Keywords

Computer Science and Digital Science

- A1.1.1. Multicore, Manycore

- A1.1.2. Hardware accelerators (GPGPU, FPGA, etc.)

- A1.1.5. Exascale

- A8.2.1. Operations research

- A8.2.2. Evolutionary algorithms

- A9.6. Decision support

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B3.1. Sustainable development

- B3.1.1. Resource management

- B7. Transport and logistics

- B8.1.1. Energy for smart buildings

1 Team members, visitors, external collaborators

Research Scientist

- Jan Gmys [University of Mons, Belgium, Researcher, until Sep 2020]

Faculty Members

- Nouredine Melab [Team leader, Université de Lille, Professor, HDR]

- Omar Abdelkafi [Université de Lille, Associate Professor]

- Matthieu Basseur [Université d'Angers, Associate Professor, until Aug 2020, HDR]

- Bilel Derbel [Université de Lille, Associate Professor, HDR]

- Arnaud Liefooghe [Université de Lille, Associate Professor]

- El-Ghazali Talbi [Université de Lille, Professor, HDR]

Post-Doctoral Fellow

- Tiago Carneiro Pessoa [Inria, until Jun 2020]

PhD Students

- Brahim Aboutaib [Université de Lille, from Sep 2020]

- Nicolas Berveglieri [Université de Lille]

- Alexandre Borges De Jesus [University of Coimbra, Portugal]

- Guillaume Briffoteaux [University of Mons, Belgium]

- Lorenzo Canonne [Inria, from Oct 2020]

- Raphael Cosson [Université de Lille]

- Juliette Gamot [Inria, from Nov 2020]

- Maxime Gobert [University of Mons, Belgium]

- Ali Hebbal [Université de Lille]

- Geoffrey Pruvost [Université de Lille]

- Jeremy Sadet [Université de Valenciennes et du Hainaut Cambrésis]

Technical Staff

- Nassime Aslimani [Université de Lille, Engineer]

- Dimitri Delabroye [Inria, Engineer]

- Jan Gmys [Inria, Engineer, from Oct 2020]

- Jingyu Ji [Inria, Engineer, until Jan 2020]

Interns and Apprentices

- Ayman Makki [Université de Lille, from Mar 2020 until Aug 2020]

Administrative Assistant

- Karine Lewandowski [Inria]

2 Overall objectives

2.1 Presentation

In the Bonus project, we target optimization problems. Solving such a problem consists in optimizing (minimizing or maximizing) one or more objective function(s) under some constraints, as formulated below:

$$\text{Min/Max} \quad F(x) = (f_1(x), f_2(x), \ldots, f_m(x)) \quad \text{subject to} \quad x \in S$$

where $S$ is the feasible search space and $x$ is the decision variable vector of dimension $n$.

Nowadays, in many research and application areas we are witnessing the emergence of the "big" era (big data, big graphs, etc.). In the optimization setting, problems are increasingly big in practice. Big optimization problems (BOPs) are problems composed of a large number of environmental input parameters and/or decision variables (high dimensionality), and/or many objective functions that may be computationally expensive. For instance, in smart grids, many optimization problems must account for a large number of consumers (appliances, electric vehicles, etc.) and multiple suppliers with various energy sources. In the area of engineering design, the optimization process must often take into account a large number of parameters from different disciplines. In addition, the evaluation of the objective function(s) often consists in executing an expensive simulation of a black-box complex system. This is typically the case in aerodynamics, where a CFD-based simulation may require several hours. On the other hand, to meet the fast-growing needs of applications in terms of computational power in a wide range of areas including optimization, high-performance computing (HPC) technologies have undergone a revolution during the last decade (see Top500 1). Indeed, HPC is evolving toward ultra-scale supercomputers composed of millions of cores supplied in heterogeneous devices including multi-core processors with various architectures, GPU accelerators and MIC coprocessors.

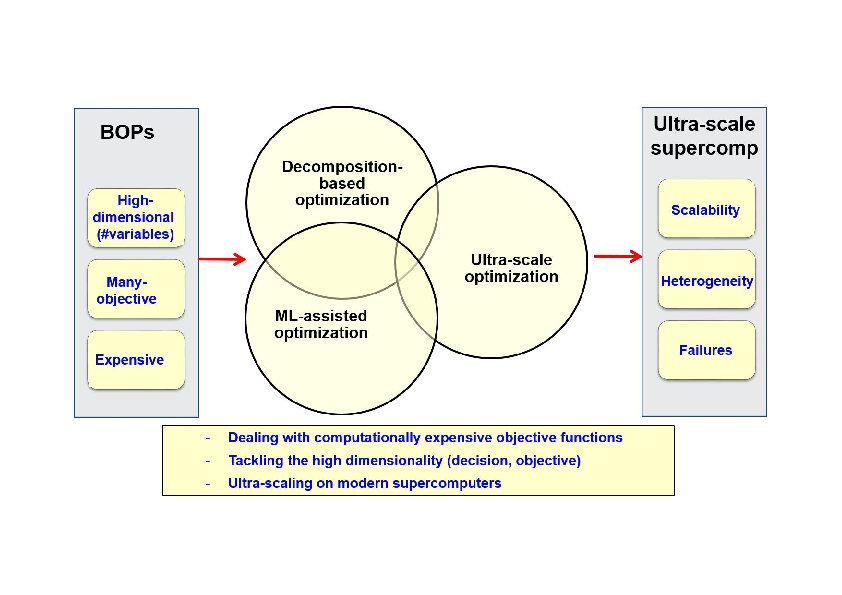

Beyond the “big buzzword”, solving BOPs raises at least four major challenges: (1) tackling their high dimensionality; (2) handling many objectives; (3) dealing with computationally expensive objective functions; and (4) scaling up on (ultra-scale) modern supercomputers. The overall scientific objective of the Bonus project is to address these challenges efficiently. On the one hand, the focus will be put on the design, analysis and implementation of optimization algorithms that are scalable for high-dimensional (in decision variables and/or objectives) and/or expensive problems. On the other hand, the focus will also be put on the design of optimization algorithms able to scale on heterogeneous supercomputers including several millions of processing cores. To achieve these objectives and meet the associated challenges, a program including three lines of research will be adopted (Fig. 1): decomposition-based optimization, Machine Learning (ML)-assisted optimization and ultra-scale optimization. These research lines are developed in the following section.

From the software standpoint, our objective is to integrate the approaches we will develop in our ParadisEO 3 2 framework in order to allow their reuse inside and outside the Bonus team. The major challenge will be to extend ParadisEO in order to make it more collaborative with other software including machine learning tools, other (exact) solvers and simulators. From the application point of view, the focus will be put on two classes of applications: complex scheduling and engineering design.

3 Research program

3.1 Decomposition-based Optimization

Given the large scale of the targeted optimization problems in terms of the number of variables and objectives, their decomposition into simplified and loosely coupled or independent subproblems is essential to meet the challenge of scalability. The first line of research is to investigate the decomposition approach in the two spaces (decision and objective) and their combination, as well as their implementation on ultra-scale architectures. The motivation of the decomposition is twofold. First, decomposition allows the parallel resolution of the resulting subproblems on ultra-scale architectures. Here also several issues will be addressed: the definition of the subproblems, their coding to allow their efficient communication and storage (checkpointing), their assignment to processing cores etc. Second, decomposition is necessary for solving large problems that cannot be solved (efficiently) using traditional algorithms. For instance, with the popular NSGA-II algorithm the number of non-dominated solutions 3 increases drastically with the number of objectives, leading to a very slow convergence to the Pareto Front 4. Therefore, decomposition-based techniques are gaining a growing interest. The objective of Bonus is to investigate various decomposition schemes and cooperation protocols between the subproblems resulting from the decomposition in order to efficiently generate global solutions of good quality. Several challenges have to be addressed: (1) how to define the subproblems (decomposition strategy); (2) how to solve them to generate local solutions (local rules); (3) how to combine these local solutions with those generated by other subproblems and how to generate global solutions (cooperation mechanism); and (4) how to combine decomposition strategies in more than one space (hybridization strategy).
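To make the remark on non-dominated solutions concrete, the following minimal Python sketch checks Pareto dominance (assuming minimization) and measures the fraction of non-dominated points in a uniformly random sample. The function names, the sample size and the random sampling are illustrative assumptions; they are not taken from NSGA-II or from the Bonus software.

```python
import random
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (minimization):
    a is no worse on every objective and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point dominates (quadratic-time filter)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def non_dominated_fraction(n_points: int, n_objectives: int, seed: int = 0) -> float:
    """Fraction of non-dominated points among uniformly random objective vectors
    (hypothetical experiment, for illustration only)."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(n_objectives)] for _ in range(n_points)]
    return len(non_dominated(points)) / n_points


if __name__ == "__main__":
    for m in (2, 5, 10):
        print(m, "objectives:", non_dominated_fraction(200, m))
```

On random samples of this kind, the fraction typically grows quickly with the number of objectives, which is one way to see why dominance-based selection loses discriminating power on many-objective problems.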

The decomposition in the decision space can be performed in different ways according to the problem at hand. Two major categories of decomposition techniques can be distinguished. The first one consists in breaking down the high-dimensional decision vector into lower-dimensional and easier-to-optimize blocks of variables. The major issue is how to define the subproblems (blocks of variables) and their cooperation protocol: randomly vs. using some learning (e.g. separability analysis), statically vs. adaptively etc. The decomposition in the decision space can also be guided by the type of variables, i.e. discrete vs. continuous. The discrete and continuous parts are optimized separately using cooperative hybrid algorithms 68. The major issue of this kind of decomposition is the presence of categorical variables in the discrete part 64. The Bonus team is addressing this issue, rarely investigated in the literature, within the context of aerospace vehicle engineering design. The second category consists in the decomposition according to the ranges of the decision variables. For continuous problems, the idea consists in iteratively subdividing the search (e.g. design) space into subspaces (hyper-rectangles, intervals etc.) and selecting those that are most likely to produce the lowest objective function value. Existing approaches meet increasing difficulty as the number of variables grows and are often applied to low-dimensional problems. We are investigating this scalability challenge (e.g. 10). For discrete problems, the major challenge is to find a coding (mapping) of the search space to a decomposable entity. We have proposed an interval-based coding of the permutation space for solving big permutation problems. The approach opens perspectives that we are investigating 7 in terms of ultra-scale parallelization, application to multi-permutation problems and hybridization with metaheuristics.
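As an illustration of how a permutation space can be mapped to a decomposable entity, the sketch below numbers the permutations of n items from 0 to n! - 1 in lexicographic order using the factorial number system, so that any set of consecutive permutations becomes an integer interval that can be split into work units. This is a generic textbook construction given under that assumption; the exact coding used in 7 may differ, and the function names are hypothetical.

```python
from math import factorial
from typing import List, Sequence, Tuple


def unrank(index: int, n: int) -> List[int]:
    """Return the permutation of range(n) whose lexicographic rank is `index`."""
    if not 0 <= index < factorial(n):
        raise ValueError("index out of range")
    items = list(range(n))
    perm = []
    for k in range(n - 1, -1, -1):
        # The leading factoradic digit selects which remaining item comes next.
        digit, index = divmod(index, factorial(k))
        perm.append(items.pop(digit))
    return perm


def rank(perm: Sequence[int]) -> int:
    """Inverse of unrank: lexicographic rank of a permutation of range(n)."""
    items = sorted(perm)
    index = 0
    for k, value in enumerate(perm):
        digit = items.index(value)
        index += digit * factorial(len(perm) - 1 - k)
        items.pop(digit)
    return index


def split_interval(begin: int, end: int, parts: int) -> List[Tuple[int, int]]:
    """Split [begin, end) into at most `parts` contiguous, near-equal sub-intervals."""
    size = end - begin
    bounds = [begin + (size * i) // parts for i in range(parts + 1)]
    return [(a, b) for a, b in zip(bounds, bounds[1:]) if a < b]


if __name__ == "__main__":
    n = 4
    for begin, end in split_interval(0, factorial(n), 3):
        print((begin, end), unrank(begin, n), "...", unrank(end - 1, n))
```

With such a coding, a work unit is fully described by two integers, which keeps communication and storage costs low regardless of how many permutations the interval covers.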

The decomposition in the objective space consists in breaking down an original many-objective problem (MaOP) into a set of cooperative single-objective subproblems (SOPs). The decomposition strategy requires the careful definition of a scalarizing (aggregation) function and its weighting vectors (each of them corresponds to a separate SOP) to guide the search process towards the best regions. Several scalarizing functions have been proposed in the literature, including weighted sum, weighted Tchebycheff, vector angle distance scaling etc. These functions are widely used but they have their limitations. For instance, weighted Tchebycheff might harm diversity maintenance, and weighted sum is inefficient when dealing with nonconvex Pareto Fronts 60. Defining a scalarizing function well-suited to the MaOP at hand is therefore a difficult and still open question being investigated in Bonus 6, 5. Studying and defining various functions, and analyzing them in depth to better understand the differences between them, is required. Regarding the weighting vectors that determine the search direction, their efficient setting is also a key and open issue. In particular, they dramatically affect the diversity performance. Their setting raises two main issues: how to determine their number according to the available computational resources, and when (statically or adaptively) and how to determine their values. Weight adaptation is one of our main concerns, which we are addressing especially from a distributed perspective. These issues correspond to the main scientific objectives targeted by our bilateral ANR-RGC BigMO project with City University (Hong Kong). The other challenges pointed out at the beginning of this section concern the way to solve locally the SOPs resulting from the decomposition of a MaOP and the mechanism used for their cooperation to generate global solutions. To deal with these challenges, our approach is to design the decomposition strategy and cooperation mechanism keeping in mind the parallel and/or distributed solving of the SOPs. Indeed, we favor local neighborhood-based mating selection and replacement to minimize the network communication cost while allowing an effective resolution 5. The major issues here are how to define the neighborhood of a subproblem and how to cooperatively update the best-known solution of each subproblem and its neighbors.
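The two most common scalarizing functions mentioned above can be sketched as follows, assuming minimization. The three-point front, the ideal point and the helper names are hypothetical and chosen only to show the known weakness of the weighted sum on a nonconvex front: the middle point is Pareto-optimal, yet no weight vector makes it the weighted-sum minimizer, whereas a balanced weighted Tchebycheff function selects it.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def weighted_sum(f: Sequence[float], w: Sequence[float]) -> float:
    """Weighted sum scalarization: sum_i w_i * f_i."""
    return sum(wi * fi for wi, fi in zip(w, f))


def weighted_tchebycheff(f: Sequence[float], w: Sequence[float],
                         z_star: Sequence[float]) -> float:
    """Weighted Tchebycheff scalarization: max_i w_i * |f_i - z*_i|,
    where z* is the ideal (reference) point."""
    return max(wi * abs(fi - zi) for wi, fi, zi in zip(w, f, z_star))


def argmin_weighted_sum(points: List[Point], w: Sequence[float]) -> Point:
    return min(points, key=lambda f: weighted_sum(f, w))


def argmin_tchebycheff(points: List[Point], w: Sequence[float],
                       z_star: Sequence[float]) -> Point:
    return min(points, key=lambda f: weighted_tchebycheff(f, w, z_star))


# Hypothetical nonconvex front: (0.6, 0.6) lies above the segment joining the extremes.
FRONT: List[Point] = [(0.0, 1.0), (0.6, 0.6), (1.0, 0.0)]
IDEAL: Point = (0.0, 0.0)

if __name__ == "__main__":
    for i in range(11):
        w = (i / 10, 1 - i / 10)
        print(w, argmin_weighted_sum(FRONT, w), argmin_tchebycheff(FRONT, w, IDEAL))
```

Each weight vector defines one single-objective subproblem, so the loop in the usage example can be read as a tiny decomposition of the bi-objective problem into eleven SOPs.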

To sum up, the objective of the Bonus team is to come up with scalable decomposition-based approaches in the decision and objective spaces. In the decision space, a particular focus will be put on high dimensionality and mixed discrete-continuous variables, which have received little interest in the literature. In particular, we will continue to investigate the interval-based (discrete) and fractal-based (continuous) approaches at larger scales using ultra-scale computing. We will also deal with the rarely addressed challenge of mixed discrete-continuous variables including categorical ones (collaboration with ONERA). In the objective space, we will investigate parallel ultra-scale decomposition-based many-objective optimization with ML-based adaptive building of scalarizing functions. A particular focus will be put on the state-of-the-art MOEA/D algorithm. This challenge is rarely addressed in the literature, which motivated the collaboration with the designer of MOEA/D (bilateral ANR-RGC BigMO project with City University, Hong Kong). Finally, the joint decision-objective decomposition, which is still in its infancy 70, is another challenge of major interest.

3.2 Machine Learning-assisted Optimization

The Machine Learning (ML) approach based on metamodels (or surrogates) is commonly used, and also adopted in Bonus, to assist optimization in tackling BOPs characterized by time-demanding objective functions. The second line of research of Bonus is focused on ML-aided optimization, both to meet the challenge of expensive functions of BOPs using surrogates and to assist the two other research lines (decomposition-based and ultra-scale optimization) in dealing with the other challenges (high dimensionality and scalability).

Several issues must be addressed to make surrogate-assisted optimization efficient and effective. First, infill criteria have to be carefully defined to adaptively select the adequate sample points (in terms of surrogate precision and solution quality). The challenge is to find the best trade-off between exploration and exploitation to efficiently refine the surrogate and guide the optimization process toward the best solutions. The most popular infill criterion is probably the Expected Improvement (EI) 63, which is based on the expected values of sample points but also, and importantly, on their variance. The variance is inherently provided by the kriging model, which is why kriging is used in the state-of-the-art efficient global optimization (EGO) algorithm 63. However, such crucial information is not provided by all surrogate models (e.g. Artificial Neural Networks) and then needs to be derived. In Bonus, we are currently investigating this issue. Second, it is known that surrogates reduce the computational burden of solving BOPs with time-consuming function(s). However, using parallel computing as a complementary way is often recommended and cited as a perspective in the conclusions of related publications. Nevertheless, despite being of critical importance, parallel surrogate-assisted optimization is weakly addressed in the literature. For instance, the introduction of the survey proposed in 62 warns that, because the area is not mature yet, the paper is more focused on the potential of the surveyed approaches than on their relative efficiency. Parallel computing is required at different levels that we are investigating.
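For reference, the standard closed form of EI for a minimization problem, under a Gaussian predictive distribution with mean $\mu(x)$ and standard deviation $\sigma(x)$ and with $f_{\min}$ the best value observed so far, is $\mathrm{EI}(x) = (f_{\min} - \mu(x))\,\Phi(z) + \sigma(x)\,\varphi(z)$ with $z = (f_{\min} - \mu(x))/\sigma(x)$. The Python sketch below implements this formula with the standard library only; the function and argument names are illustrative, and the predictive mean and standard deviation are assumed to come from some surrogate (for example a kriging model) that is not shown here.

```python
import math


def expected_improvement(mean: float, std: float, best: float) -> float:
    """Expected Improvement for minimization under a Gaussian prediction.

    mean, std: surrogate prediction and its standard deviation at a candidate point.
    best: lowest objective value observed so far.
    """
    if std <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(best - mean, 0.0)
    z = (best - mean) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal density
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # standard normal CDF
    return (best - mean) * cdf + std * pdf


if __name__ == "__main__":
    best = 1.0
    # Exploitation: slightly better prediction, no uncertainty.
    print(expected_improvement(mean=0.9, std=0.0, best=best))
    # Exploration: no predicted gain, but large uncertainty.
    print(expected_improvement(mean=1.0, std=1.0, best=best))
```

The second term is what rewards exploration: a candidate with no predicted gain can still have a larger EI than a candidate with a small certain gain, provided its predictive variance is large enough.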

Another issue with surrogate-assisted optimization is related to high dimensionality in decision as well as in objective space: it is often applied to low-dimensional problems. The joint use of decomposition, surrogates and massive parallelism is an efficient approach to deal with high dimensionality. This approach, adopted in Bonus, has received little attention in the literature. In Bonus, we are considering a generic framework in order to enable a flexible coupling of existing surrogate models within the state-of-the-art decomposition-based algorithm MOEA/D. This is a first step in extending the applicability of efficient global optimization to the multi-objective setting through parallel decomposition. Another issue, which is a consequence of high dimensionality, is the mixed (discrete-continuous) nature of decision variables, which is frequent in real-world applications (e.g. engineering design). While surrogate-assisted optimization is widely applied in the continuous setting, it is rarely addressed in the literature in the discrete-continuous framework. In 64, we have identified different ways to deal with this issue that we are investigating. Non-stationary functions, frequent in real-world applications (see Section 4.1), are another major issue that we are addressing using the concept of deep Gaussian Processes.

Finally, as mentioned at the beginning of this section, ML-assisted optimization is mainly used to deal with BOPs with expensive functions but it will also be investigated for other optimization tasks. Indeed, ML will be useful to assist the decomposition process. In the decision space, it will help to perform the separability analysis (understanding of the interactions between variables) to decompose the vector of variables. In the objective space, ML will be useful to assist a decomposition-based many-objective algorithm in dynamically selecting a scalarizing function or updating the weighting vectors according to their performance in the previous steps of the optimization process 5. Such a data-driven ML methodology would allow us to understand what makes a problem difficult or an optimization approach efficient, to predict the algorithm performance 4, to select the most appropriate algorithm configuration 8, and to adapt and improve the algorithm design for unknown optimization domains and instances. Such an autonomous optimization approach would adaptively adjust its internal mechanisms in order to tackle cross-domain BOPs.

In a nutshell, to deal with expensive optimization the Bonus team will investigate the surrogate-based ML approach with the objective of efficiently integrating surrogates in the optimization process. The focus will especially be put on high dimensionality (e.g. using decomposition) with mixed discrete-continuous variables, which is rarely investigated. The kriging metamodel (Gaussian Process or GP) will be considered in particular for engineering design (for more reliability), addressing the above issues and other major ones, mainly non-stationarity (using emerging deep GP) and ultra-scale parallelization (highly needed by the community). Indeed, a lot of work has been reported on deep neural network (deep learning) surrogates but not on the others, including (deep) GP. On the other hand, ML will be used to assist decomposition: importance of and interaction between variables in the decision space, dynamic building of scalarizing functions (selection of scalarizing functions, weight update etc.) in the objective space etc.

3.3 Ultra-scale Optimization

The third line of our research program, which distinguishes us from the other (project-)teams of the related Inria scientific theme, is ultra-scale optimization. This research line is complementary to the two others, which are sources of massive parallelism and with which it should be combined to solve BOPs. Indeed, ultra-scale computing is necessary for the effective resolution of the large number of subproblems generated by the decomposition of BOPs, the parallel evaluation of simulation-based fitness and metamodels etc. These sources of parallelism are attractive for solving BOPs and are natural candidates for ultra-scale supercomputers 5. However, their efficient use raises a big challenge: efficiently managing a massive number of irregular tasks on supercomputers with multiple levels of parallelism and heterogeneous computing resources (GPU, multi-core CPU with various architectures) and networks. Meeting this challenge requires tackling three major issues, scalability, heterogeneity and fault-tolerance, discussed in the following.

The scalability issue requires, on the one hand, the definition of scalable data structures for efficient storage and management of the tremendous number of subproblems generated by decomposition 66. On the other hand, achieving extreme scalability also requires the optimization of communications (in number of messages, their size and scope), especially at the inter-node level. For that, we target the design of asynchronous locality-aware algorithms as we did in 61, 69. In addition, efficient mechanisms are needed for granularity management and coding of the work units stored and communicated during the resolution process.

Heterogeneity means harnessing various resources including multi-core processors with different architectures and GPU devices. The challenge is therefore to design and implement hybrid optimization algorithms taking into account the difference in computational power between the various resources as well as the resource-specific issues. On the one hand, to deal with the heterogeneity in terms of computational power, we adopt in Bonus the dynamic load balancing approach based on the Work Stealing (WS) asynchronous paradigm 6 at the inter-node as well as at the intra-node level. We have already investigated such an approach, with various victim selection and work sharing strategies, in 69, 7. On the other hand, resource-specific optimization mechanisms are required to deal with related issues such as thread divergence and memory optimization on GPU, data sharing and synchronization, cache locality, and vectorization on multi-core processors etc. These issues have been considered separately in the literature, including in our works 9, 1. Indeed, in most existing works related to GPU-accelerated optimization only a single CPU core is used. This leads to a huge waste of resources, especially with the increasing number of processing cores integrated into modern processors. Using the two components jointly raises additional issues including data and work partitioning, the optimization of CPU-GPU data transfers etc.
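The work stealing principle can be illustrated with a small sequential simulation, given here as a sketch under simplifying assumptions: tasks have unit cost, workers take turns in rounds, and an idle worker steals half of the tasks of the most loaded worker. This particular victim selection and steal-half policy is a hypothetical choice for the example and is not claimed to be one of the strategies studied in 69, 7; a real implementation would be asynchronous and concurrent.

```python
from collections import deque
from typing import Deque, List


def run_work_stealing(queues: List[Deque[int]]) -> List[int]:
    """Simulate workers that process unit-cost tasks from private deques.

    An idle worker steals half of the tasks of the most loaded worker.
    Returns the number of tasks processed by each worker.
    """
    done = [0] * len(queues)
    while any(queues):  # at least one deque still holds a task
        for worker, queue in enumerate(queues):
            if not queue:
                # Victim selection: the worker with the longest deque.
                victim = max(range(len(queues)), key=lambda v: len(queues[v]))
                for _ in range(len(queues[victim]) // 2):
                    # Steal from the end opposite to the one the owner pops from.
                    queue.append(queues[victim].popleft())
            if queue:
                queue.pop()  # process one local task
                done[worker] += 1
    return done


if __name__ == "__main__":
    # All the work initially sits on worker 0; the others start idle.
    initial = [deque(range(100)), deque(), deque(), deque()]
    print(run_work_stealing(initial))
```

Stealing from the opposite end of the victim's deque is the usual design choice because it limits contention with the owner, which keeps working on its most recently generated tasks.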

Another issue induced by scalability is the increasing probability of failures in modern supercomputers 67. Indeed, as their size grows to millions of processing cores, their Mean Time Between Failures (MTBF) tends to be shorter and shorter 65. Failures may have different sources including hardware and software faults, silent errors etc. In our context, we consider failures leading to the loss of work unit(s) being processed by some thread(s) during the resolution process. The major issue, which is particularly critical in exact optimization, is how to recover the failed work units to ensure a reliable execution. This issue is tackled in the literature using different approaches: algorithm-based fault tolerance, checkpoint/restart (CR), message logging and redundancy. The CR approach can be system-level, library/user-level or application-level. The application-level approach, adopted in Bonus 2, is widely used in the literature thanks to its efficiency in terms of memory footprint. This approach raises several issues, mainly: (1) which critical information defines the state of the work units and allows their execution to be resumed properly? (2) when, where and how (using which data structures) to store it efficiently? (3) how to deal with the two other issues, scalability and heterogeneity?
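A minimal sketch of application-level checkpoint/restart is given below in Python. It assumes, purely for illustration, that the state of the search is fully described by a list of unexplored integer intervals (work units) plus the best cost found so far, and that it is stored as a JSON file; the file layout and function names are hypothetical and do not describe the mechanism of 2. The checkpoint is written to a temporary file and then renamed, so that a failure during the write cannot corrupt the previous checkpoint.

```python
import json
import os
import tempfile
from typing import List, Tuple

Interval = Tuple[int, int]


def save_checkpoint(path: str, intervals: List[Interval], best_cost: float) -> None:
    """Atomically store the unexplored work units and the best-known cost."""
    state = {"intervals": intervals, "best_cost": best_cost}
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())  # make sure the data reached the disk
    os.replace(tmp_path, path)     # atomic rename over the previous checkpoint


def load_checkpoint(path: str) -> Tuple[List[Interval], float]:
    """Restore the work units and the best-known cost saved by save_checkpoint."""
    with open(path) as handle:
        state = json.load(handle)
    return [tuple(interval) for interval in state["intervals"]], state["best_cost"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        checkpoint = os.path.join(folder, "search.ckpt")
        save_checkpoint(checkpoint, [(0, 120), (480, 720)], 1278.0)
        print(load_checkpoint(checkpoint))
```

Because only the work-unit descriptors are saved, and not the memory image of the process, the checkpoint stays small, which is the memory-footprint advantage of the application-level approach mentioned above.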

Last but not least, another roadblock to exascale is the programming of massive-scale applications for modern supercomputers. On the path to exascale, we will investigate the programming environments and execution supports able to deal with exascale challenges: large numbers of threads, heterogeneous resources etc. Various exascale programming approaches are being investigated by the parallel computing community and HPC builders: extending existing programming languages (e.g. DSL-C++) and environments/libraries (MPI+X etc.), and proposing new solutions, mainly Partitioned Global Address Space (PGAS)-based environments (Chapel, UPC, X10 etc.). It is worth noting here that our objective is not to develop a programming environment or a runtime support for exascale computing. Instead, we aim to collaborate with the research teams (inside or outside Inria) that pursue such objectives.

To sum up, we put the focus on the design and implementation of efficient big optimization algorithms dealing jointly (which is uncommon in parallel optimization) with the major issues of ultra-scale computing: scalability up to millions of cores using scalable data structures and asynchronous locality-aware work stealing; heterogeneity, addressing the multi-core and GPU-specific issues and those related to their combination; and scalable GPU-aware fault tolerance. A strong effort will be devoted to this last challenge, for the first time to the best of our knowledge, using the application-level checkpoint/restart approach to deal with failures.

4 Application domains

4.1 Introduction

For the validation of our findings we use standard benchmarks to facilitate the comparison with related works. In addition, we also target real-world applications in the context of our collaborations and industrial contracts. From the application point of view two classes are targeted: complex scheduling and engineering design. The objective is twofold: proposing new models for complex problems and solving BOPs efficiently using jointly the three lines of our research program. The following subsections present some use cases that are the focus of our current industrial collaborations.

4.2 Big optimization for complex scheduling

Three application domains are targeted: energy, health, and transport and logistics. In the energy field, with the smart grid revolution, (multi-)house energy management is gaining a growing interest. The key challenge is to make (multi-)house energy consumption and management elastic with respect to the energy market. This kind of demand-side management will be of strategic importance for energy companies in the near future. In collaboration with the EDF energy company we are working on the formulation and solving of optimization problems on demand-side management in smart micro-grids for single- and multi-user frameworks. These complex problems require taking into account multiple conflicting objectives and constraints and many (deterministic/uncertain, discrete/continuous) parameters. A representative example of such BOPs that we are addressing is the scheduling of the activation of a large number of electrical and thermal appliances for a set of homes, optimizing at least three criteria: maximizing the user's comfort, minimizing the energy bill and minimizing peak consumption situations. In the health care domain, we have collaborated with the Beckman Coulter company on the design and planning of large medical laboratories. This is a hot topic resulting from the mutualisation phenomenon, which makes these laboratories bigger. As a consequence, being responsible for analyzing medical tests ordered by physicians on patients' samples, these laboratories receive large amounts of prescriptions and tubes, making their associated workflow more complex. Our aim was therefore to design and plan any medical laboratory to minimize the costs and time required to perform the tests. More exactly, the focus was put on the multi-objective modeling and solving of large (e.g. tens of thousands of medical test tubes to be analyzed) strategic, tactical and operational problems such as the layout design, machine selection and configuration, assignment and scheduling. In 2020, we published a US patent jointly with the company presenting a clinical laboratory optimization framework.

4.3 Big optimization for engineering design

For now, the focus is on aerospace vehicle design, a complex multidisciplinary optimization process that we are exploring in collaboration with ONERA. The objective is to find the vehicle architecture and characteristics that provide the optimal performance (flight performance, safety, reliability, cost etc.) while satisfying design requirements 59. A representative topic we are investigating, and will continue to investigate throughout the lifetime of the project given its complexity, is the design of launch vehicles, which involves at least four tightly coupled disciplines (aerodynamics, structure, propulsion and trajectory). Each discipline may rely on time-demanding simulations such as Finite Element analyses (structure) and Computational Fluid Dynamics analyses (aerodynamics). Surrogate-assisted optimization is therefore needed to reduce the computation time. In addition, the problem is high-dimensional (dozens of parameters and more than three objectives), requiring different decomposition schemes (coupling vs. local variables, continuous vs. discrete and even categorical variables, scalarization of the objectives). Another major issue arising in this area is the non-stationarity of the objective functions, which is generally due to the abrupt change of a physical property that often occurs in the design of launch vehicles. In the same spirit as deep learning using neural networks, we use Deep Gaussian Processes (DGPs) to deal with non-stationary multi-objective functions. Finally, the resolution of the problem using only one objective takes one week on a multi-core processor. The first way to deal with the computational burden is to investigate multi-fidelity using DGPs to efficiently combine models of multiple fidelities. This approach has been investigated this year within the context of the PhD thesis of A. Hebbal. In addition, ultra-scale computing is required at different levels to speed up the search and improve the reliability, which is a major requirement in aerospace design. This example shows that we need to exploit the synergy between the three lines of our research program to tackle such BOPs.

5 Highlights of the year

5.1 Awards

- Ali Hebbal received the 2020 Doctoral student award from the ONERA aerospace research center: https://www.onera.fr/fr/rejoindre-onera/prix-des-doctorants.

5.2 Other highlights

- Involvement of Bonus in two region-scale projects accepted for the Université de Lille: PIA3 Equipex+ MesoNet (N. Melab: scientific coordinator for Université de Lille) and INFRANUM labeling of the regional data center (HdF) (N. Melab: member of the PI team of the project).

- Bonus won the organization of ACM GECCO 2021 in Lille, a flagship international conference in the research field of the team. This will be the first time that GECCO is organized in France since its creation in 1999.

- USA Patent with Beckman Coulter Inc., Clinical laboratory optimization framework (Eric Varlet, S. Faramarzi-oghani, E-G. Talbi), WO2020117733A1, June 2020.

6 New software and platforms

6.1 New platforms

6.1.1 Grid'5000 testbed: evolution towards SILECS

Participants: Nouredine Melab, Dimitri Delabroye, Thierry Peltier, Lucas Nussbaum.

- Keywords: Experimental testbed, large-scale computing, high-performance computing, GPU computing, cloud computing, big data

- Functional description: Grid'5000 is a project initiated in 2003 by the French government to promote scientific research on large-scale distributed systems. The project was later supported by different research organizations including Inria, CNRS, the French universities, Renater (which provides the wide-area network) etc. The overall objective of Grid'5000 was to build by 2007 a nation-wide experimental testbed composed of at least 5000 processing units and distributed over several sites in France (one of them located at Lille).

Within the framework of CPER data, the equipment of Grid'5000 at Lille was renewed in 2017-2018 in terms of hardware resources (GPU-powered servers, storage, PDUs, etc.) and infrastructure (network, inverter, etc.). As scientific leader of Grid'5000 at Lille, N. Melab was strongly involved in the management of this renewal. During 2020, he was involved in the preparation of the proposal of the SILECS project (fusion of the Grid'5000 and FIT testbeds) submitted in June to the call for expressions of interest for structuring research equipment (ESR Equipex+).

- URL: https://www.grid5000.fr/mediawiki/index.php/Grid5000:Home

7 New results

7.1 Decomposition-based optimization

During the year 2020, we have investigated decomposition-based optimization in the decision space as well as in the objective space. In the decision space, we have considered discrete as well as continuous problems. For discrete problems, we have reinvestigated our interval-based decomposition approach proposed in 7 as a baseline to explore ultra-scale Branch-and-Bound algorithms using Chapel 16. The contribution is presented in Section 7.3. For continuous problems, we have extended the geometric fractal decomposition-based approach 10 to parallel multi-objective optimization 26 considering two different approaches: scalarization and non-dominance. In 27, we have investigated the intuitive-for-decomposition bipartite graph representation for the design of a new partition crossover for the QAP. In the objective space, we have studied in 35 the joint impact of population size and sub-problem selection within the context of the MOEA/D framework. The contributions are summarized in the following.

7.1.1 Fractal Decomposition for Continuous Multi-Objective Optimization Problems

Participants: El-Ghazali Talbi, Léo Souquet, Amir Nakib.

The Fractal Decomposition Algorithm (FDA) is a metaheuristic that was recently proposed to solve high-dimensional single-objective continuous optimization problems 10. This approach is based on a geometric fractal decomposition which recursively divides the decision space into hyperspheres while searching for the optimal solution. In this work 26, two different multi-objective approaches have been investigated. The first one (Mo-FDA-S) is based on scalarization and distributed on parallel multi-node virtual environments, taking advantage of the properties of FDA. In addition, Mo-FDA-S benefits from containers, light-weight virtual machines that are designed to run a specific task. The second one (Mo-FDA-D), based on Pareto dominance sorting, includes both a new hypersphere evaluation technique based on the hypervolume indicator and a new Pareto local search algorithm. A comparison with state-of-the-art algorithms on different well-known benchmarks (ZDT and DTLZ) has demonstrated the efficiency and the robustness of the proposed approaches for two- and three-objective problems.

7.1.2 Combined Impact of Population Size and Sub-problem Selection in MOEA/D

Participants: Geoffrey Pruvost, Bilel Derbel, Arnaud Liefooghe, Ke Li, Qingfu Zhang.

This contribution 35 aims to understand and improve the working principle of decomposition-based multi-objective evolutionary algorithms. We review the design of the well-established MOEA/D framework to support the smooth integration of different strategies for sub-problem selection, while emphasizing the role of the population size and of the number of offspring created at each generation. By conducting a comprehensive empirical analysis on a wide range of multi- and many-objective combinatorial NK landscapes, we provide new insights into the combined effect of those parameters on the anytime performance of the underlying search process. In particular, we show that even a simple strategy selecting sub-problems at random outperforms existing sophisticated strategies. We also study the sensitivity of such strategies with respect to the ruggedness and the objective space dimension of the target problem.
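As a rough illustration of the two ingredients discussed above, the following minimal sketch shows a weighted Tchebycheff scalarization, one common way MOEA/D defines its sub-problems, together with a purely random sub-problem selection. The function names, the bi-objective weight layout and the number of selected sub-problems are assumptions made for this example and are not taken from 35.

```python
import random

def tchebycheff(objectives, weights, ideal):
    """Weighted Tchebycheff value of an objective vector (to be minimized)."""
    return max(w * abs(f - z) for f, w, z in zip(objectives, weights, ideal))

def uniform_weights(n_subproblems):
    """Evenly spread weight vectors for a bi-objective problem (illustrative layout)."""
    step = 1 / (n_subproblems - 1)
    return [(i * step, 1 - i * step) for i in range(n_subproblems)]

def select_subproblems(n_subproblems, n_selected, rng):
    """Random strategy: pick n_selected distinct sub-problem indices for this generation."""
    return rng.sample(range(n_subproblems), n_selected)

rng = random.Random(42)           # fixed seed so the sketch is reproducible
weights = uniform_weights(5)      # 5 sub-problems, i.e. a population of size 5
chosen = select_subproblems(len(weights), 2, rng)  # 2 offspring per generation
```

Here the population size corresponds to the number of weight vectors, and the number of selected sub-problems corresponds to the number of offspring created per generation, which are the two parameters whose interplay is studied above.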

7.1.3 Polynomial Clustering for 2D Pareto Fronts

Participants: El-Ghazali Talbi, Nicolas Dupin, Frank Nielsen.

k-medoids is a discrete sum-of-square NP-hard clustering problem, which is known to be more robust to outliers than k-means clustering. This contribution 47 examines k-medoids clustering in the case of a 2D Pareto front, as generated by bi-objective optimization approaches. A characterization of optimal clusters is provided in this case, which allows the problem to be solved to optimality in polynomial time using a dynamic programming algorithm. More precisely, the time and memory complexities of the algorithm are proven to be polynomial in the number of points to cluster. This algorithm can also be used to jointly minimize the number of clusters and the dissimilarity of clusters. This bi-objective extension is also solvable to optimality with the same complexities, which is useful to choose the appropriate number of clusters for real-life applications. Parallelization issues are also discussed, to speed up the algorithm in practice. Polynomial clustering for 2D Pareto fronts using the Euclidean distance is also demonstrated for p-center problems, both discrete and continuous.
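To give an idea of how a dynamic program can exploit the structure of a 2D Pareto front, here is a minimal sketch. It assumes, for illustration only, that optimal clusters are contiguous runs of points once the front is sorted by the first objective; this is our reading of the kind of characterization mentioned above, not a statement of the exact result in 47. The implementation is deliberately naive and does not achieve the complexity proven in the paper.

```python
def kmedoids_2d_front(points, k):
    """Minimal k-medoids cost over clusterings made of contiguous runs of the sorted front."""
    pts = sorted(points)  # on a Pareto front, sorting by f1 also orders f2
    n = len(pts)

    def sq_dist(a, b):
        return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

    # cost[i][j]: best sum of squared distances to a medoid for the run pts[i..j]
    cost = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            cost[i][j] = min(
                sum(sq_dist(pts[m], pts[t]) for t in range(i, j + 1))
                for m in range(i, j + 1)
            )

    inf = float("inf")
    # best[c][j]: minimal cost of splitting the first j points into c runs
    best = [[inf] * (n + 1) for _ in range(k + 1)]
    best[0][0] = 0.0
    for c in range(1, k + 1):
        for j in range(1, n + 1):
            # the last run covers pts[i..j-1]; the first i points use c-1 runs
            best[c][j] = min(
                (best[c - 1][i] + cost[i][j - 1] for i in range(c - 1, j)),
                default=inf,
            )
    return best[k][n]

front = [(0, 10), (1, 9), (2, 8), (10, 2), (11, 1), (12, 0)]
total_cost = kmedoids_2d_front(front, 2)
```

Running the same routine for every value of `k` from 1 to the number of points yields the trade-off between the number of clusters and their dissimilarity, which is the bi-objective view mentioned above.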

7.1.4 Design of a Partition Crossover for the Quadratic Assignment Problem

Participants: Omar Abdelkafi, Bilel Derbel, Arnaud Liefooghe, Darrell Whitley.

This contribution 27 is a study focused on the design of a partition crossover for the Quadratic Assignment Problem (QAP). On the basis of a bipartite graph representation, intuitive for decomposition, we propose to recombine the unshared components from parents, while enabling their fast evaluation using a preprocessing step for objective function decomposition. Besides a formal description and complexity analysis of the proposed crossover, we conduct an empirical analysis of its relative behavior using a number of large-size QAP instances and a number of baseline crossovers. The proposed operator is shown to have a relatively high intensification ability, while keeping execution time relatively low.

7.2 ML-assisted optimization

As pointed out in our research program 3.2, we investigate ML-assisted optimization in two directions: efficiently building surrogates to deal with expensive black-box objective functions, and automatically building and predicting/improving the performance of metaheuristics through landscape/

Regarding surrogate-assisted optimization, we have first considered the integration of Gaussian Processes (GPs) into Bayesian Optimization to address the non-stationarity issue using Deep GPs in 22, to handle multi-fidelity in 13 and to deal with multi-objective Multidisciplinary Design Optimization in 12. Second, we have proposed two other contributions for the design of surrogate-assisted multi-objective Evolutionary Algorithms: in 37 to deal with medium-scale (up to 50 decision variables) continuous problems and in 36 to optimize boolean functions using decomposition and Walsh basis.

Regarding the second direction, we thoroughly investigated in 33, 34 the problem structure/landscape analysis for large multi-objective optimization. We have also performed in 38 an instance space analysis on discrete multi-objective optimization problems and have revisited in 28 the fitness landscape analysis within the stochastic context.

In addition, we have designed optimization algorithms using ML techniques. In 18, we have combined Mixed Integer Programming (MIP) with dual heuristics and ML techniques for the design of efficient lower bounds. In 32, we have proposed an ensemble indicator-based density estimator for multi-objective optimization giving rise to an ensemble indicator-based multi-objective EA (EIB-MOEA). In 30, we have studied the design of any-time bi-objective algorithms with the aim to trade-off runtime for solution quality.

The major contributions are summarized in the following.

7.2.1 (D)GP-assisted Bayesian optimization with applications to aerospace vehicle design

Participants: Ali Hebbal, El-Ghazali Talbi, Nouredine Melab, Loïc Brevault, Mathieu Balesdent.

Bayesian Optimization (BO) using Gaussian Processes (GPs) is a popular approach to deal with optimization involving expensive black-box functions. However, because classic GPs assume a stationary covariance function, this method may not be suited to the non-stationary functions involved in the optimization problem. To overcome this issue, Deep GPs (DGPs) can be used as surrogate models instead of classic GPs. This modeling technique increases the power of representation to capture the non-stationarity by considering a functional composition of stationary GPs, providing a multi-layer structure. In 22, we have investigated the application of DGPs within the BO context. The specificities of this optimization method are discussed and highlighted with academic test cases. The performance of DGP-assisted BO is assessed on both analytical test cases and aerospace design optimization problems and compared to the state-of-the-art stationary and non-stationary BO approaches to demonstrate its high efficiency.

7.2.2 GP-based multi-fidelity with variable relationship between fidelities

Participants: Ali Hebbal, Loïc Brevault, Mathieu Balesdent.

Multi-fidelity modeling is a way to merge different fidelity models to provide engineers with accurate results with a limited computational cost. GP-based multi-fidelity modeling has emerged as a popular approach to fuse information between the different fidelity models. The relationship between the fidelity models is a key aspect in multi-fidelity modeling. In 13, we provide an overview of GP-based multi-fidelity modeling techniques for variable relationship between the fidelity models (e.g. linearity, non-linearity, variable correlation). Each technique is described within a unified framework and the links between the different techniques are highlighted. All these approaches are numerically compared on a series of analytical test cases and four aerospace-related engineering problems in order to assess their benefits and drawbacks with respect to the problem characteristics.

7.2.3 GP-assisted evolutionary algorithm for medium-scale multi-objective optimisation

Participants: Bilel Derbel, Arnaud Liefooghe, Xiaoran Ruan, Ke Li.

The scalability of surrogate-assisted Evolutionary Algorithms (SAEAs) has not been well studied yet in the literature. In 37, we have proposed a GP-assisted EA for solving medium-scale expensive multi-objective optimisation problems with up to 50 decision variables, with three distinctive features. First, instead of using all decision variables to build the surrogate model of each objective function, we only use the correlated ones. Second, rather than directly optimising the surrogate objective functions, the original multi-objective optimisation problem is transformed into a new one based on the surrogate models. Last but not least, a subset selection method is developed to choose a couple of promising candidate solutions for actual objective function evaluations, which are then used to update the training dataset. The effectiveness of our proposed algorithm is validated on benchmark problems with 10, 20 and 50 variables, in comparison with three state-of-the-art SAEAs.

7.2.4 Walsh surrogate-assisted and decomposition-based multi-objective combinatorial optimisation

Participants: Geoffrey Pruvost, Bilel Derbel, Arnaud Liefooghe, Sébastien Verel, Qingfu Zhang.

In 36, we have considered the design and analysis of surrogate-assisted algorithms for expensive multi-objective combinatorial optimization. Focusing on pseudo-boolean functions, we leverage existing techniques based on Walsh basis to operate under the decomposition framework of MOEA/D. We have investigated two design components: the cheap generation of a promising pool of offspring and the actual selection of one solution for expensive evaluation. We have proposed different variants, ranging from a filtering approach that selects the most promising solution at each iteration by using the constructed Walsh surrogates to discriminate between a pool of offspring generated by variation, to a substitution approach that selects a solution to evaluate by optimizing the Walsh surrogates in a multi-objective way. Considering bi-objective NK landscapes as benchmark problems offering different degrees of non-linearity, we have conducted a comprehensive empirical analysis including the properties of the achievable approximation sets, the anytime performance, and the impact of the order used to train the Walsh surrogates. Our empirical findings have shown that, although our surrogate-assisted design is effective, the optimal integration of Walsh models within a multi-objective evolutionary search process gives rise to particular questions for which different trade-off answers can be obtained.
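For readers unfamiliar with the Walsh basis, the sketch below illustrates what a Walsh model of a given order is for a pseudo-boolean function. Bit strings are encoded as integers, and the Walsh function of index `k` evaluated at `x` is +1 or -1 depending on the parity of the bits shared by `k` and `x`. The toy function and the exhaustive computation of the coefficients are assumptions made for this example: in an expensive setting the coefficients would have to be estimated from a limited sample of evaluated solutions, which is not shown here.

```python
def walsh(k, x):
    """Walsh basis function: -1 if k and x share an odd number of set bits, else +1."""
    return -1 if bin(k & x).count("1") % 2 else 1

def walsh_coefficients(f, n):
    """Exact Walsh coefficients of f over all 2**n bit strings (only viable for tiny n)."""
    size = 1 << n
    return [sum(f(x) * walsh(k, x) for x in range(size)) / size for k in range(size)]

def walsh_model(coeffs, order):
    """Model keeping only the terms whose index has at most `order` set bits."""
    kept = [(k, w) for k, w in enumerate(coeffs) if bin(k).count("1") <= order]
    return lambda x: sum(w * walsh(k, x) for k, w in kept)

def toy(x):
    """Hypothetical objective: number of ones plus one pairwise interaction (bits 0 and 1)."""
    return bin(x).count("1") + 2 * ((x & 1) * ((x >> 1) & 1))

n = 4
coeffs = walsh_coefficients(toy, n)
model_order1 = walsh_model(coeffs, 1)  # misses the pairwise interaction
model_order2 = walsh_model(coeffs, 2)  # captures it exactly
```

The order of the model controls which interactions between variables it can represent, which is why the order used to train the Walsh surrogates is one of the parameters analysed above.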

7.2.5 Problem structure/landscape analysis for large multi-objective optimization

Participants: Arnaud Liefooghe, Bilel Derbel, Sébastien Verel, Hernan Aguirre, Kiyoshi Tanaka, Hugo Monzón.

In 33, we have investigated the properties of large-scale multi-objective quadratic assignment problems (mQAP) and how they impact the performance of multi-objective evolutionary algorithms. The landscape of a diversified dataset of bi-, multi-, and many-objective mQAP instances is characterized by means of features measuring complementary facets of problem difficulty based on a sample of solutions collected along random and adaptive walks over the landscape. The strengths and weaknesses of a dominance-based, an indicator-based, and a decomposition-based search algorithm are then highlighted by relating their expected approximation quality to landscape features. We also discriminated between algorithms by revealing the most suitable one for subsets of instances. Finally, we investigated the performance of a feature-based automated algorithm selection approach. By relying on low-cost features, we show that our recommendation system performs best in more than of the considered mQAP instances.

In 34, we have investigated Dynamic Compartmental Models (DCMs), which are linear models inspired by epidemiology models, to study the dynamics of Multi- and Many-Objective EAs. We have introduced a new set of features based only on when non-dominated solutions are found in the population, relaxing the usual assumption that the Pareto optimal set is known in order to use DCMs on larger problems. We have also proposed an auxiliary model to estimate the hypervolume from the features of population dynamics that measure the changes of new non-dominated solutions in the population. The new features are tested by studying the population changes of the Adaptive ε-Sampling ε-Hood algorithm while solving 30 instances of a 3-objective, 100-variable MNK-landscape problem.

Finally, in collaboration with the University of Melbourne (Australia) we have performed in 38 an instance space analysis on discrete multi-objective optimization problems for the first time using visualization, algorithm performance and an independent feature selection. We have also revisited in 28 in collaboration with LISIC/ULCO the local optimality and fitness landscape analysis within the stochastic context.

7.2.6 ML-assisted design optimization algorithms

We have designed several optimization algorithms using ML techniques. In 18, in collaboration with LRI/Université Paris-Saclay, we have studied the hybridization of Mixed Integer Programming (MIP) with dual heuristics and ML techniques, to provide dual bounds for a large-scale industrial optimization problem (EURO/ROADEF Challenge 2010). The problem consists in optimizing the refueling and maintenance planning of nuclear power plants. Several MIP relaxations are presented to provide dual bounds by computing smaller MIPs than the original problem. We show how to obtain dual bounds with scenario decomposition in the different 2-stage programming MILP formulations, with a selection of scenarios guided by ML techniques. Several sets of dual bounds are computable, significantly improving the former best dual bounds of the literature and justifying the quality of the best known primal solution. In addition, we have addressed the same problem in 19 using matheuristics.

On the other hand, we have designed in 32, in collaboration with CINVESTAV (Mexico), a new mechanism to generate an ensemble indicator-based density estimator for multi-objective optimization. The proposed method gives rise to the ensemble indicator-based multi-objective evolutionary algorithm (EIB-MOEA), which shows a robust performance on different multi-objective optimization problems when compared with several existing indicator-based multi-objective evolutionary algorithms. We have also studied the design of anytime bi-objective algorithms with the aim of trading off runtime for solution quality. First, we have proposed in 11, in collaboration with CISUC (Portugal), a theoretical model for the characterization of the best trade-off between runtime and solution quality. In 30, we have studied the algorithm selection problem in a context where the decision maker's anytime preferences are defined by a general utility function, and only known at the time of selection.

7.3 Towards ultra-scale Big Optimization

During the year 2020, we have addressed in 20 the ultra-scale optimization research line within the context of parallel metaheuristics. More exactly, we have investigated one of the major issues of ultra-scale optimization (and beyond), which consists in selecting an adequate productivity-aware programming language. The challenge is to take into account, in addition to the traditional performance objective, the productivity awareness that is highly important to deal with the increasing complexity of modern supercomputers. In this pioneering study, we have compared three languages: Python, Julia and Chapel. In addition, we have investigated in 29 the design of parallelism in surrogate-assisted decomposition-based multi-objective optimization. The merit of this original contribution is to combine the three lines of our research program: decomposition-based, ML-assisted and ultra-scale optimization. As pointed out in our editorial 24, such a combination is highly important for performance but rarely addressed in the literature. Finally, in 15 we have also combined surrogate-assisted optimization with parallelism considering various evolution controls.

7.3.1 High-productivity high-performance programming for parallel metaheuristics

Participants: Jan Gmys, Tiago Carneiro, Nouredine Melab, El-Ghazali Talbi, Daniel Tuyttens.

Parallel metaheuristics require programming languages that provide both high performance and a high level of programmability. In 20, we have provided a useful data point to help practitioners gauge the difficult question of whether to invest time and effort into learning and using a new programming language. To answer this question, three productivity-aware languages (Chapel, Julia, and Python) have been compared in terms of performance, scalability and productivity. To the best of our knowledge, this is the first time such a comparison has been performed in the context of parallel metaheuristics. As a test case, we have implemented two parallel metaheuristics in the three languages for solving the 3D Quadratic Assignment Problem (Q3AP), using thread-based parallelism on a multi-core shared-memory computer. We have also evaluated and compared the performance of the three languages for a parallel fitness evaluation loop, using four different test functions with different computational characteristics. Besides providing a comparative study, we have given feedback on the implementation and parallelization process in each language.
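To make the notion of a parallel fitness evaluation loop concrete, the following minimal sketch evaluates a population with a thread pool from the Python standard library. It is not the code used in 20: the `sphere` test function, the population and the number of workers are placeholders chosen for this example.

```python
from concurrent.futures import ThreadPoolExecutor

def sphere(x):
    """Placeholder test function: sum of squares of the decision variables."""
    return sum(v * v for v in x)

def evaluate_population(population, fitness, workers=4):
    """Evaluate all individuals with a pool of threads, preserving population order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fitness, population))

population = [[1, 2], [3, 4], [0, 0]]
fitness_values = evaluate_population(population, sphere)
```

Note that in standard CPython builds the global interpreter lock prevents threads from running pure-Python bytecode concurrently, so a loop like this one mostly pays off when the fitness function releases the lock (for example in native code or I/O). This kind of language-specific consideration is part of what a comparison in terms of performance and productivity has to weigh.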

7.3.2 Design of parallelism in surrogate-assisted and decomposition-based multi-objective optimization

Participants: Nicolas Berveglieri, Bilel Derbel, Arnaud Liefooghe, Hernan Aguirre, Qingfu Zhang, Kiyoshi Tanaka.

While many studies acknowledge the impact of parallelism for single-objective expensive optimization assisted by surrogates, extending such techniques to the multi-objective setting has not yet been properly investigated, especially within the state-of-the-art decomposition framework. In 29, we first highlighted the different degrees of parallelism in existing surrogate-assisted multi-objective evolutionary algorithms based on decomposition (S-MOEA/D). We have then provided a comprehensive analysis of the key steps towards a successful parallel S-MOEA/D approach. Through an extensive benchmarking effort relying on the well-established bbob-biobj test functions, we have analyzed the performance of the different algorithm designs with respect to the problem dimensionality and difficulty, the amount of parallel cores available, and the supervised learning models considered. In particular, we have shown the difference in algorithm scalability based on the selected surrogate-assisted approaches, the performance impact of distributing the model training task and the efficacy of the designed parallel-surrogate methods.

7.3.3 Parallel Bayesian Neural Network-assisted Genetic Algorithms using various evolution controls

Participants: Guillaume Briffoteaux, Romain Ragonnet, Mohand Mezmaz, Nouredine Melab, Daniel Tuyttens.

Methods based on HPC alone are often unable to solve simulation-based optimization problems efficiently. Parallel computing can therefore be coupled with surrogate models to achieve better results. When using surrogates, as pointed out previously, it is critical to address the major trade-off between the quality (precision) and the efficiency (execution time) of the resolution. In 15, we have investigated Evolution Controls (ECs), which are strategies that define the alternation between the simulator and the surrogate within the optimization process. We propose a new EC based on the prediction uncertainty obtained from Monte Carlo Dropout (MCDropout), a technique originally dedicated to quantifying uncertainty in deep learning. Investigations of such uncertainty-aware ECs remain uncommon in surrogate-assisted evolutionary optimization. In addition, we have used parallel computing in a complementary way to address the high computational burden. Our new strategy is implemented in the context of a pioneering application to Tuberculosis Transmission Control (TBTC). The reported results show that the MCDropout-based EC coupled with massively parallel computing outperforms strategies previously proposed in the field of surrogate-assisted optimization.

In addition, we have extended this work in 39, using an EC mechanism that dynamically combines different ECs. The contribution has been validated on different standard benchmarks and the real TBTC application.
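The role of an evolution control can be illustrated with a small sketch. It assumes that, for each candidate solution, several stochastic surrogate predictions are available (as repeated forward passes with dropout would provide), and it uses one simple rule: the candidates with the largest prediction spread are sent to the simulator, while the others keep their mean surrogate prediction. This rule, the function name and the data are illustrative assumptions and do not reproduce the ECs defined in 15 or 39.

```python
from statistics import mean, pstdev

def split_by_uncertainty(predictions, n_simulate):
    """Split candidates between simulator and surrogate according to prediction spread.

    predictions: dict mapping a candidate id to a list of stochastic surrogate outputs.
    Returns (ids to simulate, dict of id -> mean prediction for the remaining ones).
    """
    stats = {c: (mean(s), pstdev(s)) for c, s in predictions.items()}
    # Most uncertain candidates first.
    ranked = sorted(stats, key=lambda c: stats[c][1], reverse=True)
    to_simulate = ranked[:n_simulate]
    to_predict = {c: stats[c][0] for c in ranked[n_simulate:]}
    return to_simulate, to_predict

# Hypothetical surrogate outputs for three candidates.
samples = {
    "a": [1.0, 1.0, 1.0],
    "b": [0.0, 2.0, 4.0],
    "c": [1.0, 1.2, 0.8],
}
simulated, predicted = split_by_uncertainty(samples, n_simulate=1)
```

The candidates selected for simulation are independent of each other, so their evaluations can be dispatched to parallel workers, which is where parallel computing complements the surrogate.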

8 Bilateral contracts and grants with industry

8.1 Bilateral grants with industry

Our current industrial granted projects are completely at the heart of the Bonus project. They are summarized in the following.

- EDF (2020–2021, Paris): this joint project with EDF, a major electrical power player in France, is supported by the PGMO programme of Jacques Hadamard foundation of mathematics 7. The project focuses on the optimization of deep neural networks applied to energy consumption forecasting. A budget of 62K€ is allocated for funding a post-doc position.

- ONERA & CNES (2016–2023, Paris): this project with major European players in the aerospace sector focuses on the design of aerospace vehicles, a high-dimensional expensive multidisciplinary problem. Tackling such a problem effectively and efficiently requires the research lines of Bonus. Two jointly supervised PhD students (J. Pelamatti and A. Hebbal) are involved in this project. The PhD thesis of J. Pelamatti was defended in March 2020 and that of A. Hebbal in January 2021. Another one (J. Gamot) started in November 2020.

- Imprevo: Startup creation initiative: The Imprevo startup proposal was integrated into the Inria Startup Studio program in October 2020. The project will benefit in 2021 from the recruitment of two temporary engineers.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Inria associate team not involved in an IIL

Associate Team DMO

- Title: Three-fold decomposition in multi-objective optimization (DMO)

- International Partner: University of Exeter, UK

- Start year: 2018

9.1.2 Inria international partners

Informal international partners

- School of Public Health and Preventive Medicine, Monash University, Australia (ranked over 1000 in the Shanghai international ranking).

- University of Melbourne, Australia.

- Habanero Extreme Scale Software Research Laboratory, Georgia Tech, Atlanta, USA.

- Federal Institute of Education, Science and Technology of Ceará, Maracanaú, Brazil.

9.1.3 Participation in other international programs

International Lab MODO

- Title: Frontiers in Massive Optimization and Computational Intelligence (MODO)

- International Partner: Shinshu University, Japan

- Start year: 2017

- See also: https://sites.google.com/view/lia-modo/

- Abstract: The aim of MODO is to federate French and Japanese researchers interested in the dimensionality, heterogeneity and expensive nature of massive optimization problems. The team receives a yearly support for international exchanges and shared manpower (joint PhD students).

PICS MOCO-Search (2018-2020)

- Title: Bridging the gap between exact methods and heuristics for multi-objective search (MOCO-Search)

- International Partner (Institution - Laboratory - Researcher): University of Coimbra and University of Lisbon, Portugal

- Start year: 2018

- Website: http://sites.google.com/view/moco-search/

- Abstract: This international project for scientific cooperation (PICS), funded by CNRS and FCT, aims to fill the gap between exact and heuristic methods for multi-objective optimization. The goal is to establish the link between the design principles of exact and heuristic methods, to identify features that make a problem more difficult to be solved by each method, and to improve their performance by hybridizing search strategies. Special emphasis is given to rigorous performance assessment, benchmarking, and general-purpose guidelines for the design of exact and heuristic multi-objective search.

9.2 International research visitors

Research stays abroad

- E-G. Talbi, University of Luxembourg (Luxembourg), November 2020

- A. Liefooghe, Schloss Dagstuhl, Leibniz Center for Informatics, January 2020

- A. Liefooghe, CNRS delegation at JFLI (IRL 3527) and Invited Professor at University of Tokyo, February to July 2020

- N. Melab, University of Mons, Belgium, many working meetings throughout the year

9.3 European initiatives

9.3.1 Collaborations in European programs, except FP7 and H2020

Program: COST CA15140

- Project acronym: ImAppNIO

- Project title: Improving applicability of nature-inspired optimization by joining theory and practice

- Duration: 2016-2020

- Coordinator: Thomas Jansen

- Abstract: The main objective of the COST Action is to bridge the gap between and improve the applicability of all kinds of nature-inspired optimisation methods. It aims at making theoretical insights more accessible and practical by creating a platform where theoreticians and practitioners can meet and exchange insights, ideas and needs; by developing robust guidelines and practical support for application development based on theoretical insights; by developing theoretical frameworks driven by actual needs arising from practical applications; by training Early Career Investigators in a theory of nature-inspired optimisation methods that clearly aims at practical applications; by broadening participation in the ongoing research of how to develop and apply robust nature-inspired optimisation methods in different application areas.

9.3.2 Collaborations with major European organizations

- University of Mons, Belgium: Parallel surrogate-assisted optimization, large-scale exact optimization, two joint PhDs (M. Gobert and G. Briffoteaux).

- University of Luxembourg: Q-Learning-based Hyper-Heuristic for Generating UAV Swarming Behaviours.

- University of Coimbra and University of Lisbon, Portugal: Exact and heuristic multi-objective search.

- University of Manchester, United Kingdom: Local optimality in multi-objective optimization.

- University of Elche and University of Murcia, Spain: Matheuristics for DEA.

9.4 National initiatives

9.4.1 ANR

- Bilateral ANR/RGC France/Hong Kong PRCI (2016-2021): “Big Multi-objective Optimization” in collaboration with City University of Hong Kong

9.5 Regional initiatives

- CPER Data (2015-2020): in this project, that promotes research and software development related to advanced data science, the Bonus team is the scientific leader (N. Melab) of one of the three research lines of the project “Optimization and High-Performance Computing”. In addition, the team is co-leader of the workpackage/lever “Research infrastructures” related to the Grid’5000 nation-wide experimental testbed.

- CPER ELSAT (2015-2020): in this project, focused on ecomobility, security and adaptability in transport, the Bonus team is involved in the transversal research line: planning and scheduling of maintenance logistics in transportation. The team got support for a one-year (2019-2020) engineer position (N. Aslimani). The support/contract is extended by 10 months.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

General chair, scientific chair

- N. Melab (Workshop co-chair): Intl. Workshop on Parallel Optimization using/for Multi- and Many-core High Performance Computing (HPCS/POMCO 2020), Barcelona, Spain, December 10–14, 2020 (postponed to February, 2021).

- J. Gmys (Workshop co-chair): Intl. Workshop on the Synergy of Parallel Computing, Optimization and Simulation (HPCS/PaCOS 2020), Barcelona, Spain, December 10–14, 2020 (postponed to February, 2021).

- E-G. Talbi (Steering committee Chair): Intl. Conf. on Optimization and Learning (OLA 2020), Cadiz, Spain, February 17–19, 2020.

- E-G. Talbi (Steering committee): IEEE Workshop Parallel Distributed Computing and Optimization (IPDPS/PDCO 2020), New Orleans, USA, May 18–20, 2020.

- E-G. Talbi (Co-President): Intl. Metaheuristics Summer School – MESS 2020 on “Learning and optimization from big data”, Catania, Italy, July 27–31, 2020.

- N. Melab (Co-chair): IBM Q Workshop to get started with Quantum Computing programming, October 5–9, 2020, Lille - https://lille-ibmq.sciencesconf.org/.

- B. Derbel (Workshop co-chair): Intl. Workshop on Decomposition Techniques in Evolutionary Optimization (DTEO), affiliated to ACM GECCO, July 2020, Mexico, on-line event.

- N. Melab (Chair): 5 simulation and HPC-related seminars at Université de Lille: IBM, IDRIS, Université de Lille DSI, University of Norway, Vallourec, 2020.

10.1.2 Scientific events: selection

Member of the conference program committees

- Intl. Conf. on Parallel Problem Solving from Nature (PPSN 2020), Leiden, The Netherlands, September 5–9, 2020

- IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, July 19–24, 2020.

- The ACM Genetic and Evolutionary Computation Conference (GECCO), Cancun, Mexico, July 8–12, 2020.

- Intl. Workshop on the Synergy of Parallel Computing, Optimization and Simulation (HPCS/PaCOS 2020), Barcelona, Spain, December 10–14, 2020.

- IEEE Intl. Workshop on Parallel/Distributed Computing and Optimization (IPDPS/PDCO), New Orleans, USA, May 18–22, 2020.

- EvoCOP 2020, European Conference on Evolutionary Computation in Combinatorial Optimization, Seville, Spain, April 15–17, 2020.

- Intl. Conf. on Optimization and Learning (OLA 2020), Cadiz, Spain, February 17–19, 2020.

- The Intl. Conference on Bioinspired Optimisation Methods and Their Applications (BIOMA 2020), Brussels, Belgium, November 19–20, 2020.

- Intl. Symposium on Intelligent Distributed Computing (IDC 2020), Reggio Calabria, Italy, September 21–23, 2020.

- The Intl. Conference on Cloud Computing and Artificial Intelligence: Technologies and Applications (CloudTech 2020), Marrakesh, Morocco, November 24–26, 2020.

10.1.3 Journal

Member of the editorial boards

- N. Melab: Associate Editor of ACM Computing Surveys (IF: 8.96) since 2019.

- N. Melab: Guest and Managing Editor (in collaboration with P. Korosec, J. Gmys and I. Chakroun) of a special issue on Synergy between Parallel Computing, Optimization and Simulation in Journal of Computational Science (JoCS), 2019–2020.

- B. Derbel: Associate Editor, IEEE Transactions on Systems, Man and Cybernetics: Systems (IEEE).

Reviewer - reviewing activities

- Transactions on Evolutionary Computation (IEEE TEC, IF: 11.169), IEEE.

- ACM Computing Surveys (IF: 8.96), ACM.

- Swarm and Evolutionary Computation (SWEVO, IF: 6.912), Elsevier.

- Future Generation Computer Systems (FGCS, IF: 6.125), Elsevier.

- Applied Soft Computing (ASOC, IF: 5.474), Elsevier

- IEEE Transactions on Parallel and Distributed Systems (IEEE TPDS, IF: 4.154), IEEE.

- Computers & Operations Research (COR, IF: 3.424), Elsevier.

- Journal of Computational Science (JoCS, IF: 2.644), Elsevier.

- IEEE Transactions on Systems, Man, and Cybernetics: Systems (IEEE SMC: Systems, IF: 9.309), IEEE.

10.1.4 Invited talks

- E-G. Talbi. High-performance machine learning-assisted optimization, Invited talk, Huawei Global Connect Event (Virtual event), November 2020.

- E-G. Talbi. Data driven metaheuristics, Keynote talk, Int. Conf. on Information Technology InCIT 2020, ChonBuri, Thailand, October 2020.

- B. Derbel, A. Liefooghe, S. Verel. Fitness landscape analysis: Understanding and predicting algorithm performance for single- and multi-objective optimization, Tutorial, International Conference on Parallel Problem Solving from Nature (PPSN 2020), Leiden, The Netherlands, September 2020.

- A. Liefooghe. Multi-objective landscapes: Problem understanding, search prediction and algorithm selection — Invited talk, JFLI, Japan, June 2020.

- A. Liefooghe. On the difficulty of evolutionary multi-objective optimization — Invited talk, University of Tokyo, Japan, February 2020.

- A. Liefooghe. On the difficulty of multiobjective combinatorial optimization problems — Dagstuhl seminar 20031 on Scalability in Multiobjective Optimization, Dagstuhl, Germany, January 2020.

10.1.5 Leadership within the scientific community

- N. Melab: scientific leader of Grid'5000 (https://www.grid5000.fr) at Lille, since 2004.

- E-G. Talbi: Co-president of the working group “META: Metaheuristics - Theory and applications”, GDR RO and GDR MACS.

- E-G. Talbi: Co-Chair of the IEEE Task Force on Cloud Computing within the IEEE Computational Intelligence Society.

- A. Liefooghe: co-secretary of the association “Artificial Evolution” (EA).

10.1.6 Scientific expertise

- N. Melab: Member of the advisory committee for the IT and management engineer training at Faculté Polytechnique de Mons, Belgium.

10.1.7 Research administration

- N. Melab: Member of the Scientific Board (Bureau Scientifique du Centre) for the Inria Lille - Nord Europe research center.

- N. Melab: Member of the steering committee of “Maison de la Simulation”

- B. Derbel: Member of the CER committee, Inria Lille - Nord Europe.

10.2 Teaching - supervision - juries

10.2.1 Teaching

Taught courses

- International Master lecture: N. Melab, Supercomputing, 45h ETD, M2, Université de Lille.

- Master lecture: N. Melab, Operations Research, 60h ETD, M1, Université de Lille.

- Licence: A. Liefooghe, Algorithmic and Data structure, 36h ETD, L2, Université de Lille.

- Licence: A. Liefooghe, Algorithmic for Operations Research, 36h ETD, L3, Université de Lille.

- Master: A. Liefooghe, Databases, 30h ETD, M1, Université de Lille.

- Master: A. Liefooghe, Advanced Object-oriented Programming, 53h ETD, M2, Université de Lille.

- Master: A. Liefooghe, Combinatorial Optimization, 10h ETD, M2, Université de Lille

- Master: A. Liefooghe, Multi-criteria Decision Aid and Optimization, 25h ETD, M2, Université de Lille

- Master: B. Derbel, Combinatorial Optimization, 35h, M2, Université de Lille

- Master: B. Derbel, Algorithms and Complexity, 35h, M1, Université de Lille

- Master: B. Derbel, Optimization and machine learning, 24h, M1, Université de Lille

- Master: B. Derbel, Big Data technologies, 24h, M1, Université de Lille

- Licence: B. Derbel, Object oriented design, 24h, L3, Université de Lille

- Licence: B. Derbel, Software engineering, 24h, L3, Université de Lille

- Licence: B. Derbel, Algorithms and data structures, 35h, L2, Université de Lille

- Licence: B. Derbel, Object oriented programming, 35h, L2, Université de Lille

- Engineering school: E-G. Talbi, Advanced optimization, 36h, Polytech'Lille, Université de Lille.

- Engineering school: E-G. Talbi, Data mining, 36h, Polytech'Lille, Université de Lille.

- Engineering school: E-G. Talbi, Operations research, 60h, Polytech'Lille, Université de Lille.

- Engineering school: E-G. Talbi, Graphs, 25h, Polytech'Lille, Université de Lille.

- Licence: O. Abdelkafi, Computer Science, 46.5h ETD, L1, Université de Lille.

- Licence: O. Abdelkafi, Web Technologies, 36h ETD, L1, Université de Lille.

- Licence: O. Abdelkafi, Unix system introduction, 6h ETD, L2, Université de Lille.

- Licence: O. Abdelkafi, Web Technologies, 24h ETD, L2 S3H, Université de Lille.

- Licence: O. Abdelkafi, Object-oriented programming, 36h ETD, L2, Université de Lille.

- Licence: O. Abdelkafi, Relational Databases, 36h ETD, L3, Université de Lille.

- Licence: O. Abdelkafi, Algorithmic for Operations Research, 36h ETD, L3, Université de Lille.

Teaching responsibilities

- Master leading: N. Melab, Co-head (with B. Merlet) of the international Master 2 of High-performance Computing and Simulation, Université de Lille.

- Master leading: B. Derbel, head of the Master MIAGE, Université de Lille.

- Master leading: A. Liefooghe, supervisor of the Master 2 MIAGE IPI-NT.

- Master leading: O. Abdelkafi, supervisor of the Master MIAGE alternance, Université de Lille.

- Head of the international relations: E-G. Talbi, Polytech'Lille, Université de Lille.

10.2.2 Supervision

- HDR defense: M. Mezmaz, Multi-level Parallel Branch-and-Bound Algorithms for Solving Permutation Problems on GPU-accelerated Clusters, September 18, 2020, Nouredine Melab.

- PhD defense: J. Pelamatti, Multi-disciplinary design of aerospace vehicles, March 9, 2020, El-Ghazali Talbi, co-supervisors from ONERA: L. Brévault, M. Balesdent, co-supervisor from CNES: Y. Guerin.

- PhD in progress (collaboration with ONERA): Ali Hebbal, Deep Gaussian processes and Bayesian optimization for non-stationary, multi-objective and multi-fidelity problems, Defense planned for January 21, 2021, El-Ghazali Talbi and Nouredine Melab, co-supervisors from ONERA: L. Brevault and M. Balesdent.

- PhD in progress (cotutelle): Maxime Gobert, Parallel multi-objective global optimization with applications to several simulation-based exploration parameter, since October 2018, Nouredine Melab (Université de Lille) and Daniel Tuyttens (University of Mons, Belgium).

- PhD in progress (cotutelle): Guillaume Briffoteaux, Bayesian Neural Networks-assisted multi-objective evolutionary algorithms: Application to the Tuberculosis Transmission Control, since October 2017, Nouredine Melab (Université de Lille) and Daniel Tuyttens (University of Mons, Belgium).

- PhD in progress: Jeremy Sadet, Surrogate-based optimization in automotive brake design, El-Ghazali Talbi (Université de Lille), Thierry Tison (Université Polytechnique Hauts-de-France).

- PhD in progress: Geoffrey Pruvost, Machine learning and decomposition techniques for large-scale multi-objective optimization, since October 2018, Bilel Derbel and Arnaud Liefooghe.

- PhD in progress: Nicolas Berveglieri, Meta-models and machine learning for massive expensive optimization, since October 2018, Bilel Derbel and Arnaud Liefooghe.

- PhD in progress: Raphael Cosson, Design, selection and configuration of adaptive algorithms for cross-domain optimization, since November 2019, Bilel Derbel and Arnaud Liefooghe.

- PhD in progress (cotutelle): Alexandre Jesus, Algorithm selection in multi-objective optimization, Bilel Derbel and Arnaud Liefooghe (Université de Lille), Luís Paquete (University of Coimbra, Portugal).

- PhD in progress: Juliette Gamot, Multidisciplinary design and analysis of aerospace concepts with a focus on internal placement optimization, since November 2020, El-Ghazali Talbi and Nouredine Melab, co-supervisors from ONERA: L. Brevault and M. Balesdent.

- PhD in progress: Lorenzo Canonne, Massively Parallel Gray-box and Large Scale Optimization, since October 2020, Bilel Derbel, Arnaud Liefooghe and Omar Abdelkafi.

Juries

- E-G. Talbi (Reviewer): PhD thesis of Antonio Gianmaria Spampinato, Metaheuristic optimization in system biology, University of Catania, Italy, defended Dec. 2020.

- E-G. Talbi (Reviewer): PhD of Sohail Ahmad, On improvements and applications of multi-verse optimizer algorithm to real-world problems, Abdul Wali Khan University, Pakistan, defended Dec. 2020.

- Bilel Derbel (Examiner): PhD thesis of Valentin Drouet, “Optimisation multi-objectifs du pilotage des réacteurs nucléaires à eau sous pression en suivi de charge dans le contexte de la transition énergétique à l'aide d'algorithmes évolutionnaires”, CEA / Université Paris-Saclay, defended Dec. 2020.

- Bilel Derbel (Examiner): PhD thesis of Amaury Dubois, “Optimisation et apprentissage de modèles biologiques : application à l’irrigation de pomme de terre”, Université du Littoral Côte d'Opale, defended Dec. 2020.

- A. Liefooghe (External Examiner): PhD of Sarah Thomson, University of Stirling, UK, defended Oct. 2020.

- E-G. Talbi (President): PhD of Peerasak Wangsom, Multi-objective optimization for scientific workflow scheduling with data movement awareness in Cloud, King Mongkut’s University of Technology Thonburi, Thailand, defended June 2020.

- E-G. Talbi (Reviewer): PhD of Abdallah Ali Zainelabden, Performance evaluation and modeling of SaaS web services in the Cloud, University of Luxembourg, defended Jan. 2020.

10.3 Popularization

10.3.1 Internal or external Inria responsibilities

- N. Melab: Chargé de Mission of High Performance Computing and Simulation at Université de Lille, since 2010.

10.3.2 Articles and contents

- E-G. Talbi. Collective intelligence at the service of health. In the Lille by Inria magazine, Inria Lille, Dec. 2020, https://www.inria.fr/fr/lintelligence-collective-au-service-de-la-sante.

11 Scientific production

11.1 Major publications

- 1 article. A Survey on the Metaheuristics Applied to QAP for the Graphics Processing Units. Parallel Processing Letters 26(3), 2016, 1--20.

- 2 article. FTH-B&B: A Fault-Tolerant Hierarchical Branch and Bound for Large Scale Unreliable Environments. IEEE Trans. Computers 63(9), 2014, 2302--2315.

- 3 article. ParadisEO: A Framework for the Reusable Design of Parallel and Distributed Metaheuristics. J. Heuristics 10(3), 2004, 357--380.

- 4 article. Problem Features versus Algorithm Performance on Rugged Multiobjective Combinatorial Fitness Landscapes. Evolutionary Computation 25(4), 2017.

- 5 phdthesis. Contributions to single- and multi-objective optimization: towards distributed and autonomous massive optimization. Université de Lille, 2017.

- 6 inproceedings. Multi-objective Local Search Based on Decomposition. Parallel Problem Solving from Nature - PPSN XIV - 14th International Conference, Edinburgh, UK, September 17-21, 2016, Proceedings, 2016, 431--441.

- 7 article. IVM-based parallel branch-and-bound using hierarchical work stealing on multi-GPU systems. Concurrency and Computation: Practice and Experience 29(9), 2017.

- 8 inproceedings. Towards Landscape-Aware Automatic Algorithm Configuration: Preliminary Experiments on Neutral and Rugged Landscapes. Evolutionary Computation in Combinatorial Optimization - 17th European Conference, EvoCOP 2017, Amsterdam, The Netherlands, April 19-21, 2017, Proceedings, 2017, 215--232.

- 9 article. GPU Computing for Parallel Local Search Metaheuristic Algorithms. IEEE Trans. Computers 62(1), 2013, 173--185.

- 10 article. Deterministic metaheuristic based on fractal decomposition for large-scale optimization. Appl. Soft Comput. 61, 2017, 468--485.

11.2 Publications of the year

International journals

International peer-reviewed conferences

Conferences without proceedings

Scientific books

Scientific book chapters

Edition (books, proceedings, special issue of a journal)

Doctoral dissertations and habilitation theses

Reports & preprints

11.3 Cited publications

- 59 inbook. Advanced Space Vehicle Design Taking into Account Multidisciplinary Couplings and Mixed Epistemic/Aleatory Uncertainties. In Space Engineering: Modeling and Optimization with Case Studies, G. Fasano and J. Pintér (eds.), Springer International Publishing, 2016, 1--48. URL: http://dx.doi.org/10.1007/978-3-319-41508-6_1

- 60 inproceedings. On the Impact of Multiobjective Scalarizing Functions. Parallel Problem Solving from Nature - PPSN XIII - 13th International Conference, Ljubljana, Slovenia, September 13-17, 2014, Proceedings, 2014, 548--558.

- 61 inproceedings. A fine-grained message passing MOEA/D. IEEE Congress on Evolutionary Computation, CEC 2015, Sendai, Japan, May 25-28, 2015. 2015, 1837--1844.

- 62 article. Parallel surrogate-assisted global optimization with expensive functions – a survey. Structural and Multidisciplinary Optimization 54(1), 2016, 3--13.

- 63 article. Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization 13(4), 1998, 455--492.

- 64 inproceedings. How to deal with mixed-variable optimization problems: An overview of algorithms and formulations. Advances in Structural and Multidisciplinary Optimization, Proc. of the 12th World Congress of Structural and Multidisciplinary Optimization (WCSMO12), Springer, 2018, 64--82. URL: http://dx.doi.org/10.1007/978-3-319-67988-4_5

- 65 article. CRAFT: A library for easier application-level Checkpoint/Restart and Automatic Fault Tolerance. CoRR abs/1708.02030, 2017. URL: http://arxiv.org/abs/1708.02030

- 66 article. Data Structures in the Multicore Age. Communications of the ACM 54(3), 2011, 76--84.

- 67 article. Addressing Failures in Exascale Computing. Int. J. High Perform. Comput. Appl. 28(2), May 2014, 129--173.

- 68 article. Combining metaheuristics with mathematical programming, constraint programming and machine learning. Annals OR 240(1), 2016, 171--215.

- 69 article. Parallel Branch-and-Bound in multi-core multi-CPU multi-GPU heterogeneous environments. Future Generation Comp. Syst. 56, 2016, 95--109.