Keywords

Computer Science and Digital Science

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.2. Data visualization

Other Research Topics and Application Domains

- B2.8. Sports, performance, motor skills

- B5.7. 3D printing

- B6.3.1. Web

- B6.3.4. Social Networks

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.4. Theater

- B9.5. Sciences

1 Team members, visitors, external collaborators

Research Scientists

- Wendy Mackay [Team leader, Inria, Senior Researcher, HDR]

- Baptiste Caramiaux [CNRS, Researcher, until Oct 2020]

- Theophanis Tsandilas [Inria, Researcher, HDR]

Faculty Members

- Michel Beaudouin-Lafon [Univ Paris-Saclay, Professor, HDR]

- Sarah Fdili Alaoui [Univ Paris-Saclay, Associate Professor]

- Cedric Fleury [Univ Paris-Saclay, Associate Professor]

- Joanna McGrenere [University of British Columbia, Inria Chair]

Post-Doctoral Fellows

- Benjamin Bressolette [Univ Paris-Saclay, until Nov 2020]

- Julien Gori [Univ Paris-Saclay, until Jan 2020]

- Janin Koch [Inria, from Jul 2020]

- Wanyu Liu [Univ Paris-Saclay, from Feb 2020 until Oct 2020]

- Antoine Loriette [Inria]

PhD Students

- Alexandre Battut [Univ Paris-Saclay, from Apr 2020]

- Arthur Fages [Univ Paris-Saclay]

- Camille Gobert [Inria, from Oct 2020]

- Per Carl Viktor Gustafsson [Univ Paris-Saclay]

- Han Han [Univ Paris-Saclay]

- Shu-Yuan Hsueh [Univ Paris-Saclay]

- Miguel Renom [Univ Paris-Saclay]

- Jean Philippe Riviere [Univ Paris-Saclay, until Nov 2020]

- Wissal Sahel [Institut de recherche technologique System X, from Nov 2020]

- Teo Sanchez [Inria]

- Martin Tricaud [CNRS]

- Oleksandra Vereschak [Sorbonne Université]

- Elizabeth Walton [Univ Paris-Saclay]

- Yiran Zhang [Univ Paris-Saclay]

- Yi Zhang [Inria]

Technical Staff

- Xi Hu [Univ Paris-Sud, Engineer, until Mar 2020]

- Nicolas Taffin [Inria, Engineer]

Interns and Apprentices

- Jekaterina Belakova [Inria, from Mar 2020 until Aug 2020]

- Camille Gobert [Inria, from Mar 2020 until Aug 2020]

- Lilly Arstad Helmersen [Inria, from Mar 2020 until Jul 2020]

- Hira Kazmi [Inria, from Mar 2020 until Jun 2020]

- Shalvi Palande [Inria, from Mar 2020 until Jul 2020]

- Michele Romani [Univ Paris-Saclay, from Mar 2020 until Jun 2020]

- Zachary Wilson [Inria, from Mar 2020 until Aug 2020]

- Ming Yao [Inria, from Mar 2020 until Aug 2020]

- Junhang Yu [Inria, from Apr 2020 until Sep 2020]

Administrative Assistant

- Irina Lahaye [Inria]

External Collaborator

- Midas Nouwens [Aarhus University, Denmark, until Jan 2020]

2 Overall objectives

Interactive devices are everywhere: we wear them on our wrists and belts; we consult them from purses and pockets; we read them on the sofa and on the metro; we rely on them to control cars and appliances; and soon we will interact with them on living room walls and billboards in the city. Over the past 30 years, we have witnessed tremendous advances in both hardware and networking technology, which have revolutionized all aspects of our lives, not only business and industry, but also health, education and entertainment. Yet the ways in which we interact with these technologies remain mired in the 1980s. The graphical user interface (GUI), revolutionary at the time, has been pushed far past its limits. Originally designed to help secretaries perform administrative tasks in a work setting, the GUI is now applied to every kind of device, for every kind of setting. While this may make sense for novice users, it forces expert users to use frustratingly inefficient and idiosyncratic tools that are neither powerful nor incrementally learnable.

ExSitu explores the limits of interaction — how extreme users interact with technology in extreme situations. Rather than beginning with novice users and adding complexity, we begin with expert users who already face extreme interaction requirements. We are particularly interested in creative professionals, artists and designers who rewrite the rules as they create new works, and scientists who seek to understand complex phenomena through creative exploration of large quantities of data. Studying these advanced users today will not only help us to anticipate the routine tasks of tomorrow, but to advance our understanding of interaction itself. We seek to create effective human-computer partnerships, in which expert users control their interaction with technology. Our goal is to advance our understanding of interaction as a phenomenon, with a corresponding paradigm shift in how we design, implement and use interactive systems. We have already made significant progress through our work on instrumental interaction and co-adaptive systems, and we hope to extend these into a foundation for the design of all interactive technology.

3 Research program

We characterize Extreme Situated Interaction as follows:

Extreme users. We study extreme users who make extreme demands on current technology. We know that human beings take advantage of the laws of physics to find creative new uses for physical objects. However, this level of adaptability is severely limited when manipulating digital objects. Even so, we find that creative professionals (artists, designers and scientists) often adapt interactive technology in novel and unexpected ways and find creative solutions. By studying these users, we hope not only to address the specific problems they face, but also to identify the underlying principles that will help us to reinvent virtual tools. We seek to shift the paradigm of interactive software, to establish the laws of interaction that significantly empower users and allow them to control their digital environment.

Extreme situations. We develop extreme environments that push the limits of today's technology. We take as given that future developments will solve "practical" problems such as cost, reliability and performance and concentrate our efforts on interaction in and with such environments. This has been a successful strategy in the past: Personal computers only became prevalent after the invention of the desktop graphical user interface. Smartphones and tablets only became commercially successful after Apple cracked the problem of a usable touch-based interface for the iPhone and the iPad. Although wearable technologies, such as watches and glasses, are finally beginning to take off, we do not believe that they will create the major disruptions already caused by personal computers, smartphones and tablets. Instead, we believe that future disruptive technologies will include fully interactive paper and large interactive displays.

Our extensive experience with the Digiscope WILD and WILDER platforms places us in a unique position to understand the principles of distributed interaction that extreme environments call for. We expect to integrate, at a fundamental level, the collaborative capabilities that such environments afford. Indeed almost all of our activities in both the digital and the physical world take place within a complex web of human relationships. Current systems only support, at best, passive sharing of information, e.g., through the distribution of independent copies. Our goal is to support active collaboration, in which multiple users are actively engaged in the lifecycle of digital artifacts.

Extreme design. We explore novel approaches to the design of interactive systems, with particular emphasis on extreme users in extreme environments. Our goal is to empower creative professionals, allowing them to act as both designers and developers throughout the design process. Extreme design affects every stage, from requirements definition, to early prototyping and design exploration, to implementation, to adaptation and appropriation by end users. We hope to push the limits of participatory design to actively support creativity at all stages of the design lifecycle. Extreme design does not stop with purely digital artifacts. The advent of digital fabrication tools and FabLabs has significantly lowered the cost of making physical objects interactive. Creative professionals now create hybrid interactive objects that can be tuned to the user's needs. Integrating the design of physical objects into the software design process raises new challenges, with new methods and skills to support this form of extreme prototyping.

Our overall approach is to identify a small number of specific projects, organized around four themes: Creativity, Augmentation, Collaboration and Infrastructure. Specific projects may address multiple themes, and different members of the group work together to advance these different topics.

4 Application domains

4.1 Creative industries

We work closely with creative professionals in the arts and in design, including music composers, musicians, and sound engineers; painters and illustrators; dancers and choreographers; theater groups; game designers; graphic and industrial designers; and architects.

4.2 Scientific research

We work with creative professionals in the sciences and engineering, including neuroscientists and doctors; programmers and statisticians; chemists and astrophysicists; and researchers in fluid mechanics.

5 Highlights of the year

5.1 Awards

- Wendy Mackay: "ACM Fellow" - https://www.acm.org/media-center/2019/december/fellows-2019

- Wendy Mackay: "Suffrage Science Award", London Institute of Medical Sciences - https://www.suffragescience.org

- Michel Beaudouin-Lafon: "Heroes of Computer Science", University of York - https://www.cs.york.ac.uk/equality-and-diversity/heroes-of-computer-science/

- New contracts: Sarah Fdili Alaoui: ANR JCJC Living Archive; Michel Beaudouin-Lafon: Equipex+ CONTINUUM; Wendy Mackay: Horizon 2020 HumanE-AI, Horizon 2020 ALMA, SystemX Smart Cockpit.

- Sarah Fdili Alaoui: Best Paper Award at ACM CHI 26

- Han L Han, Miguel Renom, Wendy Mackay and Michel Beaudouin-Lafon: Honorable Mention Award at ACM CHI 2020 20

- Viktor Gustafsson, Benjamin Horne and Wendy Mackay: Paper Award at FDG 2020 19

- Four former ExSitu Ph.D. students obtain faculty positions: Ignacio Avellino (CNRS), Marianela Ciolfi Felice (KTH, Sweden), Germán Leiva (Univ. Aarhus, Denmark), Wanyu "Abby" Liu (CNRS).

6 New software and platforms

6.1 New software

6.1.1 Digiscape

- Name: Digiscape

- Keywords: 2D, 3D, Node.js, Unity 3D, Video stream

- Functional Description: Through the Digiscape application, users can connect to a remote workspace and share files, video and audio streams with other users. Applications running on complex visualization platforms can be easily launched and synchronized.

- URL: http://www.digiscope.fr

- Contact: Olivier Gladin

- Partners: Maison de la simulation, UVSQ, CEA, ENS Cachan, LIMSI, LRI - Laboratoire de Recherche en Informatique, CentraleSupélec, Telecom Paris

6.1.2 Touchstone2

- Keyword: Experimental design

- Functional Description:

Touchstone2 is a graphical user interface to create and compare experimental designs. It is based on a visual language: Each experiment consists of nested bricks that represent the overall design, blocking levels, independent variables, and their levels. Parameters such as variable names, counterbalancing strategy and trial duration are specified in the bricks and used to compute the minimum number of participants for a balanced design, account for learning effects, and estimate session length. An experiment summary appears below each brick assembly, documenting the design. Manipulating bricks immediately generates a corresponding trial table that shows the distribution of experiment conditions across participants. Trial tables are faceted by participant. Using brushing and fish-eye views, users can easily compare among participants and among designs on one screen, and examine their trade-offs.

Touchstone2 plots a power chart for each experiment in the workspace. Each power curve is a function of the number of participants, and thus increases monotonically. Dots on the curves denote numbers of participants for a balanced design. The pink area corresponds to a power less than the 0.8 criterion: the first dot above it indicates the minimum number of participants. To refine this estimate, users can choose among Cohen’s three conventional effect sizes, directly enter a numerical effect size, or use a calculator to enter mean values for each treatment of the dependent variable (often from a pilot study).

Touchstone2 can export a design in a variety of formats, including JSON and XML for the trial table, and TSL, a language we have created to describe experimental designs. A command-line tool is provided to generate a trial table from a TSL description.

Touchstone2 runs in any modern Web browser and is also available as a standalone tool. It is used at ExSitu for the design of our experiments, and by other universities and research centers worldwide. It is available under an Open Source licence at https://touchstone2.org.

- URL: https://touchstone2.org

- Contacts: Wendy Mackay, Michel Beaudouin-Lafon

- Partner: University of Zurich

6.1.3 UnityCluster

- Keywords: 3D, Virtual reality, 3D interaction

- Functional Description:

UnityCluster is middleware to distribute any Unity 3D (https://unity3d.com/) application on a cluster of computers that run in interactive rooms, such as our WILD and WILDER rooms, or immersive CAVES (Computer-Augmented Virtual Environments). Users can interact with the application using various interaction resources.

UnityCluster provides an easy solution for running existing Unity 3D applications on any display that requires a rendering cluster with several computers. UnityCluster is based on a master-slave architecture: the master computer runs the main application and the physical simulation and manages the input, while the slave computers receive updates from the master and each render a small part of the 3D scene. UnityCluster manages data distribution and synchronization among the computers to obtain a consistent image on the entire wall-sized display surface.

UnityCluster can also deform the displayed images according to the user's position in order to match the viewing frustum defined by the user's head and the four corners of the screens. This respects the motion parallax of the 3D scene, giving users a better sense of depth.

UnityCluster is composed of a set of C# scripts that manage the network connection, data distribution, and the deformation of the viewing frustum. In order to distribute an existing application on the rendering cluster, all scripts must be embedded into a Unity package that is included in an existing Unity project.

- Contact: Cédric Fleury

- Partner: Inria
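The head-coupled viewing frustum described for UnityCluster can be illustrated with a generic off-axis projection. The Python sketch below is not UnityCluster code (which consists of C# scripts inside Unity); the function names, the near-plane parameter, and the use of three corners of a flat rectangular screen (the fourth is implied) are assumptions made for illustration only.

```python
from math import sqrt

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = sqrt(dot(v, v))
    return tuple(x / length for x in v)

def off_axis_frustum(eye, lower_left, lower_right, upper_left, near):
    """Return (left, right, bottom, top) extents of the frustum at the near plane.

    All points are 3D tuples in the same coordinate system. The screen is
    assumed to be a flat rectangle and the eye must be in front of it.
    """
    # Orthonormal basis of the screen: right, up, and normal toward the viewer.
    right = normalize(sub(lower_right, lower_left))
    up = normalize(sub(upper_left, lower_left))
    normal = normalize(cross(right, up))

    # Vectors from the eye to three screen corners.
    to_ll = sub(lower_left, eye)
    to_lr = sub(lower_right, eye)
    to_ul = sub(upper_left, eye)

    # Perpendicular distance from the eye to the screen plane.
    distance = -dot(to_ll, normal)
    if distance <= 0:
        raise ValueError("eye must be in front of the screen")

    # Project the corner offsets onto the near plane.
    scale = near / distance
    return (dot(right, to_ll) * scale,
            dot(right, to_lr) * scale,
            dot(up, to_ll) * scale,
            dot(up, to_ul) * scale)
```

With the eye centred in front of the screen, the extents are symmetric; when the eye moves to the right, the left extent grows in magnitude and the right one shrinks, which is what produces motion parallax. In a cluster setting, each rendering machine would presumably compute such extents for its own screen.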

6.1.4 VideoClipper

- Keyword: Video recording

- Functional Description:

VideoClipper is an iOS app for the Apple iPad, designed to guide the capture of video during a variety of prototyping activities, including video brainstorming, interviews, video prototyping and participatory design workshops. It relies heavily on Apple’s AVFoundation, a framework that provides essential services for working with time-based audiovisual media on iOS (https://developer.apple.com/av-foundation/). Key uses include: transforming still images (title cards) into video tracks, composing video and audio tracks in memory to create a preview of the resulting video project and saving video files into the default Photo Album outside the application.

VideoClipper consists of four main screens: project list, project, capture and import. The project list screen shows a list with the most recent projects at the top and allows the user to quickly add, remove or clone (copy and paste) projects. The project screen includes a storyboard composed of storylines that can be added, cloned or deleted. Each storyline is composed of a single title card, followed by one or more video clips. Users can reorder storylines within the storyboard, and the elements within each storyline, through direct manipulation. Users can preview the complete storyboard, including all title cards and videos, by pressing the play button, or export it to the iPad’s Photo Album by pressing the action button.

VideoClipper offers multiple tools for editing title cards and storylines. Tapping on the title card lets the user edit the foreground text, including font, size and color, change background color, add or edit text labels, including size, position, color, and add or edit images, both new pictures and existing ones. Users can also delete text labels and images with the trash button. Video clips are presented via a standard video player, with standard interaction. Users can tap on any clip in a storyline to: trim the clip with a non-destructive trimming tool, delete it with a trash button, open a capture screen by clicking on the camera icon, label the clip by clicking a colored label button, and display or hide the selected clip by toggling the eye icon.

VideoClipper is currently in beta test, and is used by students in two HCI classes at the Université Paris-Saclay, researchers in ExSitu as well as external researchers who use it for both teaching and research work. A beta test version is available on demand through Apple's TestFlight online service.

- Contacts: Wendy Mackay, Nicolas Taffin

6.1.5 WildOS

- Keywords: Human Computer Interaction, Wall displays

- Functional Description:

WildOS is middleware to support applications running in an interactive room featuring various interaction resources, such as our WILD and WILDER rooms: a tiled wall display, a motion tracking system, tablets and smartphones, etc. The conceptual model of WildOS is a platform, such as the WILD or WILDER room, described as a set of devices and on which one or more applications can be run.

WildOS consists of a server running on a machine that has network access to all the machines involved in the platform, and a set of clients running on the various interaction resources, such as a display cluster or a tablet. Once WildOS is running, applications can be started and stopped and devices can be added to or removed from the platform.

WildOS relies on Web technologies, most notably JavaScript and Node.js, as well as node-webkit and HTML5. This makes it inherently portable (it is currently tested on Mac OS X and Linux). While applications can be developed only with these Web technologies, it is also possible to bridge to existing applications developed in other environments if they provide sufficient access for remote control. Sample applications include a web browser, an image viewer, a window manager, and the BrainTwister application developed in collaboration with neuroanatomists at NeuroSpin.

WildOS is used for several research projects at ExSitu and by other partners of the Digiscope project. It was also deployed on several of Google's interactive rooms in Mountain View, Dublin and Paris. It is available under an Open Source licence at https://bitbucket.org/mblinsitu/wildos.

- URL: https://bitbucket.org/mblinsitu/wildos

- Contact: Michel Beaudouin-Lafon

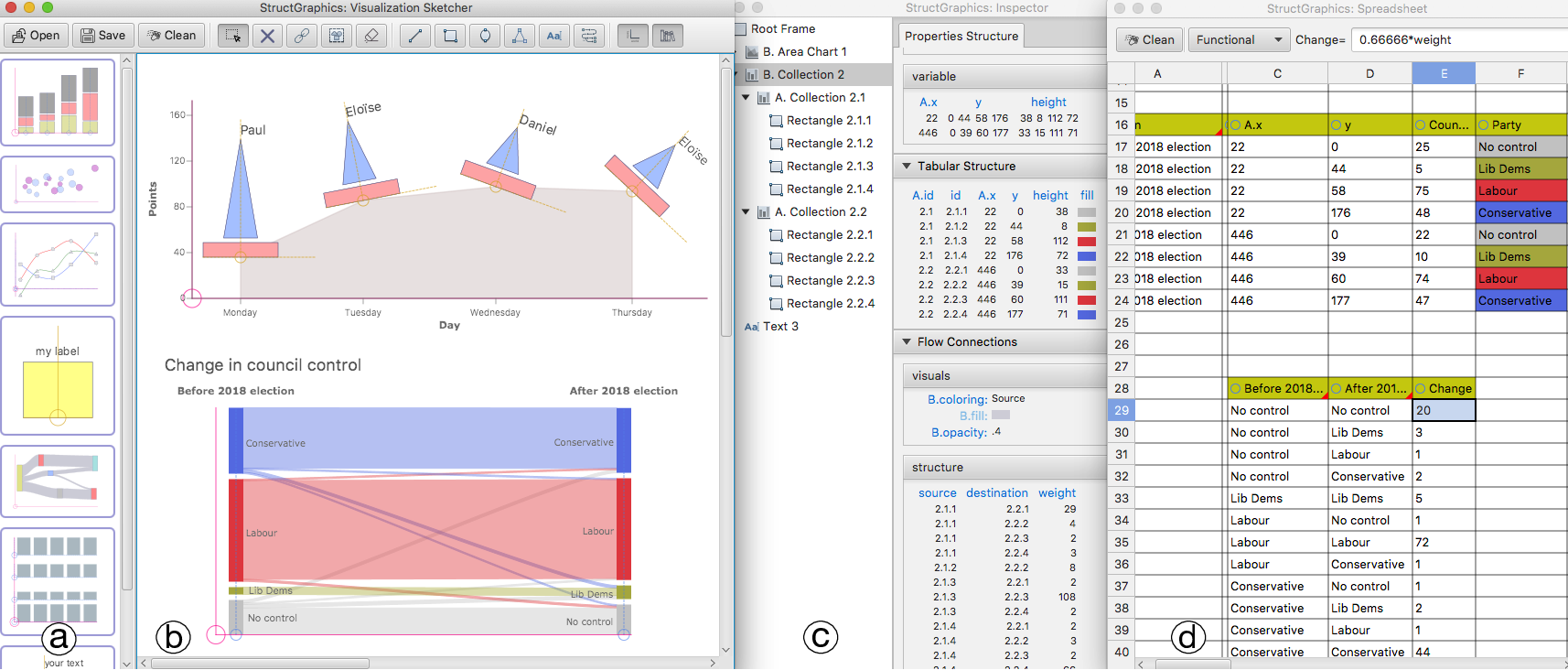

6.1.6 StructGraphics

- Keywords: Data visualization, Human Computer Interaction

- Functional Description: StructGraphics is a user interface for creating data-agnostic and fully reusable designs of data visualizations. It enables visualization designers to construct visualization designs by drawing graphics on a canvas and then structuring their visual properties without relying on a concrete dataset or data schema. Overall, StructGraphics follows the inverse workflow of traditional visualization-design systems. Rather than transforming data dependencies into visualization constraints, it allows users to interactively define the property and layout constraints of their visualization designs and then translate these graphical constraints into alternative data structures. Since visualization designs are data-agnostic, they can be easily reused and combined with different datasets.

- URL: https://gitlab.inria.fr/structgraphics/code

- Publication: https://hal.science/hal-02929811/

- Contact: Theophanis Tsandilas

6.2 New platforms

6.2.1 WILD

Participants: Michel Beaudouin-Lafon, Cédric Fleury, Olivier Gladin.

WILD is our first experimental ultra-high-resolution interactive environment, created in 2009. In 2019-2020 it received a major upgrade: the 16-computer cluster was replaced by new machines with top-of-the-line graphics cards, and the 32-screen display was replaced by thirty-two 32" 8K displays, resulting in a resolution of about 1 gigapixel (61 440 x 17 280) for an overall size of 5m80 x 1m70 (280ppi). An infrared frame adds multitouch capability to the entire display area. The platform also features a camera-based motion tracking system that lets users interact with the wall, as well as the surrounding space, with various mobile devices.
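The resolution figures for the upgraded WILD wall can be checked with a few lines of arithmetic. The sketch below assumes that each 8K panel has the standard 7680 x 4320 pixels and that the 32 panels are tiled as 8 columns by 4 rows; the report does not state the layout, but it is the grid that matches the quoted 61 440 x 17 280 total.

```python
# Assumed panel resolution (standard 8K UHD) and assumed tiling (8 x 4 = 32 panels).
PANEL_WIDTH, PANEL_HEIGHT = 7680, 4320
COLUMNS, ROWS = 8, 4

def wall_resolution(panel_width, panel_height, columns, rows):
    """Return the (width, height) in pixels of a wall of identical tiled panels."""
    return panel_width * columns, panel_height * rows

width, height = wall_resolution(PANEL_WIDTH, PANEL_HEIGHT, COLUMNS, ROWS)
total_pixels = width * height

print(width, height, total_pixels)  # 61440 17280 1061683200
```

The total of roughly 1.06 billion pixels is consistent with the "1 gigapixel" figure quoted above.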

6.2.2 WILDER

Participants: Michel Beaudouin-Lafon, Cédric Fleury, Olivier Gladin.

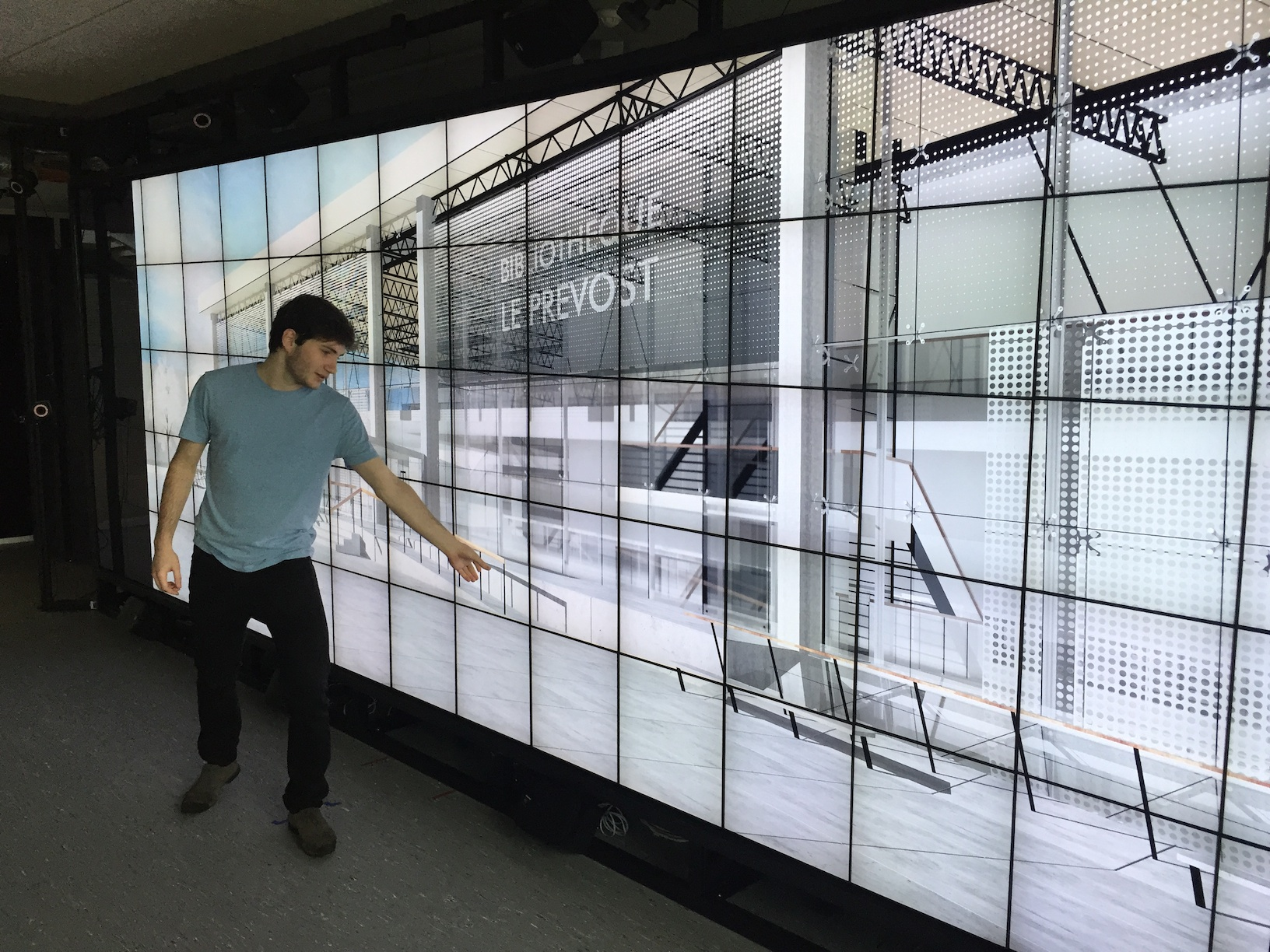

WILDER (Figure 1) is our second experimental ultra-high-resolution interactive environment, which follows the WILD platform developed in 2009. It features a wall-sized display with seventy-five 20" LCD screens, i.e. a 5m50 x 1m80 (18' x 6') wall displaying 14 400 x 4 800 = 69 million pixels, powered by a 10-computer cluster and two front-end computers. The platform also features a camera-based motion tracking system that lets users interact with the wall, as well as the surrounding space, with various mobile devices. The display uses a multitouch frame (one of the largest of its kind in the world) to make the entire wall touch sensitive.

WILDER was inaugurated in June, 2015. It is one of the ten platforms of the Digiscope Equipment of Excellence and, in combination with WILD and the other Digiscope rooms, provides a unique experimental environment for collaborative interaction.

In addition to using WILD and WILDER for our research, we have also developed software architectures and toolkits, such as WildOS and UnityCluster, that enable developers to run applications on these multi-device, cluster-based systems.

7 New results

7.1 Fundamentals of Interaction

Participants: Michel Beaudouin-Lafon, Wendy Mackay, Cédric Fleury, Theophanis Tsandilas, Benjamin Bressolette, Alexandre Eiselmeyer, Camille Gobert, Julien Gori, Han Han, Miguel Renom, Martin Tricaud, Yiran Zhang.

In order to better understand fundamental aspects of interaction, ExSitu conducts in-depth observational studies and controlled experiments which contribute to theories and frameworks that unify our findings and help us generate new, advanced interaction techniques. Our theoretical work also leads us to deepen or re-analyze existing theories and methodologies in order to gain new insights.

Following up on our work on fundamental laws of Human-Computer Interaction (HCI), such as Fitts' Law, we revisited the validity of Hick's Law 24. Hick's law is a key quantitative law in Psychology that relates reaction time to the logarithm of the number of stimulus-response alternatives in a task. The law, however, is often misunderstood in HCI. We conducted an in-depth review of the literature on choice-reaction time and concluded that: (1) Hick's law contradicts the popular principle that 'less is better'; (2) logarithmic growth of observed temporal data is not necessarily interpretable in terms of Hick's law; (3) the stimulus-response paradigm is rarely relevant to HCI tasks, where choice-reaction time can often be assumed to be constant; and (4) for user interface design, a detailed examination of the effects of psychological processes such as visual search and decision making on choice-reaction time is more fruitful than a mere reference to Hick's law.
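As a reminder of what the law states, a common formulation is RT = a + b log2(n), where n is the number of equally likely alternatives. The sketch below encodes that form only; the intercept a and slope b are empirical constants that must be fitted to data, the values used in any call are hypothetical, and some formulations use log2(n + 1) instead.

```python
from math import log2

def hick_reaction_time(n_alternatives, a, b):
    """Predicted choice-reaction time under Hick's law: a + b * log2(n).

    a (intercept, seconds) and b (seconds per bit) are empirical constants;
    this function does not estimate them.
    """
    if n_alternatives < 1:
        raise ValueError("need at least one alternative")
    return a + b * log2(n_alternatives)
```

Under this form, doubling the number of alternatives adds a constant b to the predicted time, which is why a logarithmic trend in observed data is tempting, but as noted above not sufficient, to attribute to Hick's law.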

In collaboration with researchers at the University of Zurich, we also contributed to research methodology. Argus 16 addresses a key challenge that HCI researchers face when designing a controlled experiment, i.e., choosing the appropriate number of participants, or sample size. A priori power analysis examines the relationships among multiple parameters, including the complexity associated with human participants, e.g., order and fatigue effects, to calculate the statistical power of a given experiment design. We created Argus, a tool that supports interactive exploration of statistical power: Researchers specify experiment design scenarios with varying confounds and effect sizes. Argus then simulates data and visualizes statistical power across these scenarios, which lets researchers interactively weigh various trade-offs and make informed decisions about sample size. We describe the design and implementation of Argus, and the results of a study where researchers used Argus to choose the sample size for a Fitts’s law experiment.
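The general idea of estimating power by simulation can be sketched in a few lines. This is not Argus's implementation: it assumes a simple within-participants comparison of two conditions, normally distributed per-participant differences with unit standard deviation, no order or fatigue effects, and a normal approximation to the t critical value (slightly optimistic for small samples). The function name and all parameters are hypothetical.

```python
import random
import statistics
from math import sqrt

def simulate_power(n_participants, effect_size, n_sims=2000, alpha=0.05, seed=1):
    """Estimate the power of a two-sided paired test by Monte Carlo simulation.

    effect_size is the standardized mean difference between conditions.
    Returns the fraction of simulated experiments that reject the null.
    """
    rng = random.Random(seed)
    # Normal approximation to the critical value (an assumption of this sketch).
    critical = statistics.NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(n_sims):
        # One simulated experiment: a difference score per participant.
        diffs = [rng.gauss(effect_size, 1.0) for _ in range(n_participants)]
        mean = statistics.fmean(diffs)
        std_error = statistics.stdev(diffs) / sqrt(n_participants)
        if abs(mean / std_error) > critical:
            rejections += 1
    return rejections / n_sims

if __name__ == "__main__":
    # Explore how estimated power grows with sample size for a medium effect.
    for n in (8, 16, 24, 32, 40):
        print(n, simulate_power(n, effect_size=0.5))
```

Running such a simulation over several sample sizes and effect sizes yields the kind of power curves a researcher can then compare against a target such as 0.8.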

In the context of identifying and applying unifying principles of interaction, we conducted several studies and created prototypes that illustrate the power of this theoretical and methodological approach.

The first study focused on document editing: Writing technical documents frequently requires following constraints and consistently using domain-specific terms. We interviewed 12 legal professionals and found that they must rely on their memory to manage dependencies and maintain consistent vocabulary within their documents. We created Textlets 20, interactive objects that reify text selections into persistent items. Textlets help manage consistency and constraints within the document, including selective search and replace, word count, and alternative wording. A preliminary evaluation showed that users were able to use Textlets to perform advanced tasks. They also spontaneously generated new strategies for search-and-replace and for identifying unwanted words in a document. Textlets is a powerful new technique for text editing, an area that has long been considered "solved" by commercial word processors.

The second study addressed the management of consistency across documents by knowledge workers. We interviewed 23 scientists and found that they all had difficulties using the file system to keep track of, re-find and maintain consistency among related but distributed information. We created FileWeaver 18, a system that automatically detects dependencies among files without explicit user action, tracks their history, and lets users interact directly with the graphs representing these dependencies and version history. Changes to a file can trigger recipes, either automatically or under user control, to keep the file consistent with its dependants. Users can merge variants of a file, e.g. different output formats, into a polymorphic file, or morph, and automate the management of these variants. By making dependencies among files explicit and visible, FileWeaver facilitates the automation of workflows by scientists and other users who rely on the file system to manage their data.
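The notion of propagating a change to a file's dependants can be illustrated with a small dependency graph. The sketch below is not FileWeaver code: the file names, the dictionary representation, and the function name are hypothetical, and it only computes which files become stale and in what order their recipes could be re-run.

```python
from graphlib import TopologicalSorter

def stale_files_in_update_order(dependencies, changed_file):
    """List the files affected by a change, ordered so that each file
    comes after the files it depends on.

    dependencies maps each file to the set of files it depends on.
    """
    # Invert the graph: for each file, which files depend on it directly?
    dependants = {}
    for target, sources in dependencies.items():
        for source in sources:
            dependants.setdefault(source, set()).add(target)

    # Collect everything downstream of the changed file.
    stale = set()
    pending = [changed_file]
    while pending:
        current = pending.pop()
        for target in dependants.get(current, ()):
            if target not in stale:
                stale.add(target)
                pending.append(target)

    # A topological order guarantees dependencies are rebuilt first.
    order = TopologicalSorter(dependencies).static_order()
    return [name for name in order if name in stale]

if __name__ == "__main__":
    deps = {
        "paper.pdf": {"paper.tex", "figure.png"},
        "figure.png": {"plot.py", "data.csv"},
    }
    print(stale_files_in_update_order(deps, "data.csv"))
```

In this example, changing the hypothetical `data.csv` marks the figure and then the paper as needing an update, in that order.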

The third study targeted users who create visualizations. We studied how to enable users to create custom visualizations without programming. Despite their expressive power, previous visualization authoring systems often assume that users want to generate visual representations that they already have in mind rather than explore designs. They also impose a data-to-graphics workflow, where binding data dimensions to graphical properties is a necessary step for generating visualization layouts. Targeting visualization-design tasks, we introduced StructGraphics 15, an approach for creating data-agnostic and fully reusable visualization designs. StructGraphics enables designers to construct visualization designs by drawing graphics on a canvas and then structuring their visual properties without relying on a concrete dataset or data schema. In StructGraphics, tabular data structures are derived directly from the structure of the graphics (Figure 2). Later, designers can link these structures with real datasets through a spreadsheet user interface. StructGraphics supports the design and reuse of complex data visualizations by combining graphical property sharing, by-example design specification, and persistent layout constraints. We demonstrated the power of the approach through a gallery of visualization examples and reflected on its strengths and limitations in interaction with graphic designers and data visualization experts.

We also investigated navigation in virtual reality, and more specifically, teleportation with head-mounted displays. Basic teleportation usually moves a user's viewpoint to a new destination of the virtual environment without taking into account the physical space surrounding them. However, considering the user's real workspace is crucial for preventing them from reaching its limits and thus managing direct access to multiple virtual objects. We proposed to display a virtual representation of the user's real workspace before the teleportation, and compared manual and automatic techniques for positioning such a virtual workspace 28. Controlled experiments show that both manual and automatic techniques are more efficient than basic teleportation for reaching many objects of the virtual environment. In addition, manual techniques seem more suitable for crowded scenes than automatic techniques.

7.2 Human-Computer Partnerships

Participants: Wendy Mackay, Baptiste Caramiaux, Wanyu Liu, Janin Koch, Téo Sanchez, Nicolas Taffin, Theophanis Tsandilas.

ExSitu is interested in designing effective human-computer partnerships, in which expert users control their interaction with technology. Rather than treating the human users as the 'input' to a computer algorithm, we explore human-centered machine learning, where the goal is to use machine learning and other techniques to increase human capabilities. Much of human-computer interaction research focuses on measuring and improving productivity: our specific goal is to create what we call 'co-adaptive systems' that are discoverable, appropriable and expressive for the user.

We continued our collaboration with the University of Paris Descartes and the ILDA Inria team to help data analysts query massive data series collections within interactive response times. Even though the data management community has developed sophisticated techniques for answering similarity queries quickly, the state-of-the-art indexes are still far from achieving interactive response times: depending on the dataset size and the machine, a single query can take from a few seconds to dozens of minutes. We presented and experimentally evaluated a new probabilistic learning-based method 17 that provides quality guarantees for progressive Nearest-Neighbor query answering. We provided both initial and progressive estimates of the final answer that improve during the similarity search, as well as suitable stopping criteria for the progressive queries. Experiments with synthetic and diverse real datasets demonstrated that our prediction methods constitute the first practical solution to the problem, significantly outperforming competing approaches.

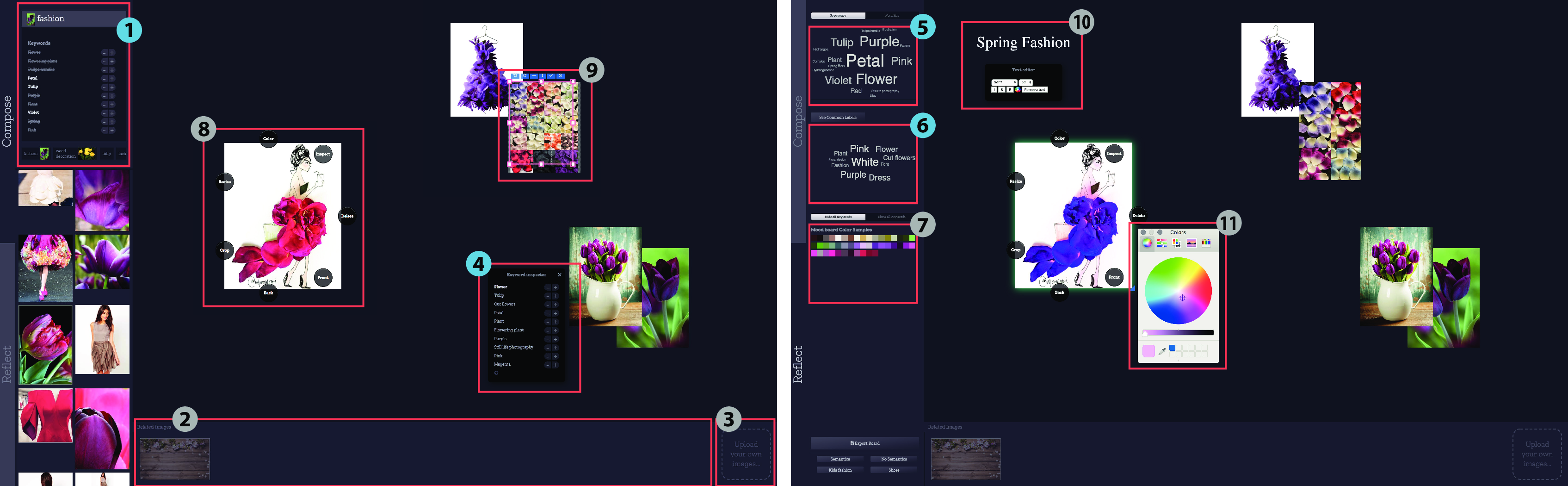

We collaborated with researchers at Aalto University to create an intelligent tool that helps designers create inspirational mood boards to express their design ideas visually, through collages of images and text. Designers find appropriate images and reflect on them as they explore emergent design concepts. We designed and developed SemanticCollage 23, a digital mood board creation tool that attaches semantic labels to images by applying a state-of-the-art semantic labeling algorithm. SemanticCollage helps designers to 1) translate vague, visual ideas into search terms; 2) make better sense of and communicate their designs; while 3) not disrupting their creative flow. Empirical work included an initial participatory design workshop, a survey of professional designers, and a structured observation with 12 professional designers that demonstrated how semantic labels help designers successfully guide image search and find relevant words that articulate their abstract, visual ideas.

7.3 Creativity

Participants: Sarah Fdili Alaoui, Baptiste Caramiaux, Wendy Mackay, Viktor Gustafsson, Shu-Yuan Hsueh, Janin Koch, Jean-Philippe Rivière, Nicolas Taffin, Theophanis Tsandilas.

ExSitu is interested in understanding the work practices of creative professionals, particularly artists, designers, and scientists, who push the limits of interactive technology. We follow a multi-disciplinary participatory design approach, working with both expert and non-expert users in diverse creative contexts.

Firstly, ExSitu explores methodological considerations. For example, Sarah Fdili Alaoui collaborated with researchers at Simon Fraser University to study how micro-phenomenology has been used in HCI/Design research since 2001 to articulate users' experience in third-wave HCI and creative contexts 26. The paper illustrates how the selected experts have applied this method by developing a practice, and presents the conditions under which descriptions of the experience unfold, as well as the value that this method can bring to the HCI/Design field. The paper received a best paper award.

Members of ExSitu organised a workshop at CHI 2020 called Where Art Meets Technology: Integrating Tangible and Intelligent Tools in Creative Processes 22. The workshop aimed to bring together researchers and practitioners to explore what the future of digital art and design will hold. The exploration centred on synthesizing key challenges and questions, along with ideas for future interaction technologies that consider mobile and tangible aspects of digital art.

ExSitu has explored diverse creative contexts, including video games, music, and dance. Our work with video games shows that players in modern Massively Multiplayer Online Role-Playing Games progress through ambitiously designed narratives, but have no real influence on the game, since only their characters' data, not the game environment, persists 19. Gustafsson et al. introduce Narrative Substrates, a theoretical framework for designing game architectures that represent, manage, and persist traces of player activity as unique, interactive content. They identify key benefits and challenges of their approach and argue that reification of emergent narratives offers new design opportunities for creating truly interactive games.

The second creative context is music and sound design. Baptiste Caramiaux collaborated in the study of timbre, an auditory attribute that conveys crucial information about the identity of a sound source, especially for music 14. The authors use a data-driven computational account to reveal the acoustic correlates of timbre. Human dissimilarity ratings are simulated with metrics learned on acoustic spectrotemporal modulation models inspired by cortical processing. The authors observe that timbre has both generic and experiment-specific acoustic correlates. These findings provide a broad overview of former studies on musical timbre and identify its relevant acoustic substrates according to biologically inspired models. Additionally, Baptiste Caramiaux collaborated in a study which examines mapping design 27. Twelve skilled NIME users designed a mapping from a T-Stick to a subtractive synthesizer and were interviewed about their approach to mapping design. The authors present a thematic analysis of the interviews and suggest that the mapping design process is an iterative process that alternates between two working modes: diffuse exploration and directed experimentation.

The third creative context is dance. Jean-Philippe Rivière defended his thesis 33 on “Capturing traces of the dance learning process”, which encompasses both focused and long-term empirical studies with dancers and choreographers, as well as a novel technology, MoveOn, which supports dance learning and provides an in-context tool for capturing and analysing learning and choreographic creative practice.

In digital art, at its intersection with artificial intelligence, Baptiste Caramiaux reported on a five-year art and science collaboration that proposes a methodology combining computational learning technology, namely Machine Learning (ML) and Artificial Intelligence (AI), with interactive music performance and choreography 29. The article describes two collaborative artistic works, Corpus Nil and Humane Methods, together with the artistic motivations and reflections on the methodology developed during the collaboration, as well as the conceptual shift of computational learning technologies from ML to AI and its impact on music performance. Baptiste Caramiaux also authored a report giving an overview of the Use of Artificial Intelligence in the Cultural and Creative Sectors 31.

Finally, Theophanis Tsandilas successfully defended his Habilitation 30, reviewing the results of more than 10 years of research at Inria on interactive tools and techniques for creative activities.

7.4 Collaboration

Participants: Cédric Fleury, Michel Beaudouin-Lafon, Wendy Mackay, Joanna McGrenere, Arthur Fages, Janin Koch, Yi Zhang.

ExSitu explores new ways of supporting collaborative interaction and remote communication. In particular, we studied co-located and remote collaboration on large wall-sized displays, video-conferencing systems, and computer tools to support collaborative activities for design and creativity.

In an industrial context, design review is an iterative process that mainly relies on two steps involving many stakeholders: design discussion and CAD data adjustment. We investigated how a wall-sized display could be used to merge these two steps by allowing multidisciplinary collaborators to simultaneously generate and explore design alternatives. We designed ShapeCompare 25 based on the feedback from a usability study. It enables multiple users to compute and distribute CAD data with touch interaction (Figure 4). To assess the benefit of the wall-sized display in such a context, we ran a controlled experiment comparing ShapeCompare with a visualization technique suitable for standard screens. The results show that pairs of participants performed a constraint-solving task faster and used more deictic instructions with ShapeCompare. From these findings, we draw generic recommendations for collaborative exploration of design alternatives.

In collaboration with researchers at Aalto University, we worked with professional designers to create a collaborative tool to support mood board design. Professional designers create mood boards to explore, visualize, and communicate hard-to-express ideas. We developed ImageSense 12, an intelligent, collaborative ideation tool that combines individual and shared work spaces, as well as collaboration with multiple forms of intelligent agents. In the collection phase, ImageSense offers fluid transitions between serendipitous discovery of curated images via ImageCascade, combined text- and image-based semantic search, and intelligent AI suggestions for finding new images. For later composition and reflection, ImageSense provides semantic labels, generated color palettes, and multiple tag clouds to help communicate the intent of the mood board. A study of nine professional designers revealed nuances in designers’ preferences for designer-led, system-led, and mixed-initiative approaches that evolve throughout the design process. We discuss the challenges in creating effective human-computer partnerships for creative activities, and suggest directions for future research.

8 Partnerships and cooperations

8.1 European initiatives

8.1.1 FP7 & H2020 Projects

Humane AI

- Title: Toward AI Systems That Augment and Empower Humans by Understanding Us, our Society and the World Around Us

- Duration: Sept 2020 - August 2024

- Coordinator: DFKI

- Partners:

- Aalto Korkeakoulusaatio SR (Finland)

- Agencia Estatal Consejo Superior De Investigaciones Cientificas (Spain)

- Albert-ludwigs-universitaet Freiburg (Germany)

- Athina-erevnitiko Kentro Kainotomias Stis Technologies Tis Pliroforias, Ton Epikoinonion Kai Tis Gnosis (Greece)

- Consiglio Nazionale Delle Ricerche (Italy)

- Deutsches Forschungszentrum Fur Kunstliche Intelligenz GMBH (Germany)

- Eidgenoessische Technische Hochschule Zuerich (Switzerland)

- Fondazione Bruno Kessler (Italy)

- German Entrepreneurship GMBH (Germany)

- INESC TEC - Instituto De Engenharia De Sistemas E Computadores, Tecnologia E Ciencia (Portugal)

- ING GROEP NV (Netherlands)

- Institut Jozef Stefan (Slovenia)

- Institut Polytechnique De Grenoble (France)

- Knowledge 4 All Foundation LBG (UK)

- Kobenhavns Universitet (Denmark)

- Kozep-europai Egyetem (Hungary)

- Ludwig-maximilians-universitaet Muenchen (Germany)

- Max-planck-gesellschaft Zur Forderung Der Wissenschaften EV (Germany)

- Technische Universitaet Wien (Austria)

- Technische Universitat Berlin (Germany)

- Technische Universiteit Delft (Netherlands)

- Thales SIX GTS France SAS (France)

- The University Of Sussex (UK)

- Universidad Pompeu Fabra (Spain)

- Universita di Pisa (Italy)

- Universiteit Leiden (Netherlands)

- University College Cork - National University of Ireland, Cork (Ireland)

- Uniwersytet Warszawski (Poland)

- Volkswagen AG (Germany)

- Inria contact: Wendy Mackay

- Summary: The goal of the HumanE AI project is to create artificial intelligence technologies that work synergistically with humans, fitting seamlessly into our complex social settings and dynamically adapting to changes in our environment. Such technologies will empower humans with AI, allowing humans and human society to reach new potentials and to deal more effectively with the complexity of a networked globalized world.

ALMA

- Title: ALMA: Human Centric Algebraic Machine Learning

- Duration: Sept 2020 - August 2024

- Coordinator: DFKI

- Partners:

- Deutsches Forschungszentrum Fur Kunstliche Intelligenz GMBH (Germany)

- Fiware Foundation EV (Germany)

- Fundacao D. Anna Sommer Champalimaud E DR. Carlos Montez Champalimaud (Portugal)

- Proyectos Y Sistemas de Mantenimiento SL (Spain)

- Teknologian Tutkimuskeskus VTT Oy (Finland)

- Universidad Carlos III de Madrid (Spain)

- Inria contact: Wendy Mackay

- Summary: Algebraic machine learning (AML) is a new AI learning paradigm that builds upon Abstract Algebra but, rather than using statistics, produces models from semantic embeddings of data into discrete algebraic structures. The goal of the ALMA project is to leverage the properties of AML to create a new generation of interactive, human-centric machine learning systems that integrate human knowledge with constraints, reduce dependence on statistical properties of the data, and enhance the explainability of the learning process.

ONE

- Title: ONE: Unified Principles of Interaction

- Funding: European Research Council (ERC Advanced Grant)

- Duration: October 2016 - September 2021

- Coordinator: Michel Beaudouin-Lafon

- Summary: The goal of ONE is to fundamentally re-think the basic principles and conceptual model of interactive systems to empower users by letting them appropriate their digital environment. The project addresses this challenge through three interleaved strands: empirical studies to better understand interaction in both the physical and digital worlds, theoretical work to create a conceptual model of interaction and interactive systems, and prototype development to test these principles and concepts in the lab and in the field. Drawing inspiration from physics, biology and psychology, the conceptual model combines substrates to manage digital information at various levels of abstraction and representation, instruments to manipulate substrates, and environments to organize substrates and instruments into digital workspaces.

8.2 National initiatives

ELEMENT

- Title: Enabling Learnability in Human Movement Interaction

- Funding: ANR

- Duration: 2019 - 2022

- Coordinator: Baptiste Caramiaux, Sarah Fdili Alaoui, Wendy Mackay

- Partners:

- Inria

- IRCAM

- Inria contact: Sarah Fdili Alaoui

- Summary: The goal of this project is to foster innovation in multimodal interaction, from non-verbal communication to interaction with digital media/content in creative applications, specifically by addressing two critical issues: the design of learnable gestures and movements; and the development of interaction models that adapt to varying levels of user expertise and facilitate human sensorimotor learning.

CAB: Cockpit and Bidirectional Assistant

- Title: Smart Cockpit Project

- Duration: Sept 2020 - August 2024

- Coordinator: SystemX Technological Research Institute

- Partners:

- SystemX

- EDF

- Dassault

- RATP

- Orange

- Inria

- Inria contact: Wendy Mackay

- Summary: The goal of the CAB Smart Cockpit project is to define and evaluate an intelligent cockpit that integrates a bi-directional virtual agent that increases the real-time capacities of operators facing complex and/or atypical situations. The project seeks to develop a new foundation for sharing agency between human users and intelligent systems: to empower rather than deskill users by letting them learn throughout the process, and to let users maintain control, even as their goals and circumstances change.

8.2.1 ARCOL

- Title: Interactive Reinforcement Co-Learning

- Funding: ANR

- Type: Young researcher project, Equipment and human resources

- Duration: 2020-2024

- Coordinator: Baptiste Caramiaux

- Partners: ISIR

- Inria contact: Baptiste Caramiaux

- Summary: Throughout our lifetime, we acquire and improve a wide range of motor skills. In other words, we become able to perform certain movements faster, better and more accurately. The mechanisms at play in motor skill acquisition are complex and not yet fully understood, but there is a consensus that practice is fundamental to skill learning. For instance, a novice musician would spend hours practicing her instrument in order to play music pieces, or to play with other musicians. The same is true for athletes. Practice plays a fundamental role in motor learning and it can, ideally, be designed in order to improve learning performance. However, practice design is challenging, as it relies mostly on heuristics (from the learner, a teacher, or a pedagogy) and tacit knowledge, and it varies across learners and over the course of learning. Designing good practice sessions is all the more important for beginners, who do not have the expertise to select efficient practice routines and to understand what makes a good or a bad practice schedule.

Archive Interactive Isadora Duncan

- Title: Archive Interactive Isadora Duncan

- Funding: Centre National de la Danse

- Duration: 2019 – 2020

- Coordinator: Elisabeth Schwartz

- Partners: Rémi Ronfard, Inria Rhône-Alpes

- Inria contact: Sarah Fdili Alaoui

- Summary: The goal of this project is to design an interactive system for archiving the repertoire of Isadora Duncan through graphical representations and movement-based interactive systems.

8.2.2 Investissements d'Avenir

CONTINUUM

- Title: Collaborative continuum from digital to human

- Type: EQUIPEX+ (Equipement d'Excellence)

- Duration: 2020 – 2029

- Coordinator: Michel Beaudouin-Lafon

- Partners:

- Centre National de la Recherche Scientifique (CNRS)

- Institut National de Recherche en Informatique et Automatique (Inria)

- Commissariat à l'Energie Atomique et aux Energies Alternatives (CEA)

- Université de Rennes 1

- Université de Rennes 2

- Ecole Normale Supérieure de Rennes

- Institut National des Sciences Appliquées de Rennes

- Aix-Marseille University

- Université de Technologie de Compiègne

- Université de Lille

- Ecole Nationale d'Ingénieurs de Brest

- Ecole Nationale Supérieure Mines-Télécom Atlantique Bretagne-Pays de la Loire

- Université Grenoble Alpes

- Institut National Polytechnique de Grenoble

- Ecole Nationale Supérieure des Arts et Métiers

- Université de Strasbourg

- COMUE UBFC Université de Technologie Belfort Montbéliard

- Université Paris-Saclay

- Télécom Paris - Institut Polytechnique de Paris

- Ecole Normale Supérieure Paris-Saclay

- CentraleSupélec

- Université de Versailles - Saint-Quentin

- Budget: 13.6 million euros of public funding from ANR

- Summary: The CONTINUUM project will create a collaborative research infrastructure of 30 platforms located throughout France, to advance interdisciplinary research based on interaction between computer science and the human and social sciences. Thanks to CONTINUUM, 37 research teams will develop cutting-edge research programs focusing on visualization, immersion, interaction and collaboration, as well as on human perception, cognition and behaviour in virtual/augmented reality, with potential impact on societal issues. CONTINUUM enables a paradigm shift in the way we perceive, interact, and collaborate with complex digital data and digital worlds by putting humans at the center of the data processing workflows. The project will empower scientists, engineers and industry users with a highly interconnected network of high-performance visualization and immersive platforms to observe, manipulate, understand and share digital data, real-time multi-scale simulations, and virtual or augmented experiences. All platforms will feature facilities for remote collaboration with other platforms, as well as mobile equipment that can be lent to users to facilitate onboarding.

8.3 Regional initiatives

Living Archive

- Funding: STIC department, Université Paris-Saclay

- Duration: 2019 – 2020

- Coordinator: Sarah Fdili Alaoui

- Inria contact: Sarah Fdili Alaoui

- Summary: The goal of this project is to design an interactive system that allows dance practitioners (including dancers, choreographers, and dance pedagogues) to collect, curate, capture, annotate, and document their own dance materials from their first-person perspective and with their own idiosyncratic methods. The project also aims to develop methods that allow the general public to access, transmit and appropriate the resulting dance repositories.

9 Dissemination

9.1 Promoting scientific activities

Chair of conference program committees

- IHM 2020-21, Conférence Francophone d'Interaction Homme-Machine, demonstration co-chair: Cédric Fleury

- ACM DIS 2021, Designing Interactive Systems: Chair of the Art and Design Exhibition, Sarah Fdili Alaoui

- ACM CHI 2021, CHI Conference on Human Factors in Computing Systems: Doctoral consortium co-chair: Wendy Mackay

- ACM UIST 2020, ACM Symposium on User Interface Software and Technology: Best Paper Awards committee co-chair: Wendy Mackay

Member of the conference program committees

- ACM DIS 2021, Designing Interactive Systems: Sarah Fdili Alaoui

- ACM UIST 2020, ACM Symposium on User Interface Software and Technology: Michel Beaudouin-Lafon, Wendy Mackay

- ACM UIST 2020 Doctoral Symposium: Michel Beaudouin-Lafon

- EuroVR 2020, EuroVR International Conference: Cédric Fleury

- IHM 2020, Conférence Francophone d’Interaction Homme-Machine: Michel Beaudouin-Lafon

- GI 2020, Graphics Interface Conference: Janin Koch, Theophanis Tsandilas

Reviewer

- ACM CHI 2021, ACM CHI Conference on Human Factors in Computing Systems: Theophanis Tsandilas, Sarah Fdili Alaoui, Michel Beaudouin-Lafon, Janin Koch, Stacy Hsueh, Wendy Mackay

- IEEE VR 2021, Virtual Reality Conference: Cédric Fleury

- ACM UIST 2020, ACM Symposium on User Interface Software and Technology: Theophanis Tsandilas, Arthur Fages, Janin Koch

- ACM DIS 2020, Designing Interactive Systems: Theophanis Tsandilas, Sarah Fdili Alaoui, Janin Koch

- IEEE VIS 2020, IEEE Visualization Conference: Theophanis Tsandilas

- ACM IMX, ACM IMX International Conference on Interactive Media Experiences: Jean-Philippe Rivière

- ACM MobileHCI 2019, Conference on Human-Computer Interaction with Mobile Devices and Services: Janin Koch

- ACM HRI 2020, ACM/IEEE International Conference on Human-Robot Interaction: Janin Koch

- ACM TEI 2020, ACM Conference on Tangible, Embedded and Embodied Interaction: Janin Koch

- ACM CHIPLAY 2020, ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play: Viktor Gustafsson

9.1.1 Journal

Member of the editorial boards

- Editor for the Human-Computer Interaction area of the ACM Books Series: Michel Beaudouin-Lafon (2013-)

- TOCHI, Transactions on Computer Human Interaction, ACM: Michel Beaudouin-Lafon (2009-), Wendy Mackay (2016-), Baptiste Caramiaux (2019-)

- PLOS ONE: Baptiste Caramiaux (2018-)

- JIPS, Journal d'Interaction Personne-Système, AFIHM: Michel Beaudouin-Lafon (2009-)

- Frontiers in Virtual Reality: Cédric Fleury (2019-)

Reviewer - reviewing activities

- TOCHI, Transactions on Computer Human Interaction, ACM: Theophanis Tsandilas, Sarah Fdili Alaoui, Janin Koch

- IEEE TVCG, Transactions on Visualization and Computer Graphics: Theophanis Tsandilas

- IEEE CG&A, Computer Graphics and Applications: Cédric Fleury

9.1.2 Invited talks

- Technologies in Dance: methods, creations and critical reflections, NYU MARL speaker series, New York: Sarah Fdili Alaoui

- Some Assembly Reprocessed, an international artistic exchange with Attack Theatre, Pittsburgh: Sarah Fdili Alaoui

- Lost in translation: Reconciling artistic and technological discourses, Humanities Center, University of Pittsburgh: Sarah Fdili Alaoui

- Designing Collaborative Systems for Inspiration, Massachusetts Institute of Technology (MIT), 1 December 2020: Janin Koch

- May AI? Design Ideation with Cooperative Contextual Bandits, KTH Stockholm, 19 November 2020: Janin Koch

- CSCW and UIST: the 1980s, ACM UIST Panel (21 October 2020): Wendy Mackay

- Hybrid Intelligence (10 October 2020): Wendy Mackay

- Structured Observation (26 June 2020): Wendy Mackay

9.1.3 Leadership within the scientific community

- STIC department (Science and Technology of Information and Communication), Université Paris-Saclay (1300 researchers and faculty): Michel Beaudouin-Lafon (Chair)

- RTRA Digiteo (Research network in Computer Science), Université Paris-Saclay: Michel Beaudouin-Lafon (Director)

- Graduate School in Computer Science, Université Paris-Saclay: Michel Beaudouin-Lafon (Adjunct Director for Research)

- Labex Digicosme: Michel Beaudouin-Lafon (Co-chair of the Interaction theme)

- ACM Technical Policy Council: Michel Beaudouin-Lafon (Vice-chair)

9.1.4 Scientific expertise

- Jury for the recruiting of a Professor, Université Paris-Saclay, 2020: Michel Beaudouin-Lafon (President)

- Jury for the recruiting of a Professor, Université Paris-Saclay, 2020: Wendy Mackay (President)

- Dutch Science Foundation on Hybrid Intelligence Scientific Advisory Board, the Netherlands: Wendy Mackay (member)

- Jury d’Admission, DR Inria: Wendy Mackay (member)

- Jury for the qualification to an Assistant Professor position, University of Paris, 2020: Theophanis Tsandilas (member)

- Jury for the qualification to an Assistant Professor position, University of Copenhagen (Denmark), 2020: Michel Beaudouin-Lafon (member)

- Jury for the ERC Generator call for projects, Université de Lille 2020: Michel Beaudouin-Lafon (member)

- Agence Nationale de la Recherche (ANR), Appel à projets générique 2020: Theophanis Tsandilas (reviewer)

- “Commission Scientifique”, Inria: Theophanis Tsandilas (member)

- ACM SIGCHI Lifetime Service Award committee, 2020: Michel Beaudouin-Lafon (Chair)

- ACM Policy Award committee, 2020: Michel Beaudouin-Lafon (member)

9.1.5 Research administration

- Programme committee of Institut Convergence DATAIA: Michel Beaudouin-Lafon (member)

- Prefiguration group of the Graduate School in Computer Science, Université Paris-Saclay: Michel Beaudouin-Lafon (member)

- “Commission consultatives paritaires (CCP)” Inria: Wendy Mackay (President)

- “Commission Locaux”, LRI: Theophanis Tsandilas (member)

9.2 Teaching - Supervision - Juries

9.2.1 Teaching

- International Masters: Theophanis Tsandilas, Probabilities and Statistics, 32h, M1, Télécom SudParis, Institut Polytechnique de Paris

- Interaction & HCID Masters: Michel Beaudouin-Lafon, Wendy Mackay, Fundamentals of Situated Interaction, 21 hrs, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Sarah Fdili Alaoui, Creative Design, 21h, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Sarah Fdili Alaoui, Studio Art Science in collaboration with Centre Pompidou, 21h, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Michel Beaudouin-Lafon, Fundamentals of Human-Computer Interaction, 21 hrs, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Wendy Mackay, Career Seminar 6 hrs, M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Wendy Mackay, HCI Winter School 21 hrs, M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Wendy Mackay, Design of Interactive Systems, 42 hrs, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Wendy Mackay, Advanced Design of Interactive Systems, 21 hrs, M1/M2, Univ. Paris-Saclay

- Interaction & HCID Masters: Baptiste Caramiaux, Gestural and Mobile Interaction, 24 hrs, M1/M2, Univ. Paris-Saclay

- Polytech: Cédric Fleury, Projet Java-Graphique-IHM, 24 hrs, 3rd year, Univ. Paris-Saclay

- Polytech: Cédric Fleury, Interaction Homme-Machine, 18 hrs, 3rd year, Univ. Paris-Saclay

- Polytech: Cédric Fleury, Option Réalité Virtuelle, 56 hrs, 5th year, Univ. Paris-Saclay

- Polytech: Cédric Fleury & Arthur Fages, Réalité Virtuelle et Interaction, 48 hrs, “Apprentis” 5th year, Univ. Paris-Saclay

- Licence Informatique: Michel Beaudouin-Lafon, Introduction to Human-Computer Interaction, 21h, second year, Univ. Paris-Saclay

9.2.2 Supervision

PhD students

- PhD: Stacy (Shu-Yuan) Hsueh, Embodied design for Human-Computer Co-creation, 7 December 2020. Advisors: Wendy Mackay & Sarah Fdili Alaoui

- PhD: Jean-Philippe Rivière, Embodied Design for Human-Computer Partnership in Learning Contexts, 11 December 2020. Advisors: Wendy Mackay, Sarah Fdili Alaoui & Baptiste Caramiaux

- PhD: Yujiro Okuya, CAD Modification Techniques for Design Reviews on Heterogeneous Interactive Systems, 8 November 2020. Advisors: Patrick Bourdot (LIMSI-CNRS) & Cédric Fleury

- PhD in progress: Yiran Zhang, Telepresence for remote and heterogeneous Collaborative Virtual Environments, October 2017. Advisors: Patrick Bourdot (LIMSI-CNRS) & Cédric Fleury

- PhD in progress: Téo Sanchez, Co-Learning in Interactive Systems, September 2018. Advisors: Baptiste Caramiaux & Wendy Mackay

- PhD in progress: Elizabeth Walton, Inclusive Design in Embodied Interaction, November 2018. Advisor: Wendy Mackay

- PhD in progress: Miguel Renom, Theoretical bases of human tool use in digital environments, October 2018. Advisors: Michel Beaudouin-Lafon & Baptiste Caramiaux

- PhD in progress: Han Han, Participatory design of digital environments based on interaction substrates, October 2018. Advisor: Michel Beaudouin-Lafon

- PhD in progress: Viktor Gustafsson, Co-adaptive Instruments for Game Design, October 2018. Advisor: Wendy Mackay

- PhD in progress: Yi Zhang, Generative Design using Instrumental Interaction, Substrates and Co-adaptive Systems, October 2018. Advisor: Wendy Mackay

- PhD in progress: Martin Tricaud, Instruments and Substrates for Procedural Creation Tools, October 2019. Advisor: Michel Beaudouin-Lafon

- PhD in progress: Arthur Fages, Collaborative 3D Modeling in Augmented Reality Environments, December 2019. Advisors: Cédric Fleury & Theophanis Tsandilas

- PhD in progress: Alexandre Battut, Interactive Instruments and Substrates for Temporal Media, April 2020. Advisor: Michel Beaudouin-Lafon

- PhD in progress: Camille Gobert, Interaction Substrates for Programming, October 2020. Advisor: Michel Beaudouin-Lafon

- PhD in progress: Wissal Sahel, Co-adaptive Instruments for Smart Cockpits, November 2020. Advisors: Wendy Mackay & Nicolas Heulot (SystemX)

Masters students

- Yao Ming, “Ink-based interaction grammars”: Theophanis Tsandilas (advisor)

- Michele Romani, “Video communication in augmented reality”: Cédric Fleury & Michel Beaudouin-Lafon (advisors)

- Lilly Arstad Helmersen, “Co-Designers Not Troublemakers: Enabling Player-Created Narratives in Persistent Game Worlds”: Wendy Mackay (advisor)

- Junhang Yu, “Interactive Path Visualization”: Wendy Mackay (advisor)

- Zachary Wilson, “Creativity Techno-Fidgets: Towards Embodied Creativity Support Tools”: Wendy Mackay (advisor)

- Ekatarina Belakova, “SonAmi: A Tangible Creativity Support Tool for Productive Procrastination”: Wendy Mackay (advisor)

- Shalvi Palande, “The Interaction Museum”: Wendy Mackay (advisor)

9.2.3 Juries

PhD theses

- Theophanis Tsandilas, Inria & Université Paris-Saclay, “Designing Interactive Tools for Creators and Creative Work”, 8 July 2020: Wendy Mackay (marraine)

- Jonas Frich, Aarhus University, “Understanding Digital Tools for Creativity”, 23 October 2020: Wendy Mackay (rapporteur)

- Stéphani Rey, Université de Bordeaux, “Apports des Interactions Tangibles pour la Création, le Choix et le Suivi de Parcours de Visite Personnalisés dans les Musées” (Tangible Interactions for Creating, Choosing and Monitoring Personalized Visits in Museums), 8 June 2020: Wendy Mackay (rapporteur)

- Valentin Lachand-Pascal, Université de Lyon, “Approche centrée activité pour la conception et l’orchestration d’activités numériques en classe”, 6 November 2020: Wendy Mackay (rapporteur)

- Nawel Khenak, Université Paris-Saclay, “Towards a unified model of Spatial Presence: categories, factors and measures. Application to the study of Telepresence in immersive environments.”, 27 October 2020: Wendy Mackay (présidente)

- Luis Galindo “’’, Université de Poitiers, 19 November: Wendy Mackay (rapporteur)

- Vincent Gouezou, LACTH - Ecole Nationale Supérieure d'Architecture et de Paysage de Lille, “De la représentation à la modélisation de l'architecture : réintroduire le dessin d'esquisse en contexte BIM par sa spatialisation en réalité virtuelle” (advisors: Frank Vermandel & Laurent Grisoni): Theophanis Tsandilas, examiner

- Romain Terrier, INSA de Rennes / IRISA-Inria Rennes, “Support à la conception collaborative en Réalité Virtuelle : du Framework basé scénarios aux interactions” (advisors: Nico Pallamin, Valérie Gouranton & Bruno Arnaldi): Cédric Fleury, examiner

9.3 Popularization

9.3.1 Articles and contents

- Statement on Essential Principles and Practices for Covid-19 Contact Tracing Applications. Michel Beaudouin-Lafon, Enrico Nardelli, and Gerhard Schimpf. ACM Europe Technology Policy Committee, 2020. https://www.acm.org/articles/bulletins/2020/may/europe-tpc-statement-on-contact-tracing

- Pourquoi vouloir interagir avec des ordinateurs ? Michel Beaudouin-Lafon (2020). In Vers le cyber-monde. Humain et numérique en interaction, Mokrane Bouzeghoub, Jamal Daafouz, and Christian Jutten (Eds.). CNRS Éditions, 18–41. 32

9.3.2 Interventions

- "Confine ta science", remote popularization conference and participatory workshop for the general public with the association TRACES, 23 March 2020 held on Twitch platform: Téo Sanchez and Baptiste Caramiaux (co-facilitators)

- Interactive exhibition piece and Podcast, 27.11.2019–15.1.2020, Art and AI Exhibition 'Connecting the Dots' : Janin Koch

- Where Art Meets Technology: Integrating Tangible and Intelligent Tools in Creative Processes, 26 April 2020 Workshop held at CHI 2020: Janin Koch (co-facilitator).

- Interviews on the France Inter national radio show "Les P'tits Bateaux": Michel Beaudouin-Lafon. Answers to children's questions: "Pourquoi sur le clavier, y’a pas, dans l’ordre, les lettres de l’alphabet" (1 March 2020), "Combien y a-t-il de touches sur les claviers des ordinateurs chinois" (12 May 2020), "Je voudrais savoir ce que veut dire point com" (20 December 2020).

10 Scientific production

10.1 Major publications

- 1 inproceedings 'Expressive Keyboards: Enriching Gesture-Typing on Mobile Devices'. Proceedings of the 29th ACM Symposium on User Interface Software and Technology (UIST 2016), Tokyo, Japan, ACM, October 2016, 583-593

- 2 inproceedings 'CamRay: Camera Arrays Support Remote Collaboration on Wall-Sized Displays'. Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI '17), Denver, United States, ACM, May 2017, 6718-6729

- 3 inproceedings 'Beyond Snapping: Persistent, Tweakable Alignment and Distribution with StickyLines'. UIST '16: Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, October 2016

- 4 inproceedings 'Touchstone2: An Interactive Environment for Exploring Trade-offs in HCI Experiment Design'. CHI 2019 - The ACM CHI Conference on Human Factors in Computing Systems, Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 217, Glasgow, United Kingdom, ACM, May 2019, 1-11

- 5 article 'ImageSense: An Intelligent Collaborative Ideation Tool to Support Diverse Human-Computer Partnerships'. Proceedings of the ACM on Human-Computer Interaction 4(CSCW1), May 2020, 1-27

- 6 inproceedings 'BIGnav: Bayesian Information Gain for Guiding Multiscale Navigation'. ACM CHI 2017 - International conference of Human-Computer Interaction, Denver, United States, May 2017, 5869-5880

- 7 article 'ShapeGuide: Shape-Based 3D Interaction for Parameter Modification of Native CAD Data'. Frontiers in Robotics and AI 5, November 2018

- 8 inproceedings 'Articulating Experience: Reflections from Experts Applying Micro-Phenomenology to Design Research in HCI'. CHI '20 - CHI Conference on Human Factors in Computing Systems, Honolulu, HI, United States, ACM, April 2020, 1-14

- 9 article 'StructGraphics: Flexible Visualization Design through Data-Agnostic and Reusable Graphical Structures'. IEEE Transactions on Visualization and Computer Graphics 27(2), October 2020, 315-325

- 10 inproceedings 'Stretchis: Fabricating Highly Stretchable User Interfaces'. ACM Symposium on User Interface Software and Technology (UIST), Tokyo, Japan, October 2016, 697-704

10.2 Publications of the year

International journals

International peer-reviewed conferences

Scientific book chapters

Doctoral dissertations and habilitation theses

Reports & preprints

10.3 Other

Scientific popularization

10.4 Cited publications

- 33 phdthesis 'Capturing traces of the dance learning process'. Université Paris-Saclay, December 2020