Keywords

Computer Science and Digital Science

- A3.1.4. Uncertain data

- A3.1.10. Heterogeneous data

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.3. Reinforcement learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.3.5. Computational photography

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.9.1. Sampling, acquisition

- A5.9.3. Reconstruction, enhancement

- A6.3.5. Uncertainty Quantification

- A8.3. Geometry, Topology

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B3.2. Climate and meteorology

- B3.3.1. Earth and subsoil

- B3.3.2. Water: sea & ocean, lake & river

- B3.3.3. Nearshore

- B5. Industry of the future

- B5.2. Design and manufacturing

- B5.5. Materials

- B5.7. 3D printing

- B5.8. Learning and training

- B8. Smart Cities and Territories

- B8.3. Urbanism and urban planning

- B9. Society and Knowledge

- B9.1.2. Serious games

- B9.2. Art

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.5.1. Computer science

- B9.5.2. Mathematics

- B9.5.3. Physics

- B9.5.5. Mechanics

- B9.6. Humanities

- B9.6.6. Archeology, History

- B9.8. Reproducibility

- B9.11.1. Environmental risks

1 Team members, visitors, external collaborators

Research Scientists

- George Drettakis [Team leader, Inria, Senior Researcher, HDR]

- Adrien Bousseau [Inria, Researcher, HDR]

Post-Doctoral Fellows

- Rada Deeb [Inria, until Jul 2020]

- Yulia Gryaditskaya [Inria, until Jan 2020]

- Thomas Leimkühler [Inria]

- Julien Philip [Inria, from Oct 2020]

- Gilles Rainer [Inria, from Dec 2020]

- Tibor Stanko [Inria, until Aug 2020]

PhD Students

- Stavros Diolatzis [Inria]

- Felix Hahnlein [Inria]

- David Jourdan [Inria]

- Georgios Kopanas [Inria]

- Julien Philip [Inria, until Sep 2020]

- Siddhant Prakash [Inria]

- Simon Rodriguez [Inria, until Sep 2020]

- Emilie Yu [Inria, from Oct 2020]

Technical Staff

- Mahdi Benadel [Inria, Engineer, from Apr 2020]

- Sebastien Morgenthaler [Inria, Engineer, until Jun 2020]

- Bastien Wailly [Inria, Engineer, until Oct 2020]

Interns and Apprentices

- Ronan Cailleau [Inria, from Oct 2020]

- Nicolas Vergnet [Inria, from Jul 2020]

- Emilie Yu [Inria, from Feb 2020 until Jul 2020]

Administrative Assistant

- Sophie Honnorat [Inria]

External Collaborator

- Frederic Durand [Massachusetts Institute of Technology, HDR]

2 Overall objectives

2.1 General Presentation

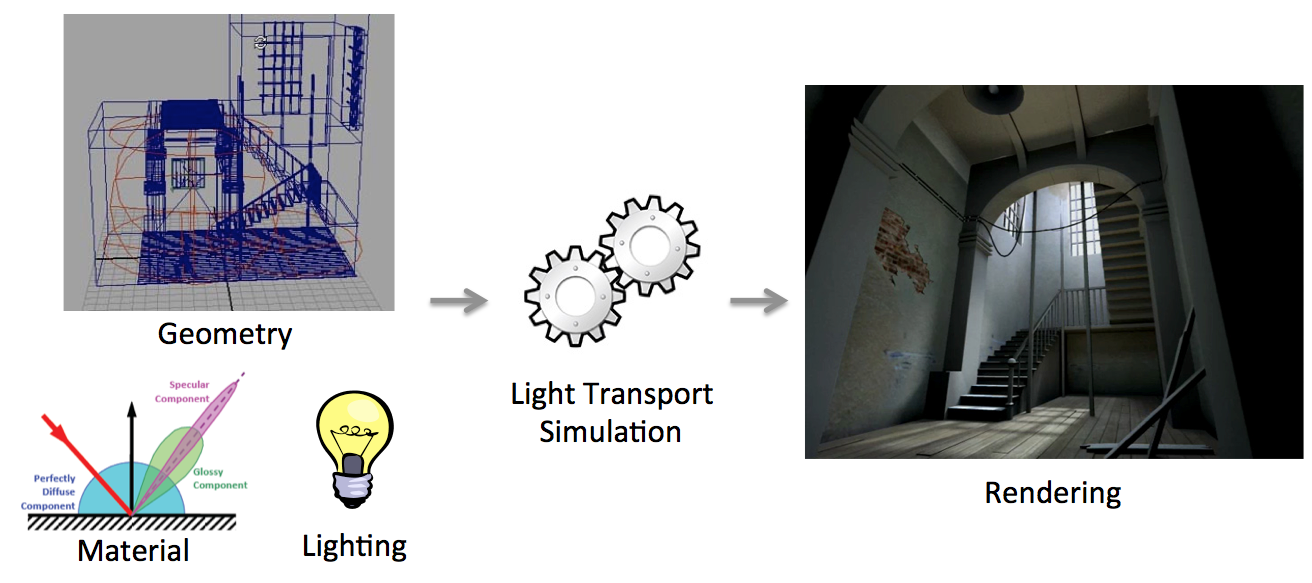

In traditional Computer Graphics (CG), input is accurately modeled by hand by artists. The artists first create the 3D geometry – i.e., the polygons and surfaces used to represent the 3D scene. They then need to assign colors, textures and more generally material properties to each piece of geometry in the scene. Finally, they also define the position, type and intensity of the lights. This modeling process is illustrated schematically in Fig. 1(left). Creating all this 3D content requires a high level of training and skill, and is reserved for a small minority of expert modelers. This tedious process is a significant distraction for creative exploration, during which artists and designers are primarily interested in obtaining compelling imagery and prototypes rather than in accurately specifying all the ingredients listed above. Designers also often want to explore many variations of a concept, which requires them to repeat the above steps multiple times.

Once the 3D elements are in place, a rendering algorithm is employed to generate a shaded, realistic image (Fig. 1(right)). Costly rendering algorithms are then required to simulate light transport (or global illumination) from the light sources to the camera, accounting for the complex interactions between light and materials and the visibility between objects. Such rendering algorithms only provide meaningful results if the input has been accurately modeled and is complete, which is prohibitive as discussed above.

A major recent development is that many alternative sources of 3D content are becoming available. Cheap depth sensors allow anyone to capture real objects but the resulting 3D models are often uncertain, since the reconstruction can be inaccurate and is most often incomplete. There have also been significant advances in casual content creation, e.g., sketch-based modeling tools. The resulting models are often approximate since people rarely draw accurate perspective and proportions. These models also often lack details, which can be seen as a form of uncertainty since a variety of refined models could correspond to the rough one. Finally, in recent years we have witnessed the emergence of new usage of 3D content for rapid prototyping, which aims at accelerating the transition from rough ideas to physical artifacts.

The inability to handle uncertainty in the data is a major shortcoming of CG today, as it prevents the direct use of cheap and casual sources of 3D content for the design and rendering of high-quality images. The abundance and ease of access to inaccurate, incomplete and heterogeneous 3D content imposes the need to rethink the foundations of 3D computer graphics so that uncertainty is treated in an inherent manner, from design all the way to rendering and prototyping.

The technological shifts we mention above, together with developments in computer vision, user-friendly sketch-based modeling, online tutorials, but also image, video and 3D model repositories and 3D printing represent a great opportunity for new imaging methods. There are several significant challenges to overcome before such visual content can become widely accessible.

In GraphDeco, we have identified two major scientific challenges of our field which we will address:

- First, the design pipeline needs to be revisited to explicitly account for the variability and uncertainty of a concept and its representations, from early sketches to 3D models and prototypes. Professional practice also needs to be adapted and facilitated to be accessible to all.

- Second, a new approach is required to develop computer graphics models and algorithms capable of handling uncertain and heterogeneous data as well as traditional synthetic content.

We next describe the context of our proposed research for these two challenges. Both directions address heterogeneous and uncertain input and (in some cases) output, and build on a set of common methodological tools.

3 Research program

3.1 Introduction

Our research program is oriented around two main axes: 1) Computer-Assisted Design with Heterogeneous Representations and 2) Graphics with Uncertainty and Heterogeneous Content. These two axes are governed by a set of common fundamental goals, share many common methodological tools and are deeply intertwined in the development of applications.

3.2 Computer-Assisted Design with Heterogeneous Representations

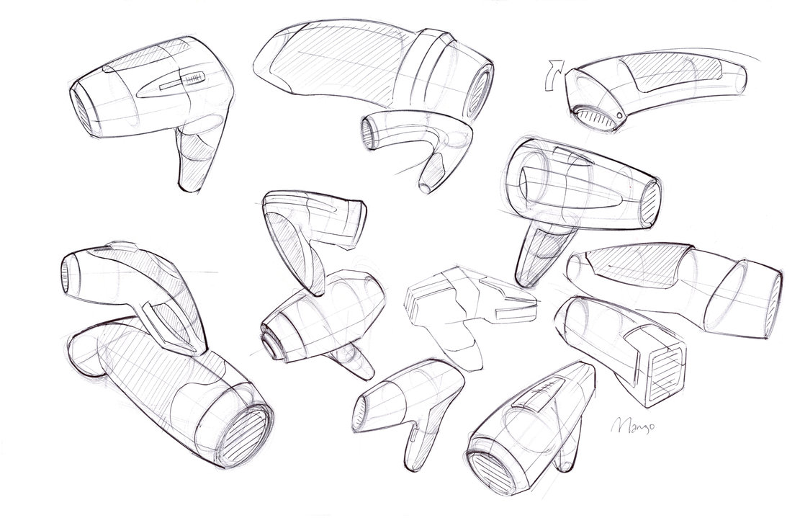

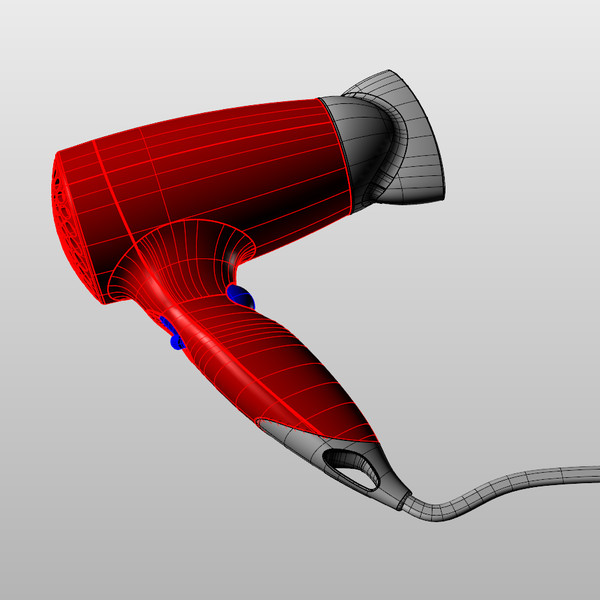

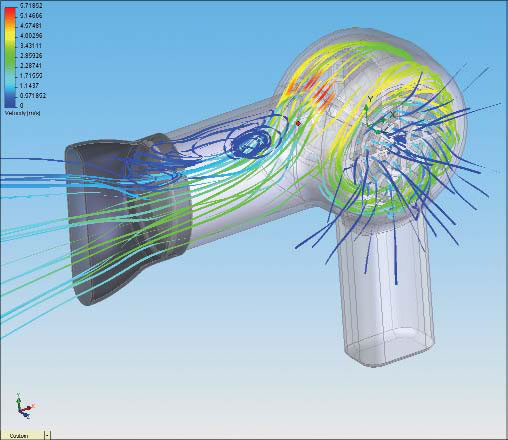

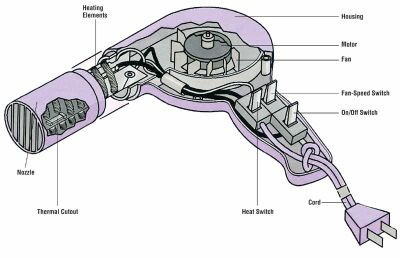

Designers use a variety of visual representations to explore and communicate about a concept. Fig. 2 illustrates some typical representations, including sketches, hand-made prototypes, 3D models, 3D printed prototypes or instructions.

Fig. 2 panels: (a) Ideation sketch, (b) Presentation sketch, (c) Coarse prototype, (d) 3D model, (f) Simulation, (e) 3D Printing, (g) Technical diagram, (h) Instructions.

The early representations of a concept, such as rough sketches and hand-made prototypes, help designers formulate their ideas and test the form and function of multiple design alternatives. These low-fidelity representations are meant to be cheap and fast to produce, to allow quick exploration of the design space of the concept. These representations are also often approximate to leave room for subjective interpretation and to stimulate imagination; in this sense, these representations can be considered uncertain. As the concept gets more finalized, time and effort are invested in the production of more detailed and accurate representations, such as high-fidelity 3D models suitable for simulation and fabrication. These detailed models can also be used to create didactic instructions for assembly and usage.

Producing these different representations of a concept requires specific skills in sketching, modeling, manufacturing and visual communication. For these reasons, professional studios often employ different experts to produce the different representations of the same concept, at the cost of extensive discussions and numerous iterations between the actors of this process. The complexity of the multi-disciplinary skills involved in the design process also hinders their adoption by laymen.

Existing solutions to facilitate design have focused on a subset of the representations used by designers. However, no solution considers all representations at once, for instance to directly convert a series of sketches into a set of physical prototypes. In addition, all existing methods assume that the concept is unique rather than ambiguous. As a result, rich information about the variability of the concept is lost during each conversion step.

We plan to facilitate design for professionals and laymen by addressing the following objectives:

- We want to assist designers in the exploration of the design space that captures the possible variations of a concept. By considering a concept as a distribution of shapes and functionalities rather than a single object, our goal is to help designers consider multiple design alternatives more quickly and effectively. Such a representation should also allow designers to preserve multiple alternatives along all steps of the design process rather than committing to a single solution early on and paying the price of this decision for all subsequent steps. We expect that preserving alternatives will facilitate communication with engineers, managers and clients, accelerate design iterations and even allow mass personalization by the end consumers.

- We want to support the various representations used by designers during concept development. While drawings and 3D models have received significant attention in past Computer Graphics research, we will also account for the various forms of rough physical prototypes made to evaluate the shape and functionality of a concept. Depending on the task at hand, our algorithms will either analyse these prototypes to generate a virtual concept, or assist the creation of these prototypes from a virtual model. We also want to develop methods capable of adapting to the different drawing and manufacturing techniques used to create sketches and prototypes. We envision design tools that conform to the habits of users rather than impose specific techniques on them.

- We want to make professional design techniques available to novices. Affordable software, hardware and online instructions are democratizing technology and design, allowing small businesses and individuals to compete with large companies. New manufacturing processes and online interfaces also allow customers to participate in the design of an object via mass personalization. However, similarly to what happened for desktop publishing thirty years ago, desktop manufacturing tools need to be simplified to account for the needs and skills of novice designers. We hope to support this trend by adapting the techniques of professionals and by automating the tasks that require significant expertise.

3.3 Graphics with Uncertainty and Heterogeneous Content

Our research is motivated by the observation that traditional CG algorithms have not been designed to account for uncertain data. For example, global illumination rendering assumes accurate virtual models of geometry, light and materials to simulate light transport. While these algorithms produce images of high realism, capturing effects such as shadows, reflections and interreflections, they are not applicable to the growing mass of uncertain data available nowadays.

The need to handle uncertainty in CG is timely and pressing, given the large number of heterogeneous sources of 3D content that have become available in recent years. These include data from cheap depth+image sensors (e.g., Kinect or the Tango), 3D reconstructions from image/video data, but also data from large 3D geometry databases, or casual 3D models created using simplified sketch-based modeling tools. Such alternate content has varying levels of uncertainty about the scene or objects being modelled. This includes uncertainty in geometry, but also in materials and/or lights – which are often not even available with such content. Since CG algorithms cannot be applied directly, visual effects artists spend hundreds of hours correcting inaccuracies and completing the captured data to make them useable in film and advertising.

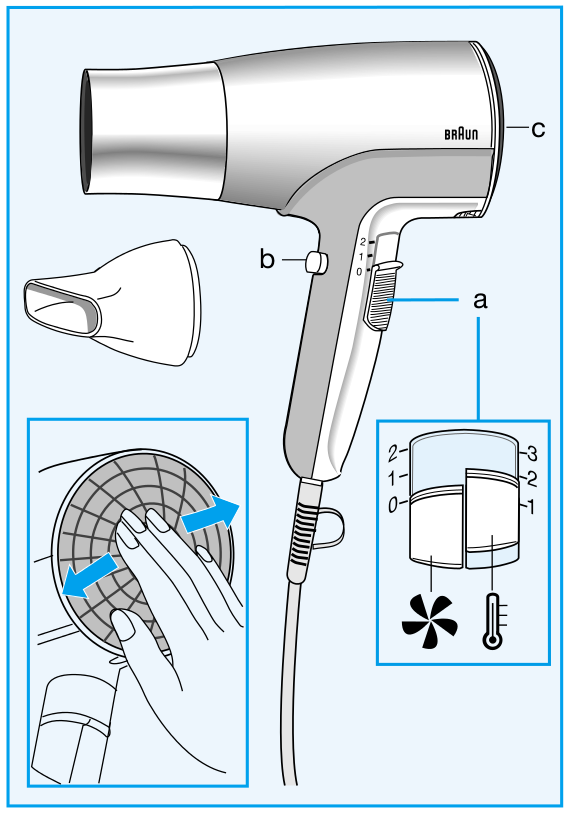


Image-Based Rendering (IBR) techniques use input photographs and approximate 3D to produce new synthetic views.

We identify a major scientific bottleneck which is the need to treat heterogeneous content, i.e., containing both (mostly captured) uncertain and perfect, traditional content. Our goal is to provide solutions to this bottleneck, by explicitly and formally modeling uncertainty in CG, and to develop new algorithms that are capable of mixed rendering for this content.

We strive to develop methods in which heterogeneous – and often uncertain – data can be handled automatically in CG with a principled methodology. Our main focus is on rendering in CG, including dynamic scenes (video/animations).

Given the above, we need to address the following challenges:

- Develop a theoretical model to handle uncertainty in computer graphics. We must define a new formalism that inherently incorporates uncertainty, and must be able to express traditional CG rendering, both physically accurate and approximate approaches. Most importantly, the new formulation must elegantly handle mixed rendering of perfect synthetic data and captured uncertain content. An important element of this goal is to incorporate cost in the choice of algorithm and the optimizations used to obtain results, e.g., preferring solutions which may be slightly less accurate, but cheaper in computation or memory.

- The development of rendering algorithms for heterogeneous content often requires preprocessing of image and video data, which sometimes also includes depth information. An example is the decomposition of images into intrinsic layers of reflectance and lighting, which is required to perform relighting. Such solutions are also useful as image-manipulation or computational photography techniques. The challenge will be to develop such “intermediate” algorithms for the uncertain and heterogeneous data we target.

- Develop efficient rendering algorithms for uncertain and heterogeneous content, reformulating rendering in a probabilistic setting where appropriate. Such methods should allow us to develop approximate rendering algorithms using our formulation in a well-grounded manner. The formalism should include probabilistic models of how the scene, the image and the data interact. These models should be data-driven, e.g., building on the abundance of online geometry and image databases, domain-driven, e.g., based on requirements of the rendering algorithms or perceptually guided, leading to plausible solutions based on limitations of perception.
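The intrinsic decomposition mentioned in the second challenge can be illustrated with a minimal sketch. It assumes the common multiplicative model in which each pixel is the product of a reflectance value and a lighting (shading) value; the report does not specify a particular model, and the function name `relight` is hypothetical. In practice the lighting layer is unknown and must be estimated from uncertain data, which is the hard part; here it is simply given.

```python
def relight(image, shading, new_shading, eps=1e-6):
    """Relight under the assumed intrinsic model: pixel = reflectance * shading."""
    relit = []
    for img_row, s_row, new_row in zip(image, shading, new_shading):
        row = []
        for pixel, s, s_new in zip(img_row, s_row, new_row):
            reflectance = pixel / max(s, eps)  # divide out the current lighting
            row.append(reflectance * s_new)    # apply the new lighting
        relit.append(row)
    return relit

# Toy 2x2 grayscale image built from a known reflectance and lighting layer.
reflectance = [[0.2, 0.8], [0.5, 0.5]]
shading = [[1.0, 0.5], [0.25, 1.0]]
image = [[r * s for r, s in zip(r_row, s_row)]
         for r_row, s_row in zip(reflectance, shading)]

# Replace the original lighting with a uniform, dimmer one.
relit = relight(image, shading, new_shading=[[0.5, 0.5], [0.5, 0.5]])
```

Because the toy lighting layer is exact, the relit image equals the reflectance scaled by the new lighting; with an estimated layer, errors in the estimate propagate directly into the result.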

4 Application domains

Our research on design and computer graphics with heterogeneous data has the potential to change many different application domains. Such applications include:

Product design will be significantly accelerated and facilitated. Current industrial workflows separate 2D illustrators, 3D modelers and engineers who create physical prototypes, which results in a slow and complex process with frequent misunderstandings and corrective iterations between different people and different media. Our unified approach based on design principles could allow all processes to be done within a single framework, avoiding unnecessary iterations. This could significantly accelerate the design process (from months to weeks), result in much better communication between the different experts, or even create new types of experts who cross boundaries of disciplines today.

Mass customization will allow end customers to participate in the design of a product before buying it. In this context of “cloud-based design”, users of an e-commerce website will be provided with controls on the main variations of a product created by a professional designer. Intuitive modeling tools will also allow users to personalize the shape and appearance of the object while remaining within the bounds of the pre-defined design space.

Digital instructions for creating and repairing objects, in collaboration with other groups working in 3D fabrication, could have significant impact in sustainable development and allow anyone to be a creator of things, not just a consumer, which is the motto of the maker movement.

Gaming experience individualization is an important emerging trend; using our results, players will also be able to integrate personal objects or environments (e.g., their homes, neighborhoods) into any realistic 3D game. The success of creative games where the player constructs their world illustrates the potential of such solutions. This approach also applies to serious gaming, with applications in medicine, education/learning, training, etc. Such interactive experiences with high-quality images of heterogeneous 3D content will also be applicable to archeology (e.g., realistic presentation of different reconstruction hypotheses), urban planning and renovation where new elements can be realistically used with captured imagery.

Virtual training is today restricted to pre-defined virtual environments that are expensive and hard to create; with our solutions, on-site data can be seamlessly and realistically used together with the actual virtual training environment. With our results, any real site can be captured, and the synthetic elements for the interventions rendered with high levels of realism, thus greatly enhancing the quality of the training experience.

Another interesting novel use of heterogeneous graphics could be for news reports. Using our interactive tool, a news reporter can take on-site footage, and combine it with 3D mapping data. The reporter can design the 3D presentation allowing the reader to zoom from a map or satellite imagery and better situate the geographic location of a news event. Subsequently, the reader will be able to zoom into a pre-existing street-level 3D online map to see the newly added footage presented in a highly realistic manner. A key aspect of these presentations is the ability of the reader to interact with the scene and the data, while maintaining a fully realistic and immersive experience. The realism of the presentation and the interactivity will greatly enhance the reader's experience and improve comprehension of the news. The same advantages apply to enhanced personal photography/videography, resulting in much more engaging and lively memories.

Other applications may include scientific domains which use photogrammetric data (captured with various 3D scanners), such as geophysics and seismology. Note however that our goal is not to produce 3D data suitable for numerical simulations; our approaches can help in combining captured data with presentations and visualization of scientific information.

5 Highlights of the year

5.1 Awards

Valentin Deschaintre received the French Computer Graphics and Geometry Thesis award from the GDR IG-RV. Valentin also received the UCA foundation Academic Excellence thesis award from Université Côte d'Azur.

5.2 Software release

Software release of SIBR: System for Image-Based Rendering. SIBR is a major software platform for Image-Based Rendering (IBR) research. The current or previous versions of this platform have been used for 10 of our publications and 4 other current projects. It includes both preprocessing tools (computing data used for rendering) and rendering utilities. We released the core system as open source under a non-commercial license in December 2020, together with full source code modules and example data for 7 publications of the group. Please see the section on new software and platforms, as well as the online documentation.

6 New software and platforms

6.1 New software

6.1.1 SynDraw

- Keywords: Non-photorealistic rendering, Vector-based drawing, Geometry Processing

- Functional Description: The SynDraw library extracts occluding contours and sharp features over a 3D shape, computes all their intersections using a binary space partitioning algorithm, and finally performs a raycast to determine the visibility of each sub-contour. The resulting lines can then be exported as an SVG file for subsequent processing, for instance to stylize the drawing with different brush strokes. The library can also export various attributes for each line, such as its visibility and type. Finally, the library embeds tools allowing one to add noise into an SVG drawing, in order to generate multiple images from a single sketch. SynDraw is based on the geometry processing library libIGL.

- Release Contributions: This first version extracts occluding contours, boundaries, creases, ridges, valleys, suggestive contours and demarcating curves. Visibility is computed with a view graph structure. Lines can be aggregated and/or filtered. Labels and outputs include: line type, visibility, depth and aligned normal map.

- Authors: Adrien Bousseau, Bastien Wailly, Adele Saint-Denis

- Contacts: Adrien Bousseau, Bastien Wailly
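As an illustration of the first step described above, the sketch below finds occluding contour edges on a closed triangle mesh using the standard definition: an edge lies on the contour when one of its two adjacent triangles faces the camera and the other faces away. This is not SynDraw's implementation or API; the function names are hypothetical, triangles are assumed to be consistently oriented with outward normals, and the intersection and visibility steps are not covered.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def occluding_contour_edges(vertices, faces, camera):
    """Return edges shared by one front-facing and one back-facing triangle."""
    facing = {}  # edge (i, j) with i < j -> front-facing flags of adjacent faces
    for i0, i1, i2 in faces:
        v0, v1, v2 = vertices[i0], vertices[i1], vertices[i2]
        normal = cross(sub(v1, v0), sub(v2, v0))
        front = dot(normal, sub(camera, v0)) > 0.0
        for a, b in ((i0, i1), (i1, i2), (i2, i0)):
            facing.setdefault((min(a, b), max(a, b)), []).append(front)
    return sorted(edge for edge, flags in facing.items()
                  if len(flags) == 2 and flags[0] != flags[1])

# Tetrahedron with outward-oriented faces, viewed from below its base.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
contour = occluding_contour_edges(vertices, faces, camera=(0.2, 0.2, -5.0))
```

From this viewpoint only the base triangle faces the camera, so the contour consists of its three edges.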

6.1.2 DeepSketch

- Keywords: 3D modeling, Sketching, Deep learning

- Functional Description: DeepSketch is a sketch-based modeling system that runs in a web browser. It relies on deep learning to recognize geometric shapes in line drawings. The system follows a client/server architecture, based on the Node.js and WebGL technology. The application's main targets are iPads or Android tablets equipped with a digital pen, but it can also be used on desktop computers.

- Release Contributions: This first version is built around a client/server Node.js application whose job is to transmit a drawing from the client's interface to the server where the deep networks are deployed, then transmit the results back to the client where the final shape is created and rendered in a WebGL 3D scene thanks to the THREE.js JavaScript framework. Moreover, the client is able to perform various camera transformations before drawing an object (change position, rotate in place, scale in place) by interacting with the touch screen. The user also has the ability to draw the shape's shadow to disambiguate depth/height. The deep networks are created, trained and deployed with the Caffe framework.

- Authors: Adrien Bousseau, Bastien Wailly

- Contacts: Adrien Bousseau, Bastien Wailly

6.1.3 DPP

- Name: Delaunay Point Process for image analysis

- Keywords: Computer vision, Shape recognition, Delaunay triangulation, Stochastic process

- Functional Description: The software extracts 2D geometric structures (planar graphs, polygons...) from images.

- Publication: hal-01950791

- Authors: Jean-Dominique Favreau, Florent Lafarge, Adrien Bousseau

- Contact: Florent Lafarge

- Participants: Jean-Dominique Favreau, Florent Lafarge, Adrien Bousseau

6.1.4 sibr-core

- Name: System for Image-Based Rendering

- Keyword: Graphics

- Scientific Description: Core functionality to support Image-Based Rendering research. The core provides basic support for camera calibration, multi-view stereo meshes and basic image-based rendering functionality. Separate dependent repositories interface with the core for each research project. This library is an evolution of the previous SIBR software, but is now much more modular.

sibr-core has been released as open source software, together with the code for several of our research papers and for papers from other authors, for comparison and benchmarking purposes.

The corresponding gitlab is: https://gitlab.inria.fr/sibr/sibr_core

The full documentation is at: https://sibr.gitlabpages.inria.fr

- Functional Description: sibr-core is a framework containing libraries and tools used internally for research projects based on Image-Based Rendering. It includes both preprocessing tools (computing data used for rendering) and rendering utilities and serves as the basis for many research projects in the group.

- Authors: Sebastien Bonopera, Jérôme Esnault, Siddhant Prakash, Simon Rodriguez, Théo Thonat, Gaurav Chaurasia, Julien Philip, George Drettakis, Mahdi Benadel

- Contact: George Drettakis

6.1.5 SGTDGP

- Name: Synthetic Ground Truth Data Generation Platform

- Keyword: Graphics

- Functional Description: The goal of this platform is to render large numbers of realistic synthetic images for use as ground truth to compare and validate image-based rendering algorithms and also to train deep neural networks developed in our team.

This pipeline consists of three major elements:

- Scene exporter

- Assisted point of view generation

- Distributed rendering on INRIA's high performance computing cluster

The scene exporter is able to export scenes created in the widely-used commercial modeler 3DSMAX to the Mitsuba open source renderer format. It handles the conversion of complex materials and shade trees from 3DSMAX, including materials made for VRay. The overall quality of the images produced with exported scenes has been improved thanks to a more accurate material conversion. The initial version of the exporter was extended and improved to provide better stability and to avoid any manual intervention.

From each scene we can generate a large number of images by placing multiple cameras. Most of the time those points of view have to be placed with a certain coherency. This task can be long and tedious. In the context of image-based rendering, cameras have to be placed in a row with a specific spacing. To simplify this process we have developed a set of tools to assist the placement of hundreds of cameras along a path.

The rendering is made with the open source renderer Mitsuba. The rendering pipeline is optimised to render a large number of points of view for a single scene. We use a path tracing algorithm to simulate the light interaction in the scene and produce high dynamic range images. It produces realistic images but it is computationally demanding. To speed up the process we set up an architecture that takes advantage of the INRIA cluster to distribute the rendering on hundreds of CPU cores.

The scene data (geometry, textures, materials) and the cameras are automatically transferred to remote workers and HDR images are returned to the user.

We have already used this pipeline to export tens of scenes and to generate several thousands of images, which have been used for machine learning and for ground-truth image production.

We have recently integrated the platform with the sibr-core software library, allowing us to read Mitsuba scenes. We have written a tool to allow camera placement to be used for rendering and for reconstruction of synthetic scenes, including alignment of the exact and reconstructed versions of the scenes. These dual-representation scenes can be used for learning and as ground truth. We can also perform various operations on the ground truth data within sibr-core, e.g., compute shadow maps of both exact and reconstructed representations.

- Authors: Laurent Boiron, Sébastien Morgenthaler, Georgios Kopanas, Julien Philip, George Drettakis

- Contact: George Drettakis
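The assisted camera placement described above (cameras in a row with a specific spacing along a path) can be sketched as follows. This is only an illustration under stated assumptions, not the platform's actual tool: the path is assumed to be a 3D polyline, the function name `place_cameras` is hypothetical, and only camera positions are computed, not orientations.

```python
import math

def place_cameras(path, spacing):
    """Return points `spacing` apart (measured by arc length) along a polyline."""
    positions = [path[0]]
    distance_to_next = spacing  # arc length left before the next camera
    for start, end in zip(path, path[1:]):
        segment = math.dist(start, end)
        travelled = 0.0
        while segment - travelled >= distance_to_next:
            travelled += distance_to_next
            t = travelled / segment
            # Linear interpolation between the two ends of the segment.
            positions.append(tuple(s + t * (e - s) for s, e in zip(start, end)))
            distance_to_next = spacing
        # Carry the unused remainder of this segment over to the next one.
        distance_to_next -= segment - travelled
    return positions

# L-shaped path of total length 7, with one camera every 2 units.
path = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
cameras = place_cameras(path, spacing=2.0)
```

Measuring the spacing by arc length rather than per segment keeps the cameras evenly distributed across corners of the path.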

6.1.6 Unity IBR

- Keyword: Graphics

- Functional Description: Unity IBR (for Image-Based Rendering in Unity) is a software module that continues the development of IBR algorithms in Unity. In this case, algorithms are developed in the context of the EMOTIVE EU project. The rendering technique was changed during the year to evaluate and compare which one produces better results suitable for game development with Unity (improvement of image quality and faster rendering). New features were also added, such as rendering of bigger datasets and some debugging utilities. The software was also updated to remain compatible with newly released versions of the Unity game engine. In addition, in order to develop a demo showcasing the technology, a multiplayer VR scene was created, demonstrating the integration of IBR with the rest of the engine.

- Authors: Sebastian Vizcay, George Drettakis

- Contact: George Drettakis

6.1.7 DeepRelighting

- Name: Deep Geometry-Aware Multi-View Relighting

- Keyword: Graphics

- Scientific Description: Implementation of the paper: Multi-view Relighting using a Geometry-Aware Network (https://hal.inria.fr/hal-02125095), based on the sibr-core library.

- Functional Description: Implementation of the paper: Multi-view Relighting using a Geometry-Aware Network (https://hal.inria.fr/hal-02125095), based on the sibr-core library.

- Publication: https://hal.inria.fr/hal-02125095

- Authors: Julien Philip, George Drettakis

- Contacts: George Drettakis, Julien Philip

- Participants: Julien Philip, George Drettakis

6.1.8 SingleDeepMat

- Name: Single-image deep material acquisition

- Keywords: Materials, 3D, Realistic rendering, Deep learning

- Scientific Description: Cook-Torrance SVBRDF parameter acquisition from a single Image using Deep learning

- Functional Description: Allows material acquisition from a single picture, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-01793826/

- Release Contributions: Based on Pix2Pix implementation by AffineLayer (Github)

- URL: https://team.inria.fr/graphdeco/projects/deep-materials/

- Publication: hal-01793826

- Authors: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

- Contacts: Valentin Deschaintre, George Drettakis, Adrien Bousseau

- Participants: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

- Partner: CSAIL, MIT

6.1.9 MultiDeepMat

- Name: Multi-image deep material acquisition

- Keywords: 3D, Materials, Deep learning

- Scientific Description: Allows material acquisition from multiple pictures, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-02164993

- Functional Description: Allows material acquisition from multiple pictures, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-02164993

- Release Contributions: Code fully rewritten since the SingleDeepMat project, but some functions are imported from it.

- URL: https://team.inria.fr/graphdeco/projects/multi-materials/

- Publication: hal-02164993

- Authors: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

- Contacts: Valentin Deschaintre, George Drettakis, Adrien Bousseau

- Participants: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

6.1.10 GuidedDeepMat

- Name: Guided deep material acquisition

- Keywords: Materials, 3D, Deep learning

- Scientific Description: Deep large scale HD material acquisition guided by an example small scale SVBRDF

- Functional Description: Deep large scale HD material acquisition guided by an example small scale SVBRDF

- Release Contributions: Code based on the MultiDeepMat project code.

- Authors: Valentin Deschaintre, George Drettakis, Adrien Bousseau

- Contacts: Valentin Deschaintre, George Drettakis, Adrien Bousseau

- Participants: Valentin Deschaintre, George Drettakis, Adrien Bousseau

6.1.11 FacadeRepetitions

- Name: Repetitive Facade Image Based Rendering

- Keywords: Graphics, Realistic rendering, Multi-View reconstruction

- Scientific Description: Implementation of the paper: Exploiting Repetitions for Image-based Rendering of Facades (https://hal.inria.fr/hal-01814058, http://www-sop.inria.fr/reves/Basilic/2018/RBDD18/, https://gitlab.inria.fr/sibr/projects/facades-repetitions/facade_repetitions), based on the sibr-core library.

- Functional Description: Implementation of the paper: Exploiting Repetitions for Image-based Rendering of Facades (https://hal.inria.fr/hal-01814058, http://www-sop.inria.fr/reves/Basilic/2018/RBDD18/, https://gitlab.inria.fr/sibr/projects/facades-repetitions/facade_repetitions), based on the sibr-core library.

- Publication: hal-01814058

- Authors: Simon Rodriguez, George Drettakis, Adrien Bousseau, Frédo Durand

- Contacts: George Drettakis, Simon Rodriguez

- Participants: George Drettakis, Simon Rodriguez, Adrien Bousseau, Frédo Durand

6.1.12 SemanticReflections

- Name: Image-Based Rendering of Cars with Reflections

- Keywords: Graphics, Realistic rendering, Multi-View reconstruction

- Scientific Description: Implementation of the paper: Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow (https://hal.inria.fr/hal-02533190, http://www-sop.inria.fr/reves/Basilic/2020/RPHD20/, https://gitlab.inria.fr/sibr/projects/semantic-reflections/semantic_reflections), based on the sibr-core library.

- Functional Description: Implementation of the paper: Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow (https://hal.inria.fr/hal-02533190, http://www-sop.inria.fr/reves/Basilic/2020/RPHD20/, https://gitlab.inria.fr/sibr/projects/semantic-reflections/semantic_reflections), based on the sibr-core library.

- Publication: hal-02533190

- Authors: Simon Rodriguez, George Drettakis, Siddhant Prakash, Lars Peter Hedman

- Contacts: George Drettakis, Simon Rodriguez

- Participants: Simon Rodriguez, George Drettakis, Siddhant Prakash, Lars Peter Hedman

6.1.13 GlossyProbes

- Name: Glossy Probe Reprojection for GI

- Keywords: Graphics, Realistic rendering, Real-time rendering

- Scientific Description: Implementation of the paper: Glossy Probe Reprojection for Interactive Global Illumination (https://hal.inria.fr/hal-02930925, http://www-sop.inria.fr/reves/Basilic/2020/RLPWSD20/, https://gitlab.inria.fr/sibr/projects/glossy-probes/synthetic_ibr), based on the sibr-core library.

- Functional Description: Implementation of the paper: Glossy Probe Reprojection for Interactive Global Illumination (https://hal.inria.fr/hal-02930925, http://www-sop.inria.fr/reves/Basilic/2020/RLPWSD20/, https://gitlab.inria.fr/sibr/projects/glossy-probes/synthetic_ibr), based on the sibr-core library.

- Publication: hal-02930925

- Authors: Simon Rodriguez, Thomas Leimkühler, Siddhant Prakash, George Drettakis

- Contacts: George Drettakis, Simon Rodriguez

- Participants: Simon Rodriguez, George Drettakis, Thomas Leimkühler, Siddhant Prakash

6.1.14 SpixelWarpandSelection

- Name: Superpixel warp and depth synthesis and Selective Rendering

- Keywords: 3D, Graphics, Multi-View reconstruction

- Scientific Description: Implementation of the following two papers, as part of the sibr library: + Depth Synthesis and Local Warps for Plausible Image-based Navigation http://www-sop.inria.fr/reves/Basilic/2013/CDSD13/ (previous bil fiche https://bil.inria.fr/fr/software/view/1802/tab ) + A Bayesian Approach for Selective Image-Based Rendering using Superpixels http://www-sop.inria.fr/reves/Basilic/2015/ODD15/

  Gitlab: https://gitlab.inria.fr/sibr/projects/spixelwarp

- Functional Description: Implementation of the following two papers, as part of the sibr library: + Depth Synthesis and Local Warps for Plausible Image-based Navigation http://www-sop.inria.fr/reves/Basilic/2013/CDSD13/ (previous bil fiche https://bil.inria.fr/fr/software/view/1802/tab ) + A Bayesian Approach for Selective Image-Based Rendering using Superpixels http://www-sop.inria.fr/reves/Basilic/2015/ODD15/

- Release Contributions: Integration into sibr framework and updated documentation

- Authors: Gaurav Chaurasia, Sylvain François Duchene, Rodrigo Ortiz Cayon, Abdelaziz Djelouah, George Drettakis

- Contact: George Drettakis

- Participants: George Drettakis, Gaurav Chaurasia, Olga Sorkine-Hornung, Sylvain François Duchene, Rodrigo Ortiz Cayon, Abdelaziz Djelouah, Siddhant Prakash

6.1.15 DeepBlending

- Name: Deep Blending for Image-Based Rendering

- Keywords: 3D, Graphics

- Scientific Description: Implementations of two papers: + Scalable Inside-Out Image-Based Rendering http://www-sop.inria.fr/reves/Basilic/2016/HRDB16/ (with MVS input) + Deep Blending for Free-Viewpoint Image-Based Rendering http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18/

- Functional Description: Implementations of two papers: + Scalable Inside-Out Image-Based Rendering http://www-sop.inria.fr/reves/Basilic/2016/HRDB16/ (with MVS input) + Deep Blending for Free-Viewpoint Image-Based Rendering http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18/

  Code: https://gitlab.inria.fr/sibr/projects/inside_out_deep_blending

- Contact: George Drettakis

- Participants: George Drettakis, Julien Philip, Lars Peter Hedman, Siddhant Prakash, Gabriel Brostow, Tobias Ritschel

7 New results

7.1 Computer-Assisted Design with Heterogeneous Representations

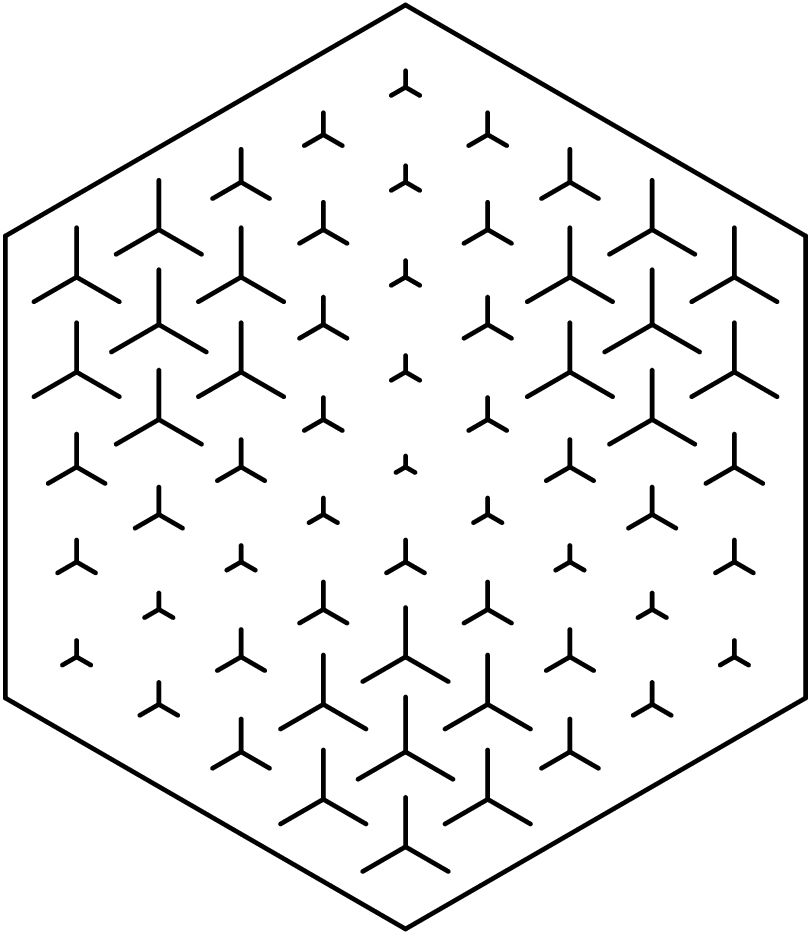

7.1.1 Integer-Grid Sketch Vectorization

Participants: Tibor Stanko, Adrien Bousseau.

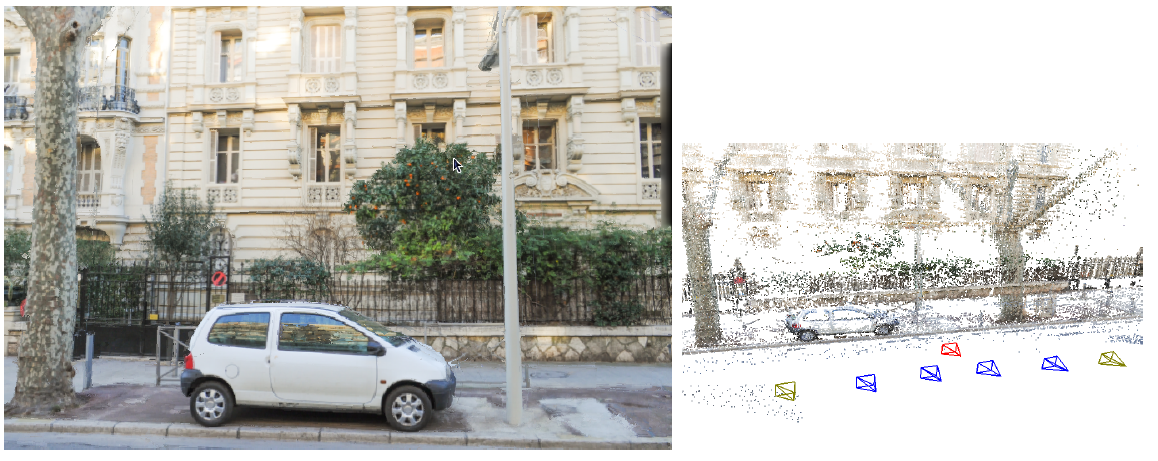

A major challenge in line drawing vectorization is segmenting the input bitmap into separate curves. This segmentation is especially problematic for rough sketches, where curves are depicted using multiple overdrawn strokes. Inspired by feature-aligned mesh quadrangulation methods in geometry processing, we propose to extract vector curve networks by parametrizing the image with local drawing-aligned integer grids. The regular structure of the grid facilitates the extraction of clean line junctions; due to the grid's discrete nature, nearby strokes are implicitly grouped together (Fig. 4). We demonstrate that our method successfully vectorizes both clean and rough line drawings, whereas previous methods focused on only one of those drawing types.

This work is a collaboration with David Bommes from University of Bern and Mikhail Bessmeltsev from University of Montreal. It was published in Computer Graphics Forum, and presented at SGP 2020 17.
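The implicit grouping of nearby strokes mentioned above can be illustrated with a deliberately simplified toy example. It is not the published method: it assumes each stroke has already been assigned a single scalar parametrization value `u` (a hypothetical input), and only shows how snapping to integer values merges strokes that lie close together.

```python
from collections import defaultdict


def group_strokes_by_grid_line(stroke_params):
    """Group strokes by the nearest integer iso-line.

    stroke_params maps a stroke id to a hypothetical scalar parametrization
    value u. Strokes whose u rounds to the same integer fall on the same
    grid line and are therefore treated as one curve.
    """
    groups = defaultdict(list)
    for stroke_id, u in stroke_params.items():
        groups[round(u)].append(stroke_id)
    return dict(groups)


if __name__ == "__main__":
    # Three overdrawn strokes near u = 3 and one separate stroke near u = 5.
    strokes = {"a": 2.9, "b": 3.1, "c": 3.05, "d": 5.2}
    print(group_strokes_by_grid_line(strokes))
```

In this toy setting the three overdrawn strokes collapse onto one grid line, while the distant stroke stays separate.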

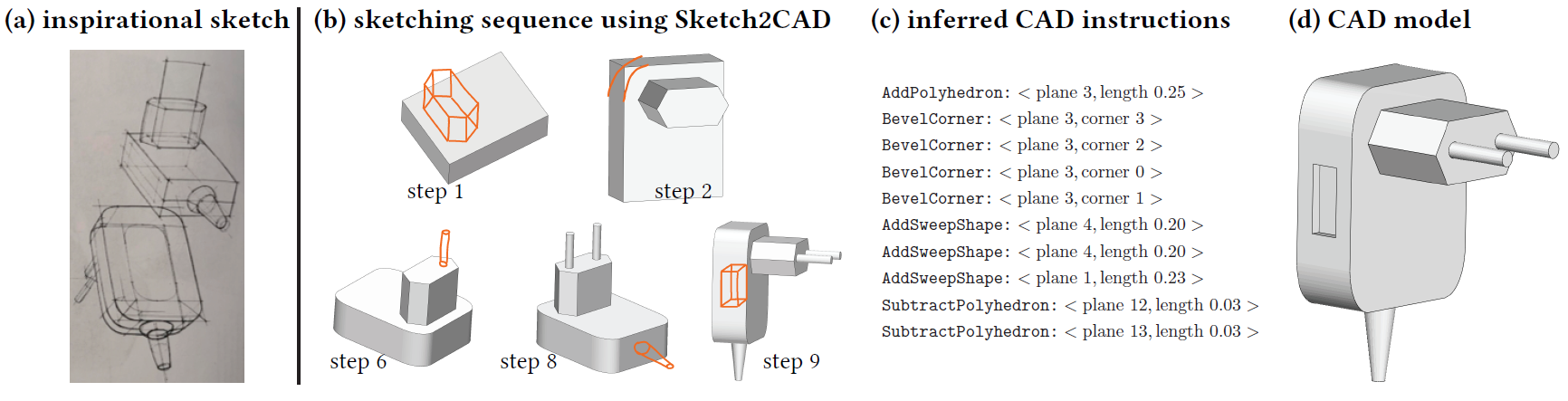

7.1.2 Sketch2CAD: Sequential CAD Modeling by Sketching in Context

Participants: Adrien Bousseau.

We present a sketch-based CAD modeling system, where users create objects incrementally by sketching the desired shape edits, which our system automatically translates to CAD operations (Fig. 5). Our approach is motivated by the close similarities between the steps industrial designers follow to draw 3D shapes, and the operations CAD modeling systems offer to create similar shapes. To overcome the strong ambiguity in parsing 2D sketches, we observe that in a sketching sequence, each step makes sense and can be interpreted in the context of what has been drawn before. In our system, this context corresponds to a partial CAD model, inferred in the previous steps, which we feed along with the input sketch to a deep neural network in charge of interpreting how the model should be modified by that sketch. Our deep network architecture then recognizes the intended CAD operation and segments the sketch accordingly, such that a subsequent optimization estimates the parameters of the operation that best fit the segmented sketch strokes. Since no datasets of paired sketching and CAD modeling sequences exist, we train our system by generating synthetic sequences of CAD operations that we render as line drawings. We present a proof-of-concept realization of our algorithm supporting four frequently used CAD operations. Using our system, participants are able to quickly model a large and diverse set of objects, demonstrating Sketch2CAD to be an alternate way of interacting with current CAD modeling systems.

This work is a collaboration with Changjian Li and Niloy J. Mitra from University College London and Adobe Research, and Hao Pan from Microsoft Research Asia. It was published in ACM Transactions on Graphics, and presented at SIGGRAPH Asia 2020 14.

7.1.3 Lifting Freehand Concept Sketches into 3D

Participants: Yulia Gryaditskaya, Felix Hähnlein, Adrien Bousseau.

In this project, we developed the first algorithm capable of automatically lifting real-world, vector-format, industrial design sketches into 3D. Targeting real-world sketches raises numerous challenges due to inaccuracies, overdrawn strokes, and construction lines (see Fig. 6).

In particular, while construction lines convey important 3D information, they add significant clutter and introduce multiple accidental 2D intersections. Our algorithm exploits the geometric cues provided by the construction lines and lifts them to 3D by computing their intended 3D intersections and depths. Once lifted to 3D, these lines provide valuable geometric constraints that we leverage to infer the 3D shape of other artist drawn strokes. The core challenge we address is inferring the 3D connectivity of construction and other lines from their 2D projections by separating 2D intersections into 3D intersections and accidental occlusions. We efficiently address this complex combinatorial problem using a dedicated search algorithm that leverages observations about designer drawing preferences, and uses those to explore only the most likely solutions of the 3D intersection detection problem.

We demonstrate that our separator outputs are of comparable quality to human annotations, and that the 3D structures we recover enable a range of design editing and visualization applications, including novel view synthesis and 3D-aware scaling of the depicted shape.

This work is a collaboration with Chenxi Liu and Alla Sheffer from University of British Columbia. It was published in ACM Transactions on Graphics, and presented at SIGGRAPH Asia 2020 13.

7.1.4 Symmetric Concept Sketches

Participants: Felix Hähnlein, Adrien Bousseau.

In this project, we leverage symmetry as a prior for reconstructing concept sketches into 3D. Reconstructing concept sketches is extremely challenging, notably due to the presence of construction lines. We observe that designers commonly use 2D construction techniques that yield symmetric lines. We are currently developing a method that uses an integer programming solver to identify symmetry pairs and deduce the resulting 3D reconstruction. To evaluate our method, we compare the results with those obtained by previous methods and show the usability of our reconstructions for further 3D processing applications.

This ongoing work is a collaboration with Alla Sheffer from University of British Columbia, and Yulia Gryaditskaya now at University of Surrey.

7.1.5 Printing-on-Fabric Meta-Material for Self-Shaping Architectural Models

Participants: David Jourdan, Adrien Bousseau.

We describe a new meta-material for fabricating lightweight architectural models, consisting of a tiled plastic star pattern layered over pre-stretched fabric, and an interactive system for computer-aided design of doubly-curved forms using this meta-material. 3D-printing plastic rods over pre-stretched fabric recently gained popularity as a low-cost fabrication technique for complex free-form shapes that automatically lift in space. Our key insight is to focus on rods arranged into repeating star patterns, with the dimensions (and hence physical properties) of the individual pattern elements varying over space. Our star-based meta-material on the one hand allows effective form-finding due to its low-dimensional design space, while on the other is flexible and powerful enough to express large-scale curvature variations. Users of our system design free-form shapes by adjusting the star pattern; our system then automatically simulates the complex physical coupling between the fabric and stars to translate the design edits into shape variations. We experimentally validate our system and demonstrate strong agreement between the simulated results and the final fabricated prototypes (see Fig. 7).

[Figure panels: Star pattern, Simulation, Fabrication]

This work is a collaboration with Mélina Skouras from Inria Grenoble Rhône-Alpes and Etienne Vouga from UT Austin. This work will be presented at Advances in Architectural Geometry 18.

7.1.6 Computational Design of Self-Actuated Surfaces by Printing Plastic Stripes on Stretched Fabric

Participants: David Jourdan, Adrien Bousseau.

In this project, we propose to solve the inverse problem of designing self-actuated structures that morph from a flat sheet to a curved 3D geometry by embedding plastic strips into pre-stretched fabric. Our inverse design tool takes as input a triangle mesh representing the desired target (deployed) surface shape, and simultaneously computes (1) a flattening of this surface into the plane, and (2) a stripe pattern on this planar domain, so that 3D-printing the stripe pattern onto fabric with constant pre-stress, and cutting the fabric along the boundary of the planar domain, yields an assembly whose static shape deploys to match the target surface. Combined with a novel technique allowing us to print on both sides of the fabric, this algorithm allows us to reproduce a wide variety of shapes.

This ongoing work is a collaboration with Mélina Skouras from Inria Grenoble Rhône-Alpes and Etienne Vouga from UT Austin.

7.1.7 CASSIE: Curve and Surface Sketching in Immersive Environments

Participants: Emilie Yu, Tibor Stanko, Adrien Bousseau.

In this project, we prototype and test a novel conceptual modeling system in VR that leverages freehand mid-air sketching, and a novel 3D optimization framework to create connected curve network armatures, predictively surfaced using patches with C0 continuity. Our system provides a judicious balance of interactivity and automation, resulting in a homogeneous 3D drawing interface for a mix of freehand curves, curve networks, and surface patches. A comprehensive user study with professional designers as well as amateurs (N=12), and a diverse gallery of 3D models, show our armature and patch functionality to offer a user experience and expressivity on par with freehand ideation, while creating sophisticated concept models for downstream applications.

This work is a collaboration with Rahul Arora and Karan Singh from University of Toronto, and J. Andreas Bærentzen from the Technical University of Denmark.

7.2 Graphics with Uncertainty and Heterogeneous Content

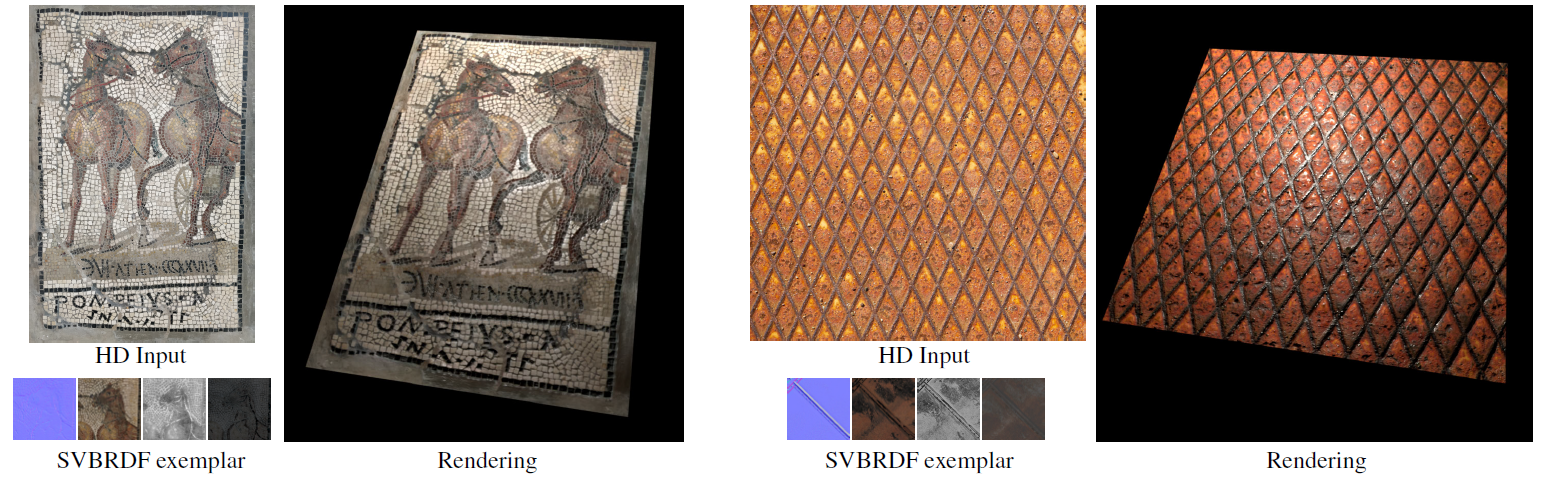

7.2.1 Guided Fine-Tuning for Large-Scale Material Transfer

Participants: Valentin Deschaintre, George Drettakis, Adrien Bousseau.

We present a method to transfer the appearance of one or a few exemplar SVBRDFs to a target image representing similar materials, as illustrated in Fig. 8. Our solution is extremely simple: we fine-tune a deep appearance-capture network on the provided exemplars, such that it learns to extract similar SVBRDF values from the target image. We introduce two novel material capture and design workflows that demonstrate the strength of this simple approach. The first workflow allows us to produce plausible SVBRDFs of large-scale objects from only a few pictures. Specifically, users only need to take a single picture of a large surface and a few close-up flash pictures of some of its details. We use existing methods to extract SVBRDF parameters from the close-ups, and our method to transfer these parameters to the entire surface, enabling the lightweight capture of surfaces several meters wide, such as murals, floors and furniture. In our second workflow, we provide a powerful way for users to create large SVBRDFs from internet pictures by transferring the appearance of existing, pre-designed SVBRDFs. By selecting different exemplars, users can control the materials assigned to the target image, greatly enhancing the creative possibilities offered by deep appearance capture.

This work was published in Computer Graphics Forum, and presented at EGSR 2020 10.

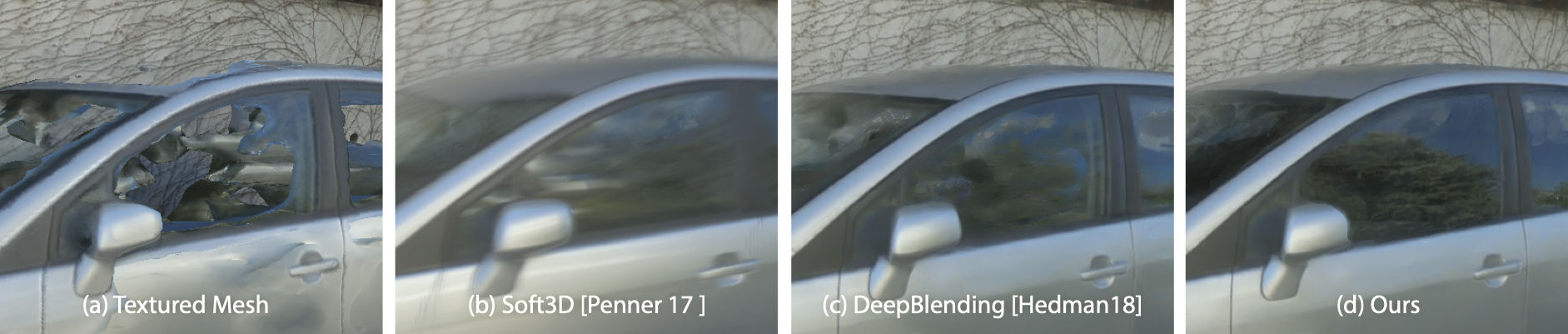

7.2.2 Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow

Participants: Simon Rodriguez, Siddhant Prakash, George Drettakis.

Image-Based Rendering (IBR) has made impressive progress towards highly realistic, interactive 3D navigation for many scenes, including cityscapes. However, cars are ubiquitous in such scenes; multi-view stereo reconstruction provides proxy geometry for IBR, but has difficulty with shiny car bodies, and leaves holes in place of reflective, semi-transparent windows on cars. We present a new approach allowing free-viewpoint IBR of cars based on an approximate analytic reflection flow computation on curved windows. Our method has three components: (1) a refinement step of the reconstructed car geometry guided by semantic labels, which provides an initial approximation for missing window surfaces and a smooth completed car hull; (2) an efficient reflection flow computation using an ellipsoid approximation of the curved car windows, which runs in real time in a shader; and (3) a reflection/background layer synthesis solution. These components allow plausible rendering of reflective, semi-transparent windows in free-viewpoint navigation. We show results on several scenes casually captured with a single consumer-level camera, demonstrating plausible car renderings with significant improvement in visual quality over previous methods (see Fig. 9).

This work is a collaboration with Peter Hedman from University College London. This work was published in Proceedings of the ACM on Computer Graphics and Interactive Techniques, and presented at ACM Symposium on Interactive 3D Graphics and Games 16.
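As a rough illustration of why an ellipsoid approximation keeps the reflection computation analytic, the sketch below intersects a viewing ray with an axis-aligned ellipsoid and returns the mirror-reflected direction at the hit point. This is only a geometric building block under our own assumptions (axis-aligned ellipsoid, ideal mirror, CPU code rather than a shader); it is not the reflection flow computation of the paper, and the function name and parameters are hypothetical.

```python
import math


def reflect_on_ellipsoid(origin, direction, center, radii):
    """Return (hit_point, unit_normal, reflected_direction) or None on a miss.

    The ellipsoid is axis-aligned, with the given center and per-axis radii.
    """
    # Scale space so that the ellipsoid becomes a unit sphere at the origin.
    o = [(origin[i] - center[i]) / radii[i] for i in range(3)]
    d = [direction[i] / radii[i] for i in range(3)]

    # Solve |o + t d|^2 = 1, a quadratic in the ray parameter t.
    a = sum(x * x for x in d)
    if a == 0.0:
        return None  # degenerate (zero-length) direction
    b = 2.0 * sum(o[i] * d[i] for i in range(3))
    c = sum(x * x for x in o) - 1.0
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the ellipsoid

    sqrt_disc = math.sqrt(disc)
    t = (-b - sqrt_disc) / (2.0 * a)  # nearest intersection
    if t <= 0.0:
        t = (-b + sqrt_disc) / (2.0 * a)  # origin is inside the ellipsoid
        if t <= 0.0:
            return None  # the ellipsoid is behind the ray

    hit = [origin[i] + t * direction[i] for i in range(3)]

    # The normal is the normalized gradient of the implicit ellipsoid equation.
    n = [(hit[i] - center[i]) / (radii[i] ** 2) for i in range(3)]
    n_len = math.sqrt(sum(x * x for x in n))
    n = [x / n_len for x in n]

    # Mirror reflection of the normalized incoming direction: r = d - 2 (d.n) n.
    d_len = math.sqrt(sum(x * x for x in direction))
    dn = [x / d_len for x in direction]
    k = 2.0 * sum(dn[i] * n[i] for i in range(3))
    reflected = [dn[i] - k * n[i] for i in range(3)]
    return hit, n, reflected


if __name__ == "__main__":
    # A ray looking straight down the z axis at an ellipsoid around the origin.
    print(reflect_on_ellipsoid((0, 0, 5), (0, 0, -1), (0, 0, 0), (2, 1, 1)))
```

Because the intersection reduces to a quadratic, the whole computation is closed-form, which is the kind of property that makes per-pixel evaluation in a shader plausible.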

7.2.3 Glossy Probe Reprojection for Interactive Global Illumination

Participants: Simon Rodriguez, Thomas Leimkühler, Siddhant Prakash, George Drettakis.

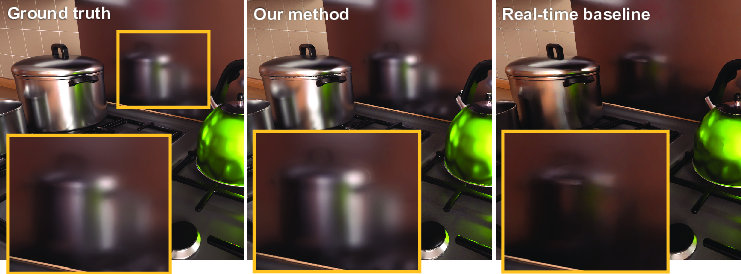

Recent rendering advances dramatically reduce the cost of global illumination. But even with hardware acceleration, complex light paths with multiple glossy interactions are still expensive; our new algorithm stores these paths in precomputed light probes and reprojects them at runtime to provide interactivity. Combined with traditional light maps for diffuse lighting our approach interactively renders all light paths in static scenes with opaque objects. Naively reprojecting probes with glossy lighting is memory-intensive, requires efficient access to the correctly reflected radiance, and exhibits problems at occlusion boundaries in glossy reflections. Our solution addresses all these issues. To minimize memory, we introduce an adaptive light probe parameterization that allocates increased resolution for shinier surfaces and regions of higher geometric complexity. To efficiently sample glossy paths, our novel gathering algorithm reprojects probe texels in a view-dependent manner using efficient reflection estimation and a fast rasterization-based search. Naive probe reprojection often sharpens glossy reflections at occlusion boundaries, due to changes in parallax. To avoid this, we split the convolution induced by the BRDF into two steps: we precompute probes using a lower material roughness and apply an adaptive bilateral filter at runtime to reproduce the original surface roughness. Combining these elements, our algorithm interactively renders complex scenes (Fig. 10) while fitting in the memory, bandwidth, and computation constraints of current hardware.

This work is a collaboration with Chris Wyman and Peter Shirley from NVIDIA. It was published in ACM Transactions on Graphics, and presented at SIGGRAPH Asia 2020 15.
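The idea of splitting the BRDF convolution into a precomputed part and a runtime part can be illustrated with a small calculation. The sketch below assumes, purely for illustration, that both blurs behave like Gaussian kernels, whose variances add under convolution. The source describes an adaptive bilateral filter and does not state this model, and the function and parameter names are hypothetical.

```python
import math


def runtime_filter_sigma(target_sigma, precomputed_sigma):
    """Width of the runtime blur needed to reach the target blur.

    Assumes Gaussian kernels: blurring with sigma_a and then sigma_b is
    equivalent to a single blur with sqrt(sigma_a**2 + sigma_b**2).
    """
    if precomputed_sigma > target_sigma:
        raise ValueError("the precomputed blur must not exceed the target blur")
    return math.sqrt(target_sigma ** 2 - precomputed_sigma ** 2)


if __name__ == "__main__":
    # Probes stored with a narrower lobe (sigma 3); the surface needs sigma 5.
    print(runtime_filter_sigma(5.0, 3.0))
```

Under this simplified model, storing the probes with a lower roughness leaves a well-defined remainder of blur to apply at runtime, which is where an edge-aware filter can avoid mixing radiance across occlusion boundaries.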

7.2.4 Learning Glossy Probes Reprojection

Participants: Ronan Cailleau, Thomas Leimkühler, George Drettakis.

This project follows previous work 15, which achieved real-time/interactive rendering of glossy (view-dependent) reflections at an unprecedented quality. We started by extending our method to also support transmission effects. Such visual effects are very common in realistic scenes, for example in objects with glass materials or appearances, and were missing from the original method. We noticed that the distortion induced by refractive objects tends to make the artifacts of the original reflection reconstruction method even more visible. This further motivated the use of deep neural networks to replace, at least partially, our hand-crafted reflection and transmission computation. We believe that such a process can be learned to further improve the quality of the results, since it is easy to generate training data using physically-based rendering. This is a challenging goal that requires the use of recent advances in neural-network-based image processing.

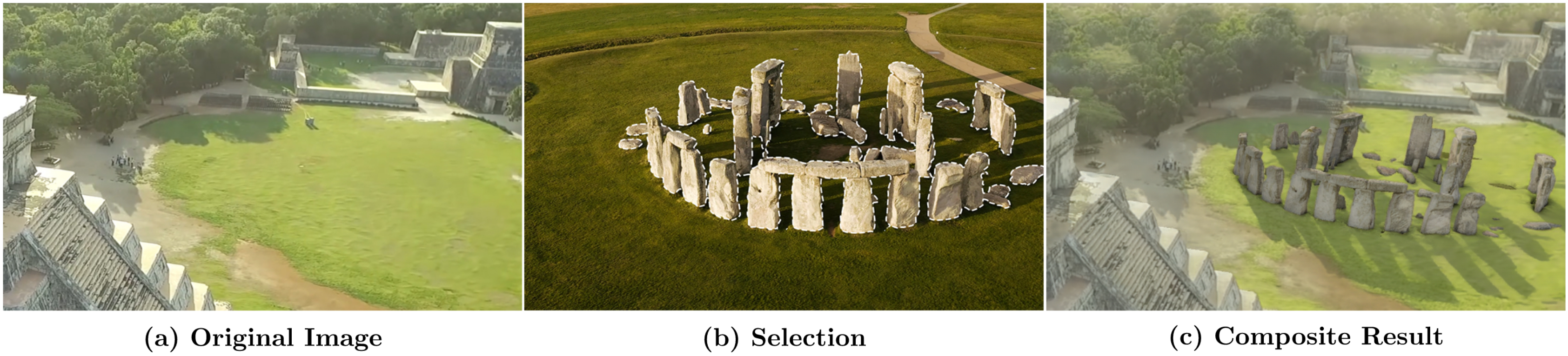

7.2.5 Repurposing a Relighting Network for Realistic Compositions of Captured Scenes

Participants: Baptiste Nicolet, Julien Philip, George Drettakis.

Multi-view stereo can be used to rapidly create realistic virtual content, such as textured meshes or a geometric proxy for free-viewpoint Image-Based Rendering (IBR). These solutions greatly simplify the content creation process compared to traditional methods, but it is difficult to modify the content of the scene. We propose a novel approach to create scenes by composing (parts of) multiple captured scenes. The main difficulty of such compositions is that lighting conditions in each captured scene are different; to obtain a realistic composition we need to make lighting coherent. We propose a two-pass solution, by adapting a multi-view relighting network. We first match the lighting conditions of each scene separately and then synthesize shadows between scenes in a subsequent pass. We also improve the realism of the composition by estimating the change in ambient occlusion in contact areas between parts and compensate for the color balance of the different cameras used for capture. We illustrate our method with results on multiple compositions of outdoor scenes and show its application to multi-view image composition (see Fig. 11), IBR and textured mesh creation.

This work was published and presented at ACM Symposium on Interactive 3D Graphics and Games 2020 19.

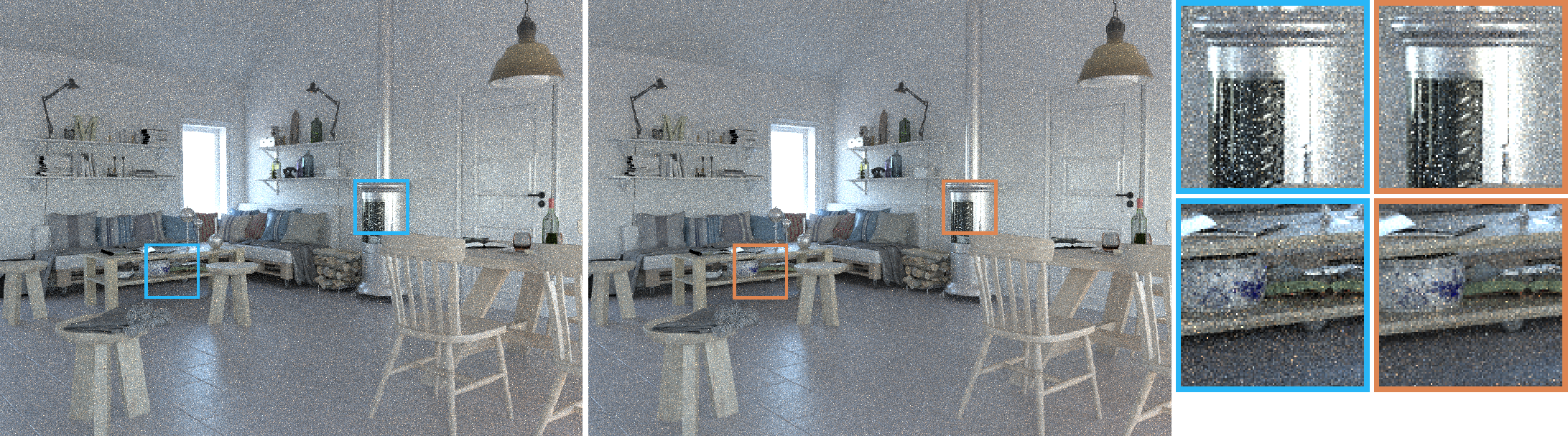

7.2.6 Practical Product Path Guiding Using Linearly Transformed Cosines

Participants: Stavros Diolatzis, George Drettakis.

Path tracing is now the standard method used to generate realistic imagery in many domains, e.g., film, special effects, architecture etc. Path guiding has recently emerged as a powerful strategy to counter the notoriously long computation times required to render such images. We present a practical path guiding algorithm that performs product sampling, i.e., samples proportional to the product of the bidirectional scattering distribution function (BSDF) and incoming radiance. We use a spatial-directional subdivision to represent incoming radiance, and introduce the use of Linearly Transformed Cosines (LTCs) to represent the BSDF during path guiding, thus enabling efficient product sampling. Despite the computational efficiency of LTCs, several optimizations are needed to make our method cost effective. In particular, we show how we can use vectorization, precomputation, as well as strategies to optimize multiple importance sampling and Russian roulette to improve performance. We evaluate our method on several scenes, demonstrating consistent improvement in efficiency compared to previous work, especially in scenes with significant glossy inter-reflection (Figure 12).

This work is a collaboration with Adrien Gruson from McGill University, Wenzel Jakob from EPFL and Derek Nowrouzezahrai from McGill University. This work was published in Computer Graphics Forum, and presented at EGSR 2020 11.
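To make the notion of product sampling concrete, here is a minimal sketch on a discretized set of directions. It assumes the BSDF and the incoming radiance are both tabulated over the same few directional bins, which is our simplification for illustration; the method described above uses a spatial-directional subdivision and Linearly Transformed Cosines instead. All names are hypothetical.

```python
def product_pdf(bsdf, radiance):
    """Discrete pdf proportional to bsdf[i] * radiance[i] for each bin i."""
    weights = [f * l for f, l in zip(bsdf, radiance)]
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("the product of BSDF and radiance is zero everywhere")
    return [w / total for w in weights]


def sample_index(pdf, u):
    """Inverse-CDF sampling of a bin index from a uniform number u in [0, 1)."""
    cumulative = 0.0
    for i, p in enumerate(pdf):
        cumulative += p
        if u < cumulative:
            return i
    return len(pdf) - 1  # guard against floating-point round-off


def contribution(bsdf, radiance, pdf, i):
    """Monte Carlo contribution of one sample drawn from bin i."""
    return bsdf[i] * radiance[i] / pdf[i]


if __name__ == "__main__":
    bsdf = [1.0, 2.0, 1.0, 0.0]
    radiance = [0.0, 1.0, 3.0, 5.0]
    pdf = product_pdf(bsdf, radiance)
    i = sample_index(pdf, 0.5)
    print(pdf, i, contribution(bsdf, radiance, pdf, i))
```

With an exact product pdf, every sample with nonzero probability yields the same contribution (the sum of the products), so the estimator has no variance in this idealized setting. Sampling proportional to the BSDF alone would waste samples on the first bin, where no light arrives, and sampling proportional to radiance alone would waste them on the last bin, where the BSDF is zero.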

7.2.7 Free-Viewpoint Neural Relighting of Indoor Scenes

Participants: Julien Philip, George Drettakis.

We introduce a mixed image rendering and relighting method that allows a user to move freely in a multi-view interior scene while altering its lighting. Our method uses a deep convolutional network trained on synthetic photo-realistic images. We adapt standard path tracing techniques to approximate complex lighting effects such as color bleeding and reflections. This work is conditionally accepted to ACM Transactions on Graphics and is a collaboration with Michael Gharbi from Adobe Research.

7.2.8 Point-Based Neural Rendering with Per-View Optimization

Participants: Georgios Kopanas, Julien Philip, Thomas Leimkühler, George Drettakis.

This project explores the combination of multi-view 3D reconstruction and neural rendering. Existing methods either use 3D geometry reconstructed with Multi-View Stereo (MVS), but cannot recover from the errors of this process, or directly learn a volumetric neural representation, but suffer from expensive training and inference. We introduce a general approach that is initialized with MVS, but allows further optimization of scene properties in the space of input views, including depth and reprojected features, resulting in improved novel view synthesis. A key element of our approach is a differentiable point-based splatting pipeline, based on our bi-directional Elliptical Weighted Average solution. To further improve quality and efficiency of our point-based method, we introduce a probabilistic depth test and efficient camera selection. We use these elements together in our neural renderer, allowing us to achieve a good compromise between quality and speed. Our pipeline can be applied to multi-view harmonization and stylization in addition to novel view synthesis.

This work is submitted for publication.

7.2.9 Hybrid Image-based Rendering for Free-view Synthesis

Participants: Siddhant Prakash, Thomas Leimkühler, Simon Rodriguez, George Drettakis.

Image-based rendering (IBR) provides a rich toolset for free-viewpoint navigation in captured scenes. We identify common IBR artifacts and combine the strengths of different algorithms to strike a good balance in the speed/quality tradeoff. We address the problem of visible color seams that arise from blending casually-captured input images by explicitly treating view-dependent effects. We also compensate for geometric reconstruction errors by refining per-view information using a novel clustering and filtering approach. Finally, we devise a practical hybrid IBR algorithm, which locally identifies and utilizes the rendering method best suited for an image region while retaining interactive rates.

This work is conditionally accepted to ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, to be published in the journal Proceedings of the ACM on Computer Graphics and Interactive Techniques.

7.2.10 Free-viewpoint Portrait Rendering with GANs using Multi-view Images

Participants: Thomas Leimkühler, George Drettakis.

Current generative adversarial networks (GANs) produce photorealistic renderings of portrait images. While recent methods provide considerable semantic control over the generated images, they can only generate a limited set of viewpoints and cannot explicitly control the camera. We use a few images of a face to perform 3D reconstruction, which allows us to define and ultimately extend the range of the images the GAN can reproduce, while obtaining precise camera control.

7.2.11 Active Exploration For Neural Rendering

Participants: Stavros Diolatzis, Julien Philip, George Drettakis.

In this project, we experiment with active learning practices to reduce the amount of training data and the training time required for neural rendering. Current methods require weeks of training on thousands of images, and these requirements only increase with the complexity of the scenes. To make the use of neural networks practical for rendering synthetic scenes, we propose an active data generation scheme that produces training data based on their importance, thus allowing for much shorter training and data generation times.

7.2.12 Practical video-based rendering of dynamic stationary environments

Participants: Théo Thonat, George Drettakis.

The goal of this work is to extend traditional Image Based Rendering to capture subtle motions in real scenes. We want to allow free-viewpoint navigation with casual capture, such as a user taking photos and videos with a single smartphone and a tripod. We focus on stochastic time-dependent textures such as waves, flames or waterfalls. We have developed a video representation able to tackle the challenge of blending unsynchronized videos.

This work is a collaboration with Sylvain Paris from Adobe Research, Miika Aittala from MIT CSAIL, and Yagiz Aksoy from ETH Zurich, and has been submitted for publication.

8 Partnerships and cooperations

8.1 International initiatives

Informal international partners

We maintain close collaborations with international experts, including:

- McGill (Canada) (A. Gruson, D. Nowrouzezahrai)

- UBC (Canada) (A. Sheffer)

- U. Toronto (Canada) (K. Singh)

- TU Delft (NL) (M. Sypesteyn, J. W. Hoftijzer)

- EPFL (Switzerland) (W. Jakob)

- U. Bern (Switzerland) (D. Bommes)

- University College London (UK) (G. Brostow, P. Hedman, N. Mitra)

- NVIDIA Research (USA) (C. Wyman, P. Shirley)

- Adobe Research (USA, UK) (S. Paris, M. Gharbi, N. Mitra)

- U. Texas, Austin (USA) (E. Vouga)

8.1.1 Visits of international scientists

We had no visits this year due to the pandemic.

8.2 European initiatives

8.2.1 D3: Drawing Interpretation for 3D Design

Participants: Yulia Gryaditskaya, Tibor Stanko, Bastien Wailly, David Jourdan, Adrien Bousseau, Felix Hähnlein.

Line drawing is a fundamental tool for designers to quickly visualize 3D concepts. The goal of this ERC project is to develop algorithms capable of understanding design drawings.

This year, we introduced two methods to create 3D objects from drawings. The first method exploits specific construction lines, called scaffolds, that designers draw to lay down the main structure of a shape before adding details. By formalizing the geometric properties of these lines, we defined an optimization procedure that recovers the depth of each stroke in an existing drawing 13.

The second method employs deep learning to recognize parametric modeling operations which, when executed in sequence, allow users to create complex shapes by drawing 14.

In addition, we also progressed on other problems related to design drawing and prototyping, such as converting bitmap drawings into vectorial curves 17 and fabricating lightweight curved surfaces with a desktop 3D printer 18.

8.2.2 ERC FunGraph

Participants: George Drettakis, Thomas Leimkühler, Sébastien Morgenthaler, Rada Deeb, Stavros Diolatzis, Siddhant Prakash, Simon Rodriguez, Julien Philip.

The ERC Advanced Grant FunGraph proposes a new methodology by introducing the concepts of rendering and input uncertainty. We define output or rendering uncertainty as the expected error of a rendering solution over the parameters and algorithmic components used with respect to an ideal image, and input uncertainty as the expected error of the content over the different parameters involved in its generation, compared to an ideal scene being represented. Here the ideal scene is a perfectly accurate model of the real world, i.e., its geometry, materials and lights; the ideal image is an infinite resolution, high-dynamic range image of this scene.
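One possible way to write the two definitions above as formulas is sketched below. The notation is ours and is not taken from the project description: the symbols, the choice of error measures, and the distributions over parameters are all assumptions made for illustration.

```latex
% Notation assumed for illustration (not from the project description):
%   S^*        ideal scene;  I^*  ideal image of S^*
%   S          scene content given to the renderer
%   R_\theta   renderer with parameters / algorithmic components \theta
%   S_\phi     content produced with generation parameters \phi
%   d_I, d_S   error measures on images and on scenes, respectively
U_{\mathrm{render}} = \mathbb{E}_{\theta}\!\left[\, d_I\!\left(R_{\theta}(S),\, I^{*}\right) \right],
\qquad
U_{\mathrm{input}} = \mathbb{E}_{\phi}\!\left[\, d_S\!\left(S_{\phi},\, S^{*}\right) \right]
```

Read this way, rendering uncertainty averages the image error over the renderer's parameters and components, while input uncertainty averages the content error over the parameters involved in generating that content.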

By introducing methods to estimate rendering uncertainty we will quantify the expected error of previously incompatible rendering components with a unique methodology for accurate, approximate and image-based renderers. This will allow FunGraph to define unified rendering algorithms that can exploit the advantages of these very different approaches in a single algorithmic framework, providing a fundamentally different approach to rendering. A key component of these solutions is the use of captured content: we will develop methods to estimate input uncertainty and to propagate it to the unified rendering algorithms, allowing this content to be exploited by all rendering approaches.

The goal of FunGraph is to fundamentally transform computer graphics rendering, by providing a solid theoretical framework based on uncertainty to develop a new generation of rendering algorithms. These algorithms will fully exploit the spectacular – but previously disparate and disjoint – advances in rendering, and benefit from the enormous wealth offered by constantly improving captured input content.

This year we had several new results. Notably, we developed a new method for Image-Based Rendering (IBR) of reflections on cars 16, providing a solution to a long-standing problem of IBR methods. We advanced on traditional rendering methods, developing a solution for real-time rendering of global illumination with glossy probes 15, and a solution for path guiding in Monte Carlo global illumination 11. We also presented a method for guided transfer of materials using deep learning 10.

9 Dissemination

9.1 Promoting scientific activities

9.1.1 Scientific events: selection

Member of the conference program committees

George Drettakis has been a member of the program committees for Pacific Graphics, EGSR, HPG.

9.1.2 Journal

Member of the editorial boards

George Drettakis is a member of the Editorial Board of Computational Visual Media (CVM).

Reviewer - reviewing activities

- Adrien Bousseau was a reviewer for Siggraph, Siggraph Asia, Computer Graphics Forum, ACM Transactions on Graphics, IEEE Transactions on Visualization and Computer Graphics, ECCV.

- George Drettakis was a reviewer for Siggraph, Siggraph Asia.

- Thomas Leimkühler was a reviewer for Siggraph, IEEE Transactions on Visualization and Computer Graphics, Eurographics, The Visual Computer Journal, Computers & Graphics.

9.1.3 Invited talks

George Drettakis gave invited talks at MPI Saarbruecken, Germany, and at Aalto University Helsinki, Finland.

9.1.4 Leadership within the scientific community

- G. Drettakis chairs the Eurographics (EG) working group on Rendering, and the steering committee of EG Symposium on Rendering.

- G. Drettakis has been selected as chair of the ACM SIGGRAPH Papers Advisory Group, which chooses the technical papers chairs of ACM SIGGRAPH conferences and is responsible for all issues related to the publication policy of our flagship conferences, SIGGRAPH and SIGGRAPH Asia.

9.1.5 Scientific expertise

- Adrien Bousseau was an evaluator for ANR.

- Adrien Bousseau was a member of the Eurographics Ph.D. award committee.

- George Drettakis is a member of the Ph.D. Award selection committee of the French GdR IG-RV.

9.1.6 Research administration

- Adrien Bousseau was a jury member for Inria Chargé(e)s de Recherche in Bordeaux.

- Adrien Bousseau is a member of the Comité du suivi doctoral, the Comité du centre, the local committee on sustainable development, and the local scientific committee on computing platforms.

- George Drettakis is an alternate member (suppléant) of the Inria Scientific Council.

- George Drettakis was a member of the hiring committee at the Technical University of Crete.

9.2 Teaching - Supervision - Juries

9.2.1 Teaching

- David Jourdan taught imperative programming for 33h (TD, L1) and algorithms and data structures for 24h (TD, L2) at Université Côte d'Azur.

- Tibor Stanko taught mathematics for biology for 20h (TD) at Université Côte d'Azur.

9.2.2 Supervision

- Ph.D.: Simon Rodriguez, Image-based methods for view-dependent effects in real and synthetic scenes, defended September 2020, George Drettakis 22.

- Ph.D.: Julien Philip, Multi-view image-based editing and rendering through deep learning and optimization, defended October 2020, George Drettakis 21.

- Ph.D. in progress: David Jourdan, Interactive architectural design, since October 2018, Adrien Bousseau and Melina Skouras (Imagine).

- Ph.D. in progress: Felix Hähnlein, Line Drawing Generation and Interpretation, since February 2019, Adrien Bousseau.

- Ph.D. in progress: Emilie Yu, 3D Sketching in Virtual Reality, since October 2020, Adrien Bousseau.

- Ph.D. in progress: Stavros Diolatzis, Guiding and Learning for Illumination Algorithms, since April 2019, George Drettakis.

- Ph.D. in progress: Georgios Kopanas, Neural Rendering with Uncertainty for Full Scenes, since September 2020, George Drettakis.

- Ph.D. in progress: Siddhant Prakash, Rendering with uncertain data with application to augmented reality, since November 2019, George Drettakis.

9.2.3 Juries

George Drettakis was an evaluator of the Ph.D. theses of Abhimitra Meka (MPI, Germany) and Markus Kettunen (Aalto University, Finland).

9.3 Popularization

9.3.1 Internal or external Inria responsibilities

George Drettakis chairs the local “Jacques Morgenstern” Colloquium organizing committee.

9.3.2 Interventions

- Adrien Bousseau gave an invited seminar to students of ENS Lyon, and a talk at the main library in Nice.

10 Scientific production

10.1 Major publications

- 1 article Single-Image SVBRDF Capture with a Rendering-Aware Deep Network ACM Transactions on Graphics 37 2018, 128 - 143

- 2 article Fidelity vs. Simplicity: a Global Approach to Line Drawing Vectorization ACM Transactions on Graphics (SIGGRAPH Conference Proceedings) 2016, URL: http://www-sop.inria.fr/reves/Basilic/2016/FLB16

- 3 article Photo2ClipArt: Image Abstraction and Vectorization Using Layered Linear Gradients ACM Transactions on Graphics (SIGGRAPH Asia Conference Proceedings) 36(6) November 2017, URL: http://www-sop.inria.fr/reves/Basilic/2017/FLB17

- 4 article OpenSketch: A Richly-Annotated Dataset of Product Design Sketches ACM Transactions on Graphics 2019

- 5 article Deep Blending for Free-Viewpoint Image-Based Rendering ACM Transactions on Graphics (SIGGRAPH Asia Conference Proceedings) 37(6) November 2018, URL: http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18

- 6 article Scalable Inside-Out Image-Based Rendering ACM Transactions on Graphics (SIGGRAPH Asia Conference Proceedings) 35(6) December 2016, URL: http://www-sop.inria.fr/reves/Basilic/2016/HRDB16

- 7 article Accommodation and Comfort in Head-Mounted Displays ACM Transactions on Graphics (SIGGRAPH Conference Proceedings) 36(4) July 2017, 11, URL: http://www-sop.inria.fr/reves/Basilic/2017/KBBD17

- 8 article Interactive Sketching of Urban Procedural Models ACM Transactions on Graphics (SIGGRAPH Conference Proceedings) 2016, URL: http://www-sop.inria.fr/reves/Basilic/2016/NGGBB16

- 9 article Multi-view Relighting using a Geometry-Aware Network ACM Transactions on Graphics 38 2019

10.2 Publications of the year

International journals

International peer-reviewed conferences

Doctoral dissertations and habilitation theses