Keywords

Computer Science and Digital Science

- A5. Interaction, multimedia and robotics

- A5.1.1. Engineering of interactive systems

- A5.1.6. Tangible interfaces

- A5.3.5. Computational photography

- A5.4. Computer vision

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A6.2.3. Probabilistic methods

- A6.2.5. Numerical Linear Algebra

- A6.2.6. Optimization

- A6.2.8. Computational geometry and meshes

Other Research Topics and Application Domains

- B5. Industry of the future

- B5.1. Factory of the future

- B9. Society and Knowledge

- B9.2. Art

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.6. Humanities

- B9.6.6. Archeology, History

- B9.6.10. Digital humanities

1 Team members, visitors, external collaborators

Research Scientists

- Pascal Barla [Team leader, Inria, Researcher, HDR]

- Gaël Guennebaud [Inria, Researcher]

- Romain Pacanowski [Inria, Researcher, from Dec 2020]

Faculty Members

- Pierre Bénard [Univ de Bordeaux, Associate Professor]

- Patrick Reuter [Univ de Bordeaux, Associate Professor, HDR]

Post-Doctoral Fellow

- David Murray [Inria, until Jun 2020]

PhD Students

- Megane Bati [Institut d'optique graduate school]

- Camille Brunel [Inria]

- Corentin Cou [CNRS]

- Charlotte Herzog [Imagine Optic, CIFRE, until Aug 2020]

- Charlie Schlick [Univ de Bordeaux]

Interns and Apprentices

- Leo Ackermann [Inria, from May 2020 until Jul 2020]

- Eric Barre [Inria, from Jun 2020 until Aug 2020]

- Melvin Even [Inria, from Jun 2020 until Sep 2020]

- Arno Galvez [Inria, from Mar 2020 until Aug 2020]

Administrative Assistant

- Anne-Laure Gautier [Inria]

External Collaborators

- Xavier Granier [Univ Paris-Saclay, HDR]

- David Murray [Institut d'optique graduate school, from Jul 2020]

- Romain Pacanowski [CNRS, until Nov 2020]

2 Overall objectives

2.1 General Introduction

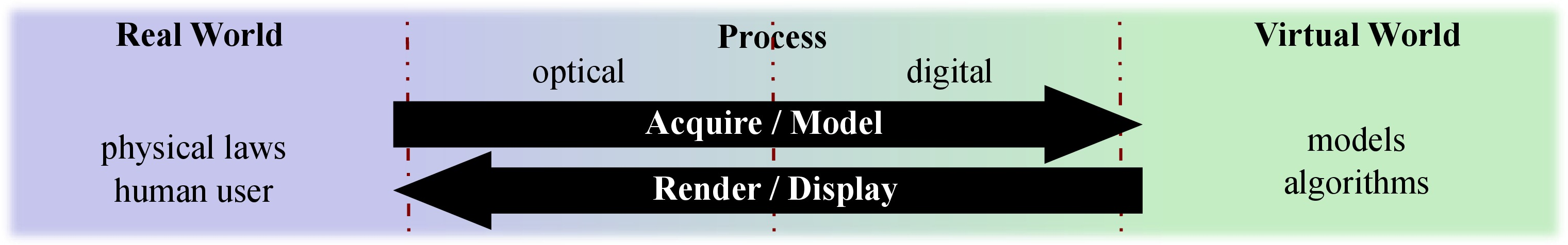

Computer generated images are ubiquitous in our everyday life. Such images are the result of a process that has seldom changed over the years: the optical phenomena due to the propagation of light in a 3D environment are simulated taking into account how light is scattered 46, 22 according to shape and material characteristics of objects. The intersection of optics (for the underlying laws of physics) and computer science (for its modeling and computational efficiency aspects) provides a unique opportunity to tighten the links between these domains in order to first improve the image generation process (computer graphics, optics and virtual reality) and next to develop new acquisition and display technologies (optics, mixed reality and machine vision).

Most of the time, light, shape, and matter properties are studied, acquired, and modeled separately, relying on realistic or stylized rendering processes to combine them in order to create final pixel colors. Such modularity, inherited from classical physics, has the practical advantage of allowing the same models to be reused in various contexts. However, independent developments lead to unoptimized pipelines and difficult-to-control solutions, since it is often not clear which part of the expected result is caused by which property. Indeed, the most efficient solutions are most often the ones that blur the frontiers between light, shape, and matter to lead to specialized and optimized pipelines, as in real-time applications (like Bidirectional Texture Functions 56 and Light-Field rendering 20). Keeping these three properties separated may lead to other problems. For instance:

- Measured materials are too detailed to be usable in rendering systems and data reduction techniques have to be developed 55, 57, leading to an inefficient transfer between real and digital worlds;

- It is currently extremely challenging (if not impossible) to directly control or manipulate the interactions between light, shape, and matter. Accurate lighting processes may create solutions that do not fulfill users' expectations;

- Artists can spend hours and days in modeling highly complex surfaces whose details will not be visible 77 due to inappropriate use of certain light sources or reflection properties.

Most traditional applications target human observers. Depending on how deeply we take into account the specificities of each user, the requirements for representations and algorithms may differ.

| Auto-stereoscopy display | HDR display | Printing both geometry and material |
| --- | --- | --- |
| ©Nintendo | ©Dolby Digital | 38 |

With the evolution of measurement and display technologies that go beyond conventional images (e.g., as illustrated in Figure 1, High-Dynamic Range Imaging 67, stereo displays or new display technologies 42, and physical fabrication 12, 29, 38) the frontiers between real and virtual worlds are vanishing 25. In this context, a sensor combined with computational capabilities may also be considered as another kind of observer. Creating separate models for light, shape, and matter for such an extended range of applications and observers is often inefficient and sometimes provides unexpected results. Pertinent solutions must be able to take into account properties of the observer (human or machine) and application goals.

2.2 Methodology

2.2.1 Using a global approach

The main goal of the MANAO project is to study phenomena resulting from the interactions between the three components that describe light propagation and scattering in a 3D environment: light, shape, and matter. Improving knowledge about these phenomena facilitates the adaptation of the developed digital, numerical, and analytic models to specific contexts. This leads to the development of new analysis tools, new representations, and new instruments for acquisition, visualization, and display.

To reach this goal, we have to first increase our understanding of the different phenomena resulting from the interactions between light, shape, and matter. For this purpose, we consider how they are captured or perceived by the final observer, taking into account the relative influence of each of the three components. Examples include but are not limited to:

- The manipulation of light to reveal reflective 17 or geometric properties 84, as mastered by professional photographers;

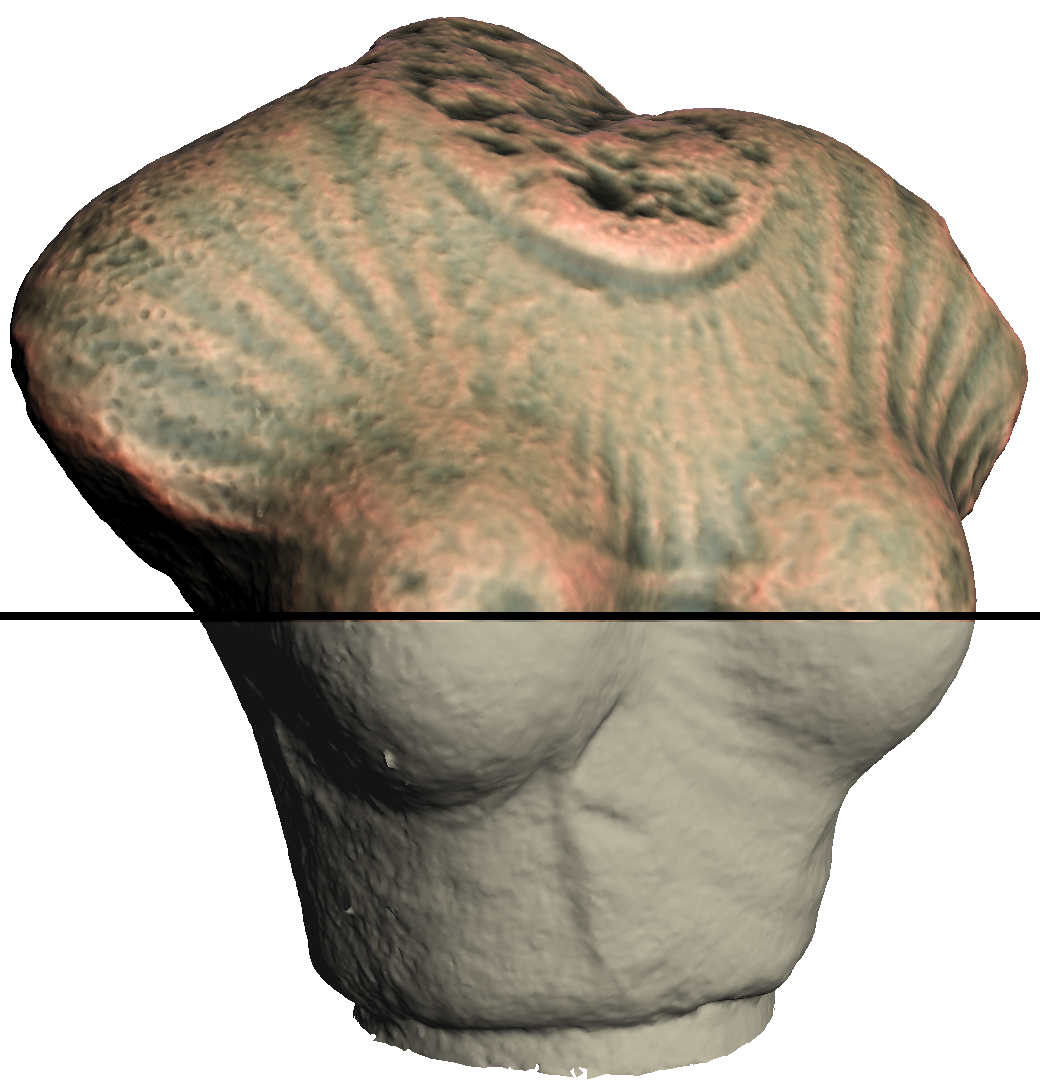

- The modification of material characteristics or lighting conditions 83 to better understand shape features, for instance to decipher archaeological artifacts;

- The large influence of shape on the captured variation of shading 65 and thus on the perception of material properties 80.

Based on the acquired knowledge of the influence of each of the components, we aim at developing new models that combine two or three of these components. Examples include the modeling of Bidirectional Texture Functions (BTFs) 28, which encode in a unique representation the effects of parallax, multiple light reflections, and shadows without having to store the reflective properties and the meso-scale geometric details separately, and Light-Fields, which are used to render 3D scenes by storing only the result of the interactions between light, shape, and matter, both in complex real environments and in simulated ones.

One of the strengths of MANAO is that we are inter-connecting computer graphics and optics. On one side, the laws of physics are required to create images but may be bent to increase either performance or user control: this is one of the key advantages of the computer graphics approach. It is worth noting that what is not possible in the real world may be possible in a digital world. On the other side, the introduced approximations may help to better comprehend the physical interactions of light, shape, and matter.

2.2.2 Taking observers into account

The MANAO project specifically aims at considering information transfer, first from the real world to the virtual world (acquisition and creation), then from computers to observers (visualization and display). For this purpose, we use a larger definition of what an observer is: it may be a human user or a physical sensor equipped with processing capabilities. Sensors and their characteristics must be taken into account in the same way as we take into account the human visual system in computer graphics. Similarly, computational capabilities may be compared to the cognitive capabilities of human users. Some characteristics are common to all observers, such as the scale of observed phenomena. Others are more specific to a set of observers. For this purpose, we have identified two classes of applications.

- Physical systems: Given our partnership, which leads to close relationships with optics, one novelty of our approach is to extend the range of possible observers to physical sensors in order to work on domains such as simulation, mixed reality, and testing. Capturing, processing, and visualizing complex data is now more and more accessible to everyone, leading to the possible convergence of real and virtual worlds through visual signals. This signal is traditionally captured by cameras. It is now possible to augment them by projecting (e.g., the infrared laser of Microsoft Kinect) and capturing (e.g., GPS localization) other signals that are outside the visible range. This supplemental information replaces values traditionally extracted from standard images and thus lowers the requirements in computational power 53. Since the captured images are the result of the interactions between light, shape, and matter, the approaches and the improved knowledge from MANAO help in designing interactive acquisition and rendering technologies that are required to merge the real and the virtual world. With the resulting unified systems (optical and digital), transfer of pertinent information is favored and inefficient conversion is likely avoided, leading to new uses in interactive computer graphics applications, like augmented reality 16, 25 and computational photography 66.

- Interactive visualization: This direction includes domains such as scientific illustration and visualization, artistic or plausible rendering. In all these cases, the observer, a human, takes part in the process, justifying once more our focus on real-time methods. When targeting average users, characteristics as well as limitations of the human visual system should be taken into account: in particular, it is known that some configurations of light, shape, and matter have masking and facilitation effects on visual perception 77. For specialized applications, the expertise of the final user and the constraints for 3D user interfaces lead to new uses and dedicated solutions for models and algorithms.

3 Research program

3.1 Related Scientific Domains

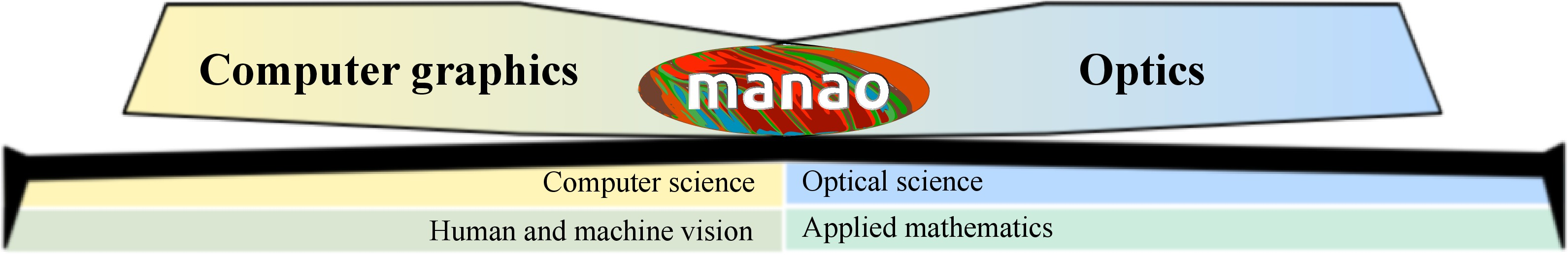

The MANAO project aims at studying, acquiring, modeling, and rendering the interactions between the three components that are light, shape, and matter from the viewpoint of an observer. As detailed at greater length in the next section, this work will be done using the following approach: first, we will tend to consider that these three components do not have strict frontiers when considering their impacts on the final observers; then, we will work not only in computer graphics, but also at the intersection of computer graphics and optics, exploring the mutual benefits that the two domains may provide. It is thus intrinsically a transdisciplinary project (as illustrated in Figure 3) and we expect results in both domains.

Thus, the proposed team-project aims at establishing a close collaboration between computer graphics (e.g., 3D modeling, geometry processing, shading techniques, vector graphics, and GPU programming) and optics (e.g., design of optical instruments, and theories of light propagation).

The following examples illustrate the strengths of such a partnership.

First, in addition to the simpler radiative transfer equations 30 commonly used in computer graphics, research in the latter will be based on a state-of-the-art understanding of light propagation and scattering in real environments.

Furthermore, research will rely on appropriate instrumentation expertise for the measurement 43, 44 and display 42 of the different phenomena.

Reciprocally, optics research may benefit from the expertise of computer graphics scientists on efficient processing to investigate interactive simulation, visualization, and design.

Furthermore, new systems may be developed by unifying optical and digital processing capabilities.

Currently, the scientific background of most of the team members is related to computer graphics and computer vision.

A large part of their work has been focused on simulating and analyzing optical phenomena as well as on acquiring and visualizing them.

Combined with the close collaboration with the optics laboratory LP2N (http://

At the boundaries of the MANAO project lie issues in human and machine vision. We have to deal with the former whenever a human observer is taken into account. On one side, computational models of human vision are likely to guide the design of our algorithms. On the other side, the study of interactions between light, shape, and matter may shed some light on the understanding of visual perception. The same kind of connection is expected with machine vision. On the one hand, traditional computational methods for acquisition (such as photogrammetry) are going to be part of our toolbox. On the other hand, new display technologies (such as the ones used for augmented reality) are likely to benefit from our integrated approach and systems. In the MANAO project we are mostly users of results from human vision. When required, some experimentation might be done in collaboration with experts from this domain, as with the European PRISM project. For machine vision, given the tight collaboration between optical and digital systems, research will be carried out inside the MANAO project.

Analysis and modeling rely on tools from applied mathematics such as differential and projective geometry, multi-scale models, frequency analysis 32 or differential analysis 65, linear and non-linear approximation techniques, stochastic and deterministic integrations, and linear algebra. We not only rely on classical tools, but also investigate and adapt recent techniques (e.g., improvements in approximation techniques), focusing on their ability to run on modern hardware: the development of our own tools (such as Eigen) is essential to control their performance and their ability to be integrated into real-time solutions or into new instruments.

3.2 Research axes

The MANAO project is organized around four research axes that cover the large range of expertise of its members and associated members. We briefly introduce these four axes in this section. More details, along with their inter-influences illustrated in Figure 2, are given in the following sections.

Axis 1 is the theoretical foundation of the project. Its main goal is to increase the understanding of light, shape, and matter interactions by combining expertise from different domains: optics and human/machine vision for the analysis and computer graphics for the simulation aspect. The goal of our analyses is to identify the different layers/phenomena that compose the observed signal. In a second step, the development of physical simulations and numerical models of these identified phenomena is a way to validate the pertinence of the proposed decompositions.

In Axis 2, the final observers are mainly physical sensors. Our goal is thus the development of new acquisition and display technologies that combine optical and digital processes to achieve fast transfers between real and digital worlds, and thereby increase the convergence of these two worlds.

Axes 3 and 4 focus on two aspects of computer graphics: rendering, visualization and illustration in Axis 3, and editing and modeling (content creation) in Axis 4. In these two axes, the final observers are mainly human users, either generic users or expert ones (e.g., archaeologists 69, computer graphics artists).

3.3 Axis 1: Analysis and Simulation

Challenge: Definition and understanding of phenomena resulting from interactions between light, shape, and matter as seen from an observer's point of view.

Results: Theoretical tools and numerical models for analyzing and simulating the observed optical phenomena.

To reach the goals of the MANAO project, we need to increase our understanding of how light, shape, and matter act together in synergy and how the resulting signal is finally observed. For this purpose, we need to identify the different phenomena that may be captured by the targeted observers. This is the main objective of this research axis, and it is achieved by using three approaches: the simulation of interactions between light, shape, and matter, their analysis, and the development of new numerical models. The resulting improved knowledge is a foundation for the research done in the three other axes, and the simulation tools together with the numerical models serve the development of the joint optical/digital systems in Axis 2 and their validation.

One of the main and earliest goals in computer graphics is to faithfully reproduce the real world, focusing mainly on light transport. Compared to researchers in physics, researchers in computer graphics rely on a subset of physical laws (mostly radiative transfer and geometric optics), and their main concern is to use the limited available computational resources efficiently while developing algorithms that are as fast as possible. For this purpose, a large set of theoretical as well as computational tools has been introduced to take maximum benefit of hardware specificities. These tools are often dedicated to specific phenomena (e.g., direct or indirect lighting, color bleeding, shadows, caustics). An efficiency-driven approach needs such a classification of light paths 39 in order to develop tailored strategies 81. For instance, starting from simple direct lighting, more complex phenomena have been progressively introduced: first diffuse indirect illumination 36, 73, then more generic inter-reflections 46, 30 and volumetric scattering 70, 27. Thanks to this search for efficiency and this classification, researchers in computer graphics have developed a now recognized expertise in the fast simulation of light propagation. Based on finite elements (radiosity techniques) or on unbiased Monte Carlo integration schemes (ray-tracing, particle-tracing, ...), the resulting algorithms and their combinations are now sufficiently accurate to be used back in physical simulations. The MANAO project will continue the search for efficient and accurate simulation techniques, extending it from computer graphics to optics. Thanks to the close collaboration with researchers from optics, new phenomena beyond radiative transfer and geometric optics will be explored.
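As a minimal illustration of the unbiased Monte Carlo integration schemes mentioned above, the following sketch estimates the irradiance received by a horizontal surface from the upper hemisphere. It is not taken from the project's code: the function name `estimate_irradiance`, the `radiance` callback, and the choice of uniform hemisphere sampling are assumptions made for the example.

```python
import math
import random


def estimate_irradiance(radiance, sample_count, rng):
    """Monte Carlo estimate of E = integral of L(w) * cos(theta) over the hemisphere.

    `radiance` is a hypothetical callback mapping a unit direction (x, y, z)
    to incoming radiance; z is the surface normal.
    """
    total = 0.0
    for _ in range(sample_count):
        # Uniform sampling of the hemisphere in solid angle:
        # cos(theta) uniform in [0, 1], phi uniform in [0, 2*pi), pdf = 1 / (2*pi).
        cos_theta = rng.random()
        phi = 2.0 * math.pi * rng.random()
        sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
        direction = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
        # Integrand divided by the pdf gives an unbiased estimator.
        total += radiance(direction) * cos_theta * 2.0 * math.pi
    return total / sample_count


if __name__ == "__main__":
    # For a constant unit radiance, the exact irradiance is pi.
    estimate = estimate_irradiance(lambda d: 1.0, 20000, random.Random(1))
    print(estimate, math.pi)
```

The estimator converges to the exact value as the number of samples grows; importance sampling (for instance, cosine-weighted directions) is one common way to reduce its variance.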

The search for algorithmic efficiency and accuracy has to be carried out in parallel with the development of numerical models. The goal of visual fidelity (generalized to accuracy from an observer's point of view in the project) combined with the goal of efficiency leads to the development of alternative representations. For instance, common classical finite-element techniques compute only basis coefficients for each discretization element: the discretization density required to obtain detailed spatial variations, and thus visual fidelity, would be too large and too computationally expensive. Examples of alternative representations include textures for decorrelating surface details from surface geometry and high-order wavelets for a multi-scale representation of lighting 26. The numerical complexity explodes when considering directional properties of light transport such as radiance (watt per square meter and per steradian, $W \cdot m^{-2} \cdot sr^{-1}$), reducing the possibility to simulate or accurately represent some optical phenomena. For instance, Haar wavelets have been extended to the spherical domain 72 but are difficult to extend to non-piecewise-constant data 75. More recently, researchers have preferred the use of Spherical Radial Basis Functions 78 or Spherical Harmonics 64. For more complex data, such as reflective properties (e.g., BRDF 58, 47 - 4D), ray-space (e.g., Light-Field 54 - 4D), and spatially varying reflective properties (6D - 68), new models and representations are still being investigated, such as rational functions 61 or dedicated models 14 and parameterizations 71, 76. For each (newly) defined phenomenon, we thus explore the space of possible numerical representations to determine the most suited one for a given application, as we have done for BRDFs 61.
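To make the idea of a compact directional representation concrete, here is a small sketch that projects a function defined on the sphere onto the first four real Spherical Harmonics (bands 0 and 1) and reconstructs it from the coefficients. The helper names (`real_sh_basis`, `project_to_sh`, `evaluate_sh`) and the midpoint quadrature are assumptions chosen for illustration, not the representation used in the cited works.

```python
import math


def real_sh_basis(x, y, z):
    """First four real spherical harmonics (bands 0 and 1) for a unit direction."""
    return (0.282095, 0.488603 * y, 0.488603 * z, 0.488603 * x)


def project_to_sh(function, n_z=64, n_phi=128):
    """Project `function(x, y, z)` onto the basis with a midpoint quadrature.

    Sampling uniformly in z and phi is uniform in solid angle, so each sample
    carries the same weight d_omega.
    """
    coefficients = [0.0, 0.0, 0.0, 0.0]
    d_omega = (2.0 / n_z) * (2.0 * math.pi / n_phi)
    for i in range(n_z):
        z = -1.0 + (i + 0.5) * 2.0 / n_z
        ring_radius = math.sqrt(max(0.0, 1.0 - z * z))
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            x, y = ring_radius * math.cos(phi), ring_radius * math.sin(phi)
            value = function(x, y, z)
            for k, basis in enumerate(real_sh_basis(x, y, z)):
                coefficients[k] += value * basis * d_omega
    return coefficients


def evaluate_sh(coefficients, x, y, z):
    """Reconstruct the low-frequency approximation in direction (x, y, z)."""
    return sum(c * b for c, b in zip(coefficients, real_sh_basis(x, y, z)))


def clamped_cosine(x, y, z):
    """Example signal: cosine lobe around +z, zero in the lower hemisphere."""
    return max(0.0, z)


if __name__ == "__main__":
    coefficients = project_to_sh(clamped_cosine)
    print(coefficients)
    print(evaluate_sh(coefficients, 0.0, 0.0, 1.0))
```

Four coefficients only capture the low-frequency part of the signal; sharper directional features need more bands, which is one reason the choice of representation depends on the phenomenon.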


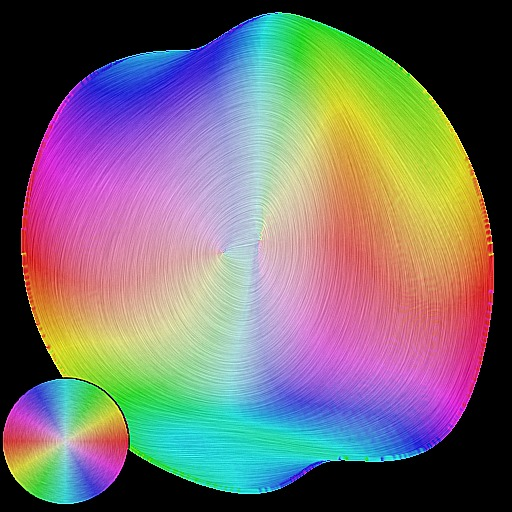

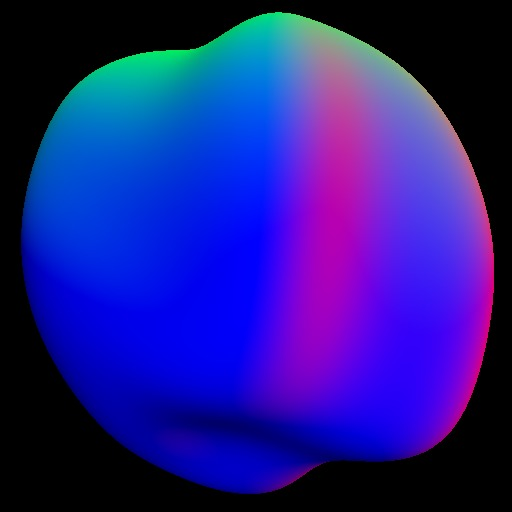

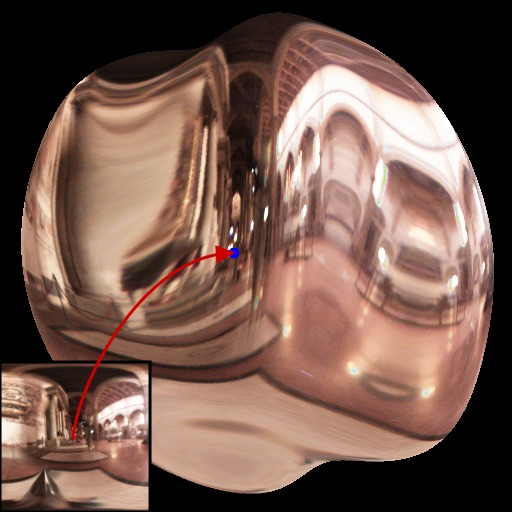

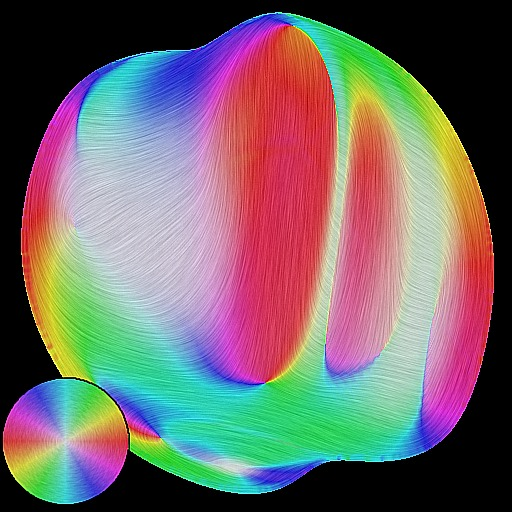

| Texturing | 1st order gradient field | Environment reflection | 2nd order gradient field |

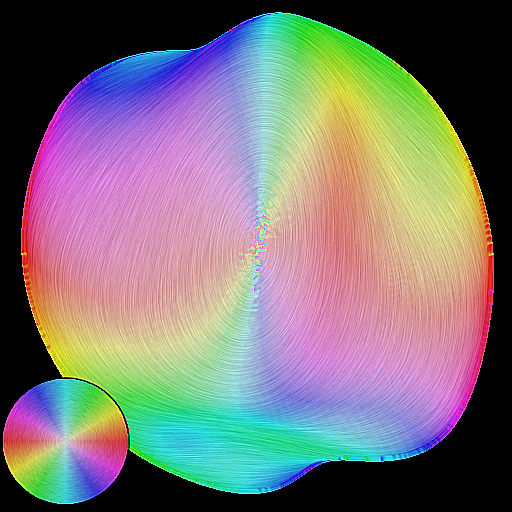

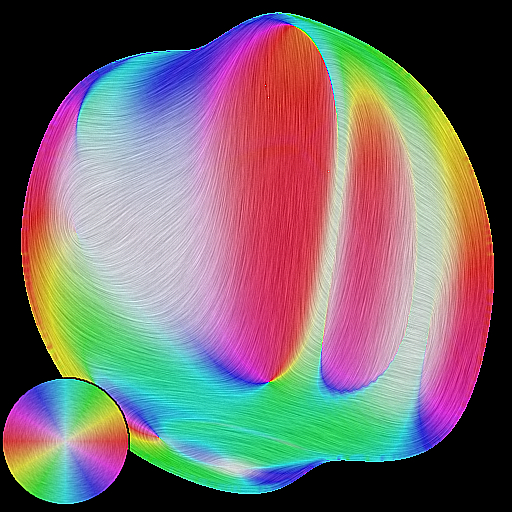

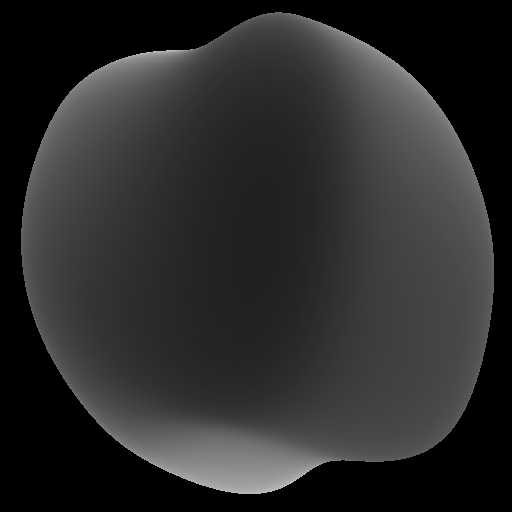

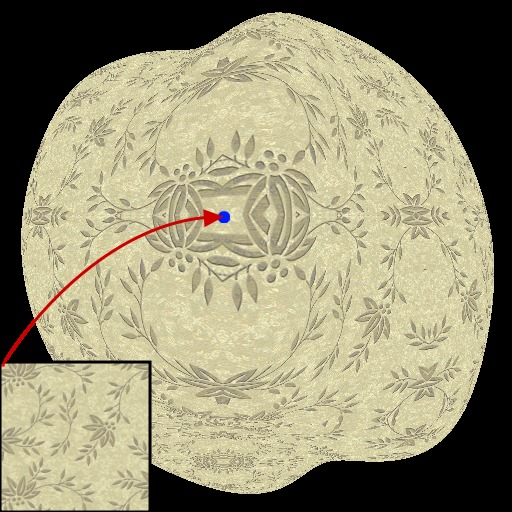

Before being able to simulate or to represent the different observed phenomena, we need to define and describe them. To understand the difference between an observed phenomenon and the classical light, shape, and matter decomposition, we can take the example of a highlight. Its observed shape (by a human user or a sensor) is the result of the interaction of these three components, and can be simulated this way. However, this does not provide any intuitive understanding of their relative influence on the final shape: an artist will directly describe the resulting shape, and not each of the three properties. We thus want to decompose the observed signal into models for each scale that can be easily understood, represented, and manipulated. For this purpose, we will rely on the analysis of the resulting interaction of light, shape, and matter as observed by a human or a physical sensor. We first consider this analysis from an optical point of view, trying to identify the different phenomena and their scale according to their mathematical properties (e.g., differential 65 and frequency analysis 32). Such an approach has led us to exhibit the influence of surface flows (depth and normal gradients) on lighting pattern deformation (see Figure 4). For a human observer, this corresponds to one recent trend in computer graphics that takes into account the human visual system 33 both to evaluate the results and to guide the simulations.

3.4 Axis 2: From Acquisition to Display

Challenge: Convergence of optical and digital systems to blend real and virtual worlds.

Results: Instruments to acquire the real world, to display the virtual world, and to make both of them interact.

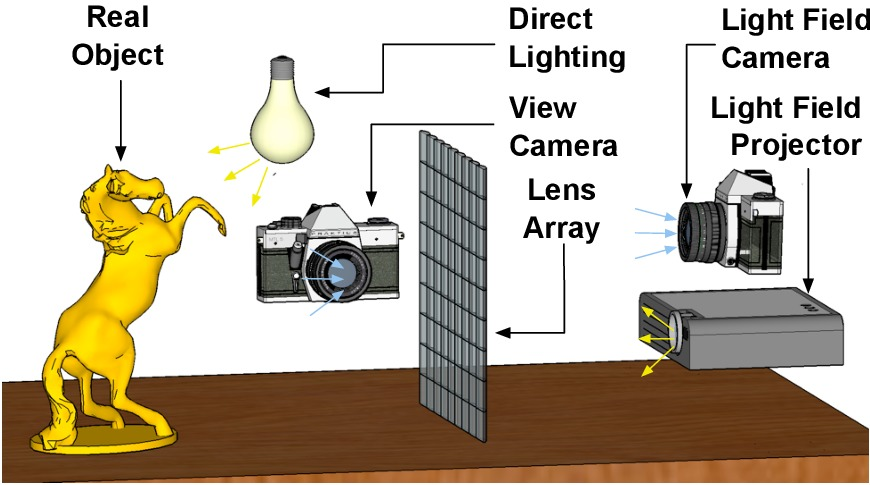

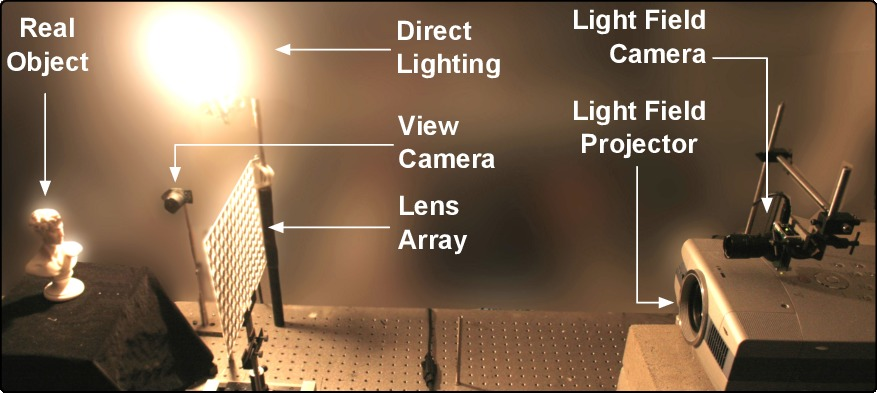

In this axis, we investigate unified acquisition and display systems, that is, systems that combine optical instruments with digital processing. From digital to real, we investigate new display approaches 54, 42. We consider projecting systems and surfaces 21, for personal use, virtual reality and augmented reality 16. From the real world to the digital world, we favor direct measurements of parameters for models and representations, using (new) optical systems unless digitization is required 35, 34. These resulting systems have to acquire the different phenomena described in Axis 1 and to display them, in an efficient manner 40, 15, 41, 44. By efficient, we mean that we want to shorten the path between the real world and the virtual world by increasing the data bandwidth between the real (analog) and the virtual (digital) worlds, and by reducing the latency for real-time interactions (we have to prevent unnecessary conversions, and to reduce processing time). To reach this goal, the systems have to be designed as a whole, not by a simple concatenation of optical systems and digital processes, nor by considering each component independently 45.

To increase data bandwidth, one solution is to increasingly parallelize the physical systems, for instance by multiplying the number of simultaneous acquisitions (e.g., simultaneous images from multiple viewpoints 44, 63). Similarly, increasing the number of viewpoints is a way toward the creation of full 3D displays 54. However, full acquisition or display of 3D real environments theoretically requires a continuous field of viewpoints, leading to huge data sizes. Despite the current belief that the increase of computational power will fill the gap, when it comes to visual or physical realism, if you double the processing power, people may want four times more accuracy, thus increasing data size as well. To reach the best performance, a trade-off has to be found between the amount of data required to accurately represent reality and the amount of required processing. This trade-off may be achieved using compressive sensing, a recent trend from the applied mathematics community that provides tools to accurately reconstruct a signal from a small set of measurements, assuming that it is sparse in a transform domain (e.g., 62, 87).
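The principle of recovering a sparse signal from fewer measurements than unknowns can be sketched with the iterative soft-thresholding algorithm (ISTA), one standard solver for l1-regularized least squares. This is only an illustrative toy, not the method of the cited references: the random Gaussian measurement matrix, the problem sizes, and the parameter values are all assumptions.

```python
import math
import random


def soft_threshold(value, threshold):
    """Proximal operator of the l1 norm: shrink a value toward zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def mat_vec(matrix, vector):
    """Product A x for a matrix stored as a list of rows."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def mat_t_vec(matrix, vector):
    """Product A^T y for a matrix stored as a list of rows."""
    columns = len(matrix[0])
    return [sum(matrix[i][j] * vector[i] for i in range(len(matrix)))
            for j in range(columns)]


def ista(matrix, measurements, sparsity_weight=0.01, iterations=500):
    """Minimize 0.5 * ||A x - y||^2 + sparsity_weight * ||x||_1 by ISTA."""
    # The squared Frobenius norm bounds the largest eigenvalue of A^T A,
    # so this step size is conservative but keeps the objective non-increasing.
    step = 1.0 / sum(a * a for row in matrix for a in row)
    estimate = [0.0] * len(matrix[0])
    for _ in range(iterations):
        residual = [y - p for y, p in zip(measurements, mat_vec(matrix, estimate))]
        correction = mat_t_vec(matrix, residual)
        estimate = [soft_threshold(x + step * c, step * sparsity_weight)
                    for x, c in zip(estimate, correction)]
    return estimate


def demo(seed=0, unknowns=40, measurement_count=20):
    """Hypothetical setup: 3 non-zero entries observed through 20 random projections."""
    rng = random.Random(seed)
    matrix = [[rng.gauss(0.0, 1.0) for _ in range(unknowns)]
              for _ in range(measurement_count)]
    signal = [0.0] * unknowns
    for index in (3, 17, 31):
        signal[index] = 1.0
    measurements = mat_vec(matrix, signal)
    return matrix, signal, measurements, ista(matrix, measurements)


if __name__ == "__main__":
    matrix, signal, measurements, estimate = demo()
    largest = sorted(range(len(estimate)), key=lambda i: -abs(estimate[i]))[:3]
    print("largest recovered entries:", sorted(largest))
```

The conservative step size makes convergence slow; practical solvers use tighter step estimates or accelerated variants, and recovery quality depends on the sparsity level and on the number of measurements.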

We prefer to achieve this goal by avoiding as much as possible the classical approach where acquisition is followed by a fitting step: this generally requires a large number of measurements, and the fitting itself may consequently consume too much memory and preprocessing time. By preventing unnecessary conversion through fitting techniques, such an approach increases the speed and reduces the data transfer for acquisition but also for display. One of the best recent examples is the work of Cossairt et al. 25. The whole system is designed around a unique representation of the energy-field issued from (or leaving) a 3D object, either virtual or real: the Light-Field. A Light-Field encodes the light emitted in any direction from any position on an object. It is acquired thanks to a lens-array that leads to the capture of, and projection from, multiple simultaneous viewpoints. A unique representation is used for all the steps of this system. Lens-arrays, parallax barriers, and coded-aperture 52 are among the key technologies to develop such acquisition (e.g., Light-Field camera 1 45 and acquisition of light-sources 35) and projection systems (e.g., auto-stereoscopic displays). Such an approach is versatile and may be applied to improve classical optical instruments 50. More generally, by designing unified optical and digital systems 59, it is possible to reduce the requirements in processing power, the memory footprint, and the cost of optical instruments.
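As a rough sketch of how a single Light-Field representation can serve several steps, the snippet below stores a discretized Light-Field as a grid of views and derives two products from it: one sub-aperture view and a synthetically refocused image obtained by shift-and-add. The nested-list layout `light_field[u][v][y][x]`, the function names, and the integer `slope` parameter are assumptions for illustration and do not describe the system of Cossairt et al.

```python
def sub_aperture_view(light_field, u, v):
    """Return the image seen from viewpoint (u, v) of the view grid."""
    return light_field[u][v]


def refocus(light_field, slope):
    """Shift-and-add refocusing.

    Each view is shifted proportionally to its offset from the central
    viewpoint (`slope` pixels per view step, integer here for simplicity),
    then all views are averaged. slope = 0 keeps the original focal plane.
    """
    n_u, n_v = len(light_field), len(light_field[0])
    height, width = len(light_field[0][0]), len(light_field[0][0][0])
    center_u, center_v = (n_u - 1) // 2, (n_v - 1) // 2
    image = [[0.0] * width for _ in range(height)]
    for u in range(n_u):
        for v in range(n_v):
            dy, dx = slope * (u - center_u), slope * (v - center_v)
            view = light_field[u][v]
            for y in range(height):
                source_y = min(max(y + dy, 0), height - 1)  # clamp at borders
                for x in range(width):
                    source_x = min(max(x + dx, 0), width - 1)
                    image[y][x] += view[source_y][source_x]
    view_count = n_u * n_v
    return [[value / view_count for value in row] for row in image]


if __name__ == "__main__":
    # Toy Light-Field: a 3 x 3 grid of identical 2 x 3 views
    # (a scene lying exactly on the focal plane).
    view = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]]
    light_field = [[view for _ in range(3)] for _ in range(3)]
    print(refocus(light_field, 0))
```

Because both functions read the same array, no intermediate fitting or conversion step is needed between acquisition-like and display-like uses of the data, which is the point made in the paragraph above.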

Those are only some examples of what we investigate. We also consider the following approaches to develop new unified systems. First, similar to (and based on) the analysis goal of Axis 1, we have to take into account as much as possible the characteristics of the measurement setup. For instance, when fitting cannot be avoided, integrating these characteristics may improve both the processing efficiency and accuracy 61. Second, we have to integrate signals from multiple sensors (such as GPS, accelerometer, ...) to avoid some computation (e.g., 53). Finally, the experience of the group in surface modeling helps the design of optical surfaces 48 for light sources or head-mounted displays.

3.5 Axis 3: Rendering, Visualization and Illustration

Challenge: How to offer the most legible signal to the final observer in real-time?

Results: High-level shading primitives, expressive rendering techniques for object depiction, real-time realistic rendering algorithms

The main goal of this axis is to offer to the final observer, in this case mostly a human user, the most legible signal in real-time. Thanks to the analysis and to the decomposition in different phenomena resulting from interactions between light, shape, and matter (Axis 1), and their perception, we can use them to convey essential information in the most pertinent way. Here, the word pertinent can take various forms depending on the application.

In the context of scientific illustration and visualization, we are primarily interested in tools to convey shape or material characteristics of objects in animated 3D scenes. Expressive rendering techniques (see Figure 6c,d) provide means for users to depict such features with their own style. To introduce our approach, we detail it from a shape-depiction point of view, a domain in which we have acquired recognized expertise. Prior work in this area mostly focused on stylization primitives to achieve line-based rendering 85, 49 or stylized shading 19, 83 with various levels of abstraction. A clear representation of important 3D object features remains a major challenge for better shape depiction, stylization and abstraction purposes. Most existing representations provide only local properties (e.g., curvature), and thus lack characterization of broader shape features. To overcome this limitation, we are developing higher level descriptions of shape 13 with increased robustness to sparsity, noise, and outliers. This is achieved in close collaboration with Axis 1 by the use of higher-order local fitting methods, multi-scale analysis, and global regularization techniques. In order not to neglect the observer and the material characteristics of the objects, we couple this approach with an analysis of the appearance model. To our knowledge, this approach has not been considered yet. This research direction is at the heart of the MANAO project, and has a strong connection with the analysis we plan to conduct in Axis 1. Material characteristics are always considered at the light ray level, but an understanding of higher-level primitives (like the shape of highlights and their motion) would help us to produce more legible renderings and permit novel stylizations; for instance, no method today is able to create stylized renderings that follow the motion of highlights or shadows. We also believe such tools play a fundamental role for geometry processing purposes (such as shape matching, reassembly, simplification), as well as for editing purposes as discussed in Axis 4.

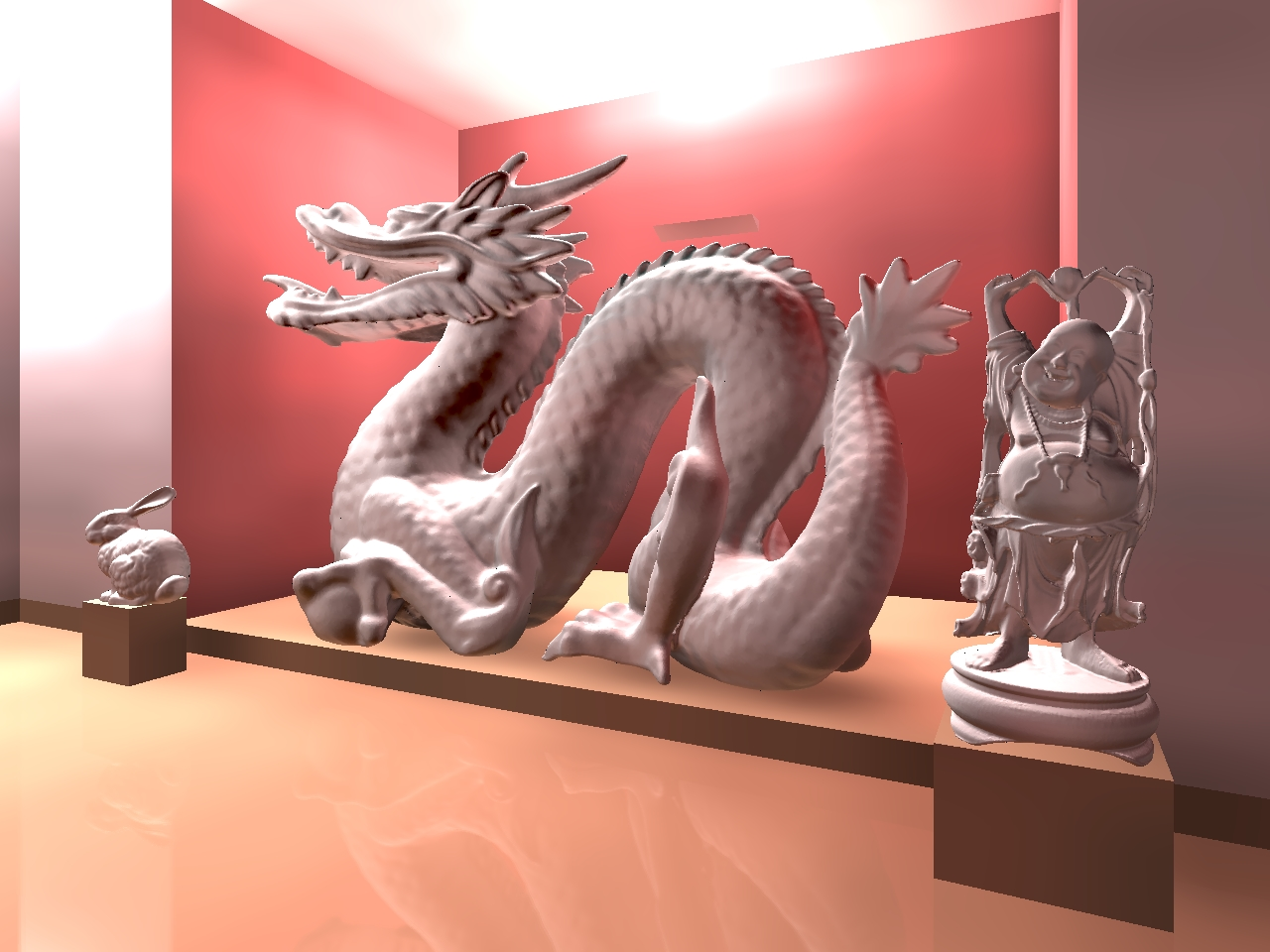

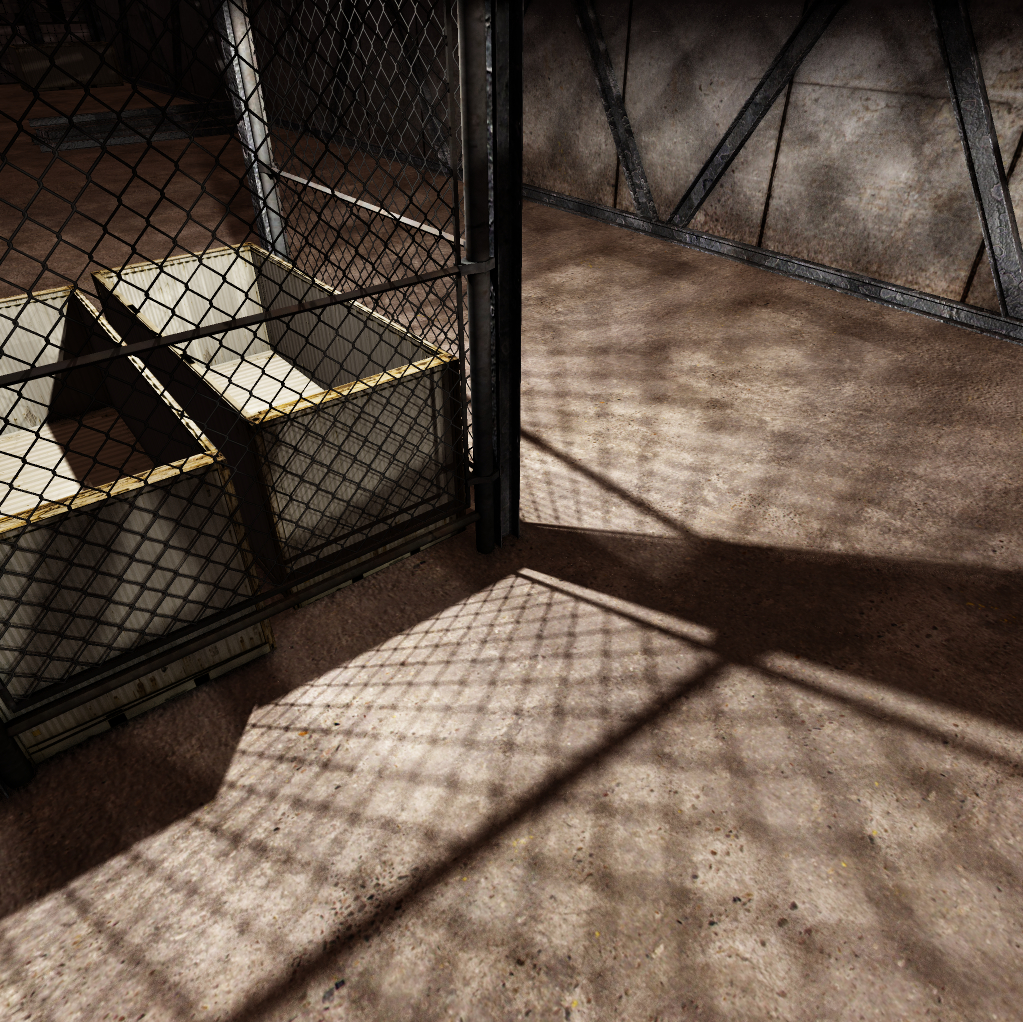

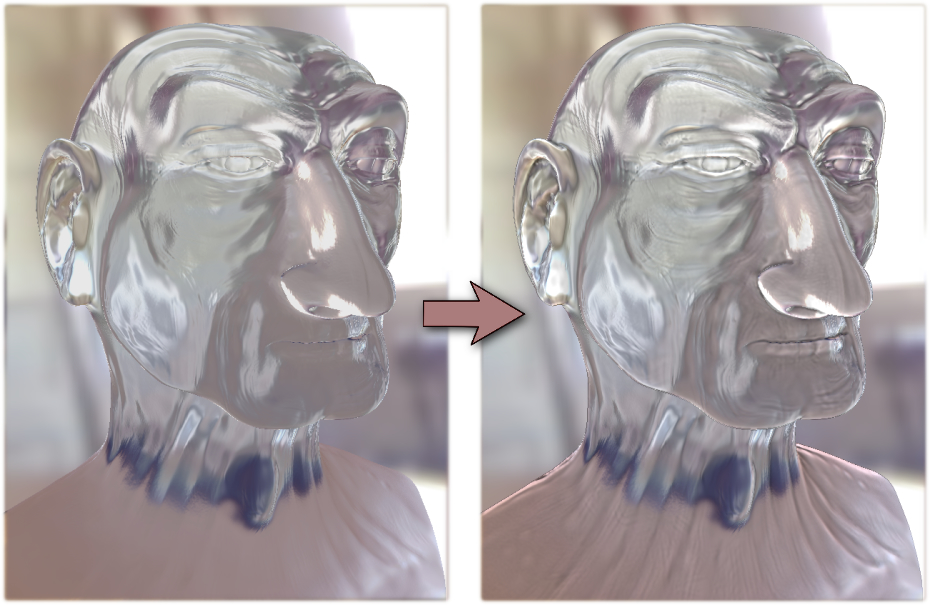

In the context of real-time photo-realistic rendering (see Figure 6a,b), the challenge is to compute the most plausible images with minimal effort. During the last decade, a lot of work has been devoted to designing approximate but real-time rendering algorithms for complex lighting phenomena such as soft-shadows 86, motion blur 32, depth of field 74, reflections, refractions, and inter-reflections. For most of these effects it becomes harder to discover fundamentally new and faster methods. On the other hand, we believe that significant speedup can still be achieved through more clever use of the massively parallel architectures of current and upcoming hardware, and/or through more clever tuning of the current algorithms. In particular, regarding the second aspect, we remark that most of the proposed algorithms depend on several parameters which can be used to trade speed for quality. Significant speed-up could thus be achieved by identifying effects that would be masked or facilitated and thus devoting appropriate computational resources to the rendering 51, 31. Indeed, the algorithm parameters controlling the quality vs speed trade-off are numerous, without a direct mapping between their values and their effect. Moreover, their ideal values vary over space and time, and to be effective such an auto-tuning mechanism has to be extremely fast so that its cost is largely compensated by its gain. We believe that our various works on the analysis of appearance, such as in Axis 1, could be beneficial for this purpose too.

Realistic and real-time rendering is closely related to Axis 2: real-time rendering is a requirement to close the loop between real world and digital world. We thus have to develop algorithms and rendering primitives that allow the integration of the acquired data into real-time techniques. We also have to ensure that these real-time techniques work with new display systems. For instance, stereo, and more generally multi-view displays are based on the multiplication of simultaneous images. Brute force solutions consist of an independent rendering pipeline for each viewpoint. A more energy-efficient solution would take advantage of the parts of the computation that may be factorized. Another example is the rendering techniques based on image processing, such as our work on augmented reality 23. Independent image processing for each viewpoint may disturb the feeling of depth by introducing inconsistent information in each image. Finally, more dedicated displays 42 would require new rendering pipelines.

3.6 Axis 4: Editing and Modeling

Challenge: Editing and modeling appearance using drawing- or sculpting-like tools through high level representations.

Results: High-level primitives and hybrid representations for appearance and shape.

During the last decade, the domain of computer graphics has exhibited tremendous improvements in image quality, both for 2D applications and 3D engines. This is mainly due to the availability of an ever increasing amount of shape details, and sophisticated appearance effects including complex lighting environments. Unfortunately, with such a growth in visual richness, even so-called vectorial representations (e.g., subdivision surfaces, Bézier curves, gradient meshes, etc.) become very dense and unmanageable for the end user, who has to deal with a huge mass of control points, color labels, and other parameters. This is becoming a major challenge, which calls for novel representations. This Axis is thus complementary to Axis 3: the focus is the development of primitives that are easy to use for modeling and editing.

More specifically, we plan to investigate vectorial representations that would be amenable to the production of rich shapes with a minimal set of primitives and/or parameters. To this end we plan to build upon our insights on dynamic local reconstruction techniques and implicit surfaces 24, 18. When working in 3D, an interesting approach to produce detailed shapes is by means of procedural geometry generation. For instance, many natural phenomena like waves or clouds may be modeled using a combination of procedural functions. Turning such functions into triangle meshes (the main rendering primitives of GPUs) is a tedious process that appears unnecessary with an adapted vectorial shape representation, where one could directly turn procedural functions into implicit geometric primitives. Since we want to prevent unnecessary conversions in the whole pipeline (here, between modeling and rendering steps), we will also consider hybrid representations mixing meshes and implicit representations. Such research thus has to be conducted while considering the associated editing tools as well as performance issues. It is indeed important to keep real-time performance (cf. Axis 2) throughout the interaction loop, from user inputs to display, via editing and rendering operations. Finally, it would be interesting to add semantic information into 2D or 3D geometric representations. Semantic geometry appears to be particularly useful for many applications such as the design of more efficient manipulation and animation tools, automatic simplification and abstraction, or even automatic indexing and searching. This constitutes a complementary but longer term research direction.
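As a rough illustration of what turning a procedural function directly into an implicit primitive can look like, the following sketch defines a sphere displaced by a sinusoidal ripple as the zero level set of a scalar function and intersects a ray with it, without ever building a triangle mesh. The function names, the ripple formula and the marching parameters are illustrative assumptions and are not taken from the team's representations.

```python
import math


def displaced_sphere(p, radius=1.0, amplitude=0.05, frequency=8.0):
    """Implicit function: negative inside, positive outside, zero on the surface."""
    x, y, z = p
    r = math.sqrt(x * x + y * y + z * z)
    # Procedural detail (assumed for illustration): a ripple driven by the height z.
    ripple = amplitude * math.sin(frequency * z)
    return r - (radius + ripple)


def intersect_ray(f, origin, direction, t_near, t_far, steps=64, iterations=40):
    """Return the first t in [t_near, t_far] where f changes sign along the ray.

    The ray is marched with a fixed step to bracket a sign change, which is then
    refined by bisection. Features thinner than one step can be missed.
    """
    def point(t):
        return tuple(o + t * d for o, d in zip(origin, direction))

    dt = (t_far - t_near) / steps
    t_prev, f_prev = t_near, f(point(t_near))
    for i in range(1, steps + 1):
        t_curr = t_near + i * dt
        f_curr = f(point(t_curr))
        if f_prev * f_curr <= 0.0:
            lo, hi, f_lo = t_prev, t_curr, f_prev
            for _ in range(iterations):
                mid = 0.5 * (lo + hi)
                f_mid = f(point(mid))
                if f_lo * f_mid <= 0.0:
                    hi = mid
                else:
                    lo, f_lo = mid, f_mid
            return 0.5 * (lo + hi)
        t_prev, f_prev = t_curr, f_curr
    return None  # no sign change found: the ray misses the surface
```

Changing the ripple only requires editing the scalar function; the intersection routine is untouched, which is the kind of conversion-free pipeline the paragraph above argues for.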

In the MANAO project, we want to investigate representations beyond the classical light, shape, and matter decomposition. We thus want to directly control the appearance of objects both in 2D and 3D applications (e.g., 79): this is a core topic of computer graphics. When working with 2D vector graphics, digital artists must carefully set up color gradients and textures: examples range from the creation of 2D logos to the photo-realistic imitation of object materials. Classic vector primitives quickly become impractical for creating illusions of complex materials and illuminations, and as a result an increasing amount of time and skill is required. This only concerns still images. For animations, vector graphics are only used to create legible appearances composed of simple lines and color gradients. There is thus a need for more complex primitives that are able to accommodate complex reflection or texture patterns, while keeping the ease of use of vector graphics. For instance, instead of drawing color gradients directly, it is more advantageous to draw flow lines that represent local surface concavities and convexities. Going through such an intermediate structure then makes it possible to deform simple material gradients and textures in a coherent way (see Figure 7), and to animate them all at once. The manipulation of 3D object materials also raises important issues. Most existing material models are tailored to faithfully reproduce physical behaviors, not to be easily controllable by artists. Therefore artists learn to tweak model parameters to satisfy the needs of a particular shading appearance, which can quickly become cumbersome as the complexity of a 3D scene increases. We believe that an alternative approach is required, whereby the material appearance of an object in a typical lighting environment is directly input (e.g., painted or drawn), and adapted to match a plausible material behavior. This way, artists will be able to create their own appearance (e.g., by using our shading primitives 79), and replicate it to novel illumination environments and 3D models. For this purpose, we will rely on the decompositions and tools issued from Axis 1.

| (a) | (b) | (c) | (d) | (e) | (f) |

4 Application domains

4.1 Physical Systems

Given our close relationships with researchers in optics, one novelty of our approach is to extend the range of possible observers to physical sensors in order to work on domains such as simulation, mixed reality, and testing. Capturing, processing, and visualizing complex data is now more and more accessible to everyone, leading to the possible convergence of real and virtual worlds through visual signals. This signal is traditionally captured by cameras. It is now possible to augment them by projecting (e.g., the infrared laser of Microsoft Kinect) and capturing (e.g., GPS localization) other signals that are outside the visible range. This supplemental information replaces values traditionally extracted from standard images and thus lowers the requirements in computational power. Since the captured images are the result of the interactions between light, shape, and matter, the approaches and the improved knowledge from MANAO help in designing interactive acquisition and rendering technologies that are required to merge the real and the virtual worlds. With the resulting unified systems (optical and digital), the transfer of pertinent information is favored and inefficient conversion is likely avoided, leading to new uses in interactive computer graphics applications, like augmented reality, displays and computational photography.

4.2 Interactive Visualization and Modeling

This direction includes domains such as scientific illustration and visualization, artistic or plausible rendering, and 3D modeling. In all these cases, the observer, a human, takes part in the process, justifying once more our focus on real-time methods. When targeting average users, characteristics as well as limitations of the human visual system should be taken into account: in particular, it is known that some configurations of light, shape, and matter have masking and facilitation effects on visual perception. For specialized applications (such as archeology), the expertise of the final user and the constraints for 3D user interfaces lead to new uses and dedicated solutions for models and algorithms.

5 Social and environmental responsibility

5.1 Footprint of research activities

For a few years now, our team has been collectively careful to limit its direct environmental impacts, mainly by limiting the number of flights and extending the lifetime of PCs and laptops beyond the warranty period.

For 2020, because of the health crisis, our team members did not take any flights, and our commuting trips were also very limited thanks to a massive use of telecommuting. Moreover, we did not buy any computer or screen this year. As a result, a careful estimation of the footprint of our research activities for the year is irrelevant.

5.2 Environmental involvement

Gaël Guennebaud and Pascal Barla are engaged in several actions and initiatives related to environmental issues, both within Inria itself and within the research and higher-education domain in general:

- We are members of the “Local Sustainable Development Committee” of Inria Bordeaux.

- G. Guennebaud is a member of the “Social and Environmental Responsibility Committee” of the LaBRI.

- We have been very active within Inria's national initiatives, in particular:

  - We have been part of the working group on reducing the footprint of professional travel, contributing in particular to the design of the content of the flyless document, which has eventually been taken up at the national level by the communication department.

  - We have participated in the elaboration of the questionnaire on the role of Inria regarding environmental issues, and helped disseminate it.

  - G. Guennebaud has been involved in the development of tools to ease the estimation of the carbon footprint of Inria teams.

- Ecoinfo: G. Guennebaud is involved in the GDS Ecoinfo of the CNRS, where he is in particular in charge of the development of the ecodiag tool.

- Labo1point5: G. Guennebaud has joined the labo1point5 group, in particular to help with the development of a module that takes into account the carbon footprint of ICT devices within their carbon footprint estimation tool (GES1point5).

- G. Guennebaud has participated in the elaboration and dissemination of a new course, “Introduction to climate change issues and the environmental impacts of ICT”, with two colleagues of the LaBRI.

6 Highlights of the year

2020 was marked by the Covid crisis and its impact on society as a whole. The world of research has also been greatly affected:

- Faculty members have seen their teaching load increase significantly;

- PhD students and post-docs have often had to deal with worsened working conditions, as well as reduced interactions with their supervisors and colleagues;

- Most scientific collaborations have been greatly affected, with several international activities cancelled or postponed to dates still to be defined.

7 New software and platforms

7.1 New software

7.1.1 Eigen

- Keyword: Linear algebra

- Functional Description: Eigen is an efficient and versatile C++ mathematical template library for linear algebra and related algorithms. In particular, it provides fixed and dynamic size matrices and vectors, matrix decompositions (LU, LLT, LDLT, QR, eigenvalues, etc.), sparse matrices with iterative and direct solvers, some basic geometry features (transformations, quaternions, axis-angles, Euler angles, hyperplanes, lines, etc.), some non-linear solvers, automatic differentiation, etc. Thanks to expression templates, Eigen provides a very powerful and easy to use API. Explicit vectorization is performed for the SSE, AltiVec and ARM NEON instruction sets, with graceful fallback to non-vectorized code. Expression templates make it possible to perform global expression optimizations and to remove unnecessary temporary objects.

- Release Contributions: In 2017, we released three revisions of the 3.3 branch with a few fixes of compilation and performance regressions, some doxygen documentation improvements, and the addition of transpose, adjoint, conjugate methods to SelfAdjointView to ease writing generic code.

- URL: http://eigen.tuxfamily.org/

- Contact: Gaël Guennebaud

- Participant: Gaël Guennebaud
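To make the idea of expression templates more concrete, here is a toy, runtime analogue written in Python. It is only a sketch of the principle (operators build a lightweight expression tree that is evaluated in one pass on assignment); Eigen itself achieves this at compile time with C++ templates, and none of the class or method names below belong to Eigen's API.

```python
class Expr:
    """Base class: arithmetic operators build a tree instead of computing."""

    def __add__(self, other):
        return Sum(self, other)

    def __mul__(self, factor):
        return Scaled(self, factor)

    __rmul__ = __mul__  # so that 2 * v also works


class Vec(Expr):
    """A concrete vector that owns its coefficients."""

    def __init__(self, data):
        self.data = list(data)

    def __len__(self):
        return len(self.data)

    def coeff(self, i):
        return self.data[i]

    def assign(self, expr):
        # One fused loop over the whole expression: no temporary vector
        # is created for each intermediate operator.
        self.data = [expr.coeff(i) for i in range(len(expr))]
        return self


class Sum(Expr):
    """Lazy coefficient-wise sum of two expressions."""

    def __init__(self, lhs, rhs):
        if len(lhs) != len(rhs):
            raise ValueError("size mismatch")
        self.lhs, self.rhs = lhs, rhs

    def __len__(self):
        return len(self.lhs)

    def coeff(self, i):
        return self.lhs.coeff(i) + self.rhs.coeff(i)


class Scaled(Expr):
    """Lazy product of an expression by a scalar."""

    def __init__(self, operand, factor):
        self.operand, self.factor = operand, factor

    def __len__(self):
        return len(self.operand)

    def coeff(self, i):
        return self.factor * self.operand.coeff(i)
```

With this sketch, `a + 2 * b` allocates no vector at all; coefficients are only computed when the expression is assigned, e.g. `Vec([0, 0, 0]).assign(a + 2 * b)`.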

7.1.2 Spectral Viewer

- Keyword: Image

- Functional Description: An open-source (spectral) image viewer that supports several image formats: ENVI (spectral), exr, png, jpg.

- URL: https://adufay.gitlabpages.inria.fr/SpectralViewer/index.html

- Contact: Romain Pacanowski

- Partner: LP2N (CNRS - UMR 5298)

7.1.3 otmap

- Name: C++ optimal transport solver on 2D grids

- Keywords: Optimal transportation, Eigen, C++, Image processing, Numerical solver

- Functional Description: This is a lightweight implementation of "Instant Transport Maps on 2D Grids". It currently supports L2-optimal maps from an arbitrary density defined on a uniform 2D grid (i.e., an image) to a square with uniform density. Inverse maps and maps between pairs of arbitrary images are then recovered through numerical inversion and composition, resulting in density-preserving but only approximately optimal maps. The code also includes three mini applications:

  - otmap: computes the forward and backward maps between one image and a uniform square, or between a pair of images. The maps are exported as .off quad meshes.

  - stippling: adapts a uniformly distributed point cloud to a given image.

  - barycenters: computes linear (resp. bilinear) approximate Wasserstein barycenters between a pair of (resp. four) images.

- Contact: Gaël Guennebaud
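The notions of forward map, numerical inversion and stippling can be illustrated with a one-dimensional analogue, where the L2-optimal map from a density on [0, 1] to the uniform density is simply its cumulative distribution function. The sketch below is not otmap's 2D solver and does not use its API; the function names are hypothetical and the density is assumed piecewise constant on a uniform grid.

```python
from bisect import bisect_right


def forward_map(density):
    """Values of the map T at the n+1 cell boundaries x_k = k / n.

    In 1D, the optimal map to the uniform density is the normalized
    cumulative sum of the input density.
    """
    total = float(sum(density))
    if total <= 0.0 or any(d < 0 for d in density):
        raise ValueError("density must be non-negative with positive mass")
    cdf = [0.0]
    for d in density:
        cdf.append(cdf[-1] + d / total)
    cdf[-1] = 1.0  # guard against accumulated rounding
    return cdf


def inverse_map(cdf, u):
    """Numerically invert the piecewise-linear map: find x with T(x) = u."""
    n = len(cdf) - 1
    k = min(bisect_right(cdf, u) - 1, n - 1)  # cell containing u
    span = cdf[k + 1] - cdf[k]
    frac = (u - cdf[k]) / span if span > 0.0 else 1.0
    return (k + frac) / n


def stipple(density, count):
    """Move `count` evenly spread points so that they follow the density."""
    cdf = forward_map(density)
    return [inverse_map(cdf, (i + 0.5) / count) for i in range(count)]
```

For instance, `stipple([3, 1], 8)` places six of the eight points in the first half of the interval and two in the second half, matching the 3:1 mass ratio.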

7.1.4 Malia

- Name: The Malia Rendering Framework

- Keywords: 3D, Realistic rendering, GPU, Ray-tracing, Material appearance

- Functional Description: The Malia Rendering Framework is an open source library for predictive, physically-realistic rendering. It comes with several applications, including a spectral path tracer, RGB-to-spectral conversion routines, a Blender bridge and a spectral image viewer.

- URL: https://pacanows.gitlabpages.inria.fr/MRF/main.md.html

- Contact: Romain Pacanowski

8 New results

8.1 Analysis and Simulation

8.1.1 Bringing an Accurate Fresnel to Real-Time Rendering: a Preintegrable Decomposition

Participants: Laurent Belcour, Mégane Bati, Pascal Barla.

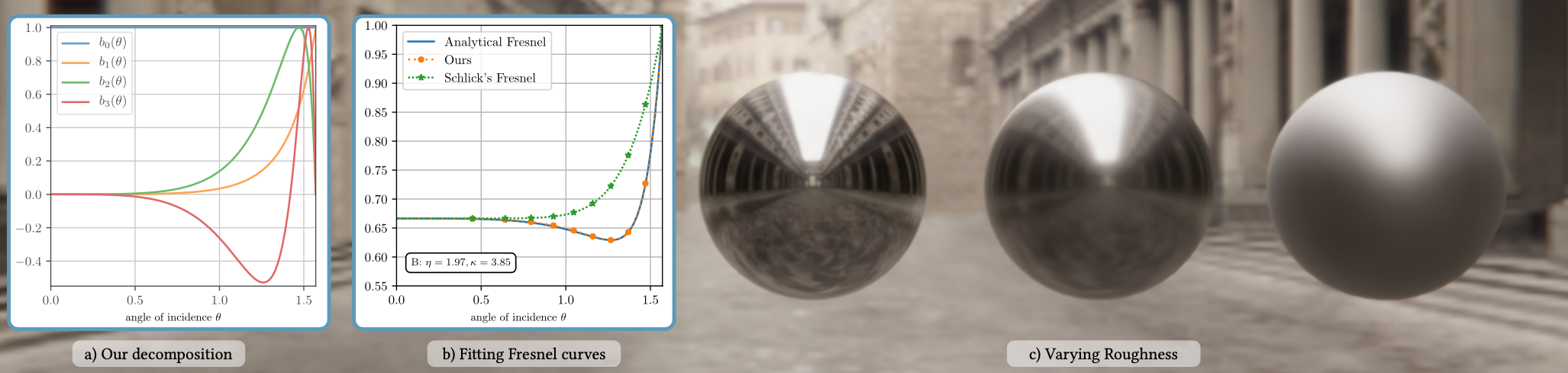

In this work 11, we introduce a new approximate Fresnel reflectance model that enables the accurate reproduction of ground-truth reflectance in real-time rendering engines. Our method is based on an empirical decomposition of the space of possible Fresnel curves (Figure 8). It is compatible with the preintegration of image-based lighting and area lights used in real-time engines. Our work makes it possible to use a reflectance parametrization 37 that was previously restricted to offline rendering.
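For readers unfamiliar with the problem, the sketch below contrasts the textbook unpolarized Fresnel reflectance of a smooth interface (with a possibly complex index of refraction) with Schlick's approximation, which is the usual real-time stand-in. It is not the decomposition introduced in this work; the function names and the sign convention for the complex index are assumptions made for illustration.

```python
import cmath


def fresnel_exact(cos_theta, n):
    """Unpolarized Fresnel reflectance for light arriving from vacuum.

    `n` is the relative index of refraction, real for dielectrics or
    complex (eta + i*kappa, an assumed convention) for conductors.
    """
    sin2 = 1.0 - cos_theta * cos_theta
    root = cmath.sqrt(n * n - sin2)
    r_s = (cos_theta - root) / (cos_theta + root)
    r_p = (n * n * cos_theta - root) / (n * n * cos_theta + root)
    return 0.5 * (abs(r_s) ** 2 + abs(r_p) ** 2)


def normal_incidence_reflectance(n):
    """Reflectance at normal incidence, the F0 parameter of Schlick's model."""
    return abs((n - 1.0) / (n + 1.0)) ** 2


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation: a fifth-power blend between F0 and 1."""
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5
```

Both functions agree at normal and grazing incidence by construction; evaluating them in between for a complex index shows the kind of discrepancy that more accurate real-time models aim to reduce.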

8.1.2 Effects of light map orientation and shape on the visual perception of canonical materials

Participants: Fan Zhang, Huib de Ridder, Pascal Barla, Sylvia Pont.

We previously presented a systematic optics-based canonical approach to test material-lighting interactions in their full natural ecology, combining canonical material and lighting modes. Analyzing the power of the spherical harmonics components of the lighting allowed us to predict the lighting effects on material perception for generic natural illumination environments. To further understand how material properties can be brought out or communicated visually, in the current study 9, we tested whether and how light map orientation and shape affect these interactions in a rating experiment: For combinations of four materials, three shapes, and three light maps, we rotated the light maps in 15 different configurations. For the velvety objects, there were main and interaction effects of lighting and light map orientation. The velvety ratings decreased when the main light source was coming from the back of the objects. For the specular objects, there were main and interaction effects of lighting and shape. The specular ratings increased when the environment in the specular reflections was clearly visible in the stimuli. For the glittery objects, there were main and interaction effects of shape and light map orientation. The glittery ratings correlated with the coverage of the glitter reflections as the shape and light map orientation varied. For the matte objects, results were robust across all conditions. Last, we propose combining the canonical modes approach with so-called importance maps to analyze the appearance features of the proximal stimulus, the image, in contradistinction to the physical parameters as an approach for optimization of material communication.

8.2 Rendering, Visualization and Illustration

8.2.1 On Learning the Best Balancing Strategy

Participants: David Murray, Sofiane Benzait, Romain Pacanowski, Xavier Granier.

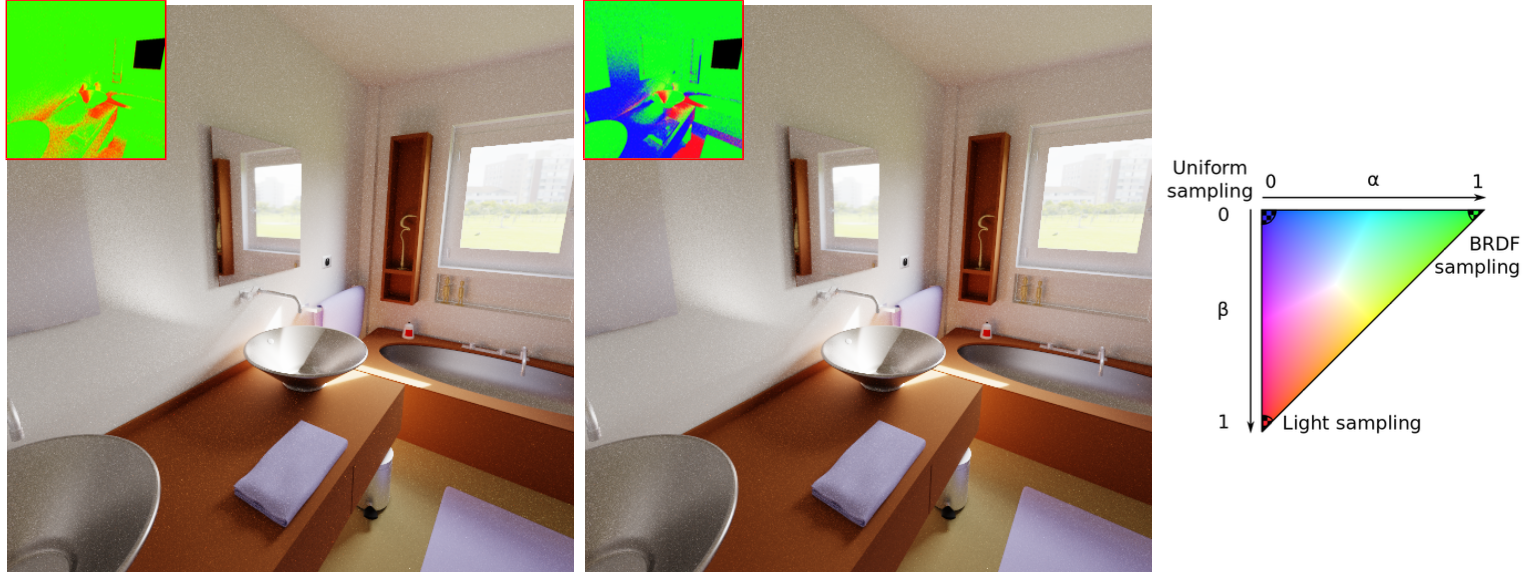

Fast computation of light propagation using Monte Carlo techniques requires finding the best samples from the space of light paths. For the last 30 years, numerous strategies have been developed to address this problem, but choosing the best one is really scene-dependent. Multiple Importance Sampling (MIS) emerges as a potential generic solution by combining different weighted strategies, to take advantage of the best ones. Most recent work has focused on defining the best weighting scheme. Among them, two papers have shown that it is possible, in the context of direct illumination, to estimate the best way to balance the number of samples between two strategies, on a per-pixel basis. In this paper 10, we extend this previous approach to Global Illumination and to three strategies (Figure 9).
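As background, the following sketch shows a standard multi-sample MIS estimator with the balance heuristic on a one-dimensional integral, using two sampling strategies and a fixed sample count per strategy. The per-pixel learning of these counts, which is the contribution of the paper, is not reproduced here; the function names and the two example densities are illustrative assumptions.

```python
import math
import random


def uniform_pdf(x):
    return 1.0


def linear_pdf(x):
    return 2.0 * x


def sample_uniform(rng):
    return 1.0 - rng.random()  # in (0, 1]


def sample_linear(rng):
    # Inverse-CDF sampling of p(x) = 2x; the argument stays in (0, 1],
    # so the density of the returned sample is never zero.
    return math.sqrt(1.0 - rng.random())


def balance_weights(x, pdfs, counts):
    """Balance heuristic: w_i(x) = n_i p_i(x) / sum_k n_k p_k(x)."""
    terms = [n * p(x) for p, n in zip(pdfs, counts)]
    total = sum(terms)
    return [t / total for t in terms]


def mis_estimate(f, samplers, pdfs, counts, rng):
    """Multi-sample MIS estimate of the integral of f over [0, 1]."""
    estimate = 0.0
    for i, (sampler, pdf, n) in enumerate(zip(samplers, pdfs, counts)):
        for _ in range(n):
            x = sampler(rng)
            w = balance_weights(x, pdfs, counts)[i]
            estimate += w * f(x) / (n * pdf(x))
    return estimate
```

The `counts` argument is the sample allocation between strategies. With the balance heuristic, an integrand proportional to the count-weighted mixture of the densities is integrated with zero variance, which hints at why the choice of allocation matters.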

8.3 Editing and Modeling

8.3.1 A Time-independent Deformer for Elastic-rigid Contacts

Participants: Camille Brunel, Pierre Bénard, Gaël Guennebaud, Pascal Barla.

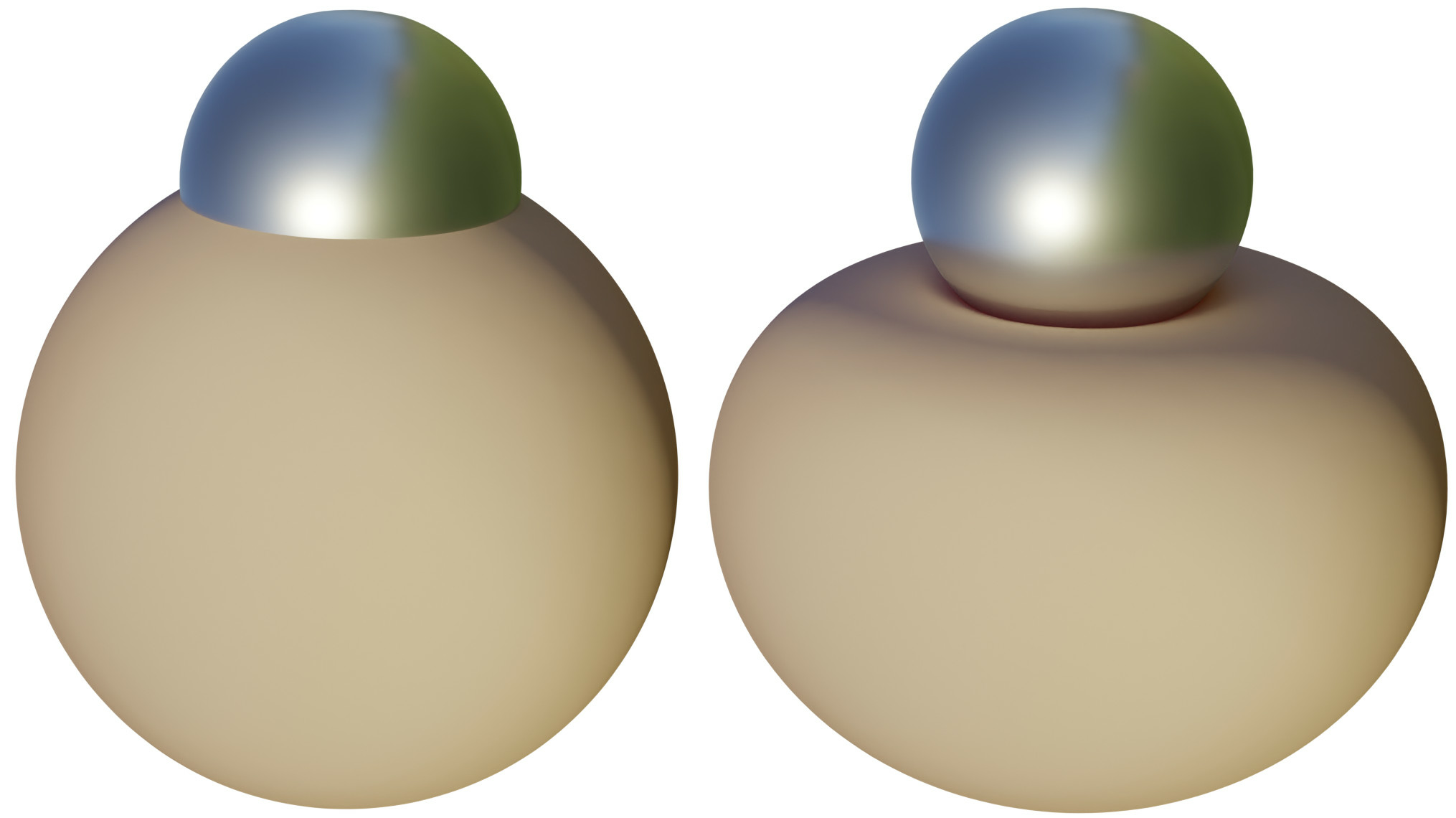

In this article 6, we introduce a new tool that assists artists in deforming an elastic object when it intersects a rigid one. As opposed to methods that rely on time-resolved simulations, our approach is entirely based on time-independent geometric operators. It thus restarts from scratch at every frame from a pair of intersecting objects and works in two stages: the intersected regions are first matched and a contact region is identified on the rigid object; the elastic object is then deformed to match the contact while producing plausible bulge effects with controllable volume preservation (Figure 10). Our direct deformation approach brings several advantages to 3D animators: it provides instant feedback, permits non-linear editing, allows for the replicability of the deformation in different settings, and grants control over exaggerated or stylized bulging effects.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

CIFRE PhD contract with Imagine Optic (2017-2020)

Participants: C. Herzog & X. Granier

For this project, we aim at developing three-dimensional X-ray imaging techniques for medical applications.

10 Partnerships and cooperations

10.1 National initiatives

10.1.1 ANR

“Young Researcher” VIDA (2017-2021)

- LP2N-CNRS-IOGS INRIA

Leader: R. Pacanowski (LP2N-CNRS-IOGS)

Participant: P. Barla

This project aims at establishing a framework for direct and inverse design of material appearance for objects of complex shape. Since the manufacturing processes are always evolving, our goal is to establish a framework that is not tied to a fabrication stage.

FOLD-Dyn (2017-2021)

IRIT, IMAGINE, MANAO, TeamTo, Mercenaries

Leader: L. Barthe (IRIT)

Local Leader: G. Guennebaud

The FOLD-Dyn project proposes the study of new theoretical approaches for the effective generation of virtual character deformations when they are animated. These deformations are twofold: character skin deformations (skinning) and garment simulations. We propose to explore the possibilities offered by a novel theoretical way of addressing character deformations: implicit skinning. This method jointly uses meshes and volumetric scalar functions. By improving the theoretical properties of scalar functions, studying their joint use with meshes, and introducing a new approach and its formalism - called multi-layer 3D scalar functions - we aim at finding effective solutions allowing production studios to easily integrate plausible character deformations together with garment simulations in their pipeline.

CaLiTrOp (2017-2021)

IRIT, LIRIS, MANAO, MAVERICK

Leader: M. Paulin (IRIT)

Participant: D. Murray

What is the inherent dimensionality, topology and geometry of light-paths space? How can we leverage this information to improve lighting simulation algorithms? These are the questions that this project wants to answer from a comprehensive functional analysis of light transport operators, with respect to the 3D scene's geometry and the reflectance properties of the objects, but also, to link operators with screen-space visual effects, with respect to the resulting picture.

11 Dissemination

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

Member of the conference program committees

Eurographics 2021, Eurographics Symposium on Geometry Processing 2020, Eurographics Symposium on Rendering 2020, Web3D Conference 2020, Eurographics Workshop on Graphics and Cultural Heritage 2020

Reviewer

ACM Siggraph, ACM Siggraph Asia

11.1.2 Journal

Reviewer - reviewing activities

ACM Transactions on Graphics, IEEE Transactions on Visualization and Computer Graphics, Journal of Vision

11.1.3 Scientific expertise

Patrick Reuter reviewed one project submission of the Horizon 2020 - Research and Innovation Action of the European Commission.

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

The members of our team are involved in teaching computer science at the University of Bordeaux and the Institut d'Optique Graduate School (IOGS). Teaching covers general computer science as well as the following graphics-related topics:

- Master : Pierre Bénard, Gaël Guennebaud, Advanced Image Synthesis, 50 HETD, M2, Univ. Bordeaux, France.

- Master : Gaël Guennebaud, Geometric Modeling, 31 HETD, M2, IOGS, France

- Master : Gaël Guennebaud and Pierre Bénard, 3D Worlds, 60 HETD, M1, Univ. Bordeaux and IOGS, France.

- Master : Pierre Bénard, Patrick Reuter, Virtual Reality, 20 HETD, M2, Univ. Bordeaux, France.

- Master : Patrick Reuter, Graphical user interfaces and Spatial augmented reality seminars, 10 HETD, M2, ESTIA, France.

- Master : Romain Pacanowski, 3D Programming, M2, 18 HETD, Bordeaux INP ENSEIRB Matmeca, France.

- Licence : Patrick Reuter, Digital Imaging, 30 HETD, L3, Univ. Bordeaux, France.

- Licence : Gaël Guennebaud, Introduction to climate change issues and the environmental impacts of ICT, 24 HETD, L3, Univ. Bordeaux, France.

One member is also in charge of a field of study:

- Master : Pierre Bénard, M2 “Informatique pour l'Image et le Son”, Univ. Bordeaux, France.

Pierre Bénard was also part of the education team of the DIU (Diplôme Inter-Universitaire) titled “Numérique et Sciences Informatiques”, which is open to secondary-school teachers who teach Computer Science in high school. The second session took place during the last three weeks of June.

11.2.2 Supervision

- PhD: Antoine Lucat, Appearance Acquisition and Rendering, IOGS & Univ. Bordeaux, R. Pacanowski & X. Granier, 06/02/2020

- PhD: Charlotte Herzog, 3 dimensions X-rays imaging for medical applications, Imagine Optic, IOGS & Univ. Bordeaux, X. Granier, 24/09/2020

- PhD in progress: Camille Brunel, Real-Time Animation and Deformation of 3D Characters, Inria & Univ. Bordeaux, P. Barla, G. Guennebaud & P. Bénard

- PhD in progress: Megane Bati, Inverse Design for Complex Material Appearance, IOGS & Univ. Bordeaux, R. Pacanowski & P. Barla

- PhD in progress: Charlie Schlick, Augmented reality with transparent multi-view screens, Inria & Univ. Bordeaux, P. Reuter

- PhD in progress: Corentin Cou, Characterization of visual appearance for 3D restitution and exploration of monumental heritage, Inria & IOGS & CNRS/INHA, G. Guennebaud, X. Granier, M. Volait, R. Pacanowski.

11.2.3 Juries

- Thibault Louis, Université Grenoble Alpes, October 22nd, 2020.

- Asmaâ Agouzoul, Univ. Bordeaux, January 9th, 2020.

11.3 Popularization

11.3.1 Internal or external Inria responsibilities

- Gaël Guennebaud and Pascal Barla are active members of the CLDD (Local Sustainable Development Committee), Gaël Guennebaud being one of the two co-representatives.

11.3.2 Interventions

- Les impacts environnementaux du numérique. Gaël Guennebaud. CATI BIOS4Biol INRAE (10/12/2020)

- Textile 3D exhibition at the MEB (Musée Ethnographique de Bordeaux) has been extended until May 2021.

12 Scientific production

12.1 Major publications

- 1 article. A Practical Extension to Microfacet Theory for the Modeling of Varying Iridescence. ACM Transactions on Graphics 36(4), July 2017, 65

- 2 article. Autostereoscopic transparent display using a wedge light guide and a holographic optical element: implementation and results. Applied optics 58(34), November 2019

- 3 article. A Two-Scale Microfacet Reflectance Model Combining Reflection and Diffraction. ACM Transactions on Graphics 36(4), Article 66, July 2017, 12

- 4 article. A View-Dependent Metric for Patch-Based LOD Generation & Selection. Proceedings of the ACM on Computer Graphics and Interactive Techniques 1(1), May 2018

- 5 article. Instant Transport Maps on 2D Grids. ACM Transactions on Graphics 37(6), November 2018, 13

12.2 Publications of the year

International journals

Conferences without proceedings

Other scientific publications

12.3 Cited publications

- 12 article. Reliefs as images. ACM Trans. Graph. 29(4), 2010. URL: http://doi.acm.org/10.1145/1778765.1778797

- 13 article. Surface Relief Analysis for Illustrative Shading. Computer Graphics Forum 31(4), June 2012, 1481-1490. URL: http://hal.inria.fr/hal-00709492

- 14 techreport. Distribution-based BRDFs. Unpublished, Univ. of Utah, 2007

- 15 article. Time-resolved 3D Capture of Non-stationary Gas Flows. ACM Trans. Graph. 27(5), 2008

- 16 book. Spatial Augmented Reality: Merging Real and Virtual Worlds. A K Peters/CRC Press, 2005

- 17 article. Optimizing Environment Maps for Material Depiction. Comput. Graph. Forum (Proceedings of the Eurographics Symposium on Rendering) 30(4), 2011. URL: http://www-sop.inria.fr/reves/Basilic/2011/BCRA11

- 18 article. Least Squares Subdivision Surfaces. Comput. Graph. Forum 29(7), 2010, 2021-2028. URL: http://hal.inria.fr/inria-00524555/en

- 19 article. Style Transfer Functions for Illustrative Volume Rendering. Comput. Graph. Forum 26(3), 2007, 715-724. URL: http://www.cg.tuwien.ac.at/research/publications/2007/bruckner-2007-STF/

- 20 inproceedings. Unstructured lumigraph rendering. Proc. ACM SIGGRAPH, 2001, 425-432. URL: http://doi.acm.org/10.1145/383259.383309

- 21 article. Application of radial basis functions to shape description in a dual-element off-axis eyewear display: Field-of-view limit. J. Society for Information Display 16(11), 2008, 1089-1098

- 22 article. A Survey on Participating Media Rendering Techniques. The Visual Computer, 2005

- 23 inproceedings. On-Line Visualization of Underground Structures using Context Features. Symposium on Virtual Reality Software and Technology (VRST), ACM, 2010, 167-170. URL: http://hal.inria.fr/inria-00524818/en

- 24 article. Non-oriented MLS Gradient Fields. Computer Graphics Forum, December 2013. URL: http://hal.inria.fr/hal-00857265

- 25 article. Light field transfer: global illumination between real and synthetic objects. ACM Trans. Graph. 27(3), 2008. URL: http://doi.acm.org/10.1145/1360612.1360656

- 26 article. A novel approach makes higher order wavelets really efficient for radiosity. Comput. Graph. Forum 19(3), 2000, 99-108

- 27 article. A quantized-diffusion model for rendering translucent materials. ACM Trans. Graph. 30(4), 2011. URL: http://doi.acm.org/10.1145/2010324.1964951

- 28 inproceedings. Reflectance and texture of real-world surfaces. IEEE Conference on Computer Vision and Pattern Recognition (ICCV), 1997, 151-157

- 29 article. Fabricating spatially-varying subsurface scattering. ACM Trans. Graph. 29(4), 2010. URL: http://doi.acm.org/10.1145/1778765.1778799

- 30 book. Advanced Global Illumination. A.K. Peters, 2006

- 31 article. Frequency analysis and sheared filtering for shadow light fields of complex occluders. ACM Trans. Graph. 30(2), 2011. URL: http://doi.acm.org/10.1145/1944846.1944849

- 32 article. Frequency Analysis and Sheared Reconstruction for Rendering Motion Blur. ACM Trans. Graph. 28(3), 2009. URL: http://hal.inria.fr/inria-00388461/en

- 33 article. Specular reflections and the perception of shape. J. Vis. 4(9), 2004, 798-820. URL: http://journalofvision.org/4/9/10/

- 34 inproceedings. BRDF Acquisition with Basis Illumination. IEEE International Conference on Computer Vision (ICCV), 2007, 1-8

- 35 article. Accurate Light Source Acquisition and Rendering. ACM Trans. Graph. 22(3), 2003, 621-630. URL: http://hal.inria.fr/hal-00308294

- 36 inproceedings. Modeling the interaction of light between diffuse surfaces. Proc. ACM SIGGRAPH, 1984, 213-222

- 37 article. Artist Friendly Metallic Fresnel. Journal of Computer Graphics Techniques (JCGT) 3(4), December 2014, 64-72. URL: http://jcgt.org/published/0003/04/03/

- 38 article. Physical reproduction of materials with specified subsurface scattering. ACM Trans. Graph. 29(4), 2010. URL: http://doi.acm.org/10.1145/1778765.1778798

- 39 phdthesis. Simulating Global Illumination Using Adaptative Meshing. University of California, 1991

- 40 article. Fluorescent immersion range scanning. ACM Trans. Graph. 27(3), 2008. URL: http://doi.acm.org/10.1145/1360612.1360686

- 41 article. Acquisition and analysis of bispectral bidirectional reflectance and reradiation distribution functions. ACM Trans. Graph. 29(4), 2010. URL: http://doi.acm.org/10.1145/1778765.1778834

- 42 article. Dynamic Display of BRDFs. Comput. Graph. Forum 30(2), 2011, 475-483. URL: http://dx.doi.org/10.1111/j.1467-8659.2011.01891.x

- 43 article. Transparent and Specular Object Reconstruction. Comput. Graph. Forum 29(8), 2010, 2400-2426

- 44 inproceedings. A Kaleidoscopic Approach to Surround Geometry and Reflectance Acquisition. IEEE Conf. Computer Vision and Pattern Recognition Workshops (CVPRW), IEEE Computer Society, 2012, 29-36

- 45 inproceedings. A theory of plenoptic multiplexing. IEEE Conf. Computer Vision and Pattern Recognition (CVPR), oral, IEEE Computer Society, 2010, 483-490

- 46 inproceedings. The rendering equation. Proc. ACM SIGGRAPH, 1986, 143-150. URL: http://doi.acm.org/10.1145/15922.15902

- 47 inproceedings. Fast, arbitrary BRDF shading for low-frequency lighting using spherical harmonics. Proc. Eurographics workshop on Rendering (EGWR), Pisa, Italy, 2002, 291-296

- 48 article. Edge clustered fitting grids for φ-polynomial characterization of freeform optical surfaces. Opt. Express 19(27), 2011, 26962-26974. URL: http://www.opticsexpress.org/abstract.cfm?URI=oe-19-27-26962

- 49 article. Demarcating curves for shape illustration. ACM Trans. Graph. (SIGGRAPH Asia) 27(5), 2008. URL: http://doi.acm.org/10.1145/1409060.1409110

- 50 article. Recording and controlling the 4D light field in a microscope using microlens arrays. J. Microscopy 235(2), 2009, 144-162. URL: http://dx.doi.org/10.1111/j.1365-2818.2009.03195.x

- 51 article. Predicted Virtual Soft Shadow Maps with High Quality Filtering. Comput. Graph. Forum 30(2), 2011, 493-502. URL: http://hal.inria.fr/inria-00566223/en

- 52 article. Programmable aperture photography: multiplexed light field acquisition. ACM Trans. Graph. 27(3), 2008. URL: http://doi.acm.org/10.1145/1360612.1360654

- 53 article. Online Tracking of Outdoor Lighting Variations for Augmented Reality with Moving Cameras. IEEE Transactions on Visualization and Computer Graphics 18(4), March 2012, 573-580. URL: http://hal.inria.fr/hal-00664943

- 54 article. 3D TV: a scalable system for real-time acquisition, transmission, and autostereoscopic display of dynamic scenes. ACM Trans. Graph. 23(3), 2004, 814-824. URL: http://doi.acm.org/10.1145/1015706.1015805

- 55 article. A data-driven reflectance model. ACM Trans. Graph. 22(3), 2003, 759-769. URL: http://doi.acm.org/10.1145/882262.882343

- 56 inproceedings. Acquisition, Synthesis and Rendering of Bidirectional Texture Functions. Eurographics 2004, State of the Art Reports, 2004, 69-94

- 57 inproceedings. Experimental Analysis of BRDF Models. Proc. Eurographics Symposium on Rendering (EGSR), 2005, 117-226

- 58 book. Geometrical Considerations and Nomenclature for Reflectance. National Bureau of Standards, 1977

- 59 article. Optical computing for fast light transport analysis. ACM Trans. Graph. 29(6), 2010

- 60 article. Volumetric Vector-based Representation for Indirect Illumination Caching. J. Computer Science and Technology (JCST) 25(5), 2010, 925-932. URL: http://hal.inria.fr/inria-00505132/en

- 61 article. Rational BRDF. IEEE Transactions on Visualization and Computer Graphics 18(11), February 2012, 1824-1835. URL: http://hal.inria.fr/hal-00678885

- 62 article. Compressive light transport sensing. ACM Trans. Graph. 28(1), 2009

- 63 article. Multicamera Real-Time 3D Modeling for Telepresence and Remote Collaboration. Int. J. digital multimedia broadcasting 2010, Article ID 247108, 12 pages, 2010. URL: http://hal.inria.fr/inria-00436467

- 64 article On the relationship between radiance and irradiance: determining the illumination from images of a convex Lambertian object J. Opt. Soc. Am. A 18 10 2001, 2448-2459

- 65 article A first-order analysis of lighting, shading, and shadows ACM Trans. Graph. 26 1 2007 URL: http://doi.acm.org/10.1145/1189762.1189764

- 66 book Computational Photography: Mastering New Techniques for Lenses, Lighting, and Sensors A K Peters/CRC Press 2012

- 67 book High Dynamic Range Imaging: Acquisition, Display and Image-Based Lighting 2nd edition Morgan Kaufmann Publishers 2010

- 68 article Pocket reflectometry ACM Trans. Graph. 30 4 2011

- 69 article ArcheoTUI - Driving virtual reassemblies with tangible 3D interaction J. Computing and Cultural Heritage 3 2 2010, 1-13 URL: http://hal.inria.fr/hal-00523688/en

- 70 article The zonal method for calculating light intensities in the presence of a participating medium ACM SIGGRAPH Comput. Graph. 21 4 1987, 293-302 URL: http://doi.acm.org/10.1145/37402.37436

- 71 inproceedings A New Change of Variables for Efficient BRDF Representation Proc. EGWR '98 1998, 11-22

- 72 inproceedings Spherical wavelets: efficiently representing functions on the sphere Proc. ACM SIGGRAPH, annual conference on Computer graphics and interactive techniques 1995, 161-172 URL: http://doi.acm.org/10.1145/218380.218439

- 73 book Radiosity and Global Illumination Morgan Kaufmann Publishers 1994

- 74 article Fourier Depth of Field ACM Transactions on Graphics 28 2 2009 URL: http://hal.inria.fr/inria-00345902

- 75 article Efficient Glossy Global Illumination with Interactive Viewing Computer Graphics Forum 19 1 2000, 13-25

- 76 article Barycentric Parameterizations for Isotropic BRDFs IEEE Trans. Vis. and Comp. Graph. 11 2 2005, 126-138 URL: http://dx.doi.org/10.1109/TVCG.2005.26

- 77 book Visual Perception from a Computer Graphics Perspective A K Peters/CRC Press 2011

- 78 article All-Frequency Precomputed Radiance Transfer Using Spherical Radial Basis Functions and Clustered Tensor Approximation ACM Trans. Graph. 25 3 2006, 967-976

- 79 inproceedings Dynamic Stylized Shading Primitives Proc. Int. Symposium on Non-Photorealistic Animation and Rendering (NPAR) ACM 2011, 99-104 URL: http://hal.inria.fr/hal-00617157/en

- 80 article The influence of shape on the perception of material reflectance ACM Trans. Graph. 26 3 2007 URL: http://doi.acm.org/10.1145/1276377.1276473

- 81 inproceedings Metropolis light transport Proc. SIGGRAPH '97, annual conference on Computer graphics and interactive techniques ACM/Addison-Wesley Publishing Co. 1997, 65-76 URL: http://doi.acm.org/10.1145/258734.258775

- 82 article Surface Flows for Image-based Shading Design ACM Transactions on Graphics 31 3 August 2012 URL: http://hal.inria.fr/hal-00702280

- 83 article Improving Shape Depiction under Arbitrary Rendering IEEE Trans. Visualization and Computer Graphics 17 8 2011, 1071-1081 URL: http://hal.inria.fr/inria-00585144/en

- 84 article Light warping for enhanced surface depiction ACM Trans. Graph. 28 3 (front cover) 2009, 25:1 URL: http://doi.acm.org/10.1145/1531326.1531331

- 85 article Implicit Brushes for Stylized Line-based Rendering Comput. Graph. Forum 30 2 (3rd best paper award) 2011, 513-522 URL: http://hal.inria.fr/inria-00569958/en

- 86 article Soft Textured Shadow Volume Comput. Graph. Forum 28 4 2009, 1111-1120 URL: http://hal.inria.fr/inria-00390534/en

- 87 article Kernel Nyström method for light transport ACM Trans. Graph. 28 3 2009

- 88 article Packet-based Hierarchical Soft Shadow Mapping Comput. Graph. Forum 28 4 2009, 1121-1130 URL: http://hal.inria.fr/inria-00390541/en