Keywords

Computer Science and Digital Science

- A5.1.3. Haptic interfaces

- A5.1.5. Body-based interfaces

- A5.1.9. User and perceptual studies

- A5.4.2. Activity recognition

- A5.4.5. Object tracking and motion analysis

- A5.4.8. Motion capture

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.10.3. Planning

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.11.1. Human activity analysis and recognition

- A6. Modeling, simulation and control

Other Research Topics and Application Domains

- B1.2.2. Cognitive science

- B2.5. Handicap and personal assistances

- B2.8. Sports, performance, motor skills

- B5.1. Factory of the future

- B5.8. Learning and training

- B7.1.1. Pedestrian traffic and crowds

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.4. Sports

1 Team members, visitors, external collaborators

Research Scientists

- Franck Multon [Team leader, Inria, Senior Researcher, HDR]

- Adnane Boukhayma [Inria, Researcher]

- Ludovic Hoyet [Inria, Researcher]

- Katja Zibrek [Inria, Starting Research Position, from Feb 2020]

Faculty Members

- Nicolas Bideau [Univ Rennes 2, Associate Professor]

- Benoit Bideau [Univ Rennes 2, Professor, HDR]

- Marc Christie [Univ Rennes 1, Associate Professor]

- Armel Crétual [Univ Rennes 2, Associate Professor, HDR]

- Georges Dumont [École normale supérieure de Rennes, Professor, HDR]

- Diane Haering [Univ Rennes 2, Associate Professor]

- Simon Kirchhofer [École normale supérieure de Rennes, ATER, from Sep 2020]

- Richard Kulpa [Univ Rennes 2, Associate Professor, HDR]

- Fabrice Lamarche [Univ Rennes 1, Associate Professor]

- Guillaume Nicolas [Univ Rennes 2, Associate Professor]

- Anne-Hélène Olivier [Univ Rennes 2, Associate Professor]

- Charles Pontonnier [École normale supérieure de Rennes, Associate Professor, HDR]

Post-Doctoral Fellows

- Pierre Raimbaud [Inria, from Dec 2020]

- Bhuvaneswari Sarupuri [Univ Rennes 2, from Oct 2020]

PhD Students

- Vicenzo Abichequer-Sangalli [Inria, from Nov 2020, co-supervised with Rainbow]

- Jean Basset [Inria, co-supervised with Morpheo]

- Florian Berton [Inria, co-supervised with Rainbow]

- Jean Baptiste Bordier [Univ Rennes 1, from Oct 2020]

- Hugo Brument [Univ Rennes 1, co-supervised with Rainbow and Hybrid teams]

- Ludovic Burg [Univ Rennes 1]

- Thomas Chatagnon [Inria, from Nov 2020, co-supervised with Rainbow]

- Adèle Colas [Inria, co-supervised with Rainbow]

- Erwan Delhaye [Univ Rennes 2]

- Louise Demestre [École normale supérieure de Rennes]

- Diane Dewez [Univ Rennes 1, co-supervised with Hybrid]

- Charles Faure [Univ Rennes 2, until Aug 2020]

- Rebecca Fribourg [Inria, co-supervised with Hybrid]

- Olfa Haj Mahmoud [Faurecia]

- Nils Hareng [Univ Rennes 2]

- Simon Hilt [École normale supérieure de Rennes, until Aug 2020]

- Alberto Jovane [Inria, co-supervised with Rainbow]

- Qian Li [Inria, from Oct 2020]

- Annabelle Limballe [Univ Rennes 2]

- Claire Livet [École normale supérieure de Rennes]

- Amaury Louarn [Univ Rennes 1, until Nov 2020]

- Pauline Morin [École normale supérieure de Rennes, from Sep 2020]

- Lucas Mourot [InterDigital, from Jun 2020]

- Benjamin Niay [Inria]

- Nicolas Olivier [InterDigital]

- Theo Perrin [École normale supérieure de Rennes, until Aug 2020]

- Pierre Puchaud [Fondation Saint-Cyr, until Nov 2020]

- Carole Puil [IFPEK Rennes]

- Pierre Touzard [Univ Rennes 2]

- Alexandre Vu [Univ Rennes 2]

- Xi Wang [Univ Rennes 1]

- Tairan Yin [Inria, from Nov 2020, co-supervised with Rainbow]

- Mohamed Younes [Inria, from Dec 2020]

Technical Staff

- Robin Adili [Inria, Engineer, from Sep 2020]

- Ronan Gaugne [Univ Rennes 1, Engineer]

- Shubhendu Jena [Inria, Engineer, from Dec 2020]

- Anthony Mirabile [SATT Ouest Valorisation, Engineer]

- Adrien Reuzeau [Inria, Engineer, from Oct 2020]

- Anthony Sorel [Univ Rennes 2, Engineer]

- Xiaofang Wang [Univ de Reims Champagne-Ardenne, Engineer, from May 2020]

Interns and Apprentices

- Robin Adili [Inria, from Feb 2020 until Jul 2020]

- Jean Peic Chou [Univ Rennes 1, from May 2020 until Aug 2020]

- Thomas Kergoat [Inria, until Apr 2020]

- Dan Mahoro [Inria, from Jun 2020]

- Florence Maqueda [Inria, from May 2020 until Jul 2020]

- Lendy Mulot [École normale supérieure de Rennes, from May 2020 until Jul 2020]

- Pierre Nicot-Berenger [Univ Rennes 2, until May 2020]

- Steven Picard [Inria, until Jul 2020]

Administrative Assistant

- Nathalie Denis [Inria]

Visiting Scientist

- Iana Podkosova [Université de Vienne - Autriche, from Sep 2020 until Nov 2020]

2 Overall objectives

2.1 Presentation

MimeTIC is a multidisciplinary team whose aim is to better understand and model human activity in order to simulate realistic autonomous virtual humans: realistic behaviors, realistic motions and realistic interactions with other characters and users. This requires modeling the complexity of the human body, as well as of the environment in which humans pick up information and on which they act. A specific focus is dedicated to human physical activity and sports, as they raise the highest constraints and the highest complexity when addressing these problems. Thus, MimeTIC is composed of experts in computer science whose research interests are computer animation, behavioral simulation, motion simulation, crowds and interaction between real and virtual humans. MimeTIC is also composed of experts in sports science, motion analysis, motion sensing, biomechanics and motion control. Hence, the scientific foundations of MimeTIC are motion sciences (biomechanics, motion control, perception-action coupling, motion analysis), computational geometry (modeling of the 3D environment, motion planning, path planning) and design of protocols in immersive environments (use of virtual reality facilities to analyze human activity).

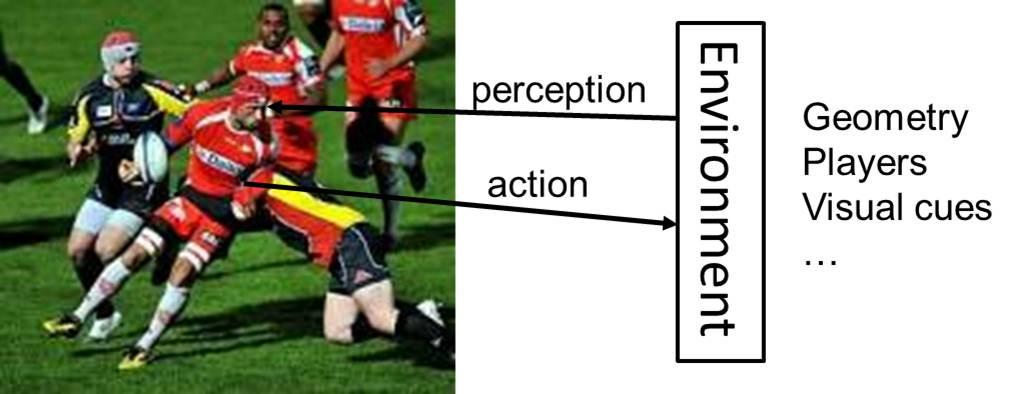

Thanks to these skills, we wish to reach the following objectives: to make virtual humans behave, move and interact in a natural manner in order to increase immersion and to improve knowledge on human motion control. In real situations (see Figure 1), people have to deal with their physiological, biomechanical and neurophysiological capabilities in order to reach a complex goal. Hence MimeTIC addresses the problem of modeling the anatomical, biomechanical and physiological properties of human beings. Moreover, virtual humans have to deal with their environment. Firstly, they have to perceive this environment and pick up relevant information. MimeTIC thus addresses the problem of modeling the environment, including its geometry and associated semantic information. Secondly, they have to act on this environment to reach their goals. This involves cognitive processes, motion planning, joint coordination and force production.

In order to reach the above objectives, MimeTIC has to address three main challenges:

- dealing with the intrinsic complexity of human beings, especially when addressing the problem of interactions between people for which it is impossible to predict and model all the possible states of the system,

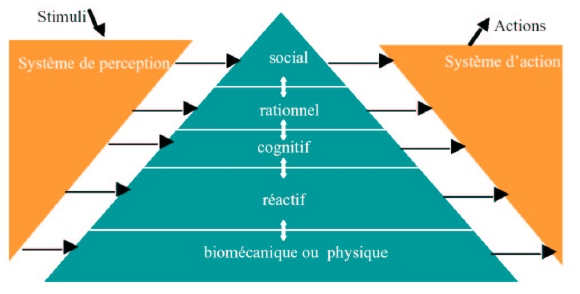

- making the different components of human activity control (such as the biomechanical and physical, the reactive, cognitive, rational and social layers) interact while each of them is modeled with completely different states and time sampling,

- and measuring human activity while balancing ecological and controllable protocols, and extracting relevant information from large databases.

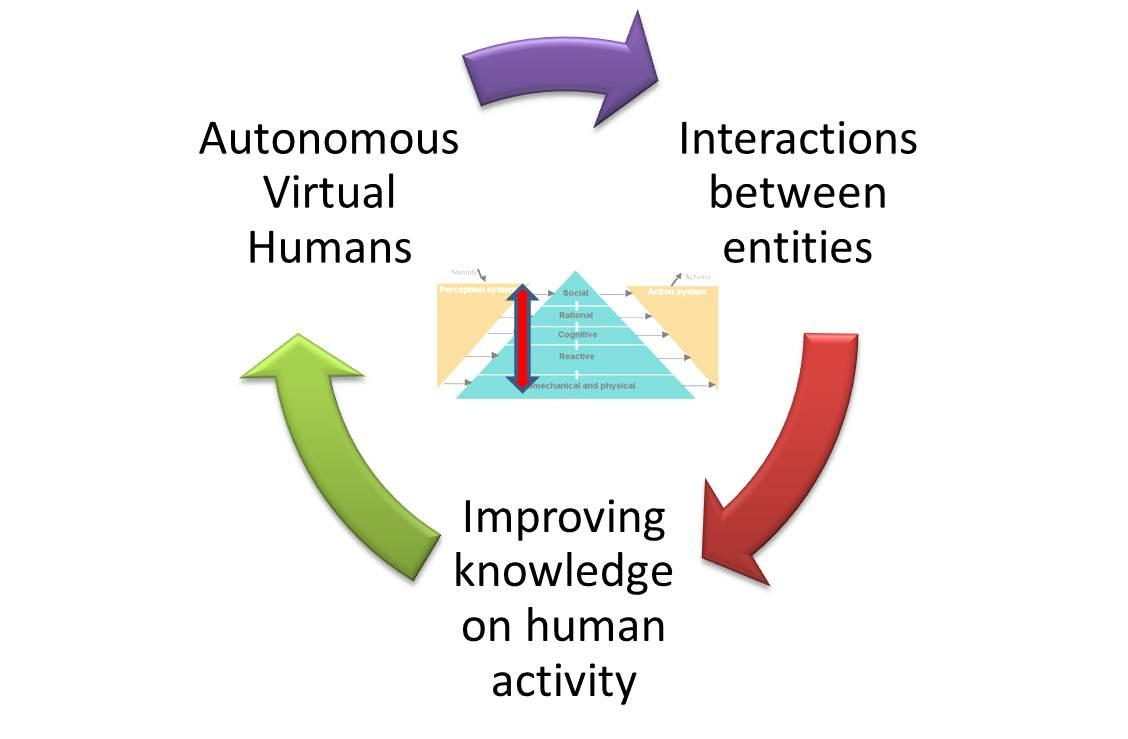

Contrary to many classical approaches in computer simulation, which mostly propose simulation without trying to understand how real people act, the team promotes a coupling between human activity analysis and synthesis, as shown in Figure 2.

In this research path, improving knowledge on human activity enables us to highlight fundamental assumptions about the natural control of human activities. These contributions can be promoted in, e.g., biomechanics, motion sciences and neurosciences. According to these assumptions, we propose new algorithms for controlling autonomous virtual humans. The virtual humans can perceive their environment and decide on the most natural action to reach a given goal. This work is promoted in computer animation and virtual reality, and has some applications in robotics through collaborations. Once autonomous virtual humans have the ability to act as real humans would in the same situation, it is possible to make them interact with others, i.e., autonomous characters (for crowds or group simulations) as well as real users. The key idea here is to analyze to what extent the assumptions proposed at the first stage lead to natural interactions with real users. This process enables the validation of both our assumptions and our models.

Among all the problems and challenges described above, MimeTIC focuses on the following domains of research:

- motion sensing, which is a key issue to extract information from raw motion capture systems and thus to propose assumptions on how people control their activity,

- human activity & virtual reality, which is explored through sports applications in MimeTIC. This domain enables the design of new methods for analyzing the perception-action coupling in human activity, and for validating whether the autonomous characters lead to natural interactions with users,

- interactions in small and large groups of individuals, to understand and model interactions with a lot of individual variability, such as in crowds,

- virtual storytelling, which enables us to design and simulate complex scenarios involving several humans who have to satisfy numerous complex constraints (such as adapting to the real-time environment in order to play an imposed scenario), and to design the coupling with the camera scenario to provide the user with a real cinematographic experience,

- biomechanics, which is essential to offer autonomous virtual humans who can react to physical constraints in order to reach high-level goals, such as maintaining balance in dynamic situations or selecting a natural motor behavior among the whole theoretical solution space for a given task,

- and autonomous characters, which is a transversal domain that can reuse the results of all the other domains to make these heterogeneous assumptions and models provide the character with natural behaviors and autonomy.

3 Research program

3.1 Biomechanics and Motion Control

Human motion control is a highly complex phenomenon that involves several layered systems, as shown in Figure 3. Each layer of this controller is responsible for dealing with perceptual stimuli in order to decide the actions that should be applied to the human body and its environment. Due to the intrinsic complexity of the information (internal representation of the body and mental state, external representation of the environment) used to perform this task, it is almost impossible to model all the possible states of the system. Even for simple problems, there generally exists an infinity of solutions. For example, from the biomechanical point of view, there are many more actuators (i.e., muscles) than degrees of freedom, leading to an infinity of muscle activation patterns for a single joint rotation. From the reactive point of view, there exists an infinity of paths to avoid a given obstacle in navigation tasks. At each layer, the key problem is to understand how people select one solution among these infinite state spaces. Several scientific domains have addressed this problem with specific points of view, such as physiology, biomechanics, neurosciences and psychology.

In biomechanics and physiology, researchers have proposed hypotheses based on accurate joint modeling (to identify the real anatomical rotational axes), energy minimization, force and torque minimization, comfort maximization (i.e., avoiding joint limits), and physiological limitations in muscle force production. All these constraints have been used in optimal controllers to simulate natural motions. The main problem is thus to define how these constraints should be combined, for example by searching for the weights used to linearly combine these criteria in order to generate a natural motion. Musculoskeletal models are typical examples for which there exists an infinity of muscle activation patterns, especially when dealing with antagonist muscles. An unresolved problem is to define how to use the above criteria to retrieve the actual activation patterns, as optimization approaches still lead to unrealistic ones. Solving it will require multidisciplinary skills including computer simulation, constraint solving, biomechanics, optimal control, physiology and neuroscience.
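To illustrate the muscle redundancy discussed in this paragraph, the following minimal sketch distributes a required joint torque over several muscles by minimizing the sum of squared normalized forces, one criterion commonly used in the literature. It is not presented as the team's method: the function name, moment arms and maximal forces are hypothetical, and upper bounds on muscle force are ignored. Under these assumptions, the closed-form solution leaves antagonist muscles silent (no co-contraction), which is one example of how a single criterion can yield unrealistic activation patterns.

```python
def min_activation_forces(moment_arms, max_forces, torque):
    """Muscle forces minimizing sum((f_i / F_i)**2) subject to
    sum(r_i * f_i) == torque and f_i >= 0.

    Assumptions (illustrative only): a single joint, constant moment
    arms r_i (m), maximal isometric forces F_i (N), and no upper bound
    on the forces.
    """
    # Only muscles whose moment arm acts in the direction of the
    # required torque can contribute; the others stay at zero force.
    active = [r * torque > 0 for r in moment_arms]
    denom = sum(r * r * F * F
                for r, F, a in zip(moment_arms, max_forces, active) if a)
    if denom == 0:
        if torque == 0:
            return [0.0] * len(moment_arms)
        raise ValueError("no muscle can produce a torque in this direction")
    # Closed-form solution obtained from the Lagrangian of the problem.
    return [torque * r * F * F / denom if a else 0.0
            for r, F, a in zip(moment_arms, max_forces, active)]


# Hypothetical joint: two agonists (positive arms), one antagonist.
arms = [0.04, 0.03, -0.05]          # moment arms in meters
strengths = [1000.0, 500.0, 800.0]  # maximal forces in newtons
forces = min_activation_forces(arms, strengths, torque=20.0)
```

Changing the criterion (for example, a different exponent or a weighted sum of several criteria) changes the predicted force sharing, which is precisely the weighting problem raised above.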

In neuroscience, researchers have proposed other theories, such as coordination patterns between joints driven by simplifications of the variables used to control the motion. The key idea is to assume that instead of controlling all the degrees of freedom, people control higher-level variables which correspond to combinations of joint angles. In walking, data reduction techniques such as Principal Component Analysis have shown that lower-limb joint angles generally lie close to a single plane whose orientation in the state space is associated with energy expenditure. Although knowledge exists for specific motions, such as locomotion or grasping, this type of approach is still difficult to generalize. The key problem is that many variables are coupled, and it is very difficult to objectively study the behavior of a single variable in various motor tasks. Computer simulation is a promising method to evaluate this type of assumption, as it makes it possible to accurately control all the variables and to check whether they lead to natural movements.
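The planarity mentioned in this paragraph can be quantified with a small Principal Component Analysis. The sketch below is only an illustration under stated assumptions: it takes three angle time series, computes the eigenvalues of their covariance matrix with the standard closed form for symmetric 3x3 matrices, and returns the fraction of variance explained by the first two components. The function names and the synthetic data are hypothetical.

```python
import math


def covariance_3x3(samples):
    """Sample covariance matrix of a list of (a1, a2, a3) angle triplets."""
    n = len(samples)
    means = [sum(s[k] for s in samples) / n for k in range(3)]
    return [[sum((s[i] - means[i]) * (s[j] - means[j]) for s in samples) / (n - 1)
             for j in range(3)] for i in range(3)]


def symmetric_eigenvalues_3x3(a):
    """Eigenvalues of a symmetric 3x3 matrix, largest first (trigonometric form)."""
    off = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if off == 0.0:  # already diagonal
        return sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * off
    p = math.sqrt(p2 / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)]
         for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # guard against rounding errors
    phi = math.acos(r) / 3.0
    e1 = q + 2.0 * p * math.cos(phi)
    e3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    e2 = 3.0 * q - e1 - e3
    return [e1, e2, e3]


def planarity_index(samples):
    """Fraction of total variance carried by the first two principal components."""
    e = symmetric_eigenvalues_3x3(covariance_3x3(samples))
    return (e[0] + e[1]) / sum(e)


# Synthetic example: the third angle is a linear combination of the
# first two, so the samples lie exactly on a plane.
planar = [(math.sin(0.1 * t), math.cos(0.1 * t),
           0.5 * math.sin(0.1 * t) - 0.3 * math.cos(0.1 * t))
          for t in range(200)]
index = planarity_index(planar)
```

An index close to 1 indicates that the three angles covary on a plane; the eigenvector associated with the smallest eigenvalue would give the orientation of that plane.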

Neuroscience also addresses the problem of coupling perception and action by providing control laws based on visual cues (or any other senses), such as determining how the optical flow is used to control direction in navigation tasks, while dealing with collision avoidance or interception. Coupling of the control variables is enhanced in this case, as the state of the body is enriched by the large amount of external information that the subject can use. Virtual environments inhabited by autonomous characters whose behavior is driven by motion control assumptions are a promising approach to solve this problem. For example, an interesting problem in this field is navigation in an environment inhabited by other people. Typically, avoiding static obstacles together with other people moving in the environment is a combinatorial problem that strongly relies on the coupling between perception and action.

One of the main objectives of MimeTIC is to enhance knowledge on human motion control by developing innovative experiments based on computer simulation and immersive environments. To this end, designing experimental protocols is a key point, and some of the researchers in MimeTIC have developed this skill in biomechanics and perception-action coupling. Associating these researchers with experts in virtual human simulation, computational geometry and constraint solving enables us to contribute to fundamental knowledge in human motion control.

3.2 Experiments in Virtual Reality

Understanding interactions between humans is challenging because it addresses many complex phenomena including perception, decision-making, cognition and social behaviors. Moreover, all these phenomena are difficult to isolate in real situations, and it is therefore highly complex to understand their individual influence on these human interactions. It is then necessary to find an alternative solution that can standardize the experiments and that allows the modification of only one parameter at a time. Video was first used, since the displayed experiment is perfectly repeatable and cut-offs (stopping the video at a specific time before its end) provide temporal information. Nevertheless, without an adapted viewpoint and stereoscopic vision, video does not provide depth information, which is very meaningful. Moreover, during a video recording session, the real human acts in front of a camera and not in front of an opponent. The interaction is then not a real interaction between humans.

Virtual Reality (VR) systems allow full standardization of the experimental situations and the complete control of the virtual environment. It is then possible to modify only one parameter at a time and to observe its influence on the perception of the immersed subject. VR can then be used to understand what information is picked up to make a decision. Moreover, cut-offs can also be used to obtain temporal information about when information is picked up. When the subject can moreover react as in a real situation, his movement (captured in real time) provides information about his reactions to the modified parameter. Not only is the perception studied, but the complete perception-action loop. Perception and action are indeed coupled and influence each other as suggested by Gibson in 1979.

Finally, VR allows the validation of virtual human models. Some models are indeed based on the interaction between the virtual character and other humans, such as a walking model. In that case, there are two ways to validate them. First, they can be compared to real data (e.g., real trajectories of pedestrians). But such data are not always available and are difficult to obtain. The alternative solution is then to use VR. The realism of the model is then validated by immersing a real subject in a virtual environment in which a virtual character is controlled by the model. The model is then evaluated from how the immersed subject reacts when interacting with the virtual character and how realistic the subject feels the virtual character is.

3.3 Computer Animation

Computer animation is the branch of computer science devoted to models for the representation and simulation of the dynamic evolution of virtual environments. A first focus is the animation of virtual characters (behavior and motion). Through a deeper understanding of interactions using VR and through better perceptual, biomechanical and motion control models to simulate the evolution of dynamic systems, the MimeTIC team has the ability to build more realistic, efficient and believable animations. Perceptual studies also enable us to focus computation time on relevant information (i.e., information that ensures natural motion from the perceptual point of view) and save time on unperceived details. The underlying challenges are (i) the computational efficiency of the system, which needs to run in real time in many situations, (ii) the capacity of the system to generalise/adapt to new situations for which data was not available or for which models were not defined, and (iii) the variability of the models, i.e., their ability to handle many body morphologies and generate variations in motions that would be specific to each virtual character.

In many cases, however, these challenges cannot be addressed in isolation. Typically, character behaviors also depend on the nature and the topology of the environment that surrounds them. In essence, a character animation system should also rely on smarter representations of the environment, in order to better perceive it and take contextualised decisions. Hence the animation of virtual characters in our context often needs to be coupled with models to represent the environment, reason, and plan both at a geometric level (can the character reach this location) and at a semantic level (should it use the sidewalk, the stairs, or the road). This represents the second focus. Underlying challenges are the ability to offer a compact, yet precise representation on which efficient path and motion planning can be performed, and on which high-level reasoning can be achieved.

Finally, a third scientific focus tied to the computer animation axis is digital storytelling. Evolved representations of motions and environments enable realistic animations. It is yet equally important to question how these events should be portrayed, when and from which angle. In essence, this means integrating discourse models into story models, the story representing the sequence of events which occur in a virtual environment, and the discourse representing how this story should be displayed (i.e., which events to show, in which order and with which viewpoint). Underlying challenges pertain to (i) narrative discourse representations, (ii) projections of the discourse into the geometry, planning camera trajectories and planning cuts between the viewpoints, and (iii) means to interactively control the unfolding of the discourse.

By establishing the foundations to build bridges between high-level narrative structures, the semantic/geometric planning of motions and events, and low-level character animations, the MimeTIC team adopts a principled and all-inclusive approach to the animation of virtual characters.

4 Application domains

4.1 Animation, Autonomous Characters and Digital Storytelling

Computer Animation is one of the main application domains of the research work conducted in the MimeTIC team, in particular in relation to the entertainment and game industries. In these domains, creating virtual characters that are able to replicate real human motions and behaviours still raises unanswered key challenges, especially as virtual characters are more and more required to populate virtual worlds. For instance, virtual characters are used to replace secondary actors and generate highly populated scenes that would be hard and costly to produce with real actors, which requires creating high-quality replicas that appear, move and behave both individually and collectively like real humans. The three key challenges for the MimeTIC team are therefore (i) to create natural animations (i.e., virtual characters that move like real humans), (ii) to create autonomous characters (i.e., that behave like real humans) and (iii) to orchestrate the virtual characters so as to create interactive stories.

First, our challenge is to create animations of virtual characters that are natural, in the broadest sense of moving like a real human would. This challenge covers several aspects of Character Animation depending on the context of application, e.g., producing visually plausible or physically correct motions, producing natural motion sequences, etc. Our goal is therefore to develop novel methods for animating virtual characters, e.g., based on motion capture, data-driven approaches, or learning approaches. However, because of the complexity of human motion (e.g., the number of degrees of freedom that can be controlled), resulting animations are not necessarily physically, biomechanically, or visually plausible. For instance, current physics-based approaches produce physically correct motions but not necessarily perceptually plausible ones. These are the reasons why most entertainment industries still mainly rely on manual animation, e.g., in games and movies. Therefore, research in MimeTIC on character animation is also conducted with the goal of validating objective (e.g., physical, biomechanical) as well as subjective (e.g., visual plausibility) criteria.

Second, one of the main challenges in terms of autonomous characters is to provide a unified architecture for the modeling of their behavior. This architecture includes perception, action and decisional parts. The decisional part needs to mix different kinds of models, acting at different time scales and working with different kinds of data, ranging from numerical (motion control, reactive behaviors) to symbolic (goal-oriented behaviors, reasoning about actions and changes). For instance, autonomous characters play the role of actors that are driven by a scenario in video games and virtual storytelling. Their autonomy allows them to react to unpredictable user interactions and adapt their behavior accordingly. In the field of simulation, autonomous characters are used to simulate the behavior of humans in different kinds of situations. They make it possible to study new situations and their possible outcomes. In the MimeTIC team, our focus is therefore not to reproduce human intelligence but to propose an architecture making it possible to model credible behaviors of anthropomorphic virtual actors evolving/moving in real time in virtual worlds. The latter can represent particular situations studied by behavioral psychologists, or correspond to an imaginary universe described by a scenario writer. The proposed architecture should mimic all the human intellectual and physical functions.

Finally, interactive digital storytelling, including novel forms of edutainment and serious games, provides access to social and human themes through stories which can take various forms, and contains opportunities for massively enhancing the possibilities of interactive entertainment, computer games and digital applications. It provides chances for redefining the experience of narrative through interactive simulations of computer-generated story worlds and opens many challenging questions at the overlap between computational narratives, autonomous behaviours, interactive control, content generation and authoring tools. Of particular interest for the MimeTIC research team, virtual storytelling triggers challenging opportunities in providing effective models for enforcing autonomous behaviours for characters in complex 3D environments. Offering characters both low-level capacities, such as perceiving the environment, interacting with it and reacting to changes in the topology, and the higher-level capacities built on them, such as modelling abstract representations for efficient reasoning, planning paths and activities, and modelling cognitive states and behaviours, requires the provision of expressive, multi-level and efficient computational models. Furthermore, virtual storytelling requires the seamless control of the balance between the autonomy of characters and the unfolding of the story through the narrative discourse. Virtual storytelling also raises challenging questions on the conveyance of a narrative through interactive or automated control of the cinematography (how to stage the characters, the lights and the cameras). For example, estimating the visibility of key subjects, or performing motion planning for cameras and lights, are central issues which have not received satisfactory answers in the literature.

4.2 Fidelity of Virtual Reality

VR is a powerful tool for perception-action experiments. VR-based experimental platforms allow exposing a population to fully controlled stimuli that can be repeated from trial to trial with high accuracy. Factors can be isolated and object manipulations (position, size, orientation, appearance, etc.) are easy to perform. Stimuli can be interactive and adapted to participants’ responses. Such features allow researchers to use VR to perform experiments in sports, motion control, perceptual control laws, spatial cognition as well as person-person interactions. However, the interaction loop between users and their environment differs in virtual conditions in comparison with real conditions. When a user interacts with an environment, action and perception are closely related. While moving, the perceptual system (vision, proprioception, etc.) provides feedback about the user’s own motion and information about the surrounding environment. This allows the user to adapt his/her trajectory to sudden changes in the environment and generate a safe and efficient motion. In virtual conditions, the interaction loop is more complex because it involves several material aspects.

First, the virtual environment is perceived through a digital display, which could affect the available information and thus potentially introduce a bias. For example, studies observed a distance compression effect in VR, partially explained by the use of head-mounted displays, which have a reduced field of view and exert weight and torques on the user’s head. Similarly, the perceived velocity in a VR environment differs from the real-world velocity, introducing an additional bias. Other factors, such as image contrast, delays in the displayed motion and the point of view, can also influence efficiency in VR. The second point concerns the user’s motion in the virtual world. The user can actually move if the virtual room is big enough or if wearing a head-mounted display. Even with real motion, authors showed that walking speed is decreased, personal space size is modified and navigation in VR is performed with increased gait instability. Although natural locomotion is certainly the most ecological approach, the limited physical size of VR setups prevents its use most of the time. Locomotion interfaces are therefore required. They are made up of two components, a locomotion metaphor (device) and a transfer function (software), that can also introduce bias in the generated motion. Indeed, the actuating movement of the locomotion metaphor can significantly differ from real walking, and the simulated motion depends on the transfer function applied. Locomotion interfaces usually cannot preserve all the sensory channels involved in locomotion.
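As a purely illustrative example of the software side of a locomotion interface, the sketch below maps a normalized device input to a virtual walking speed using a dead zone, a linear gain and a saturation. The function name, the parameter names and their default values are assumptions made for this example and do not describe any specific interface used by the team.

```python
def transfer_function(device_input, gain=1.4, dead_zone=0.1, max_speed=3.0):
    """Map a normalized device input in [-1, 1] to a virtual speed in m/s.

    Hypothetical parameters:
      gain      -- speed (m/s) reached at full device deflection
      dead_zone -- input magnitude below which the user stays still
      max_speed -- hard limit on the virtual speed
    """
    magnitude = min(abs(device_input), 1.0)
    if magnitude <= dead_zone:
        return 0.0
    # Rescale so that the speed starts from zero at the edge of the dead zone.
    scaled = (magnitude - dead_zone) / (1.0 - dead_zone)
    speed = min(gain * scaled, max_speed)
    return speed if device_input > 0 else -speed


def integrate_position(inputs, dt, **params):
    """Virtual displacement (m) produced by a sequence of device inputs."""
    return sum(transfer_function(u, **params) * dt for u in inputs)
```

Each of these parameters shapes the simulated motion: for the same physical action, a different gain or dead zone produces a different virtual trajectory, which is one way such an interface can bias the behavior being measured.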

When studying human behavior in VR, the aforementioned factors in the interaction loop potentially introduce bias both in the perception and in the generation of motor behavior trajectories. MimeTIC is working on the mandatory step of VR validation to make it usable for capturing and analyzing human motion.

4.3 Motion Sensing of Human Activity

Recording human activity is a key component of many applications and fundamental studies. Numerous sensors and systems have been proposed to measure positions, angles or accelerations of the user's body parts. Whatever the system is, one of the main problems is to automatically recognize and analyze the user's performance from poor and noisy signals. Human activity and motion are subject to variability: intra-individual variability due to space and time variations of a given motion, but also inter-individual variability due to different styles and anthropometric dimensions. MimeTIC has addressed the above problems in two main directions.

Firstly, we have studied how to recognize and quantify motions performed by a user when using accurate systems such as Vicon (product of Oxford Metrics), Qualisys, or Optitrack (product of Natural Point) motion capture systems. These systems provide large vectors of accurate information. Due to the size of the state vector (all the degrees of freedom), the challenge is to find the compact information (named features) that enables the automatic system to recognize the performance of the user. Whatever the method used, finding relevant features that are not sensitive to intra-individual and inter-individual variability is a challenge. Some researchers have proposed to manually edit these features (such as a Boolean value stating if the arm is moving forward or backward) so that the expertise of the designer is directly linked with the success ratio. Many generic features have been proposed, such as using Laban notation, which was introduced to encode dancing motions. Other approaches tend to use machine learning to automatically extract these features. However, most of the proposed approaches were used to search a database for motions whose properties correspond to the features of the user's performance (named motion retrieval approaches). This does not ensure the retrieval of the exact performance of the user, but rather of a set of motions with similar properties.
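The manually designed Boolean feature mentioned in this paragraph can be sketched as follows. This is a minimal illustration, assuming that the forward coordinate of the wrist (expressed in a body-fixed frame) is already available for each frame; the function name, the speed threshold and the sample values are hypothetical.

```python
def arm_moving_forward(wrist_forward_positions, frame_rate_hz, min_speed=0.05):
    """One Boolean per frame interval: True when the wrist moves forward
    faster than min_speed (m/s), False when it is still or moving backward.

    wrist_forward_positions -- forward coordinate of the wrist (m), one per frame
    frame_rate_hz           -- capture frequency of the motion capture system
    """
    dt = 1.0 / frame_rate_hz
    # Finite-difference velocity between consecutive frames.
    return [(b - a) / dt > min_speed
            for a, b in zip(wrist_forward_positions, wrist_forward_positions[1:])]


# Hypothetical samples captured at 100 Hz.
feature = arm_moving_forward([0.00, 0.01, 0.02, 0.02, 0.01], frame_rate_hz=100)
```

Such a feature compresses many degrees of freedom into a single value per frame, but its usefulness depends entirely on the designer's choice of coordinate and threshold, as noted above.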

Secondly, we wish to find alternatives to the above approach, which is based on analyzing accurate and complete knowledge of joint angles and positions. New sensors, such as depth cameras (Kinect, product of Microsoft), provide us with very noisy joint information but also with the surface of the user. Classical approaches would try to fit a skeleton into the surface in order to compute joint angles, which, again, leads to large state vectors. An alternative would be to extract relevant information directly from the raw data, such as the surface provided by depth cameras. The key problem is that the nature of these data may be very different from classical representations of human performance. In MimeTIC, we try to address this problem in specific application domains that require picking specific information, such as gait asymmetry or regularity for clinical analysis of human walking.

4.4 Sports

Sport is characterized by complex displacements and motions. One main objective is to understand the determinants of performance through the analysis of the motion itself. In the team, different sports have been studied, such as the tennis serve, where the goal was to understand the contribution of each segment of the body to the performance but also the risk of injuries, as well as other situations in cycling, swimming, fencing or soccer. Sports motions are dependent on visual information that the athlete can pick up in his environment, including the opponent's actions. Perception is thus fundamental to the performance. Indeed, a sporting action, being unique, complex and often limited in time, requires a selective gathering of information. This perception is often seen as a prerequisite for action; it then takes the role of a passive collector of information. However, as mentioned by Gibson in 1979, the perception-action relationship should not be considered sequentially but rather as a coupling: we perceive to act but we must act to perceive. There would thus be laws of coupling between the informational variables available in the environment and the motor responses of a subject. In other words, athletes have the ability to perceive the opportunities of action directly from the environment. Whichever school of thought is considered, VR offers new perspectives to address these concepts by additionally using real-time motion capture of the immersed athlete.

In addition to helping better understand sports and interactions between athletes, VR can also be used as a training environment, as it can provide complementary tools to coaches. It is indeed possible to add visual or auditory information to better train an athlete. For example, the knowledge gained from perceptual experiments can be used to highlight the body parts that are important to look at to correctly anticipate the opponent's action.

4.5 Ergonomics

The design of workstations nowadays tends to include assessment steps in a Virtual Environment (VE) to evaluate ergonomic features. This approach is more cost-effective and convenient since working directly on the Digital Mock-Up (DMU) in a VE is preferable to constructing a real physical mock-up in a Real Environment (RE). This is substantiated by the fact that a Virtual Reality (VR) set-up can be easily modified, enabling quick adjustments of the workstation design. Indeed, the aim of integrating ergonomics evaluation tools in VEs is to facilitate the design process, enhance the design efficiency, and reduce the costs.

The development of such platforms calls for several improvements in the field of motion analysis and VR. First, interactions have to be as natural as possible to properly mimic the motions performed in real environments. Second, the fidelity of the simulator also needs to be correctly evaluated. Finally, motion analysis tools have to be able to provide, in real time, biomechanical quantities usable by ergonomists to analyse and improve the working conditions.

In real working conditions, motion analysis and musculoskeletal risk assessment also raise many scientific and technological challenges. As in virtual reality, the fidelity of the working process may be affected by the measurement method. Wearing sensors or skin markers, together with the need to frequently calibrate the assessment system, may change the way workers perform the tasks. Whatever the measurement is, classical ergonomic assessments generally address one specific parameter, such as posture, force, or repetitions, which makes it difficult to design a musculoskeletal risk factor that actually represents this risk. Another key scientific challenge is then to design new indicators that better capture the risk of musculoskeletal disorders. However, such an indicator has to deal with the tradeoff between accurate biomechanical assessment and the difficulty of getting the required information reliably in real working conditions.

4.6 Locomotion and Interactions between walkers

Modeling and simulating locomotion and interactions between walkers is a very active, complex and competitive domain, of interest to various disciplines such as mathematics, cognitive sciences, physics, computer graphics, rehabilitation, etc. Locomotion and interactions between walkers are by definition at the very core of our society since they represent the basic synergies of our daily life. When walking in the street, we have to produce a locomotor movement while taking information about our surrounding environment in order to interact with people, move without collision, alone or in a group, intercept, meet or escape from somebody. MimeTIC is an international key contributor in the domain of understanding and simulating locomotion and interactions between walkers. By combining an approach based on Human Movement Sciences and Computer Sciences, the team focuses on locomotor invariants which characterize the generation of locomotor trajectories, and conducts challenging experiments focusing on the visuo-motor coordination involved during interactions between walkers, using both real and virtual set-ups. One main challenge is to consider and model not only the "average" behaviour of healthy young adults but also to extend to specific populations, considering the effect of pathology or the effect of age (kids, older adults). As a first example, when patients cannot walk efficiently, in particular those suffering from central nervous system affections, it becomes very useful for practitioners to benefit from an objective evaluation of their capacities. To facilitate such evaluations, we have developed two complementary indices, one based on kinematics and the other one on muscle activations. One major point of our research is that such indices are usually only developed for children whereas adults with these affections are much more numerous. We extend this objective evaluation by using a person-person interaction paradigm, which allows studying deficits in visuo-motor strategies in these specific populations.

Another fundamental question is the adaptation of the walking pattern according to anatomical constraints, such as pathologies in orthopedics, or adaptation to various human and non-human primates in paleoanthropology. Hence, the question is to predict plausible locomotion according to a given morphology. This raises fundamental questions about the variables that are regulated to control gait: balance control, minimum energy, minimum jerk, etc. In MimeTIC, we develop models and simulators to efficiently test hypotheses on gait control for given morphologies.

5 Highlights of the year

MimeTIC is part of the PIA3 Equipex+ CONTINUUM project led by CNRS, which aims to develop and maintain an outstanding national network of VR platforms. This network will support academic research on the continuum between virtual and real worlds, and its applications.

PIA3 programs to support sport were launched in 2019, with two calls. In the second call, in 2020, MimeTIC was granted two such PPR projects (among 14 submitted):

- BEST Tennis, led by Benoit Bideau and aiming at enhancing the performance of elite tennis players, with a strong investment of the French Tennis Federation,

- REVEA, led by Richard Kulpa, aiming at developing new training sessions based on Virtual Reality for Boxing, Athletics and Gymnastics.

MimeTIC is also leading Digisport, one of the 24 PIA3 EUR projects (among 81 projects submitted). This project aims at creating a graduate school to support multidisciplinary research on sports, with the main Rennes actors in sports sciences, computer sciences, electronics, data sciences, and human and social sciences.

Anne-Hélène Olivier defended her Habilitation to Direct Research (HDR) on December 7th, 2020.

5.1 Awards

Rebecca Fribourg (co-supervised with the Hybrid team) received two awards for her contributions on the topic of Avatars and Virtual Embodiment:

- IEEE Virtual Reality Best Journal Papers Award, for her work Avatar and Sense of Embodiment: Studying the Relative Preference Between Appearance, Control and Point of View.

- ICAT-EGVE Best Paper Award, for her work Influence of Threat Occurrence and Repeatability on the Sense of Embodiment and Threat Response in VR.

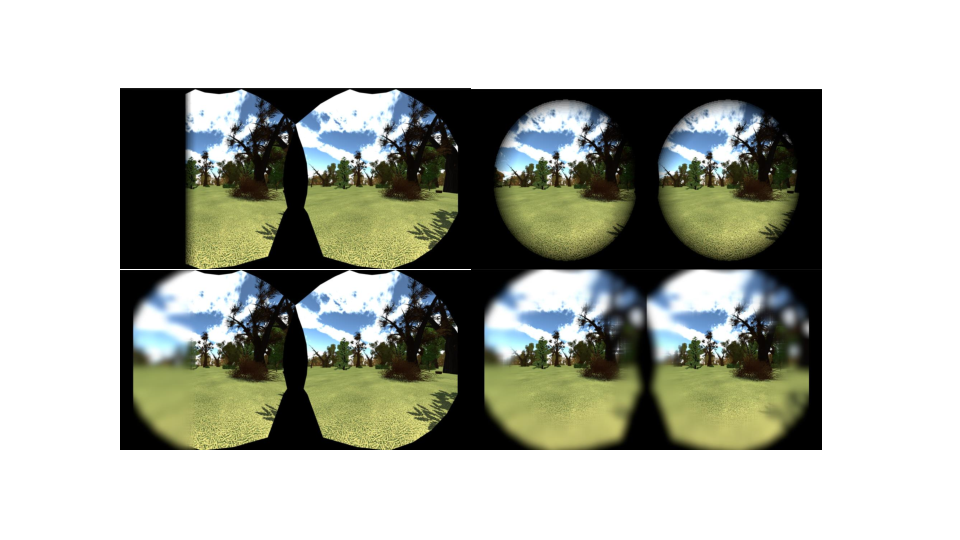

Hugo Brument (co-supervised with the Rainbow and Hybrid teams) received the Best Paper Award at the EuroVR international conference for his work Influence of Dynamic Field of View Restrictions on Rotation Gain Perception in Virtual Environments 32.

6 New software and platforms

6.1 New software

6.1.1 AsymGait

- Name: Asymmetry index for clinical gait analysis based on depth images

- Keywords: Motion analysis, Kinect, Clinical analysis

- Scientific Description: The system uses depth images delivered by the Microsoft Kinect to first retrieve the gait cycles. To this end, it analyzes the trajectories of the knees instead of the feet to obtain more robust gait event detection. Based on these cycles, the system computes a mean gait cycle model to decrease the effect of sensor noise. Asymmetry is then computed at each frame of the gait cycle as the spatial difference between the left and right parts of the body.

- Functional Description: AsymGait is a software package that works with Microsoft Kinect data, especially depth images, in order to carry out clinical gait analysis. First, it identifies the main gait events (footstrike, toe-off) using the depth information to isolate gait cycles. Then it computes a continuous asymmetry index within the gait cycle. Asymmetry is viewed as a spatial difference between the two sides of the body.

- Authors: Edouard Auvinet, Franck Multon

- Contact: Franck Multon

- Participants: Edouard Auvinet, Franck Multon
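The averaging and per-frame comparison described for AsymGait can be illustrated with a minimal sketch. It is not the AsymGait implementation: it assumes each side of the body has already been reduced to one scalar signal per frame (for example a knee height, a hypothetical choice), that gait cycles have already been segmented, and that left and right cycles are each aligned on their own gait event. The function names `mean_cycle` and `asymmetry_index` are illustrative.

```python
import numpy as np

def mean_cycle(cycles, n_samples=100):
    """Resample each gait cycle to n_samples frames, then average them.

    Averaging over cycles reduces the effect of sensor noise.
    """
    dst = np.linspace(0.0, 1.0, n_samples)
    resampled = []
    for cycle in cycles:
        cycle = np.asarray(cycle, dtype=float)
        src = np.linspace(0.0, 1.0, len(cycle))
        resampled.append(np.interp(dst, src, cycle))
    return np.mean(resampled, axis=0)

def asymmetry_index(left_mean, right_mean):
    """Per-frame absolute difference between left and right mean cycles."""
    return np.abs(np.asarray(left_mean, dtype=float)
                  - np.asarray(right_mean, dtype=float))
```

With this convention, a perfectly symmetric gait yields an index of zero at every frame of the cycle, and larger values indicate frames where the two sides differ most.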

6.1.2 Cinematic Viewpoint Generator

- Keyword: 3D animation

- Functional Description: The software, developed as an API, provides a means to automatically compute a collection of viewpoints over one or two specified geometric entities, in a given 3D scene, at a given time. These viewpoints satisfy classical cinematographic framing conventions and guidelines including different shot scales (from extreme long shot to extreme close-up), different shot angles (internal, external, parallel, apex), and different screen compositions (thirds, fifths, symmetric or di-symmetric). The viewpoints cover the range of possible framings for the specified entities. The computation of such viewpoints relies on a database of framings that are dynamically adapted to the 3D scene by using a manifold parametric representation and that guarantee the visibility of the specified entities. The set of viewpoints is also automatically annotated with cinematographic tags such as shot scales, angles, compositions, relative placement of entities, and line of interest.

- Authors: Christophe Lino, Emmanuel Badier, Marc Christie

- Contact: Marc Christie

- Participants: Christophe Lino, Emmanuel Badier, Marc Christie

- Partners: Université d'Udine, Université de Nantes

6.1.3 CusToM

- Name: Customizable Toolbox for Musculoskeletal simulation

- Keywords: Biomechanics, Dynamic Analysis, Kinematics, Simulation, Mechanical multi-body systems

- Scientific Description: The present toolbox aims at performing motion analysis using an inverse dynamics method.

Before performing the motion analysis steps, a musculoskeletal model is generated. This consists of, first, generating the desired anthropometric model from model libraries. The generated model is then kinematically calibrated using motion capture data. The inverse kinematics step, the inverse dynamics step and the muscle forces estimation step are then successively performed from motion capture and external forces data. Two folders and one script are available at the toolbox root. The Main script collects all the different functions of the motion analysis pipeline. The Functions folder contains all functions used in the toolbox. It is necessary to add this folder and all its subfolders to the Matlab path. The Problems folder contains the different studies. The user has to create one subfolder for each new study. Whenever a new musculoskeletal model is used, a new study is necessary. Different files will be automatically generated and saved in this folder. All files located at its root are related to the model and are valid whatever the motion considered. A new folder will be added for each new motion capture. All files located in such a folder are only related to the motion considered.

- Functional Description: Inverse kinematics, inverse dynamics, muscle forces estimation, external forces prediction.

- Publications: hal-02268958, hal-02088913, hal-02109407, hal-01904443, hal-02142288, hal-01988715, hal-01710990

- Contacts: Antoine Muller, Charles Pontonnier, Georges Dumont

- Participants: Antoine Muller, Charles Pontonnier, Georges Dumont, Pierre Puchaud, Anthony Sorel, Claire Livet, Louise Demestre

6.1.4 Directors Lens Motion Builder

- Keywords: Previsualization, Virtual camera, 3D animation

- Functional Description: Directors Lens Motion Builder is a software plugin for Autodesk's Motion Builder animation tool. This plugin features a novel workflow to rapidly prototype cinematographic sequences in a 3D scene, and is dedicated to the 3D animation and movie previsualization industries. The workflow integrates the automated computation of viewpoints (using the Cinematic Viewpoint Generator) to interactively explore different framings of the scene, proposes means to interactively control framings in the image space, and proposes a technique to automatically retarget a camera trajectory from one scene to another while enforcing visual properties. The tool also proposes to edit the cinematographic sequence and export the animation. The software can be linked to different virtual camera systems available on the market.

- Authors: Emmanuel Badier, Christophe Lino, Marc Christie

- Contact: Marc Christie

- Participants: Christophe Lino, Emmanuel Badier, Marc Christie

- Partner: Université de Rennes 1

6.1.5 Kimea

- Name: Kinect IMprovement for Ergonomics Assessment

- Keywords: Biomechanics, Motion analysis, Kinect

- Scientific Description: Kimea corrects skeleton data delivered by a Microsoft Kinect for ergonomics purposes. Kimea is able to manage most of the occlusions that can occur in real working situations, on workstations. To this end, Kimea relies on a database of examples/poses organized as a graph, in order to replace unreliable body segment reconstructions by poses that have already been measured on real subjects. The potential pose candidates are used in an optimization framework.

- Functional Description: Kimea takes Kinect skeleton data as input and corrects most measurement errors in order to carry out ergonomic assessment at the workstation.

- Publications: hal-01612939v1, hal-01393066v1, hal-01332716v1, hal-01332711v2, hal-01095084v1

- Authors: Pierre Plantard, Franck Multon, Anne-Sophie Le Pierres, Hubert Shum

- Contact: Franck Multon

- Participants: Franck Multon, Hubert Shum, Pierre Plantard

- Partner: Faurecia
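The idea of replacing unreliable body segments with previously measured poses can be sketched as follows. This is a deliberately simplified stand-in, not Kimea's method: it swaps the graph-organized database and the optimization framework for a brute-force nearest-neighbour search, and it assumes a per-joint reliability mask is available. The function name `correct_pose` and the array layouts are hypothetical.

```python
import numpy as np

def correct_pose(observed, reliable, database):
    """Fill unreliable joints from the closest example pose.

    observed: (J, 3) joint positions from the sensor
    reliable: (J,) boolean mask, True where the joint is trusted
    database: (N, J, 3) example poses measured on real subjects
    """
    observed = np.asarray(observed, dtype=float)
    reliable = np.asarray(reliable, dtype=bool)
    database = np.asarray(database, dtype=float)

    # Compare examples to the observation on reliable joints only.
    diff = database[:, reliable, :] - observed[reliable, :]
    dist = np.linalg.norm(diff.reshape(len(database), -1), axis=1)
    best = database[np.argmin(dist)]

    # Keep trusted joints, replace the others by the example's joints.
    corrected = observed.copy()
    corrected[~reliable] = best[~reliable]
    return corrected
```

A real system would typically blend several candidates and enforce temporal continuity rather than copy joints from a single example.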

6.1.6 Populate

- Keywords: Behavior modeling, Agent, Scheduling

- Scientific Description: The software provides the following functionalities:

- A high-level XML dialect dedicated to the description of agents' activities in terms of tasks and sub-activities that can be combined with different kinds of operators: sequential, without order, interlaced. This dialect also enables the description of time and location constraints associated with tasks.

- An XML dialect that enables the description of agents' personal characteristics.

- An informed graph describing the topology of the environment as well as the locations where tasks can be performed. A bridge between TopoPlan and Populate has also been designed. It provides an automatic analysis of an informed 3D environment that is used to generate an informed graph compatible with Populate.

- The generation of a valid task schedule based on the previously mentioned descriptions.

With a good configuration of agents' characteristics (based on statistics), we demonstrated that the task schedules produced by Populate are representative of human ones. In conjunction with TopoPlan, it has been used to populate a district of Paris as well as imaginary cities with several thousands of pedestrians navigating in real time.

- Functional Description: Populate is a toolkit dedicated to task scheduling under time and space constraints in the field of behavioral animation. It is currently used to populate virtual cities with pedestrians performing different kinds of activities involving travel between different locations. However, the generic aspect of the algorithm and underlying representations enables its use in a wide range of applications that need to link activity, time and space. The main scheduling algorithm relies on the following inputs: an informed environment description, an activity an agent needs to perform and individual characteristics of this agent. The algorithm produces a valid task schedule compatible with the time and spatial constraints imposed by the activity description and the environment. In this task schedule, time intervals relating to travel and task fulfillment are identified and locations where tasks should be performed are automatically selected.

- Contacts: Carl-Johan Jorgensen, Fabrice Lamarche

- Participants: Carl-Johan Jorgensen, Fabrice Lamarche
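To make the scheduling inputs and outputs described for Populate more concrete, here is a minimal sketch of scheduling under time and location constraints. It is not Populate's algorithm or data model: it only handles the sequential operator, uses a greedy choice of location, and replaces the informed graph by a hypothetical `travel_time` lookup table. The `Task` fields and the `schedule` function are illustrative names.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    duration: float               # time needed to perform the task
    locations: list               # candidate places where it can be done
    earliest: float = 0.0         # task cannot start before this time
    latest: float = float("inf")  # task must be finished by this time

def schedule(tasks, start_node, travel_time, t0=0.0):
    """Greedy sequential scheduler.

    For each task, pick the candidate location giving the earliest
    feasible completion time. Returns a list of tuples
    (task, location, departure, arrival, begin, end), which separates
    travel intervals from task-fulfillment intervals.
    """
    plan, node, t = [], start_node, t0
    for task in tasks:
        best = None
        for loc in task.locations:
            arrive = t + travel_time[node][loc]
            begin = max(arrive, task.earliest)  # wait if arriving early
            end = begin + task.duration
            if end <= task.latest and (best is None or end < best[3]):
                best = (loc, arrive, begin, end)
        if best is None:
            raise ValueError(f"no feasible location for task {task.name!r}")
        loc, arrive, begin, end = best
        plan.append((task.name, loc, t, arrive, begin, end))
        node, t = loc, end
    return plan
```

A greedy choice is not guaranteed to find a valid schedule whenever one exists; a complete solver would need to backtrack over location choices and handle the unordered and interlaced operators.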

6.1.7 PyNimation

- Keywords: Moving bodies, 3D animation, Synthetic human

- Scientific Description: PyNimation is a Python-based open-source (AGPL) software for editing motion capture data. It was initiated because of a lack of open-source software able to process different types of motion capture data in a unified way, which typically forces animation pipelines to rely on several commercial software packages. For instance, motions are captured with one software, retargeted using another one, then edited using a third one, etc. The goal of PyNimation is therefore to bridge the gap in the animation pipeline between motion capture software and final game engines, by handling different types of motion capture data in a unified way, providing standard and novel motion editing solutions, and exporting motion capture data in formats compatible with common 3D game engines (e.g., Unity, Unreal). Its goal is also to support our research efforts in this area, and it is therefore used, maintained, and extended to progressively include novel motion editing features, as well as to integrate the results of our research projects. In the short term, our goal is to further extend its capabilities and to share it more widely with the animation/research community.

- Functional Description: PyNimation is a framework for editing, visualizing and studying skeletal 3D animations. It was more particularly designed to process motion capture data. It stems from the wish to utilize Python’s data science capabilities and ease of use for human motion research.

In its version 1.0, PyNimation offers the following functionalities, which aim to evolve with the development of the tool:

- Import / Export of FBX, BVH, and MVNX animation file formats

- Access and modification of skeletal joint transformations, as well as a certain number of functionalities to manipulate these transformations

- Basic features for human motion animation (under development, but including e.g. different methods of inverse kinematics, editing filters, etc.)

- Interactive visualisation in OpenGL for animations and objects, including the possibility to animate skinned meshes

- Authors: Ludovic Hoyet, Robin Adili, Benjamin Niay, Alberto Jovane

- Contacts: Ludovic Hoyet, Robin Adili

6.1.8 The Theater

- Keywords: 3D animation, Interactive Scenarios

- Functional Description: The Theater is a software framework to develop interactive scenarios in virtual 3D environments. The framework provides means to author and orchestrate 3D character behaviors and simulate them in real-time. The tool provides a basis to build a range of 3D applications, from simple simulations with reactive behaviors, to complex storytelling applications including narrative mechanisms such as flashbacks.

- Contact: Marc Christie

- Participant: Marc Christie

6.2 New platforms

6.2.1 Immerstar Platform

Participants: Georges Dumont, Ronan Gaugne, Anthony Sorel, Richard Kulpa.

With the two platforms of virtual reality, Immersia (http://

7 New results

7.1 Outline

In 2020, MimeTIC has maintained its activity in motion analysis, modelling and simulation, to support the idea that these approaches are strongly coupled in a motion analysis-synthesis loop. This idea has been applied to the main application domains of MimeTIC:

- Animation, Autonomous Characters and Digital Storytelling,

- Fidelity of Virtual Reality,

- Motion sensing of Human Activity,

- Sports,

- Ergonomics,

- and Locomotion and Interactions Between Walkers.

7.2 Animation, Autonomous Characters and Digital Storytelling

MimeTIC's main research path consists in associating motion analysis and synthesis to enhance naturalness in computer animation, with applications in movie previsualisation and autonomous virtual character control. Thus, we pushed example-based techniques in order to reach a good tradeoff between simulation efficiency and naturalness of the results. In 2020, to achieve this goal, MimeTIC continued to explore the use of perceptual studies and model-based approaches, but also began to investigate deep learning.

7.2.1 Topology-aware Camera Control

Participants: Marc Christie, Amaury Louarn.

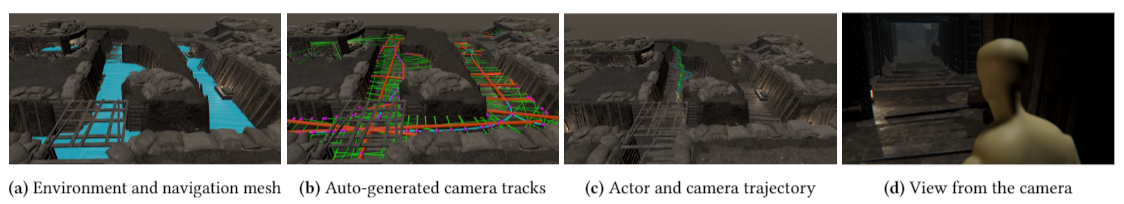

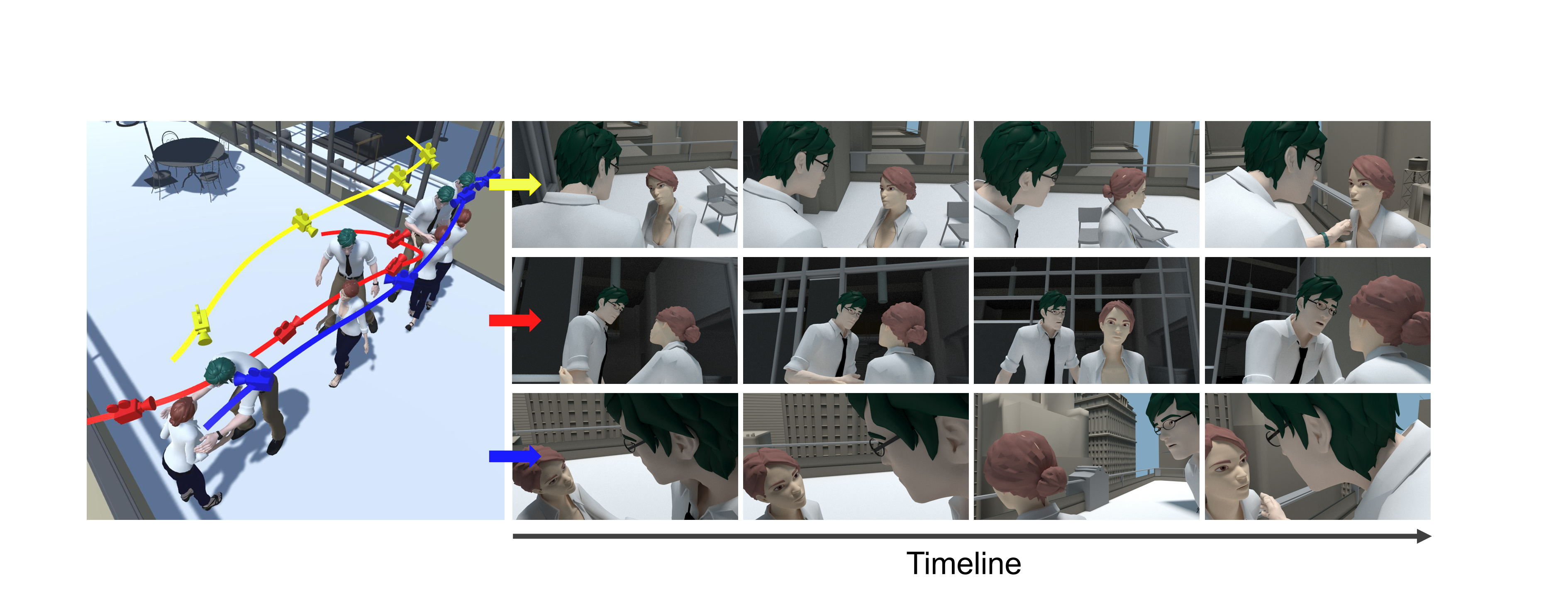

Placing and moving virtual cameras in real-time 3D environments is a task that remains complex due to the many requirements which need to be satisfied simultaneously. Beyond the essential features of ensuring visibility and frame composition for one or multiple targets, an ideal camera system should provide designers with tools to create variations in camera placement and motions, and create shots which conform to aesthetic recommendations. In this work, we propose a controllable process that will assist developers and artists in placing cinematographic cameras and camera paths throughout complex virtual environments, a task that was often manually performed until now. With no specification and no previous knowledge on the events, our tool exploits a topological analysis of the environment to capture the potential movements of the agents, highlight linearities and create an abstract skeletal representation of the environment. This representation is then exploited to automatically generate potentially relevant camera positions and trajectories organized in a graph representation with visibility information. At run-time, the system can then efficiently select appropriate cameras and trajectories according to artistic recommendations. We demonstrate the features of the proposed system with realistic game-like environments, highlighting the capacity to analyze a complex environment, generate relevant camera positions and camera tracks, and run efficiently with a range of different camera behaviours (see Figure 4) 38.

7.2.2 An interactive staging-and-shooting solver for virtual cinematography

Participants: Marc Christie, Amaury Louarn.

Research in virtual cinematography often narrows the problem down to computing the optimal viewpoint for the camera to properly convey a scene’s content. In contrast we propose to address simultaneously the questions of placing cameras, lights, objects and actors in a virtual environment through a high-level specification. We build on a staging language and propose to extend it by defining complex temporal relationships between these entities. We solve such specifications by designing pruning operators which iteratively reduce the range of possible degrees of freedom for entities while satisfying temporal constraints. Our solver first decomposes the problem by analyzing the graph of relationships between entities and then solves an ordered sequence of sub-problems. Users have the possibility to manipulate the current result for fine-tuning purposes or to creatively explore ranges of solutions while maintaining the relationships. As a result, the proposed system is the first staging-and-shooting cinematography system which enables the specification and solving of spatio-temporal cinematic layouts 39.

7.2.3 Deep Learning of Camera Behaviors

Participants: Marc Christie, Xi Wang.

Designing a camera motion controller that has the capacity to move a virtual camera automatically in relation with contents of a 3D animation, in a cinematographic and principled way, is a complex and challenging task. Many cinematographic rules exist, yet practice shows there are significant stylistic variations in how these can be applied. In this paper, we propose an example-driven camera controller which can extract camera behaviors from an example film clip and re-apply the extracted behaviors to a 3D animation, through learning from a collection of camera motions. Our first technical contribution is the design of a low-dimensional cinematic feature space that captures the essence of a film's cinematic characteristics (camera angle and distance, screen composition and character configurations) and which is coupled with a neural network to automatically extract these cinematic characteristics from real film clips. Our second technical contribution is the design of a cascaded deep-learning architecture trained to (i) recognize a variety of camera motion behaviors from the extracted cinematic features, and (ii) predict the future motion of a virtual camera given a character 3D animation. We propose to rely on a Mixture of Experts (MoE) gating+prediction mechanism to ensure that distinct camera behaviors can be learned while ensuring generalization. We demonstrate the features of our approach through experiments that highlight (i) the quality of our cinematic feature extractor (ii) the capacity to learn a range of behaviors through the gating mechanism, and (iii) the ability to generate a variety of camera motions by applying different behaviors extracted from film clips. Such an example-driven approach offers a high level of controllability which opens new possibilities toward a deeper understanding of cinematographic style and enhanced possibilities in exploiting real film data in virtual environments (see Figure 5). 
The work is a collaboration with the Beijing Film Academy in China and was presented at SIGGRAPH 2020 22.
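The gating+prediction principle of a Mixture of Experts can be illustrated with a minimal sketch. It shows one common form of MoE, in which a gate turns input features into convex weights that blend the outputs of several experts. It is not the architecture of the paper: the linear experts, the array shapes and the names `moe_predict`, `gate_W` and `expert_Ws` are assumptions made for the example, and whether the original network blends expert outputs or expert parameters is not stated here.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def moe_predict(features, motion_context, gate_W, expert_Ws):
    """Blend expert predictions with gating weights.

    features:       (F,) cinematic features from the example clip
    motion_context: (D,) current character/camera state
    gate_W:         (K, F) gating weights, one row per expert
    expert_Ws:      (K, O, D) one linear expert per behavior
    Returns the blended prediction (O,) and the gate weights (K,).
    """
    weights = softmax(gate_W @ features)                         # (K,)
    outputs = np.einsum("kod,d->ko", expert_Ws, motion_context)  # (K, O)
    return weights @ outputs, weights
```

Because the gate weights are non-negative and sum to one, each expert can specialize in one camera behavior while the blend remains a smooth interpolation between them.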

7.2.4 Efficient Visibility Computation for Camera Control

Participants: Marc Christie, Ludovic Burg.

Efficient visibility computation is a prominent requirement when designing automated camera control techniques for dynamic 3D environments; computer games, interactive storytelling or 3D media applications all need to track 3D entities while ensuring their visibility and delivering a smooth cinematographic experience. Addressing this problem requires sampling a very large set of potential camera positions and estimating visibility for each of them, which in practice is intractable. In this work, we introduce a novel technique to perform efficient visibility computation and anticipate occlusions. We first propose a GPU-rendering technique to sample visibility in Toric Space coordinates, a parametric space designed for camera control. We then rely on this visibility evaluation to compute an anticipation map which predicts the future visibility of a large set of cameras over a specified number of frames. We finally design a camera motion strategy that exploits this anticipation map to maximize the visibility of entities over time. The key features of our approach are demonstrated through comparison with classical ray-casting techniques on benchmark environments, and through an integration in multiple game-like 3D environments with heavy sparse and dense occluders 14.

7.2.5 Relative Pose Estimation and Planar Reconstruction via Superpixel-Driven Multiple Homographies

Participants: Marc Christie, Xi Wang.

This work proposes a novel method to simultaneously perform relative camera pose estimation and planar reconstruction of a scene from two RGB images. We start by extracting and matching superpixel information from both images and rely on a novel multi-model RANSAC approach to estimate multiple homographies from superpixels and identify matching planes. Ambiguity issues when performing homography decomposition are handled by proposing a voting system to more reliably estimate relative camera pose and plane parameters. A non-linear optimization process is also proposed to perform bundle adjustment that exploits a joint representation of homographies and works both for image pairs and whole sequences of images (vSLAM). As a result, the approach provides a means to perform a dense 3D plane reconstruction from two RGB images only, without relying on RGB-D inputs or strong priors such as Manhattan assumptions, and can be extended to handle sequences of images. Our results compete with keypoint-based techniques such as ORB-SLAM while providing a dense representation and are more precise than direct and semi-direct pose estimation techniques used in LSD-SLAM or DPPTAM. Results were presented at IROS 2020 42.

7.2.6 Contact Preserving Shape Transfer For Rigging-Free Motion Retargeting

Participants: Franck Multon, Jean Basset.

In 2018, we introduced the idea of a context graph to capture the relationships between body part surfaces and enhance the quality of motion retargeting. Hence, it becomes possible to retarget the motion of a source character to a target one while preserving the topological relationships between body part surfaces. However, this approach requires strictly satisfying distance constraints between body parts, whereas some of them could be relaxed to preserve naturalness. In 2019, we introduced a new paradigm based on transferring the shape instead of encoding the pose constraints to tackle this problem for isolated poses. In 2020, we extended this approach to handle continuity in motion, and non-human characters.

Hence, in 12, we presented an automatic method to retarget poses from a source to a target character by transferring the shape of the target character onto the desired pose of the source character. By considering shape instead of pose transfer, our method better preserves the contextual meaning of the source pose, typically contacts between body parts, than pose-based strategies. To this end, we propose an optimization-based method to deform the source shape in the desired pose using three main energy functions: similarity to the target shape, body part volume preservation, and collision management to preserve existing contacts and prevent penetrations. The results show that our method can retarget complex poses with several contacts to different morphologies, and is even able to create new contacts when morphology changes require them, such as increases in body size. To demonstrate the robustness of our approach to different types of shapes, we successfully apply it to basic and dressed human characters as well as wild animal models, without the need to adjust parameters.
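As an illustration only, the three energy terms listed above could be combined as a weighted sum minimized over the vertex positions $V$ of the deformed source shape. The weighted-sum form, the weights $w_s$, $w_v$, $w_c$ and the symbol names are assumptions made here for readability; the text above only states that three main energy functions are used.

```latex
\[
V^{*} \;=\; \arg\min_{V}\;
  w_{s}\,E_{\mathrm{shape}}(V)
  \;+\; w_{v}\,E_{\mathrm{vol}}(V)
  \;+\; w_{c}\,E_{\mathrm{coll}}(V)
\]
```

Here $E_{\mathrm{shape}}$ would measure similarity to the target shape, $E_{\mathrm{vol}}$ would penalize changes in body part volume, and $E_{\mathrm{coll}}$ would penalize lost contacts and penetrations.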

7.2.7 Walk Ratio: Perception of an Invariant Parameter of Human Walk on Virtual Characters

Participants: Ludovic Hoyet, Benjamin Niay, Anne-Hélène Olivier, Katja Zibrek.

Synthesizing motions that look realistic and diverse is a challenging task in animation. Therefore, a few generic walking motions are typically used when creating crowds of walking virtual characters, leading to a lack of variations as motions are not necessarily adapted to each and every virtual character's characteristics. While some attempts have been made to create variations, it appears necessary to identify the relevant parameters that influence users' perception of such variations to keep a good trade-off between computational costs and realism. In this context, we investigated 40 the ability of viewers to identify an invariant parameter of human walking named the Walk Ratio (step length to step frequency ratio), which was shown to be specific to each individual and constant for different speeds, but which has never been used to drive animations of virtual characters. To this end, we captured 2 female and 2 male actors walking at different freely chosen speeds, as well as at different combinations of step frequency and step length. We then performed a perceptual study to identify the Walk Ratio that was perceived as the most natural for each actor when animating a virtual character, and compared it to the Walk Ratio freely chosen by the actor during the motion capture session (Figure 6). We found that Walk Ratios chosen by observers were in the range of Walk Ratios measured in the literature, and that participants perceived differences between the Walk Ratios of animated male and female characters, as evidenced in the biomechanical literature. Our results provide new considerations to drive the animation of walking virtual characters using the Walk Ratio as a parameter, and might provide animators with novel means to control the walking speed of characters through simple parameters while retaining the naturalness of the locomotion.
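The relation between the Walk Ratio and walking speed can be sketched in a few lines of Python. Since speed is the product of step length and step frequency, fixing the Walk Ratio leaves a single free parameter. The function names, the units (metres and steps per second) and the sample values are assumptions chosen for illustration, not data from the study.

```python
import math


def walk_ratio(step_length_m: float, step_freq_hz: float) -> float:
    """Walk Ratio = step length / step frequency."""
    return step_length_m / step_freq_hz


def gait_from_speed(speed_m_s: float, ratio: float) -> tuple[float, float]:
    """Solve speed = length * freq and ratio = length / freq for (length, freq)."""
    step_length = math.sqrt(ratio * speed_m_s)
    step_freq = math.sqrt(speed_m_s / ratio)
    return step_length, step_freq


# Hypothetical actor: 0.70 m steps at 1.75 steps per second.
ratio = walk_ratio(0.70, 1.75)

# Keep the actor's Walk Ratio constant while changing the walking speed.
for speed in (0.8, 1.2, 1.6):
    length, freq = gait_from_speed(speed, ratio)
    print(f"speed={speed:.1f} m/s  step length={length:.3f} m  step freq={freq:.3f} steps/s")
```

With this parameterization, an animator would only set the desired speed, and step length and step frequency would follow from the character's Walk Ratio.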

7.2.8 The impact of stylization on face recognition

Participants: Ludovic Hoyet, Franck Multon, Nicolas Olivier.

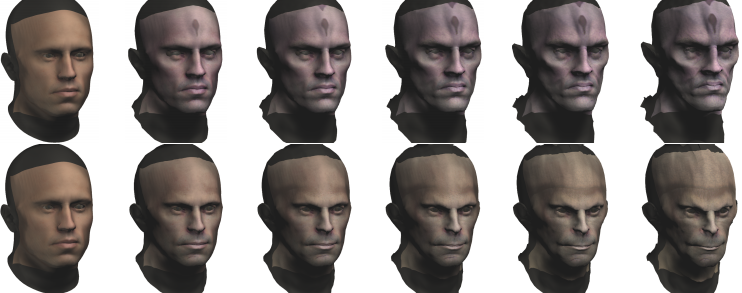

While digital humans are key aspects of the rapidly evolving areas of virtual reality, gaming, and online communications, many applications would benefit from using personalized (stylized) digital representations of users, as they were shown to highly increase immersion, presence and emotional response. In particular, depending on the target application, one may want to look like a dwarf or an elf in a heroic fantasy world, or like an alien on another planet, in accordance with the style of the narrative. Although creating such virtual replicas requires stylizing the user’s features onto the virtual character, no formal study has been conducted to assess the ability to recognize stylized characters. In collaboration with Hybrid team and InterDigital, we carried out a perceptual study investigating the effect of the degree of stylization on the ability to recognize an actor, and on the subjective acceptability of stylizations 41 (Figure 7). Results show that recognition rates decrease when the degree of stylization increases, while acceptability of the stylization increases. These results provide recommendations to achieve good compromises between stylization and recognition, and pave the way to new stylization methods providing a tradeoff between stylization and recognition of the actor.

7.2.9 Interaction Fields: Sketching Collective Behaviours.

Participants: Marc Christie, Adèle Colas, Ludovic Hoyet, Anne-Hélène Olivier, Katja Zibrek.

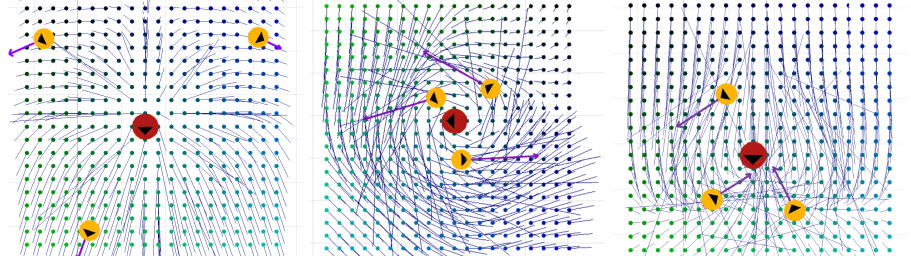

Many applications of computer graphics, such as cinema, video games, virtual reality, training scenarios, therapy, or rehabilitation, involve the design of situations where several virtual humans are engaged. In applications where a user is immersed in the virtual environment, the (collective) behaviour of these virtual humans must be realistic to improve the user's sense of presence. While expressive behaviour appears to be a crucial part of realism, collective behaviours simulated using typical crowd simulation models (e.g., social forces, potential fields, velocity selection, vision-based) usually lack expressiveness and cannot capture more subtle scenarios (e.g., a group of agents hiding from the user or blocking his/her way), which require the ability to simulate complex interactions. As subtle and adaptable collective behaviours are not easily modeled, there is a need for more intuitive ways to design such complex scenarios. In this preliminary work 50, conducted in collaboration with Julien Pettré and Claudio Pacchierotti in Rainbow team, we therefore propose a novel approach to sketch such interactions to define collective behaviours. Although other sketch-based approaches exist, these usually focus on goal-oriented path planning, and not on modelling social or collective behaviour. In comparison, we present the concepts of a new approach based on a user-friendly application enabling users to draw target interactions between agents through intuitive vector fields (Figure 8). In the future, our goal is to use this approach to facilitate the design of expressive and collective behaviours, and to consider more generic and dynamic situations in order to design diversified and subtle interactions, as the work has so far mostly focused on predefined static scenarios.
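As a rough illustration of driving an agent with a vector field attached to another agent, the following Python sketch samples a field expressed in the local frame of a source agent and uses it as the velocity of a neighbouring agent. Everything here is an assumption made for illustration: the function names, the analytic `behind_source_field` standing in for a hand-drawn field, and the constant-speed Euler update are not taken from the work described above.

```python
import numpy as np


def to_local(pos, source_pos, source_heading):
    """Express a world position in the source agent's frame (x axis = heading)."""
    c, s = np.cos(source_heading), np.sin(source_heading)
    world_to_local = np.array([[c, s], [-s, c]])
    return world_to_local @ (np.asarray(pos, float) - np.asarray(source_pos, float))


def to_world_dir(vec, source_heading):
    """Rotate a direction from the source agent's frame back to the world frame."""
    c, s = np.cos(source_heading), np.sin(source_heading)
    return np.array([[c, -s], [s, c]]) @ vec


def behind_source_field(local_pos):
    """Hypothetical field: unit vectors pointing to a spot 2 m behind the source."""
    offset = np.array([-2.0, 0.0]) - local_pos
    norm = np.linalg.norm(offset)
    return offset / norm if norm > 1e-9 else np.zeros(2)


def step(agent_pos, source_pos, source_heading, field, speed=1.0, dt=0.1):
    """Move the agent for one time step along the field sampled at its position."""
    local = to_local(agent_pos, source_pos, source_heading)
    velocity = speed * to_world_dir(field(local), source_heading)
    return np.asarray(agent_pos, float) + dt * velocity


# The source agent stands at the origin and faces +y; the other agent starts at (3, 1).
position = np.array([3.0, 1.0])
for _ in range(100):
    position = step(position, (0.0, 0.0), np.pi / 2, behind_source_field)
print(position)
```

Because the field is defined in the source agent's frame, the same drawing would keep its meaning when the source agent moves or turns.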

7.3 Fidelity of Virtual Reality

MimeTIC wishes to promote the use of Virtual Reality to analyze and train human motor performance. This raises the fundamental question of the transfer of knowledge and skills acquired in VR to real life. In 2020, we maintained our efforts to enhance the experience of users when interacting physically with a virtual world. We developed an original setup to carry out experiments evaluating various haptic feedback rendering techniques. In collaboration with Hybrid team, we put considerable effort into better simulating the avatars of users, and analyzed embodiment in various VR conditions, leading to several co-supervised PhD students. In collaboration with Rainbow team, we also explored methods to enhance the VR experience when navigating in crowds, using perceptual studies, new steering methods, and the possibility to physically interact with other people through haptic feedback.

7.3.1 Biofidelity in VR

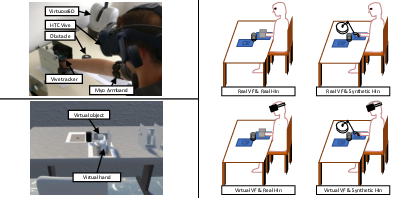

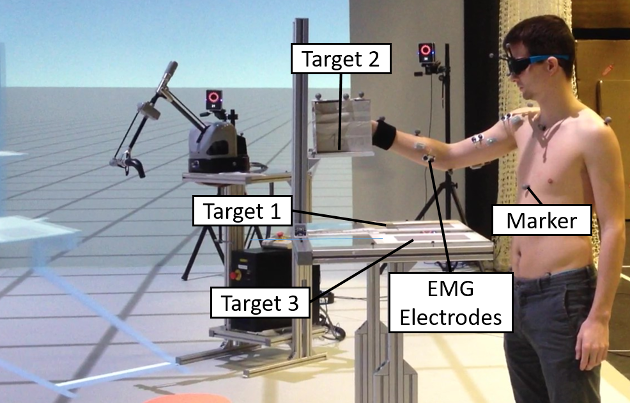

Participants: Simon Hilt, Georges Dumont, Charles Pontonnier.

Virtual environments (VE) and haptic interfaces (HI) tend to be introduced as virtual prototyping tools to assess ergonomic features of workstations. These approaches are cost-effective and convenient, since working directly on the Digital Mock-Up in a VE is preferable to constructing a physical mock-up in a Real Environment (RE): thanks to the interaction of an operator with the digital mock-up, the ergonomics of workstations can be studied at low cost upstream of the design chain. However, this is usable only if the ergonomic conclusions drawn from the VE are similar to the ones that would be drawn in the real world. The focus was put on the evaluation of the impact of visual and haptic renderings in terms of biomechanical fidelity for pick-and-place tasks. We developed an original setup 9 that separates the effects of visual and haptic renderings in the scene, in order to investigate the individual and combined effects of those modes of immersion and interaction on the biomechanical behavior of the subject during the task. We focused particularly on the mixed effects of the renderings on the biomechanical fidelity of the system to simulate pick-and-place tasks. Fourteen subjects performed time-constrained pick-and-place tasks in RE and VE with a real and a virtual, haptic-driven object at three different speeds. Motion of the hand and muscle activation of the upper limb were recorded. A questionnaire subjectively assessed discomfort and immersion. The results revealed significant differences between measured indicators in RE and VE, and with the real and the virtual object. Objective and subjective measures indicated higher muscle activity and longer hand trajectories in VE and with HI. Another important element is that no cross effect between haptic and visual rendering was reported.

These results confirmed that such systems should be used with caution for ergonomics evaluation, especially when investigating postural and muscle quantities as discomfort indicators. The last contribution of this part is an experimental setup that can easily be replicated to assess more systematically the biomechanical fidelity of virtual environments for ergonomics purposes 20. Haptic feedback is a way to interact with the Digital Mock-Up, but the control of the haptic device may affect the feedback. We proposed to evaluate the biomechanical fidelity of a pick-and-place task interacting with a 6-degrees-of-freedom HI and a dedicated inertial and viscous friction compensation algorithm. The proposed work shows that, for the manipulation of such low masses in the proposed setup 10, the subjective feeling of the user using the HI, obtained by a questionnaire, does not correspond to the muscle activity. Such a result is fundamental to classify what can be transferred from virtual to real at a biomechanical level. The development of additional algorithms is needed to achieve a higher biomechanical fidelity, i.e. one where the user feels and undergoes the same constraints as when interacting with the same real object 30.

This work is also included in the PhD thesis of Simon Hilt, defended on December 4th, 2020 47.

7.3.2 Avatar and Sense of Embodiment: Studying the Relative Preference Between Appearance, Control and Point of View.

Participants: Rebecca Fribourg, Ludovic Hoyet.

In Virtual Reality, a number of studies have been conducted to assess the influence of avatar appearance, avatar control and user point of view on the Sense of Embodiment (SoE) towards a virtual avatar. However, such studies tend to explore each factor in isolation. This work, conducted in collaboration with Ferran Argelaguet and Anatole Lécuyer in Hybrid team, aims to better understand the inter-relations among these three factors by conducting a subjective matching experiment 17. In the presented experiment (n=40), participants had to match a given “optimal” SoE avatar configuration (realistic avatar, full-body motion capture, first-person point of view), starting from a “minimal” SoE configuration (minimal avatar, no control, third-person point of view), by iteratively increasing the level of each factor. The choices of the participants provide insights about their preferences and perception over the three factors considered. Moreover, the subjective matching procedure was conducted in the context of four different interaction tasks with the goal of covering a wide range of actions an avatar can do in a VE (Figure 11). This work also included a baseline experiment (n=20) which was used to define the number and order of the different levels for each factor, prior to the subjective matching experiment (e.g. different degrees of realism ranging from abstract to personalised avatars for the visual appearance). First, the results of the subjective matching experiment show that point of view and control levels were consistently increased by users before appearance levels when it comes to enhancing the SoE. Second, several configurations were identified as providing an SoE equivalent to the one felt in the optimal configuration, but these vary between tasks. Taken together, our results provide valuable insights about which factors to prioritize in order to enhance the SoE towards an avatar in different tasks, and about configurations which lead to a fulfilling SoE in VE.

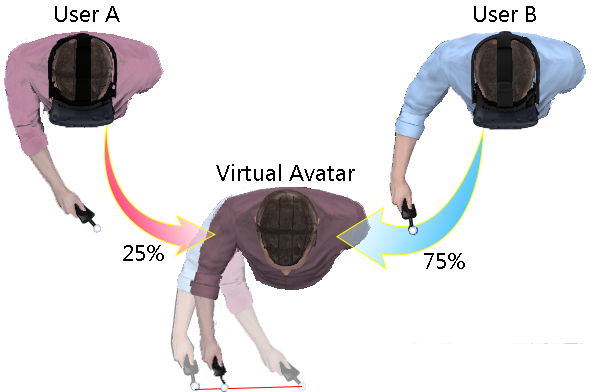

7.3.3 Virtual Co-Embodiment: Evaluation of the Sense of Agency while Sharing the Control of a Virtual Body among Two Individuals

Participants: Rebecca Fribourg, Ludovic Hoyet.

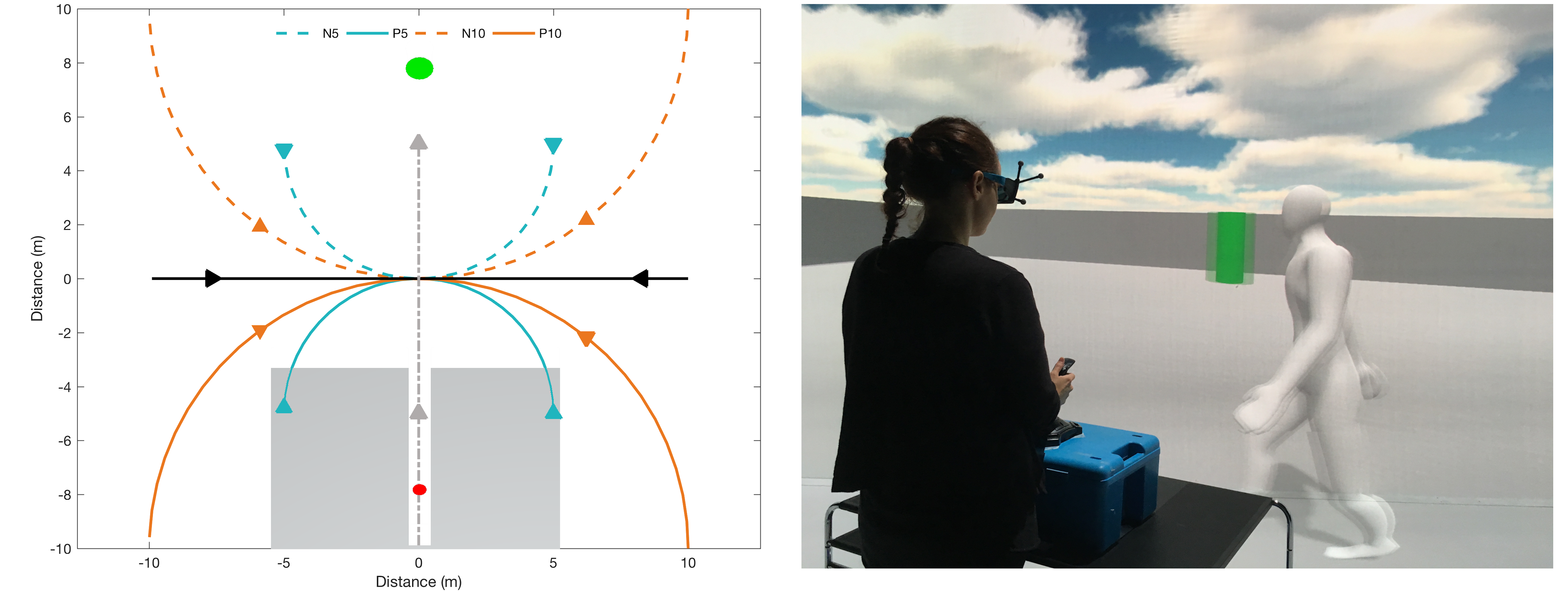

In this work, conducted in collaboration with Ferran Argelaguet and Anatole Lécuyer in Hybrid team, as well as with Nami Ogawa, Takuji Narumi and Michitaka Hirose from The University of Tokyo (Japan), we introduce a concept called “virtual co-embodiment” 18, which enables users to share their virtual avatar with another entity (e.g., another user, robot, or autonomous agent, see Figure 12). We describe a proof-of-concept in which two users can be immersed from a first-person perspective in a virtual environment and can have complementary levels of control (total, partial, or none) over a shared avatar. In addition, we conducted an experiment to investigate the influence of users' level of control over the shared avatar and prior knowledge of their actions on the users' sense of agency and motor actions. The results showed that participants are good at estimating their real level of control but significantly overestimate their sense of agency when they can anticipate the motion of the avatar. Moreover, participants performed similar body motions regardless of their real control over the avatar. The results also revealed that the internal dimension of the locus of control, which is a personality trait, is negatively correlated with the user's perceived level of control. The combined results unfold a new range of applications in the fields of virtual-reality-based training and collaborative teleoperation, where users would be able to share their virtual body.
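To make the idea of complementary levels of control concrete, the short Python sketch below blends the poses of two users into one avatar pose. The weighted average of joint positions, the function name `shared_avatar_pose` and the sample poses are assumptions for illustration only; the description above does not specify how control is shared.

```python
import numpy as np


def shared_avatar_pose(pose_user_a, pose_user_b, control_a):
    """Blend two poses given as (n_joints, 3) arrays of joint positions.

    control_a is user A's level of control in [0, 1]; user B gets the
    complementary level 1 - control_a (1 = total, 0 = none).
    """
    if not 0.0 <= control_a <= 1.0:
        raise ValueError("control_a must lie in [0, 1]")
    pose_a = np.asarray(pose_user_a, dtype=float)
    pose_b = np.asarray(pose_user_b, dtype=float)
    if pose_a.shape != pose_b.shape:
        raise ValueError("both poses must have the same shape")
    return control_a * pose_a + (1.0 - control_a) * pose_b


# Hypothetical two-joint poses (e.g., elbow and hand positions in metres).
user_a = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
user_b = [[4.0, 0.0, 0.0], [1.0, 1.0, 5.0]]

for level in (1.0, 0.25, 0.0):  # total, partial, none
    print(level, shared_avatar_pose(user_a, user_b, level).tolist())
```

A linear blend of positions is the simplest choice; blending joint rotations would need a different interpolation scheme.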

7.3.4 Influence of Threat Occurrence and Repeatability on the Sense of Embodiment and Threat Response in VR

Participants: Rebecca Fribourg, Ludovic Hoyet.