Keywords

Computer Science and Digital Science

- A1.2.1. Dynamic reconfiguration

- A1.3.1. Web

- A1.3.6. Fog, Edge

- A2.1.3. Object-oriented programming

- A2.1.10. Domain-specific languages

- A2.5. Software engineering

- A2.5.1. Software Architecture & Design

- A2.5.2. Component-based Design

- A2.5.3. Empirical Software Engineering

- A2.5.4. Software Maintenance & Evolution

- A2.5.5. Software testing

- A2.6.2. Middleware

- A2.6.4. Resource management

- A4.4. Security of equipment and software

- A4.8. Privacy-enhancing technologies

Other Research Topics and Application Domains

- B3.1. Sustainable development

- B3.1.1. Resource management

- B6.1. Software industry

- B6.1.1. Software engineering

- B6.1.2. Software evolution, maintenance

- B6.4. Internet of things

- B6.5. Information systems

- B6.6. Embedded systems

- B8.1.2. Sensor networks for smart buildings

- B9.5.1. Computer science

- B9.10. Privacy

1 Team members, visitors, external collaborators

Research Scientists

- Djamel Eddine Khelladi [CNRS, Researcher]

- Gunter Mussbacher [Inria, Chair, Professor at McGill]

- Olivier Zendra [Inria, Researcher]

Faculty Members

- Olivier Barais [Team leader, Univ de Rennes I, Professor, HDR]

- Mathieu Acher [Univ de Rennes I, Associate Professor]

- Raounak Benabidallah [Univ de Rennes I, ATER, until Aug 2021]

- Reda Bendraou [Univ de Nanterre, Associate Professor, from Sep 2021, HDR]

- Arnaud Blouin [INSA Rennes, Associate Professor, HDR]

- Johann Bourcier [Univ de Rennes I, Associate Professor, HDR]

- Stéphanie Challita [Univ de Rennes I, Associate Professor]

- Elyes Cherfa [Univ de Rennes I, from Sep 2021]

- Benoit Combemale [Univ de Rennes I, Professor, HDR]

- Jean-Marc Jezequel [Univ de Rennes I, Professor, HDR]

- Noel Plouzeau [Univ de Rennes I, Associate Professor]

- Oscar Luis Vera Perez [Univ de Rennes I, ATER, until Feb 2021]

- Nan Zhang Messe [Univ de Rennes I, ATER, until Aug 2021]

Post-Doctoral Fellow

- Xhevahire Ternava [Univ de Rennes I]

PhD Students

- June Benvegnu-Sallou [Univ de Rennes I]

- Anne Bumiller [Orange, CIFRE]

- Emmanuel Chebbi [Inria]

- Antoine Cheron [Zengularity SAS, CIFRE, until Oct 2021]

- Cassius De Oliveira Puodzius [Inria]

- Pierre Jeanjean [Inria]

- Gwendal Jouneaux [Univ de Rennes I]

- Piergiorgio Ladisa [SAP, CIFRE]

- Quentin Le Dilavrec [Univ de Rennes I]

- Luc Lesoil [Univ de Rennes I]

- Gauthier Lyan [Keolis, CIFRE, until Jul 2021]

- Hugo Martin [Univ de Rennes I, until Oct 2021]

- Lamine Noureddine [Inria, until Feb 2021]

- Alif Akbar Pranata [Inria, until Nov 2021]

- Georges Aaron Randrianaina [Univ de Rennes I, from Jul 2021]

Technical Staff

- Florian Badie [Inria, Engineer, from Nov 2021]

- Romain Belafia [Univ de Rennes I, Engineer, from Sep 2021]

- Theo Giraudet [Univ de Rennes I, Engineer, from Sep 2021]

- Bruno Lebon [Inria, Engineer, from Jul 2021 until Oct 2021]

- Romain Lefeuvre [Inria, Engineer, from Sep 2021]

- Dorian Leroy [Inria, Engineer]

- Didier Vojtisek [Inria, Engineer]

Interns and Apprentices

- Romain Belafia [Inria, from Feb 2021 until Jul 2021]

- Antoine Bouffard [Inria, from May 2021 until Aug 2021]

- Remi Daniel [INSA Rennes, from May 2021 until Aug 2021]

- Willy Fortin [Inria, from May 2021 until Aug 2021]

- Theo Giraudet [Univ de Rennes I, from May 2021 until Jul 2021]

- Antoine Gratia [Univ de Rennes I, from Mar 2021 until Jun 2021]

- Mouna Hissane [Univ de Rennes I, from May 2021 until Aug 2021]

- Philemon Houdaille [Inria, from May 2021 until Jul 2021]

- Zohra Kaouter Kebaili [INSA Rennes, from Mar 2021 until Sep 2021]

- Meziane Khodja [Univ de Rennes I, from Jun 2021 until Aug 2021]

- Alexandre Le Balanger [Univ de Rennes I, from Jun 2021 until Aug 2021]

- Marius Lumbroso [Univ de Rennes I, from May 2021 until Aug 2021]

- Quentin Mazouni [Inria, from Oct 2021]

- Jean Loup Moll [Univ de Rennes I, from Jun 2021 until Jul 2021]

- Koffi Ismael Ouattara [Inria, from Apr 2021 until Aug 2021]

- Georges Aaron Randrianaina [Univ de Rennes I, from Feb 2021 until Jul 2021]

- Leo Rolland [Univ de Rennes I, from Jun 2021 until Aug 2021]

Administrative Assistant

- Sophie Maupile [CNRS]

External Collaborator

- Gurvan Le Guernic [DGA]

2 Overall objectives

DIVERSE's research agenda targets core values of software engineering. In this fundamental domain we focus on and develop models, methodologies and theories to address major challenges raised by the emergence of several forms of diversity in the design, deployment and evolution of software-intensive systems. Software diversity has emerged as an essential phenomenon in all application domains borne by our industrial partners. These application domains range from complex systems brought by systems of systems (addressed in collaboration with Thales, Safran, CEA and DGA) and Instrumentation and Control (addressed with EDF) to pervasive combinations of Internet of Things and Internet of Services (addressed with TellU and Orange) and tactical information systems (addressed in collaboration with civil security services). Today these systems seem to be all radically different, but we envision a strong convergence of the scientific principles that underpin their construction and validation, bringing forward sane and reliable methods for the design of flexible and open yet dependable systems. Flexibility and openness are both critical and challenging software layer properties that must deal with the following four dimensions of diversity: diversity of languages, used by the stakeholders involved in the construction of these systems; diversity of features, required by the different customers; diversity of runtime environments, where software has to run and adapt; diversity of implementations, which are necessary for resilience by redundancy.

In this context, the central software engineering challenge consists in handling diversity from variability in requirements and design to heterogeneous and dynamic execution environments. In particular, this requires considering that the software system must adapt, in unpredictable yet valid ways, to changes in the requirements as well as in its environment. Conversely, explicitly handling diversity is a great opportunity to allow software to spontaneously explore alternative design solutions, and to mitigate security risks.

Concretely, we want to provide software engineers with the following abilities:

- to characterize an “envelope” of possible variations;

- to compose envelopes (to discover new macro envelopes in an opportunistic manner);

- to dynamically synthesize software inside a given envelope.

The major scientific objective that we must achieve to provide such mechanisms for software engineering is summarized below:

Scientific objective for DIVERSE: To automatically compose and synthesize software diversity from design to runtime to address unpredictable evolution of software-intensive systems

Software product lines and associated variability modeling formalisms represent an essential aspect of software diversity, which we already explored in the past, and this aspect stands as a major foundation of DIVERSE's research agenda. However, DIVERSE also exploits other foundations to handle new forms of diversity: type theory and models of computation for the composition of languages; distributed algorithms and pervasive computation to handle the diversity of execution platforms; functional and qualitative randomized transformations to synthesize diversity for robust systems.

3 Research program

3.1 Context

Applications are becoming more complex and the demand for faster development is increasing. In order to better adapt to the unbridled evolution of requirements in markets where software plays an essential role, companies are changing the way they design, develop, secure and deploy applications, by relying on:

- A massive use of reusable libraries from a rich but fragmented eco-system;

- An increasing configurability of most of the produced software;

- A strong increase in evolution frequency;

- Cloud-native architectures based on containers, naturally leading to a diversity of programming languages used, and to the emergence of infrastructure, dependency, project and deployment descriptors (models);

- Implementations of fully automated software supply chains;

- The use of lowcode/nocode platforms;

- The use of ever richer integrated development environments (IDEs), more and more deployed in SaaS mode;

- The massive use of data and artificial intelligence techniques in software production chains.

These trends are set to continue, all the while with a strong concern about the security properties of the produced and distributed software.

The numbers in the examples below help to understand why this evolution of modern software engineering brings a change of dimension:

- When designing a simple kitchen sink (hello world) with the angular framework, more than 1600 dependencies of JavaScript libraries are pulled.

- The numbers revealed by Google in 2018 showed that over 500 million tests are run per day inside Google’s systems, leading to over 4 million daily builds.

- Also at Google, they reported 86 TB of data, including two billion lines of code in nine million source files 105. Their software also rapidly evolves both in terms of frequency and in terms of size. Again, at Google, 25,000 developers typically commit 16,000 changes to the codebase on a single workday. This is also the case for most software code, including open source software.

- x264, a highly popular and configurable video encoder, provides 100+ options that can take boolean, integer or string values. There are different ways of compiling x264, and it is well-known that the compiler options (e.g., -O1, -O2, -O3 of gcc) can influence the performance of software; the widely used gcc compiler, for example, offers more than 200 options. The x264 encoder can be executed on different configurations of the Linux operating system, whose options may in turn influence x264 execution time; in recent versions ( 5), there are 16000+ options to the Linux kernel. Last but not least, x264 should be able to encode many different videos, in different formats and with different visual properties, implying a huge variability of the input space. Overall, the variability space is enormous, and ideally x264 should be run and tested in all these settings. But a rough estimation shows that the number of possible configurations, resulting from the combination of the different variability layers, is .

The DIVERSE research project is working and evolving in the context of this acceleration. We are active at all stages of the software supply chain. The software supply chain covers all the activities and all the stakeholders that relate to software production and delivery. All these activities and stakeholders have to be smartly managed together as part of an overall strategy. The goal of supply chain management (SCM) is to meet customer demands with the most efficient use of resources possible.

In this context, DIVERSE is particularly interested in the following research questions:

- How to engineer tool-based abstractions for a given set of experts in order to foster their socio-technical collaboration;

- How to generate and exploit useful data for the optimization of this supply chain, in particular for the control of variability and the management of the co-evolution of the various software artifacts;

- How to increase the confidence in the produced software, by working on the resilience and security of the artifacts produced throughout this supply chain.

3.2 Scientific background

3.2.1 Model-Driven Engineering

Model-Driven Engineering (MDE) aims at reducing the accidental complexity associated with developing complex software-intensive systems (e.g., use of abstractions of the problem space rather than abstractions of the solution space) 109. It provides DIVERSE with solid foundations to specify, analyze and reason about the different forms of diversity that occur throughout the development life cycle. A primary source of accidental complexity is the wide gap between the concepts used by domain experts and the low-level abstractions provided by general-purpose programming languages 81. MDE approaches address this problem through modeling techniques that support separation of concerns and automated generation of major system artifacts from models (e.g., test cases, implementations, deployment and configuration scripts). In MDE, a model describes an aspect of a system and is typically created or derived for specific development purposes 65. Separation of concerns is supported through the use of different modeling languages, each providing constructs based on abstractions that are specific to an aspect of a system. MDE technologies also provide support for manipulating models, for example, support for querying, slicing, transforming, merging, and analyzing (including executing) models. Modeling languages are thus at the core of MDE, which participates in the development of a sound Software Language Engineering, including a unified typing theory that integrates models as first class entities 111.

Incorporating domain-specific concepts and a high-quality development experience into MDE technologies can significantly improve developer productivity and system quality. Since the late nineties, this realization has led to work on MDE language workbenches that support the development of domain-specific modeling languages (DSMLs) and associated tools (e.g., model editors and code generators). A DSML provides a bridge between the field in which domain experts work and the implementation (programming) field. Domains in which DSMLs have been developed and used include, among others, automotive, avionics, and cyber-physical systems. A study performed by Hutchinson et al. 86 indicates that DSMLs can pave the way for wider industrial adoption of MDE.

More recently, the emergence of new classes of systems that are complex and operate in heterogeneous and rapidly changing environments raises new challenges for the software engineering community. These systems must be adaptable, flexible, reconfigurable and, increasingly, self-managing. Such characteristics make systems more prone to failure when running and thus the development and study of appropriate mechanisms for continuous design and runtime validation and monitoring are needed. In the MDE community, research is focused primarily on using models at the design, implementation, and deployment stages of development. This work has been highly productive, with several techniques now entering a commercialization phase. As software systems are becoming more and more dynamic, the use of model-driven techniques for validating and monitoring runtime behavior is extremely promising 95.

3.2.2 Variability modeling

While the basic vision underlying Software Product Lines (SPL) can probably be traced back to David Parnas' seminal article 102 on the Design and Development of Program Families, it is only quite recently that SPLs have started emerging as a paradigm shift towards modeling and developing software system families rather than individual systems 99. SPL engineering embraces the ideas of mass customization and software reuse. It focuses on the means of efficiently producing and maintaining multiple related software products, exploiting what they have in common and managing what varies among them.

Several definitions of the software product line concept can be found in the research literature. Clements et al. define it as a set of software-intensive systems sharing a common, managed set of features that satisfy the specific needs of a particular market segment or mission and are developed from a common set of core assets in a prescribed way 100. Bosch provides a different definition 71: A SPL consists of a product line architecture and a set of reusable components designed for incorporation into the product line architecture. In addition, the PL consists of the software products developed using the mentioned reusable assets. In spite of the similarities, these definitions provide different perspectives of the concept: market-driven, as seen by Clements et al., and technology-oriented for Bosch.

SPL engineering is a process focusing on capturing the commonalities (assumptions true for each family member) and variability (assumptions about how individual family members differ) between several software products 77. Instead of describing a single software system, a SPL model describes a set of products in the same domain. This is accomplished by distinguishing between elements common to all SPL members, and those that may vary from one product to another. Reuse of core assets, which form the basis of the product line, is key to productivity and quality gains. These core assets extend beyond simple code reuse and may include the architecture, software components, domain models, requirements statements, documentation, test plans or test cases.

The SPL engineering process consists of two major steps:

- Domain Engineering, or development for reuse, focuses on core assets development.

- Application Engineering, or development with reuse, addresses the development of the final products using core assets and following customer requirements.

Central to both processes is the management of variability across the product line 83. In common language use, the term variability refers to the ability or the tendency to change. Variability management is seen as the key feature that distinguishes SPL engineering from other software development approaches 72. It is increasingly regarded as the cornerstone of SPL development, covering the entire development life cycle, from requirements elicitation 113 to product derivation 117 to product testing 98, 97.

Halmans et al. 83 distinguish between essential and technical variability, especially at the requirements level. Essential variability corresponds to the customer's viewpoint, defining what to implement, while technical variability relates to product family engineering, defining how to implement it. A classification based on the dimensions of variability is proposed by Pohl et al. 104: beyond variability in time (existence of different versions of an artifact that are valid at different times) and variability in space (existence of an artifact in different shapes at the same time), Pohl et al. claim that variability is important to different stakeholders and thus has different levels of visibility: external variability is visible to the customers while internal variability, that of domain artifacts, is hidden from them. Other classification proposals come from Meekel et al. 92 (feature, hardware platform, performance and attributes variability) or Bass et al. 63, who discuss variability at the architectural level.

Central to the modeling of variability is the notion of feature, originally defined by Kang et al. as: a prominent or distinctive user-visible aspect, quality or characteristic of a software system or systems 88. Based on this notion of feature, they proposed to use a feature model to model the variability in a SPL. A feature model consists of a feature diagram and other associated information: constraints and dependency rules. Feature diagrams provide a graphical tree-like notation depicting the hierarchical organization of high level product functionalities represented as features. The root of the tree refers to the complete system and is progressively decomposed into more refined features (tree nodes). Relations between nodes (features) are materialized by decomposition edges and textual constraints. Variability can be expressed in several ways. Presence or absence of a feature from a product is modeled using mandatory or optional features. Features are graphically represented as rectangles while some graphical elements (e.g., unfilled circle) are used to describe the variability (e.g., a feature may be optional).

Features can be organized into feature groups. Boolean operators exclusive alternative (XOR), inclusive alternative (OR) or inclusive (AND) are used to select one, several or all the features from a feature group. Dependencies between features can be modeled using textual constraints: requires (presence of a feature requires the presence of another), mutex (presence of a feature automatically excludes another). Feature attributes can be also used for modeling quantitative (e.g., numerical) information. Constraints over attributes and features can be specified as well.
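To make the notions above concrete, the following minimal sketch encodes a small feature model as Boolean constraints and enumerates its valid configurations by brute force. The feature names (`Encoder`, `Codec`, `H264`, `VP9`, `Logging`, `Stats`) and the constraints are hypothetical and chosen only for illustration; real feature models are usually analyzed with SAT or CSP solvers rather than exhaustive enumeration.

```python
from itertools import product

# Hypothetical feature model, for illustration only:
#   Encoder (root)
#     Codec   (mandatory)  -> XOR group {H264, VP9}
#     Logging (optional)
#     Stats   (optional)
#   Textual constraints: Stats requires Logging; VP9 mutex Stats
FEATURES = ["Encoder", "Codec", "H264", "VP9", "Logging", "Stats"]


def is_valid(cfg):
    """Return True if cfg (dict feature -> bool) satisfies the feature model."""
    return all([
        cfg["Encoder"],                       # the root is always selected
        cfg["Codec"] == cfg["Encoder"],       # mandatory child of the root
        cfg["H264"] != cfg["VP9"],            # XOR group: exactly one codec
        (not cfg["Logging"]) or cfg["Encoder"],  # optional child needs its parent
        (not cfg["Stats"]) or cfg["Encoder"],    # optional child needs its parent
        (not cfg["Stats"]) or cfg["Logging"],    # requires constraint
        not (cfg["VP9"] and cfg["Stats"]),       # mutex constraint
    ])


def valid_configurations():
    """Enumerate all assignments and keep those accepted by the model."""
    configs = []
    for values in product([False, True], repeat=len(FEATURES)):
        cfg = dict(zip(FEATURES, values))
        if is_valid(cfg):
            configs.append(frozenset(f for f, on in cfg.items() if on))
    return configs


if __name__ == "__main__":
    for config in valid_configurations():
        print(sorted(config))
```

Exhaustive enumeration is exponential in the number of features, so it is only workable for toy models such as this one.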

Modeling variability allows an organization to capture and select which version of which variant of any particular aspect is wanted in the system 72. To implement it cheaply, quickly and safely, redoing by hand the tedious weaving of every aspect is not an option: some form of automation is needed to leverage the modeling of variability 67. Model Driven Engineering (MDE) makes it possible to automate this weaving process 87. This requires that models are no longer informal, and that the weaving process is itself described as a program (which is as a matter of fact an executable meta-model 96) manipulating these models to produce for instance a detailed design that can ultimately be transformed to code, or to test suites 103, or other software artifacts.

3.2.3 Component-based software development

Component-based software development 112 aims at providing reliable software architectures with a low cost of design. Components are now used routinely in many domains of software system designs: distributed systems, user interaction, product lines, embedded systems, etc. With respect to more traditional software artifacts (e.g., object oriented architectures), modern component models have the following distinctive features 78: description of requirements on services required from the other components; indirect connections between components thanks to ports and connectors constructs 90; hierarchical definition of components (assemblies of components can define new component types); connectors supporting various communication semantics 75; quantitative properties on the services 70.

In recent years component-based architectures have evolved from static designs to dynamic, adaptive designs (e.g., SOFA 75, Palladio 68, Frascati 93). Processes for building a system using a statically designed architecture are made of the following sequential lifecycle stages: requirements, modeling, implementation, packaging, deployment, system launch, system execution, system shutdown and system removal. If for any reason architectural changes are needed after system launch (e.g., because requirements changed, or the implementation platform has evolved, etc.) then the design process must be re-executed from scratch (unless the changes are limited to parameter adjustment in the components deployed).

Dynamic designs allow for on the fly redesign of a component based system. A process for dynamic adaptation is able to reapply the design phases while the system is up and running, without stopping it (this is different from a stop/redeploy/start process). Dynamic adaptation processes support chosen adaptation, when changes are planned and realized to maintain a good fit between the needs that the system must support and the way it supports them 89. Dynamic component-based designs rely on a component meta-model that supports complex life cycles for components, connectors, service specification, etc. Advanced dynamic designs can also take platform changes into account at runtime, without human intervention, by adapting themselves 76, 115. Platform changes and more generally environmental changes trigger imposed adaptation, when the system can no longer use its design to provide the services it must support. In order to support an eternal system 69, dynamic component based systems must separate architectural design and platform compatibility. This requires support for heterogeneity, since platform evolution can be partial.

The Models@runtime paradigm denotes a model-driven approach aiming at taming the complexity of dynamic software systems. It basically pushes the idea of reflection one step further by considering the reflection layer as a real model “something simpler, safer or cheaper than reality to avoid the complexity, danger and irreversibility of reality 107”. In practice, component-based (and/or service-based) platforms offer reflection APIs that make it possible to introspect the system (to determine which components and bindings are currently in place in the system) and dynamic adaptation (by applying CRUD operations on these components and bindings). While some of these platforms offer rollback mechanisms to recover after an erroneous adaptation, the idea of Models@runtime is to prevent the system from actually enacting an erroneous adaptation. In other words, the “model at run-time” is a reflection model that can be uncoupled (for reasoning, validation, simulation purposes) and automatically resynchronized.
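The uncouple, validate, resynchronize cycle described above can be sketched as follows. This is a simplified illustration under our own assumptions, not the API of any actual component platform: the class `ArchitectureModel`, the function `try_adaptation` and the component names are all hypothetical, and validation is reduced to checking that every binding connects two existing components.

```python
import copy


class ArchitectureModel:
    """Hypothetical reflection model: components and (client, server) bindings."""

    def __init__(self, components=(), bindings=()):
        self.components = set(components)
        self.bindings = set(bindings)

    def is_valid(self):
        # Assumed validity rule: no binding may point to a missing component.
        return all(client in self.components and server in self.components
                   for client, server in self.bindings)


def try_adaptation(current, adaptation):
    """Apply an adaptation on an uncoupled copy; commit only if it validates."""
    candidate = copy.deepcopy(current)   # uncouple the model from the system
    adaptation(candidate)                # reason on the copy, not on the system
    if candidate.is_valid():
        return candidate, True           # resynchronize: candidate becomes current
    return current, False                # erroneous adaptation is never enacted


def remove_storage(model):
    # Leaves a dangling binding, so it should be rejected.
    model.components.discard("Storage")


def add_cache(model):
    model.components.add("Cache")
    model.bindings.add(("WebUI", "Cache"))


running = ArchitectureModel({"WebUI", "Storage"}, {("WebUI", "Storage")})

if __name__ == "__main__":
    for change in (remove_storage, add_cache):
        running, accepted = try_adaptation(running, change)
        print(change.__name__, "accepted" if accepted else "rejected")
```

The point of the design is that the running system is only touched after validation succeeds, in contrast with a rollback mechanism that repairs the system after a faulty adaptation has already been applied.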

Heterogeneity is a key challenge for modern component based systems. Until recently, component based techniques were designed to address a specific domain, such as embedded software for command and control, or distributed Web based service oriented architectures. The emergence of the Internet of Things paradigm calls for a unified approach in component based design techniques. By implementing an efficient separation of concerns between platform independent architecture management and platform dependent implementations, Models@runtime is now established as a key technique to support dynamic component based designs. It provides DIVERSE with an essential foundation to explore an adaptation envelope at run-time. The goal is to automatically explore a set of alternatives and assess their relevance with respect to the considered problem. These techniques have been applied to craft software architectures exhibiting high quality of service properties 82. Multi-objective search-based techniques 80 deal with optimization problems containing several (possibly conflicting) dimensions to optimize. These techniques provide DIVERSE with the scientific foundations for reasoning and efficiently exploring an envelope of software configurations at run-time.
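As an illustration of what optimizing several conflicting dimensions means, the sketch below filters a set of candidate configurations down to its Pareto front, i.e., the candidates that no other candidate beats on every objective. The configuration names and the two objectives (latency and cost, both minimized) are assumptions made for the example; actual search-based techniques explore much larger spaces with heuristics such as evolutionary algorithms instead of comparing all pairs.

```python
def dominates(a, b):
    """a dominates b if a is no worse on all objectives and better on at least one.

    Objectives are assumed to be minimized.
    """
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(candidates):
    """Keep the candidates that are not dominated by any other candidate."""
    return {
        name: objectives
        for name, objectives in candidates.items()
        if not any(dominates(other, objectives)
                   for other_name, other in candidates.items()
                   if other_name != name)
    }


# Hypothetical configurations: (latency in ms, cost in arbitrary units).
CANDIDATES = {
    "A": (10, 5),
    "B": (20, 2),
    "C": (25, 6),
    "D": (10, 7),
}

if __name__ == "__main__":
    print(sorted(pareto_front(CANDIDATES)))
```

Here `A` and `B` are retained because each is better than the other on one objective, while `C` and `D` are both dominated by `A`.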

3.2.4 Validation and verification

Validation and verification (V&V) theories and techniques provide the means to assess the validity of a software system with respect to a specific correctness envelope. As such, they form an essential element of DIVERSE's scientific background. In particular, we focus on model-based V&V in order to leverage the different models that specify the envelope at different moments of the software development lifecycle.

Model-based testing consists in analyzing a formal model of a system (e.g., activity diagrams, which capture high-level requirements about the system, statecharts, which capture the expected behavior of a software module, or a feature model, which describes all possible variants of the system) in order to generate test cases that will be executed against the system. Model-based testing 114 mainly relies on model analysis, constraint solving 79 and search-based reasoning 91. DIVERSE leverages in particular the applications of model-based testing in the context of highly-configurable systems 116 and interactive systems 94, as well as recent advances based on diversity for test case selection 85.
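A minimal sketch of test generation from a behavioral model is given below. It assumes a hypothetical state machine (the `TRANSITIONS` table is invented for the example) and a simple all-transitions coverage criterion: for each transition, a breadth-first search finds the shortest event sequence reaching its source state, and the transition's event is appended. It stands in for the richer model analysis, constraint solving and search-based reasoning mentioned above.

```python
from collections import deque

# Hypothetical statechart of a software module: (state, event) -> next state.
TRANSITIONS = {
    ("Idle", "start"): "Running",
    ("Running", "pause"): "Paused",
    ("Paused", "resume"): "Running",
    ("Running", "stop"): "Idle",
    ("Paused", "stop"): "Idle",
}


def shortest_path(transitions, source, target):
    """Breadth-first search for the shortest event sequence from source to target."""
    queue = deque([(source, [])])
    seen = {source}
    while queue:
        state, path = queue.popleft()
        if state == target:
            return path
        for (src, event), dst in transitions.items():
            if src == state and dst not in seen:
                seen.add(dst)
                queue.append((dst, path + [event]))
    return None  # target is unreachable from source


def transition_coverage_tests(transitions, initial):
    """Generate one test (event sequence, expected final state) per transition."""
    tests = []
    for (src, event), dst in transitions.items():
        prefix = shortest_path(transitions, initial, src)
        if prefix is not None:
            tests.append((prefix + [event], dst))
    return tests


def run(transitions, initial, events):
    """Execute an event sequence on the model; a real harness would drive the system."""
    state = initial
    for event in events:
        state = transitions[(state, event)]
    return state


if __name__ == "__main__":
    for events, expected in transition_coverage_tests(TRANSITIONS, "Idle"):
        print(events, "->", expected)
```

In practice the generated sequences would be executed against the implementation and the observed state compared with the expected one; `run` only replays them on the model itself.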

Nowadays, it is possible to simulate various kinds of models. Existing tools range from industrial tools such as Simulink, Rhapsody or Telelogic to academic approaches like Omega 101, or Xholon. All these simulation environments operate on homogeneous environment models. However, to handle diversity in software systems, we also leverage recent advances in heterogeneous simulation. Ptolemy 74 proposes a common abstract syntax, which represents the description of the model structure. These elements can be decorated using different directors that reflect the application of a specific model of computation on the model element. Metropolis 64 provides modeling elements amenable to semantically equivalent mathematical models. Metropolis offers a precise semantics flexible enough to support different models of computation. ModHel'X 84 studies the composition of multi-paradigm models relying on different models of computation.

Model-based testing and simulation are complemented by runtime fault-tolerance through the automatic generation of software variants that can run in parallel, to tackle the open nature of software-intensive systems. The foundations in this case are the seminal work about N-version programming 62, recovery blocks 106 and code randomization 66, which demonstrated the central role of diversity in software to ensure runtime resilience of complex systems. Such techniques rely on truly diverse software solutions in order to provide systems with the ability to react to events, which could not be predicted at design time and checked through testing or simulation.
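The principle behind N-version programming can be sketched as a majority vote over independently developed variants of the same function. The three integer square root variants below, one of them deliberately faulty, are hypothetical stand-ins, and the voter `n_version` is our own simplified illustration rather than a reproduction of the cited work.

```python
import math
from collections import Counter


def isqrt_library(x):
    """Variant 1: standard library integer square root."""
    return math.isqrt(x)


def isqrt_search(x):
    """Variant 2: linear search for the largest r such that r * r <= x."""
    r = 0
    while (r + 1) * (r + 1) <= x:
        r += 1
    return r


def isqrt_faulty(x):
    """Variant 3: deliberately wrong, to simulate a design fault."""
    return x // 7 + 1


def n_version(variants, x):
    """Run all variants and return the result backed by a strict majority."""
    results = []
    for variant in variants:
        try:
            results.append(variant(x))
        except Exception:
            continue  # a crashing variant simply loses its vote
    if not results:
        raise RuntimeError("all variants failed")
    value, votes = Counter(results).most_common(1)[0]
    if votes <= len(variants) // 2:
        raise RuntimeError("no majority among variants")
    return value


VARIANTS = [isqrt_library, isqrt_search, isqrt_faulty]

if __name__ == "__main__":
    print(n_version(VARIANTS, 49))
```

The vote only helps when variants fail independently, which is why such techniques depend on truly diverse implementations.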

3.2.5 Empirical software engineering

The rigorous, scientific evaluation of DIVERSE's contributions is an essential aspect of our research methodology. In addition to theoretical validation through formal analysis or complexity estimation, we also aim at applying state-of-the-art methodologies and principles of empirical software engineering. This approach encompasses a set of techniques for the sound validation of contributions in the field of software engineering, ranging from statistically sound comparisons of techniques and large-scale data analysis to interviews and systematic literature reviews 110, 108. Such methods have been used for example to understand the impact of new software development paradigms 73. Experimental design and statistical tests represent another major aspect of empirical software engineering. Addressing large-scale software engineering problems often requires the application of heuristics, and it is important to understand their effects through sound statistical analyses 61.

3.3 Research axis

DIVERSE explores Software Diversity. Leveraging our strong background on Model-Driven Engineering, and our large expertise on several related fields (programming languages, distributed systems, GUI, machine learning, security...), we explore tools and methods to embrace the inherent diversity in software engineering, from the stakeholders and underlying tool-supported languages involved in the software system life cycle, to the configuration and evolution space of the modern software systems, and the heterogeneity of the targeted execution platforms. Hence, we organize our research directions according to three axes (cf. Fig. 1):

- Axis #1: Software Language Engineering. We explore the future engineering and scientific environments to support the socio-technical coordination among the various stakeholders involved across modern software system life cycles.

- Axis #2: Spatio-temporal Variability in Software and Systems. We explore systematic and automatic approaches to cope with software variability, both in space (software variants) and time (software maintenance and evolution).

- Axis #3: DevSecOps and Resilience Engineering for Software and Systems. We explore smart continuous integration and deployment pipelines to ensure the delivery of secure and resilient software systems on heterogeneous execution platforms (cloud, IoT...).

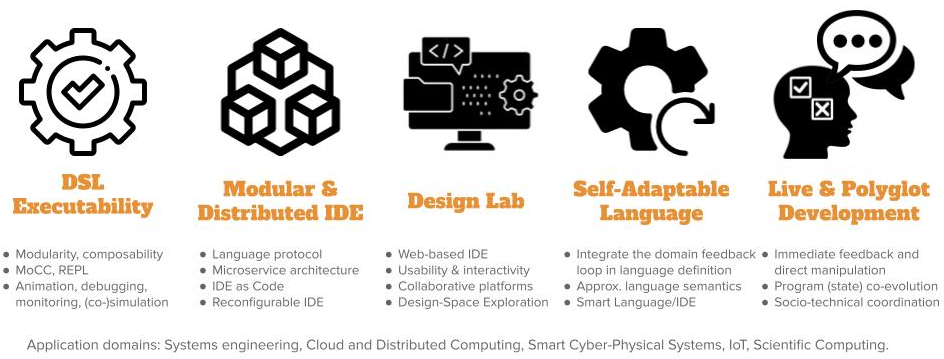

3.3.1 Axis #1: Software Language Engineering

Overall objective.

The disruptive design of new, complex systems requires a high degree of flexibility in the communication between many stakeholders, often limited by the silo-like structure of the organization itself (cf. Conway’s law). To overcome this constraint, modern engineering environments aim to: (i) better manage the necessary exchanges between the different stakeholders; (ii) provide a unique and usable place for information sharing; and (iii) ensure the consistency of the many points of view. Software languages are the key pivot between the diverse stakeholders involved, and the software systems they have to implement. Domain-Specific (Modeling) Languages enable stakeholders to address the diverse concerns through specific points of view, and their coordinated use is essential to support the socio-technical coordination across the overall software system life cycle.

Our perspectives on Software Language Engineering over the next period are presented in Figure 2 and detailed in the following paragraphs.

DSL Executability.

Providing rich and adequate environments is key to the adoption of domain-specific languages. In particular, we focus on tools that support model and program execution. We explore the foundations to define the required concerns in language specification, and systematic approaches to derive environments (e.g., IDE, notebook, design labs) including debuggers, animators, simulators, loggers, monitors, trade-off analysis, etc.

Modular & Distributed IDE.

IDEs are indispensable companions to software languages. They are increasingly turning towards Web-based platforms, heavily relying on cloud infrastructures and forges. Since language services require different computing capacities and response times (to guarantee a user-friendly experience within the IDE) and use shared resources (e.g., the program), we explore new architectures for their modularization and systematic approaches for their individual deployment and dynamic adaptation within an IDE. To cope with the ever-growing number of programming languages, manufacturers of Integrated Development Environments (IDEs) have recently defined protocols as a way to use and share multiple language services in language-agnostic environments. These protocols rely on a proper specification of the services that are commonly found in the tool support of general-purpose languages, and define a fixed set of capabilities to offer in the IDE. However, new languages appear regularly, offering unique constructs (e.g., DSLs) and supported by dedicated services to be offered as new capabilities in IDEs. This trend leads to a multiplication of new protocols that are hard to combine and possibly incompatible (e.g., overlap, different technological stacks). Beyond the proposition of specific protocols, we will explore an original approach to specify language protocols and to allow IDEs to be configured with such protocol specifications. IDEs went from directly supporting languages to protocols, and we envision the next step: IDE as code, where language protocols are created or inferred on demand and support an adaptation loop taking charge of the (re)configuration of the IDE.

Design Lab.

Web-based and cloud-native IDEs open new opportunities to bridge the gap between the IDE and collaborative platforms, e.g., forges. In the complex world of software systems, we explore new approaches to reduce the distance between the various stakeholders (e.g., systems engineers and all those involved in specialty engineering) and to improve the interactions between them through an adapted tool chain. We aim to improve the usability of development cycles with efficiency, affordance and satisfaction. We also explore new approaches to explore and interact with the design space or other concerns such as software and systems human values or security, and provide facilities for trade-off analysis and decision making.

Live & Polyglot Development.

As of today, polyglot development is massively popular and virtually all software systems put multiple languages to use, which complicates not only their development, but also their evolution and maintenance. Moreover, as software grows more critical in new application domains (e.g., data analytics, health or scientific computing), it is crucial to ease the participation of scientists, decision-makers, and more generally non-software experts. Live programming makes it possible to change a program while it is running, by propagating changes in the program code to its run-time state. This effectively bridges the gulf of evaluation between program writing and program execution: the effects a change has on the running system are immediately visible, and the developer can take immediate action. The challenges at the intersection of polyglot and live programming have received little attention so far, and we envision a language design and implementation approach to specify domain-specific languages and their coordination, and automatically provide interactive domain-specific environments for live and polyglot programming.
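As a minimal illustration of live programming, the following Python sketch swaps the code of a running program while its run-time state is preserved. The `LiveProgram` class and the `step` convention are hypothetical names chosen for this example, not part of any tool mentioned in this report.

```python
class LiveProgram:
    """Toy live-programming host: code can be replaced while state survives."""

    def __init__(self, source):
        self.state = {"total": 0}   # run-time state that must survive code changes
        self.update(source)

    def update(self, source):
        # Re-evaluate the program text and rebind the entry point.
        # The state dictionary is deliberately left untouched.
        namespace = {}
        exec(source, namespace)
        self.step = namespace["step"]

    def tick(self, value):
        self.step(self.state, value)
        return self.state["total"]


V1 = "def step(state, value):\n    state['total'] += value\n"
V2 = "def step(state, value):\n    state['total'] += 2 * value\n"

program = LiveProgram(V1)
before = [program.tick(1), program.tick(2)]   # executed with the first version
program.update(V2)                            # code changes, state is kept
after = program.tick(3)                       # executed with the second version
print(before, after)
```

The effect of the change is visible on the very next `tick`, without restarting the program or losing the accumulated total.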

Self-Adaptable Language.

Over recent years, self-adaptation has become a concern for many software systems that operate in complex and changing environments. At the core of self-adaptation lies a feedback loop and its associated trade-off reasoning, to decide on the best course of action. However, existing software languages do not abstract the development and execution of such feedback loops for self-adaptable systems. Developers have to fall back to ad-hoc solutions to implement self-adaptable systems, often with wide-ranging design implications (e.g., explicit MAPE-K loop). Furthermore, existing software languages do not capitalize on monitored usage data of a language and its modeling environment. This hinders the continuous and automatic evolution of a software language based on feedback loops from the modeling environment and runtime software system. To address the aforementioned issues, we will explore the concept of Self-Adaptable Language (SAL) to abstract the feedback loops at both system and language levels.
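To make the notion of an explicit MAPE-K loop concrete, here is a minimal Python sketch of the kind of ad-hoc feedback loop a developer typically writes by hand. All names (`Knowledge`, the latency goal, the single scaling rule) are assumptions made for illustration; the sketch does not represent the SAL approach itself.

```python
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """Shared knowledge (the K in MAPE-K): goal, observations, current setting."""
    max_latency_ms: float = 100.0
    latency_history: list = field(default_factory=list)
    workers: int = 1


def monitor(knowledge, latency_ms):
    knowledge.latency_history.append(latency_ms)


def analyze(knowledge):
    """Return True when the latest observation violates the goal."""
    return knowledge.latency_history[-1] > knowledge.max_latency_ms


def plan(knowledge, violated):
    """Trade-off reasoning reduced to one rule: add a worker on violation."""
    return knowledge.workers + 1 if violated else knowledge.workers


def execute(knowledge, target_workers):
    knowledge.workers = target_workers


def mape_k_step(knowledge, latency_ms):
    monitor(knowledge, latency_ms)
    execute(knowledge, plan(knowledge, analyze(knowledge)))
    return knowledge.workers


k = Knowledge()
trace = [mape_k_step(k, latency) for latency in (80.0, 120.0, 150.0, 90.0)]
print(trace)
```

Even in this toy form, the loop is tangled with application-level concerns (what to monitor, which goal, which action), which is the design burden that a language-level abstraction aims to remove.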

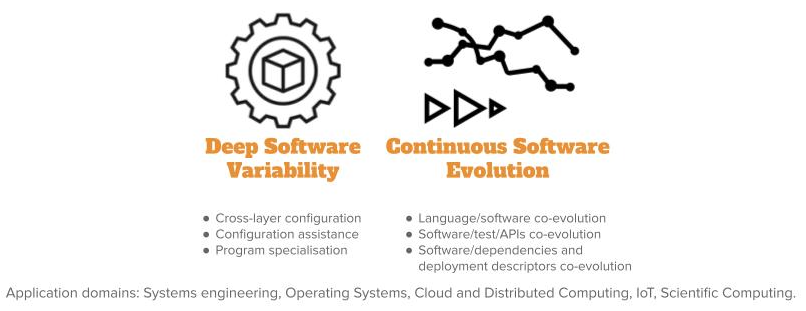

3.3.2 Axis #2: Spatio-temporal Variability in Software and Systems

Overall objective.

Leveraging our longstanding activity on variability management for software product lines and configurable systems covering diverse scenarios of use, we will investigate over the next period the impact of such variability across the diverse layers, incl. source code, input/output data, compilation chain, operating systems and underlying execution platforms. We envision better support and assistance for the configuration and optimisation (e.g., non-functional properties) of software systems according to this deep variability. Moreover, as software systems involve diverse artefacts (e.g., APIs, tests, models, scripts, data, cloud services, documentation, deployment descriptors...), we will investigate their continuous co-evolution during the overall lifecycle, including maintenance and evolution. Our perspectives on spatio-temporal variability over the next period are presented in Figure 3 and detailed in the following paragraphs.

Deep Software Variability.

Software systems can be configured to reach specific functional goals and non-functional performance, either statically at compile time or through the choice of command-line options at runtime. We observed that considering the software layer only might be a naive approach to tune the performance of the system or to test its functional correctness. In fact, many layers (hardware, operating system, input data, etc.), which are themselves subject to variability, can alter the performance or functionalities of software configurations. We call deep software variability the interaction of all variability layers that could modify the behavior or non-functional properties of a software system. Deep software variability calls for investigating how to systematically handle cross-layer configuration. The diversification of the different layers is also an opportunity to test the robustness and resilience of the software layer in multiple environments. Another interesting challenge is to tune the software for one specific execution environment. In essence, deep software variability questions the generalization of configuration knowledge.
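The combinatorial nature of cross-layer configuration can be sketched in a few lines of Python. The layers and their values below are invented for illustration; the point is only that the space to explore is the product of all layers, not the software options alone.

```python
from itertools import product

# Hypothetical variability layers; every value here is an illustrative assumption.
layers = {
    "hardware": ["x86_64", "arm64"],
    "operating_system": ["linux", "freebsd"],
    "compile_flags": ["-O0", "-O2"],
    "input_data": ["small.csv", "large.csv"],
}


def cross_layer_configurations(layers):
    """Yield every combination of one value per layer."""
    names = list(layers)
    for values in product(*(layers[name] for name in names)):
        yield dict(zip(names, values))


configs = list(cross_layer_configurations(layers))
software_only = len(layers["compile_flags"])
print(software_only, len(configs))
```

With only two values per layer, the two software-level configurations become sixteen cross-layer ones, which is why knowledge learned in one environment may not generalize to another.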

Continuous Software Evolution.

Nowadays, software development has become more and more complex, involving various artefacts, such as APIs, tests, models, scripts, data, cloud services, documentation, etc., and embedding millions of lines of code (LOC). Recent evidence highlights continuous software evolution based on thousands of commits, hundreds of releases, all done by thousands of developers. We focus on the following essential backbone dimensions in software engineering: languages, models, APIs, tests and deployment descriptors, all revolving around software code implementation. We will explore the foundations of a multidimensional and polyglot co-evolution platform, and will provide a better understanding with new empirical evidence and knowledge.

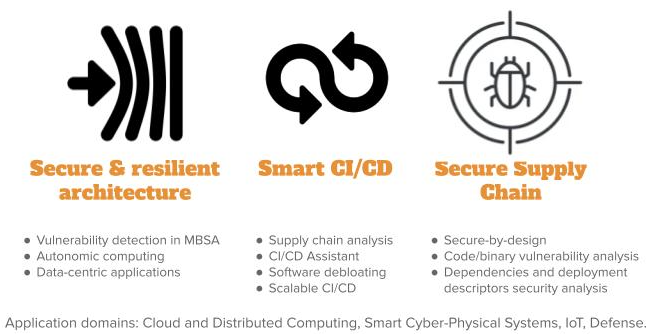

3.3.3 Axis #3: DevSecOps and Resilience Engineering for Software and Systems

Overall objective.

The production and delivery of modern software systems involve the integration of diverse dependencies and continuous deployment on diverse execution platforms in the form of large distributed socio-technical systems. This leads to new software architectures and programming models, as well as complex supply chains for final delivery to system users. In order to boost cybersecurity, we want to provide strong support to software engineers and IT teams in the development and delivery of secure and resilient software systems, i.e., systems able to resist or recover from cyberattacks. Our perspectives on DevSecOps and Resilience Engineering over the next period are presented in Figure 4 and detailed in the following paragraphs.

Secure & Resilient Architecture.

Continuous integration and deployment pipelines are processes implementing complex software supply chains. We envision an explicit and early consideration of security properties in such pipelines to help in detecting vulnerabilities. In particular, we integrate the security concern in Model-Based System Analysis (MBSA) approaches, and explore guidelines, tools and methods to drive the definition of secure and resilient architectures. We also investigate resilience at runtime through frameworks for autonomic computing and data-centric applications, both for the software systems and the associated deployment descriptors.

Smart CI/CD.

Dependency management, Infrastructure as Code (IaC) and DevOps practices open opportunities to analyze complex supply chains. We aim at providing relevant metrics to evaluate and ensure the security of such supply chains, advanced assistants to help in specifying corresponding pipelines, and new approaches to optimize them (e.g., software debloating, scalability...). We study how supply chains can actively leverage software variability and diversity to increase cybersecurity and resilience.

Secure Supply Chain.

In order to produce secure and resilient software systems, we explore new secure-by-design foundations that integrate security concerns as first-class entities through a seamless continuum from design to continuous integration and deployment. We explore new models, architectures, inter-relations, and static and dynamic analyses that rely on explicitly expressed security concerns to ensure a secure and resilient supply chain. We lead research on automatic vulnerability and malware detection in modern supply chains, considering the various artefacts either as white boxes enabling source code analysis (to avoid accidental vulnerabilities or intentional ones or code poisoning), or as black boxes requiring binary analysis (to find malware or vulnerabilities). We also conduct research on the security analysis of dependencies and deployment descriptors.

4 Application domains

Information technology affects all areas of society. The need to develop software systems is therefore present in a huge number of application domains. One of the goals of software engineering is to apply a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software whatever the application domain.

As a result, the team covers a wide range of application domains and never refrains from exploring a particular field of application. Our primary expertise is in complex, heterogeneous and distributed systems. While we historically collaborated with partners in the field of systems engineering, it should be noted that for several years now, we have investigated several new areas in depth:

- the field of web applications, with the associated design principles and architectures, for applications ranging from cloud-native applications to the design of modern web front-ends.

- the field of scientific computing in connection with the CEA DAM, Safran and scientists from other disciplines such as the ecologists of the University of Rennes 1. In this field, where writing complex software is common, we explore how we could help scientists use software engineering approaches, in particular SLE and approximate computing techniques.

- the field of large software systems such as the Linux kernel or other open-source projects. In this field, we explore, in particular, variability management, support for co-evolution and the use of polyglot approaches.

5 Highlights of the year

- Mathieu Acher is a junior member of the Institut Universitaire de France (IUF).

5.1 Awards

Best paper award at SPLC 2021 for Hugo Martin, Mathieu Acher, Juliana Alves Pereira, and Jean-Marc Jézéquel. A comparison of performance specialization learning for configurable systems (2021) 47

Best paper award for Cassius Puodzius, Olivier Zendra, Annelie Heuser, Lamine Noureddine for "Accurate and Robust Malware Analysis through Similarity of External Calls Dependency Graphs (ECDG)" in ARES-IWCC 2021 51

6 New software and platforms

6.1 New software

6.1.1 FAMILIAR

-

Keywords:

Software product line, Configurators, Customisation

-

Scientific Description:

FAMILIAR (for FeAture Model scrIpt Language for manIpulation and Automatic Reasoning) is a language for importing, exporting, composing, decomposing, editing, configuring, computing "diffs", refactoring, reverse engineering, testing, and reasoning about (multiple) feature models. All these operations can be combined to realize complex variability management tasks. A comprehensive environment is proposed as well as integration facilities with the Java ecosystem.

-

Functional Description:

Familiar is an environment for large-scale product customisation. From a model of product features (options, parameters, etc.), Familiar can automatically generate several million variants. These variants can take many forms: software, a graphical interface, a video sequence or even a manufactured product (3D printing). Familiar is particularly well suited for developing web configurators (for ordering customised products online), for providing online comparison tools and also for engineering any family of embedded or software-based products.
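To illustrate how variants are derived from a model of product features, the following Python sketch enumerates the valid configurations of a toy feature model by brute force. The feature names and constraints are invented for this example, and the code does not use FAMILIAR's own language or API.

```python
from itertools import product

# Hypothetical feature model: names and constraints are illustrative only.
FEATURES = ["engine", "petrol", "electric", "gps", "fast_charger"]


def is_valid(c):
    return (
        c["engine"]                                   # mandatory feature
        and (c["petrol"] != c["electric"])            # alternative (xor) group
        and (not c["fast_charger"] or c["electric"])  # fast_charger requires electric
    )


def valid_configurations():
    """Enumerate all feature selections and keep those satisfying the model."""
    for bits in product([False, True], repeat=len(FEATURES)):
        config = dict(zip(FEATURES, bits))
        if is_valid(config):
            yield config


variants = list(valid_configurations())
print(len(variants))
```

Brute-force enumeration only works for toy models, since the search space doubles with every feature; models yielding millions of variants call for automated reasoning rather than exhaustive listing.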

- URL:

-

Contact:

Mathieu Acher

-

Participants:

Aymeric Hervieu, Benoit Baudry, Didier Vojtisek, Edward Mauricio Alferez Salinas, Guillaume Bécan, Joao Bosco Ferreira-Filho, Julien Richard-Foy, Mathieu Acher, Olivier Barais, Sana Ben Nasr

6.1.2 GEMOC Studio

-

Name:

GEMOC Studio

-

Keywords:

DSL, Language workbench, Model debugging

-

Scientific Description:

The language workbench puts together the following tools, seamlessly integrated into the Eclipse Modeling Framework (EMF):

- Melange, a tool-supported meta-language to modularly define executable modeling languages with execution functions and data, and to extend (EMF-based) existing modeling languages.

- MoCCML, a tool-supported meta-language dedicated to the specification of a Model of Concurrency and Communication (MoCC) and its mapping to a specific abstract syntax and associated execution functions of a modeling language.

- GEL, a tool-supported meta-language dedicated to the specification of the protocol between the execution functions and the MoCC to support the feedback of the data as well as the callback of other expected execution functions.

- BCOoL, a tool-supported meta-language dedicated to the specification of language coordination patterns to automatically coordinate the execution of, possibly heterogeneous, models.

- Monilog, an extension for monitoring and logging executable domain-specific models.

- Sirius Animator, an extension to the model editor designer Sirius to create graphical animators for executable modeling languages.

-

Functional Description:

The GEMOC Studio is an Eclipse package that contains components supporting the GEMOC methodology for building and composing executable Domain-Specific Modeling Languages (DSMLs). It includes two workbenches. The GEMOC Language Workbench is intended to be used by language designers (aka domain experts) and allows them to build and compose new executable DSMLs. The GEMOC Modeling Workbench is intended to be used by domain designers to create, execute and coordinate models conforming to executable DSMLs. The different concerns of a DSML, as defined with the tools of the language workbench, are automatically deployed into the modeling workbench. They parametrize a generic execution framework that provides various generic services such as graphical animation, debugging tools, trace and event managers, and a timeline.

- URL:

-

Contact:

Benoît Combemale

-

Participants:

Didier Vojtisek, Dorian Leroy, Erwan Bousse, Fabien Coulon, Julien DeAntoni

-

Partners:

IRIT, ENSTA, I3S, OBEO, Thales TRT

6.1.3 Kevoree

-

Keywords:

M2M, Dynamic components, IoT, Heterogeneity, Smart home, Cloud, Software architecture, Dynamic deployment

-

Scientific Description:

Kevoree is an open-source models@runtime platform (http://www.kevoree.org) to properly support the dynamic adaptation of distributed systems. Models@runtime basically pushes the idea of reflection [132] one step further by considering the reflection layer as a real model that can be uncoupled from the running architecture (e.g. for reasoning, validation, and simulation purposes) and later automatically resynchronized with its running instance.

Kevoree has been influenced by previous work that we carried out in the DiVA project [132] and the Entimid project [135]. With Kevoree we push our vision of models@runtime [131] further. In particular, Kevoree provides proper support for distributed models@runtime. To this aim we introduced the Node concept to model the infrastructure topology and the Group concept to model the semantics of inter-node communication during synchronization of the reflection model among nodes. Kevoree includes a Channel concept to allow for multiple communication semantics between remote Components deployed on heterogeneous nodes. All Kevoree concepts (Component, Channel, Node, Group) obey the object type design pattern to separate deployment artifacts from running artifacts. Kevoree supports multiple kinds of very different execution node technologies (e.g. Java, Android, MiniCloud, FreeBSD, Arduino, ...).

Kevoree is distributed under the terms of the LGPL open source license.

Main competitors:

- the Fractal/Frascati eco-system (http://frascati.ow2.org/doc/1.4/frascati-userguide.html).

- SpringSource Dynamic Module (http://spring.io/)

- GCM-Proactive (http://proactive.inria.fr/)

- OSGi (http://www.osgi.org)

- Chef

- Vagrant (http://vagrantup.com/)

Main innovative features:

- distributed models@runtime platform (with a distributed reflection model and an extensible models@runtime dissemination set of strategies).

- Support for heterogeneous node types (from resource-constrained cyber-physical systems to cloud computing infrastructures).

- Fully automated provisioning model to correctly deploy software modules and their dependencies.

- Communication and concurrency access between software modules expressed at the model level (not in the module implementation).

-

Functional Description:

Kevoree is an open-source models@runtime platform to properly support the dynamic adaptation of distributed systems. Models@runtime basically pushes the idea of reflection one step further by considering the reflection layer as a real model that can be uncoupled from the running architecture (e.g. for reasoning, validation, and simulation purposes) and later automatically resynchronized with its running instance.
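The idea of editing an uncoupled model and later resynchronizing it with the running system can be sketched as a model comparison followed by the application of the resulting actions. The Python code below is a simplified illustration under assumed data structures (a mapping from component name to version); it does not use the Kevoree API.

```python
def plan_adaptation(running, target):
    """Compare the running architecture with the target model; return actions."""
    stop = sorted(running.keys() - target.keys())
    start = sorted(target.keys() - running.keys())
    update = sorted(
        name for name in running.keys() & target.keys()
        if running[name] != target[name]
    )
    return (
        [("stop", name) for name in stop]
        + [("start", name) for name in start]
        + [("update", name) for name in update]
    )


def apply_actions(running, target, actions):
    """Resynchronize the running architecture with the target model."""
    for action, name in actions:
        if action == "stop":
            del running[name]
        else:                      # "start" and "update" both install the target version
            running[name] = target[name]
    return running


running = {"sensor": "1.0", "logger": "1.0"}

# The reflection model is a copy that can be edited and validated offline.
target = dict(running)
target["sensor"] = "1.1"
del target["logger"]
target["dashboard"] = "2.0"

actions = plan_adaptation(running, target)
apply_actions(running, target, actions)
print(actions)
```

Because the target model is a plain copy, it can be checked or simulated before any action touches the running system.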

- URL:

-

Contact:

Olivier Barais

-

Participants:

Aymeric Hervieu, Benoit Baudry, Francisco-Javier Acosta Padilla, Inti Gonzalez Herrera, Ivan Paez Anaya, Jacky Bourgeois, Jean Emile Dartois, Johann Bourcier, Manuel Leduc, Maxime Tricoire, Mohamed Boussaa, Noël Plouzeau, Olivier Barais

6.1.4 Melange

-

Name:

Melange

-

Keywords:

Model-driven engineering, Meta model, MDE, DSL, Model-driven software engineering, Dedicated language, Language workbench, Meta-modelisation, Modeling language, Meta-modeling

-

Scientific Description:

Melange is a follow-up of the executable metamodeling language Kermeta, which provides a tool-supported dedicated meta-language to safely assemble language modules, customize them and produce new DSMLs. Melange provides specific constructs to assemble together various abstract syntax and operational semantics artifacts into a DSML. DSMLs can then be used as first class entities to be reused, extended, restricted or adapted into other DSMLs. Melange relies on a particular model-oriented type system that provides model polymorphism and language substitutability, i.e. the possibility to manipulate a model through different interfaces and to define generic transformations that can be invoked on models written using different DSLs. Newly produced DSMLs are correct by construction, ready for production (i.e., the result can be deployed and used as-is), and reusable in a new assembly.

Melange is tightly integrated with the Eclipse Modeling Framework ecosystem and relies on the meta-language Ecore for the definition of the abstract syntax of DSLs. Executable meta-modeling is supported by weaving operational semantics defined with Xtend. Designers can thus easily design an interpreter for their DSL in a non-intrusive way. Melange is bundled as a set of Eclipse plug-ins.

-

Functional Description:

Melange is a language workbench which helps language engineers to mashup their various language concerns as language design choices, to manage their variability, and support their reuse. It provides a modular and reusable approach for customizing, assembling and integrating DSMLs specifications and implementations.

- URL:

-

Contact:

Benoît Combemale

-

Participants:

Arnaud Blouin, Benoît Combemale, David Mendez Acuna, Didier Vojtisek, Dorian Leroy, Erwan Bousse, Fabien Coulon, Jean-Marc Jezequel, Olivier Barais, Thomas Degueule

6.1.5 DSpot

-

Keywords:

Software testing, Test amplification

-

Functional Description:

DSpot is a tool that generates missing assertions in JUnit tests. DSpot takes as input a Java project with an existing test suite and prints new test cases to the console. DSpot supports Java projects built with Maven and Gradle.

- URL:

-

Contact:

Benjamin Danglot

-

Participants:

Benoit Baudry, Martin Monperrus, Benjamin Danglot

-

Partner:

KTH Royal Institute of Technology

6.1.6 ALE

-

Name:

Action Language for Ecore

-

Keywords:

Meta-modeling, Executable DSML

-

Functional Description:

Main features of ALE include:

- Executable metamodeling: Re-open existing EClasses to insert new methods with their implementations

- Metamodel extension: The very same mechanism can be used to extend existing Ecore metamodels and insert new features (e.g., attributes) in a non-intrusive way

- Interpreted: No need to deploy Eclipse plugins, just run the behavior on a model directly in your modeling environment

- Extensible: If ALE doesn’t fit your needs, register Java classes as services and invoke them inside your implementations of EOperations.

- URL:

-

Contact:

Benoît Combemale

-

Partner:

OBEO

6.1.7 InspectorGuidget

-

Keywords:

Static analysis, Software testing, User Interfaces

-

Functional Description:

InspectorGuidget is a static code analysis tool. InspectorGuidget analyses the UI (user interface/interaction) code of a software system to extract high-level information and metrics. InspectorGuidget also finds bad UI coding practices, such as Blob listener instances. InspectorGuidget analyses Java code.

- URL:

- Publications:

-

Contact:

Arnaud Blouin

-

Participants:

Arnaud Blouin, Benoit Baudry

6.1.8 Descartes

-

Keywords:

Software testing, Mutation analysis

-

Functional Description:

Descartes evaluates the capability of your test suite to detect bugs using extreme mutation testing.

Descartes is a mutation engine plugin for PIT which implements extreme mutation operators as proposed in the paper "Will my tests tell me if I break this code?".

- URL:

- Publications:

-

Contact:

Benoit Baudry

-

Participants:

Oscar Luis Vera Perez, Benjamin Danglot, Benoit Baudry, Martin Monperrus

-

Partner:

KTH Royal Institute of Technology

6.1.9 PitMP

-

Name:

PIT for Multi-module Project

-

Keywords:

Mutation analysis, Mutation testing, Java, JUnit, Maven

-

Functional Description:

PIT and Descartes are mutation testing systems for Java applications, which allow you to verify whether your test suites can detect possible bugs, and so to evaluate the quality of your test suites. They evaluate the capability of your test suite to detect bugs using mutation testing (PIT) or extreme mutation testing (Descartes). Mutation testing does so by introducing small changes or faults into the original program. These modified versions are called mutants. A good test suite should be able to kill, i.e. detect, a mutant. Traditional mutation testing works at the instruction level, e.g., replacing ">" by "<=", so the number of generated mutants is huge, as is the time required to run the entire test suite against them. That is why the extreme mutation strategy appeared. In extreme mutation testing, the whole body of a method under test is removed. Descartes is a mutation engine plugin for PIT which implements extreme mutation operators. Both provide reports combining line coverage, mutation score and a list of weaknesses in the source.
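The difference between instruction-level and extreme mutants can be illustrated with a small Python sketch. PIT and Descartes operate on Java code; the functions and test suites below are hypothetical stand-ins written only to show when a mutant is killed. For a value-returning function, removing the body is modelled here, as an assumption, by returning a fixed constant.

```python
def is_adult(age):
    """Original function under test."""
    return age >= 18


def is_adult_conventional_mutant(age):
    """Instruction-level mutant: '>=' replaced by '>'."""
    return age > 18


def is_adult_extreme_mutant(age):
    """Extreme mutant: the whole body is replaced by a constant result."""
    return True


def weak_suite(f):
    """Passes if f behaves as expected on one easy input."""
    return f(30) is True


def strong_suite(f):
    """Also checks the boundary value and a negative case."""
    return f(30) is True and f(18) is True and f(10) is False


def killed(suite, mutant):
    """A mutant is killed when the suite fails on it."""
    return not suite(mutant)


report = {
    (suite.__name__, mutant.__name__): killed(suite, mutant)
    for suite in (weak_suite, strong_suite)
    for mutant in (is_adult_conventional_mutant, is_adult_extreme_mutant)
}
for key, value in report.items():
    print(key, value)
```

The weak suite lets both mutants survive, including the extreme one, which signals that the function is executed but its result is never meaningfully checked. The strong suite kills both.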

- URL:

-

Contact:

Caroline Landry

-

Partners:

CSQE, KTH Royal Institute of Technology, ENGINEERING

6.1.10 SE-PAC

-

Name:

Self-Evolving-PAcker Classifier

-

Keywords:

Packers, Malware, Obfuscation, Classification, Incremental clustering

-

Functional Description:

SE-PAC is a Self-Evolving PAcker Classifier software that identifies the class of the packer used to pack a (malicious) Win32 executable file. It is able to identify both known and unknown packer classes, and to update its packer knowledge with the predicted packer classes.

SE-PAC relies on incremental clustering in a semi-supervised fashion. It is composed of an offline phase and an online phase. In the offline phase, a set of features is extracted from a collection of packed binaries provided with their ground truth labels, then a density-based clustering algorithm (DBSCAN) is used to group similar packers together into clusters with respect to a distance measure. In this step, the similarity threshold is tuned in order to form the clusters that fit the best with the set of labels provided. In the online phase, SE-PAC reproduces the same operations of feature extraction and distance calculation, then uses a customized version of the incremental clustering algorithm DBSCAN in order to classify incoming packed samples, either in known packer classes by extending existing clusters or new packer classes by forming new clusters, or temporarily leave them unclassified (see notion of noise of DBSCAN). The clusters formed after each update serve as a baseline for SE-PAC to self-evolve.

Finally, SE-PAC includes a post-clustering selection strategy that extracts a set of relevant samples from the clusters found in order to reduce the cost of the post-clustering packer processing.
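The three outcomes of the online phase described above (extend a known cluster, form a new one, or leave a sample as noise) can be sketched as follows. This Python code is a deliberately simplified stand-in, not SE-PAC's customized incremental DBSCAN: it ignores core-point density and cluster merging, uses 2-D points instead of extracted packer features, and its threshold, labels and sample values are illustrative assumptions.

```python
import math

EPS = 1.0         # similarity threshold (assumed to have been tuned offline)
MIN_SAMPLES = 2   # number of nearby samples needed to found a new cluster


def close(a, b):
    return math.dist(a, b) <= EPS


def classify(sample, clusters, noise):
    """Assign sample to a known cluster, a new cluster, or keep it as noise.

    clusters maps a label to its list of points; noise lists unclassified points.
    Returns the label, or None when the sample stays unclassified.
    """
    for label, points in clusters.items():
        if any(close(sample, p) for p in points):
            points.append(sample)                  # extend an existing cluster
            return label
    neighbours = [p for p in noise if close(sample, p)]
    if len(neighbours) + 1 >= MIN_SAMPLES:
        label = f"unknown-{len(clusters)}"
        clusters[label] = neighbours + [sample]    # form a new cluster
        for p in neighbours:
            noise.remove(p)
        return label
    noise.append(sample)                           # temporarily unclassified
    return None


clusters = {"UPX": [(0.0, 0.0), (0.5, 0.0)]}       # one known packer class
noise = []
incoming = [(1.2, 0.0), (5.0, 5.0), (5.5, 5.0)]
labels = [classify(s, clusters, noise) for s in incoming]
print(labels)
```

Each classified sample is added to its cluster, so later samples are compared against the updated clusters; this is the sense in which the classifier's knowledge evolves with its own predictions.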

-

Release Contributions:

Version 1.1 brings:

- Implementation of so-called SRPs (Scattered Representative Points) for reducing the complexity of the incremental process.

- Implementation of a post-grouping strategy for the selection of relevant samples from the constituted groups.

- Better modularity of the code.

- Publication:

-

Contact:

Olivier Zendra

7 New results

7.1 Results for Axis #1: Software Language Engineering

Participants: Olivier Barais, Johann Bourcier, Benoit Combemale, Jean-Marc Jézéquel, Gurvan Leguernic, Gunter Mussbacher, Noel Plouzeau, Didier Vojtisek.

7.1.1 Foundations of Software Language Engineering

Self-Adaptable Languages

Over recent years, self-adaptation has become a concern for many software systems that have to operate in complex and changing environments. At the core of self-adaptation, there is a feedback loop and associated trade-off reasoning to decide on the best course of action. However, existing software languages do not abstract the development and execution of such feedback loops for self-adaptable systems. Developers have to fall back to ad-hoc solutions to implement self-adaptable systems, often with wide-ranging design implications (e.g., explicit MAPE-K loop). Furthermore, existing software languages do not capitalize on monitored usage data of a language and its modeling environment. This hinders the continuous and automatic evolution of a software language based on feedback loops from the modeling environment and runtime software system. To address the aforementioned issues, in 39 we introduce the concept of Self-Adaptable Language (SAL) to abstract the feedback loops at both system and language levels. We propose L-MODA (Language, Models, and Data) as a conceptual reference framework that characterizes the possible feedback loops abstracted into a SAL. To demonstrate SALs, we present emerging results on the abstraction of the system feedback loop into the language semantics. We report on the concept of Self-Adaptable Virtual Machines as an example of semantic adaptation in a language interpreter and present a roadmap for SALs.

As a first contribution towards this vision, in 38 we present SEALS, a framework for building self-adaptive virtual machines for domain-specific languages. This framework provides first-class entities for the language engineer to promote domain-specific feedback loops in the definition of the DSL operational semantics. In particular, the framework supports the definition of (i) the abstract syntax and the semantics of the language as well as the correctness envelope defining the acceptable semantics for a domain concept, (ii) the feedback loop and associated trade-off reasoning, and (iii) the adaptations and the predictive model of their impact on the trade-off. We use this framework to build three languages with self-adaptive virtual machines, discuss the relevance of the abstractions and the effectiveness of correctness envelopes, and compare their code size and performance results to their manually implemented counterparts. We show that the framework provides suitable abstractions for the implementation of self-adaptive operational semantics while introducing little performance overhead compared to a manual implementation.

IDE as Code: Reifying Language Protocols as First-Class Citizens

To cope with the ever-growing number of programming languages, manufacturers of Integrated Development Environments (IDE) have recently defined protocols as a way to use and share multiple language services (e.g., auto-completion, type checker, language runtime) in language-agnostic environments (i.e., the user interface provided by the IDE): the most notable are the Language Server Protocol (LSP) for textual editors, and the Debug Adapter Protocol (DAP) for debugging facilities. These protocols rely on a proper specification of the services that are commonly found in the tool support of general-purpose languages, and define a fixed set of capabilities to offer in the IDE. However, new languages appear regularly, offering unique constructs (e.g., Domain-Specific Languages), and supported by dedicated services to be offered as new capabilities in IDEs. This trend leads to the multiplication of new protocols, hard to combine and possibly incompatible (e.g., overlap, use of different technological stacks). Beyond the proposition of specific protocols, the goal of 37 is to highlight the importance of being able to specify language protocols and to offer IDEs to be configured with such protocol specifications. We present our vision by discussing the main concepts for the specification of language protocols, and an approach that can make use of these specifications in order to deploy an IDE as a set of coordinated, individually deployed, language capabilities (e.g., microservice choreography). IDEs went from directly supporting languages to protocols, and we envision the next step in 37: IDE as Code, where language protocols are created or inferred on demand and serve as support of an adaptation loop taking charge of the (re)configuration of the IDE.
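For readers unfamiliar with such protocols, the Python sketch below shows how a single LSP completion request is encoded on the wire: a JSON-RPC 2.0 message preceded by a `Content-Length` header. The `frame` and `unframe` helpers and the document URI are assumptions made for this example, and the parser only handles the single-header case.

```python
import json


def frame(payload):
    """Encode a JSON-RPC message with the LSP base-protocol header."""
    body = json.dumps(payload).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


def unframe(message):
    """Decode one framed message (assumes Content-Length is the only header)."""
    header, body = message.split(b"\r\n\r\n", 1)
    length = int(header.decode("ascii").split(":", 1)[1])
    return json.loads(body[:length].decode("utf-8"))


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "textDocument/completion",
    "params": {
        "textDocument": {"uri": "file:///example.dsl"},   # hypothetical document
        "position": {"line": 3, "character": 7},
    },
}

wire = frame(request)
print(wire[:40])
print(unframe(wire) == request)
```

The method name and parameter shape are fixed by the protocol, which illustrates the point made above: a capability that is not part of this fixed set, such as a DSL-specific service, has no standard message to carry it.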

DataTime: a Framework to smoothly Integrate Past, Present and Future into Models

Models at runtime were initially investigated for adaptive systems. Models are used as a reflective layer of the current state of the system to support the implementation of a feedback loop. More recently, models at runtime have also been identified as key for supporting the development of full-fledged digital twins. However, this use of models at runtime raises new challenges, such as the ability to seamlessly interact with the past, present and future states of the system. In 46, we propose a framework called DataTime to implement models at runtime which capture the state of the system according to the dimensions of both time and space, here modeled as a directed graph where both nodes and edges bear local states (i.e., values of properties of interest). DataTime provides a unifying interface to query the past, present and future (predicted) states of the system. This unifying interface provides (i) an optimized structure of the time series that capture the past states of the system, possibly evolving over time, (ii) the ability to get the last available value provided by the system’s sensors, and (iii) a continuous micro-learning over graph edges of a predictive model to make it possible to query future states, either locally or more globally, thanks to a composition law. The framework has been developed and evaluated in the context of the Intelligent Public Transportation Systems of the city of Rennes (France). This experimentation has demonstrated how DataTime can replace the heterogeneous tools otherwise used for managing data from the past, the present and the future, and facilitate the development of digital twins.
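
To make the idea of a single query entry point more concrete, here is a minimal Python sketch. It is not the DataTime API: the class name `TimedProperty`, its methods, and the linear extrapolation used as a stand-in for the learned predictive model are all assumptions made for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class TimedProperty:
    """Hypothetical holder for one property of a graph node or edge."""
    times: list = field(default_factory=list)   # strictly increasing timestamps
    values: list = field(default_factory=list)  # value recorded at each timestamp

    def record(self, t, value):
        # Assumption: samples arrive in strictly increasing time order.
        self.times.append(t)
        self.values.append(value)

    def query(self, t):
        """Return the state at time t, whether t is past, present or future."""
        if not self.times:
            raise ValueError("no sample recorded yet")
        if t <= self.times[-1]:
            # Past or present: latest sample recorded at or before t.
            i = bisect_right(self.times, t) - 1
            if i < 0:
                raise ValueError("t precedes the first sample")
            return self.values[i]
        # Future: stand-in predictor (linear extrapolation of the last two
        # samples), used here in place of a learned model.
        if len(self.times) < 2:
            return self.values[-1]
        t0, t1 = self.times[-2], self.times[-1]
        v0, v1 = self.values[-2], self.values[-1]
        return v1 + (v1 - v0) / (t1 - t0) * (t - t1)
```

The caller uses the same `query` call for any timestamp and does not need to know whether the answer comes from stored history, the latest sensor value, or a prediction.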

A Hitchhiker's Guide to Model-Driven Engineering for Data-Centric Systems

In 27, we introduce the MODA framework as a conceptual reference framework that provides the foundations for identifying the various models and their respective roles within any model-based system development life-cycle. It is intended as a guide for organizing the various models in data-centric socio-technical systems.

7.1.2 DSL for Scientific Computing

When Scientific Software Meets Software Engineering

The development of scientific software relies on the collaboration of various stakeholders for the scientific computing and software engineering activities. Computer languages have an impact on both activities and related concerns, as well as on the engineering principles required to ensure the development of reliable scientific software. The more general-purpose the language is (with low-level, computing-related, system abstractions), the more flexibility it will provide, but also the more rigorous engineering principles and Validation & Verification (V&V) activities it will require from the language user. In 32, we investigate the different levels of abstraction that a software language can propose, linked to the diverse artifacts of the scientific software development process, and the V&V facilities that the language can provide to the user at each level of abstraction. We aim to raise awareness among scientists, engineers and language providers of their shared responsibility in developing reliable scientific software.

Monilogging for Executable Domain-Specific Languages

Runtime monitoring and logging are fundamental techniques for analyzing and supervising the behavior of computer programs. However, supporting these techniques for a given language induces significant development costs that can hold language engineers back from providing adequate logging and monitoring tooling for new domain-specific modeling languages. Moreover, runtime monitoring and logging are generally considered as two different techniques: they are thus implemented separately, which makes users prone to overlooking their potentially beneficial mutual interactions. In 41, we propose a language-agnostic, unifying framework for runtime monitoring and logging and demonstrate how it can be used to define loggers, runtime monitors and combinations of the two, a.k.a. moniloggers. We provide an implementation of the framework that can be used with Java-based executable languages, and evaluate it on two implementations of the NabLab interpreter, leveraging in turn the instrumentation facilities offered by Truffle, and those offered by AspectJ.
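
The following Python sketch illustrates how a logger, a runtime monitor and a combination of the two can share one abstraction. It is only an illustration under stated assumptions: the `Monilogger` class, the toy `run` interpreter and the event format are hypothetical and do not reproduce the framework of 41, which targets Java-based executable languages.

```python
class Monilogger:
    """Hypothetical unifying abstraction: a condition on execution events
    paired with an action to perform when the condition holds."""

    def __init__(self, condition, action):
        self.condition = condition
        self.action = action

    def notify(self, event):
        if self.condition(event):
            self.action(event)


def run(program, moniloggers):
    """Toy interpreter: executes (variable, value) assignments and emits
    one event per executed statement."""
    env = {}
    for var, value in program:
        env[var] = value
        event = {"var": var, "value": value}
        for m in moniloggers:
            m.notify(event)
    return env


def demo():
    log, violations = [], []
    # Logger: always fires and records a message.
    logger = Monilogger(lambda e: True,
                        lambda e: log.append(f"{e['var']} = {e['value']}"))
    # Monitor: fires only when the property "values are non-negative" is violated.
    monitor = Monilogger(lambda e: e["value"] < 0,
                         lambda e: violations.append(e["var"]))
    # Combination: logs a message only when the monitored property is violated.
    combined = Monilogger(lambda e: e["value"] < 0,
                          lambda e: log.append(f"violation: {e['var']} = {e['value']}"))
    run([("x", 1), ("y", -2)], [logger, monitor, combined])
    return log, violations
```

In this sketch the three kinds of tools differ only in the condition and action they are given, which is one way to read the claim that logging and monitoring can be unified.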

7.1.3 Design lab

In the context of the research on the design lab axis, we worked this year on new abstractions of interaction and we also studied how to build a simulation tool combining modeling techniques and data analysis in the field of transportation with an industrial partner.

Interacto: A Modern User Interaction Processing Model

Since most software systems provide their users with interactive features, building user interfaces (UI) is one of the core software engineering tasks. It consists in designing, implementing and testing ever more sophisticated and versatile ways for users to interact with software systems, and safely connecting these interactions with commands querying or modifying their state. However, most UI frameworks still rely on a low-level model, the bare-bones UI event processing model. This model was suitable for the rather simple UIs of the early 1980s (menus, buttons, keyboards, mouse clicks), but now exhibits major software engineering flaws for modern, highly interactive UIs. These flaws include lack of separation of concerns, weak modularity and thus low reusability of code for advanced interactions, as well as low testability. To mitigate these flaws, we propose Interacto as a high-level user interaction processing model 25. By reifying the concept of user interaction, Interacto makes it easy to design, implement and test modular and reusable advanced user interactions, and to connect them to commands with built-in undo/redo support. To demonstrate its applicability and generality, we briefly present two open source implementations of Interacto for Java/JavaFX and TypeScript/Angular. We evaluate the benefits of Interacto (1) on a real-world case study, where it has been used since 2013, and (2) with a controlled experiment involving 44 master students, comparing it with traditional UI frameworks.
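
The general idea of connecting a reified user interaction to an undoable command can be sketched as follows. This is a generic Python illustration, not Interacto's API (whose implementations target Java/JavaFX and TypeScript/Angular): the names `SetText`, `History` and `Binding` are hypothetical.

```python
class SetText:
    """Hypothetical undoable command that changes the text of a document."""

    def __init__(self, doc, new_text):
        self.doc = doc
        self.new_text = new_text
        self.old_text = None

    def execute(self):
        self.old_text = self.doc["text"]  # remember the state for undo
        self.doc["text"] = self.new_text

    def undo(self):
        self.doc["text"] = self.old_text


class History:
    """Keeps executed commands so that they can be undone and redone."""

    def __init__(self):
        self.undos, self.redos = [], []

    def run(self, cmd):
        cmd.execute()
        self.undos.append(cmd)
        self.redos.clear()  # a new command invalidates the redo stack

    def undo(self):
        if self.undos:
            cmd = self.undos.pop()
            cmd.undo()
            self.redos.append(cmd)

    def redo(self):
        if self.redos:
            cmd = self.redos.pop()
            cmd.execute()
            self.undos.append(cmd)


class Binding:
    """Connects a named user interaction to a command factory."""

    def __init__(self, interaction, make_command, history):
        self.interaction = interaction
        self.make_command = make_command
        self.history = history

    def on_interaction(self, name, data):
        # Only the interaction this binding was declared for produces a command.
        if name == self.interaction:
            self.history.run(self.make_command(data))
```

Because the binding is an object rather than code scattered across event callbacks, it can be unit-tested by calling `on_interaction` directly, without a running UI.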

Impact of Data Cleansing for Urban Bus Commercial Speed Prediction

Public Transportation Information Systems (PTIS) are widely used for public bus services in cities around the world. These systems gather information about trips, bus stops, bus speeds, ridership, etc. This massive data is an inviting source of information for machine learning predictive tools. However, it most often suffers from quality deficiencies, due to multiple data sets with multiple structures, to different infrastructures using incompatible technologies, and to human errors or hardware failures. In this work 33, 45, we consider the impact of data cleansing on a classical machine-learning task: predicting urban bus commercial speed. We show that simple, transport-specific business and quality rules can drastically enhance data quality, whereas more sophisticated rules may offer little improvement despite a high computational cost.
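
As an illustration of what a simple business and quality rule might look like, the Python sketch below filters trip records before they are used for training. The record fields (`distance_km`, `duration_s`) and the 90 km/h plausibility bound are assumptions made for this example, not the rules or the data schema used in 33, 45.

```python
def commercial_speed_kmh(record):
    """Speed over a trip segment, in km/h, from distance and elapsed time."""
    return record["distance_km"] / (record["duration_s"] / 3600.0)


def is_plausible(record, max_speed_kmh=90.0):
    """Hypothetical quality rule: positive distance and duration, and a speed
    below a bound that no urban bus is assumed to exceed."""
    if record["duration_s"] <= 0 or record["distance_km"] <= 0:
        return False
    return commercial_speed_kmh(record) <= max_speed_kmh


def cleanse(records, max_speed_kmh=90.0):
    """Keep only the records that satisfy the rule."""
    return [r for r in records if is_plausible(r, max_speed_kmh)]
```

A rule of this kind costs one pass over the data, which is the sort of inexpensive check that the text contrasts with more sophisticated, computationally expensive rules.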

7.2 Results for Axis #2: Spatio-temporal Variability in Software and Systems

Participants: Mathieu Acher, Arnaud Blouin, Jean-Marc Jezequel, Djamel Eddine Khelladi, Olivier Zendra.

In 24 we perform a systematic review of the rich literature related to the learning of software configuration spaces. We report on the different application objectives (e.g., performance prediction, configuration optimization, constraint mining), use cases, targeted software systems and application domains. We review the various strategies employed to gather a representative and cost-effective sample. We describe automated software techniques used to measure functional and non-functional properties of configurations. We classify machine learning algorithms and how they relate to the pursued application. Finally, we also describe how researchers evaluate the quality of the learning process.

In 34, we apply learning techniques to the Linux kernel. With now more than 15,000 configuration options, including more than 9,000 just for the x86 architecture, the Linux kernel is one of the most complex configurable open-source systems ever developed. If all these options were binary and independent, that would indeed yield 2^15000 possible variants of the kernel. Of course, not all options are independent (leading to fewer possible variants), but some of them have tri-state values (yes, no, or module) instead of simply boolean values (leading to more possible variants). The Linux kernel is mentioned in numerous papers about configurable systems and machine learning for motivating the problem and the underlying approach. However, only a few works truly explore such a huge configuration space. In this line of work, we take up the Linux challenge either for configurations' bug prevention or for predicting the binary size of a configured kernel. We also design a learning technique capable of transferring a prediction model among variants and versions of Linux 34.
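
To give a sense of scale, the short Python sketch below computes how many decimal digits such counts have. It rests on simplifying assumptions: exactly 15,000 options (the text says "more than"), all independent, and either all binary or all tri-state. The real number of valid kernel configurations differs because of constraints between options.

```python
import math


def decimal_digits(base, n_options):
    """Number of decimal digits of base ** n_options, computed with logarithms
    so that the huge integer never has to be printed."""
    return math.floor(n_options * math.log10(base)) + 1


binary_digits = decimal_digits(2, 15000)    # all options boolean
tristate_digits = decimal_digits(3, 15000)  # all options yes/no/module
```

Under these assumptions, the count of variants is a number with 4,516 digits in the all-binary case and 7,157 digits in the all-tri-state case, which is why exhaustive exploration is out of reach and sampling plus learning is used instead.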

In 47 (Best paper award at SPLC 2021), we compare six learning techniques: three variants of decision trees (including a novel algorithm) with and without the use of model-based feature selection. We first perform a study on 8 configurable systems considered in previous related works and show that the accuracy reaches more than 90%, and that feature selection can improve the results in the majority of cases. We then perform a study on the Linux kernel and show that these techniques perform as well as on the other systems. Overall, our results show that there is no one-size-fits-all learning variant (though high accuracy can be achieved): we present guidelines and discuss tradeoffs.

In 43, 44, we start from the fact that many software projects are configurable through compile-time options (e.g., using ./configure) and also through run-time options (e.g., command-line parameters, fed to the software at execution time). Several works have shown how to predict the effect of run-time options on performance. However, how these prediction models behave when the software is built with different compile-time options has yet to be studied. For instance, is the best run-time configuration always the best, whatever the chosen compilation options? We investigate the effect of compile-time options on the performance distributions of 4 software systems. There are cases where the compiler layer effect is linear, which is an opportunity to generalize performance models or to tune and measure run-time performance at lower cost. We also show that an interplay can exist by exhibiting a case where compile-time options significantly alter the performance distributions of a configurable system.
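
The question of whether the best run-time configuration stays the best across builds can be phrased as a small check over a measurement table. The Python sketch below is illustrative only: the function names, the build labels and the timings are invented toy values, not measurements from 43, 44.

```python
def best_runtime_config(timings):
    """timings maps a run-time configuration name to a measured time
    (lower is better); returns the name of the fastest configuration."""
    return min(timings, key=timings.get)


def winner_is_stable(measurements):
    """measurements maps each compile-time option set to its run-time timings.
    Returns True if the same run-time configuration wins for every build."""
    winners = {best_runtime_config(t) for t in measurements.values()}
    return len(winners) == 1


# Toy data (invented): the second build scales every timing by 0.8,
# a linear effect that preserves the ranking of run-time configurations.
linear_case = {
    "build_a": {"cfg1": 1.0, "cfg2": 2.0},
    "build_b": {"cfg1": 0.8, "cfg2": 1.6},
}

# Toy data (invented): the second build changes which configuration wins,
# an interplay between the compile-time and run-time layers.
interplay_case = {
    "build_a": {"cfg1": 1.0, "cfg2": 2.0},
    "build_b": {"cfg1": 1.5, "cfg2": 1.2},
}
```

When the check holds, a performance model learned on one build can plausibly be reused on another; when it fails, the compile-time layer has to be taken into account.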

In 42, we observe that many layers (hardware, operating system, input data, etc.), themselves subject to variability, can alter the performance of software configurations. For instance, the configuration options of the x264 video encoder may have very different effects on x264's encoding time when used with different input videos, depending on the hardware on which it is executed. We coin the term deep software variability to refer to the interaction of all external layers modifying the behavior or non-functional properties of a software system. Deep software variability challenges practitioners and researchers: the combinatorial explosion of possible execution environments complicates the understanding, the configuration, the maintenance, the debugging, and the testing of configurable systems. There are also opportunities: harnessing all variability layers (and not only the software layer) can lead to more efficient systems and configuration knowledge that truly generalizes to any usage and context.