Keywords

Computer Science and Digital Science

- A5. Interaction, multimedia and robotics

- A5.1. Human-Computer Interaction

- A5.10. Robotics

- A5.10.1. Design

- A5.10.2. Perception

- A5.10.3. Planning

- A5.10.4. Robot control

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.6. Swarm robotics

- A5.10.7. Learning

- A5.10.8. Cognitive robotics and systems

- A5.11. Smart spaces

- A5.11.1. Human activity analysis and recognition

- A8.2. Optimization

- A8.2.2. Evolutionary algorithms

- A9.2. Machine learning

- A9.5. Robotics

- A9.7. AI algorithmics

- A9.9. Distributed AI, Multi-agent

Other Research Topics and Application Domains

- B2.1. Well being

- B2.5.3. Assistance for elderly

- B5.1. Factory of the future

- B5.6. Robotic systems

- B7.2.1. Smart vehicles

- B9.6. Humanities

- B9.6.1. Psychology

1 Team members, visitors, external collaborators

Research Scientists

- François Charpillet [Team leader, Inria, Senior Researcher, HDR]

- Olivier Buffet [Inria, Researcher, HDR]

- Francis Colas [Inria, Researcher, HDR]

- Serena Ivaldi [Inria, Researcher]

- Dominique Martinez [CNRS, Senior Researcher, HDR]

- Pauline Maurice [CNRS, Researcher]

- Jean-Baptiste Mouret [Inria, Senior Researcher, HDR]

Faculty Members

- Amine Boumaza [Univ de Lorraine, Associate Professor]

- Karim Bouyarmane [Univ de Lorraine, Associate Professor, until Sep 2021]

- Jérôme Dinet [Univ de Lorraine, Associate Professor, from Sep 2021]

- Alexis Scheuer [Univ de Lorraine, Associate Professor]

- Vincent Thomas [Univ de Lorraine, Associate Professor]

Post-Doctoral Fellow

- Yassine El Khadiri [Univ de Lorraine, from Oct 2021, ATER]

PhD Students

- Lina Achaji [Groupe PSA, CIFRE]

- Timothée Anne [Univ de Lorraine]

- Waldez Azevedo Gomes Junior [Inria]

- Abir Bouaouda [Univ de Lorraine]

- Raphaël Bousigues [Inria]

- Jessica Colombel [Inria]

- Yassine El Khadiri [unemployed, until Sep 2021]

- Yoann Fleytoux [Inria]

- Nicolas Gauville [Groupe SAFRAN, CIFRE]

- Nima Mehdi [Inria]

- Alexandre Oliveira Souza [Inria, from Oct 2021]

- Luigi Penco [Inria]

- Vladislav Tempez [Univ de Lorraine, until Nov 2021]

- Julien Uzzan [Groupe PSA, CIFRE]

- Lorenzo Vianello [Univ de Lorraine]

- Aya Yaacoub [CNRS, from Dec 2021]

- Yang You [Inria]

- Éloïse Zehnder [Univ de Lorraine]

- Jacques Zhong [CEA]

Technical Staff

- Ivan Bergonzani [Inria, Engineer]

- Éloïse Dalin [Inria, Engineer]

- Edoardo Ghini [Inria, Engineer]

- Raphaël Lartot [Inria, Engineer]

- Glenn Maguire [Inria, Engineer]

- Jean Michenaud [Inria, Engineer, from Mar 2021]

- Lucien Renaud [Inria, Engineer]

- Vladislav Tempez [Inria, Engineer, from Dec 2021]

- Nicolas Valle [Inria, Engineer, from Oct 2021]

Interns and Apprentices

- Julien Claisse [Univ de Lorraine, from Mar 2021 until Aug 2021]

- Félix Cuny-Enault [Inria, from Feb 2021 until Aug 2021]

- Evelyn D'elia [Inria, until Jul 2021]

- Marwan Hilal [Inria, from Mar 2021 until Sep 2021]

- Anuyan Ithayakumar [Univ de Lorraine, until Jun 2021]

- Mathis Lamiroy [École Normale Supérieure de Lyon, from May 2021 until Jul 2021]

- Jean Landais [Inria, from Mar 2021 until Aug 2021]

- Thomas Martin [Inria, from Feb 2021 until Jul 2021]

- Aurélien Osswald [Univ de Lorraine, from Feb 2021 until Aug 2021]

- Léonie Plancoulaine [Inria, from Jun 2021 until Aug 2021]

- Julien Romary [Inria, from Mar 2021 until Aug 2021]

- Manu Serpette [Univ de Lorraine, from Sep 2021]

- Guillaume Toussaint [Univ de Lorraine, from Mar 2021 until Aug 2021]

- Étienne Vareille [Univ de Lorraine, from Oct 2021]

Administrative Assistants

- Véronique Constant [Inria]

- Antoinette Courrier [CNRS]

Visiting Scientist

- Anji Ma [Beijing Institute of Technology, until Aug 2021]

External Collaborator

- Alexis Aubry [Univ de Lorraine]

2 Overall objectives

The goal of the Larsen team is to move robots beyond the research laboratories and manufacturing industries: current robots are far from being the fully autonomous, reliable, and interactive robots that could co-exist with us in our society and run for days, weeks, or months. While there is undoubtedly progress to be made on the hardware side, robotic platforms are quickly maturing and we believe the main challenges to achieve our goal are now on the software side. We want our software to be able to run on low-cost mobile robots that are therefore not equipped with high-performance sensors or actuators, so that our techniques can realistically be deployed and evaluated in real settings, such as in service and assistive robotic applications. We envision that these robots will be able to cooperate with each other but also with intelligent spaces or apartments which can also be seen as robots spread in the environment. Like robots, intelligent spaces are equipped with sensors that make them sensitive to human needs, habits, gestures, etc., and actuators to be adaptive and responsive to environment changes and human needs. These intelligent spaces can give robots improved skills, with less expensive sensors and actuators enlarging their field of view of human activities, making them able to behave more intelligently and with better awareness of people evolving in their environment. As robots and intelligent spaces share common characteristics, we will use, for the sake of simplicity, the term robot for both mobile robots and intelligent spaces.

Among the particular issues we want to address, we aim at designing robots able to:

- handle dynamic environments and unforeseen situations;

- cope with physical damage;

- interact physically and socially with humans;

- collaborate with each other;

- exploit the multitude of sensor measurements from their surroundings;

- enhance their acceptability and usability by end-users without a robotics background.

All these abilities can be summarized by the following two objectives:

- life-long autonomy: continuously perform tasks while adapting to sudden or gradual changes in both the environment and the morphology of the robot;

- natural interaction with robotics systems: interact with both other robots and humans for long periods of time, taking into account that people and robots learn from each other when they live together.

3 Research program

3.1 Lifelong autonomy

Scientific context

So far, only a few autonomous robots have been deployed for a long time (weeks, months, or years) outside of factories and laboratories. They are mostly mobile robots that simply “move around” (e.g., vacuum cleaners or museum “guides”) and data collecting robots (e.g., boats or underwater “gliders” that collect data about the water of the ocean).

A large part of the long-term autonomy community is focused on simultaneous localization and mapping (SLAM), with a recent emphasis on changing and outdoor environments 47, 57. A more recent theme is life-long learning: during long-term deployment, we cannot hope to equip robots with everything they need to know, therefore some things will have to be learned along the way. Most of the work on this topic leverages machine learning and/or evolutionary algorithms to improve the ability of robots to react to unforeseen changes 47, 52.

Main challenges

The first major challenge is to endow robots with a stable situation awareness in open and dynamic environments. This covers both the state estimation of the robot by itself as well as the perception/representation of the environment. Both problems have been claimed to be solved but it is only the case for static environments 51.

In the Larsen team, we aim at deployment in environments shared with humans, which implies dynamic objects that degrade both the mapping and the localization of a robot, especially in cluttered spaces. Moreover, when robots stay in the environment longer than the time needed to acquire a snapshot map, they have to face structural changes, such as the displacement of a piece of furniture or the opening or closing of a door. The current approach is to simply update an implicitly static map with all observations, with no attempt at distinguishing the relevant changes. For localization in not-too-cluttered or not-too-empty environments, this is generally sufficient as a significant fraction of the environment should remain stable. But for life-long autonomy, and in particular navigation, the quality of the map, and especially the knowledge of the stable parts, is essential.

A second major obstacle to moving robots outside of labs and factories is their fragility: Current robots often break in a few hours, if not a few minutes. This fragility mainly stems from the overall complexity of robotic systems, which involve many actuators, many sensors, and complex decisions, and from the diversity of situations that robots can encounter. Low-cost robots exacerbate this issue because they can be broken in many ways (high-quality material is expensive), because they have low self-sensing abilities (sensors are expensive and increase the overall complexity), and because they are typically targeted towards non-controlled environments (e.g., houses rather than factories, in which robots are protected from most unexpected events). More generally, this fragility is a symptom of the lack of adaptive abilities in current robots.

Angle of attack

To solve the state estimation problem, our approach is to combine classical estimation filters (Extended Kalman Filters, Unscented Kalman Filters, or particle filters) with a Bayesian reasoning model in order to internally simulate various configurations of the robot in its environment. This should allow for adaptive estimation that can be used as one aspect of long-term adaptation. To handle dynamic and structural changes in an environment, we aim at assessing, for each piece of observation, whether it is static or not.
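As a rough illustration of how a classical filter can be paired with Bayesian reasoning over candidate configurations, the sketch below runs one scalar Kalman update per hypothesis and reweights the hypotheses by the likelihood of the measurement. It is a toy under stated assumptions, not the team's estimator: the scalar state, the `offset` field standing in for a "configuration", and all function names are hypothetical.

```python
import math


def kalman_update(mean, var, z, meas_var, offset=0.0):
    """Scalar Kalman measurement update for the model z = x + offset + noise.

    Returns the posterior mean, the posterior variance, and the Gaussian
    likelihood of the measurement z under this hypothesis.
    """
    innovation = z - (mean + offset)
    innovation_var = var + meas_var
    gain = var / innovation_var
    new_mean = mean + gain * innovation
    new_var = (1.0 - gain) * var
    likelihood = math.exp(-0.5 * innovation ** 2 / innovation_var) / math.sqrt(
        2.0 * math.pi * innovation_var
    )
    return new_mean, new_var, likelihood


def multi_hypothesis_step(hypotheses, z, meas_var):
    """Update every hypothesis with measurement z and renormalize the weights.

    Each hypothesis is a dict with keys "offset", "mean", "var", "weight"
    (an illustrative data layout, assumed for this sketch).
    """
    updated = []
    for h in hypotheses:
        mean, var, lik = kalman_update(h["mean"], h["var"], z, meas_var, h["offset"])
        updated.append(
            {"offset": h["offset"], "mean": mean, "var": var, "weight": h["weight"] * lik}
        )
    total = sum(h["weight"] for h in updated)
    for h in updated:
        h["weight"] /= total
    return updated


# Two candidate configurations: an unbiased sensor and a sensor biased by +2.
hypotheses = [
    {"offset": 0.0, "mean": 0.0, "var": 1.0, "weight": 0.5},
    {"offset": 2.0, "mean": 0.0, "var": 1.0, "weight": 0.5},
]
hypotheses = multi_hypothesis_step(hypotheses, z=2.0, meas_var=1.0)
best = max(hypotheses, key=lambda h: h["weight"])
```

The hypothesis whose predicted measurement best matches the observation gains weight, which is the basic mechanism behind adaptive, multiple-model estimation.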

We also plan to address active sensing to improve the situation awareness of robots. Literally, active sensing is the ability of an interacting agent to act so as to control what it senses from its environment with the typical objective of acquiring information about this environment. A formalism for representing and solving active sensing problems has already been proposed by members of the team 46 and we aim to use it to formalize decision-making problems for improving situation awareness.

Situation awareness of robots can also be tackled by cooperation, whether between robots, between robots and sensors in the environment (i.e., intelligent spaces), or between robots and humans. This departs from classical robotics, in which robots are conceived as self-contained. But, in order to cope with environments that are as diverse as possible, these classical robots use precise, expensive, and specialized sensors, whose cost prohibits their use in large-scale deployments for service or assistance applications. Furthermore, when all sensors are on the robot, they share the same point of view on the environment, which limits perception. Therefore, we propose to complement a cheaper robot with sensors distributed in a target environment. This is an emerging research direction that shares some of the problems of multi-robot operation, and we are therefore collaborating with other teams at Inria that address the issues of communication and interoperability.

To address the fragility problem, the traditional approach is to first diagnose the situation, then use a planning algorithm to create/select a contingency plan. But, again, this calls for both expensive sensors on the robot for the diagnosis and extensive work to predict and plan for all the possible faults that, in an open and dynamic environment, are almost infinite. An alternative approach is then to skip the diagnosis and let the robot discover by trial and error a behavior that works in spite of the damage with a reinforcement learning algorithm 62, 52. However, current reinforcement learning algorithms require hundreds of trials/episodes to learn a single, often simplified, task 52, which makes them impossible to use for real robots and more ambitious tasks. We therefore need to design new trial-and-error algorithms that will allow robots to learn with a much smaller number of trials (typically, a dozen). We think the key idea is to guide online learning on the physical robot with dynamic simulations. For instance, in our recent work, we successfully mixed evolutionary search in simulation, physical tests on the robot, and machine learning to allow a robot to recover from physical damage 53, 1.

A final approach to address fragility is to deploy several robots or a swarm of robots, or to make robots evolve in an active environment. We will consider several paradigms such as (1) those inspired from collective natural phenomena in which the environment plays an active role for coordinating the activity of a huge number of biological entities such as ants and (2) those based on online learning 50. We envision transferring our knowledge of such phenomena to engineer new artificial devices such as an intelligent floor (which is in fact a spatially distributed network in which each node can sense, compute, and communicate with contiguous nodes and can interact with moving entities on top of it) in order to assist people and robots (see the principle in 60, 50, 45).

3.2 Natural interaction with robotic systems

Scientific context

Interaction with the environment is an essential requirement for an autonomous robot. When the environment is sensorized, the interaction can include localizing, tracking, and recognizing the behavior of robots and humans. One specific issue lies in the lack of predictive models for human behavior, and a critical constraint arises from the incomplete knowledge of the environment and the other agents.

On the other hand, when working in the proximity of or directly with humans, robots must be capable of safely interacting with them, which calls upon a mixture of physical and social skills. Currently, robot operators are usually trained and specialized but potential end-users of robots for service or personal assistance are not skilled robotics experts, which means that the robot needs to be accepted as reliable, trustworthy and efficient 67. Most Human-Robot Interaction (HRI) studies focus on verbal communication 61 but applications such as assistance robotics require a deeper knowledge of the intertwined exchange of social and physical signals to provide suitable robot controllers.

Main challenges

We are here interested in building the bricks for a situated Human-Robot Interaction (HRI) addressing both the physical and social dimension of the close interaction, and the cognitive aspects related to the analysis and interpretation of human movement and activity.

The combination of physical and social signals into robot control is a crucial investigation for assistance robots 63 and robotic co-workers 59. A major obstacle is the control of physical interaction (precisely, the control of contact forces) between the robot and the human while both partners are moving. In mobile robots, this problem is usually addressed by planning the robot movement taking into account the human as an obstacle or as a target, then delegating the execution of this “high-level” motion to whole-body controllers, where a mixture of weighted tasks is used to account for the robot balance, constraints, and desired end-effector trajectories 44.
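For readers unfamiliar with such controllers, a weighted whole-body control problem is commonly written as a quadratic program of the following generic form. This is a textbook-style sketch, not a formulation taken from this report, and all symbols are notation assumed here: $x$ stacks the joint accelerations $\dot{v}$, contact forces $f$, and joint torques $\tau$; each task $i$ (balance, posture, end-effector trajectory) contributes a residual $A_i x - b_i$ with weight $w_i$; $M$, $h$, $S$, and $J_c$ denote the mass matrix, the bias forces, the actuation selection matrix, and the contact Jacobian; $C x \le d$ gathers limits such as torque bounds and friction cones.

```latex
\[
\begin{aligned}
\min_{x = (\dot{v},\, f,\, \tau)} \quad & \sum_{i=1}^{n} w_i \,\lVert A_i x - b_i \rVert^2 \\
\text{s.t.} \quad & M(q)\,\dot{v} + h(q, v) = S^\top \tau + J_c(q)^\top f, \\
& C x \le d .
\end{aligned}
\]
```

The weights $w_i$ are the quantities that typically require manual tuning, since they set the trade-off between competing tasks.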

The first challenge is to make these controllers easier to deploy in real robotics systems, as currently they require a lot of tuning and can become very complex to handle the interaction with unknown dynamical systems such as humans. Here, the key is to combine machine learning techniques with such controllers.

The second challenge is to make the robot react and adapt online to the human feedback, exploiting the whole set of measurable verbal and non-verbal signals that humans naturally produce during a physical or social interaction. Technically, this means finding the optimal policy that adapts the robot controllers online, taking into account feedback from the human. Here, we need to carefully identify the significant feedback signals or some metrics of human feedback. In real-world conditions (i.e., outside the research laboratory environment) the set of signals is technologically limited by the robot's and environmental sensors and the onboard processing capabilities.

The third challenge is for a robot to be able to identify and track people using its on-board sensors. The motivation is to be able to estimate online the position, the posture, or even the moods and intentions of persons surrounding the robot. The main difficulty is to do that online, in real time, and in cluttered environments.

Angle of attack

Our key idea is to exploit the physical and social signals produced by the human during the interaction with the robot and the environment in controlled conditions, to learn simple models of human behavior and consequently to use these models to optimize the robot movements and actions. In a first phase, we will exploit human physical signals (e.g., posture and force measurements) to identify the elementary posture tasks during balance and physical interaction. The identified model will be used to optimize the robot whole-body control as prior knowledge to improve both the robot balance and the control of the interaction forces. Technically, we will combine weighted and prioritized controllers with stochastic optimization techniques. To adapt online the control of physical interaction and make it possible with human partners that are not robotics experts, we will exploit verbal and non-verbal signals (e.g., gaze, touch, prosody). The idea here is to estimate online from these signals the human intent along with some inter-individual factors that the robot can exploit to adapt its behavior, maximizing the engagement and acceptability during the interaction.

Another promising approach already investigated in the Larsen team is the capability for a robot and/or an intelligent space to localize humans in its surrounding environment and to understand their activities. This is an important issue to handle both for safe and efficient human-robot interaction.

Simultaneous Tracking and Activity Recognition (STAR) 66 is an approach we want to develop. The activity of a person is highly correlated with their position, and this approach aims at combining tracking and activity recognition so that each benefits from the other. By tracking the individual, the system may help infer their possible activity, while by estimating the activity of the individual, the system may make a better prediction of their possible future positions (especially in the case of occlusions). This direction has been tested with a simulator and particle filters 49, and one promising direction would be to couple STAR with decision-making formalisms like partially observable Markov decision processes (POMDPs). This would allow us to formalize problems such as deciding which action to take given an estimate of the human location and activity. This could also formalize other problems linked to the active sensing direction of the team: how should the robotic system choose its actions in order to better estimate the human location and activity (for instance by moving in the environment or by changing the orientation of its cameras)?

Another issue we want to address is robotic human body pose estimation. Human body pose estimation consists of tracking body parts by analyzing a sequence of input images from single or multiple cameras.

Human posture analysis is of high value for human-robot interaction and activity recognition. However, even though the arrival of new sensors like RGB-D cameras has simplified the problem, it still poses a great challenge, especially if we want to do it online, on a robot, and in realistic conditions (cluttered environments). It is even more difficult when the robot has to bring together different capabilities at both the perception and navigation levels 48. This will be tackled through different techniques, ranging from Bayesian state estimation (particle filtering) to learning and active and distributed sensing.

4 Application domains

4.1 Personal assistance

During the last fifty years, many medical advances as well as the improvement of the quality of life have resulted in a longer life expectancy in industrial societies. The increase in the number of elderly people is a matter of public health because, although elderly people can age in good health, old age also brings frailty, in particular physical frailty, which can result in a loss of autonomy. This will lead us to rethink the current model of care for elderly people.1 Capacity limits in specialized institutes, along with the preference of elderly people to stay at home as long as possible, explain a growing need for specific services at home.

Ambient intelligence technologies and robotics could contribute to this societal challenge. The spectrum of possible actions in the field of elderly assistance is very large, ranging from activity monitoring services and mobility or daily activity aids to medical rehabilitation and social interactions. This will be based on the experimental infrastructure we have built in Nancy (Smart apartment platform) as well as the deep collaboration we have with OHS 2 and the company Pharmagest and its subsidiary Diatelic, an SAS created in 2002 by a member of the team and others.

At the same time, these technologies can be beneficial to address the increasing development of musculo-skeletal disorders and diseases that is caused by the non-ergonomic postures of the workers, subject to physically stressful tasks. Wearable technologies, sensors and robotics, can be used to monitor the worker's activity, its impact on their health, and anticipate risky movements. Two application domains have been particularly addressed in the last years: industry, and more specifically manufacturing, and healthcare.

4.2 Civil robotics

Many applications for robotics technology exist within the services provided by national and local government. Typical applications include civil infrastructure services 3 such as: urban maintenance and cleaning; civil security services; emergency services involved in disaster management including search and rescue; environmental services such as surveillance of rivers, air quality, and pollution. These applications may be carried out by a wide variety of robot and operating modalities, ranging from single robots to small fleets of homogeneous or heterogeneous robots. Often robot teams will need to cooperate to span a large workspace, for example in urban rubbish collection, and operate in potentially hostile environments, for example in disaster management. These systems are also likely to have extensive interaction with people and their environments.

The skills required for civil robots match those developed in the Larsen project: operating for a long time in potentially hostile environments, potentially with small fleets of robots, and potentially in interaction with people.

5 Social and environmental responsibility

5.1 Impact of research results

Hospitals- The research in the ExoTurn project led to the deployment of a total of four exoskeletons (Laevo) in the Intensive Care Unit Department of the Hospital of Nancy (CHRU). They have been used by the medical staff since April 2020 to perform Prone Positioning on COVID-19 patients with severe ARDS. To the best of our knowledge, other hospitals (in France, Belgium, Holland, Italy and Switzerland) have followed in our footsteps and purchased Laevo exoskeletons for the same use. At the same time, the positive feedback from the CHRU of Nancy has motivated us to continue investigating whether exoskeletons could be beneficial for medical staff involved in other types of healthcare activities. A new study on bed bathing of hospitalized patients started in February 2021 in the department of vascular surgery. For sanitary reasons, preliminary experiments investigating the use of the Laevo for assisting nurses were conducted in the team's laboratory premises in summer 2021. An article presenting the findings is in preparation.

Ageing and health- This research line aims to propose technological solutions to the difficulties faced by elderly people in an ageing population (due to the increase of life expectancy). The placement of older people in a nursing home (EHPAD) is often only a choice of reason and can be rather poorly experienced by people. One answer to this societal problem is the development of smart home technologies that assist the elderly to stay in their homes longer than they can today. With this objective, we have a long-term cooperation with Pharmagest, which has been supported in recent years through a PhD thesis (Cifre) between June 2017 and August 2021. The objective is to enhance the CareLib solution developed by Diatelic (a subsidiary of the Wellcoop-Pharmagest group) and the Larsen team through a previous collaboration (Satelor project). The CareLib offer is a solution consisting of (1) a connected box (with touch screen), (2) a 3D sensor that is able (i) to measure characteristics of the gait such as the speed and step length, (ii) to identify activities of daily life, and (iii) to detect emergency situations such as a fall, and (3) universal sensors (motion, ...) installed in each part of the home. A software licence has been granted by Inria to Pharmagest.

Environment- The new project TELEMOVTOP, in collaboration with the company Isotop, aims at automating the disposal of metal sheets contaminated with asbestos from roofs. This procedure has a high environmental impact and is also a risk for the health of the workers. Robotics can be a major technological innovation in this field. With this project, the team aims at both helping to reduce the workers' risk of exposure to asbestos and accelerating the disposal process to reduce environmental pollution.

Firefighters- The project POMPEXO, in collaboration with the SDIS 54 (firefighters from Meurthe-et-Moselle) and two laboratories from the Université de Lorraine (DevAH: biomechanics, and Perseus: Cognitive ergonomics), aims at investigating the possibility to assist firefighters with an exoskeleton during the car cutting maneuver. This frequent maneuver is physically very demanding, and due to a general trend in aging and decreasing physical condition among firefighter crews, fewer and fewer firefighters are able to perform it. Hence the SDIS 54 is looking for a solution to increase the strength and reduce the fatigue of firefighters during this maneuver. Occupational exoskeletons have the potential to alleviate the physical load on the workers, and hence may be a solution. However, the feasibility and benefits of exoskeletons are task-dependent. Hence we are currently analyzing the car cutting maneuver based on data collected on-site with professional firefighters, to identify what kind of exoskeleton may be suitable.

6 Highlights of the year

6.1 Awards

- 2021 ACM SIGEVO Dissertation Award for Adam Gaier (honorable mention)

- 2021 IEEE RA-L Distinguished Service Award as Outstanding Associate Editor for Serena Ivaldi

- Serena Ivaldi was nominated among the "50 women in robotics you need to know about" by Women in Robotics & Robohub

7 New software and platforms

7.1 New software

7.1.1 RobotDART

- Name: RobotDART

- Keywords: Physical simulation, Robotics, Robot Panda

- Functional Description: RobotDART combines:

  - high-level functions around DART to simulate robots,

  - a 3D engine based on the Magnum library (for visualisation),

  - a library of common research robots (Franka, IIWA, TALOS, iCub),

  - abstractions for sensors (including cameras).

- News of the Year:

  - new URDF library (Utheque)

  - support for Tiago and UR3

  - numerous bugfixes and API improvements

- URL:

- Contact: Jean-Baptiste Mouret

- Partner: University of Patras

7.1.2 inria_wbc

- Name: Inria whole body controller

- Keyword: Robotics

- Scientific Description: This software implements Task-Space Inverse Dynamics for the Talos robot, iCub Robot, Franka-Emika Panda robot, and Tiago Robot.

- Functional Description: This controller exploits the TSID library (QP-based inverse dynamics) to implement a position controller for the Talos humanoid robot. It includes:

  - flexible configuration files,

  - links with the RobotDART library for easy simulations,

  - stabilizer and torque safety checks.

- Release Contributions: First version

- News of the Year:

  - new stabilization methods

  - numerous bug fixes

  - support of iCub and Tiago

- URL:

- Publication:

- Contact: Jean-Baptiste Mouret

- Participants: Jean-Baptiste Mouret, Éloïse Dalin, Ivan Bergonzani

8 New results

8.1 Lifelong autonomy

8.1.1 Planning and decision making

Addressing active sensing problems through Monte-Carlo tree search (MCTS)

Participants: Vincent Thomas, Olivier Buffet.

The problem of active sensing is of paramount interest for building self-awareness in robotic systems. It consists in planning actions so as to gather information (e.g., measured through the entropy over certain state variables) in an optimal way. In the past, we have proposed an original formalism, ρ-POMDPs, and new algorithms for representing and solving such active sensing problems 46 by using point-based algorithms, assuming either convex or Lipschitz-continuous criteria. More recently, we have developed new approaches based on Monte-Carlo Tree Search (MCTS), and in particular Partially Observable Monte-Carlo Planning (POMCP), which provably converges provided the criterion is continuous. Based on this, we have proposed algorithms which are more suitable for certain robotic tasks by allowing for continuous state and observation spaces.
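To make the notion of an entropy-based criterion concrete, the sketch below picks, for a discrete belief, the sensing action that minimizes the expected entropy of the posterior belief after one observation. It is only a one-step greedy illustration under simplifying assumptions (a static hidden state, a tabular observation model), not the point-based or MCTS algorithms mentioned above; the function names, the `obs_model` layout, and the "look"/"wait" actions are hypothetical.

```python
import math


def entropy(belief):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in belief if p > 0.0)


def expected_posterior_entropy(belief, obs_model_a):
    """Expected entropy of the belief after one observation.

    obs_model_a[s][o] is P(o | s) for the sensing action under consideration.
    """
    n_obs = len(obs_model_a[0])
    expected = 0.0
    for o in range(n_obs):
        joint = [b * obs_model_a[s][o] for s, b in enumerate(belief)]
        p_o = sum(joint)
        if p_o == 0.0:
            continue  # this observation cannot occur under the current belief
        posterior = [j / p_o for j in joint]  # Bayes rule
        expected += p_o * entropy(posterior)
    return expected


def greedy_sensing_action(belief, obs_model):
    """Return the action whose observation is expected to be most informative."""
    return min(obs_model, key=lambda a: expected_posterior_entropy(belief, obs_model[a]))


# Two hidden states, uniform prior. "look" yields an informative observation,
# "wait" yields an observation that is independent of the state.
belief = [0.5, 0.5]
obs_model = {
    "look": [[0.9, 0.1], [0.1, 0.9]],
    "wait": [[0.5, 0.5], [0.5, 0.5]],
}
chosen = greedy_sensing_action(belief, obs_model)
```

Because the reward here depends on the belief itself rather than on the hidden state, a standard state-based POMDP reward cannot express it directly, which is the motivation for belief-dependent criteria.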

Early this year, we published an extended version of a conference paper 64.

Publications: 40

Heuristic search for (partially observable) stochastic games

Participants: Olivier Buffet, Aurélien Delage, Vincent Thomas.

Collaboration with Jilles Dibangoye (INSA-Lyon, Inria team CHROMA) and Abdallah Saffidine (University of New South Wales (UNSW), Sydney, Australia).

Many robotic scenarios involve multiple interacting agents, robots or humans, e.g., security robots in public areas.

We have mainly worked in the past on the collaborative setting, where all agents share one objective, in particular through solving Dec-POMDPs by (i) turning them into occupancy MDPs and (ii) using heuristic search techniques and value function approximation 2. A key idea is to take the point of view of a central planner and reason on a sufficient statistic called the occupancy state.

We are now also working on applying similar approaches in the important 2-player zero-sum setting, i.e., with two competing agents. We have first proposed and evaluated an algorithm for (fully observable) stochastic games (SGs). Then we have proposed an algorithm for partially observable SGs (POSGs), here turning the problem into an occupancy Markov game, and using Lipschitz-continuity properties to derive bounding approximators. We are now working on an improved approach building on convexity and concavity properties.

[This line of research is pursued through Jilles Dibangoye's ANR JCJC PLASMA.]

Interpretable action policies

Participants: Olivier Buffet.

Collaboration with Iadine Chadès and Jonathan Ferrer Mestres (CSIRO, Brisbane, Australia), and Thomas G. Dietterich (Oregon State University, USA).

Computer-aided task planning requires providing user-friendly plans, in particular plans that make sense to the user. In probabilistic planning (in the MDP formalism), such interpretable plans can be derived by constraining action policies (rules of the form "if this state occurs, do this action") to depend on a reduced subset of (abstract) states or state variables. As a first step, we have proposed 3 solution algorithms to find a set of at most a fixed number of abstract states (forming a partition of the original state space) such that any optimal policy of the induced abstract MDP is as close as possible to optimal policies of the original MDP. We have then looked at mixed observability MDPs (MOMDPs), proposing solutions to (i) abstract visible state variables as described above, and (ii) simultaneously limit the number of hyperplanes used to represent the solution value function.

Publication: 14.

Visual prediction of robot's actions

Participants: Anji Ma, Serena Ivaldi.

This work is part of the CHIST-ERA project HEAP, which focuses on benchmarking methods for learning to grasp irregular objects in a heap. We are interested in how to plan a sequence of actions that enables the robot to bring the heap into a desired state where a grasp can succeed. We addressed this question in the work of Anji Ma, a visiting PhD student.

Visual prediction models are a promising solution for vision-based robotic grasping of cluttered, unknown soft objects. Previous models from the literature are computationally expensive, which limits reproducibility; although some consider stochasticity in the prediction model, it is often too weak to capture the reality of robotics experiments involving grasping such objects. Furthermore, previous work focused on elementary movements, which are not an efficient basis for reasoning in terms of more complex semantic actions. To address these limitations, we propose VP-GO, a “light” stochastic action-conditioned visual prediction model. We propose a hierarchical decomposition of semantic grasping and manipulation actions into elementary end-effector movements, to ensure compatibility with existing models and datasets for visual prediction of robotic actions such as RoboNet. We also record and release a new open dataset for visual prediction of object grasping, called PandaGrasp (available on GitLab). Our model can be pre-trained on RoboNet and fine-tuned on PandaGrasp, and performs similarly to more complex models in terms of signal prediction metrics. Qualitatively, it outperforms these models when predicting the outcome of complex grasps performed by our robot.

An article 54 reporting the research and the dataset was submitted to a conference.

Reinforcement learning for autonomous vehicles

Participants: Julien Uzzan, François Aioun (PSA), Thomas Hannagan (PSA), François Charpillet.

This work is funded by PSA Stellantis through a Cifre PhD grant and studies how reinforcement learning techniques can help design autonomous cars. Two application domains have been chosen: longitudinal control and "merge and split".

The longitudinal control problem, although it has already been solved in many ways, is still an interesting problem. The description is quite simple. Two vehicles are on a road: the one in front is called the “leader” and the other is the “follower”. We suppose that the road only has one lane, so there is no way for the follower to pass the leader. The objective is to learn a controller for the follower to drive as fast as possible while avoiding crashes with the leader. The algorithm used to train the agent is the Deep Deterministic Policy Gradient algorithm (DDPG). We proposed adding noise to perception during training, which gives the agent interesting properties such as a more cautious behavior and better overall performance; a patent has been filed on this approach.
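To make the idea of perception noise concrete, here is a minimal sketch of a one-lane car-following simulation in which the dynamics use the true gap while the agent only sees a noisy one. The class `FollowerEnv`, its constants (initial gap, speeds, acceleration limits) and its reward are all hypothetical choices made for illustration; this is not the simulator or the reward used in the PhD work, and no DDPG training loop is included.

```python
import random


class FollowerEnv:
    """Toy leader/follower simulation on a single lane (illustrative assumption)."""

    def __init__(self, noise_std=0.0, dt=0.1, seed=0):
        self.noise_std = noise_std  # std-dev of Gaussian noise on the perceived gap (m)
        self.dt = dt                # integration step (s)
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.gap = 30.0         # true distance to the leader (m)
        self.v_leader = 20.0    # leader speed (m/s), kept constant in this sketch
        self.v_follower = 15.0  # follower speed (m/s)
        return self._observe()

    def _observe(self):
        # Only the observation is corrupted; the true state is left untouched.
        noisy_gap = self.gap + self.rng.gauss(0.0, self.noise_std)
        return (noisy_gap, self.v_follower)

    def step(self, accel):
        accel = max(-5.0, min(2.0, accel))  # clip the commanded acceleration
        self.v_follower = max(0.0, self.v_follower + accel * self.dt)
        self.gap += (self.v_leader - self.v_follower) * self.dt
        crashed = self.gap <= 0.0
        # Reward the distance travelled, heavily penalize a crash.
        reward = -100.0 if crashed else self.v_follower * self.dt
        return self._observe(), reward, crashed
```

An agent trained on `FollowerEnv(noise_std=2.0)` cannot rely on the exact gap, which is one plausible reason why it would learn to keep a larger safety margin.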

The merge and split problem is more challenging and better justifies a learning approach since, to our knowledge, no classical control method can solve it. It refers to a road driving situation in which two roads of two lanes each merge and then split again, giving rise to an ephemeral common road of four lanes. The vehicles come from both roads and have different destinations. Thus, some will have to change lanes, while others will only have to keep theirs. In this situation, the decision process governing the ego vehicle is quite complex, as the decision depends not only on the state of the ego vehicle but also on the dynamic states of the surrounding vehicles.

Repetitive path planning in unknown environments

Participants: Julien Romary, Francis Colas.

Mobile robots can be used to autonomously explore and build a map of an environment. This requires planning paths to various goal poses while the knowledge of the map is still evolving.

On the one hand, classical multiple-query algorithms such as PRM, sPRM, or PRM* rely on a first phase to discover the connectivity of the environment; in a second phase, planning requests can then be answered faster. On the other hand, recent algorithms have been proposed for efficient planning in evolving environments, such as D* Lite and replanning variants of RRT. They all rely on propagating update cascades through their graph or tree. However, they are designed for a single goal, and the whole process needs to be restarted for a new one.

In this work, done during the master's thesis of Julien Romary, we propose an approach to extend RRT with the ability to change goal while retaining as much as possible of the connectivity discovered so far. The main idea is to modify the routines for removing and adding obstacles to respectively add a new goal and remove the previous one. We started evaluating this algorithm and found that it is indeed faster than replanning from scratch with RRT on both synthetic and simulated use cases.

Bayesian tracker for synthesizing mobile robot behavior from demonstration

Participant: Francis Colas.

Collaboration with Stéphane Magnénat (ETH Zurich).

Programming robots often involves expert knowledge in both the robot itself and the task to execute. An alternative to direct programming is for a human to show examples of the task execution and have the robot perform the task based on these examples, in a scheme known as learning or programming from demonstration.

In this work, we propose and study a generic and simple learning-from-demonstration framework. Our approach is to combine the demonstrated commands according to the similarity between the demonstrated sensory trajectories and the current replay trajectory. This tracking is solely performed based on sensor values and time and completely dispenses with the usually expensive step of precomputing an internal model of the task.
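As a rough illustration of combining demonstrated commands according to similarity, the sketch below weights every demonstrated sample by a Gaussian kernel on its distance to the current sensor values and time, then returns the weighted average command. The function `blend_commands`, the kernel and the two scale parameters are assumptions made for this example; the tracker studied in this work may differ.

```python
import math


def blend_commands(demos, current_sensors, current_time,
                   sensor_scale=1.0, time_scale=1.0):
    """Weighted average of demonstrated commands (hypothetical helper).

    demos: list of demonstrations, each a list of (time, sensors, command)
    samples, where sensors and command are tuples of floats.
    """
    weights, commands = [], []
    for demo in demos:
        for t, sensors, command in demo:
            # Squared distance in sensor space and in time.
            d_sensor = sum((a - b) ** 2 for a, b in zip(sensors, current_sensors))
            d_time = (t - current_time) ** 2
            # Gaussian similarity: close samples get a weight near 1.
            w = math.exp(-0.5 * (d_sensor / sensor_scale ** 2
                                 + d_time / time_scale ** 2))
            weights.append(w)
            commands.append(command)
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no demonstrated sample is close enough")
    dim = len(commands[0])
    return tuple(sum(w * c[i] for w, c in zip(weights, commands)) / total
                 for i in range(dim))
```

No internal model of the task is precomputed here: the demonstrations themselves are the model, and only the two scales need to be tuned.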

We analyse the behavior of the proposed model in several simulated conditions and test it on two different robotic platforms. We show that it can reproduce different capabilities with a limited number of meta-parameters.

Publications: 8.

Automatic tracking of free-flying insects using a cable-driven robot

Participants: Abir Bouaouda, Dominique Martinez (LORIA), Mohamed Boutayeb (CRAN), Rémi Pannequin (CRAN), François Charpillet.

This work has been funded by the ANR IA program and started in October 2020. The aim of this work is to evaluate the contribution of AI techniques such as reinforcement learning to the problem of controlling a cable-driven robot. Reinforcement learning for a cable-driven robot performing a tracking task is quite appealing as it can take into account many more parameters than a model-based approach. This project follows a series of works carried out in recent years by Dominique Martinez and Mohamed Boutayeb, culminating in a publication in Science Robotics. The task of the cable robot is to follow a flying insect in order to study its behavior. The end effector moved by the cables is equipped with two cameras.

This year, we have redesigned the robot. In the new configuration only three degrees of freedom are allowed, avoiding any rotation.

8.1.2 Multirobot and swarm

Signal-based self-organization of a chain of UAVs for subterranean exploration

Participants: Jean-Baptiste Mouret, Vladislav Tempez, Pierre Laclau, Enrico Natalizio (Loria / team Simbiot), Franck Rufier (CNRS/Aix-Marseille Université).

Miniature multi-rotors are promising robots for navigating subterranean networks, but maintaining a radio connection underground is challenging. In this paper, we introduce a distributed algorithm, called U-Chain (for Underground-chain), that coordinates a chain of flying robots between an exploration drone and an operator. Our algorithm only uses the measurement of the signal quality between two successive robots and an estimate of the ground speed based on an optic flow sensor. It leverages a distributed policy for each UAV and a Kalman filter to get reliable estimates of the signal quality. We evaluated our approach formally and in simulation, and we described experimental results with a chain of 3 real miniature quadrotors (12 by 12 cm) and a base station.
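The filtering step can be illustrated with a scalar Kalman filter that smooths a noisy signal-quality reading. This is a generic textbook filter under a random-walk assumption, not the U-Chain implementation; the class name `ScalarKalmanFilter` and the variance values used with it are hypothetical.

```python
class ScalarKalmanFilter:
    """One-dimensional Kalman filter with a random-walk state model."""

    def __init__(self, initial_estimate, initial_variance,
                 process_variance, measurement_variance):
        self.estimate = initial_estimate
        self.variance = initial_variance
        self.process_variance = process_variance          # how fast the true value may drift
        self.measurement_variance = measurement_variance  # sensor noise

    def update(self, measurement):
        # Predict: the value is assumed unchanged, but uncertainty grows.
        self.variance += self.process_variance
        # Correct: blend prediction and measurement according to their variances.
        gain = self.variance / (self.variance + self.measurement_variance)
        self.estimate += gain * (measurement - self.estimate)
        self.variance *= (1.0 - gain)
        return self.estimate
```

A small gain means that an isolated outlier in the raw signal quality only moves the estimate slightly, which is what makes the filtered value usable in a control policy.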

Publication: 6

Multi-robot autonomous exploration of an unknown environment

Participants: Nicolas Gauville, Dominique Malthese (Safran), Christophe Guettier (Safran), François Charpillet.

This work is funded by Safran through a Cifre PhD grant and its purpose is to study exploration problems where a set of robots has to completely observe an unknown environment such that it can then, for example, build an exhaustive map from the recorded data. In this project, we view this problem as an iterative decision process in which the robots use the information gathered by their sensors since the beginning of the mission to decide the next places to visit, together with the paths to reach these places. Accordingly, the robots determine and then execute the appropriate manoeuvre to follow those paths until the next decision step. The resulting exploration has to guarantee that the fleet of robots has perceived the whole environment by the end of the mission, and that it can be decided when the mission is finished. In this work we consider the exploration problem with a homogeneous team of robots, each equipped with a LiDAR (light detection and ranging). The multi-robot exploration problem raises some difficult issues: the robots need to coordinate so that they explore different areas. This in turn raises a communication issue: they need to manage communication loss, and also determine which pieces of information to send to other robots.

Multi-robot localisation on a load-sensing floor

Participants: Julien Claisse, Vincent Thomas, Francis Colas.

The use of floor sensors in the ambient intelligence context started in the late 1990s. We designed such a sensing floor in Nancy in collaboration with the Hikob company and Inria SED. This is a load-sensing floor which is composed of square tiles, each equipped with two ARM processors (Cortex M5 and A3), 4 load cells, and a wired connection to the four neighboring tiles. A hundred and four tiles cover the floor of our experimental platform (HIS). This year, during the internship of Julien Claisse, we focused on the problem of tracking multiple robots on this load-sensing floor. The main challenge is that pressure values cannot directly be processed by standard multi-target tracking filters like JPDAF.

In order to use those algorithms, a pre-processing step is required. Julien proposed a new pre-processing approach based on clusters of data, that can be either grouped or split, depending on the expected targets in the region. This approach was validated on real data previously recorded in our experimental platform.
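A minimal sketch of such a pre-processing step is given below: loaded tiles are grouped by adjacency, and each group is summarized by a load-weighted centroid that a tracking filter could consume as a point measurement. The function `cluster_loaded_tiles`, the per-tile load dictionary and the threshold are assumptions made for illustration; the splitting or merging of clusters according to the expected targets, which is the core of the proposed approach, is not reproduced here.

```python
def cluster_loaded_tiles(loads, threshold):
    """Group adjacent loaded tiles (hypothetical helper).

    loads: dict mapping (row, col) to the total load measured on that tile.
    Returns a list of (centroid_row, centroid_col, total_load) tuples.
    """
    active = {tile for tile, load in loads.items() if load > threshold}
    clusters = []
    while active:
        # Flood fill over the four neighboring tiles.
        stack = [active.pop()]
        members = []
        while stack:
            r, c = stack.pop()
            members.append((r, c))
            for neighbor in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if neighbor in active:
                    active.remove(neighbor)
                    stack.append(neighbor)
        total = sum(loads[m] for m in members)
        centroid_row = sum(m[0] * loads[m] for m in members) / total
        centroid_col = sum(m[1] * loads[m] for m in members) / total
        clusters.append((centroid_row, centroid_col, total))
    return clusters
```

For instance, a robot standing across two adjacent tiles yields a single cluster whose centroid lies between the two tile centers.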

Specialization and diversity in multi-robot swarm adaptation

Participants: Amine Boumaza.

Online Embodied Evolution is a distributed learning method for collective heterogeneous robotic swarms, in which evolution is carried out in a decentralized manner. In these algorithms each agent, typically a mobile robot, runs an EA on board and exchanges genetic material with other agents when they are in close range. Adaptation occurs when agents evolve the genomes that control their behavior. The environment exerts a selection pressure against which poorly adapted genomes go extinct. In this work, we address the problem of promoting reproductive isolation, a feature that has been identified as crucial in situations where behavioral specialization is desired. We hypothesize that one way to allow a swarm of robots to specialize in different tasks and create situations where division of labor emerges, is through the promotion of diversity. Our contribution is twofold. We describe a method that allows a swarm of heterogeneous agents evolving online to maintain a high degree of diversity in behavioral space in which selection is based on originality. We also introduce a method to measure behavioral distances that is well suited to online distributed settings, and argue that it provides reliable measurements and comparisons. We test the claim on a concurrent foraging task and the experiments show that diversity is indeed preserved, and that different behaviors emerge in the swarm, suggesting the emergence of reproductive isolation. Finally, we employ different analysis tools from computational biology that further support this claim.

Publication: 42

Analysis of multi-robot swarm algorithms

Participants: Amine Boumaza, Mathis Lamiroy.

In this work we study the time complexity of an instance of an online embodied evolutionary algorithm (see section 8.1.2), namely the -Online EEA. These algorithms have been successfully applied in different contexts and have been shown to be robust in open-ended environments. However, they lack theoretical grounding and rigorous analysis; there are no results that guarantee or quantify their convergence, even on simple instances of problems. In this work we aim at bounding the takeover time, that is, the time at which one (presumably fit) gene takes over the entire swarm. When such an event happens, all agents share copies of the same ancestor. The main result of this work is twofold: under certain assumptions the takeover event in a swarm of agents occurs with probability 1, and the average time for it to happen is . This bound was obtained by modeling the algorithm as a Wright-Fisher process, a well-known stochastic process from computational biology. An ongoing refinement of this result will allow us to drop the term and attain linear convergence.

8.2 Natural interaction with robotics systems

8.2.1 Teleoperation and Human-Robot collaboration

Whole-body teleoperation

Participants: Jean-Baptiste Mouret, Serena Ivaldi, Eloïse Dalin, Ivan Bergonzani, Luigi Penco, Evelyn D'Elia.

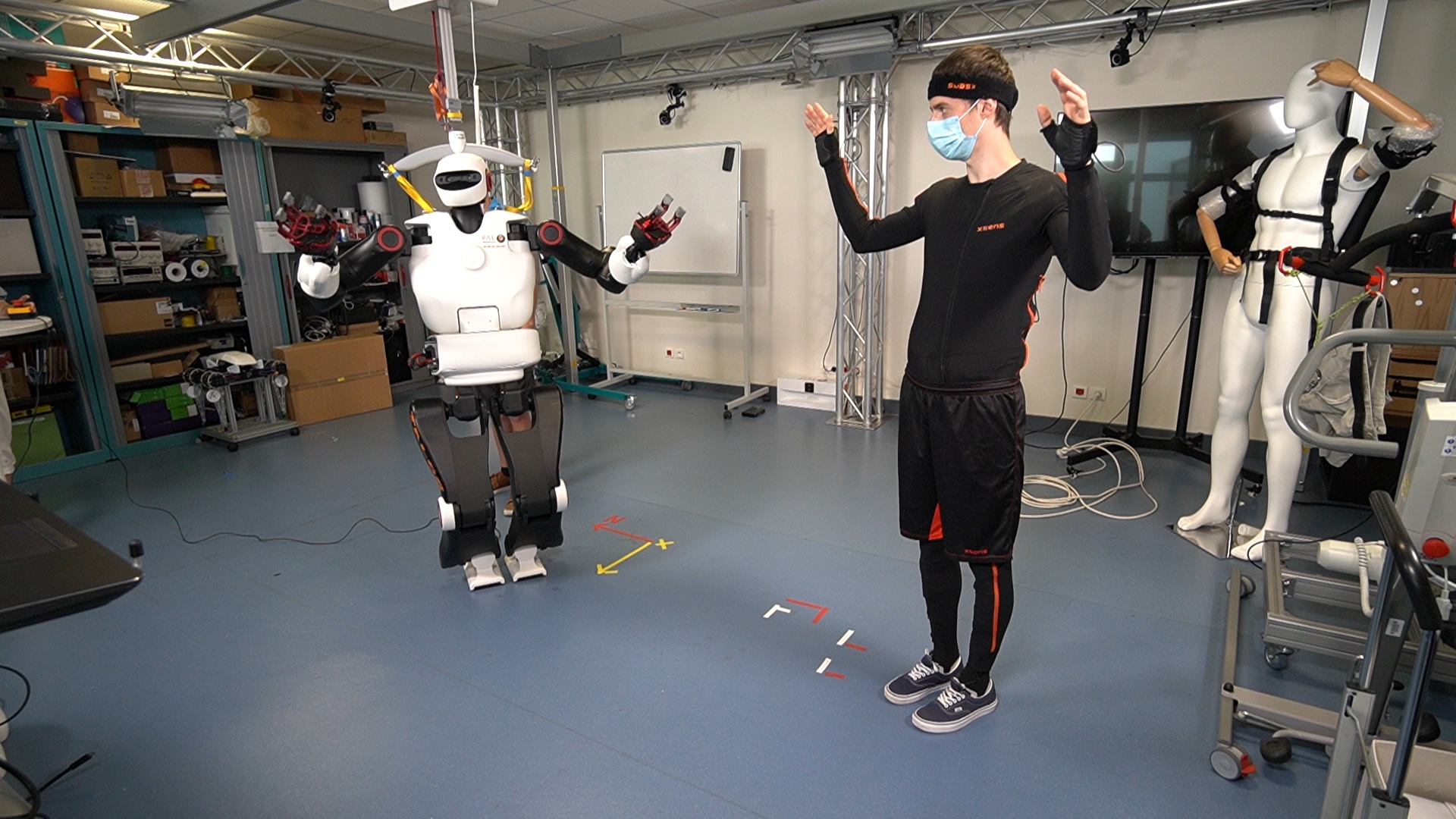

Teleoperation of the Talos robot. The operator wears a motion capture suit and the robot attempts to reproduce the Cartesian position of both hands while taking into account its own constraints (balance, self-collision, kinematics, etc.).

Thanks to the ADT wbTorque and the DGA grant “Humanoïde résilient”, we now have a reliable controller for task-based inverse dynamics. This controller takes reference positions for the hands, feet and center of mass and computes the optimal joint torques (from which positions can be computed) for each of the 32 joints. The main achievements of 2021 are:

- The Talos robot can now be teleoperated with the Xsens motion capture suit: video;

- We improved the stabilization/balance;

- We ported the latest version of this controller to the iCub humanoid robot (teleoperation with the Xsens suit) and the Tiago robot (arm + mobile base, teleoperated with a haptic 6-D joystick).

We documented our current implementation in a workshop paper: 20. The code is available online (github).

In the context of the AnDy project, there were two main activities:

- we kept developing the method for teleoperating humanoid robots in the presence of communication delays, using predictive models based on ProMPs. The results were described in an article 56 now under review.

- we investigated multi-objective optimisation strategies to automatically optimize the task weights and gains of the Talos whole-body controller, to be more precise in tracking desired reference trajectories while being more robust in balancing. The preliminary results were presented in a short paper at an ICRA 2021 workshop 43; we refined our approach by combining learned Pareto-optimal solutions with Bayesian optimization. A full article presenting our results was submitted to a conference.
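To illustrate what Pareto-optimal solutions mean when tuning controller weights against several objectives, the sketch below keeps only the non-dominated candidates among a set of evaluated parameter settings. The two objectives (a tracking error and a balance-related cost, both to be minimized) and the function names are assumptions for this example; the optimizer and objectives used in the actual work are not reproduced.

```python
def dominates(a, b):
    """True if objective vector a is no worse than b everywhere and
    strictly better on at least one objective (minimization)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(candidates):
    """Keep the non-dominated candidates.

    candidates: list of (params, objectives) pairs, where objectives is a
    tuple such as (tracking_error, balance_cost) in this hypothetical setup.
    """
    return [(params, objs) for params, objs in candidates
            if not any(dominates(other, objs) for _, other in candidates)]
```

Each point on the resulting front is a different trade-off between tracking precision and balance robustness; a second stage, such as Bayesian optimization, can then search around these points.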

Teleoperation of a mobile robot for asbestos removal

Participants: Edoardo Ghini, Serena Ivaldi.

In the context of the TELEMOVTOP project, we developed a teleoperation solution for workers in the construction sector, so that they can teleoperate a mobile robot to remove asbestos-contaminated tiles from a roof. The interface was tested with a digital twin of the robot, fully developed by our team using our own software tools, such as robot-dart and the whole-body controller library, to control the simulated robot. In November 2021, a preliminary onsite test with workers from ISOTOP (the construction company in the TELEMOVTOP project) was successfully conducted.

Adaptive control of collaborative robots for preventing musculoskeletal disorders

Participants: Anuyan Ithayakumar, Aurélien Osswald, Vincent Thomas, Pauline Maurice.

The use of collaborative robots in direct physical collaboration with humans constitutes a possible answer to musculoskeletal disorders: not only can they relieve the worker from heavy loads, but they could also guide him/her towards more ergonomic postures. In this context, the objective of the Ergobot project is to build adaptive robot strategies that are optimal regarding productivity but also the long-term health and comfort of the human worker, by adapting the robot behavior to the human's physiological state.

To do so, we are developing tools to compute a robot policy taking into account the long-term consequences of the biomechanical demands on the human worker's joints (joint loading) and to distribute the efforts among the different joints during the execution of a repetitive task. The proposed platform must merge within the same framework several works conducted in the LARSEN team, namely virtual human modelling and simulation, fatigue estimation, and decision making under uncertainty.

A first version of the platform has been developed and allowed us to perform preliminary tests and identify model-related limitations that need to be addressed.

This line of research will be pursued through Pauline Maurice's ANR JCJC ROOIBOS.

Publication: 28

Task-planning for human robot collaboration

Participants: Yang You, François Charpillet, Francis Colas, Olivier Buffet, Vincent Thomas.

Collaboration with Rachid Alami (CNRS Senior Scientist, LAAS Toulouse).

This work is part of the ANR project Flying Co-Worker (FCW) and focuses on high-level decision making for collaborative robotics. When a robot has to assist a human worker, it has no direct access to his current intention or his preferences but has to adapt its behavior to help the human complete his task.

This collaboration problem can be represented as a multi-agent problem and formalized in the decentralized-POMDP framework, where a common reward is shared among the agents. This year, we proposed an algorithm that solves infinite-horizon Dec-POMDPs by repeatedly computing best response strategies from one agent to the other (fixed) agent strategies, until convergence to an equilibrium. This approach provably converges to a local optimum, and can be restarted to search for other equilibria.
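The principle of alternating best responses can be shown on a much simpler problem than an infinite-horizon Dec-POMDP: a one-shot cooperative game where both agents receive the same payoff. The function `iterated_best_response` below is a toy sketch under that simplifying assumption, not the algorithm proposed this year.

```python
def iterated_best_response(payoff, row=0, col=0):
    """Alternate best responses in a common-payoff matrix game.

    payoff[i][j] is the reward shared by both agents when agent 1 plays i
    and agent 2 plays j. Returns (row, col, value) at an equilibrium.
    """
    while True:
        # Agent 1 best-responds to the (fixed) choice of agent 2.
        new_row = max(range(len(payoff)), key=lambda i: payoff[i][col])
        # Agent 2 best-responds to the updated choice of agent 1.
        new_col = max(range(len(payoff[0])), key=lambda j: payoff[new_row][j])
        if (new_row, new_col) == (row, col):
            return row, col, payoff[row][col]
        row, col = new_row, new_col
```

With `payoff = [[3, 0], [0, 5]]`, starting from `(0, 0)` stops at the local optimum of value 3, whereas starting from `(0, 1)` reaches the better equilibrium of value 5, which is why restarts are useful.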

However, the cost of solving this multi-agent problem is prohibitive and, even if the optimal joint policy could be built, it may be too complex to be realistically executed by a human worker. To address the collaboration issue, we thus propose to consider a mono-agent problem by taking the robot's perspective and assuming the human is part of the environment and follows a known policy. In this context, building the robot's policy consists in computing its best response given the human policy and can be formalized as a Partially Observable Markov Decision Process (POMDP). This makes the problem computationally simpler, but the difficulty lies in how to choose a relevant human policy for which the robot's best response could be built. This human policy should "synthesize" the various behaviors the human may actually adopt, accounting for the possible help of the robot (which induces a chicken-and-egg problem), and for the multiplicity of possible solutions, which is all the more important as the human is not fully rational.

We are currently investigating this direction of research to automatically compute (i) a human policy from the multi-agent model of the collaborative situation and (ii) the corresponding robot best response.

8.2.2 Human understanding

PAAVUP - models of visual attention

Participants: Olivier Buffet, Vincent Thomas.

Collaboration with Sophie Lemonnier (Associate professor in psychology, Université de Lorraine).

Understanding human decisions and resulting behaviors is of paramount importance to design interactive and collaborative systems. This project focuses on visual attention, a central process of human-system interaction. The aim of the PAAVUP project is to develop and test a predictive model of visual attention that would be adapted to task-solving situations, and thus would depend on the task to solve and the knowledge of the participant, and not only on the visual characteristics of the environment as usually done.

Taking inspiration from Wickens et al.'s theoretical model based on the expectancy and value of information 65, we plan to model visual attention as a decision-making problem under partial observability and to compare the obtained strategies to those observed in experimental situations. This is an interdisciplinary project involving the cognitive psychology laboratory. We hope that combining the skills from our scientific fields will help us better understand the theoretical foundations of existing models (computer science and cognitive psychology) and propose biologically plausible predictive computational models.

Sensor fusion for human pose estimation

Participants: Nima Mehdi, Francis Colas, Serena Ivaldi, Vincent Thomas.

This work is part of the ANR project Flying Co-Worker (FCW) and focuses on the perception of a human collaborator by a mobile robot for physical interaction. Physical interaction, for instance object hand-over, requires a precise estimate of the pose of the human, and in particular of their hands, with respect to the robot.

On the one hand, the human worker can wear a sensor suit with inertial sensors able to reconstruct the pose with good precision. However, such sensors cannot observe the absolute position and bearing of the human. All positions and orientations are therefore estimated by the suit with respect to an initial frame and this estimation is subject to drift (at a rate of a few cm per minute). On the other hand, the mobile robot can be equipped with cameras for which human pose estimation solutions are available such as OpenPose. The estimation is less precise but the error on the relative positioning is bounded.

This year we investigated a split particle filter model to separately process joint groups such as the arms, the legs or the trunk. This is made possible by the use of relative observations, that is, a pre-processing of the wearable sensor data to compute key positions of the body with respect to the root of the joint group (for instance, the shoulder or the hip) instead of an arbitrary fixed reference frame.

While this prevents correction from one group to the next, this structure allows for a drastic reduction of the number of particles needed to reach the same precision and, conversely, allows for a greater precision within the same time budget.

The ongoing work is to complement this filtering of the wearable sensor data with an additional layer including pose measurement from a camera onboard the drone. The aim is to improve the estimate of the location of the human with respect to the drone.

Human motor variability in collaborative robotics

Participants: Raphaël Bousigues, Pauline Maurice.

Collaboration with David Daney (INRIA Bordeaux), Vincent Padois (INRIA Bordeaux) and Jonathan Savin (INRS).

Occupational ergonomics studies suggest that motor variability (i.e. using one's kinematic redundancy to perform the same task using different body motions and postures) might be beneficial to reduce the risk of developing musculoskeletal disorders. The positive exploitation of motor variability is, ideally, the natural result achieved by an expert. Such optimum is, however, not always reached, or requires a very long practice time. Methodologies to help the acquisition of motor habits exploiting at best the overall variability are therefore of interest. In this respect, collaborative robots are a possible tool that could enable an individualized acquisition of such good practices at the motor level by guiding the operator.

The overarching goal of this project is to develop control laws for collaborative robots that encourage motor variability. In order to do so, it is necessary to develop models of motor variability in the context of human-robot collaboration (e.g., how do different constraints or interventions introduced by the robot affect the motor variability of a novice/expert). We are currently preparing a human subject experiment that aims at quantifying and modeling the kinematic variability of humans performing a manual task assisted by a collaborative robot, depending on their fatigue and their expertise in the task. The experimentally measured motor variability will then be compared with task-specific theoretical variability that can be estimated using human motion modeling and interval analysis.

Learning the user preference for grasping objects from few demonstrations

Participants: Yoann Fleytoux, Jean-Baptiste Mouret, Serena Ivaldi.

This work is part of the CHIST-ERA project HEAP, which focuses on benchmarking methods for learning to grasp irregular objects in a heap. One of our objectives is to design algorithms to learn the grasping policy from human experts with few demonstrations. We hypothesize that the expert can simply provide binary feedback (yes/no) about suggested candidate grasps, which are shown to the user as virtual grasps projected onto the camera images before any robot grasp execution. Our target is to learn from as few "labels" as possible, to reduce the amount of interaction with the expert. This year, we introduced a data-efficient grasping pipeline (Latent Space GP Selector, LGPS) that learns grasp preferences with only a few labels per object (typically 1 to 4) and generalizes to new views of this object. Our pipeline is based on learning a latent space of grasps with a dataset generated with any state-of-the-art grasp generator (e.g., Dex-Net). This latent space is then used as a low-dimensional input for a Gaussian process classifier that selects the preferred grasp among those proposed by the generator. The results show that our method outperforms both GR-ConvNet and GG-CNN (two state-of-the-art methods that are also based on labeled grasps) on the Cornell dataset, especially when only a few labels are used: only 80 labels are enough to correctly choose 80% of the grasps (885 scenes, 244 objects). Results are similar on our own dataset (91 scenes, 28 objects). An article was submitted to a conference 38.

Human motion decomposition

Participants: Jessica Colombel, David Daney (Auctus Bordeaux), François Charpillet.

The aim of the work is to find ways of representing human movement in order to extract meaningful physical and cognitive information. After reviewing the state of the art on human movement, we compared several methods: principal component analysis (PCA), Fourier series decomposition and inverse optimal control. These methods are applied to a signal comprising all the joint angles of a walking human. PCA makes it possible to understand the correlations between the different angles during the trajectory. Fourier series decomposition is used for a harmonic analysis of the signal. Finally, inverse optimal control provides a model of motor control that highlights qualitative properties characteristic of the whole motion. An in-depth study of the inverse optimal control method has been carried out in order to better understand its properties and to develop easier resolution methods. However, with these methods, closer attention must be paid to the reliability of the results. We wrote a paper on the reliability of inverse optimal control 36. This paper proposes an approach based on the evaluation of Karush-Kuhn-Tucker conditions relying on a complete analysis with Singular Value Decomposition. With respect to a ground truth, our simulations illustrate how the proposed analysis guarantees the reliability of the resolution. After introducing a clear methodology, the properties of the matrices are studied with different noise levels and different experimental models and conditions. We show how to implement the method step by step, explaining the numerical difficulties encountered during the resolution and thus how to make the results of the IOC problem reliable. To go further, we continued this work on inverse optimal control with a formalization of the data in polynomial form.
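As an illustration of the harmonic analysis mentioned above, the following sketch computes the first Fourier series coefficients of one period of a uniformly sampled joint angle and rebuilds the signal from them. The function names and the synthetic test signal are hypothetical; the data and the exact decomposition used in the study are not reproduced.

```python
import math


def fourier_coefficients(samples, n_harmonics):
    """Fourier series coefficients of one uniformly sampled period.

    Returns (a0, [(a_1, b_1), ..., (a_K, b_K)]) such that
    x(i) ~ a0 + sum_k a_k cos(2 pi k i / n) + b_k sin(2 pi k i / n).
    Assumes n_harmonics is below half the number of samples.
    """
    n = len(samples)
    a0 = sum(samples) / n
    coeffs = []
    for k in range(1, n_harmonics + 1):
        a_k = 2.0 / n * sum(x * math.cos(2 * math.pi * k * i / n)
                            for i, x in enumerate(samples))
        b_k = 2.0 / n * sum(x * math.sin(2 * math.pi * k * i / n)
                            for i, x in enumerate(samples))
        coeffs.append((a_k, b_k))
    return a0, coeffs


def reconstruct(a0, coeffs, n):
    """Rebuild n samples of the signal from its Fourier coefficients."""
    return [a0 + sum(a * math.cos(2 * math.pi * (k + 1) * i / n)
                     + b * math.sin(2 * math.pi * (k + 1) * i / n)
                     for k, (a, b) in enumerate(coeffs))
            for i in range(n)]
```

For a gait cycle, a handful of harmonics is often enough to summarize a joint angle, so the coefficients form a compact descriptor of the motion.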

Pedestrian behavior prediction

Participants: Lina Achaji, François Aioun (Stellantis), Julien Moreau (Stellantis), François Charpillet.

This work is done as part of the Ph.D. thesis of Lina Achaji (started on the 1st of March 2020) in the context of the OpenLab collaboration between Inria Nancy and Stellantis. The PhD is related to the development of autonomous vehicles in urban areas, which raises essential safety concerns for vulnerable road users (VRUs) such as pedestrians. Therefore, to make the roads safer, it is critical to classify and predict their future behavior. This year, we proposed a framework based on multiple variations of the Transformer model to reason attentively about the dynamic evolution of a pedestrian's past trajectory and predict their future action of crossing or not crossing the street. We showed that, using only bounding boxes as input, our model can outperform the previous state-of-the-art models and reach a prediction accuracy of 91% and an F1-score of 0.83 on the PIE dataset up to two seconds ahead in the future. In addition, we introduced a large simulated dataset (CP2A) using CARLA for action prediction. Our model similarly reached high accuracy (91%) and F1-score (0.91) on this dataset. Interestingly, we showed that pre-training our Transformer model on the simulated dataset and then fine-tuning it on the real dataset can be very effective for the action prediction task 35.
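Accuracy and F1-score are reported side by side because they can diverge, in particular when one class is rarer than the other. The sketch below shows how both are computed from binary predictions; the function `accuracy_and_f1` and the labels used with it are illustrative and unrelated to the PIE or CP2A data.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy, F1-score of the positive class) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    if tp == 0:
        return accuracy, 0.0  # no true positive: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, f1
```

With three "crossing" samples out of ten, one missed crossing and one false alarm give an accuracy of 0.8 but an F1-score of about 0.67, since F1 ignores the many correctly predicted "not crossing" samples.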

Changepoint Detection and Activity Recognition for Understanding Activities of Daily Living (ADL) @home

Participants: Yassine El Khadiri, Cédric Rose (Diatelic), François Charpillet.

This work has been partly funded by Diatelic, a subsidiary of Pharmagest through a Cifre PhD grant. This collaboration is motivated by a project called “36 mois de plus” whose purpose is to propose technological solutions to the difficulties of elderly people in an ageing population (due to the increase of life expectancy).

The objective of the Cifre PhD program was to provide personalized follow-up by learning life habits, the main objective being to track the Activities of Daily Living (ADL) and detect emergency situations needing external intervention (e.g. fall detection). For example, an algorithm capable of detecting sleep-wake cycles using only motion sensors has been developed. The approach is based on artificial intelligence techniques (Bayesian inference). The algorithms have been evaluated using a publicly available dataset and Diatelic’s own dataset. The PhD was defended in August 2021 33.
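To give a flavour of changepoint detection on motion-sensor data, the sketch below finds the single most likely change in activity rate in a sequence of event counts per time bin, assuming Poisson-distributed counts. This maximum-likelihood variant is deliberately simpler than the Bayesian inference used in the thesis, and the function names and example counts are hypothetical.

```python
import math


def poisson_loglik(counts):
    """Poisson log-likelihood of counts at their maximum-likelihood rate.

    The log(x!) terms are dropped: their sum does not depend on where
    the sequence is split.
    """
    total = sum(counts)
    if total == 0:
        return 0.0
    rate = total / len(counts)
    return total * math.log(rate) - len(counts) * rate


def best_changepoint(counts):
    """Index i that best splits counts into counts[:i] and counts[i:]."""
    best_index, best_score = None, -math.inf
    for i in range(1, len(counts)):
        score = poisson_loglik(counts[:i]) + poisson_loglik(counts[i:])
        if score > best_score:
            best_index, best_score = i, score
    return best_index
```

On counts such as `[0, 0, 1, 0, 0, 0, 5, 7, 6, 8, 5, 6]`, the detected index 6 separates a quiet period, which could correspond to sleep, from an active one.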

Social robots and loneliness: Acceptability and trust

Participants: Eloïse Zehnder, Jérôme Dinet (2LPN), François Charpillet.

This PhD work is done between the Larsen team and 2LPN, the psychology laboratory of the University of Lorraine. The main objective of the PhD program is to study how social robots or avatars can fight loneliness and how this is related to acceptability and trust 31, 32.

8.2.3 Exoskeleton and ergonomics

Using exoskeletons to assist medical staff manipulating Covid patients in ICU

Participants: Waldez Gomes, Pauline Maurice, Serena Ivaldi.

Collaboration with CHRU Nancy and INRS.

This work was conducted in the context of the ExoTurn project. In 2020 we conducted a pilot study to evaluate the potential and feasibility of back-support exoskeletons to help the caregivers in the Intensive Care Unit of the University Hospital of Nancy (France) execute Prone Positioning (PP) maneuvers on patients suffering from severe COVID-19-related Acute Respiratory Distress Syndrome (ARDS). After comparing four commercial exoskeletons, we selected and used the Laevo passive exoskeleton in the ICU in April 2020. Four exoskeletons were deployed in October 2020, during the second wave of COVID-19, to provide physical assistance during PP. In 2021, we followed up on the use of the exoskeletons in the ICU of the University Hospital of Nancy, where they were used until June 2021. While the physical assistance and benefit of the exoskeletons were clearly reported by the physicians, the adoption of the tool is limited by some organizational issues. We reported the entire experimental procedure and documented our field observations in an article published in AHFE 2021 16. To encourage the replication of our results, we published the entire materials and methods as well 39.

Using exoskeletons to assist medical staff manipulating patients in daily activities

Participants: Felix Cuny-Enault, Pauline Maurice, Serena Ivaldi.

Collaboration with CHRU Nancy.

This work is a follow-up of the ExoTurn project. While exoskeletons have proved to be useful to assist and relieve medical staff performing the prone-positioning maneuver during Covid-19, many other activities performed by nurses on a daily basis are physically demanding and a source of fatigue and musculoskeletal disorders.

We conducted an observation study in the cardiovascular department of the CHRU Nancy, where we followed nurses during their shifts for several weeks. We thereby identified the patient bed bathing task as a frequent activity that is risky from an ergonomics standpoint, due to the maintained forward bent posture during the patient manipulation. The Laevo exoskeleton that we used in the ExoTurn project is designed to provide back support during forward bent postures. Hence we conducted a first study (in the lab with novice participants, owing to the difficulty of recruiting nurses during the Covid crisis) to probe the feasibility, benefits and adverse effects of using the exoskeleton during a simulated bed bathing task. Our results show that the Laevo caused a significant change in the posture of the user, which is an unexpected effect and needs to be further investigated. These results will be presented in a paper that is in preparation.

Using digital human models to evaluate the biomechanical benefits of an exoskeleton

Participants: Pauline Maurice, Serena Ivaldi.

Collaboration with Claudia Latella and Daniele Pucci (Istituto Italiano di Tecnologia, Genova, Italy), with Jan Babic (Jozef Stefan Institute, Ljubljana, Slovenia), with Benjamin Schirrmeister, Jonas Bornmann and Jose Gonzalez (Ottobock SE & Co. KGaA, Duderstadt, Germany), Pavel Galibarov and Michael Daamsgard (ABT Technology, Denmark), Lars Fritzsche (IMK Automotive, Germany), and with Francesco Nori (Google DeepMind)

This work is part of the H2020 project AnDy. The AnDy project investigates robot control for human-robot collaboration, with a specific focus on improving ergonomics at work through physical robotic assistance. Earlier in the project, we performed a thorough assessment of the objective and subjective effects of using the Paexo passive exoskeleton designed by Ottobock (partner in the AnDy project) to assist overhead work 55. While the results were promising, experimental assessments do not provide a complete view of the biomechanical effects of the exoskeleton on the human body: this would require too many sensors to be practical. Conversely, digital human models and musculoskeletal simulations are powerful tools that are beginning to be used for such evaluations.

In this work, we investigated the effects of the Paexo exoskeleton on the whole body of the wearer, with two different types of simulations. One is a musculoskeletal simulation with the AnyBody Modeling software, which provides an estimate of muscle-related quantities such as muscle activation. While very detailed, such simulations are also computationally expensive. Hence, we also investigated the use of a joint torque estimation algorithm that provides an online estimate of the whole-body joint torques from motion capture and ground reaction force measurements. Both techniques proved useful and provide complementary data.

Prediction of human movement to control an active exoskeleton

Participants: Raphaël Lartot, Jean Michenaud, Pauline Maurice, Serena Ivaldi, François Charpillet.

This work is part of the DGA-RAPID project ASMOA. The project deals with intention prediction for exoskeleton control. The challenge is to detect the intention of movement of the user of an exoskeleton, based on data from sensors embedded on the exoskeleton (e.g. inertial measurement units (IMUs), strain gauges). The predicted movement serves to optimize the exoskeleton control in order to provide an adapted support in an intuitive manner.

We are currently investigating the use of Probabilistic Movement Primitives (ProMPs) to predict the future limb trajectory of a human, based on the observation of the first samples of the trajectory. ProMPs were previously used in the Larsen team: this work builds on code developed in the team (initially in the FP7 project CoDyCo, then in the H2020 project AnDy, and the wbTorque ADT), and aims at evaluating the technique for exoskeleton applications, in which the tight interaction between the human and the exoskeleton raises many questions.

To do so, we collected a dedicated database of human motions to train the ProMP models. We are currently coupling our ProMP library with our digital human simulation tool, in order to predict not only the trajectory of the human limb, but also the joint torques associated with it. Indeed, to facilitate the interaction, the exoskeleton needs to be torque-controlled, hence the prediction must be provided at the torque level.
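The principle of predicting a trajectory from its first samples with a ProMP can be sketched as follows. This is a minimal, one-dimensional illustration of the standard Gaussian conditioning step, not the team's ProMP library: the function names, the basis-function settings and the synthetic half-sine demonstrations are all assumptions made for the example.

```python
import numpy as np

def rbf_features(t, n_basis=10, width=0.02):
    """Normalized Gaussian basis functions at phases t in [0, 1] (width = squared bandwidth)."""
    centers = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-0.5 * (t[:, None] - centers[None, :]) ** 2 / width)
    return phi / phi.sum(axis=1, keepdims=True)

def fit_promp(demos, phi, ridge=1e-6):
    """Fit one weight vector per demonstration, then a Gaussian over the weights."""
    gram = phi.T @ phi + ridge * np.eye(phi.shape[1])
    weights = np.array([np.linalg.solve(gram, phi.T @ y) for y in demos])
    mu_w = weights.mean(axis=0)
    sigma_w = np.cov(weights, rowvar=False) + ridge * np.eye(phi.shape[1])
    return mu_w, sigma_w

def condition_on_prefix(mu_w, sigma_w, phi_obs, y_obs, obs_noise=1e-4):
    """Gaussian conditioning of the weight distribution on observed samples."""
    s = phi_obs @ sigma_w @ phi_obs.T + obs_noise * np.eye(len(y_obs))
    gain = np.linalg.solve(s, phi_obs @ sigma_w).T  # sigma_w @ phi_obs.T @ inv(s)
    mu_post = mu_w + gain @ (y_obs - phi_obs @ mu_w)
    sigma_post = sigma_w - gain @ phi_obs @ sigma_w
    return mu_post, sigma_post

# Synthetic demonstrations (assumption): half-sine movements of varying amplitude.
t = np.linspace(0.0, 1.0, 100)
phi = rbf_features(t)
demos = [a * np.sin(np.pi * t) for a in np.linspace(0.5, 1.5, 20)]
mu_w, sigma_w = fit_promp(demos, phi)

# New movement: only its first 20 samples are observed.
y_true = 1.3 * np.sin(np.pi * t)
n_obs = 20
mu_post, _ = condition_on_prefix(mu_w, sigma_w, phi[:n_obs], y_true[:n_obs])

prior_mean = phi @ mu_w  # prediction before observing any sample
y_pred = phi @ mu_post   # prediction after conditioning on the observed prefix
print("max error, prior mean: ", np.max(np.abs(prior_mean - y_true)))
print("max error, conditioned:", np.max(np.abs(y_pred - y_true)))
```

In this toy setting, conditioning on the beginning of the movement pulls the predicted trajectory away from the average demonstration and towards the movement actually being performed. Predicting joint torques rather than positions would additionally require a dynamic model of the human, which this sketch does not include.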

Towards improving ergonomics during human-robot physical interaction

Participants: Lorenzo Vianello, Waldez Gomes, Eloïse Dalin, Pauline Maurice, Jean-Baptiste Mouret, Serena Ivaldi.

Collaboration with Alexis Aubry (CRAN)

Collaborative robots have the potential to assist humans in their daily tasks and in particular in industrial tasks. The latter often involve awkward postures and repetitive actions executed in poor ergonomic conditions, which often lead to musculoskeletal disorders. Improving the ergonomics of such tasks by leveraging robotic tools is at the core of projects such as the H2020 AnDy and LUE C-Shift, in which our team is deeply involved.

This year, we progressed on different axes of research:

- We finalized the method for predicting the human upper-body posture of an operator physically coupled with a robot at the level of its end-effector. The results were published in 11 and presented at the AHFE 2021 conference as well 30.

- We proposed a method for optimizing whole-body trajectories of recorded human tasks with respect to several optimization criteria, using Pareto-optimal solutions obtained from multi-objective stochastic optimization algorithms. The proposed method relies on a parametric representation of recorded task trajectories, which benefits from our internal software libraries (ProMPs for trajectory representation and our whole-body QP controller for simulating and controlling Digital Human Models). Interestingly, the optimized trajectories are personalized, in that they are user-specific. We empirically showed that optimizing for multiple ergonomics criteria simultaneously leads to more ergonomic movements than optimizing for a single criterion: while this result was intuitive, it shows that several methods proposed in the past literature of collaborative robotics have to be revisited. Our work was published in 5 and presented at AHFE 2021 25. Interestingly, the software tools developed for this activity were also used for the case study of ExoTurn 27.

- Following up on previous results in human-human co-manipulation (more details in the PhD thesis manuscript of Waldez Gomes 34), we designed a human-robot co-manipulation experiment that enables us to investigate how humans adapt their interaction when executing a joint co-manipulation task with a robot. This experiment requires the automatic definition of the interaction role taken by the robot, which translates into different equations for its end-point stiffness as a function of the human arm co-contraction, measured by EMG sensors. The ethics authorization for the experiments was obtained in December 2021. The experiments will be conducted in 2022.

- We published two short surveys of the state of the art in shared control 10 and human-robot interaction and cooperation 12.
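To make the notion of Pareto-optimal solutions used in the trajectory optimization work above more concrete, here is a minimal sketch of a non-dominated filter over candidate trajectories scored on two criteria to be minimized. It only shows the selection of the Pareto front, not the multi-objective stochastic optimizer itself; the criteria names and scores are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b on every criterion and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(costs):
    """Indices of the candidates that no other candidate dominates (lower is better)."""
    return [i for i, c in enumerate(costs)
            if not any(dominates(other, c) for other in costs)]

# Hypothetical scores (assumption): (posture score, joint torque effort) per candidate.
candidates = [(3, 8), (4, 4), (8, 2), (5, 6), (9, 9), (3, 9)]
front = pareto_front(candidates)
print("Pareto-optimal candidates:", [candidates[i] for i in front])
```

Minimizing a single criterion returns one extreme of this front, whereas the front as a whole exposes the trade-offs between criteria, which is what makes a compromise solution selectable.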

Simulating operators' morphological variability for ergonomics assessment

Participants: Jacques Zhong, Pauline Maurice, Francis Colas.

In collaboration with Vincent Weisstroffer and Claude Andriot from CEA-LIST (PhD of Jacques Zhong funded by CEA).

Digital human models are a powerful tool to assess the ergonomics of a workstation during the design process and easily modify or optimize the workstation, without the need for a physical mock-up or lengthy human subject measurements. However, the morphological variability of the workers is rarely taken into account. Generally, only the height and volume are considered, for reachability and space questions. But morphology has other effects, such as changing the way in which a person can perform the task or changing the effort distribution in the body.

In this work, we aim to leverage dynamic simulation with a digital human model (animated using a quadratic programming controller) to simulate virtual assembly tasks for workers of any morphology. The key challenge is to transfer the task execution from one morphology to another.

As a first step, we developed a digital human simulation based on a QP controller, in which a user can manipulate the posture of the digital human using virtual reality. Hence the user can easily puppeteer the human model (e.g. by moving its hands or feet), which then changes its posture while still being dynamically consistent. The user can then evaluate the ergonomics and the efforts of the task, through indicators computed in simulation. This work has been submitted to a conference (acceptance pending).

Feasibility of using an exoskeleton to assist firefighters

Participants: Pauline Maurice.

In collaboration with Guillaume Mornieux (DevAH lab, Université de Lorraine) and Sophie Lemonnier (Perseus lab, Université de Lorraine), and SDIS 54 (firefighters from Nancy).

Firefighters have to perform physically demanding tasks which can lead to musculoskeletal disorders. In addition, firefighters are aging and their physical condition is declining, hence fewer and fewer firefighters are able to perform some maneuvers, such as the car cutting maneuver, which requires the manipulation of heavy tools. Therefore, the SDIS 54 is looking for solutions to help firefighters perform physically demanding maneuvers. Occupational exoskeletons might be a solution: in industry, they have been shown to reduce the physical load on workers and increase their endurance time. However, the feasibility and benefits of exoskeletons are very task-specific. Hence the goal of this project (POMPEXO) is to investigate whether an existing exoskeleton could be used and provide some biomechanical benefit during the car cutting maneuver (or to provide recommendations on how to modify an existing exoskeleton to be compatible with the car cutting maneuver).

To do so, we conducted an on-site data collection campaign at the firefighter premises in Nancy. We recorded physiological and biomechanical data during 16 experiments in which firefighters performed the car cutting maneuver. Thanks to these data, we can characterize the maneuver from a biomechanical standpoint, to identify which body parts could benefit most from assistance. We are currently finalizing this first step, which will help us identify which existing exoskeleton(s) could be the most suitable.

In a second phase of the project, we will use digital human simulation to estimate the effect of several exoskeleton candidates on the human body (joint loading), to help us choose the best one. Then we will test this exoskeleton with the firefighters to identify its potential and limitations.

In parallel, an acceptance study is also being conducted with the firefighters to analyze their attitudes towards exoskeletons. The results will be useful to guide and facilitate the deployment, if a suitable exoskeleton is found.

9 Bilateral contracts and grants with industry

9.1 Bilateral grants with industry

PhD grant (Cifre) with Diatelic Pharmagest

Participants: François Charpillet, Yassine El Khadiri.