Keywords

Computer Science and Digital Science

- A2.1.3. Object-oriented programming

- A2.1.12. Dynamic languages

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.7.2. Music

Other Research Topics and Application Domains

- B2.8. Sports, performance, motor skills

- B6.1.1. Software engineering

- B9.2.1. Music, sound

- B9.5.1. Computer science

- B9.5.6. Data science

- B9.6.10. Digital humanities

- B9.8. Reproducibility

1 Team members, visitors, external collaborators

Research Scientists

- Stéphane Huot [Team leader, Inria, Senior Researcher, HDR]

- Sylvain Malacria [Inria, Researcher]

- Mathieu Nancel [Inria, Researcher]

Faculty Members

- Géry Casiez [Université de Lille, Professor, HDR]

- Thomas Pietrzak [Université de Lille, Associate Professor]

- Damien Pollet [Université de Lille, Associate Professor, from Oct 2021]

Post-Doctoral Fellow

- Bruno Fruchard [Inria, from Aug 2021]

PhD Students

- Marc Baloup [Inria]

- Yuan Chen [Université de Lille & University of Waterloo - Canada]

- Johann Gonzalez Avila [Université de Lille & Carleton University - Canada]

- Alice Loizeau [Inria, from October 2021]

- Eva Mackamul [Inria]

- Gregoire Richard [Inria]

- Philippe Schmid [Inria]

- Travis West [Université de Lille & McGill University - Canada]

Technical Staff

- Axel Antoine [Inria, Engineer]

- Alice Loizeau [Inria, Engineer, from May 2021 until Sep 2021]

- Rahul Kumar Ray [Inria, Engineer, from Dec 2021]

Interns and Apprentices

- Sebastien Bart [Université de Lille, from May 2021 until Jul 2021]

- Julien Doche [ENSC Bordeaux and Université du Luxembourg, from Mar 2021 until Aug 2021]

- Alice Loizeau [Inria, until Feb 2021]

- Oceane Masse [Inria, from Jun 2021 until Aug 2021]

- Lucas Ple [Université de Lille, from Apr 2021 until Jun 2021]

- Pierrick Uro [Université de Lille, from Mar 2021 until Aug 2021]

Administrative Assistants

- Julie Jonas [Inria, until Mar 2021]

- Karine Lewandowski [Inria, from Apr 2021]

External Collaborators

- Edward Lank [University of Waterloo - Canada, until Apr 2021, HDR]

- Marcelo M. Wanderley [McGill University - Canada, from Mar 2021 until Aug 2021, HDR]

2 Overall objectives

Human-Computer Interaction (HCI) is a constantly moving field 28. Changes in computing technologies extend their possible uses, and modify the conditions of existing uses. People also adapt to new technologies and adjust them to their own needs 33. New problems and opportunities thus regularly arise and must be addressed from the perspectives of both the user and the machine, to understand and account for the tight coupling between human factors and interactive technologies. Our vision is to connect these two elements: Knowledge & Technology for Interaction.

2.1 Knowledge for Interaction

In the early 1960s, when computers were scarce, expensive, bulky, and formally scheduled machines used for automatic computations, Engelbart saw their potential as personal interactive resources. He saw them as tools we would purposefully use to carry out particular tasks and that would empower people by supporting intelligent use 25. Others at the same time saw computers differently: as partners, intelligent entities to whom we would delegate tasks. These two visions still constitute the roots of today's predominant HCI paradigms, use and delegation. In the delegation approach, much effort has gone into supporting oral, written and non-verbal forms of human-computer communication, and into analyzing and predicting human behavior. But the inconsistency and ambiguity of human beings, and the variety and complexity of contexts, make these tasks very difficult 39, and the machine thus remains the center of interest.

2.1.1 Computers as tools

The focus of Loki is not on what machines can understand or do by themselves, but on what people can do with them. We do not reject the delegation paradigm but clearly favor that of tool use, aiming for systems that support intelligent use rather than for intelligent systems. As the boundary between the two grows thinner, one of our goals is to better understand what leads users to perceive an interactive system as a tool or as a partner, and how the two paradigms can be combined for the best benefit of the user.

2.1.2 Empowering tools

The ability provided by interactive tools to create and control complex transformations in real time can support intellectual and creative processes in unusual but powerful ways. But mastering powerful tools is neither simple nor immediate; it requires learning and practice. Our research in HCI should therefore not focus only on novice or highly proficient users; it should also care about intermediate users willing to devote time and effort to developing new skills, be it for work or leisure.

2.1.3 Transparent tools

Technology is most empowering when it is transparent: invisible in effect, it does not get in your way but lets you focus on the task. Heidegger characterized this unobtrusive relation to things with the term zuhanden (ready-to-hand). Transparency of interaction is not best achieved with tools mimicking human capabilities, but with tools taking full advantage of them given the context and task. For instance, the transparency of driving a car “is not achieved by having a car communicate like a person, but by providing the right coupling between the driver and action in the relevant domain (motion down the road)” 43. Our actions towards the digital world need to be digitized and we must receive proper feedback in return. But input and output technologies impose somewhat inevitable constraints, while the number, diversity, and dynamicity of digital objects call for more and more sophisticated perception-action couplings for increasingly complex tasks. We want to study the means currently available for perception and action in the digital world: Do they leverage our perceptual and control skills? Do they support the right level of coupling for transparent use? Can we improve them or design more suitable ones?

2.2 Technology for Interaction

Studying the interactive phenomena described above in order to understand, model and ultimately improve them is one of the pillars of HCI research. Yet we also have to make those phenomena happen, to make them possible and reproducible, be it for further research or for their diffusion 27. However, because of the high viscosity and lack of openness of current systems, this requires considerable effort in designing, engineering, implementing and hacking interactive hardware and software artifacts. This is what we call “The Iceberg of HCI Research”, whose hidden part supports the design and study of new artifacts, but also informs their creation process.

2.2.1 “Designeering Interaction”

Both parts of this iceberg strongly influence each other: the design of interaction techniques (the visible top) informs on the capabilities and limitations of the platform and the software being used (the hidden bottom), giving insights into what could be done to improve them. On the other hand, new architectures and software tools open the way to new designs by providing the necessary bricks to build with 29. These bricks define the adjacent possible of interactive technology: the set of what could be designed by assembling the parts in new ways. Exploring ideas that lie outside of the adjacent possible requires the necessary technological evolutions to be addressed first. This is a slow, gradual and uncertain process, which helps to explore and fill a number of gaps in our research field but can also lead to deadlocks. We want to better understand and master this process (i. e., analyzing the adjacent possible of HCI technology and methods) and introduce tools to support and extend it. This could help make technology better suited to exploring the fundamentals of interaction and to integrating them into real systems, ultimately turning interactive systems into empowering tools.

2.2.2 Computers vs Interactive Systems

In fact, today's interactive systems (e. g., desktop computers, mobile devices) share very similar layered architectures inherited from the first personal computers of the 1970s. This abstraction of resources provides developers with standard components (UI widgets) and high-level input events (mouse and keyboard) that obviously ease the development of common user interfaces for predictable and well-defined tasks and user behaviors. But it does not favor the implementation of non-standard interaction techniques that could be better adapted to more specific contexts, or to expressive and creative uses. Such techniques often require going deeper into the system layers and hacking them until the required functionalities and/or data become accessible, which implies switching between programming paradigms and/or languages.

These limitations are all the more pervasive as interactive systems have changed deeply in the last 20 years. They are no longer limited to a simple desktop or laptop computer with a display, a keyboard and a mouse. They are becoming more and more distributed and pervasive (e. g., mobile devices, Internet of Things). They are changing dynamically with recombinations of hardware and software (e. g., transitions between multiple devices, modular interactive platforms for collaborative use). Systems are moving “out of the box” with Augmented Reality, and users are going “inside the box” with Virtual Reality. This obviously raises new challenges in terms of human factors, usability and design, but it also deeply questions current architectures.

2.2.3 The Interaction Machine

We believe that promoting digital devices to empowering tools requires better fundamental knowledge about interaction phenomena AND to revisit the architecture of interactive systems in order to support this knowledge. By following a comprehensive systems approach—encompassing human factors, hardware elements, and all software layers above—we want to define the founding principles of an Interaction Machine:

- a set of hardware and software requirements with associated specifications for interactive systems to be tailored to interaction by leveraging human skills;

- one or several implementations to demonstrate and validate the concept and the specifications in multiple contexts;

- guidelines and tools for designing and implementing interactive systems, based on these specifications and implementations.

To reach this goal, we will adopt an opportunistic and iterative strategy guided by the designeering approach, where the engineering aspect will be fueled by the interaction design and study aspect. We will address several fundamental problems of interaction related to our vision of “empowering tools”, which, in combination with state-of-the-art solutions, will inform us about the requirements for the solutions to be supported in an interactive system. This consists of reifying the concept of the Interaction Machine in multiple contexts and for multiple problems, before converging towards a more unified definition of “what is an interactive system”, the ultimate Interaction Machine, which constitutes the main scientific and engineering challenge of our project.

3 Research program

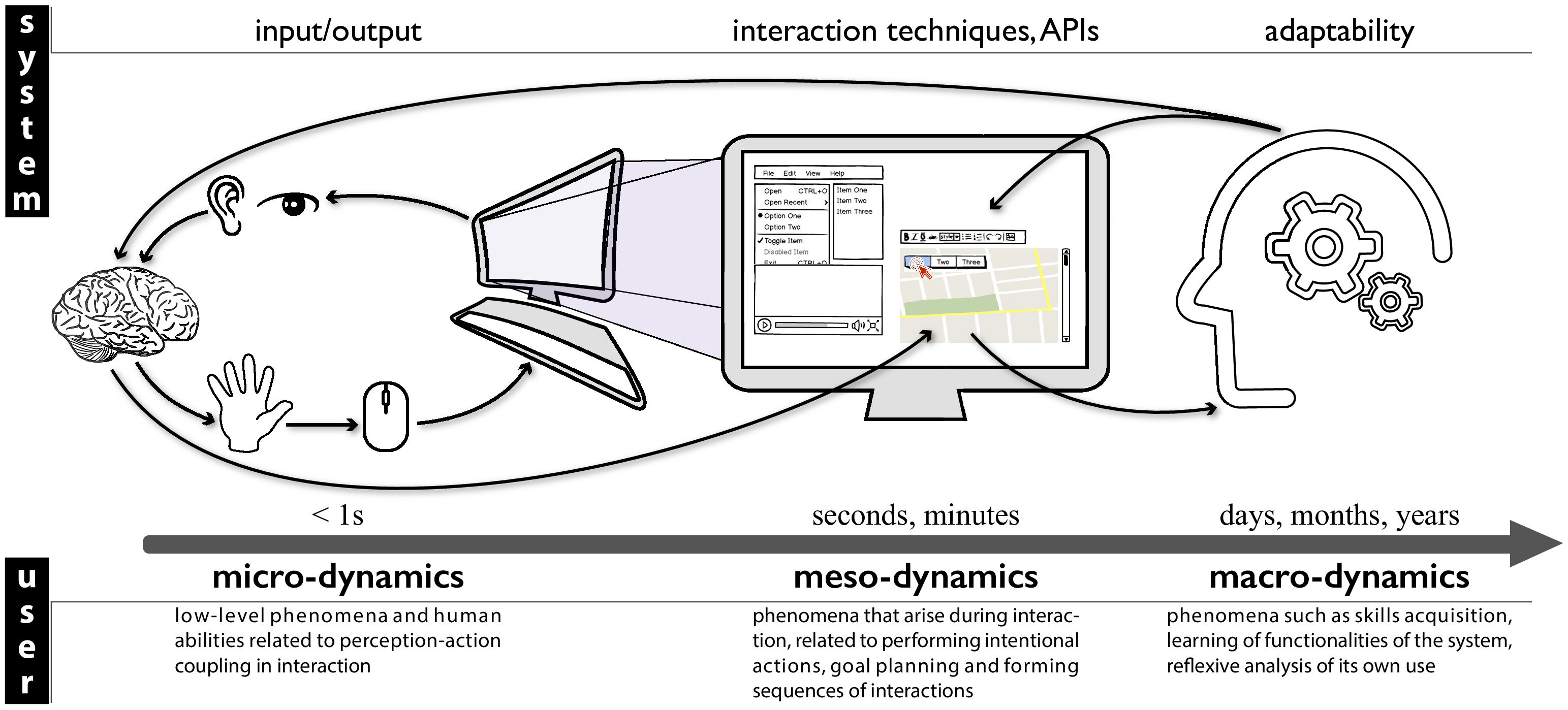

Interaction is by nature a dynamic phenomenon that takes place between interactive systems and their users. Redesigning interactive systems to better account for interaction requires a fine understanding of these dynamics from the user side so as to better handle them from the system side. In fact, the layers of current interactive systems abstract hardware and system resources from a system and programming perspective. Following our Interaction Machine concept, we are reconsidering these architectures from the user's perspective, through different levels of dynamics of interaction (see Figure 1).

Figure 1: The three levels of dynamics of interaction that we consider in our research program.

Considering phenomena that occur at each of these levels, as well as their relationships, will help us acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Although our strategy is to investigate issues and address challenges at all three levels, our immediate priority is to focus on micro-dynamics, since this level concerns very fundamental knowledge about interaction and relates to very low-level parts of interactive systems, and is therefore likely to influence our future research and developments at the other levels.

3.1 Micro-Dynamics

Micro-dynamics involve low-level phenomena and human abilities related to very short time scales (near-instantaneous actions) and to perception-action coupling in interaction, when the user has almost no control over or awareness of the action once it has started. From a system perspective, this level mostly has implications for input and output (I/O) management.

3.1.1 Transfer functions design and latency management

We have developed recognized expertise in the characterization and the design of transfer functions 24, 38, i. e., the algorithmic transformations of raw user input for system use. Ideally, transfer functions should match the interaction context. Yet the question of how to maximize one or more criteria in a given context remains open, and on-demand adaptation is difficult because transfer functions are usually implemented at the lowest possible level to avoid latency. Latency has indeed long been known as a determinant of human performance in interactive systems 32 and has recently regained attention with touch interactions 30. These two problems require joint examination to improve performance with interactive systems: latency can be a confounding factor when evaluating the effectiveness of transfer functions, and transfer functions can also include algorithms to compensate for latency.
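As an illustration of what such an algorithmic transformation can look like, the sketch below implements a velocity-dependent gain, assuming a logistic curve that moves from a low gain for slow, precise movements to a high gain for fast ones. The curve shape and every parameter value (`g_min`, `g_max`, `v_mid`, `steepness`) are hypothetical choices made for illustration, not one of the functions characterized in 24, 38.

```python
from __future__ import annotations

import math


def gain(speed_m_s: float, g_min: float = 1.0, g_max: float = 8.0,
         v_mid: float = 0.1, steepness: float = 60.0) -> float:
    """Hypothetical logistic gain curve (an assumption for illustration):
    close to g_min for slow, precise movements and close to g_max for fast
    ones, with the transition centred on v_mid (device speed in m/s)."""
    return g_min + (g_max - g_min) / (1.0 + math.exp(-steepness * (speed_m_s - v_mid)))


def transfer(dx_m: float, dy_m: float, dt_s: float) -> tuple[float, float]:
    """Map one device displacement (metres, over dt_s seconds) to a pointer
    displacement (metres on screen) by applying the speed-dependent gain."""
    speed = math.hypot(dx_m, dy_m) / dt_s
    g = gain(speed)
    return g * dx_m, g * dy_m


# A 1 mm movement in 10 ms (0.1 m/s) sits at the midpoint of the curve.
print(transfer(0.001, 0.0, 0.01))
```

Because this sketch works on displacements in metres, its behavior does not depend on mouse or screen resolution; converting counts and pixels to and from physical units is a separate step.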

We have recently proposed new cheap but robust methods for the measurement of end-to-end latency 2 and worked on compensation methods 37 and the evaluation of their perceived side effects 9. Our goal is then to automatically adapt transfer functions to individual users and contexts of use, which we started in 31, while reducing latency in order to support stable and appropriate control. To achieve this, we will investigate combinations of low-level (embedded) and high-level (application) ways to take user capabilities and task characteristics into account and reduce or compensate for latency in different contexts, e. g., using a mouse or a touchpad, a touch-screen, an optical finger navigation device or a brain-computer interface. From an engineering perspective, this knowledge on low-level human factors will help us to rethink and redesign the I/O loop of interactive systems in order to better account for them and achieve more adapted and adaptable perception-action coupling.
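To give a concrete sense of what a compensation method does, the sketch below predicts a touch position one latency interval ahead by linear extrapolation of the two most recent samples. This is a deliberately naive stand-in chosen for illustration, not the method of 37; the `Sample` type, the `predict` function and the 50 ms latency value are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    t: float  # timestamp (s)
    x: float  # position (px)
    y: float  # position (px)


def predict(prev: Sample, last: Sample, latency_s: float) -> tuple[float, float]:
    """Naive latency compensation: extrapolate linearly from the two most
    recent samples to where the finger should be latency_s seconds later."""
    dt = last.t - prev.t
    if dt <= 0:
        # Velocity cannot be estimated; fall back to the raw (lagging) position.
        return last.x, last.y
    vx = (last.x - prev.x) / dt
    vy = (last.y - prev.y) / dt
    return last.x + vx * latency_s, last.y + vy * latency_s


# Finger moving right at about 1000 px/s, with an assumed 50 ms of latency to hide.
print(predict(Sample(0.0, 100.0, 100.0), Sample(0.01, 110.0, 100.0), 0.05))
```

Rendering at the predicted position hides the lag while the velocity stays constant, but when the finger stops or changes direction the estimate briefly overshoots. Side effects of this kind are why the perception of compensation methods has to be evaluated and not only their accuracy.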

3.1.2 Tactile feedback & haptic perception

We are also concerned with the physicality of human-computer interaction, with a focus on haptic perception and related technologies. For instance, when interacting with virtual objects such as software buttons on a touch surface, the user cannot feel the click sensation as with physical buttons. The tight coupling between how we perceive and how we manipulate objects is then essentially broken, although it is instrumental for efficient direct manipulation. We have addressed this issue in multiple contexts by designing, implementing and evaluating novel applications of tactile feedback 5.

In comparison with many other modalities, one difficulty with tactile feedback is its diversity. It covers sensations of force, vibration, friction, or deformation. Although this is a richness, it also raises usability and technological challenges, since each kind of haptic stimulation requires different kinds of actuators with their own parameters and thresholds, and results obtained with one are hardly applicable to the others. From a “knowledge” point of view, we want to better understand and empirically classify haptic variables and the kind of information they can represent (continuous, ordinal, nominal), their resolution, and their applicability to various contexts. From the “technology” perspective, we want to develop tools to inform and ease the design of haptic interactions taking best advantage of the different technologies in a consistent and transparent way.

3.2 Meso-Dynamics

Meso-dynamics relate to phenomena that arise during interaction, on a longer but still short time scale. For users, this level relates to performing intentional actions, to goal planning and tool selection, and to forming sequences of interactions based on a known set of rules or instructions. From the system perspective, it relates to how possible actions are exposed to the user and how they have to be executed (i. e., interaction techniques). It also has implications for the tools used to design and implement those techniques (programming languages and APIs).

3.2.1 Interaction bandwidth and vocabulary

Interactive systems and their applications have an always-increasing number of available features and commands due to, e. g., the large amount of data to manipulate, increasing power and number of functionalities, or multiple contexts of use.

On the input side, we want to augment the interaction bandwidth between the user and the system in order to cope with this increasing complexity. In fact, most input devices capture only a few of the movements and actions the human body is capable of. Our arms and hands, for instance, have many degrees of freedom that are not fully exploited in common interfaces. We have recently designed new technologies to improve expressiveness, such as a bendable digitizer pen 26, and reliable technology for studying the benefits of finger identification on multi-touch interfaces 4.

On the output side, we want to expand users' interaction vocabulary. Not all of the features and commands of a system can be displayed on screen at the same time, and many advanced features are by default hidden from users (e. g., hotkeys) or buried in deep hierarchies of command-triggering systems (e. g., menus). As a result, users tend to use only a subset of all the tools the system actually offers 36. We will study how to help them broaden their knowledge of available functions.

Through this “opportunistic” exploration of alternative and more expressive input methods and interaction techniques, we will particularly focus on the necessary technological requirements to integrate them into interactive systems, in relation with our redesign of the I/O stack at the micro-dynamics level.

3.2.2 Spatial and temporal continuity in interaction

At a higher level, we will investigate how more expressive interaction techniques affect users' strategies when performing sequences of elementary actions and tasks. More generally, we will explore “continuity” in interaction. Interactive systems have moved from one computer to multiple connected interactive devices (computers, tablets, phones, watches, etc.) that could also be augmented through a Mixed-Reality paradigm. This distribution of interaction raises new challenges, both in terms of usability and engineering, that we clearly have to consider in our main objective of revisiting interactive systems 34. It involves the simultaneous use of multiple devices, and also changes in the role of devices according to the location, the time, the task, and the context of use: a tablet can be used as the main device while traveling, and become an input device or a secondary monitor when resuming that same task once in the office; a smart-watch can be used as a standalone device to send messages, but also as a remote controller for a wall-sized display. One challenge is then to design interaction techniques that support smooth, seamless transitions during these spatial and temporal changes in order to maintain the continuity of uses and tasks; another is to integrate these principles into future interactive systems.

3.2.3 Expressive tools for prototyping, studying, and programming interaction

Current systems suffer from engineering issues that keep constraining and influencing how interaction is thought of, designed, and implemented. Addressing the challenges we presented in this section and making the solutions possible requires extended expressiveness: researchers and designers must either wait for the proper toolkits to appear, or “hack” existing interaction frameworks, often bypassing existing mechanisms. For instance, numerous usability problems in existing interfaces stem from a common cause: the lack, or untimely discarding, of relevant information about how events are propagated and how changes come to occur in interactive environments. On top of our redesign of the I/O loop of interactive systems, we will investigate how to facilitate access to that information and also promote a more grounded and expressive way to describe and exploit input-to-output chains of events at every system level. We want to provide finer granularity and better-described connections between the causes of changes (e.g., input events and system triggers), their context (e.g., system and application states), their consequences (e.g., interface and data updates), and their timing 8. More generally, a central theme of our Interaction Machine vision is to promote interaction as a first-class object of the system 23, and we will study alternative and better-adapted technologies for designing and programming interaction, as we did recently to ease the prototyping of Digital Musical Instruments 1 or the programming of graphical user interfaces 10. Ultimately, we want to propose a unified model of hardware and software scaffolding for interaction that will contribute to the design of our Interaction Machine.
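To picture what “better-described connections” between causes, context, consequences and timing could mean in practice, the following sketch keeps a causal log of interface changes. The `Change` and `ChangeLog` types and their fields are hypothetical names of ours, not the model of 8; the point is only that retaining these links, instead of discarding them, lets a system answer “why did this happen?” after the fact.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Change:
    """Hypothetical record of one change and what led to it."""
    cause: str                        # e.g. an input event or system trigger
    context: dict[str, str]           # e.g. system and application state at that time
    consequence: str                  # e.g. an interface or data update
    timestamp_ms: float
    parent: Optional[Change] = None   # the change that triggered this one, if any


@dataclass
class ChangeLog:
    entries: list[Change] = field(default_factory=list)

    def record(self, cause: str, context: dict[str, str], consequence: str,
               timestamp_ms: float, parent: Optional[Change] = None) -> Change:
        # Copy the context so that later state mutations do not rewrite history.
        change = Change(cause, dict(context), consequence, timestamp_ms, parent)
        self.entries.append(change)
        return change

    def explain(self, change: Change) -> list[str]:
        """Return the causal chain leading to `change`, oldest cause first."""
        chain: list[str] = []
        node: Optional[Change] = change
        while node is not None:
            chain.append(f"{node.timestamp_ms:.0f} ms: {node.cause} -> {node.consequence}")
            node = node.parent
        return list(reversed(chain))


log = ChangeLog()
click = log.record("click on 'Sort' button", {"view": "table"}, "sort key set to name", 1000)
redraw = log.record("sort key changed", {"rows": "120"}, "table redrawn", 1016, parent=click)
for line in log.explain(redraw):
    print(line)
```

A flat event log would only show that a redraw occurred at 1016 ms; keeping the `parent` link is what lets the redraw be traced back to the click that caused it.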

3.3 Macro-Dynamics

Macro-dynamics involve longer-term phenomena such as skill acquisition, learning of the system's functionalities, and reflexive analysis of one's own use (e. g., when the user has to face novel or unexpected situations that require a high level of knowledge of the system and its functioning). From the system perspective, this implies better support for cross-application and cross-platform mechanisms so as to favor skill transfer. It also requires improving instrumentation and high-level logging capabilities to favor reflexive use, as well as flexibility and adaptability so that users can finely tune and shape their tools.

We want to move away from the usual binary distinction between “novices” and “experts” 3 and explore means to promote and assist digital skill acquisition in a more progressive fashion. Indeed, users have a permanent need to adapt their skills to the constant and rapid evolution of the tasks and activities they carry out on a computer system, but also to changes in the software tools they use 41. Software strikingly lacks powerful means of acquiring and developing these skills 3, forcing users to mostly rely on outside support (e. g., being guided by a knowledgeable person, following online tutorials of varying quality). As a result, users tend to rely on a surprisingly limited interaction vocabulary, or make do with sub-optimal routines and tools 42. Ultimately, the user should be able to master the interactive system to form durable and stabilized practices that would eventually become automatic and reduce mental and physical effort, making their interaction transparent.

In our previous work, we identified the fundamental factors influencing expertise development in graphical user interfaces, and created a conceptual framework that characterizes users' performance improvement with UIs 7, 3. We designed and evaluated new command selection and learning methods to support users' digital skill development with user interfaces, on both desktop 6 and touch-based computers.

We are now interested in broader means to support the analytic use of computing tools:

- to foster understanding of interactive systems. As the digital world shifts to more and more complex systems driven by machine learning algorithms, we increasingly lose our understanding of which process caused the system to respond in one way rather than another. We will study how novel interactive visualizations can help reveal and expose the “intelligence” behind these systems, so that people can better master their complexity.

- to foster reflection on interaction. We will study how we can foster users' reflection on their own interaction in order to encourage them to acquire novel digital skills. We will build real-time and off-line software for monitoring how users' ongoing activity is conducted at the application and system levels. We will develop augmented feedback and interactive history visualization tools that will offer contextual visualizations to help users better understand and share their activity, compare their actions to those of others, and discover possible improvements.

- to optimize skill transfer and tool re-appropriation. The rapid evolution of new technologies has drastically increased the frequency at which systems are updated, often requiring users to relearn everything from scratch. We will explore how we can minimize the cost of appropriating an interactive tool by helping users capitalize on their existing skills.

We plan to explore these questions, as well as the use of such aids, in several contexts such as web-based, mobile, or BCI-based applications. That said, a core aspect of this work will be to design systems and interaction techniques that are as little platform-specific as possible, in order to better support skill transfer. Following our Interaction Machine vision, this will lead us to rethink how interactive systems have to be engineered so that they can offer better instrumentation, higher adaptability, and less separation between applications and tasks in order to support reuse and skill transfer.

4 Application domains

Loki works on fundamental and technological aspects of Human-Computer Interaction that can be applied to diverse application domains.

Our 2021 research involved desktop and mobile interaction, virtual reality, touch-based and flexible input devices, and haptics, with notable applications to creativity support tools (production of illustrations, design of Digital Musical Instruments). Our technical work also contributes to the more general application domain of system design and configuration tools.

5 Highlights of the year

5.1 Awards

Honorable mention award from the ACM CHI conference to the paper “Interaction Illustration Taxonomy: Classification of Styles and Techniques for Visually Representing Interaction Scenarios”, by A. Antoine, S. Malacria, N. Marquardt & G. Casiez 12.

Honorable mention award from the ACM CHI conference to the paper “Typing Efficiency and Suggestion Accuracy Influence the Benefits and Adoption of Word Suggestions”, by Q. Roy, S. Berlioux, G. Casiez & D. Vogel 17.

CHI'20 @ CHI'21 People's Choice Best Demo Award from the ACM CHI conference to the demo “Bringing Interactivity to Research Papers and Presentations with Chameleon”, by D. Masson, S. Malacria, E. Lank & G. Casiez 35.

6 New software and platforms

6.1 New software

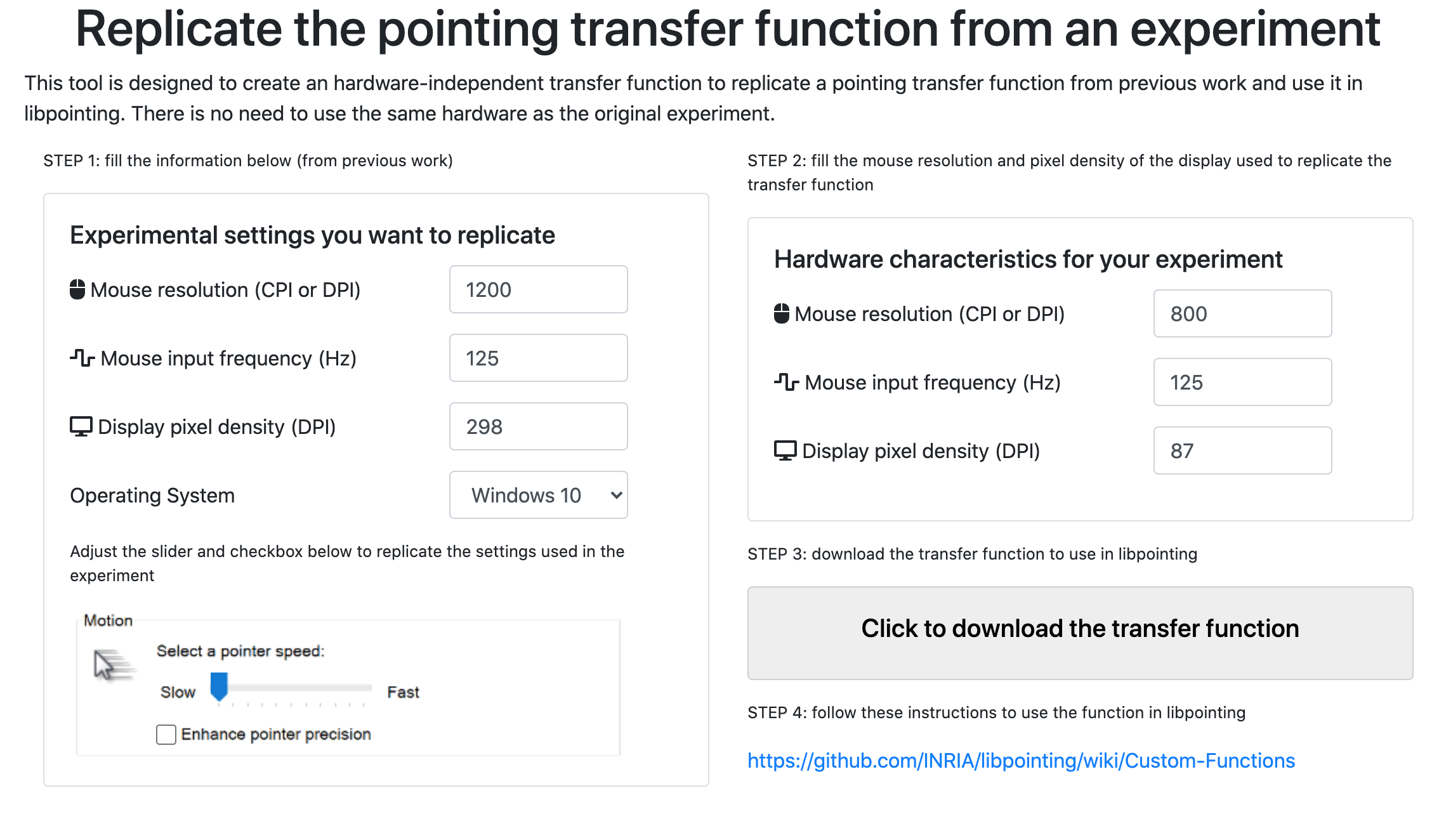

6.1.1 TransferFunctionTools

- Name: Tools for Hardware-independent Transfer Functions

- Keywords: Human Computer Interaction, Transfer functions

- Functional Description: Software tools developed for the research project on hardware-independent transfer functions, allowing a developer or an end user to obtain the same pointing transfer function even when hardware configurations differ (in terms of size, resolution, etc.).

- URL:

- Publication:

- Contact: Gery Casiez

- Participants: Gery Casiez, Mathieu Nancel, Sylvain Malacria, Damien Masson, Raiza Sarkis Hanada

6.1.2 ImageTaxonomy

- Name: Software suite for coding images and exploring them once coded

- Keyword: Human Computer Interaction

- Functional Description: Software suite implemented for the IllustrationTaxonomy project. It is composed of the following tools:
  - Coding tool: standalone PyQt application that can be used to code a set of images
  - Exploration tool: web-based application that facilitates the exploration of coded images
  - Visualisation tool: web-based application that can be used to browse the hierarchy of codes

- URL:

- Publication:

- Contact: Axel Antoine

- Participants: Axel Antoine, Sylvain Malacria, Gery Casiez

- Partner: University College London, UK

6.1.3 AvatarFacialExpressions

-

Name:

Avatar Facial Expressions

-

Keywords:

Virtual reality, Facial expression, Interaction technique, Human Computer Interaction

-

Scientific Description:

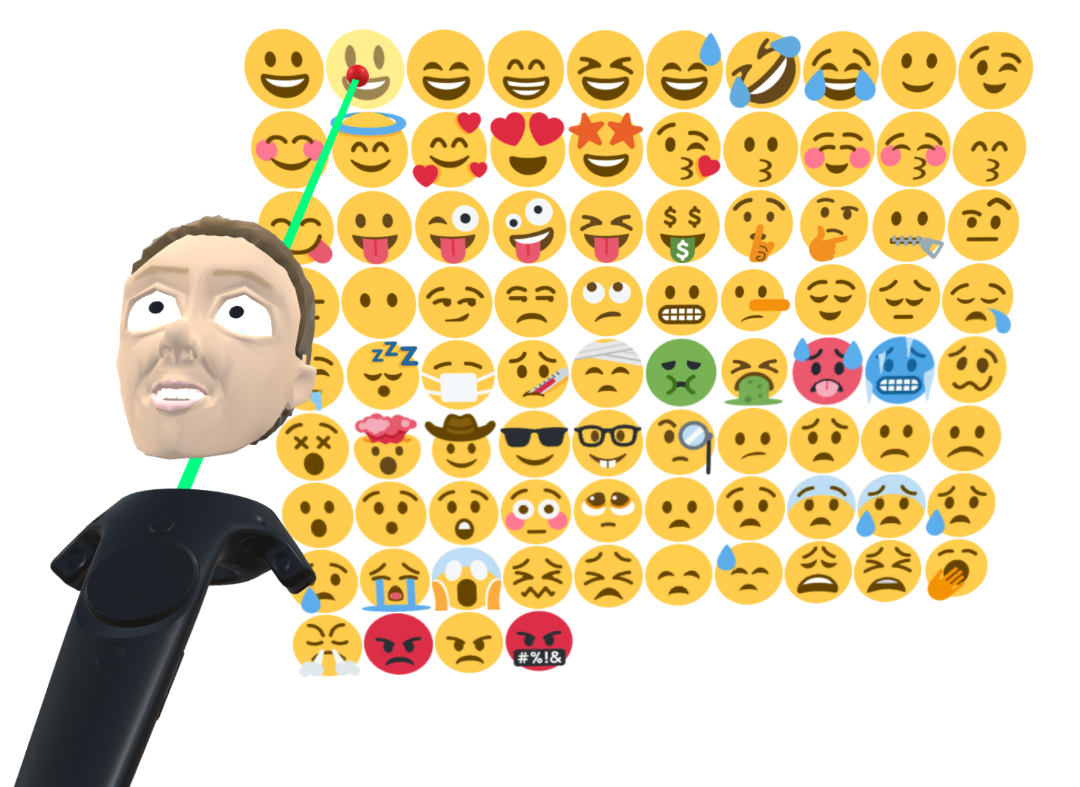

The control of an avatar’s facial expressions in virtual reality is mainly based on the automated recognition and transposition of the user’s facial expressions. These isomorphic techniques are limited to what users can convey with their own face and have recognition issues. To overcome these limitations, non-isomorphic techniques rely on interaction techniques using input devices to control the avatar’s facial expressions. Such techniques need to be designed to quickly and easily select and control an expression, and not disrupt a main task such as talking. We present the design of a set of new non- isomorphic interaction techniques for controlling an avatar facial expression in VR using a standard VR controller. These techniques have been evaluated through two controlled experiments to help designing an interaction technique combining the strengths of each approach. This technique was evaluated in a final ecological study showing it can be used in contexts such as social applications.

-

Functional Description:

This is a Unity Project containing the source code and prefab for a set of interaction techniques for the non-isomorphic control of the facial expression of an avatar in VR.

- Publication:

-

Contact:

Thomas Pietrzak

-

Participants:

Marc Baloup, Gery Casiez, Martin Hachet, Thomas Pietrzak

6.1.4 Esquisse

-

Keywords:

Vector graphics, 3D interaction, Human Computer Interaction

-

Scientific Description:

Trace figures are contour drawings of people and objects that capture the essence of scenes without the visual noise of photos or other visual representations. Their focus and clarity make them ideal representations to illustrate designs or interaction techniques. In practice, creating those figures is a tedious task requiring advanced skills, even when creating the figures by tracing outlines based on photos. To mediate the process of creating trace figures, we introduce the open-source tool Esquisse. Informed by our taxonomy of 124 trace figures, Esquisse provides an innovative 3D model staging workflow, with specific interaction techniques that facilitate 3D staging through kinematic manipulation, anchor points and posture tracking. Our rendering algorithm (including stroboscopic rendering effects) creates vector-based trace figures of 3D scenes. We validated Esquisse with an experiment where participants created trace figures illustrating interaction techniques, and results show that participants quickly managed to use and appropriate the tool.

-

Functional Description:

Esquisse is an add-on for Blender that can be used to rapidly produce trace figures. It relies on a 3D model staging workflow, with specific interaction techniques that facilitate the staging through kinematic manipulation, anchor points and posture tracking. Staged 3D scenes can be exported to SVG thanks to Esquisse's dedicated rendering algorithm.

- News of the Year:

Thanks to Technological Development Action (ADT) support that started on January 1st, 2021, a new and updated version of Esquisse is currently under development. This new version is an online platform and therefore no longer depends on Blender. It can run on both desktop and mobile platforms and will support novel staging and annotation interaction techniques.

- URL:

- Publication:

- Contact:

Sylvain Malacria

- Participants:

Axel Antoine, Géry Casiez, Sylvain Malacria

7 New results

According to our research program, over the next two to five years we will study the dynamics of interaction at three levels, depending on the interaction time scale and the related user perception and behavior: Micro-dynamics, Meso-dynamics, and Macro-dynamics. Considering the phenomena that occur at each of these levels, as well as their relationships, will help us acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Our strategy is to investigate issues and address challenges at all three levels of dynamics in order to contribute to our longer-term objective of defining the basic principles of an Interaction Machine.

7.1 Micro-dynamics

Participants: Géry Casiez [contact person], Sylvain Malacria, Mathieu Nancel, Thomas Pietrzak, Philippe Schmid, Alice Loizeau.

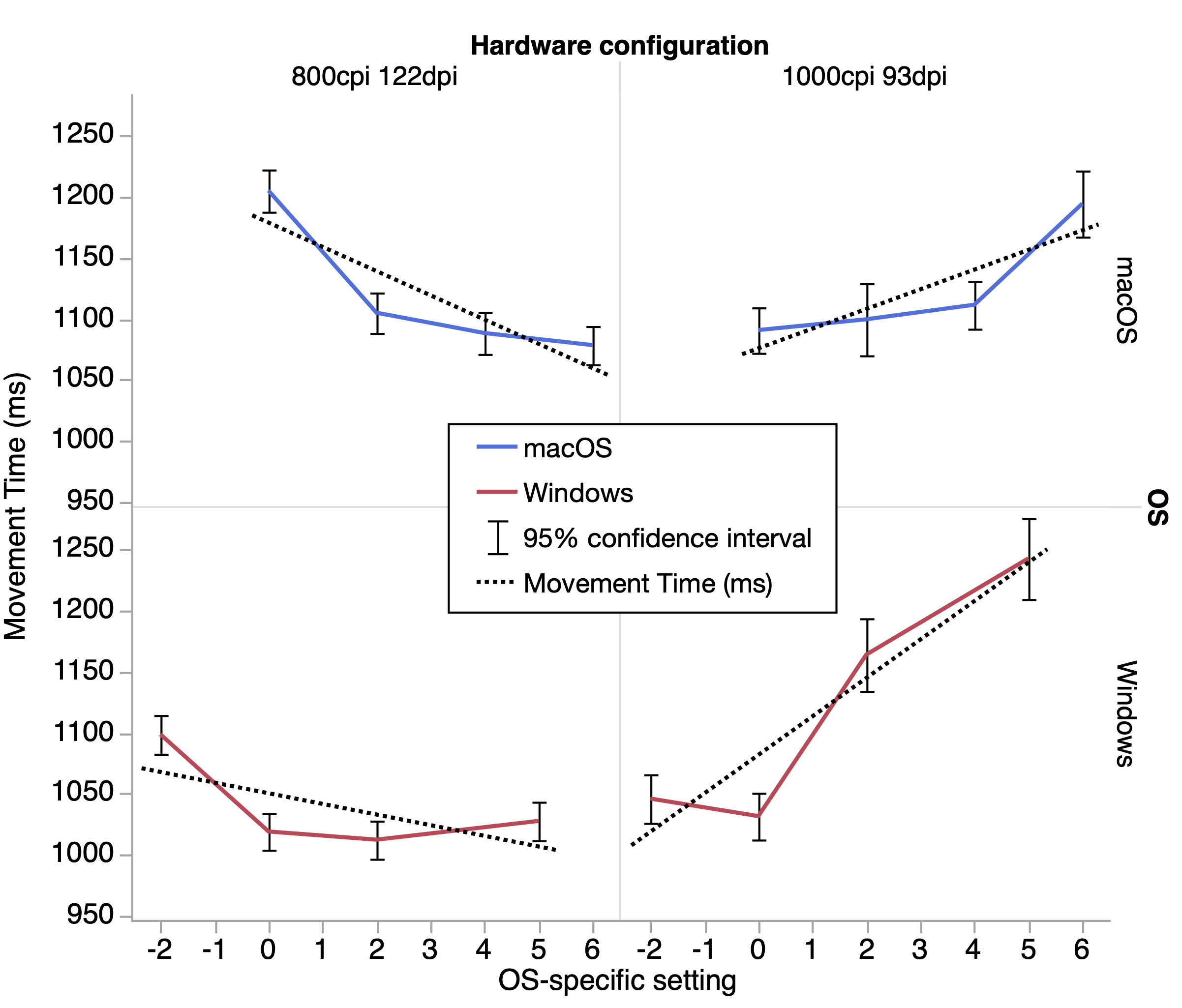

7.1.1 Relevance and Applicability of Hardware-independent Pointing Transfer Functions

Pointing transfer functions remain predominantly expressed in pixels per input counts, which can generate different visual pointer behaviors with different input and output devices; we have shown in a first controlled experiment that even small hardware differences impact pointing performance with functions defined in this manner 15. We also demonstrated the applicability of “hardware-independent” transfer functions defined in physical units. We explored two methods to maintain hardware-independent pointer performance in operating systems that require hardware-dependent definitions: scaling them to the resolutions of the input and output devices, or selecting the OS acceleration setting that produces the closest visual behavior. In a second controlled experiment, we adapted a baseline function to different screen and mouse resolutions using both methods, and the resulting functions provided equivalent performance. Lastly, we provided a tool to calculate equivalent transfer functions between hardware setups, allowing users to match pointer behavior with different devices, and researchers to tune and replicate experiment conditions. Our work emphasizes, and hopefully facilitates, the idea that operating systems should have the capability to formulate pointing transfer functions in physical units, and to adjust them automatically to hardware setups.
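The first method, scaling a function defined in physical units to the resolutions of the input and output devices, essentially amounts to unit conversion. The Python sketch below assumes that the hardware-independent function is expressed as a dimensionless gain (pointer speed divided by mouse speed) that depends on mouse speed in m/s; the function names and the example gain curve are hypothetical and are not taken from the tool described above.

```python
INCH_IN_M = 0.0254  # one inch in meters


def counts_to_pixels(counts, dt, gain_fn, mouse_cpi, screen_ppi):
    """Convert a mouse displacement in counts, measured over dt seconds,
    into a pointer displacement in pixels, using a gain defined in
    physical units (pointer speed / mouse speed, as a function of m/s)."""
    device_m = counts / mouse_cpi * INCH_IN_M      # mouse displacement in meters
    device_speed = abs(device_m) / dt              # mouse speed in m/s
    display_m = gain_fn(device_speed) * device_m   # pointer displacement in meters
    return display_m / INCH_IN_M * screen_ppi      # pointer displacement in pixels


def example_gain(speed):
    """Hypothetical acceleration curve: gain grows with speed, capped at 6."""
    return min(1.0 + 10.0 * speed, 6.0)


if __name__ == "__main__":
    # The same 1 cm mouse movement over 10 ms on two hypothetical setups.
    for cpi, ppi in [(400, 100), (1600, 200)]:
        # Counts the mouse would report (kept fractional for simplicity).
        counts = 0.01 / INCH_IN_M * cpi
        px = counts_to_pixels(counts, 0.01, example_gain, cpi, ppi)
        print(f"{cpi} CPI, {ppi} PPI: {px:.1f} px, {px / ppi * 25.4:.1f} mm on screen")
```

With this curve, both setups move the pointer by the same physical distance on screen (6 cm), even though the pixel displacements differ by a factor of two. A function expressed directly in pixels per count could not preserve this equivalence across the two setups.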

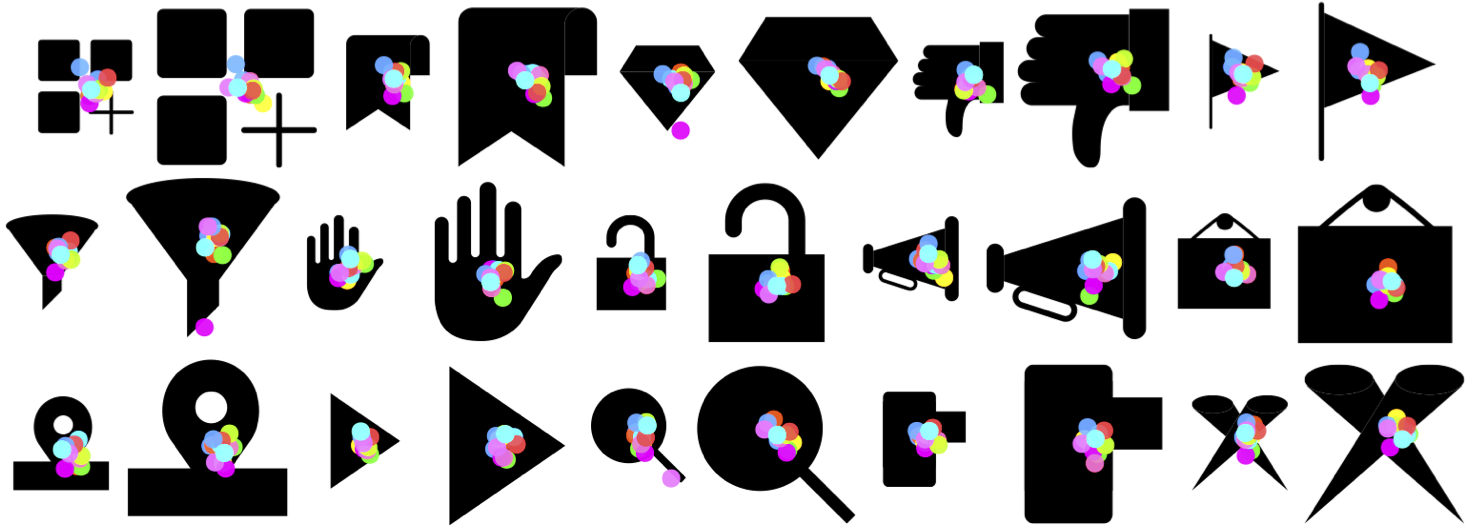

7.1.2 User Strategies When Touching Arbitrary Shaped Objects

We investigated how users touch arbitrary shapes 18. First, we conducted semi-structured interviews, using a set of fifteen shapes as props, to identify touch strategies. Our results revealed four main potential touch strategies, from which we devised seven candidate mathematical models. The first two models compute the centroid of either the shape's contour or its convex hull. The third model computes the centroid of all the pixels of the shape. The fourth and fifth models fit a rectangle to the shape, either an oriented rectangle or the axis-aligned bounding box. The last two models either compute the minimum enclosing circle or fit an ellipse to the shape. We investigated the ability of these models to predict human behaviour in a controlled experiment (see data points in Figure 3). We found that the center of a shape's bounding box best approximates a user's target location when touching arbitrary shapes. Our findings not only invite designers to use a larger variety of shapes; our model can also be used to design touch interaction adapted to user behaviour. For example, it is likely to be valuable for applications that expose shapes of various complexities, such as drawing applications.

Images showing where participants aimed at the different shapes depending on their sizes.
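Two of the candidate models, the bounding-box center (the best predictor in the study) and the centroid of all the shape's pixels, can be computed directly from a binary mask. The sketch below assumes a shape given as a 2D list of 0/1 pixels, with coordinates expressed as pixel indices; it illustrates the models and is not the study's analysis code.

```python
def shape_pixels(mask):
    """Return the (x, y) coordinates of all pixels belonging to the shape."""
    return [(x, y) for y, row in enumerate(mask) for x, value in enumerate(row) if value]


def bounding_box_center(mask):
    """Center of the axis-aligned bounding box of the shape."""
    points = shape_pixels(mask)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2)


def pixel_centroid(mask):
    """Centroid of all the pixels of the shape."""
    points = shape_pixels(mask)
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


if __name__ == "__main__":
    l_shape = [
        [1, 0, 0],
        [1, 0, 0],
        [1, 1, 1],
    ]
    print("bounding box center:", bounding_box_center(l_shape))  # (1.0, 1.0)
    print("pixel centroid:", pixel_centroid(l_shape))            # (0.6, 1.4)
```

On this L-shaped example the two models predict different touch locations, which is the kind of divergence the controlled experiment was designed to arbitrate.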

7.2 Meso-dynamics

Participants: Axel Antoine [contact person], Marc Baloup [contact person], Géry Casiez [contact person], Bruno Fruchard [contact person], Stéphane Huot [contact person], Edward Lank [contact person], Alice Loizeau [contact person], Sylvain Malacria [contact person], Mathieu Nancel [contact person], Thomas Pietrzak [contact person], Damien Pollet, Marcelo Wanderley, Travis West.

7.2.1 Non-isomorphic Interaction Techniques for Controlling Avatar Facial Expressions in VR

The control of an avatar's facial expressions in virtual reality is mainly based on the automated recognition and transposition of the user's facial expressions. These isomorphic techniques are limited to what users can convey with their own face and have recognition issues. To overcome these limitations, non-isomorphic techniques rely on interaction techniques using input devices to control the avatar's facial expressions. Such techniques need to be designed to quickly and easily select and control an expression, and not disrupt a main task such as talking. We introduced the design of a set of new non-isomorphic interaction techniques for controlling an avatar's facial expressions in VR using a standard VR controller (Figure 4) 13. These techniques were evaluated through two controlled experiments to help design an interaction technique combining the strengths of each approach. This technique was evaluated in a final ecological study showing that it can be used in contexts such as social applications.

7.2.2 Bendable Stylus Interfaces with different Flexural Stiffness

Flexible sensing styluses deliver additional degrees of input for pen-based interaction, yet no prior research had examined their integration with creative digital applications or the influence of flexural stiffness. We presented HyperBrush, a modular flexible stylus with interchangeable flexible components for digital drawing applications 14. We compared HyperBrush to rigid pressure styluses in three studies: brushstroke manipulation, menu selection, and creative digital drawing tasks. The results of HyperBrush were comparable to those of a commercial pressure pen. We concluded that different flexibilities could offer their own unique advantages, analogous to an artist’s assortment of paintbrushes.

Image of a 3D-printed flexible pen.

7.2.3 Making Mappings: Design Criteria for Live Performance

A digital musical instrument (DMI) can be viewed as being composed of a control interface, a sound synthesizer, and a mapping that connects the two. Numerous studies provide evidence that the careful design of the mapping is at least as important as the design of the control interface and the sound synthesizer: the mapping design affects how an instrument feels, how it engages the player, and even how the audience appreciates a performance. Although the literature is clear that mappings are vitally important, it remains unclear exactly how to design a mapping that allows the designer to achieve their design goals. Indeed, it is not clear which design goals are most common or important, let alone how mappings can be designed to achieve them. Much of the literature reports on the insights gained by digital instrument designers through their practice of making instruments, where design criteria for mappings are implied by broader recommendations for DMI design in general. In works focused specifically on the design of mappings, the literature often focuses on the structural, topological, and technical aspects of the mapping design, such as recommending the use of intermediary layers, dimensionality reduction, machine learning, and other novel implementation techniques. In all of this work, the specific design goals of the instrument and/or mapping are often only implied by the context of the presentation.
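To make the three-part structure concrete, the following Python sketch maps two hypothetical controller signals to two hypothetical synthesizer parameters through an intermediary layer of perceptual parameters. All signal and parameter names, ranges, and response curves are assumptions chosen for illustration; they do not come from the study.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """One reading from a hypothetical gestural controller."""
    pressure: float  # normalized to [0, 1]
    tilt: float      # normalized to [-1, 1]


def to_intermediate(frame):
    """Control signals -> intermediary (perceptual) layer."""
    return {
        "energy": frame.pressure,
        "brightness": (frame.tilt + 1.0) / 2.0,  # rescaled to [0, 1]
    }


def to_synth(layer):
    """Intermediary layer -> synthesizer parameters.
    'energy' drives two parameters (one-to-many), and the cutoff
    depends on two layer values (many-to-one)."""
    return {
        "amplitude": layer["energy"] ** 2,  # quadratic response curve
        "cutoff_hz": 200.0 + 4800.0 * layer["brightness"] * layer["energy"],
    }


def mapping(frame):
    """The complete mapping is the composition of the two layers."""
    return to_synth(to_intermediate(frame))


if __name__ == "__main__":
    print(mapping(SensorFrame(pressure=0.5, tilt=1.0)))
```

Separating the layers lets a designer change how gestures relate to perceptual qualities without touching the synthesizer-specific scaling, and vice versa.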

We have explored mapping design criteria using a novel approach 19. Rather than reporting on the insights of a single designer or a small group of designers, as is usually done in the literature, our study considers the design goals of a relatively large number of skilled users when making a mapping for live performance, and the criteria that they use to evaluate whether their mapping design allows them to achieve their goals. Two groups of participants were asked to design a mapping effective for a live performance, and then to reflect on their design process and decisions. We examined what our participants considered to be important properties for their mappings to have, and considered how these criteria may change in relation to the designer's experience with the gestural controller used. Finally, we better situated the role of mapping design and its evaluation criteria in the overall DMI design process. In summary, an effective mapping for a live performance should consider the balance of musical agency between the player and the instrument, primarily empowering the player to perform specific sounds as they intend to, but perhaps sometimes allowing the instrument to behave unexpectedly. Furthermore, and this was the strongest theme in our participants' remarks, an effective mapping for a live performance should be easy to understand, for the player as well as for the audience.

The generalizability of these results remains to be assessed for mapping design over longer periods, with a different controller, synthesizer, or mapping environment, or when the design goals and context differ from live performance. In future work, we will consider how mapping design criteria differ depending on these important factors. Ultimately, we will use these results to build more useful tools to design mappings between modular devices, helping designers to make more effective mappings for fully integrated musical instruments.

7.3 Macro-dynamics

Participants: Axel Antoine [contact person], Géry Casiez [contact person], Bruno Fruchard [contact person], Stéphane Huot [contact person], Eva Mackamul [contact person], Sylvain Malacria [contact person], Mathieu Nancel, Thomas Pietrzak, Grégoire Richard, Travis West.

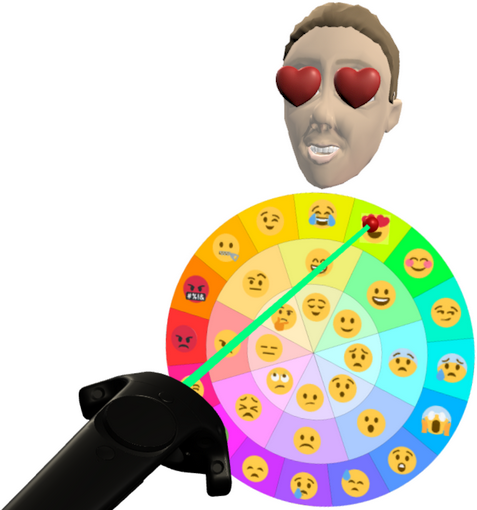

7.3.1 Typing Efficiency and Suggestion Accuracy Influence the Benefits and Adoption of Word Suggestions

Suggesting words to complete a given sequence of characters is a common feature of typing interfaces (Figure 6). Yet, previous studies have not found a clear benefit, some even finding it detrimental. We reported on the first study to control for two important factors, word suggestion accuracy and typing efficiency 17. Our accuracy factor is enabled by a new methodology that builds on standard metrics of word suggestions. Typing efficiency is varied through the device type (desktop, tablet, or phone). Results show that word suggestions are used less often in the desktop condition, with little difference between the tablet and phone conditions. Very accurate suggestions do not improve entry speed on desktop, but do on tablet and phone. Based on our findings, we discuss implications for the design of automation features in typing systems.

Images of the user interfaces of word suggestions systems.
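Keystroke savings is one of the standard metrics used to characterize word suggestion systems. The sketch below computes it under hypothetical simplifying assumptions that do not come from the study: a word is either typed in full or completed with a single tap once it first appears among the suggestions, and spaces and typing errors are ignored. It shows how suggestion accuracy bounds the potential savings; it does not reproduce the study's methodology.

```python
def keystrokes_for_word(word, shown_after):
    """Keystrokes needed to enter `word`.

    shown_after is the number of characters typed before the word first
    appears among the suggestions, or None if it never appears.
    """
    if shown_after is None or shown_after >= len(word):
        return len(word)        # the word is typed in full
    return shown_after + 1      # typed prefix plus one tap on the suggestion


def keystroke_savings(words, shown_after_list):
    """Fraction of keystrokes saved compared with typing every character."""
    total_chars = sum(len(w) for w in words)
    total_strokes = sum(
        keystrokes_for_word(w, s) for w, s in zip(words, shown_after_list)
    )
    return 1.0 - total_strokes / total_chars


if __name__ == "__main__":
    # "interaction" is suggested after 3 characters; "machine" never is.
    ks = keystroke_savings(["interaction", "machine"], [3, None])
    print(f"keystroke savings: {ks:.1%}")
```

In this example, 11 keystrokes replace 18 characters. The metric only captures potential savings: as the study shows, whether users actually accept suggestions, and whether doing so speeds them up, depends on the device and on suggestion accuracy.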

7.3.2 Interaction Illustration Taxonomy: Classification of Styles and Techniques for Visually Representing Interaction Scenarios

Static illustrations are a ubiquitous means of representing interaction scenarios. Across papers and reports, these visuals demonstrate people's use of devices, explain systems, or show design spaces. Creating such figures is challenging, and very little is known about the overarching strategies for visually representing interaction scenarios. To support this task, we contributed a unified taxonomy of design elements that compose such figures 12. In particular, we provided a detailed classification of Structural and Interaction strategies, such as composition, visual techniques, dynamics, representation of users, and many others, all in the context of the type of scenario. This taxonomy can inform researchers' choices when creating new figures, by providing a concise synthesis of visual strategies and revealing approaches they were not previously aware of. Furthermore, to support the community in creating further taxonomies, we also provided three open-source software tools that facilitate the coding process and the visual exploration of the coding scheme.

7.3.3 AZERTY amélioré: Computational Design on a National Scale

In 2019, France became the first country in the world to adopt a keyboard standard informed by computational methods, aiming to improve the performance, ergonomics, and intuitiveness of the keyboard while enabling input of many more characters. We described the human-centric approach that we took to develop this new layout 11, jointly with stakeholders from linguistics, typography, and keyboard manufacturing, as well as the French Ministry of Culture. This approach uses computational methods in the decision process not only to solve a well-defined problem but also to understand the design requirements, to inform subjective views, and to communicate the outcomes of intermediate proposals or of localized changes to the layout suggested by stakeholders. This research contributed computational methods that can be used in a participatory and inclusive fashion, respecting the different needs and roles of stakeholders.
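One way computational methods can communicate the outcome of a localized change suggested by a stakeholder is to score the layout before and after the change. The Python sketch below uses a deliberately simplified, hypothetical objective (frequency-weighted travel distance between consecutive keys) on a made-up three-key layout. The actual project targeted performance, ergonomics, and intuitiveness, which this toy objective does not model.

```python
import math


def layout_cost(layout, key_pos, bigram_freq):
    """Frequency-weighted distance traveled between consecutive characters.

    layout: character -> key identifier
    key_pos: key identifier -> (x, y) position in key units
    bigram_freq: (first_char, second_char) -> frequency
    """
    cost = 0.0
    for (a, b), freq in bigram_freq.items():
        xa, ya = key_pos[layout[a]]
        xb, yb = key_pos[layout[b]]
        cost += freq * math.hypot(xb - xa, yb - ya)
    return cost


def swap_delta(layout, key_pos, bigram_freq, c1, c2):
    """Change in cost if characters c1 and c2 exchange keys (negative = better).
    The input layout is left unchanged."""
    swapped = dict(layout)
    swapped[c1], swapped[c2] = layout[c2], layout[c1]
    return layout_cost(swapped, key_pos, bigram_freq) - layout_cost(layout, key_pos, bigram_freq)


if __name__ == "__main__":
    key_pos = {0: (0, 0), 1: (1, 0), 2: (2, 0)}  # three keys on one row
    layout = {"a": 0, "b": 1, "c": 2}
    bigrams = {("a", "c"): 10, ("a", "b"): 1}    # made-up frequencies
    print("cost:", layout_cost(layout, key_pos, bigrams))
    print("swap b/c:", swap_delta(layout, key_pos, bigrams, "b", "c"))
```

Reporting the delta of a proposed swap, rather than only an optimized layout, is what lets non-technical participants weigh a local change against the objective. A combinatorial optimizer would explore many such changes automatically.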

7.4 Interaction Machine

We had planned to organize several workshops and seminars (with external guests) dedicated to this aspect of our research program in 2021. This was not possible due to the restrictions related to the COVID-19 pandemic.

Nevertheless, continuing the contributions of past years, several of this year's new results inform our global objective of building an Interaction Machine and redefining how systems should be designed for Interaction as a whole.

7.4.1 Understand and mitigate the “blind spots” in today's interfaces

A defining principle of the Interaction Machine is to better understand interaction phenomena in order to tailor interactive systems to human capabilities and skills. To that end, it is crucial to understand these capabilities, as well as the limitations of current interactive systems with regard to them.

Direct touch on tactile displays has been publicly available for more than a decade, yet some essential aspects of their use have remained unexplored. Our recent work on modeling touch location when manipulating arbitrary shapes on touchscreens (see section 7.1.2) investigates one such blind spot. We first investigated the user strategies and behaviors that dictate where users touch arbitrary shapes, as a function of their intended use of the corresponding interactive element. We also proposed and evaluated models of touch location, which we expect could help the design of all touch-enabled interfaces, in particular for the manipulation of shapes of various complexities, such as in drawing applications. Another now-ubiquitous interactive mechanism is word suggestion, i.e. suggesting complete words as they are being typed. Despite their almost universal adoption, these systems have not generally been shown to improve typing performance, and some studies even found them detrimental. We conducted the first investigation of word suggestion under the combined scopes of input device, suggestion accuracy, and user typing efficiency (see section 7.3.1), and revealed how these factors affect how often users select suggested words and whether doing so improves typing speed. Understanding such fundamental aspects of common interactive tasks helps better adapt system features to their context and intended use. Finally, although they are used in virtually every desktop computer and gaming console, pointing transfer functions (the functions that transform mouse, trackpad, or joystick input into cursor or viewport movement) remain poorly understood to this day. This is a frequent topic of investigation in the team, and our most recent work (see section 7.1.1) reveals how their current implementation in desktop OSs hinders the generalization of consistent behaviors across devices and applications, forcing users either to satisfice with suboptimal mappings or to spend unnecessary time readjusting their acceleration settings every time they change hardware or software. We designed tools to facilitate porting cursor behaviors between systems, and discussed how to make these ubiquitous interaction mechanisms more flexible at a low level.

Overall, as we did in these studies, revealing wrong assumptions in the design of current systems, and working out how to solve the problems they hide on the users' side, is a crucial methodology to inform the design of our Interaction Machine.

7.4.2 Foster modularity and adaptability in the design of interactive systems

A central characteristic that we envision in our Interaction Machine is that it can adapt to its users, and that its users can adjust it to their own will or needs. Designing for modularity is arguably a stark departure from today's interactive systems, which are built to accomplish exactly the tasks they were designed to perform; unplanned uses usually require either hacking around the system's existing capabilities or designing a whole new system.

Between 2016 and 2019, team members contributed to the design of France's first official keyboard layout under the supervision of the French Ministry of Culture, and the process was documented in a 2021 article (see section 7.3.3). To this end, a new method of “computational design” was developed, combining performance and usability modeling with combinatorial optimization in a way that allowed non-technical stakeholders, such as expert linguists, typographers, and keyboard manufacturers, to explore hypotheses and suggest local or global changes to its design priorities or to the layout itself. Automatic or semi-automatic keyboard layout optimization is a hard challenge with many contradictory objectives, including typing performance, ergonomics, culture, and users' overall resistance to change. This system contributed to an efficient design process that illustrates how technical, cultural, and design considerations can blend seamlessly. Novel input channels are another way to extend the interactive vocabulary of a system while maintaining its already successful features. In our recent work on bendable styluses (see section 7.2.2), we explored the design space of flexural stiffness, i.e. how much an object resists bending forces, to augment tablet styluses such as those used by artists on drawing tablets. Our findings inform the potential uses and benefits of this new input characteristic, and in particular how users could use and appropriate it to augment the capabilities and expressiveness of their everyday tools. From a system perspective, this work reveals the technological weaknesses of current systems in supporting such approaches, especially in terms of advanced input management. Finally, we conducted an in-depth exploration of what constitutes a desirable mapping between sensor data and system response in the context of digital musical instruments (see section 7.2.3). This investigation involved a large panel of professional musicians who were asked to design such mappings for live performances and to reflect on their design. It revealed unexpected trade-offs, such as participants appreciating that the resulting instrument sometimes behaves unexpectedly while requiring that the mapping be easy to understand, for the player as well as for the audience.

Beyond the design of novel musical instruments, this project opens up new avenues to build and generalize tools for the configuration of interactive behaviors, bridging low-level input management and the higher-level goals and desired dynamic characteristics of the resulting output.

These are driving aspects of our work towards an Interaction Machine, and among the main directions that our team will take in the coming years: connecting low-level input management with higher-level system components in a more flexible and adaptable way.

8 Bilateral contracts and grants with industry

8.1 Bilateral Contracts with Industry

Participants: Thomas Pietrzak.

Julien Decaudin, a former engineer in the team, initiated a start-up project called Aureax. Its objective is to create a haptic navigation system for cyclists that provides turn-by-turn information through haptic cues. This project is supported by the Inria Startup Studio, with Thomas Pietrzak as scientific consultant. Thomas Pietrzak's past work on the encoding of information with tactile cues 40 and on the design and implementation of tactile displays 5 forms the scientific foundation of this initiative. In 2021 we received a grant from the Hauts-de-France region through the Start-AIRR program, and hired Rahul Kumar Ray to work on the design and evaluation of navigation cues in the laboratory, on a simulator, and on the road.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Participation in other International Programs

Université de Lille - International Associate Laboratory

Reappearing Interfaces in Ubiquitous Environments (Réapp)

with Edward Lank, Daniel Vogel & Keiko Katsuragawa at University of Waterloo (Canada) - Cheriton School of Computer Science

Duration: 2019 - 2023

The LAI Réapp is an International Associated Laboratory between Loki and Cheriton School of Computer Science from the University of Waterloo in Canada. It is funded by the University of Lille to ease shared student supervision and regular inter-group contacts. The University of Lille will also provide a grant for a co-tutelle PhD thesis between the two universities.

We are at the dawn of the next computing paradigm, where everything will be able to sense human input and augment its appearance with digital information without using screens, smartphones, or special glasses, making user interfaces simply disappear. This introduces many problems for users, including the discoverability of commands and use of diverse interaction techniques, the acquisition of expertise, and the balancing of trade-offs between inferential (AI) and explicit (user-driven) interactions in aware environments. We argue that interfaces must reappear in an appropriate way to make ubiquitous environments useful and usable. This project tackles these problems, addressing (1) the study of human factors related to ubiquitous and augmented reality environments, and the development of new interaction techniques helping to make interfaces reappear; (2) the improvement of the transition between novice and expert use and the optimization of skill transfer; and, last, (3) the question of delegation in smart interfaces, and how to adapt the trade-off between implicit and explicit interaction.

Joint publications in 2021: 18

9.2 International research visitors

9.2.1 Visits of international scientists

Inria International Chair

Marcelo M. Wanderley

Professor, Schulich School of Music/IDMIL, McGill University (Canada)

Title: Expert interaction with devices for musical expression (2017 - 2021)

The main topic of this project is expert interaction with devices for musical expression, and it consists of two main directions: the design of digital musical instruments (DMIs) and the evaluation of interactions with such instruments. It will benefit from the unique, complementary expertise available in the Loki Team, including the design and evaluation of interactive systems, the definition and implementation of software tools to track modifications of data, visualize it, and display it haptically, as well as the study of expertise development within human-computer interaction contexts. The project’s main goal is to bring together advanced research on devices for musical expression (IDMIL – McGill) and cutting-edge research in human-computer interaction (Loki Team).

Edward Lank

Professor at Cheriton School of Computer Science, University of Waterloo (Canada)

Title: Rich, Reliable Interaction in Ubiquitous Environments (2019 - 2023)

The objectives of the research program are:

- Designing Rich Interactions for Ubiquitous and Augmented Reality Environments

- Designing Mechanisms and Metaphors for Novices, Experts, and the Novice to Expert Transition

- Integrating Intelligence with Human Action in Richly Augmented Environments.

9.3 Informal International Partners

- Scott Bateman, University of New Brunswick, Fredericton, CA: interaction in 3D environments (VR, AR)

- Anna Maria Feit, Saarland University, Germany: design of the new French keyboard layout 11

- Audrey Girouard, Carleton University, Ottawa, CA: flexible input devices 14, interactions for digital fabrication (co-tutelle thesis of Johann Felipe Gonzalez Avila)

- Nicolai Marquardt, University College London, UK: illustration of interaction on static media 12

- Miguel Olivares Mendez, University of Luxembourg, Luxembourg: human factors for the teleoperation of lunar rovers

- Antti Oulasvirta, Aalto University, Finland: automated methods to adjust pointing transfer functions to user movements, design of the new French keyboard layout 11

- Simon Perrault, Singapore University of Technology and Design, Singapore: study and design of tactile interactions, perception of shapes on mobile devices 18

- Anne Roudaut, University of Bristol, UK: perception of shapes on mobile devices 18

- Daniel Vogel, University of Waterloo, Waterloo, CA: composing text on smartphones, tactile interaction 17

9.4 National initiatives

9.4.1 ANR

Causality (JCJC, 2019-2023)

Integrating Temporality and Causality to the Design of Interactive Systems

Participants: Géry Casiez [contact person], Stéphane Huot [contact person], Alice Loizeau [contact person], Sylvain Malacria [contact person], Mathieu Nancel [contact person], Philippe Schmid.

The project addresses a fundamental limitation in the way interfaces and interactions are designed and even thought about today, an issue we call procedural information loss: once a task has been completed by a computer, significant information that was used or produced while processing it is rendered inaccessible, regardless of the multiple other purposes it could serve. This loss hampers the identification and resolution of usability issues, as well as the development of new and beneficial interaction paradigms. We will explore, develop, and promote a finer granularity in the description of changes, and better-described connections between their causes, their context, their consequences, and their timing. We will apply this approach to facilitate the real-time detection, disambiguation, and resolution of frequent timing issues related to human reaction time and system latency; to provide broader access to all levels of input data, thereby reducing the need to "hack" existing frameworks to implement novel interactive systems; and to greatly increase the scope and expressiveness of command histories, allowing better error recovery but also extended editing capabilities such as the reuse and sharing of previous actions.

Web site: http://loki.lille.inria.fr/causality/

Related publications in 2021: 15.

Discovery (JCJC, 2020-2024)

Promoting and improving discoverability in interactive systems

Participants: Géry Casiez [contact person], Sylvain Malacria [contact person], Eva Mackamul.

This project addresses a fundamental limitation in the way interactive systems are usually designed, as in practice they do not tend to foster the discovery of their input methods (operations that can be used to communicate with the system) and corresponding features (commands and functionalities that the system supports). Its objective is to provide generic methods and tools to help the design of discoverable interactive systems: we will define validation procedures that can be used to evaluate the discoverability of user interfaces, design and implement novel UIs that foster input method and feature discovery, and create a design framework of discoverable user interfaces. This project investigates, but is not limited to, the context of touch-based interaction, and will also explore two critical moments when the user might engage in reflective practice on the available inputs and features: while the user is carrying out her task (discovery in-action), and after she has carried out her task, through informed reflection on her past actions (discovery on-action). This dual investigation will reveal more generic and context-independent properties that will be summarized in a comprehensive framework of discoverable interfaces. Our ambition is to trigger a significant change in the way all interactive systems and interaction techniques, existing and new, are thought of, designed, and implemented with both performance and discoverability in mind.

Web site: http://ns.inria.fr/discovery

PerfAnalytics (PIA “Sport de très haute performance”, 2020-2023)

In situ performance analysis

Participants: Géry Casiez [contact person], Bruno Fruchard [contact person], Stéphane Huot [contact person], Sylvain Malacria.

The objective of the PerfAnalytics project (Inria, INSEP, Univ. Grenoble Alpes, Univ. Poitiers, Univ. Aix-Marseille, Univ. Eiffel & 5 sports federations) is to study how video analysis, now a standard tool in sport training and practice, can be used to quantify various performance indicators and deliver feedback to coaches and athletes. The project, supported by the boxing, cycling, gymnastics, wrestling, and mountain and climbing federations, aims to provide sports partners with a scientific approach dedicated to video analysis, by coupling existing technical results on the estimation of gestures and figures from video with scientific biomechanical methodologies for advanced gesture objectification (for example, at the muscular level).

9.4.2 Inria Project Labs

AVATAR (2018-2022)

The next generation of our virtual selves in digital worlds

Participants: Marc Baloup [contact person], Géry Casiez [contact person], Stéphane Huot [contact person], Thomas Pietrzak [contact person], Grégoire Richard.

This project aims at delivering the next generation of virtual selves, or avatars, in digital worlds. In particular, we want to push further the limits of perception and interaction through our avatars, to obtain avatars that are better embodied and more interactive. Loki's contribution to this project consists in designing novel 3D interaction paradigms for avatar-based interaction, and new multi-sensory feedback to better feel our interactions through our avatars.

Partners: Inria's GRAPHDECO, HYBRID, MIMETIC, MORPHEO & POTIOC teams, Mel Slater (Event Lab, University of Barcelona, Spain), Technicolor and Faurecia.

Web site: https://avatar.inria.fr/

9.5 Regional initiatives

Ariane (Start-AIRR région Hauts-de-France, 2020-2021)

Validation of the feasibility and relevance of the use of haptic signals for the transmission of complex information

Participants: Thomas Pietrzak [contact person], Rahul Kumar Ray.

Tactons are abstract, structured tactile messages that can be used to convey information in a non-visual way. Several tactile parameters of vibrations have been explored as a medium for encoding information, such as rhythm, roughness, and spatial location. This approach has been further extended to several other haptic technologies such as pin arrays, demonstrating the possibility of giving directional cues to help visually impaired children explore simple electrical circuit diagrams and geometric shapes. More recently, we have worked in the group on other haptic technologies, in particular a non-visual display that uses the sense of touch around the wrist. The latter makes it possible, for example, to create an illusion of vibration moving continuously on the skin.

In this project we will use this new tactile feedback to create Tactons and use them in consumer applications. The key use case, developed with the start-up Aureax, is a haptic turn-by-turn navigation system for cyclists. It consists in giving the user instructions such as: turn left or right, cross a traffic circle, take a particular exit in a traffic circle, follow a fork to the left or to the right, or make a U-turn. Funding from the Hauts-de-France region allowed us to hire an engineer for 12 months, who will implement the software needed to design and study appropriate haptic cues.

Partners: Aureax.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

Member of the organizing committees

- CHI 2021: Sylvain Malacria (co-chair of the Interactivity / Demonstrations track)

- MobileHCI 2021: Thomas Pietrzak (publications co-chair)

10.1.2 Scientific events: selection

Member of the conference program committees

Reviewer

10.1.3 Journal

Reviewer - reviewing activities

- Transactions on Computer-Human Interaction (ACM): Géry Casiez, Mathieu Nancel, Thomas Pietrzak

- Journal on Multimodal User Interfaces (Springer): Mathieu Nancel

- Behaviour & Information Technology (Taylor & Francis): Mathieu Nancel

10.1.4 Invited talks

- Human-Computer Interaction for Space Activities, July 12th, 2021, LIST, Luxembourg: Thomas Pietrzak

10.1.5 Leadership within the scientific community

- Association Francophone d'Interaction Humain-Machine (AFIHM): Géry Casiez (member of the steering committee), Sylvain Malacria (member of the executive committee)

10.1.6 Scientific expertise

- Agence Nationale de la Recherche (ANR): Mathieu Nancel (member of the CES33 “Interaction and Robotics” committee)

10.1.7 Research administration

For Inria

- Evaluation Committee: Stéphane Huot (member)

For CNRS

- Comité National de la Recherche Scientifique, Sec. 7: Géry Casiez (until June 2021)

For Inria Lille – Nord Europe

- Scientific Officer: Stéphane Huot

- “Commission des Emplois de Recherche du centre Inria Lille – Nord Europe” (CER): Sylvain Malacria (member)

- “Commission de Développement Technologique” (CDT): Mathieu Nancel (member)

- “Comité Opérationnel d'Évaluation des Risques Légaux et Éthiques” (COERLE, the Inria Ethics board): Thomas Pietrzak (local correspondent)

For the Université de Lille

- Coordinator for internships at IUT A: Géry Casiez

- Computer Science Department council: Thomas Pietrzak (member)

- MADIS Graduate School council: Géry Casiez (member)

For the CRIStAL lab of Université de Lille & CNRS

- Management board: Géry Casiez

- Computer Science PhD recruiting committee: Géry Casiez (member)

Hiring committees

- Inria's committees for Junior Researcher Positions (CRCN) in Lille and Saclay: Stéphane Huot (member)

- Université de Lille's committee for Assistant Professor Positions in Computer Science (IUT A): Stéphane Huot (member)

- Université de Lille's committee for Assistant Professor Positions in Computer Science (FST): Géry Casiez (vice-president)

- Université Paris-Saclay's committee for Professor Positions in Computer Science (IUT): Géry Casiez (member)

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Master Informatique: Géry Casiez (6h), Sylvain Malacria (12h), Mathieu Nancel (8h), Thomas Pietrzak (18h), IHMA, M2, Université de Lille

- Doctoral course: Géry Casiez (12h), Experimental research and statistical methods for Human-Computer Interaction, Université de Lille

- DUT Informatique: Géry Casiez (38h), Grégoire Richard (28h), Marc Baloup (28h), IHM, 1st year, IUT A de Lille - Université de Lille

- DUT Informatique: Grégoire Richard (21.5h), Algorithmes et Programmation, 1st year, IUT A de Lille - Université de Lille

- Cursus ingénieur: Sylvain Malacria (9h), 3DETech, IMT Lille-Douai

- Master Informatique: Thomas Pietrzak (54h), Sylvain Malacria (48h), Introduction à l'IHM, M1, Université de Lille

- Master Informatique: Thomas Pietrzak (15h), Initiation à la recherche, M1, Université de Lille

- Licence Informatique: Thomas Pietrzak (52h), Sylvain Malacria (3h), Interaction Homme-Machine, L3, Université de Lille

- Licence Informatique: Thomas Pietrzak (18h), Logique, L3, Université de Lille

- Licence Informatique: Thomas Pietrzak (18h), Logique, L2, Université de Lille

- Cursus ingénieur: Thomas Pietrzak (40h), Informatique débutant, Polytech Lille

- Cursus ingénieur: Damien Pollet (65h), Java, IMT Lille-Douai

- Licence Informatique: Damien Pollet (40.5h), Informatique, L1, Université de Lille

- Licence Informatique: Damien Pollet (18h), Conception orientée objet, L3, Université de Lille

- Licence Informatique: Damien Pollet (42h), Programmation des systèmes, L3, Université de Lille

- Licence Informatique: Damien Pollet (11.5h), Option Meta, L3, Université de Lille

- Master Informatique: Damien Pollet (27h), Langages et Modèles Dédiés, M2, Université de Lille

10.2.2 Supervision

- PhD: Marc Baloup, Interaction techniques for object selection and facial expression control in virtual reality, defended in Dec. 2021, advised by Géry Casiez & Thomas Pietrzak

- PhD: Axel Antoine, Helping Users with Interactive Strategies, defended in Jan. 2021, advised by Géry Casiez & Sylvain Malacria

- PhD in progress: Alice Loizeau, Understanding and designing around error in interactive systems, started Oct. 2021, advised by Stéphane Huot & Mathieu Nancel

- PhD in progress: Yuan Chen, Making Interfaces Re-appearing in Ubiquitous Environments, started Dec. 2020, advised by Géry Casiez, Sylvain Malacria & Edward Lank (co-tutelle with University of Waterloo, Canada)

- PhD in progress: Eva Mackamul, Towards a Better Discoverability of Interactions in Graphical User Interfaces, started Oct. 2020, advised by Géry Casiez & Sylvain Malacria

- PhD in progress: Travis West, Examining the Design of Musical Interaction: The Creative Practice and Process, started Oct. 2020, advised by Stéphane Huot & Marcelo Wanderley (co-tutelle with McGill University, Canada)

- PhD in progress: Johann Felipe González Ávila, Direct Manipulation with Flexible Devices, started Sep. 2020, advised by Géry Casiez, Thomas Pietrzak & Audrey Girouard (co-tutelle with Carleton University, Canada)

- PhD in progress: Grégoire Richard, Touching Avatars: Role of Haptic Feedback during Interactions with Avatars in Virtual Reality, started Oct. 2019, advised by Géry Casiez & Thomas Pietrzak

- PhD in progress: Philippe Schmid, Command History as a Full-fledged Interactive Object, started Oct. 2019, advised by Stéphane Huot & Mathieu Nancel

10.2.3 Juries

- Eugénie Brasier (PhD, Université Paris-Saclay): Mathieu Nancel, examiner

- Jay Henderson (PhD, University of Waterloo): Sylvain Malacria, examiner

- Elio Keddisseh (PhD, Université de Toulouse): Stéphane Huot, reviewer

- Victor Mercado (PhD, Université de Rennes): Géry Casiez, examiner

- Jean-Marie Normand (HDR, Université de Nantes): Géry Casiez, reviewer

- Carole Plasson (PhD, Université de Grenoble): Géry Casiez, reviewer

- Kaixing Zhao (PhD, Université de Toulouse): Thomas Pietrzak, examiner

10.2.4 Mid-term evaluation committees

- Sami Barchid (PhD, Univ. Lille): Géry Casiez

- Adrien Chaffangeon (PhD, Laboratoire d'informatique de Grenoble): Mathieu Nancel

- Thomas Feutrier (PhD, Univ. Lille): Géry Casiez

- Milad Jamalzadeh (PhD, Univ. Lille): Géry Casiez

- Flavien Lebrun (PhD, Sorbonne Univ.): Géry Casiez

- Etienne Ménager (PhD, Univ. Lille): Géry Casiez

- Victor Paredes (PhD, IRCAM): Stéphane Huot

- Brice Parilusyan (PhD, De Vinci Innovation Center): Thomas Pietrzak

10.3 Popularization

10.3.1 Articles and contents

Several members of the team contributed to Inria's recently published white paper on the Internet of Things (IoT).

10.3.2 Education

- “Numérique et Sciences Informatiques, 1ère spécialité” [22] (Hachette, ISBN 978-2-01-786630-5): Mathieu Nancel

- “Découverte des Environnements de Recherche (L2 – Université de Lille)” – Website: Bruno Fruchard

11 Scientific production

11.1 Major publications

- 1 A method and toolkit for digital musical instruments: generating ideas and prototypes. IEEE MultiMedia 24(1), January 2017, 63-71. URL: https://doi.org/10.1109/MMUL.2017.18

- 2 Looking through the eye of the mouse: a simple method for measuring end-to-end latency using an optical mouse. Proceedings of UIST'15, ACM, November 2015, 629-636. URL: http://dx.doi.org/10.1145/2807442.2807454

- 3 Supporting novice to expert transitions in user interfaces. ACM Computing Surveys 47(2), November 2014. URL: http://dx.doi.org/10.1145/2659796

- 4 Leveraging finger identification to integrate multi-touch command selection and parameter manipulation. International Journal of Human-Computer Studies 99, March 2017, 21-36. URL: http://dx.doi.org/10.1016/j.ijhcs.2016.11.002

- 5 Direct manipulation in tactile displays. Proceedings of CHI'16, ACM, May 2016, 3683-3693. URL: http://dx.doi.org/10.1145/2858036.2858161

- 6 Promoting hotkey use through rehearsal with ExposeHK. Proceedings of CHI'13, ACM, April 2013, 573-582. URL: http://doi.acm.org/10.1145/2470654.2470735

- 7 Skillometers: reflective widgets that motivate and help users to improve performance. Proceedings of UIST'13, ACM, October 2013, 321-330. URL: http://doi.acm.org/10.1145/2501988.2501996

- 8 Causality: a conceptual model of interaction history. Proceedings of CHI'14, ACM, April 2014, 1777-1786. URL: http://dx.doi.org/10.1145/2556288.2556990

- 9 Next-point prediction metrics for perceived spatial errors. Proceedings of UIST'16, ACM, October 2016, 271-285. URL: http://dx.doi.org/10.1145/2984511.2984590

- 10 Polyphony: Programming Interfaces and Interactions with the Entity-Component-System Model. EICS 2019 - 11th ACM SIGCHI Symposium on Engineering Interactive Computing Systems, vol. 3, Valencia, Spain, June 2019

11.2 Publications of the year

International journals

International peer-reviewed conferences

Doctoral dissertations and habilitation theses

11.3 Other

Scientific popularization

11.4 Cited publications

- 23 Designing interaction, not interfaces. Proceedings of AVI'04, ACM, 2004, 15-22. URL: http://doi.acm.org/10.1145/989863.989865

- 24 No more bricolage! Methods and tools to characterize, replicate and compare pointing transfer functions. Proceedings of UIST'11, ACM, October 2011, 603-614. URL: http://dx.doi.org/10.1145/2047196.2047276

- 25 Augmenting human intellect: a conceptual framework. Technical report AFOSR-3233, Stanford Research Institute, October 1962. URL: http://www.dougengelbart.org/pubs/augment-3906.html

- 26 FlexStylus: leveraging flexion input for pen interaction. Proceedings of UIST'17, ACM, October 2017, 375-385. URL: https://doi.org/10.1145/3126594.3126597

- 27 Toolkits and Interface Creativity. Multimedia Tools Appl. 32(2), February 2007, 139-159. URL: http://dx.doi.org/10.1007/s11042-006-0062-y

- 28 A moving target: the evolution of Human-Computer Interaction. The Human Computer Interaction handbook (3rd edition), CRC Press, May 2012, xxvii-lxi. URL: http://research.microsoft.com/en-us/um/people/jgrudin/publications/history/HCIhandbook3rd.pdf

- 29 Designeering interaction: a missing link in the evolution of Human-Computer Interaction. PhD thesis, Université Paris-Sud, France, May 2013, 205 pages. URL: https://hal.inria.fr/tel-00823763

- 30 How fast is fast enough? A study of the effects of latency in direct-touch pointing tasks. Proceedings of CHI'13, ACM, April 2013, 2291-2300. URL: http://doi.acm.org/10.1145/2470654.2481317

- 31 AutoGain: Gain Function Adaptation with Submovement Efficiency Optimization. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20), Honolulu, United States, ACM, April 2020, 1-12

- 32 Lag as a determinant of human performance in interactive systems. Proceedings of CHI'93, ACM, April 1993, 488-493. URL: http://doi.acm.org/10.1145/169059.169431

- 33 Responding to cognitive overload: coadaptation between users and technology. Intellectica 30(1), ARCo, 2000, 177-193. URL: http://intellectica.org/SiteArchives/archives/n30/30_06_Mackay.pdf

- 34 Proxemic Interactions: From Theory to Practice. Synthesis Lectures on Human-Centered Informatics, Morgan & Claypool, 2015. URL: https://books.google.fr/books?id=2dPtBgAAQBAJ

- 35 Bringing Interactivity to Research Papers and Presentations with Chameleon. Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA '20), Honolulu, HI, USA, Association for Computing Machinery, New York, NY, USA, 2020, 1-4. URL: https://doi.org/10.1145/3334480.3383173

- 36 CommunityCommands: command recommendations for software applications. Proceedings of UIST'09, ACM, October 2009, 193-202. URL: http://dx.doi.org/10.1145/1622176.1622214

- 37 Next-Point Prediction for Direct Touch Using Finite-Time Derivative Estimation. Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology (UIST '18), Berlin, Germany, ACM, New York, NY, USA, 2018, 793-807. URL: http://doi.acm.org/10.1145/3242587.3242646

- 38 Mid-air pointing on ultra-walls. ACM ToCHI 22(5), October 2015. URL: http://dx.doi.org/10.1145/2766448

- 39 Human computing and machine understanding of human behavior: a survey. Artificial intelligence for human computing, LNCS 4451, Springer, 2007, 47-71. URL: http://dx.doi.org/10.1007/978-3-540-72348-6_3

- 40 Creating Usable Pin Array Tactons for Non-Visual Information. IEEE ToH 2(2), 2009, 61-72. URL: https://doi.org/10.1109/TOH.2009.6

- 41 ShowMeHow: Translating User Interface Instructions Between Applications. Proceedings of UIST'11, ACM, October 2011, 127-134. URL: http://doi.acm.org/10.1145/2047196.2047212