Keywords

Computer Science and Digital Science

- A3.1. Data

- A3.1.10. Heterogeneous data

- A3.1.11. Structured data

- A3.4. Machine learning and statistics

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.6. Neural networks

- A3.4.7. Kernel methods

- A3.4.8. Deep learning

- A9. Artificial intelligence

- A9.2. Machine learning

Other Research Topics and Application Domains

- B3.6. Ecology

- B6.3.4. Social Networks

- B7.2.1. Smart vehicles

- B8.2. Connected city

- B9.6. Humanities

1 Team members, visitors, external collaborators

Research Scientist

- Pierre-Alexandre Mattei [INRIA, Researcher]

Faculty Members

- Charles Bouveyron [Team leader, UNIV COTE D'AZUR, Professor, HDR]

- Damien Garreau [UNIV COTE D'AZUR, Associate Professor]

- Frederic Precioso [UNIV COTE D'AZUR, Professor]

- Michel Riveill [UNIV COTE D'AZUR, Professor]

- Vincent Vandewalle [UNIV COTE D'AZUR, Professor, from Sep 2022, HDR]

Post-Doctoral Fellows

- Alessandro Betti [UNIV COTE D'AZUR]

- Gabriele Ciravegna [UNIV COTE D'AZUR]

- Aude Sportisse [INRIA]

PhD Students

- Kilian Burgi [UNIV COTE D'AZUR, from Sep 2022]

- Gatien Caillet [ORANGE, from Nov 2022]

- Antoine Collin [UNIV COTE D'AZUR, from Jun 2022]

- Célia Dcruz [UNIV COTE D'AZUR]

- Kevin Dsouza [UNIV COTE D'AZUR]

- Dingge Liang [UNIV COTE D'AZUR]

- Gianluigi Lopardo [UNIV COTE D'AZUR]

- Giulia Marchello [UNIV COTE D'AZUR]

- Hugo Miralles [ORANGE]

- Kevin Mottin [UNIV COTE D'AZUR]

- Louis Ohl [UNIV COTE D'AZUR]

- Baptiste Pouthier [NXP]

- Hugo Schmutz [UNIV COTE D'AZUR]

- Hugo Senetaire [UNIV DTU]

- Julie Tores [UNIV COTE D'AZUR, from Nov 2022]

- Cédric Vincent-Cuaz [UNIV COTE D'AZUR]

- Xuchun Zhang [UNIV COTE D'AZUR]

Technical Staff

- Lucas Boiteau [INRIA, Engineer, from Aug 2022]

- Marco Corneli [UNIV COTE D'AZUR, Engineer, until Aug 2022]

- Amosse Edouard [INSTANT SYSTEM , Engineer, from Feb 2022]

- Stephane Petiot [INRIA, Engineer]

- Li Yang [CNRS, Engineer, from Feb 2022]

- Mansour Zoubeirou A Mayaki [PRO BTP, Engineer]

Interns and Apprentices

- Davide Adamo [INRIA, from Sep 2022]

Administrative Assistant

- Claire Senica [INRIA]

External Collaborators

- Marco Corneli [Université Côte d'Azur, from Sep 2022, Chaire de Professeur Junior]

- Marco Gori [UNIV FLORENCE]

- Pierre Latouche [UNIV CLERMONT AUVERG, from Nov 2022, HDR]

- Hans Ottosson [IBM]

2 Overall objectives

Artificial intelligence has become a key element in most scientific fields and is now part of everyone's life thanks to the digital revolution. Statistical, machine and deep learning methods are involved in most scientific applications where a decision has to be made, such as medical diagnosis, autonomous vehicles or text analysis. The recent and highly publicized results of artificial intelligence should not hide the remaining and new problems posed by modern data. Indeed, despite the recent improvements due to deep learning, the nature of modern data has brought new specific issues. For instance, learning with high-dimensional, atypical (networks, functions, …), dynamic, or heterogeneous data remains difficult for theoretical and algorithmic reasons. The recent establishment of deep learning has also opened new questions such as: How to learn in an unsupervised or weakly-supervised context with deep architectures? How to design a deep architecture for a given situation? How to learn with evolving and corrupted data?

To address these questions, the Maasai team focuses on topics such as unsupervised learning, theory of deep learning, adaptive and robust learning, and learning with high-dimensional or heterogeneous data. The Maasai team conducts research that links practical problems, which may come from industry or other scientific fields, with the theoretical aspects of Mathematics and Computer Science. In this spirit, the Maasai project-team is fully aligned with the “Core elements of AI” axis of the Institut 3IA Côte d’Azur. It is worth noting that the team hosts three 3IA chairs of the Institut 3IA Côte d’Azur, as well as several PhD students funded by the Institut.

3 Research program

Within the research strategy explained above, the Maasai project-team aims at developing statistical, machine and deep learning methodologies and algorithms to address the following four axes.

Unsupervised learning

The first research axis is about the development of models and algorithms designed for unsupervised learning with modern data. Let us recall that unsupervised learning (the task of learning without annotations) is one of the most challenging learning tasks. Indeed, although powerful supervised learning methods have emerged in the last decade, their requirement for huge annotated data sets remains an obstacle to their extension to new domains. In addition, the nature of modern data significantly differs from usual quantitative or categorical data. In this axis, we aim to propose models and methods explicitly designed for unsupervised learning on data such as high-dimensional, functional, dynamic or network data. All these types of data are massively available nowadays in everyday life (omics data, smart cities, ...) and they unfortunately remain difficult to handle efficiently for theoretical and algorithmic reasons. The dynamic nature of the studied phenomena is also a key point in the design of reliable algorithms.

On the one hand, we direct our efforts towards the development of unsupervised learning methods (clustering, dimension reduction) designed for specific data types: high-dimensional, functional, dynamic, text or network data. Indeed, even though those kinds of data are more and more present in every scientific and industrial domain, there is a lack of sound models and algorithms to learn in an unsupervised context from such data. To this end, we have to face problems that are specific to each data type: How to overcome the curse of dimensionality for high-dimensional data? How to handle multivariate functional data / time series? How to handle the activity length of dynamic networks? On the basis of our recent results, we aim to develop generative models for such situations, allowing the modeling of and the unsupervised learning from such modern data.

On the other hand, we focus on deep generative models (statistical models based on neural networks) for clustering and semi-supervised classification. Neural network approaches have demonstrated their efficiency in many supervised learning situations and it is of great interest to be able to use them in unsupervised situations. Unfortunately, the transfer of neural network approaches to the unsupervised context is made difficult by the huge number of model parameters to fit and the absence of an objective quantity to optimize in this case. We therefore study and design model-based deep learning methods that can handle unsupervised or semi-supervised problems in a statistically grounded way.

Finally, we also aim at developing explainable unsupervised models that ease the interaction with practitioners and their understanding of the results. There is an important need for such models, in particular when working with high-dimensional or text data. Indeed, unsupervised methods, such as clustering or dimension reduction, are widely used in application fields such as medicine, biology or digital humanities. In all these contexts, practitioners need efficient learning methods that help them make good decisions while understanding the studied phenomenon. To this end, we aim at proposing generative and deep models that encode parsimonious priors, allowing in turn an improved understanding of the results.

Understanding (deep) learning models

The second research axis is more theoretical, and aims at improving our understanding of the behaviour of modern machine learning models (including, but not limited to, deep neural networks). Although deep learning methods and other complex machine learning models are obviously at the heart of artificial intelligence, they clearly suffer from an overall weak knowledge of their behaviour, leading to a general lack of understanding of their properties. These issues are barriers to the wide acceptance of the use of AI in sensitive applications, such as medicine, transportation, or defense. We aim at combining statistical (generative) models with deep learning algorithms to justify existing results, and allow a better understanding of their performances and their limitations.

We particularly focus on researching ways to understand, interpret, and possibly explain the predictions of modern, complex machine learning models. We aim both at studying the empirical and theoretical properties of existing techniques (like the popular LIME), and at developing new frameworks for interpretable machine learning (for example based on deconvolutions or generative models). Among the relevant application domains in this context, we focus notably on text and biological data.

Another question of interest is: what are the statistical properties of deep learning models and algorithms? Our goal is to provide a statistical perspective on the architectures, algorithms, loss functions and heuristics used in deep learning. Such a perspective can reveal potential issues in existing deep learning techniques, such as biases or miscalibration. Consequently, we are also interested in developing statistically principled deep learning architectures and algorithms, which can be particularly useful in situations where limited supervision is available, and when accurate modelling of uncertainties is desirable.

Adaptive and Robust Learning

The third research axis aims at designing new learning algorithms that can learn incrementally and adapt to new data and/or new contexts, while providing predictions that are robust to biases even if the training set is small.

For instance, we have designed an innovative method, called cumulative learning, which makes it possible to learn a convolutional representation of data when the learning set is (very) small. The idea is to extend the principle of Transfer Learning: instead of training a model on one domain and transferring it once to another domain (possibly with a fine-tuning phase), this process is repeated for as many domains as are available. We have evaluated our method on mass spectrometry data for cancer detection. The difficulty of acquiring spectra makes it impossible to produce volumes of data sufficient to benefit from the power of deep learning. Thanks to cumulative learning, small numbers of spectra acquired for different types of cancer, on different organs of different species, all contribute to the learning of a deep representation that yields unequalled results from the available data on the detection of the targeted cancers. This extension of the well-known Transfer Learning technique can be applied to any kind of data.
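The repeated transfer described above can be sketched as a loop that carries the same parameters from one domain to the next. This is only an illustration under strong assumptions: a tiny logistic regression trained by gradient descent stands in for the convolutional network, the three toy domains are made up, and `fine_tune`, `cumulative_learning` and `predict` are hypothetical names, not the team's code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune(weights, data, epochs=200, lr=0.5):
    """Continue full-batch gradient descent from `weights` on one domain.

    `data` is a list of (features, label) pairs with labels in {0, 1}.
    """
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def cumulative_learning(domains, dim):
    """Train on each domain in turn, always starting from the previous weights."""
    w = [0.0] * dim
    for data in domains:
        w = fine_tune(w, data)
    return w

def predict(w, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0

# Three small, made-up domains; the second feature is a constant bias term.
domains = [
    [([-2.0, 1.0], 0), ([2.0, 1.0], 1)],
    [([-1.0, 1.0], 0), ([1.5, 1.0], 1)],
    [([-0.5, 1.0], 0), ([1.0, 1.0], 1)],
]
w_final = cumulative_learning(domains, dim=2)
print([predict(w_final, x) for x, _ in domains[-1]])
```

Plain transfer learning corresponds to a list of two domains; the cumulative variant simply lets the list grow, so that each small data set refines a representation already shaped by the previous ones.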

We also investigate active learning techniques. For example, we have proposed an active learning method for deep networks based on adversarial attacks. An unlabelled sample that becomes an adversarial example under the smallest perturbation is selected as a good candidate by our active learning strategy. This not only makes it possible to train the network incrementally, but also makes it robust to the attacks chosen for the active learning process.
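The selection rule above can be illustrated in a setting where the smallest perturbation is known exactly. The sketch below assumes a linear binary classifier, for which the smallest L2 perturbation that changes the prediction has norm |w·x + b| / ||w||; for deep networks this quantity has to be approximated with an adversarial attack, which is not shown here. The function names and the toy pool are hypothetical.

```python
import math

def min_adversarial_norm(w, b, x):
    """L2 norm of the smallest perturbation moving x onto the decision
    boundary of the linear classifier sign(w.x + b)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    w_norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(score) / w_norm

def select_queries(w, b, unlabelled, budget):
    """Indices of the `budget` unlabelled samples that are easiest to attack."""
    ranked = sorted(
        range(len(unlabelled)),
        key=lambda i: min_adversarial_norm(w, b, unlabelled[i]),
    )
    return ranked[:budget]

w, b = [3.0, 4.0], -5.0
pool = [[1.0, 1.0], [3.0, 0.0], [0.0, 0.0], [1.0, 0.6]]
print(select_queries(w, b, pool, budget=2))
```

The selected samples are the ones closest to the decision boundary, which are then sent to the annotator before the model is retrained.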

Finally, we address the problem of biases for deep networks by combining domain adaptation approaches with Out-Of-Distribution detection techniques.

Learning with heterogeneous and corrupted data

The last research axis is devoted to making machine learning models more suitable for real-world, "dirty" data. Real-world data rarely consist of a single kind of Euclidean features, and are generally heterogeneous. Moreover, it is common to find some form of corruption in real-world data sets: for example missing values, outliers, label noise, or even adversarial examples.

Heterogeneous and non-Euclidean data are indeed part of the most important and sensitive applications of artificial intelligence. As a concrete example, in medicine, the data recorded on a patient in a hospital range from images to functional data and networks. It is obviously of great interest to be able to account for all data available on the patients to propose a diagnosis and an appropriate treatment. Notice that this also applies to autonomous cars, digital humanities and biology. Proposing unified models for heterogeneous data is an ambitious task, but first attempts (e.g. the Linkage1 project) at combining two data types have shown that more general models are feasible and significantly improve performance. We also address the problem of reconciling structured and non-structured data, as well as data of different levels (individual and contextual data).

On the basis of our previous work (notably on the modeling of networks and texts), we first intend to continue to propose generative models for (at least two) different types of data. Among the target data types for which we would like to propose generative models, we can cite images and biological data, networks and images, images and texts, and texts and ordinal data. To this end, we explore modeling through common latent spaces or by hybridizing several generative models within a global framework. We are also interested in including potential corruption processes into these heterogeneous generative models. For example, we are developing new models that can handle missing values, under various sorts of missingness assumptions.

Besides the modelling point of view, we are also interested in making existing algorithms and implementations more fit for "dirty data". We study in particular ways to robustify algorithms, or to improve heuristics that handle missing/corrupted values or non-Euclidean features.

4 Application domains

The Maasai research team has the following major application domains:

Medicine

Most team members apply their research work to Medicine or extract theoretical AI problems from medical situations. In particular, our main applications to Medicine are concerned with pharmacovigilance, medical imaging, and omics. It is worth noting that medical applications cover all research axes of the team due to the high diversity of data types and AI questions. It is therefore a preferred field of application for the models and algorithms developed by the team.

Digital humanities

Another important application field for Maasai is the increasingly dynamic one of digital humanities. It is an extremely motivating field due to the very original questions that are addressed. Indeed, linguists, sociologists, geographers and historians have questions that are quite different from the usual ones in AI. This allows the team to formalize original AI problems that can be generalized to other fields, and thus to contribute indirectly to the general theory and methodology of AI.

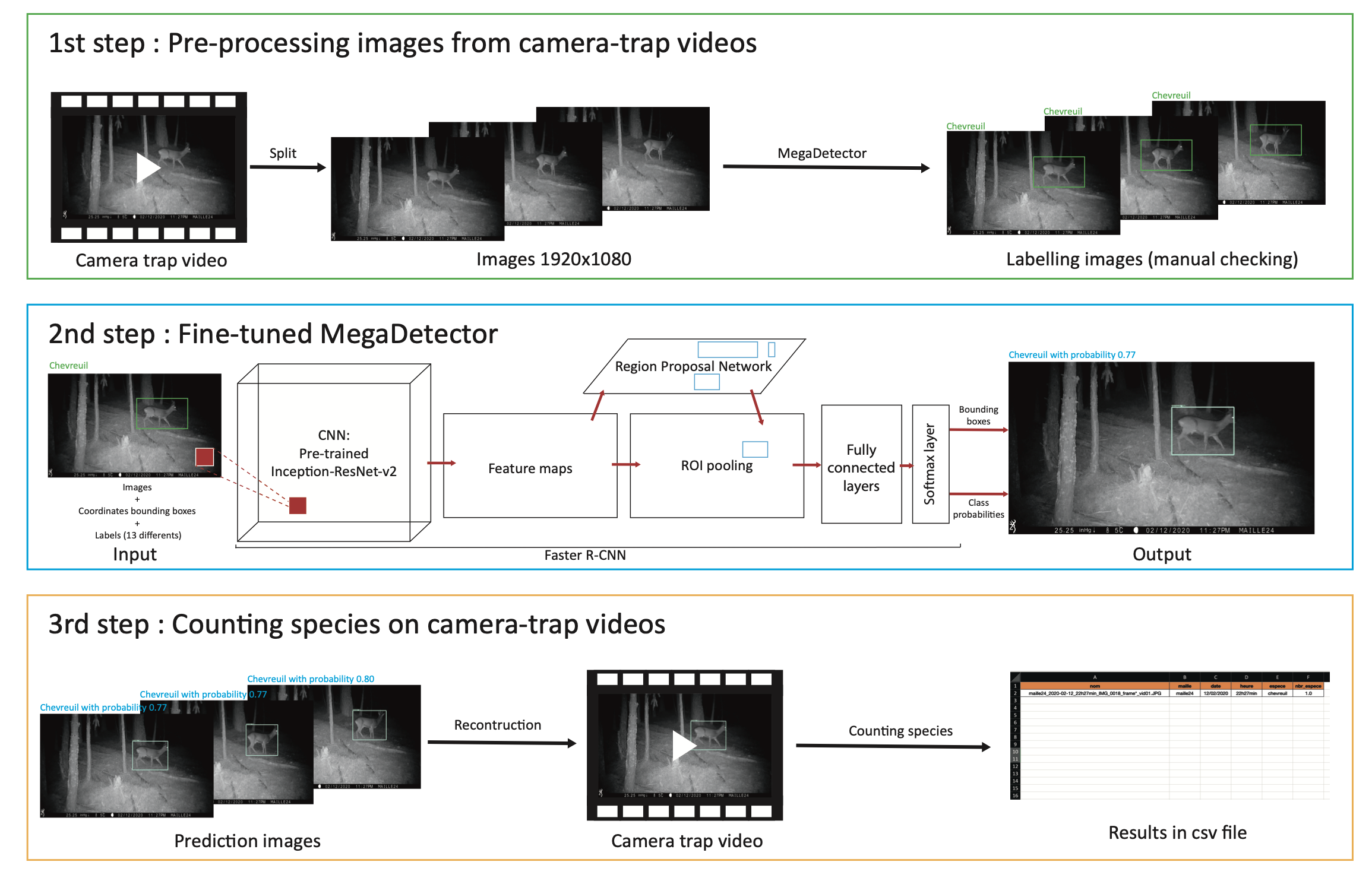

Multimedia

The last main application domain for Maasai is multimedia. With the revolution brought to the computer vision field by deep learning techniques, new questions have appeared, such as combining subsymbolic and symbolic approaches for complex semantic and perception problems, or using edge AI to embed machine learning approaches in privacy-preserving multimedia solutions. This domain brings new AI problems that require bridging the gap between different views of AI.

Other application domains

Other topics of interest of the team include astronomy, bioinformatics, recommender systems and ecology.

5 Highlights of the year

5.1 Recruitments and promotions

- The team benefited from the recruitment in 2022 of Vincent Vandewalle (coming from the Modal project-team of Inria Lille) as a Full Professor with Université Côte d’Azur. He joined the Maasai team on September 1st, 2022.

- In the meantime, Marco Corneli (who was a research engineer with Université Côte d’Azur) has been hired on a Chaire de Professeur Junior on AI & Archeology, effective September 1st, 2022.

5.2 Fundings

- Vincent Vandewalle was granted a 3IA chair from Institut 3IA Côte d'Azur.

- Marco Corneli was granted a chair of "Professor Junior" from Université Côte d'Azur.

5.3 Awards

- Hugo Schmutz was awarded a “highlight lecture” at the 35th Annual Congress of the European Association of Nuclear Medicine in Barcelona (2022), for his work in collaboration with P.-A. Mattei and O. Humbert.

- Cédric Vincent-Cuaz received a “NeurIPS Top Reviewer” award at the Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS’22).

- Pierre-Alexandre Mattei was ranked among the top 10% reviewers of the International Conference on Machine Learning (ICML) in 2022.

- Louis Ohl received a “NeurIPS Scholar Award” at the Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS’22).

5.4 Conferences co-organised by team members

- The 1st Nice Workshop on Interpretability, organised by Damien Garreau, Frédéric Precioso and Gianluigi Lopardo. The workshop took place on November 17-18, 2022 in Nice, and featured 6 senior research talks and 11 young research talks, with about 40 participants. Web : https://sites.google.com/view/nwi2022/home

- Statlearn 2022: the workshop Statlearn is a scientific workshop held every year since 2010, which focuses on current and upcoming trends in Statistical Learning. Statlearn is a scientific event of the French Society of Statistics (SFdS). The 2022 edition was the 11th edition of the Statlearn series and welcomed about 50 participants. Web : https://statlearn.sciencesconf.org

- GenU 2022: this small-scale workshop is held in person in Copenhagen in the Fall. The 2022 edition took place on September 14-15, 2022 (Web: https://genu.ai/2022/).

- SophIA Summit: AI conference that brings together researchers and companies doing AI, held every Fall in Sophia Antipolis. The 2022 edition was held on 23-25th November 2022. Web: https://univ-cotedazur.eu/events/sophia-summit.

5.5 Innovation and transfer

- A contract has been signed with the company Naval Group for the development of an open-source Python library for semi-supervised learning, via the hiring of a research engineer. Lucas Boiteau started on August 1st, 2022.

5.6 Nominations

- Vincent Vandewalle has been nominated Deputy Scientific Director of the EFELIA Côte d'Azur program, effective October 1st, 2022. The EFELIA Côte d'Azur program is funded by the AMI Compétences et Métiers d'Avenir to develop education in AI in France.

6 New software and platforms

For the Maasai research team, the main objective of the software implementations is to experimentally validate the results obtained and ease the transfer of the developed methodologies to industry. Most of the software will be released as R or Python packages that require only light maintenance, which should give the code a reasonably long lifetime. Some platforms are also proposed to ease the use of the developed methodologies by users without a strong background in Machine Learning, such as scientists from other fields.

6.1 R and Python packages

The team maintains several R and Python packages, among which the following ones have been released or updated in 2022:

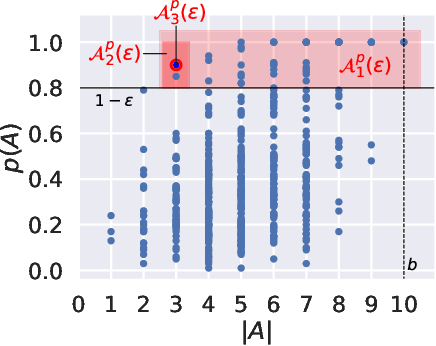

SMACE.

Web site: https://github.com/gianluigilopardo/smace.

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 1 year;

- Free Description: this Python package implements SMACE, the first Semi-Model-Agnostic Contextual Explainer. The code is available on Github as well as on pypi at https://pypi.org/project/smace, distributed under the MIT License.

POT.

Web site: https://PythonOT.github.io/.

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: lts, long term support.

- Duration of the Development (Duration): 23 releases since April 2016. MAASAI contribution: since release 0.8.0 in November 2021.

- Free Description: Open source Python library that provides several solvers for optimization problems related to Optimal Transport for signal, image processing and machine learning. Distribution: PyPI distribution, Anaconda distribution. The library has been tested on Linux, MacOSX and Windows. It requires a C++ compiler for building/installing. License: MIT license. Website and documentation: https://PythonOT.github.io/. Source Code (MIT): https://github.com/PythonOT/POT. The software contains implementations of more than 40 research papers providing new solvers for Optimal Transport problems.

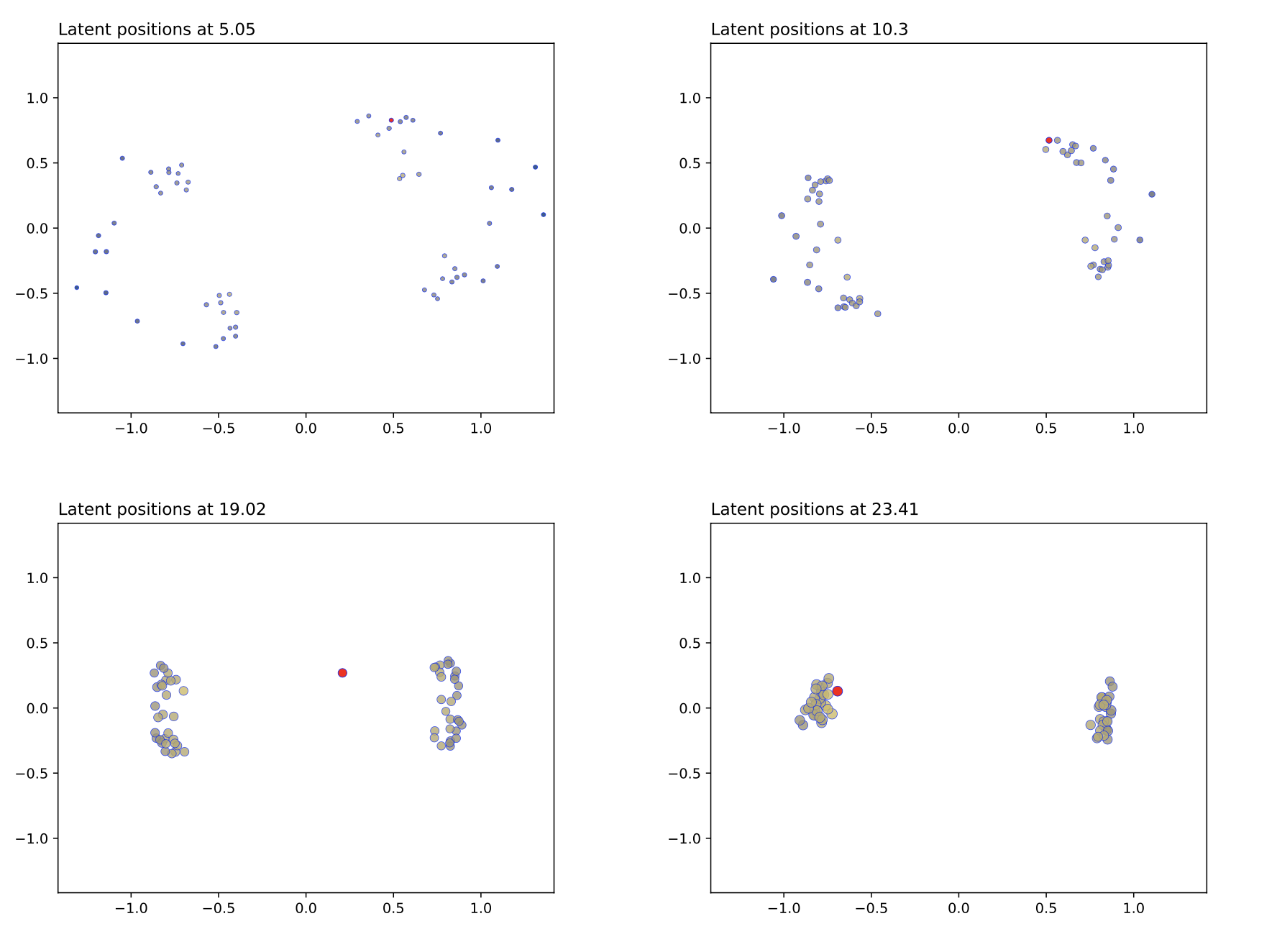

CLPM.

Web site: https://github.com/marcogenni/CLPM.

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 2 years;

- Free Description: this Python software implements CLPM, a continuous time extension of the Latent Position Model for graph embedding. The code is available on Github and distributed under the MIT License.

ordinalLBM.

Web site: https://cran.r-project.org/web/packages/ordinalLBM/index.html.

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 3 years;

- Free Description: this R package implements the inference for the ordinal latent block model for not missing at random data. The code is available on the CRAN repository and distributed under the GPL-2 | GPL-3 licence.

R-miss-tastic.

Web site: https://rmisstastic.netlify.app/.

- Software Family: vehicle.

- Audience: community.

- Evolution and maintenance: basic.

- Duration of the Development (Duration): 2 years.

- Free Description: the “R-miss-tastic” platform aims to provide an overview of standard missing values problems, methods, and relevant implementations of methodologies. Beyond gathering and organizing a large majority of the material on missing data (bibliography, courses, tutorials, implementations), “R-miss-tastic” covers the development of standardized analysis workflows. Several pipelines are developed in R and Python to allow for hands-on illustration of and recommendations on missing values handling in various statistical tasks such as matrix completion, estimation and prediction, while ensuring reproducibility of the analyses. Finally, the platform is intended for users who analyze incomplete data, researchers who want to compare their methods and search for an up-to-date bibliography, and also teachers who are looking for didactic materials (notebooks, video, slides). The platform takes the form of a reference website: https://rmisstastic.netlify.app/.

GEMINI

Web site: https://github.com/oshillou/GEMINI.r

- Software Family: vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 1 year

- Free Description: a Python software that allows users to manipulate GEMINI objective functions on their own data. By specifying a configuration file, users may plug their own data, as well as some custom models, into GEMINI clustering. The core of the software essentially lies in the file entitled losses.py, which contains all of the core objective functions for clustering. The software is currently under no licence, but we are discussing releasing it under a GPL v3 licence.

FunHDDC.

Web site: https://cran.r-project.org/web/packages/funHDDC/index.html

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 2 years;

- Free Description: this R package implements the inference for the clustering of multivariate functional data in group-specific functional subspaces. The code is available on the CRAN repository and distributed under the GPL-2 | GPL-3 licence.

FunFEM.

Web site: https://cran.r-project.org/web/packages/funFEM/index.html

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 2 years;

- Free Description: realized in 2021, this R package implements the inference for the clustering of functional data by modeling the curves within a common and discriminating functional subspace. The code is available on the CRAN repository and distributed under the GPL-2 | GPL-3 licence.

FunLBM.

Web site: https://cran.r-project.org/web/packages/funLBM/index.html

- Software Family : vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Duration of the Development (Duration): 1 year;

- Free Description: realized in 2022, this R package implements the inference for the co-clustering of functional data (time series) with application to the air pollution data in the South of France. The code is available on the CRAN repository and distributed under the GPL-2 | GPL-3 licence.

MIWAE.

Web Site: https://github.com/pamattei/miwae

- Software Family: vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Free Description: this is the implementation of the MIWAE method for handling missing data with deep generative modelling, as described in previous works of P.A. Mattei. The Python code is available on Github and freely distributed.

not-MIWAE.

Web Site: https://github.com/nbip/notMIWAE

- Software Family: vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Free Description: this is the implementation of the not-MIWAE method for handling missing not-at-random data with deep generative modelling. The Python code is available on Github and freely distributed.

supMIWAE.

Web Site: https://github.com/nbip/suptMIWAE

- Software Family: vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Free Description: this is the implementation of the supMIWAE method for supervised deep learning with missing values. The Python code is available on Github and freely distributed.

fisher-EM.

Web Site: https://cran.r-project.org/web/packages/FisherEM/index.html

- Software Family: vehicle;

- Audience: community;

- Evolution and maintenance: basic;

- Free Description: The FisherEM algorithm, proposed by Bouveyron in previous works, is an efficient method for the clustering of high-dimensional data. FisherEM models and clusters the data in a discriminative and low-dimensional latent subspace. It also provides a low-dimensional representation of the clustered data. A sparse version of the Fisher-EM algorithm is also provided in this package, created in 2020. Distributed under the GPL-2 licence.

6.2 SAAS platforms

The team also offers some SAAS (software as a service) platforms in order to allow scientists from other fields or companies to use our technologies. The team developed the following platforms:

DiagnoseNET: Automatic Framework to Scale Neural Networks on Heterogeneous Systems.

Web Site: https://diagnosenet.github.io/.

- Software Family: Transfer;

- Audience: partners;

- Evolution and maintenance: basic;

- Free Description: DiagnoseNET is a platform oriented to design a green intelligence medical workflow for deploying medical diagnostic tools with minimal infrastructure requirements and low power consumption. The first application built was to automate the unsupervised patient phenotype representation workflow trained on a mini-cluster of Nvidia Jetson TX2. The Python code is available on Github and freely distributed.

Indago.

Web site: http://indago.inria.fr. (Inria internal)

- Software Family: transfer.

- Audience: partners

- Evolution and maintenance: lts: long term support.

- Duration of the Development (Duration): 1.8 years

- Free Description: Indago implements a textual graph clustering method based on a joint analysis of the graph structure and the content exchanged between nodes. This yields a better segmentation than what could be obtained with traditional methods. Indago's main applications are built around communication network analysis, including social networks. However, Indago can be applied to any graph-structured textual network. Thus, Indago has been tested on various data, such as tweet corpora, mail networks, scientific paper co-publication networks, etc.

The software is used as a fully autonomous SaaS platform with two parts:

- A Python kernel that is responsible for the actual data processing.

- A web application that handles collecting, pre-processing and saving the data, and provides a set of visualisations for the interpretation of the results.

Indago is deployed internally on the Inria network and used mainly by the development team for testing and research purposes. We also build tailored versions for industrial or academic partners that use the software externally (with contractual agreements).

Topix.

Web site: https://topix.mi.parisdescartes.fr

- Software Family: research;

- Audience: universe;

- Evolution and maintenance: lts;

- Free Description: Topix is an innovative AI-based solution for summarizing massive and possibly extremely sparse databases involving text. Topix is a versatile technology that can be applied in a large variety of situations where large matrices of texts / comments / reviews are written by users on products or addressed to other individuals (bi-partite networks). The typical use case consists of an e-commerce company interested in understanding the relationship between its users and the products sold through the analysis of user comments. A simultaneous clustering (co-clustering) of users and products is produced by the Topix software, based on the key topics emerging from the reviews and on the underlying model. The Topix demonstration platform allows you to upload your own data on the website, in a totally secured framework, and let the AI-based software analyze them for you. The platform also proposes some typical use cases to give a better idea of what Topix can do.

7 New results

7.1 Unsupervised learning

7.1.1 Generalised Mutual Information for Discriminative Clustering

Participants: Louis Ohl, Pierre-Alexandre Mattei, Charles Bouveyron, Frédéric Precioso

Keywords: Clustering, Deep learning, Information Theory, Mutual Information

Collaborations: Mickael Leclercq, Arnaud Droit (Centre de recherche du CHU de Québec-Université, Université Laval), Warith Harchaoui (Jellysmack)

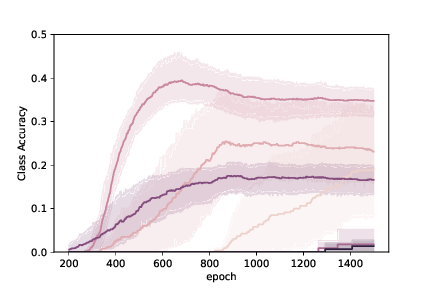

Over the last decade, successes in deep clustering have largely relied on the mutual information (MI) as an unsupervised objective for training neural networks, with increasingly elaborate regularisations. While the quality of the regularisations has been widely discussed, little attention has been paid to the relevance of MI as a clustering objective. In this paper, we first highlight how the maximisation of MI does not lead to satisfying clusters. We identify the Kullback-Leibler divergence as the main reason for this behaviour. Hence, we generalise in [26] the mutual information by changing its core distance, introducing the generalised mutual information (GEMINI): a set of metrics for unsupervised neural network training. Unlike MI, some GEMINIs do not require regularisations when training. Some of these metrics are geometry-aware thanks to distances or kernels in the data space. Finally, we highlight that GEMINIs can automatically select a relevant number of clusters, a property that has been little studied in the deep clustering context, where the number of clusters is a priori unknown.
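The change of "core distance" can be made concrete on soft cluster assignments. The sketch below assumes the standard identity that writes MI as the expected Kullback-Leibler divergence between p(x|y) and p(x), and swaps in the total variation distance as one possible alternative. The function names and the toy assignment matrices are ours; the actual GEMINI objectives are those defined in the cited paper and in the GEMINI software.

```python
import math

def cluster_proportions(probs):
    """Average the rows p(y | x_i) to estimate the cluster proportions p(y)."""
    n, k = len(probs), len(probs[0])
    return [sum(row[j] for row in probs) / n for j in range(k)]

def mutual_information(probs):
    """MI estimate: mean over samples of KL(p(y | x_i) || p(y))."""
    pbar = cluster_proportions(probs)
    total = 0.0
    for row in probs:
        for p, q in zip(row, pbar):
            if p > 0.0:  # convention 0 * log(0) = 0
                total += p * math.log(p / q)
    return total / len(probs)

def tv_variant(probs):
    """Same construction with total variation in place of KL:
    mean over samples of 0.5 * sum_k |p(k | x_i) - p(k)|."""
    pbar = cluster_proportions(probs)
    total = sum(abs(p - q) for row in probs for p, q in zip(row, pbar))
    return 0.5 * total / len(probs)

hard = [[1.0, 0.0], [0.0, 1.0]]     # two samples, confidently separated
uniform = [[0.5, 0.5], [0.5, 0.5]]  # no cluster structure at all
print(mutual_information(hard), tv_variant(hard))
print(mutual_information(uniform), tv_variant(uniform))
```

Both quantities vanish when every sample receives the same assignment and are positive for the balanced hard partition (log 2 for MI and 0.5 for the total variation variant); they differ in how they compare the conditional and marginal distributions in between.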

7.1.2 Continual Unsupervised Learning for Optical Flow Estimation with Deep Networks

Participants: Alessandro Betti

Collaborations: Simone Marullo, Matteo Tiezzi, Lapo Faggi, Enrico Meloni, Stefano Melacci

Keywords: Continual Learning, Optical Flow, Online Learning.

In [41], we present an extensive study on how neural networks can learn to estimate optical flow in a continual manner while observing a long video stream and reacting online to the streamed information without any further data buffering. To this end, we rely on photo-realistic video streams that we specifically created using 3D virtual environments, as well as on a real-world movie. Our analysis considers important model selection issues that might be easily overlooked at first glance, comparing different neural architectures and also state-of-the-art models pretrained in an offline manner. Our results not only show the feasibility of continual unsupervised learning in optical flow estimation, but also indicate that the learned models, in several situations, are comparable to state-of-the-art offline-pretrained networks. Moreover, we show how common issues in continual learning, such as catastrophic forgetting, do not affect the proposed models in a disruptive manner, given the task at hand.

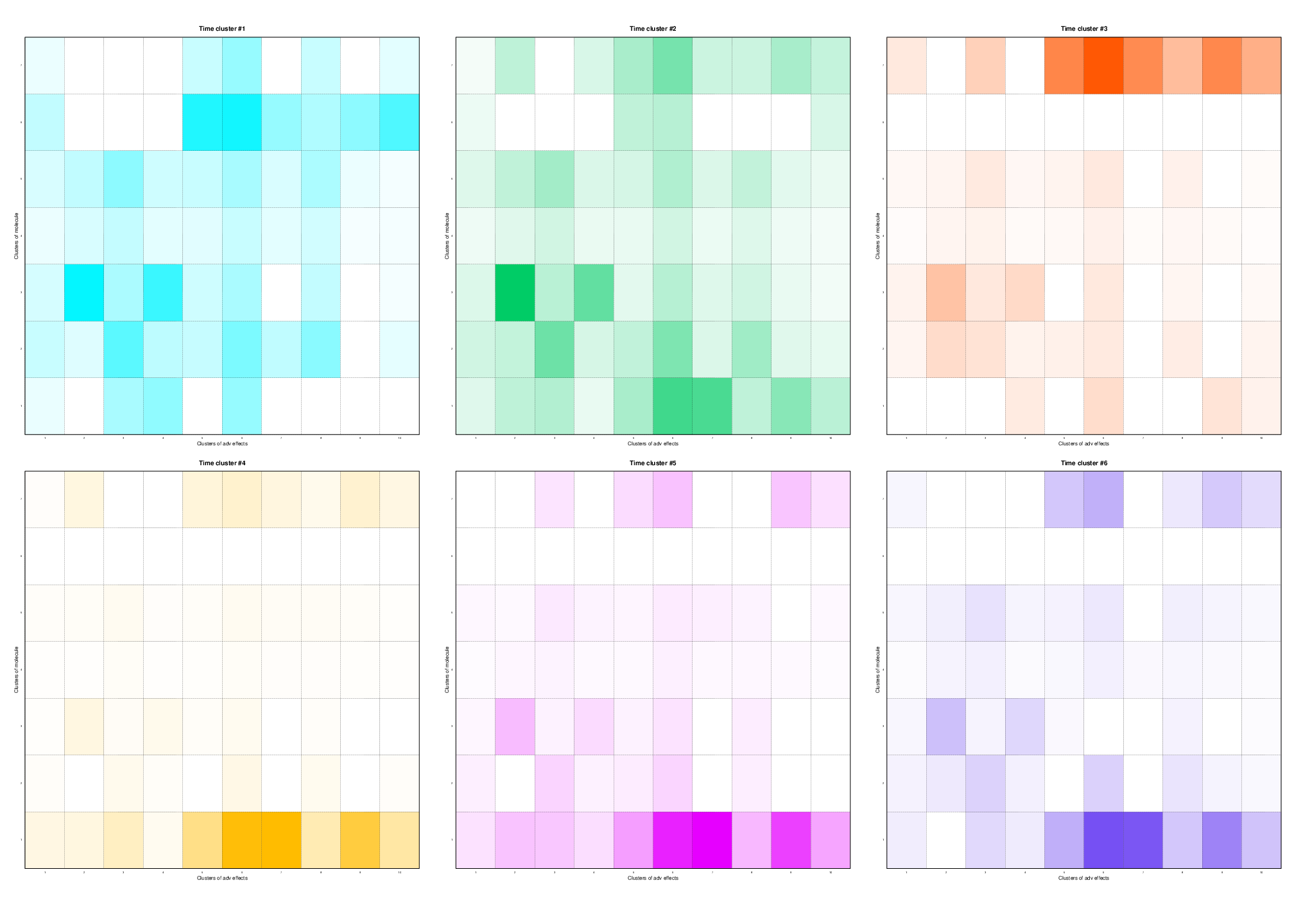

7.1.3 A Deep Dynamic Latent Block Model for the Co-clustering of Zero-Inflated Data Matrices

Participants: G. Marchello, M. Corneli, C. Bouveyron.

Keywords: Co-clustering, Latent Block Model, zero-inflated distributions, dynamic systems, VEM algorithm.

Collaborations: Regional Center of Pharmacovigilance (RCPV) of Nice.

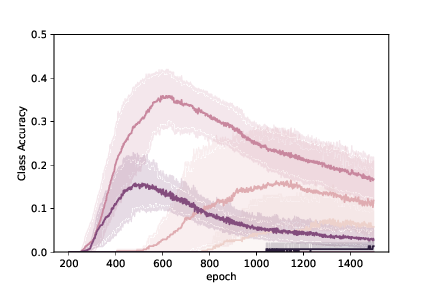

The simultaneous clustering of observations and features of data sets (known as co-clustering) has recently emerged as a central machine learning application to summarize massive data sets. However, most existing models focus on continuous and dense data in stationary scenarios, where cluster assignments do not evolve over time. In 64, we introduce a novel latent block model for the dynamic co-clustering of data matrices with high sparsity. To properly model this type of data, we assume that the observations follow a time and block dependent mixture of zero-inflated distributions, thus combining stochastic processes with the time-varying sparsity modeling. To detect abrupt changes in the dynamics of both cluster memberships and data sparsity, the mixing and sparsity proportions are modeled through systems of ordinary differential equations. The inference relies on an original variational procedure whose maximization step trains fully connected neural networks in order to solve the dynamical systems. Numerical experiments on simulated data sets demonstrate the effectiveness of the proposed methodology in the context of count data. The proposed method, called -dLBM, was then applied to two real data sets. The first is the data set on the London Bike sharing system while the second is a pharmacovigilance data set, on adverse drug reaction (ADR) reported to the Regional Center of Pharmacovigilance (RCPV) in Nice, France. Fig. 1 shows some of the main results obtained through the application of -dLBM on the pharmacovigilance data set.

Figure 1

Estimated Poisson intensities, each color represents a different drug (ADR) cluster.
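As a reminder of what a zero-inflated distribution looks like, the sketch below gives the probability mass function of a zero-inflated Poisson. The Poisson choice is an assumption suggested by the Poisson intensities shown in Fig. 1, and the parameter names are hypothetical; in the model of 64 these parameters additionally depend on time and on the block.

```python
import math

def zip_pmf(k, pi_zero, lam):
    """Zero-inflated Poisson: a point mass at 0 (weight pi_zero)
    mixed with a Poisson(lam) distribution (weight 1 - pi_zero)."""
    poisson = math.exp(-lam) * lam ** k / math.factorial(k)
    if k == 0:
        return pi_zero + (1.0 - pi_zero) * poisson
    return (1.0 - pi_zero) * poisson
```

The sparsity proportion `pi_zero` controls the excess of zeros, while `lam` drives the non-zero counts.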

7.1.4 Co-Clustering of Multivariate Functional Data for Air Pollution Analysis

Participants: Charles Bouveyron.

Keywords: generative models, model-based co-clustering, functional data, air pollution, public health

Collaborations: J. Jacques and A. Schmutz (Univ. de Lyon), Fanny Simoes and Silvia Bottini (MDlab, MSI, Univ. Côte d'Azur)

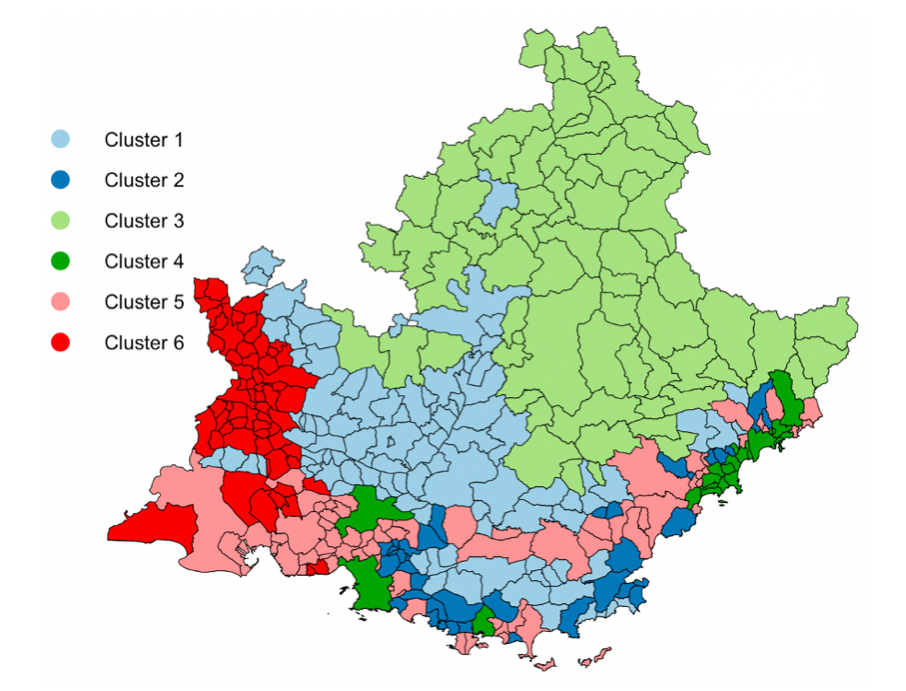

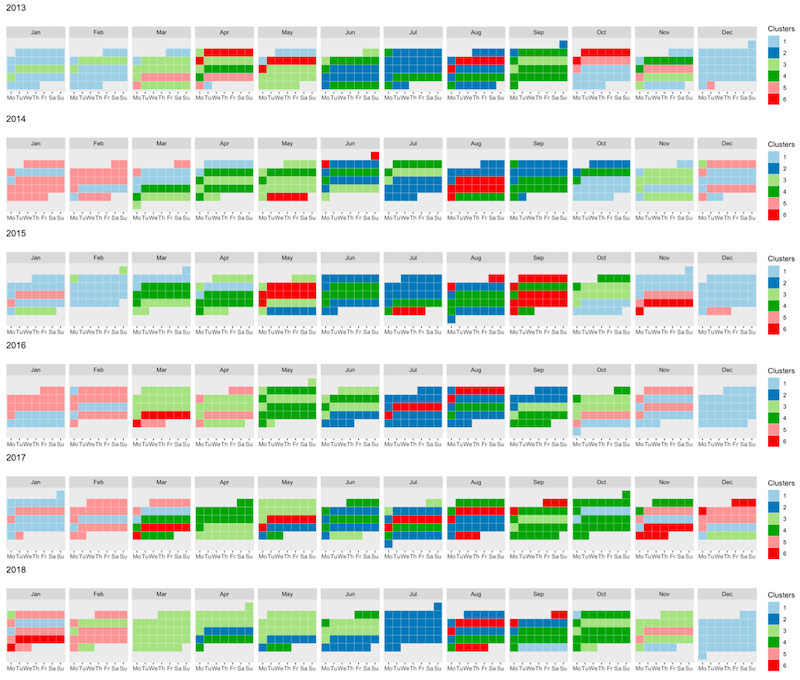

In 11, we focused on air pollution, which is nowadays a major threat to public health, with clear links to many diseases, especially cardiovascular ones. The spatio-temporal study of pollution is of great interest to governments and local authorities when deciding on public alerts or new city policies against rising pollution. The aim of this work is to study spatio-temporal profiles of environmental data collected in the south of France (Région Sud) by the public agency AtmoSud. The idea is to better understand the exposure to pollutants of the inhabitants of a large territory with important differences in terms of geography and urbanism. The data gather daily measurements of five environmental variables, namely three pollutants (PM10, NO2, O3) and two meteorological factors (pressure and temperature), over six years. Those data can be seen as multivariate functional data: quantitative entities evolving over time, for which there is a growing need for methods to summarize and understand them. For this purpose, a novel co-clustering model for multivariate functional data is defined. The model is based on a functional latent block model which assumes for each co-cluster a probabilistic distribution for multivariate functional principal component scores. A Stochastic EM algorithm, embedding a Gibbs sampler, is proposed for model inference, as well as a model selection criterion for choosing the number of co-clusters. The application of the proposed co-clustering algorithm to environmental data of the Région Sud allowed us to divide the region, composed of 357 zones, into six macro-areas with common exposure to pollution. We showed that pollution profiles vary according to the seasons and that the patterns are conserved during the six years studied. 
These results can be used by local authorities to develop specific programs to reduce pollution at the macro-area level and to identify specific periods of the year with high pollution peaks in order to set up specific prevention programs for health. Overall, the proposed co-clustering approach is a powerful resource to analyse multivariate functional data in order to identify intrinsic data structure and summarize variables profiles over long periods of time. Figure 2 illustrates the spatial and temporal clustering results.

Figure 2

Spatial clustering of the area zones according to the air pollution dynamic over the studied period (left panel) and temporal segmentation of the time (right panel). Those tools may offer meaningful summaries on such massive pollution data to experts or local authorities.

7.1.5 Semi-supervised Consensus Clustering Based on Closed Patterns

Participants: Frédéric Precioso.

Keywords: Clustering; Semi-supervised learning; Semi-supervised consensus clustering; Frequent closed itemsets

Collaborations: Tianshu Yang (Université Côte d'Azur, Amadeus), Nicolas Pasquier (Université Côte d'Azur), Luca Marchetti (Amadeus), Michael Defoin Pratel (Amadeus), in a CIFRE PhD project with Amadeus

Semi-supervised consensus clustering, also called semi-supervised ensemble clustering, is a recently emerged technique that integrates prior knowledge into consensus clustering in order to improve the quality of the clustering result. In this article 23, we propose a novel semi-supervised consensus clustering algorithm extending the previous work on the MultiCons multiple consensus clustering approach. Using a closed pattern mining technique, the proposed Semi-MultiCons algorithm generates a recommended consensus solution with a relevant inferred number of clusters, based on ensemble members with different numbers of clusters and on pairwise constraints. Compared with other semi-supervised and/or consensus clustering approaches, Semi-MultiCons does not require the number of generated clusters as an input parameter, and is able to alleviate the widely reported negative effect related to the integration of constraints into clustering. The experimental results demonstrate that the proposed method outperforms state-of-the-art semi-supervised consensus clustering algorithms.
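For readers unfamiliar with closed patterns, the following brute-force sketch enumerates the closed itemsets of a tiny transaction set: an itemset is closed when no strict superset has the same support. It only illustrates the definition; it is not the mining procedure used by Semi-MultiCons, and the toy transactions are hypothetical (in a consensus clustering setting, one could think of items as base clusters and transactions as instances).

```python
from itertools import combinations

def support(itemset, transactions):
    """Number of transactions that contain every item of the itemset."""
    return sum(1 for t in transactions if itemset <= t)

def closed_itemsets(transactions, min_support=1):
    """Brute-force search, exponential in the number of items (toy sizes only)."""
    items = sorted(set().union(*transactions))
    frequent = {}
    for size in range(1, len(items) + 1):
        for combo in combinations(items, size):
            s = support(frozenset(combo), transactions)
            if s >= min_support:
                frequent[frozenset(combo)] = s
    closed = {}
    for itemset, s in frequent.items():
        # Closed: no strict superset reaches the same support.
        if not any(itemset < other and s == frequent[other] for other in frequent):
            closed[itemset] = s
    return closed

transactions = [frozenset("abc"), frozenset("ab"), frozenset("ac"), frozenset("a")]
patterns = closed_itemsets(transactions)
```

Here `{b}` is not closed because `{a, b}` has the same support, so closed patterns give a compact summary of which groups of items always occur together.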

7.1.6 Dimension-Grouped Mixed Membership Models for Multivariate Categorical Data

Participants: Elena Erosheva.

Keywords: Bayesian estimation, grant peer review, inter-rater reliability, maximum likelihood estimation, measurement, mixed-effects models

Collaborations: Yuqi Gu (Columbia University), Gongjun Xu (University of Michigan), David B. Dunson (Duke University)

Mixed Membership Models (MMMs) are a popular family of latent structure models for complex multivariate data. Instead of forcing each subject to belong to a single cluster, MMMs incorporate a vector of subject-specific weights characterizing partial membership across clusters. With this flexibility come challenges in uniquely identifying, estimating, and interpreting the parameters. In 61, we propose a new class of Dimension-Grouped MMMs (Gro-Ms) for multivariate categorical data, which improve parsimony and interpretability. In Gro-Ms, observed variables are partitioned into groups such that the latent membership is constant for variables within a group but can differ across groups. Traditional latent class models are obtained when all variables are in one group, while traditional MMMs are obtained when each variable is in its own group. The new model corresponds to a novel decomposition of probability tensors. Theoretically, we derive transparent identifiability conditions for both the unknown grouping structure and model parameters in general settings. Methodologically, we propose a Bayesian approach for Dirichlet Gro-Ms to infer the variable grouping structure and estimate model parameters. Simulation results demonstrate good computational performance and empirically confirm the identifiability results. We illustrate the new methodology through an application to a functional disability dataset.
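The grouping idea can be sketched as a generative procedure: each subject draws membership weights, each group of variables draws one latent class from these weights, and all variables of a group are generated from that class. The function names, the Dirichlet prior parameter `alpha` and the toy response distributions below are assumptions made for illustration only.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw from a Dirichlet distribution by normalising independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def sample_subject(groups, class_probs, alpha, rng):
    """Sample one subject; the latent class is shared within each variable group.

    groups[j] is the group index of variable j;
    class_probs[k][j] is the categorical distribution of variable j under class k.
    """
    weights = sample_dirichlet(alpha, rng)
    n_groups = max(groups) + 1
    group_class = [rng.choices(range(len(alpha)), weights=weights)[0]
                   for _ in range(n_groups)]
    responses = []
    for j, g in enumerate(groups):
        probs = class_probs[group_class[g]][j]
        responses.append(rng.choices(range(len(probs)), weights=probs)[0])
    return group_class, responses

rng = random.Random(0)
# Degenerate toy case: class 0 always answers 0, class 1 always answers 1.
class_probs = [[[1.0, 0.0]] * 4, [[0.0, 1.0]] * 4]
group_class, responses = sample_subject([0, 0, 1, 1], class_probs, [1.0, 1.0], rng)
```

Setting `groups = [0, 0, 0, 0]` recovers a latent class model, while `groups = [0, 1, 2, 3]` recovers a traditional MMM, matching the two limiting cases described above.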

7.1.7 Tensor decomposition for learning Gaussian mixtures from moments

Participants: Pierre-Alexandre Mattei.

Keywords: model-based clustering, tensor decomposition, method of moments

Collaborations: Rima Khouja, Bernard Mourrain (Inria Sophia-Antipolis, AROMATH team)

In 16, we consider the problem of estimating Gaussian mixture models. As an alternative to maximum likelihood, our focus is on the method of moments. More specifically, we investigate symmetric tensor decomposition methods, where the tensor is built from empirical moments of the data distribution. We consider identifiable tensors, which have a unique decomposition, showing that moment tensors built from spherical Gaussian mixtures have this property. We prove that symmetric tensors with interpolation degree strictly less than half their order are identifiable and we present an algorithm, based on simple linear algebra operations, to compute their decomposition. Illustrative experiments show the impact of the tensor decomposition method for recovering Gaussian mixtures, in comparison with other state-of-the-art approaches.
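To see why moments lead to a symmetric tensor decomposition problem, consider the simplest case of a spherical mixture with a common variance. The notation below (weights, means, common variance) is an assumption made for this illustration; the higher-order moment tensors actually used in 16 are not reproduced here.

```latex
\begin{aligned}
p(x) &= \sum_{i=1}^{r} \omega_i \, \mathcal{N}\!\left(x \mid \mu_i, \sigma^2 I_d\right), \\
\mathbb{E}[x] &= \sum_{i=1}^{r} \omega_i \, \mu_i, \\
\mathbb{E}[x \otimes x] &= \sum_{i=1}^{r} \omega_i \, \mu_i \otimes \mu_i + \sigma^2 I_d .
\end{aligned}
```

Once the isotropic term is removed, the second-order moment is a sum of r symmetric rank-one terms that carry the weights and the means. A matrix decomposition of this kind is not unique in general, which is one reason why higher-order moment tensors, for which identifiability can hold, are of interest.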

7.1.8 Dynamic Co-Clustering for Pharmacovigilance

Participants: Charles Bouveyron, Marco Corneli, Giulia Marchello.

Keywords: generative models, dynamic co-clustering, count data, pharmacovigilance

Collaborations: Audrey Fresse (Centre de Pharmacovigilance, CHU de Nice)

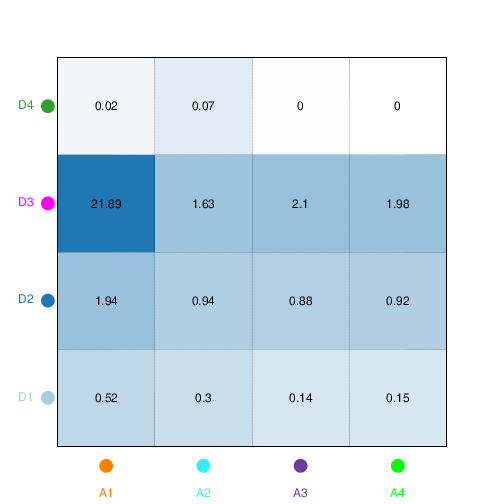

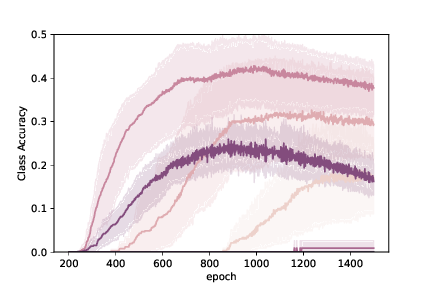

We consider in 17 the problem of co-clustering count matrices with a high level of missing values that may evolve in time. We introduce a generative model, named dynamic latent block model (dLBM), which extends the classical binary latent block model (LBM) to the dynamic case. The time-dependent count data are modeled via non-homogeneous Poisson processes (NHPPs). Continuous time is handled by a partition of the whole considered time period, with the interaction counts being aggregated on the time intervals of this partition. In this way, we obtain a sequence of static matrices that allows us to identify meaningful time clusters. Model inference is done using a SEM-Gibbs algorithm and the ICL criterion is used for model selection. Numerical experiments on simulated data highlight the main features of the proposed approach and show the interest of dLBM with respect to related works. An application to an adverse drug reaction (ADR) dataset, obtained thanks to the collaboration with the Regional Center of Pharmacovigilance (RCPV) of Nice (France), is also proposed. One of the missions of RCPVs is safety signal detection. However, the current expert detection of safety signals, despite being unavoidable, has the disadvantage of being incomplete due to the workload it represents. For this reason, developing automated methods of safety signal detection is currently a major issue in pharmacovigilance. The application of dLBM to this dataset allowed us to extract meaningful patterns for medical authorities. In particular, dLBM identifies 7 drug clusters, 10 ADR clusters and 6 time clusters. The clusters identified by the algorithm are coherent with previous knowledge and adequately represent the variety of drugs present in the dataset. 
Moreover, an in-depth analysis of the clusters found by the model revealed that dLBM correctly detected the three drugs that gave rise to the health scandals that took place between 2010 and 2020, demonstrating its potential as a routine tool in pharmacovigilance. Figure 3 illustrates this work.

Figure 3

Evolution of the relation between the drug clusters and the ADR clusters over time. Each color corresponds to a different cluster of adverse drug reaction.
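The aggregation of continuous-time interactions over a partition of the observation period can be sketched as follows. The event format `(row, col, time)` and the function name are hypothetical; the sketch only shows how a sequence of static count matrices is obtained, not the inference of dLBM.

```python
from bisect import bisect_right

def aggregate_counts(events, breakpoints, n_rows, n_cols):
    """Turn timestamped interactions (row, col, time) into one count matrix
    per time interval.

    breakpoints = [t_0, t_1, ..., t_U] defines the intervals [t_{u-1}, t_u).
    """
    n_intervals = len(breakpoints) - 1
    counts = [[[0] * n_cols for _ in range(n_rows)] for _ in range(n_intervals)]
    for row, col, time in events:
        u = bisect_right(breakpoints, time) - 1
        if 0 <= u < n_intervals:  # ignore events outside the observation window
            counts[u][row][col] += 1
    return counts

events = [(0, 1, 0.2), (0, 1, 0.7), (1, 0, 1.5), (0, 1, 1.9)]
counts = aggregate_counts(events, [0.0, 1.0, 2.0], n_rows=2, n_cols=2)
```

Each `counts[u]` is a static rows-by-columns matrix (for instance drugs by ADRs), so time intervals with similar matrices can then be grouped into time clusters.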

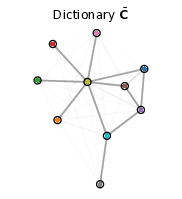

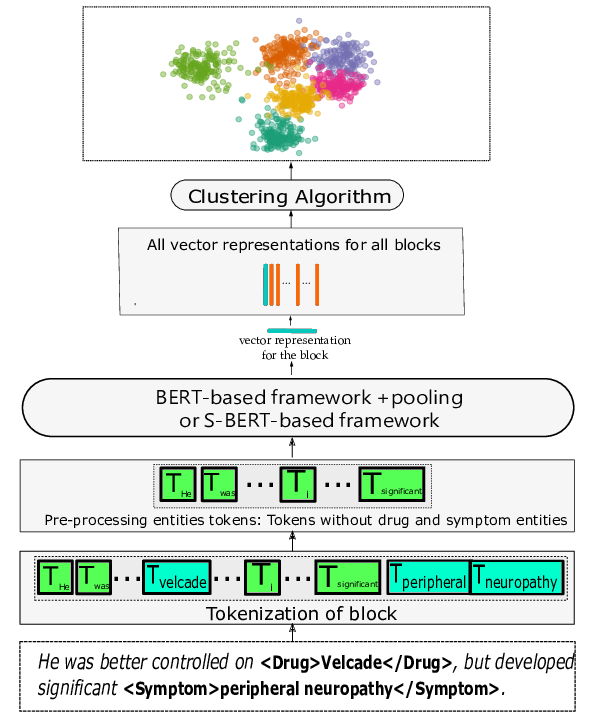

7.1.9 Embedded Topics in the Stochastic Block Model

Participants: Charles Bouveyron, Rémi Boutin, Pierre Latouche.

Keywords: generative models, clustering, networks, text, topic modeling

Collaborations: service politique du journal Le Monde

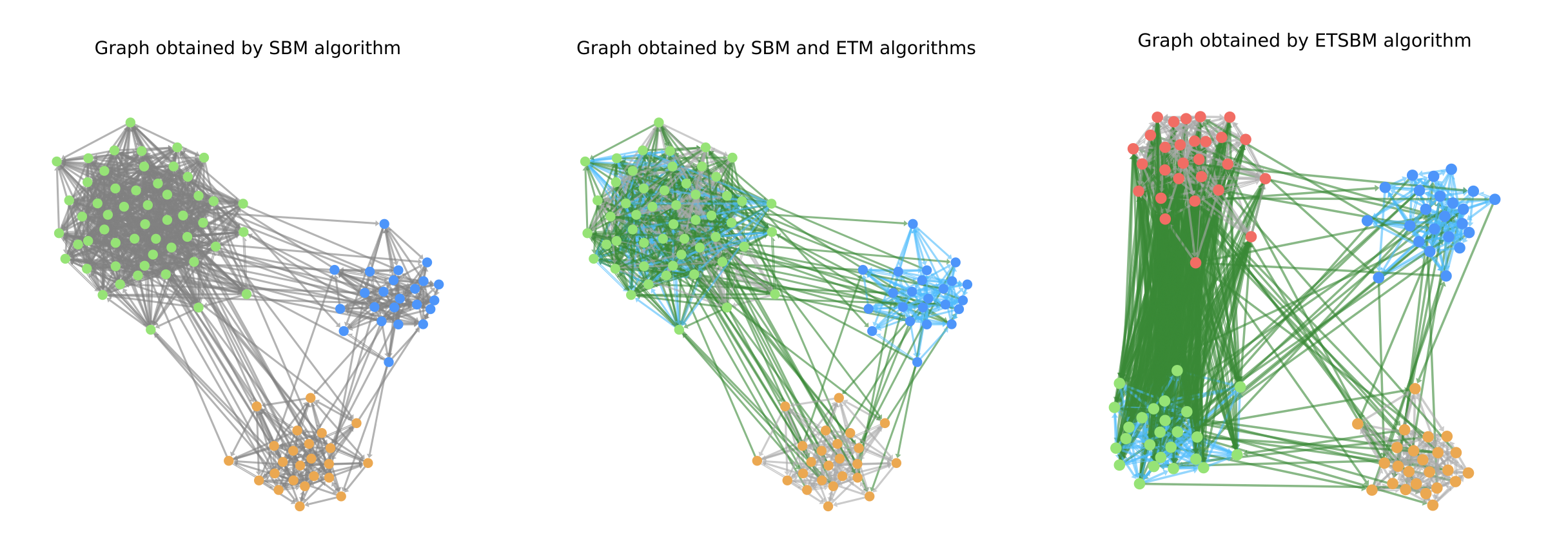

Communication networks such as emails or social networks are now ubiquitous and their analysis has become a strategic field. In many applications, the goal is to automatically extract relevant information by looking at the nodes and their connections. Unfortunately, most of the existing methods focus on analysing the presence or absence of edges, and textual data is often discarded. However, all communication networks actually come with textual data on the edges. In order to take this specificity into account, we consider in 56 networks for which two nodes are linked if and only if they share textual data. We introduce ETSBM, a deep latent variable model that handles embedded topics, to simultaneously cluster the nodes and model the topics used between the different clusters. ETSBM extends both the stochastic block model (SBM) and the embedded topic model (ETM), which are core models for studying networks and corpora, respectively. The inference is done using a variational-Bayes expectation-maximisation algorithm combined with stochastic gradient descent. The methodology is evaluated on synthetic data and on a real-world dataset.

Figure 4

Clustering on a simulated network with SBM (left), SBM+ETM (center) and ETSBM (right).
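As a reminder of the network part of the model, here is a minimal sampler for a plain directed SBM: cluster labels are drawn first, then edges are drawn independently with a probability that depends only on the clusters of the two endpoints. The function and parameter names are illustrative assumptions, and the textual part (topics on the edges) of ETSBM is deliberately left out.

```python
import random

def sample_sbm(n_nodes, proportions, block_probs, rng):
    """Sample a directed SBM: cluster labels, then independent Bernoulli edges."""
    clusters = rng.choices(range(len(proportions)), weights=proportions, k=n_nodes)
    edges = []
    for i in range(n_nodes):
        for j in range(n_nodes):
            if i != j and rng.random() < block_probs[clusters[i]][clusters[j]]:
                edges.append((i, j))
    return clusters, edges

rng = random.Random(0)
# Toy case: edges only inside clusters (probability 1 within, 0 between).
clusters, edges = sample_sbm(10, [0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]], rng)
```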

7.2 Understanding (deep) learning models

7.2.1 Explainability as statistical inference

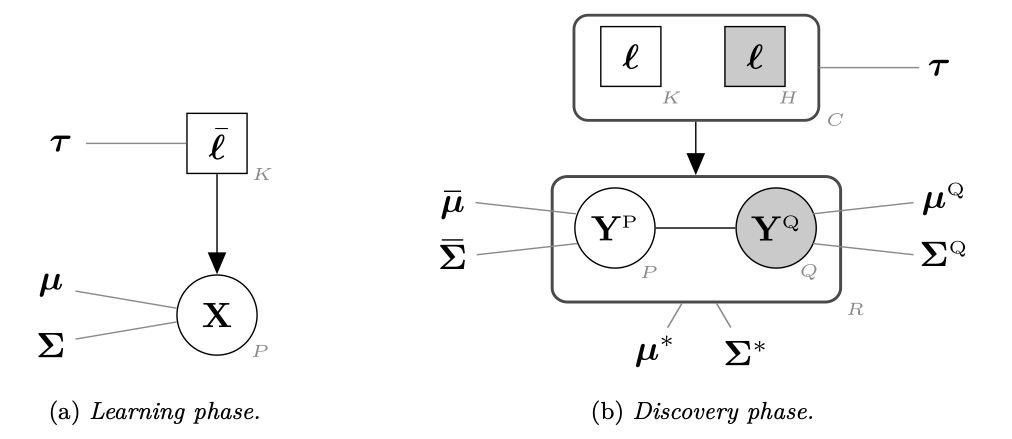

Participants: Hugo Senetaire, Damien Garreau, Pierre-Alexandre Mattei

Keywords: Interpretability, Human and AI, Explainability, latent variable models

Collaborations: Jes Frellsen (Technical University of Denmark)

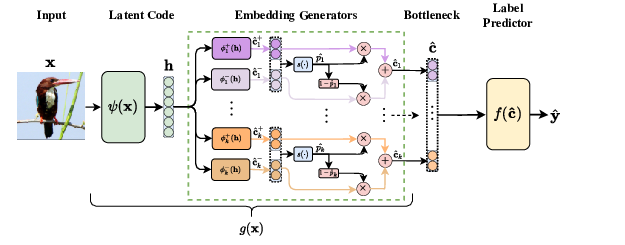

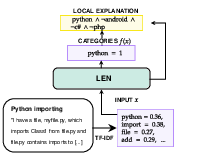

A wide variety of model explanation approaches have been proposed in recent years, all guided by very different rationales and heuristics. In 69, we take a new route and cast interpretability as a statistical inference problem. We propose a general deep probabilistic model designed to produce interpretable predictions (see fig. 5). The model’s parameters can be learned via maximum likelihood, and the method can be adapted to any predictor network architecture and any type of prediction problem. Our method is a case of amortized interpretability models, where a neural network is used as a selector to allow for fast interpretation at inference time. Several popular interpretability methods are shown to be particular cases of regularized maximum likelihood for our general model. We propose new datasets with ground truth selection which allow for the evaluation of the feature importance map. Using these datasets, we show experimentally that using multiple imputation provides more reasonable interpretations.

Figure 5

7.2.2 Concept Embedding Models

Participants: Gabriele Ciravegna, Frederic Precioso

Keywords: Deep Learning, Interpretability, Human and AI, Concept-based Explanations

Collaborations: Mateo Espinosa Zarlenga, Pietro Barbiero, Zohreh Shams, Adrian Weller, Pietro Lio, Mateja Jamnik (University of Cambridge), Francesco Giannini, Michelangelo Diligenti, Stefano Melacci (Università di Siena), Giuseppe Marra, (Katholieke Universiteit Leuven)

While any child can explain what an “apple” is by enumerating its characteristics, deep neural networks (DNNs) fail to explain what they learn in human-understandable terms despite their high prediction accuracy. This accuracy-vs-interpretability trade-off has become a major concern as high-performing DNNs become commonplace in practice, thus questioning the ethical and legal ramifications of their deployment. Concept bottleneck models (CBMs) aim at replacing “black-box” DNNs by first learning to predict a set of concepts, that is, “interpretable” high-level units of information (e.g., “colour” or “shape”) provided at training time, and then using these concepts to learn a downstream classification task. Predicting tasks as a function of concepts engenders user trust by allowing predictions to be explained in terms of concepts and by supporting human interventions, where at test-time an expert can correct a mis-predicted concept, possibly changing the CBM's output. That said, concept bottlenecks may impair task accuracy, especially when concept labels do not contain all the necessary information for accurately predicting a downstream task (i.e., they form an “incomplete” representation of the task). In principle, extending a CBM's bottleneck with a set of unsupervised neurons may improve task accuracy. However, such a hybrid approach not only significantly hinders the performance of concept interventions, but it also affects the interpretability of the learnt bottleneck, thus undermining user trust.

Figure 6

In 45, we propose Concept Embedding Models (CEMs, see fig. 6), a novel concept bottleneck model which overcomes the current accuracy-vs-interpretability trade-off found in concept-incomplete settings. Furthermore, we introduce two new metrics for evaluating concept representations and use them to help understand why our approach circumvents the limits found in the current state-of-the-art CBMs. Our experiments provide significant evidence in favour of CEM’s accuracy/interpretability and, consequently, in favour of its real-world deployment. In particular, CEMs offer: (1) state-of-the-art task accuracy, (2) interpretable concept representations aligned with human ground truths, (3) effective interventions on learnt concepts, and (4) robustness to incorrect concept interventions. While in practice CBMs require carefully selected concept annotations during training, which can be as expensive as task labels to obtain, our results suggest that CEM is more efficient in concept-incomplete settings, requiring fewer concept annotations and being more applicable to real-world tasks. While there is room for improvement in both concept alignment and task accuracy in challenging benchmarks such as CUB or CelebA, as well as in resource utilization during inference/training, our results indicate that CEM advances the state-of-the-art for the accuracy-vs-interpretability trade-off, making progress on a crucial concern in explainable AI.
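The notion of a test-time concept intervention can be illustrated on a plain concept bottleneck model (not a CEM). In this minimal sketch, both stages are logistic units with hand-picked, hypothetical weights; the point is only that the task prediction depends on the input through the concepts, so correcting a concept changes the output.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_concepts(x, concept_weights):
    """First stage: one logistic unit per concept."""
    return [sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in concept_weights]

def predict_task(concepts, task_weights):
    """Second stage: the label depends on the input only through the concepts."""
    return sigmoid(sum(w * c for w, c in zip(task_weights, concepts)))

def intervene(concepts, corrections):
    """Replace selected predicted concepts by expert-provided values."""
    return [corrections.get(i, c) for i, c in enumerate(concepts)]

x = [1.0, -1.0]
concepts = predict_concepts(x, [[2.0, 0.0], [0.0, 2.0]])
before = predict_task(concepts, [1.0, 3.0])
# An expert states that the second concept is actually present.
after = predict_task(intervene(concepts, {1: 1.0}), [1.0, 3.0])
```

In this toy example the intervention raises the task score, since the corrected concept has a positive task weight.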

7.2.3 How to scale hyperparameters for quickshift image segmentation

Participants: Damien Garreau

Keywords: Computer vision, clustering

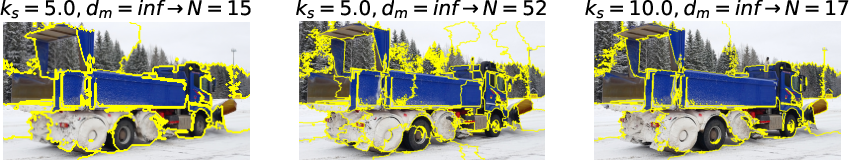

Quickshift is a popular algorithm for image segmentation, used as a preprocessing step in many applications. Unfortunately, it is quite challenging to understand the hyperparameters’ influence on the number and shape of superpixels produced by the method. In 60, we study theoretically a slightly modified version of the quickshift algorithm, with a particular emphasis on homogeneous image patches with i.i.d. pixel noise and sharp boundaries between such patches. Leveraging this analysis, we derive a simple heuristic to scale quickshift hyperparameters with respect to the image size, which we check empirically (see fig. 7).

Figure 7

Using our heuristic, we can scale hyperparameters with respect to the size of the image. Left panel: original image size, 15 superpixels obtained with kernel size and infinite maximal distance; Middle panel: same hyperparameters, image upscaled by a factor of 2, yielding approximately 4 times more superpixels; Right panel: multiplying the kernel size by 2, we end up with roughly the same number of superpixels as before.
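The arithmetic behind the caption of Figure 7 can be written down as a small sketch. The quadratic model for the number of superpixels is an assumption that is merely consistent with the three panels (infinite maximal distance); it is not the theoretical result of 60, and the function names are hypothetical.

```python
def scaled_kernel_size(kernel_size, scale_factor):
    """Heuristic from the caption: grow the kernel size with the image side length."""
    return kernel_size * scale_factor

def expected_superpixel_ratio(scale_factor, kernel_factor=1.0):
    """Rough ratio of superpixel counts, assuming the count grows like
    (image side / kernel size) ** 2."""
    return (scale_factor / kernel_factor) ** 2
```

With these assumptions, `expected_superpixel_ratio(2)` gives the factor of about 4 seen in the middle panel, and `expected_superpixel_ratio(2, 2)` gives the unchanged count of the right panel.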

7.2.4 Interpretable Prediction of Post-Infarct Ventricular Arrhythmia using Graph Convolutional Network

Participants: Damien Garreau

Collaborations: Buntheng Ly, Sonny Finsterbach, Marta Nuñez-Garcia, Pierre Jaïs, Hubert Cochet, Maxime Sermesant

Keywords: Interpretability, graph neural networks, Ventricular Arrhythmia

Heterogeneity of left ventricular (LV) myocardium infarction scar plays an important role as anatomical substrate in the mechanism of ventricular arrhythmia (VA). LV myocardium thinning, as observed on cardiac computed tomography (CT), has been shown to correlate with LV myocardial scar and with abnormal electrical activity. In 25, we propose an automatic pipeline for VA prediction, based on CT images, using a Graph Convolutional Network (GCN). The pipeline includes the segmentation of LV masks from the input CT image, the short-axis orientation reformatting, LV myocardium thickness computation and mid-wall surface mesh generation. An average LV mesh was computed and fitted to every patient in order to use the same number of vertices with point-to-point correspondence. The GCN model was trained using the thickness value as the node feature and the atlas edges as the adjacency matrix. This allows the model to process the data on the 3D patient anatomy and bypass the “grid” structure limitation of traditional convolutional neural networks. The model was trained and evaluated on a dataset of 600 patients (27% VA), using 451 (3/4) and 149 (1/4) patients as training and testing data, respectively. The evaluation results showed that the graph model (81% accuracy) outperformed the clinical baseline (67%), the left ventricular ejection fraction, and the scar size (73%). We further studied the interpretability of the trained model using LIME and integrated gradients and found promising results on the personalised discovery of the specific regions within the infarct area related to arrhythmogenesis.
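To illustrate how a graph convolution uses a scalar node feature (such as thickness) together with mesh edges, here is a minimal single-layer sketch. It assumes the widely used symmetric normalisation with self-loops and a ReLU activation; the actual architecture of 25 may differ, and the weight value is hypothetical.

```python
import math

def gcn_layer(features, edges, weight, bias=0.0):
    """One graph-convolution step on scalar node features with ReLU activation.

    Uses the symmetric normalisation D^{-1/2} (A + I) D^{-1/2} with self-loops.
    """
    n = len(features)
    neighbours = [{i} for i in range(n)]  # self-loops
    for i, j in edges:
        neighbours[i].add(j)
        neighbours[j].add(i)
    degree = [len(nb) for nb in neighbours]
    out = []
    for i in range(n):
        agg = sum(features[j] / math.sqrt(degree[i] * degree[j])
                  for j in neighbours[i])
        out.append(max(0.0, weight * agg + bias))
    return out

# Toy mesh: a triangle whose three vertices share the same thickness value.
smoothed = gcn_layer([2.0, 2.0, 2.0], [(0, 1), (1, 2), (0, 2)], weight=0.5)
```

Because each vertex only aggregates values from its mesh neighbours, the computation follows the surface of the anatomy rather than a regular pixel grid.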

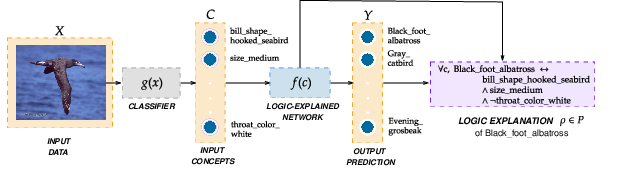

7.2.5 Logic Explained Networks

Participants: Gabriele Ciravegna, Marco Gori

Keywords: XAI, Explainability-by-design, Concept-based Explanations, Human and AI

Collaborations: Pietro Barbiero, Pietro Lió (University of Cambridge), Francesco Giannini, Marco Maggini, Stefano Melacci (Università di Siena)

In 12 we present a unified framework for XAI allowing the design of a family of neural models, the Logic Explained Networks (LENs, see fig. 8), which are trained to solve-and-explain a categorical learning problem integrating elements from deep learning and logic. Unlike vanilla neural architectures, LENs can be directly interpreted by means of a set of first-order logic (FOL) formulas. To implement such a property, LENs require their inputs to represent the activation scores of human-understandable concepts. Then, specifically designed learning objectives allow LENs to make predictions in a way that is well suited for providing FOL-based explanations that involve the input concepts. To reach this goal, LENs leverage parsimony criteria aimed at keeping their structure simple. There are several different computational pipelines in which a LEN can be configured, depending on the properties of the considered problem and on other potential experimental constraints. For example, LENs can be used to directly classify data in an explainable manner, or to explain another black-box neural classifier. Moreover, according to the user expectations, different kinds of logic rules may be provided.

Figure 8

We investigate three different use-cases comparing different ways of implementing the LEN models. While most of the emphasis of this paper is on supervised classification, we also show how LENs can be leveraged in fully unsupervised settings. Additional human priors could eventually be incorporated into the learning process and into the architecture and, following previous works, what we propose can be trivially extended to semi-supervised learning. Our work contributes to the XAI research field in the following ways: (1) It generalizes existing neural methods for solving and explaining categorical learning problems into a broad family of neural networks, i.e., the Logic Explained Networks (LENs). In particular, we extend the use of networks also to directly provide interpretable classifications, and we introduce two other main instances of LENs, i.e. ReLU networks and networks. (2) It describes how users may interconnect LENs in the classification task under investigation, and how to express a set of preferences to get one or more customized explanations. (3) It shows how to get a wide range of logic-based explanations, and how logic formulas can be restricted in their scope, working at different levels of granularity (explaining a single sample, a subset of the available data, etc.). (4) It reports experimental results using three out-of-the-box preset LENs showing how they may generalize better in terms of model accuracy than established white-box models such as decision trees on complex Boolean tasks. (5) It advertises our public implementation of LENs in a GitHub repository with extensive documentation about LEN models, implementing different trade-offs between interpretability, explainability and accuracy.
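The idea of a logic explanation over input concepts, at the level of a single sample or of a whole class, can be sketched as follows. This is a deliberately naive illustration with hypothetical function names: it builds one conjunction per sample and takes the disjunction over positively predicted samples, without the parsimony-driven simplification that LENs rely on.

```python
def local_explanation(concepts, names):
    """Conjunction describing one sample: each concept appears as itself or negated."""
    return " & ".join(n if c else f"~{n}" for c, n in zip(concepts, names))

def class_explanation(samples, predictions, names):
    """Disjunction of the distinct local explanations of positively predicted samples."""
    terms = []
    for concepts, predicted in zip(samples, predictions):
        term = local_explanation(concepts, names)
        if predicted and term not in terms:
            terms.append(term)
    return " | ".join(f"({t})" for t in terms)

# Toy Boolean task (XOR) on two binarised concepts "a" and "b".
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
predictions = [0, 1, 1, 0]
formula = class_explanation(samples, predictions, ["a", "b"])
```

On this toy task the class-level formula is `(~a & b) | (a & ~b)`, while `local_explanation` gives the explanation of a single sample.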

7.2.6 Extending Logic Explained Network to Text Classification

Participants: Gabriele Ciravegna

Keywords: XAI, Logic Explanation, Text Classification

Collaborations: Rishabh Jain, Pietro Barbiero, Pietro Lio (University of Cambridge), Francesco Giannini (Università di Siena), Davide Buffelli (Università di Padova)

The majority of the data found in an organization tends to be unstructured (with some estimates being over 80%). Unstructured data tends to be text heavy. Sifting and sorting this data by hand requires a lot of effort and time. Text classification is a useful way of automating this process, with applications ranging from small tasks (e.g., spam-email classification), to safety-critical ones (e.g., legal-document risk assessment). The development of Deep Neural Networks has enabled the creation of high-accuracy text classifiers, with state-of-the-art models leveraging different forms of architectures, like RNNs (GRU, LSTM) or Transformer models. However, these architectures are considered as black-box models, since their decision processes are not easy to explain and depend on a very large set of parameters. In order to shed light on neural models' decision processes, eXplainable Artificial Intelligence (XAI) techniques attempt to understand text attribution to certain classes, for instance by using white-box models. Interpretable-by-design models engender higher trust in human users with respect to explanation methods for black-boxes, at the cost, however, of lower prediction performance. Previous works introduced the Logic Explained Network (LEN), an explainable-by-design neural network combining the interpretability of white-box models with the high performance of neural networks. However, the authors only compared LENs with white-box models and on tabular/computer vision tasks.

Figure 9

For these reasons, in 37 we apply an improved version of the LEN, called LENp, to the text classification problem (see fig. 9), and we compare it with LIME, a standard and very well-known explanation method. LEN and LIME provide different kinds of explanations, respectively FOL formulae and feature-importance vectors, and we assess their user-friendliness by means of a user study. As an evaluation benchmark, we considered Multi-Label Text Classification for the tag classification task on the “StackSample: 10% of Stack Overflow Q&A” dataset. The paper aims to apply LENs to the text classification problem and to test the generated explanations. More specifically, its purposes are to: (1) improve the LEN explanation algorithm with LENp; (2) confirm the small performance drop when employing LENs, w.r.t. using a black-box model; (3) compare the faithfulness and the sensitivity of the explanations provided by LENs and LIME; (4) assess the user-friendliness of the two kinds of explanations.

7.2.7 Foveated Neural Computation

Participants: Alessandro Betti, Marco Gori

Collaborations: Matteo Tiezzi, Simone Marullo, Enrico Meloni, Lapo Faggi, Stefano Melacci

Keywords: Foveated Convolutional Layers, Convolutional Neural Networks, Visual Attention.

In 43, we introduce the notion of Foveated Convolutional Layer (FCL), which formalizes the idea of location-dependent convolutions with foveated processing, i.e., fine-grained processing in a given focused area and coarser processing in the peripheral regions. We show how the idea of foveated computations can be exploited not only as a filtering mechanism, but also as a means to speed up inference with respect to classic convolutional layers, allowing the user to select the appropriate trade-off between level of detail and computational burden. FCLs can be stacked into neural architectures and we evaluate them in several tasks, showing how they efficiently handle the information in the peripheral regions, eventually avoiding the development of misleading biases. When integrated with a model of human attention, FCL-based networks naturally implement a foveated visual system that guides the attention toward the locations of interest, as we experimentally analyze on a stream of visual stimuli.

7.2.8 Continual Learning through Hamilton Equations

Participants: Alessandro Betti, Marco Gori

Collaborations: Lapo Faggi, Matteo Tiezzi, Simone Marullo, Enrico Meloni, Stefano Melacci

Keywords: Continual Learning, Optimal Control, Hamilton-Jacobi.

In 35 we consider an entirely new perspective, rethinking the methodologies used to tackle continual learning instead of re-adapting offline-oriented optimization. In particular, we propose a novel method to frame continual and online learning within the framework of optimal control. The proposed formulation leads to a novel interpretation of learning dynamics in terms of Hamilton equations. As a case study for the theory, we consider the problem of unsupervised optical flow estimation from a video stream. An experimental proof of concept for this learning task is discussed with the purpose of illustrating the soundness of the proposed approach and opening the way to further research in this direction.
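For reference, the generic form of Hamilton equations in optimal control is recalled below. The symbols (state x, control u, costate p, running cost, dynamics f) are standard textbook notation chosen for this illustration and are not taken from 35, where the state would typically gather the parameters of the learner.

```latex
\begin{aligned}
\dot{x}(t) &= f\big(x(t), u(t), t\big), \qquad
J(u) = \int_0^T \ell\big(x(t), u(t), t\big)\, dt, \\
H(x, p, u, t) &= p^\top f(x, u, t) + \ell(x, u, t), \\
\dot{x} &= \frac{\partial H}{\partial p}, \qquad
\dot{p} = -\frac{\partial H}{\partial x}, \qquad
u^\star(t) \in \arg\min_{u} H\big(x(t), p(t), u, t\big).
\end{aligned}
```

In the absence of a terminal cost, the costate satisfies the condition p(T) = 0 at the final time, whereas the state is prescribed at the initial time. This mismatch of boundary conditions is one of the difficulties that any online, forward-in-time treatment has to address.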

7.2.9 A free boundary singular transport equation as a formal limit of a discrete dynamical system

Participants: Alessandro Betti

Collaborations: Giovanni Bellettini, Maurizio Paolini

Keywords: PDE, Continuous Open Mancala, Transport Equation.

In 53 we study the continuous version of a hyperbolic rescaling of a discrete game, called open mancala. The resulting PDE turns out to be a singular transport equation, with a forcing term taking values in {0, 1}, and discontinuous in the solution itself. We prove existence and uniqueness of a certain formulation of the problem, based on a nonlocal equation satisfied by the free boundary dividing the region where the forcing is one (active region) and the region where there is no forcing (tail region). Several examples, most notably the Riemann problem, are provided, related to singularity formation. Interestingly, the solution can be obtained by a suitable vertical rearrangement of a multi-function. Furthermore, the PDE admits a Lyapunov functional.

7.2.10 Forward Approximate Solution for Linear Quadratic Tracking

Participants: Alessandro Betti, Marco Gori

Collaborations: Michele Casoni

Keywords: Linear Quadratic Problem, Forward Approximation, Optimal Control.

In 54, we discuss an approximation strategy for solving the Linear Quadratic Tracking problem that is both forward and local in time. We exploit the known form of the value function along with a time reversal transformation that nicely addresses the boundary condition consistency. We provide the results of an experimental investigation with the aim of showing how the proposed solution performs with respect to the optimal solution. Finally, we also show that the proposed solution turns out to be a valid alternative to model predictive control strategies, with a dramatically reduced computational burden.
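The "known form of the value function" can be made explicit in the textbook finite-horizon setting sketched below. The notation (matrices A, B, Q, R, reference signal r, no terminal cost) is an assumption made for this illustration and may differ from the formulation of 54.

```latex
\begin{aligned}
\dot{x} &= A x + B u, \qquad
J(u) = \frac{1}{2} \int_0^T \Big[ (x - r)^\top Q \, (x - r) + u^\top R \, u \Big] dt, \\
V(x, t) &= \frac{1}{2} x^\top P(t)\, x + b(t)^\top x + c(t), \qquad
u^\star = -R^{-1} B^\top \big( P x + b \big), \\
-\dot{P} &= A^\top P + P A - P B R^{-1} B^\top P + Q, \qquad P(T) = 0, \\
-\dot{b} &= \big( A - B R^{-1} B^\top P \big)^\top b - Q r, \qquad b(T) = 0 .
\end{aligned}
```

Both P and b are fixed by conditions at the final time T and are classically integrated backward, which requires knowing the reference signal over the whole horizon. This is what makes a strategy that is forward and local in time attractive.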

7.2.11 Comparing Feature Importance and Rule Extraction for Interpretability on Text Data

Participants: Gianluigi Lopardo, Damien Garreau

Keywords: Interpretability, Explainable Artificial Intelligence, Natural Language Processing

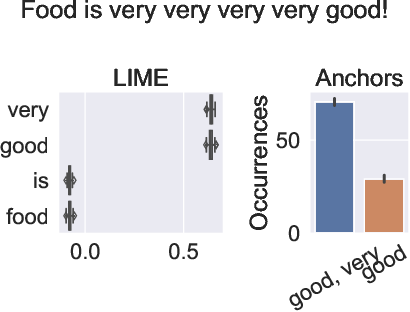

Complex machine learning algorithms are used more and more often in critical tasks involving text data, leading to the development of interpretability methods. Among local methods, two families have emerged: those computing importance scores for each feature and those extracting simple logical rules. In 39 we show that using different methods can lead to unexpectedly different explanations, even when applied to simple models for which we would expect qualitative coincidence, as in Figure 10. To quantify this effect, we propose a new approach to compare explanations produced by different methods.

Figure 10

Making a word disappear from the explanation by adding one occurrence. The classifier is applied when the multiplicities are (left) and (right).

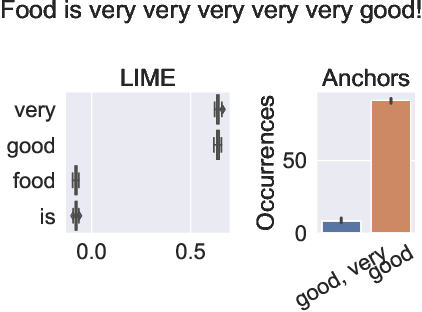

7.2.12 A Sea of Words: An In-Depth Analysis of Anchors for Text Data

Participants: Gianluigi Lopardo, Damien Garreau, Frédéric Precioso

Keywords: Interpretability, Explainable Artificial Intelligence, Natural Language Processing

Anchors (Ribeiro et al., 2018) is a post-hoc, rule-based interpretability method. For text data, it proposes to explain a decision by highlighting a small set of words (an anchor) such that the model to explain has similar outputs when they are present in a document. In 63, we present the first theoretical analysis of Anchors, under the assumption that the search for the best anchor is exhaustive. After formalizing the algorithm for text classification, illustrated in Figure 11, we present explicit results on different classes of models when the preprocessing step is TF-IDF vectorization, including elementary if-then rules and linear classifiers. We then leverage this analysis to gain insights into the behavior of Anchors for any differentiable classifier. For neural networks, we empirically show that the words corresponding to the highest partial derivatives of the model with respect to the input, reweighted by the inverse document frequencies, are selected by Anchors.

Figure 11

An illustration of the algorithm with evaluation function . Each blue dot is an anchor, with coordinate its length and coordinate its value for . Here, and the maximal length of an anchor is (the length of ). In the end, the anchor such that and is selected (red circle).
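The reweighting by inverse document frequencies mentioned above can be illustrated on the simplest case, a linear classifier, where the partial derivative with respect to each TF-IDF coordinate is just the corresponding coefficient. The following is a minimal sketch under stated assumptions, not the procedure analyzed in 63: the smoothed IDF formula is one common convention, and the function names, toy corpus, and coefficients are hypothetical.

```python
import math
from collections import Counter


def inverse_document_frequencies(corpus):
    """Smoothed IDF, log((1 + N) / (1 + df)) + 1 (one common convention, assumed here)."""
    n_docs = len(corpus)
    doc_freq = Counter(word for doc in corpus for word in set(doc.split()))
    return {w: math.log((1 + n_docs) / (1 + df)) + 1.0 for w, df in doc_freq.items()}


def rank_words(document, coefficients, idf):
    """Rank the distinct words of `document` by coefficient * IDF, highest first.

    For a linear model on TF-IDF features, the coefficient of a word is the
    partial derivative of the model with respect to that word's coordinate.
    """
    words = set(document.split())
    scores = {w: coefficients.get(w, 0.0) * idf.get(w, 0.0) for w in words}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical toy corpus and linear coefficients, for illustration only.
corpus = ["good movie", "bad movie", "good plot"]
coefficients = {"good": 1.0, "movie": 1.1, "bad": -2.0, "plot": 0.9}
idf = inverse_document_frequencies(corpus)
ranking = rank_words("good plot", coefficients, idf)
```

In this toy example, "plot" is ranked above "good" even though its coefficient is smaller, because it is rarer in the corpus and therefore has a larger inverse document frequency.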

7.2.13 Learning and Reasoning for Cultural Metadata Quality

Participants: Frédéric Precioso.

Keywords: Deep Learning, Image Recognition, Semantic Web, Knowledge Graph

Collaborations: Anna Bobasheva, Fabien Gandon (Inria)

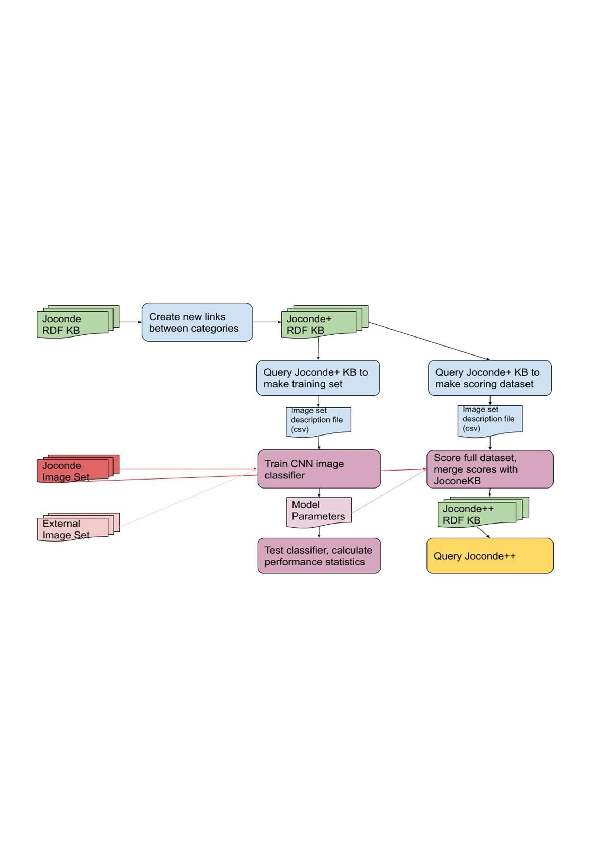

This work 10 combines semantic reasoning and machine learning to create tools that allow curators of visual art collections to identify and correct the annotations of artworks, as well as to improve the relevance of content-based search results in these collections. The research is based on the Joconde database maintained by the French Ministry of Culture, which contains illustrated artwork records from the main French public and private museums representing archeological objects, decorative arts, fine arts, historical and scientific documents, etc. The Joconde database includes semantic metadata that describes properties of the artworks and their content. The developed methods create a data pipeline that processes metadata, trains a Convolutional Neural Network image classification model, makes predictions for the entire collection and expands the metadata to serve as the basis for SPARQL search queries. We developed a set of such queries to identify noise and silence in the human annotations and to search image content with results ranked according to the relevance of the objects, quantified by the prediction score provided by the deep learning model. We also developed methods to discover new contextual relationships between the concepts in the metadata by analyzing the contrast between the concept similarities in Joconde's semantic model and other vocabularies, and we tried to improve the model prediction scores based on the semantic relations. Our results show that cross-fertilization between symbolic AI and machine learning can indeed provide the tools to address the challenges of museum curators' work in describing artwork pieces and searching for relevant images.

Figure 12

Data processing pipeline combining reasoning and learning.

7.2.14 SMACE: A New Method for the Interpretability of Composite Decision Systems

Participants: Gianluigi Lopardo, Damien Garreau, Frédéric Precioso, Greger Ottosson

Keywords: Interpretability, Composite AI, Decision-making

Collaborations: IBM France

Interpretability is a pressing issue for decision systems. Many post hoc methods have been proposed to explain the predictions of a single machine learning model. However, business processes and decision systems are rarely centered around a single model. These systems combine multiple models that produce key predictions, and then apply decision rules to generate the final decision (see Figure 13 for an illustration). To explain such decisions, we propose in 40 the Semi-Model-Agnostic Contextual Explainer (SMACE), a new interpretability method that combines a geometric approach for decision rules with existing interpretability methods for machine learning models to generate an intuitive feature ranking tailored to the end user. We show that established model-agnostic approaches produce poor results on tabular data in this setting, in particular giving the same importance to several features, whereas SMACE can rank them in a meaningful way.

7.3 Adaptive and robust learning

7.3.1 Model-agnostic out-of-distribution detection using combined statistical tests

Participants: Pierre-Alexandre Mattei, Hugo Senetaire, Hugo Schmutz

Collaborations: Jakob Havtorn, Lars Maaløe, Søren Hauberg, Jes Frellsen

Keywords: Anomaly detection, statistical tests

We present simple methods for out-of-distribution detection using a trained generative model. These techniques, based on classical statistical tests, are model-agnostic in the sense that they can be applied to any differentiable generative model. The idea is to combine a classical parametric test (Rao's score test) with the recently introduced typicality test. These two test statistics are both theoretically well-founded and exploit different sources of information: the likelihood for the typicality test, and its gradient for the score test. We show that combining them using Fisher's method leads overall to a more accurate out-of-distribution test. We also discuss the benefits of casting out-of-distribution detection as a statistical testing problem, noting in particular that false positive rate control can be valuable for practical out-of-distribution detection. Despite their simplicity and generality, these methods can be competitive with model-specific out-of-distribution detection algorithms, without any assumptions on the out-distribution.
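As an illustration of the combination step, Fisher's method turns $k$ p-values into the statistic $-2 \sum_i \log p_i$, which follows a chi-square distribution with $2k$ degrees of freedom under the null hypothesis when the p-values are independent. The sketch below is a generic, standard-library implementation, not the code used in this work; the function name is hypothetical, and how the typicality and score p-values are computed is left out.

```python
import math


def fisher_combine(p_values):
    """Combine p-values with Fisher's method (assumes independent tests).

    Returns (statistic, combined_p), where the statistic -2 * sum(log p_i)
    is compared with a chi-square law with 2k degrees of freedom.
    """
    k = len(p_values)
    if k == 0:
        raise ValueError("need at least one p-value")
    if any(not (0.0 < p <= 1.0) for p in p_values):
        raise ValueError("p-values must lie in (0, 1]")
    statistic = -2.0 * sum(math.log(p) for p in p_values)
    # Closed-form chi-square survival function for an even number (2k) of
    # degrees of freedom: exp(-x/2) * sum_{i<k} (x/2)^i / i!
    half = statistic / 2.0
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half / i
        total += term
    return statistic, math.exp(-half) * total


# Two hypothetical p-values, e.g. one per test, combined into a single one.
statistic, combined_p = fisher_combine([0.05, 0.05])
```

For two tests, the combined p-value reduces to $p_1 p_2 (1 - \log(p_1 p_2))$, so two moderately small p-values yield a smaller combined one. Thresholding the combined p-value at a level $\alpha$ gives a test whose false positive rate is controlled at $\alpha$ when the independence assumption holds.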

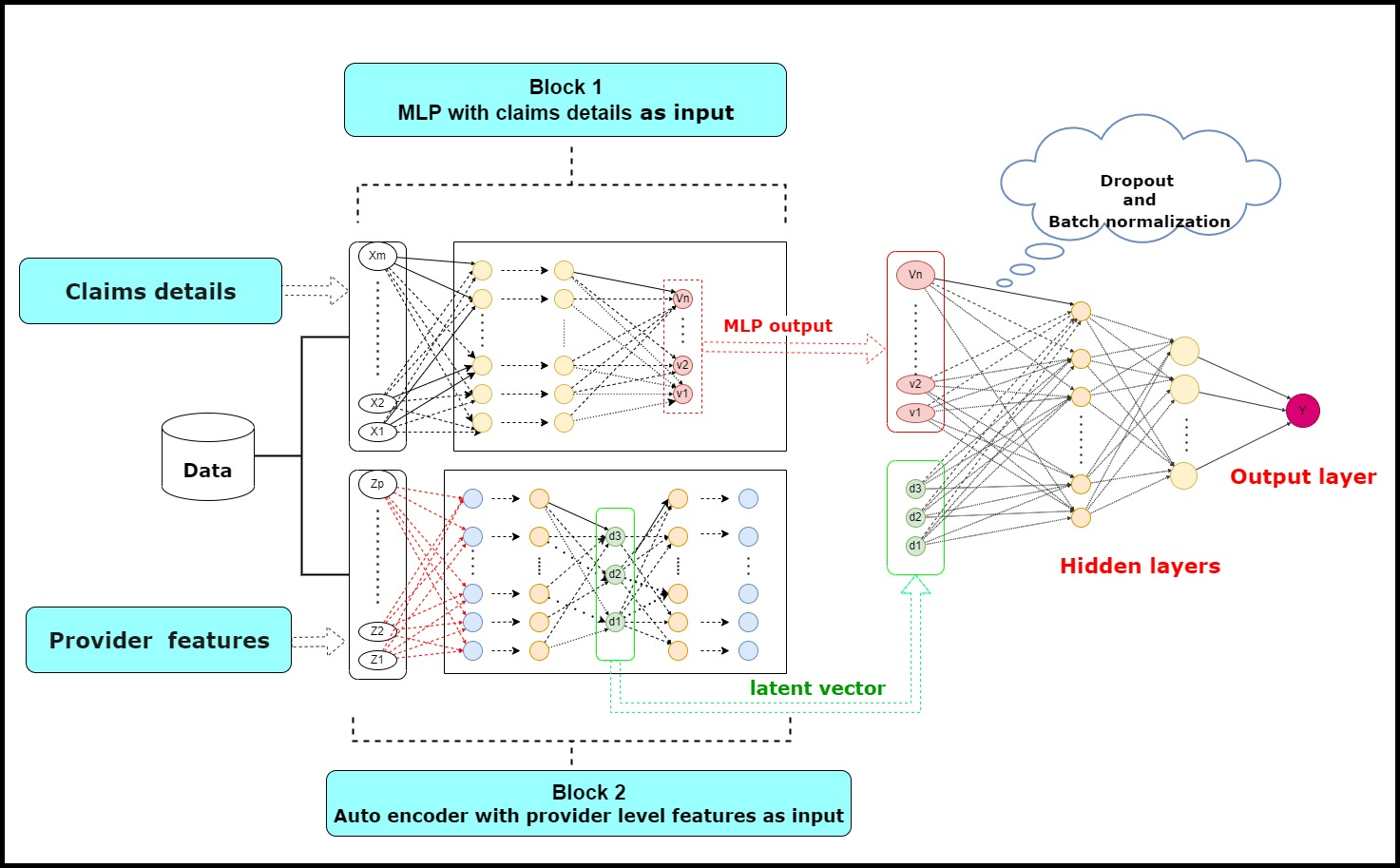

7.3.2 Autoregressive based Drift Detection Method

Participants: Mansour Zoubeirou A Mayaki, Michel Riveill

Keywords: Concept drift detection, Data streams, Auto-regressive model, Machine learning, Deep neural networks

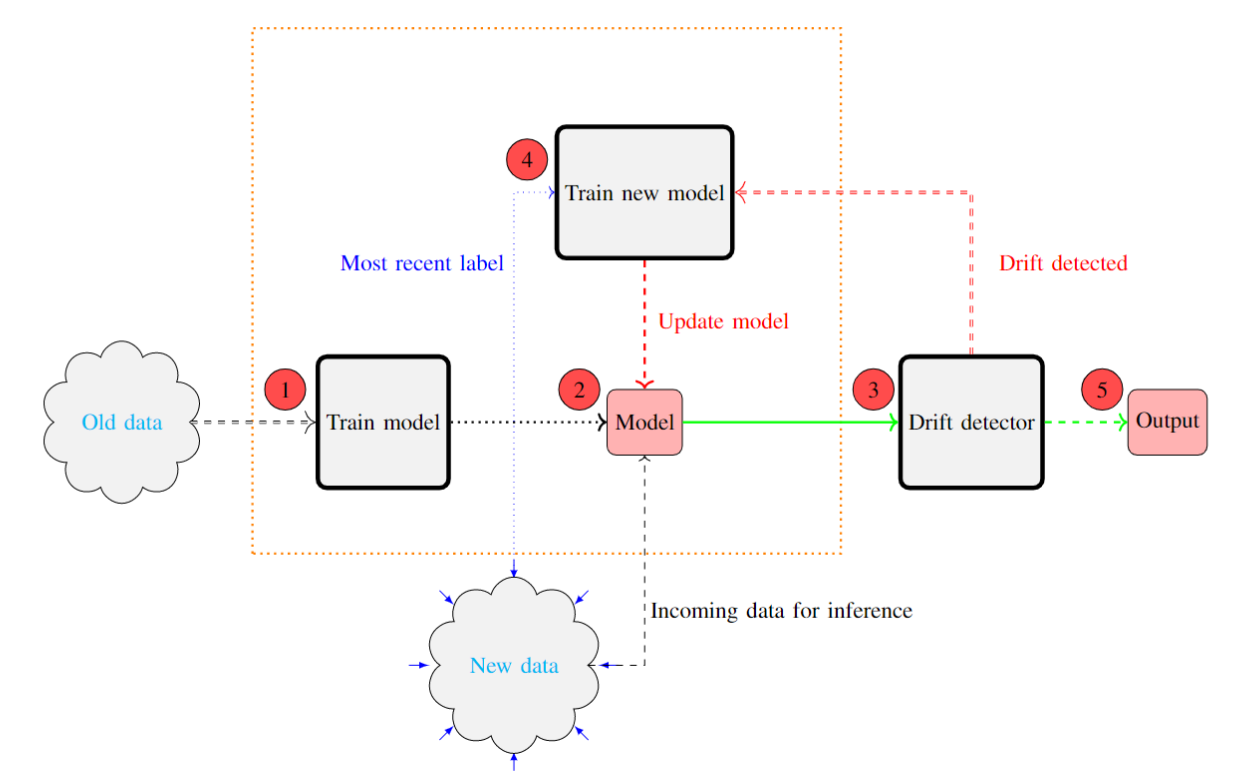

In the classic machine learning framework, models are trained on historical data and used to predict future values. It is assumed that the data distribution does not change over time (stationarity). However, in real-world scenarios, the data generation process changes over time and the model has to adapt to the new incoming data. This phenomenon is known as concept drift and leads to a decrease in the predictive model's performance. We proposed a new concept drift detection method based on autoregressive models, called ADDM 48. This method can be integrated into any machine learning algorithm, from deep neural networks to simple linear regression models. Our results show that this new method outperforms state-of-the-art drift detection methods on both synthetic and real-world data sets. Our approach comes with theoretical guarantees and is empirically effective for detecting various types of concept drift. In addition to the drift detector, we proposed a new method of concept drift adaptation based on the severity of the drift. The architecture and dataflow of ADDM are shown in Figure 14.

Figure 14
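To give a flavor of how an autoregressive model can be used to monitor a stream, the sketch below fits an AR(1) model to a reference window of a model's error values and raises an alarm when several consecutive one-step-ahead residuals are abnormally large. This is a generic illustration and not the ADDM algorithm of 48: the AR order, the residual threshold, the patience rule, and all names and parameter values are assumptions made for this example.

```python
import math
import statistics


def fit_ar1(series):
    """Least-squares fit of x_t = c + phi * x_{t-1} + noise; returns (c, phi, sigma)."""
    x_prev, x_next = series[:-1], series[1:]
    mean_prev = statistics.fmean(x_prev)
    mean_next = statistics.fmean(x_next)
    var_prev = sum((a - mean_prev) ** 2 for a in x_prev)
    cov = sum((a - mean_prev) * (b - mean_next) for a, b in zip(x_prev, x_next))
    phi = cov / var_prev if var_prev > 0 else 0.0
    c = mean_next - phi * mean_prev
    residuals = [b - (c + phi * a) for a, b in zip(x_prev, x_next)]
    return c, phi, statistics.pstdev(residuals)


def detect_drift(errors, reference_size=50, threshold=4.0, patience=3):
    """Return the index at which drift is flagged, or None if no drift is found.

    Drift is flagged once `patience` consecutive residuals exceed
    `threshold` times the residual standard deviation of the reference window.
    """
    c, phi, sigma = fit_ar1(errors[:reference_size])
    sigma = max(sigma, 1e-12)  # guard against a degenerate reference window
    run = 0
    for t in range(reference_size, len(errors)):
        predicted = c + phi * errors[t - 1]
        if abs(errors[t] - predicted) > threshold * sigma:
            run += 1
            if run >= patience:
                return t
        else:
            run = 0
    return None


# Synthetic error streams: a stable one, and one whose level jumps at t = 50.
stable = [0.1 + 0.02 * math.sin(1.7 * t) for t in range(100)]
drifted = stable[:50] + [e + 0.5 for e in stable[50:]]
```

Calling `detect_drift(stable)` and `detect_drift(drifted)` on these two streams shows the intended behavior: no alarm on the stable stream, and an alarm shortly after the jump on the drifted one.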

7.3.3 PARTIME: Scalable and Parallel Processing Over Time with Deep Neural Networks

Participants: Alessandro Betti, Marco Gori

Collaborations: Enrico Meloni, Lapo Faggi, Simone Marullo, Matteo Tiezzi, Stefano Melacci

Keywords: PyTorch, PARTIME, Software Library, Transport Equation.

In 66, we present PARTIME, a software library written in Python and based on PyTorch, designed specifically to speed up neural networks whenever data is continuously streamed over time, for both learning and inference. Existing libraries are designed to exploit data-level parallelism, assuming that samples are batched, a condition that is not naturally met in applications based on streamed data. In contrast, PARTIME starts processing each data sample as soon as it becomes available from the stream. PARTIME wraps the code that implements a feed-forward multi-layer network and distributes the layer-wise processing among multiple devices, such as Graphics Processing Units (GPUs). Thanks to its pipeline-based computational scheme, PARTIME allows the devices to perform computations in parallel. At inference time, this results in scaling capabilities that are theoretically linear with respect to the number of devices. During the learning stage, PARTIME can leverage the non-i.i.d. nature of the streamed data, whose samples evolve smoothly over time, for efficient gradient computations. Experiments are performed to empirically compare PARTIME with classic non-parallel neural computations in online learning, distributing operations on up to 8 NVIDIA GPUs. They show significant speedups that are almost linear in the number of devices, mitigating the impact of the data transfer overhead.
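The pipeline-based scheme can be pictured with a small simulation in which each layer plays the role of one device and, at every clock tick, processes what the previous layer produced at the preceding tick. The sketch below is plain Python and does not use the PARTIME API; the function name, the sentinel, and the toy stages are hypothetical, and the stages run one after another here, whereas on real hardware each would run concurrently on its own device.

```python
EMPTY = object()  # marks a pipeline slot that currently holds no sample


def pipelined_run(stages, stream):
    """Simulate layer-wise pipelining over a data stream.

    `stages` is a list of callables, one per (hypothetical) device.
    Returns a list of (tick, output) pairs, one per input sample.
    """
    slots = [EMPTY] * len(stages)  # slots[i]: latest output of stage i
    outputs = []
    samples = iter(stream)
    exhausted = False
    tick = 0
    while True:
        first = EMPTY
        if not exhausted:
            try:
                first = next(samples)
            except StopIteration:
                exhausted = True
        # Stage i reads what stage i - 1 wrote at the previous tick.
        inputs = [first] + slots[:-1]
        if exhausted and all(x is EMPTY for x in inputs):
            break
        slots = [EMPTY if x is EMPTY else stage(x) for stage, x in zip(stages, inputs)]
        if slots[-1] is not EMPTY:
            outputs.append((tick, slots[-1]))
        tick += 1
    return outputs


# Three toy "layers" applied to a stream of four samples.
stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
results = pipelined_run(stages, [1, 2, 3, 4])
```

With three stages and four samples, the first output appears at tick 2 and one output follows at every subsequent tick, so the whole stream is processed in 6 ticks instead of the 12 stage evaluations a purely sequential schedule would need. This is the source of the theoretically linear scaling in the number of devices at inference time.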

7.3.4 Unobserved classes and extra variables detection in high-dimensional discriminant analysis

Participants: Charles Bouveyron, Pierre-Alexandre Mattei.

Keywords: Adaptive supervised classification; conditional estimation; model-based discriminant analysis; unobserved classes; variable selection.

Collaborations: Michael Fop and Brendan Murphy (University College Dublin, Ireland)

In supervised classification problems, the test set may contain data points belonging to classes not observed in the learning phase. Moreover, the same units in the test data may be measured on a set of additional variables recorded at a subsequent stage with respect to when the learning sample was collected. In this situation, the classifier built in the learning phase needs to adapt to handle potential unknown classes and the extra dimensions. We introduce in 15 a model-based discriminant approach, Dimension-Adaptive Mixture Discriminant Analysis (D-AMDA), which can detect unobserved classes and adapt to the increasing dimensionality. Model estimation is carried out via a full inductive approach based on an EM algorithm. The method is then embedded in a more general framework for adaptive variable selection and classification suitable for data of large dimensions. A simulation study and an artificial experiment related to classification of adulterated honey samples are used to validate the ability of the proposed framework to deal with complex situations. Figure 15 illustrates the general framework of the proposed approach.

Figure 15

7.3.5 Knowledge-Driven Active Learning

Participants: Gabriele Ciravegna, Marco Gori, Frédéric Precioso.

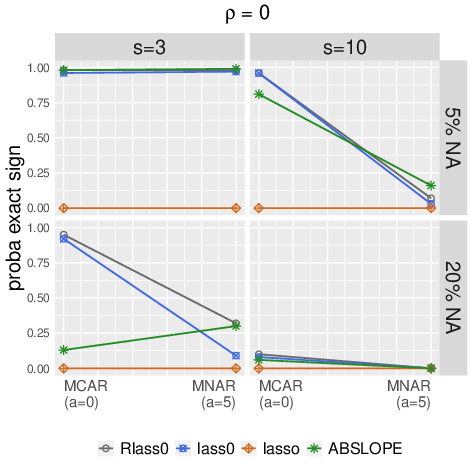

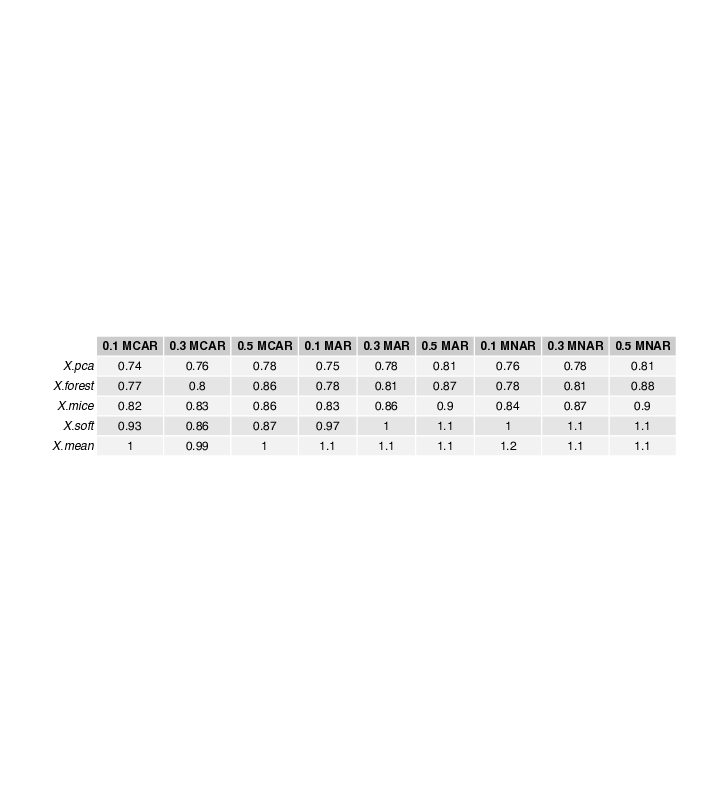

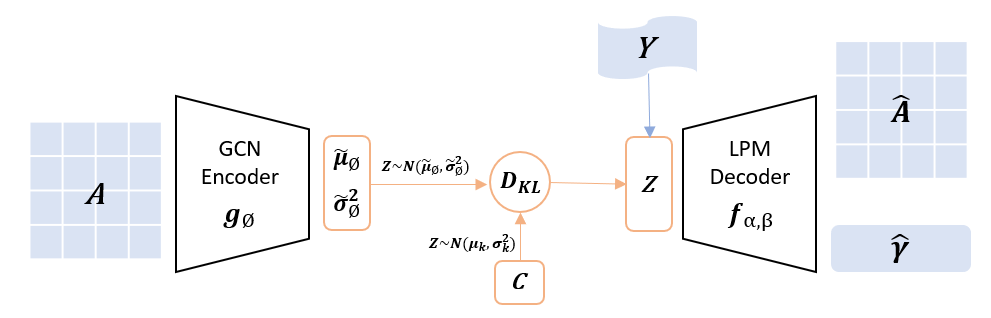

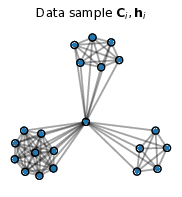

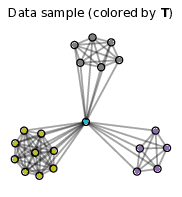

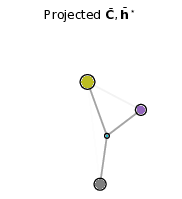

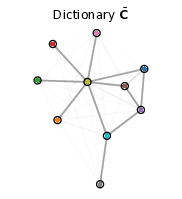

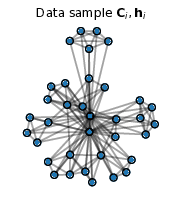

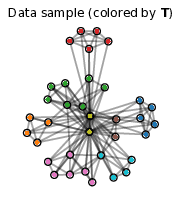

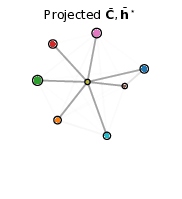

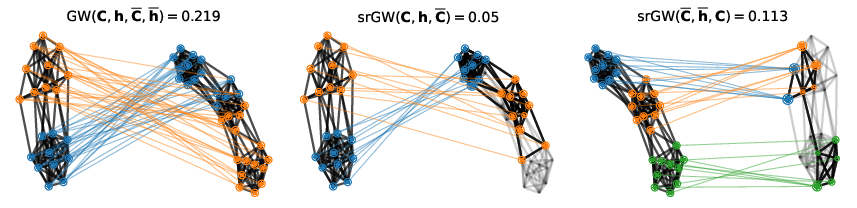

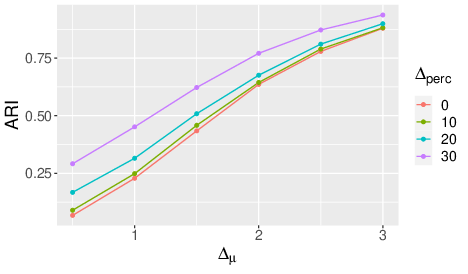

Keywords: Active Learning, Knowledge Representation, Deep Learning