Keywords

Computer Science and Digital Science

- A5.4. Computer vision

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.3. Computational photography

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A9.1. Knowledge

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B2. Health

- B2.2. Physiology and diseases

- B5.7. 3D printing

- B9.1. Education

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.2.4. Theater

- B9.6.6. Archeology, History

- B9.6.10. Digital humanities

1 Team members, visitors, external collaborators

Research Scientists

- Rémi Ronfard [Team leader, INRIA, Senior Researcher, HDR]

- Mélina Skouras [INRIA, Researcher]

Faculty Members

- Stefanie Hahmann [GRENOBLE INP, Professor, HDR]

- Olivier Palombi [Faculté de Médecine, UGA, Professor, HDR]

Post-Doctoral Fellow

- Thibault Tricard [UL, from Dec 2022]

PhD Students

- Sandrine Barbois [Faculté de médecine, Université Claude Bernard Lyon 1]

- Siyuan He [ENPC, from Nov 2022]

- Emmanuel Rodriguez [INRIA]

- Manon Vialle [UGA]

Administrative Assistant

- Marion Ponsot [INRIA]

2 Overall objectives

ANIMA focuses on developing computer tools for authoring and directing animated movies, interactive games and mixed-reality applications, using virtual sets, actors, cameras and lights. This raises several scientific challenges. Firstly, we need to build a representation of the story that the user/director has in mind, and this requires dedicated user interfaces for communicating the story. Secondly, we need to offer tools for authoring the necessary shapes and motions for communicating the story visually, and this requires a combination of high-level geometric, physical and semantic models that can be manipulated in real-time under the user’s artistic control. Thirdly, we need to offer tools for directing the story, and this requires new interaction models for controlling the virtual actors and cameras to communicate the desired story while maintaining the coherence of the story world.

2.1 Understanding stories

Stories can come in many forms. An anatomy lesson is a story. A cooking recipe is a story. A geological sketch is a story. Many paintings and sculptures are stories. Stories can be told with words, but also with drawings and gestures. For the purpose of creating animated story worlds, we are particularly interested in communicating the story with words in the form of a screenplay or with pictures in the form of a storyboard. We also foresee the possibility of communicating the story in space using spatial gestures. The first scientific challenge for the ANIMA team is to propose new computational models and representations for screenplays and storyboards, and practical methods for parsing and interpreting screenplays and storyboards from multimodal user input. To do this, we reverse engineer existing screenplays and storyboards, which are well suited for generating animation in traditional formats. We also explore new representations for communicating stories with a combination of speech commands, 3D sketches and 3D gestures, which promise to be more suited for communicating stories in new media including virtual reality, augmented reality and mixed reality.

2.2 Authoring story worlds

Telling stories visually creates additional challenges not found in traditional, text-based storytelling. Even the simplest story requires a large vocabulary of shapes and animations to be told visually. This is a major bottleneck for all narrative animation synthesis systems. The second scientific challenge for the ANIMA team is to propose methods for quickly authoring shapes and animations that can be used to tell stories visually. We devise new methods for generating shapes and shape families, understanding their functions, styles, material properties and affordances, authoring animations for a large repertoire of actions, and printing and fabricating articulated and deformable shapes suitable for creating physical story worlds with tangible interaction.

2.3 Directing story worlds

Lastly, we develop methods for controlling virtual actors and cameras in virtual worlds and editing them into movies in a variety of situations ranging from 2D and 3D professional animation, to virtual reality movies and real-time video games. Starting from the well-established tradition of the storyboard, we create new tools for directing movies in 3D animation, where the user is really the director, and the computer is in charge of its technical execution using a library of film idioms. We also explore new areas, including the automatic generation of storyboards from movie scripts for use by domain experts, rather than graphic artists.

3 Research program

The four research themes pursued by ANIMA are (i) the geometry of story worlds; (ii) the physics of story worlds; (iii) the semantics of story worlds; and (iv) the aesthetics of story worlds.

In each theme, significant advances in the state of the art are needed to propose computational models of stories, and build the necessary tools for translating stories to 3D graphics and animation.

3.1 Geometric modeling of story worlds

Scientist in charge: Stefanie Hahmann

Other participants: Rémi Ronfard, Mélina Skouras

We aim to create intuitive tools for designing 3D shapes and animations which can be used to populate interactive, animated story worlds, rather than inert and static virtual worlds. In many different application scenarios, such as preparing a product design review, teaching human anatomy with a MOOC, composing a theatre play, directing a movie or showing a sports event, 3D shapes must be modeled for the specific requirements of the animation and interaction scenarios (stories) of the application.

We will need to invent novel shape modelling methods to support the necessary affordances for interaction and maintain consistency and plausibility of the shape appearances and behaviors during animation and interaction. Compared to our previous work, we will therefore focus increasingly on designing shapes and motions simultaneously, rather than separately, based on the requirements of the stories to be told.

Previous work in the IMAGINE team has emphasized the usefulness of space-time constructions for sketching and sculpting animation both in 2D and 3D. Future work in the ANIMA team will further develop this line of research, with the long-term goal of choreographing complex multi-character animation and providing full authorial and directorial control to the user.

3.1.1 Space-time modeling

The first new direction of research in this theme is an investigation of space-time geometric modeling, i.e. the simultaneous creation of shapes and their motions. This is in continuity with our previous work on "responsive shapes", i.e. making 3D shapes respond in an intuitive way during both design and animation.

3.1.2 Spatial interaction

A second new direction of research of the ANIMA team will be the extension of sketching and sculpting tools to the case of spatial 3D interaction using virtual reality headsets, sensors and trackers.

Even though 3D modeling can be regarded as an ideal application for Virtual Reality, it is known to suffer from the lack of control for freehand drawing. Our insight is to exploit the expressiveness of hand (controller) motion and simple geometric primitives in order to form an approximated 3D shape. The goal is not to generate a final, well-shaped product, but to provide a 3D sketching tool for creating early design shapes, which act as a kind of scaffold, and for rough idea exploration. Standard 3D modeling systems can then take over to generate more complex shape details.

Research directions to be explored include (i) direct interaction using VR; (ii) applications to form a 3D shape from rough design ideas; (iii) applications to modify existing objects during design review sessions; and (iv) tools to ease communication about imagined shapes.

3.2 Physical modeling of story worlds

Scientist in charge: Mélina Skouras

Other participants: Stefanie Hahmann, Rémi Ronfard

When authoring and directing story worlds, physics is important to obtain believable and realistic behaviors, e.g. to determine how a garment should deform when a character moves, or how the branches of a tree bend when the wind starts to blow. In practice, while deformation rules could be defined a priori (e.g. procedurally), relying on physics-based simulation is more efficient in many cases as this means that we do not need to think in advance about all possible scenarios. In ANIMA, we want to go a step further. Not only do we want to be able to predict how the shape of deformable objects will change, but we also want to be able to control their deformation. In short, we are interested in solving inverse problems where we adjust some parameters of the simulation, yet to be defined, so that the output of the simulation matches what the user wants.

By optimizing design parameters, we can get realistic results on input scenarios, but we can also extrapolate to new settings. For example, solving inverse problems corresponding to static cases can be useful to obtain realistic behaviors when looking at dynamics. If we can optimize the cloth material and the shape of the patterns of a dress such that it matches what an artist designed for the first frame of an animation, then we can use the same parameters for the rest of the animation. Of course, matching dynamics is also one of our goals.

Compared to more traditional approaches, this raises several challenges. It is not clear what the best way is for the user to specify constraints, i.e. how to define what she wants (we do not necessarily want to specify the positions of all nodes of the meshes for all frames, for example). We want the shape to deform according to physical laws, but also according to what the user specified, which means that the objectives may conflict and that the problem can be over-constrained or under-constrained.

Physics may not be satisfied exactly in all story worlds: the input may be cartoonish, for example. In such cases, we may need to adapt the laws of physics or even to invent new ones. In computational fabrication, by contrast, the designer may want to design an object that cannot be fabricated using traditional materials, but in this case we cannot cheat with the physics. One idea is to extend the range of things that we can do by creating new materials (meta-materials), creating 3D shapes from flat patterns, increasing the extensibility of materials, etc.

To achieve these goals, we will need to find effective metrics (how to define objective functions that we can minimize); develop efficient models (that can be inverted); find suitable parameterizations; and develop efficient numerical optimization schemes (that can account for our specific constraints).
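As an illustration only, the inverse problems described above can be written in a generic form. The notation is an assumption introduced here, not the team's own: $p$ denotes the design parameters (material, rest shape, actuation), $x$ the simulated configuration, $E(x, p)$ the total potential energy of the simulation, $x_{\text{target}}$ the user's target, $R(p)$ an optional regularizer with weight $\lambda$, and $\mathcal{P}$ the set of admissible (e.g. fabricable) parameters.

$$
\min_{p \in \mathcal{P}} \; \tfrac{1}{2}\,\bigl\| x^{*}(p) - x_{\text{target}} \bigr\|^{2} + \lambda\, R(p)
\qquad \text{subject to} \qquad
x^{*}(p) = \arg\min_{x} \; E(x, p)
$$

In this reading, the first term is the metric to be defined, the constraint is the (static) forward model that must be differentiable or otherwise invertible, the choice of $p$ is the parameterization, and the outer minimization is the numerical optimization scheme. When the user's target conflicts with what physics allows, the residual cannot reach zero, which is one way the over-constrained case shows up.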

3.2.1 Computational design of articulated and deformable objects

We would like to extend sketch-based modeling to the design of physical objects, where material and geometric properties both contribute to the desired behaviors. Our goal in this task will be to provide efficient and easy-to-use physics-aware design tools. Instead of using a single 3D idealized model as input, we would like to use sketches, photos, videos together with semantic annotations relating to materials and motions. This will require the conceptualization of physical storyboards. This implies controlling the matter and includes the computational design of meso-scale materials that can be locally assigned to the objects; the optimization of the assignment of these materials such that the objects behave as expected; the optimization of the actuation of the object (related to the point below). Furthermore, the design of the meta-materials/objects can take into account other properties in addition to the mechanical aspects. Aesthetics, in particular, might be important.

3.2.2 Physical storyboarding

Storyboards in the context of physical animation can be seen as a concept to explain how an object/character is supposed to move or to be used (a way to describe the high-level objective). Furthermore, they can be used to represent the same object from different views, in different scales, even at different times and in different situations, to better communicate the desired behavior. Finally, they can be used to represent different objects behaving "similarly".

Using storyboards as an input to physical animation raises several scientific challenges. If one shape is to be optimized, we need to make sure that the deformed shape can be reached, i.e. that there is a continuous path from the initial shape to the final shape (e.g. deployable structures). We will need to explore different types of inputs: full target animations, key-frames, annotations (arrows), curves, multi-modal inputs. We will also need to consider other types of high-level goals, which imply that the object should move or deform in a certain way (to be optimized), e.g. locomotion or dressing up a character.

3.3 Semantic modeling of story worlds

Scientist in charge: Olivier Palombi

Other participants: Rémi Ronfard, Nicolas Szilas

Beyond geometry and physics, we aim at representing the semantics of story worlds. We use ontologies to organize story worlds into entities described by well defined concepts and relations between them. Especially important to us is the ability to "depict" story world objects and their properties during the design process 17 while their geometric and material properties are not yet defined. Another important aspect of this research direction is to make it possible to quickly create interactive 3D scenes and movies by assembling existing geometric objects and animations. This requires a conceptual model for semantic annotations, and high level query languages where the result of a semantic query can be a 3D scene or 3D movie.

One important application area for this research direction is the teaching of human anatomy. The PhD thesis of Ameya Murukutla focuses on automatic generation of augmented reality lessons and exercises for teaching anatomy to medical students and sports students using the prose storyboard language which we introduced during Vineet Gandhi's PhD thesis 11. By specializing to this particular area, we are hoping to obtain a formal validation of the proposed methods before we attempt to generalize them to other domains such as interactive storytelling and computer games.

3.3.1 Story world ontologies

We will extend our previous work on ontology modeling of anatomy 30, 32 in two main directions. Firstly, we will add procedural representations of anatomic functions that make it possible to create animations. This requires work in semantic modeling of 3D processes, including anatomic functions in the teaching of anatomy. This needs to be generalized to actor skills and object affordances in the more general setting of role playing games and storytelling. Secondly, we will generalize the approach to other storytelling domains. We are starting to design an ontology of dramatic functions, entities and 3D models. In storytelling, dramatic functions are actions and events. Dramatic entities are places, characters and objects of the story. 3D models are virtual actors, sets and props, together with their necessary skills and affordances. In both cases, story world generation is the problem of linking 3D models with semantic entities and functions, in such a way that a semantic query (in natural language or pseudo natural language) can be used to create a 3D scene or 3D animation.

3.3.2 Story world scenarios

While our research team is primarily focused on providing authoring and directing tools to artists, there are cases where we also would like to propose methods for generating 3D content automatically. The main motivation for this research direction is virtual reality, where artists attempt to create story worlds that respond to the audience actions. An important application is the emerging field of immersive augmented reality theatre 25, 33, 28, 29, 24, 19, 26. In those cases, new research work must be devoted to create plausible interactions between human and virtual actors based on an executable representation of a shared scenario.

3.4 Aesthetic modeling of story worlds

Scientist in charge: Rémi Ronfard

Other participants: Stefanie Hahmann, Mélina Skouras, François Garnier

Data-driven methods for shape modeling and animation are becoming increasingly popular in computer graphics, due to the recent success of deep learning methods. In the context of the ANIMA team, we are particularly interested in methods that can help us capture artistic styles from examples and transfer them to new content. This has important implications in authoring and directing story worlds because it is important to offer artistic control to the author or director, and to maintain a stylistic coherence while generating new content. Ideally, we would like to learn models of our users' authoring and directing styles, and create content that matches those styles.

3.4.1 Learning and transferring shape styles

We want to better understand shape aesthetics and styles, with the long-term goal of creating complex 3D scenes with a large number of shapes with consistent styles. We will also investigate methods for style transfer, which make it possible to re-use existing shapes in novel situations by adapting their style and aesthetics 27.

Extensive research has been done in the past on aesthetic shape design in the sense of fairness, i.e. visually pleasing shapes, using e.g. bending energy minimization and visual continuity. Note that these aspects are still a challenge in motion design (see next section). In shape design, we now go one step further by focusing on style. Whereas fairness is general, style is more related to application contexts, which we would like to formalize.
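For readers unfamiliar with the fairness criteria mentioned above, one common form of the bending energy is recalled here as a reminder. The symbols are introduced for illustration: $\gamma$ is a curve of length $L$ with arc-length parameter $s$ and curvature $\kappa(s)$, and $S$ is a surface with principal curvatures $\kappa_1$ and $\kappa_2$.

$$
E_{\text{bend}}(\gamma) = \int_{0}^{L} \kappa(s)^{2} \, \mathrm{d}s ,
\qquad
E_{\text{bend}}(S) = \int_{S} \bigl( \kappa_1^{2} + \kappa_2^{2} \bigr) \, \mathrm{d}A
$$

A "fair" shape in this sense is one that minimizes such a functional subject to interpolation or boundary constraints. The functional is independent of any application context, which is the sense in which fairness is general while style is not.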

3.4.2 Learning and transferring motion styles

While the aesthetics of spatial curves and surfaces has been extensively studied in the past, resulting in a large vocabulary of spline curves and surfaces with suitable control parameters, the aesthetics of temporal curves and surfaces is still poorly understood. Fundamental work is needed to better understand which geometric features are important in the perception of the aesthetic qualities of motions and to design interpolation methods that preserve them. Furthermore, we would like to transfer the learned motion styles to new animations. This is a very challenging problem, which we started to investigate in previous work in the limited domains of audiovisual speech animation 16 and hand puppeteering 23.

3.4.3 Learning and transferring film styles

In recent years, we have proposed new methods for automatically composing cinematographic shots in live action video 22 or 3D animation 20 and to edit them together into aesthetically pleasing movies 21. In future work, we plan to apply similar techniques for the new use case of immersive virtual reality. This raises interesting new issues because spatial and temporal discontinuities must be computed in real time in reaction to the user's movements. We have established a strong collaboration with the Spatial Media team at ENSADLAB to investigate those issues. We also plan to transfer the styles of famous movie directors to the generated movies by learning generative models of their composition and film editing styles, therefore extending the previous work of Thomas 31 from photographic style to cinematographic style. The pioneering work of Cutting and colleagues 18 used a valuable collection of 150 movies covering the years 1935 to 2010, mostly from classical Hollywood cinema. A more diverse dataset including European cinema in different genres and styles will be a valuable contribution to the field. Towards this goal, we are building a dataset of movie scenes aligned with their screenplays and storyboards.

4 Application domains

The research goals of the ANIMA team are applicable to many application domains which use computer graphics and are in demand of more intuitive and accessible authoring and directing tools for creating animated story worlds. This includes arts and entertainment, education and industrial design.

Arts and entertainment

Animated story worlds are central to the industries of 3D animation and video games, which are very strong in France. Designing 3D shapes and motions from storyboards is a worthwhile research goal for those industries, where it is expected to reduce production costs while at the same time increasing artistic control, which are two critical issues in those domains. Furthermore, story is becoming increasingly important in video games and new authoring and directing tools are needed for creating credible interactive story worlds, which is a challenge to many video game companies. Traditional live action cinematography is another application domain where the ANIMA team is hoping to have an impact with its research in storyboarding, virtual cinematography and film editing.

Performance art, including dance and theater, is an emergent application domain with a strong need for dedicated authoring and directing tools that allow advanced computer graphics to be incorporated into live performances. This is a challenging application domain, where computer-generated scenography and animation need to interact with human actors in real-time. As a result, we are hoping that the theater stage becomes an experimental playground for our most exploratory research themes. To promote this new application domain, we are organizing the first international workshop on computer theater in Grenoble in February 2020, under the name Journées d’Informatique Théâtrale (JIT). The workshop will assemble theater researchers, artists and computer engineers whose practice incorporates computer graphics as a means of expression and/or a creative tool. With this workshop, our goal is to create a new research discipline that could be termed “computer theater”, following the model of computer music, which is now a well established discipline.

Education

Teaching of Anatomy is a suitable domain for research. As professor of Anatomy, Olivier Palombi gives us the opportunity to experiment in the field. The formalization of anatomical knowledge in our ontology called My Corporis Fabrica (MyCF) is already operational. One challenge for us is to formalize the way anatomy is taught or more exactly the way anatomical knowledge is transmitted to the students using interactive 3D scenes.

Museography is another related application domain for our research, with a high demand for novel tools for populating and animating virtual reconstructions of art works, turning them into stories that make sense to museum audiences of all ages. Our research is also applicable to scientific museography, where animated story worlds can be used to illustrate and explain complex scientific concepts and theories.

Industrial design

Our research in designing shapes and motions from storyboards is also relevant to industrial design, with applications in the fashion industry, the automotive industry and in architecture. Those three industries are also in high demand for tools exploiting spatial interaction in virtual reality. Our new research program in physical modeling is also applicable to those industries. We have established strong partnerships in the past with PSA and Vuitton, and we will seek to extend them to architectural design as well in the future.

5 Social and environmental responsibility

5.1 Footprint of research activities

ANIMA is a small team of four permanent researchers and three PhD students, so our footprint is limited. We estimate that we run approximately twelve computers, including laptops, desktops and shared servers, at any given time.

Research in computer graphics is not (yet) data intensive. We mostly devise procedural algorithms, which require limited amounts of data. One notable exception is our work on computational editing of live performances, which produces large amounts of ultra high definition video files. We have opted for a centralized video server architecture (KinoAI) so that at least the videos are never duplicated and always reside on a single server.

On the other hand, our research requires powerful graphics processing units (GPU) which significantly increase the power consumption of our most powerful desktops.

The COVID-19 crisis has heavily changed our working habits. We have learned to work from home most of the week, and to abandon international travel entirely. This holds the promise of a vastly reduced footprint, but it is too early to say whether this is sustainable. While the team as a whole has been able to function in adverse conditions, it has become increasingly difficult to welcome interns and Masters students.

5.2 Impact of research results

Our research does not directly address social and environmental issues. Our work on 3D printing may have positive effects by allowing the more efficient use of materials in the production of prototypes. Our work on virtual medical simulation and training may provide an alternative in some cases to animal experiments and costly robotic simulations.

Our most important application domain is arts and culture, including computer animation and computer games. Globally, those sectors are creating jobs, rather than destroying them, in France and in Europe. The impact of our discipline is therefore positive at least in this respect.

We are more concerned with the impact of the software industry as a whole, i.e. private companies who implement our research papers and include them in their products. We note a tendency to increase the memory requirements of software. While much effort in software engineering is devoted to improving the execution speed of graphics programs, there is not enough effort in optimizing their footprint. This is an area that may be worth investigating in our future work.

6 Highlights of the year

6.1 Awards

Humboldt prize

Stefanie Hahmann was awarded the Gay Lussac-Humboldt prize by the Alexander von Humboldt Foundation in October 2022 in recognition of her academic career.

6.2 Expertise

Metaverse report

Rémi Ronfard was mandated by the French minister of economy and finance, and the French ministry of culture, on a six-month mission to investigate the challenges and opportunities of the metaverse, together with Adrien Basdevant and Camille Francois.

The conclusions of this exploratory mission were presented to the government on October 24, 2022.

The complete report is available from this official web site.

7 New software and platforms

7.1 New software

7.1.1 Kino AI

- Name: Artificial intelligence for cinematography

- Keywords: Video analysis, Post-production

- Functional Description: Kino AI is an implementation of the method described in our patent "automatic generation of cinematographic rushes using video processing". Starting from a single ultra high definition (UltraHD) recording of a live performance, we track and recognize all actors present on stage and generate one or more rushes suitable for cinematographic editing of a movie.

- URL:

- Publications:

- Contact: Rémi Ronfard

- Partner: IIIT Hyderabad


8 New results


8.1 Computational Design of Laser-Cut Bending-Active Structures

Participants: Mélina Skouras, Stefanie Hahmann, Emmanuel Rodriguez.

Laser-cut bending-active structure

We proposed a method to automatically design bending-active structures, made of wood, whose silhouettes at equilibrium match desired target curves. Our approach is based on the use of a parametric pattern that is regularly laser-cut on the structure and that allows us to locally modulate the bending stiffness of the material. To make the problem tractable, we rely on a two-scale approach where we first compute the mapping between the average mechanical properties of periodically laser-cut samples of MDF wood, treated here as metamaterials, and the stiffness parameters of a reduced 2D model; then, given an input target shape, we automatically select the parameters of this reduced model that give us the desired silhouette profile. We validate our method both numerically and experimentally by fabricating a number of full-scale structures of varied target shapes.

This work received the best paper award at the Symposium on Solid and Physical Modeling 2022.

8.2 Visualizing Isadora Duncan’s movements qualities

Participants: Rémi Ronfard, Mélina Skouras, Manon Vialle.

Isadora Duncan

We present a new abstract representation of choreographic motion that conveys the movement quality of fluidity that is central to the style of modern dance pioneer Isadora Duncan. We designed our model through a collaboration with an expert Duncanian dancer, using five flexible ribbons joining at the solar plexus, and animated it from motion capture data using a tailored optimization-based algorithm. We display our model in a Hololens headset and provide features that allow users to visualize and manipulate it in order to understand and learn Duncan’s choreographic style. Through a series of workshops, we explored our system with professional dancers and were able to observe how it provides them with an immersive experience of a novel visualization of Duncan’s movement qualities in a way that was not possible with traditional human-like or skeleton-based representations.

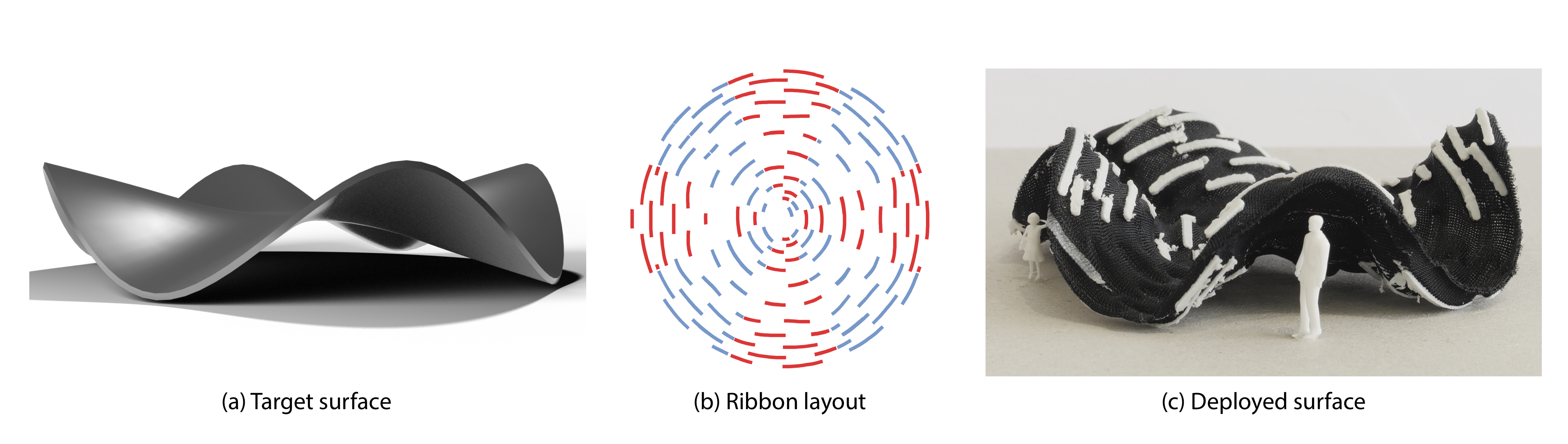

8.3 Computational Design of Self-Actuated Surfaces by Printing Plastic Ribbons on Stretched Fabric

Participant: Mélina Skouras.

Printing Plastic Ribbons on Stretched Fabric

In this work, we introduce a new mechanism for self-actuating deployable structures, based on printing a dense pattern of closely-spaced plastic ribbons on sheets of pre-stretched elastic fabric. We leverage two shape-changing effects that occur when such an assembly is printed and allowed to relax: first, the incompressible plastic ribbons frustrate the contraction of the fabric back to its rest state, forcing residual strain in the fabric and creating intrinsic curvature. Second, the differential compression at the interface between the plastic and fabric layers yields a bilayer effect in the direction of the ribbons, making each ribbon buckle into an arc at equilibrium state and creating extrinsic curvature. We describe an inverse design tool to fabricate low-cost, lightweight prototypes of freeform surfaces using the controllable directional distortion and curvature offered by this mechanism. The core of our method is a parameterization algorithm that bounds surface distortions along and across principal curvature directions, along with a pattern synthesis algorithm that covers a surface with ribbons to match the target distortions and curvature given by the aforementioned parameterization. We demonstrate the flexibility and accuracy of our method by fabricating and measuring a variety of surfaces, including nearly-developable surfaces as well as surfaces with positive and negative mean curvature, which we achieve thanks to a simple hardware setup that allows printing on both sides of the fabric.

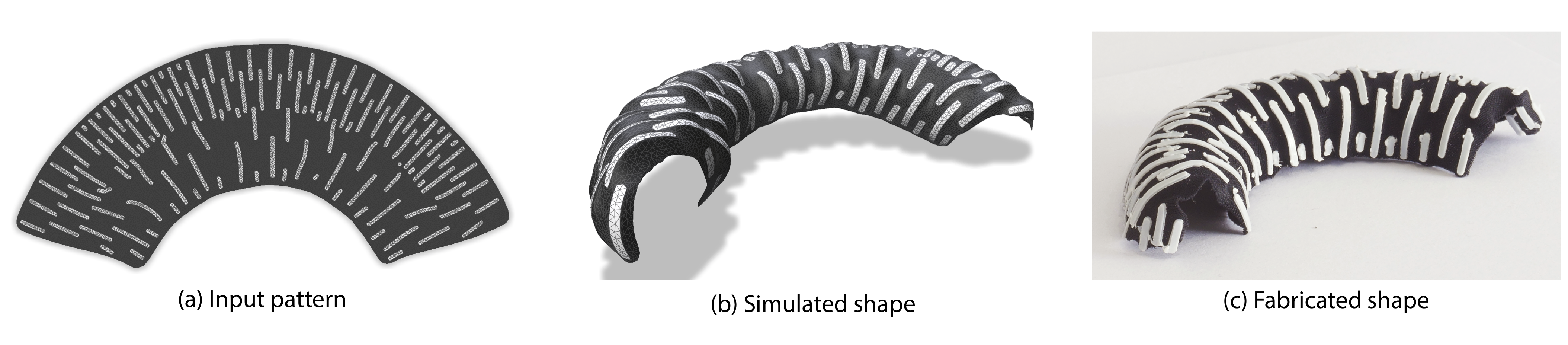

8.4 Simulation of printed-on-fabric assemblies

Participant: Mélina Skouras.


Printing-on-fabric is an affordable and practical method for creating self-actuated deployable surfaces: thin strips of plastic are deposited on top of a pre-stretched piece of fabric using a commodity 3D printer; the structure, once released, morphs to a programmed 3D shape. Several physics-aware modeling tools have recently been proposed to help design such surfaces. However, existing simulators do not capture well all the deformations these structures can exhibit. In this work, we proposed a new model for simulating printed-on-fabric composites based on a tailored bilayer formulation for modeling plastic-on-top-of-fabric strips, and an extended Saint-Venant–Kirchhoff material law for modeling the surrounding stretchy fabric. We showed how to calibrate our model through a series of standard experiments. Finally, we demonstrated the improved accuracy of our simulator by conducting various tests.
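For context, the textbook Saint-Venant–Kirchhoff energy density can be sketched in a few lines of Python. This is the standard law only, not the extended version proposed in the paper, whose additional terms are not described here. The function names, the list-of-lists matrix representation and the Lamé parameters `lam` and `mu` are assumptions made for illustration.

```python
import math


def green_strain(F):
    """Green-Lagrange strain E = 1/2 (F^T F - I) for a square deformation gradient F."""
    n = len(F)
    return [
        [
            0.5 * (sum(F[k][i] * F[k][j] for k in range(n)) - (1.0 if i == j else 0.0))
            for j in range(n)
        ]
        for i in range(n)
    ]


def stvk_energy_density(F, lam, mu):
    """Standard StVK energy density: lam/2 * tr(E)^2 + mu * tr(E^2)."""
    E = green_strain(F)
    n = len(E)
    trace_E = sum(E[i][i] for i in range(n))
    # tr(E^2) written out entry by entry (E is symmetric).
    trace_E2 = sum(E[i][j] * E[j][i] for i in range(n) for j in range(n))
    return 0.5 * lam * trace_E ** 2 + mu * trace_E2


# Illustrative inputs (hypothetical values): a 10% uniaxial stretch and a pure rotation.
F_stretch = [[1.1, 0.0], [0.0, 1.0]]
angle = 0.3
F_rotation = [[math.cos(angle), -math.sin(angle)], [math.sin(angle), math.cos(angle)]]

print(stvk_energy_density(F_stretch, lam=1.0, mu=1.0))
print(stvk_energy_density(F_rotation, lam=1.0, mu=1.0))
```

Because the energy depends on the deformation gradient only through the Green-Lagrange strain, a pure rotation stores no energy (up to rounding), while the stretch does.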

8.5 OpenKinoAI: A Framework for Intelligent Cinematography and Editing of Live Performances

Participant: Rémi Ronfard.

OpenKinoAi

OpenKinoAI is an open source framework for post-production of Ultra High Definition video which makes it possible to emulate professional multiclip editing techniques for the case of single camera recordings. OpenKinoAI includes tools for uploading raw video footage of live performances on a remote web server, detecting, tracking and recognizing the performers in the original material, reframing the raw video into a large choice of cinematographic rushes, editing the rushes into movies, and annotating rushes and movies for documentation purposes. OpenKinoAI is made available to promote research in multiclip video editing of Ultra High Definition video, and to allow performing artists and companies to use this research for archiving, documenting and sharing their work online in an innovative fashion.
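The reframing step can be illustrated with a minimal sketch: given a tracked performer's bounding box in the wide Ultra High Definition frame, compute a crop window with a fixed aspect ratio that stays inside the frame. This is an assumption-laden illustration, not OpenKinoAI's actual algorithm or API. The function name `reframe_crop` and its parameters (`aspect`, `margin`) are hypothetical.

```python
def reframe_crop(bbox, frame_size, aspect=16 / 9, margin=0.2):
    """Compute a virtual-camera crop around a tracked performer (illustrative).

    bbox       : (x, y, width, height) of the performer, in pixels
    frame_size : (frame_width, frame_height) of the source video
    aspect     : target width / height ratio of the crop (hypothetical default)
    margin     : extra room around the box, as a fraction of its size
    Returns (left, top, crop_width, crop_height).
    """
    x, y, w, h = bbox
    frame_w, frame_h = frame_size
    # Grow the box by the requested margin on every side
    crop_w = w * (1 + 2 * margin)
    crop_h = h * (1 + 2 * margin)
    # Enlarge the shorter dimension so the crop reaches the target aspect ratio
    if crop_w / crop_h < aspect:
        crop_w = crop_h * aspect
    else:
        crop_h = crop_w / aspect
    # Shrink uniformly if the crop is larger than the source frame
    scale = min(1.0, frame_w / crop_w, frame_h / crop_h)
    crop_w *= scale
    crop_h *= scale
    # Center the crop on the box, then clamp it to the frame borders
    center_x = x + w / 2
    center_y = y + h / 2
    left = min(max(center_x - crop_w / 2, 0.0), frame_w - crop_w)
    top = min(max(center_y - crop_h / 2, 0.0), frame_h - crop_h)
    return left, top, crop_w, crop_h


# Usage: one performer in a 3840x2160 recording
crop = reframe_crop((1000, 500, 400, 900), (3840, 2160))
```

Applying such a function to each frame of a track, with different margins or groups of performers, would yield several candidate rushes from a single camera recording. A practical system would also smooth the crop over time to avoid jitter.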

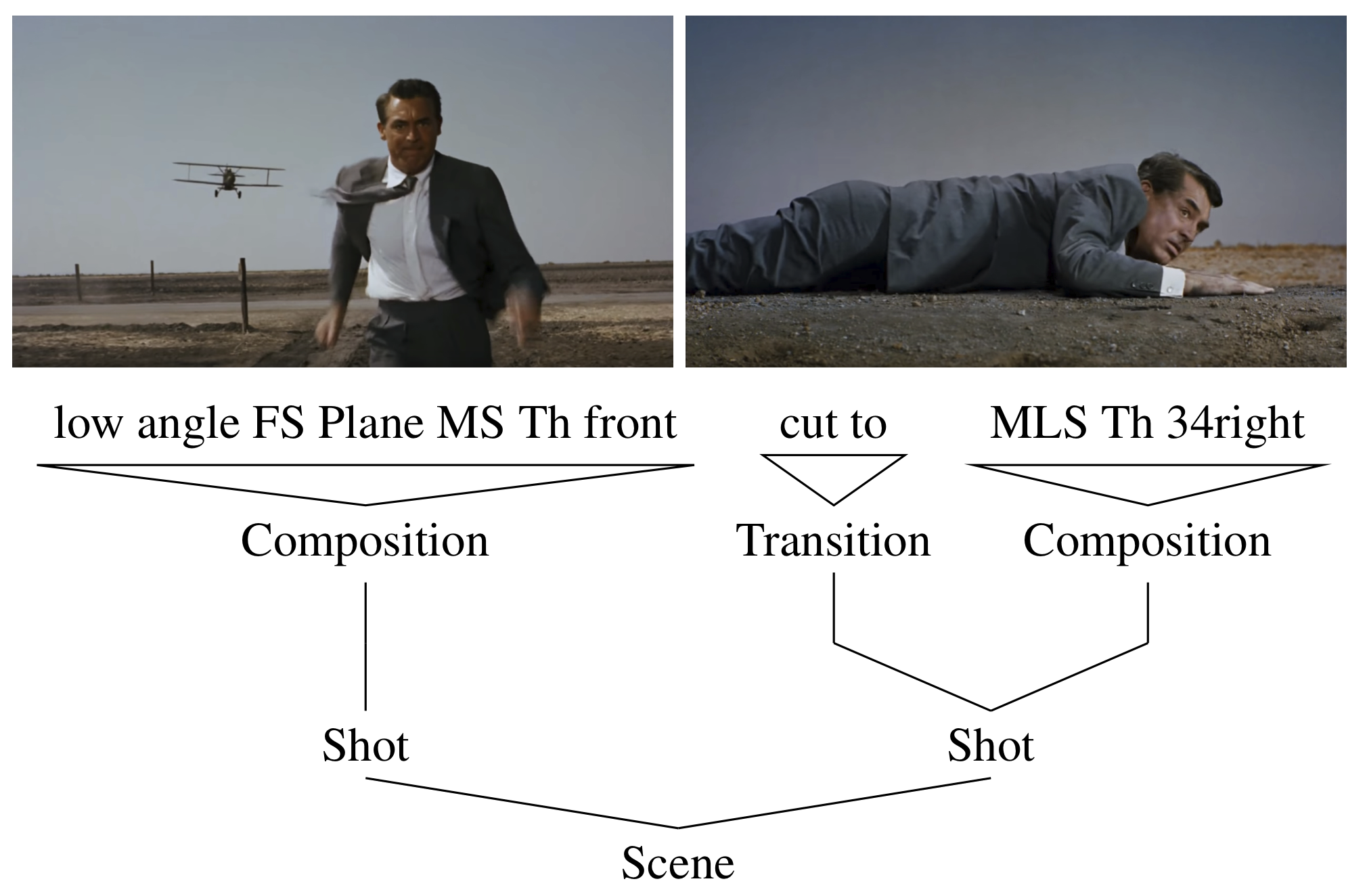

8.6 The Prose Storyboard Language: A Tool for Annotating and Directing Movies

Participant: Rémi Ronfard.

Prose storyboard language

The prose storyboard language is a formal language for describing movies shot by shot, where each shot is described with a unique sentence. The language uses a simple syntax and limited vocabulary borrowed from working practices in traditional movie-making and is intended to be readable both by machines and humans. The language has been designed over the last ten years to serve as a high-level user interface for intelligent cinematography and editing systems. In this new paper, we present the latest evolution of the language, and the results of an extensive annotation exercise showing the benefits of the language in the task of annotating the sophisticated cinematography and film editing of classic movies.

8.7 (Re-)Framing Virtual Reality

Participant: Rémi Ronfard.

Reframing virtual reality

In this paper, we address the problem of translating the rich vocabulary of cinematographic shots elaborated in classic films for use in virtual reality. Using a classic scene from Alfred Hitchcock’s "North by Northwest", we describe a series of artistic experiments attempting to enter "inside the movie" in various conditions and report on the challenges facing the film director in this task. For the case of room-scale VR, we suggest that the absence of the visual frame of the screen can be usefully replaced by the spatial frame of the physical room where the experience takes place. This "re-framing" opens new directions for creative film directing in virtual reality.

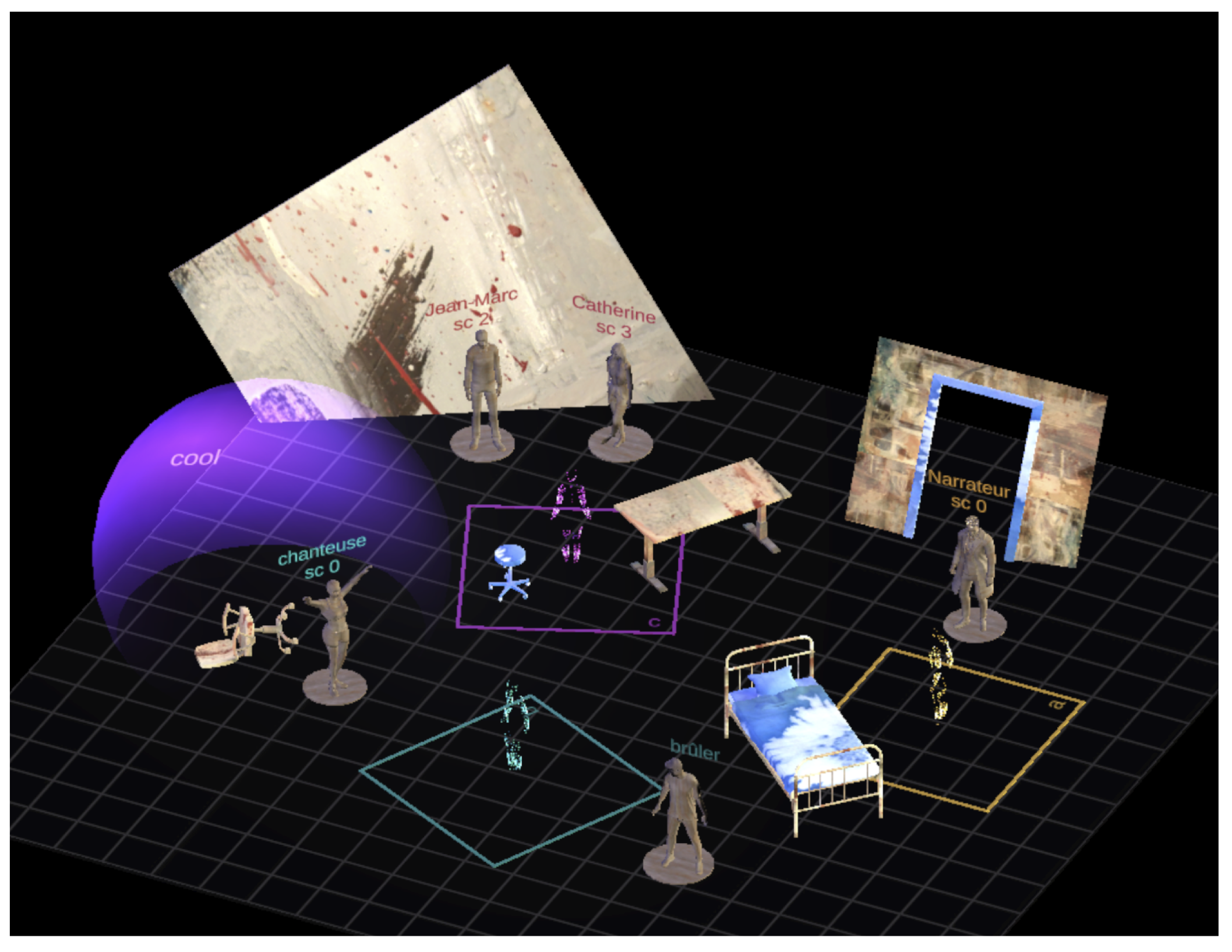

8.8 Montage as a narrative vector for virtual reality experiences

Participant: Rémi Ronfard.

Montage for virtual reality

We demonstrate through artistic experimentation that the concept of montage can be an effective vector for conveying complex narratives in immersive, room-scale virtual reality experiences. We review the particularities of virtual reality mediations regarding narrative, and we propose the new conceptual framework of "spatial montage", inspired by traditional cinematographic montage and taking into account the specific needs of virtual reality. We hypothesize that spatial montage needs to be related to the body action of the user and that it can play a significant role in relating the immersive experience to an externally authored narrative. To test these hypotheses, we have built a set of tools implementing the basic capabilities of spatial montage in the Unity 3D game engine for use in an immersive virtual environment. The resulting prototype redefines the traditional concepts of shots and cuts using virtual stages and portals and proposes new interaction paradigms for creating and experiencing montage in virtual reality headsets.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Visits to international teams

Sabbatical programme:

Stefanie Hahmann benefits from a 12-month CRCT from Grenoble INP. Since September 2022, she has been visiting the Image and Signal Processing group of Prof. Dr. Gerik Scheuermann at the Computer Science department of the University of Leipzig in Germany as part of her sabbatical.

9.2 European initiatives

9.2.1 ADAM2

Participants: Stefanie Hahmann, Emmanuel Rodriguez, Mélina Skouras, Thibault Tricard.

ADAM2 (ANALYSIS, DESIGN And MANUFACTURING using MICROSTRUCTURES) is an ongoing project funded by the Horizon 2020 (H2020) programme of the European Union, funding the PhD thesis of Emmanuel Rodriguez and the post-doc position of Thibault Tricard.

9.3 National initiatives

9.3.1 ANR SIDES-LAB

Participant: Olivier Palombi.

SIDES is a digital learning platform common to all French medical schools, used for official exams (tests) in faculties and for training students for the National Ranking Exam (ECN), which has been fully computerized since 2016 (ECNi).

As part of this platform, ANIMA is taking part in the SIDES LAB project, which started in June 2022, under the leadership of Franck Ramus, Laboratoire de Sciences Cognitives et Psycholinguistique, ENS, Paris.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

JIT 2022:

Participant: Rémi Ronfard.

Rémi Ronfard co-chaired the scientific committee of the second international Symposium on Computer Theater (Journées d'Informatique Théâtrale), which was co-organized by Inria and ENSATT (Ecole Nationale Supérieure des Arts et Techniques du Théâtre) in Lyon on October 10 and 11, 2022. The symposium attracted 30 speakers from France, Canada, Italy and Switzerland, and 100 participants. The proceedings will be published online in this HAL collection.

JIT2022 scenography session.

WICED 2022:

Participant: Rémi Ronfard.

Rémi Ronfard co-organized and co-chaired the tenth international Workshop on Intelligent Cinematography and film Editing, co-located with Eurographics in Reims on April 28, 2022. The one-day workshop attracted 10 speakers and an audience of 50 researchers and students; see the workshop web site for details. The proceedings are published in the Eurographics digital library.

Graphyz 2nd Edition:

Participant: Mélina Skouras.

Mélina Skouras co-organized the second edition of Graphyz, the first Graphics-Physics workshop, which took place at the Saline Royale of Arc-et-Senans from October 16 to October 19, 2022. The workshop gathered about 80 researchers and students working in the fields of Physics or Computer Graphics. See the workshop web site for more details.

Graphyz 2.

10.1.2 Scientific events: selection

Chair of conference program committees:

Stefanie Hahmann is the co-chair of the tutorials program at Eurographics 2022.

Member of the conference program committees:

Rémi Ronfard is a program committee member for the International Conference on Interactive Digital Storytelling (ICIDS).

Mélina Skouras was a member of the international program committee for Eurographics 2022.

Stefanie Hahmann is a program committee member for the Symposium on Solid and Physical Modeling (SPM’22).

10.1.3 Journal

Member of the editorial boards

Rémi Ronfard is a member of the editorial board of the Computer Animation and Virtual Worlds journal.

Stefanie Hahmann is an Associate Editor of CAG (Computers and Graphics, Elsevier) and CAD (Computer Aided Design, Elsevier).

Reviewer - reviewing activities:

Rémi Ronfard has reviewed papers for the Computers & Graphics (CAG), ACM Transactions on Graphics (TOG), and IEEE Transactions on Visualization and Computer Graphics (TVCG) journals.

Mélina Skouras was an external reviewer for ACM Transactions on Graphics (Proceedings of SIGGRAPH 2022).

10.1.4 Invited talks

Mélina Skouras was an invited speaker for the mini-symposium on Interactive Simulation at Curves & Surfaces 2022.

Mélina Skouras gave a keynote presentation at Graphyz 2022.

Stefanie Hahmann gave an invited talk at the University of Leipzig (Germany) in the BSV seminar in November 2022 and at the TU Freiberg (Germany) in December 2022.

Emmanuel Rodriguez gave a contributed talk at Graphyz 2022.

10.1.5 Leadership within the scientific community

Stefanie Hahmann is a member of the SMI (Shape Modeling International Association) steering committee.

Stefanie Hahmann is a Work-package Leader and Principal Investigator for INRIA in the European FET OPEN Horizon 2020 project ADAM2 (Analysis, Design and Manufacturing using Microstructures, contract no. 862025).

10.1.6 Scientific expertise

Stefanie Hahmann was a member of the jury for the SMA Young Investigator Award, Solid Modeling Association.

10.1.7 Research administration

Stefanie Hahmann is an elected member of the European Association for Computer Graphics – chapitre français (EGFR) and serves as secretary in the steering committee.

Stefanie Hahmann is an elected member of the council of Association Française d’Informatique Graphique (AFIG).

Stefanie Hahmann is an elected member of the Conseil Scientifique of Grenoble INP.

Stefanie Hahmann is a member of the Comité d’Etudes Doctorales (CED) at Inria Grenoble.

Stefanie Hahmann is Responsable Scientifique (Maths-Info) at the Grenoble Doctorate School MSTII.

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Master: Mélina Skouras, Computer Graphics II, 36 HETD, M2, Ensimag-Grenoble INP (MoSIG).

- Since September 2022, Stefanie Hahmann has been the beneficiary of a CRCT from Grenoble INP. In 2021/22, she had a teaching load of 208h HETD. She is responsible for 3 classes at Ensimag-Grenoble INP: Numerical Methods (240 students, 3rd year Bachelor level, 42h), Geometric Modeling (70 students, level M1, 58h) and Surface Modeling (45 students, level M2, 52h). She was president of the jury for more than 10 Master's (PFE) thesis defences.

- Stefanie Hahmann is co-responsible for the MMIS department (Images and Applied Maths) at Grenoble INP-ENSIMAG, with 120 students (levels M1 and M2).

10.2.2 Supervision

In 2022, Rémi Ronfard supervised the PhD theses of Rémi Sagot at University PSL (Paris Sciences et Lettres) and Sandrine Barbois at Univ Grenoble Alpes.

Mélina Skouras co-supervised the PhD of David Jourdan (who defended on March 29, 2022).

Mélina Skouras co-supervises the PhDs of Emmanuel Rodriguez (UGA, PhD in progress), Manon Vialle (UGA, PhD in progress), Alexandre Texeira da Silva (UGA, PhD in progress) and Siyuan He (ENPC, PhD started in October 2022).

Stefanie Hahmann, Mélina Skouras and Georges-Pierre Bonneau co-supervise the PhD thesis of Emmanuel Rodriguez, which started in October 2020, and the post-doc of Thibault Tricard, starting in December 2022.

10.2.3 Juries

Rémi Ronfard was a reader and member of the jury for the PhD defense of Swann Martinez at Univ. Paris 8, January 13, 2022.

Rémi Ronfard was the president of the Jury for the PhD defense of Boyao Zhou at Univ. Grenoble Alpes, November 22, 2022.

Rémi Ronfard was the president of the Jury for the PhD defense of Nolan Mestre at Univ. Grenoble Alpes, December 6, 2022.

Mélina Skouras was a member (as an examiner) of the jury for the PhD defense of Tian Gao at Sorbonne Université, November 8, 2022.

Mélina Skouras was a member (as an examiner) of the jury for the PhD defense of Paul Lacorre at Nantes Université, December 22, 2022.

Mélina Skouras was an external reviewer for the PhD thesis of Iason Manolas, University of Pisa, Italy.

Stefanie Hahmann was a reader and member of the jury for the PhD defense of Jimmy Etienne at Université de Nancy, December 1, 2022.

Stefanie Hahmann was a reader and member of the jury for the PhD defense of Semyon Efremov at Université de Nancy, May 3, 2022.

Stefanie Hahmann was a member of the Recruitment Committee (CoS) for a Full Professor position at Grenoble INP/ENSIMAG.

10.3 Popularization

10.3.1 Articles and contents

A portrait of Rémi Ronfard was featured in the French magazine Chut!, covering several of the research goals of the ANIMA team as well as the ongoing collaboration between the ANIMA team at INRIA and the SPATIAL MEDIA team at Ecole des Arts Décoratifs.

11 Scientific production

11.1 Major publications

- [1] Fine Wrinkling on Coarsely Meshed Thin Shells. ACM Transactions on Graphics 40(5), August 2021, 1-32.

- [2] Fashion Transfer: Dressing 3D Characters from Stylized Fashion Sketches. Computer Graphics Forum 40(6), 2021, 466-483.

- [3] Recognition of Laban Effort Qualities from Hand Motion. MOCO'20 - 7th International Conference on Movement and Computing, Article No. 8, Jersey City / Virtual, United States, July 2020, 1-8.

- [4] As-Stiff-As-Needed Surface Deformation Combining ARAP Energy with an Anisotropic Material. Computer-Aided Design 121, April 2020, 1-15.

- [5] Film Directing for Computer Games and Animation. Computer Graphics Forum 40(2), May 2021, 713-730.

11.2 Publications of the year

International journals

International peer-reviewed conferences

Reports & preprints
