Keywords

Computer Science and Digital Science

- A3.1.1. Modeling, representation

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A6.2.4. Statistical methods

- A6.2.6. Optimization

- A9.2. Machine learning

- A9.3. Signal analysis

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B1.2.1. Understanding and simulation of the brain and the nervous system

- B1.2.2. Cognitive science

- B1.2.3. Computational neurosciences

- B2.6.1. Brain imaging

1 Team members, visitors, external collaborators

Research Scientists

- Philippe Ciuciu [Team leader, CEA, Senior Researcher, HDR]

- Alexandre Gramfort [INRIA, Senior Researcher, HDR]

- Thomas Moreau [INRIA, Researcher]

- Bertrand Thirion [INRIA, Senior Researcher, HDR]

- Demian Wassermann [INRIA, Researcher, until Sep 2022]

- Demian Wassermann [INRIA, Senior Researcher, from Oct 2022, HDR]

Post-Doctoral Fellows

- Antoine Collas [INRIA, from Oct 2022]

- Richard Hoechenberger [INRIA, until Aug 2022]

- Hugo Richard [INRIA, until Mar 2022]

- Cedric Rommel [INRIA]

- David Sabbagh [INRIA, from Feb 2022]

- David Sabbagh [INSERM, until Jan 2022]

- Sheng H Wang [University of Helsinki, from Aug 2022, In delegation to CEA/NeuroSpin]

PhD Students

- Cedric Allain [INRIA]

- Serge Brosset [CEA, from Oct 2022]

- Charlotte Caucheteux [FACEBOOK]

- Ahmad Chamma [INRIA]

- Thomas Chapalain [ENS PARIS-SACLAY]

- L'Emir Omar Chehab [INRIA]

- Pierre-Antoine Comby [ENS PARIS SACLAY, CEA/NeuroSpin]

- Mathieu Dagreou [INRIA]

- Guillaume Daval-Frerot [CEA, until Nov 2022]

- Merlin Dumeur [UNIV PARIS SACLAY, PhD in cotutelle with Aalto University]

- Chaithya Giliyar Radhkrishna [CEA]

- Theo Gnassounou [ENS PARIS-SACLAY, from Oct 2022]

- Ambroise Heurtebise [INRIA, from Oct 2022]

- Hubert Jacob Banville [INTERAXON, until Jan 2022]

- Julia Linhart [UNIV PARIS-SACLAY]

- Benoit Malezieux [INRIA]

- Elsa Manquat [AP/HP, from Feb 2022]

- Apolline Mellot [INRIA]

- Raphael Meudec [INRIA]

- Florent Michel [UNIV PARIS SACLAY, from Apr 2022 until Sep 2022]

- Tuan Binh Nguyen [INRIA, until Feb 2022]

- Alexandre Pasquiou [INRIA]

- Zaccharie Ramzi [CEA, until Feb 2022]

- Louis Rouillard-Odera [INRIA]

- Alexis Thual [CEA]

- Gaston Zanitti [INRIA]

Technical Staff

- Majd Abdallah [INRIA, Engineer]

- Himanshu Aggarwal [INRIA, Engineer]

- Guillaume Favelier [INRIA, Engineer, until Apr 2022]

- Yasmin Mzayek [INRIA, Engineer, from Apr 2022]

- Agnes Perez-Millan [Univ. Pompeu Fabra (Barcelona, Spain), from Oct 2022, Visitor]

- Ana Ponce Martinez [INRIA, Engineer, from May 2022]

- Kumari Pooja [CEA, Engineer]

Interns and Apprentices

- Gabriela Gomez Jimenez [INRIA, from Nov 2022]

- Alexandre Le Bris [INRIA, from Sep 2022]

- Florent Michel [UNIV PARIS SACLAY, from Nov 2022]

- Joseph Paillard [INRIA, from Feb 2022 until Jul 2022]

Administrative Assistant

- Corinne Petitot [INRIA]

External Collaborators

- Zaineb Amor [CEA, PhD student at NeuroSpin, primarily assigned with BAOBAB.]

- Pierre Bellec [CRIUGM, from Oct 2022]

- Julie Boyle [CRIUGM, from Oct 2022]

- Jérôme-Alexis Chevalier [EMERTON DATA]

- Samuel Davenport [UNIV CALIFORNIE, until Feb 2022]

- Elizabeth Dupre [UNIV STANFORD, from Jun 2022 until Jun 2022]

- Ana Grilo Pinho [Western University, Canada, from Feb 2022 until Jul 2022]

- Yann Harel [CRIUGM, from Oct 2022]

- Karim Jerbi [UNIV MONTREAL, from Oct 2022]

- Gunnar Konig [IBE-LMU, from Sep 2022]

- Matthieu Kowalski [UNIV PARIS SACLAY]

- Tuan Binh Nguyen [IMT, from Mar 2022]

- François Paugam [CRIUGM, from Oct 2022]

- Hao-Ting Wang [CRIUGM, from Sep 2022]

2 Overall objectives

The Mind team, which finds its origin in the Parietal team, is uniquely equipped to impact the fields of statistical machine learning and artificial intelligence (AI) in service to the understanding of brain structure and function, in both healthy and pathological conditions.

AI, with recent progress in statistical machine learning (ML), currently aims to revolutionize how experimental science is conducted by using data as the driver of new theoretical insights and scientific hypotheses. Supervised learning and predictive models are then used to assess predictability. We thus face challenging questions such as “Can cognitive operations be predicted from neural signals?” or “Can the use of anesthesia be a causal predictor of later cognitive decline or impairment?”

To study brain structure and function, cognitive and clinical neuroscientists have access to various neuroimaging techniques. The Mind team specifically relies on non-invasive modalities: on the one hand, magnetic resonance imaging (MRI) at ultra-high magnetic field to reach high spatial resolution and, on the other hand, electroencephalography (EEG) and magnetoencephalography (MEG), which record the electric and magnetic activity of neural populations and thus follow brain activity in real time. Extracting new neuroscientific knowledge from such neuroimaging data, however, raises a number of methodological challenges, in particular in inverse problems, statistics and computer science. The Mind project aims to develop the theory and software technology to study the brain, from cognitive to clinical endpoints, using cutting-edge MRI (functional MRI, diffusion-weighted MRI) and MEG/EEG data. To uncover the most valuable information from such data, we need to solve a wide range of inverse problems using a hybrid approach in which machine or deep learning is used in combination with physics-informed constraints.

Once functional imaging data is collected, the challenge of statistical analysis becomes apparent. Beyond the standard questions (Where, when and how can statistically significant neural activity be identified?), Mind is particularly interested in identifying the driving effect, or cause, of such activity in a given cortical region. Answering these basic questions with computer programs requires the development of methodologies built on the latest research on causality, knowledge bases and high-dimensional statistics.

The field of neuroscience is now embracing more open science standards and community efforts to address the so-called “replication crisis” as well as the growing complexity of data analysis pipelines in neuroimaging. The Mind team is ideally positioned to address these issues from both angles by providing reliable statistical inference schemes as well as open source software that is compliant with international standards.

The impact of Mind will be driven by the data analysis challenges in neuroscience but also by the fundamental discoveries in neuroscience that presently inspire the development of novel AI algorithms. The Parietal team has proved in the past that this scientific positioning leads to impactful research. Hence, the newly created Mind team, formed by computer scientists and statisticians with a deep understanding of the field of neuroscience, from data acquisition to clinical needs, offers a unique opportunity to expand and explore more fully uncharted territories.

3 Research program

The scientific project of Mind is organized around four core developments (machine learning for inverse problems, heterogeneous data & knowledge bases, statistics and causal inference in high dimension, and machine learning on spatio-temporal signals).

3.1 Machine learning for inverse problems

Participants:

P. Ciuciu, A. Gramfort, T. Moreau, D. Wassermann

Inverse problems are ubiquitous in observational science. They require reconstructing a signal or image of interest, or more generally a vector of parameters, from remote observations that are possibly noisy and scarce. The link between the parameters of interest and the observations is given by physics, and is commonly well understood. Yet, the recovery of parameters is challenging as the problem is often ill-posed due to the ill-conditioning of the forward model. Machine learning is now more frequently used to address such problems, using likelihood-free inference (LFI) to invert nonlinear systems, prior learning using bi-level optimization, or reinforcement learning to guide how observations are collected.

3.1.1 From linear inverse problems to simulation based inference

| Expected breakthrough: Boosts in MR image quality and reconstruction speed and in spatio-temporal resolution of M/EEG source imaging |

| Findings: Development of data-driven regularizing functions for inverse problems, as well as deep invertible and cost-effective network architectures amenable to solve nonlinear inverse problems on neuroscience data. |

Solving an inverse problem consists in estimating the unobserved parameters at the origin of some measurements. Typical examples are image denoising or image deconvolution, where, given noisy or low resolution data, the objective is to obtain an underlying high-quality image. Inverse problems are pervasive in experimental sciences such as physics, biology or neuroscience. The common problem across these fields is that the measurements are noisy and generally incomplete.

Mathematically speaking, these inverse problems can be formulated as estimating $x$ from $y = A(x) + \varepsilon$. Here, $\varepsilon$ is an additive noise and $A$ is a (generally non-injective) mapping to a lower-dimensional space. For example, in magneto- and electroencephalography (M/EEG), $A$ is a real linear mapping and $\varepsilon$ is considered white and Gaussian, while in magnetic resonance imaging (MRI), $A$ is a complex linear mapping and $\varepsilon$ is circular complex white Gaussian. Despite the linearity of $A$ in both cases, estimating $x$ is a challenging task when the measurements are incomplete, i.e., $\dim(y) < \dim(x)$, and the problem is ill-posed. This is often the case due to physical limitations on the measurement device (M/EEG) or the acquisition time (MRI). Moreover, the linear Fourier operator only reflects an ideal acquisition process, and part of the acquisition artifacts (e.g. B0 inhomogeneity) can be compensated by considering nonlinear models, at the cost of estimating additional parameters along with the MR image.

To tackle these inverse problems, using adequate regularization will promote the right structure for the data to be recovered. Over the last decade the members of Mind have proposed state-of-the-art models and efficient algorithms based on sparsity assumptions 74, 123, 96, 79, 124, 108, 107, 94, 99, 95. MNE is the reference software developed by the team that implements these methods for MEG/EEG data while pysap-mri proposes solvers for MR image reconstruction.
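As an illustration of sparsity-based regularization, the following minimal sketch solves an under-determined linear inverse problem with an l1 penalty using the iterative soft-thresholding algorithm (ISTA). It is not the MNE or pysap-mri implementation: the random operator `A`, the problem sizes, the noise level and the value of `lam` are all assumptions chosen for the example.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def objective(A, y, x, lam):
    """Data fit plus l1 penalty: 0.5 * ||y - A x||^2 + lam * ||x||_1."""
    return 0.5 * np.sum((y - A @ x) ** 2) + lam * np.sum(np.abs(x))

def ista(A, y, lam, n_iter=500):
    """Minimize the objective above by proximal gradient descent."""
    # Step size 1/L, where L = ||A||_2^2 is the Lipschitz constant of the gradient.
    step = 1.0 / np.linalg.norm(A, ord=2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)
        x = soft_threshold(x - step * grad, step * lam)
    return x

# Hypothetical toy problem: 40 measurements, 100 unknowns, 3 active sources.
rng = np.random.default_rng(0)
n_obs, n_params = 40, 100
A = rng.standard_normal((n_obs, n_params)) / np.sqrt(n_obs)
x_true = np.zeros(n_params)
x_true[[5, 37, 80]] = [2.0, -1.5, 1.0]
y = A @ x_true + 0.01 * rng.standard_normal(n_obs)

lam = 0.05
x_hat = ista(A, y, lam)
print("objective at zero:    ", objective(A, y, np.zeros(n_params), lam))
print("objective at estimate:", objective(A, y, x_hat, lam))
```

The penalty weight `lam` trades data fidelity against sparsity; choosing it automatically is one motivation for bi-level optimization.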

The field is now progressing with novel approaches based on deep learning, either by learning the regularization from data in the context of MRI reconstruction 146, 145, or by considering nonlinear models grounded in the physics underlying the data. The team has started to explore this direction using so-called Likelihood-Free Inference (LFI) techniques built on deep invertible networks 147, 106. A particular application has been on diffusion MRI (dMRI), where we have linked the dMRI signal with physiological models of grey matter tissue 106. Still in MRI, but in susceptibility weighted imaging, another approach 88 has consisted in directly estimating the B0 field map from non-Cartesian k-space data to correct for off-resonance effects in non-Fourier operators. The Mind project will continue along this direction, studying nonlinear simulators of imaging data as building blocks. A key aspect of the proposed work is to exploit knowledge on the physics of the data generation mechanisms.

3.1.2 Bi-level optimization

| Expected breakthrough: Efficient algorithms to select hyper-parameters and priors for source localisation in MEG and image reconstruction in MRI/fMRI. |

| Findings: Bi-level optimization solvers exploiting gradients to scale with the large number of samples and hyper-parameters. |

In recent years, bi-level optimization – minimizing over a parameter which is itself the solution of another optimization problem – has raised great interest in the machine learning community. Indeed, many methods in ML reduce to this bi-level framework, typically the problem of hyper-parameter optimization.

In most practical cases, hyper-parameter selection is done using cross-validation (CV), which consists in splitting the whole dataset into training and validation sets. The parameters of the method are computed by minimizing a loss function on the training set, and the hyper-parameters are then set by minimizing the loss function on the validation set. This approach is a bi-level optimization problem: the outer (validation) loss is evaluated at the solution of the inner (training) problem.
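To make the bi-level structure concrete, here is a minimal sketch that assumes a ridge regression inner problem (chosen because it has a closed-form solution) and a squared validation error as outer loss. The gradient of the outer loss with respect to the hyper-parameter is obtained by implicit differentiation of the inner optimality condition. The function names and the synthetic data are illustrative assumptions, not the solvers developed by the team.

```python
import numpy as np

def inner_solution(X_tr, y_tr, lam):
    """Inner problem: ridge regression on the training set, in closed form."""
    H = X_tr.T @ X_tr + lam * np.eye(X_tr.shape[1])
    theta = np.linalg.solve(H, X_tr.T @ y_tr)
    return theta, H

def outer_loss(X_val, y_val, theta):
    """Outer problem: squared error on the validation set."""
    return 0.5 * np.sum((X_val @ theta - y_val) ** 2)

def hypergradient(X_tr, y_tr, X_val, y_val, lam):
    """Derivative of the outer loss with respect to lam."""
    theta, H = inner_solution(X_tr, y_tr, lam)
    grad_theta = X_val.T @ (X_val @ theta - y_val)
    # Differentiating H(lam) theta = X^T y in lam gives d(theta)/d(lam) = -H^{-1} theta.
    dtheta_dlam = -np.linalg.solve(H, theta)
    return grad_theta @ dtheta_dlam

# Hypothetical synthetic regression data.
rng = np.random.default_rng(0)
theta_true = rng.standard_normal(10)
X_tr = rng.standard_normal((50, 10))
X_val = rng.standard_normal((30, 10))
y_tr = X_tr @ theta_true + rng.standard_normal(50)
y_val = X_val @ theta_true + rng.standard_normal(30)

def val_loss_at(lam):
    """Validation loss as a function of the hyper-parameter only."""
    theta, _ = inner_solution(X_tr, y_tr, lam)
    return outer_loss(X_val, y_val, theta)

lam0 = 1.0
g = hypergradient(X_tr, y_tr, X_val, y_val, lam0)
eps = 1e-4
g_fd = (val_loss_at(lam0 + eps) - val_loss_at(lam0 - eps)) / (2 * eps)
print("implicit hypergradient:", g)
print("finite difference:     ", g_fd)
```

Such a hypergradient can drive a gradient-based search over the hyper-parameter instead of a grid search. Here the inner problem is solved exactly and on the full training set, which is the full-batch regime.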

Other instances of such problems can be found in dictionary learning, robust training of neural networks or the use of implicit layers in deep learning. In all these applications, the model or the latent variables are learned by minimizing some loss while the parameters or the dictionary are updated by minimizing a second optimization problem depending on the outcome of the first problem. While theoretical results were produced in the early 70's 87, there are still many challenges related to bi-level optimization that need to be addressed to produce methods that are both theoretically well grounded and computationally efficient. Recently, the members of Mind have published several works related to the subject 57, 58, 69, 81. We intend to pursue this effort in the following directions.

Stochastic bi-level solvers.

Bi-level solvers require the use of the whole training set before doing an update on the outer-level problem: in this sense, they are full-batch methods 69. We propose to study stochastic methods for this task, where progress on the optimization can be made using only a few samples from the training data. Stochastic algorithms are known to be faster than full-batch methods for large datasets, but are also generally harder to analyse from a theoretical standpoint. In addition to being fast, the proposed algorithms should come with statistical guarantees. These solvers can have many applications, from stochastic prior learning for inverse problems to hyper-parameter tuning in general machine learning.

Neural Dictionary Learning.

The bi-level optimization framework offers a canvas to advance the state of the art in dictionary and prior learning. Indeed, dictionary learning has long been seen as a bi-level optimization problem 122. Practical algorithms are mainly based on alternating minimization and rarely account for the sub-optimality of each sub-problem. With advances in bi-level optimization and algorithm unrolling 57, we aim at providing efficient and theoretically justified dictionary learning algorithms that will be able to leverage the technologies of differentiable programming 55, 141.

Deep Equilibrium Models.

The use of deep learning, and in particular unrolled algorithms 102, has brought a major leap in the resolution of inverse problems compared to variational approaches, specifically in terms of computing efficiency and image/signal recovery performance. However, these networks are very demanding in memory during training, which currently limits their potential. Different methods exist to alleviate this problem on both the modeling side (gradient check-pointing, reversible networks) and the implementation side (model parallelism, mixed precision), but they come at the expense of a larger computational cost. A promising research avenue, illustrated by 100, is the use of Deep Equilibrium Models. These models are defined implicitly and amount to unrolling an infinite number of iterations, while using much less memory. These implicit layers constitute another instance of a bi-level optimization problem, and we plan to work on these directions in the near future as a means to address DL image reconstruction in realistic 3D and 4D multi-coil MRI settings, both for structural and functional imaging.
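The idea of an implicitly defined layer can be sketched as follows. The example assumes a single layer of the form z = tanh(W z + U x + b) with a contractive weight matrix `W`, so that plain fixed-point iteration converges. The layer form, the sizes and the rescaling of `W` are assumptions for illustration and do not correspond to a reconstruction network from the cited work.

```python
import numpy as np

def deq_forward(W, U, b, x, tol=1e-10, max_iter=500):
    """Return z* such that z* = tanh(W z* + U x + b), by fixed-point iteration."""
    z = np.zeros(W.shape[0])
    for _ in range(max_iter):
        z_next = np.tanh(W @ z + U @ x + b)
        if np.linalg.norm(z_next - z) < tol:
            return z_next
        z = z_next
    return z

rng = np.random.default_rng(0)
d_hidden, d_in = 16, 8
W0 = rng.standard_normal((d_hidden, d_hidden))
# Rescale W to spectral norm 0.5: since tanh is 1-Lipschitz, the map is a contraction.
W = 0.5 * W0 / np.linalg.norm(W0, ord=2)
U = rng.standard_normal((d_hidden, d_in))
b = np.zeros(d_hidden)
x = rng.standard_normal(d_in)

z_star = deq_forward(W, U, b, x)
residual = np.linalg.norm(z_star - np.tanh(W @ z_star + U @ x + b))
print("fixed-point residual:", residual)
```

Only the equilibrium `z_star` is needed as output, whatever the number of iterations used to reach it. Gradients can then be obtained by implicit differentiation at the fixed point rather than by storing every intermediate iterate, which is where the memory saving comes from.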

3.1.3 Reinforcement learning for active k-space sampling

| Expected breakthrough: New hardware compliant under-sampling patterns in MRI k-space that accelerate anatomical and functional scans while optimizing MR image quality. |

| Findings: Develop novel principles of active sampling in the reinforcement learning framework which optimizes a sampling policy tightly linked to the reconstructed image quality. |

Current under-sampling schemes in MRI allow for shorter scan acquisition times, but at the cost of artifacts in various regions of the reconstructed MR image. These artifacts arise due to uncertainties in some heavily under-sampled regions of the acquired Fourier space (also called k-space). Modern reconstruction algorithms, with the use of strong priors, either hand-crafted or learned, tend to reduce these uncertainties and behave as if the acquisition is fixed.

To go beyond the state of the art, we argue that there is a need to jointly learn an algorithm that designs the optimal under-sampling pattern in k-space as well as the reconstruction network.

As this amounts to learning a sequential decision algorithm, we will rely on reinforcement learning (RL) to build up optimal k-space sampling patterns while enforcing physical constraints on the MRI sequence, as originally proposed in 77, 72, 115.

The k-space acquisition can be modeled by a sampling policy, and the rewards for the joint network are based on reconstructed image quality. Under this paradigm, after every fixed scan time, an instantaneous reconstruction can be obtained and the Fourier space uncertainty maps analysed in depth. Based on this, the scan can continue by actively sampling the k-space and enforcing denser samples in regions where uncertainty is larger. In this way, the learned k-space trajectories may become more patient- and organ-specific. Further, the trajectory can be run so as to yield the best possible reconstruction at any time under a variable scan time budget. These aspects define one of the core directions we will investigate to produce the next generation of state-of-the-art MR data sampling and image reconstruction algorithms. Recent contributions 159, 142 only approach the problem in the Cartesian framework and hence perform 1D variable density sampling along the phase encoding dimension. Given our expertise on non-Cartesian sampling, acquired in developing SPARKLING for both 2D and 3D MR imaging 115, 116, 75, we plan to extend this framework to non-Cartesian acquisition setups while still remaining compatible with hardware constraints on the gradient system. Access to the various MRI scanners at CEA/NeuroSpin is necessary for this work and is an asset for the Mind team.
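For reference, the fixed (non-adaptive) baseline mentioned above, 1D variable density sampling along the phase encoding dimension, can be sketched as follows. This is not a learned or RL-based policy: the polynomial density, its `decay` exponent and the function name are assumptions made for the example.

```python
import numpy as np

def variable_density_mask(n_lines, n_keep, decay=2.0, seed=0):
    """Draw a Cartesian mask that keeps n_keep phase-encoding lines out of n_lines.

    Lines near the k-space center (low frequencies) are drawn with higher probability.
    """
    rng = np.random.default_rng(seed)
    k = np.arange(n_lines) - n_lines // 2        # line index, 0 at the k-space center
    density = 1.0 / (1.0 + np.abs(k)) ** decay   # assumed polynomial decay
    density /= density.sum()
    kept = rng.choice(n_lines, size=n_keep, replace=False, p=density)
    mask = np.zeros(n_lines, dtype=bool)
    mask[kept] = True
    return mask

mask = variable_density_mask(n_lines=256, n_keep=64)
print("acceleration factor:", mask.size / mask.sum())
```

An active scheme would replace the fixed `density` by a policy that is updated after each partial reconstruction, placing new samples where the estimated uncertainty is largest.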

3.2 Heterogeneous Data & Knowledge Bases

Participants:

B. Thirion, D. Wassermann

Inferring the relationship between the physiological bases of the human brain and its cognitive functions requires articulating different datasets in terms of their semantics and representation. Examples of these are spatio-temporal brain images, tabular datasets, structured knowledge represented as ontologies, and probabilistic datasets. Developing a formalism that can integrate all these modalities requires constructing a framework able to represent and efficiently perform computations on high-dimensional datasets, as well as to combine hybrid data representations in deterministic and probabilistic settings. We will pursue two main angles to achieve this task: on the one hand, the automated inference of cross-dataset features, or coordinated representations, and on the other hand, the use of probabilistic logic for knowledge representation and inference. The probabilistic knowledge representation part is now well advanced with the Neurolang project, although it remains a long-term endeavor. The learning of coordinated representations is less advanced.

3.2.1 Learning coordinated representations

| Expected breakthrough: Process semantic information together with image data to bridge large-scale resources and knowledge bases |

| Findings: Set up a learning model that leverages heterogeneous data: Images, annotations, texts, and coordinate tables. |

Inference is the pathway that leads from data to knowledge. One crucial aspect is that in the context of neuroscience, data comes in different forms: Full texts, images and tables. Annotations may be full texts or simply tags associated with observed images. One challenge is thus to develop automated techniques that learn coordinated representations across such heterogeneous data sources.

This learning endeavor rests on several key machine learning techniques: compression, embeddings, and multi-layer networks. Compression (sketching) consists in building a reduced representation of some input, going from large, sparse and complex representations to low-dimensional ones while minimizing some distortion criterion. Embedding techniques also create representations, but possibly bias them to enhance some aspects of the data. They thus incorporate prior information on the data distribution or the relevance of features. Finally, multi-layer networks create intermediate representations of data that are suitable to achieve a prediction goal. Such representations are particularly rich in multi-task settings, where the outputs of the network are multi-dimensional. Following 64, we call such latent data models coordinated representations.

Deep learning is well suited to the goal of learning intermediate representations. As an example, we plan to develop a framework that coalesces in one deep learning formulation, the task of estimating brain structures, cognitive concepts, and their relationships.

Brain structures and cognitive concepts will appear as intermediate representations responsible for linking brain activity to observed behavior. However deep learning cannot be considered as a standard means to understand coordinated representations, due to the limited data available, their poor signal-to-noise ratio (SNR) and their heterogeneity. Deep learning needs instead to be adapted by injecting our expertise on statistical structure of the data (see e.g. 104, 126). Since the challenge is to train such models on limited and noisy data, we will extend our recent work 62 that has developed regularization schemes for deep-learning models: it relies on structured stochastic regularizations (a.k.a. structured dropout). Such approaches are efficient, powerful and can be used in wide settings. We will enhance them with more generic, cross-layer, grouping schemes. Additionally, we will develop two strategies: i) aggregation of predictors for variance reduction and stability of the model 104 and ii) data augmentation – i.e. learning to augment, based on unlabeled data – to improve the fit with limited data. For this we will consider plausible generative mechanisms.

3.2.2 Probabilistic Knowledge Representation

| Expected breakthrough: A domain-specific language (DSL) capable of articulating heterogeneous probabilistic data sources in neuroimaging, as a way to relate physiology to cognition. |

| Findings: Self-optimizing probabilistic solvers for discrete and continuous hierarchical models able to scale for neuroimaging problems. |

Neuroscientific data used to infer the relationships between physiology of the human brain and its cognitive function goes well beyond text, image, and tables. Knowledge graphs representing human knowledge, and the ability to encode reasoning strategies in neuroscience are also key to effectively bridge current data-centric approaches and decades-old domain knowledge. A main challenge in performing inferences combining demographic data-centric approaches, imaging measurements, and domain knowledge, is to be able to infer new knowledge soundly and efficiently taking into account the noisy nature of demographic and imaging measurements, and the common open-world assumption of ontologies and knowledge graphs. Such probabilistic hybrid logic approaches are known to be, in general, intractable in the deterministic 60 as well as in the probabilistic case 156. Nonetheless, there is an opportunity to be seized in identifying tractable segments of probabilistic hybrid logic representations able to solve open neuroscientific questions.

A noticeable opportunity to incorporate all statistical evidence gathered from noisy data into a usable knowledge base is to formalize the inferred relationships into probabilistic symbolic representations 105. These representations are much better suited to simultaneously handle data across topologies and logic systems, implementing inferential algorithms avoiding the brittleness of deterministic logic as well as causal probabilistic reasoning.

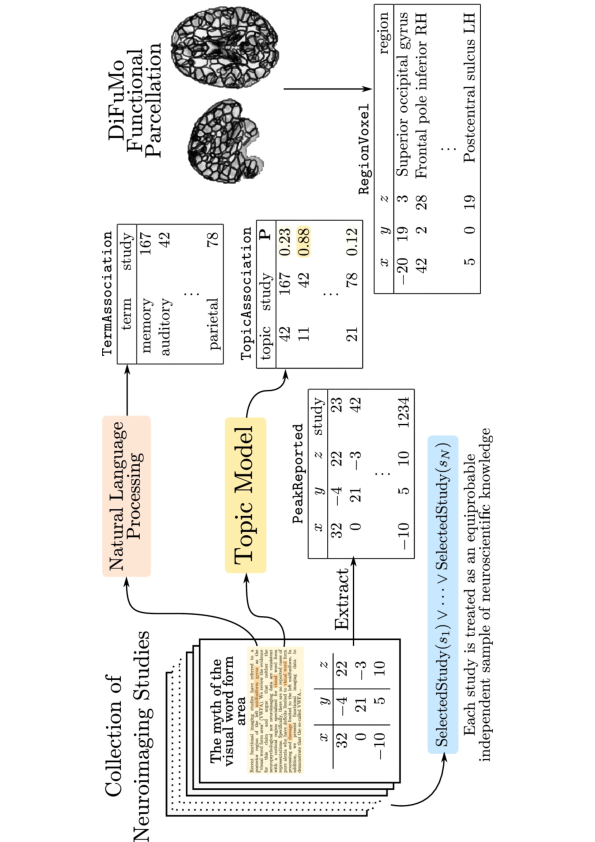

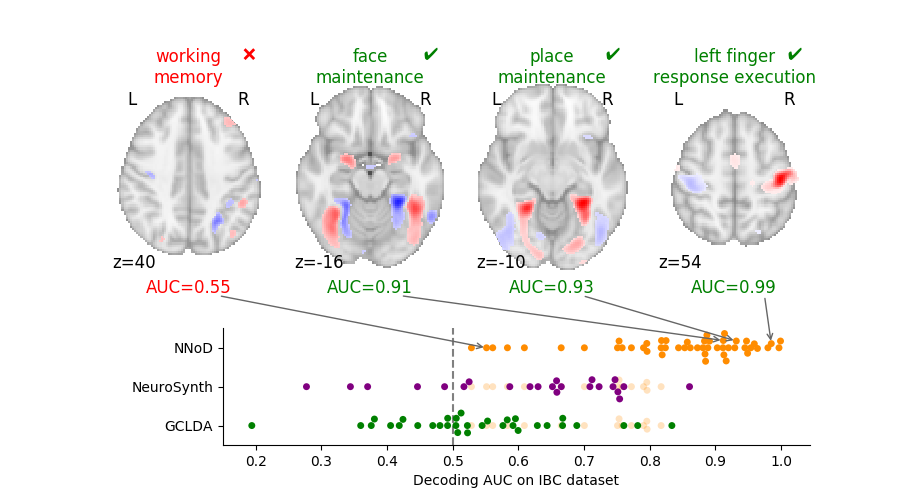

A typical application of such heterogeneous data processing is meta-analysis, which combines neuroimaging data with results found in the scientific literature. Current tools to perform this task are NeuroSynth or Neuroquery (developed by the team). However, the knowledge inferred by such tools is severely limited by the expressive power of the language used to query the data. Current meta-analytic tools, which can express queries relating test makers, article annotations, and their relationship with reported brain activations, support propositional logic only. Propositional logic requires the user to explicitly express every desired term with its characteristics and relationships. Our goal is to extend the inference capabilities of such applications by leveraging current advances in probabilistic logic languages and embedding them in the Neurolang language. Neurolang enables the encoding of complex knowledge in terms of more expressive queries. Neurolang queries use a segment of first-order logic, FO, with a tractable probabilistic extension allowing for computations on high-dimensional and large datasets. Such a segment of first-order logic enables formalising questions such as “what brain areas are most likely reported active in a study specifically when terms related to consciousness are mentioned in such study”, hence making it possible to infer, amongst other tasks, specificity and causality 157 of diverse neuroscience phenomena. To disseminate our results allowing complex expressive searches of massively aggregated diverse data, we will leverage Neurolang. The latter provides a domain-specific language (DSL) for human neuroscience research, while being able to combine imaging data, anatomical descriptions and ontologies. Three main characteristics of the DSL are key to fulfilling this goal: First, it represents neuroimaging-derived information and spatial relationships in a syntax close to the natural language used by neuroscientists 158. Second, through a back-end belonging to the Datalog family, it allows querying ontologies with the same expressive power as the current standards SPARQL and OWL 66. Finally, we will extend Neurolang to a probabilistic language able to express graphical models, allowing the implementation of a wide variety of causal inference and machine learning algorithms 63 in high-dimensional settings which are specific to neuroimaging research. In sum, by leveraging recent advances in deductive database systems 66 and this novel DSL 158, we will provide a more flexible tool to express and infer knowledge on brain structure-function relationships.

3.3 Statistics and causal inference in high dimension

Participants:

A. Gramfort, T. Moreau, B. Thirion, D. Wassermann

Statistics is the natural pathway from data to knowledge. Using statistics on brain imaging data involves dealing with high-dimensional data that can induce intensive computation and low statistical power. Besides, statistical models on large-scale data also need to take potential confounding effects and heterogeneity into account. To address these questions the Mind team will employ causal modeling and post-selection inference. Conditional and post-hoc inference are rather short-term perspectives, while the potential of causal inference stands as a longer-term endeavor.

3.3.1 Conditional inference in high dimension

| Expected breakthrough: Obtain statistical guarantees on the parameters of very-high dimensional generalized linear or non-parametric models. |

| Findings: Develop computationally efficient procedures that allow inference for such models, by leveraging structural priors on the solutions. |

Conditional inference consists of assessing the importance of a certain feature in a predictive model, while taking into account the information carried by alternative features. One motivation for using this inference scheme is that brain regions that sustain behavior and cognition are strongly interacting. Taking these interactions into account is critical to avoid confusing correlation with causation in brain/behavior analysis.
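The difference between marginal and conditional importance can be illustrated on simulated data. In the sketch below, which is a toy linear example and not one of the inference procedures discussed in this section, the outcome `y` depends on `x1` only, while `x2` is a noisy copy of `x1`. All variable names, sample sizes and noise levels are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
x1 = rng.standard_normal(n)               # feature that truly drives the outcome
x2 = x1 + 0.5 * rng.standard_normal(n)    # correlated feature with no effect of its own
y = x1 + rng.standard_normal(n)

# Marginal view: x2 looks predictive because it is correlated with x1.
marginal_corr_x2 = np.corrcoef(x2, y)[0, 1]

# Conditional view: coefficient of x2 once x1 is included in a joint linear model.
X = np.column_stack([x1, x2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
conditional_coef_x2 = coef[1]

print("marginal correlation of x2 with y:", round(float(marginal_corr_x2), 3))
print("coefficient of x2 given x1:       ", round(float(conditional_coef_x2), 3))
```

The marginal correlation of `x2` with `y` is large, whereas its coefficient in the joint model is close to zero. A conditional test asks the second question, and doing so with a formal statistical guarantee is what becomes difficult when the number of features is very large.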

Technical difficulties arise when the set of explanatory features becomes extremely large, as is frequently the case in neuroimaging: conditioning on many variables (or equivalently, high-dimensional variables) is computationally costly and statistically inefficient. The main solutions to date are based either on linear model debiasing 110 or on simulation-based approaches (knockoff inference 73 or conditional randomization tests 119). Importantly, the latter involve simulating data with statistical characteristics described explicitly (in a parametric family) or implicitly (by samples). There remain two gaps to bridge for these methods: i) the computational gap, as the algorithmic complexity of these approaches is typically cubic in the number of samples, unless more efficient generative mechanisms are available; ii) the power gap, related to the limited number of available samples. The best solution thus far consists of associating these inference procedures with dimension reduction procedures 133. The next step is adaptation to more general settings: conditional inference has been formulated in the linear framework, where it boils down to testing whether the corresponding coefficient is non-zero; hence it has to be generalized to nonlinear models, first non-parametric models like random forests, then possibly deep networks.

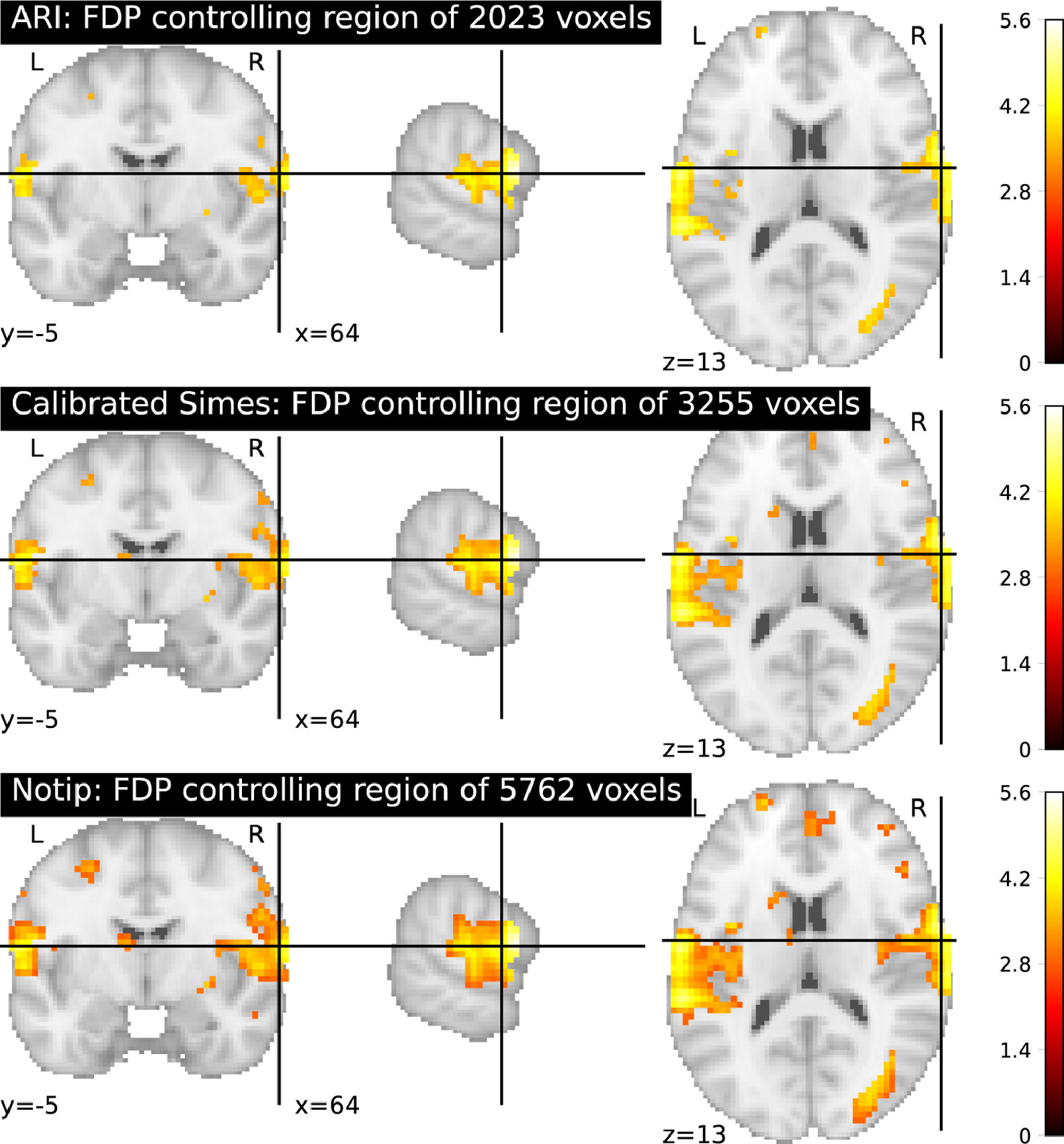

3.3.2 Post-selection inference on image data

| Expected breakthrough: Statistical control of false discovery proportion (FDP) for data under arbitrary correlation structure. |

| Findings: A computationally efficient non-parametric statistical test procedure, and a benchmark against alternative techniques. |

Large-scale statistical testing is pervasive in many scientific fields, where high-dimensional datasets are collected and compared with an outcome of interest. In such high-dimensional contexts, false discovery rate (FDR) control 68 is attractive because it yields reasonable power, while providing an explicit and interpretable control on false positives. Yet the FDR is the expectation of the false discovery proportion (FDP). Controlling the FDR does not mean that the FDP is controlled in any given experiment, a distinction that is most often ignored by practitioners. For the sake of scientific reproducibility, there is a need for methods controlling the FDP.
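The distinction between FDR and FDP can be checked by simulation. The sketch below implements the Benjamini-Hochberg step-up procedure and applies it to repeated synthetic experiments with independent Gaussian test statistics. The number of tests, the effect size and the level `q` are assumptions for the example, and the simulation ignores the spatial correlation found in real brain images.

```python
import math
import numpy as np

def benjamini_hochberg(pvals, q):
    """Boolean rejection mask of the Benjamini-Hochberg step-up procedure at level q."""
    m = len(pvals)
    order = np.argsort(pvals)
    below = pvals[order] <= q * np.arange(1, m + 1) / m
    rejected = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.nonzero(below)[0])   # largest rank passing the step-up test
        rejected[order[: k + 1]] = True
    return rejected

def simulate_fdp(n_runs=200, m=500, n_signal=50, effect=3.0, q=0.1, seed=0):
    """False discovery proportion of BH in each of n_runs independent experiments."""
    rng = np.random.default_rng(seed)
    is_null = np.ones(m, dtype=bool)
    is_null[:n_signal] = False
    fdps = np.empty(n_runs)
    for r in range(n_runs):
        z = rng.standard_normal(m)
        z[~is_null] += effect
        # One-sided p-values P(Z > z) under the standard normal null.
        pvals = np.array([0.5 * math.erfc(v / math.sqrt(2.0)) for v in z])
        rejected = benjamini_hochberg(pvals, q)
        fdps[r] = np.sum(rejected & is_null) / max(rejected.sum(), 1)
    return fdps

fdps = simulate_fdp()
print(f"mean FDP (estimates the FDR): {fdps.mean():.3f}")
print(f"90th percentile of the FDP:   {np.quantile(fdps, 0.9):.3f}")
```

The average over runs estimates the FDR, which the procedure controls, while individual runs can show a noticeably larger proportion of false discoveries.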

Such an approach has been developed in the context of neuroimaging, namely the all-resolution inference framework 148, based on classical multiple testing error control bounds. Yet, the empirical behavior of this method remains to be assessed. Moreover, it has been clearly established that the procedure is over-conservative in some settings 70. Indeed, it relies on the Simes statistical bound, which is not adaptive to the specific type of dependence in a particular dataset. To bypass these limitations, the authors of 70 have proposed a randomization-based procedure known as $\lambda$-calibration, which yields tighter mathematical bounds that are adapted to the dependency observed in the dataset at hand. It rests on a non-parametric (permutation-based) estimation of the null distribution, leading to tight and valid inference under general assumptions.

In this research axis, we propose to fix some of the open issues with the approach described in 70, namely the choice of a template family to calibrate the error distribution in the permutation procedure. We hope to propose a practical choice for this family to avoid putting the burden of choice on practitioners.

We will characterize by simulations and theoretical arguments the behavior of these error control procedures and develop efficient computational methods for the use of these tools in brain imaging analysis.

3.3.3 Causal inference for population analysis

| Expected breakthrough: Provide a reference methodology for causal and mediation analysis in high-dimensional settings. |

| Findings: Benchmark state-of-the-art techniques and further adapt them to the high-dimensional setting. |

Modern health datasets present population characteristics with many variables and in multiple modalities. They can ground prediction and understanding of individual outcomes, using machine learning techniques. Still, heterogeneous variables have complex relationships, making it hard to tease apart each factor in an outcome of interest. Potential outcome theory 150 provides a valuable framework to evaluate the impact of treatment (interventions). Treatment effects can be heterogeneous. In particular, interactions between background and treatment variables have to be considered.

The statistical behavior (consistency and efficiency) of causal estimators under non-parametric models is actively investigated 61, 135. However, this behavior in high-dimensional settings, when both the number of features and the number of samples are large, is still poorly understood. Our objective is thus to extend the theory and algorithms of causal inference to noisy high-dimensional settings, where the noise level implies that effect sizes are proportionally small, and classic methods often become inefficient and potentially inaccurate due to overfitting. More specifically, we plan to explore the following directions.

Mediation analysis and conditional independence

Mediation analysis considers the question of whether a variable (the mediator) mediates all the effect of another variable (the exposure) onto a target variable, a.k.a. the outcome. It turns out that full-mediation analysis amounts to testing whether the outcome is independent from the exposure given the mediator, which is handled by a conditional independence test. When the dimensions of these variables grow, the underlying statistical inference procedures typically lose power, or even possibly error control. We propose to leverage our experience on such high-dimensional inference problems 82, 134 to set up computationally efficient and accurate solutions to this problem.
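As an illustration of how full mediation reduces to a conditional independence test, the following sketch uses a partial-correlation test. It assumes a linear-Gaussian model, which is far more restrictive than the high-dimensional setting targeted here; the names `exposure`, `mediator` and `outcome`, as well as the simulated data, are hypothetical.

```python
import math
import numpy as np


def partial_corr_test(x, y, z):
    """Test x independent of y given z, assuming linear-Gaussian relations.

    Returns the partial correlation and a two-sided p-value obtained from
    Fisher's z-transform.
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    design = np.column_stack([np.ones(len(x)), z])
    # Remove the linear effect of z from both variables
    res_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    res_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    r = float(res_x @ res_y / math.sqrt((res_x @ res_x) * (res_y @ res_y)))
    n, k = len(x), design.shape[1] - 1
    stat = math.sqrt(n - k - 3) * math.atanh(r)
    pval = math.erfc(abs(stat) / math.sqrt(2.0))
    return r, pval


rng = np.random.default_rng(0)
n = 2000
exposure = rng.standard_normal(n)
mediator = exposure[:, None] + rng.standard_normal((n, 5))
# Full mediation: the outcome depends on the exposure only through the mediator
outcome_full = mediator @ np.ones(5) + rng.standard_normal(n)
# Partial mediation: a direct effect of the exposure remains
outcome_partial = outcome_full + 0.5 * exposure

print(partial_corr_test(exposure, outcome_full, mediator))
print(partial_corr_test(exposure, outcome_partial, mediator))
```

With a direct effect present, the partial correlation is clearly non-zero and the null hypothesis of full mediation is rejected. The loss of power mentioned above appears when the number of mediator columns approaches the number of samples.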

Latent variable models and confounders

The most important aspect of inferring causal effects from observational data is the handling of confounders, i.e., factors that affect both an intervention and its outcome. For instance, age has a clear impact on brain characteristics as well as on behavior, potentially biasing brain/behavior statistical associations. A carefully designed observational study attempts to measure all important confounders. When one does not have direct access to all confounders, there may exist noisy and uncertain measurements of proxies for confounders. A possible solution to this problem relies on generative modeling, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), to sample the unknown latent space summarizing the confounders on datasets with incomplete information; the seminal work of 121 is promising, but still requires improvements to become usable in realistic settings.
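The bias induced by a confounder can be made concrete with a toy simulation. The sketch below assumes a single, fully observed confounder and a linear outcome model, so it illustrates the simpler case in which adjustment is possible, not the latent-confounder setting discussed above; all names and numerical values are hypothetical.

```python
import numpy as np


def simulate(n=5000, tau=1.0, seed=0):
    """Toy observational data in which one confounder drives both
    treatment assignment and outcome (true treatment effect = tau)."""
    rng = np.random.default_rng(seed)
    confounder = rng.standard_normal(n)
    treatment = (confounder + rng.standard_normal(n) > 0).astype(float)
    outcome = tau * treatment + 2.0 * confounder + rng.standard_normal(n)
    return confounder, treatment, outcome


def naive_effect(treatment, outcome):
    """Difference of group means, ignoring the confounder."""
    return outcome[treatment == 1].mean() - outcome[treatment == 0].mean()


def adjusted_effect(confounder, treatment, outcome):
    """Coefficient of the treatment in an ordinary least-squares fit that
    also includes the confounder."""
    design = np.column_stack([np.ones_like(treatment), treatment, confounder])
    coef = np.linalg.lstsq(design, outcome, rcond=None)[0]
    return coef[1]


c, t, y = simulate()
print(f"naive estimate:    {naive_effect(t, y):.2f}")
print(f"adjusted estimate: {adjusted_effect(c, t, y):.2f}")
```

The naive estimate is inflated because treated units have, on average, larger confounder values, whereas the adjusted estimate stays close to the simulated effect. When the confounder is only available through noisy proxies, this simple adjustment no longer suffices.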

The quest of model selection and validation

In the classical potential outcome theory 150, causal effects are determined by both factual and counterfactual outcomes, so ground-truth effects can never be measured in an observational study. In the absence of such measures, how can we evaluate the performance of causal inference methods? Addressing this question is an important step for practical problems, in which one has to determine if an effect can safely be considered non-zero, or heterogeneous through a population. We propose to revisit the promising work of 59, analysing in detail the shortcomings of the procedure (regarding both bias and variance), especially when the model becomes high-dimensional.

3.4 Machine Learning on spatio-temporal signals

Participants:

P. Ciuciu, A. Gramfort, T. Moreau, D. Wassermann, B. Thirion

The brain is a dynamic system. A core task in neuroscience is to extract the temporal structures in the recorded signals as a means of linking them to cognitive processes or to specific neurological conditions. This calls for machine learning methods that are designed to handle multivariate signals, possibly mapped to some spatial coordinate system (e.g. in fMRI).

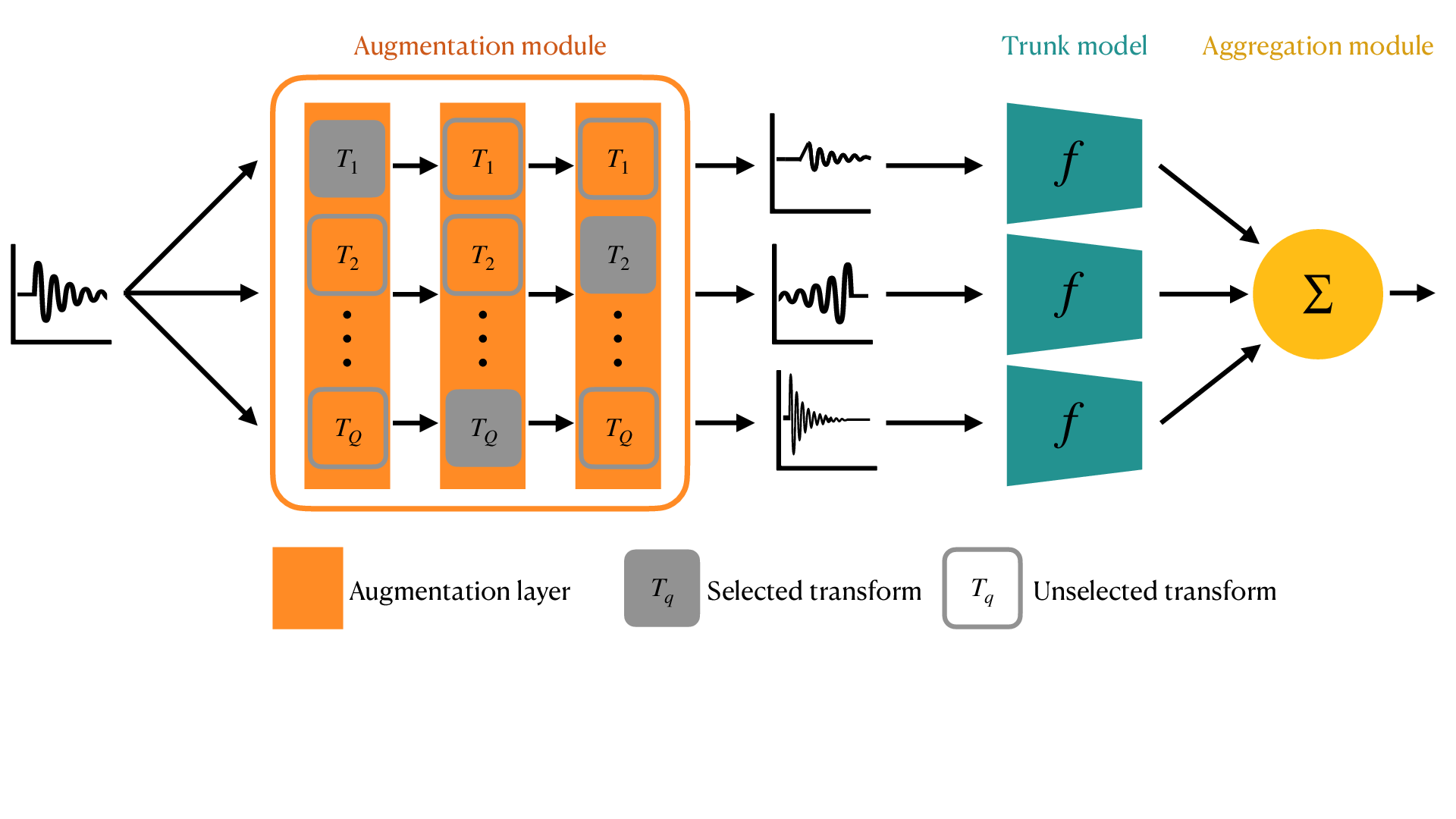

3.4.1 Injecting structural priors with Physics-informed data augmentation

| Expected breakthrough: Obtain models with more predictive power when trained on small datasets. |

| Findings: Efficient data-augmentation strategy tailored to brain signals. |

Data augmentation consists of virtually increasing dataset size during learning by applying random, yet plausible, transformations to the input data. In computer vision, this means altering data by applying symmetries, rotations, geometric deformations etc. While such strategies are reasonable for natural or medical images 140, it is still unclear how neural or BOLD signals can be augmented in order to improve prediction performance and robustness.

Some purely data-driven strategies have been proposed to augment EEG data using spectral transforms 120 or advanced strategies such as channel, time or frequency masking or phase randomizations 114, 117. Although dozens of transformations have been considered in the literature to augment EEG signals, it is now apparent that different augmentation strategies should be applied to the data as a function of the prediction task to be handled. For example, when considering sleep stage classification or BCI applications, the spatial sampling of electrodes and the duration of signals vary considerably, with the consequence that different augmentation parameters and even transformations need to be employed.
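To make these transformations concrete, here is a minimal NumPy sketch of three of them (time masking, channel dropout and phase randomization) applied to one multichannel epoch of shape `(n_channels, n_times)`. The function names, parameters and the choice of sharing random phases across channels are assumptions made for illustration, not the implementations used in the cited works.

```python
import numpy as np


def time_mask(x, mask_len, rng):
    """Zero out one random contiguous window of mask_len samples."""
    x = x.copy()
    start = rng.integers(0, x.shape[-1] - mask_len + 1)
    x[..., start:start + mask_len] = 0.0
    return x


def channel_dropout(x, p_drop, rng):
    """Set each channel to zero independently with probability p_drop."""
    keep = rng.random(x.shape[0]) >= p_drop
    return x * keep[:, None]


def phase_randomize(x, rng):
    """Fourier-transform surrogate: keep the amplitude spectrum of every
    channel and add the same random phase shifts to all channels."""
    n_times = x.shape[-1]
    spectrum = np.fft.rfft(x, axis=-1)
    phases = rng.uniform(0.0, 2.0 * np.pi, spectrum.shape[-1])
    phases[0] = 0.0  # keep the DC component real
    if n_times % 2 == 0:
        phases[-1] = 0.0  # keep the Nyquist component real
    return np.fft.irfft(spectrum * np.exp(1j * phases), n=n_times, axis=-1)


rng = np.random.default_rng(0)
epoch = rng.standard_normal((4, 256))  # synthetic stand-in for an EEG epoch
augmented = phase_randomize(channel_dropout(time_mask(epoch, 50, rng), 0.25, rng), rng)
print(augmented.shape)
```

Each transformation has a strength parameter (`mask_len`, `p_drop`, or the phase range) whose best value depends on the task, which is precisely what makes an automated selection procedure desirable.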

In this line of work we will develop algorithms that can quickly identify the relevant augmentation techniques, building for example on 85, 119. The aim is to provide a system that can automatically learn invariance within a class and across subjects in order to maximize the prediction performance on unseen data. The methodology developed will be relevant beyond neuroscience as long as a family of physics-informed transformations is available for prediction tasks at hand.

3.4.2 Learning structural priors with self-supervised learning

| Expected breakthrough: Unveiling the latent structure of brain signals from large datasets without human supervision as well as improving the prediction performance when learning from limited data. |

| Findings: Self-supervised algorithms for multivariate brain signals. |

Self-supervised learning (SSL) is a recently developed area of research that provides a compelling approach for exploiting large unlabeled datasets. With SSL, the structure of the data is used to turn an unsupervised learning problem into a supervised one, called a “pretext task”, such as solving Jigsaw puzzles from images 137 or learning how to color gray-scaled images. The representation learned on the pretext task can then be reused for unsupervised data exploration or on a supervised downstream task, with the potential to greatly reduce the number of labeled examples required to train a good predictive model.
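For time series, one possible pretext task is to predict whether two windows were recorded close together in time. The sketch below only shows how such pairs and their labels can be generated from an unlabeled recording; the task itself, the function name and the thresholds `tau_pos` and `tau_neg` are assumptions chosen for illustration.

```python
import numpy as np


def sample_pretext_pairs(signal, win, n_pairs, tau_pos, tau_neg, rng):
    """Sample pairs of windows from an unlabeled (n_channels, n_times) array.

    Label 1: window starts at most tau_pos samples apart.
    Label 0: window starts at least tau_neg samples apart.
    """
    n_starts = signal.shape[-1] - win + 1
    if tau_neg > (n_starts - 1) // 2:
        raise ValueError("tau_neg is too large for this recording length")
    starts = np.arange(n_starts)
    anchors = rng.integers(0, n_starts, size=n_pairs)
    labels = rng.integers(0, 2, size=n_pairs)
    others = np.empty(n_pairs, dtype=int)
    for k, (i, label) in enumerate(zip(anchors, labels)):
        dist = np.abs(starts - i)
        candidates = starts[dist <= tau_pos] if label == 1 else starts[dist >= tau_neg]
        others[k] = rng.choice(candidates)
    first = np.stack([signal[..., i:i + win] for i in anchors])
    second = np.stack([signal[..., j:j + win] for j in others])
    return first, second, labels, anchors, others


rng = np.random.default_rng(0)
recording = rng.standard_normal((2, 3000))  # synthetic unlabeled recording
first, second, labels, anchors, others = sample_pretext_pairs(
    recording, win=100, n_pairs=64, tau_pos=50, tau_neg=500, rng=rng
)
print(first.shape, second.shape, labels[:8])
```

A classifier trained on `(first, second) -> labels` has to learn features that vary slowly in time, and its feature extractor can then be reused on a downstream task with few labels.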

In fields like computer vision 137, 128 and time series processing 138, SSL has shown great promise in terms of prediction performance but also in ease of use. Indeed, SSL simplifies model selection and evaluation as it relies on prediction scores and cross-validation, contrary to unsupervised learning methods like ICA 56.

Recently, the team has applied SSL to two large cohorts of clinical EEG data 65, revealing insights on the data without any human supervision. However, many challenges remain. For example, in Mind, we aim to explore novel SSL strategies applicable to electrophysiology as well as to haemodynamic signals measured with fMRI. As such, our goal is to expand the recent multivariate method we have introduced in the field for the blind deconvolution of BOLD signals in both task-related and resting-state experiments 80.

While rather small networks have been employed so far on EEG data 76, 149 due to limited sets of annotations, the use of SSL tasks opens the possibility to work with much larger, mostly unlabeled datasets, and therefore with much more heavily overparametrized models. We aim to explore these directions, hoping to reach a state where pre-trained models could be available for EEG or MEG signals as is presently the case for images or for natural language processing (NLP) tasks.

3.4.3 Revealing spatio-temporal structures with convolutional sparse coding and driven point processes

| Expected breakthrough: A novel way to study and quantify temporal dependencies between neural processes, going beyond connectomes based on spectral analysis. |

| Findings: Temporal pattern finding algorithms that scale to massive MEG/EEG datasets with parallel processing and point-process inference algorithms. |

The convolutional sparse linear model is one established unsupervised learning framework designed for signals. Using algorithms known as convolutional sparse coding (CSC), this framework allows for the learning of shift-invariant patterns to sparsely reconstruct a time series. These patterns, also called atoms, correspond to recurrent structures present in the data. While some of our recent advances have improved the computational tractability of these methods 130, 129 and adapted them to neurophysiological data 109, 93, 80, there are still many shortcomings that make them impractical for applications beyond denoising.
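The model can be sketched in a few lines for a univariate signal. The code below assumes that the atoms are known and fixed, and only solves the sparse coding step with a plain proximal gradient (ISTA) loop; full CSC also learns the atoms, and the algorithms referenced above are considerably more efficient. All names and parameter values are hypothetical.

```python
import numpy as np


def reconstruct(z, atoms):
    """Sum over atoms of the convolution of each activation with its atom."""
    return sum(np.convolve(zk, dk) for zk, dk in zip(z, atoms))


def objective(x, z, atoms, lam):
    """Least-squares data fit plus l1 penalty on the activations."""
    residual = x - reconstruct(z, atoms)
    return 0.5 * residual @ residual + lam * np.abs(z).sum()


def sparse_code_ista(x, atoms, lam, n_iter=300):
    """Estimate sparse activations for fixed atoms with ISTA."""
    n_atoms, atom_len = atoms.shape
    z = np.zeros((n_atoms, x.size - atom_len + 1))
    # Conservative step size: 1 / (upper bound on the Lipschitz constant)
    step = 1.0 / np.sum(np.abs(atoms).sum(axis=1) ** 2)
    history = [objective(x, z, atoms, lam)]
    for _ in range(n_iter):
        residual = x - reconstruct(z, atoms)
        grad = -np.stack([np.correlate(residual, dk, mode="valid") for dk in atoms])
        z = z - step * grad
        z = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft threshold
        history.append(objective(x, z, atoms, lam))
    return z, history


rng = np.random.default_rng(0)
t = np.arange(32)
atoms = np.stack([np.hanning(32) * np.sin(2 * np.pi * f * t / 32) for f in (2, 5)])
atoms /= np.linalg.norm(atoms, axis=1, keepdims=True)
z_true = np.zeros((2, 500 - 32 + 1))
z_true[0, [40, 250]] = 3.0
z_true[1, [120, 400]] = -2.0
x = reconstruct(z_true, atoms) + 0.05 * rng.standard_normal(500)

z_hat, history = sparse_code_ista(x, atoms, lam=0.1)
print(f"objective: {history[0]:.2f} -> {history[-1]:.2f}")
print(f"non-zero activations: {np.count_nonzero(z_hat)} of {z_hat.size}")
```

The non-zero entries of `z_hat` mark when each atom occurs, which is the event-based description of the signal exploited in the remainder of this section.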

Model validation

The main challenge for the evaluation of unsupervised convolutional models comes from current theoretical limitations: What can we guarantee statistically concerning the recovered atoms? Due to their non-convexity, existing algorithms can only guarantee convergence to local minima, which might be sub-optimal. In this setting, it is challenging to quantify if the model parameters are well estimated and if they are actually representative of the signals. In Mind, we aim to develop statistical quantification of the uncertainty associated with such models and in this regard, provide objective selection criteria for the model and its parameters. This topic of research will benefit from our other developments on bi-level optimization and on FDR control (cf. Section 3.3.2) as well as the expertise of the team members on dictionary learning 130, 127, 129.

Capturing temporal dependencies with point processes

Another shortcoming of these models is that they do not capture temporal dependencies between the occurrences of the different atoms. However, neural activity at the level of the whole brain is highly distributed. Different brain regions form networks that are characterized by the presence of statistical dependencies in their activity 139. An interesting question is how one can model and learn these time dependencies between brain areas from MEG or EEG recordings using an unsupervised event-based approach such as CSC. One of the approaches considered is based on point processes (PP; 71, 103). PP are classical tools to study event trains (e.g. sequences of spikes) and to model their dependency structure. We aim here to develop PP-based inference algorithms as a means to capture network effects in different brain areas, but also to quantify how experimental stimuli affect the temporal statistics of temporal patterns 139. To model this latter scenario, we will develop the so-called driven PP. In a second stage, we aim to design fully unsupervised methods to capture the connections between different brain areas, leveraging the full temporal resolution of non-invasive electrophysiological signals.
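A simple way to picture a stimulus-driven point process is an inhomogeneous Poisson process whose intensity is a baseline rate plus a kernel triggered by each stimulus. The sketch below simulates such a process by thinning. The exponential kernel and the parameter names `mu`, `alpha` and `beta` are assumptions made for illustration and are not necessarily the parametrization of the driven PP to be developed.

```python
import numpy as np


def driven_intensity(t, stim_times, mu, alpha, beta):
    """Intensity mu + alpha * sum_s kernel(t - s), with an exponential
    kernel beta * exp(-beta * lag) for positive lags."""
    lags = np.asarray(t)[:, None] - np.asarray(stim_times)[None, :]
    kernel = np.where(lags > 0, beta * np.exp(-beta * np.clip(lags, 0.0, None)), 0.0)
    return mu + alpha * kernel.sum(axis=1)


def simulate_driven_pp(stim_times, t_max, mu, alpha, beta, rng):
    """Simulate event times on [0, t_max] by thinning a homogeneous process."""
    # Crude but always valid upper bound on the intensity
    lam_max = mu + alpha * beta * len(stim_times)
    n_candidates = rng.poisson(lam_max * t_max)
    candidates = np.sort(rng.uniform(0.0, t_max, n_candidates))
    accept = rng.uniform(0.0, lam_max, n_candidates) < driven_intensity(
        candidates, stim_times, mu, alpha, beta
    )
    return candidates[accept]


rng = np.random.default_rng(0)
stim_times = np.arange(2.5, 100.0, 5.0)  # one stimulus every 5 time units
events = simulate_driven_pp(stim_times, t_max=100.0, mu=0.5, alpha=2.0, beta=5.0, rng=rng)
print(f"{events.size} events, first ones at {np.round(events[:5], 2)}")
```

In this parametrization `alpha` is the expected number of extra events triggered by one stimulus, so inferring it from detected atom activations quantifies how strongly a stimulus drives a given temporal pattern.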

4 Application domains

The four research axes presented earlier were conceived in tight interaction with four main applications (large-scale predictive modeling, mapping cognition & brain networks, modeling clinical endpoints, from brain images and bio-signals to quantitative biology and physics).

4.1 Population modeling, large-scale predictive modeling

4.1.1 Unveiling Cognition Through Population Modeling

Linking the human brain's structure and function with cognitive abilities has been a research epicenter for the past 40 years. The sophistication of brain mapping machinery such as MRI, EEG and MEG has produced a treasure trove of data. Nonetheless, the effect size of the phenomena leading to understanding cognition is often drowned out by noise and inter-individual variability. A main goal of Mind is to simultaneously harness the power of large-scale general purpose datasets, such as the Human Connectome Project (HCP) and the Adolescent Brain Cognitive Development Study (ABCD), as well as small-scale high-precision ones, such as the Individual Brain Charting (IBC) dataset 144, to understand the link between the human brain's architecture and function, and cognition. Parietal's expertise has already been demonstrated in this field. Examples include using diffusion MRI (dMRI) to link the brain's macrostructure with language comprehension 78 and tissue microstructure with cognitive control 125, as well as linking functional gradients on the cortical surface 91 to functional territory segregation 143.

The Mind project will continue this task by leveraging our core methodological developments, described in the previous section, and our global collaborative network of cognitive scientists.

4.1.2 Imaging for health in the general population

Individual differences in brain function and cognition have historically been investigated by studies carried out by individual laboratories having access mainly to small sample sizes. The growing availability of public large-scale data of epidemiological dimensions curated by dedicated consortia (e.g. UK Biobank) has enabled studying the relationship between cognition and the brain with unparalleled granularity and statistical power. These resources now allow researchers to relate brain signals/images to rich descriptions of the participants including behavioral and clinical assessments in addition to social and lifestyle factors. Machine learning has proven essential when modeling biomedical outcomes from the large-scale and high-dimensional data brought by consortia and biobanks. It is used to build predictive models of heterogeneous biomedical outcomes (cognitive, social, clinical) based on different neuroscientific modalities. Taken together, this facilitates the study of lifestyle and health-related behavior in the general population, potentially revealing risk factors leading to biomarker discovery.

Mind will greatly contribute to this effort by focusing on population modeling as a tool for enhancing the analysis of clinical data and mental health.

4.1.3 Proxy measures of brain health

Clinical datasets tend to be small as sharing of data is not incentivized or institutional and economic resources are missing. As a consequence, the capacity of machine learning to learn functions that relate complex-to-grasp biomedical outcomes to heterogeneous data cannot be fully exploited. This has stimulated growing interest in proxy measures of neurological conditions derived from the general population, such as individual biological aging. One counter-intuitive aspect of the methodology is that measures of biological aging (e.g. via brain imaging) can be obtained by focusing on the age of a person, which is known in advance and is, in itself, not interesting as a target. However, by predicting the age, machine learning can capture the relevant information about aging. Based on a population of brain images, it extracts the best guess for the age of a person, indirectly positioning that person within the population. Individual-specific prediction errors therefore reflect deviations from what is statistically expected 155. The brain of a person can look similar to brains commonly seen in older (or younger) people. The resulting brain-predicted age reflects physical and cognitive impairment in adults 154, 83, 92 and reveals neurodegenerative processes 118, 101, which could be overlooked without using machine learning.
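The computation of such a proxy can be sketched as follows: an age-prediction model is fitted on a population, and the individual prediction error, often called the brain age delta, is kept as the measure of interest. The example below is a minimal sketch on synthetic features, assuming a ridge regression model and out-of-sample predictions obtained by K-fold cross-validation; the feature construction and all names are hypothetical.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (y - y_mean))
    return w, y_mean - x_mean @ w


def brain_age_delta(X, age, alpha=1.0, n_folds=5, seed=0):
    """Out-of-sample predicted age and its deviation from chronological age."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(age)), n_folds)
    predicted = np.empty(len(age))
    for test in folds:
        train = np.setdiff1d(np.arange(len(age)), test)
        w, b = ridge_fit(X[train], age[train], alpha)
        predicted[test] = X[test] @ w + b
    return predicted, predicted - age


# Synthetic population: 20 imaging-like features that drift with age
rng = np.random.default_rng(0)
age = rng.uniform(20.0, 80.0, size=500)
loadings = np.linspace(0.02, 0.08, 20)
X = age[:, None] * loadings + rng.standard_normal((500, 20))

predicted_age, delta = brain_age_delta(X, age)
print(f"mean absolute error: {np.abs(delta).mean():.1f} years")
```

A positive `delta` means that the features of a person resemble those of older individuals in the reference population. In practice the delta is usually corrected for its residual dependence on age before being related to health outcomes.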

Mind will extend this line of research in two directions: 1) assessment of brain age using EEG and non-brain data such as health records and 2) proxy measures of mental health beyond aging.

4.1.4 Studying brain age using electrophysiology

MRI is not yet available in all clinical situations and certain aspects of brain function are better understood using electrophysiological modalities (M/EEG). Until recently, it was unclear if brain age can be meaningfully estimated from M/EEG. In a recent study 97, we demonstrated, using the Cam-CAN cohort, that combining MRI and MEG enhanced detection of cognitive dysfunction. The proposed approach not only achieved integration of brain signals from distinct modalities but explicitly handled the absence of MEG or MRI recordings, adapting ideas from 111. This is key for clinical translation where one cannot afford excluding cases because one modality is missing. In the clinical setting, EEG is predominantly used (and not MEG). Clinical recordings are far noisier than lab EEG and gold-standard source modeling with MRI is rarely done outside the lab. Supported by theoretical analysis and simulations, we found through empirical benchmarks 152 that Riemannian embeddings 1) capture individual head geometry, 2) bring robustness to extreme noise, and 3) enable good age prediction from clinical 20-channel EEG (n=1300) with performance close to 306-channel lab MEG.
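A Riemannian embedding of sensor-space covariance matrices can be sketched with a tangent-space projection. The code below is a simplified illustration: it assumes the arithmetic mean as reference point (a geometric mean is the more common choice) and synthetic data; the function names are hypothetical.

```python
import numpy as np


def _spd_apply(C, func):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(C)
    return (vecs * func(vals)) @ vecs.T


def tangent_space(covs, ref=None):
    """Map SPD matrices to vectors: whiten by the reference, take the
    matrix logarithm, then vectorize the upper triangle (off-diagonal
    terms are scaled by sqrt(2) to preserve the Frobenius norm)."""
    if ref is None:
        ref = covs.mean(axis=0)
    ref_isqrt = _spd_apply(ref, lambda v: 1.0 / np.sqrt(v))
    rows, cols = np.triu_indices(covs.shape[-1])
    weights = np.where(rows == cols, 1.0, np.sqrt(2.0))
    features = []
    for C in covs:
        log_c = _spd_apply(ref_isqrt @ C @ ref_isqrt, np.log)
        features.append(weights * log_c[rows, cols])
    return np.array(features)


rng = np.random.default_rng(0)
signals = rng.standard_normal((10, 4, 200))  # (n_recordings, n_channels, n_times)
covs = signals @ signals.transpose(0, 2, 1) / signals.shape[-1]
features = tangent_space(covs)

# The same recordings seen through an unknown invertible linear mixing
mixing = np.eye(4) + 0.2 * rng.standard_normal((4, 4))
features_mixed = tangent_space(mixing @ covs @ mixing.T)
print(np.linalg.norm(features, axis=1)[:3])
print(np.linalg.norm(features_mixed, axis=1)[:3])
```

The two sets of norms coincide: a linear mixing of the channels only rotates the embedded vectors. This invariance is one way to see why such features can remain informative without explicit source modeling, and the resulting vectors can be fed to any linear regression model.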

Mind will extend this line of research by translating EEG-based brain age measures into the hospital setting and probing these in different patient populations in which ageing-related differences in brain structure and function are part of the clinical picture, e.g., neurodevelopmental disorders, postoperative cognitive decline and dementia (cf. Section 4.3).

4.1.5 Proxy measures of mental health beyond brain aging

Quantitative measures of mental health remain challenging despite substantial research efforts 112. Mental health can only be probed indirectly through psychological constructs, e.g. intelligence or anxiety gauged by valid and statistically relevant questionnaires or structured examinations by a specialist. In practice, full neuropsychological evaluation is not an automated process but relies on expert judgment to confront multiple responses and interpret them within a larger environmental context, including the cultural background of the participant. Inspired by brain age, we set out to build empirical measures of mental health 86 by predicting traditional and broadly used psychological constructs such as fluid intelligence or neuroticism in the UK Biobank. Our results have shown that all proxies captured the target constructs and were more useful than the original measures for characterizing real-world health behavior (sleep, exercise, tobacco, alcohol consumption). In the long run, we anticipate that using proxies could complement psychometric assessments by corroborating data and potentially providing more accurate data faster and more efficiently for clinical populations.

Mind will expand this line of research by systematically searching for proxy measures of physical and mental health derived from large clinical populations using electronic health records or transcripts from clinical interviews. We will propose a systematic causal analysis (treatment effect size and mediation) to provide a clearer understanding of the relationships between the many variables that characterize mental health. We will study in more detail the impact of general health markers on brain status, as this may well account for much of the unexplained variance in brain health.

4.2 Mapping cognition & brain networks

4.2.1 Problem statement

Cognitive science and psychiatry aim at describing mental operations: cognition, emotion, perception and their dysfunction. As an investigation device, they use functional brain imaging, which provides a unique window to bridge these mental concepts to the brain, neural firing and wiring. Yet aggregating results from experiments probing brain activity into a consistent description faces the roadblock that cognitive concepts and brain pathologies are ill-defined. Separation between them is often blurry. In addition, these concepts (a.k.a. psychological constructs) may not correspond to actual brain structures or systems. To tackle this challenge, we propose to leverage rapidly increasing data sources: text and brain locations described in neuroscientific publications, brain images and their annotations taken from public data repositories, and several reference datasets.

4.2.2 What machine learning can do for neuroscience

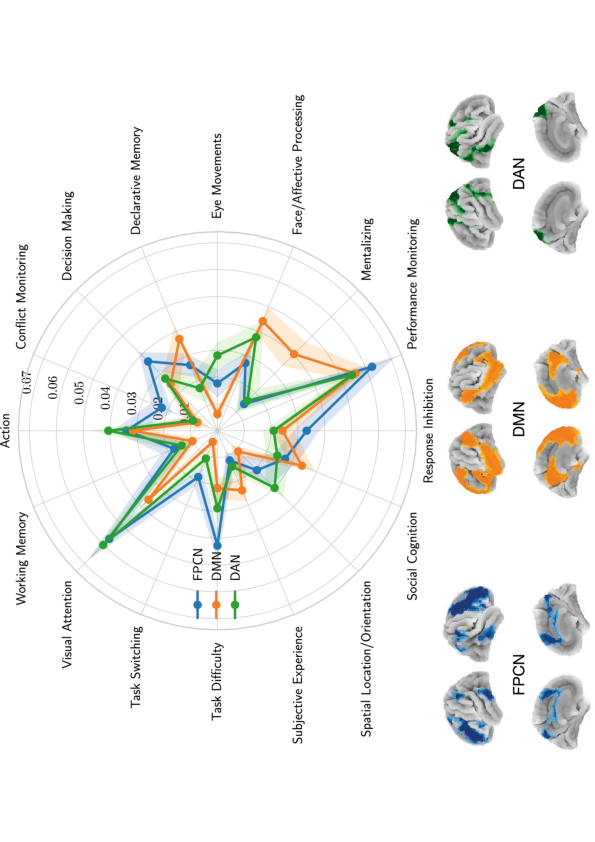

Recent works in computer vision 89 or natural language processing 84, 90 have tackled predictions on a large number of classes, getting closer to open-ended knowledge. These approaches, which rely on uncovering some form of relational structure across these classes, in effect capture the semantics of the domain 84, including the similarity structure of the relevant classes and the ambiguities across classes or the multiple aspects of a class. Broadly speaking, these contributions converge to the concept of representation learning 67, i.e. estimating latent factors that reformulate a learning problem into a new set of input features or output classes that are more natural for the data and help further analysis. These new tools enable extraction of knowledge, for instance ontology induction, with statistical learning 136. They are at the root of heterogeneous data integration, such as multi-modal machine learning 64. The machine learning challenges that we aim to tackle are three-fold:

- Existing multi-modal machine learning techniques have been developed for relatively abundant data, with overall high SNR: text, natural images, videos, sound. These data are most often non-ambiguous, while brain data typically are, due to the low SNR per image and, more crucially, poor annotation quality. We propose to tackle this by adapting machine learning solutions to this low-SNR regime: introduction of priors, aggressive dimension reduction, aggregation approaches and data augmentation to reduce overfitting.

- Leveraging implicit supervisory signals: While data sources contain lots of implicit information that could be used as targets in supervised learning, there is most often no obvious way to extract it. We propose to tackle this by using additional, ill- or not-annotated data, relying on self-supervision methods.

- Model interpretability: Our goal is to provide clear assertions on the relationships between brain structures and cognition: the inference should always lead to an updated knowledge base, i.e. updated relationships between concepts pertaining to neuroscience on one hand, psychology on the other hand. Specifically, one should be able to reason about the information extracted within Mind. For this, we will develop dedicated statistical, causal and formal (ontology-based) data analysis schemes.

Associating knowledge engineering with statistical learning to boost cognitive neuroimaging requires tackling the challenge of multimodal machine learning under noisy conditions with limited data. Doing so will capture links between behavior and brain activity, and enable aggregating the information carried by neuroimaging data to redefine and link concepts in psychology and psychiatry.

4.2.3 Perspective taken: combine distributional semantics with brain images

In natural language processing (NLP), distributional semantics capture meanings of words using similarities in the way they appear in their environment. We want to adapt these ideas to learn data-driven organizations of psychological concepts. Importantly, applying these techniques solely to the psychology literature merely captures the current status quo of the field. Including brain images is necessary to bring new information.

To link observed cognition to brain activity, two typical statistical learning problems arise: encoding, that seeks to describe brain activity from behavior; and decoding, that seeks the converse, predicting behavior from brain activity 113. In addition, statistical modeling of each aspect of the data on its own generates knowledge, typically spatial decompositions from resting-state data, and topic modeling on descriptions of behavior. The research strategy followed in this proposal is to combine the different statistical learning problems in a unified framework to extract core structures from the aggregation of neuroimaging data: on one side brain structures, and on the other side semantic relationships and concepts in psychological sciences.

Mind will in particular publish automated functional meta-analyses to give a systematic assessment of the publicly available data and question the limitations of the current conceptual framework of systems neuroscience as well as of these resources.

4.3 Modeling clinical endpoints

When sufficient data is available, machine learning can be employed to directly model various clinical endpoints (such as diagnosis, drug response, and neuropsychological scores) from brain signals without the need for proxy measures. This approach has the potential to significantly and meaningfully simplify statistical modeling in clinical research. Machine learning facilitates combining heterogeneous input data (different modalities) and does not need high confidence in underlying generative models linking the data to the clinical endpoint. As a consequence, the same class of models can be applied regardless of the endpoint. Its focus is on bounding the approximation error of the endpoint instead of correct parameter estimates. As such, it provides generalizing models that are more robust. Our team has pushed this type of research program through several important collaborations with our European clinical partners using EEG and MRI.

4.3.1 EEG-based modeling of clinical endpoints

Neurological and psychiatric disorders can show complex neurological patterns. Diagnosis is often performed clinically (based on cerebral signs and behavioral symptoms), leading to important variability across doctors. In clinical neuroscience, predicting diagnosis from brain signals is therefore a common application. In the clinical context, EEG is an economically viable option that can be applied in a wide array of circumstances. In collaboration with the Salpêtrière Hospital and the Paris Brain Institute (ICM), we have developed and validated an approach for EEG-based modeling of diagnosis for severely brain-injured patients suffering from disorders of consciousness (DoC) 98. Expert-defined features from consciousness studies were rigorously combined using random forest classification. Sensitivity analysis and benchmarks showed robustness across EEG configurations (channels, time points), protocols (resting state vs evoked responses), label noise and differences between recording sites. When changes in the signal are more subtle than they are in DoC patients (average power turned out to be one of the strongest stand-alone features), more general approaches are needed.

Our future activities will focus on extending this line of research to other clinical populations and other endpoints. We have started a collaboration with the Institut Pasteur (GHFC team, T Bourgeron, R Delorme) and the University of Montreal (PPSP team, G Dumas), to characterize differences between normally developing children and children diagnosed with autism spectrum disorders. A wide array of EEG tasks will be used and endpoints (i.e. developmental timepoints) will go beyond the usually accurate diagnosis, focusing on symptom severity and social developmental scores. With the anesthesiology department at the Lariboisière hospital (A Mebazaa, E Gayat, F Vallée) and the cognitive neurology unit (C Paquet) we aim at developing EEG-based models of cognitive decline and dysfunction in two different settings. Postoperative cognitive decline is an important complication after general anesthesia and its antecedents must be better understood. As this might be an indicator for a latent neurodegenerative condition, we plan to use our EEG-based models of both Alzheimer's Disease and Lewy body dementia in which disease progression is an important change over time.

This widening scope calls for a more general methodology as compared to our previous work on DoC. For example, in these conditions involving neurodegenerative problems, we have observed that both subtle and condition-specific spatial patterns matter more than strong and global amplitude changes. To approach these challenges we will draw on our latest M/EEG methods that were recently developed for population-level modeling of brain health and brain aging 97. We found that frequency band-specific spatial patterns of M/EEG power spectra conveyed important information about cognitive function (memory and cognitive performance) that was not explained by MRI or fMRI. This was implemented by predicting from a filter bank of frequency-band-specific source power and source connectivity features. Core challenges to enable clinical translation include lower SNR and the absence of individual anatomical MRI scans needed for gold-standard source modeling. Through theoretical analysis, simulations and benchmarks we found 152, 151 that, in M/EEG sensor space, covariance matrices in combination with spatial filtering techniques and Riemannian embeddings provide good workarounds for absent anatomical MRI scans. This covariance-based approach makes it possible to capture fine-grained spatial information related to power and connectivity without performing biophysics-based source localization. Moreover, Riemannian embeddings make predictive modeling from M/EEG covariance matrices more robust to noise, whereas their interpretability is more challenging than that of spatial filters, indicating a direction for further research. Another challenge is given by the limited numbers of labeled samples for supervised learning and EEG devices with small channel numbers, such as monitoring or user-grade EEG with 2-4 electrodes for which random loss of electrodes can be frequent. In this context, we expect important enhancements from self-supervised learning approaches 65 and deep learning methods for data augmentation, for which we have obtained the first results on non-clinical data. In these settings, the previous elements from classical approaches such as Riemannian geometry or spatial filtering can be readily implemented alongside more involved computations and transformations.

4.3.2 MRI-based modeling of clinical endpoints

Image-based biomarkers can be objectively measured and are a sign of normal or abnormal processes, of a condition or disease. Incorporating new potential imaging biomarkers requires several steps, often in parallel and complementary to each other, to be undertaken for translation into clinical practice. These can be divided into the following phases after identification: development and evaluation, validation, implementation, qualification, and utilization. Our team aims to cross two main translational gaps, that is, the translation from patients first and then to practice. Our aim through our current and active projects is to ensure that potential biomarkers, like the clear delineation of subterritories of the subthalamic nucleus (STN) in pharmaco-resistant Parkinson's disease (PD) patients (i.e. candidates for implantation of a deep brain stimulator), are 'fit for purpose' and associated with the clinical endpoint of interest, with the overarching goal being to demonstrate efficacy and health impact. This process is key to the translation into clinical practice and widespread utilization.

Through the ANR VLFMRI grant we aim to derive new MR imaging-based biomarkers related to prematurity and abnormal neurodevelopment of hospitalized neonates at low magnetic field (20 mTesla). In this setup, the objective is to perform almost continuous monitoring to detect early signs of adverse events, including ischemic stroke or encephalopathy (collaboration with Prof. V. Biran, APHP Robert Debré Hospital). An additional collaboration is already underway with the AP-HP Henri Mondor Hospital (neuroradiologist Dr B. Bapst, doing part of her PhD at NeuroSpin) to achieve high-resolution susceptibility weighted imaging (600 µm isotropic) in a scan time of 2 min 30 s for an accurate delineation of the STN in PD patients prior to surgical planning. A database of 123 patients has already been collected using both the standard SWI imaging protocol and ours, based on the SPARKLING technology. This annotated database will be key to comparing the diagnostic power of our solution with that of current care, analysing to what extent a higher image resolution is instrumental in providing a more accurate clinical diagnosis, and finally making our protocol more widely accepted in clinical practice.

Our key contribution in these projects is to translate to the clinical realm both the SPARKLING technology on the acquisition side 116, 75 and our PySAP software 99 for MR image reconstruction. In this regard, the recently accepted CEA postdoc funding should help us move the technology to clinical 7T MR systems (Magnetom Terra, Siemens-Healthineers) at the University Hospital of Poitiers through a nascent collaboration with Prof. Rémy Guillevin. Their interest is to use the high-resolution SPARKLING SWI protocol at 7T to better delineate anomalies along the central vein for the diagnosis of multiple sclerosis, as the number of anomalies predicts the grade/severity of this inflammatory pathology. In the longer term, we aim to generalize the use of our recent DL networks for MR image reconstruction 146, 145 to multiple acquisition setups and other downstream tasks (e.g. motion correction and correction of off-resonance artifacts related to inhomogeneities).

4.4 From brain images and bio-signals to quantitative biology and physics

Thanks to the developments in MIND:subsec:MLIP and MIND:subsec:MLSTP we aim to approximate more accurately the biophysical models underlying MRI and electrophysiological signals. By estimating quantities grounded in the physics of the data (time, spatial localization, tissue properties) we aim to offer more actionable outputs for cognitive, clinical and pharmacological applications.

Technologies like 4D SPARKLING should in the future allow us to carry out both fast high-resolution multi-parametric quantitative imaging (mqMRI, e.g. T1, T2 and proton density mapping) and laminar (i.e. layer-based) functional imaging in BOLD-fMRI. In the mqMRI and fMRI settings, the fourth dimension is the weighting contrast and the time axis, respectively. First, mqMRI enables a precise quantification of biomarkers such as iron stores in the pathological brain. Measuring these parameters intra-cortically in Parkinsonian patients is one of the key challenges of the coming years, especially at 7 Tesla, in order to stratify PD patients earlier and follow the evolution of their disease. Second, particular attention will be paid to the impact of the developments performed in MIND:subsec:MLIP on the statistical sensitivity of brain activity detection, which ultimately defines the final validation metric of the data acquisition/image reconstruction pipeline. For this purpose, robust experimental activation protocols such as retinotopic mapping will be used for validation on the 7T scanner and eventually on the 11.7T Iseult MR system. The finest target resolution is 500 µm isotropic in 3D.

Novel developments on bi-level optimization for hyper-parameter selection from ssub:bilevel will bring state-of-the-art inverse methods to end users who currently face the difficulty of performing model selection efficiently on empirical data. This will lead to more accurate quantitative assessments, at sub-millimeter and millisecond resolution, of where neural activity occurs.
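To make the idea of bi-level hyper-parameter selection concrete, here is a minimal sketch on a toy problem. It is an assumption-laden stand-in, not the method referred to above: the inner problem is a ridge regression on training data, the outer problem is the validation loss, and the hyper-gradient with respect to the log-regularization is obtained by implicit differentiation of the inner optimality condition. The data and the function name `outer_loss_and_hypergrad` are hypothetical.

```python
import numpy as np

# Synthetic regression data (assumption): sparse ground truth, unit noise.
rng = np.random.default_rng(0)
n_train, n_val, n_features = 50, 50, 30
w_true = np.zeros(n_features)
w_true[:5] = 1.0
X_train = rng.standard_normal((n_train, n_features))
X_val = rng.standard_normal((n_val, n_features))
y_train = X_train @ w_true + rng.standard_normal(n_train)
y_val = X_val @ w_true + rng.standard_normal(n_val)


def outer_loss_and_hypergrad(log_alpha):
    """Validation loss and its derivative with respect to log(alpha)."""
    alpha = np.exp(log_alpha)
    # Inner problem: min_w 0.5 * ||X_train w - y_train||^2 + 0.5 * alpha * ||w||^2
    hessian = X_train.T @ X_train + alpha * np.eye(n_features)
    w = np.linalg.solve(hessian, X_train.T @ y_train)
    # Outer problem: mean squared error on the validation set.
    residual = X_val @ w - y_val
    loss = 0.5 * np.mean(residual ** 2)
    grad_w = X_val.T @ residual / n_val
    # Implicit differentiation: dw/dlog_alpha = -alpha * hessian^{-1} w
    hypergrad = -alpha * grad_w @ np.linalg.solve(hessian, w)
    return loss, hypergrad


# Gradient descent on log(alpha) with a simple backtracking step size.
log_alpha = 0.0
initial_loss, _ = outer_loss_and_hypergrad(log_alpha)
for _ in range(30):
    loss, grad = outer_loss_and_hypergrad(log_alpha)
    step = 1.0
    while step > 1e-8 and outer_loss_and_hypergrad(log_alpha - step * grad)[0] > loss:
        step *= 0.5
    if step <= 1e-8:
        break  # no descent step found: treat as converged
    log_alpha -= step * grad
final_loss, _ = outer_loss_and_hypergrad(log_alpha)
print(f"alpha = {np.exp(log_alpha):.3f}, validation loss = {final_loss:.4f}")
```

Compared with a grid search, a gradient-based outer loop of this kind scales to several hyper-parameters, at the cost of one extra linear solve per outer iteration in this closed-form setting.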

The line of work on inverse problems should also influence how non-invasive neuroimaging and electrophysiology based on MRI, EEG and MEG are regarded by more traditional neurophysiologists working with animal data. By considering biophysical models of the data and estimating their parameters from empirical recordings, we hope to provide estimates of physical quantities (tissue properties, neural interaction strengths, etc.). The line of work based on stochastic simulation-based inference (SBI) could transform the way MEG, EEG and MRI data are analyzed. Here we will explore the inversion of the models offered by major software packages such as The Virtual Brain (TVB) 153 or the Human Neocortical Neurosolver (HNN) 132. A student from the group of Prof. S. Jones, which originated the HNN software, visited the team in 2022.
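The principle of simulation-based inference can be illustrated with its simplest variant, rejection sampling (approximate Bayesian computation). The sketch below is a toy example under stated assumptions: the simulator is a hypothetical noisy exponential decay, not TVB or HNN, and the prior range and acceptance quantile are arbitrary choices. More elaborate SBI methods typically replace the rejection step with a learned density estimator, but the logic is the same: parameters are inferred only by running the simulator, without an explicit likelihood.

```python
import numpy as np

rng = np.random.default_rng(0)
times = np.linspace(0.0, 2.0, 50)
noise_std = 0.05


def simulate(decay_rates):
    """Hypothetical simulator: one noisy exponential decay per parameter value."""
    decay_rates = np.atleast_1d(decay_rates)
    clean = np.exp(-decay_rates[:, None] * times[None, :])
    return clean + noise_std * rng.standard_normal(clean.shape)


# "Empirical" recording generated with a parameter unknown to the inference.
true_rate = 2.0
observed = simulate(true_rate)[0]

# 1. Draw parameters from the prior and run the simulator for each draw.
n_simulations = 20000
prior_samples = rng.uniform(0.5, 5.0, n_simulations)
simulated = simulate(prior_samples)

# 2. Keep the 1% of draws whose simulations are closest to the observation.
distances = np.linalg.norm(simulated - observed, axis=1)
threshold = np.quantile(distances, 0.01)
posterior_samples = prior_samples[distances <= threshold]

print(f"posterior mean: {posterior_samples.mean():.2f}, "
      f"posterior std: {posterior_samples.std():.2f}")
```

The accepted samples approximate the posterior distribution of the parameter, so the output is a full distribution with its uncertainty rather than a single point estimate.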

5 New software and platforms

5.1 New software

5.1.1 MNE

-

Name:

MNE-Python

-

Keywords:

Neurosciences, EEG, MEG, Signal processing, Machine learning

-

Functional Description:

Open-source Python software for exploring, visualizing, and analyzing human neurophysiological data: MEG, EEG, sEEG, ECoG, and more.

-

Release Contributions:

https://mne.tools/stable/whats_new.html

- URL:

-

Contact:

Alexandre Gramfort

-

Partners:

HARVARD Medical School, New York University, University of Washington, CEA, Aalto university, Telecom Paris, Boston University, UC Berkeley, Macquarie University, University of Oregon, Aarhus University

5.1.2 NeuroLang

-

Name:

NeuroLang

-

Keywords:

Neurosciences, Probabilistic Programming, Logic programming

-

Functional Description:

NeuroLang is a probabilistic logic programming system specialised in, but not limited to, the analysis of neuroimaging data.

-

Release Contributions:

https://neurolang.github.io/

- URL:

-

Contact:

Demian Wassermann

5.1.3 Nilearn

-

Name:

NeuroImaging with scikit learn

-

Keywords:

Health, Neuroimaging, Medical imaging

-

Functional Description:

Nilearn is the neuroimaging library that adapts the concepts and tools of scikit-learn to neuroimaging problems. As a pure Python library, it depends on scikit-learn and nibabel, the main Python library for neuroimaging I/O. It is an open-source project, available under the BSD license. The two key components of Nilearn are i) the analysis of functional connectivity (spatial decompositions and covariance learning) and ii) the most common tools for multivariate pattern analysis. A great deal of effort has been put into the efficiency of the procedures, both in terms of memory cost and computation time.

-

Release Contributions:

HIGHLIGHTS

- Updated docs with a new theme using furo.
- permuted_ols and non_parametric_inference now support TFCE statistic.
- permuted_ols and non_parametric_inference now support cluster-level Family-wise error correction.
- save_glm_to_bids has been added, which writes model outputs to disk according to BIDS convention.

See all details in https://nilearn.github.io/stable/changes/whats_new.html

- URL:

-

Contact:

Bertrand Thirion

-

Participants:

Alexandre Abraham, Alexandre Gramfort, Bertrand Thirion, Elvis Dohmatob, Fabian Pedregosa Izquierdo, Gael Varoquaux, Loic Esteve, Michael Eickenberg, Virgile Fritsch

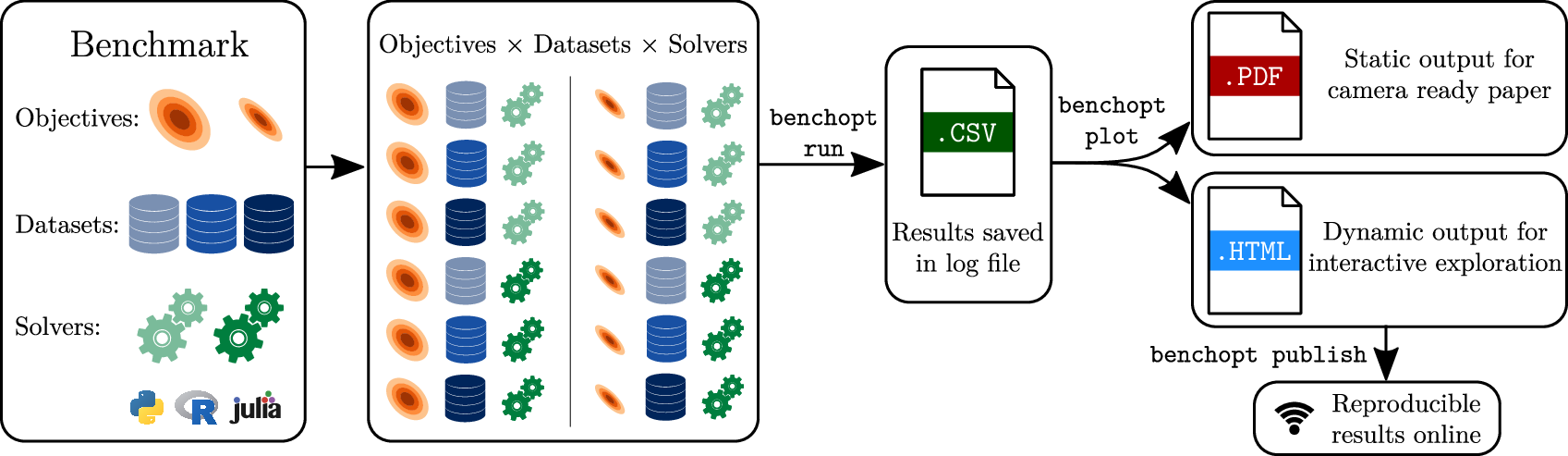

5.1.4 Benchopt

-

Keywords:

Mathematical Optimization, Benchmarking, Reproducibility

-

Functional Description:

BenchOpt is a package that makes comparisons of optimization algorithms simpler, more transparent and more reproducible. It is written in Python but can be used with many programming languages. So far it has been tested with Python, R, Julia and compiled binaries written in C/C++ available via a terminal command. If a solver can be installed via conda, it should just work!

BenchOpt is used through a simple command line, and ultimately running and replicating an optimization benchmark should be as easy as cloning a repo and launching the computation with a single command line. For now, BenchOpt features benchmarks for around 10 convex optimization problems, and we are working on expanding this to more complex optimization problems. We are also developing a website to display the benchmark results easily.

-

Release Contributions:

https://github.com/benchopt/benchopt/releases/tag/1.3.0

-

Contact:

Thomas Moreau

5.1.5 Scikit-learn

-

Keywords:

Clustering, Classification, Regression, Machine learning

-

Scientific Description:

Scikit-learn is a Python module integrating classic machine learning algorithms in the tightly-knit scientific Python world. It aims to provide simple and efficient solutions to learning problems, accessible to everybody and reusable in various contexts: machine-learning as a versatile tool for science and engineering.

-

Functional Description:

Scikit-learn can be used as a middleware for prediction tasks. For example, many web startups adapt Scikit-learn to predict buying behavior of users, provide product recommendations, detect trends or abusive behavior (fraud, spam). Scikit-learn is used to extract the structure of complex data (text, images) and classify such data with techniques relevant to the state of the art.

Easy to use, efficient and accessible to non-experts in data science, Scikit-learn is an increasingly popular machine learning library in Python. In a data exploration step, users can enter a few lines in an interactive (but non-graphical) interface and immediately see the results of their request. Scikit-learn is a prediction engine. Scikit-learn is developed in open source and available under the BSD license.

- URL:

- Publications:

-

Contact:

Olivier Grisel

-

Participants:

Alexandre Gramfort, Bertrand Thirion, Gael Varoquaux, Loic Esteve, Olivier Grisel, Guillaume Lemaitre, Jeremie Du Boisberranger, Julien Jerphanion

-

Partners:

Boston Consulting Group - BCG, Microsoft, Axa, BNP Paribas Cardif, Fujitsu, Dataiku, Assistance Publique - Hôpitaux de Paris, Nvidia

5.1.6 joblib

-

Keywords:

Parallel computing, Cache

-

Functional Description:

Facilitate parallel computing and caching in Python.

- URL:

-

Contact:

Gael Varoquaux

6 New results

6.1 Accelerated acquisition in MRI

Participants: Chaithya Giliyar Radhakrishna, Guillaume Daval-Frérot, Zaineb Amor, Philippe Ciuciu.

Main External Collaborators: Alexandre Vignaud [CEA/NeuroSpin], Pierre Weiss [CNRS, IMT (UMR 5219), Toulouse], Aurélien Massire [Siemens-Healthineers, France].

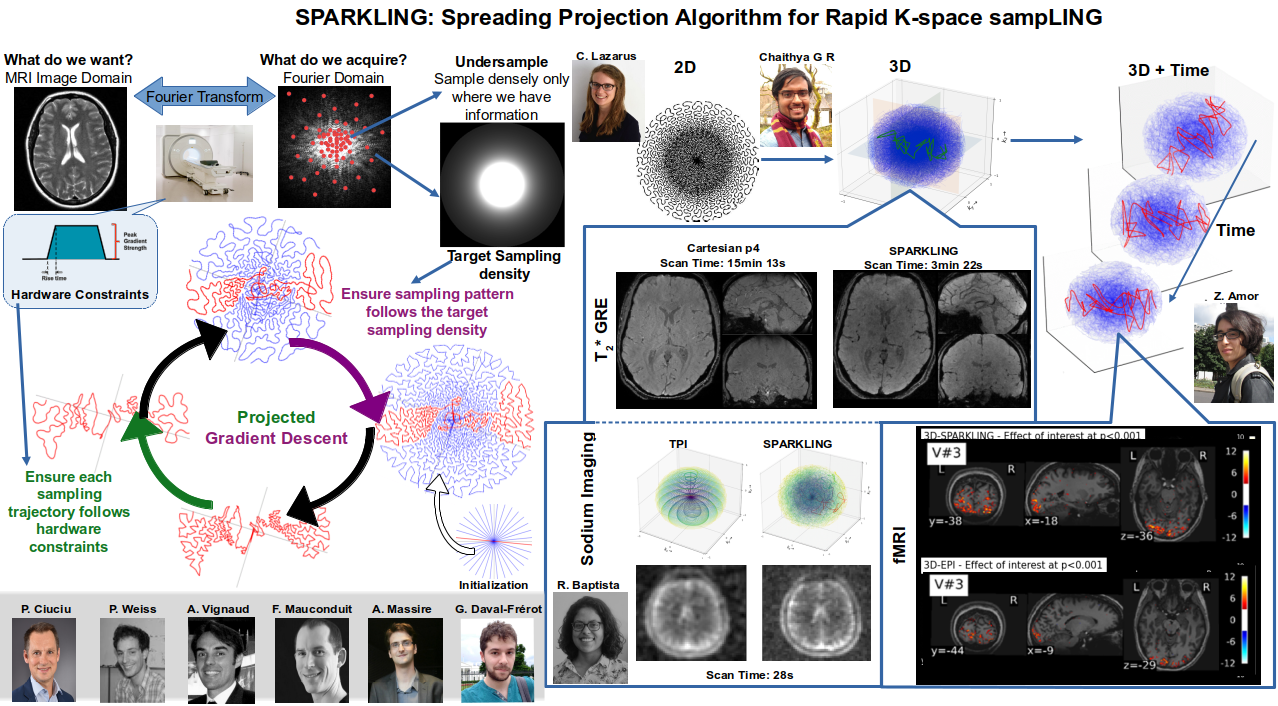

MRI is a widely used neuroimaging technique for probing brain tissues and their structure, and it provides diagnostic insights into the functional organization of the brain as well as the layout of its vessels. However, MRI relies on an inherently slow imaging process. Reducing acquisition time has been a major challenge in high-resolution MRI and has been successfully addressed by Compressed Sensing (CS) theory. However, most Fourier encoding schemes under-sample existing k-space trajectories, which unfortunately will never adequately encode all the necessary information. Recently, the Mind team has addressed this crucial issue by proposing the Spreading Projection Algorithm for Rapid K-space sampLING (SPARKLING) for 2D/3D non-Cartesian T2* and susceptibility weighted imaging (SWI) at 3 and 7 Tesla (T) 115, 116, 4. These advances have interesting applications in cognitive and clinical neuroscience, as we have already adapted this approach to high-resolution functional and metabolic (sodium 23Na) MR imaging at 7T, a very challenging feat 38, 40. Fig. 1 illustrates the application of SPARKLING to anatomical, functional and metabolic imaging. Additionally, we have shown that the SPARKLING under-sampling strategy can be used to internally estimate the static B0 field inhomogeneities, a necessary component for correcting the off-resonance artifacts they cause, which avoids the need for additional scans. This finding has been published in 16 and a patent application has been filed in the US (US Patent App. 63/124,911). Ongoing extensions such as Minimized Off Resonance SPARKLING (MORE-SPARKLING) aim to avoid such long-lasting processing by introducing a more temporally coherent sampling pattern in k-space, thereby correcting these off-resonance effects already during data acquisition 42.
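The trade-off between acquisition time and image quality can be illustrated with a toy example. The sketch below is not SPARKLING: it assumes a synthetic phantom, a plain Cartesian k-space, and a hypothetical variable-density selection of k-space lines that samples the center more densely than the periphery. It reports the acceleration factor and the error of a naive zero-filled reconstruction, which is the kind of baseline that compressed-sensing reconstructions aim to improve upon.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 128

# Synthetic phantom (assumption): a large disk with a brighter inclusion.
yy, xx = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
image = (xx**2 + yy**2 < 0.6**2).astype(float)
image += 0.5 * ((xx - 0.2) ** 2 + (yy + 0.1) ** 2 < 0.15**2)

# Fully sampled Cartesian k-space, with the zero frequency at the center.
kspace = np.fft.fftshift(np.fft.fft2(image))

# Variable-density line selection: low frequencies are kept more often.
ky = np.linspace(-1.0, 1.0, n)
keep_prob = np.clip(1.0 / (1.0 + (ky / 0.1) ** 2), 0.05, 1.0)
keep_rows = rng.random(n) < keep_prob
keep_rows[n // 2 - 4 : n // 2 + 4] = True  # always keep the k-space center
mask = np.zeros((n, n), dtype=bool)
mask[keep_rows, :] = True


def zero_filled_error(sampling_mask):
    """Relative error of the inverse FFT with unsampled coefficients set to 0."""
    recon = np.fft.ifft2(np.fft.ifftshift(kspace * sampling_mask))
    return np.linalg.norm(np.abs(recon) - image) / np.linalg.norm(image)


acceleration = 1.0 / mask.mean()
print(f"acceleration factor: {acceleration:.1f}")
print(f"zero-filled relative error: {zero_filled_error(mask):.3f}")
```

Since most of the image energy lies at low spatial frequencies, favoring the k-space center limits the error for a given number of acquired lines. Non-Cartesian trajectories and nonlinear reconstruction are needed to go further.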

6.2 Deep learning for MR image reconstruction and artifact correction

Participants: Zaccharie Ramzi, Guillaume Daval-Frérot, Chaithya Giliyar Radhakrishna, Philippe Ciuciu.

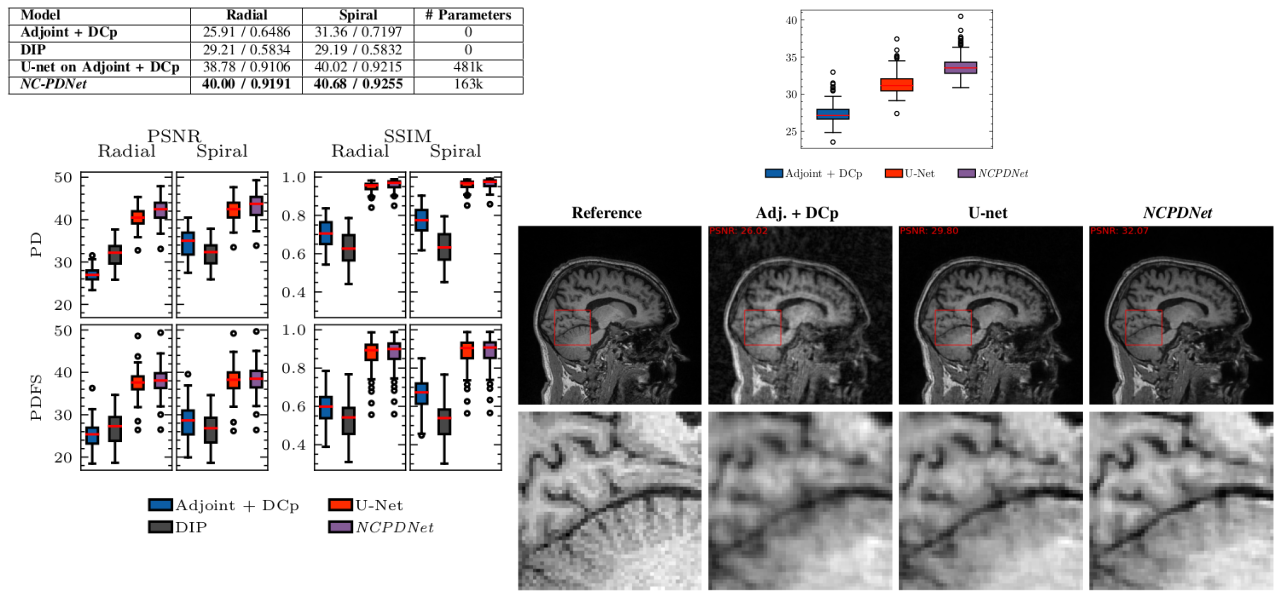

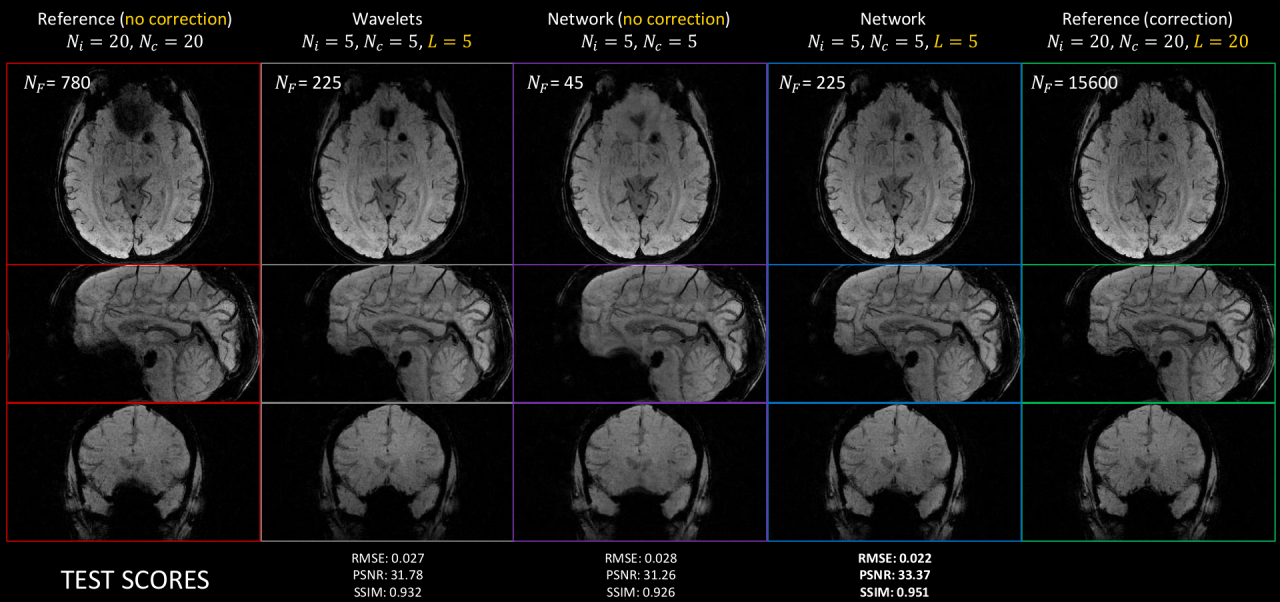

Main External Collaborators: Jean-Luc Starck [CEA/DAp/CosmoStat], Mariappan Nadar [Siemens-Healthineers, USA], Boris Mailhé [Siemens-Healthineers, USA].