2023 Activity report: Team FLOWERS

Inria teams are typically groups of researchers working on the definition of a common project, and objectives, with the goal to arrive at the creation of a project-team. Such project-teams may include other partners (universities or research institutions).

RNSR: 200820949R

- Research center: Inria Centre at the University of Bordeaux

- In partnership with: Ecole nationale supérieure des techniques avancées

- Team name: Flowing Epigenetic Robots and Systems

- Domain: Perception, Cognition and Interaction

- Theme: Robotics and Smart environments

Keywords

Computer Science and Digital Science

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.3.3. Pattern recognition

- A5.4.1. Object recognition

- A5.4.2. Activity recognition

- A5.7.3. Speech

- A5.8. Natural language processing

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.7. Learning

- A5.10.8. Cognitive robotics and systems

- A5.11.1. Human activity analysis and recognition

- A6.3.1. Inverse problems

- A9.2. Machine learning

- A9.4. Natural language processing

- A9.5. Robotics

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B1.2.1. Understanding and simulation of the brain and the nervous system

- B1.2.2. Cognitive science

- B5.6. Robotic systems

- B5.7. 3D printing

- B5.8. Learning and training

- B9. Society and Knowledge

- B9.1. Education

- B9.1.1. E-learning, MOOC

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.4. Theater

- B9.6. Humanities

- B9.6.1. Psychology

- B9.6.8. Linguistics

- B9.7. Knowledge dissemination

1 Team members, visitors, external collaborators

Research Scientists

- Pierre-Yves Oudeyer [Team leader, INRIA, Senior Researcher, HDR]

- Clément Moulin-Frier [INRIA, Researcher]

- Eleni Nisioti [INRIA, Starting Research Position, until Aug 2023]

- Hélène Sauzéon [INRIA, Professor Detachement, HDR]

Faculty Members

- David Filliat [ENSTA, Professor, HDR]

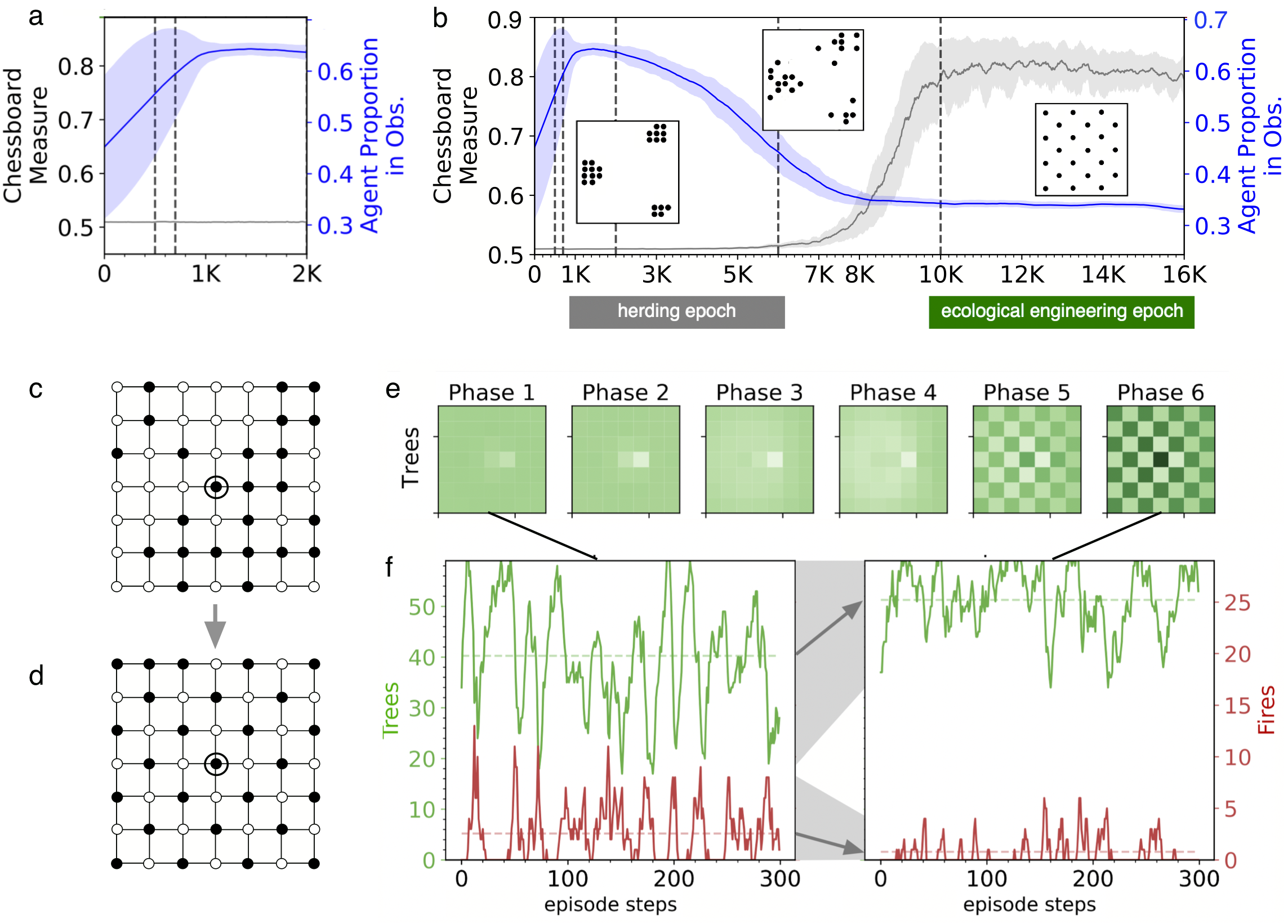

- Cécile Mazon [UNIV BORDEAUX, Associate Professor]

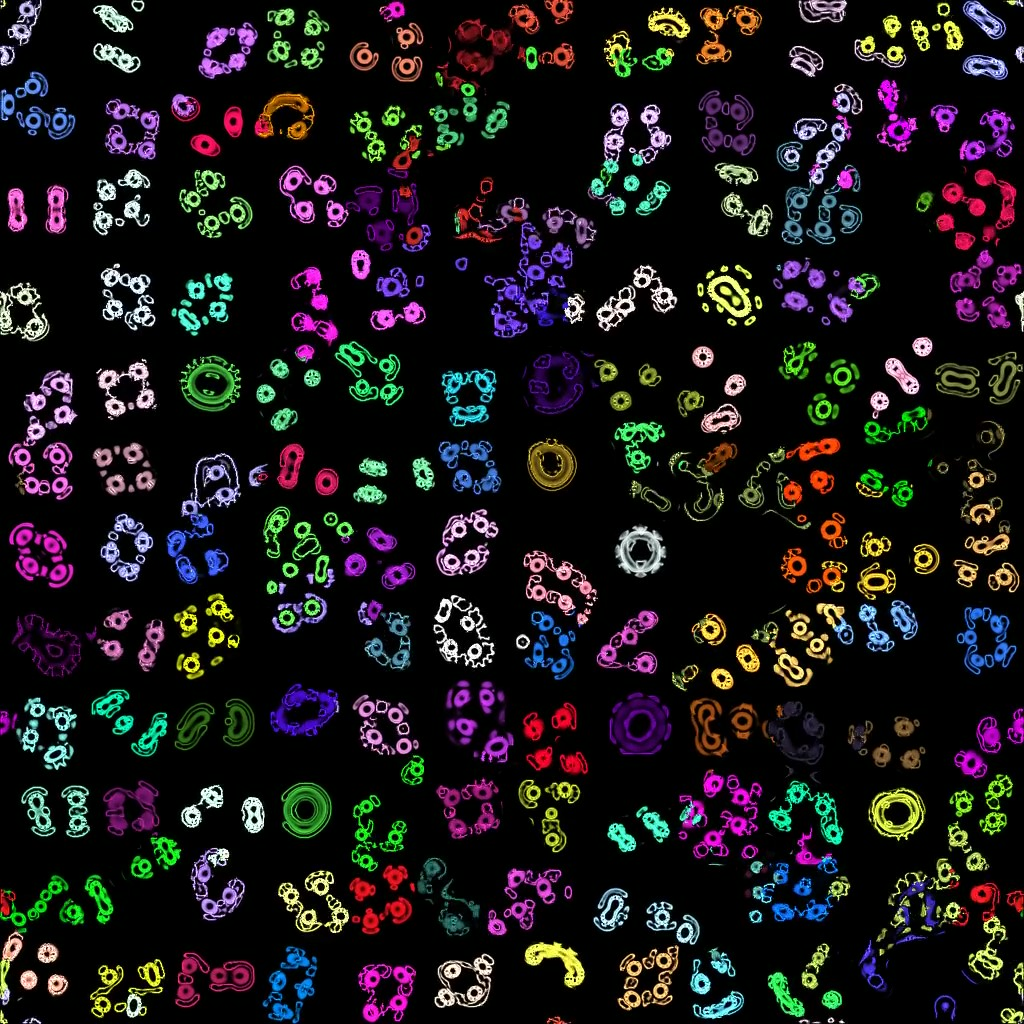

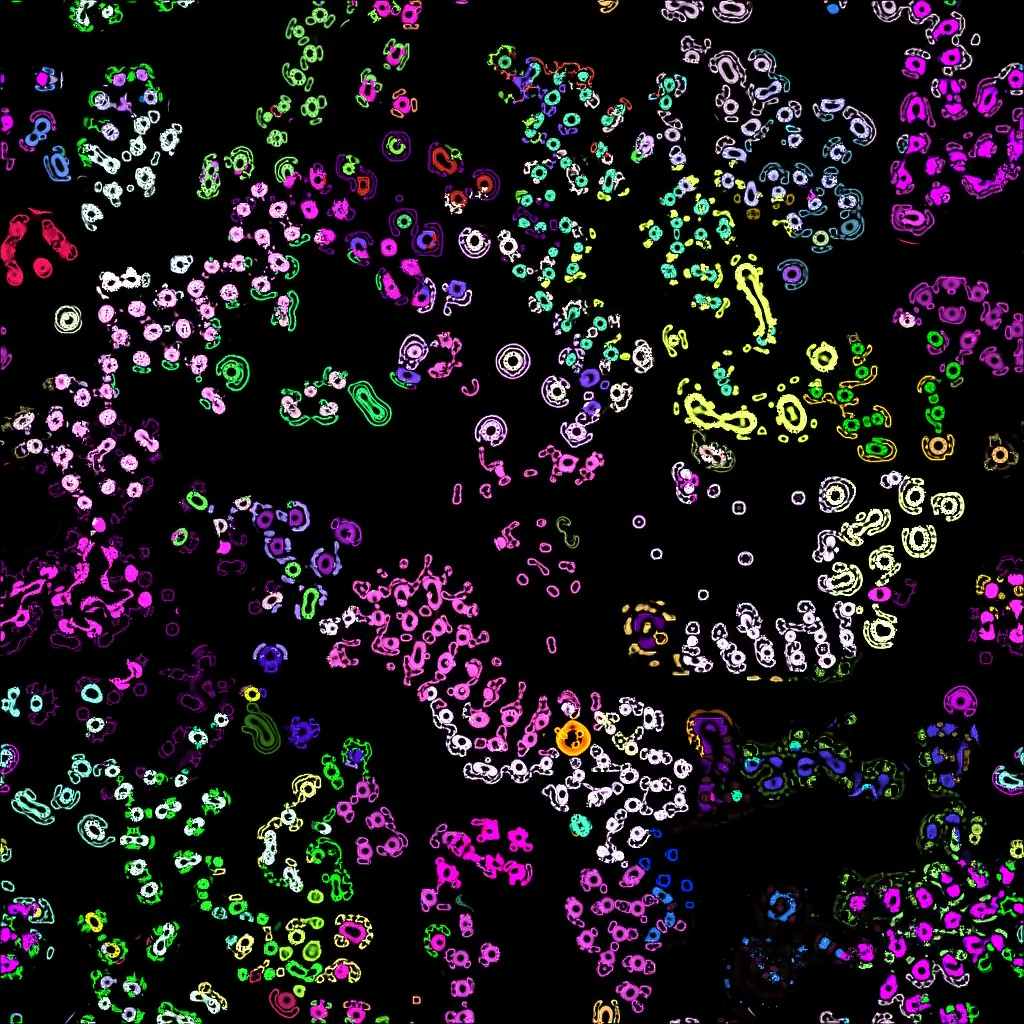

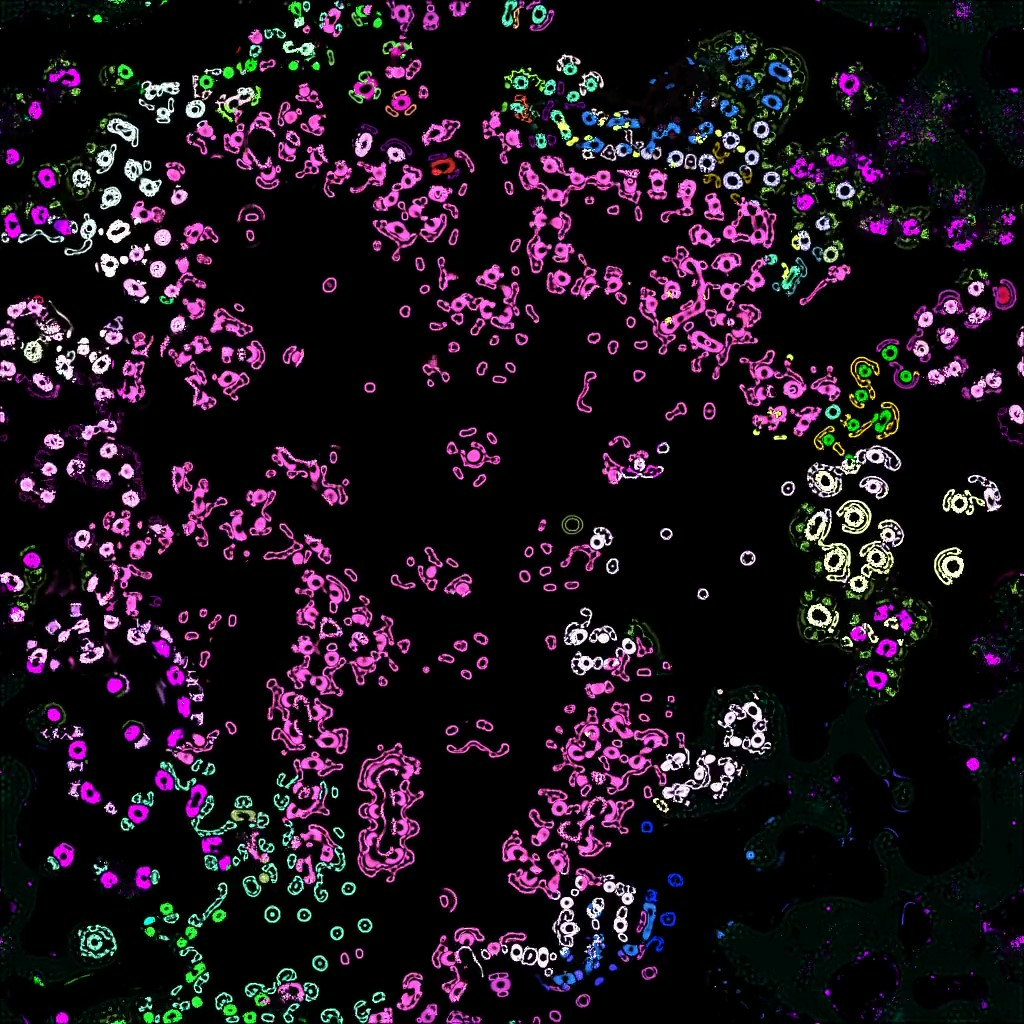

- Mai Nguyen [ENSTA]

Post-Doctoral Fellows

- Louis Annabi [ENSTA, until Aug 2023]

- Cedric Colas [INRIA, Post-Doctoral Fellow]

- Eric Meyer [INRIA, Post-Doctoral Fellow, until Aug 2023]

- Marion Pech [INRIA, Post-Doctoral Fellow, from Apr 2023]

- Remy Portelas [INRIA, Post-Doctoral Fellow, until Feb 2023]

PhD Students

- Rania Abdelghani [EVIDENCEB]

- Maxime Adolphe [ONEPOINT]

- Thomas Carta [UNIV BORDEAUX]

- Marie-Sarah Desvaux [UNIV BORDEAUX, from Oct 2023]

- Mayalen Etcheverry [POIETIS, until Oct 2023]

- Gautier Hamon [INRIA]

- Tristan Karch [INRIA, until Apr 2023]

- Grgur Kovac [INRIA]

- Jeremy Perez [UNIV BORDEAUX, from Oct 2023]

- Matisse Poupard [CATIE, CIFRE]

- Julien Pourcel [INRIA, from Nov 2023]

- Thomas Rojat [GROUPE RENAULT, until Mar 2023]

- Clément Romac [HUGGING FACE SAS, CIFRE]

- Isabeau Saint-Supery [UNIV BORDEAUX]

- Maria Teodorescu [INRIA]

- Nicolas Yax [ENS Paris, from May 2023]

Technical Staff

- Jesse Lin [INRIA, Engineer, until Oct 2023]

Interns and Apprentices

- Richard Bornemann [INRIA, Intern, from Apr 2023 until Sep 2023]

- Marie-Sarah Desvaux [INRIA, Intern, from Feb 2023 until Jun 2023]

- Theo Goix [ENS Paris, Intern, from Jun 2023 until Aug 2023]

- Corentin Leger [BORDEAUX INP, from Mar 2023 until Aug 2023]

- Stephanie Mortemousque [INRIA, Intern, from Feb 2023 until Jun 2023]

- Mathieu Perie [INRIA, Apprentice, until Feb 2023]

- Julien Pourcel [INRIA, Intern, from Apr 2023 until Oct 2023]

- Valentin Strahm [INRIA, Intern, from Feb 2023 until Jun 2023]

- Alexandre Torres-Leguet [CENTRALE59, Intern, until Feb 2023]

Administrative Assistant

- Nathalie Robin [INRIA]

External Collaborator

- Didier Roy [N/A, until Jan 2023, Associate research scientist]

2 Overall objectives

Abstract: The Flowers project-team studies models of open-ended development and learning. These models are used as tools to help us understand better how children learn, as well as to build machines that learn like children, i.e. developmental artificial intelligence, with applications in educational technologies, assisted scientific discovery, video games, robotics and human-computer interaction.

Context: Great advances have been made recently in artificial intelligence concerning the topic of how autonomous agents can learn to act in uncertain and complex environments, thanks to the development of advanced Deep Reinforcement Learning techniques. These advances have for example led to impressive results with AlphaGo 190 or algorithms that learn to play video games from scratch 156, 135. However, these techniques are still far from achieving the ambitious goal of lifelong autonomous machine learning of repertoires of skills in real-world, large and open environments. They are also very far from the capabilities of human learning and cognition. Indeed, developmental processes allow humans, and especially infants, to continuously acquire novel skills and adapt to their environment over their entire lifetime. They do so autonomously, i.e. through a combination of self-exploration and linguistic/social interaction with their social peers, sampling their own goals while benefiting from the natural language guidance of their peers, and without the need for an “engineer” to open and retune the brain and the environment specifically for each new task (e.g. for providing a task-specific external reward channel). Furthermore, humans are extremely efficient at learning quickly (with few interactions with their environment) skills that are very high-dimensional both in perception and action, while being embedded in open, changing environments with limited resources of time, energy and computation.

Thus, a major scientific challenge in artificial intelligence and cognitive sciences is to understand how humans and machines can efficiently acquire world models, as well as open and cumulative repertoires of skills over an extended time span. Processes of sensorimotor, cognitive and social development are organized along ordered phases of increasing complexity, and result from the complex interaction between the brain/body with its physical and social environment. Making progress towards these fundamental scientific challenges is also crucial for many downstream applications. Indeed, autonomous lifelong learning capabilities similar to those shown by humans are key requirements for developing virtual or physical agents that need to continuously explore and adapt skills for interacting with new or changing tasks, environments, or people. This is crucial for applications like assistive technologies with non-engineer users, such as robots or virtual agents that need to explore and adapt autonomously to new environments, adapt robustly to potential damages of their body, or help humans to learn or discover new knowledge in education settings, and need to communicate through natural language with human users, grounding the meaning of sentences into their sensorimotor representations.

The Developmental AI approach: Human and biological sciences have identified various families of developmental mechanisms that are key to explaining how infants can so robustly acquire a wide diversity of skills 137, 155, in spite of the complexity and high-dimensionality of the body 95 and the open-endedness of its potential interactions with the physical and social environment. To advance the fundamental understanding of these mechanisms of development as well as their transposition in machines, the FLOWERS team has been developing an approach called Developmental artificial intelligence, leveraging and integrating ideas and techniques from developmental robotics (207, 147, 102, 167), Deep (Reinforcement) Learning and developmental psychology. This approach consists in developing computational models that leverage advanced machine learning techniques such as intrinsically motivated Deep Reinforcement Learning, in strong collaboration with developmental psychology and neuroscience. In particular, the team focuses on models of intrinsically motivated learning and exploration (also called curiosity-driven learning), with mechanisms enabling agents to learn to represent and generate their own goals, self-organizing a learning curriculum for efficient learning of world models and skill repertoires under limited resources of time, energy and compute. The team also studies how autonomous learning mechanisms can enable humans and machines to acquire and develop grounded and culturally shared language skills, using neuro-symbolic architectures for learning structured representations and handling systematic compositionality and generalization.

Our fundamental research is organized along three strands:

- Strand 1: Lifelong autonomous learning in machines.

  Understanding how developmental mechanisms can be functionally formalized/transposed in machines, and exploring how they can allow these machines to efficiently acquire open-ended repertoires of skills through self-exploration and social interaction.

- Strand 2: Computational models as tools to understand human development in cognitive sciences.

  The computational modelling of lifelong learning and development mechanisms carried out in the team primarily aims to contribute to our understanding of the processes of sensorimotor, cognitive and social development in humans. In particular, it provides a methodological basis to analyze the dynamics of interactions across learning and inference processes, embodiment and the social environment, making it possible to formalize precise hypotheses and later test them in experimental paradigms with animals and humans. A paradigmatic example of this activity is the Neurocuriosity project carried out in collaboration with the cognitive neuroscience lab of Jacqueline Gottlieb, where theoretical models of the mechanisms of information seeking, active learning and spontaneous exploration have been developed in coordination with experimental evidence and investigation 18, 31.

- Strand 3: Applications.

  Beyond leading to new theories and new experimental paradigms to understand human development in cognitive science, as well as new fundamental approaches to developmental machine learning, the team explores how such models can find applications in robotics, human-computer interaction, multi-agent systems, automated discovery and educational technologies. In robotics, the team studies how artificial curiosity combined with imitation learning can provide essential building blocks allowing robots to acquire multiple tasks through natural interaction with naive human users, for example in the context of assistive robotics. The team also studies how models of curiosity-driven learning can be transposed in algorithms for intelligent tutoring systems, allowing educational software to incrementally and dynamically adapt to the particularities of each human learner, and proposing personalized sequences of teaching activities.

3 Research program

Research in artificial intelligence, machine learning and pattern recognition has produced a tremendous amount of results and concepts in the last decades. A blooming number of learning paradigms - supervised, unsupervised, reinforcement, active, associative, symbolic, connectionist, situated, hybrid, distributed learning... - nourished the elaboration of highly sophisticated algorithms for tasks such as visual object recognition, speech recognition, robot walking, grasping or navigation, the prediction of stock prices, the evaluation of risk for insurances, adaptive data routing on the internet, etc... Yet, we are still very far from being able to build machines capable of adapting to the physical and social environment with the flexibility, robustness, and versatility of a one-year-old human child.

Indeed, one striking characteristic of human children is the nearly open-ended diversity of the skills they learn. They not only can improve existing skills, but also continuously learn new ones. If evolution certainly provided them with specific pre-wiring for certain activities such as feeding or visual object tracking, evidence shows that there are also numerous skills that they learn smoothly but could not be “anticipated” by biological evolution, for example learning to drive a tricycle, using an electronic piano toy or using a video game joystick. On the contrary, existing learning machines, and robots in particular, are typically only able to learn a single pre-specified task or a single kind of skill. Once this task is learnt, for example walking with two legs, learning is over. If one wants the robot to learn a second task, for example grasping objects in its visual field, then an engineer needs to re-program manually its learning structures: traditional approaches to task-specific machine/robot learning typically include engineer choices of the relevant sensorimotor channels, specific design of the reward function, choices about when learning begins and ends, and what learning algorithms and associated parameters shall be optimized.

As can be seen, this requires a lot of important choices from the engineer, and one could hardly use the term “autonomous” learning. On the contrary, human children do not learn following anything looking like that process, at least during their very first years. Babies develop and explore the world by themselves, focusing their interest on various activities driven both by internal motives and social guidance from adults who only have a folk understanding of their brains. Adults provide learning opportunities and scaffolding, but ultimately young babies always decide for themselves what activity to practice or not. Specific tasks are rarely imposed on them. Yet, they steadily discover and learn how to use their body as well as its relationships with the physical and social environment. Also, the spectrum of skills that they learn continuously expands in an organized manner: they undergo a developmental trajectory in which simple skills are learnt first, and skills of progressively increasing complexity are subsequently learnt.

A link can be made to educational systems, where research in several domains has tried to study how to provide a good learning or training experience to learners. This includes the experiences that allow better learning, and the sequence in which they must be experienced. This problem is complementary to that of the learner who tries to progress efficiently, and the teacher here has to use the limited time and motivational resources of the learner as efficiently as possible. Several results from psychology 94 and neuroscience 124 have argued that the human brain feels intrinsic pleasure in practicing activities of optimal difficulty or challenge. A teacher must exploit such activities to create positive psychological states of flow 116 that foster individual engagement in learning activities. Such a view is also relevant for re-education issues, where inter-individual variability, and thus intervention personalization, are challenges of the same magnitude as those for the education of children.

A grand challenge is thus to be able to build machines that possess this capability to discover, adapt and continuously develop new know-how and new knowledge in unknown and changing environments, like human children. In 1950, Turing wrote that the child's brain would show us the way to intelligence: “Instead of trying to produce a program to simulate the adult mind, why not rather try to produce one which simulates the child's” 202. Maybe, in contrast to work in the field of Artificial Intelligence which has focused on mechanisms trying to match the capabilities of “intelligent” human adults such as chess playing or natural language dialogue 131, it is time to take the advice of Turing seriously. This is what a new field, called developmental (or epigenetic) robotics, is trying to achieve 147, 207. The approach of developmental robotics consists in importing and implementing concepts and mechanisms from developmental psychology 155, cognitive linguistics 115, and developmental cognitive neuroscience 136, where there has been a considerable amount of research and theories to understand and explain how children learn and develop. A number of general principles underlie this research agenda: embodiment 98, 171, grounding 129, situatedness 193, self-organization 197, 166, enaction 203, and incremental learning 107.

Among the many issues and challenges of developmental robotics, two are of paramount importance: exploration mechanisms, and mechanisms for abstracting and making sense of initially unknown sensorimotor channels. Indeed, the typical space of sensorimotor skills that can be encountered and learnt by a developmental robot, like those encountered by human infants, is immensely vast and inhomogeneous. With a sufficiently rich environment and multimodal set of sensors and effectors, the space of possible sensorimotor activities is simply too large to be explored exhaustively in any robot's lifetime: it is impossible to learn all possible skills and represent all conceivable sensory percepts. Moreover, some skills are very basic to learn, others very complicated, and many of them require the mastery of others in order to be learnt. For example, learning to manipulate a piano toy first requires knowing how to move one's hand to reach the piano and how to touch specific parts of the toy with the fingers. And knowing how to move the hand might require knowing how to track it visually.

Exploring such a space of skills randomly is bound to fail or to result at best in very inefficient learning 168. Thus, exploration needs to be organized and guided. The approach of epigenetic robotics is to take inspiration from the mechanisms that allow human infants to be progressively guided, i.e. to develop. There are two broad classes of guiding mechanisms which control exploration:

- internal guiding mechanisms, and in particular intrinsic motivation, responsible for spontaneous exploration and curiosity in humans, which is one of the central mechanisms investigated in FLOWERS, and technically amounts to achieving online active self-regulation of the growth of complexity in learning situations;

- social learning and guidance, learning mechanisms that exploit the knowledge of other agents in the environment and/or that are guided by those same agents. These mechanisms exist in many different forms like emotional reinforcement, stimulus enhancement, social motivation, guidance, feedback or imitation, some of which are also investigated in FLOWERS.

Internal guiding mechanisms

In infant development, one observes a progressive increase in the complexity of activities with an associated progressive increase in capabilities 155: children do not learn everything at one time. For example, they first learn to roll over, then to crawl and sit, and only when these skills are operational do they begin to learn how to stand. The perceptual system also gradually develops, increasing children's perceptual capabilities over time while they engage in activities like throwing or manipulating objects. This makes it possible to learn to identify objects in more and more complex situations and to learn more and more of their physical characteristics.

Development is therefore progressive and incremental, and this might be a crucial feature explaining the efficiency with which children explore and learn so fast. Taking inspiration from these observations, some roboticists and researchers in machine learning have argued that learning a given task could be made much easier for a robot if it followed a developmental sequence and “started simple” 90, 121. However, in these experiments, the developmental sequence was crafted by hand: roboticists manually built simpler versions of a complex task and put the robot successively in versions of the task of increasing complexity. And when they wanted the robot to learn a new task, they had to design a novel reward function.

Thus, there is a need for mechanisms that allow the autonomous control and generation of the developmental trajectory. Psychologists have proposed that intrinsic motivations play a crucial role. Intrinsic motivations are mechanisms that push humans to explore activities or situations that have intermediate/optimal levels of novelty, cognitive dissonance, or challenge 94, 116, 118. Further, the study of the critical role of intrinsic motivation as a lever of cognitive development, for everyone and at all ages, has now expanded to several fields of research: some close to its original context, such as special education or cognitive aging, and some farther away, such as neuropsychological clinical research. The role and structure of intrinsic motivation in humans have been made more precise thanks to recent discoveries in neuroscience showing the implication of dopaminergic circuits in exploration behaviours and curiosity 117, 132, 187. Based on this, a number of researchers have begun in the past few years to build computational implementations of intrinsic motivation 168, 169, 182, 92, 133, 149, 183. While initial models were developed for simple simulated worlds, a current challenge is to build intrinsic motivation systems that can efficiently drive exploratory behaviour in high-dimensional, unprepared real-world robotic sensorimotor spaces 169, 168, 170, 181. Specific and complex problems are posed by real sensorimotor spaces, in particular because they are both high-dimensional and (usually) deeply inhomogeneous. As an example of the latter issue, some regions of real sensorimotor spaces are often unlearnable due to inherent stochasticity or difficulty, in which case heuristics based on the incentive to explore zones of maximal unpredictability or uncertainty, which are often used in the field of active learning 110, 130, typically lead to catastrophic results.

The issue of high dimensionality does not only concern motor spaces, but also sensory spaces, leading to the problem of correctly identifying, among typically thousands of quantities, those latent variables that have links to behavioral choices. In FLOWERS, we aim at developing intrinsically motivated exploration mechanisms that scale in those spaces, by studying suitable abstraction processes in conjunction with exploration strategies.
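
The contrast between uncertainty-driven heuristics and progress-driven exploration can be illustrated with a minimal sketch. It is not the team's implementation: the function names, the window size and the three hand-written error histories are assumptions chosen for illustration. The sketch scores each region of a sensorimotor space either by its recent prediction error or by its learning progress (the change in mean error between two consecutive windows), and shows which region each heuristic would select.

```python
from statistics import mean


def recent_error(errors, window=4):
    """Mean prediction error over the most recent window (an uncertainty-style score)."""
    return mean(errors[-window:])


def learning_progress(errors, window=4):
    """Absolute change in mean prediction error between two consecutive windows."""
    if len(errors) < 2 * window:
        return 0.0  # not enough data to estimate progress
    older = mean(errors[-2 * window:-window])
    recent = mean(errors[-window:])
    return abs(older - recent)


# Hypothetical prediction-error histories for three regions (illustrative values only).
histories = {
    "learnable": [1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3],          # error decreases with practice
    "unlearnable_noise": [0.9, 1.0, 0.9, 1.0, 0.9, 1.0, 0.9, 1.0],  # error stays high forever
    "already_mastered": [0.0] * 8,                                  # nothing left to learn
}

# Maximizing error keeps the learner stuck in the unlearnable region.
choice_by_error = max(histories, key=lambda r: recent_error(histories[r]))

# Maximizing learning progress focuses on the region where errors are actually changing.
choice_by_progress = max(histories, key=lambda r: learning_progress(histories[r]))

print(choice_by_error, choice_by_progress)
```

In this toy setting the error-maximizing score selects the noisy region, whose error never improves, while the progress-based score selects the region where practice pays off. A practical system would add stochastic selection so that regions are periodically re-sampled as their progress changes.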

Socially Guided and Interactive Learning

Social guidance is as important as intrinsic motivation in the cognitive development of human babies 155. There is a vast literature on learning by demonstration in robots where the actions of humans in the environment are recognized and transferred to robots 89. Most such approaches are completely passive: the human executes actions and the robot learns from the acquired data. Recently, the notion of interactive learning has been introduced in 198, 97, motivated by the various mechanisms that allow humans to socially guide a robot 179. In an interactive context the steps of self-exploration and social guidance are not separated and a robot learns by self exploration and by receiving extra feedback from the social context 198, 140, 150.

Social guidance is also particularly important for learning to segment and categorize the perceptual space. Indeed, parents interact a lot with infants, for example teaching them to recognize and name objects or characteristics of these objects. Their role is particularly important in directing the infant's attention towards objects of interest, which makes it possible to simplify the perceptual space at first by pointing out a segment of the environment that can be isolated, named and acted upon. These interactions will then be complemented by the children's own experiments on the objects, chosen according to intrinsic motivation, in order to improve their knowledge of the object, its physical properties and the actions that could be performed with it.

In FLOWERS, we aim to include an intrinsic motivation system in the self-exploration part, thus combining efficient self-learning with social guidance 160, 161. We also work on developing perceptual capabilities by gradually segmenting the perceptual space and identifying objects and their characteristics through interaction with the user 148 and robot experiments 134. Another challenge is to allow for more flexible interaction protocols with the user in terms of what type of feedback is provided and how it is provided 146.

Exploration mechanisms are combined with research in the following directions:

Cumulative learning, reinforcement learning and optimization of autonomous skill learning

FLOWERS develops machine learning algorithms that can allow embodied machines to acquire cumulatively sensorimotor skills. In particular, we develop optimization and reinforcement learning systems which allow robots to discover and learn dictionaries of motor primitives, and then combine them to form higher-level sensorimotor skills.

Autonomous perceptual and representation learning

In order to harness the complexity of perceptual and motor spaces, as well as to pave the way to higher-level cognitive skills, developmental learning requires abstraction mechanisms that can infer structural information out of sets of sensorimotor channels whose semantics is unknown, discovering for example the topology of the body or the sensorimotor contingencies (proprioceptive, visual and acoustic). This process is meant to be open-ended, progressing in continuous operation from initially simple representations towards abstract concepts and categories similar to those used by humans. Our work focuses on the study of various techniques for:

- autonomous multimodal dimensionality reduction and concept discovery;

- incremental discovery and learning of objects using vision and active exploration, as well as of auditory speech invariants;

- learning of dictionaries of motion primitives with combinatorial structures, in combination with linguistic description;

- active learning of visual descriptors useful for action (e.g. grasping).

Embodiment and maturational constraints

FLOWERS studies how adequate morphologies and materials (i.e. morphological computation), associated with relevant dynamical motor primitives, can substantially simplify the acquisition of apparently very complex skills such as full-body dynamic walking in bipeds. FLOWERS also studies maturational constraints, which are mechanisms that allow for the progressive and controlled release of new degrees of freedom in the sensorimotor space of robots.

Discovering and abstracting the structure of sets of uninterpreted sensors and motors

FLOWERS studies mechanisms that allow a robot to infer structural information out of sets of sensorimotor channels whose semantics is unknown, for example the topology of the body and the sensorimotor contingencies (proprioceptive, visual and acoustic). This process is meant to be open-ended, progressing in continuous operation from initially simple representations to abstract concepts and categories similar to those used by humans.

Emergence of social behavior in multi-agent populations

FLOWERS studies how populations of interacting learning agents can collectively acquire cooperative or competitive strategies in challenging simulated environments. This differs from "Social learning and guidance" presented above: instead of studying how a learning agent can benefit from the interaction with a skilled agent, we rather consider here how social behavior can spontaneously emerge from a population of interacting learning agents. We focus on studying and modeling the emergence of cooperation, communication and cultural innovation based on theories in behavioral ecology and language evolution, using recent advances in multi-agent reinforcement learning.

Cognitive variability across Lifelong development and (re)educational Technologies

Over the past decade, progress in the field of curiosity-driven learning has generated a lot of hope, especially with regard to a major challenge, namely the inter-individual variability of developmental trajectories of learning, which is particularly critical during childhood and aging or in conditions of cognitive disorders. With the societal purpose of tackling social inequalities, FLOWERS aims to advance this new research avenue by exploring how states of curiosity change across the lifespan and across neurodevelopmental conditions (neurotypical vs. learning disabilities), while designing new educational or rehabilitative technologies for curiosity-driven learning. Information gaps or learning progress, and the awareness of them, are the core mechanisms of this part of the research program: they are highly valuable as brain fuel that maintains the individual's intrinsic motivation and leads him/her to sustain the cognitive effort needed for acquisition or rehabilitation. Accordingly, a main challenge is to understand these mechanisms in order to design supports for curiosity-driven learning, and then to embed them into (re)educational technologies. To this end, two lines of investigation are carried out in real-life settings (school, home, workplace, etc.): 1) the design of curiosity-driven interactive systems for learning and the study of their effectiveness; and 2) the automated personalization of learning programs through new algorithms maximizing learning progress in intelligent tutoring systems (ITS).

4 Application domains

Neuroscience, Developmental Psychology and Cognitive Sciences. The computational modelling of lifelong learning and development mechanisms carried out in the team primarily aims to contribute to our understanding of the processes of sensorimotor, cognitive and social development in humans. In particular, it provides a methodological basis to analyze the dynamics of the interaction across learning and inference processes, embodiment and the social environment, making it possible to formalize precise hypotheses and later test them in experimental paradigms with animals and humans. A paradigmatic example of this activity is the Neurocuriosity project carried out in collaboration with the cognitive neuroscience lab of Jacqueline Gottlieb, where theoretical models of the mechanisms of information seeking, active learning and spontaneous exploration have been developed in coordination with experimental evidence and investigation. Another example is the study of the role of curiosity in learning in the elderly, with a view to assessing its positive value as a protective ingredient against cognitive aging (i.e., the industrial project with Onepoint and the CuriousTECH associate team with M. Fernandes from the Cognitive Neuroscience Lab of the University of Waterloo).

Personal and lifelong learning assistive agents. Many indicators show that the arrival of personal assistive agents in everyday life, ranging from digital assistants to robots, will be a major fact of the 21st century. These agents will range from purely entertainment or educative applications to social companions that many argue will be of crucial help in our society. Yet, to realize this vision, important obstacles need to be overcome: these agents will have to evolve in unpredictable environments and learn new skills in a lifelong manner while interacting with non-engineer humans, which is out of reach of current technology. In this context, the refoundation of intelligent systems that developmental AI is exploring opens potentially novel horizons to solve these problems. In particular, this application domain requires advances in artificial intelligence that go beyond the current state of the art in fields like deep learning. Currently these techniques require tremendous amounts of data in order to function properly, and they are severely limited in terms of incremental and transfer learning. One of our goals is to drastically reduce the amount of data required in order for this very potent field to work when humans are in the loop. We try to achieve this by making neural networks aware of their knowledge, i.e. we introduce the concept of uncertainty and use it as part of intrinsically motivated multitask learning architectures, combined with techniques of learning by imitation.

Educational technologies that foster curiosity-driven and personalized learning. Optimal teaching and efficient teaching/learning environments can be applied to aid teaching in schools, aiming both at increasing achievement levels and at reducing the time needed. From a practical perspective, improved models could save millions of hours of students' time (and effort) in learning. These models should also predict the achievement levels of students in order to influence teaching practices. The challenges of the school of the 21st century, and in particular producing conditions for active learning that are personalized to the student's motivations, are shared with other applied fields. Special education for children with special needs, such as learning disabilities, has long recognized the difficulty of personalizing contents and pedagogies due to the great variability between and within medical conditions. Further afield, but not by much, cognitive rehabilitation carers face the same challenges: today they offer standardized cognitive training or rehabilitation programs whose benefits are modest (some individuals respond to the programs, others respond little or not at all), as they are highly subject to inter- and intra-individual variability. Curiosity-driven technologies for learning and STIs could be a promising avenue to address these issues, which are common to (mainstream and specialized) education and cognitive rehabilitation.

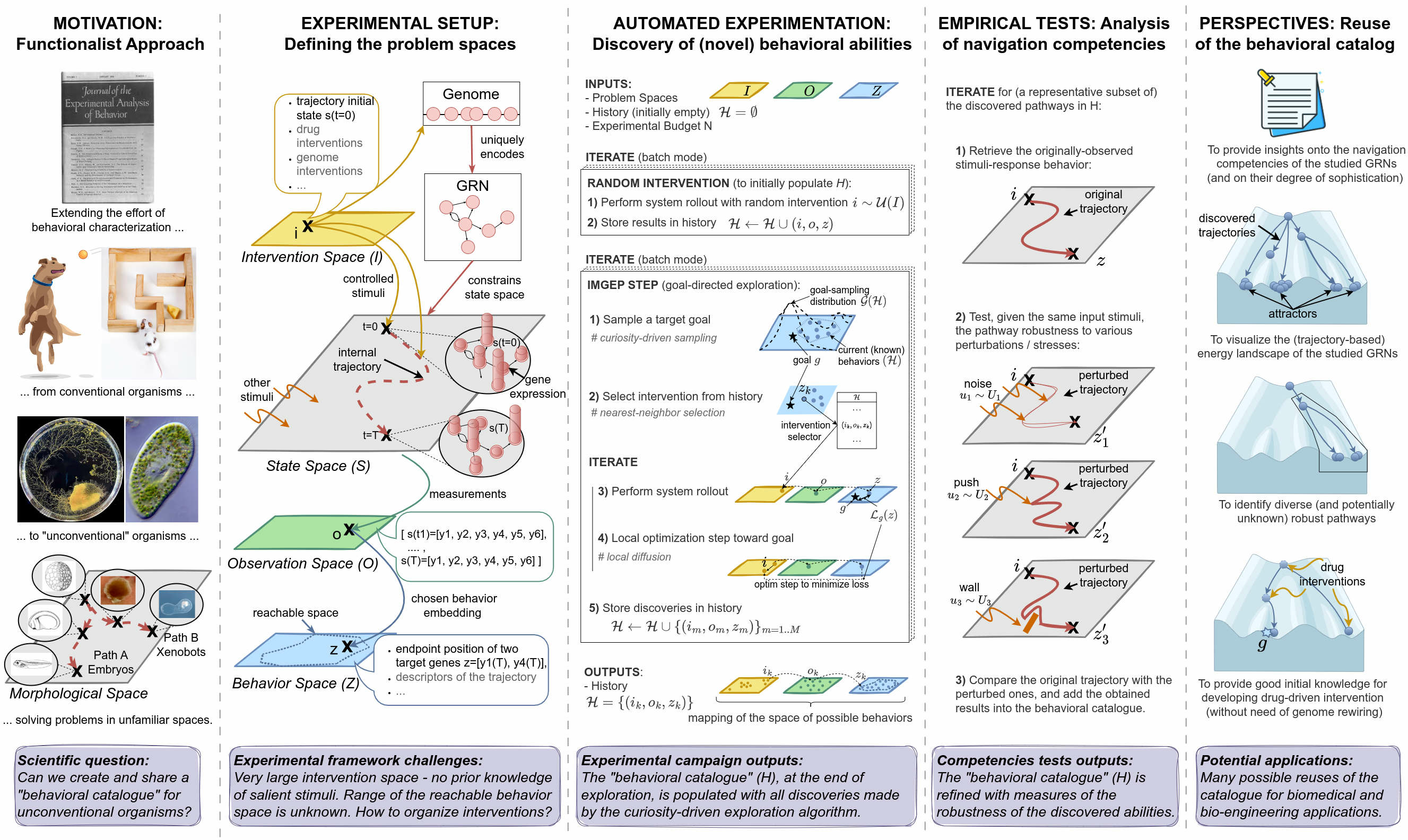

Automated discovery in science. Machine learning algorithms integrating intrinsically-motivated goal exploration processes (IMGEPs) with flexible modular representation learning are very promising directions to help human scientists discover novel structures in complex dynamical systems, in fields ranging from biology to physics. The automated discovery project led by the FLOWERS team aims to boost the efficiency of these algorithms so that scientists can better understand the space of dynamics of bio-physical systems, which could include systems related to the design of new materials or new drugs, with applications ranging from regenerative medicine to unraveling the chemical origins of life. As an example, Grizou et al. 125 recently showed how IMGEPs can be used to automate chemistry experiments addressing fundamental questions related to the origins of life (how oil droplets may self-organize into protocellular structures), leading to new insights about oil droplet chemistry. Such methods can be applied to a large range of complex systems in order to map the possible self-organized structures. The automated discovery project is intended to be interdisciplinary and to involve potentially non-expert end-users from a variety of domains. In this regard, we are currently collaborating with Poietis (a bio-printing company) and Bert Chan (an independent researcher in artificial life) to deploy our algorithms. To encourage the adoption of our algorithms by a wider community, we are also working on interactive software that provides tools to easily use automated exploration algorithms (e.g. curiosity-driven ones) in various systems.
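To make the idea of goal exploration concrete, the following is a minimal sketch of a random-goal exploration loop in the spirit of IMGEPs. It is an illustration under stated assumptions, not the team's implementation: the `toy_system` function, the parameter and outcome ranges, the nearest-neighbour selection and the Gaussian mutation are all hypothetical choices made for this example.

```python
import math
import random


def toy_system(params):
    """Hypothetical stand-in for a costly experiment: 2 parameters -> 2-D outcome."""
    x, y = params
    return (math.sin(3.0 * x) * y, math.cos(2.0 * y) * x)


def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def imgep_explore(system, n_params=2, n_bootstrap=10, n_iterations=200,
                  mutation_std=0.1, seed=0):
    """Goal exploration sketch: sample a goal, reuse the closest past experiment."""
    rng = random.Random(seed)
    history = []  # list of (parameters, observed outcome) pairs

    # Bootstrap phase: a few experiments with uniformly random parameters.
    for _ in range(n_bootstrap):
        params = tuple(rng.uniform(-1.0, 1.0) for _ in range(n_params))
        history.append((params, system(params)))

    outcome_dim = len(history[0][1])
    for _ in range(n_iterations):
        # 1. Sample a goal uniformly in the (assumed) outcome range [-1, 1]^d.
        goal = tuple(rng.uniform(-1.0, 1.0) for _ in range(outcome_dim))
        # 2. Retrieve the parameters whose past outcome is closest to the goal.
        best_params, _ = min(history, key=lambda e: squared_distance(e[1], goal))
        # 3. Perturb those parameters with Gaussian noise, clipped to [-1, 1].
        params = tuple(min(1.0, max(-1.0, p + rng.gauss(0.0, mutation_std)))
                       for p in best_params)
        # 4. Run the experiment and store the new observation.
        history.append((params, system(params)))
    return history


history = imgep_explore(toy_system)
print(len(history), "experiments recorded")
```

The returned history is the "map" of reached outcomes; in a real setting `toy_system` would be replaced by the experiment or simulator under study, and the goal space would typically be learned rather than fixed.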

Human-Robot Collaboration. Robots play a vital role in industry and ensure the efficient and competitive production of a wide range of goods. They replace humans in many tasks which would otherwise be too difficult, too dangerous, or too expensive to perform. However, the new needs and desires of society call for manufacturing systems centered on personalized products and small-series production. Human-robot collaboration could widen the use of robots in these new situations if robots become cheaper, easier to program and safe to interact with. The most relevant systems for such applications would follow an expert worker and work with (some) autonomy, while always remaining under the supervision of the human and acting based on their task models.

Environment perception in intelligent vehicles. When working in simulated traffic environments, elements of FLOWERS research can be applied to the autonomous acquisition of increasingly abstract representations of both traffic objects and traffic scenes. In particular, the object classes of vehicles and pedestrians are of interest when considering detection tasks in safety systems, as well as scene categories ("scene context") that have a strong impact on the occurrence of these object classes. As already indicated by several investigations in the field, results from present-day simulation technology can be transferred to the real world with little impact on performance. Therefore, applications of FLOWERS research that are suitably verified by real-world benchmarks have direct applicability in safety-system products for intelligent vehicles.

5 Social and environmental responsibility

5.1 Footprint of research activities

AI is a field of research that currently requires a lot of computational resources, which is a challenge as these resources have an environmental cost. In the team we try to address this challenge in two ways:

- by working on developmental machine learning approaches that model how humans manage to learn open-ended and diverse repertoires of skills under severe limits of time, energy and compute: for example, curiosity-driven learning algorithms can be used to guide agents' exploration of their environment so that they learn a world model in a sample-efficient manner, i.e. by minimizing the number of runs and computations they need to perform in the environment;

- by monitoring the number of CPU and GPU hours required to carry out our experiments. For instance, our work 11 used a total of 2.5 CPU years. More globally, our work uses large-scale computational resources, such as the Jean Zay supercomputer platform, on which we use several hundred thousand GPU and CPU hours each year.

5.2 Impact of research results

Our research activities are organized along two fundamental research axes (models of human learning and algorithms for developmental machine learning) and one applied research axis (involving multiple domains of application, see the Application Domains section). This entails different dimensions of potential societal impact:

- Towards autonomous agents that can be shaped to human preferences and be explainable: We work on reinforcement learning architectures where autonomous agents interact with a social partner to explore a large set of possible interactions and learn to master them, using language as a key communication medium. As a result, our work contributes to facilitating human intervention in the learning process of agents (e.g. digital assistants, video game characters, robots), which we believe is a key step towards more explainable and safer autonomous agents.

- Reproducibility of research: By releasing the code of our research papers, we believe that we support efforts towards reproducible science and allow the wider community to build upon and extend our work in the future. In that spirit, we also provide clear explanations of the statistical testing methods used when reporting results.

- Digital transformation and competence challenges facing schools in the 21st century: We expect our findings to inform the broader societal challenges inherent to the School of the 21st Century, ranging from helping children (and their teachers) to develop cross-domain skills for learning such as curiosity and meta-cognition, to improving inclusivity in schools (learners with disabilities, especially cognitive disabilities) and promoting lifelong learning in older adults (successful aging), using cognitive-based research findings.

- AI and personalized educational technologies to reduce inequalities due to neurodiversity: The Flowers team develops AI technologies aiming to personalize sequences of educational activities in digital educational apps: this entails the central challenge of designing systems which can have an equitable impact over a diversity of students and reduce inequalities in academic achievement. Using models of curiosity-driven learning to design AI algorithms for such personalization, we have been working to enable them to be positively and equitably impactful across several dimensions of diversity: for young learners or for aging populations; for learners with low initial levels as well as for learners with high initial levels; for "normally" developing children and for children with developmental disorders; and for learners of different socio-cultural backgrounds (e.g. we could show in the KidLearn project that the system is equally impactful along these various kinds of diversity).

- Health: Bio-printing. The Flowers team is studying the use of curiosity-driven exploration algorithms in the domain of automated discovery, enabling scientists in physics/chemistry/biology to efficiently explore and build maps of the possible structures of various complex systems. One particular domain of application we are studying is bio-printing, where a challenge consists in exploring and understanding the space of morphogenetic structures self-organized by bio-printed cell populations. This could facilitate the design and bio-printing of personalized skins or organoids for people who need transplants, and could thus have a major impact on their health.

- Tools for human creativity and the arts: Curiosity-driven exploration algorithms could also, in principle, be used as tools to help human users in creative activities ranging from writing stories to painting or musical creation, which are domains we aim to consider in the future. This constitutes another societal and cultural domain where our research could have an impact.

- Education to AI: As artificial intelligence takes a greater role in human society, it is of foremost importance to empower individuals with an understanding of these technologies. For this purpose, the Flowers lab has been actively involved in educational and popularization activities, in particular by designing educational robotics kits that form a motivating and tangible context to understand basic concepts in AI: these include the Inirobot kit (used by >30k primary school students in France) and the Poppy Education kit, now supported by the Poppy Station educational consortium.

- Health: optimization of intervention strategies during pandemic events. Modelling the dynamics of epidemics helps to propose control strategies based on pharmaceutical and non-pharmaceutical interventions (contact limitation, lockdown, vaccination, etc.). Hand-designing such strategies is not trivial because of the number of possible interventions and the difficulty of predicting long-term effects. This task can be cast as an optimization problem where state-of-the-art machine learning algorithms, such as deep reinforcement learning, might bring significant value. However, the specificity of each domain (epidemic modelling on the one hand, solving optimization problems on the other) requires strong collaborations between researchers from different fields of expertise. Due to its fundamentally multi-objective nature, the problem of optimizing intervention strategies can benefit from the goal-conditioned reinforcement learning algorithms we develop at Flowers. In this context, we have developed EpidemiOptim, a Python toolbox that facilitates collaborations between researchers in epidemiology and optimization.

6 Highlights of the year

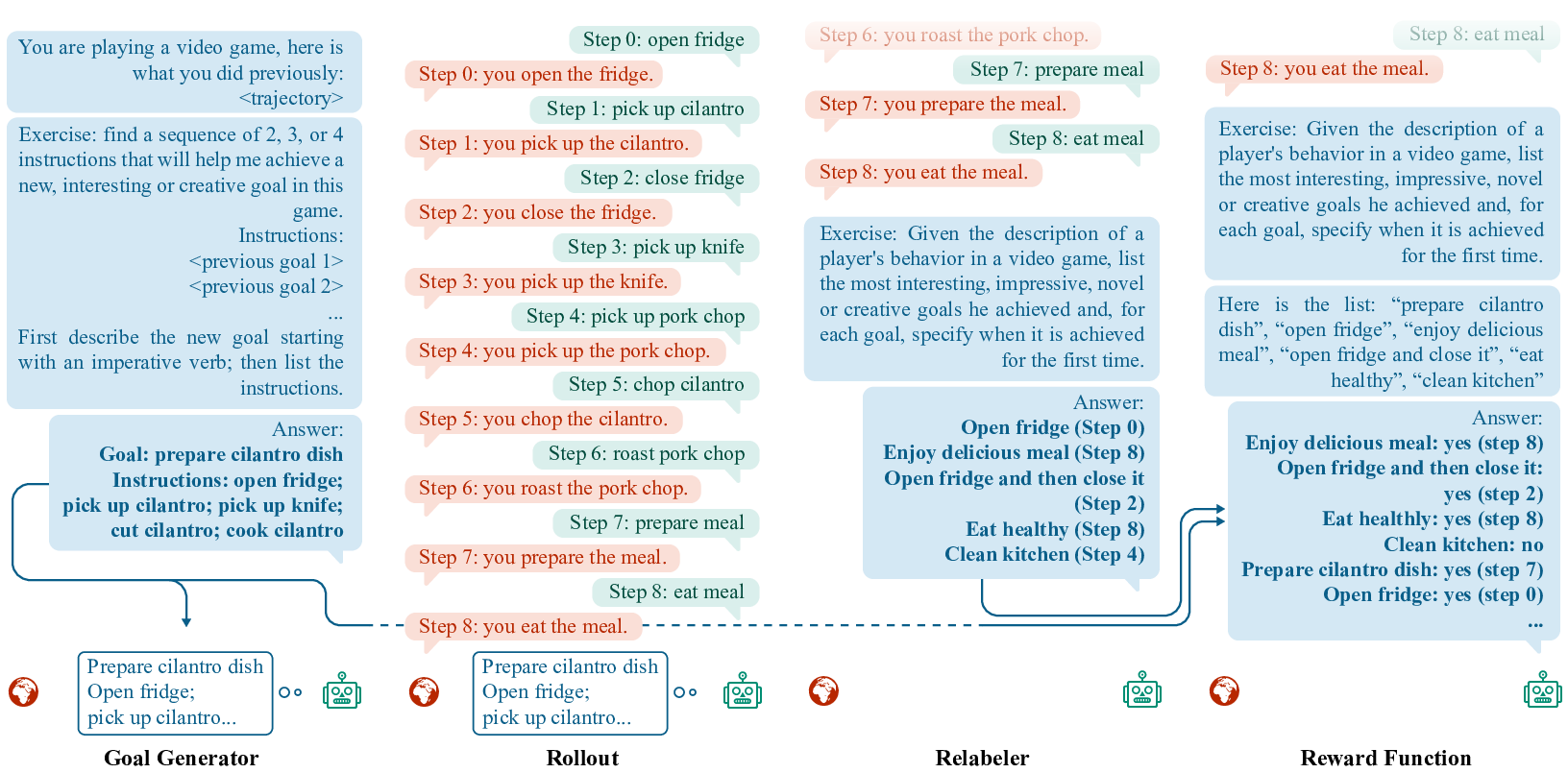

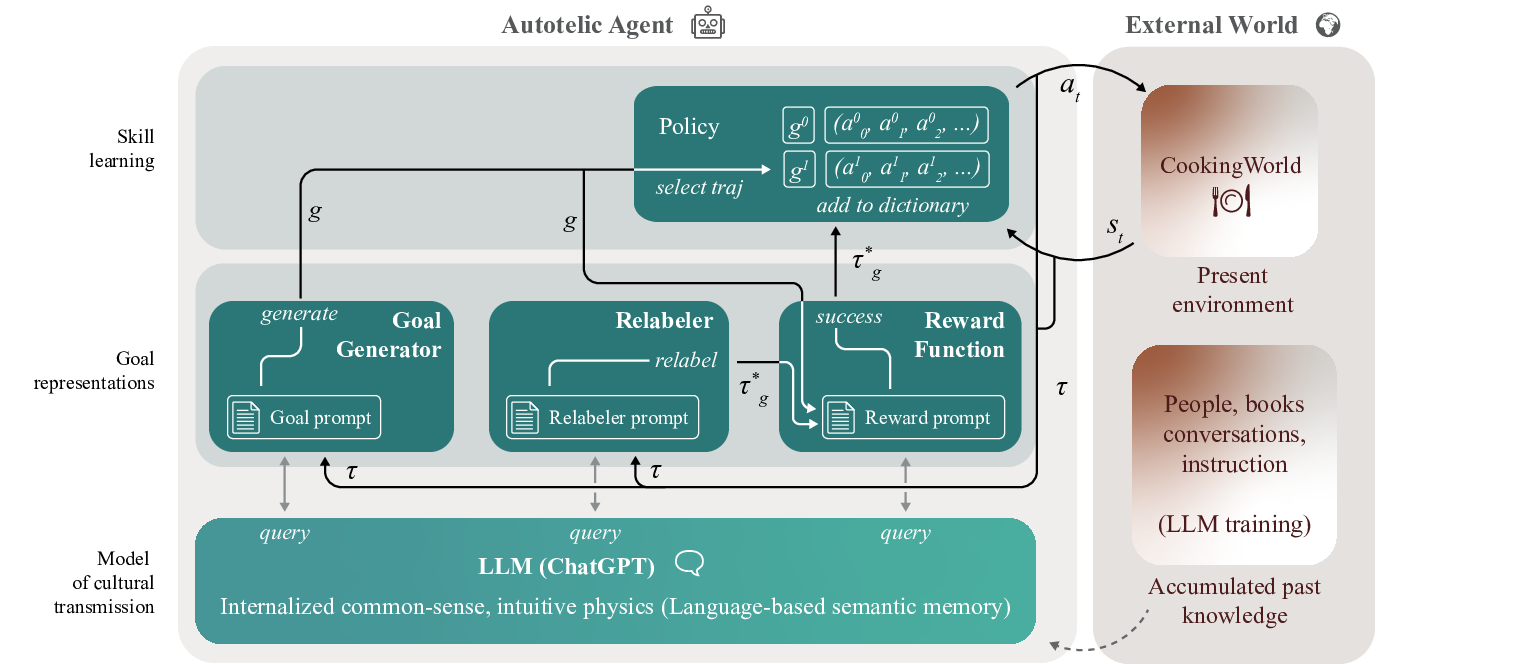

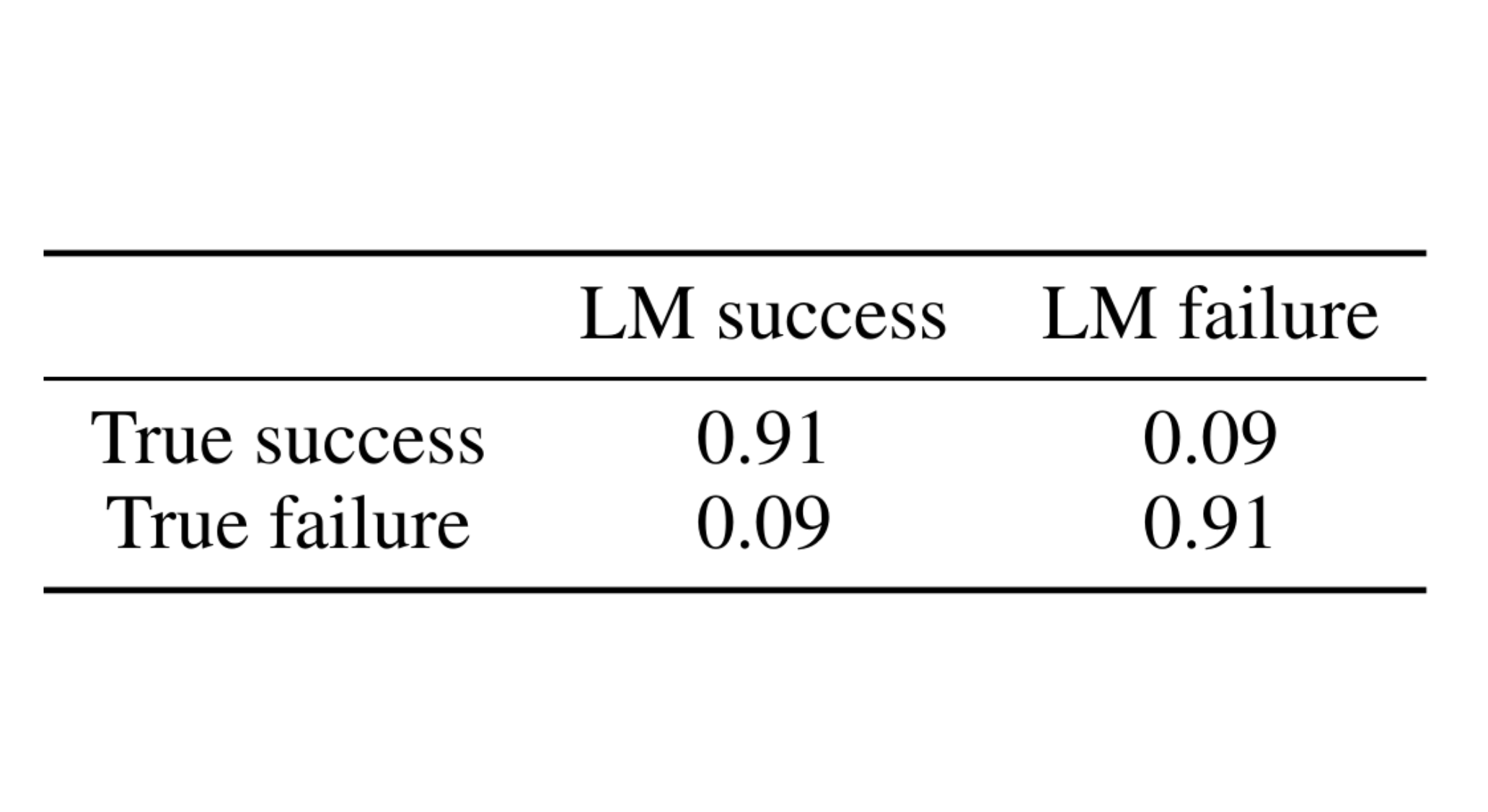

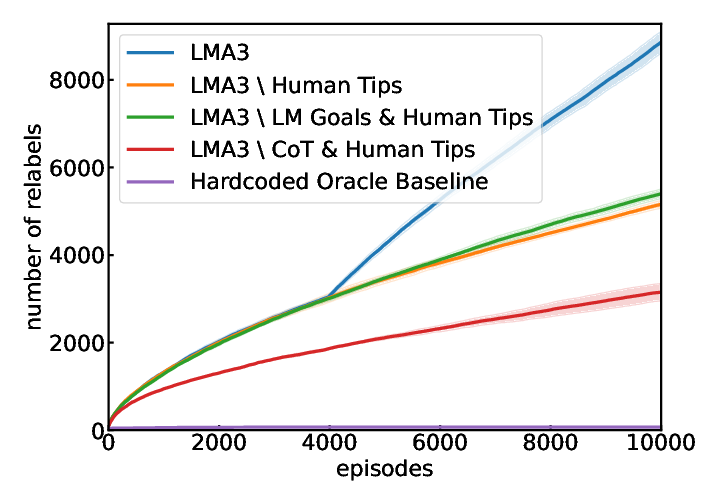

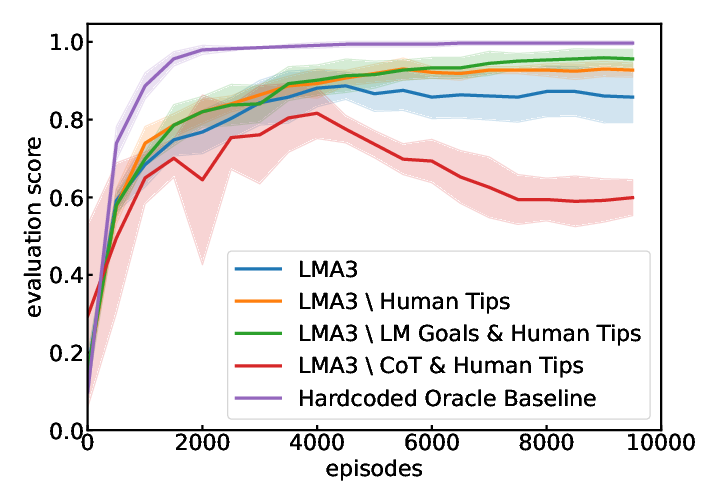

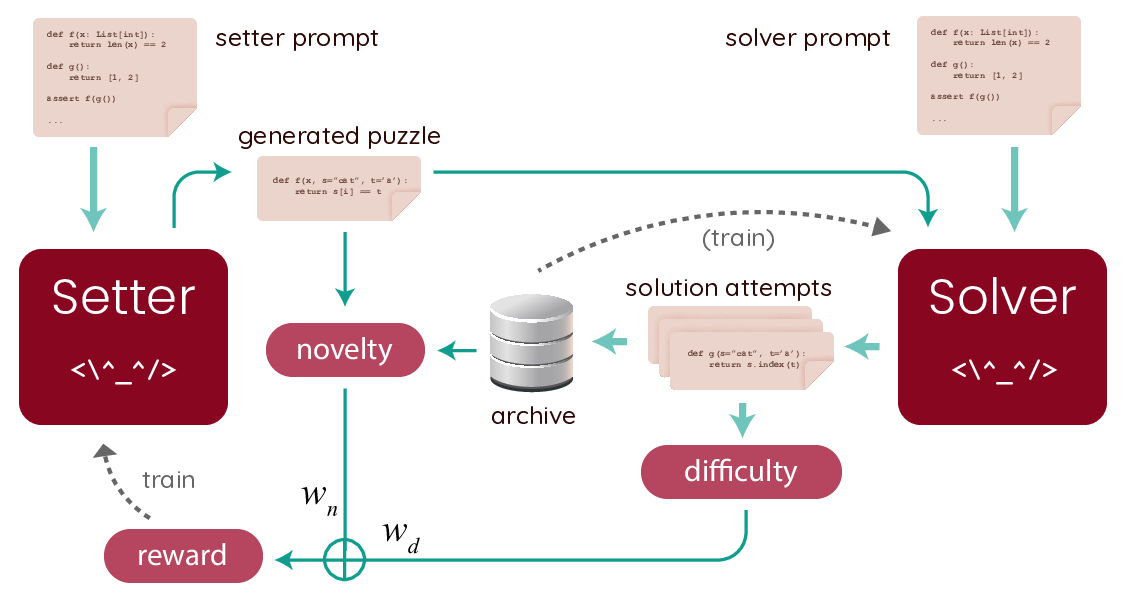

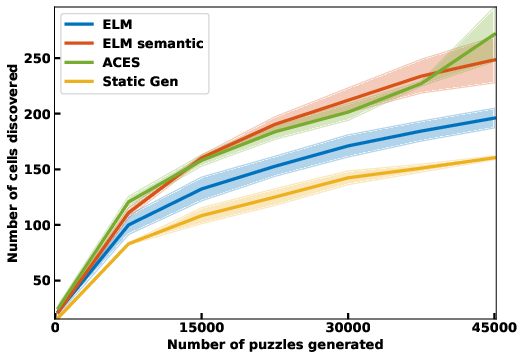

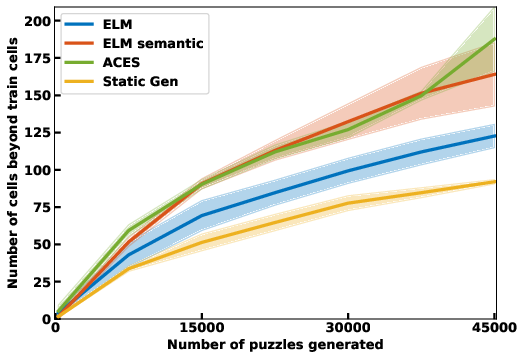

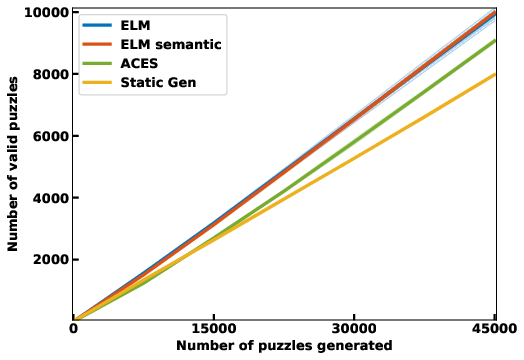

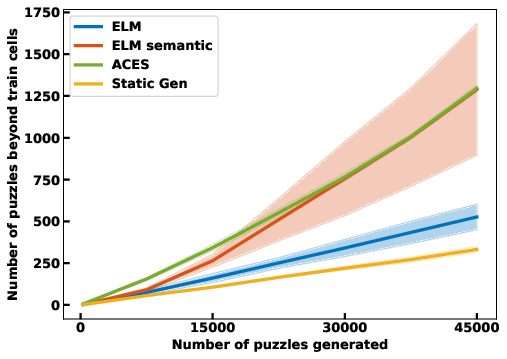

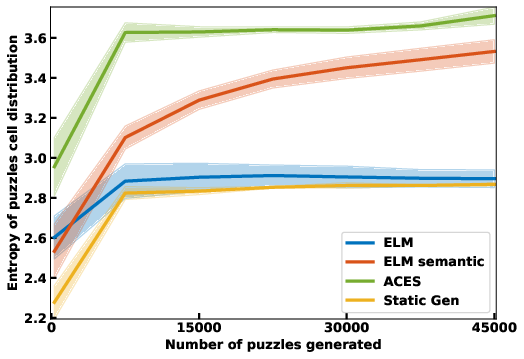

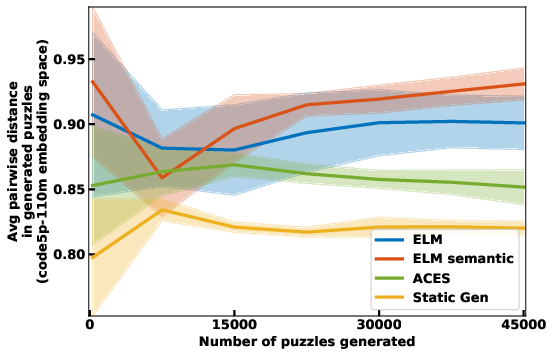

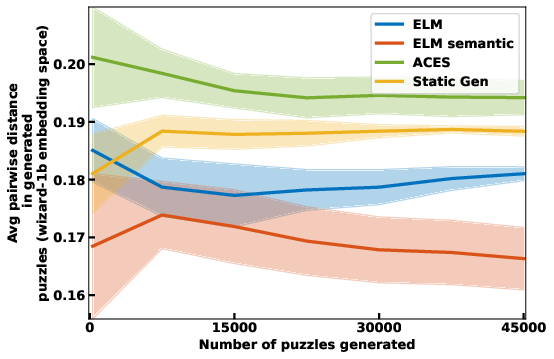

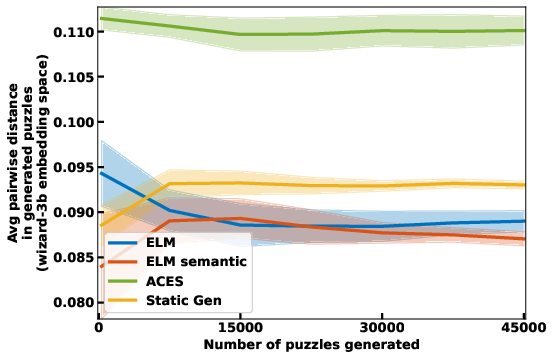

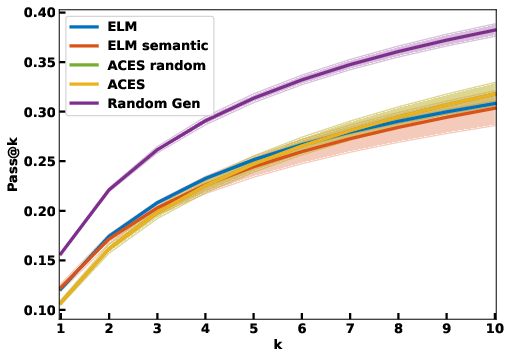

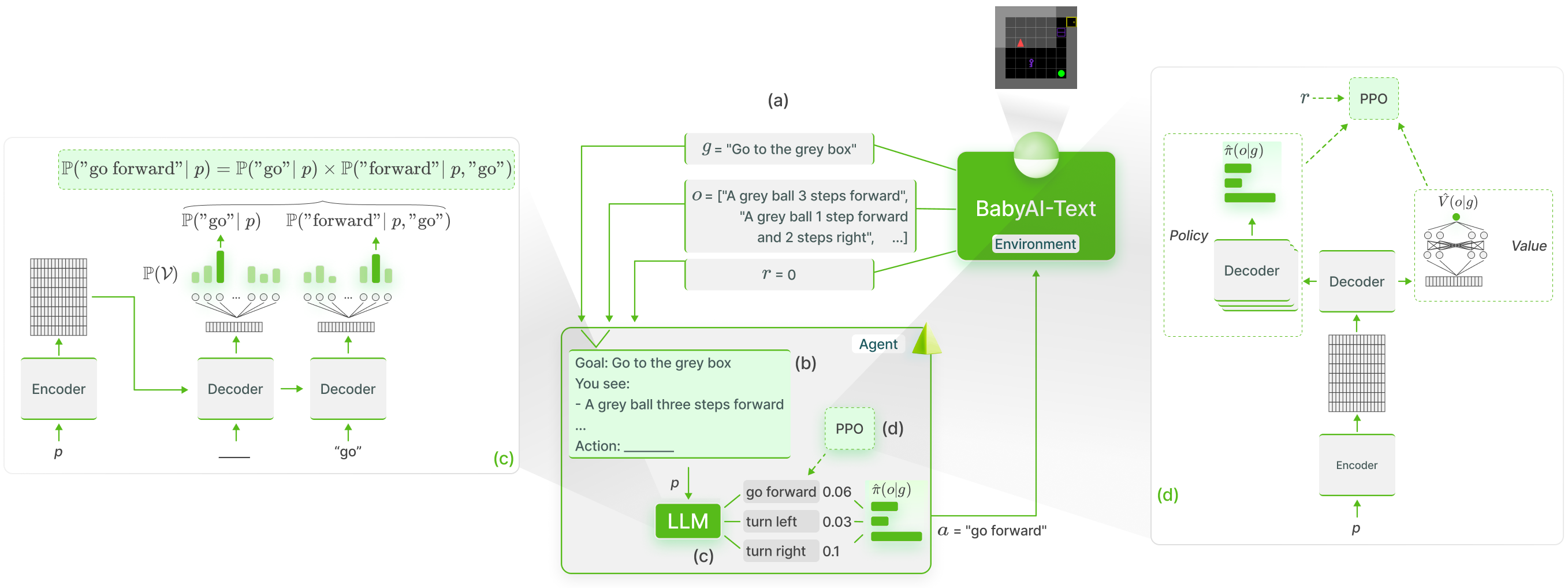

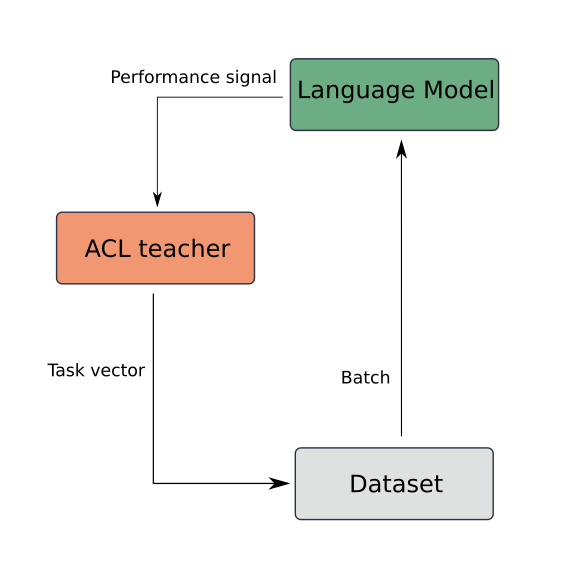

- Open-ended learning and autotelic AI with large language models: The team continued to lay the foundations of autotelic AI 113, 111, i.e. the science studying mechanisms enabling artificial agents to learn to represent and sample their own goals and achieve open-ended learning. This year, we focused on studying how to build autotelic AI systems with large language models (LLMs). In particular, we studied how LLMs can be used as cognitive tools enabling creative generation of abstract goals, either in classical interactive environments such as in the LMA3 system 45 (collab. with M-A. Côté and E. Yuan at Microsoft Research Montreal), or in code/programming environments where LLM-based autotelic agents learn by self-improving their coding abilities, e.g. in the CodePlay system 64 (collab. with M. Bowers at MIT) or in the ACES system 77. This also laid the groundwork for our participation in the new LLM4Code Inria Challenge. While doing this work, we also developed techniques for grounding language models in interactive environments using online RL, such as in the GLAM system, which makes it possible to update and align LLMs to the particular dynamics of the environment 44 (collab. with O. Sigaud at Sorbonne Univ., S. Lamprier at Univ. Angers; T. Wolf at HuggingFace). This work was associated with the development of the Lamorel Python library, designed for researchers eager to use Large Language Models (LLMs) in interactive environments (e.g. RL setups).

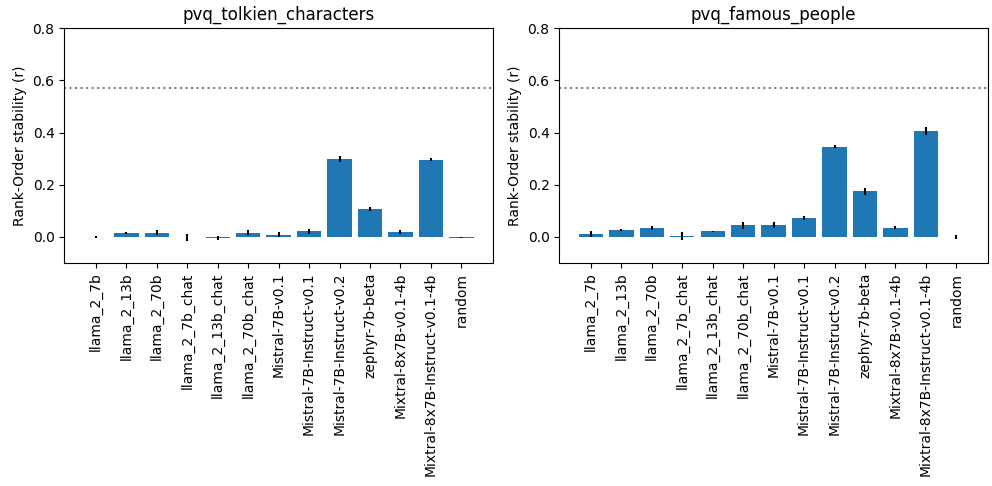

- Models of cultural evolution in humans and AI systems: As generative AI systems become powerful cultural transmission technologies that influence human cultural evolution in important ways, and can also have their own cultural processes through large-scale machine-machine interaction, the study of the dynamics of cultural processes in populations of AI systems/humans becomes crucial. We continued our work in this perspective through computational models of innovation dynamics in groups of agents 162 (associated with a collaboration with I. Momennejad at Microsoft Research), of the interaction of autotelic learning and self-organization of communication conventions 52, and of the formation of language protocols in groups of agents 70. We also studied how LLMs can encode superpositions of socio-cultural perspectives, for example value systems, and developed tools to analyze the robustness and controllability of the expression of these socio-cultural abilities 74. We also contributed to a general discussion of a new field studying "machine culture" in 36. We started a new collaboration with M. Derex from IAST Toulouse, a leading researcher in the field of Cultural Evolution, in the context of the co-direction of the PhD thesis of Jeremy Perez (started in October 2023).

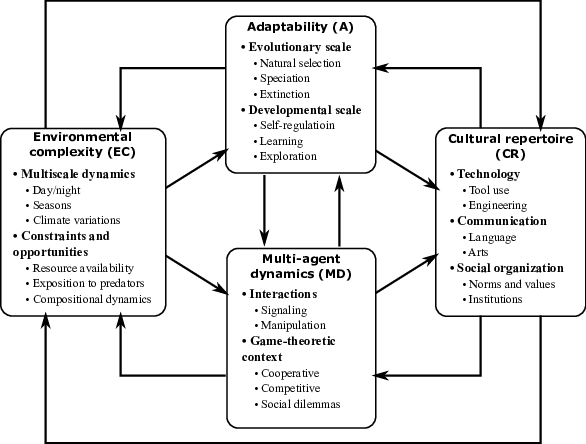

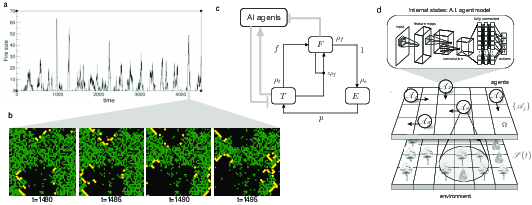

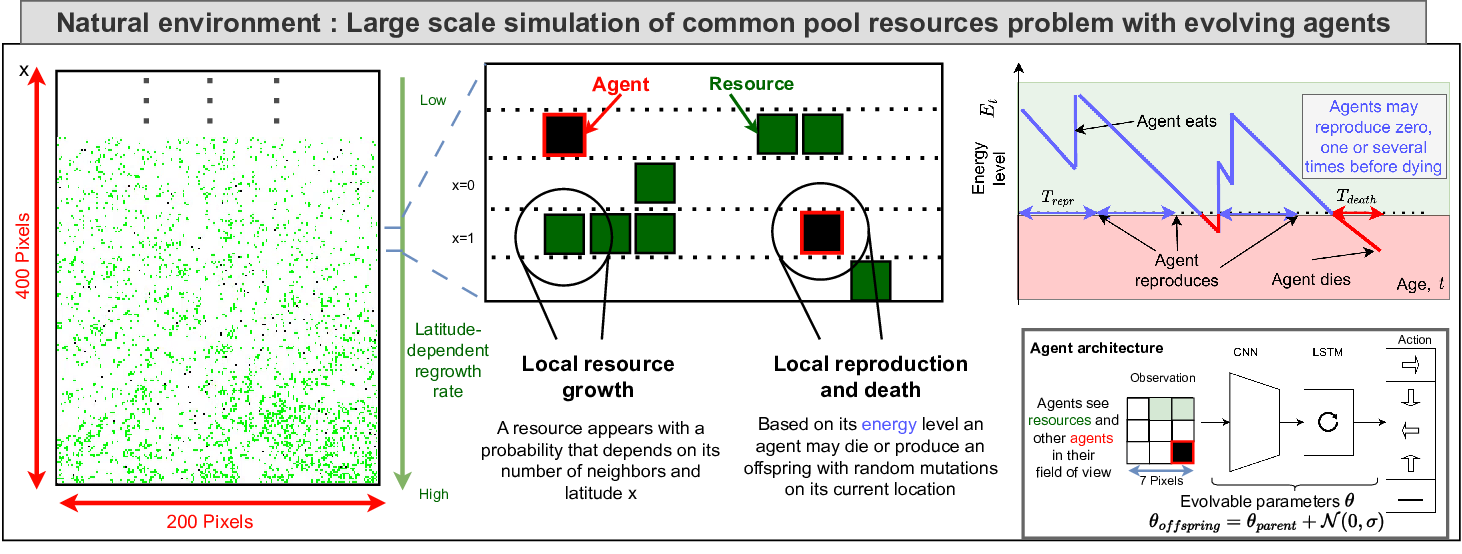

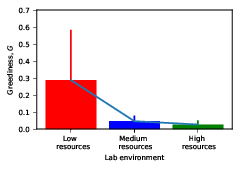

- Eco-evolutionary AI and meta-reinforcement learning: We developed an ecological research perspective on AI, highlighting the interactions between environmental, adaptive, multi-agent and cultural dynamics in sculpting intelligence. This led to the proposal of a detailed conceptual framework for studying these interactions, summarized in this blog post and in the HDR thesis of C. Moulin-Frier 157. This new research perspective is associated with several international and national collaborations: with I. Momennejad from Microsoft Research (USA), with M. Sanchez-Fibla and R. Solé from the Univ. Pompeu Fabra (Spain), with X. Hinaut from Inria Mnemosyne, with F. d'Errico and L. Doyon from the Univ. of Bordeaux (France) and with M. Derex from IAST Toulouse (France). Several conference papers were published in this context in 2023. In 48, we studied the eco-evolutionary dynamics of non-episodic neuroevolution in large multi-agent environments. In 60, we studied the emergence of collective open-ended exploration from decentralized meta-reinforcement learning. In a further paper (unresolved reference nisioti:hal-03898121), we extended the framework of autotelic reinforcement learning to multi-agent environments. In 75, we introduced an eco-devo artificial agent integrating reservoir computing and meta-reinforcement learning. In 53, we studied the dynamics of niche construction in adaptable populations evolving in diverse environments.

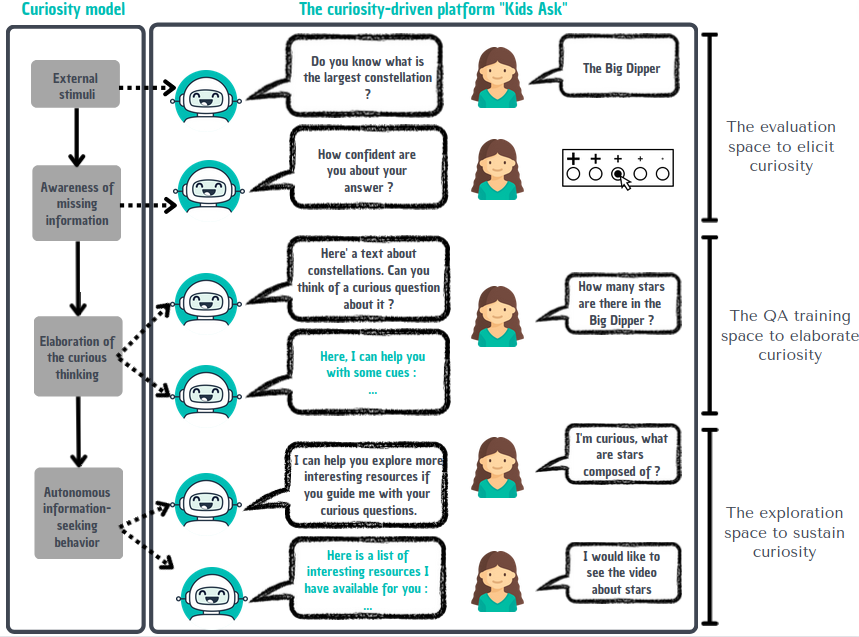

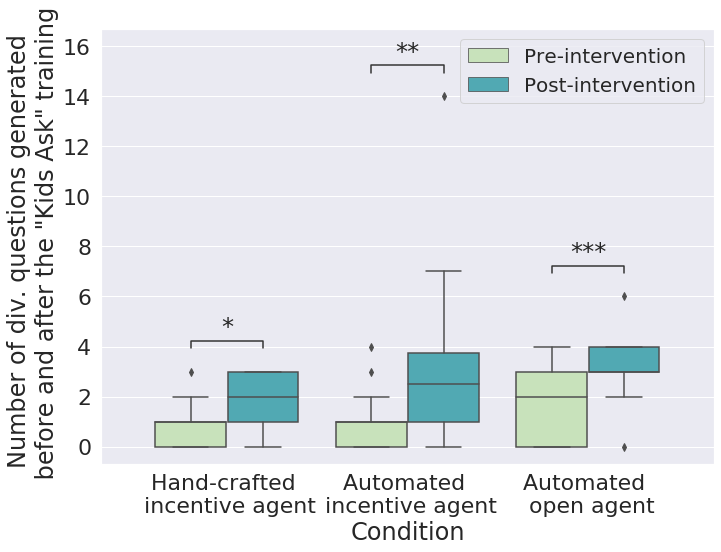

- Generative AI and educational technologies: We continued key projects studying the use of generative AI in education. First, we published one of the first international field studies investigating the pedagogical use of LLMs (here GPT-3) in real classrooms: in 33 (collab. with E. Yuan and T. Wang from MSR Montreal, and with Y-H. Wang), we showed that, when used appropriately, GPT-3 can be used to build conversational agents that efficiently train curious question asking in primary school children, making it possible to scale up a pedagogical approach for training curiosity skills that we developed previously 85. We also studied the use of LLMs (GPT-3) to partially automate qualitative analysis methods in social sciences 56 (collab. Z. Xiao, V. Liao, E. Yuan from MSR Montreal), opening new perspectives for the qualitative study of large text corpora or verbal data from psychology or educational experiments. Finally, we developed a conceptual framework to think about the opportunities and challenges associated with using generative AI in the classroom, asking in particular how this could be done while enabling children to keep and develop active learning skills 59. Here we identified that one key challenge is to improve the AI literacy of both children and their teachers: with this aim in mind, we started designing a pedagogical video series explaining in accessible ways various socio-technical dimensions of large language models (with A. Torres-Leguet). This series, freely available on the web under a Creative Commons licence, has already been reused in multiple contexts such as the MOOC AI4T (AI for teachers).

- Meta-cognition in curiosity-driven educational technologies: We developed several projects leveraging fundamental cognitive science studies of curiosity and meta-cognition to design educational interventions that either directly train these skills, or stimulate them to train other related cognitive skills ranging from maths to languages or other transverse skills like attention, and did this for diverse populations ranging from neurotypical to neurodiverse school children, to healthy young adults and aging populations.

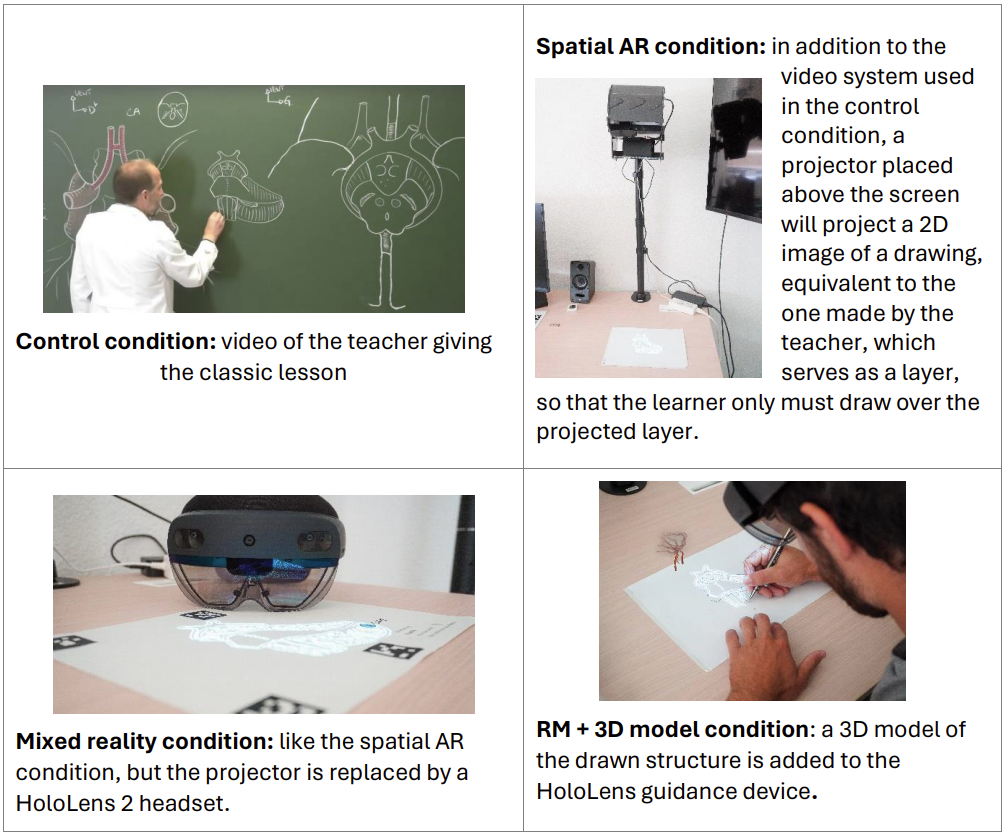

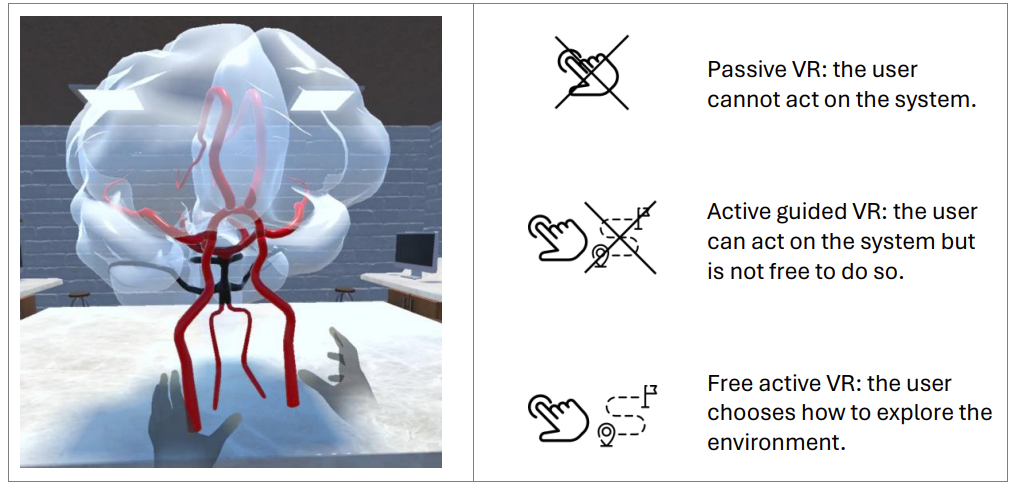

At a fundamental level, we studied the beneficial role of curiosity on route memory in children, within a new virtual reality experimental paradigm 79, in the context of our collaboration with Myra Fernandes at Univ. Waterloo (associated team CuriousTech). To refine the understanding of metacognitive awareness of one's own learning progress and its role as a curiosity booster 31, we designed an educational software (4MC project) that aims to train curiosity through the practice of meta-cognitive skills in school children, and pilot studies led to very encouraging results 41. Also, as a follow-up to our systematic review on the interactions between curiosity and cognitive load in immersive learning technologies, we started a field study with 180 university students to test hypotheses about the links between this interaction and learning performance (involving a collaboration with Prof. A. Tricot from University of Montpellier and the CATIE company).

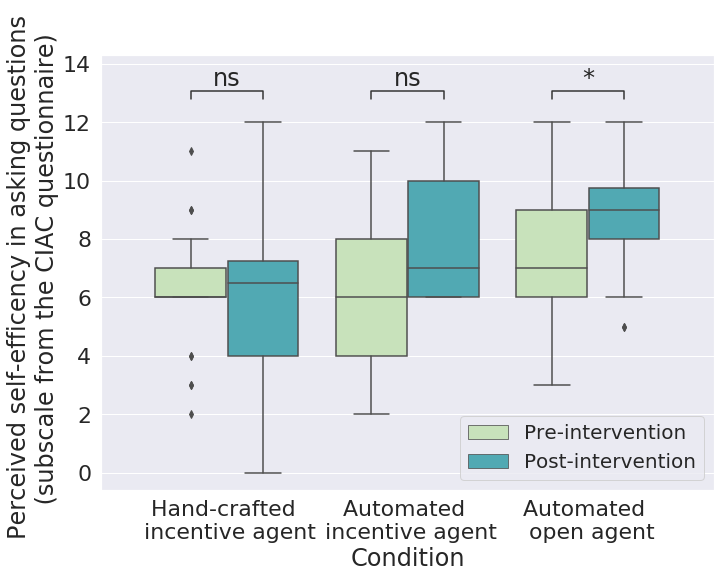

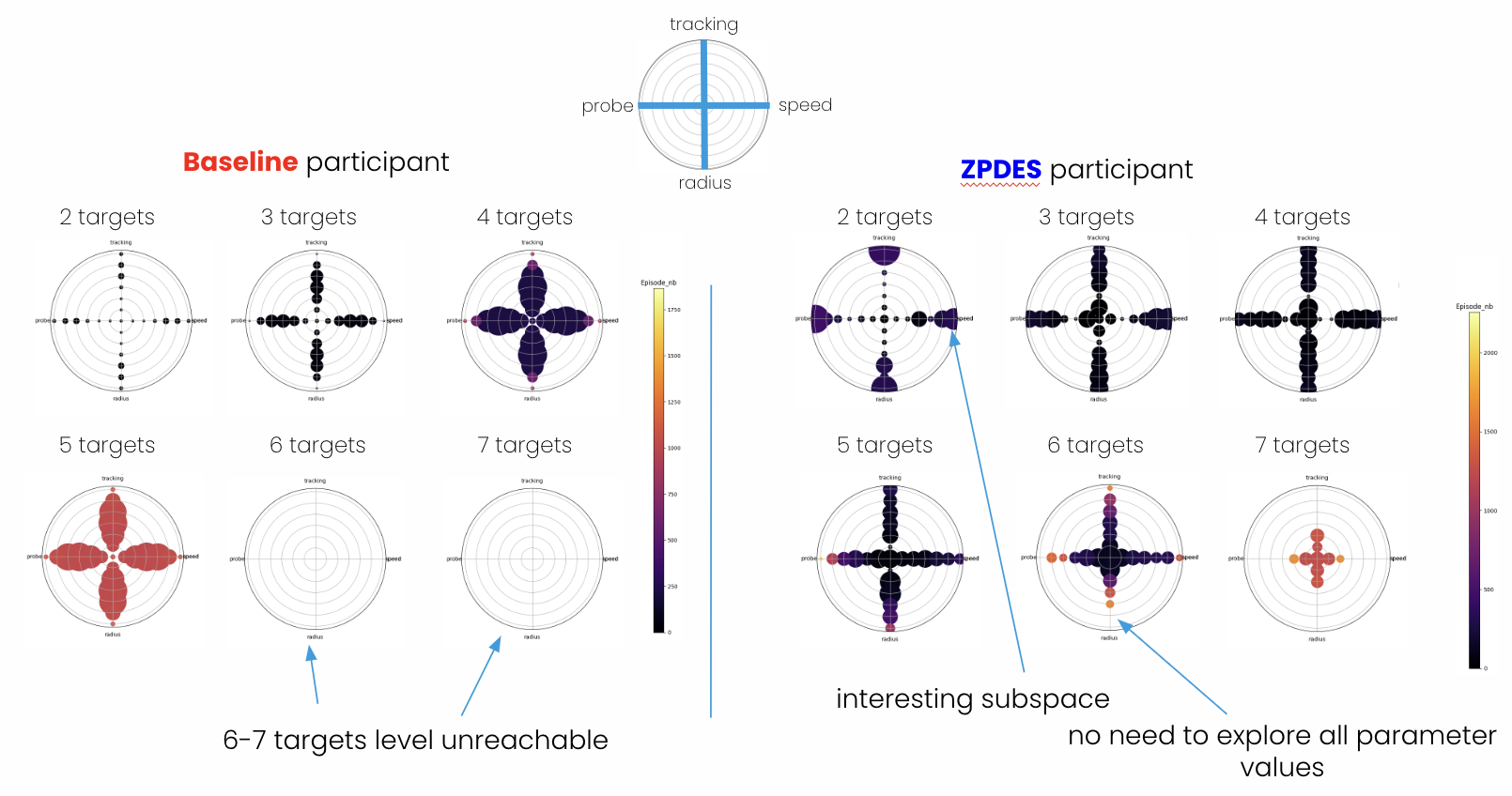

Leveraging the Learning Progress theory of human curiosity we developed in the past 17218, which led us to develop the ZPDES algorithm for personalizing sequences of exercises that foster learning efficiency and motivation 109, we continued studying how ZPDES can be used to personalize training of attention skills in both young adults and aging populations (paper in preparation, collab. with D. Bavelier at Univ. of Geneva). Related to this project, we wrote a systematic review of the use of AI in cognitive training technologies 2. We also finalized the analysis of a large-scale experimental study using ZPDES in the context of training maths skills in primary schools, with a focus on the dual impact on learning efficiency and motivation on the one hand, and on adding choice possibilities on the other hand, showing positive results of the approach in comparison with hand-made pedagogical sequences. Through a collaboration with the EvidenceB company and support from the French Ministry of Education, the ZPDES personalization system was also deployed in the large-scale AdaptivMaths system now available in all French primary schools (> 68k classrooms). EvidenceB further used ZPDES in the MIA seconde system aimed at training high-school students in maths and French.

Finally, in the Togather project, we also experimented with a system aimed at stimulating communication among stakeholders around neurodiverse children in schools (middle-school level), and in particular at fostering mutual curiosity among them while taking into account possible cross-cultural differences between French and Belgian schools 55. This was associated with a systematic review on methods to collaborate and co-educate students with special educational needs 51.

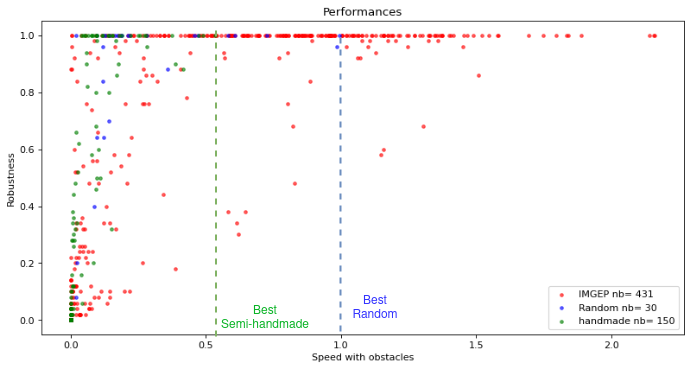

- Curiosity-driven AI for assisted scientific discovery: We continued studying how curiosity-driven AI algorithms can enable scientists (physicists, chemists, biologists, etc.) to explore and map the space of self-organized behaviours in diverse complex systems 69. In particular, through a collaboration with M. Levin at Tufts University, we studied how autotelic AI systems (IMGEP algorithms) can enable cost-effective discovery of diverse, sophisticated and robust behaviors in gene regulatory networks 73. This project was associated with the development of the ADTool software, which aims to facilitate the use of such exploration algorithms by scientists with various backgrounds, as well as with the development of SBMLtoODEjax 61, which aims to automatically parse and convert SBML models into Python models written end-to-end in JAX, enabling fast and easy-to-use biological models in ML experiments. In another project, we continued our work using exploration algorithms to study self-organized structures in continuous CAs like Lenia, making it possible to discover the self-organization of forms of primitive agency, as described in this blog post. In this context, we designed a new continuous CA called Flow Lenia, combining mechanisms for mass conservation and localized embedding of adaptive update rules: this strongly facilitates self-organization of localized patterns and opens possibilities for the self-organization of evolutionary processes. The associated paper, in collaboration with Bert Chan at Google Brain, obtained the Best paper award at Alife 2023 in Tokyo 54.

- Workshop/symposium organization: Laetitia Teodorescu and Cédric Colas were organizers of the Intrinsically-Motivated and Open-Ended Learning workshop at NeurIPS 2023, https://imol-workshop.github.io/; Mayalen Etcheverry was co-organizer of the Workshop on Agent Learning in Open-Endedness (ALOE) at NeurIPS 2023, https://sites.google.com/view/aloe2023/home; H. Sauzéon was a member of the scientific committee of the first "Scientific Day of Gerontopole of New Aquitania", April 2023, Limoges; Pierre-Yves Oudeyer was co-organizer of the Life, Structure and Cognition symposium on Evolution and Learning, at IHES, https://indico.math.cnrs.fr/event/9963/, as well as of the Curiosity, Complexity and Creativity conference 2023 at Columbia University, NY, US, https://zuckermaninstitute.columbia.edu/ccc-event.

- International Research Visits: Rania Abdelghani visited the lab of Celeste Kidd at Univ. Berkeley to develop a new project studying how children understand and (mis)use large language models in educational settings. Marion Pech, Matisse Poupart and Maxime Adolphe visited Myra Fernandes's lab and Edith Law's lab at Univ. Waterloo in the context of associated team CuriousTech.

- Collaborations with industry: The team continued collaborations with various actors in the industry, including HuggingFace, EvidenceB, CATIE, Microsoft Research, Ubisoft, OnePoint.

6.1 Awards

Rémy Portelas obtained the Best PhD award from University of Bordeaux, category "Special prize of the jury", for his thesis entitled "Automatic Curriculum Learning for Developmental Machine Learners" 176.

Erwan Plantec, Gautier Hamon, Mayalen Etcheverry, Bert Chan, Pierre-Yves Oudeyer and Clément Moulin-Frier obtained the Best paper award at Alife 2023 in Tokyo for the paper "Flow-Lenia: Towards open-ended evolution in cellular automata through mass conservation and parameter localization" 54.

6.2 PhD defenses

- Tristan Karch defended his PhD thesis on "Towards Social Autotelic Artificial Agents - Formation and Exploitation of Cultural Conventions in Autonomous Embodied Artificial Agents" 70 on May 11th, 2023.

- Mayalen Etcheverry defended her PhD thesis on "Curiosity-driven AI for Science: Automated Discovery of Self-Organized Structures" 69 on November 16th, 2023.

- Laetitia Teodorescu defended her PhD thesis on "Endless minds most beautiful: building open-ended linguistic autotelic agents with deep reinforcement learning and language models" 71 on November 20th, 2023.

7 New software, platforms, open data

7.1 New software

7.1.1 SocialAI

- Name: SocialAI: Benchmarking Socio-Cognitive Abilities in Deep Reinforcement Learning Agents

- Keywords: Artificial intelligence, Deep learning, Reinforcement learning, Large Language Models

- Functional Description: Source code for the paper https://arxiv.org/abs/2107.00956. A suite of environments for testing socio-cognitive abilities of artificial agents. Environments can be used in the multimodal setting (suitable for RL agents) and in the pure text setting (suitable for Large Language Model-based agents). Also contains RL and LLM baselines.

- URL:

- Contact: Grgur Kovac

7.1.2 AutoDisc

-

Keyword:

Complex Systems

-

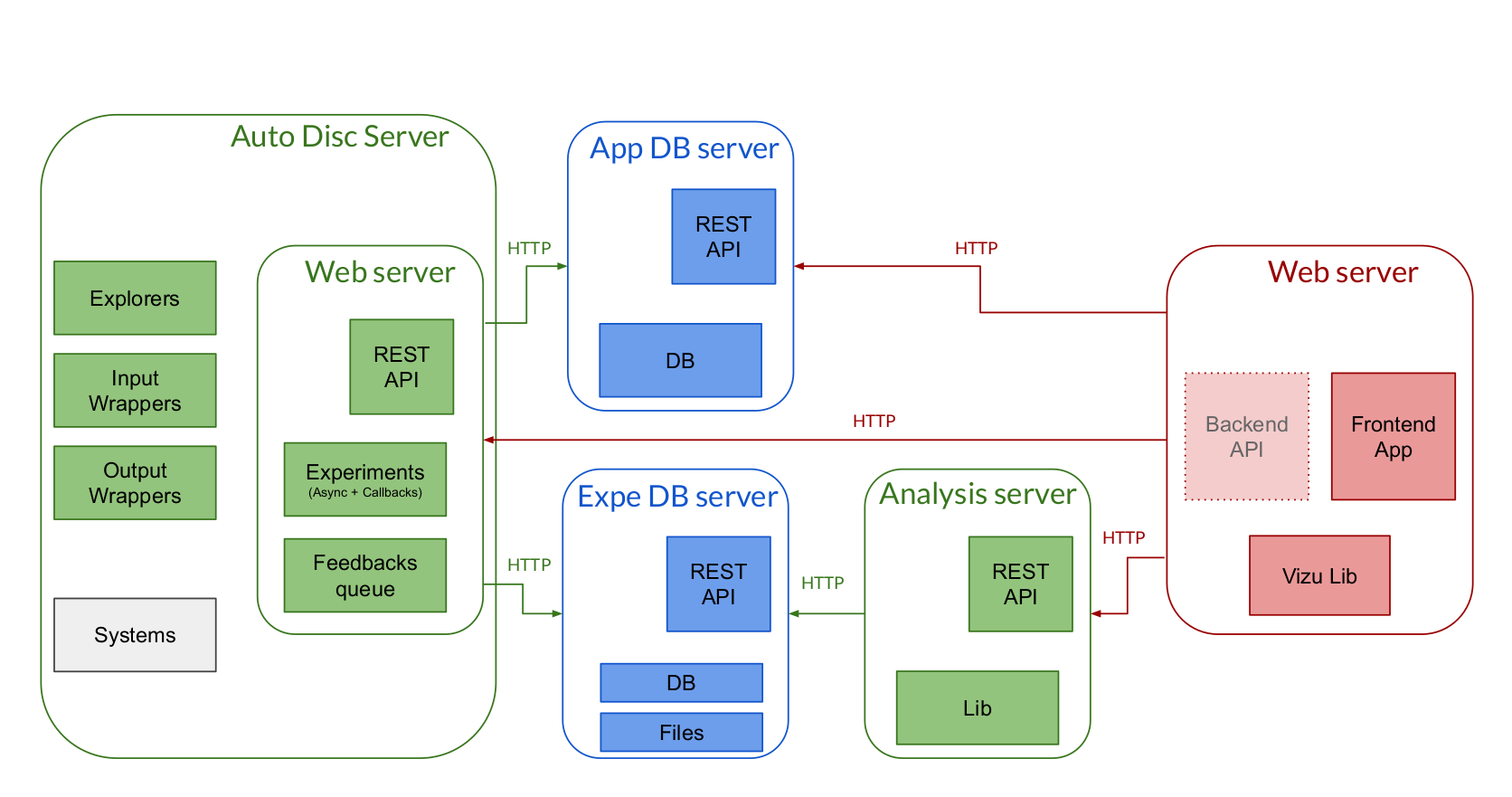

Functional Description:

AutoDisc is a software built for automated scientific discoveries in complex systems (e.g. self-organizing systems). It can be used as a tool to experiment automated discovery of various systems using exploration algorithms (e.g. curiosity-driven). Our software is fully Open Source and allows user to add their own systems, exploration algorithms or visualization methods.

- URL:

-

Contact:

Clément Romac

7.1.3 Kids Ask

- Keywords: Human Computer Interaction, Cognitive sciences

- Functional Description: Kids Ask is a web-based educational platform that involves an interaction between a child and a conversational agent. The platform is designed to teach children how to generate curiosity-based questions and use them in their learning in order to gain new knowledge in an autonomous way.

- URL:

- Contact: Rania Abdelghani

7.1.4 ToGather

- Keywords: Education, Handicap, Environment perception

- Scientific Description: With participatory design methods, we have designed an interactive website application for educational purposes. This application aims to provide interactive services with continuously updated content for the stakeholders of school inclusion of children with specific educational needs.

- Functional Description: Website gathering information on middle school students with neurodevelopmental disorders. Authentication is required to access the site's content. Each user can only access the student file(s) of the young person(s) they are accompanying. A student file contains 6 tabs, in which each type of user can add, edit or delete information: (1) Profile: to quickly get to know the student; (2) Skills: evaluation at a given moment and evolution over time; (3) Compendium of tips: includes psycho-educational tips; (4) Meetings: manager and reports; (5) News: share information over time; (6) Contacts: contact information for stakeholders. The student only has the right to view information about him/her.

- Publication:

- Contact: Cécile Mazon

- Participants: Isabeau Saint-Supery, Cécile Mazon, Eric Meyer, Hélène Sauzéon

7.1.5 mc_training

- Name: Platform for metacognitive training

- Keywords: Human Computer Interaction, Education

- Functional Description: This is a web platform for children between 9 and 11 years old, designed to help them practice 4 metacognitive skills that are thought to be involved in curiosity-driven learning: the ability to identify uncertainties, the ability to generate informed hypotheses, the ability to ask questions, and the ability to evaluate the value of a preconceived inference. Children work on reading-comprehension tasks and, for each of these skills, the platform offers help through a "conversation" with conversational agents that give instructions to perform the task, with respect to every skill, and can give suggestions if the child asks for them.

- Contact: Rania Abdelghani

7.1.6 Evolution of adaptation mechanisms in complex environments

- Name: Plasticity and evolvability under environmental variability: the joint role of fitness-based selection and niche-limited competition

- Keywords: Evolution, Ecology, Dynamic adaptation

- Functional Description:

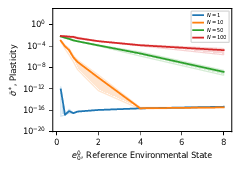

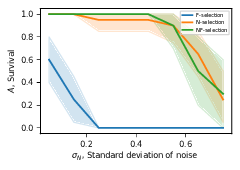

This is the code accompanying our paper "Plasticity and evolvability under environmental variability: the joint role of fitness-based selection and niche-limited competition", which is to be presented at the GECCO 2022 conference.

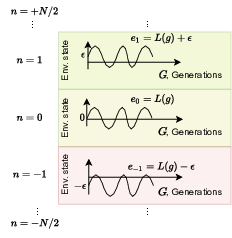

In this work we have studied the evolution of a population of agents in a world where the fitness landscape changes across generations based on a climate function and a latitudinal model that divides the world into different niches. We have implemented different selection mechanisms (fitness-based selection and niche-limited competition).

The world is divided into niches that correspond to different latitudes and whose state evolves based on a common climate function.

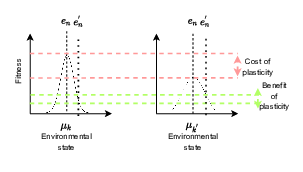

We model the plasticity of an individual using tolerance curves originally developed in ecology. Plasticity curves have the form of a Gaussian that captures the benefits and costs of plasticity when comparing a specialist (left) with a generalist (right) agent.
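As an illustration of how a Gaussian tolerance curve can express both the benefit and the cost of plasticity, here is a minimal sketch. It assumes a unit-area Gaussian centred on the individual's preferred environmental state, with the standard deviation playing the role of plasticity; the function name, the parameter names and the normalization are assumptions made for this example and are not taken from the codebase.

```python
import math


def tolerance(env_state, preferred_state, plasticity):
    """Unit-area Gaussian tolerance curve (assumed form).

    A larger `plasticity` widens the curve (the agent copes with more
    environmental states) but lowers its peak (the cost of being a generalist).
    """
    if plasticity <= 0:
        raise ValueError("plasticity must be positive")
    z = (env_state - preferred_state) / plasticity
    return math.exp(-0.5 * z * z) / (plasticity * math.sqrt(2.0 * math.pi))


# Hypothetical agents sharing the same preferred state.
specialist = {"preferred_state": 2.0, "plasticity": 0.2}
generalist = {"preferred_state": 2.0, "plasticity": 1.0}

for env_state in (2.0, 3.0):
    print(
        f"env={env_state}: "
        f"specialist={tolerance(env_state, **specialist):.4f}, "
        f"generalist={tolerance(env_state, **generalist):.4f}"
    )
```

Under these assumptions the specialist scores higher when the environment matches its preferred state, while the generalist scores higher once the environment drifts away from it.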

The repo contains the following main elements:

- folder source contains the main functionality for running a simulation

- scripts/run/reproduce_gecco.py can be used to rerun all simulations in the paper

- scripts/evaluate contains scripts for reproducing figures. reproduce_figures.py will produce all figures (provided you have already run scripts/run/reproduce_gecco.py to generate the data)

- folder projects contains data generated from running a simulation

How to run

To install all package dependencies you can create a conda environment as:

conda env create -f environment.yml

All script executions need to be run from folder source. Once there, you can use simulate.py, the main interface of the codebase, to run a simulation. For example:

python simulate.py --project test_stable --env_type stable --num_gens 300 --capacity 1000 --num_niches 10 --trials 10 --selection_type NF --climate_mean_init 2

will run a simulation with an environment whose climate function state is constantly 2, consisting of 100 niches, for 300 generations and 10 independent trials. The maximum population size will be 1000*2, and selection will be fitness-based (higher fitness means higher chances of reproduction) and niche-limited (individuals reproduce independently in each niche and compete only within a niche).

You can also take a look at scripts/run/reproduce_gecco.py to see which flags were used for the simulations presented in the paper.

Running all simulations requires some days. You can instead download the data produced by running scripts/run/reproduce_gecco.py from this google folder and unzip them under the projects directory.

- URL:

-

Contact:

Eleni Nisioti

7.1.7 SAPIENS

-

Name:

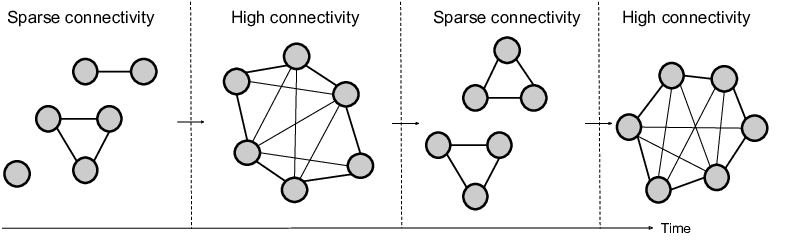

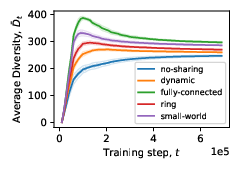

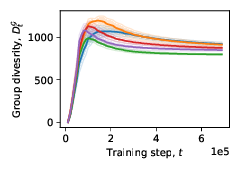

SAPIENS: Structuring multi-Agent toPology for Innovation through ExperieNce Sharing

-

Keywords:

Reinforcement learning, Multi-agent

-

Functional Description:

SAPIENS is a reinforcement learning algorithm where multiple off-policy agents solve the same task in parallel and exchange experiences on the go. The group is characterized by its topology, a graph that determines who communicates with whom.

All agents are DQNs, and the exchanged experiences take the form of transitions from their replay buffers.

Using SAPIENS we can define groups of agents connected according to a) a fully-connected topology, b) a small-world topology, c) a ring topology or d) a dynamic topology.
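To make the role of the topology concrete, the sketch below builds two of these graphs as adjacency lists and performs one round of experience sharing. It is an illustration under stated assumptions rather than the SAPIENS implementation: the function names, the `batch_size` argument and the representation of transitions as plain tuples are hypothetical.

```python
import random

def fully_connected(n):
    """Every agent is a neighbour of every other agent."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}

def ring(n):
    """Each agent is connected to its two adjacent agents on a circle."""
    return {i: sorted({(i - 1) % n, (i + 1) % n} - {i}) for i in range(n)}

def share_experiences(buffers, topology, batch_size, rng):
    """Each agent copies up to `batch_size` random transitions from its own
    replay buffer into the buffers of its neighbours."""
    # Sample first, so that transitions received in this round are not re-shared.
    outgoing = {
        agent: rng.sample(buf, min(batch_size, len(buf)))
        for agent, buf in buffers.items()
    }
    for agent, neighbours in topology.items():
        for neighbour in neighbours:
            buffers[neighbour].extend(outgoing[agent])

rng = random.Random(0)
# Hypothetical transitions: (state, action, reward, next_state) tuples.
buffers = {i: [(f"s{i}", 0, 0.0, f"s{i}'")] for i in range(4)}
share_experiences(buffers, ring(4), batch_size=1, rng=rng)
```

With the ring topology, each agent ends the round holding its own transition plus one from each of its two neighbours. With `fully_connected(4)` it would instead receive one from every other agent.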

Install required packages

You can install all required Python packages by creating a new conda environment containing the packages in environment.yml:

conda env create -f environment.yml

And then activating the environment:

conda activate sapiens

Example usages

Under notebooks there is a Jupyter notebook that will guide you through setting up simulations with a fully-connected and a dynamic social network structure for solving Wordcraft tasks. It also explains how you can access visualizations of the metrics produced during th$

Reproducing the paper results

Scripts under the scripts directory are useful for reproducing results and figures appearing in the paper.

With scripts/reproduce_runs.py you can run all simulations presented in the paper from scratch.

This file is useful for seeing how the experiments were configured, but it is better not to run it: simulations will run locally and sequentially and will take months to complete.

Instead, you can access the data files output by simulations on this online repo.

Download this zip file and uncompress it under the projects directory. This should create a projects/paper_done sub-directory.

You can now reproduce all visualizations presented in the paper. Run:

python scripts/reproduce_visuals.py

This will save some general plots under visuals, while project-specific plots are saved under the corresponding project in projects/paper_done.

- URL:

-

Contact:

Eleni Nisioti

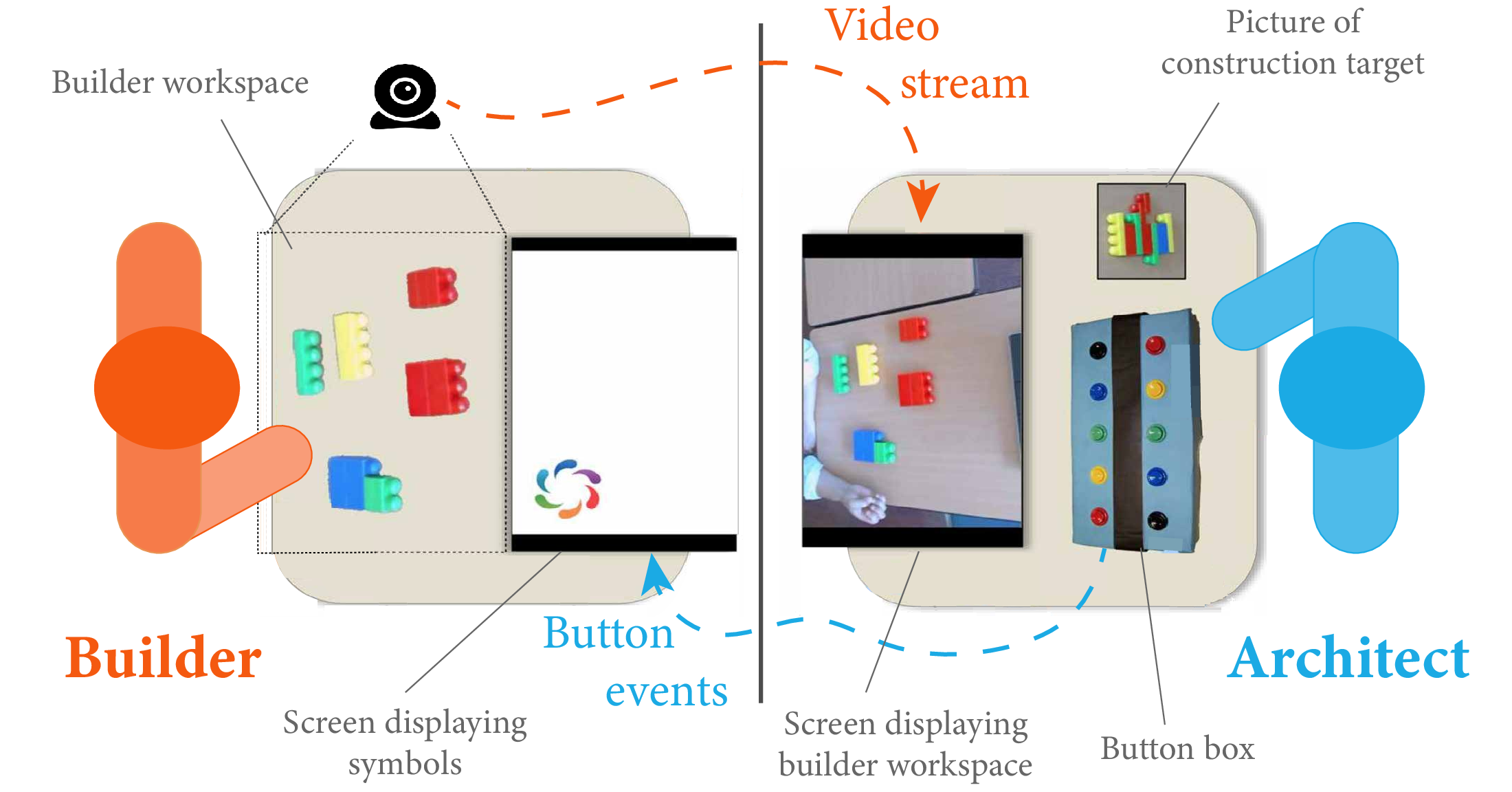

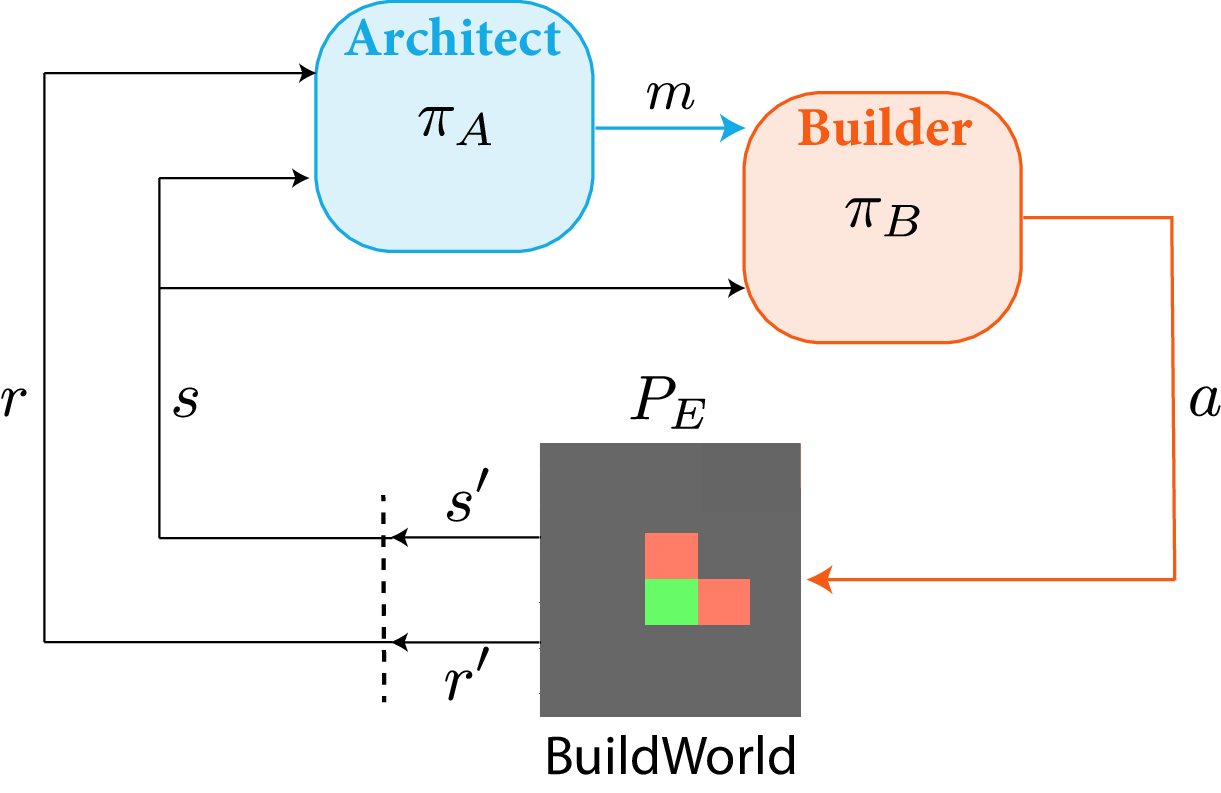

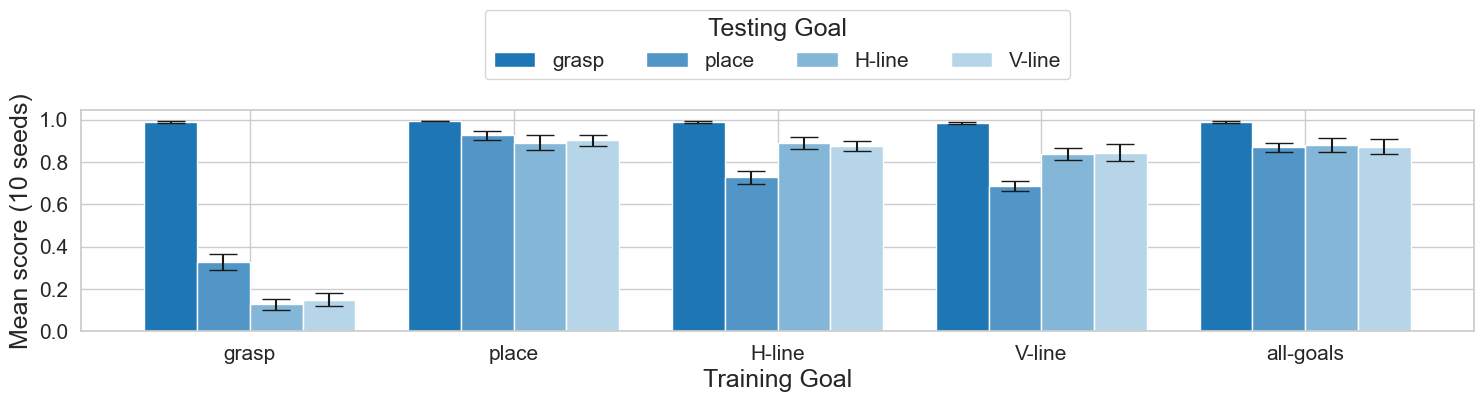

7.1.8 architect-builder-abig

-

Name:

Architect-Builder Iterated Guiding

-

Keyword:

Artificial intelligence

-

Functional Description:

Codebase for the paper "Learning to guide and to be guided in the Architect-Builder Problem".

ABIG stands for Architect-Builder Iterated Guiding and is an algorithmic solution to the Architect-Builder Problem. The algorithm leverages a learned model of the builder to guide it while the builder uses self-imitation learning to reinforce its guided behavior.

- URL:

-

Contact:

Tristan Karch

7.1.9 EAGER

-

Name:

Exploit question-Answering Grounding for effective Exploration in language-conditioned Reinforcement learning

-

Keywords:

Reinforcement learning, Language, Question Generation, Question Answering, Reward shaping

-

Functional Description:

A novel QG/QA framework for RL called EAGER. In EAGER, an agent reuses the initial language goal sentence to generate a set of questions (QG): each of these self-generated questions defines an auxiliary objective. Here, generating a question consists in masking a word of the initial language goal. Then the agent tries to answer these questions (guess the missing word) only by observing its trajectory so far. When it manages to answer a question correctly (QA), it obtains an intrinsic reward proportional to its confidence in the answer. The QA module is trained using a set of successful example trajectories. If the agent follows a path too different from correct ones at some point in its trajectory, the QA module will not answer the question correctly, resulting in zero intrinsic reward. The sum of all the intrinsic rewards measures the quality of a trajectory in relation to the given goal. In other words, maximizing this intrinsic reward incentivizes the agent to produce behaviour that unambiguously explains various aspects of the given goal.
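The question-generation and reward steps described above can be sketched in a few lines of Python. This is a simplified illustration, not the EAGER codebase: the function names, the `<mask>` token, the set of maskable words and the rule-based `toy_qa_model` (a stand-in for the trained QA module) are all hypothetical, and the proportionality constant of the reward is taken to be 1.

```python
def generate_questions(goal, maskable_words, mask_token="<mask>"):
    """Question generation: mask one word of the goal at a time.
    Returns a list of (question, expected_answer) pairs."""
    words = goal.split()
    questions = []
    for i, word in enumerate(words):
        if word in maskable_words:
            masked = words[:i] + [mask_token] + words[i + 1:]
            questions.append((" ".join(masked), word))
    return questions

def intrinsic_reward(questions, qa_model, trajectory):
    """Sum the QA confidence over the questions answered correctly.
    A wrong answer contributes zero reward."""
    total = 0.0
    for question, answer in questions:
        prediction, confidence = qa_model(question, trajectory)
        if prediction == answer:
            total += confidence
    return total

def toy_qa_model(question, trajectory):
    """Hypothetical QA module: guesses a known word that was observed in the
    trajectory and is not already present in the question."""
    vocabulary = ["red", "blue", "ball", "key"]
    seen = [w for w in vocabulary if any(w in obs.split() for obs in trajectory)]
    candidates = [w for w in seen if w not in question.split()]
    if not candidates:
        return None, 0.0
    return candidates[0], 0.8

goal = "pick up the red ball"
questions = generate_questions(goal, maskable_words={"red", "ball"})
trajectory = ["agent sees a red door", "agent moves forward"]
reward = intrinsic_reward(questions, toy_qa_model, trajectory)
```

In this toy run the trajectory only lets the model recover the word "red". One of the two questions is answered, so the reward equals that single confidence value.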

- URL:

-

Contact:

Thomas Carta

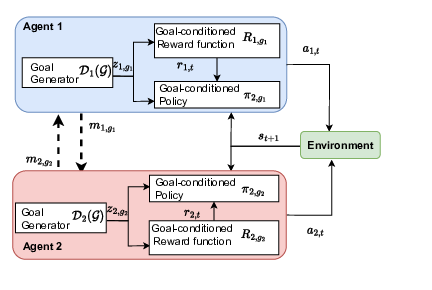

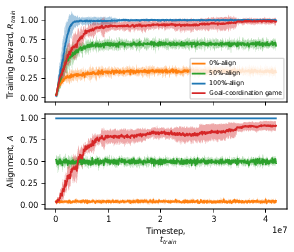

7.1.10 IMGC-MARL

-

Name:

Intrinsically Motivated Goal-Conditioned Reinforcement Learning in Multi-Agent Environments

-

Keywords:

Reinforcement learning, Multi-agent, Curiosity

-

Functional Description:

This repo contains the code base of the paper Intrinsically Motivated Goal-Conditioned Reinforcement Learning in Multi-Agent Environments.

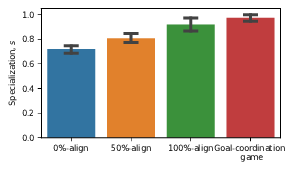

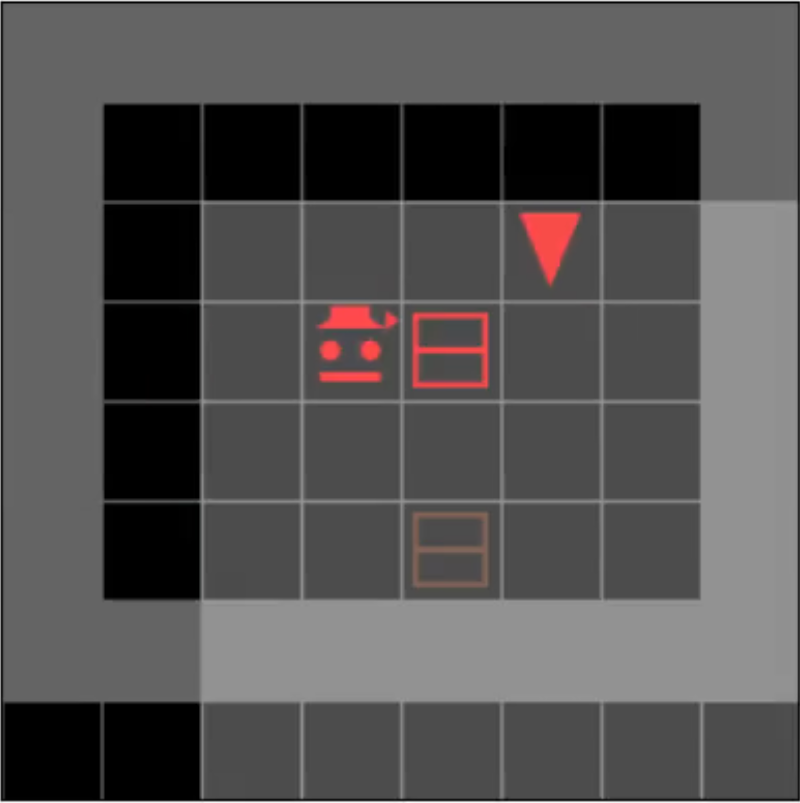

In this work, we studied the importance of goal alignment when training intrinsically motivated agents in the multi-agent goal-conditioned RL setting. We also proposed a simple algorithm, called the goal coordination game, which allows such agents to learn, in a completely decentralized/selfish way, to communicate in order to align their goals.

The repository contains the code to reproduce the results of the paper. This includes a custom RL environment (using the SimplePlayground "game engine"), the model used (architecture and hyperparameters) and custom training code (mostly based on RLlib) to train both the model and the communication. We also provide the scripts for training every condition we test and a notebook to study the results.

- URL:

-

Contact:

Gautier Hamon

7.1.11 Flow-Lenia

-

Name:

Flow Lenia: Mass conservation for the study of virtual creatures in continuous cellular automata

-

Keywords:

Cellular automaton, Self-organization

-

Functional Description:

This repo contains the code to run the Flow Lenia system, a continuous parametrized cellular automaton with mass conservation. This work extends the classic Lenia system with mass conservation and makes it possible to implement new features such as local parameters, environment components, etc.

Several variants of the system (one or several channels, etc.) are available.

Please refer to the associated paper for the details of the system.

Implemented in JAX.

- URL:

-

Contact:

Gautier Hamon

7.1.12 Kidlearn: money game application

-

Functional Description:

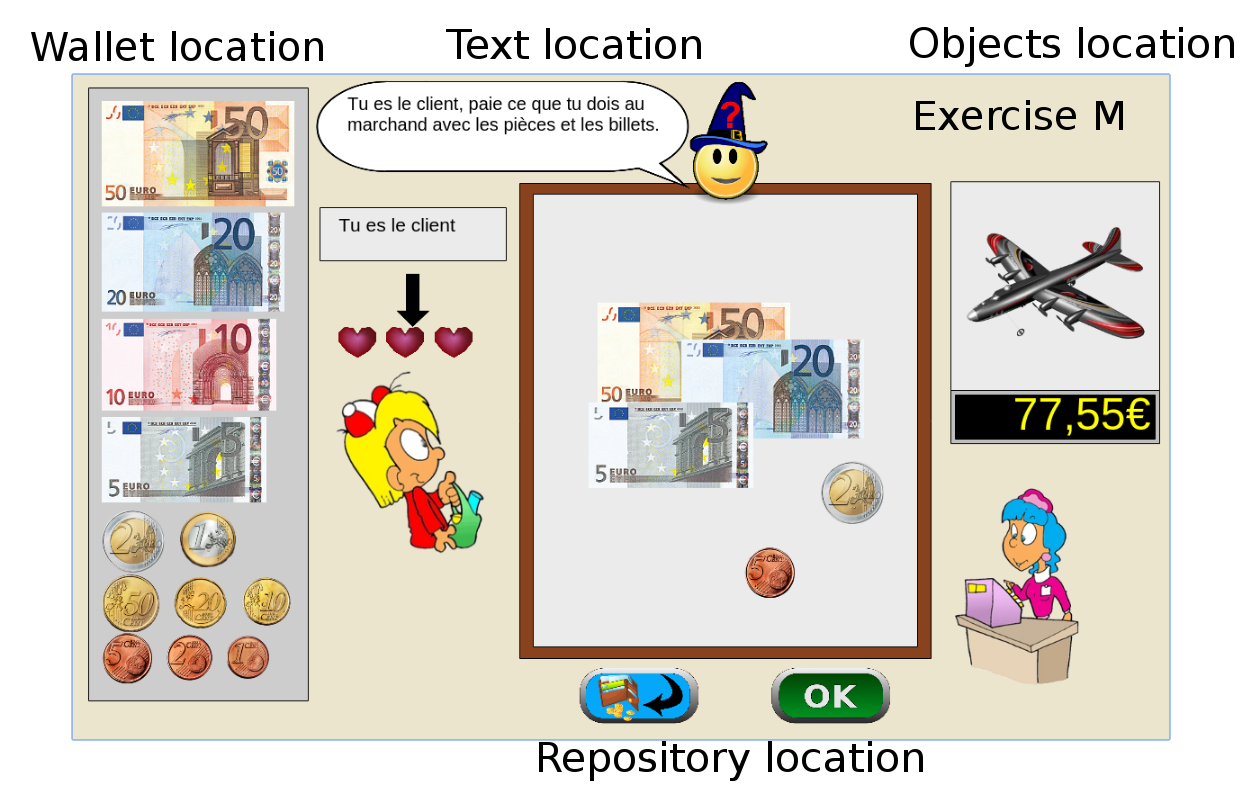

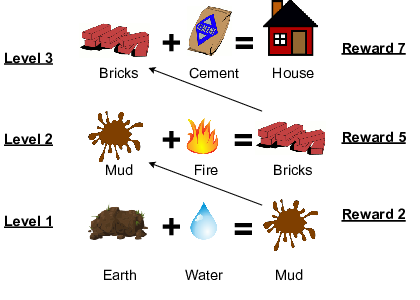

The game is instantiated in a browser environment where students are offered exercises in the form of money/token games (see Figure 1). For each exercise type, one object is presented with a given tagged price and the learner has to choose which combination of bank notes, coins or abstract tokens needs to be taken from the wallet to buy the object, with various constraints depending on the exercise parameters. The games have been developed using web technologies: HTML5, JavaScript and Django.

Figure 1: Four principal regions are defined in the graphical interface. The first is the wallet location where users can pick and drag the money items and drop them on the repository location to compose the correct price. The object and the price are present in the object location. Four different types of exercises exist: M: customer/one object, R: merchant/one object, MM: customer/two objects, RM: merchant/two objects.

- URL:

-

Contact:

Benjamin Clement

7.1.13 cognitive-testbattery

-

Name:

Cognitive test battery of human attention and memory

-

Keywords:

Open Access, Cognitive sciences

-

Scientific Description:

Cognitive test batteries are widely used in diverse research fields, such as cognitive training, cognitive disorder assessment, or brain mechanism understanding. Although they need flexibility according to the objectives of their usage, most test batteries are not available as open-source software and cannot be tuned in detail by researchers. The present study introduces an open-source cognitive test battery to assess attention and memory, using a JavaScript library, p5.js. Because of the ubiquitous nature of dynamic attention in our daily lives, it is crucial to have tools for its assessment or training. For that purpose, our test battery includes seven cognitive tasks (multiple-object tracking, enumeration, go/no-go, load-induced blindness, task-switching, working memory, and memorability), common in the cognitive science literature. Using the test battery, we conducted an online experiment to collect benchmark data. Results collected on two separate days showed high cross-day reliability: task performance did not change substantially between days. In addition, our test battery captures diverse individual differences and can evaluate them based on the cognitive factors extracted from latent factor analysis. Since we share our source code as open-source software, users can expand and manipulate experimental conditions flexibly. Our test battery is also flexible in terms of the experimental environment, i.e., it is possible to experiment either online or in a laboratory environment.

-

Functional Description:

The evaluation battery consists of 7 cognitive activities (serious games: multi-object tracking, enumeration, go/no-go, Corsi, load-induced blindness, task-switching, memorability). Easily deployable as a web application, it can be re-used and modified for new experiments. The tool is documented in order to facilitate the deployment and the analysis of results.

- URL:

- Publication:

-

Contact:

Maxime Adolphe

-

Participants:

Pierre-Yves Oudeyer, Hélène Sauzéon, Masataka Sawayama, Maxime Adolphe

7.1.14 LLM_stability

-

Keywords:

Artificial intelligence, Deep learning, Large Language Models

-

Functional Description:

Source code for the paper https://arxiv.org/abs/2307.07870

Code enabling systematic evaluation of Large Language Models with various psychology questionnaires in different contexts, e.g. following conversations on different topics.

- URL:

-

Contact:

Grgur Kovac

7.1.15 Sensorimotor-lenia

-

Keywords:

Cellular automaton, Gradient descent, Curriculum Learning

-

Functional Description:

Source code for the search of sensorimotor agency in cellular automata associated with this blog post: https://developmentalsystems.org/sensorimotor-lenia/. The code makes it possible to find rules in the cellular automaton Lenia (through gradient descent, curriculum learning and diversity search) that lead to the self-organization of moving agents robust to perturbation by obstacles.

- URL:

-

Contact:

Gautier Hamon

7.1.16 MetaIPPO

-

Keywords:

Reinforcement learning, Exploration

-

Functional Description:

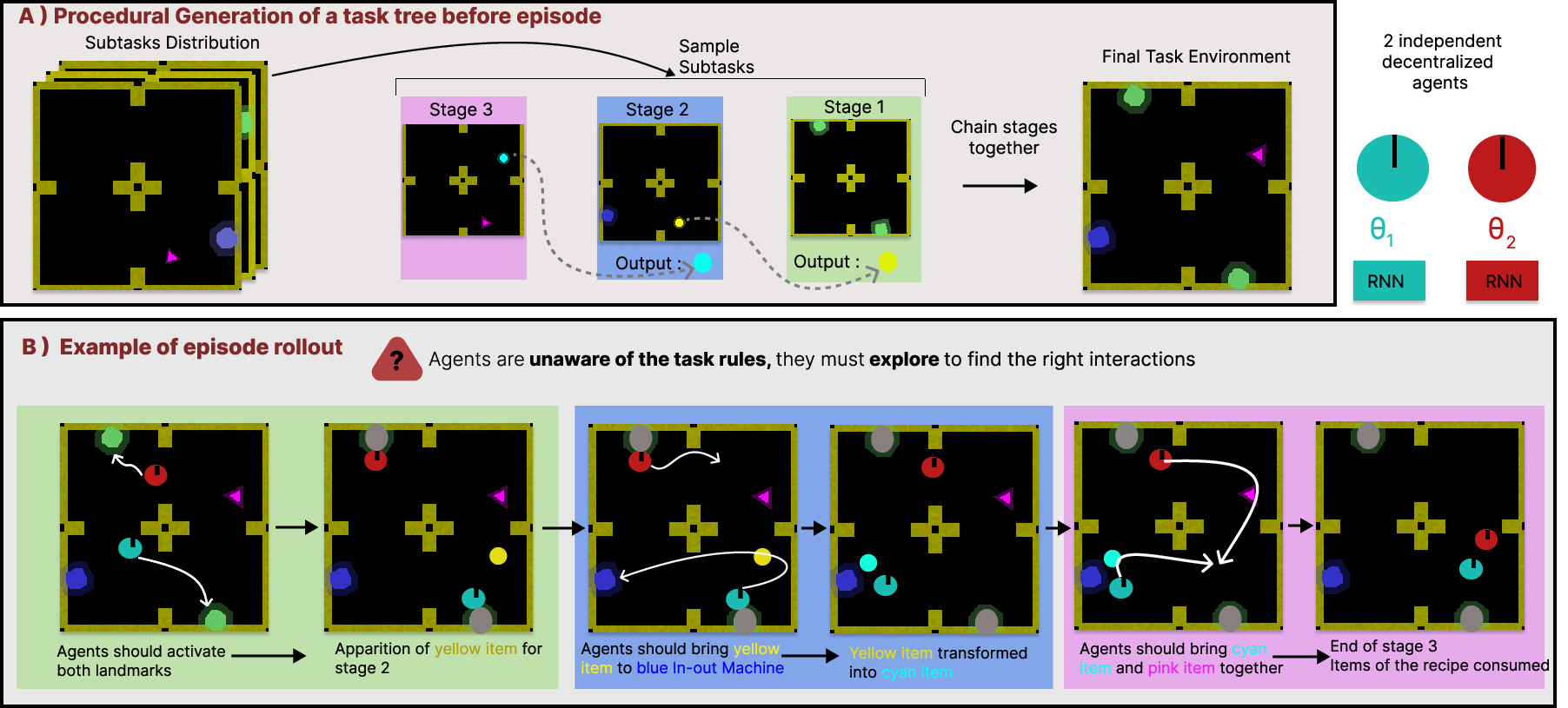

Code for the paper "Emergence of collective open-ended exploration from Decentralized Meta-Reinforcement learning" https://arxiv.org/pdf/2311.00651.pdf

We train two decentralized agents together on an open-ended task space to study the emergence of collective exploration behaviors. Our agents are able to generalize to novel objects and tasks, as well as to an essentially open-ended setting.

- URL:

-

Contact:

Gautier Hamon

7.1.17 Lamorel

-

Keywords:

Large Language Models, Reinforcement learning, Distributed computing

-

Functional Description:

Lamorel is a Python library designed for people eager to use Large Language Models (LLMs) in interactive environments (e.g. RL setups).

- URL:

- Publication:

-

Contact:

Clément Romac

7.1.18 GLAM

-

Name:

Grounding LAnguage Models

-

Keywords:

Large Language Models, Reinforcement learning

-

Scientific Description:

Recent works successfully leveraged Large Language Models' (LLM) abilities to capture abstract knowledge about the world's physics to solve decision-making problems. Yet, the alignment between LLMs' knowledge and the environment can be wrong and limit functional competence due to a lack of grounding. In this paper, we study an approach (named GLAM) to achieve this alignment through functional grounding: we consider an agent using an LLM as a policy that is progressively updated as the agent interacts with the environment, leveraging online Reinforcement Learning to improve its performance to solve goals. Using an interactive textual environment designed to study higher-level forms of functional grounding, and a set of spatial and navigation tasks, we study several scientific questions: 1) Can LLMs boost sample efficiency for online learning of various RL tasks? 2) How can they boost different forms of generalization? 3) What is the impact of online learning? We study these questions by functionally grounding several variants (size, architecture) of FLAN-T5.

-

Functional Description:

GLAM is a new approach to achieve alignment between a Large Language Model (LLM) and a considered environment/world through functional grounding: we consider an agent using an LLM as a policy that is progressively updated as the agent interacts with the environment, leveraging online Reinforcement Learning to improve its performance to solve goals.
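One way to read "using an LLM as a policy" is to score each candidate action by the log-likelihood the model assigns to the action text given a textual prompt, and to normalize these scores with a softmax. The text above does not spell out this mechanism, so the sketch below should be taken as an assumption. The `toy_log_likelihood` function is a hypothetical stand-in for a real LLM scoring call, and the online RL update of the model is not shown.

```python
import math

def action_distribution(prompt, actions, log_likelihood):
    """Turn per-action log-likelihoods into a probability distribution
    over actions with a numerically stable softmax."""
    scores = [log_likelihood(prompt, action) for action in actions]
    top = max(scores)
    exps = [math.exp(score - top) for score in scores]  # subtract max for stability
    total = sum(exps)
    return {action: e / total for action, e in zip(actions, exps)}

def toy_log_likelihood(prompt, action):
    """Hypothetical scorer: favours actions whose last word appears in the prompt.
    A real setup would query the LLM for log p(action tokens | prompt)."""
    return 0.0 if action.split()[-1] in prompt else -2.0

prompt = "Goal: go to the key. You see a key to the left."
actions = ["turn left", "turn right", "go forward"]
policy = action_distribution(prompt, actions, toy_log_likelihood)
best_action = max(policy, key=policy.get)
```

The resulting dictionary can be sampled from like any discrete policy, which is what allows standard online RL algorithms to be applied to it.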

- URL:

- Publication:

-

Contact:

Clément Romac

7.1.19 ER-MRL

-

Keywords:

Reservoir Computing, Reinforcement learning

-

Functional Description:

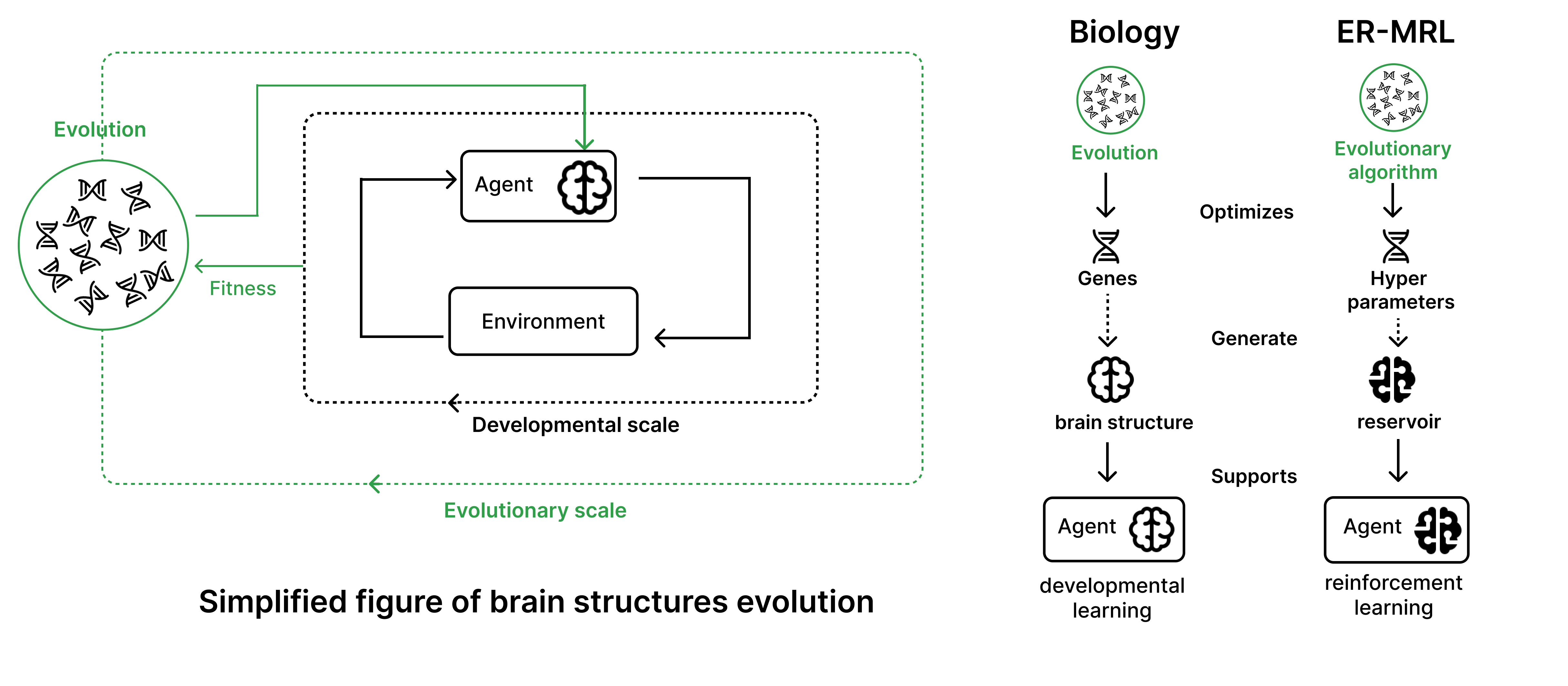

Code for the Evolving-Reservoirs-for-Meta-Reinforcement-Learning (ER-MRL) paper. Our goal is to study the following question: how can neural structures, optimized at an evolutionary scale, enhance the capabilities of agents to learn complex tasks at a developmental scale? To achieve this, we adopt a computational framework based on meta reinforcement learning, modeling the interplay between evolution and development. At the evolutionary scale, we evolve reservoirs, a family of recurrent neural networks generated from hyperparameters. These evolved reservoirs are then utilized to facilitate the learning of a behavioral policy through reinforcement learning. This is done by encoding the environment state through the reservoir before presenting it to the agent.
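The idea of encoding the environment state through a reservoir can be illustrated with a small echo-state-style network in pure Python. This is a sketch under stated assumptions and not the ER-MRL code: the `Reservoir` class, its hyperparameter names (`leak_rate`, `weight_scale`) and the uniform weight initialization are hypothetical. In particular, `weight_scale` is a crude stand-in for controlling the spectral radius of the recurrent matrix.

```python
import math
import random

class Reservoir:
    """Minimal leaky reservoir with fixed random weights, generated from a
    handful of hyperparameters (the quantities an outer loop could evolve)."""

    def __init__(self, n_inputs, n_units, leak_rate, weight_scale, seed=0):
        rng = random.Random(seed)
        self.leak_rate = leak_rate
        self.w_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                     for _ in range(n_units)]
        self.w_rec = [[rng.uniform(-1, 1) * weight_scale / n_units
                       for _ in range(n_units)]
                      for _ in range(n_units)]
        self.state = [0.0] * n_units

    def step(self, observation):
        """Update the reservoir with one observation and return its new state,
        which serves as the encoded input for the RL policy."""
        new_state = []
        for i, old in enumerate(self.state):
            drive = sum(w * x for w, x in zip(self.w_in[i], observation))
            drive += sum(w * s for w, s in zip(self.w_rec[i], self.state))
            new_state.append((1 - self.leak_rate) * old
                             + self.leak_rate * math.tanh(drive))
        self.state = new_state
        return self.state

reservoir = Reservoir(n_inputs=2, n_units=8, leak_rate=0.5, weight_scale=0.9)
features = [reservoir.step(obs) for obs in ([1.0, 0.0], [0.0, 1.0])]
```

The reservoir weights stay fixed during reinforcement learning. Only the policy reading `features` is trained, while the hyperparameters passed to the constructor are what an evolutionary loop would search over.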

- URL:

-

Contact:

Corentin Leger

7.1.20 ecoevojax_analysis

-

Keywords:

Evolutionary Algorithms, Evolution, Grid

-

Functional Description: