2023 Activity report Project-Team MAVERICK

RNSR: 201221005J

- Research center: Inria Centre at Université Grenoble Alpes

- In partnership with: CNRS, Université de Grenoble Alpes

- Team name: Models and Algorithms for Visualization and Rendering

- In collaboration with: Laboratoire Jean Kuntzmann (LJK)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.2. Data visualization

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

Other Research Topics and Application Domains

- B5.5. Materials

- B5.7. 3D printing

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.2.4. Theater

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Nicolas Holzschuch [Team leader, INRIA, Senior Researcher, until Jul 2023, HDR]

- Fabrice Neyret [Team leader, CNRS, Senior Researcher, from Aug 2023, HDR]

- Nicolas Holzschuch [INRIA, Senior Researcher, from Aug 2023, HDR]

- Fabrice Neyret [CNRS, Senior Researcher, until Jul 2023, HDR]

- Cyril Soler [INRIA, Researcher, HDR]

Faculty Members

- Georges-Pierre Bonneau [UGA, Professor, HDR]

- Joelle Thollot [GRENOBLE INP, Professor, HDR]

- Thibault Tricard [GRENOBLE INP, Associate Professor, from Sep 2023]

- Romain Vergne [UGA, Associate Professor]

Post-Doctoral Fellows

- Nolan Mestres [GRENOBLE INP, from Jun 2023]

- Nolan Mestres [GRENOBLE INP, Post-Doctoral Fellow, until May 2023]

PhD Students

- Mohamed Amine Farhat [UGA]

- Ana Maria Granizo Hidalgo [UGA]

- Nicolas Guichard [KDAB]

- Matheo Moinet [UGA, from Oct 2023]

- Antoine Richermoz [UGA]

- Ran Yu [OWEN'S CORNING, CIFRE, from Dec 2023]

Interns and Apprentices

- Julien Barres [INRIA, Intern, from Feb 2023 until Jul 2023]

- Valentin Despiau-Pujo [INRIA, Intern, from Apr 2023 until Sep 2023]

- Sacha Isaac–Chassande [INRIA, Intern, until Jul 2023]

- Yiqun Liu [INRIA, Intern, from Jun 2023 until Aug 2023]

- Yiqun Liu [UGA, Intern, from Feb 2023 until May 2023]

- Pacome Luton [ENS DE LYON, Intern, from Oct 2023]

- Matheo Moinet [INRIA, Intern, from Feb 2023 until Jun 2023]

Administrative Assistant

- Diane Courtiol [INRIA]

Visiting Scientist

- Arthur Novat [Atelier Novat, from Feb 2023]

2 Overall objectives

Computer-generated pictures and videos are now ubiquitous, both for leisure activities, such as special effects in motion pictures, feature movies and video games, and for more “serious” activities, such as visualization and simulation.

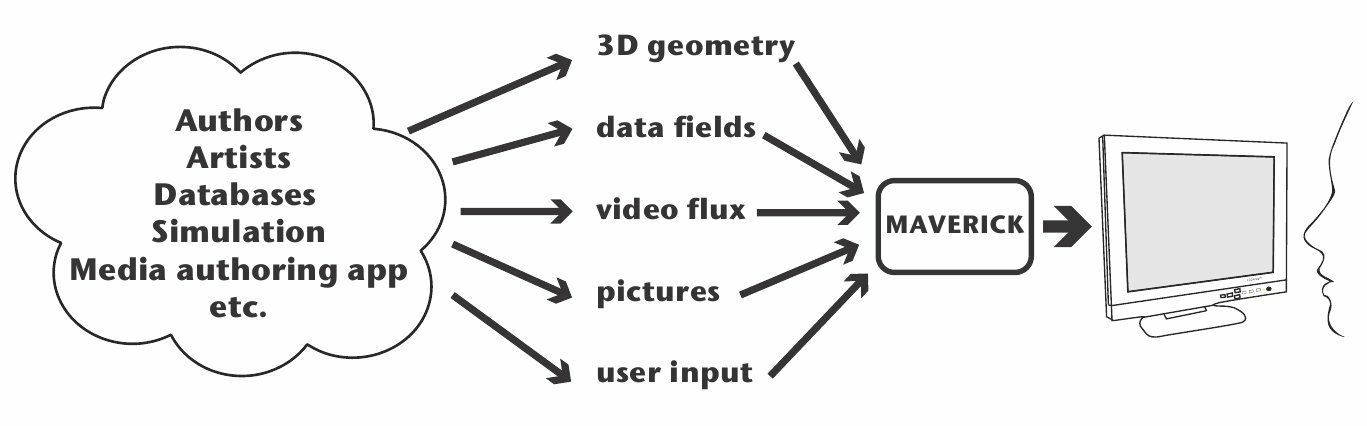

Maverick was created as a research team in January 2012 and upgraded as a research project in January 2014. We deal with image synthesis methods. We place ourselves at the end of the image production pipeline, when the pictures are generated and displayed (see Figure 1). We take many possible inputs: datasets, video flows, pictures and photographs, (animated) geometry from a virtual world... We produce as output pictures and videos.

These pictures will be viewed by humans, and we consider this fact as an important point of our research strategy, as it provides the benchmarks for evaluating our results: the pictures and animations produced must be able to convey the message to the viewer. The actual message depends on the specific application: data visualization, exploring virtual worlds, designing paintings and drawings... Our vision is that all these applications share common research problems: ensuring that the important features are perceived, avoiding cluttering or aliasing, efficient internal data representation, etc.

Computer Graphics, and especially Maverick, is at the crossroads between fundamental research and industrial applications. We both look at the constraints and needs of application users and target long-term research issues such as sampling and filtering.

Figure 1: Position of the team in the graphics pipeline

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization, where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties,

- Expressive Rendering, where we create an artistic representation of a virtual world,

- Illumination Simulation, where our focus is modelling the interaction of light with the objects in the scene,

- Complex Scenes, where our focus is rendering and modelling highly complex scenes.

The heart of Maverick is understanding what makes a picture useful, powerful and interesting for the user, and designing algorithms to create these pictures.

We will address these research problems through three interconnected approaches:

- working on the impact of pictures, by conducting perceptual studies, measuring and removing artefacts and discontinuities, evaluating the user response to pictures and algorithms,

- developing representations for data, through abstraction, stylization and simplification,

- developing new methods for predicting the properties of a picture (e.g. frequency content, variations) and adapting our image-generation algorithm to these properties.

A fundamental element of the Maverick project-team is that the research problems and the scientific approaches are all cross-connected. Research on the impact of pictures is of interest in three different research problems: Computer Visualization, Expressive rendering and Illumination Simulation. Similarly, our research on Illumination simulation will gather contributions from all three scientific approaches: impact, representations and prediction.

3 Research program

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties. Visualization can be used for data analysis, for the results of a simulation, for medical imaging data...

- Expressive Rendering, where we create an artistic representation of a virtual world. Expressive rendering corresponds to the generation of drawings or paintings of a virtual scene, but also to some areas of computational photography, where the picture is simplified in specific areas to focus the attention.

- Illumination Simulation, where we model the interaction of light with the objects in the scene, resulting in a photorealistic picture of the scene. Research includes improving the quality and photorealism of pictures, including more complex effects such as depth-of-field or motion-blur. We are also working on accelerating the computations, both for real-time photorealistic rendering and offline, high-quality rendering.

- Complex Scenes, where we generate, manage, animate and render highly complex scenes, such as natural scenes with forests, rivers and oceans, but also large datasets for visualization. We are especially interested in interactive visualization of complex scenes, with all the associated challenges in terms of processing and memory bandwidth.

The fundamental research interest of Maverick is first, understanding what makes a picture useful, powerful and interesting for the user, and second designing algorithms to create and improve these pictures.

3.1 Research approaches

We will address these research problems through three interconnected research approaches:

Picture Impact

Our first research axis deals with the impact pictures have on the viewer, and how we can improve this impact. Our research here will target:

- evaluating user response: we need to evaluate how viewers respond to the pictures and animations generated by our algorithms, through user studies, either asking viewers what they perceive in a picture or measuring how their body reacts (eye tracking, position tracking).

- removing artefacts and discontinuities: temporal and spatial discontinuities perturb viewer attention, distracting the viewer from the main message. These discontinuities occur during the picture creation process; finding and removing them is a difficult process.

Data Representation

The data we receive as input for picture generation is often unsuitable for interactive high-quality rendering: too many details, no spatial organization... Similarly the pictures we produce or get as input for other algorithms may contain superfluous details.

One of our goals is to develop new data representations, adapted to our requirements for rendering. This includes fast access to the relevant information, but also access to the specific hierarchical level of information needed: we want to organize the data in hierarchical levels, pre-filter it so that sampling at a given level also gives information about the underlying levels. Our research for this axis includes filtering, data abstraction, simplification and stylization.

The input data can be of any kind: geometric data, such as the model of an object, scientific data before visualization, pictures and photographs. It can be time-dependent or not; time-dependent data bring an additional level of challenge on the algorithm for fast updates.
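As an illustration of the hierarchical, pre-filtered representation described above, here is a minimal sketch in Python. It is not the team's implementation: it assumes a square 2D grid whose side is a power of two, uses a plain 2x2 box filter as the pre-filter, and the names `downsample`, `build_pyramid` and `sample` are hypothetical.

```python
def downsample(level):
    """Average each 2x2 block of a square grid (a simple box pre-filter)."""
    n = len(level) // 2
    return [
        [
            (level[2 * y][2 * x] + level[2 * y][2 * x + 1]
             + level[2 * y + 1][2 * x] + level[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(n)
        ]
        for y in range(n)
    ]


def build_pyramid(base):
    """Return [base, half resolution, quarter resolution, ...] down to 1x1.

    Assumption: base is square and its side is a power of two.
    """
    levels = [base]
    while len(levels[-1]) > 1:
        levels.append(downsample(levels[-1]))
    return levels


def sample(pyramid, level, u, v):
    """Nearest-neighbour lookup at normalized coordinates (u, v) in [0, 1)."""
    grid = pyramid[level]
    n = len(grid)
    return grid[min(int(v * n), n - 1)][min(int(u * n), n - 1)]


# A 4x4 grid holding the values 0..15 in row-major order.
base = [[float(x + 4 * y) for x in range(4)] for y in range(4)]
pyramid = build_pyramid(base)
coarse_value = sample(pyramid, 1, 0.9, 0.9)
```

Each coarse value summarizes the four finer values beneath it, so a lookup at a coarse level already carries averaged information about the underlying levels. A production system would likely use a better filter and a sparse layout.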

Prediction and simulation

Our algorithms for generating pictures require computations: sampling, integration, simulation... These computations can be optimized if we already know the characteristics of the final picture. Our recent research has shown that it is possible to predict the local characteristics of a picture by studying the phenomena involved: the local complexity, the spatial variations, their orientation...

Our goal is to develop new techniques for predicting the properties of a picture, and to adapt our image-generation algorithms to these properties, for example by sampling less in areas of low variation.
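The idea of sampling less in areas of low variation can be sketched as a budget allocation problem. The following Python sketch is only an illustration under stated assumptions: the predicted variation per region is given as a list of non-negative numbers, the budget is split proportionally with a guaranteed minimum per region, and the function name `allocate_samples` is hypothetical.

```python
def allocate_samples(predicted_variation, total_budget, min_per_region=1):
    """Split a sample budget across regions in proportion to predicted variation.

    Every region receives at least min_per_region samples, so that regions
    predicted as smooth are still checked.
    """
    n = len(predicted_variation)
    if total_budget < n * min_per_region:
        raise ValueError("budget too small for the guaranteed minimum")

    remaining = total_budget - n * min_per_region
    total_variation = sum(predicted_variation)
    if total_variation > 0:
        shares = [remaining * v / total_variation for v in predicted_variation]
    else:
        # No predicted variation anywhere: fall back to a uniform split.
        shares = [remaining / n] * n

    extra = [int(s) for s in shares]
    leftover = remaining - sum(extra)
    # Hand the samples lost to rounding to the largest fractional parts.
    order = sorted(range(n), key=lambda i: shares[i] - extra[i], reverse=True)
    for i in order[:leftover]:
        extra[i] += 1
    return [min_per_region + e for e in extra]


counts = allocate_samples([0.0, 1.0, 3.0], total_budget=11)
```

Here the smooth region keeps only the guaranteed minimum, while the region with three times more predicted variation receives three times more of the remaining budget.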

Our research problems and approaches are all cross-connected. Research on the impact of pictures is of interest for three different research problems: Computer Visualization, Expressive rendering and Illumination Simulation. Similarly, our research on Illumination simulation will use all three research approaches: impact, representations and prediction.

3.2 Cross-cutting research issues

Beyond the connections between our problems and research approaches, we are interested in several issues, which are present throughout all our research:

- Sampling: a ubiquitous process occurring in all our application domains, whether photorealistic rendering (e.g. photon mapping), expressive rendering (e.g. brush strokes), texturing, fluid simulation (Lagrangian methods), etc. When sampling and reconstructing a signal for picture generation, we have to ensure both coherence and homogeneity. By coherence, we mean not introducing spatial or temporal discontinuities in the reconstructed signal. By homogeneity, we mean that samples should be placed regularly in space and time. For a time-dependent signal, these requirements conflict with each other, opening new areas of research.

- Filtering: another ubiquitous process, occurring in all our application domains, whether in realistic rendering (e.g. for integrating height fields, normals, material properties), expressive rendering (e.g. for simplifying strokes), or textures (through non-linearity and discontinuities). It is especially relevant when we are replacing a signal or data with a lower resolution (for hierarchical representation); this involves filtering the data with a reconstruction kernel, representing the transition between levels.

- Performance and scalability: also a common requirement for all our applications. We want our algorithms to be usable, which implies that they can be used on large and complex scenes, placing great importance on scalability. For some applications, we target interactive and real-time use, with an update frequency between 10 Hz and 120 Hz.

- Coherence and continuity: coherence and continuity in space and time are also a common requirement of realistic as well as expressive models, and must be ensured despite contradictory requirements. We want to avoid flickering and aliasing.

- Animation: our input data is likely to be time-varying (e.g. animated geometry, physical simulation, time-dependent dataset). A common requirement for all our algorithms and data representations is that they must be compatible with animated data (fast updates for data structures, low-latency algorithms...).

3.3 Methodology

Our research is guided by several methodological principles:

- Experimentation: to find solutions and phenomenological models, we use experimentation, performing statistical measurements of how a system behaves. We then extract a model from the experimental data.

- Validation: for each algorithm we develop, we look for experimental validation: measuring the behavior of the algorithm, how it scales, how it improves over the state-of-the-art... We also compare our algorithms to the exact solution. Validation is harder for some of our research domains, but it remains a key principle for us.

- Reducing the complexity of the problem: the equations describing certain behaviors in image synthesis can have a large degree of complexity, precluding computations, especially in real time. This is true for physical simulation of fluids, tree growth, illumination simulation... We are looking for emerging phenomena and phenomenological models to describe them (see framed box “Emerging phenomena”). Using these, we simplify the theoretical models in a controlled way, to improve user interaction and accelerate the computations.

- Transferring ideas from other domains: Computer Graphics is, by nature, at the interface of many research domains: physics for the behavior of light, applied mathematics for numerical simulation, biology, algorithmics... We import tools from all these domains, and keep looking for new tools and ideas.

- Developing new fundamental tools: in situations where specific tools are required for a problem, we will proceed from a theoretical framework to develop them. These tools may in return have applications in other domains, and we are ready to disseminate them.

- Collaborating with industrial partners: we have long experience of collaboration with industrial partners. These collaborations bring us new problems to solve, with short-term or medium-term transfer opportunities. When we cooperate with these partners, we have to find out what they need, which can be very different from what they say they want (their expressed need).

4 Application domains

The natural application domain for our research is the production of digital images, for example for movies and special effects, virtual prototyping, video games... Our research has also been applied to tools for generating and editing images and textures, for example generating textures for maps. Our current application domains are:

- Offline and real-time rendering in movie special effects and video games;

- Virtual prototyping;

- Scientific visualization;

- Content modeling and generation (e.g. generating texture for video games, capturing reflectance properties, etc);

- Image creation and manipulation.

5 Social and environmental responsibility

While research in the Maverick team generally involves power-hungry computer hardware (e.g. 1 Tera-flop graphics cards) and heavy computations, the objective of most of our work is to improve the performance of algorithms or to create new methods that obtain results at a lower computation cost. The team can therefore be seen as having a favorable long-term impact on the overall energy consumption of computer graphics activities such as movie production, video games, etc.

6 Highlights of the year

6.1 Startup project

The Micmap project, which aims to create stylized panorama maps for tourist agencies, mountain operators, and local authorities, involves 3 Maverick team members (Nolan Mestres, Joëlle Thollot and Romain Vergne) and 2 external collaborators (Arthur Novat and Yann Boulanger). The project won the Out of Labs challenge (SATT Linksium) in 2021. It was then funded by the SATT from October 2022 to May 2023 and is now funded by Inria Startup Studio from June 1st, 2023 to May 2024.

6.2 Awards

Thibault Tricard, recruited Associate Professor in the team this year, received the 2023 GdR IG-RV thesis award for his thesis “Procedural noises for the design of small-scale structures in Additive Manufacturing” directed by Sylvain Lefebvre and Didier Rouxel.

7 New software, platforms, open data

7.1 New software

7.1.1 GRATIN

- Keywords: GLSL Shaders, Vector graphics, Texture Synthesis

- Functional Description: Gratin is a node-based compositing software for creating, manipulating and animating 2D and 3D data. It uses an internal directed acyclic multi-graph and provides an intuitive user interface for quickly designing complex prototypes. Gratin has several properties that make it useful for researchers and students. (1) It works in real time: everything is executed on the GPU, using OpenGL, GLSL and/or CUDA. (2) It is easily programmable: users can directly write GLSL scripts inside the interface, or create new C++ plugins that will be loaded as new nodes in the software. (3) All the parameters can be animated using keyframe curves to generate videos and demos. (4) The system makes it easy to exchange nodes, groups of nodes or full pipelines between people.

- URL:

- Contact: Romain Vergne

- Participants: Pascal Barla, Romain Vergne

- Partner: UJF

7.1.2 HQR

- Name: High Quality Renderer

- Keywords: Lighting simulation, Materials, Plug-in

- Functional Description: HQR is a global lighting simulation platform. It provides algorithms for handling materials, geometry and light sources, accepting various industry formats by default. It also provides algorithms for solving light transport simulation problems such as photon mapping, metropolis light transport and bidirectional path tracing. Using a plugin system it allows users to implement their own parts, allowing researchers to test new algorithms for specific tasks.

- URL:

- Contact: Cyril Soler

- Participant: Cyril Soler

7.1.3 libylm

- Name: LibYLM

- Keyword: Spherical harmonics

- Functional Description: This library implements spherical and zonal harmonics. It provides the means to perform decompositions, manipulate spherical harmonic distributions and provides its own viewer to visualize spherical harmonic distributions.

- URL:

- Author: Cyril Soler

- Contact: Cyril Soler
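To make the notion of a spherical harmonic decomposition concrete, here is a minimal Python sketch. It does not use or reproduce the LibYLM API: the function names are hypothetical, only the real harmonics of bands l = 0 and l = 1 are included, and the projection integral is approximated with a Fibonacci lattice of directions on the sphere.

```python
import math


def real_sh_basis(x, y, z):
    """Real spherical harmonics of bands l = 0 and l = 1 for a unit direction."""
    c0 = 0.5 * math.sqrt(1.0 / math.pi)
    c1 = math.sqrt(3.0 / (4.0 * math.pi))
    return [c0, c1 * y, c1 * z, c1 * x]


def fibonacci_sphere(n):
    """Yield n nearly uniformly distributed unit directions."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = golden_angle * i
        yield (r * math.cos(phi), r * math.sin(phi), z)


def project(f, n=20000):
    """Approximate the coefficients c_k = integral of f * Y_k over the sphere."""
    coeffs = [0.0, 0.0, 0.0, 0.0]
    for direction in fibonacci_sphere(n):
        value = f(*direction)
        for k, basis_value in enumerate(real_sh_basis(*direction)):
            coeffs[k] += value * basis_value
    weight = 4.0 * math.pi / n  # each direction stands for an equal solid angle
    return [c * weight for c in coeffs]


def reconstruct(coeffs, x, y, z):
    """Evaluate the band-limited approximation in direction (x, y, z)."""
    return sum(c * b for c, b in zip(coeffs, real_sh_basis(x, y, z)))


# Decompose a simple distribution that is brighter towards +z.
coeffs = project(lambda x, y, z: 1.0 + z)
value_at_pole = reconstruct(coeffs, 0.0, 0.0, 1.0)
```

Because the test function only contains a constant and a linear term, these two bands represent it up to quadrature error; richer distributions need higher bands.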

7.1.4 ShwarpIt

- Name: ShwarpIt

- Keyword: Warping

- Functional Description: ShwarpIt is a simple mobile app that allows you to manipulate the perception of shapes in images. Slide the ShwarpIt slider to the right to make shapes appear rounder. Slide it to the left to make shapes appear more flat. The Scale slider gives you control on the scale of the warping deformation.

- URL:

- Contact: Georges-Pierre Bonneau

7.1.5 iOS_system

- Keyword: iOS

- Functional Description: From a programmer's point of view, iOS behaves almost like a BSD Unix. Most existing open-source programs can be compiled and run on iPhones and iPads. One key exception is that there is no way to call another program (system() or fork()/exec()). This library fills the gap, providing an emulation of system() and exec() through dynamic libraries and an emulation of fork() using threads. While threads cannot provide a perfect replacement for fork(), the result is good enough for most uses, and open-source programs can easily be ported to iOS with minimal effort. Examples of software ported using this library include TeX, Python, Lua and llvm/clang.

- Release Contributions: This version makes iOS_system available as Swift Packages, making the integration in other projects easier.

- URL:

- Contact: Nicolas Holzschuch
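The general idea of replacing process creation with in-process execution can be sketched as follows. This is only a loose analogy written in Python, not the library's code or API (the real library relies on dynamic libraries on iOS): commands are assumed to be plain functions stored in a hypothetical `COMMANDS` registry, and the hypothetical `system` function runs one of them in a thread instead of a child process.

```python
import io
import shlex
import threading

# Hypothetical registry: command name -> function(argv, stdout) -> exit status.
COMMANDS = {}


def command(name):
    """Decorator registering a function as an in-process command."""
    def register(func):
        COMMANDS[name] = func
        return func
    return register


@command("echo")
def echo(argv, stdout):
    stdout.write(" ".join(argv[1:]) + "\n")
    return 0


@command("false")
def false(argv, stdout):
    return 1


def system(command_line, stdout):
    """Run a registered command in a thread and return its exit status.

    Returns 127 when the command is unknown, mirroring the shell convention.
    """
    argv = shlex.split(command_line)
    if not argv or argv[0] not in COMMANDS:
        return 127
    result = {}

    def run():
        result["status"] = COMMANDS[argv[0]](argv, stdout)

    worker = threading.Thread(target=run)
    worker.start()
    worker.join()  # like system(), wait for the command to finish
    return result.get("status", 1)


out = io.StringIO()
status = system("echo hello world", out)
```

Since the command shares the caller's address space, global state is not isolated as it would be after fork(), which is one reason threads are not a perfect replacement.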

7.1.6 Carnets for Jupyter

- Keywords: iOS, Python

- Functional Description: Jupyter notebooks are a very convenient tool for prototyping, teaching and research. Combining text, code snippets and the result of code execution, they allow users to write down ideas, test them, share them. Jupyter notebooks usually require connection to a distant server, and thus a stable network connection, which is not always possible (e.g. for field trips, or during transport). Carnets runs both the server and the client locally on the iPhone or iPad, allowing users to create, edit and run Jupyter notebooks locally.

- URL:

- Contact: Nicolas Holzschuch

7.1.7 a-Shell

- Keywords: iOS, Smartphone

- Functional Description: a-Shell is a terminal emulator for iOS. It behaves like a Unix terminal and lets the user run commands. All these commands are executed locally, on the iPhone or iPad. Commands available include standard terminal commands (ls, cp, rm, mkdir, tar, nslookup...) but also programming languages such as Python, Lua, C and C++. TeX is also available. Users familiar with Unix tools can run their favorite commands on their mobile device, on the go, without the need for a network connection.

- URL:

- Contact: Nicolas Holzschuch

7.1.8 X3D TOOLKIT

- Name: X3D Development platform

- Keywords: X3D, Geometric modeling

- Functional Description: X3DToolkit is a library to parse and write X3D files, that supports plugins and extensions.

- URL:

- Contact: Cyril Soler

- Participants: Gilles Debunne, Yannick Le Goc

7.1.9 GigaVoxels

- Keywords: 3D rendering, Real-time rendering, GPU

- Functional Description: GigaVoxels is a software platform whose goal is the real-time, high-quality rendering of very large and very detailed scenes that would not fit in memory. Its performance allows showing details over deep zooms and walking through very crowded scenes (which are rigid, for the moment). The principle is to represent data on the GPU as a Sparse Voxel Octree whose multiscale voxel bricks are produced on demand, only when necessary and only at the required resolution, and kept in an LRU cache. A user-defined producer spans the CPU and GPU and can load, transform, or procedurally create the data. Another user-defined function is called to shade each voxel according to the user-defined voxel content, so that it is the user's choice to distribute the appearance-making at creation (for faster rendering) or on the fly (for storageless thin procedural details). Efficient rendering is done using GPU differential cone tracing at the scale corresponding to the 3D MIP-mapping LOD, allowing quality rendering with a single ray per pixel. Data is produced on a cache miss, and thus only when visible (accounting for view frustum and occlusion). Soft shadows and depth of field are easily obtained using larger cones, and are indeed cheaper than unblurred rendering. Besides the representation, data management and base rendering algorithm themselves, we also worked on real-time light transport and on quality prefiltering of complex data. Ongoing research is addressing animation. GigaVoxels is currently used for the quality real-time exploration of the detailed galaxy in ANR RTIGE. Most of the work published by Cyril Crassin (et al.) during his PhD (see http://maverick.inria.fr/Members/Cyril.Crassin/ ) is related to GigaVoxels. GigaVoxels is available for Windows and Linux under the BSD-3 licence.

- URL:

- Contact: Fabrice Neyret

- Participants: Cyril Crassin, Eric Heitz, Fabrice Neyret, Jérémy Sinoir, Pascal Guehl, Prashant Goswami
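The on-demand production and LRU caching of bricks mentioned in the functional description can be illustrated with a small CPU-side sketch in Python. It is not GigaVoxels code: the class `BrickCache`, the key layout `(level, x, y, z)` and the toy producer are assumptions made for the example, and the real system manages GPU memory rather than a Python dictionary.

```python
from collections import OrderedDict


class BrickCache:
    """Fixed-capacity cache that produces bricks on a miss and evicts the
    least recently used brick when full."""

    def __init__(self, capacity, producer):
        self.capacity = capacity
        self.producer = producer      # user-defined: key -> brick data
        self.bricks = OrderedDict()   # oldest entry first
        self.misses = 0

    def get(self, key):
        if key in self.bricks:
            # Cache hit: mark the brick as most recently used.
            self.bricks.move_to_end(key)
            return self.bricks[key]
        # Cache miss: produce the brick only now that it is requested.
        self.misses += 1
        brick = self.producer(key)
        self.bricks[key] = brick
        if len(self.bricks) > self.capacity:
            self.bricks.popitem(last=False)  # evict least recently used
        return brick


def procedural_producer(key):
    """Toy producer standing in for loading or procedurally creating data."""
    level, x, y, z = key
    return f"brick at level {level}, position ({x}, {y}, {z})"


cache = BrickCache(capacity=2, producer=procedural_producer)
cache.get((0, 0, 0, 0))   # miss
cache.get((1, 0, 0, 0))   # miss
cache.get((0, 0, 0, 0))   # hit, refreshes the root brick
cache.get((1, 1, 0, 0))   # miss, evicts (1, 0, 0, 0)
```

Only bricks that a ray actually requests are ever produced, and bricks that stay in view keep being refreshed, so they are the last candidates for eviction.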

7.1.10 MobiNet

- Keywords: Co-simulation, Education, Programming

- Functional Description: The MobiNet software allows for the creation of simple applications such as video games, virtual physics experiments or pedagogical math illustrations. It relies on an intuitive graphical interface and language which allows the user to program a set of mobile objects (possibly through a network). It is available in the public domain for Linux, Windows and macOS.

- URL:

- Contact: Fabrice Neyret

- Participants: Fabrice Neyret, Franck Hétroy, Joelle Thollot, Samuel Hornus, Sylvain Lefebvre

- Partners: CNRS, LJK, INP Grenoble, Inria, IREM, Cies, GRAVIR

7.1.11 PROLAND

- Name: PROcedural LANDscape

- Keywords: Atmosphere, Masses of data, Realistic rendering, 3D, Real time, Ocean

- Functional Description: The goal of this platform is the real-time quality rendering and editing of large landscapes. All features can work with planet-sized terrains, for all viewpoints from ground to space. Most of the work published by Eric Bruneton and Fabrice Neyret (see http://evasion.inrialpes.fr/Membres/Eric.Bruneton/ ) has been done within Proland and integrated in the main branch. Proland is available under the BSD-3 licence.

- URL:

- Contact: Fabrice Neyret

- Participants: Antoine Begault, Eric Bruneton, Fabrice Neyret, Guillaume Piolet

7.1.12 LavaCake

- Name: LavaCake

- Keywords: Vulkan, 3D, 3D rendering

- Functional Description: LavaCake is an open-source framework that aims to simplify using Vulkan in C++ for rapid prototyping. The framework provides multiple functions to help manipulate the usual Vulkan structures such as queues, command buffers, render passes, shader modules, and many more.

- Contact: Thibault Tricard

8 New results

8.1 Real-Time Rendering of Complex Scenes

8.1.1 GigaVoxels 2.0

Participants: Fabrice Neyret, Antoine Richermoz.

During and after the PhD thesis of Cyril Crassin (2007-11, now at NVIDIA Research), we developed and distributed the GigaVoxels platform (gigavoxels.inria.fr), which allows real-time, high-quality walkthroughs of extremely large and detailed voxel data. It relies heavily on the GPU, with hierarchical data produced on demand and then cached on the fly by the ray-marcher when a new region becomes visible or is required at a higher resolution. Still, the limited management of concurrent tasks at the time, plus the complex treatment of tile borders at rendering and the gathering of the information necessary to produce new data, resulted in far from optimal use of the full GPU power and in many synchronizations on the CPU side. In his PhD, Antoine Richermoz revisited the problem with newly available tools: CUDA streams and graphs, sparse textures, ray-tracing cores, the ability to launch tasks from tasks, etc.

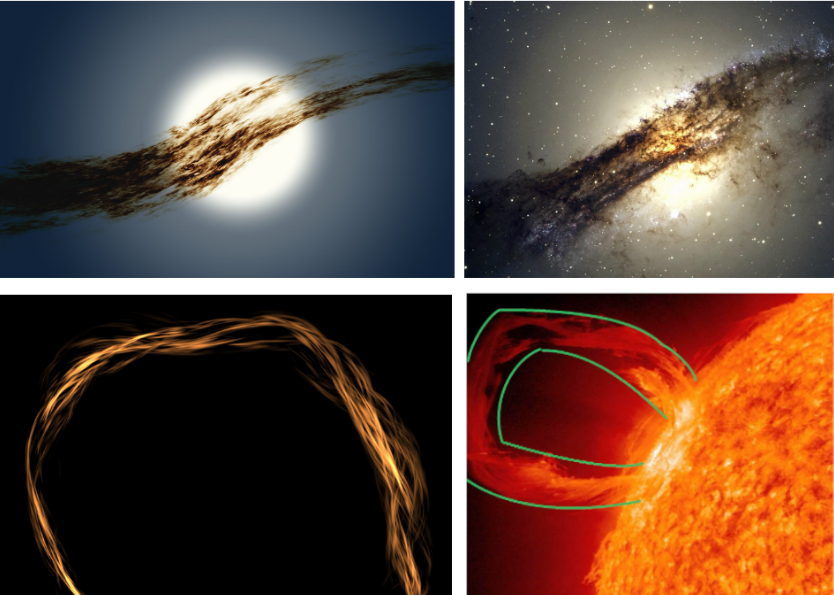

8.1.2 Efficient modeling and rendering of dust clouds and nebulae

Participants: Fabrice Neyret, Mathéo Moinet, Julien Barres.

During the ANR Galaxy/veRTIGE collaboration with RSA Cosmos and Observatoire de Paris-Meudon in 2010-2015 (cf. www.inria.fr/en/vertige-virtual-tour-through-our-galaxy ), we relied extensively on GPU volumetric rendering and 3D procedural models of detail to handle the dust clouds and nebulae for a real-time walk-through of the galaxy in Hubble-like quality, but performance and memory limits still prevented us from implementing the multiscaling and nebulae in the RSA Cosmos product. Since then, we have conducted a series of Master 2 projects on improving the expressivity of procedural models and on learning low-frequency deformations, to better combine what needs to be stored (at low resolution) with what can be computed procedurally (only where strictly necessary). In particular, Mathéo Moinet's M1 internship last year [9] and M2 internship this year [8] focused on volumetric procedural material embedded in generalized spline tubes. The first approach relied on procedural inverse mapping of the tube parameterization toward texture space, allowing for real-time (but not so cheap) rendering with no storage at all. The second relied on precomputed low-resolution uvw sparse grids in space, thus avoiding all intersection and inverse-spline transforms, at the price of a bit of memory and some complication in near-overlapping regions.

In addition, Julien Barrès did his M2 internship [6] on the efficient real-time spectral rendering of nebulae (for sensor filters and illuminants in the form of low-degree polynomial times , which includes the usual approximations of stellar black-body spectra). See Figure 2.


8.2 Light Transport Simulation

8.2.1 Spectral Analysis of the Light Transport Operator

Participants: Cyril Soler.

In 2022 we proved in a SIGGRAPH publication that the light transport operator is not compact, and therefore that the light transport equation does not benefit from the guarantees that normally come with Fredholm equations. This contradicted several previous works that took the compactness of the light transport operator for granted, and it explains why methods struggle to bound errors next to scene edges, where the integral kernel is not bounded.

We are currently investigating the structure of the spectrum of the light transport operator as well as possible methods to compute eigenvalues and eigenfunctions. While compact operators usually have a simple spectrum, non-compact ones may show any kind of structure. While we have already solved the problem for Lambertian scenes, non-Lambertian scenes still need some theoretical results. A publication is being written and will be submitted to TOG during 2024.
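For readers unfamiliar with the terminology, the light transport equation and its operator form can be written as follows. This is the standard textbook formulation rather than notation taken from the publication; the symbols $L$, $L_e$, $f_r$ and $T$ are the usual ones and are assumptions here.

```latex
% Rendering equation at surface point x, outgoing direction \omega_o.
% L_i(x, \omega_i) is the radiance leaving the point visible from x
% in direction \omega_i, so L appears on both sides.
L(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\,
    \cos\theta_i \, \mathrm{d}\omega_i

% Operator form: a Fredholm equation of the second kind,
% with T the light transport operator.
L = L_e + T L

% Formal Neumann series, one term per number of light bounces.
L = \sum_{k=0}^{\infty} T^{k} L_e
```

The spectrum under investigation is that of $T$: an eigenfunction is a light distribution that one bounce of transport maps to a scaled copy of itself.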

8.2.2 Practical models for the reflectance properties of fiber materials

Participants: Ran Yu, Cyril Soler.

After a successful Master 2 internship in collaboration with Owens Corning (Chambéry), Ran Yu has been recruited on a Cifre PhD to work on the modeling of the reflectance of fiber materials.

Her work will involve measuring and predicting the properties of complex materials made of intricate glass fiber layers, through multiple approaches including explicit calculation using path tracing, statistical and empirical models.

8.2.3 Dry powders reflectance model based on enhanced backscattering: case of hematite α-Fe2O3

Participants: Morgane Gérardin, Pauline Martinetto, Nicolas Holzschuch.

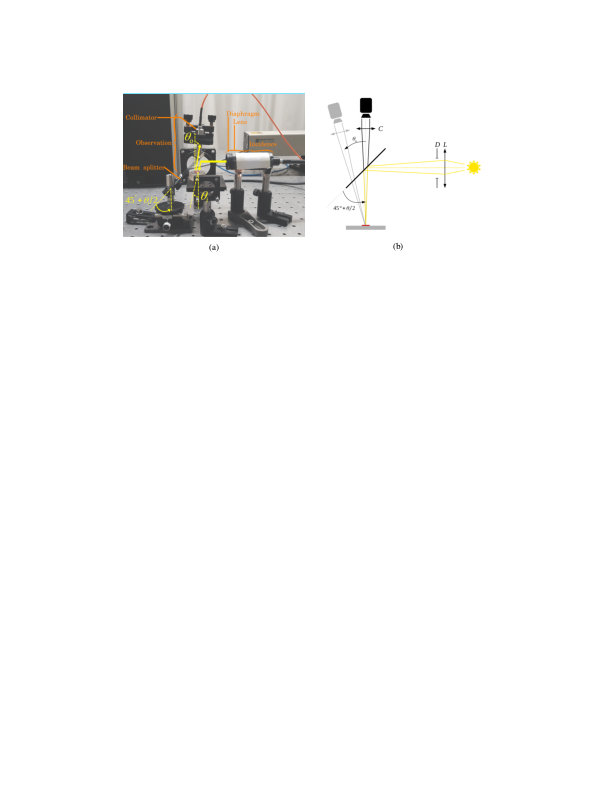

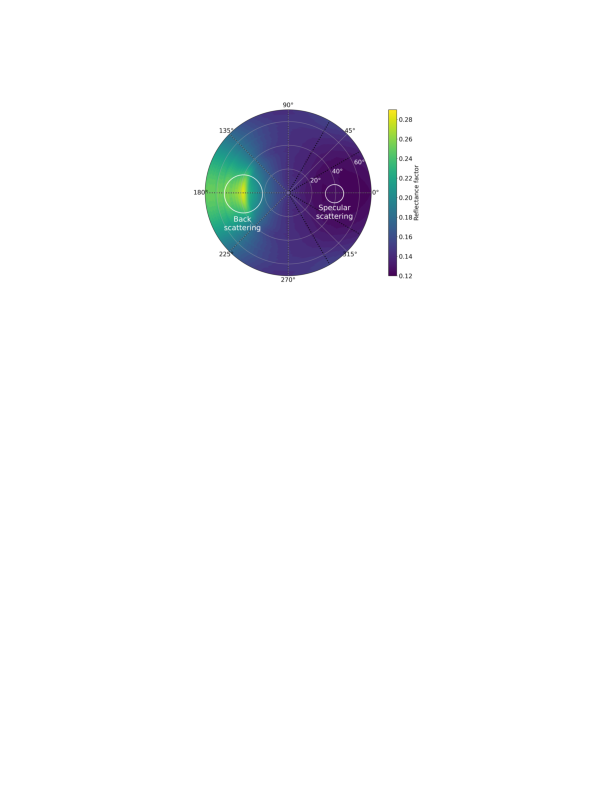

By performing bidirectional reflectance distribution function (BRDF) measurements (see Figure 3), we have identified backscattering as the main phenomenon involved in the appearance of dry nanocrystallized powders (see Figure 4). We introduce an analytical and physically based BRDF model that relies on the enhanced backscattering theory to accurately reproduce BRDF measurements. These measurements were performed on optically thick layers of dry powders with various grain morphologies. Our results are significantly better than those obtained with previous models. Our model has been validated against the BRDF measurements of multiple synthesized nanocrystallized and monodisperse Fe2O3 hematite powders [1]. Finally, we discuss the ability of our model to be extended to other materials or more complex powder morphologies.

Figure 3: Back-scattering measurement device. The beam of a white source is focused on the sample surface at an incidence θi using a 50 mm focal lens L and a beam splitter. The reflected light is collected through a collimator C in different observation directions θo.

Figure 4: Complete BRDF measurement of the hematite powder sample 1 at the wavelength 700 nm for an incidence θi = 40°. The measured geometries are marked with black dots, and the intermediate values are interpolated. Light is mostly reflected in the backward direction, and a very weak specular scattering peak may be observed as well. The spreading of the backscattering peak with the azimuth angle is due to the colormap interpolation between the measured points.

8.3 Expressive rendering

8.3.1 Micmap project

Participants: Nolan Mestres, Arthur Novat, Romain Vergne, Joëlle Thollot.

Micmap is a startup creation project following Nolan Mestres's Ph.D. thesis and a long-standing informal collaboration with Arthur Novat (Atelier Novat). The project won the Out of Labs challenge (SATT Linksium) in 2021. It was then funded by the SATT from October 2022 to May 2023 and is now funded by Inria Startup Studio from June 1st, 2023 to May 2024.

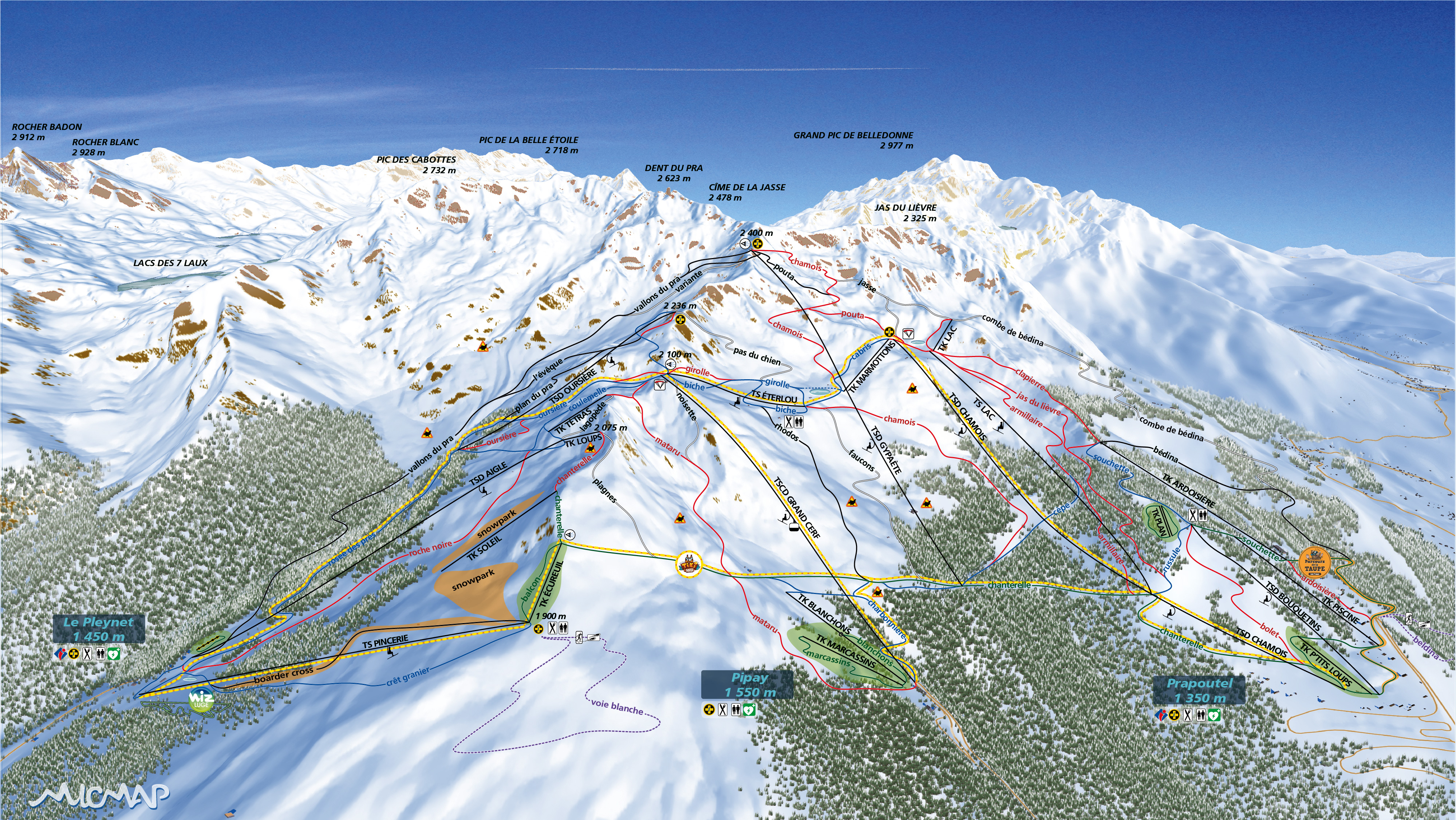

The Micmap team is composed of 3 Maverick members (Nolan Mestres, Joelle Thollot, and Romain Vergne) and 2 external collaborators (Yann Boulanger and Arthur Novat). The goal of the project is to create stylized panorama maps (Figure 5) for tourist agencies, mountain operators, and local authorities.

The project is currently in its maturation phase, combining R&D with market and economic studies. The goal is to create the company in January 2025 as a SCOP (cooperative society).

In terms of research, the fully controllable lighting model for designing digital panorama maps in the Style of Novat was presented at the ICA 12th Mountain Cartography Workshop 7 and at JC3DSHS 2.

Ski map of 7 Laux produced by a combination of our 3D software and manual editing by Arthur Novat.

8.3.2 VectorPattern: exploration of textual interactions for vector patterns generation

Participants: Joëlle Thollot.

Joëlle Thollot collaborates informally with researchers in design: Maëva Calmettes and Jean-Baptiste Joatton from Ecole supérieure de design de Villefontaine and Nolwenn Maudet from UNISTRA - Université de Strasbourg.

After the rise of direct manipulation, textual interactions have been progressively devalued in creative software. Text fields within creative software currently support limited use cases such as fine-tuning of numerical values or layer naming. Following the increased popularity of programming in art and design, we believe that text-field-based interaction can be enhanced so as to combine the unique strengths of GUI with those of text. Based on the anatomy of the text field, which is both an interactive interface element and a writing space, we propose a design space that explores its interactive capabilities to facilitate reading and comprehension as well as to support writing. To explore its potential, we apply this design space to VectorPattern, a pattern creation tool that focuses on complex pattern repetition based on explicit mathematical expressions written in text fields. With this work, we call for reevaluating the place of text fields within creative software. This work was published at IHM '23 3.
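As an illustration only, the idea of driving a pattern repetition from mathematical expressions typed in text fields can be sketched as follows. This is a minimal Python sketch under stated assumptions: the function names, the field names (`x`, `y`, `rotation`) and the variables `i` (copy index) and `n` (number of copies) are hypothetical and are not taken from VectorPattern itself.

```python
import math

# Hypothetical sketch: these names are illustrative, not VectorPattern's API.
# Functions and constants that an expression typed in a text field may use.
ALLOWED = {"sin": math.sin, "cos": math.cos, "sqrt": math.sqrt, "pi": math.pi}


def evaluate_field(expression, i, n):
    """Evaluate one text-field expression for copy number i out of n copies."""
    scope = dict(ALLOWED, i=i, n=n)
    # Builtins are emptied to keep the example small; this is not a real
    # security boundary and would need a proper parser in practice.
    return float(eval(expression, {"__builtins__": {}}, scope))


def repeat_pattern(fields, n):
    """Return one dictionary of numeric attributes per repeated copy."""
    return [
        {name: evaluate_field(expr, i, n) for name, expr in fields.items()}
        for i in range(n)
    ]


# Each entry plays the role of one text field of the (assumed) interface.
fields = {"x": "10 * i", "y": "5 * sin(2 * pi * i / n)", "rotation": "360 * i / n"}
copies = repeat_pattern(fields, 4)
for copy in copies:
    print(copy)
```

Editing the string in one field changes every copy at once, which is the kind of compact, explicit control that a purely graphical manipulation does not offer.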

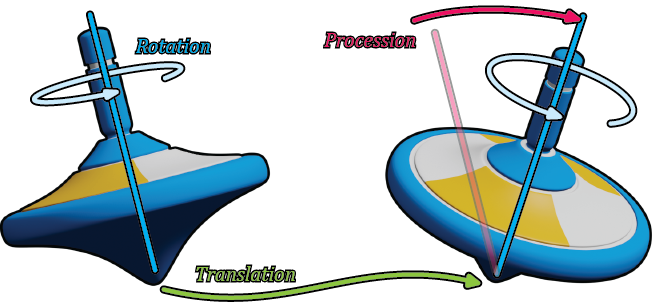

8.3.3 Controllable motion lines generation for an abstracted depiction of 3D motion

Participants: Amine Farhat, Alexandre Bleron, Romain Vergne, Joëlle Thollot.

In spite of the increasing popularity of non-photorealistic rendering techniques, very few methods make it possible to stylize the motion of moving objects. We propose a semi-automatic method to generate motion lines on top of a rendered 3D scene (see Figure 6). We start with a decomposition of the motion of the object into simple affine transformations, chosen by the artist. By drawing a proxy curve on an arbitrary frame, artists control the position of the motion lines in screen space, relative to the object. We then automatically extend the proxy to the rest of the animation, while respecting the intent of the artist through a set of weighted constraints. Finally, we use this proxy to generate a time-parametrized drawing space, on which the motion lines are generated.

This work has been published at JFIG'23 4.

Controllable motion lines generation for an abstracted depiction of 3D motion.

8.4 Synthesizing new materials

8.4.1 3D auxetic meso-structures

Participants: Georges-Pierre Bonneau, Stefanie Hahmann, Mélina Skouras.

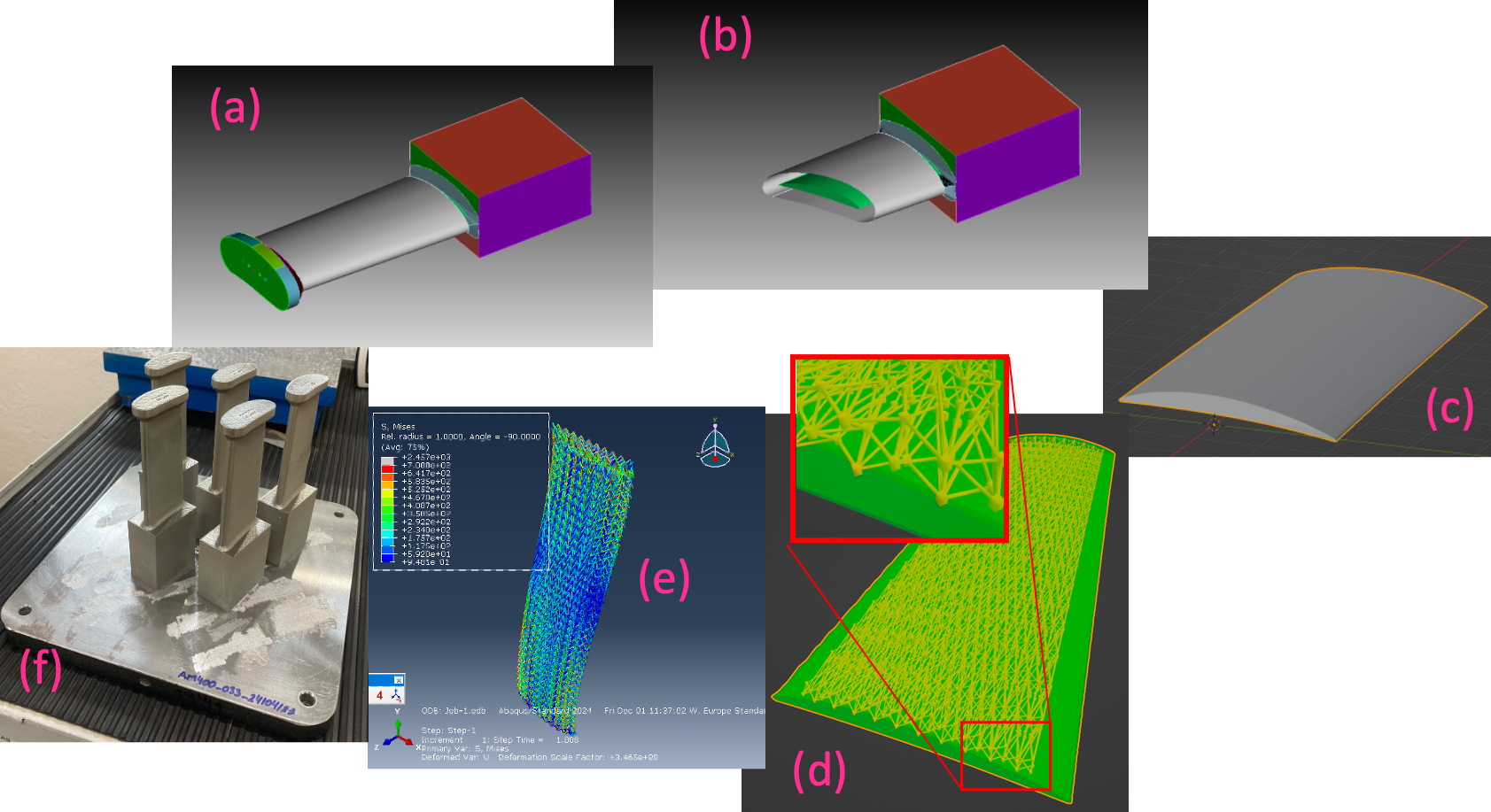

In the framework of the FET Open European Project ADAM2, we are working on producing geometric meso-structures with specific mechanical properties that can be used for the design of lightweight objects. The design of a turbine blade was addressed as a central test case requiring the expertise of all ADAM2 partners. Together with the Technion group, we were responsible for designing the geometry of the meso-structures. Our partners in applied mathematics at TU Wien and EPFL focused on the analysis of the mechanical properties, our partners at BCAM and UPV fabricated the prototypes with 5-axis CNC machining and metal laser powder bed fusion, and UPV and Trimek carried out experimental measurements on the fabricated prototypes. We are currently writing a joint publication with all partners, describing the work on the turbine blade design.

3D auxetic meso-structures filling the kernel of a turbine blade.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Participants: Nicolas Holzschuch, Nicolas Guichard, Joëlle Thollot, Romain Vergne, Amine Farhat.

- We have a contract with KDAB France, connected with the PhD thesis of Nicolas Guichard (CIFRE);

- we have a contract with LeftAngle, connected with the PhD thesis of Amine Farhat (CIFRE);

- we have a contract with Owens Corning France, connected with the PhD thesis of Ran Yu (CIFRE).

10 Partnerships and cooperations

10.1 National initiatives

10.1.1 CDTools: Patrimalp

Participants: Nicolas Holzschuch [contact], Romain Vergne.

The cross-disciplinary project Patrimalp (2018-2022) on Cultural Heritage was extended by Univ. Grenoble-Alpes under the new funding “Cross-Disciplinary Tools”, for a period of 36 months (2023-2026).

The main objective and challenge of the CDTools Patrimalp is to develop a cross-disciplinary approach to gain a better knowledge of material cultural heritage and thereby ensure its sustainability, valorization and diffusion in society. Carried out by members of UGA laboratories, combining skills in human sciences, geosciences, digital engineering and material sciences, in close connection with stakeholders of heritage and cultural life, curators and restorers, the CDTools Patrimalp intends to develop a new interdisciplinary science: Cultural Heritage Science.

10.1.2 Collaboration with University of Edinburgh

Participants: Cyril Soler [contact].

As a follow-up to the work conducted during the ANR CaLiTrOp, which ended in 2022, we have pursued the spectral analysis of light transport in collaboration with Prof. Kartic Subr at Univ. of Edinburgh. Prof. Subr was invited to Grenoble for a few days and gave a seminar in October 2023. Cyril Soler was invited to Edinburgh in June 2023.

11 Dissemination

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

General chair, scientific chair

Georges-Pierre Bonneau was paper co-chair for the conference SMI 2023

Georges-Pierre Bonneau is paper co-chair for the conference SMI 2024

Member of the organizing committees

Romain Vergne was in charge of the best paper award steering committee, JFIG 2023

11.1.2 Scientific events: selection

Member of the conference program committees

- Georges-Pierre Bonneau: IPC Member CAD 2023

- Georges-Pierre Bonneau: IPC Member GMP 2023, GMP 2024

- Fabrice Neyret: High Performance Graphics (HPG) 2023

- Romain Vergne : Best paper committee member, JFIG 2023

- Thibault Tricard : Best paper committee member, JFIG 2023

- Thibault Tricard : Eurographics Short Papers program.

Reviewer

Maverick faculty members are regular reviewers for most of the major journals and conferences of the domain.

11.1.3 Journal

Member of the editorial boards

Georges-Pierre Bonneau is a member of the Editorial Board Committee of Elsevier CAD

Reviewer - reviewing activities

Maverick faculty members are regular reviewers for most of the major journals and conferences of the domain.

11.1.4 Leadership within the scientific community

Georges-Pierre Bonneau is a member of the Directorial Board and Scientific Committee of the GdR IG-RV

Georges-Pierre Bonneau is co-chair of the Best PhD thesis award selection committee of the GdR IG-RV

11.1.5 Research administration

- Nicolas Holzschuch is an elected member of the Conseil National de l'Enseignement Supérieur et de la Recherche (CNESER) (2019-2027).

- Nicolas Holzschuch is co-responsible (with Anne-Marie Kermarrec of EPFL) of the Inria International Lab (IIL) “Inria-EPFL”.

- Nicolas Holzschuch and Georges-Pierre Bonneau are members of the Habilitation committee of the École Doctorale MSTII of Univ. Grenoble Alpes.

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

Joëlle Thollot and Georges-Pierre Bonneau are both full Professors of Computer Science. Romain Vergne and Thibault Tricard are both Associate Professors of Computer Science. They teach general computer science topics at basic and intermediate levels, and advanced courses in computer graphics, visualization and artificial intelligence at the master level. Nicolas Holzschuch teaches advanced courses in computer graphics at the master level.

Joëlle Thollot is in charge of the Erasmus+ program ASICIAO: an EU program to support six schools from Senegal and Togo in their pursuit of autonomy by helping them to develop their own method of improving quality in order to obtain the CTI accreditation.

- Licence: Joëlle Thollot, Théorie des langages, 45h, L3, ENSIMAG, France

- Licence: Joëlle Thollot, Module d'accompagnement professionnel, 10h, L3, ENSIMAG, France

- Licence: Joëlle Thollot, innovation, 10h, L3, ENSIMAG, France

- Master : Joëlle Thollot, English courses using theater, 18h, M1, ENSIMAG, France.

- Master : Joëlle Thollot, Analyse et conception objet de logiciels, 24h, M1, ENSIMAG, France.

- Licence : Romain Vergne, Introduction to algorithms, 64h, L1, UGA, France.

- Licence : Romain Vergne, WebGL, 29h, L3, IUT2 Grenoble, France.

- Licence : Romain Vergne, Programmation, 68h, L1, UGA, France.

- Master : Romain Vergne, Image synthesis, 27h, M1, UGA, France.

- Master : Romain Vergne, 3D graphics, 15h, M1, UGA, France.

- Master : Nicolas Holzschuch, Computer Graphics II, 18h, M2 MoSIG, France.

- Master : Nicolas Holzschuch, Synthèse d’Images et Animation, 32h, M2, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Image Synthesis, 23h, M1, Polytech-Grenoble, France

- Master: Georges-Pierre Bonneau, Data Visualization, 40h, M2, Polytech-Grenoble, France

- Master: Georges-Pierre Bonneau, Digital Geometry, 23h, M1, UGA

- Master: Georges-Pierre Bonneau, Information Visualization, 22h, Mastere, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Scientific Visualization, M2, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Computer Graphics II, 18h, M2 MoSiG, UGA, France.

- Master : Thibault Tricard, Artificial Intelligence Project, M2 ENSIMAG, France

- Master : Thibault Tricard, GP-GPU and High Performances Computing, M2 ENSIMAG, France

11.2.2 Supervision

- Romain Vergne and Joëlle Thollot co-supervise the PhD of Amine Farhat.

- Fabrice Neyret supervises the PhDs of Antoine Richermoz and Mathéo Moinet.

- Nicolas Holzschuch supervises the PhDs of Nicolas Guichard and Anita Granizo-Hidalgo.

11.2.3 Juries

- Joëlle Thollot was reviewer and president of the jury for the PhD defense of Mathieu Marsot (Université Grenoble-Alpes).

- Nicolas Holzschuch:

  - Examiner for the PhD defense of Yaniss Nyffenegger-Pere (Toulouse INP), February 22, 2023.

  - Reviewer for the PhD defense of Loïs Paulin (UCB Lyon 1), April 17, 2023.

  - Reviewer for the PhD defense of Elsa Tamisier (Université de Poitiers), December 18, 2023.

11.3 Popularization

11.3.1 Internal or external Inria responsibilities

Romain Vergne was co-responsible for the Groupe de Travail Rendu et Visualisation of the French association for Computer Graphics (AFIG).

11.3.2 Articles and contents

- Fabrice Neyret maintains the blog shadertoy-Unofficial and various shader examples on the Shadertoy site to popularize GPU technologies and to disseminate academic models within the computer graphics, computer science, applied math and physics fields. About 24k page views and 11k unique visitors (93% from outside France) in 2021.

- Fabrice Neyret maintains the blog desmosGraph-Unofficial to popularize the use of the interactive grapher DesmosGraph for research, communication and pedagogy. About 10k page views and 6k unique visitors (99% from outside France) in 2021.

- Fabrice Neyret maintains the blog chiffres-et-paradoxes (in French) to popularize common errors, misunderstandings and paradoxes about statistics and numerical reasoning. About 9k page views and 4.5k unique visitors since then (15% from outside France, knowing the blog is in French) on the blog, plus the viewers via the Facebook and Twitter pages.

11.3.3 Interventions

- Fabrice Neyret gave a talk, "Les maths et la physique dans les effets spéciaux et les jeux vidéo", during the 2023 edition of "Fête de la Science".

- Antoine Richermoz and Nolan Mestres ran a demonstration of 3D printing during the 2023 edition of "Fête de la Science".

12 Scientific production

12.2 Publications of the year

International journals

Invited conferences

Conferences without proceedings

Edition (books, proceedings, special issue of a journal)

Other scientific publications

12.3 Cited publications

- 9 Sculpting procedural noise: real-time rendering of interstellar dust clouds and solar flares. MA Thesis, ENSIMAG; UGA (Université Grenoble Alpes), June 2022.