2024 Activity report Project-Team ACENTAURI

RNSR: 202124072D - Research center: Inria Centre at Université Côte d'Azur

- Team name: Artificial intelligence and efficient algorithms for autonomous robotics

- Domain: Perception, Cognition and Interaction

- Theme: Robotics and Smart environments

Keywords

Computer Science and Digital Science

- A3.4.1. Supervised learning

- A3.4.3. Reinforcement learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A5.4.7. Visual servoing

- A5.10.2. Perception

- A5.10.3. Planning

- A5.10.4. Robot control

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.6. Swarm robotics

- A5.10.7. Learning

- A6.2.3. Probabilistic methods

- A6.2.4. Statistical methods

- A6.2.5. Numerical Linear Algebra

- A6.2.6. Optimization

- A6.4.2. Stochastic control

- A6.4.3. Observability and Controllability

- A6.4.4. Stability and Stabilization

- A6.4.6. Optimal control

- A7.1.4. Quantum algorithms

- A8.2. Optimization

- A8.3. Geometry, Topology

- A8.11. Game Theory

- A9.2. Machine learning

- A9.5. Robotics

- A9.6. Decision support

- A9.10. Hybrid approaches for AI

Other Research Topics and Application Domains

- B5.1. Factory of the future

- B5.6. Robotic systems

- B7.2. Smart travel

- B7.2.1. Smart vehicles

- B7.2.2. Smart road

- B8.2. Connected city

1 Team members, visitors, external collaborators

Research Scientists

- Ezio Malis [Team leader, INRIA, Senior Researcher]

- Philippe Martinet [INRIA, Senior Researcher]

- Patrick Rives [INRIA, Emeritus]

Post-Doctoral Fellows

- Minh Quan Dao [INRIA, Post-Doctoral Fellow]

- Hasan Yilmaz [INRIA, Post-Doctoral Fellow]

PhD Students

- Mohamed Mahmoud Ahmed Maloum [UNIV COTE D'AZUR, from Dec 2024]

- Emmanuel Alao [CNRS]

- Matteo Azzini [UNIV COTE D'AZUR]

- Ayan Barui [UNIV COTE D'AZUR, from Oct 2024]

- Thomas Campagnolo [NXP, from Sep 2024]

- Enrico Fiasche [UNIV COTE D'AZUR]

- Monica Fossati [UNIV COTE D'AZUR]

- Stefan Larsen [INRIA]

- Fabien Lionti [INRIA]

- Ziming Liu [INRIA, until Apr 2024]

- Diego Navarro Tellez [CEREMA]

- Andrea Pagnini [INRIA, from Dec 2024]

Technical Staff

- Mohamed Malek Aifa [INRIA, Engineer]

- Erwan Amraoui [INRIA, Engineer]

- Marie Aspro [INRIA, Engineer]

- Jon Aztiria Oiartzabal [INRIA, Engineer, from Mar 2024]

- Nicolas Chleq [INRIA, Engineer]

- Matthias Curet [INRIA, Engineer]

- Andres Gomez Hernandez [INRIA, Engineer, from Jun 2024]

- Pierre Joyet [INRIA, Engineer]

- Pardeep Kumar [INRIA, Engineer]

- Quentin Louvel [INRIA, Engineer]

- Louis Verduci [INRIA, Engineer]

Interns and Apprentices

- Thomas Campagnolo [INRIA, Intern, until Apr 2024]

- Ludovica Danovaro [INRIA, Intern, from Feb 2024 until Jul 2024]

- Raffaele Pumpo [INRIA, Intern, from Feb 2024 until Jul 2024]

Administrative Assistants

- Patricia Riveill [INRIA]

- Stephanie Verdonck [INRIA]

Visiting Scientist

- Rafael Eric Murrieta Cid [CIMA, from Sep 2024]

2 Overall objectives

The goal of ACENTAURI is to study and develop intelligent, autonomous and mobile robots that collaborate with one another to achieve challenging tasks in dynamic environments. The team focuses on perception, decision and control problems for multi-robot collaboration by proposing an original hybrid model-driven / data-driven approach to artificial intelligence and by studying efficient algorithms. The team focuses on robotic applications such as environment monitoring and transportation of people and goods. In these applications, several robots will share multi-sensor information, possibly coming from the infrastructure. The team will demonstrate the effectiveness of the proposed approaches on real robotic systems such as Autonomous Ground Vehicles (AGVs) and Unmanned Aerial Vehicles (UAVs), together with industrial partners.

The scientific objectives that we want to achieve are to develop:

- robots that are able to perceive, in real time and through their sensors, unstructured and changing environments (in space and time) and to build large-scale semantic representations that take into account the uncertainty of interpretation and the incompleteness of perception. The main scientific bottlenecks are (i) how to go beyond purely geometric maps to reach a semantic understanding of the scene and (ii) how to share these representations between robots with different sensorimotor capabilities so that they can collaborate on a common task.

- autonomous robots in the sense that they must be able to accomplish complex tasks by taking high-level cognitive-based decisions without human intervention. The robots evolve in an environment possibly populated by humans, possibly in collaboration with other robots or communicating with infrastructure (collaborative perception). The main scientific bottlenecks are (i) how to anticipate unexpected situations created by unpredictable human behavior using the collaborative perception of robots and infrastructure and (ii) how to design robust sensor-based control laws to ensure robot integrity and human safety.

- intelligent robots in the sense that they must (i) decide their actions in real time on the basis of the semantic interpretation of the state of the environment and their own state (situation awareness), (ii) manage uncertainty in the sensors, the control and the dynamic environment, (iii) predict in real time the future states of the environment taking into account their own security and human safety, and (iv) acquire new capacities and skills, or refine existing skills, through learning mechanisms.

- efficient algorithms able to process large amounts of data and solve hard problems in robotic perception, learning, decision and control. The main scientific bottlenecks are (i) how to design new efficient algorithms to reduce the processing time on ordinary computers and (ii) how to design new quantum algorithms to reduce the computational complexity in order to solve problems that cannot be solved in reasonable time on ordinary computers.

3 Research program

The research program of ACENTAURI will focus on intelligent autonomous systems, which must be able to sense, analyze, interpret, know and decide what to do in a dynamic and living environment. Defining a robotic task in such an environment requires setting up a framework in which interactions between the robot or the multi-robot system, the infrastructure and the environment can be described from a semantic level to a canonical space at different levels of abstraction. This description will be dynamic and based on the use of sensory memory and short/long-term memory mechanisms. This will require expanding and developing (i) the knowledge on the interaction between robots and the environment (using both model-driven and data-driven approaches), (ii) the knowledge on how to perceive and control these interactions, (iii) situation awareness, and (iv) hybrid architectures (using both model-driven and data-driven approaches) for monitoring the global process during the execution of the task.

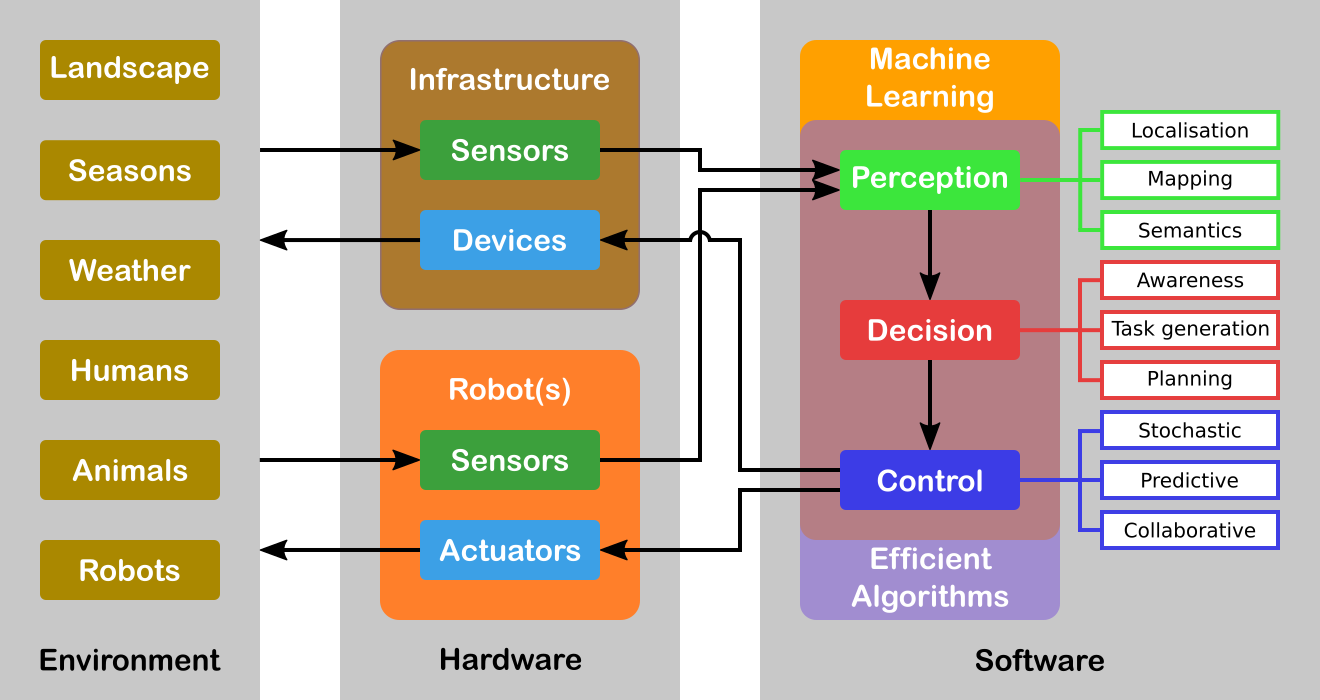

Figure 1 gives an overview of the global system, highlighting the core topics. For the sake of simplicity, we will decompose our research program into three axes related to Perception, Decision and Control. However, it should be noted that these axes are highly interconnected (e.g. there is a duality between perception and control) and all problems should be addressed in a holistic approach. Moreover, Machine Learning is in fact transversal to all the robot's capacities. Our objective is the design and the development of a parameterizable architecture for Deep Learning (DL) networks incorporating a priori model-driven knowledge. We plan to do this by choosing specialized architectures depending on the task assigned to the robot and depending on the input (from standard to future sensor modalities). These DL networks must be able to encode spatio-temporal representations of the robot's environment. Indeed, the tasks we are interested in involve the evolution of the environment over time, since the data coming from the sensors may vary over time even for static elements of the environment. We are also interested in developing a novel network for situation awareness applications (mainly in the field of autonomous driving and proactive navigation).

Another transversal issue concerns the efficiency of the algorithms involved. Either we must process a large amount of data (for example, a standard full HD camera (1920x1080 pixels) produces around 5 Terabits/hour of data to process) or the problem is hard to solve even when the underlying graph is planar. For example, path optimization problems for multiple robots are all non-deterministic polynomial-time complete (NP-complete). A particular emphasis will be given to efficient numerical analysis algorithms (in particular for optimization), which are omnipresent in all research axes. We will also explore a completely different and radically new methodology with quantum algorithms. Several quantum basic linear algebra subroutines (BLAS) (Fourier transforms, finding eigenvectors and eigenvalues, solving linear equations) exhibit exponential quantum speedups over their best known classical counterparts. These quantum BLAS (qBLAS) translate into quantum speedups for a variety of algorithms including linear algebra, least-squares fitting, gradient descent and Newton's method. The quantum methodology is completely new to the team, so the practical interest of pursuing this research direction will have to be validated in the long term.
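As a rough check of the order of magnitude quoted for the full HD camera, the sketch below computes the raw (uncompressed) data volume of a video stream. The pixel depth (24-bit RGB) and the frame rate (30 frames per second) are assumptions made for illustration, since only the resolution is stated in the text.

```python
def raw_video_bits_per_hour(width, height, bits_per_pixel=24, fps=30):
    """Uncompressed video data volume, in bits per hour.

    bits_per_pixel and fps are illustrative assumptions
    (24-bit RGB at 30 frames per second).
    """
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps * 3600  # 3600 seconds in one hour


bits = raw_video_bits_per_hour(1920, 1080)
terabits = bits / 1e12  # decimal terabits
print(f"{terabits:.2f} Tbit/hour")
```

Under these assumptions the result is slightly above 5 Terabits/hour; a different frame rate or pixel format would scale the figure proportionally.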

The research program of ACENTAURI will be decomposed into the following three research axes:

3.1 Axis A: Augmented spatio-temporal perception of complex environments

The long-term objective of this research axis is to build accurate and composite models of large-scale environments that mix metric, topological and semantic information. Ensuring the consistency of these various representations during the robot exploration and merging/sharing observations acquired from different viewpoints by several collaborative robots or sensors attached to the infrastructure, are very difficult problems. This is particularly true when different sensing modalities are involved and when the environments are time-varying. A recent trend in Simultaneous Localization And Mapping is to augment low-level maps with semantic interpretation of their content. Indeed, the semantic level of abstraction is the key element that will allow us to build the robot’s environmental awareness (see Axis B). For example, the so-called semantic maps have already been used in mobile robot navigation, to improve path planning methods, mainly by providing the robot with the ability to deal with human-understandable targets. New studies to derive efficient algorithms for manipulating the hybrid representations (merging, sharing, updating, filtering) while preserving their consistency are needed for long-term navigation.

3.2 Axis B: Situation awareness for decision and planning

The long-term objective of this research axis is to design and develop a decision-making module that is able to (i) plan the mission of the robots (global planning), (ii) generate the sub-tasks (local objectives) necessary to accomplish the mission based on Situation Awareness and (iii) plan the robot paths and/or sets of actions to accomplish each sub-task (local planning). Since we have to face uncertainties, the decision module must be able to react efficiently in real time based on the available sensor information (on-board or attached to an IoT infrastructure) in order to guarantee the safety of humans and things. For some tasks, it is necessary to coordinate a multi-robot system (centralized strategy), while for others each robot evolves independently with its own decentralized strategy. In this context, Situation Awareness is at the heart of an autonomous system: it feeds the decision-making process, but it can also be seen as a way to evaluate the performance of the global process of perception and interpretation in order to build a safe autonomous system. Situation Awareness is generally divided into three parts: perception of the elements in the environment (see Axis A), comprehension of the situation, and projection of future states (prediction and planning). When planning the mission of the robots, the decision-making module will first assume that the configuration of the multi-robot system is known in advance, for example one robot on the ground and two robots in the air. However, in our long-term objectives, the number of robots and their configurations may evolve according to the application objectives to be achieved, particularly in terms of performance, but also to take into account the dynamic evolution of the environment.

3.3 Axis C: Advanced multi-sensor control of autonomous multi-robot systems

The long-term objective of this research axis is to design multi-sensor based control (with sensors on-board or attached to an IoT infrastructure) of potentially multi-robot systems for tasks where the robots must navigate in a complex dynamic environment including the presence of humans. This implies that the controller design must not only deal explicitly with uncertainties and inaccuracies in the models of the environment and of the sensors, but also consider constraints to deal with unexpected human behavior. To deal with uncertainties and inaccuracies in the models, two strategies will be investigated. The first strategy is to use Stochastic Control techniques that assume a known probability distribution on the uncertainties. The second strategy is to use system identification and reinforcement learning techniques to deal with differences between the models and the real systems. To deal with unexpected human behavior, we will investigate Stochastic Model Predictive Control (MPC) techniques and Model Predictive Path Integral (MPPI) control techniques in order to anticipate future events and take optimal control actions accordingly. A particular emphasis will be given to the theoretical analysis (observability, controllability, stability and robustness) of the control laws.
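For readers unfamiliar with MPPI, the sketch below shows the importance-weighted update at the core of the method in its commonly published form: sampled perturbations of a nominal control sequence are averaged with weights that decay exponentially with the cost of the corresponding rollout. It is a generic illustration with scalar controls, not the controllers studied by the team; the function name `mppi_update`, the `temperature` parameter and the toy values are assumptions introduced here, and the rollout simulation that produces the costs is left out.

```python
import math


def mppi_update(nominal, noises, costs, temperature=1.0):
    """One MPPI-style update of a nominal control sequence.

    nominal: list of T scalar controls.
    noises:  K sampled perturbation sequences, each of length T.
    costs:   K rollout costs, one per perturbed control sequence.
    Returns the updated control sequence and the normalized weights.
    """
    best = min(costs)  # subtracting the minimum keeps exp() numerically stable
    raw = [math.exp(-(c - best) / temperature) for c in costs]
    total = sum(raw)
    weights = [r / total for r in raw]
    updated = [
        u + sum(w * eps[t] for w, eps in zip(weights, noises))
        for t, u in enumerate(nominal)
    ]
    return updated, weights


# Toy example: two perturbations, the first one leads to a much cheaper rollout.
nominal = [0.0, 0.0]
noises = [[1.0, 1.0], [-1.0, -1.0]]
costs = [0.0, 10.0]
updated, weights = mppi_update(nominal, noises, costs)
print(updated, weights)
```

In a receding-horizon loop, the first control of the updated sequence would be applied, the sequence shifted by one step, and the sampling repeated at the next time step.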

4 Application domains

ACENTAURI focuses on two main applications in order to validate our research using the robotics platforms described in section 7.2. We are aware that ethical questions may arise when addressing such applications. ACENTAURI follows the recommendations of the Inria ethical committee, for example regarding confidentiality issues when processing data (GDPR).

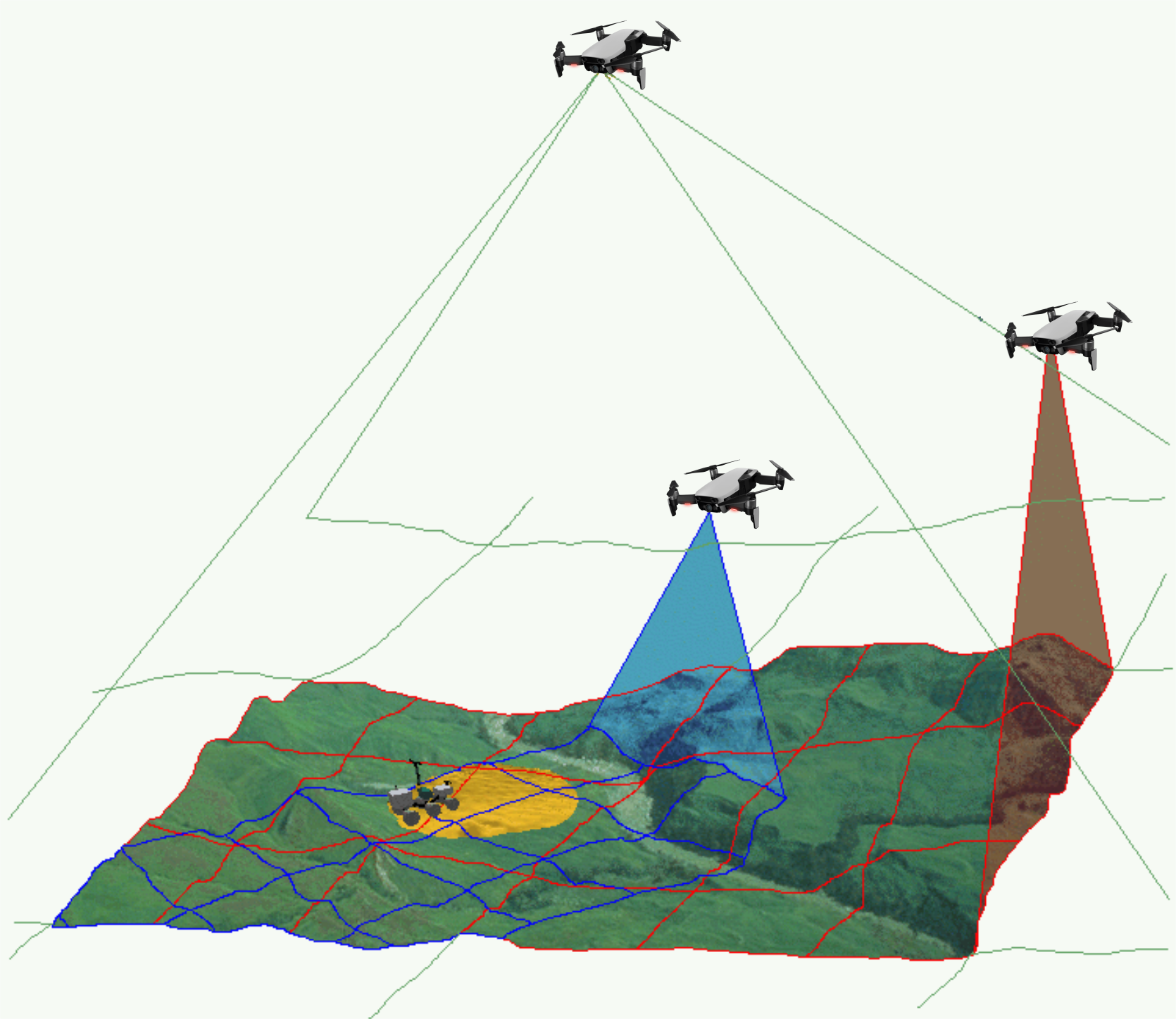

4.1 Environment monitoring with a collaborative robotic system

The first application that we will consider concerns monitoring the environment using an autonomous multi-robot system composed of ground robots and aerial robots (see Figure 2). The ground robots will patrol following a planned trajectory and will collaborate with the aerial drones to perform tasks in structured (e.g. industrial sites), semi-structured (e.g. presence of bridges, dams, buildings) or unstructured environments (e.g. agricultural space, forest space, destroyed space). In order to provide remote perception to the ground robots, one aerial drone will be in operation while a second one will be recharging its batteries on the ground vehicle. Coordinated and safe autonomous take-off and landing of the aerial drones will be a key factor to ensure the continuity of service for a long period of time. Such a multi-robot system can be used to localize survivors in case of disaster or rescue, to localize and track people or animals (for surveillance purposes), to follow the evolution of vegetation (or even invasions of insects or parasites), to follow the evolution of structures (bridges, dams, buildings, electrical cables) and to control actions in the environment, for example in agriculture (fertilization, pollination, harvesting, ...), in forests (rescue) or on land (firefighting planning). Successful achievement of such applications requires building a representation of the environment and localizing the robots in the map (see Axis A in section 3.1), re-planning the tasks of each robot when unpredictable events occur (see Axis B in section 3.2) and controlling each robot to execute the tasks (see Axis C in section 3.3). Depending on the application field, the scale and the difficulty of the problems to be solved will increase. In the Smart Factories field, the environment is relatively small, mostly structured, highly instrumented (sensors) and offers the possibility to communicate. In the Smart Territories field, we have large semi-structured or unstructured environments that are not instrumented. To set up demonstrations of this application, we intend to collaborate with industrial partners and local institutions. For example, we plan to set up a collaboration with the Parc Naturel Régional des Préalpes d'Azur to monitor the evolution of fir trees infested by bark beetles.

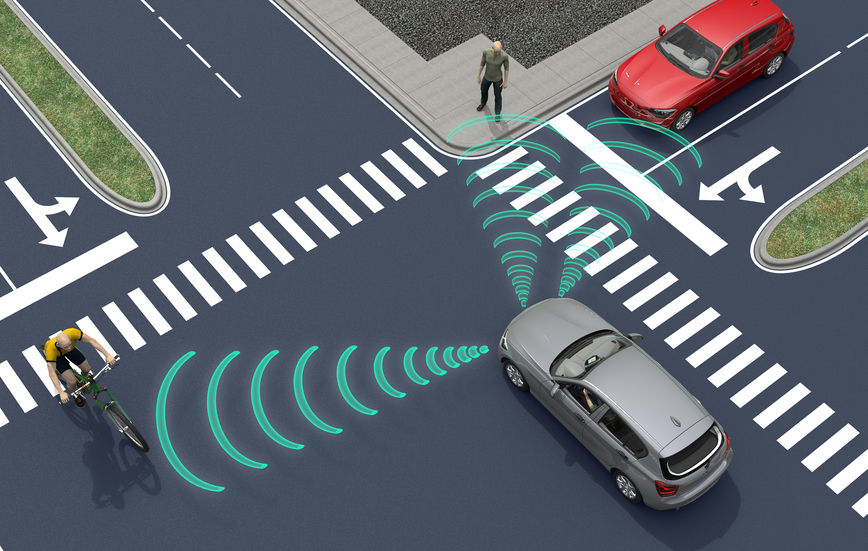

4.2 Transportation of people and goods with autonomous connected vehicles

The second application that we will consider concerns the transportation of people and goods with autonomous connected vehicles (see Figure 3). ACENTAURI will contribute to the development of Autonomous Connected Vehicles (e.g. Learning, Mapping, Localization, Navigation) and the associated services (e.g. towing, platooning, taxi). We will develop efficient algorithms to select on-line connected sensors coming from the infrastructure in order to extend and enhance the embedded perception of a connected autonomous vehicle. In cities, there exist situations where visibility is very poor, either for historical reasons or occasionally because of traffic congestion, service delivery (trucks, buses) or roadworks. There also exist situations where the danger is greater and where a connected system or intelligent infrastructure can help to enhance perception and thus reduce the risk of accident (see Axis A in section 3.1). In ACENTAURI, we will also contribute to the development of assistance and service robotics by re-using the same technologies required in autonomous vehicles. By adding the social level in the representation of the environment, and using techniques of proactive and social navigation, we will offer the robot the possibility to adapt its behavior in the presence of humans (see Axis B in section 3.2). ACENTAURI will study sensing technology on SDVs (Self-Driving Vehicles) used for material handling to improve efficiency and safety as products are moved around Smart Factories. These types of robots have the ability to sense and avoid people, as well as unexpected obstructions, in the course of doing their work (see Axis C in section 3.3). The ability to automatically avoid these common disruptions is a powerful advantage that keeps production running optimally. To set up demonstrations of this application, we will continue the collaboration with industrial partners (Renault) and with the Communauté d'Agglomération Sophia Antipolis (CASA). Experiments with 2 autonomous Renault Zoe cars will be carried out in a dedicated space lent by CASA. Moreover, we propose, with the help of the Inria Service d'Expérimentation et de Développement (SED), to set up a demonstration of an autonomous shuttle to transport people in the future extended Inria/UCA site.

5 Social and environmental responsibility

ACENTAURI is concerned with reducing the environmental footprint of its activities and is involved in several research projects related to environmental challenges.

5.1 Footprint of research activities

The main footprint of our research activities comes from travel and power consumption (computers and computer cluster). Concerning travel, after the limitations due to the COVID-19 pandemic, it has increased again, but we make our best efforts to prioritize videoconferencing. Concerning power consumption, besides classical actions to reduce the waste of energy, our research focuses on efficient optimization algorithms to minimize the computation time of the computers onboard our robotic platforms.

5.2 Impact of research results

We have planned to propose several projects related to the environmental challenges. We give below three examples of the most advanced projects that have been recently started.

The first two concern forest monitoring, one in collaboration with the Parc Naturel Régional des Préalpes d'Azur, ONF and DRAAF (EPISUD Project) and one in collaboration with INRAE UR629 Ecologie des Forêts Méditerranéennes (see ROBFORISK project in section 10.4.6).

The third concerns autonomous vehicles in agricultural applications, in collaboration with INRAE Clermont-Ferrand in the context of the PEPR "Agroécologie et numérique". We aim to develop robotic approaches for the realization of new cultivation practices, capable of acting as a lever for agroecological practices (see NINSAR Project in section 10.4.4).

6 Highlights of the year

6.1 Team progression

This year has been dedicated to the growth of our team to its nominal size. In particular we have:

- welcomed eight new members to our team, each bringing unique skills, experiences, and perspectives.

- kicked off a new collaborative project (see section 10.4.7).

- organized two workshops (one in France and one in Korea) in the context of the AISENSE associated team with the AVELAB of KAIST in Korea.

- strengthened industrial collaborations by initiating two CIFRE PhD theses:

  - Thomas Campagnolo (NXP), "Embedded Machine Learning Solutions for Autonomous Navigation". PhD supervisors: Ezio Malis and Philippe Martinet.

  - Mohamed Mahmoud Ahmed Maloum (SAFRAN), "Inertial-vision SLAM using deep learning". PhD supervisors: Ezio Malis and Philippe Martinet.

We organized a two-day team seminar in Marseille to foster scientific discussion and collaborations between team members (see Figure 4).

We have also continued a biweekly robotic seminar involving both Inria (HEPHAISTOS and ACENTAURI) and I3S (OSCAR, ROBOTVISION, ...) robotic teams in order to disseminate information about the latest advancements, trends, and research in robotics.

6.2 Awards

- Emmanuel Alao received the Best Student Paper Award for the paper "Multi-Risk Assessment and Management in the Presence of Personal Light Electric Vehicles" at the ICINCO (International Conference on Informatics in Control, Automation and Robotics) conference in November 2024.

- Mathilde Theunissen won the second prize of the jury for the work "Robustness Study of Optimal Geometries for Cooperative Multi-Robot Localization" at Journée des Jeunes Chercheuses et Jeunes Chercheurs en Robotique in November 2024.

7 New software, platforms, open data

7.1 New software

7.1.1 OPENROX

- Keywords: Robotics, Library, Localization, Pose estimation, Homography, Mathematical Optimization, Computer vision, Image processing, Geometry Processing, Real time

- Functional Description: Cross-platform C library for real-time robotics: sensor calibration, visual identification and tracking, visual odometry, lidar registration and odometry.

- News of the Year: Several modules have been added: multispectral visual servoing, camera-lidar calibration, lidar SLAM. Python and C++ plugins have been developed to use OPENROX in ROS2 nodes.

- Contact: Ezio Malis

- Partner: Robocortex

7.2 New platforms

Participants: Ezio Malis, Philippe Martinet, Nicolas Chleq, Pierre Joyet, Quentin Louvel.

7.2.1 ICAV platform

The ICAV platform has been funded by the PUV@SOPHIA project (CASA, PACA Region and state), self-funding, the Digital Reference Center from UCA, and Academy 1 from UCA. We now have two autonomous vehicles, one instrumented vehicle, many sensors (Real Time Kinematics GPS, lidars, cameras), communication devices (C-V2X, IEEE 802.11p), and one standalone localization and mapping system.

The ICAV platform is composed of (see Figure 5):

- ICAV1 is an old-generation ZOE. It was bought fully robotized and instrumented. It is equipped with a Velodyne VLP16 lidar, a low-cost Inertial Measurement Unit (IMU) and GPS, three cameras and one embedded computer.

- ICAV2 is a new-generation ZOE, which was instrumented and robotized in 2021. It is equipped with a Velodyne VLP16 lidar, a low-cost IMU and GPS, three cameras, two solid-state RS-M1 lidars, one embedded computer and one NVIDIA Jetson AGX Xavier.

- ICAV3 will be instrumented with different lidars and a multi-camera system (LADYBUG5+).

- A ground truth RTK system. An RTK GPS base station has been installed and a local server configured inside the Inria Center. Each vehicle is equipped with an RTK GPS receiver and connected to a local server in order to achieve centimeter-level localization accuracy.

- A standalone localization and mapping system. This system is composed of a Velodyne VLP16 lidar, a low-cost IMU and GPS, and one NVIDIA Jetson AGX Xavier.

- A vehicle-to-everything (V2X) communication system based on the C-V2X and IEEE 802.11p technologies.

- Different lidar sensors (Ouster OS2-128, RS-LIDAR16, RS-LIDAR32, RS-Ruby), and one multi-camera system (LADYBUG5+).

The main applications of this platform are:

- dataset acquisition

- localization, mapping, depth estimation, semantization

- autonomous navigation (path following, parking, platooning, ...), proactive navigation in shared space

- situation awareness and decision making

- V2X communication

- autonomous landing of UAVs on the roof.

ICAV2 has been used by Maria Kabtoul in order to demonstrate the effectiveness of autonomous navigation of a car in a crowd.

Indoor autonomous mobile platform

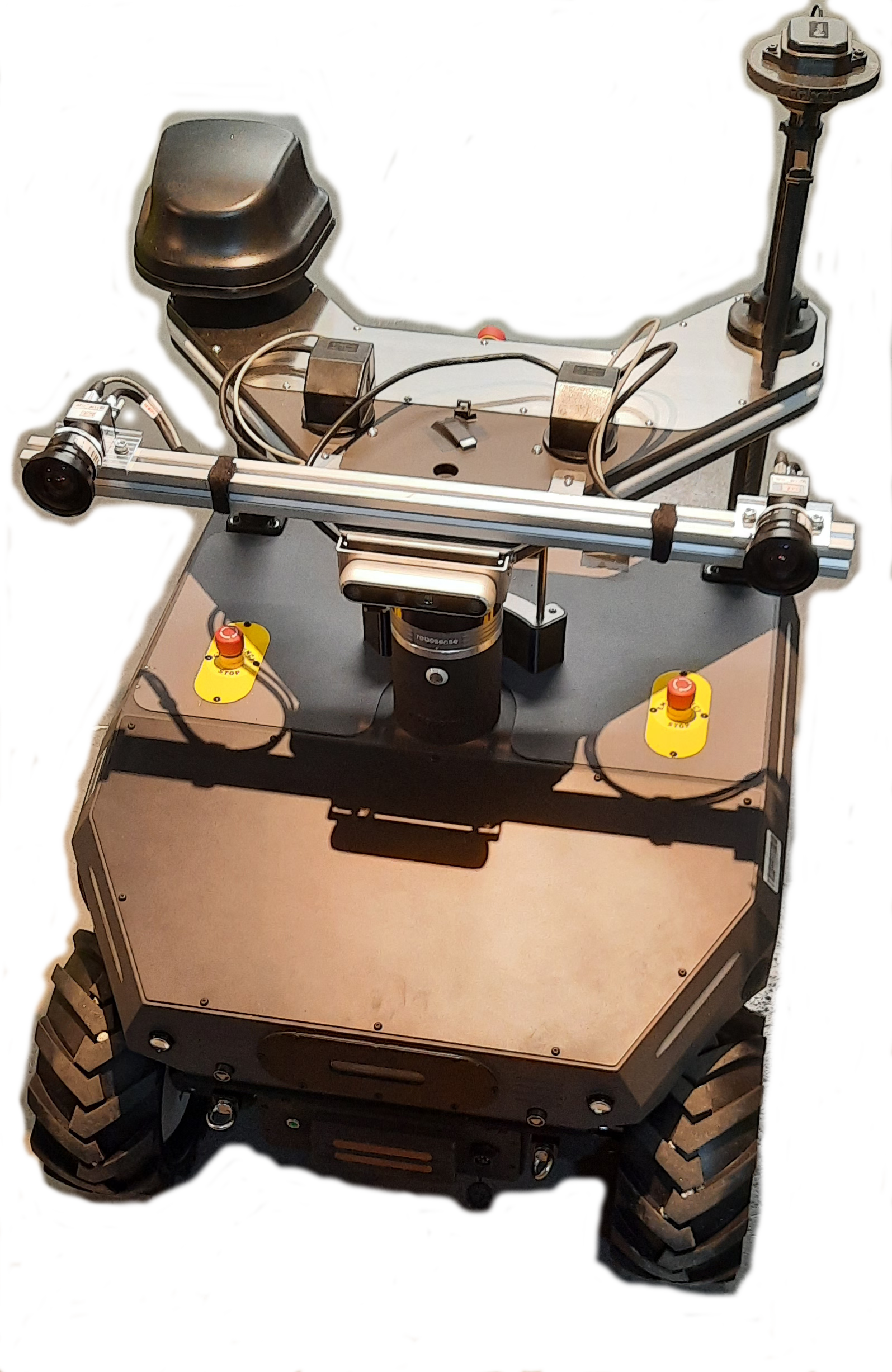

The mobile robot platform has been funded by the MOBI-DEEP project in order to demonstrate autonomous navigation capabilities in cluttered and crowded environments. This platform is composed of (see Figure 6):

- one omnidirectional mobile robot (SCOUT MINI with mecanum wheels from AgileX)

- one NVIDIA Jetson AGX Xavier for deep learning algorithm implementation

- one general-purpose laptop

- one Robosense RS-LIDAR16

- one Ricoh Z1 360° camera

- one Intel RealSense D455 RGB-D camera

The main applications of this platform are:

- indoor dataset acquisition

- localization, mapping, depth estimation, semantization

- proactive navigation in shared space

- pedestrian detection and tracking.

This platform was used in the MOBI-DEEP project to integrate the different contributions of the consortium. It is now used to demonstrate new results on social navigation.

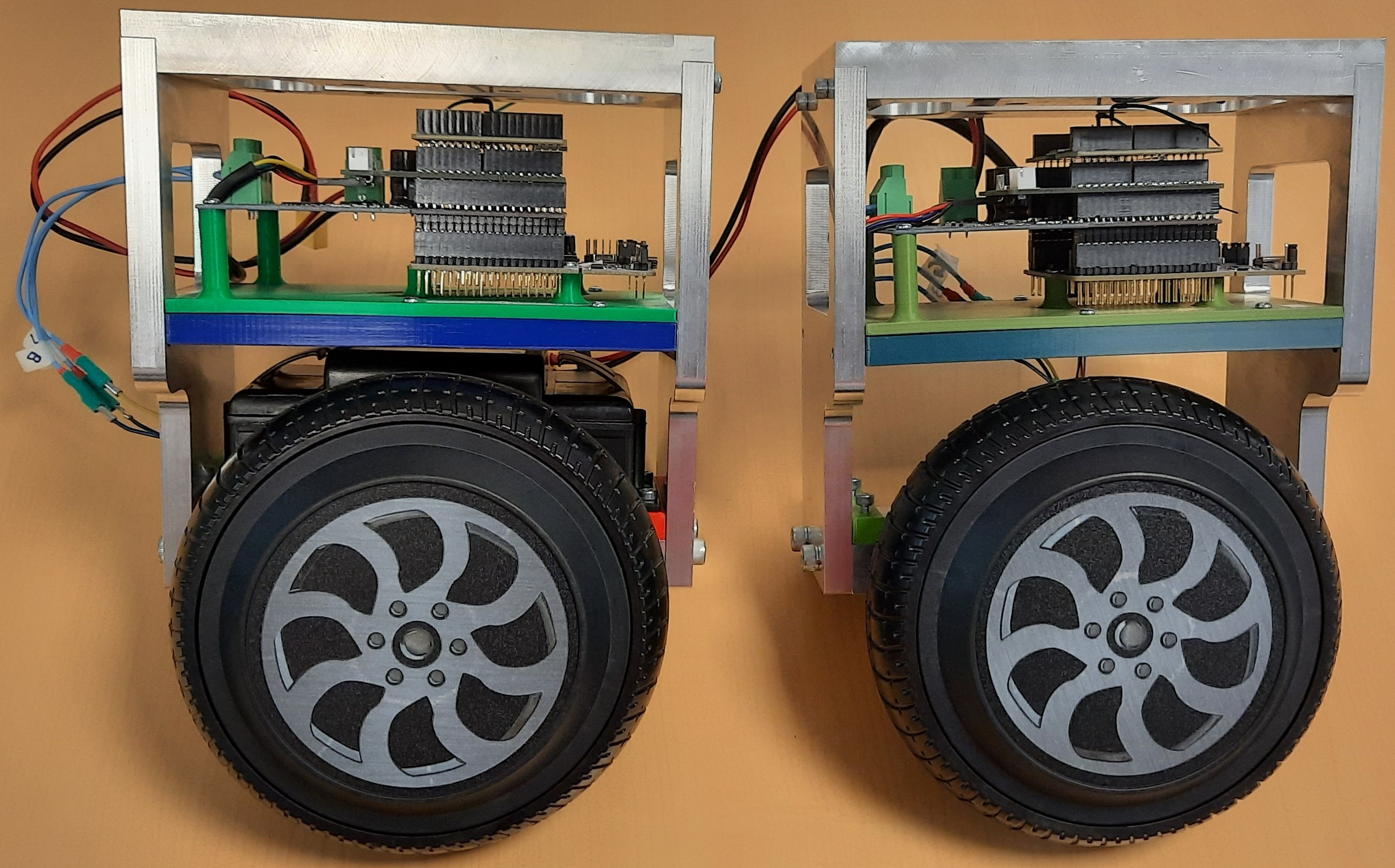

7.2.2 E-Wheeled platform

E-WHEELED is an AMDT Inria project (2019-22) coordinated by Philippe Martinet. The aim is to provide mobility to things by implementing connectivity techniques. It makes an Inria expert engineer (Nicolas Chleq) available to ACENTAURI in order to demonstrate the Proof of Concept using a small-size demonstrator (see Figure 7). Due to COVID-19, the project has been delayed.

Two wheels

7.2.3 Moving Living Lab global platform

The Moving Living Lab platform has been funded by the AgrifoodTEF project (H2020 project). It has been designed to perform physical field testing (navigation algorithms, monitoring of health and growth, sensor and robot testing) and dataset acquisition in real agricultural sites.

The Moving Living Lab platform is composed of different elements (see Figure 8):

- MLL is a moving laboratory. It has been fully equipped with a server, an RTK-GPS base, a 5G private network, WIFI, and three office desks. It is energy-autonomous and has an electric generator. It also has a trailer to transport the robots.

- SUMMIT-HM is a customized and updated version of the Summit-XL off-road mobile robot from Robotnik. It is an instrumented robot with many sensors (RTK GPS, lidar, camera, IMU), communication devices (WIFI, 5G) and one embedded NVIDIA Jetson AGX Orin.

- VERSATYL is a UAV from the Skydrone company. It has been customized with a payload instrumented with many sensors (RTK GPS, lidar, camera, IMU), communication devices (WIFI, 5G) and one embedded NVIDIA Jetson AGX Orin.

- Matrice 300 RTK is a UAV from the DJI company. It has been equipped with a multispectral camera and one embedded NVIDIA Jetson AGX Xavier.

- A ground truth RTK system. An RTK GPS base station has been installed and a local server configured inside the MLL. Each robot is equipped with an RTK GPS receiver and connected to a local server in order to achieve centimeter-level localization accuracy.

The main applications of this platform are:

- dataset acquisition

- localization, mapping, Simultaneous Localization And Mapping (SLAM)

- autonomous navigation (path planning and tracking, geofencing), proactive navigation in shared space.

7.2.4 UAV arena Dronix platform

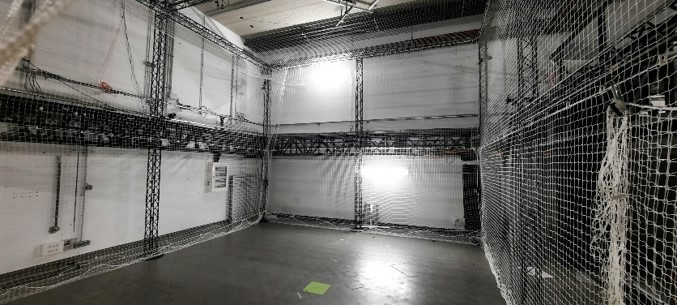

The UAV (Unmanned Aerial Vehicle) Arena (called Dronix) is a fixed and reconfigurable platform owned by the ACENTAURI team at Inria. It was co-funded by the European project AgrifoodTEF.

The volume of Dronix is 5 m x 6 m x 7 m. It is a specialized platform designed for the development, testing and demonstration of mobility algorithms for UAVs and AGRs in a controlled indoor environment. It can be considered as a fully controlled facility for preliminary testing of UAV and AGR functionalities.

Dronix platform is presented in Figure 9.

It is composed of different elements:

- Miqus M3 motion capture cameras (resolution: 1824 x 1088; normal mode: 2 MP, 340 fps; high-speed mode: 0.5 MP, 650 fps)

- Dedicated Qualisys Motion Analysis System

- Multi object tracking with specific 3D markers

The main applications of this platform are:

- Data collection via UAVs and AGRs equipped with different sensors

- Testing and validation of the calibration of different sensors

- Building ground truth localization with 12 cameras for millimeter (mm) accuracy

- Testing and validation of localization, mapping, SLAM, and navigation algorithms for UAVs, AGRs, and their collaboration

Dronix is based on the Qualisys Motion Capture and Tracking System and uses 12 Qualisys Miqus M3 cameras to localize (estimate the position and orientation of) and track any moving object (equipped with markers) in a dedicated volume. The Qualisys Track Manager software provides high-frequency localization of these objects with 0.1 mm accuracy and can be connected to a robotic system in real time as a ground truth source and/or as a real-time localization system.

8 New results

8.1 Hybrid Dense Stereo Visual Odometry

Participants: Ziming Liu, Ezio Malis, Philippe Martinet.

Visual odometry is an important task for the localization of autonomous robots and several approaches have been proposed in the literature. We consider visual odometry approaches that use stereo images since the depth of the observed scene can be correctly estimated. These approaches do not suffer from the scale factor estimation problem of monocular visual odometry approaches that can only estimate the translation up to a scale factor. Traditional model-based visual odometry approaches are generally divided into two steps. Firstly, the depths of the observed scene are estimated from the disparity obtained by matching left and right images. Then, the depths are used to obtain the camera pose. The depths can be computed for selected features like in sparse methods or for all possible pixels in the image like in dense direct methods 1.
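The first step can be illustrated with the standard pinhole relation for a rectified stereo pair, Z = f B / d, where f is the focal length in pixels, B the baseline and d the disparity. The sketch below is a generic illustration, not the team's implementation; the function name and the calibration values are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth (in meters) of a point seen in a rectified stereo pair.

    Uses the pinhole relation Z = f * B / d. Returns None when the
    disparity is not strictly positive (no valid match, or point at infinity).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


# Hypothetical calibration values, chosen only for illustration.
focal_px = 700.0
baseline_m = 0.5
for d in (70.0, 35.0, 7.0):
    print(d, depth_from_disparity(d, focal_px, baseline_m))
```

Because depth is inversely proportional to disparity, a fixed error in disparity translates into a depth error that grows with distance, which is one reason accurate disparity estimation matters for the subsequent pose estimation step.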

Recently, more and more end-to-end deep learning visual odometry approaches have been proposed, including supervised and unsupervised models. However, it has been shown that hybrid visual odometry approaches can achieve better results by combining a neural network for depth estimation with a model-based visual odometry approach. Moreover, recent works have shown that deep learning based approaches can perform much better than the traditional stereo matching approaches and provide more accurate depth estimation.

State-of-the-art supervised stereo depth estimation networks can be trained with ground-truth disparity (depth) maps. However, ground-truth depth maps are extremely difficult to obtain for all pixels in real and variable environments (almost impossible for dense depth maps). Therefore, some works train a model in simulated environments and try to reduce its gap with real-world data. However, only a few works focus on unsupervised stereo matching. Besides using ground-truth depth maps or unsupervised training, we can also build a temporal image reconstruction loss from the ground-truth camera pose, which is easy to obtain. In previous works, we proposed hybrid visual odometry methods that achieve state-of-the-art performance by fusing data-based deep learning networks and model-based localization approaches 67.

However, these methods also suffer from the deep learning domain gap problem, which leads to an accuracy drop of the hybrid visual odometry approach when a new type of data is considered. In 11, we explored a practical solution to this problem. First, the deep learning network in the hybrid visual odometry predicts the stereo disparity within a fixed search space, whereas the disparity distribution is unbalanced across stereo images acquired in different environments. We proposed an adaptive network structure to overcome this problem. Second, the model-based localization module achieves robust performance by optimizing the camera pose online on test data, which motivated us to introduce a test-time training method to improve the data-based part of the hybrid visual odometry model.

Another problem we faced in our previous work concerned stereo matching, which is one of the low-level visual perception tasks. Currently, two-stage 2D-3D networks and three-stage recurrent networks dominate deep stereo matching. These methods build a cost volume from low-resolution stereo feature maps, which splits the network into a feature net and a matching net. However, the 2D feature map is not controllable, and the low-resolution feature map has lost important matching information. To overcome these problems, we proposed in 20 the first one-stage 2D-3D deep stereo network, named StereoOne. It has an efficient module that builds a cost volume at image resolution in real time. Feature extraction and matching are learned in a single 3D network. According to the experiments, the new network easily surpasses the 2D-3D network baseline and achieves performance competitive with the state of the art.
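To make the notion of a cost volume concrete, here is a minimal sketch of a classical, hand-crafted one built directly on pixel intensities at full image resolution. It is not the learned StereoOne module: the absolute-difference cost, the function name and the toy data are assumptions chosen for illustration.

```python
import numpy as np


def build_cost_volume(left, right, max_disp):
    """Absolute-difference cost volume of shape (max_disp, H, W).

    Entry [d, y, x] compares left pixel (y, x) with right pixel (y, x - d).
    Positions where x - d falls outside the image keep an infinite cost.
    """
    h, w = left.shape
    volume = np.full((max_disp, h, w), np.inf)
    for d in range(max_disp):
        volume[d, :, d:] = np.abs(left[:, d:] - right[:, : w - d])
    return volume


# Toy data: the left image is the right image shifted by 3 pixels.
rng = np.random.default_rng(0)
right = rng.random((4, 16))
TRUE_DISP = 3
left = np.zeros_like(right)
left[:, TRUE_DISP:] = right[:, :-TRUE_DISP]

volume = build_cost_volume(left, right, max_disp=8)
# Winner-takes-all: keep the disparity with the lowest cost at each pixel.
disparity = np.argmin(volume, axis=0)
```

A learned network replaces both the raw intensities and the winner-takes-all step, but the (disparity, height, width) layout of the volume is the same idea.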

8.2 Decision making for autonomous driving in urban environment

Participants: Monica Fossati, Philippe Martinet, Ezio Malis.

Recent advancements in autonomous vehicle (AV) technology have led to increasingly sophisticated systems, particularly for relatively simple environments like highways and rural roads. However, achieving full autonomy in urban settings remains challenging due to the dynamic and unpredictable behavior of road users. Our work aims to address these challenges by integrating high-definition (HD) maps, specifically those based on the Lanelet2 format, with real-time perception data to dynamically characterize the space around a road agent. This integration leverages the graph-based structure, semantic richness, and modularity of Lanelet2 maps to provide a comprehensive, context-aware representation of the environment. Our goal is to view the road from the user’s perspective, extracting navigation-relevant information to support adaptive and proactive decision-making, and thereby enhancing the vehicle’s situational awareness and its ability to navigate complex urban scenarios safely and efficiently. This represents a significant step toward autonomous vehicles capable of intelligent, context-driven behavior in real-world environments. To this end, our work introduces key advancements in enhancing the situational awareness of autonomous vehicles:

- Improved Reachable Set Calculation integrating Lanelet2 maps with the current state and kinematic limits of vehicles to provide a more accurate prediction of potential movements at the lanelet level.

- Physical Rule Implementation leveraging the modularity of the Lanelet2 standard to estimate multiple reachable sets capable of describing and anticipating scenarios involving relaxed or violated traffic rules.

- Automatic Interaction Identification and Classification through the intersection of their reachable sets. This allows for identifying which agents could interact and how.

The proposed approach provides autonomous vehicles with a multi-level representation of the environment, enabling adaptive decision-making. It considers both traffic and physical rules, better describing human dynamics, and allows for proactive strategies and selective rule relaxation. This leads to a more robust understanding of the vehicle’s context in urban navigation.
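The idea of lanelet-level reachable sets and their intersection can be sketched on a toy graph. The example below does not use the Lanelet2 library: the map, the lanelet names, the constant-acceleration distance bound and the assumption that each agent starts at the entry of its lanelet are all hypothetical simplifications.

```python
from collections import deque


def max_travel_distance(speed, max_accel, horizon):
    """Upper bound on the distance covered within the horizon (constant acceleration)."""
    return speed * horizon + 0.5 * max_accel * horizon ** 2


def reachable_lanelets(successors, lengths, start, max_distance):
    """Lanelets whose entry can be reached within max_distance metres."""
    entry_dist = {start: 0.0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        exit_dist = entry_dist[current] + lengths[current]
        if exit_dist > max_distance:
            continue  # the agent cannot leave this lanelet within the horizon
        for nxt in successors.get(current, ()):
            if exit_dist < entry_dist.get(nxt, float("inf")):
                entry_dist[nxt] = exit_dist
                queue.append(nxt)
    return set(entry_dist)


# Hypothetical toy map: every lanelet is 20 m long.
LENGTHS = {"e1": 20.0, "e2": 20.0, "x": 20.0, "o1": 20.0}
LEGAL = {"e1": ["e2"], "e2": ["x"], "o1": ["x"]}
# Relaxed rules additionally allow a forbidden move from o1 into e2.
RELAXED = {**LEGAL, "o1": ["x", "e2"]}

ego_set = reachable_lanelets(LEGAL, LENGTHS, "e1", max_travel_distance(10.0, 2.0, 3.0))
other_range = max_travel_distance(8.0, 3.0, 3.0)
other_legal = reachable_lanelets(LEGAL, LENGTHS, "o1", other_range)
other_relaxed = reachable_lanelets(RELAXED, LENGTHS, "o1", other_range)

# An interaction is possible wherever the two reachable sets overlap.
legal_conflicts = ego_set & other_legal
relaxed_conflicts = ego_set & other_relaxed
```

In this toy scenario the two agents cannot meet if the other agent respects the traffic rules, but a conflict on lanelet e2 appears once the rule is relaxed, which is the kind of distinction a multi-level representation is meant to expose.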

8.3 Multi-Spectral Visual Servoing

Participants: Enrico Fiasché, Philippe Martinet, Ezio Malis.

The objective of this work is to propose a novel approach for Visual Servoing (VS) using a multispectral camera, where the amount of data is more than three times that of a standard color camera. To meet real-time constraints, the multispectral data captured by the camera is processed using a dimensionality reduction technique. Unlike conventional methods that select a subset of bands, our technique leverages the best information coming from all the bands of the multispectral camera. This fusion process sacrifices spectral resolution but keeps spatial resolution, which is crucial for precise robotic control in forests. The proposed approach is validated through simulations, demonstrating its efficacy in enabling timely and accurate VS in natural settings. By leveraging the full spectral information of the camera and preserving spatial details, our method offers a promising solution for VS in natural environments, surpassing the limitations of traditional band selection approaches 17.
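The text above does not name the dimensionality reduction technique. As one plausible stand-in, the sketch below fuses all bands with a principal component analysis applied to per-pixel spectra, which reduces the spectral dimension while leaving the image grid untouched. The choice of PCA, the function name and the 10-band random cube are assumptions for illustration.

```python
import numpy as np


def fuse_bands_pca(cube, n_components=1):
    """Project each pixel spectrum of an (H, W, B) cube onto its principal axes.

    Returns an (H, W, n_components) image: fewer spectral channels, same
    spatial resolution.
    """
    h, w, b = cube.shape
    spectra = cube.reshape(-1, b).astype(np.float64)  # astype returns a copy
    spectra -= spectra.mean(axis=0)
    cov = spectra.T @ spectra / (spectra.shape[0] - 1)
    _, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    axes = eigvecs[:, ::-1][:, :n_components]  # strongest components first
    return (spectra @ axes).reshape(h, w, n_components)


# Hypothetical 10-band multispectral image of 4 x 5 pixels.
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 10))
fused = fuse_bands_pca(cube, n_components=1)
```

Unlike band selection, every band contributes to the fused channel, and the first component carries at least as much variance as any single band.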

8.4 Efficient and accurate closed-form solution to pose estimation from 3D correspondences

Participants: Ezio Malis.

Computing the pose from 3D data acquired in two different frames is of high importance for several robotic tasks like odometry, SLAM and place recognition. The pose is generally obtained by solving a least-squares problem given point-to-point, point-to-plane or point-to-line correspondences. This non-linear least-squares problem can be solved by iterative optimization or, more efficiently, in closed form by using solvers of polynomial systems.

In 8, a complete and accurate closed-form solution for a weighted least-squares problem has been studied. Adding a weight to each correspondence makes it possible to increase robustness to outliers. Contrary to existing methods, the proposed approach is complete, since it is able to solve the problem in any non-degenerate case, and accurate, since it is guaranteed to find the globally optimal estimate of the weighted least-squares problem. The key contribution of this work is the combination of a robust u-resultant solver with the quaternion parametrization of the rotation, which was not considered in previous works. Simulations and experiments on real data demonstrate the superior accuracy and robustness of the proposed algorithm compared to previous approaches.
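For the special case of point-to-point correspondences only, a weighted closed-form solution with a quaternion parametrization is given by Horn's classical eigenvector method. The sketch below implements that classical method, not the u-resultant solver described above, which also handles point-to-plane and point-to-line correspondences. Function names and test data are hypothetical.

```python
import numpy as np


def quat_to_rot(q):
    """Rotation matrix of a quaternion (w, x, y, z); q and -q give the same matrix."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def weighted_pose_from_points(src, dst, weights):
    """Minimize sum_i w_i * ||R @ src_i + t - dst_i||^2 in closed form."""
    w = weights / weights.sum()
    src_c, dst_c = w @ src, w @ dst  # weighted centroids
    S = (src - src_c).T @ (w[:, None] * (dst - dst_c))  # 3x3 cross-covariance
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = np.array([
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ])
    # The optimal quaternion is the eigenvector of the largest eigenvalue of N.
    _, eigvecs = np.linalg.eigh(N)
    R = quat_to_rot(eigvecs[:, -1])
    return R, dst_c - R @ src_c


# Synthetic noise-free correspondences with a known pose.
rng = np.random.default_rng(0)
q_true = np.array([0.9, 0.1, -0.3, 0.2])
R_true = quat_to_rot(q_true)
t_true = np.array([0.5, -1.0, 2.0])
src = rng.normal(size=(20, 3))
dst = src @ R_true.T + t_true
weights = rng.uniform(0.5, 1.5, size=20)
R_est, t_est = weighted_pose_from_points(src, dst, weights)
```

The eigenvector is only defined up to sign, which reflects the fact that a quaternion and its opposite encode the same rotation.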

During this year, we aimed to address the limitations and open questions that emerged from this earlier study and to offer novel perspectives and methodologies to advance the field. Notably, one of the main factors limiting the efficiency of the u-resultant approach was its failure to exploit the two-fold symmetry inherent in the quaternion parametrization, which could have led to a more efficient solver. For these reasons, a new approach has been studied that replaces the u-resultant method with the h-resultant approach 12. The h-resultant approach consists in hiding one variable, i.e. treating it as a parameter. Such a hidden-variable approach has been used in the past to solve different problems in computer vision. The key contribution of the current work is therefore the design of a novel, efficient h-resultant solver that exploits the two-fold symmetry of the quaternion parametrization, which was not considered in previous work.

8.5 Lidar Odometry

Participants: Matteo Azzini, Ezio Malis, Philippe Martinet.

In the field of lidar odometry for autonomous navigation, the Iterative Closest Point (ICP) algorithm is a prevalent choice for estimating robot motion by comparing point clouds. However, ICP accuracy depends strongly not only on the nature of the features involved, but also on the direction chosen for extraction and matching, either from the current to the reference point cloud or vice versa. Point-to-line or point-to-plane correspondences have been proven to provide the most accurate odometry results. The matching is generally done in a mono-directional framework: extract the features (lines or planes) in the current point cloud and match them to points in the reference point cloud. In 15, we introduced a novel formulation, named Balanced ICP, that performs feature extraction (lines or planes) in both point clouds and the subsequent matching in both directions. The cost function is therefore designed to perform a simultaneous optimization over all available data, balancing the noise and extraction errors. The experiments, conducted on both simulated data and real data from the KITTI dataset, reveal that our method outperforms the classical mono-directional formulations in terms of robustness, accuracy and stability.
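The exact cost function of Balanced ICP is not given here. As a rough sketch of what a bidirectional formulation can look like, the code below sums point-to-plane residuals in both directions for a candidate pose (R, t) mapping the current frame to the reference frame. The unweighted sum, the argument layout and the toy ground-plane scene are assumptions.

```python
import numpy as np


def point_to_plane_residuals(points, plane_normals, plane_points):
    """Signed distance of each point to its matched plane."""
    return np.einsum("ij,ij->i", plane_normals, points - plane_points)


def balanced_cost(R, t, cur_pts, ref_normals, ref_plane_pts,
                  ref_pts, cur_normals, cur_plane_pts):
    """Sum of squared residuals in both matching directions."""
    # Current points moved into the reference frame, against reference planes.
    forward = point_to_plane_residuals(cur_pts @ R.T + t, ref_normals, ref_plane_pts)
    # Reference points moved into the current frame, against current planes.
    backward = point_to_plane_residuals((ref_pts - t) @ R, cur_normals, cur_plane_pts)
    return float(forward @ forward + backward @ backward)


# Toy scene: a ground plane z = 0 in the reference frame, seen from both frames.
rng = np.random.default_rng(0)
yaw = 0.3
R_true = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                   [np.sin(yaw), np.cos(yaw), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([1.0, 0.5, 0.2])


def ground_points(n):
    return np.column_stack([rng.uniform(-5.0, 5.0, (n, 2)), np.zeros(n)])


ref_pts = ground_points(5)
cur_pts = (ground_points(5) - t_true) @ R_true  # expressed in the current frame
ref_normals = np.tile([0.0, 0.0, 1.0], (5, 1))
ref_plane_pts = np.zeros((5, 3))
cur_normals = ref_normals @ R_true  # same plane, current frame
cur_plane_pts = (ref_plane_pts - t_true) @ R_true

scene = (cur_pts, ref_normals, ref_plane_pts, ref_pts, cur_normals, cur_plane_pts)
cost_true = balanced_cost(R_true, t_true, *scene)
cost_bad = balanced_cost(R_true, t_true + np.array([0.0, 0.0, 0.1]), *scene)
```

Both directions contribute equally to the cost here, so an error in the pose is penalized by the features extracted in either cloud.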

8.6 Dense-direct visual-SLAM

Participants: Diego Navarro Tellez, Ezio Malis, Raphael Antoine (CEREMA), Philippe Martinet.

In the context of the ROAD-AI project, we proposed a comprehensive framework based on direct Visual Simultaneous Localization and Mapping (V-SLAM) to observe a vertical coastal cliff 21. The precise positioning of data measurements (such as ground-penetrating radar) is crucial for environmental observations. However, near large structures, the GPS signal can be severely disrupted or even unavailable. To address this challenge, we focus on the accurate localization of drones using vision sensors and SLAM systems. Traditional SLAM approaches may lack robustness and precision, particularly when cameras lose perspective near structures. We propose a new framework that combines feature-based and direct methods to enhance localization precision and robustness. The proposed system operates in two phases: first, a SLAM phase using a stereo camera to reconstruct the environment from a distance sufficient to benefit from a wide field of view; second, a localization phase using a monocular camera. Experiments conducted in realistic simulated environments demonstrate the system's ability to localize the drone with 15-centimeter precision, surpassing existing state-of-the-art approaches.

8.7 Reliable Risk Assessment and Management in autonomous driving

Participants: Emmanuel Alao, Lounis Adouane [UTC Compiegne], Philippe Martinet.

Autonomous driving in urban scenarios has become more challenging due to the increase in Personal Light Electric Vehicles (PLEVs). PLEVs are mostly electric devices such as gyropods and scooters. They exhibit varying velocity profiles as a result of their high acceleration capacity. The multiple hypotheses about their possible motion make autonomous driving very difficult, leading to the highly conservative behavior of most control algorithms. In 13, we propose to solve this problem by performing a continuous risk assessment using a Fusion of Predictive Inter-Distance Profiles (F-PIDP). A stochastic MPC algorithm then performs effective risk management using the F-PIDP while taking adaptive constraints into account. The advantages of the proposed approach are demonstrated through simulations of multiple scenarios.

In 14, the traditional Predictive Inter-Distance Profile (PIDP) risk assessment metric (Bellingard et al., 2023) is extended to handle multiple multi-modal motions using a fusion of PIDPs (F-PIDP). This approach accounts for the uncertainties in the various trajectories that PLEVs can follow on the road. A priority-based strategy is then developed to select the most dangerous agent. Finally, the F-PIDP and a Model Predictive Control (MPC) algorithm are employed for risk management, ensuring safe and reliable navigation. The efficiency of the proposed method is validated through several simulations.

8.8 Parameter estimation of the lateral vehicle dynamics

Participants: Fabien Lionti, Nicolas Gutowski [LERIA Angers], Sébastien Aubin [DGA], Philippe Martinet.

Estimating parameters for nonlinear dynamic systems is a significant challenge across numerous research areas and practical applications.

In 19, we present a novel two-step approach for estimating parameters that control the lateral dynamics of a vehicle, acknowledging the limitations and noise within the data. The methodology merges spline smoothing of system observations with a Bayesian framework for parameter estimation. The initial phase involves applying spline smoothing to the system state variable observations, effectively filtering out noise and achieving precise estimates of the state variables’ derivatives.

Consequently, this technique allows for the direct estimation of parameters from the differential equations characterizing the system’s dynamics, bypassing the need for labor-intensive integration procedures. The subsequent phase focuses on parameter estimation from the differential equation residuals, using a likelihood-free Bayesian method, ABC-SMC (Approximate Bayesian Computation with Sequential Monte Carlo).

This Bayesian strategy offers multiple advantages: it mitigates the impact of data scarcity by incorporating prior knowledge regarding the vehicle’s physical properties and enhances interpretability through the provision of a posterior distribution for the parameters likely responsible for the observed data. Employing this innovative method facilitates the robust estimation of parameters governing vehicle lateral dynamics, even in the presence of limited and noisy data.
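The two-step principle can be sketched on a toy first-order system dx/dt = -theta * x instead of a vehicle model. To keep the example short and dependency-light, two simplifications are made and should be read as assumptions: a low-order polynomial fit stands in for spline smoothing, and plain ABC rejection stands in for ABC-SMC. All numerical values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Noisy observations of the toy system dx/dt = -theta * x with x(0) = 1.
THETA_TRUE = 2.0
t = np.linspace(0.0, 1.0, 50)
x_obs = np.exp(-THETA_TRUE * t) + rng.normal(0.0, 0.01, t.size)

# Step 1: smooth the observations and differentiate the smooth fit
# (polynomial fit used here in place of spline smoothing).
coeffs = np.polyfit(t, x_obs, deg=5)
x_hat = np.polyval(coeffs, t)
dx_hat = np.polyval(np.polyder(coeffs), t)


def residual_distance(theta):
    """RMS residual of the differential equation; no integration is needed."""
    return np.sqrt(np.mean((dx_hat + theta * x_hat) ** 2))


# Step 2: likelihood-free inference by ABC rejection. Draw parameters from a
# uniform prior and keep the 1% with the smallest residual distance.
prior_samples = rng.uniform(0.0, 5.0, 5000)
distances = np.array([residual_distance(theta) for theta in prior_samples])
threshold = np.quantile(distances, 0.01)
posterior = prior_samples[distances <= threshold]
theta_mean, theta_std = posterior.mean(), posterior.std()
```

The accepted samples approximate a posterior distribution over the parameter, so the result is a range of plausible values rather than a single point estimate. ABC-SMC refines this by shrinking the acceptance threshold over successive populations.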

8.9 A Novel 3D Model Update Framework for Long-Term Autonomy

Participants: Stefan Larsen, Ezio Malis, El Mustafa Mouaddib, Patrick Rives.

The ability to perform long-term robotic operations in dynamic environments remains a challenge in fields such as surveillance, agriculture and autonomous vehicles. For improved localization and monitoring over time, we proposed a novel model update framework using image-based 3D change localization and segmentation 18. Specifically, shallow image data is used to detect and locate significant geometric change areas in a pre-made 3D model. The main contribution of this paper is the ability to precisely segment and locate both new and missing objects from few observations, and to provide consistent model updates. The applied method for geometric change detection is robust to seasonal, viewpoint, and illumination differences that may occur between operations. Qualitative and quantitative tests with both our own and publicly available datasets show that the model update framework improves on previous methods and facilitates long-term localization.

8.10 Multi-robots localization and navigation for infrastructure monitoring

Participants: Mathilde Theunissen, Isabelle Fantoni, Ezio Malis, Philippe Martinet.

In the context of the ANR SAMURAI project, we studied the benefit of leveraging robot formation control to improve localization precision. This involves optimizing the shape of the formation based on localizability indices that depend on the sensors used by the robots and on the environment. The first focus is on the definition and optimization of a localizability index applied to collaborative localization using Ultra-Wide-Band (UWB) antennas 22.

8.11 Collaborative perception between autonomous vehicles and roadside units

Participants: Minh-Quan Dao, Ezio Malis, Vincent Fremont [LS2N, Nantes].

In the context of the ANR ANNAPOLIS project, we started to study how to increase the vehicle’s perception capacity in terms of precision, measurement field of view and information semantics, through communication between the vehicle and an intelligent infrastructure. Occlusion presents a significant challenge for safety-critical applications of autonomous driving 10. Collaborative perception has recently attracted considerable research interest thanks to its ability to enhance the perception of autonomous vehicles via deep information fusion with intelligent roadside units (RSU), thus minimizing the impact of occlusion. While significant advances have been made, the data-hungry nature of these methods creates a major hurdle for their real-world deployment, particularly due to the need for annotated RSU data. Manually annotating the vast amount of RSU data required for training is prohibitively expensive, given the sheer number of intersections and the effort involved in annotating point clouds. We address this challenge by devising a label-efficient object detection method for RSU based on unsupervised object discovery 16. Our work introduces two new modules: one for object discovery based on a spatio-temporal aggregation of point clouds, and another for refinement. Furthermore, we demonstrate that fine-tuning on a small portion of annotated data allows our object discovery models to narrow the performance gap with, or even surpass, fully supervised models. Extensive experiments are carried out on simulated and real-world datasets to evaluate our method.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

ACENTAURI is responsible for four research contracts.

9.1.1 Naval Group

Usine du Futur (2022-2025)

Participants: Ezio Malis, Philippe Martinet, Erwan Emraoui, Marie Aspro, Pierre Alliez (TITANE).

The context is that of the factory of the future for Naval Group in Lorient, for submarines and surface vessels. As input, we have a digital model (for example of a frigate), the equipment assembly schedule and measurement data (images or Lidar). Most of the components to be mounted are supplied by subcontractors. As output, we want to monitor the assembly site to compare the "as-designed" with the "as-built". The challenge of the contract is the need for coordination on the construction sites for planning decisions. It is necessary to be able to follow the progress of a real project and check its conformity using a digital twin. Currently, since checking requires going on board, inspection rounds are needed to validate the progress as well as the mountability of the equipment: for example, the cabin and the fasteners must be in place, with holes for the screws, etc. These rounds are time-consuming and accident-prone, not to mention the constraints of the site, for example the temporary lack of electricity or the numerous temporary assembly and safety equipment.

La Fontaine (2022-2025)

Participants: Ezio Malis, Philippe Martinet, Hasan Yilmaz, Pierre Joyet.

The context is that of decision support for a collaborative autonomous multi-agent system with a common objective. The multi-agent system tries to get around “obstacles” which, in turn, try to prevent the agents from reaching their goals. As part of a collaboration with NAVAL GROUP, we wish to study a number of issues related to the optimal planning and control of cooperative multi-agent systems. The objective of this contract is therefore to identify and test methods for generating trajectories that satisfy a set of constraints dictated by the interests, the modes of perception, and the behavior of these actors. The first problem to study is that of the strategy to adopt during the game. The strategy consists in defining “the set of coordinated actions, skillful operations, maneuvers with a view to achieving a specific objective”. In this framework, the main scientific issues are (i) how to formalize the problem (often as the optimization of a cost function) and (ii) how to define several possible strategies while keeping the same tools for implementation (tactics).

The second problem to study is that of the tactics to be followed during the game in order to implement the chosen strategy. The tactic consists in defining the tools to execute the strategy. In this context, we study the use of techniques such as MPC (Model Predictive Control) and MPPI (Model Predictive Path Integral) which make it possible to predict the evolution of the system over a given horizon and therefore to take the best action decision based on knowledge at time t.
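To give a flavor of how a sampling-based predictive controller chooses an action at time t, here is a deliberately reduced MPPI-style update on a 1D toy system x_{k+1} = x_k + dt * u_k. It uses a single iteration per time step, zero nominal controls and no warm start, which a full MPPI implementation would normally include. The dynamics, cost and parameter values are hypothetical.

```python
import numpy as np


def mppi_step(x0, goal, rng, horizon=10, samples=500, sigma=1.0, lam=1.0, dt=0.1):
    """Return the first control of an exponentially weighted average of rollouts."""
    # Sample candidate control sequences around a zero nominal control.
    eps = rng.normal(0.0, sigma, size=(samples, horizon))
    # Predict the state over the horizon for every candidate sequence.
    states = x0 + dt * np.cumsum(eps, axis=1)
    costs = np.sum((states - goal) ** 2, axis=1)
    # Low-cost rollouts receive exponentially larger weights.
    weights = np.exp(-(costs - costs.min()) / lam)
    weights /= weights.sum()
    return float(weights @ eps[:, 0])


rng = np.random.default_rng(0)
x, goal, dt = 0.0, 1.0, 0.1
for _ in range(80):
    u = mppi_step(x, goal, rng, dt=dt)  # decision based on knowledge at time t
    x += dt * u  # apply only the first control, then replan
```

Only the first control of the weighted average is applied before replanning, which is the receding-horizon principle shared by MPC and MPPI. The temperature `lam` trades off between following the best rollout and averaging over all of them.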

The third problem is that of combining the proposed approaches with those based on AI, and in particular on machine learning. Machine learning can intervene both in the choice of the strategy and in the development of tactics. The possibility of simulating a large number of games could allow the training of a neural network whose architecture remains to be designed.

9.1.2 NXP

Participants: Ezio Malis, Philippe Martinet, Thomas Campagnolo, Gaetan Bael [NXP].

As part of a research collaboration between the ACENTAURI team at Inria Sophia Antipolis and NXP Semiconductors, we are interested in building autonomous devices such as robots, drones or vehicles that have to navigate through various dynamic indoor and outdoor environments, such as homes, factories or cities.

The objective of the CIFRE PhD thesis of Thomas Campagnolo is to set up a complete perception system based on a generic spatio-temporal multi-level representation of the scene (geometrical, semantic, topological, ...) that will provide the information needed by an ontology of navigation tasks and directions originating from various modalities (sound, text, images, other systems). The geometric representation will be provided by a state-of-the-art SLAM algorithm, while the PhD subject will focus on extracting semantic and topological information. Semantics and topology will be extracted using a data-based approach, and a graph-based abstraction toolbox will be developed to make the connection with ontologies on one side and with the task to be done on the other side.

The PhD will address different contexts with increasing complexity, starting by defining a particular sensing system and a representation of the natural dynamic environment, and using state of the art algorithms to assess the situation at each time of evolution and to evaluate the different actions in a given horizon of time. The different contexts will concern various environments such as homes, factories, fields or cities.

9.1.3 SAFRAN

Participants: Ezio Malis, Philippe Martinet, Mohamed Mahmoud Ahmed Maloum, Ahmed Nasreddine Benaichouche [SAFRAN].

The objective of the CIFRE PhD thesis of Mohamed Mahmoud Ahmed Maloum is to study the ability of deep neural networks to address the SLAM problem by leveraging multiple sensor modalities and taking advantage of each. The challenge lies in finding a common representation space for the different modalities while maintaining a representation of the robot's poses in SE(3).

The architecture to be developed should take advantage of attention mechanisms (developed in Transformers) to weigh the measurements from different sensors (images, inertia) based on the robot’s state (proprioceptive information: inertia) as well as the environment (exteroceptive information: vision). The balance between real-time performance and accuracy, along with robustness in dynamic, uncertain, and complex environments, are important factors to consider in the study.
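As a hedged illustration of how an attention mechanism can weigh sensor measurements, the sketch below applies the scaled dot-product attention used in Transformers to two modality embeddings. The architecture of the thesis remains to be designed, so the embeddings, their dimensions and the function name are purely hypothetical.

```python
import math


def attention_fuse(query, keys, values):
    """Scaled dot-product attention over a small set of modality embeddings."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    # Numerically stable softmax over the modality scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(values[0])
    fused = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, fused


# Hypothetical embeddings: the query encodes the robot state, and each key/value
# pair encodes one sensor modality (vision, inertial).
state_query = [1.0, 0.0]
modality_keys = [[1.0, 0.0], [0.0, 1.0]]
modality_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

weights, fused = attention_fuse(state_query, modality_keys, modality_values)
```

The modality whose key is best aligned with the state query receives the larger weight, so the fusion adapts to the current state instead of using fixed sensor weights.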

In the context of the thesis, the methodology followed will be hybrid in nature, aiming to leverage both prior knowledge from physics and data-driven insights. To this end, the approach proposed will combine deep neural networks with traditional pose estimation methods to calculate visual odometry.

10 Partnerships and cooperations

10.1 International initiatives

10.1.1 Inria associate team not involved in an IIL or an international program

AISENSE

- Title: Artificial intelligence for advanced sensing in autonomous vehicles

- Duration: 2023 -> 2024

- Coordinator: Seung-Hyun Kong (skong@kaist.ac.kr)

- Partners: Korea Advanced Institute of Science and Technology, Daejeon (South Korea)

- Inria contact: Ezio Malis

- Summary: The main scientific objective of the collaboration project is to study how to build a long-term perception system in order to acquire situation awareness for the safe navigation of autonomous vehicles. The perception system will fuse different sensor data (lidar and vision) in order to localize a vehicle in a dynamic peri-urban environment, to identify and estimate the state (position, orientation, velocity, …) of all possible moving agents (cars, pedestrians, …), and to obtain high-level semantic information. To achieve this objective, we will compare different methodologies. On the one hand, we will study model-based techniques, in which the rules are pre-defined according to a given model and which need little data to be set up. On the other hand, we will study end-to-end data-based techniques, in which a single neural network is trained with data to perform the aforementioned tasks (e.g., detection, localization, and tracking). We think that the in-depth analysis and comparison of these techniques will help us to study how to combine them in a hybrid AI system where model-based knowledge is injected into neural networks and where neural networks can provide better results when the model is too complex to be explicitly handled. This problem is hard to solve since it is not clear what the best way is to combine these two radically different approaches. Finally, the perception information will be used to acquire situation awareness for safe decision making.

10.2 International research visitors

10.2.1 Visits of international scientists

Other international visits to the team

Rafael Murrieta

- Status: Professor

- Institution of origin: Centro de Investigación en Matemáticas (CIMAT)

- Country: Mexico

- Dates: September 1st 2024 - August 31st 2025

- Context of the visit: Collaboration on robot motion planning with sensory feedback and learning.

- Mobility program/type of mobility: sabbatical

10.3 European initiatives

10.3.1 Digital Europe

Agrifood-TEF (2023-2027)

Participants: Philippe Martinet, Ezio Malis, Nicolas Chleq, Pardeep Kumar, Matthias Curet, Malek Aifa, Jon Aztiria Oiartzabal, Andres Gomez Hernandez.

The Agrifood-TEF project is co-funded by the European Union and the different countries involved. It is organized in three national nodes (Italy, Germany, France) and five satellite nodes (Poland, Belgium, Sweden, Austria and Spain). It offers its services to companies and developers from all over Europe who want to validate their robotics and artificial intelligence solutions for agribusiness under real-life conditions of use, speeding up their transition to the market.

The main objectives are:

- to foster sustainable and efficient food production by empowering innovators with the validation tools needed to bridge the gap between their brightest ideas and successful market products.

- to provide services that help assess and validate third party AI and Robotics solutions in real-world conditions aiming to maximize impact from digitalization of the agricultural sector.

Five impact sectors offer tailor-made services for the testing and validation of AI-based and robotic solutions in the agri-food sector:

- Arable farming: testing and validation of robotic, selective weeding and geofencing technologies to enhance autonomous driving vehicle performances and therefore decrease farmers' reliance on traditional agricultural inputs.

- Tree crop: testing and validation of AI solutions supporting optimization of natural resources and inputs (fertilisers, pesticides, water) for Mediterranean crops (Vineyards, Fruit orchards, Olive groves).

- Horticulture: testing and validation of AI-based solutions helping to strike the right balance of nutrients while ensuring the crop and yield quality.

- Livestock farming: testing and validation of AI-based livestock management applications and organic feed production improving the sustainability of cows, pigs and poultry farming.

- Food processing: testing and validation of standardized data models and self-sovereign data exchange technologies, providing enhanced traceability in the production and supply chains.

Inria will put in place a Moving Living Lab that goes to the field in order to provide three kinds of services: data collection with a mobile ground robot or an aerial robot, evaluation of mobility algorithms with a mobile ground robot or an aerial robot, and testing of sensor and robot functionalities.

10.3.2 Other European programs/initiatives

EUROBIN (2022-2026)

Participants: Philippe Martinet, Ezio Malis, Ludovica Danovaro.

The team is part of euROBIN, the Network of Excellence on AI and robotics that was launched in 2022. The Master 2 internship of Ludovica Danovaro was funded by the euROBIN project to work in collaboration with the LARSEN team in Nancy.

10.4 National initiatives

10.4.1 ANR project ANNAPOLIS (2022-2025)

Participants: Philippe Martinet, Ezio Malis, Emmanuel Alao, Kaushik Bhowmik, Monica Fossati, Minh Quan Dao, Nicolas Chleq, Quentin Louvel.

AutoNomous Navigation Among Personal mObiLity devIceS: INRIA (ACENTAURI, CHROMA), LS2N, HEUDIASYC. This project was accepted in 2021. We are involved in Augmented Perception using Road Side Unit PPMP detection and tracking, Attention map prediction, and Autonomous navigation in presence of PPMP.

10.4.2 ANR project SAMURAI (2022-2026)

Participants: Ezio Malis, Philippe Martinet, Patrick Rives, Nicolas Chleq, Quentin Louvel, Stefan Larsen, Mathilde Theunissen, Matteo Azzini.

ShAreable Mapping using heterogeneoUs sensoRs for collAborative robotIcs: INRIA (ACENTAURI), LS2N, MIS. This project was accepted in 2021. We are involved in building shareable maps of a dynamic environment using heterogeneous sensors, in collaborative tasks of heterogeneous robots, and in updating the shareable maps.

10.4.3 ANR project TIRREX (2021-2029)

Participants: Philippe Martinet, Ezio Malis.

TIRREX is an EQUIPEX+ project funded by ANR and coordinated by N. Marchand. It is composed of six thematic axes (XXL axis, Humanoid axis, Aerial axis, Autonomous Land axis, Medical axis, Micro-Nano axis) and three transverse axes (Prototyping & Design, Manipulation, and Open infrastructure). The kick-off took place in December 2021. ACENTAURI is involved in:

- Autonomous Land axis (ROB@t), coordinated by P. Bonnifait and R. Lenain, covering Autonomous Vehicles and Agricultural robots.

- Aerial Axis is coordinated by I. Fantoni and F. Ruffier.

10.4.4 PEPR project NINSAR (2023-2026)

Participants: Philippe Martinet, Ezio Malis.

Agroecology and digital. In the framework of this PEPR, ACENTAURI is leading the coordination (R. Lenain (INRAE), P. Martinet (INRIA), Yann Perrot (CEA)) of a project called NINSAR (New ItiNerarieS for Agroecology using cooperative Robots), accepted in 2022. It gathers 17 research teams from INRIA (3), INRAE (4), CNRS (7), CEA, UniLasalle, UEVE.

10.4.5 Defi Inria-Cerema ROAD-AI

Participants: Ezio Malis, Philippe Martinet, Diego Navarro [Cerema], Pierre Joyet.

The aim of the Inria-Cerema ROAD-AI (2021-2024) defi is to invent the asset maintenance of infrastructures that could be operated in the coming years. This is to offer a significant qualitative leap compared to traditional methods. Data collection is at the heart of the integrated management of road infrastructure and engineering structures and could be simplified by deploying fleets of autonomous robots. Indeed, robots are becoming an essential tool in a wide range of applications. Among these applications, data acquisition has attracted increasing interest due to the emergence of a new category of robotic vehicles capable of performing demanding tasks in harsh environments without human supervision.

10.4.6 ROBFORISK (2023-2024)

Participants: Philippe Martinet, Ezio Malis, Enrico Fiasché, Louis Verduci, Thomas Boivin [INRAE Avignon], Pilar Fernandez [INRAE Avignon].

ROBFORISK (Robot assistance for characterizing entomological risk in forests under climate constraints) is a joint project between Inria-ACENTAURI and INRAE (UR629 Ecologie des Forêts Méditerranéennes). Three main objectives have been defined: to develop an innovative approach to insect hazard and tree vulnerability in forests, to tackle the challenge of drone visual servoing using multispectral camera information in natural environments, and to develop innovative research on pre-symptomatic stress tests of interest to forest managers and stakeholders.

10.4.7 DGA ASTRID project ASCAR (2024-2027)

Participants: Ezio Malis, Andrea Pagnini, Tarek Hamel [I3S Sophia Antipolis].

The physical laws governing the motion of Autonomous Robotic Systems involve natural symmetries reflected in sensory measurements and external forces applied to the vehicles that present invariance and/or equivariance properties. The ASCAR project will exploit this structure explicitly by developing design principles and methods tailored for systems with symmetries. More specifically, the project will establish i) a new paradigm of Guidance and Control for Autonomous Systems that seamlessly integrates, in a unified framework, modeling, control, and optimization design procedures, ii) a framework for Navigation that integrates situation awareness for the analysis and design of efficient and reliable state observers for general systems with symmetries, and iii) a new paradigm and new tools for robust sensor-based control.

Two existing platforms will be exploited during the life of the project, a quadrotor UAV and a convertible aerial vehicle, to run experiments involving various scenarios and environments and to evaluate the results inspired by the proposed research.

The project outcomes will provide engineers with an effective approach to designing efficient and computationally tractable Guidance, Navigation and Control systems for a large class of Autonomous Systems accomplishing a variety of real-world applications.

10.4.8 3IA Institute

Ezio Malis holds a senior chair from 3IA Côte d'Azur (Interdisciplinary Institute for Artificial Intelligence). The topic of his chair is “Autonomous robotic systems in dynamic and complex environments”. In addition, starting from the end of 2024, he is the scientific head of the fourth research axis entitled “AI for smart and secure territories”.

11 Dissemination

Participants: Ezio Malis, Philippe Martinet.

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

Member of the organizing committees

- Philippe Martinet has been Editor of IROS24 (124 papers).

- Philippe Martinet has been Associate Editor of IV24 (3 papers).

- Philippe Martinet has been Associate Editor of ITSC24 (5 papers).

- Ezio Malis has been Associate Editor of IROS24 (9 papers).

- Ezio Malis has been part of the Scientific Committee of the 4th Inria-DFKI European Summer School on AI (IDESSAI 2024).

11.1.2 Scientific events: selection

Member of the conference program committees

- Philippe Martinet is a member of the International Conference on Intelligent Robots and Systems (IROS24) Senior Program Committee.

- Ezio Malis is a member of the International Conference on Robotics, Computer Vision and Intelligent Systems (ROBOTVIS) Program Committee.

11.1.3 Journal

Member of the editorial boards

- Ezio Malis is Associate Editor of Robotics and Automation Letters in the area “Vision and Sensor-Based Control” (11 papers).

- Ezio Malis is Editor of the Young Professionals Column in the IEEE Robotics & Automation Magazine (1 paper).

Reviewer - reviewing activities

- Ezio Malis has been a reviewer for IEEE Transactions on Robotics (2 papers).

11.1.4 Invited talks

- Ezio Malis gave the invited talk "Hybrid AI: Integration of Rule-Driven and Data-Driven Approaches for Enhanced Autonomous Robotics" at PFIA Atelier Defense on July 2nd, 2024.

- Philippe Martinet gave an invited talk on “Proactive Autonomous Navigation in Human Populated Environment” at Owheel Workshop at UTC on March 28th, 2024.

- Philippe Martinet gave an invited talk on the “NINSAR project” at the Inria-Brasil Workshop on Digital Science and Agronomy in Montpellier on September 11th, 2024.

- Philippe Martinet gave an invited talk on “Navigation in Human Populated Environment” at ATLAS Workshop in Luxembourg on October 7th, 2024.

11.1.5 Leadership within the scientific community

- Philippe Martinet has been co-organizer of the IROS24 Workshop on Safety of Intelligent and Autonomous Vehicles: Formal Methods vs. Machine Learning approaches for reliable navigation (SIAV-FM2L).

- Philippe Martinet is a member of the Advisory Board of the Atlas project in Luxembourg (9 PhDs).

- Ezio Malis is the head of the incubator "Quantum Algorithms for Robotics" at the GDR Robotique.

11.1.6 Scientific expertise

- Philippe Martinet has carried out one expert review for the Franche-Comté Regional project Envergure-Amorçage 2024: « Amorçage » projects.

- Philippe Martinet has carried out one expert review for the ERC Consolidator Grant 2024.

- Philippe Martinet has been a reviewer for one proposal to the F.R.S.-FNRS in Belgium.

- Philippe Martinet has been a jury member of the Mobilex Challenge 1 in 2024.

- Ezio Malis has been a member of the jury for the Best Poster Award of the Journées Jeunes Chercheurs en Robotique.

11.1.7 Research administration

- Philippe Martinet is the coordinator of the PEPR project NINSAR (10.4.4).

- Philippe Martinet is the coordinator of the ANR project ANNAPOLIS (10.4.1).

- Philippe Martinet is the coordinator of the regional project EPISUD.

- Philippe Martinet is the co-coordinator of the INRIA/INRAE project ROBFORISK (10.4.6).

- Philippe Martinet is the local coordinator of the European project AGRIFOOD-TEF (10.3.1).

- Since 2024, Philippe Martinet has been a member of the project management committee (called PSG) and leader of the largest work package (WP1, physical testing) at the consortium level of the European project AGRIFOOD-TEF (10.3.1).

- Ezio Malis is the coordinator of the ANR project SAMURAI (10.4.2).

- Ezio Malis is the local coordinator of the Defi Inria-CEREMA ROAD-AI (10.4.5).

- Ezio Malis is the local coordinator of the ANR project ASCAR (10.4.7).

- Ezio Malis is a member of BECP (Bureau des comité de projets) at Centre Inria d'Université Côte d'Azur.

- Ezio Malis is the scientific leader of the Inria - Naval Group partnerships.

- Ezio Malis is a member of the scientific council of Institut 3IA Côte d'Azur and the scientific head of the fourth research axis entitled “AI for smart and secure territories”.

- Monica Fossati has been in charge of the organisation of the Biweekly Robotic Seminar (17 seminars).

- Matteo Azzini has been the social media manager for the LinkedIn account of the team.

- Enrico Fiasché has been the manager for the website and the YouTube channel of the team.

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Ezio Malis has taught 10 hours on Signal Processing at ROBO 3 of Polytech Nice.

- Ezio Malis has taught 40 hours on Robotic Vision at Master 2 ISC-Robotique of Université de Toulon.

11.2.2 Supervision

The team has received 3 M2 (master 2) students:

- Thomas Campagnolo. Subject: "Detection and tracking of moving objects with an event-based camera on an autonomous car". Supervisors: Philippe Martinet and Ezio Malis.

- Ludovica Danovaro. Subject: "Comparison of Advanced Control Methods for Autonomous Navigation of a Mobile Platform in Human-Populated Environments". Supervisors: Enrico Fiasché and Ezio Malis.

- Raffaele Pumpo. Subject: "Integration of Realistic Universal Social Force Models into a Model Predictive Controller for Robotic Navigation in Human-Populated Environments". Supervisors: Enrico Fiasché and Ezio Malis.

The permanent team members supervised the following PhD students:

- Ziming Liu (01/12/2020 - 28/02/2024): Hybrid Artificial Intelligence Methods for Autonomous Driving Applications [23]. PhD supervisors: Philippe Martinet and Ezio Malis.

- Diego Navarro (started on 01/03/2022): Precise localization and control of an autonomous multi robot system for long-term infrastructure inspection, Defi Inria-Cerema ROAD-AI. PhD supervisors: Ezio Malis, R. Antoine, and Philippe Martinet.

- Matteo Azzini (started on 1/10/2022) "Lidar-vision fusion for robust robot localization and mapping", PhD supervisors: Ezio Malis and Philippe Martinet.

- Enrico Fiasché (started on 1/10/2022) "Modeling and control of a heterogeneous and autonomous multi-robot system", PhD supervisors: Philippe Martinet and Ezio Malis.

- Stefan Larsen (started on 1/10/2022) "Detection of changes and update of environment representation using sensor data acquired by multiple collaborative robots", PhD supervisors: Ezio Malis, El Mustapha Mouaddib (MIS Amiens), Patrick Rives.

- Mathilde Theunissen (started on 1/11/2022) "Multi-robot localization and navigation for infrastructure monitoring", PhD supervisors: Isabelle Fantoni, Ezio Malis, Philippe Martinet.

- Fabien Lionti (started on 1/10/2022) "Dynamic behavior evaluation by artificial intelligence: Application to the analysis of the safety of the dynamic behavior of a vehicle", PhD supervisors: Philippe Martinet, N. Gutoswski (LERIA, Angers), S. Aubin (DGA-TT, Angers).

- Emmanuel Alao (started on 1/10/2022) "Probabilistic risk assessment and management architecture for safe autonomous navigation", PhD supervisors: L. Adouane (Heudiasyc, Compiègne) and Philippe Martinet.

- Kaushik Bhowmik (started on 1/05/2023) "Modeling and prediction of pedestrian behavior on bikes, scooters or hoverboards", PhD supervisors: Anne Spalanzani, Philippe Martinet.

- Monica Fossati (started on 1/10/2023) "Navigation sûre en environnement urbain" (Safe navigation in urban environments), PhD supervisors: Philippe Martinet and Ezio Malis.

- Thomas Campagnolo (started on 1/09/2024) "Embedded Machine Learning Solutions for Autonomous Navigation", PhD supervisors: Ezio Malis and Philippe Martinet.

- Ayan Barui (started on 1/11/2024), PhD supervisors: Ezio Malis and Philippe Martinet.

- Andrea Pagnini (started on 1/12/2024), PhD supervisor: Ezio Malis.

11.2.3 Juries

- Philippe Martinet has been reviewer and member of the jury for the PhD of Hugo Pousseur (Heudiasyc, UTC Compiègne).

- Philippe Martinet has been president and member of the jury for the PhD of Luc Desbos (ROMEA, INRAE, Clermont-Ferrand).

- Philippe Martinet has been reviewer and member of the jury for the PhD of Antoine Villemazet (RAP-LAAS, Toulouse).

- Philippe Martinet has been reviewer and member of the jury for the PhD of Lyes Saidi (Heudiasyc, UTC Compiègne).

- Philippe Martinet has been reviewer and member of the jury for the PhD of Raphael Chekroun (CAOR, Ecole des Mines de Paris).

- Ezio Malis has been reviewer and member of the jury for the PhD of Muhammad Ahmed (LS2N, Ecole Centrale, Nantes).

- Ezio Malis has been president and member of the jury for the PhD of Mohammed Guermal (Inria, Université Côte d'Azur, Sophia Antipolis).

- Ezio Malis has been president and member of the jury for the PhD of Tarek Bouazza (I3S, Université Côte d'Azur, Sophia Antipolis).

- Ezio Malis has been president and member of the jury for the PhD of Tomas Lopes de Oliveira (I3S, Université Côte d'Azur, Sophia Antipolis).

11.3 Popularization

11.3.1 Productions (articles, videos, podcasts, serious games, ...)

- Matteo Azzini supervised a five-day observation internship of a middle school student.

- Pierre Joyet gave a talk for the visit of M1 and M2 Eurecom students to our center as part of a research awareness module.

11.3.2 Other relevant science outreach activities

- Ezio Malis has been the chair of the Young Professional Committee of the IEEE Robotics and Automation Society (4 events organized at RO-MAN, ICRA40, IROS and HUMANOIDS).

12 Scientific production

12.1 Major publications

- 1 article. Real-time Quadrifocal Visual Odometry. The International Journal of Robotics Research, 29(2), 2010, 245-266.

- 2 inproceedings. Towards autonomous robot navigation in human populated environments using an Universal SFM and parametrized MPC. IROS 2023 - IEEE/RSJ International Conference on Intelligent Robots and Systems, Detroit (MI), United States, 2023.

- 3 article. How To Evaluate the Navigation of Autonomous Vehicles Around Pedestrians? IEEE Transactions on Intelligent Transportation Systems, October 2023, 1-11.

- 4 article. Platooning of Car-like Vehicles in Urban Environments: Longitudinal Control Considering Actuator Dynamics, Time Delays, and Limited Communication Capabilities. IEEE Transactions on Control Systems Technology, December 2020.

- 5 inproceedings. Towards simulation of radio-frequency component with physics informed neural networks. CAID 2023 - 5e Conference on Artificial Intelligence for Defense, Actes de la conférence CAID 2023, Rennes, France, November 2023.

- 6 inproceedings. A New Dense Hybrid Stereo Visual Odometry Approach. IROS 2022 - 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems, Kyoto, Japan, IEEE, October 2022, 6998-7003.

- 7 inproceedings. Multi-masks Generation for Increasing Robustness of Dense Direct Methods. ITSC 2023 - 26th IEEE International Conference on Intelligent Transportation Systems, Bilbao, Spain, September 2023.