2024 Activity report

Project-Team TITANE

RNSR: 201321085S

- Research center: Inria Centre at Université Côte d'Azur

- Team name: Geometric Modeling of 3D Environments

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.3. Image processing and analysis

- A5.3.2. Sparse modeling and image representation

- A5.3.3. Pattern recognition

- A5.5.1. Geometrical modeling

- A8.3. Geometry, Topology

- A8.12. Optimal transport

- A9.2. Machine learning

- A9.3. Signal analysis

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B3.3. Geosciences

- B5.1. Factory of the future

- B8.3. Urbanism and urban planning

1 Team members, visitors, external collaborators

Research Scientists

- Pierre Alliez [Team leader, INRIA, Senior Researcher]

- Florent Lafarge [INRIA, Senior Researcher]

Post-Doctoral Fellows

- Roberto Dyke [INRIA, Post-Doctoral Fellow, until Jul 2024]

- François Protais [INRIA, Post-Doctoral Fellow, from Dec 2024]

- Raphael Sulzer [LUXCARTA, Post-Doctoral Fellow]

PhD Students

- Moussa Bendjilali [ALTEIA]

- Marion Boyer [CNES]

- Rao Fu [GEOMETRY FACTORY, until Jan 2024]

- Nissim Maruani [INRIA]

- Armand Zampieri [SAMP SAS, CIFRE]

- Zhenyu Zhu [UNIV COTE D'AZUR, from Nov 2024]

Technical Staff

- Abir Affane [INRIA, Engineer]

- Rahima Djahel [INRIA, Engineer, until Jul 2024]

- Roberto Dyke [INRIA, Engineer, from Aug 2024]

- Christos Georgiadis [INRIA, Engineer, from May 2024]

Interns and Apprentices

- Sarthak Maheshwari [INRIA, Intern, from May 2024 until Jul 2024]

- Ismail Sahbane [INRIA, Intern, from Jul 2024 until Sep 2024]

- Krishna Sharma [INRIA, Intern, from May 2024 until Jul 2024]

- Arvind Kumar Sharma [INRIA, Intern, from May 2024 until Jul 2024]

Administrative Assistants

- Florence Barbara [INRIA]

- Vanessa Wallet [INRIA]

Visiting Scientists

- Ange Clement [GEOMETRY FACTORY, from Oct 2024]

- Nicole Feng [Univ Carnegie Mellon, from Jun 2024 until Jul 2024]

- Lizeth Fuentes Perez [UNIV ZURICH, from Jul 2024 until Aug 2024]

External Collaborators

- Andreas Fabri [GEOMETRY FACTORY, until May 2024]

- Sven Oesau [GEOMETRY FACTORY]

- Kacper Pluta [ESIEE]

- Laurent Rineau [GEOMETRY FACTORY]

2 Overall objectives

2.1 General Presentation

Our overall objective is the computerized geometric modeling of complex scenes from physical measurements. Along the geometric modeling and processing pipeline, this objective corresponds to the steps required to convert physical measurements into effective digital representations: analysis, reconstruction and approximation. A longer-term objective is the synthesis of complex scenes. This objective is related to analysis, as we assume that the main sources of data are measurements and that synthesis is carried out from samples.

The related scientific challenges include i) being resilient to defect-laden data due to the uncertainty in the measurement processes and imperfect algorithms along the pipeline, ii) being resilient to heterogeneous data, both in type and in scale, iii) dealing with massive data, and iv) recovering or preserving the structure of complex scenes. We define the quality of a computerized representation by its i) geometric accuracy, or faithfulness to the physical scene, ii) complexity, iii) structure accuracy and control, and iv) amenability to effective processing and high level scene understanding.

3 Research program

3.1 Context

Geometric modeling and processing revolve around three main end goals: a computerized shape representation that can be visualized (creating a realistic or artistic depiction), simulated (anticipating the real) or realized (manufacturing a conceptual or engineering design). Aside from the mere editing of geometry, central research themes in geometric modeling involve conversions between physical (real), discrete (digital), and mathematical (abstract) representations. Going from physical to digital is referred to as shape acquisition and reconstruction; going from mathematical to discrete is referred to as shape approximation and mesh generation; going from discrete to physical is referred to as shape rationalization.

Geometric modeling has become an indispensable component for computational and reverse engineering. Simulations are now routinely performed on complex shapes issued not only from computer-aided design but also from an increasing amount of available measurements. The scale of acquired data is quickly growing: we no longer deal exclusively with individual shapes, but with entire scenes, possibly at the scale of entire cities, with many objects defined as structured shapes. We are witnessing a rapid evolution of the acquisition paradigms with an increasing variety of sensors and the development of community data, as well as disseminated data.

In recent years, the evolution of acquisition technologies and methods has translated into an increasing overlap of algorithms and data in the computer vision, image processing, and computer graphics communities. Beyond the rapid increase of resolution through technological advances in sensors and in methods for mosaicing images, the line between laser scan data and photos is getting thinner. Combining, e.g., laser scanners with panoramic cameras leads to massive 3D point sets with color attributes. In addition, it is now possible to generate dense point sets not just from laser scanners but also from photogrammetry techniques when using a well-designed acquisition protocol. Depth cameras are becoming increasingly common, and beyond retrieving depth information we can enrich the main acquisition systems with additional hardware that measures geometric information about the sensor and improves data registration: e.g., accelerometers or GPS for geographic location, and compasses or gyrometers for orientation. Finally, complex scenes can be observed at different scales, ranging from satellite through aerial to pedestrian levels.

These evolutions allow practitioners to measure urban scenes at resolutions that were until now possible only at the scale of individual shapes. The related scientific challenge, however, goes beyond dealing with the massive data sets that result from the increase in resolution, as complex scenes are composed of multiple objects with structural relationships. These relationships pertain i) to the way the individual shapes are grouped to form objects, object classes or hierarchies, ii) to geometry when dealing with similarity, regularity, parallelism or symmetry, and iii) to domain-specific semantic considerations. Beyond reconstruction and approximation, consolidation and synthesis of complex scenes require rich structural relationships.

The problems arising from these evolutions suggest that the strengths of geometry and images may be combined in the form of new methodological solutions such as photo-consistent reconstruction. In addition, measuring the geometry of sensors (through gyrometers and accelerometers) often requires both geometry processing and image analysis for improved accuracy and robustness. Modeling urban scenes from measurements illustrates this growing synergy, and it has become a central concern for a variety of applications ranging from urban planning to simulation, rendering and special effects.

3.2 Analysis

Complex scenes are usually composed of a large number of objects which may significantly differ in terms of complexity, diversity, and density. These objects must be identified and their structural relationships must be recovered in order to model the scenes with improved robustness, low complexity, variable levels of details and ultimately, semantization (automated process of increasing degree of semantic content).

Object classification is an ill-posed task in which the objects composing a scene are detected and recognized with respect to predefined classes, the objective going beyond scene segmentation. The high variability within each class may explain the success of stochastic approaches, which are able to model widely variable classes. As it requires a priori knowledge, this process is often domain-specific, as for urban scenes where we wish to distinguish between classes such as ground, vegetation and buildings. Additional challenges arise when each class must be refined, such as roof super-structures for urban reconstruction.

Structure extraction consists of recovering structural relationships between objects or parts of objects. The structure may be related to adjacencies between objects, hierarchical decomposition, singularities or canonical geometric relationships. It is crucial for effective geometric modeling through levels of detail or hierarchical multiresolution modeling. Ideally, we wish to learn the structural rules that govern how the physical scene was built. Understanding the main canonical geometric relationships between object parts involves detecting regular structures and equivalences under certain transformations, such as parallelism, orthogonality and symmetry. Identifying structural and geometric repetitions or symmetries is relevant for dealing with missing data during data consolidation.

Data consolidation is a problem of growing interest for practitioners, with the increase of heterogeneous and defect-laden data. To be exploitable, such defect-laden data must be consolidated by improving the data sampling quality and by reinforcing the geometric and structural relations underlying the observed scenes. Enforcing canonical geometric relationships such as local coplanarity or orthogonality is relevant for the registration of heterogeneous or redundant data, as well as for improving the robustness of the reconstruction process.

3.3 Approximation

Our objective is to explore the approximation of complex shapes and scenes with surface and volume meshes, as well as with surface and domain tilings. A general way to state the shape approximation problem is to say that we search for the shape discretization (possibly with several levels of detail) that realizes the best complexity / distortion trade-off. Such a problem statement requires defining a discretization model, an error metric to measure distortion, and a way to measure complexity. Complexity is most commonly expressed as a number of polygon primitives, but measures closer to information theory, such as the number of bits or the minimum description length, can also be used.

For surface meshes, we intend to devise methods that provide control and guarantees both over the global approximation error and over the validity of the embedding. In addition, we seek resilience to heterogeneous data, and robustness to noise and outliers. This would allow repairing and simplifying triangle soups with cracks, self-intersections and gaps. Another exploratory objective is to deal generically with different error metrics, such as the symmetric Hausdorff distance, or a Sobolev norm that mixes errors in geometry and normals.
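To make the symmetric Hausdorff distance mentioned above concrete, here is a minimal brute-force sketch on point samples. It assumes that both the input and its approximation are represented by point samples. Real meshes would need point-to-triangle distances and a spatial index. The function names are illustrative only.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def one_sided_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a point of `a` to its nearest point in `b` (brute force, O(|a||b|))."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def symmetric_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance: the larger of the two one-sided distances."""
    return max(one_sided_hausdorff(a, b), one_sided_hausdorff(b, a))


# A coarse approximation that misses one input sample.
samples_input = [(0.0, 0.0), (1.0, 0.0), (1.0, 3.0)]
samples_approx = [(0.0, 0.0), (1.0, 0.0)]
print(symmetric_hausdorff(samples_input, samples_approx))  # 3.0
```

Here the one-sided distance from the approximation to the input is 0, but the distance in the other direction is 3. This is why the symmetric form is needed to penalize missing parts as well as spurious ones.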

For surface and domain tiling, we use the term tiling instead of meshing to stress the fact that tiles may not just be simple elements, but can model complex smooth shapes such as bilinear quadrangles. Quadrangle surface tiling is central to the so-called resurfacing problem in reverse engineering: the goal is to tile an input raw surface geometry such that the union of the tiles approximates the input well and such that each tile matches certain properties related to its shape or its size. In addition, we may require parameterization domains with a simple structure. Our goal is to devise surface tiling algorithms that are both reliable and resilient to defect-laden inputs, effective from the shape approximation point of view, and with flexible control over the structure of the tiling.

3.4 Reconstruction

Assuming a geometric dataset made of points or slices, the process of shape reconstruction amounts to recovering a surface or a solid that matches these samples. This problem is inherently ill-posed, as infinitely many shapes may fit the data. One must thus regularize the problem and add priors such as simplicity or smoothness of the inferred shape.

The concept of geometric simplicity has led to a number of interpolating techniques, commonly based upon the Delaunay triangulation. The concept of smoothness has led to a number of approximating techniques that commonly compute an implicit function such that one of its isosurfaces approximates the inferred surface. Reconstruction algorithms can also use an explicit set of prior shapes for inference, by assuming that the observed data can be described by these predefined prior shapes. One key lesson learned from the shape reconstruction problem is that there is probably no single solution that can solve all cases, each case coming with its own distinctive features. In addition, some data sets, such as point sets acquired on urban scenes, are very domain-specific and require a dedicated line of research.

In recent years, the smooth, closed case (i.e., shapes with neither sharp features nor boundaries) has received considerable attention. However, the state-of-the-art methods have several shortcomings: in addition to being generally not robust to outliers and not sufficiently robust to noise, they often require additional attributes as input, such as lines of sight or oriented normals. We wish to devise shape reconstruction methods that are both geometrically and topologically accurate without requiring additional attributes, while exhibiting resilience to defect-laden inputs. Resilience formally translates into stability with respect to noise and outliers. Correctness of the reconstruction translates into convergence in geometry and (stable parts of) topology of the reconstruction with respect to the inferred shape known through measurements.

Moving from the smooth, closed case to the piecewise smooth case (possibly with boundaries) is considerably harder as the ill-posedness of the problem applies to each sub-feature of the inferred shape. Further, very few approaches tackle the combined issue of robustness (to sampling defects, noise and outliers) and feature reconstruction.

4 Application domains

In addition to tackling enduring scientific challenges, our research on geometric modeling and processing is motivated by applications to computational engineering, reverse engineering, robotics, digital mapping and urban planning. The main outcome of our research will be algorithms with theoretical foundations. Ultimately, we wish to contribute to making geometry modeling and processing routine for practitioners who deal with real-world data. Our contributions may also be used as a sound basis for future software and technology developments.

Our first ambition for technology transfer is to consolidate the components of our research experiments in the form of new software components for the CGAL (Computational Geometry Algorithms Library). Through CGAL, we wish to contribute to the “standard geometric toolbox”, so as to provide a generic answer to application needs instead of fragmenting our contributions. We already cooperate with the Inria spin-off company Geometry Factory, which commercializes CGAL, maintains it and provides technical support.

Our second ambition is to increase the research momentum of companies through advising Cifre Ph.D. theses and postdoctoral fellows on topics that match our research program.

5 Social and environmental responsibility

5.1 Impact of research results

The Irima PEPR project is focused on the prevention of natural risks.

The Cifre thesis with Samp AI is related to digital twinning of industrial sites. Some of the applications are the decommissioning of these sites, or their upgrading to match the environmental regulations.

6 Highlights of the year

Pierre Alliez was appointed president of the CST (Conseil scientifique et technique) of IGN (the French mapping agency, i.e., the public operator for geographic and forest information in France) in November 2024.

7 New software, platforms, open data

7.1 New software

7.1.1 Module CGAL - Kinetic Surface Reconstruction

- Keywords: Geometric algorithms, Geometric modeling, Polyhedral meshes

- Scientific Description: The method takes as input a point cloud with oriented normals, see Figure 69.1. In a first step, Shape Detection is used to abstract planar shapes from the point cloud. The optional regularization of shapes (see Shape Regularization) not only improves the accuracy of the data by aligning parallel, coplanar and orthogonal shapes, but also reduces complexity. Before the planar shapes are inserted into the kinetic space partition, coplanar shapes are merged into a single shape and the 2D convex hull of each shape is constructed. The reconstruction is posed as an energy minimization that labels the convex volumes of the kinetic space partition as inside or outside. The optimal surface separating the differently labeled volumes is found via min-cut: a graph is embedded into the kinetic partition, representing every volume by a vertex and every face between two volumes by an edge connecting the corresponding vertices.

- Functional Description: The kinetic surface reconstruction package reconstructs concise polygon surface meshes from input point clouds. At its core is the Kinetic space partition, which efficiently decomposes the bounding box into a set of convex polyhedra. The decomposition is guided by a set of planar shapes previously abstracted from the input point cloud. The final surface is obtained via an energy formulation that trades data faithfulness for low complexity and is solved via min-cut. The output is a watertight polygonal mesh.

- Contact: Florent Lafarge

- Partner: GeometryFactory
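The inside/outside labeling solved by min-cut can be illustrated with a small, self-contained sketch. It is not the CGAL implementation. The cost arrays and face weights are hypothetical stand-ins for the data-fidelity and complexity terms of the energy, and a plain Edmonds-Karp max-flow replaces an optimized graph-cut solver.

```python
from collections import deque
from typing import Dict, List, Tuple


def min_cut_labels(inside_cost: List[float], outside_cost: List[float],
                   faces: List[Tuple[int, int, float]]) -> List[bool]:
    """Label volumes inside (True) or outside (False) with an s-t minimum cut.

    inside_cost[i] / outside_cost[i]: data penalty if volume i is labeled inside / outside.
    faces: (i, j, w), where w is paid if the surface passes through the face shared
    by volumes i and j (i.e. if i and j receive different labels).
    """
    n = len(inside_cost)
    s, t = n, n + 1  # source = "inside" terminal, sink = "outside" terminal
    cap: Dict[int, Dict[int, float]] = {v: {} for v in range(n + 2)}

    def add_edge(u: int, v: int, c: float) -> None:
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)  # residual reverse edge

    for i in range(n):
        add_edge(s, i, outside_cost[i])  # cut when i ends up on the outside (sink) side
        add_edge(i, t, inside_cost[i])   # cut when i stays on the inside (source) side
    for i, j, w in faces:
        add_edge(i, j, w)
        add_edge(j, i, w)

    while True:  # Edmonds-Karp: repeatedly augment along a shortest residual path
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            break
        flow, v = float("inf"), t
        while parent[v] is not None:  # bottleneck capacity along the path
            flow = min(flow, cap[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:  # push the flow and update residual capacities
            u = parent[v]
            cap[u][v] -= flow
            cap[v][u] += flow
            v = u
    # The last search visited every vertex reachable from the source: the inside side of the cut.
    return [i in parent for i in range(n)]


labels = min_cut_labels([0.0, 1.0, 10.0], [10.0, 1.2, 0.0], [(0, 1, 0.5), (1, 2, 2.0)])
print(labels)  # [True, False, False]
```

In this toy example, volume 1 is slightly cheaper to label inside (1.0 vs 1.2). However, doing so would place the surface on the expensive face (1, 2). The cut therefore labels it outside, for a total energy of 1.7 instead of 3.0. This trade-off between data terms and face terms is what lets the energy favor concise surfaces.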

7.1.2 Module CGAL - Kinetic Space Partition

- Keywords: Geometric modeling, Computational geometry

- Functional Description: This CGAL component takes as input a set of non-coplanar convex polygons and partitions the bounding box of the input into polyhedra, where each polyhedron and its facets are convex. Each facet of the partition is part of the input polygons or an extension of them.

- Contact: Florent Lafarge

- Partner: GeometryFactory
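A basic operation behind any convex space partition is cutting a convex cell with a supporting hyperplane, so that both pieces remain convex. The following sketch shows the 2D analogue: a convex polygon cut by a line. It is only an illustration under that simplification. It does not reproduce the kinetic propagation or collision events of the CGAL component, and the function name is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def split_convex_polygon(poly: List[Point], a: float, b: float, c: float
                         ) -> Tuple[List[Point], List[Point]]:
    """Split a convex polygon (vertices in order) by the line a*x + b*y + c = 0.

    Returns (negative side, positive side); both pieces are convex.
    Vertices lying exactly on the line are kept on both sides.
    """
    neg: List[Point] = []
    pos: List[Point] = []
    n = len(poly)
    for k in range(n):
        p, q = poly[k], poly[(k + 1) % n]
        fp = a * p[0] + b * p[1] + c
        fq = a * q[0] + b * q[1] + c
        if fp <= 0:
            neg.append(p)
        if fp >= 0:
            pos.append(p)
        if (fp < 0 < fq) or (fq < 0 < fp):  # edge p->q strictly crosses the line
            s = fp / (fp - fq)
            x = (p[0] + s * (q[0] - p[0]), p[1] + s * (q[1] - p[1]))
            neg.append(x)
            pos.append(x)
    return neg, pos


square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
left, right = split_convex_polygon(square, 1.0, 0.0, -0.5)  # cut by the line x = 0.5
print(left)   # [(0.0, 0.0), (0.5, 0.0), (0.5, 1.0), (0.0, 1.0)]
print(right)  # [(0.5, 0.0), (1.0, 0.0), (1.0, 1.0), (0.5, 1.0)]
```

Because a convex polygon crosses a line at most twice, each output piece is again a convex polygon with its vertices in order. The 3D component relies on the same property at the level of polyhedra and planes.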

7.1.3 Wireframe_Regul

- Keywords: Geometric modeling, Polyhedral meshes, Point cloud

- Functional Description: The software implements a method for simplifying and regularizing roof wireframes of 3D building models. It operates in two steps. First, it regularizes the 2D wireframe projected in the horizontal plane by reducing the number of vertices and enforcing geometric regularities, i.e., parallelism and orthogonality. Then, it extrudes the 2D wireframe to 3D by an optimization process that guarantees the planarity of the roof sections as well as the preservation of the vertical discontinuities and horizontal rooftop edges.

- Contact: Florent Lafarge
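One simple way to picture the "enforce parallelism and orthogonality" step is to snap edge orientations to a dominant direction or to its perpendicular, when they lie within a tolerance. The sketch below does only that. The reference direction and tolerance are hypothetical parameters. The sketch computes target orientations rather than moving vertices, and it does not reproduce the optimization used in Wireframe_Regul.

```python
import math
from typing import List, Tuple

Segment = Tuple[Tuple[float, float], Tuple[float, float]]


def snap_orientations(segments: List[Segment], reference_deg: float,
                      tol_deg: float = 10.0) -> List[float]:
    """Return one orientation in degrees, in [0, 180), per segment.

    Orientations within tol_deg of the reference direction, or of its
    perpendicular, are snapped to it; the others are left unchanged.
    """
    targets = (reference_deg % 180.0, (reference_deg + 90.0) % 180.0)
    out = []
    for (x0, y0), (x1, y1) in segments:
        theta = math.degrees(math.atan2(y1 - y0, x1 - x0)) % 180.0
        snapped = theta
        for target in targets:
            diff = abs(theta - target)
            diff = min(diff, 180.0 - diff)  # orientations are defined modulo 180 degrees
            if diff <= tol_deg:
                snapped = target
                break
        out.append(snapped)
    return out


edges = [((0, 0), (10, 1)), ((0, 0), (1, 10)), ((0, 0), (10, -1)), ((0, 0), (1, 1))]
print(snap_orientations(edges, reference_deg=0.0))
```

The first three edges are nearly horizontal or vertical and snap to 0 or 90 degrees. The diagonal edge is 45 degrees away from both targets and keeps its orientation.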

8 New results

8.1 Analysis

8.1.1 Entropy-driven Progressive Compression of 3D Point Clouds

Participants: Armand Zampieri, Pierre Alliez, Guillaume Delarue [Samp.ai], Nachwa Abou Bakr [Samp.ai].

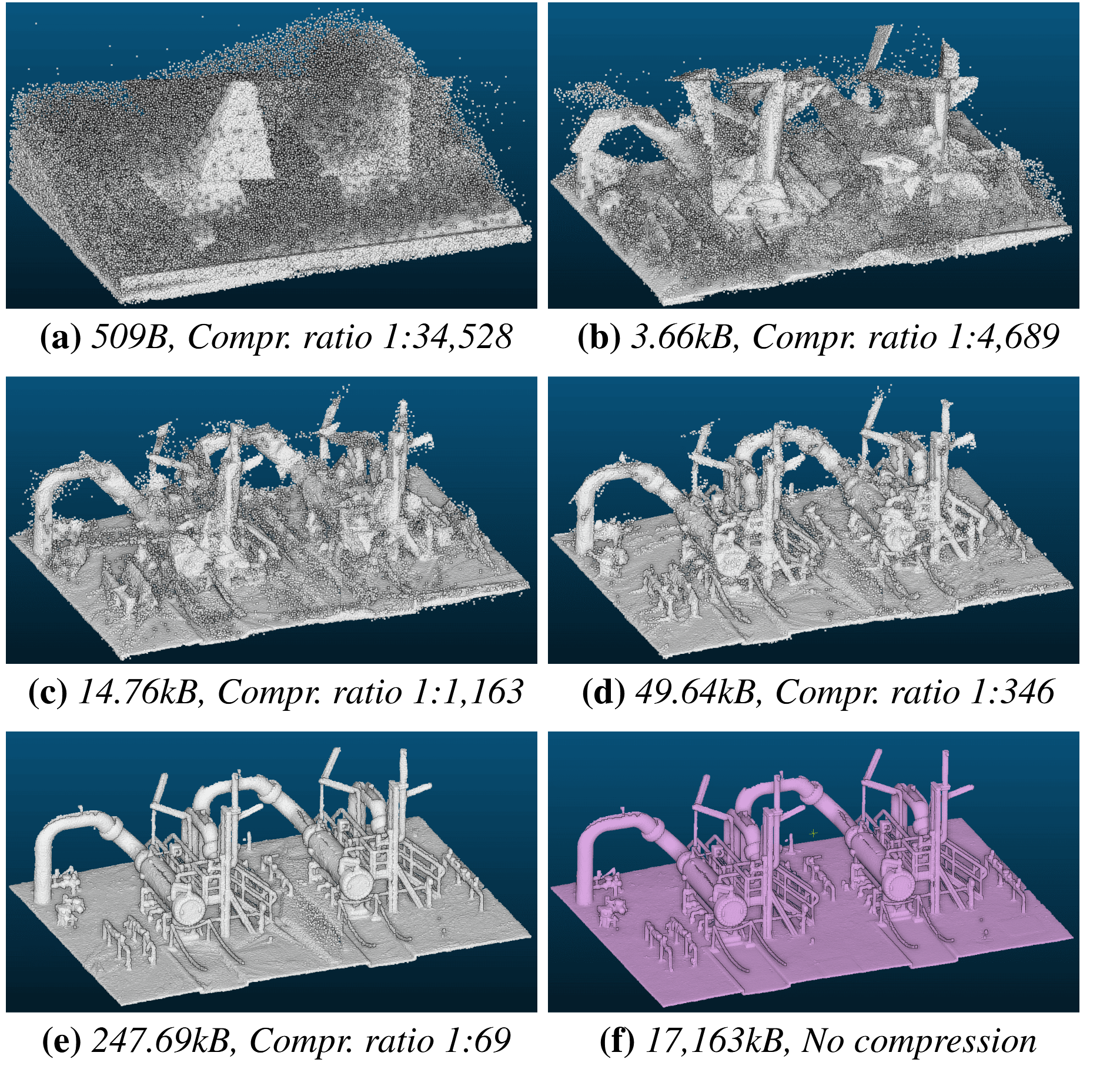

3D point clouds are one of the prevalent representations for 3D data, offering the advantage of closely matching sensing technologies and providing an unbiased representation of a measured physical scene. Progressive compression is required for real-world applications operating on networked infrastructures with restricted or variable bandwidth. We contribute a novel approach that leverages a recursive binary space partition, where the partitioning planes are not necessarily axis-aligned and are optimized via an entropy criterion (see Figure 1). The planes are encoded via a novel adaptive quantization method combined with prediction. The input 3D point cloud is encoded as an interlaced stream of partitioning planes and numbers of points in the cells of the partition. Compared to previous work, our approach improves rate-distortion performance, especially at very low bitrates. Such low bitrates are critical for the interactive navigation of large 3D point clouds on heterogeneous networked infrastructures. This work was presented at the Eurographics Symposium on Geometry Processing (SGP) 15.

Progressive decompression of a 3D point cloud.
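The interlaced stream of partitioning planes and point counts can be sketched as a breadth-first binary space partition. Coarse levels then arrive first, which is what makes decoding progressive. This is an illustration under explicit assumptions. The candidate normals are a small hypothetical set that includes non-axis-aligned directions. Each candidate plane passes through the cell centroid. The "entropy" score is a stand-in (the binary entropy of the split), not the criterion of the paper. Plane quantization and prediction are omitted.

```python
import math
from collections import deque
from typing import List, Tuple

Point = Tuple[float, float, float]

_S = 1.0 / math.sqrt(2.0)
# Hypothetical candidate normals: the three axes plus two diagonals (not axis-aligned).
CANDIDATE_NORMALS = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
                     (_S, _S, 0.0), (_S, -_S, 0.0)]


def dot(a, b) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def binary_entropy(p: float) -> float:
    """Entropy in bits of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def best_plane(points: List[Point]):
    """Stand-in criterion: plane through the centroid with the highest split entropy."""
    n = len(points)
    c = tuple(sum(p[i] for p in points) / n for i in range(3))
    best, best_score = None, -1.0
    for normal in CANDIDATE_NORMALS:
        d = dot(normal, c)
        below = sum(1 for p in points if dot(normal, p) < d)
        score = binary_entropy(below / n)
        if score > best_score:
            best, best_score = (normal, d), score
    return best


def encode(points: List[Point], max_depth: int = 3, min_points: int = 2) -> list:
    """Breadth-first BSP emitting an interlaced stream of planes and child point counts."""
    stream = [("count", len(points))]
    queue = deque([(points, 0)])
    while queue:
        cell, depth = queue.popleft()
        if depth >= max_depth or len(cell) < min_points:
            continue
        normal, d = best_plane(cell)
        below = [p for p in cell if dot(normal, p) < d]
        above = [p for p in cell if dot(normal, p) >= d]
        if not below or not above:
            continue  # degenerate split: keep the cell as a leaf
        stream.append(("plane", (normal, d)))
        stream.append(("count", len(below)))  # decoder infers len(above) = len(cell) - len(below)
        queue.append((below, depth + 1))
        queue.append((above, depth + 1))
    return stream


cube = [(float(x), float(y), float(z)) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
stream = encode(cube)
print(stream[:3])
```

Only the count of one child is written per split, because a decoder that already knows the parent count can recover the other child. Truncating the stream at any point still yields a coarser, valid partition.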

8.1.2 LineFit: A Geometric Approach for Fitting Line Segments in Images

Participants: Marion Boyer, Florent Lafarge, David Youssefi [CNES].

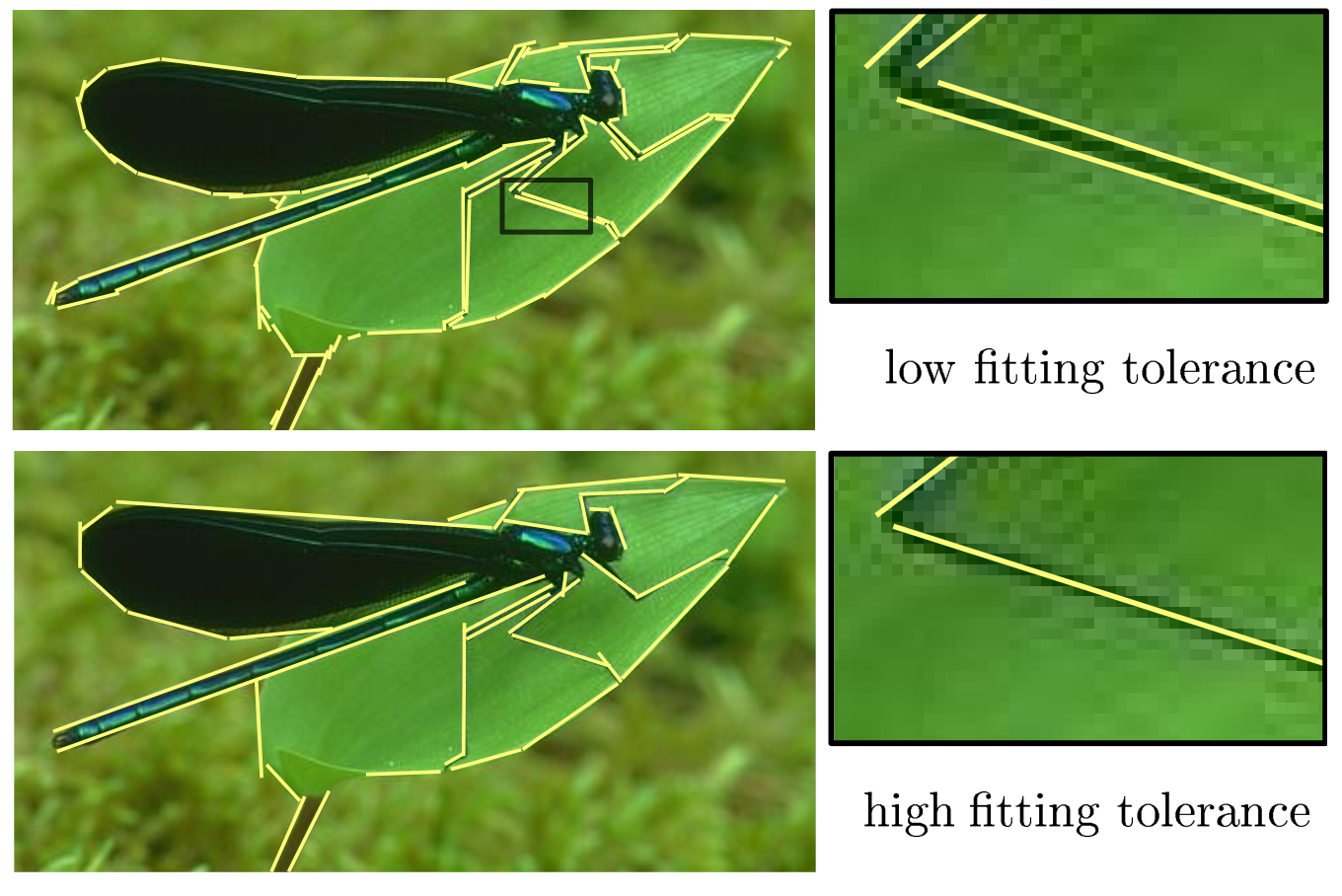

This work introduces LineFit, an algorithm that fits line segments to a predicted image gradient map. While existing detectors aim at capturing line segments on line-like structures, our algorithm also seeks to approximate curved shapes (see Figure 2). This property is useful for vectorization problems with edge-based representations, once the detected line segments are connected. Our algorithm measures and optimizes the quality of a line segment configuration globally, as a point-to-line fitting problem. The quality of a configuration is measured through the local fitting error, the completeness over the image gradient map, and the capacity to preserve geometric regularities. A key ingredient of our work is an efficient and scalable exploration mechanism that refines an initial configuration through ordered sequences of geometric operations. We show the potential of our algorithm when combined with recent deep image gradient predictions, and its competitiveness against existing detectors on different datasets, especially when scenes contain curved objects. We also demonstrate the benefit of our algorithm for polygonalizing objects. This work was presented at the European Conference on Computer Vision 18.

Line-segment detection with fitting tolerance control.
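The local fitting error of a single candidate segment can be pictured as a weighted total-least-squares line fit to the pixels it covers. This is a minimal sketch under assumptions. Using gradient magnitudes as pixel weights is hypothetical. The completeness and regularity terms, as well as LineFit's exploration mechanism, are not shown.

```python
import numpy as np


def fit_segment(points, weights=None):
    """Weighted total-least-squares segment fit to 2D points.

    Returns (endpoint_a, endpoint_b, weighted RMS point-to-line distance).
    """
    pts = np.asarray(points, dtype=float)
    w = np.ones(len(pts)) if weights is None else np.asarray(weights, dtype=float)
    c = (w[:, None] * pts).sum(axis=0) / w.sum()          # weighted centroid
    centered = pts - c
    # Principal direction: right singular vector with the largest singular value.
    _, _, vt = np.linalg.svd(np.sqrt(w)[:, None] * centered, full_matrices=False)
    d = vt[0]
    normal = np.array([-d[1], d[0]])
    residuals = centered @ normal                          # signed point-to-line distances
    rms = float(np.sqrt((w * residuals ** 2).sum() / w.sum()))
    t = centered @ d                                       # positions along the line
    return c + t.min() * d, c + t.max() * d, rms


a, b, rms = fit_segment([(x, 2 * x + 1) for x in range(5)])
print(a, b, rms)  # endpoints (0, 1) and (4, 9) in some order, rms close to 0
```

A curved set of pixels yields a larger residual. In a configuration search, such a residual is the kind of signal that can trigger splitting one segment into several shorter ones.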

8.1.3 PASOA: PArticle baSed Bayesian Optimal Adaptive design

Participants: Jacopo Iollo, Pierre Alliez, Florence Forbes [Statify Inria research team], Christophe Heinkelé [Cerema].

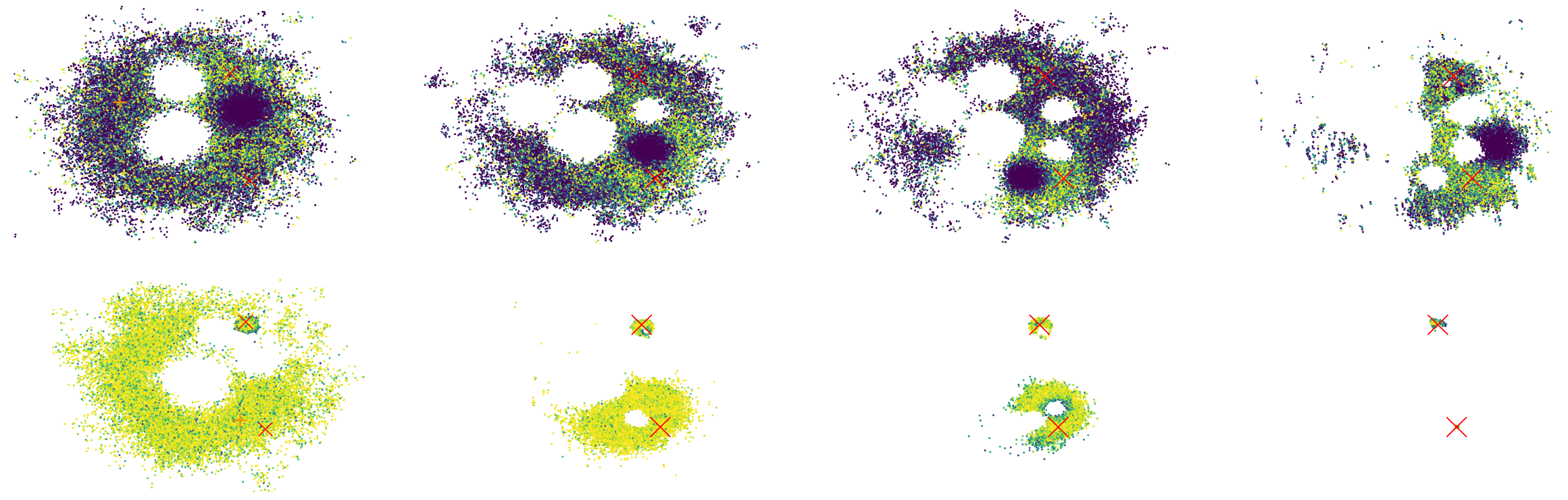

We propose a new procedure named PASOA for Bayesian experimental design, which performs sequential design optimization while simultaneously providing accurate estimates of successive posterior distributions for parameter inference. The sequential design process is carried out via a contrastive estimation principle, using stochastic optimization and Sequential Monte Carlo (SMC) samplers to maximize the Expected Information Gain (EIG). As larger information gains are obtained for larger distances between successive posterior distributions, this EIG objective may worsen classical SMC performance. To handle this issue, we propose tempering to obtain both a large information gain and an accurate SMC sampling, which we show to be crucial for performance. This novel combination of stochastic optimization and tempered SMC makes it possible to jointly handle design optimization and parameter inference. We provide a proof that the obtained optimal design estimators benefit from a consistency property. Numerical experiments confirm the potential of the approach, which outperforms other recent procedures (see Figure 3). This work was presented at the International Conference on Machine Learning (ICML) 19.

PASOA: PArticle baSed Bayesian Optimal Adaptive design.
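Tempering is common in SMC samplers. Instead of jumping directly from one distribution to the next, particles are reweighted by the likelihood raised to a small exponent increment, which is chosen so that the weights do not degenerate. The sketch below shows the generic adaptive rule: bisection on the next exponent so that the effective sample size (ESS) stays above a target. The target fraction of 0.5 is an assumption. This is not the PASOA procedure itself, which couples tempering with contrastive EIG optimization.

```python
import numpy as np


def ess(log_w: np.ndarray) -> float:
    """Effective sample size of importance weights given as unnormalized log-weights."""
    w = np.exp(log_w - np.max(log_w))  # shift for numerical stability
    w /= w.sum()
    return float(1.0 / np.sum(w ** 2))


def next_temperature(log_lik: np.ndarray, current: float,
                     target_frac: float = 0.5, iters: int = 50) -> float:
    """Largest next exponent in [current, 1] whose incremental weights keep ESS >= target.

    Moving from p(theta) L(theta)^current to p(theta) L(theta)^next reweights each
    particle by L(theta)^(next - current), i.e. adds (next - current) * log_lik.
    """
    n = len(log_lik)
    target = target_frac * n
    if ess((1.0 - current) * log_lik) >= target:
        return 1.0  # safe to jump straight to the full likelihood
    lo, hi = current, 1.0  # invariant: ESS at lo >= target, ESS at hi < target
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ess((mid - current) * log_lik) >= target:
            lo = mid
        else:
            hi = mid
    return lo


rng = np.random.default_rng(0)
log_lik = rng.normal(0.0, 50.0, size=1000)  # a very peaked likelihood over 1000 particles
tau = next_temperature(log_lik, 0.0)
print(tau, ess(tau * log_lik))
```

With a sharply peaked likelihood, the rule takes a small step so that about half of the particles remain effective. A resample-and-move step would then follow before the next increment.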

8.1.4 Bayesian Experimental Design via Contrastive Diffusions

Participants: Jacopo Iollo, Pierre Alliez, Florence Forbes [Statify Inria research team], Christophe Heinkelé [Cerema].

Bayesian Optimal Experimental Design (BOED) is a powerful tool to reduce the cost of running a sequence of experiments. When based on the Expected Information Gain (EIG), design optimization corresponds to the maximization of some intractable expected contrast between prior and posterior distributions. Scaling this maximization to high-dimensional and complex settings has been an issue due to the inherent computational complexity of BOED. In this work, we introduce an expected posterior distribution with cost-effective sampling properties, and provide tractable access to the EIG contrast maximization via a new EIG gradient expression. Diffusion-based samplers are used to compute the dynamics of the expected posterior, and ideas from bi-level optimization are leveraged to derive an efficient joint sampling-optimization loop, without resorting to lower-bound approximations of the EIG. The resulting efficiency gain makes it possible to extend BOED to the well-tested generative capabilities of diffusion models. By incorporating generative models into the BOED framework, we expand its scope and enable its use in scenarios that were previously impractical. Numerical experiments and comparisons with state-of-the-art methods show the potential of the approach. This work is available on arXiv 24.
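To make the quantity being maximized concrete, consider a toy linear-Gaussian model that is not from the paper: y = θd + ε, with θ ~ N(0, 1) and ε ~ N(0, σ²). Its EIG has the closed form ½ log(1 + d²/σ²). The sketch compares this closed form with the standard nested Monte Carlo estimator, whose inner evidence estimate is what makes EIG expensive in general. It does not implement the contrastive-diffusion method.

```python
import numpy as np


def log_normal_pdf(x, mean, std):
    return -0.5 * ((x - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))


def eig_nested_mc(d: float, sigma: float, n_outer: int = 2000, n_inner: int = 2000,
                  seed: int = 0) -> float:
    """Nested Monte Carlo estimate of EIG(d) = E[log p(y | theta, d) - log p(y | d)]."""
    rng = np.random.default_rng(seed)
    theta = rng.standard_normal(n_outer)                  # theta ~ prior N(0, 1)
    y = theta * d + sigma * rng.standard_normal(n_outer)  # y ~ p(y | theta, d)
    log_lik = log_normal_pdf(y, theta * d, sigma)         # log p(y | theta, d)
    inner = rng.standard_normal(n_inner)                  # one batch of prior samples, shared
    ll_inner = log_normal_pdf(y[:, None], inner[None, :] * d, sigma)  # (n_outer, n_inner)
    m = ll_inner.max(axis=1)
    log_evidence = m + np.log(np.mean(np.exp(ll_inner - m[:, None]), axis=1))  # log-mean-exp
    return float(np.mean(log_lik - log_evidence))


def eig_exact(d: float, sigma: float) -> float:
    return float(0.5 * np.log(1.0 + d ** 2 / sigma ** 2))


for d in (0.5, 1.0, 2.0):
    print(d, eig_nested_mc(d, 1.0), eig_exact(d, 1.0))
```

In this toy model, larger |d| is more informative. The estimator needs n_outer × n_inner likelihood evaluations for every candidate design, a cost that grows quickly in realistic models.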

8.1.5 BIM2TWIN: Optimal Construction Management and Production Control

Participants: Pierre Alliez, Rahima Djahel.

This work has been done in collaboration with 27 colleagues from the consortium of the BIM2TWIN European project.

The construction sector is often described as having low productivity, weak innovation and slow adoption of digital solutions. However, the adoption of BIM has streamlined the design process by facilitating communication between the stakeholders involved, and has shown that deploying digital solutions can greatly improve productivity. Construction brings together professionals from different companies around the same project for a limited period; as a result, the construction process is affected by numerous problems, such as design and planning issues (for both human resources and materials), unsuitable working methods or equipment, and safety and security issues for workers on site. The concept of the Digital Twin was first mooted in the 1970s. Initially evoked in relation to factory production processes, the notion has since evolved and spread to other areas such as engineering, product design, simulation and predictive maintenance. Its popularity has increased considerably in recent years with technological advances such as the Internet of Things (IoT), artificial intelligence (AI) and advanced 3D modeling. For the construction industry, deploying Digital Building Twins (DBT) on construction sites promises to address the inherent complexity of construction activity. A DBT can provide a decision-support environment that combines an automated system capturing on-site information with services that process, analyze and transform this raw information into knowledge. Accurate and reliable knowledge of the current status supports decisions when planning production systems that have some resilience to unforeseen events occurring randomly during on-site activities. Applied to the construction sector, a digital twin is a virtual replica of a building or physical infrastructure that already exists or is under construction.

This is the context of the BIM2TWIN project, which aimed to research, develop and test a technical solution comprising a comprehensive set of tools for monitoring the progress of a construction site. The project focused on monitoring the construction process, assessing construction quality, monitoring the safety and security of workers on site, and optimizing the use of equipment. The solution developed in BIM2TWIN also includes a service that accounts for deviations from the planned schedule reported during construction, and proposes alternative plans to respond to these deviations as effectively as possible. The project thus sought to highlight the potential of the DBT concept for optimizing construction processes based on digital monitoring on site and in the supply chain, on AI-based diagnosis of the monitored data, and on simulation and predictive analysis of proposed alternative production plans. This survey has been released as a technical report 23.

8.1.6 Digital Twin Enabled Construction Process Monitoring

Participants: Rahima Djahel, Kacper Pluta [ESIEE Paris], Mathew Alwyn [University of Cambridge], Li Shuyan [University of Cambridge], Ioannis Brilakis [University of Cambridge].

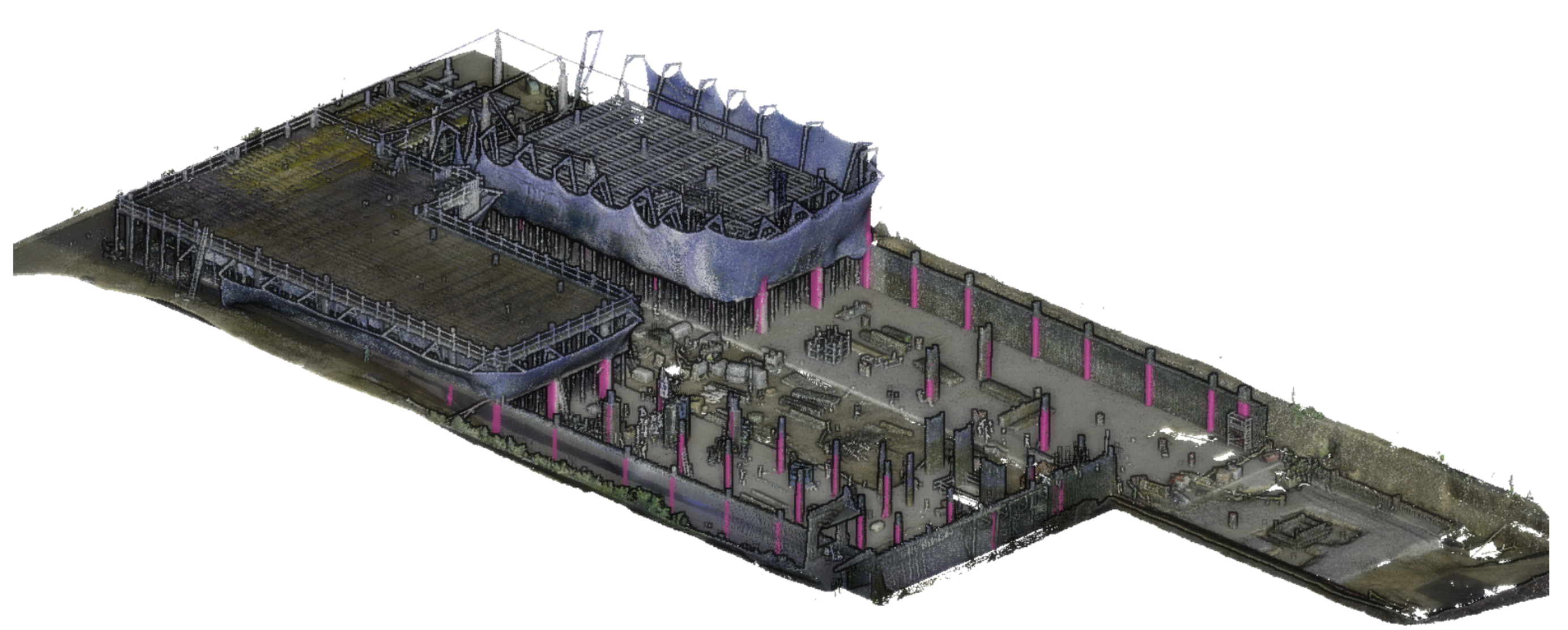

Digital Twin technology has revolutionized the oversight of newly built structures. This study proposes digital twin-based automatic progress monitoring on construction sites: 3D point clouds are compared with the Building Information Model to track progress and predict completion (see Figure 4). It highlights the integration of semi-continuous monitoring with a building's digital twin for efficient construction management. Leveraging precise data improves the understanding of the site status and helps identify schedule deviations, enabling timely actions. Demonstrated on real-world construction data, visualized Gantt charts showcase its efficacy, offering insights into task status, schedule deviations, and projected completion dates. This underscores the potential of digital twin technology to transform construction oversight. This work was presented at the European Conference on Computing in Construction 16.

Digital Twin Enabled Construction Process Monitoring.
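A minimal version of the scan-versus-BIM comparison marks a BIM element as built when enough of its surface samples have a scan point nearby. It then reports elements that the schedule expects to be finished but that the scan does not confirm. All element names, the 5 cm tolerance (assuming coordinates in meters) and the 60% coverage threshold are hypothetical. The sketch also assumes that the scan is already registered to the BIM, and it uses brute-force nearest-neighbor search where a practical pipeline would use a spatial index.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def element_status(element_samples: Dict[str, List[Point]], scan: List[Point],
                   tol: float = 0.05, min_coverage: float = 0.6) -> Dict[str, bool]:
    """Mark a BIM element as built when enough of its surface samples have a scan point within tol."""
    status = {}
    for name, samples in element_samples.items():
        covered = sum(1 for s in samples if min(math.dist(s, p) for p in scan) <= tol)
        status[name] = covered / len(samples) >= min_coverage
    return status


def behind_schedule(status: Dict[str, bool], planned_done: List[str]) -> List[str]:
    """Elements the schedule expects to be finished but that the scan does not confirm."""
    return sorted(e for e in planned_done if not status.get(e, False))


bim = {"wall_A": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
       "slab_B": [(0.0, 0.0, 3.0), (1.0, 0.0, 3.0)]}
scan = [(0.0, 0.0, 0.01), (1.0, 0.0, 0.02)]
status = element_status(bim, scan)
print(status)                                         # {'wall_A': True, 'slab_B': False}
print(behind_schedule(status, ["wall_A", "slab_B"]))  # ['slab_B']
```

Repeating this check at every semi-continuous scan produces, per task, the observed-versus-planned status that a Gantt chart can display.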

8.2 Reconstruction

8.2.1 PoNQ: a Neural QEM-based Mesh Representation

Participants: Nissim Maruani, Pierre Alliez, Mathieu Desbrun [Geomerix Inria project-team], Maks Ovsjanikov [Ecole Polytechnique].

Although polygon meshes have been a standard representation in geometry processing, their irregular and combinatorial nature hinders their suitability for learning-based applications. In this work, we introduce a novel learnable mesh representation through a set of local 3D sample Points and their associated Normals and Quadric error metrics (QEM) w.r.t. the underlying shape, which we denote PoNQ (see Figure 5). A global mesh is directly derived from PoNQ by efficiently leveraging the knowledge of the local quadric errors. Besides marking the first use of QEM within a neural shape representation, our contribution guarantees both topological and geometrical properties by ensuring that a PoNQ mesh does not self-intersect and is always the boundary of a volume. Notably, our representation does not rely on a regular grid, is supervised directly by the target surface alone, and also handles open surfaces with boundaries and/or sharp features. We demonstrate the efficacy of PoNQ through a learning-based mesh prediction from SDF (signed distance function) grids and show that our method surpasses recent state-of-the-art techniques in terms of both surface and edge-based metrics. This work was presented at the international conference on Computer Vision and Pattern Recognition (CVPR) 20.

PoNQ: a Neural QEM-based Mesh Representation

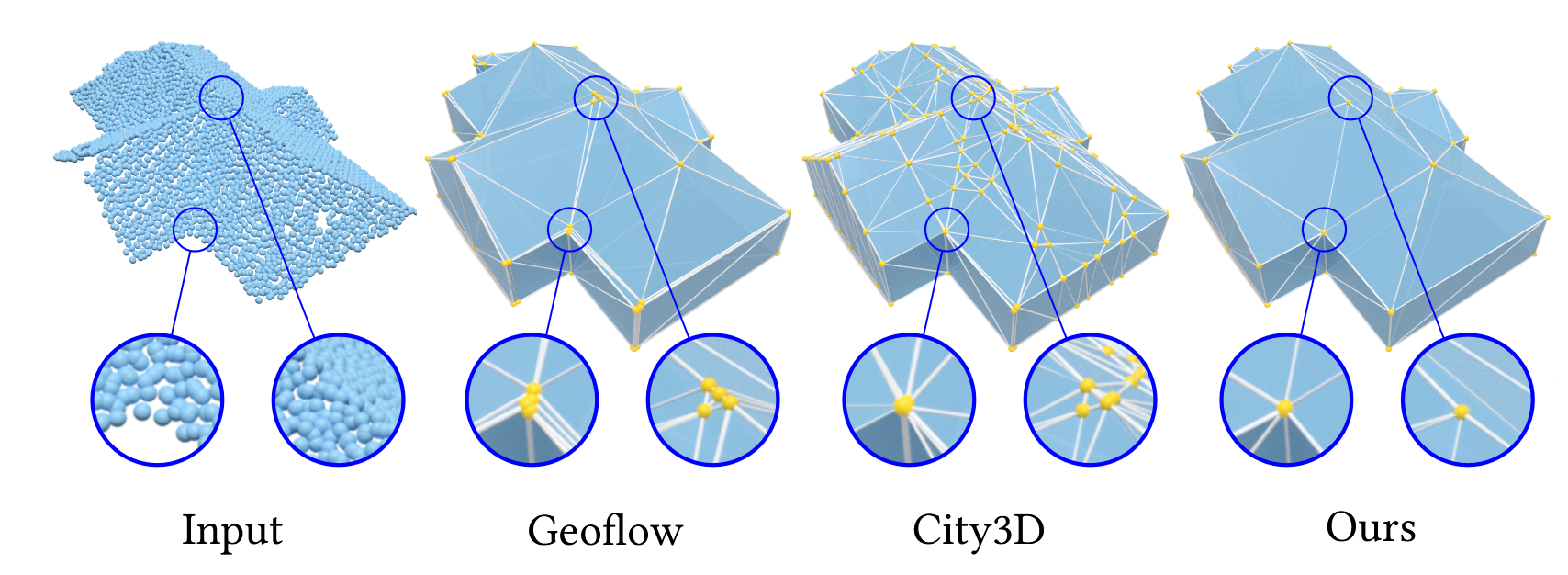

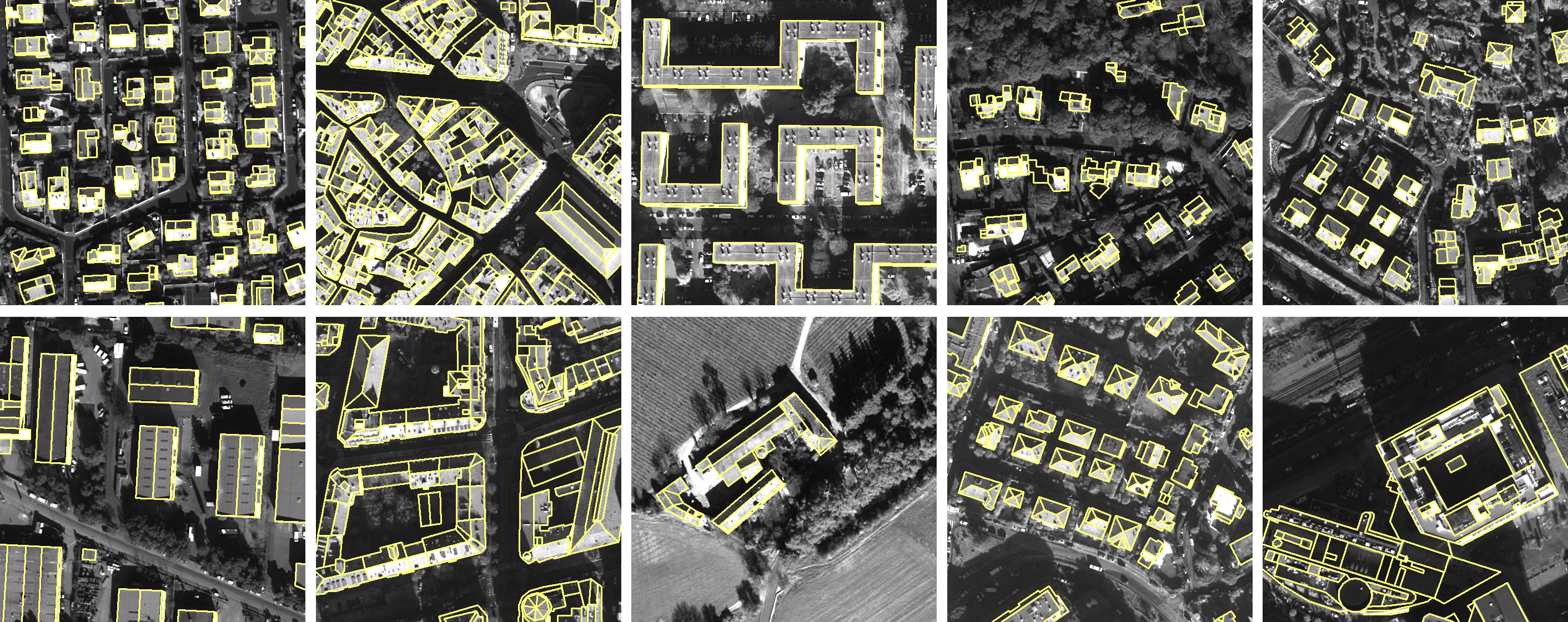
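PoNQ builds on the classical quadric error metric. Each sample point p_i with normal n_i defines a plane, and a vertex placed to minimize the sum of squared distances to these planes can recover sharp corners. The sketch below shows only that classical QEM solve, written as the linear system A x = b. It does not show PoNQ's learned per-point quadrics or its mesh extraction.

```python
import numpy as np


def qem_vertex(points: np.ndarray, normals: np.ndarray) -> np.ndarray:
    """Point minimizing sum_i (n_i . (x - p_i))^2 over planes through p_i with normal n_i.

    Setting the gradient to zero gives A x = b, with A = sum n_i n_i^T
    and b = sum n_i (n_i . p_i).
    """
    n = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    A = n.T @ n
    b = (n * np.sum(n * points, axis=1, keepdims=True)).sum(axis=0)
    # lstsq returns the minimum-norm solution when A is rank-deficient (e.g. flat or edge regions).
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Three orthogonal planes x = 1, y = 2, z = 3 meet at a sharp corner.
pts = np.array([[1.0, 5.0, 5.0], [5.0, 2.0, 5.0], [5.0, 5.0, 3.0]])
nrm = np.array([[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
print(qem_vertex(pts, nrm))  # approximately [1. 2. 3.]
```

When only two planes are present, the minimizer is a whole line. The minimum-norm choice then picks the point of that line closest to the origin. In practice, one would regularize toward a nearby sample instead.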

8.2.2 SimpliCity: Reconstructing Buildings with Simple Regularized 3D Models

Participants: Raphael Sulzer, Florent Lafarge, Jean-Philippe Bauchet [Luxcarta], Yuliya Tarabalka [Luxcarta].

Automatic methods for reconstructing buildings from airborne LiDAR point clouds focus on producing accurate 3D models in a fast and scalable manner, but they overlook the problem of delivering simple and regularized models to practitioners. As a result, output meshes often suffer from connectivity approximations around corners, with either multiple vertices and tiny facets, or broken planarity constraints on roof sections and facade components. We propose a framework based on 2D planimetric arrangements to address this problem. First, rather than regularizing the 3D planes as commonly done in the literature, we regularize a 2D polygonal partition constructed from the planes. Second, we extrude this partition to 3D by an optimization process that guarantees the planarity of the roof sections as well as the preservation of the vertical discontinuities and horizontal rooftop edges. We show the benefits of our approach against existing methods by producing simpler 3D models while offering similar fidelity and efficiency (see Figure 6). This work was presented at the USM3D workshop of the international conference on Computer Vision and Pattern Recognition 17.

SimpliCity: Reconstructing Buildings with Simple Regularized 3D Models

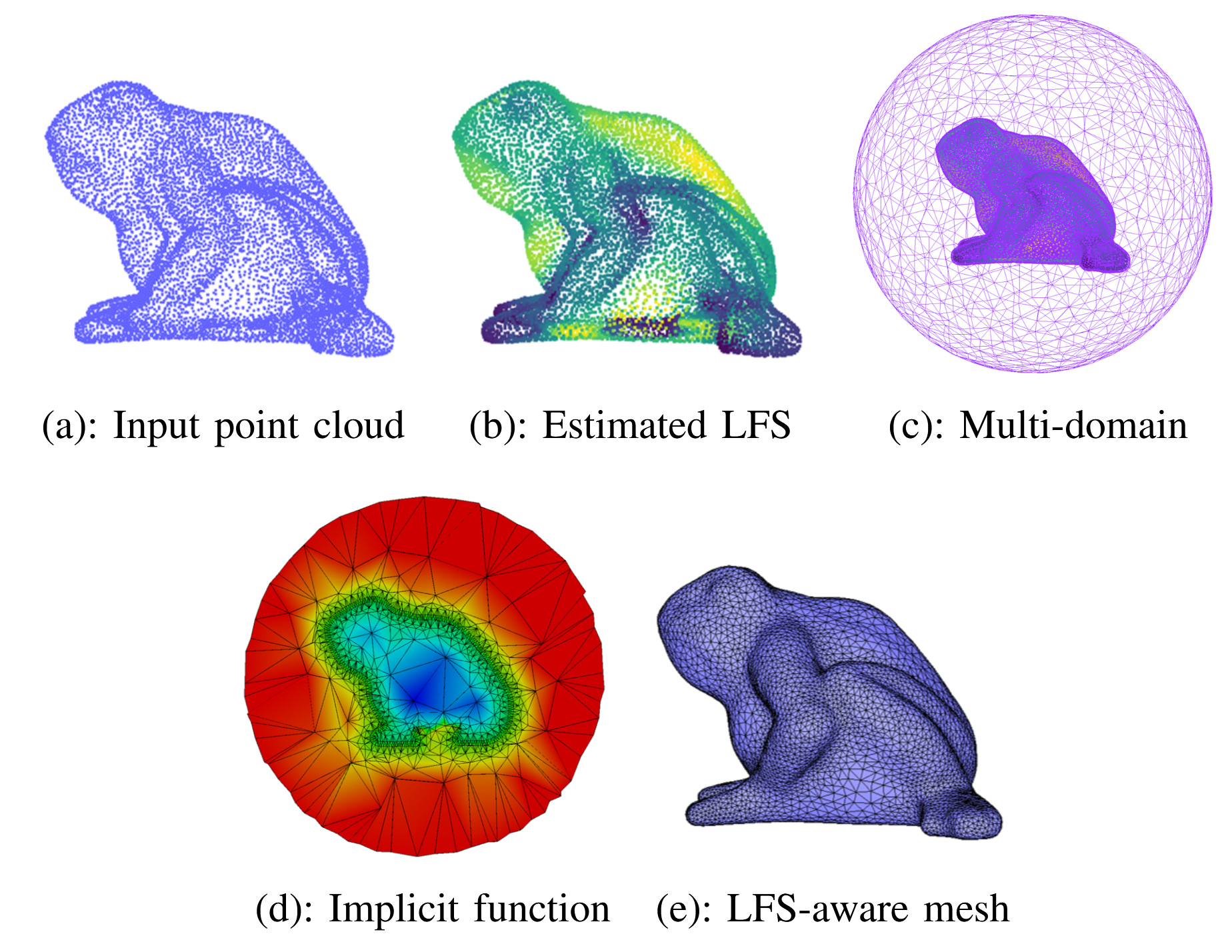

8.2.3 LFS-Aware Surface Reconstruction from Unoriented 3D Point Clouds

Participants: Rao Fu, Pierre Alliez, Kai Hormann [Università della Svizzera italiana].

This work introduces a novel approach for generating isotropic surface triangle meshes directly from unoriented 3D point clouds, with a mesh density that adapts to the estimated local feature size (LFS). Popular reconstruction pipelines first reconstruct a dense mesh from the input point cloud and then apply remeshing to obtain an isotropic mesh. This sequential pipeline makes it hard to obtain a lower-density mesh that still preserves fine details. Instead, our approach reconstructs both an implicit function and an LFS-aware mesh sizing function directly from the input point cloud; these are then used to produce the final LFS-aware mesh without remeshing (see Figure 7). We combine the local curvature radius and the shape diameter to estimate the LFS directly from the input point cloud. Additionally, we propose a new mesh solver that computes an implicit function whose zero level set delineates the surface without requiring normal orientation. The added value of our approach is to generate isotropic meshes directly from 3D point clouds with an LFS-aware density, thus achieving a trade-off between geometric detail and mesh complexity. Our experiments also demonstrate that our method is robust to noise, outliers and missing data, and that it preserves sharp features on CAD point clouds. This work was published in the IEEE Transactions on Multimedia journal 14.
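The combination of the two geometric properties into an LFS estimate, and its use as a sizing function, can be sketched as follows. Taking the minimum of curvature radius and shape diameter follows the description of the method; the rest is our assumption: the target edge length at a sample is `scale * LFS`, and the field is made `lipschitz`-Lipschitz with the standard construction min_i(s_i + L |q - p_i|), so that mesh size cannot vary abruptly between nearby regions. Both `scale` and `lipschitz` are hypothetical parameters.

```python
import numpy as np


def local_feature_size(curvature_radius, shape_diameter):
    """Per-point LFS estimate: the smaller of the two geometric properties."""
    return np.minimum(curvature_radius, shape_diameter)


def lipschitz_sizing(query, points, lfs, scale=0.5, lipschitz=0.5):
    """Target edge length at `query` from per-point LFS values.

    Hypothetical choices: the size at sample i is s_i = scale * LFS_i, and the
    field min_i(s_i + L * |q - p_i|) is L-Lipschitz and never exceeds s_i at p_i."""
    sizes = scale * np.asarray(lfs, dtype=float)
    offsets = np.asarray(points, dtype=float) - np.asarray(query, dtype=float)
    return float(np.min(sizes + lipschitz * np.linalg.norm(offsets, axis=1)))


pts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
lfs = local_feature_size(np.array([0.2, 2.0]), np.array([1.0, 0.5]))
print(lfs)  # [0.2 0.5]
print(lipschitz_sizing([0.5, 0.0, 0.0], pts, lfs))
```

In a Delaunay refinement mesher, a function like `lipschitz_sizing` would be queried as the upper bound on facet size at each candidate location.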

LFS-Aware Surface Reconstruction from 3D Point Clouds.

8.2.4 Shape Reconstruction from 3D Point Clouds

Participants: Rao Fu.

This Ph.D. thesis, funded by the EU project GRAPES, was advised by Pierre Alliez.

Shape reconstruction from raw 3D point clouds is a core challenge in the fields of computer graphics and computer vision. This thesis explores new approaches to tackle this multifaceted problem, with a specific focus on two aspects: primitive segmentation and mesh-based surface reconstruction. For primitive segmentation, we introduce BPNet, a deep-learning framework designed to segment 3D point clouds according to Bézier primitives. Unlike conventional methods that address different primitive types in isolation, BPNet offers a more general and adaptive approach. Inspired by the Bézier decomposition techniques commonly employed for Non-Uniform Rational B-Spline (NURBS) models, we use Bézier decomposition to guide the segmentation of point clouds, removing the constraints posed by specific categories of primitives. More specifically, we contribute a joint optimization framework via a soft voting regularizer that enhances primitive segmentation, an auto-weight embedding module that streamlines the clustering of point features, and a reconstruction module that refines the segmented primitives. We validate the proposed approach on the ABC and AIM@Shape datasets. The experiments show superior segmentation performance and shorter inference time compared to baseline methods. For surface reconstruction, we contribute a method designed to generate isotropic surface triangle meshes directly from unoriented 3D point clouds. The benefits of this approach lie in its adaptability to the local feature size (LFS). Our method consists of three steps: LFS estimation, implicit function reconstruction and LFS-aware mesh sizing. The LFS estimation process computes the minimum of two geometric properties, the local curvature radius and the shape diameter, determined via jet-fitting and a Lipschitz-guided dichotomic search, respectively.
The implicit function reconstruction step proceeds in three sub-steps: constructing a tetrahedral multi-domain from an unsigned distance function, signing the multi-domain via data fitting, and generating a signed robust distance function. Finally, the mesh sizing function, derived from the locally estimated LFS, controls the Delaunay refinement process used to mesh the zero level set of the implicit function. This approach yields isotropic meshes directly from 3D point clouds while offering an LFS-aware mesh density. Our experiments demonstrate the robustness of this approach, showcasing its ability to handle noise, outliers and missing data 22.
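The curvature-radius part of the LFS estimation relies on jet-fitting. A minimal sketch of the idea, assuming a degree-2 jet, a known unit normal at the query point and at least six neighbors, fits a height function in the local tangent frame and reads approximate principal curvatures off its Hessian. This is a simplified stand-in (it ignores the fitted slope), not the thesis implementation, and the function name is hypothetical.

```python
import numpy as np


def curvature_radius_2jet(neighbors, center, normal):
    """Smallest principal curvature radius at `center`, estimated by fitting
    a degree-2 height function z(x, y) to the neighbors in a local frame."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    # Orthonormal tangent basis (u, v) perpendicular to n
    helper = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(n, helper)
    u /= np.linalg.norm(u)
    v = np.cross(n, u)
    local = np.asarray(neighbors, dtype=float) - np.asarray(center, dtype=float)
    x, y, z = local @ u, local @ v, local @ n
    # Least-squares fit of z = a x^2 + b xy + c y^2 + d x + e y + f (needs >= 6 points)
    M = np.column_stack([x * x, x * y, y * y, x, y, np.ones_like(x)])
    coef, *_ = np.linalg.lstsq(M, z, rcond=None)
    a, b, c = coef[:3]
    # Hessian of the height function; its eigenvalues approximate the
    # principal curvatures when the fitted slope (d, e) is small
    k = np.linalg.eigvalsh(np.array([[2 * a, b], [b, 2 * c]]))
    k_max = np.max(np.abs(k))
    return np.inf if k_max < 1e-12 else 1.0 / k_max


# Patch of a sphere of radius 2 whose top touches the origin
g = np.linspace(-0.2, 0.2, 5)
xx, yy = np.meshgrid(g, g)
zz = -2.0 + np.sqrt(4.0 - xx**2 - yy**2)
patch = np.column_stack([xx.ravel(), yy.ravel(), zz.ravel()])
print(curvature_radius_2jet(patch, [0, 0, 0], [0, 0, 1]))  # close to 2
```

A flat neighborhood gives an infinite radius, so in the LFS minimum the shape diameter then takes over.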

8.3 Approximation

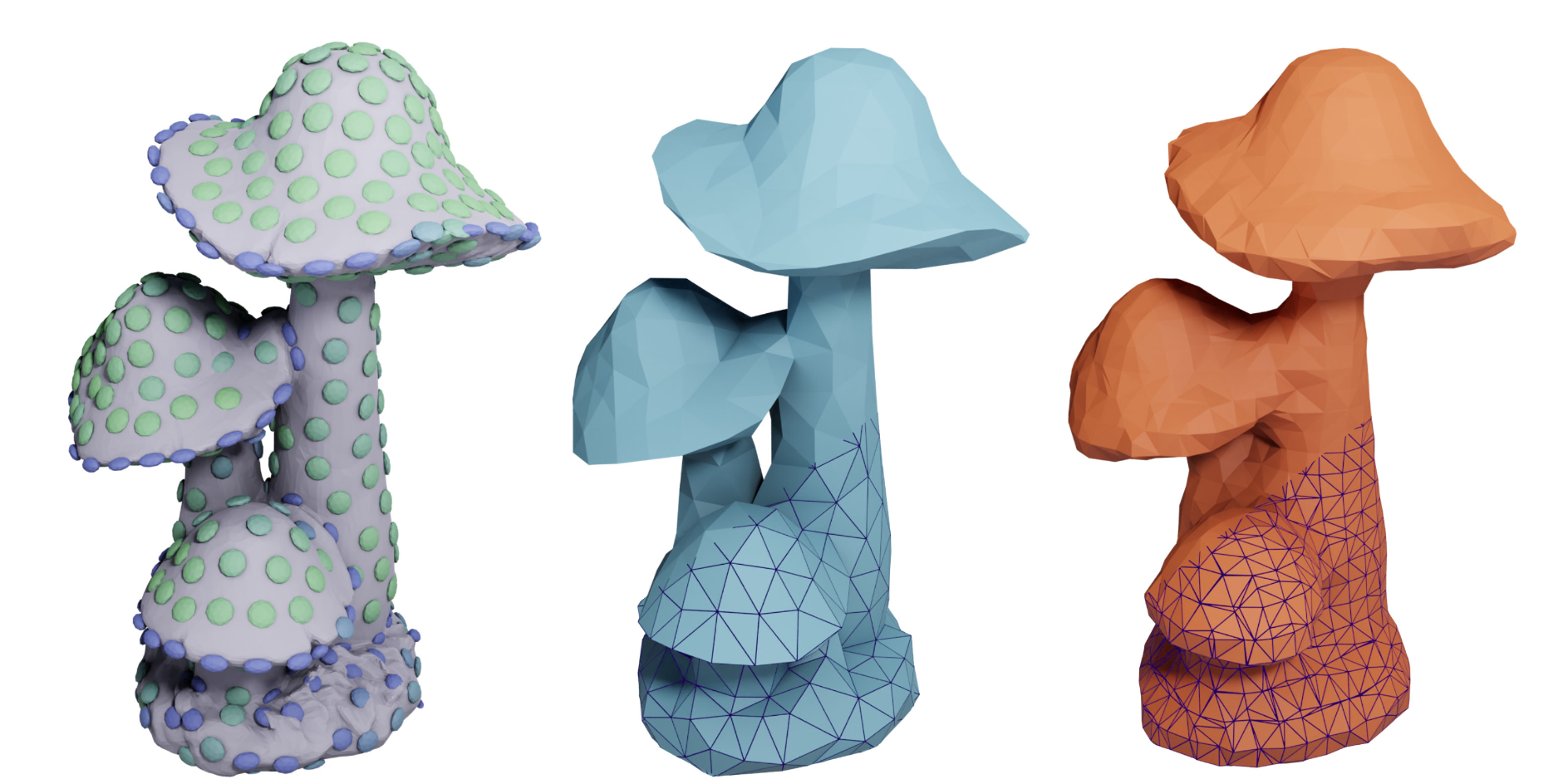

8.3.1 Concise Plane Arrangements for Low-Poly Surface and Volume Modeling

Participants: Raphael Sulzer, Florent Lafarge.

Plane arrangements are a useful tool for surface and volume modeling. However, their main drawback is poor scalability. We introduce two key novelties that enable the construction of plane arrangements for complex objects and entire scenes: (i) an ordering scheme for plane insertion and (ii) the direct use of input points during arrangement construction. Both ingredients reduce the number of unwanted splits, improving the scalability of the construction by up to two orders of magnitude compared to existing algorithms. We further introduce a remeshing and simplification technique that allows us to extract low-polygon surface meshes and lightweight convex decompositions of volumes from the arrangement (see Figure 8). We show that our approach leads to state-of-the-art results for these tasks by comparing it to learning-based and traditional approaches on various datasets. This work was presented at the European Conference on Computer Vision (ECCV) 21.
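The effect of using input points during construction can be illustrated with a deliberately simplified toy. Here a "cell" is just the set of point indices it contains rather than a convex polyhedron, planes are lines in 2D, and two rules are our own assumptions: planes are inserted by decreasing inlier count, and in point-guided mode a cell is split only if it contains inliers of the inserted plane. The toy only illustrates the counting argument; it is not the published algorithm.

```python
import numpy as np


def insert_planes(points, planes, point_guided=True):
    """Toy partition in which each cell is an array of point indices.

    planes: list of (normal, offset, inlier_indices) describing n . p + offset = 0.
    Hypothetical rules: planes are inserted by decreasing inlier count, and in
    point-guided mode a cell is split only if it contains inliers of the plane."""
    points = np.asarray(points, dtype=float)
    order = sorted(range(len(planes)), key=lambda i: -len(planes[i][2]))
    cells, splits = [np.arange(len(points))], 0
    for i in order:
        normal, offset, inliers = planes[i]
        next_cells = []
        for cell in cells:
            side = points[cell] @ np.asarray(normal, dtype=float) + offset
            pos, neg = cell[side >= 0], cell[side < 0]
            crosses = len(pos) > 0 and len(neg) > 0
            supported = np.isin(cell, inliers).any()
            if crosses and (supported or not point_guided):
                next_cells += [pos, neg]
                splits += 1
            else:
                next_cells.append(cell)
        cells = next_cells
    return cells, splits


pts = [[-2, 0], [-2, 1], [-1.5, 0], [-1.5, 1],          # far left + inliers of x = -1.5
       [1, 0], [1, 1], [2, 0], [2, 1], [1, 0.5], [2, 0.5]]  # right block + inliers of y = 0.5
planes = [((1, 0), 1.5, [2, 3]), ((0, 1), -0.5, [8, 9])]
_, guided = insert_planes(pts, planes)
_, naive = insert_planes(pts, planes, point_guided=False)
print(guided, naive)  # 2 3
```

The plane y = 0.5 is supported only by points on the right, so the point-guided variant leaves the far-left cell intact, while the naive variant splits it too.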

Concise Plane Arrangements for Low-Poly Surface and Volume Modeling.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

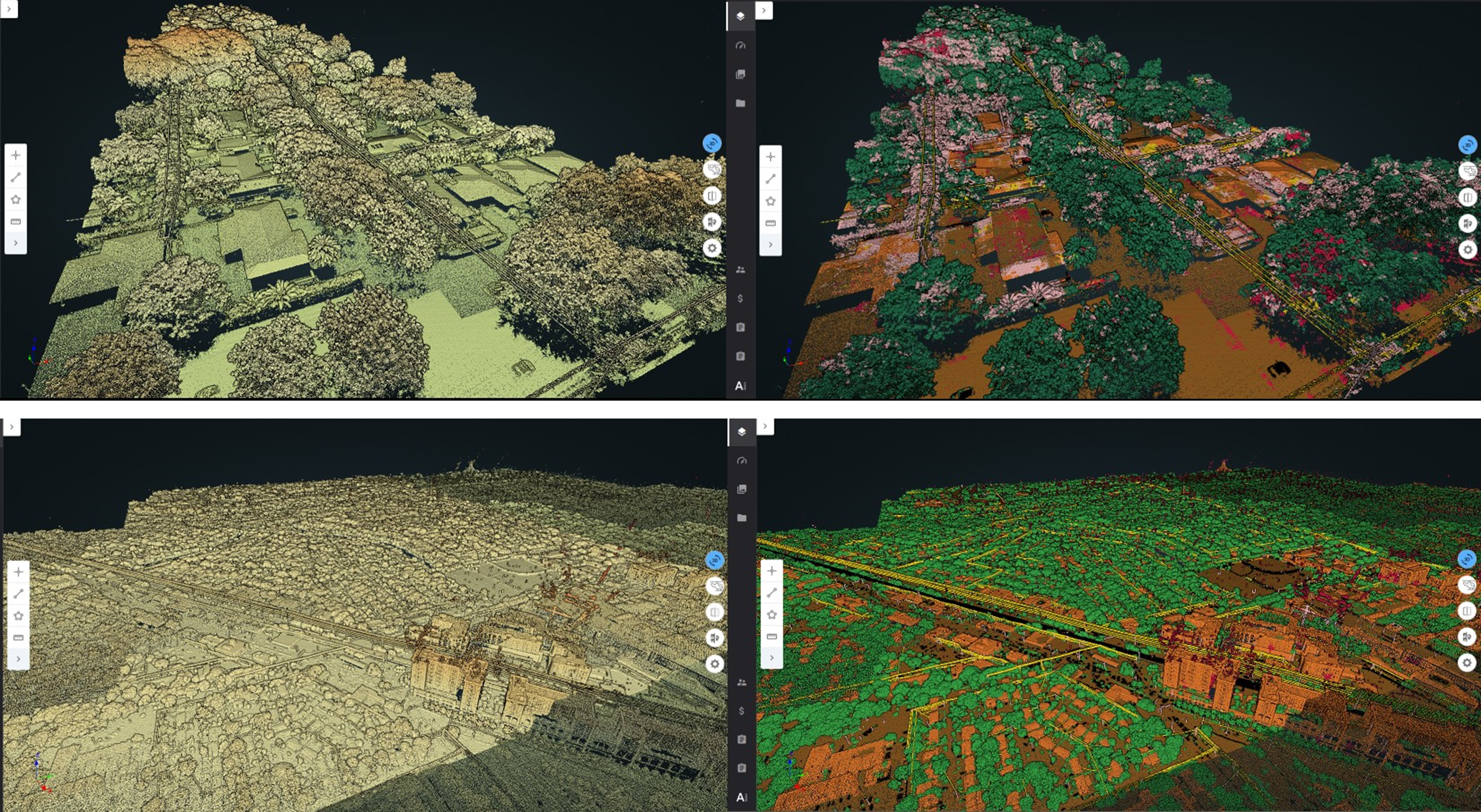

AlteIA - Cifre thesis

Participants: Pierre Alliez, Moussa Bendjilali.

This Cifre Ph.D. thesis project, entitled “From 3D Point Clouds to Cognitive 3D Models”, started in September 2023, for a total duration of 3 years.

Context. Digital twinning of large-scale scenes and industrial sites requires acquiring the geometry and appearance of terrains, large-scale structures and objects. In this thesis, we assume that the acquisition is performed by an aerial laser scanner (LiDAR) attached to a helicopter (Figure 9). The generated 3D point clouds record the point coordinates, reflectivity intensities, and possibly other attributes such as colors. These raw measurement data can be massive, on the order of 70 points per square meter, summing up to several hundred million points for scenes extending over kilometers. Analyzing large-scale scenes requires (1) enriching these data with an abstraction layer, (2) understanding the structure and semantics of the scenes, (3) producing so-called cognitive 3D models that are searchable, and (4) detecting the changes in the scenes over time.
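To make the order of magnitude concrete, the back-of-the-envelope computation below uses the density quoted above together with two hypothetical values: a 5 km² site and a raw record of 26 bytes per point (three float64 coordinates plus a uint16 intensity).

```python
def survey_size(density_per_m2, area_km2, bytes_per_point):
    """Number of points and raw size in GB of an airborne LiDAR survey."""
    n_points = density_per_m2 * area_km2 * 1e6  # 1 km^2 = 1e6 m^2
    return n_points, n_points * bytes_per_point / 1e9


# Hypothetical 5 km^2 site; 26 bytes/point = float64 x, y, z + uint16 intensity
n, gb = survey_size(70, 5.0, 3 * 8 + 2)
print(f"{n:.2e} points, {gb:.1f} GB")  # 3.50e+08 points, 9.1 GB
```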

Large-scale scene of an electric infrastructure.

We want to explore novel algorithms for addressing the aforementioned requirements. The input is assumed to be a LiDAR 3D point cloud recording the point coordinates and surface reflectivity intensity of a point set sampled over a physical scene. The objectives are multifaceted:

- Semantic segmentation. We wish to retrieve the semantics of the scene as well as the location, pose and semantic classes of objects of interest. Ideally, we seek dense semantic segmentation, where the class of each sample point is inferred in order to yield a complete description of the physical scene.

- Instance segmentation. We wish to distinguish each instance of a semantic class so that all objects of a scene can be enumerated.

- Abstraction. We wish to detect canonical primitives (planes, cylinders, spheres, etc.) that approximate (abstract) the objects of the physical scenes, and understand the relationships between them (e.g., adjacency, containment, parallelism, orthogonality, coplanarity, concentricity). Abstraction goes beyond the notion of geometric simplification, as it can deliberately discard the fine details present in dense 3D point clouds.

- Object detection. Given a library of CAD models, we wish to detect and enumerate all occurrences of an object of interest used as a query. We assume some prior knowledge, either of the primitive shape and its parametric description, or of the shape of the query.

- Change detection. Monitoring changes in industrial sites requires series of LiDAR acquisitions over time. The objective is to detect and characterize changes in the physical scene, with or without any explicit taxonomy.

- Levels of detail. The above tasks should be tackled with levels of detail (LODs), both for the geometry and the semantics. LODs will relate to geometric tolerance errors and to a hierarchy of semantic ontologies.
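For the abstraction objective, a classical baseline for detecting one canonical primitive is RANSAC plane fitting. The sketch below is that textbook technique (random three-point hypotheses, inlier counting, then a least-squares refit via PCA), shown on synthetic data; it is not the method developed in the thesis, and the threshold and iteration count are illustrative assumptions.

```python
import numpy as np


def ransac_plane(points, threshold=0.02, iterations=500, seed=0):
    """Detect the dominant plane n . p + offset = 0 in a point cloud with RANSAC,
    then refine it by a least-squares (PCA) fit on the inliers."""
    pts = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(pts), dtype=bool)
    for _ in range(iterations):
        p0, p1, p2 = pts[rng.choice(len(pts), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        length = np.linalg.norm(normal)
        if length < 1e-12:  # collinear sample, no unique plane
            continue
        normal /= length
        inliers = np.abs((pts - p0) @ normal) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 3:
        return None, best
    centroid = pts[best].mean(axis=0)
    # Right singular vector of the smallest singular value = plane normal
    normal = np.linalg.svd(pts[best] - centroid, full_matrices=False)[2][-1]
    return (normal, -normal @ centroid), best


# Noisy roof plane z = 0.5 (300 points) plus 100 uniform outliers
rng = np.random.default_rng(1)
plane_pts = np.column_stack([rng.uniform(0, 1, (300, 2)), 0.5 + rng.normal(0, 0.005, 300)])
cloud = np.vstack([plane_pts, rng.uniform(0, 1, (100, 3))])
(normal, offset), inliers = ransac_plane(cloud)
```

Running the detector repeatedly on the remaining points is the usual way to extract several primitives, after which relationships such as parallelism or orthogonality can be tested on the recovered normals.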

CNES - Airbus

Participants: Marion Boyer, Florent Lafarge.

The goal of this collaboration is to design an automatic pipeline for the 3D modeling of urban scenes in the CityGML LOD2 formalism using the new generation of stereoscopic satellite images, in particular the 30 cm resolution images offered by Pléiades Néo satellites (see Figure 10). Traditional methods usually start with a semantic classification step followed by a 3D reconstruction of the objects composing the urban scene. Too often in traditional approaches, inaccuracies and errors from the classification phase are propagated and amplified throughout the reconstruction process without any possible subsequent correction. In contrast, our main idea is to extract semantic information about the urban scene and reconstruct the geometry of its objects simultaneously. This project started in October 2022, for a total duration of 3 years.

Pléiades Néo satellite images

Luxcarta

Participants: Raphael Sulzer, Florent Lafarge.

The main goal of this collaboration with Luxcarta is to develop new algorithms that improve the geometry and topology of the 3D building models produced by the company. The algorithms should (i) detect and enforce geometric regularities in 3D models, such as parallelism of roof edges or connection of polygonal facets at exactly one vertex, and (ii) simplify models, e.g., by removing undesired facets or incoherent roof typologies. Both objectives must be met under the constraint that the geometric accuracy of the solution remains close to that of the input models. Assuming the input 3D models are valid watertight polygon surface meshes, we will investigate metrics that quantify the geometric regularity and simplicity of the input models, and propose a formulation that allows both continuous and discrete modifications. Continuous modifications will aim to better align vertices and edges, in particular to reinforce geometric regularities. Discrete modifications will handle changes in roof topology, for instance the removal or creation of roof sections or new adjacencies between them. The output models must be valid 2-manifold watertight polygon surface meshes. We will also investigate optimization mechanisms to explore the large solution space of the problem efficiently. This project started in November 2023, for a total duration of 1 year.
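As an example of the kind of regularity metric mentioned above, one simple (hypothetical) choice scores the fraction of roof-edge pairs, projected to 2D, whose undirected orientations agree within an angular tolerance. The tolerance value and the function name are our assumptions; the project's actual metrics are still under investigation.

```python
import numpy as np


def parallelism_score(edges, angle_tol_deg=2.0):
    """Fraction of edge pairs that are parallel up to angle_tol_deg.

    edges: list of ((x0, y0), (x1, y1)) roof edges projected to 2D."""
    d = np.array([np.subtract(q, p) for p, q in edges], dtype=float)
    theta = np.arctan2(d[:, 1], d[:, 0]) % np.pi  # undirected orientation in [0, pi)
    diff = np.abs(theta[:, None] - theta[None, :])
    diff = np.minimum(diff, np.pi - diff)  # orientations 0 and pi - eps are parallel
    i, j = np.triu_indices(len(theta), k=1)  # each unordered pair once
    return float(np.mean(diff[i, j] < np.deg2rad(angle_tol_deg)))


footprint = [((0, 0), (10, 0)), ((10, 0), (10, 5)), ((10, 5), (0, 5)), ((0, 5), (0, 0))]
print(parallelism_score(footprint))  # 2 of the 6 edge pairs are parallel
```

Pairs that pass the tolerance test but are not exactly parallel are natural candidates for the continuous modifications described above.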

Naval Group

Participants: Pierre Alliez.

This project is in collaboration with the Acentauri project-team (two research engineers have been co-advised since November 2023). The context is that of the factory of the future for Naval Group, for submarines and surface ships. As input, we have a digital model (e.g., of a frigate), the equipment assembly schedule and measurement data (images or LiDAR). We wish to monitor the assembly site to compare the "as-designed" model with the "as-built" model. The main problem is the need for coordination on construction sites for decision making and planning optimization. We wish to follow the progress of a real project and check its conformity with the help of a digital twin. Currently, since Naval Group needs to verify progress on site, rounds of visits are required to validate the progress as well as the equipment. These rounds are time-consuming, not to mention the constraints of the site, such as the temporary absence of electricity or the numerous pieces of temporary assembly and safety equipment. The objective of the project is to automate the monitoring, with sensor intelligence to validate the work. Fixed sensors (e.g., cameras or LiDAR) or mobile sensors (e.g., drones) will be used, together with smartphones and tablets carried by operators on site.

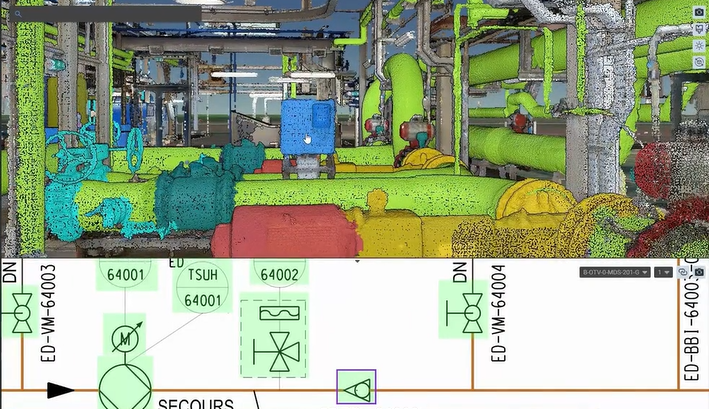

Samp AI - Cifre thesis

Participants: Armand Zampieri, Pierre Alliez.

This project (Cifre Ph.D. thesis) investigates algorithms for progressive, random-access compression of 3D point clouds for digital twinning of industrial sites (see Figure 11). The context is as follows. Laser scans of industrial facilities contain massive amounts of 3D points, which are very reliable visual representations of these facilities. These points represent the positions, color attributes and other metadata about object surfaces. Samp turns these 3D points into intelligent digital twins that can be searched and interactively visualized on heterogeneous clients, via streamed services on the cloud. The 3D point clouds are first structured and enriched with additional attributes such as semantic labels or hierarchical group identifiers. Efficient streaming motivates progressive compression of these 3D point clouds, while local search or visualization queries call for random-access capabilities. Given an enriched 3D point cloud as input, the objective is to generate as output a compressed version with the following properties: (1) Random-access compressed (RAC) file format: it provides random access (not just sequential access) to the decompressed content, so that each compressed chunk can be decompressed independently, depending on the area in focus on the client side. (2) Progressive compression: the enriched point cloud is converted into a stream of refinements, with an optimized rate-distortion tradeoff allowing early access to a faithful version of the content during streaming. (3) Structure preservation: all structural and semantic information is preserved during compression, allowing for structure-aware queries on the client. (4) Lossless at the end: the original data can be perfectly reconstructed from the compressed data once streaming is completed.
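Property (1) and the lossless requirement can be illustrated with a minimal sketch, assuming a plain (N, 3) float64 array of coordinates, square XY tiles and the general-purpose zlib codec from the Python standard library. Each tile is compressed independently, so a client can decode only the tile in focus; progressive refinement and the enriched attributes are deliberately left out, and the tile layout is our own assumption rather than the format developed in the thesis.

```python
import zlib

import numpy as np


def compress_tiles(points, tile_size):
    """Split an (N, 3) float64 point array into square XY tiles and compress
    each tile independently, so any tile can be decoded on its own."""
    pts = np.ascontiguousarray(points, dtype=np.float64)
    keys = np.floor(pts[:, :2] / tile_size).astype(np.int64)
    index = {}
    for key in np.unique(keys, axis=0):
        mask = np.all(keys == key, axis=1)
        index[(int(key[0]), int(key[1]))] = zlib.compress(pts[mask].tobytes(), 9)
    return index


def decompress_tile(index, key):
    """Random access: decode only the requested tile (lossless round trip)."""
    return np.frombuffer(zlib.decompress(index[key]), dtype=np.float64).reshape(-1, 3)


rng = np.random.default_rng(0)
cloud = rng.uniform(0, 10, (1000, 3))
tiles = compress_tiles(cloud, 5.0)
tile = decompress_tile(tiles, (1, 0))  # only this chunk is decompressed
```

A dedicated point cloud codec would exploit spatial coherence far better than zlib on raw floats; the point here is only the independent, losslessly decodable chunks.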

Digital twin of an industrial site.

AI Verse

Participants: Pierre Alliez, Abir Affane.

In collaboration with Guillaume Charpiat (Tau Inria project-team, Saclay) and AI Verse (Inria spin-off).

We pursued our collaboration with AI Verse on statistics for improving sampling quality, applied to the parameters of a generative model for 3D scenes. A postdoctoral fellow (Abir Affane) was hired in 2023 for an initial period of three years, but the position ended at the end of 2024. The research topic is described next. The AI Verse technology is designed to create infinitely many random and semantically consistent 3D scenes. Scene creation is fast, taking less than 4 seconds per labeled image. From these 3D scenes, the system builds high-quality synthetic images that come with rich labels which, unlike manually annotated labels, are unbiased (Figure 12). As with real data, no metric exists to evaluate how well a synthetic dataset performs for training a neural network. We thus tend to favor the photorealism of the images, but such a criterion is far from ideal. The current technology provides a means to control a rich list of additional parameters (quality of lighting, trajectory and intrinsic parameters of the virtual camera, selection and placement of assets, degrees of occlusion of the objects, choice of materials, etc.). Since the generation engine can modify all of these parameters at will to generate many samples, we will explore optimization methods for improving the sampling quality. Most likely, a set of samples generated randomly by the generative model does not cover the whole space of interesting situations well, because of unsuited sampling laws or of sampling realization issues in high dimensions. The main question is how to improve the quality of this generated dataset, which one would like to be close, in some sense, to the given target dataset (consisting of examples of images that one would like to generate). For this, statistical analyses of these two datasets and of their differences are required, in order to spot possible issues such as strongly under-represented areas of the target domain.
Then, sampling laws can be adjusted accordingly, possibly by optimizing their hyper-parameters, if any.
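One simple instance of this analysis, sketched under our own assumptions, compares the histogram of a single scene parameter (normalized to [0, 1]) in the generated and target datasets: bins where the target-to-generated density ratio is large are under-represented, and the same ratios give importance weights for resampling. The parameter, bin count and threshold are hypothetical; real use would involve many parameters and richer density estimators.

```python
import numpy as np


def histogram_reweighting(generated, target, bins=10, value_range=(0.0, 1.0), eps=1e-9):
    """Compare the distribution of one scene parameter in the generated and
    target datasets; return per-sample resampling weights and per-bin ratios."""
    edges = np.linspace(value_range[0], value_range[1], bins + 1)
    g_density, _ = np.histogram(generated, bins=edges, density=True)
    t_density, _ = np.histogram(target, bins=edges, density=True)
    ratio = t_density / (g_density + eps)  # ratio > 1: bin under-represented
    bin_of = np.clip(np.digitize(generated, edges) - 1, 0, bins - 1)
    weights = ratio[bin_of]
    return weights / weights.sum(), ratio


rng = np.random.default_rng(0)
generated = rng.uniform(0.0, 1.0, 5000)  # e.g. a lighting parameter, sampled uniformly
target = rng.uniform(0.8, 1.0, 1000)     # target images concentrate at high values
weights, ratio = histogram_reweighting(generated, target)
under_represented = np.flatnonzero(ratio > 2.0)
resampled = rng.choice(generated, size=2000, p=weights)
```

Instead of resampling existing samples, the same ratios could guide an adjustment of the sampling law itself, which is closer to the hyper-parameter optimization mentioned above.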

Beds generated by AI Verse technology.

9.2 Bilateral grants with industry

Adobe

Participants: Pierre Alliez.

We received an unrestricted grant from Adobe Research.

10 Partnerships and cooperations

10.1 European initiatives

10.1.1 H2020 projects

BIM2TWIN

Participants: Pierre Alliez, Rahima Djahel.

BIM2TWIN project on cordis.europa.eu

Title: BIM2TWIN: Optimal Construction Management & Production Control

Duration: From November 1, 2020 to April 30, 2024

Partners:

- INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France

- SPADA CONSTRUCTION (SPADA CONSTRUCTION), France

- AARHUS UNIVERSITET (AU), Denmark

- FIRA GROUP OY, Finland

- INTSITE LTD (INTSITE), Israel

- THE CHANCELLOR MASTERS AND SCHOLARS OF THE UNIVERSITY OF CAMBRIDGE, United Kingdom

- ORANGE SA (Orange), France

- UNISMART - FONDAZIONE UNIVERSITA DEGLI STUDI DI PADOVA (UNISMART), Italy

- FIRA RAKENNUS OY, Finland

- FUNDACION TECNALIA RESEARCH and INNOVATION (TECNALIA), Spain

- TECHNISCHE UNIVERSITAET MUENCHEN (TUM), Germany

- FLOW TECHNOLOGIES OY, Finland

- IDP INGENIERIA Y ARQUITECTURA IBERIA SL (IDP), Spain

- SITEDRIVE OY, Finland

- UNIVERSITA DEGLI STUDI DI PADOVA (UNIPD), Italy

- FIRA OY, Finland

- RUHR-UNIVERSITAET BOCHUM (RUHR-UNIVERSITAET BOCHUM), Germany

- CENTRE SCIENTIFIQUE ET TECHNIQUE DU BATIMENT (CSTB), France

- DANMARKS TEKNISKE UNIVERSITET (TECHNICAL UNIVERSITY OF DENMARK DTU), Denmark

- TECHNION - ISRAEL INSTITUTE OF TECHNOLOGY, Israel

- UNIVERSITA POLITECNICA DELLE MARCHE (UNIVPM), Italy

- ACCIONA CONSTRUCCION SA, Spain

- SIEMENS AKTIENGESELLSCHAFT, Germany

Inria contact: Pierre Alliez

Coordinator: CENTRE SCIENTIFIQUE ET TECHNIQUE DU BATIMENT (CSTB)

Summary:

BIM2TWIN aims to build a Digital Building Twin (DBT) platform for construction management that implements lean principles to reduce operational waste of all kinds, thereby shortening schedules, reducing costs, enhancing quality and safety, and reducing the carbon footprint. BIM2TWIN proposes a comprehensive, holistic approach. It consists of a DBT platform that provides full situational awareness and an extensible set of construction management applications. It supports a closed-loop Plan-Do-Check-Act mode of construction. Its key features are:

- Grounded conceptual analysis of data, information and knowledge in the context of DBTs, which underpins a robust system architecture

- A common platform for data acquisition and complex event processing to interpret multiple monitored data streams from the construction site and supply chain to establish real-time project status in a Project Status Model (PSM)

- Exposure of the PSM to a suite of construction management applications through an easily accessible application programming interface (API) and directly to users through a visual information dashboard

- Applications include monitoring of schedule, quantities & budget, quality, safety, and environmental impact.

- PSM representation based on a property graph semantically linked to the Building Information Model (BIM) and all project management data. The property graph enables flexible, scalable storage of raw monitoring data in different formats, as well as storage of interpreted information. It enables a smooth transition from construction to operation.
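To illustrate what a property graph linking BIM elements to monitoring data can look like, here is a minimal in-memory sketch. The node labels, the `OBSERVED_IN` relation, the property names and the sample records are all hypothetical and do not reflect the BIM2TWIN schema; a production PSM would rely on a graph database rather than Python lists.

```python
from dataclasses import dataclass, field


@dataclass
class PropertyGraph:
    """Minimal in-memory property graph: labeled nodes and typed edges,
    both carrying arbitrary key/value properties."""
    nodes: dict = field(default_factory=dict)  # node id -> (label, properties)
    edges: list = field(default_factory=list)  # (source, relation, target, properties)

    def add_node(self, node_id, label, **props):
        self.nodes[node_id] = (label, props)

    def add_edge(self, source, relation, target, **props):
        self.edges.append((source, relation, target, props))

    def targets(self, source, relation):
        return [t for s, r, t, _ in self.edges if s == source and r == relation]


def latest_status(graph, element_id):
    """Most recent observed status of a BIM element (hypothetical schema)."""
    observations = [graph.nodes[o][1] for o in graph.targets(element_id, "OBSERVED_IN")]
    if not observations:
        return "not observed"
    return max(observations, key=lambda p: p["date"])["status"]


# Hypothetical records: one wall of the BIM, observed in two site scans
psm = PropertyGraph()
psm.add_node("wall-12", "BimElement", ifc_type="IfcWall")
psm.add_node("scan-0301", "Observation", date="2023-03-01", status="formwork")
psm.add_node("scan-0315", "Observation", date="2023-03-15", status="installed")
psm.add_edge("wall-12", "OBSERVED_IN", "scan-0301", confidence=0.8)
psm.add_edge("wall-12", "OBSERVED_IN", "scan-0315", confidence=0.9)
print(latest_status(psm, "wall-12"))  # installed
```

Keeping raw observations as separate nodes, rather than overwriting a status field on the BIM element, is what lets such a graph store both raw monitoring data and interpreted information.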

BIM2TWIN is a broad, multidisciplinary consortium with hand-picked partners who together provide an optimal combination of knowledge, expertise and experience in a variety of monitoring technologies, artificial intelligence, computer vision, information schema and graph databases, construction management, equipment automation and occupational safety. The DBT platform will be tested on three demonstration sites (Spain, France and Finland).

10.2 National initiatives

10.2.1 3IA Côte d'Azur

Participants: Pierre Alliez, Nissim Maruani, François Protais.

Pierre Alliez holds a chair from 3IA Côte d'Azur (Interdisciplinary Institute for Artificial Intelligence). The topic of his chair is “3D modeling of large-scale environments for the smart territory”. In addition, he is the scientific head of the fourth research axis entitled “AI for smart and secure territories”. Two PhD students and one postdoctoral fellow have been funded by this program: Tong Zhao (Ecole des Ponts ParisTech, Master MVA, now at Dassault Systèmes Paris), Nissim Maruani (Ecole Polytechnique, Master MVA, third-year PhD student) and François Protais (postdoctoral fellow since December 2024).

10.2.2 Inria challenge ROAD-AI - with CEREMA

Participants: Pierre Alliez, Jacopo Iollo, Roberto Dyke.

The road network is one of the most important elements of public heritage. Road operators are responsible for maintaining, operating, upgrading, replacing and preserving this heritage, while carefully managing budgetary and human resources. Today, the infrastructure is being severely tested by climate change (increased frequency of extreme events, particularly flooding and landslides). Users are also extremely attentive to issues of safety and comfort linked to the use of infrastructure, as well as to environmental issues relating to infrastructure construction and maintenance. Roads and structures are complex systems: they comprise numerous sub-systems, their intrinsic characteristics evolve non-linearly, they are exposed to extreme events, and their very long lifespan subjects them to strong changes in use or constraints (e.g., climate change). This Inria challenge takes into account the following difficulties: volume and nature of data (heterogeneity, incompleteness, multiple sources, etc.), multiple scales of analysis (from the sub-systems of an asset to the complete national network), prediction of very local behaviors on the scale of a global network, complexity of road objects due to their operation and weather conditions, 3D modeling with semantization, and expert knowledge modeling. This Inria challenge aims to provide the scientific building blocks for upstream data acquisition and downstream decision support. Cerema's use cases for this challenge are divided into four parts:

- Build a dynamic “digital twin” of the road and its environment on the scale of a complete network;

- Model the behavioral "laws" of pavements and engineering structures using data from surface monitoring or structure visits, sensors and environmental data;

- Invent the concept of connected bridges and tunnels on a system scale;

- Define strategic investment and maintenance planning methods (predictive, prescriptive and autonomous).

10.2.3 PEPR NumPex

Participants: Pierre Alliez, Christos Georgiadis.

The Digital PEPR for the Exascale (NumPEx) aims to design and develop the software components and tools that will equip future exascale machines and to prepare the major application domains to fully exploit the capabilities of these machines. These major application domains include both scientific research and the industrial sector. This project therefore contributes to France's response to the next EuroHPC call for expressions of interest (AMI) (Exascale France Project), with a view to hosting one of the two exascale machines planned in Europe by 2024. The French consortium has chosen GENCI as its "Hosting Entity" and the CEA TGCC as its "Hosting Center". This PEPR contributes to building a set of tools, software and applications, as well as training, that will allow France to remain one of the leaders in the field by developing a national ecosystem for Exascale coordinated with the European strategy.

Our team is mainly involved in the first workpackage entitled “Model and discretize at Exascale”. The main motivation is that geometric representations and their discrete counterparts (such as meshes) are usually the starting point for simulation. Such representations must be adaptive, possibly multiresolution, robust to defects, and efficiently parallel for large-scale models. The physics-based models need to include multiple phenomena, or process couplings at multiple scales in space and time. Space and time adaptivity are then mandatory. Time integration requires special care to become more parallel, more asynchronous, and more accurate for long-time simulations. The requirement of adaptivity in geometry and physics creates an imbalance that needs to be overcome. AI-driven, data-driven, reduced-order, and more generally surrogate models are now mandatory and take various forms. Data must be understood in a broad sense: from observations of the real physical system to synthetic data generated by the physical models. Surrogates enable model evaluations that are orders of magnitude faster, by extracting and compressing salient features during a very intensive training/learning (supervised, unsupervised or reinforcement) phase. Research topics include: handling multiphysics and multiscale coupling, learning parametric dependencies, differential operators or underlying physical laws, mitigating intensive communications during the compression process, and exploiting the underlying computer and data architectures. Multi-fidelity models include hierarchies of models to provide a multi-fidelity problem-solving approach to address very intensive problems beyond the current computing capabilities. The challenge is to switch between representations to efficiently compute and handle bias in so-called “many-query” problems, which include design, optimization and other high-dimensional PDE problems.
For our team, this project is funding a postdoctoral fellow (Christos Georgiadis since May 2024) for one year, in collaboration with Strasbourg University.
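The surrogate idea (extracting and compressing salient features from many full-order solutions) can be illustrated with proper orthogonal decomposition (POD), one classical reduced-order technique. This is a generic sketch under our own assumptions, not a NumPEx deliverable: the parametric "solver" below is a hypothetical closed-form stand-in for an expensive simulation, and the energy threshold is illustrative.

```python
import numpy as np


def pod_basis(snapshots, energy=0.9999):
    """Proper orthogonal decomposition of a (n_dofs, n_snapshots) matrix:
    keep the fewest modes capturing `energy` of the centered variance."""
    mean = snapshots.mean(axis=1)
    U, s, _ = np.linalg.svd(snapshots - mean[:, None], full_matrices=False)
    captured = np.cumsum(s**2) / np.sum(s**2)
    r = min(int(np.searchsorted(captured, energy)) + 1, len(s))
    return mean, U[:, :r]


def encode(field_values, mean, basis):
    return basis.T @ (field_values - mean)  # r reduced coordinates


def decode(coords, mean, basis):
    return mean + basis @ coords


x = np.linspace(0.0, 1.0, 200)


def solve(mu):
    """Hypothetical parametric solver: u(x; mu) = mu sin(pi x) + mu^2 cos(pi x)."""
    return mu * np.sin(np.pi * x) + mu**2 * np.cos(np.pi * x)


snapshots = np.column_stack([solve(mu) for mu in np.linspace(0.5, 2.0, 20)])
mean, basis = pod_basis(snapshots)
u_new = solve(1.23)
u_rom = decode(encode(u_new, mean, basis), mean, basis)
```

Here 200 degrees of freedom are represented by two reduced coordinates; a full surrogate would additionally learn the map from the parameter to these coordinates, which is where the learning-based models mentioned above come in.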

10.2.4 PEPR IRiMa

Participants: Florent Lafarge, Zhenyu Zhu.

The exploratory IRiMa PEPR aims to formalize a "science of risk" to contribute to the development of a new strategy for the management of risks and disasters and their impacts in the context of global change. To achieve this, it implements a series of research projects and expert assessments (involving observation, analysis or decision support) to accelerate the transition to a society capable of facing a range of threats (hydro-climatic, telluric, technological, health-related, coupled), by adapting and becoming more resilient and sustainable. Meeting this challenge, made more pressing by climate change, requires consolidating, stimulating and coordinating the national research effort. The PEPR will seek to integrate knowledge from geoscience, engineering, biology, digital technology and the social sciences, taking a systemic approach to the management of natural and technological risks. It will propose innovative tools to better detect, understand, quantify, anticipate and manage risks and disasters, with a particular focus on cascade effects combining natural, environmental, technological, health and biological hazards.

Our team is involved in the Intelligent Mapping project of the IRiMa PEPR, to propose innovative solutions to object vectorization problems. Indeed, a central problem in the project is to describe objects of interest contained in remote sensing data, typically large-scale images, with vectorized representations such as polygons, planar graphs or networks of parametric curves. These compact and parametrizable representations are important for understanding, analyzing and simulating natural risks. One of the main difficulties in our context is that objects of interest can have various appearances and geometric specificities. For instance, buildings are surface objects whose boundaries are piecewise-linear, while roads or faults are networks of curved lines. In the literature, methods are usually specific to only one type of vector representation. For our team, this project has been funding a PhD student, Zhenyu Zhu, since October 2024.
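A classical building block for turning a pixel-level boundary into a compact piecewise-linear representation is Ramer-Douglas-Peucker simplification. The sketch below is that textbook algorithm on a hypothetical staircase-like building outline; it handles only the piecewise-linear case and is not the vectorization approach developed in the project, which also targets curved line networks.

```python
import numpy as np


def douglas_peucker(points, tolerance):
    """Simplify a 2D polyline: keep the vertex farthest from the chord if it
    deviates by more than `tolerance`, and recurse on both halves."""
    pts = np.asarray(points, dtype=float)
    if len(pts) < 3:
        return pts
    start, end = pts[0], pts[-1]
    seg = end - start
    seg_len = np.linalg.norm(seg)
    if seg_len == 0.0:  # closed polyline: use distance to the start point
        dist = np.linalg.norm(pts - start, axis=1)
    else:  # perpendicular distance to the chord (2D cross product)
        dist = np.abs(seg[0] * (pts[:, 1] - start[1]) - seg[1] * (pts[:, 0] - start[0])) / seg_len
    i = int(np.argmax(dist))
    if dist[i] <= tolerance:
        return np.array([start, end])
    left = douglas_peucker(pts[: i + 1], tolerance)
    right = douglas_peucker(pts[i:], tolerance)
    return np.vstack([left[:-1], right])  # drop the duplicated split vertex


# Jittered L-shaped outline, as obtained by tracing a raster building mask
outline = [(x, 0.1 * (x % 2)) for x in range(11)] + [(10 + 0.1 * (y % 2), y) for y in range(1, 11)]
print(douglas_peucker(outline, tolerance=0.5))
```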

11 Dissemination

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

Member of the organizing committees

- Florent Lafarge co-organized the CVPR workshop on Urban Scene Modeling in June 2024 in Seattle, US (150 attendees)

Member of the conference program committees

- Pierre Alliez was a committee member of the Eurographics Symposium on Geometry Processing, organized in Boston, July 2024.

Reviewer

- Pierre Alliez was a reviewer for ACM SIGGRAPH, CVPR and Eurographics Symposium on Geometry Processing.

- Florent Lafarge was a reviewer for CVPR, ECCV, ACM SIGGRAPH, NeurIPS and the AI4Space CVPR workshop.

11.1.2 Journal

Member of the editorial boards

- Pierre Alliez is the Editor in Chief of the Computer Graphics Forum (CGF), in tandem with Michael Wimmer until the end of 2025. CGF is one of the leading journals in Computer Graphics and the official journal of the Eurographics Association.

- Pierre Alliez is a member of the editorial board of the CGAL open source project.

- Florent Lafarge is an associate editor for the ISPRS Journal of Photogrammetry and Remote Sensing.

11.1.3 Invited talks

- Florent Lafarge gave an invited talk at the PFIA (Plate-Forme Intelligence Artificielle) conference 2024 (La Rochelle, France). The title was "Défense et IA - Reconstruction en 3D de scènes urbaines à partir de données aéroportées: quels progrès depuis 20 ans ?" (Defense and AI - 3D reconstruction of urban scenes from airborne data: what progress in 20 years?).

11.1.4 Scientific expertise

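- Florent Lafarge was a reviewer for the MESR (French Ministry of Higher Education and Research) for the CIR (crédit d'impôt recherche, research tax credit) and JEI (jeunes entreprises innovantes, young innovative companies) schemes.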

11.1.5 Research administration

- Pierre Alliez has been the president of the Inria Evaluation Committee since September 2023, in collaboration with Christine Morin and Luce Brotcorne. This role is a half-time position.

- Pierre Alliez has been the scientific head of the Inria-DFKI partnership since September 2022.

- Pierre Alliez has been the president of the CST (comité scientifique et technique, scientific and technical committee) of IGN (French Mapping Agency) since November 2024.

- Pierre Alliez is a member of the scientific committee of the 3IA Côte d'Azur.

- Florent Lafarge is a member of the NICE committee. The main tasks of the NICE committee are to review the scientific aspects of postdoctoral applications, and to give scientific opinions on candidates for national campaigns for postdoctoral stays, delegations and secondments, as well as on requests for long-term invitations.

- Florent Lafarge is a member of the technical committee of the UCA Academy of Excellence "Space, Environment, Risk and Resilience".

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Master: Pierre Alliez (with Gaétan Bahl and Armand Zampieri), Deep Learning, 32h, M2, Université Côte d'Azur, France.

- Master: Pierre Alliez (with Xavier Descombes, Marc Antonini and Laure Blanc-Féraud), advanced machine learning, 12h, M2, Université Côte d'Azur, France.

- Master: Florent Lafarge, Applied AI, 8h, M2, Université Côte d'Azur, France.

- Master: Florent Lafarge (with Marion Boyer and Angelos Mantzaflaris), Numerical Interpolation, 55h, M1, Université Côte d'Azur, France.

11.2.2 Supervision

- PhD defended in February 2024: Rao Fu, Surface reconstruction from raw 3D point clouds 22, funded by EU project GRAPES, in collaboration with Geometry Factory, advised by Pierre Alliez.

- PhD in progress: Jacopo Iollo, Sequential Bayesian Optimal Design, funded by Inria challenge ROAD-AI, in collaboration with CEREMA, since January 2022, co-advised by Florence Forbes (Inria Grenoble), Christophe Heinkele (CEREMA) and Pierre Alliez.

- PhD in progress: Marion Boyer, Geometric modeling of urban scenes with LOD2 formalism from satellite images, funded by CNES and Airbus, since October 2022, advised by Florent Lafarge.

- PhD in progress: Nissim Maruani, Learnable representations for 3D shapes, funded by 3IA, since November 2022, co-advised by Pierre Alliez and Mathieu Desbrun (Geomerix Inria project-team, Inria Saclay), and in collaboration with Maks Ovsjanikov (Ecole Polytechnique).

- PhD in progress: Armand Zampieri, Compression and visibility of 3D point clouds, Cifre thesis with Samp AI, since December 2022, co-advised by Pierre Alliez and Guillaume Delarue (Samp AI).

- PhD in progress: Moussa Bendjilali, From 3D point clouds to cognitive 3D models, Cifre thesis with AlteIA, since September 2023, co-advised by Pierre Alliez and Nicola Luminari (AlteIA).

- PhD in progress: Zhenyu Zhu, Object vectorization from remote sensing data, funded by PEPR IRiMa, since October 2024, advised by Florent Lafarge.

11.2.3 Juries

- Pierre Alliez was a reviewer for the PhD of Thanasis Zoumpekas (Barcelona University).

- Pierre Alliez was a member of the doctoral monitoring committee ("Comité de Suivi Doctoral") of Arnaud Gueze (Ecole Polytechnique), Davide Adamo (CEPAM and Université Côte d'Azur) and Stefan Larsen (Acentauri Inria project-team).

- Florent Lafarge was a reviewer for the PhDs of Yuchen Bai (University of Grenoble Alpes), Weixiao Gao (TU Delft) and Maxim Khomiakov (Technical University of Denmark).

- Florent Lafarge chaired the PhD thesis committee of Mohamed Cherif (Université Côte d'Azur).

- Florent Lafarge was a member of the doctoral monitoring committee ("Comité de Suivi Doctoral") for the PhD thesis of Amine Ouasfi (Mimetic Inria project-team).

11.3 Popularization

11.3.1 Participation in Live events

Pierre Alliez gave a live demo on learnable representations for 3D shapes at the Fête de la Science in Antibes Juan-les-Pins in October 2024.

12 Scientific production

12.1 Major publications

- [1] KIPPI: KInetic Polygonal Partitioning of Images. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, United States, June 2018.

- [2] Kinetic Shape Reconstruction. ACM Transactions on Graphics, 2020. Note: This project was partially funded by Luxcarta Technology. We thank our anonymous reviewers for their input, Qian-Yi Zhou and Arno Knapitsch for providing us datasets from the Tanks and Temples benchmark (Meeting Room, Horse, M60, Barn, Ignatius, Courthouse and Church), and Pierre Alliez, Mathieu Desbrun and George Drettakis for their help and advice. We are also grateful to Liangliang Nan and Hao Fang for sharing comparison materials. Datasets Full thing, Castle, Tower of Pi and Hilbert cube originate from Thingi10K; Hand, Rocker Arm, Fertility and Lans from Aim@Shape; and Stanford Bunny and Asian Dragon from the Stanford 3D Scanning Repository.

- [3] Optimal Voronoi Tessellations with Hessian-based Anisotropy. ACM Transactions on Graphics, December 2016, 12.

- [4] Towards large-scale city reconstruction from satellites. European Conference on Computer Vision (ECCV), Amsterdam, Netherlands, October 2016.

- [5] Curved Optimal Delaunay Triangulation. ACM Transactions on Graphics 37(4), August 2018, 16.

- [6] Approximating shapes in images with low-complexity polygons. CVPR 2020 - IEEE Conference on Computer Vision and Pattern Recognition, Seattle / Virtual, United States, June 2020.

- [7] Isotopic Approximation within a Tolerance Volume. ACM Transactions on Graphics 34(4), 2015, 12.

- [8] Variance-Minimizing Transport Plans for Inter-surface Mapping. ACM Transactions on Graphics 36, 2017, 14.

- [9] Alpha Wrapping with an Offset. ACM Transactions on Graphics 41(4), June 2022, 1-22.

- [10] Semantic Segmentation of 3D Textured Meshes for Urban Scene Analysis. ISPRS Journal of Photogrammetry and Remote Sensing 123, 2017, 124-139.

- [11] LOD Generation for Urban Scenes. ACM Transactions on Graphics 34(3), 2015, 15.

- [12] Entropy-driven Progressive Compression of 3D Point Clouds. Computer Graphics Forum 43(5), 2024.

- [13] Variational Shape Reconstruction via Quadric Error Metrics. SIGGRAPH 2023 - The 50th International Conference & Exhibition On Computer Graphics & Interactive Techniques, Los Angeles, United States, August 2023.

12.2 Publications of the year

International journals

International peer-reviewed conferences

Doctoral dissertations and habilitation theses

Reports & preprints