2024 Activity report: Project-Team CHROMA

RNSR: 201521334D

- Research centers: Inria Lyon Centre, Inria Centre at Université Grenoble Alpes

- In partnership with: Institut national des sciences appliquées de Lyon

- Team name: Cooperative and Human-aware Robot Navigation in Dynamic Environments

- In collaboration with: Centre d'innovation en télécommunications et intégration de services

- Domain: Perception, Cognition and Interaction

- Theme: Robotics and Smart environments

Keywords

Computer Science and Digital Science

- A1.3.2. Mobile distributed systems

- A1.5.2. Communicating systems

- A2.3.1. Embedded systems

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.3. Reinforcement learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.1. Human-Computer Interaction

- A5.4.1. Object recognition

- A5.4.2. Activity recognition

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A5.4.6. Object localization

- A5.4.7. Visual servoing

- A5.10.2. Perception

- A5.10.3. Planning

- A5.10.4. Robot control

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.6. Swarm robotics

- A5.10.7. Learning

- A5.11.1. Human activity analysis and recognition

- A6.1.2. Stochastic Modeling

- A6.1.3. Discrete Modeling (multi-agent, people centered)

- A6.2.3. Probabilistic methods

- A6.2.6. Optimization

- A6.4.1. Deterministic control

- A6.4.2. Stochastic control

- A6.4.3. Observability and Controllability

- A8.2. Optimization

- A8.2.1. Operations research

- A8.2.2. Evolutionary algorithms

- A8.11. Game Theory

- A9.2. Machine learning

- A9.5. Robotics

- A9.6. Decision support

- A9.7. AI algorithmics

- A9.9. Distributed AI, Multi-agent

- A9.10. Hybrid approaches for AI

Other Research Topics and Application Domains

- B5.2.1. Road vehicles

- B5.6. Robotic systems

- B7.1.2. Road traffic

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientists

- Christian Laugier [INRIA, Emeritus]

- Agostino Martinelli [INRIA, Researcher]

- Alessandro Renzaglia [INRIA, Researcher]

- Baudouin Saintyves [INRIA, ISFP, from Nov 2024]

Faculty Members

- Olivier Simonin [Team leader, INSA LYON, Professor]

- Christine Solnon [INSA LYON, Professor, until Aug 2024]

- Anne Spalanzani [UGA, Professor]

Post-Doctoral Fellows

- Jacopo Castellini [INSA LYON, Post-Doctoral Fellow, until Feb 2024]

- Xiao Peng [INSAVALOR, Post-Doctoral Fellow, from Apr 2024 until Aug 2024]

- Johan Peralez [INSA LYON, Post-Doctoral Fellow]

PhD Students

- Rabbia Asghar [INRIA]

- Théotime Balaguer [INSA LYON]

- Kaushik Bhowmik [INRIA]

- Aurélien Delage [INSA LYON, ATER, until Aug 2024]

- Manuel Diaz Zapata [INRIA, until Oct 2024]

- Simon Ferrier [INRIA, from Sep 2024]

- Thomas Genevois [POLE EMPLOI, until Jun 2024]

- Jean-Baptiste Horel [POLE EMPLOI, from Dec 2024]

- Jean-Baptiste Horel [INRIA, until Sep 2024]

- Pierre Marza [LIRIS, until Aug 2024]

- Xiao Peng [INSAVALOR, until Feb 2024]

- Florian Rascoussier [IMT ATLANTIQUE, until Aug 2024]

- Gustavo Andres Salazar Gomez [INRIA]

- Benjamin Sportich [INRIA]

Technical Staff

- Thierry Arrabal [INSA LYON, Engineer, until Jul 2024]

- Hugo Bantignies [INRIA, Engineer]

- Robin Baruffa [INRIA, Engineer]

- Kenza Boubakri [INRIA, Engineer, from Oct 2024]

- David Brown [INRIA, Engineer]

- Johan Faure [INRIA, Engineer, until Feb 2024]

- Simon Ferrier [INSAVALOR, Engineer, until Mar 2024]

- Romain Fontaine [INSAVALOR, Engineer, until Mar 2024]

- Wenqian Liu [INRIA, Engineer, until Oct 2024]

- Khushdeep Singh Mann [INRIA, Engineer, until Oct 2024]

- Damien Rambeau [INSAVALOR, Engineer, until Sep 2024]

- Lukas Rummelhard [INRIA, Engineer, SED member]

- Nicolas Valle [INRIA, Engineer, (half-time)]

- Stéphane d'Alu [INSA LYON, Engineer]

Interns and Apprentices

- Jules Cassan [FIL, Intern, from Mar 2024 until Aug 2024]

- Simon Ferrier [INRIA, Intern, from Jun 2024 until Aug 2024, pre-thesis]

- Alessio Masi [INRIA, Intern, from Apr 2024 until Aug 2024]

- Chanattan Sok [INRIA, Intern, from Sep 2024]

Administrative Assistants

- Anouchka Ronceray [INRIA]

- Linda Soumari [INSA Lyon]

Visiting Scientist

- Etienne Villemure [UNIV SHERBROOKE, from Nov 2024]

External Collaborators

- Jilles Dibangoye [UNIV GRONINGEN]

- Fabrice Jumel [CPE LYON]

- Jacques Saraydaryan [CPE LYON]

2 Overall objectives

2.1 Origin of the project

Chroma is a bi-localized project-team at Inria Lyon and Inria Grenoble (in the Auvergne-Rhône-Alpes region). The project was launched in 2015 and officially became an Inria project-team on December 1st, 2017. It brings together experts in perception and decision-making for mobile robotics and intelligent transport, all of whom share common approaches that mainly relate to the fields of Artificial Intelligence and Control. It was originally founded by members of the working group on robotics at CITI lab1, led by Prof. Olivier Simonin (INSA Lyon2), and members of the Inria project-team eMotion (2002-2014), led by Christian Laugier, at Inria Grenoble. The academic members of the team are Olivier Simonin (Prof., INSA Lyon), Anne Spalanzani (Prof., UGA), Christian Laugier (Inria researcher emeritus, Grenoble), Agostino Martinelli (Inria researcher CR, Grenoble), Alessandro Renzaglia (Inria researcher CR, Lyon, since 2021) and Baudouin Saintyves (Inria researcher ISFP, Lyon, since 2024). Before joining the University of Groningen (NL) as Professor, Jilles Dibangoye was a member from 2015 to 2023 (as Asso. Prof., INSA Lyon). Christine Solnon (Prof., INSA Lyon) was also a member of the team from 2020 to 2024. Jacques Saraydaryan and Fabrice Jumel, lecturer-researchers at CPE Lyon, have been associate members since 2015.

The overall objective of Chroma is to address fundamental and open issues that lie at the intersection of the emerging research fields called “Human Centered Robotics” 3, “Multi-Robot Systems” 4, and AI for humanity.

More precisely, our goal is to design algorithms and models that allow autonomous agents to perceive, decide, learn, and finally adapt to their environment. A focus is given to unknown and human-populated environments, where robots or vehicles have to navigate and cooperate to fulfill complex tasks.

In this context, recent advances in embedded computational power, sensor and communication technologies, and miniaturized mechatronic systems make the required technological breakthroughs possible.

2.2 Research themes

To address the mentioned challenges, we take advantage of recent advances in: probabilistic methods, machine learning, planning and optimization methods, multi-agent decision making, and swarm intelligence. We also draw inspiration from other disciplines such as Sociology, to take into account human models, or Physics/Biology, to design self-organized robots.

Chroma research is organized in two thematic axes: i) Perception and Situation Awareness, and ii) Decision Making. We elaborate on these axes below.

- Perception and Situation Awareness.

This axis aims at understanding complex dynamic scenes, involving mobile objects and human beings, by exploiting prior knowledge and streams of perceptual data coming from various sensors.

To this end, we investigate three complementary research problems:

- Bayesian & AI based Perception: How to interpret in real time a complex dynamic scene perceived using a set of different sensors, and how to predict the near-future evolution of this dynamic scene and the related collision risks? How to extract the semantic information and process it for the autonomous navigation step?

- Modeling and simulation of dynamic environments: How to model or learn the behavior of dynamic agents (pedestrians, cars, cyclists...) in order to better anticipate their trajectories?

- Robust state estimation: Acquire a deep understanding of several sensor fusion problems and investigate their observability properties in the case of unknown inputs.

- Decision making.

This second axis aims to design algorithms and architectures that can achieve both scalability and quality for decision making in intelligent robotic systems and more generally for problem solving.

Our methodology builds upon advantages of three (complementary) approaches: planning & control, machine learning, and swarm intelligence.

- Planning under constraints: In this theme we study planning algorithms for a single mobile robot or a fleet of mobile robots facing complex and dynamic environments, i.e. environments populated by humans and/or mostly unknown. Approaches include heuristics and exact methods (e.g. constraint programming).

- Machine learning: We search for efficient and adaptive behaviours based on deep & reinforcement learning methods to solve complex single or multi-agent decision-making tasks.

- Decentralized models: This theme explores decentralised algorithms and models for the control of multi-agent/multi-robot systems. In particular, we study swarm robotics for its ability to generate systems that are self-organising, robust and adaptive to perturbations.

Chroma is also concerned with applications and transfer of the scientific results. Our main applications include autonomous and connected vehicles, service robotics, and exploration & mapping tasks with ground and aerial robots. Chroma is currently involved in several projects in collaboration with automobile companies (Renault, Toyota) and robotics companies such as Enchanted Tools (see Section 4).

The team has its own robotic platforms to support the experimentation activity (see5). In Grenoble, we have two experimental vehicles equipped with various sensors: a Toyota Lexus and a Renault Zoe; the Zoe car was automated in December 2016. We have also developed two experimental test tracks, one at Inria Grenoble (including connected roadside sensors and a controlled dummy pedestrian) and one at IRT Nanoelec & CEA Grenoble (including a road intersection with traffic lights and several pieces of urban road equipment). In Lyon, we have a fleet of UAVs (Unmanned Aerial Vehicles) composed of 5 PX4 Vision, 3 IntelAero and 10 Crazyflie mini-UAVs (with a Lighthouse localization system). We also have a fleet of ground robots composed of 16 Turtlebot robots and 3 Pepper humanoids. These platforms are maintained and developed by contractual engineers and by SED engineers of the team.

3 Research program

3.1 Introduction

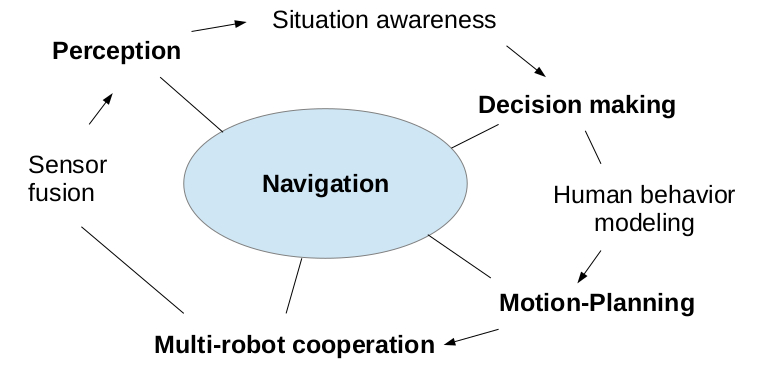

The Chroma team aims to deal with different issues of autonomous navigation for single and multiple robots: perception, decision-making and cooperation. Figure 1 outlines the different themes and sub-themes investigated by Chroma.

Research themes of the team: Sensor fusion, perception, situation awareness, decision making, motion planning, multi-robot cooperation

Below, we present our approaches to these research themes and how they combine to contribute to the general problem of robot navigation. Chroma pays particular attention to the problem of autonomous navigation in highly dynamic environments populated by humans and to cooperation in multi-robot systems. We share this goal with other major robotics laboratories/teams in the world, such as the Autonomous Systems Lab at ETH Zurich, the Robotic Embedded Systems Laboratory at USC, KIT 6 (Prof. Christoph Stiller lab and Prof. Ruediger Dillmann lab), UC Berkeley, Vislab Parma (Prof. Alberto Broggi), and the iCeiRA 7 laboratory in Taipei, to cite a few. Chroma collaborates at various levels (visits, postdocs, research projects, common publications, etc.) with most of these laboratories.

3.2 Perception and Situation Awareness

Project-team positioning

The team carries out research across the full (software) stack of an autonomous driving system, from perception of the environment to motion forecasting and trajectory optimization. While most of the techniques that we develop can stand on their own and are typically tested on our experimental platforms, we are gradually moving towards their complete integration, which will result in a functioning autonomous driving software stack. Beyond that point, we plan to focus our research on developing new methods that improve the individual performance of each of the stack’s components, as well as the global performance. This is similar to how autonomous driving companies operate (e.g. Waymo, Cruise, Tesla). The research topics that we explore are addressed by a very long list of international research laboratories and private companies. However, in terms of scope, we find the research groups of Prof. Dr.-Ing. Christoph Stiller (KIT), Prof. Marcelo Ang (NUS), Prof. Daniela Rus (MIT) and Prof. Wolfram Burgard (TRI California) to be the closest to us; we have active interconnections with these research groups (e.g. in the scope of the IEEE RAS Technical Committee AGV-ITS, where we co-chair many of the related annual workshops). At the national level, we cooperate in the framework of several R&D projects with UTC, Institut Pascal Clermont-Ferrand, University Gustave Eiffel, and the Inria teams ASTRA, Acentauri and Convecs. The originality of our research lies in the combination of Bayesian approaches, AI technologies, human-vehicle interaction models, a robust state estimation formalism, and a mix of real experiments and formal methods for validating our technologies in complex real-world dynamic scenarios.

Our research on visual-inertial sensor fusion is closely related to the research carried out by the group of Prof. Stergios Roumeliotis (University of Minnesota) and the group of Prof. Davide Scaramuzza (University of Zurich). Our originality in this context is the introduction of closed-form solutions instead of filter-based approaches.

Collaborations

- Main long-term industrial collaborations: IRT Nanoelec & CEA (10 years), Toyota Motor Europe (12 years, R&D center Zaventem-Brussels), Renault (10 years), SME Easymile (3 years), Sumitomo Japan (started in 2019), Iveco (3 years in the framework of the STAR project). These collaborations are funded either by industry or by national, regional or European projects. The main outputs are common publications, software development, patents and technological transfers. Two former doctoral students of our team were recruited some years ago by the TME Zaventem and Renault R&D centers, respectively.

- Main academic collaborations: Institut Pascal Clermont-Ferrand, University Compiegne (UTC), University Gustave Eiffel, several Inria Teams (ASTRA, Acentauri, Convecs, Rainbow). These collaborations are most of the time conducted in the scope of various National R&D projects (FUI, PIA, ANR, etc.). The main outputs are scientific and software exchanges. In addition, we had a fruitful collaboration with B. Mourrain from the Inria-Aromath team. The goal was to find the analytical solution of a polynomial equation system that fully characterizes the cooperative visual-inertial sensor fusion problem with 2 agents.

- International cooperation for scientific exchanges and the organization of IEEE scientific events, e.g. with NUS & NTU Singapore, Peking University, TRI Mountain View, KIT Karlsruhe, Coimbra University. We have constantly interacted with Prof. D. Scaramuzza from the University of Zurich to make our findings in visual-inertial sensor fusion more usable in a realistic context (e.g., for the autonomous navigation of drones).

3.3 Decision Making

Project-team positioning

In his reference book Planning Algorithms 56, S. LaValle discusses the different dimensions that make motion planning a complex problem: the number of robots, the obstacle regions, the uncertainty of perception and action, and the allowable velocities. In particular, it is emphasized that multi-robot planning in complex environments is an NP-hard problem, which implies that exact approaches have exponential worst-case time complexity (unless P=NP). Moreover, dynamic and uncertain environments, such as human-populated ones, add to this complexity. In this context, we aim at scaling up decision-making in human-populated environments and in multi-robot systems, while dealing with the intrinsic limits of the robots and machines (computation capacity, limited communication). To address these challenges, we explore new algorithms and AI architectures following three directions: online planning/self-organization for real-time decision/control, machine learning to adapt to the complexity of uncertain environments, and combinatorial optimization to deal with offline constrained problems. Combining these approaches, seeking to scale them up, and evaluating them on real platforms are also elements of originality of our work.

We share these goals with other laboratories/teams in the world, such as the Mobile Robotics Laboratory and Intelligent Systems "IntRoLab" at Sherbrooke University (Canada) led by Prof. F. Michaud, the Learning Agents Research Group (LARG) within the AI Lab at the University of Texas (Austin) led by Prof. P. Stone, the Autonomous Systems Lab at ETH Zurich (Switzerland) led by R. Siegwart, the Robotic Sensor Networks Lab at the University of Minnesota (USA) led by Prof. V. Isler, and the Autonomous Robots Lab at NTNU (Norway), to cite a few. At Inria, we share some of our objectives with the LARSEN team in Nancy (multi-agent machine learning, multi-robot planning) and the RAINBOW team in Rennes (multi-UAV planning). We also collaborate with the ACENTAURI team, in Sophia Antipolis, on autonomous navigation among humans. In France, among other labs working on similar subjects, we can cite the LAAS (CNRS, Toulouse), the ISIR lab (CNRS, Paris Sorbonne Univ.) and the MAD team at Caen University. In the more generic domain of problem solving, we can mention the TASC team at LS2N (Nantes), the CRIL lab (Lens) and, at the international level, the Insight Centre for Data Analytics (Cork, Ireland).

Collaborations

- Social navigation: collaboration with Julie Dugdale and Dominique Vaufreydaz, Asso. Prof. at LIG Laboratory and with Prof. Philippe Martinet from Acentauri Inria team (common PhD students and projects: ANR VALET, ANR HIANIC, ANR ANNAPOLIS).

- Multi-robot exploration: Prof. Cedric Pradalier from GeorgiaTech Metz/CNRS (France) leading the European project BugWright2 — Prof. Luca Schenato from University of Padova (Italy).

- Swarm of UAVs: Prof. Isabelle Guérin-Lassous from LIP lab/Lyon 1 (common PhD students and projects: ANR CONCERTO, INRIA/DGA DynaFlock) — Research Director Isabelle Fantoni from CNRS/LS2N Lab in Nantes (common projects: ANR CONCERTO, Equipex TIRREX, ANR VORTEX)

- Machine Learning: strong collaborations with Christian Wolf, Naver Labs Europe & LIRIS lab/INSA8 (common PhD students and projects: ANR DELICIO, ANR AI Chair REMEMBER), with Laetitia Matignon, Asso. Prof. at LIRIS lab/Univ Lyon 1 (common projects and PhD/Master students, e.g. BPI/ANR SOLAR-Nav), and with François Charpillet and Olivier Buffet, Inria researchers in the LARSEN team, Nancy (common publications, projects such as ANR PLASMA, BPI/ANR SOLAR-Nav).

- Semantic grids for autonomous navigation: Collaboration with Toyota Motor Europe (TME), R&D center Zaventem (Brussels). R&D contracts, common publications and patents.

- Constrained optimization: Omar Rifki (Post-doc) and Thierry Garaix (Asso. Prof.) at LIMOS Ecole des Mines de Saint Etienne; Pascal Lafourcade and François Delobel, Asso. Prof. at LIMOS Université Clermont-Ferrand.

4 Application domains

Applications in Chroma are organized in two main domains: i) Future cars and transportation of persons and goods in cities, and ii) Service robotics with ground and aerial robots. These domains correspond to the experimental fields initiated in Grenoble (eMotion team) and in Lyon (CITI lab). However, the scientific objectives described in the previous sections are intended to apply equally to both application domains. Although our work on Bayesian Perception is today applied to the intelligent vehicle domain, we aim to generalize it to any mobile robot. The same applies to the work on multi-agent decision making: we aim to apply our algorithms to any fleet of mobile robots (service robots, connected vehicles, UAVs). This has been the philosophy of the team since its creation.

the Toyota Lexus, the autonomous version of the Renault Zoe car, the Pepper humanoid, the PX4 Vision UAV


4.1 Future cars and transportation systems

Thanks to the introduction of new sensor and ICT technologies in cars and in mass transportation systems, and also to the pressure of the economic and security requirements of our modern society, this application domain is quickly changing. Various technologies are currently being developed by both research and industrial laboratories. These technologies are progressively reaching maturity, as witnessed by the results of large-scale experiments and challenges such as Google’s car project and several future product announcements made by the car industry. Moreover, the legal issue is starting to be addressed in the USA (see for instance the recent laws in Nevada and in California authorizing autonomous vehicles on roads) and in several other countries (including France).

In this context, we are interested in the development of ADAS 9 systems aimed at improving the comfort and safety of car users (e.g., ACC, emergency braking, danger warnings), of Fully Autonomous Driving functions for controlling the movements of private or public vehicles in some particular driving situations and/or in some equipped areas (e.g., automated car parks or captive fleets in downtown centers or private sites), and of Intelligent Transport, including the optimization of existing transportation solutions.

In this context, Chroma has two experimental vehicles equipped with various sensors (a Toyota Lexus and a Renault Zoe, see Fig. 2), which are maintained by Inria-SED 10 and allow the team to carry out experiments in realistic traffic conditions (urban, road and highway environments). The Zoe car was automated in December 2016 through our collaboration with the team of P. Martinet (IRCCyN Lab, Nantes), which enables new experiments in the team.

4.2 Service robotics with ground and aerial robots

Service robotics is a quickly emerging application domain, and more and more industrial companies (e.g., IS-Robotics, Samsung, LG) are now commercializing service and intervention robotics products such as vacuum cleaner robots, drones for civil applications, entertainment robots, etc. One of the main challenges is to propose robots that are sufficiently robust and autonomous, easily usable by non-specialists, and marketed at a reasonable cost. We are involved in developing fleets of ground and aerial robots, see Fig. 2. Since 2019, we have studied solutions for 3D observation/exploration of complex scenes or environments with a fleet of UAVs (e.g. BugWright2 H2020 project, ANR AVENUE, ANR VORTEX).

A more recent challenge for the coming decade is to develop robotized systems for assisting elderly and/or disabled people. In the continuity of our work in the IPL PAL 11, we aim to propose smart technologies to assist electric wheelchair users in their movements and also to control autonomous cars in human crowds (see Figure 5 for illustration). Another emerging application is humanoid robots helping humans at home or at work. In this context, we address the problem of NAMO (Navigation Among Movable Obstacles) in indoor environments. More generally, we address navigation and object search with humanoid robots. This is the case with the recent BPI/ANR SOLAR-Nav project involving the mobile semi-humanoid Mirokai robot from our partner Enchanted Tools (2024-27).

5 Social and environmental responsibility

5.1 Footprint of research activities

- Some of our research topics involve high CPU usage. Some researchers in the team use the Grid5000 computing cluster, which guarantees efficient management of computing resources and facilitates the reproducibility of experiments. Some researchers in the team keep a log summarizing all the uses of computing servers in order to be able to make statistics on these uses and assess their environmental footprint more finely.

5.2 Impact of research results

- Romain Fontaine's thesis aims to develop algorithms for the efficient optimization of delivery rounds in the city. Our work is motivated by taking into account environmental constraints, set out in the report "Assurer le fret dans un monde fini" from The Shift Project (theshiftproject.org/wp-content/uploads/2022/03/Fret_rapport-final_ShiftProject_PTEF.pdf)

- Léon Fauste's thesis focuses on the design of a decision support tool to choose the scale when relocating production activities (work in collaboration with the Inria STEEP team). This resulted in a publication in collaboration with STEEP at ROADEF 49 and the joint organization of a workshop on the theme "Does techno-solutionism have a future?" during the Archipel conference, which was organized in June 2022 (archipel.inria.fr/).

- Christine Solnon has co-organized in Lyon (with Sylvain Bouveret, Nadia Brauner, Pierre Fouilhoux, Alexandre Marié, and Michael Poss), a one day workshop on environmental and societal challenges of decision making, on the 17th of November (sponsored by GDR RO and GDR IA).

- Chroma will organize in 2024 the national Archipel conference (archipel.inria.fr) on anthropocene challenges.

- Some of us are strongly involved in the development of INSA Lyon training courses to integrate DDRS issues (Christine Solnon is a member of the CoPil and the GTT on environmental and social digital issues). This participation feeds our reflections on the subject.

6 Highlights of the year

6.1 Awards and appointments

- Best Paper award at the JFSMA 2024 conference: J. Saraydaryan, F. Jumel, O. Simonin 31.

- O. Simonin has been appointed as member of the scientific board of the GDR Robotique (starting 1/1/2025).

6.2 Recruitments and new collaborations

- Recruitment of Baudouin Saintyves, as ISFP Inria, in Lyon, who brings a new research topic in Chroma on Modular Robotics, and a collaboration with the University of Chicago.

- We obtained an Inria Associated Team with Univ. of Sherbrooke/3IT Institute (Canada). This project, called 'ROBLOC', is co-headed by François Michaud (Prof. U. Sherbrooke) and Olivier Simonin (CHROMA).

6.3 New projects

- BPI/ANR Defi Transfert 'SOLAR-NAV' (3,7M€) "SOcial Logistics Advanced Robotic NAVigation". Partners are Chroma/Inria Lyon (1,2M€), Enchanted Tools company, Lyon CHU/Station H, and Univ. Lyon 3 (2024-2028). Anne Spalanzani is the PI of the first 2 years (research part).

7 New software, platforms, open data

7.1 New software

7.1.1 CMCDOT

- Functional Description:

CMCDOT is a Bayesian filtering system for dynamic occupancy grids, allowing parallel estimation of occupancy probabilities for each cell of a grid, inference of velocities, prediction of the risk of collision and association of cells belonging to the same dynamic object. It is the latest generation of a suite of Bayesian filtering methods developed in the Inria eMotion team, then in the Inria Chroma team (BOF, HSBOF, ...). It integrates the management of hybrid sampling methods (classical occupancy grids for static parts, particle sets for dynamic parts) into a unified Bayesian programming formalism, while incorporating elements resembling the Dempster-Shafer theory (an "unknown" state, allowing a focus of computing resources). It also offers a projection of the estimated scene into the near future, to identify potential collisions with the ego-vehicle or any other element of the environment, as well as a very low-cost pre-segmentation of coherent dynamic spaces (taking speeds into account). It takes as input instantaneous occupancy grids generated by sensor models for different sources. The system is composed of a ROS package, which manages I/O connectivity and encapsulates the core of the application, embedded and optimized on Nvidia GPUs (CUDA), allowing real-time analysis of the direct environment on embedded boards (Tegra X1, X2). ROS (Robot Operating System) is a set of open source tools to develop software for robotics. Developed in an automotive setting, these techniques can be exploited in all areas of mobile robotics, and are particularly suited to the management of highly dynamic and uncertain environments (e.g. urban scenarios with pedestrians, cyclists, cars, buses, etc.).

- Contact: Christian Laugier
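To make the idea of a per-cell Bayesian occupancy estimate concrete, here is a minimal sketch of fusing instantaneous occupancy grids from several sensors in log-odds form. It is only an illustration of the static occupancy-grid part: it is not the CMCDOT implementation and includes no velocity inference, particle sets, "unknown" state or GPU code. The function names, the independence assumptions and the 0.5 sensor-model prior are all assumptions made for this example.

```python
import math


def logit(p, eps=1e-6):
    """Log-odds of a probability, clamped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def fuse_occupancy(prior, sensor_grids):
    """Fuse per-sensor occupancy grids into a posterior grid, cell by cell.

    Assumes cells and sensors are independent and that each sensor
    model was built with a 0.5 prior (hypothetical simplifications).
    """
    rows, cols = len(prior), len(prior[0])
    posterior = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            log_odds = logit(prior[r][c])
            for grid in sensor_grids:
                log_odds += logit(grid[r][c])  # add each sensor's evidence
            posterior[r][c] = 1.0 / (1.0 + math.exp(-log_odds))
    return posterior


# Two hypothetical 2x2 instantaneous grids (values are made up).
prior = [[0.5, 0.5], [0.5, 0.5]]
lidar = [[0.9, 0.5], [0.2, 0.5]]
camera = [[0.8, 0.5], [0.3, 0.7]]
posterior = fuse_occupancy(prior, [lidar, camera])
```

Each cell is updated independently of the others, which is what makes this kind of update a natural fit for a parallel (e.g. GPU) implementation.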

7.1.2 Ground Elevation and Occupancy Grid Estimator (GEOG - Estimator)

- Functional Description:

GEOG-Estimator is a system for the joint estimation of the shape of the ground, in the form of a Bayesian network of constrained elevation nodes, and of the ground-obstacle classification of a point cloud. Starting from an unclassified 3D point cloud, it consists of a set of expectation-maximization methods computed in parallel on the network of elevation nodes, integrating the constraints of spatial continuity as well as the influence of 3D points, classified as ground or obstacles. Once the ground model is generated, the system can then construct an occupancy grid, taking into account the classification of 3D points and the actual height of these impacts. Mainly used with lidars (Velodyne64, Quanergy M8, IBEO Lux), the approach can be generalized to any type of sensor providing 3D point clouds. In the case of lidars, moreover, free-space information between the source and the 3D point can be integrated into the construction of the grid, as well as the height at which the laser passes through the area (taking into account the height of the laser in the sensor model). The system is applicable to all areas of mobile robotics and is particularly suitable for unknown environments. GEOG-Estimator was originally developed to allow optimal integration of 3D sensors in systems using 2D occupancy grids, taking into account the orientation of sensors and arbitrary ground shapes. The ground model generated can be used directly, whether for mapping or as a pre-calculation step for methods of obstacle recognition or classification. Designed to be efficient (real-time) in the context of embedded applications, the entire system is implemented on Nvidia graphics cards (in CUDA) and optimized for Tegra X2 embedded boards. To ease interconnections with the sensor outputs and other perception modules, the system is implemented using ROS (Robot Operating System), a set of open source tools for robotics.

- Contact: Christian Laugier
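The second stage described above (building a 2D occupancy grid from points once a ground model is available) can be sketched as follows. This is a simplified illustration under strong assumptions: the ground model is given as a known function rather than estimated by expectation-maximization, and the names `classify_and_rasterize`, `ground_height`, `obstacle_margin` and the probability constants are hypothetical, not taken from GEOG-Estimator.

```python
UNKNOWN, FREE, OCCUPIED = 0.5, 0.1, 0.9  # illustrative probabilities


def classify_and_rasterize(points, ground_height, grid_shape=(4, 4),
                           cell_size=1.0, obstacle_margin=0.2):
    """Classify 3D points as ground/obstacle and fill a 2D occupancy grid.

    `ground_height(x, y)` stands in for the estimated ground model;
    a point is an obstacle if it lies more than `obstacle_margin`
    above the ground at its (x, y) location.
    """
    rows, cols = grid_shape
    grid = [[UNKNOWN] * cols for _ in range(rows)]
    for x, y, z in points:
        r, c = int(y // cell_size), int(x // cell_size)
        if not (0 <= r < rows and 0 <= c < cols):
            continue  # point falls outside the grid
        if z - ground_height(x, y) > obstacle_margin:
            grid[r][c] = OCCUPIED  # obstacle evidence takes precedence
        elif grid[r][c] != OCCUPIED:
            grid[r][c] = FREE  # ground hit: cell is likely traversable
    return grid


def sloped_ground(x, y):
    """Hypothetical ground model: a gentle slope along x."""
    return 0.1 * x


points = [
    (0.5, 0.5, 0.05),  # on the ground
    (2.5, 1.5, 1.00),  # well above the ground: obstacle
    (3.5, 3.5, 0.40),  # high in absolute terms, but on the slope
    (9.0, 9.0, 0.00),  # outside the grid, ignored
]
grid = classify_and_rasterize(points, sloped_ground)
```

The third point shows why classifying against the local ground elevation matters: with a flat-ground assumption and the same margin, a point at height 0.40 would be labeled an obstacle.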

7.1.3 Zoe Simulation

- Name: Simulation of INRIA's Renault Zoe in Gazebo environment

- Keyword: Simulation

- Functional Description:

This simulation represents the Renault Zoe vehicle, taking into account realistic physical phenomena (friction, sliding, inertia, ...). The simulated vehicle embeds sensors similar to those of the actual vehicle, which provide measurement data in the same format. Moreover, the software inputs and outputs are identical to the vehicle's. Therefore, any program executed on the vehicle can be used with the simulation, and vice versa.

- Contact: Christian Laugier

7.1.4 Hybrid-state E*

- Name: Path planning with Hybrid-state E*

- Keywords: Planning, Robotics

- Functional Description:

Considering a vehicle with the kinematic constraints of a car and an environment represented by a probabilistic occupancy grid, this software produces a path from the initial position of the vehicle to its destination. The computed path may include complex maneuvers if necessary. However, the suggested path is often the simplest and shortest one.

This software is designed to take advantage of Bayesian occupancy grids such as the ones computed by the CMCDOT software.

- URL:

-

Contact:

Christian Laugier

-

Partner:

CEA

7.1.5 Pedsim_ros_AV

-

Name:

Pedsim_ros_AV

-

Keywords:

Simulator, Multi-agent, Crowd simulation, Autonomous Cars, Pedestrian

-

Scientific Description:

These ROS packages support robotic developments that require the simulation of pedestrians and an autonomous vehicle in various shared space scenarios. They make it possible: 1. in simulation, to pre-test autonomous vehicle navigation algorithms in various crowd scenarios, 2. in real crowds, to help online prediction of pedestrian trajectories around the autonomous vehicle.

Individual pedestrian model in shared space (perception, distraction, personal space, pedestrians standing, trip purpose). Model of pedestrians in social groups (couples, friends, colleagues, family). Autonomous car model. Pedestrian-autonomous car interaction model. Definition of shared space scenarios: 3 environments (business zone, campus, city centre) and 8 crowd configurations.

-

Functional Description:

Simulation of pedestrians and an autonomous vehicle in various shared space scenarios. Adaptation of the original Pedsim_ros model to simulate heterogeneous crowds in shared spaces (individuals, social groups, etc.). The car model is integrated into the simulator and the interactions between pedestrians and the autonomous vehicle are modeled. The autonomous vehicle can be controlled from inside the simulation or from outside the simulator by ROS commands.

- URL:

- Publications:

-

Contact:

Manon Predhumeau

-

Participants:

Manon Predhumeau, Anne Spalanzani, Julie Dugdale, Lyuba Mancheva

-

Partner:

LIG

7.1.6 S-NAMO-SIM

-

Name:

S-NAMO Simulator

-

Keywords:

Simulation, Navigation, Robotics, Planning

-

Functional Description:

ROS-compatible 2D simulator of NAMO (Navigation Among Movable Obstacles) and MR-NAMO (Multi-Robot NAMO) algorithms.

-

Release Contributions:

Creation

-

Contact:

Olivier Simonin

7.1.7 SimuDronesGR

-

Name:

Simulation of UAV fleets with Gazebo/ROS

-

Keywords:

Robotics, Simulation

-

Functional Description:

The simulator includes the following functionality:

1) Simulation of the mechanical behavior of an Unmanned Aerial Vehicle:

- Modeling of the body's aerodynamics with lift, drag and moment

- Modeling of the rotors' aerodynamics using the expressions of the forces and moments from Philippe Martin's and Erwan Salaün's 2010 IEEE Conference on Robotics and Automation paper "The True Role of Accelerometer Feedback in Quadrotor Control"

2) Ground-truth information:

- Position in the East-North-Up reference frame

- Linear velocity in the East-North-Up and Front-Left-Up reference frames

- Linear acceleration in the East-North-Up and Front-Left-Up reference frames

- Orientation from the East-North-Up reference frame to the Front-Left-Up reference frame (quaternions)

- Angular velocity of the Front-Left-Up reference frame, expressed in the Front-Left-Up reference frame

3) Simulation of the following sensors:

- Inertial Measurement Unit with 9DoF (Accelerometer + Gyroscope + Orientation)

- Barometer using an ISA model for the troposphere (valid up to 11 km above Mean Sea Level)

- Magnetometer with the Earth's magnetic field declination

- GPS antenna with a geodesic map projection
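
The barometer item above relies on the International Standard Atmosphere (ISA) for the troposphere. As a rough illustration only, and not the simulator's actual code, the following minimal sketch evaluates the standard ISA pressure-altitude relation and its inverse. The function and constant names are hypothetical; the numerical constants are the usual ISA sea-level values.

```python
# Minimal ISA troposphere sketch (illustrative names, not taken from SimuDronesGR).
P0_PA = 101325.0        # sea-level standard pressure [Pa]
T0_K = 288.15           # sea-level standard temperature [K]
LAPSE_K_PER_M = 0.0065  # tropospheric temperature lapse rate [K/m]
G0 = 9.80665            # standard gravity [m/s^2]
R_AIR = 287.05287       # specific gas constant of dry air [J/(kg K)]
TROPOPAUSE_M = 11000.0  # upper validity limit of this model [m above MSL]

# Exponent g0 / (L * R) of the barometric formula, about 5.256.
EXPONENT = G0 / (LAPSE_K_PER_M * R_AIR)


def isa_pressure(altitude_m):
    """Static pressure [Pa] at a given altitude [m above MSL], troposphere only."""
    if not 0.0 <= altitude_m <= TROPOPAUSE_M:
        raise ValueError("this ISA sketch is only valid between 0 and 11 km")
    temperature = T0_K - LAPSE_K_PER_M * altitude_m
    return P0_PA * (temperature / T0_K) ** EXPONENT


def isa_altitude(pressure_pa):
    """Inverse relation: altitude [m above MSL] from a static pressure [Pa]."""
    return (T0_K / LAPSE_K_PER_M) * (1.0 - (pressure_pa / P0_PA) ** (1.0 / EXPONENT))
```

A simulated barometer would typically add sensor noise and bias on top of `isa_pressure`; these are deliberately left out of this sketch.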

-

Release Contributions:

Initial version

-

Contact:

Olivier Simonin

-

Partner:

Insa de Lyon

7.1.8 spank

-

Name:

Swarm Protocol And Navigation Kontrol

-

Keyword:

Protocols

-

Functional Description:

Communication and distance measurement in a UAV swarm.

- URL:

-

Contact:

Stéphane D'alu

-

Participant:

Stéphane D'alu

7.2 New platforms

7.2.1 Chroma Aerial Robots platform

Participants: Olivier Simonin, Alessandro Renzaglia, Johan Faure.

This platform is composed of:

- Four PX4 Vision quadrotor UAVs (Unmanned Aerial Vehicles), acquired in 2021 and 2022. This platform is funded and used in the projects "Dynaflock" (Inria-DGA) and ANR "CONCERTO" (the team also owns 5 Parrot Bebop UAVs).

- 10 Crazyflie mini-drones and a Lighthouse localization system, acquired in 2024.

- Two outdoor inflatable aviaries of 6 m (L) x 4 m (W) x 5 m (H) each.

- Two indoor inflatable aviaries of 5 m (L) x 3 m (W) x 2.5 m (H) each.

7.2.2 Barakuda

Participants: Lukas Rummelhard, Thomas Genevois, Robin Baruffa, Hugo Bantignies, Nicolas Turro.

Within the scope of the ANR Challenge MOBILEX, a 4-wheel all-terrain Barakuda robot from Shark Robotics has been provided to the team. It will be equipped with sensors and computing capabilities to achieve increasing levels of autonomy.

8 New results

8.1 Bayesian Perception

Participants: Lukas Rummelhard, Robin Baruffa, Hugo Bantignies, Nicolas Turro, Jean-Baptiste Horel, Alessandro Renzaglia, Anne Spalanzani, Christian Laugier.

8.1.1 Evolution of the CMCDOT framework

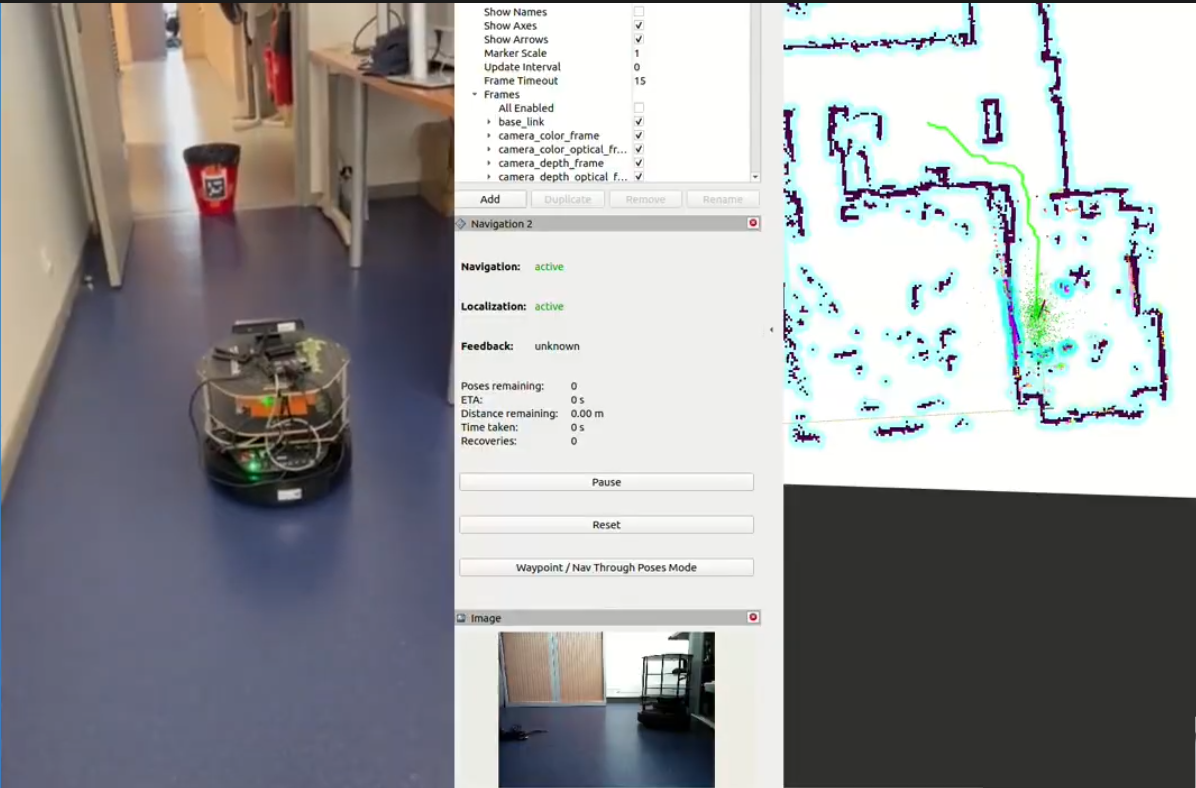

Participants: Robin Baruffa, Hugo Bantignies, Nicolas Turro, Lukas Rummelhard.

Recognized as one of the core technologies developed within the team over the years (see the related sections in previous Chroma activity reports and, before that, e-Motion reports), the CMCDOT framework is a generic Bayesian perception framework designed to estimate a dense representation of dynamic environments and the associated risks of collision by fusing and filtering multi-sensor data. The whole perception system has been developed, implemented and tested on embedded devices, incorporating new key modules over time. Now extended to 3D for grid fusion and incorporating semantic states in the whole process, this framework and the corresponding software are at the core of many important industrial partnerships and academic contributions, and will be the subject of important developments in terms of both research and engineering. This year, the activity focused mainly on engineering issues. Work on the software and technological base has brought much stronger modularity and robustness, integrating the latest reference developments of the mobile robotics community into our solutions:

- Migration from ROS1 to ROS2: the migration was finally decided and carried out this year. It involved porting all the developed perception and navigation software, along with all the embedded GPU memory transfer optimizations.

- Integration in the Nav2 framework: the perception system has been interfaced with the Nav2 framework and the navigation system integrated into it. The modularity and flexibility of Nav2 make it possible to develop specific bricks progressively while benefiting from a set of proven generic functionalities.

- Overall software stability: in synergy with the maturation efforts to spin off a start-up based on these technologies, major steps have been taken to industrialize the software.

8.1.2 Validation of AI-based algorithms in autonomous vehicles

Participants: Jean-Baptiste Horel, Alessandro Renzaglia, Khushdeep Mann, Radu Mateescu [Convecs], Wendelin Serwe [Convecs], Christian Laugier.

In recent years, there has been an increasing demand for regulating and validating intelligent vehicle systems to ensure their correct functioning and build public trust in their use. Yet, the analysis of safety and reliability poses a significant challenge. More and more solutions for autonomous driving are based on complex AI-based algorithms whose validation is particularly challenging to achieve. An important part of our work has been devoted to tackling this problem, finding new approaches to validate probabilistic perception algorithms for autonomous driving, and investigating how simulations can be combined with real experiments via our augmented reality framework to solve this challenge. This activity started with our participation in the European project Enable-S3 (2016-2019) and continued with the PRISSMA project (2021-2024), in which we participated in collaboration with the Inria CONVECS team. This project, funded by the French government in the framework of the “Grand Défi: Sécuriser, certifier et fiabiliser les systèmes fondés sur l'intelligence artificielle”, brought together several companies and public institutes to tackle the challenges of the validation and certification of artificial intelligence based solutions for autonomous mobility.

Within this context, our main effort has focused on proposing a new approach that, given a high-level and human-interpretable description of a critical situation, generates relevant AV scenarios and uses them for automatic verification. To achieve this goal, we integrate two recently proposed methods, one for generation and one for verification, that are both based on formal verification tools. First, we proposed the use of formal conformance test generation tools to derive, from a verified formal model, sets of scenarios to be run in a simulator 54. Second, we model check the traces of the simulation runs to validate the probabilistic estimation of collision risks. Using formal methods brings the combined advantages of an increased confidence in the correct representation of the chosen configuration (temporal logic verification), a guarantee of the coverage and relevance of automatically generated scenarios (conformance testing), and an automatic quantitative analysis of the test execution (verification and statistical analysis on traces) 55. The last part of the project, in 2024, was mainly dedicated to carrying out an experimental campaign at the Transpolis testing facility to showcase the main results of this work 25.

8.2 Situation Awareness & Decision-making for Autonomous Vehicles

Participants: Christian Laugier, Manuel Alejandro Diaz-Zapata, Alessandro Renzaglia, Jilles Dibangoye, Anne Spalanzani, Wenqian Liu, Abhishek Tomy, Khushdeep Singh Mann, Rabbia Asghar, Lukas Rummelhard, Gustavo Salazar-Gomez, Olivier Simonin.

In this section, we present all the novel results in the domains of perception, motion prediction and decision-making for autonomous vehicles.

8.2.1 Situation Understanding and Motion Forecasting

Participants: Kaushik Bhowmik, Anne Spalanzani.

Forecasting the motion of surrounding traffic is one of the key challenges in the quest to achieve safe autonomous driving technology. Current state-of-the-art deep forecasting architectures are capable of producing impressive results. However, in many cases, they also output completely unreasonable trajectories, making them unsuitable for deployment. In 2023, we started to work on predicting the motion of heterogeneous agents in urban environments (K. Bhowmik's PhD). The goal is to predict the behavior of pedestrians, cyclists, and even electric scooters. Using deep learning methods and existing large datasets of urban scenes, we plan to build robust models of various agents. When their motion is highly unpredictable, we aim to warn the autonomous vehicle that a specific zone around it could be dangerous.

8.2.2 Cross-dataset evaluation of Semantic Grid Generation Models

Participants: Manuel Alejandro Diaz-Zapata [PhD. student], Wenqian Liu, Robin Baruffa, Christian Laugier, Jilles Dibangoye, Olivier Simonin.

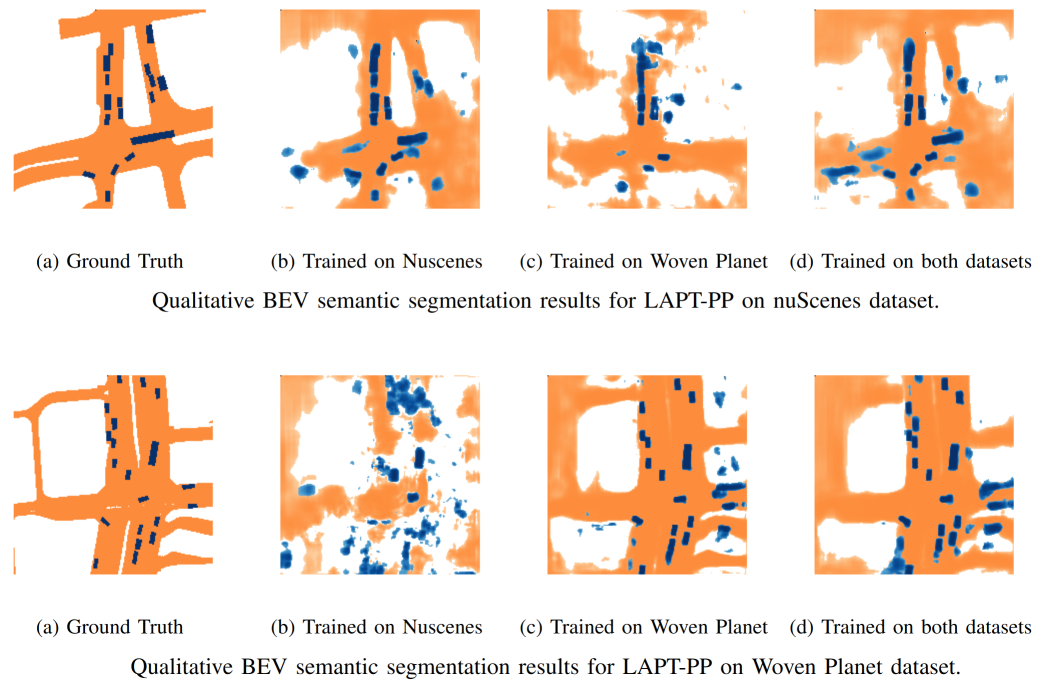

A comprehensive cross-dataset evaluation framework for Bird's-Eye View (BEV) semantic segmentation was introduced in 2024 through the BEVal study 23. This work addresses a critical gap in BEV segmentation research, which historically focused on single-dataset evaluation (typically nuScenes), potentially leading to overly specialized models that may fail when faced with different environments or sensor configurations. The study evaluated three state-of-the-art BEV segmentation approaches across different sensor modalities: LSS (camera-only), LAPT (early camera-LiDAR fusion), and LAPT-PP (late camera-LiDAR fusion). Testing was conducted across the nuScenes and Woven Planet datasets, examining performance on three key semantic categories: vehicles, humans, and drivable areas (see Fig. 3). A notable finding was that models heavily dependent on LiDAR data, particularly LAPT-PP, showed significant performance degradation in cross-dataset scenarios, with up to 80% reduction in IoU scores. In contrast, camera-based models demonstrated better generalization capabilities across datasets.

The research also explored multi-dataset training, combining both datasets during the training phase, which showed promising results with models achieving more balanced performance across both datasets. Particularly noteworthy was the improvement in human segmentation performance on the Woven Planet dataset, which saw a 10-15% increase when trained with the more diverse nuScenes data included. The study's findings underscore the importance of cross-dataset validation in developing robust BEV segmentation models for autonomous driving applications. The results suggest that while sensor fusion approaches can achieve high performance on individual datasets, their generalization capabilities may be limited by dataset-specific characteristics, particularly in LiDAR configurations. This highlights the need for diverse training data and robust validation strategies in developing reliable autonomous driving systems.
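
To make the metric behind these comparisons concrete, the following minimal sketch computes the Intersection over Union (IoU) of two binary BEV grids for one semantic class, together with the relative drop between an in-domain and a cross-dataset score. It is a generic illustration with hypothetical function names, not the evaluation code of the BEVal study, and the grids used when calling it are assumed to be plain lists of 0/1 rows.

```python
import math


def iou(pred, target):
    """Intersection over Union of two binary grids given as lists of 0/1 rows."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # IoU is undefined when the class is absent from both grids.
    return intersection / union if union else math.nan


def relative_drop(in_domain_iou, cross_dataset_iou):
    """Fraction of the in-domain IoU lost when evaluating on another dataset."""
    return (in_domain_iou - cross_dataset_iou) / in_domain_iou
```

With this definition, a model whose IoU falls from 0.5 on its training dataset to 0.1 on an unseen dataset exhibits a relative drop of 80%.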

Qualitative Results for Cross-dataset Validation

8.2.3 AI-driven Motion Planning and Temporal Multi-Modality Sensor Fusion for Semantic Grid Prediction

Participants: Gustavo Salazar-Gomez, Manuel Alejandro Diaz-Zapata, Wenqian Liu, Christian Laugier, Anne Spalanzani, Khushdeep Singh Mann.

In autonomous driving, accurately perceiving the environment despite occlusions is vital for safe and reliable navigation. TLCFuse was designed to enhance occlusion-aware semantic segmentation in autonomous driving scenarios. By integrating temporal cues and fusing multi-modal inputs from LiDAR, cameras, and ego-motion, TLCFuse creates robust bird's-eye-view (BEV) semantic grids capable of detecting occluded objects. The model processes temporal multi-step inputs using a transformer-based encoder-decoder architecture to extract spatio-temporal features effectively. Evaluated on the nuScenes dataset, TLCFuse outperforms state-of-the-art methods, particularly in scenarios with occlusions. The model's adaptability is further demonstrated in downstream tasks, including multi-step BEV prediction and ego-vehicle trajectory forecasting, showcasing its potential for broader applications in autonomous driving systems. This work was presented at the IEEE Intelligent Vehicles Symposium (IV) 2024 30.

In the context of our cooperation with Toyota Motor Europe, we focus on developing advanced and reliable approaches for autonomous driving capabilities, promoting ethical and explainable decision making.

Working on AI-driven motion planning for autonomous vehicles was the main focus in 2024 with the start of Gustavo Salazar's PhD. The main objective is to propose an AI-driven framework for Trajectory Planning & Decision-making for autonomous driving, which takes into account the knowledge provided by the perception and tracking modules and interactions with other agents to plan the optimal and safest trajectory, avoiding the obstacles and complying with the traffic rules.

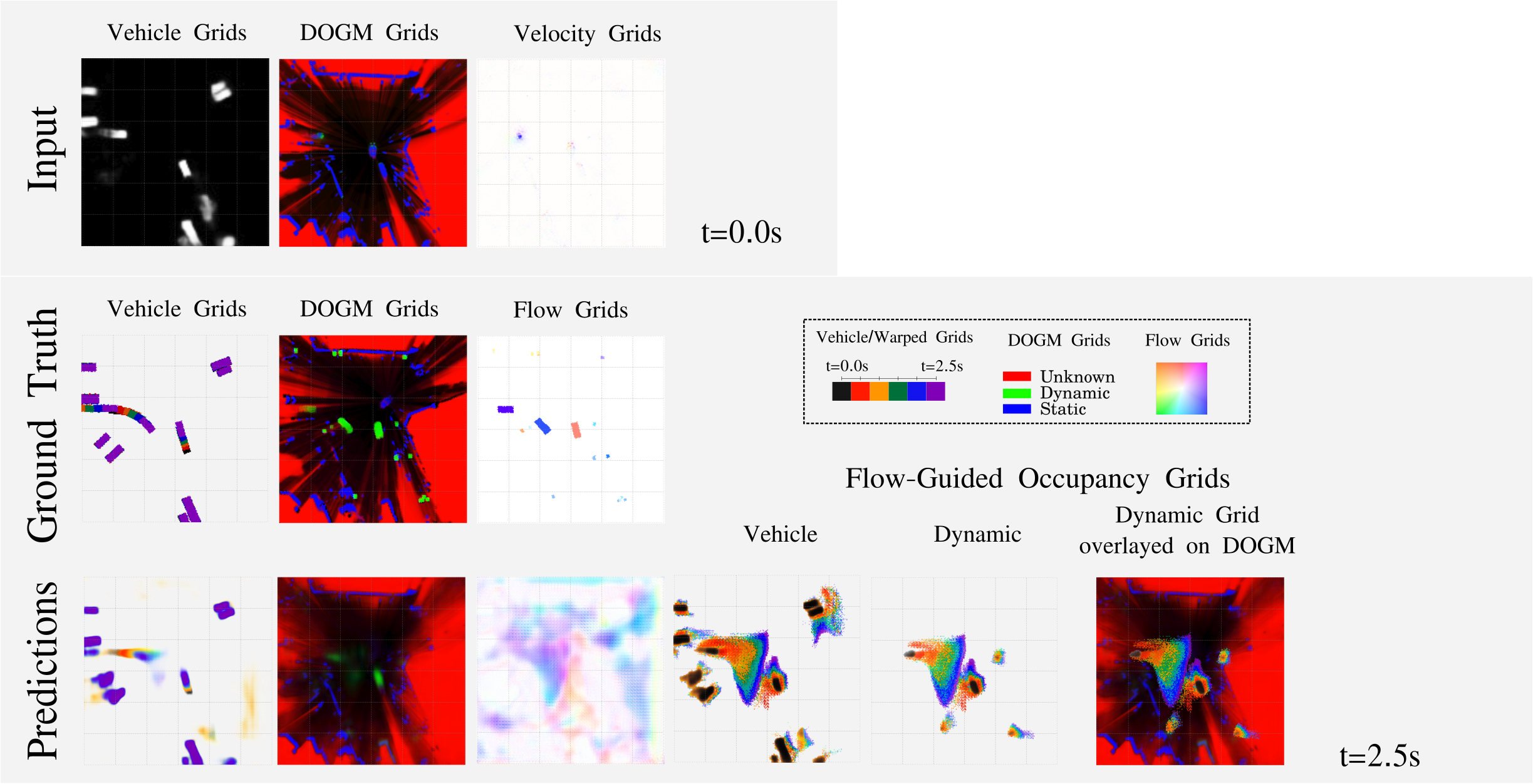

8.2.4 Dynamic Scene Prediction for Urban Driving Scene Using Occupancy Grid Maps

Participants: Rabbia Asghar [PhD. student], Lukas Rummelhard, Anne Spalanzani, Christian Laugier.

Accurate prediction of driving scenes is essential for road safety and autonomous driving. This remains a challenging task due to uncertainty in sensor data, the complex behaviors of agents, and the possibility of multiple feasible futures. Over the course of Rabbia Asghar's PhD, we have addressed the prediction problem by representing the scene as dynamic occupancy grid maps (DOGMs) and integrating semantic information. We employ a deep learning-based spatiotemporal approach to predict the evolution of the scene and learn complex behaviours.

Carrying this work forward, in 2024 we considered the prediction of flow, or velocity vectors, in the scene. We proposed a novel multi-task framework that leverages dynamic OGMs and semantic information to predict both future vehicle semantic grids and the future flow of the scene. This incorporation of semantic flow not only offers intermediate scene features but also enables the generation of warped semantic grids. Evaluation on the real-world nuScenes dataset demonstrates improved prediction capabilities and an enhanced ability of the model to retain dynamic vehicles within the scene. This work was published at IEEE ITSC 2024 21.

Building on these results, we proposed a novel approach that brings together agent-agnostic scene predictions and agent-specific predictions. This work not only focuses on predicting the behaviors of vehicle agents but also identifies other dynamic entities within the scene and anticipates their possible evolutions. We predict scene evolution as agent semantic grids, dynamic occupancy grid maps, and scene flow grids. Leveraging flow-guided prediction, these grids enable diverse future predictions for vehicles and dynamic elements while also capturing inter-grid dependencies through specialized loss functions. Evaluations on the real-world nuScenes and Woven Planet datasets demonstrate superior prediction performance for dynamic vehicles and generic dynamic scene elements compared to baseline methods (see Fig. 4). This work was presented in a poster session at IEEE IROS 2024 41.

Complex driving scene forecasting

8.3 Robust state estimation (Sensor fusion)

Participants: Agostino Martinelli.

In 2024, the primary contribution of Dr. Martinelli's research was the application of the general solution to the unknown input observability problem found in 2022 57, and of the solution to the parameter identifiability problem found in 2023, to widely studied biological models of HIV dynamics. This application not only uncovered significant errors in the existing literature but also revealed new properties of the model, including insights from its variants. The results were published in the Journal of Theoretical Biology 16.

Additionally, Dr. Martinelli made significant improvements to the general solution of the unknown input observability problem, initially proposed in 2022. This enhancement, referred to as the "canonicalization process," was published in the journal Information Fusion 40 and directly on HAL 15.

The solutions from 2022 (unknown input observability) and 2023 (time-varying parameter identifiability), originally derived from robotics-related problems, have proven to be both powerful and versatile. Their application in 2024 to biological models, such as HIV dynamics, highlights their broad utility and impact, enabling the correction of key errors in the current state of the art.

8.4 Online planning and learning for robot navigation

8.4.1 Motion-planning in dense pedestrian environments

Participants: Thomas Genevois, Anne Spalanzani.

Under the coordination of Anne Spalanzani, we study new motion planning algorithms that allow robots and vehicles to navigate in human-populated environments while predicting pedestrians' motions. We model pedestrian and crowd behaviors using notions of social forces and cooperative behavior estimation. We investigate new methods to build safe and socially compliant trajectories using these behavior models. We propose proactive navigation solutions as well as deep learning ones.

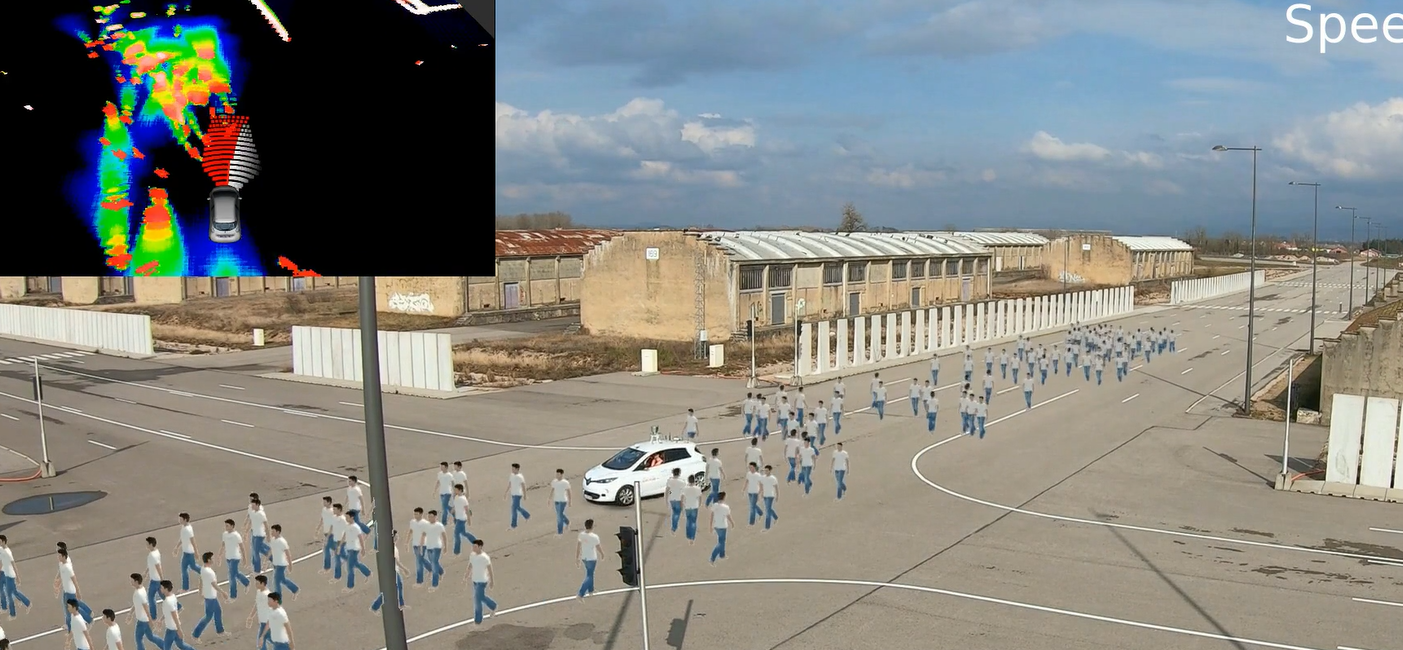

Since 2018, we have been working on modelling crowds and autonomous vehicles using Extended Social Force Models (in the scope of Manon Predhumeau's PhD) and on Proactive Navigation in dense human-populated environments (in the scope of Maria Kabtoul's PhD). The focus of the first work has been on the realistic simulation of crowds in shared spaces with an autonomous vehicle (AV), see Fig. 5.a. We proposed an agent-based model for pedestrian reactions to an AV in a shared space, based on empirical studies and the state of the art. The model includes an AV with the characteristics of a Renault Zoe car, and pedestrians' reactions to the vehicle. The model extends the Social Force Model with a new decision model, which integrates various observed reactions of pedestrians and pedestrian groups. The SPACiSS simulator is an open-source simulator still used by the team. The focus of the second work was a pedestrian-vehicle interaction behavioral model and a proactive navigation algorithm. The model estimates the pedestrian's cooperation with the vehicle in an interaction scenario by a quantitative time-varying function. Using this cooperation estimate, the pedestrian's trajectory is predicted by a cooperation-based trajectory planning model. We then used this cooperation-based behavioral model to design a proactive longitudinal velocity controller.

In 2023, Thomas Genevois worked on improving the previous work on this topic. He proposed improvements to the SPACiSS simulator, as well as a human- and interaction-aware collision avoidance method that can be used in the global architecture of the Zoe car. He then linked the SPACiSS simulator to his Augmented Reality simulator so that navigation strategies among crowds can be tested using the real Zoe car 52. He defended his PhD in June 2024.

A scene of an Autonomous Vehicle with pedestrians

8.4.2 Attention Graph for Multi-Robot Social Navigation with Deep Reinforcement Learning

Participants: Jacques Saraydaryan, Laetitia Matignon (Lyon1/LIRIS), Olivier Simonin.

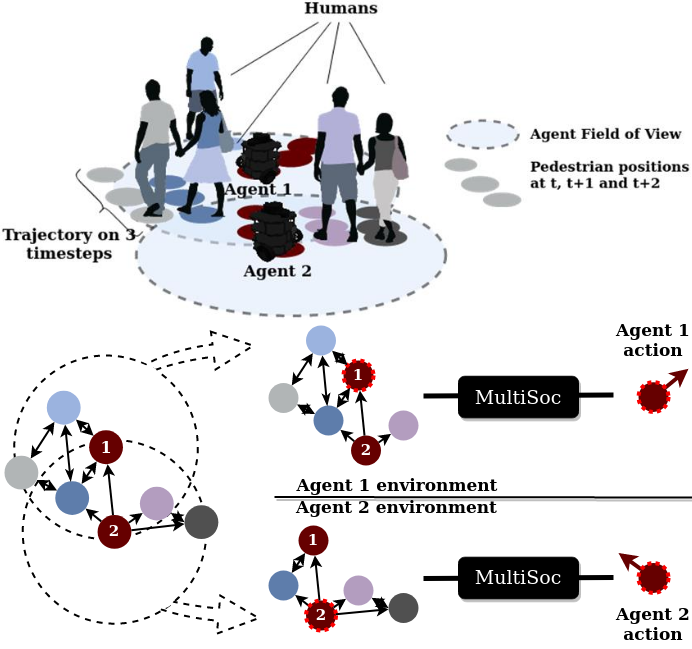

Multisoc architecture

Learning robot navigation strategies among pedestrians is crucial for domain-based applications. Combining perception, planning and prediction makes it possible to model the interactions between robots and pedestrians, resulting in impressive outcomes, especially with recent approaches based on deep reinforcement learning (RL). However, these works do not consider multi-robot scenarios.

In this work, we define MultiSoc, a new method for learning multi-agent socially aware navigation strategies using RL (see illustration in Fig. 6). Inspired by recent works on multi-agent deep RL, our method leverages a graph-based representation of agent interactions, combining the positions and fields of view of entities (pedestrians and agents). Each agent uses a model based on two Graph Neural Networks combined with attention mechanisms. First, an edge-selector produces a sparse graph, then a crowd coordinator applies node attention to produce a graph representing the influence of each entity on the others. This is incorporated into a model-free RL framework to learn multi-agent policies. We evaluated our approach in simulation and provide a series of experiments under various conditions (number of agents/pedestrians). Empirical results show that our method learns faster than mono-agent social navigation deep RL techniques, and enables efficient implicit multi-agent coordination in challenging crowd navigation with multiple heterogeneous humans. Furthermore, by incorporating customizable meta-parameters, we can adjust the neighborhood density taken into account in our navigation strategy. This work was published at the AAMAS 2024 conference 24. We are now continuing this work with a postdoc supported by the SOLAR-Nav project, starting in 2025.

8.4.3 Deep-Learning for vision-based navigation

Participants: Pierre Marza (PhD. student CITI & LIRIS), Christian Wolf (NaverLabs europe), Olivier Simonin, Laetitia Matignon (LIRIS/Lyon 1).

PhD thesis of Pierre Marza (2021-24). In the context of the REMEMBER AI Chair, Pierre Marza's PhD studied high-level navigation skills for autonomous agents in 3D environments.

In this work, we focus on training neural agents able to perform semantic mapping, both from an augmented supervision signal and with proposed neural-based scene representations. Neural agents are often trained with Reinforcement Learning (RL) from a sparse reward signal. Guiding the learning of scene mapping abilities by augmenting the vanilla RL supervision signal with auxiliary spatial reasoning tasks is shown to help with navigating efficiently. Instead of improving the training signal of neural agents, we showed how incorporating specific neural-based representations of semantics and geometry within the architecture of the agent can help improve performance in goal-driven navigation 58. Since 2023, we have studied how to best explore a 3D environment in order to build neural-based representations of space that are as satisfying as possible according to robotics-oriented metrics. This work was published at the IROS 2024 conference 26. Finally, we moved from navigation-only agents trained to generalize to new environments to multi-task agents (see the CVPR 2024 publication 27). Pierre Marza defended his PhD thesis on November 25th, 2024 35.

8.5 Multi-Robot path planning and mapping

8.5.1 Navigation Among Movable Obstacles (NAMO): extension to multi-robot and social constraints

Participants: Jacques Saraydaryan, Olivier Simonin, David Brown (Ing. Inria).

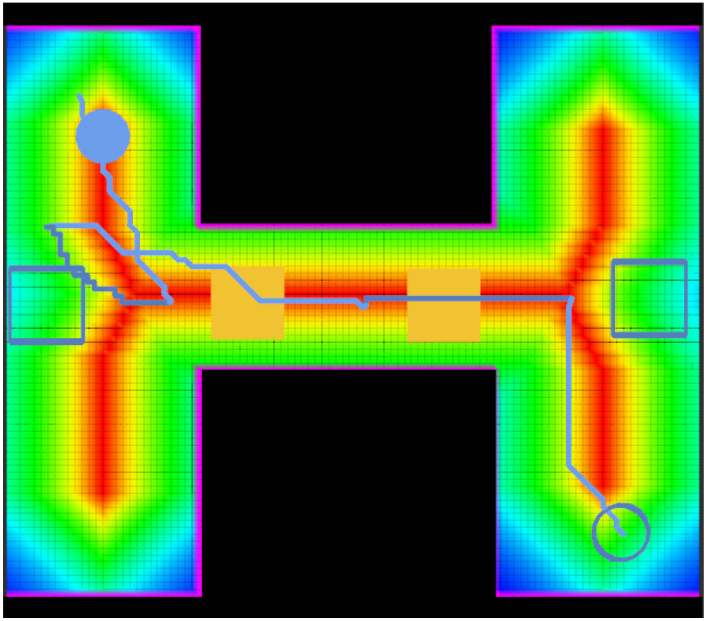

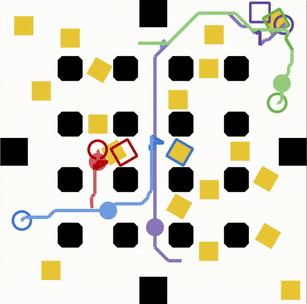

S-NAMO paths and Pepper robot

In Chroma, we have studied NAMO (Navigation Among Movable Obstacles) problems since 2018. The PhD thesis of Benoit Renault (2019-23) allowed us to extend existing algorithms with the social cost of object placement (Social-NAMO 62) and to generalize them to multi-robot NAMO problems.

In 2023, we obtained an Inria ADT project, called NAMOEX, aiming to extend Benoit Renault's NAMO simulator. By hiring David Brown (Eng.) in NAMOEX, we made the simulator more robust and studied multi-robot NAMO strategies, leading to a publication at the IROS 2024 conference 29. In 2024, we developed a connection between our S-NAMO planner and the Gazebo robotic simulator, which was also extended into a monitoring tool for real experiments with a TurtleBot robot, see Fig. 7.

8.5.2 Multi-UAV Exploration and Visual Coverage of 3D Environments

Participants: Alessandro Renzaglia, Benjamin Sportich, Kenza Boubakri, Olivier Simonin.

Multi-robot teams, especially when they include Unmanned Aerial Vehicles (UAVs), are highly efficient systems for assisting humans in gathering information on large and complex environments. In these scenarios, cooperative coverage and exploration are two fundamental problems with numerous important applications, such as mapping, inspection of complex structures, and search and rescue missions. In both cases, the goal of the robots is to navigate through the environment and cooperate to acquire new information in order to complete the mission in the shortest possible time 64. Part of this activity was recently conducted in the framework of the European H2020 project BugWright2, which ended in early 2024, where a central use-case focused on corrosion detection and inspection on cargo ships. In this context, we investigated path planning strategies for fleets of UAVs to efficiently inspect large surfaces such as ship hulls and water tanks 53.

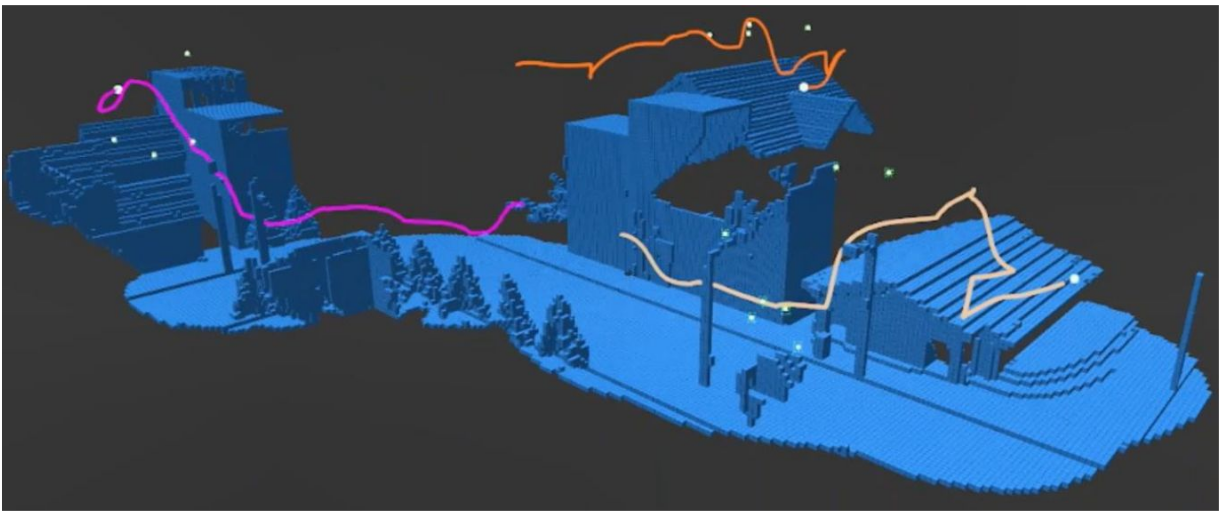

Illustration of UAV trajectories during an exploration mission.

This line of research is now mainly carried out within the ANR project AVENUE, which focuses on the coordination of a fleet of UAVs to efficiently explore and reconstruct unknown 3D outdoor scenes. In particular, this work considers both the task execution time and the accuracy of the final map by proposing a distributed strategy in which each robot can dynamically switch between two different behaviors: exploration mode and coverage mode. The exploration mode aims at obtaining as much information as possible, as fast as possible, about the structure of the environment and its surfaces, even if imperfect, while the coverage mode aims at refining the mesh based on the collected information. Formally, the two behaviors are obtained by defining different information gain functions, both using a Euclidean Signed Distance Field (ESDF) based representation of the environment, to define future viewpoints. See the illustration in Fig. 8.

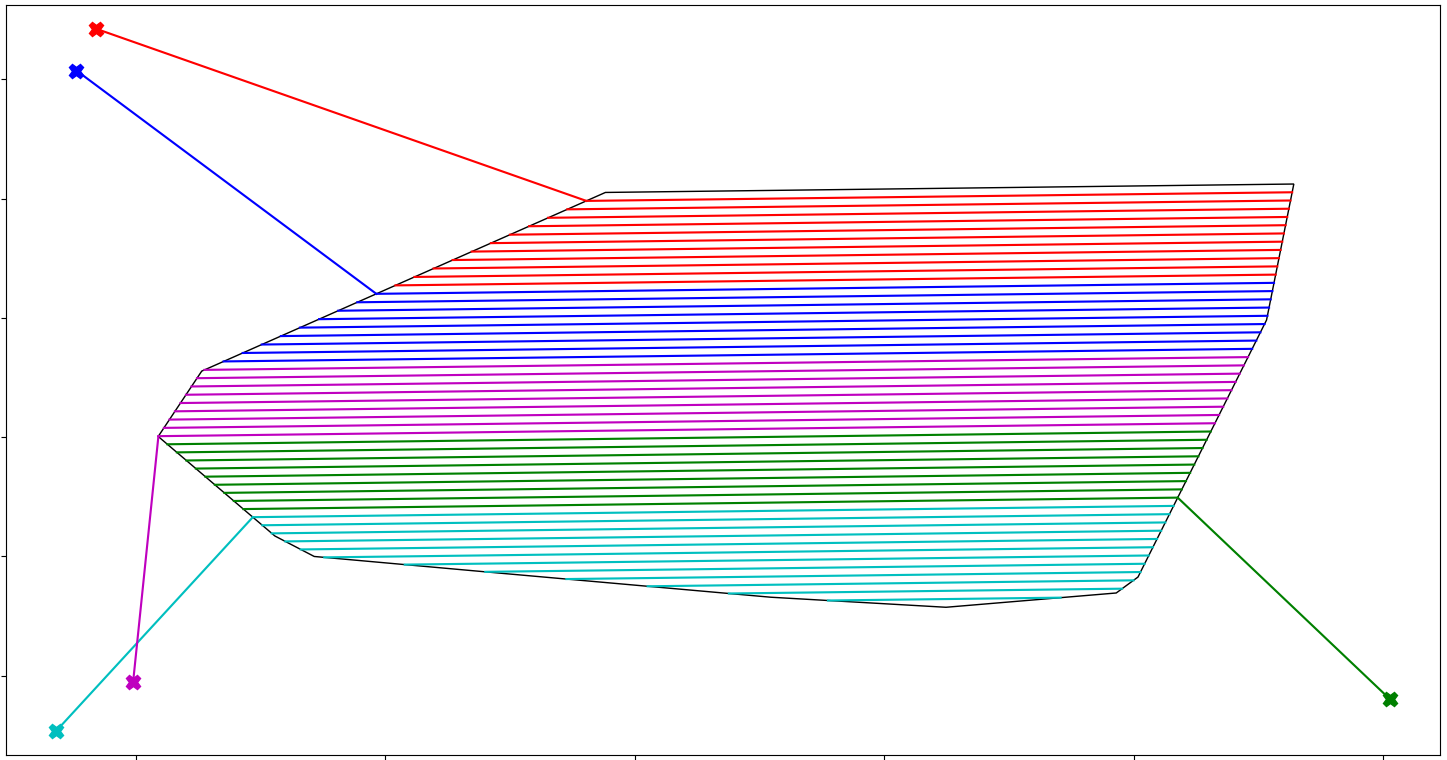

8.5.3 Multi-Robot Path Planning with Cable Constraints

Participants: Xiao Peng, Christine Solnon, Olivier Simonin, Simon Ferrier, Damien Rambeau.

This research was done within the context of the European project BugWright2 (2020-24), which aimed at designing an adaptable autonomous multi-robot solution for inspecting ship outer hulls.

PhD thesis of Xiao Peng. The aim of this PhD thesis was to design algorithms to plan the missions of a set of mobile robots, each attached to a flexible cable (a tether), while ensuring that the cables never cross. In 60, we formally defined the Non-Crossing MAPF (Multi-Agent Path Finding) problem and showed how to compute lower and upper bounds for this problem by solving well-known assignment problems. We introduced a Variable Neighbourhood Search approach for improving the upper bound, and a CP model for solving the problem to optimality. In 59, we extended this approach to the case of non-point-sized robots. In 2023/24, we extended the study to the coverage of an area with obstacles and forbidden areas using a single tethered robot. Our solving approach relies on combining spanning-tree computation and ILP. This work has been accepted for publication in IEEE Robotics and Automation Letters (RAL journal) 17. We then examined different algorithms to divide the environment in order to distribute the coverage among several robots. Xiao Peng defended his PhD thesis on February 19th, 2024 36.
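
To illustrate the non-crossing constraint, the sketch below considers a deliberately simplified setting: point-sized robots in an obstacle-free plane, each cable modelled as a straight segment from a fixed anchor to an assigned target. It enumerates all assignments by brute force and keeps the non-crossing one with the shortest longest cable. This is only an assumption-laden toy, not the bounds, Variable Neighbourhood Search or CP model of the cited work, and all function names are hypothetical.

```python
from itertools import permutations
from math import dist


def orientation(a, b, c):
    """Sign (+1, 0 or -1) of the 2D cross product (b - a) x (c - a)."""
    value = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (value > 0) - (value < 0)


def segments_cross(p1, q1, p2, q2):
    """True if segments p1-q1 and p2-q2 properly cross.

    Touching endpoints and collinear overlaps are not detected by this test.
    """
    return (orientation(p1, q1, p2) * orientation(p1, q1, q2) < 0
            and orientation(p2, q2, p1) * orientation(p2, q2, q1) < 0)


def best_non_crossing_assignment(anchors, targets):
    """Brute-force search over assignments of targets to anchors.

    Returns (longest_cable_length, permutation) for the best assignment whose
    straight cables do not cross, or None if no such assignment exists.
    """
    best = None
    for perm in permutations(range(len(targets))):
        cables = [(anchors[i], targets[j]) for i, j in enumerate(perm)]
        crossing = any(
            segments_cross(*cables[i], *cables[j])
            for i in range(len(cables))
            for j in range(i + 1, len(cables))
        )
        if crossing:
            continue
        cost = max(dist(a, t) for a, t in cables)
        if best is None or cost < best[0]:
            best = (cost, perm)
    return best
```

The enumeration is factorial in the number of robots, which is one reason why assignment-based bounds and dedicated search methods are needed beyond toy instances.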

Simulations and Robotics experimentations

In parallel with the thesis, we developed an experimental setup in order to examine the complexity of deploying the proposed algorithms with real robots. First, Damien Rambeau (Eng.) developed a realistic simulation of crawler robots attached to tethers with Gazebo (he defined a cable model able to wrap around obstacles). Second, Simon Ferrier (Eng.) developed a real robot demonstrator based on several TurtleBot mobile robots attached to windable cables. Finally, we proposed a hybrid demonstrator in which we merged real crawler robots (from the CNRS/GeorgiaTech Metz partner) with simulated ones, in order to study complex multi-tethered-crawler scenarios. We produced a video and a presentation of this work for the ICRA 40th anniversary conference 43.

8.5.4 Multi-robot agricultural itineraries planning (NINSAR project)

Participants: Simon Ferrier, Olivier Simonin, Alessandro Renzaglia.

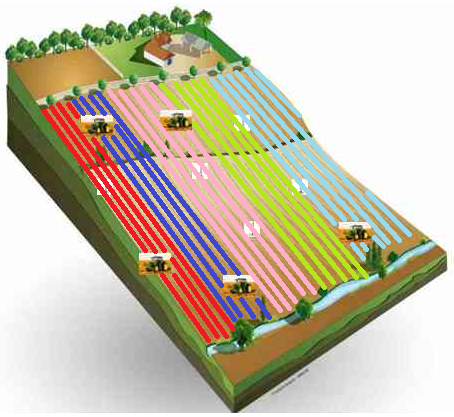

(a) Rows allocation example (b) Solution given by our heuristic for a field of 50 rows covered by 5 robots.

The NINSAR project, part of the PEPR AgroEcologie & Numerique, aims to use robots to help the agricultural sector. In the PhD thesis of Simon Ferrier, started in summer 2024, we define and study algorithms for the mission planning of a fleet of mobile robots. The first work concerns planning the itinerary of each robot so that all rows of a field are treated in minimum time. We defined an optimization problem whose criteria are minimizing the makespan (mission duration) and the computational time.

To deal with this planning problem, we proposed two approaches: 1) a heuristic based on the division of the total sum of row lengths, combined with a solution improvement algorithm, and 2) a Branch and Bound algorithm computing the optimal solution, along with an anytime version. An illustration of the problem and of a solution is presented in Fig. 9. Results show that the heuristic approach obtains very good results (close to optimality) in a very short time, while the exact approach suffers from a combinatorial explosion as the number of deployed robots grows.

The next objective is to integrate the execution of plans with unexpected events requiring (local) online replanning.
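
As a rough illustration of the idea of dividing the total sum of row lengths, the following sketch assigns consecutive rows to robots so that each robot receives a share close to the total length divided by the number of robots, and then evaluates the makespan. It is a simplified stand-in written under strong assumptions (identical robot speeds, no travel cost between rows, no improvement step) and does not reproduce the heuristic or the Branch and Bound algorithm of the thesis. Function names are hypothetical.

```python
def allocate_rows(row_lengths, n_robots):
    """Assign consecutive rows to robots based on an equal share of total length.

    A row goes to the robot whose share interval contains the row's midpoint
    along the cumulative length axis, which keeps each block contiguous.
    """
    total = float(sum(row_lengths))
    if n_robots <= 0 or total <= 0.0:
        raise ValueError("need at least one robot and a positive total length")
    share = total / n_robots
    blocks = [[] for _ in range(n_robots)]
    cumulative = 0.0
    for index, length in enumerate(row_lengths):
        midpoint = cumulative + length / 2.0
        robot = min(int(midpoint // share), n_robots - 1)
        blocks[robot].append(index)
        cumulative += length
    return blocks


def makespan(row_lengths, blocks, speed=1.0):
    """Mission duration: time needed by the most loaded robot."""
    return max(sum(row_lengths[i] for i in block) / speed for block in blocks)
```

For a field of 50 rows of equal length shared among 5 robots, this allocation gives 10 consecutive rows to each robot. With rows of unequal length, the blocks are only approximately balanced, which is where an improvement step or an exact search becomes useful.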

8.5.5 Cooperative Gradient Estimation for Multi-Robot 3D Source Seeking

Participants: Alessandro Renzaglia.

As part of our research on online path planning and coordination of multi-robot systems, we recently began collaborating with the Gipsa-lab in Grenoble to study the problem of source seeking 47, 63. This problem is widely studied in robotics due to its numerous applications, including search and rescue operations, environmental monitoring, and pollution source detection. In source-seeking scenarios, an unknown source generates a 3D spatially distributed signal field. A team of autonomous robots, equipped with sensors to measure signal intensity at their respective locations, aims to cooperatively localize the source by leveraging the information collected and shared within the team.

Our work focuses on developing novel strategies to estimate the gradient of the signal field cooperatively. This gradient estimate is then used to guide the multi-robot system toward the source location. A key contribution of our research has been the use of geometric optimization approaches, such as centroidal Voronoi partitions on 3D surfaces, to define suitable multi-robot formations and then exploit their geometric properties to analytically obtain accurate and robust gradient estimates 22. This class of solutions offers the advantages of low computational cost, distributed implementation for increased robustness, and suitability for online team reconfiguration. This research is still ongoing and we are actively improving the initial solutions. In particular, current investigations focus on the impact of heterogeneous sensors with varying noise levels and on how the team can adapt to such data to maintain robust and reliable gradient estimates.
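To make the role of formation geometry concrete, the sketch below implements one standard estimator from the source-seeking literature, which is not necessarily the one developed in 22. It assumes N robots at equal distance r from the formation center c, placed symmetrically so that the sum of the outer products of their offsets equals (N r² / 3) I. Under that assumption, (3 / (N r²)) Σ f(x_i)(x_i − c) recovers the gradient at c exactly for a linear field. The octahedral formation and the function names are illustrative choices.

```python
from typing import List, Sequence


def octahedron(center: Sequence[float], radius: float) -> List[List[float]]:
    """Six robot positions at +/- radius along each axis around the center."""
    positions = []
    for axis in range(3):
        for sign in (1.0, -1.0):
            p = list(center)
            p[axis] += sign * radius
            positions.append(p)
    return positions


def estimate_gradient(positions: List[Sequence[float]], readings: List[float]) -> List[float]:
    """Gradient estimate at the formation center from one reading per robot.

    Assumes a symmetric formation: equal distances to the center and
    sum_i d_i d_i^T = (N r^2 / 3) I, where d_i is the offset of robot i.
    """
    n = len(positions)
    center = [sum(p[k] for p in positions) / n for k in range(3)]
    offsets = [[p[k] - center[k] for k in range(3)] for p in positions]
    r2 = sum(c * c for c in offsets[0])  # squared formation radius
    scale = 3.0 / (n * r2)
    return [scale * sum(f * d[k] for f, d in zip(readings, offsets)) for k in range(3)]


if __name__ == "__main__":
    def field(p):
        # Hypothetical linear signal field with gradient (2, -1, 0.5).
        return 2.0 * p[0] - 1.0 * p[1] + 0.5 * p[2] + 4.0

    robots = octahedron([1.0, 2.0, 3.0], 0.5)
    print(estimate_gradient(robots, [field(p) for p in robots]))
```

Each term of the sum depends on a single robot's reading and offset, so the estimate can be accumulated from locally shared measurements.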

8.6 Swarm Robotics

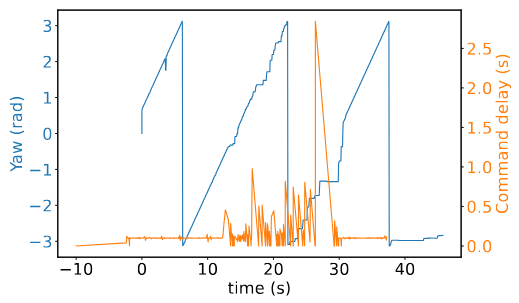

8.6.1 Communications in swarm of UAVs

Participants: Théotime Balaguer (PhD student), Thierry Arrabal, Olivier Simonin, Stephane d'Alu, Isabelle Guerin-Lassous (Lyon 1/Lip), Nicolas Valle, Hervé Rivano (Agora team).

In the context of the last year of the CONCERTO ANR project, we studied the impact of the quality of Wi-Fi communications on the behavior of remotely controlled UAVs. We designed an experimental platform composed of PX4 Vision UAVs, a Motion Capture system (the one of the ARMEN/LS2N team), and a tool we developed to generate mission traffic from the ground station. This allowed us to analyze how the presence of mission traffic impacts the stability of the reception of the control traffic, and how, in turn, it impacts the behavior of the UAVs. This work is presented in the publication 20 and illustrated in Fig. 10.

Traffic perturbation on UAV remote control


In the context of the PhD thesis of Théotime Balaguer, we proposed in 2023 a co-simulator, called DANCERS, which makes it possible to connect any robotics physics simulator with any network simulator. In 2024 we finalized its implementation and evaluation, in particular by connecting the Gazebo and ns3 simulators to study communication in multi-robot systems such as a fleet of drones. DANCERS also supports hybrid SITL (Software In The Loop) simulation. This work has been published in the French journal ROIA 14 and was recently accepted for publication at the international conference IEEE SIMPAR 2025 (Simulation, Modeling, and Programming for Autonomous Robots).

In 2024, we continued to study the Time of Flight approach to measure distances between UAVs. Stephane D'Alu, who is preparing a PhD in both the Chroma and Agora teams, studies the implementation of a broadcast-based protocol 48 with UWB Decawave chips embedded on UAVs. The proposed solution has been simulated and also tested experimentally with several static units. A first publication is in preparation.
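For readers unfamiliar with Time of Flight ranging, the sketch below shows the textbook single-sided two-way ranging computation: the initiator measures the round-trip time, subtracts the responder's known reply delay, and halves the result. This is only a generic illustration. The broadcast-based protocol studied in the thesis may combine timestamps differently, and the function name is hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def twr_distance(t_round: float, t_reply: float) -> float:
    """Distance from a single-sided two-way ranging exchange.

    t_round: time between sending the poll and receiving the response (s),
             measured by the initiator.
    t_reply: processing delay between reception and reply (s),
             measured by the responder.
    Assumption: clock drift between the two units is neglected.
    """
    time_of_flight = (t_round - t_reply) / 2.0
    return SPEED_OF_LIGHT * time_of_flight


if __name__ == "__main__":
    reply_delay = 200e-6                      # hypothetical 200 microsecond reply delay
    one_way = 10.0 / SPEED_OF_LIGHT           # two units placed 10 m apart
    print(twr_distance(reply_delay + 2 * one_way, reply_delay))
```

Because one nanosecond of timing error corresponds to about 30 cm, the quality of the timestamps largely determines the ranging accuracy.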

8.6.2 Flocking-based UAVs chain creation

Participants: Théotime Balaguer (PhD student), Olivier Simonin, Isabelle Fantoni (LS2N, Nantes), Isabelle Guerin-Lassous (Lyon 1/Lip), Etienne Villemurev (Univ. Sherbrooke/3IT, Canada).

PhD thesis of Theotime Balaguer

Chain creation


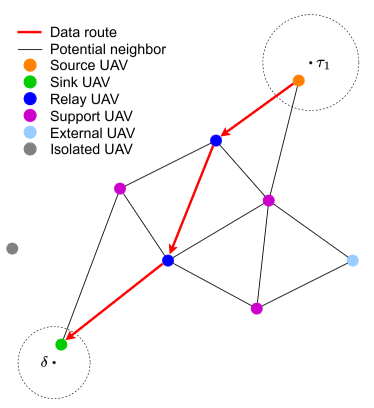

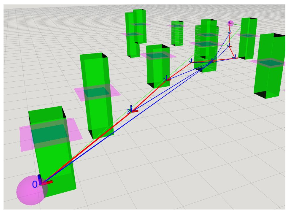

In the context of the PhD thesis of Théotime Balaguer and the Associated Team ROBLOLC with the Univ. of Sherbrooke, we proposed a new decentralized algorithm to manage the deployment of a fleet of drones from a source area to one or several target areas. Our approach extends the flocking model, which was initially defined to allow a fleet of agents to fly together. We propose an algorithm ensuring the emergence of a “mission” communication path from a leader drone to a source drone while the leader flies towards its target area. By defining two possible roles for the other drones (mission node/free node), drones dynamically join the “mission” path as the flock stretches, while avoiding obstacles and the communication perturbations they cause. The originality of our model is that it is decentralized and able to exploit data routing measurements to dynamically optimize the mission paths in terms of distance and QoS. This work is illustrated in figure 11. A publication is in preparation.

8.6.3 Graph-based decentralized exploration with a swarm of UAVs in unknown subterranean environments

Participants: Mathis Fleuriel (PhD student), Olivier Simonin, Elena Vanneaux (ENSTA Paris, U2IS), David Filiat (ENSTA Paris, U2IS).

The use of unmanned aerial systems (UAS) is a promising solution for search and rescue (SAR) operations conducted to find missing persons. In the ANR VORTEX project, we study how autonomous drones can be rapidly deployed in indoor environments without conventional communications or mapping, by leveraging cameras and vision-based processes instead. We propose to explore an approach where a swarm of small UAVs (quadrotors) quickly deploys in the environment while forming a dynamic mesh of sensors. By flying autonomously while keeping visual contact with at least one other UAV, each drone will be able to estimate its relative location and also to relay information from drone to drone. As a consequence, the drones will form a dynamic topological graph that progressively covers the environment.

In 2024, we started to study exploration strategies through Mathis Fleuriel's Master internship (co-supervised by E. Vanneaux from ENSTA Paris and Olivier Simonin from the Chroma team). We proposed a first model, defined as multi-agent behaviors allowing the swarm to explore the environment and to self-reconfigure when perturbed (e.g., when a dead end is encountered). Our approach merges graph search strategies and swarm behaviors to achieve time efficiency while coping with the limited number of drones. To this end, the model relies on deploying a chain of drones guided by a leader, which is the drone managing the exploration (the leader role can change when the chain needs reconfiguration). At the end of 2024, Mathis Fleuriel was recruited as a PhD student in Lyon, within the VORTEX project, to continue this research (with the same co-supervisors). A first publication on the chain model is in preparation.

8.6.4 Robotic Matter

Participants: Baudouin Saintyves, Heinrich Jaeger (Prof. University of Chicago), Olivier Simonin.

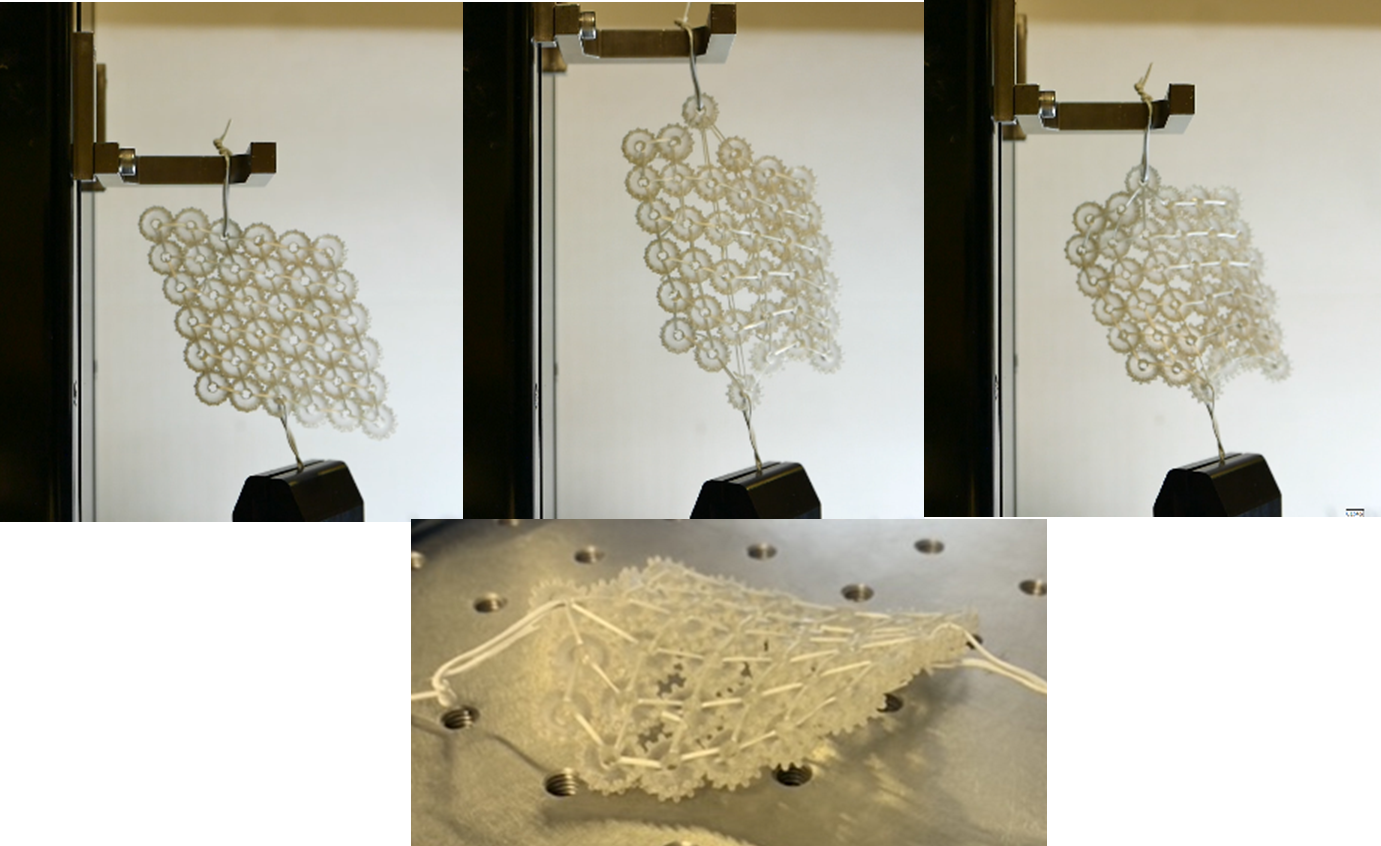

Tensile test experiment on a passive proof of concept, toward the conception of Shellbots. Full deformation cycle from left to right.

Since November, Baudouin Saintyves has made progress on the development of a new robotic prototype, the Shellbot. The goal is to build a monolayer that can reconfigure robotically to change its local curvature. We have built a passive prototype, a metamaterial, and performed preliminary mechanical testing to guide the design of a future robotic version (see figure 12). This study explores how the reorganization of the building blocks correlates with the final curvature of the material.

8.7 Multi-Agent Sequential Decision-Making under Uncertainty

This research was led by Jilles S. Dibangoye until he left the team in 2023 to join the University of Groningen (NL). This section presents the work carried out until 2024, which covers the foundations of sequential decision making by a group of cooperative or competitive robots or, more generally, artificial agents. To this end, we explore combinatorial optimization, convex optimization and reinforcement learning methods.

8.7.1 Optimally Solving Decentralized POMDPs Under Hierarchical Information Sharing

Participants: Johan Peralez, Aurélien Delage, Olivier Buffet (Inria Nancy, Larsen), Jilles S. Dibangoye.

This work is the result of an effort to provide a strong theory for optimally solving decentralized decision-making problems, represented as decentralized partially observable Markov decision processes (Dec-POMDPs), with tractable structures 65. It describes the core ideas of multi-agent reinforcement learning algorithms for Dec-POMDPs under hierarchical information sharing, the dominant management style in our society, in which each agent knows what her immediate subordinate knows. In this case, we prove backups to be linear, because the (normal-form) Bellman optimality equations are, for the first time, specified in extensive form. Doing so makes it possible to sequence agent decisions at every point in time while still preserving optimality, paving the way for the first optimal Q-learning algorithm with linear-time updates for an important subclass of Dec-POMDPs. This is a significant improvement over the double-exponential time required by normal-form Bellman optimality equations, in which the decisions of all agents are updated in sync at every point in time. Experiments show that algorithms using extensive-form instead of normal-form Bellman optimality equations can be applied effectively to much larger problems. This work has been published in ICML 2024 28.

8.7.2 Optimally Solving Decentralized POMDPs: The Sequential Central Learning Approach

Participants: Johan Peralez [Post-doc], Aurélien Delage [PhD Student], Olivier Buffet [Inria Nancy, Larsen], Jilles S. Dibangoye.

The centralized learning for simultaneous decentralized execution paradigm emerged as the state-of-the-art approach to ε-optimally solving a simultaneous-move decentralized partially observable Markov decision process, but scalability remains a significant issue. This paper presents a novel and more scalable alternative, namely sequential central learning for simultaneous decentralized execution. This methodology pushes the applicability of Bellman’s principle of optimality further, raising three new properties. First, it allows a central learner to reason upon sufficient sequential statistics instead of prior simultaneous ones. Next, it proves that ε-optimal value functions are piecewise linear and convex in sufficient sequential statistics. Finally, it drops the time complexity of the backup operators from double exponential to linear, at the expense of longer planning horizons. Besides, it makes it easy to use single-agent methods: for example, a reinforcement learning algorithm enhanced with these findings applies while still preserving its asymptotic convergence guarantees. Experiments on standard 2- as well as n-agent benchmarks from the literature against state-of-the-art ε-optimal solvers confirm the superiority of the novel paradigm. This work was accepted for publication at the AAAI 2025 conference.

8.8 Constrained Optimisation problems

8.8.1 Constraint Programming

Participants: Jean-Yves Courtonne (Inria STEEP), François Delobel (LIMOS), Patrick Derbez (Inria Capsule), Léon Fauste, Arthur Gontier (Inria Capsule), Mathieu Mangeot (Inria STEEP), Loïc Rouquette, Christine Solnon.

In many of our applications, we have to solve constrained optimization problems (COPs) such as vehicle routing problems or multi-agent path finding problems. These COPs are usually NP-hard, and we study and design algorithms for solving them. More particularly, we study approaches based on Constraint Programming (CP), which provides declarative languages for describing these problems by means of constraints, and generic algorithms for solving them by means of constraint propagation. In 61, we introduce canonical codes for describing benzenoids, and we introduce CP models for efficiently generating all benzenoids that satisfy some given constraints.

PhD thesis of Léon Fauste (since Sept. 2021). This thesis is funded by Ecole Normale Supérieure Paris Saclay and it is co-supervised by Mathieu Mangeot, Jean-Yves Courtonne (from Inria STEEP team) and Christine Solnon. The goal of the thesis is to design new tools for choosing the best geographical scale when relocating productive activities, and the context of this work is described in 49. During the first months of his thesis, Léon has designed a first Integer Linear Programming (ILP) model described in 50. In 2023/2024, Léon is on leave (césure) for one year in order to follow a Master in Geography.

8.8.2 Vehicle routing problems

Participants: Romain Fontaine (PhD Student CITI), Christine Solnon, Jilles S. Dibangoye (Univ. Groningen).

The research is done within the context of the transportation challenge and funded by INSA Lyon.

Integration of traffic conditions:

In classical vehicle routing problems, travel times between locations to visit are assumed to be constant. This is not realistic because traffic conditions vary throughout the day, especially in an urban context. As a consequence, quickest paths (i.e., successions of road links) and travel times between locations may change during the day. To address this lack of realism, the cost functions that define travel times must become time-dependent. These time-dependent cost functions are defined by exploiting data coming from sensors, and the frequency of the measurements as well as the number and position of the sensors may have an impact on tour quality.
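The effect of time-dependent cost functions on a tour can be sketched as follows. The example assumes, for illustration only, that the travel time between two locations is piecewise constant over fixed time steps (one value per measurement period) and that the last value holds afterwards. The class name, the 60-minute step and the travel times are hypothetical and do not come from the sensor data used in this work.

```python
from typing import Dict, List, Tuple


class TimeDependentCost:
    """Travel time between two locations as a function of the departure time.

    Assumption (illustrative): one constant travel time per time step.
    """

    def __init__(self, step: float, travel_times: List[float]):
        self.step = step                  # duration of a time step (minutes)
        self.travel_times = travel_times  # travel time measured for each step

    def __call__(self, departure: float) -> float:
        # The last measured value is used beyond the covered time window.
        idx = min(int(departure // self.step), len(self.travel_times) - 1)
        return self.travel_times[idx]


def tour_arrival_time(
    tour: List[int],
    costs: Dict[Tuple[int, int], TimeDependentCost],
    start: float,
) -> float:
    """Arrival time at the end of the tour when leaving its first location at `start`."""
    t = start
    for origin, destination in zip(tour, tour[1:]):
        t += costs[(origin, destination)](t)  # cost depends on the current time
    return t


if __name__ == "__main__":
    costs = {
        (0, 1): TimeDependentCost(60.0, [10.0, 30.0]),
        (1, 2): TimeDependentCost(60.0, [20.0, 5.0]),
        (2, 0): TimeDependentCost(60.0, [15.0, 15.0]),
    }
    for start in (0.0, 50.0):
        end = tour_arrival_time([0, 1, 2, 0], costs, start)
        print(f"start={start:5.1f}  end={end:5.1f}  duration={end - start:5.1f}")
```

With these made-up values the same tour takes 45 minutes when started at time 0 and 30 minutes when started at time 50, which is why the tour cost cannot be evaluated without the departure time. Piecewise-constant travel times can also let a later departure arrive earlier (a FIFO violation), so smoother models such as piecewise-linear functions are often preferred.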