2024 Activity report: Project-Team VIRTUS

- RNSR: 202224309G

- Research center: Inria Centre at Rennes University

- In partnership with: Université Rennes 2, Université de Rennes

- Team name: The VIrtual Us

- In collaboration with: Institut de recherche en informatique et systèmes aléatoires (IRISA), Mouvement, Sport, Santé (M2S)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.5.4. Animation

- A5.6.1. Virtual reality

- A5.6.3. Avatar simulation and embodiment

- A5.11.1. Human activity analysis and recognition

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.8. Sports, performance, motor skills

- B7.1.1. Pedestrian traffic and crowds

- B9.3. Medias

- B9.5.6. Data science

1 Team members, visitors, external collaborators

Research Scientists

- Julien Pettre [Team leader, INRIA, Senior Researcher]

- Samuel Boivin [INRIA, Researcher]

- Ludovic Hoyet [INRIA, Researcher]

- Katja Zibrek [INRIA, ISFP]

Faculty Members

- Kadi Bouatouch [Univ. Rennes, Professor, Emeritus]

- Marc Christie [Univ. Rennes, Associate Professor]

- Aline Hufschmitt [Univ. Rennes, Associate Professor, from Sep 2024]

- Anne Helene Olivier [UNIV RENNES II, Associate Professor]

PhD Students

- Kelian Baert [TECHNICOLOR, CIFRE]

- Jean-Baptiste Bordier [Univ. Rennes, until Feb 2024]

- Philippe De Clermont Gallerande [INTERDIGITAL, CIFRE]

- Céline Finet [INRIA]

- Alexis Jensen [Univ. Rennes]

- Jordan Martin [LCPP, CIFRE]

- Yuliya Patotskaya [INRIA]

- Xiaoyuan Wang [ENS RENNES]

- Tony Wolff [Univ. Rennes]

- Tairan Yin [INRIA, until Mar 2024]

Technical Staff

- François Bourel [Univ. Rennes, from Dec 2024]

- Remi Cambuzat [INRIA, Engineer, until Nov 2024]

- Théo Gerard [Univ. Rennes, from Oct 2024]

- Bhaswar Gupta [INRIA, Engineer]

Interns and Apprentices

- Mihai Anca [INRIA, Intern, from Apr 2024 until Sep 2024]

- Arthur Audrain [INRIA, Intern, until May 2024]

- Gautier Campagne [INRIA, Intern, from Mar 2024 until Sep 2024]

- Basile Fleury [INRIA, Intern, from Apr 2024 until Jun 2024]

- Raphael Manus [Univ. Rennes, Intern, from Apr 2024 until Sep 2024]

- Leo Mothais [INRIA, Intern, from Jun 2024 until Aug 2024]

- Clara Moy [ENS RENNES, Intern, from Oct 2024]

- Clara Moy [INRIA, Intern, from May 2024 until Jul 2024]

Administrative Assistant

- Gwenaelle Lannec [Univ. Rennes]

External Collaborator

- Christian Bouville [none]

2 Overall objectives

Numerical simulation is a tool at the disposal of scientists for understanding and predicting real phenomena. The simulation of complex physical behaviours is, for example, fully integrated into the technological design of aircraft, cars or engineered structures, in order to study their aerodynamics or mechanical resistance. The economic and technological impact of simulators is undeniable in terms of preventing design flaws and saving time in the development of increasingly complex systems.

Let us now imagine the impact of a simulator that would incorporate our digital alter-egos: simulated doubles capable of reproducing real humans in their behaviours, choices, attitudes, reactions, movements or appearances. The simulation would no longer be limited to identifying physical parameters, such as the shape of an object to improve its mechanical properties, but would also extend to identifying functional or interaction parameters, significantly broadening its scope of application. Now also imagine that this simulation is immersive: beyond simulating humans, it would enable real users to share the experience of a simulated situation with their digital alter-egos. This would open up a wide range of possibilities for studies accounting for psychological and sociological parameters, which are key to deciphering human behaviours, as well as for experiential, narrative or emotional dimensions. This would be a revolution, but also a key challenge, as putting the human being into equations, in all its aspects and dimensions, is extremely difficult. This is the challenge we propose to tackle by exploring the design and applications of immersive simulators for scenes populated by virtual humans.

This challenge is transdisciplinary by nature. The human being can be studied from the perspectives of Physics, Psychology, Sociology and Neurosciences, all of which have given rise to many research topics at the interface with Computer Science: biomechanical simulation, character animation and rendering, artificial intelligence, computational neurosciences, etc. In this context, our transversal activity aims to create a new generation of virtual, realistic, autonomous, expressive, reactive and interactive humans to populate virtual scenes. Driven by the ambition of designing immersive virtual populations, our Inria project-team The Virtual Us (our virtual alter-egos, code name VirtUs) aims to design immersive simulators of populated scenes (shortened as “VirtUs simulators”) where virtual and real humans coexist with a sufficient level of realism for the experience lived virtually, and its results, to be transposable to reality. Achieving this goal will allow us to use VirtUs simulators to better replicate real humans digitally and to better interact with them, and thus to create new kinds of narrative experiences as well as new ways to observe and understand human behaviours.

3 Research program

3.1 Scientific Framework and Challenges

Immersive simulation of populated environments is currently undergoing a revolution on several fronts, with origins that extend far beyond the confines of this single theme. Immersive technologies are going through an industrial revolution and are now available at low cost to a very wide range of users. Software solutions for simulation have undergone a similar revolution: generic solutions from the world of video games (e.g., Unity, Unreal) are now commonly available to design interactive and immersive virtual worlds in a simplified way. Beyond these technological aspects, simulation techniques, and in particular those dealing with human-related processes, are undergoing the machine learning revolution, with a radical shift in paradigm: from procedural approaches that tend to reproduce the mechanisms by which humans make decisions and carry out actions, we are moving towards solutions that directly reproduce the results of these mechanisms, learned from large statistics of past behaviors. On a broad horizon, these revolutions radically change the interactions between digital systems and the real world, including us, suddenly bringing them much closer to certain human capacities to interpret or predict the world and to act or react to it. These technological and scientific revolutions necessarily reposition the application framework of simulators and open up new uses, such as immersive simulation as a training ground for intelligent systems or as a means of collecting data on human-computer interaction.

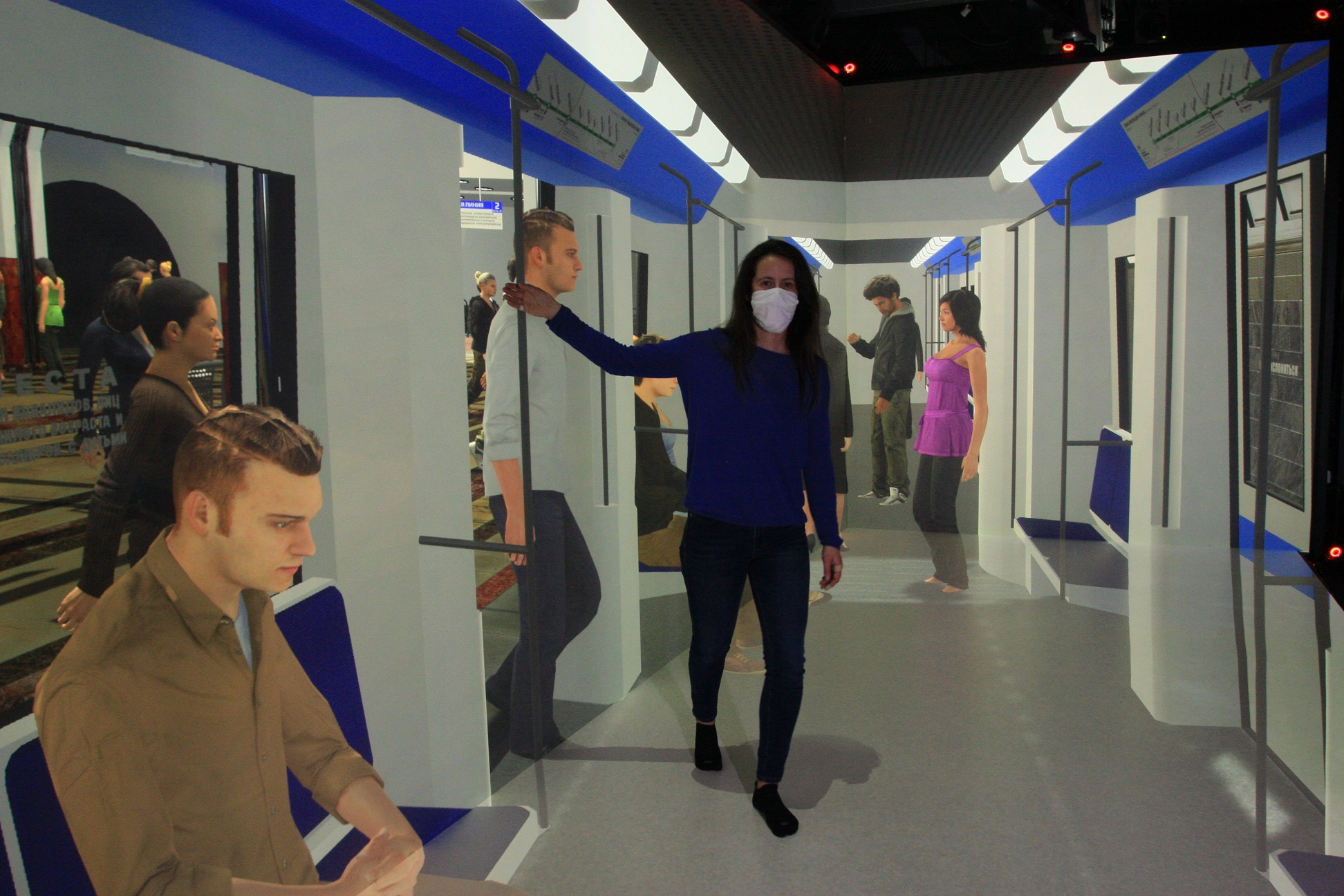

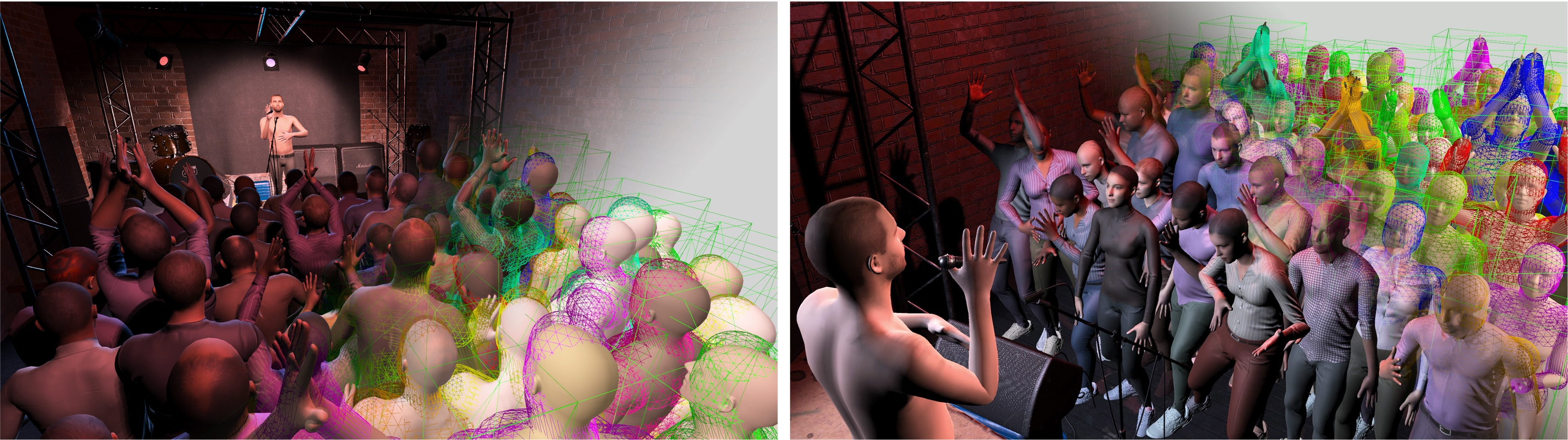

Figure 1: From left to right, illustrations of VirtUs simulator usage scenarios S1, S2 and S3


The VirtUs team's proposal is fully in line with this revolution. Typical uses of VirtUs simulators in a technological or scientific setting are illustrated in Figure 1 by the following example scenarios:

- S1: We want to model collective behaviours from a microscopic perspective, i.e., to understand the mechanisms of local human interactions and how they result in emergent crowd behaviours. The VirtUs simulator is used to immerse real users in specific social settings, expose them to controlled behaviours of their neighbours, gather behavioral data in controlled conditions and, as an application, support modeling decisions.

- S2: We want to evaluate and improve a robot's capability to navigate a public space in proximity to humans. The VirtUs simulator is used to immerse a robot in a virtual public space in order to generate an unlimited number of test scenarios for it, generate automatically annotated sensor data for learning navigation-related tasks, determine safety-critical density thresholds, observe the reactions of subjects immersed alongside it, etc.

- S3: We want to evaluate the design of a transportation facility in terms of comfort and safety. The VirtUs simulator is used to immerse real users in virtual facilities in order to study the positioning of information signs, measure the related gaze activity, evaluate users' personal experiences under changing conditions, evaluate safety procedures in specific crisis scenarios, evaluate reactions to specific events, etc.

These three scenarios allow us to detail the ambitions of VirtUs and, in particular, to define the major challenges that the team wishes to take up:

- C1: Better capture the characteristics of human motion in complex and varied situations

- C2: Provide increased realism in individual and collective behaviours (building on the models obtained in C1)

- C3: Improve user immersion, not only to create new user experiences, but also to better capture and understand user behaviours

These scenarios and challenges also stress that they cannot be addressed concretely without taking into account how VirtUs simulators are used. Scenario S2, for the synthesis of robot sensor data, requires simulated scenes to reflect the same characteristics as real ones (for instance, training a robot to predict human movements requires virtual characters to indeed cover all the relevant postures and movements). Scenarios S1 and S3 require verifying that users have access to the same information guiding their behaviour as in a real situation (for instance, that the salience of the virtual scene is consistent with reality and leads users to behave as they would in a real situation). Thus, while the core scientific questions that drive the team remain the same, they are addressed across the whole spectrum of applications of VirtUs simulators. VirtUs members explore scientific applications that directly contribute to the VirtUs research program or to connected fields, such as the study of crowd behaviours, the study of pedestrian behaviour, and virtual cinematography.

3.2 Research Axes

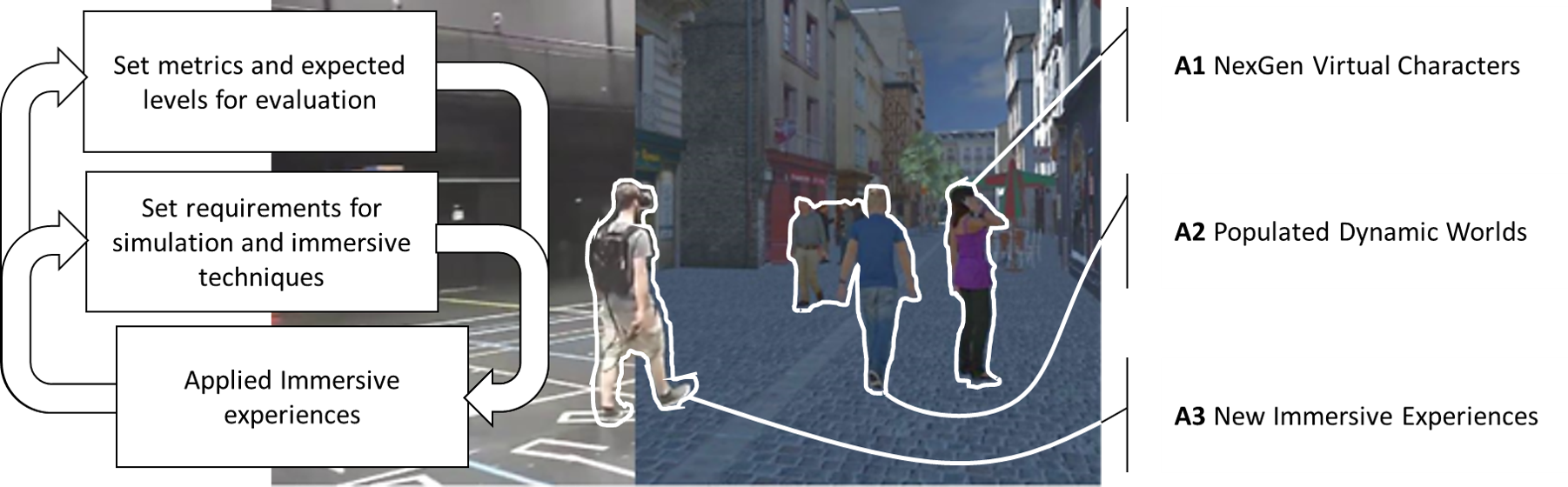

Figure 2: VirtUs research scheme and axes

Figure 2 shows how we articulate the challenges taken up by the team, and how we identify three research axes to implement this scheme, addressing problems at different scales: the individual virtual human, the group, and the simulator as a whole.

3.2.1 Axis A1 - NextGen Virtual Characters

Summary:

At the individual level, we aim at developing the next generation of virtual humans, whose capabilities enable them to act autonomously in immersive environments and to interact with immersed users in a natural and believable way.

Vision:

Technology now exists to generate virtual characters with a unique visual appearance (body shape, skin color, hairstyle, clothing, etc.). But a large programming or design effort is still required to individualize their motion style, to give them traits based on the way they move, or to make them behave consistently across a large set of situations. Our vision is that individualizing motion should be as easy as tuning appearance. This way, virtual characters could, for example, convey their personality or their emotional state through the way they move, or adapt to new scenarios. Unlike other approaches, which rely on ever-larger datasets to extend characters' motion capabilities, we prefer to explore procedural techniques that adjust existing motions.

Long term scientific objective:

Axis A1 addresses challenge C1, to better capture the characteristics of human motion in varied situations, as well as C2, to increase the realism of individual behaviour. Our expectation is that users will have access to realistic (ecological) immersive experiences of virtual worlds by putting the spotlight on the VirtUs characters that populate them. The objective is to bring characters to life, so that they become our digital alter-egos through the way they move and behave in their environment and the way they interact with us. Our holy grail is the perceived realism of character motion, meaning that users can understand the meaning of characters' actions, interpret their expressions or predict their behaviours, just as they do with real humans. In the long term, this objective raises the questions of characters' non-verbal communication capabilities, including multimodal interactions between characters and users, such as touch (haptic rendering of character contacts) or audio (character reactions to user sounds), as well as the adaptation of a character's behaviours to its morphology, physiology or psychology, making it possible, for example, to vary the weight, age, height or emotional state of a character with all the adaptations that follow. Finally, our goal is to avoid constantly expanding motion databases to capture these variations, which requires expensive hardware and effort, and instead to apply these variations procedurally to existing motions.

3.2.2 Axis A2 - Populated Dynamic Worlds

Summary:

Populated Dynamic Worlds: at the group or crowd level, we aim at developing techniques to design populated environments (i.e., how to define and control the activity of large numbers of virtual humans) as well as to simulate them (i.e., enable them to autonomously interact together, perform group actions and collective behaviours).

Vision:

Designing immersive populated environments requires bringing many characters to life, which basically means that thousands of animation trajectories must be generated, possibly in real time to enable interaction. Our vision is that, while automating the animation process is obviously required to manage complexity, there is also an absolute need for intelligent authoring tools that let designers control, script or devise the higher-level aspects of populated scenes: exhibited population behaviours, density, reactions to events, etc. Ten years from now, we aim for tools that combine the authoring and simulation aspects of our problem and let designers work in the most intuitive manner. Typically working from a “palette” of examples of typical populations and behaviours, we aim for virtual population design processes as simple as “I would like to populate my virtual railway station in the style of the New York central station at rush hour (full of commuters), or in the style of Paris Montparnasse station on a weekend (with more families, and different kinds of shopping activities)”.

Long term scientific objective:

Axis A2 tackles the challenge of building and conveying lively, complex and realistic virtual environments populated by many characters. It addresses challenges C1 and C2, extending them to complex and collective situations compared with the previous axis. Our objective is twofold. First, we want to simulate the motion of crowds of characters. Second, we want to deliver tools for authoring crowded scenes. At the interface of these two objectives, we also explore the question of animating crowds, i.e., generating full-body animations for crowd characters (crowd simulation works at abstraction levels where bodies are not represented). We target a high level of visual realism. To this end, our objective is to be able to simulate and author virtual crowds that resemble real ones, e.g., by working from examples of crowd behaviours and tuning the simulation and animation processes accordingly. We also want to progressively erase the distinction that exists today between crowd simulation and crowd animation, which is critical for handling complex body-to-body interactions. By setting this objective, we keep the application field of our crowd simulation techniques wide open, as we satisfy both the need for visual realism in entertainment applications and the need for data assimilation capabilities in real crowd management applications.

3.2.3 Axis A3 - New Immersive Experiences

Summary:

New immersive experiences: in an application framework, we aim at devising relevant evaluation metrics and demonstrating the performance of VirtUs simulations as well as compliance and transferability of new immersive experiences to reality.

Vision:

We have highlighted through several scenarios the high potential of VirtUs simulators to provide new immersive experiences and to reach new horizons in terms of scientific or technological applications. Our vision is that new immersive capabilities, and especially new kinds of immersive interactions with realistic groups of virtual characters, can lead to radical changes in various domains, such as the experimental process for studying human behaviour in fields like social psychology, or entertainment, to tell stories across new media. Ten years from now, immersive simulators like VirtUs ones will have demonstrated their capacity to reach new levels of realism that open up such possibilities, offering experiences where users can perceive the context in which the immersive experience takes place, understand and interpret the actions of the characters around them, and be caught up in the story conveyed by the simulated world.

Long term scientific objective:

Axis A3 addresses the challenge of designing novel immersive experiences, building on the innovations from the first two research axes to design VirtUs simulators that place real users in close interaction with virtual characters. We design our immersive experiences with two scientific objectives in mind. Our first objective is to observe users in a new generation of immersive experiences where they move, behave and interact as in everyday life, so that observations made in VirtUs simulators enable us to study increasingly complex and ecological situations. This could be a step change in the technologies used to study human behaviours, which we apply to our own research objects developed in Axes A1 and A2. Our second objective is to take advantage of this face-to-face interaction with VirtUs characters to evaluate their capability to exhibit more subtle behaviours (e.g., reactivity, expressiveness), in order to improve immersion protocols. However, the corresponding evaluation methodologies have yet to be invented. Long-term perspectives also encompass a deeper understanding of how immersive contents are perceived, not only at the low level of image saliency (to compose more visually perceptible scenes and contents), but also at higher levels related to cognition and emotion (to compose scenes and contents with meaning and emotional impact). By embracing this broader vision, we expect to bring more compelling and enjoyable interactive user experiences.

4 Application domains

In this section we detail how each research axis of the VirtUs team contributes to different application areas. We have identified the directly related disciplines, which we detail in the subsections below.

4.1 Computer Graphics, Computer Animation and Virtual Reality

Our research program aims at increasing the action and reaction capabilities of the virtual characters that populate our immersive simulations. We therefore contribute to techniques that enable us to animate virtual characters, develop their autonomous behaviour capabilities, control their visual representations and, more specifically for immersive applications, develop their capabilities to interact with a real user, and finally adapt all these techniques to the limited computational time budget imposed by real-time, immersive and interactive applications. These contributions are at the interface of computer graphics, computer animation and virtual reality.

Our research also aims at proposing tools to stage a set of characters in relation to a specific environment. Our contributions aim to make scene creation tasks intuitive while ensuring strong coherence between the visible behaviour of the characters and the actions expected in the environment in which they evolve. These contributions have applications in the field of computer animation.

4.2 Cinematography and Digital Storytelling

Our research targets the understanding of complex movie sequences, and more specifically how low- and high-level features are spatially and temporally orchestrated to create a coherent and aesthetic narrative. This understanding then feeds computational models (through probabilistic encodings but also by relying on learning techniques), which are designed to regenerate new animated contents through automated or interactive approaches, e.g., exploring stylistic variations. This finds applications in 1) the analysis of film/TV/broadcast contents, augmenting the nature and range of knowledge that can be extracted from such contents, with interest from major content providers (Amazon, Netflix, Disney), and 2) the generation of animated contents, with interest from animation companies, film previsualisation and game engines.

Furthermore, our research focuses on extracting lighting features from real or captured scenes and simulating these lighting conditions in new virtual or augmented reality contexts. The underlying scientific challenges are complex and relate to understanding, from images, where lights are located, how they interact with the scene and how light propagates. Understanding this light staging and reproducing it in virtual environments has many applications in the film and media industries, which seek to seamlessly integrate virtual and real contents through spatially and temporally coherent light setups.

4.3 Human motion, Crowd dynamics and Pedestrian behaviours

Our research program contributes to crowd modelling and simulation, including pedestrian simulation. Crowd simulation has various applications, ranging from entertainment (visual effects for cinema, animated and interactive scenes for video games) to architecture and security (sizing of venues open to the public, flow management). Our research program also aims to immerse users in these simulations in order to study their behaviour, opening the way to applications in research on human behaviour, including human movement sciences, or the modelling of pedestrians and crowds.

4.4 Psychology and Perception

One important dimension of our research consists in identifying and measuring what users of our immersive simulators are able to perceive of the virtual situations. We are particularly interested in understanding how the content presented to them is interpreted, how they react to different situations, which elements impact their reactions as well as their immersion in the virtual world, and how all these elements differ from real situations. Such challenges are directly related to the fields of Psychology and Perception.

5 Social and environmental responsibility

The VirtUs team is tackling the environmental issue by reshaping its ecosystem, in particular by redeveloping its national network, and is putting its scientific objectives and methodological approach at the service of applications linked to low-carbon mobility.

5.1 Footprint of research activities

VirtUs is seeking to reduce its footprint by 'relocating' its research activities and redeploying its network within France. In particular, the team has submitted several collaborative research projects at the national level with partners including the SNCF (the French national railway company), the Muséum National d'Histoire Naturelle and Gustave Eiffel University.

Some members of the VirtUs team, and in particular Ludovic Hoyet, CRCN Inria, are actively taking part in discussion groups on reducing the carbon footprint generated by research activities. For example, he is one of the two "Environmental Impact Reduction and Awareness Chairs" for the IEEE VR 2025 conference.

5.2 Impact of research results

VirtUs is also revisiting the applications of its research, seeking to address issues related to mobility and low-carbon modes of travel. Working with the SNCF, the VirtUs team is looking to tackle issues of crowd management in transport infrastructures. With teams from Gustave Eiffel University, VirtUs is exploring issues relating to the development of multi-modal traffic zones, in particular pedestrian-bicycle interactions. Finally, with the Muséum National d'Histoire Naturelle, VirtUs is exploring applications to marine biology, to better understand the impact of human activities on marine wildlife.

6 Highlights of the year

6.1 New collaboration with Muséum National d'Histoire Naturelle

In conjunction with the Muséum National d'Histoire Naturelle, the VirtUs team was able to obtain funding for a thesis as part of the "Ocean-Climate" PPR. The thesis focuses on the study of the behaviour of crustaceans, in particular spider crabs, to gain a better understanding of the impact of human activity (in particular noise pollution of marine environments) on the behaviour of this fauna.

6.2 Closure of the CrowdDNA project

The H2020 FET-Open CrowdDNA project ended on 31 October 2024. The project, led by a European consortium of 6 partners, explored the issue of safety in dense crowds in an original way. In particular, the project collected detailed physical and biomechanical data of great scientific value on the behaviour of dense crowds, leading to new approaches for modelling, simulating and analysing the risk associated with the behaviour of dense crowds. The project deployed the concept of 'crowd observatories', bringing to the fore the need to be active in the field to address these issues.

6.3 Awards

- Tairan Yin received the "Best Papers - Honorable Mentions" award at IEEE VR 2024 for his paper entitled: With or Without You: Effect of Contextual and Responsive Crowds on VR-based Crowd Motion Capture

7 New software, platforms, open data

7.1 New software

7.1.1 AvatarReady

- Name: A unified platform for the next generation of our virtual selves in digital worlds

- Keywords: Avatars, Virtual reality, Augmented reality, Motion capture, 3D animation, Embodiment

- Scientific Description: AvatarReady is an open-source tool (AGPL) written in C#, providing a plugin for the Unity 3D software to facilitate the use of humanoid avatars in mixed reality applications. Because configuring avatars that come from different sources, and driving them with different interaction techniques and devices, is currently complex and only semi-automatic, AvatarReady aggregates several industrial solutions and results from the academic state of the art to offer a simple, fast and seamless way to use humanoid avatars in mixed reality. For example, it can automatically configure avatars from different libraries (e.g., Rocketbox, Character Creator, Mixamo) and makes it easy to use different avatar control methods (e.g., motion capture, inverse kinematics). AvatarReady is also organized in a modular way so that scientific advances can be progressively integrated into the framework. It is furthermore accompanied by a utility that generates ready-to-use avatar packages that can be used on the fly, as well as a website that displays these packages and offers them for download.

- Functional Description: AvatarReady is a Unity tool to facilitate the configuration and use of humanoid avatars for mixed reality applications. It comes with a utility to generate ready-to-use avatar packages and a website to display them and offer them for download.

- URL:

- Contact: Ludovic Hoyet
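Part of what AvatarReady automates is mapping the skeletons of avatars from different libraries onto a common humanoid description. AvatarReady itself is a C# Unity plugin whose internals are not described here, so the Python sketch below only illustrates the general idea: detect a library from its bone-naming convention, then map canonical humanoid bones to that library's names. The bone subset and the per-library names (Mixamo's `mixamorig:` prefix, Rocketbox's 3ds Max Biped-style `Bip01` names) are assumptions to check against actual assets, and all function and constant names are hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical subset of canonical humanoid bones every configured avatar should expose.
CANONICAL_BONES = ("Hips", "Spine", "Head", "LeftUpperLeg", "RightUpperLeg")

# Assumed per-library aliases: canonical bone name -> bone name used by that library.
LIBRARY_ALIASES: Dict[str, Dict[str, str]] = {
    "mixamo": {
        "Hips": "mixamorig:Hips",
        "Spine": "mixamorig:Spine",
        "Head": "mixamorig:Head",
        "LeftUpperLeg": "mixamorig:LeftUpLeg",
        "RightUpperLeg": "mixamorig:RightUpLeg",
    },
    "rocketbox": {
        "Hips": "Bip01 Pelvis",
        "Spine": "Bip01 Spine",
        "Head": "Bip01 Head",
        "LeftUpperLeg": "Bip01 L Thigh",
        "RightUpperLeg": "Bip01 R Thigh",
    },
}


def detect_library(bone_names: List[str]) -> Optional[str]:
    """Return the first library whose aliases all appear in the skeleton, else None."""
    names = set(bone_names)
    for library, aliases in LIBRARY_ALIASES.items():
        if all(alias in names for alias in aliases.values()):
            return library
    return None


def configure_avatar(bone_names: List[str]) -> Dict[str, str]:
    """Map each canonical bone to this avatar's bone name, or raise if unrecognised."""
    library = detect_library(bone_names)
    if library is None:
        raise ValueError("unrecognised skeleton naming convention")
    return {canon: LIBRARY_ALIASES[library][canon] for canon in CANONICAL_BONES}


if __name__ == "__main__":
    skeleton = ["mixamorig:Hips", "mixamorig:Spine", "mixamorig:Head",
                "mixamorig:LeftUpLeg", "mixamorig:RightUpLeg", "mixamorig:LeftHand"]
    print(configure_avatar(skeleton)["LeftUpperLeg"])
```

Detection requires every alias of a library to be present, so a skeleton that matches no known convention is rejected rather than partially mapped; extra bones (such as hands here) are simply ignored.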

7.1.2 PyNimation

- Keywords: Moving bodies, 3D animation, Synthetic human

- Scientific Description: PyNimation is Python-based open-source (AGPL) software for editing motion capture data. It was initiated because of a lack of open-source software able to process different types of motion capture data in a unified way, a gap which typically forces animation pipelines to rely on several commercial tools: for instance, motions are captured with one tool, retargeted with another, then edited with a third, etc. The goal of PyNimation is therefore to bridge the gap in the animation pipeline between motion capture software and final game engines, by handling different types of motion capture data in a unified way, providing standard and novel motion editing solutions, and exporting motion capture data in formats compatible with common 3D game engines (e.g., Unity, Unreal). It is also meant to support our research efforts in this area, and is therefore used, maintained and extended to progressively include novel motion editing features and to integrate the results of our research projects. In the short term, our goal is to further extend its capabilities and to share it more widely with the animation and research communities.

- Functional Description: PyNimation is a framework for editing, visualizing and studying skeletal 3D animations; it was designed more particularly to process motion capture data. It stems from the wish to leverage Python's data science capabilities and ease of use for human motion research.

  In its version 1.0, PyNimation offers the following functionalities, which are expected to evolve with the development of the tool:
  - Import/export of the FBX, BVH and MVNX animation file formats
  - Access to and modification of skeletal joint transformations, as well as a number of functionalities to manipulate these transformations
  - Basic features for human motion animation (under development, but including, e.g., different inverse kinematics methods, editing filters, etc.)
  - Interactive OpenGL visualisation of animations and objects, including the possibility to animate skinned meshes

- URL:

- Contact: Ludovic Hoyet
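PyNimation exposes skeletal joint transformations. As a generic illustration of what these transformations encode, and not PyNimation's API (which is not documented here), the sketch below computes global joint positions from per-joint offsets and local rotations by forward kinematics. It follows the BVH convention, in which a joint's position is its parent's global position plus the parent's global rotation applied to the joint's offset. The `Joint` type and helper names are hypothetical, and rotations are restricted to the z axis to keep the example short.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def rot_z(angle_deg: float) -> Mat3:
    """3x3 rotation matrix about the z axis."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))


def mat_vec(a: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(a[i][k] * v[k] for k in range(3)) for i in range(3))


@dataclass
class Joint:
    name: str
    parent: Optional[str]  # None for the root joint
    offset: Vec3           # position relative to the parent (like a BVH OFFSET)
    rotation: Mat3         # local rotation for the current frame


def global_positions(joints: List[Joint]) -> Dict[str, Vec3]:
    """Forward kinematics; joints must be listed parents before children."""
    glob_rot: Dict[str, Mat3] = {}
    glob_pos: Dict[str, Vec3] = {}
    for j in joints:
        if j.parent is None:
            glob_pos[j.name] = j.offset
            glob_rot[j.name] = j.rotation
        else:
            p_rot, p_pos = glob_rot[j.parent], glob_pos[j.parent]
            rotated = mat_vec(p_rot, j.offset)  # offset expressed in world space
            glob_pos[j.name] = tuple(p + o for p, o in zip(p_pos, rotated))
            glob_rot[j.name] = mat_mul(p_rot, j.rotation)  # accumulate rotations
    return glob_pos


if __name__ == "__main__":
    arm = [
        Joint("shoulder", None, (0.0, 0.0, 0.0), rot_z(90.0)),
        Joint("elbow", "shoulder", (1.0, 0.0, 0.0), rot_z(-90.0)),
        Joint("wrist", "elbow", (1.0, 0.0, 0.0), rot_z(0.0)),
    ]
    print(global_positions(arm)["wrist"])
```

In this two-link arm, rotating the shoulder by 90 degrees lifts the elbow to approximately (0, 1, 0), and the elbow's -90 degree rotation cancels the shoulder's, so the wrist ends up at approximately (1, 1, 0). Editing a single local rotation thus moves every descendant joint, which is why motion editing tools operate on these local transformations.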

7.2 Open data

Full Body Motion Capture of Single Individuals Following External Perturbations from Different Directions

- Contributors: Thomas Chatagnon, Charles Pontonnier, Anne-Hélène Olivier, Ludovic Hoyet and Julien Pettré

- Description: This dataset is composed of C3D files corresponding to the full-body motion of participants undergoing external perturbations at shoulder height under different sensory conditions. The temporal force profiles of the perturbations are also available. The experiment received approval from an ethics committee, and all participants signed an informed consent form regarding the processing of their data. The experiments were carried out with 21 healthy young adults (10 females, 11 males), aged between 20 and 38 years (mean: 27.2, std: 4.2). Mean mass was 70.2 kg (std: 12.1 kg) and mean height was 1.74 m (std: 0.08 m). Participants' motion was recorded using 45 reflective markers and a 23-camera Qualisys system (200 Hz). The markers were placed on participants following standardised anatomical landmarks. The output signal of the force sensor was processed using a Butterworth low-pass filter with a 5 Hz cutoff frequency, applied without phase shift. The force sensor was synchronised with the motion capture software. Three reflective markers were also placed along the pole in order to retrieve the exact direction of the perturbations.

- Dataset PID: https://doi.org/10.5281/zenodo.10512652

- Project link: https://zenodo.org/records/10512652

- Publications:

- Contact: julien.pettre@inria.fr

- Release contributions:
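The dataset description states that the force signal was low-pass filtered with a 5 Hz Butterworth filter "without phase shift". A common way to obtain zero phase is forward-backward filtering, the approach implemented by SciPy's `filtfilt`. The sketch below assumes a second-order filter and a 200 Hz sampling rate for the force sensor, neither of which is stated in the source, and is written in pure Python so that it runs without dependencies; it is an illustration, not the contributors' processing code.

```python
import math
from typing import List, Sequence, Tuple


def butter2_lowpass_coeffs(cutoff_hz: float, fs_hz: float) -> Tuple[Tuple[float, float, float], Tuple[float, float]]:
    """Second-order Butterworth low-pass coefficients via the bilinear transform."""
    k = math.tan(math.pi * cutoff_hz / fs_hz)  # pre-warped analog cutoff
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def _filter_once(x: List[float], b, a) -> List[float]:
    """Direct-form I biquad, started in steady state at the first sample."""
    x1 = x2 = y1 = y2 = x[0]  # valid steady state because the DC gain is exactly 1
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def zero_phase_lowpass(x: Sequence[float], cutoff_hz: float, fs_hz: float) -> List[float]:
    """Filter forward, then backward on the reversed output, cancelling the phase lag."""
    if len(x) == 0:
        return []
    b, a = butter2_lowpass_coeffs(cutoff_hz, fs_hz)
    forward = _filter_once(list(x), b, a)
    backward = _filter_once(forward[::-1], b, a)
    return backward[::-1]


if __name__ == "__main__":
    fs = 200.0  # assumed sampling rate of the force sensor
    t = [n / fs for n in range(400)]
    signal = [math.sin(2 * math.pi * ti) + 0.5 * math.sin(2 * math.pi * 50 * ti) for ti in t]
    smoothed = zero_phase_lowpass(signal, cutoff_hz=5.0, fs_hz=fs)
    print(round(smoothed[200], 3))
```

Filtering twice squares the magnitude response, so the attenuation at the 5 Hz cutoff becomes about -6 dB instead of -3 dB and the effective order doubles. Starting each pass in steady state at the first sample is a simple edge-handling choice; SciPy's `filtfilt` instead pads the signal and derives initial conditions from `lfilter_zi`.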

8 New results

8.1 NextGen Virtual Characters

8.1.1 Human Motion Prediction under Unexpected Perturbation

Participants: Julien Pettré [contact].

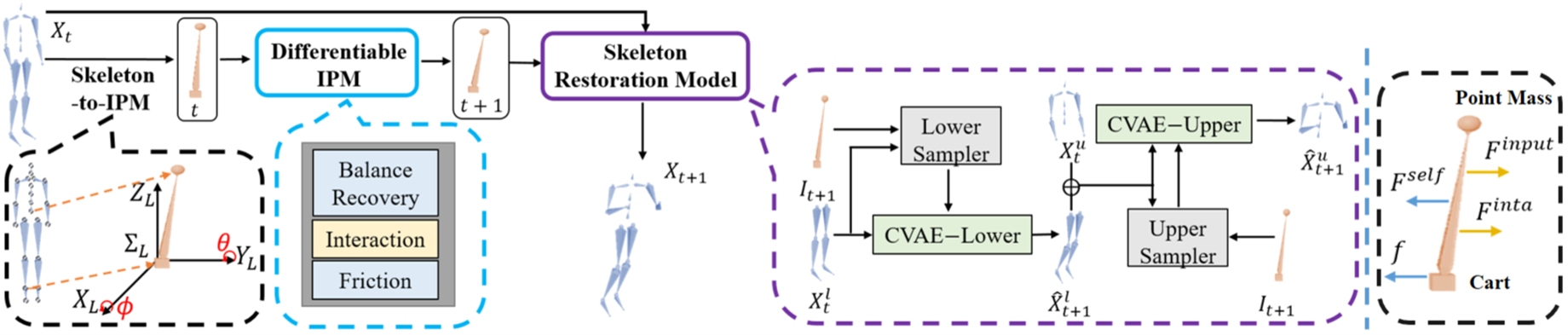

Figure 3: Overview of our model

We investigate a new task in human motion prediction 17: predicting motions under unexpected physical perturbations, potentially involving multiple people. Compared with existing research, this task involves predicting less controlled, unpremeditated and purely reactive motions in response to external impacts, as well as how such motions propagate through people. It brings new challenges such as data scarcity and the prediction of complex interactions. To this end, we propose a new method capitalizing on differentiable physics and deep neural networks, as illustrated in Figure 3, leading to an explicit Latent Differentiable Physics (LDP) model. Through experiments, we demonstrate that LDP has high data efficiency, outstanding prediction accuracy, strong generalizability and good explainability. Since no prior work addresses this task, a comprehensive comparison with 11 baselines adapted from several relevant domains is conducted, showing that LDP outperforms existing research both quantitatively and qualitatively, improving prediction accuracy by as much as 70% and demonstrating significantly stronger generalization.
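The LDP model itself is not detailed in this report, so the following is only a generic, minimal sketch of the idea underlying differentiable physics, under our own assumptions. A hypothetical one-dimensional unit-mass body on a spring-damper (a stand-in for a perturbed human, not the LDP formulation) is pushed briefly and simulated with semi-implicit Euler; forward-mode automatic differentiation with dual numbers then yields the exact gradient of a trajectory-matching loss with respect to the stiffness `k`. All names and parameter values (`simulate`, `c`, `dt`, `push`, `push_steps`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Dual:
    """Forward-mode autodiff number: val + der * eps, with eps**2 = 0."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        o = _lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = _lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return _lift(other) - self

    def __mul__(self, other):
        o = _lift(other)
        # Product rule: (a + a' eps)(b + b' eps) = ab + (a'b + ab') eps
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants with zero derivative."""
    return x if isinstance(x, Dual) else Dual(float(x))


def simulate(k, c=4.0, dt=0.01, steps=100, push=20.0, push_steps=5):
    """Unit-mass body on a spring-damper, pushed for a few steps, then released.

    Every operation is differentiable, so passing k as a Dual propagates
    d(position)/dk through the whole trajectory.
    """
    x, v = 0.0, 0.0
    xs = []
    for n in range(steps):
        force = push if n < push_steps else 0.0
        acc = force - k * x - c * v
        v = v + acc * dt  # semi-implicit Euler: update velocity first
        x = x + v * dt
        xs.append(x)
    return xs


def trajectory_loss(k, observed: List[float]):
    """Sum of squared position errors between simulated and observed trajectories."""
    return sum((x - o) * (x - o) for x, o in zip(simulate(k), observed))


def loss_gradient(k: float, observed: List[float]) -> float:
    """d(loss)/dk, obtained by differentiating through the simulator (seed der = 1)."""
    return trajectory_loss(Dual(k, 1.0), observed).der


if __name__ == "__main__":
    observed = simulate(50.0)  # synthetic "ground truth" trajectory
    print(loss_gradient(30.0, observed))
```

The gradient returned by `loss_gradient` matches a central finite-difference estimate and is exactly zero at the stiffness that generated the observations; this is the quantity a gradient-based optimizer, or a neural network trained end-to-end through the simulator, would use. Forward mode is used here only for brevity; practical systems typically rely on reverse-mode differentiation from a deep learning framework.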

8.1.2 Standing balance recovery strategies of young adults in a densely populated environment following external perturbations

Participants: Thomas Chatagnon, Ludovic Hoyet, Anne-Hélène Olivier, Julien Pettré [contact].

In this work 5, performed in collaboration with the MimeTIC Inria team and Forschungszentrum Jülich, we investigated the recovery strategies used by young adults to maintain standing balance following external force-controlled perturbations in densely populated group formations. In particular, we studied the moment of step initiation as well as the characteristics of the first recovery steps and of hand-raising. The experimental data considered in this work are part of a larger dataset relying on a new experimental paradigm inspired by Feldmann and Adrian (2023). In this experiment, 20 participants (8 females, 12 males, 24.8 ± 3.7 y.o.) equipped with motion capture suits were asked to stand in a tightly packed formation before receiving a force-controlled perturbation. In total, four group configurations and two interpersonal distancing conditions were investigated. The standing balance recovery strategies observed in this dense groups experiment were then compared with the behaviour of single individuals following external perturbations. Results suggest that the moment of initiation of recovery steps was affected by the initial interpersonal distancing condition. The first recovery steps within the studied dense groups were slower, smaller and more dispersed than those of single individuals for comparable levels of perturbation intensity. However, the relationship between the average speed of the first recovery steps and their length remained similar to that of single individuals. This suggests that the duration of the first recovery step remained almost constant in both the dense groups experiment and the experiment with single individuals. Finally, we observed a significant occurrence of participants raising their hands, as physical interactions played an important role in this dense groups experiment. This behaviour was mostly initiated before the recovery steps.
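The step from the speed-length relationship to a constant step duration can be made explicit. Writing $L$ for the length of the first recovery step, $T$ for its duration and $\bar{v}$ for its average speed, and assuming (as the inference above implies) that speed grows roughly in proportion to length with some coefficient $\alpha$:

```latex
\bar{v} = \frac{L}{T}, \qquad \bar{v} \approx \alpha L
\quad\Longrightarrow\quad
T = \frac{L}{\bar{v}} \approx \frac{1}{\alpha}
```

The duration then does not depend on step length, and a similar $\alpha$ in the dense-group and single-individual experiments implies a similar, nearly constant duration in both.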

8.1.3 Temporal segmentation of motion propagation in response to an external impulse

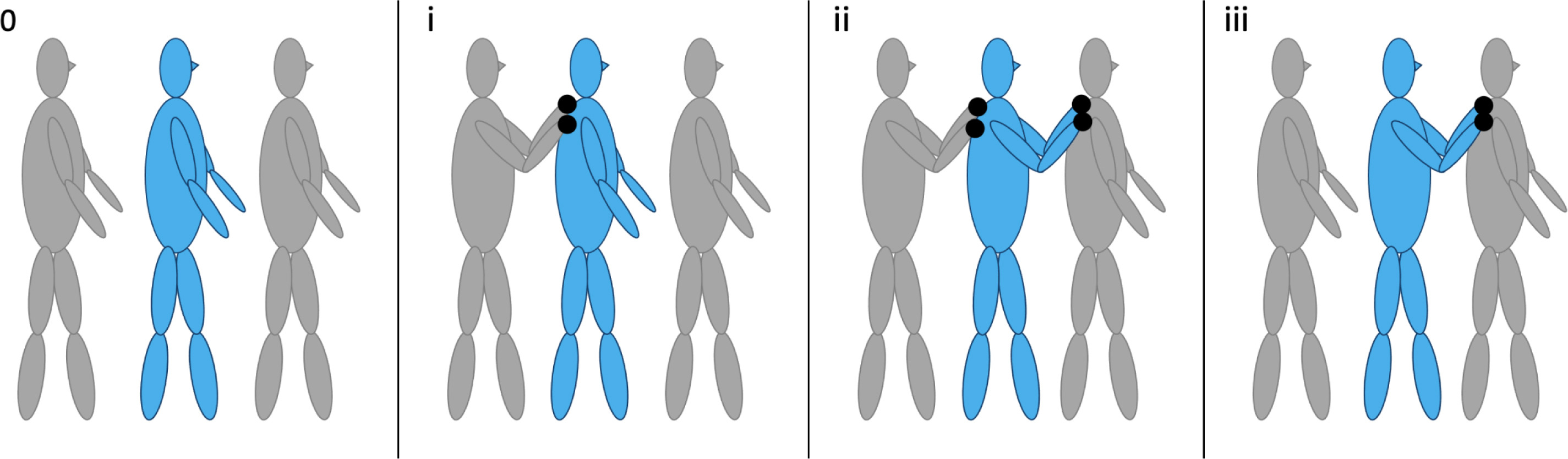

Participants: Thomas Chatagnon, Julien Pettré [contact].

Overview of the phases of impulse propagation for the blue person in the middle: (0) no external impact, (i) receiving an impulse, (ii) receiving and passing on an impulse, and (iii) passing on an impulse. The black dots represent contacts.

In high-density crowds, local motion can propagate, amplify, and lead to macroscopic phenomena, including "density waves". These density waves only occur when individuals interact and impulses are transferred to neighbours. How this impulse is passed on by the human body, and what effects this has on individuals, is still not fully understood. To investigate this further, experiments focusing on the propagation of a push were conducted within the EU-funded project CrowdDNA, in collaboration with Forschungszentrum Jülich 6. In these experiments, the crowd is greatly simplified to five people standing in a row (Figure 4). The rearmost person in the row was pushed forward in a controlled manner with a punching bag. The intensity of the push, the initial distance between participants and the initial arm posture were varied. Collected data included side-view and top-view video recordings, head trajectories, 3D motion recorded with motion capture (MoCap) suits, and the pressure measured at the punching bag. A hybrid tracking algorithm combines the MoCap data with the head trajectories, allowing the motion of each limb to be analysed in relation to other persons. The observed motion of the body in response to the push can be divided into three phases: (i) receiving an impulse, (ii) receiving and passing on an impulse, and (iii) passing on an impulse. Using the 3D MoCap data, we can identify the start and end times of each phase. To determine when a push is passed on, the forward motion of the person in front has to be considered. The projection of the center of mass relative to the initial position of the feet measures how far a person is displaced from their rest position. Specifying the timing of these phases is particularly important to distinguish between different types of physical interactions. Our results contribute to the development and validation of a pedestrian model for identifying risks due to motion propagation in dense crowds.
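The displacement measure and the use of the front neighbour's motion can be sketched as follows. This is a minimal illustration under stated assumptions: positions are 2D ground-plane coordinates in metres, the forward direction is known, the 2.5 cm onset threshold is hypothetical, and treating phase (i) as the interval between a person's own onset and the onset of the person in front is our reading of the text rather than the authors' exact criterion.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec2 = Tuple[float, float]


def com_displacement(com: Sequence[Vec2], left_foot0: Vec2, right_foot0: Vec2,
                     forward: Vec2 = (1.0, 0.0)) -> List[float]:
    """Forward offset of the ground-projected CoM from the initial mid-foot point."""
    bx = 0.5 * (left_foot0[0] + right_foot0[0])
    by = 0.5 * (left_foot0[1] + right_foot0[1])
    norm = math.hypot(forward[0], forward[1])
    fx, fy = forward[0] / norm, forward[1] / norm
    return [(cx - bx) * fx + (cy - by) * fy for cx, cy in com]


def onset_frame(disp: Sequence[float], threshold: float = 0.025) -> Optional[int]:
    """First frame where the offset exceeds its initial value by `threshold` (m).

    The 0.025 m default is a hypothetical value chosen for illustration.
    """
    for i, d in enumerate(disp):
        if d - disp[0] > threshold:
            return i
    return None


def receiving_only_frames(own: Sequence[float], front: Sequence[float],
                          threshold: float = 0.025) -> Optional[Tuple[int, int]]:
    """Assumed phase (i): from own onset until the person in front starts moving."""
    start, end = onset_frame(own, threshold), onset_frame(front, threshold)
    if start is None or end is None or end < start:
        return None
    return start, end
```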

8.1.4 A survey on realistic virtual humans in animation: what is realism and how to evaluate it?

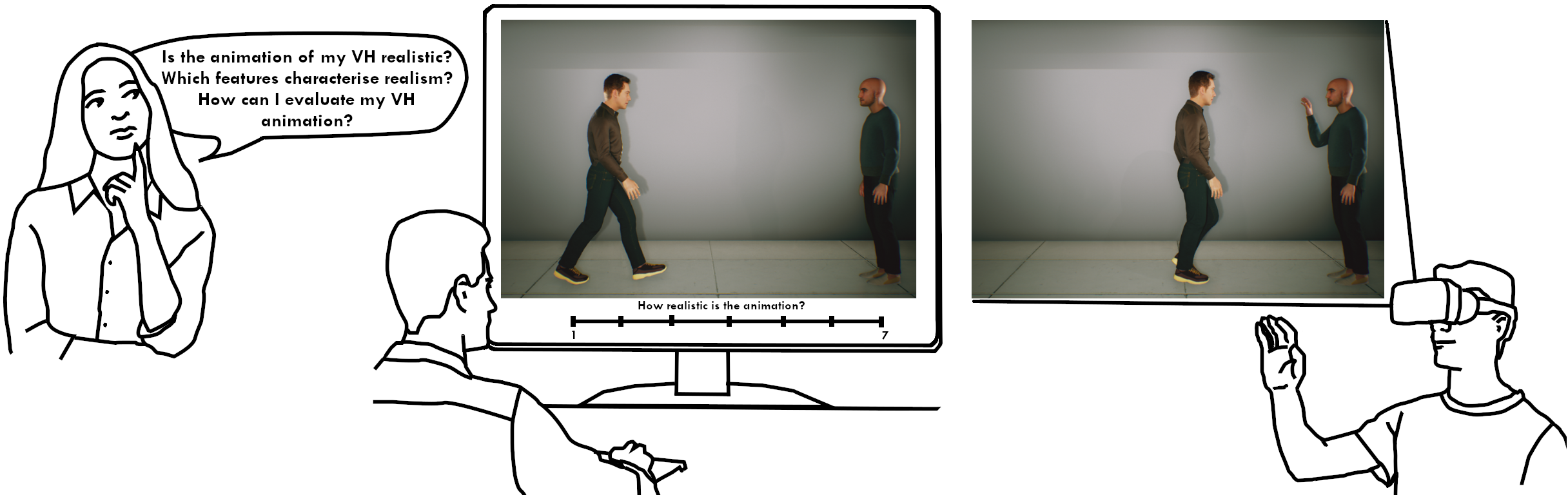

Participants: Ludovic Hoyet, Anne-Hélène Olivier [contact], Rim Rekik Dit Nekhili, Katja Zibrek.

The central goal of this survey is to explore the aspects of animation realism of virtual humans. We approach this topic by reviewing existing definitions of realism, providing a taxonomy of animation features that influence realism, and discussing existing evaluation methods: objective, subjective and hybrid approaches. Two examples of subjective approaches are depicted in the image above: (left) a user rating realism with a self-report measure, and (right) a user reacting to the virtual human while immersed in a VR environment.

Generating realistic animated virtual humans is a problem that has been extensively studied, with many applications in different types of virtual environments. However, creating such realistic animations is challenging, especially because of the number and variety of influencing factors, which must be identified and evaluated. In this survey 10, performed in collaboration with the Morpheo Inria team, we attempt to provide a clearer understanding of how the multiple factors studied in the literature impact the level of realism of animated virtual humans (see Figure 5), by surveying studies that assess their realism. This includes a review of the features that have been manipulated to increase the realism of virtual humans, as well as of the evaluation approaches that have been developed. As evaluating animated virtual humans in a way that agrees with human perception remains an active research problem, this survey further identifies important open problems and directions for future research.

8.1.5 Collision avoidance behaviours of young adult walkers: Influence of a virtual pedestrian’s age-related appearance and gait profile

Participants: Ludovic Hoyet, Anne-Hélène Olivier [contact].

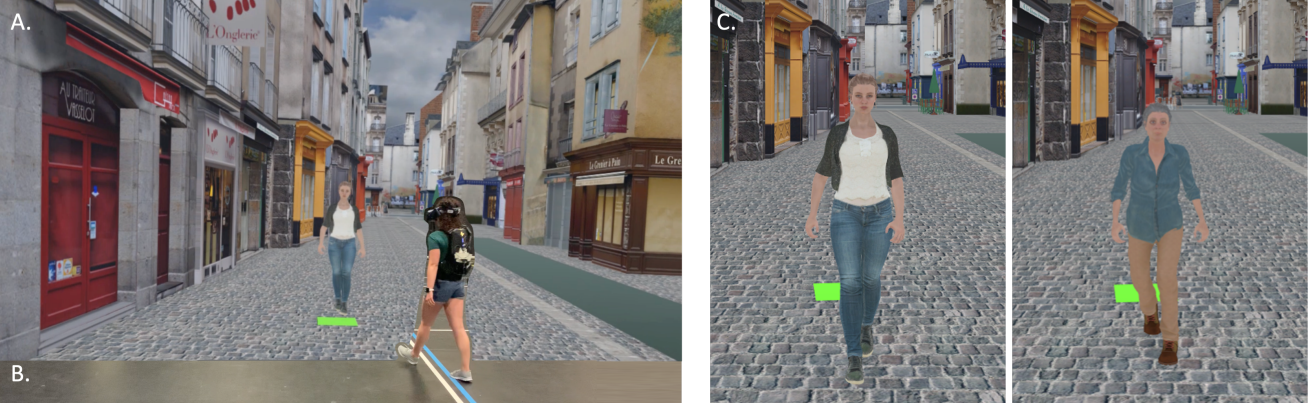

Virtual environment from the viewpoint of the participant’s starting position (A), real-world set-up (B), and the Young Adult (YA) and Older Adult (OA) virtual pedestrians (C).

Populating virtual worlds with realistic pedestrian behaviours is challenging but essential to ensure natural interactions with users. In pedestrian encounters, successful collision avoidance requires adapting speed and/or locomotor trajectory based on situational and personal characteristics. Personal characteristics such as the age of a pedestrian can easily be observed, but whether age affects avoidance behaviours is unknown. This is, however, an important question for ensuring the realism and variety of simulations. The purpose of the current study 13, performed in collaboration with the University of Waterloo and Wilfrid Laurier University (Canada), was to examine the influence of a virtual pedestrian’s (VP) age-related appearance and gait profile on avoidance behaviours during a circumvention task. We expected that older adult (OA) gait characteristics and/or appearance would result in more cautious behaviours. Young adults (YA; n=17, 5 females; 23.6 ± 2.7 yrs) navigated a virtual street using a head-mounted display (HMD). Individuals walked 8 m towards a goal while avoiding a VP (Figure 6) that approached and steered towards the participant’s left or right, or continued straight, while exhibiting different age-related appearances and gait profiles: 1. YA appearance, YA gait; 2. OA appearance, OA gait; 3. OA appearance, YA gait; and 4. YA appearance, OA gait. Statistical analyses revealed significant effects of VP appearance and gait profile on clearance distance at the time of crossing. Clearance was larger when the VP resembled an OA (M = 0.79 ± 0.23 m) versus a YA (M = 0.76 ± 0.19 m), and when the VP walked like an OA (M = 0.80 ± 0.23 m) versus a YA (M = 0.76 ± 0.20 m). The larger clearance distances observed with OA characteristics may be due to societal norms associated with the principle of parental respect, as well as to a cautious strategy anticipating the potential balance instability (wavering) commonly observed in OAs.
This research sheds light on how age-related cues influence pedestrian interactions, with implications for the design of populated virtual environments.
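The clearance metric can be illustrated with a short sketch. The text does not define it operationally, so we assume here that the crossing time is the first frame at which the virtual pedestrian is no longer ahead of the participant along the participant's walking direction, and that clearance is the Euclidean distance between the two walkers' tracked positions at that frame. Both definitions are assumptions for illustration.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def clearance_at_crossing(participant: Sequence[Vec2], pedestrian: Sequence[Vec2],
                          heading: Vec2 = (1.0, 0.0)) -> float:
    """Distance between the two walkers at the first frame where the virtual
    pedestrian is no longer ahead of the participant along `heading`
    (assumed definition of the crossing time)."""
    norm = math.hypot(*heading)
    hx, hy = heading[0] / norm, heading[1] / norm
    for (px, py), (qx, qy) in zip(participant, pedestrian):
        ahead = (qx - px) * hx + (qy - py) * hy   # longitudinal gap
        if ahead <= 0.0:
            return math.hypot(qx - px, qy - py)
    raise ValueError("the walkers never cross")
```

With frame-sampled data the result depends on the sampling rate; interpolating between the two frames that bracket the sign change would give a smoother estimate.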

8.1.6 Entropy and Speed: Effects of Obstacle Motion Properties on Avoidance Behavior in Virtual Environment

Participants: Ludovic Hoyet, Yuliya Patotskaya, Julien Pettré, Katja Zibrek [contact].

Participants traversed a virtual room, avoiding a moving cylinder with varying speed and entropy.

Avoiding moving obstacles in immersive environments requires adjustments to the walking trajectory and depends on the type of obstacle movement. Previous research studied the impact of speed and direction of motion, but little is known about how the predictability of motion affects human circumvention in terms of distance to the obstacle (proximity). In this paper 15, we investigate how participants navigate VR collision avoidance scenarios with obstacles whose motion varies in speed and predictability (Figure 7). We introduce a novel concept of creating unpredictable motion using entropy calculations. We anticipated that higher entropy would increase the proximity distance, which we measured with several metrics related to the distance from the obstacle and from the centre of the scene. We found a significant influence of motion speed and predictability on proximity-related metrics, with participants tending to keep larger distances when obstacle speed and entropy were higher. We also outline two decision-making strategies for avoidance behaviour and investigate the factors that influence individuals' choice of one strategy over the other.
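The text does not detail how entropy is computed. One common choice, assumed here, is the Shannon entropy of the histogram of direction changes along the obstacle's path: an obstacle moving in a straight line gives 0 bits, while turns spread evenly over k bins give log2(k) bits. The bin count is a hypothetical parameter.

```python
import math
from collections import Counter
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def turn_entropy(headings: Sequence[float], n_bins: int = 8) -> float:
    """Entropy (bits) of the histogram of heading changes along a trajectory.

    Assumed measure of motion predictability; `n_bins` is hypothetical.
    """
    counts = Counter()
    for h0, h1 in zip(headings, headings[1:]):
        turn = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi   # wrap to [-pi, pi)
        b = min(int((turn + math.pi) / (2 * math.pi) * n_bins), n_bins - 1)
        counts[b] += 1
    total = sum(counts.values())
    return shannon_entropy([c / total for c in counts.values()])
```

Used in reverse, the same measure can steer generation: sampling turns from a distribution with a chosen entropy yields obstacle paths with a controlled level of unpredictability.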

8.1.7 Correspondence-free online human motion retargeting

Participants: Anne-Hélène Olivier [contact], Rim Rekik Dit Nekhili.

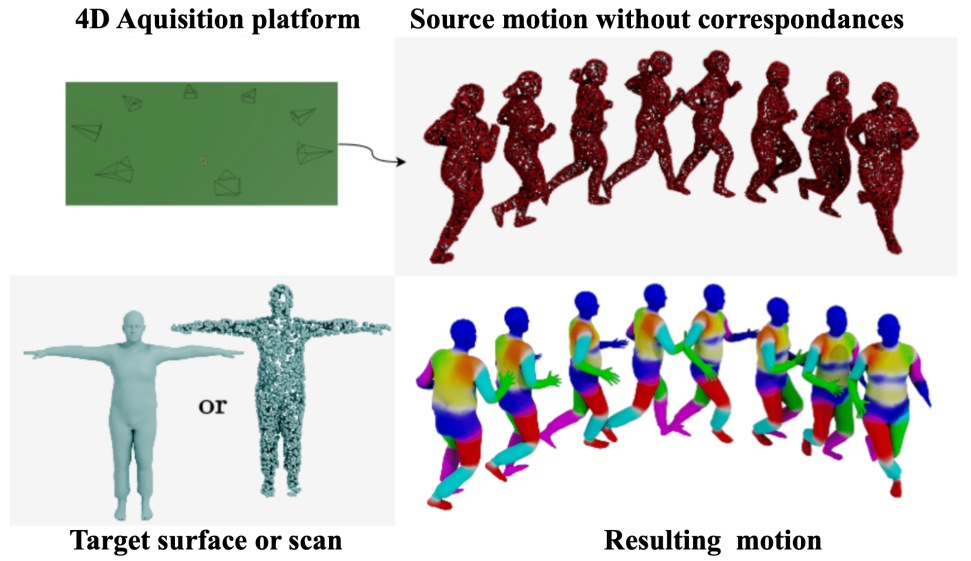

Given an untracked motion performed by a source subject (top) and a target body shape (bottom left), our method animates the target with the source motion, preserving temporal correspondences of the output motion (bottom right).

In this work 16, performed in collaboration with the Morpheo Inria team, we present a data-driven framework for unsupervised human motion retargeting that animates a target subject with the motion of a source subject (Figure 8). Our method is correspondence-free, requiring neither spatial correspondences between the source and target shapes nor temporal correspondences between different frames of the source motion. This makes it possible to animate a target shape with arbitrary sequences of humans in motion, possibly captured using 4D acquisition platforms or consumer devices. Our method unifies the advantages of two existing lines of work, namely skeletal motion retargeting, which leverages long-term temporal context, and surface-based retargeting, which preserves surface details, by combining a geometry-aware deformation model with a skeleton-aware motion transfer approach. During inference, our method runs online, i.e., input frames can be processed sequentially, and retargeting is performed in a single forward pass per frame. Experiments show that including long-term temporal context during training improves the method's accuracy for skeletal motion and detail preservation. Furthermore, our method generalizes to unobserved motions and body shapes. We demonstrate that our method achieves state-of-the-art results on two test datasets and that it can be used to animate human models with the output of a multi-view acquisition platform.
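The online setting (frames arriving one at a time, one forward pass per frame) can be sketched as a streaming loop. Here `model` is a hypothetical stand-in for the retargeting network, and the bounded window of past frames is only one assumed way of carrying temporal context; the actual method may keep its context differently (for example in a recurrent state).

```python
from collections import deque
from typing import Any, Callable, List


class OnlineRetargeter:
    """Streams frames through a model one at a time, keeping a bounded history.

    `model` is a hypothetical callable mapping (history of source frames,
    target shape) to one retargeted frame; it stands in for the network.
    """

    def __init__(self, model: Callable[[List[Any], Any], Any], target_shape: Any,
                 context: int = 64):
        self.model = model
        self.target_shape = target_shape
        self.history = deque(maxlen=context)   # oldest frames drop out automatically

    def step(self, source_frame: Any) -> Any:
        """Consume one new source frame and return one output frame (one forward pass)."""
        self.history.append(source_frame)
        return self.model(list(self.history), self.target_shape)
```

Because each call only needs the frames seen so far, output can be produced as soon as each input frame arrives, which is what distinguishes this from offline methods that require the whole sequence.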

8.2 Populated Dynamic Worlds

8.2.1 Collaborative services for Crowd Safety systems across the Edge-Cloud Computing Continuum

Participants: Julien Pettré [contact].

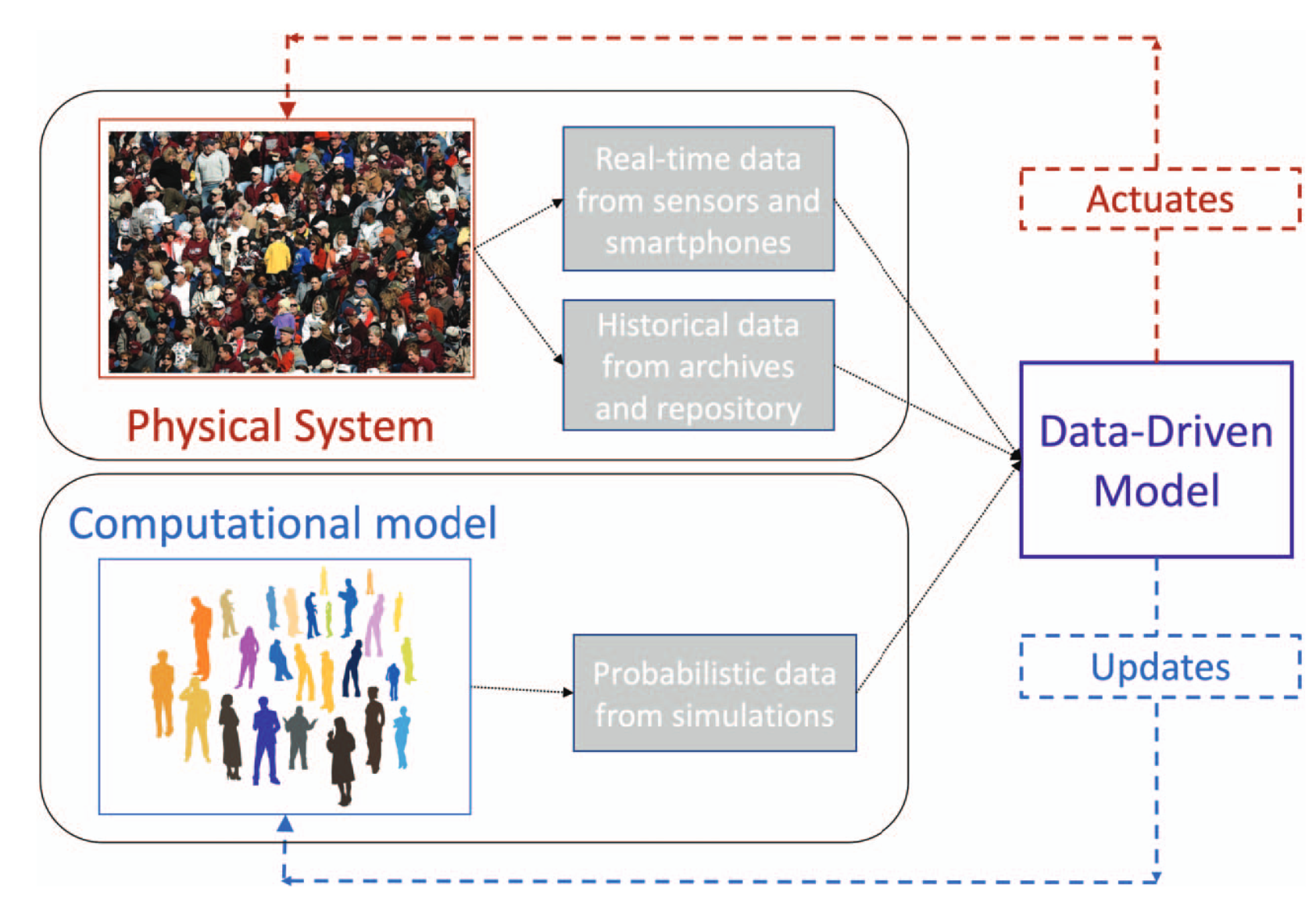

Digital twins aim to automatically control the state of data-driven and computational models by comparing the current state, derived from historical and real-time data, with the ideal or desired state obtained from simulation.

Individuals face crowds and mass gatherings on a daily basis, at venues such as entertainment events or in transportation systems. Crowd management for large-scale planned events is essential, and depends on behavioural and mathematical modelling to understand how crowds move in different scenarios. In this paper, we propose the creation of a set of collaborative services, the CrowdMesh platform, to allow for the processing, analysis, and sharing of crowd monitoring datasets built over the Edge-Cloud Continuum (see Figure 9). Comprehensive tools and best practices are needed to ensure that analysis pipelines are robust and that results can be replicated 12. This work was performed in collaboration with the Stack Inria team.

8.2.2 Resolving Collisions in Dense 3D Crowd Animations

Participants: Ludovic Hoyet, Anne-Hélène Olivier, Julien Pettré [contact].

Our physics-based method resolves the collisions in highly-dense 3D crowds, enabling the synthesis of 3D crowd animations where characters realistically interact and push each other, as shown in this indoor concert scene. Through a rigorous perceptual study, we demonstrate that resolving collisions is needed to generate 3D dense crowds that move in a natural and convincing way, while traditional animation methods do not model or correct such contacts between 3D characters.

In this work 7, performed in collaboration with the Universidad Rey Juan Carlos (Spain), we propose a novel contact-aware method to synthesize highly dense 3D crowds of animated characters. Existing methods animate crowds by first computing the 2D global motion, approximating subjects as 2D particles, and then introducing individual character motions without considering their surroundings. This creates the illusion of a 3D crowd but, as density increases, characters frequently intersect each other, since character-to-character contact is not modeled. We tackle this issue and propose a general method that takes any crowd animation and resolves the residual collisions. To this end, we take a physics-based approach to model contacts between articulated characters. This enables the real-time synthesis of high-density 3D crowds with dozens of individuals that do not intersect each other, producing an unprecedented level of physical correctness in animations (Figure 10). Under the hood, we model each individual using a parametric human body incorporating a set of 3D proxies that approximate its volume. We then build a large system of articulated rigid bodies and use an efficient physics-based approach to solve for individual body poses that do not collide with each other while maintaining the overall motion of the crowd. We first validate our approach objectively and quantitatively. We then explore the relation between physical correctness and perceived realism through an extensive user study that evaluates the relevance of solving contacts in dense crowds. Results demonstrate that our approach outperforms existing methods for crowd animation in terms of geometric accuracy and overall realism.
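To illustrate what resolving collisions between volume proxies means, the sketch below uses sphere proxies and a simple position-based projection: overlapping spheres belonging to different characters are pushed apart along the line joining their centres. This is a deliberately simpler scheme than the articulated-rigid-body physics described above. It does not enforce joint constraints or preserve the overall crowd motion, and the sphere representation is an assumption.

```python
import math
from typing import List, Sequence


def resolve_overlaps(centers: List[List[float]], radii: Sequence[float],
                     owners: Sequence[int], iterations: int = 10) -> List[List[float]]:
    """Push apart overlapping sphere proxies that belong to different characters.

    Position-based projection for illustration only; the method described in
    the text solves an articulated rigid-body system instead.
    """
    pts = [list(c) for c in centers]
    n = len(pts)
    for _ in range(iterations):
        moved = False
        for i in range(n):
            for j in range(i + 1, n):
                if owners[i] == owners[j]:
                    continue                      # ignore self-contacts
                d = [pts[j][k] - pts[i][k] for k in range(3)]
                dist = math.sqrt(sum(x * x for x in d))
                pen = radii[i] + radii[j] - dist  # penetration depth
                if pen > 0.0 and dist > 1e-12:
                    for k in range(3):            # split the correction equally
                        shift = 0.5 * pen * d[k] / dist
                        pts[i][k] -= shift
                        pts[j][k] += shift
                    moved = True
        if not moved:
            break
    return pts
```

The pairwise loop is quadratic in the number of proxies; a real-time system would add a broad phase (e.g., a spatial grid) so that only nearby proxies are tested.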

8.3 New Immersive Experiences

8.3.1 With or Without You: Effect of Contextual and Responsive Crowds on VR-based Crowd Motion Capture

Participants: Marc Christie, Ludovic Hoyet, Julien Pettré [contact], Tairan Yin.

Illustration of our proposed 3R paradigm (Replace-Record-Replay), where a single user (red outline) is initially immersed into a simulated contextual crowd whose autonomous agents (green outline) are successively replaced by the user’s captured data (blue outline). The consistency of the user’s behavior in this 3R scenario is compared with 4R (Replace-Record-Replay-Responsive), where the blue agents are made locally responsive.

While data is vital to better understand and model interactions within human crowds, capturing real crowd motions is extremely challenging. Virtual Reality (VR) has demonstrated its potential to help, by immersing users either in simulated virtual crowds based on autonomous agents or in motion-capture-based crowds. In the latter case, users' own captured motion can be used to progressively extend the size of the crowd, a paradigm called Record-and-Replay (2R). However, both approaches have shown several limitations that impact the quality of the acquired crowd data. In this work 11, we propose the new concept of contextual crowds, which leverages both crowd simulation and the 2R paradigm to obtain more consistent crowd data. We evaluate two strategies to implement it: a Replace-Record-Replay (3R) paradigm, where users are initially immersed in a simulated crowd whose agents are successively replaced by the user's captured data, and a Replace-Record-Replay-Responsive (4R) paradigm, where the pre-recorded agents are additionally endowed with responsive capabilities (see Figure 11). These two paradigms are evaluated through two real-world-based scenarios replicated in VR. Our results suggest that surrounding VR users with agents from the beginning of the recording process makes their behaviors much more natural, enabling the 3R and 4R paradigms to improve the consistency of captured crowd datasets.

8.3.2 Virtual Crowds Rheology: Evaluating the Effect of Character Representation on User Locomotion in Crowds

Participants: Ludovic Hoyet, Jordan Martin, Julien Pettré [contact].

Participant (yellow outline) moving through virtual crowds at two densities (top and bottom rows), with agents represented as enclosing elliptic cylinders (left), puppets (middle) or realistic characters (right).

Crowd data is a crucial element in the modeling of collective behaviors, and opens the way to simulation for their study or prediction. Given the difficulty of acquiring such data, virtual reality is useful for simplifying experimental processes and opening up new experimental opportunities. This comes at the cost of having to assess the biases introduced by the use of this technology. This work 9, carried out in collaboration with the firefighter's department of the Laboratoire Central de la Prefecture de Police de Paris, is part of this effort and investigates the effect of the graphical representation of a crowd on the behavior of a user immersed in it (Figure 12). More specifically, we examine navigation through virtual crowds, in terms of travel speeds and local navigation choices, as a function of the visual representation of the virtual agents that make up the crowd (simple geometric model, anthropomorphic model or realistic model). Through an experiment in which users navigate virtual crowds of varying densities, we show that the effect of the visual representation is limited, but that an anthropomorphic representation offers the best trade-off between computational complexity and ecological validity, although a more realistic representation may be preferred when user behaviour is studied in more detail. Our work leads to clear recommendations on the design of immersive simulations for the study of crowd behavior.

8.3.3 Wheelchair Proxemics: interpersonal behaviour between pedestrians and power wheelchair drivers in real and virtual environments

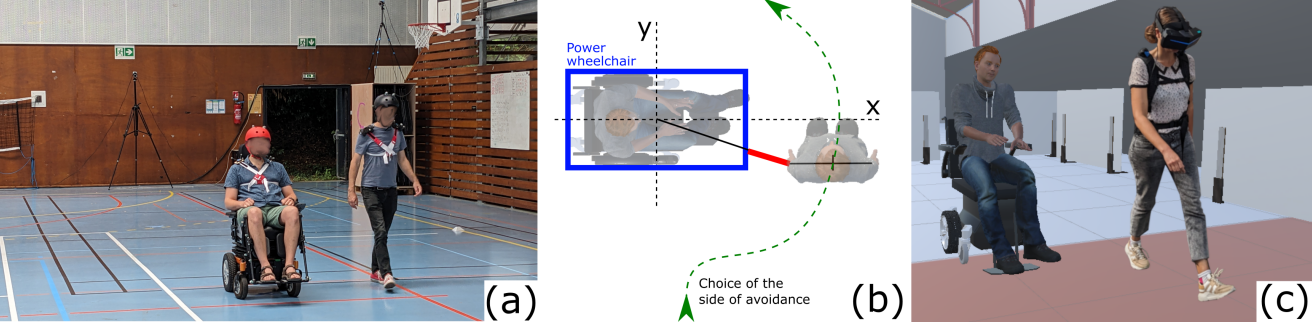

Participants: Emilie Leblong, Anne-Hélène Olivier [contact].

We are interested in evaluating collision avoidance strategies (b), including speed, side of avoidance, and shape-to-shape distance between a pedestrian and a power wheelchair user, to improve the design of power wheelchair navigation simulators. We designed 2 experiments where one of the 2 persons involved in the interaction was static and the other moved to a given goal while avoiding collision. We also aim to compare avoidance behavior in real (a) and virtual (c) conditions.

Immersive environments provide opportunities to learn and transfer skills to real life. This opens up new areas of application, such as rehabilitation, where people with neurological disabilities can learn to drive a power wheelchair (PWC) through the development of immersive simulators. To expose these users to everyday interaction situations, it is important to ensure realistic interactions with the virtual humans that populate the simulated environment, as PWC users should learn to drive and navigate under everyday conditions. While non-verbal pedestrian-pedestrian interactions have been extensively studied, understanding pedestrian-PWC user interactions during locomotion is still an open research area. Our study 14, performed in collaboration with the Rainbow Inria team, aimed to investigate the regulation of interpersonal distance (i.e., proxemics) between a pedestrian and a PWC user in real and virtual situations (Figure 13). We designed 2 experiments in which 1) participants had to reach a goal by walking (respectively driving a PWC) and avoid a static PWC confederate (respectively a standing confederate), and 2) participants had to walk to a goal and avoid a static confederate seated on a PWC in real and virtual conditions. Our results showed that interpersonal distances differed significantly depending on whether the pedestrian avoided the PWC user or vice versa. We also showed an influence of the orientation of the person to be avoided. We discuss these findings with respect to pedestrian-pedestrian interactions, as well as their implications for the design of virtual humans interacting with PWC users in rehabilitation applications. In particular, we propose a proof of concept that adapts existing microscopic crowd simulation algorithms to account for the specificities of pedestrian-PWC user interactions.
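A shape-to-shape distance between a pedestrian and a PWC user differs from the centre-to-centre distance used by many pedestrian models, because the wheelchair footprint is elongated and its orientation matters. The sketch below models the pedestrian as a disc and the wheelchair as an oriented rectangle; these shapes and the dimensions in the example are illustrative assumptions, not the representation used in the study.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def pedestrian_to_wheelchair_distance(ped: Vec2, ped_radius: float,
                                      chair: Vec2, half_extents: Vec2,
                                      yaw: float) -> float:
    """Shape-to-shape distance between a disc (pedestrian) and an oriented
    rectangle (assumed wheelchair footprint); values <= 0 mean contact."""
    dx, dy = ped[0] - chair[0], ped[1] - chair[1]
    c, s = math.cos(yaw), math.sin(yaw)
    lx, ly = c * dx + s * dy, -s * dx + c * dy            # pedestrian in chair frame
    qx = max(-half_extents[0], min(lx, half_extents[0]))  # closest footprint point
    qy = max(-half_extents[1], min(ly, half_extents[1]))
    return math.hypot(lx - qx, ly - qy) - ped_radius
```

For example, with hypothetical half-extents of 0.6 m by 0.35 m and a 0.25 m pedestrian radius, a pedestrian 2 m away along the chair's long axis is 1.15 m from the footprint, but 1.4 m away when the chair is rotated by 90 degrees. A disc-based model would report the same distance in both cases.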

8.3.4 DepthLight: a Single Image Lighting Pipeline for Seamless Integration of Virtual Objects into Real Scenes

Participants: Samuel Boivin [contact], Marc Christie [contact], Raphaël Manus.

Two qualitative examples showcasing DepthLight's capability to generate spatial-aware lighting effects from a single low dynamic range and limited field-of-view image (LDR LFOV). We integrated two 3D rabbits – half purely diffuse material, and half specular material in each input image. DepthLight reconstructs an entire emissive texture mesh representation of the scene that re-casts spatialized lighting on virtual objects and enables seamless integration of synthetic objects in real images for VFX applications whatever their location in the scene. This approach enables photorealistic relighting of objects, capturing intricate lighting variations and occlusion effects that traditional environment map representations fail to achieve.

We present DepthLight, a novel method to estimate spatial lighting for photorealistic Visual Effects (VFX) from a single image. Previous approaches either rely on estimated or captured environment maps, which fail to account for localized lighting effects, or use simplified representations of estimated light positions, which do not fully capture the complexity of the lighting process. DepthLight addresses these limitations by using a single LDR image with a limited field of view (LFOV) as input to build an emissive texture mesh, a simple and lightweight 3D representation for photorealistic object relighting. Our approach includes a two-step HDR environment map estimation process. First, an LDR panorama is generated around the input image using a photorealistic diffusion-based inpainting technique. Then, an LDR-to-HDR network reconstructs the HDR panorama. A deep-learning depth estimation technique is applied to the photorealistic LDR panorama to build an emissive mesh representation, which provides spatial lighting information and is used to relight virtual objects and compose the final image. This flexible pipeline can be easily integrated into different VFX production workflows. Our experiments show that DepthLight seamlessly integrates virtual objects into real scenes with a visually plausible simulation of the lighting. We compared our results to ground-truth lighting using Unreal Engine, as well as to state-of-the-art approaches that rely purely on HDRI lighting. Finally, we validated our approach using well-established image quality assessment metrics.
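The geometric step that turns a panorama and a per-pixel depth map into an emissive mesh can be sketched as follows: each pixel is back-projected along its viewing direction to the estimated depth, and neighbouring pixels are joined into triangles that would carry the HDR radiance of their pixels as emission. The equirectangular convention (y-up, longitude along the image width, image centre looking down +z) and the grid triangulation are assumptions for illustration, not DepthLight's implementation.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_point(u: float, v: float, depth: float, width: int, height: int) -> Vec3:
    """Back-project a continuous equirectangular pixel coordinate with its depth
    (assumed convention: y-up, longitude along the width, z forward at the centre)."""
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    d = (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))
    return (depth * d[0], depth * d[1], depth * d[2])


def panorama_mesh(depth: List[List[float]]) -> Tuple[List[Vec3], List[Tuple[int, int, int]]]:
    """One vertex per pixel centre, two triangles per quad, wrapping in longitude.
    Per-vertex emission would be the HDR radiance of the same pixel."""
    h, w = len(depth), len(depth[0])
    verts = [pixel_to_point(x + 0.5, y + 0.5, depth[y][x], w, h)
             for y in range(h) for x in range(w)]
    faces = []
    for y in range(h - 1):
        for x in range(w):
            i, j = y * w + x, y * w + (x + 1) % w   # wrap around at the seam
            faces.append((i, j, i + w))
            faces.append((j, j + w, i + w))
    return verts, faces
```

Compared with an environment map, which implicitly places all light at infinite distance, the mesh keeps light sources at their estimated positions, so a virtual object receives different illumination depending on where it is placed.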

8.3.5 Cinematographic Camera Diffusion Model

Participants: Marc Christie [contact].

Designing effective camera trajectories in virtual 3D environments is a challenging task, even for experienced animators. Despite an elaborate film grammar, forged through years of experience, that enables the specification of camera motions through cinematographic properties (framing, shot sizes, angles, motions), there are endless possibilities in deciding how to place and move cameras with characters. Dealing with these possibilities is part of the complexity of the problem. While numerous techniques have been proposed in the literature (optimization-based solving, encoding of empirical rules, learning from real examples,...), the results lack either variety or ease of control. In this work 8, we propose a cinematographic camera diffusion model using a transformer-based architecture to handle temporality, exploiting the stochasticity of diffusion models to generate diverse, high-quality trajectories conditioned on high-level textual descriptions. We extend the work by integrating keyframing constraints and the ability to blend naturally between motions using latent interpolation, increasing the degree of control available to designers. We demonstrate the strengths of this text-to-camera motion approach through qualitative and quantitative experiments and gather feedback from professional artists. The code and data are available at https://github.com/jianghd1996/Camera-control.
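The text does not say which latent interpolation is used to blend camera motions. A common choice for Gaussian-distributed latents, assumed here, is spherical linear interpolation (slerp), which follows the arc between two latent vectors instead of the straight line; plain linear interpolation would be the simpler alternative. The sketch works on plain Python lists and does not handle exactly opposite vectors.

```python
import math
from typing import List, Sequence


def slerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Spherical linear interpolation between two latent vectors (t in [0, 1]).

    Assumed blending scheme; opposite (antiparallel) vectors are not handled.
    """
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (na * nb)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if omega < 1e-6:                      # nearly parallel: fall back to lerp
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    s = math.sin(omega)
    wa, wb = math.sin((1 - t) * omega) / s, math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]
```

Sweeping `t` from 0 to 1 and decoding each interpolated latent (with a decoder not shown here) would yield a family of camera motions that transition gradually from one to the other.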

8.3.6 SPARK: Self-supervised Personalized Real-time Monocular Face Capture

Participants: Marc Christie [contact], Kelian Baert.

Feedforward monocular face capture methods seek to reconstruct posed faces from a single image of a person. Current state-of-the-art approaches can regress parametric 3D face models in real time across a wide range of identities, lighting conditions and poses by leveraging large image datasets of human faces. However, these methods suffer from a clear limitation: the underlying parametric face model only provides a coarse estimation of the face shape, which limits their practical applicability in tasks that require precise 3D reconstruction (aging, face swapping, digital make-up,...). In this work, we propose a method for high-precision 3D face capture that takes advantage of a collection of unconstrained videos of a subject as prior information. Our proposal builds on a two-stage approach. We start with the reconstruction of a detailed 3D face avatar of the person, capturing both precise geometry and appearance from a collection of videos. We then use the encoder from a pre-trained monocular face reconstruction method, substitute its decoder with our personalized model, and proceed with transfer learning on the video collection. Using our pre-estimated image formation model, we obtain a more precise self-supervision objective, enabling improved expression and pose alignment. This results in a trained encoder capable of efficiently regressing pose and expression parameters in real time from previously unseen images, which, combined with our personalized geometry model, yields more accurate and higher-fidelity mesh inference. Through extensive qualitative and quantitative evaluation, we show the superiority of our final model over state-of-the-art baselines, and demonstrate its ability to generalize to unseen poses, expressions and lighting.

8.3.7 AKiRa: Augmentation Kit on Rays for optical video generation

Participants: Marc Christie [contact], Robin Courant.

Recent advances in text-conditioned video diffusion have greatly improved video quality. However, these methods offer users limited or no control over camera aspects, including dynamic camera motion, zoom, distorted lenses and focus shifts. These motion and optical aspects are crucial for adding controllability and cinematic elements to generation frameworks, ultimately resulting in visual content that draws focus, enhances mood, and guides emotions according to filmmakers' intentions. In this work, we aim to close the gap between controllable video generation and camera optics. To achieve this, we propose AKiRa (Augmentation Kit on Rays), a novel augmentation framework that builds and trains a camera adapter with a complex camera model over an existing video generation backbone. It enables fine-grained control over camera motion as well as complex optical parameters (focal length, distortion, aperture) to achieve cinematic effects such as zoom, fisheye effect, and bokeh. Extensive experiments demonstrate AKiRa's effectiveness in combining and composing camera optics while outperforming all state-of-the-art methods. This work sets a new landmark in controlled and optically enhanced video generation, paving the way for future optical video generation methods.
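To show how optical parameters change the rays a camera casts, the sketch below computes one unit ray direction per pixel for a pinhole camera with a one-parameter radial distortion (the division model). Zoom corresponds to changing the focal length; negative `k1` bends edge rays outwards, giving a barrel or fisheye-like look. This camera model is an assumption for illustration: AKiRa's exact parameterization is not given here, and aperture (bokeh) is not modelled.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def camera_rays(width: int, height: int, focal_px: float, k1: float = 0.0) -> List[Vec3]:
    """Unit ray direction per pixel centre for a pinhole camera with a
    one-parameter division-model lens distortion (k1 < 0: barrel/fisheye-like).

    Illustrative camera model; not AKiRa's actual parameterization.
    """
    cx, cy = width / 2.0, height / 2.0
    rays = []
    for v in range(height):
        for u in range(width):
            xd = (u + 0.5 - cx) / focal_px        # distorted normalized coordinates
            yd = (v + 0.5 - cy) / focal_px
            denom = 1.0 + k1 * (xd * xd + yd * yd)
            if denom <= 0.0:
                raise ValueError("k1 too strong for this field of view")
            xu, yu = xd / denom, yd / denom      # division-model undistortion
            n = math.sqrt(xu * xu + yu * yu + 1.0)
            rays.append((xu / n, yu / n, 1.0 / n))
    return rays
```

A per-pixel ray map of this kind is one plausible way to feed camera motion and optics to a video generator as a dense conditioning signal, since every parameter change shows up directly in the rays.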

8.3.8 E.T. the Exceptional Trajectories: Text-to-camera-trajectory generation with character awareness

Participants: Marc Christie [contact], Robin Courant.

Stories and emotions in movies emerge through the effect of well-thought-out directing decisions, in particular camera placement and movement over time. Crafting compelling camera trajectories remains a complex iterative process, even for skilful artists. To tackle this, we propose a dataset called the Exceptional Trajectories (E.T.), which contains camera trajectories along with character information and textual captions describing both the camera and the characters. To our knowledge, this is the first dataset of its kind. To show the potential applications of the E.T. dataset, we propose a diffusion-based approach, named Director, which generates complex camera trajectories from textual captions that describe the relation and synchronisation between the camera and characters. To ensure robust and accurate evaluations, we train CLaTr, a Contrastive Language-Trajectory embedding, on the E.T. dataset and use it to define evaluation metrics. We posit that our proposed dataset and method significantly advance the democratization of cinematography, making it more accessible to common users.
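A contrastive language-trajectory embedding is typically trained so that a caption and its matching trajectory land close together while mismatched pairs are pushed apart. The sketch below assumes a CLIP-style symmetric InfoNCE loss over a batch of paired embeddings; the loss form and the temperature of 0.07 (a common default) are assumptions, not CLaTr's documented configuration. Once such an embedding is trained, the cosine similarity between a caption and a generated trajectory can serve as an alignment score.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def _cross_entropy_diag(logits: List[List[float]]) -> float:
    """Mean over rows of -log softmax(row)[i] for the matching (diagonal) entry."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        lse = m + math.log(sum(math.exp(x - m) for x in row))  # stable log-sum-exp
        total += lse - row[i]
    return total / len(logits)


def contrastive_loss(text: Sequence[Sequence[float]], traj: Sequence[Sequence[float]],
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE (assumed objective): caption i should match trajectory i only."""
    t = [_normalize(x) for x in text]
    c = [_normalize(y) for y in traj]
    logits = [[sum(a * b for a, b in zip(ti, cj)) / temperature for cj in c] for ti in t]
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (_cross_entropy_diag(logits) + _cross_entropy_diag(transposed))
```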

8.3.9 Plausible and Diverse Human Hand Grasping Motion Generation

Participants: Marc Christie [contact], Xiaoyuan Wang.

Techniques to grasp targeted objects in realistic and diverse ways find many applications in computer graphics, robotics and VR. This study generates diverse grasping motions while keeping the final grasps plausible for human hands. We first build on a Transformer-based VAE to encode diverse reaching motions into a latent representation, denoted GMF, and then train an MLP-based cVAE to learn the grasping affordance of targeted objects. Finally, by learning a denoising process, we condition GMF on the affordance to generate grasping motions for the targeted object. We have identified possible improvements to our results, which we will address in future work.
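Both the Transformer-based VAE and the MLP-based cVAE rely on the same standard building blocks, sketched below in plain Python: the reparameterization trick, the closed-form KL divergence to a standard normal prior, and, for the conditional variant, feeding the condition to the decoder. These are generic VAE components rather than the authors' code, and "object features" as the condition is a hypothetical example.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], logvar: Sequence[float],
                   rng: random.Random) -> List[float]:
    """z = mu + sigma * eps with eps ~ N(0, I); makes z a differentiable
    function of mu and logvar in an autodiff framework."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in closed form."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))


def decoder_input(z: Sequence[float], condition: Sequence[float]) -> List[float]:
    """In a conditional VAE the decoder also sees the condition
    (hypothetical example: object features describing grasp affordance)."""
    return list(z) + list(condition)
```

The training loss of such a model adds the KL term to a reconstruction error; at generation time, sampling z from N(0, I) and decoding it together with a new condition produces diverse outputs for the same object.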

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Cifre InterDigital - Deep-based semantic representation of avatars for virtual reality

Participants: Ludovic Hoyet [contact], Philippe De Clermont Gallerande.

The overall objective of the PhD thesis of Philippe De Clermont Gallerande, which started in February 2023, is to explore novel approaches enabling both full-body and facial encoding and decoding for multi-user immersive experiences. A further objective is to enable the evaluation of the quality of experience. More specifically, one focus is to propose semantic-based representations of digital characters (avatars) for multi-user immersive telepresence that are compact, plausible and simultaneously resilient to the data perturbations caused by streaming. This PhD is conducted within the context of the joint laboratory Nemo.ai between Inria and InterDigital, and more specifically within the Ys.ai project, which is dedicated to exploring novel research questions and applications in Virtual Reality. This work is also conducted in collaboration between the two Inria teams Hybrid and Virtus, as well as with the Interactive Media team of InterDigital.

Cifre InterDigital - Facial features from high quality cinema footage

Participants: Marc Christie [contact], Kelian Baert.

The overall objective of the PhD thesis of Kelian Baert, funded by the VFX company Mikros Image, is to explore novel representations to extract facial features from high-quality cinema footage, and to provide intuitive techniques for facial editing, including shape, appearance and animation. More precisely, we aim to improve the controllability of learning-based techniques for editing photo-realistic faces in video sequences, targeting visual effects for cinema. The goal is to accelerate post-production processes on faces by enabling an artist to finely control different characteristics over time. Applications are numerous: rejuvenation and aging, make-up/tattooing, strong morphological modifications (adding a third eye, for example), replacing an understudy's face with the actor's face, and adjustments to the actor's performance. The PhD will rely on a threefold approach: transferring features from real to synthetic, editing in the synthetic domain on simplified representations, and transferring the content back to photorealistic sequences using GAN- or diffusion-based models to ensure visual quality.

LCPP (PhD contract) - Immersive crowd simulation for the study and design of public spaces

Participants: Julien Pettré [contact], Ludovic Hoyet, Jordan Martin.

The overall objective of the PhD thesis of Jordan Martin, started in November 2022, is to explore the use of Virtual Reality to better design and assess public spaces. The VirtUs team specialises in the simulation, animation and immersion of virtual crowds. The aim of this thesis is to explore new ways of analysing crowd behaviours in real environments using virtual reality technologies that allow users to be directly immersed in digital replicas of these situations. More specifically, Jordan studies the technical conditions under which Virtual Reality experiments yield realistic data. These conditions concern the visual representation of virtual humans as well as the display setup.

9.2 Bilateral Grants with Industry

Unreal MegaGrant

Participants: Marc Christie [contact], Anthony Mirabile.

The objective of the Unreal MegaGrant (70k€) is to initiate the development of Augmented Reality techniques as animation authoring tools. While we have demonstrated the benefits of VR as a relevant authoring tool for artists, with this funding we would like to explore the animation capabilities of augmented reality techniques. The underlying challenges pertain to precisely estimating hand poses for fine animation tasks, and to exploring high-level authoring means such as gesture-based techniques.

10 Partnerships and cooperations

10.1 International initiatives

10.1.1 Inria associate team not involved in an IIL or an international program

SocNav

-

Title:

Studying complex social navigation with an innovative, co-developed immersive platform

-

Duration:

2024 ->

-

Coordinator:

Anne-Hélène Olivier and Bradford McFadyen (Brad.McFadyen@fmed.ulaval.ca)

-

Partners:

- Université Laval Quebec (Canada)

-

Inria contact:

Anne Helene Olivier

-

Summary:

The context of the SocNav team is the study of locomotor navigation in more socially complex environments representative of daily life. When walking toward a goal, alone or with others in public spaces, we continuously interact with our dynamic surroundings, in particular by avoiding collisions with other pedestrians. However, research to date has mainly considered simple, pairwise interactions, and many questions remain. In particular, the combined roles of anticipatory and reactive control underlying locomotor navigation in socially complex environments with multiple pedestrian interactions remain to be studied. Such studies must also consider the confounding effects of one’s physical limitations, due to physical activity level or neurological deficits, and of the mode of transport (i.e., biped vs. wheeled). This work will require the means to create protocols that allow flexible control over environmental factors, something best provided through immersive virtual reality (VR), in which both teams are experts. Therefore, this project has 3 main objectives:

1. Create and evaluate an immersive experimental platform to be used for the study of social navigation in complex social environments.

2. Study and model how environmental and personal factors affect the control of navigation in complex social environments in healthy populations.

3. Study and model how neurological deficits affect the control of navigation in complex social environments.

The parallel development of such an immersive platform will solidify collaborations beyond the granting period as well as provide a tool that can have great potential for application. The findings from the proposed collaborations will not only improve our understanding of the trade-offs in anticipatory and reactive control for locomotor navigation of complex social environments, but will also significantly contribute to improving assessment and training tools, and the development of intelligent mobility aids (smart wheelchairs).

10.2 International research visitors

10.2.1 Visits of international scientists

Other international visits to the team

Bobby Bodenheimer

-

Status

Professor

- Institution of origin: Dept. of Computer Science, Vanderbilt University

- Country: USA

- Dates: Sept 1 - Sept 8, 2024

- Context of the visit: Visit of the team to discuss our respective activities

-

Mobility program/type of mobility:

Bobby's own funding

Joris Boulo

-

Status

PhD Candidate

- Institution of origin: Univ Laval, Québec

- Country: Canada

- Dates: July, 2024

- Context of the visit: Collaboration on the development of the VR Platform LogVs together with Umans

-

Mobility program/type of mobility:

Associate team SocNav

Brad McFadyen

-

Status

Professor

- Institution of origin: Univ Laval, Québec

- Country: Canada

- Dates: Nov 18 - Nov 29, 2024

- Context of the visit: Collaboration on new theories of locomotor navigation and on the VR platform for studying pedestrian behavior; preparation of a symposium proposal for ISPGR

-

Mobility program/type of mobility:

Associate team SocNav

Krista Best

-

Status

Associate Professor

- Institution of origin: Univ Laval, Québec

- Country: Canada

- Dates: Nov 18 - Nov 19, 2024

- Context of the visit: Collaboration on the design of experiments using the wheelchair simulator and data processing and sharing

-

Mobility program/type of mobility:

Associate team SocNav

François Routhier

-

Status

Professor

- Institution of origin: Univ Laval, Québec

- Country: Canada

- Dates: Nov 18 - Nov 19, 2024

- Context of the visit: Collaboration on the design of experiments using the wheelchair simulator and data processing and sharing

-

Mobility program/type of mobility:

Associate team SocNav

Ioannis Karamouzas

-

Status

Associate Professor

- Institution of origin: University of California, Riverside

- Country: USA

- Dates: 10-20 December 2024

- Context of the visit: Collaboration on the PhD of Alexis Jensen

-

Mobility program/type of mobility:

Research stay

10.3 European initiatives

10.3.1 Horizon Europe

META-TOO

META-TOO project on cordis.europa.eu

-

Title:

A transfer of knowledge and technology for investigating gender-based inappropriate social interactions in the Metaverse

-

Duration:

From June 1, 2024 to May 31, 2027

-

Partners:

- INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France

- ETHNIKO KAI KAPODISTRIAKO PANEPISTIMIO ATHINON (UOA), Greece

- FUNDACIO DE RECERCA CLINIC BARCELONA-INSTITUT D INVESTIGACIONS BIOMEDIQUES AUGUST PI I SUNYER (IDIBAPS-CERCA), Spain

-

Inria contact:

Katja Zibrek

-

Coordinator:

ETHNIKO KAI KAPODISTRIAKO PANEPISTIMIO ATHINON (UOA), Greece

-

Summary:

The META-TOO proposal centres on the transfer of knowledge and skills from two distinguished European institutions, INRIA and IDIBAPS, each renowned for its research contributions at the digital forefront, to the National and Kapodistrian University of Athens (NKUA), which serves as the coordinating institution and represents a Widening country (Greece). Specifically, both INRIA (The French National Institute for Research in Computer Science and Automation) and IDIBAPS (Fundació de Recerca Clínic Barcelona in Spain) have earned global recognition for their outstanding contributions to Extended Reality (XR) research. It is precisely within this domain that we have chosen to focus the support of INRIA and IDIBAPS (henceforth called “mentors”) for the National and Kapodistrian University of Athens (“coordinator”), with a concerted effort aimed at reinforcing research management and administrative competencies, alongside enhancing research and innovation capabilities.

10.3.2 H2020 projects

H2020 MSCA ITN CLIPE

-

Title:

Creating Lively Interactive Populated Environments

-

Duration:

2020 - 2024

-

Coordinator:

University of Cyprus

-

Partners:

- University of Cyprus (CY)

- Universitat Politecnica de Catalunya (ES)

- INRIA (FR)

- University College London (UK)

- Trinity College Dublin (IE)

- Max Planck Institute for Intelligent Systems (DE)

- KTH Royal Institute of Technology, Stockholm (SE)

- Ecole Polytechnique (FR)

- Silversky3d (CY)

-

Inria contact:

Julien Pettré

-

Summary:

CLIPE is an Innovative Training Network (ITN) funded by the Marie Skłodowska-Curie program of the European Commission. The primary objective of CLIPE is to train a generation of innovators and researchers in the field of virtual character simulation and animation. Advances in technology are pushing toward making VR/AR worlds a daily experience. Whilst virtual characters are an important component of these worlds, bringing them to life and giving them interaction and communication abilities requires highly specialized programming combined with artistic skills, as well as considerable investment: video game development, where millions are spent on large teams of programmers and designers, is a typical example. The research objective of CLIPE is to design the next generation of VR-ready characters. CLIPE addresses the most important current aspects of the problem by making characters capable of: behaving more naturally; interacting with real users and sharing a virtual experience with them; and being more intuitively and extensively controllable by virtual world designers. To meet these objectives, the CLIPE consortium gathers some of the main European actors in the fields of VR/AR, computer graphics, computer animation, psychology and perception. CLIPE also extends its partnership to key industrial actors of populated virtual worlds, giving students the ability to explore new application fields and start collaborations beyond academia.


FET-Open CrowdDNA

-

Title:

CrowdDNA

-

Duration:

2020 - 2024

-

Coordinator:

Inria

-

Partners:

- Inria (FR)

- ONHYS (FR)

- University of Leeds (UK)

- Crowd Dynamics (UK)

- Universidad Rey Juan Carlos (ES)

- Forschungszentrum Jülich (DE)

- Universität Ulm (DE)

-

Inria contact:

Julien Pettré

-

Summary: