Section: New Results

Topological and Geometric Inference

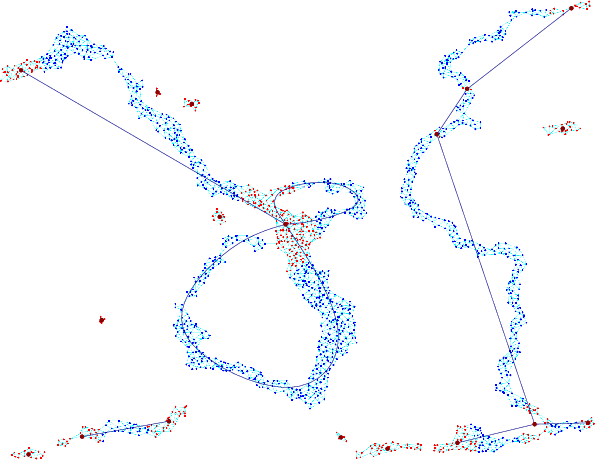

Metric graph reconstruction from noisy data

Participants : Frédéric Chazal, Marc Glisse.

In collaboration with Mridul Aanjaneya, Daniel Chen, Leonidas J. Guibas and Dmitriy Morozov.

Many real-world data sets can be viewed as noisy samples of special types of metric spaces called metric graphs. Building on the notions of correspondence and Gromov-Hausdorff distance in metric geometry, we describe a model for such data sets as an approximation of an underlying metric graph. We present a novel algorithm that takes such a data set as input and outputs the underlying metric graph with guarantees. We also implement the algorithm and evaluate its performance on a variety of real-world data sets [26].

Persistence-Based Clustering in Riemannian Manifolds

Participants : Frédéric Chazal, Steve Oudot.

In collaboration with Leonidas J. Guibas and Primoz Skraba.

We introduce a clustering scheme that combines a mode-seeking phase with a cluster merging phase in the corresponding density map. While mode detection is done by a standard graph-based hill-climbing scheme, the novelty of our approach resides in its use of topological persistence to guide the merging of clusters. Our algorithm provides additional feedback in the form of a set of points in the plane, called a persistence diagram (PD), which provably reflects the prominences of the modes of the density. In practice, this feedback enables the user to choose relevant parameter values, so that under mild sampling conditions the algorithm will output the correct number of clusters, a notion that can be made formally sound within persistence theory.

The algorithm only requires rough estimates of the density at the data points, and knowledge of (approximate) pairwise distances between them. It is therefore applicable in any metric space. Meanwhile, its complexity remains practical: although the size of the input distance matrix may be up to quadratic in the number of data points, a careful implementation only uses a linear amount of memory and takes barely more time to run than to read through the input [29].
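The two phases described above (graph-based hill climbing, then persistence-guided merging) can be illustrated with a minimal sketch. It is not the implementation of [29]: the function name `tomato_sketch`, the adjacency-list input `neighbors`, and the threshold name `tau` are assumptions made for this example. Each point is processed in order of decreasing density; a point with no denser neighbor starts a new cluster, and two clusters meeting at a point are merged when the lower of their two peaks rises less than `tau` above that point.

```python
def tomato_sketch(density, neighbors, tau):
    """Cluster graph vertices by hill climbing plus persistence-based merging.

    density   : list of density estimates, one per vertex
    neighbors : adjacency lists (hypothetical input format)
    tau       : prominence threshold below which a peak is merged away
    Returns, for each vertex, the index of the peak that roots its cluster.
    """
    n = len(density)
    order = sorted(range(n), key=lambda i: -density[i])
    parent = [None] * n  # union-find forest; None means "not processed yet"

    def find(i):
        # Roots are always the highest peak of their cluster.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in order:
        higher = [j for j in neighbors[i] if parent[j] is not None]
        if not higher:
            parent[i] = i  # local maximum of the density: new cluster
            continue
        # Mode-seeking step: attach i to its densest processed neighbor.
        best = max(higher, key=lambda j: density[j])
        parent[i] = find(best)
        # Merging step: i connects clusters; merge those of low prominence.
        for j in higher:
            ri, rj = find(i), find(j)
            if ri == rj:
                continue
            lo, hi = (ri, rj) if density[ri] < density[rj] else (rj, ri)
            if density[lo] - density[i] < tau:
                parent[lo] = hi
    return [find(i) for i in range(n)]


# Six points on a line with two density peaks (at indices 1 and 4).
density = [1.0, 3.0, 2.0, 0.5, 2.5, 1.0]
neighbors = [[1], [0, 2], [1, 3], [2, 4], [3, 5], [4]]
print(tomato_sketch(density, neighbors, tau=1.0))
print(tomato_sketch(density, neighbors, tau=2.5))
```

In this toy example the second peak has prominence 2.5 - 0.5 = 2.0, so it survives as its own cluster for `tau=1.0` and is absorbed into the first for `tau=2.5`. Choosing `tau` in a gap of the prominences is the role the persistence diagram plays in the text above.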

Data-driven trajectory smoothing

Participant : Frédéric Chazal.

In collaboration with Daniel Chen, Leonidas J. Guibas, Xiaoye Jiang and Christian Sommer.

Motivated by the increasing availability of large collections of noisy GPS traces, we present a new data-driven framework for smoothing trajectory data. The framework, which can be viewed as a generalization of the classical moving average technique, naturally leads to efficient algorithms for various smoothing objectives. We analyze an algorithm based on this framework and provide connections to previous smoothing techniques. We implement a variation of the algorithm to smooth an entire collection of trajectories and show that it performs well on both synthetic data and massive collections of GPS traces [28].
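For reference, the classical moving average that the framework generalizes can be sketched as below. This shows only the baseline technique, not the data-driven method of [28]; the function name `moving_average` and the `half_window` parameter are assumptions made for this example.

```python
def moving_average(trajectory, half_window):
    """Replace each point by the mean of the points within half_window steps.

    trajectory  : list of coordinate tuples, in time order
    half_window : number of samples taken on each side (hypothetical name)
    The window is truncated at both ends of the trajectory.
    """
    n = len(trajectory)
    smoothed = []
    for i in range(n):
        lo = max(0, i - half_window)
        hi = min(n, i + half_window + 1)
        window = trajectory[lo:hi]
        # Average each coordinate separately over the window.
        smoothed.append(tuple(sum(c) / len(window) for c in zip(*window)))
    return smoothed


# A three-point trace with a spike in the second coordinate.
trace = [(0.0, 0.0), (1.0, 3.0), (2.0, 0.0)]
print(moving_average(trace, half_window=1))
```

The baseline averages each trajectory only against itself, which is the limitation a framework drawing on a whole collection of traces is meant to address.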

A Weighted $k$-Nearest Neighbor Density Estimate for Geometric Inference

Participants : Frédéric Chazal, David Cohen-Steiner.

In collaboration with Gérard Biau, Luc Devroye and Carlos Rodriguez.

Motivated by a broad range of potential applications in topological and geometric inference, we introduce a weighted version of the $k$-nearest neighbor density estimate. Various pointwise consistency results of this estimate are established. We present a general central limit theorem under the lightest possible conditions. In addition, a strong approximation result is obtained and the choice of the optimal set of weights is discussed. In particular, the classical $k$-nearest neighbor estimate is not optimal in a sense described in the manuscript. The proposed method has been implemented to recover level sets in both simulated and real-life data [12].
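As an illustration, the classical nearest neighbor density estimate at a point x is k / (n V_d R_k(x)^d), where R_k(x) is the distance from x to its k-th nearest sample point and V_d is the volume of the unit ball in dimension d. The sketch below uses one natural weighted form, in which both the neighbor index j and the term R_j(x)^d are averaged against weights. That exact form is an assumption of this example rather than a quotation from [12], as are the names `weighted_knn_density` and `weights`. It reduces to the classical estimate when all the weight sits on a single index k.

```python
import math


def unit_ball_volume(d):
    """Volume of the unit ball in R^d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def weighted_knn_density(x, sample, weights):
    """Weighted nearest neighbor density estimate at x (assumed form).

    x       : query point, a tuple of d coordinates
    sample  : list of n sample points (tuples of d coordinates)
    weights : weights[j] is the weight given to the (j+1)-th nearest neighbor
    """
    n, d = len(sample), len(x)
    dists = sorted(math.dist(x, p) for p in sample)
    numerator = sum(w * (j + 1) for j, w in enumerate(weights))
    denominator = n * unit_ball_volume(d) * sum(
        w * dists[j] ** d for j, w in enumerate(weights)
    )
    return numerator / denominator


sample = [(0.0,), (1.0,), (2.0,), (4.0,)]
# All weight on the 3rd neighbor: the classical estimate with k = 3.
print(weighted_knn_density((0.0,), sample, [0.0, 0.0, 1.0]))
# Equal weight on the two nearest neighbors.
print(weighted_knn_density((0.5,), sample, [0.5, 0.5]))
```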

Deconvolution for the Wasserstein metric and geometric inference

Participants : Frédéric Chazal, Claire Caillerie.

In collaboration with Jérôme Dedecker and Bertrand Michel.

Recently, [17], [13] have defined a distance function to measures to answer geometric inference problems in a probabilistic setting. According to their result, the topological properties of a shape can be recovered by using the distance to a known measure $\nu$, if $\nu$ is close enough to a measure $\mu$ concentrated on this shape. Here, close enough means that the Wasserstein distance between $\mu$ and $\nu$ is sufficiently small. Given a point cloud, a natural candidate for $\nu$ is the empirical measure $\mu_n$. Nevertheless, in many situations the data points are not located on the geometric shape but in the neighborhood of it, and $\mu_n$ can be too far from $\mu$. In a deconvolution framework, we consider a slight modification of the classical kernel deconvolution estimator, and we give a consistency result and rates of convergence for this estimator. Some simulated experiments illustrate the deconvolution method and its application to geometric inference on various shapes and with various noise distributions [14].
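For the empirical measure of n sample points and a mass parameter m = k/n, the distance function to the measure has a simple closed form: it is the root mean squared distance from the query point to its k nearest sample points. A minimal sketch of that formula follows. It covers only the distance to the empirical measure, not the deconvolution estimator of [14], and the function name and argument names are assumptions made for this example.

```python
import math


def distance_to_empirical_measure(x, sample, k):
    """Distance from x to the empirical measure of `sample`, with mass k/n.

    Computed as sqrt((1/k) * sum of squared distances to the k nearest
    sample points).
    """
    squared = sorted(math.dist(x, p) ** 2 for p in sample)
    return math.sqrt(sum(squared[:k]) / k)


# Points on a segment of the x-axis, plus one isolated outlier.
sample = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (1.0, 5.0)]
query = (1.0, 5.0)  # the outlier itself

print(min(math.dist(query, p) for p in sample))          # 0.0
print(distance_to_empirical_measure(query, sample, k=3))
```

The ordinary distance to the point cloud vanishes at the outlier, while the distance to the measure stays large there because it averages over several neighbors. This averaging is what makes the function stable under perturbations that are small in the Wasserstein sense.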

Manifold Reconstruction Using Tangential Delaunay Complexes

Participants : Jean-Daniel Boissonnat, Arijit Ghosh.

We give a new provably correct algorithm to reconstruct a $k$-dimensional manifold embedded in $d$-dimensional Euclidean space [44]. The input to our algorithm is a point sample coming from an unknown manifold. Our approach is based on two main ideas: the notion of tangential Delaunay complex and the technique of sliver removal by weighting the sample points. Unlike previous methods, we do not construct any subdivision of the $d$-dimensional ambient space. As a result, the running time of our algorithm depends only linearly on the extrinsic dimension $d$, while it depends quadratically on the size of the input sample and exponentially on the intrinsic dimension $k$. This is the first certified algorithm for manifold reconstruction whose complexity depends linearly on the ambient dimension. We also prove that, for a dense enough sample, the output of our algorithm is ambient isotopic to the manifold and a close geometric approximation of the manifold.

Equating the witness and restricted Delaunay complexes

Participants : Jean-Daniel Boissonnat, Ramsay Dyer, Arijit Ghosh, Steve Oudot.

It is a well-known fact that the restricted Delaunay and witness complexes may differ when the landmark and witness sets are located on submanifolds of $\mathbb{R}^d$ of dimension 3 or more. Currently, the only known way of overcoming this issue consists of building some crude superset of the witness complex, and applying a greedy sliver exudation technique on this superset. Unfortunately, the construction time of the superset depends exponentially on the ambient dimension, which makes the witness complex based approach to manifold reconstruction impractical. This work [43] provides an analysis of the reasons why the restricted Delaunay and witness complexes fail to include each other. From this a new set of conditions naturally arises under which the two complexes are equal.

Reconstructing 3D compact sets

Participant : David Cohen-Steiner.

In collaboration with Frédéric Cazals.

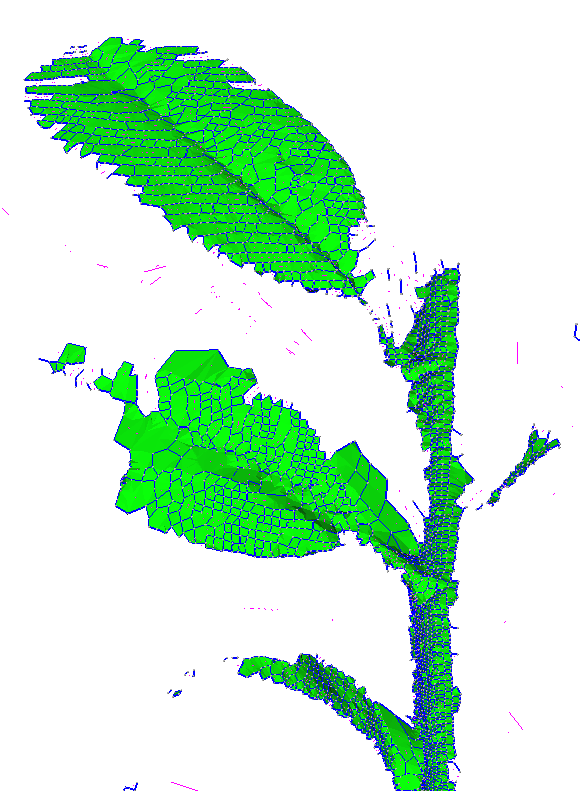

Reconstructing a 3D shape from sample points is a central problem faced in medical applications, reverse engineering, natural sciences, cultural heritage projects, etc. While these applications motivated intense research on 3D surface reconstruction, the problem of reconstructing more general shapes has received hardly any attention. This paper develops a reconstruction algorithm that changes the 3D reconstruction paradigm as follows.

First, the algorithm handles general shapes, i.e., compact sets, as opposed to surfaces. Under mild assumptions on the sampling of the compact set, the reconstruction is proved to be correct in terms of homotopy type. Second, the algorithm does not output a single reconstruction but a nested sequence of plausible reconstructions. Third, the algorithm accommodates topological persistence so as to select the most stable features only. Finally, in case of reconstruction failure, it allows the identification of under-sampled areas, so as to possibly fix the sampling.

These key features are illustrated by experimental results on challenging datasets (see Figure 5), and should prove instrumental in enhancing the processing of such datasets in the aforementioned applications [16].