Section: New Results

Multimodal immersive interaction

Brain-Computer Interaction based mental state

Participants : Anatole Lécuyer [contact] , Bruno Arnaldi, Laurent George, Yann Renard.

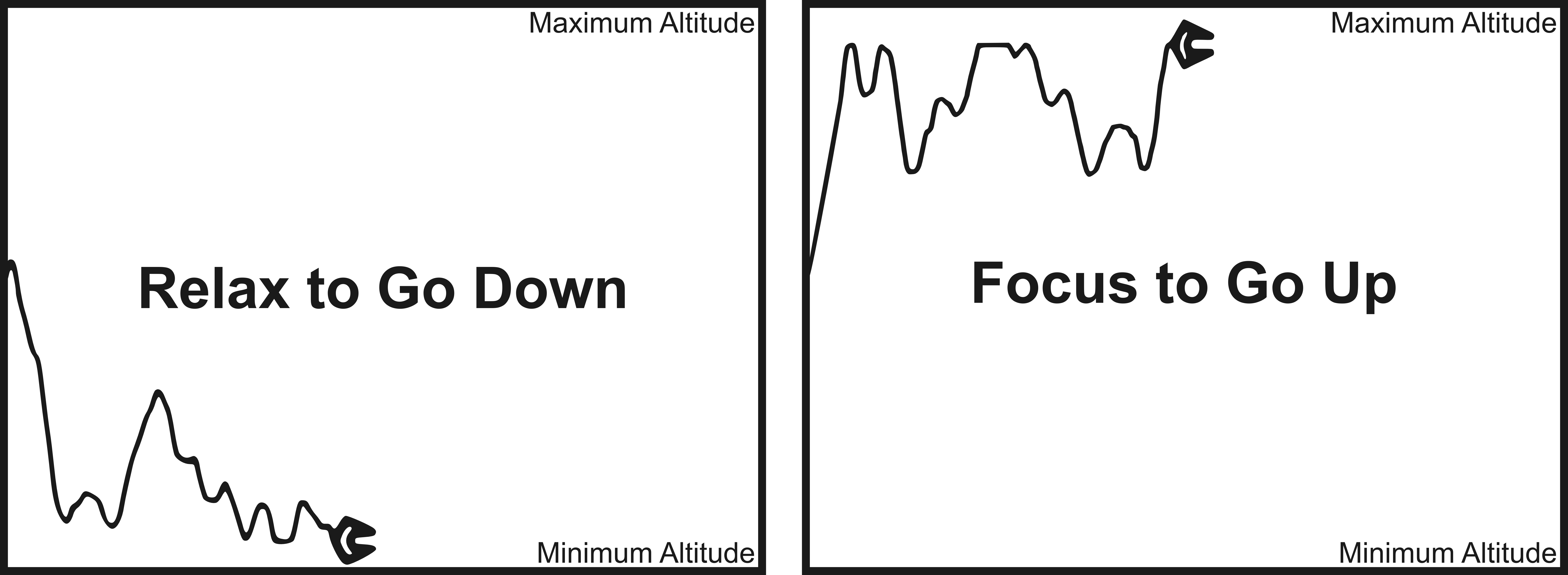

In [20], presented at the IEEE EMBS conference, we explored the use of electrical biosignals measured on the scalp during mental relaxation and concentration tasks to control an object in a video game, as illustrated in Figure 4. To evaluate the requirements of such a system in terms of sensors and signal processing, we compared two designs. The first used a single scalp electroencephalographic (EEG) electrode and the power in the alpha frequency band. The second used sixteen scalp EEG electrodes and machine learning methods. The role of muscular activity was also evaluated using five electrodes positioned on the face and neck.
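As a minimal sketch of the first design (one electrode, alpha-band power), the snippet below estimates band power with a plain discrete Fourier transform and thresholds it. The 8-12 Hz band limits, the `threshold` value and the function names are illustrative assumptions, not parameters reported in [20]; the only physiological fact relied upon is that alpha power tends to increase during relaxation.

```python
import math

def band_power(samples, fs, f_lo, f_hi):
    """Mean normalized DFT power over the bins whose frequency lies in [f_lo, f_hi] Hz."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    total, count = 0.0, 0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
            total += (re * re + im * im) / (n * n)
            count += 1
    return total / count if count else 0.0

def classify_window(samples, fs, threshold):
    """Label one EEG window; the threshold is hypothetical and would be calibrated per user."""
    alpha = band_power(samples, fs, 8.0, 12.0)  # assumed alpha band limits
    return "relaxation" if alpha > threshold else "concentration"
```

In practice the threshold would have to be calibrated for each participant, which is one reason a multi-electrode, machine-learning design can be more robust.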

Results show that the first design enabled 70% of the participants to successfully control the game, whereas 100% of the participants managed to do so with the second design based on machine learning. Subjective questionnaires confirm these results: users generally felt that they had control in both designs, with an increased feeling of control in the second one. Offline analysis of face and neck muscle activity shows that this activity could also be used to distinguish between relaxation and concentration tasks. These results suggest that combining muscular and brain activity could improve the performance of this kind of system. They also suggest that muscular activity was probably recorded by the EEG electrodes.

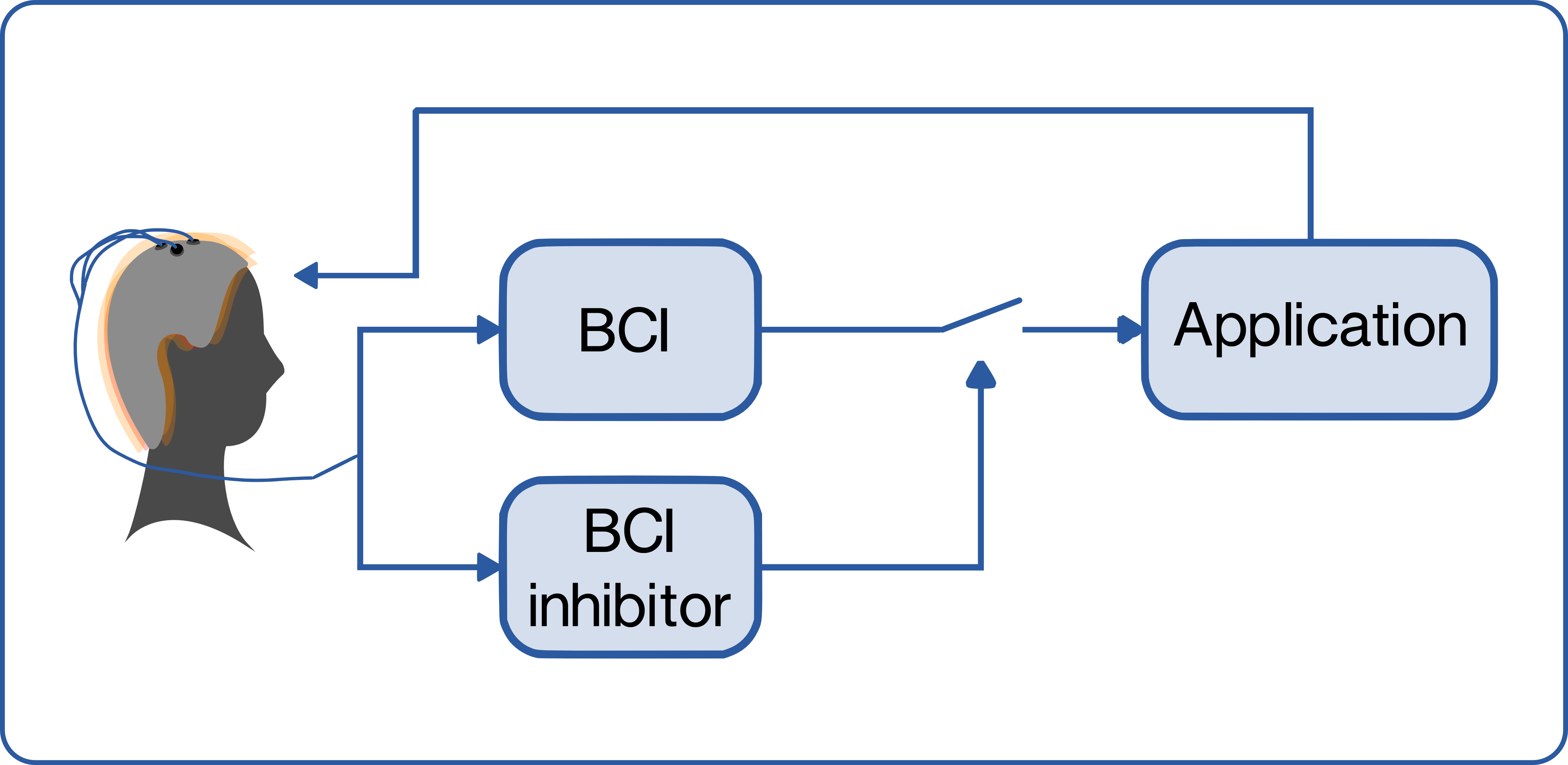

In [19], presented at the 5th International Brain-Computer Interface Conference, we introduce the concept of a Brain-Computer Interface (BCI) inhibitor, which is meant to keep the BCI on standby until the user is ready, in order to improve the overall performance and usability of the system. A BCI inhibitor can be defined as a system that monitors the user's state and inhibits BCI interaction until specific requirements (e.g. brain activity pattern, user attention level) are met.

We conducted a pilot study to evaluate a hybrid BCI composed of a classic synchronous BCI system based on motor imagery and a BCI inhibitor (Figure 5). The BCI inhibitor initiates the control period of the BCI when requirements in terms of brain activity are reached (i.e. stability in the beta band).

Preliminary results with four participants suggest that a BCI inhibitor can improve BCI performance.
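The gating idea behind the inhibitor can be sketched as follows. This is only an illustration under stated assumptions: "stability in the beta band" is approximated here by a low relative standard deviation of recent beta-power estimates, and the class name, window length and `max_rel_std` threshold are hypothetical, not taken from [19].

```python
from collections import deque
from statistics import mean, pstdev

class BCIInhibitor:
    """Keeps the BCI on standby until recent beta-band power looks stable."""

    def __init__(self, window=5, max_rel_std=0.1):
        self.history = deque(maxlen=window)  # most recent beta-power estimates
        self.max_rel_std = max_rel_std       # assumed stability criterion

    def update(self, beta_power):
        """Feed one beta-power estimate; return True when the control period may start."""
        self.history.append(beta_power)
        if len(self.history) < self.history.maxlen:
            return False  # not enough observations yet
        avg = mean(self.history)
        if avg <= 0:
            return False
        return pstdev(self.history) / avg <= self.max_rel_std
```

A synchronous motor-imagery trial would then be started only once `update` returns `True`, instead of at a fixed time.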

Navigating in virtual worlds using a Brain-Computer Interface

Participants : Anatole Lécuyer [contact] , Jozef Legény.

When a person looks at a light flickering at a constant frequency, a corresponding electrical signal can be observed in their EEG. This phenomenon, located in the occipital area of the brain, is called Steady-State Visual-Evoked Potential (SSVEP).
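To make the principle concrete, the following sketch picks, among several candidate flicker frequencies, the one with the strongest response in a single EEG channel. It is a simplified illustration: the function names are hypothetical, and practical SSVEP detectors typically combine several occipital channels and harmonics.

```python
import math

def power_at(samples, fs, freq):
    """Normalized squared magnitude of the projection of the signal onto a sinusoid at `freq` Hz."""
    n = len(samples)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(samples))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(samples))
    return (re * re + im * im) / (n * n)

def detect_target(samples, fs, target_freqs):
    """Return the candidate flicker frequency with the largest power in the signal."""
    return max(target_freqs, key=lambda f: power_at(samples, fs, f))
```

Each flickering target is assigned its own frequency, so the detected frequency identifies the target the user is looking at.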

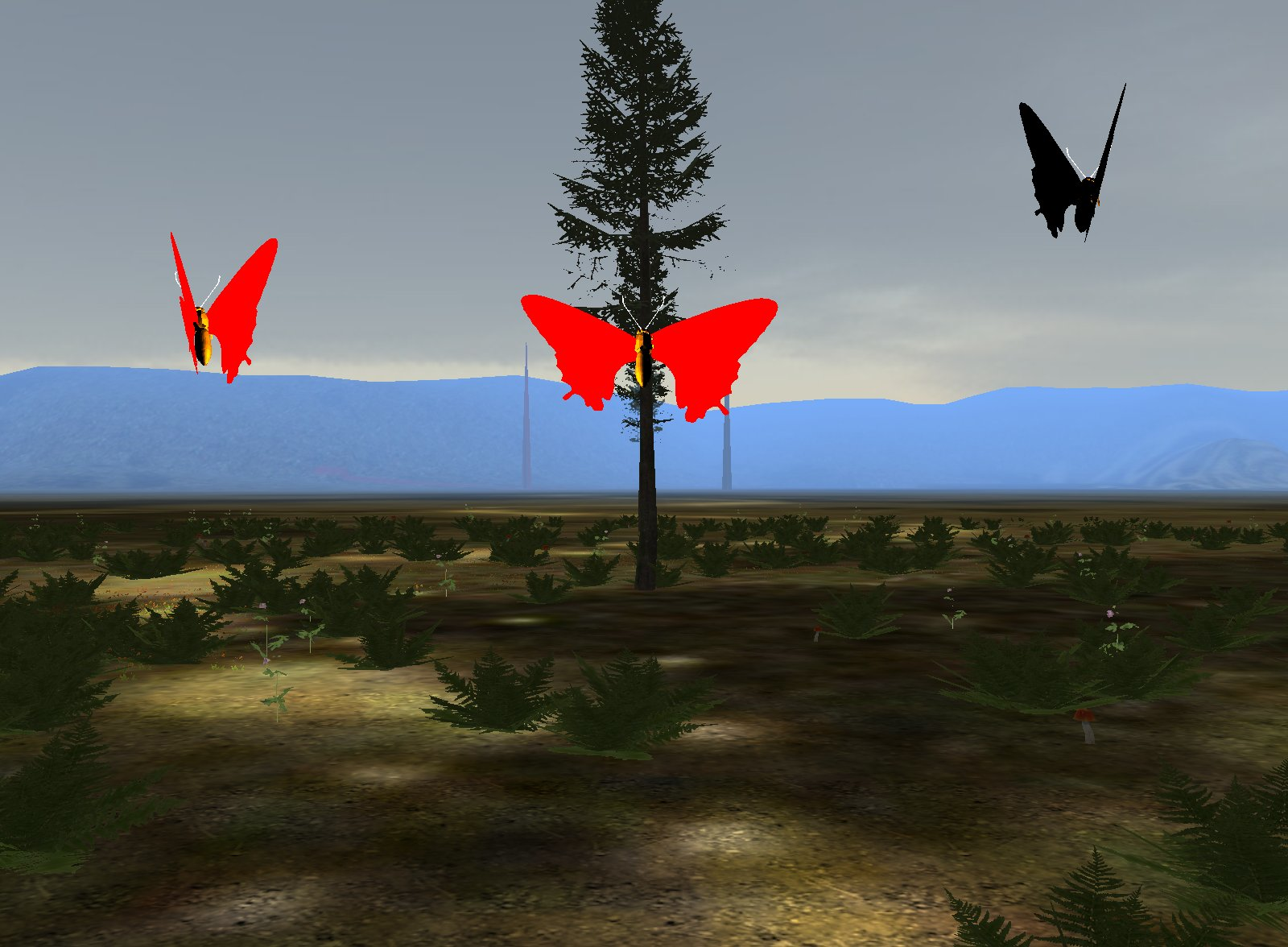

In [7] we introduce a novel paradigm for a controller using SSVEP. Whereas state-of-the-art implementations use static flickering targets, we used animated and moving objects. In our example applications, animated butterflies fly in front of the user, as shown in Figure 6. A study revealed that, at the cost of decreased performance, this controller increases the subjective feeling of presence.

These results show that integrating visual SSVEP stimulation into the environment is possible and that further study is necessary in order to improve the performance of the system.

Walking-in-place in virtual environments

Participants : Anatole Lécuyer [contact] , Maud Marchal [contact] , Léo Terziman, Bruno Arnaldi, Franck Multon.

The Walking-In-Place interaction technique was introduced to navigate infinitely in 3D virtual worlds by walking in place in the real world. The technique was initially developed for users standing in immersive setups and was built upon sophisticated visual displays and tracking equipment. We have proposed to revisit the whole pipeline of the Walking-In-Place technique to match a larger set of configurations, and notably to apply it to desktop Virtual Reality. With our novel "Shake-Your-Head" technique, the user can sit down and use small screens and standard input devices for tracking. The locomotion simulation can compute various motions such as turning, jumping and crawling, using the head movements of the user as sole input (Figure 7).

In a second study [29] we analyzed and compared the trajectories made in a Virtual Environment with two different navigation techniques. The first is a standard joystick technique and the second is the Walking-In-Place (WIP) technique. We proposed a spatial and temporal analysis of the trajectories produced with both techniques during a virtual slalom task. We found that trajectories and users' behaviors are very different across the two conditions. Our results notably showed that with the WIP technique the users turned more often and navigated more sequentially, i.e. waited to cross obstacles before changing their direction. However, the users were also able to modulate their speed more precisely with the WIP. These results could be used to optimize the design and future implementations of WIP techniques. Our analysis could also become the basis of a future framework to compare other navigation techniques.
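As a rough illustration of what a spatial and temporal trajectory analysis can measure, the sketch below computes a path length, a number of turns and a mean speed from sampled 2D positions. These metrics, the 15-degree turn threshold and the function names are assumptions chosen for the example; they are not the exact measures used in [29].

```python
import math

def path_length(points):
    """Total length of a polyline given as a list of (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def count_turns(points, min_angle_deg=15.0):
    """Count heading changes larger than min_angle_deg between consecutive segments."""
    turns = 0
    for a, b, c in zip(points, points[1:], points[2:]):
        h1 = math.atan2(b[1] - a[1], b[0] - a[0])
        h2 = math.atan2(c[1] - b[1], c[0] - b[0])
        delta = math.atan2(math.sin(h2 - h1), math.cos(h2 - h1))  # wrap to [-pi, pi]
        if abs(math.degrees(delta)) > min_angle_deg:
            turns += 1
    return turns

def mean_speed(points, dt):
    """Average speed, assuming positions are sampled every dt seconds."""
    return path_length(points) / (dt * (len(points) - 1))
```

Computing the same metrics for both conditions gives a simple basis for comparing the joystick and WIP trajectories on the slalom task.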

Improved interactive stereoscopic rendering : SCVC

Participants : Jérôme Ardouin, Anatole Lécuyer [contact] , Maud Marchal [contact] , Eric Marchand.

Frame cancellation comes from the conflict between two depth cues: stereo disparity and occlusion with the screen border. When this conflict occurs, the user suffers from poor depth perception of the scene. It also leads to uncomfortable viewing and eyestrain due to problems in fusing left and right images.

In [10], presented at the IEEE 3DUI conference, we propose a novel method to avoid frame cancellation in real-time stereoscopic rendering. To solve the disparity/frame occlusion conflict, we propose rendering only the part of the viewing volume that is free of conflict by using clipping methods available in standard real-time 3D APIs. This volume is called the Stereo Compatible Volume (SCV) and the method is named Stereo Compatible Volume Clipping (SCVC).
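The following top-view (2D) sketch illustrates one plausible reading of the conflict-free volume. It assumes that a point in front of the screen is free of conflict only if it is visible to both eyes through the screen frame, while a point behind the screen is kept whenever at least one eye sees it (as through a window). This interpretation, the geometry parameters and the function names are assumptions made for illustration; the exact definition of the SCV is given in [10].

```python
def hits_screen(eye_x, x, z, screen_dist, half_width):
    """True if the ray from the eye through (x, z) crosses the screen plane inside its borders."""
    x_on_screen = eye_x + (x - eye_x) * screen_dist / z
    return -half_width <= x_on_screen <= half_width

def in_stereo_compatible_volume(x, z, eye_sep=0.065, screen_dist=1.0, half_width=0.5):
    """Top-view membership test; eyes sit at (-eye_sep/2, 0) and (+eye_sep/2, 0), screen at z = screen_dist."""
    if z <= 0:
        return False  # behind the viewer
    left = hits_screen(-eye_sep / 2, x, z, screen_dist, half_width)
    right = hits_screen(eye_sep / 2, x, z, screen_dist, half_width)
    if z < screen_dist:
        return left and right  # in front of the screen: assumed to require both eyes
    return left or right       # behind the screen: assumed window-like, one eye suffices
```

In a real-time renderer the same test would not be run per point; it would be expressed as additional clipping planes applied to the geometry in front of the screen.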

Black Bands, a proven method initially designed for stereoscopic movies, was also implemented in order to conduct an evaluation. Twenty-two people were asked to answer open questions and to score criteria for SCVC, Black Bands and a Control method with no specific treatment. Results show that subjective preference and the user's depth perception near the screen edges seem to be improved by SCVC, and that Black Bands did not achieve the performance we expected.

At a time when stereoscopic-capable hardware is available on the mass consumer market, the disparity/frame occlusion conflict in stereoscopic rendering will become more noticeable, and SCVC could be a recommended solution. Its simplicity of implementation makes the method applicable to a wide range of rendering software, from VR applications to game engines.

Six degrees-of-freedom haptic interaction

Participants : Anatole Lécuyer [contact] , Maud Marchal [contact] , Gabriel Cirio.

Haptic interaction with virtual objects is a major concern in the virtual reality field. There are many physically-based efficient models that enable the simulation of a specific type of media, e.g. fluid volumes, deformable and rigid bodies. However, combining these often heterogeneous algorithms in the same virtual world in order to simulate and interact with different types of media can be a complex task.

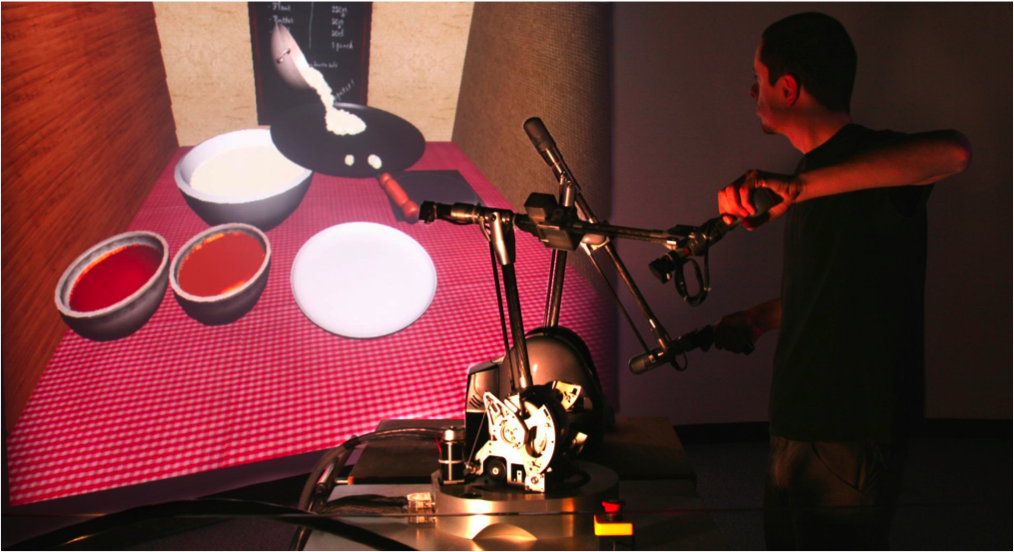

In [5], published in IEEE Transactions on Visualization and Computer Graphics, we propose a novel approach that allows real-time 6 Degrees of Freedom (DoF) haptic interaction with fluids of variable viscosity. Our haptic rendering technique, based on a Smoothed-Particle Hydrodynamics (SPH) physical model, provides realistic haptic feedback through physically-based forces. 6DoF haptic interaction with fluids is made possible by a new coupling scheme and a unified particle model, allowing the use of arbitrary-shaped rigid bodies. In particular, fluid containers can be created to hold fluid and hence transmit to the user the force feedback coming from fluid stirring, pouring, shaking or scooping. We evaluate and illustrate the main features of our approach through different scenarios, highlighting the 6DoF haptic feedback and the use of containers.
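For readers unfamiliar with SPH, the sketch below shows its basic building block: the density of each particle is a kernel-weighted sum over neighbouring particle masses. The poly6 kernel used here is a common choice in the SPH literature and is an assumption of this example; the kernels, the coupling scheme and the force computation of [5] are not reproduced.

```python
import math

def poly6(r, h):
    """Poly6 smoothing kernel in 3D with support radius h (zero outside the support)."""
    if r < 0 or r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3

def densities(positions, masses, h):
    """SPH density estimate: rho_i = sum_j m_j * W(|x_i - x_j|, h), brute force over all pairs."""
    rho = []
    for pi in positions:
        total = 0.0
        for pj, mj in zip(positions, masses):
            total += mj * poly6(math.dist(pi, pj), h)
        rho.append(total)
    return rho
```

A real-time implementation would replace the brute-force double loop with a spatial grid so that only particles within the support radius are visited.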

The Virtual Crepe Factory [14] illustrates this approach for 6DoF haptic interaction with fluids. It showcases a two-handed interactive haptic scenario: a recipe that uses different types of fluid to make a special pancake also known as a "crepe". The scenario (Figure 8) guides the user through all the steps required to prepare a crepe: from the stirring and pouring of the dough to the spreading of different toppings, without forgetting the challenging flipping of the crepe. With the Virtual Crepe Factory, users can experience for the first time 6DoF haptic interactions with fluids of varying viscosity.

In [15], presented at the IEEE Virtual Reality Conference, we propose the first haptic rendering technique for simulating and interacting with multistate media (Figure 9), namely fluids, deformable bodies and rigid bodies, in real-time and with 6DoF haptic feedback. The shared physical model (SPH) for all three types of media avoids the complexity of dealing with different algorithms and their coupling. We achieve high update rates while simulating a physically-based virtual world governed by fluid and elasticity theories, and show how to render interaction forces and torques through a 6DoF haptic device.

Joyman: a human-scale joystick for navigating in virtual worlds

Participants : Maud Marchal [contact] , Anatole Lécuyer, Julien Pettré.

We have proposed a novel interface called Joyman (Figure 10), designed for immersive locomotion in virtual environments. Whereas many previous interfaces preserve or stimulate the user's proprioception, the Joyman aims at preserving equilibrioception in order to improve the feeling of immersion during virtual locomotion tasks. The proposed interface is based on the metaphor of a human-scale joystick. The device has a simple mechanical design that allows users to indicate their virtual navigation intentions by leaning accordingly. We have also proposed a control law inspired by the biomechanics of human locomotion to transform the measured leaning angle into a walking direction and speed, i.e., a virtual velocity vector. A preliminary evaluation was conducted to assess the advantages and drawbacks of the proposed interface and to better define what can be expected from such a device.
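To illustrate what mapping a leaning angle to a virtual velocity vector can look like, here is a deliberately simple sketch. It is not the biomechanically inspired control law of [25]: the linear mapping, the dead zone, the saturation angle, the maximum speed and the function name are all hypothetical choices made for the example.

```python
import math

def lean_to_velocity(pitch_deg, roll_deg, dead_zone_deg=2.0, max_lean_deg=20.0, max_speed=1.4):
    """Map a leaning angle to a planar velocity (vx lateral, vy forward) in m/s.

    pitch_deg is the forward lean and roll_deg the sideways lean; all parameters are assumed values.
    """
    lean = math.hypot(pitch_deg, roll_deg)
    if lean <= dead_zone_deg:
        return (0.0, 0.0)  # small leans are ignored so the user can stand still
    ratio = min((lean - dead_zone_deg) / (max_lean_deg - dead_zone_deg), 1.0)
    speed = ratio * max_speed
    heading = math.atan2(roll_deg, pitch_deg)  # 0 rad means straight ahead
    return (speed * math.sin(heading), speed * math.cos(heading))
```

For example, under these assumed parameters a full forward lean of 20 degrees yields the maximum forward speed, and any lean below 2 degrees yields no motion.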

The principle of this new interface was published at the IEEE 3DUI international conference [25] and a patent has been filed for the interface. A demonstration of this interface was given at ACM Siggraph Asia Emerging Technologies [33].

Interactions within 3D virtual universes

Participants : Thierry Duval [contact] , Valérie Gouranton [contact] , Bruno Arnaldi, Laurent Aguerreche, Cédric Fleury, Thi Thuong Huyen Nguyen.

Our work focuses upon new formalisms for 3D interactions in virtual environments, to define what an interactive object is, what an interaction tool is, and how these two kinds of objects can communicate together. We also propose virtual reality patterns to combine navigation with interaction in immersive virtual environments.

We have worked on generic interaction tools for collaboration, based on multi-point interaction. In that context, we have studied the efficiency of one instance of our Reconfigurable Tangible Device, the RTD-3, for collaborative manipulation of 3D objects compared to state-of-the-art metaphors [9]. We have set up an experiment for collaborative distant co-manipulation (Figure 1) of a clipping plane for remotely analyzing 3D scientific data from an earthquake simulation.