Section: New Results

Interaction Techniques

Participants: Caroline Appert, Michel Beaudouin-Lafon, Anastasia Bezerianos, David Bonnet, Olivier Chapuis [correspondent], Cédric Fleury, Stéphane Huot, Can Liu, Justin Mathew, Wendy Mackay, Halla Olafsdottir, Theophanis Tsandilas, Oleksandr Zinenko.

InSitu explores interaction and visualization techniques in a variety of contexts, including individual interaction techniques for display surfaces that range from mobile devices, through standard desktop systems and tabletops, to very large wall-sized displays.

This year, we investigated multi-touch gestures on tabletops [26], studied the combination of tilt and touch on smartphones [29], proposed novel bimanual interaction techniques for tablets [18], introduced a focus+context technique to facilitate route following [14], introduced GlideCursor to facilitate pointing on large displays [15], compared physical navigation in front of a wall-size display with virtual navigation on the desktop [22], studied users' behavior in immersive Virtual Environments [12], built a tool to ease the extraction and expression of parallelism in programs [30], and investigated the effect of contours on star glyphs [13].

In addition to providing knowledge for designers and practitioners, this set of results advances our overall understanding of basic interactive phenomena and helps us better understand how user practices will change.

Multitouch on Tabletop – We systematically studied how users adapt their grasp when asked to translate and rotate virtual objects on a multitouch tabletop [26]. We showed that users choose a grip orientation that is influenced by three factors: (1) a preferred orientation defined by the start object position, (2) a preferred orientation defined by the target object position, and (3) the anticipated object rotation. We examined these results in light of the most recent models of planning for the manipulation of physical objects and explored how they can inform the design of tabletop applications.

Tilt & Touch – We studied the combination of tilt and touch when interacting with mobile devices [29]. We conducted an experiment to explore the effectiveness of TilTouch gestures for both one-handed and two-handed use. Our results identify the best combinations of TilTouch gestures in terms of performance, motor coordination, and user preferences.
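
To make the idea of a combined gesture concrete, the sketch below classifies a single TilTouch-style input as a pair (tilt direction, swipe direction). It assumes the device reports pitch and roll in degrees and that a swipe is summarized by its displacement in screen pixels (y pointing down). The thresholds, direction names, sign conventions, and function names are hypothetical and are not taken from [29].

```python
from typing import Optional, Tuple

# Hypothetical thresholds: below them, the tilt or swipe is treated as absent.
TILT_THRESHOLD_DEG = 15.0
SWIPE_THRESHOLD_PX = 30.0


def tilt_direction(pitch_deg: float, roll_deg: float) -> Optional[str]:
    """Dominant tilt axis. Assumes positive pitch = top edge tilted away from
    the user and positive roll = right edge tilted down (assumed conventions)."""
    if max(abs(pitch_deg), abs(roll_deg)) < TILT_THRESHOLD_DEG:
        return None
    if abs(pitch_deg) >= abs(roll_deg):
        return "forward" if pitch_deg > 0 else "backward"
    return "right" if roll_deg > 0 else "left"


def swipe_direction(dx: float, dy: float) -> Optional[str]:
    """Dominant swipe axis in screen coordinates (y grows downward)."""
    if max(abs(dx), abs(dy)) < SWIPE_THRESHOLD_PX:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def classify_tiltouch(pitch_deg: float, roll_deg: float,
                      dx: float, dy: float) -> Optional[Tuple[str, str]]:
    """Return (tilt, swipe) only when both components are present."""
    tilt = tilt_direction(pitch_deg, roll_deg)
    swipe = swipe_direction(dx, dy)
    if tilt is None or swipe is None:
        return None
    return (tilt, swipe)
```

For example, `classify_tiltouch(20, 3, 80, -10)` yields `("forward", "right")`. With four tilt and four swipe directions this vocabulary has 16 combinations; an experiment such as the one above is what tells designers which of them are worth keeping.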

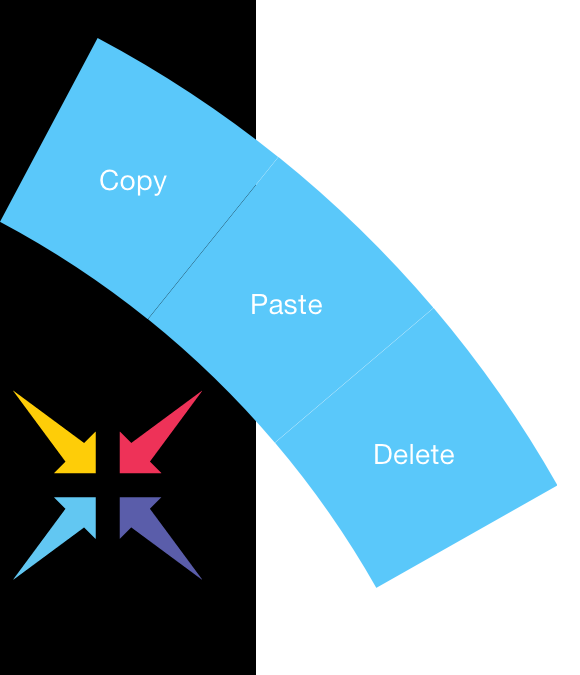

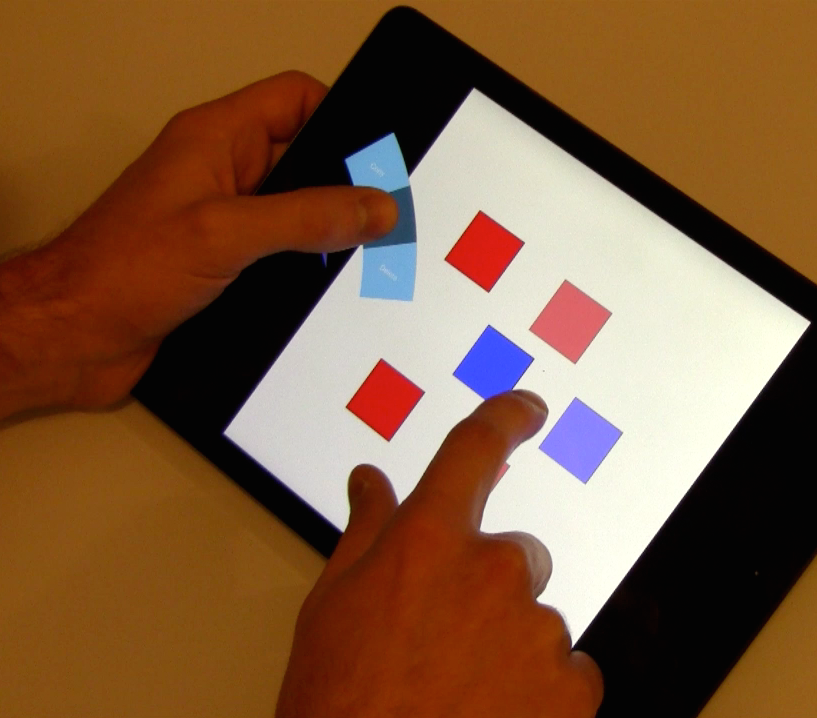

SPad – We created SPad [18], a new bimanual interaction technique designed to improve productivity on multi-touch tablets: the user holds the device with the non-dominant hand, activates quasimodes with the thumb of that same hand, and interacts with the content using the dominant hand (figure 3). Following an iterative design process, we created a tablet application that demonstrates how SPad enables faster, more direct, and more powerful interaction without increasing complexity.
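
A quasimode is a mode that stays active only while the user physically maintains it. The sketch below models that idea: the non-dominant thumb holds a mode button, and each dominant-hand action is interpreted in whichever mode is held at that moment. The `SPadState` class and the mode names are hypothetical illustrations, not the SPad implementation described in [18].

```python
from typing import Optional


class SPadState:
    """Hypothetical quasimode tracker: a mode is active only while it is held."""

    DEFAULT_MODE = "manipulate"  # behavior when no thumb button is held

    def __init__(self) -> None:
        self.held_mode: Optional[str] = None

    def thumb_down(self, mode: str) -> None:
        # The non-dominant thumb presses a mode button on the pad.
        self.held_mode = mode

    def thumb_up(self) -> None:
        # Releasing the thumb ends the quasimode at once, so the user cannot
        # be left in a mode they forgot to exit.
        self.held_mode = None

    def interpret(self, action: str) -> str:
        # A dominant-hand action is routed according to the currently held mode.
        mode = self.held_mode or self.DEFAULT_MODE
        return f"{mode}:{action}"


pad = SPadState()
print(pad.interpret("drag"))  # manipulate:drag
pad.thumb_down("select")
print(pad.interpret("drag"))  # select:drag
pad.thumb_up()
print(pad.interpret("drag"))  # manipulate:drag
```

The same dominant-hand gesture thus changes meaning depending only on what the holding hand is doing, which is what lets the mode switch happen without extra on-screen widgets.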

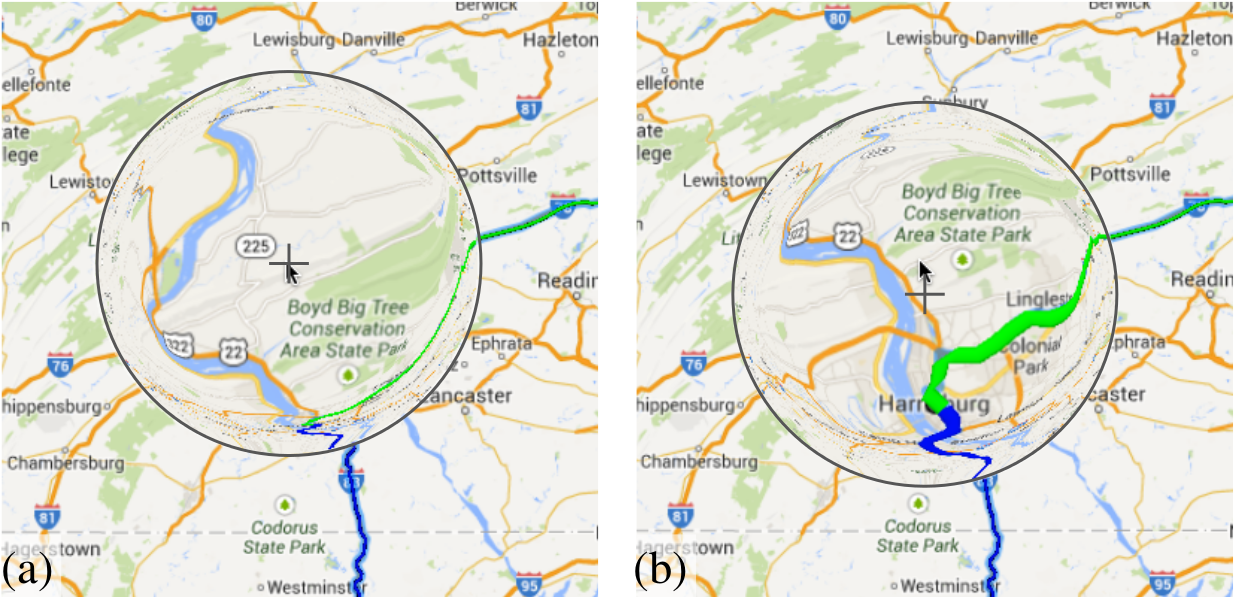

RouteLenses – Millions of people use the Web to search for geographical itineraries. Inspecting these itineraries on a map remains tedious because they seldom fit on screen, requiring much panning and zooming to see details. Focus+context techniques address this problem by displaying the route at a scale at which it fits entirely on screen: users see the whole route at once and steer a magnifying lens along the path to reveal additional detail. We created RouteLenses [14], a type of lens that automatically adjusts its position based on the geometry of the path that users steer through (figure 4). RouteLenses make it easier for users to follow a route, yet do not constrain movement too strictly, leaving users free to move the lens away from the path to explore its surroundings.
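
The sketch below illustrates one simple way a lens could be drawn toward a route while still letting the user leave it: the lens center is pulled toward the nearest point on the route polyline, with a pull that fades linearly to zero at a capture radius. This attraction rule, the `capture_radius` parameter, and the function names are assumptions for illustration; the adjustment actually used by RouteLenses [14] may differ.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def closest_point_on_segment(p: Point, a: Point, b: Point) -> Point:
    """Orthogonal projection of p onto segment ab, clamped to the segment."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return a  # degenerate segment
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return (a[0] + t * dx, a[1] + t * dy)


def closest_point_on_route(p: Point, route: List[Point]) -> Tuple[Point, float]:
    """Nearest point on a polyline route, and its distance to p."""
    best, best_d = route[0], math.dist(p, route[0])
    for a, b in zip(route, route[1:]):
        q = closest_point_on_segment(p, a, b)
        d = math.dist(p, q)
        if d < best_d:
            best, best_d = q, d
    return best, best_d


def lens_center(cursor: Point, route: List[Point],
                capture_radius: float = 40.0) -> Point:
    """Hypothetical attraction rule: full snap onto the route, no pull beyond
    capture_radius (px), linear falloff in between."""
    q, d = closest_point_on_route(cursor, route)
    if d >= capture_radius:
        return cursor  # far from the route: the lens follows the cursor freely
    w = 1.0 - d / capture_radius
    return (cursor[0] + w * (q[0] - cursor[0]),
            cursor[1] + w * (q[1] - cursor[1]))
```

With a horizontal route from (0, 0) to (100, 0), a cursor at (50, 10) places the lens at (50, 2.5), close to the path, while a cursor at (50, 100) is left untouched so the surroundings can still be explored.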

GlideCursor – Pointing on large displays with an indirect, relative pointing device such as a touchpad often requires clutching. We designed and evaluated GlideCursor [15], which lets the cursor continue to move during clutching gestures. The effect is that of controlling the cursor as a detached object that can be pushed, with inertia and friction similar to a puck pushed across a table. We analyzed gliding from both a practical and a theoretical perspective and conducted two studies. The first controlled experiment established that gliding reduces clutching and can improve pointing performance over large distances. We introduced a measure called cursor efficiency to capture the effects of gliding on clutching. The second experiment showed that participants use gliding even when an efficient acceleration function lets them perform the task without it, and that doing so does not degrade their performance.
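
The puck analogy can be sketched as a small simulation: when the finger lifts off the touchpad, the cursor keeps its release velocity and slows down under a constant deceleration, like sliding friction, until it stops. The deceleration value, frame duration, and the `glide` function are hypothetical; the actual GlideCursor transfer function in [15] may differ.

```python
import math
from typing import Iterator, Tuple

Vec = Tuple[float, float]


def glide(pos: Vec, vel: Vec, decel: float = 2000.0, dt: float = 1 / 120,
          max_steps: int = 10_000) -> Iterator[Vec]:
    """Yield cursor positions after the finger lifts off the touchpad.

    pos: cursor position at lift-off (px); vel: cursor velocity at lift-off
    (px/s); decel: constant friction deceleration (px/s^2, hypothetical value);
    dt: frame duration (s). The caller stops iterating when a new touch begins.
    """
    x, y = pos
    speed = math.hypot(vel[0], vel[1])
    if speed == 0.0:
        return  # nothing to glide
    ux, uy = vel[0] / speed, vel[1] / speed  # the glide keeps the release direction
    for _ in range(max_steps):
        speed = max(0.0, speed - decel * dt)  # sliding friction slows the "puck"
        if speed == 0.0:
            break
        x += ux * speed * dt
        y += uy * speed * dt
        yield (x, y)
```

With a constant deceleration $a$, a glide starting at speed $v$ covers about $v^2/(2a)$: `glide((0, 0), (1000, 0), decel=2000, dt=0.001)` ends close to x = 250 px. In an interactive loop built on this sketch, iteration would be abandoned as soon as the finger touches the pad again, so the user can catch the cursor mid-glide.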

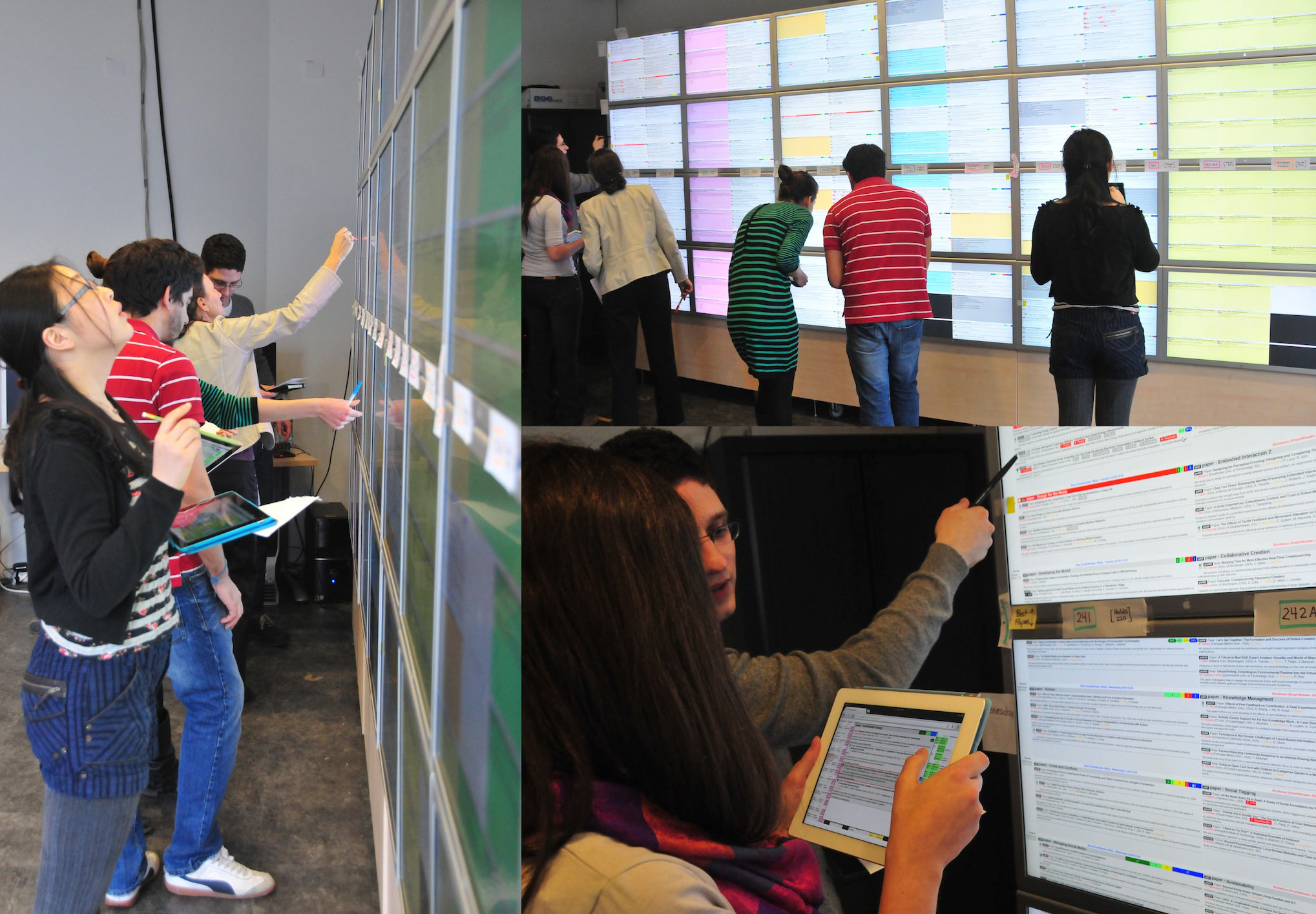

Wall vs. Desktop – The advent of ultra-high-resolution wall-size displays and their use for complex tasks call for a more systematic analysis and a deeper understanding of their advantages and drawbacks compared with desktop monitors. While previous work has mostly addressed search, visualization, and sense-making tasks, we designed and evaluated an abstract classification task that involves explicit data manipulation [22]. Based on our observations of real-world use of a wall display (figure 5, left), this task represents a large category of applications. We conducted a controlled experiment that uses this task to compare physical navigation in front of a wall-size display (figure 5, right) with virtual navigation using pan-and-zoom on the desktop. Our main finding is a robust interaction effect between display type and task difficulty: while the desktop can be faster than the wall for simple tasks, the wall gains a sizable advantage as the task becomes more difficult.
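
The interaction effect can be written as a difference of differences. Using notation introduced here for illustration only (it is not taken from [22]), let $\bar{T}_{\text{wall}}(d)$ and $\bar{T}_{\text{desk}}(d)$ denote mean task completion times at difficulty level $d$:

$$
\Delta(d) = \bar{T}_{\text{wall}}(d) - \bar{T}_{\text{desk}}(d),
\qquad
\Delta(d_{\text{hard}}) - \Delta(d_{\text{easy}}) < 0 .
$$

A display effect alone would leave $\Delta(d)$ roughly constant across difficulty levels. The reported interaction instead corresponds to $\Delta(d)$ decreasing with difficulty: it can be positive for simple tasks (desktop faster) and becomes negative for difficult ones (wall faster).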

Immersive VE – The feeling of presence is essential for efficient interaction within Virtual Environments (VEs). When a user is fully immersed within a VE through a large immersive display system, her feeling of presence can be altered because of disturbing interactions with her physical environment. This alteration can be avoided by taking into account the physical features of the user as well as those of the system hardware. Moreover, the 3D abstract representation of these physical features can also be useful for collaboration between distant users. In [12] we presented how we use the Immersive Interactive Virtual Cabin (IIVC) model to obtain this virtual representation of the user’s physical environment and we illustrated how this representation can be used in a collaborative navigation task in a VE. We also presented how we can add 3D representations of 2D interaction tools in order to cope with asymmetrical collaborative configurations, providing 3D cues for a user to understand the actions of others even if he/she is not fully immersed in the shared VE.

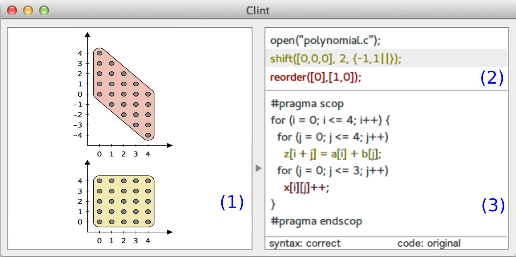

Clint – We created Clint, a direct manipulation tool to ease the extraction and expression of parallelism in existing programs [30]. Clint is built on top of state-of-the-art compilation tools (the polyhedral representation of programs) to provide a visual representation of the code, perform automatic data dependence analysis, and ensure the correctness of code transformations (figure 6). It can be used to rework and improve automatically generated optimizations and to make manual program transformation faster, safer, and more efficient.
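
Clint relies on polyhedral tools to compute data dependences symbolically. For intuition only, the sketch below computes the same kind of information by brute force over a small, concrete iteration domain for a single statement `A[w(i)] = f(A[r(i)])`: it lists flow, anti, and output dependences between distinct iterations, and a loop with none of them can have its iterations reordered or run in parallel. This enumeration is a swapped-in stand-in, not polyhedral analysis, and does not handle symbolic bounds; all names are hypothetical.

```python
from typing import Callable, Iterable, List, Tuple

Index = Callable[[int], int]  # maps an iteration i to an array subscript


def loop_carried_dependences(domain: Iterable[int], write: Index,
                             read: Index) -> List[Tuple[str, int, int]]:
    """Brute-force dependence test for one statement A[write(i)] = f(A[read(i)]).

    Returns (kind, i_src, i_dst) for every pair of iterations i_src < i_dst
    that access the same element of A with at least one write.
    """
    its = sorted(domain)
    deps = []
    for pos, i_src in enumerate(its):
        for i_dst in its[pos + 1:]:
            if write(i_src) == read(i_dst):
                deps.append(("flow", i_src, i_dst))    # read after write
            if read(i_src) == write(i_dst):
                deps.append(("anti", i_src, i_dst))    # write after read
            if write(i_src) == write(i_dst):
                deps.append(("output", i_src, i_dst))  # write after write
    return deps


def is_parallel(domain: Iterable[int], write: Index, read: Index) -> bool:
    # Without any dependence between distinct iterations, the iterations
    # can be executed in any order, including in parallel.
    return not loop_carried_dependences(domain, write, read)
```

For example, `A[i] = A[i] + 1` is parallel, whereas `A[i] = A[i - 1]` carries a flow dependence from each iteration to the next; a transformation tool must preserve such dependences to remain correct.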

Star Glyphs – We conducted three crowdsourced studies on Amazon Mechanical Turk to determine the effect of contours on data glyphs such as star glyphs [13]. Our results indicate that glyphs without contours lead viewers to naturally make data-driven judgements, whereas adding contours encourages judgements based on shape similarity, e.g., perceiving rotated variations of a glyph as similar even though they are not similar in data space.
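
A small geometric sketch shows why rotated variations are easy to confuse once a contour is drawn. In a star glyph, each variable is drawn as a ray whose length encodes its value, and a contour joins the ray tips into a closed polygon. Cyclically shifting the data values produces exactly the same polygon rotated by $2\pi/n$, even though the values are now assigned to different variables. The function names and the assumption that values are normalized to [0, 1] are illustrative choices, not taken from [13].

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def star_points(values: Sequence[float], radius: float = 1.0) -> List[Point]:
    """Tip of each ray: value k is drawn at angle 2*pi*k/n, at a distance
    proportional to the value (values assumed normalized to [0, 1])."""
    n = len(values)
    return [(radius * v * math.cos(2 * math.pi * k / n),
             radius * v * math.sin(2 * math.pi * k / n))
            for k, v in enumerate(values)]


def star_glyph(values: Sequence[float], contour: bool) -> List[Segment]:
    """Segments to draw: one ray per variable, plus a closed contour
    through the ray tips when contour is True."""
    tips = star_points(values)
    segments = [((0.0, 0.0), tip) for tip in tips]
    if contour:
        n = len(tips)
        segments += [(tips[k], tips[(k + 1) % n]) for k in range(n)]
    return segments


def rotate(p: Point, angle: float) -> Point:
    """Rotate a point counterclockwise about the glyph center."""
    c, s = math.cos(angle), math.sin(angle)
    return (p[0] * c - p[1] * s, p[0] * s + p[1] * c)
```

For `[0.2, 0.9, 0.4, 0.7]` and its cyclic shift `[0.7, 0.2, 0.9, 0.4]`, rotating each tip of the first glyph by $\pi/2$ lands on the tips of the second. With `contour=True` both glyphs draw the same outline in different orientations, whereas the rays alone keep each variable's value tied to a fixed axis.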