Section: Research Program

Perception and Situation Awareness

Robust perception and decision-making in open and dynamic environments populated by human beings is an open and challenging scientific problem. Traditional perception techniques do not provide an adequate solution to these problems, mainly because such environments are uncontrolled (partially unknown and open) and impose strong constraints (in particular high dynamicity and strong uncertainty). This means that proposed solutions must simultaneously account for real-time processing, temporary occlusions, dynamic changes and motion prediction; they must also include explicit models for reasoning about uncertainty (data incompleteness, sensing errors, hazards of the physical world).

Sensor fusion

In the context of autonomous navigation, we investigate sensor fusion problems in which sensors and robots have limited capabilities. This relates to the general study of the minimal conditions for observability.

Special attention is devoted to the fusion of inertial and monocular vision sensors. We are particularly interested in closed-form solutions, i.e., solutions that determine the state solely from the measurements obtained during a short time interval. This is fundamental in robotics, since such solutions do not need initialization. For the fusion of visual and inertial measurements, we have recently obtained such closed-form solutions [41], [44]. This work is currently supported by our ANR project VIMAD (Autonomous navigation of aerial drones by fusing visual and inertial data, led by A. Martinelli, Chroma).
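To make the idea of a closed-form solution concrete, the sketch below sets up a strongly simplified visual-inertial problem and solves it with a single linear least-squares step, with no initial guess. It assumes, for illustration only, that the camera orientation is known and aligned with the world frame (e.g., from gyroscope integration), that a single point feature is tracked, and that the data are noise-free. Writing the camera position as p(t) = p0 + v0 t + g t²/2 + S(t), where S(t) is the double integral of the accelerometer's specific force, and the feature as F - p(t_i) = λ_i μ_i with μ_i the observed unit bearing, gives equations λ_0 μ_0 - λ_i μ_i - v0 t_i - g t_i²/2 = S_i that are linear in the unknowns (v0, g, λ_0, ..., λ_{n-1}). All names and numerical values are hypothetical; this is not the algorithm of [41] or [44], only an instance of the same linear structure.

```python
import numpy as np

# Hypothetical ground truth used to synthesize measurements.
V0_TRUE = np.array([0.5, -0.2, 0.1])     # initial velocity (world frame)
G_TRUE = np.array([0.0, 0.0, -9.81])     # gravity (world frame)
F_REL = np.array([2.0, 1.0, 4.0])        # feature position relative to p0


def double_integrated_specific_force(t):
    """S(t): double integral from 0 to t of the specific force
    f(u) = [cos u, sin u, u/2], with zero initial conditions.
    Stands in for numerically integrated accelerometer data."""
    return np.array([1.0 - np.cos(t), t - np.sin(t), t**3 / 12.0])


def simulate(times):
    """Return unit bearings mu_i to the feature and the IMU terms S_i."""
    bearings, S = [], []
    for t in times:
        s = double_integrated_specific_force(t)
        d = F_REL - V0_TRUE * t - 0.5 * G_TRUE * t**2 - s   # F - p(t)
        bearings.append(d / np.linalg.norm(d))
        S.append(s)
    return np.array(bearings), np.array(S)


def closed_form_vi(times, bearings, S):
    """Solve lambda_0 mu_0 - lambda_i mu_i - v0 t_i - g t_i^2 / 2 = S_i
    for i >= 1 in one linear least-squares step.
    Unknowns x = [v0 (3), g (3), lambda_0, ..., lambda_{n-1}]."""
    n = len(times)
    A = np.zeros((3 * (n - 1), 6 + n))
    b = np.zeros(3 * (n - 1))
    for i in range(1, n):
        rows = slice(3 * (i - 1), 3 * i)
        t = times[i]
        A[rows, 0:3] = -t * np.eye(3)              # coefficient of v0
        A[rows, 3:6] = -0.5 * t**2 * np.eye(3)     # coefficient of g
        A[rows, 6] = bearings[0]                   # coefficient of lambda_0
        A[rows, 6 + i] = -bearings[i]              # coefficient of lambda_i
        b[rows] = S[i]
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[0:3], x[3:6], x[6:]


# Usage: eight camera frames over 3.5 s.
times = np.linspace(0.0, 3.5, 8)
bearings, S = simulate(times)
v0_est, g_est, lambdas = closed_form_vi(times, bearings, S)
```

With eight frames there are 21 scalar equations for 14 unknowns, so the system is overdetermined; with real, noisy data the same least-squares step would return an approximate solution that could seed a filter.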

We are also interested in understanding the observability properties of these sensor fusion problems. In other words, for a given sensor fusion problem, we want to determine which physical quantities can be estimated from the sensor measurements. This is a fundamental step in properly defining the state to be estimated. To achieve this goal, we apply standard analytic tools developed in control theory, together with the concept of continuous symmetry recently introduced by the eMotion team [40]. To account for disturbances, we introduce general analytic tools that derive the observability properties in the nonlinear case when some of the system inputs are unknown (and act as disturbances).
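The link between continuous symmetries and unobservability can be illustrated numerically on a toy system chosen here for illustration (it is not one of the sensor fusion problems studied above): a unicycle with known inputs (v, ω) that measures only the bearing of a landmark at the origin. Rotating the robot position about the landmark and its heading by the same angle leaves both the dynamics and the measurements unchanged, so that direction of the state cannot be estimated. The sketch checks this directly, then computes a finite-difference Jacobian of the output sequence with respect to the initial state; its numerical rank plays the role of the observability rank. Function names, step size and tolerances are hypothetical.

```python
import math
import numpy as np


def bearing_outputs(state, inputs, dt=0.1):
    """Simulate a unicycle (x, y, theta) with known inputs (v, omega) and
    return, at each step, cos and sin of the bearing of a landmark at the
    origin expressed in the robot frame (cos/sin avoid angle wrapping)."""
    x, y, th = state
    out = []
    for v, w in inputs:
        beta = math.atan2(-y, -x) - th
        out.extend([math.cos(beta), math.sin(beta)])
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += w * dt
    return np.array(out)


def rotate_about_landmark(state, phi):
    """Continuous symmetry: rotate position about the landmark and heading by phi."""
    x, y, th = state
    c, s = math.cos(phi), math.sin(phi)
    return (c * x - s * y, s * x + c * y, th + phi)


def observability_rank(state, inputs, eps=1e-6, tol=1e-6):
    """Numerical rank of d(outputs)/d(initial state), by central differences."""
    cols = []
    for k in range(3):
        plus, minus = list(state), list(state)
        plus[k] += eps
        minus[k] -= eps
        diff = bearing_outputs(plus, inputs) - bearing_outputs(minus, inputs)
        cols.append(diff / (2 * eps))
    J = np.column_stack(cols)
    return np.linalg.matrix_rank(J, tol=tol), J


inputs = [(1.0, 0.3 * math.sin(0.2 * k)) for k in range(30)]
s0 = (3.0, 1.0, 2.0)

# Two different initial states produce identical measurement sequences.
same = np.allclose(bearing_outputs(s0, inputs),
                   bearing_outputs(rotate_about_landmark(s0, 0.7), inputs))

rank, J = observability_rank(s0, inputs)
# Infinitesimal generator of the symmetry at s0: d/dphi of the rotated state.
generator = np.array([-s0[1], s0[0], 1.0])
```

The rank is 2 rather than 3, and the generator of the rotation spans the null space of the Jacobian: the distance to the landmark is observable here (the speed is known), but the robot's absolute orientation around the landmark is not.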


Bayesian perception

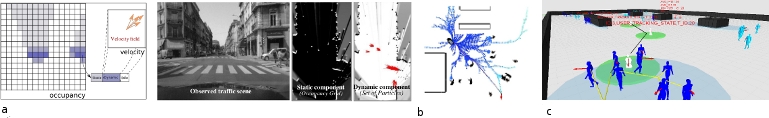

In previous work carried out in the eMotion team, we proposed a new paradigm in robotics called “Bayesian Perception”. The foundation of this approach is the concept of the “Bayesian Occupancy Filter (BOF)”, initially proposed in the PhD thesis of Christophe Coué [28] and further developed in the team [36]. The basic idea is to combine a Bayesian filter with a probabilistic grid representation of both space and motion; see the illustration in Fig. 1.a. This model allows the filtering and fusion of heterogeneous and uncertain sensor data, and takes into account the history of sensor measurements, a probabilistic model of the sensors and of the uncertainty, and a dynamic model of the motion of the observed objects. Current and future work on this research axis addresses two complementary issues:

-

Development of a complete framework for extending the Bayesian Perception approach to the object level, in particular by robustly integrating higher-level functions such as multiple-object detection and tracking or object classification. The idea is to avoid well-known data association problems by reasoning both at the occupancy grid level and at the object level (i.e., identified clusters of dynamic cells) [47].

-

Software and hardware integration to improve the efficiency of the approach (high parallelism) and to reduce important factors such as chip size, price and energy consumption. This work is developed in cooperation with CEA LETI and the Perfect project of IRT Nanoelec. Validation and certification issues will also be addressed within the scope of the ECSEL ENABLE-S3 project (to start in 2016).
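The grid-level idea behind the BOF, a Bayesian filter over a joint occupancy/velocity representation in each cell, can be sketched in one dimension. In the minimal version below, each cell stores a table P(O, V) over occupancy O ∈ {empty, occupied} and a small set of velocity hypotheses; the prediction step takes the state of cell c for velocity v from its antecedent cell c - v, and the correction step applies a binary sensor model. The velocity set, sensor probabilities and boundary prior are hypothetical choices, and this is a simplified illustration in the spirit of the BOF rather than the formulation of [28] or [36].

```python
import numpy as np

VELOCITIES = [-1, 0, 1, 2]     # hypothetical velocity hypotheses, cells per step
P_Z_OCC = {1: 0.9, 0: 0.1}     # hypothetical sensor model P(z | occupied)
P_Z_EMPTY = {1: 0.1, 0: 0.9}   # hypothetical sensor model P(z | empty)


def initial_grid(n_cells):
    """Each cell holds P(O, V): axis 1 is O (0 = empty, 1 = occupied),
    axis 2 indexes VELOCITIES. Start from a uniform prior."""
    return np.full((n_cells, 2, len(VELOCITIES)), 1.0 / (2 * len(VELOCITIES)))


def predict(P):
    """The (o, v) state of cell c is inherited from antecedent cell c - v;
    antecedents outside the grid are replaced by the uniform prior."""
    n = P.shape[0]
    prior = 1.0 / (2 * len(VELOCITIES))
    pred = np.empty_like(P)
    for j, v in enumerate(VELOCITIES):
        for c in range(n):
            a = c - v
            pred[c, :, j] = P[a, :, j] if 0 <= a < n else prior
    return pred / pred.sum(axis=(1, 2), keepdims=True)


def update(P, z):
    """Bayesian correction with a binary measurement z[c] in {0, 1} per cell."""
    like = np.array([[P_Z_EMPTY[zc], P_Z_OCC[zc]] for zc in z])  # shape (n, 2)
    post = P * like[:, :, None]
    return post / post.sum(axis=(1, 2), keepdims=True)


def occupancy(P):
    """Marginal probability that each cell is occupied."""
    return P[:, 1, :].sum(axis=1)


# Usage: one object starts at cell 2 and moves +1 cell per step.
n_cells, pos = 20, 2
P = initial_grid(n_cells)
for step in range(7):
    if step > 0:
        P = predict(P)
        pos += 1
    z = [0] * n_cells
    z[pos] = 1
    P = update(P, z)
```

After a few steps the occupied mass in the object's cell concentrates on the velocity hypothesis +1, even though no explicit object or data association was ever introduced. Every cell is processed independently within each step, which is what makes this family of filters amenable to the highly parallel implementations mentioned above.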
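One simple way to obtain “identified clusters of dynamic cells” from a grid is a connected-components pass over cells that are both likely occupied and moving. The sketch below uses a 4-connected flood fill with hypothetical thresholds on occupancy and speed; it is a generic clustering step shown for illustration, not the object-level method of [47].

```python
from collections import deque


def cluster_dynamic_cells(occupancy, speed, occ_thresh=0.5, speed_thresh=0.5):
    """Group occupied, moving cells into objects using a 4-connected flood fill.
    occupancy and speed are 2D lists (rows x cols); thresholds are hypothetical.
    Returns a list of clusters, each a sorted list of (row, col) cells."""
    rows, cols = len(occupancy), len(occupancy[0])
    dynamic = [[occupancy[r][c] > occ_thresh and speed[r][c] > speed_thresh
                for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    clusters = []
    for r in range(rows):
        for c in range(cols):
            if not dynamic[r][c] or seen[r][c]:
                continue
            queue, cluster = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                cr, cc = queue.popleft()
                cluster.append((cr, cc))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and dynamic[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            clusters.append(sorted(cluster))
    return clusters


# Usage: two moving blobs and one occupied but static cell at (3, 0).
occ = [
    [0.9, 0.9, 0.0, 0.0, 0.0],
    [0.9, 0.0, 0.0, 0.8, 0.8],
    [0.0, 0.0, 0.0, 0.8, 0.0],
    [0.95, 0.0, 0.0, 0.0, 0.0],
]
spd = [
    [1.2, 1.0, 0.0, 0.0, 0.0],
    [1.1, 0.0, 0.0, 2.0, 2.1],
    [0.0, 0.0, 0.0, 1.9, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
objects = cluster_dynamic_cells(occ, spd)
```

The static occupied cell is excluded because its speed is below the threshold, so only the two moving blobs become object candidates; tracking and classification would then operate on these clusters while the grid keeps handling low-level uncertainty.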

Situation Awareness & Bayesian Decision-making

Prediction is an important ability for navigation in dynamic, uncertain environments, in particular prediction of the evolution of the perceived actors in order to make safe on-line decisions (the concept of “Bayesian Decision-making”). We have recently shown that an interesting property of the Bayesian Perception approach is that it generates short-term conservative predictions (i.e., assuming motion parameters remain stable over a short time) of the likely future evolution of the observed scene, even if sensing information is temporarily incomplete or unavailable [46]. But in human-populated environments, estimating more abstract properties (e.g., object classes, affordances, agents' intentions) is also crucial to understanding the future evolution of the scene. Our current and future work in this research axis focuses on two complementary issues:

-

Development of an integrated approach for “Situation Awareness & Risk Assessment” in complex dynamic scenes involving multiple moving agents (e.g., vehicles, cyclists, pedestrians) whose behaviors are usually unknown but predictable. Our approach combines machine learning, to build a model of agent behaviors, with generic motion prediction techniques (Kalman-based, GHMM [57], Gaussian Processes [52]). For long-term prediction, we will consider the semantic level (knowledge about agents' activities and tasks) and the planning techniques developed in the following section.

-

Development of a new framework for on-line Bayesian Decision-Making in multi-vehicle environments, under both dynamic and uncertainty constraints, based on contextual information and on a continuous analysis of the evolution of the probabilistic collision risk. Results have recently been obtained in cooperation with Renault and Berkeley, using the “Intention / Expectation” paradigm and Dynamic Bayesian Networks [38], [39]. This work is carried out through several cooperative projects (Toyota, Renault, Perfect project of IRT Nanoelec).
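Short-term conservative prediction under a “motion parameters are stable” assumption, including periods when sensing is unavailable, can be illustrated with the Kalman-based option listed above. The sketch swaps in a 1D constant-velocity Kalman filter instead of the grid-level BOF prediction of [46]: when a measurement is missing (None), only the prediction step runs, so the estimate keeps moving at the last estimated velocity while its variance grows. The noise parameters q and r and the scenario are hypothetical.

```python
import numpy as np


def cv_model(dt, q):
    """1D constant-velocity model, state [position, velocity], with
    white-noise-acceleration process noise of spectral density q."""
    F = np.array([[1.0, dt], [0.0, 1.0]])
    Q = q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
    return F, Q


def kalman_track(measurements, dt=0.1, q=0.5, r=0.05):
    """Kalman filter over position measurements; None means the sensor is
    unavailable, so only the prediction step is applied."""
    F, Q = cv_model(dt, q)
    H = np.array([[1.0, 0.0]])
    R = np.array([[r]])
    x = np.array([measurements[0], 0.0])     # start at first position, unknown speed
    P = np.diag([r, 1.0])
    history = []
    for z in measurements[1:]:
        x = F @ x                             # predict
        P = F @ P @ F.T + Q
        if z is not None:                     # correct only when data arrive
            y = z - H @ x
            S = H @ P @ H.T + R
            K = P @ H.T @ np.linalg.inv(S)
            x = x + K @ y
            P = (np.eye(2) - K @ H) @ P
        history.append((x.copy(), P.copy()))
    return history


# Usage: a pedestrian walking at 1.2 m/s, with a 1 s sensing dropout (k = 20..29).
true_pos = [1.2 * 0.1 * k for k in range(40)]
meas = [None if 20 <= k < 30 else p for k, p in enumerate(true_pos)]
hist = kalman_track(meas)
var = [P[0, 0] for _, P in hist]
```

The position variance increases at every step of the dropout and drops again as soon as a measurement is available, which is the conservative behavior a risk assessment layer can exploit: predictions remain usable, but with honestly inflated uncertainty.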
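A probabilistic collision risk can be estimated, in its simplest form, by sampling uncertain agent states, propagating them over a short horizon and counting how often the agents come closer than a safety radius. The sketch below does this by Monte Carlo under a constant-velocity assumption with Gaussian uncertainty on the initial state [x, y, vx, vy]; it is a generic illustration, not the “Intention / Expectation” Dynamic Bayesian Network approach of [38], [39], and the radius, horizon and covariances are hypothetical.

```python
import numpy as np


def collision_probability(mean_a, cov_a, mean_b, cov_b, horizon, dt=0.1,
                          radius=2.0, n_samples=20000, seed=0):
    """Monte Carlo estimate of P(the two agents come within `radius` metres
    of each other at some sampled time in [0, horizon]), assuming
    constant-velocity motion and Gaussian initial states [x, y, vx, vy]."""
    rng = np.random.default_rng(seed)
    a = rng.multivariate_normal(mean_a, cov_a, size=n_samples)
    b = rng.multivariate_normal(mean_b, cov_b, size=n_samples)
    collided = np.zeros(n_samples, dtype=bool)
    for t in np.arange(0.0, horizon + 1e-9, dt):
        pa = a[:, :2] + t * a[:, 2:]          # propagate each sample
        pb = b[:, :2] + t * b[:, 2:]
        collided |= np.linalg.norm(pa - pb, axis=1) < radius
    return collided.mean()


# Usage: two vehicles approaching an intersection at 5 m/s.
cov = np.diag([0.09, 0.09, 0.04, 0.04])       # hypothetical state uncertainty
car_a = np.array([-10.0, 0.0, 5.0, 0.0])
car_b_conflict = np.array([0.0, -10.0, 0.0, 5.0])   # both reach (0, 0) at t = 2 s
car_b_clear = np.array([0.0, -30.0, 0.0, 5.0])      # arrives well after car A
risk_conflict = collision_probability(car_a, cov, car_b_conflict, cov, horizon=5.0)
risk_clear = collision_probability(car_a, cov, car_b_clear, cov, horizon=5.0)
```

Recomputing such a quantity at every cycle gives a continuously updated risk signal on which a decision layer can act; richer behavior models (learned motion patterns, intentions) would replace the constant-velocity propagation step.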