Section: New Results

Analysis and modeling for compact representation

3D modelling, light-fields, 3D meshes, epitomes, image-based rendering, inpainting, view synthesis

Visual attention

Participant : Olivier Le Meur.

Visual attention is the mechanism allowing to focus our visual processing resources on behaviorally relevant visual information. Two kinds of visual attention exist: one involves eye movements (overt orienting) whereas the other occurs without eye movements (covert orienting). Our research activities deal with the understanding and modeling of overt attention.

Saccadic model: Since 2015, we have worked on saccadic model, which predicts the visual scanpaths of an observer watching a scene displayed onscreen. In 2016, we proposed a first improvement consisting in using spatially-variant and context-dependent viewing biases. We showed that the joint distribution of saccade amplitudes and orientations is significantly dependent on the type of visual stimulus. In addition, the joint distribution turns out to be spatially variant within the scene frame. This model outperforms state-of-the-art saliency models, and provides scanpaths in close agreement with human behavior. In [19], [35], we went further by showing that saccadic models are a flexible framework that can be tailored to emulate observer's viewing tendencies. More specifically, we tailored the proposed model to simulate visual scanpaths of 5 age groups of observers (i.e. adults, 8-10 y.o., 6-8 y.o., 4-6 y.o. and 2 y.o.). The key point is that the joint distribution of saccade amplitude and orientation is a visual signature specific to each age group, and can be used to generate age-dependent scanpaths. Our age-dependent saccadic model does not only output human-like, age-specific visual scanpaths, but also significantly outperforms other state-of-the-art saliency models. We demonstrated that the computational modelling of visual attention, through the use of saccadic model, can be efficiently adapted to emulate the gaze behavior of a specific group of observers.

Effects on Comics by Clustering Gaze Data: Comics are a compelling communication medium conveying a visual storytelling. With a smart mixture of text or/and other visual information, artists tell a story by drawing the viewer attention on specific areas. With the digital comics revolution (e.g. mobile comic and webcomic), we are witnessed a resurgence of interest for this art form. This new form of comics allows not only to tackle a wider audience but also new consumption methods. An open question in this endeavor is identifying where in a comic panel the effects should be placed. We proposed a fast, semi-automatic technique to identify effects-worthy segments in a comic panel by utilizing gaze locations as a proxy for the importance of a region. We took advantage of the fact that comic artists influence viewer gaze towards narrative important regions. By capturing gaze locations from multiple viewers, we can identify important regions. The key contribution is to leverage a theoretical breakthrough in the computer networks community towards robust and meaningful clustering of gaze locations into semantic regions, without needing the user to specify the number of clusters. We have developed a method based on the concept of relative eigen quality that takes a scanned comic image and a set of gaze points and produces an image segmentation. A variety of effects such as defocus, recoloring, stereoscopy, and animations has been demonstrated. We also investigated the use of artificially generated gaze locations from saliency models in place of actual gaze locations.

Perceptual metric for perceptual transfer: Color transfer between input and target images has raised a lot of interest in the past decade. Color transfer aims at modifying the look of an original image considering the illumination and the color palette of a reference image. It can be employed for image and video enhancement by simulating the appearance of a given image or a video sequence. Different color transfer methods often result in different output images. The process of determining the most plausible output image is difficult and requires, due to the lack of an objective metric, time-consuming and costly subjective experiments. To overcome this problem, we proposed a perceptual model for evaluating results from color transfer methods [31]. From a subjective experiment, involving several color transfer methods, we build a regression model with random forests to describe the relationship between a set of features (e.g. objective quality, saliency, etc.) and the subjective scores. An analysis and a cross-validation showed that the predictions of the proposed quality metric are highly accurate.

Saliency-based navigation in omnidirectional image

Participants : Olivier Le Meur, Thomas Maugey.

Omnidirectional images describe the color information at a given position from all directions. Affordable 360° cameras have recently been developed leading to an explosion of the 360 degrees data shared on the social networks. However, an omnidirectional image does not contain interesting content everywhere. Some part of the images are indeed more likely to be looked at by some users than others. Knowing these regions of interest might be useful for 360° image compression, streaming, retargeting or even editing. In the work published in [25], a new approach based on 2D image saliency is proposed both to model the user navigation within a 360° image, and to detect which parts of an omnidirectional content might draw users’ attention. A double cube projection is first used to put the saliency estimation in the classical 2D image framework. Consecutively, the saliency map serves as a support for the navigation estimation algorithm.

Context-aware Clustering and Assessment of Photo Collections

Participants : Dmitry Kuzovkin, Olivier Le Meur.

To ensure that all important moments of an event are represented and that challenging scenes are correctly captured, both amateur and professional photographers often opt for taking large quantities of photographs. As such, they are faced with the tedious task of organizing large collections and selecting the best images among similar variants. Automatic methods assisting with this task are based on independent assessment approaches, evaluating each image apart from other images in the collection. However, the overall quality of photo collections can largely vary due to user skills and other factors. We explore the possibility of context-aware image quality assessment, where the photo context is defined using a clustering approach, and statistics of both the extracted context and the entire photo collection are used to guide identification of low-quality photos. We demonstrate that the proposed method is able to adapt flexibly to the nature of processed albums and to facilitate the task of image selection in diverse scenarios.

Light fields view extraction from lenslet images

Participants : Pierre David, Christine Guillemot, Mikael Le Pendu.

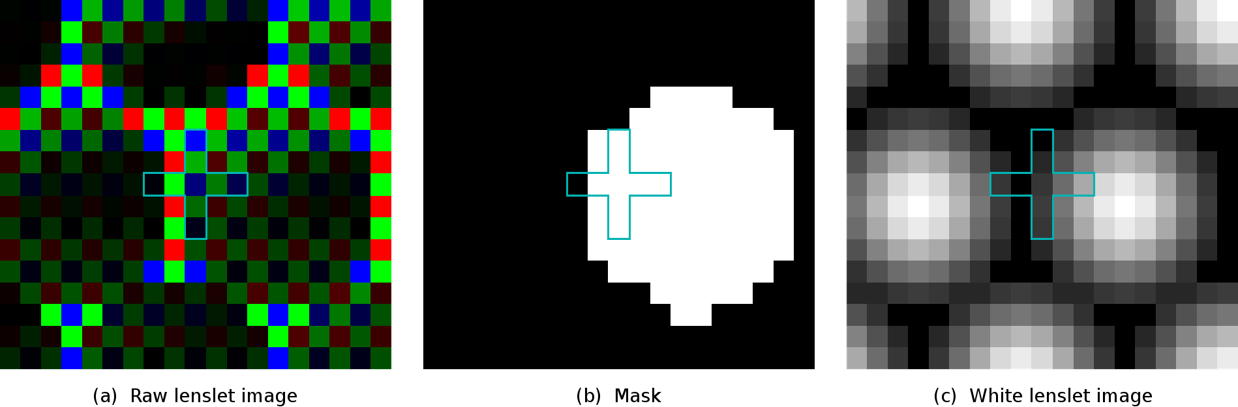

Practical systems have recently emerged for the capture of real light fields which go from cameras arrays to single cameras mounted on moving gantries and plenoptic cameras. While camera arrays capture the scene from different viewpoints, hence with a large baseline, plenoptic cameras use an array of micro-lenses placed in front of the photosensor to separate the light rays striking each microlens into a small image on the photosensors pixels, and this way capture dense angular information with a small baseline. Extracting views from the raw lenslet data captured by plenoptic cameras involves several processing steps: devignetting which, with white images, aims at compensating for the loss of illumination at the periphery of the micro-lenses, color demosaicing, alignment of the sensor data with the micro-lens array, and converting the hexagonal sampling grid into a rectangular sampling grid. These steps are quite critical as they have a strong impact on the quality of the extracted sub-aperture images (views).

We have addressed two important steps of the view extraction from lenslet data: color demosaicing and alignment of the micro-lens array on the photosensor. We have developed a new method guided by a white lenslet image for color demosaicing of raw lenslet data [27](best paper award). The white lenslet image gives measures of confidence on the color values which are then used to weight the color samples interpolation (see Fig.3. Similarly, the white image is used to guide the interpolation performed in the alignment of the micro-len arrays on the photosensor. The method significantly decreases the crosstalk artefacts from which suffer existing methods.

|

Super-rays for efficient Light fields processing

Participants : Matthieu Hog, Christine Guillemot.

Light field acquisition devices allow capturing scenes with unmatched post-processing possibilities. However, the huge amount of high dimensional data poses challenging problems to light field processing in interactive time. In order to enable light field processing with a tractable complexity, we have addressed, in collaboration with Neus Sabater (technicolor) the problem of light field over-segmentation [15]. We have introduced the concept of super-ray, which is a grouping of rays within and across views (see Fig.4), as a key component of a light field processing pipeline. The proposed approach is simple, fast, accurate, easily parallelisable, and does not need a dense depth estimation. We have demonstrated experimentally the efficiency of the proposed approach on real and synthetic datasets, for sparsely and densely sampled light fields. As super-rays capture a coarse scene geometry information, we have also shown how they can be used for real time light field segmentation and correcting refocusing angular aliasing.