Keywords

Computer Science and Digital Science

- A5.1.2. Evaluation of interactive systems

- A5.1.9. User and perceptual studies

- A5.3. Image processing and analysis

- A5.4. Computer vision

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A6.1.1. Continuous Modeling (PDE, ODE)

- A6.1.4. Multiscale modeling

- A6.1.5. Multiphysics modeling

- A6.2.4. Statistical methods

Other Research Topics and Application Domains

- B1.1.8. Mathematical biology

- B1.2.1. Understanding and simulation of the brain and the nervous system

- B1.2.3. Computational neurosciences

- B2.1. Well being

- B2.5.1. Sensorimotor disabilities

- B2.5.3. Assistance for elderly

- B2.7.2. Health monitoring systems

- B9.3. Medias

- B9.5.2. Mathematics

- B9.5.3. Physics

1 Team members, visitors, external collaborators

Research Scientists

- Bruno Cessac [Team leader, Inria, Senior Researcher, HDR]

- Aurelie Calabrese [Inria, Starting Research Position]

- Pierre Kornprobst [Inria, Senior Researcher, HDR]

- Hui-Yin Wu [Inria, from Oct 2020, Starting Faculty Position]

Post-Doctoral Fellows

- Selma Souihel [Univ Côte d'Azur, until Aug 2020]

- Hui-Yin Wu [Inria, until Sep 2020]

PhD Students

- Simone Ebert [Univ Côte d'Azur, from Sep 2020]

- Evgenia Kartsaki [University of Newcastle]

Interns and Apprentices

- Johanna Delachambre [Inria, Apprentice, from Nov 2020]

- Simone Ebert [Univ Côte d'Azur, until Feb 2020]

- Sebastian Gallardo-Diaz [Inria, until Mar 2020]

- Alex Ye [Inria, from Mar 2020 until Sep 2020]

Administrative Assistant

- Marie-Cecile Lafont [Inria]

2 Overall objectives

Vision is a key function to sense the world and perform complex tasks. It has high sensitivity and strong reliability, even though most of its input is noisy, changing, and ambiguous. A better understanding of biological vision can thus bring about scientific, medical, societal, and technological advances, contributing towards well-being and quality of life. This is especially true considering the aging of the population in developed countries, where the number of people with impaired vision is increasing dramatically 46.

In these countries, the majority of people with vision loss are adults who are legally blind, but not totally blind; instead, they have what is referred to as low vision. Low vision is a condition caused by eye disease, in which visual acuity is 3/10 or poorer in the better seeing eye (corrected normal acuity being 10/10) and cannot be corrected or improved with glasses, medication or surgery. Severe acuity reduction, reduced contrast sensitivity and visual field loss are the three types of visual impairment leading to low vision. Broadly speaking, visual field loss can be divided into macular loss (central scotoma) and peripheral loss (tunnel vision), resulting from the development of a retinopathy that damages the retina (e.g., retinitis pigmentosa).

Common forms of retinopathy include maculopathies, such as diabetic maculopathy, myopic maculopathy or Age-related Macular Degeneration (AMD). They are non-curable retinal diseases that affect mainly the macula, causing Central visual Field Loss (CFL). Patients suffering from such pathologies will develop a blind region called scotoma, located at the center of their visual field and spanning about 20° or more (Figure 1). To better visualize the impact of such a large hole in your visual field, try stretching your index and little finger as far as possible from each other at arm’s length; the span is about 15°.

Figure 1: Central blind spot (i.e., scotoma), as perceived by an individual suffering from Central Field Loss (CFL) when looking at someone's face.
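The sizes quoted above are visual angles. As a minimal sketch (not taken from the report), the standard relation θ = 2·atan(s / 2d) between an object's size s, its viewing distance d and its angular size θ can be checked numerically. The function names, the hand span (18 cm), the arm length (68 cm) and the screen distance (57 cm) are illustrative assumptions.

```python
from math import atan, degrees, radians, tan


def visual_angle_deg(size, distance):
    """Angular size in degrees of an object of `size` seen at `distance` (same units)."""
    return degrees(2 * atan(size / (2 * distance)))


def size_for_angle(angle_deg, distance):
    """Physical size that subtends `angle_deg` degrees at `distance`."""
    return 2 * distance * tan(radians(angle_deg) / 2)


# Assumed values: 18 cm between the two fingertips, held 68 cm from the eye.
hand_span_deg = visual_angle_deg(0.18, 0.68)

# Width hidden by a 20 degree scotoma on a screen viewed at 57 cm (assumed distance).
scotoma_width_m = size_for_angle(20, 0.57)
```

With these assumed measurements the hand span comes out close to 15°, and a 20° scotoma hides roughly 20 cm of a screen viewed at 57 cm.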

In contrast to visual deficits such as myopia, hypermetropia, or cataract, these pathologies cannot be compensated by glasses, lenses or surgery and necessitate specific, ongoing research and techniques for diagnosis, rehabilitation, aids or therapy. This calls for integrated efforts to understand the human visual system, investigating the impact of visual pathologies at both the neuronal and perceptual levels, as well as the development of novel solutions for diagnosis, rehabilitation, and assistance technologies. In particular, we investigate a number of methodologies, including:

- Image enhancement: There is strong interest in using methods from image processing and computer graphics in the design of vision-aid systems, for example to simplify complex scenes, augment important visual indices, or compensate for missing information. The choice of enhancement technique needs to be adapted to the perceptual skills and needs of each patient.

- Biophysical modeling: Mathematical models are used to explain observations, predict behaviours and propose new experiments. This requires constant interaction with neuroscientists. In the Biovision team, we use this approach to better understand vision in normal and pathological conditions (Figure 2).

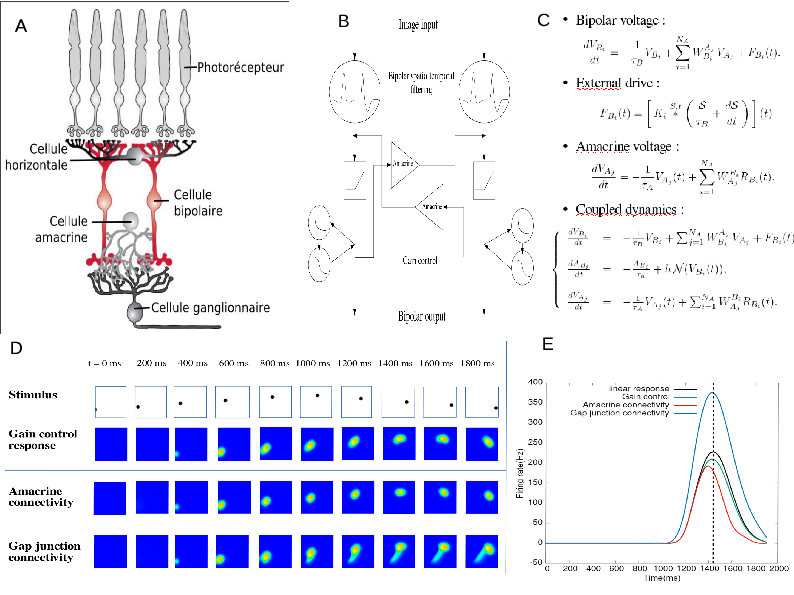

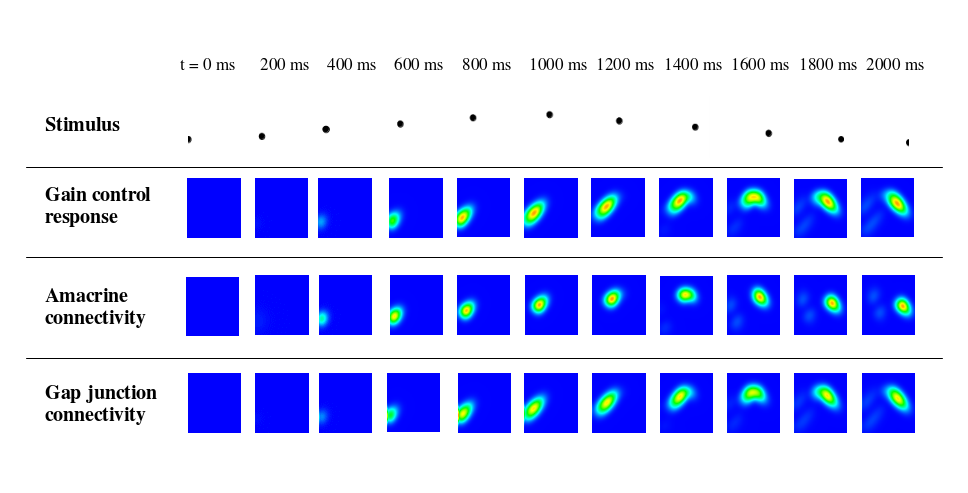

Figure 2: The process of retina modelling. A) The retina structure from biology. B) Designing a simplified architecture keeping the retina components that we want to better understand (here, the role of amacrine cells in motion anticipation). C) Deriving mathematical equations from A and B. D, E) Results from numerical modelling and mathematical modelling. Here, our retina model's response to a parabolic motion: the peak of the response obtained with amacrine cell connectivity or gain control occurs in advance of the peak of the response obtained without these mechanisms. This illustrates retinal anticipation.

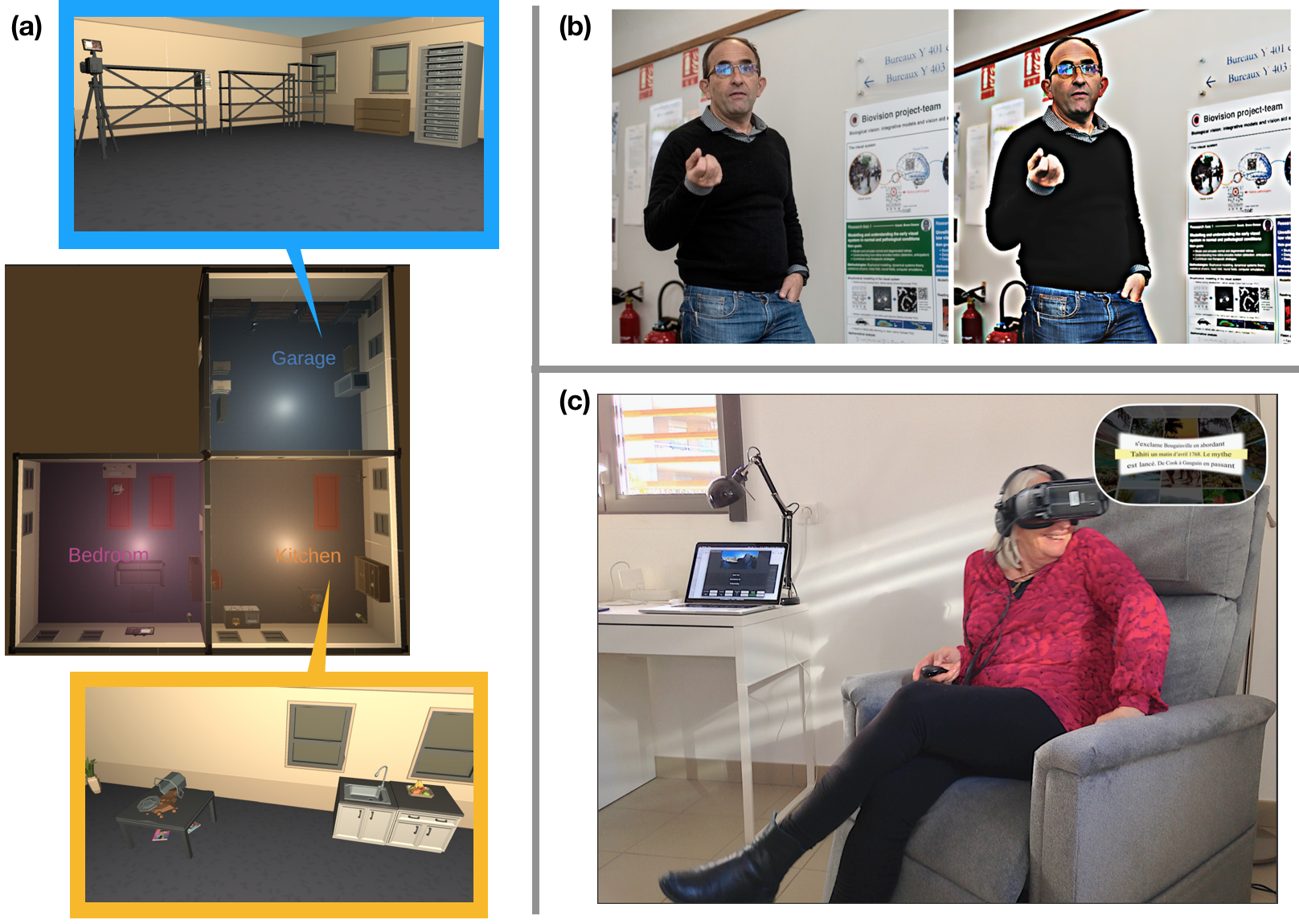

- Virtual and augmented reality: 3D graphics and animations technologies allow the creation of “mixed reality” on a spectrum of partially to fully virtual 3D environments. Augmented reality involves injecting 3D content as an overlay on a screen or transparent lens; virtual reality refers to immersing the viewer in a fully virtual world. Mixed reality applications can be experienced with headsets, smart glasses, or even mobile devices. They have strong applications towards study of user attention and behavior, immersive storytelling experiences, and in the context of low vision, to increase accessibility of visual media content by combining image processing techniques (Figure 3).

- Retinal imaging: Microperimetry refers to the precise and automated assessment of visual field sensitivity. Pairing both anatomical and functional measurements, microperimetry is an essential tool to define field loss extent and progression. Indeed, it supports two essential types of testing: (1) a scotometry (i.e., a precise measure of field loss position, size and magnitude), by presenting light stimuli of varying luminance in specific locations and for a given duration of time; (2) a fixation exam, by presenting a fixation target and recording the eye position for a given time period (usually 20-30 sec). Therefore, this retinal imaging technique is a core measure of Biovision's work with visually impaired patients.

- Eye-tracking: In explorations of oculomotor behavior, eye tracking makes it possible to record the exact position of the gaze at any time point, providing measures of eye movement patterns, such as fixation duration, number of fixations and saccade duration. Because of calibration issues, using eye tracking with patients suffering from loss of macular function is a real challenge. In this respect, members of Biovision have extensive experience, in addition to collaborating with health institutes, as well as cognitive scientists and neuroscientists, for the design of eye-tracking studies with low-vision patients.
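To make the eye-movement measures listed above concrete, here is a minimal sketch of how fixations might be extracted from raw gaze samples. It uses velocity-threshold classification, a common approach in the eye-tracking literature that is chosen here for illustration and is not described in the report. The function name, the sample format (time in milliseconds, gaze position in degrees) and the 30 deg/s threshold are all assumptions.

```python
from math import hypot


def detect_fixations(samples, velocity_threshold=30.0):
    """Group gaze samples into fixations with a simple velocity threshold.

    samples: list of (t_ms, x_deg, y_deg) tuples sorted by time.
    Returns a list of (start_ms, end_ms) fixation intervals.
    """
    fixations = []
    start = end = None
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        # Angular speed between consecutive samples, in degrees per second.
        speed = hypot(x1 - x0, y1 - y0) / ((t1 - t0) / 1000.0)
        if speed < velocity_threshold:
            if start is None:
                start = t0
            end = t1
        elif start is not None:
            # A fast (saccade-like) step closes the current fixation.
            fixations.append((start, end))
            start = None
    if start is not None:
        fixations.append((start, end))
    return fixations


# Synthetic 100 Hz recording: gaze rests at 0 deg, jumps 5 deg, then rests again.
samples = [(t, 0.0, 0.0) for t in range(0, 50, 10)]
samples += [(t, 5.0, 0.0) for t in range(50, 100, 10)]

fixations = detect_fixations(samples)
number_of_fixations = len(fixations)
fixation_durations_ms = [end - start for start, end in fixations]
```

On this synthetic recording the sketch finds two fixations of 40 ms each, separated by one fast step. Real recordings, in particular from patients with central field loss, would need noise filtering and a more robust classifier.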

In this context, Biovision aims to develop fundamental research as well as technology transfer along three axes of research and to form a strong synergy, involving a large network of national and international collaborators with neuroscientists, physicians, and modelers (Figure 3).

Figure 3: Multiple methodologies in graphics and image processing have applications towards low-vision technologies including (a) 3D virtual environments for studies of user perception and behavior, and creating 3D stimuli for model testing, (b) image enhancement techniques to magnify and increase visibility of contours of objects and people, and (c) personalization of media content such as text in 360 degrees visual space using VR headsets.

3 Research program

3.1 Axis 1 - Understanding the normal visual system at neuronal and perceptual levels

A holistic point of view is emerging in neuroscience, where one can simultaneously observe how vision works on different levels in the hierarchy of the visual system. An explosion of interest in multi-scale functional analysis and connectomics in brain science, and the rapid advancement in perceptual studies of visual systems are at the forefront of this movement. These studies call for new theoretical and integrated models where the goal is to model visual functions from motion integration at the retino-cortical level, to perception of and reaction to both basic stimuli and complex media content. In Biovision, we contribute to a better understanding of the visual system with the following main goals:

- Better understanding the early visual system (retina, thalamus (LGN), cortex V1), with an emphasis on the retina, from a modelling perspective. In tight collaboration with neurobiology labs, we want to extract the computational principles ruling the way the retina encodes a visual scene thanks to its multi-layered structure, made of a wide variety of functional circuits involving specific cells and synapses. Our analysis is at the interface between biology, physics, mathematics and computer science, and involves multiple scales, from ion channels to the whole retina. This is necessary to figure out how local changes, induced e.g. by pharmacology, development, or pathologies (see next section), impact retinal processing. We also want to understand how the information flow coming out of the retina drives the thalamic (LGN) and cortical (V1) responses, especially when handling motion: anticipation of a trajectory, detection of surprise (e.g. sharp change in a trajectory), fast motion (e.g. saccades).

- Reading, moving, behaving in 3D virtual environments. Here, the goal is to better understand how normally sighted people behave or react in specific, controlled conditions. Using virtual reality headsets with eye trackers, we are able to generate specific synthetic 3D environments where eye and head movements can be monitored. Emotional and behavioral reactions can equally be observed using specific sensors such as electrodermal activity meters. This opens the possibility of adapting the 3D and visual content to the subject's reaction and feedback. Studies under controlled 3D environments provide a benchmark of contextual data for normally sighted people. In Biovision, we address such challenges as the modeling of user attention and emotion for interactive tasks in virtual reality (such as navigation and object manipulation), and the study of reading behavior and related ocular motion patterns for automatically generated textual content.

- Analysis of visual media and user perception. Automated techniques to analyze visual content such as videos, images, complex documents, and 3D stimuli directly address challenges in understanding the impact of motion, style, and content on user perception. While deep learning has been used for various visual classification tasks, it has limitations for the qualitative analysis of visual content due to the difficulty of interpretability. Through multidisciplinary collaboration with researchers in computer science, linguistics and media studies, we can formalise visual properties of film or other visual media 47, and establish pipelines for automated content analysis, design embeddings for deep learning models to enhance interpretability, and use findings to the benefit of qualitative media studies.

3.2 Axis 2 - Understanding the impact of low vision at neuronal and perceptual levels

Following the same spirit as in Axis 1, we wish to study pathology's impact at multiple scales. Similarly, we want to develop new theoretical and integrated models of vision, from the microscopic level to perception. Notably, in Biovision, we have a particular interest in understanding how Central visual Field Loss (CFL) impacts attentional and oculo-motor behaviors. In this axis we aim at:

- Modeling of retinal prostheses stimulation and cortical responses. Retinal impairments leading to low vision start at the microscopic scale (cell scale or smaller, e.g. ion channel) and progressively affect the whole retina with cell degeneration, excessive cell growth (like Mueller cells), reshaping, appearance of large scale electric oscillations 36… These evolving degeneracies obviously impact sight but also have to be taken into account when proposing strategies to cure or assist low-vision people. For example, when using electrical prostheses, electric diffusion enlarges the size of evoked phosphenes 41 and the appearance of spontaneous electric oscillations can screen the electric stimulation 31. One of the key ideas of our team (see previous section) is that the multi-scale dynamics of the retina, in normal or pathological conditions, can be addressed from the point of view of dynamical systems theory complemented by large scale simulations. In this spirit we are currently interested in the modeling of retinal prostheses stimulation and cortical responses in collaboration with F. Chavane's team (INT) 30. We are also interested in developing models for the dynamical evolution of retinal degeneracies.

- Understanding eccentric vision pointing for selection and identification. The CFL caused by non-curable retinal diseases such as AMD induces dramatic deficits in several high-level perceptual abilities: mainly text reading, face recognition, and visual search of objects in cluttered environments, thus degrading the autonomy and the quality of life of these people. To adapt to this irreversible handicap, visually impaired people must learn how to use the peripheral parts of their visual field optimally. This requires the development of an “eccentric viewing (EV)” ability allowing low-vision persons to “look away” from the entity (e.g., a face) they want to identify. As a consequence, an efficient improvement of EV should target one of the most fundamental visuomotor functions in humans: pointing at targets with an appropriate combination of hand, head and eyes. We claim that this function should be re-adapted and assisted in priority. To do so, we want to understand the relation between sensori-motor behavior (while performing pointing tasks) and the pathology characteristics (from anatomo-functional retinal imaging). In particular, better understanding how EV could be optimized for each patient is at the core of the ANR DEVISE (see Sec. 5.1). This work will have a strong impact on the design of rehabilitation methods and vision-aid systems.

- Understanding how low vision impacts attentional and oculo-motor behaviors during reading. Difficulty with reading remains the primary complaint of CFL patients seeking rehabilitation 32. Considering its high relevance to quality of life and independence, especially among the elderly, reading should be maintained at all costs in those individuals. For struggling readers with normal vision (e.g., dyslexics, 2nd language learners), reading performance can be improved by reducing text complexity (e.g., substituting difficult words, splitting long sentences), i.e., by simplifying text optimally. However, this approach of text simplification has never been applied to low vision before. One of our goals is to investigate the different components of text complexity in the context of CFL, combining experimental investigations of both higher-level factors (linguistics) and lower-level ones (visual). The results will constitute groundwork for precise guidelines to design reading aid systems relying on automated text simplification tools, especially targeted towards low vision. In addition to this experimental approach, modelling will also be used to try and decipher the reading behaviour of the visually impaired. Our goal is to model mathematically pathological eye movements during reading 34, 33, taking into account the perceptual constraint of the scotoma and adjusting the specific influence of linguistic parameters, using results from our experimental investigation on text complexity with CFL.

- Attentional and behavioural studies using virtual reality. Studying low-vision accessibility to visual media content is a major challenge due to the difficulty of (1) having quantifiable metrics on user experience, which varies between individuals, and (2) providing well-controlled environments to develop such metrics. Virtual reality offers the opportunity to create a fully customisable and immersive 3D environment for the study of user perception and behaviour. Integrated sensors such as gaze tracking, accelerometers, and gyroscopes allow, to some degree, the tracking of user head, limb (from handheld controllers), and eye movement. Added sensors can further extend our capabilities to understand the impact of different user experiences, such as the addition of electrodermal sensors to gain insight into cognitive load and emotional intensity.

3.3 Axis 3 - Diagnosis, rehabilitation, and low-vision aids

In 2015, 405 million people were visually impaired around the globe, against “only” 285 million in 2010. Because of aging and its strong correlation with eye disease prevalence, this number is only expected to grow. To address this global health problem, actions must be taken to design efficient solutions for diagnosis, personalized rehabilitation methods, and vision-aid systems handling real-life situations. In Biovision, we envision synergistic solutions where, e.g., the same principles used in our rehabilitation protocols could be useful in a vision-aid system.

- Exploring new solutions for diagnosis. Our goal is to allow for earlier and more decisive detection of visual pathologies than current methods to prevent the development of some pathologies by earlier interventions in the form of treatment or rehabilitation. These methods will rely on a fine analysis of subjects doing a specific task (e.g., pointing or reading). They will result from our understanding of the relation between the anatomo-functional characteristics of the pathology and the sensori-motor performance of the subjects.

- Designing rehabilitation methods in virtual reality (VR). Current rehabilitation methods used by ophthalmologists, optometrists, or orthoptists often impose non-ecological constraints on oculomotor behaviours (for example by keeping the head still) and rely on repetitive exercises which can be unpleasant and hardly motivating. One particular focus we have is to train eccentric vision for patients suffering from CFL. Our objective is to design novel visual rehabilitation protocols using virtual reality, to offer immersive and engaging experiences, adapted to the target audience and personalized depending on their pathology. This work requires a large combination of skills, from the precise identification of the sensori-motor function we target, to the design of an engaging immersive experience that focuses on this function, and of course the long testing phase necessary to validate the efficacy of a rehabilitation method. It has to be noted that our goal is not to use VR simply to reproduce existing conventional therapies but instead develop new motivating serious games allowing subjects to be and behave in real-life scenarios. Beyond, we also want to take inspiration from what is done in game development (e.g., game design, level design, narratology).

- Developing innovative vision-aid digital systems to empower patients with improved perceptual capacities. Based on our understanding of the visual system and subjects' behavior in normal and pathological conditions, we want to develop digital systems to help low-vision people by increasing the accessibility of their daily-life activities. We have a particular focus on text and multi-modal content accessibility (e.g., books and magazines), again for people with CFL, for whom the task of reading sometimes becomes impossible. The solutions we develop seek to integrate linguistic, perceptual and ergonomic concepts. We currently explore solutions on different media (e.g., tablets, VR).

4 Application domains

4.1 Applications of low-vision studies and technologies

- Rehabilitation: Serious games use game mechanics in order to achieve goals such as in training, education, or awareness. In our context, we consider serious games as a way to help low-vision patients in performing rehabilitation exercises. Virtual and augmented reality technology is a promising platform to develop such rehabilitation exercises targeted to specific pathologies due to their potential to create fully immersive environments, or inject additional information in the real world. For example, with Age-Related Macular Degeneration (AMD), our objective is to propose solutions allowing rehabilitation of visuo-perceptual-motor functions to optimally use residual portions of the peripheral retina and obtain efficient eccentric viewing.

- Vision-aid systems: A variety of aids for low-vision people are already on the market. They use various kinds of desktop (e.g. CCTVs), handheld (mobile applications), or wearable (e.g. OxSight, Helios) technologies, and offer different functionalities including magnification, image enhancement, text to speech, face and object recognition. Our goal is to design new solutions allowing autonomous interaction primarily using mixed reality – virtual and augmented reality. This technology could offer new affordable solutions developed in synergy with rehabilitation protocols to provide personalized adaptations and guidance.

- Cognitive research: Virtual and augmented reality technology represents a new opportunity to conduct cognitive and behavioural research using virtual environments where all parameters can be psychophysically controlled. Our objective is to re-assess common theories by allowing patients to freely explore their environment in more ecological conditions.

4.2 Applications of vision modeling studies

-

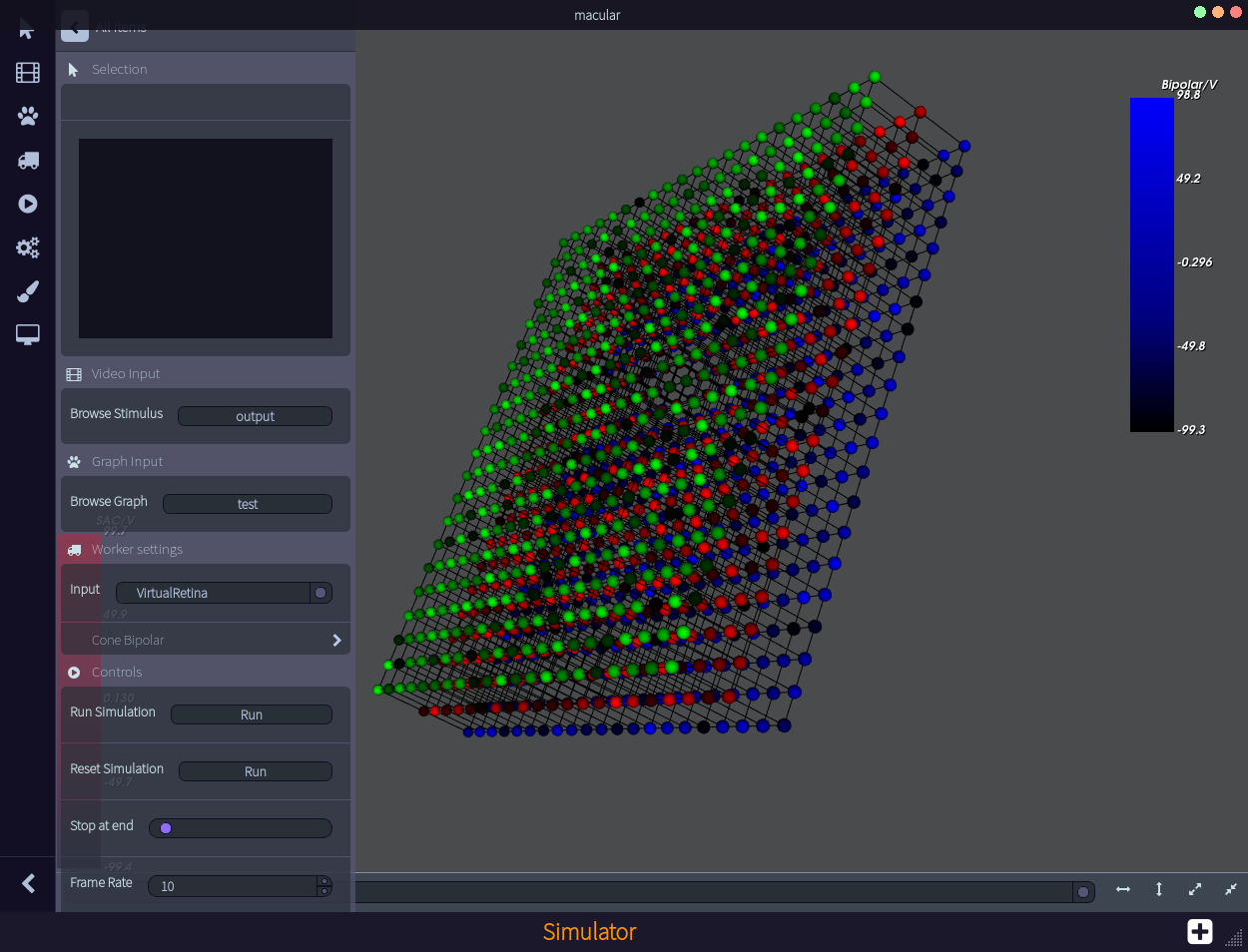

Neuroscience research. Making in-silico experiments is a way to reduce the experimental costs, to test hypotheses and design models, and to test algorithms. Our goal is to develop a large-scale simulations platform of impaired retinas, called Macular, allowing to mimic specific degeneracies or pharmacologically induced impairments, as well as to emulate electric stimulation by prostheses.

In addition, the platform provides a realistic entry to models or simulators of the thalamus or the visual cortex, in contrast to the entries usually considered in modelling studies.

- Education. Macular is also targeted as a useful tool for educational purposes, illustrating for students how the retina works and responds to visual stimuli.

4.3 Applications of multimedia analysis and synthesis

- Media studies and social awareness. Investigating interpretable models of media analysis will allow us to provide tools to conduct qualitative media studies on large amounts of data in relation to existing societal challenges and issues. This includes understanding the impact of media design on accessibility for patients of low-vision, as well as raising awareness towards biases in media towards various minority groups.

- Content creation and assisted creativity. Models of user perception can be integrated in tools for content creators, such as to simulate low-vision conditions for architecture or media design. Furthermore, generative media technologies such as autoencoder neural networks can be integrated in assistive

5 Highlights of the year

5.1 New ANR projects

- The Agence Nationale de la Recherche (ANR) will be funding the project DEVISE, for 4 years, starting from 2021 (AAPG 2020). In this project, we aim to develop, in a virtual reality headset, new functional rehabilitation techniques for visually impaired people. A strong point of these techniques will be the personalization of their parameters according to each patient’s pathology, and they will eventually be based on serious games whose practice will increase the sensory-motor capacities that are deficient in these patients. For more details: https://team.inria.fr/biovision/anr-devise

- The Agence Nationale de la Recherche (ANR) will be funding the project ShootingStar, for 4 years, starting from 2021 (AAPG 2020). In this project, in collaboration with the Integrative Neuroscience and Cognition Center, Institut des Neurosciences de la Timone, NeuroPsi, and Institut de la Vision, we aim at studying the visual perception of fast (over 100 deg/s) motion, especially related to ocular saccades shifting the retinal image at speeds of 100-500 degrees of visual angle per second. How these very fast shifts are suppressed, leading to clear, accurate, and stable representations of the visual scene, is a fundamental unsolved problem in visual neuroscience known as saccadic suppression. The project combines neuroscience, psychophysics, and modeling. In particular, the Biovision team (in collaboration with NeuroPsi – Alain Destexhe) will design a model of the early visual system (retina-LGN-V1) to study saccadic suppression.

5.2 Action de Développement Technologique (ADT)

- The CDT commission for Inria Sophia Antipolis Méditerranée awarded Aurelie Calabrèse and Pierre Kornprobst a 12PM ADT for their project entitled: “InriaREAD : Développement d’une plateforme pour étudier les comportements de lecture en vision normale et pathologique” (InriaREAD: development of a platform to study reading behaviors in normal and pathological vision).

5.3 Student grants

- Selma Souihel, supervised by Bruno Cessac, obtained 5 months of postdoctoral funding from the Neuromod institute, allowing her to finish her work on retinal anticipation and contribute to the Macular software.

- Evgenia Kartsaki, supervised by Bruno Cessac, was a laureate of the Docwalker international mobility program funded by the EUR Digital Systems for Humans (DS4H) and the Academy of Excellence “Networking, Information, and Digital Society”. Docwalker supported a one-month stay at the lab of M. Hennig at the Institute for Adaptive and Neural Computation, School of Informatics, University of Edinburgh, Scotland.

- Simone Ebert obtained funding from the Neuromod institute for a 3-year PhD, supervised by Bruno Cessac.

6 New software and platforms

6.1 New software

6.1.1 SlidesWatchAssistant

- Name: Helping visually impaired employees to follow presentations in the company: Towards a mixed reality solution

- Keywords: Handicap, Low vision, Vision-aid system

- Scientific Description: The objective of the work is to develop a first proof-of-concept (PoC) targeting a precise use-case scenario defined by EDF (contract with InriaTech). The use case is that of an employee with visual impairment wishing to follow a presentation. The idea of the PoC is a vision-aid system based on a mixed-reality solution. This work aims at (1) estimating the feasibility and interest of this kind of solution and (2) identifying research questions that could be jointly addressed in a future partnership.

- Functional Description: This PoC has the following features: watch the presentation using camera pass-through; take a photo; show/hide the photo panel; navigate through photos; zoom in/out; apply image transforms. Three image enhancements were tested in LIVE and PHOTO modes: color inversion, and the Peli transform at high and low intensity.

- Authors: Pierre Kornprobst, Riham Nehmeh, Carlos Zubiaga Pena, Julia Elizabeth Luna

- Contacts: Pierre Kornprobst, Riham Nehmeh

- Participants: Pierre Kornprobst, Riham Nehmeh, Carlos Zubiaga Pena, Julia Elizabeth Luna

- Partner: EDF
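As an illustration of the simplest of the image transforms listed in the functional description, the sketch below applies color inversion to a small grayscale image stored as nested lists. It is a hypothetical, dependency-free example and not the SlidesWatchAssistant implementation; the function name and the 8-bit value range are assumptions. The Peli transform is not sketched here because the report gives no details about it.

```python
def invert_colors(image, max_value=255):
    """Return a copy of a grayscale image with inverted intensities.

    image: list of rows, each row a list of integer pixel values in [0, max_value].
    Dark text on a bright slide becomes bright text on a dark background.
    """
    return [[max_value - pixel for pixel in row] for row in image]


# Tiny 2x3 example: a bright slide background (255) with darker strokes.
slide_patch = [
    [255, 0, 255],
    [255, 40, 200],
]
inverted_patch = invert_colors(slide_patch)
```

Applying the function twice returns the original image, which is convenient for a show/hide style toggle.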

6.1.2 CardNews3D

- Name: 3D Cards for Accessible News Reading on the Web

- Keywords: 3D web, Virtual reality, Accessibility, Reading

- Functional Description: CardNews3D is a toolkit for a browser-based news reading application that can be customized for low-vision reading. It features (1) a multi-layered navigation scheme for pages, articles, and multimedia content (text and images), (2) a card-like reading interface with content labels and navigation view, and (3) a menu that allows the user to customize reading parameters including polarity (i.e., foreground and background color), text size, and visibility of interface elements.

The toolkit is implemented using the A-Frame framework for virtual reality on popular headset browsers.

- Release Contributions: First version of the software

- Publication: hal-02321739

- Authors: Hui-Yin Wu, Pierre Kornprobst, Aurelie Calabrese

- Contact: Hui-Yin Wu

- Participants: Hui-Yin Wu, Pierre Kornprobst, Aurelie Calabrese

6.2 New platforms

6.2.1 Macular

Keywords: Large scale simulations, retino-cortical modelling, retinal prostheses, effect of pharmacology.

Participants: Evgenia Kartsaki, Selma Souihel, Alex Ye, Bruno Cessac.

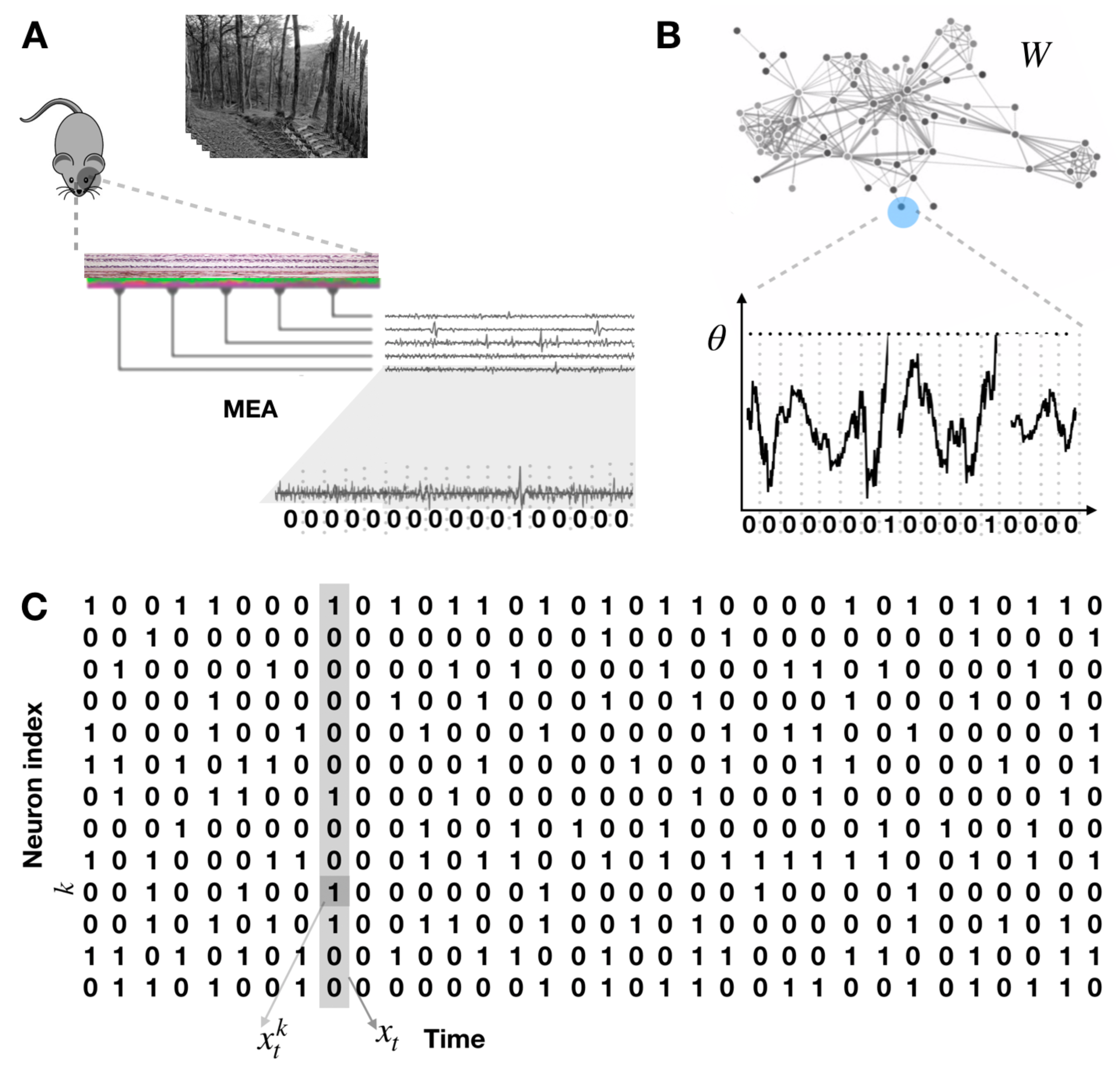

Scientific description: Macular is a platform for the numerical simulation of the retina and primary visual cortex (Figure 4). This software is currently under development within the Biovision team of Inria (https://

6.2.2 Automated analysis of anatomical and functional retinal exams to quantify the residual visual function of low-vision patients

Participants: Pierre Kornprobst, Aurélie Calabrèse

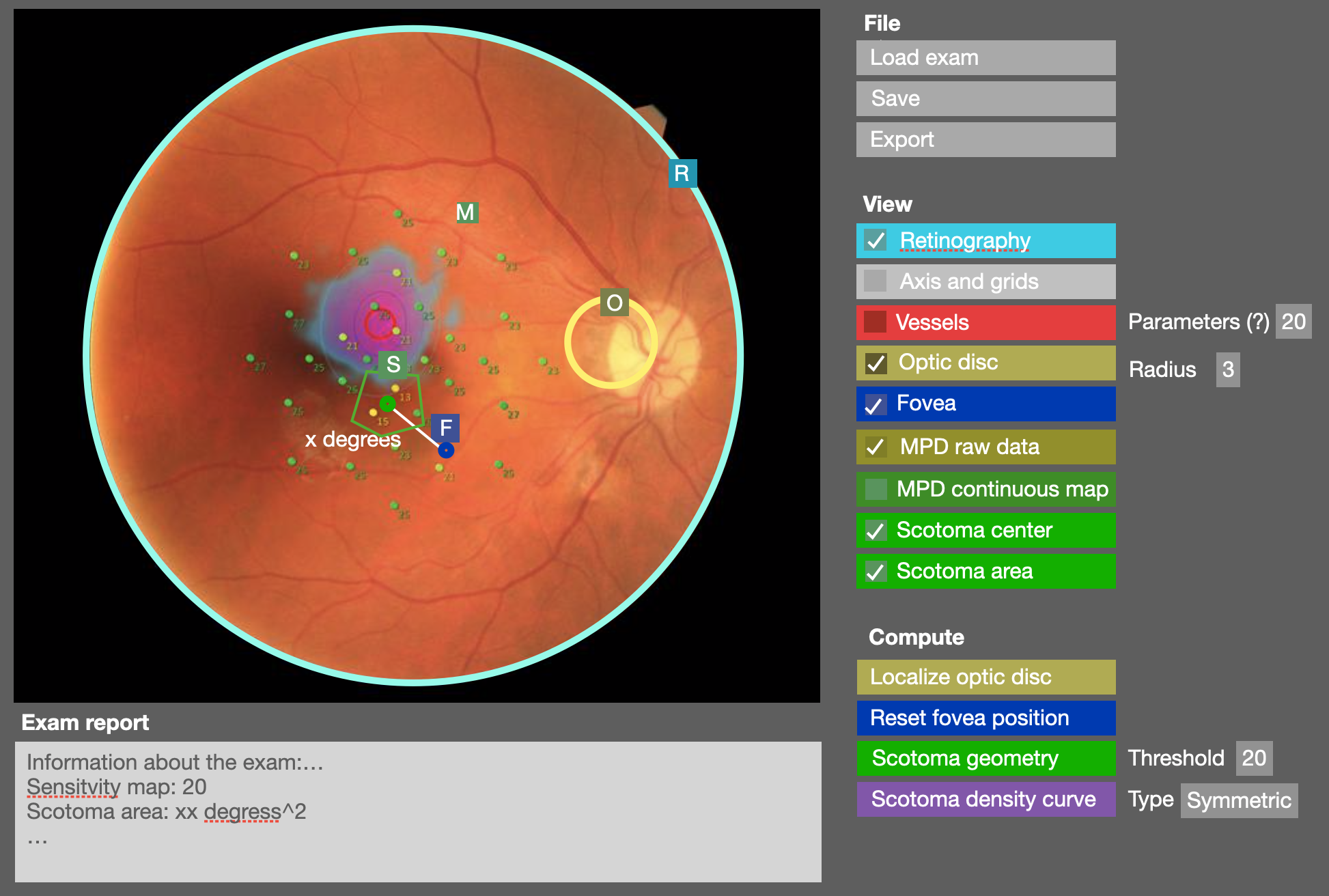

Scientific Description: Microperimetry is an ophthalmic exam coupling precise measurement of the functional visual field with anatomical images of the retina. In both research and clinics, microperimetry is essential to define the extent and progression of visual field loss and contrast sensitivity deficits. In the Biovision team, we use the three different microperimeter models currently available to perform standard automated microperimetry: the MP1 (Nidek Inc.), the MP3 (Nidek Inc.) and the MAIA (iCare). Currently, microperimetry exam results must be analysed manually by trained individuals to extract data of interest.

In collaboration with Eric Castet (CNRS, Marseille), Pierre Kornprobst and Aurelie Calabrese launched a new project to design and develop software to analyse microperimetry data and extract relevant outcomes automatically (Figure 5). Furthermore, this tool is intended to work efficiently for data acquired with all models of microperimeter. Software development was performed during the 'projet de fin d'études' (final-year project) of 3 students from Polytech Nice (Erwan Gaymard, Hugo Lavezac and Sacha Wanono - See Section 10.2.2).
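As an example of the kind of outcome that can be derived from a fixation exam, the sketch below computes the bivariate contour ellipse area (BCEA), a fixation stability measure widely used in the low-vision literature. Whether the tool described here computes it is not stated in the report, so this is only an assumed illustration. The formula is BCEA = 2kπ·σx·σy·√(1 − ρ²) with k = −ln(1 − P), where P is the proportion of gaze samples enclosed by the ellipse; the function name and the default P = 0.68 are assumptions.

```python
from math import log, pi, sqrt


def bcea(xs, ys, proportion=0.68):
    """Bivariate contour ellipse area of gaze positions (in squared input units).

    xs, ys: horizontal and vertical eye positions, e.g. in degrees.
    proportion: fraction of samples the ellipse is meant to enclose.
    """
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two equally long sequences of at least 2 samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sample variances and covariance.
    var_x = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for y in ys) / (n - 1)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)
    k = -log(1 - proportion)
    # sigma_x * sigma_y * sqrt(1 - rho^2) equals sqrt(var_x * var_y - cov_xy^2),
    # which avoids dividing by a zero standard deviation.
    return 2 * k * pi * sqrt(max(var_x * var_y - cov_xy ** 2, 0.0))


# Synthetic fixation samples scattered symmetrically around the target (degrees).
area = bcea([-1.0, 1.0, -1.0, 1.0], [-1.0, -1.0, 1.0, 1.0])
```

A smaller area indicates steadier fixation. Gaze samples that all fall on a single line give an area of zero.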

6.2.3 Developing an automated generator of standardized MNREAD sentences

Participants: Pierre Kornprobst, Aurélie Calabrèse

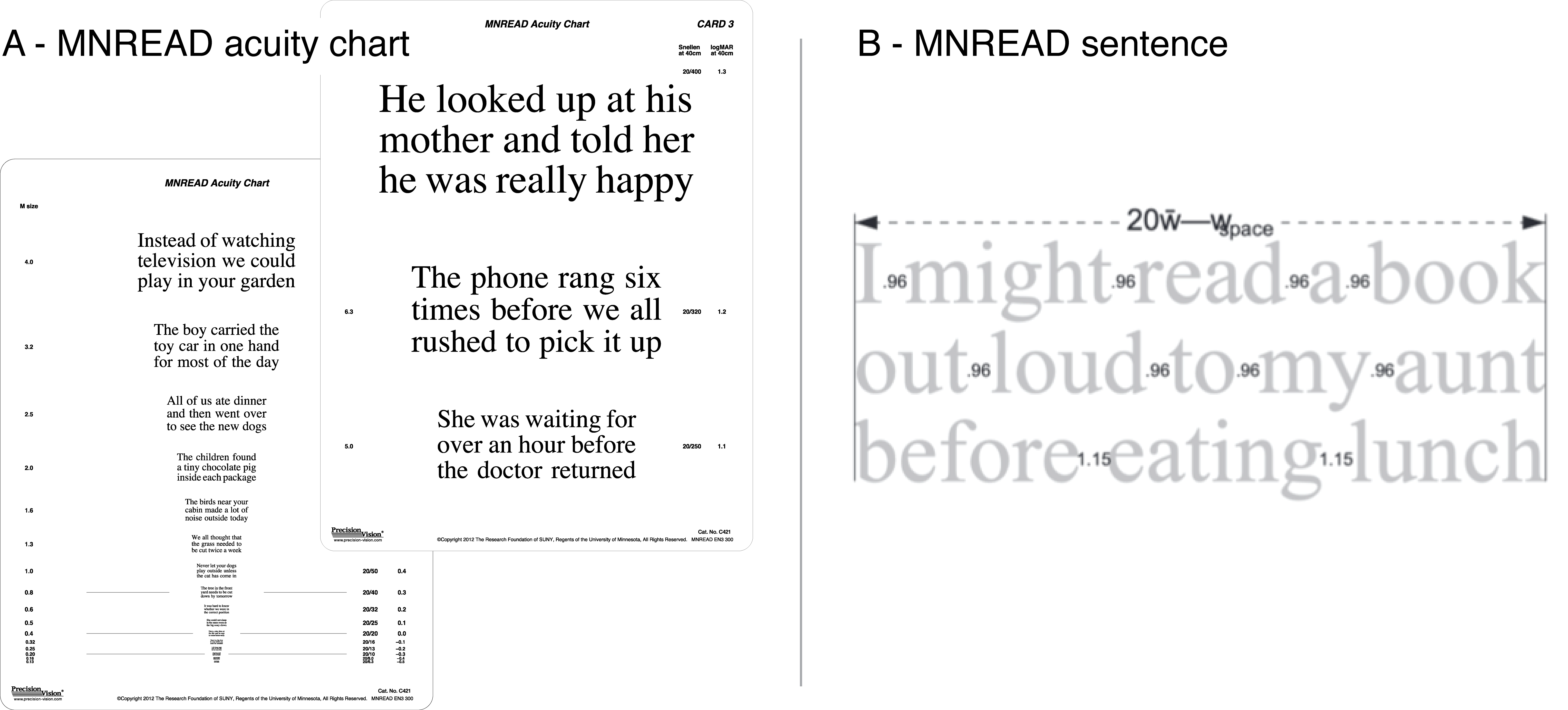

Scientific Description: Since reading speed is a strong predictor of visual ability and vision-related quality of life for patients with vision loss 42, reading performance has become one of the most important clinical measures for judging the effectiveness of treatments, surgical procedures or rehabilitation techniques. Accurate measurement of reading performance requires a highly standardized reading test, such as the MNREAD acuity chart 39. This test, available in 19 languages, measures reading performance in people with normal and low vision. In brief, performance is measured from the time needed to read a series of short sentences that were designed to be equivalent in terms of linguistics, length and layout (Figure 6-A). To ensure accurate measurement, each sentence must be presented only once to avoid introducing a memorization bias.
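The time-based measurement described above can be turned into a reading speed with a few lines of code. This is a minimal sketch assuming the conventional MNREAD scoring, in which each sentence counts as 10 standard-length words and misread words are subtracted; the function and parameter names are hypothetical and are not taken from the MNREAD app.

```python
def reading_speed_wpm(reading_time_s, word_errors=0, words_per_sentence=10):
    """Reading speed in words per minute for one test sentence.

    reading_time_s: time taken to read the sentence aloud, in seconds.
    word_errors: number of words misread or skipped.
    words_per_sentence: assumed number of standard-length words per sentence.
    """
    if reading_time_s <= 0:
        raise ValueError("reading time must be positive")
    words_read = max(words_per_sentence - word_errors, 0)
    return 60.0 * words_read / reading_time_s


speed_clean = reading_speed_wpm(5.0)                  # sentence read in 5 s, no errors
speed_one_error = reading_speed_wpm(6.0, word_errors=1)  # 6 s with one misread word
```

Under these assumptions a sentence read without errors in 5 seconds corresponds to 120 words per minute, and one read in 6 seconds with a single error corresponds to 90 words per minute.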

Because of their highly constrained nature (Figure 6-B), MNREAD sentences are hard to produce, leading to a very limited number of test versions (only two in French). Given that repeated measures are needed in many applications of MNREAD, there is a strong interest from the scientific and medical communities for a much larger pool of sentences.

Recent progress in Natural Language Generation – at the crossroads between natural language processing and artificial intelligence – has led to the development of automated text generation software. From semi-automated template-based methods 40 to fully automated ones using supervised machine learning 37, generating natural written material from computation remains at the prototype stage.

In order to go beyond the current literature in the field of Natural Language Generation, Aurélie Calabrèse and Pierre Kornprobst launched a new project in collaboration with Jean-Charles Régin (a specialist in constraint programming and natural language processing from Université Côte d’Azur, I3S). Our consortium has started to explore methods for the automatic production of constrained text from a corpus that are able to satisfy a large number of constraints, and will continue to pursue this work. After a year, a first prototype version of our generator is ready to be tested and validated experimentally.

The possibility to create an almost infinite number of controlled MNREAD sentences, paired with the MNREAD iPad app designed by Aurelie Calabrèse 35, will open up a large number of potential applications: (1) in clinical research, to assess the effectiveness of treatments, reading-aid devices or rehabilitation techniques by measuring a potential reading speed improvement at several time points over the course of the intervention; (2) in the field of rehabilitation, by creating adaptive reading trainings with controlled text complexity; etc. Beyond its use for low vision, it could also be applied to the fields of: (3) education, to screen children for reading disorders such as dyslexia using sentences customized based on grade level. Generally speaking, it could provide relevant additional information for disability assessment; (4) typography, by generalizing the principles of the test to evaluate the effects of dependent variables other than print size (e.g., assess the readability of a newly designed font, of line length, etc.).

This software development was initiated during the Master 2 internship of Arthur Doglio (UCA, M2 Informatique), and then continued by Alexandre Bonlarron (UCA, M2 Informatique), during his 'Travail d'Étude et de Recherche' (TER) project (See Section 10.2.2).

7 New results

We present here the new scientific results of the team over the course of the year. For each entry, members of Biovision are marked with a .

7.1 Understanding the normal visual system at neuronal and perceptual levels

7.1.1 Large visual neuron assemblies receptive fields estimation using a super resolution approach

Participants: Daniela Pamplona, Gerrit Hilgen, Matthias H. Hennig, Bruno Cessac, Evelyne Sernagor, Pierre Kornprobst

1 Ecole Nationale Supérieure de Techniques Avancées, Institut Polytechnique de Paris, France

2 Institute of Neuroscience (ION), United Kingdom

3 Institute for Adaptive and Neural Computation, University of Edinburgh, United Kingdom

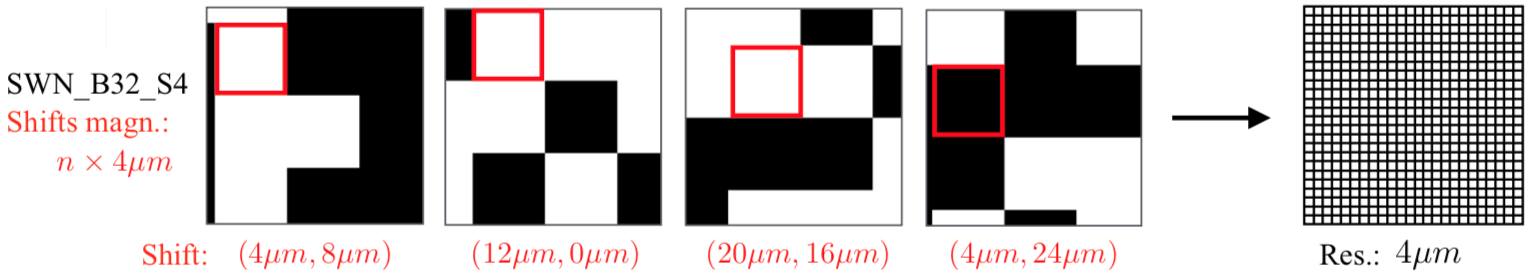

Description: One primary goal in analyzing properties of sensory neurons is to map the sensory space of the neural response, thus estimating the neuron’s receptive field (RF). For visual neurons, the classical method to estimate RFs is the Spike Triggered Average (STA) response to light stimuli. In short, STA estimates the average stimulus before each spike evoked by a white noise stimulus whose block size can be tuned ad hoc to target one single neuron. However, this approach becomes impractical for large-scale recordings of heterogeneous populations of neurons, since no single block size can optimally match all neurons. Here, we aim to overcome this limitation by leveraging super resolution techniques to extend STA’s scope. We defined a novel type of stimulus, the shifted white noise, by introducing random spatial shifts in the white noise stimulus in order to increase the resolution of the measurements without compromising on response strength. We evaluated this new stimulus thoroughly on both synthetic and real neuronal populations consisting of 216 and 4798 neurons, respectively. Considering the same target STA resolution, the average error in the synthetic dataset using our stimulus was 1.7 times smaller than when using the classical stimulus, with successful mapping of 2.3 times more neurons, covering a broader range of RF sizes. Moreover, successful RF mapping of single neurons was achieved within only 1 min of stimulation, which is more than 10 times more efficient than when using classical white noise stimuli. Similar improvements were obtained with experimental electrophysiological data. Considering the same target STA resolution, we successfully mapped 18 times more RFs covering a broader range of sizes (with RF size distribution kurtosis 0.3 times smaller). Overall, the shifted white noise improves RF estimation in several ways: (1) it performs better at the single-cell level; (2) RF estimation is independent of the neuron’s position relative to the stimulus and offers high resolution; (3) our approach is stronger at the population level, yielding more RFs encompassing a broader range of sizes; (4) the approach is faster, requiring shorter stimulation time. Finally, this stimulus could also be used in other spike-triggered methods, extended to the time dimension, and adapted to other sensory modalities. Due to its design simplicity and strong results, we anticipate that the shifted white noise could become a new standard in sensory physiology, allowing the discovery of novel, higher-resolution RF properties.

Figure 7 illustrates our method. For more information: 24

Example of shifted white noise stimulus.
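The principle described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the study: the function names, the block and frame sizes, the simulated Gaussian receptive field and the rectified Poisson spiking model are all assumptions made for the example, and the STA is computed at zero lag only.

```python
import numpy as np

def shifted_white_noise(n_frames, n_blocks, block_px, rng):
    """Binary checkerboard frames, each displaced by a random sub-block shift."""
    size = n_blocks * block_px
    frames = np.empty((n_frames, size, size))
    for t in range(n_frames):
        # One extra block per axis so the shifted window is always fully covered.
        coarse = rng.choice([-1.0, 1.0], size=(n_blocks + 1, n_blocks + 1))
        fine = np.kron(coarse, np.ones((block_px, block_px)))
        dy, dx = rng.integers(0, block_px, size=2)
        frames[t] = fine[dy:dy + size, dx:dx + size]
    return frames

def spike_triggered_average(frames, spike_counts):
    """Spike-weighted mean of the stimulus frames (zero-lag STA)."""
    return np.tensordot(spike_counts, frames, axes=(0, 0)) / spike_counts.sum()

rng = np.random.default_rng(0)
frames = shifted_white_noise(n_frames=5000, n_blocks=4, block_px=4, rng=rng)

# Hypothetical neuron: Gaussian RF centred at (9, 6), rectified Poisson spiking.
yy, xx = np.mgrid[0:16, 0:16]
rf = np.exp(-((yy - 9) ** 2 + (xx - 6) ** 2) / (2 * 1.5 ** 2))
drive = np.tensordot(frames, rf, axes=([1, 2], [0, 1]))
spike_counts = rng.poisson(np.maximum(drive, 0.0))

sta = spike_triggered_average(frames, spike_counts)
peak = np.unravel_index(np.argmax(sta), sta.shape)
print("STA peak (row, col):", peak)
```

Because each frame is shifted by a random number of pixels, block edges fall at different positions from frame to frame, so the averaged estimate is resolved at the pixel scale even though each individual frame is made of coarse blocks.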

7.1.2 Ghost Attractors in Spontaneous Brain Activity: Recurrent Excursions Into Functionally-Relevant BOLD Phase-Locking States

Participants: Jakub Vohryzek, Gustavo Deco, Bruno Cessac, Morten Kringelbach, Joana Cabral

1 University of Oxford, United Kingdom

2 Aarhus University, Denmark

3 UPF - Universitat Pompeu Fabra, Barcelona

4 University of Minho, Portugal

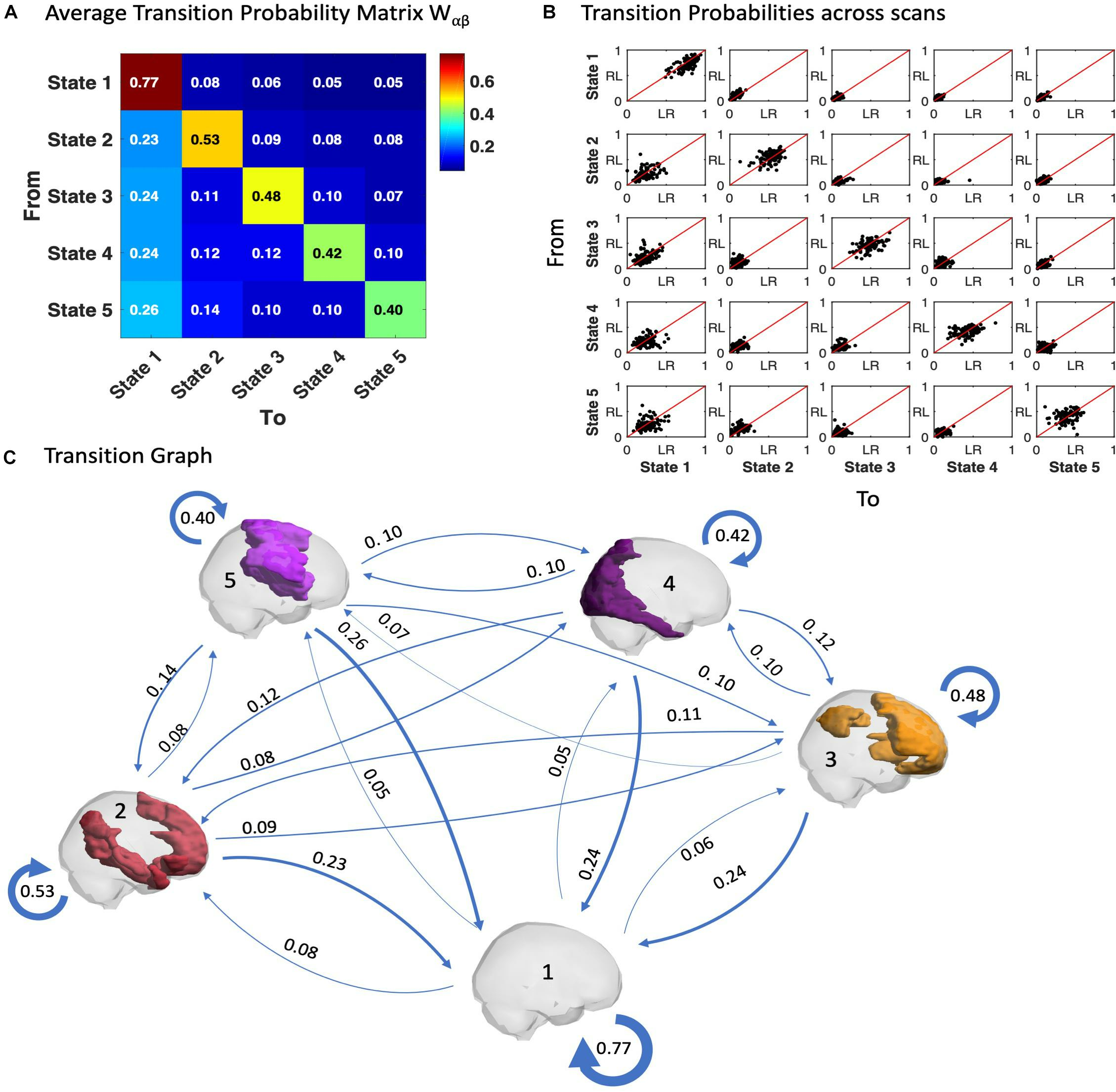

Description: Functionally relevant network patterns form transiently in brain activity during rest, where a given subset of brain areas exhibits temporally synchronized BOLD signals. To adequately assess the biophysical mechanisms governing intrinsic brain activity, a detailed characterization of the dynamical features of functional networks is needed from the experimental side to constrain theoretical models. In this work, we use an open-source fMRI dataset from 100 healthy participants from the Human Connectome Project and analyze whole-brain activity using Leading Eigenvector Dynamics Analysis (LEiDA), which serves to characterize brain activity at each time point by its whole-brain BOLD phase-locking pattern. Clustering these BOLD phase-locking patterns into a set of k states, we demonstrate that the cluster centroids closely overlap with reference functional subsystems. Borrowing tools from dynamical systems theory, we characterize spontaneous brain activity in the form of trajectories within the state space, calculating the Fractional Occupancy and the Dwell Times of each state, as well as the Transition Probabilities between states. Finally, we demonstrate that within-subject reliability is maximized when including the high frequency components of the BOLD signal ( Hz), indicating the existence of individual fingerprints in dynamical patterns evolving at least as fast as the temporal resolution of acquisition (here TR = 0.72 s). Our results reinforce the mechanistic scenario that resting-state networks are the expression of erratic excursions from a baseline synchronous steady state into weakly-stable partially-synchronized states – which we term ghost attractors. To better understand the rules governing the transitions between ghost attractors, we use methods from dynamical systems theory, giving insights into high-order mechanisms underlying brain function.

Figure 8 illustrates our results. For more details, see the paper https://
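The three trajectory measures named above (Fractional Occupancy, Dwell Times and Transition Probabilities) can be computed from a sequence of state labels as sketched below. The sketch assumes that the clustering step has already assigned one of k states to every time point; the function name and the toy label sequence are hypothetical, and only the TR value is taken from the text.

```python
import numpy as np

def state_metrics(labels, k, tr):
    """Fractional occupancy, mean dwell time (s) and transition matrix of a state sequence."""
    labels = np.asarray(labels)
    occupancy = np.bincount(labels, minlength=k) / labels.size

    # Run-length encode the sequence to obtain the duration of each visit.
    starts = np.concatenate(([0], np.flatnonzero(np.diff(labels)) + 1))
    lengths = np.diff(np.concatenate((starts, [labels.size])))
    run_states = labels[starts]
    dwell = np.array([
        lengths[run_states == s].mean() * tr if np.any(run_states == s) else 0.0
        for s in range(k)
    ])

    # Count transitions between consecutive time points, then normalise each row.
    counts = np.zeros((k, k))
    np.add.at(counts, (labels[:-1], labels[1:]), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    transitions = np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
    return occupancy, dwell, transitions

labels = [0, 0, 1, 1, 1, 0, 2, 2]  # toy sequence, not real data
occupancy, dwell, transitions = state_metrics(labels, k=3, tr=0.72)
print(occupancy, dwell, transitions, sep="\n")
```

In this convention the diagonal of the transition matrix holds the probability of staying in the same state, which some analyses prefer to exclude before normalising.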

7.1.3 A mean-field approach to the dynamics of networks of complex neurons, from nonlinear Integrate-and-Fire to Hodgkin–Huxley models

Participants: M. Carlu, O. Chehab, L. Dalla Porta, D. Depannemaecker, C. Héricé, M. Jedynak, E. Köksal Ersöz, P. Muratore, S. Souihel, C. Capone, Y. Zerlaut, A. Destexhe, and M. di Volo

1 Department of Integrative and Computational Neuroscience, Paris-Saclay Institute of Neuroscience, CNRS, France

2 Ecole Normale Superieure Paris-Saclay, France

3 Institut d’Investigacions Biomèdiques August Pi i Sunyer, Barcelona, Spain

4 Strathclyde Institute of Pharmacy and Biomedical Sciences, United Kingdom

5 Université Grenoble Alpes, Grenoble Institut des Neurosciences and Institut National de la Santé et de la Recherche Médicale (INSERM U1216), France

6 INSERM, U1099, Rennes, France

7 MathNeuro Team, Inria Sophia Antipolis Méditerranée, France

8 Physics Department, Sapienza University, Italy

9 Laboratoire de Physique Théorique et Modelisation, Université de Cergy-Pontoise, France

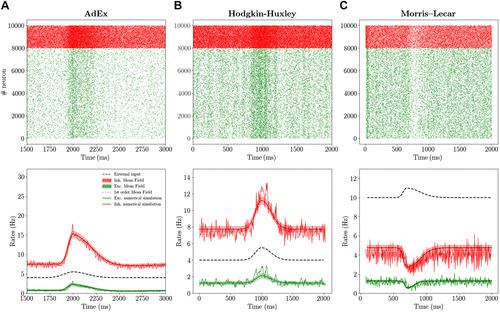

Description: Brain dynamics can be investigated at different scales, from the microscopic cellular scale, describing the voltage dynamics of neurons and synapses, to the mesoscopic scale, characterizing the dynamics of whole populations of neurons, up to the scale of the whole brain where several populations connect together. A large effort has been made to derive population descriptions from the specificity of the network model under consideration. This bottom-up approach makes it possible to obtain a dimensionally reduced mean-field description of the network population dynamics in different regimes.

On the one hand, mean-field models provide a simpler, reduced picture of the dynamics of a population of neurons, thus helping to unveil the mechanisms that determine specific observed phenomena. On the other hand, they enable a direct comparison with imaging studies where the spatial resolution implies that the recorded field represents the average over a large population of neurons (i.e., a mean field).

Here, we present a mean-field formalism able to predict the collective dynamics of large networks of conductance-based interacting spiking neurons. We apply this formalism to several neuronal models, from the simplest Adaptive Exponential Integrate-and-Fire model to the more complex Hodgkin–Huxley and Morris–Lecar models. We show that the resulting mean-field models are capable of predicting the correct spontaneous activity of both excitatory and inhibitory neurons in asynchronous irregular regimes, typical of cortical dynamics. Moreover, it is possible to quantitatively predict the population response to external stimuli in the form of external spike trains. This mean-field formalism therefore provides a paradigm to bridge the scale between population dynamics and the microscopic complexity of the individual cells' physiology.

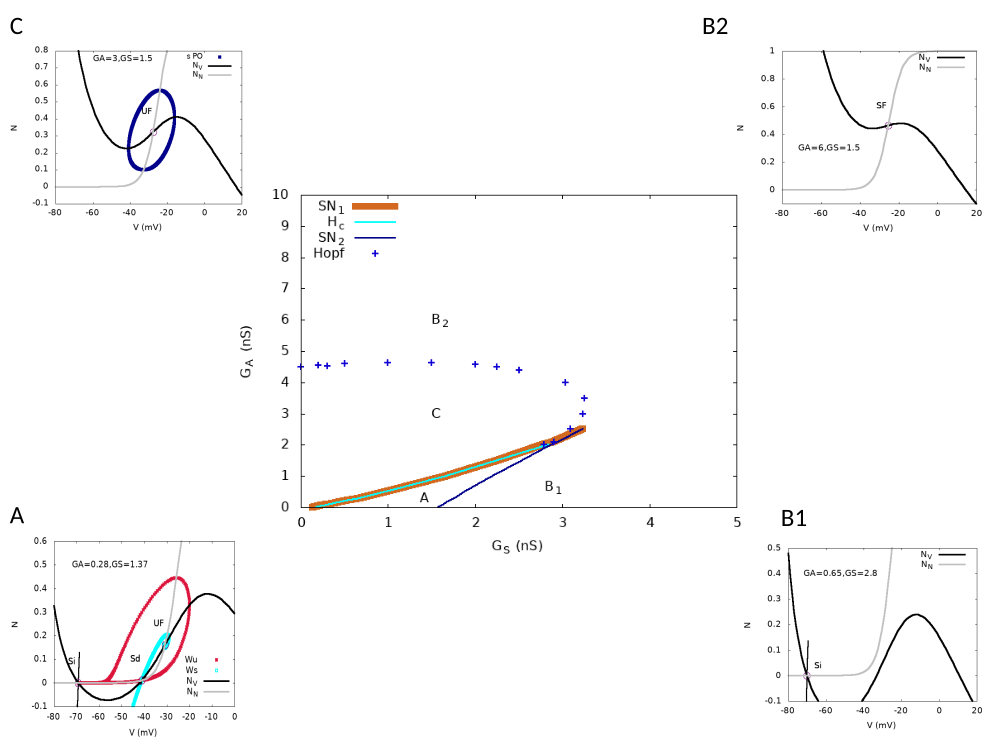

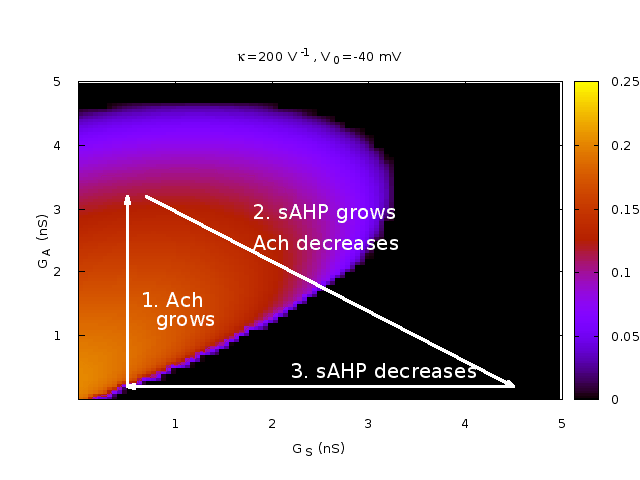

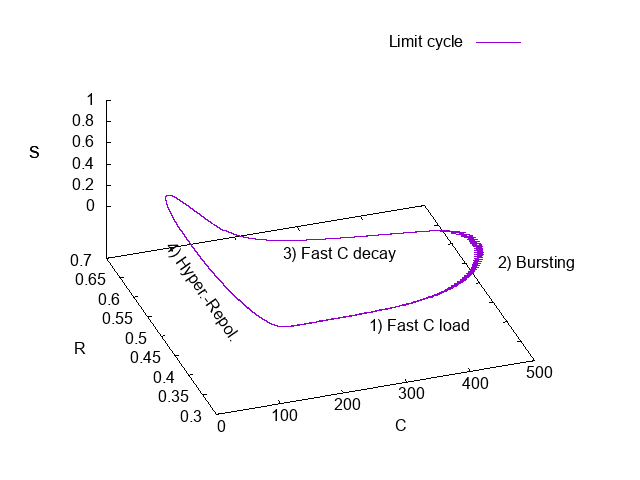

Figure 9 illustrates our results. For more details, see the paper https://
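To make the microscopic end of this description concrete, the sketch below integrates a single Adaptive Exponential Integrate-and-Fire neuron driven by a constant current. It is not the mean-field formalism itself, and it uses current input rather than the conductance-based synapses considered in the paper. The parameter values, the forward Euler scheme and the function name are assumptions chosen for illustration.

```python
import numpy as np

def simulate_adex(current_pa, t_max_ms=500.0, dt_ms=0.05):
    """Forward-Euler integration of one AdEx neuron; returns spike times in ms."""
    # Illustrative parameters (assumptions). Units: pF, nS, mV, ms, pA.
    C, g_L, E_L = 281.0, 30.0, -70.6
    V_T, delta_T = -50.4, 2.0
    tau_w, a, b = 144.0, 4.0, 80.5
    V_reset, V_peak = -70.6, 0.0

    V, w = E_L, 0.0
    spike_times = []
    for step in range(int(t_max_ms / dt_ms)):
        exp_term = g_L * delta_T * np.exp((V - V_T) / delta_T)
        dV = (-g_L * (V - E_L) + exp_term - w + current_pa) / C
        dw = (a * (V - E_L) - w) / tau_w
        V += dt_ms * dV
        w += dt_ms * dw
        if V >= V_peak:
            # Spike: reset the membrane potential and increment the adaptation current.
            spike_times.append(step * dt_ms)
            V = V_reset
            w += b
    return np.array(spike_times)

spike_times = simulate_adex(current_pa=1000.0)
isi = np.diff(spike_times)
print(f"{spike_times.size} spikes, first ISI {isi[0]:.1f} ms, last ISI {isi[-1]:.1f} ms")
```

The lengthening inter-spike intervals reflect spike-frequency adaptation, one of the single-cell features that a mean-field reduction of such networks has to account for.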

7.1.4 Thermodynamic Formalism in Neuronal Dynamics and Spike Train Statistics

Participants: Rodrigo Cofré, Cesar Maldonado, Bruno Cessac

1 CIMFAV-Ingemat, Facultad de Ingeniería, Universidad de Valparaíso, Valparaíso 2340000, Chile

2 IPICYT/División de Matemáticas Aplicadas, San Luis Potosí 78216, Mexico

Description: Since neuronal spikes can be represented as binary variables, it is natural to adapt methods and concepts from statistical physics, and more specifically the statistical physics of spin systems, to analyse spike trains statistics. In this review, we show how the so-called Thermodynamic Formalism can be used to study the link between the neuronal dynamics and spike statistics, not only from experimental data, but also from mathematical models of neuronal networks, properly handling causal and memory dependent interactions between neurons and their spikes. The Thermodynamic Formalism provides indeed a rigorous mathematical framework for studying quantitative and qualitative aspects of dynamical systems. At its core, there is a variational principle that corresponds, in its simplest form, to the Maximum Entropy principle. It is used as a statistical inference procedure to represent, by specific probability measures (Gibbs measures), the collective behaviour of complex systems. This framework has found applications in different domains of science. In this article, we review how the Thermodynamic Formalism can be exploited in the field of theoretical neuroscience, as a conceptual and operational tool, in order to link the dynamics of interacting neurons and the statistics of action potentials from either experimental data or mathematical models. We comment on perspectives and open problems in theoretical neuroscience that could be addressed within this formalism.

Figure 9 illustrates our results.

For more details, see the paper https://
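In its simplest, memoryless form, the Maximum Entropy principle mentioned above leads to a Gibbs distribution over binary spike patterns. The sketch below enumerates this distribution for a handful of neurons. It is an illustration under stated assumptions: the pairwise (Ising-like) form of the potential, the parameter values `h` and `J`, and the function name are chosen for the example and do not cover the causal, memory-dependent case treated in the review.

```python
import itertools
import numpy as np

def gibbs_distribution(h, J):
    """P(pattern) proportional to exp(sum_i h_i w_i + sum_{i<j} J_ij w_i w_j), w_i in {0, 1}."""
    n = len(h)
    patterns = np.array(list(itertools.product([0, 1], repeat=n)))
    potential = patterns @ h + np.einsum("pi,ij,pj->p", patterns, np.triu(J, 1), patterns)
    weights = np.exp(potential)
    return patterns, weights / weights.sum()

# Hypothetical parameters for three neurons.
h = np.array([-1.0, -0.5, -2.0])
J = np.array([[0.0, 0.8, 0.0],
              [0.8, 0.0, -0.3],
              [0.0, -0.3, 0.0]])

patterns, p = gibbs_distribution(h, J)
rates = p @ patterns                   # probability that each neuron spikes
entropy = -np.sum(p * np.log(p))       # entropy of the distribution, in nats
print(rates, entropy)
```

Exhaustive enumeration scales as 2^n and is only practical for small groups of neurons; it is used here because it makes the normalisation explicit.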

7.1.5 The Retina as a Dynamical System

Participants: Bruno Cessac

Description: The retina is the entrance to the visual system. The development of new technologies and experimental methodologies (MEA, 2-photon, genetic engineering, pharmacology) and the resulting experiments have made it possible to show that the retina is not a mere camera, transforming the flow of photons coming from a visual scene into sequences of action potentials interpretable by the visual cortex. It appears, in contrast, that the specific and hierarchical structure of the retina allows it to pre-process visual information, at different scales, in order to reduce redundancy and increase the speed, efficiency and reliability of visual responses. This is particularly salient in the processing of motion, which is the essence of what our visual system receives as input. The retina is thus a fascinating object and its study opens the door to a better understanding of how our brain processes visual information. However, the retina is a specific organ with specific neurons and circuits, rather different from their cortical counterparts, leading to specific questions that require specific techniques. Some of these questions can be addressed in the realm of dynamical systems theory.

In this paper, considering the retina as a high dimensional, non autonomous, dynamical system, layered and structured, with non stationary and spatially inhomogeneous entries (visual scenes), we present several examples where dynamical systems-, bifurcations-, and ergodic-theory provide useful insights on retinal behaviour and dynamics.

Figure 11 illustrates our results. It shows a bifurcation diagram in a model of bursting cells involved in the process of retinal waves. For more details, see the paper https://

7.1.6 On the potential role of lateral connectivity in retinal anticipation

Participants: Selma Souihel, Bruno Cessac

Description: Our visual system has to constantly handle moving objects. Static images do not exist for it, as the environment, our body, our head, our eyes are constantly moving. The process leading from the photons reception in the retina to the cortical response takes about milliseconds. Most of this delay is due to photo-transduction. Though this might look fast, it is actually too slow. A tennis ball moving at 30 m/s - 108 km/h (the maximum measured speed is about 250 km/h) covers between and 3 m during this time, so, without a mechanism compensating this delay, it would not be possible to play tennis (not to speak of survival, a necessary condition for a species to reach the level where playing tennis becomes possible). The visual system is indeed able to extrapolate the trajectory of a moving object to perceive it at its actual location. This corresponds to anticipation mechanisms taking place in the visual cortex and in the retina, with different modalities.
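The order of magnitude in the tennis example follows from multiplying the ball speed by the processing delay. The short sketch below makes this arithmetic explicit. The delay values are hypothetical and chosen only for illustration; only the ball speed is taken from the text.

```python
def distance_covered(speed_m_per_s, delay_ms):
    """Distance in metres travelled at constant speed during a processing delay."""
    return speed_m_per_s * delay_ms / 1000.0

ball_speed = 30.0  # m/s, i.e. 108 km/h, the speed quoted in the text

# Hypothetical delays in milliseconds, chosen only for illustration.
for delay_ms in (50, 100):
    print(f"{delay_ms} ms -> {distance_covered(ball_speed, delay_ms):.1f} m")
```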

In this paper we analyse the potential effects of lateral connectivity (amacrine cells and gap junctions) on motion anticipation in the retina. Our main result is that lateral connectivity can - under conditions analysed in the paper - trigger a wave of activity enhancing the anticipation mechanism provided by local gain control. We illustrate these predictions by two examples studied in the experimental literature: differential motion sensitive cells and direction sensitive cells where direction sensitivity is inherited from asymmetry in gap junctions connectivity. We finally present reconstructions of retinal responses to 2D visual inputs to assess the ability of our model to anticipate motion in the case of three different 2D stimuli.

Figure 12 illustrates our results.

For more details, see the paper https://

7.1.7 Linear Response of General Observables in Spiking Neuronal Network Models

Participants: Bruno Cessac, Ignacio Ampuero, Rodrigo Cofré

1 Departamento de Informática, Universidad Técnica Federico Santa María, Valparaíso, Chile

2 CIMFAV-Ingemat, Facultad de Ingeniería 2340000, Universidad de Valparaíso, Valparaíso, Chile

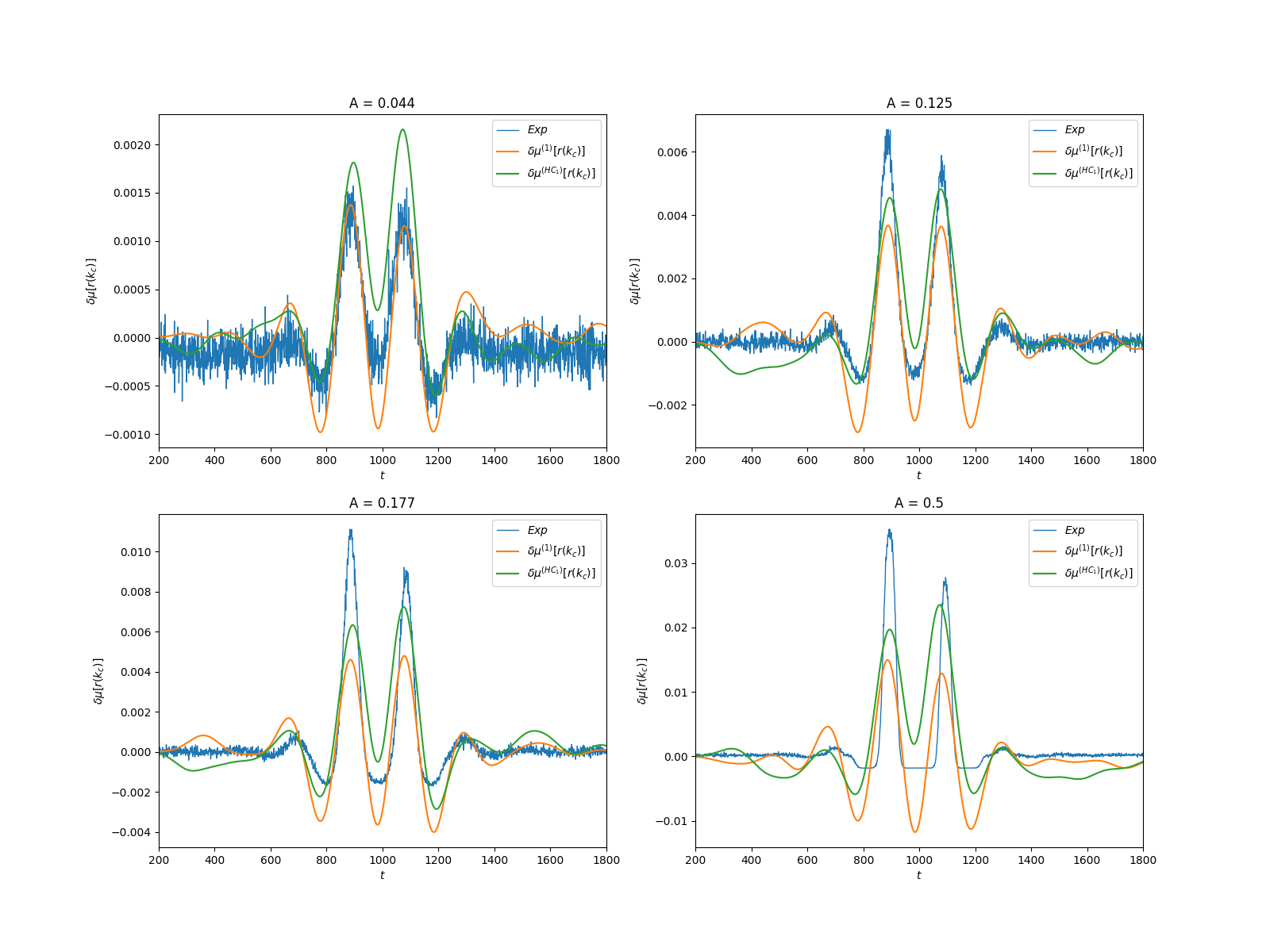

Description: We consider a spiking neuronal network model where the non-linear dynamics and neuron interactions naturally produce spatio-temporal spike correlations. We assume that these neurons reach a stationary state without stimuli and, from a given time, are submitted to a time-dependent stimulation (See Fig. 13). How are the spatio-temporal spike correlations modified by this stimulus? We address this question in the context of linear response theory using methods from ergodic theory and so-called chains with complete connections, extending the notion of Markov chains to infinite memory, providing a generalized notion of Gibbs distribution. We show that the spatio-temporal response can be written in terms of a history-dependent convolution kernel applied to the stimuli. We compute this kernel explicitly in a specific model example and analyse the validity of our linear response formula by numerical means. This relation allows us to predict the influence of a weak-amplitude, time-dependent external stimulus on spatio-temporal spike correlations, from the spontaneous statistics (without stimulus) in a general context where the memory in spike dynamics can extend arbitrarily far in the past. Using this approach, we show how the linear response is explicitly related to the collective effect of the stimuli, intrinsic neuronal dynamics, and network connectivity on spike train statistics.

For more detail, see the paper https://
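The statement that the response is a convolution kernel applied to the stimulus can be illustrated numerically. The sketch below applies a causal kernel to a weak step stimulus. It is a generic illustration of a linear response, not the kernel derived in the paper: the exponential kernel shape, its time constant, the stimulus and the function name are all assumptions.

```python
import numpy as np

def linear_response(kernel, stimulus, dt):
    """Causal convolution: response[t] = dt * sum over s <= t of kernel[t - s] * stimulus[s]."""
    return dt * np.convolve(stimulus, kernel)[: len(stimulus)]

dt = 0.001                       # time step in seconds
n, onset = 200, 50               # number of samples and stimulus onset index
t = np.arange(n) * dt

tau = 0.02                       # hypothetical kernel time constant (s)
kernel = np.exp(-t / tau) / tau  # hypothetical kernel with roughly unit area

stimulus = np.zeros(n)
stimulus[onset:] = 0.1           # weak step stimulus switched on at the onset index

response = linear_response(kernel, stimulus, dt)
print(response[onset - 1], response[onset + 20], response[-1])
```

The response is exactly zero before the stimulus is switched on and then relaxes towards a plateau set by the kernel area, which is the causal behaviour expected of a linear response.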

7.1.8 Investigating how specific classes of retinal cells contribute to vision with a multi-layered simulator

Participants: Evgenia Kartsaki, Gerrit Hilgen, Evelyne Sernagor, Bruno Cessac

1 Biosciences Institute, Newcastle University, Newcastle, UK

Description: Thanks to the astonishing functional and anatomical diversity within retinal neuronal classes, our brain can recreate images from interpreting parallel streams of information emitted by the retina. However, how these neuronal classes interact to perform these functions remains largely a mystery.

To track down the signature of a specific cell class, we employ a novel experimental approach that is based on the ability to pharmacologically control the level of neural activity in specific subgroups of retinal ganglion cells (RGCs), specialized cells which connect the retina to the brain via the optic nerve, and amacrine cells (ACs), interneurons that modulate RGC activity over a wide area via lateral connectivity. We modified the activity of Scnn1a- and Grik4-expressing RGCs and ACs through excitatory DREADD (Designer Receptors Exclusively Activated by Designer Drugs) activation using clozapine-n-oxide (CNO). We hypothesize that modifying the activity of RGCs and/or ACs may not only affect the individual response of these cells, but also their concerted activity to different stimuli, impacting the information sent to the brain, thereby shedding light on their role in population encoding of complex visual scenes. However, it is difficult to distinguish the pharmacological effect on purely experimental grounds when both cell types express DREADDs and respond to CNO, as these cells "antagonize" each other. In contrast, modeling and numerical simulation make it possible to separate these effects.

To this end, we have developed a novel simulation platform that can reflect normal and impaired retinal function (from single-cell to large-scale population). It is able to handle different visual processing circuits and allows us to visualize responses to visual scenes (movies). In addition, it simulates retinal responses in normal and pharmacologically induced conditions; namely, in the presence of DREADD-expressing cells sensitive to CNO-induced activity modulation. Firstly, we deploy a circuit that models different RGC classes and their interactions via ACs and emulates both their individual and concerted responses to simple and complex stimuli. Next, we study the direct (single-cell) or indirect (network) pharmacological effect when the activity of RGCs and/or ACs is modified in order to disentangle their role in experimental observations. To fit and constrain the numerical models and check their validity and predictions, we use empirical data. Ultimately, we expect this synergistic effort (1) to contribute new knowledge on the role specific subclasses of RGCs play in conveying meaningful signals to the brain, leading to visual perception, and (2) to propose new experimental paradigms to understand how the activation of ensembles of neurons in the retina can encode visual information.

This work has been presented in 28

7.1.9 Joint Attention for Automated Video Editing

Participants: Hui-Yin Wu, Trevor Santarra, Michael Leece, Rolando Vargas, Arnav Jhala

1 Unity Technologies, USA

2 University of California Santa Cruz, USA

3 Facebook, USA

4 North Carolina State University, USA

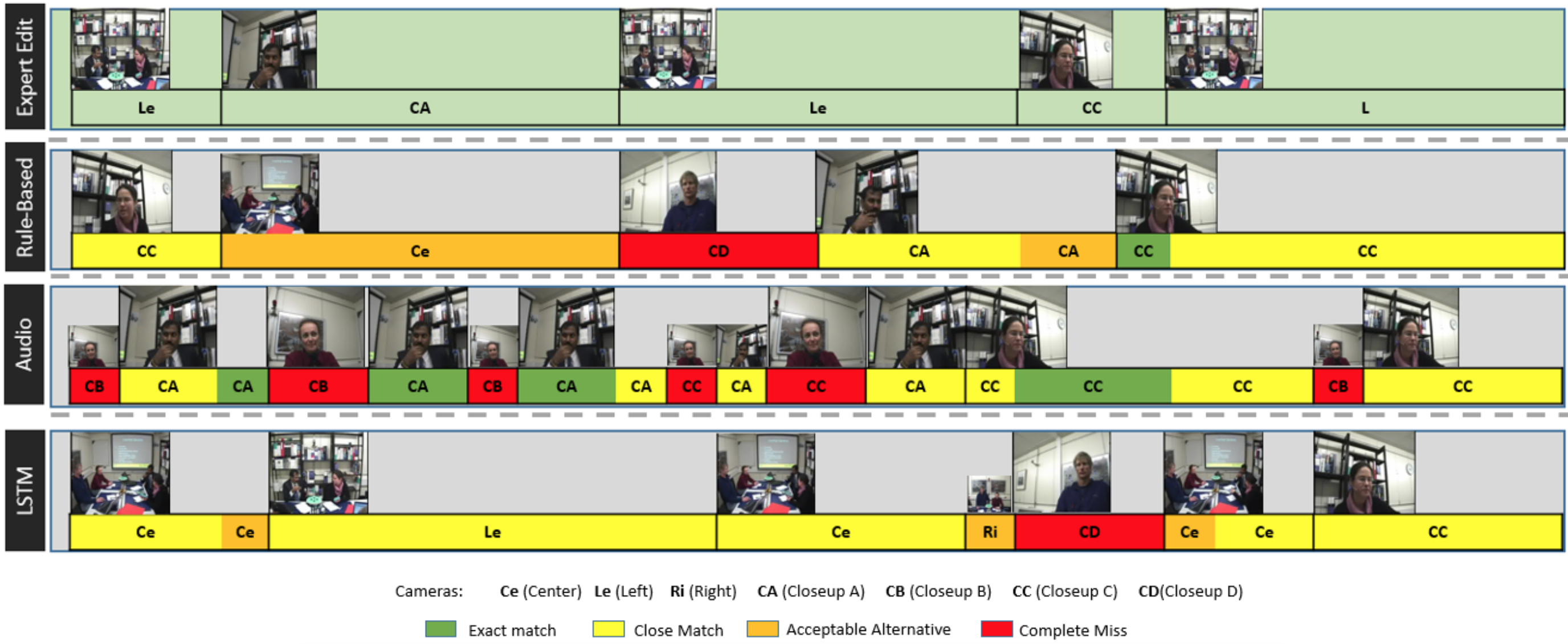

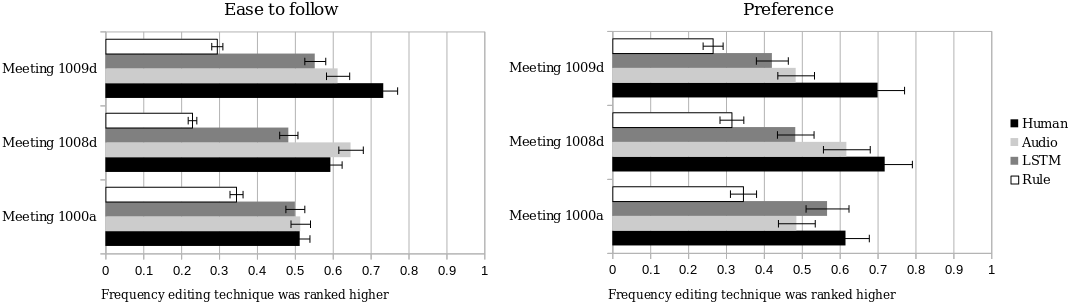

Description: Joint attention refers to the shared focal points of attention for occupants in a space. In this work, we proposed a computational definition of joint attention behaviours for the automated editing of meetings in multi-camera environments from the AMI corpus – containing over 100 hours of audio-video data captured in smart conference rooms.

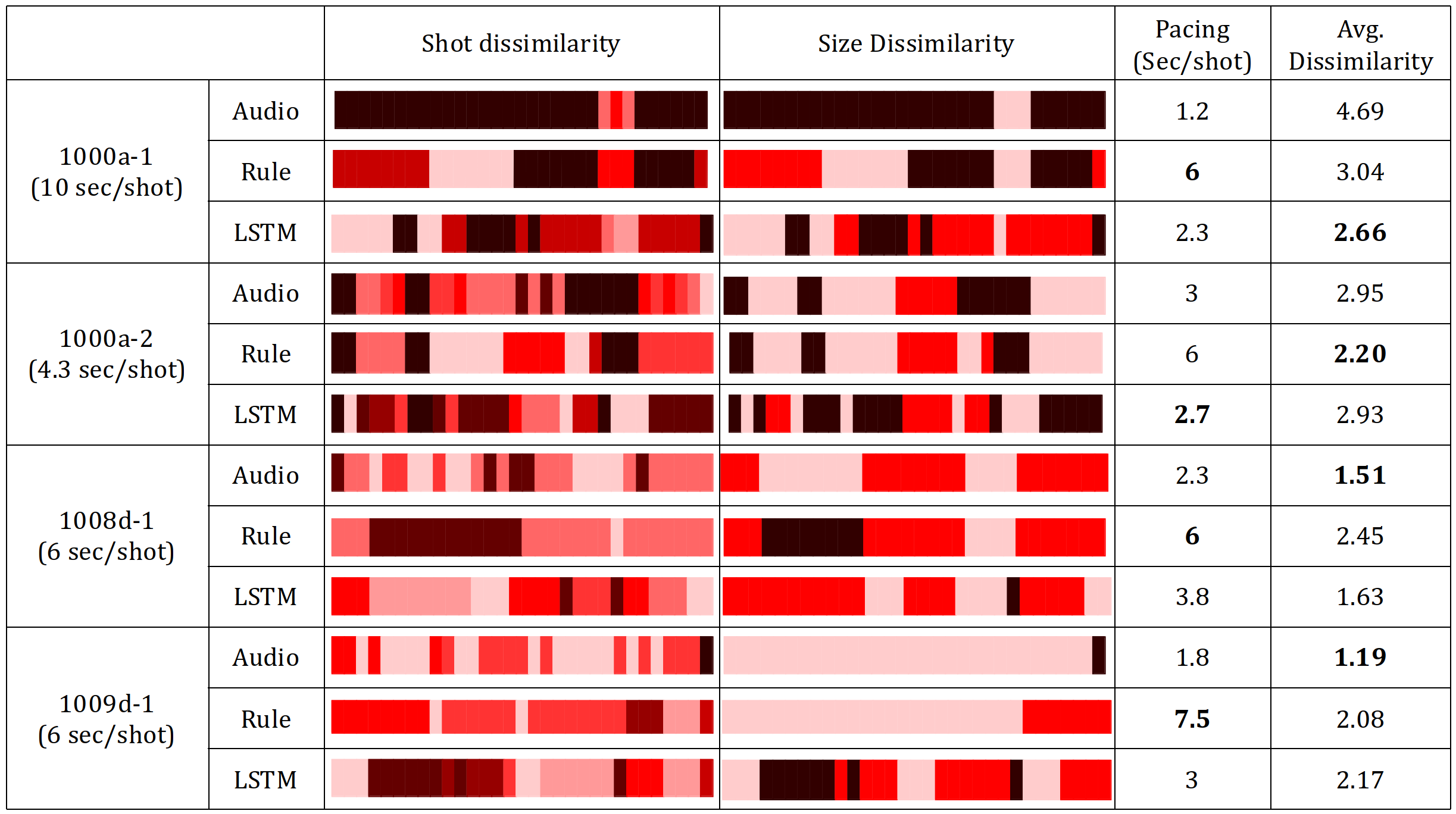

Using extracted head pose and individual headset amplitude as input features, we developed three editing methods: (1) a naive audio-based method that selects the camera using only the headset input, (2) a rule-based edit that selects cameras at a fixed pacing using pose data, and (3) an editing algorithm using LSTM (long short-term memory) learned joint attention from both pose and audio data, trained on expert edits. An example output of the three methods compared to the expert edit is shown in Figure 14.
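The first of these methods, the naive audio-based edit, can be approximated by selecting at each frame the camera associated with the loudest headset. The sketch below is a hypothetical reading of that idea rather than the published algorithm: it assumes one close-up camera per participant, and the moving-average smoothing, the window length and the function name are choices made for the example.

```python
import numpy as np

def audio_based_edit(amplitudes, window=5):
    """Pick, for every frame, the camera of the participant with the loudest smoothed headset signal.

    amplitudes: array of shape (n_frames, n_participants).
    """
    kernel = np.ones(window) / window
    smoothed = np.column_stack(
        [np.convolve(channel, kernel, mode="same") for channel in amplitudes.T]
    )
    return smoothed.argmax(axis=1)

# Toy input: participant 0 speaks during the first 50 frames, participant 1 afterwards.
amplitudes = np.zeros((100, 2))
amplitudes[:50, 0] = 1.0
amplitudes[50:, 1] = 1.0

cameras = audio_based_edit(amplitudes)
print(cameras[45:55])
```

Smoothing prevents the edit from flickering between cameras on short bursts of sound, at the cost of reacting slightly later to a change of speaker.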

An evaluation of these methods was conducted, both qualitatively against the human edit (Figure 15) and quantitatively in a user study with 22 participants (Figure 16). Results indicate that LSTM-trained joint attention produces edits that are comparable to the expert edit, offering a wider range of camera views than audio, while being more generalizable than rule-based methods.

This work was published as a full paper at the ACM Interactive Media Experiences 2020 conference 10 and presented as an invited talk at the 9th Eurographics Workshop on Intelligent Cinematography and Editing (WICED 2020). The video presentation of the work can be found here: https://

7.2 Understanding the impact of low vision at neuronal and perceptual levels

7.2.1 The inhibitory effect of word neighborhood size when reading with central field loss is modulated by word predictability and reading proficiency

Participants: Lauren Sauvan, Natacha Stolowy, Carlos Aguilar, Thomas François, Nuria Gala, Frédéric Matonti, Eric Castet, Aurélie Calabrèse

1 North Hospital, Marseille, France

2 Université Côte d'Azur, France

3 Université catholique de Louvain, Belgique

4 Aix-Marseille University, Marseille, France

5 University Hospital of La Timone, Marseille, France

Description: For a given word, word neighborhood size corresponds to the total number of new words that can be formed by changing one letter, while preserving letter positions 48. For example, the word “shore” has many neighbors, including chore, score and share, while “neighbor” has 0 neighbors. For normal readers, word neighborhood size has a facilitatory effect on word recognition (the more neighbors, the easier to identify) 45. In the case of low vision, visual input is deteriorated and access to text is only partial. Because bottom-up visual input is less reliable, patients must rely much more on top-down linguistic inference than normally sighted readers. Here we investigate the effect of word neighborhood size on reading performance with central field loss (CFL) and whether it is modulated by word predictability and reading proficiency.
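The definition of word neighborhood size given above translates directly into code: substitute each letter in turn and keep the candidates that exist in a lexicon. The sketch below uses a small toy lexicon invented for the example; a real analysis would rely on a full lexical database for the language of the study and would need to handle accented letters.

```python
from string import ascii_lowercase

def orthographic_neighbors(word, lexicon):
    """Words of the lexicon obtained by changing exactly one letter, positions preserved."""
    neighbors = set()
    for i, original in enumerate(word):
        for letter in ascii_lowercase:
            if letter == original:
                continue
            candidate = word[:i] + letter + word[i + 1:]
            if candidate in lexicon:
                neighbors.add(candidate)
    return neighbors

# Toy lexicon, for illustration only.
lexicon = {"shore", "chore", "score", "share", "shone", "store", "horse", "neighbor"}

print(sorted(orthographic_neighbors("shore", lexicon)))
print(len(orthographic_neighbors("neighbor", lexicon)))
```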

Nineteen patients with binocular CFL from 32 to 89 years old (mean ± SD = 75 ± 15) read short sentences presented with the self-paced reading paradigm. Accuracy and reading time were measured for each target word read, along with its predictability, i.e., its probability of occurrence following the two preceding words in the sentence using a trigram analysis. Linear mixed effects models were then fit to estimate the individual contributions of word neighborhood size, predictability, frequency and length on accuracy and reading time, while taking patients’ reading proficiency into account.
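Trigram predictability, as used above, is the conditional probability of a word given the two words that precede it. The sketch below estimates it from raw counts. The three-sentence corpus is invented for the example, and the absence of smoothing is a simplification; the study itself does not specify these details here.

```python
from collections import Counter

def build_trigram_predictability(sentences):
    """Return a function estimating P(w3 | w1, w2) from raw trigram counts."""
    trigram_counts = Counter()
    context_counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            trigram_counts[(w1, w2, w3)] += 1
            context_counts[(w1, w2)] += 1

    def predictability(w1, w2, w3):
        context = context_counts[(w1, w2)]
        return trigram_counts[(w1, w2, w3)] / context if context else 0.0

    return predictability

# Toy corpus, for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat sat on the sofa",
    "the cat ran to the door",
]
predictability = build_trigram_predictability(corpus)
print(predictability("the", "cat", "sat"))
print(predictability("on", "the", "mat"))
```

A target word with a low value under this measure corresponds to the "not easily predictable" case in which the inhibitory effect of neighborhood size was found to be strongest.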

For the less proficient readers, who have given up daily reading as a consequence of their visual impairment (Figure 17 - red lines), we found that: (1) the effect of neighborhood size was reversed compared to normally sighted readers and (2) of higher amplitude than the effect of frequency. Furthermore, (3) this inhibitory effect is of greater amplitude (up to 50% decrease in reading speed) when a word is not easily predictable because its chances of occurring after the two preceding words in a specific sentence are rather low (Figure 17 - A vs. B).

Severely impaired patients with CFL often quit reading on a daily basis because this task becomes simply too exhausting. This innovative 3-fold result is of great relevance in the context of low-vision rehabilitation, advocating for lexical text simplification as a new alternative to promote effective rehabilitation in these patients. Indeed, these results provide first guidelines for optimal lexical simplification rules, such as substituting complex words (i.e., words with many orthographic neighbors) with synonyms that have fewer neighbors and equal or higher frequency. By increasing reading accessibility for those who struggle the most, text simplification has the potential to become an efficient rehabilitation tool and a daily reading assistive technology, (1) improving overall reading ability and fluency, while (2) fostering the long-term motivation necessary to resume daily reading practice.

This work was published in Scientific Reports. For more details: 8

7.2.2 Can patients with central field loss perform head pointing in a virtual reality environment?

Participants: Aurélie Calabrèse, Hui-Yin Wu, Pierre Kornprobst, Ambre Denis-Noël, Eric Castet, Frédéric Matonti

1 Aix-Marseille University, Marseille, France

2 Centre d'Ophtalmologie Monticelli-Paradis, Marseille, France

Description: Head pointing (a common way to interact with the world in VR environments) may represent a promising option for patients with CFL, who have lost the ability to direct their gaze efficiently towards a target. Yet, little is known about the actual head-pointing capacities of these patients. In order to set a first milestone in VR research for the visually impaired, we recently led an experiment to evaluate whether patients with CFL are able to perform precise head-pointing tasks in VR. Forty-nine patients with binocular CFL, aged 34 to 97 (mean = 77±13), were tested with an Oculus Go headset in a very simple VR environment (grey background). At the beginning of each block, a head-contingent reticle was displayed in a specific location in front of the patient. A total of 9 reticle locations were tested either in the center of the visual field or with a 7° offset. At each trial, a target appeared in the visual field and patients were instructed to move their head to position the reticle precisely onto the target. Targets were black circles (1° to 3° diameter) randomly presented in five fixed positions (center or top, right, bottom, left at 18° of eccentricity). On average, patients were able to use their head to position the reticle precisely onto the target 94% of the time. Individual differences emerged, with a significant drop in pointing speed performance for specific reticle locations.

This represents a fundamental step towards the design of efficient and user-friendly visual aids and rehabilitation tools using VR. For instance, head pointing could provide an ergonomic framework to design user interfaces that require precise pointing abilities to perform item selection. Similarly, one can imagine designing head-contingent pointing exercises that will drive the rehabilitation process while limiting straining of the eyes.

These are preliminary results of the ANR project Devise, which was recently funded through the 2020 ANR campaign. This work has been accepted for an oral presentation at Vision 2020+1 (conference scheduled in 2020 and postponed to 2021). For more details, see 27.

7.2.3 Reading Speed as an Objective Measure of Improvement Following Vitrectomy for Symptomatic Vitreous Opacities

Participants: Edwin H. Ryan, Linda A. Lam, Christine M. Pulido, Steven R. Bennett, Aurélie Calabrèse

1 VitreoRetinal Surgery PA, Edina, Minnesota, USA

2 USC Keck School of Medicine, Los Angeles, California, USA

3 Feinberg School of Medicine, Chicago, Illinois, USA

Description: There is currently no objective measure of the visual deficits experienced by patients with symptomatic vitreous opacities (SVOs) that would also correlate with the functional improvement they report following their removal (i.e. vitrectomy). While this surgical intervention is subjectively reported as helpful by patients, no objective measure of visual function (e.g. visual acuity) was ever able to report its benefits and most surgeons are reluctant to perform it.

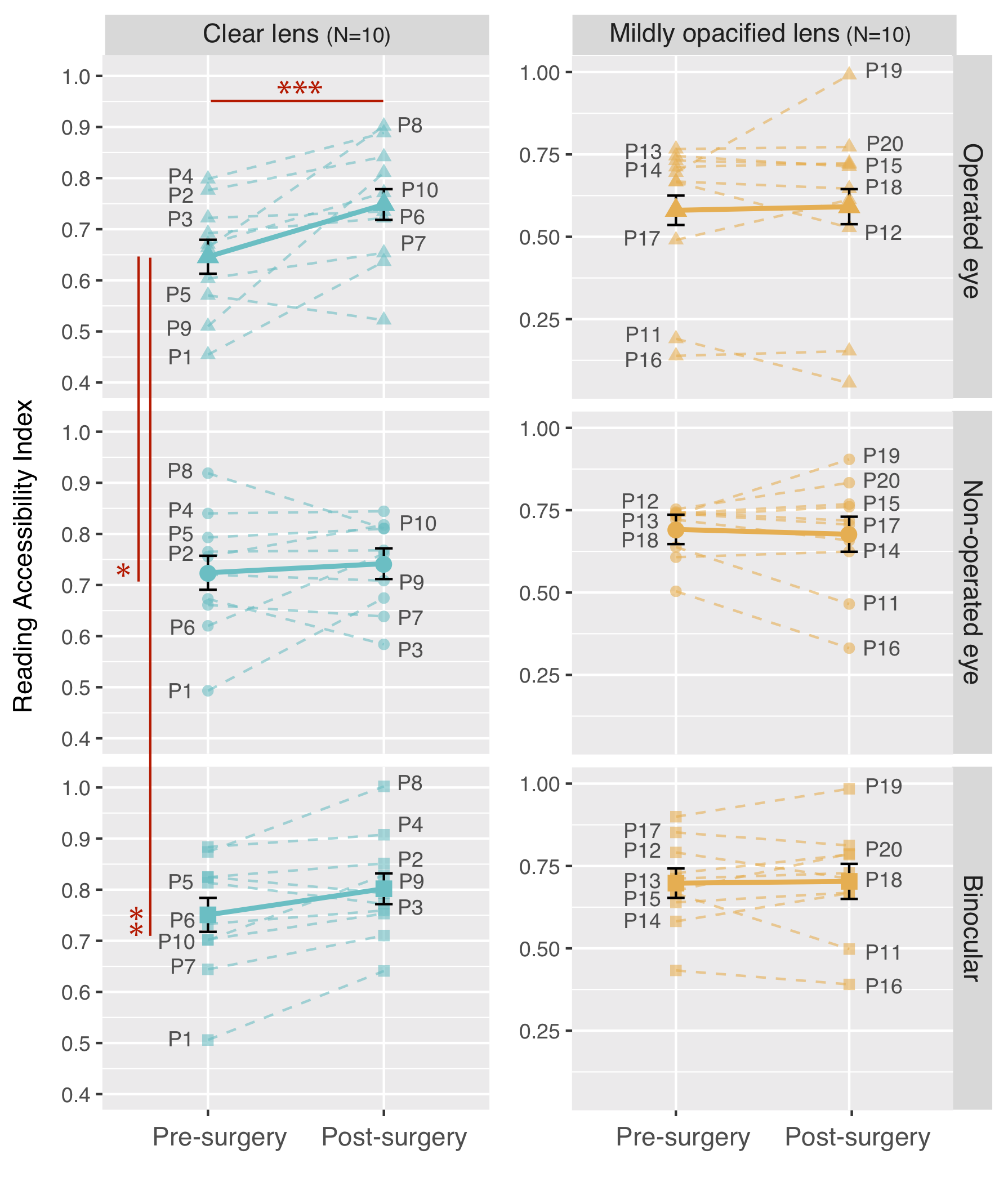

This clinical study aims to determine whether reading speed can be used as a reliable outcome measure to assess objectively the impact of both SVOs and vitrectomy on patients’ visual performance.

Twenty adult patients seeking surgery for SVO were included. Measures of visual function were obtained before and after vitrectomy using the MNREAD app, a standardized reading test running on an iPad tablet 1.

In patients with non-opacified lenses (n = 10), maximum reading speed (MRS) increased significantly from 138 to 159 words per minute after complete removal of SVOs by vitrectomy (95% confidence interval, 14-29; P < .001) (Figure 18). For patients with mildly opacified lenses (n = 10), there was no significant difference in MRS before and after surgery in any of the three conditions tested (operated eye, unoperated eye, and binocular).

Reading speed is impaired with SVOs and improves following vitrectomy in phakic and pseudophakic eyes with clear lenses. Reading speed is a valid objective measure to assess the positive effect of vitrectomy for SVOs on near-distance daily life activities. Moreover, our results provide evidence to the surgical community that this intervention does produce significant functional benefit for daily-life activities, but only in patients with clear lenses (i.e., pseudophakic and phakic with no cataract).

This work was published in Ophthalmic Surgery, Lasers & Imaging Retina. For more details: 6

7.3 Diagnosis, rehabilitation and low vision aids

7.3.1 Towards Accessible News Reading Design in Virtual Reality for Low Vision

Participants: Hui-Yin Wu, Aurélie Calabrèse, Pierre Kornprobst

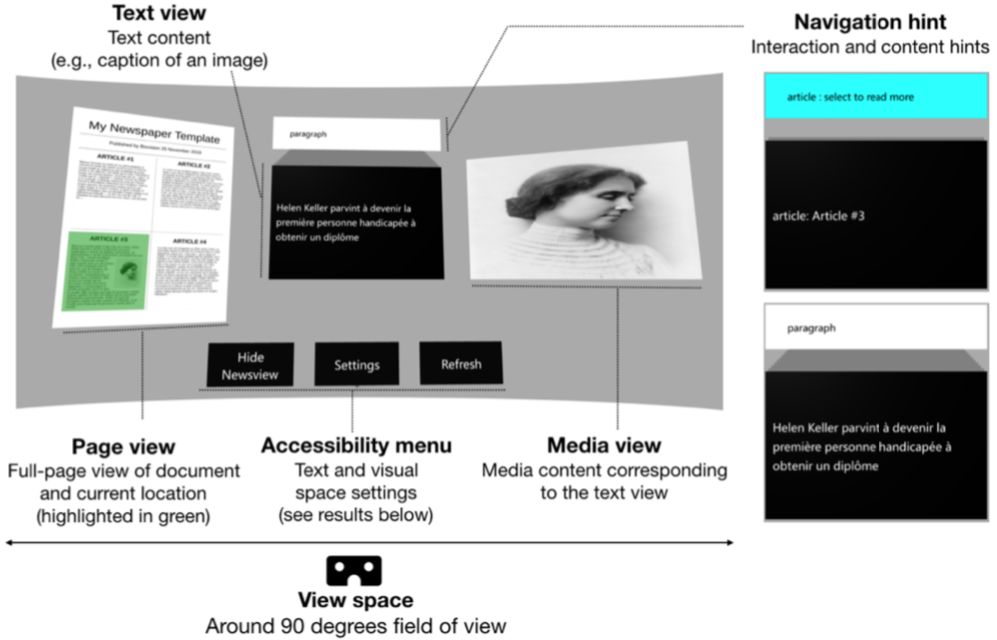

Description: Low-vision conditions resulting in partial loss of the visual field strongly affect patients' daily tasks and routines, and none more prominently than the ability to access text. Though vision aids such as magnifiers, digital screens, and text-to-speech devices can improve overall accessibility to text, news media, which is non-linear and has complex and volatile formatting, is still inaccessible, barring low-vision patients from easy access to essential news content. This work positions virtual reality as the next step towards accessible and enjoyable news reading. We review the current state of low-vision reading aids and conduct a hands-on study of eight reading applications in virtual reality to evaluate how accessibility design is currently implemented in existing products. From this we extract a number of design principles related to the usage of virtual reality for low-vision reading. We then present a framework that integrates the design principles resulting from our analysis and study, and implement a proof-of-concept for this framework using browser-based graphics to demonstrate the feasibility of our proposal with modern virtual reality technology (see Fig. 19).

This work was accepted to the conference Vision 2020 (postponed to 2021). For more information: 25. The software produced for this work is in the process of obtaining a CeCILL licence through an APP deposit (cf. New Softwares).

Application prototype: The global overview of the newspaper page is shown side-by-side with the enlarged text and images of the highlighted region. Navigation hints above the card show what type of content is displayed (e.g., photo, heading, paragraph) and whether the card can be selected (i.e., highlighted in light blue) to reveal further content. Text and images of the newspaper are purely for demonstrating a proof-of-concept.

7.3.2 Study and design of user evaluation of VR platform for accessible news reading

Participants: Hui-Yin Wu, Aurélie Calabrèse, Pierre Kornprobst

Description: We present a number of major findings that position virtual reality as a promising solution towards accessible and enjoyable news reading for low vision patients.

Notably, through a literature review and a hands-on study of existing applications, we identified eight advantages, as detailed in Table 1.

| Advantage | Description |
|---|---|
| Comfort | Users have no obligation to sit at a desk, bent over the text (e.g., with handheld magnifiers), nor fight with lighting conditions. |
| Mobility | Headsets can be used in various reading environments without additional cables or surfaces. |
| Visual field | The field of view of modern VR headsets is 90-110 degrees. |
| Multifunction | Modern VR headsets all come with web browsers, online capabilities, and downloadable applications. |
| Multimedia | Affords text, image, audio, video, and 3D content. |
| Interaction | Interaction methods can be customized, encompassing controller, voice, head movement, and gesture. |
| Immersion | Provides a private virtual space that is separate from the outside world, offering privacy and freedom to personalise the space 43. |
| Affordability | Compatible with most modern smartphones, a Google Cardboard© costs as little as $15, and an Oculus Go© $200. |

In addition, we also identified six major points of low-vision accessibility design for VR reading platforms: (1) global and local navigation, (2) adjustable print and layout, (3) smart text contrasting, (4) accessibility menu, (5) hands-free control, and (6) image enhancement. These were also evaluated in eight existing applications (Table 2).

| Application | Device | Genre | Content | Accessibility: MZ | Accessibility: PS | Accessibility: AB |
|---|---|---|---|---|---|---|
| | GVR,CB | books | app limited | | | |
| Chimera | GVR | books | epub | • | | |
| ComX VR | CB | comics | app limited | • | | |
| ImmersionVR Reader | GVR,GO | books | epubs & pdf | • | • | |
| Madefire Comics | GVR,CB,DD | comics | app limited | • | | |
| Sphere Toon | VI | comics | app limited | • | | |
| Virtual Book Viewer | GO | books | pdfs & images | • | | |
| Vivepaper | V,CB | books | app limited | | | |

Finally, in preparation for user evaluations, we collected a set of 90 articles from the French Vikidia site (a version of Wikipedia adapted to the reading level of children) and, using ParseTree, verified that they have the same linguistic properties as the iRest test for low-vision reading 44.

The reading platform is planned for release as an open toolbox using browser-based graphics (WebGL) that implements the design principles from our study. A proof-of-concept is created using this toolbox to demonstrate the feasibility of our proposal with modern virtual reality technology.

7.3.3 Auto-illustration of short French texts

Participants: Paola Palowski, Pierre Kornprobst, Marco Benzi, Elena Cabrio

1 Inria, Wimmics project-team

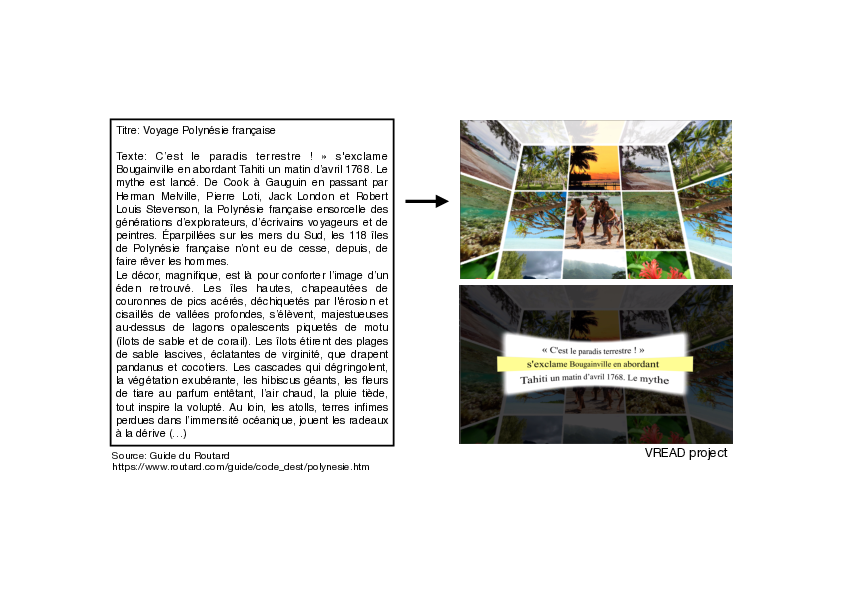

Description: Text auto-illustration refers to the automatic annotation of text with images. Traditionally, research on the topic has focused on domain-specific texts in English and has depended on curated image data sets and human evaluation. This research automates text auto-illustration by uniting natural language processing (NLP) methods and resources with computer vision (CV) image classification models to create a pipeline for auto-illustration of short French texts and its automatic evaluation. We create a corpus containing documents for which we build queries. Each query is based on a named entity that is associated with a synset label and is used to retrieve images. These images are analyzed and classified with a synset label. We create an algorithm that analyzes this set of synsets to automatically evaluate whether an image correctly illustrates a text. We compare our results to human evaluation and find that our system’s evaluation of illustrations is on par with human evaluation. Our system’s standards for acceptance are high, which excludes many images that are potentially good candidates for illustration. However, images that our system approves for illustration are also approved by human reviewers. This method was applied to create simple text-related 3D environments to support an immersive reading experience (see Fig. 20 or supporting video).
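To make the evaluation step more concrete, here is a minimal sketch of how synset labels could be compared to decide whether an image illustrates a query. It is not the algorithm used in this work: the toy hypernym table, the function names `ancestors` and `illustrates`, and the `max_depth` acceptance rule are all hypothetical, standing in for a real lexical resource and image classifier.

```python
# Toy hypernym hierarchy (child -> parent). Hypothetical stand-in for a
# lexical resource; the labels are illustrative only.
HYPERNYM = {
    "atoll": "island",
    "island": "land",
    "lagoon": "lake",
    "lake": "body_of_water",
    "canoe": "boat",
    "boat": "vessel",
}


def ancestors(synset):
    """Return the synset followed by all of its hypernyms, nearest first."""
    chain = [synset]
    while chain[-1] in HYPERNYM:
        chain.append(HYPERNYM[chain[-1]])
    return chain


def illustrates(query_synset, image_synsets, max_depth=1):
    """Accept an image if one of its predicted synsets equals the query
    synset or reaches it within max_depth hypernym steps (assumed rule)."""
    for label in image_synsets:
        if query_synset in ancestors(label)[: max_depth + 1]:
            return True
    return False


accepted = illustrates("island", ["atoll", "canoe"])   # "atoll" is one step below "island"
rejected = illustrates("island", ["lagoon"])           # no predicted label reaches "island"
strict = illustrates("land", ["atoll"])                # two steps away, beyond max_depth=1
relaxed = illustrates("land", ["atoll"], max_depth=2)  # accepted once the rule is relaxed
```

A small `max_depth` mimics a strict acceptance standard of the kind described above: it rejects loosely related images, at the cost of excluding some plausible candidates.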

For more information: 29

Application of the text auto-illustration method for immersive reading experiences. From a text about Polynésie française (left), we automatically find images that are used to "paint" the walls of a 3D cube so that the user can alternate between a reading phase and an image exploration phase (see VRead project).

8 Bilateral contracts and grants with industry

8.1 Bilateral contracts with industry

Helping visually impaired employees to follow presentations in the company: Towards a mixed reality solution

Participants: Riham Nehmeh, Carlos Zubiaga, Julia-Elizabeth Luna, Arnaud Mas, Alain Schmid, Christophe Hugon, Pierre Kornprobst

1 InriaTech, UCA Inria, France

2 EDF, R&D PERICLES – Groupe Réalité Virtuelle et Visualisation Scientifique, France

3 EDF, Lab Paris Saclay, Département SINETICS, France

4 R&DoM

Duration: 3 months

Objective: The objective of the work is to develop a proof-of-concept (PoC) targeting a precise use-case scenario defined by EDF (contract with InriaTech, supervised by Pierre Kornprobst). The use case is that of an employee with visual impairment wishing to follow a presentation. The idea of the PoC is a vision-aid system based on a mixed-reality solution. This work aims at (1) estimating the feasibility and interest of such a solution and (2) identifying research questions that could be jointly addressed in a future partnership.

APP Deposit: SlidesWatchAssistant (IDDN.FR.001.080024.000.S.P.2020.000.31235)

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Inria International Labs

MAGMA

- Title: Modelling And understandinG Motion Anticipation in the retina

- Duration: 2019 - 2022

- Coordinator: Bruno Cessac

- Partners:

- Advanced Center for Electrical and Electronic Engineering (AC3E), Universidad Técnica Federico Santa María Valparaiso (Chile)

- Inria contact: Bruno Cessac

- Summary: Motion processing is a fundamental visual computation underlying many visuomotor features, such as motion anticipation, which compensates for the transmission delays between retina and cortex and is fundamental for survival. We want to strengthen an existing collaborative network between the Universidad de Valparaiso in Chile and the Biovision team, bringing together skills in physiological recording in the retina, data analysis, numerical platforms, and theoretical tools to implement functional and biophysical models. The aim is to understand the mechanisms underlying the anticipatory response and the predictive coding observed in the mammalian retina, with a special emphasis on the role of lateral connectivity (amacrine cells and gap junctions).

9.1.2 Inria international partners

- Evelyne Sernagor lab, University of Newcastle, UK.

- Neuroscience institute and University of Valparaiso, Chile.

- Matthias Hennig lab, University of Edinburgh, UK.

9.2 International research visitors

Due to the COVID-19 pandemic, we had no international visitors this year.

9.3 National initiatives

9.3.1 ANR

Trajectory

- Title: Encoding and predicting motion trajectories in early visual networks

- Programme: ANR

- Duration: October 2015 - September 2020

- Coordinator: Invibe Team, Institut des Neurosciences de la Timone, Frédéric Chavane

- Partners:

- Institut de Neurosciences de la Timone (CNRS and Aix-Marseille Université, France)

- Institut de la Vision (IdV), Paris, France

- Universidad Técnica Federico Santa María (Electronics Engineering Department, Valparaíso, Chile)

- Inria contact: Bruno Cessac

- Summary:

Global motion processing is a major computational task of biological visual systems. When an object moves across the visual field, the sequence of visited positions is strongly correlated in space and time, forming a trajectory. These correlated images generate a sequence of local activations of the feed-forward stream. Local properties such as position, direction and orientation can be extracted at each time step by a feed-forward cascade of linear filters and static non-linearities. However, such local, piecewise analysis ignores the recent history of motion and faces several difficulties, such as systematic delays, ambiguous information processing (e.g., aperture and correspondence problems), high sensitivity to noise, and segmentation problems when several objects are present. Indeed, two main aspects of visual processing have been largely ignored by the dominant, classical feed-forward scheme. First, natural inputs are often ambiguous, dynamic and non-stationary, e.g., objects moving along complex trajectories. To process them, the visual system must segment them from the scene, estimate their position and direction over time and predict their future location and velocity. Second, each of these processing steps, from the retina to the highest cortical areas, is implemented by an intricate interplay of feed-forward, feedback and horizontal interactions. Thus, at each stage, a moving object will not only be processed locally, but also generate a lateral propagation of information. Despite decades of motion processing research, it is still unclear how the early visual system processes motion trajectories. We, among others, have proposed that anisotropic diffusion of motion information in retinotopic maps can contribute to resolving many of these difficulties. Under this perspective, motion integration, anticipation and prediction would be jointly achieved through the interactions between feed-forward, lateral and feedback propagations within a common spatial reference frame, the retinotopic maps.

Addressing this question is particularly challenging, as it requires probing these sequences of events at multiple scales (from individual cells to large networks) and multiple stages (retina, primary visual cortex (V1)). “TRAJECTORY” proposes such an integrated approach. Using state-of-the-art micro- and mesoscopic recording techniques combined with modeling approaches, we aim at dissecting, for the first time, the population responses at two key stages of visual motion encoding: the retina and V1. Preliminary experiments and previous computational studies demonstrate the feasibility of our work.

We plan three coordinated physiology and modeling work-packages aimed at exploring two crucial early visual stages in order to answer the following questions: How is a translating bar represented and encoded within a hierarchy of visual networks, and under which conditions does it elicit anticipatory responses? How is visual processing shaped by the recent history of motion along a more or less predictable trajectory? How much processing happens in V1 as opposed to simply reflecting transformations occurring already in the retina?

First, the project is timely because the partners master new tools such as multi-electrode arrays and voltage-sensitive dye imaging for investigating the dynamics of neuronal populations covering a large segment of the motion trajectory, both in retina and V1. Second, it is strategic: motion trajectories are a fundamental aspect of visual processing that is also a technological obstacle in computer vision and neuroprostheses design. Third, this project is unique in proposing to jointly investigate retinal and V1 levels within a single experimental and theoretical framework. Lastly, it is mature, being grounded in (i) preliminary data paving the way for the three different aims and (ii) a history of strong interactions between the different groups that have decided to join their efforts.
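The feed-forward cascade of linear filters and static non-linearities mentioned in the project summary can be illustrated with a minimal linear-nonlinear sketch. This is a generic textbook construction under stated assumptions, not a model from the project: the kernel values, the half-wave rectification, and all function names are hypothetical.

```python
def linear_filter(stimulus, kernel):
    """Causal temporal convolution: out[t] = sum_k kernel[k] * stimulus[t - k]."""
    out = []
    for t in range(len(stimulus)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            if t - k >= 0:
                acc += weight * stimulus[t - k]
        out.append(acc)
    return out


def rectify(x, threshold=0.0):
    """Static non-linearity: half-wave rectification above a threshold."""
    return max(0.0, x - threshold)


def ln_response(stimulus, kernel, threshold=0.0):
    """Linear filtering followed by a pointwise static non-linearity."""
    return [rectify(v, threshold) for v in linear_filter(stimulus, kernel)]


# Hypothetical biphasic kernel and a brief stimulus pulse at time step 2.
kernel = [0.5, 1.0, -0.5]
stimulus = [0, 0, 1, 0, 0, 0]
response = ln_response(stimulus, kernel)
peak_step = response.index(max(response))
print(response, peak_step)
```

With this kernel the response peaks one time step after the pulse, a toy instance of the systematic delay that a purely local feed-forward analysis introduces.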

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

Member of the organizing committees

- B. Cessac organized the “mini-cours” and the “challenges in neuroscience” of the Neuromod institute : (see webpage http://