Keywords

Computer Science and Digital Science

- A2.5. Software engineering

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A6. Modeling, simulation and control

- A6.2. Scientific computing, Numerical Analysis & Optimization

- A6.3. Computation-data interaction

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.4. Therapies

- B2.5. Handicap and personal assistances

- B2.6. Biological and medical imaging

- B2.8. Sports, performance, motor skills

- B5.1. Factory of the future

- B5.2. Design and manufacturing

- B5.8. Learning and training

- B5.9. Industrial maintenance

- B6.4. Internet of things

- B8.1. Smart building/home

- B8.3. Urbanism and urban planning

- B9.1. Education

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.4. Sports

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Anatole Lécuyer [Team leader, Inria, Senior Researcher, HDR]

- Fernando Argelaguet Sanz [Inria, Researcher]

Faculty Members

- Bruno Arnaldi [INSA Rennes, Professor, HDR]

- Melanie Cogné [Univ de Rennes I, Hospital Staff]

- Valérie Gouranton [INSA Rennes, Associate Professor]

- Maud Marchal [INSA Rennes, Associate Professor, until Mar 2020, HDR]

Post-Doctoral Fellows

- Thomas Howard [CNRS]

- Panagiotis Kourtesis [Inria, from Oct 2020]

- Justine Saint-Aubert [Inria, from Apr 2020]

PhD Students

- Guillaume Bataille [Orange Labs, until Sep 2020]

- Antonin Bernardin [INSA Rennes, until Jun 2020]

- Hugo Brument [Univ de Rennes I]

- Xavier De Tinguy De La Girouliere [INSA Rennes, until Aug 2020]

- Diane Dewez [Inria]

- Mathis Fleury [Inria]

- Gwendal Fouché [Inria]

- Rebecca Fribourg [Inria]

- Adelaïde Genay [Inria]

- Vincent Goupil [Vinci Construction, CIFRE, from Nov 2020]

- Lysa Gramoli [Orange Labs, CIFRE, from Oct 2020]

- Martin Guy [ECN]

- Romain Lagneau [INSA Rennes, until Jun 2020]

- Salome Le Franc [Centre hospitalier régional et universitaire de Rennes]

- Flavien Lecuyer [INSA Rennes, until Aug 2020]

- Tiffany Luong [Institut de recherche technologique B-com, CIFRE]

- Victor Rodrigo Mercado Garcia [Inria]

- Nicolas Olivier [InterDigital, CIFRE]

- Etienne Peillard [Inria, until Nov 2020]

- Grégoire Richard [Inria]

- Romain Terrier [Institut de recherche technologique B-com, until Sep 2020]

- Guillaume Vailland [INSA Rennes]

- Sebastian Vizcay [Inria]

Technical Staff

- Alexandre Audinot [INSA Rennes, Engineer]

- Ronan Gaugne [Univ de Rennes I, Engineer]

- Thierry Gaugry [INSA Rennes, Engineer, until Jun 2020]

- Florian Nouviale [INSA Rennes, Engineer]

- Thomas Prampart [Inria, Engineer]

- Adrien Reuzeau [INSA Rennes, Engineer, until Sep 2020]

- Hakim Si Mohammed [Inria, Engineer, until Jun 2020]

Interns and Apprentices

- Bastien Daniel [INSA Rennes, Intern, from Jun 2020 until Sep 2020]

- Loic Delabrouille [École normale supérieure de Rennes, Intern, from Jun 2020 until Aug 2020]

- Quentin Denis-Lutard [Inria, Intern, from May 2020 until Aug 2020]

- Rafik Drissi [Univ de Rennes I, Intern, until Sep 2020]

- Timothee Durgeaud [INSA Rennes, Intern, from Jun 2020 until Aug 2020]

- Johan Julien [INSA Rennes, Intern, from May 2020 until Aug 2020]

- Meven Leblanc [INSA Rennes, Intern, from Jun 2020 until Sep 2020]

- Thomas Rinnert [Inria, Intern, until Mar 2020]

- Killian Tiroir [INSA Rennes, Intern, from Apr 2020 until Aug 2020]

Administrative Assistant

- Nathalie Denis [Inria]

External Collaborators

- Guillaume Moreau [École centrale de Nantes, HDR]

- Jean Marie Normand [École centrale de Nantes]

2 Overall objectives

Our research project belongs to the scientific field of Virtual Reality (VR) and 3D interaction with virtual environments. VR systems can be used in numerous applications, such as industry (virtual prototyping, assembly or maintenance operations, data visualization), entertainment (video games, theme parks), arts and design (interactive sketching or sculpture, CAD, architectural mock-ups), education and science (physical simulations, virtual classrooms), or medicine (surgical training, rehabilitation systems). A major change that we foresee in the next decade in the field of Virtual Reality relates to the emergence of new paradigms of interaction (input/output) with Virtual Environments (VE).

Today, the most common way to interact with 3D content is still to measure the user's motor activity, i.e., his/her gestures and physical motions when manipulating different kinds of input devices. However, a recent trend consists in soliciting more movements and greater physical engagement of the user's body. We can notably stress the emergence of bimanual interaction, natural walking interfaces, and whole-body involvement. These new interaction schemes bring a new level of complexity in terms of generic physical simulation of potential interactions between the virtual body and the virtual surroundings, and a challenging "trade-off" between performance and realism. Moreover, research is also needed to characterize the influence of these new sensory cues on the user's resulting feelings of "presence" and immersion.

Besides, a novel kind of user input has recently appeared in the field of virtual reality: the user's mental activity, which can be measured by means of a "Brain-Computer Interface" (BCI). Brain-Computer Interfaces are communication systems which measure the user's electrical cerebral activity and translate it, in real time, into an exploitable command. BCIs introduce a new way of interacting "by thought" with virtual environments. However, current BCIs can only extract a small number of mental states, and hence a small number of mental commands. Thus, research is still needed to extend the capacities of BCIs, and to better exploit the few available mental states in virtual environments.

Our first motivation is thus to design novel “body-based” and “mind-based” controls of virtual environments and to reach, in both cases, more immersive and more efficient 3D interaction.

Furthermore, in current VR systems, motor activities and mental activities are always considered separately and exclusively. This is reminiscent of the well-known “body-mind dualism”, which is at the heart of historical philosophical debates. In this context, our objective is to introduce novel “hybrid” interaction schemes in virtual reality, by considering motor and mental activities jointly, i.e., in a harmonious, complementary, and optimized way. Thus, we intend to explore novel paradigms of 3D interaction mixing body and mind inputs. Moreover, our approach becomes even more challenging when considering and connecting multiple users, which implies multiple bodies and multiple brains collaborating and interacting in virtual reality.

Our second motivation is thus to introduce a “hybrid approach” which will mix the mental and motor activities of one or multiple users in virtual reality.

3 Research program

The scientific objective of the Hybrid team is to improve the 3D interaction of one or multiple users with virtual environments, by making full use of the physical engagement of the body, and by incorporating mental states by means of brain-computer interfaces. We intend to improve each component of this framework individually, but also their subsequent combinations.

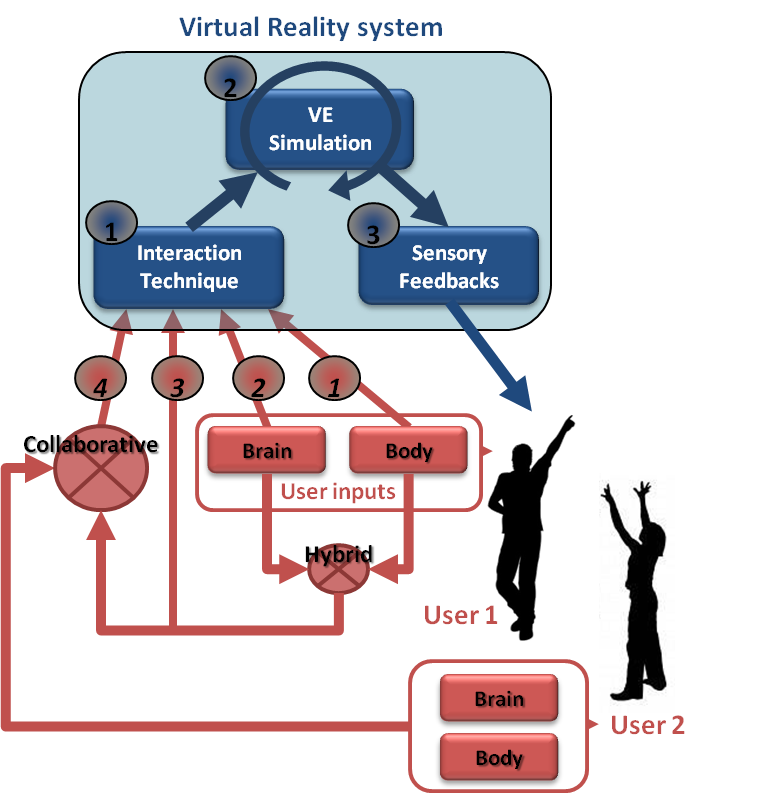

The "hybrid" 3D interaction loop between one or multiple users and a virtual environment is depicted in Figure 1. Different kinds of 3D interaction situations are distinguished (red arrows, bottom): 1) body-based interaction, 2) mind-based interaction, 3) hybrid and/or 4) collaborative interaction (with at least two users). In each case, three scientific challenges arise which correspond to the three successive steps of the 3D interaction loop (blue squares, top): 1) the 3D interaction technique, 2) the modeling and simulation of the 3D scenario, and 3) the design of appropriate sensory feedback.

The 3D interaction loop involves various possible inputs from the user(s) and different kinds of output (or sensory feedback) from the simulated environment. Each user can involve his/her body and mind by means of corporal and/or brain-computer interfaces. A hybrid 3D interaction technique (1) mixes mental and motor inputs and translates them into a command for the virtual environment. The real-time simulation (2) of the virtual environment takes these commands into account to change and update the state of the virtual world and virtual objects. The state changes are sent back to the user and perceived by means of different sensory feedbacks (e.g., visual, haptic and/or auditory) (3). These sensory feedbacks close the 3D interaction loop. Other users can also interact with the virtual environment using the same procedure, and can possibly “collaborate” by means of “collaborative interaction techniques” (4).
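The loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the team's implementation: the names `UserInput`, `interaction_technique`, `simulate` and `render_feedback` are hypothetical, and the fusion rule (the hand position drives the object, a decoded "focus" state triggers grasping) is only one arbitrary example of a hybrid technique.

```python
from dataclasses import dataclass, field


@dataclass
class UserInput:
    """Inputs of one user for one frame (hypothetical structure)."""
    hand_x: float       # motor input, e.g. a tracked hand position in metres
    mental_state: str   # mental input, e.g. a label decoded by a BCI ("rest" or "focus")


@dataclass
class WorldState:
    """Minimal virtual world: one object that can be grasped and moved."""
    object_x: float = 0.0
    grabbed: bool = False
    log: list = field(default_factory=list)


def interaction_technique(inp: UserInput) -> dict:
    """Step (1): mix motor and mental inputs into one command.
    Hypothetical rule: the hand drives the target position, a "focus" state grasps."""
    return {"target_x": inp.hand_x, "grab": inp.mental_state == "focus"}


def simulate(world: WorldState, cmd: dict) -> WorldState:
    """Step (2): update the virtual world in response to the command."""
    world.grabbed = cmd["grab"]
    if world.grabbed:
        world.object_x = cmd["target_x"]
    return world


def render_feedback(world: WorldState) -> str:
    """Step (3): turn the new state into feedback; a string stands in for visual/haptic output."""
    haptic = "vibrate" if world.grabbed else "none"
    message = f"object at {world.object_x:.2f}, haptic={haptic}"
    world.log.append(message)
    return message


def run_loop(world: WorldState, inputs: list) -> list:
    """Close the loop once per frame; other users could feed inputs into the same world (step 4)."""
    return [render_feedback(simulate(world, interaction_technique(i))) for i in inputs]


if __name__ == "__main__":
    world = WorldState()
    frames = [UserInput(0.5, "rest"), UserInput(0.8, "focus")]
    print(run_loop(world, frames))
    # ['object at 0.00, haptic=none', 'object at 0.80, haptic=vibrate']
```

Keeping the three steps as separate functions mirrors the three challenges listed next: each one can be replaced (a different technique, a physics engine, a real haptic device) without touching the others.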

This description highlights three major challenges, which correspond to three mandatory steps when designing 3D interaction with virtual environments:

- 3D interaction techniques: This first step consists in translating the actions or intentions of the user (inputs) into an explicit command for the virtual environment. In virtual reality, the classical tasks that require such user commands were categorized early on into four types 69: navigating the virtual world, selecting a virtual object, manipulating it, or controlling the application (entering text, activating options, etc.). However, the addition of a third dimension, stereoscopic rendering and advanced VR interfaces make many techniques that proved efficient in 2D inappropriate, and make it necessary to design specific interaction techniques and adapted tools. This challenge is renewed here by the various kinds of 3D interaction which are targeted. In our case, we consider various cases, with motor and/or cerebral inputs, and potentially multiple users.

- Modeling and simulation of complex 3D scenarios: This second step corresponds to the real-time update of the state of the virtual environment in response to all the potential commands or actions sent by the user. The complexity of the data and phenomena involved in 3D scenarios is constantly increasing. It corresponds, for instance, to the multiple states of the entities present in the simulation (rigid, articulated, deformable, fluids, which can constitute both the user’s virtual body and the different manipulated objects), and to the multiple physical phenomena implied by natural human interactions (squeezing, breaking, melting, etc.). The challenge here consists in modeling and simulating these complex 3D scenarios while meeting two strong constraints of virtual reality systems: performance (real-time and interactivity) and genericity (e.g., multi-resolution, multi-modal, multi-platform, etc.).

- Immersive sensory feedbacks: This third step corresponds to the display of the multiple sensory feedbacks (output) coming from the various VR interfaces. These feedbacks enable the user to perceive the changes occurring in the virtual environment. They close the 3D interaction loop, immerse the user, and potentially generate a subsequent feeling of presence. Among the various VR interfaces developed so far, we can stress two kinds of sensory feedback: visual feedback (3D stereoscopic images using projection-based systems such as CAVE systems or Head Mounted Displays), and haptic feedback (related to the sense of touch and to tactile or force-feedback devices). The Hybrid team has strong expertise in haptic feedback, and in the design of haptic and “pseudo-haptic” rendering 70. Note that a major trend in the community, which is strongly supported by the Hybrid team, relates to a “perception-based” approach, which aims at designing sensory feedbacks that are well in line with human perceptual capacities.
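Pseudo-haptic rendering can be illustrated with its best-known mechanism, a modulation of the control/display (C/D) ratio: when the displayed cursor moves more slowly than the user's hand inside a region, users tend to perceive resistance or friction without any force-feedback device. The sketch below is a one-dimensional illustration only. The function name, the zone bounds and the `resistance` parameter are assumptions chosen for readability, and a real system would also need to re-synchronize hand and cursor.

```python
def pseudo_haptic_path(hand_positions, zone=(0.4, 0.6), resistance=1.0):
    """Map real 1D hand positions to displayed cursor positions (C/D ratio modulation).

    Outside `zone`, the cursor follows the hand one-to-one (gain 1).
    Inside `zone`, each hand step is scaled by 1 / (1 + resistance), so the cursor
    slows down and the user tends to perceive resistance. `zone` and `resistance`
    are illustrative parameters, not values taken from the literature.
    """
    if resistance < 0:
        raise ValueError("resistance must be non-negative")
    low, high = zone
    inside_gain = 1.0 / (1.0 + resistance)
    cursor = hand_positions[0]
    path = [cursor]
    for previous, current in zip(hand_positions, hand_positions[1:]):
        # The gain depends on where the displayed cursor is, not on the hand.
        gain = inside_gain if low <= cursor <= high else 1.0
        cursor += gain * (current - previous)
        path.append(cursor)
    return path


if __name__ == "__main__":
    hand = [0.0, 0.2, 0.4, 0.6, 0.8]
    print([round(x, 2) for x in pseudo_haptic_path(hand)])
    # [0.0, 0.2, 0.4, 0.5, 0.6]
```

The hand ends at 0.8 while the cursor ends at 0.6: the visual lag accumulated inside the zone is what carries the pseudo-haptic cue.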

These three scientific challenges are addressed differently according to the context and the user inputs involved. We propose to consider three different contexts, which correspond to the three different research axes of the Hybrid research team, namely: 1) body-based interaction (motor input only), 2) mind-based interaction (cerebral input only), and then 3) hybrid and collaborative interaction (i.e., the mixing of body and brain inputs from one or multiple users).

3.1 Research Axes

The scientific activity of the Hybrid team follows three main axes of research:

- Body-based interaction in virtual reality. Our first research axis concerns the design of immersive and effective "body-based" 3D interactions, i.e., relying on a physical engagement of the user’s body. This trend is probably the most popular one in VR research at the moment. Most VR setups make use of tracking systems which measure specific positions or actions of the user in order to interact with a virtual environment. However, in recent years, novel options have emerged for measuring “full-body” movements or other, even less conventional, inputs (e.g., body equilibrium). In this first research axis, we are thus concerned with the emergence of new kinds of “body-based interaction” with virtual environments. This implies the design of novel 3D user interfaces and 3D interaction techniques, novel simulation models and techniques, and novel sensory feedbacks for body-based interaction with virtual worlds. It involves the real-time physical simulation of complex interactive phenomena, and the design of corresponding haptic and pseudo-haptic feedback.

- Mind-based interaction in virtual reality. Our second research axis concerns the design of immersive and effective “mind-based” 3D interactions in Virtual Reality. Mind-based interaction with virtual environments makes use of Brain-Computer Interface technology. This technology corresponds to the direct use of brain signals to send “mental commands” to an automated system such as a robot, a prosthesis, or a virtual environment. BCI is a rapidly growing area of research, and several impressive prototypes are already available. However, the emergence of such a novel user input also calls for novel and dedicated 3D user interfaces. This implies studying the extension of the mental vocabulary available for 3D interaction with VEs, then designing specific 3D interaction techniques "driven by the mind" and, last, designing immersive sensory feedbacks that could help improve the learning of brain control in VR.

- Hybrid and collaborative 3D interaction. Our third research axis intends to study the combination of motor and mental inputs in VR, for one or multiple users. This concerns the design of mixed systems, with potentially collaborative scenarios involving multiple users, and thus multiple bodies and multiple brains sharing the same VE. This research axis therefore involves two interdependent topics: 1) collaborative virtual environments, and 2) hybrid interaction. It should lead to collaborative virtual environments with multiple users, and shared systems with body and mind inputs.

4 Application domains

4.1 Overview

The research program of the Hybrid team aims at the next generations of virtual reality and 3D user interfaces, which could address both the “body” and the “mind” of the user. Novel interaction schemes are designed for one or multiple users. We target better integrated systems and more compelling user experiences.

The applications of our research program correspond to the applications of virtual reality technologies which could benefit from the addition of novel body-based or mind-based interaction capabilities:

- Industry: with training systems, virtual prototyping, or scientific visualization;

- Medicine: with rehabilitation and reeducation systems, or surgical training simulators;

- Entertainment: with the movie industry, content customization, video games or attractions in theme parks;

- Construction: with virtual mock-up design and review, or historical/architectural visits;

- Cultural Heritage: with acquisition, virtual excavation, virtual reconstruction and visualization.

5 Social and environmental responsibility

5.1 Impact of research results

A salient initiative launched in 2020 and carried out by Hybrid is the Inria Covid-19 project “VERARE”. VERARE is a unique and innovative concept, implemented in record time thanks to a close collaboration between the Hybrid research team and the teams of the intensive care and physical and rehabilitation medicine departments of Rennes University Hospital. VERARE consists in using virtual environments and VR technologies for the rehabilitation of Covid-19 patients who are emerging from a coma, weakened, and with strong difficulties in recovering walking. With VERARE, the patient is immersed in different virtual environments using a VR headset. He or she is represented by an “avatar” carrying out different motor tasks involving the lower limbs, for example: walking, jogging, avoiding obstacles, etc. Our main hypothesis is that observing such virtual actions, and progressively resuming motor activity in VR, will allow rehabilitation to start sooner, as soon as the patient leaves the ICU. The patient will then be able to carry out sessions in his or her room, or even from the hospital bed, in simple and secure conditions, with the hope of obtaining a final clinical benefit, either in terms of motor and walking recovery or in terms of hospital length of stay. The project started at the end of April 2020, and we were able to deploy a first version of our application at Rennes University Hospital in mid-June 2020, only 2 months after the project started. Several patients have already started using our virtual reality application at Rennes University Hospital, and the clinical evaluation of VERARE is expected to be completed in 2021.

6 Highlights of the year

- Mélanie Cogné (Medical Doctor, PhD, CHU Rennes) has joined the Hybrid team as a permanent member.

6.1 Awards

- IEEE VR Best Journal Paper Award 2020: obtained by Rebecca Fribourg, Ferran Argelaguet, Anatole Lécuyer, Ludovic Hoyet, for the paper entitled “Avatar and Sense of Embodiment: Studying the Relative Preference Between Appearance, Control and Point of View” 20.

- IEEE VR Best Conference Paper Award 2020: obtained by Hakim Si-Mohammed, Catarina Lopes-Dias, María Duarte, Ferran Argelaguet Sanz, Camille Jeunet, Géry Casiez, Gernot Müller-Putz, Anatole Lécuyer, Reinhold Scherer, for the paper entitled “Detecting System Errors in Virtual Reality Using EEG Through Error-Related Potentials” 53.

- EuroVR Conference Best Paper Award 2020: obtained by Hugo Brument, Maud Marchal, Anne-Hélène Olivier, Ferran Argelaguet, for the paper entitled “Influence of Dynamic Field of View Restrictions on Rotation Gain Perception in Virtual Environments” 38.

- ICAT-EGVE Conference Best Paper Award 2020: obtained by Rebecca Fribourg, Evan Blanpied, Ludovic Hoyet, Anatole Lécuyer, Ferran Argelaguet, for the paper entitled “Influence of Threat Occurrence and Repeatability on the Sense of Embodiment and Threat Response in VR” 41.

- Best IEEE VR 3DUI Contest Demo Award - Honorable Mention 2020: obtained by Alexandre Audinot, Diane Dewez, Gwendal Fouché, Rebecca Fribourg, Thomas Howard, Flavien Lécuyer, Tiffany Luong, Victor Mercado, Adrien Reuzeau, Thomas Rinnert, Guillaume Vailland, and Ferran Argelaguet, for the paper entitled “3Dexterity: Finding your place in a 3-armed world” 36.

7 New software and platforms

7.1 New software

7.1.1 #FIVE

- Name: Framework for Interactive Virtual Environments

- Keywords: Virtual reality, 3D, 3D interaction, Behavior modeling

- Scientific Description: #FIVE (Framework for Interactive Virtual Environments) is a framework for the development of interactive and collaborative virtual environments. #FIVE was developed to answer the need for an easier and faster design and development of virtual reality applications. #FIVE provides a toolkit that simplifies the declaration of possible actions and behaviours of objects in a VE. It also provides a toolkit that facilitates the setting and management of collaborative interactions in a VE. It supports the distribution of the VE across different setups. It also proposes guidelines to efficiently create a collaborative and interactive VE. The current implementation is in C# and comes with a Unity3D engine integration, compatible with the MiddleVR framework.

- Functional Description: #FIVE contains software modules that can be interconnected and help build interactive and collaborative virtual environments. Thanks to #FIVE's modules, the user can focus on the domain-specific aspects of his/her application (industrial training, medical training, etc.). These modules can be used in a wide range of domains relying on virtual reality applications that require interactive environments and collaboration, such as training.

- URL: https://bil.inria.fr/fr/software/view/2527/tab

- Publication: hal-01147734

- Contacts: Florian Nouviale, Bruno Arnaldi, Valérie Gouranton

- Participants: Florian Nouviale, Valérie Gouranton, Bruno Arnaldi, Vincent Goupil, Carl-Johan Jorgensen, Emeric Goga, Adrien Reuzeau, Alexandre Audinot
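The idea of declaring the possible actions of objects, rather than hard-coding them, can be illustrated with a type-and-relation sketch. Everything below is hypothetical (the class names, the `possible_actions` helper and the example types) and does not reproduce #FIVE's C# API. Objects carry types, a relation lists the type required for each of its roles, and the actions available in the scene are derived by matching objects to those types.

```python
from itertools import permutations


class VEObject:
    """A virtual object described by the types (capabilities) it has. Hypothetical."""

    def __init__(self, name, types):
        self.name = name
        self.types = set(types)


class Relation:
    """A declared action that needs one object per role, each role requiring a type. Hypothetical."""

    def __init__(self, name, required_types):
        self.name = name
        self.required_types = list(required_types)


def possible_actions(relations, objects):
    """List (relation name, object names) pairs whose objects match the required types, role by role."""
    actions = []
    for relation in relations:
        # Try every ordered assignment of distinct objects to the relation's roles.
        for combo in permutations(objects, len(relation.required_types)):
            if all(t in obj.types for t, obj in zip(relation.required_types, combo)):
                actions.append((relation.name, tuple(obj.name for obj in combo)))
    return actions


if __name__ == "__main__":
    scene = [
        VEObject("screwdriver", ["Screwdriver", "Grabbable"]),
        VEObject("screw", ["Screwable"]),
    ]
    rules = [Relation("Screw", ["Screwdriver", "Screwable"]), Relation("Grab", ["Grabbable"])]
    print(possible_actions(rules, scene))
    # [('Screw', ('screwdriver', 'screw')), ('Grab', ('screwdriver',))]
```

With this declarative style, adding a new object type to a scene automatically enables every relation it satisfies, which is the kind of reuse across training domains that the description above emphasizes.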

7.1.2 #SEVEN

- Name: Sensor Effector Based Scenarios Model for Driving Collaborative Virtual Environments

- Keywords: Virtual reality, Interactive Scenarios, 3D interaction

- Scientific Description: #SEVEN (Sensor Effector Based Scenarios Model for Driving Collaborative Virtual Environments) is a model and an engine based on Petri nets extended with sensors and effectors, enabling the description and execution of complex and interactive scenarios.

- Functional Description: #SEVEN enables the execution of complex scenarios for driving Virtual Reality applications. #SEVEN's scenarios are based on enhanced Petri net and state machine models, which are able to describe and solve intricate event sequences. #SEVEN comes with an editor for creating, editing, remotely controlling and running scenarios. #SEVEN is implemented in C# and can be used as a stand-alone application or as a library. An integration with the Unity3D engine, compatible with MiddleVR, also exists.

- Release Contributions: Added state machine handling for scenario description, in addition to the existing Petri net format. Improved scenario editor.

- URL: https://bil.inria.fr/fr/software/view/2528/tab

- Publications: hal-01147733, hal-01199738, tel-01419698, hal-01086237

- Contacts: Valérie Gouranton, Bruno Arnaldi, Florian Nouviale

- Participants: Florian Nouviale, Valérie Gouranton, Bruno Arnaldi, Vincent Goupil, Emeric Goga, Carl-Johan Jorgensen, Adrien Reuzeau, Alexandre Audinot
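The general idea of a Petri net extended with sensors and effectors can be sketched in Python under clearly stated assumptions. The `SensorPetriNet` class, its firing policy and the example maintenance scenario are illustrative only and do not reflect #SEVEN's actual engine or file format. In this sketch, transitions are checked in declaration order within one step. A transition fires when its input places hold enough tokens and its sensor observes the expected event in the virtual environment. Firing moves tokens and triggers an effector.

```python
from collections import Counter


class SensorPetriNet:
    """Petri net whose transitions also need a sensor condition and trigger an effector (illustrative)."""

    def __init__(self, marking):
        self.marking = dict(marking)   # place name -> number of tokens
        self.transitions = []

    def add_transition(self, name, inputs, outputs, sensor, effector):
        self.transitions.append((name, inputs, outputs, sensor, effector))

    def enabled(self, inputs):
        # Each occurrence of a place in `inputs` consumes one token.
        return all(self.marking.get(p, 0) >= n for p, n in Counter(inputs).items())

    def step(self, env):
        """Check transitions in declaration order; fire each one that is enabled and sensed.
        A token produced earlier in the same step can already be consumed later in it."""
        fired = []
        for name, inputs, outputs, sensor, effector in self.transitions:
            if self.enabled(inputs) and sensor(env):
                for place in inputs:
                    self.marking[place] -= 1
                for place in outputs:
                    self.marking[place] = self.marking.get(place, 0) + 1
                effector(env)   # act on the (here: dictionary-based) virtual environment
                fired.append(name)
        return fired


def build_demo():
    """Hypothetical two-step maintenance scenario: open a door, then remove a part."""
    net = SensorPetriNet({"start": 1})
    net.add_transition("open_door", ["start"], ["door_open"],
                       sensor=lambda env: env.get("handle_touched", False),
                       effector=lambda env: env.update(door_animation="play"))
    net.add_transition("remove_part", ["door_open"], ["done"],
                       sensor=lambda env: env.get("part_grabbed", False),
                       effector=lambda env: env.update(hint="procedure complete"))
    return net


if __name__ == "__main__":
    env, net = {}, build_demo()
    print(net.step(env))          # []  (nothing sensed yet)
    env["handle_touched"] = True
    print(net.step(env))          # ['open_door']
    env["part_grabbed"] = True
    print(net.step(env))          # ['remove_part']
```

Separating the token logic (what may happen next) from sensors and effectors (what is observed and done in the VE) is what allows the same scenario to drive different applications.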

7.1.3 OpenVIBE

- Keywords: Neurosciences, Interaction, Virtual reality, Health, Real time, Neurofeedback, Brain-Computer Interface, EEG, 3D interaction

- Functional Description: OpenViBE is a free and open-source software platform devoted to the design, test and use of Brain-Computer Interfaces (BCI). The platform consists of a set of software modules that can be integrated easily and efficiently to design BCI applications. The key features of the OpenViBE software are its modularity, high performance, portability, multiple-user facilities and connection with high-end/VR displays. The platform's designer tool enables users to build complete scenarios from existing software modules, using a dedicated graphical language and a simple Graphical User Interface (GUI). This software is available on the Inria Forge under the terms of the AGPL licence, and it was officially released in June 2009. Since then, the OpenViBE software has been downloaded more than 60000 times, and it is used by numerous laboratories, projects, and individuals worldwide. More information, downloads, tutorials, videos and documentation are available on the OpenViBE website.

- URL: http://openvibe.inria.fr

- Authors: Charles Garraud, Jérôme Chabrol, Thierry Gaugry, Cedric Riou, Yann Renard, Anatole Lécuyer, Jozef Legény, Laurent Bonnet, Jussi Tapio Lindgren, Fabien Lotte, Thomas Prampart, Thibaut Monseigne

- Contacts: Anatole Lécuyer, Ana Bela Leconte

- Participants: Cedric Riou, Thierry Gaugry, Anatole Lécuyer, Fabien Lotte, Jussi Tapio Lindgren, Laurent Bougrain, Maureen Clerc, Théodore Papadopoulo

- Partners: INSERM, GIPSA-Lab
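OpenViBE scenarios chain processing boxes (acquisition, filtering, feature extraction, classification) in its graphical designer. The sketch below is a text-only illustration of that box-chaining idea, not of OpenViBE's own API. It chains two hypothetical boxes. The first computes band power with a naive DFT in the 8-12 Hz mu band, whose decrease during motor imagery (event-related desynchronization) is a common BCI feature. The second is a threshold classifier that turns this power into a command. The sampling rate, threshold and function names are assumptions.

```python
import math

FS = 128  # sampling rate in Hz (assumed)


def band_power(window, fs, f_low, f_high):
    """Mean power of DFT bins between f_low and f_high (Hz); naive O(N^2) DFT for short windows."""
    n = len(window)
    powers = []
    for k in range(n // 2 + 1):
        if f_low <= k * fs / n <= f_high:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(window))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(window))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0


def chain(*boxes):
    """Connect processing 'boxes' so that each one's output feeds the next."""
    def run(data):
        for box in boxes:
            data = box(data)
        return data
    return run


# Hypothetical two-box scenario: mu-band power, then a threshold classifier.
decode = chain(
    lambda window: band_power(window, FS, 8, 12),
    lambda power: "move" if power < 1.0 else "rest",  # threshold chosen arbitrarily
)

if __name__ == "__main__":
    strong_mu = [math.sin(2 * math.pi * 10 * i / FS) for i in range(FS)]  # 1 s of 10 Hz signal
    weak_mu = [0.2 * x for x in strong_mu]                                 # attenuated mu rhythm
    print(decode(strong_mu), decode(weak_mu))  # rest move
```

A real pipeline would add spatial and temporal filtering and a trained classifier; the point here is only that each stage is an independent, reusable unit.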

7.2 New platforms

7.2.1 Immerstar

- Participants: Florian Nouviale, Ronan Gaugne

- URL: http://www.irisa.fr/immersia/

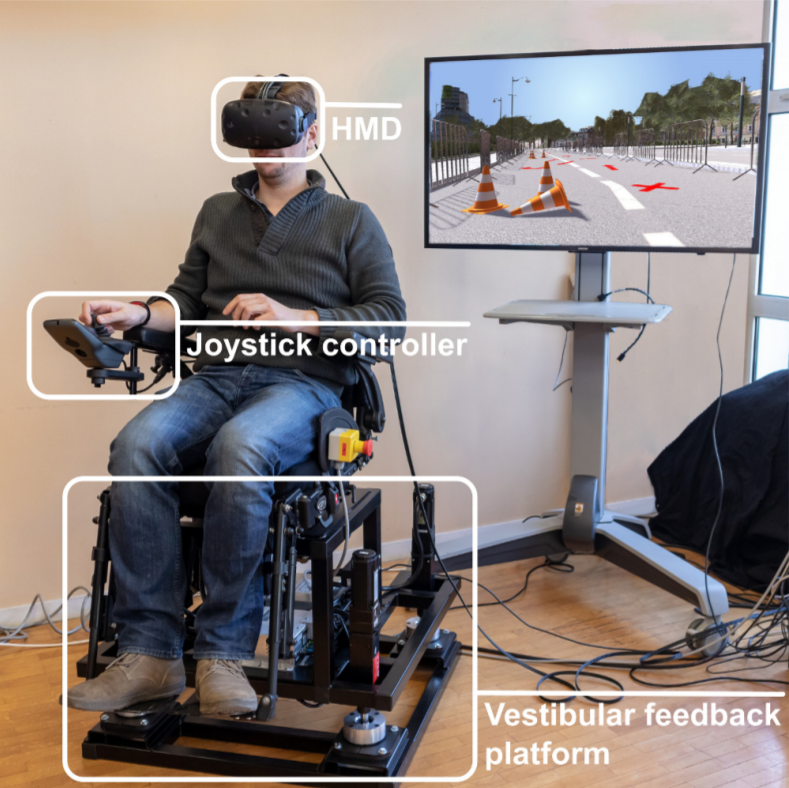

With the two virtual reality technological platforms Immersia and Immermove, grouped under the name Immerstar, the team has access to high-level scientific facilities. This equipment benefits the research teams of the center and has allowed them to extend their local, national and international collaborations. The Immerstar platform received Inria funding for the 2015-2019 period, which enabled several important evolutions. In particular, in 2018, a haptic system covering the entire volume of the Immersia platform was installed, allowing various configurations, from a single haptic device to dual haptic devices used by either one or two users. In addition, a motion platform designed to introduce motion feedback for powered wheelchair simulations has also been incorporated (see Figure 2).

We celebrated the twentieth anniversary of the Immersia platform in November 2019 by inaugurating the new haptic equipment. We organized scientific presentations, attended by 150 participants, and visits for the support services, attended by 50 people.

Building on this support, in 2020 we participated in a PIA3-Equipex+ proposal. This proposal, CONTINUUM, involves 22 partners, has been successfully evaluated and will be funded. The CONTINUUM project will create a collaborative research infrastructure of 30 platforms located throughout France, to advance interdisciplinary research based on interaction between computer science and the human and social sciences. Thanks to CONTINUUM, 37 research teams will develop cutting-edge research programs focusing on visualization, immersion, interaction and collaboration, as well as on human perception, cognition and behaviour in virtual/augmented reality, with potential impact on societal issues. CONTINUUM enables a paradigm shift in the way we perceive, interact, and collaborate with complex digital data and digital worlds by putting humans at the center of data processing workflows. The project will empower scientists, engineers and industry users with a highly interconnected network of high-performance visualization and immersive platforms to observe, manipulate, understand and share digital data, real-time multi-scale simulations, and virtual or augmented experiences. All platforms will feature facilities for remote collaboration with other platforms, as well as mobile equipment that can be lent to users to facilitate onboarding.

8 New results

8.1 Virtual Reality Tools and Usages

8.1.1 Do Distant or Colocated Audiences Affect User Activity in VR?

Participants: Romain Terrier, Valérie Gouranton, Bruno Arnaldi.

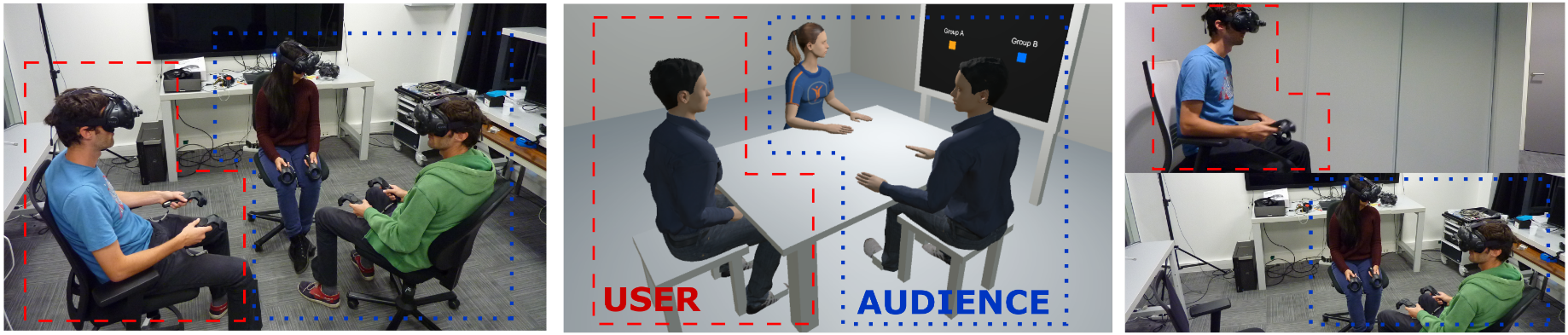

In this work 61, we explored the impact of distant or colocated real audiences on social inhibition through a user study in virtual reality (VR) (see Figure 3). The study investigated the differences between two multi-user configurations (i.e., the local and distant conditions) and one control condition where the user is alone (i.e., the alone condition). In the local condition, a single user and a real audience shared the same real room. Conversely, in the distant condition, the user and the audience were separated into two different real rooms. The user performed a number categorization task in VR, for which the users’ performance results (i.e., type of answer and answering time) were extracted, as well as subjective feelings and perceptions (i.e., perceptions of others, stress, cognitive workload, presence). The differences between the local and distant configurations were explored. Furthermore, we investigated potential gender biases in the objective and subjective results. In both the local and distant conditions, the presence of a real audience affected the user’s performance due to social inhibition. The users were even more influenced when the audience did not share the same room, even though the audience was less directly perceived in this condition.

This work was done in collaboration with Orange Labs Rennes and the Institute of research and technology b<>com, Cesson-Sevigne, France.

8.1.2 Scenario-based VR Framework for Product Design

Participants: Romain Terrier, Valérie Gouranton, Bruno Arnaldi.

Virtual Reality (VR) applications are promising solutions for supporting design processes across multiple domains. In complex systems (e.g., machines, cities, interior layouts), VR applications are used alongside Computer Assisted Design (CAD) systems, which (1) are rigid (i.e., they lack customization) and (2) limit design iterations. VR systems need to address these shortcomings so that they can become widespread and adaptable across design domains. We thus propose a new VR Framework 54 based on scenarios and a new generic theoretical design model, to assist developers in creating versatile and personalized applications for designers. The generic theoretical model describes the common design activities shared by many design domains, and the scenario depicts the design model to allow design iterations in VR. Through scenarios, the VR Framework enables the creation of customized copies of the generic design process to fulfill the needs of each design domain. The customization capability of our solution is illustrated on a use case.
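The notion of customized copies of a generic design process can be sketched as follows. The activity names (`create`, `modify`, `evaluate`, `validate`), the `DesignScenario` class and the `customize`/`unroll` helpers are hypothetical stand-ins, since the framework's actual design model is not detailed here. The point is that each domain works on its own copy, leaving the generic model untouched, and that design iterations replay the scenario.

```python
from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class DesignScenario:
    domain: str
    activities: list = field(default_factory=list)


# Hypothetical generic design model: activities shared by many design domains.
GENERIC = DesignScenario("generic", ["create", "modify", "evaluate", "validate"])


def customize(generic, domain, add_after=None, remove=()):
    """Return a domain-specific copy; the generic model itself is never modified."""
    scenario = deepcopy(generic)
    scenario.domain = domain
    scenario.activities = [a for a in scenario.activities if a not in remove]
    for anchor, new_activity in (add_after or {}).items():
        position = scenario.activities.index(anchor) + 1
        scenario.activities.insert(position, new_activity)
    return scenario


def unroll(scenario, iterations):
    """Sequence of activities over several design iterations (the scenario loops back)."""
    return [a for _ in range(iterations) for a in scenario.activities]


if __name__ == "__main__":
    interior = customize(GENERIC, "interior layout",
                         add_after={"modify": "check_circulation"}, remove=("validate",))
    print(interior.activities)   # ['create', 'modify', 'check_circulation', 'evaluate']
    print(unroll(interior, 2))
```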

This work was done in collaboration with the Institute of research and technology b<>com, Cesson-Sevigne, France and the Human Design Group (HDG), Toulouse, France.

8.1.3 A Machine Learning Tool to Match 2D Drawings and 3D Objects’ Category for Populating Mockups in VR

Participants: Romain Terrier.

Virtual Environments (VEs) relying on Virtual Reality (VR) can facilitate co-design by enabling users to create 3D mockups directly in the VE. Databases of 3D objects are helpful to populate the mockup, but require retrieval methods for the users. In the early stages of the design process, mockups are made up of common objects rather than variations of objects. Retrieving a 3D object from a large database can be tedious, even more so in VR. Taking into account both the need for natural user interaction and the need to populate the mockup with common 3D objects, we propose in this work a retrieval method based on 2D sketching in VR and machine learning. Our system is able to recognize 90 categories of objects related to VR interior design with an accuracy of up to 86%.
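The retrieval step can be sketched independently of the recognition model. This sketch assumes that the trained classifier returns one score per object category for a VR sketch; the model itself, the category names and the file names below are hypothetical. A simple strategy is to convert the scores to probabilities and offer the objects of the top-k categories, which gives the user a fallback when the best guess is wrong.

```python
import math


def softmax(scores):
    """Convert raw category scores into probabilities (numerically stable)."""
    top = max(scores.values())
    exps = {cat: math.exp(s - top) for cat, s in scores.items()}
    total = sum(exps.values())
    return {cat: e / total for cat, e in exps.items()}


def retrieve(scores, database, k=2):
    """Return (category, probability, candidate objects) for the k most likely categories."""
    probs = softmax(scores)
    ranked = sorted(probs, key=probs.get, reverse=True)[:k]
    return [(cat, probs[cat], database.get(cat, [])) for cat in ranked]


if __name__ == "__main__":
    # Hypothetical classifier output for one sketch, and a toy object database.
    scores = {"chair": 3.1, "lamp": 2.5, "table": 1.2}
    database = {"chair": ["chair_01.obj", "chair_02.obj"], "lamp": ["lamp_01.obj"]}
    for category, p, objects in retrieve(scores, database):
        print(f"{category}: {p:.2f} -> {objects}")
```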

This work was done in collaboration with the Institute of research and technology b<>com, Cesson-Sevigne, France.

8.1.4 Action sequencing in VR, a no-code Approach

Participants: Flavien Lécuyer, Adrien Reuzeau, Ronan Gaugne, Valérie Gouranton, Bruno Arnaldi.

In many domains, it is common to have procedures with a given sequence of actions to follow. To perform such procedures, virtual reality is a helpful tool, as it allows a user to be safely placed in a given situation as many times as needed, without risk. Indeed, learning in a real situation implies risks both for the studied object (or the patient), e.g., a badly treated injury, and for the trainee, e.g., a lack of danger awareness. To do this, it is necessary to integrate the procedure in the virtual environment in the form of a scenario. Creating such a scenario is a difficult task for a domain expert, as the required coding skill level is too high. Often, a developer is needed to manage the creation of the virtual content, with the implied drawbacks (e.g., time loss and misunderstandings).

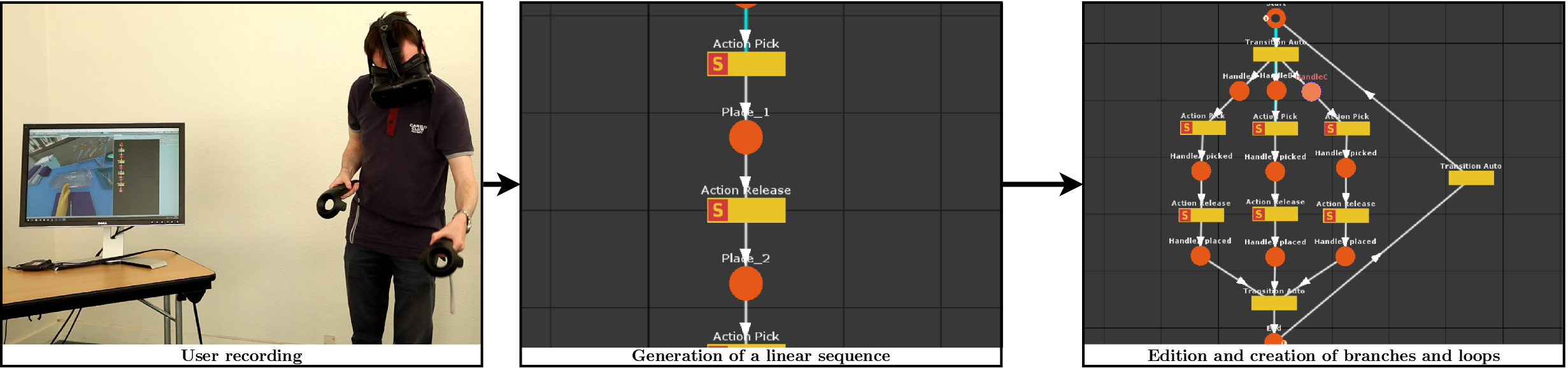

We propose 29 a complete workflow that lets domain experts create their own scenarized content for virtual reality without any need for coding. This workflow is divided into two steps: first, a new approach is provided to generate a scenario without any code, through the principle of creating by doing. Then, efficient methods are provided to reuse the scenario in an application in different ways, either for a human user guided by the scenario or for a virtual actor controlled by it (see Figure 4).
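As a rough illustration of the two reuse modes, the sketch below assumes the simplest possible scenario: a strictly linear list of actions recorded while the expert demonstrates the procedure. The class `RecordedScenario` and its methods are hypothetical names, not the API of the authors' tool, whose scenario model supports more than a single fixed order.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class RecordedScenario:
    """Hypothetical linear scenario built by recording the expert's actions."""
    actions: List[str] = field(default_factory=list)

    def record(self, action: str) -> None:
        # Called each time the expert performs an action in the virtual environment.
        self.actions.append(action)

    def next_expected(self, done: List[str]) -> Optional[str]:
        """Guidance mode: the next action the trainee should perform, or None when finished."""
        # The trainee's history must match the beginning of the recorded sequence.
        if done != self.actions[: len(done)]:
            raise ValueError("trainee deviated from the recorded sequence")
        return self.actions[len(done)] if len(done) < len(self.actions) else None

    def replay(self) -> Iterator[str]:
        """Actor mode: yield the actions in order for a virtual actor to execute."""
        yield from self.actions
```

The same recorded data serves both modes: guidance compares the trainee's history with the recording, while the virtual actor simply consumes the recording in order.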

8.1.5 Unveiling the implicit knowledge, one scenario at a time

Participants: Flavien Lécuyer, Adrien Reuzeau, Valérie Gouranton, Bruno Arnaldi.

When defining virtual reality applications with complex procedures, such as medical operations or mechanical assembly and maintenance procedures, the complexity and variability of the procedures make the definition of the scenario difficult and time-consuming. Indeed, the variability complicates the definition of the scenario by the experts, and its combinatorics demand a comprehension effort from the developer that is often out of reach. Additionally, experts have a hard time explaining the procedures with a sufficient level of detail, as they usually forget to mention some actions that are, in fact, important for the application.

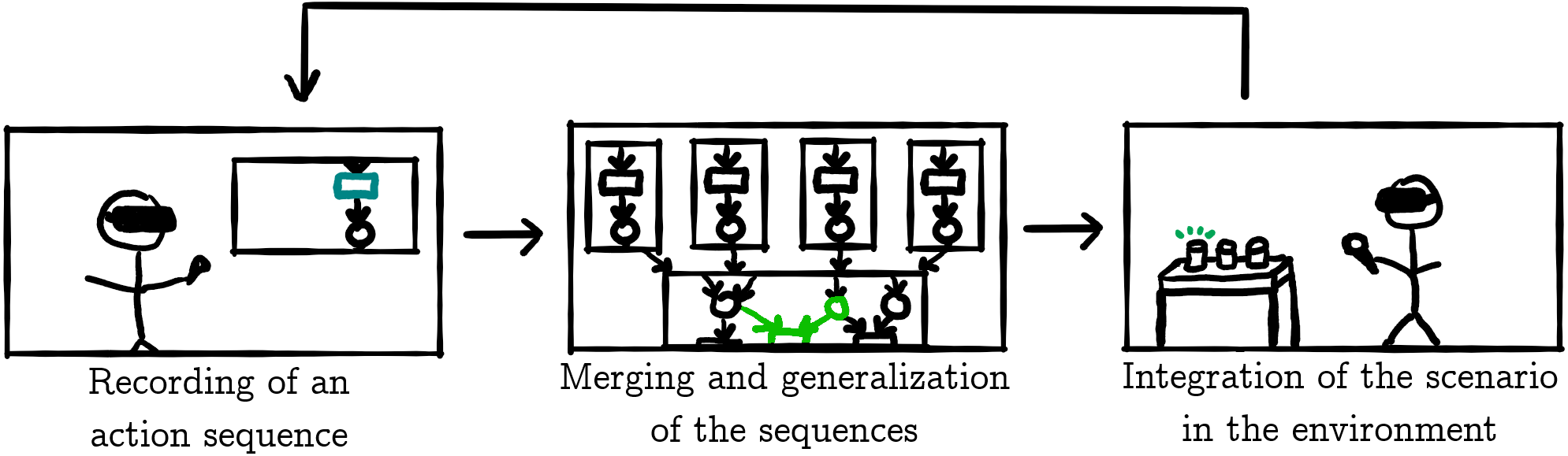

To ease the creation of scenarios, we propose 28 a complete methodology based on (1) an iterative process composed of (2) the recording of actions in virtual reality to create sequences of actions, and (3) the use of mathematical tools that can generate a complete scenario from a few of those sequences, with (4) graphical visualization of the scenarios and complexity indicators. This process helps the expert determine which sequences must be recorded to obtain a scenario with the required variability (see Figure 5).
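One way to picture how a few recordings can yield a scenario with more variability than any single one is to merge them into a graph whose nodes are the sets of actions completed so far. The sketch below is a hypothetical illustration, not the mathematical tools used in the cited work: it assumes that only the set of completed actions (not their order) determines what can happen next, and it uses the number of distinct paths as a simple complexity indicator.

```python
from collections import defaultdict
from functools import lru_cache
from typing import Dict, FrozenSet, List, Set, Tuple

State = FrozenSet[str]


def build_state_graph(sequences: List[List[str]]) -> Dict[State, Set[Tuple[str, State]]]:
    """Merge recorded sequences into a graph whose nodes are sets of completed actions."""
    edges: Dict[State, Set[Tuple[str, State]]] = defaultdict(set)
    for seq in sequences:
        state: State = frozenset()
        for action in seq:
            nxt = state | {action}
            edges[state].add((action, nxt))
            state = nxt
    return edges


def count_paths(edges: Dict[State, Set[Tuple[str, State]]], start: State = frozenset()) -> int:
    """Complexity indicator: number of distinct action orders allowed from `start`."""

    @lru_cache(maxsize=None)
    def paths(state: State) -> int:
        successors = edges.get(state)
        if not successors:
            return 1  # terminal state: exactly one way to finish
        return sum(paths(nxt) for _, nxt in successors)

    return paths(start)
```

For instance, merging the two recordings `A, B, C, D` and `B, A, D, C` yields a graph that admits four distinct orders, because each recording contributes an independent swap that can be combined with the other.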

This work was done in collaboration with the Hycomes team.

8.1.6 Vestibular Feedback on a Virtual Reality Wheelchair Driving Simulator: A Pilot Study

Participants: Guillaume Vailland, Valérie Gouranton, Bruno Arnaldi.

Autonomy and the ability to maintain social activities can be challenging for people with disabilities experiencing reduced mobility. In the case of disabilities that impact mobility, power wheelchairs can help such people retain or regain autonomy. Nonetheless, driving a power wheelchair is a complex task that requires a combination of cognitive, visual and visuo-spatial abilities. In this context, driving simulators might be efficient and promising tools to provide alternative, adaptive, flexible, and safe training. In previous work, we proposed a Virtual Reality (VR) driving simulator 56, 57 integrating vestibular feedback to simulate wheelchair motion sensations. The performance and acceptability of a VR simulator rely on a satisfying user Quality of Experience (QoE). This work presents a pilot study assessing the impact of the provided vestibular feedback on user QoE (see Figure 6). The results show that activating the vestibular feedback increases the sense of presence (SoP) and decreases cybersickness.

This work was done in collaboration with the Rainbow team.

8.1.7 Introducing Mental Workload Assessment for the Design of VR Training Scenarios

Participants: Tiffany Luong, Ferran Argelaguet, Anatole Lécuyer.

Training is one of the major use cases of Virtual Reality (VR) due to the flexibility and reproducibility of VR simulations. However, the user's cognitive state, and in particular mental workload (MWL), remains largely unexplored in the design of training scenarios. In this work 47, we propose to consider MWL for the design of complex training scenarios involving multiple parallel tasks in VR. The proposed approach is based on assessing the MWL elicited by each potential task configuration in the training application. The resulting model is then used to create training scenarios able to modulate the user's MWL over time. This approach is illustrated by a VR flight training simulator based on the Multi-Attribute Task Battery II, able to generate 12 different task configurations. A first user study (N=38) was conducted to assess the MWL for each task configuration using self-reports and performance measurements. This assessment was then used to generate three training scenarios designed to induce different levels of MWL over time. A second user study (N=14) confirmed that the proposed approach was able to induce the expected MWL over time for each training scenario. These results pave the way for further studies exploring how MWL modulation can be used to improve VR training applications.
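A minimal sketch of the scenario-generation step, assuming the assessment produced one MWL score per task configuration and that a scenario is simply a time series of configurations: at each time step, pick the configuration whose assessed MWL is closest to a target curve. The configuration names and scores are hypothetical, and the greedy nearest-match rule is an illustrative choice, not necessarily the one used in the cited work.

```python
from typing import Dict, List


def generate_scenario(mwl_model: Dict[str, float], target_curve: List[float]) -> List[str]:
    """For each time step, choose the configuration whose assessed MWL is closest to the target.

    mwl_model: hypothetical mapping from task configuration to its assessed MWL score.
    target_curve: desired MWL level at each time step of the training scenario.
    """
    scenario = []
    for target in target_curve:
        best = min(mwl_model, key=lambda cfg: abs(mwl_model[cfg] - target))
        scenario.append(best)
    return scenario


# Hypothetical scores for three of the possible task configurations.
model = {"monitoring only": 0.2, "monitoring + tracking": 0.5, "all tasks": 0.9}
rising_then_falling = generate_scenario(model, [0.1, 0.6, 0.95, 0.4])
```

A richer version could also penalize switching configurations too often, since abrupt changes may themselves affect the user's workload.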

This work was done in collaboration with the Institute of research and technology b<>com, Cesson-Sevigne, France.

8.1.8 Towards Real-Time Recognition of Users' Mental Workload Using Integrated Physiological Sensors into a VR HMD

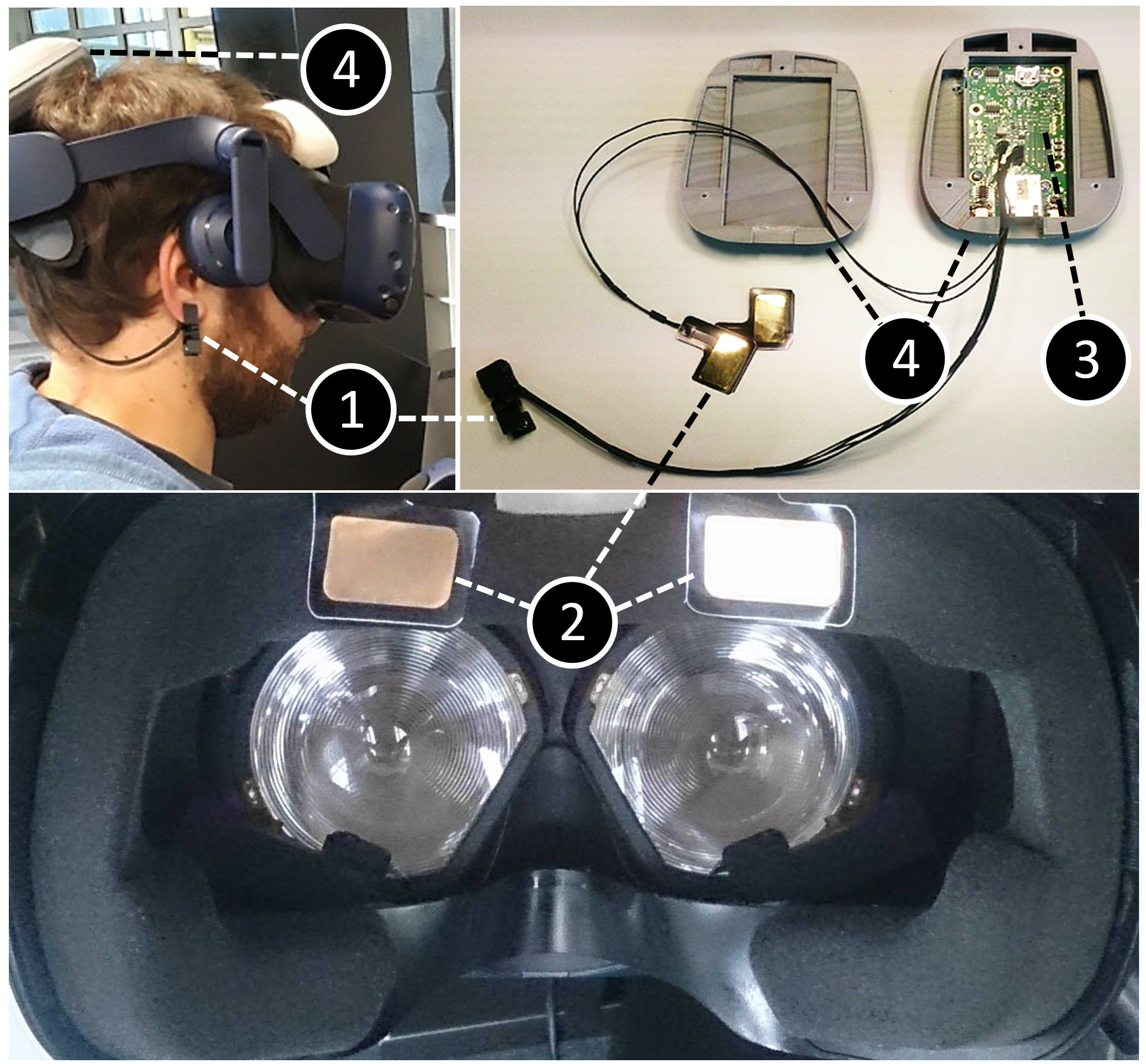

Participants: Tiffany Luong, Ferran Argelaguet, Anatole Lécuyer.

In this work 48, we proposed an “all-in-one” solution for the real-time recognition of users’ mental workload in Virtual Reality (VR) through the customization of a commercial HMD with physiological sensors. First, we describe the hardware and software solution employed to build the system (see Figure 7). Second, we detail the machine learning methods used for the automatic recognition of the users' mental workload, which are based on the well-known Random Forest algorithm. To gather data to train the system, we conducted an extensive user study with 75 participants using a VR flight simulator to induce different levels of mental workload. With the collected data, we were able to train the system to classify four different levels of mental workload with an accuracy of up to 65%. In addition, we discuss the role of the signal normalization procedures and the contribution of the different physiological signals to the recognition accuracy, and we compare the results obtained with the sensors embedded in the HMD with those of commercial-grade systems. Taken together, our results suggest that such an all-in-one approach, with physiological sensors directly embedded in the HMD, is a promising path for VR applications in which real-time or offline estimation of mental workload is beneficial.
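The report mentions the role of signal normalization without detailing the procedures compared. One common option, shown here purely as an assumption, is to z-score each physiological feature per participant so that inter-individual baselines (e.g. resting heart rate) do not dominate the classifier; the normalized features could then be fed to a Random Forest, for instance scikit-learn's `RandomForestClassifier`.

```python
from statistics import mean, pstdev
from typing import Dict, List


def normalize_per_participant(samples: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Z-score one physiological feature using each participant's own mean and std.

    samples: hypothetical mapping from participant id to that participant's feature values.
    """
    normalized = {}
    for pid, values in samples.items():
        mu, sigma = mean(values), pstdev(values)
        # A constant signal carries no information after centering; map it to zeros.
        normalized[pid] = [(v - mu) / sigma if sigma > 0 else 0.0 for v in values]
    return normalized
```

After this step, two participants whose heart rates differ only by a constant offset produce identical feature values, so the classifier has to rely on relative changes rather than absolute levels.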

This work was done in collaboration with the Institute of research and technology b<>com, Cesson-Sevigne, France.

8.1.9 Influence of Dynamic Field of View Restrictions on Rotation Gain Perception in Virtual Environments

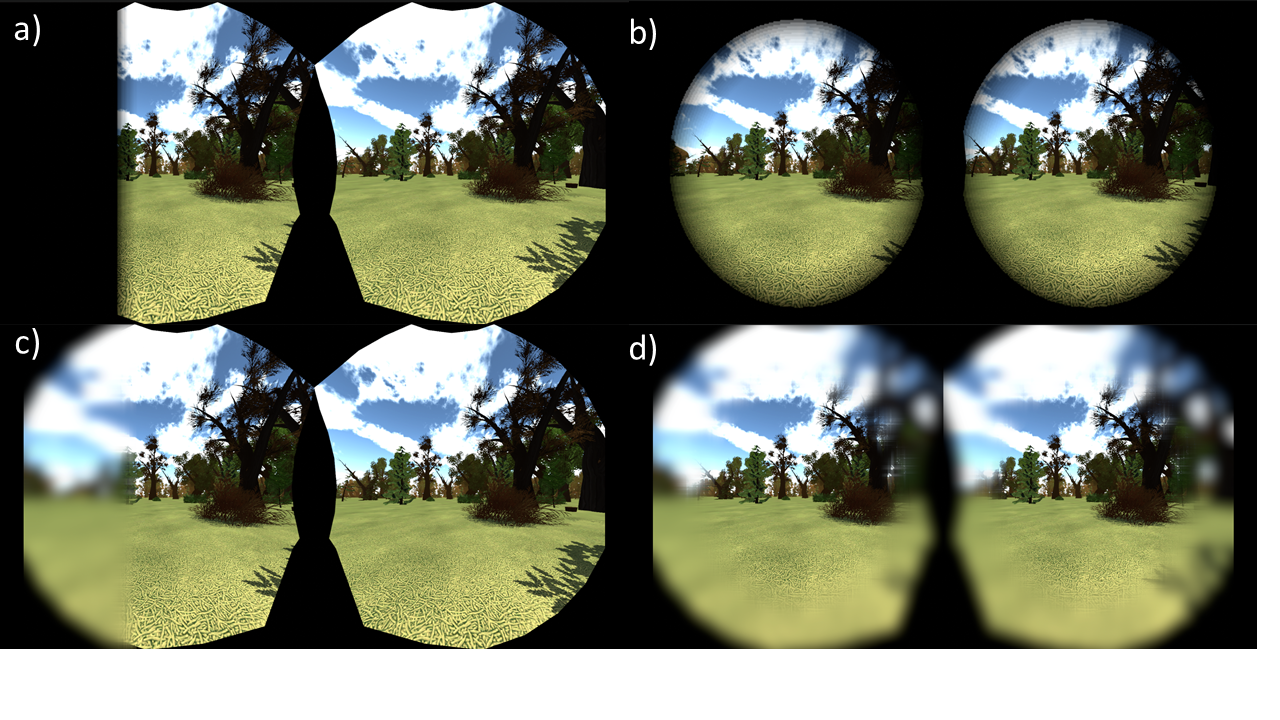

Participants: Hugo Brument, Maud Marchal, Ferran Argelaguet.

The perception of rotation gains, defined as a modification of the virtual rotation with respect to the real rotation, has been widely studied to determine detection thresholds, and rotation gains are widely applied in redirected navigation techniques. In contrast, Field of View (FoV) restrictions have been explored in virtual reality as a mitigation strategy for motion sickness, although they can alter the user's perception and navigation performance in virtual environments. This work 38 explored whether dynamic FoV manipulations, also referred to as vignetting, could alter the perception of rotation gains during virtual rotations in virtual environments (see Figure 8). We conducted a study to estimate and compare perceptual thresholds of rotation gains while varying the vignetting type (no vignetting, horizontal vignetting and global vignetting) and the vignetting effect (luminance or blur). Twenty-four participants performed 60° or 90° virtual rotations in a virtual forest, with different rotation gains applied. Participants had to choose whether or not the virtual rotation was greater than the physical one. Results showed that the point of subjective equality differed across vignetting types, but not across vignetting effects or turn angles. Subjective questionnaires indicated that vignetting seems less comfortable than the baseline condition for performing the task. We discuss how such results can be applied to improve the design of vignetting for redirection techniques, as well as the understanding of the perception of rotation gains.
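A rotation gain g scales the physical rotation into the virtual one, so a 90° virtual turn with g = 1.2 requires a 75° physical turn. The point of subjective equality (PSE) is the gain at which participants answer “virtual greater than physical” half of the time. Studies of this kind usually fit a psychometric function (e.g. a logistic curve); the sketch below uses plain linear interpolation between tested gains as a simpler stand-in, and the data in the test are made up.

```python
from typing import Optional, Sequence


def physical_rotation(virtual_deg: float, gain: float) -> float:
    """Physical turn needed for a given virtual turn when virtual = gain * physical."""
    return virtual_deg / gain


def estimate_pse(gains: Sequence[float], p_greater: Sequence[float]) -> Optional[float]:
    """Gain at which 50% of answers were 'virtual rotation greater than physical'.

    gains: tested rotation gains, in increasing order.
    p_greater: proportion of 'greater' answers observed at each gain.
    Linearly interpolates between the two tested gains that bracket 50%.
    """
    points = list(zip(gains, p_greater))
    for (g0, p0), (g1, p1) in zip(points, points[1:]):
        if p0 <= 0.5 <= p1 and p1 > p0:
            return g0 + (0.5 - p0) * (g1 - g0) / (p1 - p0)
    return None  # the 50% point is not bracketed by the tested gains
```

A PSE different from 1 indicates a perceptual bias: the virtual rotation feels equal to the physical one only when it is actually scaled.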

This work was done in collaboration with the MimeTIC and Rainbow teams.

8.1.10 Does the Control Law Matter? Characterization and Evaluation of Control Laws for Virtual Steering Navigation

Participants: Hugo Brument, Maud Marchal, Ferran Argelaguet.

This work 39 aimed to investigate the influence of the control law in virtual steering techniques, and in particular of the speed update, on users' behaviour while navigating in virtual environments. To this end, we first proposed a characterization of existing control laws. Then, we designed a user study to evaluate the impact of the control law on users' behaviour and performance in a navigation task. Participants had to perform a virtual slalom while wearing a head-mounted display. They followed three different sinusoidal-like trajectories (with low, medium and high curvature) using a torso-steering navigation technique with three different control laws (constant, linear and adaptive). The adaptive control law, based on the biomechanics of human walking, takes into account the relation between speed and curvature. We propose a spatial and temporal analysis of the trajectories performed both in the virtual and the real environment. The results show that users' trajectories and behaviours were significantly affected by the shape of the trajectory but also by the control law. In particular, users' angular velocity was higher with the constant and linear laws compared to the adaptive law. In addition, the constant and linear laws generated a higher variability in linear speed, angular velocity and acceleration profiles compared to the adaptive law. The analysis of subjective feedback suggests that these differences might result in a lower perceived physical demand and effort for the adaptive control law. This work concludes by discussing the potential applications of such results to improve the design and evaluation of navigation control laws.
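The three control-law families can be sketched as functions mapping a normalized torso-lean input in [0, 1] to a forward speed. The report does not give the exact formulas, so the threshold, the maximum speed of 1.4 m/s and especially the adaptive law are assumptions: the adaptive version here caps speed with the two-thirds power law reported for human locomotion, v = k·κ^(-1/3), where κ is the path curvature and k a hypothetical gain.

```python
def constant_law(lean: float, v_max: float = 1.4, threshold: float = 0.1) -> float:
    """Constant law: fixed speed as soon as the torso input exceeds a threshold."""
    return v_max if lean > threshold else 0.0


def linear_law(lean: float, v_max: float = 1.4) -> float:
    """Linear law: speed proportional to the torso input, clamped to [0, 1]."""
    return v_max * min(max(lean, 0.0), 1.0)


def adaptive_law(lean: float, curvature: float, v_max: float = 1.4, k: float = 1.0) -> float:
    """Adaptive law (assumed form): linear speed capped by v = k * curvature ** (-1/3),
    so tighter turns (higher curvature, in 1/m) are taken more slowly."""
    v = linear_law(lean, v_max)
    if curvature > 0:
        v = min(v, k * curvature ** (-1.0 / 3.0))
    return v
```

With this form, straight segments (zero curvature) fall back to the linear law, while the cap only becomes active in sufficiently tight turns.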

This work was done in collaboration with the MimeTIC and Rainbow teams.

8.2 Virtual Avatars

8.2.1 Avatar and Sense of Embodiment: Studying the Relative Preference Between Appearance, Control and Point of View

Participants: Rebecca Fribourg, Ferran Argelaguet, Anatole Lécuyer.

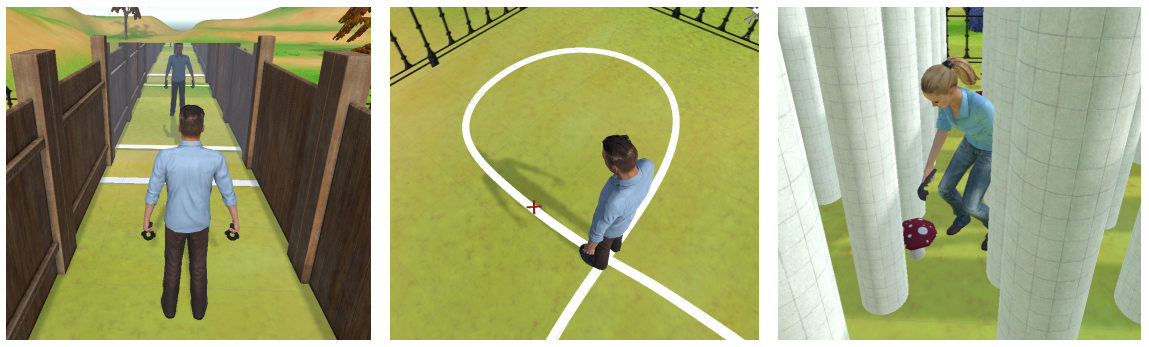

In Virtual Reality, a number of studies have been conducted to assess the influence of avatar appearance, avatar control and user point of view on the Sense of Embodiment (SoE) towards a virtual avatar. However, such studies tend to explore each factor in isolation. This work aims to better understand the inter-relations among these three factors by conducting a subjective matching experiment. We conducted an experiment 20 in which participants had to match a given “optimal” SoE avatar configuration (realistic avatar, full-body motion capture, first-person point of view), starting from a “minimal” SoE configuration (minimal avatar, no control, third-person point of view), by iteratively increasing the level of each factor. The choices of the participants provide insights into their preferences and perception of the three factors considered. Moreover, the subjective matching procedure was conducted in the context of four different interaction tasks, with the goal of covering a wide range of actions an avatar can perform in a VE (see Figure 9). The work also included a baseline experiment (n=20), which was used to define the number and order of the different levels for each factor prior to the subjective matching experiment (e.g. different degrees of realism ranging from abstract to personalised avatars for the visual appearance). First, the results of the subjective matching experiment show that users consistently increased the point of view and control levels before the appearance levels when it came to enhancing the SoE. Second, several configurations were identified that elicited a SoE equivalent to the one felt in the optimal configuration, but these configurations varied between tasks. Taken together, our results provide valuable insights about which factors to prioritize in order to enhance the SoE towards an avatar in different tasks, and about configurations that lead to a fulfilling SoE in VEs.

This work was done in collaboration with the MimeTIC team.

8.2.2 Virtual Co-Embodiment: Evaluation of the Sense of Agency while Sharing the Control of a Virtual Body among Two Individuals

Participants: Rebecca Fribourg, Ferran Argelaguet, Anatole Lécuyer.

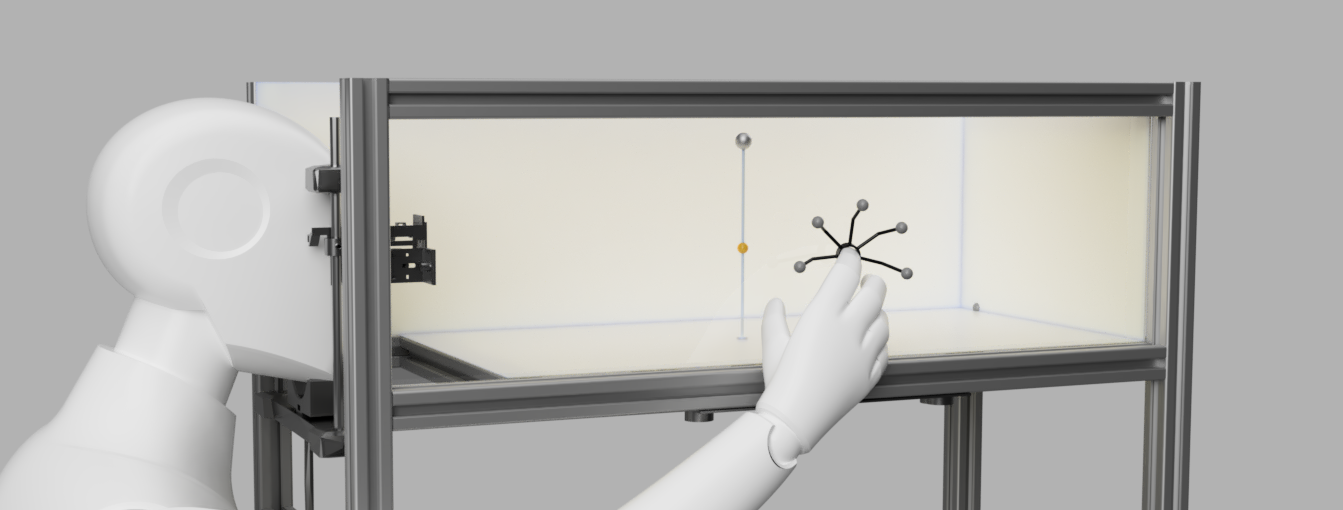

In this work, we introduce a concept called “virtual co-embodiment” 21, which enables a user to share their virtual avatar with another entity (e.g., another user, robot, or autonomous agent), as depicted in Figure 10. We describe a proof-of-concept in which two users can be immersed from a first-person perspective in a virtual environment and can have complementary levels of control (total, partial, or none) over a shared avatar. In addition, we conducted an experiment to investigate the influence of users' level of control over the shared avatar and of prior knowledge of their actions on the users' sense of agency and motor actions. The results showed that participants are good at estimating their real level of control but significantly overestimate their sense of agency when they can anticipate the motion of the avatar. Moreover, participants performed similar body motions regardless of their real control over the avatar. The results also revealed that the internal dimension of the locus of control, which is a personality trait, is negatively correlated with the user's perceived level of control. The combined results open up a new range of applications in the fields of virtual-reality-based training and collaborative teleoperation, where users would be able to share their virtual body.
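The report does not specify how the two users' movements are combined. A minimal sketch, assuming the shared avatar's joint positions are a weighted average of both users' tracked joints, maps total, partial and no control to weights of 1, 0.5 and 0; the function name and pose format are hypothetical, and joint rotations would need quaternion interpolation (slerp) rather than the plain averaging shown here.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def blend_pose(pose_a: Dict[str, Vec3], pose_b: Dict[str, Vec3], weight_a: float) -> Dict[str, Vec3]:
    """Shared-avatar joint positions as a weighted average of two users' tracked joints.

    weight_a = 1.0: user A has total control; 0.5: control shared equally; 0.0: no control.
    """
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must be in [0, 1]")
    weight_b = 1.0 - weight_a
    return {
        joint: tuple(weight_a * a + weight_b * b for a, b in zip(pose_a[joint], pose_b[joint]))
        for joint in pose_a
    }
```

Because the two weights always sum to 1, the levels of control are complementary by construction: whatever control user A gives up is transferred to user B.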

This work was done in collaboration with the MimeTIC team and the University of Tokyo.

8.2.3 Virtual Avatars as Children Companions For a VR-based Educational Platform: How Should They Look Like?

Participants: Jean-Marie Normand.

Virtual Reality (VR) has the potential to become a game changer in education, with studies showing that VR can lead to better quality of, and access to, education. One promising area, especially for young children, is the use of Virtual Companions that act as teaching assistants and support the learners' educational journey in the virtual environment. However, as is the case in real life, the appearance of the virtual companions can be critical for the learning experience. This work studies the impact of the age, gender and general appearance (human- or robot-like) of virtual companions on 9-12-year-old children 55. Our results over two experiments (n=24 and n=13) tend to show that children have a greater sense of Spatial Presence, Engagement and Ecological Validity when interacting with a human-like Virtual Companion of the same age and of a different gender.

This work was done in collaboration with Elsa Thiaville and Anthony Ventresque, from SFI Lero & School of Computer Science, University College Dublin, Ireland and Joe Kenny from Zeeko, an Irish company.

8.2.4 Influence of Threat Occurrence and Repeatability on the Sense of Embodiment and Threat Response in VR

Participants: Rebecca Fribourg, Ferran Argelaguet, Anatole Lécuyer.

Does virtual threat harm the Virtual Reality (VR) experience? We explored the potential impact of threat occurrence (see Figure 11) and repeatability on users' Sense of Embodiment (SoE) and threat response 41. The main findings of our experiment are that the introduction of a threat does not alter users' SoE but might change their behaviour while performing a task after the threat occurrence. In addition, threat repetitions did not show any effect on users' subjective SoE, or on their subjective and objective responses to threat. Taken together, our results suggest that embodiment studies should expect a potential change in participants' behaviour while doing a task after a threat has been introduced, but that threat introduction and repetition do not seem to impact either the subjective measure of the SoE (user responses to questionnaires) or the objective measure of the SoE (behavioural response to threat towards the virtual body).

This work was done in collaboration with the MimeTIC team and Davidson College.

8.2.5 Studying the Role of Haptic Feedback on Virtual Embodiment in a Drawing Task

Participants: Grégoire Richard, Ferran Argelaguet, Anatole Lécuyer.

The role of haptic feedback on virtual embodiment is investigated in this work 34 in a context of active and fine manipulation. In particular, we explore which haptic cue, with varying ecological validity, has more influence on virtual embodiment. We conducted a within-subject experiment with 24 participants and compared self-reported embodiment towards a humanoid avatar during a coloring task under three conditions: force feedback, vibrotactile feedback, and no haptic feedback. In the experiment, force feedback was more ecological as it matched reality more closely, while vibrotactile feedback was more symbolic. Taken together, our results show a significant superiority of force feedback over no haptic feedback regarding embodiment, and a significant superiority of force feedback over the other two conditions regarding subjective performance. These results suggest that more ecological feedback is better suited to elicit embodiment during fine manipulation tasks.

This work was done in collaboration with the Loki Inria team.

8.2.6 Studying the Inter-Relation Between Locomotion Techniques and Embodiment in Virtual Reality

Participants: Diane Dewez, Ferran Argelaguet, Anatole Lécuyer.

This work 40 explored the potential inter-relation between locomotion and embodiment by focusing on the two following questions: Does the locomotion technique have an impact on the user’s sense of embodiment? Does embodying an avatar have an impact on the user’s preference and performance depending on the locomotion technique? To address these questions, we conducted a user study with sixty participants. Three widely used locomotion techniques were evaluated: real walking, walking-in-place and virtual steering (see Figure 12). All participants performed four tasks with and without a full-body avatar. The results show that participants had a comparable sense of embodiment with all techniques when embodied in an avatar seen from first-person perspective, and that the presence or absence of the virtual avatar did not alter their performance while navigating, independently of the technique. Taken together, our results represent a first attempt to qualify the inter-relation between virtual navigation and virtual embodiment, and suggest that the 3D locomotion technique used has little influence on the user’s sense of embodiment in VR.

This work was done in collaboration with the MimeTIC team.

8.2.7 The impact of stylization on face recognition

Participants: Ferran Argelaguet, Anatole Lécuyer, Nicolas Olivier.

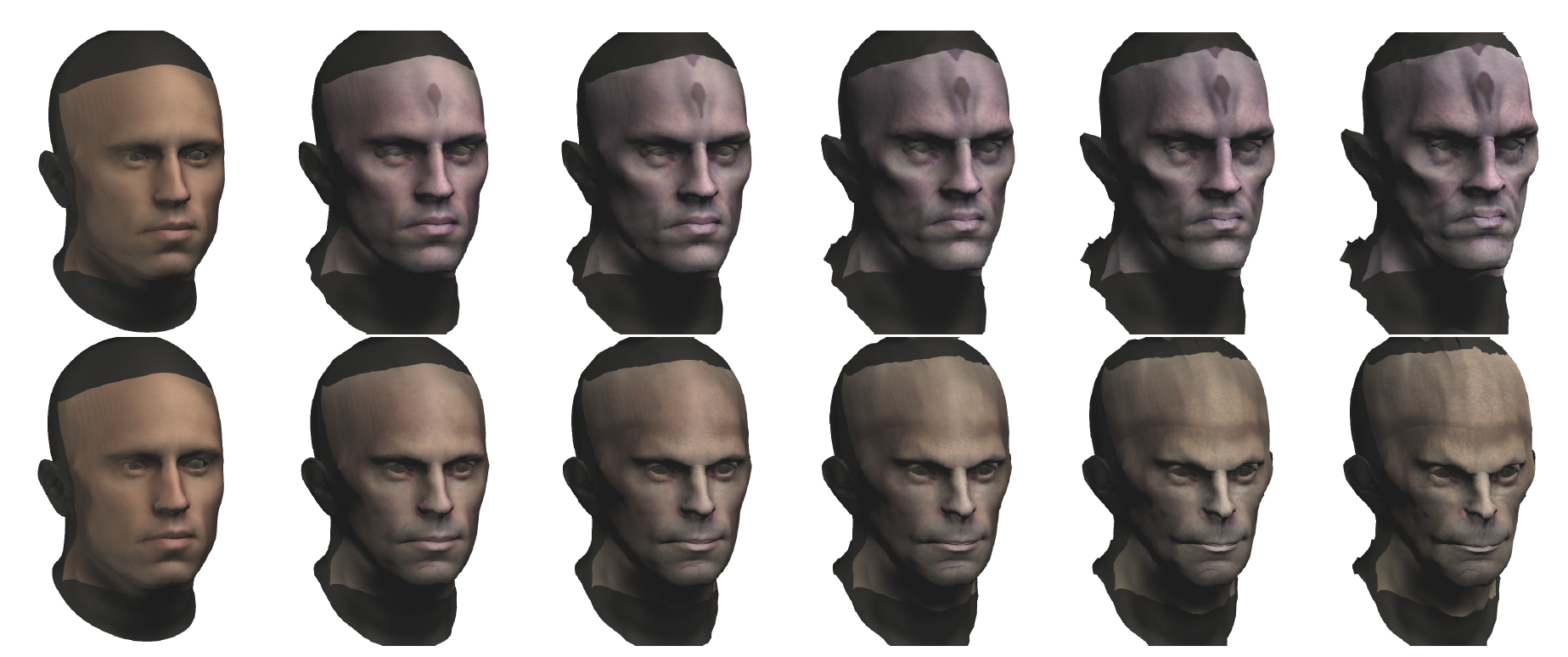

While digital humans are key aspects of the rapidly evolving areas of virtual reality, gaming, and online communications, many applications would benefit from using personalized (stylized) digital representations of users, as such representations have been shown to greatly increase immersion, presence and emotional response. In particular, depending on the target application, one may want to look like a dwarf or an elf in a heroic fantasy world, or like an alien on another planet, in accordance with the style of the narrative. While creating such virtual replicas requires transferring the user’s features, in stylized form, onto the virtual character, no formal study had yet been conducted to assess the ability to recognize stylized characters. We carried out a perceptual study investigating the effect of the degree of stylization on the ability to recognize an actor, and the subjective acceptability of stylizations 51 (see Figure 13). Results show that recognition rates decrease when the degree of stylization increases, while the acceptability of the stylization increases. These results provide recommendations for achieving good compromises between stylization and recognition, and pave the way for new stylization methods that balance the two.

This work was done in collaboration with the MimeTIC team and Interdigital.

8.2.8 3Dexterity: Finding your place in a 3-armed world

Participants: Audinot Alexandre, Dewez Diane, Fouché Gwendal, Fribourg Rebecca, Howard Thomas, Lécuyer Flavien, Luong Tiffany, Mercado Victor, Reuzeau Adrien, Rinnert Thomas, Vailland Guillaume, Argelaguet Ferran.

In the context of the IEEE VR 2020 3DUI Contest entitled “Embodiment for the Difference”, we showcased a VR application highlighting the challenges that people with physical disabilities face in their daily lives. Two-armed users are placed in a world where people normally have three arms, making them effectively physically disabled. The scenario takes the user through the process of struggling with everyday interactions (designed for humans with three arms), then receiving a third-arm prosthesis and thus recovering some level of autonomy. The experience is intended to generate a sense of difference and empathy for physically disabled persons.

8.3 Augmented Reality Tools and Usages

8.3.1 Can Retinal Projection Displays Improve Spatial Perception in Augmented Reality?

Participants: Étienne Peillard, Jean-Marie Normand, Ferran Argelaguet Sanz, Guillaume Moreau, Anatole Lécuyer.

Commonly used Head-Mounted Displays in Augmented Reality (AR), namely Optical See-Through (OST) displays, suffer from a major drawback: their focal lenses can only provide a fixed focal distance. Such a limitation is suspected to be one of the main factors of distance misperception in AR.

In this work 52, we studied the use of an emerging kind of AR display to tackle such perception issues: Retinal Projection Displays (RPDs). With RPDs, virtual images have no focal distance and the AR content is always in focus. We conducted the first reported experiment evaluating the egocentric distance perception of observers using Retinal Projection Displays (see Figure 14). We compared the precision and accuracy of depth estimation between real and virtual targets, displayed by either OST HMDs or RPDs. Interestingly, our results show that RPDs provide depth estimates in AR closer to real ones than OST HMDs do. Indeed, the use of an OST device was found to lead to an overestimation of the perceived distance by 15%, whereas the distance overestimation bias dropped to 3% with RPDs. In addition, participants reported the same level of task difficulty with both displays, and no difference in precision was found. As such, our results shed the first light on the benefits of retinal projection displays in terms of user perception in Augmented Reality, suggesting that RPD is a promising technology for AR applications in which accurate distance perception is required.
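The 15% and 3% figures are overestimation biases, i.e. a measure of accuracy, while precision refers to the spread of the estimates. As an assumption about how such figures can be computed, the sketch below takes the mean signed relative error as the bias and its standard deviation as the precision measure; the cited work may define these metrics differently.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def distance_bias(real: Sequence[float], estimated: Sequence[float]) -> Tuple[float, float]:
    """Return (bias, spread) of relative distance errors over paired trials.

    bias: mean signed relative error; +0.15 means distances are overestimated by 15%.
    spread: standard deviation of the relative errors (lower means more precise).
    """
    if len(real) != len(estimated) or len(real) < 2:
        raise ValueError("need at least two paired trials")
    rel_errors = [(e - r) / r for r, e in zip(real, estimated)]
    return mean(rel_errors), stdev(rel_errors)
```

Using relative rather than absolute errors makes trials at different target distances comparable, which matters when targets span a wide range of depths.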

This work was done in collaboration with Yuta Itoh, from the Augmented Vision Laboratory of the Tokyo Institute of Technology.

8.3.2 A Unified Design & Development Framework for Mixed Interactive Systems

Participants: Guillaume Bataille, Valérie Gouranton, Bruno Arnaldi.

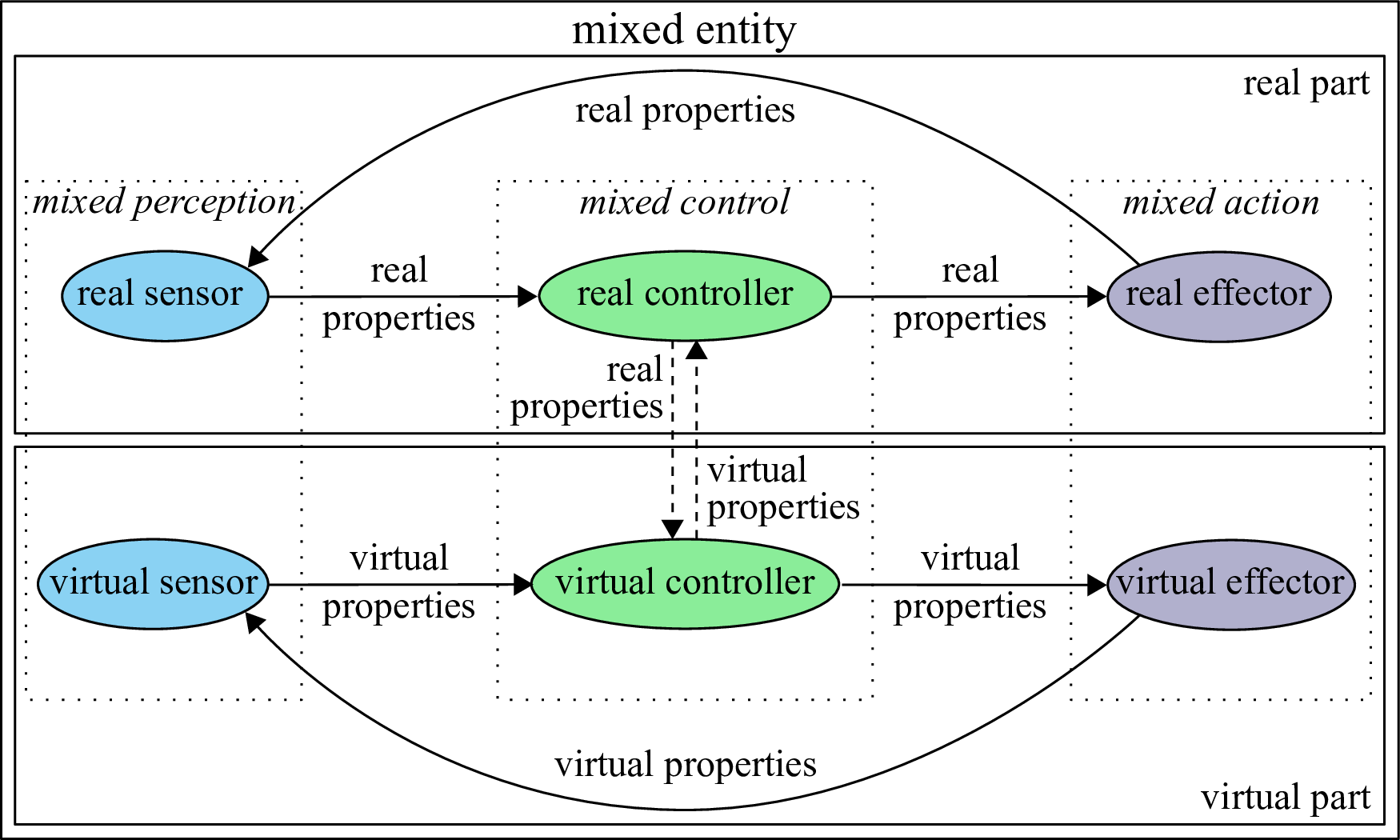

Mixed reality, natural user interfaces and the internet of things are complementary computing paradigms. They converge towards new forms of interactive systems named mixed interactive systems. Because of their rapidly growing complexity, mixed interactive systems raise new challenges for designers and developers. We need new abstractions of these systems in order to describe their real-virtual interplay. We also need to break mixed interactive systems down into pieces in order to segment their complexity into comprehensible subsystems. This work 37 presents a framework to enhance the design and development of these systems. We propose a model unifying the paradigms of mixed reality, natural user interfaces and the internet of things. Our model decomposes a mixed interactive system into a graph of mixed entities. Our framework implements this model, which facilitates interactions between users, mixed reality devices and connected objects (see Figure 15). To demonstrate our approach, we present how designers and developers can use this framework to develop a mixed interactive system dedicated to smart building occupants.
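To make the idea of a graph of mixed entities concrete, the sketch below models each entity as a node holding a state and links to other entities, with state changes propagated along the links. `MixedEntity`, `connect` and `update` are hypothetical names chosen for this illustration and do not reflect the framework's actual API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Set


@dataclass(eq=False)  # identity comparison: the graph may contain cycles
class MixedEntity:
    """Hypothetical node of a mixed interactive system (real, virtual, or both)."""
    name: str
    state: Dict[str, Any] = field(default_factory=dict)
    links: List["MixedEntity"] = field(default_factory=list, repr=False)

    def connect(self, other: "MixedEntity") -> None:
        # Directed link: state changes on self are forwarded to other.
        self.links.append(other)

    def update(self, key: str, value: Any, _visited: Optional[Set[int]] = None) -> None:
        """Set a state value and forward it to linked entities, visiting each node once."""
        visited = set() if _visited is None else _visited
        if id(self) in visited:
            return
        visited.add(id(self))
        self.state[key] = value
        for other in self.links:
            other.update(key, value, visited)


gesture = MixedEntity("gesture")          # natural user interface input
lamp = MixedEntity("smart lamp")          # connected object (real side)
hologram = MixedEntity("lamp hologram")   # its mixed reality counterpart (virtual side)
gesture.connect(lamp)
lamp.connect(hologram)
hologram.connect(lamp)  # real and virtual lamps mirror each other
gesture.update("on", True)
```

Here a user's gesture switches on a connected lamp, whose state is mirrored by its holographic counterpart; the visited set prevents infinite loops when the real and virtual lamps reference each other.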

This work was done in collaboration with Jérémy Lacoche, from Orange.

8.4 Haptic Feedback

8.4.1 Towards Haptic Images: A Survey on Touchscreen-Based Surface Haptics

Participants: Antoine Costes, Ferran Argelaguet, Anatole Lécuyer.

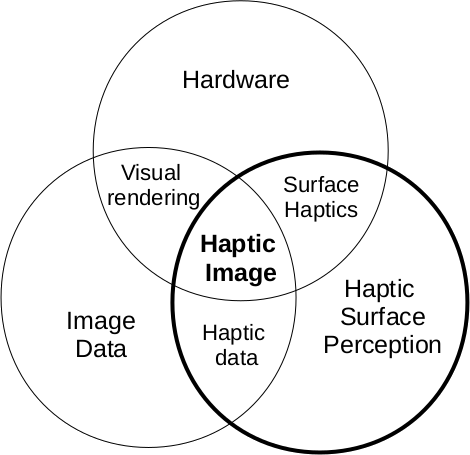

In this work 17, we propose a survey on touchscreen-based surface haptics. The development of tactile screens opens new perspectives for co-located images and haptic rendering, leading to the concept of “haptic images.” These emerge from the combination of image data, rendering hardware, and haptic perception, and enable one to perceive haptic feedback while manually exploring an image. However, this raises two scientific challenges, which serve as thematic axes for this survey (see Figure 16). Firstly, the choice of appropriate haptic data raises a number of issues about human perception, measurements, modeling and distribution. Secondly, the choice of appropriate rendering technology implies a difficult trade-off between expressiveness and usability.

This work was done in collaboration with the company Interdigital.

8.4.2 Capacitive Sensing for Improving Contact Rendering with Tangible Objects in VR

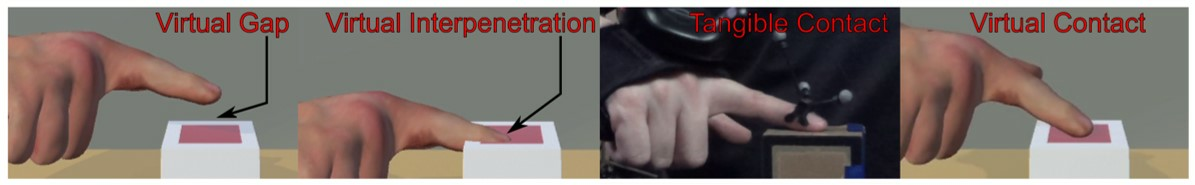

Participants: Xavier de Tinguy, Maud Marchal, Anatole Lécuyer.

In this work 18, we propose an innovative approach to tracking and rendering contacts with tangible objects in VR, which compensates for relative positioning errors between the user's hand and the tangible object in order to achieve better visuo-haptic synchronization upon contact and preserve immersion during interaction in VR. We employ one tangible object to provide distributed haptic sensations. It is equipped with capacitive sensors to estimate the proximity of the user’s fingertips to its surface. This information is then used to retarget, prior to contact, the fingertip positions obtained from a standard vision-based tracking system, so as to achieve better synchronization between virtual and tangible contacts (see Figure 17). The main contributions of this work can be summarized as follows. We proposed a novel approach for enhancing contact rendering in VR when using tangible objects, by instrumenting the latter with capacitive sensors. We designed and showcased a sensing system and a visuo-haptic interaction technique enabling high contact synchronization between what users see and feel. We conducted a user study showing the capability of our combined approach, compared with two stand-alone state-of-the-art tracking systems (Vicon and HTC Vive), to improve the VR experience.
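The retargeting step can be pictured in one dimension along the surface normal. In this sketch, which is an assumption and not the published algorithm, the displayed fingertip follows the vision tracker far from the object, then is progressively blended toward the capacitive distance estimate inside a hypothetical 2 cm sensing range, so that visual contact occurs exactly when the capacitive sensor detects touch.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def retarget_fingertip(p_tracked: Vec3, normal: Vec3, d_tracked: float,
                       d_capacitive: Optional[float], sensing_range: float = 0.02) -> Vec3:
    """Shift the displayed fingertip along the surface normal toward the capacitive estimate.

    p_tracked: fingertip position from the vision tracker (m).
    normal: unit outward surface normal of the tangible at the closest point.
    d_tracked: fingertip-to-surface distance implied by the tracker (m).
    d_capacitive: distance estimated by the capacitive sensor, or None if out of range.
    sensing_range: hypothetical range (m) within which the capacitive estimate is trusted.
    """
    if d_capacitive is None or d_capacitive >= sensing_range:
        return p_tracked  # far from the surface: keep the tracker estimate
    alpha = 1.0 - d_capacitive / sensing_range  # 0 at the range limit, 1 at contact
    d_display = (1.0 - alpha) * d_tracked + alpha * d_capacitive
    shift = d_display - d_tracked  # negative values move the fingertip toward the surface
    return tuple(p + shift * n for p, n in zip(p_tracked, normal))
```

The blend weight grows continuously from 0 at the edge of the sensing range, so the displayed fingertip does not jump when the capacitive estimate starts being used.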

This work was done in collaboration with the Inria Rainbow team.

8.4.3 WeATaViX: WEarable Actuated TAngibles for VIrtual reality eXperiences

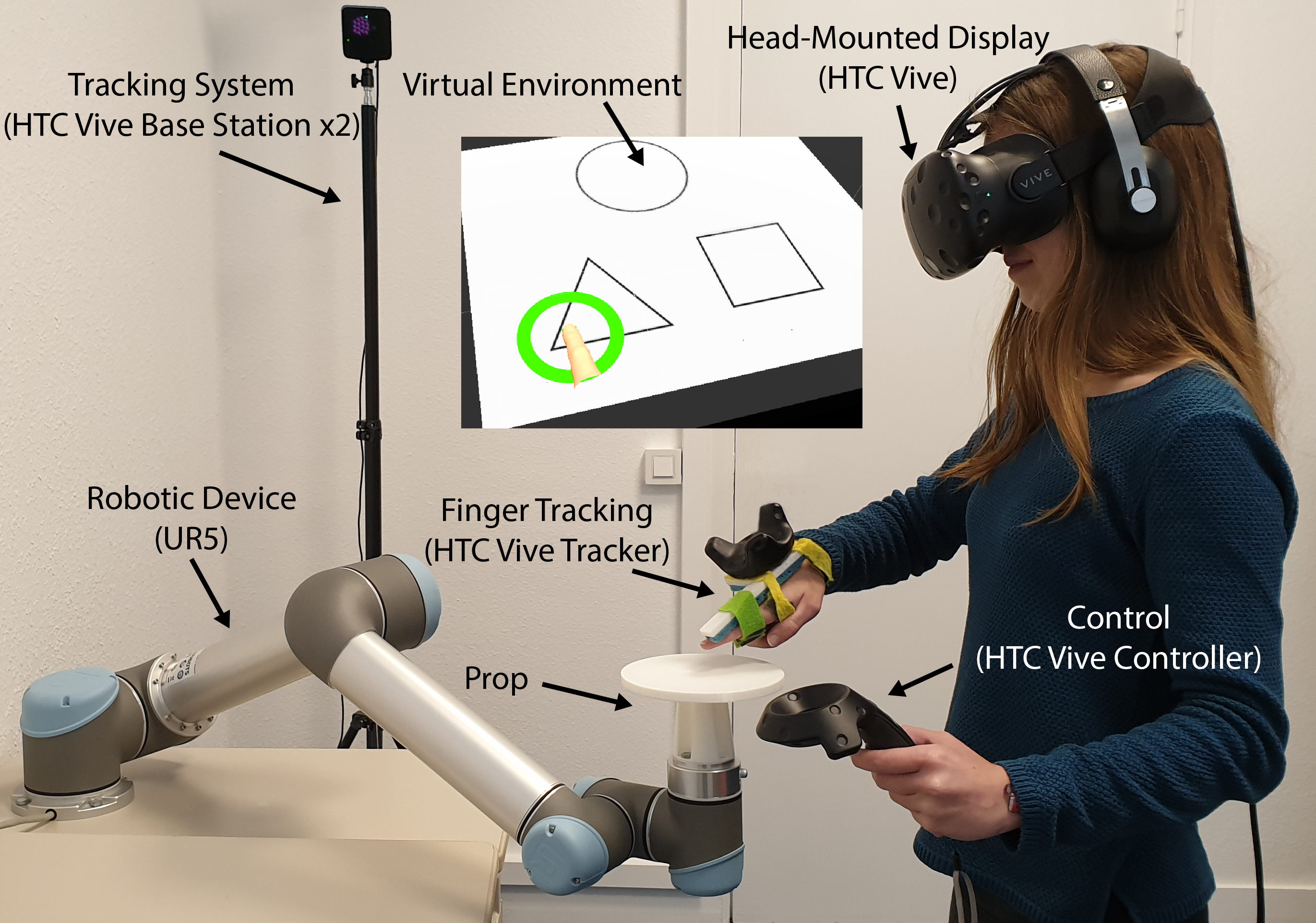

Participants: Xavier de Tinguy, Thomas Howard, Maud Marchal, Anatole Lécuyer.

This work 60 focuses on the design and evaluation of a wearable haptic interface for natural manipulation of tangible objects in Virtual Reality (VR). It proposes an interaction concept between encountered-type and tangible haptics. The actuated 1-degree-of-freedom interface brings a tangible object in and out of contact with the user’s palm, rendering the making and breaking of contact with virtual objects and allowing the grasping and manipulation of virtual objects. Attached to the back of the user's hand with a sticky silicone layer, the lightweight device is designed to be as unobtrusive as possible (see Figure 18). Device performance tests show that changes in contact state can be rendered with delays as low as 50 ms, with additional improvements to contact synchronicity obtained through our proposed interaction technique. An exploratory user study in VR showed that our device can render compelling grasp and release interactions with static and slowly moving virtual objects, contributing to the users' immersion.
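The report does not detail the proposed interaction technique for contact synchronicity. One hypothetical way to hide an actuation delay is predictive triggering: start bringing the tangible into the palm when the estimated time to contact with the virtual object falls below the device's delay. The function and its parameters are illustrative assumptions; only the 50 ms figure comes from the text.

```python
def should_trigger(distance: float, approach_speed: float, actuation_delay: float = 0.050) -> bool:
    """Decide whether to start actuating so contact is rendered on time.

    distance: current hand-to-virtual-object distance (m).
    approach_speed: closing speed toward the object (m/s, positive when approaching).
    actuation_delay: time the device needs to change contact state (s); 50 ms is the
        lower bound reported for the device, used here only as a default.
    """
    if distance <= 0.0:
        return True  # already in contact with the virtual object
    if approach_speed <= 0.0:
        return False  # the hand is not moving toward the object
    time_to_contact = distance / approach_speed
    return time_to_contact <= actuation_delay
```

Triggering too early would make the tangible touch the palm before the virtual contact is seen, so a real implementation would likely need to balance the prediction against tracking noise.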

This work was done in collaboration with the Inria Rainbow team.

8.4.4 Design and Evaluation of Interaction Techniques Dedicated to Integrate Encountered-Type Haptic Displays in Virtual Environments

Participants: Victor Mercado, Maud Marchal, Anatole Lécuyer.

In this work 33, we presented novel interaction techniques (ITs) dedicated to encountered-type haptic displays (ETHDs) (see Figure 19). The techniques aim at addressing issues commonly presented by these devices, such as limited contact areas, lags and unexpected collisions with the user. First, our work proposes a design framework based on several parameters defining the interactive process between the user and the ETHD (input, movement control, displacement and contact). Five techniques based on different branches of the design space were conceived, named respectively Swipe, Drag, Clutch, Bubble and Follow. Then, a use-case scenario was designed to depict the usage of these techniques on the task of touching and coloring a wide, flat surface. Finally, a user study based on the coloring task was conducted to assess the performance and user experience of each IT. Results were in favor of the Drag and Clutch techniques, which are based on manual surface displacement, absolute position selection and intermittent contact interaction. Taken together, our results and design methodology pave the way for the design of future ITs for ETHDs in virtual environments.

This work was done in collaboration with the Inria Rainbow team.

8.4.5 “Kapow!”: Augmenting Contacts with Real and Virtual Objects Using Stylized Visual Effects

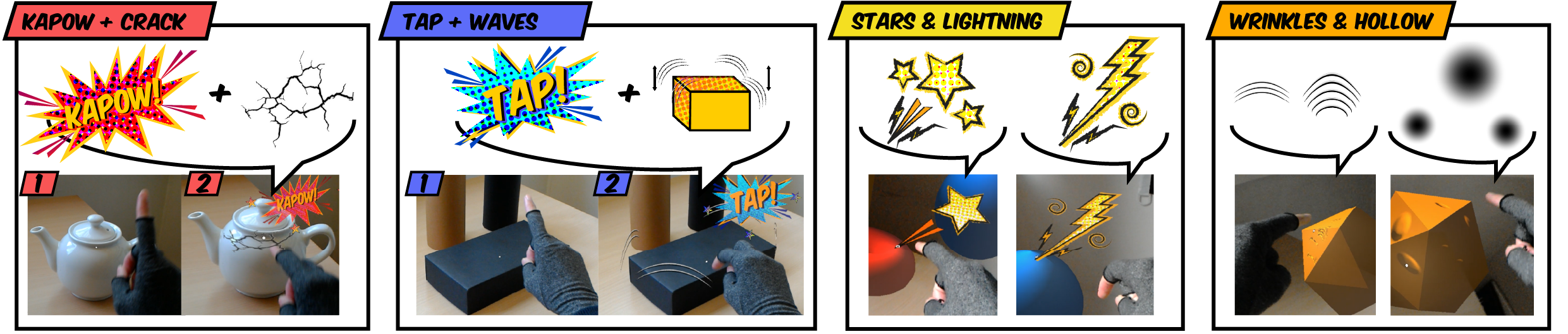

Participants: Victor Mercado, Jean-Marie Normand, Anatole Lécuyer.

In this work 66, we proposed a set of stylized visual effects (VFX) meant to improve the sensation of contact with objects in Augmented Reality (AR). Various graphical effects have been conceived, such as virtual cracks, virtual wrinkles, or even virtual onomatopoeias inspired by comics (see Figure 20). The VFX are meant to augment the perception of contact, with either real or virtual objects, in terms of material properties or contact location, for instance. These VFX can be combined with a pseudo-haptic approach to further increase the range of simulated physical properties of the touched materials. An illustrative setup based on a HoloLens headset was designed, in which our proposed VFX can be explored. The VFX appear each time a contact is detected between the user's finger and an object in the scene. Such a VFX-based approach could be introduced in AR applications for which the perception and display of contact information are important.

8.4.6 PUMAH: Pan-tilt Ultrasound Mid-Air Haptics for larger interaction workspace in virtual reality

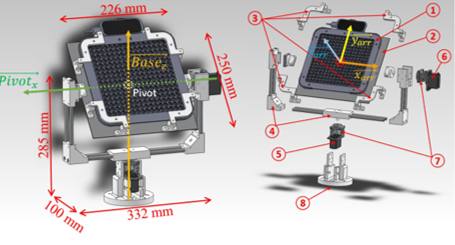

Participants: Thomas Howard, Maud Marchal, Anatole Lécuyer.

Mid-air haptic interfaces are promising tools for providing tactile feedback in Virtual Reality (VR) applications, as they do not require the user to be tethered to, hold, or wear any system or device. Currently, one of the most mature solutions for providing mid-air haptic feedback is focused ultrasound phased arrays. These modulate the phase of an array of ultrasound emitters so as to generate focused points of oscillating high pressure, which in turn elicit vibrotactile sensations when encountering a user’s skin. While these arrays feature a reasonably large vertical workspace, they are not capable of displaying stimuli far beyond their horizontal limits, which severely limits their workspace in the lateral dimensions. In this work 25, we propose an innovative low-cost solution for enlarging the workspace of focused ultrasound arrays. It features 2 degrees of freedom, rotating the array around the pan and tilt axes, thereby significantly increasing the usable workspace and enabling multi-directional feedback (see Figure 21). Our hardware tests and human subject study in an ecological VR setting show a 14-fold increase in workspace volume, with focal point repositioning speeds over 0.85 m/s while delivering tactile feedback with a positional accuracy below 18 mm. Finally, we propose a representative use case to exemplify the potential of our system for VR applications.
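Pointing the array at a target with pan and tilt rotations amounts to converting the target's position into spherical angles. The sketch assumes a frame where the array's normal is along +z at rest, pan rotates about z and tilt is measured from the normal; the actual mechanism's axes and zero positions may differ. Once aimed, the array only needs to focus at the returned distance along its normal.

```python
import math
from typing import Tuple


def aim_array(target: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Pan and tilt angles (radians) pointing the array normal at a target, plus focal distance.

    Assumed frame: array centre at the origin, normal along +z at rest,
    pan rotates about z, tilt is the angle between the normal and the target direction.
    """
    x, y, z = target
    distance = math.sqrt(x * x + y * y + z * z)
    pan = math.atan2(y, x)
    tilt = math.atan2(math.hypot(x, y), z)
    return pan, tilt, distance
```

A target directly above the array needs no rotation, while a target level with the array requires a 90° tilt, a region that a fixed, upward-facing array cannot reach.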

This work was done in collaboration with the Inria Rainbow team.

8.4.7 Comparing Motion-based Versus Controller-based Pseudo-haptic Weight Sensations in VR

Participants: Anatole Lécuyer.

In this work 44, we examined whether pseudo-haptic experiences can be achieved using a game controller without motion tracking. For this purpose, we implemented a virtual hand manipulation method that uses the controller’s analog stick. We compared the method’s pseudo-haptic experience to that of the conventional approach of using a hand-held motion controller. The results suggest that our analog stick manipulation can present pseudo-weight sensations in a similar way to the conventional approach. This means that interaction designers and users can also choose to utilize analog stick manipulation for pseudo-haptic experiences, as an alternative to motion controllers.
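A common way to produce pseudo-haptic weight is to lower the control/display (C/D) gain when a heavier object is held. The virtual hand then moves more slowly for the same input. The Python sketch below applies that idea to analog-stick input. It is an illustrative assumption, not the mapping used in 44. The speed, reference mass, and gain floor are hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class StickHand:
    """Moves a virtual hand from analog-stick input with a mass-dependent C/D gain."""
    max_speed: float = 0.5       # m/s at full stick deflection (assumed)
    reference_mass: float = 0.5  # kg rendered with unit gain (assumed)
    min_gain: float = 0.2        # floor so that very heavy objects still move

    def cd_gain(self, virtual_mass: float) -> float:
        # Up to the reference mass: unit gain; heavier: gain shrinks as 1/mass.
        if virtual_mass <= self.reference_mass:
            return 1.0
        return max(self.min_gain, self.reference_mass / virtual_mass)

    def step(self, position, stick, virtual_mass, dt):
        """Advance the (x, y) hand position by one frame lasting dt seconds."""
        gain = self.cd_gain(virtual_mass)
        sx, sy = (max(-1.0, min(1.0, v)) for v in stick)  # stick axes clamped to [-1, 1]
        x, y = position
        return (x + sx * self.max_speed * gain * dt,
                y + sy * self.max_speed * gain * dt)
```

With these defaults, pushing the stick fully upward for one second lifts a 0.1 kg object by 0.5 m. The same input lifts a 1 kg object by only 0.25 m. That slowdown is the visual cue intended to be read as weight.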

This work was done in collaboration with Hirose Lab (University of Tokyo).

8.4.8 Influence of virtual reality visual feedback on the illusion of movement induced by tendon vibration of wrist in healthy participants

Participants: Salomé Lefranc, Mathis Fleury, Mélanie Cogné, Anatole Lécuyer.

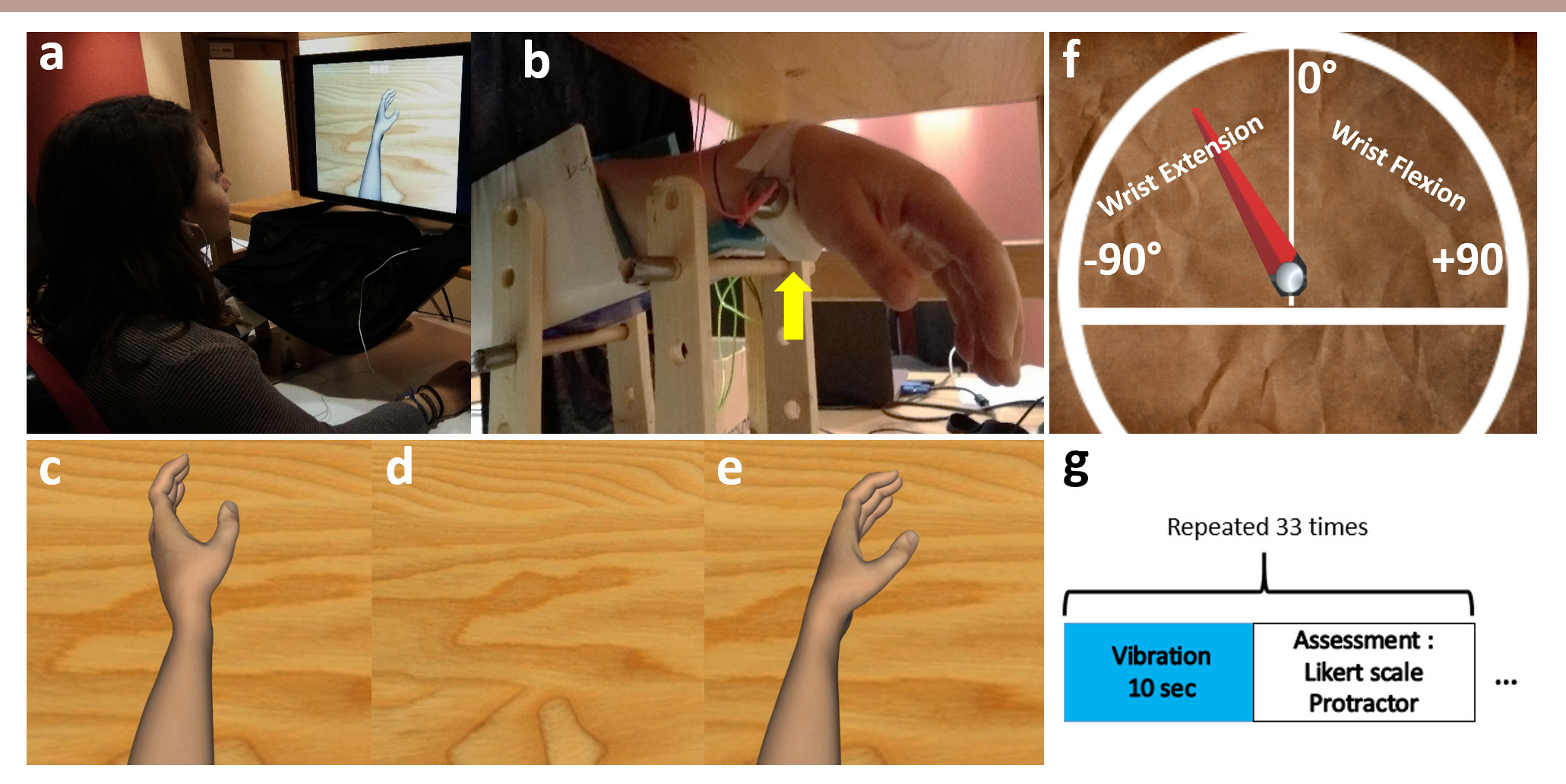

Illusion of movement induced by tendon vibration is often used in neurorehabilitation. The aim of our study 27 was to investigate which modality of visual feedback in Virtual Reality (VR), associated with tendon vibration of the wrist, could induce the best illusion of movement. Thirty healthy participants received tendon vibration of the wrist inducing an illusion of movement. Three VR visual conditions were applied: a moving virtual hand (Moving condition), a static virtual hand (Static condition), or no virtual hand at all (Hidden condition) (see Figure 22). The participants had to rate the intensity of the illusory movement on a Likert scale and to report the subjective degree of extension of their wrist. The Moving condition induced a higher intensity of illusion of movement and a higher sensation of wrist extension than both the Hidden condition (p<0.001 and p<0.001, respectively) and the Static condition (p<0.001 and p<0.001, respectively). This study demonstrates the importance of carefully selecting the visual feedback to improve the illusion of movement induced by tendon vibration. Future work will consist of testing the same hypothesis with stroke patients.

This work was done in collaboration with CHU Rennes.

8.5 Brain-Computer Interfaces

8.5.1 A survey on the use of haptic feedback in BCI/NF

Participants: Mathis Fleury, Giulia Lioi, Anatole Lécuyer.

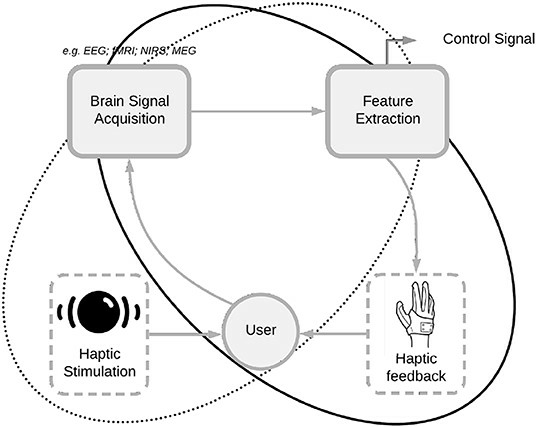

Neurofeedback (NF) and brain-computer interface (BCI) applications rely on the registration and real-time feedback of individual patterns of brain activity, with the aim of achieving self-regulation of specific neural substrates or control of external devices. These approaches have historically employed visual stimuli. However, in some cases vision is unsuitable or insufficiently engaging. Other sensory modalities, such as auditory or haptic feedback, have been explored, and multisensory stimulation is expected to improve the quality of the interaction loop. Moreover, for motor imagery tasks, closing the sensorimotor loop through haptic feedback may be relevant for motor rehabilitation applications, as it can promote plasticity mechanisms. In this work 19, we survey the various haptic technologies and describe their application to BCIs and NF (see Figure 23). We identify major trends in the use of haptic interfaces for BCI and NF systems and discuss crucial aspects that could motivate further studies.

This work was done in collaboration with the Inria EMPENN team.

8.5.2 A Multi-Target Motor Imagery Training Using Bimodal EEG-fMRI Neurofeedback: A Pilot Study in Chronic Stroke Patients

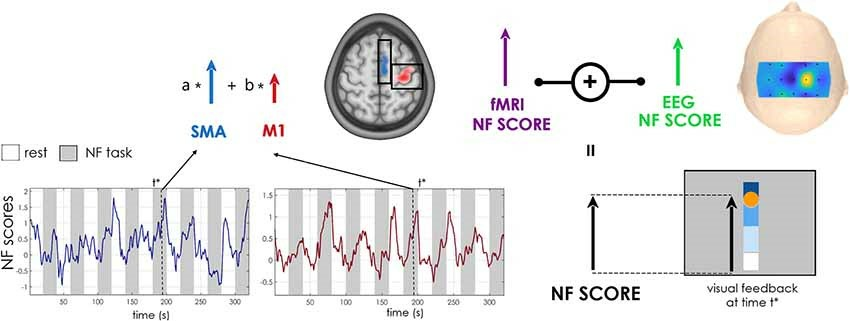

Participants: Mathis Fleury, Giulia Lioi, Anatole Lécuyer.

Traditional rehabilitation techniques present limitations, and the majority of patients show poor 1-year post-stroke recovery. Thus, Neurofeedback (NF) and brain-computer interface (BCI) applications for stroke rehabilitation are gaining increased attention. Indeed, NF has the potential to enhance volitional control of targeted cortical areas and thus to impact motor function recovery. However, current implementations are limited by the temporal, spatial, or practical constraints of the specific imaging modality used. In this pilot work 30, and for the first time in the literature, we applied bimodal EEG-fMRI NF for upper-limb stroke recovery in four stroke patients with different stroke characteristics and motor impairment severity. We also propose a novel multi-target training approach that guides the training towards the activation of the ipsilesional primary motor cortex (see Figure 24). In addition to fMRI and EEG outcomes, we assess the integrity of the corticospinal tract (CST) with tractography. Preliminary results suggest the feasibility of our approach and show its potential to induce an augmented activation of ipsilesional motor areas, depending on the severity of the stroke deficit. Only the two patients with a preserved CST and subcortical lesions succeeded in upregulating the ipsilesional primary motor cortex and exhibited a functional improvement of upper-limb motricity. These findings highlight the importance of taking into account the variability of the stroke patient population and enabled us to identify inclusion criteria for the design of future clinical studies.

This work was done in collaboration with the Inria EMPENN team and CHU Rennes.

8.5.3 Impact of 1D and 2D visualisation on EEG-fMRI neurofeedback training during a motor imagery task

Participants: Mathis Fleury, Giulia Lioi, Anatole Lécuyer.

Bimodal EEG-fMRI neurofeedback (NF) is of great interest. First, it can improve the quality of NF training by combining different real-time information (haemodynamic and electrophysiological) from the participant's brain activity. Second, it has the potential to improve our understanding of the link and synergy between the two modalities (EEG and fMRI). There are, however, different ways to present NF scores to the participant during bimodal neurofeedback sessions. In this work 58, we investigate the impact of using a 1D or a 2D representation when visual feedback is given during a motor imagery task. Results show better coherence between EEG and fMRI when the 2D display is used, and show that subjects can regulate EEG and fMRI separately.
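To make the 1D versus 2D distinction concrete, the Python sketch below maps a normalized EEG score and a normalized fMRI score to each display. The equal-weight average for the 1D gauge and the x = EEG, y = fMRI axis assignment in 2D are assumptions for illustration, not necessarily the exact design of 58. The sketch shows that a 1D gauge can show the same level for very different EEG/fMRI combinations. A 2D display keeps the two contributions visibly separate.

```python
def clamp01(value: float) -> float:
    """Clip a normalized NF score to [0, 1]."""
    return max(0.0, min(1.0, value))


def display_1d(eeg_score: float, fmri_score: float, eeg_weight: float = 0.5) -> float:
    """Single gauge level: weighted mean of both scores (equal weights assumed)."""
    return clamp01(eeg_weight * clamp01(eeg_score) + (1 - eeg_weight) * clamp01(fmri_score))


def display_2d(eeg_score: float, fmri_score: float) -> tuple:
    """Ball position in the unit square: x follows EEG, y follows fMRI (assumed)."""
    return (clamp01(eeg_score), clamp01(fmri_score))


if __name__ == "__main__":
    for eeg, fmri in [(1.0, 0.0), (0.0, 1.0), (0.5, 0.5)]:
        print(eeg, fmri, display_1d(eeg, fmri), display_2d(eeg, fmri))
```

All three pairs in the usage loop yield the same 1D gauge level of 0.5 but three distinct 2D positions. Under these assumptions, only the 2D display tells the participant which modality to work on.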

This work was done in collaboration with the Inria EMPENN team.

8.5.4 Simultaneous EEG-fMRI during a neurofeedback task, a brain imaging dataset for multimodal data integration

Participants: Mathis Fleury, Giulia Lioi, Anatole Lécuyer.

Combining EEG and fMRI allows the fine spatial resolution of fMRI to be integrated with the accurate temporal resolution of EEG. However, this combination presents numerous challenges, notably when performed in real time to implement a Neurofeedback (NF) loop. Here we describe a multimodal dataset of EEG and fMRI acquired simultaneously during a motor imagery NF task, supplemented with structural MRI data. The study involved 30 healthy volunteers undergoing five training sessions. We showed the potential and merit of simultaneous EEG-fMRI NF in previous work. In this work 31, we illustrate the type of information that can be extracted from this dataset and show its potential use. This represents one of the first simultaneous recordings of EEG and fMRI for NF, and here we present the first open-access bimodal NF dataset integrating EEG and fMRI. We believe that it will be a valuable tool to (1) advance and test methodologies for multimodal data integration, (2) improve the quality of the NF provided, (3) improve methodologies for de-noising EEG acquired under MRI, and (4) investigate the neuromarkers of motor imagery using multimodal information.

This work was done in collaboration with the Inria EMPENN team.

8.5.5 Uncovering EEG Correlates of Covert Attention in Soccer Goalkeepers: Towards Innovative Sport Training Procedures

Participants: Ferran Argelaguet, Anatole Lécuyer.

Advances in sports sciences and neurosciences offer new opportunities to design efficient and motivating sport training tools. For instance, using NeuroFeedback (NF), athletes can learn to self-regulate specific brain rhythms and consequently improve their performance. In this work 26, we focused on soccer goalkeepers' Covert Visual Spatial Attention (CVSA) abilities, which are essential for these athletes to reach high performance. We looked for Electroencephalography (EEG) markers of CVSA usable for virtual reality-based NF training procedures, i.e., markers that comply with the following criteria: (1) specific to CVSA, (2) detectable in real time, and (3) related to goalkeepers' performance/expertise. Our results revealed that the best-known EEG marker of CVSA, namely increased α-power ipsilateral to the attended hemifield, was not usable since it did not comply with criteria 2 and 3. Nonetheless, we highlighted a significant positive correlation between athletes' improvement in CVSA abilities and the increase of their α-power at rest. While the specificity of this marker (criterion 1) remains to be demonstrated, it complied with both criteria 2 and 3. This result suggests that it may be possible to design innovative ecological training procedures for goalkeepers, for instance using a combination of NF and cognitive tasks performed in virtual reality.
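The best-known CVSA marker mentioned above is often quantified as an alpha-band lateralization index. The NumPy sketch below computes one common formulation: (ipsilateral - contralateral) / (ipsilateral + contralateral) alpha power, using one left and one right posterior channel. The 8-12 Hz band, the Hann-windowed periodogram, and the two-channel setup are assumptions, not the exact pipeline of 26. The same `band_power` function applied to a resting recording gives an estimate of the α-power at rest.

```python
import numpy as np


def band_power(signal, fs, band=(8.0, 12.0)):
    """Mean power in `band` (Hz) from a Hann-windowed periodogram of one channel."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[mask].mean()


def alpha_lateralization(left_channel, right_channel, fs, attended_side):
    """(ipsi - contra) / (ipsi + contra) alpha power for the attended hemifield.

    Left-hemisphere electrodes are ipsilateral to the left hemifield. A positive
    value matches the classic CVSA pattern of higher ipsilateral alpha.
    """
    if attended_side not in ("left", "right"):
        raise ValueError("attended_side must be 'left' or 'right'")
    p_left = band_power(left_channel, fs)
    p_right = band_power(right_channel, fs)
    ipsi, contra = (p_left, p_right) if attended_side == "left" else (p_right, p_left)
    return (ipsi - contra) / (ipsi + contra)
```

Computing such an index on short sliding windows is what "detectable in real time" (criterion 2) would require of a marker in an NF loop.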

This work was done in collaboration with the MimeTIC team and EPFL.

8.5.6 Detecting System Errors in Virtual Reality Using EEG Through Error-Related Potentials

Participants: Hakim Si Mohammed, Ferran Argelaguet, Anatole Lécuyer.

When people interact with their environment and experience or witness an error (e.g., an unexpected event), a specific brain pattern known as an error-related potential (ErrP) can be observed in electroencephalographic (EEG) signals. Virtual Reality (VR) technology enables users to interact with computer-generated environments and provides multimodal sensory feedback. Using VR systems can, however, be error-prone. In this work 53, we investigate the presence of ErrPs when VR users face three types of visualization errors: (Te) tracking errors when manipulating virtual objects, (Fe) feedback errors, and (Be) background anomalies. We conducted an experiment in which 15 participants (see Figure 25) were exposed to the three types of errors while performing a center-out pick-and-place task in VR. The results showed that tracking errors generate error-related potentials, whereas the other types of errors did not generate such discernible patterns. In addition, we show that it is possible to detect the ErrPs generated by tracking losses in single trials, with an accuracy of 85%. This constitutes a first step towards the automatic detection of error-related potentials in VR applications. It paves the way to the design of adaptive and self-corrective VR/AR applications that exploit information directly from the user's brain.
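Single-trial ErrP detection is commonly approached with time-window features and a linear classifier. The NumPy sketch below pairs window-mean features with a shrinkage LDA. It is a generic baseline offered under that assumption, not the pipeline or classifier behind the 85% accuracy reported in 53. The epoch shape, window count, and shrinkage value are hypothetical.

```python
import numpy as np


def window_means(epochs, n_windows=8):
    """(n_trials, n_channels, n_samples) -> (n_trials, n_channels * n_windows) features."""
    epochs = np.asarray(epochs, dtype=float)
    n_trials, n_channels, n_samples = epochs.shape
    usable = n_samples - n_samples % n_windows        # drop samples that do not fill a window
    x = epochs[:, :, :usable].reshape(n_trials, n_channels, n_windows, -1)
    return x.mean(axis=3).reshape(n_trials, -1)


class ShrinkageLDA:
    """Two-class LDA (0 = correct, 1 = error) with a shrunk pooled covariance."""

    def __init__(self, shrinkage=0.1):
        self.shrinkage = shrinkage

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        X0, X1 = X[y == 0], X[y == 1]
        mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
        centred = np.vstack([X0 - mu0, X1 - mu1])
        cov = centred.T @ centred / (len(X) - 2)
        # Shrink towards a scaled identity to keep the covariance well conditioned.
        target = np.trace(cov) / cov.shape[0] * np.eye(cov.shape[0])
        cov = (1 - self.shrinkage) * cov + self.shrinkage * target
        self.w = np.linalg.solve(cov, mu1 - mu0)
        self.b = -self.w @ (mu0 + mu1) / 2
        return self

    def predict(self, X):
        """1 where the epoch is classified as containing an ErrP."""
        return (np.asarray(X, dtype=float) @ self.w + self.b > 0).astype(int)
```

Shrinkage matters here because ErrP datasets typically have few error trials relative to the number of channel-by-window features. In that regime the raw covariance estimate is poorly conditioned.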

This work was done in collaboration with TU Graz.

8.6 Cultural Heritage

8.6.1 From the engraved tablet to the digital tablet, history of a fifteenth century music score

Participants: Ronan Gaugne, Valérie Gouranton.

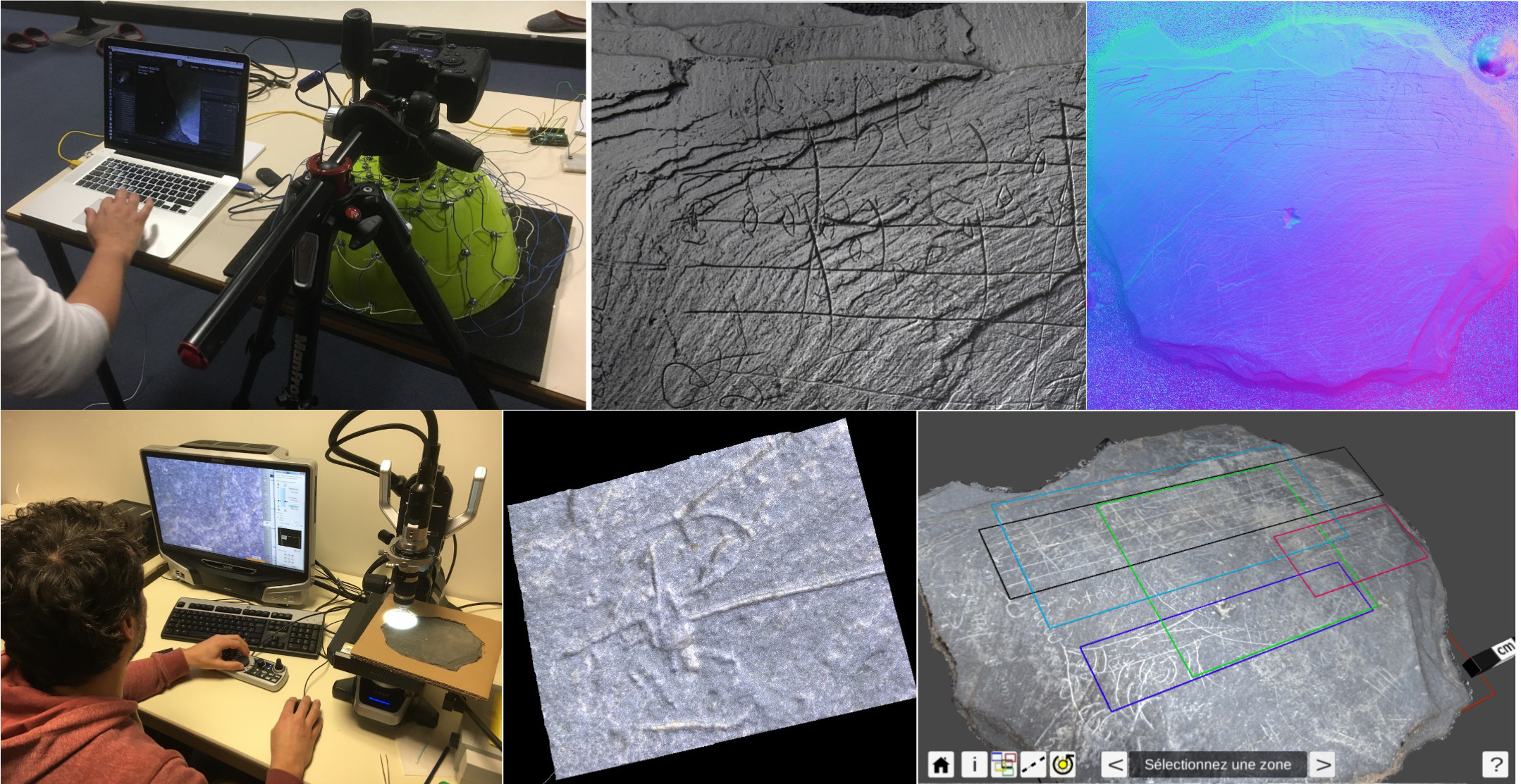

During an important archaeological excavation in the center of the city of Rennes, a 15th-century engraved tablet was discovered in the area of a former convent. The tablet is covered with engraved inscriptions on both sides and includes a musical score.

Different digitization techniques were used to study and showcase the tablet (see Figure 26). Digitization allowed an advanced analysis of the inscriptions and the generation of a complete and precise 3D model of the artifact. This model was used to produce an interactive application deployed both on touch tablets and on a website 24. The interactive application integrates a musical interpretation of the score that gives access to a testimony of intangible heritage. This interdisciplinary work gathered archaeologists, researchers from computer science and physics, and a professional musician.

This work was done in collaboration with Inrap, France.

8.6.2 Reconstruction of life aboard an East India Company ship in the 18th century

Participants: Ronan Gaugne, Valérie Gouranton.