2023 Activity report, Project-Team HYBRID

RNSR: 201322122U

- Research center: Inria Centre at Rennes University

- In partnership with: Institut national des sciences appliquées de Rennes, CNRS, Université de Rennes

- Team name: 3D interaction with virtual environments using body and mind

- In collaboration with: Institut de recherche en informatique et systèmes aléatoires (IRISA)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A2.5. Software engineering

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A6. Modeling, simulation and control

- A6.2. Scientific computing, Numerical Analysis & Optimization

- A6.3. Computation-data interaction

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.4. Therapies

- B2.5. Handicap and personal assistances

- B2.6. Biological and medical imaging

- B2.8. Sports, performance, motor skills

- B5.1. Factory of the future

- B5.2. Design and manufacturing

- B5.8. Learning and training

- B5.9. Industrial maintenance

- B6.4. Internet of things

- B8.1. Smart building/home

- B8.3. Urbanism and urban planning

- B9.1. Education

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.4. Sports

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Anatole Lécuyer [Team leader, INRIA, Senior Researcher, HDR]

- Fernando Argelaguet Sanz [INRIA, Researcher, HDR]

- Marc Macé [CNRS, Researcher, HDR]

- Léa Pillette [CNRS, Researcher]

- Justine Saint-Aubert [CNRS, Researcher, from Dec 2023]

Faculty Members

- Bruno Arnaldi [INSA Rennes, Emeritus, HDR]

- Valérie Gouranton [INSA Rennes, Associate Professor, HDR]

- Mélanie Villain [UNIV Rennes, Associate Professor]

Post-Doctoral Fellows

- Elodie Bouzbib [INRIA, until Nov 2023]

- Yann Glemarec [INRIA, Post-Doctoral Fellow, from Oct 2023]

- Francois Le Jeune [INRIA, Post-Doctoral Fellow, from Mar 2023]

- Justine Saint-Aubert [INRIA, until Oct 2023]

- Kyung-Ho Won [INRIA, Post-Doctoral Fellow, from Mar 2023]

PhD Students

- Clément Broutin [CENTRALE Nantes, from Dec 2023]

- Florence Celant-Le Manac'H [CHRU Rennes, UNIV Rennes, from Nov 2023]

- Romain Chabbert [CNRS, from Oct 2023, INSA Rennes]

- Antonin Cheymol [INRIA, INSA Rennes]

- Maxime Dumonteil [UNIV Rennes, from Oct 2023]

- Nicolas Fourrier [Segula Technologies, CIFRE, CENTRALE Nantes]

- Vincent Goupil [Sogea Bretagne, CIFRE, until Oct 2023, INSA Rennes]

- Lysa Gramoli [ORANGE LABS, CIFRE, until Sep 2023, INSA Rennes]

- Martin Guy [CENTRALE Nantes, until Feb 2023]

- Jeanne Hecquard [INRIA, UNIV Rennes]

- Gabriela Herrera Altamira [INRIA and UNIV Lorraine]

- Emilie Hummel [INRIA, INSA Rennes]

- Julien Lomet [UNIV Paris 8, UNIV Rennes]

- Julien Manson [UNIV Rennes, from Oct 2023]

- Maé Mavromatis [INRIA, until Mar 2023]

- Yann Moullec [UNIV Rennes]

- Grégoire Richard [INRIA, UNIV Lille, until Jun 2023]

- Mathieu Risy [INSA Rennes]

- Tom Roy [INRIA and InterDigital, CIFRE, from Oct 2023, INSA Rennes]

- Sony Saint-Auret [INRIA, INSA Rennes]

- Emile Savalle [UNIV Rennes]

- Sabrina Toofany [INRIA, from Oct 2023, UNIV Rennes]

- Philippe de Clermont Gallerande [INRIA and InterDigital, CIFRE, from Mar 2023, UNIV Rennes]

Technical Staff

- Alexandre Audinot [INSA Rennes, Engineer]

- Ronan Gaugne [UNIV Rennes, Engineer]

- Lysa Gramoli [INSA Rennes, Engineer, from Nov 2023]

- Anthony Mirabile [INRIA, Engineer, from Oct 2023]

- Florian Nouviale [INSA Rennes, Engineer]

- Thomas Prampart [INRIA, Engineer]

- Adrien Reuzeau [UNIV Rennes, Engineer]

Interns and Apprentices

- Arthur Chaminade [INRIA, Intern, from May 2023 until Oct 2023]

- Lea Driessens [INRIA, Intern, from Feb 2023 until Jun 2023]

- Maxime Dumonteil [INRIA, Intern, from Apr 2023 until Sep 2023]

- Pierre Fayol [UNIV Rennes, Intern, from Mar 2023 until Aug 2023]

- Julien Manson [ENS Rennes, Intern, from Feb 2023 until Aug 2023]

- Vincent Philippe [INRIA, Intern, from May 2023 until Nov 2023]

Administrative Assistant

- Nathalie Denis [INRIA]

External Collaborators

- Rebecca Fribourg [CENTRALE Nantes]

- Guillaume Moreau [IMT Atlantique, HDR]

- Jean-Marie Normand [CENTRALE Nantes, HDR]

2 Overall objectives

Our research project belongs to the scientific field of Virtual Reality (VR) and 3D interaction with virtual environments. VR systems can be used in numerous applications such as industry (virtual prototyping, assembly or maintenance operations, data visualization), entertainment (video games, theme parks), arts and design (interactive sketching or sculpture, CAD, architectural mock-ups), education and science (physical simulations, virtual classrooms), or medicine (surgical training, rehabilitation systems). A major change that we foresee in the next decade in the field of Virtual Reality is the emergence of new paradigms of interaction (input/output) with Virtual Environments (VE).

Today, the most common way to interact with 3D content is still to measure the user's motor activity, i.e., his/her gestures and physical motions when manipulating different kinds of input devices. However, a recent trend consists in soliciting more movement and more physical engagement of the user's body. We can notably stress the emergence of bimanual interaction, natural walking interfaces, and whole-body involvement. These new interaction schemes bring a new level of complexity in terms of generic physical simulation of potential interactions between the virtual body and the virtual surroundings, and a challenging "trade-off" between performance and realism. Moreover, research is also needed to characterize the influence of these new sensory cues on the resulting feelings of "presence" and immersion of the user.

Besides, a novel kind of user input has recently appeared in the field of virtual reality: the user's mental activity, which can be measured by means of a "Brain-Computer Interface" (BCI). Brain-Computer Interfaces are communication systems which measure the user's electrical cerebral activity and translate it, in real time, into an exploitable command. BCIs introduce a new way of interacting "by thought" with virtual environments. However, current BCIs can only detect a small number of mental states, and hence support only a small number of mental commands. Thus, research is still needed to extend the capacities of BCIs and to better exploit the few available mental states in virtual environments.

Our first motivation thus consists in designing novel “body-based” and “mind-based” controls of virtual environments and achieving, in both cases, more immersive and more efficient 3D interaction.

Furthermore, in current VR systems, motor activities and mental activities are always considered separately and exclusively. This is reminiscent of the well-known “body-mind dualism”, which is at the heart of historical philosophical debates. In this context, our objective is to introduce novel “hybrid” interaction schemes in virtual reality, by considering motor and mental activities jointly, i.e., in a harmonious, complementary, and optimized way. Thus, we intend to explore novel paradigms of 3D interaction mixing body and mind inputs. Moreover, our approach becomes even more challenging when considering and connecting multiple users, which implies multiple bodies and multiple brains collaborating and interacting in virtual reality.

Our second motivation thus consists in introducing a “hybrid approach” which will mix the mental and motor activities of one or multiple users in virtual reality.

3 Research program

The scientific objective of the Hybrid team is to improve the 3D interaction of one or multiple users with virtual environments, by making full use of the physical engagement of the body, and by incorporating mental states by means of brain-computer interfaces. We intend to improve each component of this framework individually, as well as their combinations.

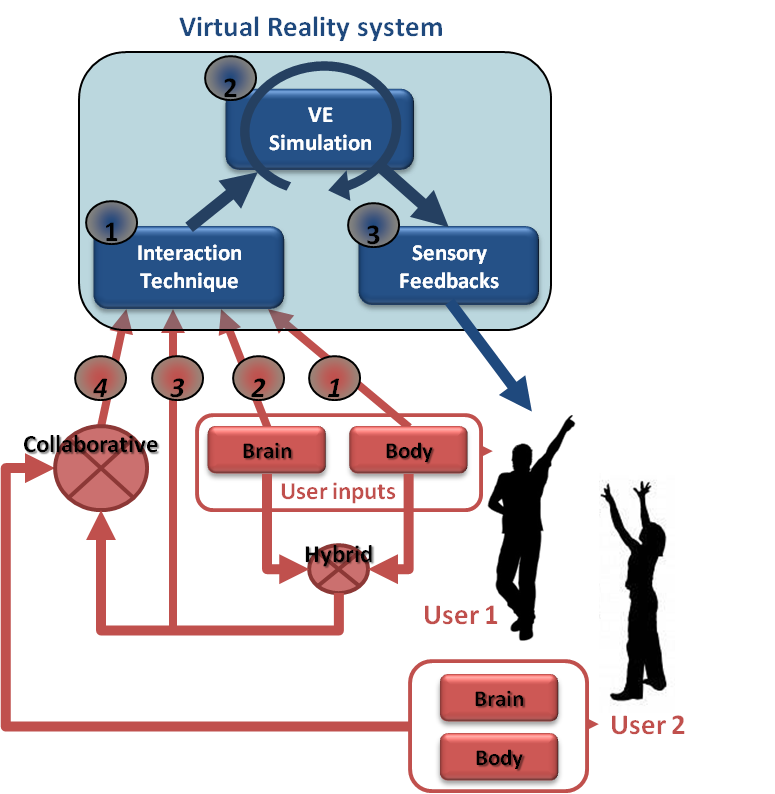

The “hybrid” 3D interaction loop between one or multiple users and a virtual environment is depicted in Figure 1. Different kinds of 3D interaction situations are distinguished (red arrows, bottom): 1) body-based interaction, 2) mind-based interaction, 3) hybrid and/or 4) collaborative interaction (with at least two users). In each case, three scientific challenges arise which correspond to the three successive steps of the 3D interaction loop (blue squares, top): 1) the 3D interaction technique, 2) the modeling and simulation of the 3D scenario, and 3) the design of appropriate sensory feedback.

3D hybrid interaction loop between one or multiple users and a virtual reality system

The 3D interaction loop involves various possible inputs from the user(s) and different kinds of output (or sensory feedback) from the simulated environment. Each user can involve his/her body and mind by means of corporal and/or brain-computer interfaces. A hybrid 3D interaction technique (1) mixes mental and motor inputs and translates them into a command for the virtual environment. The real-time simulation (2) of the virtual environment takes these commands into account to change and update the state of the virtual world and virtual objects. The state changes are sent back to the user and perceived through different sensory feedbacks (e.g., visual, haptic and/or auditory) (3). These sensory feedbacks close the 3D interaction loop. Other users can also interact with the virtual environment using the same procedure, and can potentially “collaborate” using “collaborative interactive techniques” (4).
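The loop can be illustrated with a deliberately simplified Python sketch. It is not the team's software: the input types, the "grasp" command, and the 0.7 confidence threshold are hypothetical choices made for the example. The hybrid technique (1) lets the body drive an object's motion while a mental command, accepted only above a confidence threshold, controls the grasp; the simulation (2) updates the world; and a feedback function (3) reports the new state.

```python
from dataclasses import dataclass


@dataclass
class MotorInput:
    dx: float  # tracked hand displacement along one axis, in metres (hypothetical)


@dataclass
class MentalInput:
    command: str       # label from a hypothetical BCI classifier
    confidence: float  # classifier confidence in [0, 1]


@dataclass
class WorldState:
    object_x: float = 0.0
    grasped: bool = False


def hybrid_technique(motor: MotorInput, mental: MentalInput, threshold: float = 0.7) -> dict:
    """Step (1): mix motor and mental inputs into a single command.

    The body drives the motion; the mind controls the grasp, but only
    when the classifier is confident enough.
    """
    grasp = mental.command == "grasp" and mental.confidence >= threshold
    return {"move": motor.dx, "grasp": grasp}


def simulate(state: WorldState, command: dict) -> WorldState:
    """Step (2): update the virtual world; the object only moves while grasped."""
    grasped = command["grasp"]
    new_x = state.object_x + command["move"] if grasped else state.object_x
    return WorldState(object_x=new_x, grasped=grasped)


def feedback(state: WorldState) -> str:
    """Step (3): textual stand-in for visual, haptic or auditory feedback."""
    return f"object at {state.object_x:.2f} m, {'held' if state.grasped else 'released'}"


state = WorldState()
for motor, mental in [(MotorInput(0.1), MentalInput("grasp", 0.9)),
                      (MotorInput(0.2), MentalInput("grasp", 0.4))]:
    state = simulate(state, hybrid_technique(motor, mental))
    print(feedback(state))
# object at 0.10 m, held
# object at 0.10 m, released
```

In the second iteration the mental command falls below the threshold, so the grasp is released and the hand motion no longer moves the object.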

This description highlights three major challenges, which correspond to three mandatory steps when designing 3D interaction with virtual environments:

- 3D interaction techniques: This first step consists in translating the actions or intentions of the user (inputs) into an explicit command for the virtual environment. In virtual reality, the classical tasks that require such user commands were classified early on into four categories 51: navigating the virtual world, selecting a virtual object, manipulating it, or controlling the application (entering text, activating options, etc.). However, adding a third dimension and using stereoscopic rendering along with advanced VR interfaces cause many 2D techniques to become inappropriate. It is thus necessary to design specific interaction techniques and adapted tools. This challenge is renewed here by the various kinds of 3D interaction which are targeted. In our case, we consider various situations, with motor and/or cerebral inputs, and potentially multiple users.

- Modeling and simulation of complex 3D scenarios: This second step corresponds to the update of the state of the virtual environment, in real-time, in response to all the potential commands or actions sent by the user. The complexity of the data and phenomena involved in 3D scenarios is constantly increasing. It corresponds for instance to the multiple states of the entities present in the simulation (rigid, articulated, deformable, fluids, which can constitute both the user’s virtual body and the different manipulated objects), and the multiple physical phenomena implied by natural human interactions (squeezing, breaking, melting, etc). The challenge consists here in modeling and simulating these complex 3D scenarios and meeting, at the same time, two strong constraints of virtual reality systems: performance (real-time and interactivity) and genericity (e.g., multi-resolution, multi-modal, multi-platform, etc).

- Immersive sensory feedbacks: This third step corresponds to the display of the multiple sensory feedbacks (output) coming from the various VR interfaces. These feedbacks enable the user to perceive the changes occurring in the virtual environment. They close the 3D interaction loop, immerse the user, and can generate a subsequent feeling of presence. Among the various VR interfaces developed so far, we can stress two kinds of sensory feedback: visual feedback (3D stereoscopic images using projection-based systems such as CAVE systems or Head-Mounted Displays) and haptic feedback (related to the sense of touch and to tactile or force-feedback devices). The Hybrid team has strong expertise in haptic feedback and in the design of haptic and “pseudo-haptic” rendering 52. Note that a major trend in the community, strongly supported by the Hybrid team, relates to a “perception-based” approach, which aims at designing sensory feedbacks that are well in line with human perceptual capabilities.
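A common way to produce pseudo-haptic feedback is to alter the control/display (C/D) ratio between the user's real motion and the displayed motion: slowing the displayed motion inside a region tends to be perceived as resistance or friction, without any force-feedback device. The Python sketch below only illustrates this principle in one dimension; the region bounds and the 0.5 gain are arbitrary assumptions, not values from the team's work.

```python
def displayed_displacement(x: float, dx: float,
                           region: tuple = (0.4, 0.6),
                           gain_inside: float = 0.5) -> float:
    """Map a real hand displacement dx, made at displayed position x, to a displayed displacement.

    Outside the region the mapping is 1:1. Inside, motion is scaled by
    gain_inside (< 1), so the cursor lags behind the hand there.
    """
    lo, hi = region
    gain = gain_inside if lo <= x <= hi else 1.0
    return gain * dx


def trajectory(real_steps, x0: float = 0.0) -> list:
    """Integrate displayed positions for a sequence of equal real hand steps."""
    x = x0
    positions = [x]
    for dx in real_steps:
        x += displayed_displacement(x, dx)
        positions.append(x)
    return positions


# The hand moves by the same amount at every step, yet the displayed
# steps shrink inside the region, which is meant to feel "sticky".
print(trajectory([0.1] * 8))
```

With a gain of 0.5, the user has to move the hand twice as far to cross the region, which is what makes it feel like a resistive area.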

These three scientific challenges are addressed differently according to the context and the user inputs involved. We propose to consider three different contexts, which correspond to the three different research axes of the Hybrid research team, namely: 1) body-based interaction (motor input only), 2) mind-based interaction (cerebral input only), and then 3) hybrid and collaborative interaction (i.e., the mixing of body and brain inputs from one or multiple users).

3.1 Research Axes

The scientific activity of the Hybrid team follows three main axes of research:

- Body-based interaction in virtual reality. Our first research axis concerns the design of immersive and effective "body-based" 3D interactions, i.e., relying on a physical engagement of the user’s body. This trend is probably the most popular one in VR research at the moment. Most VR setups make use of tracking systems which measure specific positions or actions of the user in order to interact with a virtual environment. However, in recent years, novel options have emerged for measuring “full-body” movements or other, even less conventional, inputs (e.g., body equilibrium). In this first research axis, we focus on new emerging methods of “body-based interaction” with virtual environments. This implies the design of novel 3D user interfaces and 3D interaction techniques, new simulation models and techniques, and innovative sensory feedbacks for body-based interaction with virtual worlds. It involves real-time physical simulation of complex interactive phenomena, and the design of corresponding haptic and pseudo-haptic feedback.

- Mind-based interaction in virtual reality. Our second research axis concerns the design of immersive and effective “mind-based” 3D interactions in Virtual Reality. Mind-based interaction with virtual environments relies on Brain-Computer Interface technology, which corresponds to the direct use of brain signals to send “mental commands” to an automated system such as a robot, a prosthesis, or a virtual environment. BCI is a rapidly growing area of research and several impressive prototypes are already available. However, the emergence of such a novel user input also calls for novel and dedicated 3D user interfaces. This implies studying the extension of the mental vocabulary available for 3D interaction with VEs, the design of specific 3D interaction techniques “driven by the mind” and, last, the design of immersive sensory feedbacks that could help improve the learning of brain control in VR.

- Hybrid and collaborative 3D interaction. Our third research axis intends to study the combination of motor and mental inputs in VR, for one or multiple users. This concerns the design of mixed systems, with potentially collaborative scenarios involving multiple users, and thus multiple bodies and multiple brains sharing the same VE. This research axis therefore involves two interdependent topics: 1) collaborative virtual environments, and 2) hybrid interaction. It should lead to collaborative virtual environments with multiple users, and to shared systems with body and mind inputs.

4 Application domains

4.1 Overview

The research program of the Hybrid team targets the next generations of virtual reality and 3D user interfaces, which could address both the “body” and the “mind” of the user. Novel interaction schemes are designed for one or multiple users. We target better integrated systems and more compelling user experiences.

The applications of our research program correspond to the applications of virtual reality technologies which could benefit from the addition of novel body-based or mind-based interaction capabilities:

- Industry: with training systems, virtual prototyping, or scientific visualization;

- Medicine: with rehabilitation and re-education systems, or surgical training simulators;

- Entertainment: with the movie industry, content customization, video games, or theme-park attractions;

- Construction: with virtual mock-up design and review, or historical/architectural visits;

- Cultural Heritage: with acquisition, virtual excavation, virtual reconstruction and visualization.

5 Social and environmental responsibility

5.1 Impact of research results

A salient initiative carried out by Hybrid in relation to social responsibility in the field of health is the Inria Covid-19 project “VERARE”. VERARE is a unique and innovative concept implemented in record time thanks to a close collaboration between the Hybrid research team and the teams of the intensive care and physical and rehabilitation medicine departments of Rennes University Hospital. VERARE consists in using virtual environments and VR technologies for the rehabilitation of Covid-19 patients who are coming out of a coma, weakened, and with strong difficulties in recovering walking. With VERARE, the patient is immersed in different virtual environments using a VR headset. The patient is represented by an “avatar” carrying out different motor tasks involving the lower limbs, for example walking, jogging, or avoiding obstacles. Our main hypothesis is that observing such virtual actions, and progressively resuming motor activity in VR, will allow rehabilitation to start earlier, as soon as the patient leaves the ICU. Patients will then be able to carry out sessions in their room, or even from their hospital bed, in simple and secure conditions, with the hope of obtaining a final clinical benefit, either in terms of motor and walking recovery or in terms of hospital length of stay. The project started at the end of April 2020, and we were able to deploy a first version of our application at the Rennes hospital in mid-June 2020, only 2 months after the project started. Covid patients are now using our virtual reality application at the Rennes University Hospital, and the clinical evaluation of VERARE is still ongoing and expected to be completed in 2024. The project is also driving the research activity of Hybrid on many aspects, e.g., haptics, avatars, and VR user experience, with 4 papers published in IEEE TVCG in 2022 and 2023.

6 Highlights of the year

- Hiring of Justine Saint-Aubert as CNRS Research Scientist.

- Hiring of Thomas Prampart as Inria Research Engineer.

- Attribution of IEEE VR 2025 conference organization (Anatole Lécuyer and Ferran Argelaguet are General Chairs)

- Definition of the new team project: Seamless

6.1 Awards

- IEEE VGTC Virtual Reality Best PhD Dissertation Award (Honorable Mention) - Hugo Brument

7 New software, platforms, open data

7.1 New software

7.1.1 OpenViBE

- Keywords: Neurosciences, Interaction, Virtual reality, Health, Real time, Neurofeedback, Brain-Computer Interface, EEG, 3D interaction

- Functional Description: OpenViBE is a free and open-source software platform devoted to the design, test and use of Brain-Computer Interfaces (BCI). The platform consists of a set of software modules that can be integrated easily and efficiently to design BCI applications. The key features of OpenViBE software are its modularity, its high performance, its portability, its multiple-user facilities and its connection with high-end/VR displays. The OpenViBE Designer enables users to build complete scenarios based on existing software modules, using a dedicated graphical language and a simple Graphical User Interface (GUI). This software is available on the Inria Forge under the terms of the AGPL licence, and it was officially released in June 2009. Since then, OpenViBE has been downloaded more than 60,000 times, and it is used by numerous laboratories, projects, and individuals worldwide. More information, downloads, tutorials, videos, and documentation are available on the OpenViBE website.

- Release Contributions: Added: a metabox to compute the log of signal power; artifacted files for algorithm tests. Changed: refactoring of the CMake build process; updated wildcards in .gitignore; updated CSV File Writer/Reader (stimulations only). Removed: Ogre games and their dependencies; Mensia distribution. Fixed: an intermittent compiler bug.

- News of the Year: Python 2 support was dropped in favour of Python 3. New feature boxes: Riemannian geometry, multimodal Graz visualisation, artefact detection, feature selection, and stimulation validator. Support for Ubuntu 18.04 and Fedora 31.

- URL:

- Contact: Anatole Lécuyer

- Participants: Cedric Riou, Thierry Gaugry, Anatole Lécuyer, Fabien Lotte, Jussi Lindgren, Laurent Bougrain, Maureen Clerc Gallagher, Théodore Papadopoulo, Thomas Prampart

- Partners: INSERM, GIPSA-Lab
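The log-of-signal-power metabox listed in the release contributions computes a classic BCI feature. As a rough illustration only (this is not OpenViBE code and does not reproduce its box parameters), the log of the mean power over a window of samples can be computed as follows; the epsilon guard and the example window are assumptions. Taking the logarithm is commonly done because band power is strongly skewed, and its log is closer to the Gaussian distributions that linear classifiers such as LDA assume.

```python
import math


def log_power(window, eps=1e-12):
    """Log of the mean power of a window of (e.g., band-pass filtered) EEG samples.

    eps avoids log(0) on a flat signal; its value is an arbitrary choice.
    """
    if not window:
        raise ValueError("empty window")
    power = sum(s * s for s in window) / len(window)
    return math.log(power + eps)


# Hypothetical window of samples (in microvolts) from one filtered channel.
samples = [2.0, -2.0, 2.0, -2.0]
print(log_power(samples))  # about 1.386, i.e. log(4)
```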

7.1.2 Xareus

- Name: Xareus

- Keywords: Virtual reality, Augmented reality, 3D, 3D interaction, Behavior modeling, Interactive Scenarios

- Scientific Description: Xareus mainly contains a scenario engine (#SEVEN) and a relation engine (#FIVE). #SEVEN is a model and an engine based on Petri nets extended with sensors and effectors, enabling the description and execution of complex and interactive scenarios. #FIVE is a framework for the development of interactive and collaborative virtual environments. #FIVE was developed to answer the need for an easier and faster design and development of virtual reality applications. #FIVE provides a toolkit that simplifies the declaration of possible actions and behaviours of objects in a VE. It also provides a toolkit that facilitates the setting up and management of collaborative interactions in a VE. It supports distributing the VE across different setups. It also proposes guidelines to efficiently create a collaborative and interactive VE.

- Functional Description: Xareus is implemented in C# and is available as libraries. An integration with the Unity3D engine also exists. Thanks to the Xareus modules, users can focus on the domain-specific aspects of their application (industrial training, medical training, etc.). These modules can be used in a vast range of domains for augmented and virtual reality applications requiring interactive environments and collaboration, such as training. The scenario engine is based on Petri nets with the addition of sensors and effectors that allow the execution of complex scenarios for driving Virtual Reality applications. Xareus comes with a scenario editor integrated into Unity 3D for creating, editing, remotely controlling and running scenarios. The relation engine contains software modules that can be interconnected and help build interactive and collaborative virtual environments.

- Release Contributions: This version is up to date with Unity 3D 2022.3 LTS and includes a rewrite of most of the visual editor with the UIToolkit library. It also adds many features and options to customize the editor. Added: sections; multiple sensors/effectors on one transition; negation of sensors; website.

- URL:

- Publications:

- Contact: Valérie Gouranton

- Participants: Florian Nouviale, Valérie Gouranton, Bruno Arnaldi, Vincent Goupil, Carl-Johan Jorgensen, Emeric Goga, Adrien Reuzeau, Alexandre Audinot
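The Xareus scenario engine is described as Petri nets extended with sensors and effectors. The Python sketch below illustrates that idea under our own assumptions; it is not the #SEVEN model nor the Xareus C# API. A transition fires when its input places hold tokens and its sensor (a predicate over the virtual environment) is true, and firing runs its effectors on the environment. All place, transition, sensor and effector names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Env = Dict[str, bool]  # hypothetical state of the virtual environment


@dataclass
class Transition:
    name: str
    inputs: List[str]    # places that must hold a token
    outputs: List[str]   # places that receive a token when firing
    # Sensor: condition observed in the virtual environment (always true by default).
    sensor: Callable[[Env], bool] = lambda env: True
    # Effectors: actions applied to the virtual environment when the transition fires.
    effectors: List[Callable[[Env], None]] = field(default_factory=list)


@dataclass
class ScenarioNet:
    marking: Dict[str, int]
    transitions: List[Transition]

    def enabled(self, t: Transition, env: Env) -> bool:
        # Classic Petri-net enabling rule, guarded by the transition's sensor.
        return all(self.marking.get(p, 0) > 0 for p in t.inputs) and t.sensor(env)

    def step(self, env: Env) -> List[str]:
        """Try each transition once, in list order; fire it if enabled at that moment."""
        fired = []
        for t in self.transitions:
            if self.enabled(t, env):
                for p in t.inputs:
                    self.marking[p] -= 1
                for p in t.outputs:
                    self.marking[p] = self.marking.get(p, 0) + 1
                for effect in t.effectors:
                    effect(env)
                fired.append(t.name)
        return fired


# Hypothetical training step: once the trainee grabs the tool, the door opens.
def open_door(env: Env) -> None:
    env["door_open"] = True


env = {"tool_grabbed": False, "door_open": False}
net = ScenarioNet(
    marking={"waiting_for_tool": 1},
    transitions=[Transition("tool_taken", ["waiting_for_tool"], ["tool_in_hand"],
                            sensor=lambda e: e["tool_grabbed"], effectors=[open_door])],
)
print(net.step(env))     # [] (sensor is false, nothing fires)
env["tool_grabbed"] = True
print(net.step(env))     # ['tool_taken']
print(env["door_open"])  # True
```

Keeping sensors and effectors outside the net structure means the same scenario graph can be reused while only the bindings to the virtual environment change.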

7.1.3 AvatarReady

- Name: A unified platform for the next generation of our virtual selves in digital worlds

- Keywords: Avatars, Virtual reality, Augmented reality, Motion capture, 3D animation, Embodiment

- Scientific Description: AvatarReady is an open-source tool (AGPL) written in C#, providing a plugin for the Unity 3D software to facilitate the use of humanoid avatars in mixed reality applications. Because semi-automatically configuring avatars coming from different origins, and using different interaction techniques and devices, is currently complex, AvatarReady aggregates several industrial solutions and results from the academic state of the art to offer a simple and fast way to use humanoid avatars seamlessly in mixed reality. For example, it can automatically configure avatars from different libraries (e.g., Rocketbox, Character Creator, Mixamo), and easily use different avatar control methods (e.g., motion capture, inverse kinematics). AvatarReady is also organized in a modular way so that scientific advances can be progressively integrated into the framework. AvatarReady is furthermore accompanied by a utility to generate ready-to-use avatar packages that can be used on the fly, as well as a website to display them and offer them for download.

- Functional Description: AvatarReady is a Unity tool to facilitate the configuration and use of humanoid avatars for mixed reality applications. It comes with a utility to generate ready-to-use avatar packages and a website to display them and offer them for download.

- URL:

- Authors: Ludovic Hoyet, Fernando Argelaguet Sanz, Adrien Reuzeau

- Contact: Ludovic Hoyet
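Inverse kinematics is one of the avatar control methods listed for AvatarReady. To illustrate the principle only (AvatarReady's own solvers are not described here), a planar two-bone limb such as an arm can be solved analytically with the law of cosines. The segment lengths and target below are arbitrary, and the sketch always returns the same one of the two mirror-image elbow solutions.

```python
import math


def two_bone_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians so the chain's end reaches (x, y).

    The elbow angle is relative to the upper segment. Unreachable targets are
    clamped to the nearest reachable distance along the same direction.
    """
    d = math.hypot(x, y)
    d = max(abs(l1 - l2), min(l1 + l2, d))
    # Law of cosines gives the elbow bend needed for a shoulder-to-hand distance d.
    cos_elbow = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Aim at the target, then compensate for the offset introduced by the bent elbow.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Forward kinematics, used to check the IK solution."""
    ex, ey = l1 * math.cos(shoulder), l1 * math.sin(shoulder)
    return ex + l2 * math.cos(shoulder + elbow), ey + l2 * math.sin(shoulder + elbow)


# Hypothetical arm: 0.30 m upper arm, 0.25 m forearm, target hand position (0.35, 0.20).
s, e = two_bone_ik(0.35, 0.20, 0.30, 0.25)
print(forward(s, e, 0.30, 0.25))  # approximately (0.35, 0.20)
```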

7.1.4 ElectroStim

- Keywords: Virtual reality, Unity 3D, Electrotactility, Sensory feedback

- Scientific Description: ElectroStim provides an agnostic haptic rendering framework able to exploit electrical stimulation capabilities, to quickly test different electrode prototypes, and to offer a fast and easy way to author electrotactile sensations so that they can quickly be compared when used as tactile feedback in VR interactions. The framework was designed to exploit electrotactile feedback, but it can also be extended to other tactile rendering systems such as vibrotactile feedback. Furthermore, it is designed to be easily extendable to other types of haptic sensations.

- Functional Description: This software provides the tools necessary to control an electrotactile stimulator in Unity 3D. It allows precise control of the system to generate tactile sensations in virtual reality applications.

- Publication:

- Authors: Sebastian Santiago Vizcay, Fernando Argelaguet Sanz

- Contact: Fernando Argelaguet Sanz
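ElectroStim is described as agnostic to the stimulation hardware and extensible to vibrotactile feedback. One common way to obtain that property is a shared rendering interface with one back-end per actuator type, so that interaction code only authors device-independent sensations. The Python sketch below illustrates this design under our own assumptions: the class names, the intensity-to-current mapping and the current range are hypothetical placeholders and do not come from ElectroStim, which targets Unity 3D; real current ranges must be calibrated per user and electrode.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class TactileSensation:
    """Device-independent description of a sensation authored by the designer."""
    intensity: float  # normalized: 0 (off) to 1 (maximum)
    duration_ms: int


class TactileRenderer(ABC):
    """Common interface: VR interaction code only talks to this class."""

    @abstractmethod
    def render(self, sensation: TactileSensation) -> dict:
        """Translate a sensation into device-specific command parameters."""


class ElectrotactileRenderer(TactileRenderer):
    def __init__(self, min_ma: float, max_ma: float):
        # Placeholder calibration range between perception and comfort thresholds.
        self.min_ma, self.max_ma = min_ma, max_ma

    def render(self, sensation: TactileSensation) -> dict:
        i = min(max(sensation.intensity, 0.0), 1.0)
        amplitude = 0.0 if i == 0 else self.min_ma + i * (self.max_ma - self.min_ma)
        return {"amplitude_ma": amplitude, "duration_ms": sensation.duration_ms}


class VibrotactileRenderer(TactileRenderer):
    def render(self, sensation: TactileSensation) -> dict:
        i = min(max(sensation.intensity, 0.0), 1.0)
        return {"duty_cycle": i, "duration_ms": sensation.duration_ms}


touch = TactileSensation(intensity=0.5, duration_ms=100)
for renderer in (ElectrotactileRenderer(min_ma=1.0, max_ma=3.0), VibrotactileRenderer()):
    print(type(renderer).__name__, renderer.render(touch))
```

Comparing electrode prototypes then amounts to swapping or recalibrating a back-end while the authored sensations stay unchanged.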

7.2 New platforms

7.2.1 Immerstar

Participants: Florian Nouviale, Ronan Gaugne.

URL: Immersia website

With the two virtual reality technological platforms Immersia and Immermove, grouped under the name Immerstar, the team has access to high-level scientific facilities. This equipment benefits the research teams of the center and has allowed them to extend their local, national and international collaborations. In 2023, the Immersia platform was extended with a new space dedicated to scientific experimentation in XR, named ImmerLab (see figure 2). A photography rig provided by InterDigital was also installed; it will make it possible to explore the computation and rendering of head and face images.

We celebrated the twentieth anniversary of the Immersia platform in November 2019 by inaugurating the new haptic equipment. We organized scientific presentations attended by 150 participants, as well as visits for support services staff, which welcomed 50 people.

Since 2021, Immerstar has been funded by the PIA3-Equipex+ project CONTINUUM. The CONTINUUM project involves 22 partners and animates a collaborative research infrastructure of 30 platforms located throughout France. It aims to foster advanced interdisciplinary research based on interaction between computer science and the human and social sciences. Thanks to CONTINUUM, 37 research teams can develop cutting-edge research programs focusing on visualization, immersion, interaction and collaboration, as well as on human perception, cognition and behaviour in virtual/augmented reality, with potential impact on societal issues. CONTINUUM enables a paradigm shift in the way we perceive, interact, and collaborate with complex digital data and digital worlds by putting humans at the center of the data processing workflows.

Since the end of 2021, Immerstar has been involved in a new National Research Infrastructure, which gathers the main platforms of CONTINUUM.

Immerstar is also involved in EUR Digisport led by University of Rennes, H2020 EU projects GuestXR and Share Space, and PIA4 DemoES AIR led by University of Rennes.

In October 2023, the platform team was strengthened by the recruitment of a permanent research engineer, Adrien Reuzeau, by the University of Rennes. In 2023, Immerstar was also granted CPER funding for a two-year University Rennes 2 research engineer position dedicated to developing partnerships with private entities.

Immersia also hosted teaching activities for students from INSA Rennes, ENS Rennes, and University of Rennes.

Immersia VR and haptics platform

8 New results

8.1 Virtual Reality Tools and Usages

8.1.1 Assisted walking-in-place: Introducing assisted motion to walking-by-cycling in embodied virtual reality

Participants: Yann Moullec, Mélanie Cogné [contact], Justine Saint-Aubert, Anatole Lécuyer.

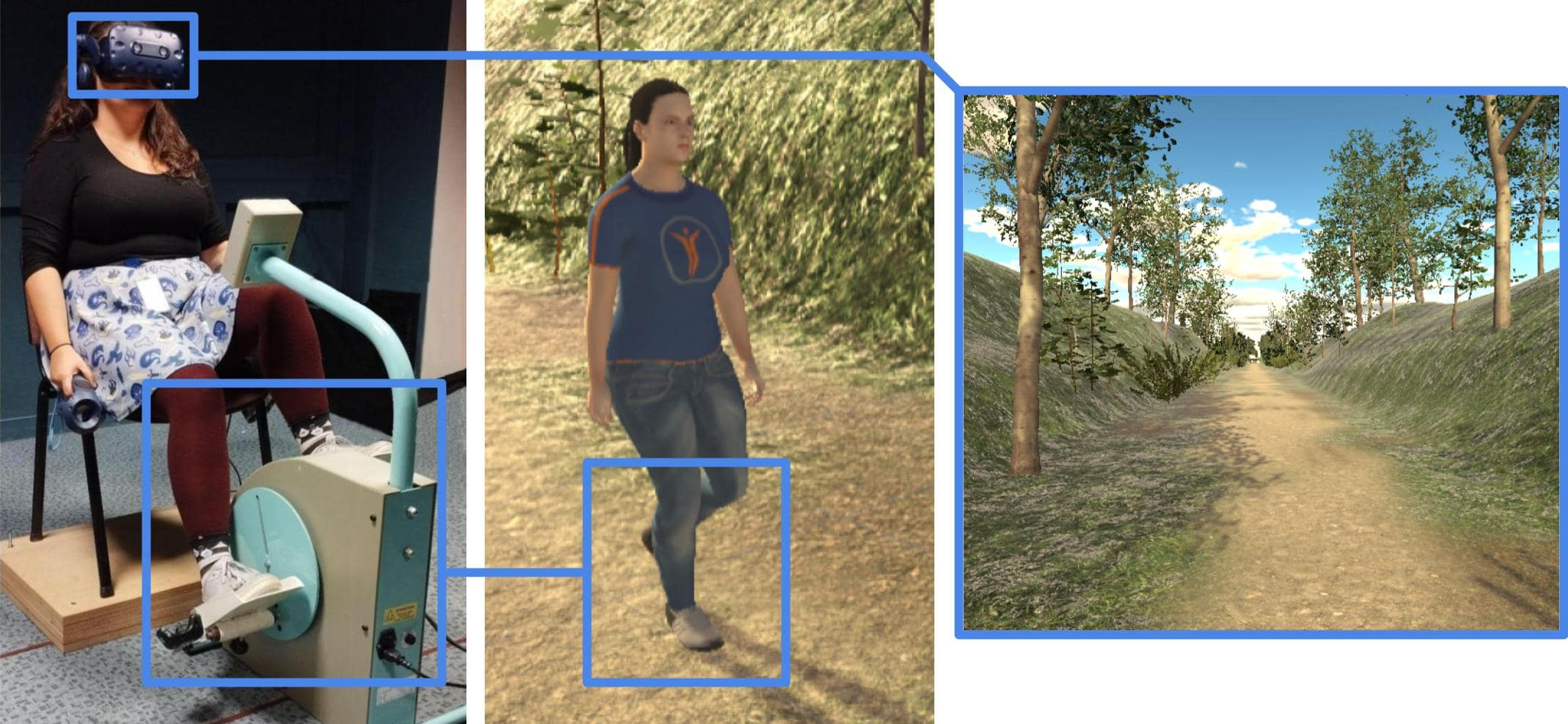

In this work 28, we investigate the use of a motorized bike to support the walk of a self-avatar in Virtual Reality (VR). While existing walking-in-place (WIP) techniques render compelling walking experiences, they can be judged repetitive and strenuous. Our approach consists in assisting a WIP technique so that users do not have to move actively, thereby reducing effort and fatigue. We chose to assist a technique called walking-by-cycling, which maps the cycling motion of a bike onto the walking of the user's self-avatar, by using a motorized bike (see figure 3). We expected that our approach could provide participants with a compelling walking experience while reducing the effort required to navigate. We conducted a within-subjects study in which we compared "assisted walking-by-cycling" to a traditional active walking-by-cycling implementation, and to a standard condition where the user is static. In the study, we measured embodiment (including ownership and agency), walking sensation, perceived effort, and fatigue. Results showed that assisted walking-by-cycling induced more ownership, agency, and walking sensation than the static simulation. Additionally, assisted walking-by-cycling induced levels of ownership and walking sensation similar to those of active walking-by-cycling, but it induced less perceived effort. Taken together, this work promotes the use of assisted walking-by-cycling in situations where users cannot or do not want to exert much effort while walking in embodied VR, such as for injured or disabled users, prolonged use, medical rehabilitation, or virtual visits.

Teaser of the experimental setup
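The core of walking-by-cycling is a mapping from pedalling to the self-avatar's gait. The summary does not detail that mapping, so the Python sketch below rests on illustrative assumptions only: one crank revolution drives one full gait cycle (one stride, i.e., two steps), and avatar speed is cadence times a fixed stride length (1.4 m, an arbitrary value). With the motorized bike the crank is turned by the motor rather than by the user, but the same crank signal can drive the avatar.

```python
import math
from dataclasses import dataclass


@dataclass
class GaitState:
    phase: float  # gait-cycle phase in [0, 1); 0 and 0.5 are opposite heel strikes (assumed convention)
    speed: float  # avatar forward speed in m/s


def crank_to_gait(crank_angle_rad: float, cadence_rpm: float, stride_m: float = 1.4) -> GaitState:
    """Map the bike crank state onto the self-avatar's walking cycle.

    Assumption: one crank revolution = one gait cycle (one stride = two steps),
    so speed = strides per second * stride length.
    """
    phase = (crank_angle_rad / (2 * math.pi)) % 1.0
    strides_per_s = cadence_rpm / 60.0
    return GaitState(phase=phase, speed=strides_per_s * stride_m)


# Active pedalling or motor-driven (assisted) rotation: the avatar is animated the same way.
print(crank_to_gait(math.pi, cadence_rpm=50))
```

The gait phase would typically index a walking animation cycle, while the speed moves the avatar through the virtual environment.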

8.1.2 Assistive robotic technologies for next-generation smart wheelchairs

Participants: Valérie Gouranton [contact].

This work 27 describes the robotic assistive technologies developed for users of electrically powered wheelchairs, within the framework of the European Union’s Interreg ADAPT (Assistive Devices for Empowering Disabled People Through Robotic Technologies) project. In particular, special attention is devoted to the integration of advanced sensing modalities and the design of new shared control algorithms. In response to the clinical needs identified by our medical partners, two novel smart wheelchairs with complementary capabilities and a virtual reality (VR)-based wheelchair simulator have been developed (see figure 4). These systems have been validated via extensive experimental campaigns in France and the United Kingdom.

Wheelchair simulator tested by a volunteer in immersive conditions
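Shared control is often formulated as a blend between the user's joystick command and the command of an assistive controller. The sketch below shows one common, simple form (linear blending with a weight that depends on the distance to the nearest obstacle); it is an assumption for illustration, not the algorithms developed in the ADAPT project, and the thresholds `D_SAFE` and `D_MIN` are hypothetical.

```python
# Hypothetical distance thresholds in metres (not taken from the ADAPT project).
D_SAFE = 1.0  # at or beyond this distance the user has full control
D_MIN = 0.3   # at or below this distance the assistance has full control


def assistance_weight(obstacle_distance_m: float, d_safe: float = D_SAFE, d_min: float = D_MIN) -> float:
    """Blending weight in [0, 1] that grows linearly as an obstacle gets closer."""
    if obstacle_distance_m >= d_safe:
        return 0.0
    if obstacle_distance_m <= d_min:
        return 1.0
    return (d_safe - obstacle_distance_m) / (d_safe - d_min)


def blend_commands(user_cmd, assist_cmd, obstacle_distance_m: float) -> tuple:
    """Linearly blend (linear velocity, angular velocity) command pairs."""
    alpha = assistance_weight(obstacle_distance_m)
    return tuple((1.0 - alpha) * u + alpha * a for u, a in zip(user_cmd, assist_cmd))


if __name__ == "__main__":
    joystick = (1.0, 0.0)  # user pushes straight ahead at 1 m/s
    avoid = (0.2, 0.5)     # assistive controller slows down and turns
    for distance in (2.0, 0.65, 0.2):
        print(distance, blend_commands(joystick, avoid, distance))
```

A linear blend keeps the user in charge in open space and hands over control progressively near obstacles; more elaborate shared control laws replace the fixed thresholds with learned or context-dependent weights.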

This work was done in collaboration with Inria RAINBOW Team, MIS - Modélisation Information et Systèmes - UR UPJV 4290, University College of London, Pôle Saint-Hélier - Médecine Physique et de Réadaptation [Rennes], CNRS-AIST JRL - Joint Robotics Laboratory and IRSEEM - Institut de Recherche en Systèmes Electroniques Embarqués.

8.1.3 VR for vocational and ecological rehabilitation of patients with cognitive impairment: a survey

Participants: Emilie Hummel, Mélanie Cogné [contact], Anatole Lécuyer, Valérie Gouranton.

Cognitive impairment arises from various brain injuries or diseases, such as traumatic brain injury, stroke, schizophrenia, or cancer-related cognitive impairment. Cognitive impairment can be an obstacle to patients' return to work. Research suggests various technology-based interventions for cognitive and vocational rehabilitation. This work 23 offers an overview of sixteen vocational or ecological VR-based clinical studies among patients with cognitive impairment. The objective is to analyze these studies from a VR perspective, focusing on the VR apparatus and tasks, and on the adaptivity, transferability, and immersion of the interventions. Our results highlight how a higher level of immersion could bring participants to a deeper level of engagement and transferability (the latter being rarely assessed in the current literature), and reveal a lack of adaptivity in studies involving patients with cognitive impairment. From these considerations, we discuss the challenges of creating a standardized yet adaptive protocol, and the perspectives of using immersive technologies to allow precise monitoring, personalized rehabilitation and increased commitment.

This work was done in collaboration with the Service de recherche clinique du Centre François Baclesse, ANTICIPE - Unité de recherche interdisciplinaire pour la prévention et le traitement des cancers and the CHU of Caen.

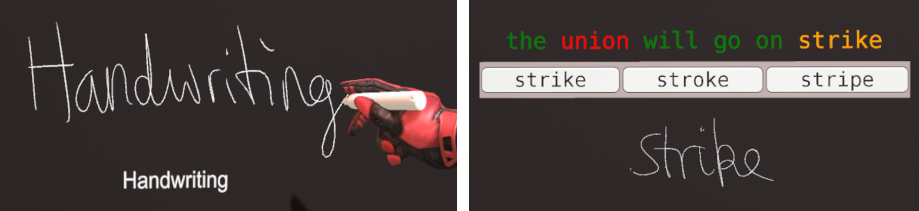

8.1.4 Handwriting for efficient text entry in industrial VR applications: Influence of board orientation and sensory feedback on performance

Participants: Guillaume Moreau, Jean-Marie Normand [contact].

Text entry in Virtual Reality (VR) is becoming an increasingly important task as the availability of hardware increases and the range of VR applications widens. This is especially true for industrial VR applications where users need to input data frequently. Large-scale industrial adoption of VR is still hampered by the productivity gap between entering data via a physical keyboard and VR data entry methods. Data entry needs to be efficient, easy to use and learn, and not frustrating. In this work 19, we present a new data entry method based on handwriting recognition (HWR). Users can input text by simply writing on a virtual surface. We conducted a user study to determine the best writing conditions in terms of surface orientation and sensory feedback, the latter consisting of visual, haptic, and auditory cues (see figure 5). We found that using a slanted board with sensory feedback is best to maximize writing speed and minimize physical demand. We also evaluated the performance of our method in terms of text entry speed, error rate, usability and workload. The results show that handwriting in VR achieves high entry speed and usability with little training compared to other controller-based virtual text entry techniques. Error rates remain high, however: the total error rate is 9.3% in the best condition, and could be reduced by using more efficient handwriting recognition tools. After 40 phrases of training, participants reach an average of 14.5 WPM, while a group with high VR familiarity reaches 16.16 WPM after the same training. The highest observed textual data entry speed is 21.11 WPM.

Handwriting recognition for text entry in VR
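The speed and error figures above are expressed with the usual text-entry metrics. As a hedged illustration (we assume, without confirmation from the paper, that the standard definitions were used), words per minute counts five characters as one word, and the total error rate (TER) combines the errors left in the final text with the errors the user made but corrected. The function names are illustrative.

```python
def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry speed, counting five characters as one word.

    The first character is excluded because timing usually starts when it is entered.
    """
    return (len(transcribed) - 1) / seconds * 60.0 / 5.0


def minimum_string_distance(a: str, b: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # deletion
                            curr[j - 1] + 1,             # insertion
                            prev[j - 1] + (ca != cb)))   # substitution
        prev = curr
    return prev[-1]


def total_error_rate(presented: str, transcribed: str, corrected_errors: int) -> float:
    """TER = (INF + IF) / (C + INF + IF).

    INF: errors left in the final text (estimated with the string distance),
    IF: errors made but corrected (e.g. erased strokes),
    C: correct characters.
    """
    inf = minimum_string_distance(presented, transcribed)
    correct = max(len(presented), len(transcribed)) - inf
    total = correct + inf + corrected_errors
    return (inf + corrected_errors) / total if total else 0.0


if __name__ == "__main__":
    # A hypothetical 30-character phrase written in 24 seconds: (30 - 1) / 24 * 60 / 5.
    print(words_per_minute("x" * 30, 24.0))
    print(total_error_rate("the quick brown fox", "the quick brown fix", corrected_errors=1))
```

For instance, a 30-character phrase written in 24 seconds corresponds to 14.5 WPM, the average speed reached after training in the study.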

This work was done in collaboration with Segula Technologies.

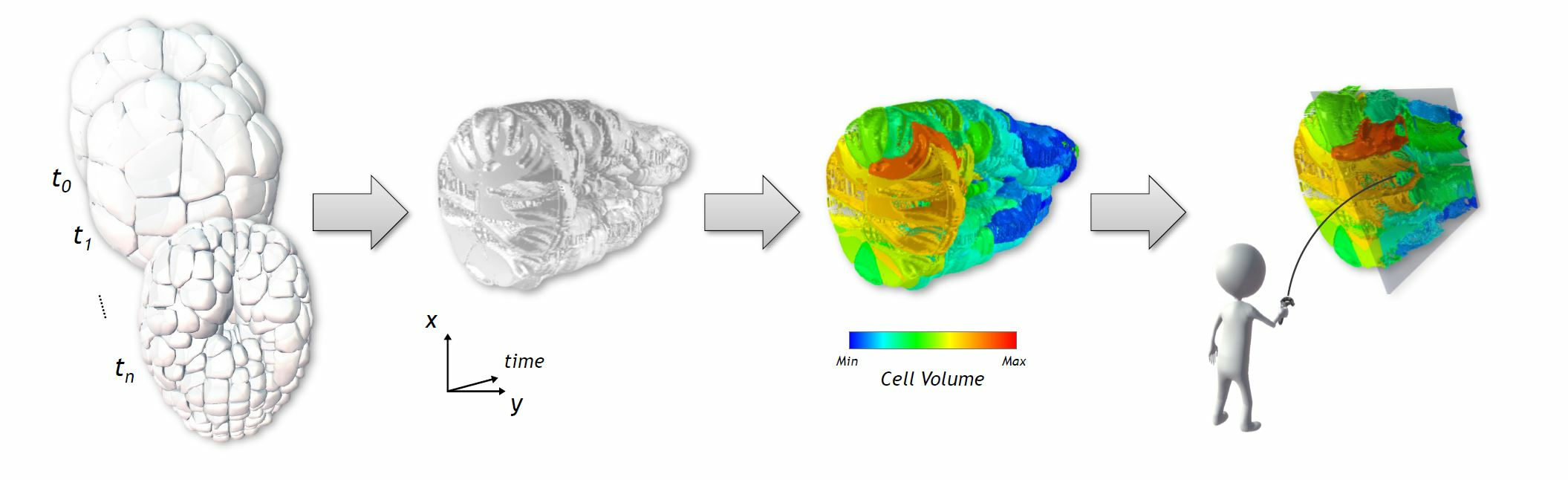

8.1.5 Immersive and interactive visualization of 3D spatio-temporal data using a space time hypercube: Application to cell division and morphogenesis analysis

Participants: Gwendal Fouché, Ferran Argelaguet [contact].

The analysis of multidimensional time-varying datasets faces challenges, notably regarding the representation of the data and the visualization of temporal variations. In this work 18, we proposed an extension of the well-known Space-Time Cube (STC) visualization technique to visualize time-varying 3D spatial data, taking advantage of the interaction capabilities of Virtual Reality (VR). First, we propose the Space-Time Hypercube (STH) as an abstraction for 3D temporal data, extended from the STC concept. Second, using an embryo development imaging dataset as an example, we detail the construction and visualization of an STC based on a user-driven projection of the spatial and temporal information. This projection yields a 3D STC visualization, which can also encode additional numerical and categorical data (see figure 6). Additionally, we propose a set of tools allowing the user to filter and manipulate the 3D STC, which leverage the visualization, exploration and interaction possibilities offered by VR. Finally, we evaluated the proposed visualization method in the context of 3D temporal cell imaging data analysis through a user study with five biologists (n = 5). These domain experts also accompanied the application design as consultants, providing insights on how the STC visualization could be used for the exploration of complex 3D temporal morphogenesis data.

Immersive and interactive visualization of 3D spatio-temporal data
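One way to read the user-driven projection described above is: choose a projection plane in 3D, project each spatial sample onto it, and use time as the third axis of the cube. The Python sketch below implements that reading; the plane-normal parameterization, `space_time_cube` and `time_scale` are assumptions for illustration, and the projection actually used in this work may differ.

```python
import math

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def plane_basis(normal: Vec3) -> tuple[Vec3, Vec3]:
    """Orthonormal in-plane axes (u, v) for a user-chosen projection plane."""
    n = normalize(normal)
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)  # avoid a helper parallel to n
    u = normalize(cross(helper, n))
    v = cross(n, u)  # unit length because n and u are orthonormal
    return u, v


def space_time_cube(samples, normal: Vec3, time_scale: float = 1.0):
    """Project (position, time) samples into STC coordinates (x, y, t).

    The spatial position is projected onto the plane orthogonal to `normal`
    (the depth along `normal` is discarded) and time becomes the third axis.
    """
    u, v = plane_basis(normal)
    return [(dot(p, u), dot(p, v), t * time_scale) for p, t in samples]


if __name__ == "__main__":
    # A hypothetical cell drifting over three time steps, viewed along the z axis.
    track = [((0.0, 0.0, 5.0), 0.0), ((1.0, 0.5, 5.2), 1.0), ((2.0, 1.0, 5.4), 2.0)]
    for point in space_time_cube(track, normal=(0.0, 0.0, 1.0)):
        print(point)
```

Letting the user rotate the projection plane changes which spatial dimension is sacrificed, which is the kind of interaction that VR makes convenient.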

This work was done in collaboration with Inria Serpico team and the LIRMM.

8.1.6 Can you find your way? Comparing wayfinding behaviour between reality and virtual reality

Participants: Vincent Goupil, Bruno Arnaldi, Ferran Argelaguet, Valérie Gouranton [contact].

Signage is an essential element in finding one’s way and avoiding getting lost in open and indoor environments. Yet, designing an effective signage system for a complex structure remains a challenge, as some buildings may need to communicate a lot of information in a minimum amount of space. Virtual reality (VR) provides a new way of studying human wayfinding behaviour, offering a flexible and cost-effective platform for assessing the efficiency of signage, especially during the design phase of a building. However, it is not yet clear whether wayfinding behaviour and signage interpretation differ between reality and virtual reality. We conducted a wayfinding experiment 34 using signage with 20 participants who performed a series of tasks in virtual and real conditions (see figure 7). Participants were video-recorded in both conditions. In addition, oral feedback and post-experiment questionnaires were collected as supplementary data. The aim of this study was to investigate the wayfinding behaviour of a user using signs in an unfamiliar real and virtual environment. The results of the experiment showed a similarity in behaviour between both environments; regardless of the order of passage and the environment, participants required less time to complete the task during the second run by reducing their mistakes and learning from their first run.

Wayfinding in VR

This work was done in collaboration with Vinci Construction and Sogea Bretagne.

8.1.7 Deep weathering effects

Participants: Jean-Marie Normand [contact], Guillaume Moreau.

Weathering phenomena are ubiquitous in urban environments, where old buildings severely degraded by water penetration are easy to observe. Despite being an important part of any realistic city, this kind of phenomenon has received little attention from the Computer Graphics community compared to stains resulting from biological or flow effects on building exteriors. In this work 29, we present physically-inspired deep weathering effects, where the penetration of humidity (i.e., water particles) and its interaction with a building’s internal structural elements result in large, visible degradation effects. Our implementation is based on a particle-based model of humidity propagation, coupled with a spring-based interaction simulation that allows chemical interactions, such as the formation of rust, to deform and destroy a building’s inner structure. To illustrate our methodology, we show a collection of deep degradation effects applied to urban models, involving the creation of rust or ice within walls (see figure 8).

Realistic weathering effects in graphics

This work was done in collaboration with the University of Girona (Spain).

8.2 Avatars and Virtual Embodiment

8.2.1 The sense of embodiment in Virtual Reality and its assessment methods

Participants: Martin Guy, Jean-Marie Normand [contact], Guillaume Moreau.

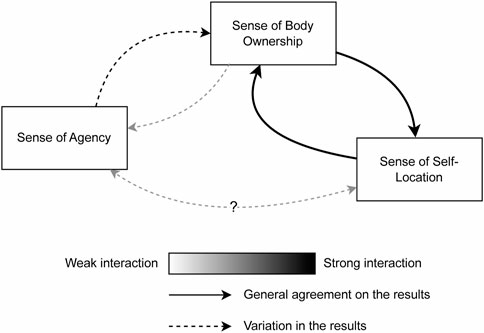

The sense of embodiment refers to the sensations of being inside, having, and controlling a body. In virtual reality, it is possible to substitute a person's body with a virtual body, referred to as an avatar. Modulations of the sense of embodiment through modifications of this avatar have perceptual and behavioural consequences that can influence the way users interact with the virtual environment. Therefore, it is essential to define metrics that enable a reliable assessment of the sense of embodiment in virtual reality, in order to better understand its dimensions, how they interact, and their influence on the quality of interaction in the virtual environment. In this work 21, we first introduce the current knowledge on the sense of embodiment, its dimensions (senses of agency, body ownership, and self-location), and how they relate to one another (see figure 9). Then, we review the different methods currently used to assess the sense of embodiment, ranging from questionnaires to neurophysiological measures. We provide a critical analysis of the existing metrics, discussing their advantages and drawbacks in the context of virtual reality. Notably, we argue that real-time measures of embodiment that are also specific and do not require double tasking are the most relevant in the context of virtual reality. Electroencephalography seems a good candidate for the future if its drawbacks (such as its sensitivity to movement and its limited practicality) can be overcome. While the perfect metric, if it exists, has yet to be identified, this work provides clues on which metric to choose depending on the context, which should hopefully contribute to better assessing and understanding the sense of embodiment in virtual reality.

Sense of embodiment

This work was done in collaboration with INCIA (Bordeaux).

8.2.2 I’m transforming! Effects of visual transitions to change of avatar on the sense of embodiment in AR

Participants: Anatole Lécuyer [contact], Adélaïde Genay.

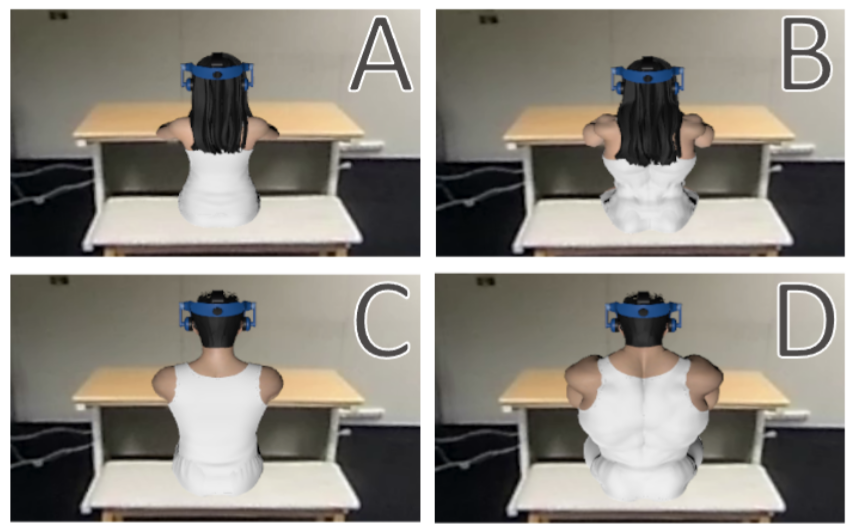

Virtual avatars are increasingly featured in Virtual Reality (VR) and Augmented Reality (AR) applications. When embodying a virtual avatar, one may wish to change appearance over the course of the embodiment. However, switching suddenly from one appearance to another can break the continuity of the user experience and potentially impact the sense of embodiment (SoE), especially when the new appearance is very different. In this work 36, we explore how applying smooth visual transitions at the moment of the change can help maintain the SoE and benefit the overall user experience. To address this, we implemented an AR system allowing users to embody a regular-shaped avatar that can be transformed into a muscular one through a visual effect (see figure 10). The avatar’s transformation can be triggered either by the user through a physical action (“active” transition), or automatically by the system (“passive” transition). We conducted a user study to evaluate the effects of these two types of transformations on the SoE by comparing them to control conditions without visual feedback of the transformation. Our results show that changing the appearance of one’s avatar with an active transition (with visual feedback), compared to a passive transition, helps to maintain the user’s sense of agency, a component of the SoE. They also partially suggest that the Proteus effects experienced during the embodiment were enhanced by these transitions. Therefore, we conclude that visual effects controlled by the user when changing their avatar’s appearance can benefit their experience by preserving the SoE and intensifying the Proteus effects.

Avatar transition

This work was done in collaboration with NAIST and Inria POTIOC team.

8.2.3 I am a Genius! Influence of virtually embodying Leonardo Da Vinci on creative performance

Participants: Anatole Lécuyer [contact].

Virtual reality (VR) provides users with the ability to substitute their physical appearance by embodying virtual characters (avatars) using head-mounted displays and motion-capture technologies. Previous research demonstrated that the sense of embodiment toward an avatar can impact user behavior and cognition. In this work 20, we present an experiment designed to investigate whether embodying a well-known creative genius could enhance participants' creative performance. Following a preliminary online survey to select a famous character suited to the purpose of this study, we developed a VR application allowing participants to embody either Leonardo da Vinci (see figure 11) or a self-avatar. Self-avatars were approximately matched with participants in terms of skin tone and morphology. Forty participants took part in three tasks seamlessly integrated into a virtual workshop. The first task was based on Guilford's Alternate Uses test (GAU) to assess participants' divergent thinking abilities in terms of fluency and originality. The second task was based on a Remote Associates Test (RAT) to evaluate convergent thinking abilities. Lastly, the third task consisted of designing potential alternative uses of an object displayed in the virtual environment using a 3D sketching tool. Participants embodying Leonardo da Vinci demonstrated significantly higher divergent thinking abilities, with a substantial difference in fluency between the groups. Conversely, participants embodying a self-avatar performed significantly better in the convergent thinking task. Taken together, these results promote the use of our virtual embodiment approach, especially in applications where divergent creativity plays an important role, such as design and innovation.

Impersonating Da Vinci
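Fluency and originality in alternate-uses tasks are commonly scored by counting a participant's distinct valid responses (fluency) and the responses that are statistically rare within the sample (originality). The sketch below implements that common scheme; the rarity threshold, the case and whitespace normalization, and the function name are assumptions, and the study may have scored responses differently (for example with human raters).

```python
from collections import Counter


def score_alternate_uses(responses: dict[str, list[str]],
                         rarity_threshold: float = 0.05) -> dict[str, tuple[int, int]]:
    """Return {participant: (fluency, originality)}.

    fluency: number of distinct non-empty responses;
    originality: number of those responses given by at most
    `rarity_threshold` of all participants.
    """
    cleaned = {p: {r.strip().lower() for r in rs if r.strip()} for p, rs in responses.items()}
    n_participants = len(cleaned)
    # How many participants gave each (normalized) response.
    counts = Counter(r for rs in cleaned.values() for r in rs)
    scores = {}
    for participant, rs in cleaned.items():
        fluency = len(rs)
        originality = sum(1 for r in rs if counts[r] / n_participants <= rarity_threshold)
        scores[participant] = (fluency, originality)
    return scores


if __name__ == "__main__":
    # Hypothetical answers for "alternate uses of a brick" from four participants.
    answers = {"a": ["doorstop", "paperweight"], "b": ["doorstop"],
               "c": ["Doorstop", "bookend"], "d": ["doorstop "]}
    print(score_alternate_uses(answers, rarity_threshold=0.25))
```

With real sample sizes, a small threshold such as 5% is typical; the larger threshold here only makes the four-participant example meaningful.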

This work was done in collaboration with Arts et Métiers.

8.2.4 Beyond my real body: Characterization, impacts, applications and perspectives of “dissimilar” avatars in Virtual Reality

Participants: Antonin Cheymol, Rebecca Fribourg [contact], Anatole Lécuyer, Jean-Marie Normand, Ferran Argelaguet.

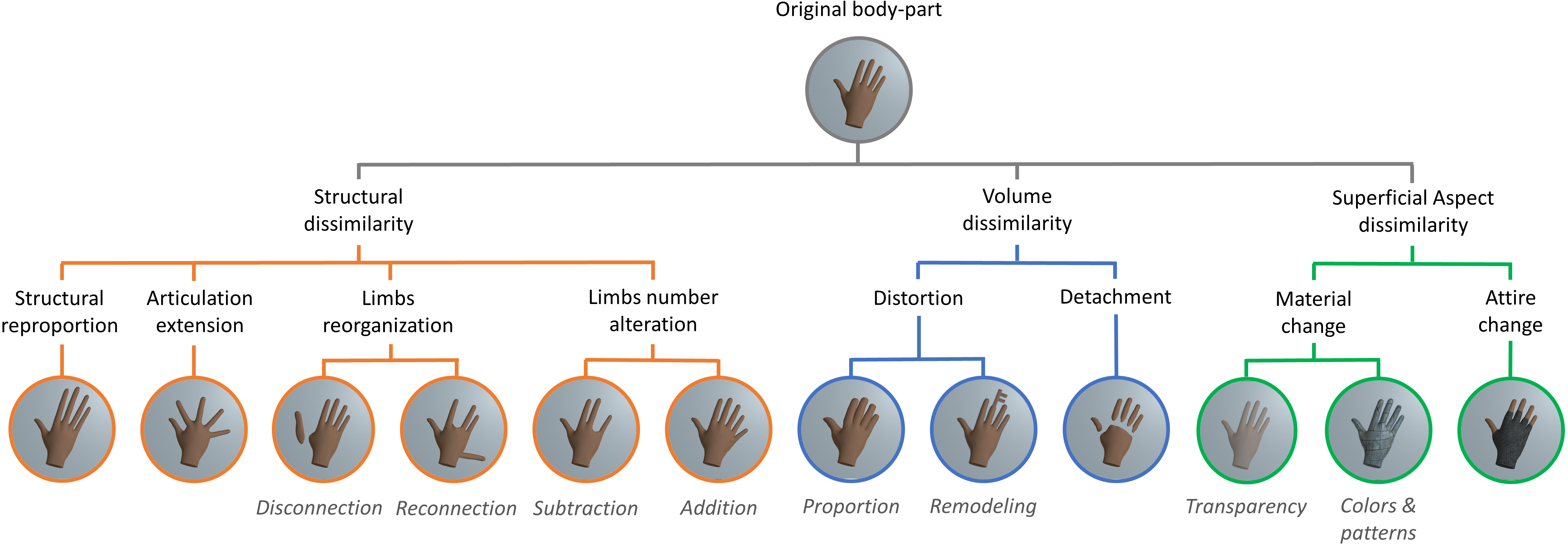

In virtual reality, the avatar (the user’s digital representation) is an important element that can drastically influence the immersive experience. Here, we focus especially on “dissimilar” avatars, i.e., avatars diverging from the real appearance of the user, whether or not they preserve an anthropomorphic aspect (see figure 12). Previous studies reported that dissimilar avatars can positively impact the user experience, for example in terms of interaction, perception or behaviour. However, given the sparse and multi-disciplinary nature of research related to dissimilar avatars, this research tends to lack a common understanding and methodology, which hampers the establishment of new knowledge on this topic. In this work 15, we propose to address these limitations by discussing: (i) a methodology for characterizing dissimilar avatars, (ii) their impacts on the user experience, (iii) their different fields of application, and finally, (iv) future research directions on this topic. Taken together, we believe that this work can support future research related to dissimilar avatars, and help designers of VR applications leverage dissimilar avatars appropriately.

Atomic hand taxonomy

8.2.5 Now I wanna be a dog: Exploring the impact of audio and tactile feedback on animal embodiment

Participants: Rebecca Fribourg [contact].

Embodying a virtual creature or animal in Virtual Reality (VR) is becoming common and can have numerous beneficial impacts. For instance, it can help actors improve their performance of a computer-generated creature, or it can foster the user's empathy towards threatened animal species. However, users must feel a sense of embodiment towards their virtual representation, which is commonly achieved by providing congruent sensory feedback. Providing effective visuo-motor feedback for dysmorphic bodies can be challenging due to the morphological differences between humans and animals. Thus, the purpose of this work 41 was to experiment with the inclusion of audio and audio-tactile feedback to begin unveiling their influence on animal avatar embodiment (see figure 13). Two experiments were conducted to examine the effects of different sensory feedback on participants’ embodiment of a dog avatar in an Immersive Virtual Environment (IVE). The first experiment (n = 24) included audio, tactile, audio-tactile, and baseline conditions. The second experiment (n = 34) involved audio and baseline conditions only.

Dog avatar

This work was done in collaboration with TCD (Trinity College Dublin).

8.2.6 To stick or not to stick? Studying the impact of offset recovery techniques during mid-air interactions

Participants: Maé Mavromatis, Anatole Lécuyer, Ferran Argelaguet [contact].

During mid-air interactions, common approaches (such as the god-object method) typically rely on visually constraining the user's avatar to avoid visual interpenetration with the virtual environment in the absence of kinesthetic feedback. In this work 26, we explored two methods that influence how the position mismatch (positional offset) between users' real and virtual hands is recovered when releasing contact with virtual objects. The first method (sticky) constrains the user's virtual hand until the mismatch is recovered, while the second method (unsticky) employs an adaptive offset recovery method. In a first study, we explored the effect of positional offset and of motion alteration on users' behavioral adjustments and perception. In a second study, we evaluated variations in the sense of embodiment and the preference between the two control laws. Overall, both methods presented similar results in terms of performance and accuracy, yet positional offsets strongly impacted motion profiles and users' performance. Both methods also resulted in comparable levels of embodiment. Finally, participants usually expressed strong preferences toward one of the two methods, but these choices were individual-specific and did not appear to be correlated solely with characteristics external to the individuals. Taken together, these results highlight the relevance of exploring the customization of motion control algorithms for avatars.
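The difference between the sticky and unsticky strategies can be illustrated in one dimension, along the normal of the contacted surface. In this hedged sketch, which is not the control laws of the study, the sticky variant keeps the virtual hand on the surface until the real hand catches up, whereas the unsticky variant lets the virtual hand move immediately and shrinks the offset in proportion to the hand's motion; `recovery_gain` and both function names are hypothetical.

```python
def sticky(real_traj: list[float], contact: float) -> list[float]:
    """Virtual hand stays on the surface until the real hand comes back to it."""
    return [max(r, contact) for r in real_traj]


def unsticky(real_traj: list[float], contact: float, recovery_gain: float = 0.5) -> list[float]:
    """Virtual hand follows the real hand at once; the offset decays with motion.

    Each frame, the remaining offset shrinks by `recovery_gain` times the
    distance travelled by the real hand, until it reaches zero.
    """
    if not real_traj:
        return []
    offset = contact - real_traj[0]  # initial mismatch: virtual hand above the real hand
    previous = real_traj[0]
    virtual = []
    for r in real_traj:
        offset = max(0.0, offset - recovery_gain * abs(r - previous))
        previous = r
        virtual.append(r + offset)
    return virtual


if __name__ == "__main__":
    # Real hand 4 cm below the surface (at 0.0) when contact is released, then withdrawn.
    real = [-0.04, -0.02, 0.0, 0.02, 0.04, 0.06]
    print("sticky  ", [round(x, 3) for x in sticky(real, 0.0)])
    print("unsticky", [round(x, 3) for x in unsticky(real, 0.0)])
```

In the sticky case the virtual hand is motionless during the first frames of the withdrawal, whereas in the unsticky case it moves immediately but more slowly than the real hand until the offset is consumed.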

This work was done in collaboration with Inria Virtus team.

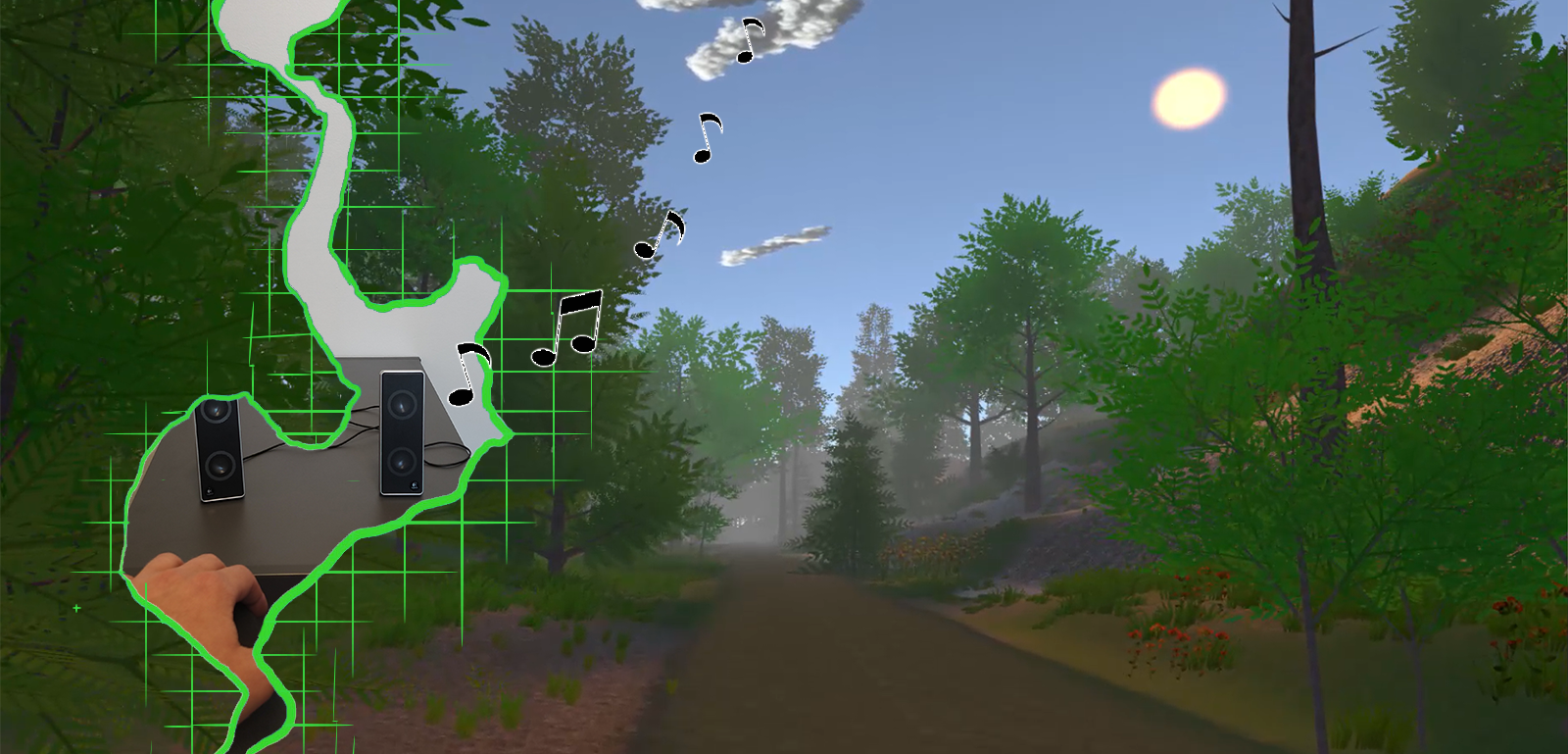

8.2.7 Cybersickness, cognition, & motor skills: The effects of music, gender, and gaming experience

Participants: Panagiotis Kourtesis, Ferran Argelaguet [contact].

Recent research has attempted to identify methods to mitigate cybersickness and to examine its aftereffects. In this direction, this work 24 examined the effects of cybersickness on cognitive, motor, and reading performance in VR (see figure 14). It also evaluated the mitigating effects of music on cybersickness, as well as the role of gender and of the user's computing, VR, and gaming experience. This work reports on two studies. In the first study, 92 participants selected the music tracks considered most calming (low valence) or joyful (high valence), to be used in the second study. In the second study, 39 participants performed an assessment four times: once before the rides (baseline), and then once after each of the three rides. During each ride, either Calming music, Joyful music, or No Music was played, while linear and angular accelerations were applied to induce cybersickness. In each assessment, while immersed in VR, participants evaluated their cybersickness symptomatology and performed a verbal working memory task, a visuospatial working memory task, and a psychomotor task. While participants responded to the cybersickness questionnaire (3D UI), eye-tracking was used to measure reading time and pupil size (pupillometry). The results showed that both Joyful and Calming music substantially decreased the intensity of nausea-related symptoms. However, only Joyful music significantly decreased overall cybersickness intensity. Importantly, cybersickness was found to decrease verbal working memory performance and pupil size. It also significantly slowed psychomotor (reaction time) and reading abilities. Higher gaming experience was associated with lower cybersickness. When controlling for gaming experience, there were no significant differences between female and male participants in terms of cybersickness.

The outcomes indicate the effectiveness of music in mitigating cybersickness, the important role of gaming experience in cybersickness, and the significant effects of cybersickness on pupil size, cognition, psychomotor skills, and reading ability. A related publication 25 compares and validates the Cybersickness in Virtual Reality Questionnaire (CSQ-VR) against the SSQ and VRSQ questionnaires.

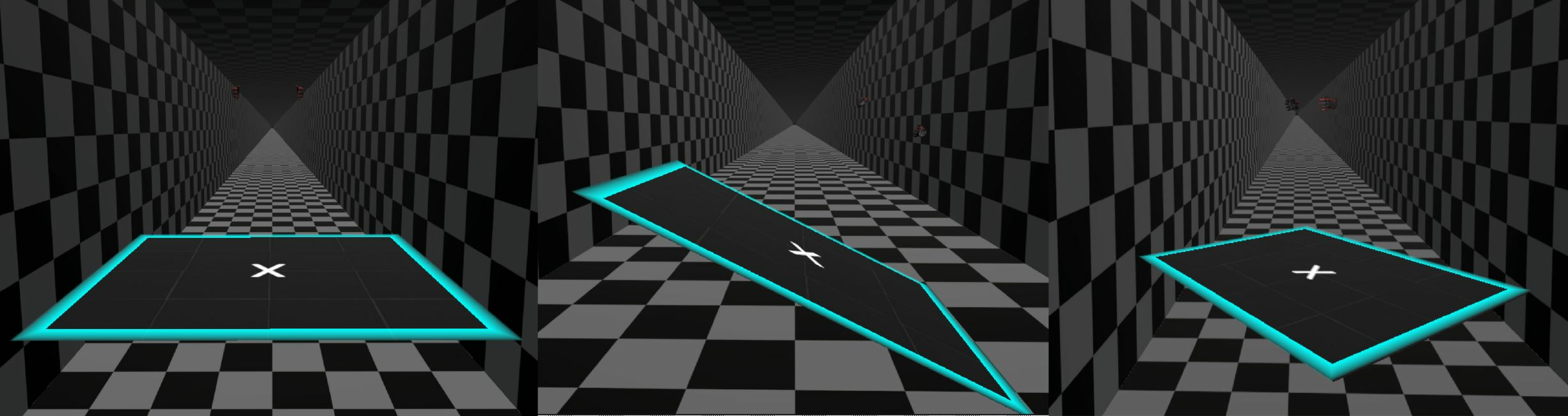

Accelerations in VR

This work was done in collaboration with the University of Edinburgh.

8.3 Haptic Feedback

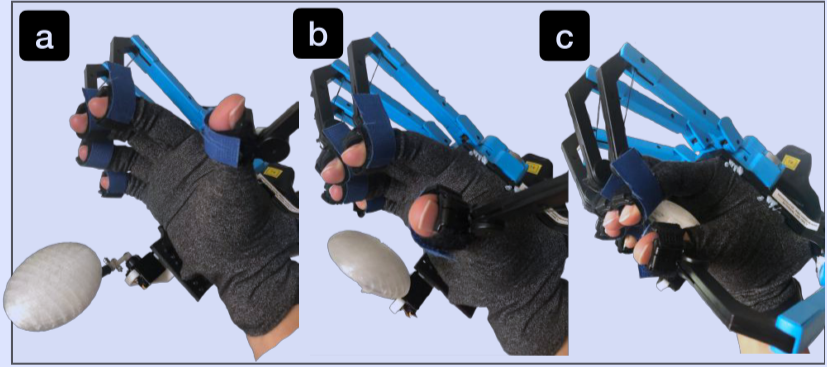

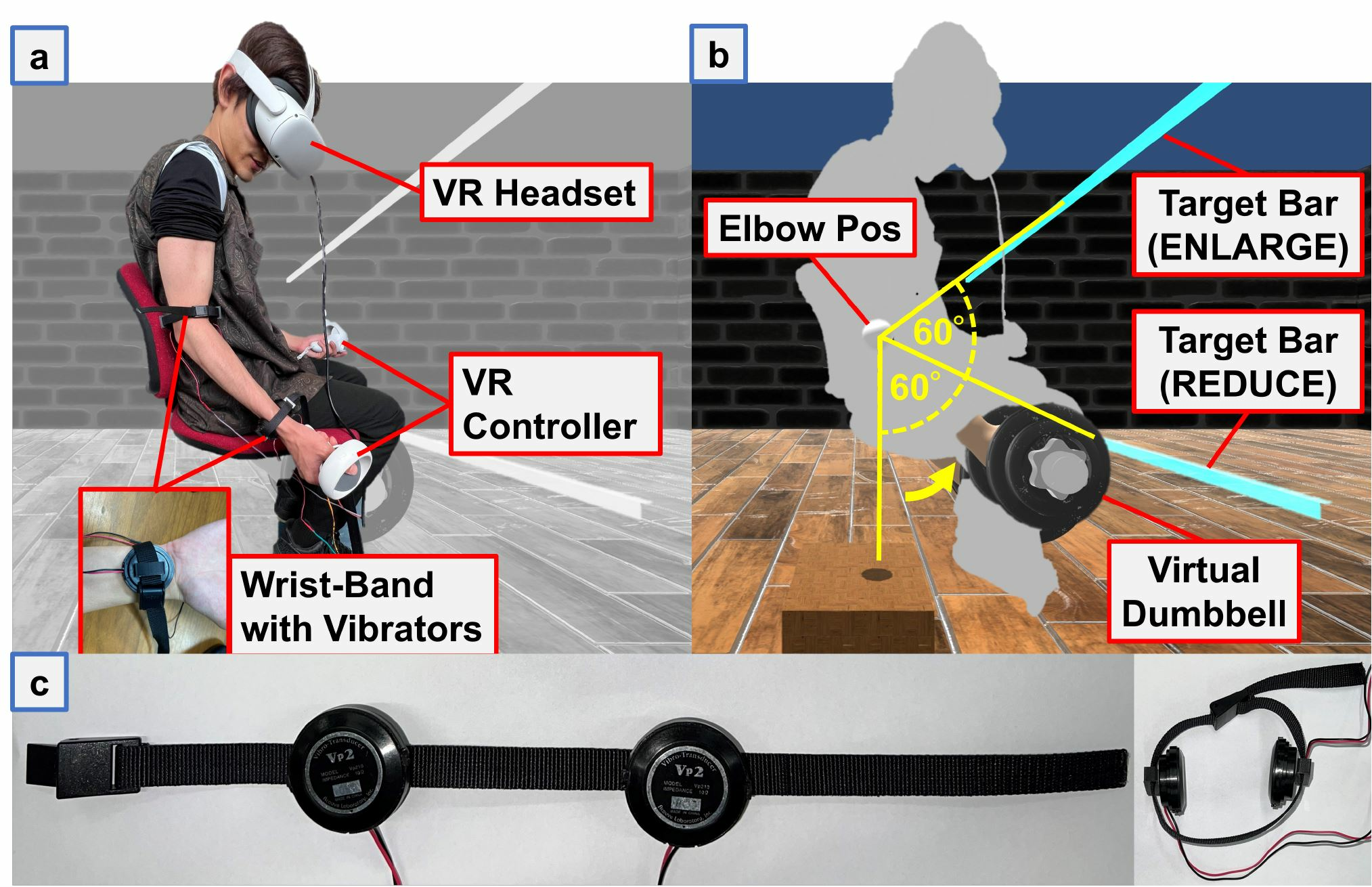

8.3.1 PalmEx: adding palmar force-feedback for 3D manipulation with haptic exoskeleton gloves

Participants: Anatole Lécuyer [contact], Elodie Bouzbib.

Haptic exoskeleton gloves are a widespread solution for providing force-feedback in Virtual Reality (VR), especially for 3D object manipulation. However, they still lack an important feature regarding in-hand haptic sensations: palmar contact. In this work 14, we present PalmEx, a novel approach that incorporates palmar force-feedback into exoskeleton gloves to improve overall grasping sensations and manual haptic interactions in VR. PalmEx's concept is demonstrated through a self-contained hardware system augmenting a hand exoskeleton with an encountered-type palmar contact interface, which physically encounters the user's palm (see figure 15). We build upon current taxonomies to elicit PalmEx's capabilities for both the exploration and manipulation of virtual objects. We first conduct a technical evaluation optimising the delay between the virtual interactions and their physical counterparts. We then empirically evaluate PalmEx's proposed design space in a user study (n=12) to assess the potential of a palmar contact for augmenting an exoskeleton. Results show that PalmEx offers the best rendering capabilities to perform believable grasps in VR. PalmEx highlights the importance of palmar stimulation and provides a low-cost solution to augment existing high-end consumer hand exoskeletons.

PalmEx prototype

This work was done in collaboration with Inria RAINBOW team.

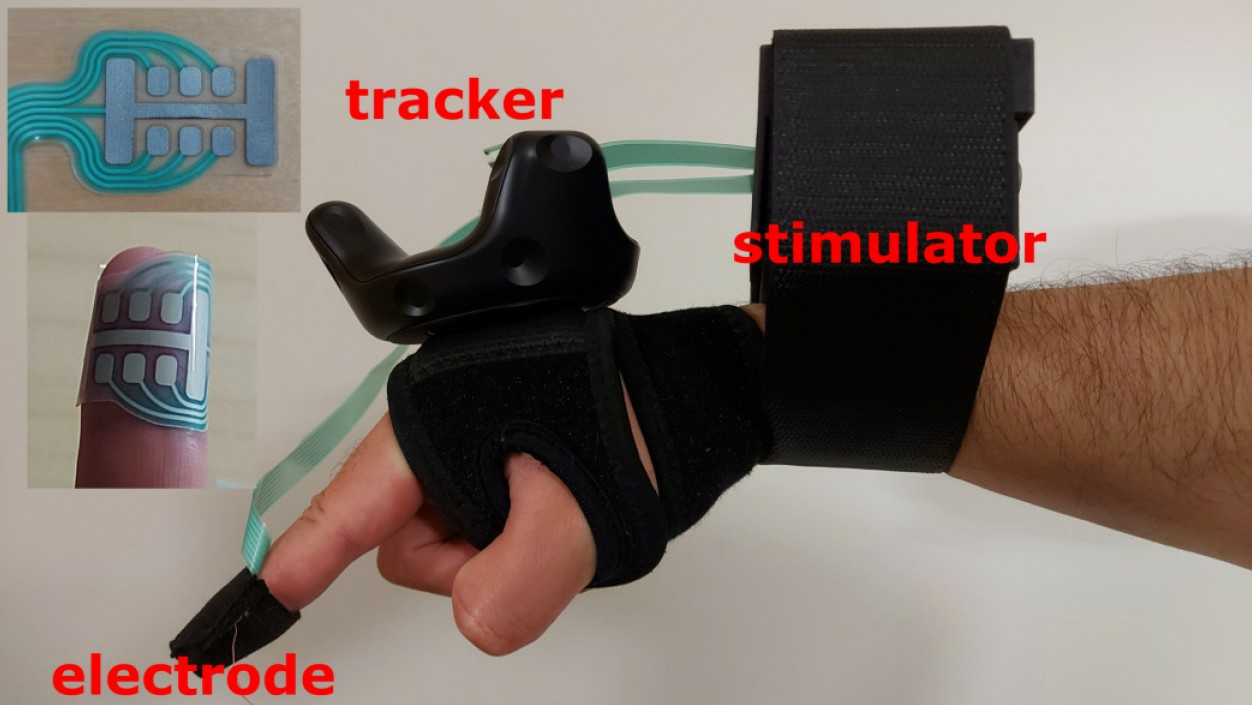

8.3.2 Design, evaluation and calibration of wearable electrotactile interfaces for enhancing contact information in virtual reality

Participants: Sebastian Vizcay, Panagiotis Kourtesis, Ferran Argelaguet [contact].

Electrotactile feedback is a convenient tactile rendering method thanks to its reduced form factor and power consumption. Yet, its use in immersive virtual reality has scarcely been addressed. This work 30 explores how electrotactile feedback could be used to enhance contact information for mid-air interactions in virtual reality (see figure 16). We propose an electrotactile rendering method that modulates the perceived intensity of the electrotactile stimuli according to the interpenetration distance between the user's finger and the virtual surface. In a first user study (N=21), we assessed the performance of our method against visual interpenetration feedback and no feedback. Contact precision and accuracy were significantly improved when using interpenetration feedback. The results also showed that the calibration of the electrotactile stimuli was key, which motivated a second study exploring how the calibration procedure could be improved. In this second study (N=16), we compared two calibration techniques: a non-VR keyboard method and a VR direct interaction method. While the two methods provided comparable usability and calibration accuracy, the VR method was significantly faster. These results pave the way for the use of electrotactile feedback as an efficient alternative to visual feedback for enhancing contact information in virtual reality.

Electrical stimulator prototype
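The rendering method described above modulates the stimulation with the interpenetration depth, and the study stresses that calibration is key. A minimal sketch of such a mapping, assuming a linear ramp between a per-user perception threshold and a maximum comfortable level, is shown below; the linear law, `max_depth_m` and the `Calibration` fields are assumptions, not the exact rendering of the paper.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    """Per-user calibration values (units depend on the stimulator, e.g. pulse width)."""
    threshold: float    # lowest intensity the user can perceive
    max_comfort: float  # highest intensity the user finds comfortable


def electrotactile_intensity(penetration_m: float, cal: Calibration, max_depth_m: float = 0.02) -> float:
    """Map finger-surface interpenetration to a stimulation intensity.

    No contact gives no stimulation; contact starts at the perception threshold
    and the intensity grows linearly with depth, saturating at `max_depth_m`.
    """
    if penetration_m <= 0.0:
        return 0.0
    t = min(penetration_m / max_depth_m, 1.0)
    return cal.threshold + t * (cal.max_comfort - cal.threshold)


if __name__ == "__main__":
    user = Calibration(threshold=100.0, max_comfort=300.0)  # hypothetical calibrated values
    for depth in (0.0, 0.005, 0.01, 0.03):
        print(depth, electrotactile_intensity(depth, user))
```

Starting the ramp at the perception threshold rather than at zero makes even a shallow contact noticeable, which is one reason why the calibration procedure matters so much for this kind of feedback.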

This work was done in collaboration with Inria RAINBOW team.

8.3.3 Tangible avatar: Enhancing presence and embodiment during seated virtual experiences with a prop-based controller

Participants: Justine Saint-Aubert [contact], Ferran Argelaguet, Anatole Lécuyer.

In this work 39, we investigate the use of a prop to control human-like avatars in virtual environments while remaining seated. We believe that manipulating a tangible interface, capable of rendering physical sensations and reproducing the movements of an avatar, could lead to a stronger sense of presence and strengthen the relationship between users and the avatar (embodiment) compared to other established controllers. We present a controller based on an instrumented artist doll that users can manipulate to move the avatar in virtual environments (see figure 17). We evaluated the influence of such a controller on the sense of presence and the sense of embodiment in three perspectives (third-person perspective on a screen, immersive third-person perspective, and immersive first-person perspective in a head-mounted display). As an illustration, we compared the controller with gamepad controllers for controlling the movements of an avatar in a ball-kicking game. The results showed that the prop-based controller can increase the sense of presence and fun in all three perspectives. It also enhances the sense of embodiment in the immersive perspectives. It could therefore enhance the user experience in various simulations involving human-like avatars.

Tangible prop

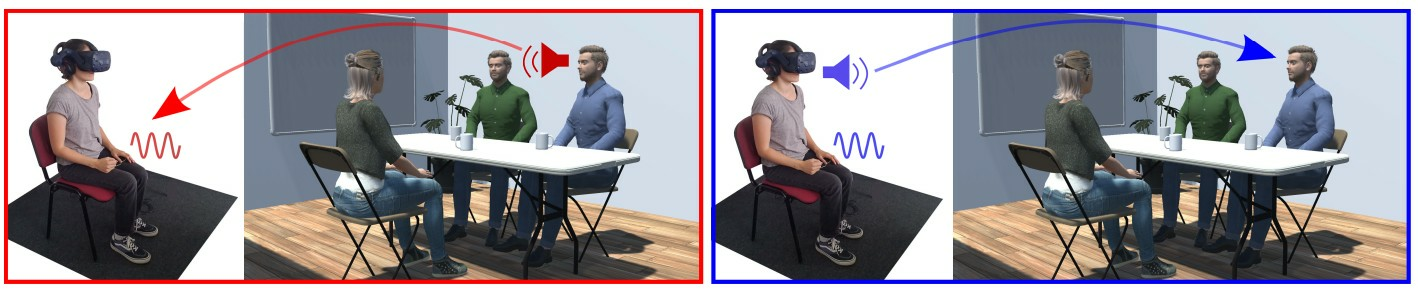

8.3.4 Persuasive vibrations: speech-based vibrations on persuasion, leadership, and co-presence during verbal communication in VR

Participants: Justine Saint-Aubert [contact], Ferran Argelaguet, Marc Macé, Anatole Lécuyer.

In Virtual Reality (VR), a growing number of applications involve verbal communication with avatars, such as teleconferencing, entertainment, virtual training, and social networks. In this context, our work 40 investigates how tactile feedback consisting of vibrations synchronized with speech could influence aspects of VR social interactions such as persuasion, co-presence and leadership. We conducted two experiments where participants embodied a first-person avatar attending a virtual meeting in immersive VR (see figure 18). In the first experiment, participants listened to two speaking virtual agents, and the speech of one agent was augmented with vibrotactile feedback. Interestingly, the results show that such vibrotactile feedback could significantly improve the perceived co-presence, as well as the persuasiveness and leadership of the haptically-augmented agent. In the second experiment, participants were asked to speak to two agents, and their own speech was or was not augmented with vibrotactile feedback. The results show that vibrotactile feedback again had a positive effect on co-presence, and that participants perceived their speech as more persuasive in the presence of haptic feedback. Taken together, our results demonstrate the strong potential of haptic feedback for supporting social interactions in VR, and pave the way to novel uses of vibrations in a wide range of applications in which verbal communication plays a prominent role.

Persuasive vibrations in VR
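Vibrations synchronized with speech are typically derived from the loudness envelope of the voice signal. The sketch below shows one simple way to do this (frame-wise RMS level with a noise gate, mapped to an amplitude in [0, 1]); the frame length, gate and full-scale values and the function name are hypothetical, and the study may have used a different signal-processing chain.

```python
import math


def speech_to_vibration(samples: list[float], sample_rate: int = 16000, frame_ms: float = 20.0,
                        noise_gate: float = 0.02, full_scale: float = 0.3) -> list[float]:
    """Convert mono speech samples in [-1, 1] into per-frame vibration amplitudes in [0, 1].

    Each frame's RMS level is computed; frames below `noise_gate` are treated as
    silence, and louder frames are scaled linearly, saturating at `full_scale`.
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    amplitudes = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        if rms < noise_gate:
            amplitudes.append(0.0)
        else:
            amplitudes.append(min(1.0, (rms - noise_gate) / (full_scale - noise_gate)))
    return amplitudes


if __name__ == "__main__":
    rate = 16000
    silence = [0.0] * 320
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / rate) for n in range(320)]  # stand-in for voiced speech
    print(speech_to_vibration(silence + tone, rate))  # one silent frame, one loud frame
```

A 20 ms frame keeps the vibration closely locked to syllables while remaining slow enough for typical vibrotactile actuators to follow.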

This work was done in collaboration with Inria RAINBOW team and the Ivcher Institute (Israel).

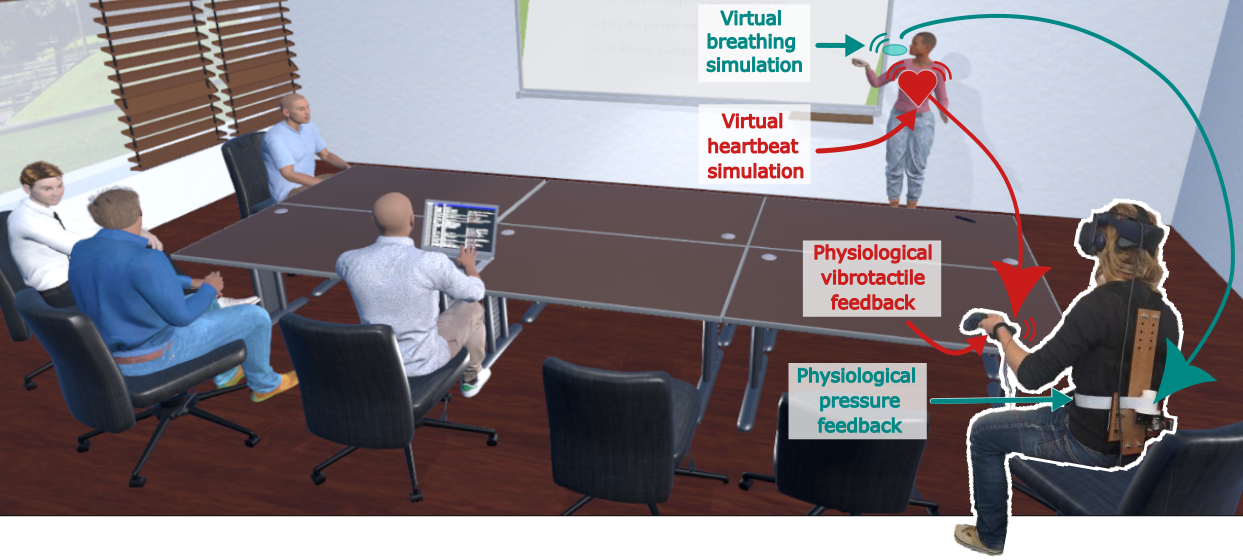

8.3.5 Fostering empathy in social Virtual Reality through physiologically based affective haptic feedback

Participants: Jeanne Hecquard, Justine Saint-Aubert, Ferran Argelaguet, Anatole Lécuyer, Marc Macé [contact].

We study the promotion of positive social interactions in VR by fostering empathy with other users present in the virtual scene. In this work 35, we propose using affective haptic feedback to reinforce the connection with another user through the direct perception of their physiological state. We developed a virtual meeting scenario where a human user attends a presentation with several virtual agents. Throughout the meeting, the presenting virtual agent faces various difficulties that alter her stress level. The human user directly feels her stress via two physiologically based affective haptic interfaces: a compression belt and a vibrator, simulating respectively the breathing and the heart rate of the presenter (see figure 19). We conducted a user study comparing such a "sympathetic" haptic rendering with an "indifferent" one that does not communicate the presenter's stress status and remains constant and relaxed at all times. Results are mixed and user-dependent, but they show that sympathetic haptic feedback is globally preferred and can enhance empathy and perceived connection to the presenter. The results promote the use of affective haptics in social VR applications, in which fostering positive relationships plays an important role.

Empathy feedback
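The sympathetic rendering described above maps the presenter's stress onto two rhythmic signals, one for the compression belt (breathing) and one for the vibrator (heartbeat). A hedged sketch of such a mapping is given below: the linear interpolation, the physiological ranges, the pulse duration and all function names are assumptions for illustration, not the parameters of the study.

```python
import math

# Hypothetical physiological ranges for a relaxed (stress = 0) and a highly
# stressed (stress = 1) presenter; not the values used in the study.
HEART_RATE_BPM = (70.0, 110.0)
BREATHING_RATE_PER_MIN = (12.0, 24.0)


def physiological_targets(stress: float) -> tuple[float, float]:
    """Interpolate heart rate (bpm) and breathing rate (breaths/min) from stress in [0, 1]."""
    s = min(max(stress, 0.0), 1.0)
    heart = HEART_RATE_BPM[0] + s * (HEART_RATE_BPM[1] - HEART_RATE_BPM[0])
    breathing = BREATHING_RATE_PER_MIN[0] + s * (BREATHING_RATE_PER_MIN[1] - BREATHING_RATE_PER_MIN[0])
    return heart, breathing


def haptic_commands(t: float, stress: float, pulse_s: float = 0.1) -> tuple[float, bool]:
    """Belt compression in [0, 1] and vibrator on/off state at time t (seconds)."""
    heart_bpm, breaths_per_min = physiological_targets(stress)
    belt = 0.5 * (1.0 - math.cos(2.0 * math.pi * breaths_per_min / 60.0 * t))
    beat_period = 60.0 / heart_bpm
    vibrating = (t % beat_period) < pulse_s  # short pulse at the start of each beat
    return belt, vibrating


def rendering_stress(presenter_stress: float, sympathetic: bool) -> float:
    """The 'indifferent' condition ignores the presenter's stress and stays relaxed."""
    return presenter_stress if sympathetic else 0.0


if __name__ == "__main__":
    for t in (0.0, 0.3, 0.7, 1.4):
        print(t, haptic_commands(t, rendering_stress(0.8, sympathetic=True)))
```

Driving both conditions through the same function and only changing the stress input keeps the indifferent rendering identical in form, so that the comparison isolates the communication of stress.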

This work was done in collaboration with Inria RAINBOW team.

8.3.6 MultiVibes: What if your VR controller had 10 times more vibrotactile actuators?

Participants: Grégoire Richard, Ferran Argelaguet, Anatole Lécuyer [contact].

Consumer-grade virtual reality (VR) controllers are typically equipped with a single vibrotactile actuator, which can only create simple, non-spatialized tactile sensations by vibrating the entire controller. In this work 38, we proposed MultiVibes, a VR controller capable of rendering spatialized sensations at different locations on the user’s hand and fingers by leveraging the funneling effect, an illusion in which multiple vibrations are perceived as a single one. The designed prototype includes ten vibrotactile actuators in direct contact with the skin of the hand, limiting the propagation of vibrations through the controller (see figure 20). We evaluated MultiVibes through two controlled experiments. The first focused on users' ability to recognize spatio-temporal patterns, while the second focused on the impact of MultiVibes on users’ haptic experience when interacting with virtual objects they can feel. Taken together, the results show that MultiVibes is capable of providing accurate spatialized feedback and that users prefer MultiVibes over recent VR controllers.

MultiVibes prototype
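A common way to exploit the funneling effect is to split a desired amplitude between two neighbouring actuators so that a single phantom vibration is felt in between. The sketch below uses an energy-based split often used in the tactile-illusion literature, on a simplified one-dimensional actuator layout; MultiVibes' actual layout and rendering may differ, and the function names and positions are hypothetical.

```python
import math


def funneling_amplitudes(beta: float, amplitude: float) -> tuple[float, float]:
    """Energy-based amplitude split between two neighbouring actuators.

    beta in [0, 1] is the normalized position of the phantom sensation between
    actuator 1 (beta = 0) and actuator 2 (beta = 1); the squared amplitudes
    sum to amplitude ** 2.
    """
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return math.sqrt(1.0 - beta) * amplitude, math.sqrt(beta) * amplitude


def phantom_sensation(actuator_positions: list[float], target: float, amplitude: float) -> dict[int, float]:
    """Drive the two actuators surrounding `target` (positions sorted along one axis)."""
    for i in range(len(actuator_positions) - 1):
        left, right = actuator_positions[i], actuator_positions[i + 1]
        if left <= target <= right:
            beta = (target - left) / (right - left)
            a_left, a_right = funneling_amplitudes(beta, amplitude)
            return {i: a_left, i + 1: a_right}
    raise ValueError("target lies outside the actuator array")


if __name__ == "__main__":
    # Hypothetical actuators every 10 mm along a finger; phantom vibration at 15 mm.
    print(phantom_sensation([0.0, 10.0, 20.0], 15.0, amplitude=1.0))
```

Sweeping `target` over time between actuators is one way to render moving spatio-temporal patterns with a small number of actuators.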

This work was done in collaboration with Inria LOKI team.

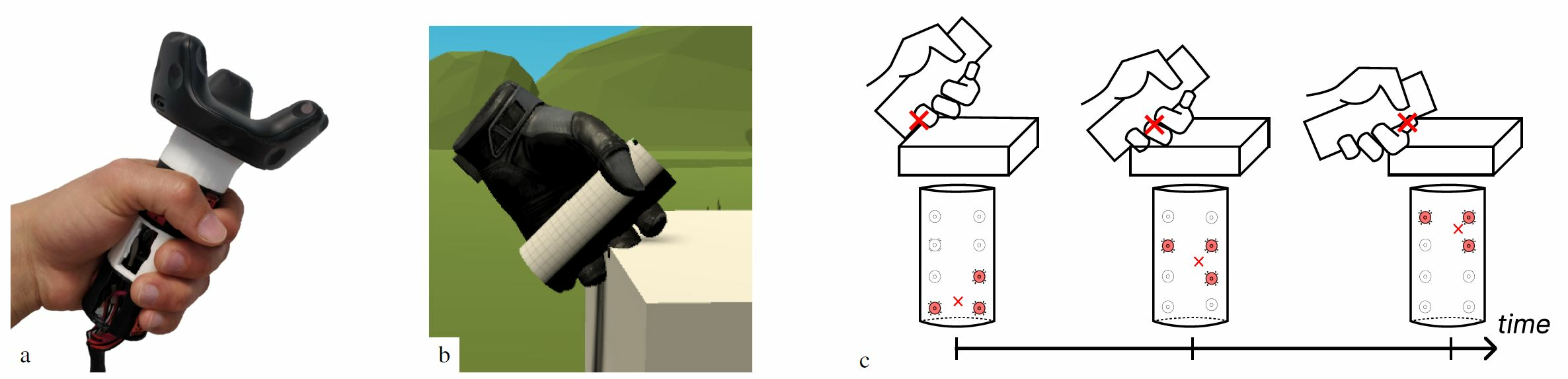

8.3.7 Inducing self-motion sensations with haptic feedback: State-of-the-art and perspectives on “haptic motion”

Participants: Anatole Lécuyer [contact].

While virtual reality applications flourish, there is a growing need for technological solutions that induce compelling self-motion as an alternative to cumbersome motion platforms. Haptic devices target the sense of touch, yet a growing number of researchers have managed to address the sense of motion by means of specific and localized haptic stimulations. This innovative approach constitutes a specific paradigm that can be called “haptic motion”. This work 16 aims to introduce, formalize, survey and discuss this relatively new research field. First, we summarize some core concepts of self-motion perception and propose a definition of the haptic motion approach based on three criteria. Then, we present a summary of the existing related literature, from which we formulate and discuss three research problems that we consider key for the development of the field: the rationale for designing a proper haptic stimulus, the methods to evaluate and characterize self-motion sensations, and the use of multimodal motion cues (see figure 21).

Self-motion sensations

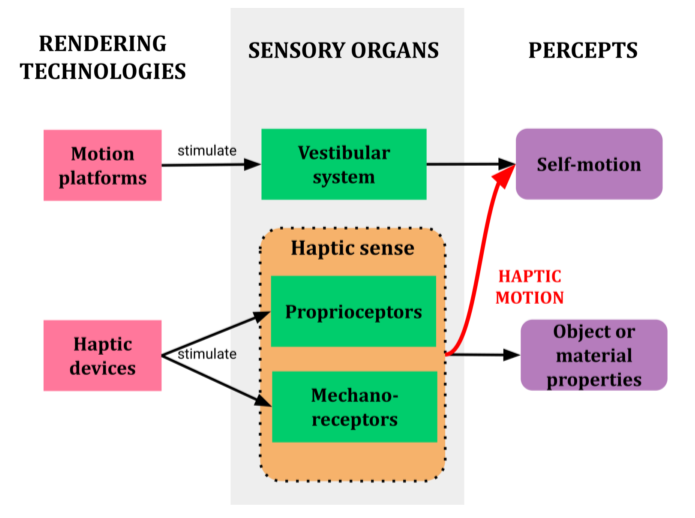

8.3.8 Leveraging tendon vibration to extend pseudo-haptic sensations in VR

Participants: Ferran Argelaguet, Anatole Lécuyer [contact].

The pseudo-haptic technique modifies haptic perception by appropriately altering the visual feedback of body movements. Because tendon vibration can affect somatosensory perception, we proposed a method that leverages tendon vibration to extend pseudo-haptics 22. To evaluate it, we conducted three experiments investigating the effect of tendon vibration on the range and resolution of pseudo-haptic sensation (see figure 22). The first experiment evaluated the effect of tendon vibration on the detection threshold (DT) of the discrepancy between visual and physical motion. The results show that vibrations on the inner tendons of the wrist and elbow increased the DT, indicating that tendon vibration widens the range of visual motion gains that can be applied without users noticing. The second experiment investigated the effect of tendon vibration on the resolution, that is, the just noticeable difference (JND) of pseudo-weight sensation. The with- and without-vibration conditions yielded similar JNDs, so both can be considered to offer a similar resolution of the sense of weight. The third experiment investigated the equivalence between the weight sensation induced by tendon vibration and by visual motion gain, that is, the point of subjective equality (PSE). The results show that vibration increases the sense of weight, with an effect equivalent to applying a visual motion gain of 0.64 without vibration. Taken together, our results suggest that tendon vibration can enable a significantly wider (nearly double) range of pseudo-haptic sensation without impairing its resolution.
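The visual motion gain manipulated in these experiments can be sketched as a scaling of the hand's displacement around a reference point. The helper below and the choice of the grasp position as reference are illustrative assumptions, not the implementation used in the study; only the 0.64 value comes from the reported PSE.

```python
Vec3 = tuple[float, float, float]

# Gain reported above as perceptually equivalent to tendon vibration (PSE).
GAIN_EQUIVALENT_TO_VIBRATION = 0.64


def displayed_hand_position(physical: Vec3, reference: Vec3, gain: float) -> Vec3:
    """Scale the hand's displacement from a reference point by `gain`.

    Hypothetical pseudo-haptic mapping: with gain < 1 the virtual hand moves
    less than the real one, which the results above associate with a
    heavier sense of weight; gain == 1 reproduces the real motion.
    """
    return tuple(r + gain * (p - r) for p, r in zip(physical, reference))


reference = (0.0, 0.0, 0.0)  # e.g. hand position when the object is grasped
lifted = (0.0, 0.5, 0.0)     # real hand lifted by 0.5 m
print(displayed_hand_position(lifted, reference, GAIN_EQUIVALENT_TO_VIBRATION))
```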

Tendon vibrations

This work was done in collaboration with the University of Tokyo.

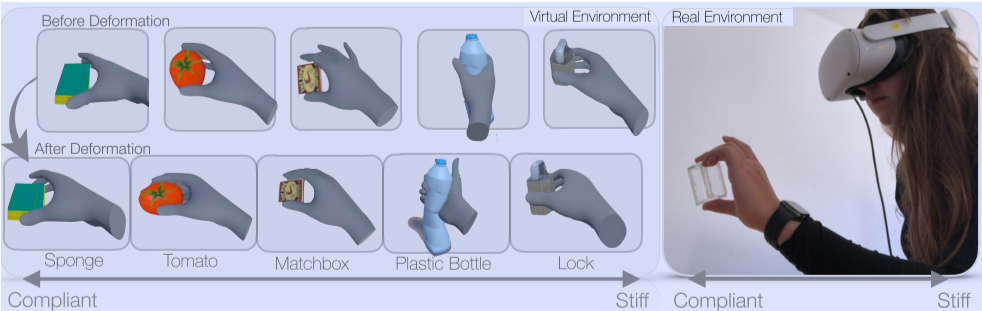

8.3.9 When tangibles become deformable: Studying pseudo-stiffness perceptual thresholds in a VR grasping task

Participants: Anatole Lécuyer [contact], Elodie Bouzbib.

Pseudo-haptic techniques, or visuo-haptic illusions, leverage the dominance of vision over touch to alter users’ perception. Because they create a discrepancy between virtual and physical interactions, these illusions only hold up to a perceptual threshold. Many haptic properties, such as weight, shape or size, have been studied using pseudo-haptic techniques. In this work, we focus on estimating the perceptual thresholds for pseudo-stiffness in a virtual reality grasping task 13. We conducted a user study to estimate whether, and to what extent, compliance can be induced on a non-compressible tangible object (see figure 23). Our results show that (1) compliance can be induced in a rigid tangible object and that (2) pseudo-haptics can simulate stiffness values beyond 24 N/cm (between a gummy bear and a raisin, up to rigid objects). Pseudo-stiffness efficiency is (3) enhanced by the objects’ scale, but (4) mostly correlated with the user’s input force. Taken together, our results offer novel opportunities to simplify the design of future haptic interfaces and to extend the haptic properties of passive props in VR.
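One simple way to picture pseudo-stiffness is to drive the visual deformation of the virtual object from the user's measured grip force through a linear spring model (x = F / k), while the physical prop stays rigid. This is a sketch under stated assumptions: the report does not describe the study's deformation model, and the helper name and the clamp are hypothetical; only the 24 N/cm value comes from the results above.

```python
def displayed_compression_cm(grip_force_n: float,
                             stiffness_n_per_cm: float,
                             max_compression_cm: float) -> float:
    """Visual compression of a virtual object squeezed with `grip_force_n`.

    Hypothetical linear spring (Hooke's law): x = F / k, with F in newtons
    and k in N/cm, so x is in cm. The result is clamped so the object never
    appears to compress beyond `max_compression_cm`.
    """
    if stiffness_n_per_cm <= 0:
        raise ValueError("stiffness must be positive")
    force = max(grip_force_n, 0.0)  # ignore negative sensor noise
    return min(force / stiffness_n_per_cm, max_compression_cm)


# A 12 N squeeze on a 24 N/cm virtual object is rendered as 0.5 cm of compression.
print(displayed_compression_cm(12.0, 24.0, max_compression_cm=2.0))
```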

Deformable tangibles

This work was done in collaboration with Inria RAINBOW team.

8.4 Brain Computer Interfaces

8.4.1 Design and evaluation of vibrotactile stimulus to support a KMI-based neurofeedback

Participants: Anatole Lécuyer [contact], Gabriela Altamira.

In this work 31, we are developing a brain-computer interface that integrates visual and vibrotactile feedback on the forearm and the hand in a gamified virtual environment, to give stroke patients situated and embodied information about the quality of their kinesthetic motor imagery (KMI) of a grasping movement (see figure 24). Multimodal sensory stimuli are used to provide a sense of embodiment; these stimuli are described in a related publication 32. In particular, adding vibrotactile feedback is expected to improve motor imagery performance in both neurotypical and stroke participants.
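The report does not specify how KMI quality is quantified or how it drives the vibrotactile actuators. Purely as an illustration, the sketch below assumes a normalized KMI quality score in [0, 1] (for example, a classifier's output probability for the imagined grasp) and maps it linearly to a vibration amplitude, with no feedback below a threshold; the function, score and threshold are all hypothetical.

```python
def vibration_amplitude(kmi_score: float,
                        threshold: float = 0.5,
                        max_amplitude: float = 1.0) -> float:
    """Map a normalized KMI quality score to a vibrotactile amplitude.

    Hypothetical mapping: scores at or below `threshold` produce no vibration;
    above it, the amplitude rises linearly up to `max_amplitude` at score 1.
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must lie in [0, 1)")
    score = min(max(kmi_score, 0.0), 1.0)  # clamp noisy classifier outputs
    if score <= threshold:
        return 0.0
    return max_amplitude * (score - threshold) / (1.0 - threshold)


for score in (0.3, 0.6, 0.9):
    print(score, round(vibration_amplitude(score), 2))
```

A threshold keeps the feedback silent for weak or unreliable imagery, at the cost of giving no guidance to users who are just below it; this trade-off is a design assumption, not a result from the study.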

Multisensory interface for stroke neurorehabilitation

This work was done in collaboration with LORIA and Univ. Lorraine.

8.4.2 Which sensations should you imagine?

Participants: François Le Jeune, Emile Savalle, Léa Pillette [contact].

During motor imagery-based brain-computer interface (MI-BCI) training, users are most often instructed to perform kinesthetic motor imagery, i.e., to imagine the sensations related to a movement [1]. However, motor imagery involves a great variety of sensations, which can be either interoceptive or exteroceptive [2]. Interoceptive sensations related to movement arise from within the body, such as muscle contraction, whereas exteroceptive sensations are sensed through the skin, such as touch or vibration. It remains unknown which of these sensations MI-BCI users should be advised to imagine. Our experiment 44 therefore aims to study how imagining these sensations influences neurophysiological activity and user experience.

8.4.3 Measuring presence in a virtual environment using electroencephalography: A study of breaks in presence using an oddball paradigm

Participants: Emile Savalle, Léa Pillette, Ferran Argelaguet, Anatole Lécuyer, Kyung-Ho Won, Marc Macé [contact].

Presence is one of the main factors conditioning the user experience in virtual reality (VR). It corresponds to the illusion of being physically located in a virtual environment. Presence is usually measured subjectively through questionnaires. However, questionnaires cannot be filled in while the user is experiencing presence, as doing so would disrupt the feeling. Using electroencephalography (EEG) to monitor users while they are immersed in VR offers an opportunity to bridge this gap and assess presence continuously. This study 47 investigates whether different levels of presence can be distinguished from EEG signals (see figure 25). Going further, we also attempted to classify single trials according to the level of presence in VR 45.
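In an oddball paradigm, rare "deviant" stimuli are interleaved with frequent "standard" ones, and event-related potentials (ERPs) are obtained by averaging EEG epochs time-locked to each stimulus type. The minimal sketch below computes the deviant-minus-standard difference wave and its mean amplitude in a time window, which could then be compared across presence conditions. It assumes single-channel epochs that are already extracted and baseline-corrected; the function names and the window are hypothetical, and this is not the analysis pipeline of the cited study.

```python
from statistics import fmean

Epoch = list[float]  # one channel, samples time-locked to a stimulus


def average_erp(epochs: list[Epoch]) -> Epoch:
    """Sample-by-sample mean across epochs of equal length."""
    n_samples = len(epochs[0])
    if any(len(e) != n_samples for e in epochs):
        raise ValueError("all epochs must have the same length")
    return [fmean(epoch[i] for epoch in epochs) for i in range(n_samples)]


def difference_wave(standard: list[Epoch], deviant: list[Epoch]) -> Epoch:
    """Deviant-minus-standard ERP, the usual oddball contrast."""
    std_erp = average_erp(standard)
    dev_erp = average_erp(deviant)
    return [d - s for d, s in zip(dev_erp, std_erp)]


def mean_amplitude(wave: Epoch, start: int, stop: int) -> float:
    """Mean of `wave` over sample indices [start, stop)."""
    return fmean(wave[start:stop])


# Toy data: three samples per epoch (real epochs span hundreds of samples).
standard = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]]
deviant = [[2.0, 5.0, 6.0], [4.0, 7.0, 8.0]]
wave = difference_wave(standard, deviant)
print(wave, mean_amplitude(wave, 1, 3))
```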

Teaser measuring presence in VR

8.4.4 Acceptability of BCI-based procedures for motor rehabilitation after stroke: A questionnaire study among patients

Participants: Léa Pillette [contact].

Stroke leaves around 40% of surviving patients dependent in their daily activities, notably due to severe motor disabilities. BCIs have been shown to favour motor recovery after stroke, but their efficiency has not yet reached the level required for clinical use. We hypothesise that improving BCI acceptability, notably by personalising BCI-based rehabilitation procedures to each patient, will reduce anxiety and favour engagement in the rehabilitation process, thereby increasing the efficiency of those procedures. To test this hypothesis, we need to understand how to adapt BCI procedures to each patient's profile. We therefore built a model of BCI acceptability 43 based on the literature, adapted it into a questionnaire, and distributed the latter to post-stroke patients (N=140). Several articles related to this work have been published 42, 50, 37.

This work was done in collaboration with INCIA (Bordeaux), CLLE and ICHN (Toulouse) and CRISTAL (Lille).

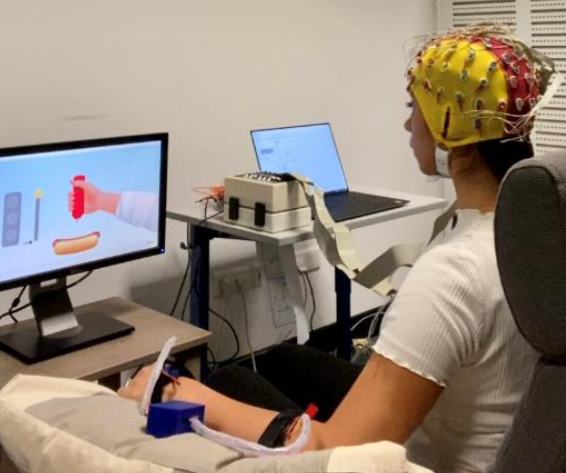

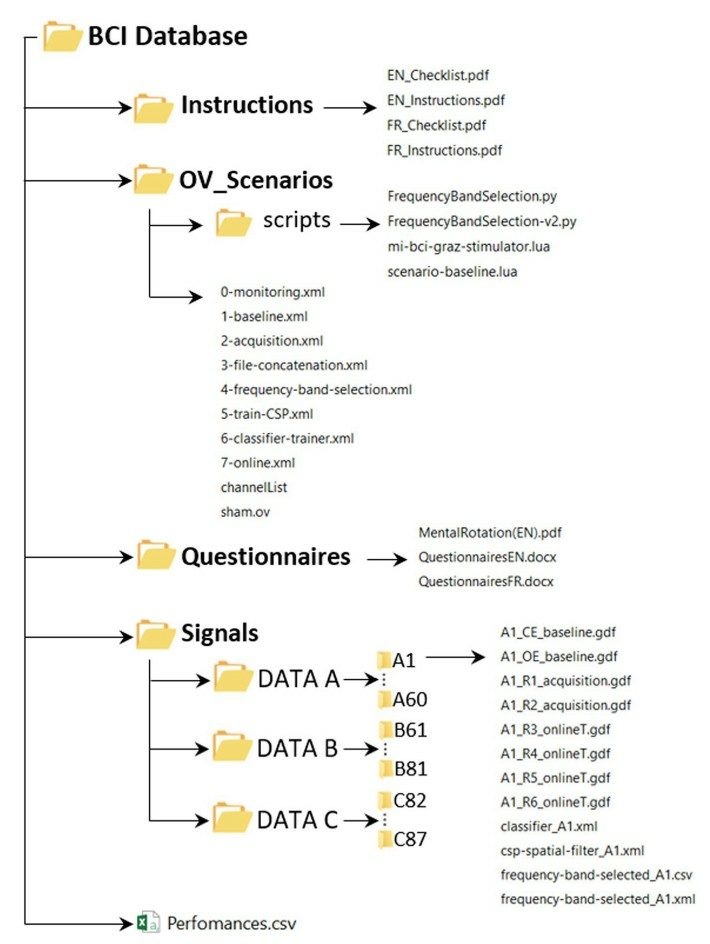

8.4.5 A large EEG database with users’ profile information for motor imagery brain-computer interface research

Participants: Léa Pillette [contact].

In this work 17, we present and share a large database containing electroencephalographic signals from 87 human participants, collected during a single day of brain-computer interface (BCI) experiments and organized into 3 datasets (A, B, and C), all recorded using the same protocol: right- and left-hand motor imagery (MI). Each session contains 240 trials (120 per class), for a total of more than 20,800 trials, or approximately 70 hours of recording time. The database includes each user's BCI performance, detailed information about their demographics, personality profile and some cognitive traits, as well as the experimental instructions and code (executed in the open-source platform OpenViBE). Such a database could prove useful for various studies, including but not limited to: (1) studying the relationships between BCI users’ profiles and their BCI performance, (2) studying how EEG signal properties vary across users’ profiles and MI tasks, (3) using the large number of participants to design cross-user BCI machine learning algorithms, or (4) incorporating users’ profile information into the design of EEG signal classification algorithms. The content of this EEG database is also described in a companion article 49 (see figure 26).
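The trial count quoted above follows from 87 participants × 240 trials = 20,880 trials. For use case (3), cross-user algorithms are commonly evaluated with a leave-one-subject-out split, in which each participant is held out once as the test user. The sketch below illustrates that split; the participant identifiers and helper names are hypothetical and do not reflect the database's actual file layout.

```python
from typing import Iterator, Sequence

N_PARTICIPANTS = 87
TRIALS_PER_SESSION = 240  # 120 left-hand + 120 right-hand MI trials


def total_trials(n_participants: int = N_PARTICIPANTS,
                 trials_per_session: int = TRIALS_PER_SESSION) -> int:
    """Total number of MI trials, assuming one session per participant."""
    return n_participants * trials_per_session


def leave_one_subject_out(subject_ids: Sequence[int]) -> Iterator[tuple[list[int], int]]:
    """Yield (training subjects, held-out test subject) for each fold."""
    for test_id in subject_ids:
        train_ids = [s for s in subject_ids if s != test_id]
        yield train_ids, test_id


print(total_trials())  # 20880
folds = list(leave_one_subject_out(range(N_PARTICIPANTS)))
train_ids, test_id = folds[0]
print(len(folds), len(train_ids) * TRIALS_PER_SESSION, test_id)
```

Holding out whole participants, rather than random trials, prevents trials from the test user leaking into training, which is what makes the evaluation genuinely cross-user.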

EEG database structure

This work was done in collaboration with Inria POTIOC team (Bordeaux).

8.4.6 Does gender matter in motor imagery BCIs?

Participants: Léa Pillette [contact].

A major issue in applying motor imagery brain-computer interfaces (MI-BCIs) is BCI inefficiency, i.e., the inability of some users to control a BCI, which affects 15-30% of the population. Several studies have examined the effect of gender on MI-BCI performance, but their findings remain inconsistent due to small sample sizes and unequal gender distributions in past research. This study 48 therefore aimed to address this gap by collecting a large sample of female and male BCI users and investigating the role of gender in MI-BCIs in a reliable and generalizable manner.

This work was done in collaboration with Tilburg University (Netherlands) and Inria POTIOC team (Bordeaux).

8.5 Art and Cultural Heritage

8.5.1 Virtual Reality for the preservation and promotion of historical real tennis

Participants: Ronan Gaugne [contact], Sony Saint-Auret, Valérie Gouranton.

Real tennis, or “courte paume” as it was originally called in French, is a racket sport that has been played for centuries and is considered the ancestor of tennis. It was very popular in Europe during the Renaissance and was practiced across all layers of society. It is still played today on a few courts around the world, notably in the United Kingdom, France, Australia and the USA. It has been listed in the Inventory of Intangible Cultural Heritage in France since 2012. The goal of our project 33 was to raise interest in this historical sport and to let new and future generations experience it. We developed a virtual environment that enables users to experience a game of real tennis (see figure 27). We then tested this environment to assess its acceptability and usability in different contexts of use. We found that this use of virtual reality enabled our participants to discover the history and rules of the sport in a didactic and pleasant manner. We hope that our VR application will encourage younger and future generations to play real tennis.

“Real tennis” in VR

8.5.2 De l’imagerie médicale à la réalité virtuelle pour l’archéologie (From medical imaging to virtual reality for archaeology)

Participants: Ronan Gaugne, Bruno Arnaldi, Valérie Gouranton [contact].

In this book chapter, we describe the IRMA project 46, which aims to design innovative methodologies for research on historical and archaeological heritage based on a combination of medical imaging technologies and interactive 3D restitution modalities (virtual reality, augmented reality, haptics, additive manufacturing) (see figure 28). These tools build on recent research results from a collaboration between IRISA, Inrap and the company Image ET, and are intended for cultural heritage professionals such as museums, curators, restorers and archaeologists.

3D printed copy of a fibula

This work was done in collaboration with the Inrap institute, the Trajectoires team (Paris) and the company Image ET.

9 Bilateral contracts and grants with industry

9.1 Grants with Industry

Nemo.AI Laboratory with InterDigital

Participants: Ferran Argelaguet [contact], Anatole Lécuyer, Yann Glemarrec, Tom Roy, Philippe Clermont de Gallerande.

To engage and employ scientists and engineers across the Brittany region in researching the technologies that will shape the metaverse, Inria, the French National Institute for Research in Digital Science and Technology, and InterDigital, Inc. (NASDAQ:IDCC), a mobile and video technology research and development company, launched the Nemo.AI Common Lab. This public-private partnership is dedicated to leveraging the combined research expertise of Inria and InterDigital labs to foster local participation in emerging innovations and global technology trends. Named after the pioneering Captain Nemo from Jules Verne’s 20,000 Leagues Under the Sea, the Nemo.AI Common Lab aims to equip the Brittany region with resources to pursue cutting-edge scientific research and explore the technologies that will define media experiences in the future. The project reflects the recognized importance of artificial intelligence (AI) in enabling new media experiences in a digital and responsible society.

Orange Labs

Participants: Lysa Gramoli, Bruno Arnaldi, Valérie Gouranton [contact].

This grant started in October 2020. It funded Lysa Gramoli’s PhD, carried out with Orange Labs, on “Simulation of autonomous agents in connected virtual environments”.

Sogea Bretagne

Participants: Vincent Goupil, Bruno Arnaldi, Valérie Gouranton [contact].