Keywords

Computer Science and Digital Science

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.7.2. Music

Other Research Topics and Application Domains

- B2.2.6. Neurodegenerative diseases

- B2.8. Sports, performance, motor skills

- B6.1.1. Software engineering

- B9.2.1. Music, sound

- B9.5.1. Computer science

- B9.5.6. Data science

- B9.6.10. Digital humanities

- B9.8. Reproducibility

1 Team members, visitors, external collaborators

Research Scientists

- Stéphane Huot [Team leader, Inria, Senior Researcher, HDR]

- Edward Lank [Inria & University of Waterloo, Canada, Advanced Research Position, until Apr 2020]

- Sylvain Malacria [Inria, Researcher]

- Mathieu Nancel [Inria, Researcher]

- Marcelo M. Wanderley [Inria & McGill University, Canada, Advanced Research Position, until Mar 2020]

Faculty Members

- Géry Casiez [Université de Lille, Professor, Junior member of Institut Universitaire de France, HDR]

- Thomas Pietrzak [Université de Lille, Associate Professor]

Post-Doctoral Fellow

- Raiza Sarkis Hanada [Inria, until Mar 2020]

PhD Students

- Axel Antoine [Université de Lille]

- Marc Baloup [Inria]

- Yuan Chen [Université de Lille & University of Waterloo, Canada, from Dec 2020]

- Johann Felipe Gonzalez Avila [Université de Lille & Carleton University, Canada, from Sep 2020]

- Eva Mackamul [Inria, from Oct 2020]

- Damien Masson [University of Waterloo, Canada]

- Nicole Pong [Inria, until Feb 2020]

- Gregoire Richard [Inria]

- Philippe Schmid [Inria]

- Travis West [Université de Lille & McGill University, Canada, from Oct 2020]

Interns and Apprentices

- Shaan Chopra [Université de Lille, from Jun 2020 until Aug 2020]

- Rohan Hundia [Université de Lille, from Jun 2020 until Aug 2020]

- Alice Loizeau [Inria, from Sep 2020]

- Ophelie Morel [Université de Picardie Jules Verne, until Apr 2020]

- Enzo Pain [Université de Lille, from May 2020 until Jul 2020]

- Pierrick Uro [Université de Lille, from Jun 2020 until Jul 2020]

Administrative Assistant

- Julie Jonas [Inria]

External Collaborator

- Edward Lank [University of Waterloo, Canada, from May 2020]

2 Overall objectives

Human-Computer Interaction (HCI) is a constantly moving field 41. Changes in computing technologies extend their possible uses, and modify the conditions of existing uses. People also adapt to new technologies and adjust them to their own needs 45. New problems and opportunities thus regularly arise and must be addressed from the perspectives of both the user and the machine, to understand and account for the tight coupling between human factors and interactive technologies. Our vision is to connect these two elements: Knowledge & Technology for Interaction.

2.1 Knowledge for Interaction

In the early 1960s, when computers were scarce, expensive, bulky, and formally scheduled machines used for automatic computations, Engelbart saw their potential as personal interactive resources. He saw them as tools we would purposefully use to carry out particular tasks and that would empower people by supporting intelligent use 38. Others at the same time were seeing computers differently, as partners, intelligent entities to whom we would delegate tasks. These two visions still constitute the roots of today's predominant HCI paradigms, use and delegation. In the delegation approach, a lot of effort has been made to support oral, written and non-verbal forms of human-computer communication, and to analyze and predict human behavior. But the inconsistency and ambiguity of human beings, and the variety and complexity of contexts, make these tasks very difficult 50, and the machine is thus the center of interest.

2.1.1 Computers as tools

The focus of Loki is not on what machines can understand or do by themselves, but on what people can do with them. We do not reject the delegation paradigm but clearly favor the one of tool use, aiming for systems that support intelligent use rather than for intelligent systems. And as the frontier is getting thinner, one of our goals is to better understand what makes an interactive system perceived as a tool or as a partner, and how the two paradigms can be combined for the best benefit of the user.

2.1.2 Empowering tools

The ability provided by interactive tools to create and control complex transformations in real-time can support intellectual and creative processes in unusual but powerful ways. But mastering powerful tools is neither simple nor immediate: it requires learning and practice. Our research in HCI should not just focus on novice or highly proficient users. It should also care about intermediate ones willing to devote time and effort to develop new skills, be it for work or leisure.

2.1.3 Transparent tools

Technology is most empowering when it is transparent: invisible in effect, it does not get in your way but lets you focus on the task. Heidegger characterized this unobtruded relation to things with the term zuhanden (ready-to-hand). Transparency of interaction is not best achieved with tools mimicking human capabilities, but with tools taking full advantage of them given the context and task. For instance, the transparency of driving a car “is not achieved by having a car communicate like a person, but by providing the right coupling between the driver and action in the relevant domain (motion down the road)” 54. Our actions towards the digital world need to be digitized and we must receive proper feedback in return. But input and output technologies pose somewhat inevitable constraints while the number, diversity, and dynamicity of digital objects call for more and more sophisticated perception-action couplings for increasingly complex tasks. We want to study the means currently available for perception and action in the digital world: Do they leverage our perceptual and control skills? Do they support the right level of coupling for transparent use? Can we improve them or design more suitable ones?

2.2 Technology for Interaction

Studying the interactive phenomena described above is one of the pillars of HCI research, in order to understand, model and ultimately improve them. Yet, we have to make those phenomena happen, to make them possible and reproducible, be it for further research or for their diffusion 40. However, because of the high viscosity and the lack of openness of current systems, this requires considerable effort in designing, engineering, implementing and hacking hardware and software interactive artifacts. This is what we call “The Iceberg of HCI Research”, of which the hidden part supports the design and study of new artifacts, but also informs their creation process.

2.2.1 “Designeering Interaction”

Both parts of this iceberg strongly influence each other: The design of interaction techniques (the visible top) informs on the capabilities and limitations of the platform and the software being used (the hidden bottom), giving insights into what could be done to improve them. On the other hand, new architectures and software tools open the way to new designs, by giving the necessary bricks to build with 42. These bricks define the adjacent possible of interactive technology, the set of what could be designed by assembling the parts in new ways. Exploring ideas that lie outside of the adjacent possible requires the necessary technological evolutions to be addressed first. This is a slow and gradual but uncertain process, which helps to explore and fill a number of gaps in our research field but can also lead to deadlocks. We want to better understand and master this process (i.e., analyzing the adjacent possible of HCI technology and methods) and introduce tools to support and extend it. This could help to make technology better suited to the exploration of the fundamentals of interaction, and to their integration into real systems, a way to ultimately improve interactive systems to be empowering tools.

2.2.2 Computers vs Interactive Systems

In fact, today's interactive systems (e.g., desktop computers, mobile devices) share very similar layered architectures inherited from the first personal computers of the 1970s. This abstraction of resources provides developers with standard components (UI widgets) and high-level input events (mouse and keyboard) that obviously ease the development of common user interfaces for predictable and well-defined tasks and users' behaviors. But it does not favor the implementation of non-standard interaction techniques that could be better adapted to more particular contexts, to expressive and creative uses. Those often require going deeper into the system layers, and hacking them until getting access to the required functionalities and/or data, which implies switching between programming paradigms and/or languages.

And these limitations are even more pervasive as interactive systems have changed deeply in the last 20 years. They are no longer limited to a simple desktop or laptop computer with a display, a keyboard and a mouse. They are becoming more and more distributed and pervasive (e.g., mobile devices, Internet of Things). They are changing dynamically with recombinations of hardware and software (e.g., transition between multiple devices, modular interactive platforms for collaborative use). Systems are moving “out of the box” with Augmented Reality, and users are going “inside of the box” with Virtual Reality. This is obviously raising new challenges in terms of human factors, usability and design, but it also deeply questions current architectures.

2.2.3 The Interaction Machine

We believe that promoting digital devices to empowering tools requires both better fundamental knowledge about interaction phenomena and a revisited architecture of interactive systems that supports this knowledge. By following a comprehensive systems approach, encompassing human factors, hardware elements, and all software layers above, we want to define the founding principles of an Interaction Machine:

- a set of hardware and software requirements with associated specifications for interactive systems to be tailored to interaction by leveraging human skills;

- one or several implementations to demonstrate and validate the concept and the specifications in multiple contexts;

- guidelines and tools for designing and implementing interactive systems, based on these specifications and implementations.

To reach this goal, we will adopt an opportunistic and iterative strategy guided by the designeering approach, where the engineering aspect will be fueled by the interaction design and study aspect. We will address several fundamental problems of interaction related to our vision of “empowering tools”, which, in combination with state-of-the-art solutions, will instruct us on the requirements for the solutions to be supported in an interactive system. This consists in reifying the concept of the Interaction Machine into multiple contexts and for multiple problems, before converging towards a more unified definition of “what is an interactive system”, the ultimate Interaction Machine, which constitutes the main scientific and engineering challenge of our project.

3 Research program

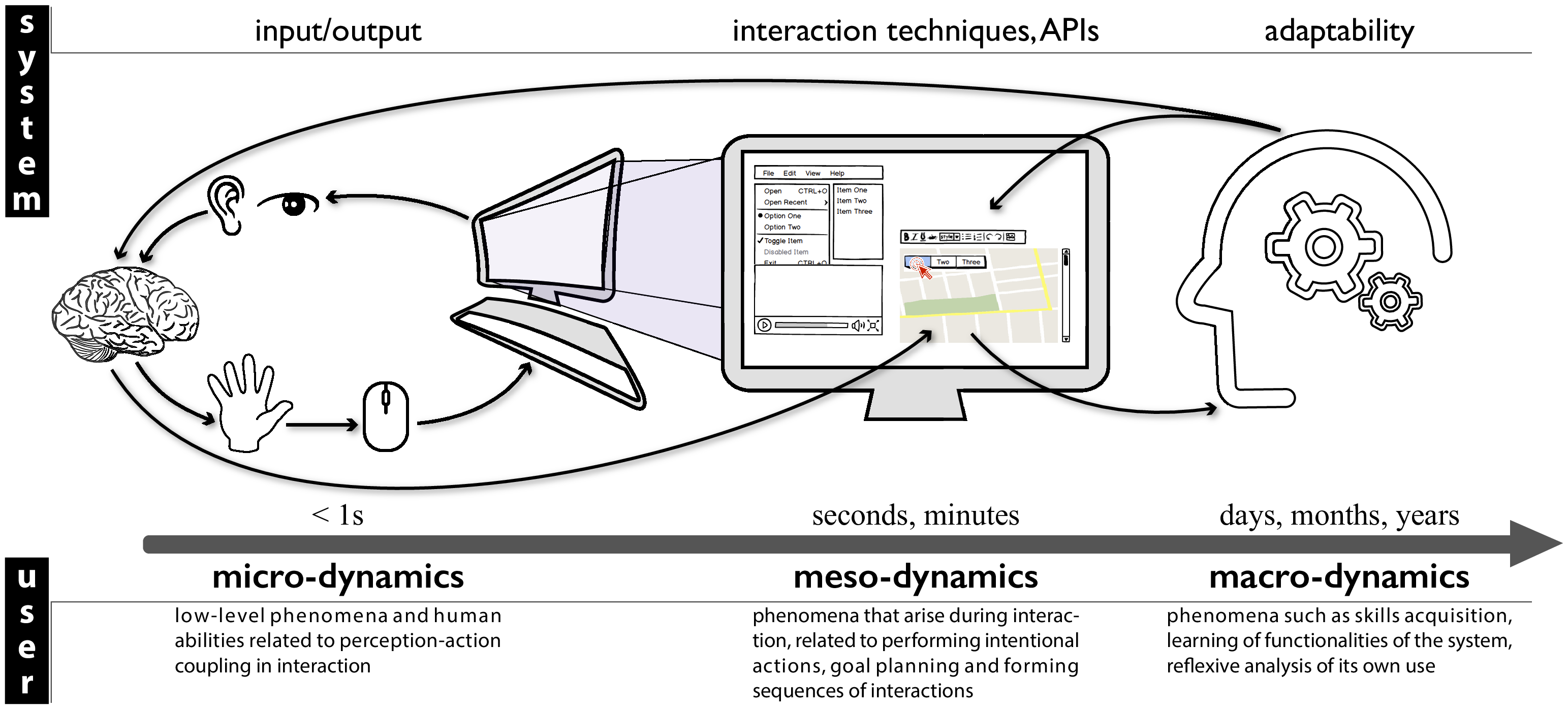

Interaction is by nature a dynamic phenomenon that takes place between interactive systems and their users. Redesigning interactive systems to better account for interaction requires a fine understanding of these dynamics from the user side so as to better handle them from the system side. In fact, layers of current interactive systems abstract hardware and system resources from a system and programming perspective. Following our Interaction Machine concept, we are reconsidering these architectures from the user's perspective, through different levels of dynamics of interaction (see Figure 1).

Considering phenomena that occur at each of these levels as well as their relationships will help us to acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Although our strategy is to investigate issues and address challenges for all of the three levels, our immediate priority is to focus on micro-dynamics since it concerns very fundamental knowledge about interaction and relates to very low-level parts of interactive systems, which is likely to influence our future research and developments at the other levels.

3.1 Micro-Dynamics

Micro-dynamics involve low-level phenomena and human abilities related to very short time scales and to perception-action coupling in interaction, when the user has almost no control over or awareness of the action once it has started. From a system perspective, they have implications mostly for input and output (I/O) management.

3.1.1 Transfer functions design and latency management

We have developed a recognized expertise in the characterization and the design of transfer functions 37, 49, i.e., the algorithmic transformations of raw user input for system use. Ideally, transfer functions should match the interaction context. Yet the question of how to maximize one or more criteria in a given context remains an open one, and on-demand adaptation is difficult because transfer functions are usually implemented at the lowest possible level to avoid latency. Latency has indeed long been known as a determinant of human performance in interactive systems 44 and recently regained attention with touch interactions 43. These two problems require cross examination to improve performance with interactive systems: Latency can be a confounding factor when evaluating the effectiveness of transfer functions, and transfer functions can also include algorithms to compensate for latency.
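As an illustration only, a velocity-based transfer function of the kind discussed above can be sketched as follows. The gain curve, the parameter names (`cpi`, `ppi`, `g_min`, `g_max`, `v_sat`) and their default values are assumptions made for the example. They do not describe any particular operating system or any function studied in the cited work.

```python
import math

METERS_PER_INCH = 0.0254


def transfer(dx_counts, dy_counts, dt_s, cpi=800.0, ppi=96.0,
             g_min=1.0, g_max=4.0, v_sat=0.5):
    """Map a raw device displacement (sensor counts) to a cursor displacement (pixels).

    All defaults are hypothetical: cpi is the sensor resolution (counts per inch),
    ppi the display density (pixels per inch), v_sat the device speed (m/s) at
    which the gain stops increasing. dt_s must be strictly positive.
    """
    # 1. Convert sensor counts to the physical displacement of the device, in metres.
    dx_m = dx_counts / cpi * METERS_PER_INCH
    dy_m = dy_counts / cpi * METERS_PER_INCH
    # 2. Estimate the device speed over the sampling interval, in m/s.
    speed = math.hypot(dx_m, dy_m) / dt_s
    # 3. Hypothetical gain curve: linear ramp from g_min to g_max, saturating at v_sat.
    gain = g_min + (g_max - g_min) * min(speed / v_sat, 1.0)
    # 4. Apply the gain and convert metres to display pixels.
    px_per_m = ppi / METERS_PER_INCH
    return dx_m * gain * px_per_m, dy_m * gain * px_per_m
```

Working in physical units (metres and metres per second) rather than counts and pixels keeps the gain curve independent of the sensor and display resolutions, which is one way to make such a function comparable across devices.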

We have recently proposed new cheap but robust methods for the measurement of end-to-end latency 2 and worked on compensation methods 48 and the evaluation of their perceived side effects 9. Our goal is then to automatically adapt transfer functions to individual users and contexts of use, which we started in 23, while reducing latency in order to support stable and appropriate control. To achieve this, we will investigate combinations of low-level (embedded) and high-level (application) ways to take user capabilities and task characteristics into account and reduce or compensate for latency in different contexts, e.g., using a mouse or a touchpad, a touch-screen, an optical finger navigation device or a brain-computer interface. From an engineering perspective, this knowledge on low-level human factors will help us to rethink and redesign the I/O loop of interactive systems in order to better account for them and achieve more adapted and adaptable perception-action coupling.

3.1.2 Tactile feedback & haptic perception

We are also concerned with the physicality of human-computer interaction, with a focus on haptic perception and related technologies. For instance, when interacting with virtual objects such as software buttons on a touch surface, the user cannot feel the click sensation as with physical buttons. The tight coupling between how we perceive and how we manipulate objects is then essentially broken although this is instrumental for efficient direct manipulation. We have addressed this issue in multiple contexts by designing, implementing and evaluating novel applications of tactile feedback 5.

In comparison with many other modalities, one difficulty with tactile feedback is its diversity. It groups sensations of forces, vibrations, friction, or deformation. Although this is a richness, it also raises usability and technological challenges since each kind of haptic stimulation requires different kinds of actuators with their own parameters and thresholds, and results from one are hardly applicable to others. From a “knowledge” point of view, we want to better understand and empirically classify haptic variables and the kind of information they can represent (continuous, ordinal, nominal), their resolution, and their applicability to various contexts. From the “technology” perspective, we want to develop tools to inform and ease the design of haptic interactions taking best advantage of the different technologies in a consistent and transparent way.

3.2 Meso-Dynamics

Meso-dynamics relate to phenomena that arise during interaction, on a longer but still short time-scale. For users, they are related to performing intentional actions, to goal planning and tool selection, and to forming sequences of interactions based on a known set of rules or instructions. From the system perspective, they relate to how possible actions are exposed to the user and how they have to be executed (i.e., interaction techniques). They also have implications for the tools for designing and implementing those techniques (programming languages and APIs).

3.2.1 Interaction bandwidth and vocabulary

Interactive systems and their applications have an always-increasing number of available features and commands due to, e.g., the large amount of data to manipulate, increasing power and number of functionalities, or multiple contexts of use.

On the input side, we want to augment the interaction bandwidth between the user and the system in order to cope with this increasing complexity. In fact, most input devices capture only a few of the movements and actions the human body is capable of. Our arms and hands for instance have many degrees of freedom that are not fully exploited in common interfaces. We have recently designed new technologies to improve expressibility such as a bendable digitizer pen 39, or reliable technology for studying the benefits of finger identification on multi-touch interfaces 4.

On the output side, we want to expand users' interaction vocabulary. All of the features and commands of a system cannot be displayed on screen at the same time, and many advanced features are by default hidden from users (e.g., hotkeys) or buried in deep hierarchies of command-triggering systems (e.g., menus). As a result, users tend to use only a subset of all the tools the system actually offers 47. We will study how to help them to broaden their knowledge of available functions.

Through this “opportunistic” exploration of alternative and more expressive input methods and interaction techniques, we will particularly focus on the necessary technological requirements to integrate them into interactive systems, in relation with our redesign of the I/O stack at the micro-dynamics level.

3.2.2 Spatial and temporal continuity in interaction

At a higher level, we will investigate how more expressive interaction techniques affect users' strategies when performing sequences of elementary actions and tasks. More generally, we will explore the “continuity” in interaction. Interactive systems have moved from one computer to multiple connected interactive devices (computer, tablets, phones, watches, etc.) that could also be augmented through a Mixed-Reality paradigm. This distribution of interaction raises new challenges, both in terms of usability and engineering, that we clearly have to consider in our main objective of revisiting interactive systems 46. It involves the simultaneous use of multiple devices and also the changes in the role of devices according to the location, the time, the task, and contexts of use: a tablet device can be used as the main device while traveling, and it becomes an input device or a secondary monitor when resuming that same task once in the office; a smart-watch can be used as a standalone device to send messages, but also as a remote controller for a wall-sized display. One challenge is then to design interaction techniques that support smooth, seamless transitions during these spatial and temporal changes in order to maintain the continuity of uses and tasks, and to determine how to integrate these principles in future interactive systems.

3.2.3 Expressive tools for prototyping, studying, and programming interaction

Current systems suffer from engineering issues that keep constraining and influencing how interaction is conceived, designed, and implemented. Addressing the challenges we presented in this section and making the solutions possible require extended expressiveness, and researchers and designers must either wait for the proper toolkits to appear, or “hack” existing interaction frameworks, often bypassing existing mechanisms. For instance, numerous usability problems in existing interfaces stem from a common cause: the lack, or untimely discarding, of relevant information about how events are propagated and how changes come to occur in interactive environments. On top of our redesign of the I/O loop of interactive systems, we will investigate how to facilitate access to that information and also promote a more grounded and expressive way to describe and exploit input-to-output chains of events at every system level. We want to provide finer granularity and better-described connections between the causes of changes (e.g., input events and system triggers), their context (e.g., system and application states), their consequences (e.g., interface and data updates), and their timing 8. More generally, a central theme of our Interaction Machine vision is to promote interaction as a first-class object of the system 36, and we will study alternative and better-adapted technologies for designing and programming interaction, as we did recently to ease the prototyping of Digital Musical Instruments 1 or the programming of animations in graphical interfaces 10. Ultimately, we want to propose a unified model of hardware and software scaffolding for interaction that will contribute to the design of our Interaction Machine.

3.3 Macro-Dynamics

Macro-dynamics involve longer-term phenomena such as skills acquisition, learning of functionalities of the system, and reflexive analysis of one's own use (e.g., when the user has to face novel or unexpected situations which require a high level of knowledge of the system and its functioning). From the system perspective, this implies better supporting cross-application and cross-platform mechanisms so as to favor skill transfer. It also requires improving the instrumentation and high-level logging capabilities to favor reflexive use, as well as flexibility and adaptability for users to be able to finely tune and shape their tools.

We want to move away from the usual binary distinction between “novices” and “experts” 3 and explore means to promote and assist digital skill acquisition in a more progressive fashion. Indeed, users have a permanent need to adapt their skills to the constant and rapid evolution of the tasks and activities they carry out on a computer system, but also to the changes in the software tools they use 52. Software strikingly lacks powerful means of acquiring and developing these skills 3, forcing users to mostly rely on outside support (e.g., being guided by a knowledgeable person, following online tutorials of varying quality). As a result, users tend to rely on a surprisingly limited interaction vocabulary, or make do with sub-optimal routines and tools 53. Ultimately, the user should be able to master the interactive system to form durable and stabilized practices that would eventually become automatic and reduce the mental and physical efforts, making their interaction transparent.

In our previous work, we identified the fundamental factors influencing expertise development in graphical user interfaces, and created a conceptual framework that characterizes users' performance improvement with UIs 7, 3. We designed and evaluated new command selection and learning methods to leverage users' digital skill development with user interfaces, on both desktop 6 and touch-based computers.

We are now interested in broader means to support the analytic use of computing tools:

- to foster understanding of interactive systems. As the digital world makes the shift to more and more complex systems driven by machine learning algorithms, we increasingly lose our comprehension of which process caused the system to respond in one way rather than another. We will study how novel interactive visualizations can help reveal and expose the “intelligence” behind these systems, so that people can better master their complexity.

- to foster reflection on interaction. We will study how we can foster users' reflection on their own interaction in order to encourage them to acquire novel digital skills. We will build real-time and off-line software for monitoring how users' ongoing activity is conducted at an application and system level. We will develop augmented feedback and interactive history visualization tools that will offer contextual visualizations to help users to better understand and share their activity, compare their actions to those of others, and discover possible improvements.

- to optimize skill-transfer and tool re-appropriation. The rapid evolution of new technologies has drastically increased the frequency at which systems are updated, often requiring users to relearn everything from scratch. We will explore how we can minimize the cost of having to appropriate an interactive tool by helping users to capitalize on their existing skills.

We plan to explore these questions as well as the use of such aids in several contexts like web-based, mobile, or BCI-based applications. However, a core aspect of this work will be to design systems and interaction techniques that will be as little platform-specific as possible, in order to better support skill transfer. Following our Interaction Machine vision, this will lead us to rethink how interactive systems have to be engineered so that they can offer better instrumentation, higher adaptability, and less separation between applications and tasks in order to support reuse and skill transfer.

4 Application domains

Loki works on fundamental and technological aspects of Human-Computer Interaction that can be applied to diverse application domains.

Our 2020 research involved desktop and mobile interaction, augmented and virtual reality, and touch-based, haptic, and BCI interfaces, with notable applications to medicine (analysis of fine motor control for patients with Parkinson's disease) as well as creativity support tools (production of illustrations, design of Digital Musical Instruments). Our technical work also contributes to the more general application domain of systems design.

5 Highlights of the year

Géry Casiez and Mathieu Nancel received the Prix Challenge Hyve (academic contribution) from the Région Hauts-de-France for their project “Real-time Latency Measure and Compensation”.

Julien Decaudin, a former engineer in the team, initiated a start-up project called Aureax. The objective is to create a navigation solution for cyclists that conveys turn-by-turn information through haptic cues. This project is supported by Inria Startup Studio with Thomas Pietrzak from Loki as scientific consultant.

5.1 Awards

Best paper award from the IEEE VR conference to the paper “Detecting System Errors in Virtual Reality Using EEG through Error Related Potentials”, from H. Si-Mohammed, C. Lopes Dias, M. Duarte, F. Argelaguet, C. Jeunet, G. Casiez, G. Müller-Putz, A. Lécuyer & R. Scherer 26.

Honorable mention award from the ACM CHI conference to the paper “AutoGain: Gain Function Adaptation with Submovement Efficiency Optimization”, from B. Lee, M. Nancel, S. Kim & A. Oulasvirta 23.

6 New software and platforms

6.1 New software

6.1.1 Chameleon

- Name: Chameleon Interactive Documents

- Keywords: HCI, Augmented documents

- Functional Description: Chameleon is a system-wide tool that combines computer vision algorithms used for image identification with an open database format to allow for the layering of dynamic content. Using Chameleon, static documents can be easily upgraded by layering user-generated interactive content on top of static images, all while preserving the original static document format and without modifying existing applications.

- Release Contributions: Initial version

- News of the Year: Software improved to be shared on-line. The corresponding paper has been published.

- URL: https://ns.inria.fr/loki/chameleon

- Publications: hal-02467817, hal-02867619

- Contact: Géry Casiez

- Partner: University of Waterloo

6.1.2 Spatial Jitter

- Name: SpatialJitterAsyncInputOutput

- Keywords: HCI, Spatial jitter

- Functional Description: Source code for a simulator that measures the spatial jitter introduced by different output frequencies. Participants' anonymous data is also available to test the simulator. The source code used to generate the figures presented in the article https://dx.doi.org/10.1145/3379337.3415833 is also available.

- Release Contributions: Initial version

- News of the Year: Software improved to be shared on-line. The corresponding paper has been published.

- URL: https://ns.inria.fr/loki/async

- Publication: hal-02919191

- Contact: Géry Casiez

- Partner: Google

7 New results

According to our research program, in the next two to five years, we will study dynamics of interaction along three levels depending on interaction time scale and related user's perception and behavior: Micro-dynamics, Meso-dynamics, and Macro-dynamics. Considering phenomena that occur at each of these levels as well as their relationships will help us to acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Our strategy is to investigate issues and address challenges for all of the three levels of dynamics. For the past two years we focused on micro-dynamics since it concerns very fundamental knowledge about interaction and relates to very low-level parts of interactive systems. In 2020 we were able to build upon those results (micro), but also to enlarge the scope of our studies within larger interaction time scales (meso and macro). Some of these results have also contributed to our objective of defining the basic principles of an Interaction Machine.

7.1 Micro-dynamics

Participants: Géry Casiez, Sylvain Malacria, Mathieu Nancel, Thomas Pietrzak, Philippe Schmid.

7.1.1 Modeling and reducing spatial jitter caused by asynchronous input and output rates

Jitter in interactive systems occurs when visual feedback is perceived as unstable or trembling even though the input signal is smooth or stationary. It can have multiple causes such as sensing noise, or feedback calculations introducing or exacerbating sensing imprecisions. Jitter can however occur even when each individual component of the pipeline works perfectly, as a result of the differences between the input frequency and the display refresh rate. This asynchronicity can introduce rapidly-shifting latencies between the rendering of feedback and its display on screen, which can result in trembling cursors or viewports. Through a collaboration with Google we contributed a better understanding of this particular type of jitter 18. We first detailed the problem from a mathematical standpoint, from which we developed a predictive model of jitter amplitude as a function of input and output frequencies, and a new metric to measure this spatial jitter. Using touch input data gathered in a study, we developed a simulator to validate this model and to assess the effects of different techniques and settings with any output frequency. The most promising approach, when the time of the next display refresh is known, is to estimate (interpolate or extrapolate) the user's position at a fixed time interval before that refresh. When input events occur at 125 Hz, as is common in touch screens, we show that an interval of 4 to 6 ms works well for a wide range of display refresh rates. This method effectively cancels most of the jitter introduced by input/output asynchronicity, while introducing minimal imprecision or latency.
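The resampling idea described above (estimating the input position at a fixed interval before the next display refresh) can be illustrated with a minimal sketch. It assumes one-dimensional positions, plain linear interpolation and extrapolation, and a 5 ms interval chosen within the 4 to 6 ms range mentioned above. The function names and data layout are hypothetical and this is not the simulator distributed with the paper.

```python
from bisect import bisect_right


def estimate_position(samples, t_query):
    """Linearly interpolate or extrapolate a position at time t_query.

    samples: list of (time_s, position) pairs sorted by time, at least two.
    """
    if len(samples) < 2:
        raise ValueError("need at least two input samples")
    times = [t for t, _ in samples]
    # Index of the first sample strictly after t_query.
    i = bisect_right(times, t_query)
    # Clamp so that (i - 1, i) is always a valid pair: the bracketing pair when
    # t_query lies inside the samples, the first or last pair otherwise.
    i = min(max(i, 1), len(samples) - 1)
    (t0, p0), (t1, p1) = samples[i - 1], samples[i]
    return p0 + (p1 - p0) * (t_query - t0) / (t1 - t0)


def position_for_refresh(samples, t_refresh, offset_s=0.005):
    """Estimate the position at a fixed interval before the next display refresh."""
    return estimate_position(samples, t_refresh - offset_s)
```

Because the query time is always the same distance from the refresh, the apparent age of the displayed position stays constant from frame to frame, whatever the phase between input events and refreshes. The cost is that some frames rely on extrapolation, which is why a short interval is preferable.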

7.1.2 Transfer functions

The control-display gains involved in the cursor control mechanisms of most operating systems have been found to improve pointing performance, e.g. with mice and trackpads. However the reasons for these improvements, and how to design gain transfer functions best suited to a given user or task, remain open questions as they involve complex feedback-loops of perception, decision and action that are challenging to study all at once.

In collaboration with Aalto University and KAIST we designed AutoGain 23, an unobtrusive method to individualize a gain function for indirect pointing devices in contexts where cursor trajectories can be tracked. AutoGain gradually improves pointing efficiency by using a submovement-level tracking+optimization technique that minimizes aiming error (undershooting/overshooting) for each sub-movement. We first showed that AutoGain can produce gain functions with performance comparable to that of commercial OSs, in less than half an hour of active use. We also demonstrated AutoGain’s applicability to emerging input devices (a Leap Motion controller) for which there exists no standard gain function. Finally, a one-month longitudinal study of normal computer use with AutoGain showed performance improvements from participants’ default (OS) transfer functions.

On a more exploratory approach, we tested non-constant but linear velocity-based control display gains to determine which parameters were responsible for pointing performance changes based on analyses of the movement kinematics 16. Using a Fitts’ paradigm, constant gains of 1 and 3 were compared with a linearly increasing gain (i.e., the control display gain increases with the motor velocity) and a decreasing gain (i.e., the control display gain decreases with the motor velocity). Three movements with various indexes of difficulty (ID) were tested (3, 5 and 7 bits). The increasing gain was expected to increase the velocity of the initial impulse phase and decrease that of the correction phase, thus decreasing the movement time (MT), and the contrary in the case of the decreasing gain. Although the decreasing gain increased MT at ID3, the increasing gain was found to be less efficient than the constant gain of 3, probably because a non-constant gain between the motion and its visual consequences disrupted the sensorimotor control. In addition, the kinematic analyses of the movements suggested that the motion profile was planned by the central nervous system based on the visuomotor gain at maximum motor velocity, as common features were observed between the constant gain of 1 and the decreasing gain, and between the constant gain of 3 and the increasing gain. By contrast, the amplitude of the velocity profile seemed to be specific to each particular visuomotor mapping process.
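The four gain conditions can be sketched as simple functions of motor velocity. This is only an illustration: the velocity range `V_MAX`, the assumption that the non-constant gains span the interval between 1 and 3, the function names, and the use of the Shannon formulation of the index of difficulty are ours and are not taken from 16.

```python
import math

V_MAX = 0.5  # hypothetical maximum motor velocity, in m/s


def constant_gain(g):
    """Gain that does not depend on motor velocity."""
    return lambda v: g


def linear_gain(g_at_rest, g_at_vmax, v_max=V_MAX):
    """Gain that varies linearly with motor velocity, clamped to [0, v_max]."""
    def gain(v):
        ratio = min(max(abs(v) / v_max, 0.0), 1.0)
        return g_at_rest + (g_at_vmax - g_at_rest) * ratio
    return gain


def cursor_velocity(gain, motor_velocity):
    """Display-space velocity produced by a given motor velocity."""
    return gain(motor_velocity) * motor_velocity


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits (assumed)."""
    return math.log2(distance / width + 1)


# Assumed parameterization of the four conditions.
CONDITIONS = {
    "constant 1": constant_gain(1.0),
    "constant 3": constant_gain(3.0),
    "increasing": linear_gain(1.0, 3.0),
    "decreasing": linear_gain(3.0, 1.0),
}
```

Under this assumed parameterization, the increasing gain equals 3 and the decreasing gain equals 1 at maximum motor velocity, which is the kind of pairing the kinematic analysis describes. Distance-to-width ratios of 7, 31 and 127 give indexes of difficulty of 3, 5 and 7 bits with the Shannon formulation.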

7.1.3 Analysis of pointing kinematics

Pointing movements can also be analyzed through sensory loss, involving irreversible behavioral and neural changes 15. Paradigms of short-term limb immobilization mimic deprivation of proprioceptive inputs and motor commands, which occur after the loss of limb use. While several studies have shown that short-term immobilization induced motor control impairments, the origin of such modifications is an open question. A Fitts’ pointing task was conducted, and kinematic analyses were performed to assess whether the feedforward and/or feedback processes of motor control were impacted. The Fitts’ pointing task specifically required dealing with spatial and temporal aspects (speed-accuracy trade-off) to be as fast and as accurate as possible. Forty trials were performed on two consecutive days by Control and Immobilized participants who wore a splint on the right arm during this 24 h period. The immobilization modified the motor control in a way that the full spatiotemporal structure of the pointing movements differed: A global slowdown appeared. The acceleration and deceleration phases were both longer, suggesting that immobilization impacted both the early impulse phase based on sensorimotor expectations and the later online correction phase based on feedback use. First, the feedforward control may have been less efficient, probably because the internal model of the immobilized limb would have been incorrectly updated relative to internal and environmental constraints. Second, immobilized participants may have taken more time to correct their movements and precisely reach the target, as the processing of proprioceptive feedback might have been altered.

In the ParkEvolution project, we studied the detection of early signs of Parkinson's disease from the information of the mouse pointer coordinates. An appropriate choice of segments in the cursor position raw data provides a filtered signal from which a number of quantifiable criteria can be obtained. Dynamical features were derived based on control theory methods and a subsequent analysis allowed the detection of users with tremor 27. Real-life data from patients with Parkinson's and healthy controls were used to illustrate our detection method.

7.1.4 Detecting system errors in virtual reality using EEG through error related potentials

When people interact with the environment and experience or witness an error (e.g. an unexpected event), a specific brain pattern, known as error-related potential (ErrP), can be observed in their electroencephalographic (EEG) signals. Virtual Reality (VR) technology enables users to interact with computer-generated simulated environments and provides multi-modal sensory feedback. Using VR systems can, however, be error-prone. We investigated the presence of ErrPs when VR users face 3 types of visualization errors: (Te) tracking errors when manipulating virtual objects, (Fe) feedback errors, and (Be) background anomalies 26. We conducted an experiment in which 15 participants were exposed to the 3 types of errors while performing a center-out pick and place task in virtual reality. The results showed that tracking errors generate error-related potentials, whereas the other types of errors did not generate such discernible patterns. In addition, we showed that it is possible to detect the ErrPs generated by tracking losses in single trials, with an accuracy of 85%. This constitutes a first step towards the automatic detection of error-related potentials in VR applications, paving the way to the design of adaptive and self-corrective VR/AR applications by exploiting information directly from the user's brain.

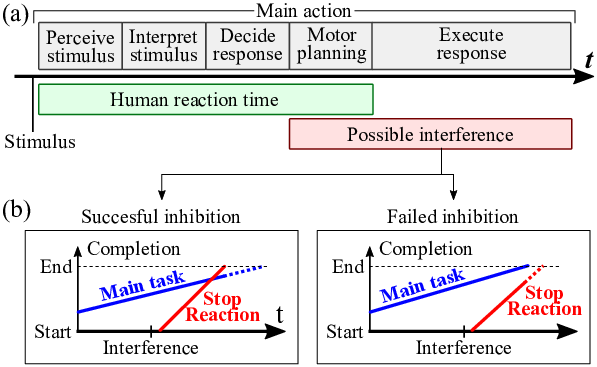

7.1.5 Interaction Interferences: Implications of Last-Instant System State Changes

We studied interaction interferences 25, situations where an unexpected change occurs in an interface immediately before the user performs an action, causing the corresponding input to be misinterpreted by the system (Figure 2). For example, a user tries to select an item in a list, but the list is automatically updated immediately before the click, causing the wrong item to be selected. We formally defined interaction interferences and discussed their causes from behavioral and system-design perspectives. We also reported the results of a survey examining users' perceptions of the frequency, frustration, and severity of interaction interferences. Finally, we conducted a controlled experiment exploring the minimum time interval, before clicking, below which participants could not refrain from completing their action. Our results suggest that users' ability to inhibit an action follows previous findings in neuro-psychology (e.g. an inhibition threshold above 200 ms) and will help design and tune mechanisms to alleviate or prevent interferences. They also hint that our participants’ “refrain-ability” is not affected by target distance, size, or direction in typical pointing tasks.
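One way such a mitigation mechanism could work is sketched below, for the list example given above. This is a hypothetical illustration, not a mechanism proposed or evaluated in 25: the class, its method names, and the choice of 200 ms as the guard interval (borrowed from the inhibition threshold mentioned above) are assumptions.

```python
from dataclasses import dataclass

# Assumed guard interval, taken from the ~200 ms inhibition threshold.
INHIBITION_THRESHOLD_S = 0.2


@dataclass
class GuardedList:
    """A list widget that refuses clicks arriving too soon after an update."""
    items: list
    last_change_t: float = float("-inf")

    def update(self, new_items, now):
        """Replace the list content and remember when it changed."""
        self.items = list(new_items)
        self.last_change_t = now

    def click(self, index, now):
        """Return the selected item, or None if the click is likely an
        interference (the user could not have reacted to the update)."""
        if now - self.last_change_t < INHIBITION_THRESHOLD_S:
            return None
        return self.items[index]
```

For instance, if the list is updated at t = 10.0 s, a click at t = 10.05 s is ignored, whereas a click at t = 10.5 s selects the item currently displayed. A real system would also need to decide what to do with a rejected click (e.g. ask for confirmation or apply it to the previous state), which this sketch leaves open.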

7.2 Meso-dynamics

Participants: Axel Antoine, Marc Baloup, Géry Casiez, Stéphane Huot, Edward Lank, Sylvain Malacria, Mathieu Nancel, Thomas Pietrzak, Thibault Raffaillac, Marcelo Wanderley.

7.2.1 Bringing interactivity to static supports

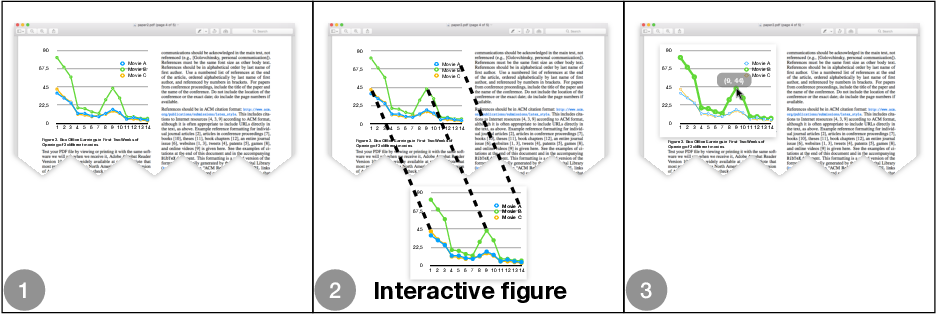

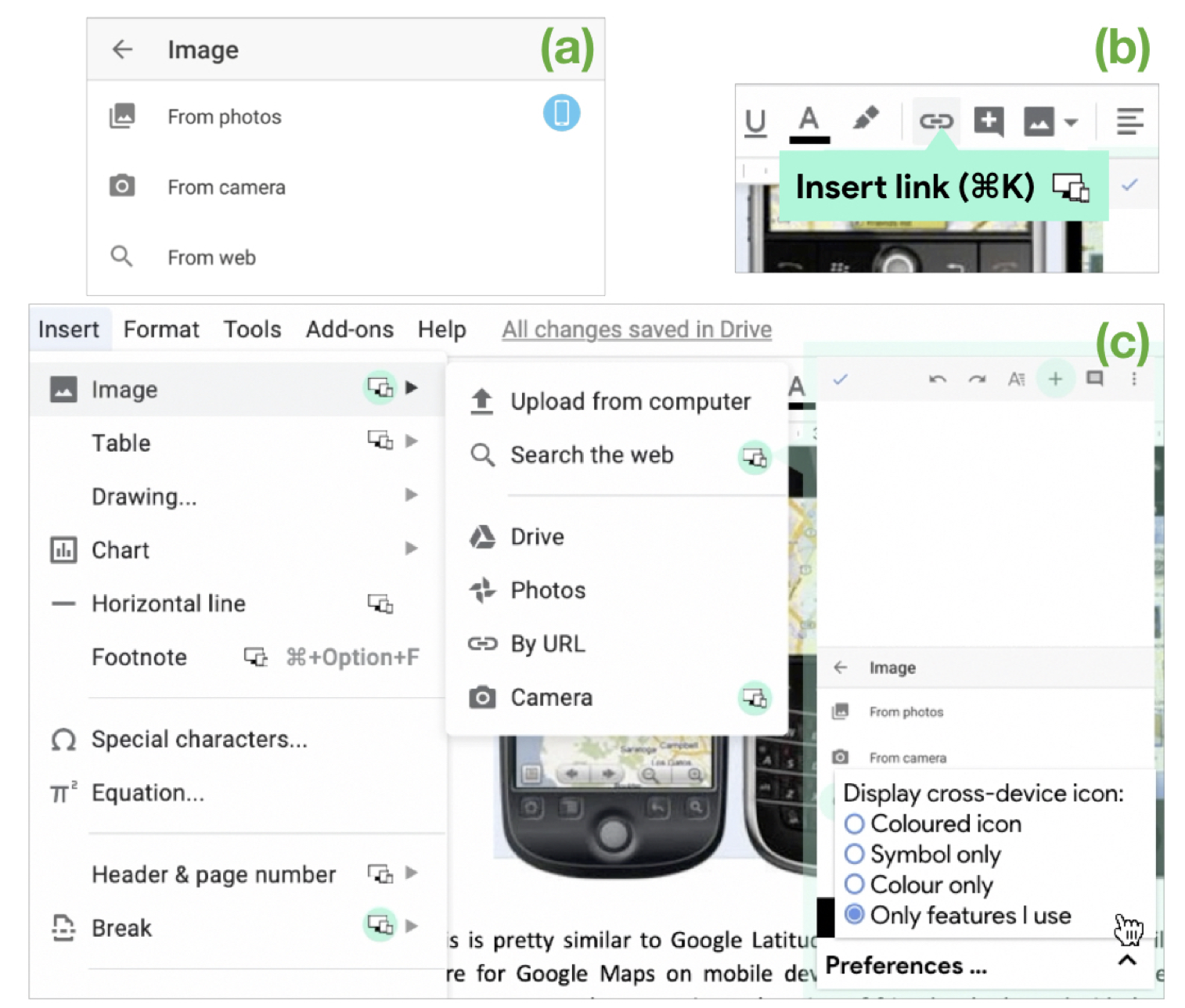

Documents such as presentations, instruction manuals, and research papers are disseminated using various file formats, many of which barely support the incorporation of interactive content. To address this lack of interactivity, we introduced Chameleon, a system-wide tool that combines computer vision algorithms used for image identification with an open database format to allow for the layering of dynamic content 24, 34. Using Chameleon, static documents can be easily upgraded by layering user-generated interactive content on top of static images, all while preserving the original static document format and without modifying existing applications (Figure 3). We detailed the development of Chameleon, including the design and evaluation of vision-based image replacement algorithms, the new document-creation pipeline as well as a user study evaluating Chameleon.

7.2.2 Comparing speech recognition and touchscreen typing for composition and transcription on a smartphone

Ruan et al. found transcribing short phrases with speech recognition nearly 200% faster than typing on a smartphone. We extended this comparison to a novel composition task, using a protocol that enables a controlled comparison with transcription 21. Results show that both composing and transcribing are faster with speech than with typing. However, the magnitude of this difference is lower with composition, and speech has a lower error rate than the keyboard during composition, but not during transcription. When transcribing, speech outperformed typing in most NASA-TLX measures, but when composing, there were no significant differences between typing and speech for any measure except physical demand.

7.2.3 Efficient command selection without explicit memorization

Efficient command selection mechanisms often implement the principle of rehearsal, which states that “guidance should be a physical rehearsal of the way an expert would issue a command”. In practice, commands are hidden by default and are revealed only when the user waits for a given duration (e.g. holding a button pressed for 250 ms). Unpracticed users can therefore select commands by waiting for the command overlay to appear, whereas experienced users can directly perform the gesture they rehearsed and memorized through muscle memory. Unfortunately, it also means that users have to systematically wait to select a command they are not familiar with, or memorize beforehand where it is located.

This delayed display of menu items is a core design component of marking menus, arguably to prevent visual distraction and foster the use of mark mode. We investigated these assumptions, by contrasting the original marking menu design with immediately-displayed marking menus 22. In three controlled experiments, we failed to reveal obvious and systematic performance or usability advantages to using delay and mark mode. Only in very constrained settings – after significant training and only two items to learn – did traditional marking menus show a time improvement of about 260 ms. Otherwise, we found an overall decrease in performance with delay, whether participants exhibited practiced or unpracticed behaviour. Our final study failed to demonstrate that an immediately-displayed menu interface is more visually disrupting than a delayed menu. These findings inform the costs and benefits of incorporating delay in marking menus, and motivate guidelines for situations in which its use is desirable.

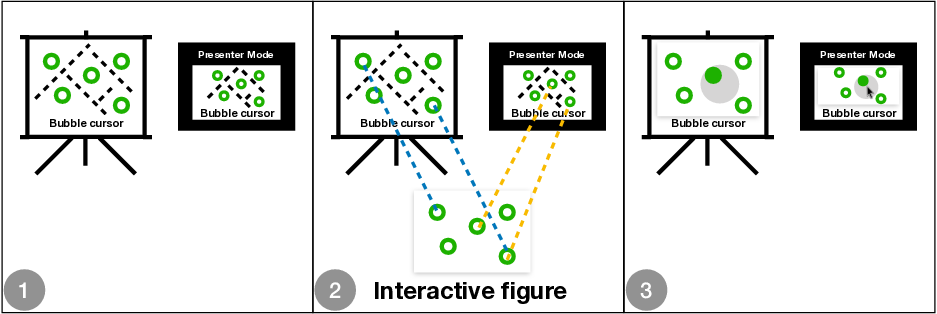

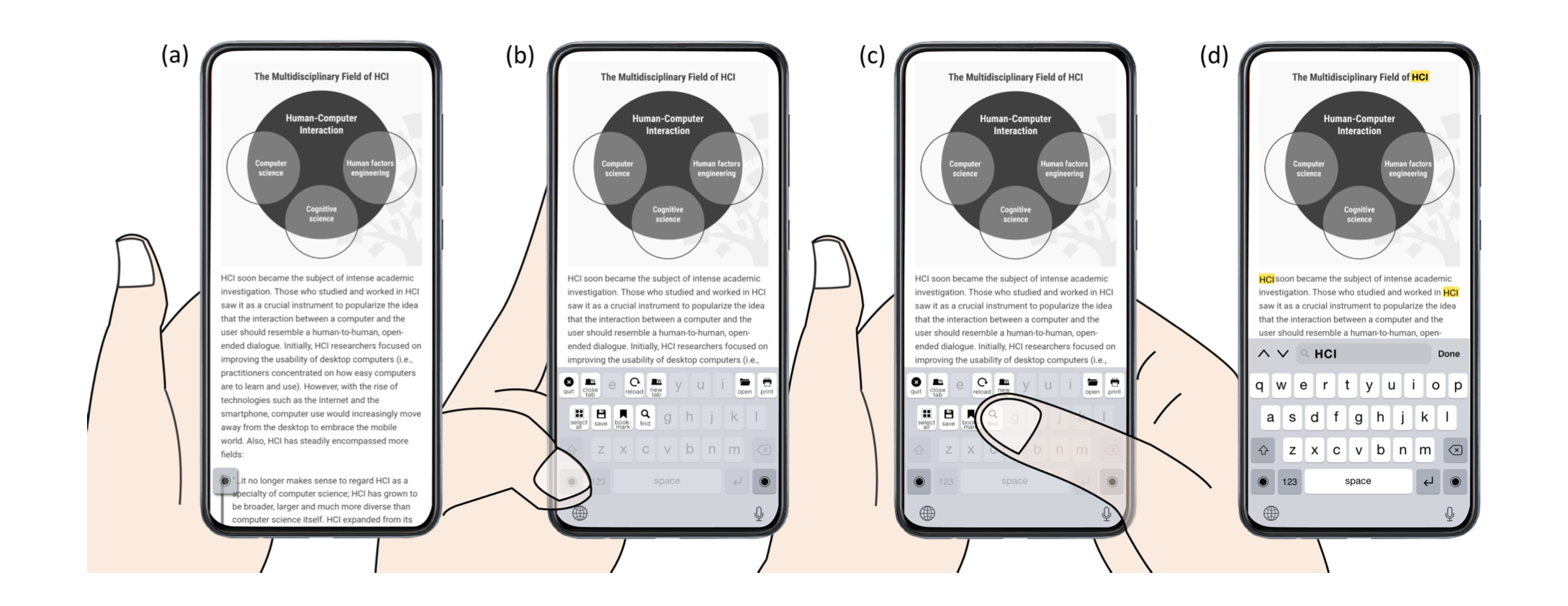

We also investigated how efficient touch-based command selection could be designed without relying on the principle of rehearsal. Notably, we argued that the dynamic nature of touchscreens makes hotkeys, conventionally used as a shortcut mechanism on desktop computers, an interesting command selection mechanism even for keyboard-less applications 20. We investigated the performance and usage of soft keyboard shortcuts or hotkeys (abbreviated SoftCuts, Figure 4) through two studies comparing different input methods across sitting, standing and walking conditions. Our results suggest that SoftCuts are not only appreciated by participants but also support rapid command selection with different devices and hand configurations. We also found no evidence that walking degrades their performance under certain input conditions.

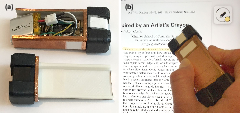

7.2.4 Design of Vibrotactile Widgets with Printed Actuators and Sensors

New technologies for printing sensors and actuators combine the flexibility of interface layouts of touchscreens with localized vibrotactile feedback, but their fabrication still requires industrial-grade facilities. Until these technologies become easily replicable, interaction designers need material for ideation. We proposed an open-source hardware and software toolbox providing maker-grade tools for iterative design of vibrotactile widgets with industrial-grade printed sensors and actuators 29. Our hardware toolbox provides a mechanical structure to clamp and stretch printed sheets, and electronic boards to drive sensors and actuators. Our software toolbox expands the design space of haptic interaction techniques by reusing the wide palette of available audio processing algorithms to generate real-time vibrotactile signals. We validated our toolbox with the implementation of buttons, sliders, and touchpads with tactile feedback.
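As a rough illustration of how audio-style synthesis can produce a vibrotactile signal, the sketch below generates a short decaying sine burst of the kind that might accompany a button press. It does not use the toolbox from 29: the sample rate, carrier frequency, duration, decay constant, and function name are all assumptions.

```python
import math

SAMPLE_RATE = 44100  # assumed audio-style sample rate, in Hz


def click_burst(freq_hz=200, duration_ms=30, decay_per_s=120.0):
    """Exponentially decaying sine burst, as a list of samples in [-1, 1]."""
    n = SAMPLE_RATE * duration_ms // 1000
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        envelope = math.exp(-decay_per_s * t)  # amplitude decays over time
        samples.append(envelope * math.sin(2 * math.pi * freq_hz * t))
    return samples
```

Because the signal is an ordinary sample buffer, standard audio operations (filtering, mixing, amplitude modulation) could be applied to it before it is sent to an actuator driver.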

7.3 Macro-dynamics

Participants: Géry Casiez, Stéphane Huot, Sylvain Malacria, Thomas Pietrzak, Nicole Pong, Grégoire Richard.

7.3.1 Manipulation, Learning, and Recall with Tangible Pen-Like Input

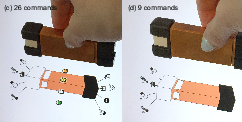

We examined two key human performance characteristics of a pen-like tangible input device that executes a different command depending on which corner, edge, or side contacts a surface. The manipulation time when transitioning between contacts was examined using physical mock-ups of three representative device sizes and a baseline pen mock-up. Results show that the largest device is fastest overall, with minimal differences from a pen for equivalent transitions. Using a hardware prototype able to sense all 26 different contacts (Figure 5), a second experiment evaluated learning and recall. Results show that almost all 26 contacts can be learned in a two-hour session, with an average of 94% recall after 24 hours. The results provide empirical evidence for the practicality, design, and utility of this type of tangible pen-like input.
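The figure of 26 contacts is what one obtains for a box-shaped body: 8 corners, 12 edges and 6 sides. The short sketch below enumerates them, assuming a rectangular cuboid; the representation of each contact as a vector of -1, 0 and 1 is our own illustrative encoding, not the prototype's sensing scheme.

```python
from collections import Counter
from itertools import product

# Number of zero coordinates in the direction vector -> kind of contact.
KIND = {2: "side", 1: "edge", 0: "corner"}


def contacts():
    """Enumerate the contacts of a cuboid as direction vectors in {-1, 0, 1}^3.

    A vector with two zeros points at a side, one zero at an edge,
    and no zero at a corner. The null vector is not a contact.
    """
    result = []
    for v in product((-1, 0, 1), repeat=3):
        zeros = v.count(0)
        if zeros == 3:
            continue
        result.append((KIND[zeros], v))
    return result


counts = Counter(kind for kind, _ in contacts())
```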

7.3.2 Towards BCI-Based Interfaces for Augmented Reality

Brain-Computer Interfaces (BCIs) enable users to interact with computers without any dedicated movement, bringing new hands-free interaction paradigms. We studied the combination of BCI and Augmented Reality (AR) 33. We first tested the feasibility of using BCI in AR settings based on Optical See-Through Head-Mounted Displays (OST-HMDs). Experimental results showed that BCI and OST-HMD equipment (an EEG headset and a HoloLens in our case) are compatible and that small movements of the head can be tolerated when using the BCI. Second, we introduced a design space for command display strategies based on BCI in AR, exploiting a well-known brain pattern called Steady-State Visually Evoked Potential (SSVEP). Our design space relies on five dimensions concerning the visual layout of the BCI menu, namely: orientation, frame-of-reference, anchorage, size and explicitness. We implemented various BCI-based display strategies and tested them within the context of mobile robot control in AR. Our findings were finally integrated within an operational prototype based on a real mobile robot that is controlled in AR using a BCI and a HoloLens headset. Taken together, our results (4 user studies) and our methodology could pave the way to future interaction schemes in Augmented Reality exploiting 3D User Interfaces based on brain activity and BCIs.

7.3.3 Studying the Role of Haptic Feedback on Virtual Embodiment

We investigated the role of haptic feedback on virtual embodiment in a context of active and fine manipulation. In particular, we explored which haptic cue has more influence on virtual embodiment 14. We conducted a within-subject experiment with 24 participants and compared self-reported embodiment over a humanoid avatar during a coloring task under three conditions: force feedback, vibrotactile feedback, and no haptic feedback. In the experiment, force feedback was more ecological as it matched reality more closely, while vibrotactile feedback was more symbolic. Taken together, our results show significant superiority of force feedback over no haptic feedback regarding embodiment, and significant superiority of force feedback over the other two conditions regarding subjective performance. Those results suggest that a more ecological feedback is better suited to elicit embodiment during fine manipulation tasks.

7.3.4 Designing for Cross-Device Software Learnability

We investigated how interactive systems could be designed to better support cross-device software learnability 17. People increasingly access cross-device applications from their smartphones while on the go. Yet, they do not fully use the mobile versions for complex tasks, preferring the desktop version of the same application. We conducted a survey (N=77) to identify challenges when switching back and forth between devices. We discovered significant cross-device learnability issues, including that users often find exploring the mobile version frustrating, which leads them to give up on it prematurely. Based on the findings, we created four design concepts: Peek-Through Icons (Figure 6), Dual-Screen Façade, Keyword Searchbar and To-Learn List. These concepts, which vary along four key dimensions (the device involved, automation, temporality, and learning approach), were implemented as video prototypes to explore how to support cross-device learnability. Interviews (N=20) probing the design concepts identified individual differences affecting cross-device learning preferences, and showed that users are more motivated to use cross-device applications when offered the right cross-device learnability support. We conclude with future design directions for supporting seamless cross-device learnability.

7.4 Interaction Machine

Several of our new results this year informed our global objective of building an Interaction Machine and redefining how systems should be designed for Interaction as a whole.

7.4.1 Understand and mitigate the “blind spots” in today's interfaces

A defining principle of the Interaction Machine is to better understand interaction phenomena in order to tailor interactive systems to human capabilities and skills. To that end, it is crucial to understand the limitations of current interactive systems with regard to these capabilities. Our recent work on input-output asynchronicity (see section 7.1.1) describes, models, and alleviates a category of cursor or interface jitter that results from input and output devices (e.g. mouse and screen) having different refresh rates. All forms of jitter can be visually disturbing to users, but they tend to be caused by sensing inaccuracies or computation errors; this particular one, however, occurs even when all components of the system function exactly as expected, which may explain why it has received so little attention. We modeled it and proposed a software method to alleviate it regardless of the hardware involved, which we expect could help improve the low-level software layers of interactive systems with high-end input and output rates. Another such blind spot is one we dubbed “interaction interferences” (see section 7.1.5): sudden changes in an interface that occur right before the user is about to perform an action, e.g. click a button or press a key, too late for them to interrupt it, and that cause that action to be interpreted differently from what the user intended when they initiated their movement. This phenomenon, which can be very frustrating to users, is also one that can occur when every aspect of the interactive system is otherwise faultless. Beyond describing this phenomenon, this work contributes a telling illustration of the sort of hidden assumptions that underlie interaction design; here, that users can react immediately to any new information (no latency), and that any sensed event, even a click on a pop-up window that has been on screen for 50 milliseconds, is an accurate indicator of their intentions.

Overall, as we did in these studies, revealing incorrect assumptions in the design of current systems, and working out how to solve the problems they hide on the user's side, is a crucial methodology to inform the design of our Interaction Machine.

7.4.2 Sense and integrate the user's state into interaction design

A critical aspect in our path to an Interaction Machine is also to reveal new perceptual or cognitive phenomena that can improve how systems interact with users. In particular, we explore means to better understand the user's intentions, reactions, and overall state in order to integrate them into the system reactions.

In the domains of mixed reality and haptics, we explored the effects of force and vibrotactile feedback on perceived performance in a fine motor task in VR (see section 7.3.3). Our work revealed that force feedback, which better simulates the physicality of the task, improves both the user's sense of embodiment in the VR avatar and the user's perceived performance in the task. Such results pinpoint force feedback, and such “ecological” output channels in general, as a promising research direction to improve the bandwidth between user and system in VR. Still in mixed reality, we revealed that EEG can successfully be combined with VR and could contribute to solving some of its immediate issues (see section 7.1.4). We found that error-related potentials (ErrP) can be sensed while using a VR headset, and accurately match simulated errors in our virtual environment. In effect, this means that we can detect the user's errors ahead of time, paving the way for adaptive and self-corrective VR/AR applications that exploit information directly from the user's brain.

But these findings are not limited to novel environments, and we expect to reveal interesting and relevant phenomena even in well-established areas of HCI. We recently challenged the assumptions behind the delayed display of menu items in a cornerstone of interaction technique design, the marking menu: that it fosters the use of “mark” mode, and that it prevents visual distraction (see section 7.2.3). In three controlled experiments, and despite popular belief, we failed to reveal obvious and systematic performance or usability advantages to using delay and mark mode. This work challenges decades of agreed-upon design guidelines on menu techniques and reveals new trade-offs, thereby affecting how interaction techniques should be designed.

7.4.3 Foster modularity and adaptability in interactive systems

A central characteristic that we envision in our Interaction Machine is that it can adapt to its users, and that its users can adjust it to their own will or needs. Designing for modularity is arguably a stark departure from today's interaction systems, which are designed to accomplish the exact tasks they were designed to perform; unplanned uses usually require either hacking around the system's existing capabilities, or designing a whole new system.

A first step is to identify what users want to but cannot do with existing systems, and how to make these features possible in order to better understand what a system requires to be truly modular. Chameleon (see section 7.2.1) is an example of bending an operating system's capabilities to bypass its own limitations. Documents in an OS are disseminated using various file formats, most of which do not support the incorporation of interactive content. Chameleon uses computer vision to upgrade static documents with alternative, dynamic, or even interactive content, while preserving the original static document format – and without modifying any existing applications. But limitations can be harder to circumvent, as sometimes true modularity requires sophisticated, expensive equipment that restricts who can access it. This is especially true when building physical devices. New technologies for printing sensors and actuators combine the flexibility of touchscreens with localized vibrotactile feedback, but their fabrication requires industrial-grade facilities. We proposed an open-source hardware and software toolbox for the design of vibrotactile widgets with printed sensors and actuators (see section 7.2.4). It allows interaction designers to easily explore and iterate over new physical devices, or to simply augment existing ones with new capabilities.

Finally, in line with the “continuity” goal defined in our research program, the concept of modularity needs to evolve from being a characteristic of individual devices and systems to a unified aspect of a user's interactive experience. Applications are increasingly cross-device, i.e. one can access the same application from a laptop at home or from a smartphone while on the go. Yet, users tend to prefer the desktop version of the same application for complex tasks. In a survey, we discovered significant cross-device learnability issues, including that users often find exploring the mobile version frustrating and give up on it prematurely (see section 7.3.4). We created and ran video probes on four design concepts to explore how to better support cross-device learnability. This provides us with promising directions for combining and integrating different interactive devices into a unified and continuous experience, which is another goal of the Interaction Machine.

8 Bilateral contracts and grants with industry

8.1 Bilateral Contracts with Industry

Julien Decaudin, a former engineer in the team, initiated a start-up project called Aureax. The objective is to create a haptic navigation system for cyclists that conveys turn-by-turn information through haptic cues. This project is supported by Inria Startup Studio, with Thomas Pietrzak as scientific consultant. His past work on the encoding of information with tactile cues 51 and on the design and implementation of tactile displays 5 is the scientific foundation of this initiative.

9 Partnerships and cooperations

9.1 International Initiatives

9.1.1 Inria International Partners

Declared Inria International Partners

Marcelo M. Wanderley – Professor, Schulich School of Music/IDMIL, McGill University (Canada)

Inria International Chair: Expert interaction with devices for musical expression (2017 - 2021)

The main topic of this project is the expert interaction with devices for musical expression and consists of two main directions: the design of digital musical instruments (DMIs) and the evaluation of interactions with such instruments. It will benefit from the unique, complementary expertise available at the Loki Team, including the design and evaluation of interactive systems, the definition and implementation of software tools to track modifications of, visualize and haptically display data, as well as the study of expertise development within human-computer interaction contexts. The project’s main goal is to bring together advanced research on devices for musical expression (IDMIL – McGill) and cutting-edge research in Human-computer interaction (Loki Team).

Joint publications in 2020: 30

Edward Lank – Professor at Cheriton School of Computer Science, University of Waterloo (Canada)

Inria International Chair: Rich, Reliable Interaction in Ubiquitous Environments (2019 - 2023)

The objectives of the research program are:

- Designing Rich Interactions for Ubiquitous and Augmented Reality Environments

- Designing Mechanisms and Metaphors for Novices, Experts, and the Novice to Expert Transition

- Integrating Intelligence with Human Action in Richly Augmented Environments.

Informal International Partners

- Andy Cockburn, University of Canterbury, Christchurch, NZ: characterization of usability problems 25

- Daniel Vogel, University of Waterloo, Waterloo, CA: composing text on smartphones, tactile interaction 21, 19, 33

- Simon Perrault, Singapore University of Technology and Design, Singapore: study and design of tactile interactions 20

- Audrey Girouard, Carleton University, Ottawa, CA: new flexible interaction devices (co-tutelle thesis of Johann Felipe Gonzalez Avila)

- Paul Dietz & Bruno Araújo, Tactual Labs, Toronto, CA: new flexible interaction devices (co-tutelle thesis of Johann Felipe Gonzalez Avila)

- Nicolai Marquardt, University College London, UK: illustration of interaction on a static support

- Scott Bateman, University of New Brunswick, Fredericton, CA: interaction in 3D environments (VR, AR)

- Antti Oulasvirta, Aalto University, Finland: automated methods to adjust pointing transfer functions to user movements 23, design of the new French keyboard layout

- Anna Maria Feit, Saarland University, Germany: design of the new French keyboard layout

9.1.2 Participation in other International Programs

Université de Lille - International Associate Laboratory

Reappearing Interfaces in Ubiquitous Environments (Réapp)

with Edward Lank, Daniel Vogel & Keiko Katsuragawa at University of Waterloo (Canada) - Cheriton School of Computer Science

Duration: 2019 - 2023

The LAI Réapp is an International Associated Laboratory between Loki and Cheriton School of Computer Science from the University of Waterloo in Canada. It is funded by the Université de Lille to ease shared student supervision and regular inter-group contacts. Université de Lille will also provide a grant for a co-tutelle PhD thesis between the two universities.

We are at the dawn of the next computing paradigm where everything will be able to sense human input and augment its appearance with digital information without using screens, smartphones, or special glasses—making user interfaces simply disappear. This introduces many problems for users, including the discoverability of commands and use of diverse interaction techniques, the acquisition of expertise, and the balancing of trade-offs between inferential (AI) and explicit (user-driven) interactions in aware environments. We argue that interfaces must reappear in an appropriate way to make ubiquitous environments useful and usable. This project tackles these problems, addressing (1) the study of human factors related to ubiquitous and augmented reality environments, and the development of new interaction techniques helping to make interfaces reappear; (2) the improvement of transition between novice and expert use and optimization of skill transfer; and, last, (3) the question of delegation in smart interfaces, and how to adapt the trade-off between implicit and explicit interaction.

9.2 International Research Visitors

9.2.1 Visits of International Scientists

- Edward Lank, Professor at the University of Waterloo (Canada), who has been awarded an Inria International Chair in our team in 2019, spent 4 months in our group this year (January to April).

- Marcelo M. Wanderley, Professor at McGill University (Canada), who has been awarded an Inria International Chair in our team in 2017, spent 3 months in our group this year (February to April).

9.3 National Initiatives

9.3.1 ANR

Causality (JCJC, 2019-2023)

Integrating Temporality and Causality to the Design of Interactive Systems

Participants: Géry Casiez, Rohan Hundia, Stéphane Huot, Alice Loizeau, Sylvain Malacria, Mathieu Nancel, Philippe Schmid.

The project addresses a fundamental limitation in the way interfaces and interactions are designed and even thought about today, an issue we call procedural information loss: once a task has been completed by a computer, significant information that was used or produced while processing it is rendered inaccessible regardless of the multiple other purposes it could serve. It hampers the identification and solving of identifiable usability issues, as well as the development of new and beneficial interaction paradigms. We will explore, develop, and promote finer granularity and better-described connections between the causes of those changes, their context, their consequences, and their timing. We will apply it to facilitate the real-time detection, disambiguation, and solving of frequent timing issues related to human reaction time and system latency; to provide broader access to all levels of input data, therefore reducing the need to “hack” existing frameworks to implement novel interactive systems; and to greatly increase the scope and expressiveness of command histories, allowing better error recovery but also extended editing capabilities such as reuse and sharing of previous actions.

Web site: http://

Related publications in 2020: 25, 23.

Discovery (JCJC, 2020-2024)

Promoting and improving discoverability in interactive systems

Participants: Géry Casiez, Shaan Chopra, Sylvain Malacria, Eva Mackamul.

This project addresses a fundamental limitation in the way interactive systems are usually designed, as in practice they do not tend to foster the discovery of their input methods (operations that can be used to communicate with the system) and corresponding features (commands and functionalities that the system supports). Its objective is to provide generic methods and tools to help the design of discoverable interactive systems: we will define validation procedures that can be used to evaluate the discoverability of user interfaces, design and implement novel UIs that foster input method and feature discovery, and create a design framework of discoverable user interfaces. This project investigates, but is not limited to, the context of touch-based interaction and will also explore two critical timings when the user might trigger a reflective practice on the available inputs and features: while the user is carrying out her task (discovery in-action); and after having carried out her task, through informed reflection on her past actions (discovery on-action). This dual investigation will reveal more generic and context-independent properties that will be summarized in a comprehensive framework of discoverable interfaces. Our ambition is to trigger a significant change in the way all interactive systems and interaction techniques, existing and new, are conceived, designed, and implemented with both performance and discoverability in mind.

Web site: http://

PerfAnalytics (PIA “Sport de très haute performance”, 2020-2023)

In situ performance analysis

Participants: Géry Casiez, Stéphane Huot, Sylvain Malacria.

The objective of the PerfAnalytics project (Inria, INSEP, Université Grenoble-Alpes, Université de Poitiers, Université Aix-Marseille, Université Eiffel & 5 sports federations) is to study how video analysis, now a standard tool in sport training and practice, can be used to quantify various performance indicators and deliver feedback to coaches and athletes. The project, supported by the boxing, cycling, gymnastics, wrestling, and mountain and climbing federations, aims to provide sports partners with a scientific approach dedicated to video analysis, by coupling existing technical results on the estimation of gestures and figures from video with scientific biomechanical methodologies for advanced gesture objectification (muscular for example).

9.3.2 Inria Project Labs

AVATAR (2018-2022)

The next generation of our virtual selves in digital worlds

Participants: Marc Baloup, Géry Casiez, Stéphane Huot, Thomas Pietrzak, Grégoire Richard.

This project aims at delivering the next generation of virtual selves, or avatars, in digital worlds. In particular, we want to push further the limits of perception and interaction through our avatars to obtain avatars that are better embodied and more interactive. Loki's contribution to this project consists in designing novel 3D interaction paradigms for avatar-based interaction and new multi-sensory feedback to better feel our interactions through our avatars.

Partners: Inria's GRAPHDECO, HYBRID, MIMETIC, MORPHEO & POTIOC teams, Mel Slater (Event Lab, University of Barcelona, Spain), Technicolor and Faurecia.

Web site: https://

Related publication in 2020: 14

10 Dissemination

10.1 Promoting Scientific Activities

10.1.1 Scientific Events: Organisation

Member of the Organizing Committees

10.1.2 Scientific Events: Selection

Member of the Conference Program Committees

Reviewer

- CHI (ACM): Mathieu Nancel

- UIST (ACM): Géry Casiez, Sylvain Malacria, Mathieu Nancel

- ISS (ACM): Géry Casiez, Mathieu Nancel

- SIGGRAPH (ACM): Géry Casiez

- VR (IEEE): Géry Casiez, Mathieu Nancel

- Haptics Symposium (IEEE): Géry Casiez

- NordiCHI (ACM): Sylvain Malacria, Mathieu Nancel

- Eurohaptics: Thomas Pietrzak

- Ubicomp (ACM): Thomas Pietrzak

10.1.3 Journal

Member of the Editorial Boards

- Thomas Pietrzak and Marcelo Wanderley co-edited a special issue of Intl. Journal of Human-Computer Studies (Elsevier) with extended versions of HAID 2019 papers 30.

Reviewer - Reviewing Activities

- Transactions on Computer-Human Interaction (ACM): Géry Casiez, Sylvain Malacria, Thomas Pietrzak

- Intl. Journal of Human-Computer Studies (Elsevier): Thomas Pietrzak

10.1.4 Invited Talks

- Forging digital hammers: the design and engineering of empowering interaction techniques and devices, February 25th, 2020, Collaborative Learning of Usability Experiences (CLUE), Carleton University, Canada: Thomas Pietrzak

- Pointing Preconceptions Out, March 3rd, 2020, University of Waterloo, University of Toronto and Chatham Labs, Canada: Mathieu Nancel

- Recognition or recall? The case of “expert” features in Graphical User Interfaces, March 3rd, 2020, University of Waterloo, University of Toronto and Chatham Labs, Canada: Sylvain Malacria

- Leveraging direct manipulation for the design of interactive systems, June 29th, 2020, University of Luxembourg: Thomas Pietrzak

- Increasing Input Vocabulary and Improving User Experience with Touch Surfaces, November 12th, 2020, Huawei Intelligent Collaboration Workshop 2020 (virtual): Géry Casiez

10.1.5 Leadership within the Scientific Community

- Association Francophone d'Interaction Homme-Machine (AFIHM): Géry Casiez (member of the scientific council since March 2019)

10.1.6 Scientific Expertise

- High Council for Evaluation of Research and Higher Education (HCÉRES): Stéphane Huot (expert reviewer for the Lab-STICC evaluation committee)

- Agence Nationale de la Recherche (ANR): Stéphane Huot (vice-chair for the CES33 “Interaction and Robotics” committee)

- CONEX-Plus, Universidad Carlos III de Madrid, Spain: Mathieu Nancel (expert reviewer for post-doctoral grants)

- SNF, Switzerland: Thomas Pietrzak (expert reviewer for research grant)

- Leverhulme Trust, United Kingdom: Thomas Pietrzak (expert reviewer for research grant)

- Dutch Research Council (NWO), The Netherlands: Sylvain Malacria (expert reviewer for research grant)

10.1.7 Research Administration

For Inria

- Evaluation Committee: Stéphane Huot (member)

For CNRS

- Comité National de la Recherche Scientifique, Sec. 7: Géry Casiez (member since Sep 2020)

For Inria Lille – Nord Europe

- Scientific Officer: Stéphane Huot (since Feb 2020)

- “Commission des Emplois de recherche du centre Inria Lille – Nord Europe” (CER): Sylvain Malacria (member)

- “Commission de développement technologique” (CDT): Mathieu Nancel (member)

- “Comité opérationnel d'évaluation des risques légaux et éthiques” (COERLE, the Inria Ethics board): Stéphane Huot (local correspondent until Jan 2020), Thomas Pietrzak (local correspondent since Feb 2020)

For the Université de Lille

- Coordinator for internships at IUT A: Géry Casiez

- Computer Science Department council: Thomas Pietrzak (member)

For the CRIStAL lab of Université de Lille & CNRS

- Direction board: Géry Casiez

- Computer Science PhD recruiting committee: Géry Casiez (member)

Hiring committees

- Inria's committee for Junior Researcher Positions (CRCN) in Lille: Stéphane Huot (member)

- Université de Lille's committee for Full Professor Positions in Computer Science (FST): Géry Casiez (member)

- Université de Lille's committee for Assistant Professor Positions in Computer Science (IUT A): Géry Casiez (president)

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Parcours des Ecoles d’ingénieurs Polytech: Thomas Pietrzak, Informatique débutant, 36h, 1st year, Polytech Lille

- DUT Informatique: Géry Casiez (38h), Stéphane Huot (28h), Axel Antoine (28h), Marc Baloup (28h), IHM, 1st year, IUT A de Lille - Université de Lille

- DUT Informatique: Marc Baloup, Algorithme et Programmation, 21.5h, 1st year, IUT A de Lille - Université de Lille

- DUT Informatique: Marc Baloup, CDIN Web, 11h, 1st year, IUT A de Lille - Université de Lille

- Licence Informatique: Thomas Pietrzak, Logique, 36h, L3, Université de Lille

- Licence Informatique: Thomas Pietrzak, Image et Interaction 2D, 10.5h, L3, Université de Lille

- Cursus ingénieur: Sylvain Malacria (10h), 3DETech, IMT Lille-Douai

- Master Informatique: Thomas Pietrzak (34.5h), Sylvain Malacria (34.5h), IHM, M1, Université de Lille

- Master Informatique: Géry Casiez (4h), Sylvain Malacria (12h), Mathieu Nancel (8h), Thomas Pietrzak (18h), NIHM, M2, Université de Lille

- Master Informatique: Géry Casiez (4h), RVI, M2, Université de Lille

- Doctoral course - Experimental research and statistical methods for Human-Computer Interaction: Géry Casiez (12h)

10.2.2 Supervision

- PhD: Nicole Ke Chen Pong, Understanding and Improving Users Interactive Vocabulary, defended in Oct. 2020, advised by Nicolas Roussel, Sylvain Malacria & Stéphane Huot

- PhD in progress: Yuan Chen, Making Interfaces Re-appearing in Ubiquitous Environments, started Dec. 2020, advised by Géry Casiez, Sylvain Malacria & Edward Lank (co-tutelle with University of Waterloo, Canada)

- PhD in progress: Eva Mackamul, Towards a Better Discoverability of Interactions in Graphical User Interfaces, started Oct. 2020, advised by Géry Casiez & Sylvain Malacria

- PhD in progress: Travis West, Examining the Design of Musical Interaction: The Creative Practice and Process, started Oct. 2020, advised by Stéphane Huot & Marcelo Wanderley (co-tutelle with McGill University, Canada)

- PhD in progress: Johann Felipe González Ávila, Direct Manipulation with Flexible Devices, started Sep. 2020, advised by Géry Casiez, Thomas Pietrzak & Audrey Girouard (co-tutelle with Carleton University, Canada)

- PhD in progress: Grégoire Richard, Touching Avatars: Role of Haptic Feedback during Interactions with Avatars in Virtual Reality, started Oct. 2019, advised by Géry Casiez & Thomas Pietrzak

- PhD in progress: Philippe Schmid, Command History as a Full-fledged Interactive Object, started Oct. 2019, advised by Mathieu Nancel & Stéphane Huot

- PhD: Damien Masson, Supporting Interactivity with Static Content, University of Waterloo, Canada, started Jan. 2019, advised by Edward Lank, Géry Casiez & Sylvain Malacria (in Waterloo)

- PhD in progress: Marc Baloup, Interaction with avatars in immersive virtual environments, started Oct. 2018, advised by Géry Casiez & Thomas Pietrzak

- PhD in progress: Axel Antoine, Helping Users with Interactive Strategies, started Oct. 2017, advised by Géry Casiez & Sylvain Malacria

10.2.3 Juries

- Guillaume Bataille (PhD, INSA Rennes): Géry Casiez, reviewer

- Theophanis Tsandilas (HDR, Université Paris-Saclay): Géry Casiez, reviewer

- Basil Duvernoy (PhD, Sorbonne Université): Thomas Pietrzak, examiner

10.2.4 Mid-term evaluation committees

- Moitree Basu (PhD, Université de Lille): Géry Casiez

- Mohammad Ghosn (PhD, Université de Lille): Géry Casiez

- Anatolii Khalin (PhD, Université de Lille): Géry Casiez

- Vincent Reynaert (PhD, Université de Lille): Géry Casiez

- Yen-Ting Yeh (PhD, University of Waterloo, Canada): Géry Casiez

11 Scientific production

11.1 Major publications

- 1 (article) A method and toolkit for digital musical instruments: generating ideas and prototypes. IEEE MultiMedia 24(1), January 2017, 63-71. URL: https://doi.org/10.1109/MMUL.2017.18

- 2 (inproceedings) Looking through the eye of the mouse: a simple method for measuring end-to-end latency using an optical mouse. Proceedings of UIST'15, ACM, November 2015, 629-636. URL: http://dx.doi.org/10.1145/2807442.2807454

- 3 (article) Supporting novice to expert transitions in user interfaces. ACM Computing Surveys 47(2), November 2014. URL: http://dx.doi.org/10.1145/2659796

- 4 (article) Leveraging finger identification to integrate multi-touch command selection and parameter manipulation. International Journal of Human-Computer Studies 99, March 2017, 21-36. URL: http://dx.doi.org/10.1016/j.ijhcs.2016.11.002

- 5 (inproceedings) Direct manipulation in tactile displays. Proceedings of CHI'16, ACM, May 2016, 3683-3693. URL: http://dx.doi.org/10.1145/2858036.2858161

- 6 (inproceedings) Promoting hotkey use through rehearsal with ExposeHK. Proceedings of CHI'13, ACM, April 2013, 573-582. URL: http://doi.acm.org/10.1145/2470654.2470735

- 7 (inproceedings) Skillometers: reflective widgets that motivate and help users to improve performance. Proceedings of UIST'13, ACM, October 2013, 321-330. URL: http://doi.acm.org/10.1145/2501988.2501996

- 8 (inproceedings) Causality: a conceptual model of interaction history. Proceedings of CHI'14, ACM, April 2014, 1777-1786. URL: http://dx.doi.org/10.1145/2556288.2556990

- 9 (inproceedings) Next-point prediction metrics for perceived spatial errors. Proceedings of UIST'16, ACM, October 2016, 271-285. URL: http://dx.doi.org/10.1145/2984511.2984590