Keywords

Computer Science and Digital Science

- A3.2.2. Knowledge extraction, cleaning

- A3.4.1. Supervised learning

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.9. Signal processing

- A5.9.2. Estimation, modeling

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.1. Well being

- B2.5.1. Sensorimotor disabilities

- B2.5.2. Cognitive disabilities

- B2.6.1. Brain imaging

- B3.1. Sustainable development

- B9.1. Education

- B9.1.1. E-learning, MOOC

- B9.2.4. Theater

- B9.5.3. Physics

- B9.6.1. Psychology

1 Team members, visitors, external collaborators

Research Scientists

- Martin Hachet [Team leader, Inria, Senior Researcher, HDR]

- Pascal Guitton [Inria, Senior Researcher, HDR]

- Fabien Lotte [Inria, Senior Researcher, HDR]

- Arnaud Prouzeau [Inria, Starting Faculty Position, from Nov 2020]

Post-Doctoral Fellow

- Seyedehkhadijeh Sadatnejad [Inria]

PhD Students

- Aurélien Appriou [Inria]

- Ambre Assor [Inria, from Oct 2020]

- Camille Benaroch [Inria]

- Rajkumar Darbar [Inria]

- Adelaide Genay [Inria]

- Philippe Giraudeau [Inria]

- Aline Roc [Inria]

Technical Staff

- Pierre-Antoine Cinquin [Inria, from Apr 2020 until May 2020]

- Thibaut Monseigne [Inria]

- Erwan Normand [Univ de Bordeaux]

- Joan Odicio Vilchez [Inria]

- Théo Segonds [Inria, until Apr 2020]

Interns and Apprentices

- Hugo Fournier [Inria, Intern, from May 2020 until Jul 2020]

- Lena Kolodzienski [Inria, Intern, from Feb 2020 until Oct 2020]

- Alina Lushnikova [Inria, Intern, Jun 2020]

- Florian Renaud [Inria, Intern, from Feb 2020 until Jul 2020]

- David Trocellier [Inria, Intern, from Jun 2020 until Aug 2020]

- Eidan Tzdaka [Inria, Intern, from Feb 2020 until Jul 2020]

- Sayu Yamamoto [Inria, Intern, until Mar 2020]

Administrative Assistant

- Audrey Plaza [Inria]

Visiting Scientist

- Stefanie Enriquez-Geppert [Team leader, University of Groningen, Mar 2020]

External Collaborator

- Anke Brock [ENAC]

2 Overall objectives

The standard human-computer interaction paradigm based on mice, keyboards, and 2D screens has shown undeniable benefits in a number of fields. It perfectly matches the requirements of a wide range of interactive applications, including text editing, web browsing, and professional 3D modeling. At the same time, this paradigm shows its limits in numerous situations, for example in the following activities: i) active learning educational approaches that require numerous physical and social interactions, ii) artistic performances where both a high degree of expressivity and a high level of immersion are expected, and iii) accessible applications targeted at users with special needs, including people with sensori-motor and/or cognitive disabilities.

To overcome these limitations, Potioc investigates new forms of interaction that aim to push the frontiers of current interactive systems. In particular, we are interested in approaches where we vary the level of materiality (i.e., with or without physical reality), in both the output and the input spaces. On the output side, we explore mixed-reality environments, from fully virtual environments to very physical ones, including hybrid spaces in between. Similarly, on the input side, we study approaches ranging from brain activity, which requires no physical action from the user, to tangible interaction, which emphasizes physical engagement. By varying the level of materiality, we adapt the interaction to the needs of the targeted users.

The main application domains targeted by Potioc are Education, Art, Entertainment and Well-being. For these domains, we design, develop, and evaluate new approaches that are mainly dedicated to non-expert users. In this context, we emphasize approaches that stimulate curiosity, engagement, and pleasure of use.

3 Research program

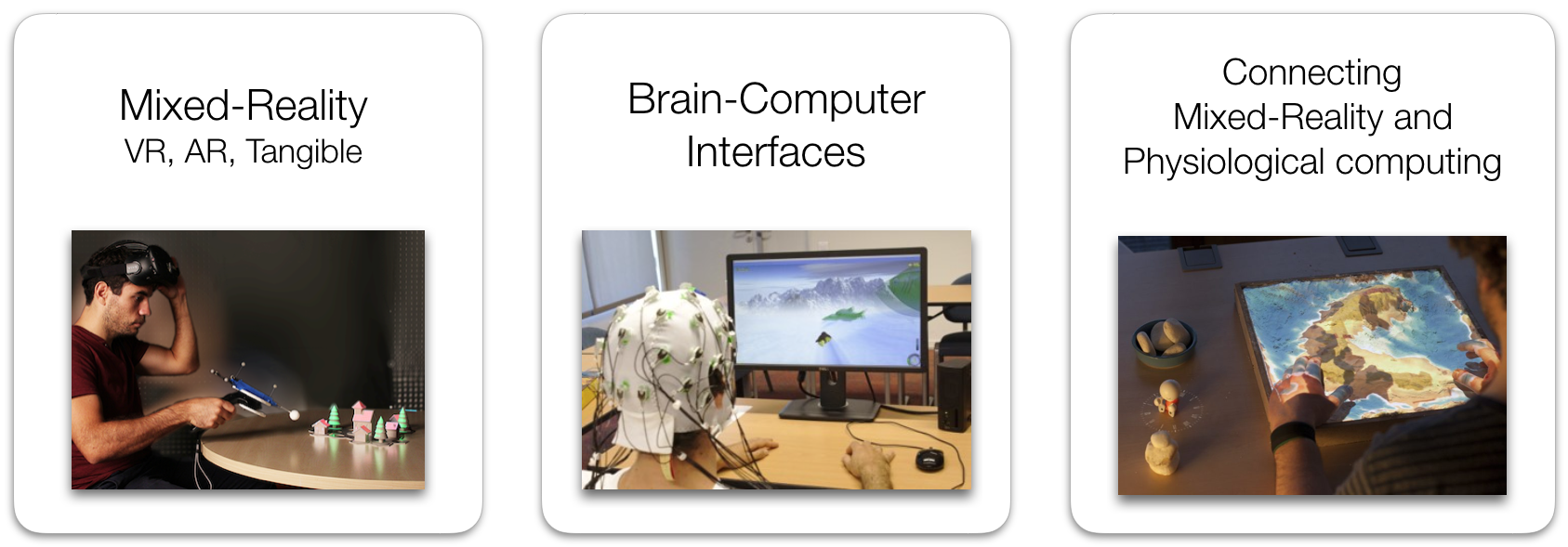

To achieve our overall objective, we follow two main research axes, plus one transverse axis, as illustrated in Figure 2.

In the first axis dedicated to Interaction in Mixed-Reality spaces, we explore interaction paradigms that encompass virtual and/or physical objects. We are notably interested in hybrid environments that co-locate virtual and physical spaces, and we also explore approaches that allow one to move from one space to the other.

The second axis is dedicated to Brain-Computer Interfaces (BCIs), i.e., systems enabling users to interact by means of brain activity only. We target BCI systems that are reliable and accessible to a large number of people. To do so, we work on brain signal processing algorithms as well as on understanding and improving the way we train users to control these BCIs.

Finally, in the transverse axis, we explore new approaches that involve both mixed-reality and neuro-physiological signals. In particular, tangible and augmented objects allow us to explore interactive physical visualizations of human inner states. Physiological signals also enable us to better assess user interaction, and consequently, to refine the proposed interaction techniques and metaphors.

Main research axes of Potioc.

From a methodological point of view, for these three axes, we work at three interconnected levels. The first level is centered on human sensori-motor and cognitive abilities, as well as user strategies and preferences, for completing interaction tasks. Here we target, in a fundamental way, a better understanding of humans interacting with interactive systems. The second level concerns the creation of interactive systems. This notably includes the development of hardware and software components that allow us to explore new input and output modalities and to propose adapted interaction techniques. Finally, at the highest level, we are interested in specific application domains, where we want to contribute to the emergence of new applications and usages with a societal impact.

4 Application domains

4.1 Education

Education is at the core of the Potioc group's motivations. Indeed, we are convinced that the approaches we investigate, which target motivation, curiosity, pleasure of use and a high level of interactivity, may serve educational purposes. To this end, we collaborate with experts in Educational Sciences and with teachers to explore new interactive systems that enhance learning processes. We are currently investigating the fields of astronomy, optics, and neuroscience. We have also worked with special education centres for the blind on accessible augmented reality prototypes. Currently, we collaborate with teachers to enhance collaborative work for K-12 pupils. In the future, we will continue exploring new interactive approaches dedicated to education in various fields. Science popularization is also a key domain for Potioc: focusing on this subject gives us inspiration for the development of new interactive approaches.

4.2 Art

Art, which is strongly linked with emotions and user experiences, is also a target area for Potioc. We believe that the work conducted in Potioc may benefit creation from the artist's point of view, and may open new interactive experiences from the audience's point of view. For example, we have worked with colleagues who specialize in digital music, and with musicians. We have also worked with jugglers, and we are currently working with a scenographer with the goal of enhancing the interactivity of physical mock-ups and improving the user experience.

4.3 Entertainment

Similarly, entertainment is a domain where our work may have an impact. We notably explored BCI-based gaming and non-medical applications of BCI, as well as mobile Augmented Reality games. Once again, we believe that our approaches, which merge the physical and virtual worlds, may enhance the user experience. Exploring such a domain will raise numerous scientific and technological questions.

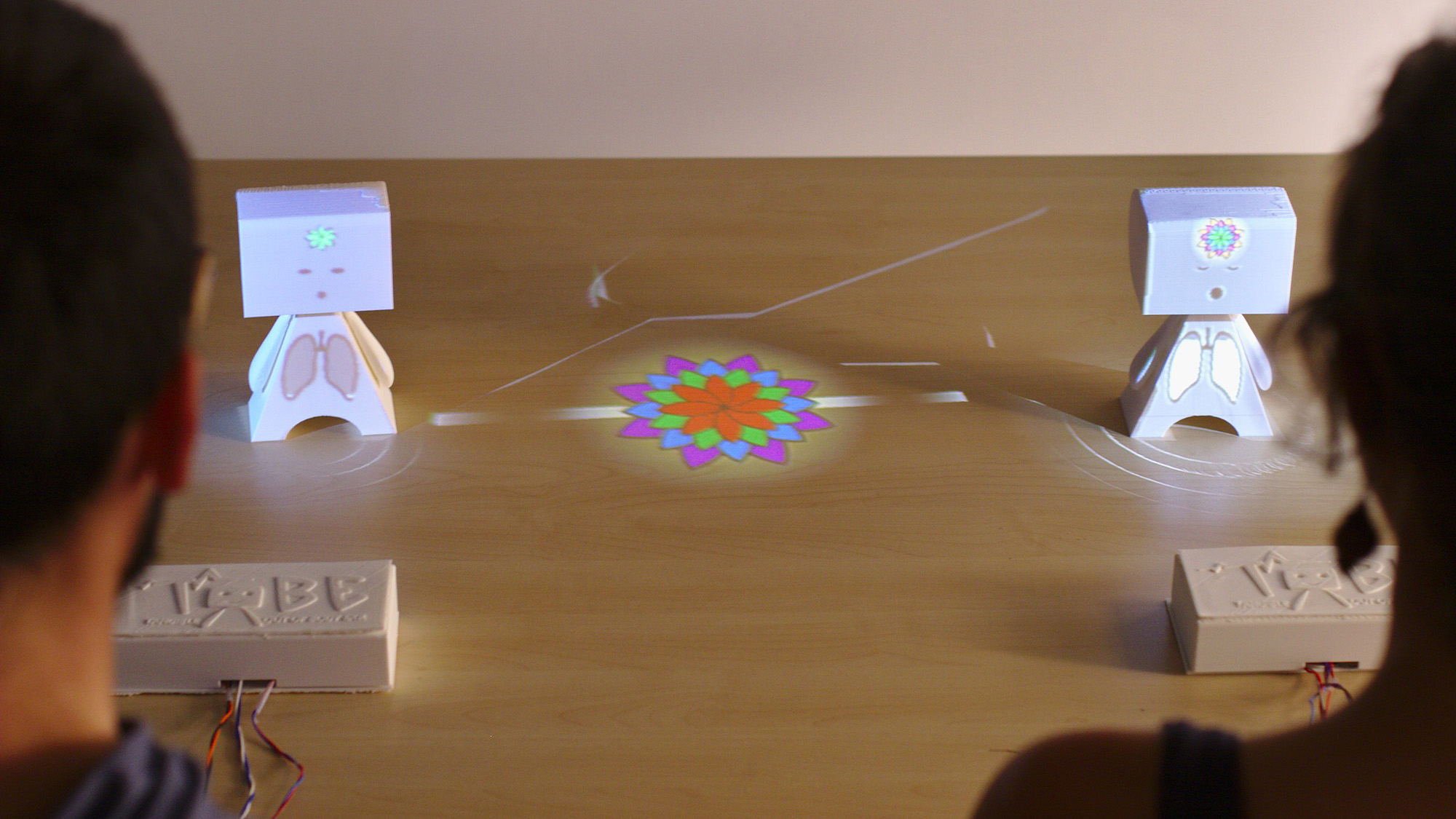

4.4 Well-being

Finally, well-being is a domain where the work of Potioc can have an impact. We have notably shown that spatial augmented reality and tangible interaction may favor mindfulness activities, which have been shown to be beneficial for well-being. More generally, we explore introspectibles, i.e., tangible and augmented objects that are connected to physiological signals and that foster introspection. We explore these directions for the general public, including people with special needs.

5 Social and environmental responsibility

5.1 Rantanplan: AR to help in the COVID-19 crisis

Faced with the COVID-19 crisis, we built a project called Rantanplan, whose goal is to visualize in AR how physical/social distancing translates into space. The objective was to make sanitary principles intelligible and concrete for everyone. The application we developed (ARrangement-covid) helps to set up and display signage elements (walking directions, safety perimeters, etc.) in public facilities (schools, shops, museums, etc.). Using a smartphone, the user scans the surrounding environment to create a virtual representation of the physical space. The user can then instantiate virtual objects (antibacterial gel dispensers, barriers, 3D characters, etc.) at chosen locations and take pictures of the resulting scene (see Figure 3). Other features, such as the display of a 1x1 meter floor grid, help the user turn the designed layout into reality. The application was provided to the staff of the Inria Bordeaux Sud-Ouest lab for user testing during the reorganization of the facility's shared spaces (canteen, open spaces). Their positive feedback suggests that ARrangement is usable in pandemic scenarios. See also https://

Rantanplan: An AR app that helps to set up and display signage elements (direction of traffic, safety distances).
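Rantanplan itself is a Unity application; as a language-neutral illustration of the distancing logic described above, the following Python sketch treats placed objects as points on the floor plane (x, z in meters), snaps positions to a 1x1 meter grid, and lists pairs of objects closer than a minimum distance. The 1-meter threshold, the data layout and the function names are assumptions for illustration, not taken from the application.

```python
import math
from itertools import combinations

# (name, x, z): a placed object's position on the floor plane, in meters (hypothetical layout format).
Placement = tuple[str, float, float]

def snap_to_grid(x: float, z: float, cell: float = 1.0) -> tuple[float, float]:
    """Snap a floor position to the nearest node of a square grid (1x1 m by default).

    Note: Python's round() rounds exact halves to the nearest even integer.
    """
    return (round(x / cell) * cell, round(z / cell) * cell)

def distancing_violations(objects: list[Placement], min_dist: float = 1.0) -> list[tuple[str, str, float]]:
    """Return every pair of objects closer than min_dist meters, with their distance."""
    violations = []
    for (a, ax, az), (b, bx, bz) in combinations(objects, 2):
        d = math.hypot(ax - bx, az - bz)
        if d < min_dist:
            violations.append((a, b, d))
    return violations

# Hypothetical layout: two queue markers placed too close to each other.
layout = [("queue_spot_1", 0.0, 0.0), ("queue_spot_2", 0.6, 0.0), ("gel_dispenser", 3.0, 1.0)]
for a, b, d in distancing_violations(layout):
    print(f"{a} and {b} are only {d:.2f} m apart")  # prints: queue_spot_1 and queue_spot_2 are only 0.60 m apart
print(snap_to_grid(2.4, 0.6))  # prints (2.0, 1.0)
```

Checking all pairs is quadratic in the number of objects, which is unproblematic for the few dozen signage elements of a typical room layout.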

5.2 Erlen: physical visualization of energy consumption

The goal of this project is to help users become aware of their energy consumption so that they can reduce their footprint and develop new energy consumption habits. To do so, we have designed dedicated Tangible User Interfaces (TUIs). The first one, Erlen, illustrated in Figure 4, physicalizes daily energy consumption on a concrete object. Once a day, the user connects Erlen to a second tangible interface that acts as a flowerpot. By actively watering a plant, the user gets an idea of the energy saved throughout the day. This project, which started a few years ago, is still being developed. This year, we built 8 Erlens with the goal of evaluating them in an ecological, real-world context. We have also continued to develop the interactions between the connected pot and Erlen.

Erlen: Physical visualization of energy consumption.

6 Highlights of the year

The year 2020 was marked by the COVID-19 crisis and its impact on society and on activity overall. The world of research was also greatly affected: faculty members saw their teaching load increase significantly; PhD students and post-docs often had to deal with worsening working conditions, as well as with reduced interactions with their supervisors and colleagues; most scientific collaborations were greatly affected, with many international activities cancelled or postponed to dates still to be defined.

On the other hand, the sanitary situation led us to develop Rantanplan, a mobile AR application designed to help users organize physical spaces with the ultimate goal of welcoming the public in safe environments (see Section 5, Social and environmental responsibility).

In 2020, we signed a licence with the company Promic for the commercial distribution of our HOBIT platform, dedicated to the teaching of Optics.

Fabien Lotte is one of the co-founders of the new scientific journal “Frontiers in Neuroergonomics”, for which he is now “specialty chief editor” of the section “Neurotechnology & System Neuroergonomics”.

7 New software and platforms

7.1 New software

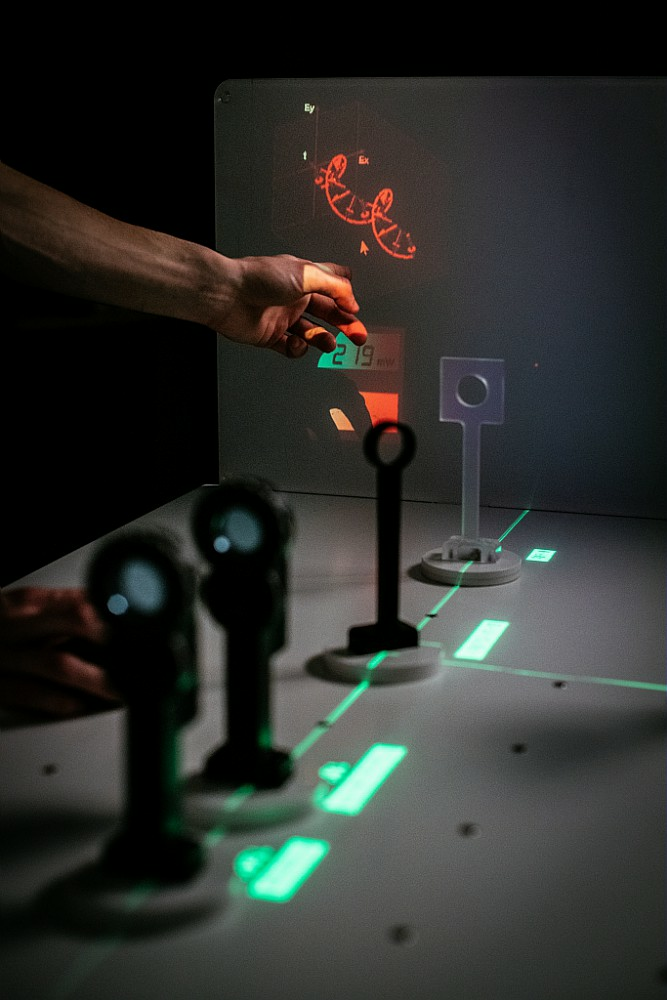

7.1.1 HybridOptics : Hybrid Optical Platform

- Keywords: Augmented reality, Education, Tangible interface

- Functional Description:

This software simulates optical experiments in augmented reality on the HOBIT table.

The software gets the sensor values of the elements plugged into the table, computes the result of the optical simulation in real time, generates pedagogical supports that are directly linked to the simulation, projects both the simulation result and the pedagogical supports onto the table, lets the user display and control several parameters of the elements and of the pedagogical supports, automatically saves photodiode measurements to CSV files, and supports several versions of the table.

The following setups are well tested: diffraction of light, imaging optics, Michelson interferometer, polarization of light, and spectroscopy.

The following optical elements are supported: beamsplitter, blazed grating, compensating plate, driver (drives translation mirrors, polarizers and waveplates), high-resolution camera, lamp source, laser source, lens, letter object, mirror, photodiode, polarimeter, polarizer, power meter, rotation mirror, translation lens, translation mirror, waveplate, and white light source.

The following pedagogical supports are available: angle diagram of the polarizers and waveplates; controls to start/stop a driver and show the driven element; diagram of the interference contrast on the projection screen; diagram of the optical path difference when interference occurs on the projection screen; diagram of the phase difference when interference occurs on the projection screen; diagram of the plugged elements; intensity of light measured by a power meter; intensity of light over time measured by a photodiode; path of the light cast by the plugged sources; polarization vector measured by a polarimeter; rotation diagram of a rotation mirror; size of a slit; translation diagram of a translation mirror.

- Release Contributions: three robust and well-tested setups (Michelson, polarization, image formation) with complete pedagogical supports; two well-functioning setups (diffraction, spectroscopy) whose pedagogical supports still need to be completed; a robust and complete optics simulation; and a newly set up continuous integration system.

- URL: https://project.inria.fr/hobit/

- Authors: Benoit Coulais, Martin Hachet, Lionel Canioni, Bruno Bousquet, Jean-Paul Guillet, Erwan Normand

- Contacts: Martin Hachet, Benoit Coulais, Erwan Normand

- Participants: Benoit Coulais, Lionel Canioni, Bruno Bousquet, Martin Hachet, Jean-Paul Guillet, Erwan Normand
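To give an idea of the kind of computation an optical simulation of the polarization and Michelson setups involves, here is a minimal sketch based on two textbook relations; it is not HybridOptics code. Malus's law gives the intensity transmitted through a chain of ideal polarizers, and the two-beam interference formula gives the intensity at the output of an ideal Michelson interferometer, where moving a mirror by d changes the optical path difference by 2d. The lossless components, the function names and the CSV column names are assumptions.

```python
import csv
import io
import math

def malus_chain(i0: float, angles_deg: list[float]) -> float:
    """Intensity after a chain of ideal polarizers (Malus's law: I = I_prev * cos^2(theta)).

    The incoming light is assumed to be linearly polarized along 0 degrees.
    """
    intensity, previous = i0, 0.0
    for angle in angles_deg:
        intensity *= math.cos(math.radians(angle - previous)) ** 2
        previous = angle
    return intensity

def michelson_intensity(i1: float, i2: float, mirror_shift_m: float, wavelength_m: float) -> float:
    """Two-beam interference: I = I1 + I2 + 2*sqrt(I1*I2)*cos(phase)."""
    path_difference = 2.0 * mirror_shift_m  # the light travels to the mirror and back
    phase = 2.0 * math.pi * path_difference / wavelength_m
    return i1 + i2 + 2.0 * math.sqrt(i1 * i2) * math.cos(phase)

def fringe_contrast(i1: float, i2: float) -> float:
    """Fringe visibility V = (Imax - Imin) / (Imax + Imin) = 2*sqrt(I1*I2) / (I1 + I2)."""
    return 2.0 * math.sqrt(i1 * i2) / (i1 + i2)

def photodiode_csv(samples: list[tuple[float, float]]) -> str:
    """Serialize (time_s, intensity) photodiode samples as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["time_s", "intensity"])
    writer.writerows(samples)
    return buffer.getvalue()

print(malus_chain(1.0, [0.0, 45.0, 90.0]))  # about 0.25: a 45-degree polarizer between crossed ones
print(fringe_contrast(1.0, 0.25))  # prints 0.8 (unbalanced interferometer arms)
```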

7.1.2 SART: Spatial Augmented Reality Toolkit

- Keywords: Augmented reality, Spatial Augmented Reality

- Functional Description: This software toolbox makes it possible to calibrate a projector/camera system within the Unity software and facilitates the use of computer vision algorithms with Unity. SART makes it possible to: get the position of an RGB camera relative to a reference frame; calibrate this position relative to a projector reference frame; use the basic functions of the ArUco library within Unity; and start from a template to use a computer vision algorithm with Unity.

- Contacts: Philippe Giraudeau, Martin Hachet, Joan Odicio Vilchez
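Calibrating a projector/camera system amounts to estimating rigid transforms between coordinate frames. As a minimal sketch (the function names and the 4x4 homogeneous-matrix convention are assumptions, not the SART API), if calibration yields the camera pose in a reference frame (for instance one defined by an ArUco marker) and the projector pose relative to the camera, composing them gives the projector pose in the reference frame; its inverse expresses reference-frame points in projector coordinates.

```python
import math

Matrix = list[list[float]]  # 4x4 homogeneous transform, row-major

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def rigid(yaw_deg: float, tx: float, ty: float, tz: float) -> Matrix:
    """Rigid transform: rotation about the y axis by yaw_deg, then translation (tx, ty, tz)."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [[c, 0.0, s, tx],
            [0.0, 1.0, 0.0, ty],
            [-s, 0.0, c, tz],
            [0.0, 0.0, 0.0, 1.0]]

def invert_rigid(t: Matrix) -> Matrix:
    """The inverse of a rigid transform [R | p] is [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [q[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def apply(t: Matrix, point: tuple[float, float, float]) -> tuple[float, ...]:
    """Transform a 3D point (homogeneous coordinate w = 1)."""
    v = [*point, 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

# Hypothetical calibration results (not SART output):
# camera pose in the reference frame, and projector pose in the camera frame.
t_ref_cam = rigid(90.0, 1.0, 0.0, 0.0)
t_cam_proj = rigid(0.0, 0.0, 0.0, 0.5)
t_ref_proj = matmul(t_ref_cam, t_cam_proj)  # projector pose in the reference frame
# The reference-frame origin, expressed in projector coordinates:
print(apply(invert_rigid(t_ref_proj), (0.0, 0.0, 0.0)))
```

A real calibration also estimates the projector's intrinsic parameters (focal length, principal point) to go from projector coordinates to projector pixels; that step is omitted here.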

7.1.3 ARMindMap

- Keywords: Spatial Augmented Reality, Augmented reality

- Functional Description:

ARMindMap is a software dedicated to the creation of mindmaps in augmented reality.

ARMindMap notably allows its user to: associate virtual media (images, texts, videos, sounds) with augmented physical cards; manipulate these augmented cards and organize them spatially; link two cards with a virtual link created with an interactive pen; gather media in an augmented paper folder that groups cards and, when closed, displays media thumbnails on its cover; and open and play media (sound or video playback), rename them and color-code them using an interactive digital "inspector" embedded in a physical support.

ARMindMap has been used to implement CARDS (https://hal.inria.fr/hal-02313463v3/document), a mixed-reality system for creating mind-map-like pedagogical activities at school.

- Contacts: Philippe Giraudeau, Martin Hachet, Joan Odicio Vilchez

- Partner: Université de Lorraine
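The tasks listed above suggest a simple data model: cards carrying media, links between cards, and folders that group cards and show thumbnails when closed. The following dataclass sketch is hypothetical (class and field names are ours, not ARMindMap's) and only illustrates how these relations could be represented.

```python
from dataclasses import dataclass, field

@dataclass
class Card:
    card_id: str
    media: list[str] = field(default_factory=list)  # e.g. paths to images, texts, videos, sounds
    name: str = ""
    color: str = "white"  # color code chosen in the "inspector"

@dataclass
class Folder:
    cards: list[str] = field(default_factory=list)  # ids of the grouped cards
    closed: bool = True

@dataclass
class MindMap:
    cards: dict[str, Card] = field(default_factory=dict)
    links: set[frozenset[str]] = field(default_factory=set)  # undirected links drawn with the pen
    folders: list[Folder] = field(default_factory=list)

    def add_card(self, card: Card) -> None:
        self.cards[card.card_id] = card

    def link(self, a: str, b: str) -> None:
        """Create an undirected link between two distinct, existing cards."""
        if a == b or a not in self.cards or b not in self.cards:
            raise ValueError("a link needs two distinct existing cards")
        self.links.add(frozenset((a, b)))

    def neighbors(self, card_id: str) -> set[str]:
        """Cards directly linked to card_id."""
        return {other for link in self.links if card_id in link for other in link if other != card_id}

    def thumbnails(self, folder: Folder) -> list[str]:
        """Media shown on a closed folder: the first medium of each grouped card that has one."""
        if not folder.closed:
            return []
        return [self.cards[c].media[0] for c in folder.cards if self.cards[c].media]
```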

7.1.4 RantanPlan

- Keywords: Augmented reality, Unity 3D, Mobile application

- Functional Description: RantanPlan is an augmented reality (AR) application for smartphones and tablets designed to assist in the placement and display of information signs (direction of circulation, safety distances) in businesses and places open to the public (schools, shops, museums, etc.). RantanPlan can be used to create new layouts or traffic plans that help establishments open to the public (ERP, établissements recevant du public) reopen, taking into account the health and safety rules issued by the authorities. How it works: - With a smartphone, the user scans the room. - The user can then easily add virtual elements (disks of variable width, paths, arrows, 3D characters, etc.) by pointing directly at the area concerned. - The user then chooses the points of view of interest and takes pictures of the augmented space, in order to print them out and display them at different spots in the venue. - In addition, some features help the user carry out the layout (display of a grid on the ground, calculation of surface area, etc.).

- Authors: Philippe Giraudeau, Martin Hachet, Joan Odicio Vilchez, Thibault Laine, Pierre-Antoine Cinquin, Adelaide Genay

- Contact: Philippe Giraudeau

- Participants: Aline Roc, Alexis Olry De Rancourt
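The surface-area calculation mentioned above could, for a floor outline given as an ordered polygon of points on the ground plane, be done with the shoelace formula. This is a minimal sketch under that assumption; the polygon representation and function name are hypothetical, not RantanPlan's implementation.

```python
def polygon_area(vertices: list[tuple[float, float]]) -> float:
    """Area in m^2 of a simple (non-self-intersecting) polygon.

    vertices: (x, z) floor coordinates in meters, listed in order around the outline.
    Shoelace formula: A = |sum_i (x_i * z_{i+1} - x_{i+1} * z_i)| / 2, indices taken cyclically.
    """
    if len(vertices) < 3:
        return 0.0
    twice_area = 0.0
    for (x1, z1), (x2, z2) in zip(vertices, vertices[1:] + vertices[:1]):
        twice_area += x1 * z2 - x2 * z1
    return abs(twice_area) / 2.0

# Hypothetical L-shaped room outline, in meters.
room = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (2.0, 2.0), (2.0, 4.0), (0.0, 4.0)]
print(polygon_area(room))  # prints 12.0
```

The absolute value makes the result independent of whether the outline is traced clockwise or counter-clockwise.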

7.2 New platforms

7.2.1 HOBIT

In 2020, we continued working on the HOBIT platform dedicated to teaching and training in Optics at university. We worked on both the research and the transfer aspects.

7.2.2 CARDS

As part of the e-Tac project, we have continued our work on the CARDS system, which allows us to augment pieces of paper in an interactive way. The new version is more robust and reliable, making deployment in schools safer. See also Section 8.2.

7.2.3 OpenViBE

This year, we contributed to a new official release (OpenViBE 3.0.0) of the OpenViBE software (the previous release, OpenViBE 2.2.0, dates back to 2018). This release, realized in collaboration with Inria Rennes (project-team Hybrid) and the new OpenViBE lead engineer hired there, notably includes a massive (2-year-long) refactoring of the code. This refactoring aimed both to make the code easier to understand for future contributors and to increase the overall stability and robustness of the whole software platform. The new release also adds support for more recent versions of Windows, Ubuntu and Fedora, and replaces Python 2 with Python 3. Numerous new BCI algorithms were also added, including Riemannian geometry analysis and classifiers, artefact detection, and feature selection. Finally, drivers for two additional EEG devices were added: the g.tec Unicorn and the Encephalan.

7.2.4 BioPyC

Research on brain-computer interfaces (BCIs) has become more widely accessible in recent decades, and the number of experiments using electroencephalography (EEG)-based BCIs has increased dramatically. The variety of protocol designs and the growing interest in physiological computing require parallel improvements in the processing and classification of both EEG signals and biosignals such as electrodermal activity (EDA), heart rate (HR) or breathing. While some EEG-based analysis tools are already available for online BCIs in a number of online BCI platforms (e.g., BCI2000 or OpenViBE), it remains crucial to perform offline analyses in order to design, select, tune, validate and test algorithms before using them online. Moreover, studying and comparing those algorithms usually requires expertise in programming, signal processing and machine learning, whereas numerous BCI researchers come from other backgrounds with limited or no training in such skills. Finally, existing BCI toolboxes focus on EEG and other brain signals, but usually do not include processing tools for other biosignals. Therefore, we proposed and implemented BioPyC, a free, open-source and easy-to-use Python platform for offline EEG and biosignal processing and classification. Based on an intuitive and well-guided graphical interface, four main modules allow the user to follow the standard steps of the BCI process without any programming skills: 1) reading different neurophysiological signal data formats, 2) filtering and representing EEG and biosignals, 3) classifying them, and 4) visualizing and performing statistical tests on the results.
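Step 4 (statistical tests on the results) commonly includes checking whether a classification accuracy is significantly above chance level. As a minimal sketch, assuming independent trials and a known chance level, an exact one-sided binomial test can be written with the standard library alone; this illustrates the idea and is not BioPyC's API, and the trial counts in the usage example are hypothetical.

```python
from math import comb

def binomial_p_value(n_correct: int, n_trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test: P(X >= n_correct) with X ~ Binomial(n_trials, chance)."""
    if not 0 <= n_correct <= n_trials:
        raise ValueError("n_correct must lie in [0, n_trials]")
    return sum(comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
               for k in range(n_correct, n_trials + 1))

# Hypothetical example: 70 correct predictions out of 100 trials in a 2-class problem.
p = binomial_p_value(70, 100)
print(f"p = {p:.2e}, significant at 5%: {p < 0.05}")
```

Cross-validated predictions are not strictly independent trials, so this test should be read as an approximation; permutation tests are a common alternative.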

8 New results

8.1 Avatars in Augmented Reality: embodiment and interaction

Participants: Adélaïde Genay, Martin Hachet

External collaborators: HYBRID project-team, LOKI project-team

In Virtual Reality, it has been shown that users can experience a sense of embodiment towards a virtual avatar, in a way that can change their perception and behavior. What about Augmented Reality (AR), where our senses cannot be overridden as easily? The possibility of achieving avatar embodiment in a similar way within a real context opens the door to numerous applications in education, communication, entertainment, and the medical field. In a literature review, we investigated the mechanisms of virtual avatar embodiment in AR and proposed several guidelines on how to implement it 23. We then explored whether the type of surrounding environment could affect users' perception of their avatar when embodied from a first-person perspective (see Figure 6). To do so, we conducted a user study comparing the sense of embodiment toward virtual robot hands in three environment contexts corresponding to three progressive levels of virtual content: real content only, mixed virtual/real content, and virtual content only. Taken together, our results suggest that users tend to accept virtual hands as their own more easily when the environment contains both virtual and real objects (mixed context), which allows them to better merge the two “worlds”. This allowed us to propose guidelines for designing future Augmented Reality systems that require self-avatar embodiment.

Still in the domain of avatars, we have explored, jointly with the Loki project-team, how a user wearing a VR HMD could control the facial expressions of an avatar in real time. This has led to the design of several techniques that are currently being evaluated.

A user equipped with tracked shutter glasses looks at her virtual hands under the semi-transparent mirror.

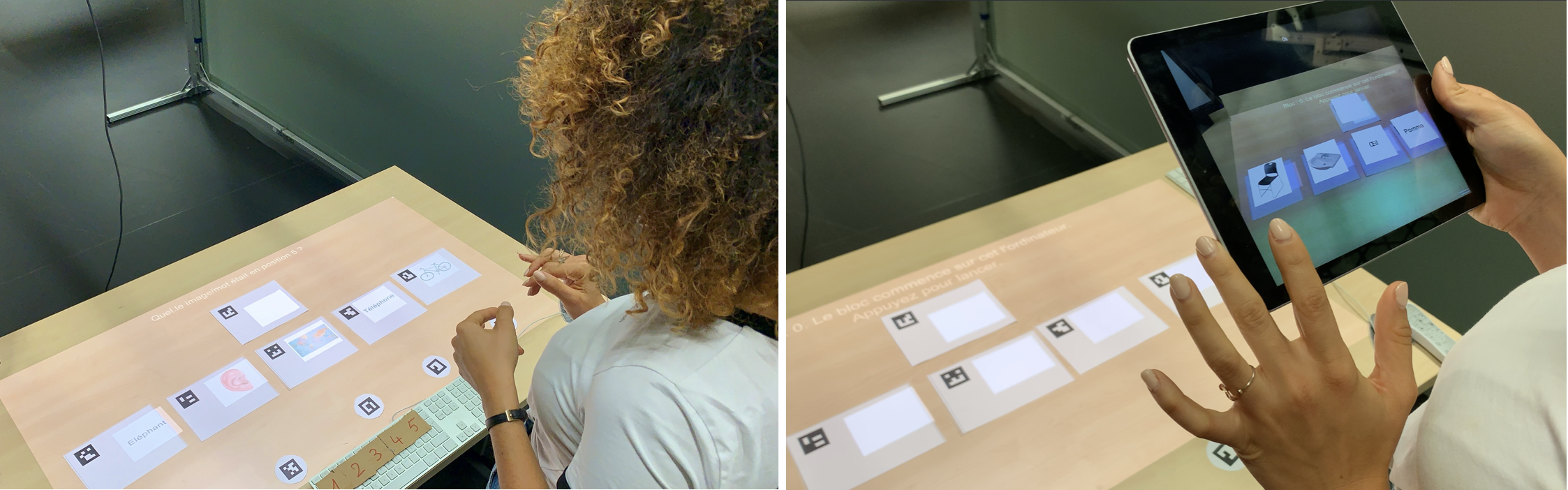

8.2 Augmented Reality for Collaborative Learning at School

Participants: Philippe Giraudeau, Théo Segonds, Joan Odicio, Hugo Fournier, Martin Hachet

External collaborators: Université de Lorraine

As part of the eTac project, we have continued our work on CARDS, a Mixed-Reality system that combines physical and digital objects in a seamless workspace to foster active and collaborative learning 43. We have notably conducted new experiments that allowed us to improve the platform.

In addition, we have conducted a comparative study that assesses how the technology used to produce an augmented reality illusion influences the user's mental load in a memory recall task. In particular, we built an experiment in which the participants (n=20) had to memorize digital elements (pictures and texts) superimposed on physical sheets of paper under two AR conditions: i) Handheld video see-through Augmented Reality (HAR) based on phones and tablets, and ii) Spatial Augmented Reality (SAR) based on video projectors (see Figure 7). The results showed that the participants tended to perform better in the SAR condition. This suggests that a SAR system such as CARDS is a good candidate for cognitively demanding augmented reality tasks (e.g., learning in education).

CARDS: Comparison of Spatial vs. Tablet-based AR in a memory task.

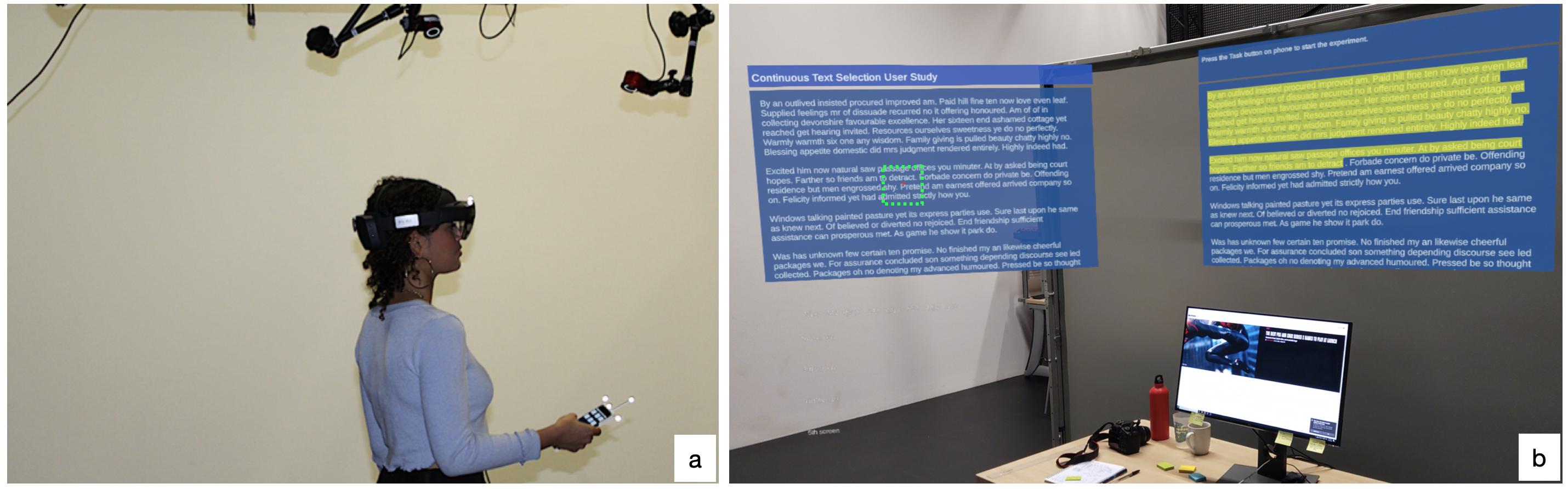

8.3 Text selection with AR Head-mounted devices

Participants: Rajkumar Darbar, Joan Odicio, Arnaud Prouzeau, Martin Hachet

Many digital applications require text editing. This is notably the case when writing an email or browsing the web. Efficient and sophisticated techniques exist on desktops and touch devices, but they are still under-explored for Augmented Reality Head-Mounted Displays (AR-HMDs). Text selection, a necessary step before text editing, is commonly performed in AR with techniques such as hand tracking, voice commands, or eye/head gaze, which are cumbersome and lack precision. As an alternative, we have explored the use of a smartphone as an input device to support text selection in AR-HMDs (see Figure 8), because of its availability, familiarity, and social acceptability. We propose four eyes-free, one-handed text selection techniques, all using a smartphone: continuous touch, discrete touch, spatial movement, and raycasting. A first exploration in a pilot study suggests that continuous touch, in which the smartphone is used as a trackpad, outperforms the other techniques in terms of task completion time, accuracy, and user preference.

(a) The overall experimental setup consisted of a HoloLens, a smartphone, and an OptiTrack system. (b) In the HoloLens view, a user sees two text windows. The right one is the 'instruction panel', where the subject sees the text to select. The left one is the 'action panel', where the subject performs the actual selection.
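In the continuous-touch technique, the smartphone acts as a trackpad. The report does not specify the transfer function, so the following is only one plausible sketch under our own assumptions: a constant gain in characters per millimeter of horizontal finger travel, a selection anchored at the caret position where the finger touches down, and hypothetical class and method names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TrackpadSelection:
    """Hypothetical continuous-touch text selection: horizontal finger travel moves the caret."""
    text_length: int
    caret: int = 0
    gain_chars_per_mm: float = 0.5  # assumed constant control-display gain
    anchor: Optional[int] = None  # caret position when the finger touched down
    residual: float = 0.0  # sub-character motion carried over between move events

    def touch_down(self) -> None:
        self.anchor = self.caret

    def touch_move(self, dx_mm: float) -> None:
        self.residual += dx_mm * self.gain_chars_per_mm
        step = int(self.residual)  # whole characters, truncated toward zero
        self.residual -= step
        self.caret = max(0, min(self.text_length, self.caret + step))

    def touch_up(self) -> tuple[int, int]:
        """End the gesture and return the selected [start, end) character range."""
        start = self.anchor if self.anchor is not None else self.caret
        self.anchor = None
        self.residual = 0.0
        return (min(start, self.caret), max(start, self.caret))

selection = TrackpadSelection(text_length=120, caret=10)
selection.touch_down()
selection.touch_move(10.0)  # 10 mm of finger travel at 0.5 character/mm
print(selection.touch_up())  # prints (10, 15)
```

Carrying the fractional remainder between events keeps slow, small finger movements from being lost; a velocity-dependent gain, as in desktop pointer acceleration, would be a natural variant.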

8.4 New pedagogical supports for HOBIT

Participants: Erwan Normand, Martin Hachet

External collaborators: Université de Bordeaux

In collaboration with our colleagues in Optics, we have continued our work on the HOBIT platform. We have notably developed new tools (e.g., physical/virtual motors to automatically control the value of an optical component), built new optics experiments (e.g., diffraction grating), and explored new augmentations as pedagogical supports. A research paper describing all these evolutions is currently in preparation.

Augmented Optics experiment with HOBIT

8.5 Accessible Board Games using VR

Participants: Lauren Thevin, Anke Brock, Martin Hachet

External collaborators: CNRS, Université Paul Sabatier, ENAC

Board games allow us to share collective entertainment experiences. Unfortunately, most board games exclude people with visual impairments, as they were not initially designed for players with special needs. Indeed, most information in board games is accessible through vision only (e.g., dice, cards). We used Spatial Augmented Reality to make existing board games inclusive by adding interactivity. Following a participatory design method, we first analyzed the requirements of a generic adaptability framework for existing games. Then, in a case study with an existing board game considered not accessible (Jamaica), we designed an interactive version based on Augmented Reality with touch detection, using the PapARt prototype (JamaicAR, illustrated in Figure 10). We evaluated this prototype in a user study with 15 participants, including sighted, low-vision and blind players. All players, independent of visual status, were able to play the Augmented Reality game. Moreover, all players rated the game positively regarding attractiveness, play engrossment, enjoyment and social connectivity. We suggest that Spatial Augmented Reality holds the potential to make games more inclusive for everyone.

JamaicAR prototype composed of the PapARt spatial augmented reality system and a board game.

8.6 Virtual Reality Glasses for Orientation and Mobility Training of Visually Impaired People

Participants: Lauren Thevin, Anke Brock

External collaborators: CNRS, Université Paul Sabatier, ENAC

Orientation and Mobility (O&M) classes teach people with visual impairments how to navigate the world; for instance, how to cross a road. Yet, this training can be difficult and dangerous due to conditions such as traffic and weather. Virtual Reality can help overcome these challenges by providing interactive, controlled environments. However, most existing Virtual Reality tools rely on visual feedback, which limits their use with students with visual impairments. In a collaborative design approach with O&M instructors from IRSA (Institut Régional des Sourds et Aveugles), we designed an affordable and accessible Virtual Reality system for O&M classes, called X-Road. Using a smartphone and a bespoke head mount, X-Road provides both visual and audio feedback and allows users to move in space as in the real world. In a study with 13 students with visual impairments, X-Road proved to be an effective alternative for teaching and learning classical O&M tasks, and both students and instructors were enthusiastic about this technology. We also presented design recommendations for inclusive VR systems. See 20.

Visually impaired students using the X-Road prototype.

8.7 Echelles Célestes: A hybrid setup for an artistic experience

Participants: Florian Renaud, Thibault Lainé, Martin Hachet

External collaborators: Léna d'Azy

In 2020, we continued our work with the scenographer Cécile Léna to conceive and build a hybrid setup that combines a real physical mock-up and a virtual environment. Our prototype has moved from the lab to the artist's studio, where it is now being aesthetically refined (Figure 12). Our objective is to propose interactive and immersive experiences to the visitors of various events (e.g., scientific exhibitions dedicated to the sky).

Echelles célestes allows the participant to explore the sky.

8.8 Tangible and modular devices for supporting communication

Participants: Joan Sol Roo, Pierre-Antoine Cinquin, Martin Hachet

External collaborators: Ullo

As part of our collaboration with Ullo, we published and presented a paper at MobileHCI 2020 25. This paper presents our work on the design and development of a tangible, modular approach for the construction of physiological interfaces, as described in our 2019 activity report https://

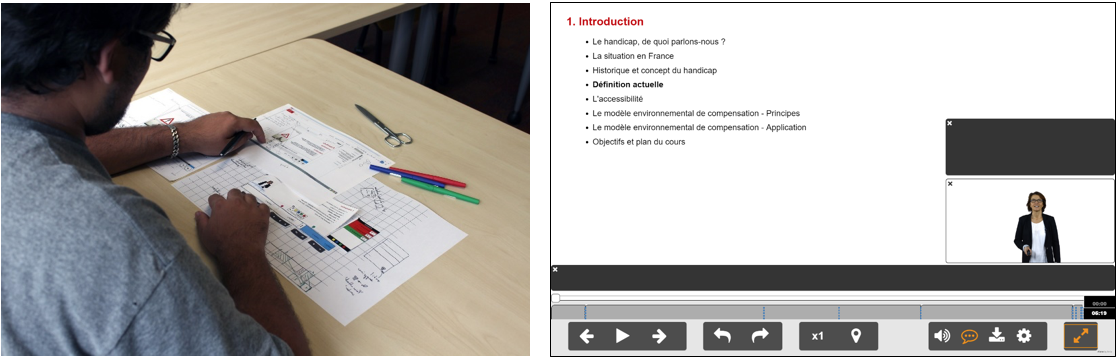

8.9 Accessibility of e-learning systems

Participants: Pascal Guitton

External collaborator: Hélène Sauzéon

In 2020, we concluded our work on the accessibility of new digital teaching systems such as MOOCs. In these systems, accessibility for people with disabilities is often forgotten, which excludes them, particularly people with cognitive impairments, for whom accessibility standards are far from being established. We have shown in 41 that very few research activities deal with this issue.

In past years, we proposed new design principles based on knowledge in the areas of accessibility (Ability-based Design and Universal Design), digital pedagogy (Instructional Design, with functionalities that reduce cognitive load: navigation by concept, slowing of the flow, etc.), specialized pedagogy (Universal Design for Learning, e.g., automatic note-taking, and Self-Determination Theory, e.g., configuration of the interface according to users' needs and preferences) and psychoeducational interventions (e.g., supporting joint teacher-learner attention), but also through a participatory design approach involving students with disabilities and experts in the field of disability (Figure 13). From this framework, we designed interaction features that have been implemented in a specific MOOC player called Aïana. Moreover, we produced a MOOC on digital accessibility, published on the national MOOC platform (FUN) using Aïana (4 sessions since 2016, with more than 13,000 registered participants). https://

Design of Aïana MOOC player.

8.10 Mental state decoding from EEG signals using robust machine learning

Participants: Aurélien Appriou, Khadijeh Sadatnejad, Maria Sayu Yamamoto, Fabien Lotte

External collaborators: Pierre-Yves Oudeyer, Edith Law, Jessie Ceha

Towards measuring states of epistemic curiosity through electroencephalographic signals:

Understanding the neurophysiological mechanisms underlying curiosity, and therefore being able to identify a person's curiosity level, would provide useful information for researchers and designers in numerous fields such as neuroscience, psychology, and computer science. A first step towards uncovering the neural correlates of curiosity is to collect neurophysiological signals during states of curiosity. These signals can then be used to develop signal processing and machine learning (ML) tools that distinguish curious states from non-curious ones.

We therefore ran an experiment in which we used EEG to measure the brain activity of participants as they were induced into states of curiosity using trivia question-and-answer chains. We used two ML algorithms to classify curious EEG signals against non-curious ones:

- Filter Bank Common Spatial Pattern (FBCSP) coupled with Linear Discriminant Analysis (LDA);
- a Filter Bank Tangent Space Classifier (FBTSC).

Global results indicate that both algorithms performed better with 3-to-5 s time windows than with other window lengths. This suggests an optimal time window length of 4 seconds for estimating curiosity states from EEG signals (63.09% classification accuracy for the FBTSC, 60.93% for FBCSP+LDA). This work was published in the IEEE Systems, Man, and Cybernetics (SMC) conference 21.
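The core of the FBCSP+LDA pipeline can be sketched as below. This is a minimal NumPy illustration under stated assumptions, not the code used in the study:

- it computes two-class CSP spatial filters for a single frequency band (FBCSP repeats this step on several band-pass filtered copies of the signals, which is omitted here);
- it extracts normalized log-variance features;
- it trains a shared-covariance LDA.

All function names are hypothetical.

```python
import numpy as np


def covariances(trials):
    """Trace-normalised spatial covariance of each trial.

    trials: array of shape (n_trials, n_channels, n_samples), assumed
    band-pass filtered and zero-mean.
    """
    covs = np.einsum("tcs,tds->tcd", trials, trials)
    return covs / np.trace(covs, axis1=1, axis2=2)[:, None, None]


def csp_filters(trials, labels, n_pairs=1):
    """Two-class CSP filters solving Ca w = lambda (Ca + Cb) w."""
    covs = covariances(trials)
    ca = covs[labels == 0].mean(axis=0)
    cb = covs[labels == 1].mean(axis=0)
    # Whiten the composite covariance Ca + Cb ...
    evals, evecs = np.linalg.eigh(ca + cb)
    whitener = evecs @ np.diag(evals ** -0.5) @ evecs.T
    # ... then diagonalise the whitened class-0 covariance (eigenvalues ascending).
    _, v = np.linalg.eigh(whitener @ ca @ whitener)
    w = whitener @ v
    # Filters at both ends of the spectrum maximise variance for one class
    # while minimising it for the other.
    n = w.shape[1]
    keep = np.r_[np.arange(n_pairs), np.arange(n - n_pairs, n)]
    return w[:, keep]


def log_var_features(trials, filters):
    """Normalised log-variance of the spatially filtered signals."""
    projected = np.einsum("ck,tcs->tks", filters, trials)
    var = projected.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


def fit_lda(x, y):
    """Two-class LDA with a pooled within-class covariance."""
    mu0, mu1 = x[y == 0].mean(axis=0), x[y == 1].mean(axis=0)
    sw = np.cov(x[y == 0], rowvar=False) + np.cov(x[y == 1], rowvar=False)
    w = np.linalg.solve(sw, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2
    return w, b


def predict_lda(x, w, b):
    """Label 1 on the positive side of the LDA hyperplane, 0 otherwise."""
    return (x @ w + b > 0).astype(int)
```

In use, `csp_filters` and `fit_lda` are fitted on calibration trials. New trials are then classified with `predict_lda(log_var_features(trials, filters), w, b)`.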

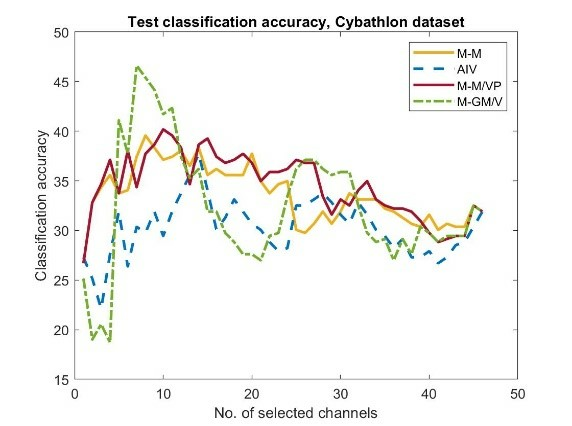

Reducing between-session non-stationarity in BCI using Riemannian Channel Selection:

Between-session non-stationarity is a major challenge for current Brain-Computer Interfaces (BCIs), as it degrades system performance. In this study, we investigate the use of channel selection for reducing between-session non-stationarity in BCI applications. We use the Riemannian geometry framework of covariance matrices due to its robustness and promising performance. Current Riemannian channel selection methods do not consider non-stationarity and are usually tested on a single session. Here, we propose a new channel selection approach that specifically considers non-stationarity effects and is assessed on multi-session BCI data sets. We remove the least significant channels using a sequential floating backward selection search strategy.

Our contributions include: 1) quantifying the non-stationarity effects on brain activity in multi-class problems with different criteria in a Riemannian framework, and 2) a method to predict whether BCI performance can be improved by channel selection.

Results: we evaluated the proposed approaches on three multi-session, multi-class mental task (MT)-based BCI data sets. They could lead to significant performance improvements (up to 5.83%, depending on the data set). Fig. 1 illustrates the improvement resulting from channel selection for covariance matrices of different dimensions, on a 4-class problem recorded during the training of a tetraplegic BCI user. Reducing non-stationarity by channel selection could significantly improve classification accuracy at a subject-specific near-optimal number of channels, compared with using all channels. Our proposed channel selection approach thus contributes to making Riemannian BCI classifiers more robust to both between-session and within-session non-stationarity.
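A sequential floating backward selection search can be sketched generically as below. The scoring function is a placeholder assumption. The study used non-stationarity criteria computed in a Riemannian framework, whose exact definitions are not given here. `score` is therefore left as a user-supplied callable: any function of a channel subset where higher is better. The hypothetical `sfbs` helper keeps the best subset found for each subset size, from which a near-optimal number of channels can be picked.

```python
from typing import Callable, Dict, FrozenSet, Hashable, Iterable, Tuple

Subset = FrozenSet[Hashable]


def sfbs(channels: Iterable[Hashable],
         score: Callable[[Subset], float],
         n_keep: int) -> Dict[int, Tuple[float, Subset]]:
    """Sequential floating backward selection down to n_keep channels.

    Returns, for every subset size visited, the best (score, subset) found.
    """
    all_channels = frozenset(channels)
    current = all_channels
    best: Dict[int, Tuple[float, Subset]] = {len(current): (score(current), current)}

    while len(current) > n_keep:
        # Exclusion: drop the channel whose removal yields the highest score.
        current = max((current - {c} for c in current), key=score)
        s = score(current)
        if len(current) not in best or s > best[len(current)][0]:
            best[len(current)] = (s, current)

        # Conditional inclusion: re-add a previously removed channel only if it
        # beats the best subset already recorded for that larger size.
        while len(current) < len(all_channels):
            back = max((current | {c} for c in all_channels - current), key=score)
            s_back = score(back)
            if s_back > best[len(back)][0]:
                current = back
                best[len(back)] = (s_back, back)
            else:
                break
    return best
```

For example, with a toy additive score standing in for a real Riemannian criterion, `max(sfbs(chs, score, 2).values(), key=lambda t: t[0])` returns the best-scoring subset over all visited sizes. The floating (inclusion) step lets the search undo an earlier removal that turned out to be a poor choice.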

Test classification accuracy on the multi-session data of a tetraplegic BCI user, for different channel selection criteria.

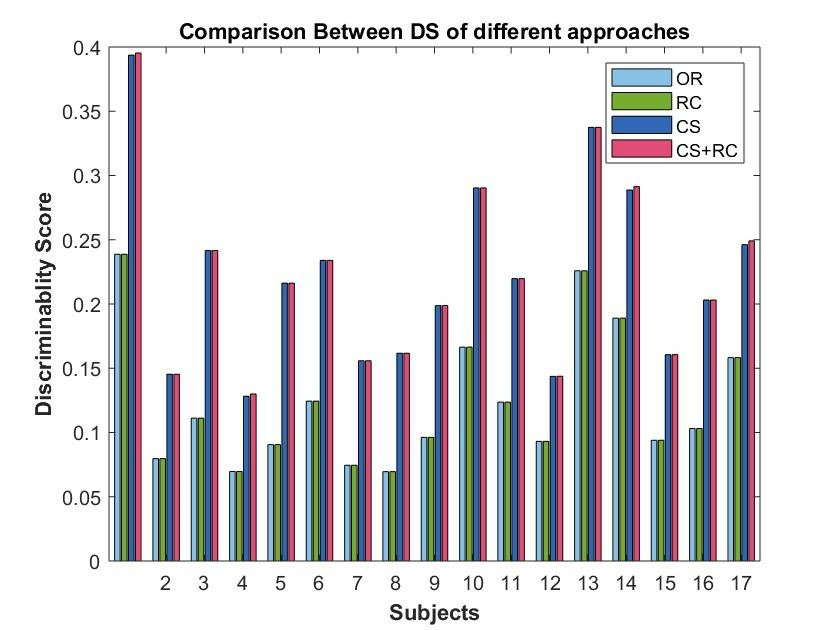

Reducing between-session non-stationarity in BCI applications over the Riemannian manifold of covariance matrices:

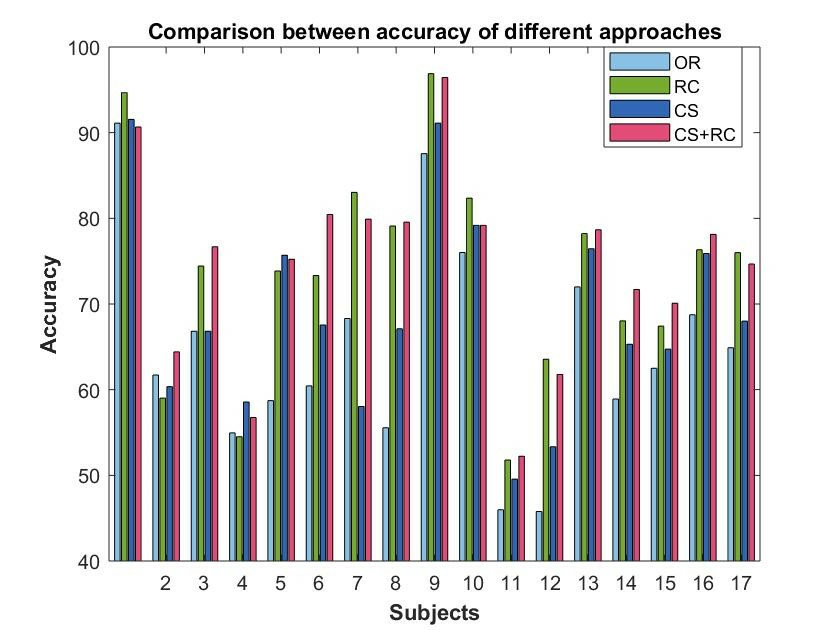

A major drawback of current BCIs is between-session non-stationarity. Covariance matrices (CMs) analyzed on a Riemannian manifold are a promising BCI representation, and in this setting the issue has mostly been addressed by data distribution matching. Although this can improve classification accuracy (CA), it can neglect user training effects and decrease class discriminability by mixing sessions with low and high discriminability. We aim at reducing non-stationarity and improving class discriminability by rejecting EEG channels that lead to class overlap and non-stationarity.

Using Riemannian geometry, we propose a discriminability score (DS) that considers the mean and the variance of the sample covariance matrices (SCMs) of each class. We first reject, by backward elimination, the channels that reduce DS. Then, we match the distributions of the SCMs of different sessions by re-centering them around the identity matrix. We evaluated this method on data from 17 subjects performing 3 mental tasks during 6 sessions. Using a Minimum Distance to Mean classifier, we compared DS and CA for four conditions (Fig. 2):

1. re-centering only (RC);
2. re-centering after channel selection (CS+RC);
3. CS only;
4. neither RC nor CS (OR).

Results revealed significant differences between methods in both CA and DS. Post-hoc tests revealed that CS+RC significantly outperformed OR in CA and DS. CS improved DS compared with RC and OR, but did not improve CA compared with RC. Data distribution matching methods such as RC can resolve part of the between-session non-stationarity and improve CA, but they nonetheless significantly decrease the overall DS. Our proposed CS method can improve both DS and CA. In the future, this approach will be investigated to generate feedback for online BCI user training. This study was accepted for the BCI Meeting 2021.
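Re-centering (RC) and the Minimum Distance to Mean (MDM) classifier rely on the affine-invariant Riemannian mean of SPD matrices. The sketch below is an illustrative NumPy version under that assumption. It:

- estimates the Riemannian mean by the usual fixed-point iteration;
- whitens a set of SCMs by the inverse square root of their mean, so that their mean becomes the identity;
- assigns each matrix to the class whose Riemannian mean is closest.

The discriminability score (DS) is not reproduced, since its exact formula is not given here. All function names are hypothetical.

```python
import numpy as np


def _eig_fn(c, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, v = np.linalg.eigh(c)
    return (v * fn(w)) @ v.T


def _invsqrtm(c):
    return _eig_fn(c, lambda w: 1.0 / np.sqrt(w))


def riemann_distance(a, b):
    """Affine-invariant distance ||log(A^-1/2 B A^-1/2)||_F."""
    isa = _invsqrtm(a)
    return float(np.sqrt(np.sum(np.log(np.linalg.eigvalsh(isa @ b @ isa)) ** 2)))


def riemann_mean(covs, n_iter=100, tol=1e-10):
    """Riemannian (Karcher) mean of SPD matrices by fixed-point iteration."""
    m = covs.mean(axis=0)  # Euclidean mean as initial guess
    for _ in range(n_iter):
        sq, isq = _eig_fn(m, np.sqrt), _invsqrtm(m)
        # Average of the matrices mapped to the tangent space at m.
        t = np.mean([_eig_fn(isq @ c @ isq, np.log) for c in covs], axis=0)
        m = sq @ _eig_fn(t, np.exp) @ sq
        if np.linalg.norm(t) < tol:
            break
    return m


def recenter(covs):
    """Whiten SCMs by their Riemannian mean so that their mean becomes identity."""
    isq = _invsqrtm(riemann_mean(covs))
    return np.array([isq @ c @ isq for c in covs])


def mdm_fit(covs, labels):
    """One Riemannian mean per class."""
    return {k: riemann_mean(covs[labels == k]) for k in np.unique(labels)}


def mdm_predict(covs, class_means):
    """Assign each SCM to the class with the closest Riemannian mean."""
    keys = list(class_means)
    return np.array([
        keys[int(np.argmin([riemann_distance(class_means[k], c) for k in keys]))]
        for c in covs
    ])
```

In a multi-session setting, `recenter` would be applied separately to each session's SCMs before pooling them. Because the affine-invariant distance is unchanged by this whitening, re-centering aligns sessions without altering within-session geometry.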

(a) Average DS on the training set; (b) average classification accuracy with channel selection on the test set.

Detecting EEG outliers for BCI on the Riemannian manifold using spectral clustering:

Automatically detecting and removing EEG outliers is essential to design robust BCIs. In this study, we proposed a novel outlier detection method that works on the Riemannian manifold of sample covariance matrices (SCMs). Existing outlier detection methods rely on a reference matrix and a threshold, and therefore run the risk of erroneously rejecting some samples as outliers even when there is no outlier. To address this limitation, our method, Riemannian Spectral Clustering (RiSC), detects outliers by clustering SCMs into non-outliers and outliers, based on a proposed similarity measure. Instead of setting a threshold, this measure takes the Riemannian geometry of the space into account. It magnifies the similarity within the non-outlier cluster and weakens it between the non-outlier and outlier clusters.

To assess RiSC performance, we generated artificial EEG data sets contaminated with outliers of different strengths and numbers. Comparing the Hit-False (HF) difference between RiSC and existing outlier detection methods confirmed that RiSC detected outliers significantly better (p < 0.001). In particular, RiSC improved the HF difference the most for the data sets with the most severe outlier contamination. This work was published in the IEEE Engineering in Medicine and Biology Conference (EMBC) 2020 28.
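To illustrate the general idea of separating outliers by clustering rather than thresholding, the sketch below performs a generic two-way spectral clustering of SCMs. It is not RiSC. The similarity measure here is an assumed Gaussian kernel on the affine-invariant Riemannian distance, with the median pairwise distance as bandwidth, and the smaller cluster is simply labeled as outliers. When the clustering collapses into a single group, it returns no outliers. However, it has no principled rule for outlier-free data sets, which RiSC's proposed similarity measure is designed to handle. Function names are hypothetical.

```python
import numpy as np


def _invsqrtm(c):
    w, v = np.linalg.eigh(c)
    return (v / np.sqrt(w)) @ v.T


def riemann_distance(a, b):
    """Affine-invariant Riemannian distance between SPD matrices a and b."""
    isa = _invsqrtm(a)
    return float(np.sqrt(np.sum(np.log(np.linalg.eigvalsh(isa @ b @ isa)) ** 2)))


def spectral_two_way(covs, sigma=None, n_iter=20):
    """Split SCMs into two clusters (Ng-Jordan-Weiss style spectral clustering)."""
    n = len(covs)
    d = np.array([[riemann_distance(covs[i], covs[j]) for j in range(n)]
                  for i in range(n)])
    if sigma is None:
        sigma = np.median(d[np.triu_indices(n, 1)])  # assumed bandwidth heuristic
    affinity = np.exp(-d ** 2 / (2 * sigma ** 2))
    deg = affinity.sum(axis=1)
    # Two leading eigenvectors of D^-1/2 A D^-1/2, rows normalised to unit length.
    _, vecs = np.linalg.eigh(affinity / np.sqrt(np.outer(deg, deg)))
    emb = vecs[:, -2:]
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    # 2-means in the embedding, seeded with a point and the point farthest from it.
    far = np.argmax(np.linalg.norm(emb - emb[0], axis=1))
    centers = np.stack([emb[0], emb[far]])
    labels = np.zeros(n, dtype=int)
    for _ in range(n_iter):
        dist = np.linalg.norm(emb[:, None, :] - centers[None], axis=2)
        labels = np.argmin(dist, axis=1)
        if labels.min() == labels.max():  # degenerate case: a single cluster
            break
        centers = np.stack([emb[labels == k].mean(axis=0) for k in (0, 1)])
    return labels


def detect_outliers(covs, sigma=None):
    """Indices of the SCMs in the smaller cluster, treated here as outliers."""
    labels = spectral_two_way(covs, sigma)
    minority = np.argmin(np.bincount(labels, minlength=2))
    return np.flatnonzero(labels == minority)
```

Row-normalizing the spectral embedding makes the split insensitive to how the eigen-solver orients the two leading eigenvectors when the graph is almost disconnected.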

8.11 Understanding and modeling Mental-Imagery BCI user training

Participants: Camille Benaroch, Aline Roc, Aurélien Appriou, Eidan Tzdaka, Lena Kolodzienski, Thibaut Monseigne, Fabien Lotte

External collaborators: Camille Jeunet, Léa Pillette, Jelena Mladenovic, Bernard N'Kaoua, Stefanie Enriquez-Geppert

A review of user training methods in brain-computer interfaces based on mental tasks:

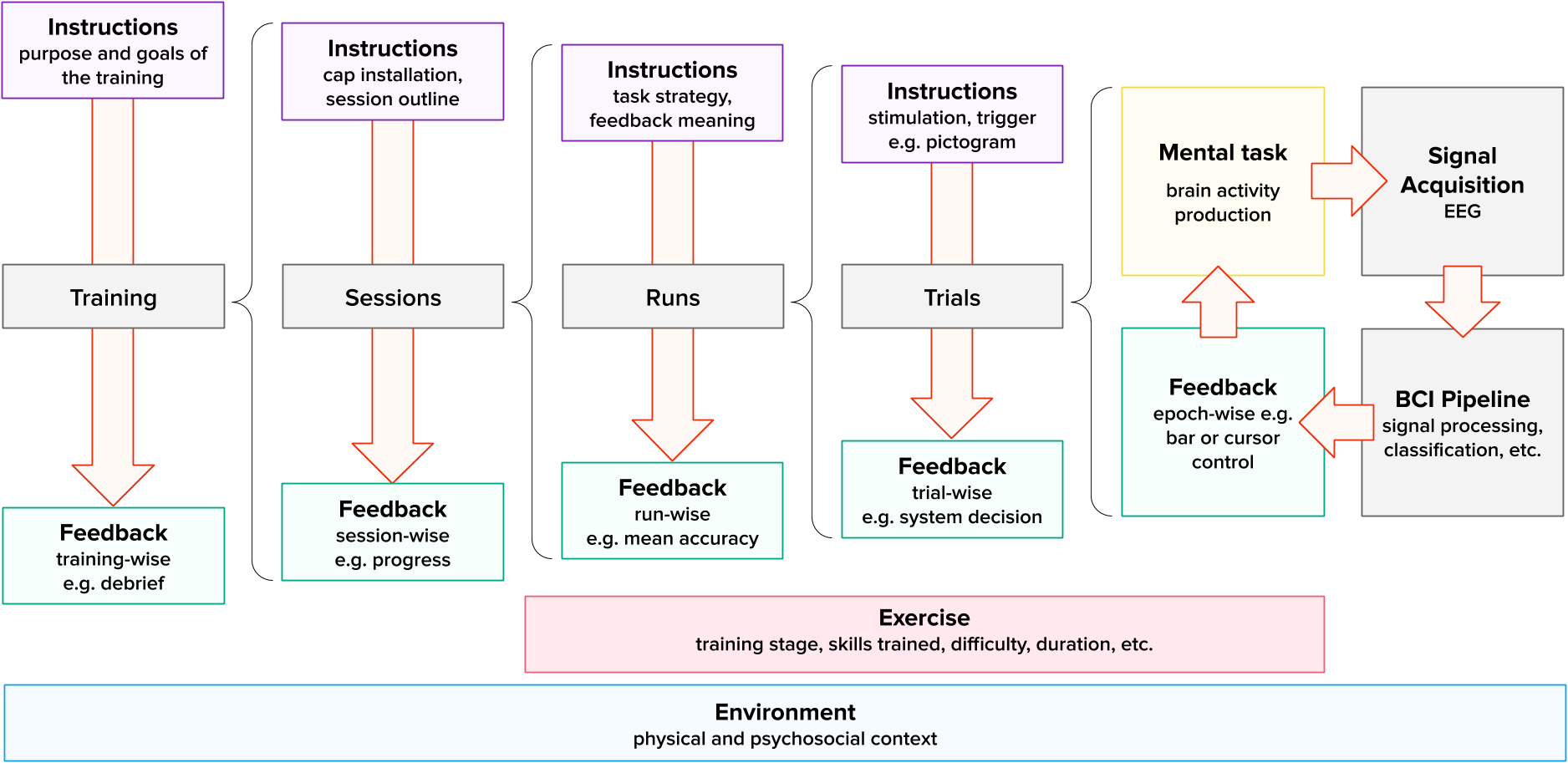

Command encoding for Mental Task (MT)-based BCIs requires long calibration and training periods. Users need to learn to produce signals that are stable and clear enough to be recognized by the system, and different enough from each other to be distinguished as several commands. The period of user-BCI interaction whose purpose is to enable users to develop or improve their BCI control skills is referred to as user training. However, standard MT-BCI training exercises are extremely simple and repetitive. They have been shown to be suboptimal, as they do not satisfy general human learning principles. Thus, there is a need to design new BCI training exercises that are better adapted to human learning principles and to users' specificities.

To progress towards more suitable training approaches, an important step is to examine existing practices and recommendations for BCI training. We argue that identifying and defining the various parameters composing a BCI training (and their interactions) might shed light on the gaps in user training. It might also provide levers to positively impact users' understanding, perceptions, motivation, etc. For this reason, we conducted a review of various training components, i.e., environment, feedback, instructions, and exercises. A framework for MT-BCI user training (see Figure 16) is described in a review paper published in the Journal of Neural Engineering 19. The paper also covers existing practices, challenges, and perspectives for MT-BCI user training.

A framework for describing and studying MT-BCI user training.

The influence of instructions on BCI and Neurofeedback user training

In ongoing work in collaboration with the CLLE, SANPSY and the University of Groningen, we aim to investigate the effects of instructions on the efficacy of EEG NeuroFeedback (NF) and brain-computer interface (BCI) training procedures. We conducted a review of the literature in other fields. We also performed a qualitative assessment of information systematically extracted from the text of published randomized controlled trial (RCT) studies. Based on these insights, we categorized instructions into 8 domains:

(i) Task environment and feedback signal;
(ii) Mental strategies;
(iii) Avoiding artifacts;
(iv) Motivation;
(v) Transfer;
(vi) Trainers' behaviour;
(vii) Ethics;
(viii) Other.

Results indicate that instructions are provided throughout the experiments, at different training stages: recruitment, beginning of the first session, beginning of each session, during the session, at the end of the last session, and at other points in time. However, instructions are under-reported in the reviewed papers. The information reported in NF/BCI papers mostly concerns the task environment, the feedback signal, and the mental strategies given to participants, with very little reported about the other instruction domains. We presented a generalized framework intended to enable more precise and rigorous design and reporting of instructions in NF/BCI studies.

The influence of experimenters on Mental Task-based BCI user performance and learning

Experimenters play a central role in BCI training. However, very little is known about the influence experimenters might have on the results obtained. In an experiment, we assessed the impact of gender-related factors on MT-BCI performance. Preliminary results regarding the interaction between experimenters' and participants' gender on the evolution of MT-BCI performance were previously published in a short conference paper presented at the 8th International BCI Conference. Our results suggest that women experimenters may positively influence participants' progress compared with men experimenters.

In a recently submitted journal paper, we now present additional and more complete results. These concern participants' psychological profiles, their user experience, and potential confounding factors such as motor-related artifacts. In particular, a significant interaction between experimenters' and participants' gender was found on the evolution of trial-wise performance. Another interaction was found between participants' tension and experimenters' gender on average performance. This suggests that experimenters' gender could influence MI-BCI performance depending on participants' gender and tension. Experimenters' influence on MT-BCI user training outcomes should be better controlled, assessed and reported, to further benefit from it while preventing any bias.

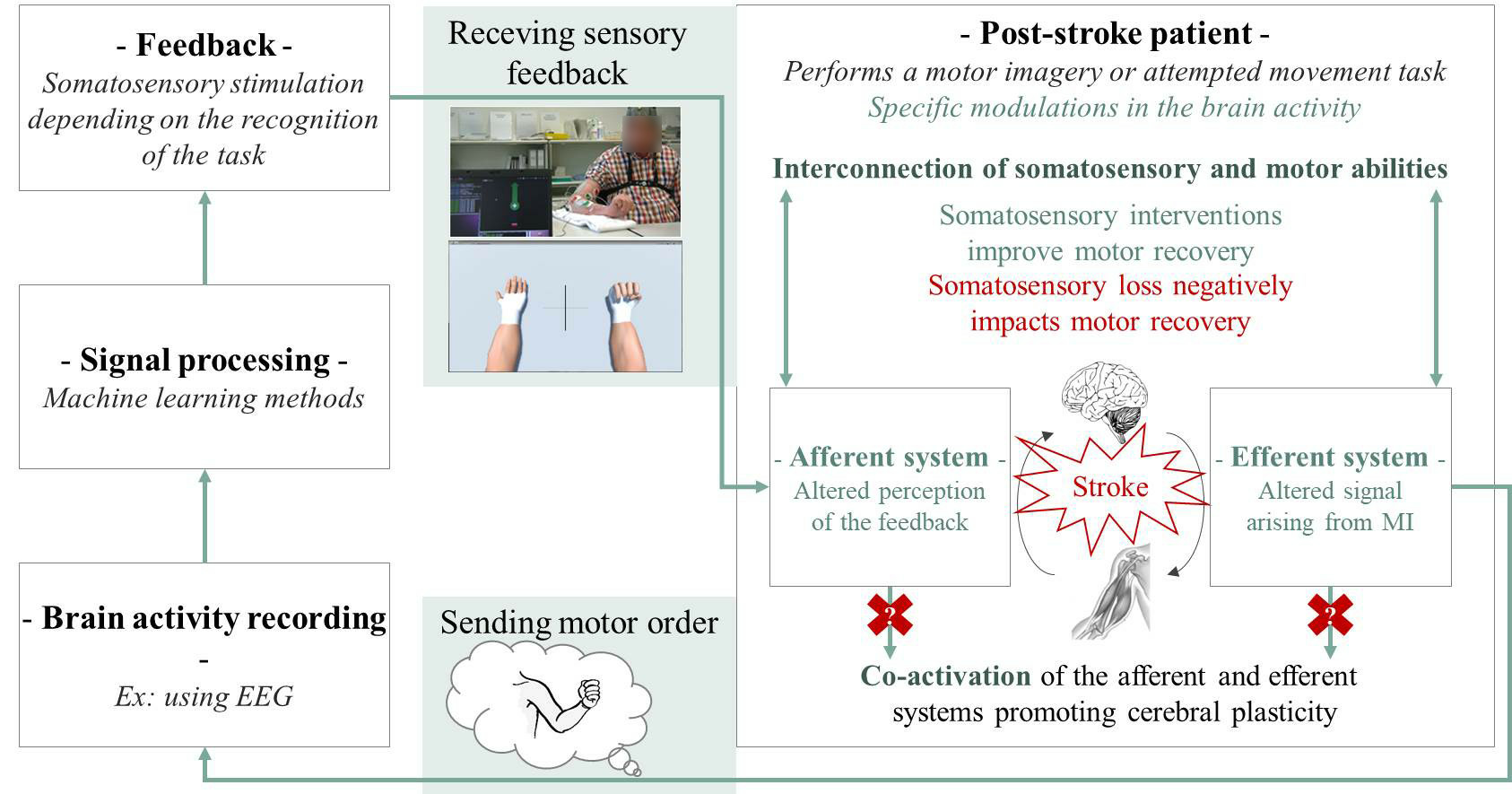

Why we should systematically assess, control and report somatosensory impairments in BCI-based motor rehabilitation after stroke studies

The neuronal loss resulting from stroke forces 80% of patients to undergo motor rehabilitation, for which Brain-Computer Interfaces (BCIs) and NeuroFeedback (NF) can be used. During rehabilitation, patients attempt or imagine performing a movement. BCIs/NF then provide them with synchronized sensory (e.g., tactile) feedback based on their sensorimotor-related brain activity, which aims at fostering brain plasticity and motor recovery. The co-activation of ascending (i.e., somatosensory) and descending (i.e., motor) networks indeed enables significant functional motor improvement, together with significant sensorimotor-related neurophysiological changes. Somatosensory abilities are essential for patients to perceive the feedback provided by the BCI system. Thus, somatosensory impairments may significantly alter the efficiency of BCI-based motor rehabilitation.

To precisely understand and assess the impact of somatosensory impairments, in a joint work with Bordeaux Hospital (Pellegrin) and the HCAS team, we first review the literature on post-stroke BCI-based motor rehabilitation (14 randomized clinical trials). Somatosensory abilities play a central role in BCI-based motor rehabilitation post-stroke. Yet we show that they are rarely reported, and rarely used as inclusion/exclusion criteria, in the literature on the matter. We then argue that somatosensory abilities have repeatedly been shown to influence motor rehabilitation outcomes in general. This stresses the importance of also considering and reporting them in the literature on BCI-based rehabilitation after stroke, especially since half of post-stroke patients suffer from somatosensory impairments (see Figure 17). We argue that somatosensory abilities should systematically be assessed, controlled and reported if we want to precisely assess their influence on BCI efficiency.
Not doing so could result in the misinterpretation of reported results. Doing so could improve (1) our understanding of the mechanisms underlying motor recovery, (2) our ability to adapt the therapy to the patients' impairments, and (3) our comprehension of the between-subject and between-study variability of therapeutic outcomes mentioned in the literature. This work was published in the journal NeuroImage: Clinical 17.

A model describing how somatosensory abilities can impair BCI-based stroke rehabilitation.

Towards understanding the impact of mental task execution on users' state, experience and performance.

General literature in instructional design suggests that both interface content and task difficulty should be adapted to users' states, e.g., their fatigue, attention, motivation, or cognitive load. When subjectively reported after MT-BCI experiments, cognitive load correlates with system performance. However, a dynamic adaptation of the system complexity during training is only possible if users' load can be assessed during training. In an ongoing experiment, we try to determine whether (and how) we can estimate variations in users' cognitive load during MT-BCI training, i.e., while the user is training to produce mental tasks to control a BCI.

To do so, 4 different types of baselines were recorded by crossing two controlled working-memory tasks (N-back and Rspan) with two difficulty levels per task, which theoretically induce different loads. Then, participants trained to control a standard MT-BCI (left- vs. right-hand imagery) during 12 short runs (16 trials/run). Subjective assessments of cognitive load were performed between runs. We also conducted semi-structured interviews to better document (1) what people choose to imagine and (2) how they feel about the execution of these tasks.

Computational models of MT-BCI performance: Motor Imagery-based BCIs (MI-BCIs) allow users to control a computer for various applications using their brain activity alone, which is usually recorded by electroencephalography (EEG). Although BCI applications are numerous, their use outside laboratories is still scarce due to their poor accuracy. By performing neurophysiological analyses, and notably by identifying neurophysiological predictors of BCI performance, we may better understand this phenomenon and its causes. In turn, this may also help us to better understand, and thus possibly improve, BCI user training.

We therefore used statistical models dedicated to the prediction of MI-BCI user performance. These models rely on users' neurophysiological features, extracted from a two-minute EEG recording in a "relax with eyes open" condition. We considered data from 56 subjects, recorded in this condition before they performed an MI-BCI experiment. We used a machine learning regression algorithm with leave-one-subject-out cross-validation to build our prediction model. We also computed correlations between these features (neurophysiological predictors) and users' MI-BCI performance.

Our results suggest that such models could predict user performance significantly better than chance, but with a relatively high mean absolute error of 12.43%. We also found significant correlations between a few of our features and performance. These include the previously explored -band predictor, as well as a new one proposed here: the -peak location variability. These results are thus encouraging for better understanding and predicting BCI illiteracy. However, further improvements are required to obtain more reliable predictions. This work was published in the IEEE Systems, Man, and Cybernetics (SMC) conference 27.
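Leave-one-subject-out (LOSO) evaluation of a performance predictor can be sketched as follows. The regression algorithm used in the study is not specified here, so ordinary least squares is an assumption. The permutation test is one common way, not necessarily the one used, to check that predictions beat chance. Each subject contributes one feature vector (e.g., resting-state EEG features) and one performance value. The function names are hypothetical.

```python
import numpy as np


def loso_predictions(features, performance):
    """Leave-one-subject-out predictions from an ordinary least-squares model.

    features: (n_subjects, n_features); performance: (n_subjects,).
    """
    n = len(performance)
    preds = np.empty(n)
    for i in range(n):
        train = np.arange(n) != i
        x_train = np.column_stack([np.ones(train.sum()), features[train]])
        coef, *_ = np.linalg.lstsq(x_train, performance[train], rcond=None)
        preds[i] = np.concatenate(([1.0], features[i])) @ coef
    return preds


def mean_absolute_error(y_true, y_pred):
    return float(np.mean(np.abs(y_true - y_pred)))


def permutation_p_value(features, performance, n_perm=200, seed=0):
    """Share of label permutations whose LOSO error is at least as low as the real one."""
    rng = np.random.default_rng(seed)
    observed = mean_absolute_error(performance,
                                   loso_predictions(features, performance))
    null_errors = []
    for _ in range(n_perm):
        shuffled = rng.permutation(performance)
        null_errors.append(
            mean_absolute_error(shuffled, loso_predictions(features, shuffled)))
    return (1 + sum(e <= observed for e in null_errors)) / (1 + n_perm)
```

Holding out one whole subject at a time measures how well the model generalizes to a new user. That is the relevant question when predicting a newcomer's performance from a short resting-state recording.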

Proposition of an optimization of the discriminative frequency band selection algorithm for Motor-Imagery based BCIs: in MI-BCIs, MI tasks modulate EEG activity in the μ and β frequency bands (8-30 Hz). Data-driven methods are often used to select features in these bands during system calibration, with little consideration for the resulting human performance. Our analyses revealed two effects of the Most Discriminant Frequency Band (MDFB):

(1) subjects with an MDFB narrower than 3.5 Hz have more difficulty controlling an MI-BCI than subjects with a wider MDFB (Wilcoxon test: s = -4.4, p < 10⁻⁵);
(2) subjects with an MDFB mean value above 16 Hz seem to have lower performance than subjects with an MDFB mean value below 16 Hz (Wilcoxon test: s = 2.6, p < .05).

Based on these results, we modified the frequency band selection algorithm by adding constraints. We are currently testing this new algorithm in an experiment to see whether adding constraints could increase users' performance.
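The sketch below shows one way such constraints could be added to a band selection step. It is a hypothetical illustration, not the modified algorithm. It assumes a per-frequency-bin discriminability score is already available and searches all contiguous bands. Among bands at least 3.5 Hz wide whose mean frequency (taken here as the band midpoint) does not exceed 16 Hz, it keeps the one with the highest mean score.

```python
from typing import Optional, Sequence, Tuple


def select_band(freqs: Sequence[float], scores: Sequence[float],
                min_width: float = 3.5, max_center: float = 16.0
                ) -> Optional[Tuple[float, float]]:
    """Contiguous band [lo, hi] with the highest mean per-bin score, subject to
    hi - lo >= min_width and (lo + hi) / 2 <= max_center.

    freqs must be sorted in ascending order; scores[k] is the (assumed)
    discriminability of the bin at freqs[k].
    """
    best: Optional[Tuple[float, float, float]] = None
    for i in range(len(freqs)):
        for j in range(i + 1, len(freqs)):
            lo, hi = freqs[i], freqs[j]
            # Constraints motivated by the two analyses above.
            if hi - lo < min_width or (lo + hi) / 2 > max_center:
                continue
            mean_score = sum(scores[i:j + 1]) / (j - i + 1)
            if best is None or mean_score > best[0]:
                best = (mean_score, lo, hi)
    return None if best is None else (best[1], best[2])
```

With a score profile peaking in the β range, the unconstrained search (`max_center=30.0`) would pick a β band. The default constraints instead steer selection towards the best sufficiently wide band centered at or below 16 Hz.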

8.12 Long-term BCI training of a Tetraplegic User: Adaptive Riemannian Classifiers and User Training

Participants: Camille Benaroch, Aline Roc, Aurélien Appriou, Khadijeh Sadatnejad, Thibaut Monseigne, Fabien Lotte

External collaborators: Camille Jeunet, Léa Pillette, Jelena Mladenovic, Smeethy Pramij

While often presented as promising assistive technologies for motor-impaired users, EEG-based BCIs remain barely used by end-users outside laboratories. There is thus a need to design BCIs that can be used outside the lab by end-users, e.g., severely motor-impaired users. Moreover, the low reliability of current BCIs is a major drawback that needs to be solved for real-life applications. Therefore, we designed a multi-class Mental Task (MT)-based BCI for the long-term training (20 sessions over 3 months) of a tetraplegic user, for the CYBATHLON BCI series 2019. In this BCI race championship, tetraplegic pilots try to control a virtual car in a computer game using a BCI. This year, we analyzed in detail the various data collected during this training. Our approach aimed at combining progressive MT-BCI user training with a newly designed machine learning pipeline based on Riemannian classifiers.

We followed a two-step training process:

- Sessions 1 to 11 trained the user to control a 2-class MT-BCI by performing either two cognitive tasks (REST and MENTAL SUBTRACTION) or two motor-imagery tasks (LEFT-HAND and RIGHT-HAND). The feedback in these sessions was provided by a session-specific classifier.
- In the subsequent 9 sessions, to address between-session variability, we used an adaptive Riemannian classifier. This classifier was a session-independent 4-class classifier combining all the MT tasks used before.

Indeed, Riemannian classifiers generally reach high accuracy, making them promising for real-life BCI applications. Moreover, our Riemannian classifier was adaptive: it was incrementally updated in an unsupervised way to capture the changing EEG data distribution due to both within- and between-session non-stationarity. Experimental evidence confirmed the effectiveness of this approach, with an improvement of about +30% in classification accuracy at the end of the training compared with the initial sessions.
However, while this approach improved BCI accuracy with training on standard cue-based BCI tasks, it did not translate into improved CYBATHLON BCI game performances with training. This work was submitted for publication to a journal.
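The report does not detail how the Riemannian classifier was updated. The sketch below shows one common unsupervised scheme, as an assumption. A reference matrix (e.g., a global mean used for re-centering) is moved along the affine-invariant geodesic towards each new, unlabeled covariance matrix with weight 1/n. This approximates a running Riemannian mean without needing labels. The class and function names are hypothetical.

```python
import numpy as np


def _eig_fn(c, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, v = np.linalg.eigh(c)
    return (v * fn(w)) @ v.T


def geodesic(a, b, t):
    """Point at fraction t along the affine-invariant geodesic from a to b."""
    sa = _eig_fn(a, np.sqrt)
    isa = _eig_fn(a, lambda w: 1.0 / np.sqrt(w))
    return sa @ _eig_fn(isa @ b @ isa, lambda w: w ** t) @ sa


class AdaptiveReference:
    """Running Riemannian reference matrix updated without labels (hypothetical)."""

    def __init__(self, initial):
        self.mean = np.asarray(initial, dtype=float)
        self.count = 1

    def update(self, cov):
        # Move 1/n of the way towards the new matrix: an incremental
        # approximation of the Riemannian mean of all matrices seen so far.
        self.count += 1
        self.mean = geodesic(self.mean, cov, 1.0 / self.count)
        return self.mean

    def recenter(self, cov):
        """Whiten a new SCM by the current reference before classification."""
        isq = _eig_fn(self.mean, lambda w: 1.0 / np.sqrt(w))
        return isq @ cov @ isq
```

Because the update needs no labels, it can follow drifts in the EEG distribution within and across sessions. A classifier such as MDM would then compare the re-centered matrices with class means estimated on re-centered data.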

8.13 Grand Challenges in Neurotechnology and System Neuroergonomics

Participants: Fabien Lotte

External collaborators: Stephen Fairclough

Neurotechnologies, which interface machines with brain and body signals, are a key technology for the developing field of Neuroergonomics, i.e., for studying the brain in the wild. The primary aim of neurotechnology in this context is the creation of novel forms of human-computer interaction (HCI) in everyday life, which are designed to enhance health, safety or productivity. However, despite this idealistic potential, current neurotechnologies remain under development in the laboratory and are rarely used in the field. In this joint work with Liverpool John Moores University, we identified and presented three grand challenges for the scientific community to resolve in order to create working neurotechnologies outside of the laboratory. First, at the machine level, a grand challenge is to design robust and reliable neurotechnologies, that can be used accurately by anyone, anytime and anywhere. This development would require greater understanding and formal modelling of those various sources of noise and non-stationarities that affect neurophysiological signals in the wild. These models can be utilised to design sensors, signal processing and machine learning algorithms that can produce robust data under different measurement conditions. This grand challenge also includes designing algorithms that combine various types of neurophysiological signal and can be calibrated on the basis of very little or no data from new users. A second grand challenge is to design User eXperiences (UX) with neurotechnologies that are usable, acceptable and useful from a user perspective. This second challenge involves the identification of HCI design principles within the context of neurotechnology. The creation of these principles requires careful study and understanding of how users interact with neurotechnologies, e.g., how do patterns of usage change with experience? How do users develop an understanding of these systems and their benefits? 
In order to enhance UX, we must define tools and protocols to evaluate user interaction with neurotechnology from the perspective of performance, user acceptance and learning. Finally, a third and final grand challenge is to develop systems thinking in neuroergonomics and neurotechnology, i.e., to study, plan and optimise the use of neurotechnologies in whole systems, notably in work and lifestyle practices. This challenge includes an understanding of neurotechnologies within a broad sociotechnical perspective. On a broader scale, it would include studying, envisioning and formalizing these technologies in the context of protocols, procedures, laws and regulations related to their uses. A systems perspective also provides an understanding of complexity at multiple levels, e.g., the person, team, organisation, and possible emergent usages. These grand challenges will certainly keep the neuroergonomics community busy for many years. Solving them should hopefully lead to new neurotechnological systems that can be used in everyday life and confer tangible benefits on their users. This work was published in Frontiers in Neuroergonomics 13.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Ullo:

- Duration: 2017-2020

- Local coordinator: Martin Hachet

- Following our work with the Introspectibles (Teegi, TOBE, Inner Garden), we are currently working with the ULLO company to bring these new interfaces to healthcare centers.

AKIANI:

- Duration: 2019-2020

- Local coordinator: Fabien Lotte

- InriaTech project on physiological computing and neuroergonomics.

Wisear:

- Duration: 2020

- Local coordinator: Fabien Lotte

- Consulting project on in-ear EEG-based BCI.

10 Partnerships and cooperations

10.1 International initiatives

10.1.1 Inria international partners

Informal international partners

- University of Ulster, UK

- SKOLTECH, Russia

- Tokyo University of Agriculture and Technology, Japan

- Liverpool John Moores University, UK

- Univ. Groningen, The Netherlands

10.2 International research visitors

10.2.1 Visits of international scientists

- Stefanie Enriquez-Geppert, Univ. Groningen, The Netherlands

10.2.2 Visits to international teams

Research stays abroad

- Aurélien Appriou visited the “De Sa Lab”, University of California in San Diego, from February to April 2020

10.3 European initiatives

10.3.1 FP7 & H2020 Projects

BrainConquest:

- Program: ERC Starting Grant

- Project title: BrainConquest - Boosting Brain-Computer Communication with High Quality User Training

- Duration: 2017-2022

- Coordinator: Fabien Lotte

- Abstract: Brain-Computer Interfaces (BCIs) are communication systems that enable users to send commands to computers through brain signals only, by measuring and processing these signals. Making computer control possible without any physical activity, BCIs have promised to revolutionize many application areas, notably assistive technologies, e.g., for wheelchair control, and man-machine interaction. Despite this promising potential, BCIs are still barely used outside laboratories, due to their current poor reliability. For instance, BCIs only using two imagined hand movements as mental commands decode, on average, less than 80% of these commands correctly, while 10 to 30% of users cannot control a BCI at all. A BCI should be considered a co-adaptive communication system: its users learn to encode commands in their brain signals (with mental imagery) that the machine learns to decode using signal processing. Most research efforts so far have been dedicated to decoding the commands. However, BCI control is a skill that users have to learn too. Unfortunately, how BCI users learn to encode the commands is essential but barely studied, i.e., fundamental knowledge about how users learn BCI control is lacking. Moreover, standard training approaches are only based on heuristics, without satisfying human learning principles. Thus, poor BCI reliability is probably largely due to highly suboptimal user training. In order to obtain a truly reliable BCI, we need to completely redefine user training approaches. To do so, I propose to study and statistically model how users learn to encode BCI commands. Then, based on human learning principles and this model, I propose to create a new generation of BCIs which ensure that users learn how to successfully encode commands with a high signal-to-noise ratio in their brain signals, hence making BCIs dramatically more reliable.
Such a reliable BCI could positively change man-machine interaction, as BCIs have promised to do but have so far failed to.

10.4 National initiatives

eTAC: Tangible and Augmented Interfaces for Collaborative Learning:

- Funding: EFRAN

- Duration: 2017-2021

- Coordinator: Université de Lorraine

- Local coordinator: Martin Hachet

- Partners: Université de Lorraine, Inria, ESPE, Canopé, OpenEdge

- The e-TAC project proposes to investigate the potential of technologies "beyond the mouse" to promote collaborative learning in a school context. In particular, we will explore augmented reality and tangible interfaces, which support active learning and favor social interaction.

- website: http://e-tac.univ-lorraine.fr/index

ANR Project EMBER:

- Duration: 2020-2023

- Partners: Inria/AVIZ, Sorbonne Université

- Coordinator: Pierre Dragicevic (Inria Saclay)

- Local coordinator: Martin Hachet

- The goal of the project will be to study how embedding data into the physical world can help people to get insights into their own data. While the vast majority of data analysis and visualization takes place on desktop computers located far from the objects or locations the data refers to, in situated and embedded data visualizations, the data is directly visualized near the physical space, object, or person it refers to.

- website: https://ember.inria.fr

Inria Project Lab AVATAR:

- Duration: 2018-2022

- Partners: Inria project-teams: GraphDeco, Hybrid, Loki, MimeTIC, Morpheo

- Coordinator: Ludovic Hoyet (Inria Rennes)

- Local coordinator: Martin Hachet

- This project aims at designing avatars (i.e., the user’s representation in virtual environments) that are better embodied, more interactive and more social, through improving all the pipeline related to avatars, from acquisition and simulation, to designing novel interaction paradigms and multi-sensory feedback.

- website: https://avatar.inria.fr

10.5 Regional initiatives

STEP - HOBIT:

- Duration: 2020

- Partners: Université de Bordeaux

- Coordinator: Bruno Bousquet

- Local coordinator: Martin Hachet

- STEP stands for Soutien à la transformation et à l'expérimentation pédagogiques, meaning Support for Educational Transformation and Experimentation. This project allowed us to recruit one engineer for the HOBIT platform, who developed teaching materials for new experiments in optics.

11 Dissemination

11.1 Promoting scientific activities

General chair, scientific chair

- Co-general chair of the WACAI 2020 online day - Fabien Lotte

Member of the organizing committees

- 5th National day on Neurofeedback, La Rochelle, October 2020 - Fabien Lotte

Chair of conference program committees

- IEEE VR 2021 - Martin Hachet

Member of the conference program committees

- WACAI 2020/2021 - Fabien Lotte

Reviewer

- CHI 2020

- ETIS 2020

- Int BCI Meeting 2020/2021

- WACAI 2020/2021

- IEEE SMC 2020

- CORTICO days 2020

Member of the editorial boards

- Journal of Neural Engineering, Editorial Board member - Fabien Lotte

- Brain-Computer Interfaces, Associate Editor - Fabien Lotte

- Frontiers in Human Neuroscience, Section Brain-Computer Interfaces, guest Associate Editor - Fabien Lotte

- Frontiers in Neuroergonomics, Section Neurotechnology and Systems, Specialty Chief Editor - Fabien Lotte

Reviewer - reviewing activities

- Trends in HCI - Fabien Lotte

- Neurophysiology Clinique / Clinical Neurophysiology - Fabien Lotte

- IEEE Transactions on Biomedical Engineering - Fabien Lotte

- Journal of Neural Engineering - Fabien Lotte

- IEEE Transactions on Neural Systems and Rehabilitation Engineering - Fabien Lotte

- Virtual Reality (VIRE) - Fabien Lotte

- Brain-Computer Interfaces - Fabien Lotte

- Frontiers in Human Neuroscience, section Brain-Computer Interfaces - Fabien Lotte

- Scientific Reports - Fabien Lotte

- Psychophysiology - Fabien Lotte

- Frontiers in Neuroscience - Khadijeh Sadatnejad

- Entropy - Khadijeh Sadatnejad

11.1.1 Invited talks

- F. Lotte, “Towards reliable Brain-Computer Interaction with high quality user training”, 5th International USERN congress and prize awarding festival, Tehran, Iran and Online, November 2020

- F. Lotte and JA. Micoulaud-Franchi, “Peut-on réparer son cerveau en apprenant à contrôler son activité cérébrale ?” (Can one repair one's brain by learning to control one's brain activity?), ViV HEALTHTECH, Online, November 2020

- M. Bikson, AM. Brouwer, D. Callan, S. Fairclough, K. Gramann, F. Krueger, F. Lotte, S. Perrey, “The many facets of Neuroergonomics”, Neuroergonomics webinar, Online, October 2020

- F. Lotte, “Optimizing mental imagery-based BCIs: machine and human learning approaches”, g.tec BCI and Neurotechnology Summer School 2020, Online, June 2020

- F. Lotte, L. Okorokova, “Does BCI need a human? A human being as the most important part of BMI. Operator's training and experience”, BCI 101 Online Global Series 2020, May 2020

11.1.2 Leadership within the scientific community

- CORTICO (French scientific association for BCI) board member - Fabien Lotte

11.1.3 Scientific expertise

- Committee for recruitment of an assistant professor at INSA Lyon - Martin Hachet

- Committee for recruitment of a professor at INSA Rennes - Pascal Guitton

- Committee for recruitment of a professor at Université de Rennes - Pascal Guitton

- Expert for the ANR Varan call for projects - Pascal Guitton

- Expert for the Crédit d'Impôt Recherche (French research tax credit) - Martin Hachet

- Reviewer for NSF CRCNS (Computational Neuroscience) research grants, USA - Fabien Lotte

- Reviewer for FWF research grants, Austria - Fabien Lotte

11.1.4 Research administration

- Member of Inria Ethical Committee (COERLE) - Pascal Guitton

- Member of Inria Parity and Equality Committee (Comité Parité et Égalité) - Pascal Guitton

- Member of Inria Radar Committee (new annual Activity Report) - Pascal Guitton

- Member of Inria International Chairs Committee - Pascal Guitton

- Member of the GTnum #interactionhybridation, Ministry of Education - Martin Hachet

- Member of Inria “Research Employment Committee” - Fabien Lotte

- Elected Secretary of the AFoDIB (association of computer science PhD students in Bordeaux) - Aline Roc

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Master: Virtual Reality, 12h eqTD, M2 Cognitive science, Université de Bordeaux - Martin Hachet

- Master: Virtual Reality, 6h eqTD, M2 Cognitive science, Université de Bordeaux - Fabien Lotte

- Master: Virtual and Augmented Reality, 12h eqTD, M2 Computer science, Université de Bordeaux - Martin Hachet

- Master: Virtual and Augmented Reality, 3h eqTD, M2 Computer science, Université de Bordeaux - Fabien Lotte

- Master: Machine Learning for EEG-based BCI, 1.5h eqTD, course “Emerging topics on neuroengineering and neuroergonomics”, Drexel University / Shanghai Jiao Tong University, China (Online) - Fabien Lotte

- Bachelor: C programming, 29h eqTD, L2 Université de Bordeaux - Adélaïde Genay

- Engineering school: Virtual and Augmented Reality, 1.5h eqTD, ENSC - Martin Hachet

- Engineering school: Web and database programming: 43h eqTD, ENSC, 1st year engineering (bachelor 3) - Aline Roc

- Engineering school: Individual IT projects, ENSC, 2nd year engineering (master 1) - Aline Roc

- Engineering school: Algorithmics and programming: 36h eqTD, ENSC, 1st year engineering (bachelor 3) - Aline Roc

- Engineering school: Aeronautical engineering and Neuroergonomics, 3h eqTD, M2 ISAE-Supaero, Toulouse - Fabien Lotte

- Engineering school: Immersion and interaction with visual worlds, 4h eqTD, Graduate Degree Artificial Intelligence, École Polytechnique, Palaiseau - Fabien Lotte

- Engineering school: Brain-Computer Interfaces, 6h eqTD, EJMIN, Angoulême (Online), France - Fabien Lotte

- Engineering school: Advanced mathematics and computer science, 60h eqTD, École Nationale Supérieure des Arts et Métiers (ENSAM), Bordeaux, France - Camille Benaroch

11.2.2 Supervision

- PhD in progress: Ambre Assor, Visualization of situated personal data, since 1/10/2020, Martin Hachet

- PhD in progress: Adélaïde Genay, Hybrid Avatars, since 1/10/2019, Martin Hachet

- PhD in progress: Aline Roc, Designing, studying and optimizing training tasks and training programs for BCI control, since 1/7/2019, Fabien Lotte

- PhD in progress: Marc Baloup, Interaction with Avatars, since 1/10/2018, Martin Hachet (33%)

- PhD in progress: Camille Benaroch, Computational Modeling of BCI user training, since 1/10/2018, Fabien Lotte (50%)

- PhD in progress: Rajkumar Darbar, Actuated Tangible User Interfaces, since 1/12/2017, Martin Hachet

- PhD in progress: Philippe Giraudeau, Collaborative learning with tangible and augmented interfaces, since 1/10/2017, Martin Hachet

- PhD defended: Aurélien Appriou, Estimating learning-related mental states in EEG, defended 17/12/2020, Fabien Lotte

11.2.3 Juries

- HDR: Mario Chavez [with report], Sorbonne University, Fabien Lotte

- PhD: Ksenia B. Volkova [with report], HSE University, Moscow, Russia, Fabien Lotte

- PhD: Alexandre Moly [with report], Université Grenoble Alpes, France, Fabien Lotte

- PhD: Aurore Bussalb [with report], Université de Paris, France, Fabien Lotte

- PhD: Federica Turi [with report], Université Côte d’Azur/Inria Sophia-Antipolis, France, Fabien Lotte

- PhD: Sébastien Rimbert [with report], Univ. Lorraine/Inria Nancy Grand-Est, France, Fabien Lotte

- PhD: Alexandre Challard [examiner & president], Université Toulouse 3 – Paul Sabatier, France, Fabien Lotte

- PhD: Sebastian Castano-Candamil [examiner], University of Freiburg, Germany, Fabien Lotte

- PhD: Guillaume Bataille [with report], INSA Rennes, Pascal Guitton

- PhD: Patrick Péréa [with report], Université Grenoble Alpes, Martin Hachet

- PhD: Charles Bailly [with report], Université Grenoble Alpes, Martin Hachet

- PhD: Alexandre Lecat [examiner], Univ. de Bordeaux, Pascal Guitton

- PhD: Stéphanie Rey [president], Univ. de Bordeaux, Pascal Guitton

11.3 Popularization

11.3.1 Articles and contents

- Le numérique pour apprendre le numérique ? (Using digital technology to learn digital technology?), binaire blog, Le Monde, 2020, Pascal Guitton & Thierry Viéville [39]

- C'est l'histoire d'un GAN (This is the story of a GAN), binaire blog, Le Monde, 2020, Marie-Agnès Enard, Pascal Guitton & Thierry Viéville [36]

- Quels sont les liens entre IA et éducation (What are the links between AI and education?), binaire blog, Le Monde, 2020, Pascal Guitton & Thierry Viéville [40]

- Parler de vérité autrement (Talking about truth differently), binaire blog, Le Monde, 2020, Marie-Agnès Enard, Pascal Guitton & Thierry Viéville [37]

- Tous au libre (Everyone to free software), binaire blog, Le Monde, 2020, Marie-Agnès Enard, Pascal Guitton & Thierry Viéville [38]

- The Mind-Machine podcast, episode 10, Online, September 2020, F. Lotte and S. Fairclough

- Interview for the magazine “Horizon”, November 2020, by Tom Cassauwers, “Brain-controlled computers are becoming a reality, but major hurdles remain” - Fabien Lotte

- Interview for the magazine “Sciences et Avenir”, November 2020, by Elena Sender, “Comment l'IA et le cerveau collabore” (How AI and the brain collaborate) - Fabien Lotte

- Filming of a TV segment for the France 4 show “C'est toujours pas sorcier”, November 2020 - Fabien Lotte

11.3.2 Interventions

- “De l'être humain à l'être virtuel : quels rapports entretenons-nous avec nos avatars ?” (From human being to virtual being: what relationships do we have with our avatars?), Regards Croisés, Pessac, 27/02/2020 (Adélaïde Genay).

12 Scientific production

12.1 Major publications

- 1 inproceedings Framework for Electroencephalography-based Evaluation of User Experience CHI '16 - SIGCHI Conference on Human Factors in Computing Systems San Jose, United States May 2016

- 2 inproceedings Teegi: Tangible EEG Interface UIST-ACM User Interface Software and Technology Symposium Honolulu, United States ACM October 2014

- 3 inproceedings HOBIT: Hybrid Optical Bench for Innovative Teaching CHI'17 - Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems Denver, United States May 2017

- 4 article Touch-Based Interfaces for Interacting with 3D Content in Public Exhibitions IEEE Computer Graphics and Applications 33 2 March 2013, 80-85 URL: http://hal.inria.fr/hal-00789500

- 5 inproceedings A Survey of Interaction Techniques for Interactive 3D Environments Eurographics 2013 - STAR Girona, Spain May 2013

- 6 article Advances in User-Training for Mental-Imagery Based BCI Control: Psychological and Cognitive Factors and their Neural Correlates Progress in Brain Research February 2016

- 7 article A physical learning companion for Mental-Imagery BCI User Training International Journal of Human-Computer Studies 136 102380 April 2020

- 8 article A review of user training methods in brain computer interfaces based on mental tasks Journal of Neural Engineering 2020

- 9 inproceedings Inner Garden: Connecting Inner States to a Mixed Reality Sandbox for Mindfulness CHI '17 - ACM Conference on Human Factors in Computing Systems Denver, United States ACM May 2017

- 10 inproceedings One Reality: Augmenting How the Physical World is Experienced by combining Multiple Mixed Reality Modalities UIST 2017 - 30th ACM Symposium on User Interface Software and Technology ACM Quebec City, Canada October 2017

12.2 Publications of the year

International journals

International peer-reviewed conferences

Conferences without proceedings

Scientific books

Edition (books, proceedings, special issue of a journal)

Reports & preprints

Other scientific publications

12.3 Other

Scientific popularization

12.4 Cited publications

- 41 article Online e-learning and cognitive disabilities: A systematic review Computers and Education 130 March 2019, 152-167

- 42 inproceedings Towards Truly Accessible MOOCs for Persons with Cognitive Disabilities: Design and Field Assessment ICCHP 2018 - 16th International Conference on Computers Helping People with Special Needs Linz, Austria July 2018

- 43 inproceedings CARDS: A Mixed-Reality System for Collaborative Learning at School ACM ISS'19 - ACM International Conference on Interactive Surfaces and Spaces Daejeon, South Korea November 2019