Keywords

Computer Science and Digital Science

- A3.2.2. Knowledge extraction, cleaning

- A3.4.1. Supervised learning

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.9. Signal processing

- A5.9.2. Estimation, modeling

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.1. Well being

- B2.5.1. Sensorimotor disabilities

- B2.5.2. Cognitive disabilities

- B2.6.1. Brain imaging

- B3.1. Sustainable development

- B9.1. Education

- B9.1.1. E-learning, MOOC

- B9.2.4. Theater

- B9.5.3. Physics

- B9.6.1. Psychology

1 Team members, visitors, external collaborators

Research Scientists

- Martin Hachet [Team leader, Inria, Senior Researcher, HDR]

- Pierre Dragicevic [Inria, Researcher, from Sep 2021]

- Pascal Guitton [Inria, Senior Researcher, until Aug 2021, HDR]

- Yvonne Jansen [CNRS, Researcher, from Sep 2021]

- Fabien Lotte [Inria, Senior Researcher, HDR]

- Arnaud Prouzeau [Inria, Starting Faculty Position]

Faculty Member

- Stephanie Cardoso [UNIV BORDEAUX MONTAI, Associate Professor, from Sep 2021]

Post-Doctoral Fellows

- Luiz Augusto De Macedo Morais [Inria, from Sep 2021]

- Sébastien Rimbert [Inria, from Mar 2021]

- Seyedehkhadijeh Sadatnejad [Inria, until Mar 2021]

PhD Students

- Come Annicchiarico [INSERM, from Nov 2021]

- Ambre Assor [Inria]

- Camille Benaroch [Inria, until Dec 2021]

- Rajkumar Darbar [Inria, until Jul 2021]

- Adelaide Genay [Inria]

- Philippe Giraudeau [Inria, until May 2021]

- Edwige Gros [Inria, from Oct 2021]

- Morgane Koval [Inria, from Oct 2021]

- Clara Rigaud [Sorbonne Université, from Sep 2021]

- Aline Roc [Inria]

- David Trocellier [Univ de Bordeaux, from Oct 2021]

Technical Staff

- Vincent Casamayou [Inria, Engineer, from Oct 2021]

- Pauline Dreyer [Inria, Technician, from Feb 2021]

- Thibaut Monseigne [Inria, Engineer]

- Joan Odicio Vilchez [Inria, Engineer, until Jun 2021]

Interns and Apprentices

- Nibras Abo Alzahab [Inria, from Oct 2021 until Dec 2021]

- Mihai Anca [Inria, from May 2021 until Jul 2021]

- Thibault Audouit [Cap sciences, from Feb 2021 until Jul 2021]

- Alper Er [Inria, from Mar 2021 until Sep 2021]

- Edwige Gros [Inria, from Mar 2021 until Jul 2021]

- Anagael Pereira [Univ de Bordeaux, from Oct 2021]

- Smeety Pramij [Inria, from Jan 2021 until Jul 2021]

- Julie Vallcaneras [Inria, from May 2021 until Jul 2021]

Administrative Assistant

- Audrey Plaza [Inria]

2 Overall objectives

The standard human-computer interaction paradigm, based on mice, keyboards, and 2D screens, has shown undeniable benefits in a number of fields. It perfectly matches the requirements of a large number of interactive applications, including text editing, web browsing, and professional 3D modeling. At the same time, this paradigm shows its limits in numerous situations. This is the case, for example, in the following activities: i) active-learning educational approaches that require numerous physical and social interactions, ii) artistic performances where both a high degree of expressivity and a high level of immersion are expected, and iii) accessible applications targeted at users with special needs, including people with sensori-motor and/or cognitive disabilities.

To overcome these limitations, Potioc investigates new forms of interaction that aim to push the frontiers of current interactive systems. In particular, we are interested in approaches where we vary the level of materiality (i.e., with or without physical reality), in both the output and the input spaces. On the output side, we explore mixed-reality environments, from fully virtual environments to very physical ones, or in between, using hybrid spaces. Similarly, on the input side, we study approaches ranging from brain activity, which requires no physical action from the user, to tangible interaction, which emphasizes physical engagement. By varying the level of materiality, we adapt the interaction to the needs of the targeted users.

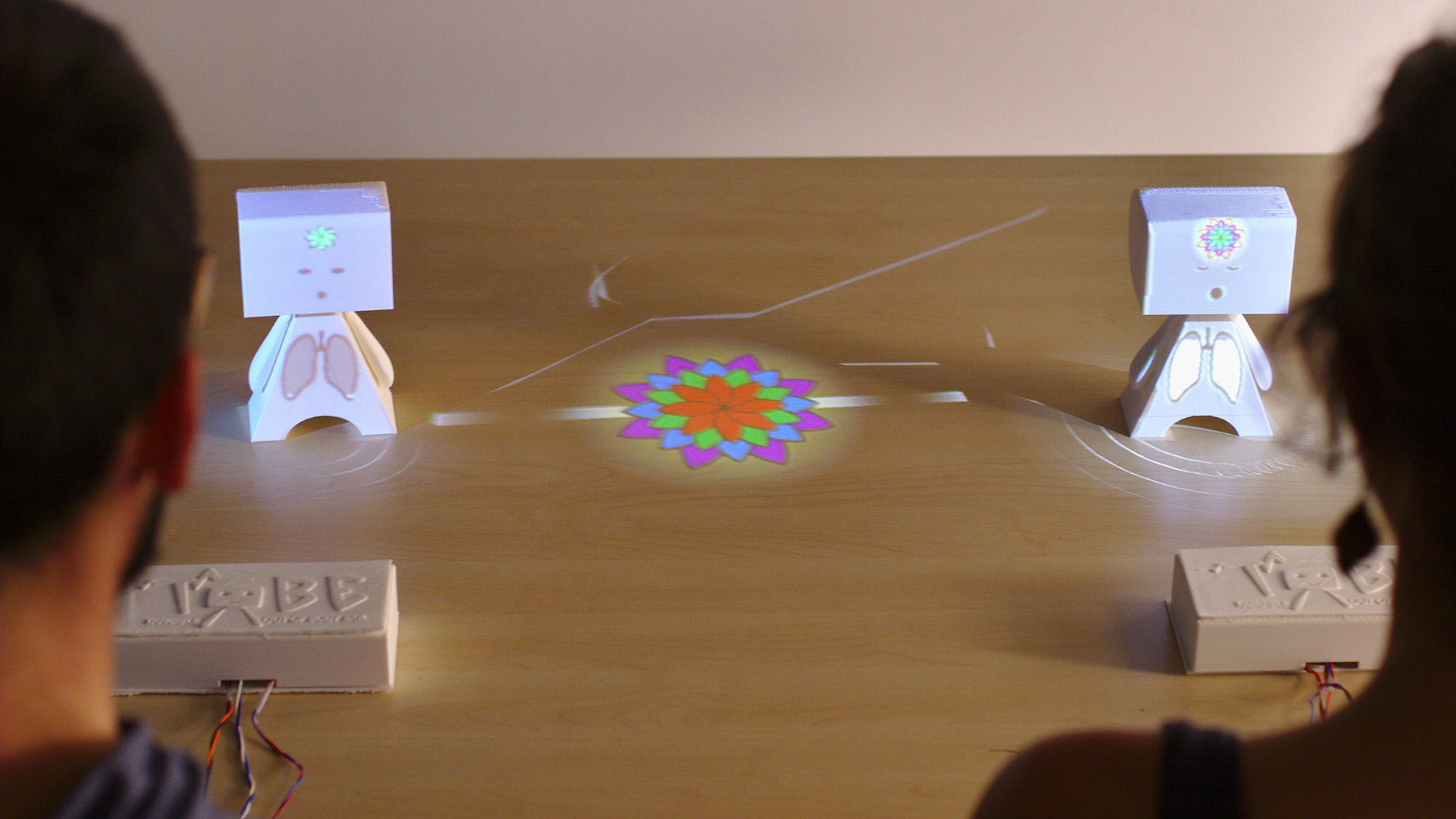

Two users are facing a physical puppet on which digital information is projected.

The main application domains targeted by Potioc are Education, Art, Entertainment and Well-being. For these domains, we design, develop, and evaluate new approaches that are mainly dedicated to non-expert users. In this context, we thus emphasize approaches that stimulate curiosity, engagement, and pleasure of use.

3 Research program

To achieve our overall objective, we follow two main research axes, plus one transverse axis, as illustrated in Figure 2.

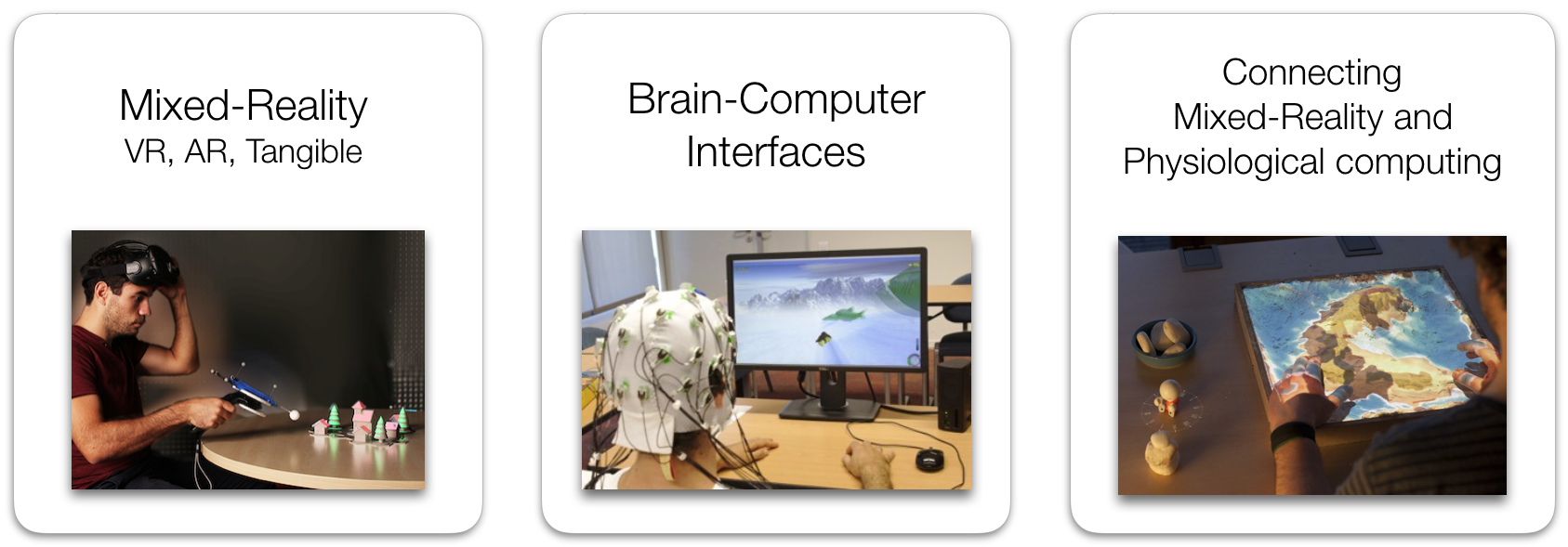

In the first axis dedicated to Interaction in Mixed-Reality spaces, we explore interaction paradigms that encompass virtual and/or physical objects. We are notably interested in hybrid environments that co-locate virtual and physical spaces, and we also explore approaches that allow one to move from one space to the other.

The second axis is dedicated to Brain-Computer Interfaces (BCI), i.e., systems enabling users to interact by means of brain activity only. We target BCI systems that are reliable and accessible to a large number of people. To do so, we work on brain signal processing algorithms as well as on understanding and improving the way we train our users to control these BCIs.

Finally, in the transverse axis, we explore new approaches that involve both mixed-reality and neuro-physiological signals. In particular, tangible and augmented objects allow us to explore interactive physical visualizations of human inner states. Physiological signals also enable us to better assess user interaction, and consequently, to refine the proposed interaction techniques and metaphors.

Three images visually represent Potioc's research axes: a user with an HMD facing physical objects, a user wearing an EEG headset in front of a screen, and a sandbox augmented with digital information projected on it.

Main research axes of Potioc.

From a methodological point of view, for these three axes, we work at three interconnected levels. The first level is centered on human sensori-motor and cognitive abilities, as well as user strategies and preferences, for completing interaction tasks. We target, in a fundamental way, a better understanding of humans interacting with interactive systems. The second level is about the creation of interactive systems. This notably includes the development of hardware and software components that allow us to explore new input and output modalities, and to propose adapted interaction techniques. Finally, at the third and highest level, we are interested in specific application domains. We want to contribute to the emergence of new applications and usages with a societal impact.

4 Application domains

4.1 Education

Education is at the core of the motivations of the Potioc group. Indeed, we are convinced that the approaches we investigate—which target motivation, curiosity, pleasure of use and high level of interactivity—may serve education purposes. To this end, we collaborate with experts in Educational Sciences and teachers for exploring new interactive systems that enhance learning processes. We are currently investigating the fields of astronomy, optics, and neurosciences. We have also worked with special education centres for the blind on accessible augmented reality prototypes. Currently, we collaborate with teachers to enhance collaborative work for K-12 pupils. In the future, we will continue exploring new interactive approaches dedicated to education, in various fields. Popularization of Science is also a key domain for Potioc. Focusing on this subject allows us to get inspiration for the development of new interactive approaches.

4.2 Art

Art, which is strongly linked with emotions and user experiences, is also a target area for Potioc. We believe that the work conducted in Potioc may be beneficial for creation from the artist's point of view, and that it may open up new interactive experiences from the audience's point of view. As an example, we have worked with colleagues who are specialists in digital music, and with musicians. We have also worked with jugglers, and we are currently working with a scenographer with the goal of enhancing the interactivity of physical mockups and improving the user experience.

4.3 Entertainment

Similarly, entertainment is a domain where our work may have an impact. We notably explored BCI-based gaming and non-medical applications of BCI, as well as mobile Augmented Reality games. Once again, we believe that our approaches that merge the physical and the virtual world may enhance the user experience. Exploring such a domain will raise numerous scientific and technological questions.

4.4 Well-being

Finally, well-being is a domain where the work of Potioc can have an impact. We have notably shown that spatial augmented reality and tangible interaction may favor mindfulness activities, which have been shown to be beneficial for well-being. More generally, we explore introspectible objects, which are tangible and augmented objects that are connected to physiological signals and that foster introspection. We explore these directions for the general public, including people with special needs.

5 Social and environmental responsibility

5.1 Accessible e-learning tools and accessible board games

Among our explorations in Human-Computer Interaction, we have been interested in designing and developing tools that can be used by people suffering from physiological or cognitive disorders.

In particular, we used the PapARt tool developed in the team to help make board games accessible to people with visual impairments 16.

We also designed a MOOC player, called AIANA, to ease access for people with cognitive disorders (attention, memory).

5.2 Augmented reality for environmental challenges

Faced with the major challenge of climate change, we are currently orienting our research towards approaches that may contribute to pro-environmental behaviors. To do so, we are framing research directions and building projects with the objective of putting our expertise in HCI, visualization, and mixed reality to work for the reduction of human impact on the planet.

5.3 Humanitarian information visualization

Members of the team have been involved in research promoting humanitarian goals, such as studying how to design visualizations of humanitarian data in a way that promotes charitable donations to traveling migrants and other populations in need. See

6 Highlights of the year

- Two permanent researchers joined the team: Pierre Dragicevic (transfer from Inria AVIZ) and Yvonne Jansen (CNRS, transfer from ISIR to LaBRI).

- Four HOBIT systems, developed in the team, have been purchased by universities as part of a regional pedagogical project.

6.1 Awards

Best paper award for:

- IEEE VIS 2021: Wesley Willett, Bon Adriel Aseniero, Sheelagh Carpendale, Pierre Dragicevic, Yvonne Jansen, Lora Oehlberg, and Petra Isenberg. "Perception! Immersion! Empowerment!: Superpowers as Inspiration for Visualization." IEEE Transactions on Visualization and Computer Graphics (2021) 21.

- ISS 2021: Lauren Thevin, Nicolas Rodier, Bernard Oriola, Martin Hachet, Christophe Jouffrais, Anke M. Brock. Inclusive Adaptation of Existing Board Games for Gamers with and without Visual Impairments using a Spatial Augmented Reality Framework for Touch Detection and Audio Feedback 16.

Young investigator award for:

- A. Appriou, F. Lotte, “Tools for affective, cognitive and conative states estimation from both EEG and physiological signals”, Proceedings of the Third International Neuroergonomics Conference, 2021.

Best student talk for:

- J. Mladenovic, J. Frey, J. Mattout, F. Lotte, "Biased feedback influences learning of Motor Imagery BCI", 8th International BCI Meeting, 2021.

Best student poster for:

- M.S. Yamamoto, K. Sadatnejad, R. M. Islam, F. Lotte, T. Tanaka. "Reliable outlier detection by spectral clustering on Riemannian manifold of EEG covariance matrix", 8th International BCI Meeting, 2021.

7 New software and platforms

7.1 New software

7.1.1 BioPyC

- Name: Bio-signals with python-based classifier

- Keywords: Machine learning, Classification, EEG, Signal processing, Brain-Computer Interface, Computational biology

- Functional Description: BioPyC is an open-source and easy-to-use platform for offline signal processing and classification. This toolbox allows users to perform offline analyses of both EEG and other biosignals, i.e., to apply signal processing and classification algorithms to neurophysiological signals such as EEG, HR, EDA or breathing. Four main modules allow the user to follow the standard steps of the classification and analysis process: 1) reading different EEG and biosignal data formats, 2) filtering and representing EEG and biosignals, 3) classifying them, and 4) visualizing and performing statistical tests on the results. To facilitate these analyses, BioPyC offers a graphical user interface (GUI) based on Jupyter that allows users to handle the toolbox without any prior knowledge of computer science or machine learning. Finally, with BioPyC, users can apply and study algorithms for the main steps of biosignal analysis, i.e., pre-processing, signal processing, classification, statistical analysis and data visualization.

- Release Contributions: First version

- URL:

- Contact: Aurélien Appriou

- Participants: Dan Dutartre, Aurélien Appriou, Fabien Lotte
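As an illustration of the four steps listed in the functional description above (read, represent, classify, evaluate), here is a minimal sketch in plain Python. It does not use BioPyC and does not reproduce its API: the function names, the synthetic one-channel trials, the log-variance feature, and the threshold classifier are all assumptions chosen to keep the example short and self-contained.

```python
import math
import random
import statistics


def make_trials(n_trials, n_samples, amplitude, rng):
    """Step 1 (stand-in for reading a data file): synthetic one-channel trials.

    Each trial is a 10 Hz sine sampled at an assumed 100 Hz, plus Gaussian noise.
    """
    return [
        [amplitude * math.sin(2 * math.pi * 10 * t / 100) + rng.gauss(0, 0.5)
         for t in range(n_samples)]
        for _ in range(n_trials)
    ]


def log_power(trial):
    """Step 2: represent a trial by the log of its variance (a power-like feature)."""
    return math.log(statistics.pvariance(trial))


def train_threshold(features_a, features_b):
    """Step 3: one-feature classifier, a threshold halfway between the class means."""
    return (statistics.mean(features_a) + statistics.mean(features_b)) / 2


def accuracy(threshold, features_a, features_b):
    """Step 4: fraction of trials that fall on the correct side of the threshold."""
    correct = sum(f < threshold for f in features_a)
    correct += sum(f >= threshold for f in features_b)
    return correct / (len(features_a) + len(features_b))


rng = random.Random(0)  # fixed seed so that the run is reproducible
low = [log_power(t) for t in make_trials(40, 200, 1.0, rng)]   # hypothetical class A
high = [log_power(t) for t in make_trials(40, 200, 3.0, rng)]  # hypothetical class B

# Train on the first half of each class, evaluate on the held-out second half.
thr = train_threshold(low[:20], high[:20])
acc = accuracy(thr, low[20:], high[20:])
print(f"held-out accuracy: {acc:.2f}")
```

A real analysis would replace the synthetic data with recorded signals, add proper filtering, and use cross-validation and statistical tests rather than a single train/test split.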

7.2 New platforms

7.2.1 CARDS

Participants: Philippe Giraudeau, Joan Odicio, Martin Hachet.

We have continued our work with the CARDS system, which allows us to augment pieces of paper in an interactive way. This technology serves as a basis for a startup project led by Philippe Giraudeau.

7.2.2 HOBIT

Participants: Erwan Normand, Vincent Casamayou, Martin Hachet.

Similarly, we have continued improving the HOBIT platform for exploring innovative teaching of wave optics. This hybrid (real/virtual) tool is being installed in four universities to support new, concrete pedagogical approaches at university level.

7.2.3 OpenViBE

Participants: Thibaut Monseigne, Fabien Lotte.

External collaborators: Thomas Prampart [Inria Rennes - Hybrid], Anatole Lécuyer [Inria Rennes - Hybrid].

This year, we contributed to two new official releases (OpenViBE 3.1.0 and 3.2.0) of the OpenViBE software (two releases per year are planned from now on). These releases, produced in collaboration with Inria Rennes (Hybrid project-team), include minor bug fixes, an update of dependencies, the addition of an algorithm for the detection and correction of artifacts, the addition of a template to communicate with Unity via LSL, and finally a redesign of the build system.

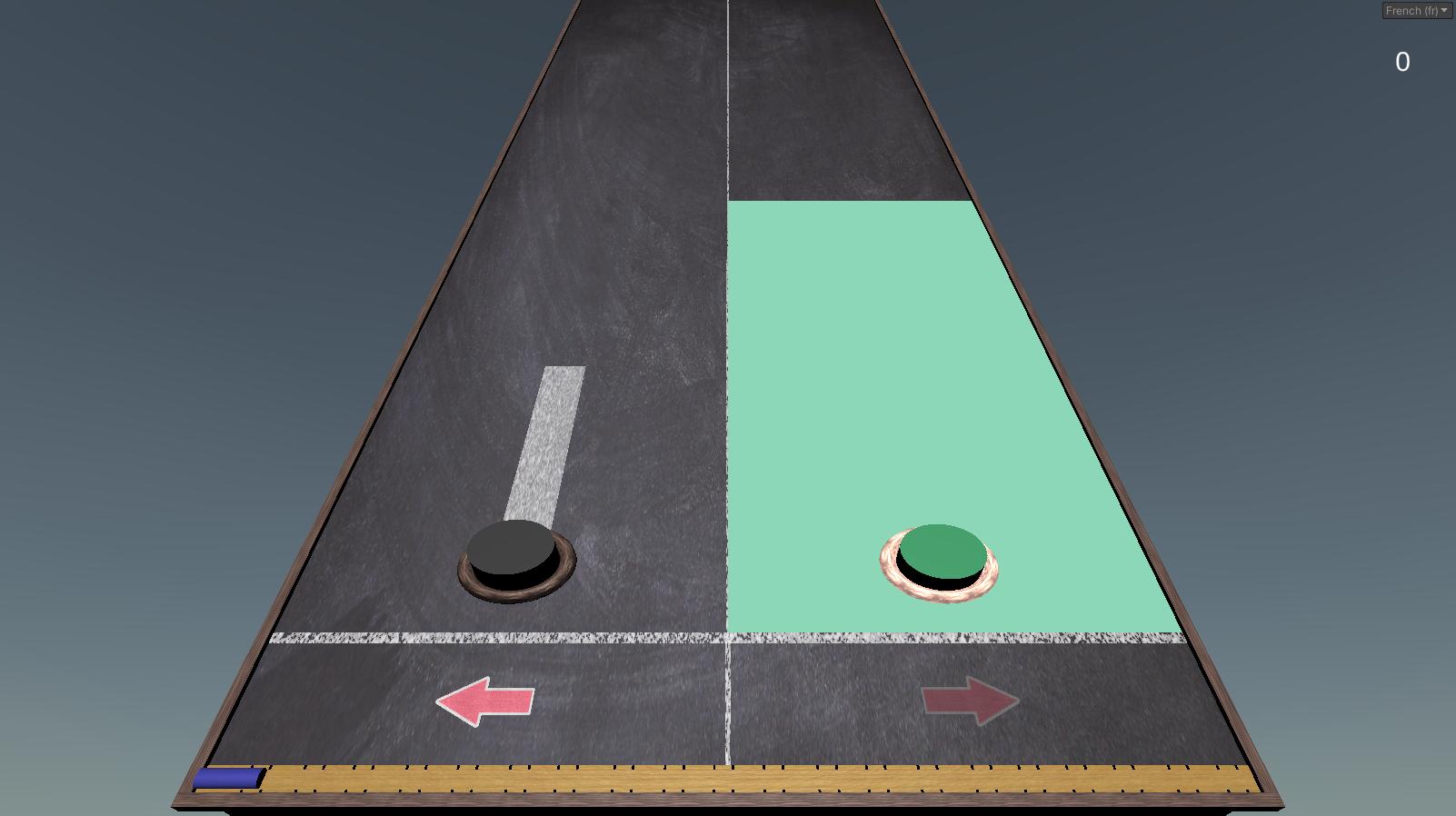

7.2.4 Brain Hero

Participants: Thibaut Monseigne, Aline Roc, Fabien Lotte.

Brain Hero is a gamification of the traditional feedback of mental imagery BCI protocols. The principle is inspired by rhythm games such as Guitar Hero, in order to make BCI sessions more interesting for the subjects. The game was implemented in Unity and communicates with the OpenViBE software (used in the team for BCI protocols) using the LSL (Lab Streaming Layer) protocol.

This picture represents the visual interface of the Brain Hero BCI training environment. It looks like notes on a guitar fretboard, each note representing a specific mental task for the BCI.

The visual feedback and gaming environment of the Brain Hero mental imagery-based BCI training.

8 New results

8.1 Avatars in Augmented Reality

Participants: Adélaïde Genay, Martin Hachet.

External collaborators: Anatole Lécuyer [Inria Rennes - Hybrid].

In 2021, we continued our work on avatarization in augmented reality. The study described in our 2020 activity report, about how surrounding objects influence the sense of embodiment in optical see-through experiences, was published in “Frontiers in Virtual Reality” 15.

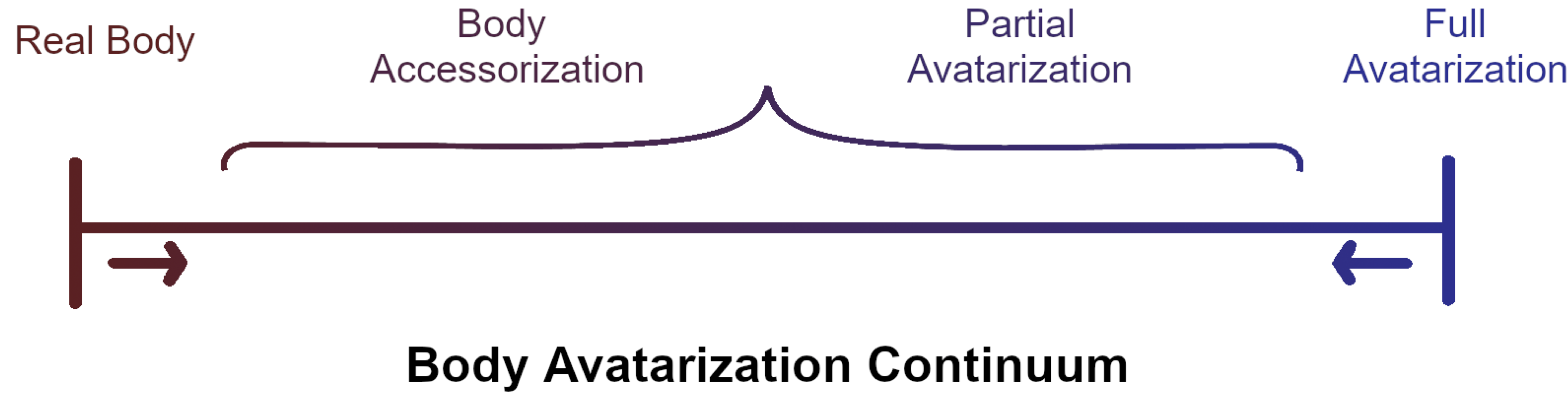

In addition, we published a literature review in “TVCG” 14 covering the embodiment of virtual self-avatars in AR. Our goal was (i) to guide readers through the different options and challenges linked to the implementation of AR embodiment systems, (ii) to provide a better understanding of AR embodiment perception by classifying the existing knowledge, and (iii) to offer insight into future research topics and trends for AR and avatar research. To do so, we introduced a taxonomy of virtual embodiment experiences by defining a “body avatarization” continuum (see Figure 4). The presented knowledge suggests that the sense of embodiment evolves in the same way in AR as in other settings, but this possibility has yet to be fully investigated. We suggest that, although it is not yet well understood, the embodiment of avatars has a promising future in AR, and we conclude by discussing possible directions for research.

We are now starting a collaboration with NARA Institute of technology - CARE lab (Japan), to push these explorations further.

A diagram shows the continuum.

The body avatarization continuum covers the extent of the user’s virtual representation within a virtual embodiment system, regardless of the environmental context. It is inspired by the Reality-Virtuality Continuum proposed by Milgram et al. on the virtuality degree of an environment.

8.2 Control of Facial Expressions in VR

Participants: Martin Hachet.

External collaborators: Marc Baloup [Inria Lille - Loki], Gery Casiez [Univ Lille - Loki], Thomas Pietrzak [Univ Lille - Loki].

This work, published at VRST 2021 23, is at the heart of the PhD project of Marc Baloup (Inria Lille - Loki team), co-advised by Martin Hachet.

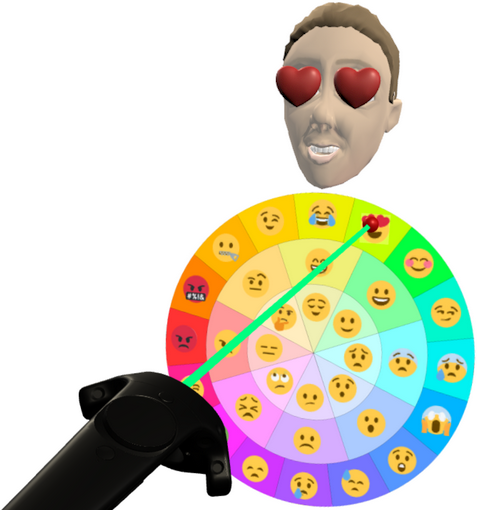

The control of an avatar's facial expressions in virtual reality is mainly based on the automated recognition and transposition of the user's facial expressions. These isomorphic techniques are limited to what users can convey with their own face and have recognition issues. To overcome these limitations, non-isomorphic techniques rely on interaction techniques using input devices to control the avatar's facial expressions. Such techniques need to be designed so that users can quickly and easily select and control an expression without disrupting a main task such as talking. We present the design of a set of new non-isomorphic interaction techniques for controlling an avatar's facial expressions in VR using a standard VR controller. These techniques, such as the one illustrated in Figure 5, were evaluated in two controlled experiments, which helped us design an interaction technique combining the strengths of each approach. This technique was evaluated in a final ecological study showing that it can be used in contexts such as social applications.

This image shows a VR controller with a ray pointing to a disc where emojis are displayed, and an avatar's head with the corresponding expression.

Control of facial expression in VR

8.3 HOBIT+

Participants: Vincent Casamayou, Martin Hachet.

External collaborators: Bruno Bousquet [Univ. Bordeaux], Lionel Canioni [Univ. Bordeaux], Jean-Paul Guillet [Univ. Bordeaux].

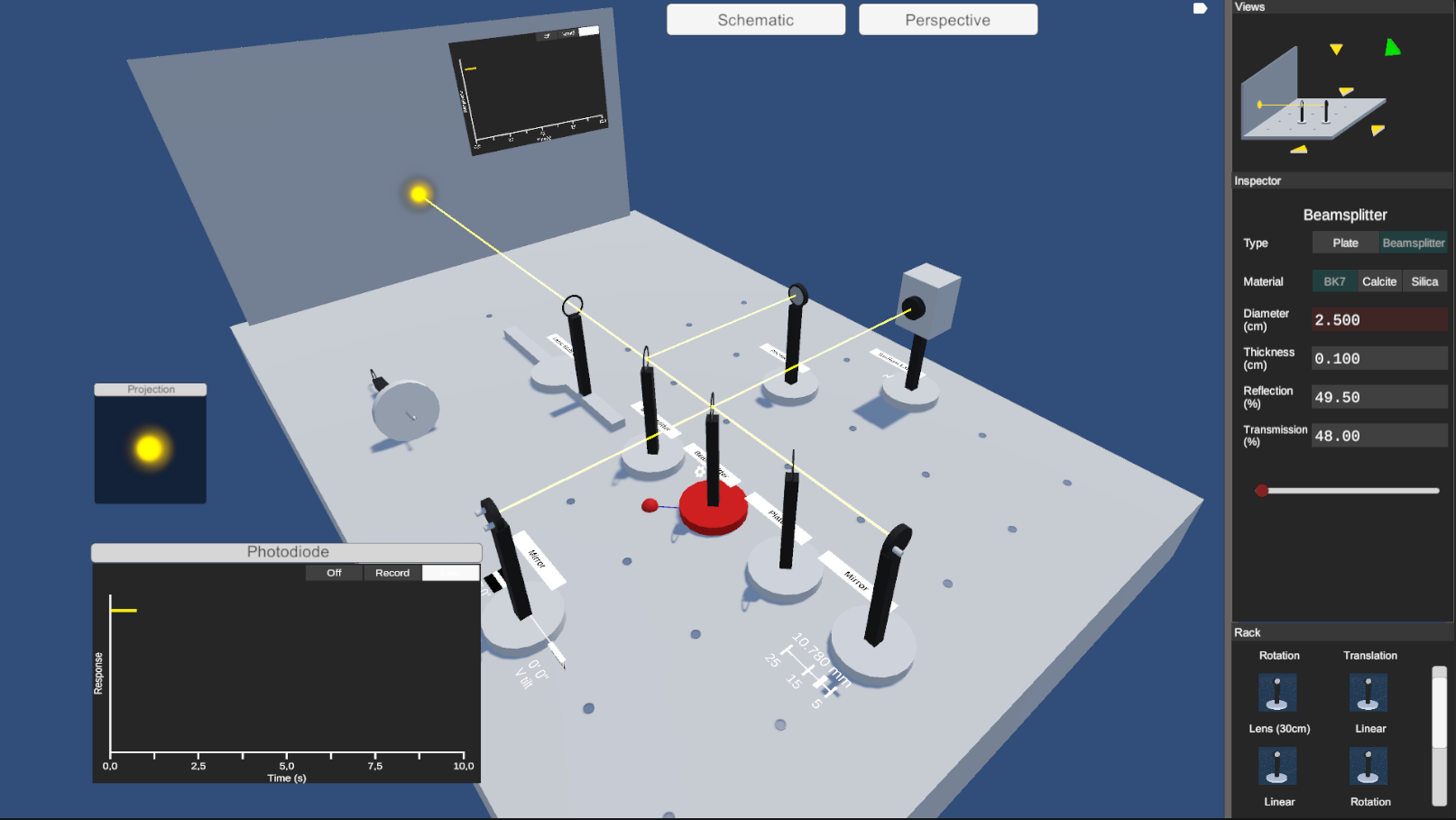

We have started extending the concept of HOBIT, a hybrid (physical/virtual) platform dedicated to the learning of wave optics 37, 36, to a purely virtual version of the system (see Figure 6). The main objective is for remote students to continue doing practical work, as if they were manipulating the physical table. Ultimately, we envision connecting the physical table and its virtual counterparts to explore hybrid (in situ/remote) collaborative training sessions.

A view of the desktop version of the HOBIT software

8.4 Interaction in Mixed Reality and Accessible Board Games

Participants: Rajkumar Darbar, Arnaud Prouzeau, Martin Hachet.

External collaborators: Lauren Thevin [UCO Bretagne], Anke Brock [ENAC], Christophe Jouffrais [CNRS], Bernard Oriola [CNRS].

Two of our projects started in 2020 were published in 2021.

The first one, led by Rajkumar Darbar, focuses on text selection with AR head-mounted displays 25. The main idea is to use a mobile device for interacting with displayed text. Four techniques were proposed and evaluated. See the dedicated section of our 2020 report.

The second one is the result of a collaboration with Lauren Thevin and Anke Brock, former members of Potioc, as well as other collaborators. We explored how PapARt, an interactive projection technology developed in the team, could make board games accessible to players with visual impairments (see the description in our 2020 report). This work was published at ISS 2021 and obtained a best paper award 16.

8.5 Investigating Around Field of View Glanceable Command Selection in AR-HMDs

Participants: Rajkumar Darbar, Arnaud Prouzeau, Martin Hachet.

Designing menus for augmented reality head-mounted displays (AR-HMDs) is challenging because of the limited display field of view (FOV) of current devices. In this work, we are exploring an approach where users can quickly access contextual commands in AR-HMDs by interacting below the display area. Compared to previous work, our technique provides the following advantages: commands are always available in the user’s FOV (i.e., head referenced) with minimal occlusion and can be issued at any time with a single tap; there is no need to explicitly switch attention away from the ongoing task; and no external device is required beyond an AR-HMD with hand tracking.

A user wears a HoloLens. She is selecting a button.

Reducing the visual clutter in HoloLens by interacting below the display area.

8.6 Hidden Objects in Situated Visualization

Participants: Ambre Assor, Arnaud Prouzeau, Pierre Dragicevic, Martin Hachet.

As part of the PhD thesis of Ambre Assor, we are defining a design space of techniques for handling non-visible physical referents in situated visualizations. Although visual occlusion has rarely been discussed in the literature on situated visualizations, it is a known problem in the areas of 3D user interfaces and 3D visualization, as well as in AR and virtual reality (VR). To construct our design space, we take inspiration from the techniques proposed in these research areas. These techniques served to identify the main dimensions, to which we add specific ones dedicated to situated visualizations. The final design space includes dimensions related to: (1) the visual representation of the physical referent; (2) the interactivity and degree of embodiment of the visual representation of the physical referent; (3) the view paradigm of the technique; (4) the data visualisation associated with the physical referent. With this design space, we define families of potential techniques, and we explore the challenges and opportunities associated with the design of techniques for situated visualizations with non-visible objects.

8.7 Tangible interfaces for Railroad Traffic Monitoring

Participants: Arnaud Prouzeau, Martin Hachet.

External collaborators: Maudeline Marlier [SNCF].

Maudeline Marlier did her end-of-study internship at Potioc and at SNCF, working on the use of tangible interaction in railroad traffic monitoring control rooms. More specifically, the aim was to work on the concept of a tangible interface and to develop a proof of concept that could be shown to operators before testing it with them. It was first important to understand the context and the activities performed in such control rooms. To this end, several observation sessions and interviews were organised in control rooms around Paris. Results from these sessions suggest that communication and information sharing are essential in these rooms, especially during a crisis, in which operators stand up, discuss, and watch information on their colleagues' screens. In this way, they can analyse what others are doing through non-verbal communication and anticipate their needs: this represents a typical use case for tangible interfaces. Maudeline then developed a first prototype of a tangible interface working on a tabletop (see Figure 8). This work will be continued in a CIFRE PhD with Maudeline and SNCF.

A user is moving a token on a multi-touch tabletop.

A working prototype has been developed on a multi-touch tabletop and uses tangible tokens.

8.8 Superpower-Inspired Framework for Data Visualization

Participants: Pierre Dragicevic, Yvonne Jansen.

External collaborators: Wesley Willett [Univ. Calgary], Bon Adriel Aseniero [Autodesk], Sheelagh Carpendale [Simon Fraser Univ.], Lora Oehlberg [Univ. Calgary], Petra Isenberg [Inria Paris-Saclay, correspondent].

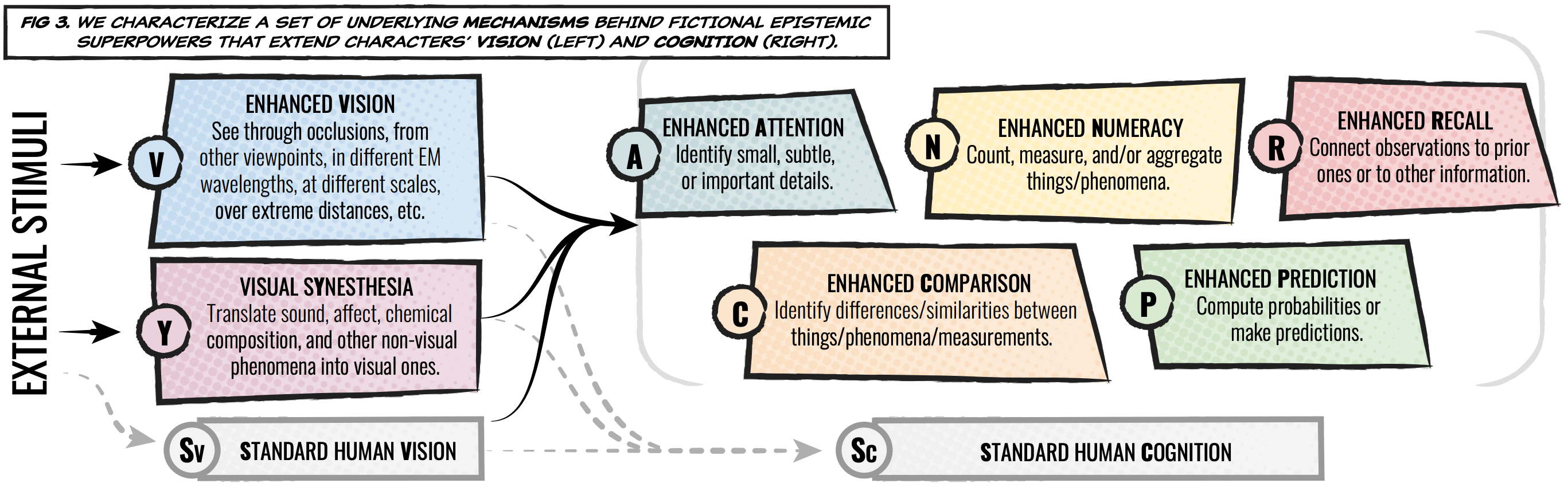

We explored how the lens of fictional superpowers can help characterize how visualizations empower people and provide inspiration for new visualization systems. Researchers and practitioners often tout visualizations' ability to “make the invisible visible” and to “enhance cognitive abilities.” Meanwhile superhero comics and other modern fiction often depict characters with similarly fantastic abilities that allow them to see and interpret the world in ways that transcend traditional human perception. We investigated the intersection of these domains, and showed how the language of superpowers can be used to characterize existing visualization systems and suggest opportunities for new and empowering ones. We introduced two frameworks: The first characterizes seven underlying mechanisms that form the basis for a variety of visual superpowers portrayed in fiction. The second identifies seven ways in which visualization tools and interfaces can instill a sense of empowerment in the people who use them. Building on these observations, we illustrated a diverse set of “visualization superpowers” and highlighted opportunities for the visualization community to create new systems and interactions that empower new experiences with data. Material and illustrations are available under CC-BY 4.0 at osf.io/8yhfz.

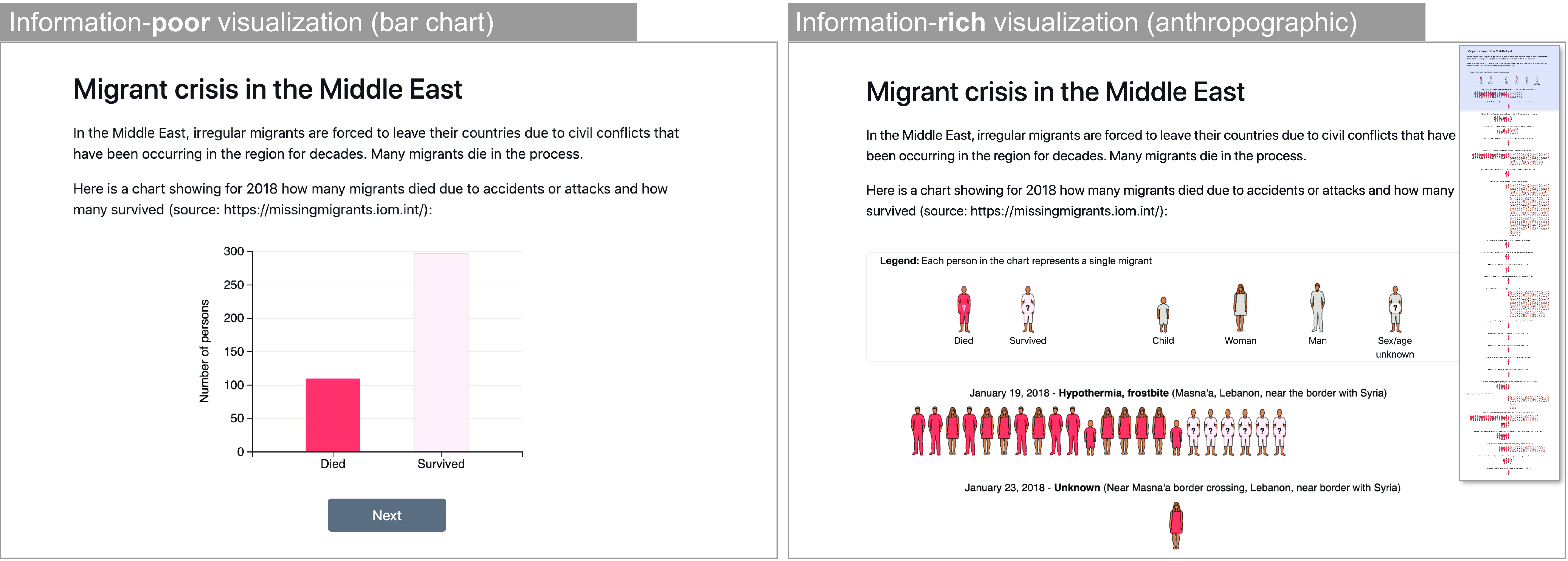

8.9 Visualizations for Promoting Empathy and Compassion

Participants: Luiz Morais [Universidade Federal de Campina Grande], Yvonne Jansen [Universidade Federal de Campina Grande], Nazareno Andrade [Universidade Federal de Campina Grande], Pierre Dragicevic [correspondent].

When showing data about people, visualization designers and data journalists often use design strategies that presumably help the audience relate to those people. The term “anthropographics” has been recently coined to refer to this practice and the resulting visualizations. Empirical studies have recently examined whether anthropographics promote empathy, compassion, or the likelihood of prosocial behavior, but findings have been inconclusive so far. In an article published at ACM CHI 47, we contributed a detailed overview of past experiments, and two new experiments that use large samples and a combination of design strategies to maximize the possibility of finding an effect. We tested an information-rich anthropographic against a simple bar chart (Figure 10), asking participants to allocate hypothetical money in a crowdsourcing study. We found that the anthropographic had, at best, a small effect on money allocation. Such a small effect may be relevant for large-scale donation campaigns, but the large sample sizes required to observe an effect and the noise involved in measuring it make it very difficult to study in more depth. We discussed implications of our findings, such as the importance of testing alternative anthropographic design strategies and of using alternative methodologies, such as real-money donation tasks or observations in the field (for example, testing alternative designs on the website of a real charity). Data and code are available at osf.io/xqae2.

8.10 Review of Data Physicalization

Participants: Pierre Dragicevic [KU Leuven], Yvonne Jansen [KU Leuven], Andrew Vande Moere [KU Leuven].

Data physicalization is a rich and vast research area that studies the use of physical artifacts to convey data. It overlaps with a number of research areas including information visualization, scientific visualization, visual analytics, tangible user interfaces, shape-changing interfaces, personal fabrication interfaces, as well as graphic design, architecture, and art. We published a book chapter 46 in an HCI handbook that surveys academic work on data physicalization and also provides a broad overview of nonacademic work. It discusses how data physicalization has been used for analytical purposes, communication and education, accessibility, self-reflection and self-expression, and finally for enjoyment and meaning. It also discusses enabling technologies, reviews empirical studies, and surveys models and theories of data physicalization.

8.11 Use of Space in Immersive Analytics

Participants: Arnaud Prouzeau.

External collaborators: Maxime Cordeil [University of Queensland], Thibault Friedrich [Ecole de Technologie Superieure in Montreal], Michael McGuffin [Ecole de Technologie Superieure in Montreal], Jiazhou Liu [Monash University], Barrett Ens [Monash University], Tim Dwyer [Monash University], Benjamin Lee [Monash University], Yidan Zhang [Monash University], Sarah Goodwin [Monash University].

An immersive system is one whose technology allows us to “step through the glass” of a computer display to engage in a visceral experience of interaction with digitally-created elements. As immersive technologies have rapidly matured to bring commercially successful virtual and augmented reality (VR/AR) devices and mass-market applications, researchers have sought to leverage their benefits, such as enhanced sensory perception and embodied interaction, to aid human data understanding and sensemaking 26, 18. In 2021, we mainly explored the use of the 3D workspace in immersive analytics systems.

In immersive environments, positioning data around the user to utilise the abundant display space has been advocated as an advantage over traditional flat screens. However, other than limiting the distance users must walk, there is no clear design rationale behind this common practice, and little research on the impact of wraparound layouts on visualisation tasks. One aspect of user performance in such tasks is the ability to remember the spatial locations of visualisations as users shift their focus between them. Motivated by these use cases, we studied the effects of a flat-wall layout versus a circular-wraparound layout on a visuo-spatial memory task in a virtual environment. The results show that participants were able to recall spatial patterns with greater accuracy using flat layouts than with wraparound layouts. Our study participants also preferred the flat layout over the wraparound layout, and subjectively reported less mental and physical effort with a flat layout. A second study showed that this better performance was not offset by the use of landmarks, nor by removing the possibility for participants to get an overview of the workspace. This work was performed in collaboration with Monash University and will be submitted to ACM ISS 2022.

Another method to improve spatial memory is to increase the size of the workspace to encourage users to move physically within it. In collaboration with ETS Montreal, we performed a study testing this hypothesis and found only a very small benefit of a large workspace over a small one. This work was presented at GI 2021 27.

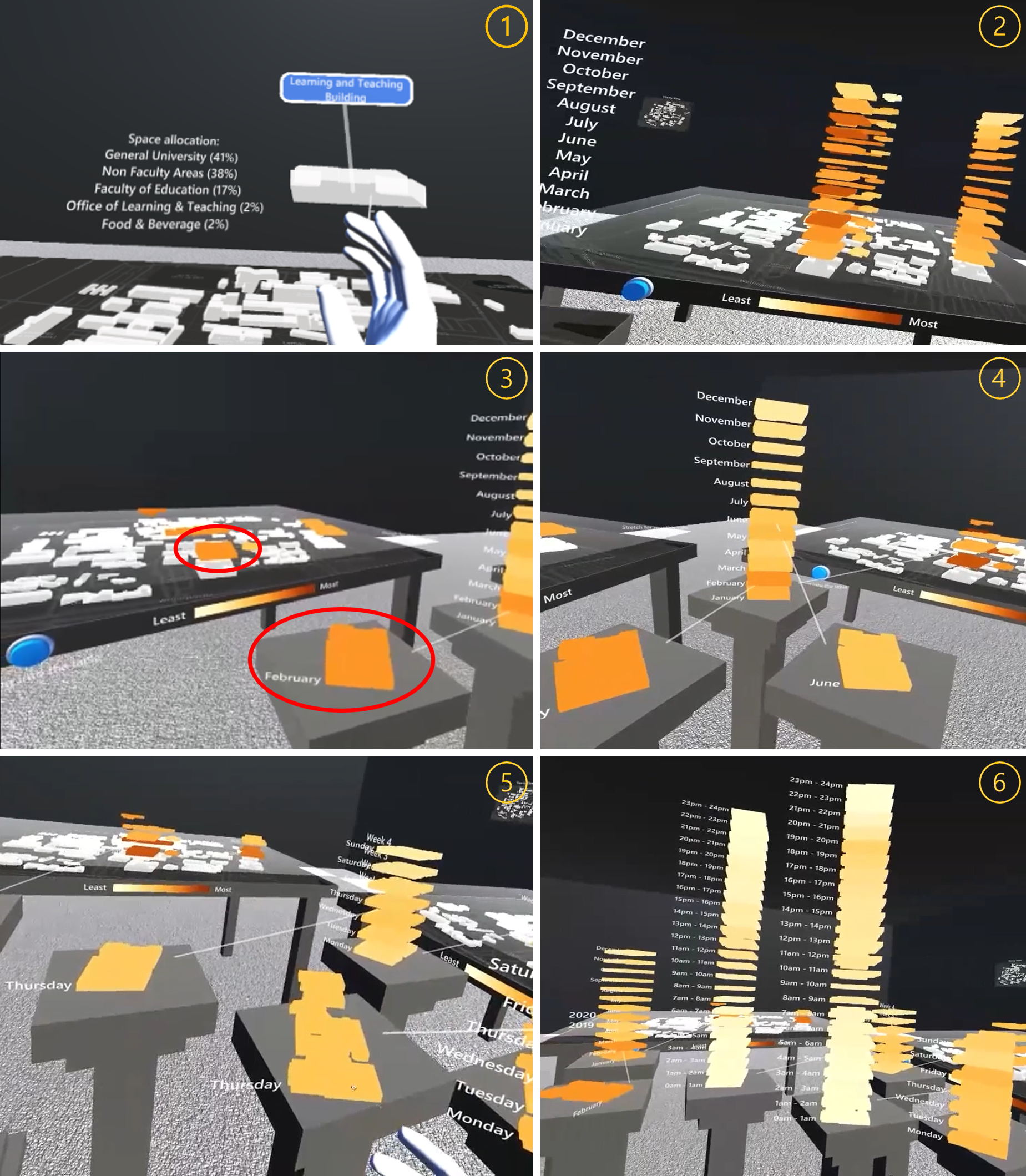

There are six small multiples demonstrating the TimeTables prototype. In Virtual Reality, we can see a table with a map of a campus. 3D representations of the buildings are placed on top of each other to represent different moments in time, and their colors encode the energy consumption.

Illustration of the TimeTables prototype.

In collaboration with Monash University, we explored the potential of using VR to explore spatio-temporal datasets. We developed a prototype, called TimeTables, that focuses on the visualisation and manipulation of space-time cubes. TimeTables uses multiple space-time cubes on virtual tabletops, which users can manipulate by extracting time layers or individual buildings to create new tabletop views (see Figure 11). The surrounding environment includes a large space for multiple linked tabletops and a storage wall. TimeTables presents information at different time scales by stretching layers to drill down in time. Users can also jump into tabletops to inspect data from an egocentric perspective. We present a use case scenario of energy consumption displayed on a university campus to demonstrate how our system could support data exploration and analysis over space and time. This work has been accepted at IEEE VR 2022.

Finally, working in a 3D space does not mean you should only use 3D visualisations. In collaboration with Monash University, we explored the design space of transformations between 2D and 3D visualisations. To explore this, we first establish an overview of the different states that a data visualisation can take in AR/VR, followed by how transformations between these states can facilitate common visualisation tasks. We then describe a design space of how these transformations function, in terms of the different stages throughout the transformation, and the user interactions and input parameters that affect it. This design space is then demonstrated with multiple exemplary techniques based in AR/VR. This work has been accepted at ACM CHI 2022.

A workshop on spatial sensemaking in immersive analytics has been accepted at ACM CHI 2022 and Arnaud is part of the organising committee.

8.12 Virtual Reality to Amplify Episodic Future Thinking

Participants: Adelaide Genay, Arnaud Prouzeau.

External collaborators: Verity Petratos [Monash University], Simon Van Baal [Monash University], Alex Robinson [Monash University], Antonio Verdejo-Garcia [Monash University].

Neuroscience research discovered that when we imagine the future with the same level of detail and affective quality as when we remember the past, we tend to make more future-oriented decisions (e.g. staying home to protect others). Episodic future thinking (EFT) is a prospection-based training that stimulates this ability and has been shown to improve future-oriented choices while simultaneously reducing anxiety about the future. Our team is currently working on two ways to improve the effectiveness and scalability of EFT: (1) building an immersive virtual reality (VR) scenario to facilitate imagining the future with sufficient detail and infusing it with positive emotions; (2) exploring the potential of collective EFT (imagining the future together) via VR communities. In 2021, a first study was performed at Monash University to assess the impact of using VR training (see Figure 12) during EFT training; it showed encouraging results regarding delay discounting, an important mechanism of impulsivity. A second, wider study focused on a clinical population (food addiction) is planned for 2022.

View of a 3D virtual scene of a forest track with picture panels.

3D model of a part of the Kokoda trail track, located near Melbourne in Australia, that was used in the VR training.

8.13 VR Authoring Systems

Participants: Edwige Gros, Arnaud Prouzeau, Martin Hachet.

Edwige Gros performed a 6-month internship at Potioc during which she explored the design of an immersive tool to author interactive experiences in virtual reality using demonstration and direct manipulation. Authoring by demonstration and direct manipulation is an intuitive way of creating content, as users directly visualize the outcome of their actions and create the interaction from a first-person point of view inside the context. Yet authoring by demonstration raises several challenges. First, as a user demonstrates the interaction, the system has several ambiguities to resolve: it needs to identify the expected action, the referent of the action, as well as its spatial and temporal context and properties. Second, some concepts required for interaction authoring are intangible, abstract, or both.

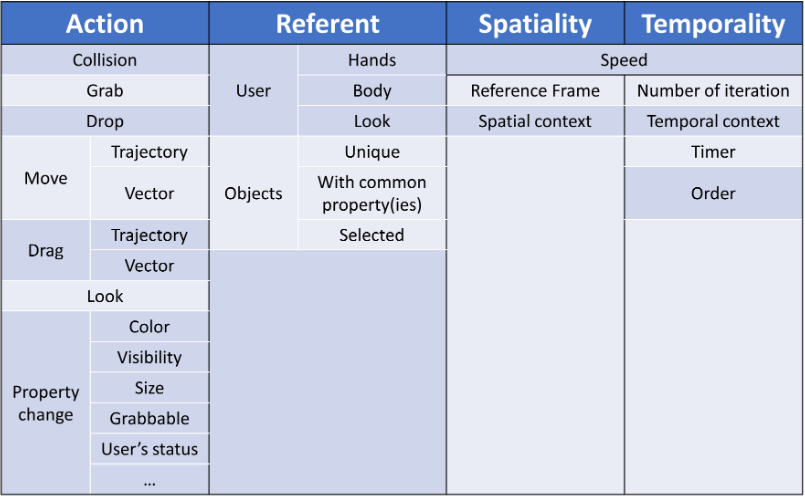

We proposed a framework to define an interaction through demonstration (see Figure 13) and developed an immersive authoring prototype in VR. Users can record their actions directly or manipulate a puppet in order to specify the final user’s action. Users can also record object modifications, interactions and movements. Testing is also enabled during the creation process to allow an iterative authoring method and facilitate error processing. Edwige is now following up on this topic in a PhD and is exploring the collaborative aspect of this design experience.

Table representing the framework for authoring interaction by demonstration.

Framework for authoring interaction by demonstration. We identified four main categories: action, referent, spatiality and temporality.

8.14 Accessibility of E-Learning Systems

Participants: Pascal Guitton.

External collaborators: Hélène Sauzéon [EP Flowers].

In 2021, we concluded our work on new digital teaching systems such as MOOCs. Unfortunately, accessibility for people with disabilities is often forgotten, which excludes them, particularly those with cognitive impairments for whom accessibility standards are far from being established. We have shown in 44 that very few research activities deal with this issue.

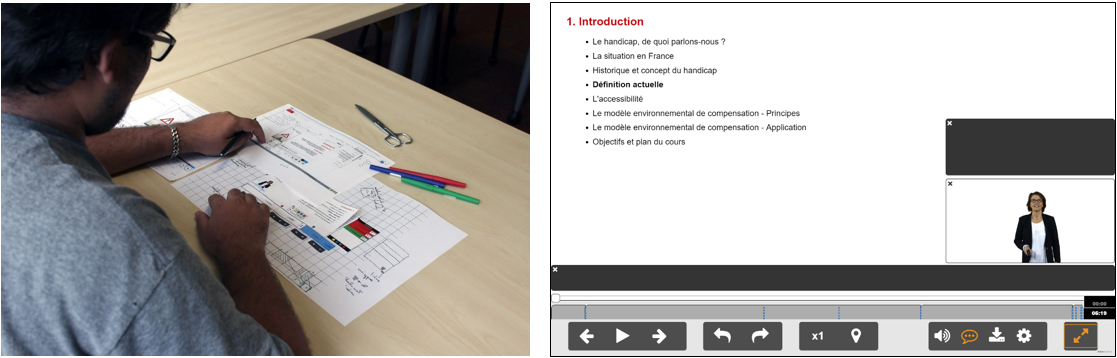

In past years, we have proposed new design principles based on knowledge in the areas of accessibility (Ability-based Design and Universal Design), digital pedagogy (Instruction Design with functionalities that reduce the cognitive load: navigation by concept, slowing of the flow…), specialized pedagogy (Universal Design for Learning, e.g., automatic note-taking, and Self Determination Theory, e.g., configuration of the interface according to users' needs and preferences) and psychoeducational interventions (e.g., support for joint teacher-learner attention), but also through a participatory design approach involving students with disabilities and experts in the field of disability (Figure 14). From these frameworks, we have designed interaction features which have been implemented in a specific MOOC player called Aïana. Moreover, we have produced a MOOC on digital accessibility which is published on the national MOOC platform (FUN) using Aïana (4 sessions since 2016 with more than 13 000 registered participants). Our first field studies demonstrated the benefits of using Aïana for disabled participants 45. We published one of the first systematic reviews on the accessibility of e-learning systems 43. Finally, in 2021, early interaction (with accessibility features), participation, learning and learner experience were assessed through a field study (N=546) without equivalent in the literature, including learners with and without disabilities 13.

Image showing an interface design session with a real user (left side) and a slide displayed using Aïana (right side)

Design of Aïana MOOC player.

8.15 Tools for affective, cognitive and conative states estimation from both EEG and physiological signals

Participants: Aurélien Appriou, David Trocellier, Fabien Lotte.

External collaborators: Pierre-Yves Oudeyer [Inria Bordeaux Sud-Ouest, Flowers], Edith Law [Univ. Waterloo], Jessie Ceha [Univ. Waterloo], Dan Dutartre [Inria Bordeaux Sud-Ouest, SED], Andrzej Cichocki [SKOLTECH].

Passive BCIs could estimate cognitive (process of coming to know and understand), conative (personal, intentional and motivational drives to process the information) and affective states (emotional interpretation of perceptions, information, or knowledge). Being able to estimate such mental states from EEG and/or physiological signals, using passive BCIs, could improve, for instance, the understanding of individual users’ capabilities and motivations to learn. However, doing so is no easy task. Indeed, it requires psychological models and protocols to induce and manipulate such mental states, as well as machine learning algorithms and software tools to robustly estimate them from EEG signals. Our recent research works with passive BCIs addressed these issues. In particular, we proposed 1) machine learning algorithms, which we designed and/or studied, to classify cognitive and affective states from EEG; 2) BioPyC, an open-source Python toolbox for easy classification of EEG and physiological signals 11; and 3) a protocol and study demonstrating that a new conative state, curiosity, can be estimated from EEG and physiological signals. This work was presented at the third Neuroergonomics conference and received the "Young Investigator Award".

8.16 Riemannian channel selection for BCI with between-session non-stationarity reduction capabilities

Participants: Khadijeh Sadatnejad, Fabien Lotte.

Between-session non-stationarity is a major challenge of current BCIs that affects system performance. We investigated the use of channel selection for reducing between-session non-stationarity with Riemannian BCI classifiers. We used the Riemannian geometry framework of covariance matrices due to its robustness and promising performances. Current Riemannian channel selection methods do not consider between-session non-stationarity and are usually tested on a single session. We proposed a new channel selection approach that specifically considers non-stationarity effects and is assessed on multi-session BCI data sets. To do so, we removed the least significant channels using a sequential floating backward selection search strategy. Our contributions include: 1) quantifying the non-stationarity effects on brain activity in multi-class problems by different criteria in a Riemannian framework and 2) a method to predict whether BCI performance can improve using channel selection. We evaluated the proposed approaches on three multi-session and multi-class mental tasks (MT)-based BCI datasets. For datasets affected by between-session non-stationarity, they led to significant improvements in performance compared to using all channels. Over all datasets, they significantly outperformed state-of-the-art Riemannian channel selection methods, notably when selecting small channel sets. This work was very recently accepted in the journal IEEE Transactions on Neural Systems and Rehabilitation Engineering.
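As an illustration only, the search strategy named above can be sketched in a few lines of Python. This is a simplified version of sequential floating backward selection, not the implementation used in the study: the function name `sfbs`, the channel names, the per-channel numbers and the `toy_score` criterion are all hypothetical stand-ins for the Riemannian criteria.

```python
from typing import Callable, Dict, FrozenSet, Iterable


def sfbs(channels: Iterable[str],
         score: Callable[[FrozenSet[str]], float],
         target_size: int) -> FrozenSet[str]:
    """Simplified sequential floating backward selection (higher score is better)."""
    all_channels = frozenset(channels)
    if not 0 < target_size <= len(all_channels):
        raise ValueError("target_size must be in 1..number of channels")

    current = all_channels
    # Best score seen so far for each subset size.
    best: Dict[int, float] = {len(current): score(current)}

    while len(current) > target_size:
        # Exclusion: drop the channel whose removal leaves the best subset.
        current = max((current - {c} for c in sorted(current)), key=score)
        size = len(current)
        best[size] = max(best.get(size, float("-inf")), score(current))

        # Conditional inclusion: re-add removed channels while doing so
        # strictly beats the best subset previously seen at the larger size.
        while current != all_channels:
            candidate = max(
                (current | {c} for c in sorted(all_channels - current)),
                key=score,
            )
            if score(candidate) <= best[len(candidate)]:
                break
            current = candidate
            best[len(current)] = score(current)

    return current


# Hypothetical per-channel numbers, for illustration only.
DISCRIMINABILITY = {"C3": 0.9, "C4": 0.8, "Cz": 0.5, "Fz": 0.2, "Pz": 0.4}
SESSION_SHIFT = {"C3": 0.1, "C4": 0.1, "Cz": 0.4, "Fz": 0.6, "Pz": 0.1}


def toy_score(subset: FrozenSet[str]) -> float:
    """Toy criterion: reward class separation, penalise between-session shift."""
    return sum(DISCRIMINABILITY[c] - SESSION_SHIFT[c] for c in sorted(subset))


selected = sfbs(DISCRIMINABILITY, toy_score, target_size=3)
print(sorted(selected))
```

With an additive toy criterion such as this one, the floating step never fires and the search reduces to plain backward elimination; the re-inclusion step only matters when channels interact, which is the expected situation with covariance-based criteria.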

8.17 Towards identifying optimal biased feedback for various user states and traits in motor imagery BCI

Participants: Smeety Pramij, Fabien Lotte.

External collaborators: Jelena Mladenovic [Univ. Belgrade], Jérémy Frey [Ullo], Jérémie Mattout [Inserm Lyon].

Neural self-regulation is necessary for achieving control over BCIs. This can be an arduous learning process, especially for motor imagery BCI. Various training methods were proposed to assist users in accomplishing BCI control and increase performance, notably the use of biased feedback, i.e., a non-realistic representation of performance. Benefits of biased feedback on performance and learning vary between users (e.g. depending on their initial level of BCI control) and remain speculative. To disentangle the speculations, we investigated what personality type, initial state and calibration performance (CP) could benefit from a biased feedback. We conducted an experiment (n=30 for 2 sessions) in which the feedback provided to each group (n=10) was either positively, negatively or not biased. Statistical analyses suggested that interactions between bias and: 1) workload, 2) anxiety, and 3) self-control significantly affected online performance. For instance, low initial workload paired with negative bias was associated with higher peak performances (86%) than without any bias (69%). High anxiety related negatively to performance no matter the bias (60%), while low anxiety matched best with negative bias (76%). For low CP, learning rate (LR) increased with negative bias only in the short term (LR=2%), as it dropped severely during the second session (LR=-1%). Overall, we unveiled many interactions between said human factors and bias. Additionally, we used prediction models to confirm and reveal even more interactions. This work was published in the journal IEEE Transactions on Biomedical Engineering 17.

8.18 Multi-Session Influence of Two Modalities of feedback and Their Order of Presentation on MI-BCI User Training

Participants: Fabien Lotte.

External collaborators: Léa Pillette [INCIA], Bernard N'Kaoua [Univ. Bordeaux], Romain Sabau [Univ. Bordeaux].

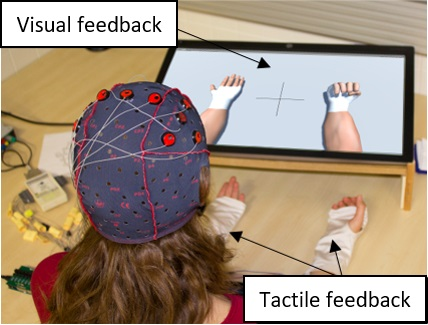

By performing motor-imagery tasks, for example, imagining hand movements, users of Motor-Imagery based BCIs (MI-BCIs) can control digital technologies, for example, a neuroprosthesis, using their brain activity only. MI-BCI users need to train, usually using a unimodal visual feedback, to produce brain activity patterns that are recognizable by the system. The literature indicates that multimodal vibrotactile and visual feedback is more effective than unimodal visual feedback, at least for short term training. However, the multi-session influence of such multimodal feedback on MI-BCI user training remained unknown, as did the influence of the order of presentation of the feedback modalities. In our experiment, 16 participants trained to control a MI-BCI during five sessions with a realistic visual feedback and five others with both a realistic visual feedback and a vibrotactile one. Training benefited from a multimodal feedback, in terms of performances and self-reported mindfulness. There was also a significant influence of the order of presentation of the modalities. Participants who started training with a visual feedback had higher performances than those who started training with a multimodal feedback. We recommend taking into account the order of presentation for future experiments assessing the influence of several modalities of feedback. This work was published in the journal MDPI Multimodal Technologies and Interaction 19.

A picture showing our vibrotactile gloves worn by a BCI participant who can also see 3D hands on a screen on top of her real hands.

Our visual and vibrotactile feedback for MI-BCI user training.

8.19 Modeling Mental Imagery-based BCI user performances

Participants: Camille Benaroch, Aline Roc, Fabien Lotte.

External collaborators: Camille Jeunet [INCIA/CNRS/Univ. Bordeaux], Maria Sayu Yamamoto [Univ. Paris-Saclay].

Models based on Neurophysiological Factors:

In that work, we tried to understand the mechanisms underlying MT-BCI user training, notably by identifying neurophysiological factors related to it. Such factors of interest should generalize across datasets. However, so far, most neurophysiological factors have been identified, individually, as factors related to BCI performance but not as predictors of the performance of unseen users. Furthermore, they were studied in single experiments and/or data sets. Therefore, in our work:

- We computed and compared neurophysiological predictors of MI-BCI performance found in the literature using two datasets.

- We proposed new neurophysiological predictors of MI-BCI performance.

- We studied whether statistical models can predict and explain MT-BCI user performance across experiments, based on users' neurophysiological characteristics.

We used data from 85 subjects, for a total of 340 online MI-BCI runs collected from two different studies based on the same MI-BCI paradigm. We used machine learning regressions with a leave-one-subject-out cross-validation on one dataset to build our predictive model of performance and, to study the reliability of the model, we used it to predict performance on another dataset. Our results suggested that it was possible to predict MI-BCI performances of unseen users using neurophysiological characteristics of a user with an error of 9.3% (p = 0.005). In addition, the proposed new predictors were found to be relevant predictors of MI-BCI performances in our analyses.
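The evaluation scheme described above (leave-one-subject-out cross-validation on one dataset, then a check on a second dataset) can be sketched as follows. This is a minimal illustration under strong assumptions, not the models used in the study: it uses a single hypothetical predictor per subject, one row per subject, an ordinary least-squares line as the regression, and made-up numbers.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:  # constant predictor: fall back to predicting the mean
        return 0.0, y_bar
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return slope, y_bar - slope * x_bar


def loso_mae(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean absolute error when each subject is predicted by a model
    trained on all the other subjects."""
    errors: List[float] = []
    for held_out in range(len(xs)):
        train_x = [x for i, x in enumerate(xs) if i != held_out]
        train_y = [y for i, y in enumerate(ys) if i != held_out]
        slope, intercept = fit_line(train_x, train_y)
        errors.append(abs(slope * xs[held_out] + intercept - ys[held_out]))
    return mean(errors)


def cross_dataset_mae(train_x: Sequence[float], train_y: Sequence[float],
                      test_x: Sequence[float], test_y: Sequence[float]) -> float:
    """Fit on one dataset, report mean absolute error on another."""
    slope, intercept = fit_line(train_x, train_y)
    return mean(abs(slope * x + intercept - y) for x, y in zip(test_x, test_y))


# Made-up values: one neurophysiological predictor and one accuracy (%) per subject.
predictor_a = [0.2, 0.4, 0.5, 0.7, 0.9]
accuracy_a = [55.0, 62.0, 66.0, 71.0, 80.0]
predictor_b = [0.3, 0.6, 0.8]
accuracy_b = [60.0, 67.0, 78.0]

print("LOSO error on dataset A:", round(loso_mae(predictor_a, accuracy_a), 2))
print("Error on dataset B:", round(
    cross_dataset_mae(predictor_a, accuracy_a, predictor_b, accuracy_b), 2))
```

Holding out whole subjects, rather than individual runs, is what makes the reported error an estimate for unseen users: no data from the tested subject reaches the model during training.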

Models based on characteristics of the machine learning algorithms used:

In this work, we proposed to study and extract predictors from two data-driven algorithms frequently used during MI-BCI system calibration, i.e., the common spatial pattern (CSP) algorithm and linear discriminant analysis (LDA). In particular:

- We extracted characteristics from the trained CSP and LDA weights.

- We studied the relationships between MI-BCI performances and subject-specific characteristics extracted from the CSP algorithm and LDA.

- We studied whether statistical models can predict and explain MI-BCI user performance across experiments, based on subject-specific characteristics extracted from the machine (i.e., from the results of the calibration algorithms).

Our results suggested that a large majority of our proposed characteristics were strongly correlated to MI-BCI performances.

When should MI-BCI feature optimization include prior knowledge, and which one?:

MI-BCIs rely on interactions between humans and machines. Therefore, the (learning) characteristics of both components are key to understanding and improving performances. Data-driven methods are often used to select/extract features with very little neurophysiological prior. Should such approaches include prior knowledge and, if so, which one? Can such approaches reach optimal performances? Our work studied the relationship between BCI performances and characteristics of the subject-specific Most Discriminant Frequency Band (MDFB) selected by a popular heuristic algorithm. First, our results showed a correlation between the selected MDFB characteristics (mean and width) and performances 35. Then, to investigate a possible causality link, we compared, online, performances obtained with a constrained (enforcing characteristics associated with high performances) and an unconstrained algorithm. Although we could not conclude on causality, average performances using the constrained algorithm were the highest. Finally, to understand the relationship between MDFB characteristics and performances better, we used machine learning to 1) predict MI-BCI performances using MDFB characteristics and 2) select automatically the optimal algorithm (constrained or unconstrained) for each subject. Our results revealed that the constrained algorithm could improve performances for subjects with either clearly distinct or no distinct EEG patterns. This work was submitted to the Brain-Computer Interfaces journal, and was accepted conditionally with minor revisions.

8.20 Long-term BCI training of a Tetraplegic User: Adaptive Riemannian Classifiers and User Training

Participants: Camille Benaroch, Aline Roc, Smeety Pramij, Khadijeh Sadatnejad, Thibaut Monseigne, Fabien Lotte.

External collaborators: Aurélien Appriou [Flitsport], Camille Jeunet [INCIA/CNRS/Univ. Bordeaux], Léa Pillette [Centrale Nantes], Jelena Mladenovic [Univ. Belgrade].

While often presented as promising assistive technologies for motor-impaired users, EEG-based BCIs remain barely used outside laboratories due to low reliability in real-life conditions. There is thus a need to design long-term reliable BCIs that can be used outside the lab by end-users, e.g., severely motor-impaired ones. Therefore, we proposed and evaluated the design of a multi-class Mental Task (MT)-based BCI for longitudinal training (20 sessions over 3 months) of a tetraplegic user for the CYBATHLON BCI series 2019. In this BCI championship, tetraplegic pilots mentally drive a virtual car in a racing video game. We aimed at combining a progressive user MT-BCI training with a newly designed machine learning pipeline based on adaptive Riemannian classifiers shown to be promising for real-life applications. We followed a two-step training process: the first 11 sessions served to train the user to control a 2-class MT-BCI by performing either two cognitive tasks (REST and MENTAL SUBTRACTION) or two motor-imagery tasks (LEFT-HAND and RIGHT-HAND). The second training step (9 remaining sessions) applied an adaptive, session-independent Riemannian classifier that combined all 4 MT classes used before. Moreover, as our Riemannian classifier was incrementally updated in an unsupervised way, it would capture both within and between-session non-stationarity. Experimental evidence confirmed the effectiveness of this approach. Namely, the classification accuracy improved by about 30% at the end of the training compared to initial sessions. We also studied the neural correlates of this performance improvement. Using a newly proposed BCI user learning metric, we could show our user learned to improve his BCI control by producing EEG signals matching increasingly more the BCI classifier training data distribution, rather than by improving his EEG class discrimination. Note that, when re-analyzing past data sets, we could also observe this new type of learning in some subjects 40. However, the resulting improvement was effective only on synchronous (cue-based) BCI and it did not translate into improved CYBATHLON BCI game performances. This work was published in the journal Frontiers in Human Neuroscience: Brain-Computer Interfaces 12.

8.21 Training programs for Mental-Task based BCI user training:

Participants: Aline Roc, Smeety Pramij, Thibaut Monseigne, Pauline Dreyer, Fabien Lotte.

A Framework for Mental-Task based BCI user training:

Based on the review and study of related works, we are working towards identifying the relevant parameters allowing for a more comprehensive description of user training, such as interaction properties (what to perform, when, how, targeted skill, etc.), environment (social and physical), information communicated through instructions or through feedback/interface design (modality/dimensionality, etc.). Such a framework can help to study and describe training in a more structured way, perhaps providing a template to support a better design, evaluation, comparison or reporting of experimental research. This framework was presented at the (virtual) international BCI meeting 39.

Studying User states during MT-BCI training

We are investigating user states through subjective feedback from the user and passive BCI classification. We recently assembled a dataset with 24 participants training over three Mental Task (MT)-BCI sessions (with CSP+LDA classification) to control a standard MT-BCI (left vs. right-hand imagery) during 12 short runs (16 trials/run). Before each session, they all completed two different cognitive tasks (N-back & Rspan), each involving a high and a low cognitive load, while EEG activity was recorded. Participants also provided subjective measures of their cognitive load during BCI interaction after each 16-trial run (with 10-point Likert scales over 6 items).

In ongoing work, we attempt to build a classifier based on these Working-Memory Tasks and use it to assess and study users' cognitive load level during MI-BCI training.

We also showed different trends in users' self-assessments, especially a higher reported load in the first session compared to the second and subsequent sessions. It also seems that users tend to gradually improve their capability to estimate their own performance based on the feedback they receive.

We also conducted semi-structured interviews to better document (1) what people choose to imagine and (2) how they feel about the execution of these tasks.

Involving the users in their training

In current BCI user training paradigms, the interaction is generally both synchronous and guided, which means that the user is provided with both temporal cues and content cues. For example, in the Graz paradigm, users are provided with a specific time period of a few seconds during which they are instructed to perform a specific task, then another time period in which they have to perform another task, and so on. However, synchronous and guided training is repetitive and ultimately boring for the user. Additionally, the literature in human learning suggests that learners should train with individualized learning objectives and proceed at their own pace 38. Thus, we designed an experimental protocol with 2 experimental groups who will train with feedback to control a MT-BCI. The control group will undergo a standard closed training, with fully synchronous and guided copy tasks, while the other group will undergo a modified version of the protocol where a percentage of the training will be user-initiated instead (with no cues indicating which task to perform or when). Such runs will allow for internally-paced and internally-guided decisions during user-device interactions. For both groups and for future experiments, an experimental platform for user training was designed and implemented (it is named Brain Hero) to allow for easy modification of many trial parameters (e.g. presence, speed or aspect of the cues) and to improve the collection of online metrics. Additionally, as compared to the standard one, the user interface is inspired by rhythm games and is expected to provide improved user experience through gamification.

8.22 Public BCI data sharing:

Participants: Pauline Dreyer, Aline Roc, Camille Benaroch, Thibaut Monseigne, Sébastien Rimbert, Fabien Lotte.

The numerous BCI experiments carried out within the BrainConquest project allow the creation of a large database, which has been analyzed and verified in order to be shared permanently and free of charge with the scientific community. In particular, we will share: the protocols used for the BCI experiments; the EEG and physiological signals of 87 BCI participants; information about their profile: demographics, current state (e.g., their state of fatigue or motivation, measured through questionnaires), personality traits and BCI performance; and the OpenViBE codes and data (e.g., the parameters of the machine learning algorithms) generated during each experiment. This will allow the community to extend this research, for example by using our extensive EEG data to design new machine learning algorithms or to identify new factors influencing BCI learning.

8.23 Understanding the fundamental interrelation between ERD/ERS and MI-BCI use

Participants: Sébastien Rimbert, Fabien Lotte.

One of the most prominent BCI types of interaction is Motor Imagery (MI)-based BCI. Users control a system by performing MI tasks, e.g., imagining hand or foot movements detected from EEG signals. Indeed, movement and imagination of movement activate similar neural networks, enabling the MI-based BCI to exploit the modulation of sensorimotor rhythms (SMR) over the motor cortex, respectively known as Event-Related Desynchronization (ERD) and Event-Related Synchronization (ERS), coming from the mu (7-13 Hz) and beta (15-30 Hz) frequency bands. Despite current research, the relationship between ERD/ERS generated during the MI task is still not well understood. Indeed, numerous studies have previously shown that there is a lot of inter-subject and intra-subject variability in ERD and ERS patterns, but the origin of this phenomenon remains difficult to understand. This lack of knowledge about the temporal variability of cerebral motor patterns limits the possibilities of improving the performance of BCIs, which remains quite poor on average. All these unknowns would be problematic for such targeted applications. A variation in the nature or quality of the brain inputs can affect the overall quality of the BCI, and therefore the corresponding user experience, and vice versa, creating a potential mutual degradation of the BCI-loop.
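For readers unfamiliar with how ERD and ERS are quantified, one common convention expresses them as the percentage change in band power during the task relative to a reference (rest) period, with negative values read as ERD and positive values as ERS. The sketch below follows that convention; it is an illustration, not the analysis pipeline of this work. It assumes the samples were already band-pass filtered in the band of interest, and the function names and sample values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def band_power(samples: Sequence[float]) -> float:
    """Mean squared amplitude of an already band-pass-filtered signal."""
    return mean(s * s for s in samples)


def erd_ers_percent(reference: Sequence[float], task: Sequence[float]) -> float:
    """Relative band-power change (%) of the task period versus the reference.

    Negative values indicate a power decrease (ERD),
    positive values a power increase (ERS).
    """
    ref_power = band_power(reference)
    if ref_power == 0:
        raise ValueError("reference period has zero power")
    return 100.0 * (band_power(task) - ref_power) / ref_power


# Made-up filtered samples: the amplitude halves during imagined movement.
rest = [2.0, -2.0, 2.0, -2.0]
imagery = [1.0, -1.0, 1.0, -1.0]
print(erd_ers_percent(rest, imagery))  # -75.0, i.e. an ERD
```

In practice the power would be averaged over many trials and tracked over time within the trial, which is where the inter- and intra-subject variability discussed above becomes visible.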

We believe that a better understanding of the intra-individual variability of ERD/ERS during BCI use is crucial for developing effective interfaces. Indeed, individual activation patterns during MI are largely neglected in most studies, which have mainly focused on identifying patterns common to all participants. In particular, we need to better understand how the cerebral motor patterns underlying the MI task (i.e., ERDs and ERSs) are modulated depending (i) on the context of the BCI experiment, (ii) on the characteristics of the user (e.g., right-handed/left-handed, age, gender, athlete, etc.) or (iii) on the user's experience with the BCI over time. Particularly, how the user changes with continued use of the interface, in terms of mastery of the task, acceptability of the interface and subjective perception of motivation, has not been sufficiently investigated. Thus, we propose to analyze large MI-BCI databases (> 150 subjects) to show how ERD/ERS are modulated according to the experimental context, the characteristics of the user or their specific experience with the BCI. We expect that this enhanced knowledge will enable the design of more efficient MI-BCIs adapted to each user.

8.24 Guidelines to use Transfer Learning for Motor Imagery Detection: an experimental study

Participants: Sébastien Rimbert, Fabien Lotte.

External collaborators: Laurent Bougrain [Univ. Lorraine], Pedro Luiz Coelho Rodrigues [Inria Paris-Saclay, Parietal], Geoffrey Canron [Univ. Lorraine].

MI-BCIs have shown promising results for motor recovery, intraoperative awareness detection or assistive technology control. However, they suffer from several limitations due to the high variability of EEG signals, mainly lengthy and tedious calibration times usually required for each new day of use, and a lack of reliability for all users. Such problems can be addressed, to some extent, using transfer learning algorithms. However, the performance of such algorithms has been very variable so far, and when they can be safely used is still unclear. Therefore, in this work, we studied the performance of various state-of-the-art Riemannian transfer learning algorithms on a MI-BCI database (30 users), for various conditions: 1) supervised and unsupervised transfer learning; 2) various amounts of available training EEG data for the target domain; 3) intra-session or inter-session transfer; 4) users with both good and less good MI-BCI performances. From such experiments, we derived guidelines about when to use which algorithm. Re-centering the target data is effective as soon as a few samples of this target set are taken into account. This is true even for intra-session transfer learning. Likewise, re-centering is particularly useful for subjects who have difficulty producing stable motor imagery from session to session. This work was published in the IEEE NER'21 conference 24.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Wisear:

- Duration: 2020-2021

- Local coordinator: Fabien Lotte

- Consulting project on in-ear EEG-based BCI

10 Partnerships and cooperations

10.1 International initiatives

10.1.1 Participation in other International Programs

BCI Endeavour

- Description: BCI Endeavour is an international consortium that aims at designing a state-of-the-art MT-BCI user training protocol and at running this protocol in 20+ labs in order to create the largest MT-BCI study and database (to be shared open-source) ever.

- Partner Institution(s):

- INCIA, France (Project leader. Lead: Camille Jeunet)

- Inria (Bordeaux, Paris, Rennes), France

- INSERM Lyon, France

- Univ. Lille, France

- Univ. Lorraine, France

- ISAE-Supaero, France

- Univ. Groningen, The Netherlands

- Donders Institute, The Netherlands

- Univ. Graz, Austria

- TU Graz, Austria

- Univ. Vienna, Austria

- Univ. Würzburg, Germany

- TU Berlin, Germany

- Univ. Oldenburg, Germany

- Univ. Essex, UK

- Univ. Glasgow, UK

- Univ. Ulster, UK

- ETH Zurich, Switzerland

- Univ. Padova, Italy

- Fondazione Santa Lucia, Italy

- Univ. Lisbon, Portugal

- Tokyo University of Agriculture and Technology, Japan

- Date/Duration: 2020-present

- Role: Our team is part of three design subgroups for the design of a unified shared MT-BCI protocol:

- "Instructions" (led by S. Enriquez-Geppert, Univ. Groningen, The Netherlands) - Aline Roc, Sébastien Rimbert, Fabien Lotte

- "Assessment" (led by L. Pillette, INCIA, France) - Aline Roc, Sébastien Rimbert

- "Machine learning" (led by Reinhold Scherer, Univ. Essex, UK) - Camille Benaroch, Fabien Lotte

10.2 European initiatives

10.2.1 FP7 & H2020 projects

BrainConquest:

- Funding: ERC Starting Grant

- Project title: BrainConquest - Boosting Brain-Computer Communication with High Quality User Training

- Duration: 2017-2022

- Coordinator: Fabien Lotte

- Abstract: Brain-Computer Interfaces (BCIs) are communication systems that enable users to send commands to computers through brain signals only, by measuring and processing these signals. Making computer control possible without any physical activity, BCIs have promised to revolutionize many application areas, notably assistive technologies, e.g., for wheelchair control, and man-machine interaction. Despite this promising potential, BCIs are still barely used outside laboratories, due to their current poor reliability. For instance, BCIs only using two imagined hand movements as mental commands decode, on average, less than 80% of these commands correctly, while 10 to 30% of users cannot control a BCI at all. A BCI should be considered a co-adaptive communication system: its users learn to encode commands in their brain signals (with mental imagery) that the machine learns to decode using signal processing. Most research efforts so far have been dedicated to decoding the commands. However, BCI control is a skill that users have to learn too. Unfortunately how BCI users learn to encode the commands is essential but is barely studied, i.e., fundamental knowledge about how users learn BCI control is lacking. Moreover standard training approaches are only based on heuristics, without satisfying human learning principles. Thus, poor BCI reliability is probably largely due to highly suboptimal user training. In order to obtain a truly reliable BCI we need to completely redefine user training approaches. To do so, I propose to study and statistically model how users learn to encode BCI commands. Then, based on human learning principles and this model, I propose to create a new generation of BCIs which ensure that users learn how to successfully encode commands with high signal-to-noise ratio in their brain signals, hence making BCIs dramatically more reliable. 
Such a reliable BCI could positively change man-machine interaction as BCIs have promised but failed to do so far.

BITSCOPE:

- Funding: CHIST-ERA Grant

- Project title: BITSCOPE: Brain Integrated Tagging for Socially Curated Online Personalised Experiences

- Duration: 2022-2024 (3 years)

- Coordinator: Tomàs Ward, Dublin City University, Ireland

- Abstract: This project presents a vision for brain computer interfaces (BCI) which can enhance social relationships in the context of sharing virtual experiences. In particular we propose BITSCOPE, that is, Brain-Integrated Tagging for Socially Curated Online Personalised Experiences. We envisage a future in which attention, memorability and curiosity elicited in virtual worlds will be measured without the requirement of “likes” and other explicit forms of feedback. Instead, users of our improved BCI technology can explore online experiences leaving behind an invisible trail of neural data-derived signatures of interest. This data, passively collected without interrupting the user, and refined in quality through machine learning, can be used by standard social sharing algorithms such as recommender systems to create better experiences. Technically the work concerns the development of a passive hybrid BCI (phBCI). It is hybrid because it augments electroencephalography with eye tracking data, galvanic skin response, heart rate and movement in order to better estimate the mental state of the user. It is passive because it operates covertly without distracting the user from their immersion in their online experience and uses this information to adapt the application. It represents a significant improvement in BCI due to the emphasis on improved denoising facilitating operation in home environments and the development of robust classifiers capable of taking inter- and intra-subject variations into account. We leverage our preliminary work in the use of deep learning and geometrical approaches to achieve this improvement in signal quality. The user state classification problem is ambitiously advanced to include recognition of attention, curiosity, and memorability which we will address through advanced machine learning, Riemannian approaches and the collection of large representative datasets in co-designed user centred experiments.

- Partner Institution(s):

- Dublin City University, Ireland (Project Leader. Lead: Tomàs Ward)

- Inria Bordeaux Sud-Ouest, France (Lead: Fabien Lotte)

- Nicolaus Copernicus University, Poland (Lead: Veslava Osinska)

- Polytechnic University of Valencia, Spain (Lead: Mariona Alcañiz)

10.3 National initiatives

eTAC: Tangible and Augmented Interfaces for Collaborative Learning:

- Funding: EFRAN

- Duration: 2017-2021

- Coordinator: Université de Lorraine

- Local coordinator: Martin Hachet

- Partners: Université de Lorraine, Inria, ESPE, Canopé, OpenEdge

- The e-TAC project proposes to investigate the potential of technologies “beyond the mouse” in order to promote collaborative learning in a school context. In particular, we will explore augmented reality and tangible interfaces, which support active learning and favor social interaction.

- website: eTac

ANR Project EMBER:

- Duration: 2020-2023

- Partners: Inria/AVIZ, Sorbonne Université

- Coordinator: Pierre Dragicevic

- Local coordinator: Martin Hachet

- The goal of the project is to study how embedding data into the physical world can help people gain insights into their own data. While the vast majority of data analysis and visualization takes place on desktop computers located far from the objects or locations the data refers to, in situated and embedded data visualizations the data is visualized directly near the physical space, object, or person it refers to.

- website: Ember

Inria Project Lab AVATAR:

- Duration: 2018-2022

- Partners: Inria project-teams: GraphDeco, Hybrid, Loki, MimeTIC, Morpheo

- Coordinator: Ludovic Hoyet (Inria Rennes)

- Local coordinator: Martin Hachet

- This project aims at designing avatars (i.e., the user’s representation in virtual environments) that are better embodied, more interactive and more social, by improving the whole avatar pipeline, from acquisition and simulation to the design of novel interaction paradigms and multi-sensory feedback.

- website: Avatar

11 Dissemination

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

General chair, scientific chair

- General co-chair of WACAI 2021 (Fabien Lotte)

Member of the organizing committees

- alt.chi co-chair at ACM CHI 2021 (Pierre Dragicevic)

- EnergyVis co-chair at ACM e-Energy 2021 (Arnaud Prouzeau)

- General chair 4th International OpenViBE workshop @NEC'21 (Fabien Lotte)

- 4th International OpenViBE workshop @NEC'21 (Aline Roc, Sébastien Rimbert)

- General co-chair, Neuroergonomics Webinar 3, "Neurotechnologies and System Neuroergonomics" (Fabien Lotte)

- NEC'21 Grand Challenge: Passive BCI competition (Fabien Lotte)

- Workshop "Brain-Computer Interfaces for outside the lab: Neuroergonomics for human-computer interaction, education and sport" @International BCI meeting 2021 (Fabien Lotte)

11.1.2 Scientific events: selection

Chair of conference program committees

- Program co-chair IEEE VR 2021 and 2022 (Martin Hachet)

Member of the conference program committees

- IEEE VIS 2021 (Pierre Dragicevic, Yvonne Jansen, Arnaud Prouzeau)

- IEEE VR 21-22 (Arnaud Prouzeau)

- ACM ISS 21 (Arnaud Prouzeau)

- ACM MobileHCI 21 (Arnaud Prouzeau)

- IEEE ISMAR 21 (Arnaud Prouzeau)

- Rencontres Doctorales IHM 2021 (Martin Hachet)

- Neuroergonomics Conference (NEC) 2021 (Fabien Lotte)

- Augmented Humans (AHs) 2021 (Fabien Lotte)

Reviewer

The members of Potioc regularly review for numerous conferences: NEC 2021, AHs 2021, alt.chi 2021, IEEE SMC 2021, JJC-ICON 2021, IEEE VR/3DUI 2022, ACM CHI, ACM VRST, IEEE ISMAR

11.1.3 Journal

Member of the editorial boards

- Member of the editorial board of the Journal of Perceptual Imaging (JPI) (Pierre Dragicevic)

- Member of the editorial board of the Springer Human–Computer Interaction Series (HCIS) (Pierre Dragicevic)

- Co-specialty Chief Editor of Frontiers in Neuroergonomics: Neurotechnology and System Neuroergonomics (Fabien Lotte)

- Member of the editorial board of Journal of Neural Engineering (Fabien Lotte)

- Member of the editorial board of Brain-Computer Interfaces (Fabien Lotte)

- Member of the editorial board of IEEE Transactions on Biomedical Engineering (Fabien Lotte)

Reviewer - reviewing activities

The members of Potioc regularly review for numerous journals:

Frontiers in Neuroscience, Frontiers in Neuroergonomics, Frontiers in Human Neuroscience, Frontiers in Computer Science, Frontiers in Sports and Active Living, Frontiers in Neurorobotics, Plos One, PeerJ, IEEE Journal of Biomedical and Health Informatics, Journal of Neural Engineering, International Journal of Human Computer Studies, Psychophysiology, IEEE Transactions on Neural Systems and Rehabilitation Engineering, IEEE Transactions on Biomedical Engineering, IEEE Transactions on Visualization and Computer Graphics

11.1.4 Invited talks

- “Interagir avec l’invisible dans des espaces physico-numériques”, EASD Pau, November 2021 (Martin Hachet)

- “Visualisation immersive de données spatiales”, 3D Webinar « autour de la 3D », co-organized by GDR CNRS MAGIS and GDR CNRS IG-RV, July 2021 (Arnaud Prouzeau)

- “Contribution of median nerve stimulation in the design of a BCI based on cerebral motor activity: towards improvement in the detection of intraoperative awareness during general anaesthesia”, CHRU Brugmann Seminar, Belgium, November 2021 (Sébastien Rimbert)

- "Understanding, Modelling and Optimizing BCI user training: the BrainConquest project" - BCI Society BCI Thursdays - Next Generations: Industry Academia Talks, online, December 2021 (Fabien Lotte)

- "Interfaces Cerveau-Ordinateur et NeuroFeedback : enjeux et perspectives pour la recherche biomédicale" - Institut de Recherche Biomédicale des Armées (IRBA), Brétigny, France, November 2021 (Fabien Lotte)

- "Brain-Computer Interaction: at the crossroad of Machine and Human Learning research", 6th USERN Congress and prize awarding Festival, online/Tehran, November 2021 (Fabien Lotte)

- "Interfaces Cerveau-Machines", 47ème Congrès de la Société Francophone de Chronobiologie (SFC), Bordeaux, October 2021 (Fabien Lotte)

- "Towards Theories of Mental Task-based Brain-Computer Interfaces User Training", 3rd International Neuroergonomics conference, online/Munich, September 2021 (Fabien Lotte)

11.1.5 Leadership within the scientific community

- Member of the IRCAM Scientific council (Martin Hachet)

- Member of the Administrative Council of CORTICO, the French association for BCI research (Fabien Lotte, Sébastien Rimbert)

11.1.6 Scientific expertise

- Expert for the French Ministry of Research: “Crédit Impôt Recherche” (Martin Hachet)

- Reviewer for the Austrian Science Fund (FWF) (Arnaud Prouzeau, Fabien Lotte)

- Reviewer for Lecturer promotion at the University of Essex, UK (Fabien Lotte)

- Committee for recruitment of a professor at University of Bordeaux (Pascal Guitton)

- Committee for recruitment of an assistant professor at École Centrale de Nantes (Pascal Guitton)

11.1.7 Research administration

- Member of the CCSU (Commission Consultative de Spécialistes de l’Université Paris-Saclay) (Pierre Dragicevic)

- Member of the Conseil de Labo LISN (Pierre Dragicevic)

- Co-chair of the IID axis of the Labex Digicosme (Pierre Dragicevic)

- Member of the CER (Comité d’Éthique de la Recherche) Paris-Saclay (Pierre Dragicevic)

- Member of the CUMI (Commission des Utilisateurs des Moyens Informatiques) at Inria BSO (Arnaud Prouzeau)

- Member of GT Num GTHybridationInteraction - Ministry of Research and Education (Martin Hachet)

- Elected Secretary of the AFoDIB (association of computer science PhD students in Bordeaux) (Aline Roc)

- Elected Representative of Students at the EDMI doctoral school Council (Aline Roc)

- Member of Inria Bordeaux's commission for research jobs (CER) (Fabien Lotte)

- Member of the LaBRI scientific council (Fabien Lotte)

- President of the Cognitive Science association ASCOERGO, mission of Bordeaux research dissemination (David Trocellier)

- Member of Inria Ethical Committee (COERLE) (Pascal Guitton)

- Member of Inria Radar Committee (new annual Activity Report) (Pascal Guitton)

- Member of Inria International Chairs Committee (Pascal Guitton)

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Doctoral School: Interaction, Réalité Virtuelle et Réalité augmentée, 12h eqTD, EDMI, Université de Bordeaux (Martin Hachet)

- Master: Réalité Virtuelle, 12h eqTD, M2 Cognitive science, Université de Bordeaux (Martin Hachet)

- Master: Réalité Virtuelle, 6h eqTD, M2 Cognitive science, Université de Bordeaux (Fabien Lotte)