Keywords

Computer Science and Digital Science

- A5.3.3. Pattern recognition

- A5.4. Computer vision

- A5.4.2. Activity recognition

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A9.1. Knowledge

- A9.2. Machine learning

- A9.3. Signal analysis

- A9.8. Reasoning

Other Research Topics and Application Domains

- B1.2.2. Cognitive science

- B2.1. Well being

- B7.1.1. Pedestrian traffic and crowds

- B8.1. Smart building/home

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientists

- Francois Bremond [Team leader, Inria, Senior Researcher, HDR]

- Antitza Dantcheva [Inria, Researcher, HDR]

- Esma Ismailova [Ecole Nationale Supérieure des Mines de Saint Etienne, Researcher, until Feb 2021]

- Alexandra Konig [Inria, Starting Research Position]

- Sabine Moisan [Inria, Researcher, HDR]

- Jean-Paul Rigault [Univ Côte d'Azur, Emeritus]

- Monique Thonnat [Inria, Senior Researcher, HDR]

- Susanne Thummler [Univ Côte d'Azur, Researcher, until Aug 2021]

Post-Doctoral Fellows

- Michal Balazia [Univ Côte d'Azur, until Mar 2021]

- Abhijit Das [Inria, until Feb 2021]

- Laura Ferrari [Univ Côte d'Azur]

- Mohsen Tabejamaat [Inria]

- Leonard Torossian [Inria, until Oct 2021]

- Ujjwal Ujjwal [Inria, until Nov 2021]

PhD Students

- Abid Ali [Univ Côte d'Azur]

- David Anghelone [Inria / Thales Group, from Apr 2021]

- Hao Chen [Inria]

- Rui Dai [Univ Côte d'Azur]

- Juan Diego Gonzales Zuniga [Inria, until Jul 2021]

- Mohammed Guermal [Inria]

- Jen Cheng Hou [Inria]

- Indu Joshi [Inria, from Aug 2021]

- Thibaud Lyvonnet [Inria]

- Tomasz Stanczyk [Inria, from Aug 2021]

- Valeriya Strizhkova [Inria]

- Yaohui Wang [Inria]

- Di Yang [Inria]

Technical Staff

- Tanay Agrawal [Inria, Engineer]

- Sebastien Gilabert [Inria, Engineer]

- Snehashis Majhi [Inria, Engineer, from Sep 2021]

- Farhood Negin [Inria, Engineer]

- Duc Minh Tran [Inria, Engineer]

Interns and Apprentices

- Dhruv Agarwal [Inria, until Jul 2021]

- Elias Bou Ghosn [Inria, from Apr 2021 until Sep 2021]

- Benjamin Brou [Inria, from Apr 2021 until Jun 2021]

- Mansi Mittal [Inria, from Aug 2021]

- Vishal Pani [Inria, until Jul 2021]

- Carlotta Sanges [Inria, until Mar 2021]

- Neelabh Sinha [Inria, from Feb 2021 until Jul 2021]

- Rian Touchent [Inria, from May 2021 until Aug 2021]

- Deepak Yadav [Inria, from Sep 2021]

Administrative Assistant

- Sandrine Boute [Inria]

External Collaborators

- Michal Balazia [Univ Côte d'Azur, from Apr 2021]

- Rachid Guerchouche [Univ Côte d'Azur]

2 Overall objectives

2.1 Presentation

The STARS (Spatio-Temporal Activity Recognition Systems) team focuses on the design of cognitive vision systems for Activity Recognition. More precisely, we are interested in the real-time semantic interpretation of dynamic scenes observed by video cameras and other sensors. We study long-term spatio-temporal activities performed by agents such as human beings, animals or vehicles in the physical world. The major issue in semantic interpretation of dynamic scenes is to bridge the gap between the subjective interpretation of data and the objective measures provided by sensors. To address this problem Stars develops new techniques in the field of computer vision, machine learning and cognitive systems for physical object detection, activity understanding, activity learning, vision system design and evaluation. We focus on two principal application domains: visual surveillance and healthcare monitoring.

2.2 Research Themes

Stars is focused on the design of cognitive systems for Activity Recognition. We aim at endowing cognitive systems with perceptual capabilities to reason about an observed environment, to provide a variety of services to people living in this environment while preserving their privacy. In today world, a huge amount of new sensors and new hardware devices are currently available, addressing potentially new needs of the modern society. However the lack of automated processes (with no human interaction) able to extract a meaningful and accurate information (i.e. a correct understanding of the situation) has often generated frustrations among the society and especially among older people. Therefore, Stars objective is to propose novel autonomous systems for the real-time semantic interpretation of dynamic scenes observed by sensors. We study long-term spatio-temporal activities performed by several interacting agents such as human beings, animals and vehicles in the physical world. Such systems also raise fundamental software engineering problems to specify them as well as to adapt them at run time.

We propose new techniques at the frontier between computer vision, knowledge engineering, machine learning and software engineering. The major challenge in semantic interpretation of dynamic scenes is to bridge the gap between the task dependent interpretation of data and the flood of measures provided by sensors. The problems we address range from physical object detection, activity understanding, activity learning to vision system design and evaluation. The two principal classes of human activities we focus on, are assistance to older adults and video analytic.

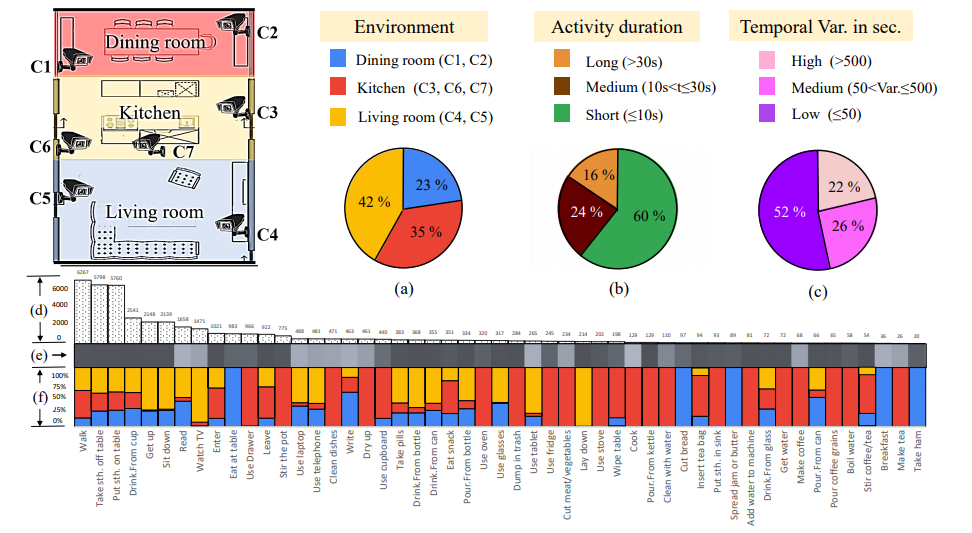

Typical examples of complex activity are shown in Figure 1 and Figure 2 for a homecare application (See Toyota Smarthome Dataset at ). In this example, the duration of the monitoring of an older person apartment could last several months. The activities involve interactions between the observed person and several pieces of equipment. The application goal is to recognize the everyday activities at home through formal activity models (as shown in Figure 3) and data captured by a network of sensors embedded in the apartment. Here typical services include an objective assessment of the frailty level of the observed person to be able to provide a more personalized care and to monitor the effectiveness of a prescribed therapy. The assessment of the frailty level is performed by an Activity Recognition System which transmits a textual report (containing only meta-data) to the general practitioner who follows the older person. Thanks to the recognized activities, the quality of life of the observed people can thus be improved and their personal information can be preserved.

.png)

| Activity (PrepareMeal, | |

| PhysicalObjects( | (p : Person), (z : Zone), (eq : Equipment)) |

| Components( | (s_inside : InsideKitchen(p, z)) |

| (s_close : CloseToCountertop(p, eq)) | |

| (s_stand : PersonStandingInKitchen(p, z))) | |

| Constraints( | (z->Name = Kitchen) |

| (eq->Name = Countertop) | |

| (s_close->Duration >= 100) | |

| (s_stand->Duration >= 100)) | |

| Annotation( | AText("prepare meal"))) |

The ultimate goal is for cognitive systems to perceive and understand their environment to be able to provide appropriate services to a potential user. An important step is to propose a computational representation of people activities to adapt these services to them. Up to now, the most effective sensors have been video cameras due to the rich information they can provide on the observed environment. These sensors are currently perceived as intrusive ones. A key issue is to capture the pertinent raw data for adapting the services to the people while preserving their privacy. We plan to study different solutions including of course the local processing of the data without transmission of images and the utilization of new compact sensors developed for interaction (also called RGB-Depth sensors, an example being the Kinect) or networks of small non visual sensors.

2.3 International and Industrial Cooperation

Our work has been applied in the context of more than 10 European projects such as COFRIEND, ADVISOR, SERKET, CARETAKER, VANAHEIM, SUPPORT, DEM@CARE, VICOMO, EIT Health.

We had or have industrial collaborations in several domains: transportation (CCI Airport Toulouse Blagnac, SNCF, Inrets, Alstom, Ratp, Toyota, GTT (Italy), Turin GTT (Italy)), banking (Crédit Agricole Bank Corporation, Eurotelis and Ciel), security (Thales R&T FR, Thales Security Syst, EADS, Sagem, Bertin, Alcatel, Keeneo), multimedia (Thales Communications), civil engineering (Centre Scientifique et Technique du Bâtiment (CSTB)), computer industry (BULL), software industry (AKKA), hardware industry (ST-Microelectronics) and health industry (Philips, Link Care Services, Vistek).

We have international cooperations with research centers such as Reading University (UK), ENSI Tunis (Tunisia), Idiap (Switzerland), Multitel (Belgium), National Cheng Kung University, National Taiwan University (Taiwan), MICA (Vietnam), IPAL, I2R (Singapore), University of Southern California, University of South Florida (USA), Michigan State University (USA), Chinese Academy of Sciences (China), IIIT Delhi (India), Hochschule Darmstadt (Germany), Fraunhofer Institute for Computer Graphics Research IGD (Germany).

2.3.1 Industrial Contracts

-

Toyota: (Action Recognition System):

This project run from the 1st of August 2013 up to 2023. It aimed at detecting critical situations in the daily life of older adults living home alone. The system is intended to work with a Partner Robot (to send real-time information to the robot for assisted living) to better interact with older adults. The funding was 106 Keuros for the 1st period and more for the following years.

- Thales: This contract is a CIFRE PhD grant and runs from September 2018 until September 2021 within the French national initiative SafeCity. The main goal is to analyze faces and events in the invisible spectrum (i.e., low energy infrared waves, as well as ultraviolet waves). In this context models will be developed to efficiently extract identity, as well as event - information. This models will be employed in a school environment, with a goal of pseudo-anonymized identification, as well as event-detection. Expected challenges have to do with limited colorimetry and lower contrasts.

- Kontron: This contract is a CIFRE PhD grant and runs from April 2018 until April 2021 to embed CNN based people tracker within a video-camera.

- ESI: This contract is a CIFRE PhD grant and runs from September 2018 until March 2022 to develop a novel Re-Identification algorithm which can be easily set-up with low interaction.

3 Research program

3.1 Introduction

Stars follows three main research directions: perception for activity recognition, action recognition and semantic activity recognition. These three research directions are organized following the workflow of activity recognition systems: First, the perception and the action recognition directions provide new techniques to extract powerful features, whereas the semantic activity recognition research direction provides new paradigms to match these features with concrete video analytic and healthcare applications.

Transversely, we consider a new research axis in machine learning, combining a priori knowledge and learning techniques, to set up the various models of an activity recognition system. A major objective is to automate model building or model enrichment at the perception level and at the understanding level.

3.2 Perception for Activity Recognition

Participants: François Brémond, Antitza Dantcheva, Sabine Moisan, Monique Thonnat.

Keywords: Activity Recognition, Scene Understanding, Machine Learning, Computer Vision, Cognitive Vision Systems, Software Engineering.

3.2.1 Introduction

Our main goal in perception is to develop vision algorithms able to address the large variety of conditions characterizing real world scenes in terms of sensor conditions, hardware requirements, lighting conditions, physical objects, and application objectives. We have also several issues related to perception which combine machine learning and perception techniques: learning people appearance, parameters for system control and shape statistics.

3.2.2 Appearance Models and People Tracking

An important issue is to detect in real-time physical objects from perceptual features and predefined 3D models. It requires finding a good balance between efficient methods and precise spatio-temporal models. Many improvements and analysis need to be performed in order to tackle the large range of people detection scenarios.

Appearance models. In particular, we study the temporal variation of the features characterizing the appearance of a human. This task could be achieved by clustering potential candidates depending on their position and their reliability. This task can provide any people tracking algorithms with reliable features allowing for instance to (1) better track people or their body parts during occlusion, or to (2) model people appearance for re-identification purposes in mono and multi-camera networks, which is still an open issue. The underlying challenge of the person re-identification problem arises from significant differences in illumination, pose and camera parameters. The re-identification approaches have two aspects: (1) establishing correspondences between body parts and (2) generating signatures that are invariant to different color responses. As we have already several descriptors which are color invariant, we now focus more on aligning two people detection and on finding their corresponding body parts. Having detected body parts, the approach can handle pose variations. Further, different body parts might have different influence on finding the correct match among a whole gallery dataset. Thus, the re-identification approaches have to search for matching strategies. As the results of the re-identification are always given as the ranking list, re-identification focuses on learning to rank. "Learning to rank" is a type of machine learning problem, in which the goal is to automatically construct a ranking model from a training data.

Therefore, we work on information fusion to handle perceptual features coming from various sensors (several cameras covering a large scale area or heterogeneous sensors capturing more or less precise and rich information). New 3D RGB-D sensors are also investigated, to help in getting an accurate segmentation for specific scene conditions.

Long term tracking. For activity recognition we need robust and coherent object tracking over long periods of time (often several hours in video surveillance and several days in healthcare). To guarantee the long term coherence of tracked objects, spatio-temporal reasoning is required. Modeling and managing the uncertainty of these processes is also an open issue. In Stars we propose to add a reasoning layer to a classical Bayesian framework modeling the uncertainty of the tracked objects. This reasoning layer can take into account the a priori knowledge of the scene for outlier elimination and long-term coherency checking.

Controlling system parameters. Another research direction is to manage a library of video processing programs. We are building a perception library by selecting robust algorithms for feature extraction, by insuring they work efficiently with real time constraints and by formalizing their conditions of use within a program supervision model. In the case of video cameras, at least two problems are still open: robust image segmentation and meaningful feature extraction. For these issues, we are developing new learning techniques.

3.3 Action Recognition

Participants: François Brémond, Antitza Dantcheva, Monique Thonnat.

Keywords: Machine Learning, Computer Vision, Cognitive Vision Systems.

3.3.1 Introduction

Due to the recent development of high processing units, such as GPU, this is now possible to extract meaningful features directly from videos (e.g. video volume) to recognize reliably short actions. Action Recognition benefits also greatly from the huge progress made recently in Machine Learning (e.g. Deep Learning), especially for the study of human behavior. For instance, Action Recognition enables to measure objectively the behavior of humans by extracting powerful features characterizing their everyday activities, their emotion, eating habits and lifestyle, by learning models from a large number of data from a variety of sensors, to improve and optimize for example, the quality of life of people suffering from behavior disorders. However, Smart Homes and Partner Robots have been well advertised but remain laboratory prototypes, due to the poor capability of automated systems to perceive and reason about their environment. A hard problem is for an automated system to cope 24/7 with the variety and complexity of the real world. Another challenge is to extract people fine gestures and subtle facial expressions to better analyze behavior disorders, such as anxiety or apathy. Taking advantage of what is currently studied for self-driving cars or smart retails, there is a large avenue to design ambitious approaches for the healthcare domain. In particular, the advance made with Deep Learning algorithms has already enabled to recognize complex activities, such as cooking interactions with instruments, and from this analysis to differentiate healthy people from the ones suffering from dementia.

To address these issues, we propose to tackle several challenges:

3.3.2 Action recognition in the wild

The current Deep Learning techniques are mostly developed to work on few clipped videos, which have been recorded with students performing a limited set of predefined actions in front of a camera with high resolution. However, real life scenarios include actions performed in a spontaneous manner by older people (including people interactions with their environment or with other people), from different viewpoints, with varying framerate, partially occluded by furniture at different locations within an apartment depicted through long untrimmed videos. Therefore, a new dedicated dataset should be collected in a real-world setting to become a public benchmark video dataset and to design novel algorithms for ADL activity recognition. A special attention should be taken to anonymize the videos.

3.3.3 Attention mechanisms for action recognition

Activities of Daily Living (ADL) and video-surveillance activities are different from internet activities (e.g. Sports, Movies, YouTube), as they may have very similar context (e.g. same background kitchen) with high intra-variation (different people performing the same action in different manners), but in the same time low inter-variation, similar ways to perform two different actions (e.g. eating and drinking a glass of water). Consequently, fine-grained actions are badly recognized. So, we will design novel attention mechanisms for action recognition, for the algorithm being able to focus on a discriminative part of the person conducting the action. For instance, we will study attention algorithms, which could focus on the most appropriate body parts (e.g. full body, right hand). In particular, we plan to design a soft mechanism, learning the attention weights directly on the feature map of a 3DconvNet, a powerful convolutional network, which takes as input a batch of videos.

3.3.4 Action detection for untrimmed videos

Many approaches have been proposed to solve the problem of action recognition in short clipped 2D videos, which achieved impressive results with hand-crafted and deep features. However, these approaches cannot address real life situations, where cameras provide online and continuous video streams in applications such as robotics, video surveillance, and smart-homes. Here comes the importance of action detection to help recognizing and localizing each action happening in long videos. Action detection can be defined as the ability to localize starting and ending of each human action happening in the video, in addition to recognizing each action label. There have been few action detection algorithms designed for untrimmed videos, which are based on either sliding window, temporal pooling or frame-based labeling. However, their performance is too low to address real-word datasets. A first task consists in benchmarking the already published approaches to study their limitations on novel untrimmed video datasets, recorded following real-world settings. A second task could be to propose a new mechanism to improve either 1) the temporal pooling directly from the 3DconvNet architecture using for instance Temporal Convolution Networks (TCNs) or 2) frame-based labeling with a clustering technique (e.g. using Fisher Vectors) to discover the sub-activities of interest.

3.3.5 View invariant action recognition

The performance of current approaches strongly relies on the used camera angle: enforcing that the camera angle used in testing is the same (or extremely close to) as the camera angle used in training, is necessary for the approach performs well. On the contrary, the performance drops when a different camera view-point is used. Therefore, we aim at improving the performance of action recognition algorithms by relying on 3D human pose information. For the extraction of the 3D pose information, several open-source algorithms can be used, such as openpose or videopose3D (from CMU or Facebook research, . Also, other algorithms extracting 3d meshes can be used. To generate extra views, Generative Adversial Network (GAN) can be used together with the 3D human pose information to complete the training dataset from the missing view.

3.3.6 Uncertainty and action recognition

Another challenge is to combine the short-term actions recognized by powerful Deep Learning techniques with long-term activities defined by constraint-based descriptions and linked to user interest. To realize this objective, we have to compute the uncertainty (i.e. likelihood or confidence), with which the short-term actions are inferred. This research direction is linked to the next one, to Semantic Activity Recognition.

3.4 Semantic Activity Recognition

Participants: François Brémond, Sabine Moisan, Monique Thonnat.

Keywords: Activity Recognition, Scene Understanding, Computer Vision.

3.4.1 Introduction

Semantic activity recognition is a complex process where information is abstracted through four levels: signal (e.g. pixel, sound), perceptual features, physical objects and activities. The signal and the feature levels are characterized by strong noise, ambiguous, corrupted and missing data. The whole process of scene understanding consists in analyzing this information to bring forth pertinent insight of the scene and its dynamics while handling the low level noise. Moreover, to obtain a semantic abstraction, building activity models is a crucial point. A still open issue consists in determining whether these models should be given a priori or learned. Another challenge consists in organizing this knowledge in order to capitalize experience, share it with others and update it along with experimentation. To face this challenge, tools in knowledge engineering such as machine learning or ontology are needed.

Thus we work along the following research axes: high level understanding (to recognize the activities of physical objects based on high level activity models), learning (how to learn the models needed for activity recognition) and activity recognition and discrete event systems.

3.4.2 High Level Understanding

A challenging research axis is to recognize subjective activities of physical objects (i.e. human beings, animals, vehicles) based on a priori models and objective perceptual measures (e.g. robust and coherent object tracks).

To reach this goal, we have defined original activity recognition algorithms and activity models. Activity recognition algorithms include the computation of spatio-temporal relationships between physical objects. All the possible relationships may correspond to activities of interest and all have to be explored in an efficient way. The variety of these activities, generally called video events, is huge and depends on their spatial and temporal granularity, on the number of physical objects involved in the events, and on the event complexity (number of components constituting the event).

Concerning the modeling of activities, we are working towards two directions: the uncertainty management for representing probability distributions and knowledge acquisition facilities based on ontological engineering techniques. For the first direction, we are investigating classical statistical techniques and logical approaches. For the second direction, we built a language for video event modeling and a visual concept ontology (including color, texture and spatial concepts) to be extended with temporal concepts (motion, trajectories, events ...) and other perceptual concepts (physiological sensor concepts ...).

3.4.3 Learning for Activity Recognition

Given the difficulty of building an activity recognition system with a priori knowledge for a new application, we study how machine learning techniques can automate building or completing models at the perception level and at the understanding level.

At the understanding level, we are learning primitive event detectors. This can be done for example by learning visual concept detectors using SVMs (Support Vector Machines) with perceptual feature samples. An open question is how far can we go in weakly supervised learning for each type of perceptual concept (i.e. leveraging the human annotation task). A second direction is to learn typical composite event models for frequent activities using trajectory clustering or data mining techniques. We name composite event a particular combination of several primitive events.

3.4.4 Activity Recognition and Discrete Event Systems

The previous research axes are unavoidable to cope with the semantic interpretations. However they tend to let aside the pure event driven aspects of scenario recognition. These aspects have been studied for a long time at a theoretical level and led to methods and tools that may bring extra value to activity recognition, the most important being the possibility of formal analysis, verification and validation.

We have thus started to specify a formal model to define, analyze, simulate, and prove scenarios. This model deals with both absolute time (to be realistic and efficient in the analysis phase) and logical time (to benefit from well-known mathematical models providing re-usability, easy extension, and verification). Our purpose is to offer a generic tool to express and recognize activities associated with a concrete language to specify activities in the form of a set of scenarios with temporal constraints. The theoretical foundations and the tools being shared with Software Engineering aspects.

The results of the research performed in perception and semantic activity recognition (first and second research directions) produce new techniques for scene understanding and contribute to specify the needs for new software architectures (third research direction).

4 Application domains

4.1 Introduction

While in our research the focus is to develop techniques, models and platforms that are generic and reusable, we also make effort in the development of real applications. The motivation is twofold. The first is to validate the new ideas and approaches we introduce. The second is to demonstrate how to build working systems for real applications of various domains based on the techniques and tools developed. Indeed, Stars focuses on two main domains: video analytic and healthcare monitoring.

Domain: Video Analytics

Our experience in video analytic (also referred to as visual surveillance) is a strong basis which ensures both a precise view of the research topics to develop and a network of industrial partners ranging from end-users, integrators and software editors to provide data, objectives, evaluation and funding.

For instance, the Keeneo start-up was created in July 2005 for the industrialization and exploitation of Orion and Pulsar results in video analytic (VSIP library, which was a previous version of SUP). Keeneo has been bought by Digital Barriers in August 2011 and is now independent from Inria. However, Stars continues to maintain a close cooperation with Keeneo for impact analysis of SUP and for exploitation of new results.

Moreover new challenges are arising from the visual surveillance community. For instance, people detection and tracking in a crowded environment are still open issues despite the high competition on these topics. Also detecting abnormal activities may require to discover rare events from very large video data bases often characterized by noise or incomplete data.

Domain: Healthcare Monitoring

Since 2011, we have initiated a strategic partnership (called CobTek) with Nice hospital (CHU Nice, Prof P. Robert) to start ambitious research activities dedicated to healthcare monitoring and to assistive technologies. These new studies address the analysis of more complex spatio-temporal activities (e.g. complex interactions, long term activities).

4.1.1 Research

To achieve this objective, several topics need to be tackled. These topics can be summarized within two points: finer activity description and longitudinal experimentation. Finer activity description is needed for instance, to discriminate the activities (e.g. sitting, walking, eating) of Alzheimer patients from the ones of healthy older people. It is essential to be able to pre-diagnose dementia and to provide a better and more specialized care. Longer analysis is required when people monitoring aims at measuring the evolution of patient behavioral disorders. Setting up such long experimentation with dementia people has never been tried before but is necessary to have real-world validation. This is one of the challenge of the European FP7 project Dem@Care where several patient homes should be monitored over several months.

For this domain, a goal for Stars is to allow people with dementia to continue living in a self-sufficient manner in their own homes or residential centers, away from a hospital, as well as to allow clinicians and caregivers remotely provide effective care and management. For all this to become possible, comprehensive monitoring of the daily life of the person with dementia is deemed necessary, since caregivers and clinicians will need a comprehensive view of the person's daily activities, behavioral patterns, lifestyle, as well as changes in them, indicating the progression of their condition.

4.1.2 Ethical and Acceptability Issues

The development and ultimate use of novel assistive technologies by a vulnerable user group such as individuals with dementia, and the assessment methodologies planned by Stars are not free of ethical, or even legal concerns, even if many studies have shown how these Information and Communication Technologies (ICT) can be useful and well accepted by older people with or without impairments. Thus one goal of Stars team is to design the right technologies that can provide the appropriate information to the medical carers while preserving people privacy. Moreover, Stars will pay particular attention to ethical, acceptability, legal and privacy concerns that may arise, addressing them in a professional way following the corresponding established EU and national laws and regulations, especially when outside France. Now, Stars can benefit from the support of the COERLE (Comité Opérationnel d'Evaluation des Risques Légaux et Ethiques) to help it to respect ethical policies in its applications.

As presented in 2, Stars aims at designing cognitive vision systems with perceptual capabilities to monitor efficiently people activities. As a matter of fact, vision sensors can be seen as intrusive ones, even if no images are acquired or transmitted (only meta-data describing activities need to be collected). Therefore new communication paradigms and other sensors (e.g. accelerometers, RFID, and new sensors to come in the future) are also envisaged to provide the most appropriate services to the observed people, while preserving their privacy. To better understand ethical issues, Stars members are already involved in several ethical organizations. For instance, F. Brémond has been a member of the ODEGAM - “Commission Ethique et Droit” (a local association in Nice area for ethical issues related to older people) from 2010 to 2011 and a member of the French scientific council for the national seminar on “La maladie d'Alzheimer et les nouvelles technologies - Enjeux éthiques et questions de société” in 2011. This council has in particular proposed a chart and guidelines for conducting researches with dementia patients.

For addressing the acceptability issues, focus groups and HMI (Human Machine Interaction) experts, are consulted on the most adequate range of mechanisms to interact and display information to older people.

5 Social and environmental responsibility

5.1 Footprint of research activities

We have limited our travels by reducing our physical participation to conferences and to international collaborations.

5.2 Impact of research results

We have been involved for many years in promoting public transportation by improving safety onboard and in station. Moreover, we have been working on pedestrian detection for self-driving cars, which will help also reducing the number of individual cars.

6 Highlights of the year

During this period, several novel activity recognition algorithms have been designed for Activities of Daily Living (ADLs) in real-world settings. These algorithms got the best performances on all relevant action datasets. However, most of them were built in more or less artificial settings. Therefore, we have released a new video dataset in real-world settings, which is going to become one of the main benchmarks of the domain: Real-World Activities of Daily Living. We have validated our activity detection algorithms on this new video dataset to foster novel research directions.

7 New software and platforms

Most of team contributions come with a published paper and an associated software, which is publicly available through github.

7.1 New software

7.1.1 SUP

-

Name:

Scene Understanding Platform

-

Keywords:

Activity recognition, 3D, Dynamic scene

-

Functional Description:

SUP is a software platform for perceiving, analyzing and interpreting a 3D dynamic scene observed through a network of sensors. It encompasses algorithms allowing for the modeling of interesting activities for users to enable their recognition in real-world applications requiring high-throughput.

- URL:

-

Contact:

François Brémond

-

Participants:

Etienne Corvée, François Brémond, Hung Nguyen, Vasanth Bathrinarayanan

-

Partners:

CEA, CHU Nice, USC Californie, Université de Hamburg, I2R

7.1.2 VISEVAL

-

Functional Description:

ViSEval is a software dedicated to the evaluation and visualization of video processing algorithm outputs. The evaluation of video processing algorithm results is an important step in video analysis research. In video processing, we identify 4 different tasks to evaluate: detection, classification and tracking of physical objects of interest and event recognition.

- URL:

-

Contact:

François Brémond

-

Participants:

Bernard Boulay, François Brémond

8 New results

8.1 Introduction

This year Stars has proposed new results related to its three main research axes: (i) perception for activity recognition, (ii) action recognition and (iii) semantic activity recognition.

Perception for Activity Recognition

Participants: François Brémond, Antitza Dantcheva, Juan Diego Gonzales zuniga, Farhood Negin, Vishal Pani, Indu Joshi, David Anghelone, Laura M. Ferrari, Neelabh Sinha, Neelabh Sinha, Hao Chen, Yaohui Wang, Valeriya Strizhkova, Mohsen Tabejamaat, Abhijit Das.

The new results for perception for activity recognition are:

- Detection of Tiny Vehicles from Satellite Video (see 8.2)

- TrichTrack: Multi-Object Tracking of Small-Scale Trichogramma Wasps (see 8.3)

- On Generalizable and Interpretable Biometric (see 8.4)

- Sensor-invariant Fingerprint ROI Segmentation Using Recurrent Adversarial Learning (see 8.5)

- Biosignals analysis for multimodal learning (see REFERENCE NOT FOUND: STARS-RA-2021/label/resultats:laurra_1)

- Learning-based approach for wearables design (see REFERENCE NOT FOUND: STARS-RA-2021/label/resultats:laurra_2)

- Computer Vision and Deep Learning applied to Facial Analysis in the invisible spectra (see 8.8)

- Explainable Thermal to Visible Face Recognition using Latent-Guided Generative Adversarial Network (see 8.9)

- Facial Landmark Heatmap Activated Multimodal Gaze Estimation (see 8.10)

- ICE: Inter-instance Contrastive Encoding for Unsupervised Person Re-identification (see 8.11)

- Joint Generative and Contrastive Learning for Unsupervised Person Re-identification (see 8.12)

- Emotion Editing in Head Reenactment Videos using Latent Space Manipulation (see 8.13)

- Learning to Generate Human Video (see 8.14)

- Guided Flow Field Estimation by Generating Independent Patches (see 8.15)

- BVPNet: Video-to-BVP Signal Prediction for Remote Heart Rate Estimation (see 8.16)

- Demystifying Attention Mechanisms for Deepfake Detection (see 8.17)

- Computer Vision for deciphering and generating faces (see 8.18)

Action Recognition

Participants: François Brémond, Antitza Dantcheva, Monique Thonnat, Mohammed Guermal, Tanay Agrawal, Abid Ali, Jen-Cheng Hou, Di Yang, Rui Dai, Snehashis Majhi.

The new results for action recognition are:

- DAM : Dissimilarity Attention Module for Weakly-supervised Video Anomaly Detection (see 8.19)

- Pyramid Dilated Attention Network (see 8.20)

- Class-Temporal Relational Network (see 8.21)

- Learning an Augmented RGB Representation with Cross-Modal Knowledge Distillation for Action Detection (see 8.22)

- VPN++: Rethinking Video-Pose embeddings for understanding Activities of Daily Living (see 8.23)

- Multimodal Personality Recognition using Cross-Attention Transformer and Behaviour Encoding (see 8.24)

- From Multimodal to Unimodal Attention in Transformers using Knowledge Distillation (see 8.25)

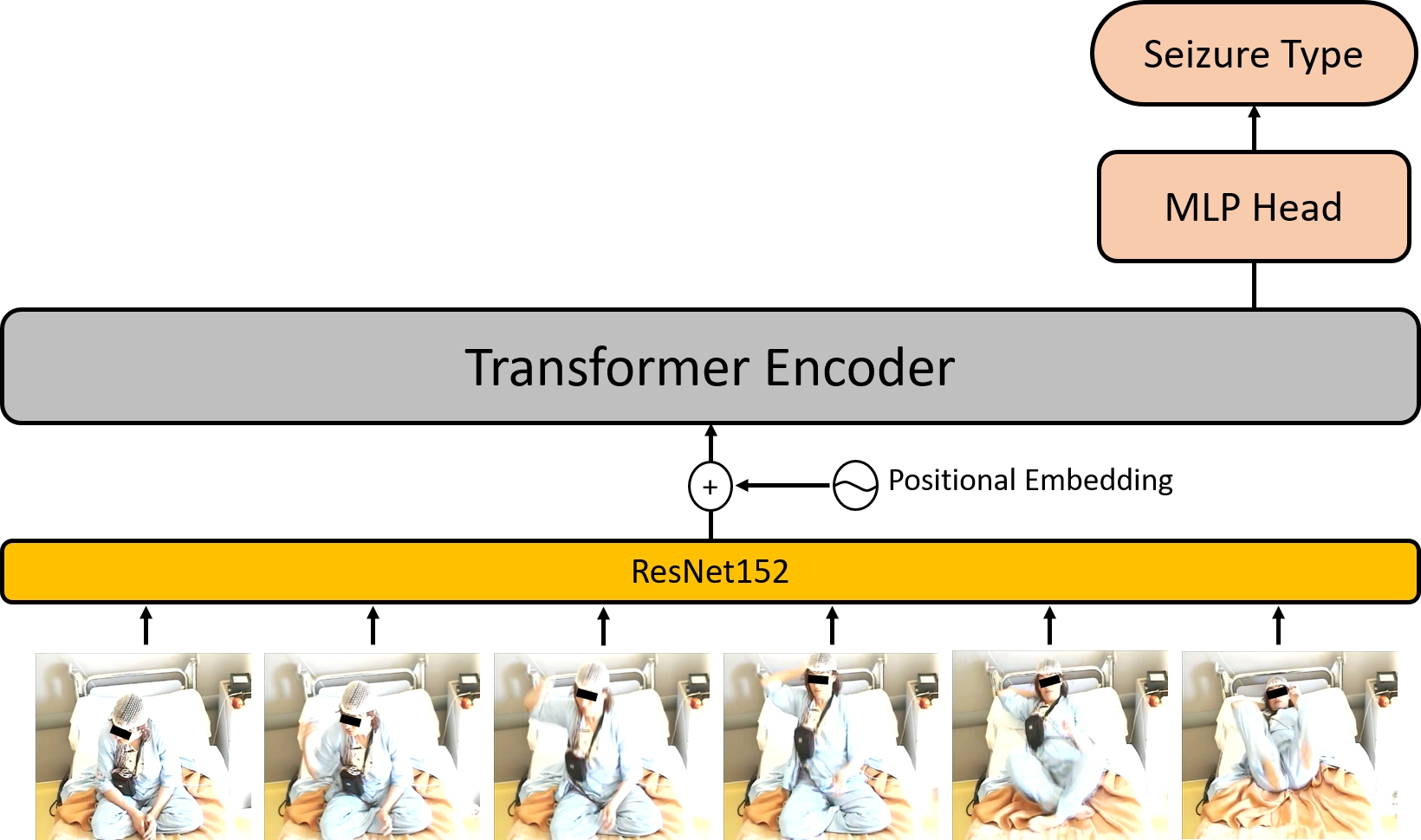

- Quantified Analysis for Video Recordings of Seizure (see 8.26)

- A Self-supervised pre-training framework for Vision-based Seizure Classification (see 8.27)

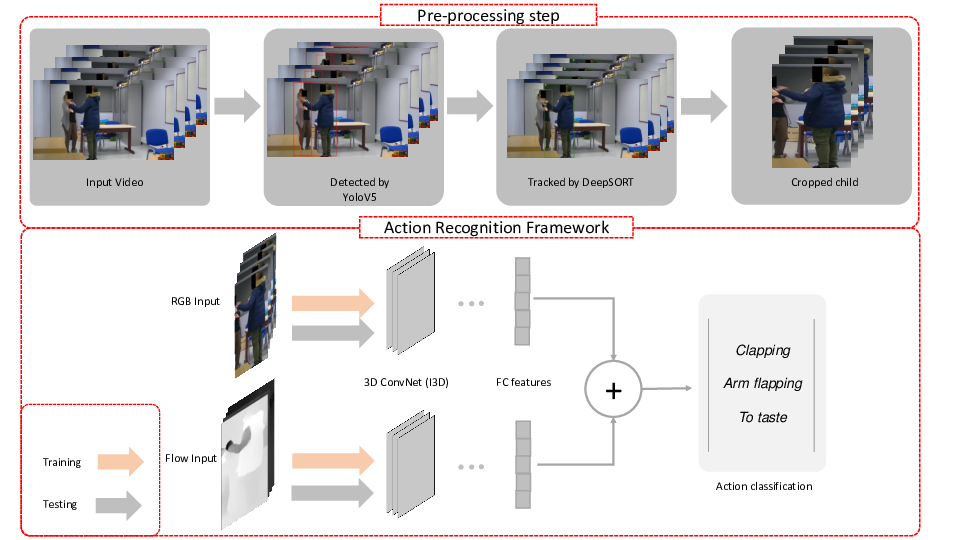

- Video-based Behavior Understanding of Children for Objective Diagnosis of Autism (see 8.28)

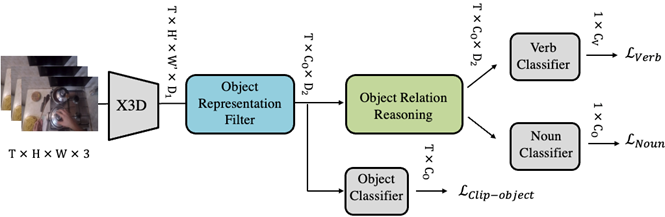

- Human activity recognition for interaction scerarios (see 8.29)

- Self-Supervised Video Pose Representation Learning for Occlusion-Robust Action Recognition (see 8.30)

Semantic Activity Recognition

Participants: Sabine Moisan, François Brémond, Monique Thonnat, Jean-Paul Rigault, Alexandra Konig, Rachid Guerchouche, Thibaud L'Yvonnet.

For this research axis, the contributions are:

8.2 Detection of Tiny Vehicles from Satellite Video

Participants: Farhood Negin, François Brémond.

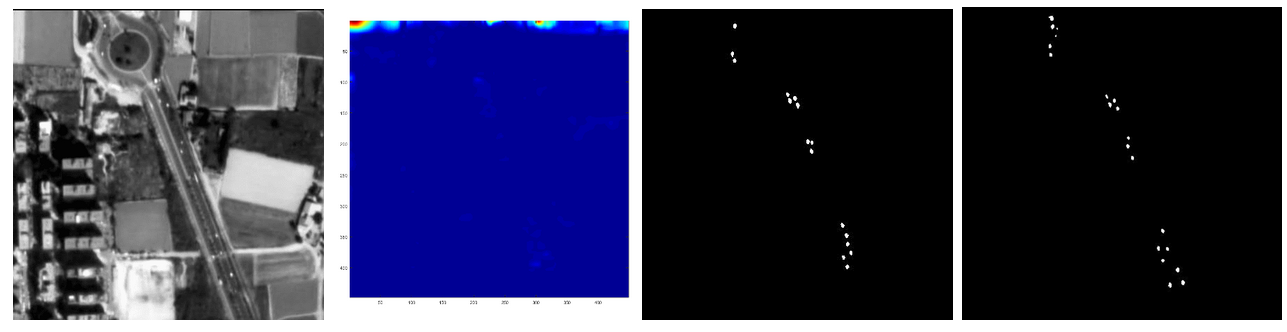

In this work, our goal is to achieve a perceptual understanding of the images/videos captured by satellites or other aerial imaging techniques. This will allow us to evaluate the behavior of various entities in those images and to plan appropriate responses and prevent undesirable actions such as abnormal behaviors 58, 59. To develop such systems, the primary step is the detection of objects of interest in the acquired imagery. Therefore, our first task is to detect objects in satellite images.

8.2.1 Work Description

Objects in the satellite datasets have a very small size (5 to 20 pixels) and the conventional methods have a hard time detecting those objects 70. There is a huge discrepancy between how a model works on small objects compared to large objects. In deep architectures, as the model learns, it forms features from the images passing through it. These features are based on pixels, and pixels in small objects are not so many for the model to learn strong features. Spatiotemporal information is already utilized in other contexts such as temporal segmentation but it is not fairly investigated in object detection in satellite images and there are only a few works using this information 65. In this work, the first goal is to develop a spatiotemporal-based framework to achieve a reliable detection and tracking of tiny objects from the satellite image sequences.

8.2.2 Datasets and Methods

Three datasets will be used for our evaluations (Figure 4): AirBus provided data, CGSTL dataset (Chinese satellite), and WPAFB 2009 (U.S. Air force). CGST dataset is a wide area imagery dataset that covers 3-4 square kilometers where 3 selected areas ( pixels) are fully annotated in every 10 frames. WPAFB is captured by 6 cameras and to obtain the final image six images are stitched together. It covers an area of around 35 square kilometers and the captured frames are multiresolution where he highest resolution is pixels.

To achieve a baseline the subsequent steps are followed: Image registration (stabilization), background subtraction, filtering. It is necessary to compensate for the camera motion by aligning all the previous frames to the current frame. Therefore, the transformation matrix: denoting transformation from frame t-k to frame t is calculated. For that SIFT feature point detector for interest point detection and SURF for feature extraction are utilized. Then, features matched between the two frames and transformation which is calculated by RANSAC. The stabilized frames then are subsampled at every 5 frames. The foreground objects are detected by frame differentiation and then morphological operations are applied to remove irregular blobs and too small/big detections (results are shown in Figure 5).

In this work, to produce a baseline, we applied image processing techniques for detection and tracking problems. In this step, the challenges are realized and will be addressed by designing new solutions and leveraging the available methods. One approach to solving the object detection challenge is to have an iterative process for training a deep model. In this approach, a background subtraction algorithm with a higher threshold is used to generate object proposals. Then, a classifier is trained based on the proposals to decide which proposal is a genuine object or merely a noise.

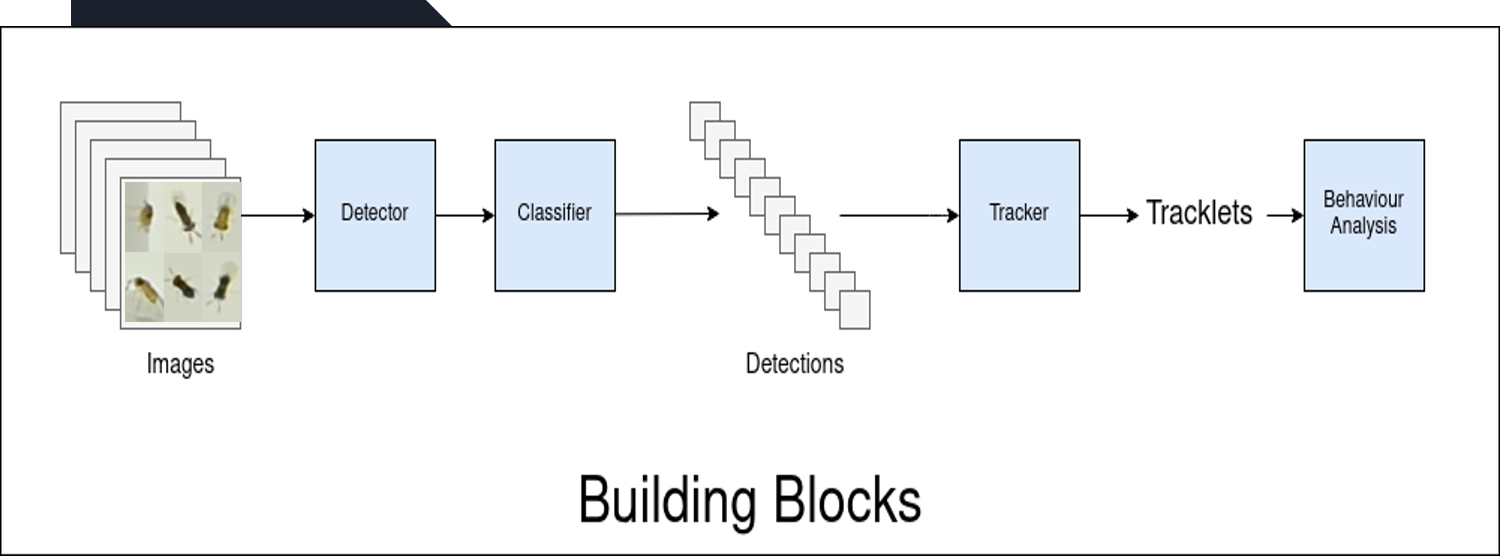

8.3 TrichTrack: Multi-Object Tracking of Small-Scale Trichogramma Wasps

Participants: Vishal Pani, Martin Bernet, Vincent Calcagno, Louise Van Oudenhove, François Brémond.

Trichogramma wasps behaviors are studied extensively due to their effectiveness as biological control agents across the globe. However, to our knowledge, the field of intra/inter-species Trichogramma behavior is yet to be explored thoroughly. To study these behaviors it is crucial to identify and track Trichogramma individuals over a long period in a lab setup. For this, we propose a robust tracking pipeline named TrichTrack 51. Due to the unavailability of labeled data, we train our detector using an iterative weakly supervised method. We also use a weakly supervised method to train a Re-Identification (ReID) network by leveraging noisy tracklet sampling. This enables us to distinguish Trichogramma individuals that are indistinguishable from human eyes. We also develop a two-staged tracking module that filters out the easy association to improve its efficiency. Our method outperforms existing insect trackers on most of the MOTMetrics, specifically on ID switches and fragmentations.

8.4 On Generalizable and Interpretable Biometrics

Participants: Indu Joshi, Antitza Dantcheva.

Black box behaviour and poor generalization are major limitation of state-of-the-art biometrics recognition systems. We work towards addressing these limitations in our recent work. We exploit attention mechanisms and adversarial learning to improve generalization ability and uncertainty estimation to impart interpretability to biometrics recognition systems. We describe these contributions in the following sections.

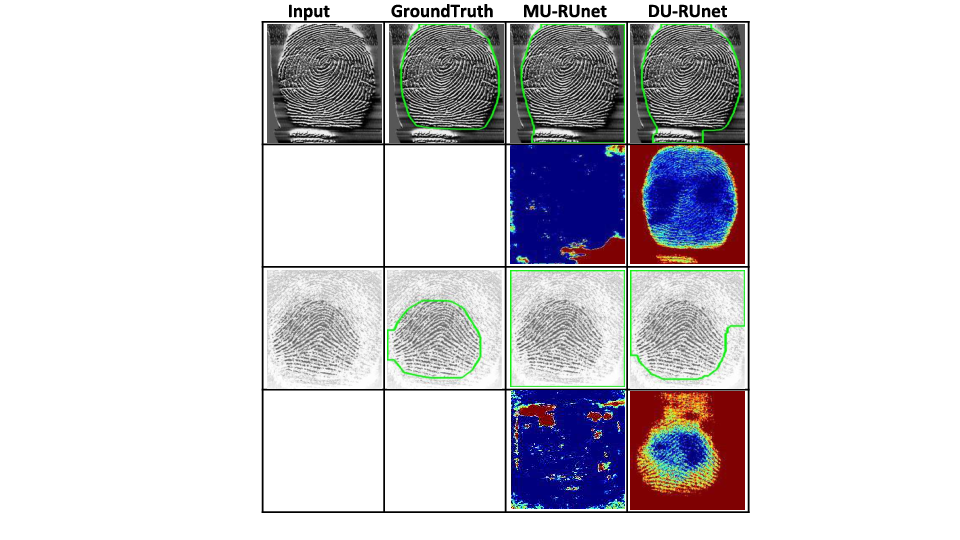

Data Uncertainty Guided Noise Aware Preprocessing We exploit Bayesian framework to estimate data uncertainty in a fingerprint preprocessing model 41. Given the input fingerprint image, data uncertainty estimation requires placing a prior distribution over the output of the model and calculating the variance of noise in model output. Predicted data uncertainty being input dependent, is learned as a function of input image. To obtain both the preprocessed image and its associated uncertainty, the network architecture of the baseline fingerprint preprocessing model is modified. The last layer of the baseline architecture is modified by splitting it into two. One branch predicts the model output (preprocessed image), whereas the other branch predicts the data uncertainty (noise variance). The mapping between input and the preprocessed image is learnt in a supervised manner. However, no labels for uncertainty are used, and the uncertainty values are learnt in an unsupervised manner. Furthermore, The loss function of the baseline architecture is also modified as suggested in 64. Results reveal that predicting data uncertainty helps the model to identify noisy regions in fingerprint images (see Figure 7), due to which higher activations are obtained around foreground fingerprint pixels. As a result, improved segmentation performance on noisy background pixels is obtained. Similar observations are reported for fingerprint enhancement.

8.5 Sensor-invariant Fingerprint ROI Segmentation Using Recurrent Adversarial Learning

Participants: Indu Joshi, Antitza Dantcheva.

A fingerprint region of interest (ROI) segmentation algorithm is designed 42 to separate the foreground fingerprint from the background noise. All the learning based state-of-the-art fingerprint ROI segmentation algorithms proposed in the literature are benchmarked on scenarios when both training and testing databases consist of fingerprint images acquired from the same sensors. However, when testing is conducted on a different sensor, the segmentation performance obtained is often unsatisfactory. As a result, every time a new fingerprint sensor is used for testing, the fingerprint ROI segmentation model needs to be re-trained with the fingerprint image acquired from the new sensor and its corresponding manually marked ROI. Manually marking fingerprint ROI is expensive because firstly, it is time consuming and more importantly, requires domain expertise. In order to save the human effort in generating annotations required by state-of-the-art, we propose a fingerprint ROI segmentation model which aligns the features of fingerprint images derived from the unseen sensor such that they are similar to the ones obtained from the fingerprints whose ground truth ROI masks are available for training. Specifically, we propose a recurrent adversarial learning based feature alignment network that helps the fingerprint ROI segmentation model to learn sensor-invariant features. Consequently, sensor-invariant features learnt by the proposed ROI segmentation model help it to achieve improved segmentation performance on fingerprints acquired from the new sensor. Experiments on publicly available FVC databases demonstrate the efficacy of the proposed work.

8.6 Biosignals analysis for multimodal learning

Participants: Laura M. Ferrari, François Brémond.

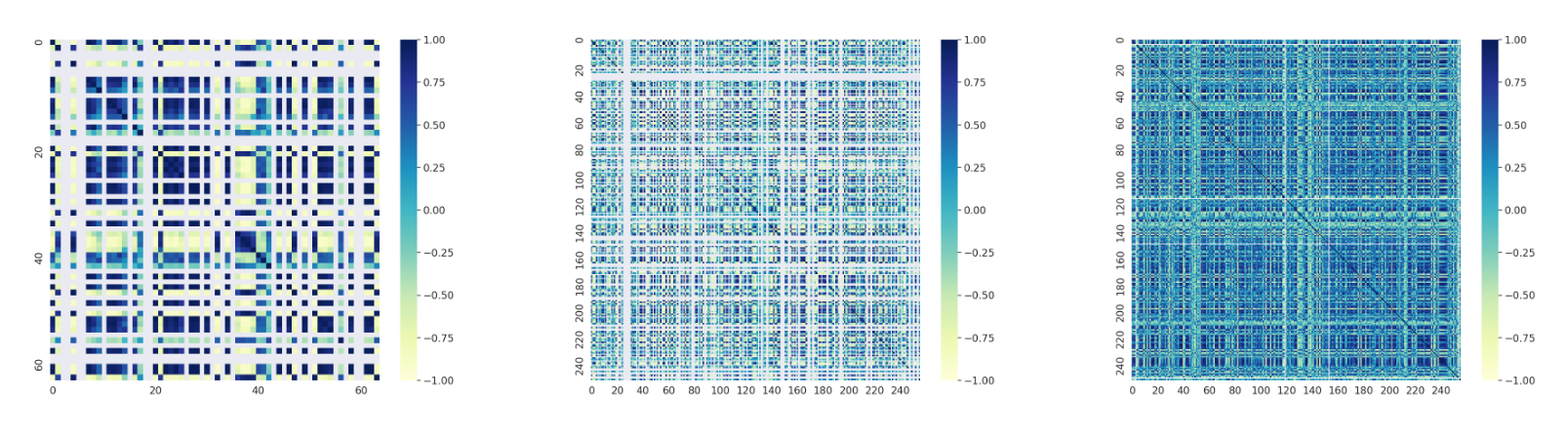

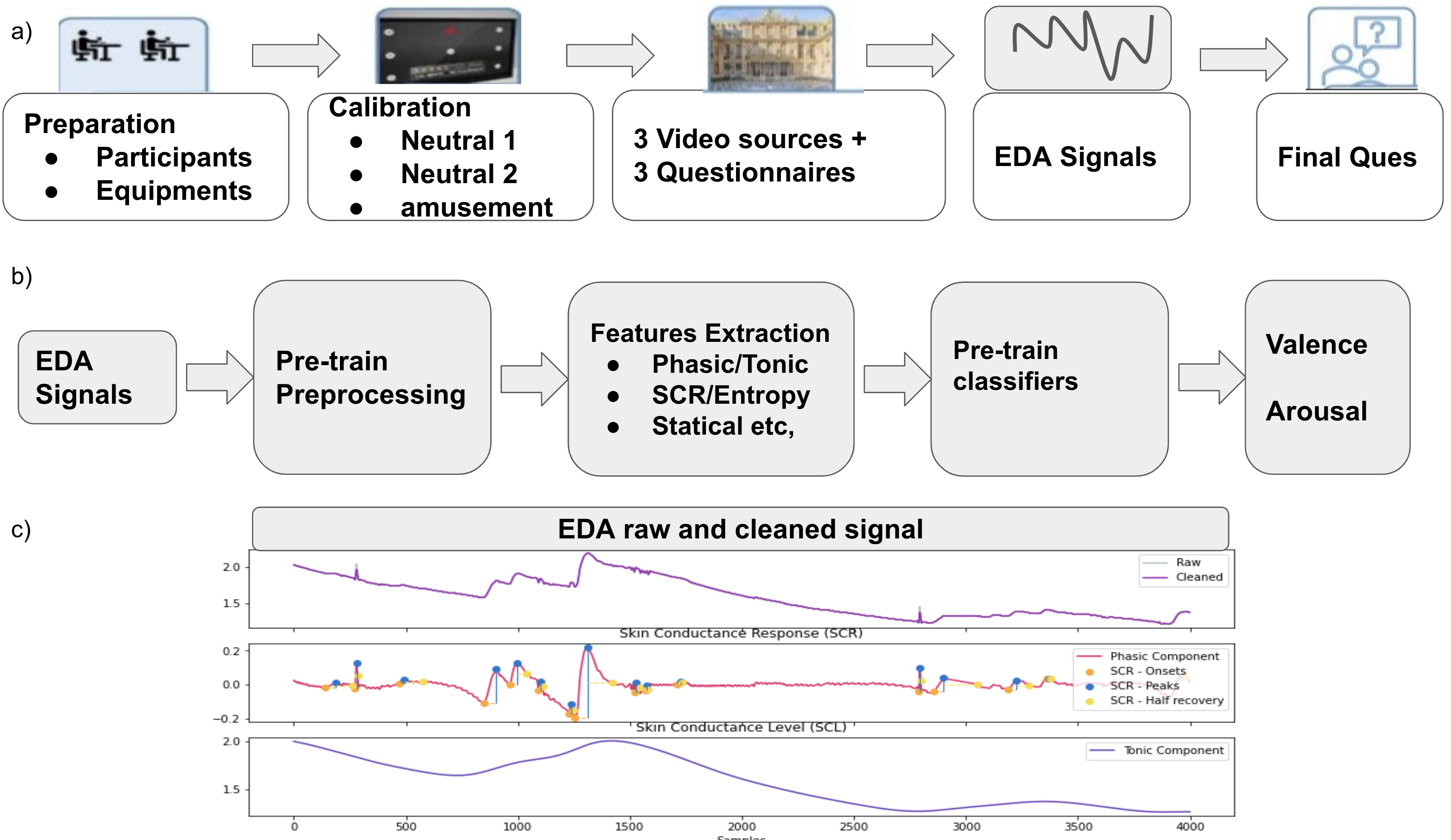

Multimodal machine learning aims at developing models that can process information from multiple input. Recently many fields have started exploiting multimodality as emotions or personality recognition. The idea is to combine salient information from different modalities such as RGB/3D cameras, thermal sensor, audio and biosensors. Despite the proven increased accuracy over singular modality the limits of multimodal analysis are multiple. Regarding the processing of biosignals, little has been reported for the treatment of multiple biosignals, coming from the skin surface. Indeed, while lot of works deal with electroencephalography (EEG) analysis for clinical applications, other source of information as electrodermal activity (EDA) and electrocardiography (ECG) have not been fully exploited nor a multimodal analysis proposed. Moreover multimodal datasets with high quality biosignals are limited in terms of dimension and quantity. In this project we are working to develop a robust pipeline to analyse multiple kinds of biosignals (e.g. EDA, ECG, EEG, EMG etc.). The analysis comprehends a pre-processing step (Figure 9c), with filtering and artefact removal, and then a feature extraction and selection step. Furthermore, we are building a refined dataset with more than 60 participants (Figure 9a). The goal is to combine and compare biosignal with video analysis to infer on emotion recognition, at first. Nevertheless this approach can be extended to health data in order to assist clinicians in their daily activities developing new insights in neuroscience.

8.7 Learning-based approach for wearables design

Participants: Laura M. Ferrari, François Brémond.

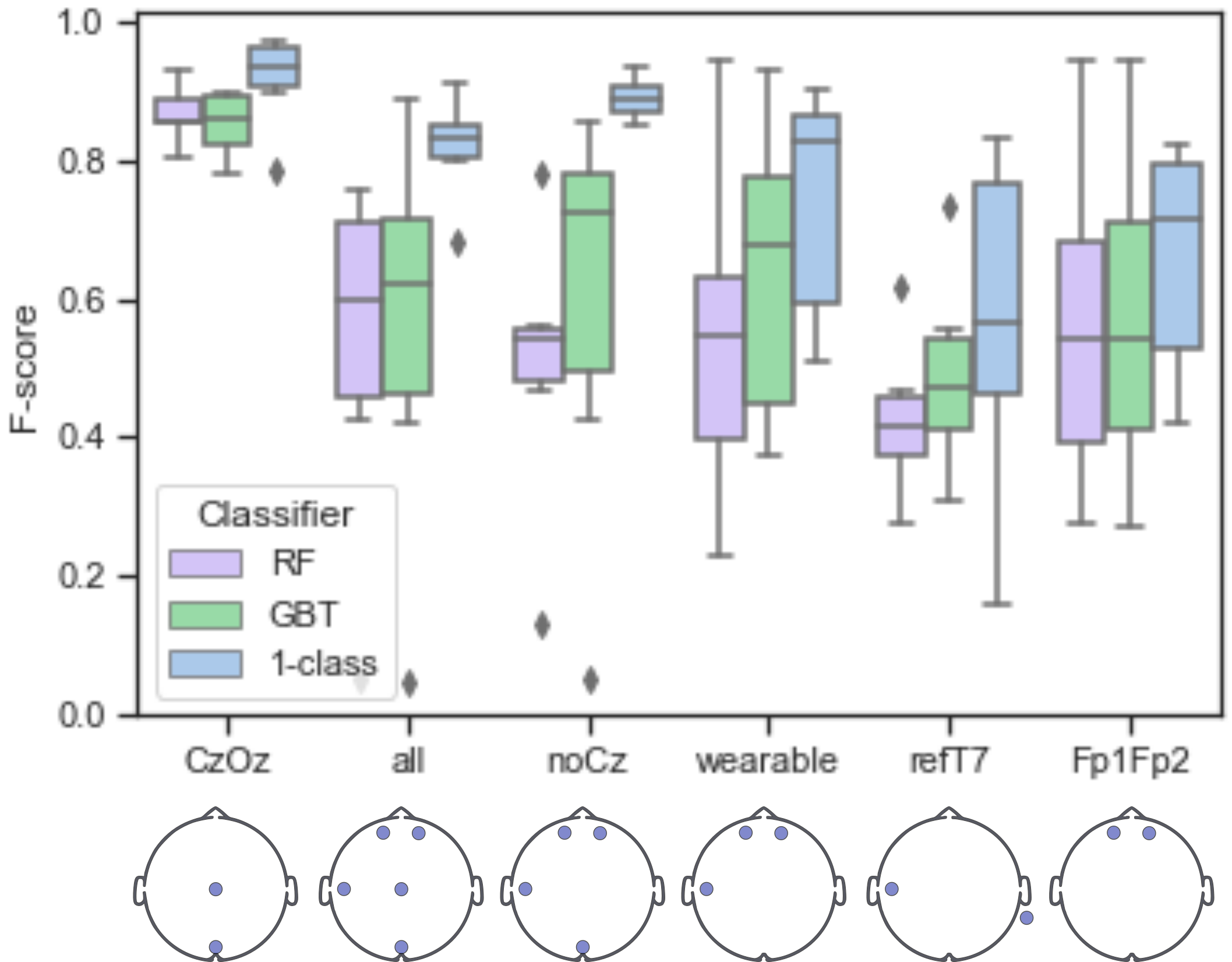

A limiting factor towards the wide use of wearable devices for continuous healthcare monitoring is their cumbersome and obtrusive nature. This is particularly true in electroencephalography (EEG), where numerous electrodes are placed in contact with the scalp to perform brain activity recordings. We propose to identify the optimal wearable EEG electrode set, in terms of minimal number of electrodes, comfortable location and performance, for EEG-based event detection and monitoring 38. By relying on the demonstrated power of autoencoder (AE) networks to learn latent representations from high-dimensional data, our proposed strategy trains an AE architecture in a one-class classification setup with different electrode combinations as input data. Alpha waves detection is the use case through which we demonstrate that the proposed method allows to detect a brain state from an optimal set of electrodes. The so-called wearable configuration, consisting of electrodes in the forehead and behind the ear, is the chosen optimal set, with an average F-score of 0.78 (Figure 10). Comparing this work with the state-of-the-art, it can be related to the more general problem of feature selection. Since this accounts to select the EEG channels achieving the highest performing accuracy, these methods do not consider the required number of electrodes, comfort or discreteness as a selection criteria. However, some methods used in sleep studies have considered comfortable EEG channels, e.g. forehead electrodes, among their pool of features. The main difference with other methods is that the AE network here adopted permits the use of unbalanced training, which is an advantage in view of wearable implementation. Although not directly comparable, this formulation allows our method to achieve a higher accuracy (83%) than that one reported in previous works, in forehead electrodes (76-77%) 60, 62. The proposed method represents a proof-of-concept on how machine learning-based techniques can help the design of realistic wearables for every-day use, which can go beyond EEG applications.

8.8 Computer Vision and Deep Learning applied to Facial Analysis in the invisible spectra

Participants: David Anghelone, Antitza Dantcheva.

Beyond the Visible - A survey on cross-spectral face recognition

This subject is within the framework of the national project SafeCity: Security of Smart Cities.

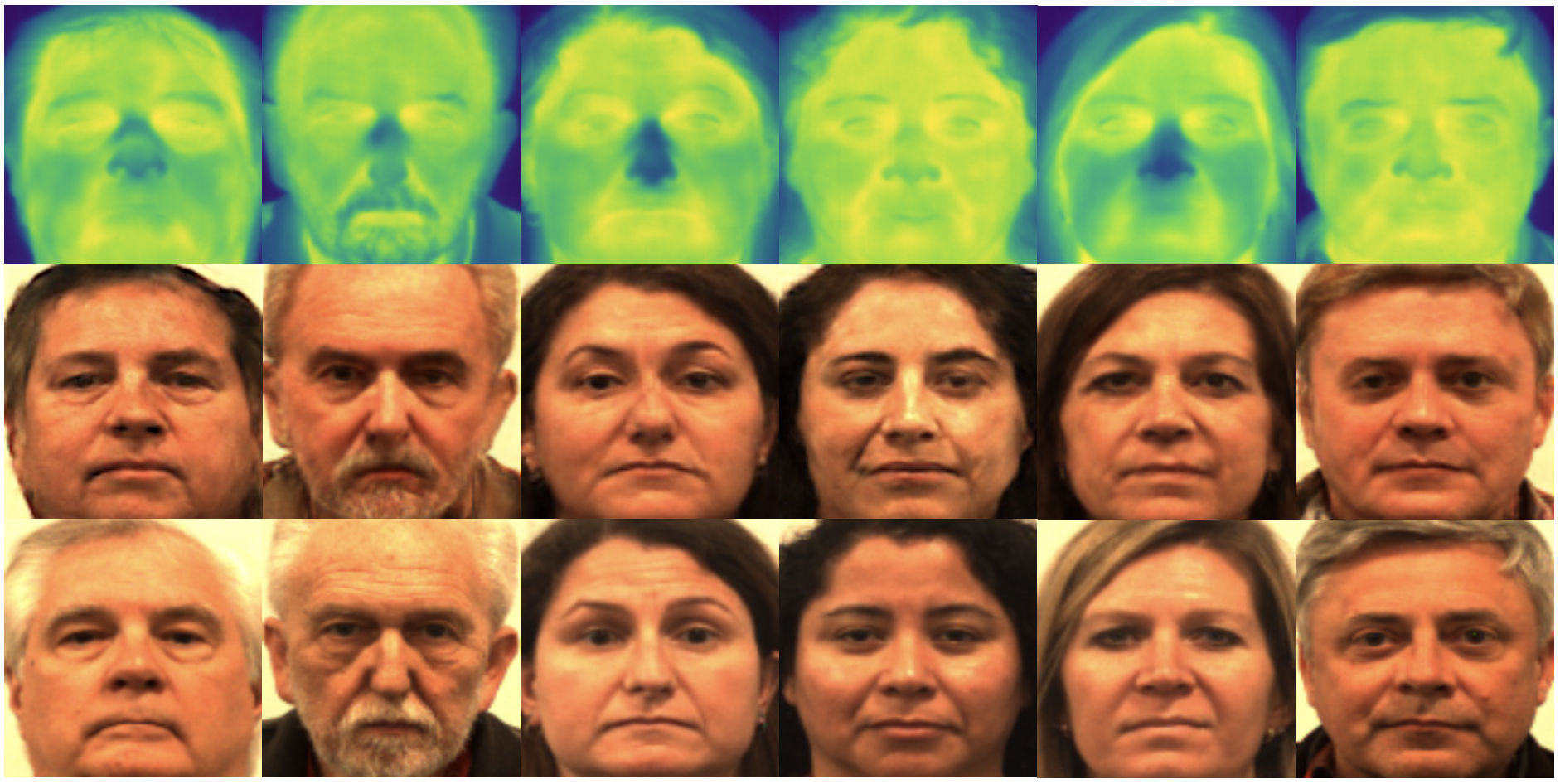

Face recognition has been a highly active area for decades and has witnessed increased interest in the scientific community. In addition, these technologies are being widely deployed, becoming part of our daily life. So far, these systems operate mainly in the visible spectrum as RGB-imagery, due to the ubiquity of advanced sensor technologies. However, limitations encountered in the visible spectrum such as illumination-restriction, variation in poses, noise as well as occlusion significantly degrades the recognition performance. In order to overcome such limitations, recent research has explored face recognition based on spectral bands beyond the visible. In this context, one pertinent scenario has been the matching of facial images that are sensed in different modalities - infrared vs. visible. Challenging in this recognition process has been the significant variation in facial appearance caused by the modality gap, this is depicted on Figure 11. Motivated by this, we conduced a survey on cross-spectral face recognition by providing an overview of recent advance and placing emphasis on deep learning methods.

8.9 Explainable Thermal to Visible Face Recognition using Latent-Guided Generative Adversarial Network

Participants: David Anghelone, Cunjian Chen, Philippe Faure, Arun Ross, Antitza Dantcheva.

One major challenge in performing thermal-to-visible face image translation is preserving the identity across different spectral bands. Existing work does not effectively disentangle the identity from other confounding factors. We hence proposed LG-GAN 28 a Latent-Guided Generative Adversarial Network to explicitly decompose an input image into identity code that is spectral-invariant and style code that is spectral-dependent. By using such a disentanglement, we were able to analyze the identity preservation by interpreting and visualizing the identity code. We presented extensive face recognition experiments on two challenging Visible-Thermal face datasets. In particular, LG-GAN increased facial recognition accuracy - the setting, where we directly compared a thermal probe to the visible gallery, to - the setting, where we applied our LG-GAN prior to matching. Hence, translating thermal face images into visible-like face images with LG-GAN significantly boosts the verification performance. Figure 12 depicts synthesized samples generated by LG-GAN from thermal face input. Additionally, we showed that the learned identity code is effective in preserving the identity, thus offering useful insights on interpreting and explaining thermal-to-visible face image translation.

8.10 Facial Landmark Heatmap Activated Multimodal Gaze Estimation

Participants: Neelabh Sinha, Michal Balazia, Mansi Mittal.

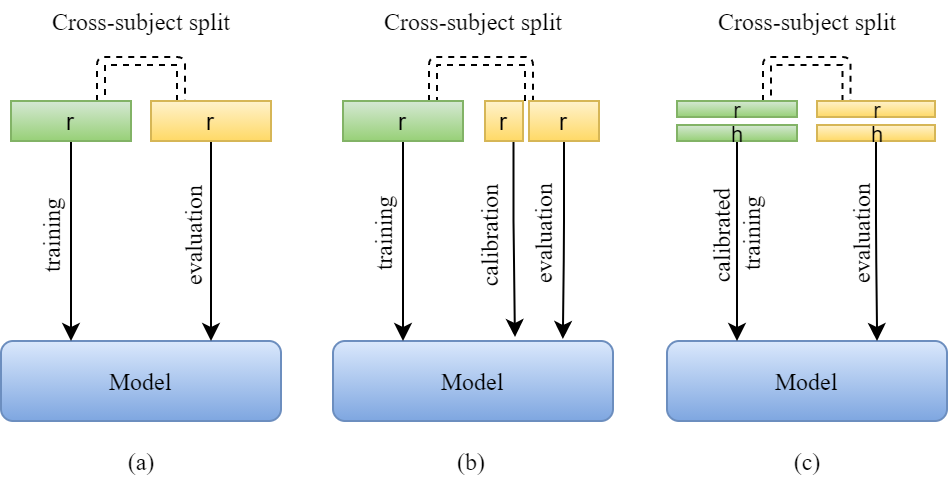

3D gaze estimation is about predicting the line of sight of a person in 3D space. Person-independent models (Figure 13(a)) lack precision due to anatomical differences of subjects, whereas person-specific calibrated techniques (Figure 13(b)) add strict constraints on scalability. To overcome these issues, we propose a novel technique, Facial Landmark Heatmap Activated Multimodal Gaze Estimation (FLAME), as a way of combining eye anatomical information using eye landmark heatmaps to obtain precise gaze estimation without any person-specific calibration (Figure 13(c)).

Different types of gaze estimation methods: (a) person-independent technique, (b) person-specific technique, (c) FLAME. Training subjects are green and test subjects are yellow. rr stands for RGB image and hh stands for eye landmark heatmap.

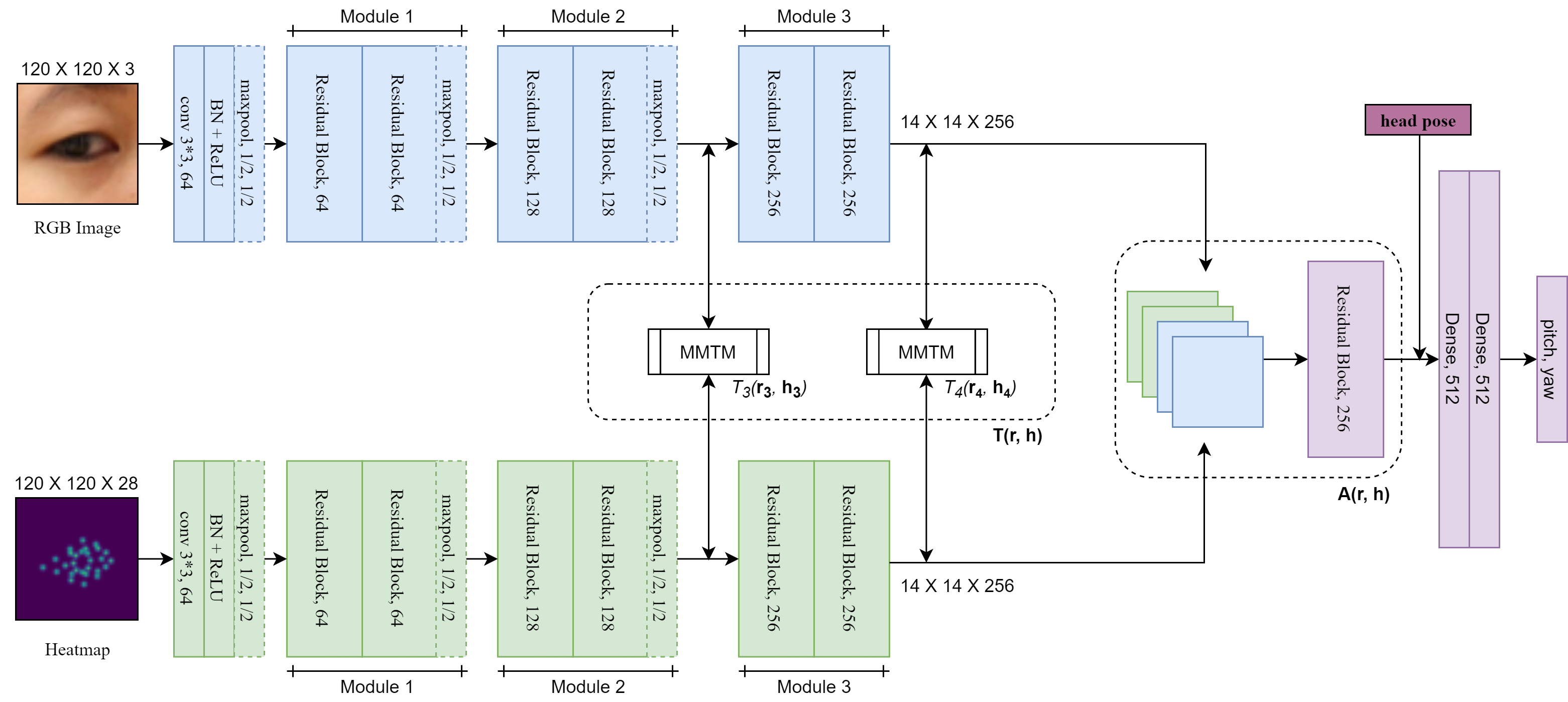

Information exchanged between the RGB stream and the eye landmark heatmap stream is defined by a transfer function based on Multimodal Transfer Module (MMTM). MMTM is a slow modality fusion block used to re-calibrate channel-wise features based on squeeze and excitation mechanism between any two feature maps of arbitrary dimension. To design the transfer function, we use this MMTM block for feature reactivation at the last and second last feature maps, as given in Figure 14. This is because we want the network to learn some initial representation and then, the higher-level features of both streams can be utilized by each other. At the deeper layers, using this transfer function can help both individual unimodal streams to learn better representation by benefiting from the information extracted by the other stream.

Evaluations reported in our paper 46 demonstrates a competitive performance of about 10% improvement on benchmark datasets ColumbiaGaze and EYEDIAP. To validate our method, we also conduct an ablation study, further proving that incorporating eye anatomical information plays a vital role in accurately predicting gaze.

8.11 ICE: Inter-instance Contrastive Encoding for Unsupervised Person Re-identification

Participants: Hao Chen, Benoit Lagade, François Brémond.

Recent self-supervised contrastive learning provides an effective approach for unsupervised person re-identification (ReID) by learning invariance from different views (transformed versions) of an input. In this work, we incorporate a Generative Adversarial Network (GAN) and a contrastive learning module into one joint training framework. While the GAN provides online data augmentation for contrastive learning, the contrastive module learns view-invariant features for generation, as shown in Figure 15. In this context, we propose a mesh-based view generator. Specifically, mesh projections serve as references towards generating novel views of a person. In addition, we propose a view-invariant loss to facilitate contrastive learning between original and generated views. Deviating from previous GAN-based unsupervised ReID methods involving domain adaptation, we do not rely on a labeled source dataset, which makes our method more flexible. Extensive experimental results show that our method 32 significantly outperforms state-of-the-art methods under both, fully unsupervised and unsupervised domain adaptive settings on several large scale ReID datsets. Source code and models are available under . This work has been published in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2021.

Left: Traditional self-supervised contrastive learning maximizes agreement between representations (f1f_1 and f2f_2) of augmented views from Data Augmentation (DA). Right: Joint generative and contrastive learning maximizes agreement between original and generated views.

8.12 Joint Generative and Contrastive Learning for Unsupervised Person Re-identification

Participants: Hao Chen, Yaohui Wang, Benoit Lagade, Antitza Dantcheva, François Brémond.

Unsupervised person re-identification (ReID) aims at learning discriminative identity features without annotations. Recently, self-supervised contrastive learning has gained increasing attention for its effectiveness in unsupervised representation learning. The main idea of instance contrastive learning is to match a same instance in different augmented views. However, the relationship between different instances has not been fully explored in previous contrastive methods, especially for instance-level contrastive loss. To address this issue, we propose Inter-instance Contrastive Encoding (ICE) 31 that leverages inter-instance pairwise similarity scores to boost previous class-level contrastive ReID methods. We first use pairwise similarity ranking as one-hot hard pseudo labels for hard instance contrast, which aims at reducing intra-class variance. Then, we use similarity scores as soft pseudo labels to enhance the consistency between augmented and original views, which makes our model more robust to augmentation perturbations. Experiments on several large-scale person ReID datasets validate the effectiveness of our proposed unsupervised method ICE, as shown in Figure 16, which is competitive with even supervised methods. Code is made available at github. This work has been published in IEEE/CVF International Conference on Computer Vision (ICCV) 2021.

Comparison of top 5 retrieved images on Market1501 between CAP and ICE. Green boxes denote correct results, while red boxes denote false results. Important visual clues are marked with red dashes.

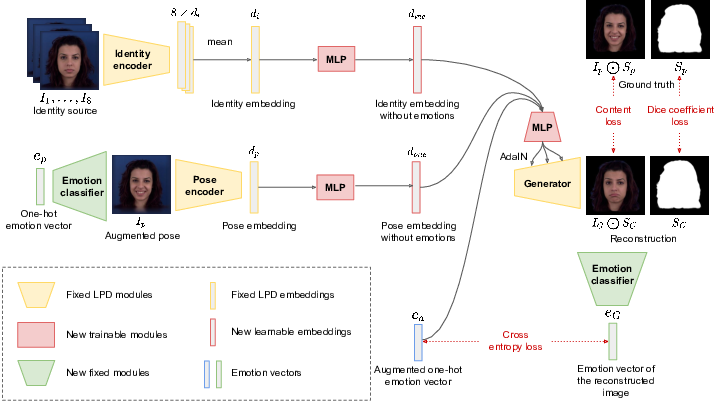

8.13 Emotion Editing in Head Reenactment Videos using Latent Space Manipulation

Participants: Valeriya Strizhkova, François Brémond, Antitza Dantcheva, Yaohui Wang, David Anghelone, Di Yang.

Video generation greatly benefits from integrating facial expressions, as they are highly pertinent in social interaction and hence increase realism in generated talking head videos. Motivated by this, we propose a method for editing emotions in head reenactment videos that is streamlined to modify the latent space of a pre-trained neural head reenactment system 47. Specifically, our method seeks to disentangle emotions from the latent pose and identity representation. The proposed learning process is based on cycle consistency and image reconstruction losses. Our results suggest that despite its simplicity, such learning successfully decomposes emotion from pose and identity. Our method reproduces facial mimics of a person from a driving video, as well as allows for emotion editing in the reenactment video. We compare our method to the state-of-art for altering emotions in reenactment videos, producing more realistic results that the state-of-art.

8.14 Learning to Generate Human Video

Participants: Yaohui Wang, Antitza Dantcheva, François Brémond.

Generative Adversarial Networks (GANs) have witnessed increasing attention due to their abilities to model complex visual data distributions, which allow them to generate and translate realistic images. While realistic video generation is the natural sequel, it is substantially more challenging w.r.t. complexity and computation, associated to the simultaneous modeling of appearance, as well as motion. Specifically, in inferring and modeling the distribution of human videos, generative models face three main challenges: (a) generating uncertain motion and retaining of human appearance, (b) modeling spatio-temporal consistency, as well as (c) understanding of latent representation.

In this thesis 56, we propose three novel approaches towards generating high-visual quality videos and interpreting latent space in video generative models. We firstly introduce a method, which learns to conditionally generate videos based on single input images. Our proposed model allows for controllable video generation by providing various motion categories. Secondly, we present a model, which is able to produce videos from noise vectors by disentangling the latent space into appearance and motion. We demonstrate that both factors can be manipulated in both, conditional and unconditional manners. Thirdly, we introduce an unconditional video generative model that allows for interpretation of the latent space. We place emphasis on the interpretation and manipulation of motion. We show that our proposed method is able to discover semantically meaningful motion representations, which in turn allow for control in generated results. Finally, we describe a novel approach to combine generative modeling with contrastive learning for unsupervised person re-identification. Specifically, we leverage generated data as data augmentation and show that such data can boost re-identification accuracy.

8.15 Guided Flow Field Estimation by Generating Independent Patches

Participants: Mohsen Tabejamaat, Farhood Negin, François Brémond.

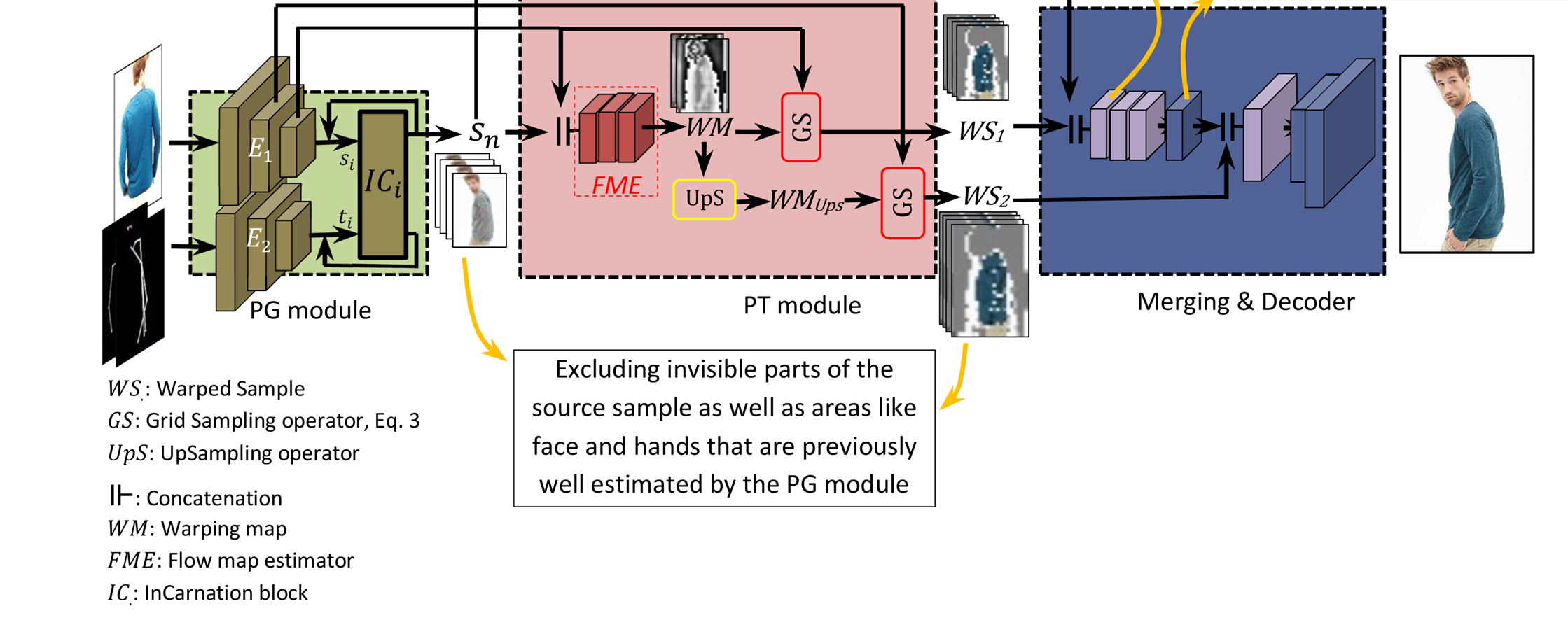

Guided flow field estimation is a novel strategy for high fidelity generation of fashion images. Current strategies propose to warp the samples using an offline pre-training fashion, where an additional network is considered for extracting the trajectory of warping flows so as to be further used as a prior to the main generative process. While interesting, pushing the network in a pseudo-Siamese way leads to a huge number of parameters, ending up to a significantly reduced generalization ability of the network. To address the issue, we propose the flow maps to adaptively learn from the estimations of the output sample rather than the fix keypoints at the input of the network (Figure 17), published in BMVC 2021 52. Finding a solution that enables for a fine trade off between the quantity of the parameters and the quality of samples was at the forefront of our project definition.

We also proposed a patch generation module which helps the transfer function be specialized on specific tasks. We proposed the target patches to be estimated from the same locations in the source sample but through two distinct functions that act as individual experts on the source and target samples.

8.16 BVPNet: Video-to-BVP Signal Prediction for Remote Heart Rate Estimation

Participants: Abhijit Das, Antitza Dantcheva.

We propose a new method for remote photoplethysmography (rPPG) based heart rate (HR) estimation. In particular, our proposed method BVPNet 37 is streamlined to predict the blood volume pulse (BVP) signals from face videos. Towards this, we firstly define ROIs based on facial landmarks and then extract the raw temporal signal from each ROI. Then the extracted signals are pre-processed via first-order difference and Butterworth filter and combined to form a Spatial-Temporal map (STMap). We then propose to revise U-Net, in order to predict BVP signals from the STMap. BVPNet takes into account both temporal and frequency domain losses in order to learn better than conventional models. Our experimental results suggest that our BVPNet outperforms the state-of-the-art methods on two publicly available datasets (MMSE-HR and VIPL-HR).

8.17 Demystifying Attention Mechanisms for Deepfake Detection

Participants: Abhijit Das, Ritaban Roy, Indu Joshi, Srijan Das, Antitza Dantcheva.

Manipulated images and videos, i.e., deepfakes have become increasingly realistic due to the tremendous progress of deep learning methods. However, such manipulation has triggered social concerns, necessitating the introduction of robust and reliable methods for deepfake detection. In this works 53, 36, we explore a set of attention mechanisms and adapt them for the task of deepfake detection. Generally, attention mechanisms in videos modulate the representation learned by a convolutional neural network (CNN) by focusing on the salient regions across space-time. In our scenario, we aim at learning discriminative features to take into account the temporal evolution of faces to spot manipulations. To this end, we address the two research questions `How to use attention mechanisms?', and `What type of attention is effective for the task of deepfake detection?' Towards answering these questions, we provide a detailed study and experiments on videos tampered by four manipulation techniques, as included in the FaceForensics++ dataset. We investigate three scenarios, where the networks are trained to detect (a) all manipulated videos, (b) each manipulation technique individually, as well as (c) the veracity of videos pertaining to manipulation techniques not included in the train set.

8.18 Computer Vision for deciphering and generating faces

Participants: Antitza Dantcheva.

The main volume of the HDR 54 is in computer vision, and it aims to holistically decipher information enciphered in human faces.Motivation originates from the emerging importance of automated face-analysis in our evolving society, be it for security or health applications, as well as from the practicality of such systems. Specifically, we have placed emphasis on learning representations of human faces concerning two main domains of application: security and healthcare. While seemingly different, these applications share the core processing-competence, which has proven to be beneficial as it has brought to the fore cross-fertilization of ideas across areas. With respect to security, we have designed algorithms, which extract soft biometrics attributes such as gender, age, ethnicity, height and weight. We have aimed at mitigating bias, when estimating such attributes. Prior, we have established the impact of facial cosmetics on automated face analysis systems and have then focused on the design of methods that reduce such impact and ensure for makeup-robust face recognition.

Results related to healthcare deal with facial behavioral analysis, as well as apathy analysis of Alzheimer's disease patients. In our current work with the STARS team of Inria and the Cognition Behaviour Technology (CoBTeK) lab of the Université Côte d'Azur, we have developed a series of spatio-temporal methods for facial behavior, emotion and expression recognition.

Most recently, we have additionally focused on Generative Adversarial Networks (GANs), which have witnessed increasing attention due to their abilities to model complex visual data distributions. We have proposed a number of novel approaches towards conditional and unconditional generation of realistic videos and have additionally aimed at disentangling the latent space into appearance and motion, as well as interpreting it.

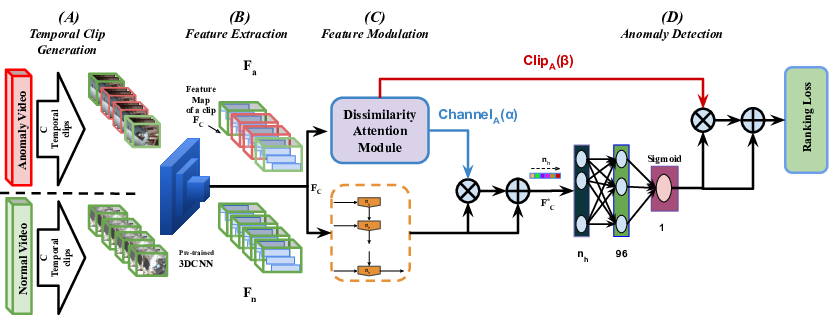

8.19 DAM : Dissimilarity Attention Module for Weakly-supervised Video Anomaly Detection

Participants: Snehashis Majhi, Srijan Das, François Brémond.

Video anomaly detection under weak supervision is complicated due to the difficulties in identifying the anomaly and normal instances during training, hence, resulting in non-optimal margin of separation. In this paper, we propose a framework consisting of Dissimilarity Attention Module (DAM) 44 to discriminate the anomaly instances from normal ones both at feature level and score level. In order to decide instances to be normal or anomaly, DAM takes local spatio-temporal (i.e. clips within a video) dissimilarities into account rather than the global temporal context of a video 45. This allows the framework to detect anomalies in real-time (i.e. online) scenarios without the need of extra window buffer time. Further more, we adopt two-variants of DAM for learning the dissimilarities between successive video clips. The proposed framework along with DAM is validated on two large scale anomaly detection datasets i.e. UCF-Crime and ShanghaiTech, outperforming the online state-of-the-art approaches by 1.5 and 3.4 respectively.

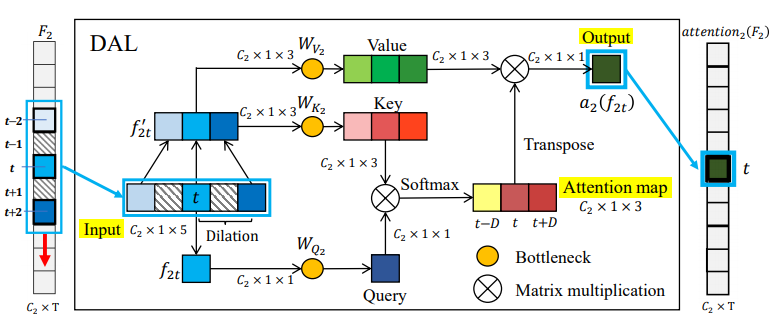

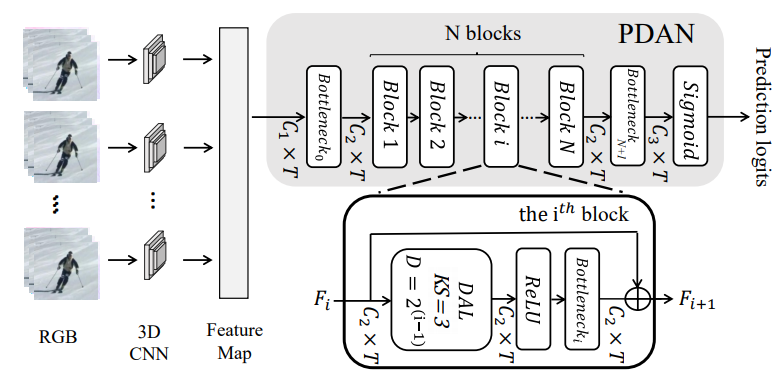

8.20 Pyramid Dilated Attention Network

Participants: Rui Dai, Srijan Das, François Brémond.

Handling long and complex temporal information is an important challenge for action detection tasks. This challenge is further aggravated by densely distributed actions in untrimmed videos. Previous action detection methods fail in selecting the key temporal information in long videos. To this end, we introduce the Dilated Attention Layer (DAL) 35, see Fig. 20. Compared to previous temporal convolution layer, DAL allocates attentional weights to local frames in the kernel, which enables it to learn better local representation across time. Furthermore, we introduce Pyramid Dilated Attention Network (PDAN) which is built upon DAL, see Fig. 21. With the help of multiple DALs with different dilation rates, PDAN can model short-term and long-term temporal relations simultaneously by focusing on local segments at the level of low and high temporal receptive fields. This property enables PDAN to handle complex temporal relations between different action instances in long untrimmed videos. To corroborate the effectiveness and robustness of our method, we evaluate it on three densely annotated, multi-label datasets: MultiTHUMOS, Charades and Toyota Smarthome Untrimmed (TSU) dataset. PDAN is able to outperform previous state-of-the-art methods on all these datasets. This work was published in the Winter Conference on Applications of Computer Vision 2021 (WACV 2021).

Dilated Attention Layer (DAL). In this figure, we present a computation flow inside the kernel at time step tt for layer ii=2 (kernel size KS is 3, dilation rate D is 2). Afterwards, DAL processes one step forward following the red arrow at time t+1t+1.

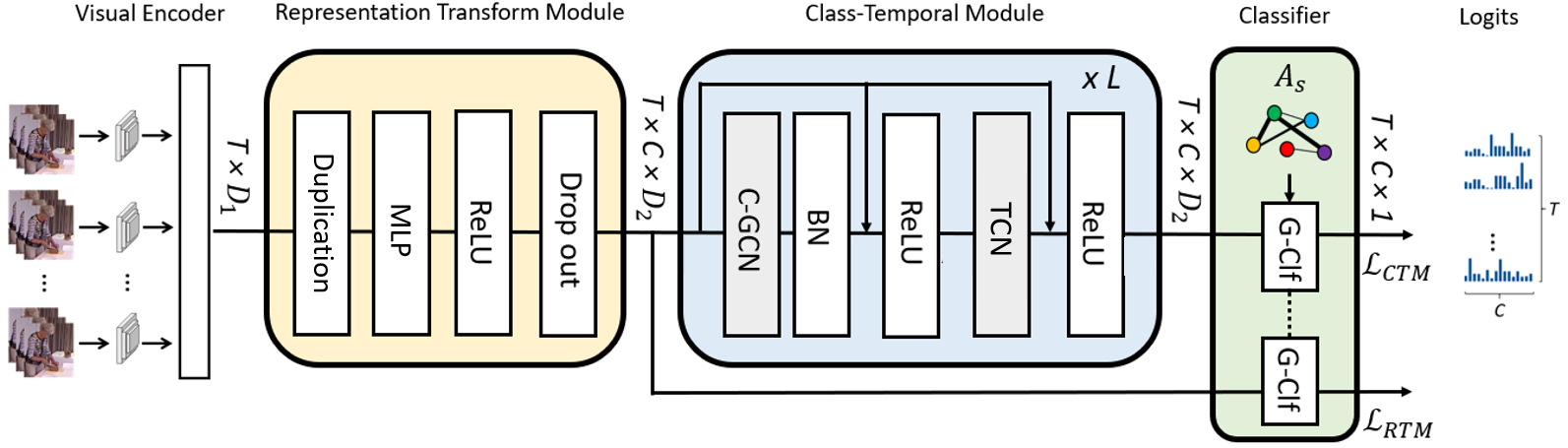

8.21 Class-Temporal Relational Network

Participants: Rui Dai, Srijan Das, François Brémond.

Action detection is an essential and challenging task, especially for densely labelled datasets of untrimmed videos. There are many real-world challenges in those datasets, such as composite action, co-occurring action, and high temporal variation of instance duration. For handling these challenges, we propose to explore both the class and temporal relations of detected actions. We introduce an end-to-end network 33 (see Fig. 22): Class-Temporal Relational Network (CTRN). It contains three key components: (1) The Representation Transform Module filters the class-specific features from the mixed representations to build graph-structured data. (2) The Class-Temporal Module models the class and temporal relations in a sequential manner. (3) G-classifier leverages the privileged knowledge of the snippet-wise co-occurring action pairs to further improve the co-occurring action detection. We evaluate CTRN on three challenging densely labelled datasets and achieve state-of-the-art performance, reflecting the effectiveness and robustness of our method. This work is accepted in The British Machine Vision Conference 2021 (BMVC 2021) 33 as an oral presentation.

8.22 Learning an Augmented RGB Representation with Cross-Modal Knowledge Distillation for Action Detection

Participants: Rui Dai, Srijan Das, François Brémond.

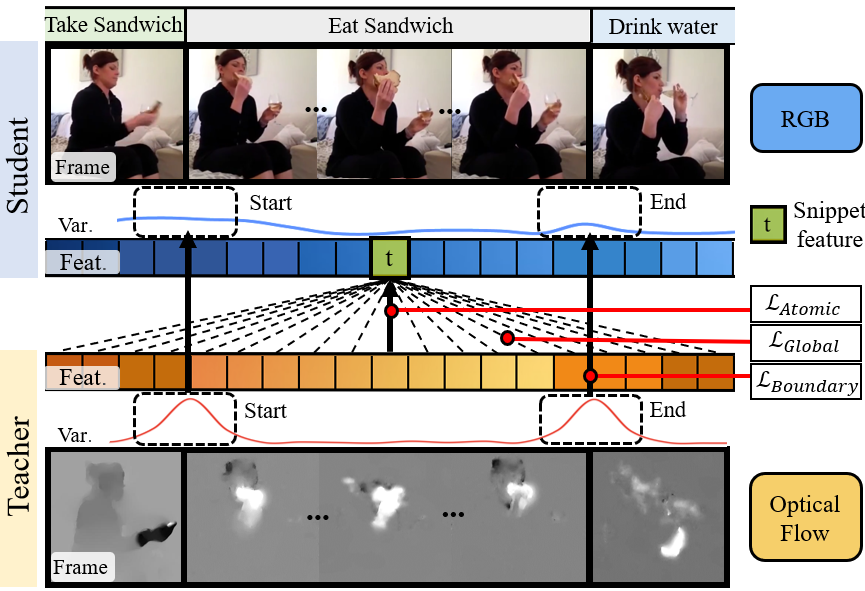

In video understanding, most cross-modal knowledge distillation (KD) methods are tailored for classification tasks, focusing on the discriminative representation of the trimmed videos. However, action detection requires not only categorizing actions, but also localizing them in untrimmed videos. Therefore, transferring knowledge pertaining to temporal relations is critical for this task which is missing in the previous cross-modal KD frameworks. To this end, we aim at learning an augmented RGB representation for action detection, taking advantage of additional modalities at training time through KD. We propose a KD framework 34 consisting of two levels of distillation (see Fig. 23). On one hand, atomic-level distillation encourages the RGB student to learn the sub-representation of the actions from the teacher in a contrastive manner. On the other hand, sequence-level distillation encourages the student to learn the temporal knowledge from the teacher, which consists of transferring the Global Contextual Relations and the action Boundary Saliency. The result is an Augmented-RGB stream that can achieve competitive performance as the two-stream network while using only RGB at inference time. Extensive experimental analysis shows that our proposed distillation framework is generic and outperforms other popular cross-modal distillation methods in the action detection task. This work was published in the International Conference on Computer Vision 2021 (ICCV 2021) 34.

Proposed cross-modal distillation framework for action detection. Our distillation framework is composed of three loss terms corresponding to different types of knowledge to transfer across modalities. ℒAtomic\mathcal {L}_{Atomic}: Atomic KD loss; ℒGlobal\mathcal {L}_{Global}: Global Contextual Relation loss; ℒBoundary\mathcal {L}_{Boundary}: Boundary Saliency loss.

8.23 VPN++: Rethinking Video-Pose embeddings for understanding Activities of Daily Living

Participants: Rui Dai, Srijan Das, François Brémond.

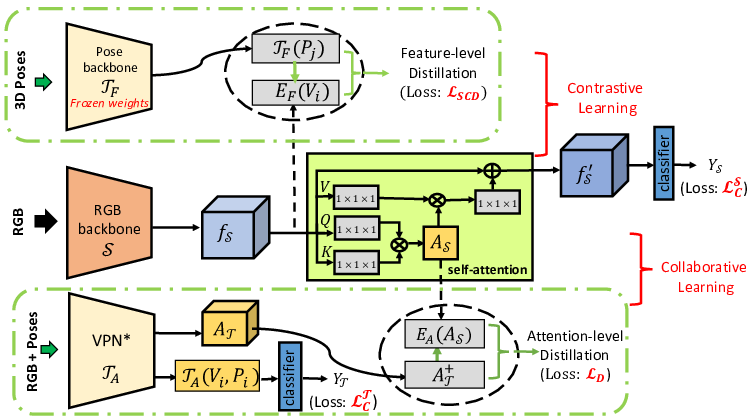

Many attempts have been made towards combining RGB and 3D poses for the recognition of Activities of Daily Living (ADL). ADL may look very similar and often necessitate to model fine-grained details to distinguish them. Because the recent 3D ConvNets are too rigid to capture the subtle visual patterns across an action, this research direction is dominated by methods combining RGB and 3D Poses. But the cost of computing 3D poses from RGB stream is high in the absence of appropriate sensors. This limits the usage of aforementioned approaches in real-world applications requiring low latency. Then, how to best take advantage of 3D Poses for recognizing ADL? To this end, we propose an extension of a pose driven attention mechanism: Video-Pose Network (VPN) 17, exploring two distinct directions. One is to transfer the Pose knowledge into RGB through a feature-level distillation and the other towards mimicking pose driven attention through an attention-level distillation. Finally, these two approaches are integrated into a single model, we call VPN++ (see Fig. 24). We show that VPN++ is not only effective but also provides a high speed up and high resilience to noisy Poses. VPN++, with or without 3D Poses, outperforms the representative baselines on 4 public datasets. This work is accepted in Transactions on Pattern Analysis and Machine Intelligence (TPAMI) 17.

8.24 Multimodal Personality Recognition using Cross-Attention Transformer and Behaviour Encoding

Participants: Tanay Agrawal, Dhruv Agrawal, Neelabh Sinha, François Brémond.

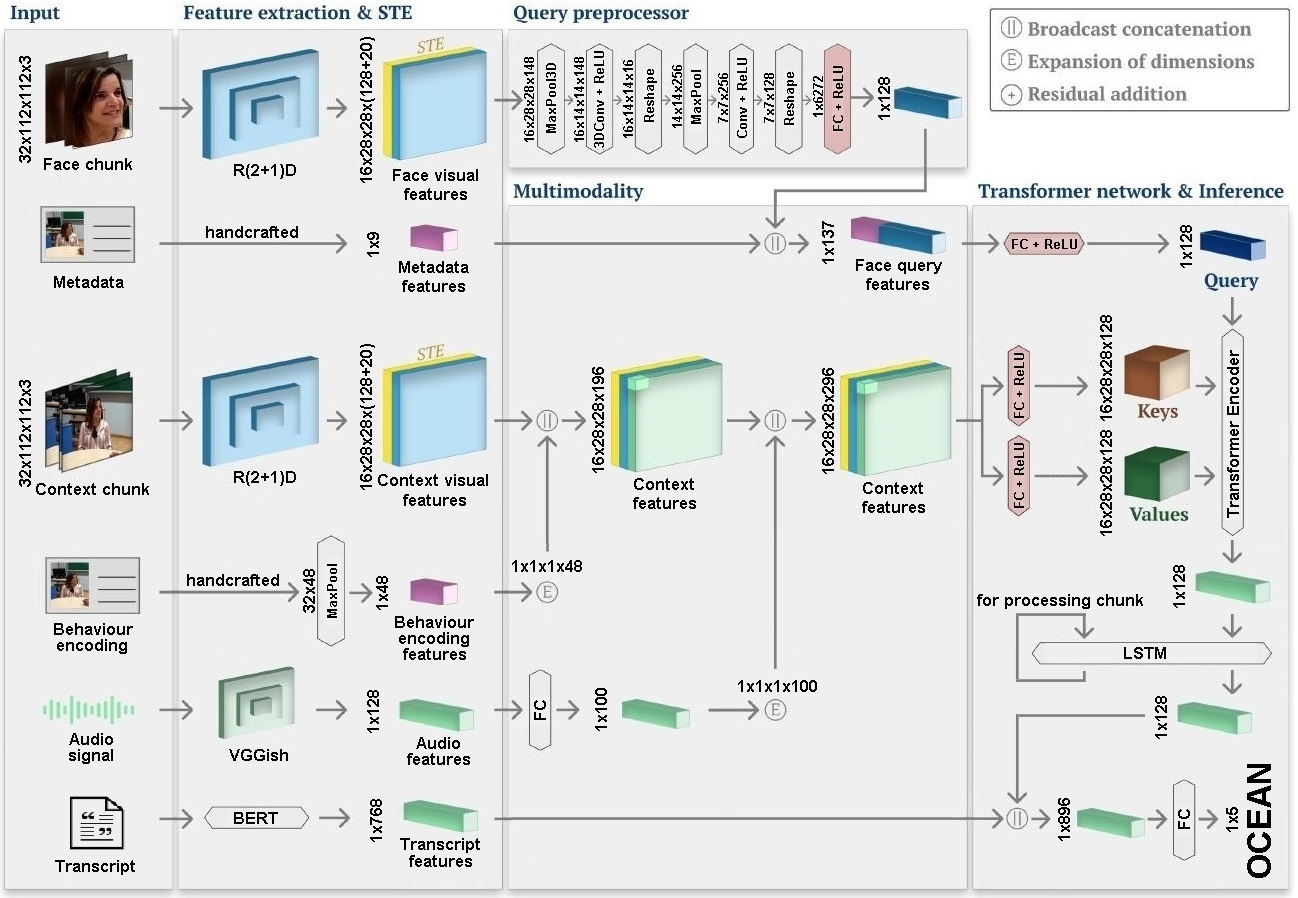

Personality computing and affective computing have gained recent interest in many research areas. The datasets for the task generally have multiple modalities like video, audio, language and bio-signals. We propose a flexible model for the task which exploits all available data. The task involves complex relations and, to avoid using a large model for video processing specifically, we propose the use of behaviour encoding which boosts performance with minimal change to the model. Cross-attention using transformers has become popular in recent times and is utilised for fusion of different modalities. Since long term relations may exist, breaking the input into chunks is not desirable, thus the proposed model processes the entire input together.

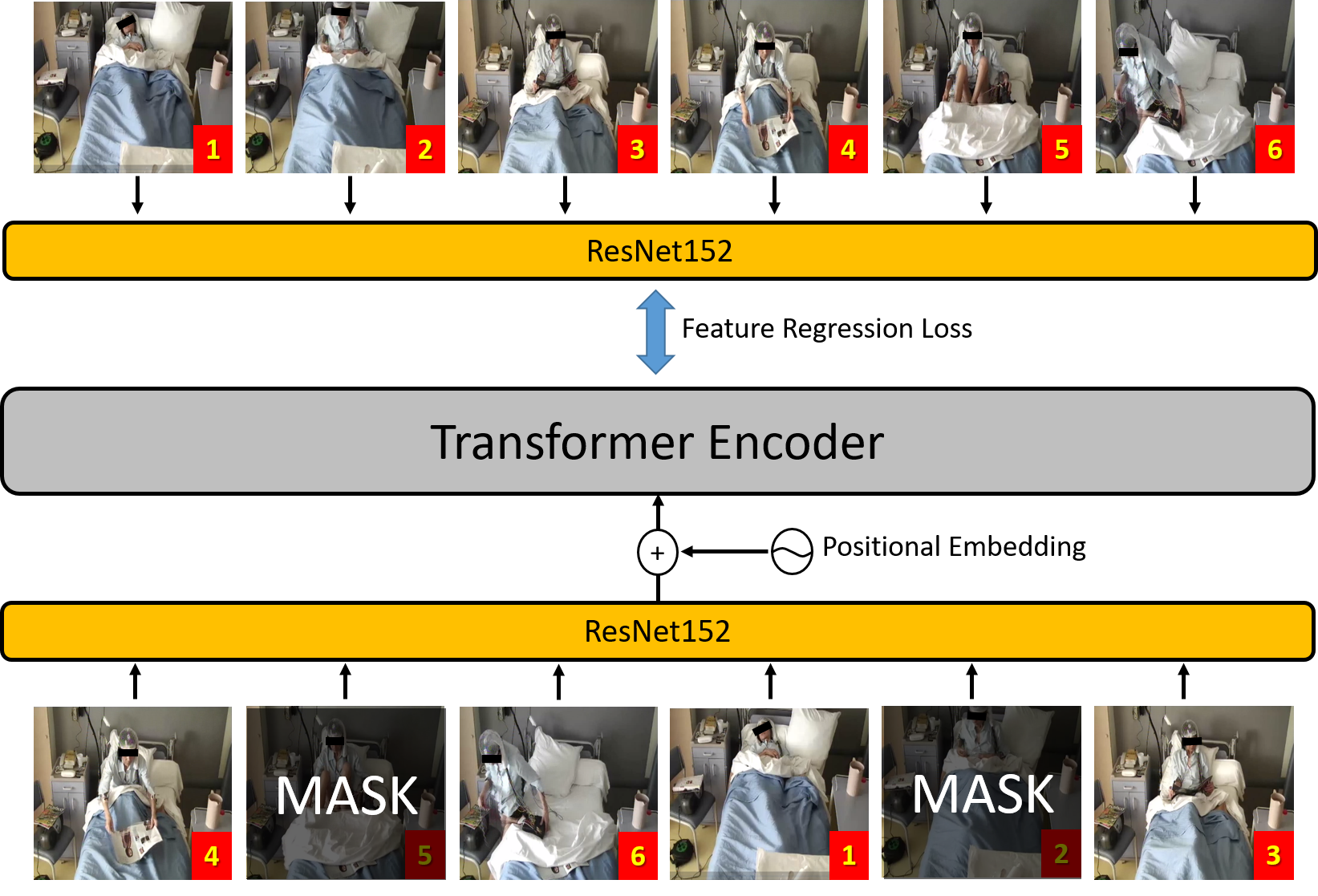

Our approach uses face crops of the target person and relates it to body language, surroundings and speech using a transformer based architecture. Short-term temporal relations are processed in this way and longer temporal relations are established using LSTM. For transcript analysis, short term temporal relations are not very meaningful so the features for the entire input sequences are extracted using BERT. Late fusion is then finally used for inferring the OCEAN personality traits. Figure 25 shows the overview of the entire architecture.