Keywords

Computer Science and Digital Science

- A2.1.10. Domain-specific languages

- A3.1.4. Uncertain data

- A3.1.7. Open data

- A3.1.8. Big data (production, storage, transfer)

- A3.3. Data and knowledge analysis

- A3.3.1. On-line analytical processing

- A3.3.3. Big data analysis

- A3.5.1. Analysis of large graphs

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.6. Tangible interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A6.3.3. Data processing

- A9.6. Decision support

Other Research Topics and Application Domains

- B1. Life sciences

- B1.1. Biology

- B1.2. Neuroscience and cognitive science

- B9.5.6. Data science

- B9.6. Humanities

- B9.6.1. Psychology

- B9.6.3. Economy, Finance

- B9.6.6. Archeology, History

- B9.6.10. Digital humanities

1 Team members, visitors, external collaborators

Research Scientists

- Jean-Daniel Fekete [Team leader, INRIA, Senior Researcher, HDR]

- Tobias Isenberg [INRIA, Senior Researcher, HDR]

- Petra Isenberg [INRIA, Researcher, HDR]

Faculty Member

- Frederic Vernier [UNIV PARIS SACLAY, Associate Professor]

Post-Doctoral Fellows

- Florent Cabric [INRIA, from Oct 2022]

- Gaelle Richer [INRIA, until May 2022]

- Mickael Sereno [INRIA]

PhD Students

- Katerina Batziakoudi [Berger-Levrault, CIFRE, from Oct 2022]

- Tingying He [UNIV PARIS SACLAY]

- Jiayi Hong [INRIA]

- Mohammad Alaul Islam [INRIA]

- Alexis Pister [INRIA]

- Lijie Yao [INRIA]

Technical Staff

- Christian Poli [INRIA]

Interns and Apprentices

- Federica Bucchieri [INRIA, Intern, from Mar 2022 until Aug 2022]

- Ebrar D. Santos [INRIA, Intern, from Mar 2022 until Aug 2022]

Administrative Assistant

- Katia Evrat [INRIA]

Visiting Scientists

- Raimund Dachselt [Technische Universität Dresden, from Feb 2022 until Feb 2022]

- Nivan Ferreira Junior [UFPE, from Jul 2022 until Oct 2022]

- Juan Carlos Torres Cantero [University of Granada, from May 2022 until Jul 2022]

2 Overall objectives

2.1 Objectives

Aviz (Analysis and VIsualiZation) is a multidisciplinary project that seeks to improve data exploration methods, techniques, and tools based on Interactive Visualization. Visualization, in general, refers to the graphical representation of data or concepts to aid access, distribution or explanations of data. Card et al. give a general definition for visualization as

“the use of computer-supported, interactive, visual representations of data to amplify cognition.” 74

Taking this definition, visualization is a means of creating visual aids that lead to insight into the underlying data sets. It is not about producing nice pictures but about making data understandable and explorable so that visualizations help viewers gain knowledge about the data. It is about aiding the process of forming a mental model for the acquired data and so helping the viewer to understand underlying concepts, patterns, and connections within the data 127. In particular, visualization aims to improve humans' sensemaking of complex data by taking advantage of the capabilities of their vision system: visual information can be processed in parallel and with a high bandwidth into the human cognitive centers 136. Ware defines five advantages of visualization 136:

- Comprehension: Supports the comprehension of large amounts of data.

- Pattern Perception: Previously unnoticed properties of data may emerge.

- Problem Analysis: Problems within the data may become immediately apparent.

- Adaptability: Facilitates understanding of large- and small-scale features of data.

- Interpretation: Hypothesis formulation is facilitated.

Three main areas of visualization have evolved in the computer science community: Scientific Visualization, Information Visualization, and Visual Analytics. Scientific visualization is primarily concerned with displaying real or simulated scientific data. Basic visualization techniques for this area include surface rendering, volume rendering, and animation. Typical examples include processing of satellite photographs, fluid flows, or medical data. Datasets in information visualization typically come from large information spaces or information systems and can be either structured or unstructured. Examples include network data, multi-dimensional tables of abstract measurements, or unstructured data such as text. Visual analytics, finally, is concerned with augmenting human-led data exploration with automatic techniques such as machine learning. The Aviz team has expertise in all three areas of visualization.

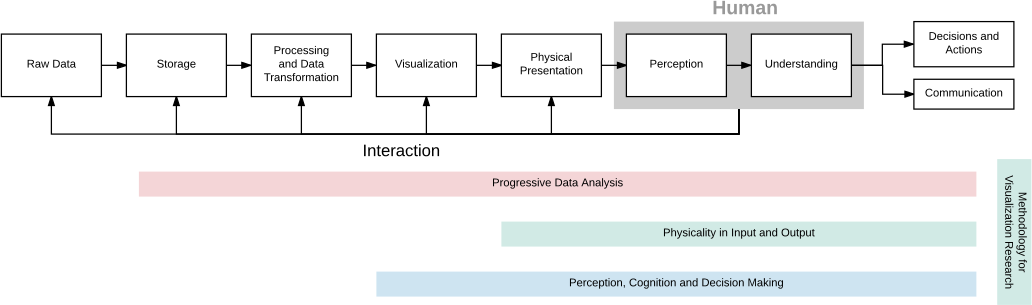

As shown in Figure 1, visualization deals with the data analysis pipeline, and research in visualization has been addressing all the stages, with less emphasis on the two initial ones and the last one. In its initial incarnation, Aviz focused on interaction in combination with visualization, physical presentation, and perception. We now want to expand our research to wider questions, both on the human side and on the system side. On the human side, we want to better understand human perception and cognition to improve visualization techniques, so as to better convey information to the human brain. We also want to better understand human biases in order to overcome them when possible, or to provide methods to avoid them otherwise.

On the system side, we want to expand the scope of visualization, which is currently limited to relatively small datasets and relatively simple analytical methods. To achieve scalability in visualization, we will focus on a paradigm shift: progressive data analysis. Long-running computations currently hamper the exploration and visualization process because human attention is limited by latency constraints. We want to design exploratory systems that provide continuous feedback and allow interactions at any time during computation. The new progressive data analysis paradigm offers these capabilities, but to be usable, it requires the whole analytical pipeline to be re-implemented, and visualization and interaction techniques to be adapted.

2.2 Research Themes

Aviz's research on Visualization and Visual Analytics is organized around four research themes, described in more detail in the next section. Instead of addressing point problems, each research theme will address several stages of the visualization pipeline in a holistic manner, as summarized in Figure 1.

1. Progressive Data Analysis will address visualization scalability problems. Existing data analysis systems (such as Tableau 130, R 131, or Python with its data analysis ecosystem 105) are not scalable for exploratory analysis because their latency is not controllable. This theme will lay out the foundations of progressive data analysis systems, which generate estimates of the results and update the analyst continuously at a bounded pace. It will focus on all the stages of the data analysis pipeline: data management mechanisms, data analysis modules, as well as visualizations, perception, understanding, and decision making.

2. Physicality in Input and Output will seek to better understand the benefits of physicality for information. Although beyond-desktop environments for visualization are generating more and more interest, theories and empirical data are lacking. This theme will consolidate the nascent areas of data physicalization, situated visualization, and immersive visualization.

3. Perception, Cognition, and Decision Making will study how we perceive and understand visualizations in order to develop generalized guidelines for optimizing effectiveness. It will generalize results obtained with simple charts to more complex visualizations of large datasets, establish theories on the use of abstraction in visualization, and contribute new empirical knowledge on decision making with visualizations.

4. Methodologies for Visualization Research will develop new methods to ground the study of the above scientific questions, and to benefit visualization more generally. This theme will develop evidence-based strategies for communicating quantitative empirical findings, and will promote methodological discussions and open research practices within the field.

3 Research program

3.1 Progressive Data Analysis

Permanent involved: Jean-Daniel Fekete

While data analysis has made tremendous progress in scalability in the last decade, this progress has only benefited “confirmatory” analysis or model-based computation; progress in data exploration has lagged behind.

Existing data analysis systems do not support data exploration at scale because, for large amounts of data or for expensive computations, their latency is not controllable: computations can take minutes, hours, even days and months. Cognitive psychologists have shown that human cognitive capabilities degrade when latency increases 118, 126. Miller 118 points out that the feedback of a system should remain below 10 seconds to maintain the user's attention. Therefore, to limit latency, analysts currently resort to complex, inefficient, and unsatisfactory strategies, such as sampling, with its issues related to representativity.

To address the scalability problem under controlled latency, instead of performing each computation in one long step that forces the analyst to wait for an unbounded amount of time, a progressive system generates estimates of the results and updates the analyst continuously at a bounded pace. The process continues until the computation is complete, or it stops early if the analyst considers that the quality of the estimates is sufficient to make a decision. During the process, a progressive system allows users to monitor the computation with visualizations and steer it with interactions.
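The behavior described above can be illustrated with a minimal Python sketch. It is not the ProgressiVis implementation; the function name `progressive_mean` and the `budget_s` parameter are hypothetical and chosen only for illustration. The generator computes a running mean and reports an estimate each time a time budget elapses, so the consumer gets feedback at a bounded pace and may stop early.

```python
import time
from typing import Iterable, Iterator, Tuple


def progressive_mean(
    values: Iterable[float], budget_s: float = 0.05
) -> Iterator[Tuple[int, float]]:
    """Yield (items_seen, running_mean) about every `budget_s` seconds.

    Hypothetical helper for illustration only: the last yielded pair is the
    exact mean over all items, earlier pairs are estimates.
    """
    count = 0
    total = 0.0
    reported = 0  # number of items covered by the last reported estimate
    deadline = time.monotonic() + budget_s
    for value in values:
        count += 1
        total += value
        if time.monotonic() >= deadline:
            # Budget exhausted: report the current estimate, then resume.
            yield count, total / count
            reported = count
            deadline = time.monotonic() + budget_s
    if count and reported != count:
        # Computation complete: report the final, exact result once.
        yield count, total / count


if __name__ == "__main__":
    data = (x % 7 for x in range(5_000_000))
    for seen, estimate in progressive_mean(data, budget_s=0.1):
        print(f"{seen:>9} items, mean so far: {estimate:.4f}")
        if seen >= 2_000_000:
            break  # the analyst decides the estimate is good enough
```

Breaking out of the loop plays the role of the analyst stopping the computation early; a real progressive system would additionally let the analyst steer parameters while the computation runs.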

While the topic of progressive data analysis started to emerge in the late 1990s, it has remained marginal in practice because it touches three fields of computer science that are traditionally separate: data management, data analysis, and visualization. Research on progressive data analysis remains fragmented; the solutions proposed are partial and the different solutions cannot always be combined. We have organized a Dagstuhl seminar on Progressive Data Analysis and Visualization 82, 132 that acknowledged the harm of this topical separation and devised a research agenda. Aviz will participate in this agenda with specific assets.

Aviz has recently started to design and implement the ProgressiVis language, which is natively progressive 83. The language relies on a Python interpreter, but its execution semantics are different in the sense that all the operations that would take time to execute are performed progressively. The ProgressiVis system touches all the stages of the conceptual data analysis pipeline of Figure 1; it integrates data management mechanisms, data analysis modules, as well as visualizations, perception, understanding, and decision making. Aviz will strengthen its work on the implementation of a natively progressive data science system. Such a system will lead to the following research topics:

- Progressive language kernel and data management mechanisms

- Progressive algorithms and computation strategies

- Progressive visualizations

- Management of uncertainties, computed from the algorithms and conveyed to the analysts.

Language:

ProgressiVis relies on a Python interpreter that is convenient for quick prototyping but is inefficient. Once the semantics of the language and its core libraries become clearer, we will replace the implementation with a C++ library that will improve performance and allow its integration in other popular data science environments such as R and Julia. We are collaborating with colleagues in the database community to tackle the challenges related to progressive data management 132, 62.

Algorithms:

Existing libraries implementing algorithms are mostly “eager”, sometimes “online”, and more rarely “streaming”, but very few are compatible with our progressive paradigm. We have started to work in collaboration with the University of Seoul and the University of Delft on the problem of converting existing algorithms into a progressive form. We currently have a running version of approximate k-nearest-neighbors 103, kernel density estimation 103, k-means clustering, multidimensional projection using t-SNE 103, and several other core algorithms. We want to further expand the range of algorithms we can support, and better understand how algorithms could be transformed into their progressive counterparts.

Progressive computation can trade speed, memory, and quality. When analysts want to compute rough estimates of their results quickly, several progressive algorithms offer parameters to control the tradeoffs. For example, our progressive k-nearest-neighbors algorithm 103 allows choosing the number of k-d-trees, which influences the memory footprint, accuracy, and speed. Progressive data analysis also uses and adapts streaming algorithms, also called “data sketches” 84, that offer various tradeoffs between speed, memory, and quality.
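As a small illustration of such a tradeoff, the sketch below uses reservoir sampling (Algorithm R), a standard streaming technique that keeps a uniform random sample of fixed size from a stream of unknown length. It is given here only as an example of a bounded-memory summary and is not claimed to be one of the algorithms used in ProgressiVis; the function name and parameters are hypothetical. The reservoir size `k` is the tuning parameter: a larger `k` costs more memory and yields better estimates.

```python
import random
from typing import Iterable, List, Optional


def reservoir_sample(
    stream: Iterable[float], k: int, seed: Optional[int] = None
) -> List[float]:
    """Return a uniform random sample of at most `k` items from `stream`.

    Memory use is O(k) regardless of the stream length.
    """
    rng = random.Random(seed)
    reservoir: List[float] = []
    for index, item in enumerate(stream):
        if index < k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Keep the new item with probability k / (index + 1).
            slot = rng.randint(0, index)  # both bounds are inclusive
            if slot < k:
                reservoir[slot] = item
    return reservoir


if __name__ == "__main__":
    sample = reservoir_sample(range(1_000_000), k=1_000, seed=42)
    # The sample mean approximates the stream mean using only 1,000 values.
    print(sum(sample) / len(sample))
```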

Visualizations:

Just as algorithms need to be adapted or transformed, visualization techniques and user interfaces also need to be adapted to be used in a progressive setting. For the visualization pipeline, including the rendering phase, there has been previous work related to progressive layout computation and progressive rendering. For example, in network visualization, there is an old tradition of using iterative force-directed solvers and showing their results progressively when computing complex graph layouts. We want to generalize this approach to all visualizations. In recent years, we have collaborated on work related to the progressive computation of multidimensional projections, including PCA and t-SNE 120, 103, and will continue to improve the scalability of projection methods. Progressive rendering is popular in the real-time computer graphics and gaming domains, but new in visualization. Our colleague Renaud Blanch from Grenoble has started to investigate this problem 71 by designing a rendering engine that can visualize and filter a large amount of data in interactive time, transiently simplifying details when necessary. We will also explore this problem both in the context of direct rendering, when the GPU shares the memory performing the computations, and in the context of web-based rendering.

We have also started to explore the problem of requirements for progressive user interfaces 61, leading the community of researchers to understand that progressive systems need to provide effective assessments of the quality of their results to allow analysts to make early decisions. This justifies the next research topic on the management of uncertainties. We have also started to propose new data models for managing aggregated visualizations 104. We will now continue our research to adapt existing visualization techniques and interaction environments to deal with progressive results and parameter steering.

Management of uncertainties:

When performing exploratory data analysis on large data, analysts start by trying some algorithms with different parameters until they obtain useful results. Therefore, it is of paramount importance to be able to realize that a specific algorithm or a specific set of parameters is not leading to a useful result, and to abort an analysis before it ends in order to explore other methods. On the other hand, in many circumstances when data is “well-behaved”, progressive algorithms achieve very good results quickly, and analysts can make a decision before the end of the algorithms' execution. The assessment of a quality measure related to a computed result is a complex problem, already tackled by other communities (e.g., streaming, approximate queries). Progressive Data Analysis adds two problems: 1) controlling uncertainty during progressive computations, and 2) conveying the results of this uncertainty in a way understandable by analysts, while avoiding possible cognitive biases such as priming and anchoring 106.

To control the uncertainty during the progression of a computation, methods related to Sequential Analysis 85 should be used. They are well-known in the area of clinical trials and have evolved recently to manage large amounts of data while controlling time or quality in Approximate Query Databases such as BlinkDB 58. These approaches should be further adapted for progressive data analysis. The topic of human cognitive bias will be developed further in Section 3.3.4.
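To make the idea of quality-controlled early stopping concrete, here is a deliberately simplified Python sketch. It maintains a running mean and variance with Welford's online algorithm and stops once a normal-approximation confidence interval is narrower than a tolerance. The function name and its parameters are hypothetical. Note that checking a fixed-level interval after every item does not provide the error guarantees of proper sequential analysis methods, which correct for such repeated looks; the sketch only illustrates the control loop.

```python
import math
from typing import Iterable, Tuple


def mean_until_precise(
    values: Iterable[float],
    tolerance: float,
    z: float = 1.96,
    min_items: int = 30,
) -> Tuple[int, float, float]:
    """Consume `values` until the confidence half-width is <= `tolerance`.

    Returns (items_used, mean_estimate, half_width). `min_items` must be at
    least 2 so that the sample variance is defined. If the stream ends before
    `min_items` items are seen, half_width is infinite.
    """
    n = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the mean (Welford)
    half_width = math.inf
    for x in values:
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        if n >= min_items:
            std_err = math.sqrt(m2 / (n - 1) / n)
            half_width = z * std_err
            if half_width <= tolerance:
                break  # estimate is precise enough: stop early
    return n, mean, half_width


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    stream = (rng.gauss(10.0, 2.0) for _ in range(1_000_000))
    used, estimate, hw = mean_until_precise(stream, tolerance=0.05)
    print(f"stopped after {used} items: {estimate:.3f} +/- {hw:.3f}")
```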

3.2 Physicality in Input and Output

Permanents involved: Petra Isenberg, Tobias Isenberg, Jean-Daniel Fekete

In the past few years, researchers at Aviz have begun to investigate beyond-desktop environments for visualization 92, 93, 97, 113. In particular, our team has made important contributions in the areas of large-display visualizations 90, 70, 89, tangible interaction for scientific visualization 64, 63, 66, and data physicalization 98, 102, 100, 112. We will pursue and consolidate this stream of research by focusing on how to leverage physicality in visualization, both as an input channel and as an output channel. We will more specifically i) explore physically-situated and physically-embedded visualizations for personal analytics; and ii) study augmented-reality-based scientific visualization.

3.2.1 Situated Data Representations for Personal Analytics

We will study how embedding data visualizations in the physical world can empower people to make effective use of their personal data in a variety of application contexts. People generate data in many areas of daily life (e.g., data generated from shopping cards, fitness and health data). However, these types of personal data are often only exploited by the companies capturing them, while they remain unused by the people who “generated” them. Rarely is personal data subjected to an in-depth analysis and used for reflection and decision making.

Personal visual analytics is a new and growing area of research 88, but has mostly focused on desktop and mobile applications. With our work we want to go beyond the traditional platforms of personal data analytics by using situated data visualizations on various types of non-traditional displays. In a situated data visualization, the data is directly visualized near the physical space, object, or person it originates from 137. For example, a person may attach small e-ink displays embedded with sensors at various locations of their house or their workplace, to better understand their use of space, of equipment, or of energy resources. Or a person who wishes to exercise more may use an augmented reality device to visualize their past running performance in-place. New situated data visualizations like these can surface information in the physical environment—allowing viewers to interpret data in-context and take action in response to it. Because they will make it possible to explore personal data and take action in relevant physical locations, situated visualizations of personal data have the potential to radically transform how people relate to data.

This research will investigate how situated visualizations of personal data can help address problems faced by the general public by investigating a range of research hypotheses and application domains. For example, we will study how augmented reality technology can help surface digital traces of personal data in physical environments and enable people to make more informed decisions. We will also study how small, portable, and situated micro-visualizations can allow people to understand personal data in-place in changing, dynamic, and mobile contexts.

On this topic, we already had an “équipe associée” Inria grant with University of Calgary, and the ANR project Ember.

3.2.2 Visualization using Augmented Reality Devices

Based on past work on the integration of various types of input and output devices for the visualization of inherently three-dimensional spatial data 64, 63, 66, 91, 114, 94, 95, 96, we investigate the use of augmented reality (AR) head-mounted displays (HMDs) in particular. This approach combines stereoscopically projected 3D data, which provides a high degree of visual immersion, with innovative interaction techniques that facilitate data exploration through the immersion that arises from direct manipulation. It is part of the recently established subfield of visualization research that focuses on immersive analytics 81, 73, and it promises to combine the benefits of traditional virtual reality (VR) environments with those of environments that use traditional, touch, or tangible input and innovative interaction designs. We specifically focus on AR-based HMDs because they do not prevent us from using traditional data exploration environments such as workstations, which are not only familiar to experts but also widely available. The AR HMD, moreover, is an easily maintainable device that does not rely on constant calibration and thus could more easily be integrated into and accepted by existing research facilities than more elaborate VR settings such as CAVEs.

Specifically, we have worked on two projects in this direction. One is a collaboration with particle physicists at the local linear accelerator laboratory as well as researchers at CERN, with whom we have explored an interactive data exploration tool for the experimental data generated at CERN from collisions of protons. In a second project, we have worked with climate researchers on novel collaborative data analysis scenarios in which several experts investigate 3D datasets together with the help of AR HMDs. We are currently also working with archaeological conservation experts to investigate the use of AR technology to capture and analyze spatial and abstract data about historical monuments and their conservation status, and to guide and collaborate on this data capture.

3.2.3 Other

In addition to the two major themes previously mentioned, Aviz has also been involved in research on the use of tangible input devices, both to navigate abstract data visualizations 99, 109 and to explore 3D data 95, 67, 65. We will continue this stream of research, possibly applying emerging trends in tangible input device design 86 to data exploration.

3.3 Perception, Cognition and Decision Making

Permanents involved: Petra Isenberg, Tobias Isenberg, Jean-Daniel Fekete

As we collect increasingly large amounts of data in fields such as climate science, finance, and medicine, the need to understand and communicate that data becomes more important. Data visualizations (e.g., graphs, maps, infographics) are often used to give an overview of information; however, it can be challenging to predict whether these visualizations will be effective before spending resources to develop them. Consequently, researchers make use of experimental methods from visual perception and cognition to study how we perceive and understand visualizations in order to develop generalized guidelines for optimizing effectiveness. While many past studies provide a research groundwork using simple and small visualizations (such as a bar graph with four bars), there has been little research on perception and cognition for more complex visualizations used for large datasets with thousands or millions of elements. Novel techniques researched by Aviz to alleviate complexity can be more effectively used if we understand when and why these techniques become beneficial.

3.3.1 Perceiving the Gist of a Visualization

It would be highly inefficient if users of large data visualizations serially inspected individual visual elements. Instead, the visual system allows people to quickly get a visual gist of an overall pattern in the image 129. But how quickly and reliably can we detect these gists when multiple groups or regions in a visualization need to be summarized separately and compared? What happens to speed and accuracy as more items are added to a visualization? These open questions about the impact of data scale and complexity on visualization perception were discussed in the review article by Szafir, Haroz, Gleicher, and Franconeri 129. To answer these questions, the experimental methods and findings from visual perception can be employed. Specifically, “ensemble coding” is the term used by vision scientists to describe our ability to rapidly perceive simple statistical properties of sets without the need to inspect individual items. Research has shown that we can perceive averages for sets made of simple 59 and complex 128 objects as well as the variance of sets for simple objects 119. But it is not clear if or how we can answer more complex statistical questions about subsets within visualizations, such as:

- How different are trends between groups of line charts?

- Which group's trends are more variable?

- Is the distribution of trends uniform or normally distributed?

For the field of visual perception research, understanding how we make these statistical decisions with collections would be a novel contribution that helps explain how our brain represents and summarizes complex information, how much information is discarded to make rapid judgements, and when biases or accuracy limits arise when working with collections of items.

For the field of data visualization research, understanding the conditions when we can make these statistical judgements and the speed and precision with which we can make them will directly translate to new guidelines for optimal use of visualization for large datasets. Although much visualization software uses settings that rely on design intuition, these results would be the first science-based guidelines for large data visualization and progressive visualization.

3.3.2 Cognitive Limitations for Visually Selecting Subsets in Visualizations

A critical task for analyzing large data mentioned in the previous section is filtering and comparing subsets. But why and when are these filtering tasks difficult? And how can a visualization facilitate the identification of subsets – especially for data with many categories? How much information we can visually select and attend to and the minimum size of a selection are strongly limited, and this limitation is impacted by the layout of items in an image and the types of visual features and tasks employed 87.

3.3.3 Illustrative Visualization

We will continue our work in the area of illustrative visualization. This sub-field of visualization takes inspiration from illustrators' decades to centuries of experience in using knowledge from perception and cognition to better portray scientific subject matter. Another input arises from the field of non-photorealistic rendering, which has developed numerous techniques for stylizing images and other input data. Traditionally, illustrative visualization has thus been applied primarily to data with a concrete spatial mapping in 2D and, more frequently, in 3D space. Nonetheless, some efforts for understanding the potential benefit of illustrative visualization to non-spatial data have been documented, including some by our own team 78, 60. An interesting aspect in this context is the role that depiction style can play in how visualizations or other forms of depiction are interpreted and understood. For example, we recently investigated the effect of stylization on emotion for images 68, and will extend this work in the future to study how we can use stylization to change the way people perceive, consume, and understand visualizations. One hypothesis to investigate, for example, is that a more hand-drawn depiction style 80 could lead to viewers spending more time with a visualization, and thus taking more time to understand and interact with it.

Another main future direction of research in this context is the question of what the role of abstraction is in illustrative visualization 134 as well as visualization in general, and specifically how we can provide dedicated means to control the abstraction being applied to visual representations of data. This means that we need to go beyond seeing abstraction only as a side-product of stylization as it has traditionally been viewed in many approaches in non-photorealistic rendering as well as illustrative visualization to date, and investigate how we can interactively adjust it to provide practitioners with a means to find the best visual representations for a given task. For example, we have investigated this question recently in the context of structural biology 117, 138 and DNA nanostructures 116, 115, but also want to expand this work to other application domains in the future.

3.3.4 Decision Making with Visualizations

Aviz has recently started a novel stream of research on the topic of judgment and decision making with visualizations 76, 75, 77. Human decision making and cognitive biases are important research topics in the fields of psychology, economics and marketing, but until recently, the presence of cognitive biases in visualization-supported decision making has not been investigated. Yet, visualization systems are increasingly used to support decision making: large companies switch to visualization solutions to improve their decisions in a range of areas, where large sums of money or human lives are at stake. More and more, the ultimate goal of visualization is not to understand patterns in the data and get insights as was traditionally assumed, but to make good decisions. In order to fully understand how information visualizations can support decision making, it is important to go beyond traditional evaluations based on data understanding, and study how visualizations interact with human judgment, human heuristics, and cognitive biases.

We will pursue this important stream of research by investigating yet-unexplored areas, such as group decision-making with visualizations, decision making in the presence of uncertainty and incomplete information (in connection with the topics discussed in Section 3.1), and the use of visualizations to support social choice and group decisions in the presence of conflicts of interest. How cognitive biases interact with visual perception is also an important and difficult question that has remained largely unexplored. In terms of application domains, we will be focusing more on evidence-based decision making in everyday life, e.g., how visualizations can be useful (or harmful) when choosing medicines, food products, means of transportation, charity donations, or when electing politicians. For example, a recent study has investigated whether specific infographic designs can lead people to feel more empathy towards suffering populations 72, but with inconclusive or negative results. In collaboration with the University of Campina Grande in Brazil, we are currently investigating designs that we believe are more likely to be effective. We will be evaluating these designs using metrics that are more likely to be correlated with prosocial decision making than empathy, as it has been shown that emotion or empathy is not always conducive to helping behavior.

3.4 Methodology for Visualization Research

Permanents involved: Petra Isenberg, Tobias Isenberg, Jean-Daniel Fekete

An important aspect of any scientific research is to establish and follow rigorous and effective methodologies for acquiring new knowledge. In the field of Visualization in particular, scientific discourse on the validity, use, and establishment of methodologies is important as the field is highly interdisciplinary, with diverse influences and opinions. It is important to establish, for example, what level of rigor the field should require of its methods, how to choose among established methods and methodologies, and how to best communicate the results of our empirical research. Aviz researchers have in the past been very active in contributing critical reflection on ways to assess different forms of value offered by visualization and visual analytics research and design (e.g., 79, 101, 107, 108, 110, 124, 135). This included work on novel research methods but also on existing methods and tools such as statistics. As part of the new team we will focus our efforts on three main topics related to visualization research methodologies.

3.4.1 Promoting and Following Open Research Practices

For issues with open research practices to be addressed, educational materials and guidelines need to be written, so that researchers have clarity about how to make their research more credible. Moreover, Aviz members are working with the organizing bodies of the visualization research community to establish incentives for sharing research artifacts and, potentially, minimal requirements for openness in published articles. Meanwhile, it is important to continue measuring and cataloging openness in the field to monitor progress. The goal is to improve the credibility and applicability of the field’s research.

3.4.2 Shaping the Scientific Visualization Community

In the past, Aviz researchers have been heavily involved in the organizational structure of the IEEE Visualization conferences, the most prestigious venue in our field, by proposing workshops and tutorials and by serving on various organizing committees, steering committees, editorial boards, and even the IEEE Visualization Executive Committee that oversees the whole IEEE Visualization conference. We will continue to do so. We will, in particular, aid the process by providing data collection and analysis services through the vispubdata.org dataset that we are collecting, cleaning, and making available to the community. The dataset has already been used in research (e. g., 111) but also to shape the scientific community, for instance to propose program committee members and new processes, and it was used by the Visualization Restructuring Committee (ReVISe). We are also involved in the EuroVis community and participate at multiple levels in its organization and management.

4 Application domains

4.1 Natural Sciences

As part of a CORDI PhD project, we collaborated with researchers at CERN on interactive data visualization using augmented reality, with the goal to better understand this new visualization environment and to support the physicists in analysing their 3D particle collision data. As part of another CORDI PhD project, we collaborated with researchers at the German Center for Climate Computation (DKRZ) and the Helmholtz-Zentrum Hereon (formerly Helmholtz-Zentrum Geesthacht, HZG), to better understand collaborative data exploration and interaction in immersive analytics contexts. Finally, as part of the Inria IPL “Naviscope,” we collaborate with researchers at INRAE (as well as other Inria teams) on interactive visualization tools for the exploration of plant embryo development. We also work with experts from the natural sciences in general to create illustrative visualizations of scientific data, such as a continuous zooming technique from the nucleus of a cell all the way down to the atom configuration of the DNA, for example for the application in education.

4.2 Social Sciences

We collaborate with social science researchers from EHESS Paris on the visualization of dynamic networks; they use our systems (GeneaQuilts 69, Vistorian 125, PAOHVis 133, PK-Clustering 121) and teach them to students and researchers. Our tools are used daily by ethnographers and historians to study the evolution of social relations over time. In the social sciences, many datasets are gathered by individual researchers to answer a specific question, and automated analytical methods cannot be applied to these small datasets. Furthermore, the studies are often focused on specific persons or organizations and not always on the modeling or prediction of the behavior of large populations. The tools we design to visualize complex multivariate dynamic networks are unique and suited to typical research questions shared by a large number of researchers. This line of research is supported by the DataIA “HistorIA” project, and by the “IVAN” European project. We currently collaborate with PayAnalytics, an Icelandic company, on visualizing data to help companies close their gender pay gaps.

4.3 Medicine

We collaborate with the Health Data Hub on the analysis and visualization of the CNAM “parcours de santé” (care pathway) data to help referent doctors and epidemiologists make sense of French health data. In particular, we are working on a subset of the CNAM data focused on urinary problems, and we have received very positive feedback from doctors, who can now compare what actually happens to patients treated in France with what they expected from the literature. This project is just starting but is already getting a lot of traction from our partners in medicine, epidemiology, and health economics.

We are also collaborating with the “Assistance Publique - Hôpitaux de Paris” (AP-HP) on a newly funded project called URGE, aimed at improving the emergency services of the Parisian hospitals. See the press announcement.

5 Social and environmental responsibility

5.1 Impact of research results

Aviz' work on illustrative visualization has the potential to be integrated into future teaching materials for students in schools, visitors in museums, or similar.

Aviz' work on the visualization of large document corpora with Cartolabe is used to present the results of the French “Grand débat”, as well as other citizen expressions.

Aviz' work on the gender pay gap aims at improving decision making for closing the adjusted pay gap.

Open science: Aviz regularly shares full research material on the repository of the Center for Open Science to facilitate scrutiny, reuse, and replication:

- Visualization in Motion: A Research Agenda and Two Evaluations 39 — osf.io/km3s2/, osf.io/9c4bz/, osf.io/t748d/, gitlab.inria.fr/lyao/visinmotion, GRSI Stamp

- Reflections on Visualization in Motion for Fitness Trackers 42 — hal.inria.fr/hal-03775633

- Situated Visualization in Motion for Swimming 48 — hal.inria.fr/hal-03700406

- Situated Visualization in Motion for Video Game 53 — hal.inria.fr/hal-03694019

- Situated Visualization in Motion in Video Game for Different Types of Data 46 — hal.inria.fr/hal-03700418

- BeauVis: A Validated Scale for Measuring the Aesthetic Pleasure of Visual Representations 3 — osf.io/fxs76, osf.io/djrn3, github.com/tingying-he/beauvis, GRSI Stamp

- LineageD: An Interactive Visual System for Plant Cell Lineage Assignments based on Correctable Machine Learning 20 — osf.io/rhyg4, gitlab.inria.fr/jhong/lineaged, GRSI Stamp

- Do You Believe Your (Social Media) Data? A Personal Story on Location Data Biases, Errors, and Plausibility as well as their Visualization 22 — osf.io/u8ejr, github.com/tobiasisenberg/Motion-Plausibility-Profiles, GRSI Stamp

- Point Specification in Collaborative Visualization for 3D Scalar Fields Using Augmented Reality 30 — osf.io/43j9g, osf.io/7a6yr, github.com/MickaelSERENO/SciVis_Server/tree/CHI2020

- Hybrid Touch/Tangible Spatial Selection in Augmented Reality 31 — osf.io/qu634, osf.io/pwauq, osf.io/rvpuc, github.com/MickaelSERENO/SciVis_Server/tree/CHI2020, github.com/MickaelSERENO/TangibleBrushAR_GRSI, GRSI Stamp

- Understanding Differences between Combinations of 2D and 3D Input and Output Devices for 3D Data Visualization 37 – osf.io/7gsk8, github.com/xiyaowang/2D3DInOutputFor3DVis

- Preferences and Effectiveness of Sleep Data Visualizations for Smartwatches and Fitness Bands 41 – osf.io/yz8ar/

- Context Specific Visualizations on Smartwatches 55 – osf.io/vhn43/

- Studying Early Decision Making with Progressive Bar Charts 27 — osf.io/tn2ph/

- Scalability in Visualization 29 — osf.io/xrvu7/

- Six methods for transforming layered hypergraphs to apply layered graph layout algorithms 16 — osf.io/grvwu/

- Pyramid-based Scatterplots Sampling for Progressive and Streaming Data Visualization 15 — ProgressivePyramidBasedSampling and www.yunhaiwang.net/Vis2021/progressive-sampling/

6 Highlights of the year

6.1 Concours

- Petra Isenberg was nominated as DR2 at Inria

6.2 Awards

- Our IEEE VIS best paper on “Perception! Immersion! Empowerment! Superpowers as Inspiration for Visualization” was published in IEEE TVCG 38.

- Mickaël Sereno and Petra Isenberg received special recognitions for outstanding reviews for the CHI 2023 conference.

7 New software and platforms

7.1 New software

7.1.1 Cartolabe

- Name: Cartolabe

- Keyword: Information visualization

- Functional Description:

The goal of Cartolabe is to build a visual map representing the scientific activity of an institution/university/domain from published articles and reports. Using the HAL Database, Cartolabe provides the user with a map of the themes, authors, and articles. ML techniques are used for dimensionality reduction and for cluster and topic identification; visualization techniques are used for a scalable 2D representation of the results.

Cartolabe has in particular been applied to the Grand Debat dataset (3M individual propositions from French citizens, see https://cartolabe.fr/map/debat). The results were used to test both the scaling capabilities of Cartolabe and its flexibility with non-scientific and non-English corpora. We also added sub-map capabilities that display the result of a year/lab/word filtering as a heatmap generated online with only the filtered points, to facilitate exploration. Cartolabe was also applied in 2020 to the COVID-19 Kaggle publication dataset (Cartolabe-COVID project) to explore these publications.

- URL:

- Publication:

- Contact: Philippe Caillou

- Participants: Philippe Caillou, Jean Daniel Fekete, Michèle Sebag, Anne-Catherine Letournel

- Partners: LRI - Laboratoire de Recherche en Informatique, CNRS

7.1.2 PAOHvis

- Name: Parallel Aggregated Ordered Hypergraph Visualization

- Keywords: Dynamic networks, Hypergraphs

- Functional Description:

Parallel Aggregated Ordered Hypergraph (PAOH) is a novel technique to visualize dynamic hypergraphs 133. Hypergraphs are a generalization of graphs where edges can connect more than two vertices. Hypergraphs can be used to model co-authorship networks with multiple authors per article, or networks of business partners. A dynamic hypergraph evolves over discrete time slots. A PAOH display represents vertices as parallel horizontal bars and hyperedges as vertical lines that connect two or more vertices. We believe that PAOH is the first technique with a highly readable representation of dynamic hypergraphs without overlaps. It is easy to learn and is well suited for medium-sized dynamic hypergraph networks such as those commonly generated by digital humanities projects, our driving application domain.

- URL:

- Contact: Jean Daniel Fekete
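To make the PAOH layout described above more concrete, the following minimal Python sketch models a small dynamic hypergraph and prints a text approximation of the display: one row per vertex and one column per hyperedge, grouped by time slot. This is only an illustration under our own assumptions and not the PAOHvis implementation; the `Hyperedge` class, the `render_paoh` function, and the co-authorship data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperedge:
    """A hyperedge connects two or more vertices within one time slot."""
    time_slot: int
    vertices: frozenset


def render_paoh(vertices, hyperedges):
    """Return a text grid with one row per vertex and one column per hyperedge.

    Columns are grouped by time slot (groups are separated by a space).
    A '|' marks a vertex connected by the hyperedge in that column,
    and a '-' marks the horizontal bar of a vertex that is not connected.
    """
    ordered = sorted(hyperedges, key=lambda e: e.time_slot)
    width = max(len(v) for v in vertices)
    rows = []
    for v in vertices:
        cells = []
        previous_slot = None
        for edge in ordered:
            if previous_slot is not None and edge.time_slot != previous_slot:
                cells.append(" ")  # gap between two time slots
            cells.append("|" if v in edge.vertices else "-")
            previous_slot = edge.time_slot
        rows.append(v.ljust(width) + " " + "".join(cells))
    return "\n".join(rows)


# Hypothetical co-authorship data: each hyperedge stands for one article.
authors = ["Ann", "Bob", "Cyd"]
articles = [
    Hyperedge(2021, frozenset({"Ann", "Bob"})),
    Hyperedge(2021, frozenset({"Ann", "Bob", "Cyd"})),
    Hyperedge(2022, frozenset({"Bob", "Cyd"})),
]
grid = render_paoh(authors, articles)
print(grid)
```

Because every hyperedge gets its own column, no two hyperedges share drawing space, which is the property that keeps such a display free of overlaps in this toy setting.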

7.1.3 ParcoursVis

- Name: Visualization of Patient Pathways

- Keywords: Visualization, Health, Progressive visualization

- Scientific Description:

We developed ParcoursVis, our Progressive Visual Analytics (PVA) tool to explore patients' care pathways at scale. Current tools to visualize temporal event sequences are restricted to datasets of at most a few thousand sequences to remain reactive. With ParcoursVis, we aim to visualize patients' care pathways stored in country-level databases, which can contain orders of magnitude more event sequences, using a progressive architecture. Instead of waiting for the whole computation to finish before rendering the final results, PVA tools yield partial results each time the algorithm processes a small chunk of data or completes an iteration. This paradigm keeps the tool reactive and speeds up processes such as checking a query for errors.

- Functional Description:

ParcoursVis allows extracting a subset of the nationwide database from CNAMTS, transforming the raw data into meaningful medical events, and visualizing it interactively at scale via a web interface.

For the moment, ParcoursVis focuses on non-cancerous prostate adenoma. With this focus, our domain expert users extract meaningful high-level types of events (e.g., treatments and outcomes) that the patients undertake in their care pathways.

Using a progressive visualization method, ParcoursVis visualizes in an aggregated manner the care pathways of tens of millions of patients treated with thousands of events over decades, several orders of magnitude more than existing interactive systems.

- URL:

- Authors: Jean Daniel Fekete, Mickael Sereno

- Contact: Jean Daniel Fekete
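The progressive paradigm described above, in which partial results are yielded after each chunk of data, can be sketched with a Python generator. This is a minimal illustration under our own assumptions and not the ParcoursVis architecture; the function name `progressive_counts`, the event names, and the chunk size are hypothetical.

```python
from collections import Counter
from itertools import islice


def progressive_counts(sequences, chunk_size):
    """Yield a running tally of event types after each chunk of sequences.

    Each yielded Counter is a partial result that a front end could render
    immediately instead of waiting for the full pass to finish.
    """
    totals = Counter()
    iterator = iter(sequences)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        for sequence in chunk:
            totals.update(sequence)
        yield Counter(totals)  # copy, so earlier partial results stay unchanged


# Hypothetical care pathways: each one is a list of high-level event types.
pathways = [
    ["treatment_a", "treatment_b"],
    ["treatment_a"],
    ["treatment_a", "surgery"],
    ["treatment_b", "surgery"],
    ["treatment_a"],
]
partials = list(progressive_counts(pathways, chunk_size=2))
for step, partial in enumerate(partials, start=1):
    print(step, dict(partial))
```

In an interactive setting, the consumer would redraw the aggregated view after each yielded result, so that an obviously wrong query can be spotted and cancelled after the first chunks.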

7.1.4 LineageD

- Keywords: 3D, Human Computer Interaction, Machine learning

- Scientific Description:

We describe LineageD, a hybrid web-based system to predict, visualize, and interactively adjust plant embryo cell lineages. Currently, plant biologists explore the development of an embryo and its hierarchical cell lineage manually, based on a 3D dataset that represents the embryo status at one point in time. This human decision-making process, however, is time-consuming, tedious, and error-prone due to the lack of integrated graphical support for specifying the cell lineage. To fill this gap, we developed a new system to support the biologists in their tasks using an interactive combination of 3D visualization, abstract data visualization, and correctable machine learning to modify the proposed cell lineage. We use existing manually established cell lineages to obtain a neural network model. We then allow biologists to use this model to repeatedly predict assignments of a single cell division stage. After each hierarchy level prediction, we allow them to interactively adjust the machine-learning-based assignment, which we then integrate into the pool of verified assignments for further predictions. In addition to building the hierarchy this way in a bottom-up fashion, we also allow users to divide the whole embryo and create the hierarchy tree in a top-down fashion for a few steps, improving the ML-based assignments by reducing the potential for wrong predictions. We visualize the continuously updated embryo and its hierarchical development using both 3D spatial and abstract tree representations, together with information about the model's confidence and spatial properties. We conducted case study validations with five expert biologists to explore the utility of our approach and to assess the potential for LineageD to be used in their daily workflow. We found that the visualizations of both 3D representations and abstract representations help with decision making and that the top-down approach to building the hierarchy tree can reduce assignment errors in real practice.

- Functional Description:

LineageD is a hybrid web-based system to predict, visualize, and interactively adjust plant embryo cell lineages. Users can build the hierarchy tree in two directions, bottom-up and top-down. Also, they can check and correct assignments based on Machine Learning predictions.

- URL:

- Publication:

- Contact: Jiayi Hong

- Partner: INRAE

7.2 New platforms

7.2.1 Vispubdata.org

Participants: Petra Isenberg [Inria Paris-Saclay, correspondent], Tobias Isenberg [Inria Paris-Saclay].

AVIZ members are making available for research a dataset of IEEE VIS publications at vispubdata.org. This dataset is actively being used for research and conference organization.

7.2.2 VisImageNavigator

Participants: Petra Isenberg [Inria Paris-Saclay, correspondent], Tobias Isenberg [Inria Paris-Saclay].

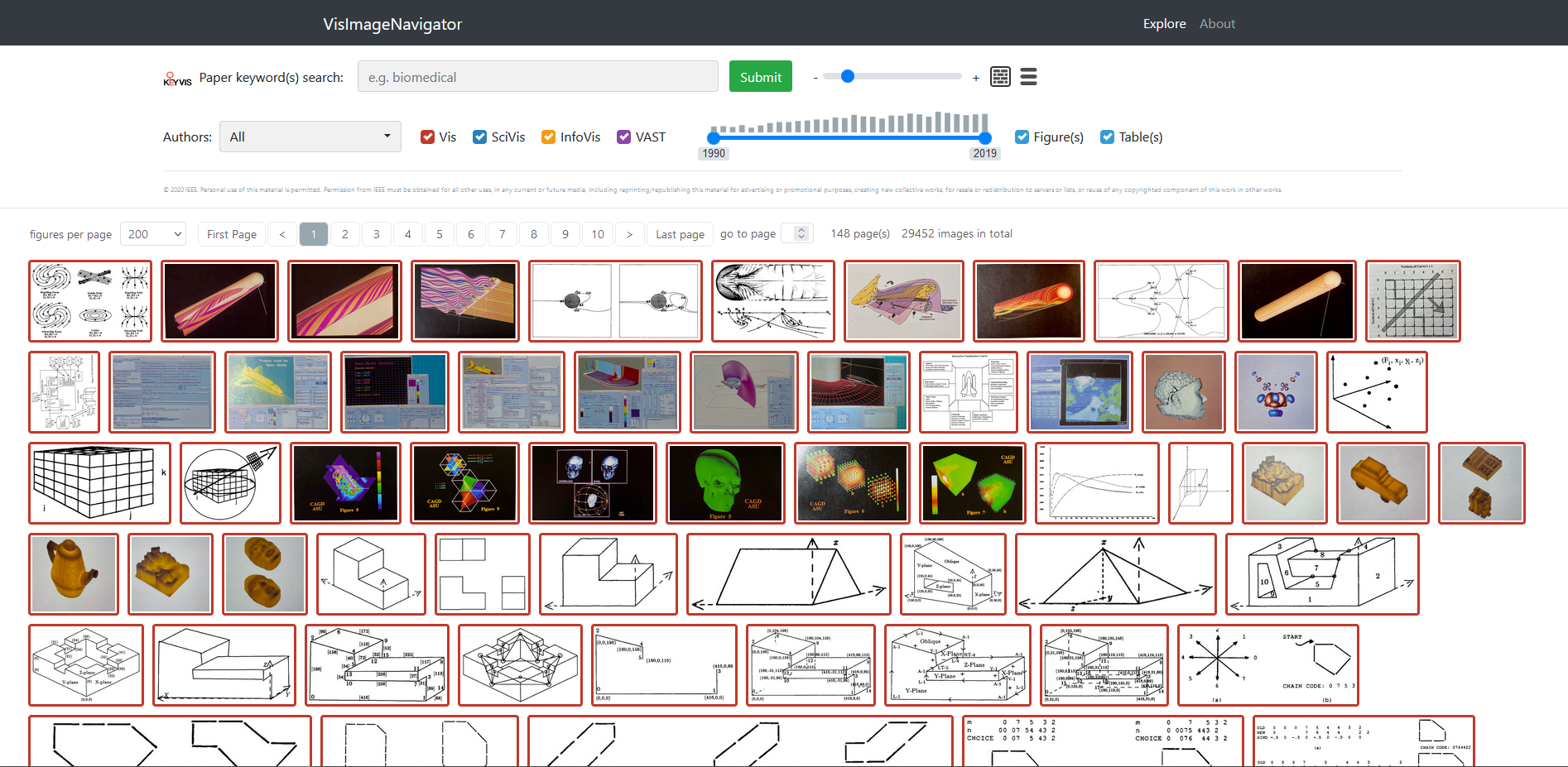

An image of the VisImageNavigator interface showing images collected from IEEE VIS papers in a grid format with several sliders and checkboxes to filter by time and conference.

AVIZ members are contributing to making available for research a dataset of IEEE VIS images at ieee-dataport.org/open-access/ieee-vis-figures-and-tables-image-dataset 49. The set of images can be browsed online at vispubfigures.github.io/VisPubFigures/. The work is primarily led by Jian Chen at Ohio State University.

7.2.3 Gender in Visualization Dataset

Participants: Natkamon Tovanich [System X], Pierre Dragicevic [Inria Bordeaux], Petra Isenberg [Inria Paris-Saclay, correspondent].

In the context of our paper on “Gender in 30 Years of IEEE Visualization” we make available data about the diversity of the Visualization community in terms of the gender of authors, organizing, and program committee members as well as award winners: www.aviz.fr/Research/Gender-in-Vis.

7.2.4 ParcoursVis

Participants: Jean-Daniel Fekete [Inria Paris-Saclay, correspondent], Mickaël Sereno [Inria Paris-Saclay].

The French Social Security (CNAMTS) wants to analyze the patient pathways for specific treatments to understand how treatments are actually carried out in contrast to the recommendations. Aviz and the Health Data Hub are collaborating to design and implement a visualization system, ParcoursVis, to explore its prescription data. ParcoursVis allows extracting a subset of the nationwide database from CNAMTS, transforming the raw data into meaningful medical events, and visualizing it interactively at scale. See more information at www.aviz.fr/Research/ParcoursVis.

8 New results

8.1 Hybrid Touch/Tangible Spatial Selection in Augmented Reality

Participants: Mickaël Sereno [Inria Paris-Saclay, correspondent], Stéphane Gosset [Inria Paris-Saclay], Lonni Besançon [Linköping Univ.], Tobias Isenberg [Inria Paris-Saclay].

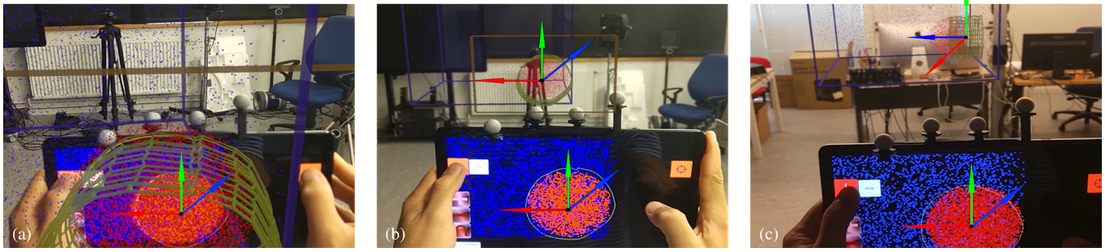

Our three AR mappings. In Natural Mapping (a), the user extrudes the drawn 2D shape in a direct approach. The position of the virtual tablet overlaps the position of the physical tablet. In Relative-Aligned (b), the orientations of the virtual and physical tablets with respect to the physical space are the same during an extrusion. However, the user controls the virtual plane with relative motions. In this example, the user stepped back before remotely starting an extrusion. Finally, the Relative-Full mode (c) creates two coordinate systems. Movements in the one defined by the physical tablet are mapped to the coordinate system defined by the virtual tablet. In this example, the user, after having placed the tablet in front of the dataset, stepped back and rotated around the dataset, which the user is now facing sideways. As the user moves along the normal of the physical tablet, its virtual counterpart also moves along its own normal axis (the blue arrows).

We study tangible touch tablets combined with Augmented Reality Head-Mounted Displays (AR-HMDs) to perform spatial 3D selections 9. We are primarily interested in the exploration of 3D unstructured datasets such as point clouds or volumetric datasets. AR-HMDs immerse users by showing datasets stereoscopically, and tablets provide a set of 2D exploration tools. Because AR-HMDs merge the visualization, interaction, and the users’ physical spaces, users can also use the tablets as tangible objects in their 3D space. Nonetheless, the tablets’ touch displays provide their own visualization and interaction spaces, separated from those of the AR-HMD. This raises several research questions compared to traditional setups. In this paper, we theorize, discuss, and study different available mappings for manual spatial selections using a tangible tablet within an AR-HMD space. We then study the use of this tablet within a 3D AR environment, compared to its use with a 2D external screen.

8.2 Visualization in Motion: A Research Agenda and Two Evaluations

Participants: Lijie Yao [Inria Paris-Saclay, correspondent], Anastasia Bezerianos [Univ. Paris-Saclay], Romain Vuillemot [Ecole Centrale Lyon], Petra Isenberg [Inria Paris-Saclay].

Visualization scenarios that involve different types of relative movement between viewers and visualization: (a) 0 A.D. game characters with attached health meters; (b) an augmented basketball match from the tool Clipper CourtVision; (c) a walkable visualization of the general organization of scholars at ENAC in France; (d) an on-street bar chart, created by the Respect New Haven activist group, that can be driven or walked by; (e) a runner looking at her fitness data; (f) a person checking financial charts on her phone while walking to a meeting.

We contribute a research agenda for visualization in motion and two experiments to understand how well viewers can read data from moving visualizations 13. We define visualizations in motion as visual data representations that are used in contexts that exhibit relative motion between a viewer and an entire visualization. Sports analytics, video games, wearable devices, or data physicalizations are example contexts that involve different types of relative motion between a viewer and a visualization. To analyze the opportunities and challenges for designing visualization in motion, we show example scenarios and outline a first research agenda. Motivated primarily by the prevalence of and opportunities for visualizations in sports and video games, we started to investigate a small aspect of our research agenda: the impact of two important characteristics of motion, speed and trajectory, on a stationary viewer's ability to read data from moving donut and bar charts. We found that increasing speed and trajectory complexity negatively affected the accuracy of reading values from the charts and that bar charts were more negatively impacted. In practice, however, this impact was small: both charts were still read fairly accurately.

8.3 BeauVis: A Validated Scale for Measuring the Aesthetic Pleasure of Visual Representations

Participants: Tingying He [Univ. Paris-Saclay, correspondent], Petra Isenberg [Inria Paris-Saclay], Raimund Dachselt [Technische Universität Dresden], Tobias Isenberg [Inria Paris-Saclay].

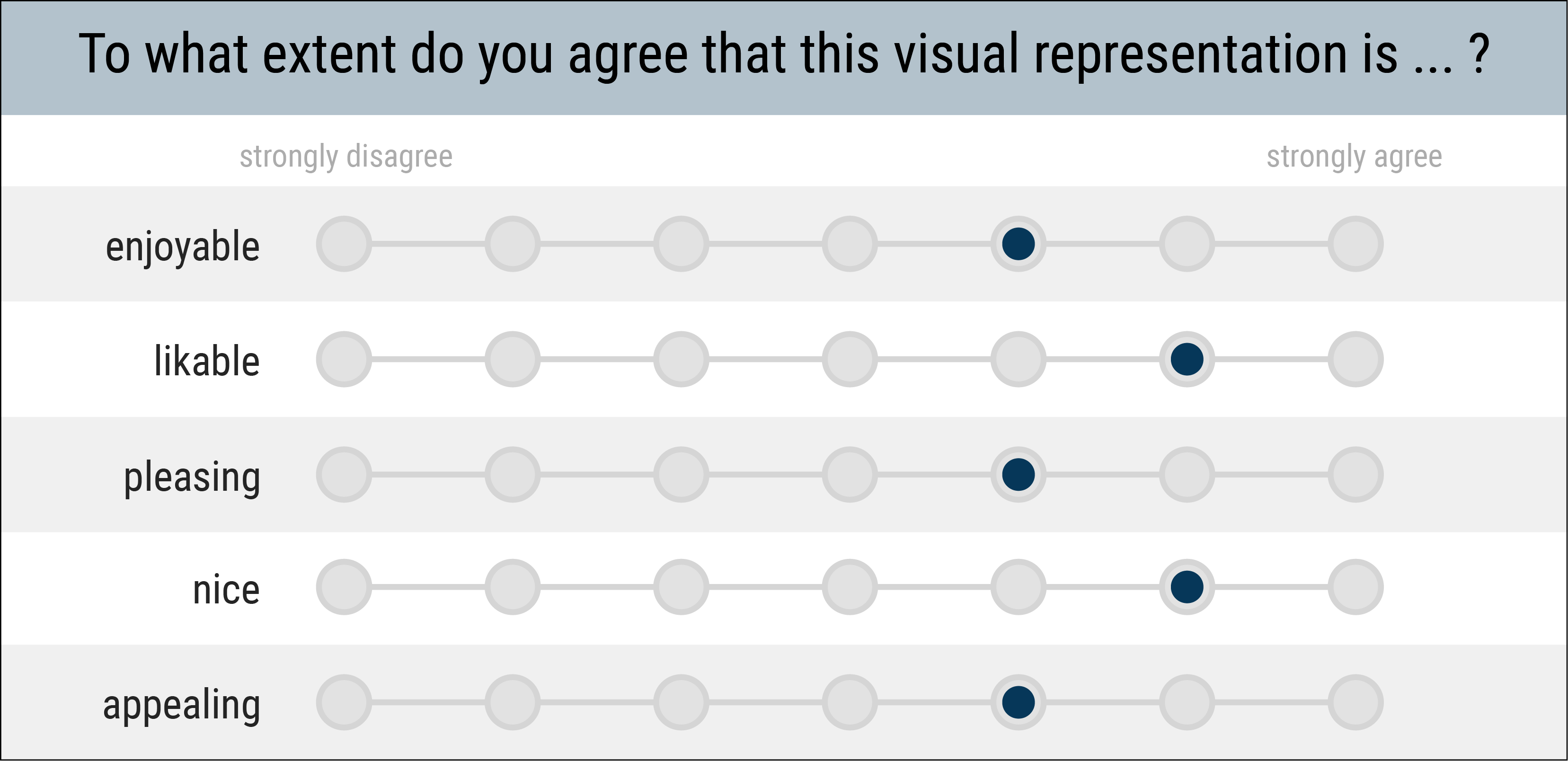

Our BeauVis scale in its recommended version, showing 5 Likert items with the prompts: enjoyable, likable, pleasing, nice, appealing. The question at the top reads “To what extent do you agree that this visualization is...?” The end-points of the Likert items range from strongly disagree to strongly agree.

We developed and validated a rating scale to assess the aesthetic pleasure (or beauty) of a visual data representation: the BeauVis scale. With our work we offer researchers and practitioners a simple instrument to compare the visual appearance of different visualizations, unrelated to data or context of use. Our rating scale can, for example, be used to accompany results from controlled experiments or be used as informative data points during in-depth qualitative studies. Given the lack of an aesthetic pleasure scale dedicated to visualizations, researchers have mostly chosen their own terms to study or compare the aesthetic pleasure of visualizations. Yet, many terms are possible and currently no clear guidance on their effectiveness regarding the judgment of aesthetic pleasure exists. To solve this problem, we engaged in a multi-step research process to develop the first validated rating scale specifically for judging the aesthetic pleasure of a visualization (osf.io/fxs76). Our final BeauVis scale consists of five items, “enjoyable,” “likable,” “pleasing,” “nice,” and “appealing.” Beyond this scale itself, we contribute (a) a systematic review of the terms used in past research to capture aesthetics, (b) an investigation with visualization experts who suggested terms to use for judging the aesthetic pleasure of a visualization, and (c) a confirmatory survey in which we used our terms to study the aesthetic pleasure of a set of 3 visualizations.
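As an illustration of how ratings collected with the five BeauVis items could be aggregated, the following minimal Python sketch averages one participant's item ratings for one visualization. Averaging the items and the 7-point range are assumptions made for this example rather than details stated above; the function name `beauvis_score` and the sample response are hypothetical.

```python
from statistics import mean

# The five items of the scale, as listed in the text above.
BEAUVIS_ITEMS = ("enjoyable", "likable", "pleasing", "nice", "appealing")


def beauvis_score(ratings, points=7):
    """Average the five item ratings of one participant for one visualization.

    `ratings` maps each item to an integer from 1 (strongly disagree)
    to `points` (strongly agree). The 7-point default is an assumption.
    """
    missing = [item for item in BEAUVIS_ITEMS if item not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    values = [ratings[item] for item in BEAUVIS_ITEMS]
    if any(not 1 <= value <= points for value in values):
        raise ValueError(f"ratings must lie between 1 and {points}")
    return mean(values)


# Hypothetical response of one participant.
response = {"enjoyable": 6, "likable": 5, "pleasing": 6, "nice": 7, "appealing": 6}
score = beauvis_score(response)
print(score)
```

Scores computed this way for two visualizations rated by the same participants could then be compared alongside the other measures of a controlled experiment.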

8.4 LineageD: An Interactive Visual System for Plant Cell Lineage Assignments based on Correctable Machine Learning

Participants: Jiayi Hong [Inria Paris-Saclay, correspondent], Alain Trubuil [INRAE Paris-Saclay], Tobias Isenberg [Inria Paris-Saclay].

Screenshot of LineageD, for the example of assigning a 256-cell embryo (in progress). The core elements are the Main 3D View of the embryo, here exploded and with the target cell highlighted, and the Hierarchy Tree of the lineage, which is interactively established by the biologists. The Target and Sister View shows the relative position of the target cell within the whole embryo and its proposed sister. The Thumbnail View of the tree provides the context of the full hierarchy. Finally, the Operation Panel supports further control of the tool.

We developed LineageD, a hybrid web-based system to predict, visualize, and interactively adjust plant embryo cell lineages. Currently, plant biologists explore the development of an embryo and its hierarchical cell lineage manually, based on a 3D dataset that represents the embryo status at one point in time. This human decision-making process, however, is time-consuming, tedious, and error-prone due to the lack of integrated graphical support for specifying the cell lineage. To fill this gap, we developed a new system to support the biologists in their tasks using an interactive combination of 3D visualization, abstract data visualization, and correctable machine learning to modify the proposed cell lineage. We use existing manually established cell lineages to obtain a neural network model. We then allow biologists to use this model to repeatedly predict assignments of a single cell division stage. After each hierarchy level prediction, we allow them to interactively adjust the machine-learning-based assignment, which we then integrate into the pool of verified assignments for further predictions. In addition to building the hierarchy this way in a bottom-up fashion, we also allow users to divide the whole embryo and create the hierarchy tree in a top-down fashion for a few steps, improving the ML-based assignments by reducing the potential for wrong predictions. We visualize the continuously updated embryo and its hierarchical development using both 3D spatial and abstract tree representations, together with information about the model's confidence and spatial properties. We conducted case study validations with five expert biologists to explore the utility of our approach and to assess the potential for LineageD to be used in their daily workflow. We found that the visualizations of both 3D representations and abstract representations help with decision making and that the top-down approach to building the hierarchy tree can reduce assignment errors in real practice.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Participation in other International Programs

MicroVis

Participants: Petra Isenberg, Alaul Islam, Lijie Yao.

- Program: ANR PRCI

- Title: MicroVis: Micro visualizations for pervasive and mobile data exploration

- Partner Institution(s): University of Stuttgart, Germany

- Date/Duration: 2019–2023

9.1.2 Visits of international scientists

Dr. Nivan Ferreira

- Status: assistant professor

- Institution of origin: Universidade Federal de Pernambuco

- Country: Brazil

- Dates: July 1st–October 30th, 2022

- Context of the visit: lab visit, invited talk, collaboration with Jean-Daniel Fekete

- Mobility program/type of mobility: DataIA invited professor

Dr. Daniel F. Keefe

- Status: professor

- Institution of origin: University of Minnesota, Twin Cities

- Country: USA

- Dates: November 21–22, 2022

- Context of the visit: lab visit, invited talk, collaboration with Tobias Isenberg

- Mobility program/type of mobility: sabbatical

Dr. Raimund Dachselt

- Status: professor

- Institution of origin: Technische Universität Dresden

- Country: Germany

- Dates: February 2022

- Context of the visit: work on joint research projects

- Mobility program/type of mobility: sabbatical

Dr. Juan Carlos Torres Cantero

- Status: professor

- Institution of origin: University of Granada

- Country: Spain

- Dates: May to July 2022

- Context of the visit: collaboration with Tobias Isenberg

- Mobility program/type of mobility: research stay

9.1.3 Visits to international teams

Research stays abroad

Tobias Isenberg

- Visited institution: KAUST: King Abdullah University of Science and Technology

- Country: Kingdom of Saudi Arabia

- Dates: November 5–10, 2022

- Context of the visit: research visit and invited talk, organization of collaboration

- Mobility program/type of mobility: research stay, invited talk

Alaul Islam

- Visited institution: Interactive Media Lab Dresden, Technische Universität Dresden

- Country: Germany

- Dates: June 26–July 8, 2022

- Context of the visit: research visit and invited talk

- Mobility program/type of mobility: research stay, invited talk

9.2 National initiatives

- Program: ANR PRC (ANR-19-CE33-0012)

- Project acronym: EMBER

- Project title: Situated Visualizations for Personal Analytics

- Duration: 2020 – 2023. Total funding: 712 k€

- Coordinator: Pierre Dragicevic

- Other partners: Inria Bordeaux, Sorbonne Université

- Abstract:

The Ember project will study how situated data visualization systems can help people use their personal data (e.g., fitness and physiological data, energy consumption, banking transactions, online social activity,…) for their own benefit. Although personal data is generated in many areas of daily life, it remains underused by individuals. Rarely is personal data subjected to an in-depth analysis and used to inform daily decisions. This research aims to empower individuals to improve their lives by helping them become advanced consumers of their own data. This research builds on the area of personal visual analytics, which focuses on giving the general public effective and accessible tools to get insights from their own data. Personal visual analytics is a nascent area of research, but has so far focused on scenarios where the data visualization is far removed from the source of the data it refers to. The goal of this project is to address the limitations of traditional platforms of personal data analytics by exploring the potential of situated data visualizations. In a situated data visualization, the data is directly visualized near the physical space, object, or person it refers to. Situated data visualizations have many potential benefits: they can surface information in the physical environment and allow viewers to interpret data in-context; they can be tailored to highlight spatial connections between data and the physical environment, making it easier to make decisions and act on the physical world in response to the insights gained; and they can embed data into physical environments so that it remains visible over time, making it easier to monitor changes, observe patterns over time and collaborate with other people. Website: ember.inria.fr/.

- Program: Inria IPL

- Project acronym: NAVISCOPE

- Project title: Image-guided navigation and visualization of large data sets in live cell imaging and microscopy

- Duration: 2018 – 2022.

- Coordinator: Charles Kervrann (Inria Rennes)

- Participants in AVIZ: Tobias Isenberg, Jiayi Hong

- Other partners: Inria Rennes and other Inria centers

- Abstract:

Nowadays, the detection and visualization of important localized events and processes in multidimensional and multi-valued images, especially in cell and tissue imaging, is tedious and inefficient. Specialized scientists can miss key events due to the complexity of the data and the lack of computer guidance. In this proposal, we plan to develop original and cutting-edge visualization and navigation methods to assist scientists, enabling semi-automatic analysis, manipulation, and investigation of temporal series of multi-valued volumetric images, with a strong focus on live cell imaging and microscopy application domains. We will build Naviscope upon the strength of scientific visualization and machine learning methods in order to provide systems capable of helping scientists better understand massive amounts of information. Such systems will be able to recognize and highlight the most informative regions of the dataset by reducing the amount of information displayed and guiding the observer's attention. In the Naviscope project, we address the following three challenges and issues:

- Novel machine learning methods able to detect the main regions of interest, and automatic quantification of sparse sets of molecular interactions and cell processes during navigation to save memory and computational resources.

- Novel visualization methods able to encode 3D motion/deformation vectors and dynamics features with color/texture-based and non-sub-resolved representations, abstractions, and discretization, as used to show 2D motion and deformation vectors and patterns.

- Effective machine learning-driven navigation and interaction techniques for complex functional 3D+Time data enabling the analysis of sparse sets of localized intra-cellular events and cell processes (migration, division, etc.).

Finally, we will also have to overcome the technological challenge of integrating the software developed in each team into a single original tool for users in biological imaging, and potentially in medical imaging. Website: project.inria.fr/naviscope/.

Other projects:

- DATAIA project HistorIA “Computational social sciences and information visualization join forces to explore and analyze large historical databases”. Duration: 36 months. Total funding: 240k€. Partners: Inria Saclay, Telecom Paris. Coordinators: Jean-Daniel Fekete, Christophe Prieur.

- Health Data Hub “ParcoursVis” post-doctoral funding. Duration: 24 months. Total funding: 220k€. Partners: Inria Saclay, Health Data Hub. Coordinator: Jean-Daniel Fekete. See www.aviz.fr/Research/ParcoursVis.

- AP-HP / Inria joint project URGE. Duration: 48 months. Total funding: 520k€. Partners: Inria, Hôpital Saint Antoine, Sorbonne Université. Coordinators: Xavier Leroy (Inria), Youri Yordanov (AP-HP, Inserm, Sorbonne Université).

- Action Exploratoire EquityAnalytics: Visual Analytics for Pay Equity. Post-doctoral funding. Duration: 24 months. Total funding: 106k€. Coordinator: Petra Isenberg. Partner: PayAnalytics.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: selection

- Petra Isenberg: Paper Co-Chair for ACM MobileHCI

- Tobias Isenberg: Area Papers Co-Chair for VIS 2022

Member of the conference program committees

- Petra Isenberg: IEEE VIS 2022

- Tobias Isenberg: BELIV 2022, VCBM 2022, EuroVis 2022

- Jean-Daniel Fekete: IEEE VIS 2022 Short Papers

- Mickaël Sereno: ACM MobileHCI

- Jiayi Hong: ACM SIGGRAPH Asia Posters

Conference Reviewer

- IEEE Visualization: Jean-Daniel Fekete, Petra Isenberg, Tobias Isenberg, Jiayi Hong, Lijie Yao

- IEEE VR 2022: Tobias Isenberg, Petra Isenberg

- IEEE VR 2023: Mickaël Sereno

- IEEE ISMAR 2022: Mickaël Sereno

- BELIV 2022: Tobias Isenberg, Petra Isenberg

- ACM SIGGRAPH Asia 2022, full papers: Tobias Isenberg

- ACM SIGGRAPH Asia 2023, extended abstracts: Mickaël Sereno, Jiayi Hong, Lijie Yao

- Eurographics STARs 2023: Tobias Isenberg

- EuroVis STARs 2023: Tobias Isenberg, Jean-Daniel Fekete

- ACM ISS 2022: Tobias Isenberg

- VCBM 2022: Tobias Isenberg

- ACM MobileHCI: Mickaël Sereno

- ACM VRST 2022: Jiayi Hong

- ACM IHM 2023: Jiayi Hong

- ChinaVis 2022: Jiayi Hong, Lijie Yao

10.1.2 Journal

Member of the editorial boards

- Jean-Daniel Fekete: Associate Editor-in-Chief IEEE Transactions on Visualization and Computer Graphics

- Petra Isenberg: Associate Editor IEEE Transactions on Visualization and Computer Graphics

- Petra Isenberg: Associate Editor-in-Chief IEEE Computer Graphics and Applications (CG&A)

- Tobias Isenberg: Associate Editor for Elsevier Computers & Graphics and Elsevier Graphics and Visual Computing

Journal Reviewer

- IEEE Transactions on Visualization and Computer Graphics: Tobias Isenberg, Mickaël Sereno, Petra Isenberg

- International Journal of Human-Computer Interaction: Mickaël Sereno

- ACM Journal on Computing and Cultural Heritage: Mickaël Sereno

- Computer Science Review: Petra Isenberg

- ACM Transactions on Computer-Human Interaction: Petra Isenberg

10.1.3 Invited talks

- Jean-Daniel Fekete: Keynote at the Inria-EPFL Workshop 2022, “Scalability in Exploratory Visual Data Analysis with Progressive Data Analysis”, Lausanne, Nov. 18, 2022

- Jean-Daniel Fekete: Keynote at the Visualization in Data Science 2022 Conference, “Scalability with Progressive Data Science”, Oklahoma City, Oct. 16, 2022

- Tobias Isenberg: Illustrative Visualization of DNA: A Voyage from the Nucleus to the Nucleotides and Back, Indiana University, USA (November 2022)

- Tobias Isenberg: Thoughts on how to Effectively Explore Visual Representations in Mixed Reality, KAUST, Saudi Arabia (November 2022)

- Alaul Islam: Visualizations for Fitness Trackers, Interactive Media Lab Dresden, Germany (July 2022)

- Petra Isenberg: Evaluating the value of visualization tools & designs, Beijing Summer School (July 2022)

- Petra Isenberg: When Visualization meets HCI, Sino-European Workshop Series on Visualization (February 2022)

- Petra Isenberg: Micro Visualizations, LISN IAH Department Day (December 2022)

10.1.4 Leadership within the scientific community

- Jean-Daniel Fekete: Member of the Scientific Committee of the French journal “humanités numériques”

- Jean-Daniel Fekete: Committee member of the Prix de Thèse Gilles Kahn (French best CS PhD dissertation award)

- Petra Isenberg: Vice-Chair of the IEEE VIS Steering Committee

- Tobias Isenberg, Petra Isenberg: Steering Committee for BELIV

- Tobias Isenberg: Member of the EuroVis 2022 best poster committee workshop series

10.1.5 Scientific expertise

- Petra Isenberg: Expert Reviewer for the Swedish Vinväxt Initiative

- Petra Isenberg: Member of the ANR grant review committee, Interaction et Robotique

- Petra Isenberg: Member of the Inria Concours n° 2022-DT-SIF-1

10.1.6 Research administration

- Petra Isenberg: Member of the Commission de Développement Technologique (Inria)

- Petra Isenberg: Commission formation de la GS ISN (Paris-Saclay)

- Frédéric Vernier: Conseil d'Administration of Université Paris-Saclay (member of the board's bureau)

- Frédéric Vernier: Commission Électorale of Université Paris-Saclay

- Frédéric Vernier: Commission des Carrières des Enseignants-Chercheurs (CCEC, equivalent to the Conseil Académique en formation restreinte) of Université Paris-Saclay

- Frédéric Vernier: Elected member of the council of the computer science department of Université Paris-Saclay

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Master: Petra Isenberg, Lijie Yao, “Interactive Information Visualization”, 36.5h equivalent TD (total, co-taught with Anastasia Bezerianos), M1/M2, Université Paris-Saclay.

- Master: Petra Isenberg, “Visual Analytics”, 60h equivalent TD (co-taught with Natkamon Tovanich), M2, CentraleSupélec.

- Licence: Tobias Isenberg, “Introduction to Computer Graphics”, 18h equivalent TD, L3, Polytech Paris-Saclay, France.

- Master: Tobias Isenberg, “Photorealistic Rendering/Advanced Computer Graphics”, 28h equivalent TD, M2, Polytech Paris-Saclay, France.

- Master: Tobias Isenberg, Jiayi Hong, “Data Visualization”, 36h equivalent TD, M2, CentraleSupélec, France.

- Master: Frédéric Vernier, class and lab class, “Information visualization”, 24h equivalent TD, M2 ISC, Faculté des sciences d'Orsay, Univ. Paris-Saclay, France.

- Master: Frédéric Vernier, class and lab class, “Web development with node.js”, 21h equivalent TD, M2 HCI, Faculté des sciences d'Orsay, Univ. Paris-Saclay, France.

- Licence: Mickaël Sereno, tutorial sessions for “Introduction to Computer Graphics”, 24h equivalent TD, ET3 (equivalent to L3), Polytech Paris-Saclay, France.

- Licence: Mickaël Sereno, tutorial sessions for “Informatique Graphique 3D”, 40h equivalent TD, equivalent to L3, Ecole Polytechnique Paris-Saclay, France.

- Licence: Frédéric Vernier, class and lab class, “Introduction to Computer Graphics”, 42h equivalent TD, L1-MI, Faculté des sciences d'Orsay, Univ. Paris-Saclay, France.

- Licence: Frédéric Vernier, class and lab class, “Advanced Computer Graphics”, 36h equivalent TD, L2-MI, Faculté des sciences d'Orsay, Univ. Paris-Saclay, France.

- Licence: Frédéric Vernier, class and lab class, “Web development”, 42h equivalent TD, L3 computer science, Faculté des sciences d'Orsay, Univ. Paris-Saclay, France.

10.2.2 Supervision

- PhD completed: Alexis Pister, Exploration, Analysis, Interpretability of Large Historical Networks, Univ. Paris-Saclay, supervised by Jean-Daniel Fekete (co-supervision with Christophe Prieur), defense December 2022

- PhD in progress: Katerina Batziakoudi, Visualization of budget and accounting data, supervised by Jean-Daniel Fekete and Stéphanie Rey from the Berger-Levrault company, defense in 2025

- PhD completed: Natkamon Tovanich, Visualization and Visual Analytics of Blockchain, supervised by Petra Isenberg and Jean-Daniel Fekete, defense February 2022

- PhD in progress: Alaul Islam, Micro Visualizations for Fitness Trackers, supervised by Petra Isenberg, defense planned for March 2023

- PhD in progress: Lijie Yao, Situated Visualization in Motion, Univ. Paris-Saclay, supervised by Petra Isenberg and Anastasia Bezerianos, defense planned for December 2023

- PhD in progress: Tobias Isenberg, Alain Trubuil; PhD student: Jiayi Hong; Thesis: Machine Learning Supported Interactive Visualization of Hybrid 3D and 2D Data for the Example of Plant Cell Lineage Specification; on-going, defense planned for February 2023

- PhD in progress: Tobias Isenberg; PhD student: Tingying He; Thesis: A design space for visual data mapping for low-color displays; on-going, defense planned for September 2024

- MSc thesis completed: Petra Isenberg, Lijie Yao; Master student: Federica Bucchieri; Thesis: Situated Visualization in Motion for Video Games; Duration: 01/03/2022 - 31/08/2022

- MSc thesis completed: Jiayi Hong, Tobias Isenberg; Master student: Ebrar Ada D. Santos; Thesis: Exploring Interaction Design for Combining 2D Abstract Representations with 3D Representations; Duration: 01/03/2022 - 31/08/2022

10.2.3 PhD Juries

- Jean-Daniel Fekete: member of the PhD jury for Dr. Miguel Renom, Université Paris-Saclay: Theoretical Bases of human tool use in digital environments (Apr. 12, 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Gwendal Fouché, INSA Rennes, France: Immersive and Interactive Visualization of 3D Temporal Data (December 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Éloi Durant, Université de Montpellier, France: Development of a Tool for the Visualization of Plant Pangenomes (September 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Laura A. Garrison, University of Bergen, Norway: From Molecules to the Masses: Visual Exploration, Analysis, and Communication of Human Physiology (September 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Corentin Guérinot, Institut Pasteur, France: Statistical Signatures of Learning in the Olfactory Bulb and a New Approach to Image Stack Segmentation (June 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Jung Nam, University of Minnesota, USA: Everyday Scientific Visualization: Making 3D Visualization Techniques Accessible for Day-To-Day Team-Science Collaboration and Analysis (May 2022)

- Tobias Isenberg: member of the PhD jury for Dr. Pauline Olivier, École Polytechnique, France: Dynamic Sketches: Hierarchical Modeling of Complex and Time-evolving Scenes (March 2022)

- Petra Isenberg: opponent in the PhD jury for Dr. Nico Reski, Linnaeus University, Sweden: Supporting Data Interaction and Hybrid Asymmetric Collaboration Using Virtual Reality Within the Context of Immersive Analytics (September 2022)

10.3 Popularization

10.3.1 Social Media Responsibilities

- Petra Isenberg is social media chair at IEEE Computer Graphics and Applications

10.3.2 Education

- Tobias Isenberg: organization and supervision of a stage d’observation des collèges et lycées (observation internship for middle- and high-school students) on the topic of sensor programming, data visualization, and fabrication (April 2022)

11 Scientific production

11.1 Major publications

- 1 article Pyramid-based Scatterplots Sampling for Progressive and Streaming Data Visualization. IEEE Transactions on Visualization and Computer Graphics 28(1), January 2022, 593–603

- 2 article Six Methods for Transforming Layered Hypergraphs to Apply Layered Graph Layout Algorithms. Computer Graphics Forum 41(3), June 2022, 1467-8659

- 3 article BeauVis: A Validated Scale for Measuring the Aesthetic Pleasure of Visual Representations. IEEE Transactions on Visualization and Computer Graphics 29(1), January 2023, 363–373

- 4 article LineageD: An Interactive Visual System for Plant Cell Lineage Assignments based on Correctable Machine Learning. Computer Graphics Forum 41(3), June 2022, 195–207

- 5 article Do you believe your (social media) data? A personal story on location data biases, errors, and plausibility as well as their visualization. IEEE Transactions on Visualization and Computer Graphics 28(9), September 2022, 3277–3291