Keywords

Computer Science and Digital Science

- A5.3. Image processing and analysis

- A5.3.2. Sparse modeling and image representation

- A5.3.3. Pattern recognition

- A5.5.1. Geometrical modeling

- A8.3. Geometry, Topology

- A8.12. Optimal transport

- A9.2. Machine learning

Other Research Topics and Application Domains

- B2.5. Handicap and personal assistances

- B3.3. Geosciences

- B5.1. Factory of the future

- B5.6. Robotic systems

- B5.7. 3D printing

- B8.3. Urbanism and urban planning

1 Team members, visitors, external collaborators

Research Scientists

- Pierre Alliez [Team leader, INRIA, Senior Researcher, HDR]

- Florent Lafarge [INRIA, Senior Researcher, HDR]

Post-Doctoral Fellows

- Roberto Dyke [INRIA, from Dec 2022]

- Johann Lussange [INRIA, until Aug 2022]

- Raphael Sulzer [INRIA, from Nov 2022]

- Daniel Zint [INRIA]

PhD Students

- Gaétan Bahl [IRT ST EXUPERY, until Feb 2022]

- Marion Boyer [CNES, from Oct 2022]

- Rao Fu [GEOMETRY FACTORY]

- Jacopo Iollo [INRIA]

- Nissim Maruani [INRIA, from Nov 2022]

- Mulin Yu [INRIA]

- Armand Zampieri [SAMP SAS, CIFRE, from Dec 2022]

- Tong Zhao [INRIA, until Nov 2022]

- Alexandre Zoppis [Université Côte d’Azur]

Technical Staff

- Kacper Pluta [INRIA, Engineer]

- Cédric Portaneri [INRIA, Engineer, until Jan 2022]

Interns and Apprentices

- Théo Aguilar Vidal [Université Côte d’Azur, from Apr 2022 until Sep 2022]

- Amaury Autric [Ecole Polytechnique Palaiseau, from Jun 2022 until Aug 2022]

- Qiuyu Chen [Polytech Nice Sophia, Université Côte d’Azur, from Mar 2022 until Aug 2022]

- Agrawal Rudransh [Indian Institute of Technology Delhi, from Jun 2022 until Aug 2022, Engineer]

Administrative Assistant

- Florence Barbara [INRIA]

Visiting Scientists

- Andriamahenina Ramanantoanina [Università della Svizzera Italiana, from Sep 2022]

- Julian Stahl [Erlangen University, from Sep 2022 until Sep 2022]

External Collaborators

- Andreas Fabri [GEOMETRY FACTORY]

- Sven Oesau [GEOMETRY FACTORY, from Apr 2022]

- Laurent Rineau [GEOMETRY FACTORY]

2 Overall objectives

2.1 General Presentation

Our overall objective is the computerized geometric modeling of complex scenes from physical measurements. Along the geometric modeling and processing pipeline, this objective corresponds to the steps required for converting physical measurements into effective digital representations: analysis, reconstruction and approximation. A longer-term objective is the synthesis of complex scenes. This objective is related to analysis, as we assume that the main sources of data are measurements and that synthesis is carried out from samples.

The related scientific challenges include i) being resilient to defect-laden data due to the uncertainty in the measurement processes and imperfect algorithms along the pipeline, ii) being resilient to heterogeneous data, both in type and in scale, iii) dealing with massive data, and iv) recovering or preserving the structure of complex scenes. We define the quality of a computerized representation by its i) geometric accuracy, or faithfulness to the physical scene, ii) complexity, iii) structure accuracy and control, and iv) amenability to effective processing and high-level scene understanding.

3 Research program

3.1 Context

Geometric modeling and processing revolve around three main end goals: a computerized shape representation that can be visualized (creating a realistic or artistic depiction), simulated (anticipating the real) or realized (manufacturing a conceptual or engineering design). Aside from the mere editing of geometry, central research themes in geometric modeling involve conversions between physical (real), discrete (digital), and mathematical (abstract) representations. Going from physical to digital is referred to as shape acquisition and reconstruction; going from mathematical to discrete is referred to as shape approximation and mesh generation; going from discrete to physical is referred to as shape rationalization.

Geometric modeling has become an indispensable component for computational and reverse engineering. Simulations are now routinely performed on complex shapes that originate not only from computer-aided design but also from a growing volume of measurements. The scale of acquired data is growing quickly: we no longer deal exclusively with individual shapes, but with entire scenes, possibly at the scale of entire cities, with many objects defined as structured shapes. We are witnessing a rapid evolution of acquisition paradigms, with an increasing variety of sensors and the development of community data as well as disseminated data.

In recent years, the evolution of acquisition technologies and methods has translated into an increasing overlap of algorithms and data across the computer vision, image processing, and computer graphics communities. Beyond the rapid increase in resolution brought by technological advances in sensors and by image mosaicking methods, the line between laser scan data and photos is getting thinner. Combining, e.g., laser scanners with panoramic cameras leads to massive 3D point sets with color attributes. In addition, it is now possible to generate dense point sets not just from laser scanners but also from photogrammetry techniques, provided a well-designed acquisition protocol is used. Depth cameras are increasingly common, and beyond retrieving depth information we can enrich the main acquisition systems with additional hardware to measure geometric information about the sensor and improve data registration: e.g., accelerometers or GPS for geographic location, and compasses or gyrometers for orientation. Finally, complex scenes can be observed at different scales, ranging from satellite through aerial to pedestrian levels.

These evolutions allow practitioners to measure urban scenes at resolutions that were until now possible only at the scale of individual shapes. The related scientific challenge, however, goes beyond handling the massive data sets that result from increased resolution, as complex scenes are composed of multiple objects with structural relationships. These relationships relate i) to the way the individual shapes are grouped to form objects, object classes or hierarchies, ii) to geometry, when dealing with similarity, regularity, parallelism or symmetry, and iii) to domain-specific semantic considerations. Beyond reconstruction and approximation, consolidation and synthesis of complex scenes require rich structural relationships.

The problems arising from these evolutions suggest that the strengths of geometry and images may be combined in new methodological solutions such as photo-consistent reconstruction. In addition, measuring the geometry of sensors (through gyrometers and accelerometers) often requires both geometry processing and image analysis for improved accuracy and robustness. Modeling urban scenes from measurements illustrates this growing synergy, and it has become a central concern for a variety of applications, ranging from urban planning and simulation to rendering and special effects.

3.2 Analysis

Complex scenes are usually composed of a large number of objects which may significantly differ in terms of complexity, diversity, and density. These objects must be identified and their structural relationships must be recovered in order to model the scenes with improved robustness, low complexity, variable levels of details and ultimately, semantization (automated process of increasing degree of semantic content).

Object classification is an ill-posed task in which the objects composing a scene are detected and recognized with respect to predefined classes, an objective that goes beyond scene segmentation. The high variability within each class may explain the success of stochastic approaches, which are able to model widely variable classes. As it requires a priori knowledge, this process is often domain-specific, as for urban scenes where we wish to distinguish between classes such as ground, vegetation and buildings. Additional challenges arise when each class must be refined, such as roof superstructures for urban reconstruction.

Structure extraction consists of recovering structural relationships between objects or parts of objects. The structure may be related to adjacencies between objects, hierarchical decomposition, singularities or canonical geometric relationships. It is crucial for effective geometric modeling through levels of detail or hierarchical multiresolution modeling. Ideally, we wish to learn the structural rules that govern the manufacturing of the physical scene. Understanding the main canonical geometric relationships between object parts involves detecting regular structures and equivalences under certain transformations, such as parallelism, orthogonality and symmetry. Identifying structural and geometric repetitions or symmetries is relevant for dealing with missing data during data consolidation.

Data consolidation is a problem of growing interest for practitioners, with the increase of heterogeneous and defect-laden data. To be exploitable, such defect-laden data must be consolidated by improving the data sampling quality and by reinforcing the geometric and structural relations underlying the observed scenes. Enforcing canonical geometric relationships such as local coplanarity or orthogonality is relevant for registration of heterogeneous or redundant data, as well as for improving the robustness of the reconstruction process.

3.3 Approximation

Our objective is to explore the approximation of complex shapes and scenes with surface and volume meshes, as well as with surface and domain tilings. A general way to state the shape approximation problem is to say that we search for the shape discretization (possibly with several levels of detail) that realizes the best complexity / distortion trade-off. Such a problem statement requires defining a discretization model, an error metric to measure distortion, and a way to measure complexity. The latter is most commonly expressed as a number of polygon primitives, but other measures closer to information theory lead to quantities such as the number of bits or the minimum description length.

For surface meshes, we intend to devise methods that provide control and guarantees over both the global approximation error and the validity of the embedding. In addition, we seek resilience to heterogeneous data and robustness to noise and outliers. This would allow repairing and simplifying triangle soups with cracks, self-intersections and gaps. Another exploratory objective is to deal generically with different error metrics, such as the symmetric Hausdorff distance or a Sobolev norm that mixes errors in geometry and normals.
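The symmetric Hausdorff distance can be made concrete with a minimal brute-force sketch. It evaluates the distance between two finite point samples, for instance points sampled on an input surface and on its approximation. The sketch assumes that both shapes are represented by small point samples. Practical mesh-to-mesh distances instead rely on surface sampling and spatial search structures.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def one_sided_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a point of `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def symmetric_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance: the larger of the two one-sided distances."""
    return max(one_sided_hausdorff(a, b), one_sided_hausdorff(b, a))


if __name__ == "__main__":
    # Samples of an "input" shape and of a coarser approximation that misses one corner.
    input_samples = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    approx_samples = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    print(one_sided_hausdorff(approx_samples, input_samples))  # 0.0
    print(symmetric_hausdorff(input_samples, approx_samples))  # 1.0
```

The example shows why the symmetric version matters. Every point of the approximation lies on the input, so the one-sided distance is 0. The approximation still misses a corner of the input, and only the reverse direction detects it. The nested loops are quadratic in the number of samples.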

For surface and domain tiling, the term tiling is substituted for meshing to stress the fact that tiles may not be just simple elements, but can model complex smooth shapes such as bilinear quadrangles. Quadrangle surface tiling is central to the so-called resurfacing problem in reverse engineering: the goal is to tile an input raw surface geometry such that the union of the tiles approximates the input well and each tile matches certain properties related to its shape or its size. In addition, we may require parameterization domains with a simple structure. Our goal is to devise surface tiling algorithms that are reliable and resilient to defect-laden inputs, effective from the shape approximation point of view, and that offer flexible control over the structure of the tiling.

3.4 Reconstruction

Assuming a geometric dataset made out of points or slices, the process of shape reconstruction amounts to recovering a surface or a solid that matches these samples. This problem is inherently ill-posed as infinitely-many shapes may fit the data. One must thus regularize the problem and add priors such as simplicity or smoothness of the inferred shape.

The concept of geometric simplicity has led to a number of interpolating techniques commonly based upon the Delaunay triangulation. The concept of smoothness has led to a number of approximating techniques that commonly compute an implicit function such that one of its isosurfaces approximates the inferred surface. Reconstruction algorithms can also use an explicit set of prior shapes for inference, by assuming that the observed data can be described by these predefined prior shapes. One key lesson learned from the shape reconstruction problem is that there is probably no single solution that can solve all cases, each case coming with its own distinctive features. In addition, some data sets, such as point sets acquired on urban scenes, are very domain-specific and require a dedicated line of research.

In recent years, the smooth, closed case (i.e., shapes without sharp features or boundaries) has received considerable attention. However, state-of-the-art methods have several shortcomings: in addition to being generally not robust to outliers and not sufficiently robust to noise, they often require additional attributes as input, such as lines of sight or oriented normals. We wish to devise shape reconstruction methods that are both geometrically and topologically accurate without requiring additional attributes, while exhibiting resilience to defect-laden inputs. Resilience formally translates into stability with respect to noise and outliers. Correctness of the reconstruction translates into convergence in geometry and (stable parts of) topology of the reconstruction with respect to the inferred shape known through measurements.

Moving from the smooth, closed case to the piecewise smooth case (possibly with boundaries) is considerably harder as the ill-posedness of the problem applies to each sub-feature of the inferred shape. Further, very few approaches tackle the combined issue of robustness (to sampling defects, noise and outliers) and feature reconstruction.

4 Application domains

In addition to tackling enduring scientific challenges, our research on geometric modeling and processing is motivated by applications to computational engineering, reverse engineering, robotics, digital mapping and urban planning. The main outcome of our research will be algorithms with theoretical foundations. Ultimately, we wish to contribute to making geometric modeling and processing routine for practitioners who deal with real-world data. Our contributions may also be used as a sound basis for future software and technology developments.

Our first ambition for technology transfer is to consolidate the components of our research experiments in the form of new software components for CGAL (the Computational Geometry Algorithms Library). Through CGAL, we wish to contribute to the “standard geometric toolbox”, so as to provide a generic answer to application needs instead of fragmenting our contributions. We already cooperate with the Inria spin-off company Geometry Factory, which commercializes CGAL, maintains it and provides technical support.

Our second ambition is to increase the research momentum of companies by advising Cifre Ph.D. theses and postdoctoral fellows on topics that match our research program.

5 Social and environmental responsibility

5.1 Impact of research results

We are collaborating with Ekinnox, an Inria spin-off which develops human movement analysis solutions for healthcare institutions, and with the Lamhess laboratory (Université Côte d'Azur). We provide scientific advice on automated analysis of human walking, in collaboration with Laurent Busé from the Aromath project-team.

6 Highlights of the year

Three PhD students have defended their theses.

This year has been rich in new collaborations on digital twins of various kinds: for factories, industrial sites, civil infrastructures and construction sites.

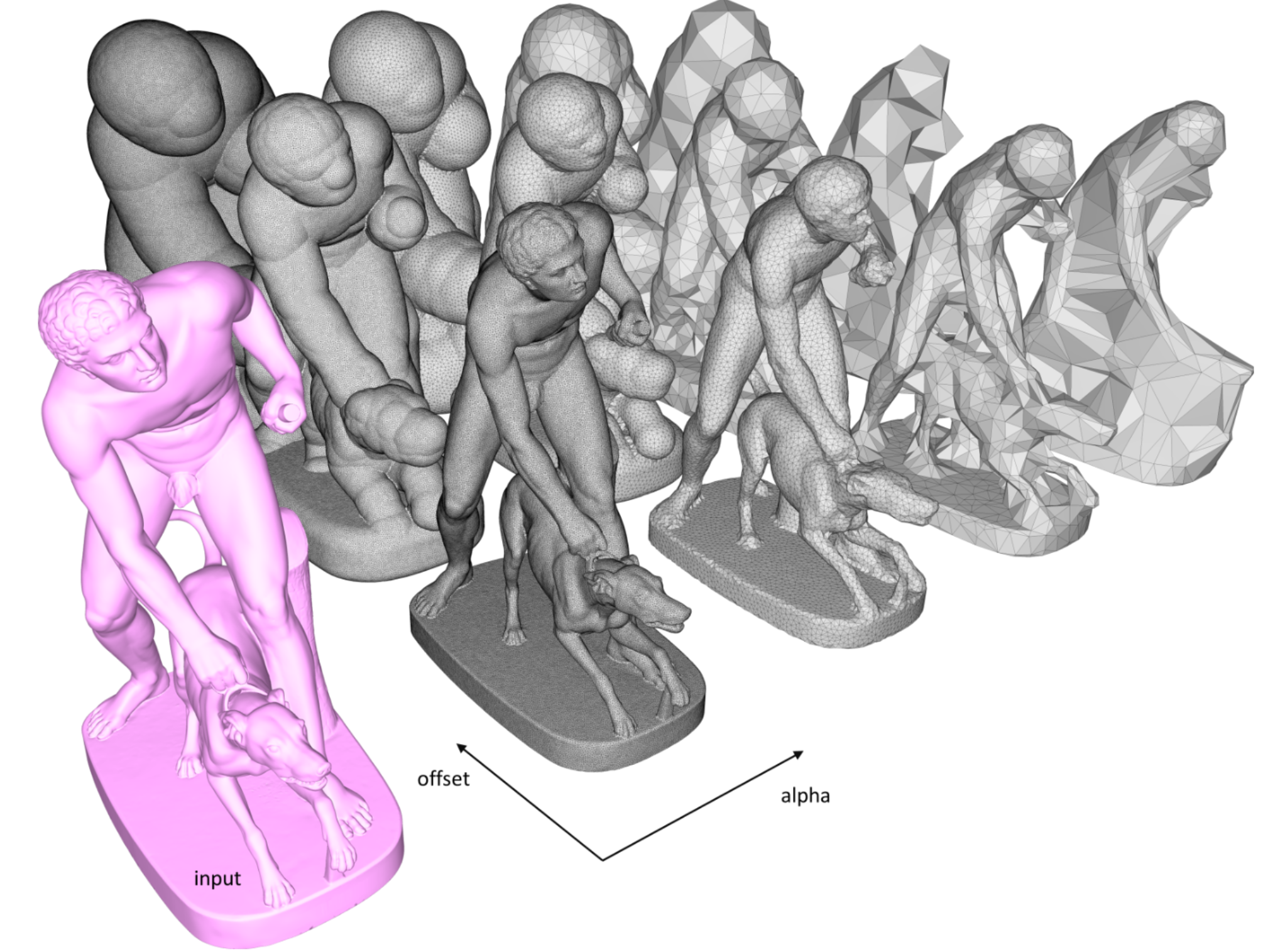

We have successfully completed a three-year collaboration with Google X by delivering a novel algorithm for wrapping raw input 3D data, implementing it as a new component of the CGAL library with help from Geometry Factory, and publishing a paper at the ACM SIGGRAPH conference 13.

Our activities on CGAL have intensified, with one postdoc funded by the French recovery plan (plan de relance) in collaboration with Geometry Factory, and three interns funded by the Google Summer of Code.

We have started a new collaborative project (an Inria challenge) with the CEREMA institute, on digital twinning of roads and civil infrastructures.

Pierre Alliez has been editor-in-chief of Computer Graphics Forum since January 2022 (212 submissions handled this year, with help from 54 associate editors).

7 New software and platforms

7.1 New software

7.1.1 Cifre PhD Thesis Titane - DS

-

Name:

Software for Cifre PhD thesis Titane - Dassault Systemes

-

Keyword:

Geometric computing

-

Functional Description:

This software addresses the problem of simplifying two-dimensional polygonal partitions that exhibit strong regularities. Such partitions are relevant for reconstructing urban scenes in a concise way. Preserving long linear structures spanning several partition cells motivates a point-line projective duality approach in which points represent line intersections and lines may carry multiple points. We implement a simplification algorithm that seeks a balance between fidelity to the input partition, enforcement of canonical relationships between lines (orthogonality or parallelism), and low output complexity. Our methodology alternates continuous optimization by Riemannian gradient descent with combinatorial reduction, resulting in a progressive simplification scheme. Our experiments show that preserving canonical relationships helps gracefully degrade partitions of urban scenes, and yields more concise and regularity-preserving meshes than previous mesh-based simplification approaches.
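The point-line duality can be illustrated with homogeneous coordinates. A line ax + by + c = 0 is stored as the triple (a, b, c), and the intersection point of two lines is the cross product of their triples. The sketch below is our own illustration, not the code of the software described here. It also tests the canonical relationships, parallelism and orthogonality, from the line normals (a, b). The helper names and the angular tolerance `tol_deg` are hypothetical.

```python
import math
from typing import Optional, Tuple

# A line a*x + b*y + c = 0 in homogeneous coordinates (projective dual of a point).
Line = Tuple[float, float, float]


def cross(u: Line, v: Line) -> Tuple[float, float, float]:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def intersection(l1: Line, l2: Line, eps: float = 1e-12) -> Optional[Tuple[float, float]]:
    """Intersection point of two lines, or None if they are parallel."""
    x, y, w = cross(l1, l2)
    if abs(w) < eps:
        return None  # the lines meet at a point at infinity
    return (x / w, y / w)


def angle_deg(l1: Line, l2: Line) -> float:
    """Unsigned angle in [0, 90] degrees between two lines, from their normals (a, b)."""
    dot = abs(l1[0] * l2[0] + l1[1] * l2[1])
    norm = math.hypot(l1[0], l1[1]) * math.hypot(l2[0], l2[1])
    return math.degrees(math.acos(min(1.0, dot / norm)))  # clamp rounding above 1


def is_parallel(l1: Line, l2: Line, tol_deg: float = 1.0) -> bool:
    return angle_deg(l1, l2) <= tol_deg


def is_orthogonal(l1: Line, l2: Line, tol_deg: float = 1.0) -> bool:
    return angle_deg(l1, l2) >= 90.0 - tol_deg


if __name__ == "__main__":
    x_eq_1, y_eq_2, x_eq_3 = (1.0, 0.0, -1.0), (0.0, 1.0, -2.0), (2.0, 0.0, -6.0)
    print(intersection(x_eq_1, y_eq_2))  # (1.0, 2.0)
    print(intersection(x_eq_1, x_eq_3))  # None
    print(is_orthogonal(x_eq_1, y_eq_2), is_parallel(x_eq_1, x_eq_3))  # True True
```

Homogeneous coordinates avoid special-casing vertical lines, and parallel lines appear naturally as intersections with w = 0. The software described here may formulate the canonical relationships differently.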

-

Contact:

Pierre Alliez

-

Participants:

Pierre Alliez, Florent Lafarge

-

Partner:

Dassault Systèmes

7.1.2 CGAL - Orthtree

-

Name:

Quadtrees, Octrees, and Orthtrees

-

Keyword:

Octree/Quadtree

-

Functional Description:

Quadtrees are tree data structures in which each node encloses a square section of space, and each internal node has exactly 4 children. Octrees are a similar data structure in 3D in which each node encloses a cubic section of space, and each internal node has exactly 8 children.

We call the generalization of such data structures "orthtrees", as orthants are generalizations of quadrants and octants. The term "hyperoctree" can also be found in the literature to name such data structures in dimensions 4 and higher.

This package provides a general data structure Orthtree along with aliases for Quadtree and Octree. These trees can be constructed with custom point ranges and split predicates, and iterated on with various traversal methods.
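The sketch below illustrates the concept in a language-neutral way. It is not the API of the CGAL package, which is a C++ library. It implements a d-dimensional orthtree in which splitting a node creates 2^d children, so the same code yields a quadtree in 2D and an octree in 3D. It uses a hypothetical split predicate with two parameters, `max_points` and `max_depth`, and a preorder traversal.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional, Tuple

Point = Tuple[float, ...]


@dataclass
class Node:
    center: Point                 # center of the node's (hyper)cube
    half: float                   # half of the cube's side length
    depth: int
    points: List[Point] = field(default_factory=list)
    children: Optional[List["Node"]] = None  # 2**d children once split

    def child_index(self, p: Point) -> int:
        """Bit i is set when p lies on the upper side of the center along axis i."""
        idx = 0
        for axis, (x, c) in enumerate(zip(p, self.center)):
            if x >= c:
                idx |= 1 << axis
        return idx


def split(node: Node) -> None:
    """Create the 2**d orthants of `node` and move its points into them."""
    dim = len(node.center)
    h = node.half / 2.0
    node.children = []
    for idx in range(2 ** dim):
        center = tuple(c + (h if (idx >> axis) & 1 else -h)
                       for axis, c in enumerate(node.center))
        node.children.append(Node(center, h, node.depth + 1))
    for p in node.points:
        node.children[node.child_index(p)].points.append(p)
    node.points = []


def refine(node: Node, max_points: int, max_depth: int) -> None:
    """Split predicate: too many points and depth budget not exhausted."""
    if len(node.points) > max_points and node.depth < max_depth:
        split(node)
        for child in node.children:
            refine(child, max_points, max_depth)


def preorder(node: Node) -> Iterator[Node]:
    yield node
    for child in node.children or []:
        yield from preorder(child)


if __name__ == "__main__":
    pts = [(0.1, 0.1), (0.2, 0.2), (0.8, 0.8), (0.9, 0.1)]
    root = Node(center=(0.5, 0.5), half=0.5, depth=0, points=list(pts))
    refine(root, max_points=1, max_depth=5)
    for n in preorder(root):
        if n.children is None and n.points:
            print(n.depth, n.points)
```

Only leaves store points in this sketch. The two close points near the origin force two extra levels of subdivision before they are separated.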

-

Release Contributions:

Initial version

-

Contact:

Pierre Alliez

-

Participants:

Pierre Alliez, Jackson Campolattaro, Simon Giraudot

-

Partner:

GeometryFactory

7.1.3 CGAL - 3D Alpha Wrapping

-

Keyword:

Shrink-wrapping

-

Scientific Description:

Various tasks in geometric modeling and processing require 3D objects represented as valid surface meshes, where "valid" refers to meshes that are watertight, intersection-free, orientable, and combinatorially 2-manifold. Such representations offer well-defined notions of interior/exterior and geodesic neighborhoods.

3D data are usually acquired through measurements followed by reconstruction, designed by humans, or generated through imperfect automated processes. As a result, they can exhibit a wide variety of defects including gaps, missing data, self-intersections, degeneracies such as zero-volume structures, and non-manifold features.

Given the large repertoire of possible defects, many methods and data structures have been proposed to repair specific defects, usually with the goal of guaranteeing specific properties in the repaired 3D model. Reliably repairing all types of defects is notoriously difficult and is often an ill-posed problem as many valid solutions exist for a given 3D model with defects. In addition, the input model can be overly complex with unnecessary geometric details, spurious topological structures, nonessential inner components, or excessively fine discretizations. For applications such as collision avoidance, path planning, or simulation, getting an approximation of the input can be more relevant than repairing it. Approximation herein refers to an approach capable of filtering out inner structures, fine details and cavities, as well as wrapping the input within a user-defined offset margin.

Given an input 3D geometry, we address the problem of computing a conservative approximation, where conservative means guaranteeing a strictly enclosed input. We seek unconditional robustness in the sense that the output mesh should be valid (oriented, combinatorially 2-manifold and without self-intersections), even for raw input with many defects and degeneracies. The default input is a soup of 3D triangles, but the generic interface leaves the door open to other types of finite 3D primitives.

-

Functional Description:

The algorithm proceeds by shrink-wrapping and refining a 3D Delaunay triangulation loosely bounding the input. Two user-defined parameters, alpha and offset, offer control over the maximum size of cavities that the shrink-wrapping process can enter and over the tightness of the final surface mesh to the input, respectively. Combined, these parameters provide a means to trade fidelity to the input for complexity of the output.

-

Contact:

Pierre Alliez

-

Participants:

Pierre Alliez, Cedric Steveen Portaneri, Mael Rouxel-Labbé

-

Partner:

GeometryFactory

7.1.4 CGAL - NURBS meshing

-

Name:

CGAL - Meshing NURBS surfaces via Delaunay refinement

-

Keywords:

Meshing, NURBS

-

Scientific Description:

NURBS is the dominant boundary representation (B-Rep) in CAD systems. The NURBS meshing algorithms available for industrial applications are based on meshing individual NURBS surfaces. This process can be hampered by the inevitable trimming defects in NURBS models. These defects lead to non-watertight surface meshes and, in turn, to failures when generating volumetric meshes. To guarantee the generation of valid surface and volumetric meshes of NURBS models even in the presence of trimming defects, NURBS models are meshed via Delaunay refinement, based on the Delaunay oracle implemented in CGAL. To achieve the Delaunay refinement, the trimmed regions of a NURBS model are covered with balls. Protection balls are used to cover sharp features. The ball centers are taken as weighted points in the Delaunay refinement, so that sharp features are preserved in the mesh. The ball sizes are determined by local geometric features. Blending balls are used to cover the other trimmed regions, which do not need to be preserved. Inside blending balls, implicit surfaces are generated with Duchon’s interpolating spline from a handful of sampling points. The intersection computation in the Delaunay refinement depends on the region where the intersection is computed. The general line/NURBS surface intersection is computed for intersections away from trimmed regions, and the line/implicit surface intersection is computed for intersections inside blending balls. The resulting mesh is a watertight volumetric mesh satisfying user-defined size and shape criteria for facets and cells. Sharp features are preserved, the mesh adapts to local features, and mesh elements may cross the boundaries between smooth surface patches.
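The sketch below illustrates what fitting an implicit surface with Duchon's interpolating spline involves. In 3D, it fits f(x) = Σ w_i |x − x_i| + c_0 + c · x to a handful of constraint points by solving the standard augmented linear system. On-surface points carry value 0 and an interior point carries value −1. This is a simplified stand-alone illustration under that assumption. How the CGAL component chooses its sampling points and solves the system is not described here. A small dense solver is included so that the block is self-contained.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]


def solve(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Dense Gaussian elimination with partial pivoting (fine for a handful of points)."""
    n = len(matrix)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-14:
            raise ValueError("singular system (e.g., all points coplanar)")
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for k in range(col, n + 1):
                a[r][k] -= f * a[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = a[r][n] - sum(a[r][k] * x[k] for k in range(r + 1, n))
        x[r] = s / a[r][r]
    return x


def fit_duchon(centers: Sequence[Point], values: Sequence[float]) -> Callable[[Point], float]:
    """Fit f(x) = sum_i w_i |x - x_i| + c0 + c . x interpolating `values` at `centers`."""
    n = len(centers)
    size = n + 4
    m = [[0.0] * size for _ in range(size)]
    for i, xi in enumerate(centers):
        for j, xj in enumerate(centers):
            m[i][j] = math.dist(xi, xj)          # kernel phi(r) = r in 3D
        poly = (1.0, *xi)                         # linear polynomial basis 1, x, y, z
        for k in range(4):
            m[i][n + k] = poly[k]
            m[n + k][i] = poly[k]                 # orthogonality constraints on the weights
    sol = solve(m, list(values) + [0.0] * 4)
    w, c = sol[:n], sol[n:]

    def f(x: Point) -> float:
        radial = sum(wi * math.dist(x, xi) for wi, xi in zip(w, centers))
        return radial + c[0] + c[1] * x[0] + c[2] * x[1] + c[3] * x[2]

    return f


if __name__ == "__main__":
    # Six on-surface samples of the unit sphere (value 0) and one interior sample (value -1).
    pts = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
           (0.0, -1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0), (0.0, 0.0, 0.0)]
    vals = [0.0] * 6 + [-1.0]
    f = fit_duchon(pts, vals)
    print([round(f(p), 6) for p in pts])
```

The zero level set of `f` then plays the role of the implicit surface inside a blending ball. The linear polynomial term makes the system singular when all points are coplanar, hence the explicit check.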

-

Functional Description:

Input: NURBS surface. Output: isotropic tetrahedral mesh.

-

Contact:

Pierre Alliez

-

Participants:

Pierre Alliez, Laurent Busé, Xiao Xiao, Laurent Rineau

-

Partner:

GeometryFactory

7.2 New platforms

Participants: Pierre Alliez, Florent Lafarge.

No new platforms in the period.

8 New results

Our quest for algorithms that are both reliable and resilient to defects led to several new results, either for repairing urban 3D models or for providing topological guarantees by design (e.g., strict enclosure of input 3D models for robotics applications). Our collaboration with Dassault Systèmes (Cifre PhD thesis) ended with a novel algorithm for simplifying urban scenes from a 2D standpoint. Our collaborations with industrial partners are a rich source of highly diverse problems. We pursued our research activities in remote sensing (onboard image analysis via low-power deep learning) through our collaboration with IRT Saint Exupéry. Our collaboration with Dorea technology was an opportunity to explore a physics-informed shape approximation method for radiative thermal simulation of satellites. We have a small number of research projects on real-time analysis, a topic that is very new and challenging. Our ANR project on indoor localization for soldiers ended with a new wearable sensor system combining images and 3D point clouds. Our collaboration with Ekinnox (Inria spin-off) led to a new method for gait analysis.

8.1 Analysis

8.1.1 Finding Good Configurations of Planar Primitives in Unorganized Point Clouds

Participants: Mulin Yu, Florent Lafarge.

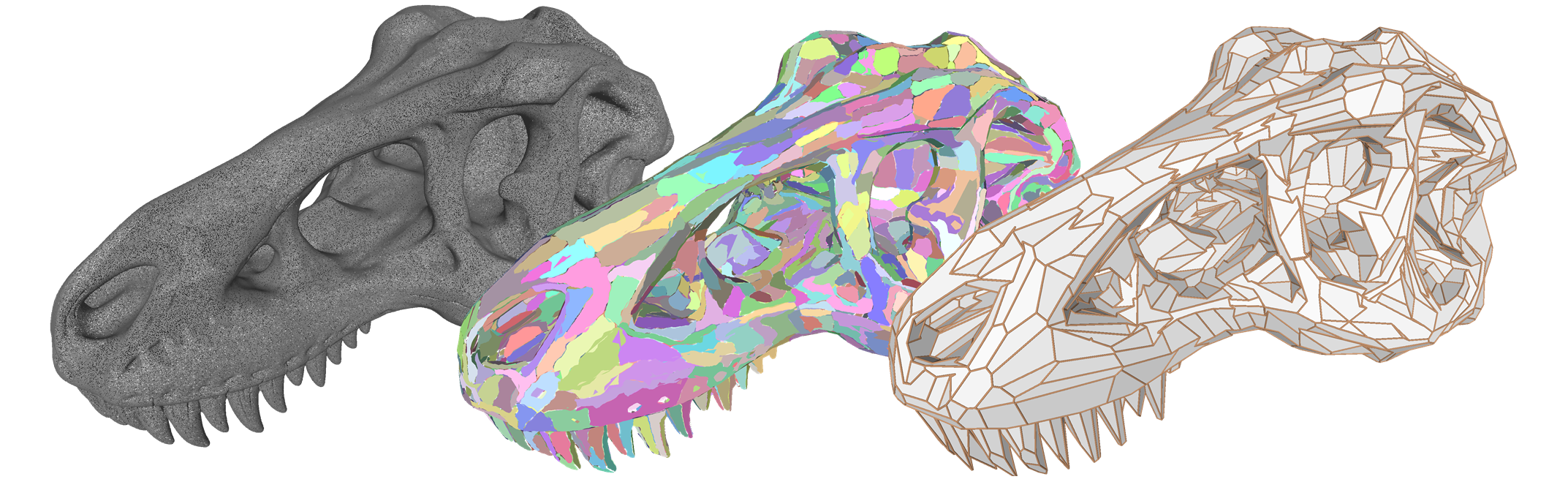

Planar primitives are detected on a surface triangle mesh.

We propose an algorithm for detecting planar primitives from unorganized 3D point clouds. Departing from an initial configuration, the algorithm refines both the continuous plane parameters and the discrete assignment of input points to them, seeking high fidelity, high simplicity and high completeness. Our key contribution lies in the design of an exploration mechanism guided by a multiobjective energy function. Transitions within the large solution space are handled by five geometric operators that create, remove and modify primitives. We demonstrate the potential of our method on a variety of scenes, from organic shapes to man-made objects, and on data from a variety of sensors, from multiview stereo to laser scanning. We show its efficacy with respect to existing primitive fitting approaches and illustrate its applicative interest in compact mesh reconstruction, when combined with a plane assembly method (see Figure 1). This work was presented at the Conference on Computer Vision and Pattern Recognition 19.
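The idea of an energy that balances fidelity, simplicity and completeness can be sketched as a hypothetical weighted sum of three terms:

- fidelity: the mean point-to-plane distance of the assigned points;
- simplicity: the number of primitives;
- completeness: the fraction of unassigned points.

The weights, the normalization and the "accept if lower" rule are illustrative assumptions, not the formulation of the published method. An exploration mechanism would evaluate such an energy before and after applying an operator (create, remove or modify a primitive).

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d): a*x + b*y + c*z + d = 0


def point_plane_distance(p: Point, plane: Plane) -> float:
    a, b, c, d = plane
    return abs(a * p[0] + b * p[1] + c * p[2] + d) / math.sqrt(a * a + b * b + c * c)


def energy(points: Sequence[Point], planes: Sequence[Plane], assignment: Dict[int, int],
           w_fid: float = 1.0, w_simp: float = 0.1, w_comp: float = 1.0) -> float:
    """Hypothetical weighted energy; lower is better.

    `assignment` maps a point index to a plane index; unassigned points are absent.
    """
    fidelity = (sum(point_plane_distance(points[i], planes[k]) for i, k in assignment.items())
                / len(assignment)) if assignment else 0.0
    simplicity = len(planes)                            # fewer primitives is simpler
    completeness = 1.0 - len(assignment) / len(points)  # fraction of points left unexplained
    return w_fid * fidelity + w_simp * simplicity + w_comp * completeness


if __name__ == "__main__":
    pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0), (0.5, 0.5, 1.0)]
    empty = energy(pts, [], {})
    # Candidate move "create a primitive": the plane z = 0 explains the four coplanar points.
    created = energy(pts, [(0.0, 0.0, 1.0, 0.0)], {0: 0, 1: 0, 2: 0, 3: 0})
    print(empty, created, created < empty)
```

In this toy setting, creating the plane lowers the energy, so the move would be accepted. The outlier at z = 1 stays unassigned because explaining it would cost either fidelity or an extra primitive.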

8.1.2 Single-Shot End-to-end Road Graph Extraction

Participants: Gaétan Bahl, Florent Lafarge.

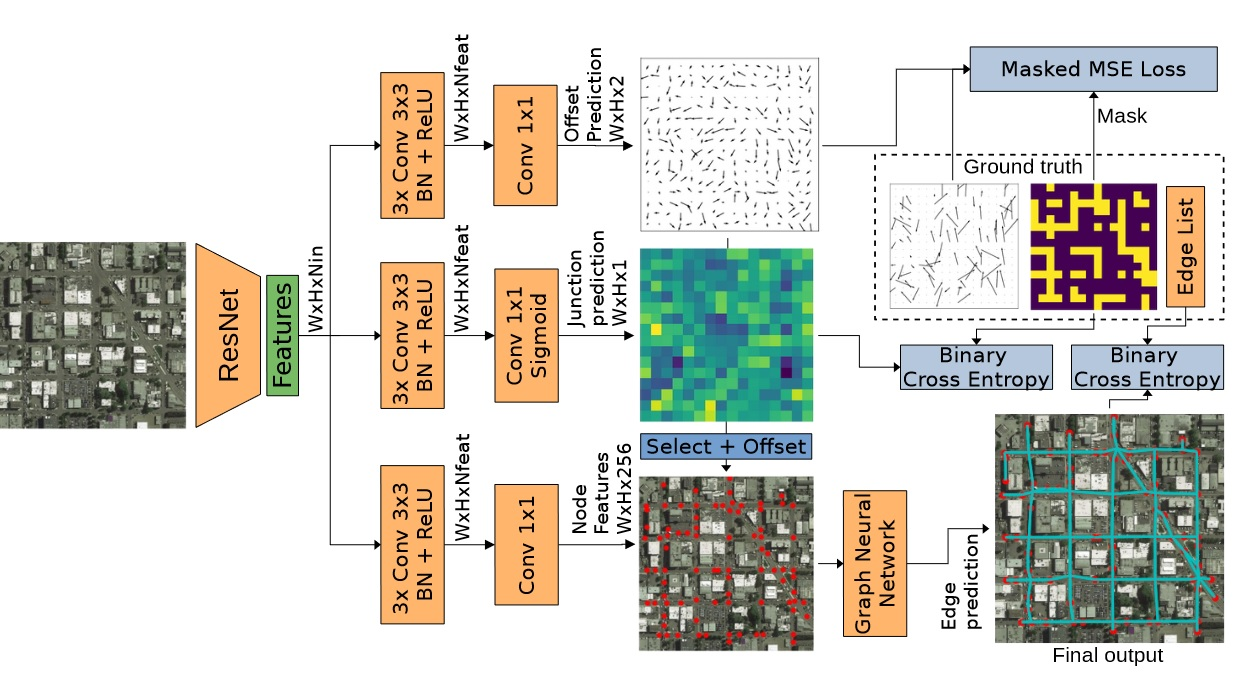

In collaboration with Mehdi Bahri (Imperial College London).

Road graph extraction from a satellite image.

Automatic road graph extraction from aerial and satellite images is a long-standing challenge. Existing algorithms are based either on pixel-level segmentation followed by vectorization, or on iterative graph construction using next move prediction. Both strategies suffer from severe drawbacks, in particular high computational cost and incomplete outputs. By contrast, we propose a method that directly infers the final road graph in a single pass. The key idea is to combine two networks. A Fully Convolutional Network locates points of interest, such as intersections, dead ends and turns. A Graph Neural Network then predicts links between these points. This strategy is more efficient than iterative methods. It also streamlines the training process by removing the need to generate starting locations, while keeping the training end-to-end (see Figure 2). We evaluate our method against existing works on the popular RoadTracer dataset and achieve competitive results. We also benchmark the speed of our method and show that it is faster than existing approaches. Our method opens the possibility of in-flight processing on embedded devices for applications such as real-time road network monitoring and alerts for disaster response. This work was presented at the Computer Vision and Pattern Recognition (CVPR) EarthVision Workshop 16.
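The last step of such a single-pass pipeline turns detected points of interest and predicted link scores into a graph. It can be sketched as below. For illustration only, we assume that the network outputs a score in [0, 1] for each ordered pair of points. The symmetrization by averaging, the 0.5 threshold and the degree-based node labels are hypothetical choices, not those of the published method.

```python
from typing import Dict, Sequence, Set


def build_road_graph(num_points: int, link_scores: Sequence[Sequence[float]],
                     threshold: float = 0.5) -> Dict[int, Set[int]]:
    """Undirected graph from pairwise link scores in [0, 1] (hypothetical network output)."""
    adj: Dict[int, Set[int]] = {i: set() for i in range(num_points)}
    for i in range(num_points):
        for j in range(i + 1, num_points):
            score = 0.5 * (link_scores[i][j] + link_scores[j][i])  # symmetrize i->j and j->i
            if score >= threshold:
                adj[i].add(j)
                adj[j].add(i)
    return adj


def node_types(adj: Dict[int, Set[int]]) -> Dict[int, str]:
    """Label nodes by degree: 1 = dead end, 2 = road point (e.g., a turn), >= 3 = intersection."""
    names = {0: "isolated", 1: "dead end", 2: "road point"}
    return {i: names.get(len(nbrs), "intersection") for i, nbrs in adj.items()}


if __name__ == "__main__":
    # Hypothetical keypoints (pixel coordinates): a T-junction at point 1 with three dead ends.
    points = [(0, 0), (10, 0), (20, 0), (10, 10)]
    scores = [[0.0, 0.9, 0.1, 0.2],
              [0.8, 0.0, 0.9, 0.7],
              [0.1, 0.9, 0.0, 0.1],
              [0.2, 0.9, 0.1, 0.0]]
    graph = build_road_graph(len(points), scores)
    print(graph, node_types(graph))
```

Because the whole graph comes out of one thresholding pass over all pairs, there is no iterative next-move loop and no need for starting locations.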

8.1.3 Scanner Neural Network for On-board Segmentation of Satellite Images

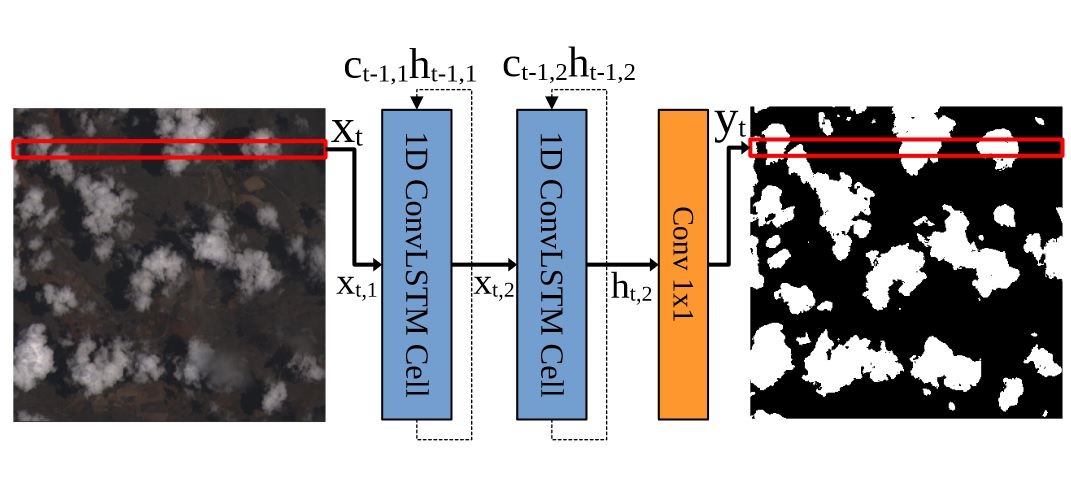

Participants: Gaétan Bahl, Florent Lafarge.

Low-power neural network.

Traditional Convolutional Neural Networks (CNNs) for semantic segmentation of images use 2D convolution operations. The spatial inductive bias of 2D convolutions allows CNNs to build hierarchical feature representations. However, these networks require the whole feature maps to be kept in memory until the end of the inference. This is not ideal for memory- and latency-critical applications such as real-time on-board satellite image segmentation. In this work, we propose a new neural network architecture for semantic segmentation, "ScannerNet", based on a recurrent 1D convolutional architecture. Our network segments the input image line by line, which reduces the memory footprint and output latency. These characteristics make it ideal for on-the-fly segmentation of images on board satellites equipped with pushbroom sensors, such as Landsat 8, or satellites with limited compute capabilities, such as CubeSats. We perform cloud segmentation experiments on embedded hardware and show that our method offers a good compromise between accuracy, memory usage and latency. This work was presented at the International Geoscience and Remote Sensing Symposium 17.
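The toy sketch below shows why line-by-line processing bounds memory. It processes an image row by row. Each incoming row goes through a 1D convolution and is combined with a hidden state carried over from the previous row, and a per-pixel label is emitted immediately. Only one row and the hidden state are held in memory, whatever the image height. The kernel, the scalar `recurrence` weight and the sign threshold are hypothetical stand-ins for learned layers. This is not the ScannerNet architecture itself.

```python
import math
from typing import Iterable, Iterator, List, Sequence


def conv1d_same(row: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """1D cross-correlation with zero padding; output has the row's length (odd kernel)."""
    r = len(kernel) // 2
    out = []
    for i in range(len(row)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i + j - r
            if 0 <= idx < len(row):
                acc += w * row[idx]
        out.append(acc)
    return out


def segment_line_by_line(rows: Iterable[Sequence[float]], kernel: Sequence[float],
                         recurrence: float = 0.5) -> Iterator[List[int]]:
    """Consume image rows one at a time and emit a binary label row for each.

    Only the current row and a hidden state of one row's width are kept in memory.
    """
    hidden: List[float] = []
    for row in rows:
        features = conv1d_same(row, kernel)
        if not hidden:
            hidden = [0.0] * len(features)
        hidden = [math.tanh(f + recurrence * h) for f, h in zip(features, hidden)]
        yield [1 if h > 0.0 else 0 for h in hidden]


if __name__ == "__main__":
    image = [[-1.0, -1.0, 1.0, 1.0], [-1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, 1.0]]
    for labels in segment_line_by_line(iter(image), kernel=[0.0, 1.0, 0.0]):
        print(labels)
```

Since the function is a generator, labels for a row are available as soon as that row has been read. This matches the streaming nature of pushbroom acquisition.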

8.1.4 Sharp Feature Consolidation from Raw 3D Point Clouds via Displacement Learning

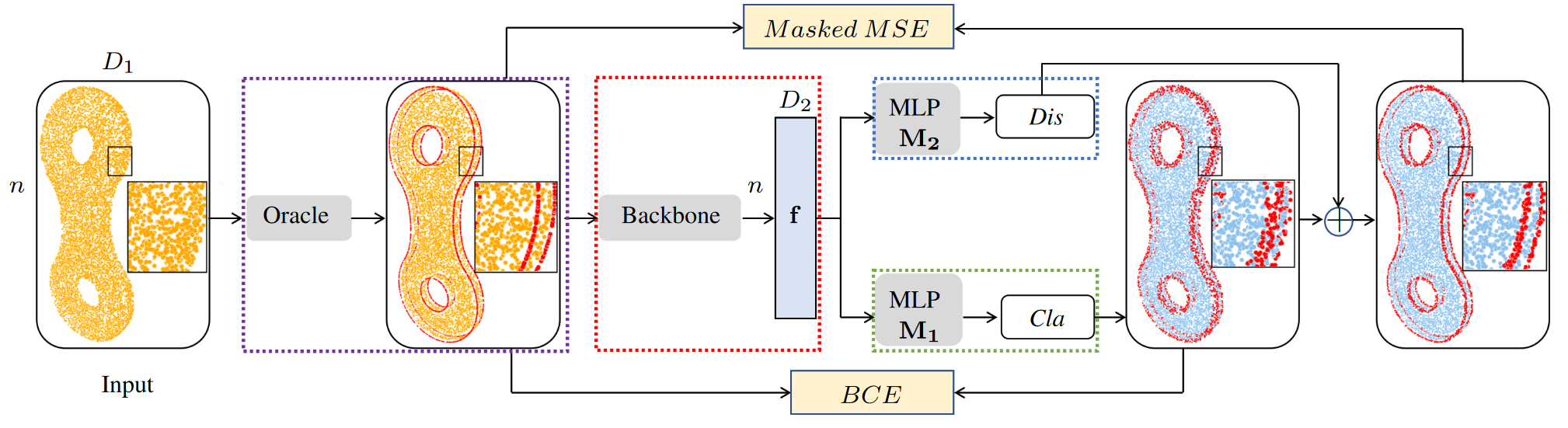

Participants: Tong Zhao, Mulin Yu, Florent Lafarge, Pierre Alliez.

Consolidation of sharp features.

Detecting sharp features in raw 3D point clouds is an essential step in designing efficient priors for several 3D vision applications. We present a deep learning-based approach that learns to detect and consolidate sharp feature points on raw 3D point clouds. We devised a multi-task neural network architecture that identifies points near sharp features and predicts displacement vectors toward the local sharp features (see Figure 4). The detected points are then consolidated via relocation. Our approach achieves robustness to noise by using a dynamic labeling oracle during the training phase. The approach is also flexible and can be combined with several popular point-based network architectures. Our experiments demonstrate that our approach outperforms previous work in terms of detection accuracy measured on the popular ABC dataset. We show the efficacy of the proposed approach by applying it to several 3D vision tasks. A preprint version of this work is available on arXiv 24 and is currently under submission.
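A simple geometric oracle illustrates the displacement targets that such a network learns to predict. For each point, the target is the displacement toward the closest sharp-feature segment. The point is labeled "near a sharp feature" when that displacement is shorter than a radius. Consolidation then amounts to adding the displacement to the flagged points. Two elements are assumptions of this sketch: representing sharp features as segments, and the `radius` parameter. The sketch shows the geometry of the task only. It does not reproduce the training procedure or the dynamic labeling oracle of the published work.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Segment = Tuple[Vec, Vec]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_on_segment(p: Vec, seg: Segment) -> Vec:
    a, b = seg
    ab = sub(b, a)
    denom = dot(ab, ab)
    t = 0.0 if denom == 0.0 else max(0.0, min(1.0, dot(sub(p, a), ab) / denom))
    return (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])


def displacement(p: Vec, edges: Sequence[Segment]) -> Vec:
    """Vector from p to the closest point on the sharp-feature segments."""
    candidates = [sub(closest_on_segment(p, e), p) for e in edges]
    return min(candidates, key=lambda d: dot(d, d))


def label_and_consolidate(points: Sequence[Vec], edges: Sequence[Segment],
                          radius: float) -> Tuple[List[Vec], List[bool]]:
    """Flag points within `radius` of a sharp feature and relocate them onto it."""
    moved: List[Vec] = []
    near: List[bool] = []
    for p in points:
        d = displacement(p, edges)
        is_near = math.sqrt(dot(d, d)) <= radius
        near.append(is_near)
        moved.append(add(p, d) if is_near else p)
    return moved, near


if __name__ == "__main__":
    edges = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))]
    pts = [(0.5, 0.1, 0.0), (0.5, 1.0, 0.0), (1.2, 0.05, 0.0)]
    print(label_and_consolidate(pts, edges, radius=0.25))
```

The third point projects beyond the segment end, so the clamped parameter `t` moves it onto the endpoint, which is a corner of the feature.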

8.1.5 SCR: Smooth Contour Regression with Geometric Priors

Participants: Gaétan Bahl, Florent Lafarge.

In collaboration with Lionel Daniel (IRT Saint Exupéry).

Regression of smooth contours.

While object detection methods traditionally make use of pixel-level masks or bounding boxes, alternative representations such as polygons or active contours have recently emerged. Among them, methods based on the regression of Fourier or Chebyshev coefficients have shown high potential on freeform objects. Because they define object shapes as polar functions, however, these methods are limited to star-shaped domains. We address this issue with SCR, a method that captures resolution-free object contours as complex periodic functions. The method offers a good compromise between accuracy and compactness thanks to the design of efficient geometric shape priors (see Figure 5). We benchmark SCR on the popular COCO 2017 instance segmentation dataset and show its competitiveness against existing algorithms in the field. In addition, we design a compact version of our network, which we benchmark on embedded hardware with a wide range of power targets, achieving up to real-time performance. A preprint version of this work is available on arXiv 23.

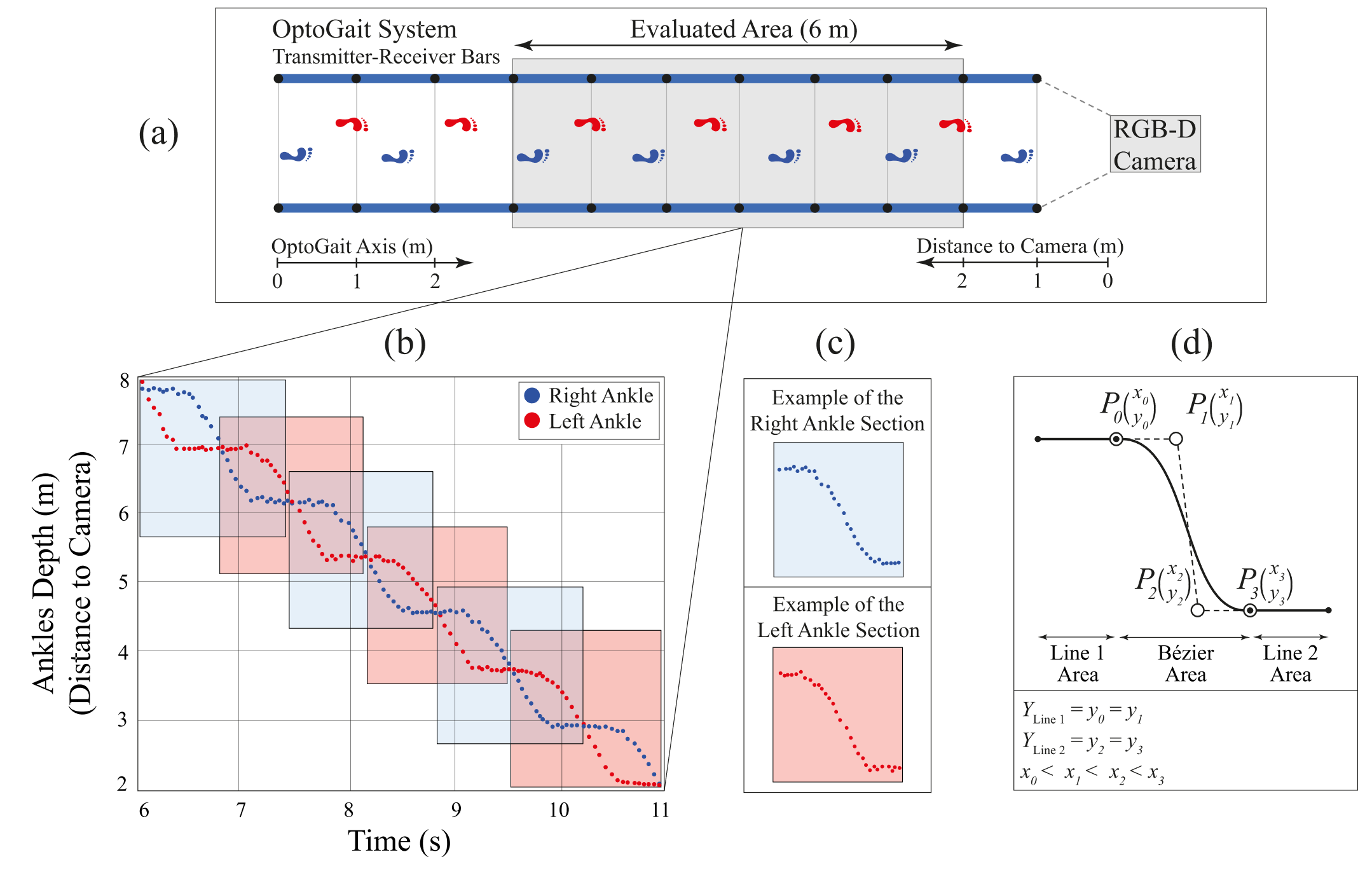
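Polar and complex-periodic contour representations can be contrasted as follows. A polar representation r(θ) yields exactly one boundary point per direction from the center, so it can only describe star-shaped domains. A complex periodic function z(t) = Σ_k c_k e^{2πikt}, with t in [0, 1), can trace any closed curve given enough terms. The sketch below evaluates such a truncated Fourier series. The coefficient values are illustrative and are not produced by SCR.

```python
import cmath
import math
from typing import Dict, List, Tuple


def contour(coeffs: Dict[int, complex], num_samples: int) -> List[Tuple[float, float]]:
    """Sample z(t) = sum_k c_k * exp(2*pi*i*k*t) at num_samples values of t in [0, 1)."""
    points = []
    for s in range(num_samples):
        t = s / num_samples
        z = sum(c * cmath.exp(2j * math.pi * k * t) for k, c in coeffs.items())
        points.append((z.real, z.imag))
    return points


if __name__ == "__main__":
    # k = 0 sets the contour's center and k = 1 a circle of radius |c_1|; other
    # frequencies (illustrative values) bend the circle into a more complex closed curve.
    circle = contour({0: 1 + 1j, 1: 2 + 0j}, 8)
    wobbly = contour({0: 0j, 1: 1 + 0j, -2: 0.4 + 0j, 3: 0.2j}, 64)
    print(circle[:2], len(wobbly))
```

The contour is described by a fixed, small set of complex coefficients and can be sampled at any density. This is what makes such a representation compact and resolution-free.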

8.1.6 A kinematic-geometric model based on ankles’ depth trajectory in frontal plane for gait analysis using a single RGB-D camera

Participants: Pierre Alliez.

In collaboration with Mehran Hatamzadeh and Laurent Busé (Aromath Inria project team), Frédéric Chorin and Raphael Zory (CHU Nice) and Jean-Dominique Favreau (EKINNOX).

Analysis of human gait.

The emergence of RGB-D cameras and the development of pose estimation algorithms offer opportunities in biomechanics. However, challenges remain when using them for gait analysis, including noise, which leads to misidentification of gait events and to inaccuracy. We therefore present a novel kinematic-geometric model for spatio-temporal gait analysis, based on the ankles' trajectory in the frontal plane and on distance-to-camera data (depth). Our approach consists of three main steps: identification of the gait pattern and its modeling via parameterized curves, development of a fitting algorithm, and computation of locomotive indices. The proposed fitting algorithm applies to the depth data of both ankles simultaneously, numerically minimizing geometric and biomechanical error functions. For validation, 15 subjects were asked to walk along the OptoGait walkway while both the OptoGait system and an RGB-D camera (Microsoft Azure Kinect) were recording. The spatio-temporal parameters of both feet were then computed using the OptoGait and the proposed model (see Figure 6). Validation results show that the proposed model yields good to excellent absolute statistical agreement. Our kinematic-geometric model offers several benefits: (1) it relies only on the ankles' depth trajectory, both for gait event extraction and for the calculation of spatio-temporal parameters; (2) it can be used with any kind of RGB-D camera, or even with 3D marker-based motion analysis systems in the absence of toe and heel markers; and (3) it enables improving the results by denoising and smoothing the ankles' depth trajectory. Hence, the proposed kinematic-geometric model facilitates the development of portable markerless systems for accurate gait analysis. This work was published in the Journal of Biomechanics 11.
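The parameterized-curve fitting itself is not detailed in this summary, so the following Python sketch uses a much cruder, hypothetical heuristic to show how spatio-temporal parameters can be read from a single ankle's depth trajectory. It assumes the subject walks toward the camera, so that the depth of a planted ankle stays roughly constant during stance and decreases during swing. The speed threshold and all function names are illustrative, and this is not the published model.

```python
from typing import List, Tuple


def _summarize(run: List[int], times: List[float], depth: List[float]) -> Tuple[float, float]:
    """Mid-time and mean depth of a run of sample indices."""
    mid_time = (times[run[0]] + times[run[-1]]) / 2
    mean_depth = sum(depth[i] for i in run) / len(run)
    return mid_time, mean_depth


def stance_plateaus(times: List[float], depth: List[float], max_speed: float) -> List[Tuple[float, float]]:
    """Detect runs of samples where the ankle is nearly static (assumed stance phases)."""
    plateaus: List[Tuple[float, float]] = []
    run: List[int] = []
    for i in range(1, len(depth)):
        speed = abs(depth[i] - depth[i - 1]) / (times[i] - times[i - 1])
        if speed < max_speed:
            if not run:
                run.append(i - 1)
            run.append(i)
        elif run:
            plateaus.append(_summarize(run, times, depth))
            run = []
    if run:
        plateaus.append(_summarize(run, times, depth))
    return plateaus


def strides(plateaus: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """(stride length, stride time) between consecutive stance phases of the same ankle.

    Assumes walking toward the camera, so depth decreases from one stance to the next.
    """
    return [(d0 - d1, t1 - t0) for (t0, d0), (t1, d1) in zip(plateaus, plateaus[1:])]
```

On noisy RGB-D data, such a finite-difference threshold would misfire, which is precisely why the published approach fits parameterized curves to both ankles jointly rather than thresholding raw samples.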

8.1.7 Deep learning architectures for onboard satellite image analysis

Participants: Gaétan Bahl.

This Ph.D. thesis was advised by Florent Lafarge.

The recent advances in high-resolution Earth observation satellites and the reduction in revisit times introduced by the creation of satellite constellations have led to the daily creation of large amounts of image data (hundreds of terabytes per day). Simultaneously, the popularization of deep learning techniques has enabled the development of architectures capable of extracting semantic content from images. While these algorithms usually require powerful hardware, low-power AI inference accelerators have recently been developed and have the potential to be used in the next generations of satellites, thus opening the possibility of onboard analysis of satellite imagery. By extracting the information of interest from satellite images directly onboard, a substantial reduction in bandwidth, storage and memory usage can be achieved. Current and future applications, such as disaster response, precision agriculture and climate monitoring, would benefit from lower processing latency and even real-time alerts. In this thesis, our goal is two-fold. On the one hand, we design efficient deep learning architectures that are able to run on low-power edge devices, such as satellites or drones, while retaining sufficient accuracy. On the other hand, we design our algorithms while keeping in mind the importance of a compact output that can be efficiently computed, stored, and transmitted to the ground or to other satellites within a constellation. First, by using depth-wise separable convolutions and convolutional recurrent neural networks, we design efficient semantic segmentation neural networks with a low number of parameters and low memory usage. We apply these architectures to cloud and forest segmentation in satellite images. We also specifically design an architecture for cloud segmentation on the FPGA of OPS-SAT, a satellite launched by ESA in 2019, and perform onboard experiments remotely. Second, we develop an instance segmentation architecture for the regression of smooth contours based on the Fourier coefficient representation, which allows detected object shapes to be stored and transmitted efficiently. We evaluate the performance of our method on a variety of low-power computing devices. Finally, we propose a road graph extraction architecture based on a combination of fully convolutional and graph neural networks. We show that our method is significantly faster than competing methods, while retaining good accuracy 20.
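The parameter savings of depth-wise separable convolutions follow from simple arithmetic, sketched below in Python. The layer sizes in the usage lines are illustrative and not taken from the thesis; biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k convolution mapping c_in to c_out channels (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depth-wise k x k convolution (one filter per input channel) plus a 1 x 1 point-wise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Usage: a 3 x 3 layer from 64 to 128 channels.
std = standard_conv_params(3, 64, 128)        # 73728 weights
sep = depthwise_separable_params(3, 64, 128)  # 576 + 8192 = 8768 weights
print(f"{std / sep:.1f}x fewer weights")      # prints "8.4x fewer weights"
```

The ratio approaches k * k when c_out is large, which is why 3 x 3 separable layers save close to an order of magnitude in weights and multiply-accumulate operations on edge hardware.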

8.2 Reconstruction

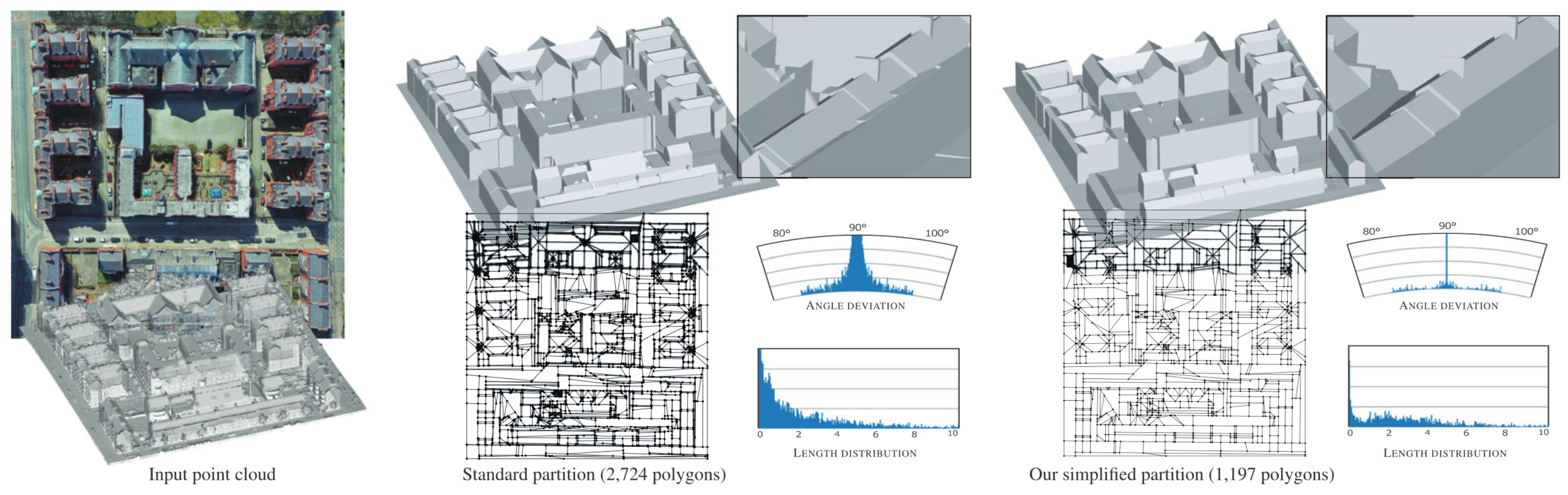

8.2.1 Simplification of 2D Polygonal Partitions via Point‐line Projective Duality, and Application to Urban Reconstruction

Participants: Florent Lafarge, Pierre Alliez.

In collaboration with Julien Vuillamy and André Lieutier (Dassault Systemes).

Simplification of a 2D polygonal partition.

We address the problem of simplifying two-dimensional polygonal partitions that exhibit strong regularities. Such partitions are relevant for reconstructing urban scenes in a concise way. Preserving long linear structures spanning several partition cells motivates a point-line projective duality approach in which points represent line intersections, and lines possibly carry multiple points. We propose a simplification algorithm that seeks a balance between the fidelity to the input partition, the enforcement of canonical relationships between lines (orthogonality or parallelism) and a low complexity output. Our methodology alternates continuous optimization by Riemannian gradient descent with combinatorial reduction, resulting in a progressive simplification scheme. Our experiments show that preserving canonical relationships helps gracefully degrade partitions of urban scenes, and yields more concise and regularity-preserving meshes than common mesh-based simplification approaches (see Figure 7). This work was published in the Computer Graphics Forum journal and presented at the Eurographics Symposium on Geometry Processing 14.
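The point-line duality at the heart of this representation can be made concrete with homogeneous coordinates. In the minimal Python sketch below (textbook projective geometry, not the authors' Riemannian optimization; all helper names are hypothetical), a point (x, y) is written (x, y, 1) and a line a*x + b*y + c = 0 is written (a, b, c). Joining two points and intersecting two lines are then the same cross product, and the canonical relationships the method enforces reduce to conditions on the line normals (a, b).

```python
import math
from typing import Tuple

Hom = Tuple[float, float, float]  # homogeneous point (x, y, 1) or line (a, b, c)


def cross(u: Hom, v: Hom) -> Hom:
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def line_through(p: Tuple[float, float], q: Tuple[float, float]) -> Hom:
    """Line a*x + b*y + c = 0 joining two points."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def intersection(l: Hom, m: Hom, eps: float = 1e-12) -> Tuple[float, float]:
    """Point where two lines meet: by duality, the same cross product."""
    x, y, w = cross(l, m)
    if abs(w) < eps:
        raise ValueError("parallel lines meet at infinity")
    return (x / w, y / w)


def _unit_normal(l: Hom) -> Tuple[float, float]:
    norm = math.hypot(l[0], l[1])
    return (l[0] / norm, l[1] / norm)


def are_orthogonal(l: Hom, m: Hom, tol: float = 1e-9) -> bool:
    (a1, b1), (a2, b2) = _unit_normal(l), _unit_normal(m)
    return abs(a1 * a2 + b1 * b2) < tol


def are_parallel(l: Hom, m: Hom, tol: float = 1e-9) -> bool:
    (a1, b1), (a2, b2) = _unit_normal(l), _unit_normal(m)
    return abs(a1 * b2 - a2 * b1) < tol
```

Because a vertex of the partition is just the intersection of two line variables, moving one line during optimization moves every vertex it carries, which is how long linear structures spanning several cells stay straight.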

8.2.2 ConSLAM: Periodically Collected Real-World Construction Dataset for SLAM and Progress Monitoring

Participants: Kacper Pluta, Pierre Alliez.

In collaboration with Maciej Trzeciak, Yasmin Fathy and Ioannis Brilakis (University of Cambridge), and Lucio Alcalde, Stanley Chee and Antony Bromley (Laing O'Rourke).

Hand-held scanners are increasingly being adopted in construction-site workflows. Yet, they suffer from accuracy problems that prevent their deployment for demanding use cases. In this work, we present a real-world dataset collected periodically on a construction site to measure the accuracy of the SLAM algorithms used by mobile scanners. The dataset contains time-synchronised and spatially registered images and LiDAR scans, inertial data and professional ground-truth scans. To the best of our knowledge, this is the first publicly available dataset that reflects the periodic need to scan construction sites for accurate progress monitoring with a hand-held scanner. This work was presented at a European Conference on Computer Vision (ECCV) workshop 18, 25.
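Since the dataset already ships time-synchronised streams, a consumer typically only needs to pair each image with the nearest LiDAR scan. The following minimal Python sketch shows one way to do this under an assumed maximum time offset; the function name and the tolerance are hypothetical and not part of the ConSLAM tooling.

```python
import bisect
from typing import List, Tuple


def associate_by_timestamp(
    camera_times: List[float], lidar_times: List[float], max_dt: float
) -> List[Tuple[int, int]]:
    """Pair each camera frame with the nearest LiDAR scan within max_dt seconds.

    `lidar_times` must be sorted in increasing order.
    Returns (camera index, lidar index) pairs; frames without a close scan are skipped.
    """
    pairs: List[Tuple[int, int]] = []
    for i, t in enumerate(camera_times):
        k = bisect.bisect_left(lidar_times, t)
        best = None
        # The nearest scan is either just before or just after t.
        for j in (k - 1, k):
            if 0 <= j < len(lidar_times):
                dt = abs(lidar_times[j] - t)
                if dt <= max_dt and (best is None or dt < best[1]):
                    best = (j, dt)
        if best is not None:
            pairs.append((i, best[0]))
    return pairs
```

Binary search keeps the association at O(n log m) for n frames and m scans, which matters for long periodic acquisitions.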

8.2.3 Wearable Cooperative SLAM System for Real-time Indoor Localization Under Challenging Conditions

Participants: Pierre Alliez, Fernando Ireta Munoz.

This research was funded by the ANR/DGA MALIN challenge. In collaboration with Viachaslau Kachurka, David Roussel, Fabien Bonardi, Jean-Yves Didier, Hicham Hadj-Abdelkader and Samia Bouchafa (IBISC lab, Evry), and Bastien Rault and Maxime Robin (Innodura TB).

Wearable system for indoor localization.

Real-time, globally consistent GPS tracking is critical for accurate localization and is crucial for applications such as autonomous navigation or multi-robot mapping. However, under challenging environmental conditions such as indoor/outdoor transitions, GPS signals are only partially available or not consistent over time. In this project, we present a real-time tracking system for continuously locating emergency response agents in challenging conditions. A cooperative localization method based on Laser-Visual-Inertial (LVI) and GPS sensors is achieved by communicating optimization events between a LiDAR-Inertial SLAM (LI-SLAM) and a Visual-Inertial SLAM (VI-SLAM) that operate simultaneously. Pose estimation assisted by multiple SLAM approaches provides the GPS localization of the agent when a stand-alone GPS fails. The system was tested under the terms of the MALIN Challenge, which aims to globally localize agents across outdoor and indoor environments under challenging conditions (such as smoke-filled rooms, stairs, indoor/outdoor transitions, repetitive patterns and extreme lighting changes), where a stand-alone SLAM is known to be insufficient for maintaining localization. The system achieved an Absolute Trajectory Error of 0.48%, with a pose update rate between 15 and 20 Hz. Furthermore, the system is able to build a globally consistent 3D LiDAR map that is post-processed to create 3D reconstructions at different levels of detail. This work was published in the IEEE Sensors journal 12.
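The Absolute Trajectory Error reported above can be sketched as follows. The summary does not state how the 0.48% figure is normalized, so dividing the RMSE by the ground-truth path length is an assumption here. The sketch also assumes the estimated and ground-truth poses are already time-associated and expressed in the same frame (real evaluations first align the trajectories, for example with a rigid or similarity transform).

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def ate_rmse(estimated: List[Vec3], ground_truth: List[Vec3]) -> float:
    """Root-mean-square position error over associated, already aligned poses."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty trajectories of equal length")
    squared = [math.dist(e, g) ** 2 for e, g in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


def path_length(trajectory: List[Vec3]) -> float:
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def ate_percent(estimated: List[Vec3], ground_truth: List[Vec3]) -> float:
    """ATE as a percentage of the ground-truth path length (assumed normalization)."""
    return 100.0 * ate_rmse(estimated, ground_truth) / path_length(ground_truth)
```

For example, a constant 0.1 m error along a 20 m path gives 0.5%, which is the order of magnitude reported for the system.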

8.3 Approximation

8.3.1 Alpha wrapping with an offset

Participants: Cédric Portaneri, Pierre Alliez.

In collaboration with Mael Rouxel-Labbe (Geometry Factory), David Cohen-Steiner (Inria DataShape), and Michael Hemmer (formerly at Google X).

Wrapping a 3D model with an offset.

Given an input 3D geometry such as a triangle soup or a point set, we address the problem of generating a watertight and orientable surface triangle mesh that strictly encloses the input. The output mesh is obtained by greedily refining and carving a 3D Delaunay triangulation on an offset surface of the input, where carving is performed with empty balls of radius alpha. The proposed algorithm is controlled via two user-defined parameters: alpha and offset. Alpha controls the size of cavities or holes that cannot be traversed during carving, while offset controls the distance between the vertices of the output mesh and the input (see Figure 9). Our algorithm is guaranteed to terminate and to yield a valid and strictly enclosing mesh, even for defect-laden inputs. Genericity is achieved using an abstract interface probing the input, enabling any geometry to be used, provided a few basic geometric queries can be answered. We benchmark the algorithm on large public datasets such as Thingi10k, and compare it to state-of-the-art approaches in terms of robustness, approximation, output complexity, speed, and peak memory consumption. This work was presented at the ACM SIGGRAPH Conference 13 and is available as a software component of the open-source CGAL library.
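Since alpha and offset are absolute lengths, a practical convention, also found in CGAL's alpha wrapping example programs, is to derive them from the bounding-box diagonal of the input so that they scale with the model. The Python sketch below is only a helper for choosing the two parameters, not the wrapping algorithm; the default divisors 20 and 600 are illustrative assumptions rather than recommendations from the paper.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def bbox_diagonal(points: Iterable[Vec3]) -> float:
    """Length of the diagonal of the axis-aligned bounding box."""
    pts = list(points)
    lo = [min(p[i] for p in pts) for i in range(3)]
    hi = [max(p[i] for p in pts) for i in range(3)]
    return math.dist(lo, hi)


def wrap_parameters(
    points: Iterable[Vec3], relative_alpha: float = 20.0, relative_offset: float = 600.0
) -> Tuple[float, float]:
    """Absolute (alpha, offset) obtained by dividing the bounding-box diagonal."""
    diag = bbox_diagonal(points)
    return diag / relative_alpha, diag / relative_offset
```

Larger divisors give smaller alpha and offset, hence a tighter wrap that follows more details and can enter smaller cavities, at the cost of more output triangles and longer running times.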

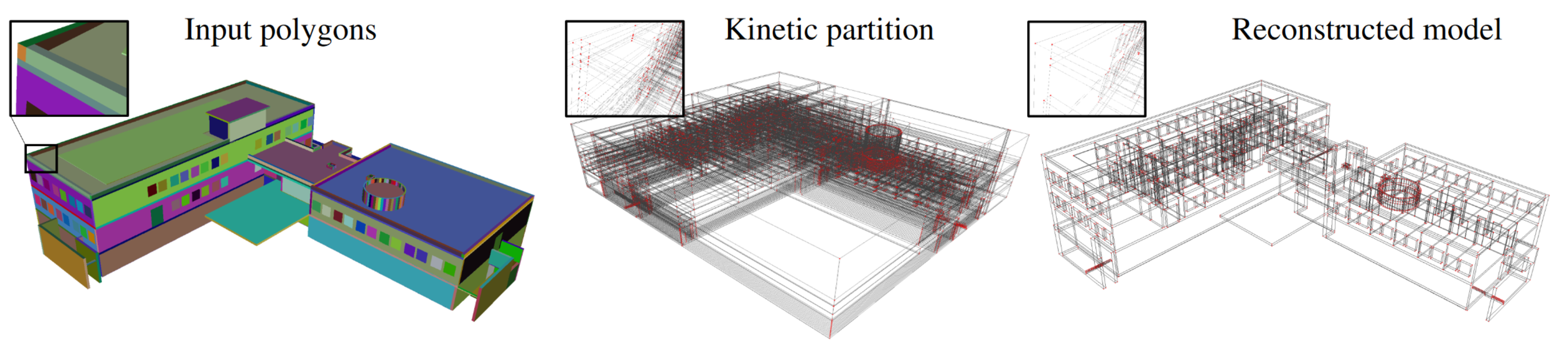

8.3.2 Repairing geometric errors in 3D urban models with kinetic data structures

Participants: Mulin Yu, Florent Lafarge.

In collaboration with Sven Oesau and Bruno Hilaire (CSTB).


Urban 3D models created either interactively by human operators or automatically with reconstruction algorithms often contain geometric and semantic errors. Correcting them in an automated manner is an important scientific challenge. Prior work, which traditionally relies on local analysis and heuristic-based geometric operations on mesh data structures, is typically tailor-made for specific 3D formats and urban objects. We propose a more general method to process different types of urban models without tedious parameter tuning. The key idea lies in the construction of a kinetic data structure that decomposes the 3D space into polyhedra by extending the facets of the imperfect input model. Such a data structure allows us to rebuild all the relations between the facets in an efficient and robust manner. Once built, the cells of the polyhedral partition are regrouped by semantic classes to reconstruct the corrected output model. We demonstrate the robustness and efficiency of our algorithm on a variety of real-world defect-laden models and show its competitiveness with respect to traditional mesh repairing techniques on both Building Information Modeling (BIM) and Geographic Information Systems (GIS) data. This work was published in the ISPRS Journal of Photogrammetry and Remote Sensing 15.
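The regrouping step can be illustrated with a union-find structure. This minimal Python sketch assumes that each polyhedral cell has already received a semantic label (for instance inherited from the input facets that bound it) and that cell adjacency is known from the partition; the labels "wall", "roof" and "outside" are hypothetical, and the paper's actual labeling procedure may differ.

```python
from typing import Dict, List, Sequence, Tuple


def group_cells_by_label(labels: Sequence[str], adjacency: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Merge adjacent polyhedral cells that share a semantic label (union-find)."""
    parent = list(range(len(labels)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for a, b in adjacency:
        if labels[a] == labels[b]:
            root_a, root_b = find(a), find(b)
            if root_a != root_b:
                parent[root_b] = root_a

    groups: Dict[int, List[int]] = {}
    for i in range(len(labels)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Usage: cells 0, 1 and 4 are connected wall cells; cell 2 is roof, cell 3 is outside.
print(group_cells_by_label(["wall", "wall", "roof", "outside", "wall"], [(0, 1), (1, 2), (2, 3), (1, 4)]))
# prints [[0, 1, 4], [2], [3]]
```

The corrected model's surface would then consist of the partition faces separating cells of different groups; this sketch stops at the grouping.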

8.3.3 Geometric simplification for radiative thermal simulation of satellites

Participants: Vincent Vadez.

This Ph.D. thesis was co-advised by Pierre Alliez and François Brunetti.

The life cycle of a satellite includes the launch phase, positioning on the desired orbit, various maneuvers (deployment of solar panels and safety position), and finally placement on the graveyard orbit. The satellite orbits in a hostile environment, exposed to thermal variations of very large amplitude as it alternates between sun exposure and eclipse phases. The survival of the satellite depends on the temperature of its components, whose variation must be kept within safety intervals. In this context, the thermal simulation of the satellite during its design is crucial to anticipate the reality of its operation. Radiative thermal simulation is essential for anticipating the generation of energy from solar and albedo radiation, and for regulating the temperatures of on-board equipment. Ideal operation consists in providing appropriate cooling for components exposed to radiation and, conversely, heating unexposed components. As an order of magnitude, the external temperature ranges from -150 to +150 °C, while the internal electronic equipment has a safe range between -50 and +50 °C, with a safety margin of 10 °C. In the eclipse phase, where radiation is significantly lower, heating is provided by the energy accumulated during the exposed phase, combined with heat pipes for thermal regulation. In this thesis, the objective is to advance knowledge on radiative thermal simulation methods for satellites. To this end, two approaches are considered. The first approach consists in establishing a reference calculation of a quantity governing radiative thermal simulation: view factors. Because computation time is constrained, this method relies on a hierarchical data structure enabling progressive computation of view factors, in order to offer a satisfactory tradeoff between computation time and desired accuracy. For the sake of accuracy, a prediction step is added to ensure better convergence towards the reference value. The second approach, also motivated by time constraints, aims at reducing the geometric model of a mechanical part or a spacecraft while remaining faithful to the numerical simulation. To make the decimation physics-informed, a preprocessing step relying on a sensitivity analysis is carried out. To better preserve the physical simulation, the geometric cost of a simplification operator is coupled with a factor derived from the simulation deviation between the reference model and the reduced model 21.
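For readers unfamiliar with view factors, the quantity can be sketched with the standard differential-area approximation F_ij = cos(theta_i) cos(theta_j) A_j / (pi r^2), valid when both patches are small compared to their distance. The Python sketch below assumes unit normals and ignores occlusion; it does not reproduce the hierarchical, progressive computation or the prediction step of the thesis.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def view_factor_small_patches(c_i: Vec3, n_i: Vec3, c_j: Vec3, n_j: Vec3, area_j: float) -> float:
    """Approximate view factor from patch i to patch j (unit normals, no occlusion test)."""
    r = (c_j[0] - c_i[0], c_j[1] - c_i[1], c_j[2] - c_i[2])
    dist2 = _dot(r, r)
    dist = math.sqrt(dist2)
    cos_i = _dot(n_i, r) / dist
    cos_j = -_dot(n_j, r) / dist
    if cos_i <= 0.0 or cos_j <= 0.0:
        return 0.0  # the patches do not face each other
    return cos_i * cos_j * area_j / (math.pi * dist2)
```

The formula satisfies reciprocity, A_i F_ij = A_j F_ji, which is a cheap sanity check for any view-factor implementation. Its cost is quadratic in the number of patches, which motivates hierarchical schemes and, in the second approach, geometric decimation.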

8.3.4 Reconstructing and Repairing Urban Models with Kinetic Data Structures

Participants: Mulin Yu.

This Ph.D. thesis was advised by Florent Lafarge.

Compact and accurate digital 3D models of buildings are commonly used by practitioners for the visualization of existing or imaginary environments, for physical simulations or for the fabrication of urban objects. Generating such ready-to-use models is, however, a difficult problem. When created by designers, 3D models usually contain geometric errors whose automatic correction is a scientific challenge. When created from data measurements, typically laser scans or multiview images, the accuracy and complexity of the models produced by existing reconstruction algorithms often do not meet the requirements of practitioners. In this thesis, we address this problem by proposing two algorithms: one for repairing the geometric errors contained in urban-specific formats of 3D models, and one for reconstructing compact and accurate models from input point clouds generated by laser scanning or multiview stereo imagery. The key component of these algorithms is a space-partitioning data structure able to decompose the space into polyhedral cells in a natural and efficient manner. This data structure is used both to correct geometric errors by reassembling the facets of defect-laden 3D models, and to reconstruct concise 3D models from point clouds with a quality that approaches that of models generated with interactive Computer-Aided Design tools. The first contribution of this thesis is an algorithm to repair different types of urban models. Prior work, which traditionally relies on local analysis and heuristic-based geometric operations on mesh data structures, is typically tailor-made for specific 3D formats and urban objects. We propose a more general method to process different types of urban models without tedious parameter tuning. The key idea lies in the construction of a kinetic data structure that decomposes the 3D space into polyhedra by extending the facets of the imperfect input model. Such a data structure allows us to rebuild all the relations between the facets in an efficient and robust manner. Once built, the cells of the polyhedral partition are regrouped by semantic classes to reconstruct the corrected output model. We demonstrate the robustness and efficiency of the algorithm on a variety of real-world defect-laden models and show its competitiveness with respect to traditional mesh repairing techniques on both Building Information Modeling (BIM) and Geographic Information Systems (GIS) data. The second contribution is a reconstruction algorithm inspired by the Kinetic Shape Reconstruction method, which improves the latter in several ways. In particular, we propose a data fitting technique for detecting planar primitives from unorganized 3D point clouds. Departing from an initial configuration, the technique refines both the continuous plane parameters and the discrete assignment of input points to them by seeking high fidelity, high simplicity and high completeness. The solution is found by an exploration mechanism guided by a multi-objective energy function. The transitions within the large solution space are handled by five geometric operators that create, remove and modify primitives. We demonstrate its potential not only on buildings but on a variety of scenes, from organic shapes to man-made objects 22.
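To make the trade-off between fidelity, simplicity and completeness concrete, here is a minimal Python sketch of a configuration energy that an exploration mechanism could compare before and after applying an operator. The three terms, their normalization and the weights are hypothetical choices for illustration; the thesis's actual energy and its five operators are not reproduced.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (nx, ny, nz, d) with a unit normal: n.p + d = 0


def configuration_energy(
    points: Sequence[Vec3],
    planes: Sequence[Plane],
    assignment: Sequence[Optional[int]],
    weights: Tuple[float, float, float] = (1.0, 0.1, 1.0),
) -> float:
    """Hypothetical multi-objective energy (lower is better)."""
    w_fidelity, w_simplicity, w_completeness = weights
    residuals = [
        abs(planes[k][0] * p[0] + planes[k][1] * p[1] + planes[k][2] * p[2] + planes[k][3])
        for p, k in zip(points, assignment)
        if k is not None
    ]
    fidelity = sum(residuals) / len(residuals) if residuals else 0.0  # mean point-to-plane distance
    simplicity = float(len(planes))                                    # number of primitives
    completeness = 1.0 - len(residuals) / len(points)                  # fraction of unassigned points
    return w_fidelity * fidelity + w_simplicity * simplicity + w_completeness * completeness
```

With these weights, creating a second plane for an unassigned point is accepted when the completeness gain (0.25 for one of four points) outweighs the simplicity penalty (0.1 per plane); changing the weights shifts the balance toward more or fewer primitives.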

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

X Development LLC (formerly Google X)

Participants: Cédric Portaneri, Pierre Alliez.

We explored an adaptive framework to repair and generate levels of detail from defect-laden 3D measurement data. Repair here means converting raw 3D point sets or triangle soups into watertight solids, which are suitable for collision detection and situational awareness of robots. In this context, adaptive refers to the capability to select not only different levels of detail, but also the type of representation best suited to the environment of the scene and the task of the robot. This project focused on geometry processing for collision detection between robots and mechanical parts or other robots in motion.
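Whether a triangle mesh is watertight can be checked combinatorially before using it for collision queries. As a minimal sketch (our own simplification, not the project's repair pipeline), the Python function below requires every directed edge to appear exactly once and its reverse to appear exactly once, which means every edge is shared by exactly two consistently oriented triangles. Vertex-manifoldness and self-intersections are not checked.

```python
from collections import Counter
from typing import Iterable, Tuple

Triangle = Tuple[int, int, int]  # vertex indices in counter-clockwise order


def is_closed_and_consistently_oriented(triangles: Iterable[Triangle]) -> bool:
    """True if the mesh has no boundary edge and a consistent orientation."""
    directed = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            directed[(u, v)] += 1
    return all(
        count == 1 and directed.get((v, u), 0) == 1
        for (u, v), count in directed.items()
    )


# Usage: a consistently oriented tetrahedron passes; removing a face opens a hole.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_closed_and_consistently_oriented(tetrahedron))      # prints True
print(is_closed_and_consistently_oriented(tetrahedron[:3]))  # prints False
```

A raw triangle soup from measurement data typically fails this test because of holes, duplicated faces or flipped normals, which is what a repair step must fix.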

Dorea technology

Participants: Vincent Vadez, Pierre Alliez.

In collaboration with the SME Dorea Technology, our objective was to advance knowledge on the radiative thermal simulation of satellites via geometric model reduction. The survival of a satellite depends on the temperature of its components, whose variation must be kept within safety intervals. In this context, the thermal simulation of the satellite during its design is crucial to anticipate the reality of its operation. This CIFRE project started in August 2018, for a total duration of 3.5 years.

Luxcarta

Participants: Johann Lussange, Florent Lafarge.

The goal of this collaboration is to develop efficient, robust and scalable methods for extracting geometric shapes from the latest generation of satellite images (at 0.3 m resolution). Besides satellite images, input data will also include classification maps that identify the location of buildings, and Digital Surface Models that provide a rough pixel-based estimate of the elevation of urban objects. The geometric shapes will first be restricted to planes, which typically describe the piecewise-planar geometry of buildings well. The goal will then be to detect each roof section or facade component of a building and to identify it with a plane in 3D space. This DGA Rapid project started in September 2020, for a total duration of 2 years.
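As a minimal sketch of the plane-identification step, assume that the Digital Surface Model pixels of one roof section have already been isolated (for instance with the classification map). A roof plane can then be fitted as a height field z = a*x + b*y + c by least squares. The Python code below solves the 3x3 normal equations with Cramer's rule; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, elevation) of one DSM pixel


def _det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_roof_plane(samples: Sequence[Sample]) -> Tuple[float, float, float]:
    """Least-squares (a, b, c) of z = a*x + b*y + c via the normal equations."""
    sxx = sum(x * x for x, _, _ in samples)
    sxy = sum(x * y for x, y, _ in samples)
    syy = sum(y * y for _, y, _ in samples)
    sx = sum(x for x, _, _ in samples)
    sy = sum(y for _, y, _ in samples)
    n = float(len(samples))
    sxz = sum(x * z for x, _, z in samples)
    syz = sum(y * z for _, y, z in samples)
    sz = sum(z for _, _, z in samples)
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(m)
    if abs(det) < 1e-12:
        raise ValueError("degenerate samples (fewer than 3 non-collinear pixels)")
    solution = []
    for col in range(3):
        # Cramer's rule: replace one column by the right-hand side.
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(_det3(mc) / det)
    return solution[0], solution[1], solution[2]
```

A height-field parameterization cannot represent vertical facades, and plain least squares is sensitive to DSM outliers; a robust estimator such as RANSAC and a general plane parameterization would be needed for facade components.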

CNES - Airbus

Participants: Marion Boyer, Florent Lafarge.

The goal of this collaboration is to design an automatic pipeline for the 3D modeling of urban scenes under the CityGML LOD2 formalism, using the new generation of stereoscopic satellite images, in particular the 30 cm resolution images offered by the Pléiades Néo satellites. Traditional methods usually start with a semantic classification step followed by a 3D reconstruction of the objects composing the urban scene. Too often in such approaches, inaccuracies and errors from the classification phase are propagated and amplified throughout the reconstruction process without any possible subsequent correction. In contrast, our main idea is to extract semantic information about the urban scene and to reconstruct the geometry of objects simultaneously. This project started in October 2022, for a total duration of 3 years.

CSTB

Participants: Mulin Yu, Florent Lafarge.

This collaboration took the form of a research contract. The project investigated the design of as-automatic-as-possible algorithms for repairing and converting Building Information Modeling (BIM) models of buildings in different urban-specific CAD formats using combinatorial maps. This project started in November 2019, for a total duration of 3 years.
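For readers unfamiliar with combinatorial maps, here is a minimal Python sketch of a 2-map, written for illustration only (the contract's actual implementation is not described here). Each edge side of each face is a dart; beta1 maps a dart to the next dart around its face, and beta2 maps it to the dart of the adjacent face sharing the same edge. Faces are the cycles of beta1, and a closed surface requires beta2 to pair every dart with a distinct dart.

```python
from typing import Dict


def count_faces(beta1: Dict[int, int]) -> int:
    """Number of faces = number of cycles of the beta1 permutation."""
    seen = set()
    faces = 0
    for start in beta1:
        if start in seen:
            continue
        faces += 1
        d = start
        while d not in seen:
            seen.add(d)
            d = beta1[d]
    return faces


def is_valid_closed_2map(beta1: Dict[int, int], beta2: Dict[int, int]) -> bool:
    """beta1 must permute the darts; beta2 must be a fixed-point-free involution."""
    darts = set(beta1)
    if set(beta1.values()) != darts or set(beta2) != darts:
        return False
    return all(e != d and beta2.get(e) == d for d, e in beta2.items())


# Usage: two triangles glued along all three edges (a "pillow").
beta1 = {0: 1, 1: 2, 2: 0, 3: 4, 4: 5, 5: 3}
beta2 = {0: 3, 3: 0, 1: 5, 5: 1, 2: 4, 4: 2}
print(count_faces(beta1), is_valid_closed_2map(beta1, beta2))  # prints 2 True
```

Because adjacency is stored explicitly on darts rather than recomputed from coordinates, such structures can encode the topology of a BIM model independently of the geometric errors it contains.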

IRT Saint-Exupéry

Participants: Gaétan Bahl, Florent Lafarge.

This project (Ph.D. thesis) investigated low-power deep learning architectures for detecting, localizing and characterizing changes in temporal satellite images. These architectures are designed to be exploited on-board satellites with low computational resources. The project started in March 2019, for a total duration of 3 years.

Samp AI

Participants: Pierre Alliez, Armand Zampieri.

This project (CIFRE Ph.D. thesis) investigates algorithms for progressive, random-access compression of 3D point clouds acquired on industrial sites.

The context is as follows. Laser scans of industrial facilities contain massive amounts of 3D points, which are very reliable visual representations of these facilities. These points carry positions, color attributes and other metadata about object surfaces. Samp turns these 3D points into intelligent digital twins that can be searched and interactively visualized on heterogeneous clients, via streamed services on the cloud. The 3D point clouds are first structured and enriched with additional attributes such as semantic labels or hierarchical group identifiers. Efficient streaming motivates progressive compression of these 3D point clouds, while local search or visualization queries call for random-access capabilities. Given an enriched 3D point cloud as input, the objective is to generate as output a compressed version with the following properties: (1) Random-accessible compressed (RAC) file format: it provides random access (not just sequential access) to the decompressed content, so that each compressed chunk can be decompressed independently, depending on the area in focus on the client side. (2) Progressive compression: the enriched point cloud is converted into a stream of refinements, with an optimized rate-distortion tradeoff allowing early access to a faithful version of the content during streaming. (3) Structure preservation: all structural and semantic information is preserved during compression, allowing structure-aware queries on the client. (4) Lossless at the end: the original data can be perfectly reconstructed from the compressed data once streaming is completed.
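Properties (1) and (4) can be illustrated with Python's standard zlib module. In this minimal sketch, the point cloud is assumed to be already split into spatial chunks upstream; each chunk is compressed independently and an index of (offset, size) pairs allows any chunk to be decoded without touching the others. zlib is a general-purpose codec standing in for a point-cloud-specific one, and progressive refinement (property 2) is not covered; all function names are hypothetical.

```python
import struct
import zlib
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def pack_points(points: List[Vec3]) -> bytes:
    """Serialize points as little-endian float32 triples."""
    return b"".join(struct.pack("<3f", *p) for p in points)


def compress_chunks(chunks: List[bytes]) -> Tuple[bytes, List[Tuple[int, int]]]:
    """Compress each chunk independently; the index stores (offset, size) per chunk."""
    blob = bytearray()
    index: List[Tuple[int, int]] = []
    for chunk in chunks:
        compressed = zlib.compress(chunk, 9)
        index.append((len(blob), len(compressed)))
        blob += compressed
    return bytes(blob), index


def decompress_chunk(blob: bytes, index: List[Tuple[int, int]], i: int) -> bytes:
    """Random access: decode chunk i only (lossless round trip)."""
    offset, size = index[i]
    return zlib.decompress(blob[offset:offset + size])
```

Independent chunks trade some compression ratio (no context is shared across chunks) for random access; chunk size is therefore a tuning knob between rate and query granularity.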

Geometry Factory

Participants: Pierre Alliez, Daniel Zint.

In this collaborative project, with a postdoctoral fellowship funded by the French recovery plan (plan de relance), we explored a novel algorithm for computing offsets of surface triangle meshes, with guarantees on topology and geometric error bounds. Reliable offsets are becoming indispensable for automated design exploration approaches that loop between simulation and shape editing. We are currently benchmarking the algorithm on industrial use cases.
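The offset of a shape at distance delta is the sublevel set of its unsigned distance function. As a minimal 2D analogue (a polyline instead of a triangle mesh, and without any of the topological or error guarantees of the project's algorithm), the Python sketch below computes point-to-polyline distances and tests membership in the offset region. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from p to the closed segment [a, b]."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    len2 = dx * dx + dy * dy
    if len2 == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Parameter of the orthogonal projection, clamped to the segment.
    t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / len2))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def distance_to_polyline(p: Point, vertices: Sequence[Point]) -> float:
    return min(point_segment_distance(p, a, b) for a, b in zip(vertices, vertices[1:]))


def in_offset(p: Point, vertices: Sequence[Point], delta: float) -> bool:
    """p belongs to the offset region of radius delta."""
    return distance_to_polyline(p, vertices) <= delta
```

The 3D counterpart replaces the segment distance with a point-to-triangle distance; the hard part, which this sketch does not address, is extracting a mesh of the delta level set with guaranteed topology and bounded error.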

AI Verse

Participants: Pierre Alliez.

We are currently pursuing our collaboration with AI Verse on statistics for improving sampling quality, applied to the parameters of a generative model for 3D scenes. A postdoctoral fellowship is funded for three years (co-advised with Guillaume Charpiat from Inria Saclay and AI Verse), but recruitment is pending. The full research topic is described next.

The AI Verse technology is designed to create infinitely random and semantically consistent 3D scenes. This creation is fast, taking less than 4 seconds per labeled image. From these 3D scenes, the system is able to build high-quality synthetic images that come with rich labels which, unlike manually annotated labels, are unbiased. As with real data, no metric exists to evaluate how well a synthetic dataset performs for training a neural network. We thus tend to favor the photorealism of the images, but this criterion is far from being the best. The current technology provides a means to control a rich list of additional parameters (quality of lighting, trajectory and intrinsic parameters of the virtual camera, selection and placement of assets, degrees of occlusion of the objects, choice of materials, etc.). Since the generation engine can modify all of these parameters at will to generate many samples, we will explore optimization methods for improving the sampling quality. Most likely, a set of samples generated randomly by the generative model does not cover the whole space of interesting situations well, because of unsuitable sampling laws or sampling realization issues in high dimensions. The main question is how to improve the quality of this generated dataset, which one would like to be close in some sense to a given target dataset (consisting of examples of images that one would like to generate). For this, statistical analyses of these two datasets and of their differences are required, in order to spot possible issues such as strongly under-represented areas of the target domain. Sampling laws can then be adjusted accordingly, possibly by optimizing their hyper-parameters, if any.
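A very simple instance of such a statistical comparison is a per-parameter histogram ratio. The Python sketch below assumes a single scalar scene parameter normalized to [0, 1] (for example a hypothetical lighting intensity) that can be measured on both the generated and the target images, and flags bins where the target density exceeds the generated density by a chosen factor. The threshold and names are illustrative; the real analysis would be multivariate.

```python
from collections import Counter
from typing import List, Sequence


def bin_index(value: float, lo: float, hi: float, bins: int) -> int:
    """Histogram bin of a value in [lo, hi], clamped to the valid range."""
    b = int((value - lo) / (hi - lo) * bins)
    return min(max(b, 0), bins - 1)


def under_represented_bins(
    generated: Sequence[float],
    target: Sequence[float],
    lo: float,
    hi: float,
    bins: int,
    ratio_threshold: float = 3.0,
) -> List[int]:
    """Bins where the target frequency exceeds the generated frequency by at least ratio_threshold."""
    g = Counter(bin_index(v, lo, hi, bins) for v in generated)
    t = Counter(bin_index(v, lo, hi, bins) for v in target)
    flagged: List[int] = []
    for b in range(bins):
        p_target = t[b] / len(target)
        p_generated = g[b] / len(generated)
        if p_target > 0 and (p_generated == 0 or p_target / p_generated >= ratio_threshold):
            flagged.append(b)
    return flagged
```

The sampling law of the generator could then be reweighted toward the flagged bins, for instance in proportion to the estimated density ratio; histogram ratios degrade quickly in high dimensions, which is one reason the project calls for more elaborate statistical tools.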

Naval group

Participants: Pierre Alliez.

This project is conducted in collaboration with the Acentauri project-team. It has started, but we still need to recruit a postdoctoral fellow. The context is that of the factory of the future for Naval Group, for submarines and surface ships. As input, we have a digital model (e.g., a frigate), the equipment assembly schedule and measurement data (images or Lidar). We wish to monitor the assembly site to compare the "as-designed" model with the "as-built" one. The main problem is the need for coordination on construction sites for decision making and planning optimization. We wish to track the progress of a real project and check its conformity with the help of a digital twin. Currently, since verification requires being on site, rounds of visits are needed to validate the progress as well as the equipment. These rounds are time-consuming, not to mention the constraints of the site, such as the temporary absence of electricity or the numerous pieces of temporary assembly and safety equipment. The objective of the project is to automate the monitoring, with sensor intelligence to validate the work. Fixed sensors (e.g., cameras or Lidar) or mobile sensors (e.g., drones) will be used, together with smartphones/tablets carried by operators on site.
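A basic as-designed versus as-built check can be sketched for a planar element such as a bulkhead. The Python code below assumes the scan is already registered to the digital model and that the scan points attributed to the element are known; the tolerance (in meters), the minimum conforming fraction and the status strings are hypothetical choices, not project specifications.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (nx, ny, nz, d) with a unit normal


def conforming_fraction(points: Sequence[Vec3], plane: Plane, tolerance: float) -> float:
    """Fraction of scan points lying within `tolerance` of the as-designed plane."""
    nx, ny, nz, d = plane
    within = sum(1 for x, y, z in points if abs(nx * x + ny * y + nz * z + d) <= tolerance)
    return within / len(points)


def element_status(
    points: Sequence[Vec3], plane: Plane, tolerance: float = 0.02, min_fraction: float = 0.8
) -> str:
    if not points:
        return "not observed"  # e.g. occluded, or not yet installed
    if conforming_fraction(points, plane, tolerance) >= min_fraction:
        return "conforming"
    return "deviating"
```

Distinguishing "not observed" from "deviating" matters on a cluttered site, where temporary equipment often hides elements; combining fixed and mobile sensors is one way to reduce the number of unobserved elements.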

10 Partnerships and cooperations

10.1 International research visitors

10.1.1 Visits of international scientists

Other international visits to the team

Julian Stahl

- Status: Master intern

- Institution of origin: University of Erlangen

- Country: Germany

- Dates: September-October

- Context of the visit: Google Summer of Code student

- Mobility program/type of mobility: Funded by the team

Andriamahenina Ramanantoanina

- Status: PhD student

- Institution of origin: University of Lugano

- Country: Switzerland

- Dates: October-December

- Context of the visit: PhD student from the EU GRAPES project

- Mobility program/type of mobility: European ITN network

10.2 European initiatives

10.2.1 H2020 projects

BIM2TWIN

BIM2TWIN project on cordis.europa.eu

- Title: BIM2TWIN: Optimal Construction Management & Production Control

- Duration: From November 1, 2020 to April 30, 2024

- Partners:

  - INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France
  - SPADA CONSTRUCTION (SPADA CONSTRUCTION), France
  - AARHUS UNIVERSITET (AU), Denmark
  - FIRA GROUP OY, Finland
  - INTSITE LTD (INTSITE), Israel
  - THE CHANCELLOR MASTERS AND SCHOLARS OF THE UNIVERSITY OF CAMBRIDGE, United Kingdom
  - ORANGE SA (Orange), France
  - UNISMART - FONDAZIONE UNIVERSITA DEGLI STUDI DI PADOVA (UNISMART), Italy
  - FUNDACION TECNALIA RESEARCH and INNOVATION (TECNALIA), Spain
  - TECHNISCHE UNIVERSITAET MUENCHEN (TUM), Germany
  - IDP INGENIERIA Y ARQUITECTURA IBERIA SL (IDP), Spain
  - SITEDRIVE OY, Finland
  - UNIVERSITA DEGLI STUDI DI PADOVA (UNIPD), Italy
  - FIRA OY, Finland
  - RUHR-UNIVERSITAET BOCHUM (RUHR-UNIVERSITAET BOCHUM), Germany
  - CENTRE SCIENTIFIQUE ET TECHNIQUE DU BATIMENT (CSTB), France
  - TECHNION - ISRAEL INSTITUTE OF TECHNOLOGY, Israel
  - UNIVERSITA POLITECNICA DELLE MARCHE (UNIVPM), Italy
  - ACCIONA CONSTRUCCION SA (ACCIONA), Spain
  - SIEMENS AKTIENGESELLSCHAFT, Germany

- Inria contact: Pierre Alliez

- Coordinator: Bruno Fies (CSTB)

- Summary:

BIM2TWIN aims to build a Digital Building Twin (DBT) platform for construction management that implements lean principles to reduce operational waste of all kinds, shortening schedules, reducing costs, enhancing quality and safety and reducing carbon footprint. BIM2TWIN proposes a comprehensive, holistic approach. It consists of a DBT platform that provides full situational awareness and an extensible set of construction management applications. It supports a closed-loop Plan-Do-Check-Act mode of construction. Its key features are:

1. Grounded conceptual analysis of data, information and knowledge in the context of DBTs, which underpins a robust system architecture.

2. A common platform for data acquisition and complex event processing to interpret multiple monitored data streams from the construction site and supply chain, in order to establish real-time project status in a Project Status Model (PSM).

3. Exposure of the PSM to a suite of construction management applications through an easily accessible application programming interface (API), and directly to users through a visual information dashboard.

4. Applications include monitoring of schedule, quantities & budget, quality, safety, and environmental impact.

5. A PSM representation based on a property graph semantically linked to the Building Information Model (BIM) and all project management data. The property graph enables flexible, scalable storage of raw monitoring data in different formats, as well as storage of interpreted information. It enables a smooth transition from construction to operation.

BIM2TWIN is a broad, multidisciplinary consortium with hand-picked partners who together provide an optimal combination of knowledge, expertise and experience in a variety of monitoring technologies, artificial intelligence, computer vision, information schemas and graph databases, construction management, equipment automation and occupational safety. The DBT platform will be tested on 3 demo sites (SP, FR, FI).

We are participating in the GRAPES RTN project (learninG, Representing, And oPtimizing shapES), coordinated by ATHENA (Greece). GRAPES aims at advancing the state of the art in mathematics, CAD, and machine learning in order to promote game-changing approaches for generating, optimizing, and learning 3D shapes, along with multisectoral training for young researchers. Concrete applications include simulation and fabrication, hydrodynamics and marine design, manufacturing and 3D printing, retrieval and mining, reconstruction and urban planning. In this context, Pierre Alliez advises a PhD student in collaboration with the beneficiary partner Geometry Factory. Within this scope, we focus on dense semantic segmentation of 3D point clouds and semantic-aware reconstruction of 3D scenes. Our goal is to enrich the final reconstructed 3D models with labels that reflect the main semantic classes of outdoor scenes, such as ground, buildings, roads and vegetation. The Titane project-team also contributes to the training of young researchers via tutorials on the CGAL library and practical exercises on real-world use cases.

10.3 National initiatives

10.3.1 3IA Côte d'Azur

Pierre Alliez holds a senior chair from 3IA Côte d'Azur (Interdisciplinary Institute for Artificial Intelligence). The topic of his chair is “3D modeling of large-scale environments for the smart territory”. In addition, he is the scientific head of the fourth research axis entitled “AI for smart and secure territories”.

10.3.2 ANR

BIOM: Building Indoor and Outdoor Modeling

Participants: Muxingzi Li, Florent Lafarge [contact].

The BIOM project aimed at automatic, simultaneous indoor and outdoor modeling of buildings from images and dense point clouds. The goal was to achieve a complete, geometrically accurate, semantically annotated but nonetheless lean 3D CAD representation of buildings and of the objects they contain, in the form of a Building Information Model (BIM) that helps manage buildings throughout their life cycle (renovation, simulation, deconstruction). The project was carried out in collaboration with IGN (coordinator), Ecole des Ponts Paristech, CSTB and INSA-ICube. Total budget: 723 k€, of which 150 k€ for TITANE. The PhD thesis of Muxingzi Li was funded by this project, which started in February 2018 for a total duration of 4 years.

11 Dissemination

Participants: Pierre Alliez, Florent Lafarge.

11.1 Promoting scientific activities

11.1.1 Scientific events: selection

Member of the conference program committees

- Pierre Alliez was an advisory board member for the EUROGRAPHICS 2023 conference.

- Pierre Alliez was a member of the scientific committee for the SophIA Summit conference.

- Florent Lafarge was an area chair for 3DV.

Reviewer

- Pierre Alliez was a reviewer for ACM SIGGRAPH, SIGGRAPH Asia and Eurographics Symposium on Geometry Processing.

- Florent Lafarge was a reviewer for CVPR, ECCV, and ACM SIGGRAPH.

11.1.2 Journal

Member of the editorial boards

- Florent Lafarge is an associate editor for the ISPRS Journal of Photogrammetry and Remote Sensing and for the Revue Française de Photogrammétrie et de Télédétection.

Reviewer - reviewing activities

- Florent Lafarge was a reviewer for TPAMI.

11.1.3 Invited talks

- Pierre Alliez gave a keynote on geometry processing and learning at the ISPRS conference in Nice.

- Pierre Alliez gave an invited talk on geometric modeling for digital twinning at the International Conference on Discretization in Geometry and Dynamics, in Germany.

- Florent Lafarge gave invited talks at TU Delft and at the Journées Françaises d'Informatique Graphique (JFIG 2022) on efficient space-partitioning data structures for vision problems.

11.1.4 Leadership within the scientific community

Since January 2022, Pierre Alliez has been editor-in-chief of the Computer Graphics Forum (CGF), in tandem with Helwig Hauser. CGF is one of the leading journals in Computer Graphics and the official journal of the Eurographics Association.

Since 2020, Pierre Alliez has chaired the Ph.D. award committee of the Eurographics Association.

Pierre Alliez is a member of the following steering committees: Eurographics, Eurographics Symposium on Geometry Processing and Eurographics Workshop on Graphics and Cultural Heritage.

Pierre Alliez is a member of the editorial board of the CGAL open source project.

11.1.5 Scientific expertise

- Pierre Alliez was a reviewer for the ERC and for the Eureka program. He is a scientific advisory board member for the Bézout Labex in Paris (Models and algorithms: from the discrete to the continuous).

- Florent Lafarge was a reviewer for the MESR (CIR and JEI assessments).

11.1.6 Research administration

- Pierre Alliez was Head of Science (VP for Science) of the Inria Sophia Antipolis center, in tandem with Fabien Gandon, until mid-2022. In this role, he worked with the executive team to advise and support the Institute's scientific strategy, to stimulate new research directions and the creation of project-teams, and to manage the scientific evaluation and renewal of the project-teams.

- Since September 2022, Pierre Alliez has been scientific head of the Inria-DFKI partnership.

- Pierre Alliez is a member of the scientific committee of the 3IA Côte d'Azur.

- Florent Lafarge is a member of the NICE committee. The main tasks of the NICE committee are to review the scientific aspects of postdoctoral application files, and to give scientific opinions on candidates in national campaigns for postdoctoral positions, delegations and secondments, as well as on requests for long-term invitations.

- Florent Lafarge is a member of the technical committee of the UCA Academy of Excellence "Space, Environment, Risk and Resilience".

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Master: Pierre Alliez (with Xavier Descombes, Marc Antonini and Laure Blanc-Feraud), advanced machine learning, 10h, M2, Univ. Côte d'Azur, France.

- Master: Pierre Alliez, Gaétan Bahl and Guillaume Cordonnier (from Graphdeco), deep learning, 21h, M2, Univ. Côte d'Azur, France.

- Master: Florent Lafarge, Applied AI, 8h, M2, Univ. Côte d'Azur, France.

- Master: Pierre Alliez, Florent Lafarge and Angelos Mantzaflaris (from Aromath), numerical interpolation, 60h, M1, Univ. Côte d'Azur, France.

- Master: Florent Lafarge, mathematics for geometry, 22h, M1, EFREI, France.

11.2.2 Supervision

- PhD defended in June: Gaétan Bahl, Deep learning architectures for onboard satellite image analysis, advised by Florent Lafarge.

- PhD defended in September: Vincent Vadez, Geometric simplification for radiative thermal simulation of satellites, Cifre thesis with Dorea, co-advised by Pierre Alliez and Francois Brunetti (Dorea).

- PhD defended in December: Mulin Yu, Reconstructing and Repairing Urban Models with Kinetic Data Structures, advised by Florent Lafarge.

- PhD withdrawn in March: Alexandre Zoppis, City reconstruction from satellite data, advised by Florent Lafarge.

- PhD in progress: Tong Zhao, Shape reconstruction, since November 2019, co-advised by Pierre Alliez and Laurent Busé (Aromath Inria project-team), funded by 3IA, in collaboration with Jean-Marc Thiery and Tamy Boubekeur (formerly at Telecom ParisTech, now at Adobe research), and David Cohen-Steiner (Datashape Inria project-team). Tong will defend in March 2023.

- PhD in progress: Rao Fu, Piecewise-curved reconstruction from raw 3D point clouds, thesis funded by the EU project GRAPES, in collaboration with Geometry Factory, since January 2021, advised by Pierre Alliez.

- PhD in progress: Jacopo Iollo, , thesis funded by Inria challenge ROAD-AI, in collaboration with CEREMA, since January 2022, co-advised by Florence Forbes (Inria Grenoble), Christophe Heinkele (CEREMA) and Pierre Alliez.

- PhD in progress: Marion Boyer, Geometric modeling of urban scenes with LOD2 formalism from satellite images, since October 2022, advised by Florent Lafarge.

- PhD in progress: Nissim Maruani, Physics-informed geometric modeling and processing, funded by 3IA, since November 2022, co-advised by Pierre Alliez and Mathieu Desbrun (Inria Saclay).

- PhD in progress: Armand Zampieri, Compression and visibility of 3D point clouds, Cifre thesis with Samp AI, since December 2022, co-advised by Pierre Alliez and Guillaume Delarue (Samp AI).

11.2.3 Juries

- Pierre Alliez was a reviewer for the PhD of Claudio Mancinelli (Univ. Genova), Raphael Sulzer (IGN) and Theo Deprelle (Ecole des Ponts), and a PhD committee member for Florent Jousse (Epione project-team) and Gaétan Bahl.

- Pierre Alliez was a member of the "Comité de Suivi Doctoral" (doctoral monitoring committee) for the PhD theses of Di Yang (Stars project-team) and Emilie Yu (Graphdeco project-team).

- Florent Lafarge was a member of the "Comité de Suivi Doctoral" for the PhD theses of Cherif Abedrazek (GEOAZUR) and Mariem Mezghanni (LIX).

12 Scientific production

12.1 Major publications

- [1] Kinetic Shape Reconstruction. ACM Transactions on Graphics, 2020.

- [2] Optimal Voronoi Tessellations with Hessian-based Anisotropy. ACM Transactions on Graphics, December 2016, 12.

- [3] Towards large-scale city reconstruction from satellites. European Conference on Computer Vision (ECCV), Amsterdam, Netherlands, October 2016.

- [4] Curved Optimal Delaunay Triangulation. ACM Transactions on Graphics 37(4), August 2018, 16.

- [5] Approximating shapes in images with low-complexity polygons. CVPR 2020 - IEEE Conference on Computer Vision and Pattern Recognition, Seattle / Virtual, United States, June 2020.

- [6] Isotopic Approximation within a Tolerance Volume. ACM Transactions on Graphics 34(4), 2015, 12.

- [7] Variance-Minimizing Transport Plans for Inter-surface Mapping. ACM Transactions on Graphics 36, 2017, 14.

- [8] Alpha Wrapping with an Offset. ACM Transactions on Graphics 41(4), June 2022, 1-22.

- [9] Semantic Segmentation of 3D Textured Meshes for Urban Scene Analysis. ISPRS Journal of Photogrammetry and Remote Sensing 123, 2017, 124-139.

- [10] LOD Generation for Urban Scenes. ACM Transactions on Graphics 34(3), 2015, 15.

12.2 Publications of the year

International journals

International peer-reviewed conferences

Doctoral dissertations and habilitation theses

Reports & preprints

Other scientific publications