2023 Activity report: Project-Team MIMETIC

RNSR: 201120991Y

- Research center: Inria Centre at Rennes University

- In partnership with: Université Haute Bretagne (Rennes 2), École normale supérieure de Rennes, Université de Rennes

- Team name: Analysis-Synthesis Approach for Virtual Human Simulation

- In collaboration with: Institut de recherche en informatique et systèmes aléatoires (IRISA), Mouvement, Sport, Santé (M2S)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.1.3. Haptic interfaces

- A5.1.5. Body-based interfaces

- A5.1.9. User and perceptual studies

- A5.4.2. Activity recognition

- A5.4.5. Object tracking and motion analysis

- A5.4.8. Motion capture

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.10.3. Planning

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.11.1. Human activity analysis and recognition

- A6. Modeling, simulation and control

Other Research Topics and Application Domains

- B1.2.2. Cognitive science

- B2.5. Handicap and personal assistances

- B2.8. Sports, performance, motor skills

- B5.1. Factory of the future

- B5.8. Learning and training

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.4. Sports

1 Team members, visitors, external collaborators

Research Scientists

- Franck Multon [Team leader, INRIA, Professor Detachement, until Aug 2023, HDR]

- Franck Multon [Team leader, INRIA, Senior Researcher, from Sep 2023, HDR]

- Adnane Boukhayma [INRIA, Researcher]

Faculty Members

- Benoit Bardy [UNIV MONTPELLIER, Associate Professor Delegation, until Aug 2023, HDR]

- Nicolas Bideau [UNIV RENNES II, Associate Professor]

- Benoit Bideau [UNIV RENNES II, Professor, HDR]

- Armel Cretual [UNIV RENNES II, Associate Professor, HDR]

- Georges Dumont [ENS RENNES, Professor, HDR]

- Diane Haering [UNIV RENNES II, Associate Professor]

- Richard Kulpa [UNIV RENNES II, Professor, HDR]

- Fabrice Lamarche [UNIV RENNES, Associate Professor]

- Guillaume Nicolas [UNIV RENNES II, Associate Professor]

- Charles Pontonnier [ENS RENNES, Associate Professor, HDR]

Post-Doctoral Fellows

- Théo Rouvier [ENS Rennes]

- Aurelie Tomezzoli [ENS RENNES, Post-Doctoral Fellow]

PhD Students

- Ahmed Abdourahman Mahamoud [INRIA, from Dec 2023]

- Kelian Baert [Technicolor, CIFRE, from Sep 2023]

- Rebecca Crolan [ENS RENNES]

- Shubhendu Jena [INRIA]

- Qian Li [INRIA, until Oct 2023]

- Guillaume Loranchet [INTERDIGITAL, CIFRE, from Nov 2023]

- Pauline Morin [ENS RENNES, until Aug 2023]

- Hasnaa Ouadoudi Belabzioui [MOOVENCY, CIFRE]

- Amine Ouasfi [INRIA]

- Valentin Ramel [INRIA, from Jun 2023]

- Victor Restrat [INRIA, from Oct 2023]

- Etienne Ricard [INRS - VANDOEUVRE-LES-NANCY]

- Sony Saint-Auret [INRIA]

- Aurelien Schuster [FONDATION ST CYR, from Oct 2023]

- Mohamed Younes [INRIA]

Technical Staff

- Benjamin Gamblin [UNIV RENNES II, Engineer, until Sep 2023]

- Ronan Gaugne [UNIV RENNES, Engineer]

- Laurent Guillo [CNRS, Engineer]

- Julian Joseph [INRIA, Engineer, from Oct 2023]

- Tangui Marchand Guerniou [INRIA, Engineer, until Aug 2023]

- Valentin Ramel [INRIA, Engineer, until May 2023]

- Salome Ribault [INRIA, Engineer, until Sep 2023]

Interns and Apprentices

- Girardine Kabayisa Ndoba [INRIA, Intern, from May 2023 until Jul 2023]

- Achraf Sbai [INRIA, Intern, from Jul 2023]

Administrative Assistant

- Nathalie Denis [INRIA]

2 Overall objectives

2.1 Presentation

MimeTIC is a multidisciplinary team whose aim is to better understand and model human activity in order to simulate realistic autonomous virtual humans: realistic behaviors, realistic motions and realistic interactions with other characters and users. This requires modeling the complexity of the human body, as well as of the environment in which humans pick up information and on which they act. A specific focus is dedicated to human physical activity and sports, as they raise the highest constraints and complexity when addressing these problems. Thus, MimeTIC is composed of experts in computer science whose research interests are computer animation, behavioral simulation, motion simulation, crowds and interaction between real and virtual humans. MimeTIC also includes experts in sports science, motion analysis, motion sensing, biomechanics and motion control. Hence, the scientific foundations of MimeTIC are motion sciences (biomechanics, motion control, perception-action coupling, motion analysis), computational geometry (modeling of the 3D environment, motion planning, path planning) and design of protocols in immersive environments (use of virtual reality facilities to analyze human activity).

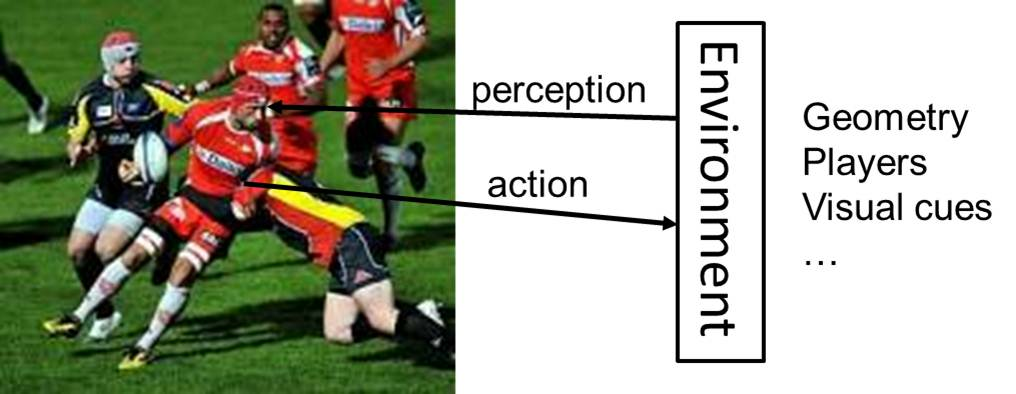

Thanks to these skills, we wish to reach the following objectives: to make virtual humans behave, move and interact in a natural manner in order to increase immersion and improve knowledge on human motion control. In real situations (see Figure 1), people have to deal with their physiological, biomechanical and neurophysiological capabilities in order to reach a complex goal. Hence MimeTIC addresses the problem of modeling the anatomical, biomechanical and physiological properties of human beings. Moreover, virtual characters have to deal with their environment. First, they have to perceive this environment and pick up relevant information. Thus, MimeTIC focuses on the problem of modeling the environment, including its geometry and associated semantic information. Second, they have to act on this environment to reach their goals. This involves cognitive processes, motion planning, joint coordination and force production.

Figure 1: Main objective of MimeTIC: to better understand human activity in order to improve virtual human simulations. This involves modeling the complexity of human bodies, as well as of the environments in which they pick up information and on which they act.

In order to reach the above objectives, MimeTIC has to address three main challenges:

- deal with the intrinsic complexity of human beings, especially when addressing the problem of interactions between people for which it is impossible to predict and model all the possible states of the system,

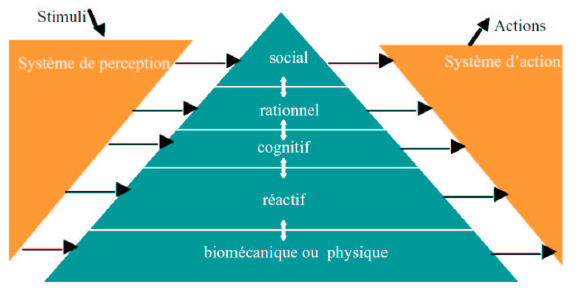

- make the different components of human activity control (such as the biomechanical and physical, the reactive, cognitive, rational and social layers) interact while each of them is modeled with completely different states and time sampling,

- and measure human activity while balancing ecological and controllable protocols, and extract relevant information from large databases.

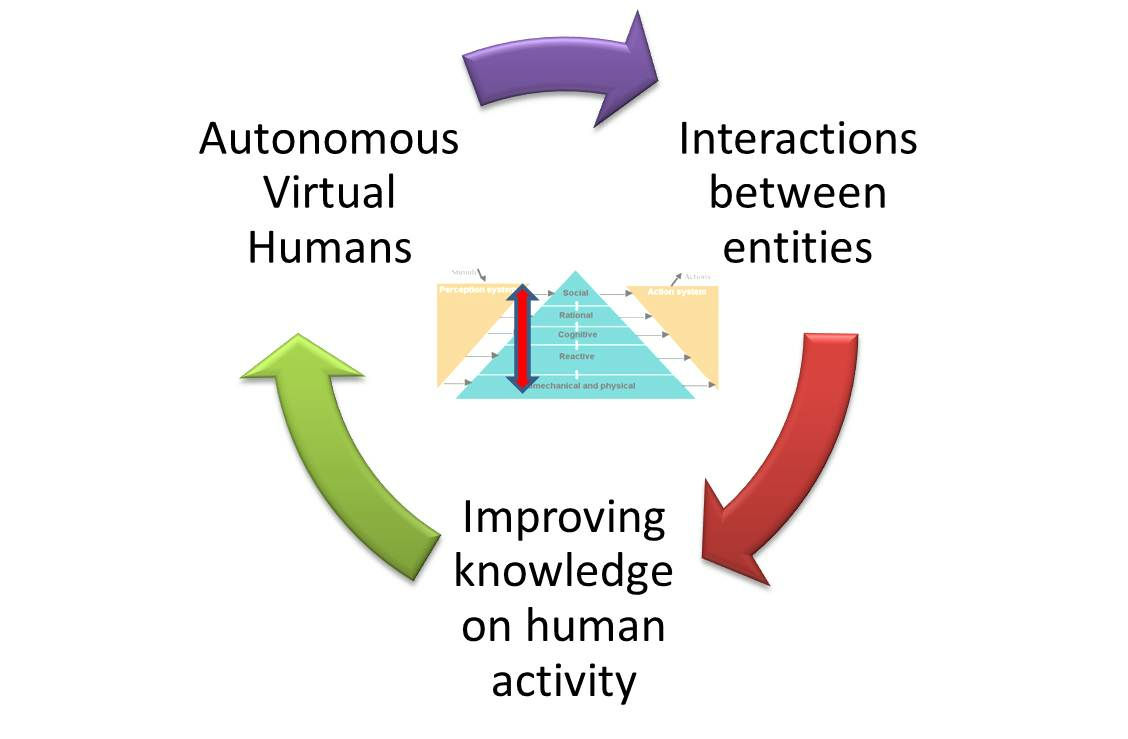

As opposed to many classical approaches in computer simulation, which mostly propose simulation without trying to understand how real people act, the team promotes a coupling between human activity analysis and synthesis, as shown in Figure 2.

Figure 2: Research path of MimeTIC: coupling analysis and synthesis of human activity enables us to create more realistic autonomous characters and to evaluate assumptions about human motion control.

In this research path, improving knowledge on human activity allows us to highlight fundamental assumptions about the natural control of human activities. These contributions can be promoted in, e.g., biomechanics, motion sciences and neurosciences. Based on these assumptions, we propose new algorithms for controlling autonomous virtual humans. The virtual humans can perceive their environment and decide on the most natural action to reach a given goal. This work is promoted in computer animation and virtual reality, and has some applications in robotics through collaborations. Once autonomous virtual humans have the ability to act as real humans would in the same situation, it is possible to make them interact with others, i.e., autonomous characters (for crowds or group simulations) as well as real users. The key idea here is to analyze to what extent the assumptions proposed at the first stage lead to natural interactions with real users. This process enables the validation of both our assumptions and our models.

Among all the problems and challenges described above, MimeTIC focuses on the following domains of research:

- motion sensing, which is key to extracting information from raw motion capture data and thus to proposing assumptions on how people control their activity,

- human activity & virtual reality, which is explored through sports applications in MimeTIC. This domain enables the design of new methods for analyzing the perception-action coupling in human activity, and for validating whether the autonomous characters lead to natural interactions with users,

- interactions in small and large groups of individuals, to understand and model interactions with a lot of individual variability, such as in crowds,

- virtual storytelling, which enables us to design and simulate complex scenarios involving several humans who have to satisfy numerous complex constraints (such as adapting to the real-time environment in order to play an imposed scenario), and to design the coupling with the camera scenario to provide the user with a real cinematographic experience,

- biomechanics, which is essential to offer autonomous virtual humans who can react to physical constraints in order to reach high-level goals, such as maintaining balance in dynamic situations or selecting a natural motor behavior among the whole theoretical solution space for a given task,

- autonomous characters, which is a transversal domain that can reuse the results of all the other domains so that these heterogeneous assumptions and models provide the character with natural behaviors and autonomy.

3 Research program

3.1 Biomechanics and Motion Control

Human motion control is a highly complex phenomenon that involves several layered systems, as shown in Figure 3. Each layer of this controller is responsible for dealing with perceptual stimuli in order to decide the actions that should be applied to the human body and its environment. Due to the intrinsic complexity of the information (internal representation of the body and mental state, external representation of the environment) used to perform this task, it is almost impossible to model all the possible states of the system. Even for simple problems, there generally exists an infinity of solutions. For example, from the biomechanical point of view, there are many more actuators (i.e. muscles) than degrees of freedom, leading to an infinity of muscle activation patterns for a single joint rotation. From the reactive point of view, there exists an infinity of paths to avoid a given obstacle in navigation tasks. At each layer, the key problem is to understand how people select one solution among these infinite state spaces. Several scientific domains have addressed this problem from specific points of view, such as physiology, biomechanics, neurosciences and psychology.

Figure 3: Layers of the natural motion control system in humans.

In biomechanics and physiology, researchers have proposed hypotheses based on accurate joint modeling (to identify the real anatomical rotational axes), energy minimization, force and torque minimization, comfort maximization (i.e. avoiding joint limits), and physiological limitations in muscle force production. All these constraints have been used in optimal controllers to simulate natural motions. The main problem is thus to define how these constraints should be combined, for example by searching for the weights used to linearly combine these criteria in order to generate a natural motion. Musculoskeletal models are typical examples for which there exists an infinity of muscle activation patterns, especially when dealing with antagonist muscles. How to use the above criteria to retrieve the actual activation patterns remains unresolved, as optimization approaches still lead to unrealistic ones. Solving this open problem will require multidisciplinary skills including computer simulation, constraint solving, biomechanics, optimal control, physiology and neuroscience.
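The redundancy discussed above can be illustrated with a toy static optimization. This is a minimal sketch under strong assumptions, not the team's method: a single joint is crossed by three agonist muscles with hypothetical moment arms and maximal forces, and the chosen criterion (minimizing the sum of squared activations) is only one of the candidate criteria mentioned in the text. Activation bounds and antagonist muscles are deliberately ignored.

```python
# Toy muscle-redundancy problem: one joint torque, three muscles.
# All numerical values are hypothetical and chosen for illustration only.

def min_norm_activations(moment_arms, max_forces, torque):
    """Return activations a_i minimizing sum(a_i**2) subject to
    sum(r_i * Fmax_i * a_i) == torque (closed-form least-norm solution).

    Bounds 0 <= a_i <= 1 are NOT enforced in this sketch.
    """
    # Torque produced by each muscle at full activation.
    gains = [r * f for r, f in zip(moment_arms, max_forces)]
    norm_sq = sum(g * g for g in gains)
    # Least-norm solution of a single linear equality constraint.
    return [g * torque / norm_sq for g in gains]


def joint_torque(moment_arms, max_forces, activations):
    """Net joint torque produced by a given activation pattern."""
    return sum(r * f * a for r, f, a in zip(moment_arms, max_forces, activations))


moment_arms = [0.04, 0.03, 0.05]    # metres (assumed)
max_forces = [800.0, 600.0, 400.0]  # newtons (assumed)
target_torque = 20.0                # newton-metres (assumed)

activations = min_norm_activations(moment_arms, max_forces, target_torque)

# A different pattern that produces the same torque with the first muscle only:
# both are valid solutions, and only the criterion singles one of them out.
alternative = [target_torque / (moment_arms[0] * max_forces[0]), 0.0, 0.0]
```

Both `activations` and `alternative` satisfy the torque constraint; changing the criterion (or the weights between several criteria) changes which pattern is selected, which is the difficulty the paragraph describes.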

In neuroscience, researchers have proposed other theories, such as coordination patterns between joints driven by simplifications of the variables used to control the motion. The key idea is to assume that instead of controlling all the degrees of freedom, people control higher-level variables which correspond to combinations of joint angles. In walking, data reduction techniques such as Principal Component Analysis have shown that lower-limb joint angles generally lie close to a single plane whose orientation in the state space is associated with energy expenditure. Although knowledge exists for specific motions, such as locomotion or grasping, this type of approach is still difficult to generalize. The key problem is that many variables are coupled and it is very difficult to objectively study the behavior of a single variable in various motor tasks. Computer simulation is a promising method to evaluate this type of assumption, as it makes it possible to accurately control all the variables and to check whether they lead to natural movements.
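The planarity result mentioned above can be checked on data with a Principal Component Analysis of three angle signals. The sketch below is illustrative only: the angle trajectories are synthetic (sinusoids, with the third angle built as a linear combination of the first two so that the data are planar by construction), and the function names are hypothetical. It uses the closed-form eigenvalues of a symmetric 3x3 matrix to stay dependency-free.

```python
import math


def covariance_matrix(samples):
    """3x3 sample covariance matrix of a list of (x, y, z) tuples."""
    n = len(samples)
    means = [sum(s[i] for s in samples) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for s in samples:
        d = [s[i] - means[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / (n - 1)
    return cov


def eigenvalues_sym3(a):
    """Eigenvalues (largest first) of a symmetric 3x3 matrix, closed form."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p2 = sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    if p == 0.0:
        return [q, q, q]  # matrix is a multiple of the identity
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)]
         for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # clamp against rounding errors
    phi = math.acos(r) / 3.0
    e1 = q + 2.0 * p * math.cos(phi)
    e3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    e2 = 3.0 * q - e1 - e3
    return [e1, e2, e3]


# Synthetic gait cycle (degrees); the values are assumptions, not measurements.
samples = []
for k in range(200):
    t = 2.0 * math.pi * k / 200
    thigh = 20.0 * math.sin(t)
    shank = 30.0 * math.sin(t - 0.8)
    foot = 0.5 * thigh + 0.9 * shank  # planar by construction
    samples.append((thigh, shank, foot))

eigs = eigenvalues_sym3(covariance_matrix(samples))
# Share of the variance explained by the first two principal components.
planarity = (eigs[0] + eigs[1]) / sum(eigs)
```

On real recordings, a `planarity` value close to 1 would indicate that the three angles covary in a plane; how the plane orientation relates to energy expenditure is not modeled here.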

Neuroscience also addresses the problem of coupling perception and action by providing control laws based on visual cues (or any other senses), such as determining how the optical flow is used to control direction in navigation tasks, while dealing with collision avoidance or interception. Coupling of the control variables is enhanced in this case, as the state of the body is enriched by the large amount of external information that the subject can use. Virtual environments inhabited by autonomous characters whose behavior is driven by motion control assumptions are a promising approach to solve this problem. For example, an interesting issue in this field is navigation in an environment inhabited by other people. Typically, avoiding static obstacles along with other people moving inside that environment is a combinatorial problem that strongly relies on the coupling between perception and action.

One of the main objectives of MimeTIC is to enhance knowledge on human motion control by developing innovative experiments based on computer simulation and immersive environments. To this end, designing experimental protocols is a key point, and some of the researchers in MimeTIC have developed this skill in biomechanics and perception-action coupling. Associating these researchers with experts in virtual human simulation, computational geometry and constraint solving allows us to enhance fundamental knowledge in human motion control.

3.2 Experiments in Virtual Reality

Understanding interactions between humans is challenging because it addresses many complex phenomena including perception, decision-making, cognition and social behaviors. Moreover, all these phenomena are difficult to isolate in real situations, and it is therefore highly complex to understand their individual influence on these human interactions. It is then necessary to find an alternative solution that can standardize the experiments and that allows the modification of only one parameter at a time. Video was first used, since the displayed experiment is perfectly repeatable and cut-offs (stopping the video at a specific time before its end) provide temporal information. Nevertheless, without an adapted viewpoint and stereoscopic vision, video does not provide depth information, which is very meaningful. Moreover, during video recording sessions, a real human acts in front of a camera and not in front of an opponent. The recorded interaction is therefore not a real interaction between humans.

Virtual Reality (VR) systems allow full standardization of the experimental situations and complete control of the virtual environment. They make it possible to modify only one parameter at a time and observe its influence on the perception of the immersed subject. VR can then be used to understand what information is picked up to make a decision. Moreover, cut-offs can also be used to obtain temporal information about when information is picked up. When the subject can react as in a real situation, the subject's movement (captured in real time) provides information about their reactions to the modified parameter. Not only perception is studied, but the complete perception-action loop. Perception and action are indeed coupled and influence each other, as suggested by Gibson in 1979.

Finally, VR also allows the validation of virtual human models. Some models are indeed based on the interaction between the virtual character and other humans, such as a walking model. Such a model can be validated in two ways. It can be compared to real data (e.g. real trajectories of pedestrians), but such data are not always available and are difficult to obtain. The alternative is then to use VR: the realism of the model is validated by immersing a real subject in a virtual environment in which a virtual character is controlled by the model. The evaluation is then deduced from how the immersed subject reacts when interacting with the model and how realistic the subject feels the virtual character is.

3.3 Computer Animation

Computer animation is the branch of computer science devoted to models for the representation and simulation of the dynamic evolution of virtual environments. A first focus is the animation of virtual characters (behavior and motion). Through a deeper understanding of interactions using VR and through better perceptual, biomechanical and motion control models to simulate the evolution of dynamic systems, the MimeTIC team has the ability to build more realistic, efficient and believable animations. Perceptual studies also enable us to focus computation time on relevant information (i.e. what ensures natural motion from the perceptual point of view) and save time on unperceived details. The underlying challenges are (i) the computational efficiency of the system, which needs to run in real time in many situations, (ii) the capacity of the system to generalise and adapt to new situations for which data were not available or models were not defined, and (iii) the variability of the models, i.e. their ability to handle many body morphologies and generate variations in motions that would be specific to each virtual character.

In many cases, however, these challenges cannot be addressed in isolation. Typically, character behaviors also depend on the nature and the topology of the environment that surrounds them. In essence, a character animation system should also rely on smarter representations of the environment, in order to better perceive the environment itself and take contextualised decisions. Hence the animation of virtual characters in our context often needs to be coupled with models to represent the environment, to reason, and to plan both at a geometric level (can the character reach this location?) and at a semantic level (should it use the sidewalk, the stairs, or the road?). This represents the second focus. The underlying challenge is to offer a compact yet precise representation on which efficient path planning, motion planning and high-level reasoning can be performed.
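The distinction between geometric and semantic planning can be sketched with a shortest-path search on a labeled grid. This is only an illustration under assumed conventions, not the team's environment representation: the grid encoding (`S` for sidewalk, `R` for road, `#` for obstacle), the cost values and the function name `plan_path` are all hypothetical.

```python
import heapq

# Hypothetical semantic costs per cell type; '#' cells are not traversable.
SEMANTIC_COST = {"S": 1.0, "R": 5.0}


def plan_path(grid, start, goal):
    """Dijkstra search on a 4-connected grid.

    Geometric level: '#' cells cannot be entered (reachability).
    Semantic level: entering a cell costs SEMANTIC_COST[label].
    Returns (total_cost, path), or (inf, []) when the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = [cell]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return cost, path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + SEMANTIC_COST[grid[nr][nc]]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    return float("inf"), []


# A road block surrounded by sidewalk: crossing is shorter, walking around is cheaper.
grid = [
    "SSSSS",
    "SRRRS",
    "SRRRS",
    "SSSSS",
]
cost, path = plan_path(grid, (1, 0), (1, 4))

# The same query with the goal walled off is geometrically infeasible.
blocked = ["S#S"]
blocked_cost, blocked_path = plan_path(blocked, (0, 0), (0, 2))
```

With these assumed costs, the planner goes around the road on the sidewalk even though the straight crossing has fewer cells, which is the kind of contextualised decision the paragraph refers to.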

Finally, a third scientific focus is digital storytelling. Evolved representations of motions and environments enable realistic animations. Yet it is equally important to question how these events should be portrayed, when and from which angle. In essence, this means integrating discourse models into story models, the story representing the sequence of events which occur in a virtual environment, and the discourse representing how this story should be displayed (i.e. which events to show, in which order and from which viewpoint). The underlying challenges pertain to:

- narrative discourse representations,

- projections of the discourse into the geometry, planning camera trajectories and planning cuts between the viewpoints,

- means to interactively control the unfolding of the discourse.

By thus establishing the foundations to build bridges between the high-level narrative structures, the semantic/geometric planning of motions and events, and low-level character animations, the MimeTIC team adopts a principled and all-inclusive approach to the animation of virtual characters.

4 Application domains

4.1 Animation, Autonomous Characters and Digital Storytelling

Computer Animation is one of the main application domains of the research work conducted in the MimeTIC team, in particular in relation to the entertainment and game industries. In these domains, creating virtual characters that are able to replicate real human motions and behaviours still raises key unanswered challenges, especially as virtual characters are required to populate virtual worlds. For instance, virtual characters are used to replace secondary actors and generate highly populated scenes that would be hard and costly to produce with real actors. This requires creating high-quality replicas that appear, move and behave both individually and collectively like real humans. The three key challenges for the MimeTIC team are therefore:

- to create natural animations (i.e., virtual characters that move like real humans),

- to create autonomous characters (i.e., that behave like real humans),

- to orchestrate the virtual characters so as to create interactive stories.

First, our challenge is to create animations of virtual characters that are natural, i.e. moving like a real human would. This challenge covers several aspects of Character Animation depending on the context of application, e.g., producing visually plausible or physically correct motions, producing natural motion sequences, etc. Our goal is therefore to develop novel methods for animating virtual characters, based on motion capture, data-driven approaches, or learning approaches. However, because of the complexity of human motion (number of degrees of freedom that can be controlled), resulting animations are not necessarily physically, biomechanically, or visually plausible. For instance, current physics-based approaches produce physically correct motions but not necessarily perceptually plausible ones. For all these reasons, most entertainment industries (gaming and movie production, for example) still mainly rely on manual animation. Therefore, research in MimeTIC on character animation is also conducted with the goal of validating the results from an objective standpoint (physical, biomechanical) as well as a subjective one (visual plausibility).

Second, one of the main challenges in terms of autonomous characters is to provide a unified architecture for the modeling of their behavior. This architecture includes perception, action and decisional parts. The decisional part needs to mix different kinds of models, acting at different time scales and working with different kinds of data, ranging from numerical (motion control, reactive behaviors) to symbolic (goal-oriented behaviors, reasoning about actions and changes). For instance, autonomous characters play the role of actors that are driven by a scenario in video games and virtual storytelling. Their autonomy allows them to react to unpredictable user interactions and adapt their behavior accordingly. In the field of simulation, autonomous characters are used to simulate the behavior of humans in different kinds of situations. They make it possible to study new situations and their possible outcomes. In the MimeTIC team, our focus is therefore not to reproduce human intelligence but to propose an architecture making it possible to model credible behaviors of anthropomorphic virtual actors moving in real time in virtual worlds. These worlds can represent particular situations studied by behavioral psychologists, or correspond to an imaginary universe described by a scenario writer. The proposed architecture should mimic all the human intellectual and physical functions.

Finally, interactive digital storytelling, including novel forms of edutainment and serious games, provides access to social and human themes through stories which can take various forms, and offers opportunities for massively enhancing the possibilities of interactive entertainment, computer games and digital applications. It provides chances for redefining the experience of narrative through interactive simulations of computer-generated story worlds and opens many challenging questions at the overlap between computational narratives, autonomous behaviours, interactive control, content generation and authoring tools. Of particular interest for the MimeTIC research team, virtual storytelling raises challenges in providing effective models for enforcing autonomous behaviours for characters in complex 3D environments. Characters need low-level capacities, such as perceiving the environment, interacting with it and reacting to changes in its topology, on which higher-level capacities can be built, such as modelling abstract representations for efficient reasoning, planning paths and activities, and modelling cognitive states and behaviours. Offering both levels requires expressive, multi-level and efficient computational models. Furthermore, virtual storytelling requires seamless control of the balance between the autonomy of characters and the unfolding of the story through the narrative discourse. Virtual storytelling also raises challenging questions on the conveyance of a narrative through interactive or automated control of the cinematography (how to stage the characters, the lights and the cameras). For example, estimating the visibility of key subjects and performing motion planning for cameras and lights are central issues which have not received satisfactory answers in the literature.

4.2 Fidelity of Virtual Reality

VR is a powerful tool for perception-action experiments. VR-based experimental platforms allow exposing a population to fully controlled stimuli that can be repeated from trial to trial with high accuracy. Factors can be isolated, and object manipulations (position, size, orientation, appearance, etc.) are easy to perform. Stimuli can be interactive and adapted to participants’ responses. Such features allow researchers to use VR to perform experiments in sports, motion control, perceptual control laws, spatial cognition as well as person-person interactions. However, the interaction loop between users and their environment differs in virtual conditions in comparison with real conditions. When a user interacts in an environment, action and perception are closely related. While moving, the perceptual system (vision, proprioception, etc.) provides feedback about the user’s own motion and information about the surrounding environment. That allows the user to adapt his/her trajectory to sudden changes in the environment and generate a safe and efficient motion. In virtual conditions, the interaction loop is more complex because it involves several material aspects.

First, the virtual environment is perceived through a digital display, which could affect the available information and thus potentially introduce a bias. For example, studies observed a distance compression effect in VR, partially explained by the use of a head-mounted display with a reduced field of view that exerts weight and torques on the user’s head. Similarly, the perceived velocity in a VR environment differs from the real-world velocity, introducing an additional bias. Other factors, such as the image contrast, delays in the displayed motion and the point of view, can also influence efficiency in VR. The second point concerns the user’s motion in the virtual world. The user can actually move if the virtual room is big enough or if wearing a head-mounted display. Even with real motion, authors showed that walking speed is decreased, personal space size is modified and navigation in VR is performed with increased gait instability. Although natural locomotion is certainly the most ecological approach, the limited physical size of VR setups prevents its use most of the time. Locomotion interfaces are therefore required. They are made up of two components, a locomotion metaphor (device) and a transfer function (software), that can also introduce bias in the generated motion. Indeed, the actuating movement of the locomotion metaphor can significantly differ from real walking, and the simulated motion depends on the transfer function applied. Locomotion interfaces usually cannot preserve all the sensory channels involved in locomotion.
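To make the role of the transfer function concrete, the following sketch maps a normalized device input to a virtual walking speed and integrates it over time. It is a hypothetical design given for illustration only: the dead zone, the linear rescaling, the maximal speed of 1.4 m/s and the function names are assumptions, not a description of any interface used by the team.

```python
import math


def transfer_function(deflection, max_speed=1.4, dead_zone=0.1):
    """Map a device input in [-1, 1] to a virtual walking speed in m/s.

    Hypothetical design: inputs inside the dead zone are ignored, and the
    remaining range is rescaled linearly up to max_speed (assumed value).
    """
    deflection = max(-1.0, min(1.0, deflection))  # clamp out-of-range inputs
    magnitude = abs(deflection)
    if magnitude <= dead_zone:
        return 0.0
    scaled = (magnitude - dead_zone) / (1.0 - dead_zone)
    return math.copysign(scaled * max_speed, deflection)


def simulate(inputs, dt=0.1):
    """Integrate the virtual position (metres) for a sequence of inputs
    sampled every dt seconds."""
    position = 0.0
    for u in inputs:
        position += transfer_function(u) * dt
    return position


# One second of full forward input, sampled at 10 Hz.
travelled = simulate([1.0] * 10)
```

Changing `max_speed` or `dead_zone` changes the virtual trajectory obtained from the same physical input, which is one way a transfer function can bias the generated motion.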

When studying human behavior in VR, the aforementioned factors in the interaction loop potentially introduce bias both in the perception and in the generation of motor behavior trajectories. MimeTIC is working on the mandatory step of VR validation to make it usable for capturing and analyzing human motion.

4.3 Motion Sensing of Human Activity

Recording human activity is a key point of many applications and fundamental works. Numerous sensors and systems have been proposed to measure positions, angles or accelerations of the user's body parts. Whatever the system is, one of the main problems is to be able to automatically recognize and analyze the user's performance from poor and noisy signals. Human activity and motion are subject to variability: intra-individual variability due to space and time variations of a given motion, but also inter-individual variability due to different styles and anthropometric dimensions. MimeTIC has addressed the above problems in two main directions.

First, we have studied how to recognize and quantify motions performed by a user when using accurate systems such as Vicon (product from Oxford Metrics), Qualisys, or Optitrack (product from Natural Point) motion capture systems. These systems provide large vectors of accurate information. Due to the size of the state vector (all the degrees of freedom), the challenge is to find the compact information (named features) that enables the automatic system to recognize the performance of the user. Whatever the method used, finding relevant features that are not sensitive to intra-individual and inter-individual variability is a challenge. Some researchers have proposed to manually design these features (such as a Boolean value stating whether the arm is moving forward or backward), so that the expertise of the designer is directly linked with the success ratio. Many generic features have been proposed, such as Laban notation, which was introduced to encode dancing motions. Other approaches tend to use machine learning to automatically extract these features. However, most of the proposed approaches were used to search a database for motions whose properties correspond to the features of the user's performance (named motion retrieval approaches). This does not ensure the retrieval of the exact performance of the user, but of a set of motions with similar properties.
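A hand-designed Boolean feature of the kind mentioned above can be sketched in a few lines. The data layout is an assumption made for illustration: a list of wrist coordinates along a hypothetical forward axis, one value per frame. The function name and threshold are also hypothetical.

```python
def arm_moving_forward(wrist_forward_positions, threshold=0.0):
    """Return one Boolean per frame transition: True when the wrist
    coordinate along the (assumed) forward axis increases by more than
    `threshold` between two consecutive frames."""
    return [
        (later - earlier) > threshold
        for earlier, later in zip(wrist_forward_positions,
                                  wrist_forward_positions[1:])
    ]


# Hypothetical wrist positions in metres, one per frame.
positions = [0.10, 0.15, 0.22, 0.20, 0.12, 0.18]
features = arm_moving_forward(positions)
# features == [True, True, False, False, True]
```

Such a feature compresses a full trajectory into a few bits, but its usefulness depends entirely on the designer's choice of axis and threshold, as the paragraph points out.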

Second, we wish to find alternatives to the above approach, which is based on analyzing accurate and complete knowledge of joint angles and positions. New sensors, such as depth cameras (Kinect, product from Microsoft), provide us with very noisy joint information but also with the surface of the user. Classical approaches would try to fit a skeleton into the surface in order to compute joint angles which, again, lead to large state vectors. An alternative would be to extract relevant information directly from the raw data, such as the surface provided by depth cameras. The key problem is that the nature of these data may be very different from classical representations of human performance. In MimeTIC, we try to address this problem in some application domains that require picking specific information, such as gait asymmetry or regularity for clinical analysis of human walking.
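As an example of such specific information, gait asymmetry is often summarized by a symmetry index comparing the left and right values of a gait parameter. The sketch below uses a common form of this index, the absolute left-right difference divided by the left-right mean, expressed as a percentage. It is given as an assumption about what such a measure can look like, not as the measure the team extracts from depth data, and the step durations are invented.

```python
def symmetry_index(left_values, right_values):
    """Symmetry index in percent: 0 means perfectly symmetric.

    SI = 100 * |mean_left - mean_right| / (0.5 * (mean_left + mean_right))
    """
    mean_left = sum(left_values) / len(left_values)
    mean_right = sum(right_values) / len(right_values)
    return 100.0 * abs(mean_left - mean_right) / (0.5 * (mean_left + mean_right))


# Hypothetical step durations in seconds for each side.
left_steps = [0.52, 0.50, 0.54]
right_steps = [0.48, 0.50, 0.46]
asymmetry = symmetry_index(left_steps, right_steps)  # about 8 percent
```

The index only needs one scalar per step and per side, so it can in principle be computed from any signal that allows steps to be segmented, without reconstructing a full skeleton.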

4.4 Sports

Sport is characterized by complex displacements and motions. One main objective is to understand the determinants of performance through the analysis of the motion itself. In the team, different sports have been studied, such as the tennis serve, where the goal was to understand the contribution of each body segment to performance as well as the risk of injury, along with other situations in cycling, swimming, fencing or soccer. Sport motions depend on visual information that athletes can pick up in their environment, including the opponent's actions. Perception is thus fundamental to performance. Indeed, a sporting action, being unique, complex and often limited in time, requires a selective gathering of information. This perception is often seen as a prerequisite for action; it then takes the role of a passive collector of information. However, as mentioned by Gibson in 1979, the perception-action relationship should not be considered sequentially but rather as a coupling: we perceive to act but we must act to perceive. There would thus be laws of coupling between the informational variables available in the environment and the motor responses of a subject. In other words, athletes have the ability to perceive opportunities for action directly from the environment. Whichever school of thought is considered, VR offers new perspectives to address these concepts when used together with real-time motion capture of the immersed athlete.

In addition to helping to better understand sports and interactions between athletes, VR can also be used as a training environment, as it can provide complementary tools to coaches. It is indeed possible to add visual or auditory information to better train an athlete. The knowledge gained from perceptual experiments can, for example, be used to highlight the body parts that are important to look at in order to correctly anticipate the opponent's action.

4.5 Ergonomics

The design of workstations nowadays tends to include assessment steps in a Virtual Environment (VE) to evaluate ergonomic features. This approach is more cost-effective and convenient since working directly on the Digital Mock-Up (DMU) in a VE is preferable to constructing a real physical mock-up in a Real Environment (RE). This is substantiated by the fact that a Virtual Reality (VR) set-up can be easily modified, enabling quick adjustments of the workstation design. Indeed, the aim of integrating ergonomics evaluation tools in VEs is to facilitate the design process, enhance the design efficiency, and reduce the costs.

The development of such platforms calls for several improvements in the field of motion analysis and VR. First, interactions have to be as natural as possible to properly mimic the motions performed in real environments. Second, the fidelity of the simulator also needs to be correctly evaluated. Finally, motion analysis tools have to be able to provide, in real time, biomechanical quantities that ergonomists can use to analyse and improve the working conditions.

In real working conditions, motion analysis and musculoskeletal risk assessment also raise many scientific and technological challenges. As in virtual reality, the fidelity of the working process may be affected by the measurement method. Wearing sensors or skin markers, together with the need to frequently calibrate the assessment system, may change the way workers perform their tasks. Whatever the measurement is, classical ergonomic assessments generally address one specific parameter, such as posture, force, or repetitions, which makes it difficult to design a musculoskeletal risk factor that actually represents this risk. Another key scientific challenge is then to design new indicators that better capture the risk of musculoskeletal disorders. However, such indicators have to deal with the trade-off between accurate biomechanical assessment and the difficulty of getting the required information reliably in real working conditions.

4.6 Locomotion and Interactions between walkers

Modeling and simulating locomotion and interactions between walkers is a very active, complex and competitive domain, investigated by various disciplines such as mathematics, cognitive sciences, physics, computer graphics, rehabilitation, etc. Locomotion and interactions between walkers are by definition at the very core of our society since they represent the basic synergies of our daily life. When walking in the street, we have to produce a locomotor movement while picking up information about our surrounding environment in order to interact with people, move without collision, alone or in a group, and intercept, meet or escape from somebody. MimeTIC is a key international contributor to the domain of understanding and simulating locomotion and interactions between walkers. By combining an approach based on Human Movement Sciences and Computer Sciences, the team focuses on locomotor invariants which characterize the generation of locomotor trajectories. We also conduct challenging experiments focusing on the visuo-motor coordination involved during interactions between walkers, using both real and virtual set-ups. One main challenge is to consider and model not only the "average" behaviour of healthy young adults but also that of specific populations, considering the effect of pathology or the effect of age (kids, older adults). As a first example, when patients cannot walk efficiently, in particular those suffering from central nervous system affections, it becomes very useful for practitioners to benefit from an objective evaluation of their capacities. To facilitate such evaluations, we have developed two complementary indices, one based on kinematics and the other one on muscle activations. One major point of our research is that such indices are usually only developed for children, whereas adults with these affections are much more numerous. We extend this objective evaluation by using a person-person interaction paradigm, which allows studying deficits in visuo-motor strategies in these specific populations.

Another fundamental question is the adaptation of the walking pattern according to anatomical constraints, such as pathologies in orthopedics, or adaptation to various human and non-human primates in paleoanthropology. Hence, the question is to predict plausible locomotion according to a given morphology. This raises fundamental questions about the variables that are regulated to control gait: balance control, minimum energy, minimum jerk, etc. In MimeTIC we develop models and simulators to efficiently test hypotheses on gait control for given morphologies.

5 Social and environmental responsibility

MimeTIC is not directly involved in environmental responsibilities.

6 Highlights of the year

6.1 Achievements

- Team evaluation: The team was evaluated by three experts for the last time this year, ending a 12-year cycle for the project. The team was recognized by the experts as an expert in sport sciences and in physical activity analysis and synthesis.

- Franck Multon status: Franck Multon has been hired as Research Director by INRIA.

- Olympic Games "Paris2024": Many team members have been strongly involved in the scientific support of the training of French Olympic teams, and have carried out dissemination of this research work to the general public.

7 New software, platforms, open data

7.1 New software

7.1.1 AsymGait

-

Name:

Asymmetry index for clinical gait analysis based on depth images

-

Keywords:

Motion analysis, Kinect, Clinical analysis

-

Scientific Description:

The system uses depth images delivered by the Microsoft Kinect to first retrieve the gait cycles. To this end, it analyzes the trajectories of the knees instead of the feet to obtain more robust gait event detection. Based on these cycles, the system computes a mean gait cycle model to decrease the effect of sensor noise. Asymmetry is then computed at each frame of the gait cycle as the spatial difference between the left and right parts of the body.

-

Functional Description:

AsymGait is a software package that works with Microsoft Kinect data, especially depth images, in order to carry out clinical gait analysis. First, it identifies the main gait events (footstrike, toe-off) using the depth information to isolate gait cycles. Then it computes a continuous asymmetry index within the gait cycle. Asymmetry is viewed as a spatial difference between the two sides of the body.

-

Contact:

Franck Multon

-

Participants:

Edouard Auvinet, Franck Multon
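
The AsymGait pipeline described above (mean gait cycle, then a per-frame left/right difference) can be illustrated with a minimal sketch. It is not the AsymGait implementation: it assumes that gait cycles have already been segmented and resampled to the same number of frames, and that each frame is reduced to one scalar per body side, whereas the actual software works on depth images. The function names `mean_cycle` and `asymmetry_index` and the toy values are hypothetical.

```python
from statistics import mean


def mean_cycle(cycles):
    """Average several gait cycles frame by frame.

    `cycles` is a list of cycles; every cycle is a list of per-frame values,
    and all cycles are assumed to be resampled to the same length.
    """
    n_frames = len(cycles[0])
    if any(len(c) != n_frames for c in cycles):
        raise ValueError("all cycles must have the same number of frames")
    return [mean(c[i] for c in cycles) for i in range(n_frames)]


def asymmetry_index(left_cycles, right_cycles):
    """Per-frame absolute difference between the mean left and right cycles."""
    left = mean_cycle(left_cycles)
    right = mean_cycle(right_cycles)
    return [abs(l - r) for l, r in zip(left, right)]


# Toy data (hypothetical, arbitrary units): two cycles per side, four frames each.
left_cycles = [[1.0, 2.0, 3.0, 2.0], [1.2, 2.2, 2.8, 2.0]]
right_cycles = [[1.0, 2.5, 3.0, 1.5], [1.0, 2.5, 3.0, 1.5]]
index = asymmetry_index(left_cycles, right_cycles)
```

Averaging before differencing is what reduces the influence of sensor noise on the index; the result is one asymmetry value per frame of the cycle rather than a single global score.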

7.1.2 Cinematic Viewpoint Generator

-

Keyword:

3D animation

-

Functional Description:

The software, developed as an API, provides a means to automatically compute a collection of viewpoints over one or two specified geometric entities, in a given 3D scene, at a given time. These viewpoints satisfy classical cinematographic framing conventions and guidelines, including different shot scales (from extreme long shot to extreme close-up), different shot angles (internal, external, parallel, apex), and different screen compositions (thirds, fifths, symmetric or dissymmetric). The viewpoints cover the range of possible framings for the specified entities. The computation of such viewpoints relies on a database of framings that are dynamically adapted to the 3D scene by using a manifold parametric representation, and that guarantee the visibility of the specified entities. The set of viewpoints is also automatically annotated with cinematographic tags such as shot scales, angles, compositions, relative placement of entities, and line of interest.

-

Contact:

Marc Christie

-

Participants:

Christophe Lino, Emmanuel Badier, Marc Christie

-

Partners:

Université d'Udine, Université de Nantes

7.1.3 CusToM

-

Name:

Customizable Toolbox for Musculoskeletal simulation

-

Keywords:

Biomechanics, Dynamic Analysis, Kinematics, Simulation, Mechanical multi-body systems

-

Scientific Description:

The present toolbox aims at performing a motion analysis thanks to an inverse dynamics method.

Before performing the motion analysis steps, a musculoskeletal model is generated. This consists of, first, generating the desired anthropometric model from model libraries. The generated model is then kinematically calibrated using motion capture data. The inverse kinematics step, the inverse dynamics step and the muscle forces estimation step are then successively performed from motion capture and external forces data. Two folders and one script are available at the toolbox root. The Main script collects all the different functions of the motion analysis pipeline. The Functions folder contains all functions used in the toolbox. It is necessary to add this folder and all its subfolders to the Matlab path. The Problems folder is used to contain the different studies. The user has to create one subfolder for each new study. Whenever a new musculoskeletal model is used, a new study is necessary. Different files will be automatically generated and saved in this folder. All files located at its root are related to the model and are valid whatever the motion considered. A new folder will be added for each new motion capture. All files located in such a folder are only related to the motion considered.

-

Functional Description:

Inverse kinematics, inverse dynamics, muscle forces estimation, external forces prediction

- Publications:

-

Contact:

Charles Pontonnier

-

Participants:

Antoine Muller, Charles Pontonnier, Georges Dumont, Pierre Puchaud, Anthony Sorel, Claire Livet, Louise Demestre

7.1.4 Directors Lens Motion Builder

-

Keywords:

Previsualization, Virtual camera, 3D animation

-

Functional Description:

Directors Lens Motion Builder is a software plugin for Autodesk's Motion Builder animation tool. This plugin features a novel workflow to rapidly prototype cinematographic sequences in a 3D scene, and is dedicated to the 3D animation and movie previsualization industries. The workflow integrates the automated computation of viewpoints (using the Cinematic Viewpoint Generator) to interactively explore different framings of the scene, proposes means to interactively control framings in the image space, and proposes a technique to automatically retarget a camera trajectory from one scene to another while enforcing visual properties. The tool also proposes to edit the cinematographic sequence and export the animation. The software can be linked to different virtual camera systems available on the market.

-

Contact:

Marc Christie

-

Participants:

Christophe Lino, Emmanuel Badier, Marc Christie

-

Partner:

Université de Rennes 1

7.1.5 Kimea

-

Name:

Kinect IMprovement for Ergonomics Assessment

-

Keywords:

Biomechanics, Motion analysis, Kinect

-

Scientific Description:

Kimea corrects skeleton data delivered by a Microsoft Kinect for ergonomics purposes. Kimea is able to manage most of the occlusions that can occur in real working situations, at workstations. To this end, Kimea relies on a database of examples/poses organized as a graph, in order to replace unreliable reconstructions of body segments by poses that have already been measured on real subjects. The potential pose candidates are used in an optimization framework.

-

Functional Description:

Kimea takes Kinect data (skeleton data) as input and corrects most measurement errors in order to carry out ergonomic assessment at workstations.

- Publications:

-

Contact:

Franck Multon

-

Participants:

Franck Multon, Hubert Shum, Pierre Plantard

-

Partner:

Faurecia

7.1.6 Populate

-

Keywords:

Behavior modeling, Agent, Scheduling

-

Scientific Description:

The software provides the following functionalities:

- A high-level XML dialect dedicated to the description of agents' activities in terms of tasks and sub-activities that can be combined with different kinds of operators: sequential, without order, interlaced. This dialect also enables the description of time and location constraints associated with tasks.

- An XML dialect that enables the description of agent's personal characteristics.

- An informed graph describes the topology of the environment as well as the locations where tasks can be performed. A bridge between TopoPlan and Populate has also been designed. It provides an automatic analysis of an informed 3D environment that is used to generate an informed graph compatible with Populate.

- The generation of a valid task schedule based on the previously mentioned descriptions.

With a good configuration of agents' characteristics (based on statistics), we demonstrated that the task schedules produced by Populate are representative of human ones. In conjunction with TopoPlan, it has been used to populate a district of Paris as well as imaginary cities with several thousand pedestrians navigating in real time.

-

Functional Description:

Populate is a toolkit dedicated to task scheduling under time and space constraints in the field of behavioral animation. It is currently used to populate virtual cities with pedestrians performing different kinds of activities that involve travelling between different locations. However, the generic nature of the algorithm and of the underlying representations enables its use in a wide range of applications that need to link activity, time and space. The main scheduling algorithm relies on the following inputs: an informed environment description, an activity an agent needs to perform, and the individual characteristics of this agent. The algorithm produces a valid task schedule compatible with the time and spatial constraints imposed by the activity description and the environment. In this task schedule, time intervals relating to travel and task fulfillment are identified, and the locations where tasks should be performed are automatically selected.

-

Contact:

Fabrice Lamarche

-

Participants:

Carl-Johan Jorgensen, Fabrice Lamarche
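
The kind of output described for Populate (a schedule in which travel intervals and task intervals are identified under time and location constraints) can be illustrated with a deliberately simplified sketch. It is not Populate's algorithm: it assumes a purely sequential activity, a fixed location per task and a precomputed travel-time table, whereas Populate also handles unordered and interlaced tasks and selects locations automatically from an informed graph. The function `schedule`, the dictionary keys and the toy data are hypothetical.

```python
def schedule(tasks, travel_time, start_location, start_time=0):
    """Build a schedule for tasks that must be performed in the given order.

    tasks: list of dicts with keys name, location, duration, earliest, latest
           (a task must start within [earliest, latest]).
    travel_time: dict mapping (from_location, to_location) to a duration.
    Returns a list of (kind, label, start, end) intervals, or None if a
    time constraint cannot be met.
    """
    timeline = []
    now, here = start_time, start_location
    for task in tasks:
        if task["location"] != here:
            arrival = now + travel_time[(here, task["location"])]
            timeline.append(("travel", f"{here}->{task['location']}", now, arrival))
            now, here = arrival, task["location"]
        begin = max(now, task["earliest"])  # wait if the agent arrives too early
        if begin > task["latest"]:
            return None  # the time window of this task is missed
        end = begin + task["duration"]
        timeline.append(("task", task["name"], begin, end))
        now = end
    return timeline


# Toy activity (hypothetical): times are in minutes from the start of the day.
tasks = [
    {"name": "buy bread", "location": "bakery", "duration": 5, "earliest": 10, "latest": 30},
    {"name": "work", "location": "office", "duration": 60, "earliest": 0, "latest": 40},
]
travel_time = {("home", "bakery"): 8, ("bakery", "office"): 12}
plan = schedule(tasks, travel_time, "home")
```

Here the agent reaches the bakery at minute 8, waits until the task window opens at minute 10, and the schedule is rejected (returns `None`) as soon as one task cannot start within its window.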

7.1.7 PyNimation

-

Keywords:

Moving bodies, 3D animation, Synthetic human

-

Scientific Description:

PyNimation is a Python-based open-source (AGPL) software for editing motion capture data. It was initiated because of a lack of open-source software able to process different types of motion capture data in a unified way, which typically forces animation pipelines to rely on several commercial software packages. For instance, motions are captured with one software package, retargeted using another one, then edited using a third one, etc. The goal of PyNimation is therefore to bridge the gap in the animation pipeline between motion capture software and final game engines, by handling different types of motion capture data in a unified way, providing standard and novel motion editing solutions, and exporting motion capture data in formats compatible with common 3D game engines (e.g., Unity, Unreal). Its goal is also to support our research efforts in this area; it is therefore used, maintained, and extended to progressively include novel motion editing features, as well as to integrate the results of our research projects. In the short term, our goal is to further extend its capabilities and to share it more widely with the animation/research community.

-

Functional Description:

PyNimation is a framework for editing, visualizing and studying skeletal 3D animations. It was designed in particular to process motion capture data. It stems from the wish to utilize Python’s data science capabilities and ease of use for human motion research.

In its version 1.0, PyNimation offers the following functionalities, which aim to evolve with the development of the tool:

- Import / Export of FBX, BVH, and MVNX animation file formats

- Access and modification of skeletal joint transformations, as well as a certain number of functionalities to manipulate these transformations

- Basic features for human motion animation (under development, but including e.g. different methods of inverse kinematics, editing filters, etc.)

- Interactive visualisation in OpenGL for animations and objects, including the possibility to animate skinned meshes

- URL:

-

Authors:

Ludovic Hoyet, Robin Adili, Benjamin Niay, Alberto Jovane

-

Contact:

Ludovic Hoyet

7.1.8 The Theater

-

Keywords:

3D animation, Interactive Scenarios

-

Functional Description:

The Theater is a software framework to develop interactive scenarios in virtual 3D environments. The framework provides means to author and orchestrate 3D character behaviors and simulate them in real time. The tool provides a basis to build a range of 3D applications, from simple simulations with reactive behaviors to complex storytelling applications including narrative mechanisms such as flashbacks.

-

Contact:

Marc Christie

-

Participant:

Marc Christie

7.2 New platforms

7.2.1 Immerstar Platform

Participants: Georges Dumont [contact], Ronan Gaugne, Anthony Sorel, Richard Kulpa.

With the two virtual reality platforms, Immersia and Immermove, grouped under the name Immerstar, the team has access to high-level scientific facilities. This equipment benefits the research teams of the center and has allowed them to extend their local, national and international collaborations. The Immerstar platform was granted Inria CPER funding for 2015-2019, which enabled important evolutions of the equipment. The first technical evolutions were decided in 2016 and implemented in 2017. On the one hand, for Immermove, a third face was added to the immersive space and the Vicon tracking system was extended, an extension that continued this year with 23 new cameras. On the other hand, for Immersia, WQXGA laser projectors with increased global resolution, a new tracking system with a higher frequency, and new computers for simulation and image generation were installed in 2017. In 2018, a Scale One haptic device was installed. As planned in the CPER proposal, it allows one- or two-handed haptic feedback in the full space covered by Immersia, with the possibility of carrying the user. Building on this support, in 2020 we participated in a PIA3-Equipex+ proposal. This proposal, CONTINUUM, involves 22 partners, was successfully evaluated and will be funded. The CONTINUUM project will create a collaborative research infrastructure of 30 platforms located throughout France, to advance interdisciplinary research based on interaction between computer science and the human and social sciences. Thanks to CONTINUUM, 37 research teams will develop cutting-edge research programs focusing on visualization, immersion, interaction and collaboration, as well as on human perception, cognition and behaviour in virtual/augmented reality, with potential impact on societal issues. 
CONTINUUM enables a paradigm shift in the way we perceive, interact, and collaborate with complex digital data and digital worlds by putting humans at the center of the data processing workflows. The project will empower scientists, engineers and industry users with a highly interconnected network of high-performance visualization and immersive platforms to observe, manipulate, understand and share digital data, real-time multi-scale simulations, and virtual or augmented experiences. All platforms will feature facilities for remote collaboration with other platforms, as well as mobile equipment that can be lent to users to facilitate onboarding. The kick-off meeting of CONTINUUM was held on January 14th, 2022. A global meeting was held on July 5th and 6th, 2022.

8 New results

8.1 Outline

In 2023, MimeTIC has maintained its activity in motion analysis, modelling and simulation, to support the idea that these approaches are strongly coupled in a motion analysis-synthesis loop. This idea has been applied to the main application domains of MimeTIC:

- Animation, Autonomous Characters and Digital Storytelling,

- Motion sensing of Human Activity,

- Sports,

- Ergonomics,

- Locomotion and Interactions Between Walkers.

8.2 Animation, Autonomous Characters and Digital Storytelling

MimeTIC's main research path consists in associating motion analysis and synthesis to enhance naturalness in computer animation, with applications in camera control, movie previsualisation, and autonomous virtual character control. Thus, we pushed example-based techniques in order to reach a good trade-off between simulation efficiency and naturalness of the results. In 2022, to achieve this goal, MimeTIC continued to explore the use of perceptual studies and model-based approaches, but also began to investigate deep learning to generate plausible behaviors.

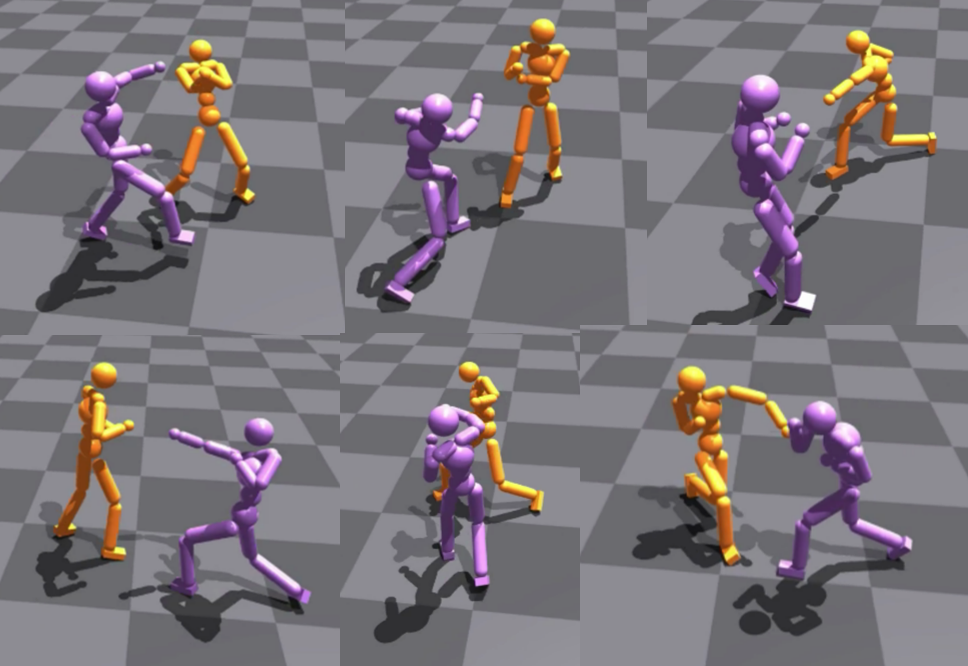

8.2.1 MAAIP: Multi-Agent Adversarial Interaction Priors for imitation from fighting demonstrations for physics-based characters

Participants: Mohamed Younes, Franck Multon [contact], Richard Kulpa.

Simulated shadowboxing interactions between two physics-based characters.

Simulating realistic interactions and motions for physics-based characters is of great interest for interactive applications and for automatic secondary character animation in the movie and video game industries. Recent works in reinforcement learning have proposed impressive results for single-character simulation, especially those that use imitation-learning-based techniques. However, imitating the motions of multiple characters also requires modeling their interactions. In this paper, we propose a novel approach based on Multi-Agent Generative Adversarial Imitation Learning that generalizes the idea of motion imitation for one character to deal with both the interactions and the motions of multiple physics-based characters [25]. Two unstructured datasets are given as inputs: 1) a single-actor dataset containing motions of a single actor performing a set of motions linked to a specific application, and 2) an interaction dataset containing a few examples of interactions between multiple actors. Based on these datasets, our system trains control policies allowing each character to imitate the interactive skills associated with each actor, while preserving the intrinsic style. This approach has been tested on two different fighting styles, boxing and full-body martial art, to demonstrate the ability of the method to imitate different styles.

8.3 Motion Sensing of Human Activity

MimeTIC has long experience in motion analysis in laboratory conditions. In the MimeTIC project, we proposed to explore how these approaches could be transferred to ecological situations, with a lack of control over the experimental conditions. In 2022, we continued to explore the use of deep learning techniques to capture human performance based on simple RGB or depth images. We also continued exploring how to customize complex musculoskeletal models with simple calibration processes. We also investigated the use of machine learning to access parameters that could not be measured directly.

8.3.1 Evaluation of hybrid deep learning and optimization method for 3D human pose and shape reconstruction in simulated depth images

Participants: Adnane Boukhayma, Franck Multon [contact].

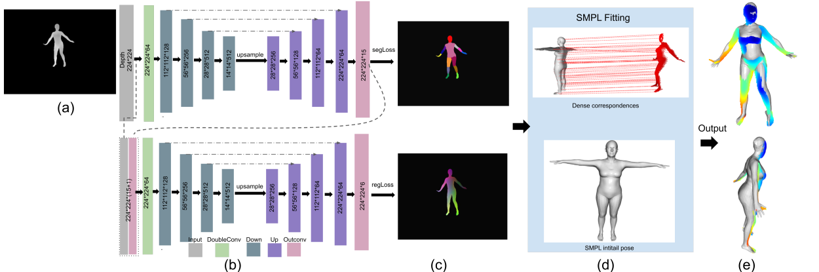

a) Input depth image; b) DoubleUnet, two stacked Unets that infer segmentation and color map regression; c) embedded color: the first three channels encode the human part, the last three channels encode the pixel normalized distance; d) SMPL fitting; e) Output: 3D human shape.

In 2022, we addressed the problem of capturing both the shape and the pose of a character using a single depth sensor. Some previous works proposed to fit a parametric generic human template in the depth image, while others developed deep learning (DL) approaches to find the correspondence between depth pixels and vertices of the template. In this paper, we explore the possibility of combining these two approaches to benefit from their respective advantages. The hypothesis is that DL dense correspondence should provide more accurate information to template model fitting, compared to previous approaches which only use estimated joint positions. Thus, we stacked a state-of-the-art DL dense correspondence method (namely double U-Net) and parametric model fitting (namely SMPLify-X). The experiments on the SURREAL [1] and DFAUST [2] datasets and a subset of AMASS [3] show that this hybrid approach enables us to enhance pose and shape estimation compared to using DL or model fitting separately. This result opens new perspectives in pose and shape estimation in many applications where complex or invasive motion capture set-ups are impossible, such as sports, dance, ergonomic assessment, etc.

In 2023, we evaluated this method more deeply, including its ability to segment the background in complex simulated depth images [24]. Results show that this hybrid approach enables us to enhance pose and shape estimation compared to using DL or model fitting separately. We also evaluated the ability of the DL-based dense correspondence method to segment the background, and not only the body parts. We also evaluated 4 different methods to perform the model fitting based on a dense correspondence, where the number of available 3D points differs from the number of corresponding template vertices. These two results enabled us to better understand how to combine DL and model fitting, and the potential limits of this approach when dealing with real depth images. Future works could explore the potential of taking temporal information into account, which has proven to increase the accuracy of pose and shape reconstruction based on a single depth or RGB image.

This work was part of the European project SCHEDAR, funded by ANR, and led by Cyprus University. This work was performed in collaboration with University of Reims Champagne Ardennes.

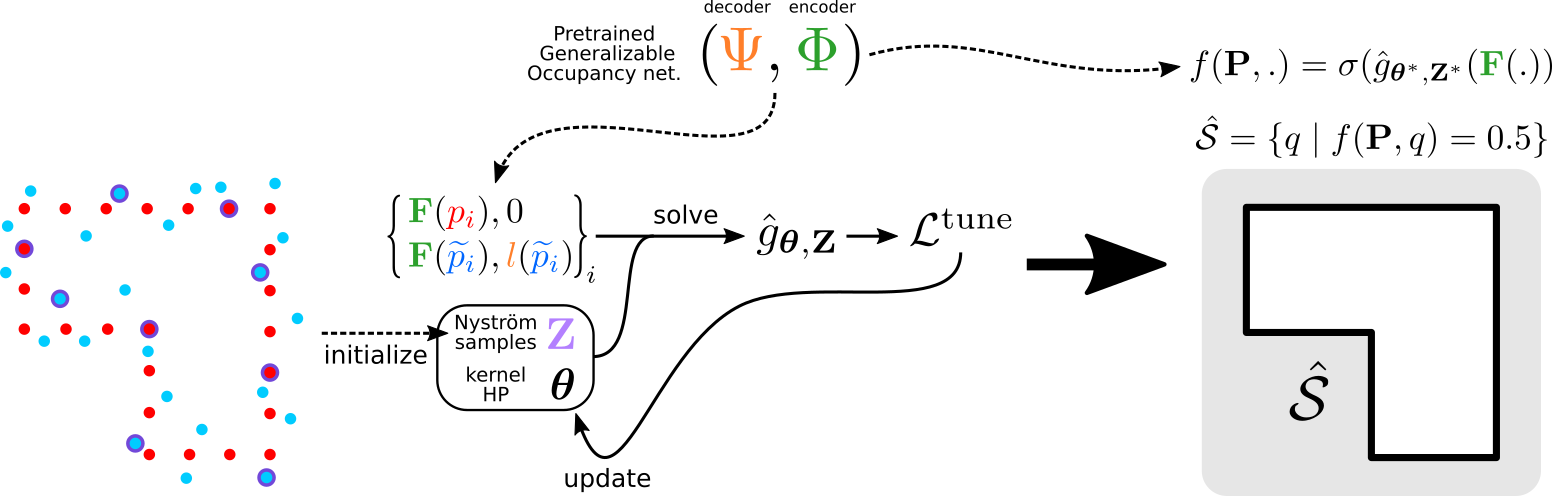

8.3.2 Robustifying Generalizable Implicit Shape Networks with a Tunable Non-Parametric Model

Participants: Adnane Boukhayma [contact], Amine Ouasfi.

Feedforward generalizable models for implicit shape reconstruction from unoriented point clouds present multiple advantages, including high performance and inference speed. However, they still suffer from generalization issues, ranging from underfitting the input point cloud to misrepresenting samples outside of the training data distribution, or with topologies unseen at training. We propose here an efficient mechanism to remedy some of these limitations at test time. We combine the inter-shape data prior of the network with an intra-shape regularization prior of a Nyström Kernel Ridge Regression, that we further adapt by fitting its hyperparameters to the current shape. The resulting shape function, defined in a shape-specific Reproducing Kernel Hilbert Space, benefits from desirable stability and efficiency properties and grants a shape-adaptive expressiveness-robustness trade-off. We demonstrate the improvement obtained through our method with respect to baselines and the state-of-the-art using synthetic and real data.
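
For readers unfamiliar with the regularizer mentioned above, the generic textbook form of Nyström Kernel Ridge Regression can be written as follows. This is the standard formulation, given here as background and not necessarily the exact variant used in the paper. The symbols are assumptions introduced for illustration: $n$ input points $x_i$ with target values $y_i$, $m \ll n$ landmark points $z_j$, a kernel $k_\theta$ with hyperparameters $\theta$, and a ridge weight $\lambda > 0$.

$$
f(x) = \sum_{j=1}^{m} \alpha_j \, k_\theta(x, z_j),
\qquad
\alpha = \left( K_{nm}^{\top} K_{nm} + \lambda n \, K_{mm} \right)^{\dagger} K_{nm}^{\top} y,
$$

$$
(K_{nm})_{ij} = k_\theta(x_i, z_j), \qquad (K_{mm})_{ij} = k_\theta(z_i, z_j).
$$

The coefficients $\alpha$ minimize $\frac{1}{n} \lVert K_{nm}\alpha - y \rVert^2 + \lambda \, \alpha^{\top} K_{mm} \alpha$, so only an $m \times m$ system has to be solved. In this generic setting, fitting the hyperparameters to the current shape corresponds to choosing $\theta$ and $\lambda$ per shape.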

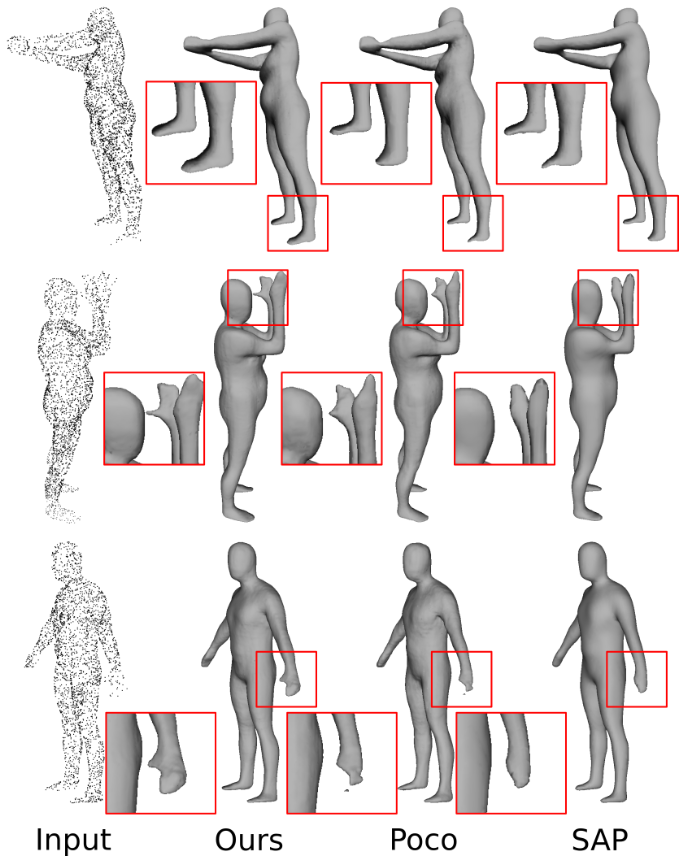

8.3.3 Mixing-Denoising Generalizable Occupancy Networks

Participants: Adnane Boukhayma [contact], Amine Ouasfi.

While current state-of-the-art generalizable implicit neural shape models rely on the inductive bias of convolutions, it is still not entirely clear how properties emerging from such biases are compatible with the task of 3D reconstruction from point cloud. We explore an alternative approach to generalizability in this context. We relax the intrinsic model bias (i.e. using MLPs to encode local features as opposed to convolutions) and constrain the hypothesis space instead with an auxiliary regularization related to the reconstruction task, i.e. denoising. The resulting model is the first only-MLP locally conditioned implicit shape reconstruction from point cloud network with fast feed forward inference. Point cloud borne features and denoising offsets are predicted from an exclusively MLP-made network in a single forward pass. A decoder predicts occupancy probabilities for queries anywhere in space by pooling nearby features from the point cloud order-invariantly, guided by denoised relative positional encoding. We outperform the state-of-the-art convolutional method while using half the number of model parameters.

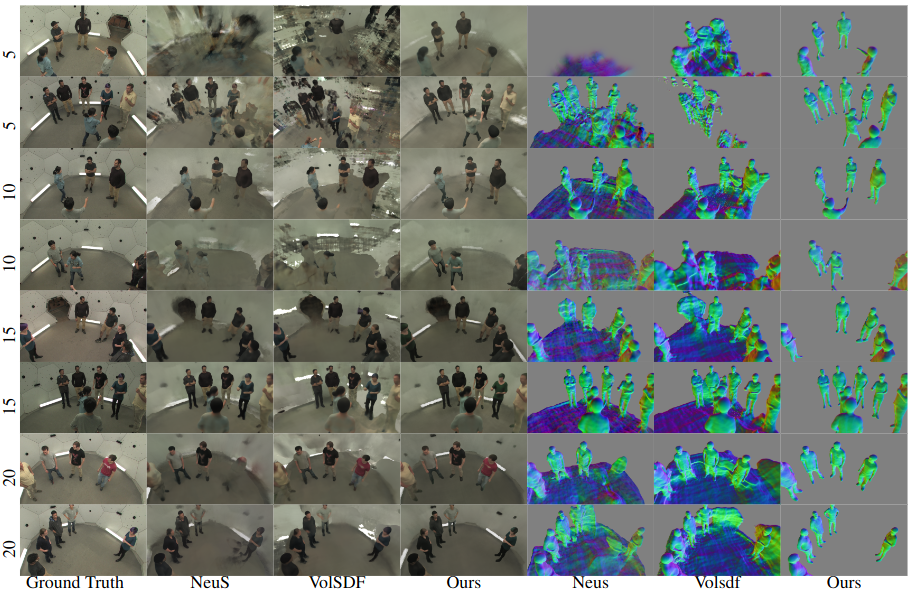

8.3.4 Few-Shot Multi-Human Neural Rendering Using Geometry Constraints

Participants: Adnane Boukhayma [contact], Qian Li, Franck Multon.

We present a method for recovering the shape and radiance of a scene consisting of multiple people given solely a few images. Multi-human scenes are complex due to additional occlusion and clutter. For single-human settings, existing approaches using implicit neural representations have achieved impressive results that deliver accurate geometry and appearance. However, it remains challenging to extend these methods for estimating multiple humans from sparse views. We propose a neural implicit reconstruction method that addresses the inherent challenges of this task through the following contributions: First, we use geometry constraints by exploiting pre-computed meshes using a human body model (SMPL). Specifically, we regularize the signed distances using the SMPL mesh and leverage bounding boxes for improved rendering. Second, we created a ray regularization scheme to minimize rendering inconsistencies, and a saturation regularization for robust optimization in variable illumination. Extensive experiments on both real and synthetic datasets demonstrate the benefits of our approach and show state-of-the-art performance against existing neural reconstruction methods.

8.3.5 Contact-conditioned hand-held object reconstruction from single-view images

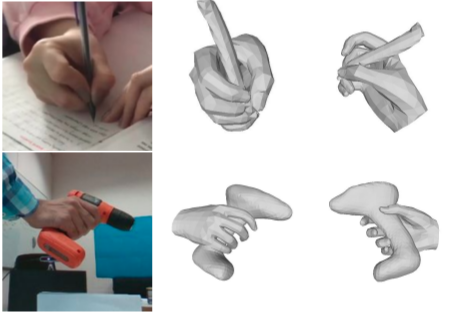

Participants: Adnane Boukhayma [contact].

Reconstructing the shape of hand-held objects from single-view color images is a long-standing problem in computer vision and computer graphics. The task is complicated by the ill-posed nature of single-view reconstruction, as well as potential occlusions due to both the hand and the object. Previous works mostly handled the problem by utilizing known object templates as priors to reduce the complexity. In contrast, our paper proposes a novel approach without knowing the object templates beforehand but by exploiting prior knowledge of contacts in hand-object interactions to train an attention-based network that can perform precise hand-held object reconstructions with only a single forward pass in inference. The network we propose encodes visual features together with contact features using a multi-head attention module as a way to condition the training of a neural field representation. This neural field representation outputs a Signed Distance Field representing the reconstructed object and extensive experiments on three well-known datasets demonstrate that our method achieves superior reconstruction results even under severe occlusion compared to the state-of-the-art techniques.

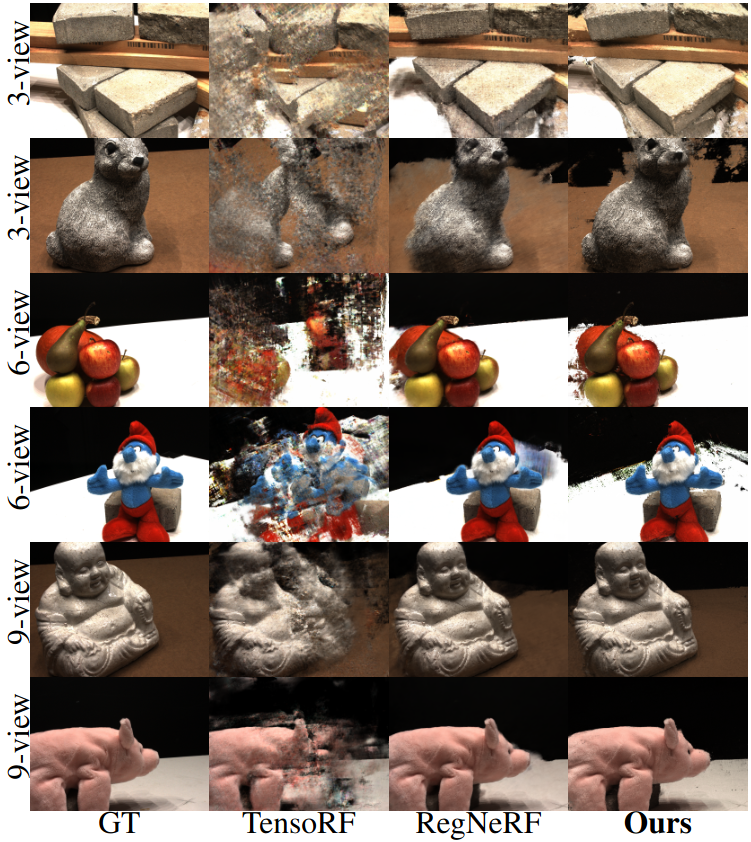

8.3.6 Regularizing Neural Radiance Fields from Sparse RGBD Inputs

Participants: Adnane Boukhayma [contact], Qian Li, Franck Multon.

This paper aims at improving neural radiance fields (NeRF) from sparse inputs. NeRF achieves photo-realistic renderings when given dense inputs, while its performance drops dramatically as the number of training views decreases. Our insight is that the standard volumetric rendering of NeRF is prone to over-fitting due to the lack of overall geometry and local neighborhood information from limited inputs. To address this issue, we propose a global sampling strategy with a geometry regularization that uses warped images as augmented pseudo-views to encourage geometry consistency across views. In addition, we introduce a local patch sampling scheme with a patch-based regularization for appearance consistency. Furthermore, our method exploits depth information for explicit geometry regularization. The proposed approach outperforms existing baselines on the real-world DTU benchmark from sparse inputs and achieves state-of-the-art results.
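As an illustration of the volumetric rendering that these regularizers act on, the following minimal sketch composites densities and colors along a single ray with the standard NeRF quadrature, and compares the rendered depth with a sensor depth. The function names, the handling of the last sample interval, and the squared-error form of the depth term are assumptions made for this example; they are not taken from the paper.

```python
import math


def render_ray(sigmas, colors, t_vals):
    """Composite one ray with the standard NeRF quadrature.

    sigmas: densities at the samples, colors: (r, g, b) tuples,
    t_vals: increasing sample depths along the ray (at least two).
    """
    # Interval lengths between samples; the last interval reuses the
    # previous length (an assumption of this sketch).
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(len(t_vals) - 1)]
    deltas.append(deltas[-1])

    transmittance = 1.0  # probability that the ray reaches the current sample
    weights = []
    for sigma, delta in zip(sigmas, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this interval
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha

    color = [sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3)]
    depth = sum(w * t for w, t in zip(weights, t_vals))  # expected depth
    return color, depth, weights


def depth_regularizer(rendered_depth, sensor_depth):
    """Hypothetical depth term: squared error against an RGBD measurement."""
    return (rendered_depth - sensor_depth) ** 2
```

With sparse views, many density fields reproduce the training pixels equally well; a depth term of this kind penalizes those whose expected ray termination disagrees with the measured depth.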

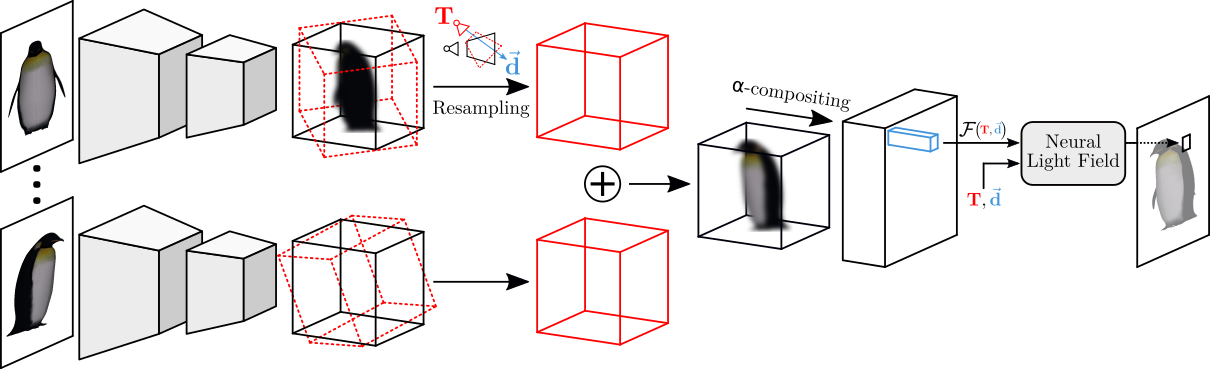

8.3.7 Learning Generalizable Light Field Networks from Few Images

Participants: Adnane Boukhayma [contact], Qian Li, Franck Multon.

We explore a new strategy for few-shot novel view synthesis based on a neural light field representation. Given a target camera pose, an implicit neural network maps each ray to its target pixel color directly. The network is conditioned on local ray features generated by coarse volumetric rendering from an explicit 3D feature volume. This volume is built from the input images using a 3D ConvNet. Our method achieves competitive performance on synthetic and real MVS data with respect to state-of-the-art neural radiance field based competitors, while offering rendering that is 100 times faster.

8.3.8 Few 'Zero Level Set'-Shot Learning of Shape Signed Distance Functions in Feature Space

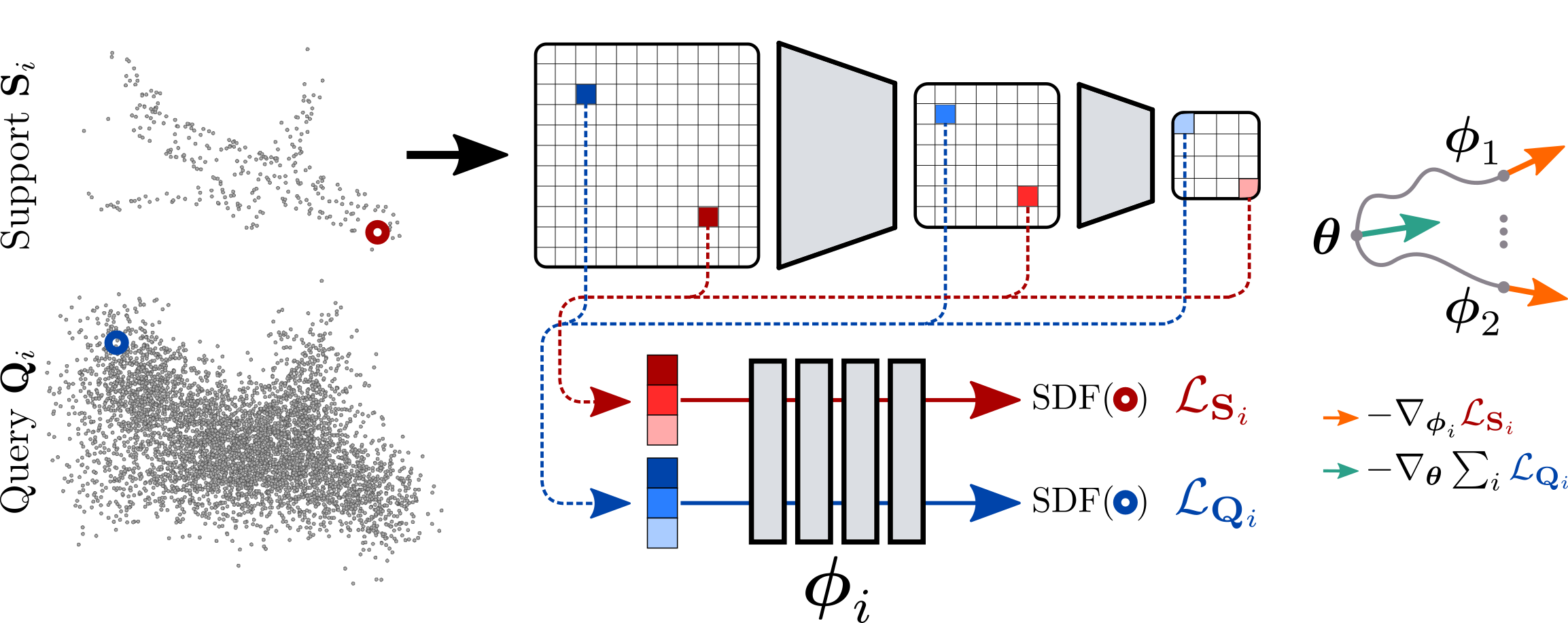

Participants: Adnane Boukhayma [contact], Amine Ouasfi.

We explore a new idea for learning-based shape reconstruction from a point cloud, based on the recently popularized implicit neural shape representations. We cast the problem as a few-shot learning of implicit neural signed distance functions in feature space, which we approach using gradient-based meta-learning. We use a convolutional encoder to build a feature space given the input point cloud. An implicit decoder learns to predict signed distance values given points represented in this feature space. Setting the input point cloud, i.e. samples from the target shape function's zero level set, as the support (i.e. context) in few-shot learning terms, we train the decoder such that it can adapt its weights to the underlying shape of this context with a few (5) tuning steps. We thus combine two types of implicit neural network conditioning mechanisms simultaneously for the first time, namely feature encoding and meta-learning. Our numerical and qualitative evaluation shows that in the context of implicit reconstruction from a sparse point cloud, our proposed strategy, i.e. meta-learning in feature space, outperforms existing alternatives, namely standard supervised learning in feature space, and meta-learning in Euclidean space, while still providing fast inference.

Overview of our method. Our input is a sparse point cloud (the support) and our output is an implicit neural SDF, modeled by a neural network comprised of a convolutional encoder (top in gray) and an MLP decoder (bottom in gray). The decoder predicts SDF values for 3D points (red/blue circles) through their spatially sampled features (squares in shades of red/blue) from the encoder's activation maps. Following a gradient-based few-shot learning algorithm (MAML), we learn a meta-decoder in encoder feature space whose meta-parameters can quickly adapt to a new shape, i.e. yield new shape-specific parameters, given its support. This is achieved by iterating per-shape 5-step adaptation gradient descent (orange arrow) using the support loss, and one-step meta gradient descent (green arrow) by back-propagating the query set loss, evaluated with the specialized parameters, w.r.t. the meta-parameters. At test time, 5 fine-tuning iterations are performed similarly, starting from the converged meta-model, to evaluate the adapted SDF.
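The two nested loops described above (5-step per-shape adaptation on the support, then one meta gradient step on the query loss) can be sketched on a deliberately tiny problem. This is a toy illustration only, under strong assumptions: the "decoder" is a single scalar weight fitting a line instead of an SDF, there is no encoder, and all names and learning rates are hypothetical. The scalar model is chosen because the gradient through the inner loop can be written exactly without an automatic differentiation library.

```python
def mse(w, xs, ys):
    """Mean squared error of the scalar linear model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad_mse(w, xs, ys):
    """Derivative of mse with respect to w."""
    return sum(2.0 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def adapt(w_meta, support, inner_lr=0.02, steps=5):
    """Inner loop: a few gradient steps on the support loss of one task."""
    xs, ys = support
    w = w_meta
    for _ in range(steps):
        w -= inner_lr * grad_mse(w, xs, ys)
    return w


def meta_step(w_meta, tasks, inner_lr=0.02, meta_lr=0.5, steps=5):
    """Outer loop: one gradient step on the query loss of the adapted weights."""
    meta_grad = 0.0
    for support, query in tasks:
        w_task = adapt(w_meta, support, inner_lr, steps)
        xs, _ = support
        # For this scalar model every inner step is affine in w, so the
        # derivative of w_task w.r.t. w_meta is exactly this factor.
        mean_x2 = sum(x * x for x in xs) / len(xs)
        jac = (1.0 - 2.0 * inner_lr * mean_x2) ** steps
        meta_grad += grad_mse(w_task, *query) * jac
    return w_meta - meta_lr * meta_grad / len(tasks)
```

In a real setting the inner-loop Jacobian is obtained by back-propagating through the adaptation steps; the closed form used here only holds for this toy model.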

8.3.9 Pressure insoles assessment for external forces prediction

Participants: Pauline Morin, Georges Dumont [contact], Charles Pontonnier [contact].

Force platforms generally constrain the analysis of human movement to the laboratory. Promising methods for estimating ground reaction forces and moments (GRF&M) can overcome this limitation. The most effective family of methods consists of minimizing a cost, constrained by the subject's dynamic equilibrium, to distribute the force over the contact surface on the ground. The detection of contact surfaces over time depends on numerous parameters. In this work we evaluated two contact detection methods: the first based on foot kinematics and the second based on pressure insole data. Optimal parameters for these two methods were identified for walking, running, and sidestep cut tasks. The results show that a single threshold in position or velocity is sufficient to guarantee a good estimate. Using pressure insole data to detect contact improves the estimation of the position of the center of pressure (CoP). Both methods demonstrated a similar level of accuracy in estimating ground reaction forces 17.
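The two families of contact detection compared above can be sketched as simple thresholding rules. The following is a minimal illustration, assuming a height threshold on a foot marker and a total-pressure threshold on the insole cells; the function names, threshold values and units are hypothetical and are not the optimal parameters identified in the study. The center of pressure is computed as the pressure-weighted centroid of the cell positions.

```python
def contact_from_height(marker_heights, max_height=0.03):
    """Kinematic detection: contact when a foot marker is close to the ground.

    marker_heights: one height per frame (metres, assumed unit).
    """
    return [h <= max_height for h in marker_heights]


def contact_from_pressure(frames, min_total=20.0):
    """Insole detection: contact when the summed cell pressure is high enough.

    frames: one list of cell pressures per frame (arbitrary units).
    """
    return [sum(frame) >= min_total for frame in frames]


def center_of_pressure(cell_positions, pressures):
    """Pressure-weighted centroid of the insole cells, or None if unloaded."""
    total = sum(pressures)
    if total <= 0.0:
        return None
    x = sum(p * pos[0] for pos, p in zip(cell_positions, pressures)) / total
    y = sum(p * pos[1] for pos, p in zip(cell_positions, pressures)) / total
    return (x, y)
```

The kinematic rule only indicates whether the foot is in contact, whereas the insole also gives the pressure distribution, which is consistent with the improved CoP estimate reported above.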

8.4 Sports

MimeTIC promotes the idea of coupling motion analysis and synthesis in various domains, especially sports. More specifically, we have long experience and international leadership in using Virtual Reality for analyzing and training sports performance. In 2022, we continued to explore how to enhance the use of VR to design original training systems. More specifically, we addressed the problem of early motion recognition to make a virtual opponent react to the user's action before it ends. We also worked on the behavioral animation of the virtual athletes. Finally, we used VR as a means to analyze perception in sports, or to train anticipation skills by introducing visual artifacts in the VR experience.

We also initiated some simulation work to better characterize the interaction between users and their physical environment, such as interactions between swimmers and diving boards.

8.4.1 VR for training perceptual-motor skills of boxers and relay runners for Paris 2024 Olympic games

Participants: Richard Kulpa [contact], Annabelle Limballe.

The revolution in digital technologies, and in particular Virtual Reality, in the field of sport has opened up new perspectives for the creation of new modalities for analyzing and training the skills underlying performance. Virtual Reality allows for the standardization, control and variation (even beyond real conditions) of stimuli while simultaneously quantifying performance. This provides the opportunity to offer specific training sessions, complementary to traditional ones. In addition, in order to continuously improve their performance, athletes need to train more and more, but they may reach their physical limits. Virtual Reality can create new training modalities that allow them to continue training while minimising the risk of injury (for example, due to the repetition of high-intensity work in races for a 4x100m relay or due to the impacts of defensive training in boxing). It may also be relevant for injured athletes who cannot physically practice their discipline but need to continue to train perceptually and cognitively by confronting field situations. In a series of publications, we described how Virtual Reality is effectively implemented in the French Boxing and Athletics federations to train athletes' anticipation skills in their preparation for the Paris 2024 Olympic Games. In the 4x100m relay 32, 35, the team's performance partly depends on the athletes' ability to synchronize their movements and therefore initiate their race at the right moment, before the partner arrives in the relay transmission zone, despite the pressure exerted by the opponents. The Virtual Reality training protocols are therefore designed to train each athlete to initiate his or her race at the right moment, with a tireless and always available avatar, based on the motion capture of real sprinters, whose race characteristics can be configured in terms of speed, lane, curvature, gender, etc.
In boxing 33, the federation wants to improve boxers' anticipation skills in defensive situations without exposing them to repeated blows that could injure them, which is impossible in real training. Virtual Reality training protocols allow boxers to focus on the appropriate information on the opponent, which should enable them to anticipate attacks and adopt the relevant parry. These works show how these different challenges can be addressed in the REVEA project through the deployment of an interdisciplinary research programme.

8.4.2 Acceptance of VR training tools in high-level sport

Participants: Richard Kulpa [contact].

Under certain conditions, immersive virtual reality (VR) has shown its effectiveness in improving sport performance. However, the psychological impact of VR on athletes is often overlooked, even though it can be deleterious (e.g., decreased performance, stopping the use of VR). We have recently highlighted a significant intention of athletes to use a VR Head Mounted Display (VR-HMD) designed to increase their sport performance 34, 40. Whatever their level, before a first use they all considered it quite useful (except for recreational athletes), quite easy to use, and quite pleasant to use. Coaches are also concerned by the use of the VR-HMD: if athletes accept the VR-HMD but coaches do not, there is a risk that the VR-HMD will never be used despite its potential benefits. In this context and based on the Technology Acceptance Model, a second study aimed at identifying possible blockages by measuring coaches’ acceptance of the VR-HMD device before the first use 39. A total of 239 coaches, from different sports and from local to international level, filled out a questionnaire assessing perceived usefulness to improve training, perceived usefulness to improve athletes’ performance, perceived ease of use, perceived enjoyment, job relevance, and coaches’ intention to use it. Structural equation modeling analysis, one-sample t-tests, and one-way ANOVAs were used to examine the data.
The main results show that (1) coaches’ intention to use the VR-HMD is positively predicted by perceived usefulness to improve athletes’ performance, perceived enjoyment, and job relevance, but not by perceived ease of use, (2) coaches significantly consider the VR-HMD useful to include in their training and to improve their athletes’ performance, easy to use, pleasant to use, and relevant for their job, and (3) no significant differences appear on the previous scores according to coaches’ levels, except for job relevance: international and national coaches find the VR-HMD more relevant to their job than local level coaches. All these results highlight that the VR-HMD is rather well accepted by the coaches before a first use.
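For readers unfamiliar with the one-sample t-test mentioned above, the statistic can be computed as in the following minimal sketch. The choice of reference value (for instance the midpoint of the questionnaire scale) is an assumption of this example, since the comparison value used in the study is not stated here; the function name is hypothetical.

```python
import math
import statistics


def one_sample_t(scores, reference):
    """t statistic of a one-sample t-test of mean(scores) against reference.

    Requires at least two scores with non-zero variance; the statistic has
    len(scores) - 1 degrees of freedom.
    """
    n = len(scores)
    mean = statistics.mean(scores)
    std_err = statistics.stdev(scores) / math.sqrt(n)  # sample std, n - 1
    return (mean - reference) / std_err
```

A large positive statistic indicates that the mean score lies significantly above the reference value, which is the sense in which coaches "significantly consider" the device useful.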

8.4.3 Multiple Players Tracking in Virtual Reality: Influence of Soccer Specific Trajectories and Relationship With Gaze Activity

Participants: Richard Kulpa [contact], Anthony Sorel, Annabelle Limballe, Benoit Bideau, Alexandre Vu.