2024 Activity report Project-Team BIOVISION

RNSR: 201622040S - Research center Inria Centre at Université Côte d'Azur

- Team name: Biologically plausible Integrative mOdels of the Visual system : towards synergIstic Solutions for visually-Impaired people and artificial visiON

- Domain: Digital Health, Biology and Earth

- Theme: Computational Neuroscience and Medicine

Keywords

Computer Science and Digital Science

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.9. User and perceptual studies

- A5.3. Image processing and analysis

- A5.4. Computer vision

- A5.5.4. Animation

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.8. Natural language processing

- A6.1.1. Continuous Modeling (PDE, ODE)

- A6.1.4. Multiscale modeling

- A6.1.5. Multiphysics modeling

- A6.2.4. Statistical methods

- A6.3.3. Data processing

- A7.1.3. Graph algorithms

- A9.4. Natural language processing

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B1.1.8. Mathematical biology

- B1.2. Neuroscience and cognitive science

- B1.2.1. Understanding and simulation of the brain and the nervous system

- B1.2.2. Cognitive science

- B1.2.3. Computational neurosciences

- B2.1. Well being

- B2.5.1. Sensorimotor disabilities

- B2.5.3. Assistance for elderly

- B2.7.2. Health monitoring systems

- B9.1.2. Serious games

- B9.3. Medias

- B9.5.2. Mathematics

- B9.5.3. Physics

- B9.6.8. Linguistics

- B9.9. Ethics

1 Team members, visitors, external collaborators

Research Scientists

- Bruno Cessac [Team leader, INRIA, Senior Researcher, HDR]

- Pierre Kornprobst [INRIA, Senior Researcher, HDR]

- Hui-Yin Wu [INRIA, ISFP]

Post-Doctoral Fellow

- Sebastian Santiago Vizcay [INRIA, Post-Doctoral Fellow, until Feb 2024]

PhD Students

- Alexandre Bonlarron [INRIA, until Sep 2024]

- Johanna Delachambre [UNIV COTE D'AZUR]

- Pauline Devictor [UNIV COTE D'AZUR, from Nov 2024]

- Jerome Emonet [INRIA]

- Franz Franco Gallo [INRIA]

- Sebastian Gallardo Diaz [INRIA, CIFRE]

- Erwan Petit [UNIV COTE D'AZUR]

- Florent Robert [UNIV COTE D'AZUR, until Sep 2024]

Technical Staff

- Clement Bergman [INRIA, Engineer, until May 2024]

Interns and Apprentices

- Gillian Arlabosse [TELECOM SUDPARIS, Intern, from Jul 2024 until Aug 2024]

- Pauline Devictor [UNIV COTE D'AZUR, Intern, from Mar 2024 until Sep 2024]

- Christos Kyriazis [INRIA, Intern, from Mar 2024 until Aug 2024]

- Christos Kyriazis [UNIV COTE D'AZUR, Intern, until Feb 2024]

- Laura Piovano [UNIV COTE D'AZUR, Intern, from Sep 2024]

- Laura Piovano [UNIV COTE D'AZUR, Intern, from Mar 2024 until May 2024]

Administrative Assistant

- Marie-Cecile Lafont [INRIA]

Visiting Scientists

- Francisco Miqueles Varela [UNIV CHILI, from Mar 2024 until May 2024]

- Jorge Portal Diaz [UNIV CHILI, from Mar 2024 until May 2024]

External Collaborators

- Aurélie Calabrese [CNRS]

- Eric Castet [CNRS]

2 Overall objectives

Vision is a key function to sense our world and perform complex tasks. It has high sensitivity and strong reliability, even though most of its input is noisy, changing, and ambiguous. A better understanding of how biological vision works opens up scientific challenges as well as promising technological, medical and societal breakthroughs. Fundamental questions, such as understanding how a visual scene is encoded by the retina into spike trains, transmitted to the visual cortex via the optic nerve through the thalamus, decoded in a fast and efficient way, and then turned into a sense of perception, offer perspectives in research and technological developments for current and future generations.

Vision is not always functional though. Sometimes, "something" goes wrong. Although many visual impairments such as myopia, hypermetropia, or cataract can be corrected by glasses, contact lenses, or other means like medicine or surgery, pathologies impairing the retina such as Age-Related Macular Degeneration (AMD) and Retinitis Pigmentosa (RP) cannot be fixed with these standard treatments 39. They result in a progressive degradation of vision (Figure 1), up to a stage of low vision (visual acuity of less than 6/18 to light perception, or a visual field of less than 10 degrees from the point of fixation) or blindness. Thus, people with low vision must learn to adjust to their pathologies. Progress in research and technology can help them. Considering the aging of the population in developed countries and its strong correlation with the prevalence of eye diseases, low vision has already become a major societal problem.

Figure depicts a picture of a person through the eyes of a person with CFL (a scotoma blurs the image).

Central blind spot (i.e., scotoma), as perceived by an individual suffering from Central Field Loss (CFL) when looking at someone's face.

In this context, the Biovision Team's research revolves around the central theme of biological vision and perception, and the impact of low vision conditions. Our strategy is based upon four cornerstones: to model, to assist diagnosis, to aid visual activities like reading, and to enable personalized content creation. We aim to develop fundamental research as well as technology transfer along three entangled axes of research:

- Axis 1: Modeling the retina and the primary visual system.

- Axis 2: Diagnosis, rehabilitation, and low-vision aids.

- Axis 3: Visual media analysis and creation.

These axes form a stable, three-pillared basis for our research activities, giving our team an original combination of expertise: modeling for the neurosciences, computer vision, Virtual and Augmented Reality (XR), and media analysis and creation. Our research themes require strong interactions with experimental neuroscientists, modelers, ophthalmologists and patients, constituting a large network of national and international collaborators. Biovision is therefore a strongly multi-disciplinary team. We publish in international journals and conferences in several fields including neuroscience, low vision, mathematics, physics, computer vision, multimedia, computer graphics, and human-computer interaction.

3 Research program

3.1 Axis 1 - Modeling the retina and the primary visual system.

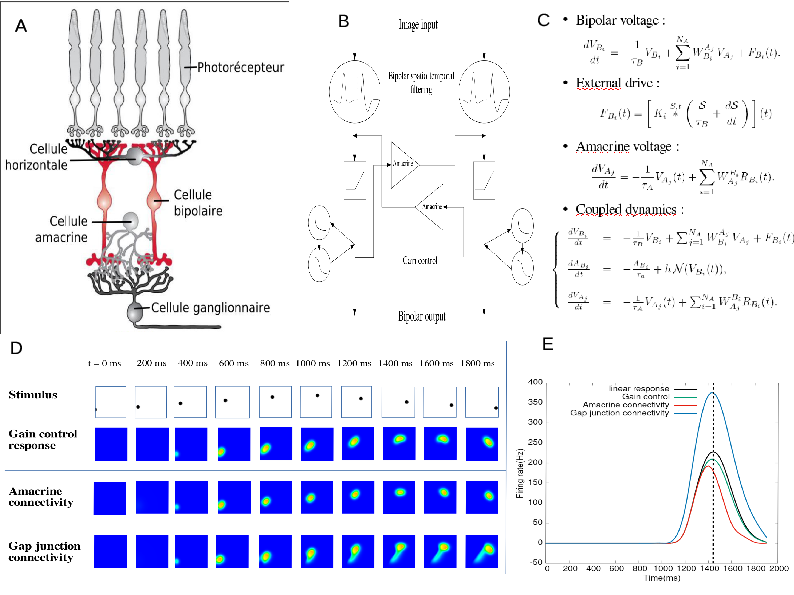

In collaboration with neuroscience labs, we derive phenomenological equations and analyze them mathematically by adopting methods from theoretical physics or mathematics (Figure 2). We also develop simulation platforms like Pranas or Macular, which help us compare theoretical predictions with numerical simulations, and allow researchers to perform in silico experimentation under conditions rarely accessible to experimentalists (such as simultaneously recording the retina layers and the primary visual cortex1 (V1)). Specifically, our research focuses on the modeling and mathematical study of:

- Multi-scale dynamics of the retina in the presence of spatio-temporal stimuli;

- Response to motion, anticipation and surprise in the early visual system;

- Spatio-temporal coding and decoding of visual scenes by spike trains;

- Retinal pathologies.

The process of retina modeling. A) Inspired by the retina structure in biology, we B) design a simplified architecture keeping the retina components that we want to better understand. C) From this we derive equations that we can study mathematically and/or with numerical simulations (D). E) An example: our retina model's response to a parabolic motion, where the peak of response resulting from amacrine cell connectivity or gain control is in advance with respect to the peak of response without these mechanisms. This illustrates retinal anticipation.

The process of retina modeling. A) The retina structure from biology. B) Designing a simplified architecture keeping the retina components that we want to better understand (here, the role of amacrine cells in motion anticipation). C) Deriving mathematical equations from A and B. D, E) Results from numerical and mathematical modeling. Here, we show our retina model's response to a parabolic motion, where the peak of response resulting from amacrine cell connectivity or gain control is in advance with respect to the peak of response without these mechanisms. This illustrates retinal anticipation.

3.2 Axis 2 - Diagnosis, rehabilitation, and low-vision aids.

In collaboration with low vision clinical centers and cognitive science labs, we develop computer science methods, open software and toolboxes to assist low vision patients (Figure 3), with a particular focus on Age-Related Macular Degeneration2. As AMD patients still have a plastic and functional vision in their peripheral visual field 45, they must develop efficient “Eccentric Viewing” (EV) to adapt to the central blind zone (scotoma) and to direct gaze away from the object they want to identify 54. Commonly proposed assistance tools involve visual rehabilitation methods 47 and visual aids that usually consist of magnifiers 40.

Our main research goals are:

- Understanding the relations between anatomo-functional and behavioral observations;

- Diagnosis from reading performance screening and oculomotor behavior analysis;

- Personalized and gamified rehabilitation for training eccentric viewing in VR;

- Personalized visual aid systems for daily living activities.

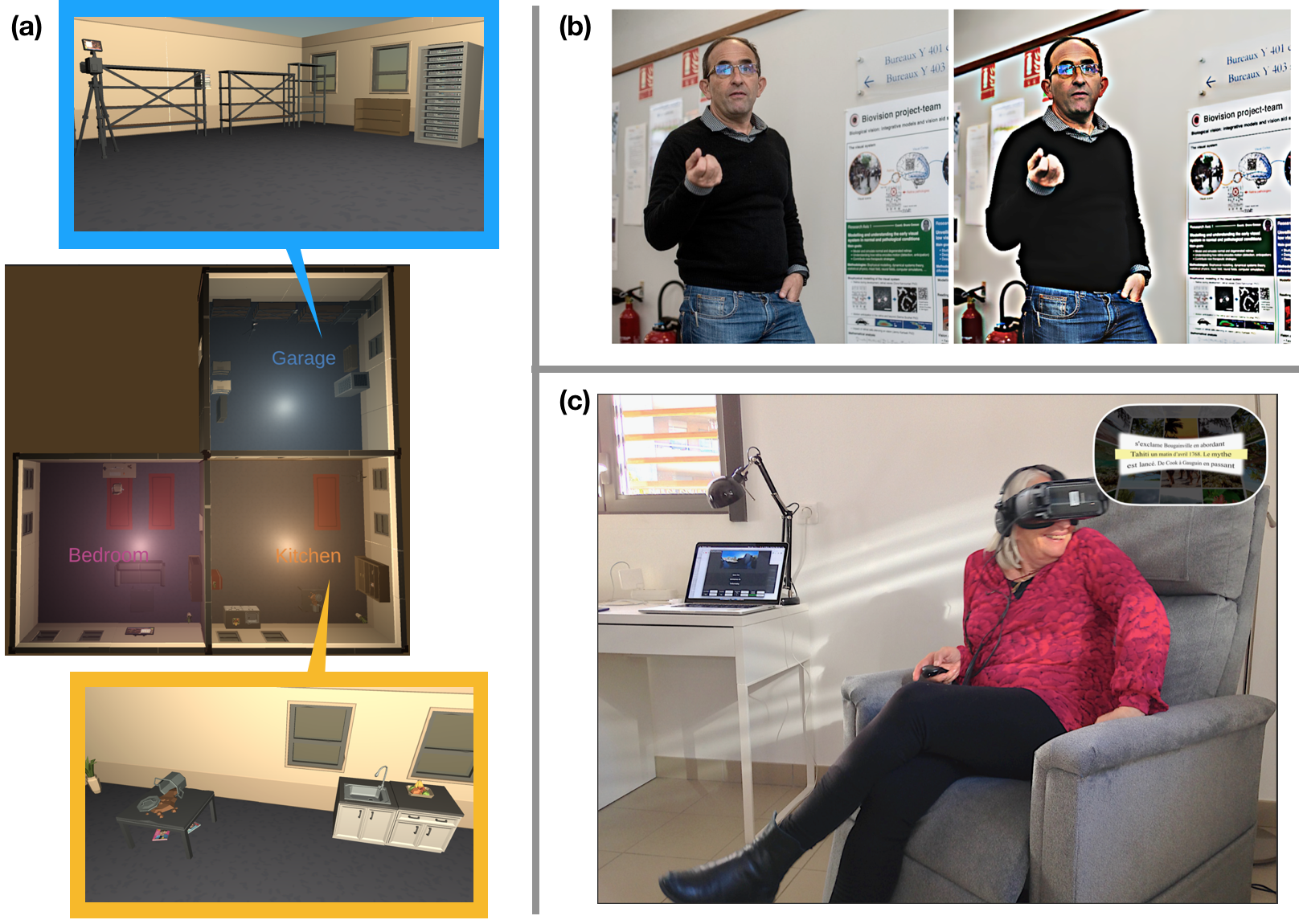

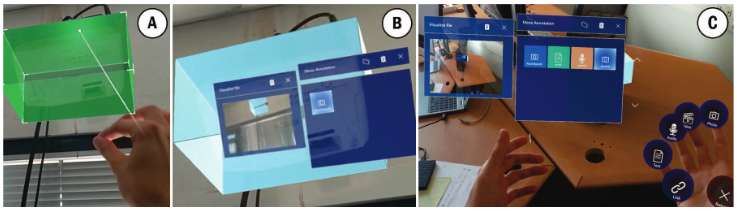


Multiple methodologies in graphics and image processing have applications towards low-vision technologies including (a) 3D virtual environments for studies of user perception and behavior, and creating 3D stimuli for model testing, (b) image enhancement techniques to magnify and increase visibility of contours of objects and people, and (c) personalization of media content such as text in 360 degrees visual space using VR headsets.

3.3 Axis 3 - Visual media analysis and creation.

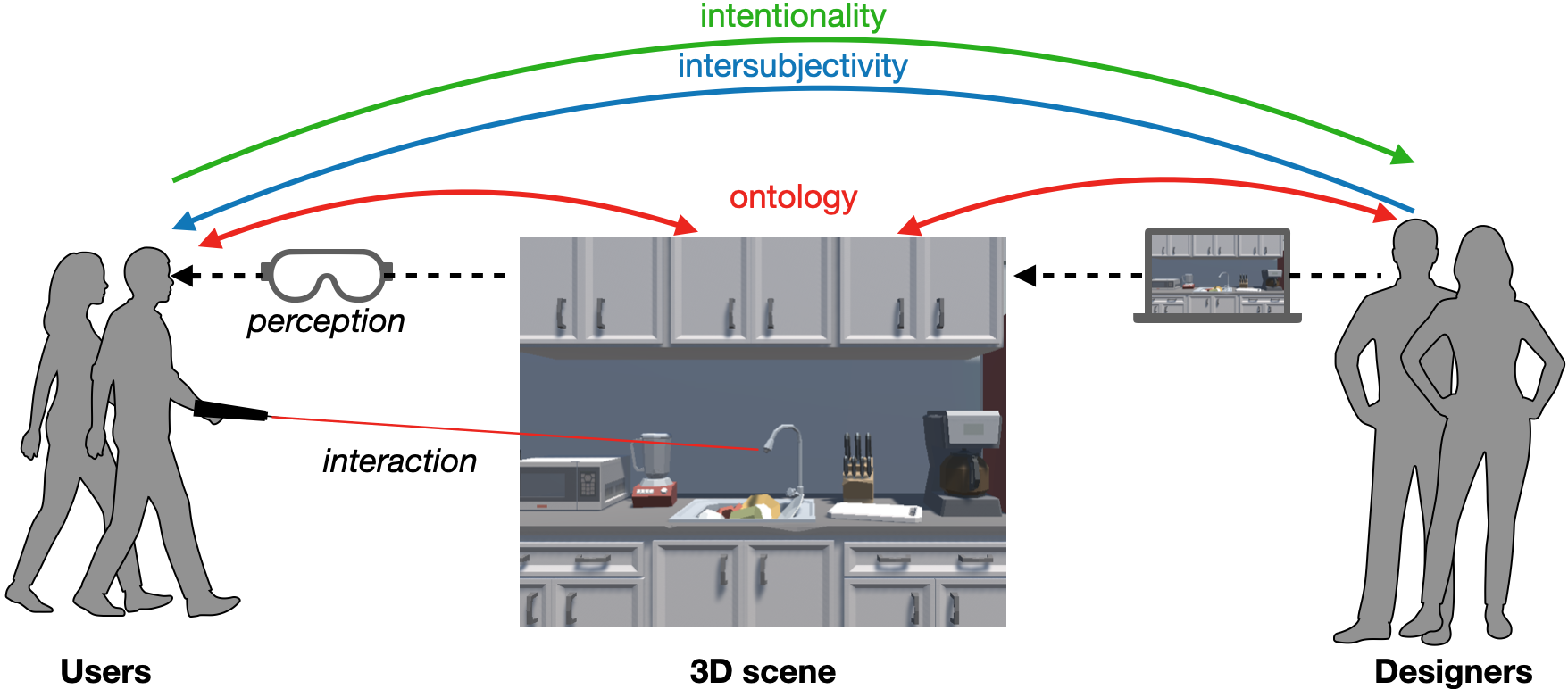

We investigate the impact of visual media design on user experience and perception, and propose assisted creativity tools for creating personalized and adapted media content (Figure 4). We employ computer vision and deep learning techniques for media understanding in film and in complex documents like newspapers. We deploy this understanding in new media platforms such as virtual and augmented reality for applications in low-vision training, accessible media design, and generation of 3D visual stimuli:

- Accessible / personalized media design for low vision training and reading platforms;

- Assisted creativity tools for 3D environments;

- Visual system and multivariate user behavior modeling in 3D contextual environments;

- Visual media understanding and gender representation in film.

On the left we have users, in the middle, the 3D scene, and on the right designers. Designers create the 3D scene through a computer interface and users interact with it through headsets and controllers. We see three main gaps of perception: ontology between the scene and its various users, intersubjectivity when communicating interactive possibilities between the designer and the user, and intentionality when designers analyze user intentions.

4 Application domains

4.1 Applications of low-vision studies

- Cognitive research: Virtual reality technology represents a new opportunity to conduct cognitive and behavioral research in virtual environments where all parameters can be psychophysically controlled. In the scope of ANR DEVISE, we are currently developing and using the PTVR software (Perception Toolbox for Virtual Reality) 16 to develop our own experimental protocols to study low vision. However, we believe that the potential of PTVR is much larger as it could be useful to any researcher familiar with Python programming willing to create and analyze a sophisticated experiment in VR with parsimonious code.

- Serious games: Serious games use game mechanics in order to achieve goals such as in training, education, or awareness. In our context, we want to explore serious games as a way to help low-vision patients in performing rehabilitation exercises. Virtual and augmented reality technology is a promising platform to develop such rehabilitation exercises targeted to specific pathologies due to their potential to create fully immersive environments, or inject additional information in the real world. For example, with Age-Related Macular Degeneration (AMD), our objective is to propose solutions allowing rehabilitation of visuo-perceptual-motor functions to optimally use residual portions of the peripheral retina defined from anatomo-functional exams 43.

- Vision aid-systems: A variety of aids for low-vision people are already on the market. They use various kinds of desktop (e.g., CCTVs), handheld (mobile applications), or wearable (e.g. OxSight, Helios) technologies, and offer different functionalities including magnification, image enhancement, text to speech, face and object recognition. Our goal is to design new solutions allowing autonomous interaction primarily using mixed reality – virtual and augmented reality. This technology could offer new affordable solutions developed in synergy with rehabilitation protocols to provide personalized adaptations and guidance.

4.2 Applications of vision modeling studies

- Neuroscience research. Performing in silico experiments is a way to reduce experimental costs, to test hypotheses and design models, and to test algorithms. Our goal is to develop a large-scale simulation platform of the normal and impaired retinas. This platform, called Macular, allows one to test hypotheses on retinal functions in normal vision (such as the role of amacrine cells in motion anticipation 58, or the expected effects of pharmacology on retina dynamics 44). It is also designed to mimic specific degeneracies or pharmacologically induced impairments 53, as well as to emulate electric stimulation by prostheses.

In addition, the platform provides a realistic entry to models or simulators of the thalamus or the visual cortex, in contrast to the entries usually considered in modeling studies.

- Education. Macular is also targeted as a useful tool for educational purposes, illustrating for students how the retina works and responds to visual stimuli.

4.3 Applications of visual media analysis and creation

- Engineering immersive interactive storytelling platforms. With the sharp rise in popularity of immersive virtual and augmented reality technologies, it is important to acknowledge their strong potential to create engaging, embodied experiences. Reaching this goal involves investigating the impact of virtual and augmented reality platforms from an interactivity and immersivity perspective: how do people parse visual information, react, and respond when faced with media content 15, 11, 50, 49?

- Personalized content creation and assisted creativity. Models of user perception can be integrated in tools for content creators, such as to simulate low-vision conditions to aid in the design of accessible spaces and media, and to diversify immersive scenarios used for studying user perception, for entertainment, and for rehabilitation 14, 61.

- Media studies and social awareness. Investigating interpretable models of media analysis will allow us to provide tools to conduct qualitative media studies on large amounts of data in relation to existing societal challenges and issues. This includes understanding the impact of media design on accessibility for patients with low-vision, as well as raising awareness towards biases in media 62 and existing media analysis tools from deep learning paradigms 63.

5 Social and environmental responsibility

The research themes in the Biovision team have direct social impacts on two fronts:

- Low vision: we work in partnership with neuroscientists and ophthalmologists to design technologies for the diagnosis and rehabilitation of low-vision pathologies, addressing a strong societal challenge.

- Accessibility: in concert with researchers in media studies, we tackle the social challenge of designing accessible media, including for the population with visual impairments, and of addressing media bias both in content design and in machine learning approaches.

6 Highlights of the year

6.1 Awards

- Simone Ebert got the first thesis prize of the EDSTIC, mention COP/ELEC/SN, for her PhD "Dynamical synapses in the retinal network" completed in 2023 under the supervision of B. Cessac.

- We published the paper “Temporal pattern recognition in retinal ganglion cells is mediated by dynamical inhibitory synapses” in Nature Communications.

- We obtained ECOS-SUD funding for the project CANARD, in collaboration with the University of Valparaiso (Chile) and the Federal University of Minas Gerais (Brazil), for the year 2025.

- Franz Franco Gallo received a best poster award at the SophIA Summit.

7 New software, platforms, open data

7.1 New software

7.1.1 Macular

- Name: Numerical platform for simulations of the primary visual system in normal and pathological conditions

- Keywords: Retina, Vision, Neurosciences

- Scientific Description: Macular is built around a central idea: its use and its graphical interface can evolve according to the user's objectives. It can therefore be used in user-designed scenarios, such as simulation of retinal waves, simulation of retinal and cortical responses to prosthetic stimulation, study of pharmacological impact on retinal response, etc. The user can design their own scenarios using the Macular Template Engine.

At the heart of Macular is an object called "Cell". These "cells" are inspired by biological cells, but the concept is more general: a cell can also be a group of cells of the same type, a field generated by a large number of cells (for example a cortical column), or an electrode in a retinal prosthesis. A cell is defined by internal variables (evolving over time), internal parameters (adjusted by cursors), a dynamic evolution (described by a set of differential equations) and inputs. Inputs can come from an external visual scene or from other synaptically connected cells. Synapses are also Macular objects defined by specific variables, parameters, and equations. Cells of the same type are connected in layers according to a graph with a specific type of synapses (intra-layer connectivity). Cells of different types can also be connected via synapses (inter-layer connectivity).

All the information concerning the types of cells, their inputs, their synapses and the organization of the layers is stored in a file of type .mac (for "macular") defining what we call a "scenario". Different types of scenarios are offered to the user, which they can load and play, while modifying the parameters and viewing the variables (see technical section).

- Functional Description: Macular is a simulation platform for the retina and the primary visual cortex, designed to reproduce the response to visual stimuli or to electrical stimuli, in normal vision conditions, or altered (pharmacology, pathology, development).

- Release Contributions: First release.

- URL:

- Contact: Bruno Cessac

- Participants: Bruno Cessac, Evgenia Kartsaki, Selma Souihel, Jerome Emonet, Sebastian Gallardo Diaz, Ghada Bahloul, Tristan Cabel, Erwan Demairy, Pierre Fernique, Thibaud Kloczko, Come Le Breton, Jonathan Levy, Nicolas Niclausse, Jean-Luc Szpyrka, Julien Wintz, Carlos Zubiaga Pena
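
As an illustration of the cell abstraction described in the Scientific Description of Macular, the following minimal Python sketch shows one way such an object could be organized: an internal variable, a parameter, a differential equation, and inputs coming from a stimulus or from connected cells. It is not Macular's implementation or its .mac format. The class names `Cell` and `Synapse`, the leaky-integrator dynamics, and the explicit Euler scheme are all assumptions chosen for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class Synapse:
    """Hypothetical synapse: a weighted link from a presynaptic cell."""
    source: "Cell"
    weight: float  # positive = excitatory, negative = inhibitory


@dataclass
class Cell:
    """Hypothetical cell with one internal variable and one parameter."""
    tau: float = 0.05      # internal parameter: time constant (seconds)
    voltage: float = 0.0   # internal variable, evolves over time
    synapses: list = field(default_factory=list)

    def derivative(self, stimulus: float) -> float:
        # Leaky integrator: dV/dt = -V / tau + external input + synaptic input.
        synaptic = sum(s.weight * s.source.voltage for s in self.synapses)
        return -self.voltage / self.tau + stimulus + synaptic


def euler_step(cells, stimuli, dt):
    """Advance all cells by one explicit Euler step of size dt."""
    # Evaluate every derivative first so that the update is synchronous.
    derivs = [c.derivative(s) for c, s in zip(cells, stimuli)]
    for c, d in zip(cells, derivs):
        c.voltage += dt * d


# Two-cell example: a cell driven by a constant stimulus
# inhibits a second cell through one synapse.
driver = Cell()
target = Cell(synapses=[Synapse(source=driver, weight=-2.0)])
for _ in range(2000):
    euler_step([driver, target], stimuli=[1.0, 0.0], dt=0.001)
```

In this toy setting the driven cell settles at `tau * stimulus` and the inhibited cell at a negative value proportional to the synaptic weight. Richer dynamics would replace the body of `derivative`.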

7.1.2 GUsT-3D

- Name: Guided User Tasks Unity plugin for 3D virtual reality environments

- Keywords: 3D, Virtual reality, Interactive Scenarios, Ontologies, User study

- Functional Description: We present the GUsT-3D framework for designing Guided User Tasks in embodied VR experiences, i.e., tasks that require the user to carry out a series of interactions guided by the constraints of the 3D scene. GUsT-3D is implemented as a set of tools that support a 4-step workflow to: (1) annotate entities in the scene with names, navigation, and interaction possibilities, (2) define user tasks with interactive and timing constraints, (3) manage scene changes, task progress, and user behavior logging in real-time, and (4) conduct post-scenario analysis through spatio-temporal queries on user logs, and visualizing scene entity relations through a scene graph.

The software also includes a set of tools for processing gaze tracking data, including: cleaning and synchronization of the data, calculation of fixations with I-VT, I-DT, IDTVR, IS5T, Remodnav, and IDVT algorithms, and visualization of the data (points of regard and fixations) in both real time and collectively.

- News of the Year: A new version of the software has been released with additional functionalities for collection, processing, and analysis of gaze tracking. We also made major changes to the overall workflow (system logging, interactions, bug fixes, etc.). This version was used in two user studies.

- URL:

- Publications:

- Contact: Hui-Yin Wu

- Participants: Hui-Yin Wu, Marco Alba Winckler, Lucile Sassatelli, Florent Robert

- Partner: I3S
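
Of the fixation-detection algorithms listed in the GUsT-3D description, I-VT (velocity-threshold identification) is the simplest to illustrate. The sketch below is a generic textbook version and not the GUsT-3D code. The function names, the input layout (timestamps in seconds, gaze angles in degrees), and the 30 deg/s default threshold are assumptions.

```python
import math


def ivt_fixations(times, xs, ys, velocity_threshold=30.0):
    """Group consecutive low-velocity gaze samples into fixations.

    times: sample timestamps in seconds (strictly increasing)
    xs, ys: gaze direction in degrees of visual angle
    Returns a list of (start_time, end_time, mean_x, mean_y) tuples.
    """
    fixations, current = [], []
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        speed = math.hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]) / dt
        if speed < velocity_threshold:
            # Sample i belongs to a fixation; include its predecessor too.
            if not current:
                current.append(i - 1)
            current.append(i)
        elif current:
            # A fast sample-to-sample movement closes the running fixation.
            fixations.append(_summarize(current, times, xs, ys))
            current = []
    if current:
        fixations.append(_summarize(current, times, xs, ys))
    return fixations


def _summarize(indices, times, xs, ys):
    """Collapse a run of sample indices into one fixation record."""
    n = len(indices)
    return (
        times[indices[0]],
        times[indices[-1]],
        sum(xs[i] for i in indices) / n,
        sum(ys[i] for i in indices) / n,
    )


# Synthetic 100 Hz trace: fixation near (0, 0), a fast jump, fixation near (10, 0).
times = [i * 0.01 for i in range(8)]
xs = [0.0, 0.1, 0.0, 0.1, 10.0, 10.1, 10.0, 10.1]
ys = [0.0] * 8
fixations = ivt_fixations(times, xs, ys)
```

Dispersion-based variants such as I-DT replace the velocity test with a bound on the spatial spread of samples inside a time window. The choice between them usually depends on sampling rate and noise level.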

7.1.3 PTVR

- Name: Perception Toolbox for Virtual Reality: The Open-Source Python Library for Virtual Reality Experiments

- Keywords: Visual perception, Behavioral science, Virtual reality

- Functional Description: PTVR is a fully open-source library for creating visual perception experiments in virtual reality using a high-level Python interface. It revolutionizes the way you design and conduct VR experiments, combining power and simplicity to bring your ideas to life:

(1) Effortless 3D Placement: With our innovative “Perimetric” coordinate system, positioning stimuli in 3D space is as simple as it gets. (2) Visual Angle Precision: Easily manage visual angles in a three-dimensional environment for precise experimental control. (3) Flat Screen Simulation: Replicate and extend standard 2D screen experiments on virtual flat screens, seamlessly bridging the gap between 2D and VR. (4) Data at Your Fingertips: Save experimental results and capture behavioral data, including head and gaze tracking, with ease. (5) Interactive Documentation: Our user-friendly guides include animated 3D figures that demystify the subtleties of working in a 3D space. (6) Hands-On Learning: Dive into the action with a suite of demo scripts, designed to be experienced directly through a VR headset—perfect for quick mastery of PTVR’s capabilities.

New for 2024: Import Realistic 3D Assets into Your Experiments. PTVR now supports the integration of complex and realistic 3D objects—commonly referred to as “assets” in the VR world. From furniture to detailed animals, characters, and even entire cities, you can leverage pre-made assets available for purchase or free download. With Unity’s “asset bundle” system, importing and managing large collections of assets is a breeze. PTVR’s intuitive syntax allows you to incorporate these assets directly into your experiments, expanding your creative possibilities.

Whether you're a researcher, educator, or VR enthusiast, PTVR is your go-to Python tool for crafting innovative, immersive, and precise virtual reality experiments. Join the open-source community today and take your experiments to the next dimension!

- URL:

- Publication:

- Contact: Pierre Kornprobst

- Partner: Aix-Marseille Université - CNRS Laboratoire de Psychologie Cognitive - UMR 7290 - Team ‘Perception and attention’
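
The visual-angle control mentioned in point (2) of the PTVR description rests on a standard geometric relation: an object of size s viewed frontally at distance d subtends an angle of 2·atan(s / (2d)). The sketch below implements this relation in plain Python. It does not use or reproduce the PTVR API, and the function names are hypothetical.

```python
import math


def size_for_visual_angle(angle_deg: float, distance_m: float) -> float:
    """Size (meters) that subtends angle_deg at distance_m, viewed frontally."""
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_for_size(size_m: float, distance_m: float) -> float:
    """Visual angle (degrees) subtended by an object of size_m at distance_m."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


# A target that must subtend 2 degrees, placed at 1 m and then at 5 m.
near = size_for_visual_angle(2.0, 1.0)
far = size_for_visual_angle(2.0, 5.0)
```

Keeping the angle fixed while the distance changes scales the physical size linearly, which is why specifying stimuli in degrees rather than meters makes an experiment independent of where the stimulus is placed in depth.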

7.1.4 Tatoovi

- Name: Tagging tool for videos

- Keywords: Annotation tool, Video analysis, Multimedia player, Data visualization

- Functional Description: Tatoovi is an application which provides different modules for video annotation including: (1) a customizable annotation dictionary with a visual editor that includes fields for classes, numerical values and scales, labelling, and text, (2) multi-level annotation by keyframes and shots/clips, with an interface for film metadata and character information, (3) a click-and-drag pose module to edit and visualize character skeletons and bounding boxes, (4) multi-timeline visualization and intuitive video playback and navigation tools, and (5) separate JSON export of timelines, annotations, and dictionaries for ease of collaboration, data analysis, and use in machine learning models.

- Contact: Hui-Yin Wu

- Participants: Hui-Yin Wu, Lucile Sassatelli, Clement Bergman, Genevieve Masioni Kibadi, Luan Nguyen

- Partner: I3S

7.2 New platforms

Members of Biovision are marked with a .

7.2.1 CREATTIVE3D platform for navigation studies in VR

Participants: Hui-Yin Wu, Florent Robert, Lucile Sassatelli [UniCA, CNRS, I3S; Institut Universitaire de France], Marco Winckler [UniCA, CNRS, I3S].

As part of ANR CREATTIVE3D, the Biovision team has established a technological platform in the Kahn immersive space including:

- a 40 tracked space for the Vive Pro Eye virtual reality headset with 4-8 infra-red base stations,

- GUsT-3D software (Section 7.1.2) under Unity for the creation, management, logging, analysis, and scene graph visualization of interactive immersive experiences,

- Vive Pro Eye headset integrated sensors including gyroscope and accelerometers, spatial tracking through base stations, and 120 Hz eye tracker,

- External sensors including XSens Awinda Starter inertia-based motion capture suits and Shimmer GSR physiological sensors.

The platform has hosted around 60 user studies lasting over 120 hours this year, partly published in 11. It has also provided demonstrations of our virtual reality projects for visitors, collaborators, and students.

7.3 Open data

CREATTIVE3D multimodal dataset of user behavior in virtual reality

- Contributors: Hui-Yin Wu, Florent Robert, Lucile Sassatelli [UniCA, CNRS, I3S], Marco Winckler [UniCA, Polytech, CNRS, I3S], Stephen Ramanoël [UniCA, LAMHESS], Auriane Gros [UniCA, CHU Nice, CoBTeK].

- Description: In the context of the ANR CREATTIVE3D project, we present the CREATTIVE3D dataset of human interaction and navigation at road crossings in virtual reality. It is the largest dataset of human motion in fully-annotated scenarios (40 hours, 2.6 million poses), captured in dynamic 3D scenes with multivariate – gaze, physiology, and motion – data. Its purpose is to investigate the impact of simulated low-vision conditions using dynamic eye tracking under real walking and simulated walking conditions. Extensive effort has been made to ensure the transparency, usability, and reproducibility of the study and collected data, even under extremely complex study conditions involving 6 degrees of freedom interactions, and multiple sensors.

- Dataset PID (DOI):

- Project link:

- Publications:

- Contact: Hui-Yin Wu

Visual Objectification in Films: Towards a New AI Task for Video Interpretation

- Contributors: Julie Tores [UniCA, CNRS, I3S], Lucile Sassatelli [UniCA, CNRS, I3S], Hui-Yin Wu, Clément Bergman, Léa Andolfi [UniCA, CNRS, I3S], Victor Ecrement [Sorbonne Université, GRIPIC], Frédéric Precioso [UniCA, Inria, CNRS, I3S], Thierry Devars [Sorbonne Université, GRIPIC], Magali Guaresi [UniCA, CNRS, BCL], Virginie Julliard [Sorbonne Université, GRIPIC], Sarah Lecossais [Université Sorbonne Paris Nord, LabSIC].

- Description: This is the first dataset for visual objectification in videos, specifically in films. We name this dataset ObyGaze12, short for ObjectifyingGaze12, which has the following highlights:

- It considers the multiple dimensions of the construct of visual objectification, made of filmic (framing and editing over successive shots, camera motion, etc.) and iconographic properties (visible objects, body parts, attire, character interactions, etc.).

- It is based on a thesaurus articulating five sub-constructs identified from multidisciplinary literature (film studies, psychology) from which we define typical instances, then grouped into coarse-grained visual concepts.

- The data is annotated densely with concepts, and shows the multi-factorial property of objectification, corroborating some recent developments in cognitive psychology.

- Categories to annotate include a hard negative category meant to perform fine-grained error analysis and improve model generalization.

- Dataset Link:

- Project link:

- Publications:

- Contact: Hui-Yin Wu

8 New results

We present here the new scientific results of the team over the course of the year. For each entry, members of Biovision are marked with a .

8.1 Modeling the retina and the primary visual system

8.1.1 Temporal pattern recognition in retinal ganglion cells is mediated by dynamical inhibitory synapses

Participants: Simone Ebert, Thomas Buffet [Institut de la Vision, Sorbonne Université, Paris, France.], Semihcan Sermet [Institut de la Vision, Sorbonne Université, Paris, France.], Olivier Marre [Institut de la Vision, Sorbonne Université, Paris, France.], Bruno Cessac.

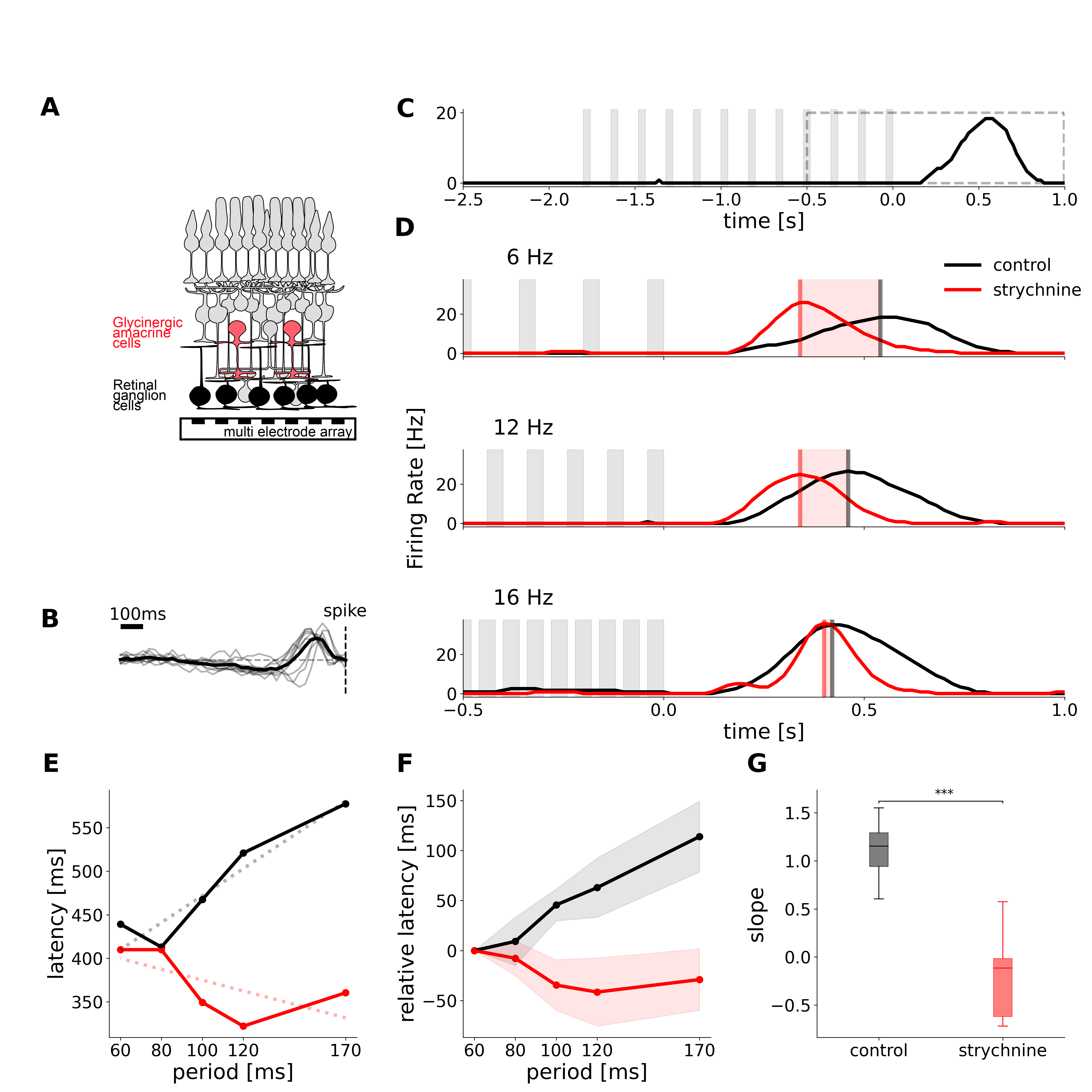

Description: A fundamental task for the brain is to generate predictions of future sensory inputs, and signal errors in these predictions. Many neurons have been shown to signal omitted stimuli during periodic stimulation, even in the retina. However, the mechanisms of this error signaling are unclear. Here we show that depressing inhibitory synapses enable the retina to signal an omitted stimulus in a flash sequence. While ganglion cells, the retinal output, responded to an omitted flash with a constant latency over many frequencies of the flash sequence, we found that this was not the case once inhibition was blocked. We built a simple circuit model and showed that depressing inhibitory synapses was a necessary component to reproduce our experimental findings (Figure 5). We also generated new predictions with this model, which we confirmed experimentally. Depressing inhibitory synapses could thus be a key component to generate the predictive responses observed in many brain areas.

This figure shows a schematic of the retina, experimental recordings of the Omitted Stimulus Response, in normal conditions and under application of strychnine which blocks glycine.

Glycinergic amacrine cells are necessary for predictive timing of the OSR. A. Schematic representation of the retina, with the activity of retinal ganglion cells being recorded with a multi-electrode array. B. Temporal traces of the receptive fields of the cells that exhibit an Omitted Stimulus Response (OSR). C. Example of OSR. The cell responds to the end of the stimulation, after the last flash. The dotted rectangle shows the time period of focus in panel D. D. Experimental recording of the OSR in one cell in control condition (black) and with strychnine to block glycinergic amacrine cell transmission (red). Firing rate responses to flash trains of 3 different frequencies are aligned to the last flash of each sequence. Flashes are represented by gray patches, vertical lines indicate the maximum of the response peak, red shaded areas indicate the temporal discrepancy between control and strychnine conditions.

This work has been published in the journal Nature Communications 17.
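
To make the notion of a depressing synapse concrete, the sketch below simulates a generic resource-depletion model of short-term depression: each presynaptic event consumes a fraction of an available resource, which then recovers exponentially. This is a textbook-style illustration and not the circuit model of the paper. The function name and the parameter values (`use_fraction`, `tau_recovery`) are assumptions.

```python
import math


def depressing_synapse_response(event_times, use_fraction=0.5, tau_recovery=0.3):
    """Return the synaptic efficacy transmitted at each presynaptic event.

    The available resource starts at 1. Between events it relaxes back
    toward 1 with time constant tau_recovery (seconds). At each event a
    fraction use_fraction of the resource is released and removed.
    """
    resource, last_time, efficacies = 1.0, None, []
    for t in event_times:
        if last_time is not None:
            # Exact exponential recovery over the inter-event interval.
            decay = math.exp(-(t - last_time) / tau_recovery)
            resource = 1.0 - (1.0 - resource) * decay
        released = use_fraction * resource
        efficacies.append(released)
        resource -= released
        last_time = t
    return efficacies


# Periodic events at 10 Hz versus 2 Hz.
fast = depressing_synapse_response([i * 0.1 for i in range(10)])
slow = depressing_synapse_response([i * 0.5 for i in range(10)])
```

With these assumed parameters, the efficacy drops along each train and settles at a lower level for the faster train, so the state of the synapse at the end of a sequence carries information about the stimulation frequency.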

8.1.2 How does the inner retinal network shape the ganglion cells receptive field : a computational study

Participants: Evgenia Kartsaki [Biosciences Institute, Newcastle University, Newcastle upon Tyne, UK; UniCA, Inria, SED], Gerrit Hilgen [Biosciences Institute, Newcastle University, Newcastle upon Tyne, UK; Health & Life Sciences, Applied Sciences, Northumbria University, Newcastle upon Tyne UK], Evelyne Sernagor [Biosciences Institute, Newcastle University, Newcastle upon Tyne, UK], Bruno Cessac.

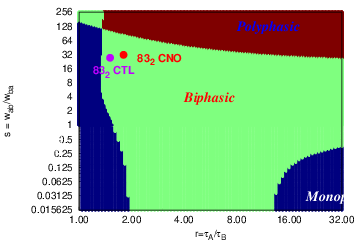

Description: We consider a model of basic inner retinal connectivity where bipolar cells (BCs) and amacrine cells (ACs) interconnect, and both cell types project onto retinal ganglion cells (RGCs), modulating their response output to the brain visual areas. We derive an analytical formula for the spatio-temporal response of retinal ganglion cells to stimuli, taking into account the effects of amacrine cell inhibition. This analysis reveals two important functional parameters of the network: (i) the intensity of the interactions between bipolar and amacrine cells, and (ii) the characteristic time scale of these responses. Both parameters have a profound combined impact on the spatiotemporal features of RGC responses to light. The validity of the model is confirmed by faithfully reproducing pharmacogenetic experimental results obtained by stimulating excitatory DREADDs (Designer Receptors Exclusively Activated by Designer Drugs) expressed on subclasses of ganglion cells and amacrine cells, thereby modifying the inner retinal network activity to visual stimuli in a complex, entangled manner. DREADDs are activated by "designer drugs" such as clozapine-n-oxide (CNO), resulting in an increase in free cytoplasmic calcium and a profound increase in excitability in the cells that express these DREADDs (Roth, 2016). We found DREADD expression both in subsets of RGCs and in ACs in the inner nuclear layer (INL) 51. In these conditions, CNO would act both on ACs and RGCs, providing a way to unravel the entangled effects of: (i) direct excitation of DREADD-expressing RGCs and increased inhibitory input onto RGCs originating from DREADD-expressing ACs; (ii) a change in the cells' response timescale (via a change in the membrane conductance), thereby providing an experimental setup to validate our theoretical predictions. Our mathematical model allows us to explore and decipher these complex effects in a manner that would not be feasible experimentally and provides novel insights into retinal dynamics.

Figure 6 illustrates our results. This paper has been published in the journal Neural Computation 18.
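To make the role of the two functional parameters more concrete, here is a minimal sketch of a toy linear circuit with a single bipolar cell and a single amacrine cell. It is not the published model: the equations, the function names (`is_oscillatory`, `simulate_step_response`) and all parameter values are assumptions chosen for illustration. The bipolar cell excites the amacrine cell with weight `w_plus`, and the amacrine cell inhibits the bipolar cell with weight `w_minus`; each cell leaks with its own characteristic time.

```python
def is_oscillatory(tau_b, tau_a, w_minus, w_plus):
    """Return True if the toy BC-AC pair has complex eigenvalues.

    Assumed toy dynamics (illustrative only):
        dV_B/dt = -V_B / tau_b - w_minus * V_A + drive
        dV_A/dt = -V_A / tau_a + w_plus  * V_B
    The eigenvalues of this 2x2 linear system are complex when the
    discriminant below is negative, which gives a damped oscillation.
    """
    discriminant = (1.0 / tau_a - 1.0 / tau_b) ** 2 - 4.0 * w_minus * w_plus
    return discriminant < 0.0


def simulate_step_response(tau_b, tau_a, w_minus, w_plus,
                           drive=1.0, dt=1e-3, t_end=5.0):
    """Integrate the toy system with forward Euler for a constant drive.

    Returns the list of bipolar voltages V_B at each time step.
    """
    v_b, v_a = 0.0, 0.0
    trace = []
    for _ in range(int(round(t_end / dt))):
        dv_b = -v_b / tau_b - w_minus * v_a + drive
        dv_a = -v_a / tau_a + w_plus * v_b
        v_b += dt * dv_b
        v_a += dt * dv_a
        trace.append(v_b)
    return trace


# Equal time scales with strong coupling: damped oscillation.
print(is_oscillatory(tau_b=0.1, tau_a=0.1, w_minus=5.0, w_plus=5.0))
# Well-separated time scales with weak coupling: monotonic relaxation.
print(is_oscillatory(tau_b=0.05, tau_a=0.5, w_minus=1.0, w_plus=1.0))
```

In this toy setting, the transition between monotonic and oscillatory responses depends jointly on the difference of the inverse time constants and on the product of the two synaptic weights, which gives an intuition for why the two parameters act in combination.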

This figure shows how a slight change in two parameters, the ratio of the amacrine and bipolar characteristic times and the ratio of the inhibitory and excitatory synaptic weights, induces a dramatic change in the shape of the receptive field of a ganglion cell.


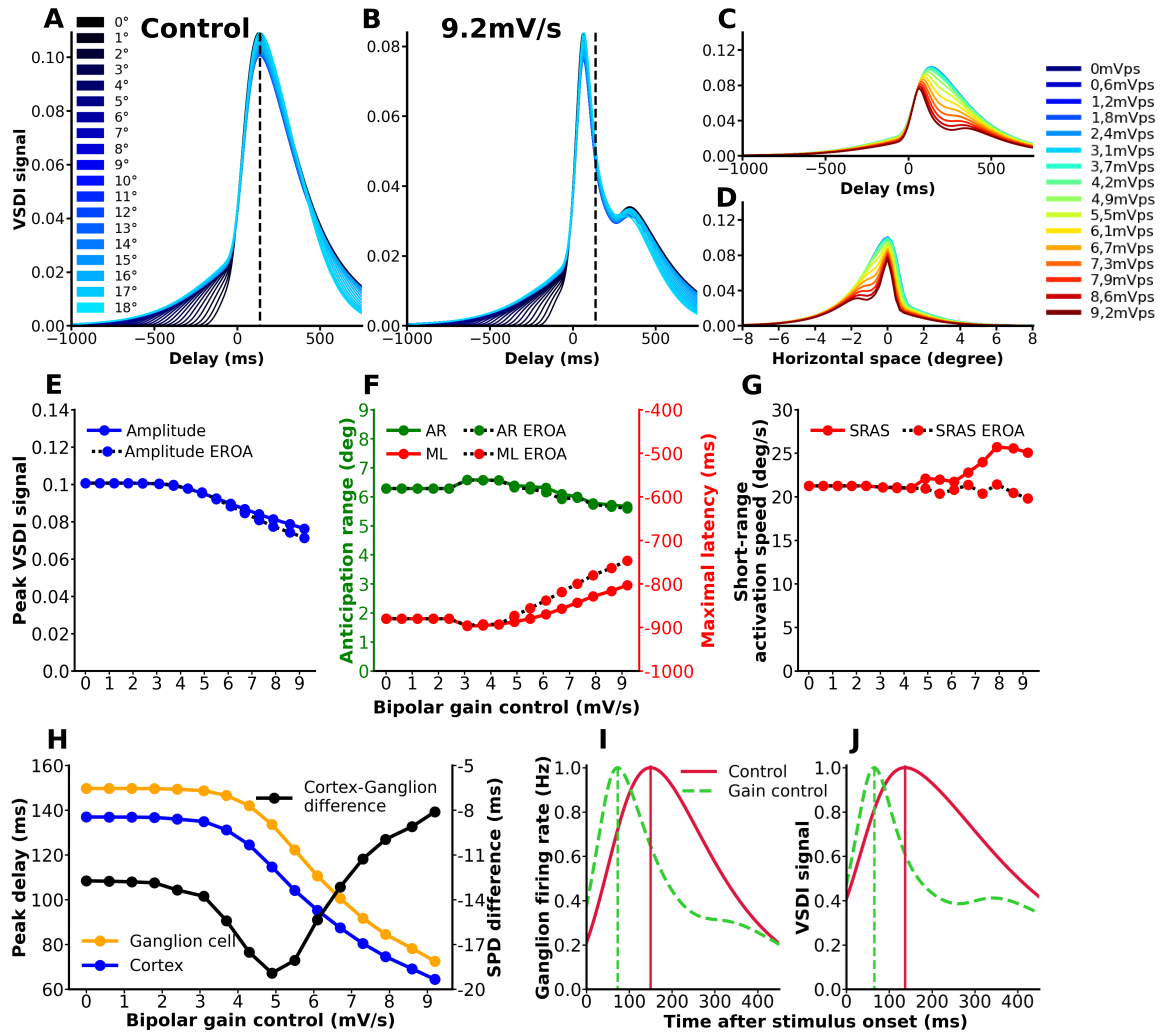

8.1.3 A chimera model for motion anticipation in the retina and the primary visual cortex

Participants: Jérôme Emonet, Selma Souihel [Inria, P16 - Programme IA], Bruno Cessac, Alain Destexhe [NeuroPSI - Institut des Neurosciences Paris-Saclay], Frédéric Chavane [INT - Institut de Neurosciences de la Timone], Matteo Di Volo [UCBL - Université Claude Bernard Lyon 1].

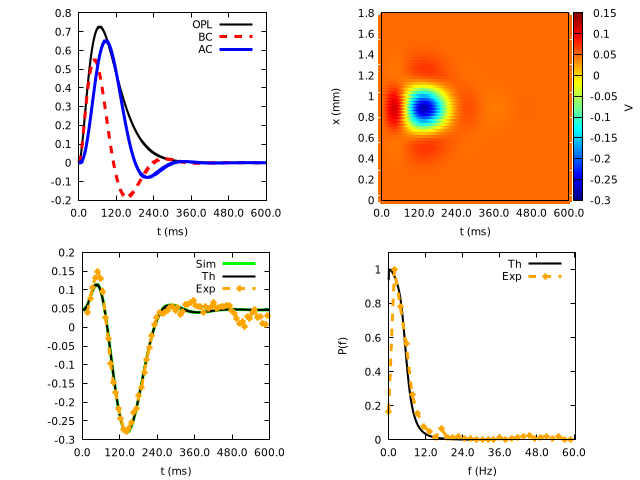

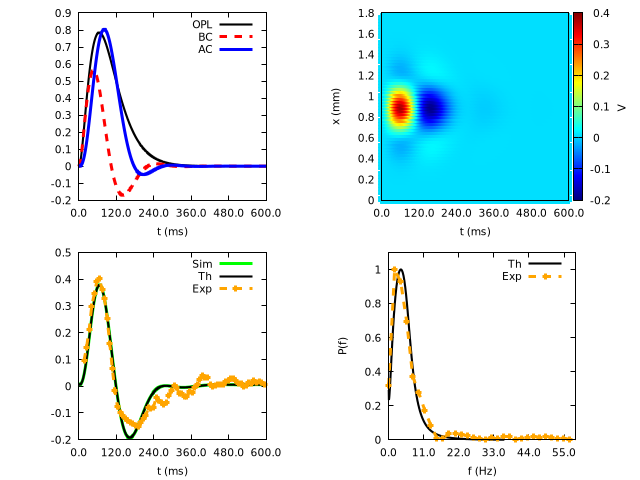

Description: Motion anticipation refers to the capacity of the visual system to compensate for inherent delays in visual processing. This ability results from distinct mechanisms taking place in the retina 38 and in the visual cortex 52. To study their respective roles, we propose a mean field model of the primary visual cortex (V1) connected to a realistic retina model. Our first goal is to reproduce experimental results on motion anticipation, obtained in monkeys using voltage-sensitive dye imaging (VSDI) 37, and to assess the impact of the retina in this process. For this, we first study the model in the case where the retina does not itself provide anticipation. Anticipation is then triggered only by a cortical mechanism, called "anticipation by latency". As we show, this mechanism strongly depends on the intensity of the retinal input supplied to the cortex, even if the retina does not itself provide anticipation. We also unravel the effect of stimulus features, such as speed and contrast, and report the impact of physiological parameters that are not accessible experimentally, such as the size of cortical extensions or fibre conduction. Then, we explore the changes in the cortical wave of anticipation when V1 is driven by a retinal output implementing different potential retina-driven anticipatory mechanisms, including gain control and lateral inhibition by amacrine cells. In this setting, we show how retinal and cortical anticipation combine to provide efficient processing in which the VSDI signal response is in advance of the moving object that triggers it, compensating for the delays in visual processing, in full agreement with the experimental results of 37. This work has been presented at the conferences "Annual Neuromod Meeting" 26, "AREADNE 2024" 33, and ICMNS 2024 32, and has been submitted to the journal Neural Computation. An example of results is shown in Fig. 7.

This figure shows the effect of gain control at the retinal bipolar cell level on the cortical activity measured with Voltage Sensitive Dye.


8.1.4 Optical Flow Estimation pre-training with simulated stage II retinal waves

Participants: Christos Kyriazis, Bruno Cessac, Hui-Yin Wu, Pierre Kornprobst.

Context: Retinal waves are an early developmental process in the mammalian visual system, playing a crucial role in structuring retinal connectivity and priming the system for visual functions such as motion detection. In 30, we investigated innovative approaches to leveraging simulated retinal waves (RWs) as a form of pre-training for machine learning (ML) models, particularly in the domain of optical flow estimation (OFE).

Description: We focus on a recent non-transformer machine learning architecture, RAFT 59, and employ a transfer learning strategy to harness the potential of retinal waves (RWs) in improving optical flow estimation (OFE) performance through a related task. Additionally, we explore an alternative approach that approximates the optical flow of RWs, enabling their direct application within OFE. While the concept of leveraging these biologically inspired stimuli to create more accessible training data holds promise for enhancing the generalization capabilities of OFE models, our findings indicate limited effectiveness with both approaches.

8.2 Diagnosis, rehabilitation, and low-vision aids

8.2.1 Constrained text generation to measure reading performance

Participants: Alexandre Bonlarron, Aurélie Calabrèse [Aix-Marseille Université (CNRS, Laboratoire de Psychologie Cognitive, Marseille, France) ], Pierre Kornprobst, Jean-Charles Régin [UniCA, I3S, Constraints and Application Lab].

Context: Measuring reading performance is one of the most widely used methods in ophthalmology clinics to judge the effectiveness of treatments, surgical procedures, or rehabilitation techniques 57. However, reading tests are limited by the small number of standardized texts available. For the MNREAD test 55, which is one of the reference tests used as an example in this work, there are only two sets of 19 sentences in French. These sentences are challenging to write because they have to respect rules of different kinds (e.g., related to grammar, length, lexicon, and display). They are also tricky to find: out of a sample of more than three million sentences from children’s literature, only four satisfy the criteria of the MNREAD reading test.

Description: To obtain more sentences, we proposed in 41 a new standardized sentence generation method. Using this approach, we generated under constraints all possible MNREAD-like sentences that can be built from a given corpus and ranked them from ‘good’ to ‘poor’ using a transformer-based language model (GPT-2). To validate our method, we asked 14 normally sighted participants (age 14 to 56) to read 3 sets of 30 French sentences: MNREAD sentences, generated sentences ranked as ‘good’, and generated sentences ranked as ‘poor’. All were displayed at 40 cm, in regular polarity, with a fixed print size of 1.3 logMAR. Corrected reading speed was measured in words/min (wpm) and analyzed using a mixed-effects model. Results were presented at the ARVO 2024 conference 31. On average, ‘good’ generated sentences were read at 164 wpm. This value was not significantly different from the reading speed of 162 wpm yielded by MNREAD sentences (p=0.5). On the other hand, reading speed was significantly slower for ‘poor’ generated sentences, with an average value of 151 wpm (p<0.001). Our method seems to provide valid standardized sentences that follow the MNREAD rules and yield similar performance, at least in French. Since our method is easily applicable to other languages, further investigation is needed to validate the generation of sentences in English, Spanish, Italian, etc.
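As an illustration of what a corrected reading speed in wpm can look like, the sketch below follows the usual MNREAD scoring convention, in which each sentence counts as 10 standard-length words and misread words are subtracted before dividing by the reading time. The report does not spell out the formula used in the study, so this convention, the function name `corrected_reading_speed_wpm` and the example values are assumptions.

```python
def corrected_reading_speed_wpm(reading_time_s, errors=0, words_per_sentence=10):
    """Corrected reading speed in words per minute for one sentence.

    Assumed convention: speed = 60 * (words - errors) / time, with
    10 standard-length words per MNREAD-like sentence.
    """
    if reading_time_s <= 0:
        raise ValueError("reading_time_s must be positive")
    # The number of correctly read words cannot be negative.
    words_read = max(0, words_per_sentence - errors)
    return 60.0 * words_read / reading_time_s


# Hypothetical trials: (reading time in seconds, number of misread words).
trials = [(3.0, 0), (4.0, 2), (3.6, 1)]
speeds = [corrected_reading_speed_wpm(t, e) for t, e in trials]
mean_speed = sum(speeds) / len(speeds)
print(speeds, mean_speed)
```

Per-sentence speeds computed this way would then typically be fed to the statistical analysis (here, a mixed-effects model) rather than averaged naively as in this toy usage.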

8.2.2 Inclusive news design: enhancing reading comfort on small displays through layout optimization

Participants: Sebastian Gallardo, Dorian Mazauric [UniCA, Inria, ABS Team], Pierre Kornprobst.

Context: The digital era is transforming the newspaper industry, offering new digital user experiences for all readers. However, to be successful, newspaper designers face a tricky design challenge: combining the design and aesthetics of the printed edition (which remains a reference for many readers) with the functionalities of the online edition (continuous updates, responsiveness) to create the e-newspaper of the future, a synthesis based on usability, reading comfort, and engagement. In this spirit, our project aims to develop a novel inclusive digital news reading experience that will benefit all readers: you, me, and people with low vision, for whom newspapers are a way to be part of a well-evolved society.

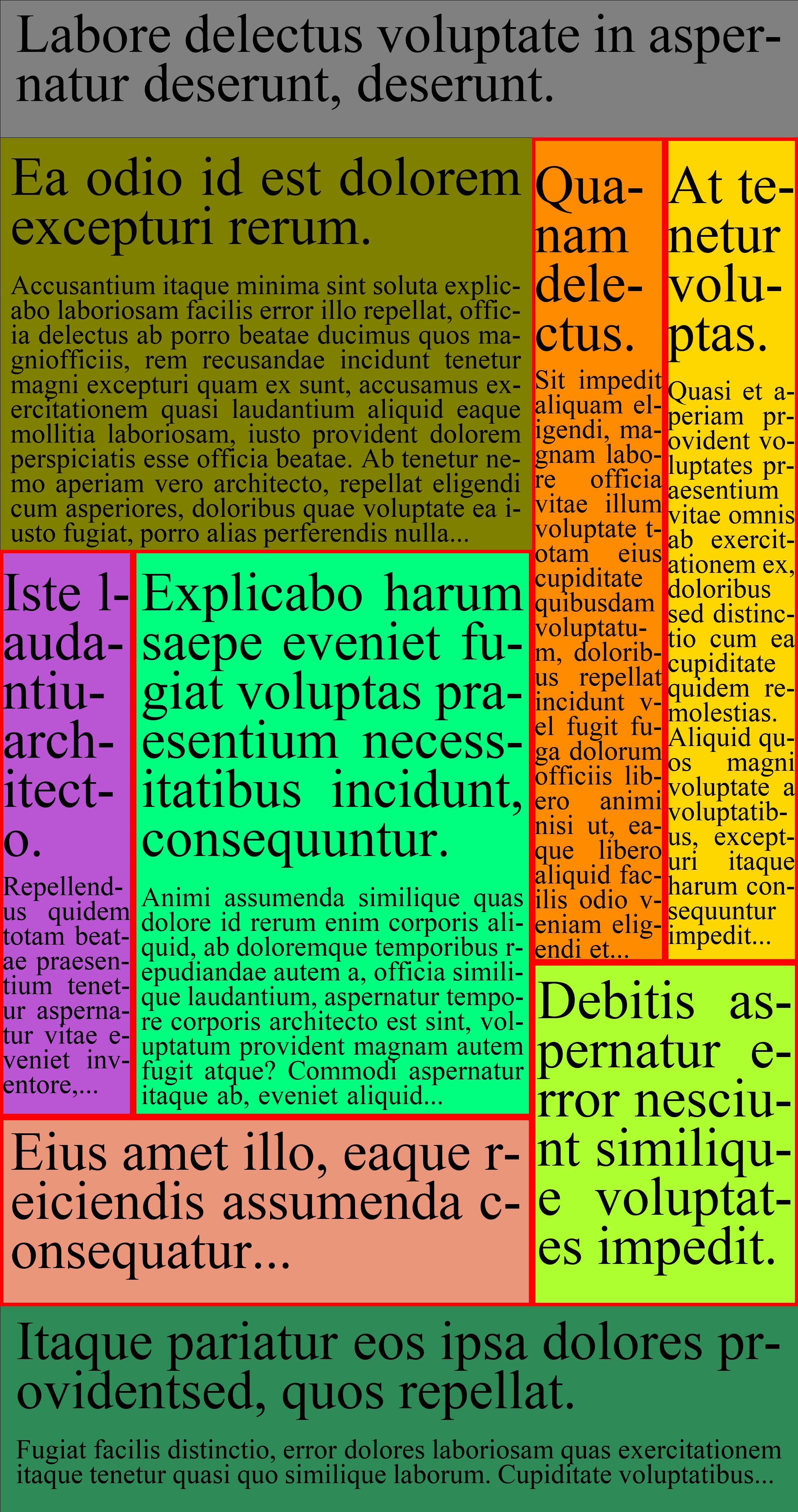

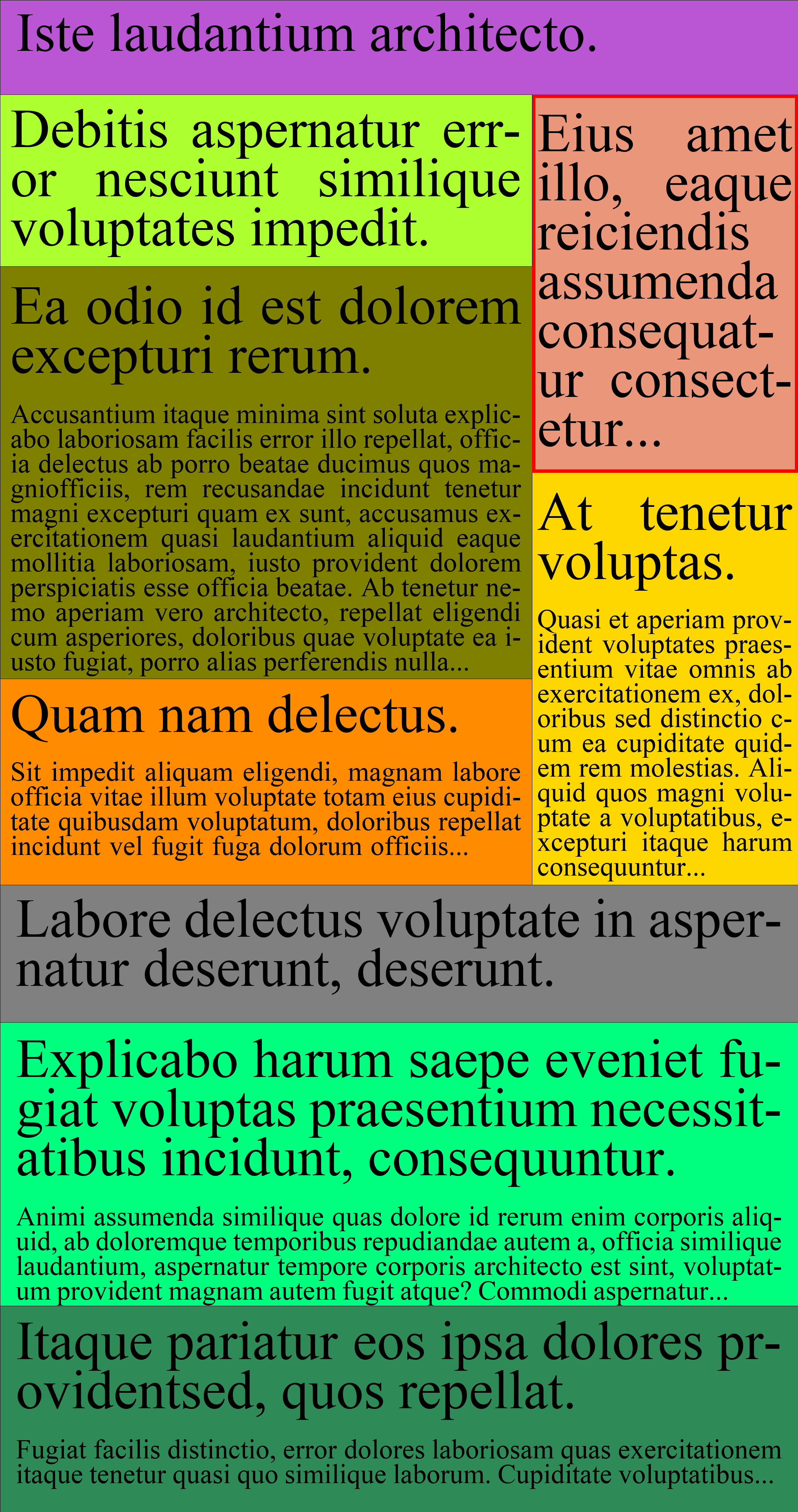

Description: In this work, we focus on how to read newspapers comfortably on a small display. Simply transposing print newspapers to digital media cannot be satisfactory because they were not designed for small displays. One key feature that is lost is the notion of entry points, which are essential for navigation. By focusing on headlines as entry points, we show how to produce alternative layouts for small displays that preserve the quality of entry points (readability and usability) while optimizing aesthetics and style. Our method re-lays out the page using a genetic-inspired algorithm. We tested it on realistic newspaper pages. For the case discussed here, we obtained more than 2000 different layouts in which the font size was increased by a factor of two. We show that the quality of headlines is globally much better with the new layouts than with the original layout (Figure 8). Future work will aim to generalize this promising approach, accounting for the complexity of real newspapers, with user experience quality as the primary goal.

More details are available in 48. This work is still ongoing.

Figure panels: (a), (b), (c) UW=6, (d) UW=1.

The figure has four panels. The first panel represents a newspaper page composed of several articles, each with a headline and a body text. The goal is to see how to magnify this page while preserving reading comfort and newspaper design. The second panel illustrates the usual solution, which consists in simply magnifying everything; this results in a local/global navigation problem since only a portion of the page remains visible. The third panel shows an alternative solution where only the font size is increased while keeping the original design. The benefit of this solution is that all the articles remain visible, so that the reader can more easily choose which one to read. However, since the geometry of the page is kept the same, increasing the font size may result in headlines flowing over many lines, making them hard to read and aesthetically poor. The fourth panel shows the result of our approach, which consists in increasing the font size as in the previous case but also exploring different layouts so that headlines do not flow over too many lines. By doing so, we provide a new self-contained layout that is easier to understand on small displays.

From print to online newspapers on small displays: (a) Print newspaper page where each article has been colored to highlight the structure. On small displays, headlines become too small to be readable and usable for navigation. Magnification is needed. (b) Pinch-zoom result with a magnification factor of two: Illustrates the common local/global navigation difficulty encountered when reading newspapers via digital kiosk applications. (c) Increasing the font with a factor two, keeping the original layout: Articles with unwanted shapes (denoted by UW, here when headlines flow on more than three lines) are boxed in red; (d) Best result of our approach showing how the page in (a) has been transformed to preserve headlines quality.
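The notion of an unwanted shape (UW) in the caption, a headline flowing over more than three lines, can be sketched as a simple count. The snippet below is only an illustration under strong assumptions: it estimates line breaks with a fixed average character width (`char_width_ratio`, a hypothetical parameter) instead of real font metrics, and the function names and sample headlines are made up. It is not the layout engine used in the project.

```python
import textwrap


def headline_line_count(headline, column_width_pt, font_size_pt,
                        char_width_ratio=0.5):
    """Estimate how many lines a headline occupies in a column.

    Assumption: every character is char_width_ratio * font_size_pt wide,
    a crude stand-in for real font metrics.
    """
    chars_per_line = max(1, int(column_width_pt / (font_size_pt * char_width_ratio)))
    return len(textwrap.wrap(headline, width=chars_per_line))


def count_unwanted(articles, font_size_pt, max_lines=3):
    """Count articles whose headline flows over more than max_lines lines.

    articles is a list of (headline, column_width_pt) pairs.
    """
    return sum(
        1
        for headline, width in articles
        if headline_line_count(headline, width, font_size_pt) > max_lines
    )


page = [
    ("Local elections bring surprising results across the region", 200),
    ("Short news", 200),
]
print(count_unwanted(page, font_size_pt=10))  # original font size
print(count_unwanted(page, font_size_pt=20))  # font size doubled, same layout
```

A layout search such as the genetic-inspired one described above could use a count of this kind as one term of its fitness function, alongside aesthetic criteria.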

8.2.3 A clustering based article template recommendation system for newspaper editors

Participants: Sebastian Gallardo, Bruno Génuit [Demain un Autre Jour], Dorian Mazauric [UniCA, Inria, ABS Team], Pierre Kornprobst.

Context: Newspaper editors face significant challenges in page production, as printed newspapers are becoming less profitable and more costly to produce. Improving productivity in this process is crucial. In 29, we focus on the task of selecting the most appropriate article template for given content, a process that is time-consuming and difficult. We demonstrate how techniques from Recommendation Systems (RS) can be adapted and extended to assist newspaper editors by recommending the best templates based on their needs and preferences. We propose a clustering-based recommendation system that promotes diversity, which is a critical requirement in this context.

Description: Our method consists of two phases. The first is an offline clustering phase that uses a graph-matching neural network to compute a custom similarity measure (GMN) between templates, modeled as graphs. This clustering process is based on content and structural information, independent of user preferences. The second phase is an online recommendation system that incorporates both user preferences and content requirements, promoting templates that minimize similarity. Compared to existing methods, our approach performs better in terms of novelty and diversity, especially in cases where high-rated items are clustered together. We present promising results through case examples, demonstrating the practical applicability of our method for real-world scenarios, while also opening avenues for future studies with industry professionals. While this study provides a first step towards applying recommendation systems to optimize the selection of article templates in the newspaper production process, further research is needed to extend this work to full-page layouts and multi-page designs, incorporating more comprehensive recommendations that address the broader scope of newspaper production.
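The idea of promoting diversity when high-rated items fall in the same cluster can be illustrated with a small greedy selection. This is a simplified sketch, not the published system: it assumes cluster labels have already been computed offline (the graph-matching similarity is not reproduced here), and the function name `recommend_diverse`, the tuple layout and the scores are hypothetical.

```python
def recommend_diverse(templates, k):
    """Pick up to k templates, favoring one template per cluster first.

    templates is a list of (template_id, cluster_id, score) tuples, where
    score stands for how well the template matches the user's preferences
    and content requirements (higher is better).
    """
    ranked = sorted(templates, key=lambda t: t[2], reverse=True)
    picked, leftovers, seen_clusters = [], [], set()
    for template_id, cluster_id, _score in ranked:
        if cluster_id not in seen_clusters:
            # Best-scoring template of a cluster not yet represented.
            picked.append(template_id)
            seen_clusters.add(cluster_id)
        else:
            # Kept as a fallback if there are fewer clusters than k.
            leftovers.append(template_id)
    return (picked + leftovers)[:k]


candidates = [
    ("t1", 0, 0.90),
    ("t2", 0, 0.85),  # highly rated but in the same cluster as t1
    ("t3", 1, 0.60),
    ("t4", 2, 0.50),
]
print(recommend_diverse(candidates, k=3))
```

A plain top-k ranking on the same input would return two near-identical templates from cluster 0, which is the situation the diversity requirement is meant to avoid.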

8.3 Visual media analysis and creation

8.3.1 Human Trajectory Forecasting in 3D Environments: Navigating Complexity under Low Vision

Participants: Franz Franco Gallo, Hui-Yin Wu, Lucile Sassatelli [UniCA, CNRS, I3S].

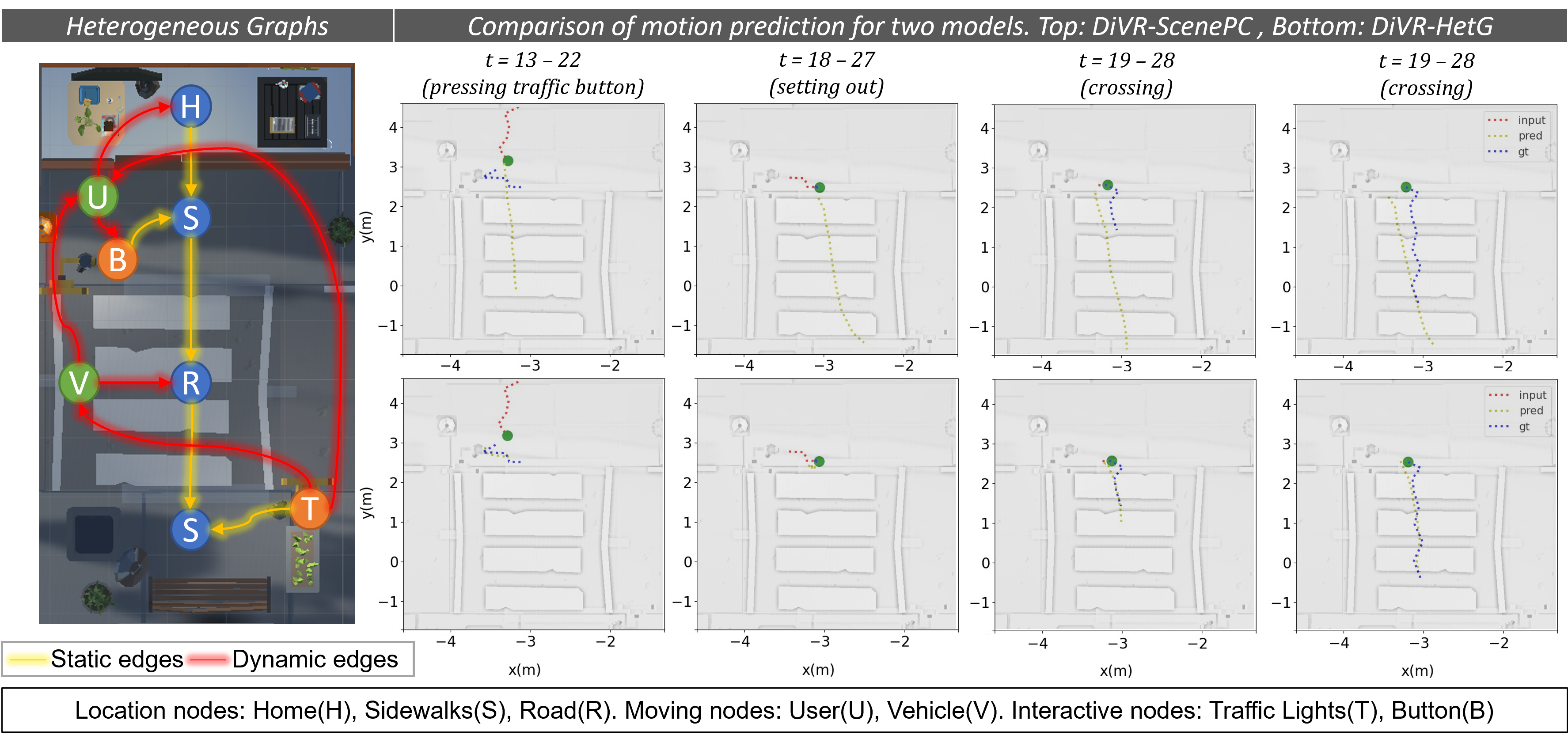

Context: This work tackles the challenge of predicting human trajectories while people carry out complex tasks in contextually rich virtual environments. Our evaluation uses the CREATTIVE3D multimodal dataset on human interaction and navigation in 3D virtual reality (VR). In the dataset, navigating traffic crossings with simulated visual impairments is used as an example of complex or unpredictable situations.

Description: In the first part of the work, we establish evaluations for a base multi-layer perceptron (MLP) and two state-of-the-art models: TRACK (RNN) and GIMO (transformer), on tasks with varying levels of complexity and visual impairment conditions. Our findings indicate that a model trained on normal visual conditions and simple tasks does not generalize on test data with complex interactions and simulated visual impairments, despite including 3D scene context and user gaze. In comparison, a model trained on diverse visual and task conditions is more robust, with up to 84% decrease in positional error and 9% in orientation error, but with the trade-off of lower accuracy for simpler tasks. We believe this work can benefit real-world applications such as autonomous driving, and enable context-aware computing for diverse scenarios and populations.
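For readers unfamiliar with trajectory forecasting metrics, the sketch below shows one common way to compute a positional error and a relative decrease between two models. The exact metric definitions used in the paper are not given in this report, so the mean Euclidean distance, the function names and the numbers here are assumptions for illustration only.

```python
import math


def mean_position_error(predicted, ground_truth):
    """Mean Euclidean distance between predicted and true positions.

    Both arguments are equally long lists of (x, y, z) points, one per
    predicted time step.
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


def relative_decrease_percent(baseline_error, new_error):
    """Percentage by which new_error is lower than baseline_error."""
    return 100.0 * (baseline_error - new_error) / baseline_error


# Hypothetical two-step trajectory, in meters.
truth = [(0.0, 0.0, 0.0), (1.0, 3.0, 4.0)]
prediction = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(mean_position_error(prediction, truth))
print(relative_decrease_percent(baseline_error=1.0, new_error=0.16))
```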

On the left, we show the 3D environment of a road crossing overlaid with a graph that shows the connectivity of places (sidewalk, crossings), objects (traffic lights, a movable box), and the user. On the right, we show a number of predictions comparing results using scene point clouds with results using the graph input.

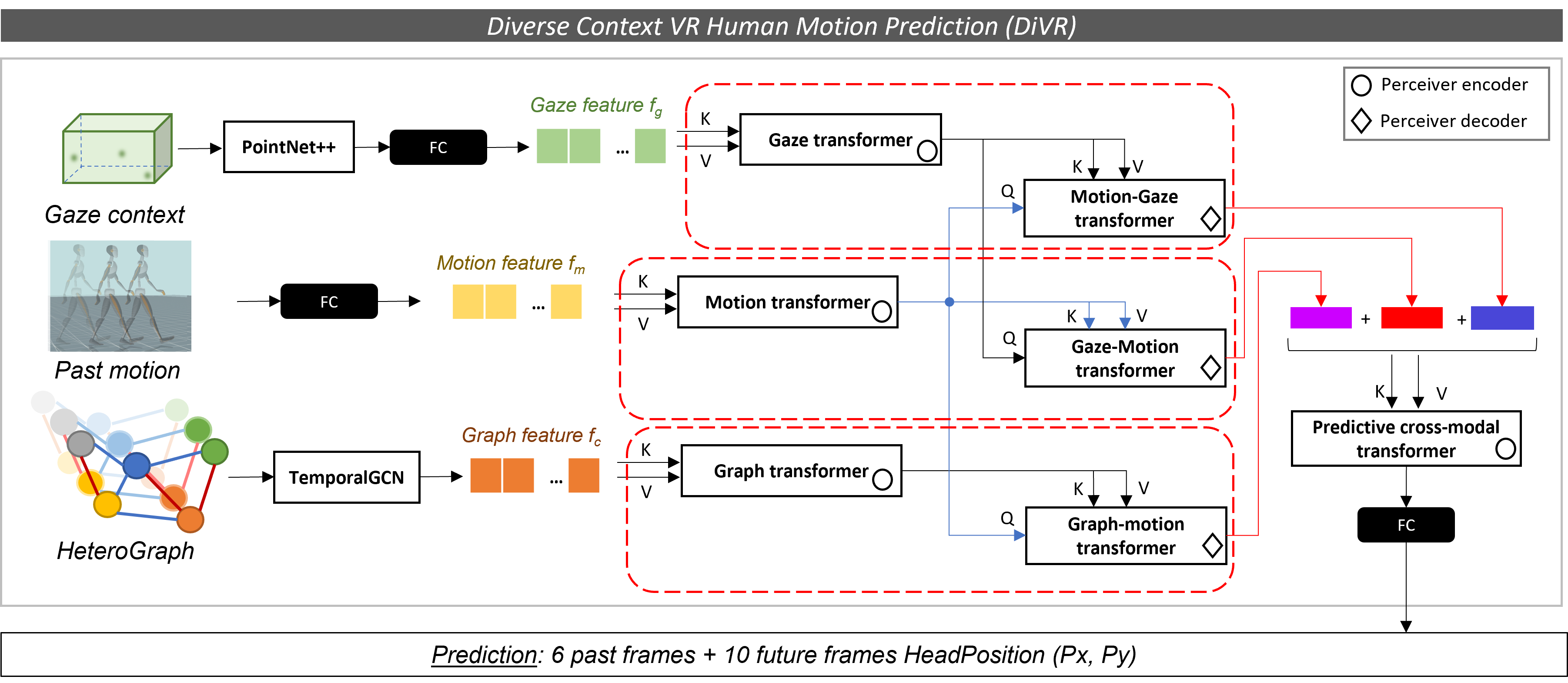

In the second part of the work, we investigate how virtual environments provide a rich and controlled setting for collecting detailed data on human behavior, offering unique opportunities for predicting human trajectories in dynamic scenes. Most existing approaches have overlooked the potential of these environments, focusing instead on static contexts without considering user-specific factors. We propose Diverse Context VR Human Motion Prediction (DiVR), a cross-modal transformer based on the Perceiver architecture that integrates both static and dynamic scene context using a heterogeneous graph convolution network (Figure 10). We conduct extensive experiments comparing DiVR against existing architectures including MLP, LSTM, and transformers with gaze and point cloud context. We also test our model's generalizability across different users, tasks, and scenes. Results show that DiVR achieves higher accuracy and adaptability compared to other models and to static graphs. Some results are illustrated in Figure 9. This work highlights the advantages of using VR datasets for context-aware human trajectory modeling, with potential applications in enhancing user experiences in the metaverse. Our source code is publicly available on Inria Gitlab.

Diagram of our model showing the three inputs that enter three transformer branches (for gaze, motion, and graph), cross-attention blocks (motion-gaze, gaze-motion, and graph-motion) and finally a cross-modal transformer block that takes all three outputs for prediction.

These works were presented at the ACM MMSys Workshop on Immersive Mixed and Virtual Environment Systems 7 and the ECCV Workshop on Computer Vision for Metaverse 21, 36. It received a best poster award at the SophIA summit.

8.3.2 Task-based methodology to characterize immersive user experience with multivariate data

Participants: Florent Robert, Hui-Yin Wu, Marco Winckler [UniCA, Polytech, CNRS, I3S], Lucile Sassatelli [UniCA, CNRS, I3S].

Context: Virtual Reality (VR) technologies elicit strong emotions compared to traditional media, stimulating the brain in ways comparable to real-life interactions. This makes VR systems promising for research and applications in training or rehabilitation, where they can imitate realistic situations. Nonetheless, evaluating the user experience in immersive environments is daunting: the richness of the media makes it challenging to synchronize context with behavioral metrics in order to provide fine-grained personalized feedback or performance evaluation. The variety of scenarios and interaction modalities compounds the difficulty of understanding users in the face of lifelike training scenarios, complex interactions, and rich context.

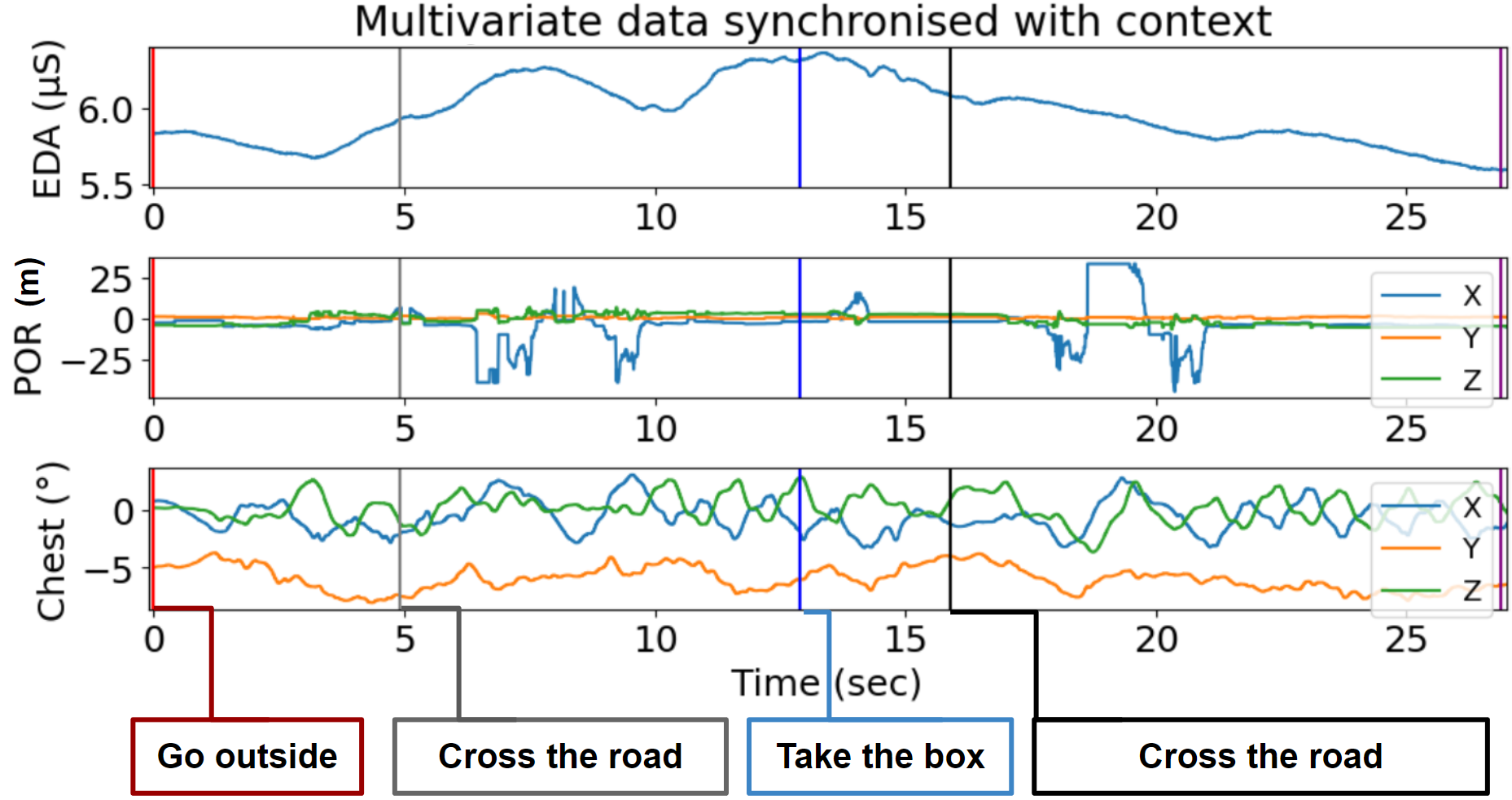

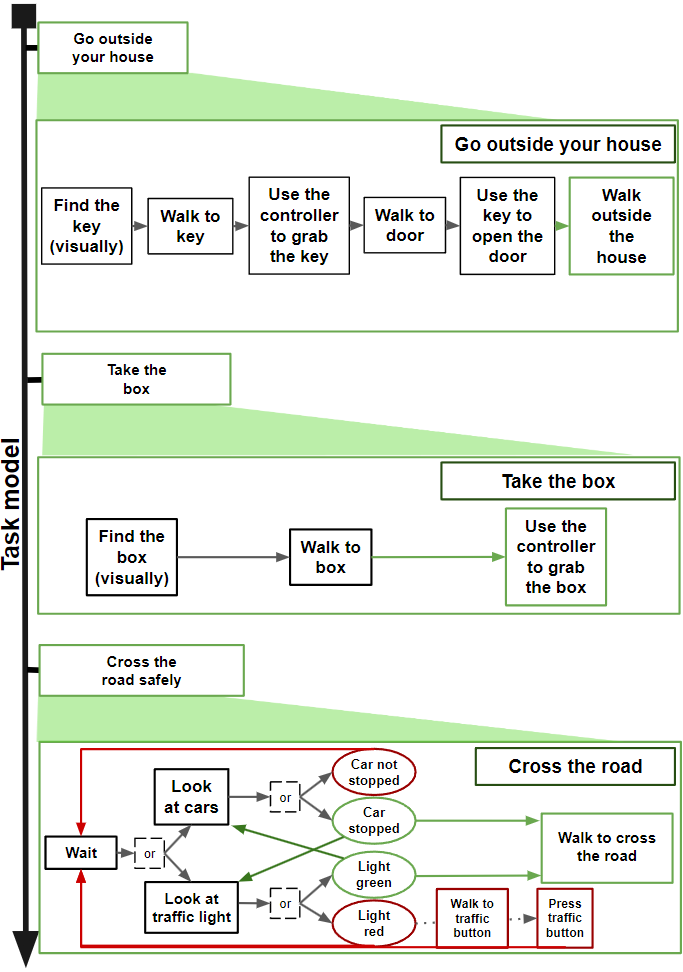

We see three graphs that show the workflow of the scenario where the user carries out four designated tasks. Each task shows more detailed actions such as "Find the key", "Walk to the door", "Use the key to open the door" for the task "Go outside your house".


Description: We propose a task-based methodology that provides fine-grained descriptions and analysis of the experiential user experience (UX) in VR that (1) aligns low-level tasks (e.g., take an object, go somewhere) with multivariate behavior metrics: gaze, motion, skin conductance (Figure 11), (2) defines performance components (i.e., attention, decision, and efficiency) and baselines to evaluate task performance (e.g., the time it takes for a user to carry out the task, whether they direct their gaze towards key elements, etc.), and (3) characterizes task performance with multivariate user behavior data. To illustrate our approach, we apply the task-based methodology to an existing dataset from a road crossing study in VR (task model shown in Figure 12). We find that the task-based methodology allows us to better observe the experiential UX by highlighting fine-grained relations between behavior profiles and task performance, opening pathways to personalized feedback and experiences in future VR applications. This work was published at the 2024 IEEE Conference on Virtual Reality 12.
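Step (1), aligning low-level tasks with behavior metrics, can be pictured as slicing a time-stamped signal by task intervals. The following sketch is a deliberately minimal illustration: the data layout, the function name `summarize_tasks`, the task names reused from the workflow figure and the signal values are all assumptions, and the actual methodology handles several modalities and richer performance components.

```python
from statistics import mean


def summarize_tasks(tasks, samples):
    """Align a time-stamped signal with task intervals.

    tasks is a list of (name, start_s, end_s) tuples; samples is a list of
    (timestamp_s, value) pairs, for example skin conductance readings.
    Returns, per task, its duration and the mean of the samples that fall
    inside the half-open interval [start_s, end_s).
    """
    summary = {}
    for name, start_s, end_s in tasks:
        values = [v for t, v in samples if start_s <= t < end_s]
        summary[name] = {
            "duration_s": end_s - start_s,
            "n_samples": len(values),
            "mean_value": mean(values) if values else None,
        }
    return summary


tasks = [("Find the key", 0.0, 2.0), ("Walk to the door", 2.0, 5.0)]
signal = [(0.5, 1.0), (1.5, 3.0), (2.5, 4.0), (6.0, 9.0)]
for name, stats in summarize_tasks(tasks, signal).items():
    print(name, stats)
```

Per-task summaries of this kind can then be compared against baselines, such as the expected time to complete a task.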

8.3.3 Visual Objectification in Films: Towards a New AI Task for Video Interpretation

Participants: Julie Tores [UniCA, CNRS, I3S], Lucile Sassatelli [UniCA, CNRS, I3S], Hui-Yin Wu, Clément Bergman, Léa Andolfi [UniCA, CNRS, I3S], Victor Ecrement [Sorbonne Université, GRIPIC], Frédéric Precioso [UniCA, Inria, CNRS, I3S], Thierry Devars [Sorbonne Université, GRIPIC], Magali Guaresi [UniCA, CNRS, BCL], Virginie Julliard [Sorbonne Université, GRIPIC], Sarah Lecossais [Université Sorbonne Paris Nord, LabSIC].

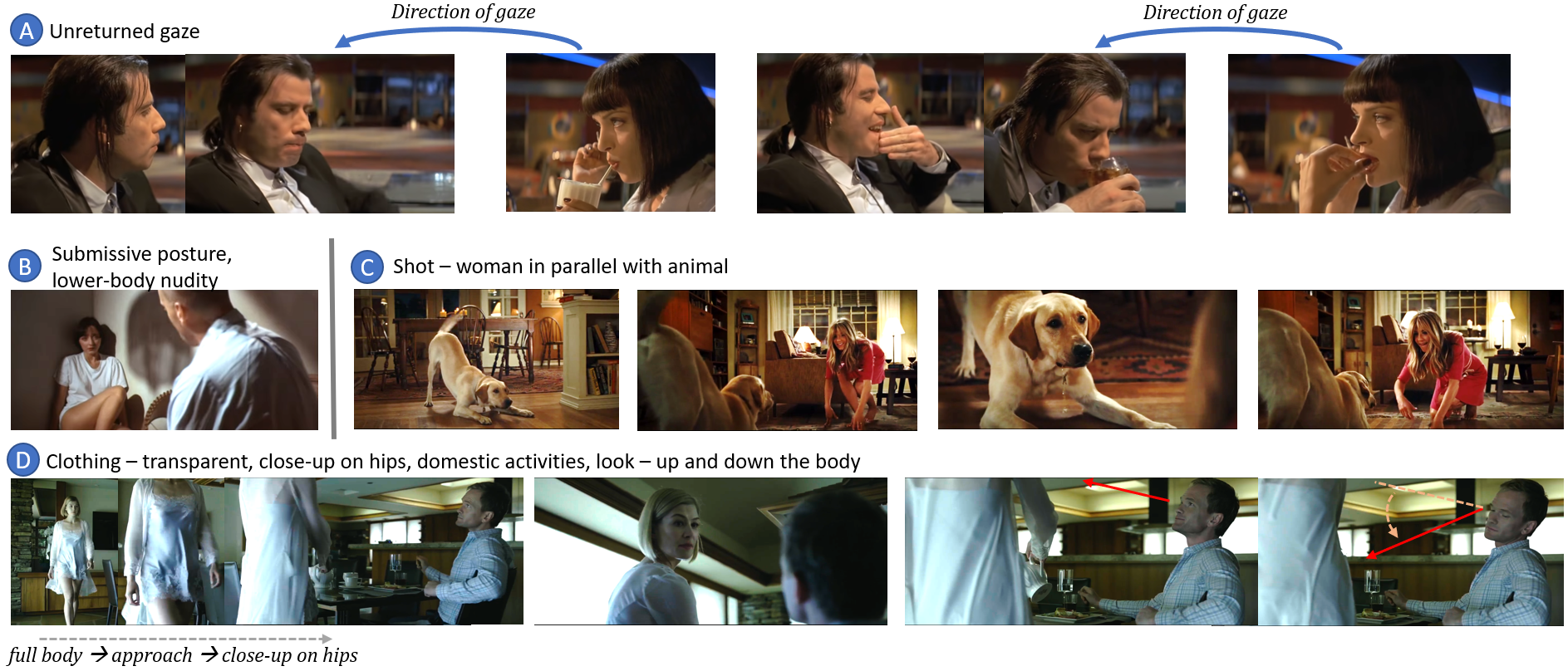

Description: In film gender studies, the concept of “male gaze” refers to the way the characters are portrayed on the screen as objects of desire rather than subjects. In this work, we introduce a novel video-interpretation task, to detect character objectification in films. The purpose is to reveal and quantify the usage of complex temporal patterns operated in cinema to produce the cognitive perception of objectification. We introduce the ObyGaze12 dataset, made of 1914 movie clips densely annotated by experts for objectification concepts identified in film studies and psychology. Examples of films and annotations can be seen in Figure 13. We evaluate recent vision models, show the feasibility of the task with concept bottleneck models, and discuss the limitations. Our new dataset and code are made available to the community.

We show examples of objectification in three films, including (A) a man not meeting the gaze of a woman sitting directly across from him in a restaurant, (B) a man looking down on a woman who is not wearing any clothing on the lower body, (C) a woman crouched down parallel to a dog, and (D) the camera watching a woman wearing a semi-transparent night gown approaching a man, stopping with the camera on her hips, and the man shifting the gaze downward to look at the woman's hips.

This work was presented as a poster highlight (top 11.9% of accepted papers) at the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 25.

8.3.4 HandyNotes: using the hands to create semantic representations of contextually aware real-world objects

Participants: Clément Quéré [UniCA, Polytech, CNRS, I3S], Aline Menin [UniCA, CNRS, I3S], Raphaël Julien [UniCA, Polytech], Hui-Yin Wu, Marco Winckler [UniCA, Polytech, CNRS, I3S].

Description: We investigate Mixed Reality (MR) technologies to provide a seamless integration of digital information in physical environments through human-made annotations. Creating digital annotations of physical objects raises many challenges, even for (simple) tasks such as adding digital notes and connecting them to real-world objects. To address this, we have developed an MR system using the Microsoft HoloLens 2 to create semantic representations of contextually aware real-world objects while interacting with holographic virtual objects. User interaction is enhanced by using the fingers as placeholders for menu items. Our application and its usage are shown in Figure 14. We demonstrate our approach through two real-world scenarios. We also discuss the challenges of using MR technologies.

There are three subfigures labeled A to C showing (A) a virtual box floating over a projector with a hand in a pinch motion to select it, (B) two menus over the virtual box that allow the user to take a picture of the projector annotation, and (C) a user with their right hand open and menu icons for different annotations such as text and voice hover on each finger tip.

This work was published and presented at the 2024 IEEE Conference on Virtual Reality 23.

8.3.5 AMD Journee: A patient co-designed VR experience to raise awareness towards the impact of AMD on social interactions

Participants: Johanna Delachambre, Monica Di Meo [CHU - Hôpital Pasteur, Nice], Frédérique Lagniez [CHU - Hôpital Pasteur, Nice], Christine Morfin-Bourlat [CHU - Hôpital Pasteur, Nice], Stéphanie Baillif [CHU - Hôpital Pasteur, Nice], Hui-Yin Wu, Pierre Kornprobst.

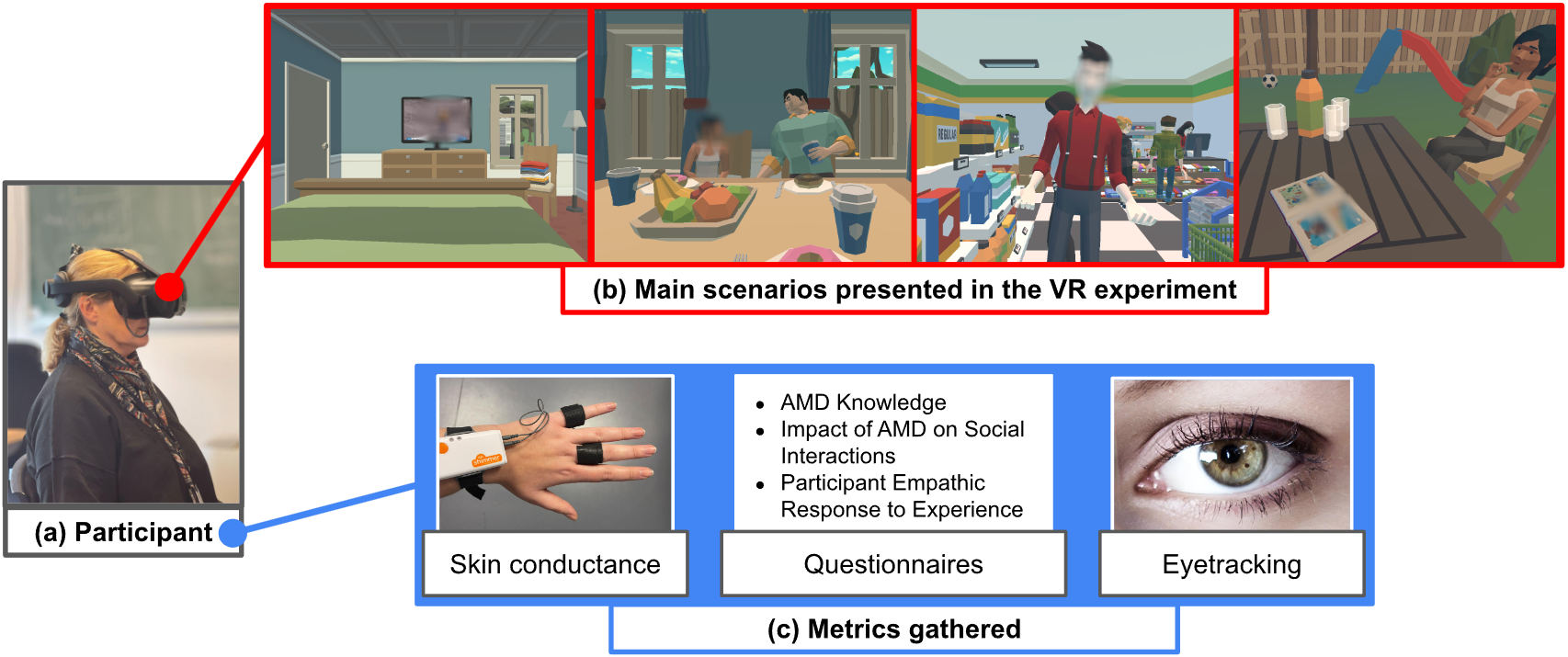

Context: Virtual reality (VR) has great potential for raising awareness, thanks to its ability to immerse users in situations different from their everyday experiences, such as various disabilities. In 20, we present a VR experience designed to raise awareness towards the impact of age-related macular degeneration (AMD) on social interactions for patients.

Description: We investigated age-related macular degeneration (AMD), which causes loss of central visual field acuity (a scotoma) and hinders patients from perceiving facial expressions and gestures. This often leads to social challenges such as awkward interactions, misunderstandings, and feelings of isolation. Using virtual reality (VR), we co-designed an immersive experience with four daily-life scenarios of AMD patients, informed by structured interviews with patients and orthoptists. The experience adopts the patient’s perspective, incorporating voiceovers to narrate their emotions, challenges, adaptive strategies, and advice on how supportive social actions can improve their quality of life. A virtual scotoma, designed to follow the user's gaze via an HTC Vive Focus 3 headset with eye-tracking, enhances realism. Guided by a formal definition of awareness, we evaluate our experience on three components of awareness – knowledge, engagement, and empathy – through established questionnaires, continuous measures of gaze and skin conductance, and qualitative feedback. In an experiment with 29 participants, the experience demonstrated a strong positive impact on awareness about AMD. The scotoma and events also influenced gaze activity and emotional responses. These findings highlight the potential of immersive technologies to raise public awareness of conditions like AMD and pave the way for fine-grained, multimodal studies of user behavior to design more impactful experiences.
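A gaze-contingent scotoma can be thought of as an opacity mask centered on the current gaze point. The sketch below computes such a mask value for one pixel with a soft edge. It is a generic illustration only: the circular shape, the linear falloff, the parameter names (`radius`, `feather`) and the units are assumptions and do not describe the rendering used in the VR experience.

```python
import math


def scotoma_alpha(px, py, gaze_x, gaze_y, radius, feather):
    """Opacity of a virtual scotoma at pixel (px, py).

    Returns 1.0 (fully occluded) within `radius` of the gaze point,
    0.0 (fully visible) beyond `radius + feather`, and a linear ramp
    in between. All quantities share the same unit (e.g., pixels).
    """
    distance = math.hypot(px - gaze_x, py - gaze_y)
    if distance <= radius:
        return 1.0
    if distance >= radius + feather:
        return 0.0
    return 1.0 - (distance - radius) / feather


# The mask follows the gaze: the same pixel changes opacity as gaze moves.
print(scotoma_alpha(3.0, 0.0, gaze_x=0.0, gaze_y=0.0, radius=2.0, feather=2.0))
print(scotoma_alpha(3.0, 0.0, gaze_x=3.0, gaze_y=0.0, radius=2.0, feather=2.0))
```

In a real headset pipeline, the gaze point would be updated from the eye tracker at every frame and the mask applied in a shader rather than per pixel in Python.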

This work was presented at the IMX conference 20 and in a workshop organized by Fedrha 46. The experience is available under an open CeCILL licence (IDDN.FR.001.170016.000.S.P.2024.000.31230) at the project webpage.

General workflow of the VR experiment. On the top, four images illustrate the scenarios of the experience. On the bottom, images and text describe the data gathered, including skin conductance response, eye tracking, and answers from questionnaires.

General workflow of the VR experiment: (a) the participant wearing a headset is going through (b) four main scenarios designed to show “what it is like” to live with AMD and interact with others. (c) Multiple types of data on the user experience are collected including skin conductance response, eye tracking, and questionnaires.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Participants: Pierre Kornprobst, Sebastián Gallardo, Dorian Mazauric [UniCA, Inria, ABS Team].

- Cifre contract with the company Demain Un Autre Jour (directed by Bruno Génuit), in the scope of the PhD of Sebastián Gallardo (co-supervised by Pierre Kornprobst and Dorian Mazauric, June 2023 – May 2026).

10 Partnerships and cooperations

10.1 International initiatives

10.1.1 Associate Teams in the framework of an Inria International Lab or in the framework of an Inria International Program

FUSION

- Title: Functional structure of the retina: A physiological and computational approach

- Duration: 2024 ->

- Coordinator: Adrian Palacios (adrian.palacios@uv.cl)

- Partners:

  - Universidad de Valparaiso (Chile)

- Inria contact: Bruno Cessac

- Summary: This project aims to gain a better understanding of the retinal response to complex visual stimuli, and to discover the role played by the network of lateral interneurons (amacrine cells) in this response. To achieve this, we will adopt a dual methodology: experimental and computational. Using real-time control and a feedback loop, we will exploit a computer model of the retina to adapt, in real time, visual stimuli to the recorded responses of retinal cells. The experiments will be carried out in Valparaiso (Chile). The Biovision team will develop the control software and extrapolate the results at Inria Sophia-Antipolis, drawing on its recent theoretical advances in retinal modeling. This is a transdisciplinary, international project at the interface between biology, computer science and mathematical neuroscience. Beyond a better understanding of the role of its network structure in the retina's response to complex spatio-temporal stimuli, this project could potentially have an impact on diagnostic methods for neurodegenerative diseases such as Alzheimer's.

10.1.2 Participation in other International Programs

ESTHETICS

Participants: Bruno Cessac, Rodrigo Cofré, Simone Ebert, Francisco Miqueles, Adrian Palacios, Erwan Petit, Jorge Portal-Diaz, Luc Pronzato, Ludovic Sacchelli.

- Title: Exploring the functional Structure of THe rETIna with Closed loop Stimulation. A physiological and computational approach.

- Partner Institution(s):

  - Facultad de Ciencias, Universidad de Valparaíso, Chile

  - Universidad de Valparaíso, Chile

  - I3S, CNRS, Sophia-Antipolis, France

- Date/Duration: 2 years

- Additional info/keywords: This project, funded by Idex RISE, aims to better understand the retina's response to complex visual stimuli and unravel the role played by the lateral interneurons network (amacrine cells) in this response. For this, we will adopt an experimental methodology using real-time control and a feedback loop to adapt, in real time, visual stimulations to recorded retinal cell responses. The experiments will be done in Valparaiso (Chile). The Biovision team will develop the control software and extrapolate results at Inria Sophia-Antipolis based on its recent theoretical advances in retina modeling. This is, therefore, a transdisciplinary and international project at the interface between biology, computer sciences and mathematical neurosciences. Beyond a better understanding of its network structure's role in shaping the retina's response to complex spatiotemporal stimuli, this project could potentially impact methods for diagnosing neurodegenerative diseases like Alzheimer's.

10.2 International research visitors

10.2.1 Visits of international scientists

Other international visits to the team

Francisco Miqueles

- Status: intern (master/eng)

- Institution of origin: Universidad Valparaiso

- Country: Chile

- Dates: 01/02/2024-28/05/2024

- Context of the visit: collaboration with A. Palacios team, ESTHETICS project.

- Mobility program/type of mobility: internship.

Jorge Portal Diaz

- Status: PhD

- Institution of origin: Universidad Valparaiso

- Country: Chile

- Dates: 01/02/2024-28/05/2024

- Context of the visit: collaboration with A. Palacios team, ESTHETICS project.

- Mobility program/type of mobility: Research stay.

10.3 National initiatives

Participants: Bruno Cessac, Pierre Kornprobst, Hui-Yin Wu.

10.3.1 ANR

ShootingStar

- Title: Processing of naturalistic motion in early vision

- Programme: ANR

- Duration: April 2021 - March 2025

- Coordinator: Mark WEXLER (CNRS‐INCC)

- Partners:

  - Institut de Neurosciences de la Timone (CNRS and Aix-Marseille Université, France)

  - Institut de la Vision (IdV), Paris, France

  - Unité de Neurosciences Information et Complexité, Gif sur Yvette, France

  - Laboratoire Psychologie de la Perception - UMR 8242, Paris

- Inria contact: Bruno Cessac

- Summary: The natural visual environments in which we have evolved have shaped and constrained the neural mechanisms of vision. Rapid progress has been made in recent years in understanding how the retina, thalamus, and visual cortex are specifically adapted to processing natural scenes. Over the past several years it has, in particular, become clear that cortical and retinal responses to dynamic visual stimuli are themselves dynamic. For example, the response in the primary visual cortex to a sudden onset is not a static activation, but rather a propagating wave. Probably the most common motions in the retina are image shifts due to our own eye movements: in free viewing in humans, ocular saccades occur about three times every second, shifting the retinal image at speeds of 100-500 degrees of visual angle per second. How these very fast shifts are suppressed, leading to clear, accurate, and stable representations of the visual scene, is a fundamental unsolved problem in visual neuroscience known as saccadic suppression. The new Agence Nationale de la Recherche (ANR) project “ShootingStar” aims at studying the unexplored neuroscience and psychophysics of the visual perception of fast (over 100 deg/s) motion, and incorporating these results into models of the early visual system.

DEVISE

-

Title:

From novel rehabilitation protocols to visual aid systems for low vision people through Virtual Reality

-

Programme:

ANR

-

Duration:

2021–2025

-

Coordinator:

Eric Castet (Laboratoire de Psychologie Cognitive, Marseille)

-

Partners:

- CNRS/Aix Marseille University – AMU, Cognitive Psychology Laboratory

- AMU, Mediterranean Virtual Reality Center

-

Inria contact:

Pierre Kornprobst

-

Summary:

The ANR DEVISE project (Developing Eccentric Viewing in Immersive Simulated Environments) aims to develop new functional rehabilitation techniques for visually impaired people, delivered through a virtual reality headset. A key strength of these techniques will be that their parameters are personalized to each patient’s pathology. They will eventually be based on serious games whose practice will improve the sensory-motor capacities that are deficient in these patients.

CREATTIVE3D

-

Title:

Creating attention driven 3D contexts for low vision

-

Programme:

ANR

-

Duration:

2022–2026

-

Coordinator:

Hui-Yin Wu

-

Partners:

- Université Côte d'Azur I3S, LAMHESS, CoBTEK laboratories

- CNRS/Aix Marseille University – AMU, Cognitive Psychology Laboratory

-

Summary:

CREATTIVE3D deploys virtual reality (VR) headsets to study navigation behaviors in complex environments under both normal and simulated low-vision conditions. We aim to model multi-modal user attention and behavior, and use this understanding for the design of assisted creativity tools and protocols for the creation of personalized 3D-VR content for low vision training and rehabilitation.

TRACTIVE

-

Title:

Towards a computational multimodal analysis of film discursive aesthetics

-

Programme:

ANR

-

Duration:

2022–2026

-

Coordinator:

Lucile Sassatelli

-

Partners:

- Université Côte d'Azur CNRS I3S

- Université Côte d'Azur, CNRS BCL

- Sorbonne Université, GRIPIC

- Université Toulouse 3, CNRS IRIT

- Université Sorbonne Paris Nord, LabSIC

-

Inria contact:

Hui-Yin Wu

-

Summary:

TRACTIVE's objective is to characterize and quantify gender representation and the objectification of women in films and visual media by designing an AI-driven multimodal (visual and textual) discourse analysis. The project aims to establish a novel framework for the analysis of gender representation in visual media. We integrate AI, linguistics, and media studies in an iterative approach that both pinpoints the multimodal discourse patterns of gender in film and quantitatively reveals their prevalence. We devise a new interpretative framework for media and gender studies that incorporates modern AI capabilities. Our models, published through an online tool, will engage the general public through participative science to raise awareness of gender-in-media issues from a multi-disciplinary perspective.

11 Dissemination

Participants: Alexandre Bonlarron, Bruno Cessac, Johanna Delachambre, Simone Ebert, Jérôme Emonet, Pierre Kornprobst, Erwan Petit, Florent Robert, Hui-Yin Wu.

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

Member of the organizing committees

- H.-Y. Wu co-organized, with Cécile Mazon (EPI Flowers), the Journées Scientifiques d'Inria on "Handicap, perte d'autonomie & numérique", held in Paris on November 19-20.

11.1.2 Scientific events: selection

Member of the conference program committees

- H.-Y. Wu was associate chair for the conference ACM Interactive Media Experiences (IMX 2024).

Reviewer

- B. Cessac was a reviewer for the 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society.

- P. Kornprobst was a reviewer for the 33rd International Joint Conference on Artificial Intelligence (IJCAI-24), Main Track.

- H.-Y. Wu was a reviewer for the IEEE Conference on Virtual Reality (IEEEVR 2024).

- H.-Y. Wu was a reviewer for the IEEE International Symposium on the Internet of Sounds.

- H.-Y. Wu was a reviewer for the ACM Symposium on Engineering Interactive Computing Systems (EICS).

11.1.3 Journal

Reviewer - reviewing activities

- H.-Y. Wu was a reviewer for the journal Elsevier Displays.

- H.-Y. Wu was a reviewer for the journal Springer Multimedia Tools and Applications.

11.1.4 Invited talks

- B. Cessac gave a talk at the Institut de Physique de Nice, entitled "The retina from a physical perspective."

- H.-Y. Wu gave an invited talk at the 48th "Forum de culture scientifique" about her research in virtual reality and artificial intelligence.

- H.-Y. Wu participated in the round table for the Educazur/France Immersive Learning event on "Immersive technologies and learning".

11.1.5 Scientific expertise

- P. Kornprobst reviewed one proposal submitted to the ERC Advanced Grant 2023 Call.

- H.-Y. Wu was part of the Maître de Conférences selection committee for UniCA DS4H.

11.1.6 Research administration

- B. Cessac was a member of the Comité Scientifique de l'Institut Neuromod.

- B. Cessac is a member of the Bureau du Comité des Equipes Projets.

- B. Cessac was a member of the Comité de Suivi et Pilotage Spectrum.

- H.-Y. Wu is a member of the Comité de Suivi Doctoral of Inria.

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

- Licence 2: A. Bonlarron (58 hours, TD) Mécanismes internes des systèmes d'exploitation, Licence en informatique, UniCA, France.

- Master 1: B. Cessac (24 hours, cours) Introduction to Modelling in Neuroscience, master Mod4NeuCog, UniCA, France.

- Master 1: E. Petit (15 hours, TD), Introduction to Modelling in Neuroscience, master Mod4NeuCog, UniCA, France.

- Licence 1: E. Petit (36 hours TD), Bases de l'informatique en python, Licence math/info, UniCA, France.

- Licence 2: J. Emonet (22 hours), Introduction à l'informatique, Licence SV, UniCA, France.

- Licence 3: J. Emonet (16 hours), Programmation python et environnement linux, Licence BIM, UniCA, France.

- Licence 3: J. Emonet (20 hours), Biostatistiques, Licence SV, UniCA, France.

- Master 1: J. Delachambre (17.5 hours TD), Création de mondes virtuels, Master en Informatique (SI4), Polytech Nice Sophia, UniCA, France.

- Master 2: J. Delachambre (9 hours TD), Techniques d'interaction et multimodalité, Master en Informatique (SI5) mineure IHM, Polytech Nice Sophia, UniCA, France.

- Master 1: F. Robert (30 hours TD), Création de mondes virtuels, Master en Informatique (SI4), Polytech Nice Sophia, UniCA, France.

- Licence 3: F. Robert (39.5 hours TD), Analyse du besoin & Application Web, MIAGE, UniCA, France.

- Master 1: H.-Y. Wu (5 hours course), Introduction to scientific research, Master 1, DS4H, UniCA, France.

- Master 1: H.-Y. Wu (8 hours course), Introduction to scientific research, Master en Informatique (SI5), Polytech Nice Sophia, UniCA, France.

- Master 1: H.-Y. Wu (12 hours course), Création de mondes virtuels, Master en Informatique (SI4), Polytech Nice Sophia, UniCA, France.

- Master 2: H.-Y. Wu (6 hours course), Techniques d'interaction et multimodalité, Master en Informatique (SI5) mineure IHM, Polytech Nice Sophia, UniCA, France.

- Master 2: H.-Y. Wu supervises M2 student final projects (TER), 2 hours, Master en Informatique (SI5), Polytech Nice Sophia, UniCA, France.

11.2.2 Supervision

- B. Cessac co-supervised the PhD of Jérôme Emonet (with A. Destexhe), "A retino-cortical model to study movement-generated waves in the visual system", defended on November 28th.

- B. Cessac supervises the PhD of Erwan Petit, "Modeling activity waves in the retina".

- B. Cessac co-supervised the M1 and M2 internship of Laura Piovano, Master Mod4NeuCog, in collaboration with S. Ebert.

- B. Cessac, P. Kornprobst and H.-Y. Wu co-supervised the M1 and M2 internship of C. Kyriazis, Master Mod4NeuCog.

- P. Kornprobst co-supervised (with J.-C. Régin) the PhD of Alexandre Bonlarron, "Pushing the limits of reading performance screening with Artificial Intelligence: Towards large-scale evaluation protocols for the Visually Impaired".

- P. Kornprobst co-supervises (with D. Mazauric) the PhD of Sebastian Gallardo, "Making newspaper layouts dynamic: Study of a new combinatorial/geometric packing problems". CIFRE contract with the company Demain un Autre Jour (Toulouse).

- P. Kornprobst and H.-Y. Wu co-supervise the PhD of Johanna Delachambre, "Social interactions in low vision: A collaborative approach based on immersive technologies".

- H.-Y. Wu co-supervised (with Marco Winckler and Lucile Sassatelli) the PhD of Florent Robert, "Analyzing and Understanding Embodied Interactions in Extended Reality Systems", defended on December 9, 2024.

- H.-Y. Wu co-supervises (with Lucile Sassatelli) the PhD of Franz Franco Gallo, "Modeling 6DoF Navigation and the Impact of Low Vision in Immersive VR Contexts".

- H.-Y. Wu co-supervises (with Marco Winckler and Aline Menin) the PhD of Clément Quéré, "Apports des réalités étendues pour l’exploration visuelle de données spatio-temporelles".

- H.-Y. Wu co-supervises (with Lucile Sassatelli and Frédéric Précioso) the PhD of Julie Tores, "Deep learning to detect objectification in films and visual media".

- H.-Y. Wu co-supervises (with Marco Winckler) the PhD of Pauline Devictor, "Empathetic storytelling in interactive extended reality".

- H.-Y. Wu supervised the Master 2 internship of Pauline Devictor.

11.2.3 Juries

- B. Cessac was a member of the comité de thèse of Anastasiia Maslianitsyna (Institut de la Vision, Paris).

- H.-Y. Wu was a jury member for the defense of Paritosh Sharma (University of Paris-Saclay, Paris).

- H.-Y. Wu was a member of the comité de thèse of Géraldine Cherchi (UniCA, SVS, Nice).

- H.-Y. Wu was a member of the comité de thèse of Kevin Galéry (UniCA, SVS, Nice).

- H.-Y. Wu was a member of the comité de thèse of Yujie Huang (Université de Nantes, France).

11.3 Popularization

11.3.1 Other science outreach relevant activities

- H.-Y. Wu gave an outreach talk on pursuing a scientific career in computer science to students at Lycée Calmette, Nice.

12 Scientific production

12.1 Major publications

- 1. Article. A New Vessel-Based Method to Estimate Automatically the Position of the Nonfunctional Fovea on Altered Retinography From Maculopathies. Translational vision science & technology 12, July 2023.

- 2. Article. PTVR – A software in Python to make virtual reality experiments easier to build and more reproducible. Journal of Vision 24, April 2024.

- 3. Article. Linear response for spiking neuronal networks with unbounded memory. Entropy 23(2), February 2021, 155. (The institution funded the publication fees so that this article is open access.)

- 4. In proceedings. AMD Journee: A Patient Co-designed VR Experience to Raise Awareness Towards the Impact of AMD on Social Interactions. IMX 2024 - ACM International Conference on Interactive Media Experiences, Stockholm, Sweden, June 2024.

- 5. Article. Temporal pattern recognition in retinal ganglion cells is mediated by dynamical inhibitory synapses. Nature Communications 15(1), July 2024, 6118.

- 6. Article. A constructive mean field analysis of multi population neural networks with random synaptic weights and stochastic inputs. Frontiers in Computational Neuroscience 3(1), 2009. URL: http://arxiv.org/abs/0808.1113

- 7. In proceedings. Human Trajectory Forecasting in 3D Environments: Navigating Complexity under Low Vision. MMVE 2024 - ACM Multimedia Systems Workshop on IMmersive Mixed and Virtual Environment Systems (MMVE '24: Proceedings of the 16th International Workshop on Immersive Mixed and Virtual Environment Systems), Bari, Italy, ACM, April 2024, 57-63.