2023 Activity report, Project-Team EX-SITU

RNSR: 201521246H

- Research center: Inria Saclay Centre at Université Paris-Saclay

- In partnership with: CNRS, Université Paris-Saclay

- Team name: Extreme Situated Interaction

- In collaboration with: Laboratoire Interdisciplinaire des Sciences du Numérique

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.2. Data visualization

- A5.6.2. Augmented reality

Other Research Topics and Application Domains

- B2.8. Sports, performance, motor skills

- B6.3.1. Web

- B6.3.4. Social Networks

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.4. Theater

- B9.5. Sciences

1 Team members, visitors, external collaborators

Research Scientists

- Wendy Mackay [Team leader, INRIA, Senior Researcher, HDR]

- Janin Koch [INRIA, ISFP]

- Theophanis Tsandilas [INRIA, Researcher, HDR]

Faculty Members

- Michel Beaudouin-Lafon [UNIV PARIS SACLAY, Professor, HDR]

- Sarah Fdili Alaoui [UNIV PARIS SACLAY, Associate Professor, HDR]

Post-Doctoral Fellow

- Johnny Sullivan [UNIV PARIS SACLAY]

PhD Students

- Tove Bang [UNIV PARIS SACLAY]

- Alexandre Battut [UNIV PARIS SACLAY, until Mar 2023]

- Eya Ben Chaaben [INRIA]

- Vincent Bonczak [INRIA, from Sep 2023]

- Léo Chédin [ENS PARIS-SACLAY, from Sep 2023]

- Romane Dubus [UNIV PARIS SACLAY]

- Arthur Fages [UNIV PARIS SACLAY, until Mar 2023]

- Camille Gobert [INRIA]

- Yasaman Mashhadi Hashem Marandi [INRIA, from Oct 2023]

- Capucine Nghiem [UNIV PARIS SACLAY]

- Anna Offenwanger [UNIV PARIS SACLAY]

- Xiaohan Peng [INRIA, from Sep 2023]

- Wissal Sahel [IRT SYSTEM X]

- Martin Tricaud [UNIV PARIS SACLAY]

- Yann Trividic [UNIV PARIS SACLAY, from Oct 2023]

Technical Staff

- Sébastien Dubos [UNIV PARIS SACLAY, Engineer, from Oct 2023]

- Olivier Gladin [INRIA, Engineer]

- Alexandre Kabil [UNIV PARIS SACLAY, Engineer]

- Nicolas Taffin [INRIA, Engineer, until Jul 2023]

- Junhang Yu [INRIA, until May 2023]

Interns and Apprentices

- Ricardo Calcagno [UNIV PARIS SACLAY, from May 2023 until Jul 2023, Intern]

- Léo Chédin [Université Paris-Saclay, from Mar 2023 until Aug 2023, Masters Intern]

- Lars Oberhofer [INRIA, Intern, from May 2023 until Aug 2023]

- Elena Pasalskaya [INRIA, Intern, from Apr 2023 until Jun 2023]

- Lea Paymal [UNIV PARIS SACLAY (ENS), from Mar 2023 until Aug 2023, Masters Intern]

- Xiaohan Peng [INRIA, Intern, from Mar 2023 until Aug 2023, Masters]

- Catalina-Gabriela Radu [INRIA, Intern, from May 2023 until Aug 2023]

- Noemie Tapie [UNIV PARIS SACLAY, from Apr 2023 until Aug 2023]

Administrative Assistant

- Julienne Moukalou [INRIA]

External Collaborators

- Joanna Lynn McGrenere [University of British Columbia, Former Inria Chair, Full Professor]

- Nicolas Taffin [UNIV PARIS SACLAY, LISN, from Aug 2023]

2 Overall objectives

Interactive devices are everywhere: we wear them on our wrists and belts; we consult them from purses and pockets; we read them on the sofa and on the metro; we rely on them to control cars and appliances; and soon we will interact with them on living room walls and billboards in the city. Over the past 30 years, we have witnessed tremendous advances in both hardware and networking technology, which have revolutionized all aspects of our lives, not only business and industry, but also health, education and entertainment. Yet the ways in which we interact with these technologies remain mired in the 1980s. The graphical user interface (GUI), revolutionary at the time, has been pushed far past its limits. Originally designed to help secretaries perform administrative tasks in a work setting, the GUI is now applied to every kind of device, for every kind of setting. While this may make sense for novice users, it forces expert users to use frustratingly inefficient and idiosyncratic tools that are neither powerful nor incrementally learnable.

ExSitu explores the limits of interaction: how extreme users interact with technology in extreme situations. Rather than beginning with novice users and adding complexity, we begin with expert users who already face extreme interaction requirements. We are particularly interested in creative professionals, artists and designers who rewrite the rules as they create new works, and scientists who seek to understand complex phenomena through creative exploration of large quantities of data. Studying these advanced users today will help us not only to anticipate the routine tasks of tomorrow, but also to advance our understanding of interaction itself. We seek to create effective human-computer partnerships, in which expert users control their interaction with technology. Our goal is to advance our understanding of interaction as a phenomenon, with a corresponding paradigm shift in how we design, implement and use interactive systems. We have already made significant progress through our work on instrumental interaction and co-adaptive systems, and we hope to extend these into a foundation for the design of all interactive technology.

3 Research program

We characterize Extreme Situated Interaction as follows:

Extreme users. We study extreme users who make extreme demands on current technology. We know that human beings take advantage of the laws of physics to find creative new uses for physical objects. However, this level of adaptability is severely limited when manipulating digital objects. Even so, we find that creative professionals (artists, designers and scientists) often adapt interactive technology in novel and unexpected ways and find creative solutions. By studying these users, we hope not only to address the specific problems they face, but also to identify the underlying principles that will help us to reinvent virtual tools. We seek to shift the paradigm of interactive software and to establish the laws of interaction that significantly empower users and allow them to control their digital environment.

Extreme situations. We develop extreme environments that push the limits of today's technology. We take as given that future developments will solve “practical” problems such as cost, reliability and performance, and concentrate our efforts on interaction in and with such environments. This has been a successful strategy in the past: personal computers only became prevalent after the invention of the desktop graphical user interface. Smartphones and tablets only became commercially successful after Apple cracked the problem of a usable touch-based interface for the iPhone and the iPad. Although wearable technologies, such as watches and glasses, are finally beginning to take off, we do not believe that they will create the major disruptions already caused by personal computers, smartphones and tablets. Instead, we believe that future disruptive technologies will include fully interactive paper and large interactive displays.

Our extensive experience with the Digiscope WILD and WILDER platforms places us in a unique position to understand the principles of distributed interaction that extreme environments call for. We expect to integrate, at a fundamental level, the collaborative capabilities that such environments afford. Indeed, almost all of our activities in both the digital and the physical world take place within a complex web of human relationships. Current systems only support, at best, passive sharing of information, e.g., through the distribution of independent copies. Our goal is to support active collaboration, in which multiple users are actively engaged in the lifecycle of digital artifacts.

Extreme design. We explore novel approaches to the design of interactive systems, with particular emphasis on extreme users in extreme environments. Our goal is to empower creative professionals, allowing them to act as both designers and developers throughout the design process. Extreme design affects every stage, from requirements definition, to early prototyping and design exploration, to implementation, to adaptation and appropriation by end users. We hope to push the limits of participatory design to actively support creativity at all stages of the design lifecycle. Extreme design does not stop with purely digital artifacts. The advent of digital fabrication tools and FabLabs has significantly lowered the cost of making physical objects interactive. Creative professionals now create hybrid interactive objects that can be tuned to the user's needs. Integrating the design of physical objects into the software design process raises new challenges, requiring new methods and skills to support this form of extreme prototyping.

Our overall approach is to identify a small number of specific projects, organized around four themes: Creativity, Augmentation, Collaboration and Infrastructure. Specific projects may address multiple themes, and different members of the group work together to advance these different topics.

4 Application domains

4.1 Creative industries

We work closely with creative professionals in the arts and in design, including music composers, musicians, and sound engineers; painters and illustrators; dancers and choreographers; theater groups; game designers; graphic and industrial designers; and architects.

4.2 Scientific research

We work with creative professionals in the sciences and engineering, including neuroscientists and doctors; programmers and statisticians; chemists and astrophysicists; and researchers in fluid mechanics.

5 Highlights of the year

- Michel Beaudouin-Lafon was named an ACM Fellow;

- Wendy Mackay published a book based on her 2022 Inaugural Lecture as Visiting Professor in Computer Science for the Collège de France, “Réimaginer nos interactions avec le monde numérique” 31;

- Wendy Mackay published the first fully single-source authored book, “DOIT: The Design of Interactive Things” 30, distributed at the CHI'23 conference;

- Sarah Fdili Alaoui defended her Habilitation 33: “Dance-Led Research”;

- The PEPR eNSEMBLE (Future of Digital Collaboration) was launched in October 2023;

- The ERC PoC (proof-of-concept) project “OnePub: Single-Source Collaborative Publishing” started in March 2023;

- Wendy Mackay and Michel Beaudouin-Lafon testified about Human-Centered AI to the European Parliament (Brussels, May 2023).

5.1 Awards

6 New software, platforms, open data

6.1 New software

6.1.1 Digiscape

- Name: Digiscape

- Keywords: 2D, 3D, Node.js, Unity 3D, Video stream

- Functional Description: Through the Digiscape application, users can connect to a remote workspace and share files, video and audio streams with other users. Applications running on complex visualization platforms can be easily launched and synchronized.

- URL:

- Contact: Olivier Gladin

- Partners: Maison de la simulation, UVSQ, CEA, ENS Cachan, LIMSI, LRI - Laboratoire de Recherche en Informatique, CentraleSupélec, Telecom Paris

6.1.2 Touchstone2

- Keyword: Experimental design

- Functional Description:

Touchstone2 is a graphical user interface to create and compare experimental designs. It is based on a visual language: Each experiment consists of nested bricks that represent the overall design, blocking levels, independent variables, and their levels. Parameters such as variable names, counterbalancing strategy and trial duration are specified in the bricks and used to compute the minimum number of participants for a balanced design, account for learning effects, and estimate session length. An experiment summary appears below each brick assembly, documenting the design. Manipulating bricks immediately generates a corresponding trial table that shows the distribution of experiment conditions across participants. Trial tables are faceted by participant. Using brushing and fish-eye views, users can easily compare among participants and among designs on one screen, and examine their trade-offs.
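The link between counterbalancing, the minimum number of participants for a balanced design and the resulting trial table can be illustrated with a short Python sketch. It assumes a Williams-style balanced Latin square, which is only one possible counterbalancing strategy; the function names `balanced_latin_square` and `trial_table` are hypothetical and are not part of Touchstone2 or TSL.

```python
from typing import List, Tuple


def balanced_latin_square(n: int) -> List[List[int]]:
    """Orders of n conditions (indices 0..n-1) forming a balanced Latin square.

    Each condition appears once per position, and each condition immediately
    precedes every other condition equally often (first-order carryover balance).
    Odd n needs each row followed by its reverse, hence 2n rows.
    """
    if n < 1:
        raise ValueError("need at least one condition")
    # Williams base sequence: 0, 1, n-1, 2, n-2, ...
    base = [0] + [(j + 1) // 2 if j % 2 == 1 else n - j // 2 for j in range(1, n)]
    rows = [[(c + r) % n for c in base] for r in range(n)]
    if n % 2 == 1:
        rows = [order for row in rows for order in (row, row[::-1])]
    return rows


def trial_table(conditions: List[str], n_participants: int) -> List[Tuple[int, int, str]]:
    """(participant, trial, condition) rows; participant p follows row p mod len(square)."""
    square = balanced_latin_square(len(conditions))
    table = []
    for p in range(n_participants):
        for trial, c in enumerate(square[p % len(square)], start=1):
            table.append((p + 1, trial, conditions[c]))
    return table


# The smallest balanced design uses one participant per row of the square.
conditions = ["A", "B", "C", "D"]
min_balanced = len(balanced_latin_square(len(conditions)))
for row in trial_table(conditions, min_balanced):
    print(row)
```

Any multiple of the number of rows keeps the design balanced, which is why valid participant counts come in steps rather than one by one.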

Touchstone2 plots a power chart for each experiment in the workspace. Each power curve is a function of the number of participants, and thus increases monotonically. Dots on the curves denote numbers of participants for a balanced design. The pink area corresponds to a power less than the 0.8 criterion: the first dot above it indicates the minimum number of participants. To refine this estimate, users can choose among Cohen’s three conventional effect sizes, directly enter a numerical effect size, or use a calculator to enter mean values for each treatment of the dependent variable (often from a pilot study).
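The idea behind the first dot above the 0.8 criterion can be sketched in Python as follows. This is a simplification under stated assumptions, not necessarily how Touchstone2 computes its curves: it uses a normal approximation of power for a paired (within-subjects) comparison instead of the exact noncentral t distribution, so it slightly underestimates the required sample size. The parameter `step` is a hypothetical stand-in for the number of participants in one balanced block, such as the number of rows of a Latin square.

```python
import math
from statistics import NormalDist

# Cohen's conventional effect sizes for d.
COHEN_D = {"small": 0.2, "medium": 0.5, "large": 0.8}


def approx_power(d: float, n: int, alpha: float = 0.05) -> float:
    """Normal approximation of two-sided power for a paired comparison
    with standardized effect size d and n participants."""
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)
    shift = d * math.sqrt(n)
    return std.cdf(shift - z_crit) + std.cdf(-shift - z_crit)


def min_participants(d: float, step: int, target: float = 0.8,
                     alpha: float = 0.05, max_n: int = 10_000) -> int:
    """Smallest multiple of `step` (one dot on the power curve) whose
    approximate power reaches `target`."""
    for n in range(step, max_n + 1, step):
        if approx_power(d, n, alpha) >= target:
            return n
    raise ValueError("target power not reached within max_n participants")


for name, d in COHEN_D.items():
    print(name, min_participants(d, step=4))
```

Because power grows monotonically with n, scanning the balanced sizes in increasing order and stopping at the first one above the criterion yields the minimum.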

Touchstone2 can export a design in a variety of formats, including JSON and XML for the trial table, and TSL, a language we have created to describe experimental designs. A command-line tool is provided to generate a trial table from a TSL description.

Touchstone2 runs in any modern Web browser and is also available as a standalone tool. It is used at ExSitu for the design of our experiments, and by other universities and research centers worldwide. It is available under an Open Source licence at https://touchstone2.org.

- URL:

- Contact: Wendy Mackay

- Partner: University of Zurich

6.1.3 UnityCluster

- Keywords: 3D, Virtual reality, 3D interaction

- Functional Description:

UnityCluster is middleware that distributes any Unity 3D (https://unity3d.com/) application over a cluster of computers driving interactive rooms, such as our WILD and WILDER rooms, or immersive CAVEs (Cave Automatic Virtual Environments). Users can interact with the application using various interaction resources.

UnityCluster provides an easy way to run existing Unity 3D applications on any display that requires a rendering cluster of several computers. UnityCluster is based on a master-slave architecture: the master computer runs the main application and the physics simulation and manages input, while the slave computers receive updates from the master and each renders a small part of the 3D scene. UnityCluster manages data distribution and synchronization among the computers to obtain a consistent image across the entire wall-sized display surface.
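How each render computer's share of the wall might be described is illustrated by the Python sketch below. It assumes the wall is split into a regular grid of equally sized tiles, one per render node; the `Tile` type, the `partition_wall` function and the grid layout are hypothetical, and UnityCluster itself is implemented as C# scripts inside Unity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tile:
    node: int    # index of the render node that draws this tile
    x: int       # pixel offset of the tile on the wall
    y: int
    width: int
    height: int
    # Normalized wall rectangle [u0, u1] x [v0, v1] covered by the tile,
    # used to select the matching slice of the shared view.
    u0: float
    v0: float
    u1: float
    v1: float


def partition_wall(wall_w: int, wall_h: int, cols: int, rows: int) -> List[Tile]:
    """Split a wall of wall_w x wall_h pixels into cols x rows equal tiles."""
    if wall_w % cols or wall_h % rows:
        raise ValueError("wall resolution must divide evenly into the grid")
    tw, th = wall_w // cols, wall_h // rows
    return [
        Tile(node=r * cols + c, x=c * tw, y=r * th, width=tw, height=th,
             u0=c / cols, v0=r / rows, u1=(c + 1) / cols, v1=(r + 1) / rows)
        for r in range(rows) for c in range(cols)
    ]
```

In this sketch, the master would broadcast the shared scene state on every frame and each node would render only its own `Tile`. For instance, `partition_wall(14400, 4800, 15, 5)` yields 75 tiles of 960 x 960 pixels.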

UnityCluster can also deform the displayed images according to the user's position in order to match the viewing frustum defined by the user's head and the four corners of the screens. This respects the motion parallax of the 3D scene, giving users a better sense of depth.
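The head-coupled deformation described above corresponds to an asymmetric (off-axis) view frustum. The following Python sketch assumes the screen lies in the plane z = 0 with axis-aligned edges and the tracked eye at positive z; the general case for arbitrarily oriented screens is Kooima's generalized perspective projection. The returned values follow the argument order of OpenGL's `glFrustum`, and the view transform must also translate the scene by the negated eye position. Names are hypothetical, not UnityCluster's code.

```python
from typing import NamedTuple, Tuple


class Frustum(NamedTuple):
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye: Tuple[float, float, float],
                     screen_min: Tuple[float, float],
                     screen_max: Tuple[float, float],
                     near: float = 0.1, far: float = 100.0) -> Frustum:
    """Frustum whose near-plane rectangle projects exactly onto the screen
    corners as seen from `eye` (all coordinates in the same unit, e.g. meters)."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges, taken relative to the eye,
    # from the eye-screen distance ez down to the near-plane distance.
    scale = near / ez
    (x0, y0), (x1, y1) = screen_min, screen_max
    return Frustum((x0 - ex) * scale, (x1 - ex) * scale,
                   (y0 - ey) * scale, (y1 - ey) * scale, near, far)


# A 2 m x 1 m screen viewed from 1 m away, first centered, then 0.5 m to the right.
print(off_axis_frustum((0.0, 0.0, 1.0), (-1.0, -0.5), (1.0, 0.5)))
print(off_axis_frustum((0.5, 0.0, 1.0), (-1.0, -0.5), (1.0, 0.5)))
```

As the user moves to the right, the frustum becomes asymmetric (the left extent grows and the right extent shrinks), which produces the motion parallax. On a tiled wall, each node could pass its own tile's corners as `screen_min` and `screen_max`.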

UnityCluster is composed of a set of C# scripts that manage the network connection, data distribution, and the deformation of the viewing frustum. To distribute an existing application on the rendering cluster, all scripts must be embedded into a Unity package that is included in the existing Unity project.

- Contact: Cédric Fleury

- Partner: Inria

6.1.4 VideoClipper

- Keyword: Video recording

- Functional Description:

VideoClipper is an iOS app for the Apple iPad, designed to guide video capture during a variety of prototyping activities, including video brainstorming, interviews, video prototyping and participatory design workshops. It relies heavily on Apple’s AVFoundation, a framework that provides essential services for working with time-based audiovisual media on iOS (https://developer.apple.com/av-foundation/). VideoClipper uses it mainly to transform still images (title cards) into video tracks, to compose video and audio tracks in memory to preview the resulting video project, and to save video files to the default Photo Album outside the application.

VideoClipper consists of four main screens: project list, project, capture and import. The project list screen shows a list with the most recent projects at the top and allows the user to quickly add, remove or clone (copy and paste) projects. The project screen includes a storyboard composed of storylines that can be added, cloned or deleted. Each storyline is composed of a single title card, followed by one or more video clips. Users can reorder storylines within the storyboard, and the elements within each storyline, through direct manipulation. Users can preview the complete storyboard, including all title cards and videos, by pressing the play button, or export it to the iPad’s Photo Album by pressing the action button.

VideoClipper offers multiple tools for editing title cards and storylines. Tapping on the title card lets the user edit the foreground text, including font, size and color; change the background color; add or edit text labels, including their size, position and color; and add or edit images, either new pictures or existing ones. Users can also delete text labels and images with the trash button. Video clips are presented via a standard video player, with standard interaction. Users can tap on any clip in a storyline to: trim the clip with a non-destructive trimming tool, delete it with a trash button, open a capture screen by tapping the camera icon, label the clip by tapping a colored label button, and display or hide the selected clip by toggling the eye icon.
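The storyboard structure and the non-destructive trimming described above can be modeled with a few Python data classes. This is a hypothetical sketch, not VideoClipper's actual iOS code: the class names, the fixed title-card duration and the choice to exclude hidden clips from the preview are assumptions.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Clip:
    source: str                       # recorded file; never modified by edits
    duration: float                   # length of the source, in seconds
    trim_start: float = 0.0           # non-destructive in point
    trim_end: Optional[float] = None  # non-destructive out point (None = end of source)
    label: Optional[str] = None       # colored label
    hidden: bool = False              # toggled by the eye icon

    def trim(self, start: float, end: float) -> None:
        if not 0.0 <= start < end <= self.duration:
            raise ValueError("trim points must lie within the source")
        self.trim_start, self.trim_end = start, end  # only metadata changes

    @property
    def played(self) -> float:
        end = self.duration if self.trim_end is None else self.trim_end
        return end - self.trim_start


@dataclass
class Storyline:
    title: str                                   # text of the single title card
    clips: List[Clip] = field(default_factory=list)
    title_seconds: float = 3.0                   # assumed title-card duration

    def duration(self) -> float:
        return self.title_seconds + sum(c.played for c in self.clips if not c.hidden)


@dataclass
class Storyboard:
    storylines: List[Storyline] = field(default_factory=list)

    def move(self, src: int, dst: int) -> None:
        """Reorder by direct manipulation: drag storyline src to position dst."""
        self.storylines.insert(dst, self.storylines.pop(src))

    def clone(self, i: int) -> None:
        """Insert an independent copy right after storyline i."""
        self.storylines.insert(i + 1, copy.deepcopy(self.storylines[i]))

    def duration(self) -> float:
        return sum(s.duration() for s in self.storylines)
```

Because trimming only moves the in and out points, the original recording stays intact and a trim can later be widened again.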

VideoClipper is currently in beta test, and is used by students in two HCI classes at Université Paris-Saclay, by researchers in ExSitu and by external researchers who use it for both teaching and research. A beta version is available on demand through Apple's TestFlight service.

- Contact: Wendy Mackay

6.1.5 WildOS

- Keywords: Human Computer Interaction, Wall displays

- Functional Description:

WildOS is middleware to support applications running in an interactive room featuring various interaction resources, such as our WILD and WILDER rooms, with a tiled wall display, a motion tracking system, tablets, smartphones, etc. In the conceptual model of WildOS, a platform such as the WILD or WILDER room is described as a set of devices on which one or more applications can run.

WildOS consists of a server running on a machine that has network access to all the machines involved in the platform, and a set of clients running on the various interaction resources, such as a display cluster or a tablet. Once WildOS is running, applications can be started and stopped and devices can be added to or removed from the platform.
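The platform model above (a set of devices, on which applications are started and stopped while devices are added or removed) can be summarized in a small Python sketch. WildOS itself is written in JavaScript on Node.js; the `Platform` class and its method names here are hypothetical and only mirror the concepts in the description.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Set


@dataclass
class Platform:
    name: str
    devices: Dict[str, str] = field(default_factory=dict)       # device id -> kind
    running: Dict[str, Set[str]] = field(default_factory=dict)  # app -> device ids

    def add_device(self, device_id: str, kind: str) -> None:
        self.devices[device_id] = kind

    def remove_device(self, device_id: str) -> None:
        self.devices.pop(device_id, None)
        for device_ids in self.running.values():
            device_ids.discard(device_id)  # running apps simply lose that device

    def start(self, app: str, kinds: Optional[Iterable[str]] = None) -> Set[str]:
        """Start `app` on every device, or only on devices of the given kinds."""
        wanted = None if kinds is None else set(kinds)
        targets = {d for d, k in self.devices.items() if wanted is None or k in wanted}
        self.running[app] = targets
        return targets

    def stop(self, app: str) -> None:
        self.running.pop(app, None)
```

A real server would also send start and stop messages to the client running on each device; the sketch only keeps the bookkeeping.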

WildOS relies on Web technologies, most notably JavaScript and Node.js, as well as node-webkit and HTML5. This makes it inherently portable (it is currently tested on Mac OS X and Linux). While applications can be developed using only these Web technologies, it is also possible to bridge to existing applications developed in other environments if they provide sufficient access for remote control. Sample applications include a web browser, an image viewer, a window manager, and the BrainTwister application developed in collaboration with neuroanatomists at NeuroSpin.

WildOS is used for several research projects at ExSitu and by other partners of the Digiscope project. It was also deployed on several of Google's interactive rooms in Mountain View, Dublin and Paris. It is available under an Open Source licence at https://bitbucket.org/mblinsitu/wildos.

- URL:

- Contact: Michel Beaudouin-Lafon

6.1.6 StructGraphics

- Keywords: Data visualization, Human Computer Interaction

- Scientific Description:

Information visualization research has developed powerful systems that enable users to author custom data visualizations without textual programming. These systems can support graphics-driven practices by bridging lazy data-binding mechanisms with vector-graphics editing tools. Yet, despite their expressive power, visualization authoring systems often assume that users want to generate visual representations that they already have in mind rather than explore designs. They also impose a data-to-graphics workflow, where binding data dimensions to graphical properties is a necessary step for generating visualization layouts. In this work, we introduce StructGraphics, an approach for creating data-agnostic and fully reusable visualization designs. StructGraphics enables designers to construct visualization designs by drawing graphics on a canvas and then structuring their visual properties without relying on a concrete dataset or data schema. In StructGraphics, tabular data structures are derived directly from the structure of the graphics. Later, designers can link these structures with real datasets through a spreadsheet user interface. StructGraphics supports the design and reuse of complex data visualizations by combining graphical property sharing, by-example design specification, and persistent layout constraints. We demonstrate the power of the approach through a gallery of visualization examples and reflect on its strengths and limitations in interaction with graphic designers and data visualization experts.

- Functional Description:

StructGraphics is a user interface for creating data-agnostic and fully reusable designs of data visualizations. It enables visualization designers to construct visualization designs by drawing graphics on a canvas and then structuring their visual properties without relying on a concrete dataset or data schema. Overall, StructGraphics inverts the workflow of traditional visualization-design systems. Rather than transforming data dependencies into visualization constraints, it allows users to interactively define the property and layout constraints of their visualization designs and then translate these graphical constraints into alternative data structures. Since visualization designs are data-agnostic, they can be easily reused and combined with different datasets.
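The inverted workflow can be illustrated with a toy Python sketch: properties shared by all marks in a collection become constraints, the varying ones become the columns of a derived table, and a dataset is later bound by mapping its fields onto those columns. The sketch assumes marks are flat dictionaries of visual properties; it ignores StructGraphics' nested collections and layout constraints, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Mark = Dict[str, object]  # visual properties of one drawn shape


def derive_table(marks: List[Mark]) -> Tuple[Mark, List[str], List[Mark]]:
    """Return (shared properties, varying column names, one table row per mark)."""
    if not marks:
        raise ValueError("need at least one mark")
    keys = sorted(marks[0])
    if any(sorted(m) != keys for m in marks):
        raise ValueError("all marks must expose the same properties")
    shared = {k: marks[0][k] for k in keys if all(m[k] == marks[0][k] for m in marks)}
    columns = [k for k in keys if k not in shared]
    rows = [{k: m[k] for k in columns} for m in marks]
    return shared, columns, rows


def bind(shared: Mark, mapping: Dict[str, str], data: List[Dict[str, object]]) -> List[Mark]:
    """Instantiate one mark per data row: shared constraints plus mapped columns."""
    return [{**shared, **{prop: row[fld] for prop, fld in mapping.items()}} for row in data]


# Three bars drawn by hand: same width and color, different heights.
bars = [{"color": "gray", "width": 10, "height": h} for h in (5, 8, 3)]
shared, columns, rows = derive_table(bars)
print(shared, columns, rows)
print(bind(shared, {"height": "sales"}, [{"sales": 4}, {"sales": 7}]))
```

The design itself never references a dataset, so the same `shared` constraints can be bound to any data with a field mapped onto `height`.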

- URL:

- Publication:

- Contact: Theophanis Tsandilas

- Participant: Theophanis Tsandilas

6.2 New platforms

6.2.1 WILD

Participants: Michel Beaudouin-Lafon [correspondant], Cédric Fleury, Olivier Gladin.

WILD is our first experimental ultra-high-resolution interactive environment, created in 2009. In 2019-2020 it received a major upgrade: the 16-computer cluster was replaced by new machines with top-of-the-line graphics cards, and the 32-screen display was replaced by thirty-two 32" 8K displays, resulting in a resolution of about 1 gigapixel (61 440 x 17 280 pixels) for an overall size of 5m80 x 1m70 (280ppi). An infrared frame adds multitouch capability to the entire display area. The platform also features a camera-based motion tracking system that lets users interact with the wall, as well as the surrounding space, with various mobile devices.
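The WILD display figures can be checked with a few lines of Python. The sketch assumes the standard 8K UHD panel resolution of 7680 x 4320 pixels and an 8 x 4 arrangement of the 32 displays, which is what the stated 61 440 x 17 280 total implies. It computes the pixel density of a single 32-inch panel, about 275 ppi, which is in line with the approximate 280ppi figure.

```python
import math

PANEL_W, PANEL_H = 7680, 4320  # assumed 8K UHD resolution of each display
COLS, ROWS = 8, 4              # 8 x 4 = 32 displays (inferred from the totals)
DIAGONAL_IN = 32               # display diagonal, in inches

wall_w, wall_h = COLS * PANEL_W, ROWS * PANEL_H
pixels = wall_w * wall_h
panel_ppi = math.hypot(PANEL_W, PANEL_H) / DIAGONAL_IN

print(wall_w, wall_h)                    # 61440 17280
print(f"{pixels / 1e9:.2f} gigapixels")  # 1.06 gigapixels
print(f"{panel_ppi:.0f} ppi")            # 275 ppi
```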

6.2.2 WILDER

Participants: Michel Beaudouin-Lafon [correspondant], Cédric Fleury, Olivier Gladin.

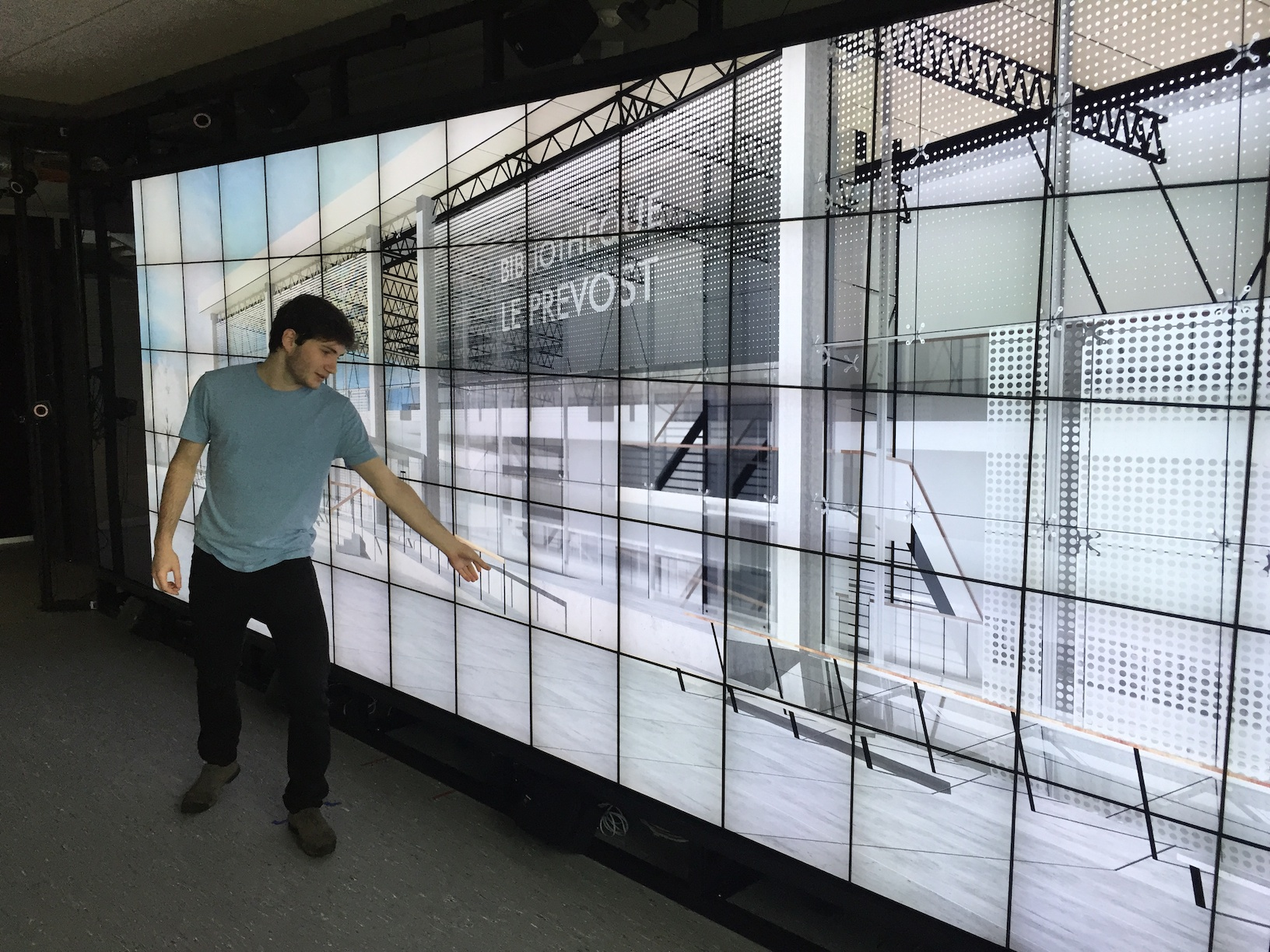

WILDER (Figure 1) is our second experimental ultra-high-resolution interactive environment, which follows the WILD platform developed in 2009. It features a wall-sized display with seventy-five 20" LCD screens, i.e., a 5m50 x 1m80 (18' x 6') wall displaying 14 400 x 4 800 = 69 million pixels, powered by a 10-computer cluster and two front-end computers. The platform also features a camera-based motion tracking system that lets users interact with the wall, as well as the surrounding space, with various mobile devices. The display uses a multitouch frame (one of the largest of its kind in the world) to make the entire wall touch sensitive.

WILDER was inaugurated in June 2015. It is one of the ten platforms of the Digiscope Equipment of Excellence and, in combination with WILD and the other Digiscope rooms, provides a unique experimental environment for collaborative interaction.

In addition to using WILD and WILDER for our research, we have also developed software architectures and toolkits, such as WildOS and UnityCluster, that enable developers to run applications on these multi-device, cluster-based systems.

Figure 1: User in front of the 5m50 x 1m80, 75-screen WILDER platform.
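The WILDER figures can also be checked numerically. This Python sketch assumes the 75 screens form a 15 x 5 grid of square 960 x 960-pixel screens, an inference from the stated totals (14 400 / 15 = 4 800 / 5 = 960), and estimates the physical size from the side of a square 20-inch screen; the small gap to the stated 5m50 x 1m80 would presumably come from bezels.

```python
import math

COLS, ROWS = 15, 5   # inferred: 15 x 5 = 75 screens
SCREEN_PX = 960      # inferred: square screens of 960 x 960 pixels
DIAGONAL_IN = 20     # screen diagonal, in inches

wall_w, wall_h = COLS * SCREEN_PX, ROWS * SCREEN_PX
print(wall_w, wall_h, wall_w * wall_h)  # 14400 4800 69120000

side_m = DIAGONAL_IN / math.sqrt(2) * 0.0254  # side of a square 20" screen, in meters
print(f"{COLS * side_m:.2f} m x {ROWS * side_m:.2f} m")  # 5.39 m x 1.80 m
```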

7 New results

7.1 Fundamentals of Interaction

Participants: Michel Beaudouin-Lafon [correspondant], Wendy Mackay, Theophanis Tsandilas, Camille Gobert, Han Han, Miguel Renom, Martin Tricaud.

In order to better understand fundamental aspects of interaction, ExSitu conducts in-depth observational studies and controlled experiments which contribute to theories and frameworks that unify our findings and help us generate new, advanced interaction techniques 1.

User interfaces typically feature tools to act on objects and rely on the ability of users to discover or learn how to use these tools. Previous work in HCI has used the Theory of Affordances to explain how users understand the possibilities for action in digital environments. We have continued our work on assessing the value of the Technical Reasoning theory for understanding interaction with digital tools and our ability to appropriate such tools for unexpected uses. Technical Reasoning 39 is a theory from cognitive neuroscience which posits that users accumulate abstract knowledge of object properties and technical principles, known as mechanical knowledge, that is essential for tool use. Drawing on this theory, we demonstrated in previous work 40 that Technical Reasoning can be at play when interacting with digital content. Based on this first result, we introduced interaction knowledge as the “mechanical” knowledge of digital environments 24. We provided evidence of its relevance by reporting on an experiment in which participants performed tasks in a digital environment with ambiguous possibilities for interaction. We analyzed how interaction knowledge was transferred across two digital domains, text editing and graphical editing, and suggested that interaction knowledge models an essential type of knowledge for interacting in the digital world.

This theoretical work reinforces our belief that the current organization of the digital world into applications that act as “walled gardens”, isolated from their environment, is not ideal. We created Stratify 17, a new interaction model and proof-of-concept prototype based on the concepts of information substrates for organizing digital information and interaction instruments for manipulating these substrates. This approach makes the concept of application unnecessary and leads to flexible and extensible environments in which users can combine content at will and choose the tools they need to manipulate it. The implementation of Stratify combines a data-reactive approach to specify relationships among digital objects with a functional-reactive approach to handle interaction. This combination enables the creation of rich information substrates that can be freely inspected and modified, as well as interaction instruments that are decoupled from the objects they interact with, making it possible to use instruments with objects they were not designed for. We illustrated the flexibility of the approach with several examples.
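The combination of data-reactive relationships and decoupled instruments can be illustrated with a deliberately tiny Python sketch. It is not Stratify's implementation: `Cell` is a hypothetical reactive value that recomputes its dependents when it changes (with naive propagation that may recompute a node twice in diamond-shaped graphs), and `nudge` is a hypothetical instrument that folds a stream of input events into whatever settable cell it is applied to.

```python
from typing import Any, Callable, Iterable, List, Optional


class Cell:
    """A value in an information substrate; derived cells track their inputs."""

    def __init__(self, value: Any = None,
                 formula: Optional[Callable[..., Any]] = None,
                 inputs: Iterable["Cell"] = ()):
        self._formula = formula
        self._inputs: List["Cell"] = list(inputs)
        self._dependents: List["Cell"] = []
        for cell in self._inputs:
            cell._dependents.append(self)
        self._value = value if formula is None else self._compute()

    def _compute(self) -> Any:
        return self._formula(*(c.value for c in self._inputs))

    @property
    def value(self) -> Any:
        return self._value

    def set(self, value: Any) -> None:
        if self._formula is not None:
            raise ValueError("derived cells are read-only")
        self._value = value
        self._propagate()

    def _propagate(self) -> None:
        for dep in self._dependents:
            dep._value = dep._compute()
            dep._propagate()


def nudge(target: Cell, deltas: Iterable[float]) -> None:
    """Instrument: apply a stream of pointer deltas to any numeric cell."""
    for dx in deltas:
        target.set(target.value + dx)


x = Cell(10)
width = Cell(5)
right = Cell(formula=lambda a, b: a + b, inputs=(x, width))
nudge(x, [1, 2])   # used on a position...
nudge(width, [5])  # ...and, unchanged, on a size it was not written for
print(x.value, width.value, right.value)  # 13 10 23
```

The instrument knows nothing about positions or sizes, only that its target can be read and set, which is what allows it to be reused on objects it was not designed for.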

We developed a general framework for interacting with computer languages, including programming languages as well as description languages such as LaTeX, HTML or Markdown. In previous work, we created iLaTeX 38 to facilitate interaction with images, tables and formulas in LaTeX. We generalized this concept to that of projections, tools that help users interact with representations that better fit their needs than plain text. We collected 62 projections from the literature and from a design workshop and found that 60% of them can be implemented using a table, a graph or a form. However, projections are often hardcoded for specific languages and situations, and in most cases only the developers of a code editor can create or adapt projections, leaving no room for appropriation by their users. We introduced lorgnette 20, a new framework for letting programmers augment their code editor with projections and demonstrated five examples that use lorgnette to create projections that can be reused in new contexts.
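A projection can be thought of as a pair of functions: one that parses a span of source text into a more suitable representation (here a table), and one that writes edits back as text. The following Python sketch shows that round trip for the body of a LaTeX `tabular` environment. It is a hypothetical illustration, not lorgnette's API, and it ignores escaped ampersands, `\hline` and multi-column cells.

```python
from typing import Callable, List

Table = List[List[str]]


def parse_tabular(body: str) -> Table:
    """Text -> table: rows end with a double backslash, cells are split on '&'."""
    rows = [r.strip() for r in body.split("\\\\") if r.strip()]
    return [[cell.strip() for cell in r.split("&")] for r in rows]


def unparse_tabular(table: Table) -> str:
    """Table -> text, one row per line."""
    return "\n".join(" & ".join(row) + " \\\\" for row in table)


def edit_through_projection(source: str, begin: str, end: str,
                            edit: Callable[[Table], None]) -> str:
    """Project the text between `begin` and `end` as a table, edit it, write it back."""
    start = source.index(begin) + len(begin)
    stop = source.index(end, start)
    table = parse_tabular(source[start:stop])
    edit(table)
    return source[:start] + "\n" + unparse_tabular(table) + "\n" + source[stop:]


doc = "\\begin{tabular}{ll}\na & b \\\\\nc & d \\\\\n\\end{tabular}"


def set_cell(table: Table) -> None:
    table[1][1] = "e"  # an edit made in the table view, not in the text


print(edit_through_projection(doc, "\\begin{tabular}{ll}", "\\end{tabular}", set_cell))
```

The user edits a cell in the table view, and only the projected span of the source is rewritten.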

Existing systems dealing with the increasing volume of data series cannot guarantee interactive response times, even for fundamental tasks such as similarity search. It is therefore necessary to develop analytic approaches that support exploration and decision making by providing progressive results before the final, exact ones have been computed. Prior work lacks both efficiency and accuracy when applied to large-scale data series collections. We presented and experimentally evaluated ProS 11, a new probabilistic learning-based method that provides quality guarantees for progressive Nearest Neighbor (NN) query answering. We developed our method for k-NN queries and demonstrated how it can be applied with the two most popular distance measures, namely Euclidean distance and Dynamic Time Warping (DTW). We provide both initial and progressive estimates of the final answer, which improve as the similarity search proceeds, as well as suitable stopping criteria for progressive queries. Moreover, we describe how this method can be used to develop a progressive algorithm for data series classification (based on a k-NN classifier), and we additionally propose a method designed specifically for the classification task. Experiments with several diverse synthetic and real datasets demonstrate that our prediction methods constitute the first practical solutions to the problem, significantly outperforming competing approaches.
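To make progressive answering concrete, the Python sketch below implements the two distance measures and a plain progressive 1-NN scan that reports each improvement of the best-so-far answer together with the fraction of the collection examined. It deliberately omits what ProS contributes, namely learned probabilistic estimates of how close the current answer is to the final one and the stopping criteria derived from them. Function names are hypothetical, and DTW here uses an absolute-difference cost without a warping window.

```python
import math
from typing import Callable, Iterator, List, Sequence, Tuple

Series = Sequence[float]


def euclidean(a: Series, b: Series) -> float:
    if len(a) != len(b):
        raise ValueError("Euclidean distance needs series of equal length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw(a: Series, b: Series) -> float:
    """Classic O(n*m) dynamic time warping with |x - y| as local cost."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def progressive_nn(query: Series, collection: List[Series],
                   dist: Callable[[Series, Series], float]
                   ) -> Iterator[Tuple[int, float, float]]:
    """Yield (index, distance, fraction scanned) whenever the best-so-far improves."""
    best = math.inf
    for i, series in enumerate(collection):
        d = dist(query, series)
        if d < best:
            best = d
            yield i, d, (i + 1) / len(collection)


data = [[5, 5], [3, 3], [9, 9], [0, 1]]
for idx, d, frac in progressive_nn([0, 0], data, euclidean):
    print(f"best so far: #{idx} at {d:.2f} after {frac:.0%} of the data")
```

A user may stop the scan at any intermediate answer; the last answer yielded equals the exact nearest neighbor.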

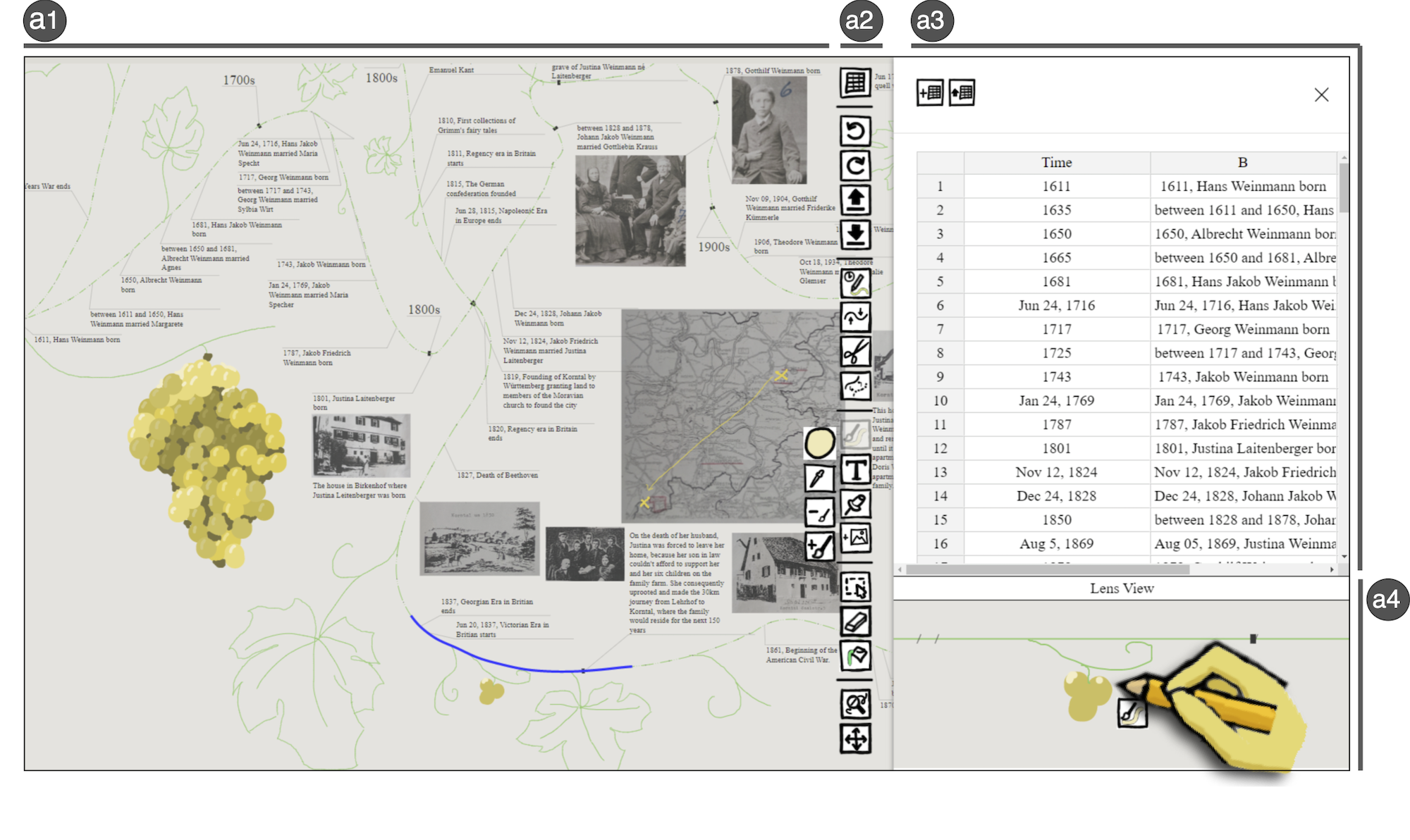

Timelines are essential for visually communicating chronological narratives and reflecting on the personal and cultural significance of historical events. Existing visualization tools tend to support conventional linear representations, but fail to capture personal, idiosyncratic conceptualizations of time. In response, we built TimeSplines 13, a visualization authoring tool that allows people to sketch multiple free-form temporal axes and populate them with heterogeneous, time-oriented data via incremental and lazy data binding. Authors can bend, compress, and expand temporal axes to emphasize or de-emphasize intervals based on their personal importance; they can also annotate the axes with text and figurative elements to convey contextual information. The results of two user studies show how people appropriate the concepts in TimeSplines to express their own conceptualization of time, while our curated gallery of images demonstrates the expressive potential of our approach.

Image of the TimeSplines system for sketching idiosyncratic timelines.
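Compressing and expanding intervals of a temporal axis amounts to a monotonic but non-uniform mapping from time to distance along the sketched curve. A minimal Python sketch, assuming piecewise-linear emphasis: each interval between break points gets a weight (above 1 expands it, below 1 compresses it), and the result is an arc-length position that a renderer would then locate on the free-form stroke. The names are hypothetical and do not reflect TimeSplines' API.

```python
from bisect import bisect_right
from typing import Callable, List


def make_time_axis(breaks: List[float], weights: List[float],
                   length: float) -> Callable[[float], float]:
    """Return a function mapping a time in [breaks[0], breaks[-1]] to an
    arc-length position in [0, length] along the axis."""
    if len(weights) != len(breaks) - 1 or any(w <= 0 for w in weights):
        raise ValueError("need one positive weight per interval")
    if any(b <= a for a, b in zip(breaks, breaks[1:])):
        raise ValueError("breaks must be strictly increasing")
    # Weighted length of each interval and cumulative offsets along the axis.
    spans = [(b - a) * w for a, b, w in zip(breaks, breaks[1:], weights)]
    starts = [0.0]
    for span in spans:
        starts.append(starts[-1] + span)
    total = starts[-1]

    def to_position(t: float) -> float:
        if not breaks[0] <= t <= breaks[-1]:
            raise ValueError("time outside the axis")
        i = min(bisect_right(breaks, t) - 1, len(weights) - 1)
        return (starts[i] + (t - breaks[i]) * weights[i]) / total * length

    return to_position


# Emphasize the second decade three times as much as the first.
axis = make_time_axis([0, 10, 20], [1, 3], length=100)
print([axis(t) for t in (0, 5, 10, 15, 20)])  # [0.0, 12.5, 25.0, 62.5, 100.0]
```

Because every weight is positive, the mapping stays monotonic: events keep their chronological order even when an interval is strongly compressed.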

Designing interactive technology to support safety-critical systems poses multiple challenges with respect to security, access to operators and the proprietary nature of the data. We conducted a two-year participatory design project with French power grid operators to both understand their specific needs and collaborate on the design of a novel collaborative tool called StoryLines 25. Our primary objective was to capture detailed, in-context data about operators' work practices as part of a larger project designed to provide bi-directional assistance between an intelligent agent and a human operator. We targeted handovers between shifts to take advantage of the operators' existing practice of articulating the current status of the grid and expected future events. This let us capture information that would otherwise be lost and gain valuable insight into operators' decision rationale and decision-making patterns. We combined a bottom-up participatory design approach with a top-down generative theory approach to design StoryLines, an interactive timeline that helps operators collect information from diverse tools, record reminders and share relevant information with the next shift's operator.

At the methodological level, we continued our long-time commitment to developing participatory design methods, with two publications of an educational nature. First, the “DOIT book” 30 presents the Do It process, which integrates a variety of interaction design methods into a coherent whole. Created for participants at the CHI'23 Conference in Hamburg, Germany, this book contains four methods from a forthcoming book entitled DOIT: The Design of Interactive Things. Each method was developed and refined to highlight the interaction aspect of HCI. All are grounded in social science research, and were selected for their relevance to both User Experience (UX) designers and Human-Computer Interaction (HCI) researchers. Each has been successfully employed in both corporate and academic settings by novice and professional interaction designers. Novice designers will be able to learn and apply these methods immediately, while more advanced designers will be able to develop custom variations that reflect the underlying principles and adapt them to the needs of their current project. The four methods chosen for this book (story interviews, video brainstorming, video prototyping and generative walk-throughs) emphasize the importance of capturing and representing users' lived experiences in the form of stories that inspire innovative ideas and accommodate potential breakdowns. Each takes advantage of video to capture those stories, generate ideas, explore designs, and evaluate and redesign the resulting system.

Second, we revised and extended a handbook chapter on participatory design and prototyping 32, originally published in 2007. In this new version, we emphasize the role of participatory design in prototyping activities. Participatory design actively involves users throughout the design process, from the initial discovery of their needs to the final assessment of the system. As in all forms of interaction design, this requires two complementary processes: generating new ideas that expand the design space, and selecting specific ideas, thus contracting the design space. During this process, designers create prototypes that help them generate and explore this space of possibilities, ideally with the ongoing participation of users. Successful prototypes represent different aspects of the system and help ground the design process in concrete artifacts that highlight specific design questions. We classify prototypes according to their representation, level of precision, interactivity, life cycle and scope, and explain how to create and use these different kinds of prototypes, with examples selected from key phases of the interaction design process.

Together, the DOIT book and the prototyping chapter are useful resources for students, practitioners and researchers who want to engage in participatory design and actively use prototypes at all stages of the design process.

7.2 Human-Computer Partnerships

Participants: Wendy Mackay [co-correspondant], Janin Koch [co-correspondant], Téo Sanchez, Nicolas Taffin, Theophanis Tsandilas.

ExSitu is interested in designing effective human-computer partnerships in which expert users control their interaction with intelligent systems. Rather than treating human users as the “input” to a computer algorithm, we explore human-centered machine learning, where the goal is to use machine learning and other techniques to increase human capabilities. Whereas much of human-computer interaction research focuses on measuring and improving productivity, our specific goal is to create what we call “co-adaptive systems” that are discoverable, appropriable and expressive for the user. The historical foundation for this work is described in the book Réimaginer nos interactions avec le monde numérique, based on Wendy Mackay's inaugural lecture at the Collège de France 31.

Wendy Mackay explored the problem of how to design “human-computer partnerships” that take optimal advantage of human skills and system capabilities 22. Artificial Intelligence research is usually measured in terms of the effectiveness of an algorithm, whereas Human-Computer Interaction research focuses on enhancing human abilities. However, better AI algorithms are neither necessary nor sufficient for creating more effective intelligent systems. The paper describes how to successfully balance the simplicity of the user's interaction with the expressive power of the system by using “generative theories of interaction” 37. The goal is to create interactive intelligent systems in which users can discover relevant functionality, express individual differences and appropriate the system for their own personal use.

We organized a workshop at the CHI'23 conference on Human-Human Collaborative Ideation 26 to explore how artificial intelligence can be incorporated into creativity support tools. People generate more innovative ideas when they collaborate and collectively explore ideas with each other. Advances in artificial intelligence have led to intelligent tools that support ideation by providing inspiration, suggesting methods or generating alternatives. However, the role of AI as a mediator between creative professionals is poorly understood. The workshop brought together researchers and practitioners interested in understanding the trade-offs and potential of integrating AI into human-human collaborative ideation.

We reported on the Dagstuhl Perspectives Workshop we co-organized on “Human-Centered Artificial Intelligence” 35, which sought to provide the scientific and technological foundations for designing and deploying hybrid human-centered AI systems that work in partnership with human beings and that enhance human capabilities rather than replace human intelligence. We argued that fundamentally new solutions are needed for core research problems in AI and human-computer interaction (HCI), especially to help people understand actions recommended or performed by AI systems and to facilitate meaningful interaction between humans and AI systems. We identified a series of specific challenges, including: learning complex world models; building effective and explainable machine learning systems; developing human-controllable intelligent systems; adapting AI systems to dynamic, open-ended real-world environments (in particular robots and autonomous systems); achieving in-depth understanding of humans and complex social contexts; and enabling self-reflection within AI systems.

We continued our work on Marcelle 19, an open-source toolkit dedicated to the design of web applications involving interactions with machine learning algorithms. We demonstrated how Marcelle can stimulate research and education in the field of interactive machine learning, particularly in scenarios involving several types of users.

We also analyzed movement learning 12. We proposed a metric learning method that bridges the gap between human ratings of movement similarity in a motor learning task and computational metric evaluation on the same task: it uses a Dynamic Time Warping algorithm to derive an optimal set of movement features that best explain human ratings. We evaluated this method on an existing movement dataset composed of videos of participants practising a complex gesture sequence toward a target template, together with the collected data that describe the movements. We showed a linear relationship between human ratings and our learned computational metric, which identifies the most salient temporal moments implicitly used by annotators, as well as movement parameters that correlate with motor improvements in the dataset. We concluded by discussing how this method could be generalised to design computational tools dedicated to movement annotation and the evaluation of skill learning.
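The core computation, a Dynamic Time Warping cost under a weighted feature metric, can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from 12: each movement is assumed to be a sequence of frames of numeric features, and the `weights` argument is a hypothetical stand-in for the learned metric (one non-negative importance per feature). DTW then finds the cheapest temporal alignment between an attempt and the template, so differences in timing are not penalized while differences in weighted features are.

```python
import math
from typing import List, Sequence

Frame = Sequence[float]  # one time step: a vector of movement features


def weighted_distance(a: Frame, b: Frame, weights: Sequence[float]) -> float:
    """Weighted Euclidean distance between two frames.

    `weights` is a hypothetical learned metric: one non-negative
    importance value per movement feature.
    """
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))


def dtw(seq_a: Sequence[Frame], seq_b: Sequence[Frame], weights: Sequence[float]) -> float:
    """Classic dynamic time warping cost between two movement sequences."""
    if not seq_a or not seq_b:
        raise ValueError("both sequences must contain at least one frame")
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning seq_a[:i] with seq_b[:j]
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = weighted_distance(seq_a[i - 1], seq_b[j - 1], weights)
            # extend the cheapest predecessor: skip in a, skip in b, or match
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


if __name__ == "__main__":
    # Toy two-feature data, invented for illustration only.
    template = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
    slow_start = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
    offset = [[0.0, 5.0], [1.0, 5.0], [2.0, 5.0], [3.0, 5.0]]
    print(dtw(template, slow_start, [1.0, 1.0]))  # 0.0: timing difference absorbed
    print(dtw(template, offset, [1.0, 1.0]))      # 20.0: feature 2 penalized
    print(dtw(template, offset, [1.0, 0.0]))      # 0.0: feature 2 ignored
```

In a metric learning setting, the weights would be fitted so that these DTW costs track human similarity ratings; that fitting step is omitted here, and the sketch only shows how a choice of weights changes which differences count.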

Recent work identified a concerning trend of disproportionate gender representation among research participants in Human-Computer Interaction (HCI). Motivated by the fact that Human-Robot Interaction (HRI) shares many participant practices with HCI, we explored 14 whether this trend is mirrored in HRI. We produced a dataset that covers participant gender representation in all 684 full papers published at the HRI conference from 2006 to 2021, which allowed us to identify current trends in HRI research participation. We found an over-representation of male research participants, as well as inconsistent and/or incomplete gender reporting. We also examined whether and how participant gender was considered in user studies. Finally, we conducted a survey of HRI researchers to examine correlations between who performs which roles and how this might affect gender bias across different sub-fields of HRI, and we offer practical suggestions for HRI researchers in the future.

7.3 Creativity

Participants: Sarah Fdili Alaoui, Wendy Mackay [correspondant], Tove Grimstad, Manon Vialle, Liz Walton, Janin Koch, Nicolas Taffin.

ExSitu is interested in understanding the work practices of creative professionals who push the limits of interactive technology. We follow a multi-disciplinary participatory design approach, working with both expert and non-expert users in diverse creative contexts.

We investigated the creative practices of computational artists 28, who use computing as their primary medium for producing visual output, e.g. through the use of creative coding or generative design. These practices entail a deep understanding of the mediating properties of code, data and computational representations for producing novel and valuable artifacts. We conducted an observational study with 12 artists and designers who create and/or configure algorithmic procedures to produce visual works. The results led us to analyze creative behavior as an epistemic process, whereby agents generate knowledge about their medium through epistemic actions, and produce their medium by externalizing this knowledge into epistemic artifacts. Epistemic artifacts are created explicitly for the sake of exploration and understanding rather than for progressing toward the creative goal. This work contributes a deeper understanding of the roles of instrumentality and materiality in the creative process and opens up new perspectives to better support this epistemic process in software and to define evaluation frameworks that account for it.

Sarah Fdili Alaoui successfully defended her habilitation, entitled “Dance-Led Research”, which describes her evolution through different approaches to studying dance and choreography.

We present 29 a system, co-designed with a connoisseur, that visualizes a star-like ribbon joining at the solar plexus, animated from motion capture data to perform Isadora Duncan's dances. The system also visualizes the trace of the solar plexus and specific keyframes of the choreography as key poses placed in 3D space. We display the visualization in a HoloLens headset and provide features that allow users to manipulate it in order to understand and learn Duncan's qualities and choreographic style. In a workshop with dancers, we ran a structured observation in which we qualitatively compared how the dancers were able to perceive Duncan's qualities and embody them using the system under two conditions: displaying all future keyframes or displaying a limited number of keyframes. We discuss the results of our workshop and the use of augmented reality in the studio for pedagogical purposes.

We present 15 a long-term collaboration between dancers and designers, centred on the transmission of the century-old repertoire of modern dance pioneer Isadora Duncan. We engaged in a co-design process with a Duncanian dancer, consisting of conversations and participation in her transmission of Duncan's choreographies and technique. We then articulated experiential qualities central to Duncan's repertoire and used them to guide the design of our probes, the sounding scarfs. Our probes sonify dancers' movements using temporal sensors embedded in the fabric of the scarfs, with the goal of evoking Duncan's work and legacy. We shared the probes with our Duncan dance community and found that they deepen the dancers' engagement with the repertoire. Finally, we discuss how co-designing with slowness and humility was key to the dialogue created between the practitioners, allowing for seamless integration of design research and dance practice.

We present 16 a soma design process for a digital musical instrument, grounded in the designer's first-person perspective of practicing Dalcroze eurhythmics, a pedagogical approach to learning music through movement. Our goal was to design an instrument that invites musicians to experience music as movement. The designer engaged in the soma design process by first sensitising her body through Dalcroze training. She then articulated her bodily experiences as experiential design qualities that guided the making of the instrument. The process resulted in the design of a large suspended mobile that is played by touching it with bare skin. We shared our instrument with 7 professional musicians and observed how it inspires a variety of approaches to musical meaning-making, ranging from exploring sound to choreographing the body. Finally, we discuss how engaging with Dalcroze eurhythmics can be generative for the design of music-movement interaction.

We followed a Research through Design (RtD) process that explored the concept of loops as a design material 23. Using an ethnographic design methodology, we aimed to create artifacts that represent the ways in which content can be repeated, distorted and modified in loops. Our process consisted first of the practice of noticing loops in everyday life. This led us to curate a portfolio of occurrences of loops in objects and living organisms from our surroundings. Second, we organized the loops that we noticed into a typology and created a score that instantiates a variety of loops. Finally, we followed the score to produce (1) knitting work, (2) woodwork, (3) lino printing and (4) dance performances as art-based physicalizations of different instances of loops. Our study of loops uncovers a designerly way to reflect on an everyday phenomenon (loops) through making physical artifacts and dancing.

Designing technology for dance presents a unique challenge to conventional design research and methods, because it is subject to the diverse and idiosyncratic approaches of the artistic practice in which it is situated. We investigated this by joining a dance company to develop interactive technologies for a new performance 27. From our first-hand account, we show the design space to be messy and non-linear, demanding flexibility and negotiation between multiple stakeholders and constraints. Through interviews with performers and choreographers, we identified nine themes for incorporating technology into dance, revealing tensions and anxiety, but also evolution and improvised processes that weave complex layers into a finished work. We find design for dance productions to be resistant to formal interpretation, requiring designers to embrace the intertwining stages, steps, and methods of the artistic processes that generate them …

7.4 Collaboration

Participants: Sarah Fdili Alaoui, Michel Beaudouin-Lafon [co-correspondant], Wendy Mackay [co-correspondant], Arthur Fages, Janin Koch, Theophanis Tsandilas.

ExSitu explores new ways of supporting collaborative interaction and remote communication.

A major achievement is the launch of the national network PEPR eNSEMBLE on the future of Digital Collaboration. Team members were heavily involved in spearheading, organizing and launching this 38M€ project, which gathers over 80 research groups from multiple disciplines across France. The project was inaugurated in October 2023 in Grenoble, and we organized and ran a 3-day summer school with 18 Ph.D. students as a launch event. PEPR eNSEMBLE is organized into 5 main areas covering all aspects of collaboration: collaboration in space, collaboration in time, collaboration with intelligent systems, collaboration at scale, and transversal aspects (ethics, methodology, regulation and economics). ExSitu is involved in all of these areas, as well as in the management of the entire project.

We continued our long-standing collaboration with Aarhus University on malleable software for collaboration by exploring better support for video conferencing. Video conferencing software is traditionally built as one-size-fits-all, with little support for tailoring either its functionality or its user interface. By contrast, physical meetings let people spontaneously rearrange furniture, equipment, and themselves to match the format and atmosphere of the meeting. Our goal was to make end-user tailoring of virtual meetings as easy as rearranging furniture in a physical meeting room. Together with our Danish colleagues 21, we developed a design space for video conferencing systems that includes a five-step “ladder of tailorability,” from minor adjustments to live reprogramming of the interface. We created Mirrorverse, a proof-of-concept system that shows how live tailoring can be technically realized in a video conferencing interface. We showed how it applies the principles of computational media to support live tailoring of video conferencing interfaces to accommodate highly diverse meeting situations. We presented multiple use scenarios, including a virtual workshop, an online yoga class, and a stand-up team meeting, to evaluate the approach and demonstrate its potential for new forms of remote meetings with fluid transitions across activities.

We also investigated how to support collaborative design in shared augmented-reality environments 18. In such environments, multiple designers can create and interact with 3D virtual content that overlays their shared physical objects. Although content created by one designer can serve as a source of inspiration for others, it can also disrupt the collaborators' creative process. To deal with the conflicts that arise in such situations, we developed a conceptual framework that allows multiple versions of augmentations of the same physical object to coexist in parallel virtual spaces. With this framework, users can partially or totally desynchronize their virtual environment to generate their own content and then explore alternatives created by others. We demonstrated the framework through a collaborative design system in which two designers wearing augmented-reality headsets sketch in 3D around a physical sewing mannequin to design a dress. Arthur Fages successfully defended his thesis on this topic 34.
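The idea of parallel, partially desynchronized augmentation spaces can be illustrated with a small data model. This is a hypothetical sketch, not the framework or system from 18: the class and method names (`ParallelSpaces`, `desync`, `explore`, `resync`) are invented, 3D sketch strokes are reduced to text labels, and we assume that "partial" desynchronization means forking the augmentations of some physical objects while staying synchronized on others.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Stroke:
    author: str
    label: str  # stand-in for 3D sketch geometry (hypothetical)


@dataclass
class ParallelSpaces:
    """Hypothetical model: one shared version per physical object, plus private forks."""

    shared: dict[str, list[Stroke]] = field(default_factory=dict)
    # private[user][obj] exists only while `user` is desynchronized on `obj`
    private: dict[str, dict[str, list[Stroke]]] = field(default_factory=dict)

    def desync(self, user: str, obj: str) -> None:
        """Fork the shared augmentations of `obj` into a private copy for `user`."""
        self.private.setdefault(user, {})[obj] = list(self.shared.get(obj, []))

    def add(self, user: str, obj: str, stroke: Stroke) -> None:
        """Add to the user's fork if desynchronized on `obj`, else to the shared version."""
        forks = self.private.get(user, {})
        if obj in forks:
            forks[obj].append(stroke)
        else:
            self.shared.setdefault(obj, []).append(stroke)

    def view(self, user: str, obj: str) -> list[Stroke]:
        """What `user` currently sees on `obj`: their fork if any, else the shared version."""
        return self.private.get(user, {}).get(obj, self.shared.get(obj, []))

    def explore(self, other: str, obj: str) -> list[Stroke]:
        """Peek at another user's version as a copy, without changing one's own space."""
        return list(self.view(other, obj))

    def resync(self, user: str, obj: str, publish: bool) -> None:
        """Drop the user's fork; if `publish`, it replaces the shared version."""
        fork = self.private.get(user, {}).pop(obj, None)
        if fork is not None and publish:
            self.shared[obj] = fork


if __name__ == "__main__":
    spaces = ParallelSpaces()
    spaces.add("alice", "mannequin", Stroke("alice", "collar"))
    spaces.desync("bob", "mannequin")                 # bob forks the mannequin only
    spaces.add("bob", "mannequin", Stroke("bob", "sleeve"))
    print([s.label for s in spaces.view("alice", "mannequin")])    # ['collar']
    print([s.label for s in spaces.explore("bob", "mannequin")])   # ['collar', 'sleeve']
```

Publishing on resync simply replaces the shared version in this sketch; a real system would need a merge policy when several designers publish alternatives concurrently.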

8 Bilateral contracts and grants with industry

8.1 Bilateral contracts with industry

Participants: Wendy Mackay, Wissal Sahel, Robert Falcasantos.

CAB: Cockpit and Bidirectional Assistant

- Title: Smart Cockpit Project

- Duration: Sept 2020 - August 2024

- Coordinator: SystemX Technological Research Institute

- Partners: SystemX, EDF, Dassault, RATP, Orange, Inria

- Inria contact: Wendy Mackay

- Summary: The goal of the CAB Smart Cockpit project is to define and evaluate an intelligent cockpit that integrates a bi-directional virtual agent that increases, in real time, the capacities of operators facing complex and/or atypical situations. The project seeks to develop a new foundation for sharing agency between human users and intelligent systems: to empower rather than deskill users by letting them learn throughout the process, and to let users maintain control, even as their goals and circumstances change.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Participation in other International Programs

Participants: Theophanis Tsandilas, Anna Offenwanger.

GRAVIDES

- Title: Grammars for Visualization-Design Sketching

- Funding: CNRS - University of Toronto Collaboration Program

- Duration: 2021 - 2023

- Coordinator: Theophanis Tsandilas and Fanny Chevalier

- Partners:
  - CNRS – LISN
  - University of Toronto – Dept. of Computer Science

- Inria contact: Theophanis Tsandilas

- Summary: The goal of the project is to create novel visualization authoring tools that enable common users with no design expertise to sketch and visually express their personal data.

Joanna McGrenere

- Status: Full Professor

- Institution of origin: University of British Columbia

- Country: Canada

- Dates: 2-5 May 2023

- Context of the visit: Ongoing research collaboration

- Mobility program/type of mobility: research stay, lecture

Danielle Lottridge

- Status: Associate Professor

- Institution of origin: University of Auckland

- Country: New Zealand

- Dates: 2-12 May 2023

- Context of the visit: Collaboration on an interactive exhibit

- Mobility program/type of mobility: research stay, lecture

Fanny Chevalier

- Status: Associate Professor

- Institution of origin: University of Toronto

- Country: Canada

- Dates: 2-5 May 2023

- Context of the visit: Research collaboration

- Mobility program/type of mobility: research stay, lecture

Susanne Bødker

- Status: Full Professor

- Institution of origin: Aarhus University

- Country: Denmark

- Dates: 12-16 May 2023

- Context of the visit: Research collaboration

- Mobility program/type of mobility: research stay, lecture

9.2 European initiatives

9.2.1 Horizon Europe

OnePub

Participants: Michel Beaudouin-Lafon, Wendy Mackay, Camille Gobert, Yann Trividic.

OnePub project on cordis.europa.eu

- Title: Single-source Collaborative Publishing

- Duration: From October 1, 2023 to March 30, 2025

- Partners: Université Paris-Saclay

- Budget: 150 Keuros public funding from ERC

- Coordinator: Michel Beaudouin-Lafon

- Summary: Book publishing involves many stakeholders and a complex set of inflexible tools and formats. Current workflows are inefficient because authors and editors cannot make changes directly to the content once it has been laid out. They are costly because creating different output formats, such as PDF, ePub or HTML, requires manual labor. Finally, new requirements such as the European directive on accessibility incur additional costs and delays.

The goal of the OnePub POC project is to demonstrate the feasibility and value of a book production workflow based on a set of collaborative editing tools and a single document source representing the “ground truth” of the book content and layout. The editing tools will be tailored to the needs of each stakeholder, e.g. author, editor or typesetter, and will feature innovative interaction techniques from the PI’s ERC project ONE.

The project will focus on textbooks and academic publications as its testbed because they feature some of the most stringent constraints in terms of content types and content layout. They also run on tight deadlines, emphasizing the need for an efficient process.

OnePub will define a unified format for the document source, create several collaborative document editors, and develop an open and extensible architecture so that new editors and add-ons can be added to the workflow. Together, these developments will set the stage for a new ecosystem for the publishing industry, with a level playing field where software companies can provide components while publishers and their service contractors can decide which components to use in their workflows.

SustainML

SustainML project on cordis.europa.eu

- Title: Application Aware, Life-Cycle Oriented Model-Hardware Co-Design Framework for Sustainable, Energy Efficient ML Systems

- Duration: From October 1, 2022 to September 30, 2025

- Partners:
  - INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France
  - PROYECTOS Y SISTEMAS DE MANTENIMIENTO SL (EPROSIMA EPROS), Spain
  - IBM RESEARCH GMBH (IBM), Switzerland
  - SAS UPMEM, France
  - KOBENHAVNS UNIVERSITET (UCPH), Denmark
  - DEUTSCHES FORSCHUNGSZENTRUM FUR KUNSTLICHE INTELLIGENZ GMBH (DFKI), Germany
  - RHEINLAND-PFALZISCHE TECHNISCHE UNIVERSITAT, Germany

- Inria contact: Janin Koch

- Coordinator:

- Summary: AI is increasingly becoming a significant factor in the CO2 footprint of the European economy. To avoid a conflict between sustainability and economic competitiveness, and to allow the European economy to leverage AI for its leadership in a climate-friendly way, new technologies are needed to reduce the energy requirements of all parts of AI systems. A key problem is that the tools (e.g. PyTorch) and methods that currently drive the rapid spread and democratization of AI prioritize performance and functionality while paying little attention to the CO2 footprint. As a consequence, we see rapid growth in AI applications, but much less so in AI applications that are optimized for low power and sustainability. To change that, we aim to develop an interactive design framework and associated models, methods and tools that will foster energy efficiency throughout the whole life-cycle of ML applications: from the design and exploration phase, which includes exploratory iterations of training, testing and optimizing different system versions, through the final training of the production systems (which often involves huge amounts of data, computation and epochs), to (where appropriate) continuous online re-training during deployment for the inference process. The framework will optimize the ML solutions based on the application tasks, across levels from hardware to model architecture. AI developers of all experience levels will be able to make use of the framework through its emphasis on human-centric, interactive, transparent design and functional knowledge cores, instead of the common black-box and fully automated optimization approaches in AutoML. The framework will be made available on the AI4EU platform and disseminated through close collaboration with initiatives such as the ICT 48 networks. It will also be directly exploited by the industrial partners representing various parts of the relevant value chain: from software framework, through hardware, to AI services.

9.2.2 H2020 projects

ALMA

ALMA project on cordis.europa.eu

- Title: ALMA: Human Centric Algebraic Machine Learning

- Duration: From September 1, 2020 to August 31, 2024

- Partners:
  - INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France
  - TEKNOLOGIAN TUTKIMUSKESKUS VTT OY (VTT), Finland
  - PROYECTOS Y SISTEMAS DE MANTENIMIENTO SL (EPROSIMA EPROS), Spain
  - ALGEBRAIC AI SL, Spain
  - DEUTSCHES FORSCHUNGSZENTRUM FUR KUNSTLICHE INTELLIGENZ GMBH (DFKI), Germany
  - RHEINLAND-PFALZISCHE TECHNISCHE UNIVERSITAT, Germany
  - FIWARE FOUNDATION EV (FIWARE), Germany
  - UNIVERSIDAD CARLOS III DE MADRID (UC3M), Spain
  - FUNDACAO D. ANNA DE SOMMER CHAMPALIMAUD E DR. CARLOS MONTEZ CHAMPALIMAUD (FUNDACAO CHAMPALIMAUD), Portugal

- Inria contact: Wendy Mackay

- Coordinator:

- Summary: Algebraic Machine Learning (AML) has recently been proposed as a new learning paradigm that builds upon abstract algebra and model theory. Unlike other popular learning algorithms, AML is not a statistical method; it produces generalizing models from semantic embeddings of data into discrete algebraic structures, with the following properties:

- P1: Is far less sensitive to the statistical characteristics of the training data and does not fit (or even use) parameters;

- P2: Has the potential to seamlessly integrate unstructured and complex information contained in training data with a formal representation of human knowledge and requirements;

- P3: Uses internal representations based on discrete sets and graphs, offering a good starting point for generating human-understandable descriptions of what has been learned, and why and how;

- P4: Can be implemented in a distributed way that avoids centralized, privacy-invasive collections of large data sets in favor of a collaboration of many local learners at the level of learned partial representations.

The aim of the project is to leverage the above properties of AML for a new generation of Interactive, Human-Centric Machine Learning systems that will:

- Reduce bias and prevent discrimination by reducing dependence on statistical properties of training data (P1), integrating human knowledge with constraints (P2), and exploring the how and why of the learning process (P3)

- Facilitate trust and reliability by respecting ‘hard’ human-defined constraints in the learning process (P2) and enhancing explainability of the learning process (P3)

- Integrate complex ethical constraints into Human-AI systems by going beyond basic bias and discrimination prevention (P2) to interactively shaping the ethics related to the learning process between humans and the AI system (P3)

- Facilitate a new distributed, incremental collaborative learning method by going beyond the dominant off-line and centralized data processing approach (P4)


ONE

Participants: Michel Beaudouin-Lafon, Wendy Mackay, Camille Gobert, Martin Tricaud.

ONE project on cordis.europa.eu

- Title: Unified Principles of Interaction

- Duration: From October 1, 2016 to March 30, 2023

- Partners: Université Paris-Saclay

- Budget: 2.5 Meuros public funding from ERC

- Coordinator: Michel Beaudouin-Lafon

- Summary: Most of today’s computer interfaces are based on principles and conceptual models created in the late seventies. They are designed for a single user interacting with a closed application on a single device with a predefined set of tools to manipulate a single type of content. But one is not enough! We need flexible and extensible environments where multiple users can truly share content and manipulate it simultaneously, where applications can be distributed across multiple devices, where content and tools can migrate from one device to the next, and where users can freely choose, combine and even create tools to make their own digital workbench.

The goal of ONE is to fundamentally re-think the basic principles and conceptual model of interactive systems to empower users by letting them appropriate their digital environment. The project addresses this challenge through three interleaved strands: empirical studies to better understand interaction in both the physical and digital worlds, theoretical work to create a conceptual model of interaction and interactive systems, and prototype development to test these principles and concepts in the lab and in the field. Drawing inspiration from physics, biology and psychology, the conceptual model combines substrates to manage digital information at various levels of abstraction and representation, instruments to manipulate substrates, and environments to organize substrates and instruments into digital workspaces.

By identifying first principles of interaction, ONE unifies a wide variety of interaction styles and creates more open and flexible interactive environments.

9.3 National initiatives

eNSEMBLE

- Title: Future of Digital Collaboration

- Type: PEPR Exploratoire

- Duration: 2022 – 2030

- Coordinator: Gilles Bailly, Michel Beaudouin-Lafon, Stéphane Huot, Laurence Nigay

- Partners:
  - Centre National de la Recherche Scientifique (CNRS)
  - Institut National de Recherche en Informatique et Automatique (Inria)
  - Université Grenoble Alpes
  - Université Paris-Saclay

- Budget: 38.25 Meuros public funding from ANR / France 2030

- Summary: The purpose of eNSEMBLE is to fundamentally redefine digital tools for collaboration. Whether to reduce travel, to better connect territories and society, or to face the problems and transformations of the coming decades, the challenges of the 21st century will require us to collaborate at an unprecedented speed and scale.

To address this challenge, a paradigm shift in the design of collaborative systems is needed, comparable to the one that saw the advent of personal computing. To achieve this goal, we need to invent mixed (i.e. physical and digital) collaboration spaces that do not simply replicate the physical world in virtual environments, enabling co-located and/or geographically distributed teams to work together smoothly and efficiently.

Beyond this technological challenge, the eNSEMBLE project also addresses sovereignty and societal challenges: by creating the conditions for interoperability between communication and sharing services in order to open up the "private walled gardens" that currently require all participants to use the same services, we will enable new players to offer solutions adapted to the needs and contexts of use. Users will thus be able to choose combinations of potentially "intelligent" tools and services for defining mixed collaboration spaces that meet their needs without compromising their ability to exchange with the rest of the world. By making these services more accessible to a wider population, we will also help reduce the digital divide.

These challenges require a major long-term investment in multidisciplinary work (Computer Science, Ergonomics, Cognitive Psychology, Sociology, Design, Law, Economics) of both theoretical and empirical nature. The scientific challenges addressed by eNSEMBLE are:

- Designing novel collaborative environments and conceptual models;

- Combining human and artificial agency in collaborative set-ups;

- Enabling fluid collaborative experiences that support interoperability;

- Supporting the creation of healthy and sustainable collectives; and

- Specifying socio-technical norms with legal/regulatory frameworks.

eNSEMBLE will impact many sectors of society - education, health, industry, science, services, public life, leisure - by improving productivity, learning, care and well-being, as well as participatory democracy.

CONTINUUM

-

Title:

Collaborative continuum from digital to human

-

Type:

EQUIPEX+ (Equipement d'Excellence)

-

Duration:

2020 – 2029

-

Coordinator:

Michel Beaudouin-Lafon

-

Partners:

- Centre National de la Recherche Scientifique (CNRS)

- Institut National de Recherche en Informatique et Automatique (Inria)

- Commissariat à l'Energie Atomique et aux Energies Alternatives (CEA)

- Université de Rennes 1

- Université de Rennes 2

- Ecole Normale Supérieure de Rennes

- Institut National des Sciences Appliquées de Rennes

- Aix-Marseille University

- Université de Technologie de Compiègne

- Université de Lille

- Ecole Nationale d'Ingénieurs de Brest

- Ecole Nationale Supérieure Mines-Télécom Atlantique Bretagne-Pays de la Loire

- Université Grenoble Alpes

- Institut National Polytechnique de Grenoble

- Ecole Nationale Supérieure des Arts et Métiers

- Université de Strasbourg

- COMUE UBFC Université de Technologie Belfort Montbéliard

- Université Paris-Saclay

- Télécom Paris - Institut Polytechnique de Paris

- Ecole Normale Supérieure Paris-Saclay

- CentraleSupélec

- Université de Versailles - Saint-Quentin

-

Budget:

€13.6M in public funding from ANR

-

Summary:

The CONTINUUM project will create a collaborative research infrastructure of 30 platforms located throughout France to advance interdisciplinary research at the intersection of computer science and the human and social sciences. Thanks to CONTINUUM, 37 research teams will develop cutting-edge research programs focusing on visualization, immersion, interaction and collaboration, as well as on human perception, cognition and behaviour in virtual/augmented reality, with potential impact on societal issues. CONTINUUM will enable a paradigm shift in the way we perceive, interact, and collaborate with complex digital data and digital worlds by putting humans at the center of data processing workflows. The project will empower scientists, engineers and industry users with a highly interconnected network of high-performance visualization and immersive platforms to observe, manipulate, understand and share digital data, real-time multi-scale simulations, and virtual or augmented experiences. All platforms will feature facilities for remote collaboration with other platforms, as well as mobile equipment that can be lent to users to facilitate onboarding.

GLACIS

-

Title:

Graphical Languages for Creating Infographics

-

Funding:

ANR

-

Duration:

2022 – 2025

-

Coordinator:

Theophanis Tsandilas

-

Partners:

- Inria Saclay (Theophanis Tsandilas, Michel Beaudouin-Lafon, Pierre Dragicevic)

- Inria Sophia Antipolis (Adrien Bousseau)

- École Centrale de Lyon (Romain Vuillemot)

- University of Toronto (Fanny Chevalier)

-

Inria contact:

Theophanis Tsandilas

-

Summary:

This project investigates interactive tools and techniques that can help graphic designers, illustrators, data journalists, and infographic artists produce creative and effective visualizations for communication purposes, e.g., to inform the public about the evolution of a pandemic or to help novices interpret global-warming predictions.

Living Archive

-

Title:

Interactive Documentation of Dance Heritage

-

Funding:

ANR JCJC

-

Duration:

2020 – 2024

-

Coordinator:

Sarah Fdili Alaoui

-

Partners:

Université Paris-Saclay

-

Inria contact:

Sarah Fdili Alaoui

-

Summary:

The goal of this project is to design accessible, flexible and adaptable interactive systems that allow practitioners to easily document their dance using their own methods and personal artifacts, emphasizing their first-person perspective. We will ground our methodology in action research, in which, through long-term commitment to fieldwork and collaboration, we seek both to contribute to knowledge in Human-Computer Interaction and to benefit the communities of practice. More specifically, the interactive systems will allow dance practitioners to generate interactive repositories made of self-curated collections of heterogeneous materials that capture and document their dance practices from their first-person perspective. We will deploy these systems in real-world situations through long-term fieldwork that aims both at assessing the technology and at benefiting the communities of practice, exemplifying socially relevant, collaborative, and engaged research.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

Member of the organizing committees

- HHAI 2023, International Conference on Hybrid Human-Artificial Intelligence, Technical Program Chair: Janin Koch

- HHAI 2023, International Conference on Hybrid Human-Artificial Intelligence, Doctoral Consortium jury: Wendy Mackay (member)

- ACM CHI, ACM Human Factors in Computing Systems, Workshop on Human-Human Collaborative Ideation: Janin Koch and Wendy Mackay (co-organizers)

- ACM CHI, ACM Human Factors in Computing Systems, Student Design Competition committee: Wendy Mackay (jury)

- ACM UIST, ACM Symposium on User Interface Software and Technology, Demo Award committee: Wendy Mackay (jury)

- ACM UIST, ACM Symposium on User Interface Software and Technology, Lasting Impact Award committee: Michel Beaudouin-Lafon (chair)

- ACM UIST, ACM Symposium on User Interface Software and Technology, Doctoral Consortium committee: Michel Beaudouin-Lafon (member)

- IHM 2023, Workshop on Synchronous and Remote Collaboration, Co-Chair: Alexandre Kabil

10.1.2 Scientific events: selection

Chair of conference program committees

- HHAI (2023-), International Conference on Hybrid Human-Artificial Intelligence, Steering Committee member: Janin Koch

Member of the conference program committees

- ACM CHI 2024, ACM CHI Conference on Human Factors in Computing Systems: Janin Koch, Theophanis Tsandilas

- ACM CHI 2023, ACM CHI Conference on Human Factors in Computing Systems: Michel Beaudouin-Lafon, Wendy Mackay

- ACM UIST 2023, ACM Symposium on User Interface Software and Technology: Michel Beaudouin-Lafon, Wendy Mackay

- IEEE VIS 2023, IEEE Visualization and Visual Analytics Conference: Theophanis Tsandilas

Reviewer

- ACM CHI 2024, ACM CHI Conference on Human Factors in Computing Systems: Tove Grimstad Bang, Anna Offenwanger, John Sullivan

- ACM C&C 2023, ACM Conference on Creativity and Cognition: John Sullivan, Theophanis Tsandilas, Tove Grimstad Bang, Camille Gobert

- ACM UIST 2023, ACM Symposium on User Interface Software and Technology: Janin Koch, Tove Grimstad Bang, Camille Gobert, Anna Offenwanger

- ACM DIS 2023, ACM DIS Conference on Designing Interactive Systems: Tove Grimstad Bang

- ACM ISS 2023, ACM Conference on Interactive Surfaces and Spaces: John Sullivan

- NIME 2023, International Conference on New Interfaces for Musical Expression: John Sullivan

- IEEE PacificVis 2024, IEEE Pacific Visualization Symposium: Theophanis Tsandilas

10.1.3 Journal

Member of the editorial boards

- Editor for the Human-Computer Interaction area of the ACM Books Series: Michel Beaudouin-Lafon (2013-)

- TOCHI, ACM Transactions on Computer-Human Interaction: Michel Beaudouin-Lafon (2009-), Wendy Mackay (2016-)

- ACM Tech Briefs: Michel Beaudouin-Lafon (2021-)

- JAIR, Journal of Artificial Intelligence Research, Special Issue on Human-Centred Computing: Wendy Mackay (co-editor) (2022-2023)

- ACM New Publications Board: Wendy Mackay (2020-)

- CACM Editorial Board Online: Wendy Mackay (2020-)

- CACM Website Redesign: Wendy Mackay (2022)

- Frontiers of Computer Science, Special Issue on Hybrid Human Artificial Intelligence: Janin Koch (Editor) (2023-)

Reviewer - reviewing activities

- IEEE TVCG, IEEE Transactions on Visualization and Computer Graphics: Theophanis Tsandilas

- Elsevier IJHCS, International Journal of Human-Computer Studies: Theophanis Tsandilas

- Elsevier Computers and Graphics: Theophanis Tsandilas

- ACM TOCHI, Transactions on Computer-Human Interaction: Janin Koch, John Sullivan

- ACM HCIJ, Human Computer Interaction Journal: Janin Koch

10.1.4 Invited talks

- Inria, “Espoirs et déboires des métavers” (Hopes and Disappointments of Metaverses), 20 January 2023: Michel Beaudouin-Lafon

- ROS 2 AI Integration Working Group, “Human-Computer Interaction and Artificial Intelligence”, 24 January 2022: Wendy Mackay and Janin Koch

- Interview and Round Table. “Femmes Inspirantes” International Women’s Day celebration, Paris. 8 March 2023: Wendy Mackay

- IRT System X, Orsay, “Information Theory Meets Human-Computer Partnerships”, 15 March 2023: Michel Beaudouin-Lafon

- CHI workshop “Integrating AI in Human-Human Collaborative Ideation”, 28 April 2023: Wendy Mackay and Janin Koch (co-organizers)

- Keynote “Applying Generative Theory to Human-Computer Partnerships”, CRAI-CIS Seminar. Aalto, Finland. 10 May 2023: Wendy Mackay

- Invited Address “Theory-driven HCI”, CRAI-CIS Seminar. Aalto, Finland. 11 May 2023: Michel Beaudouin-Lafon

- Invited address. “Creating Human-Computer Partnerships”. Association DocA2U, the association of doctoral students of the École Doctorale Sciences, Technologies, Santé of the A2U Alliance (Amiens, Artois and Ulco): Wendy Mackay

- Maison de la Simulation, Saclay, “Un macroscope numérique pour l'interaction collaborative en grand” (A Digital Macroscope for Large-Scale Collaborative Interaction), 6 June 2023: Michel Beaudouin-Lafon

- Invited Address. “Theory-driven HCI”, HCI Seminar. University of California San Diego (UCSD), La Jolla, CA, USA. 14 June 2023: Michel Beaudouin-Lafon

- Invited address “Applying Generative Theory to Human-Computer Partnerships”, HCI Seminar. University of California San Diego (UCSD), La Jolla, CA, USA. 14 June 2023: Wendy Mackay

- Invited address “Creating Human-Computer Partnerships”. HHAI Doctoral Consortium, Munich, Germany. 27 June 2023: Wendy Mackay

- McGill University, “Dance technology design research at ex)situ”, July 2023: John Sullivan

- Keynote and panel “Creating Human-Computer Partnerships”, ICML HMCaT Workshop, Baltimore, Maryland. 23 July 2023: Wendy Mackay

- Invited Talk and Panel. “Human-Computer Partnerships” at the EAC Panel for ACM AI and Education. Vancouver, Canada. 15 August 2023: Wendy Mackay

- Keynote “Les Partenariats Humain-Machine : Interagir avec l'Intelligence Artificielle” (Human-Machine Partnerships: Interacting with Artificial Intelligence), GRETSI’23 conference, Grenoble, France. 28 August 2023: Wendy Mackay

- “Human-AI collaboration: Shifting the focus to interaction”. (Keynote) Human-in-the-Loop applied Machine Learning (HITLAML) workshop, Luxembourg, 6 September 2023: Janin Koch

- Invited Address. “Creating Human-Computer Partnerships” at the ISO-CEN International Workshop on Human-Machine Teaming, IBM Research Innovation Center, Saclay, France. 18 September 2023: Wendy Mackay

- Invited Panel. “Analogie avec la biologie évolutive” (Analogy with Evolutionary Biology) at the eNSEMBLE kickoff, Grenoble, France. 4 October 2023: Wendy Mackay

- Invited Address. “Designing Collaborative Systems”. eNSEMBLE autumn school, Grenoble, France. 5 October 2023: Wendy Mackay

- Invited Address “Les enjeux de l'intelligence artificielle” (The Stakes of Artificial Intelligence), École Boulle, Paris, 18 October 2023: Wendy Mackay

- Keynote “Creating Human-Computer Partnerships”. Stanford University HCI Seminar, Stanford, CA, USA. 2 November 2023: Wendy Mackay

- Keynote. “Creating Human-Computer Partnerships”, CHIRA'23 conference, Rome, Italy. 17 November 2023: Wendy Mackay

- Paris-Saclay Institute for Mobility Infrastructures, “The CONTINUUM Research Infrastructure”, November 2023: Alexandre Kabil