2024Activity reportProject-TeamGRAPHDECO

RNSR: 201521163T- Research center Inria Centre at Université Côte d'Azur

- Team name: GRAPHics and DEsign with hEterogeneous COntent

- Domain:Perception, Cognition and Interaction

- Theme:Interaction and visualization

Keywords

Computer Science and Digital Science

- A3.1.4. Uncertain data

- A3.1.10. Heterogeneous data

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.3. Reinforcement learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.3.5. Computational photography

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.9.1. Sampling, acquisition

- A5.9.3. Reconstruction, enhancement

- A6.1. Methods in mathematical modeling

- A6.1.4. Multiscale modeling

- A6.1.5. Multiphysics modeling

- A6.2. Scientific computing, Numerical Analysis & Optimization

- A6.2.6. Optimization

- A6.2.8. Computational geometry and meshes

- A6.3.1. Inverse problems

- A6.3.2. Data assimilation

- A6.3.5. Uncertainty Quantification

- A6.5.2. Fluid mechanics

- A6.5.3. Transport

- A8.3. Geometry, Topology

- A9.2. Machine learning

- A9.3. Signal analysis

- A9.10. Hybrid approaches for AI

Other Research Topics and Application Domains

- B3.2. Climate and meteorology

- B3.3.1. Earth and subsoil

- B3.3.2. Water: sea & ocean, lake & river

- B3.3.3. Nearshore

- B3.4.1. Natural risks

- B5. Industry of the future

- B5.2. Design and manufacturing

- B5.5. Materials

- B5.7. 3D printing

- B5.8. Learning and training

- B8. Smart Cities and Territories

- B8.3. Urbanism and urban planning

- B9. Society and Knowledge

- B9.1.2. Serious games

- B9.2. Art

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.3. Medias

- B9.5.1. Computer science

- B9.5.2. Mathematics

- B9.5.3. Physics

- B9.5.5. Mechanics

- B9.5.6. Data science

- B9.6. Humanities

- B9.6.6. Archeology, History

- B9.8. Reproducibility

- B9.11.1. Environmental risks

1 Team members, visitors, external collaborators

Research Scientists

- George Drettakis [Team leader, INRIA, Senior Researcher]

- Adrien Bousseau [INRIA, Senior Researcher]

- Guillaume Cordonnier [INRIA, Researcher]

- Andreas Meuleman [INRIA, Starting Research Position, from Oct 2024]

Post-Doctoral Fellows

- Melike Aydinlilar [INRIA, Post-Doctoral Fellow, from Jun 2024]

- Alban Gauthier [INRIA, Post-Doctoral Fellow]

- Georgios Kopanas [INRIA, Post-Doctoral Fellow, until Jan 2024]

- Andreas Meuleman [INRIA, Post-Doctoral Fellow, until Sep 2024]

- Anran Qi [INRIA, Post-Doctoral Fellow]

- Marzia Riso [INRIA, Post-Doctoral Fellow, from Oct 2024]

- Emilie Yu [INRIA, until Apr 2024]

PhD Students

- Berend Baas [INRIA]

- Aryamaan Jain [INRIA]

- Henro Kriel [INRIA, from Sep 2024]

- Alexandre Lanvin [INRIA, from Dec 2024]

- Panagiotis Papantonakis [INRIA]

- Yohan Poirier-Ginter [UNIV LAVAL QUEBEC]

- Nicolas Rosset [INRIA]

- Petros Tzathas [INRIA]

- Nicolas Violante [INRIA]

Technical Staff

- Alexandre Lanvin [INRIA, Engineer, until Nov 2024]

- Ishaan Shah [INRIA, Engineer, from Dec 2024]

- Jiayi Wei [INRIA, Engineer, from Sep 2024]

Interns and Apprentices

- David Behrens [INRIA, Intern, from Jun 2024 until Aug 2024]

- Juan Sebastian Osorno Bolivar [INRIA, Intern, from May 2024 until Sep 2024]

- Vishal Pani [INRIA, Intern, until Feb 2024]

- Clement Remy [ENS DE LYON, Intern, from Jun 2024 until Jul 2024]

Administrative Assistant

- Sophie Honnorat [INRIA]

Visiting Scientists

- Frederic Durand [MIT, until Aug 2024]

- Gilda Manfredi [University of Basilicata, until Mar 2024]

- Eric Paquette [ETS MONTREAL, from Oct 2024 until Nov 2024]

2 Overall objectives

In traditional Computer Graphics (CG), input is accurately modeled by artists. Artists first create the 3D geometry – i.e., the surfaces used to represent the 3D scene. This task can be achieved using tools akin to sculpting for human-made objects, or using physical simulation for objects formed by natural phenomena. Artists then need to assign colors, textures and more generally material properties to each piece of geometry in the scene. Finally, they also define the position, type and intensity of the lights.

Creating all this 3D content by hand is a notoriously tedious process, both for novice users who do not have the skills to use complex modeling software, and for creative professionals who are primarily interested in obtaining a diversity of imagery and prototypes rather than in accurately specifying all the ingredients listed above. While physical simulation can alleviate some of this work for certain classes of objects (landscapes, fluids, plants), simulation algorithms are often costly and difficult to control.

Once all 3D elements of a scene are in place, a rendering algorithm is employed to generate a shaded, realistic image. Rendering algorithms typically involve the accurate simulation of light transport, accounting for the complex interactions between light and materials as light bounces over the surfaces of the scene to reach the camera. Similarly to the simulation of natural phenomena, the simulation of light transport is computationally expensive, and only provides meaningful results if the input is accurate and complete.

A major recent development is that many alternative sources of 3D content are becoming available. Cheap depth sensors but also video and photos allow anyone to capture real objects. However, the resulting 3D models are often inaccurate and incomplete due to limitations of these sensors and acquisition setups. There have also been significant advances in casual content creation, e.g., sketch-based modeling tools. But the resulting models are often approximate since people rarely draw accurate perspective and proportions, nor fine details. Unfortunately, the traditional Computer Graphics pipeline outlined above is unable to directly handle the uncertainty present in cheap sources of 3D content. The abundance and ease of access to inaccurate, incomplete and heterogeneous 3D content imposes the need to rethink the foundations of 3D computer graphics to allow uncertainty to be treated in inherent manner in Computer Graphics, from design and simulation all the way to rendering and prototyping.

The technological shifts we mention above, together with developments in computer vision and machine learning, and the availability of large repositories of images, videos and 3D models represent a great opportunity for new imaging methods. In GraphDeco, we have identified three major scientific challenges that we strive to address to make such visual content widely accessible:

- First, the design pipeline needs to be revisited to explicitly account for the variability and uncertainty of a concept and its representations, from early sketches to 3D models and prototypes. Professional practice also needs to be adapted to be accessible to all.

- Second, a new approach is required to develop computer graphics models and rendering algorithms capable of handling uncertain and heterogeneous data as well as traditional synthetic content.

- Third, physical simulation needs to be combined with approximate user inputs to produce content that is realistic and controllable.

We have developed a common thread that unifies these three axes: the combination of machine learning with optimization and simulation, allowing the treatment of uncertain data for the synthesis of visual content. This common methodology – which falls under the umbrella term of machine learning for visual computing – provides a shared language and toolbox for the three research axes in our group, allowing frequent and in-depth collaborations between all three permanent researchers of the group, and a strong cohesive dynamic for Ph.D. students and postdocs.

As a result of this approach, GRAPHDECO is one of the few groups worldwide with in-depth expertise of both computer graphics techniques and deep learning approaches, in all three “traditional pillars” of CG: modeling, animation and rendering.

3 Research program

3.1 Introduction

Our research program is oriented around three main axes: 1) Computer-Assisted Design with Heterogeneous Representations, 2) Graphics with Uncertainty and Heterogeneous Content, and 3) Physical Simulation of Natural Phenomena. These three axes are governed by a set of common fundamental goals, share many common methodological tools and are deeply intertwined in the development of applications.

3.2 Computer-Assisted Design with Heterogeneous Representations

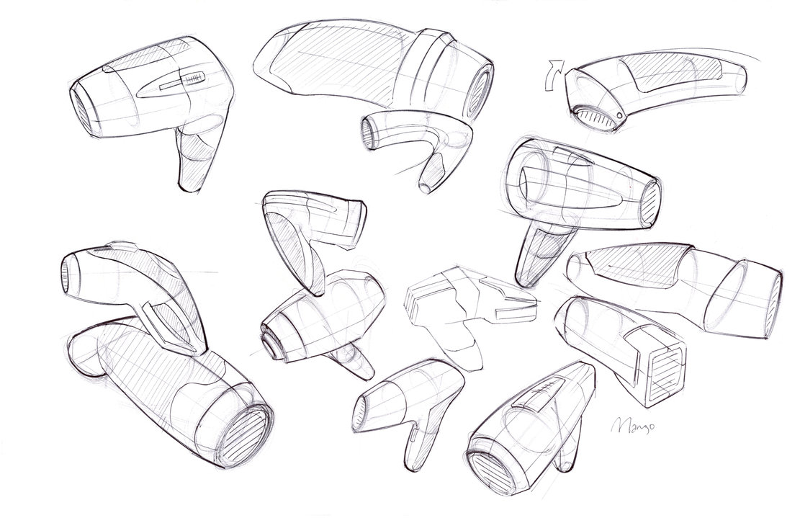

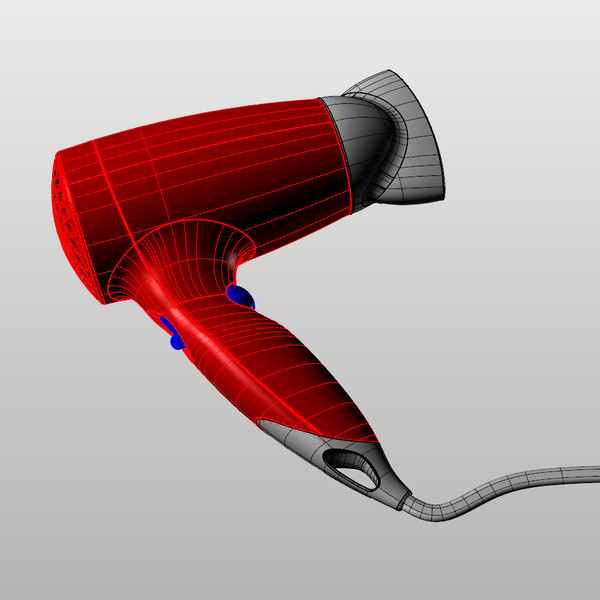

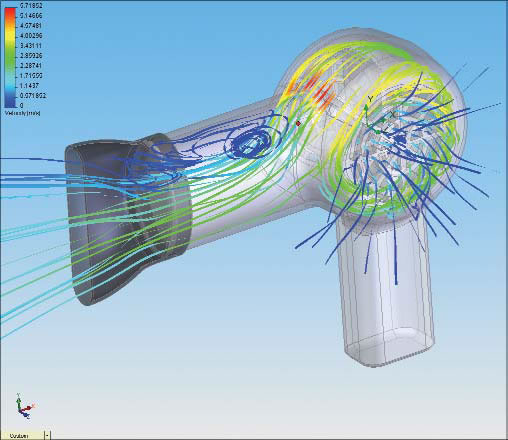

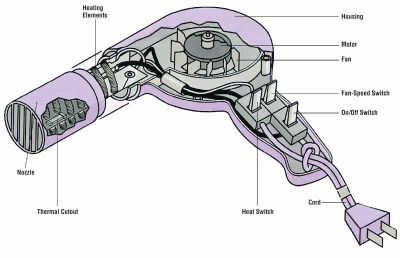

Designers use a variety of visual representations to explore and communicate about a concept. Figure 1 illustrates some typical representations, including sketches, hand-made prototypes, 3D models, 3D printed prototypes or instructions.

|

|

|

|

| (a) Ideation sketch | (b) Presentation sketch | (c) Coarse prototype | (d) 3D model |

|

|

|

|

| (f) Simulation | (e) 3D Printing | (g) Technical diagram | (h) Instructions |

Various design sketches used to inspire our research.

The early representations of a concept, such as rough sketches and hand-made prototypes, help designers formulate their ideas and test the form and function of multiple design alternatives. These low-fidelity representations are meant to be cheap and fast to produce, to allow quick exploration of the design space of the concept. These representations are also often approximate to leave room for subjective interpretation and to stimulate imagination; in this sense, these representations can be considered uncertain. As the concept gets more finalized, time and effort are invested in the production of more detailed and accurate representations, such as high-fidelity 3D models suitable for simulation and fabrication. These detailed models can also be used to create didactic instructions for assembly and usage.

Producing these different representations of a concept requires specific skills in sketching, modeling, manufacturing and visual communication. For these reasons, professional studios often employ different experts to produce the different representations of the same concept, at the cost of extensive discussions and numerous iterations between the actors of this process. The complexity of the multi-disciplinary skills involved in the design process also hinders their adoption by laymen.

Existing solutions to facilitate design have focused on a subset of the representations used by designers. However, no solution considers all representations at once, for instance to directly convert a series of sketches into a set of physical prototypes. In addition, all existing methods assume that the concept is unique rather than ambiguous. As a result, rich information about the variability of the concept is lost during each conversion step.

We plan to facilitate design for professionals and laymen by addressing the following objectives:

- We want to assist designers in the exploration of the design space that captures the possible variations of a concept. By considering a concept as a distribution of shapes and functionalities rather than a single object, our goal is to help designers consider multiple design alternatives more quickly and effectively. Such a representation should also allow designers to preserve multiple alternatives along all steps of the design process rather than committing to a single solution early on and pay the price of this decision for all subsequent steps. We expect that preserving alternatives will facilitate communication with engineers, managers and clients, accelerate design iterations and even allow mass personalization by the end consumers.

- We want to support the various representations used by designers during concept development. While drawings and 3D models have received significant attention in past Computer Graphics research, we will also account for the various forms of rough physical prototypes made to evaluate the shape and functionality of a concept. Depending on the task at hand, our algorithms will either analyze these prototypes to generate a virtual concept, or assist the creation of these prototypes from a virtual model. We also want to develop methods capable of adapting to the different drawing and manufacturing techniques used to create sketches and prototypes. We envision design tools that conform to the habits of users rather than impose specific techniques to them.

- We want to make professional design techniques available to novices. Affordable software, hardware and online instructions are democratizing technology and design, allowing small businesses and individuals to compete with large companies. New manufacturing processes and online interfaces also allow customers to participate in the design of an object via mass personalization. However, similarly to what happened for desktop publishing thirty years ago, desktop manufacturing tools need to be simplified to account for the needs and skills of novice designers. We hope to support this trend by adapting the techniques of professionals and by automating the tasks that require significant expertise.

3.3 Graphics with Uncertainty and Heterogeneous Content

Our research is motivated by the observation that traditional CG algorithms have not been designed to account for uncertain data. For example, global illumination rendering assumes accurate virtual models of geometry, light and materials to simulate light transport. While these algorithms produce images of high realism, capturing effects such as shadows, reflections and interreflections, they are not applicable to the growing mass of uncertain data available nowadays.

The need to handle uncertainty in CG is timely and pressing, given the large number of heterogeneous sources of 3D content that have become available in recent years. These include data from cheap depth+image sensors (e.g., Kinect or the Tango), 3D reconstructions from image/video data, but also data from large 3D geometry databases, or casual 3D models created using simplified sketch-based modeling tools. Such alternate content has varying levels of uncertainty about the scene or objects being modeled. This includes uncertainty in geometry, but also in materials and/or lights – which are often not even available with such content. Since CG algorithms cannot be applied directly, visual effects artists spend hundreds of hours correcting inaccuracies and completing the captured data to make them useable in film and advertising.

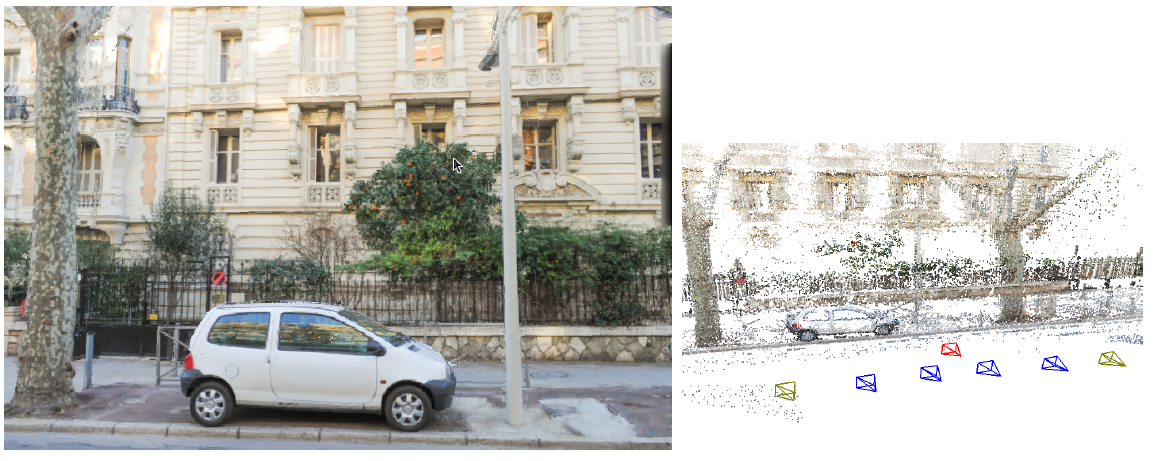

|

|

Image-Based Rendering (IBR) techniques use input photographs and approximate 3D to produce new synthetic views.

Image-Based Rendering (IBR) techniques use input photographs and approximate 3D to produce new synthetic views.

We identify a major scientific bottleneck which is the need to treat heterogeneous content, i.e., containing both (mostly captured) uncertain and perfect, traditional content. Our goal is to provide solutions to this bottleneck, by explicitly and formally modeling uncertainty in CG, and to develop new algorithms that are capable of mixed rendering for this content.

We strive to develop methods in which heterogeneous – and often uncertain – data can be handled automatically in CG with a principled methodology. Our main focus is on rendering in CG, including dynamic scenes (video/animations) (see Fig. 2).

Given the above, we need to address the following challenges:

- Develop a theoretical model to handle uncertainty in computer graphics. We must define a new formalism that inherently incorporates uncertainty, and must be able to express traditional CG rendering, both physically accurate and approximate approaches. Most importantly, the new formulation must elegantly handle mixed rendering of perfect synthetic data and captured uncertain content. An important element of this goal is to incorporate cost in the choice of algorithm and the optimizations used to obtain results, e.g., preferring solutions which may be slightly less accurate, but cheaper in computation or memory.

- The development of rendering algorithms for heterogeneous content often requires preprocessing of image and video data, which sometimes also includes depth information. An example is the decomposition of images into intrinsic layers of reflectance and lighting, which is required to perform relighting. Such solutions are also useful as image-manipulation or computational photography techniques. The challenge will be to develop such “intermediate” algorithms for the uncertain and heterogeneous data we target.

- Develop efficient rendering algorithms for uncertain and heterogeneous content, reformulating rendering in a probabilistic setting where appropriate. Such methods should allow us to develop approximate rendering algorithms using our formulation in a well-grounded manner. The formalism should include probabilistic models of how the scene, the image and the data interact. These models should be data-driven, e.g., building on the abundance of online geometry and image databases, domain-driven, e.g., based on requirements of the rendering algorithms or perceptually guided, leading to plausible solutions based on limitations of perception.

3.4 Physical Simulation of Natural Phenomena

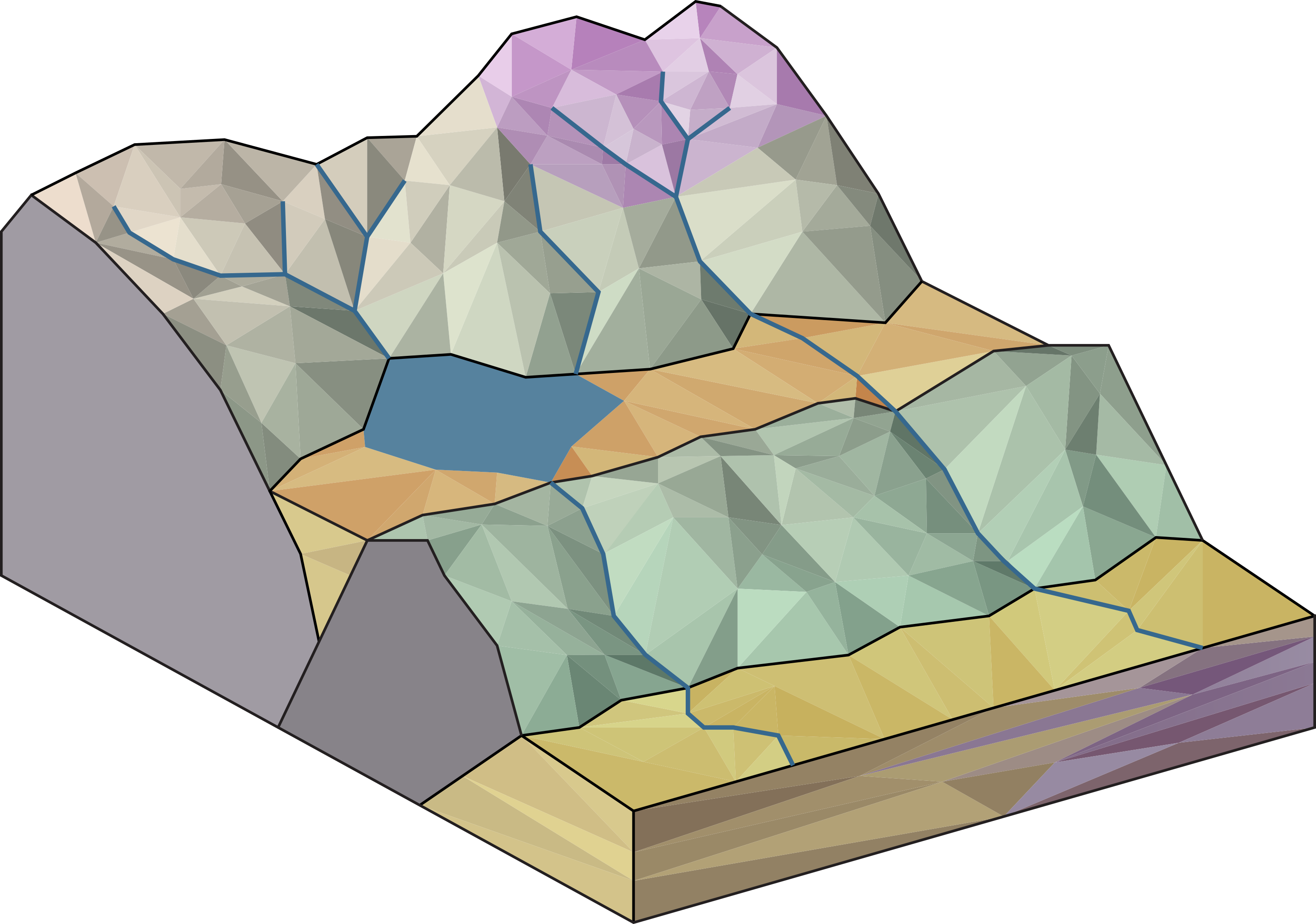

Our world emerged from the conjunction of natural phenomena at different scales, from the orogenesis of mountains to the evolution of ecosystems or the daily changes in weather conditions.

Understanding and modeling these phenomena is key to visually synthesizing our environments, reducing the uncertainty inherent to the capture of natural sceneries, and anticipating the impacts of natural hazards on our societies. For all these applications, the ability of a user to efficiently direct the simulation is preeminent, which provides us with two key constraints: first, the models should be fast to enable interactive interactions between the user and the simulation. Second, the models have to exhibit efficient control mechanisms.

The previous work on natural phenomena is as diverse as the number of scientific fields specialized in environmental and Earth sciences but with a main focus on predictability. In contrast, computer graphics has a long history of models focused on efficiency, robustness, and controllability, although originally explored for dynamic visual effects (smoke, explosions) and less so for natural phenomena that are more considered from a procedural or phenomenon-based perspective.

We benefit from computer graphics expertise in efficient and controllable physically-based simulations and extend it to natural phenomena. We explore new methods in machine learning and optimization, that enable us to enhance the efficiency of our models and reach a new space of forward and inverse control mechanisms. Coupling these models with physics provides guarantees on the quality of the results and a physical interpretation of the controls.

4 Application domains

Our research on design, simulation and computer graphics with heterogeneous data has the potential to change many different application domains. Such applications include:

Product design will be significantly accelerated and facilitated. Current industrial workflows separate 2D illustrators, 3D modelers and engineers who create physical prototypes, which results in a slow and complex process with frequent misunderstandings and corrective iterations between different people and different media. Our unified approach based on design principles could allow all processes to be done within a single framework, avoiding unnecessary iterations. This could significantly accelerate the design process (from months to weeks), result in much better communication between the different experts, or even create new types of experts who cross boundaries of disciplines today.

Mass customization will allow end customers to participate in the design of a product before buying it. In this context of “cloud-based design”, users of an e-commerce website will be provided with controls on the main variations of a product created by a professional designer. Intuitive modeling tools will also allow users to personalize the shape and appearance of the object while remaining within the bounds of the pre-defined design space.

Digital instructions for creating and repairing objects, in collaboration with other groups working in 3D fabrication, could have significant impact in sustainable development and allow anyone to be a creator of things, not just consumers, the motto of the makers movement.

Gaming experience individualization is an important emerging trend; using our results players will also be able to integrate personal objects or environments (e.g., their homes, neighborhoods) into any realistic 3D game. The success of creative games where the player constructs their world illustrates the potential of such solutions. This approach also applies to serious gaming, with applications in medicine, education/learning, training etc. Such interactive experiences with high-quality images of heterogeneous 3D content will be also applicable to archeology (e.g., realistic presentation of different reconstruction hypotheses), urban planning and renovation where new elements can be realistically used with captured imagery.

Virtual training, which today is restricted to pre-defined virtual environment(s) that are expensive and hard to create; with our solutions on-site data can be seamlessly and realistically used together with the actual virtual training environment. With our results, any real site can be captured, and the synthetic elements for the interventions rendered with high levels of realism, thus greatly enhancing the quality of the training experience.

Earth and environmental sciences use simulations to understand and characterize natural processes. One of the common scientific methodologies requires testing several simulations with different sets of parameters to observe emergent behavior or to match observed data. Our fast simulation models accelerate this workflow, while our focus on control gives new tools to efficiently reduce the misfit between simulations and observations.

Natural hazard prevention is becoming ever more critical now that several climatic tipping points are crossed or about to be. Fast and controllable simulations of natural phenomena could allow public authorities to quickly assert different scenarios on the verge of imminent hazards, informing them of the probable impacts of their decisions.

Another interesting novel use of heterogeneous graphics could be for news reports. Using our interactive tool, a news reporter can take on-site footage, and combine it with 3D mapping data. The reporter can design the 3D presentation allowing the reader to zoom from a map or satellite imagery and better situate the geographic location of a news event. Subsequently, the reader will be able to zoom into a pre-existing street-level 3D online map to see the newly added footage presented in a highly realistic manner. A key aspect of these presentation is the ability of the reader to interact with the scene and the data, while maintaining a fully realistic and immersive experience. The realism of the presentation and the interactivity will greatly enhance the readers experience and improve comprehension of the news. The same advantages apply to enhanced personal photography/videography, resulting in much more engaging and lively memories. Such interactive experiences with high-quality images of heterogeneous 3D content will be also applicable to archeology (e.g., realistic presentation of different reconstruction hypotheses), urban planning and renovation where new elements can be realistically used with captured imagery.

Other applications may include scientific domains which use photogrammetric data (captured with various 3D scanners), such as geophysics and seismology. Note however that our goal is not to produce 3D data suitable for numerical simulations; our approaches can help in combining captured data with presentations and visualization of scientific information.

5 Social and environmental responsibility

5.1 Footprint of research activities

Deep learning algorithms use a significant amount of computing resources. We are attentive to this issue and plan to implement a more detailed policy for monitoring overall resource usage.

5.2 Impact of research results

G. Cordonnier collaborates with geologists and glaciologists on various projects, developing computationally efficient models that can have direct impact in climate related research. A. Bousseau regularly collaborates with designers; their needs serve as an inspiration for some of his research projects, including the developement of innovative digital tools for circular design. Finally, the work in FUNGRAPH (G. Drettakis) has advanced research in visualization for reconstruction of real scenes. The recent 3D Gaussian Splatting (3DGS) 33 work has resulted in extensive technology transfer with many commercial licenses of the code already completed. These involve diverse industrial domains, including e-commerce, casual capture for 3D reconstruction, film and television production, virtual and extended reality, real-estate visualization and others. The 3DGS technology significantly reduces the computation time and thus computational resources required compared to the previous state of the art in 3D reconstruction/novel view synthesis that was the Neural Radiance Fields method (for typical scenes, 40 min instead of 48 hours of GPU time).

6 Highlights of the year

The most significant highlight of the year was the success of the 3D Gaussian Splatting method 33 which has had unprecedented impact both scientifically (already more than 2500 citations on google scholar since 2023) and in terms of technology transfer (around 11 licenses commercialized by Inria, see Sec. 5.2).

6.1 Awards

- George Drettakis received the Eurographics Outstanding Career Award.

- George Drettakis received the Grand Prix Inria - Academie de Sciences.

- Emilie Yu received the Ph.D. award from GdR IG-RV and the Gilles Kahn Ph.D. award from Société informatique de France (SIF).

- Yorgos Kopanas received the EDSTIC Doctoral Prize in Computer Science.

- Our paper FastFlow: GPU Acceleration of Flow and Depression Routing for Landscape Simulation 14 received the Best Paper Award at Pacific Graphics 2024.

- Our paper Unerosion: Simulating Terrain Evolution Back in Time 23 received an Honorable Mention to the Best Paper Award at the ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2024.

- Our paper CADTalk: An Algorithm and Benchmark for Semantic Commenting of CAD Programs 31 was selected as a highlight at CVPR (top 10%).

6.2 Press release

- Article on 3D Gaussian Splatting: "Une technique revolutionne la creation des scenes en 3D", Le Monde, May 2024

- 3D Gaussian Splatting mentioned in the "Best of Science News 2024" in Le Monde, December 2024,

- 3D Mag website interview , October 2024.

- Inria media department articles on NERPHYS: and on the Grand Prix Inria - Academie de Sciences.

- Adrien Bousseau was interviewed for People of ACM.

7 New software, platforms, open data

7.1 New software

7.1.1 sibr-core

-

Name:

System for Image-Based Rendering

-

Keyword:

Graphics

-

Scientific Description:

Core functionality to support Image-Based Rendering research. The core provides basic support for camera calibration, multi-view stereo meshes and basic image-based rendering functionality. Separate dependent repositories interface with the core for each research project. This library is an evolution of the previous SIBR software, but now is much more modular.

sibr-core has been released as open source software, as well as the code for several of our research papers, as well as papers from other authors for comparisons and benchmark purposes.

The corresponding gitlab is: https://gitlab.inria.fr/sibr/sibr_core

The full documentation is at: https://sibr.gitlabpages.inria.fr

This year several improvements were added as part of the 3D Gaussian Splatting support.

-

Functional Description:

sibr-core is a framework containing libraries and tools used internally for research projects based on Image-Base Rendering. It includes both preprocessing tools (computing data used for rendering) and rendering utilities and serves as the basis for many research projects in the group.

-

Contact:

George Drettakis

7.1.2 3DGaussianSplats

-

Name:

3D Gaussian Splatting for Real-Time Radiance Field Rendering

-

Keywords:

3D, View synthesis, Graphics

-

Scientific Description:

Implementation of the method 3D Gaussian Splatting for Real-Time Radiance Field Rendering, see https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/

-

Functional Description:

3D Gaussian Splatting is a method that achieves real-time rendering of captured scenes with quality that equals the previous method with the best quality, while only requiring optimization times competitive with the fastest previous methods

3D Gaussian Splatting represents 3D scenes with 3D Gaussians that preserve desirable properties of continuous volumetric radiance fields for scene optimization while avoiding unnecessary computation in empty space. The method performs interleaved optimization/density control of the 3D Gaussians, notably optimizing anisotropic covariance to achieve an accurate representation of the scene. We provide a fast visibility-aware rendering algorithm that supports anisotropic splatting and both accelerates training and allows realtime rendering.

We have provided several updates to the software this year, most importantly integrating new features for better quality and speed of optimization.

- URL:

-

Contact:

George Drettakis

-

Participants:

Georgios Kopanas, Bernhard Kerbl, Thomas Leimkuhler

7.1.3 NerfShop

-

Name:

Interactive Editing of Neural Radiance Fields

-

Keywords:

3D, Deep learning

-

Scientific Description:

Software implementation of the paper "Nerfshop: Interactive Editing of Neural Radiance Fields". See https://repo-sam.inria.fr/fungraph/nerfshop/

-

Functional Description:

Neural Radiance Fields (NeRFs) have revolutionized novel view synthesis for captured scenes, with recent methods allowing interactive free-viewpoint navigation and fast training for scene reconstruction. NeRFshop is a novel end-to-end method that allows users to interactively edit NeRFs by selecting and deforming objects through cage-based transformations.

The software has seen extensive usage in followup papers during this year.

- URL:

-

Contact:

George Drettakis

7.1.4 H3DGS

-

Name:

Hierarchical 3D Gaussian Splatting

-

Keywords:

3D modeling, 3D rendering, Differentiable Rendering

-

Scientific Description:

Implementation of the SIGGRAPH 2024 paper A Hierarchical 3D Gaussian Representation for Real-Time Rendering of Very Large Datasets, project page https://repo-sam.inria.fr/fungraph/hierarchical-3d-gaussians/

-

Functional Description:

Implementation of the SIGGRAPH 2024 paper A Hierarchical 3D Gaussian Representation for Real-Time Rendering of Very Large Datasets, project page https://repo-sam.inria.fr/fungraph/hierarchical-3d-gaussians/

- URL:

-

Contact:

George Drettakis

-

Participants:

Georgios Kopanas, Alexandre Lanvin, Bernhard Kerbl, Andreas Meuleman, George Drettakis, Michael Wimmer

-

Partner:

Technische Universität Wien

7.1.5 DiffRelightGS

-

Name:

A Diffusion Approach to Radiance Field Relighting using Multi-Illumination Synthesis

-

Keywords:

3D, 3D reconstruction, 3D rendering, Artificial intelligence, Machine learning

-

Functional Description:

A method to create relightable 3D radiance fields from single-illumination data by exploiting priors extracted from 2D image diffusion models.

- URL:

- Publication:

-

Contact:

George Drettakis

-

Participants:

Yohan Poirier-Ginter, Alban Gauthier, Julien Philip, Jean-Francois Lalonde, George Drettakis

-

Partner:

Université Laval

7.1.6 3DLayers

-

Name:

VR painting system with layers

-

Keywords:

Virtual reality, 3D, Painting

-

Functional Description:

This is the source code for the prototype implementation of the research paper: "3D-Layers: Bringing Layer-Based Color Editing to VR Painting", Emilie Yu, Fanny Chevalier, Karan Singh and Adrien Bousseau, ACM Transactions on Graphics (SIGGRAPH) - 2024

This is a Unity project that implements a simple VR application compatible with Quest 2/3/Pro headsets. The project features:

- A VR app with basic 3D painting features (painting tube strokes, stroke deletion and transformation, color palette, undo/redo). - A UI in the VR app to create, paint in, and edit shape and appearance layers, as described in the 3DLayers paper. We have a basic menu UI for users to visualize and navigate in the layer hierarchy. - A basic in-Unity visualizer for paintings created with our system. It enables users to view and render still frames or simple camera path animations. We used it to create all results in the paper/video.

- URL:

-

Contact:

Emilie Yu

7.1.7 VideoDoodles

-

Name:

VideoDoodles: Hand-Drawn Animations on Videos with Scene-Aware Canvases

-

Keywords:

3D web, 3D, 2D animation, 3D animation, Visual tracking

-

Scientific Description:

Implementation for Siggraph 2023 paper VideoDoodles: Hand-Drawn Animations on Videos with Scene-Aware Canvases

-

Functional Description:

We present an interactive system to ease the creation of so-called video doodles – videos on which artists insert hand-drawn animations for entertainment or educational purposes. Video doodles are challenging to create because to be convincing, the inserted drawings must appear as if they were part of the captured scene. In particular, the drawings should undergo tracking, perspective deformations and occlusions as they move with respect to the camera and to other objects in the scene – visual effects that are difficult to reproduce with existing 2D video editing software. Our system supports these effects by relying on planar canvases that users position in a 3D scene reconstructed from the video. Furthermore, we present a custom tracking algorithm that allows users to anchor canvases to static or dynamic objects in the scene, such that the canvases move and rotate to follow the position and direction of these objects.

Our system is composed of the following elements: * A preprocessing library to convert depth, camera and motion data into data formats compatible with our system * A collection of Python scripts that can be used either offline to execute 3D point tracking (with the possibility to specify 1 to N keyframes) , or as a backend server to our interactive frontend UI * A web UI to author video doodles. It features a renderer capable of displaying the composited video doodles , an editing interface to keyframe canvases , a sketching interface to create frame-by-frame animations on canvases , basic export capabilities.

- URL:

-

Contact:

Emilie Yu

7.1.8 pySBM

-

Keywords:

3D modeling, Vector-based drawing

-

Scientific Description:

This project is the official implementation of our paper Symmetry-driven 3D Reconstruction from Concept Sketches, published at SIGGRAPH 2022 https://ns.inria.fr/d3/SymmetrySketch/

The software is currently part of an ERC PoC development cycle for the creation of a Blender plugin.

-

Functional Description:

This is a sketch-based modeling library. It proposes an interface to input a vector sketch, process it and reconstruct it in 3D. It also contains a Blender interface to trace a drawing and to interact with its 3D reconstruction.

- URL:

-

Contact:

Adrien Bousseau

-

Participants:

Felix Hahnlein, Yulia Gryaditskaya, Alla Sheffer, Adrien Bousseau

-

Partner:

University of British Columbia

7.1.9 pyLowStroke

-

Keywords:

Vector-based drawing, 3D modeling

-

Scientific Description:

This library contains several functionalities to process line drawings, including loading and saving in svg format, detecting vanishing points, calibrating a camera, detecting intersections. It has been used to develop our reconstruction algorithm Symmetry-driven 3D Reconstruction from Concept Sketches published at SIGGRAPH 2022 https://ns.inria.fr/d3/SymmetrySketch/

-

Functional Description:

This is a library for low-level processing of freehand sketches. Example applications include reading and writing vector drawings, removing hooks, classifying lines into straight lines and curves and the calibration of a perspective camera model.

The software is currently part of an ERC PoC development cycle for the creation of a Blender plugin.

- URL:

-

Contact:

Adrien Bousseau

-

Participants:

Felix Hahnlein, Yulia Gryaditskaya, Bastien Wailly, Adrien Bousseau

7.1.10 AnaTerrains

-

Name:

Physically-based analytical erosion for fast terrain generation

-

Keywords:

Analytic model, Terrain, Simulation

-

Functional Description:

Source code for the paper "Physically-based analytical erosion for fast terrain generation". This code demonstrates the use of analytical solutions of erosion laws to generate large-scale mountain ranges instantly.

-

Contact:

Guillaume Cordonnier

7.1.11 Fastflow

-

Name:

GPU Acceleration of Flow and Depression Routing for Landscape Simulation

-

Keywords:

Flow routing, Landscape, GPU

-

Functional Description:

Library for the fast computation of flow and depression routing on the GPU for numerical simulations of landscapes in hydrology and geomorphology. This code enables the computation of flow-related properties such as the discharge over large Digital Elevation Models (flow routing). It solves the problem that local minima in topography interrupt the flow path (depression routing). The code is optimized for the GPU, resulting in fast execution time for large domains.

- URL:

-

Contact:

Guillaume Cordonnier

8 New results

8.1 Computer-Assisted Design with Heterogeneous Representations

8.1.1 3D-Layers: Bringing Layer-Based Color Editing to VR Painting

Participants: Emilie Yu, Adrien Bousseau, Fanny Chevalier [University of Toronto], Karan Singh [University of Toronto].

The ability to represent artworks as stacks of layers is fundamental to modern graphics design, as it allows artists to easily separate visual elements, edit them in isolation, and blend them to achieve rich visual effects. Despite their ubiquity in 2D painting software, layers have not yet made their way to VR painting, where users paint strokes directly in 3D space by gesturing a 6-degrees-of-freedom controller. But while the concept of a stack of 2D layers was inspired by real-world layers in cell animation, what should 3D layers be? We propose to define 3D-Layers as groups of 3D strokes, and we distinguish the ones that represent 3D geometry from the ones that represent color modifications of the geometry. We call the former substrate layers and the latter appearance layers. Strokes in appearance layers modify the color of the substrate strokes they intersect. Thanks to this distinction, artists can define sequences of color modifications as stacks of appearance layers, and edit each layer independently to finely control the final color of the substrate. We have integrated 3D-Layers into a VR painting application and we evaluated its flexibility and expressiveness by conducting a usability study with experienced VR artists. Figure 3 details an artwork created with our system.

A 3D illustration of a lighthouse created with our VR painting system.

This work is a collaboration with Fanny Chevalier and Karan Singh from the University of Toronto and was initiated during the visit of Emilie Yu at University of Toronto in May-August 2023 funded by MITACS. It was published in ACM Transactions on Graphics and presented at SIGGRAPH 2024 24.

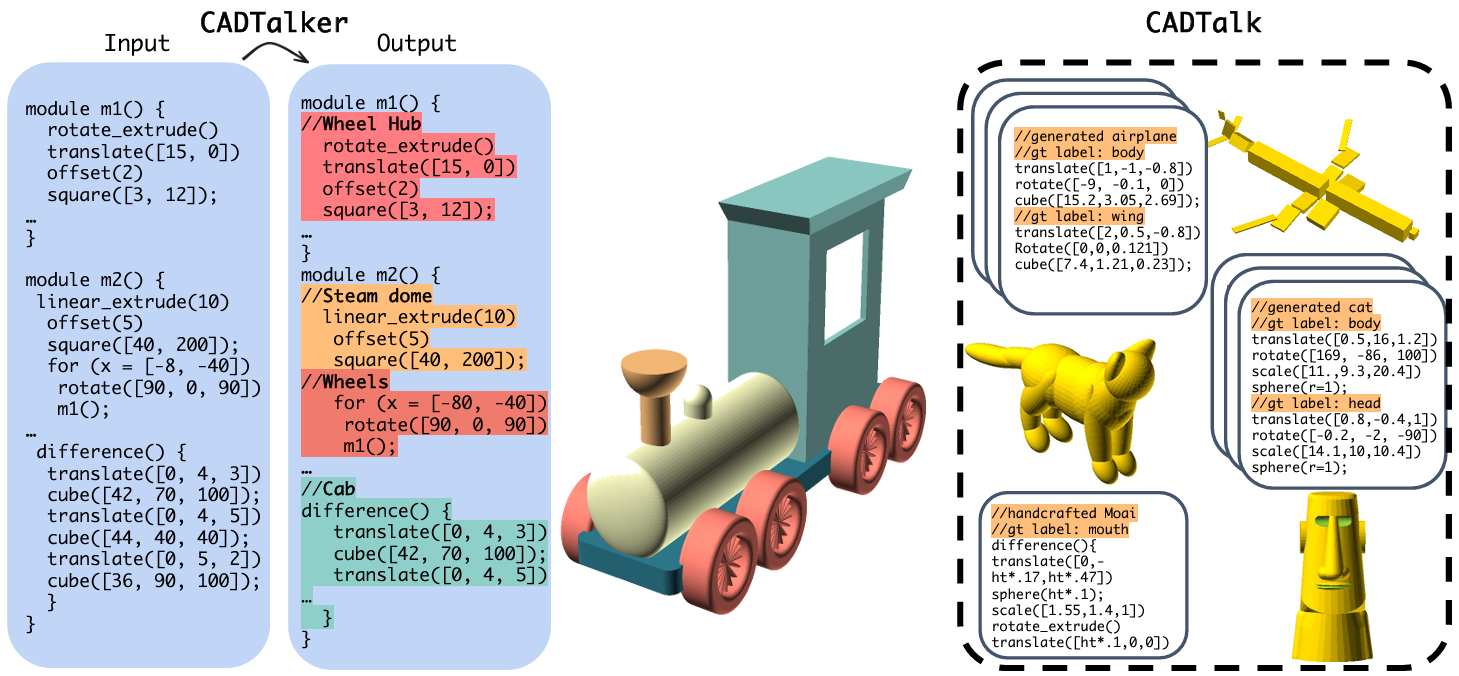

8.1.2 CADTalk: An Algorithm and Benchmark for Semantic Commenting of CAD Programs

Participants: Adrien Bousseau, Haocheng Yuan [University of Edinburgh], Jing Xu [University of Edinburgh], Hao Pan [Microsoft Research Asia], Niloy J. Mitra [University College London, Adobe Research], Changjian Li [University of Edinburgh].

CAD programs are a popular way to compactly encode shapes as a sequence of operations that are easy to parametrically modify. However, without sufficient semantic comments and structure, such programs can be challenging to understand, let alone modify. We introduce the problem of semantic commenting CAD programs, wherein the goal is to segment the input program into code blocks corresponding to semantically meaningful shape parts and assign a semantic label to each block (see Fig. 4). We solve the problem by combining program parsing with visual-semantic analysis afforded by recent advances in foundational language and vision models. Specifically, by executing the input programs, we create shapes, which we use to generate conditional photorealistic images to make use of semantic annotators for such images. We then distill the information across the images and link back to the original programs to semantically comment on them. Additionally, we collected and annotated a benchmark dataset, CADTalk, consisting of 5,280 machine-made programs and 45 human-made programs with ground truth semantic comments to foster future research. We extensively evaluated our approach, compared to a GPT-based baseline approach, and an open-set shape segmentation baseline, i.e., PartSLIP, and report an 83.24% accuracy on the new CADTalk dataset.

A CAD program representing a train, with comments highlighted.

This work is a collaboration with Haocheng Yuan, Jing Xu and Changjian Li from University of Edinburgh, Hao Pan from Microsoft Research Asia, and Niloy J. Mitra from University College London and Adobe Research. It was published at the Conference on Computer Vision and Pattern Recognition (CVPR) where it was selected as a highlight (top 10%) 31.

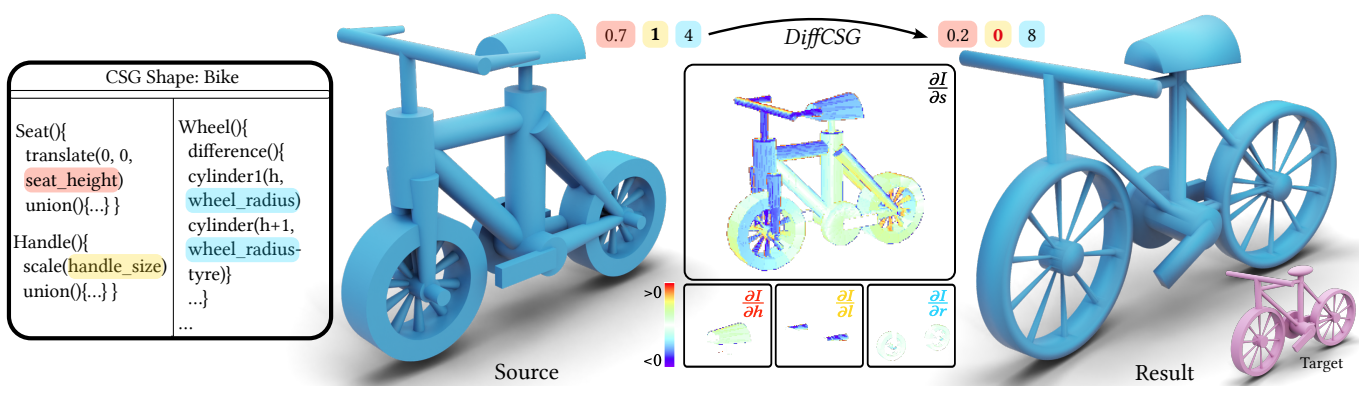

8.1.3 DiffCSG: Differentiable CSG via Rasterization

Participants: Adrien Bousseau, Haocheng Yuan [University of Edinburgh], Hao Pan [Microsoft Research Asia], Chengquan Zhang [Nanjing University], Niloy J. Mitra [University College London, Adobe Research], Changjian Li [University of Edinburgh].

Differentiable rendering is a key ingredient for inverse rendering and machine learning, as it allows to optimize scene parameters (shape, materials, lighting) to best fit target images. Differentiable rendering requires that each scene parameter relates to pixel values through differentiable operations. While 3D mesh rendering algorithms have been implemented in a differentiable way, these algorithms do not directly extend to Constructive-Solid-Geometry (CSG), a popular parametric representation of shapes, because the underlying boolean operations are typically performed with complex black-box mesh-processing libraries. We present an algorithm, DiffCSG, to render CSG models in a differentiable manner. Our algorithm builds upon CSG rasterization, which displays the result of boolean operations between primitives without explicitly computing the resulting mesh and, as such, bypasses black-box mesh processing. We describe how to implement CSG rasterization within a differentiable rendering pipeline, taking special care to apply antialiasing along primitive intersections to obtain gradients in such critical areas. Our algorithm is simple and fast, can be easily incorporated into modern machine learning setups, and enables a range of applications for computer-aided design, including direct and image-based editing of CSG primitives (Fig. 5).

The dimensions of a bike model are optimized to fit a target image.

This work is a collaboration with Haocheng Yuan and Changjian Li from University of Edinburgh, Chengquan Zhang from Nanjing University, Hao Pan from Microsoft Research Asia, and Niloy J. Mitra from University College London and Adobe Research. It was published at the conference track of SIGGRAPH Asia 30.

8.1.4 Single-Image SVBRDF Estimation with Learned Gradient Descent

Participants: Adrien Bousseau, Xuejiao Luo [Technical University of Delft], Leonardo Scandolo [Technical University of Delft], Elmar Eisemann [Technical University of Delft].

Recovering spatially-varying materials from a single photograph of a surface is inherently ill-posed, making the direct application of a gradient descent on the reflectance parameters prone to poor minima. Recent methods leverage deep learning either by directly regressing reflectance parameters using feed-forward neural networks or by learning a latent space of SVBRDFs (Spatially-Varying Bidirectional Reflection Distribution Functions) using encoder-decoder or generative adversarial networks followed by a gradient-based optimization in latent space. The former is fast but does not account for the likelihood of the prediction, i.e., how well the resulting reflectance explains the input image. The latter provides a strong prior on the space of spatially-varying materials, but this prior can hinder the reconstruction of images that are too different from the training data. Our method combines the strengths of both approaches. We optimize reflectance parameters to best reconstruct the input image using a recurrent neural network, which iteratively predicts how to update the reflectance parameters given the gradient of the reconstruction likelihood. By combining a learned prior with a likelihood measure, our approach provides a maximum a posteriori estimate of the SVBRDF. Our evaluation shows that this learned gradient-descent method achieves state-of-the-art performance for SVBRDF estimation on synthetic and real images (Fig. 6).

Comparison between the material parameters obtained with our method and with a previous method on the same two photographs.

This work is a collaboration with Xuejiao Luo, Leonardo Scandolo and Elmar Eisemann from the Technical University of Delft and was initiated during Adrien Bousseau's sabbatical visit there in 2022-2023. It was published in Computer Graphics Forum and presented at Eurographics 2024 16.

8.1.5 STIVi: Turning Perspective Sketching Video into Interactive Tutorials

Participants: Adrien Bousseau, Capucine Nghiem [Inria Ex)Situ, LISN], Mark Sypesteyn [TU Deflt], Jan Willem Hoftijzer [TU Deflt], Maneesh Agrawala [Stanford University], Theophanis Tsandilas [Inria Ex)Situ, LISN].

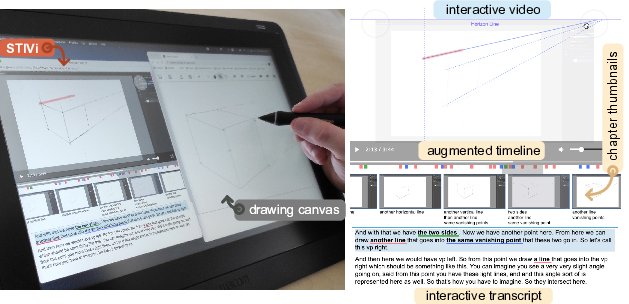

For design and art enthusiasts who seek to enhance their skills through instructional videos, following drawing instructions while practicing can be challenging. STIVi presents perspective drawing demonstrations and commentary of prerecorded instructional videos as interactive drawing tutorials that students can navigate and explore at their own pace (see Fig. 7). Our approach involves a semi-automatic pipeline to assist instructors in creating STIVi content by extracting pen strokes from video frames and aligning them with the accompanying audio commentary. Thanks to this structured data, students can navigate through transcript and in-video drawing, refer to provided highlights in both modalities to guide their navigation, and explore variations of the drawing demonstration to understand fundamental principles. We evaluated STIVi's interactive tutorials against a regular video player. We observed that our interface supports non-linear learning styles by providing students alternative paths for following and understanding drawing instructions.

STIVi's interface

This work is a collaboration with Theophanis Tsandilas from Inria, LISN, Université Paris-Saclay, Jan Willem Hoftijzer and Mark Sypesteyn from TU Delft and Maneesh Agrawala from Stanford University. It was presented at the Graphics Interface 2024 conference and published in the conference's proceedings 28.

8.1.6 Presentation Sketches in Product Design

Participants: Adrien Bousseau, Capucine Nghiem [Inria Ex)Situ, LISN], Mark Sypesteyn [TU Deflt], Jan Willem Hoftijzer [TU Deflt], Theophanis Tsandilas [Inria Ex)Situ, LISN].

Sketching is a core skill for industrial designers. While substantial research has studied sketching for product development, our work focuses on the activity of product presentation. We study product designer's practice of sketching for presentation, and more generally their presentation preparation workflow through three lenses: (1) We analyzed a corpus of presentation sketches to identify their visual characteristics and storytelling techniques. (2) We interviewed nine designers about their workflow and decision-making related to a recent presentation of theirs. (3) We also invited these designers to brainstorm on a sketch-based dynamic presentation authoring tool to explore the potential for novel sketch-based tools supporting their presentation authoring workflow.

This work is a collaboration with Theophanis Tsandilas from Inria, LISN, Université Paris-Saclay, Jan Willem Hoftijzer and Mark Sypesteyn from TU Delft.

8.1.7 Reconstructing CAD Programs from Hand-drawn Sketches

Participants: Henro Kriel, Adrien Bousseau, Gilda Manfredi [University of Basilicata], Daniel Ritche [Brown University].

We present a system that takes a concept sketch and reconstructs the depicted CAD model. We segment strokes into CAD operations, label these operations, and fit their parameters. We propose a graph neural network architecture to segment and label concept sketches for fitting, and we leverage sketch processing techniques, such as 3D reconstruction and cycle detection, to ease this prediction task.

This project is a collaboration with Gilda Manfredi, who visited our lab for 6 months, and Daniel Ritchie from Brown.

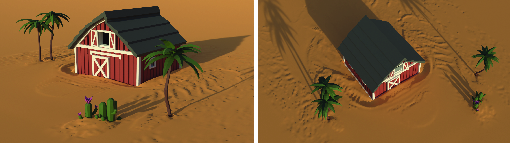

8.1.8 Surface Dissections: Waste Reuse through Shape Approximations

Participants: Berend Baas, Adrien Bousseau, David Bommes [University of Bern].

The design and manufacturing industry faces an urgent need to shift away from linear methods of production to more circular production models. In order to aid in this effort, the current generation of computational design tools needs to be rethought in order to accommodate reused materials for reuse scenarios. Motivated by this use case, this research project proposes a new computational inverse problem we label Surface Dissections, generalizing dissection puzzles to curved domains, with the intent to approximate target designs using reclaimed material. We propose an interactive method in an effort to solve this problem.

This is ongoing work with David Bommes from the University of Bern, Switzerland.

8.1.9 Recovering Data from Hand-Drawn Infographics

Participants: Anran Qi, Theophanis Tsandilas [Inria Ex)Situ], Ariel Shamir [Reichman University], Adrien Bousseau.

Data collection and visualization have long been perceived as activities reserved for experts. However, by drawing simple geometric figures –or glyphs – anyone can easily record and visualize their own data. Still, the resulting hand-drawn infographics do not provide direct access to the raw data values being visualized, hindering subsequent digital editing of both the data and the glyphs. We present a method to recover data values from glyph-based hand-drawn infographics. Given a visualization in a bitmap format and a user-specified parametric template of the glyphs in that visualization, we leverage deep neural networks to detect and localize all glyphs, and estimate the data values they represent. This reverse-engineering procedure effectively disentangles the depicted data from its visual representation, enabling various editing applications, such as visualizing new data values or testing different visualizations of the same data.

This is ongoing work with Theophanis Tsandilas from Inria Ex)Situ and Ariel Shamir from Reichman University.

8.1.10 Computational garment reuse

Participants: Anran Qi, Maria Korosteleva [Meshcapade], Nico Pietroni [University of Technology of Sydney], Olga Sorkine-Hornung [ETH Zurich], Adrien Bousseau.

The fashion industry is experiencing mass production, mass consumption, and as a consequence it also produces mass pollution. According to the UN Environment Program, the fashion industry is responsible for about 10% of global carbon emissions. Despite this, only 1% of used clothes are recycled into new clothes due to a lack of technology for recovering virgin fibers. Garment upcycling, a creative practice that transforms old clothes, termed as the source, into a new item, termed as the target, is gaining popularity as a method of recycling that individuals can engage in daily. We propose a computational method to compute the fabrication plan that transforms from the source to the target. We formulate this fabrication plan computation as an optimization that seeks to reuse as much of the existing seams and hems as possible (to minimize fabrication cost), while deviating as little as possible from the target (to best preserve design intent).

This is ongoing work with Maria Korosteleva from Meshcapade, Nico Pietroni from the University of Technology of Sydney and Olga Sorkine-Hornung from ETH Zurich.

8.2 Graphics with Uncertainty and Heterogeneous Content

8.2.1 Learning Images Across Scales Using Adversarial Training

Participants: George Drettakis, Guillaume Cordonnier, Krzysztof Wolski [MPI], Adarsh Djeacoumar [MPI], Alireza Javanmardi [MPI], Hans-Peter Seidel [MPI], Christian Theobalt [MPI], Karol Myszkowski [MPI], Xingang Pan [Nanyang Technological University, Singapour], Thomas Leimkühler [MPI].

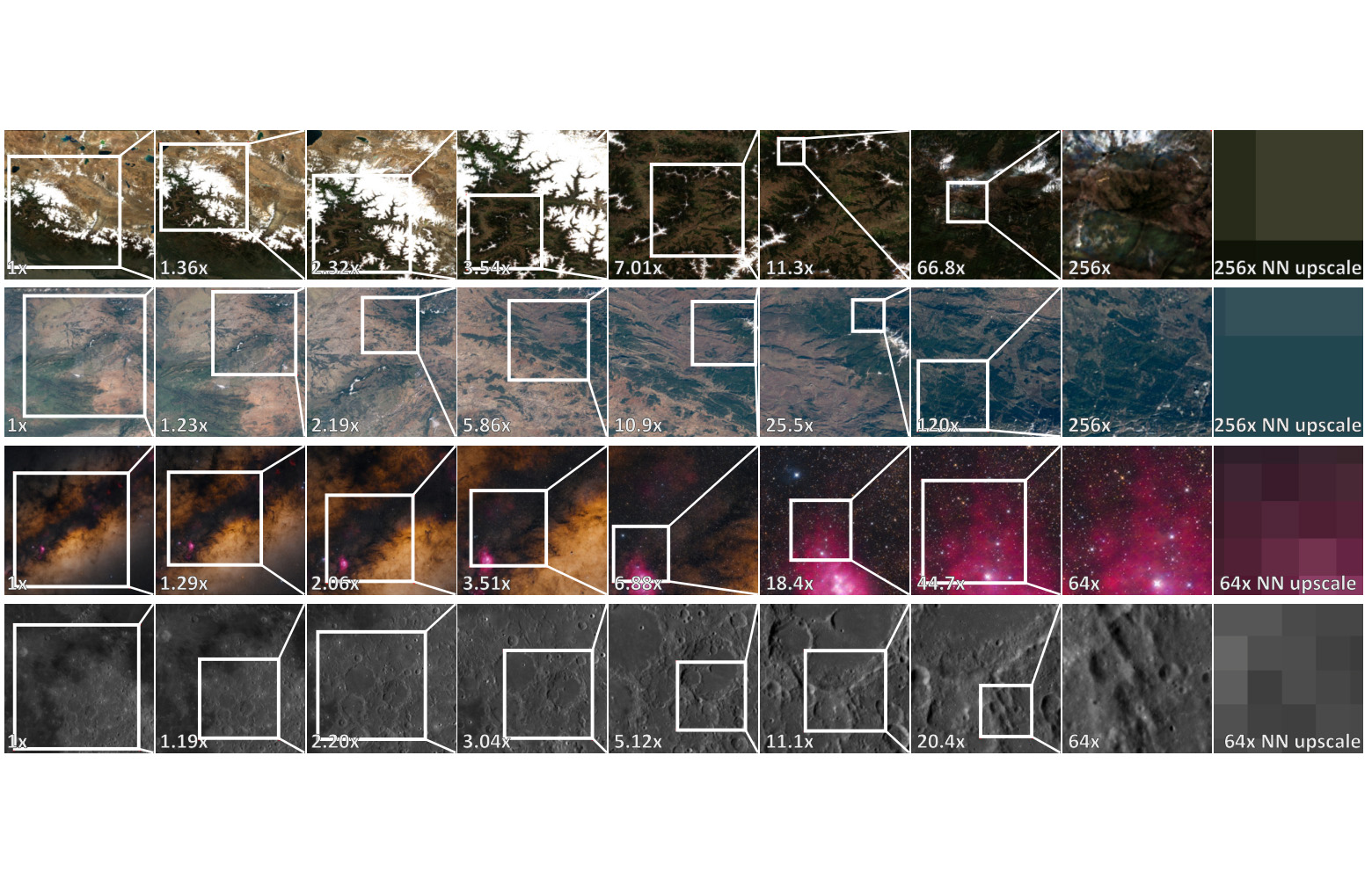

The real world exhibits rich structure and detail across many scales of observation. It is difficult, however, to capture and represent a broad spectrum of scales using ordinary images. We devise a novel paradigm for learning a representation that captures an orders-of-magnitude variety of scales from an unstructured collection of ordinary images. We treat this collection as a distribution of scale-space slices to be learned using adversarial training, and additionally enforce coherency across slices. Our approach relies on a multiscale generator with carefully injected procedural frequency content, which allows to interactively explore the emerging continuous scale space. Training across vastly different scales poses challenges regarding stability, which we tackle using a supervision scheme that involves careful sampling of scales. We show that our generator can be used as a multiscale generative model, and for reconstructions of scale spaces from unstructured patches. Significantly outperforming the state of the art, we demonstrate zoom-in factors of up to 256x at high quality and scale consistency (see Fig. 8).

Learning-scales illustration

This work is a collaboration with Thomas Leimkuehler, Krzysztof Wolski, Adarsh Djeacoumar, Alireza Javanmardi, Hans-Peter Seidel, Christian Theobalt, Karol Myszkowski from the Max-Plank-Institut für Informatik in Saarbruecken and Xingang Pan from NTU - Nanyang Technological University in Singapour; the work was started while Thomas was a postdoc at GRAPHDECO. It was published in ACM Transactions on Graphics, and presented at ACM SIGGRAPH 22.

8.2.2 N-Dimensional Gaussians for Fitting of High Dimensional Functions

Participants: Georgios Kopanas, Stavros Diolatzis [Intel Labs], Tobias Zirr [Intel Labs], Alexander Kuznetsov [Intel Labs], Anton Kaplanyan [Intel Labs].

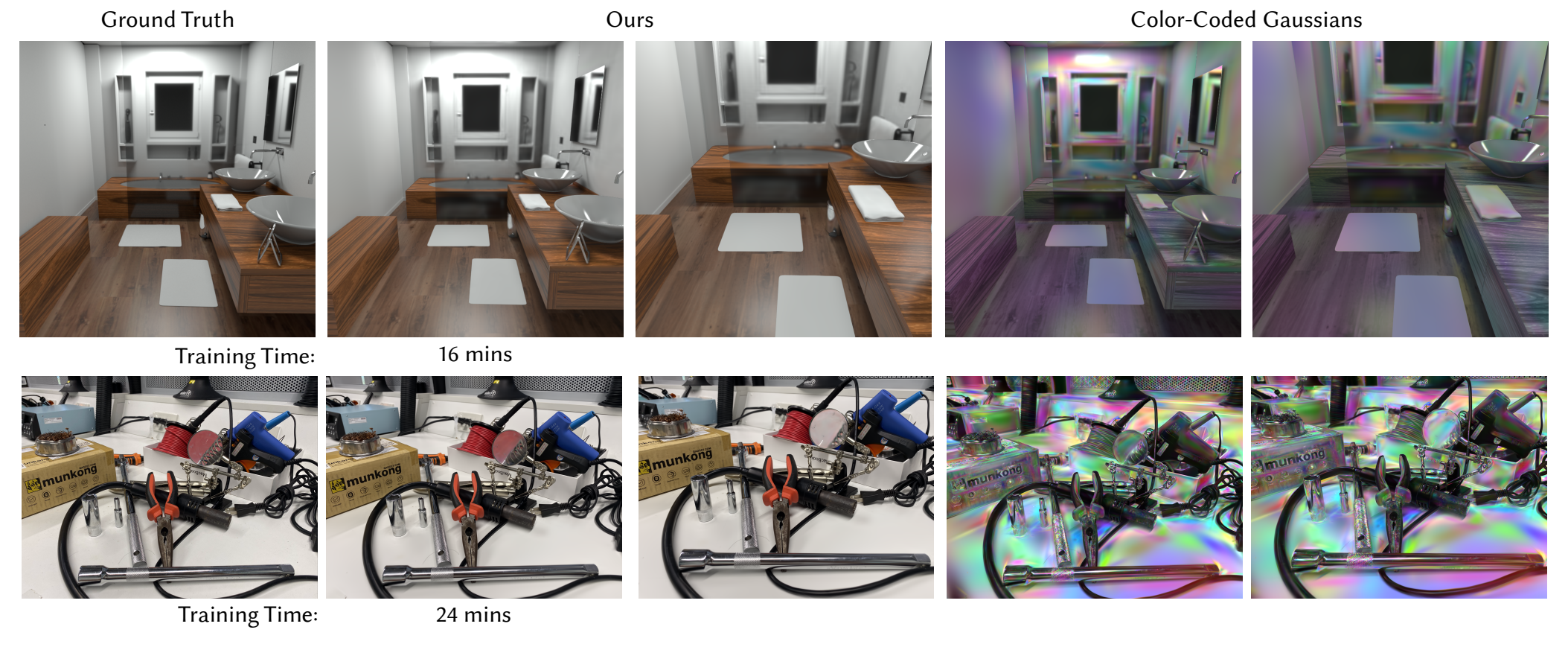

In the wake of many new ML-inspired approaches for reconstructing and representing high-quality 3D content, recent hybrid and explicitly learned representations exhibit promising performance and quality characteristics. However, their scaling to higher dimensions is challenging, e.g. when accounting for dynamic content with respect to additional parameters such as material properties, illumination, or time. We tackle these challenges with an explicit representation based on Gaussian mixture models. With our solutions, we arrive at efficient fitting of compact N-dimensional Gaussian mixtures and enable efficient evaluation at render time: For fast fitting and evaluation, we introduce a high-dimensional culling scheme that efficiently bounds N-D Gaussians, inspired by Locality Sensitive Hashing. For adaptive refinement yet compact representation, we introduce a loss-adaptive density control scheme that incrementally guides the use of additional capacity toward missing details. With these tools we can for the first time represent complex appearance that depends on many input dimensions beyond position or viewing angle within a compact, explicit representation optimized in minutes and rendered in milliseconds (see Fig. 9).

An illustration of N-Dimensional Gaussians.

This work is a collaboration with S. Diolatzis, T. Zirr, Z. Kuznetsov and A. Kaplanyan from Intel. It was published in Transactions on Graphics, and presented in SIGGRAPH 2024 25.

8.2.3 MatUp: Repurposing Image Upsamplers for SVBRDFs

Participants: Alban Gauthier, Bernhard Kerbl [TU Wien], Jérémy Levallois [Adobe Research], Robin Faury [Adobe Research], Jean-Marc Thiery [Adobe Research], Tamy Boubekeur [Adobe Research].

We propose MatUp, an upsampling filter for material super-resolution. Our method takes as input a low-resolution SVBRDF and upscales its maps so that their rendering under various lighting conditions fits upsampled renderings inferred in the radiance domain with pre-trained RGB upsamplers. We formulate our local filter as a compact Multilayer Perceptron (MLP), which acts on a small window of the input SVBRDF and is optimized using a sparsity-inducing loss defined over upsampled radiance at various locations. This optimization is entirely performed at the scale of a single, independent material. In doing so, MatUp leverages the reconstruction capabilities acquired over large collections of natural images by pre-trained RGB models and provides regularization over self-similar structures. In particular, our lightweight neural filter avoids retraining complex architectures from scratch or accessing any large collection of low/high resolution material pairs - which do not actually exist at the scale RGB upsamplers are trained with. As a result, MatUp provides fine and coherent details in the upscaled material maps (see Fig. 10), as shown in an extensive evaluation we provide.

MatUp illustration

This work is a collaboration with Bernhard Kerbl from Technische Universität Wien and Jérémy Levallois, Robin Faury, Jean-Marc Thiery, and Tamy Boubekeur from Adobe Research. It was published in Computer Graphics Forum, and presented at Eurographics Symposium on Rendering (EGSR) 2024 12.

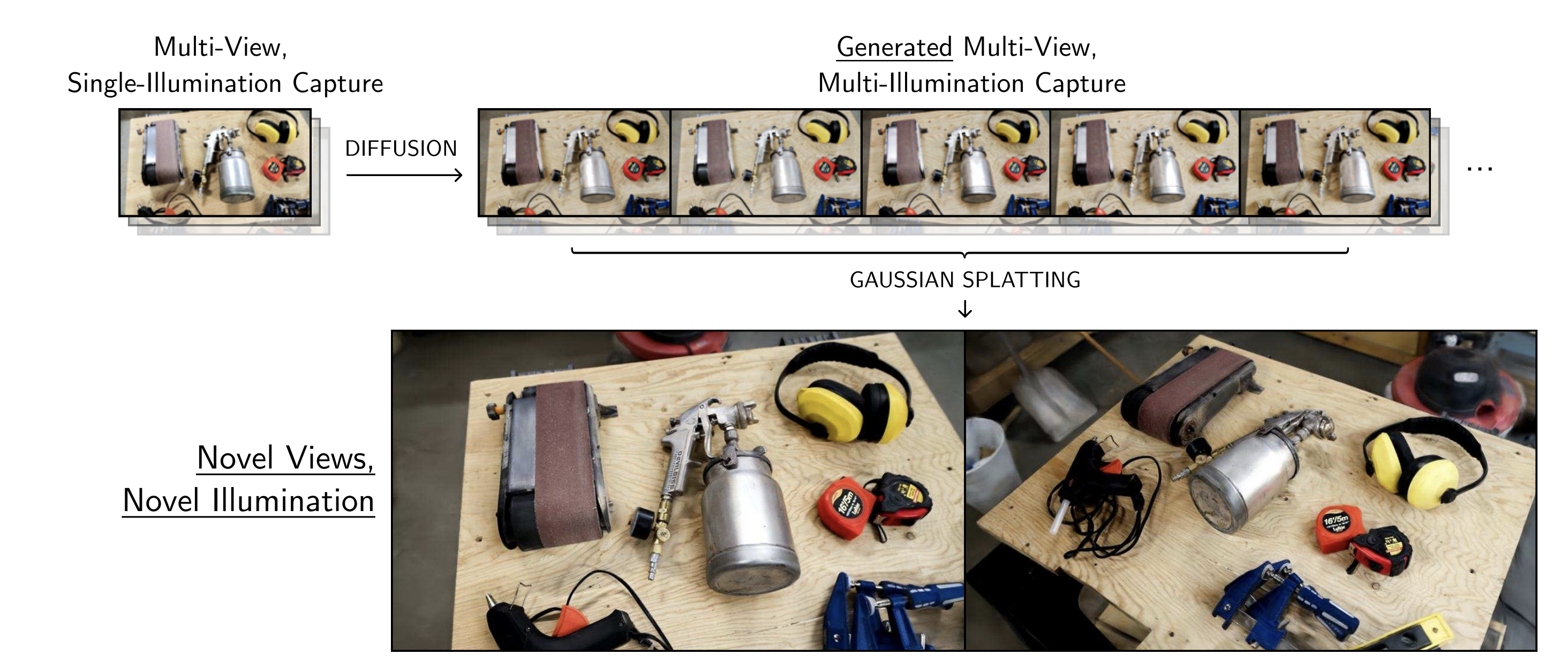

8.2.4 A Diffusion Approach to Radiance Field Relighting using Multi-Illumination Synthesis

Participants: Yohan Poirier-Ginter, Alban Gauthier, George Drettakis, Julien Philip [Adobe Research], Jean-François Lalonde [Université Laval].

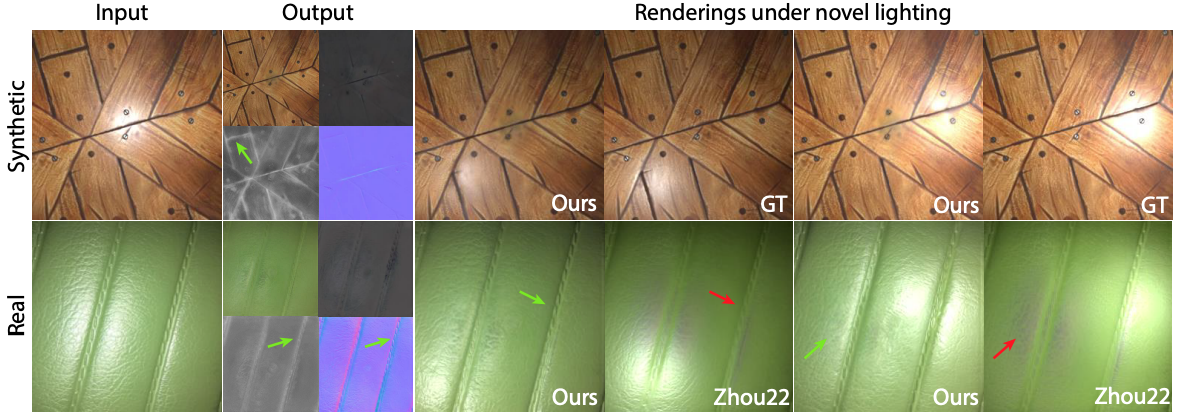

Relighting radiance fields is severely underconstrained for multi-view data, which is most often captured under a single illumination condition; it is especially hard for full scenes containing multiple objects. We introduce a method to create relightable radiance fields using such single-illumination data by exploiting priors extracted from 2D image diffusion models. We first fine-tune a 2D diffusion model on a multi-illumination dataset conditioned by light direction, allowing us to augment a single-illumination capture into a realistic – but possibly inconsistent – multi-illumination dataset from directly defined light directions. We use this augmented data to create a relightable radiance field represented by 3D Gaussian splats. To allow direct control of light direction for low-frequency lighting, we represent appearance with a multi-layer perceptron parameterized by light direction. To enforce multi-view consistency and overcome inaccuracies, we optimize a per-image auxiliary feature vector. We show results on synthetic and real multi-view data under single illumination, demonstrating that our method successfully exploits 2D diffusion model priors to allow realistic 3D relighting for complete scenes (see Fig. 11).

Illustration for `A Diffusion Approach to Radiance Field Relighting using Multi-Illumination Synthesis'

This work is a collaboration with Julien Philip (Adobe Research) and Jean-François Lalonde (Université Laval). It was published in the journal Computer Graphics Forum and presented at the Eurographics Symposium on Rendering (EGSR) 2024 17.

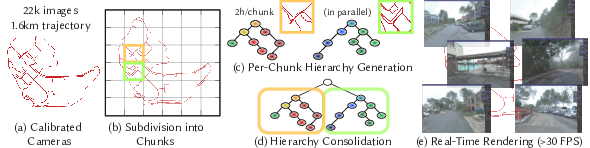

8.2.5 A Hierarchical 3D Gaussian Representation for Real-Time Rendering of Very Large Datasets

Participants: Andreas Meuleman, Alexandre Lanvin, George Drettakis, Bernhard Kerbl [TU Wien], Georgios Kopanas [Google], Michael Wimmer [TU Wien].

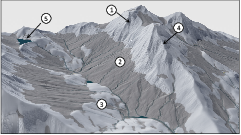

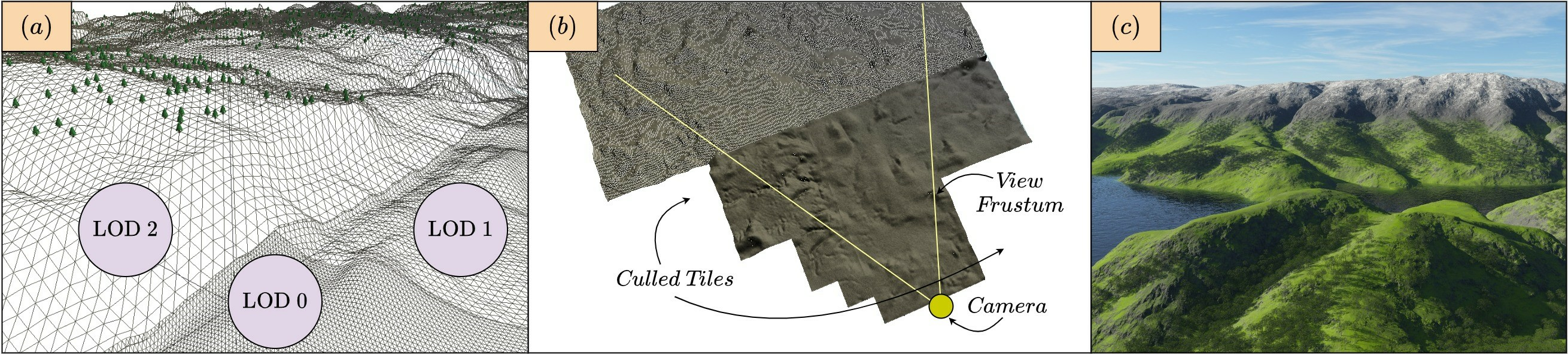

Novel view synthesis has seen major advances in recent years, with 3D Gaussian splatting offering an excellent level of visual quality, fast training and real-time rendering. However, the resources needed for training and rendering inevitably limit the size of the captured scenes that can be represented with good visual quality. We introduce a hierarchy of 3D Gaussians that preserves visual quality for very large scenes, while offering an efficient Level-of-Detail (LOD) solution for efficient rendering of distant content with effective level selection and smooth transitions between levels. We introduce a divide-and-conquer approach that allows us to train very large scenes in independent chunks. We consolidate the chunks into a hierarchy that can be optimized to further improve visual quality of Gaussians merged into intermediate nodes. Very large captures typically have sparse coverage of the scene, presenting many challenges to the original 3D Gaussian splatting training method; we adapt and regularize training to account for these issues. We present a complete solution, that enables real-time rendering of very large scenes and can adapt to available resources thanks to our LOD method. We show results for captured scenes with up to tens of thousands of images with a simple and affordable rig, covering trajectories of up to several kilometers and lasting up to one hour (see Fig. 12).

H3DGS

This work is a collaboration with Bernhard Kerbl and Michael Wimmer (TU Wien) and Georgios Kopanas (Google). It was published in Transaction on Graphics (TOG) and presented at Siggraph 2024 15.

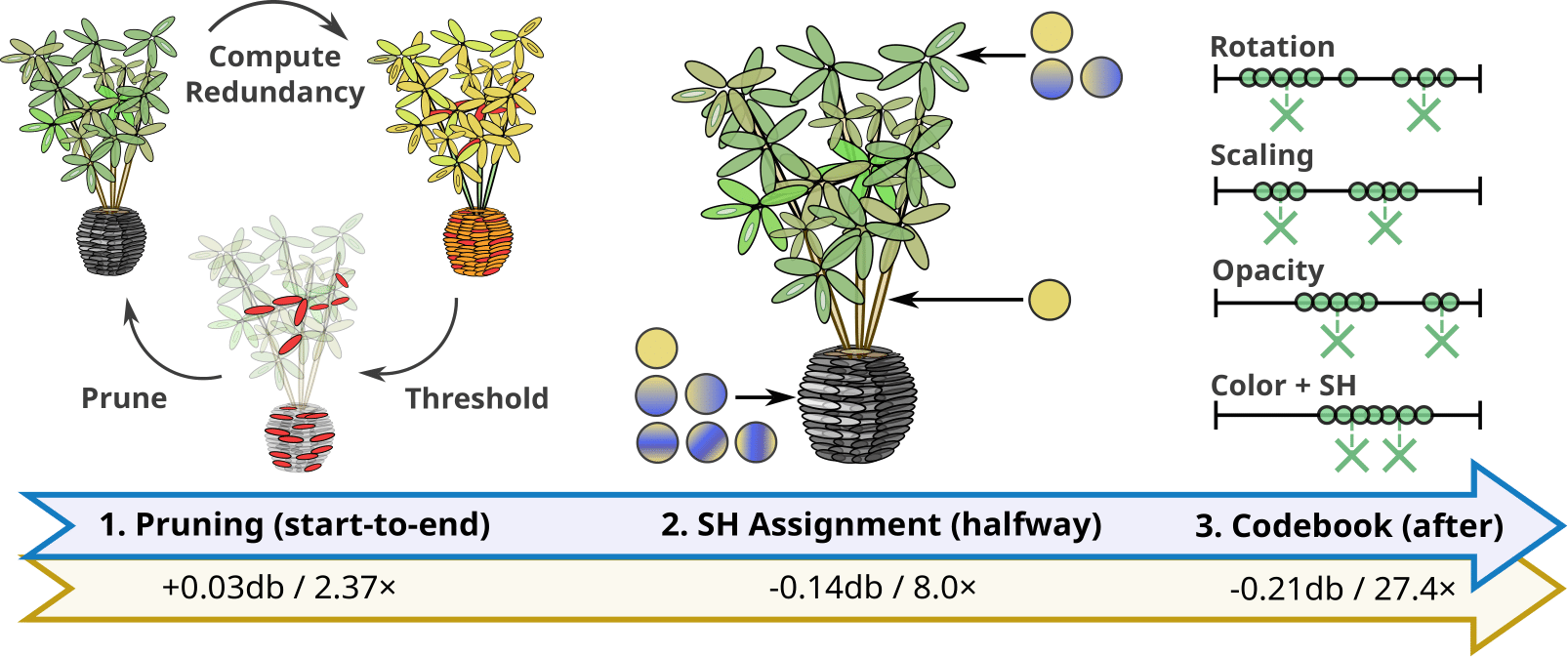

8.2.6 Reducing the Memory Footprint of 3D Gaussian Splatting

Participants: Panagiotis Papantonakis, Georgios Kopanas, Bernhard Kerbl [TU Wien], Alexandre Lanvin, George Drettakis.

3D Gaussian splatting provides excellent visual quality for novel view synthesis, with fast training and real-time rendering; unfortunately, the memory requirements of this method for storing and transmission are unreasonably high. We first analyze the reasons for this, identifying three main areas where storage can be reduced: the number of 3D Gaussian primitives used to represent a scene, the number of coefficients for the spherical harmonics used to represent directional radiance, and the precision required to store Gaussian primitive attributes. We present a solution to each of these issues. First, we propose an efficient, resolution-aware primitive pruning approach, reducing the primitive count by half. Second, we introduce an adaptive adjustment method to choose the number of coefficients used to represent directional radiance for each Gaussian primitive, and finally a codebook-based quantization method, together with a half-float representation for further memory reduction. Taken together, these three components result in a 27 times reduction in overall size on disk on the standard datasets we tested, along with a 1.7 speedup in rendering speed (see Fig. 13). We demonstrate our method on standard datasets and show how our solution results in significantly reduced download times when using the method on a mobile device.

Reduced 3DGS Overview

This work is a collaboration with Bernhard Kerbl from TU Wien and was presented at the 2024 ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D) 29.

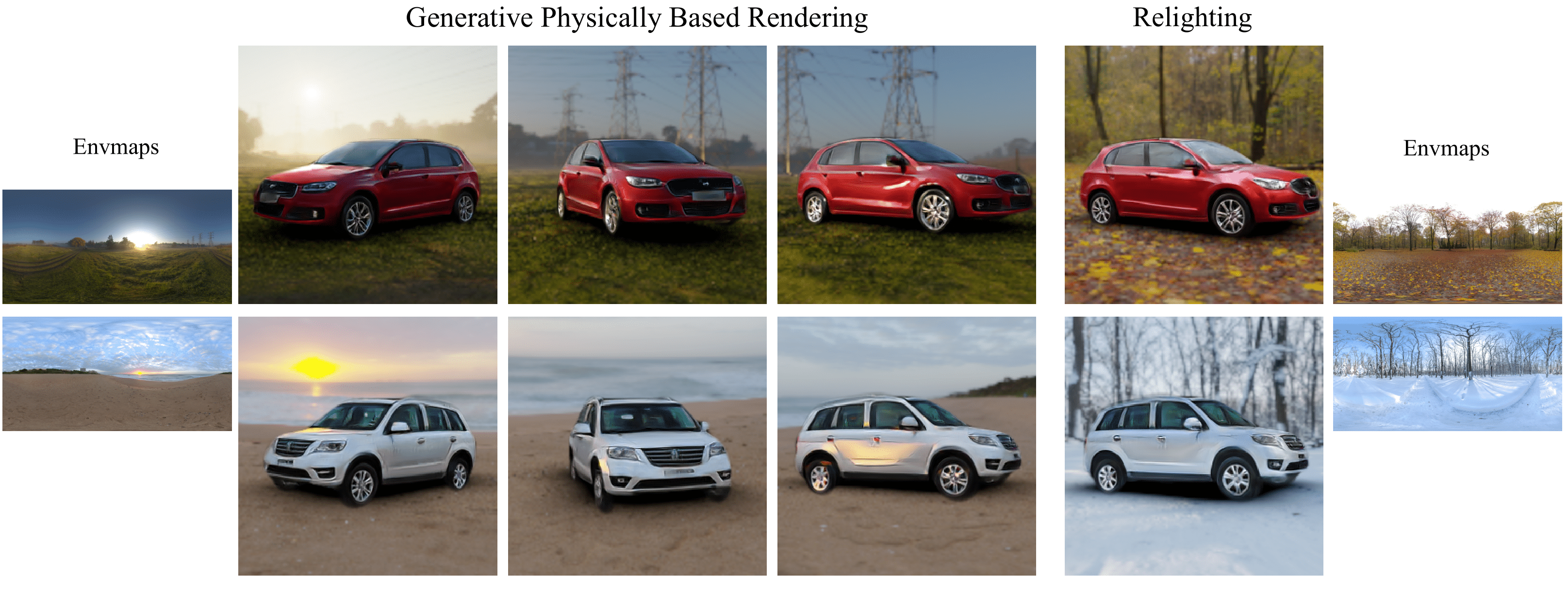

8.2.7 Physically-Based Lighting for 3D Generative Models of Cars

Participants: Nicolas Violante, Alban Gauthier, Stavros Diolatzis [Intel], Thomas Leimkühler [MPI], George Drettakis.

Recent work has demonstrated that Generative Adversarial Networks (GANs) can be trained to generate 3D content from 2D image collections, by synthesizing features for neural radiance field rendering. However, most such solutions generate radiance, with lighting entangled with materials. This results in unrealistic appearance, since lighting cannot be changed and view-dependent effects such as reflections do not move correctly with the viewpoint. In addition, many methods have difficulty for full, 360° rotations, since they are often designed for mainly front-facing scenes such as faces.

We introduce a new 3D GAN framework that addresses these shortcomings, allowing multi-view coherent 360° viewing and at the same time relighting for objects with shiny reflections, which we exemplify using a car dataset. The success of our solution stems from three main contributions. First, we estimate initial camera poses for a dataset of car images, and then learn to refine the distribution of camera parameters while training the GAN. Second, we propose an efficient Image-Based Lighting model, that we use in a 3D GAN to generate disentangled reflectance, as opposed to the radiance synthesized in most previous work. The material is used for physically-based rendering with a dataset of environment maps. Third, we improve the 3D GAN architecture compared to previous work and design a careful training strategy that allows effective disentanglement. Our model is the first that generates a variety of 3D cars that are multi-view consistent and that can be relit interactively with any environment map (see Fig. 14).

Illustration of physically-lighted cars generated with our method.

This work is a collaboration with Thomas Leimkühler from MPI Informatik and Stavros Diolatzis from Intel. It was published in Computer Graphics Forum and presented in Eurographics 2024 21.

8.2.8 Studying the Effect of Volumetric Rendering Approximations for 3D Gaussian Splatting

Participants: George Drettakis, Georgios Kopanas, Bernhard Kerbl [TU Wien], Adam Celarek [TU Wien], Michael Wimmer [TU Wien].

We present an in-depth study of the effect of various approximations to volumetric rendering that are performed in the 3D Gaussian Splatting algorithm. These include the fact that constant opacity is used instead of density for attenuation, that self-attenuation is ignored and that primitives are assumed not to intersect. We show that for moderately large numbers of primitives, none of these approximations affects quality.

This work is in collaboration with B. Kerbl, A. Celarek and M. Wimmer from TU Wien, Austria.

8.2.9 SVBRDF Prediction and Generative Image Modeling for Appearance Modeling of 3D Scenes

Participants: Alban Gauthier, Alexandre Lanvin, Adrien Bousseau, George Drettakis, Valentin Deschaintre [Adobe Research], Fredo Durand [MIT].

We introduce a method for texturing 3D scenes with SVBRDFs. This method exploits the complementary strengths of two active streams of research – generative image modeling and SVBRDF prediction. We build on the former to generate multiple views of a given scene conditioned on its geometry, while we leverage the latter to recover material parameters for each of these views, which we then merge into a common texture atlas.

This work is in collaboration with V. Deschaintre from Adobe Research and F. Durand from MIT.

8.2.10 Physical Reflections in Gaussian Splatting

Participants: Yohan Poirier-Ginter, George Drettakis, Jean-François Lalonde [Université Laval].

Radiance fields are highly effective at capturing the appearance of complex lighting effects. In theory, they implement reflections with viewpoint-dependent coloration e.g. spherical harmonics in Gaussian splatting. In practice, they struggle to do so robustly and rely on duplicate copies of objects standing in for reflected images. Besides damaging geometric reconstruction, such entanglement precludes reasoning about the content of a scene and its physical properties. We seek to model all reflections in Gaussian splatting properly and explicitly, with hardware-accelerated raytracing and a principled BRDF model.

8.2.11 Incremental Scalable Reconstruction

Participants: Andreas Meuleman, Ishaan Shah, Alexandre Lanvin, George Drettakis, Bernhard Kerbl [TU Wien].

Existing methods for large-scale 3D reconstruction are slow and require preprocessed poses. We present an algorithm for novel view synthesis from casually captured videos of large-scale scenes, providing real-time feedback.

This work is in collaboration with B. Kerbl from TU Wien, Austria.

8.2.12 Caching Global Illumination using Gaussian Primitives

Participants: Ishaan Shah, Alban Gauthier, George Drettakis.

Achieving real-time rendering global illumination has been a long-standing goal in Computer Graphics. Modeling global illumination is computationally expensive making it impractical for real-time applications. Caching global illumination is a popular approach alleviating the computational cost. In this work, we propose a novel caching technique that uses Gaussian primitives to store global illumination. Our method is quick to optimize (5-10s) and is able to represent high-frequency illumination such as sharp shadows and glossy reflections.

8.2.13 Adding Textures to Gaussian Splatting

Participants: Panagiotis Papantonakis, Georgios Kopanas, Frédo Durand [MIT], George Drettakis.

Gaussian Splatting is a method for Novel View Synthesis, that represents static scenes as a set of Gaussian primitives. Each primitive is characterized by a set of parameters that controls its appearance and geometry. In the original method, a primitive could only have a single color throughout its extent. We observed that this limitation can lead to an increased number of primitives in areas where the geometry is simple (e.g. a planar surface) but the appearance is complex, as is the case of a textured surface. In these cases, the overhead of geometric parameters becomes a burden and contributes to an increased memory usage. We try to remove this limitation by supporting multiple color samples per primitive. Out of the possible options, we have decided to proceed with a texture grid attached on top of our primitives. In that way, apart from increasing the expressivity of appearance by querying the texture grid, we hope to benefit from other advantages of textures, like prefiltering and efficient storage.

This work is in collaboration with F. Durand from MIT.

8.2.14 Dynamic Gaussian Splatting

Participants: Petros Tzathas, Andreas Meuleman, Guillaume Cordonnier, George Drettakis.

Gaussian Splatting has quickly established itself as the prevailing method for novel view synthesis. Nevertheless, as originally formulated, the method restricts itself to static scenes. We seek to extend its modeling capabilities to scenes that include motion. In contrast to other works sharing the same goal, we aim to reformulate the specific manner in which the representation is adapted to better model the data by examining the resulting error.

8.2.15 Splat and Replace: 3D Reconstruction with Repetitive Elements

Participants: Nicolas Violante, Alban Gauthier, Andreas Meuleman, Fredo Durand [MIT], Thibault Groueix [Adobe Research], George Drettakis.

We propose to leverage the information of repetitive elements to improve the reconstruction of low-quality parts of scenes due to poor coverage and occlusions in the capture. After a base 3D reconstruction, we detect multiple occurrences of the same object, or instances, in the scene, and merge them together into a common shared representation that effectively aggregates multi-view and multi-illumination information from all instances. This representation is then finetuned and used to replace poorly-reconstructed instances, improving the overall geometry while also accounting for the specific appearance variations of each instance.

This work is in collaboration with F. Durand from MIT and T. Groueix from Adobe Research.

8.3 Physical Simulation for Graphics

8.3.1 Volcanic Skies: coupling explosive eruptions with atmospheric simulation to create consistent skyscapes

Participants: Guillaume Cordonnier, Cilliers Pretorius [University of Cape Town], James Gain [University of Cape Town], Maud Lastic [LIX], Damien Rohmer [LIX], Marie-Paule Cani [LIX], Jiong Chen [LIX, Inria GeomeriX].

Explosive volcanic eruptions rank among the most terrifying natural phenomena, and are thus frequently depicted in films, games, and other media, usually with a bespoke once-off solution. In this paper, we introduce the first general-purpose model for bi-directional interaction between the atmosphere and a volcano plume. In line with recent interactive volcano models, we approximate the plume dynamics with Lagrangian disks and spheres and the atmosphere with sparse layers of 2D Eulerian grids, enabling us to focus on the transfer of physical quantities such as temperature, ash, moisture, and wind velocity between these sub-models. We subsequently generate volumetric animations by noise-based procedural upsampling keyed to aspects of advection, convection, moisture, and ash content to generate a fully-realized volcanic skyscape. Our model captures most of the visually salient features emerging from volcano-sky interaction, such as windswept plumes, enmeshed cap, bell and skirt clouds, shockwave effects, ash rain, and sheathes of lightning visible in the dark (see Fig. 15).

Different atmospheric phenomena from volcanic eruptions modeled by our method.

This work is a collaboration with Cilliers Pretorius and James Gain from University of Cape Town, Maud Lastic, Damien Rohmer and Marie-Paule Cani from LIX, and Jiong Chen from LIX and Inria GeomeriX. It was published in Computer Graphics Forum and presented at Eurographics 2024 18.

8.3.2 Fast simulation of viscous lava flow using Green's functions as a smoothing kernel

Participants: Guillaume Cordonnier, Yannis Kedadry [Ecole Normale Supérieure].

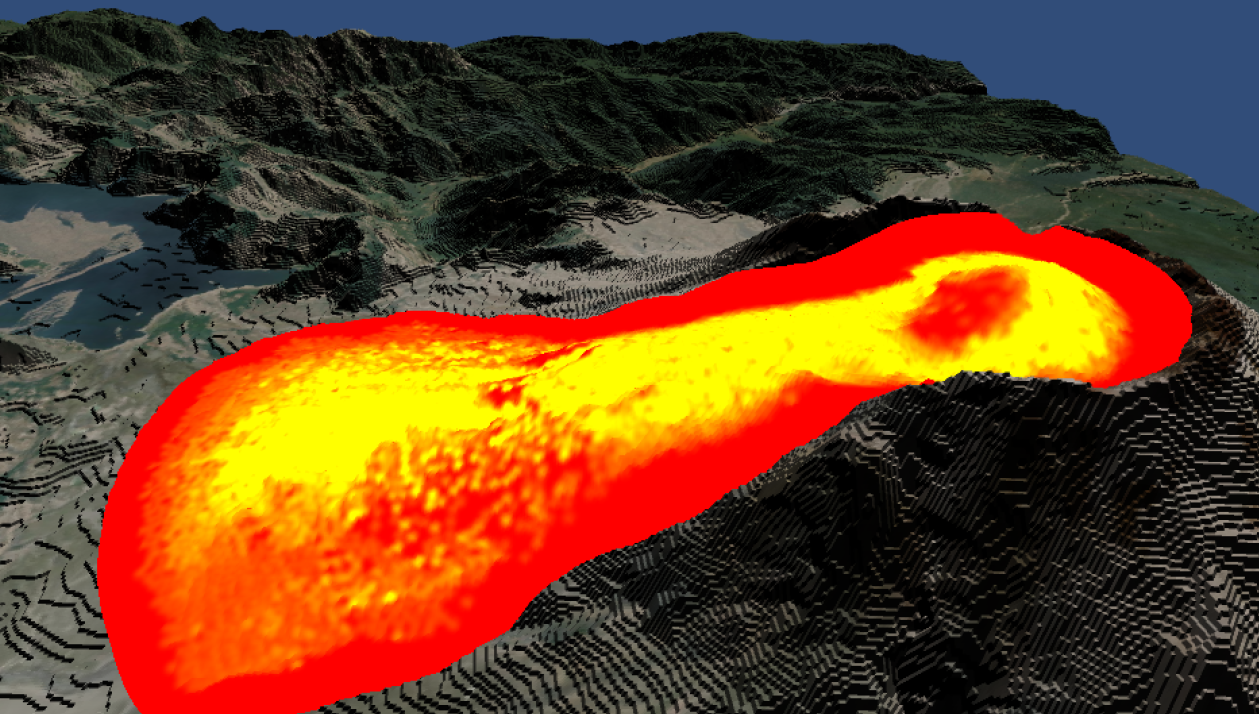

We present a novel approach to simulate large-scale lava flow in real-time. We use a depth-averaged model from numerical vulcanology to simplify the problem to 2.5D using a single layer of particle with thickness. Yet, lava flow simulation is challenging due to its strong viscosity which introduces computational instabilities. We solve the associated partial differential equations with approximated Green's functions and observe that this solution acts as a smoothing kernel. We use this kernel to diffuse the velocity based on Smoothed Particle Hydrodynamics. This yields a representation of the velocity that implicitly accounts for horizontal viscosity which is otherwise neglected in standard depth-average models. We demonstrate that our method efficiently simulates large-scale lava flows in real-time (see Fig. 16).

Illustration of our algorithm for the fast simulation of viscous lava.

This work is a collaboration with Yannis Kedadry from Ecole Normale Supérieure, initiated during Yannis' internship in GraphDeco in 2023. It was presented as a poster at the ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2024 27.

8.3.3 Unerosion: simulating terrain evolution back in time

Participants: Guillaume Cordonnier, Zhanyu Yang [Purdue University], Bedrich Benes [Purdue University], Marie-Paule Cani [LIX], Christian Perrenoud [Institut de Paléontologie Humaine].

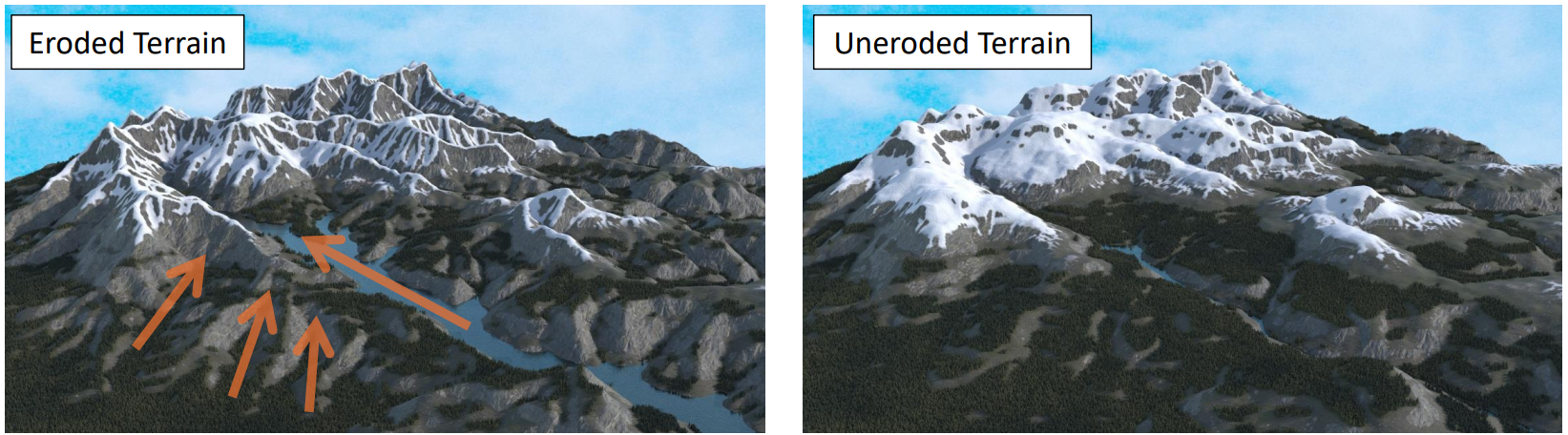

While the past of terrain cannot be known precisely because an effect can result from many different causes, exploring these possible pasts opens the way to numerous applications ranging from movies and games to paleogeography. We introduce unerosion, an attempt to recover plausible past topographies from an input terrain represented as a height field (see Fig. 17). Our solution relies on novel algorithms for the backward simulation of different processes: fluvial erosion, sedimentation, and thermal erosion. This is achieved by re-formulating the equations of erosion and sedimentation so that they can be simulated back in time. These algorithms can be combined to account for a succession of climate changes backward in time, while the possible ambiguities provide editing options to the user. Results show that our solution can approximately reverse different types of erosion while enabling users to explore a variety of alternative pasts. Using a chronology of climatic periods to inform us about the main erosion phenomena, we also went back in time using real measured terrain data. We checked the consistency with geological findings, namely the height of river beds hundreds of thousands of years ago.

Illustration of our algorithm for unerosion.