2024 Activity report, Project-Team LOKI

RNSR: 201822657D

- Research center: Inria Centre at the University of Lille

- In partnership with: Université de Lille

- Team name: Technology & Knowledge for Interaction

- In collaboration with: Centre de Recherche en Informatique, Signal et Automatique de Lille

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.5. Body-based interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.4. Multisensory feedback and interfaces

- A5.7.2. Music

- A9.2. Machine learning

- A9.6. Decision support

Other Research Topics and Application Domains

- B2.8. Sports, performance, motor skills

- B3.1. Sustainable development

- B5.7. 3D printing

- B6.1.1. Software engineering

- B9.2. Art

- B9.2.1. Music, sound

- B9.4. Sports

- B9.5.1. Computer science

- B9.5.6. Data science

- B9.6.10. Digital humanities

1 Team members, visitors, external collaborators

Research Scientists

- Bruno Fruchard [INRIA, ISFP]

- Janin Koch [INRIA, ISFP, from Aug 2024]

- Sylvain Malacria [INRIA, Researcher, HDR]

- Mathieu Nancel [INRIA, Researcher]

Faculty Members

- Géry Casiez [Team leader, UNIV LILLE, Professor]

- Thomas Pietrzak [UNIV LILLE, Professor, from Sep 2024]

- Thomas Pietrzak [UNIV LILLE, Associate Professor, until Aug 2024]

- Damien Pollet [UNIV LILLE, Associate Professor]

- Aurélien Tabard [Université Lyon 1, Associate Professor Delegation, until Aug 2024]

PhD Students

- Eya Ben Chaaben [INRIA, from Aug 2024]

- Yuan Chen [WATERLOO CANADA, from Apr 2024 until Aug 2024]

- Yuan Chen [UNIV LILLE, until Mar 2024]

- Johann Gonzalez Avila [UNIV LILLE, until Mar 2024]

- Vincent Lambert [Université Grenoble Alpes]

- Suliac Lavenant [INRIA]

- Alice Loizeau [UNIV LILLE, ATER, from Sep 2024]

- Alice Loizeau [INRIA, until Aug 2024]

- Omid Niroomandi [INRIA, from Nov 2024]

- Antoine Nollet [UNIV LILLE, from Oct 2024]

- Xiaohan Peng [Université Paris-Saclay, from Aug 2024]

- Raphael Perraud [INRIA]

- Travis West [UNIV MCGILL]

Technical Staff

- Raphaël James [INRIA, Engineer, until Feb 2024]

- Timo Maszewski [INRIA, Engineer, until Mar 2024]

Interns and Apprentices

- David Adamov [INRIA, Intern, from Apr 2024 until Jul 2024]

- Madjdah Attatebi [UNIV LILLE, Intern, from May 2024 until Sep 2024]

- Jeremy Delobel [UNIV LILLE, until Feb 2024]

- Audrey Heurtaux Sene [INRIA, Intern, from Mar 2024 until Jun 2024]

- Ang Li [UNIV LILLE, Intern, from Sep 2024]

- Antoine Martinsse [UNIV LILLE, until Feb 2024]

- Flavien Volant [UNIV LILLE, Intern, from Apr 2024 until Jul 2024]

Administrative Assistant

- Lucile Leclercq [INRIA]

Visiting Scientists

- Ravin Balakrishnan [UNIV TORONTO, from Sep 2024]

- Scott Bateman [University of New Brunswick, from Dec 2024]

2 Overall objectives

Human-Computer Interaction (HCI) is a constantly moving field 42. Changes in computing technologies extend their possible uses, and modify the conditions of existing uses. People also adapt to new technologies and adjust them to their own needs 47. New problems and opportunities thus regularly arise and must be addressed from the perspectives of both the user and the machine, to understand and account for the tight coupling between human factors and interactive technologies. Our vision is to connect these two elements: Knowledge & Technology for Interaction.

2.1 Knowledge for Interaction

In the early 1960s, when computers were scarce, expensive, bulky, and formally scheduled machines used for automatic computations, Engelbart saw their potential as personal interactive resources. He saw them as tools we would purposefully use to carry out particular tasks and that would empower people by supporting intelligent use 38. Others at the same time saw computers differently, as partners: intelligent entities to which we would delegate tasks. These two visions still constitute the roots of today's predominant HCI paradigms, use and delegation. In the delegation approach, much effort has gone into supporting oral, written and non-verbal forms of human-computer communication, and into analyzing and predicting human behavior. But the inconsistency and ambiguity of human beings, and the variety and complexity of contexts, make these tasks very difficult 52, and this approach places the machine at the center of interest.

2.1.1 Computers as tools

The focus of Loki is not on what machines can understand or do by themselves, but on what people can do with them. We do not reject the delegation paradigm but clearly favor that of tool use, aiming for systems that support intelligent use rather than for intelligent systems. As the boundary between the two grows thinner, one of our goals is to better understand what makes an interactive system perceived as a tool or as a partner, and how the two paradigms can be combined for the best benefit of the user.

2.1.2 Empowering tools

The ability provided by interactive tools to create and control complex transformations in real time can support intellectual and creative processes in unusual but powerful ways. But mastering powerful tools is neither simple nor immediate; it requires learning and practice. Our research in HCI should not focus only on novice or highly proficient users; it should also care about intermediate ones willing to devote time and effort to developing new skills, be it for work or leisure.

2.1.3 Transparent tools

Technology is most empowering when it is transparent: invisible in effect, it does not get in your way but lets you focus on the task. Heidegger characterized this unobtrusive relation to things with the term zuhanden (ready-to-hand). Transparency of interaction is not best achieved with tools mimicking human capabilities, but with tools taking full advantage of them given the context and task. For instance, the transparency of driving a car “is not achieved by having a car communicate like a person, but by providing the right coupling between the driver and action in the relevant domain (motion down the road)” 55. Our actions towards the digital world need to be digitized, and we must receive proper feedback in return. But input and output technologies impose largely inevitable constraints, while the number, diversity, and dynamicity of digital objects call for increasingly sophisticated perception-action couplings for increasingly complex tasks. We want to study the means currently available for perception and action in the digital world: Do they leverage our perceptual and control skills? Do they support the right level of coupling for transparent use? Can we improve them or design more suitable ones?

2.2 Technology for Interaction

Studying the interactive phenomena described above, in order to understand, model and ultimately improve them, is one of the pillars of HCI research. Yet we also have to make those phenomena happen, to make them possible and reproducible, be it for further research or for their diffusion 41. Because of the high viscosity and the lack of openness of current systems, this requires considerable effort in designing, engineering, implementing and hacking hardware and software interactive artifacts. This is what we call “The Iceberg of HCI Research”, whose hidden part supports the design and study of new artifacts, but also informs their creation process.

2.2.1 “Designeering Interaction”

Both parts of this iceberg strongly influence each other: the design of interaction techniques (the visible top) informs on the capabilities and limitations of the platform and the software being used (the hidden bottom), giving insights into what could be done to improve them. Conversely, new architectures and software tools open the way to new designs, by providing the necessary bricks to build with 43. These bricks define the adjacent possible of interactive technology: the set of what could be designed by assembling the parts in new ways. Exploring ideas that lie outside the adjacent possible requires the necessary technological evolutions to be addressed first. This is a slow, gradual and uncertain process, which helps to explore and fill a number of gaps in our research field but can also lead to deadlocks. We want to better understand and master this process (i. e., analyzing the adjacent possible of HCI technology and methods) and introduce tools to support and extend it. This could help make technology better suited to exploring the fundamentals of interaction and to integrating them into real systems, ultimately turning interactive systems into empowering tools.

2.2.2 Computers vs Interactive Systems

In fact, today's interactive systems (e. g., desktop computers, mobile devices) share very similar layered architectures inherited from the first personal computers of the 1970s. This abstraction of resources provides developers with standard components (UI widgets) and high-level input events (mouse and keyboard) that obviously ease the development of common user interfaces for predictable and well-defined tasks and user behaviors. But it does not favor the implementation of non-standard interaction techniques that could be better adapted to more particular contexts, or to expressive and creative uses. These often require going deeper into the system layers and hacking them until the required functionalities and/or data become accessible, which implies switching between programming paradigms and/or languages.

These limitations are all the more pressing as interactive systems have changed deeply in the last 20 years. They are no longer limited to a simple desktop or laptop computer with a display, a keyboard and a mouse. They are becoming more and more distributed and pervasive (e. g., mobile devices, Internet of Things). They change dynamically with recombinations of hardware and software (e. g., transitions between multiple devices, modular interactive platforms for collaborative use). Systems are moving “out of the box” with Augmented Reality, and users are going “inside the box” with Virtual Reality. This obviously raises new challenges in terms of human factors, usability and design, but it also deeply questions current architectures.

2.2.3 The Interaction Machine

We believe that turning digital devices into empowering tools requires better fundamental knowledge about interaction phenomena AND revisiting the architecture of interactive systems in order to support this knowledge. By following a comprehensive systems approach, encompassing human factors, hardware elements, and all software layers above, we want to define the founding principles of an Interaction Machine:

- a set of hardware and software requirements with associated specifications for interactive systems to be tailored to interaction by leveraging human skills;

- one or several implementations to demonstrate and validate the concept and the specifications in multiple contexts;

- guidelines and tools for designing and implementing interactive systems, based on these specifications and implementations.

To reach this goal, we will adopt an opportunistic and iterative strategy guided by the designeering approach, where the engineering aspect will be fueled by the interaction design and study aspect. We will address several fundamental problems of interaction related to our vision of “empowering tools”, which, in combination with state-of-the-art solutions, will inform us on the requirements an interactive system must meet to support these solutions. This consists of reifying the concept of the Interaction Machine in multiple contexts and for multiple problems, before converging towards a more unified definition of “what is an interactive system”: the ultimate Interaction Machine, which constitutes the main scientific and engineering challenge of our project.

3 Research program

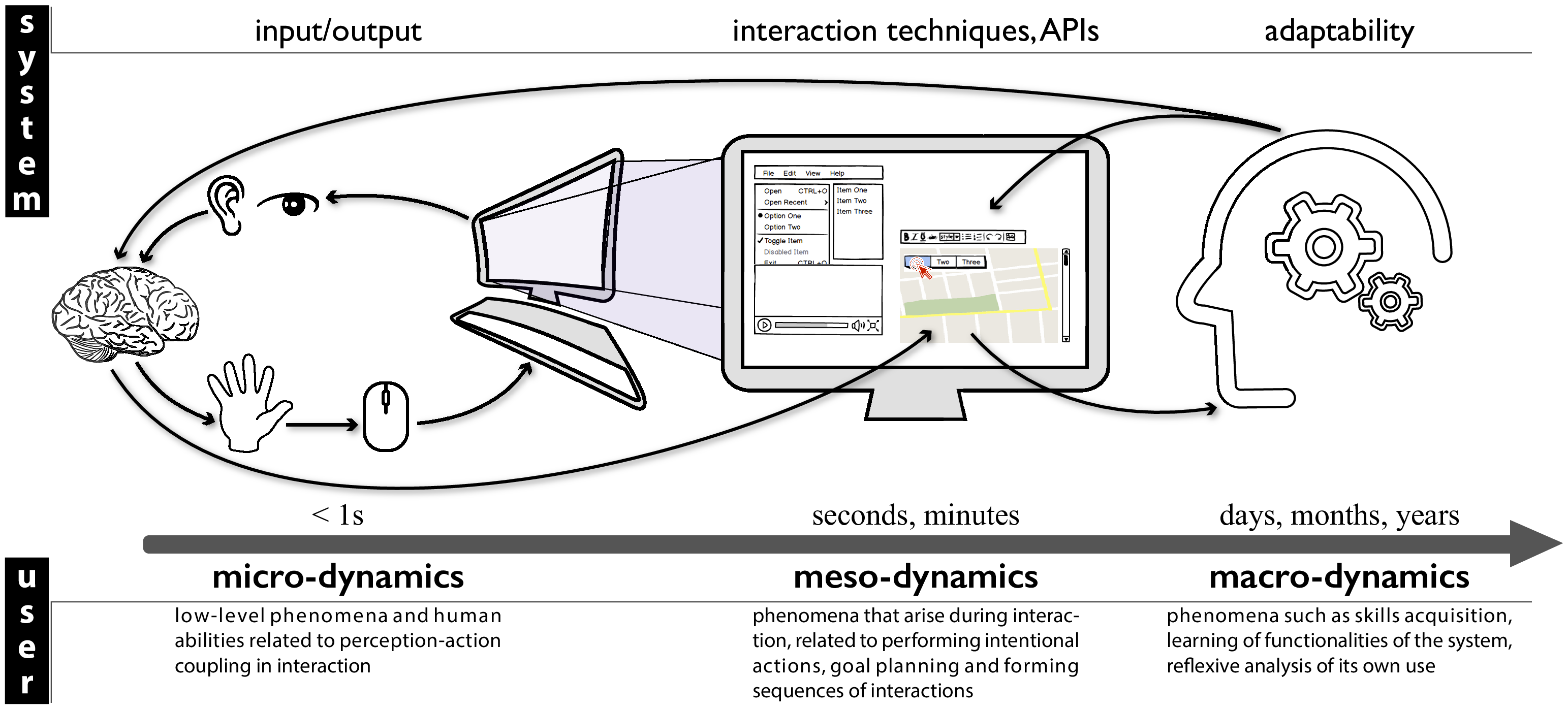

Interaction is by nature a dynamic phenomenon that takes place between interactive systems and their users. Redesigning interactive systems to better account for interaction requires a fine understanding of these dynamics from the user side so as to better handle them from the system side. In fact, the layers of current interactive systems abstract hardware and system resources from a system and programming perspective. Following our Interaction Machine concept, we are reconsidering these architectures from the user's perspective, through different levels of dynamics of interaction (see Figure 1).

Figure 1: The three levels of dynamics of interaction (micro, meso and macro) that we consider in our research program.

Considering the phenomena that occur at each of these levels, as well as their relationships, will help us acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Although our strategy is to investigate issues and address challenges at all three levels, our immediate priority is micro-dynamics, since it concerns very fundamental knowledge about interaction and relates to very low-level parts of interactive systems, which is likely to influence our future research and developments at the other levels.

3.1 Micro-Dynamics

Micro-dynamics involve low-level phenomena and human abilities related to very short time scales and to perception-action coupling in interaction, when the user has almost no control over or awareness of the action once it has been started. From a system perspective, it mostly has implications for input and output (I/O) management.

3.1.1 Transfer functions design and latency management

We have developed a recognized expertise in the characterization and the design of transfer functions 37, 51, i. e., the algorithmic transformations of raw user input for system use. Ideally, transfer functions should match the interaction context. Yet the question of how to maximize one or more criteria in a given context remains an open one, and on-demand adaptation is difficult because transfer functions are usually implemented at the lowest possible level to avoid latency. Latency has indeed long been known as a determinant of human performance in interactive systems 46 and recently regained attention with touch interactions 44. These two problems require cross examination to improve performance with interactive systems: Latency can be a confounding factor when evaluating the effectiveness of transfer functions, and transfer functions can also include algorithms to compensate for latency.
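To make the notion of a transfer function concrete, the following minimal Python sketch maps one raw device report (in device counts) to a cursor displacement (in pixels) using a speed-dependent gain. The sigmoid gain curve, its parameters (`g_min`, `g_max`, `v_inflection`, `steepness`) and the device and display resolutions are illustrative assumptions; this is neither one of the functions characterized in our work nor one used by an operating system.

```python
import math


def sigmoid_gain(speed_m_s: float, g_min: float = 1.0, g_max: float = 8.0,
                 v_inflection: float = 0.1, steepness: float = 40.0) -> float:
    """Gain rising smoothly from g_min (slow movements) to g_max (fast movements).
    The curve shape and all default values are illustrative assumptions."""
    return g_min + (g_max - g_min) / (1.0 + math.exp(-steepness * (speed_m_s - v_inflection)))


def transfer(dx_counts: int, dy_counts: int, device_cpi: float = 800.0,
             device_hz: float = 125.0, display_ppi: float = 96.0) -> tuple[float, float]:
    """Map one raw device report (in counts) to a cursor displacement (in pixels).
    Assumes one report every 1 / device_hz seconds; resolutions are placeholders."""
    m_per_count = 0.0254 / device_cpi            # 1 inch = 0.0254 m
    dx_m, dy_m = dx_counts * m_per_count, dy_counts * m_per_count
    speed = math.hypot(dx_m, dy_m) * device_hz   # device speed in m/s
    gain = sigmoid_gain(speed)
    px_per_m = display_ppi / 0.0254
    # Cursor displacement = gain x device displacement, expressed in display pixels.
    return dx_m * gain * px_per_m, dy_m * gain * px_per_m
```

Because the gain depends on speed, slow movements allow fine control while fast ones cover large distances. The same raw counts also translate into different physical motions depending on the device resolution and report rate, which is why a transfer function needs to know them.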

We have proposed new cheap but robust methods for input filtering 4 and for the measurement of end-to-end latency 36 and worked on compensation methods 50 and the evaluation of their perceived side effects 12. Our goal is then to automatically adapt transfer functions to individual users and contexts of use, which we started in 45, while reducing latency in order to support stable and appropriate control. To achieve this, we will investigate combinations of low-level (embedded) and high-level (application) ways to take user capabilities and task characteristics into account and reduce or compensate for latency in different contexts, e. g., using a mouse or a touchpad, a touch-screen, an optical finger navigation device or a brain-computer interface. From an engineering perspective, this knowledge on low-level human factors will help us to rethink and redesign the I/O loop of interactive systems in order to better account for them and achieve more adapted and adaptable perception-action coupling.
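Input filtering illustrates why filtering and latency must be studied together: stronger smoothing reduces jitter but adds lag. As an illustration, the sketch below implements a speed-adaptive low-pass filter following the published description of the 1€ filter (Casiez, Roussel and Vogel, CHI 2012), whose cutoff frequency rises with the estimated signal speed. The default values of `min_cutoff`, `beta` and `d_cutoff` are placeholders to be tuned per device, and timestamps are assumed to be strictly increasing.

```python
import math


class LowPass:
    """First-order exponential smoothing: y = alpha * x + (1 - alpha) * y_prev."""
    def __init__(self) -> None:
        self.y = None

    def __call__(self, x: float, alpha: float) -> float:
        self.y = x if self.y is None else alpha * x + (1.0 - alpha) * self.y
        return self.y


def smoothing_factor(cutoff_hz: float, dt: float) -> float:
    """Smoothing factor of a first-order low-pass filter for a given sampling period."""
    tau = 1.0 / (2.0 * math.pi * cutoff_hz)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """Speed-adaptive low-pass filter; parameter defaults are placeholders."""
    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.007, d_cutoff: float = 1.0):
        self.min_cutoff, self.beta, self.d_cutoff = min_cutoff, beta, d_cutoff
        self.x_filt, self.dx_filt = LowPass(), LowPass()
        self.prev_t = None

    def __call__(self, x: float, t: float) -> float:
        if self.prev_t is None:
            # First sample: nothing to smooth against yet.
            self.prev_t = t
            self.dx_filt(0.0, 1.0)
            return self.x_filt(x, 1.0)
        dt = t - self.prev_t  # assumed > 0
        self.prev_t = t
        # Speed estimate: derivative w.r.t. the previous filtered value, itself low-passed.
        dx = self.dx_filt((x - self.x_filt.y) / dt, smoothing_factor(self.d_cutoff, dt))
        # Cutoff grows with speed: little jitter when slow, little lag when fast.
        cutoff = self.min_cutoff + self.beta * abs(dx)
        return self.x_filt(x, smoothing_factor(cutoff, dt))
```

One filter instance is used per input dimension, e.g. `f = OneEuroFilter()` then `f(x, t)` for each new sample of `x` received at time `t` (in seconds).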

3.1.2 Tactile feedback & haptic perception

We are also concerned with the physicality of human-computer interaction, with a focus on haptic perception and related technologies. For instance, when interacting with virtual objects such as software buttons on a touch surface, the user cannot feel the click sensation as with physical buttons. The tight coupling between how we perceive and how we manipulate objects is then essentially broken although this is instrumental for efficient direct manipulation. We have addressed this issue in multiple contexts by designing, implementing and evaluating novel applications of tactile feedback 7.

In comparison with many other modalities, one difficulty with tactile feedback is its diversity: it groups sensations of forces, vibrations, friction, or deformation. Although this is a richness, it also raises usability and technological challenges, since each kind of haptic stimulation requires different kinds of actuators with their own parameters and thresholds, and results obtained with one kind are hardly applicable to the others. From a “knowledge” point of view, we want to better understand and empirically classify haptic variables and the kind of information they can represent (continuous, ordinal, nominal), their resolution, and their applicability to various contexts. From the “technology” perspective, we want to develop tools to inform and ease the design of haptic interactions, taking best advantage of the different technologies in a consistent and transparent way.

3.2 Meso-Dynamics

Meso-dynamics relate to phenomena that arise during interaction, on a longer but still short time scale. For users, it relates to performing intentional actions, to goal planning and tool selection, and to forming sequences of interactions based on a known set of rules or instructions. From the system perspective, it relates to how possible actions are exposed to the user and how they have to be executed (i. e., interaction techniques). It also has implications for the tools used to design and implement those techniques (programming languages and APIs).

3.2.1 Interaction bandwidth and vocabulary

Interactive systems and their applications have an always-increasing number of available features and commands due to, e. g., the large amount of data to manipulate, increasing power and number of functionalities, or multiple contexts of use.

On the input side, we want to augment the interaction bandwidth between the user and the system in order to cope with this increasing complexity. In fact, most input devices capture only a few of the movements and actions the human body is capable of. Our arms and hands for instance have many degrees of freedom that are not fully exploited in common interfaces. We have recently designed new technologies to improve expressibility such as a bendable digitizer pen 39, or reliable technology for studying the benefits of finger identification on multi-touch interfaces 40.

On the output side, we want to expand users' interaction vocabulary. Not all the features and commands of a system can be displayed on screen at the same time, and many advanced features are hidden from users by default (e. g., hotkeys) or buried in deep hierarchies of command-triggering systems (e. g., menus). As a result, users tend to use only a subset of all the tools the system actually offers 49. We will study how to help them broaden their knowledge of available functions.

Through this “opportunistic” exploration of alternative and more expressive input methods and interaction techniques, we will particularly focus on the necessary technological requirements to integrate them into interactive systems, in relation with our redesign of the I/O stack at the micro-dynamics level.

3.2.2 Spatial and temporal continuity in interaction

At a higher level, we will investigate how more expressive interaction techniques affect users' strategies when performing sequences of elementary actions and tasks. More generally, we will explore “continuity” in interaction. Interactive systems have moved from one computer to multiple connected interactive devices (computers, tablets, phones, watches, etc.) that could also be augmented through a Mixed-Reality paradigm. This distribution of interaction raises new challenges, both in terms of usability and engineering, that we clearly have to consider in our main objective of revisiting interactive systems 48. It involves the simultaneous use of multiple devices and also changes in the role of devices according to the location, the time, the task, and the context of use: a tablet can be used as the main device while traveling, and become an input device or a secondary monitor when resuming that same task once in the office; a smartwatch can be used as a standalone device to send messages, but also as a remote controller for a wall-sized display. One challenge is then to design interaction techniques that support smooth, seamless transitions during these spatial and temporal changes in order to maintain the continuity of uses and tasks; another is to integrate these principles into future interactive systems.

3.2.3 Expressive tools for prototyping, studying, and programming interaction

Current systems suffer from engineering issues that keep constraining and influencing how interaction is thought, designed, and implemented. Addressing the challenges presented in this section and making the solutions possible requires extended expressiveness, and researchers and designers must either wait for the proper toolkits to appear, or “hack” existing interaction frameworks, often bypassing existing mechanisms. For instance, numerous usability problems in existing interfaces stem from a common cause: the lack, or untimely discarding, of relevant information about how events are propagated and how changes come to occur in interactive environments. On top of our redesign of the I/O loop of interactive systems, we will investigate how to facilitate access to that information and also promote a more grounded and expressive way to describe and exploit input-to-output chains of events at every system level. We want to provide finer granularity and better-described connections between the causes of changes (e. g., input events and system triggers), their context (e. g., system and application states), their consequences (e. g., interface and data updates), and their timing 11. More generally, a central theme of our Interaction Machine vision is to promote interaction as a first-class object of the system 35, and we will study alternative and better-adapted technologies for designing and programming interaction, as we did recently to ease the prototyping of Digital Musical Instruments 2 or the programming of graphical user interfaces 14. Ultimately, we want to propose a unified model of hardware and software scaffolding for interaction that will contribute to the design of our Interaction Machine.
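To make the idea of better-described input-to-output chains more concrete, here is a minimal Python sketch of an event record that keeps its cause, context, consequences and timing, so that any interface change can be traced back to the input that produced it. The `CausalEvent` class, its fields and the event kinds are hypothetical illustrations, not the API of any of our toolkits.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass(eq=False)  # identity equality: each event is a unique occurrence
class CausalEvent:
    kind: str                     # illustrative labels: "input", "system", "ui-update"
    payload: dict
    cause: CausalEvent | None = field(default=None, repr=False)
    context: dict = field(default_factory=dict)  # snapshot of relevant system/app state
    timestamp: float = field(default_factory=time.monotonic)
    consequences: list[CausalEvent] = field(default_factory=list, repr=False)

    def spawn(self, kind: str, payload: dict, context: dict | None = None) -> CausalEvent:
        """Create an event caused by this one and link them in both directions."""
        child = CausalEvent(kind, payload, cause=self, context=context or {})
        self.consequences.append(child)
        return child

    def chain(self) -> list[CausalEvent]:
        """Return the causal chain from the root cause down to this event."""
        events: list[CausalEvent] = []
        e: CausalEvent | None = self
        while e is not None:
            events.append(e)
            e = e.cause
        return events[::-1]
```

With such records, the end-to-end delay of a chain can be read directly, e.g. `redraw.timestamp - click.timestamp` for a redraw spawned (indirectly) by a click.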

3.3 Macro-Dynamics

Macro-dynamics involve longer-term phenomena such as skill acquisition, learning the functionalities of the system, and reflexive analysis of one's own use (e. g., when users face novel or unexpected situations that require a high level of knowledge of the system and its functioning). From the system perspective, it implies better support for cross-application and cross-platform mechanisms so as to favor skill transfer. It also requires improving instrumentation and high-level logging capabilities to favor reflexive use, as well as flexibility and adaptability so that users can finely tune and shape their tools.

We want to move away from the usual binary distinction between “novices” and “experts” 5 and explore means to promote and assist digital skill acquisition in a more progressive fashion. Indeed, users have a permanent need to adapt their skills to the constant and rapid evolution of the tasks and activities they carry out on a computer system, but also to the changes in the software tools they use 53. Software strikingly lacks powerful means of acquiring and developing these skills 5, forcing users to rely mostly on outside support (e. g., being guided by a knowledgeable person, following online tutorials of varying quality). As a result, users tend to rely on a surprisingly limited interaction vocabulary, or make do with sub-optimal routines and tools 54. Ultimately, users should be able to master the interactive system to form durable and stabilized practices that would eventually become automatic and reduce mental and physical effort, making their interaction transparent.

In our previous work, we identified the fundamental factors influencing expertise development in graphical user interfaces, and created a conceptual framework that characterizes users' performance improvement with UIs 5, 10. We designed and evaluated new command selection and learning methods to foster users' digital skill development with user interfaces, on both desktop and touch-based computers 8.

We are now interested in broader means to support the analytic use of computing tools:

- to foster understanding of interactive systems. As the digital world shifts to increasingly complex systems driven by machine learning algorithms, we increasingly lose our comprehension of which process caused the system to respond in one way rather than another. We will study how novel interactive visualizations can help reveal and expose the “intelligence” behind such systems, in ways that help people better master their complexity.

- to foster reflection on interaction. We will study how we can foster users' reflection on their own interaction in order to encourage them to acquire novel digital skills. We will build real-time and off-line software for monitoring how users' ongoing activity is conducted at the application and system levels. We will develop augmented feedback and interactive history visualization tools that will offer contextual visualizations to help users better understand and share their activity, compare their actions to those of others, and discover possible improvements.

- to optimize skill transfer and tool re-appropriation. The rapid evolution of new technologies has drastically increased the frequency at which systems are updated, often requiring users to relearn everything from scratch. We will explore how to minimize the cost of appropriating an interactive tool by helping users capitalize on their existing skills.

We plan to explore these questions, as well as the use of such aids, in several contexts such as web-based, mobile, or BCI-based applications. However, a core aspect of this work will be to design systems and interaction techniques that are as platform-independent as possible, in order to better support skill transfer. Following our Interaction Machine vision, this will lead us to rethink how interactive systems have to be engineered so that they can offer better instrumentation, higher adaptability, and less separation between applications and tasks in order to support reuse and skill transfer.

4 Application domains

Loki works on fundamental and technological aspects of Human-Computer Interaction that can be applied to diverse application domains.

Our 2024 research involved desktop and mobile interaction, gestural interaction, virtual and extended reality, scientific communication supports, haptics, data visualization and sport analytics. Our technical work contributes to the more general application domains of interactive systems engineering.

5 Social and environmental responsibility

5.1 Footprint of research activities

Since 2022, we have included an estimate of the carbon footprint in our provisional travel budget. Although this is not our primary criterion, it at least makes us aware of it and leads us to consider it in our decisions, especially when events can also be attended remotely.

We favor low-footprint transportation methods (train, carpooling) for travel as much as possible. We also avoid traveling to long-distance conferences when not necessary. For example, the ACM CHI 2024 conference was held in Hawaii, and only LOKI members who had to physically present a scientific contribution attended.

5.2 Impact of research results

Aurélien Tabard participated in writing the guide Eco-concevoir des produits durables et réparables (Eco-designing durable and repairable products) from the Club de la Durabilité, presented at the Assemblée Nationale on February 9th 2024. He also contributed to two articles in Le Monde on the impact of digital technologies on the environment and on solutions to make our electronic devices more sustainable.

6 Highlights of the year

6.1 Awards

Best paper honorable mention award (top 5%) from the CHI'24 ACM conference for the paper “DirectGPT: A Direct Manipulation Interface to Interact with Large Language Models”, Damien Masson, Sylvain Malacria, Géry Casiez and Daniel Vogel. 25.

Best student poster award from the IHM'24 conference for the poster “Adaptability of software tutorials to user interfaces”, Raphaël Perraud. 32.

7 New software, platforms, open data

7.1 New software

7.1.1 Asynchrome

- Keywords: Raster graphics, Web Application

- Functional Description: A simple web-based drawing program based on PIXI.js. Its aim is to serve as a test base for the command history libraries developed during the ANR Causality project. It currently provides basic drawing functions including layer creation and manipulation (ordering, blending, opacity), and control of various brush parameters (size, opacity, flow, smoothing, spacing, blending, etc.).

- Release Contributions: First submission to BIL. Beta version under development.

- Contact: Mathieu Nancel
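Asynchrome serves as a test base for command history libraries. As a generic illustration of what such a library manages, and not of the ANR Causality libraries themselves, the Python sketch below implements a linear undo/redo history with the classic command pattern. The `Layer` class and the `SetOpacity` command are hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


class Command(Protocol):
    def do(self) -> None: ...
    def undo(self) -> None: ...


@dataclass
class Layer:
    name: str
    opacity: float = 1.0


@dataclass
class SetOpacity:
    """Hypothetical command changing a layer's opacity."""
    layer: Layer
    new: float
    old: float = 0.0

    def do(self) -> None:
        self.old = self.layer.opacity  # remember the previous value for undo
        self.layer.opacity = self.new

    def undo(self) -> None:
        self.layer.opacity = self.old


class History:
    """Linear history: executing a new command discards the redo branch."""
    def __init__(self) -> None:
        self.done: list[Command] = []
        self.undone: list[Command] = []

    def execute(self, cmd: Command) -> None:
        cmd.do()
        self.done.append(cmd)
        self.undone.clear()

    def undo(self) -> None:
        if self.done:
            cmd = self.done.pop()
            cmd.undo()
            self.undone.append(cmd)

    def redo(self) -> None:
        if self.undone:
            cmd = self.undone.pop()
            cmd.do()
            self.done.append(cmd)
```

This linear policy loses undone commands as soon as a new one is executed; non-linear histories avoid that loss at the cost of more bookkeeping.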

7.1.2 ClimbingAnalysis

- Name: Interactive Analytical Tool for Lead Climbing Data

- Keywords: Human Computer Interaction, Sport, Data analysis

- Functional Description: This tool has been designed in collaboration with the Fédération Française de la Montagne et de l'Escalade (FFME) to analyze data from high-level athletes captured using a video annotation tool. It visualizes the data through several graphs, each focusing on a different type of data, such as hold times and climbing speed. Depending on the type of analysis required, the tool can be used to compare several athletes on the same route, or to analyze an athlete's performances over several competitions.

- Release Contributions: A new podium panel to evaluate French athletes' performances over a year, and bug fixes.

- News of the Year: Publication of a scientific paper at the French-speaking conference IHM'24 (https://ihm2024.afihm.org/) that reports on the tool's functionalities, along with a demonstration during the conference.

- Contact: Bruno Fruchard

- Participants: Bruno Fruchard, Timo Maszewski

- Partner: Fédération Française de la Montagne et de l'Escalade (FFME)
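To illustrate the kind of measures the tool displays, the sketch below derives per-hold times and an average progression speed from video annotations. The annotation format (a time-sorted list of `(hold_number, time_s)` pairs recording when the climber reaches each hold) and the definition of speed in holds per second are assumptions made for illustration; they are not the FFME annotation schema.

```python
def hold_times(annotations: list[tuple[int, float]]) -> dict[int, float]:
    """Time spent on each hold, i.e. the delay until the next annotated hold.
    annotations: hypothetical (hold_number, time_s) pairs sorted by time."""
    times: dict[int, float] = {}
    for (hold, t), (_, next_t) in zip(annotations, annotations[1:]):
        # Accumulate in case the climber comes back to a hold.
        times[hold] = times.get(hold, 0.0) + (next_t - t)
    return times


def mean_speed(annotations: list[tuple[int, float]]) -> float:
    """Average progression in holds per second between first and last annotation."""
    (first_hold, t0), (last_hold, t1) = annotations[0], annotations[-1]
    return (last_hold - first_hold) / (t1 - t0)
```

For instance, with `[(1, 0.0), (2, 4.0), (3, 5.5), (5, 9.5)]`, the climber spends 4 s on hold 1, 1.5 s on hold 2 and 4 s on hold 3; the last annotated hold has no duration since no later event closes it.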

7.1.3 libpointing

- Name: An open-source cross-platform library to get raw events from pointing devices and master transfer functions

- Keyword: Transfer functions

- Functional Description: Libpointing is a software toolkit that provides direct access to HID pointing devices and supports the design and evaluation of pointing transfer functions. The toolkit provides resolution and frequency information for the available pointing and display devices and makes it easy to choose between them at run-time through the use of URIs. It makes it possible to bypass the system's transfer functions and receive raw asynchronous events from one or more pointing devices. It replicates as faithfully as possible the transfer functions used by Microsoft Windows, Apple OS X and Xorg (the X.Org Foundation server). Running on these three platforms, it makes it possible to compare the replicated functions to the genuine ones as well as to custom ones. The toolkit is written in C++ with Python, Java and Node.js bindings available (about 49,000 lines of code in total). It is publicly available under the GPLv2 license.

- Release Contributions: Added support for new versions of Python and improved the continuous integration.


- Contact: Géry Casiez

- Participants: Géry Casiez, Nicolas Roussel

- Partner: Université de Lille

7.1.4 OpenSCAD_BP

-

Name:

OpenSCAD version supporting bidirectional programming

-

Keywords:

OpenSCAD, Bidirectional programming

-

Functional Description:

The modified version of OpenSCAD supports integrated navigation and editing through interactions with the view. It supports reverse search: interacting with an element in the view highlights the corresponding lines of code. It also supports forward search, highlighting the part of the view that corresponds to a given line of code. Editing consists of translating and rotating objects in the view, which updates the corresponding lines of code accordingly.
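Here is a simplified sketch of the bookkeeping that forward search, reverse search and view-based editing require. Each rendered part remembers the source line of the statement that produced it, and dragging a part in the view rewrites the numeric arguments of the `translate([...])` on that line. `RenderedPart`, the helper functions and the regex-based rewrite are hypothetical and are not the OpenSCAD_BP implementation. Only literal numbers are updated; coordinates written as expressions are left untouched.

```python
import re
from dataclasses import dataclass


@dataclass
class RenderedPart:
    part_id: int      # identifier of a part picked in the 3D view
    source_line: int  # 1-based line of the OpenSCAD statement that produced it


def reverse_search(parts: list[RenderedPart], picked_id: int) -> int:
    """View -> code: the line to highlight when the user picks a part."""
    return next(p.source_line for p in parts if p.part_id == picked_id)


def forward_search(parts: list[RenderedPart], line: int) -> list[int]:
    """Code -> view: the parts to highlight for a given line of code."""
    return [p.part_id for p in parts if p.source_line == line]


TRANSLATE = re.compile(r"translate\(\s*\[([^\]]*)\]\s*\)")


def apply_drag(code_line: str, delta: tuple[float, float, float]) -> str:
    """Add `delta` to the first translate([x, y, z]) of a line after a drag in the view."""
    def shift(m: re.Match) -> str:
        try:
            coords = [float(c) for c in m.group(1).split(",")]
        except ValueError:
            return m.group(0)  # parametric expression: leave it unchanged
        moved = [c + d for c, d in zip(coords, delta)]
        return "translate([" + ", ".join(f"{v:.10g}" for v in moved) + "])"
    return TRANSLATE.sub(shift, code_line, count=1)


parts = [RenderedPart(0, 3), RenderedPart(1, 5), RenderedPart(2, 5)]
print(reverse_search(parts, 1))  # 5
print(forward_search(parts, 5))  # [1, 2]
print(apply_drag("translate([10, 0, 5]) cube(4);", (2, 3, 0)))  # translate([12, 3, 5]) cube(4);
```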

-

Release Contributions:

We added support to allow users to retrieve parametric expressions from the visual representation for reuse in the code, streamlining the design process of 3D objects.

-

News of the Year:

Publication of a scientific paper at ACM UIST'24 on retrieving parametric expressions from the visual representation in OpenSCAD for reuse in the code.

-

Contact:

Gery Casiez

-

Participants:

Johann Gonzalez Avila, Gery Casiez, Thomas Pietrzak, Audrey Girouard

7.1.5 Polyphony

-

Name:

Polyphony

-

Keywords:

Human Computer Interaction, Toolkit, Engineering of Interactive Systems

-

Functional Description:

Polyphony is an experimental toolkit demonstrating the use of Entity-Component-System (ECS) to design Graphical User Interfaces (GUI) with web technologies (HTML canvas or SVG). It also extends the original ECS model to support advanced interfaces.
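To illustrate the Entity-Component-System model that Polyphony builds on, here is a minimal Python sketch. Entities are plain identifiers, components are plain data attached to them, and systems are functions that process every entity owning a given combination of components. The `World` store, the component types and the two systems are hypothetical and do not reproduce Polyphony's API, which targets web technologies. Drawing is reduced to text commands to keep the example self-contained.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Iterator


@dataclass
class World:
    """Minimal ECS store: entities are ints, components live in one table per component type."""
    tables: dict[type, dict[int, object]] = field(default_factory=dict)
    _next_id: count = field(default_factory=count)

    def create(self, *components: object) -> int:
        entity = next(self._next_id)
        for c in components:
            self.tables.setdefault(type(c), {})[entity] = c
        return entity

    def query(self, *types: type) -> Iterator[tuple]:
        """Yield (entity, component, ...) for entities that own all the given component types."""
        first, *rest = types
        for entity, comp in self.tables.get(first, {}).items():
            if all(entity in self.tables.get(t, {}) for t in rest):
                yield (entity, comp, *(self.tables[t][entity] for t in rest))


@dataclass
class Bounds:
    x: float
    y: float
    w: float
    h: float


@dataclass
class Fill:
    color: str


@dataclass
class Clickable:
    label: str


def render_system(world: World) -> list[str]:
    """Every entity with Bounds and Fill is drawn, whatever else it is."""
    return [f"rect {b.x},{b.y} {b.w}x{b.h} {f.color}" for _, b, f in world.query(Bounds, Fill)]


def pick_system(world: World, px: float, py: float) -> list[str]:
    """Labels of the clickable entities under the pointer."""
    return [c.label for _, b, c in world.query(Bounds, Clickable)
            if b.x <= px < b.x + b.w and b.y <= py < b.y + b.h]


world = World()
world.create(Bounds(0, 0, 100, 30), Fill("blue"), Clickable("OK"))  # a button
world.create(Bounds(0, 0, 400, 300), Fill("white"))                  # a background panel
print(render_system(world))        # ['rect 0,0 100x30 blue', 'rect 0,0 400x300 white']
print(pick_system(world, 10, 10))  # ['OK']
```

Adding a `Clickable` component to the background entity would make it pickable without changing either system. This kind of composition is what ECS is meant to ease.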

-

News of the Year:

Deployed and documented a development and production ecosystem for the project, involving software testing, continuous integration and the provision of examples/demonstrators. A demonstrator, in the form of a blog-format tutorial including sections of interactive code, has been set up.

-

Contact:

Damien Pollet

-

Participants:

Thibault Raffaillac, Stephane Huot, Damien Pollet, Raphaël James

7.2 Open data

- Experimental data for the paper 17 are available at https://osf.io/63US9/.

- Code and a live demo for the tool presented in 25 are available at http://ns.inria.fr/loki/DirectGPT.

- The code for the paper 20 is available at http://ns.inria.fr/loki/bp.

- The documentation and code for the paper 33 are available at https://sygaldry.enchantedinstruments.com/.

- The library "Pyrbit" introduced in the paper 22 is available at https://jgori-ouistiti.github.io/pyrbit/.

8 New results

According to our research program, we have studied the dynamics of interaction at three levels, depending on the interaction time scale and the related user perception and behavior: Micro-dynamics, Meso-dynamics, and Macro-dynamics. Considering the phenomena that occur at each of these levels, as well as their relationships, will help us acquire the necessary knowledge (Empowering Tools) and technological bricks (Interaction Machine) to reconcile the way interactive systems are designed and engineered with human abilities. Our strategy is to investigate issues and address challenges at all three levels of dynamics in order to contribute to our longer-term objective of defining the basic principles of an Interaction Machine.

8.1 Micro-dynamics

Participants: Géry Casiez [contact person], Yuan Chen, Alice Loizeau, Sylvain Malacria, Mathieu Nancel, Omid Niroomandi, Thomas Pietrzak.

8.1.1 Arm Cooling Selectively Impacts Sensorimotor Control

The benefits of cold have long been recognized in sport and medicine. However, cold also has costs, which have been investigated more rarely, notably in terms of sensorimotor control. We hypothesized that, in addition to peripheral effects, cold slows down the processing of proprioceptive cues, which has an impact on both feedback and feedforward control. We therefore compared the performance of participants whose right arm had been immersed in either cold water (arm temperature: 14°C) or lukewarm water (arm temperature: 34°C) 17. In a first experiment, we administered a Fitts’s pointing task and performed a kinematic analysis to determine whether sensorimotor control processes were affected by the cold. Results revealed 1) modifications in late kinematic parameters, suggesting changes in the use of proprioceptive feedback, and 2) modifications in early kinematic parameters, suggesting changes in action representations and/or feedforward processes. To explore our hypothesis further, we ran a second experiment that involved no physical movement, and therefore no peripheral effects. Participants performed a hand laterality task, which is known to involve implicit motor imagery and to assess the internal representation of the hand. They were shown images of left and right hands, randomly displayed in different orientations in the picture plane, and had to identify as quickly and as accurately as possible whether each image showed a left or a right hand. Results revealed slower responses and more errors when participants had to mentally rotate the cooled hand shown at the extreme orientation of 160°, which further suggests an impact of cold on action representations.

8.1.2 A Comparison of Virtual Reality Menu Archetypes

Spatial menus, meaning visual menus requiring movement in space to interact, are a fundamental part of virtual reality (VR) interfaces. The design, development, and evaluation of novel spatial menu styles has been a research topic for decades. However, not all demonstrated concepts in academia make the jump to real-world implementations. Moreover, since VR design and development on a consumer scale is still in its relative infancy, designers lack informed insight into the relative performance, accuracy, comfort, and preference between current common implementations.

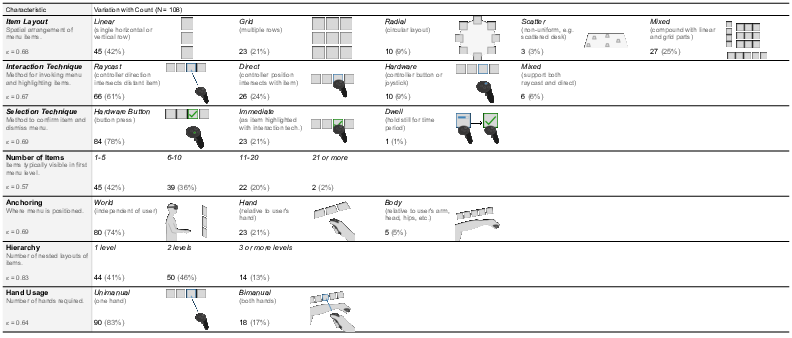

We contributed an analysis of the prevalence and relative performance of archetypal VR menu techniques 18. An initial survey of 108 menu interfaces in 84 popular commercial VR applications establishes common design characteristics (Table 1). These characteristics motivate the design of raycast, direct, and marking menu archetypes, and a two-experiment comparison of their relative performance with one and two levels of hierarchy using 8 or 24 items. With a single-level menu, direct input is the fastest interaction technique in general and is unaffected by the number of items. With a two-level hierarchical menu, marking is fastest regardless of the number of items. Menus using raycasting, the most common menu interaction technique, were among the slowest of the tested menus but were rated the most consistently usable. Using the combined results, we provide design and implementation recommendations with applications to general VR menu design.
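For readers unfamiliar with marking menus, the following Python sketch shows the core of the selection logic. The direction of a stroke picks one of n equal angular sectors, and a two-level hierarchy chains two strokes. The sector layout (item 0 to the right, indices increasing counter-clockwise), the minimum stroke length and its units are our own assumptions, not details of the study's implementation.

```python
import math
from typing import Optional, Tuple


def marking_menu_item(dx: float, dy: float, n_items: int = 8,
                      min_length: float = 0.05) -> Optional[int]:
    """Item selected by a stroke vector (dx, dy), with y pointing up.

    Item 0 is centred on the +x direction and indices increase counter-clockwise.
    Strokes shorter than `min_length` (assumed to be metres in the controller's
    plane) select nothing, so small hand tremors do not trigger commands.
    """
    if math.hypot(dx, dy) < min_length:
        return None
    angle = math.degrees(math.atan2(dy, dx)) % 360.0  # 0 to 360, counter-clockwise from +x
    sector = 360.0 / n_items
    return int(round(angle / sector)) % n_items


def two_level_selection(first: Tuple[float, float], second: Tuple[float, float],
                        n_items: int = 8) -> Optional[Tuple[int, int]]:
    """Two-level marking menu: the first stroke opens a submenu, the second picks in it."""
    top = marking_menu_item(*first, n_items=n_items)
    sub = marking_menu_item(*second, n_items=n_items)
    return None if top is None or sub is None else (top, sub)


print(marking_menu_item(0.0, 0.2))                     # 2 (straight up)
print(two_level_selection((-0.2, 0.0), (0.1, -0.1)))  # (4, 7)
```

With `n_items=24`, each sector spans 15 degrees instead of 45.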

8.2 Meso-dynamics

Participants: Géry Casiez, Yuan Chen, Bruno Fruchard, Felipe Gonzalez, Alice Loizeau, Sylvain Malacria, Mathieu Nancel, Raphaël Perraud, Thomas Pietrzak [contact person], Damien Pollet, Travis West, Janin Koch, Eya Ben Chaaben.

8.2.1 LuxAR

People often expand their desktop space with multiple displays, but physical constraints and costs pose challenges. Spatial augmented reality (SAR), which uses projectors to transform surfaces into interactive displays, offers a good alternative. However, fixed or handheld systems lack flexibility and can cause fatigue. A possible solution is to develop a method for securely positioning the projector-camera unit (pro-cam), enabling explicit and direct manipulation for extended tasks.

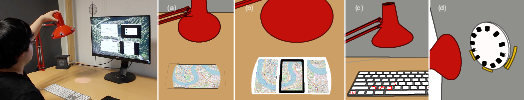

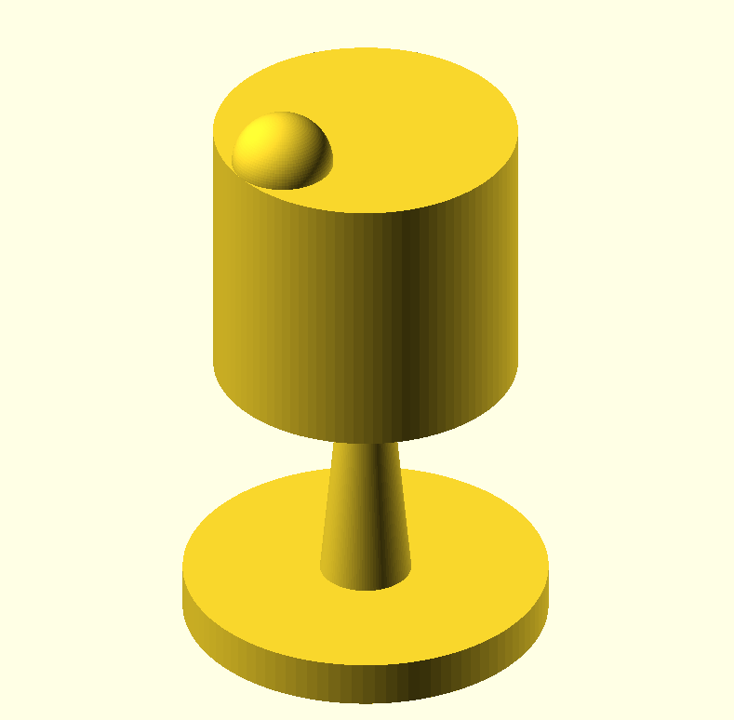

Therefore, we prototyped and evaluated LuxAR 19, a desktop input and output device in the form of an architect desk lamp (Figure 2). The bulb is replaced with a pico laser projector, a button replaces the switch, and the mechanical design allows it to remain in position between user manipulations. By also tracking lamp position and orientation, we explore novel interaction techniques to extend and augment conventional devices in a physical desktop environment. Content can be transferred from devices to the surrounding environment, and the representation of content can be adapted to surfaces, objects, and other devices using the lamp projection target and lamp proximity. A user study with the prototype and semi-structured interviews examine proposed interactions and consider potential scenarios and applications. Based on the results, we propose further design considerations for direct manipulation systems to extend and augment desktop computing. This work is further detailed in Chen's Ph.D. 29.

LuxAR is an architect lamp instrumented with a projector to extend direct manipulation interfaces into the physical surroundings, such as: (a) moving desktop windows onto desk surfaces; (b) extending interaction space of mobile devices; (c) augmenting keyboard with shortcut keys; (d) visualizing information related to nearby objects like a wall clock.

8.2.2 DirectGPT

Given a textual instruction in the form of a “prompt”, a large language model (LLM) can generate outputs such as emails, computer code, and vector images. The output often needs to be tweaked by conversing with the LLM until a satisfactory result is obtained. However, this tweaking process relies on relatively slow text input, requires precise references to elements in the output, and demands carefully chosen wording to work around unintuitive limitations of LLMs.

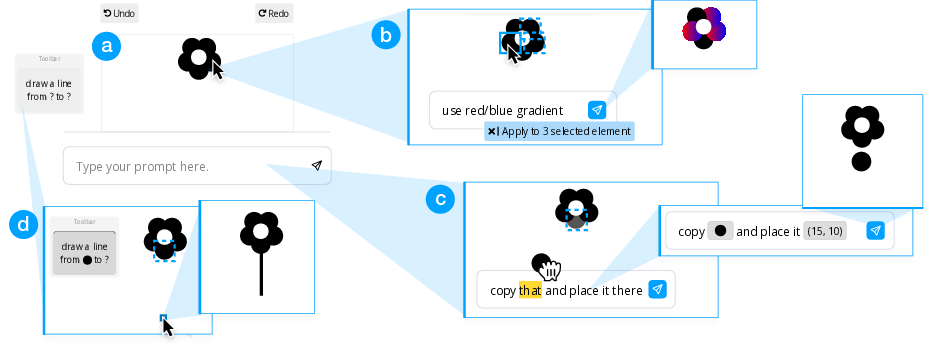

DirectGPT used on a vector image to demonstrate direct manipulation principles for LLMs: (a) continuous representation of the objects of interest; physical actions to (b) localize the effect of prompts and (c) refer to objects; (d) reusable prompts in a toolbar of commands; and reversible operations through undo and redo features.

Instead of solely relying on words, we introduced and characterized prompting through direct manipulation as a way to facilitate conversations with LLMs 25. This includes: continuous representation of generated objects of interest; reuse of prompt syntax in a toolbar of commands; manipulable outputs to compose or control the effect of prompts; and undo mechanisms. This idea is exemplified in DirectGPT (Figure 3), a user interface layer on top of ChatGPT that works by transforming direct manipulation actions to engineered prompts. A study shows participants were 50% faster and relied on 50% fewer and 72% shorter prompts to edit text, code, and vector images compared to baseline ChatGPT. Our work contributes a validated approach to integrate LLMs into traditional software using direct manipulation.
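The central mechanism, turning a direct manipulation action into an engineered prompt, can be sketched as follows. This is a hypothetical illustration, not DirectGPT's code. The prompt wording, the `Selection` and `ToolbarCommand` structures and the `History` class are assumptions, and the call to the LLM itself is omitted. The sketch shows how a click localizes the effect of a reusable toolbar prompt, and how keeping successive outputs makes operations reversible.

```python
from dataclasses import dataclass


@dataclass
class Selection:
    """Hypothetical: the object the user clicked in the output (e.g. an SVG element)."""
    object_id: str
    source: str  # the fragment of the output that represents this object


@dataclass
class ToolbarCommand:
    """Hypothetical: a prompt saved by the user as a reusable toolbar button."""
    name: str
    instruction: str


def build_prompt(document: str, selection: Selection, command: ToolbarCommand) -> str:
    """Translate 'click on an object + press a toolbar button' into an engineered prompt."""
    return (
        "You are editing the following document.\n"
        f"<document>\n{document}\n</document>\n"
        f'Apply the change ONLY to the element with id "{selection.object_id}":\n'
        f"<element>\n{selection.source}\n</element>\n"
        f"Change: {command.instruction}\n"
        "Return the complete updated document and nothing else."
    )


class History:
    """Undo/redo over successive LLM outputs, so that every prompt is reversible."""

    def __init__(self, initial: str) -> None:
        self._states = [initial]
        self._index = 0

    def push(self, new_state: str) -> None:
        # A new output discards any redo branch, as in most editors.
        self._states = self._states[: self._index + 1] + [new_state]
        self._index += 1

    def undo(self) -> str:
        self._index = max(0, self._index - 1)
        return self._states[self._index]

    def redo(self) -> str:
        self._index = min(len(self._states) - 1, self._index + 1)
        return self._states[self._index]


svg = '<svg><circle id="sun" r="10" fill="yellow"/></svg>'
prompt = build_prompt(svg, Selection("sun", '<circle id="sun" r="10" fill="yellow"/>'),
                      ToolbarCommand("Make red", "set its fill color to red"))
print(prompt)
```

The same `ToolbarCommand` can then be applied to any other clicked element without retyping or rewording the instruction.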

8.2.3 Challenges and Solutions for OpenSCAD Users

Direct manipulation has been established as the main interaction paradigm for Computer-Aided Design (CAD) for decades. It provides fast, incremental, and reversible actions that allow for an iterative process on a visual representation of the result. Despite its numerous advantages, some users prefer a programming-based approach in which they describe the 3D model they design with a specific programming language, such as OpenSCAD. This approach allows users to create complex structured geometries and facilitates abstraction. Unfortunately, most current knowledge about CAD practices focuses only on direct manipulation programs. We interviewed 20 programming-based CAD users to understand their motivations and challenges 21. Our findings reveal that this programming-oriented population faces difficulties in the design process in tasks such as 3D spatial understanding, validation and code debugging, creation of organic shapes, code-view navigation, and creation of parametric designs.

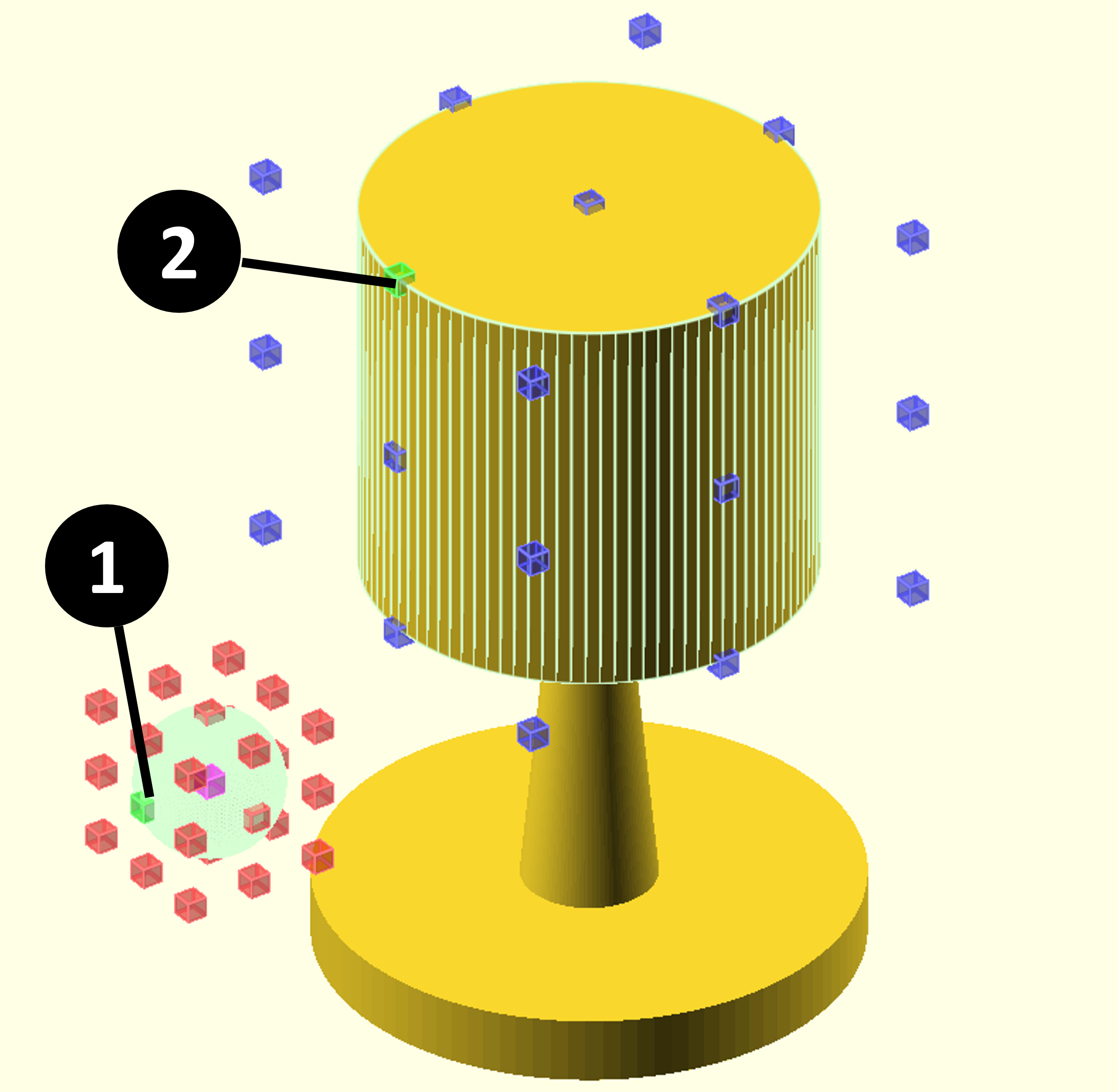

One of the challenges we identified is the creation of parametric designs. Programming-based CAD involves complex programming and arithmetic expressions to describe geometric properties, linking various object properties to create parametric designs. Unfortunately, these applications lack assistance, making the process unnecessarily demanding. We propose a solution that allows users to retrieve parametric expressions from the visual representation for reuse in the code, streamlining the design process 20 (Figure 4). We demonstrated this concept through a proof-of-concept implemented in the programming-based CAD application, OpenSCAD, and conducted an experiment with 11 users. Our findings suggest that this solution could significantly reduce design errors, improve interactivity and engagement in the design process, and lower the entry barrier for newcomers by reducing the mathematical skills typically required in programming-based CAD applications. These results are further developed in Gonzalez's Ph.D. 30.

Delta vector allows users to place one object's handle relative to another object's handle. Left: the user right-clicks the origin (1) and destination (2) handles, creating the delta vector in the clipboard. Right: the user can then paste the resulting translate into the sphere definition to position it parametrically.
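The key idea behind the delta vector is that the clipboard receives an expression rather than a number. In the hypothetical sketch below, each handle's coordinates are stored as OpenSCAD expressions (strings) derived from the code, and the delta between two handles is emitted as a parametric `translate([...])`. The function name and the string representation are our assumptions, as is the minimal simplification, which handles only equal or zero terms. None of these details come from the published implementation.

```python
def delta_vector(origin: tuple[str, str, str], dest: tuple[str, str, str]) -> str:
    """Parametric translate() moving the `origin` handle onto the `dest` handle.

    Coordinates are OpenSCAD expressions; only trivial cases are simplified.
    """
    def diff(a: str, b: str) -> str:
        if a == b:
            return "0"
        if a == "0":
            return b
        return f"{b} - ({a})"

    return "translate([" + ", ".join(diff(a, b) for a, b in zip(origin, dest)) + "])"


# Sphere centred at the origin; destination = top corner of a cube of size [w, d, h]
print(delta_vector(("0", "0", "0"), ("w", "d", "h")))  # translate([w, d, h])
```

Because the pasted vector refers to `w`, `d` and `h`, the sphere stays attached to the cube corner when those parameters change.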

8.2.4 Investigating the Frictions due to Interface Differences when Following Software Video Tutorials

Video tutorials are the main medium for learning novel software skills. However, the User Interface (UI) presented in a video tutorial may differ from the learner's UI because of customizations or differences in software versions. We investigated the frictions resulting from such differences on learners' ability to reproduce a task demonstrated in a video tutorial 26, 32. Through a morphological analysis, we first identified 13 types of “interface differences” that vary in terms of the availability, reachability and spatial location of features in the interface. To better assess the frictions resulting from each of these differences, we then conducted a laboratory study with 26 participants instructed to reproduce a vector graphics editing task. Our results highlight interesting UI comparison behaviors and illustrate various approaches employed to visually locate features.

8.3 Macro-dynamics

Participants: Géry Casiez, Bruno Fruchard, Vincent Lambert, Suliac Lavenant, Sylvain Malacria [contact person], Timo Maszewski, Mathieu Nancel, Antoine Nollet, Janin Koch, Xiaohan Peng, Aurélien Tabard.

8.3.1 Studying the representation of Microgestures

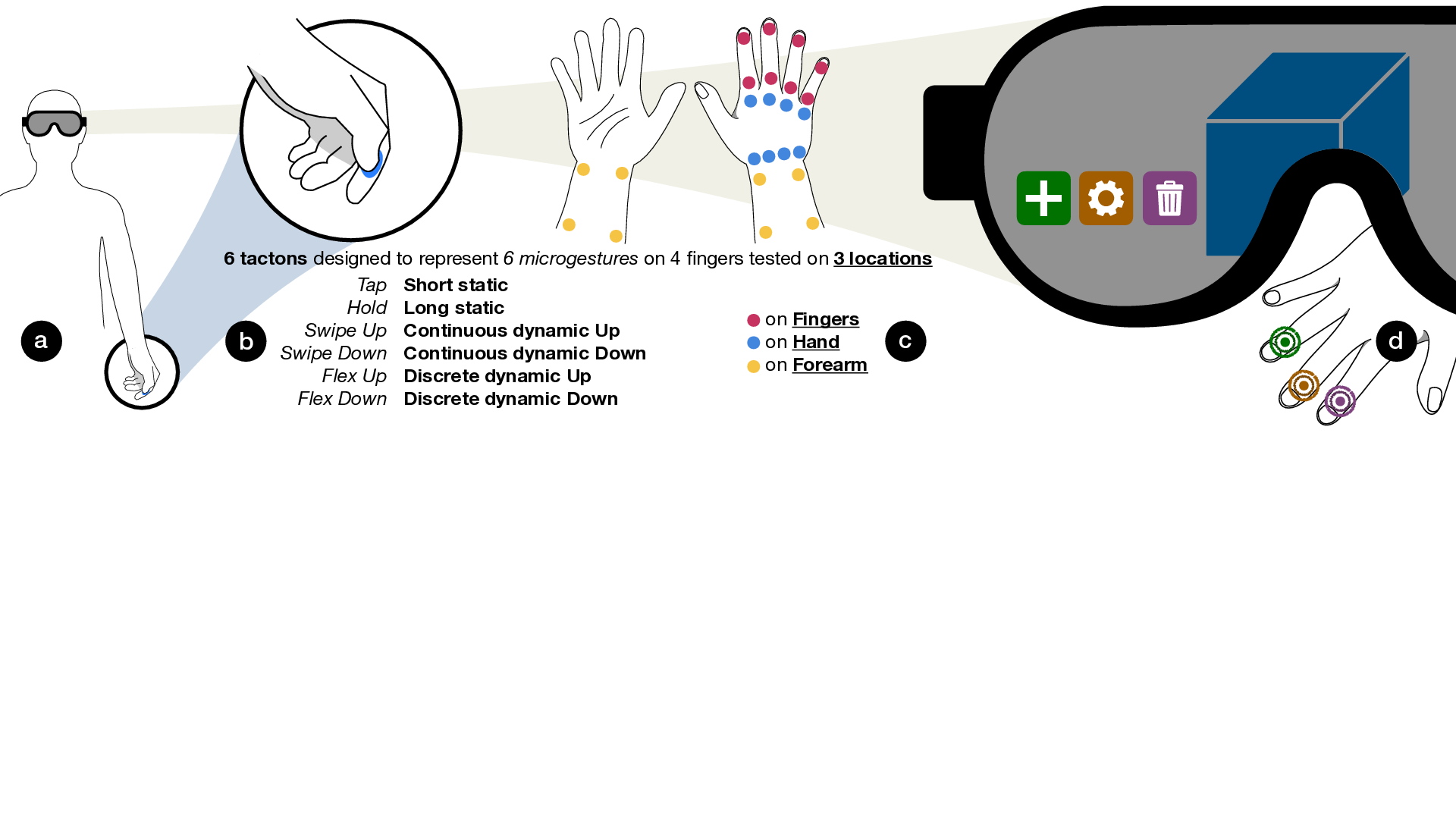

Hand microgestures are promising for mobile interaction with wearable devices. However, they will not be adopted if practitioners cannot communicate to users the microgestures associated with the commands of their applications. This requires unambiguous representations that simultaneously show the multiple microgestures available to control an application.

We first studied visual representations that simultaneously show the multiple microgestures available in an application 23. Using a systematic approach, we evaluated how such representations should be designed and contrasted 4 conditions depending on the microgestures (tap-swipe and tap-hold) and fingers (index and index-middle) considered. Based on the results, we designed a simultaneous representation of microgestures for a given set of 14 application commands. We then evaluated the usability of the representation for novice users and its suitability for small screens compared with a baseline. Finally, we formulated 8 recommendations based on the results of all the experiments. In particular, redundant graphical and textual representations of microgestures should only be displayed for novice users.

Vibrotactile Haptic Cues for representing microgestures: a) an example of microgesture interaction in mixed reality; b) 6 tactons designed to represent 6 microgestures; c) the 6 tactons are tested on 4 fingers with 3 haptic device locations; d) an example of vibrotactile feedforward for microgesture interaction in mixed reality.

We also explored the use of vibrotactile haptic cues for representing microgestures 24 (Figure 5). We built a four-axis haptic device that provides vibrotactile cues mapped to all four fingers. We designed six patterns, inspired by the six most commonly studied microgestures, that can be played independently on each axis of the device. We ran an experiment testing three device locations (fingers, back of the hand, and forearm) for pattern and axis recognition. For all three device locations, participants interpreted the patterns with similar accuracy. We also found that they were better at distinguishing the axes when the device was placed on the fingers. The back-of-the-hand and forearm locations remain suitable alternatives but involve a greater trade-off between recognition rate and expressiveness.

8.3.2 Design Study of a Data Analysis Tool for Lead Climbing

We performed a design study of an analytical tool for lead climbing performances 27, created in collaboration with the French Federation of Climbing (FFME). Its goal is to facilitate the exploration of a dataset to identify new performance indicators and better train French athletes. Regular interviews enabled us to identify the analysts' needs and design a robust system they can access at any time. A thematic analysis of transcripts from two evaluation days with the national coach highlighted the importance of accessing performance videos to view key passages, the need to navigate between several levels of information, and the value of relying on qualitative data to better understand athletes' choices. The paper details our design choices and outlines the solutions implemented, to inform the design of future sports performance analysis systems.

Data analytical tool tailored to lead climbing analyses used by coaches of the French climbing federation.

8.3.3 Model-based Evaluation of Recall-based Interaction Techniques

We tackled two challenges in the empirical evaluation of interaction techniques that rely on user memory, such as hotkeys, here called Recall-based interaction techniques (RBITs) 22: (1) the lack of guidance for designing the associated study protocols, and (2) the difficulty of comparing evaluations performed with different protocols. To address these challenges, we propose a model-based evaluation of RBITs. This approach relies on a computational model of human memory to (1) predict the informativeness of a particular protocol through the variance of the estimated parameters (Fisher information), and (2) compare the recall performance of RBITs based on the inferred parameters rather than on behavioral statistics, which has the advantage of being independent of the study protocol. We also release a Python library implementing our approach to help researchers produce more robust and meaningful comparisons of RBITs.
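The following Python sketch illustrates both uses of a memory model under an assumption chosen for simplicity: recall follows a one-parameter exponential forgetting curve, p = exp(-αt). Here t is the delay since the item was last studied, and α is a forgetting rate specific to a technique or user. Under this model, the Fisher information of a protocol (its set of test delays) indicates how precisely α can be estimated, since the variance of the maximum-likelihood estimate is asymptotically about 1/I. Comparisons then use the estimated α rather than raw recall rates. This is not Pyrbit's API, and its models may differ.

```python
import math


def p_recall(alpha: float, delay: float) -> float:
    """Assumed exponential forgetting curve: probability of recall after `delay`."""
    return math.exp(-alpha * delay)


def fisher_information(alpha: float, delays: list[float]) -> float:
    """Information about alpha carried by one Bernoulli recall trial at each delay.

    For success probability p(alpha), a trial carries (dp/dalpha)^2 / (p (1 - p)).
    With p = exp(-alpha t), dp/dalpha = -t p, so each term is t^2 p / (1 - p).
    """
    total = 0.0
    for t in delays:
        if t <= 0:
            continue  # under this model, immediate tests always succeed: no information
        p = p_recall(alpha, t)
        total += t * t * p / (1.0 - p)
    return total


def estimate_alpha(delays: list[float], recalled: list[bool],
                   alpha_max: float = 2.0, steps: int = 2000) -> float:
    """Maximum-likelihood estimate of alpha, by grid search for simplicity."""
    def log_likelihood(alpha: float) -> float:
        ll = 0.0
        for t, r in zip(delays, recalled):
            p = min(max(p_recall(alpha, t), 1e-12), 1.0 - 1e-12)
            ll += math.log(p) if r else math.log(1.0 - p)
        return ll
    grid = [alpha_max * (i + 1) / steps for i in range(steps)]
    return max(grid, key=log_likelihood)


# Same number of trials, two protocols, evaluated at an illustrative alpha = 0.2
print(fisher_information(0.2, [1, 1, 1, 1]))    # short delays only
print(fisher_information(0.2, [1, 5, 10, 20]))  # spread-out delays
```

With these illustrative values, the spread-out protocol carries more than twice the information of the short-delay protocol for the same number of trials.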

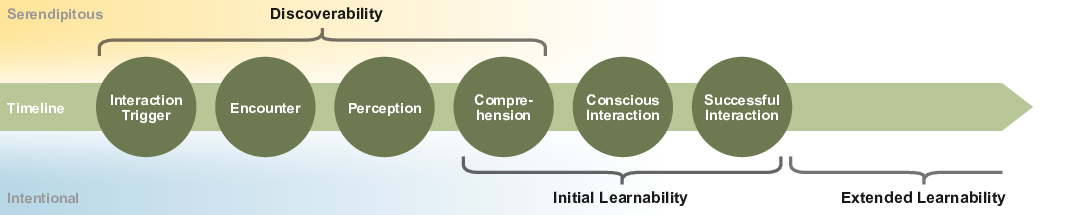

8.3.4 Clarifying and differentiating discoverability

Everyday users are confronted with increasingly complex technologies, whether these are novel introductions or familiar interfaces that keep changing and expanding. As a result, users must continually discover new functionality and interactivity, a challenge that is rarely addressed when novel technology is proposed. One possible contributing factor is the variety of associated concepts, which are often inconsistently defined, and the resulting lack of clarity on how to address these problems. We examined the usage and definitions of discoverability and related concepts, and offer clarifications where definitions are ambiguous, while highlighting focus areas and limitations 16. In doing so, we provide a clear definition of discoverability, underscore the separation of system, feature and interaction discoverability, and elucidate how other concepts focused on initial interactions differ (Figure 7).

Timeline of progressing initial interaction. Both discoverability and learnability apply during this timeframe and influence the interaction's occurrence and success.

8.3.5 Quantifying and Leveraging Uncertain and Imprecise Answers in Multiple Choice Questionnaires for Crowdsourcing

Questionnaires are an efficient way to collect feedback from many users. However, the reliability of the results is a major issue, even with honest participants. Indeed, participants face situations of doubt and usually do not have the option to express their hesitations. We describe a user study in which we gave participants the possibility to provide 1) a certitude rate, 2) imprecise answers, or 3) both a certitude rate and imprecise answers 28. First, we observe that contributors express their hesitations consistently: task difficulty correlates with the uncertainty and imprecision of the answers. Second, our results demonstrate the effectiveness of a decision-making process that uses this additional information within the theory of belief functions. This process reduces the error rate, and fewer participants are required to reach a satisfactory rate of correct answers.
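One standard way to exploit such answers within belief function theory is sketched below in Python; we do not claim it matches the study's exact pipeline. Each contribution becomes a mass function that assigns the stated certitude to the (possibly imprecise) set of chosen options and the remainder to the whole set of options, which represents ignorance. Contributions are fused with Dempster's rule of combination, and the decision is made on the pignistic probability.

```python
from itertools import product

Mass = dict[frozenset, float]


def contributor_mass(options: set, certitude: float, frame: frozenset) -> Mass:
    """Simple support function: `certitude` on the chosen options, the rest on ignorance."""
    chosen = frozenset(options)
    if chosen == frame or certitude >= 1.0:
        return {chosen: 1.0}
    return {chosen: certitude, frame: 1.0 - certitude}


def dempster(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule: multiply masses of intersecting sets, renormalise away the conflict."""
    combined: Mass = {}
    conflict = 0.0
    for (a, x), (b, y) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + x * y
        else:
            conflict += x * y
    if conflict >= 1.0:
        raise ValueError("totally conflicting sources cannot be combined")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


def pignistic(m: Mass) -> dict:
    """Share each set's mass equally among its options, giving a probability for decisions."""
    bet: dict = {}
    for s, v in m.items():
        for option in s:
            bet[option] = bet.get(option, 0.0) + v / len(s)
    return bet


frame = frozenset("ABCD")
answers = [({"A"}, 0.9), ({"A", "B"}, 0.6), ({"B"}, 0.3)]  # (chosen options, certitude)
fused = contributor_mass(*answers[0], frame)
for options, certitude in answers[1:]:
    fused = dempster(fused, contributor_mass(options, certitude, frame))
bet = pignistic(fused)
print(max(bet, key=bet.get))  # A
```

In this example, the third contributor's hesitant vote for B barely affects the decision. The confident answer and the imprecise answer reinforce each other on A.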

8.3.6 Capturing stakeholders values and preferences regarding algorithmic systems

Complex decisions made by algorithmic systems should embed the interests and values of the different stakeholders involved in the making of these systems. We investigate how users' preferences can be captured in order to incorporate more ethical considerations into the design of such systems 31. Adopting a value-sensitive design approach, we propose a method to measure how much the context can affect the differences between the declared importance of values and the decisions made in a given situation. We developed a survey tool that measures the importance of values in either an absolute or a relative way, by comparing pairs of values in specific situations. We conducted a preliminary study to test our survey tool with fifteen participants in the context of Smart Grids. Our results show differences in the way participants estimate values and highlight the benefit of capturing users' preferences in context.

8.4 Interaction Machine

Participants: Géry Casiez, Bruno Fruchard, Janin Koch, Alice Loizeau, Sylvain Malacria, Mathieu Nancel [contact person], Thomas Pietrzak, Damien Pollet, Travis West.

8.4.1 GUI Behaviors to Minimize Pointing-based Interaction Interferences

Pointing-based interaction interferences are situations in which GUI elements appear, disappear, or change shortly before being selected, too late for the user to inhibit their movement. Their cause lies in the design of most GUIs, in which any user event on an interactive element is unquestioningly taken to reflect the user's intention, even one millisecond after that element has changed. Previous work indicates that interferences can cause frustration and sometimes severe consequences. This work investigates new default behaviors for GUI elements that aim to prevent interferences from occurring or to mitigate their consequences 15. We present a design space of the advantages and technical requirements of these behaviors, and demonstrate in a controlled study how simple rules can reduce the occurrence of so-called "Pop-up-style" interferences, as well as user frustration. We then discuss their application to various forms of interaction interferences. We conclude by addressing the feasibility and trade-offs of implementing these behaviors in existing systems.
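As an illustration of what such a default behavior might look like, the Python sketch below ignores a press that lands on a widget within a short window after the widget appeared or changed. In that case, the press most likely targeted whatever was there before. The study's actual rules are not detailed here. The 300 ms window, the `Widget` and `InterferenceGuard` names, and the choice to log ignored presses rather than silently discard them are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Widget:
    name: str
    last_changed_ms: float  # when the widget last appeared, moved, or changed content


@dataclass
class InterferenceGuard:
    """Hypothetical rule: a press on a widget that changed less than `grace_ms` ago is ignored."""
    grace_ms: float = 300.0
    ignored: list[tuple[str, float]] = field(default_factory=list)

    def accept_press(self, widget: Widget, press_ms: float) -> bool:
        age = press_ms - widget.last_changed_ms
        if 0.0 <= age < self.grace_ms:
            # Too recent for the user to have seen the widget and aimed at it:
            # keep a trace so the system could later explain or replay the press.
            self.ignored.append((widget.name, press_ms))
            return False
        return True


guard = InterferenceGuard()
popup = Widget("Install update now?", last_changed_ms=1000.0)
print(guard.accept_press(popup, 1120.0))  # False: the pop-up appeared 120 ms earlier
print(guard.accept_press(popup, 1500.0))  # True: 500 ms after it appeared
```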

8.4.2 Sygaldry: DMI Components First and Foremost

Motivated by challenges involved in the long-term maintenance of digital musical instruments, the frustrating problem of glue code, and the inherent complexity of evaluating new instruments, we developed Sygaldry, a C++20 library of digital musical instrument components 33. By emphasising the development of components first and foremost, and through use of C++20 language features, strict management of dependencies, and literate programming, Sygaldry provides immediate benefits to rapid prototyping, maintenance, and replication of DMIs, encourages code portability and code-reuse, and reduces the burden of glue-code in DMI firmware. Recognising that there still remains significant future work, we discuss the advantages of focusing development and research on DMI components rather than individual DMIs, and argue that a modern C++ library is among the most appropriate realisations of these efforts.

9 Partnerships and cooperations

9.1 Inria associate team not involved in an IIL or an international program

INPUT

-

Title:

Re-designing the Input Pipeline in Interactive Systems

-

Duration:

2024 -> 2026

-

Coordinator:

Géry Casiez

-

Partners:

- University of Waterloo, Waterloo (Canada)

-

Inria contact:

Géry Casiez

-

Summary:

The objective of the team is to redesign the input pipeline in interactive systems, from the capture of user motion by a sensor, its filtering, transformation and interpretation by the system, to the production of feedback to the user. Routine tasks such as controlling a system cursor or moving a virtual camera involve continuous visuo-motor control, to which the system has to respond accurately and with minimal latency.

The objective for the first year is to focus on input filtering and signal processing, with the primary goal of creating an improved version of the 1€ filter. This filter, published by Géry Casiez, Nicolas Roussel and Daniel Vogel in 2012, is widely used in research and industry and remains the benchmark for filtering noisy signals in interactive systems. Other objectives include further work on latency and transfer functions in interactive systems.
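Because the 1€ filter is the starting point of this work, a compact Python transcription of the published algorithm may help readers. This is our own sketch, not the reference implementation, and it does not represent the improved version under development. The filter is a first-order low-pass filter whose cutoff frequency rises with the estimated speed of the signal. `min_cutoff` (Hz) controls jitter at low speed, `beta` controls lag at high speed, and `d_cutoff` (Hz) smooths the speed estimate. The parameter values in the usage line are illustrative only.

```python
import math
from typing import Optional


def smoothing_factor(dt: float, cutoff_hz: float) -> float:
    """Exponential smoothing factor of a first-order low-pass filter with this cutoff."""
    tau = 1.0 / (2.0 * math.pi * cutoff_hz)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """1€ filter (Casiez, Roussel & Vogel, CHI 2012) for one noisy scalar signal."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.0, d_cutoff: float = 1.0) -> None:
        self.min_cutoff, self.beta, self.d_cutoff = min_cutoff, beta, d_cutoff
        self.t_prev: Optional[float] = None
        self.x_prev = 0.0   # previous filtered value
        self.dx_prev = 0.0  # previous filtered speed

    def __call__(self, t: float, x: float) -> float:
        if self.t_prev is None:  # first sample: nothing to smooth yet
            self.t_prev, self.x_prev = t, x
            return x
        dt = t - self.t_prev
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # 1) Estimate the speed and low-pass it with a fixed cutoff.
        a_d = smoothing_factor(dt, self.d_cutoff)
        dx_hat = a_d * (x - self.x_prev) / dt + (1.0 - a_d) * self.dx_prev
        # 2) Slow motion -> low cutoff (less jitter); fast motion -> high cutoff (less lag).
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = smoothing_factor(dt, cutoff)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.t_prev, self.x_prev, self.dx_prev = t, x_hat, dx_hat
        return x_hat


# Usage: filter one coordinate of a pointer sampled at 100 Hz
f = OneEuroFilter(min_cutoff=1.0, beta=0.01)
smoothed = [f(i / 100.0, x) for i, x in enumerate([10.0, 10.4, 9.8, 10.1, 30.0, 50.0])]
```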

9.2 International research visitors

9.2.1 Visits of international scientists

Other international visits to the team

Ravin Balakrishnan

-

Status

Professor

-

Institution of origin:

University of Toronto

-

Country:

Canada

-

Dates:

01/09/2024 to 31/07/2025

-

Context of the visit:

Ravin Balakrishnan is on sabbatical in the team to develop collaborations on interaction in Augmented Reality and Virtual Reality.

-

Mobility program/type of mobility:

sabbatical

Scott Bateman

-

Status

Associate Professor

-

Institution of origin:

University of New Brunswick

-

Country:

Canada

-

Dates:

09/12/2024 to 20/12/2024

-

Context of the visit:

Scott Bateman visited the team to discuss the ongoing collaboration on transfer functions and interaction in 3D environments (VR, AR). He also taught a course at ULille.

-

Mobility program/type of mobility:

invited professor by ULille

9.2.2 Visits to international teams

Sabbatical programme

Sylvain Malacria

-

Visited institution:

IIS Laboratory at the University of Tokyo (Japan)

-

Dates of the stay:

From 15/07/2024 to 15/05/2025

-

Summary of the stay:

Sylvain Malacria is on a sabbatical visit in the IIS Lab at the University of Tokyo, funded by a JSPS international fellowship for research in Japan. This sabbatical project is focused on the design and implementation of software tools to facilitate the authoring and reading of scientific documents.

9.3 Informal International Partners

-

Fanny Chevalier, University of Toronto, Ontario, CA

visual communication of input possibilities on touch-screens

-

Scott Bateman, University of New Brunswick, Fredericton, CA

transfer functions, interaction in 3D environments (VR, AR)

-

Audrey Girouard, Carleton University, Ottawa, CA

interactions for digital fabrication 21, 20, 30 (co-tutelle thesis of Johann Felipe Gonzalez Avila)

-

Daniel Vogel, University of Waterloo, Waterloo, CA

spatial augmented reality 19, 29 (co-tutelle thesis of Yuan Chen) and polymorphic documents 25 (co-supervision of the Ph.D. thesis of Damien Masson, who received the 2023 Prix de thèse en IHM from AFIHM for his thesis “Transformer l'expérience de lecture des documents scientifiques avec le polymorphisme”)

9.4 National initiatives

9.4.1 ANR

Causality (JCJC, 2019-2024)

Integrating Temporality and Causality to the Design of Interactive Systems

Participants: Géry Casiez, Alice Loizeau, Sylvain Malacria, Mathieu Nancel [contact person].

The project addresses a fundamental limitation in the way interfaces and interactions are designed and even thought about today, an issue we call procedural information loss: once a task has been completed by a computer, significant information that was used or produced while processing it is rendered inaccessible, regardless of the multiple other purposes it could serve. This loss hampers the identification and resolution of usability issues, as well as the development of new and beneficial interaction paradigms. We will explore, develop, and promote finer granularity and better-described connections between the causes of those changes, their context, their consequences, and their timing. We will apply it to facilitate the real-time detection, disambiguation, and solving of frequent timing issues related to human reaction time and system latency; to provide broader access to all levels of input data, therefore reducing the need to "hack" existing frameworks to implement novel interactive systems; and to greatly increase the scope and expressiveness of command histories, allowing better error recovery but also extended editing capabilities such as reuse and sharing of previous actions.

Web site: loki.lille.inria.fr/causality

Related publications in 2024: 15

Discovery (JCJC, 2020-2025)

Promoting and improving discoverability in interactive systems

Participants: Géry Casiez, Eva Mackamul, Sylvain Malacria [contact person], Raphaël Perraud, Suliac Lavenant.

This project addresses a fundamental limitation in the way interactive systems are usually designed: in practice, they do not tend to foster the discovery of their input methods (operations that can be used to communicate with the system) and corresponding features (commands and functionalities that the system supports). Its objective is to provide generic methods and tools to help the design of discoverable interactive systems: we will define validation procedures that can be used to evaluate the discoverability of user interfaces, design and implement novel UIs that foster input method and feature discovery, and create a design framework of discoverable user interfaces. This project investigates, but is not limited to, the context of touch-based interaction and will also explore two critical timings when the user might trigger a reflective practice on the available inputs and features: while the user is carrying out her task (discovery in-action); and after she has carried out her task, through informed reflection on her past actions (discovery on-action). This dual investigation will reveal more generic and context-independent properties that will be summarized in a comprehensive framework of discoverable interfaces. Our ambition is to trigger a significant change in the way all interactive systems and interaction techniques, existing and new, are thought, designed, and implemented with both performance and discoverability in mind.

Web site: ns.inria.fr/discovery

Related publications in 2024: 32, 26, 16, 24

PerfAnalytics (PIA “Sport de très haute performance”, 2020-2025)

In situ performance analysis

Participants: Géry Casiez, Bruno Fruchard [contact person], Sylvain Malacria, Timo Maszewski.

The objective of the PerfAnalytics project (Inria, INSEP, Univ. Grenoble Alpes, Univ. Poitiers, Univ. Aix-Marseille, Univ. Eiffel & 5 sports federations) is to study how video analysis, now a standard tool in sport training and practice, can be used to quantify various performance indicators and deliver feedback to coaches and athletes. The project, supported by the boxing, cycling, gymnastics, wrestling, and mountain and climbing federations, aims to provide sports partners with a scientific approach dedicated to video analysis, by coupling existing technical results on the estimation of gestures and figures from video with scientific biomechanical methodologies for advanced gesture objectification (muscular for example).

Partners: the project involves several academic partners (Inria, INSEP, Univ. Grenoble Alpes, Univ. Poitiers, Univ. Aix-Marseille, Univ. Eiffel), as well as elite staff and athletes from different Olympic disciplines (Climbing, BMX Race, Gymnastics, Boxing and Wrestling).

Web site: perfanalytics.fr

Related publications in 2024: 27

MIC (PRC, 2022-2026)

Microgesture Interaction in Context

Participants: Vincent Lambert, Suliac Lavenant, Sylvain Malacria, Thomas Pietrzak [contact person].

MIC aims to study and promote microgesture-based interaction by putting it into practice in real-life use situations. Microgestures are hand gestures performed with one hand on that same hand. Examples include tap and swipe gestures performed by one finger on another finger. We study interaction techniques based on microgestures, or on the combination of microgestures with another modality such as haptic feedback. We also study mechanisms that support the discoverability and learnability of microgestures.

Partners: Univ. Grenoble Alpes, Inria, Univ. Toulouse 2, CNRS, Institut des Jeunes Aveugles, Immersion SA.

Web site: mic.imag.fr

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

General chair, scientific chair

- HHAI: Janin Koch (Head of steering committee)

- Journée IHM IG RV 2024: Thomas Pietrzak (Co-organizer)

Member of the organizing committees

- IHM 2024: Bruno Fruchard (Doctoral Consortium)

10.1.2 Scientific events: selection

Member of the conference program committees

Reviewer

- CHI 2024 (ACM): Géry Casiez, Yuan Chen, Bruno Fruchard, Janin Koch, Sylvain Malacria, Mathieu Nancel

- UIST 2024 (ACM): Sylvain Malacria, Mathieu Nancel, Thomas Pietrzak, Janin Koch

- EuroHaptics (IEEE): Bruno Fruchard, Sylvain Malacria, Thomas Pietrzak

- DIS 2024 (ACM): Janin Koch

- ISMAR 2024 (IEEE): Yuan Chen, Bruno Fruchard, Thomas Pietrzak

- IMX 2024 (ACM): Bruno Fruchard

- ISS 2024 (ACM): Yuan Chen

- ECCE 2024: Bruno Fruchard

- MobileHCI 2024 (ACM): Sylvain Malacria

- GI 2024 (CHCCS): Mathieu Nancel

- Chinese CHI 2024 (ICACHI): Yuan Chen

10.1.3 Journal

Member of the editorial boards

- Frontiers in CS/AI: Janin Koch

Reviewer - reviewing activities

- IMWUT 2024 (ACM): Sylvain Malacria

- ToCHI (ACM): Mathieu Nancel, Janin Koch

- IJHCS: Géry Casiez

10.1.4 Invited talks

- “Human-AI Exploration: How to design for ideation with AI”, MSAD seminar, Troyes: Janin Koch

- “Changing how we see research illustrations”, Invited talk TOHAI'24 Symposium, Tokyo (Japan): Sylvain Malacria

- “Improving User Experience with Interactive Scientific Documents”, French Korean Workshop on AI, New Networks, Programming Languages and Mathematical Sciences, Seoul (Republic of Korea): Sylvain Malacria

- “Why interaction methods should be exposed and recognizable”, Visiting talk Hokkaido University, Sapporo (Japan): Sylvain Malacria

- “Why interaction methods should be exposed and recognizable”, Visiting talk Keio University, Yokohama (Japan): Sylvain Malacria

10.1.5 Leadership within the scientific community

- Association Francophone d'Interaction Humain-Machine (AFIHM): Géry Casiez (member of the steering committee), Bruno Fruchard (member of the executive committee), Sylvain Malacria (member of the executive committee), Raphaël Perraud (Webmaster and communication manager of the young researchers (JCJC) taskforce), Suliac Lavenant (Discord administrator of the young researchers (JCJC) taskforce)

10.1.6 Research administration

For the Inria Centre at the University of Lille

- “Commission des Utilisateurs des Moyens Informatique” (CUMI): Mathieu Nancel (president)

- “Commission des Emplois de Recherche” (CER): Bruno Fruchard (member)

- “Référent médiation”: Bruno Fruchard

- “Comité Opérationnel d'Évaluation des Risques Légaux et Éthiques” (COERLE, the Inria Ethics board): Thomas Pietrzak (local correspondent), Mathieu Nancel (member)

For the Université de Lille

- MADIS Graduate School council: Géry Casiez (member)

- Computer Science Department joint commission (“commission mixte”): Thomas Pietrzak (member)

- Coordinator for internships at IUT de Lille: Géry Casiez

- Co-coordinator for internships at the Computer Science Department: Damien Pollet

- Deputy director of the Computer Science Department for finances: Thomas Pietrzak

For the CRIStAL lab of Université de Lille & CNRS

- Direction Board: Géry Casiez (Deputy Director)

- Computer Science PhD recruiting committee: Géry Casiez (member)

- Laboratory council: Sylvain Malacria (member), Thomas Pietrzak (member)

- Coordinator of the Human & Humanities research axis: Thomas Pietrzak

- Member of the “Commission parité”: Bruno Fruchard

Hiring committees

- University of Lille (IUT) committee for Assistant Professor Position in Computer Science: Géry Casiez (president)

- University of Lille (FST) committee for Assistant Professor Position in Computer Science: Sylvain Malacria (member)

- University Toulouse III Paul Sabatier committee for Assistant Professor Position in Computer Science: Mathieu Nancel (member)

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Doctoral course: Géry Casiez (12h), Experimental research and statistical methods for Human-Computer Interaction, Université de Lille

- Master Informatique: Géry Casiez (12h), Mathieu Nancel (12h), Sylvain Malacria (12h), Thomas Pietrzak (12h), Interactions Humain-Machine avancées, M2, Université de Lille

- Master Informatique: Thomas Pietrzak (48h), Sylvain Malacria (48h), Interaction Humain-Machine, M1, Université de Lille

- Master Informatique: Damien Pollet (27h), Langages et Modèles Dédiés, M2, Université de Lille

- Licence Informatique: Bruno Fruchard (18h), Alice Loizeau (18h), Raphaël Perraud (18h), Introduction à l'Interaction Humain-Machine, L3, Université de Lille

- Licence Informatique: Raphaël Perraud (18h), JSFS, L3, Université de Lille

- Licence Informatique: Damien Pollet (18h), Suliac Lavenant (18h), Informatique, L1, Université de Lille

- Licence Informatique: Damien Pollet (36h), Algorithmes et Programmation, L1, Université de Lille

- Licence Informatique: Damien Pollet (18h), Conception orientée objet, L3, Université de Lille

- Licence Informatique: Damien Pollet (21h), Programmation des systèmes, L3, Université de Lille

- Licence Informatique: Damien Pollet (42h), Projet, L3, Université de Lille

- Licence Informatique: Damien Pollet (9h), Coordination des stages, L3, Université de Lille

- BUT Informatique: Géry Casiez (11h), Automatisation de la chaîne de production, 3rd year, IUT de Lille - Université de Lille

- BUT Informatique: Géry Casiez (20h), React, 3rd year, IUT de Lille - Université de Lille

- BUT Informatique: Géry Casiez (38h), Bruno Fruchard (30h), IHM, 1st year, IUT de Lille - Université de Lille

- BUT Informatique: Géry Casiez (8h), SAÉ développement d'applications, 1st year, IUT de Lille - Université de Lille

10.2.2 Supervision

- PhD in progress: Omid Niroomandi, Re-designing the Input Pipeline in Interactive Systems, started Nov. 2024, advised by Géry Casiez, Mathieu Nancel & Daniel Vogel

- PhD in progress: Antoine Nollet, Automatic Information Management for Collaborative Spaces, started Oct. 2024, advised by Sylvain Malacria, Bruno Fruchard & Carla Griggio

- PhD in progress: Suliac Lavenant, Using haptic cues to improve micro-gesture interaction, started Oct. 2023, advised by Thomas Pietrzak, Sylvain Malacria, Laurence Nigay & Alix Goguey

- PhD in progress: Xiaohan Peng, Designing Interactive Human-Computer Drawing Experiences, started Oct. 2023, advised by Janin Koch & Wendy Mackay (Inria Saclay)

- PhD in progress: Raphaël Perraud, Fostering the discovery of interactions through adapted tutorials, started Nov. 2022, advised by Sylvain Malacria

- PhD in progress: Eya Ben Chaaben, Exploring Human-AI Collaboration and Explainability for Sustainable ML, started Nov. 2022, advised by Janin Koch & Wendy Mackay (Inria Saclay)

- PhD in progress: Vincent Lambert, Discoverability and representation of interactions using micro-hand gestures, started Sep. 2022, advised by Laurence Nigay, Sylvain Malacria & Alix Goguey

- PhD in progress: Alice Loizeau, Understanding and designing around error in interactive systems, started Oct. 2021, advised by Stéphane Huot & Mathieu Nancel

- PhD in progress: Pierrick Uro, Studying the sense of co-presence in Augmented Reality, started Sep. 2021, advised by Thomas Pietrzak, Florent Berthaut, Laurent Grisoni & Marcelo Wanderley (co-tutelle with McGill University, Canada)

- PhD in progress: Travis West, Examining the Design of Musical Interaction: The Creative Practice and Process, started Oct. 2020, advised by Stéphane Huot & Marcelo Wanderley (co-tutelle with McGill University, Canada)

- PhD: Johann Felipe González Ávila, Facilitating Programming-Based 3D Computer-Aided Design Using Bidirectional Programming 30, defended in April 2024, advised by Géry Casiez, Thomas Pietrzak & Audrey Girouard (co-tutelle with Carleton University, Canada)

- PhD: Yuan Chen, Pervasive Desktop Computing by Direct Manipulation of an Augmented Lamp 29, defended in Sep. 2024, advised by Géry Casiez, Sylvain Malacria, Daniel Vogel & Edward Lank (co-tutelle with University of Waterloo, Canada)

10.2.3 Juries

- Gabriela Herrera Altamira (PhD, Université de Lorraine): Géry Casiez, reviewer

- Yann Moullec (PhD, Université de Rennes): Géry Casiez, reviewer

- Johann Wentzel (PhD, University of Waterloo): Géry Casiez, examiner

- Nicolas Fourrier (PhD, IMT Atlantique): Thomas Pietrzak, reviewer

- Pierre-Antoine Cabaret (PhD, INSA Rennes): Thomas Pietrzak, reviewer

10.2.4 PhD mid-term evaluation committees

- Julien Cauquis (IMT Atlantique): Géry Casiez

- Congjian Gao (LS2N, Université de Nantes): Bruno Fruchard

- Intissar Cherif (Université Paris-Saclay): Thomas Pietrzak

- Axel Carayon (Université Toulouse III): Thomas Pietrzak

- Sabrina Toofany (Université de Rennes): Thomas Pietrzak

- Anna Siacchitano (Université Grenoble Alpes, ESTIA): Sylvain Malacria

10.3 Popularization

10.3.1 Productions (articles, videos, podcasts, serious games, ...)

- Interview by “L'Essentiel” for the RJMI event, October 18th 2024 – Bruno Fruchard

- Utiliser tout le potentiel de nos écrans connectés: Interview by the “Interstices” audio Podcast, July 18th 2024 34 – Sylvain Malacria

- Participation in the writing of the Eco-concevoir des produits durables et réparables guide from the Club de la Durabilité, presented at the Assemblée Nationale on February 9th 2024 – Aurélien Tabard

- Six solutions concrètes pour rendre nos appareils électroniques plus durables (Le Monde, March 2024) – Aurélien Tabard

- Comment diminuer l'impact carbone de nos pratiques numériques (Le Monde, April 2024) – Aurélien Tabard

- Peut-on échapper à l'emprise numérique ? (Socialter, June/July 2024, Superpuces) – Aurélien Tabard

10.3.2 Participation in Live events

- Workshop with high school students on June 24th 2024 – David Adamov & Bruno Fruchard

One-hour workshops for two groups (n=30) of high school students visiting the Inria Centre at the University of Lille. We gave a broad introduction to research in HCI and presented David's work on interaction techniques, followed by a small competition to annotate a video as fast as possible using various techniques.

- Co-organization of RJMI “Rencontre Jeunes Mathématiciennes et Informaticiennes” on October 21st-22nd 2024 – Simon Lemaire, Magda Guennadi & Bruno Fruchard

A two-day event involving 30 high school girls. Its goals were to expose gender biases in computer science and mathematics research, to present research work to younger students, and to run workshops on various topics led by PhD students. The event included two talks, by Clémentine Maurice and Mylène Maida.

- Participation in RJMI “Rencontre Jeunes Mathématiciennes et Informaticiennes” on October 21st-22nd 2024 – Alice Loizeau

- Organization of “Les Innovantes” on December 6th 2024 – Alice Loizeau, Magda Guennadi & Bruno Fruchard

We invited high school students from the Hauts-de-France region to the Inria Centre at the University of Lille to attend presentations promoting women's contributions to computer science. Three women (Anne-Marie Jolly, Solène Lambert, Camille Dupré) presented their research on topics such as augmented reality and collaborative surgery training, and discussed their experience as women in research.

- Participation in “Girls Can Code” on November 30th - December 1st 2024 – Antoine Nollet

Supervised middle and high school girls during coding exercises, answered questions about careers in computer science, and explained research work in computer science.

- Participation in setting up the exhibition Numérique en eaux troubles, October 2024 – Aurélien Tabard

- Participation in Déclics on December 12th – Raphaël Perraud

A half-day event fostering discussions between researchers and high school students to show them how knowledge is built, followed by a panel with education staff.

11 Scientific production

11.1 Major publications

- [1] Modeling and Reducing Spatial Jitter caused by Asynchronous Input and Output Rates. UIST 2020 - ACM Symposium on User Interface Software and Technology, Virtual (previously Minneapolis, Minnesota), United States, October 2020.