Section: New Results

Interaction

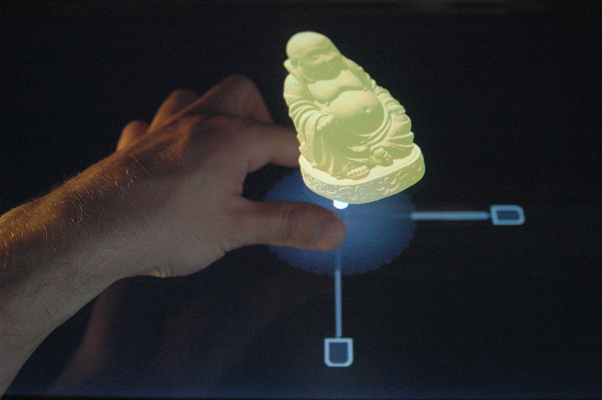

Toucheo: Multitouch + Stereo

Participants : Martin Hachet, Benoit Bossavit, Aurélie Cohé.

We propose a new system that efficiently combines direct multitouch interaction and 3D stereoscopic visualization (see Figure 5). In our approach, users interact by means of simple 2D gestures on a monoscopic touchscreen, while visualizing occlusion-free 3D stereoscopic objects floating above the surface at an optically correct distance. By making the 3D virtual space coincide with the physical space, we produce a rich, seamless workspace where the advantages of both direct and indirect interaction are jointly exploited. In addition to the standard multitouch gestures and controls that we build on (e.g. pan, zoom, and standard 2D widgets), we have designed a dedicated multitouch 3D transformation widget. This widget allows near-direct control of the rotation, scaling, and translation of the manipulated objects. To illustrate the power of our setup, we have designed a demo scenario where participants reassemble 3D virtual fragments. This scenario, like many others, benefits from our proposal, in which the strengths of multitouch interaction and stereoscopic visualization are unified in an innovative and relevant workspace [19] [25]. See highlights.

Touch-based interaction

Participants : Jérémy Laviole, Aurélie Cohé, Martin Hachet.

We have continued exploring 3D User Interfaces for [multi-]touch screens. In particular, in [26], we conducted a user study to better understand the impact of directness on user performance for an RST (rotation, scaling, translation) docking task, in both 2D and 3D visualization conditions. We have also designed a new 3D transformation widget, called tBox, that can be operated easily and efficiently with simple gestures on touchscreens. In our approach, users apply rotations by means of physically plausible gestures, and we have extended successful 2D tactile principles to the context of 3D interaction [23].
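To make the RST terminology concrete, the following minimal sketch shows how a 2D rotation, a uniform scale, and a translation are commonly derived from a two-finger gesture. It is a generic illustration, not the procedure used in [26] or in tBox; the function name `rst_from_two_touches` and its argument layout are assumptions introduced here.

```python
import math


def rst_from_two_touches(p0, p1, q0, q1):
    """Estimate (angle, scale, translation) from a two-finger gesture.

    Hypothetical helper for illustration only.
    p0, p1: (x, y) positions of the two fingers when the gesture starts.
    q0, q1: (x, y) positions of the same fingers at the current time.
    """
    # Vector joining the two fingers, before and after the motion.
    vx, vy = p1[0] - p0[0], p1[1] - p0[1]
    wx, wy = q1[0] - q0[0], q1[1] - q0[1]

    len_v = math.hypot(vx, vy)
    len_w = math.hypot(wx, wy)
    if len_v == 0.0:
        raise ValueError("the two starting touch points must be distinct")

    # Scaling: ratio of the finger spreads.
    scale = len_w / len_v

    # Rotation: change in orientation of the inter-finger vector,
    # wrapped to the interval (-pi, pi].
    angle = math.atan2(wy, wx) - math.atan2(vy, vx)
    angle = math.atan2(math.sin(angle), math.cos(angle))

    # Translation: displacement of the midpoint between the fingers.
    tx = (q0[0] + q1[0]) / 2.0 - (p0[0] + p1[0]) / 2.0
    ty = (q0[1] + q1[1]) / 2.0 - (p0[1] + p1[1]) / 2.0

    return angle, scale, (tx, ty)
```

For example, `rst_from_two_touches((0, 0), (1, 0), (1, 1), (1, 3))` yields a rotation of approximately π/2, a scale of 2.0, and a translation of (0.5, 2.0). In a docking task, the three components would be applied to the manipulated object until it matches the target pose.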

Immersive environments

Participant : Martin Hachet.

We have continued working on immersive environments. In particular, with the "Digital Sound" group of LaBRI, we have studied how sound processes should be visualized in immersive setups [13]. In another collaboration, with the REVES Inria project-team, we have explored how to design 3D pieces of architecture in a CAVE [21].

Brain-Computer Interaction

Participant : Fabien Lotte.

With Fabien Lotte joining the IPARLA team as a research scientist in January 2011, a new research topic related to interaction is being explored: Brain-Computer Interfaces (BCI). BCIs are communication systems that enable their users to send commands to a computer by means of brain activity only, this activity generally being measured using ElectroEncephaloGraphy (EEG). BCIs therefore offer a new way to interact with computers and interactive 3D applications. In this area, we have explored new techniques to analyze and process EEG signals in order to identify the mental state of the user [14] [20]. This has led to improved robustness and mental state recognition performance. Another challenge is that a BCI requires collecting several examples of EEG signals from the user in order to calibrate the system, which makes the calibration step long and inconvenient. To alleviate this problem, we have proposed to generate artificial EEG signals from the few EEG signals already available. Our evaluations have shown that this approach can indeed significantly reduce the calibration time [28]. Together with the Inria VR4I team, we have also explored the use of new mental states to drive a BCI: attention and relaxation states. We have shown that it is indeed possible to identify relaxation and concentration in EEG signals, and that these states can be used to drive a BCI [24]. Finally, we have critically analyzed the usefulness and potential of BCI for 3D video games [27].
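The idea of enlarging a small calibration set with artificial signals can be illustrated with a short sketch. The text above does not describe how the artificial EEG signals are produced, so the scheme below (cutting recorded trials of one class into time segments and recombining segments taken from randomly chosen trials) is an assumption made purely for illustration, and the function name `make_artificial_trials` is hypothetical. Trials are simplified to single-channel lists of samples.

```python
import random


def make_artificial_trials(trials, n_segments, n_new, seed=0):
    """Build artificial trials by recombining time segments of real ones.

    Illustrative assumption, not the method reported in [28].
    trials: list of equal-length sample lists, all from the same class.
    n_segments: number of consecutive time segments per trial.
    n_new: number of artificial trials to generate.
    """
    if not trials:
        raise ValueError("at least one recorded trial is required")
    length = len(trials[0])
    if any(len(t) != length for t in trials):
        raise ValueError("all trials must have the same length")
    if not 1 <= n_segments <= length:
        raise ValueError("n_segments must be between 1 and the trial length")

    rng = random.Random(seed)

    # Segment boundaries, as evenly spaced as integer indices allow.
    bounds = [k * length // n_segments for k in range(n_segments + 1)]

    new_trials = []
    for _ in range(n_new):
        artificial = []
        for start, end in zip(bounds, bounds[1:]):
            # Each segment keeps its original time position but is
            # borrowed from a randomly selected recorded trial.
            donor = rng.choice(trials)
            artificial.extend(donor[start:end])
        new_trials.append(artificial)
    return new_trials
```

Because every segment stays at its original position in time, each artificial trial has the same length as the recorded ones and preserves their coarse temporal structure. The recorded and artificial trials together would then be passed to the usual calibration procedure.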

Tangible user interfaces

Participant : Patrick Reuter.

Tangible user interfaces have proven to be useful for the manipulation of 3D objects, such as for selection and navigation tasks, and even for deformation tasks. Deforming 3D models realistically is a crucial task when it comes to studying the physical behavior of 3D objects, for example in engineering, in sculpting applications, and in other domains. With recent progress in physical deformation models and increasing computing power, physically realistic deformation simulations can now be run at interactive rates. Consequently, there is an increasing demand for efficient and user-friendly interfaces for physically realistic deformation in real time. We proposed a general concept for creating physically realistic deformations of 3D models with a tangible user interface, and instantiated this concept in a concrete prototype that runs in real time and uses a passive tangible user interface embodying the 3D model [32].