Section: New Results

Plausible Image Rendering

Filtering Solid Gabor Noise

Participants : Ares Lagae, George Drettakis.


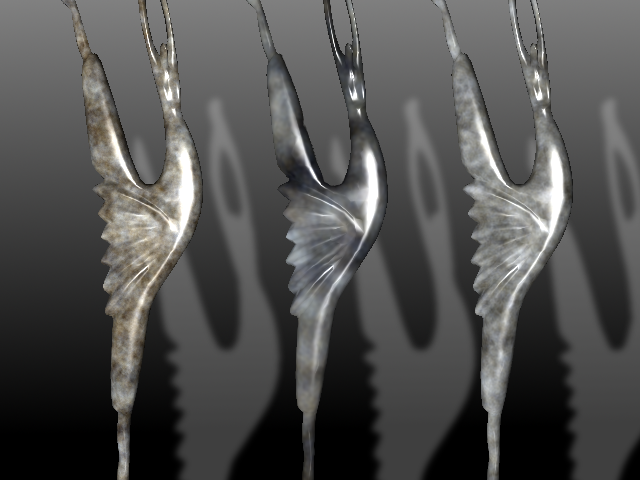

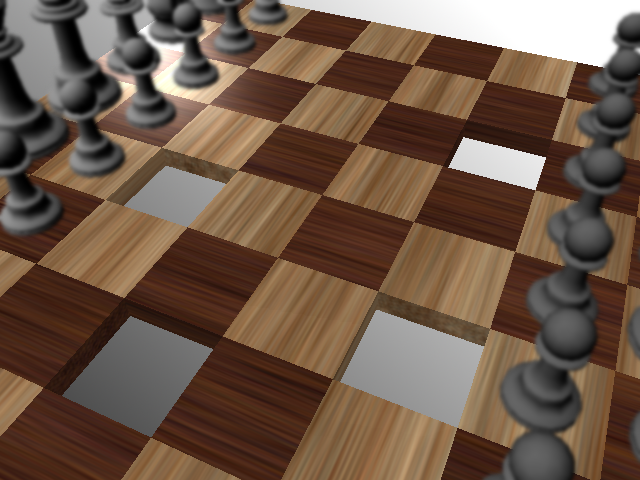

Solid noise is a fundamental tool in computer graphics. Surprisingly, no existing noise function supports both high-quality anti-aliasing and continuity across sharp edges. Existing noise functions either introduce discontinuities of the solid noise at sharp edges, as is the case for wavelet noise and Gabor noise, or lose detail when anti-aliased, as is the case for Perlin noise and wavelet noise. In this project, we show that a slicing approach is required to preserve continuity across sharp edges, and we present a new noise function that supports anisotropic filtering of sliced solid noise. This is made possible by individually filtering the slices of Gabor kernels, which requires the proper treatment of phase. This in turn leads to the introduction of the phase-augmented Gabor kernel and random-phase Gabor noise, our new noise function. We demonstrate that our new noise function supports both high-quality anti-aliasing and continuity across sharp edges, as well as anisotropy. Fig. 3 shows several solid procedural textures generated with our new random-phase solid Gabor noise.
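To make the random-phase idea concrete, the following is a minimal 2D sketch in Python; it is not the solid, sliced and filtered formulation of [18]. It assumes the common Gabor kernel form (a Gaussian envelope of bandwidth `a` times a cosine of frequency `F0` and orientation `omega0`) and gives each impulse a uniformly random phase instead of a random weight. The function names, parameter values, impulse layout and 5% envelope cutoff are illustrative assumptions.

```python
import math
import random


def gabor_kernel(x, y, K, a, F0, omega0, phase):
    """Gaussian envelope times an oriented cosine with an explicit phase offset."""
    envelope = K * math.exp(-math.pi * a * a * (x * x + y * y))
    along = x * math.cos(omega0) + y * math.sin(omega0)  # coordinate along the orientation
    return envelope * math.cos(2.0 * math.pi * F0 * along + phase)


def make_impulses(count, extent, seed):
    """Uniform impulse positions in [0, extent)^2, each with a uniform random phase."""
    rng = random.Random(seed)
    return [
        (rng.uniform(0.0, extent), rng.uniform(0.0, extent), rng.uniform(0.0, 2.0 * math.pi))
        for _ in range(count)
    ]


def random_phase_gabor_noise(x, y, impulses, K=1.0, a=0.05, F0=0.0625, omega0=math.pi / 4):
    """Sparse convolution: sum the kernels of all impulses within the truncation radius."""
    # Skip kernels whose envelope has dropped below 5% of its peak (illustrative cutoff).
    radius = math.sqrt(-math.log(0.05) / math.pi) / a
    total = 0.0
    for xi, yi, phase in impulses:
        dx, dy = x - xi, y - yi
        if dx * dx + dy * dy < radius * radius:
            total += gabor_kernel(dx, dy, K, a, F0, omega0, phase)
    return total


impulses = make_impulses(count=200, extent=128.0, seed=1)
row = [random_phase_gabor_noise(float(px), 64.0, impulses) for px in range(0, 128, 16)]
```

Sampling `random_phase_gabor_noise` on a pixel grid yields an oriented, band-limited texture. The explicit `phase` argument is the quantity that, according to the text above, must be treated properly when the slices of Gabor kernels are filtered individually.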

This work was presented at ACM SIGGRAPH 2011 in Vancouver and published in ACM Transactions on Graphics [18] (SIGGRAPH paper).

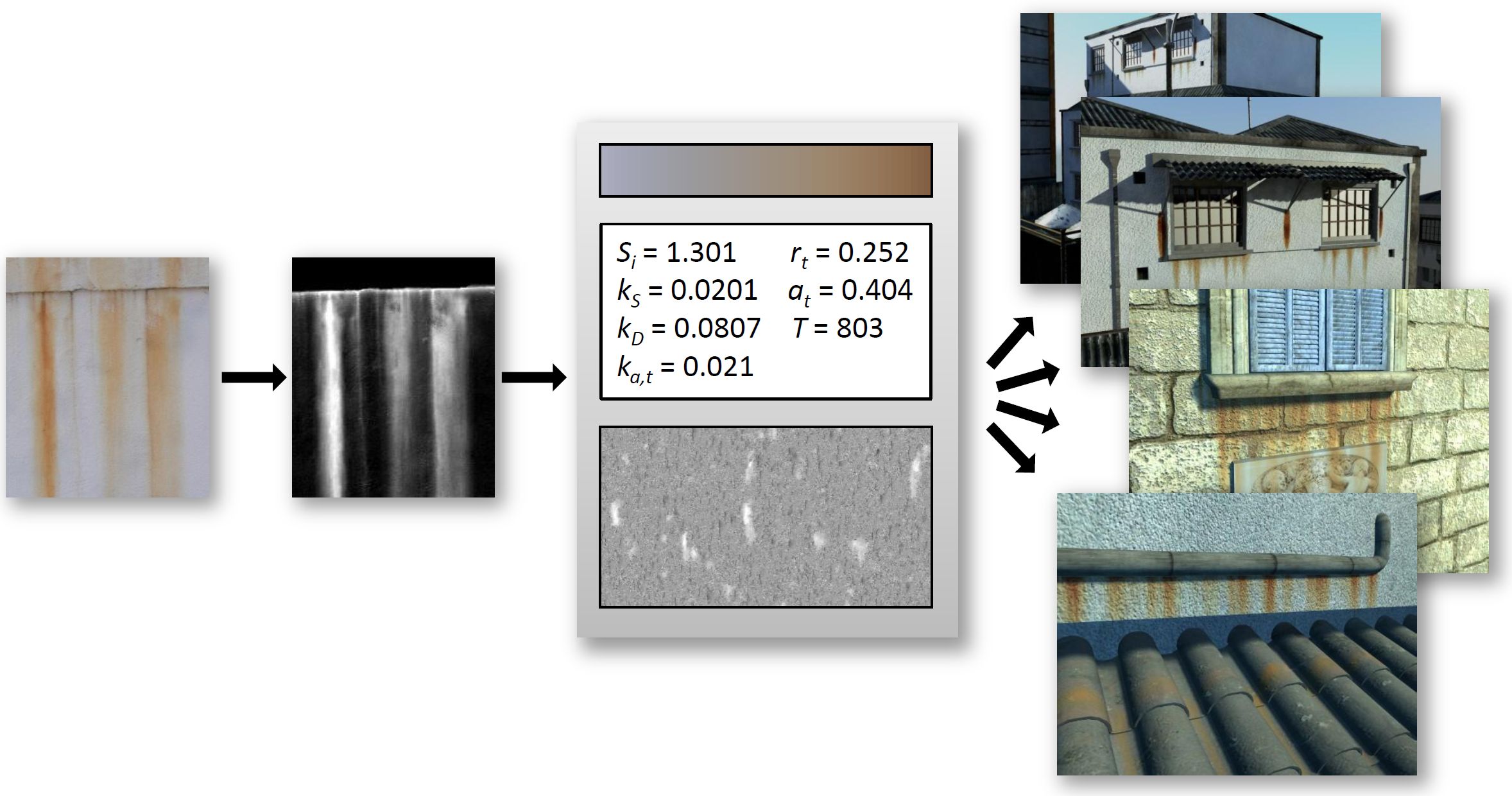

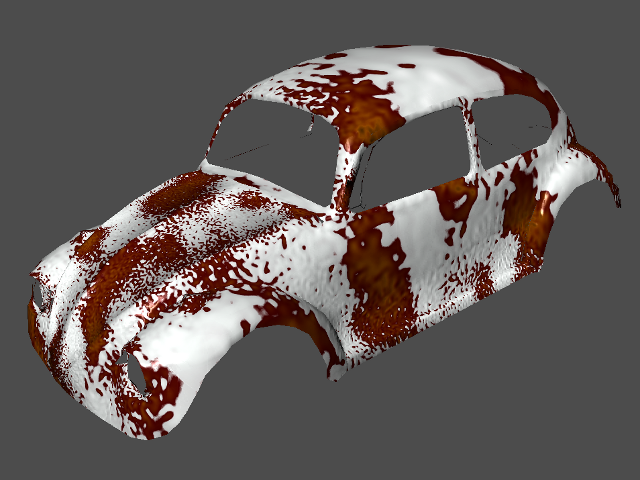

Image-Guided Weathering for Flow Phenomena

Participants : Carles Bosch, Pierre-Yves Laffont, George Drettakis.

The simulation of weathered appearance is essential for the realistic modeling of urban environments. With digital photography and Internet image collections, visual examples of weathering effects are readily available. These images, however, mix the appearance of the weathering phenomena with the specific local context. In [12], we introduced a new methodology to estimate the parameters of a phenomenological weathering simulation from existing imagery, in a form that allows new target-specific weathering effects to be produced. In addition to driving the simulation from images, we complement the visual result with details and colors extracted from the images. We illustrated this methodology using flow stains as a representative case, demonstrating how a rich collection of flow patterns can be generated from a small set of exemplars. Fig. 4 shows the major components of this approach.

This work was published in ACM Transactions on Graphics [12] and also presented at ACM SIGGRAPH 2011 in Vancouver (TOG paper).


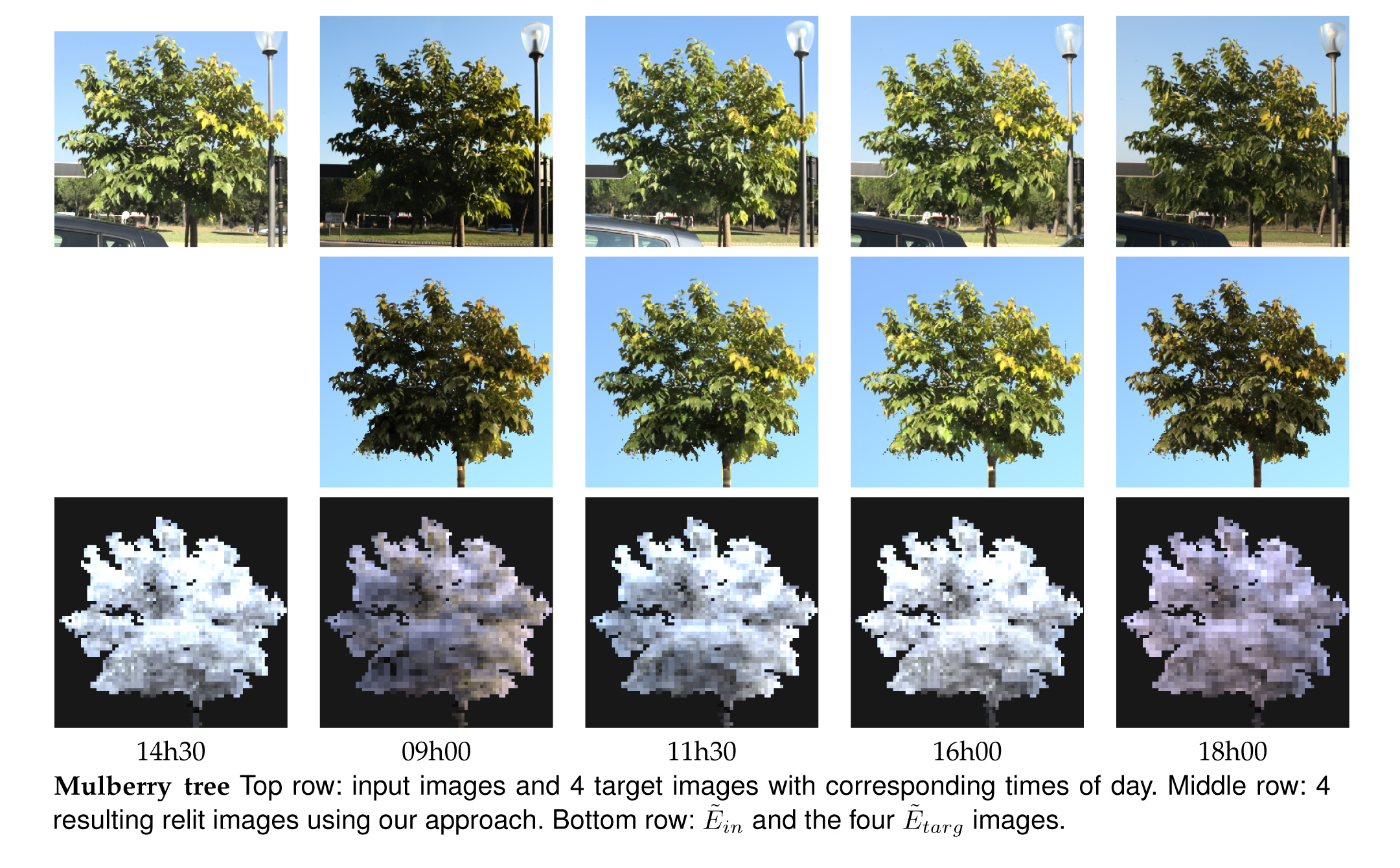

Relighting Photographs of Tree Canopies

Participants : Marcio Cabral, George Drettakis.

We present an image-based approach to relighting photographs of tree canopies. Our goal is to minimize capture overhead: the only input required is a set of photographs of the tree taken at a single time of day, while relighting is possible for any other time. We first analyze lighting in a tree canopy, both theoretically and using simulations. From this analysis, we observe that tree canopy lighting is similar to volumetric illumination. We therefore assume a single-scattering volumetric lighting model for tree canopies and diffuse leaf reflectance. To validate these assumptions, we apply our method to several synthetic renderings of tree models, for which we are able to compute all quantities involved.

Our method first creates a volumetric representation of the tree from 10-12 photographs taken from different viewpoints around the tree at a single time of day, and uses a single-scattering participating-media lighting model. An analytical sun and sky illumination model, namely the Preetham model, provides a consistent representation of lighting for both the captured input time and the unknown target times.
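As an illustration of what a single-scattering participating-media model computes, the sketch below ray-marches one eye ray through a homogeneous medium. The homogeneous medium, the isotropic phase function and the `sun_transmittance` callback are simplifying assumptions made for this example; the method itself works on the volumetric representation reconstructed from the photographs. A closed form for the unshadowed case is included as a check.

```python
import math


def single_scatter_radiance(sigma_t, sigma_s, length, sun_radiance,
                            sun_transmittance=lambda s: 1.0, steps=256):
    """Midpoint-rule ray march of single scattering along one eye ray.

    sigma_t, sigma_s: extinction and scattering coefficients (homogeneous medium).
    sun_transmittance(s): hypothetical callback giving the fraction of sunlight
    that reaches depth s along the ray.
    """
    phase = 1.0 / (4.0 * math.pi)  # isotropic phase function (assumption)
    ds = length / steps
    radiance = 0.0
    for i in range(steps):
        s = (i + 0.5) * ds
        to_eye = math.exp(-sigma_t * s)  # attenuation from the scattering point to the eye
        radiance += to_eye * sigma_s * phase * sun_radiance * sun_transmittance(s) * ds
    return radiance


def closed_form_unshadowed(sigma_t, sigma_s, length, sun_radiance):
    """Analytic result when sunlight reaches every point along the ray unattenuated."""
    phase = 1.0 / (4.0 * math.pi)
    return sigma_s * phase * sun_radiance * (1.0 - math.exp(-sigma_t * length)) / sigma_t
```

With `sun_transmittance` left at 1, `single_scatter_radiance(0.5, 0.4, 4.0, 10.0)` agrees with `closed_form_unshadowed(0.5, 0.4, 4.0, 10.0)` (about 0.55) up to the quadrature error. A non-constant callback is where self-shadowing by leaves would enter.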

We relight the input image by applying the ratio of the target-time and input-time lighting representations. We compute this representation efficiently by simultaneously encoding transmittance from the sky and to the eye in spherical harmonics. We validate our method by relighting images of synthetic trees and comparing to path-traced solutions. We also present results for photographs, validated against time-lapse ground-truth sequences. An example is shown in Fig. 5. This work was published in IEEE Transactions on Visualization and Computer Graphics [15], and was done in collaboration with former members of REVES, N. Bonneel and S. Lefebvre.
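The ratio step can be sketched as follows, under a deliberate simplification: each pixel's lighting at a given time is taken to be the dot product of one spherical-harmonic (SH) transfer vector for that pixel with the SH coefficients of the sun and sky radiance at that time. The names `transfer_sh`, `sky_sh_input` and `sky_sh_target` are hypothetical, and the sketch does not reproduce the joint encoding of sky and eye transmittance described above.

```python
def sh_dot(a, b):
    """Dot product of two SH coefficient vectors (integral of the product for an orthonormal basis)."""
    return sum(x * y for x, y in zip(a, b))


def relight_pixel(pixel_rgb, transfer_sh, sky_sh_input, sky_sh_target, eps=1e-8):
    """Scale each channel by target lighting / input lighting.

    transfer_sh: SH coefficients of the pixel's light transport (hypothetical).
    sky_sh_input, sky_sh_target: one SH coefficient vector per RGB channel
    for the sun and sky radiance at the input and target times.
    """
    relit = []
    for channel in range(3):
        lighting_in = sh_dot(transfer_sh, sky_sh_input[channel])
        lighting_target = sh_dot(transfer_sh, sky_sh_target[channel])
        relit.append(pixel_rgb[channel] * lighting_target / max(lighting_in, eps))
    return relit
```

Under the diffuse-reflectance assumption, a pixel equals reflectance times lighting, so multiplying by the lighting ratio changes the illumination without requiring the reflectance to be known. Identical input and target coefficients leave the pixel unchanged.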

Silhouette-aware Warping for Image-based Rendering

Participants : Gaurav Chaurasia, George Drettakis.


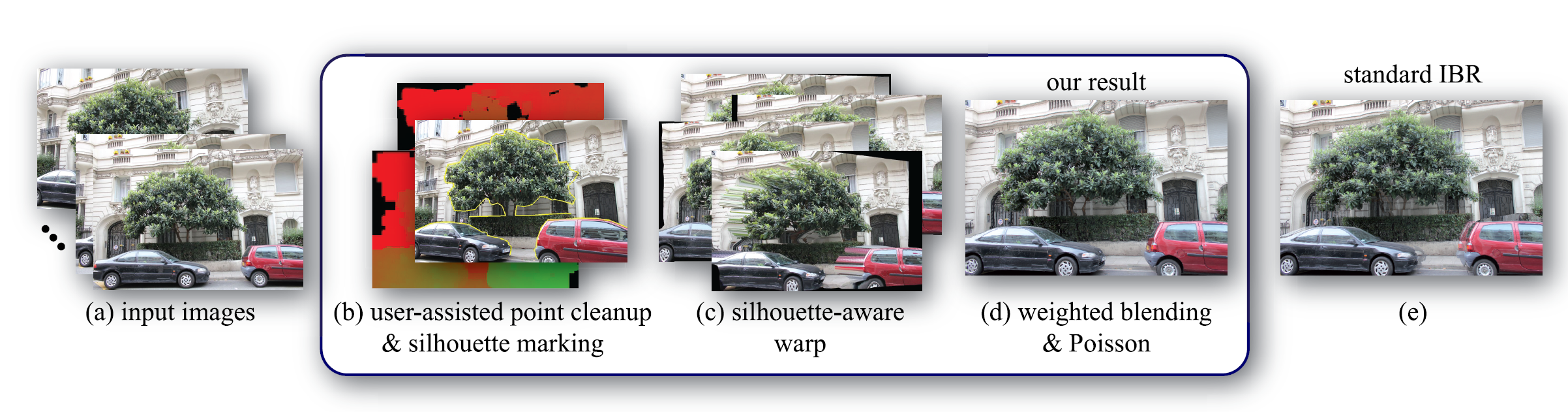

We have presented a novel solution for image-based rendering (IBR) of urban scenes based on image warping. IBR techniques allow capture and display of 3D environments using photographs. Modern IBR pipelines reconstruct proxy geometry using multi-view stereo, reproject the photographs onto the proxy and blend them to create novel views. The success of these methods depends on accurate 3D proxies, which are difficult to obtain for complex objects such as trees and cars. Increasing the number of input images does not improve reconstruction proportionally, and surface extraction is challenging even from dense range scans for scenes containing such objects. Our approach does not depend on dense, accurate geometric reconstruction; instead, we compensate for sparse 3D information by variational warping. In particular, we formulate silhouette-aware warps that preserve salient depth discontinuities. This improves the rendering of difficult foreground objects, even when deviating from view interpolation. We use a semi-automatic step to identify depth discontinuities and extract a sparse set of depth constraints used to guide the warp. On the technical side, our formulation breaks new ground by demonstrating how to incorporate discontinuities in variational warps. Our framework is lightweight and produces good-quality IBR for previously challenging environments, as shown in Fig. 6.
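The effect of dropping smoothness across a depth discontinuity can be seen in a one-dimensional toy version of a variational warp. The sketch below is our own illustration, not the formulation of [16]: grid vertices start at x_i = i, each grid edge is asked to keep its original length, a few vertices are pulled toward target positions (standing in for the sparse depth constraints), and edges marked as crossing a silhouette get no smoothness term. The least-squares normal equations are tridiagonal and are solved with the Thomas algorithm. Every connected piece of the grid needs at least one constraint; otherwise the system is singular.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[i] = A[i][i-1], upper[i] = A[i][i+1]."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    for i in range(n):
        m = diag[i] - (lower[i] * c[i - 1] if i > 0 else 0.0)
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - (lower[i] * d[i - 1] if i > 0 else 0.0)) / m
    x = [0.0] * n
    x[n - 1] = d[n - 1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def silhouette_aware_warp(n, constraints, cuts=frozenset(), weight=1000.0):
    """Warp n vertices originally at x_i = i (hypothetical 1D toy model).

    constraints: {vertex: target position}, enforced softly with `weight`.
    cuts: edge indices i whose edge (i, i+1) crosses a depth discontinuity;
    no smoothness term is added there, so the two sides may separate.
    """
    lower, diag, upper, rhs = [0.0] * n, [0.0] * n, [0.0] * n, [0.0] * n
    for i in range(n - 1):
        if i in cuts:
            continue
        rest = 1.0  # original edge length x_{i+1} - x_i
        # Normal equations of (u[i+1] - u[i] - rest)^2.
        diag[i] += 1.0
        diag[i + 1] += 1.0
        upper[i] -= 1.0
        lower[i + 1] -= 1.0
        rhs[i] -= rest
        rhs[i + 1] += rest
    for k, target in constraints.items():
        # Normal equations of weight * (u[k] - target)^2.
        diag[k] += weight
        rhs[k] += weight * target
    return solve_tridiagonal(lower, diag, upper, rhs)
```

With constraints `{0: 0.0, 5: 8.0}` and no cut, the stretch is spread evenly over all five edges. With `cuts={2}`, vertices 0 to 2 stay at 0, 1, 2 and vertices 3 to 5 move to 6, 7, 8: the whole stretch is absorbed by a gap at the discontinuity instead of distorting either side.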

Applications. Robust image-based rendering makes it easy to generate photorealistic visual content, which is useful for virtual reality applications. Commercial products such as Google Street View and Microsoft Photosynth use rudimentary image-based rendering for large-scale visualization of cities. Our work advances the state of the art by treating the hardest class of scenes, those containing complex foreground objects such as trees and cars.

This work is a collaboration with Prof. Dr. Olga Sorkine (ETH Zurich). It was published in the special issue of the journal Computer Graphics Forum [16] and presented at the Eurographics Symposium on Rendering 2011 in Prague, Czech Republic.

Real Time Rough Refractions

Participant : Adrien Bousseau.


We have proposed an algorithm to render objects made of transparent materials with rough surfaces in real time, under environment lighting. Rough surfaces such as frosted and misted glass cause wide scattering as light enters and exits objects, which significantly complicates the rendering of such materials. We introduced two contributions to approximate the successive scattering events at interfaces due to rough refraction: first, an approximation of the Bidirectional Transmittance Distribution Function (BTDF) using spherical Gaussians, suitable for real-time estimation of environment lighting using pre-convolution; second, a combination of cone tracing and macro-geometry filtering to efficiently integrate the scattered rays at the exiting interface of the object. Our method produces plausible results in real time, as demonstrated by comparison against stochastic ray tracing (Figure 7).
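Spherical Gaussians (SGs) lend themselves to this kind of approximation because products and integrals of SG lobes have closed forms. The sketch below shows those two identities in Python for the standard SG form G(v) = a exp(λ(μ·v - 1)). How the rough BTDF is mapped to SG parameters, and the pre-convolved environment lookup, are not shown; the function names are our own.

```python
import math


def sg_eval(v, mu, lam, amp):
    """Spherical Gaussian amp * exp(lam * (dot(mu, v) - 1)); mu and v are unit 3-vectors."""
    cos_angle = sum(m * x for m, x in zip(mu, v))
    return amp * math.exp(lam * (cos_angle - 1.0))


def sg_product(mu1, lam1, amp1, mu2, lam2, amp2):
    """Return (mu, lam, amp) of the SG equal to the product of two SGs."""
    p = [lam1 * a + lam2 * b for a, b in zip(mu1, mu2)]
    lam = math.sqrt(sum(c * c for c in p))
    if lam == 0.0:
        raise ValueError("opposite lobes of equal sharpness give a constant, not an SG")
    mu = [c / lam for c in p]
    amp = amp1 * amp2 * math.exp(lam - lam1 - lam2)
    return mu, lam, amp


def sg_integral(lam, amp):
    """Integral of amp * exp(lam * (dot(mu, v) - 1)) over the unit sphere."""
    return 2.0 * math.pi * amp / lam * (-math.expm1(-2.0 * lam))
```

The product identity keeps a chain of SG terms inside the SG family, and the integral gives the total energy of the resulting lobe in closed form, which is what makes SG-based lobes cheap to combine at run time.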

This work is a collaboration with Charles De Rousiers, Kartic Subr, Nicolas Holzschuch (ARTIS / INRIA Rhône-Alpes) and Ravi Ramamoorthi (UC Berkeley) as part of our CRISP associate team with UC Berkeley. The work was presented at the ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games 2011 and won the best paper award.

Single view intrinsic images

Participants : Pierre-Yves Laffont, Adrien Bousseau, George Drettakis.


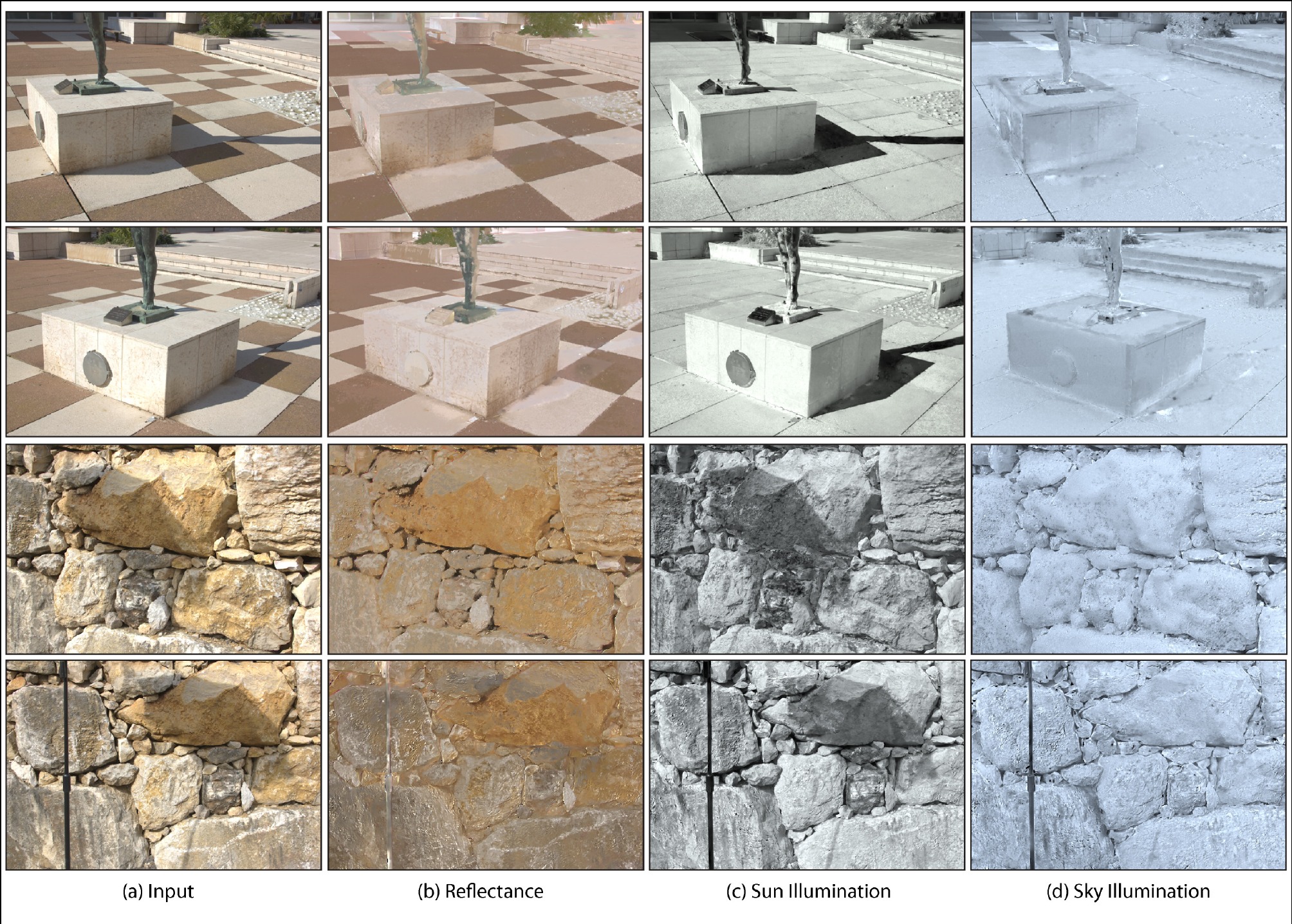

We introduced a new algorithm for decomposing photographs of outdoor scenes into intrinsic images, i.e. independent layers for illumination and reflectance (material color).

Extracting intrinsic images from photographs is a hard problem, typically solved with image-driven methods that require numerous user indications. Recent methods in computer vision allow easy acquisition of medium-quality geometric information about a scene using multiple photographs from different views. We developed a new algorithm that exploits this noisy and somewhat unreliable information to automate and improve image-driven propagation algorithms for deducing intrinsic images. In particular, we developed a new approach to estimate cast-shadow regions in the image by refining an initial estimate obtained from the reconstructed geometry. We use a voting algorithm in color space that robustly identifies reflectance values for sparse reconstructed 3D points, allowing us to accurately determine visibility to the sun at these points. This information is then propagated to the remaining pixels. In a final step, we adapt appropriate image propagation algorithms by replacing manual user indications with data inferred from the geometry-driven shadow and reflectance estimation. This allows us to automatically extract intrinsic images from multiple viewpoints, enabling many types of image manipulation.
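The propagation of sparse estimates to the remaining pixels can be illustrated with a one-dimensional, edge-aware least-squares sketch. This is a generic stand-in, not the propagation algorithm adapted in [21]: known sun-visibility values at a few pixels (playing the role of the reconstructed 3D points) are enforced softly, and neighboring pixels are encouraged to share values with a weight exp(-(ΔI)²/σ²) that becomes negligible across strong intensity edges such as shadow boundaries. The function names and the values of `sigma` and `weight` are assumptions.

```python
import math


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm; lower[i] = A[i][i-1], upper[i] = A[i][i+1]."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    for i in range(n):
        m = diag[i] - (lower[i] * c[i - 1] if i > 0 else 0.0)
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - (lower[i] * d[i - 1] if i > 0 else 0.0)) / m
    x = [0.0] * n
    x[n - 1] = d[n - 1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def propagate_scanline(intensity, known, sigma=0.1, weight=1000.0):
    """Spread sparse values along a scanline, blocking propagation at strong edges.

    intensity: per-pixel image intensities guiding the propagation.
    known: {pixel index: value}, e.g. sun visibility at reconstructed points (hypothetical).
    """
    n = len(intensity)
    lower, diag, upper, rhs = [0.0] * n, [0.0] * n, [0.0] * n, [0.0] * n
    for i in range(n - 1):
        diff = intensity[i + 1] - intensity[i]
        w = math.exp(-(diff * diff) / (sigma * sigma))  # affinity between neighbors
        # Normal equations of w * (v[i+1] - v[i])^2.
        diag[i] += w
        diag[i + 1] += w
        upper[i] -= w
        lower[i + 1] -= w
    for k, value in known.items():
        diag[k] += weight
        rhs[k] += weight * value
    return solve_tridiagonal(lower, diag, upper, rhs)
```

On a flat scanline the two constraints are interpolated smoothly, whereas a strong intensity edge between pixels 2 and 3 keeps the lit side near 1 and the shadowed side near 0.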

As illustrated in Figure 8, our method can extract reflectance at each pixel of the input photographs. The decomposition also yields separate sun illumination and sky illumination components, enabling easier manipulation of shadows and illuminant colors in image editing software.
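Once separate layers are available, editing reduces to recombining them. The sketch below assumes, for illustration, that each pixel's color is reflectance times the sum of the sun and sky illumination layers, and it tints the layers per channel before recombining. `recompose` and its tint parameters are hypothetical names, not part of an existing tool.

```python
def recompose(reflectance, sun, sky, sun_tint=(1.0, 1.0, 1.0), sky_tint=(1.0, 1.0, 1.0)):
    """Rebuild one RGB pixel as reflectance * (sun_tint * sun + sky_tint * sky).

    A sun_tint of (0, 0, 0) removes the sun component, i.e. puts the pixel in shadow;
    other tints change the color of the sun or sky illuminant.
    """
    return tuple(
        r * (ts * s_sun + tk * s_sky)
        for r, s_sun, s_sky, ts, tk in zip(reflectance, sun, sky, sun_tint, sky_tint)
    )


reflectance = (0.5, 0.4, 0.3)
sun = (1.0, 0.9, 0.8)
sky = (0.2, 0.25, 0.3)
original = recompose(reflectance, sun, sky)  # about (0.6, 0.46, 0.33)
shadowed = recompose(reflectance, sun, sky, sun_tint=(0.0, 0.0, 0.0))
warmer = recompose(reflectance, sun, sky, sun_tint=(1.2, 1.0, 0.8))
```

Because reflectance is kept fixed, shadow removal and illuminant recoloring only touch the illumination terms, so material colors are preserved.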

This work won the best paper award at “les journées de l'AFIG (Association Francophone d'Informatique Graphique) 2011”, and will appear in the French journal REFIG [21].

Single view intrinsic images with indirect illumination

Participants : Pierre-Yves Laffont, Adrien Bousseau, George Drettakis.

Building on our work published in [21], we extended our intrinsic image decomposition method to handle indirect illumination, in addition to sun and sky illumination. We introduced a new algorithm to reliably identify points in shadow based on a parameterization of the reflectance with respect to sun visibility, and proposed separating the illumination components using cascaded image-based propagation algorithms. We also demonstrated direct applications of our results for end users in widespread image editing software. This rich intrinsic image decomposition method has been submitted for publication.
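The idea of parameterizing reflectance by sun visibility can be sketched for a single pixel. Assuming, for illustration, that an observed color is reflectance times (visibility × sun + sky), each candidate visibility v in [0, 1] implies a reflectance I / (v·sun + sky). The sketch picks the v whose implied reflectance is closest to a reference reflectance, such as one obtained by voting. The grid search, the squared-error criterion and all names are our assumptions, not the algorithm described above, and the sky term must be nonzero in every channel.

```python
def reflectance_at_visibility(pixel, sun, sky, v):
    """Reflectance implied by an RGB pixel if its sun visibility were v (0 = shadow, 1 = lit)."""
    return [c / (v * s + k) for c, s, k in zip(pixel, sun, sky)]


def estimate_sun_visibility(pixel, sun, sky, reference_reflectance, steps=100):
    """Grid-search v in [0, 1] so that the implied reflectance best matches the reference."""
    best_v, best_err = 0.0, float("inf")
    for i in range(steps + 1):
        v = i / steps
        implied = reflectance_at_visibility(pixel, sun, sky, v)
        err = sum((a - b) ** 2 for a, b in zip(implied, reference_reflectance))
        if err < best_err:
            best_v, best_err = v, err
    return best_v
```

For example, a pixel synthesized with v = 0.3 from known reflectance, sun and sky colors is assigned v = 0.3 again; pixels whose estimate is close to 0 would be labeled as in shadow.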

Multi-lighting multi-view intrinsic images

Participants : Pierre-Yves Laffont, Adrien Bousseau, George Drettakis.

We compute intrinsic image decompositions using several images of the same scene under different viewpoints and lighting conditions. Such images can be easily gathered for famous landmarks, using photo sharing websites such as Flickr. Our method leverages the heterogeneity of photo collections (multiple viewpoints and lighting conditions) to guide the intrinsic image separation process. With this automatic decomposition, we aim to facilitate many image editing tasks and improve the quality of image-based rendering from photo collections.

This work is in collaboration with Frédo Durand (associate professor at MIT) and Sylvain Paris (researcher at Adobe).

Warping superpixels

Participants : Gaurav Chaurasia, Sylvain Duchêne, George Drettakis.

We are developing a novel representation of multi-view scenes in the form of superpixels. This representation segments the scene into semantically meaningful regions, called superpixels, which admit variational warps [16], thereby leveraging the power of shape-preserving warps while providing automatic silhouette and occlusion handling. This entails several contributions, namely incorporating geometric priors into superpixel extraction, generating consistent warping constraints, and a novel rendering pipeline that assembles warped superpixels into the novel view while maintaining spatio-temporal coherence.

This work is a collaboration with Prof. Dr. Olga Sorkine (ETH Zurich).

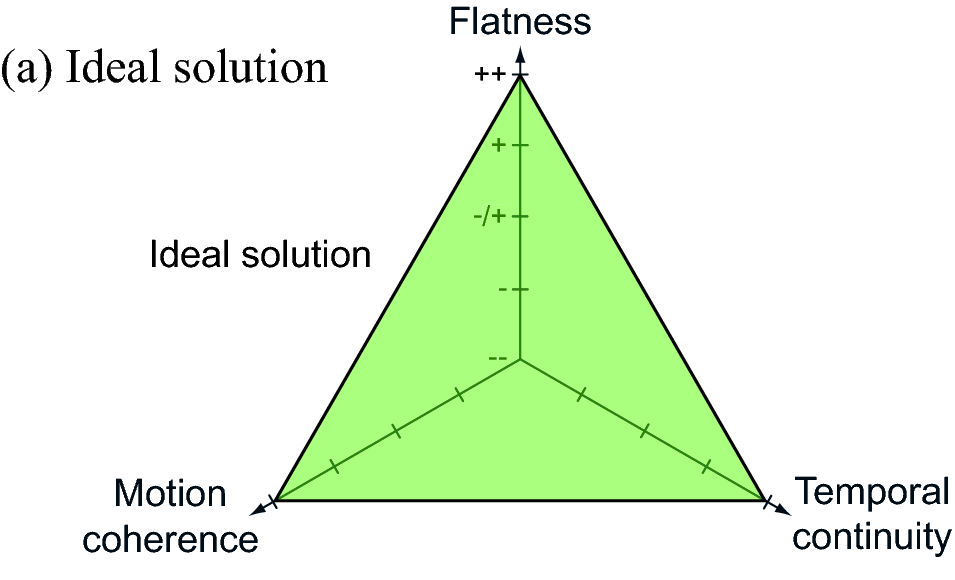

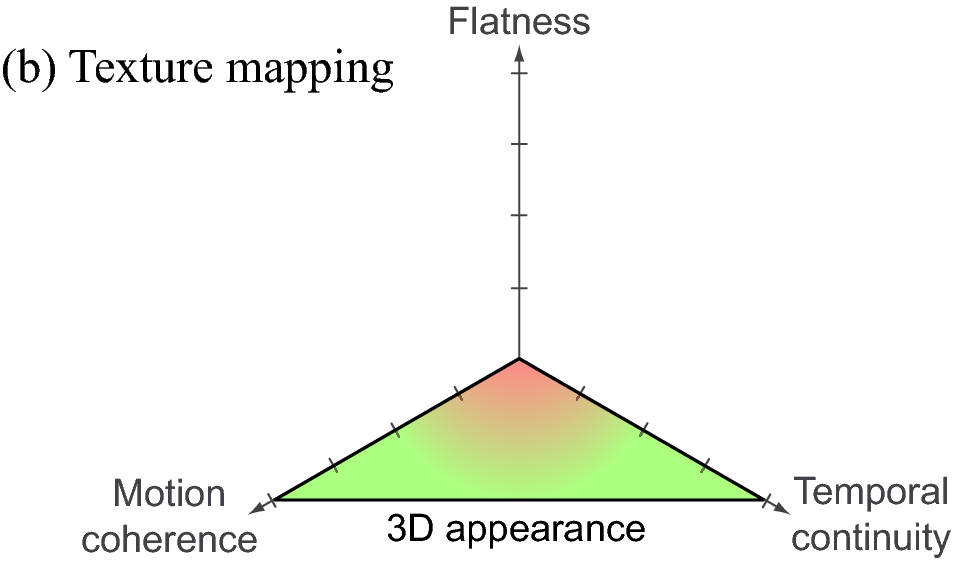

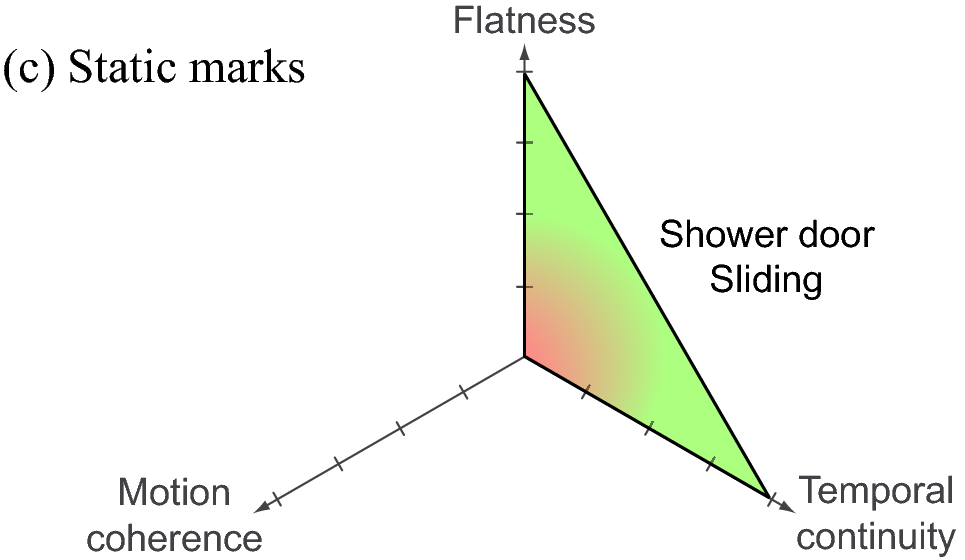

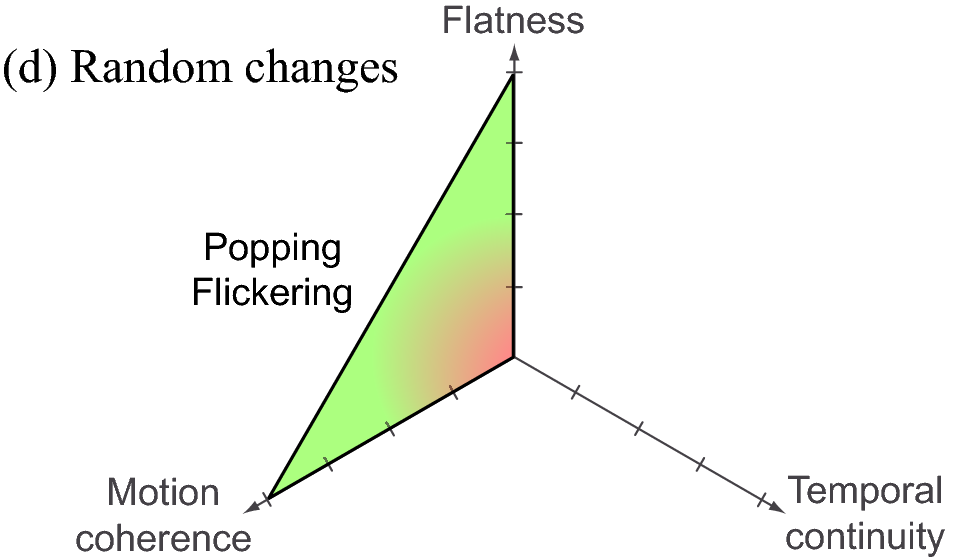

State-of-the-art Report on Temporal Coherence for Stylized Animations

Participant : Adrien Bousseau.

Non-photorealistic rendering (NPR) algorithms allow the creation of images in a variety of styles, ranging from line drawing and pen-and-ink to oil painting and watercolor. These algorithms provide greater flexibility, control and automation than traditional drawing and painting. The main challenge of computer-generated stylized animations is to reproduce the look of traditional drawings and paintings while minimizing the distracting flickering and sliding artifacts present in hand-drawn animations. These goals are inherently conflicting, and any attempt to address the temporal coherence of stylized animations is a trade-off. This survey is motivated by the growing number of methods proposed in recent years and the need for a comprehensive analysis of the trade-offs they propose. We formalized the problem of temporal coherence in terms of goals and compared existing methods accordingly (Figure 9). The goal of this report is to help non-expert readers choose the method that best suits their needs, and to motivate further research addressing the limitations of existing methods.


This work is a collaboration with Pierre Bénard and Joëlle Thollot (ARTIS / INRIA Rhône-Alpes) and was published in the journal Computer Graphics Forum [14].

Improving Gabor Noise

Participant : Ares Lagae.

We recently proposed a new procedural noise function, Gabor noise, which offers a combination of properties not found in existing noise functions. In this project, we present three significant improvements to Gabor noise: (1) an isotropic kernel for Gabor noise, which speeds up isotropic Gabor noise by a factor of roughly two, (2) an error analysis of Gabor noise, which relates the kernel truncation radius to the relative error of the noise, and (3) spatially varying Gabor noise, which enables spatial variation of all noise parameters. These improvements make Gabor noise an even more attractive alternative to existing noise functions. Fig. 3 shows a procedural texture generated with our improved Gabor noise. This work was published in IEEE Transactions on Visualization and Computer Graphics [19].
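The link between the kernel truncation radius and the discarded part of the kernel can be illustrated with the Gaussian envelope alone. The sketch below assumes the envelope K exp(-π a² r²) of the Gabor kernel and a hypothetical `threshold` on its relative value; it does not reproduce the error analysis of [19], which concerns the relative error of the noise itself. A convenient property of this 2D envelope is that the fraction of its integral lying outside radius r equals exp(-π a² r²), so the threshold is also the fraction of envelope mass that truncation discards.

```python
import math


def truncation_radius(a, threshold):
    """Radius at which exp(-pi a^2 r^2) falls to `threshold` times its peak value."""
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must lie in (0, 1)")
    return math.sqrt(-math.log(threshold) / math.pi) / a


def discarded_envelope_fraction(a, radius):
    """Fraction of the 2D envelope's integral lying outside `radius`.

    The integral of exp(-pi a^2 r^2) * 2 pi r from radius to infinity is
    exp(-pi a^2 radius^2) / a^2, and the full integral is 1 / a^2.
    """
    return math.exp(-math.pi * a * a * radius * radius)
```

For a = 0.05, a threshold of 0.05 gives r of about 19.5, and halving the threshold to 0.025 only raises r to about 21.7. The number of impulses visited per evaluation scales with r², and therefore grows only with -ln(threshold).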