Section: Scientific Foundations

Fast parametric estimation and its applications

Parametric estimation may often be formalized as follows:

$$y = F(x, \Theta) + n \tag{1}$$

where:

the measured signal $y$ is a functional $F$ of the "true" signal $x$, which depends on a set $\Theta$ of parameters,

$n$ is a noise corrupting the data.

Finding a "good" approximation of the components of $\Theta$ has been the subject of a huge literature in various fields of applied mathematics. Most of this research has been carried out in a probabilistic setting, which requires a good knowledge of the statistical properties of $n$. Our project is devoted to a new standpoint, which does not require this knowledge and which is based on the following tools, which are of algebraic flavor:

differential algebra (Differential algebra was introduced in nonlinear control theory by one of us almost twenty years ago in order to understand specific questions such as input-output inversion. It allowed us to recast the whole of nonlinear control in a more realistic light. The best example is of course the discovery of flat systems, which are now quite popular in industry.), which plays with respect to differential equations a role similar to that of commutative algebra with respect to algebraic equations;

module theory, i.e. linear algebra over rings that are not necessarily commutative;

operational calculus, which is the most classical tool among control and mechanical engineers (Operational calculus is often formalized via the Laplace transform, whereas the Fourier transform is today the cornerstone of estimation. Note that the one-sided Laplace transform is causal, but the Fourier transform over $\mathbb{R}$ is not.).

Linear identifiability

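In most problems that appear in linear control as well as in signal processing, the unknown parameters are linearly identifiable: standard elimination procedures yield the following matrix equation

$$P \begin{pmatrix} \theta_1 \\ \vdots \\ \theta_r \end{pmatrix} = Q \tag{2}$$

where:

$\theta_i$, $1 \le i \le r$, represents an unknown parameter,

$P$ is an $r \times r$ square matrix and $Q$ is an $r \times 1$ column matrix,

the entries of $P$ and $Q$ are finite linear combinations of terms of the form $t^{\nu} \frac{d^{\mu} \xi}{dt^{\mu}}$, $\mu, \nu \ge 0$, where $\xi$ is an input or output signal.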

How to deal with perturbations and noises?

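With noisy measurements, equation (2) becomes:

$$P \begin{pmatrix} \theta_1 \\ \vdots \\ \theta_r \end{pmatrix} = Q + R \tag{3}$$

where $R$ is an $r \times 1$ column matrix, whose entries are finite linear combinations of terms of the form $t^{\nu} \frac{d^{\mu} \eta}{dt^{\mu}}$, $\mu, \nu \ge 0$, where $\eta$ is a perturbation or a noise.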

Structured perturbations

A perturbation $\varpi$ is said to be structured if, and only if, it can be annihilated by a linear differential operator of the form $\sum_{\text{finite}} a_k(t) \frac{d^k}{dt^k}$, where $a_k(t)$ is a rational function of $t$, i.e. $\left( \sum_{\text{finite}} a_k(t) \frac{d^k}{dt^k} \right) \varpi = 0$. Note that many classical perturbations, like a constant bias, are annihilated by such an operator. An unstructured noise cannot be annihilated by a non-zero differential operator.

By well-known properties of the non-commutative ring of differential operators, we can multiply both sides of equation (3) by a suitable differential operator $\Delta$ such that equation (3) becomes:

$$\Delta P \begin{pmatrix} \theta_1 \\ \vdots \\ \theta_r \end{pmatrix} = \Delta Q + R' \tag{4}$$

where the entries of the $r \times 1$ column matrix $R'$ are unstructured noises.
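As a brief illustration of the definition of a structured perturbation, here are three standard annihilators. They are textbook identities given as examples, not operators taken from the project; the symbols $\gamma_0$, $c$, $A$, $\omega$ and $\varphi$ are assumed constants.

```latex
\frac{d}{dt}\,\gamma_0 = 0,
\qquad
\left( t\,\frac{d}{dt} - 1 \right)(c\,t) = 0,
\qquad
\left( \frac{d^2}{dt^2} + \omega^2 \right)\bigl( A \sin(\omega t + \varphi) \bigr) = 0 .
```

The first identity covers the constant bias mentioned above. The second shows an annihilator with a non-constant coefficient. The third applies to a sinusoidal perturbation whose frequency is known.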

Attenuating unstructured noises

Unstructured noises are usually modeled as stochastic processes such as white Gaussian noise. Here they are regarded instead as highly fluctuating phenomena, which may therefore be attenuated by low-pass filters. Note that no precise knowledge of the statistical properties of the noises is required.
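The low-pass effect of integration can be checked numerically. The sketch below is only an illustration under assumed values: the two sinusoids, the step `dt` and the helper names `running_integral` and `peak` are hypothetical. It integrates a slow and a fast sinusoid of unit amplitude and compares the peak values of the results.

```python
import math

def running_integral(samples, dt):
    """Trapezoidal running integral starting from 0 (same length as samples)."""
    out = [0.0]
    for k in range(1, len(samples)):
        out.append(out[-1] + 0.5 * (samples[k] + samples[k - 1]) * dt)
    return out

def peak(samples):
    """Largest absolute value of a sampled signal."""
    return max(abs(v) for v in samples)

dt = 1e-4
t = [k * dt for k in range(10001)]                        # 0 .. 1 s
slow = [math.sin(2 * math.pi * 1.0 * ti) for ti in t]     # 1 Hz, unit amplitude
fast = [math.sin(2 * math.pi * 100.0 * ti) for ti in t]   # 100 Hz, unit amplitude

# Ratio of output peak to input peak after one integration.
slow_gain = peak(running_integral(slow, dt)) / peak(slow)
fast_gain = peak(running_integral(fast, dt)) / peak(fast)
print(slow_gain, fast_gain)
```

Since the integral of $\sin(\omega t)$ from $0$ is $(1 - \cos \omega t)/\omega$, the peak after integration is $2/\omega$: the faster the fluctuation, the stronger the attenuation. No statistical model of the signal is used.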

Comments

Although the previous noise attenuation procedure (It is reminiscent of what most practitioners in electronics do.) may be fully explained via formula (4), its theoretical comparison (Let us stress again that many computer simulations and several laboratory experiments have already been carried out successfully and compare quite favorably with existing techniques.) with today's literature (Especially in signal processing.) has yet to be done. It will require a complete rethinking of the notions of noise and perturbation. Besides some connections with physics, it might lead to quite new "epistemological" issues [101].

Some hints on the calculations

The time derivatives of the input and output signals appearing in equations (2), (3), (4) can be suppressed in the following two ways, which may be combined:

integrate both sides of the equation a sufficient number of times,

take the convolution product of both sides by a suitable low pass filter.

The numerical values of the unknown parameters can be obtained by integrating both sides of the modified equation (4) over a very short time interval.
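To make the first of the two ways concrete, here is a short worked identity, given as an illustration and assuming a sufficiently smooth signal $y$. Integrating terms of the form $t^{\nu} y^{(\mu)}$ at least $\mu$ times removes every derivative of $y$, by integration by parts:

```latex
\int_0^t \sigma\,\dot{y}(\sigma)\,d\sigma
  = t\,y(t) - \int_0^t y(\sigma)\,d\sigma ,
\qquad
\int_0^t\!\!\int_0^{\lambda} \sigma^2\,\ddot{y}(\sigma)\,d\sigma\,d\lambda
  = t^2 y(t) - 4\int_0^t \sigma\,y(\sigma)\,d\sigma
    + 2\int_0^t\!\!\int_0^{\lambda} y(\sigma)\,d\sigma\,d\lambda .
```

The right-hand sides involve only the measured signal, multiplications by powers of the time variable, and integrations.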

A first, very simple example

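Let us illustrate the basic ideas of the algebraic approach on a very simple example. For this purpose, consider the first-order linear system:

$$\dot{y}(t) = a\,y(t) + u(t) + \gamma_0 \tag{5}$$

where $a$ is an unknown parameter to be identified and $\gamma_0$ is an unknown constant perturbation. With the notations of operational calculus and $y_0 = y(0)$, equation (5) reads:

$$s\,\hat{y}(s) = a\,\hat{y}(s) + \hat{u}(s) + y_0 + \frac{\gamma_0}{s} \tag{6}$$

where $\hat{y}(s)$ represents the Laplace transform of $y(t)$.

In order to eliminate the term $\gamma_0$, first multiply both sides of this equation by $s$ and then take their derivatives with respect to $s$:

$$\frac{d}{ds}\left[ s \left\{ s\,\hat{y}(s) = a\,\hat{y}(s) + \hat{u}(s) + y_0 + \frac{\gamma_0}{s} \right\} \right] \tag{7}$$

$$\Rightarrow \quad 2 s\,\hat{y}(s) + s^2\,\hat{y}'(s) = a \left( s\,\hat{y}'(s) + \hat{y}(s) \right) + s\,\hat{u}'(s) + \hat{u}(s) + y_0 \tag{8}$$

Recall that $\hat{y}'(s) = \frac{d\hat{y}(s)}{ds}$ corresponds to $-t\,y(t)$. Assume $y_0 = 0$ for simplicity of presentation (If $y_0 \neq 0$, one has to take above derivatives of order 2 with respect to $s$, in order to eliminate the initial condition.). Then, for any $\nu > 0$,

$$s^{-\nu} \left[ 2 s\,\hat{y}(s) + s^2\,\hat{y}'(s) \right] = s^{-\nu} \left[ a \left( s\,\hat{y}'(s) + \hat{y}(s) \right) + s\,\hat{u}'(s) + \hat{u}(s) \right] \tag{9}$$

For $\nu = 3$, we obtain the estimated value of $a$:

$$a = \frac{2 \int_0^T d\lambda \int_0^{\lambda} y(t)\,dt - \int_0^T t\,y(t)\,dt + \int_0^T d\lambda \int_0^{\lambda} t\,u(t)\,dt - \int_0^T d\lambda \int_0^{\lambda} d\sigma \int_0^{\sigma} u(t)\,dt}{\int_0^T d\lambda \int_0^{\lambda} d\sigma \int_0^{\sigma} y(t)\,dt - \int_0^T d\lambda \int_0^{\lambda} t\,y(t)\,dt} \tag{10}$$

Since $T > 0$ can be very small, estimation via (10) is very fast.

Note that equation (10) represents an on-line algorithm, which involves only two kinds of operations on $u$ and $y$: (1) multiplications by $t$, and (2) integrations over a pre-selected time interval.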
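A minimal numerical sketch of the estimator (10) follows, assuming the system has the form $\dot{y} = a y + u + \gamma_0$ with $y(0) = 0$. The parameter values, the constant input, the trapezoidal quadrature and the names `running_integral` and `estimate_a` are all assumptions made for illustration; they are not the project's implementation.

```python
import math

def running_integral(samples, dt):
    """Trapezoidal running integral starting from 0 (same length as samples)."""
    out = [0.0]
    for k in range(1, len(samples)):
        out.append(out[-1] + 0.5 * (samples[k] + samples[k - 1]) * dt)
    return out

def estimate_a(t, y, u):
    """Ratio of iterated integrals of (10), evaluated at T = t[-1]."""
    dt = t[1] - t[0]
    I = lambda f: running_integral(f, dt)
    ty = [ti * yi for ti, yi in zip(t, y)]   # multiplication by t
    tu = [ti * ui for ti, ui in zip(t, u)]
    num = 2 * I(I(y))[-1] - I(ty)[-1] + I(I(tu))[-1] - I(I(I(u)))[-1]
    den = I(I(I(y)))[-1] - I(I(ty))[-1]
    return num / den

# Hypothetical test case (values chosen for illustration only).
a_true, gamma0, u0 = -2.0, 0.5, 1.0
dt, T = 1e-4, 0.5
t = [k * dt for k in range(int(round(T / dt)) + 1)]
u = [u0] * len(t)
# Closed-form solution of the system with y(0) = 0 and constant input u0.
y = [(u0 + gamma0) / a_true * (math.exp(a_true * ti) - 1.0) for ti in t]

a_hat = estimate_a(t, y, u)
print(a_hat)  # expected to be close to a_true
```

The unknown bias `gamma0` is never passed to `estimate_a`: it is eliminated by the algebraic manipulation itself, and the estimate uses only samples of `u` and `y` over the window $[0, T]$.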

If we now consider an additional noise $n(t)$ of zero mean in (5), say:

$$\dot{y}(t) = a\,y(t) + u(t) + \gamma_0 + n(t) \tag{11}$$

it can be considered as a fast fluctuating signal. The order $\nu$ in (9) determines the number of iterated integrals (3 integrals in (10)). Those iterated integrals are low-pass filters, which attenuate the fluctuations.

This example, simple as it is, clearly shows how algebraic techniques proceed:

they are non-asymptotic: the parameter $a$ is obtained from (10) in finite time;

they are deterministic: no knowledge of the statistical properties of the noise is required.

A second simple example, with delay

Consider the first-order linear system with constant input delay (This example is taken from [93]. We refer the reader to it for further details.):

$$\dot{y}(t) + a\,y(t) = y(0)\,\delta + \gamma_0 H + b\,u(t - \tau) \tag{12}$$

Here we use a distributional-like notation, where $\delta$ denotes the Dirac impulse and $H$ is its integral, i.e. the Heaviside function (unit step) (In this document, for the sake of simplicity, we abuse the language by merging in a single notation $H$ the Heaviside function and the integration operator. To be rigorous, the iterated integration ($k$ times) corresponds, in the operational domain, to a division by $s^k$, whereas the convolution with $t^{k-1} H$ corresponds to a division by $s^k/(k-1)!$. For $k = 1$, there is no difference and $H * y$ realizes the integration of $y$. More generally, since we will always apply these operations to complete equations (left- and right-hand sides), the factor $(k-1)!$ makes no difference.). Still for simplicity, we suppose that the parameter $a$ is known. The parameter to be identified is now the delay $\tau$. As previously, $\gamma_0$ is a constant perturbation, and $a$, $b$, and $\tau$ are constant parameters. Consider also a step input $u = u_0 H$. A first-order differentiation yields:

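$$\ddot{y} + a\,\dot{y} = \varphi_0 + \gamma_0\,\delta + b\,u_0\,\delta_{\tau} \tag{13}$$

where $\delta_{\tau}$ denotes the delayed Dirac impulse and $\varphi_0 = (\dot{y}(0) + a\,y(0))\,\delta + y(0)\,\delta^{(1)}$, of order 1 and support $\{0\}$, contains the contributions of the initial conditions. According to Schwartz's theorem, multiplication by a function $\alpha$ such that $\alpha(0) = \alpha'(0) = 0$, $\alpha(\tau) = 0$ yields interesting simplifications. For instance, choosing $\alpha(t) = t^3 - \tau t^2$ leads to the following equalities (to be understood in the distributional framework):

$$t^3 \left[ \ddot{y} + a\,\dot{y} \right] = \tau\,t^2 \left[ \ddot{y} + a\,\dot{y} \right], \qquad b\,u_0\,t^3\,\delta_{\tau} = b\,u_0\,\tau\,t^2\,\delta_{\tau} \tag{14}$$

The delay $\tau$ becomes available from $k \ge 1$ successive integrations (represented by the operator $H$), as follows:

$$\tau = \frac{H^k (w_0 + a\,w_3)}{H^k (w_1 + a\,w_2)}, \qquad t > \tau \tag{15}$$

where the $w_i$ are defined using the notation $z_i = t^i y$ by:

$$\begin{aligned}
w_0 &= t^3 y^{(2)} = 6 z_1 - 6 z_2^{(1)} + z_3^{(2)}, \\
w_1 &= t^2 y^{(2)} = 2 z_0 - 4 z_1^{(1)} + z_2^{(2)}, \\
w_2 &= t^2 y^{(1)} = -2 z_1 + z_2^{(1)}, \\
w_3 &= t^3 y^{(1)} = -3 z_2 + z_3^{(1)}.
\end{aligned}$$

These coefficients show that $k \ge 2$ integrations avoid any differentiation in the delay identification.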
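The delay estimate (15) can be sketched numerically for $k = 2$ integrations, assuming the system has the form $\dot{y} + a y = \gamma_0 + b\,u_0 H(t - \tau)$ with $y(0) = y_0$ and that $a$ is known. With $z_i = t^i y$, two integrations of $t^3 \ddot{y}$, $t^2 \ddot{y}$, $t^2 \dot{y}$ and $t^3 \dot{y}$ leave only integrals of the $z_i$. All numerical values, the threshold `eps`, the trapezoidal quadrature and the function names below are assumptions made for illustration; they are not the settings of the reported simulations.

```python
import math

def running_integral(samples, dt):
    """Trapezoidal running integral starting from 0 (same length as samples)."""
    out = [0.0]
    for k in range(1, len(samples)):
        out.append(out[-1] + 0.5 * (samples[k] + samples[k - 1]) * dt)
    return out

def estimate_delay(t, y, a, eps=1e-4):
    """Delay estimate at t[-1] from two integrations; 0.0 while not identifiable."""
    dt = t[1] - t[0]
    I = lambda f: running_integral(f, dt)
    z1 = [ti * yi for ti, yi in zip(t, y)]
    z2 = [ti ** 2 * yi for ti, yi in zip(t, y)]
    z3 = [ti ** 3 * yi for ti, yi in zip(t, y)]
    I_z1, I_z2, I_z3 = I(z1), I(z2), I(z3)
    II_z0, II_z1, II_z2 = I(I(y)), I(I_z1), I(I_z2)
    # Twice-integrated terms: no derivative of y is needed.
    H2w0 = z3[-1] - 6 * I_z2[-1] + 6 * II_z1[-1]   # from t^3 * y''
    H2w1 = z2[-1] - 4 * I_z1[-1] + 2 * II_z0[-1]   # from t^2 * y''
    H2w2 = I_z2[-1] - 2 * II_z1[-1]                # from t^2 * y'
    H2w3 = I_z3[-1] - 3 * II_z2[-1]                # from t^3 * y'
    num = H2w0 + a * H2w3
    den = H2w1 + a * H2w2
    if abs(den) < eps:          # before the delay, numerator and denominator vanish
        return 0.0
    return num / den

# Hypothetical parameter values (illustration only).
a, b, tau, y0, gamma0, u0 = 2.0, 1.0, 0.6, 0.3, 2.0, 1.0
dt = 1e-3
t = [k * dt for k in range(2001)]                  # 0 .. 2 s

def y_exact(ti):
    """Closed-form response: step of size b*u0 applied at time tau."""
    y_inf = gamma0 / a
    if ti < tau:
        return y_inf + (y0 - y_inf) * math.exp(-a * ti)
    y_tau = y_inf + (y0 - y_inf) * math.exp(-a * tau)
    y_inf2 = (gamma0 + b * u0) / a
    return y_inf2 + (y_tau - y_inf2) * math.exp(-a * (ti - tau))

y = [y_exact(ti) for ti in t]
tau_hat = estimate_delay(t, y, a)                  # window extends past the delay
tau_early = estimate_delay(t[:301], y[:301], a)    # window ends before the delay
print(tau_hat, tau_early)
```

Neither `gamma0`, `b`, `u0` nor the initial condition enters `estimate_delay`. When the window ends before the delay, numerator and denominator are both numerically zero, so the function returns zero.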


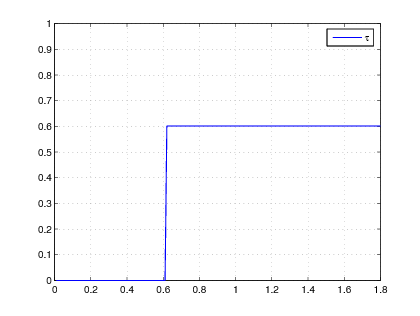

Figure 1 gives a numerical simulation of the estimate (15) for a fixed number of integrations and fixed parameter values. Because the delay is not identifiable over $(0, \tau)$, the estimate of $\tau$ is set to zero until the numerator or the denominator on the right-hand side of (15) reaches a significant nonzero value.

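Again, note that the realization of algorithm (15) involves two kinds of operators: (1) integrations and (2) multiplications by $t$. It relies on the measurement of $y$ and on the knowledge of $a$. If $a$ is also unknown, the same approach can be used for a simultaneous identification of $a$ and $\tau$. The following relation is derived from (14):

$$\tau\,(H^k w_1) + a\tau\,(H^k w_2) - a\,(H^k w_3) = H^k w_0$$

and a linear system with unknown parameters $(\tau, a\tau, a)$ is obtained by using different integration orders $k_1$, $k_2$, $k_3$:

$$\begin{pmatrix}
H^{k_1} w_1 & H^{k_1} w_2 & H^{k_1} w_3 \\
H^{k_2} w_1 & H^{k_2} w_2 & H^{k_2} w_3 \\
H^{k_3} w_1 & H^{k_3} w_2 & H^{k_3} w_3
\end{pmatrix}
\begin{pmatrix} \tau \\ a\tau \\ -a \end{pmatrix}
=
\begin{pmatrix} H^{k_1} w_0 \\ H^{k_2} w_0 \\ H^{k_3} w_0 \end{pmatrix}$$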

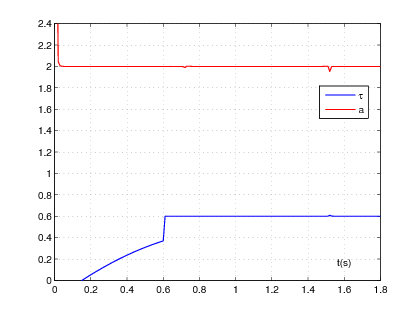

The resulting numerical simulations are shown in Figure 2. For identifiability reasons, the linear system obtained may be inconsistent for $t < \tau$.
