Section: New Software and Platforms

Poppy project

HiPi Board

Functional Description

HiPi is a board for controlling robots from a Raspberry Pi. It is an extension of the Pixl board with the following features:

- A DC/DC power converter from 12V (motor) to 5V (Raspberry Pi) at 3A.

- An RS232 and an RS485 bus connected to the Raspberry Pi by SPI for driving the Dynamixel MX and RX motor series.

This board will soon be integrated into the new head of the Poppy Humanoid and Poppy Torso.

Using the Raspberry Pi for all Poppy robots will reduce hardware complexity (we currently maintain 4 types of embedded boards, with different Linux kernels and configurations) and make new robots easier to install and use.

IKPy

Inverse Kinematics Python Library

Functional Description

IKPy is a Python Inverse Kinematics library, designed to be simple to use and extend. It provides Forward and Inverse Kinematics functionality, bundled with helper tools such as 3D plotting of the kinematic chains. Being written entirely in Python, IKPy is lightweight and relies on numpy and scipy for fast optimization. IKPy is compatible with many robots because it can parse URDF files automatically. It also supports other representations (such as DH parameters) as well as custom ones. Moreover, it provides a framework to easily implement new Inverse Kinematics strategies. Originally developed for the Poppy project, it can also be used as a standalone library.
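To make the idea of inverse kinematics by numerical optimization concrete, here is a minimal sketch for a planar two-link arm. It is not IKPy's API: the function names, link lengths, step size and starting angles are all assumptions chosen for illustration, and it uses only the Python standard library, whereas IKPy itself builds on numpy and scipy.

```python
import math

def forward_kinematics(angles, lengths):
    """Return the (x, y) tip position of a planar serial arm."""
    x = y = 0.0
    total = 0.0
    for angle, length in zip(angles, lengths):
        total += angle  # each joint angle is relative to the previous link
        x += length * math.cos(total)
        y += length * math.sin(total)
    return x, y

def inverse_kinematics(target, lengths, initial, step=0.1, tol=1e-6, max_iter=5000):
    """Minimise the squared tip-to-target distance by plain gradient descent."""
    angles = list(initial)
    for _ in range(max_iter):
        x, y = forward_kinematics(angles, lengths)
        ex, ey = x - target[0], y - target[1]
        if math.hypot(ex, ey) < tol:
            break
        grads = []
        for i in range(len(angles)):
            # Joint i moves every link from i onward, so sum their contributions.
            dx = dy = 0.0
            total = sum(angles[:i])
            for j in range(i, len(angles)):
                total += angles[j]
                dx -= lengths[j] * math.sin(total)
                dy += lengths[j] * math.cos(total)
            grads.append(ex * dx + ey * dy)
        angles = [a - step * g for a, g in zip(angles, grads)]
    return angles

# Hypothetical arm: two links of length 1, target inside the reachable disc.
lengths = [1.0, 1.0]
target = (1.0, 1.0)
solution = inverse_kinematics(target, lengths, initial=[0.3, 0.3])
reached = forward_kinematics(solution, lengths)
print(solution, reached)
```

A library such as IKPy generalizes this pattern to 3D chains described by URDF files and delegates the minimization to a dedicated optimizer rather than a fixed-step descent.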

Pixl Board

Functional Description

Pixl is a tiny board used to create low-cost robots based on the Raspberry Pi board and Dynamixel XL-320 motors. This board has two main features:

- The power part, allowing the user to plug a 7.5V AC/DC converter or a battery directly into the Pixl. This power is distributed to all XL-320 motors and is converted to 5V for the Raspberry Pi board.

- The communication part, which converts full duplex to half duplex and vice versa. The half-duplex part switches between RX and TX automatically. Another connector allows users to connect their XL-320 network.

The board is used in the Poppy Ergo Jr robot.

Poppy

Functional Description

The Poppy Project team develops open-source 3D-printed robot platforms based on robust, flexible, easy-to-use and easy-to-reproduce hardware and software. In particular, the use of 3D printing and rapid prototyping technologies is a central aspect of this project, and makes it easy and fast not only to reproduce the platform, but also to explore morphological variants. Poppy targets three domains of use: science, education and art.

In the Poppy project we are working on the Poppy System, a new modular and open-source robotic architecture. It is designed to help people create and build custom robots. Much like Lego, it lets users build robots or smart objects from standardized elements.

Poppy System is a unified system in which essential robotic components (actuators, sensors...) are independent modules connected with other modules through standardized interfaces:

- Unified mechanical interfaces, simplifying the assembly process and the design of 3D printable parts.

- Unified communication between elements using the same connector and bus for each module.

- Unified software, making it easy to program each module independently.

Our ambition is to create an ecosystem around this system so that communities can develop custom modules that follow the Poppy System standards and are compatible with all other Poppy robots.

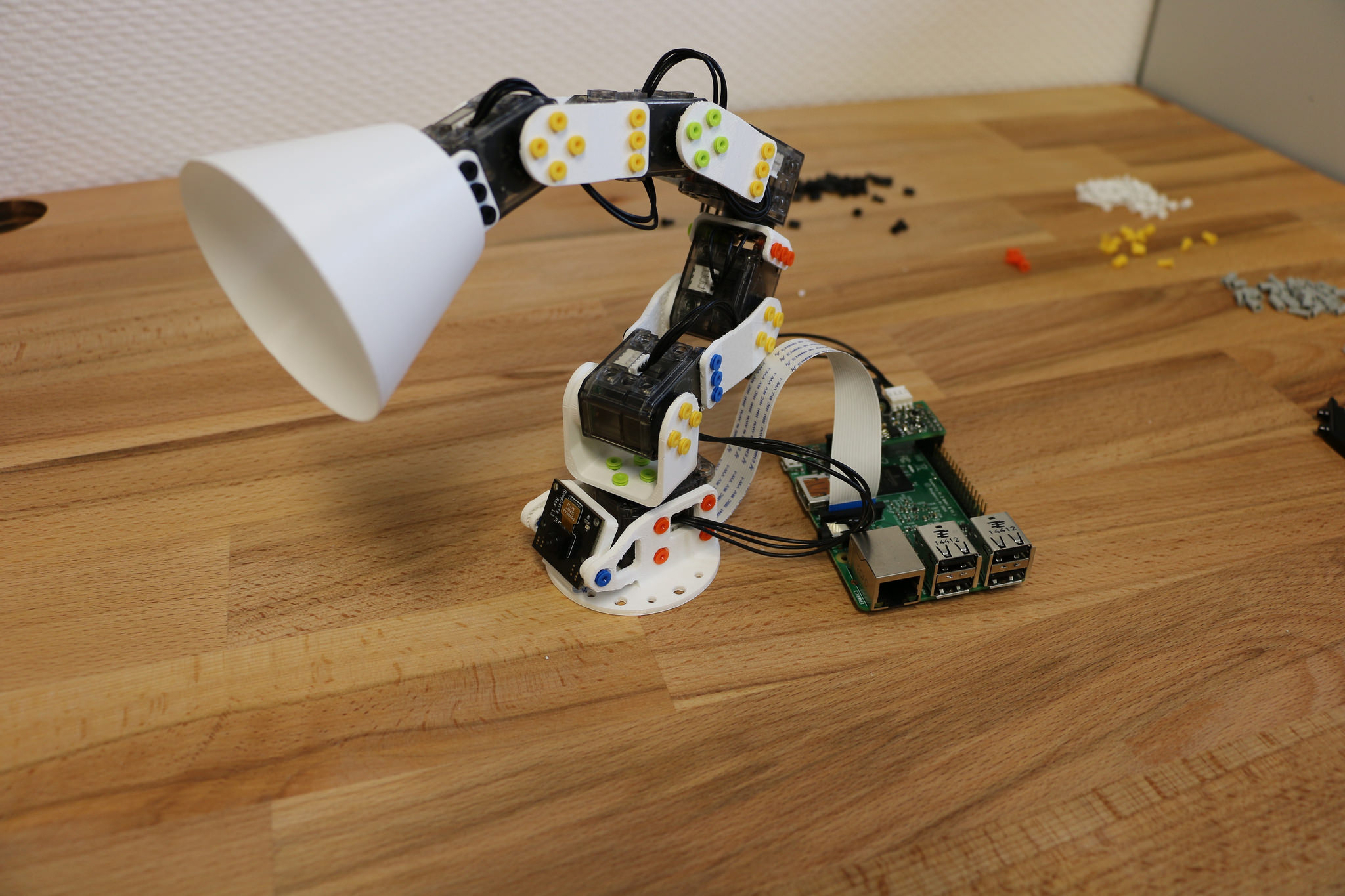

Poppy Ergo Jr

Functional Description

Poppy Ergo Jr is an open hardware robot developed by the Poppy Project to explore the use of robots in classrooms for learning robotics and computer science.

It is available as an arm with 4 or 6 degrees of freedom, designed to be both expressive and low-cost. This is achieved by the use of FDM 3D printing and low-cost Robotis XL-320 actuators. A Raspberry Pi camera is attached to the robot so it can detect objects, faces or QR codes.

The Ergo Jr is controlled by the Pypot library and runs on a Raspberry Pi 2 or 3 board. Communication between the Raspberry Pi and the actuators is made possible by the Pixl board we have designed.

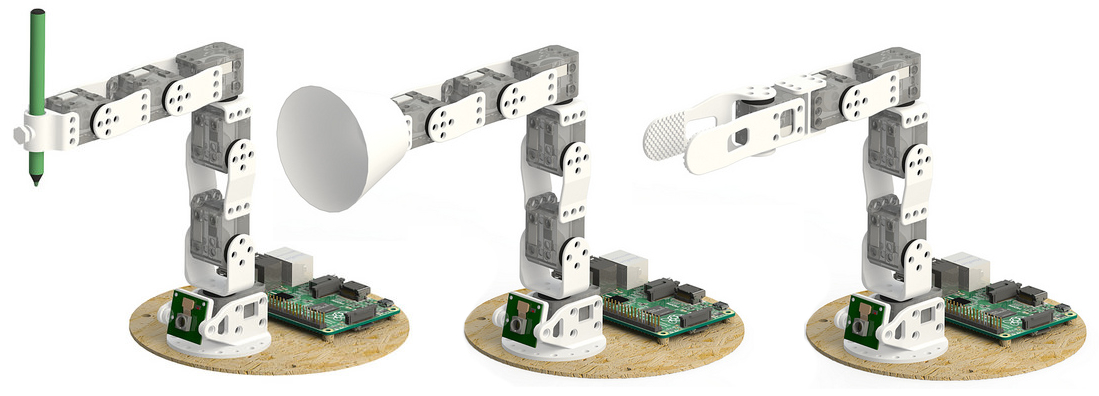

The Poppy Ergo Jr robot has several 3D printed tools extending its capabilities. Currently available tools are a lampshade, a gripper and a pen holder.

With the release of a new Raspberry Pi board in early 2016, the Poppy Ergo Jr disk image was updated to support both Raspberry Pi 2 and 3 boards. The same disk image can be used seamlessly with either board.

Poppy Ergo Jr Installer

Functional Description

The Ergo Jr robot software can alternatively be installed using containers.

Users keep their own operating system installation, then add the software required by the Ergo Jr in a sandboxed environment. This results in a non-intrusive installation on the host system.

The implementation uses Docker containers, and the image is hosted on Docker Hub.

Poppy Ergo Jr Simulator

Functional Description

The Poppy Project, through Poppy Education, wants users to become familiar with robotics, even without owning a physical robot.

For that purpose, the Poppy Project team created a dummy robot in Pypot that is meant to be used in conjunction with a consumer application. We chose to develop a web-hosted application using a 3D engine (Three.js) to render the robot.

Our ambition is to have a completely standalone simulated robot with physics. Some prototypes were created to benchmark possible solutions.

PyPot

Scientific Description

Pypot is a framework developed to make it easy and fast to control custom robots based on Dynamixel motors. This framework provides different levels of abstraction corresponding to different types of use. Pypot can be used to:

- define the structure of a custom robot and control it through high-level commands,

- define primitives and easily combine them to create complex behavior.
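The idea of combining primitives can be sketched as follows. This is a self-contained illustration and not Pypot's actual classes: `Primitive`, `Sinus`, `Hold`, `combine`, the motor names and the averaging rule are all assumptions made for the example.

```python
import math

class Primitive:
    """A behaviour that proposes target angles (in degrees) for some motors."""
    def targets(self, t):
        raise NotImplementedError

class Sinus(Primitive):
    """Oscillate one motor around zero."""
    def __init__(self, motor, amplitude, frequency):
        self.motor = motor
        self.amplitude = amplitude
        self.frequency = frequency

    def targets(self, t):
        angle = self.amplitude * math.sin(2 * math.pi * self.frequency * t)
        return {self.motor: angle}

class Hold(Primitive):
    """Keep a set of motors at fixed positions."""
    def __init__(self, positions):
        self.positions = dict(positions)

    def targets(self, t):
        return dict(self.positions)

def combine(primitives, t):
    """Average the proposals of all primitives that address the same motor."""
    proposals = {}
    for primitive in primitives:
        for motor, angle in primitive.targets(t).items():
            proposals.setdefault(motor, []).append(angle)
    return {motor: sum(values) / len(values) for motor, values in proposals.items()}

# Two hypothetical primitives running at the same time on motors "m1" and "m2".
result = combine([Sinus("m1", 30.0, 0.5), Hold({"m1": 10.0, "m2": -20.0})], t=0.5)
print(result)
```

Because each primitive only proposes targets, behaviors can be written and tested independently and then layered; the merge rule (here a plain average) is the single place where conflicts between them are resolved.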

Pypot is part of the Poppy project. It is the core library used by the Poppy robots. This abstraction layer makes it possible to switch seamlessly from one Poppy robot to another. It also provides a common set of tools, such as forward and inverse kinematics, simple computer vision, recording and replaying moves, or easy access to the autonomous exploration library Explauto.

To extend Pypot's application domains and its connection to the outside world, it also provides an HTTP API. On top of providing an easy way to connect to smart sensors or connected devices, it is notably used to connect to Snap!, a variant of the well-known Scratch visual programming language.

Functional Description

Pypot is entirely written in Python to allow for fast development, easy deployment and quick scripting by non-expert developers. It can also benefit from the scientific and machine learning libraries existing in Python. The serial communication is handled through the standard library and offers high performance (10ms sensorimotor loop) for common Poppy uses. It is cross-platform and has been tested on Linux, Windows and Mac OS.
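As an illustration of what a 10 ms sensorimotor loop involves, the sketch below runs a step function at a fixed period and reports the worst deadline overrun. It is a generic pattern, not Pypot's implementation: `run_loop` and its parameters are hypothetical names, and the clock and sleep functions are injectable only so the loop can be exercised without real delays.

```python
import time

def run_loop(step, period=0.010, iterations=100,
             clock=time.perf_counter, sleep=time.sleep):
    """Call step() every `period` seconds; return the worst overrun in seconds."""
    next_deadline = clock()
    worst_overrun = 0.0
    for _ in range(iterations):
        step()  # read sensors, compute, send motor commands
        # Deadlines are absolute, so a slow iteration does not shift later ones.
        next_deadline += period
        remaining = next_deadline - clock()
        if remaining > 0:
            sleep(remaining)
        else:
            worst_overrun = max(worst_overrun, -remaining)
    return worst_overrun

if __name__ == "__main__":
    overrun = run_loop(lambda: None, period=0.010, iterations=20)
    print("worst overrun (s):", overrun)
```

Scheduling against absolute deadlines rather than sleeping a fixed amount after each step keeps the average rate at the requested period even when individual steps vary in duration.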

Pypot is also compatible with the V-REP simulator. This makes it possible to switch transparently from a real robot to its simulated equivalent with a single code base.

Finally, it has been developed to be easily and quickly extended for other types of motors and sensors.

It works with Python 2.7 or Python 3.3 or later, and has also been adapted to the Raspberry Pi board.

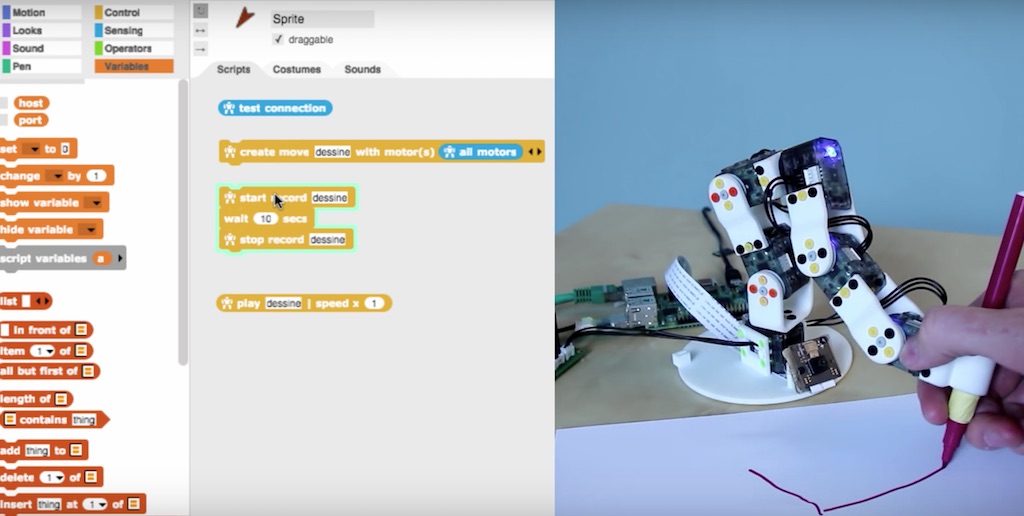

Pypot has been connected to Snap!, a variant of the famous Scratch visual language, developed to teach computer science to children. It is based on a drag-and-drop blocks interface to write scripts by assembling those blocks.

Thanks to the Snap! HTTP block, users can control robots directly from this visual interface by connecting to Pypot. A set of dedicated Snap! blocks has been designed, such as *set motor position* or *get motor temperature*.

Snap! is also used as a tool to program the robot by demonstration. Using the *record* and *play* blocks, users can easily trigger kinesthetic recording of the whole robot or only a specific subpart, such as an arm. These records can then be played on their own or "mixed" with other recordings (played either in sequence or simultaneously) to compose complex choreographies. The moves are encoded as Gaussian mixture models (GMM), which allows the definition of clean mathematical operators for combining them.
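As a reminder of why mixture models combine cleanly, a GMM over a variable $x$ (for instance time and joint positions, which is an assumption here) has the standard form below. The second line shows one possible combination operator, a weighted blend with $0 \le \alpha \le 1$; the source does not specify which operators are actually used, so this is only an illustration.

$$
p(x) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k), \qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1
$$

$$
\alpha\, p_A(x) + (1-\alpha)\, p_B(x) = \sum_{k=1}^{K_A} \alpha\, \pi_k^{A}\, \mathcal{N}(x \mid \mu_k^{A}, \Sigma_k^{A}) + \sum_{k=1}^{K_B} (1-\alpha)\, \pi_k^{B}\, \mathcal{N}(x \mid \mu_k^{B}, \Sigma_k^{B})
$$

The blended density is again a GMM, with $K_A + K_B$ components whose weights still sum to one, so the result of combining two moves can itself be combined further.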

This recording tool has been developed and used in collaboration with artists who show interest in the concept of robotic moves.

PyQMC

Python library for Quasi-Metric Control

Functional Description

PyQMC is a Python library implementing the control method described in http://dx.doi.org/10.1371/journal.pone.0083411. It solves discrete Markov decision processes by computing a Quasi-Metric on the state space. This model-based method has the advantage of being goal-independent and can thus produce a policy for any goal with relatively little recomputation. A new addition to this method is the possibility of learning the transition model and the Quasi-Metric online.
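The goal-independence argument can be illustrated on a toy problem. The sketch below is a simplified deterministic analogue, not PyQMC's API and not the stochastic formulation of the cited paper: the transition table, `quasi_metric` and `policy` are hypothetical names, and the quasi-metric is computed as an all-pairs minimal cost with the Floyd-Warshall algorithm.

```python
import math

# Hypothetical deterministic model: transitions[state][action] = (next_state, cost).
# Moving "left" costs more than moving "right", so distances are asymmetric.
transitions = {
    0: {"right": (1, 1.0)},
    1: {"right": (2, 1.0), "left": (0, 3.0)},
    2: {"left": (1, 3.0)},
}

def quasi_metric(transitions):
    """Minimal cost d[s][g] of reaching g from s, for every pair of states."""
    states = list(transitions)
    d = {s: {g: (0.0 if s == g else math.inf) for g in states} for s in states}
    for s, actions in transitions.items():
        for next_state, cost in actions.values():
            if next_state != s:
                d[s][next_state] = min(d[s][next_state], cost)
    for k in states:  # Floyd-Warshall relaxation through intermediate state k
        for s in states:
            for g in states:
                if d[s][k] + d[k][g] < d[s][g]:
                    d[s][g] = d[s][k] + d[k][g]
    return d

def policy(transitions, d, state, goal):
    """Pick the action minimising immediate cost plus remaining distance."""
    actions = transitions[state]
    return min(actions, key=lambda a: actions[a][1] + d[actions[a][0]][goal])

d = quasi_metric(transitions)
print(d[0][2], d[2][0])               # asymmetric: 2.0 versus 6.0
print(policy(transitions, d, 1, 2))   # "right"
print(policy(transitions, d, 1, 0))   # "left"
```

The table `d` is computed once; changing the goal only changes the argument passed to `policy`, which is the sense in which a policy for any goal requires little recomputation in this simplified setting.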