
New Results

Editing and Modeling

Flow-guided Warping for Image-based Shape Manipulation

Manipulating object shape in images usually requires prior knowledge of the objects' 3D geometry, together with either user interaction or large databases of 3D objects.

In collaboration with the Maverick team (Inria Rhône-Alpes), we have developed a method that manipulates the perceived shape of an object from a single input color image, without requiring additional 3D information, user input, or 3D object databases.

The key idea is to create the illusion of shape sharpening or rounding by exaggerating orientation patterns in the image that are strongly correlated with surface curvature (fig. 10).

We build on a growing body of work in both human and computer vision showing the importance of orientation patterns in conveying shape. We complement it with mathematical relationships and a statistical image analysis revealing that structure tensors are indeed strongly correlated with surface shape features.
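To make the structure-tensor analysis concrete, here is a minimal sketch of the standard 2D structure tensor and the orientation and coherence measures usually derived from it. It is not the statistical analysis or code used in this work: it assumes a grayscale image stored as a list of rows, uses central differences for the gradient and a box filter as a stand-in for the usual Gaussian smoothing, and all function names are hypothetical.

```python
import math

def gradients(img):
    """Central-difference gradients (Ix, Iy) of a grayscale image with clamped borders."""
    h, w = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so border pixels reuse their nearest valid neighbor.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    ix = [[(px(y, x + 1) - px(y, x - 1)) / 2.0 for x in range(w)] for y in range(h)]
    iy = [[(px(y + 1, x) - px(y - 1, x)) / 2.0 for x in range(w)] for y in range(h)]
    return ix, iy

def box_blur(a, r):
    """Mean filter of radius r (assumed stand-in for Gaussian smoothing)."""
    h, w = len(a), len(a[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [a[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out

def structure_tensor(img, r=1):
    """Smoothed entries (Jxx, Jxy, Jyy) of the outer product of the image gradient."""
    ix, iy = gradients(img)
    h, w = len(img), len(img[0])
    jxx = box_blur([[ix[y][x] * ix[y][x] for x in range(w)] for y in range(h)], r)
    jxy = box_blur([[ix[y][x] * iy[y][x] for x in range(w)] for y in range(h)], r)
    jyy = box_blur([[iy[y][x] * iy[y][x] for x in range(w)] for y in range(h)], r)
    return jxx, jxy, jyy

def orientation_and_coherence(jxx, jxy, jyy):
    """Dominant gradient angle (radians) and coherence in [0, 1] for one pixel."""
    half_trace = (jxx + jyy) / 2.0
    d = math.sqrt(((jxx - jyy) / 2.0) ** 2 + jxy ** 2)
    l1, l2 = half_trace + d, half_trace - d  # tensor eigenvalues, l1 >= l2
    theta = 0.5 * math.atan2(2.0 * jxy, jxx - jyy)
    coherence = (l1 - l2) / (l1 + l2) if l1 + l2 > 1e-12 else 0.0
    return theta, coherence
```

On a horizontal intensity ramp, the center pixel gets a dominant gradient angle of 0 and a coherence of 1 (a single, perfectly aligned orientation); the isophotes, and hence the visible orientation pattern, run perpendicular to that angle.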

We then rely on these correlations to introduce a flow-guided image warping algorithm, which in effect exaggerates orientation patterns involved in shape perception.
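The warping step can be illustrated with a generic backward warp: each output pixel fetches the input at a position displaced along a per-pixel direction field, here assumed to be an angle map such as the one a structure tensor provides. This is a minimal sketch under that assumption only; how the actual method derives its displacement field from orientation patterns is not reproduced, and the names `backward_warp`, `theta`, `magnitude` and `alpha` are hypothetical.

```python
import math

def bilinear(img, x, y):
    """Bilinearly sample a grayscale image at continuous (x, y), clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1.0 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1.0 - fx) + img[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy

def backward_warp(img, theta, magnitude, alpha):
    """out(p) = img(p + alpha * magnitude(p) * (cos theta(p), sin theta(p)))."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = alpha * magnitude[y][x]  # signed displacement length for this pixel
            dx = s * math.cos(theta[y][x])
            dy = s * math.sin(theta[y][x])
            out[y][x] = bilinear(img, x + dx, y + dy)
    return out
```

With `alpha = 0` the warp is the identity; on a horizontal ramp with `theta = 0` and `magnitude = 1`, `alpha = 0.5` shifts every sample by half a pixel, clamped at the image border. Backward mapping is used because it leaves no holes in the output.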

We evaluate our technique by 1) comparing it to ground truth shape deformations, and 2) performing two perceptual experiments to assess its effects.

Our algorithm produces convincing shape manipulation results on synthetic images and photographs, for various materials and lighting environments. This work has been published at ACM SIGGRAPH 2016 [17].

Figure 10. Our warping technique takes as input (a) a single image (Jules Bennes, after Barye: “walking lion”) and modifies its perceived surface shape, either making it sharper in (b) or rounder in (c). Panels: (a) input image (©Expertissim); (b) shape sharpening; (c) shape rounding.

Local Shape Editing at the Compositing Stage

Modern compositing software allows artists to linearly recombine different 3D rendered outputs (e.g., diffuse and reflection shading) in post-process, enabling simple yet interactive appearance manipulations. Renderers also routinely provide auxiliary buffers (e.g., normals, positions) that may be used to add local light sources or depth-of-field effects at the compositing stage. These methods are attractive both in product design and in movie production, as they allow designers and technical directors to test different ideas without having to re-render an entire 3D scene. In this work, we extended this approach to the editing of local shape: users modify the rendered normal buffer, and our system automatically updates the diffuse and reflection buffers to provide a plausible result. Our method is based on the reconstruction of a pair of prefiltered environment maps (one diffuse, one reflection) for each distinct object/material appearing in the image. We seamlessly combine the reconstructed buffers in a recompositing pipeline that runs in real time on the GPU using arbitrarily modified normals. This work has been published at the Eurographics Symposium on Rendering [24].
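As an illustration of re-shading from modified normals, the sketch below fits the diffuse buffer of one object/material as a low-order function of the normal and then evaluates that fit at edited normals. It is only a stand-in for the diffuse half of the method: it replaces the reconstruction of a prefiltered environment map with a least-squares fit in a 9-term quadratic basis (spanning the same space as the classic low-order spherical-harmonics irradiance approximation, here with unnormalized basis functions), handles a single grayscale channel, and ignores the reflection buffer. All names are hypothetical.

```python
import math

def normalize(n):
    """Rescale an edited normal to unit length."""
    length = math.sqrt(sum(c * c for c in n))
    return tuple(c / length for c in n)

def basis9(n):
    """Unnormalized 9-term quadratic basis (same span as SH bands 0-2 on the sphere)."""
    x, y, z = n
    return [1.0, x, y, z, x * y, y * z, x * z, x * x - y * y, 3.0 * z * z - 1.0]

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x

def fit_diffuse(normals, values):
    """Least-squares fit (via normal equations) of diffuse values as a function of normal."""
    ata = [[0.0] * 9 for _ in range(9)]
    atb = [0.0] * 9
    for n, v in zip(normals, values):
        b = basis9(n)
        for i in range(9):
            atb[i] += b[i] * v
            for j in range(9):
                ata[i][j] += b[i] * b[j]
    return solve(ata, atb)

def shade(coeffs, n):
    """Re-shade with a (possibly edited, possibly unnormalized) normal."""
    return sum(c * b for c, b in zip(coeffs, basis9(normalize(n))))
```

In a compositing setting one would fit one set of coefficients per color channel and per object/material ID, using the original normal and diffuse buffers as samples, then call `shade` on the user-edited normals. A low-order fit suits the diffuse term because diffuse shading is a heavily low-pass-filtered function of the normal; the view-dependent reflection term would need a sharper representation.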

Topology-Aware Neighborhoods for Point-Based Simulation and Reconstruction

Figure

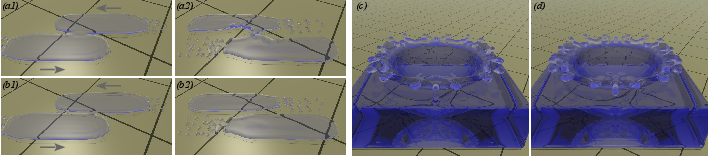

11. Two SPH fluid simulations using a standard Euclidean particle neighborhood (a,c), and our new topological neighborhood (b,d). On the left, two fluid components are crossing while moving in opposite directions. Our new neighborhood performs accurate merging computations and avoids both unwanted fusion in the reconstruction and incorrect fluid interaction in the simulation. On the right, our accurate neighborhoods lead to different shape of the splash, and enable the reconstruction of the fluid with an adequate topology while avoiding bulging at distance.

|

|

Particle-based simulations are widely used in computer graphics. In this field, several recent results have improved either the simulation itself or the tension of the final fluid surface. In current particle-based implementations, the particle neighborhood is computed by considering only the Euclidean distance between fluid particles. As a result, particles from different fluid components interact, which generates both locally incorrect behavior in the simulation and blending artifacts in the reconstructed fluid surface.
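The problem can be reproduced with a brute-force Euclidean neighborhood and the widely used 3D poly6 SPH density kernel. In this minimal sketch (hypothetical names, unit particle mass, O(n²) search instead of a spatial grid), a particle in one row of fluid picks up density from a second, disconnected row that merely passes within the kernel radius `h`.

```python
import math

def dist2(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def poly6(r2, h):
    """Standard 3D poly6 SPH kernel, evaluated on a squared distance r2."""
    if r2 >= h * h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r2) ** 3

def euclidean_neighbors(pos, i, h):
    """All particles (including i itself) strictly within distance h of particle i."""
    return [j for j in range(len(pos)) if dist2(pos[i], pos[j]) < h * h]

def density(pos, i, h, mass=1.0):
    """SPH density of particle i, summed over its Euclidean neighborhood."""
    return sum(mass * poly6(dist2(pos[i], pos[j]), h)
               for j in euclidean_neighbors(pos, i, h))
```

For two parallel rows of particles 0.15 apart with `h = 0.2`, the density of a particle in the first row increases as soon as the second row is present, even though the two rows never touch: this is exactly the spurious cross-component interaction described above.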

In collaboration with IRIT, we developed a better neighborhood computation for both the physical simulation and the surface reconstruction steps (fig. 11). Our approach tracks and stores the local fluid topology around each particle using a graph structure. In this graph, only particles within the same local fluid component are neighbors, and disconnected fluid particles are inserted only if they come into contact. The graph connectivity also accounts for the asymmetric behavior of particles when they merge and split, and the fluid surface is reconstructed accordingly, which avoids blending components at a distance before they merge. In the simulation, this neighborhood information is exploited to better control the fluid density and the force interactions in the vicinity of its boundaries. For instance, it prevents spurious collision events when two distinct fluid components cross without contact, and it avoids fluid interactions through thin waterproof walls. This leads to an overall more consistent fluid simulation and reconstruction.
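The core idea of restricting kernel neighborhoods to a particle's own fluid component can be sketched with a contact graph and union-find. This is a simplification under stated assumptions: particles closer than a hypothetical `contact` distance (smaller than the kernel radius `h`) are linked, components are recomputed from scratch with a symmetric criterion, and the temporal tracking and asymmetric merge/split handling described above are not modeled. All names are hypothetical.

```python
def dist2(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def find(parent, i):
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def fluid_components(pos, contact):
    """Label particles by connected component of the contact graph (edge if closer than contact)."""
    n = len(pos)
    parent = list(range(n))
    for i in range(n):
        for j in range(i + 1, n):
            if dist2(pos[i], pos[j]) < contact * contact:
                ri, rj = find(parent, i), find(parent, j)
                if ri != rj:
                    parent[ri] = rj  # merge the two components
    return [find(parent, i) for i in range(n)]

def topological_neighbors(pos, labels, i, h):
    """Kernel neighbors of particle i, restricted to particles of its own component."""
    return [j for j in range(len(pos))
            if labels[j] == labels[i] and dist2(pos[i], pos[j]) < h * h]
```

With two rows 0.15 apart and `contact = 0.12`, the rows form separate components and never see each other even though they lie within `h = 0.2`; moving the second row to 0.1 links them, so they become neighbors only once they are in contact.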

This work has been published at the Eurographics/ACM SIGGRAPH Symposium on Computer Animation [18].