Section: New Results

Knowledge-based Models for Narrative Design


Other permanent researchers: Marie-Paule Cani, Frédéric Devernay, Jean-Claude Léon, Olivier Palombi.

Our long-term goal is to develop high-level models that help users express and convey their own narrative content, from fiction stories to more practical educational or demonstrative scenarios. Before the narration itself can be specified, a first step is to define models that express a priori knowledge about the background scene and about the objects or characters of interest. Our first goal is therefore to develop 3D ontologies able to express such knowledge. Our second goal is to define a representation for narration, to be used in future storyboarding frameworks and virtual direction tools. Our last goal is to develop high-level models for virtual cinematography, such as rule-based cameras that automatically follow the ongoing action and semi-automatic editing tools that make it easy to convey the narration as a movie.

Zooming On All Actors: Automatic Focus+Context Split Screen Video Generation


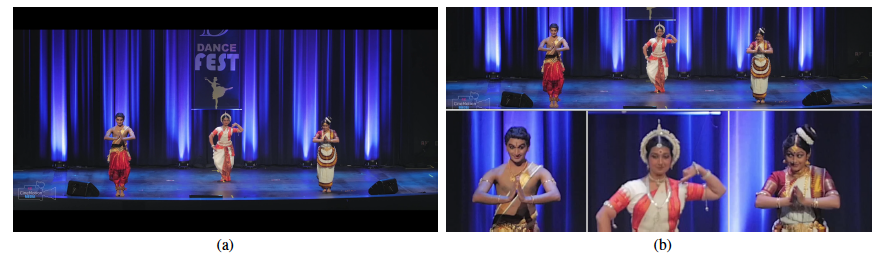

Recordings of stage performances are easy to capture with a high-resolution camera but difficult to watch, because the actors' faces appear too small. We present an approach that automatically transforms such recordings into a split-screen video showing both the context of the scene and close-up details of the actors [20], as illustrated in Figure 6. Given a static recording of a stage performance and tracking information about the actors' positions, our system generates videos showing a focus+context view based on close-up camera motions computed by crop-and-zoom. The key to our approach is to compute these camera motions so that they are cinematically valid close-ups, and to ensure that the set of views of the different actors is properly coordinated and presented. We pose the computation of camera motions as a convex optimization problem that produces detailed views and smooth movements, subject to cinematic constraints such as not cutting faces with the edge of the frame. Additional constraints link the close-up views of the individual actors, causing them to merge seamlessly when actors are close to one another. The generated views are placed in a layout that preserves the spatial relationships between actors. We demonstrate our results on a variety of staged theater and dance performances.
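To give a rough feel for this kind of formulation, the following is a minimal one-dimensional sketch and not the model of [20]. It assumes a single actor, a horizontal track of positions in pixels, and a fixed crop width. The function name `smooth_crop_centers`, its parameters, and the quadratic objective are all illustrative assumptions. The sketch minimizes a data term plus a smoothness term, subject to a box constraint that keeps the actor inside the crop window, and solves this convex problem by projected gradient descent.

```python
def smooth_crop_centers(track, half_width, margin, smooth_weight=10.0, iters=2000):
    """Hypothetical 1D sketch: pick crop-window centers x_t for one actor.

    Minimizes  sum_t (x_t - track_t)^2 + smooth_weight * sum_t (x_{t+1} - x_t)^2
    subject to |x_t - track_t| <= half_width - margin,
    i.e. the actor stays at least `margin` pixels inside the crop window.
    """
    slack = half_width - margin
    if slack < 0:
        raise ValueError("margin must not exceed half_width")
    n = len(track)
    lo = [c - slack for c in track]  # lowest admissible center per frame
    hi = [c + slack for c in track]  # highest admissible center per frame
    x = list(track)                  # feasible starting point
    # The Hessian's largest eigenvalue is below 2 + 8 * smooth_weight,
    # so this step size is safe for projected gradient descent.
    step = 1.0 / (2.0 + 8.0 * smooth_weight)
    for _ in range(iters):
        grad = [2.0 * (x[t] - track[t]) for t in range(n)]
        for t in range(n - 1):
            d = 2.0 * smooth_weight * (x[t + 1] - x[t])
            grad[t] -= d
            grad[t + 1] += d
        # Gradient step followed by projection onto the box constraints.
        x = [min(hi[t], max(lo[t], x[t] - step * grad[t])) for t in range(n)]
    return x


if __name__ == "__main__":
    jittery_track = [100, 104, 98, 103, 99, 105, 101, 97, 102, 100]
    centers = smooth_crop_centers(jittery_track, half_width=20, margin=5)
    print([round(c, 1) for c in centers])
```

In this toy setting the smoothness weight trades off how closely the virtual camera follows the actor against how steadily it moves, while the box constraint plays the role of a cinematic constraint such as not cutting the actor with the frame edge. Coupling several actors, zoom, and layout would require additional variables and constraints that are not attempted here.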

Make Gestures to Learn: Reproducing Gestures Improves the Learning of Anatomical Knowledge More than Just Seeing Gestures

Manual gestures can facilitate problem solving as well as language and conceptual learning. Both seeing and making gestures during learning seem to be beneficial. However, the stronger activation of the motor system when making gestures should provide supplementary cues to consolidate and re-enact the mental traces created during learning. In this work [14], we tested this hypothesis in the context of anatomy learning by naïve adult participants. Anatomy is a challenging topic to learn and is of specific interest for research on embodied learning, as the learning content can be directly linked to the learner's own body. Two groups of participants were asked to watch a video lecture on the anatomy of the forearm. The video included a model making gestures related to the content of the lecture. Both groups saw the gestures, but only one group also imitated the model. Knowledge tests were run just after learning and a few days later. The results revealed that imitating gestures improves the recall of the names of structures and of their localization on a diagram. However, this effect was significant only in the long-term assessments. This suggests that: (1) the integration of motor actions and knowledge may require sleep; (2) a specific activation of the motor system during learning may improve the consolidation and/or the retrieval of memories.