Section: New Results

Fundamentals of Interaction

Participants: Michel Beaudouin-Lafon [contact], Wendy Mackay, Cédric Fleury, Theophanis Tsandilas, Benjamin Bressolette, Julien Gori, Han Han, Yiran Zhang, Miguel Renom, Philip Tchernavskij, Martin Tricaud.

In order to better understand fundamental aspects of interaction, ExSitu conducts in-depth observational studies and controlled experiments which contribute to theories and frameworks that unify our findings and help us generate new, advanced interaction techniques. Our theoretical work also leads us to deepen or re-analyze existing theories and methodologies in order to gain new insights.

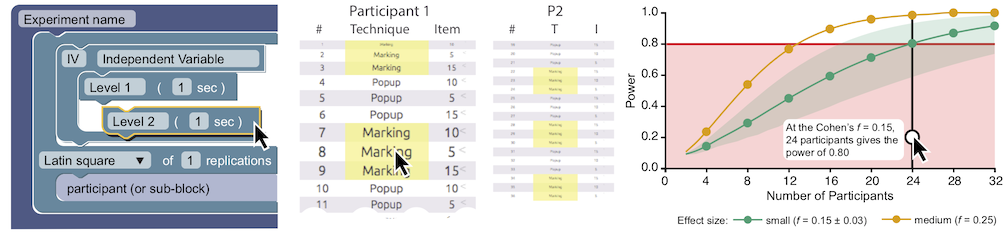

At the methodological level and in collaboration with the University of Zurich (Switzerland), we have developed Touchstone2 [19] (Best Paper award), a direct-manipulation interface for generating and examining trade-offs in experiment designs (Fig. 2). Based on interviews with experienced researchers, we developed an interactive environment for manipulating experiment design parameters, revealing patterns in trial tables, and estimating and comparing statistical power. We also developed TSL, a declarative language that precisely represents experiment designs. In two studies, experienced HCI researchers successfully used Touchstone2 to evaluate design trade-offs and calculate how many participants are required for particular effect sizes. Touchstone2 is freely available at https://touchstone2.org and we encourage the community to use it to improve the accountability and reproducibility of research by sharing TSL descriptions of their experimental designs.
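To make the notion of a trial table concrete, the following minimal sketch generates one for a within-participant design whose technique order is counterbalanced with a cyclic Latin square. It is an illustration only, not Touchstone2's implementation and not TSL syntax: the function names, the factor names (`Mouse`, `Touch`, `Pen`, `small`, `large`) and the number of replications are all assumptions made for the example.

```python
from itertools import product


def latin_square(conditions):
    """Cyclic Latin square: row i is the condition list rotated left by i."""
    n = len(conditions)
    return [[conditions[(i + j) % n] for j in range(n)] for i in range(n)]


def trial_table(participants, techniques, sizes, replications=2):
    """Build rows of (participant, block, technique, size, replication).

    Each participant gets one block per technique; the block order comes
    from the Latin square row assigned to that participant.
    """
    orders = latin_square(techniques)
    rows = []
    for p in range(participants):
        order = orders[p % len(orders)]
        for block, technique in enumerate(order, start=1):
            for size, rep in product(sizes, range(1, replications + 1)):
                rows.append((f"P{p + 1}", block, technique, size, rep))
    return rows


# Hypothetical design: 3 participants x 3 techniques x 2 sizes x 2 replications.
table = trial_table(3, ["Mouse", "Touch", "Pen"], ["small", "large"])
for row in table[:4]:
    print(row)
```

With three techniques and three participants, each technique appears exactly once in each block position, which is the kind of pattern a trial table makes visible.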


The book “Sticky Creativity: Post-It Note Cognition, Interaction and Digitalization” [32], Academic Press, explores how the Post-It note has “become the most commonly used design material in creative design activities”, with research and use cases to illustrate its role in creative activities. Wendy Mackay converted her one-day Master Class on participatory design methods into a book chapter, shifting the designer's focus from static wireframes to prototyping how users will interact with a proposed new technology. The course takes the reader through a full interaction design cycle, with nine illustrated participatory design methods. It begins with a design brief: create an augmented sticky note inspired by observations of how people actually use paper sticky notes. Story-based interviews reveal both breakdowns and creative new uses of sticky notes. Brainstorming and video brainstorming, informed by the users' stories, generate new ideas. Paper prototyping a design concept related to augmented sticky notes lets designers explore ideas for a future system to address an untapped need or desire. Shooting a video prototype, guided by titlecards and a storyboard, illustrates how future users will interact with the proposed system. Finally, a design walkthrough identifies key problems and suggests ideas for improvement.

At the theoretical level, we have continued our exploration of Information Theory as a design tool for HCI. We analyzed past and current applications of Shannon's theory to HCI research to identify areas where information-theoretic concepts can be used to understand, design and optimize human-computer communication [30]. We have also continued our long-standing strand of work on pointing by evaluating several models for assessing pointing performance by participants with motor impairments [27]. Specifically, we studied the strengths and weaknesses of various models, from traditional Fitts' Law to the WHo model, the EMG regression and the method of Positional Variance Profiles (PVPs), on datasets from abled participants vs. participants with dyspraxia.
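As a reminder of the simplest of these models, the sketch below fits Fitts' Law in its common Shannon formulation, MT = a + b log2(D/W + 1), by ordinary least squares. The trial data are invented for illustration and are not taken from the datasets studied in [27]. The function names are assumptions, and the WHo model, EMG regression and PVPs are not covered here.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of the index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def fit_fitts(ids, times):
    """Least-squares fit of MT = a + b * ID; returns (a, b)."""
    n = len(ids)
    mean_id = sum(ids) / n
    mean_mt = sum(times) / n
    sxx = sum((x - mean_id) ** 2 for x in ids)
    sxy = sum((x - mean_id) * (y - mean_mt) for x, y in zip(ids, times))
    b = sxy / sxx
    a = mean_mt - b * mean_id
    return a, b


# Made-up trials: (distance in px, target width in px, movement time in s).
trials = [(64, 64, 0.30), (192, 64, 0.50), (448, 64, 0.70), (960, 64, 0.90)]

ids = [index_of_difficulty(d, w) for d, w, _ in trials]
times = [mt for _, _, mt in trials]
a, b = fit_fitts(ids, times)
print(f"intercept a = {a:.3f} s, slope b = {b:.3f} s/bit")
```

The slope b is the time cost of each additional bit of difficulty. A single regression line of this kind summarizes average performance only, which is one reason to compare it against models that also account for variability.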

In the context of the ERC ONE project on Unified Principles of Interaction, Philip Tchernavskij defended his Ph.D. thesis on malleable software [40]. The goal of malleable software is to make it as easy as possible for users themselves to change software, or to have it changed on their behalf in response to their developing needs. Current approaches do not address this issue adequately: software engineering promotes flexible code, but in practice this does not help end-users effect change in their software. Based on a study of a network of communities working with biodiversity data, we found that the mode of software production, i.e. the technologies and economic relations that produce software, is biased towards centralized, one-size-fits-all systems. Instead, we should seek to create infrastructures for plurality, i.e. tools that help multiple communities collaborate without forcing them to consolidate around identical interfaces or data representations. Malleable software seeks to maximize the kinds of modifications that can take place through regular interactions, e.g. direct manipulation of interface elements. By generalizing existing control structures for interaction under the concepts of co-occurrences and entanglements, we created an environment where interactions can be dynamically created and modified. The Tangler prototype illustrates the power of these concepts to create malleable software.

In collaboration with Aarhus University (Denmark), we created Videostrates [22] to explore the notion of an interactive substrate for video data. Videostrates is based on our joint previous work on Webstrates (https://webstrates.net) and supports both live and recorded video composition with a declarative HTML-based notation, combining both simple and sophisticated editing tools that can be used collaboratively. Videostrates is programmable and unleashes the power of the modern web platform for video manipulation. We demonstrated its potential through three use scenarios (Fig. 3): collaborative video editing with multiple tools and devices; orchestration of multiple live streams that are recorded and broadcast to a popular streaming platform; and programmatic creation of video using WebGL and shaders for blue screen effects. These scenarios demonstrate Videostrates’ potential for novel collaborative video editors with fully programmable interfaces.


We conducted an in-depth observational study of landscape architecture students to reveal a new phenomenon in pen-and-touch surface interaction: interstices [24]. We observed that the students' bimanual interactions with a pen and touch surface involved various sustained hand gestures, interleaved between their regular commands. The position of the non-preferred hand indicates anticipated actions. We observed it hovering near the surface for sustained periods; pulled back but still floating above the surface; resting in the lap; and stabilizing the preferred hand while handwriting. These interstitial actions reveal anticipated actions and therefore should be taken into account in the design of novel interfaces.

We also started a study of blind or visually impaired people to better understand how they use graphical user interfaces [28]. The goal is to design multimodal interfaces for sighted users that do not rely on the visual channel as much as current GUIs.

In collaboration with the University of Paris Descartes and the ILDA Inria team, we investigated how to help users query massive data series collections within interactive response times. We demonstrated the importance of providing progressive whole-matching similarity search results on large time series collections (100 GB). Our experiments showed that there is a significant gap between the time the 1st Nearest Neighbor (1-NN) is found and the time when the search algorithm terminates [29]. In other words, users often wait without any improvement in their answers. We further showed that high-quality approximate answers are found very early, e.g., in less than one second, so they can support highly interactive visual analysis tasks. We discussed how to estimate probabilistic distance bounds, and how to help analysts evaluate the quality of their progressive results. The results of this collaboration have led to Gogolou's Ph.D. thesis (ILDA Inria team) [38].
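The gap between finding the 1-NN and terminating the search can be illustrated with a small sketch of progressive search. This is a toy under stated assumptions, not the method evaluated in [29]: a plain linear scan over random in-memory series stands in for the search algorithm, Euclidean distance is assumed, and the names `progressive_1nn` and `euclidean` are hypothetical.

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two equal-length series."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def progressive_1nn(query, collection):
    """Yield (series_visited, best_index, best_distance) on each improvement.

    The caller can display every intermediate answer immediately instead
    of waiting for the scan to finish.
    """
    best_index, best_distance = None, math.inf
    for i, series in enumerate(collection):
        d = euclidean(query, series)
        if d < best_distance:
            best_index, best_distance = i, d
            yield i + 1, best_index, best_distance


# Synthetic data (assumption): 2000 random series of length 32.
random.seed(0)
collection = [[random.gauss(0, 1) for _ in range(32)] for _ in range(2000)]
query = [random.gauss(0, 1) for _ in range(32)]

updates = list(progressive_1nn(query, collection))
visited_when_found = updates[-1][0]
print(f"1-NN found after visiting {visited_when_found} of {len(collection)} series")
```

Every series visited after the last update is work during which the displayed answer no longer changes. Without a bound on the remaining distance, however, the user cannot know that the current answer is already final, which is why probabilistic distance bounds matter.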

In the context of virtual reality, we explored how to integrate the real world surrounding users into the virtual environment. In many virtual reality systems, the user's physical workspace is superimposed on a particular area of the virtual environment. This spatial consistency allows users to physically walk in the virtual environment and interact with virtual content through tangible objects. However, as soon as users perform virtual navigation to travel on a large scale (i.e. move their physical workspace in the virtual environment), they break this spatial consistency. We introduced two switch techniques to help users recover spatial consistency in some predefined virtual areas when using a teleportation technique for virtual navigation [26]. We conducted a controlled experiment on a box-opening task in a CAVE-like system to evaluate the performance and usability of these switch techniques. The results highlight that helping the user recover spatial consistency ensures the accessibility of the entire virtual interaction space of the task. Consequently, the switch techniques decrease the time and cognitive effort required to complete the task.