Section: New Results

Collaboration

Participants: Cédric Fleury [correspondent], Michel Beaudouin-Lafon, Wendy Mackay, Carla Griggio, Yujiro Okuya, Arthur Fages.

ExSitu explores new ways of supporting collaborative interaction and remote communication. In particular, we studied co-located collaboration on large wall-sized displays, video-conferencing systems for remote collaboration, and collaboration between professional designers and developers during the design of interactive systems.

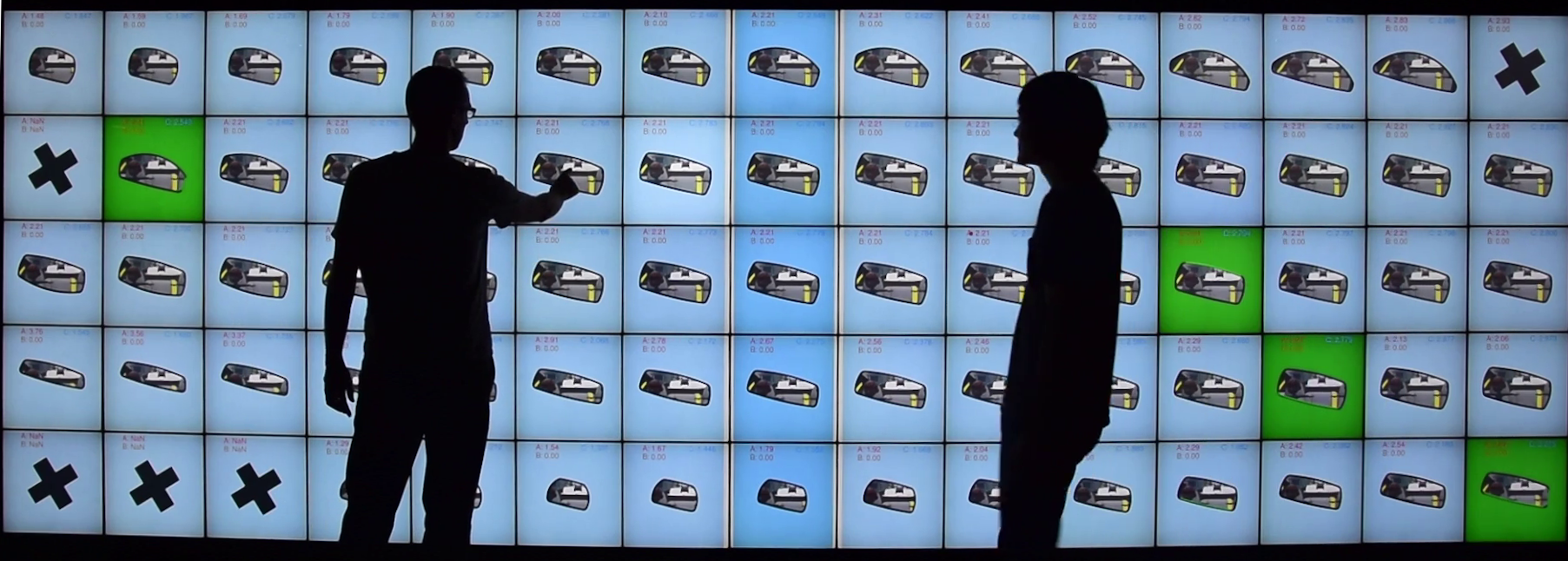

Multi-touch wall-sized displays, such as those of the Digiscope network (http://digiscope.fr/), afford collaborative exploration of large datasets and re-organization of digital content. In the context of industrial design, computer-aided design (CAD) is now an essential part of the design process, allowing experts to evaluate and adjust product design using digital mock-ups. We investigated how a wall-sized display could be used to allow multidisciplinary collaborators (e.g. designers, engineers, ergonomists) to explore a large number of design alternatives. In particular, we designed a system that allows non-CAD experts to generate multiple variations of a CAD model and distribute them on a wall-sized display (Figure 4). We ran a usability study and a controlled experiment to assess the benefit of wall-sized displays in such a context. Yujiro Okuya, under the supervision of Patrick Bourdot (LIMSI-CNRS) and Cédric Fleury, successfully defended his thesis "CAD Modification Techniques for Design Reviews on Heterogeneous Interactive Systems" [39] on this topic.

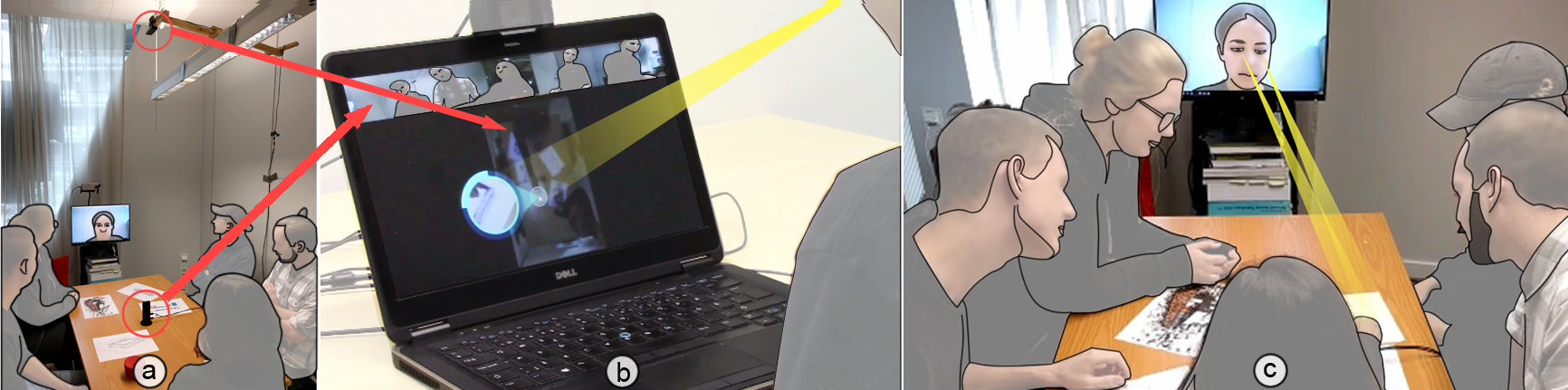

For remote collaboration using video, interpreting gaze direction is critical for communication between coworkers sitting around a table and a remote satellite colleague. However, 2D video distorts images and makes this interpretation inaccurate. We proposed GazeLens [23], a video-conferencing system that improves coworkers’ ability to interpret the satellite worker’s gaze (Figure 5). A 360° camera captures the coworkers and a ceiling camera captures artifacts on the table. The system combines these two video feeds in a single interface. Lens widgets strategically guide the satellite worker’s attention toward specific areas of her/his screen, allowing coworkers to clearly interpret her/his gaze direction. Controlled experiments showed that GazeLens increases coworkers’ overall gaze interpretation accuracy in comparison to a conventional video-conferencing system.

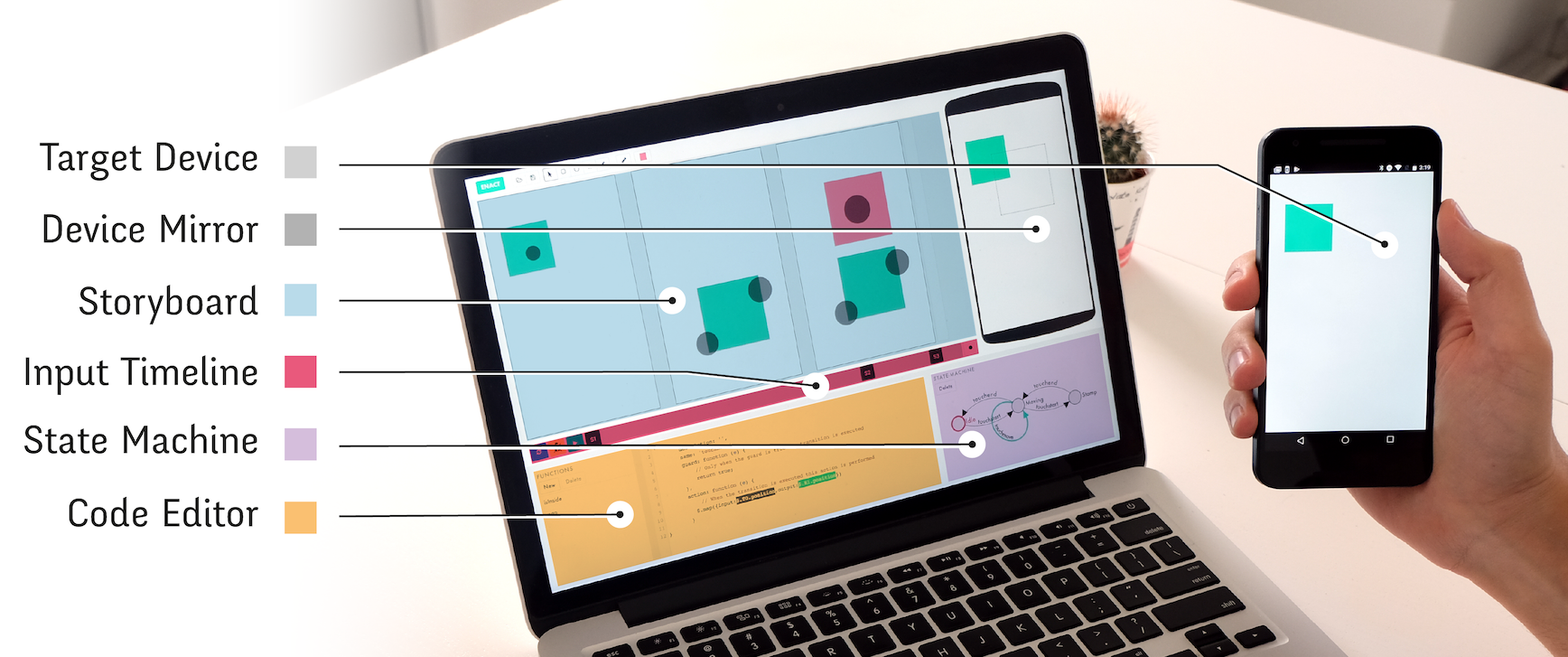

Finally, we conducted an in-depth study of the collaboration patterns between designers and developers of interactive systems, and created a tool, Enact, to facilitate their work [14]. Professional designers and developers often struggle when transitioning between the design and implementation of an interactive system. We found that current practices induce unnecessary rework and cause discrepancies between design and implementation. We identified three recurring types of breakdowns: omitting critical details, ignoring edge cases, and disregarding technical limitations. We introduced four design principles to create tools that mitigate these problems: provide multiple viewpoints, maintain a single source of truth, reveal the invisible, and support design by enaction. We applied these principles to create Enact, a live environment for prototyping touch-based interactions (Figure 6). We conducted two studies to assess Enact and compare it with current tools. Results suggest that Enact helps participants detect more edge cases, increases designers’ participation, and provides new opportunities for co-creation.