Keywords

Computer Science and Digital Science

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.3.3. Pattern recognition

- A5.4.1. Object recognition

- A5.4.2. Activity recognition

- A5.7.3. Speech

- A5.8. Natural language processing

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.7. Learning

- A5.10.8. Cognitive robotics and systems

- A5.11.1. Human activity analysis and recognition

- A6.3.1. Inverse problems

- A9. Artificial intelligence

- A9.2. Machine learning

- A9.5. Robotics

- A9.7. AI algorithmics

Other Research Topics and Application Domains

- B1.2.1. Understanding and simulation of the brain and the nervous system

- B1.2.2. Cognitive science

- B5.6. Robotic systems

- B5.7. 3D printing

- B5.8. Learning and training

- B9. Society and Knowledge

- B9.1. Education

- B9.1.1. E-learning, MOOC

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.4. Theater

- B9.6. Humanities

- B9.6.1. Psychology

- B9.6.8. Linguistics

- B9.7. Knowledge dissemination

1 Team members, visitors, external collaborators

Research Scientists

- Pierre-Yves Oudeyer [Team leader, Inria, Senior Researcher, HDR]

- Clément Moulin-Frier [Inria, Researcher]

Faculty Members

- Natalia Diaz Rodriguez [École Nationale Supérieure de Techniques Avancées]

- David Filliat [École Nationale Supérieure de Techniques Avancées, Professor, HDR]

- Cécile Mazon [Univ de Bordeaux, Associate Professor]

- Mai Nguyen [École Nationale Supérieure de Techniques Avancées, until Nov 2020]

- Helene Sauzeon [Univ de Bordeaux, Professor, HDR]

Post-Doctoral Fellows

- Eleni Nisioti [Inria, from Dec 2020]

- Chris Reinke [Inria, until Feb 2020]

PhD Students

- Rania Abdelghani [Evidenceb, CIFRE, from Dec 2020]

- Maxime Adolphe [Onepoint, CIFRE, from Sep 2020]

- Mehdi Alaimi [Inria, from Feb 2020 until Aug 2020]

- Florence Carton [CEA]

- Hugo Caselles-Dupre [Softbank Robotics]

- Cedric Colas [Inria]

- Thibault Desprez [Inria, from Apr 2020 until Jun 2020]

- Mayalen Etcheverry [Poietis, CIFRE]

- Tristan Karch [Inria]

- Timothee Lesort [École Nationale Supérieure de Techniques Avancées, until May 2020]

- Eleni Nisioti [Inria, Nov 2020]

- Vyshakh Palli Thaza [Renault, CIFRE]

- Remy Portelas [Inria]

- Thomas Rojat [Renault, CIFRE, from Mar 2020]

- Julius Taylor [Inria, from Nov 2020]

- Alexandr Ten [Inria]

- Maria Teodorescu [Inria, from Mar 2020]

Technical Staff

- Benjamin Clément [Inria, Engineer]

- Mayalen Etcheverry [Inria, Engineer, from Feb 2020 until Aug 2020]

- Grgur Kovac [Inria, Engineer]

- Alexandre Pere [Inria, Engineer, until Feb 2020]

- Clement Romac [Inria, Engineer, from Oct 2020]

- Didier Roy [Inria]

Interns and Apprentices

- Maxime Adolphe [Inria, from Feb 2020 until Jul 2020]

- Thibault Audouit [Inria, from May 2020 until Jul 2020]

- Camille Chastagnol [Association pour le développement de l'enseignement et des recherches d'Aquitaine, from Feb 2020 until Jun 2020]

- Younes Rabii [Inria, from Feb 2020 until Jul 2020]

- Clement Romac [Inria, from Mar 2020 until Aug 2020]

- Djiby Soumare [Inria, from May 2020 until Jul 2020]

- Maria Teodorescu [Inria, until Feb 2020]

- Valentin Villecroze [Inria, from Apr 2020 until Aug 2020]

Administrative Assistant

- Nathalie Robin [Inria]

Visiting Scientist

- Kevyn Collins-Thompson [Université du Michigan, until Jul 2020]

External Collaborator

- Wang Chak Chan [Automated Systems Limited-Hong Kong, from Nov 2020]

2 Overall objectives

The Flowers project-team, at Inria, University of Bordeaux and Ensta ParisTech, studies models of open-ended development and learning. These models are used as tools to help us understand better how children learn, as well as to build machines that learn like children, i.e. developmental artificial intelligence, with applications in educational technologies, automated discovery, robotics and human-computer interaction.

A major scientific challenge in artificial intelligence and cognitive sciences is to understand how humans and machines can efficiently acquire world models, as well as open and cumulative repertoires of skills over an extended time span. Processes of sensorimotor, cognitive and social development are organized along ordered phases of increasing complexity, and result from the complex interaction between the brain/body with its physical and social environment.

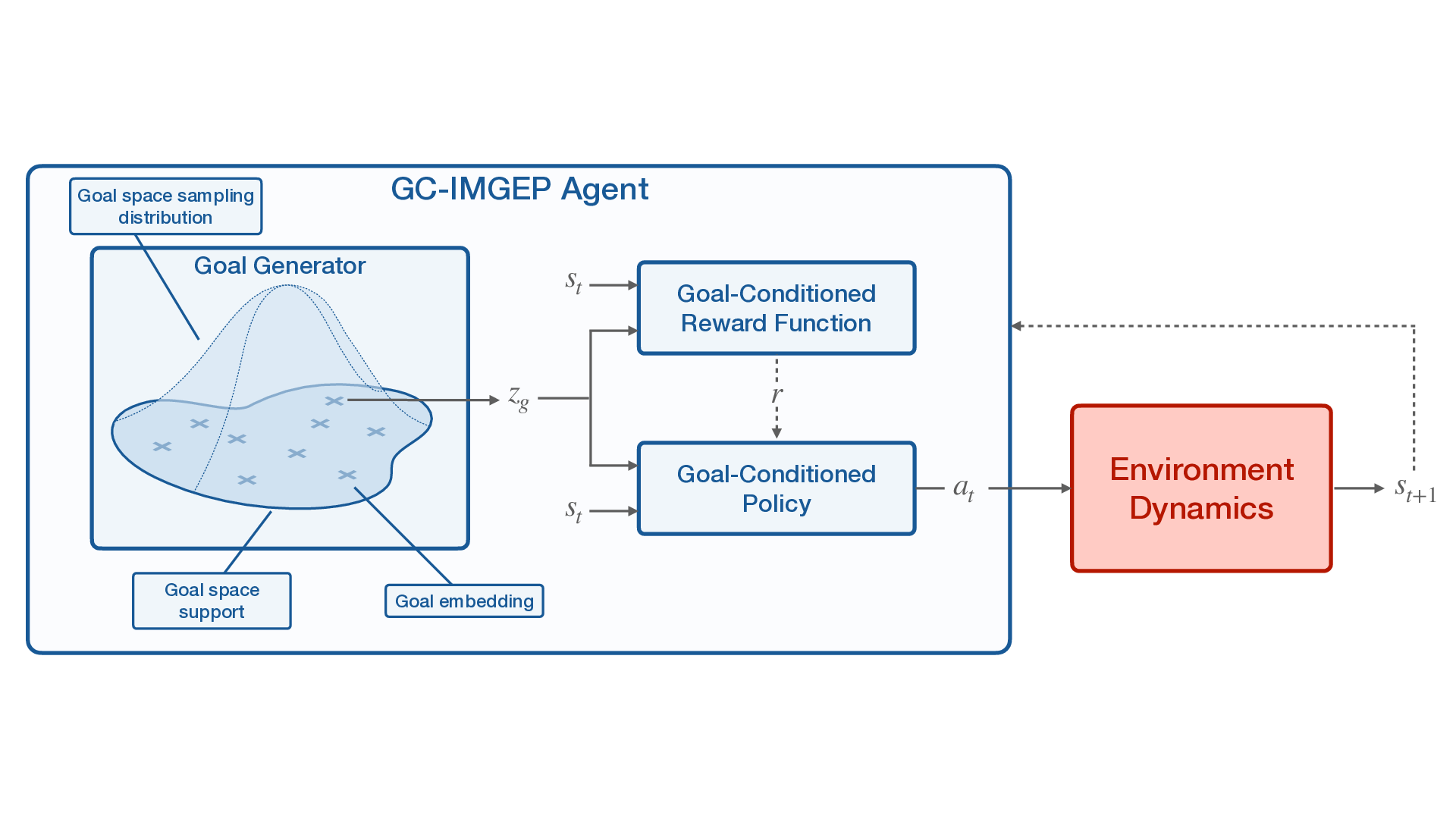

To advance the fundamental understanding of mechanisms of development, the FLOWERS team develops computational models that leverage advanced machine learning techniques such as intrinsically motivated deep reinforcement learning, in strong collaboration with developmental psychology and neuroscience. In particular, the team focuses on models of intrinsically motivated learning and exploration (also called curiosity-driven learning), with mechanisms enabling agents to learn to represent and generate their own goals, self-organizing a learning curriculum for efficient learning of world models and skill repertoire under limited resources of time, energy and compute. The team also studies how autonomous learning mechanisms can enable humans and machines to acquire grounded language skills, using neuro-symbolic architectures for learning structured representations and handling systematic compositionality and generalization.

Beyond leading to new theories and new experimental paradigms to understand human development in cognitive science, as well as new fundamental approaches to developmental machine learning, the team explores how such models can find applications in robotics, human-computer interaction, multi-agent systems, automated discovery and educational technologies. In robotics, the team studies how artificial curiosity combined with imitation learning can provide essential building blocks allowing robots to acquire multiple tasks through natural interaction with naïve human users, for example in the context of assistive robotics. The team also studies how models of curiosity-driven learning can be transposed in algorithms for intelligent tutoring systems, allowing educational software to incrementally and dynamically adapt to the particularities of each human learner, and proposing personalized sequences of teaching activities.

Research axes

The work of FLOWERS is organized around the following axes:

- Curiosity-driven exploration and sensorimotor learning: intrinsic motivations are mechanisms that developmental psychologists have identified to explain important forms of spontaneous exploration and curiosity. In FLOWERS, we develop computational intrinsic motivation systems and test them on embodied machines, where they regulate the growth of complexity in exploratory behaviours. These mechanisms are studied as active learning mechanisms that make it possible to learn efficiently in large inhomogeneous sensorimotor spaces and environments;

- Cumulative learning of sensorimotor skills: FLOWERS develops machine learning algorithms that allow embodied machines to cumulatively acquire sensorimotor skills. In particular, we develop optimization and reinforcement learning systems which allow robots to discover and learn dictionaries of motor primitives, and then combine them to form higher-level sensorimotor skills.

- Natural and intuitive social learning: FLOWERS develops interaction frameworks and learning mechanisms allowing non-engineer humans to teach a robot naturally. This involves two sub-themes: 1) techniques allowing for natural and intuitive human-robot interaction, including simple ergonomic interfaces for establishing joint attention; 2) learning mechanisms that allow the robot to use the guidance hints provided by the human to teach new skills;

- Discovering and abstracting the structure of sets of uninterpreted sensors and motors: FLOWERS studies mechanisms that allow a robot to infer structural information out of sets of sensorimotor channels whose semantics is unknown, for example the topology of the body and the sensorimotor contingencies (proprioceptive, visual and acoustic). This process is meant to be open-ended, progressing in continuous operation from initially simple representations to abstract concepts and categories similar to those used by humans.

- Body design and role of the body in sensorimotor and social development: We study how the physical properties of the body (geometry, materials, distribution of mass, growth, ...) can impact the acquisition of sensorimotor and interaction skills. This requires considering the body as an experimental variable, and to this end we develop special methodologies for rapidly designing and evaluating new morphologies, especially using rapid prototyping techniques like 3D printing.

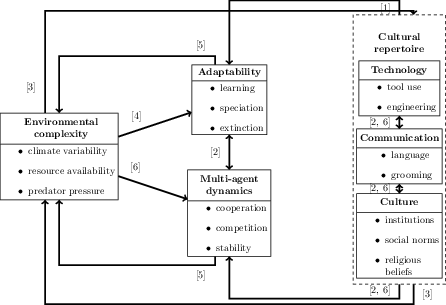

- Emergence of social behavior in multi-agent populations: We study how populations of interacting learning agents can collectively acquire cooperative or competitive strategies in challenging simulated environments. We specifically focus on the role of two factors: (i) cognitive architectures, including the role of curiosity-driven exploration in the emergence of complex social behavior; (ii) environmental dynamics, including how task structure and environmental variability influence emergent social behavior. Our work is grounded in principles and theories from behavioral ecology and language evolution, and uses recent advances in multi-agent reinforcement learning as a modeling framework.

- Curiosity and intrinsic motivations in cognitive learning: We are extending our research on the role of curiosity and intrinsic motivations in learning through experiments with human participants, along two lines of research. The first aims to develop a lifelong approach to the role of intrinsic motivation and curiosity in cognitive learning (e.g., spatial learning, attentional learning, etc.) at all ages of life (children, young adults and older adults), in order to demonstrate the lifespan developmental nature of these mechanisms in knowledge acquisition and cognitive development. Basically, the aim is to examine whether curiosity/intrinsic motivation is an essential ingredient for tackling inter-individual variability in learning performance. The second aims to study the links between states of curiosity, learning progress and metacognition, and in particular the role of metacognitive strategies of self-regulation in the generation of states of curiosity and learning progress.

- Educational technologies and Intelligent Tutoring Systems: FLOWERS develops new educational technologies and Intelligent Tutoring Systems, using both curiosity-related models and artificial intelligence techniques (online optimization methods) in order to personalize learning sequences for each individual and to maximize curiosity and learning in real-world contexts (at school or on MOOC platforms). Two areas of research are being investigated: 1) the design of curiosity-driven interactive educational systems that introduce mechanisms for self-questioning and self-exploration of knowledge during the learning process; 2) the design of Intelligent Tutoring Systems that promote individual learning progress while personalizing the learning path in the task space to be covered by the learner. These two areas are enriched by applied studies in neuropsychological clinics (aging, cognitive disorders related to neurodevelopmental syndromes such as autism spectrum or attentional disorders), where inter-individual variability is a critical challenge for designing educational programs or cognitive training or remediation programs.

3 Research program

Research in artificial intelligence, machine learning and pattern recognition has produced a tremendous amount of results and concepts in recent decades. A blooming number of learning paradigms - supervised, unsupervised, reinforcement, active, associative, symbolic, connectionist, situated, hybrid, distributed learning... - nourished the elaboration of highly sophisticated algorithms for tasks such as visual object recognition, speech recognition, robot walking, grasping or navigation, the prediction of stock prices, the evaluation of risk for insurance, adaptive data routing on the internet, etc. Yet, we are still very far from being able to build machines capable of adapting to the physical and social environment with the flexibility, robustness, and versatility of a one-year-old human child.

Indeed, one striking characteristic of human children is the nearly open-ended diversity of the skills they learn. They not only can improve existing skills, but also continuously learn new ones. While evolution certainly provided them with specific pre-wiring for certain activities such as feeding or visual object tracking, evidence shows that there are also numerous skills that they learn smoothly but that could not have been “anticipated” by biological evolution, for example learning to ride a tricycle, using an electronic piano toy or using a video game joystick. By contrast, existing learning machines, and robots in particular, are typically only able to learn a single pre-specified task or a single kind of skill. Once this task is learnt, for example walking with two legs, learning is over. If one wants the robot to learn a second task, for example grasping objects in its visual field, then an engineer needs to manually re-program its learning structures: traditional approaches to task-specific machine/robot learning typically include engineer choices of the relevant sensorimotor channels, specific design of the reward function, choices about when learning begins and ends, and what learning algorithms and associated parameters shall be optimized.

As can be seen, this requires a lot of important choices from the engineer, and one could hardly use the term “autonomous” learning. By contrast, human children do not learn following anything that looks like this process, at least during their very first years. Babies develop and explore the world by themselves, focusing their interest on various activities driven both by internal motives and social guidance from adults who only have a folk understanding of their brains. Adults provide learning opportunities and scaffolding, but eventually young babies always decide for themselves what activity to practice or not. Specific tasks are rarely imposed on them. Yet, they steadily discover and learn how to use their body as well as its relationships with the physical and social environment. Also, the spectrum of skills that they learn continuously expands in an organized manner: they undergo a developmental trajectory in which simple skills are learnt first, and skills of progressively increasing complexity are subsequently learnt.

A link can be made to educational systems, where research in several domains has tried to study how to provide a good learning or training experience to learners. This includes identifying the experiences that allow better learning, and the sequence in which they must be experienced. This problem is complementary to that of the learner who tries to progress efficiently: the teacher here has to use the limited time and motivational resources of the learner as efficiently as possible. Several results from psychology 75 and neuroscience 94 have argued that the human brain feels intrinsic pleasure in practicing activities of optimal difficulty or challenge. A teacher must exploit such activities to create positive psychological states of flow 86 that foster the individual's engagement in learning activities. Such a view is also relevant for reeducation issues, where inter-individual variability, and thus intervention personalization, are challenges of the same magnitude as those for the education of children.

A grand challenge is thus to be able to build machines that possess this capability to discover, adapt and develop continuously new know-how and new knowledge in unknown and changing environments, like human children. In 1950, Turing wrote that the child's brain would show us the way to intelligence: “Instead of trying to produce a program to simulate the adult mind, why not rather try to produce one which simulates the child's” 152. Maybe, in opposition to work in the field of Artificial Intelligence which has focused on mechanisms trying to match the capabilities of “intelligent” human adults such as chess playing or natural language dialogue 100, it is time to take the advice of Turing seriously. This is what a new field, called developmental (or epigenetic) robotics, is trying to achieve 116, 156. The approach of developmental robotics consists in importing and implementing concepts and mechanisms from developmental psychology 121, cognitive linguistics 85, and developmental cognitive neuroscience 104, where there has been a considerable amount of research and theories to understand and explain how children learn and develop. A number of general principles underlie this research agenda: embodiment 78, 134, grounding 98, situatedness 148, self-organization 150, 129, enaction 154, and incremental learning 81.

Among the many issues and challenges of developmental robotics, two are of paramount importance: exploration mechanisms, and mechanisms for abstracting and making sense of initially unknown sensorimotor channels. Indeed, the typical space of sensorimotor skills that can be encountered and learnt by a developmental robot, like those encountered by human infants, is immensely vast and inhomogeneous. With a sufficiently rich environment and multimodal set of sensors and effectors, the space of possible sensorimotor activities is simply too large to be explored exhaustively in any robot's lifetime: it is impossible to learn all possible skills and represent all conceivable sensory percepts. Moreover, some skills are very basic to learn, others are very complicated, and many of them require the mastery of others in order to be learnt. For example, learning to manipulate a piano toy first requires knowing how to move one's hand to reach the piano and how to touch specific parts of the toy with the fingers. And knowing how to move the hand might require knowing how to track it visually.

Exploring such a space of skills randomly is bound to fail, or to result at best in very inefficient learning 130. Thus, exploration needs to be organized and guided. The approach of epigenetic robotics is to take inspiration from the mechanisms that allow human infants to be progressively guided, i.e. to develop. There are two broad classes of guiding mechanisms which control exploration:

- internal guiding mechanisms, and in particular intrinsic motivation, responsible for spontaneous exploration and curiosity in humans, which is one of the central mechanisms investigated in FLOWERS, and technically amounts to achieving online active self-regulation of the growth of complexity in learning situations;

- social learning and guidance, a learning mechanism that exploits the knowledge of other agents in the environment and/or that is guided by those same agents. These mechanisms exist in many different forms like emotional reinforcement, stimulus enhancement, social motivation, guidance, feedback or imitation, some of which are also investigated in FLOWERS.

Internal guiding mechanisms

In infant development, one observes a progressive increase of the complexity of activities with an associated progressive increase of capabilities 121. Children do not learn everything at one time: for example, they first learn to roll over, then to crawl and sit, and only when these skills are operational do they begin to learn how to stand. The perceptual system also gradually develops, increasing children's perceptual capabilities over time while they engage in activities like throwing or manipulating objects. This makes it possible to learn to identify objects in more and more complex situations and to learn more and more of their physical characteristics.

Development is therefore progressive and incremental, and this might be a crucial feature explaining the efficiency with which children explore and learn so fast. Taking inspiration from these observations, some roboticists and researchers in machine learning have argued that learning a given task could be made much easier for a robot if it followed a developmental sequence and “started simple” 68, 89. However, in these experiments, the developmental sequence was crafted by hand: roboticists manually built simpler versions of a complex task and put the robot successively in versions of the task of increasing complexity. And when they wanted the robot to learn a new task, they had to design a novel reward function.

Thus, there is a need for mechanisms that allow the autonomous control and generation of the developmental trajectory. Psychologists have proposed that intrinsic motivations play a crucial role. Intrinsic motivations are mechanisms that push humans to explore activities or situations that have intermediate/optimal levels of novelty, cognitive dissonance, or challenge 75, 86, 88. Further, the study of the critical role of intrinsic motivation as a lever of cognitive development for all, and at all ages, has today expanded to several fields of research: close to its original study, special education and cognitive aging, and, farther away, neuropsychological clinical research. The role and structure of intrinsic motivation in humans have been made more precise thanks to recent discoveries in neuroscience showing the implication of dopaminergic circuits in exploration behaviours and curiosity 87, 101, 145. Based on this, a number of researchers have begun in the past few years to build computational implementations of intrinsic motivation 130, 132, 143, 72, 102, 118, 144. While initial models were developed for simple simulated worlds, a current challenge is to build intrinsic motivation systems that can efficiently drive exploratory behaviour in high-dimensional unprepared real-world robotic sensorimotor spaces 132, 130, 133, 142. Specific and complex problems are posed by real sensorimotor spaces, in particular because they are both high-dimensional and (usually) deeply inhomogeneous. As an example of the latter issue, some regions of real sensorimotor spaces are often unlearnable due to inherent stochasticity or difficulty, in which case heuristics based on the incentive to explore zones of maximal unpredictability or uncertainty, which are often used in the field of active learning 84, 99, typically lead to catastrophic results.
The issue of high dimensionality does not only concern motor spaces, but also sensory spaces, leading to the problem of correctly identifying, among typically thousands of quantities, those latent variables that have links to behavioral choices. In FLOWERS, we aim at developing intrinsically motivated exploration mechanisms that scale in those spaces, by studying suitable abstraction processes in conjunction with exploration strategies.
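The contrast between uncertainty-seeking heuristics and progress-based intrinsic motivation can be illustrated with a minimal sketch. It is a toy simulation under stated assumptions, not the team's implementation: the three regions, their error dynamics (`sample_error`), the window size and the epsilon-greedy selection are all hypothetical choices made for illustration. An agent repeatedly picks a region to practise; one interest measure is the recent mean prediction error, the other is the absolute change in error between the older and the recent half of a sliding window (a simple proxy for learning progress).

```python
import math
import random
from collections import deque

REGIONS = ("trivial", "learnable", "unlearnable")


def sample_error(region, practice, rng):
    """Toy prediction error after `practice` trials (assumed dynamics)."""
    if region == "trivial":
        return 0.01 * rng.random()  # already mastered
    if region == "learnable":
        # Error decreases with practice, plus a little measurement jitter.
        return math.exp(-practice / 100) + 0.01 * (rng.random() - 0.5)
    # Unlearnable: error stays high however much the agent practises.
    return 0.8 + 0.04 * (rng.random() - 0.5)


def mean(values):
    return sum(values) / len(values)


def mean_error(errors):
    """Uncertainty-style interest: prefer regions with high recent error."""
    return mean(errors)


def learning_progress(errors):
    """Progress-style interest: change in error across the window."""
    errs = list(errors)
    half = len(errs) // 2
    if half == 0:
        return 0.0
    return abs(mean(errs[:half]) - mean(errs[-half:]))


def simulate(interest, steps=300, eps=0.2, window=20, warmup=4, seed=0):
    """Epsilon-greedy region selection driven by an interest measure."""
    rng = random.Random(seed)
    errors = {r: deque(maxlen=window) for r in REGIONS}
    counts = {r: 0 for r in REGIONS}

    def practise(region):
        errors[region].append(sample_error(region, counts[region], rng))
        counts[region] += 1

    for region in REGIONS:  # a few initial trials everywhere
        for _ in range(warmup):
            practise(region)
    for _ in range(steps):
        if rng.random() < eps:
            region = rng.choice(REGIONS)
        else:
            region = max(REGIONS, key=lambda r: interest(errors[r]))
        practise(region)
    return counts


if __name__ == "__main__":
    print("max error        :", simulate(mean_error))
    print("learning progress:", simulate(learning_progress))
```

In this toy setting, the error-maximizing agent spends most of its trials in the region where error never decreases, whereas the progress-based agent concentrates on the region where practice actually pays off. A plain sliding-window estimate like this one is sensitive to noise, so it should be read as an illustration of the principle only.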

Socially Guided and Interactive Learning

Social guidance is as important as intrinsic motivation in the cognitive development of human babies 121. There is a vast literature on learning by demonstration in robots where the actions of humans in the environment are recognized and transferred to robots 67. Most such approaches are completely passive: the human executes actions and the robot learns from the acquired data. Recently, the notion of interactive learning has been introduced in 151, 77, motivated by the various mechanisms that allow humans to socially guide a robot 139. In an interactive context the steps of self-exploration and social guidance are not separated and a robot learns by self exploration and by receiving extra feedback from the social context 151, 109, 119.

Social guidance is also particularly important for learning to segment and categorize the perceptual space. Indeed, parents interact a lot with infants, for example teaching them to recognize and name objects or characteristics of these objects. Their role is particularly important in directing the infant's attention towards objects of interest, which makes it possible to simplify the perceptual space at first by pointing out a segment of the environment that can be isolated, named and acted upon. These interactions are then complemented by the children's own experiments on the objects, chosen according to intrinsic motivation, in order to improve their knowledge of the object, its physical properties and the actions that could be performed with it.

In FLOWERS, we aim to include an intrinsic motivation system in the self-exploration part, thus combining efficient self-learning with social guidance 126, 127. We also work on developing perceptual capabilities by gradually segmenting the perceptual space and identifying objects and their characteristics through interaction with the user 117 and robot experiments 103. Another challenge is to allow for more flexible interaction protocols with the user in terms of what type of feedback is provided and how it is provided 114.

Exploration mechanisms are combined with research in the following directions:

Cumulative learning, reinforcement learning and optimization of autonomous skill learning

FLOWERS develops machine learning algorithms that allow embodied machines to cumulatively acquire sensorimotor skills. In particular, we develop optimization and reinforcement learning systems which allow robots to discover and learn dictionaries of motor primitives, and then combine them to form higher-level sensorimotor skills.

Autonomous perceptual and representation learning

In order to harness the complexity of perceptual and motor spaces, as well as to pave the way to higher-level cognitive skills, developmental learning requires abstraction mechanisms that can infer structural information out of sets of sensorimotor channels whose semantics is unknown, discovering for example the topology of the body or the sensorimotor contingencies (proprioceptive, visual and acoustic). This process is meant to be open-ended, progressing in continuous operation from initially simple representations towards abstract concepts and categories similar to those used by humans. Our work focuses on the study of various techniques for:

- autonomous multimodal dimensionality reduction and concept discovery;

- incremental discovery and learning of objects using vision and active exploration, as well as of auditory speech invariants;

- learning of dictionaries of motion primitives with combinatorial structures, in combination with linguistic description;

- active learning of visual descriptors useful for action (e.g. grasping).

Embodiment and maturational constraints

FLOWERS studies how adequate morphologies and materials (i.e. morphological computation), associated with relevant dynamical motor primitives, can substantially simplify the acquisition of apparently very complex skills such as full-body dynamic walking in bipeds. FLOWERS also studies maturational constraints, which are mechanisms that allow for the progressive and controlled release of new degrees of freedom in the sensorimotor space of robots.

Discovering and abstracting the structure of sets of uninterpreted sensors and motors

FLOWERS studies mechanisms that allow a robot to infer structural information out of sets of sensorimotor channels whose semantics is unknown, for example the topology of the body and the sensorimotor contingencies (proprioceptive, visual and acoustic). This process is meant to be open-ended, progressing in continuous operation from initially simple representations to abstract concepts and categories similar to those used by humans.

Emergence of social behavior in multi-agent populations

FLOWERS studies how populations of interacting learning agents can collectively acquire cooperative or competitive strategies in challenging simulated environments. This differs from "Social learning and guidance" presented above: instead of studying how a learning agent can benefit from the interaction with a skilled agent, we rather consider here how social behavior can spontaneously emerge from a population of interacting learning agents. We focus on studying and modeling the emergence of cooperation, communication and cultural innovation based on theories in behavioral ecology and language evolution, using recent advances in multi-agent reinforcement learning.

Cognitive variability across Lifelong development and (re)educational Technologies

Over the past decade, progress in the field of curiosity-driven learning has generated a lot of hope, especially with regard to a major challenge, namely the inter-individual variability of developmental trajectories of learning, which is particularly critical during childhood and aging or in conditions of cognitive disorders. With the societal purpose of tackling social inequalities, FLOWERS aims to advance this new research avenue by exploring how states of curiosity change across the lifespan and across neurodevelopmental conditions (neurotypical vs. learning disabilities), while designing new educational or rehabilitative technologies for curiosity-driven learning. Information gaps or learning progress, and awareness of them, are the core mechanisms of this part of the research program, because of their high value as brain fuel: they maintain the individual's intrinsic state of motivation and lead him/her to pursue his/her cognitive efforts for acquisition or rehabilitation. Accordingly, a main challenge is to understand these mechanisms in order to design supports for curiosity-driven learning, and then to embed them into (re)educational technologies. To this end, two lines of investigation are carried out in real-life settings (school, home, workplace, etc.): 1) the design of curiosity-driven interactive systems for learning and the study of their effectiveness; and 2) the automated personalization of learning programs through new algorithms maximizing learning progress in ITS.

4 Application domains

Neuroscience, Developmental Psychology and Cognitive Sciences. The computational modelling of life-long learning and development mechanisms carried out in the team primarily aims to contribute to our understanding of the processes of sensorimotor, cognitive and social development in humans. In particular, it provides a methodological basis to analyze the dynamics of the interaction across learning and inference processes, embodiment and the social environment, allowing precise hypotheses to be formalized and later tested in experimental paradigms with animals and humans. A paradigmatic example of this activity is the Neurocuriosity project, carried out in collaboration with the cognitive neuroscience lab of Jacqueline Gottlieb, where theoretical models of the mechanisms of information seeking, active learning and spontaneous exploration have been developed in coordination with experimental evidence and investigation, see https://

Personal and lifelong learning assistive agents. Many indicators show that the arrival of personal assistive agents in everyday life, ranging from digital assistants to robots, will be a major fact of the 21st century. These agents will range from purely entertainment or educative applications to social companions that many argue will be of crucial help in our society. Yet, to realize this vision, important obstacles need to be overcome: these agents will have to operate in unpredictable environments and learn new skills in a lifelong manner while interacting with non-engineer humans, which is out of reach of current technology. In this context, the refoundation of intelligent systems that developmental AI is exploring potentially opens novel horizons to solve these problems. In particular, this application domain requires advances in artificial intelligence that go beyond the current state of the art in fields like deep learning. Currently these techniques require tremendous amounts of data in order to function properly, and they are severely limited in terms of incremental and transfer learning. One of our goals is to drastically reduce the amount of data required in order for this very potent field to work when humans are in the loop. We try to achieve this by making neural networks aware of their knowledge, i.e. we introduce the concept of uncertainty and use it as part of intrinsically motivated multitask learning architectures, combined with techniques of learning by imitation.

Educational technologies that foster curiosity-driven and personalized learning. Optimal teaching and efficient teaching/learning environments can be applied to aid teaching in schools, aiming both to increase achievement levels and to reduce the time needed. From a practical perspective, improved models could save millions of hours of students' time (and effort) in learning. These models should also predict the achievement levels of students in order to influence teaching practices. The challenges of the school of the 21st century, in particular producing conditions for active learning that are personalized to the student's motivations, are shared with other applied fields. Special education for children with special needs, such as learning disabilities, has long recognized the difficulty of personalizing contents and pedagogies due to the great variability between and within medical conditions. Not far removed, cognitive rehabilitation carers face the same challenges: today they offer standardized cognitive training or rehabilitation programs whose benefits are modest (some individuals respond to the programs, others respond little or not at all), as they are highly subject to inter- and intra-individual variability. Curiosity-driven technologies for learning and STIs could be a promising avenue to address these issues, which are common to (mainstream and specialized) education and cognitive rehabilitation.

Automated discovery in science. Machine learning algorithms integrating intrinsically-motivated goal exploration processes (IMGEPs) with flexible modular representation learning are very promising directions to help human scientists discover novel structures in complex dynamical systems, in fields ranging from biology to physics. The automated discovery project led by the FLOWERS team aims to boost the efficiency of these algorithms to enable scientists to better understand the space of dynamics of bio-physical systems, which could include systems related to the design of new materials or new drugs, with applications ranging from regenerative medicine to unraveling the chemical origins of life. As an example, Grizou et al. 96 recently showed how IMGEPs can be used to automate chemistry experiments addressing fundamental questions related to the origins of life (how oil droplets may self-organize into protocellular structures), leading to new insights about oil droplet chemistry. Such methods can be applied to a large range of complex systems in order to map the possible self-organized structures. The automated discovery project is intended to be interdisciplinary and to involve potentially non-expert end-users from a variety of domains. In this regard, we are currently collaborating with Poietis (a bio-printing company) and Bert Chan (an independent researcher in artificial life) to deploy our algorithms. To encourage the adoption of our algorithms by a wider community, we are also working on interactive software that aims to provide tools to easily use the automated exploration algorithms (e.g. curiosity-driven) in various systems.

Human-Robot Collaboration. Robots play a vital role in industry and ensure the efficient and competitive production of a wide range of goods. They replace humans in many tasks which otherwise would be too difficult, too dangerous, or too expensive to perform. However, the new needs and desires of society call for manufacturing systems centered around personalized products and small-series production. Human-robot collaboration could widen the use of robots in these new situations if robots become cheaper, easier to program and safe to interact with. The most relevant systems for such applications would follow an expert worker and work with (some) autonomy, while always remaining under the supervision of the human and acting based on their task models.

Environment perception in intelligent vehicles. When working in simulated traffic environments, elements of FLOWERS research can be applied to the autonomous acquisition of increasingly abstract representations of both traffic objects and traffic scenes. In particular, the object classes of vehicles and pedestrians are of interest when considering detection tasks in safety systems, as are scene categories ("scene context") that have a strong impact on the occurrence of these object classes. As already indicated by several investigations in the field, results from present-day simulation technology can be transferred to the real world with little impact on performance. Therefore, applications of FLOWERS research that are suitably verified by real-world benchmarks have direct applicability in safety-system products for intelligent vehicles.

5 Social and environmental responsibility

5.1 Footprint of research activities

AI is a field of research that currently requires a lot of computational resources, which is a challenge as these resources have an environmental cost. In the team we try to address this challenge in two ways:

- by working on developmental machine learning approaches that model how humans manage to learn open-ended and diverse repertoires of skills under severe limits of time, energy and compute: for example, curiosity-driven learning algorithms can be used to guide agents' exploration of their environment so that they learn a world model in a sample-efficient manner, i.e. by minimizing the number of runs and computations they need to perform in the environment;

- by monitoring the number of CPU and GPU hours required to carry out our experiments. For instance, our work 43 used a total of 2.5 CPU years. More globally, our work uses large-scale computational resources, such as the Jean Zay supercomputer platform, for which we obtained a credit of 2 million hours of GPU and CPU for the year 2021.

5.2 Impact of research results

Our research activities are organized along two fundamental research axes (models of human learning and algorithms for developmental machine learning) and one application research axis (involving multiple domains of application, see the Application Domains section). This entails different dimensions of potential societal impact:

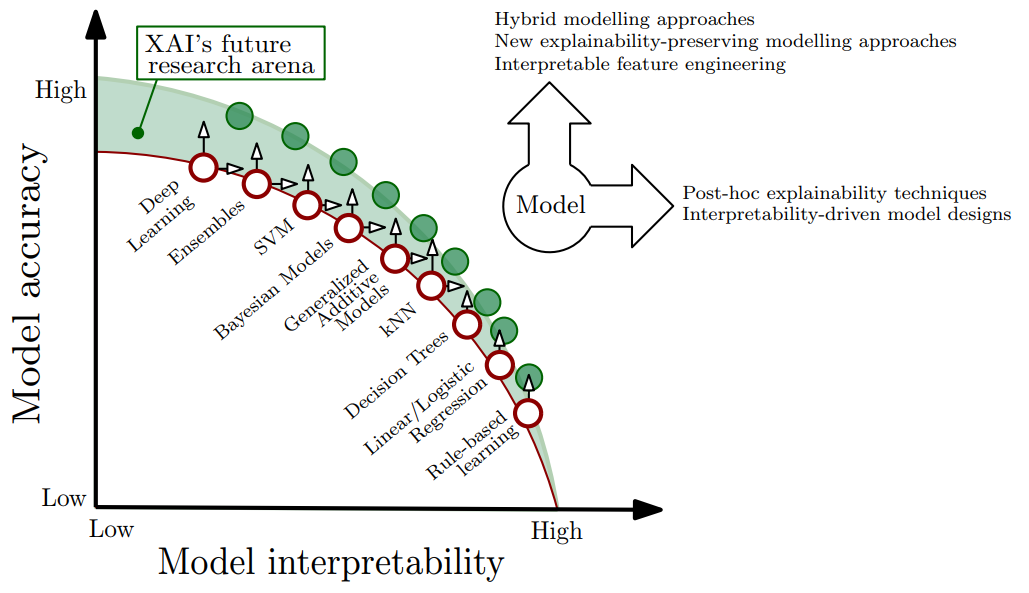

- Towards autonomous agents that can be shaped to human preferences and be explainable: We work on reinforcement learning architectures where autonomous agents interact with a social partner to explore a large set of possible interactions and learn to master them, using language as a key communication medium. As a result, our work contributes to facilitating human intervention in the learning process of agents (e.g. digital assistants, video game characters, robots), which we believe is a key step towards more explainable and safer autonomous agents.

- Reproducibility of research: By releasing the code of our research papers, we believe that we help efforts in reproducible science and allow the wider community to build upon and extend our work in the future. In that spirit, we also provide clear explanations of the statistical testing methods when reporting the results.

- AI and personalized educational technologies that support inclusivity and diversity and reduce inequalities: The Flowers team develops AI technologies aiming to personalize sequences of educational activities in digital educational apps: this entails the central challenge of designing systems which can have equitable impact over a diversity of students and reduce inequalities. Using models of curiosity-driven learning to design AI algorithms for such personalization, we have been working to enable them to be positively and equitably impactful across several dimensions of diversity: for young learners or for aging populations; for learners with low initial levels as well as for learners with high initial levels; for "normally" developing children and for children with developmental disorders; and for learners of different socio-cultural backgrounds (e.g. we could show in the KidLearn project that the system is equally impactful along these various kinds of diversities).

- Health: Bio-printing. The Flowers team is studying the use of curiosity-driven exploration algorithms in the domain of automated discovery, enabling scientists in physics/chemistry/biology to efficiently explore and build maps of the possible structures of various complex systems. One particular domain of application we are studying is bio-printing, where a challenge consists in exploring and understanding the space of morphogenetic structures self-organized by bio-printed cell populations. This could facilitate the design and bio-printing of personalized skins or organoids for people who need transplants, and thus could have a major impact on their health.

- Tools for human creativity and the arts: Curiosity-driven exploration algorithms could also in principle be used as tools to help human users in creative activities ranging from writing stories to painting or musical creation, which are domains we aim to consider in the future, and thus this constitutes another societal and cultural domain where our research could have impact.

- Education to AI: As artificial intelligence takes a greater role in human society, it is of foremost importance to empower individuals with an understanding of these technologies. For this purpose, the Flowers lab has been actively involved in educational and popularization activities, in particular by designing educational robotics kits that form a motivating and tangible context to understand basic concepts in AI: these include the Inirobot kit (used by >30k primary school students in France, see https://pixees.fr/dm1r.fr/) and the Poppy Education kit (https://www.poppy-education.org), now supported by the Poppy Station educational consortium (see https://www.poppy-station.org).

6 Highlights of the year

Automated discovery in the sciences

The team made major progress in developing the new application domain of automated discovery in the sciences. We formalized this new research area, and introduced proof-of-concept results showing how intrinsically motivated goal exploration algorithms can be used as a tool to explore, map and learn to represent a diversity of self-organized patterns in complex dynamical systems. This opens stimulating perspectives in domains ranging from biology to chemistry and physics.

This work was presented in two papers accepted for oral presentation (< 1.5 % acceptance rate) at the NeurIPS and ICLR 2020 conferences. The work was carried out by Mayalen Etcheverry (CIFRE PhD with the Poïetis company, for ICLR and NeurIPS) and Chris Reinke (Postdoc, for ICLR), and co-supervised by C. Moulin-Frier (NeurIPS) and PY Oudeyer (ICLR and NeurIPS). See 38 (ICLR paper) and 35, as well as the blog post https://

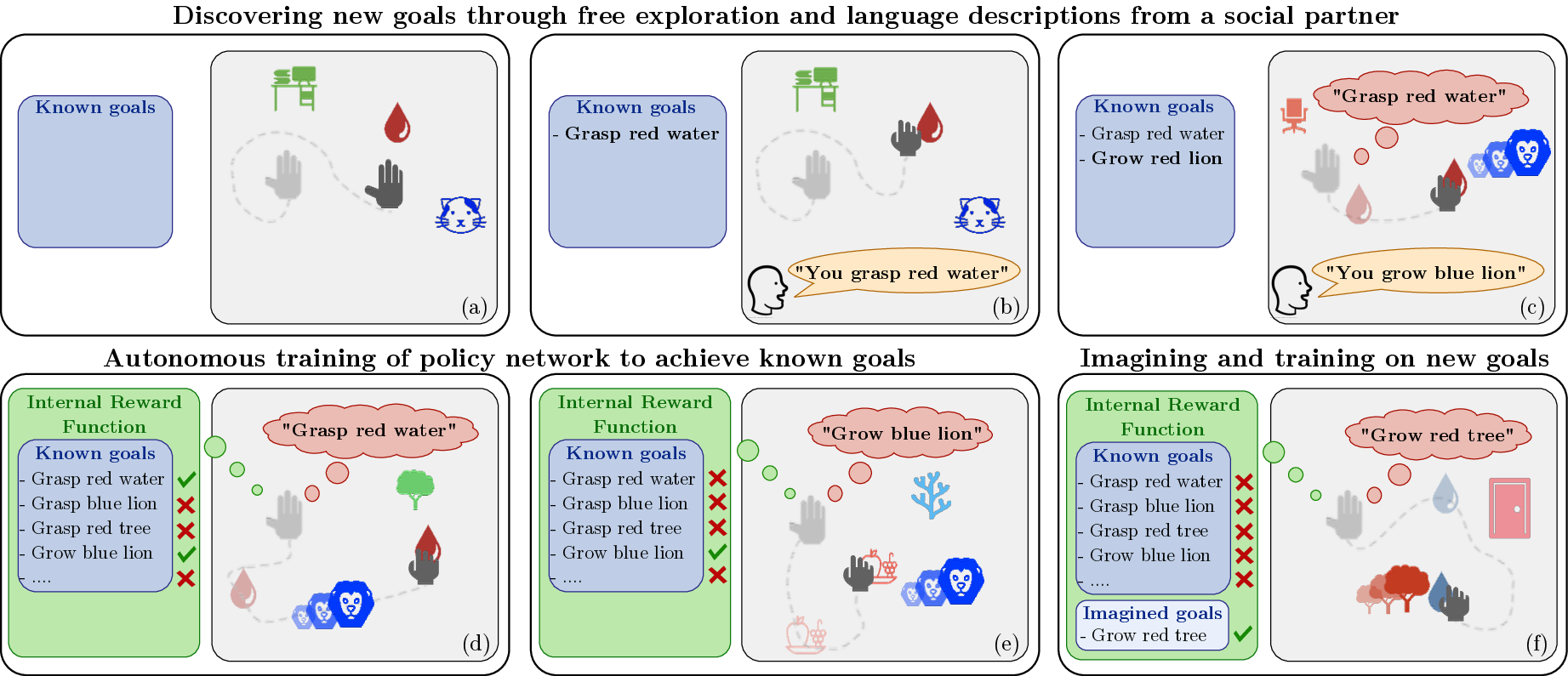

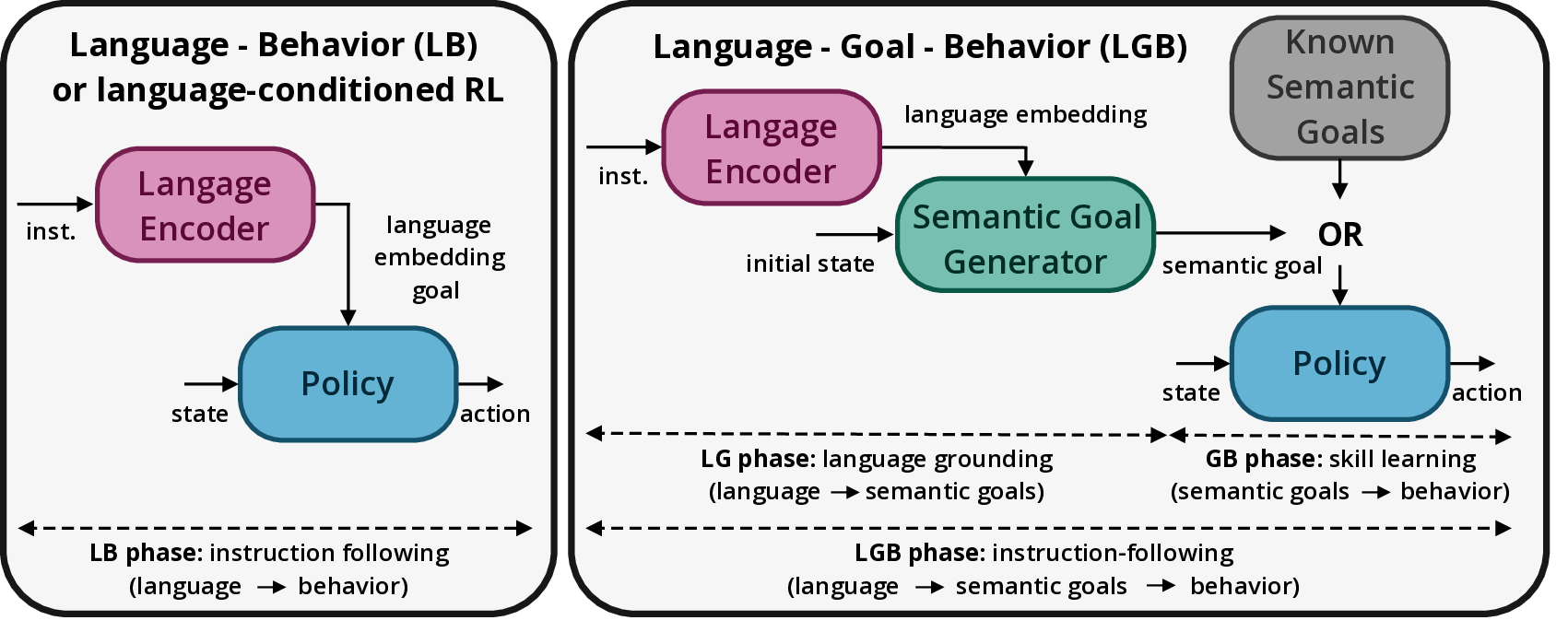

Language-guided curiosity-driven deep reinforcement learning with systematic generalization

The team made major advances in developmental machine learning, introducing techniques enabling autonomous agents to use language as a cognitive tool to imagine goals in intrinsically motivated exploration. This enables new forms of creative exploration, where agents can imagine goals that are outside the distribution of goals known so far. This approach, conceptually rooted in developmental psychology ideas from Vygotsky, also leverages modular deep learning techniques enabling agents to generalize their understanding to new sentences. This work was published at NeurIPS 2020 43. First authors were Cédric Colas, Tristan Karch and Nicolas Lair, with co-supervision from PY Oudeyer, PF. Dominey and C. Moulin-Frier.

Educational technologies that foster curiosity-driven learning in humans

Together with the edTech industrial consortium Adaptiv'Maths (https://

Awards

PY Oudeyer was awarded an individual ANR Chair in Artificial Intelligence, and elected as Distinguished Speaker of the IEEE Computational Intelligence Society. C Moulin-Frier obtained an ANR JCJC grant, an Inria Exploratory Action and an Inria Cordi PhD grant in 2020 (see 10 for detail).

7 New software and platforms

7.1 New software

7.1.1 Explauto

- Name: an autonomous exploration library

- Keyword: Exploration

- Scientific Description:

An important challenge in developmental robotics is how robots can be intrinsically motivated to efficiently learn parametrized policies that solve parametrized multi-task reinforcement learning problems, i.e. learn the mappings between actions and the problems they solve, or the sensory effects they produce. This can be a robot learning how arm movements make physical objects move, or how movements of a virtual vocal tract modulate vocalization sounds. The way the robot collects its own sensorimotor experience has a strong impact on learning efficiency because, for most robotic systems, the involved spaces are high-dimensional, the mapping between them is non-linear and redundant, and there is limited time allowed for learning. If robots explore the world in an unorganized manner, e.g. randomly, learning algorithms will often be ineffective because only very sparse data points will be collected. Data are precious due to the high dimensionality and the limited time, yet data are not equally useful due to non-linearity and redundancy. This is why learning has to be guided using efficient exploration strategies, allowing the robot to actively drive its own interaction with the environment in order to gather maximally informative data to optimize the parametrized policies. In recent years, work in developmental learning has explored various families of algorithmic principles which allow the efficient guiding of learning and exploration.

Explauto is a framework developed to study, model and simulate curiosity-driven learning and exploration in real and simulated robotic agents. Explauto's scientific roots trace back to the Intelligent Adaptive Curiosity algorithmic architecture 131, which has been extended to a more general family of autonomous exploration architectures by 70 and recently expressed as a compact and unified formalism 123. The library is detailed in 125. In Explauto, interest models implement the strategies of active selection of particular problems / goals in a parametrized multi-task reinforcement learning setup to efficiently learn parametrized policies. The agent can have different available strategies, parametrized problems, models, sources of information, or learning mechanisms (for instance imitating by mimicking vs by emulation, or asking for help from one teacher or another), and chooses between them in order to optimize learning (a process called strategic learning 128). Given a set of parametrized problems, a particular exploration strategy is to randomly draw goals / RL problems to solve in the motor or problem space. More efficient strategies are based on the active choice of learning experiments that maximize learning progress using bandit algorithms, e.g. maximizing improvement of predictions or of competences to solve RL problems 131. This automatically drives the system to explore and learn easy skills first, and then explore skills of progressively increasing complexity. Both random and learning progress strategies can act either on the motor or on the problem space, resulting in motor babbling or goal babbling strategies.

- Motor babbling consists in sampling commands in the motor space according to a given strategy (random or learning progress), predicting the expected effect, executing the command through the environment and observing the actual effect. Both the parametrized policies and interest models are finally updated according to this experience.

- Goal babbling consists in sampling goals in the problem space and using the current policies to infer a motor action supposed to solve the problem (inverse prediction). The robot/agent then executes the command through the environment and observes the actual effect. Both the parametrized policies and interest models are finally updated according to this experience. It has been shown that this second strategy allows a progressive solving of problems much more uniformly in the problem space than with a motor babbling strategy, where the agent samples directly in the motor space 70.
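The two strategies above can be contrasted on a toy problem. The following is a minimal sketch and does not use the Explauto API: the two-joint arm `arm_forward`, the nearest-neighbour inverse prediction `nearest_command`, the exploration noise `sigma` and the grid-based `coverage` measure are all assumptions made for illustration, and goals are drawn at random rather than through a learning-progress interest model.

```python
import math
import random


def arm_forward(m):
    """Toy environment (assumption): 2-joint planar arm, angles -> hand position."""
    a1, a2 = m
    x = 0.5 * math.cos(a1) + 0.5 * math.cos(a1 + a2)
    y = 0.5 * math.sin(a1) + 0.5 * math.sin(a1 + a2)
    return (x, y)


def nearest_command(memory, goal):
    """Inverse prediction by nearest neighbour: return the stored command
    whose observed effect was closest to the goal."""
    return min(memory, key=lambda ms: math.dist(ms[1], goal))[0]


def motor_babbling(n_iter, rng):
    """Sample commands directly in the motor space and record their effects."""
    memory = []
    for _ in range(n_iter):
        m = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        memory.append((m, arm_forward(m)))
    return memory


def goal_babbling(n_iter, rng, n_bootstrap=10, sigma=0.2):
    """Sample goals in the effect space, infer a command, perturb and execute it."""
    memory = motor_babbling(n_bootstrap, rng)  # a few random commands to start
    for _ in range(n_iter - n_bootstrap):
        goal = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        guess = nearest_command(memory, goal)
        m = tuple(max(-math.pi, min(math.pi, a + rng.gauss(0.0, sigma)))
                  for a in guess)
        memory.append((m, arm_forward(m)))  # observe the actual effect
    return memory


def coverage(memory, n_cells=10):
    """Number of distinct grid cells of the effect space that were reached."""
    return len({(int((x + 1) * n_cells / 2), int((y + 1) * n_cells / 2))
                for _, (x, y) in memory})
```

Calling `coverage(motor_babbling(500, random.Random(0)))` and `coverage(goal_babbling(500, random.Random(0)))` gives a rough way to compare how uniformly each strategy fills the effect space on this toy arm.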

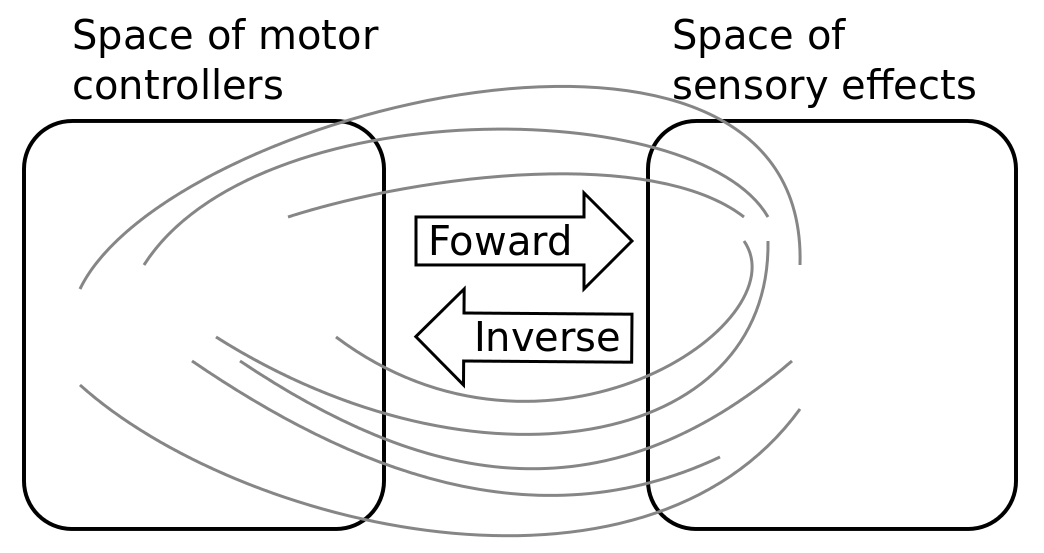

Figure 1: Complex parametrized policies involve high dimensional action and effect spaces. For the sake of visualization, the motor M and sensory S spaces are only 2D each in this example. The relationship between M and S is non-linear, dividing the sensorimotor space into regions of unequal stability: small regions of S can be reached very precisely by large regions of M, or large regions in S can be very sensitive to variations in M. This non-linearity can imply redundancy, where the same sensory effect can be attained using distinct regions in M.

- Functional Description:

This library provides high-level API for an easy definition of:

- Real and simulated robotic setups (Environment level),

- Incremental learning of parametrized policies (Sensorimotor level),

- Active selection of parametrized RL problems (Interest level).

The library comes with several built-in environments. Two of them correspond to simulated environments: a multi-DoF arm acting on a 2D plane, and an under-actuated torque-controlled pendulum. The third one allows the control of real robots based on Dynamixel actuators using the Pypot library. Learning parametrized policies involves machine learning algorithms, which are typically regression algorithms to learn forward models, from motor controllers to sensory effects, and optimization algorithms to learn inverse models, from sensory effects, or problems, to the motor programs that reach them. We call these sensorimotor learning algorithms sensorimotor models. The library comes with several built-in sensorimotor models: simple nearest-neighbor look-up, non-parametric models combining classical regressions and optimization algorithms, online mixtures of Gaussians, and discrete Lidstone distributions. Explauto sensorimotor models are online learning algorithms, i.e. they are trained iteratively during the interaction of the robot with the environment in which it evolves. Explauto also provides a unified interface to define exploration strategies using the InterestModel class. The library comes with two built-in interest models: random sampling, and sampling that maximizes the learning progress in forward or inverse predictions.
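To make the idea of an interest model based on learning progress more concrete, here is a minimal sketch. It is not the Explauto `InterestModel` class: the class name `LearningProgressInterest`, its methods, the one-dimensional goal space split into bins, and the epsilon-random exploration are all assumptions chosen for illustration.

```python
import random
from collections import deque


class LearningProgressInterest:
    """Hypothetical interest model: split a 1D goal space [0, 1) into bins and
    prefer bins where recent competence is changing the most."""

    def __init__(self, n_bins=5, window=10, eps=0.2, seed=0):
        self.n_bins = n_bins
        self.eps = eps
        self.rng = random.Random(seed)
        # Keep the last 2 * window competence values observed in each bin.
        self.history = [deque(maxlen=2 * window) for _ in range(n_bins)]

    def bin_of(self, goal):
        return min(int(goal * self.n_bins), self.n_bins - 1)

    def progress(self, b):
        """Absolute difference between mean competence of the recent half
        and of the older half of the bin's history."""
        h = list(self.history[b])
        if len(h) < 2:
            return 0.0
        half = len(h) // 2
        older, recent = h[:half], h[half:]
        return abs(sum(recent) / len(recent) - sum(older) / len(older))

    def sample(self):
        """Pick a bin proportionally to its learning progress (with some
        random exploration), then draw a goal uniformly inside that bin."""
        lps = [self.progress(b) for b in range(self.n_bins)]
        if self.rng.random() < self.eps or sum(lps) == 0.0:
            b = self.rng.randrange(self.n_bins)
        else:
            b = self.rng.choices(range(self.n_bins), weights=lps)[0]
        return (b + self.rng.random()) / self.n_bins

    def update(self, goal, competence):
        self.history[self.bin_of(goal)].append(competence)
```

In an exploration loop, `sample()` would provide the next goal and `update(goal, competence)` would be called once the outcome is observed, for instance with competence defined as the negative distance between the goal and the reached effect.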

Explauto environments now handle actions depending on a current context, as for instance in an environment where a robotic arm is trying to catch a ball: the arm trajectories will depend on the current position of the ball (context). Also, if the dynamics of the environment change over time, a new sensorimotor model (Non-Stationary Nearest Neighbor) is able to cope with those changes by giving more weight to recent experiences. These new features are explained in Jupyter notebooks.
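One plausible way to favour recent experiences in a nearest-neighbour model is to add an age penalty to the distance. The sketch below only illustrates that idea; it is not the Non-Stationary Nearest Neighbor implementation shipped with Explauto, and the class name `RecencyWeightedNN` and the `age_penalty` parameter are assumptions.

```python
import math


class RecencyWeightedNN:
    """Hypothetical forward model: nearest-neighbour look-up where older
    samples are penalized, so recent observations win when the environment
    has changed."""

    def __init__(self, age_penalty=0.01):
        self.age_penalty = age_penalty
        self.data = []  # (command, effect) pairs, oldest first

    def update(self, m, s):
        self.data.append((tuple(m), tuple(s)))

    def forward(self, m):
        """Predict the effect of command m (requires at least one sample)."""
        n = len(self.data)

        def score(item):
            i, (mi, _) = item
            age = n - 1 - i  # 0 for the most recent sample
            return math.dist(mi, m) + self.age_penalty * age

        _, (_, s) = min(enumerate(self.data), key=score)
        return s
```

With `age_penalty=0` this reduces to a plain nearest-neighbour look-up; larger values make the model forget old observations faster.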

This library has been used in many experiments including:

- the control of a 2D simulated arm,

- the exploration of the inverse kinematics of a poppy humanoid (both on the real robot and on the simulated version),

- acoustic model of a vocal tract.

Explauto is cross-platform and has been tested on Linux, Windows and Mac OS. It has been released under the GPLv3 license.

- URL: https://github.com/flowersteam/explauto

- Contacts: Clément Moulin-Frier, Pierre Rouanet, Sebastien Forestier

7.1.2 KidBreath

- Keyword: Machine learning

- Functional Description: KidBreath is a responsive web application composed of several interactive contents related to asthma and displayed in different forms: learning activities with quizzes, short games and videos. It includes profile creation and personalization, and a section that shows the history and scores of learning activities, to track how KidBreath use evolves. To test the Kidlearn algorithm, it was adapted and integrated into this platform. Development in PHP, HTML-5, CSS, MySQL, JQuery, Javascript. Hosting in APACHE, LINUX, PHP 5.5, MySQL, OVH.

- Contacts: Pierre-Yves Oudeyer, Manuel Lopes, Alexandra Delmas

- Partner: ItWell SAS

7.1.3 Kidlearn: money game application

- Functional Description:

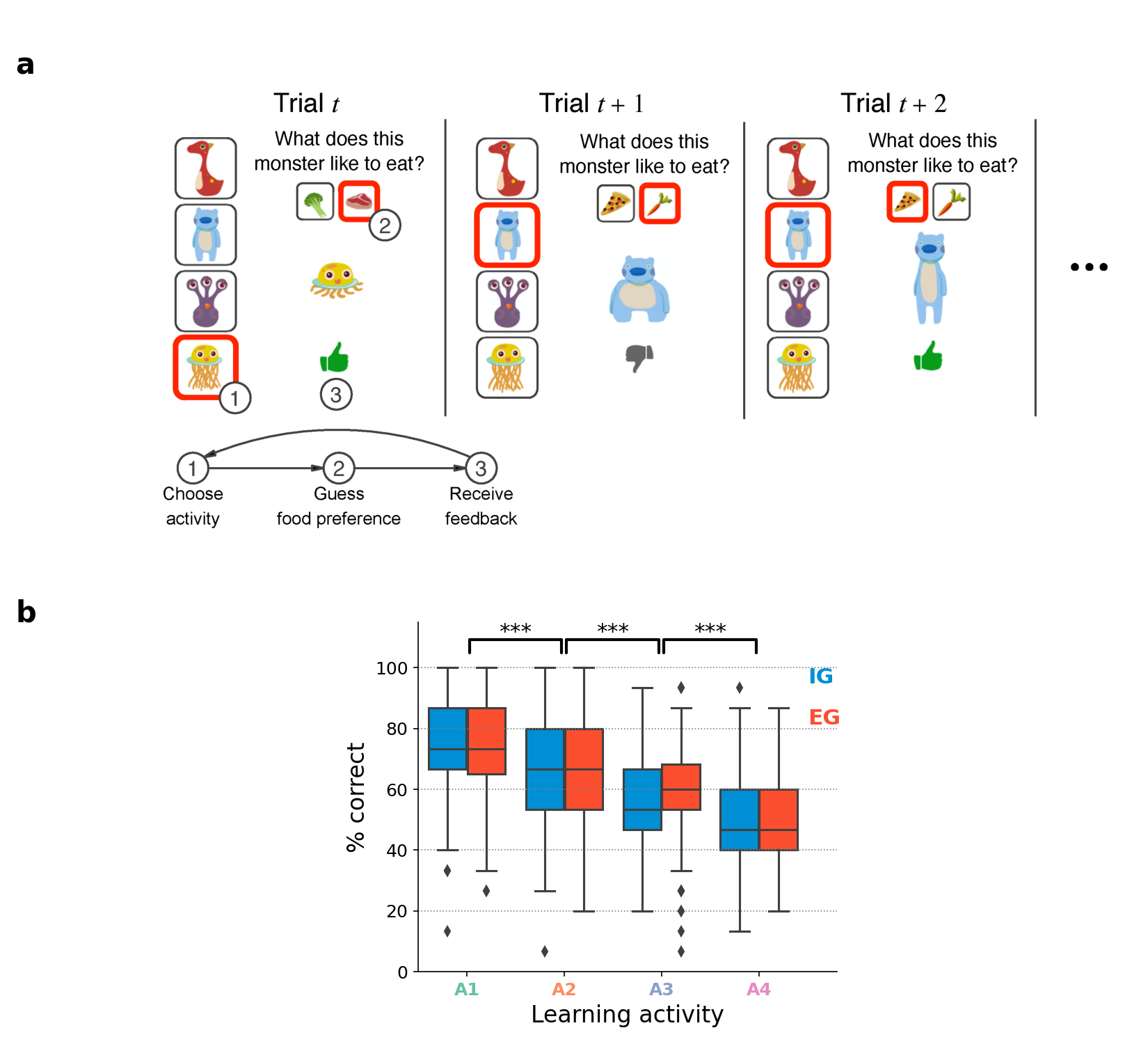

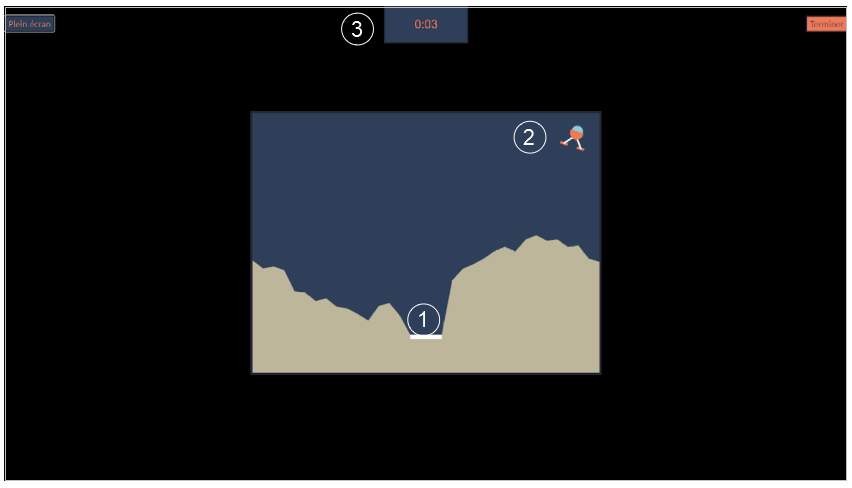

The game is instantiated in a browser environment where students are given exercises in the form of money/token games (see Figure 2). For an exercise type, one object is presented with a given tagged price and the learner has to choose which combination of bank notes, coins or abstract tokens needs to be taken from the wallet to buy the object, with various constraints depending on the exercise parameters. The games have been developed using web technologies: HTML5, JavaScript and Django.

Figure 2: Four principal regions are defined in the graphical interface. The first is the wallet location where users can pick and drag the money items and drop them on the repository location to compose the correct price. The object and the price are present in the object location. Four different types of exercises exist: M : customer/one object, R : merchant/one object, MM : customer/two objects, RM : merchant/two objects.

- URL: https://flowers.inria.fr/research/kidlearn/

- Contact: Benjamin Clement

7.1.4 Kidlearn: script for Kidbreath use

- Keyword: PHP

- Functional Description: A new way to test Kidlearn algorithms is to use them on the Kidbreath platform. The Kidbreath platform uses an Apache/PHP server, so to facilitate the integration of our algorithms, a Python script has been written that lets PHP code easily use the existing Python library, which includes our algorithms.
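The report does not describe the interface of this script. As an illustration only, a bridge of this kind could exchange JSON over standard input and output, as in the sketch below. The request fields (`activities`, `history`), the function names, and the placeholder selection rule (pick the least-practised activity) are all assumptions; a real bridge would call the Kidlearn library instead.

```python
import json
import sys


def handle_request(raw):
    """Parse a JSON request and return a JSON response naming the next activity.

    Placeholder logic (assumption): propose the activity that appears least
    often in the learner's history."""
    request = json.loads(raw)
    activities = request["activities"]
    history = request.get("history", [])
    counts = {a: 0 for a in activities}
    for entry in history:
        if entry["activity"] in counts:
            counts[entry["activity"]] += 1
    next_activity = min(activities, key=lambda a: counts[a])
    return json.dumps({"next_activity": next_activity})


def main(stream_in=sys.stdin, stream_out=sys.stdout):
    """Command-line entry point: read one request, write one response."""
    stream_out.write(handle_request(stream_in.read()) + "\n")
```

Calling `main()` at the bottom of the file would turn it into a command-line script that PHP can invoke, for example through `proc_open` or `shell_exec`, passing the request as JSON and decoding the printed response with `json_decode`.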

- URL: https://flowers.inria.fr/research/kidlearn/

- Contact: Benjamin Clement

7.1.5 KidLearn

- Keyword: Automatic Learning

- Functional Description: KidLearn is software that adaptively personalizes sequences of learning activities to the particularities of each individual student. It aims to propose to the student the right activity at the right time, concurrently maximizing their learning progress and motivation.

- URL: https://flowers.inria.fr/research/kidlearn/

- Authors: Benjamin Clement, Didier Roy, Pierre-Yves Oudeyer, Manuel Lopes

- Contacts: Benjamin Clement, Pierre-Yves Oudeyer

- Participants: Benjamin Clement, Didier Roy, Manuel Lopes, Pierre Yves Oudeyer

7.1.6 Poppy

- Keywords: Robotics, Education

- Functional Description:

The Poppy Project team develops open-source 3D printed robot platforms based on robust, flexible, easy-to-use and easy-to-reproduce hardware and software. In particular, the use of 3D printing and rapid prototyping technologies is a central aspect of this project, and makes it easy and fast not only to reproduce the platform, but also to explore morphological variants. Poppy targets three domains of use: science, education and art.

In the Poppy project we are working on the Poppy System which is a new modular and open-source robotic architecture. It is designed to help people create and build custom robots. It permits, in a similar approach as Lego, building robots or smart objects using standardized elements.

Poppy System is a unified system in which essential robotic components (actuators, sensors...) are independent modules connected with other modules through standardized interfaces:

- Unified mechanical interfaces, simplifying the assembly process and the design of 3D printable parts.

- Unified communication between elements using the same connector and bus for each module.

- Unified software, making it easy to program each module independently.

Our ambition is to create an ecosystem around this system so communities can develop custom modules, following the Poppy System standards, which can be compatible with all other Poppy robots.

- URL: https://www.poppy-project.org/

- Contacts: Matthieu Lapeyre, Pierre Rouanet, Pierre-Yves Oudeyer, Didier Roy, Stephanie Noirpoudre, Theo Segonds, Damien Caselli, Nicolas Rabault

- Participants: Jonathan Grizou, Matthieu Lapeyre, Pierre Rouanet, Pierre-Yves Oudeyer

7.1.7 Poppy Ergo Jr

- Name: Poppy Ergo Jr

- Keywords: Robotics, Education

- Functional Description:

Poppy Ergo Jr is an open hardware robot developed by the Poppy Project to explore the use of robots in classrooms for learning robotics and computer science.

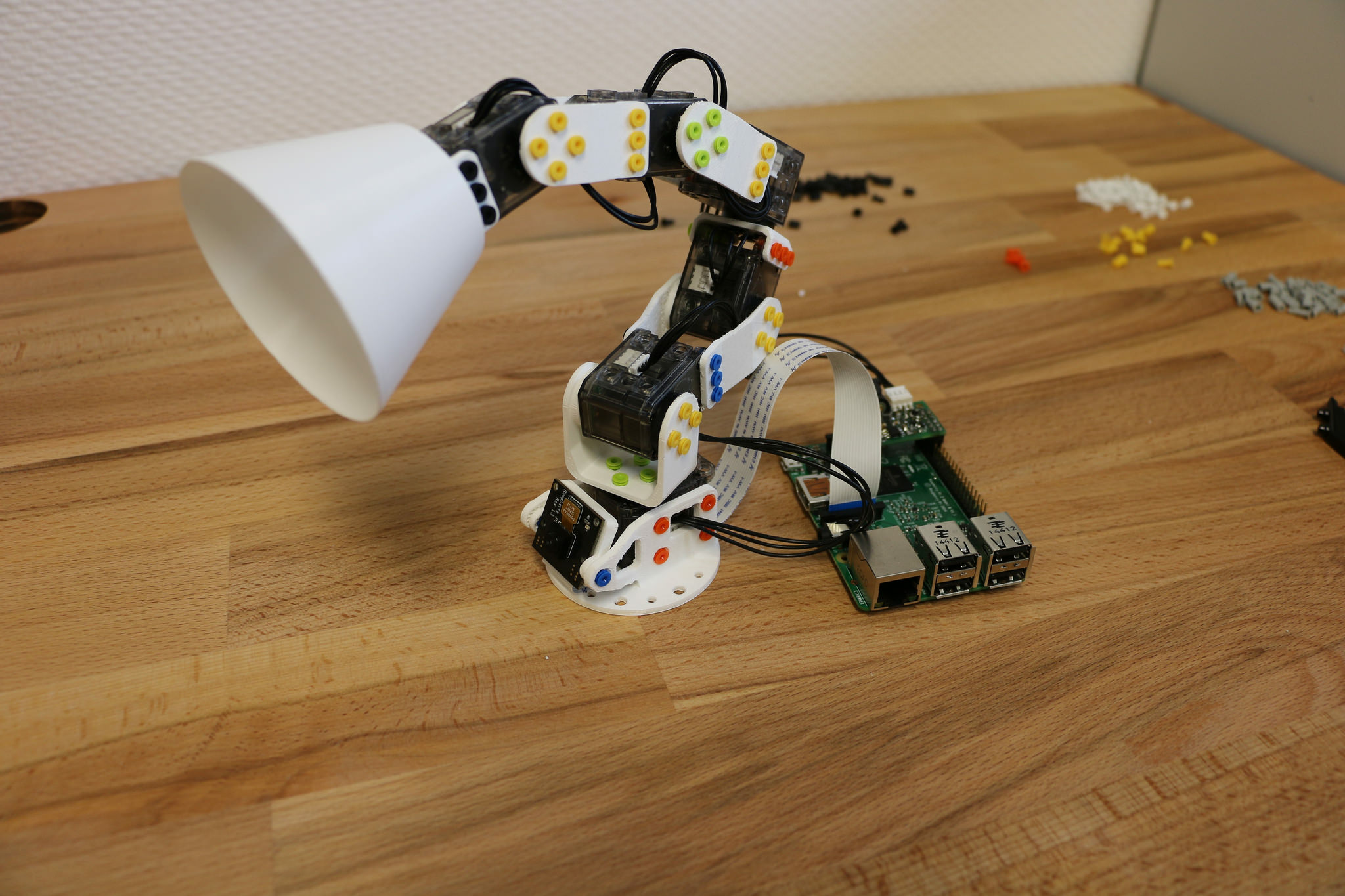

It is available as a 6 or 4 degrees of freedom arm designed to be both expressive and low-cost. This is achieved by the use of FDM 3D printing and low-cost Robotis XL-320 actuators. A Raspberry Pi camera is attached to the robot so it can detect objects, faces or QR codes.

The Ergo Jr is controlled by the Pypot library and runs on a Raspberry Pi 2 or 3 board. Communication between the Raspberry Pi and the actuators is made possible by the Pixl board we have designed.

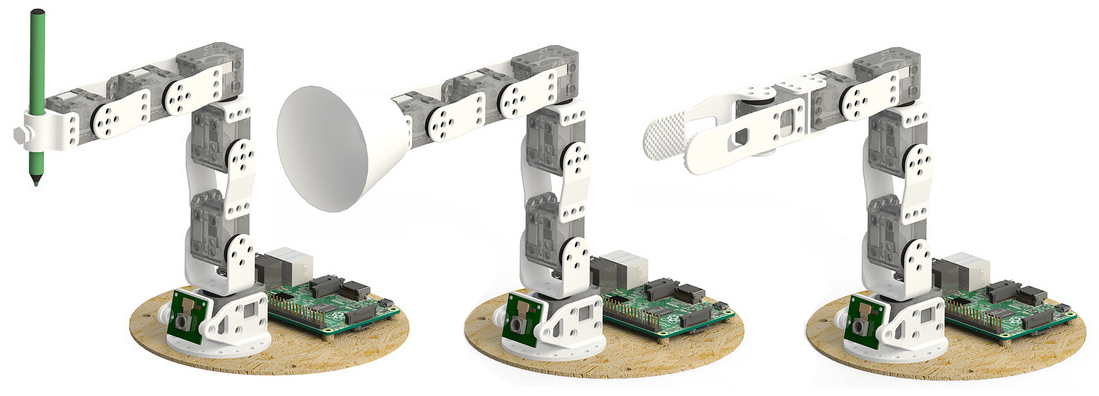

Figure 3: Poppy Ergo Jr, 6-DoFs arm robot for education

The Poppy Ergo Jr robot has several 3D printed tools extending its capabilities. There are currently the lampshade, the gripper and a pen holder.

Figure 4: The available Ergo Jr tools: a pen holder, a lampshade and a gripper

With the release of a new Raspberry Pi board in early 2016, the Poppy Ergo Jr disk image was updated to support Raspberry Pi 2 and 3 boards. The disk image can be used seamlessly with either board.

- URL: https://github.com/poppy-project/poppy-ergo-jr

- Contacts: Theo Segonds, Damien Caselli

7.1.8 S-RL Toolbox

- Name: Reinforcement Learning (RL) and State Representation Learning (SRL) for Robotics

- Keywords: Machine learning, Robotics

- Functional Description: This repository was made to evaluate State Representation Learning methods using Reinforcement Learning. It integrates various RL algorithms (PPO, A2C, ARS, ACKTR, DDPG, DQN, ACER, CMA-ES, SAC, TRPO) along with different SRL methods (see SRL Repo) in an efficient way (1 million steps in 1 hour with an 8-core CPU and 1 Titan X GPU), and provides automatic logging, plotting, saving and loading of trained agents.

- URL: https://github.com/araffin/robotics-rl-srl

- Contact: David Filliat

- Partner: ENSTA

7.1.9 Deep-Explauto

- Name: Deep-Explauto

- Keywords: Deep learning, Unsupervised learning, Learning, Experimentation

- Functional Description:

Until recently, curiosity-driven exploration algorithms were based on classic learning algorithms, unable to handle high-dimensional problems (see explauto). Recent advances in the field of deep learning offer new algorithms able to handle such situations.

Deep explauto is an experimental library, containing reference implementations of curiosity-driven exploration algorithms. Given the experimental aspect of exploration algorithms, and the low maturity of the libraries and algorithms using deep learning, proposing black-box implementations of those algorithms that would enable their blind use seems unrealistic.

Nevertheless, in order to quickly launch new experiments, this library offers a set of objects, functions and examples that help kickstart new experiments.

- Contact: Alexandre Pere

7.1.10 Orchestra

- Name: Orchestra

- Keyword: Experimental mechanics

- Functional Description:

Orchestra is a set of tools meant to help in performing experimental campaigns in computer science. It provides you with simple tools to:

- Organize a manual experimental workflow, leveraging git and lfs through a simple interface.

- Collaborate with other people on a single experimental campaign.

- Execute pieces of code on remote hosts such as clusters or clouds, in one line.

- Automate the execution of batches of experiments and the presentation of the results through a clean web UI.

Many advanced tools exist online to handle similar situations. Most of them target very complicated workflows, e.g. DAGs of tasks. Those tools are very powerful but lack the simplicity needed by newcomers. Here, we propose a limited but very simple tool to handle one of the most common situations in experimental campaigns: the repeated execution of an experiment on variations of parameters.

In particular, we include three tools:

- expegit: a tool to organize your experimental campaign results in a git repository using git-lfs (large file storage).

- runaway: a tool to execute code on distant hosts, parameterized with easy-to-use file templates.

- orchestra: a tool to automate the use of the two previous tools on large campaigns.
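The use case described above, repeating one experiment over variations of parameters, can be illustrated independently of Orchestra. The sketch below does not use the expegit, runaway or orchestra command-line interfaces; the helper names `expand_grid` and `to_command` and the `--name=value` argument convention are assumptions.

```python
import itertools
import shlex


def expand_grid(grid):
    """Yield one dict per combination of parameter values (Cartesian product)."""
    names = sorted(grid)
    for values in itertools.product(*(grid[n] for n in names)):
        yield dict(zip(names, values))


def to_command(script, params):
    """Build the shell command that runs `script` with the given parameters."""
    args = " ".join(f"--{k}={shlex.quote(str(v))}"
                    for k, v in sorted(params.items()))
    return f"python {shlex.quote(script)} {args}"


if __name__ == "__main__":
    # Hypothetical campaign: 2 learning rates x 3 seeds = 6 runs.
    for params in expand_grid({"lr": [0.1, 0.01], "seed": [0, 1, 2]}):
        print(to_command("train.py", params))
```

Each generated command line corresponds to one execution of the experiment; a campaign tool would then dispatch these commands to remote hosts and collect the results.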

- Contact: Alexandre Pere

7.1.11 Curious

- Name: Curious: Intrinsically Motivated Modular Multi-Goal Reinforcement Learning

- Keywords: Exploration, Reinforcement learning, Artificial intelligence

- Functional Description: This is an algorithm for learning a controller for an agent in a modular multi-goal environment. In these types of environments, the agent faces multiple goals classified in different types (e.g. reaching goals, grasping goals for a manipulation robot).

- Contact: Cedric Colas

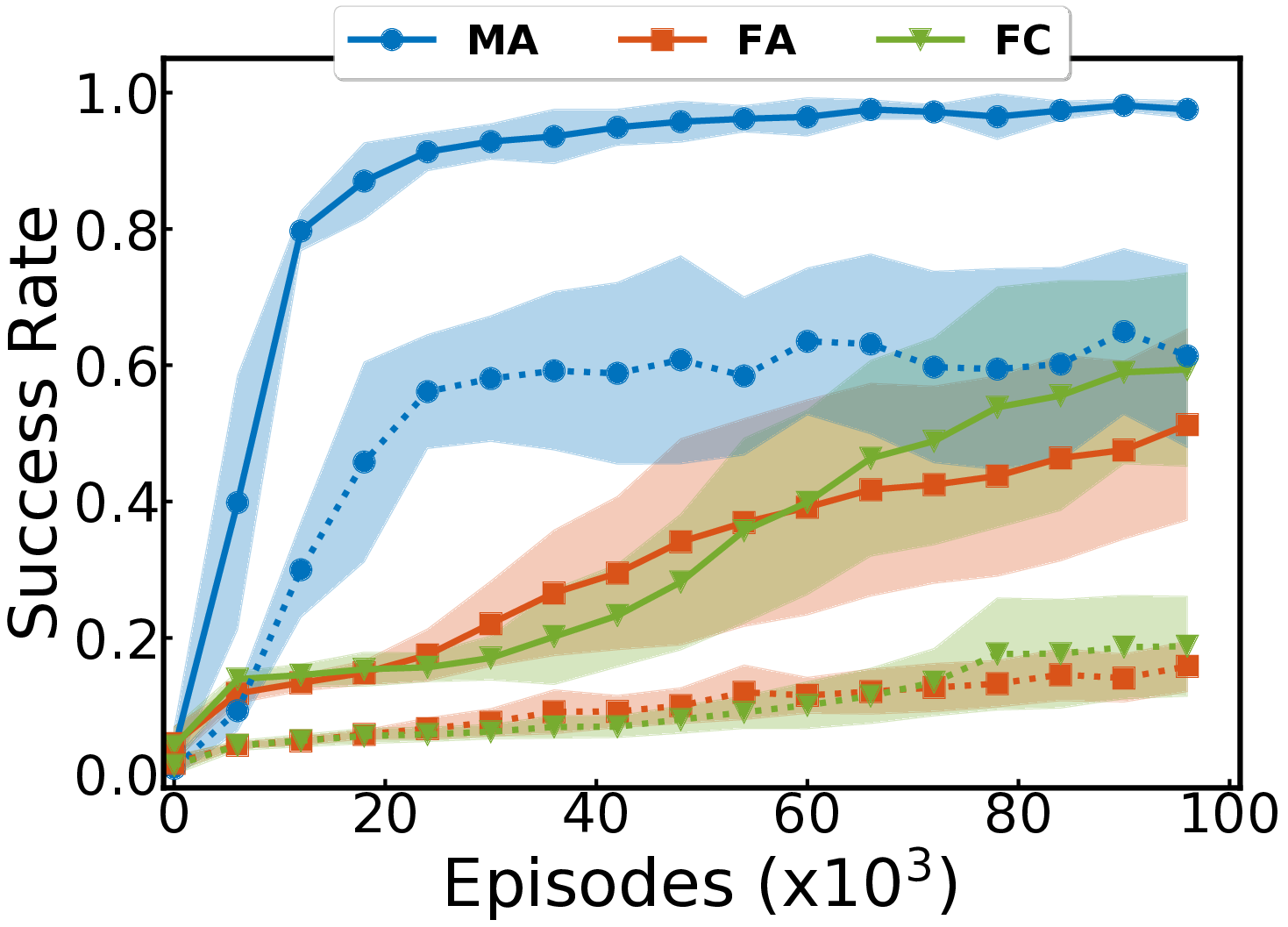

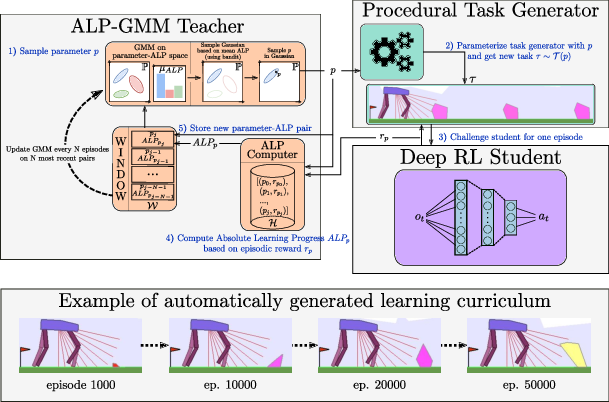

7.1.12 teachDeepRL

- Name: Teacher algorithms for curriculum learning of Deep RL in continuously parameterized environments

- Keywords: Machine learning, Git

- Functional Description:

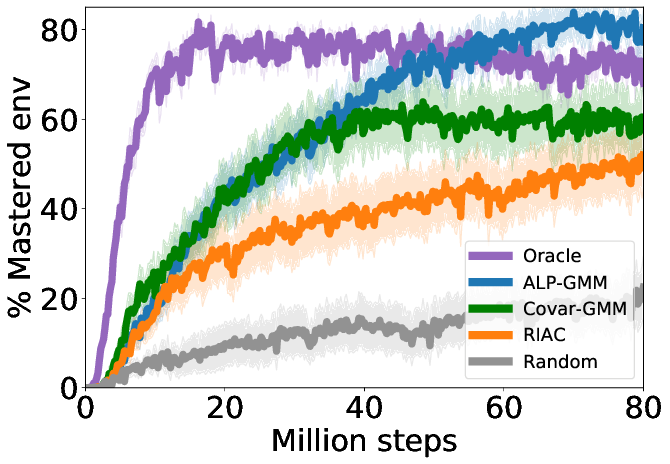

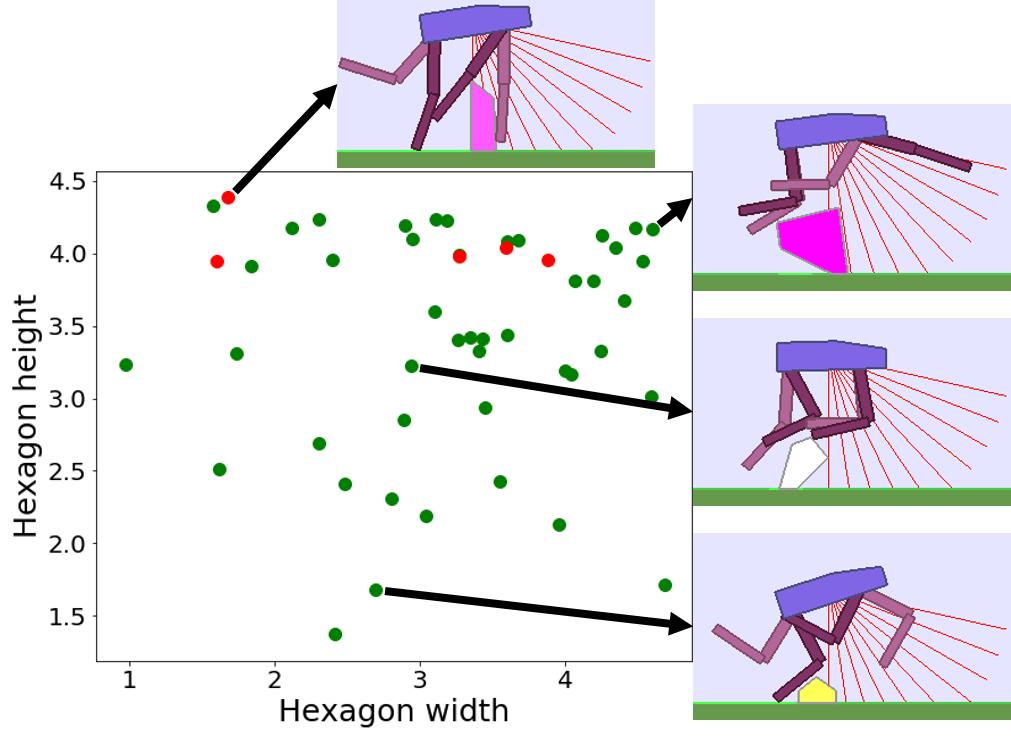

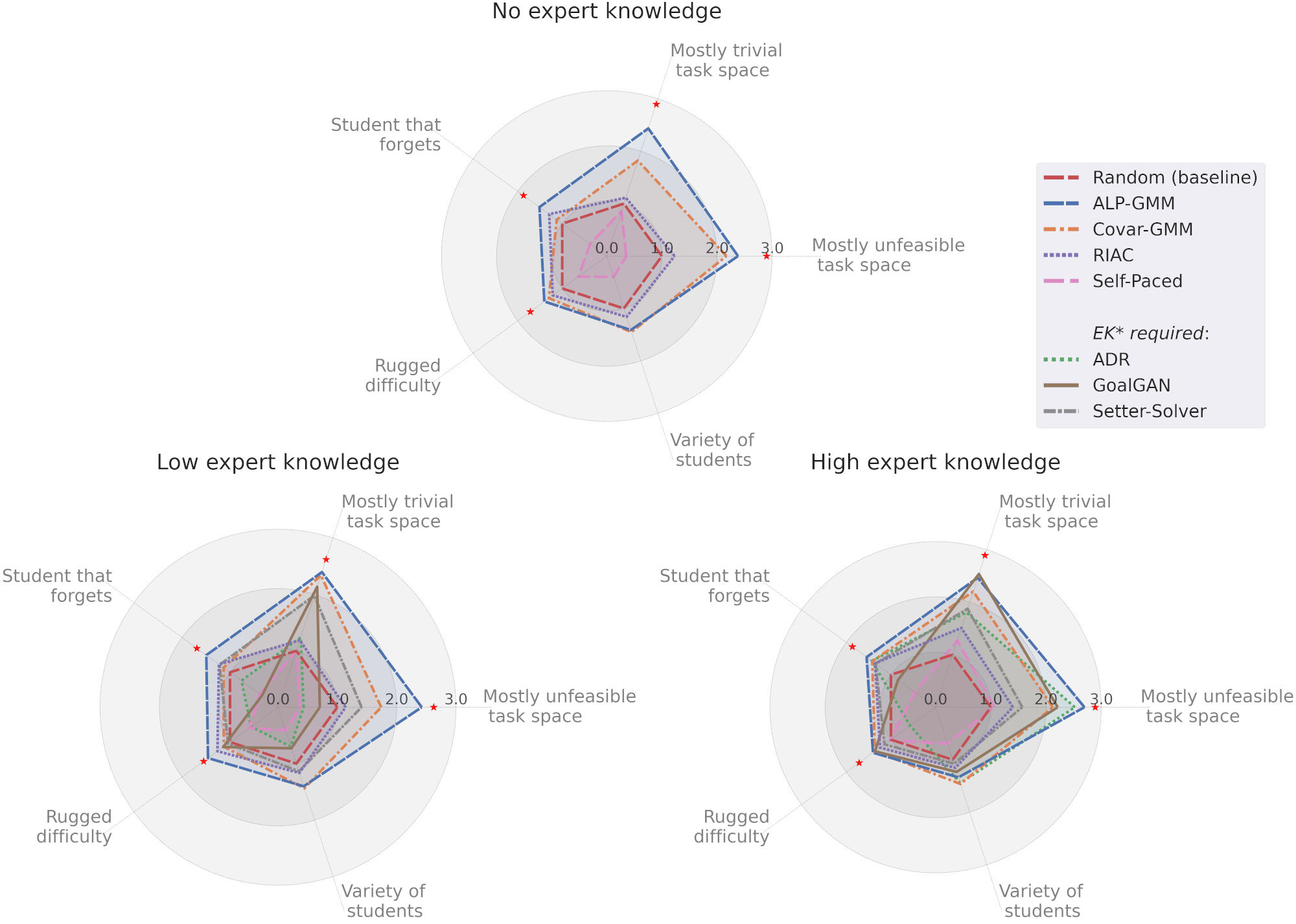

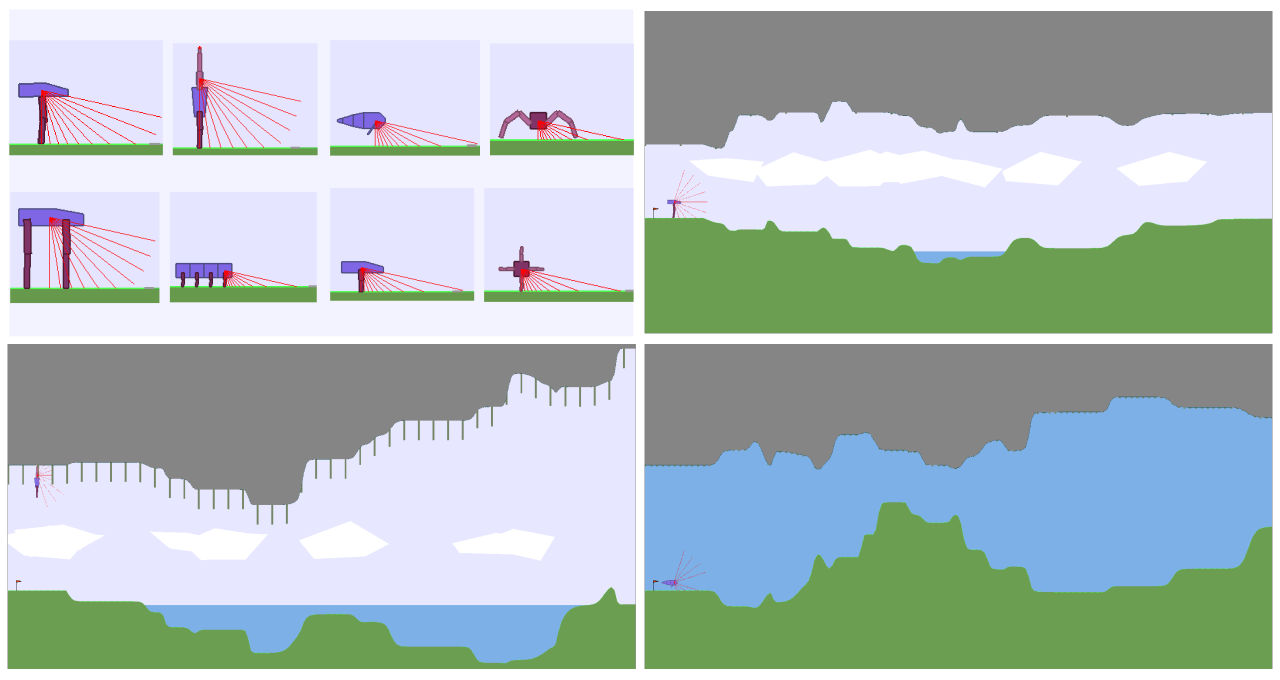

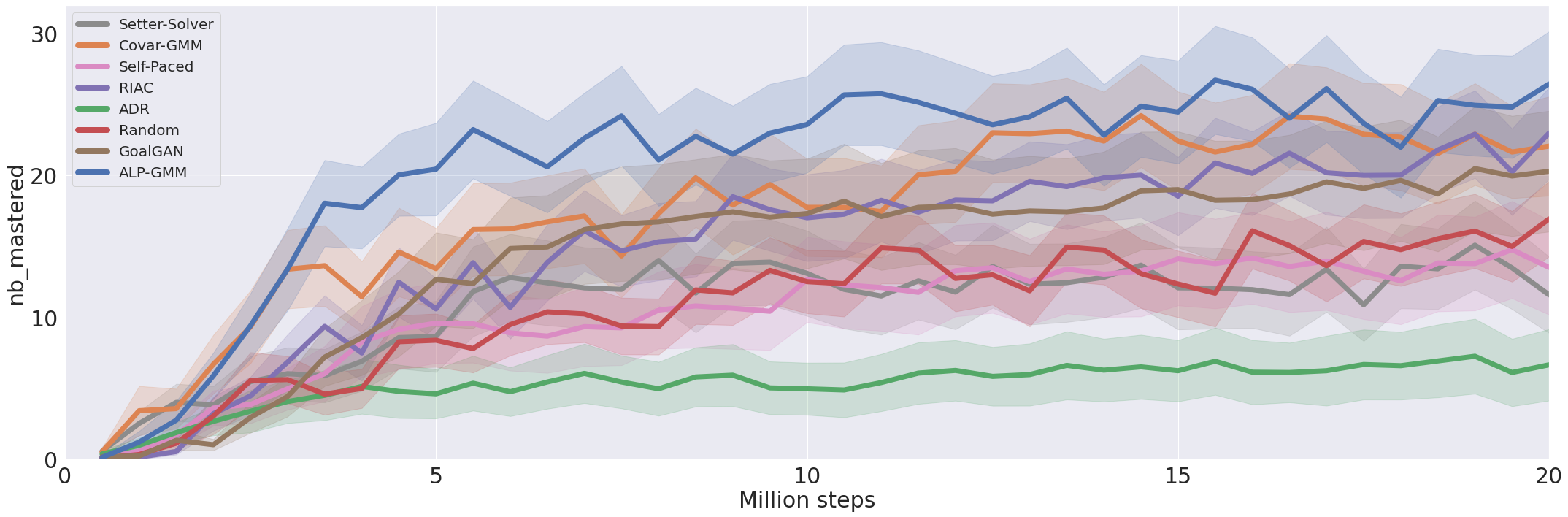

Codebase from our CoRL2019 paper https://arxiv.org/abs/1910.07224

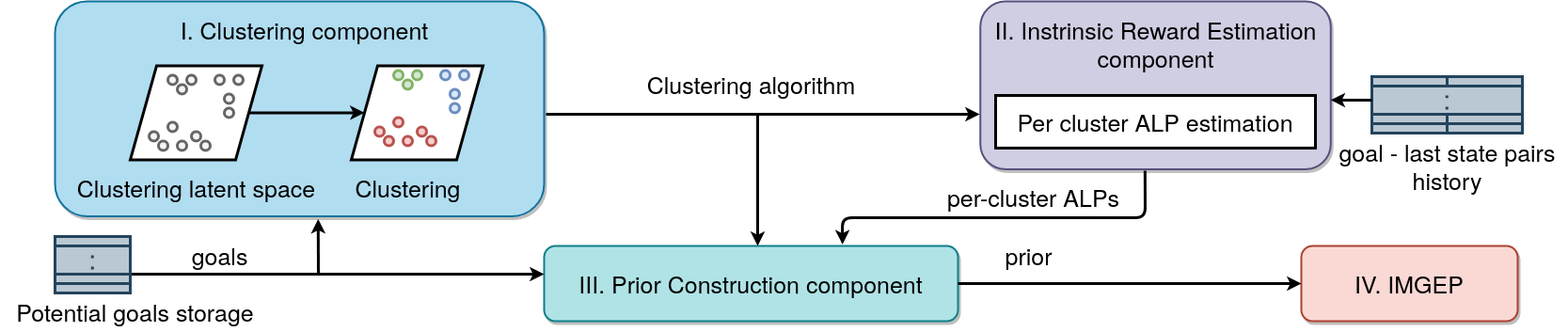

This github repository provides implementations for the following teacher algorithms:

- Absolute Learning Progress-Gaussian Mixture Model (ALP-GMM), our proposed teacher algorithm

- Robust Intelligent Adaptive Curiosity (RIAC), from Baranes and Oudeyer, R-IAC: robust intrinsically motivated exploration and active learning.

- Covar-GMM, from Moulin-Frier et al., Self-organization of early vocal development in infants and machines: The role of intrinsic motivation.

- URL: https://github.com/flowersteam/teachDeepRL

- Author: Remy Portelas

- Contact: Remy Portelas

7.1.13 Automated Discovery of Lenia Patterns

- Keywords: Exploration, Cellular automaton, Deep learning, Unsupervised learning

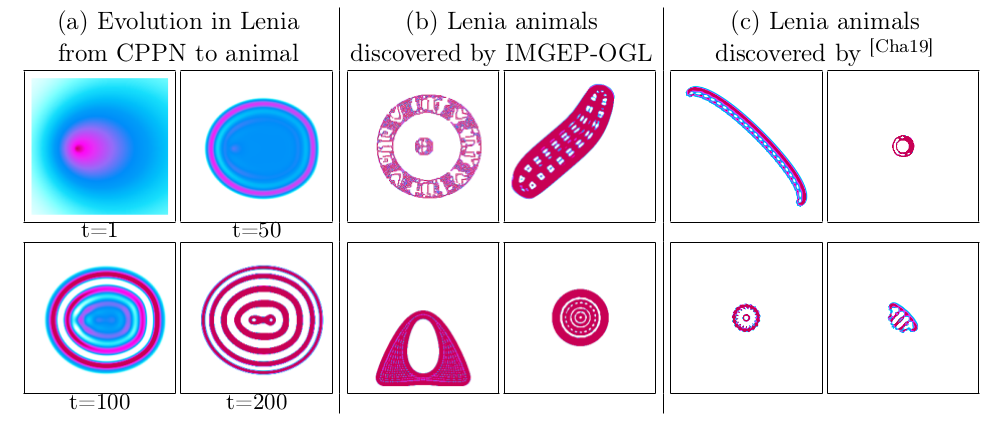

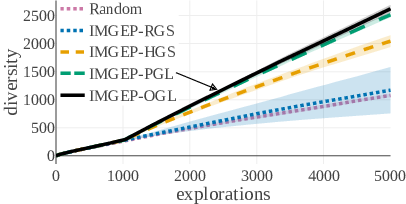

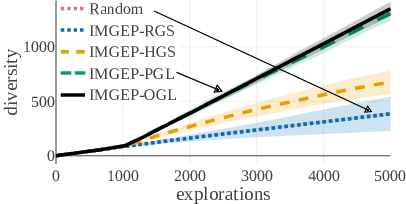

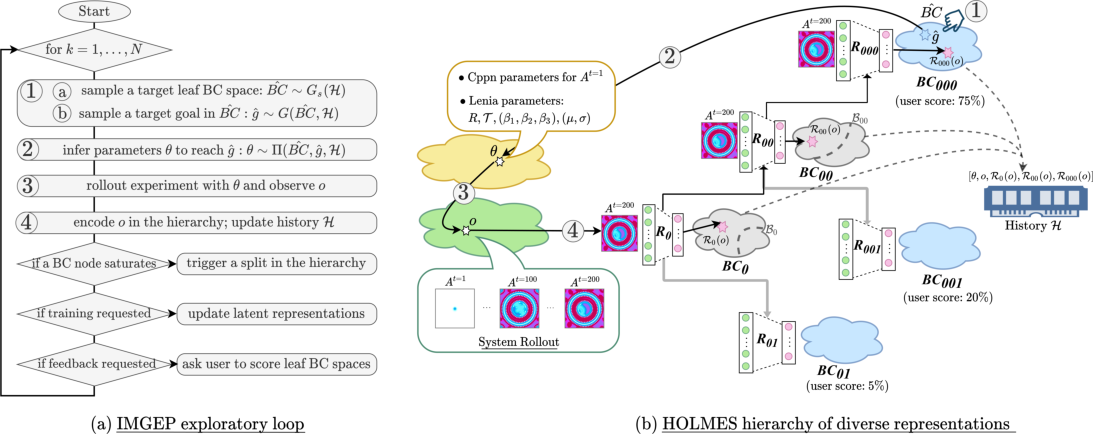

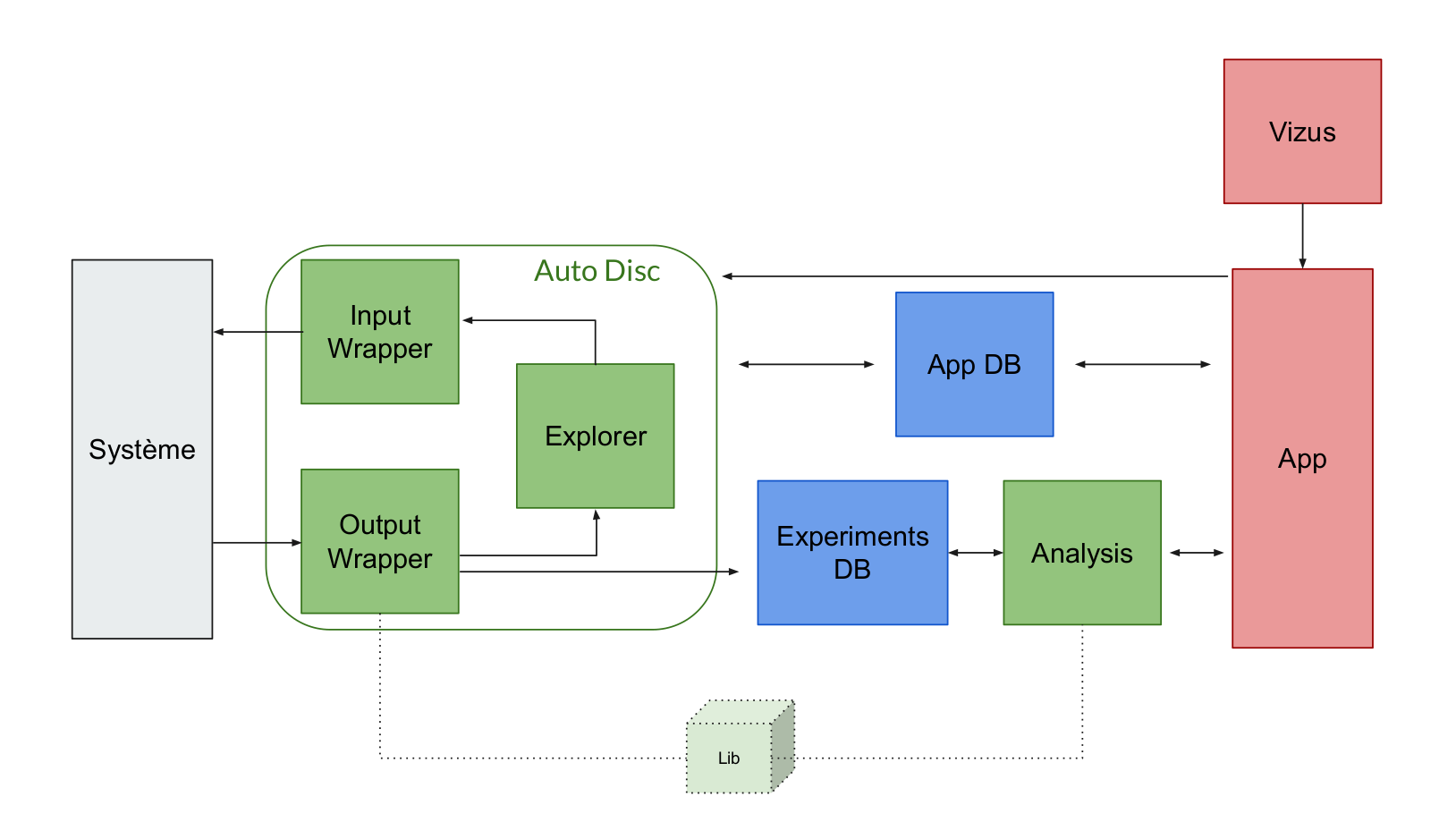

- Scientific Description: In many complex dynamical systems, artificial or natural, one can observe self-organization of patterns emerging from local rules. Cellular automata, like the Game of Life (GOL), have been widely used as abstract models enabling the study of various aspects of self-organization and morphogenesis, such as the emergence of spatially localized patterns. However, findings of self-organized patterns in such models have so far relied on manual tuning of parameters and initial states, and on the human eye to identify “interesting” patterns. In this paper, we formulate the problem of automated discovery of diverse self-organized patterns in such high-dimensional complex dynamical systems, as well as a framework for experimentation and evaluation. Using a continuous GOL as a testbed, we show that recent intrinsically-motivated machine learning algorithms (POP-IMGEPs), initially developed for learning of inverse models in robotics, can be transposed and used in this novel application area. These algorithms combine intrinsically motivated goal exploration and unsupervised learning of goal space representations. Goal space representations describe the “interesting” features of patterns for which diverse variations should be discovered. In particular, we compare various approaches to define and learn goal space representations from the perspective of discovering diverse spatially localized patterns. Moreover, we introduce an extension of a state-of-the-art POP-IMGEP algorithm which incrementally learns a goal representation using a deep auto-encoder, and the use of CPPN primitives for generating initialization parameters. We show that it is more efficient than several baselines and equally efficient as a system pre-trained on a hand-made database of patterns identified by human experts.

- Functional Description: Python source code of experiments and data analysis for the paper "Intrinsically Motivated Discovery of Diverse Patterns in Self-Organizing Systems" (Chris Reinke, Mayalen Etcheverry, Pierre-Yves Oudeyer, submitted to ICLR 2020). The software includes: the Lenia environment, exploration algorithms (IMGEPs, random search), deep learning algorithms for unsupervised learning of goal spaces, tools and configurations to run experiments, and data analysis tools.

- URL: https://github.com/flowersteam/automated_discovery_of_lenia_patterns

- Contacts: Chris Reinke, Mayalen Etcheverry

7.1.14 ZPDES_ts

- Name: ZPDES in typescript

- Keywords: Machine learning, Education

- Functional Description: ZPDES is a machine learning-based algorithm that customizes the content of training courses to each learner's level. It has already been implemented in Python in the Kidlearn software, alongside other algorithms. Here, ZPDES is implemented in TypeScript.

- URL: https://flowers.inria.fr/research/kidlearn/

- Authors: Benjamin Clement, Pierre-Yves Oudeyer, Didier Roy, Manuel Lopes

- Contact: Benjamin Clement

7.1.15 GEP-PG

- Name: Goal Exploration Process - Policy Gradient

- Keywords: Machine learning, Deep learning

- Functional Description: Reinforcement Learning algorithm working with OpenAI Gym environments. A first phase implements exploration using a Goal Exploration Process (GEP). Samples collected during exploration are then transferred to the memory of a deep reinforcement learning algorithm (deep deterministic policy gradient or DDPG). DDPG then starts learning from a pre-initialized memory so as to maximize the sum of discounted rewards given by the environment.

- Contact: Cedric Colas
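The two-phase structure described above can be illustrated with a minimal sketch. It is not the GEP-PG code: the one-dimensional environment, the constant-action policy, the function names `rollout` and `gep_exploration`, and all numeric settings are hypothetical stand-ins chosen for illustration. It only shows how a goal exploration phase can fill a transition buffer that a DDPG learner would then start from.

```python
import random

def rollout(params, horizon=20):
    """Run one episode in a toy 1-D environment (hypothetical stand-in for a Gym task)."""
    action = max(-1.0, min(1.0, params[0]))  # constant-action "policy", clipped to [-1, 1]
    state, transitions = 0.0, []
    for _ in range(horizon):
        next_state = state + 0.1 * action
        reward = -abs(next_state - 1.0)  # reward is higher closer to x = 1
        transitions.append((state, action, reward, next_state))
        state = next_state
    return transitions, state  # the outcome is the final position

def gep_exploration(n_episodes=50, n_bootstrap=10, seed=0):
    """Goal exploration phase: returns the collected transitions and the outcome archive."""
    rng = random.Random(seed)
    archive = []        # (policy parameters, outcome) pairs
    replay_buffer = []  # transitions to hand over to the RL phase
    for episode in range(n_episodes):
        if episode < n_bootstrap:
            # Bootstrap with random policy parameters.
            params = [rng.uniform(-1.0, 1.0)]
        else:
            # Sample a goal in outcome space, reuse the policy whose outcome
            # was closest to it, and perturb that policy slightly.
            goal = rng.uniform(-2.0, 2.0)
            nearest_params, _ = min(archive, key=lambda entry: abs(entry[1] - goal))
            params = [nearest_params[0] + rng.gauss(0.0, 0.1)]
        transitions, outcome = rollout(params)
        archive.append((params, outcome))
        replay_buffer.extend(transitions)
    return replay_buffer, archive

replay_buffer, archive = gep_exploration()
print(len(replay_buffer), "transitions ready to pre-fill the DDPG memory")
```

In this sketch the second phase is left out on purpose: any off-policy learner with a replay memory could be initialized from `replay_buffer` before it starts its own interaction with the environment.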

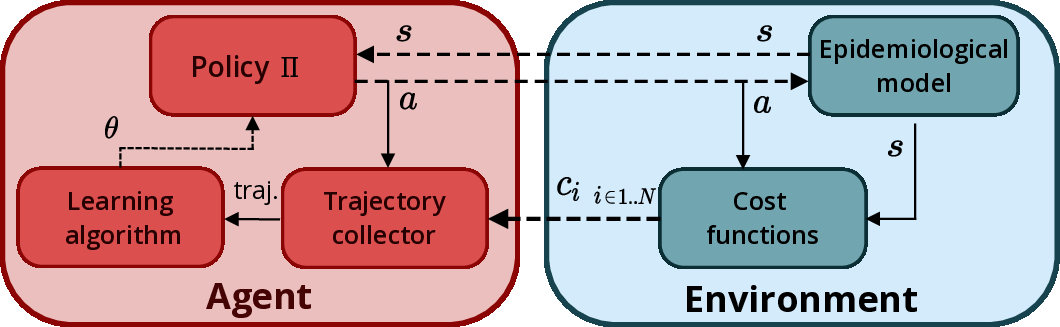

7.1.16 EpidemiOptim

- Name: EpidemiOptim: a toolbox for the optimization of control policies in epidemiological models

- Keywords: Epidemiology, Optimization, Dynamical system, Reinforcement learning, Multi-objective optimisation

- Functional Description: This toolbox provides a modular set of tools to optimize intervention strategies in epidemiological models. The user can define an epidemiological model, or use a pre-coded one, to represent an epidemic, and can define a set of cost functions that specify a particular optimization problem. Given an optimization problem (epidemiological model, cost functions and action modalities), the user can define or reuse optimization algorithms to find intervention strategies that minimize the costs. Finally, the toolbox contains visualization and comparison tools, which make it easy to investigate various hypotheses.

- URL: https://github.com/flowersteam/EpidemiOptim

- Contacts: Cedric Colas, Clément Moulin-Frier, Melanie Prague
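The modular decomposition described above (epidemiological model, cost functions, optimization algorithm) can be sketched as follows. This does not use the EpidemiOptim API: the discrete-time SIR model, the threshold-based lockdown policy, the function names and every parameter value are assumptions made for illustration only.

```python
import random

def simulate_sir(policy, days=120, beta=0.3, gamma=0.1, lockdown_factor=0.3):
    """Discrete-time SIR model (assumed here); returns (health cost, economic cost)."""
    s, i, r = 0.99, 0.01, 0.0
    total_infections, lockdown_days = 0.0, 0
    for _ in range(days):
        lockdown = policy(i)  # action modality: lockdown on or off
        rate = beta * lockdown_factor if lockdown else beta
        new_infections = rate * s * i
        new_recoveries = gamma * i
        s, i, r = s - new_infections, i + new_infections - new_recoveries, r + new_recoveries
        total_infections += new_infections
        lockdown_days += int(lockdown)
    # Health cost: cumulative infected fraction. Economic cost: fraction of days locked down.
    return total_infections, lockdown_days / days

def aggregated_cost(health_cost, economic_cost, weight=0.5):
    """Scalarize the two costs with a hypothetical trade-off weight."""
    return weight * health_cost + (1.0 - weight) * economic_cost

def random_search(n_candidates=200, seed=0):
    """Optimization algorithm: random search over lockdown thresholds."""
    rng = random.Random(seed)
    best_threshold, best_cost = None, float("inf")
    for _ in range(n_candidates):
        threshold = rng.uniform(0.0, 0.5)
        cost = aggregated_cost(*simulate_sir(lambda infected, t=threshold: infected > t))
        if cost < best_cost:
            best_threshold, best_cost = threshold, cost
    return best_threshold, best_cost

threshold, cost = random_search()
print(f"lockdown when infected fraction > {threshold:.3f} (aggregated cost {cost:.3f})")
```

Keeping the model, the costs and the optimizer as separate functions is what allows each one to be swapped independently, for instance replacing random search with a reinforcement learning or multi-objective method.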

7.1.17 IMAGINE

- Keywords: Exploration, Reinforcement learning, Modeling language, Artificial intelligence

- Functional Description: This software provides: (1) an environment modelling the social interaction between an autonomous agent and a social partner, in which the social partner gives natural language descriptions when the agent performs something interesting in the environment; (2) a modular architecture allowing the autonomous agent to manipulate and to target goals expressed in natural language. This architecture is divided into three modules: (2.a) a goal achievement function mapping language descriptions and the agent's observations to a reward signal; (2.b) a goal-conditioned policy that uses the reward signal to learn the behaviour required to reach the goal (expressed in natural language), trained via reinforcement learning; (2.c) a goal imagination module allowing the agent to compose known goals into new sentences in order to creatively explore new outcomes in its environment.

- URL: https://github.com/flowersteam/Imagine

- Contacts: Tristan Karch, Cedric Colas, Clément Moulin-Frier, Pierre-Yves Oudeyer

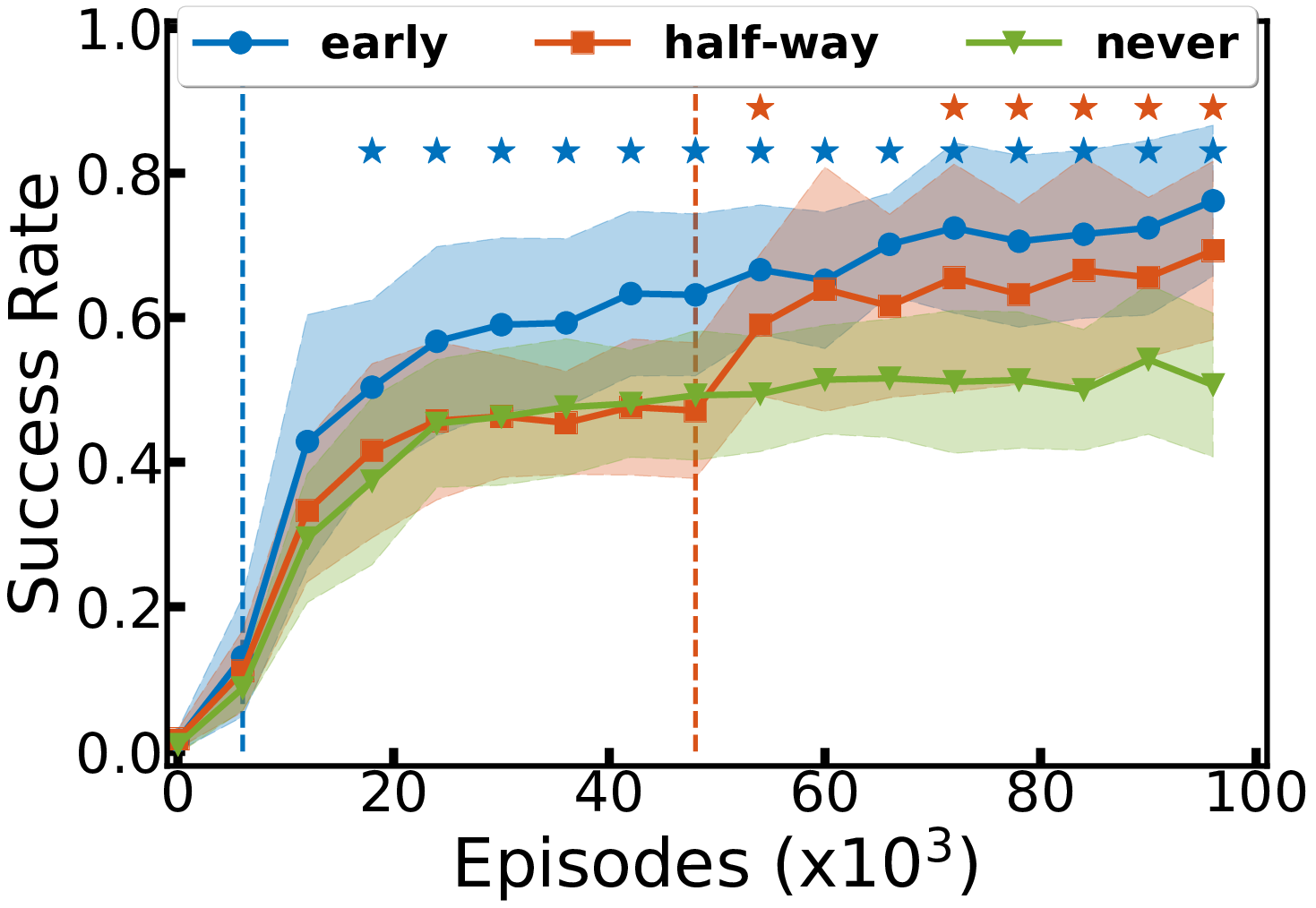

7.1.18 DECSTR

- Name: Grounding Language to Autonomously-Acquired Skills via Goal Generation

- Keywords: Reinforcement learning, Curiosity, Intrinsic motivations

- Functional Description: DECSTR is a learning algorithm that trains an agent to reach semantic goals made of predicates characterizing spatial relations between pairs of blocks. After this first skill learning phase, the agent trains a language generation module that converts linguistic inputs into semantic goals. This module enables efficient language grounding.

- Contact: Cedric Colas
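A semantic goal made of predicates over pairs of blocks can be represented very compactly. The sketch below is illustrative only and is not the DECSTR implementation: the two predicates `close` and `above`, their thresholds, the block names and the helper functions are all assumptions.

```python
import math
from itertools import combinations, permutations

def close(a, b, threshold=0.07):
    """Symmetric predicate (assumed): the two block centres are near each other."""
    return math.dist(a, b) < threshold

def above(a, b, tolerance=0.03):
    """Asymmetric predicate (assumed): block a sits above block b."""
    horizontal_gap = math.dist(a[:2], b[:2])
    return horizontal_gap < tolerance and a[2] > b[2]

def semantic_configuration(blocks):
    """Map block positions (name -> (x, y, z)) to the truth value of every predicate."""
    names = sorted(blocks)
    config = {}
    for a, b in combinations(names, 2):   # unordered pairs for the symmetric predicate
        config[("close", a, b)] = close(blocks[a], blocks[b])
    for a, b in permutations(names, 2):   # ordered pairs for the asymmetric predicate
        config[("above", a, b)] = above(blocks[a], blocks[b])
    return config

def goal_reached(blocks, goal):
    """A goal is a partial assignment of predicate values that must hold."""
    config = semantic_configuration(blocks)
    return all(config[predicate] == value for predicate, value in goal.items())

blocks = {"A": (0.0, 0.0, 0.0), "B": (0.0, 0.0, 0.05), "C": (0.5, 0.0, 0.0)}
goal = {("above", "B", "A"): True, ("close", "A", "C"): False}
print(goal_reached(blocks, goal))
```

Under this representation, a goal does not name a precise target position: many physical arrangements satisfy the same set of predicate values, which is what makes such goals abstract.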

7.1.19 holmes

- Name: IMGEP-HOLMES, an algorithm for meta-diversity search applied to the automated discovery of novel structures in complex dynamical systems

- Keywords: Exploration, Incremental learning, Unsupervised learning, Hierarchical architecture, Intrinsic motivations, Cellular automaton, Complexity

- Functional Description: Python source code to reproduce the experiments and data analysis for the paper "Hierarchically Organized Latent Modules for Exploratory Search in Morphogenetic Systems" (Mayalen Etcheverry, Clément Moulin-Frier and Pierre-Yves Oudeyer, published at NeurIPS 2020). The user can define a complex system to explore, or use the Lenia environment, which is already provided, and can select an explorer for this system (Random or IMGEP explorer). For the IMGEP explorer, many variants of goal space representations are provided in the source code: hand-defined descriptors of the Lenia system, descriptors learned without supervision that can be trained online during exploration (VAE variants and Contrastive Learning variants), and the hierarchical, progressively learned architecture presented in the paper (HOLMES). The software also includes tools and configurations to run experiments, to analyze and compare results, and to run the scripts on supercomputers (SLURM job manager).

- URL: https://github.com/flowersteam/holmes

- Contact: Mayalen Etcheverry

7.1.20 metaACL

- Name: Meta Automatic Curriculum Learning

- Keywords: Machine learning, Git

-

Functional Description:

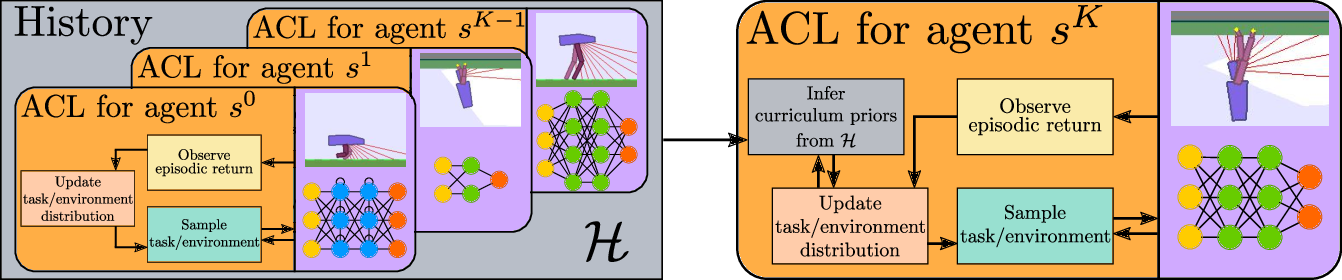

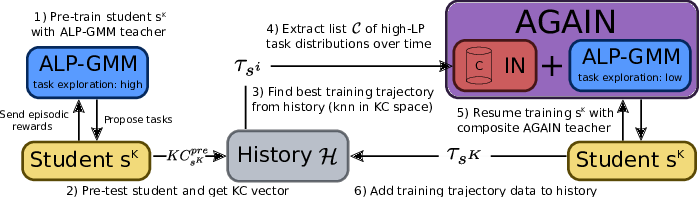

Codebase from our arxiv paper https://arxiv.org/abs/2011.08463

This github repository provides implementations for AGAIN (Alp-Gmm and Inferred Progress Niches), our proposed Meta automatic curriculum learning teacher algorithm.

- URL: https://github.com/flowersteam/meta-acl

- Contact: Remy Portelas

7.1.21 EmComPartObs

- Name: Studying the joint role of partial observability and channel reliability in emergent communication

- Keywords: Multi-agent, Reinforcement learning, Emergent communication

- Functional Description: This source code contains a new grid-world environment where two agents interact to solve a task, multi-agent reinforcement learning algorithms that solve that task, and plotting utilities.

- URL: https://github.com/UnrealLink/emergent_communication

- Publication: hal-03100681

- Contacts: Valentin Villecroze, Clément Moulin-Frier

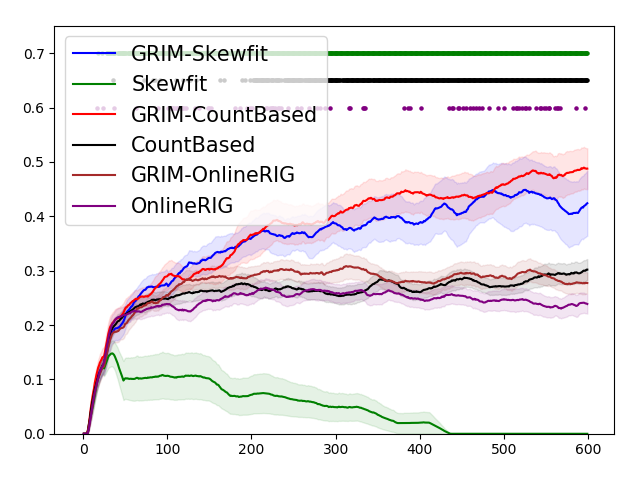

7.1.22 grimgep

- Name: GRIMGEP: Learning Progress for Robust Goal Sampling in Visual Deep Reinforcement Learning

- Keywords: Machine learning, Reinforcement learning, Artificial intelligence, Exploration, Intrinsic motivations, Git, Deep learning

- Functional Description: Source code for the GRIMGEP paper (https://arxiv.org/abs/2008.04388). Contains: (1) an implementation of the GRIMGEP framework on top of three different underlying IMGEPs (Skew-fit, CountBased, OnlineRIG); (2) an image-based 2D environment (PlaygroundRGB).

- URL: https://gitlab.com/Grg/grimgep

- Contacts: Grgur Kovac, Adrien Laversanne-Finot, Pierre-Yves Oudeyer

7.1.23 flowers-OL

- Name: flowers-open-lab

- Keyword: Experimentation

- Functional Description: This web platform, designed for planning and running remote behavioural studies, provides the following features: registration and login of participants; presentation of the instructions for the experiment and collection of informed consent; behavioural tasks and questionnaires; automatic management of each participant's schedule (emails are sent before the participant's appointments); quick and easy addition of new experimental conditions.

- URL: https://flowers-mot.bordeaux.inria.fr/

- Contacts: Alexandr Ten, Maxime Adolphe

8 New results

8.1 Computational Models of Curiosity-Driven Learning in Humans

8.1.1 Testing the Learning Progress Hypothesis in Curiosity-Driven Exploration in Human Adults

Participants: Pierre-Yves Oudeyer, Alexandr Ten.

This project involves a collaboration between the Flowers team and the Cognitive Neuroscience Lab of J. Gottlieb at Columbia Univ. (NY, US), on the understanding and computational modeling of mechanisms of curiosity, attention and active intrinsically motivated exploration in humans.

It is organized around the study of the hypothesis that subjective meta-cognitive evaluation of information gain (or control gain or learning progress) could generate intrinsic reward in the brain (living or artificial), driving attention and exploration independently from material rewards, and allowing for autonomous lifelong acquisition of open repertoires of skills. The project combines expertise about attention and exploration in the brain and a strong methodological framework for conducting experiments with monkeys, human adults and children, together with computational modeling of curiosity/intrinsic motivation and learning.

Such a collaboration paves the way towards what is now a central strategic objective of the Flowers team: designing and conducting experiments in animals and humans that are informed by computational/mathematical theories of information seeking, and that make it possible to test the predictions of these theories.

Context

Curiosity can be understood as a family of mechanisms that evolved to allow agents to maximize their knowledge (or their control) of the useful properties of the world - i.e., the regularities that exist in the world - using active, targeted investigations. In other words, we view curiosity as a decision process that maximizes learning/competence progress (rather than minimizing uncertainty) and assigns value ("interest") to competing tasks based on their epistemic qualities - i.e., their estimated potential to allow discovery and learning about the structure of the world.
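To make the contrast between maximizing learning progress and minimizing uncertainty concrete, here is a minimal sketch of a task-selection rule driven by learning progress. It is not the computational model used in this project: the windowed estimate of progress, the exploration rate `epsilon`, the function names and the three toy tasks are all assumptions chosen for illustration.

```python
import random

def learning_progress(errors, window=5):
    """Absolute change in mean error between the two most recent windows."""
    if len(errors) < 2 * window:
        return 0.0  # not enough history to estimate progress
    older = errors[-2 * window:-window]
    recent = errors[-window:]
    return abs(sum(older) / window - sum(recent) / window)

def choose_task(error_histories, rng, epsilon=0.2):
    """Pick a task with probability proportional to its learning progress."""
    tasks = sorted(error_histories)
    progress = [learning_progress(error_histories[task]) for task in tasks]
    if rng.random() < epsilon or sum(progress) == 0:
        return rng.choice(tasks)  # occasional uniform exploration
    return rng.choices(tasks, weights=progress, k=1)[0]

# Three hypothetical tasks: one being learned, one already mastered,
# and one that is unlearnable (error stays high and never changes).
error_histories = {
    "learnable": [1.0 - 0.08 * k for k in range(10)],
    "mastered": [0.0] * 10,
    "unlearnable": [0.5] * 10,
}
rng = random.Random(0)
picks = [choose_task(error_histories, rng) for _ in range(100)]
print({task: picks.count(task) for task in error_histories})
```

In this toy setting the unlearnable task has the highest remaining error, yet its learning progress is zero, so a progress-based rule mostly ignores it. A rule that chased uncertainty or error alone would keep returning to it.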

Because a curiosity-based system acts in conditions of extreme uncertainty (when the distributions of events may be entirely unknown), there is in general no optimal solution to the question of which exploratory action to take 115, 133, 141. Therefore we hypothesize that, rather than using a single optimization process as has been the case in most previous theoretical work 95, curiosity comprises a family of mechanisms that include simple heuristics related to novelty/surprise and measures of learning progress over longer time scales 130, 71, 122. These different components are related to the subject's epistemic state (knowledge and beliefs) and may be integrated with fluctuating weights that vary according to the task context. Our aim is to quantitatively characterize this dynamic, multi-dimensional system in a computational framework based on models of intrinsically motivated exploration and learning.

Because of its reliance on epistemic currencies, curiosity is also very likely to be sensitive to individual differences in personality and cognitive functions. Humans show well-documented individual differences in curiosity and exploratory drives 113, 140, and rats show individual variation in learning styles and novelty-seeking behaviors 90, but the basis of these differences is not understood. We postulate that an important component of this variation is related to differences in working memory capacity and executive control which, by affecting the encoding and retention of information, will impact the individual's assessment of learning, novelty and surprise and, ultimately, the value they place on these factors 135, 149, 66, 153. To start understanding these relationships, about which nothing is known, we will search for correlations between curiosity and measures of working memory and executive control in the population of children we test in our tasks, analyzed from the point of view of computational models of the underlying mechanisms.

A final premise guiding our research is that essential elements of curiosity are shared by humans and non-human primates. Human beings have a superior capacity for abstract reasoning and building causal models, which is a prerequisite for sophisticated forms of curiosity such as scientific research. However, if the task is adequately simplified, essential elements of curiosity are also found in monkeys 113, 107 and, with adequate characterization, this species can become a useful model system for understanding the neurophysiological mechanisms.

Objectives

Our studies have several highly innovative aspects, both with respect to curiosity and to the traditional research field of each member team.

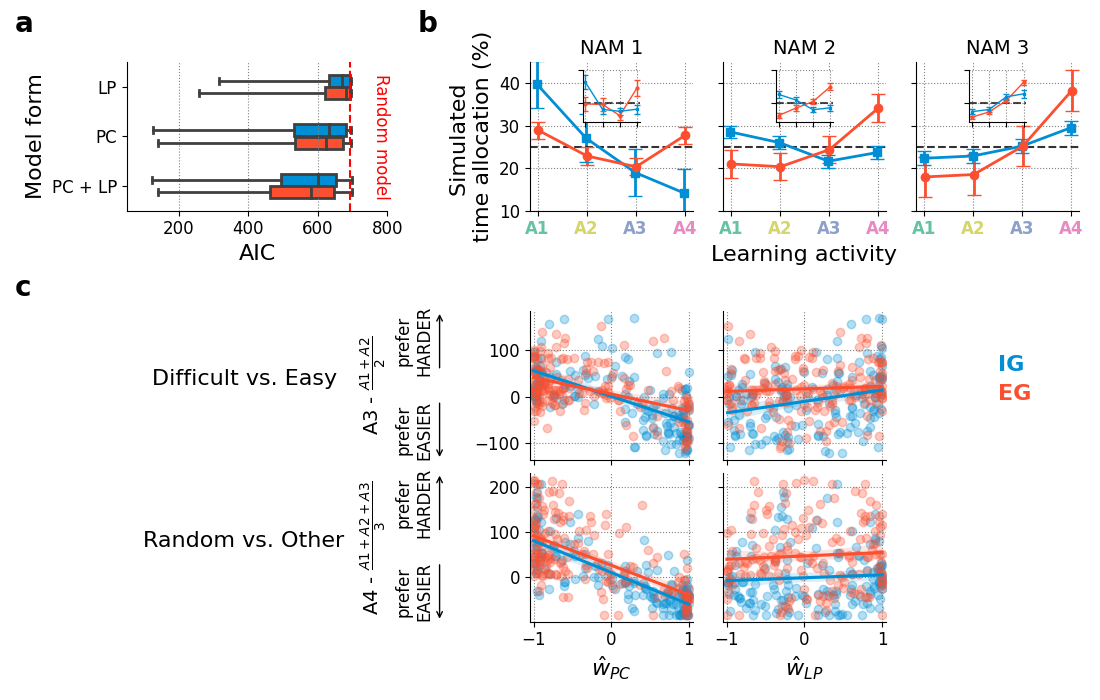

- Linking curiosity with quantitative theories of learning and decision making: While existing investigations examined curiosity in qualitative, descriptive terms, here we propose a novel approach that integrates quantitative behavioral and neuronal measures with computationally defined theories of learning and decision making.