Keywords

Computer Science and Digital Science

- A5.1.3. Haptic interfaces

- A5.1.7. Multimodal interfaces

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.6. Object localization

- A5.4.7. Visual servoing

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.9.2. Estimation, modeling

- A5.10.2. Perception

- A5.10.3. Planning

- A5.10.4. Robot control

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.6. Swarm robotics

- A5.10.7. Learning

- A6.4.1. Deterministic control

- A6.4.3. Observability and Controllability

- A6.4.4. Stability and Stabilization

- A6.4.5. Control of distributed parameter systems

- A6.4.6. Optimal control

- A9.5. Robotics

- A9.7. AI algorithmics

- A9.9. Distributed AI, Multi-agent

Other Research Topics and Application Domains

- B2.4.3. Surgery

- B2.5. Handicap and personal assistances

- B5.1. Factory of the future

- B5.6. Robotic systems

- B8.1.2. Sensor networks for smart buildings

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientists

- Paolo Robuffo Giordano [Team leader, CNRS, Senior Researcher, HDR]

- François Chaumette [Inria, Senior Researcher, HDR]

- Alexandre Krupa [Inria, Researcher, HDR]

- Claudio Pacchierotti [CNRS, Researcher]

- Julien Pettré [Inria, Senior Researcher, HDR]

Faculty Members

- Marie Babel [INSA Rennes, Associate Professor, HDR]

- Quentin Delamare [École normale supérieure de Rennes, Associate Professor]

- Vincent Drevelle [Univ de Rennes I, Associate Professor]

- Maud Marchal [INSA Rennes, Professor, from Mar 2020, HDR]

- Eric Marchand [Univ de Rennes I, Professor, HDR]

Post-Doctoral Fellows

- Pratik Mullick [Inria, from Nov 2020]

- Gennaro Notomista [CNRS, from Nov 2020]

PhD Students

- Vicenzo Abichequer Sangalli [Inria, from Nov 2020]

- Julien Albrand [INSA Rennes, from Oct 2020]

- Javad Amirian [Inria]

- Benoit Antoniotti [Creative Rennes, CIFRE, until Jan 2020]

- Antonin Bernardin [INSA Rennes, from Jul 2020]

- Florian Berton [Inria]

- Pascal Brault [Inria]

- Thomas Chatagnon [Inria, from Nov 2020]

- Adele Colas [Inria]

- Cedric De Almeida Braga [Inria]

- Xavier De Tinguy De La Girouliere [INSA Rennes, from Sep 2020 until Oct 2020]

- Mathieu Gonzalez [Institut de recherche technologique B-com]

- Fabien Grzeskowiak [Inria]

- Alberto Jovane [Inria]

- Glenn Kerbiriou [INSA Rennes, from Dec 2020]

- Lisheng Kuang [China Scholarship Council, from Mar 2020]

- Romain Lagneau [INSA Rennes, from Jul 2020]

- Emilie Leblong [Pôle Saint-Hélier, from Oct 2020]

- Fouad Makiyeh [Inria, from Sep 2020]

- Alexander Oliva [Inria]

- Rahaf Rahal [Univ de Rennes I]

- Maxime Robic [Inria, from Nov 2020]

- Agniva Sengupta [Inria, until Jun 2020]

- Lev Smolentsev [Inria, from Nov 2020]

- John Thomas [Inria, from Dec 2020]

- Guillaume Vailland [INSA Rennes]

- Tairan Yin [Inria, from Nov 2020]

Technical Staff

- Marco Aggravi [CNRS, Engineer]

- Dieudonne Atrevi [Inria, Engineer]

- Julien Bruneau [Inria, Engineer]

- Louise Devigne [INSA Rennes, Engineer]

- Solenne Fortun [Inria, Engineer]

- Thierry Gaugry [INSA Rennes, Engineer, from Jul 2020]

- Guillaume Gicquel [CNRS, Engineer]

- Thomas Howard [CNRS, Engineer]

- Joudy Nader [CNRS, Engineer]

- Noura Neji [Inria, Engineer]

- François Pasteau [INSA Rennes, Engineer]

- Yuliya Patotskaya [Inria, Engineer, from Oct 2020]

- Fabien Spindler [Inria, Engineer]

- Ramana Sundararaman [Inria, Engineer, until Sep 2020]

- Wouter Van Toll [Inria, Engineer]

Interns and Apprentices

- Pierre Antoine Cabaret [INSA Rennes, from Jun 2020 until Sep 2020]

- Johann Courty [Univ de Rennes I, from Mar 2020 until Aug 2020]

- Juliette Grosset [Inria, from Feb 2020 until Jul 2020]

- Albert Khim [Inria, from Feb 2020 until Aug 2020]

- Muhammad Nazeer [Inria, from Feb 2020 until Jul 2020]

- Adrien Vigne [École normale supérieure de Rennes, from May 2020 until Jul 2020]

Administrative Assistants

- Hélène de La Ruée [Inria, until Feb 2020]

- Hélène de La Ruée [Univ de Rennes I, from Mar 2020]

Visiting Scientists

- Riccardo Arciulo [Lazio Region, Italy, until Apr 2020]

- Beatriz Cabrero Daniel [Universitat Pompeu Fabra, Barcelona, until Feb 2020]

- Raul Fernandez Fernandez [Universidad Carlos III de Madrid, Spain, from Sep 2020]

- Jose Gallardo Monroy [National Council of Science and Technology, Mexico, from Mar 2020 until May 2020]

2 Overall objectives

The long-term vision of the Rainbow team is to develop the next generation of sensor-based robots able to navigate and/or interact in complex unstructured environments together with human users. Clearly, the word “together” can have very different meanings depending on the particular context: for example, it can refer to mere co-existence (robots and humans share some space while performing independent tasks), human-awareness (the robots need to be aware of the human state and intentions for properly adjusting their actions), or actual cooperation (robots and humans perform some shared task and need to coordinate their actions).

One could argue that these two goals (a high degree of robot autonomy and a tight involvement of human users) are somewhat in conflict, since higher robot autonomy should imply less (or no) human intervention. However, we believe that our general research direction is well motivated, for the following reasons:

- despite the many advances in robot autonomy, complex and high-level cognitive decisions are still out of reach. In most applications involving tasks in unstructured environments, uncertainty, and interaction with the physical world, human assistance is still necessary, and will most probably remain so for decades to come. On the other hand, robots are extremely capable of autonomously executing specific and repetitive tasks with great speed and precision, and of operating in dangerous/remote environments, while humans possess unmatched cognitive capabilities and world awareness that allow them to take complex and quick decisions;

- the cooperation between humans and robots is often an implicit constraint of the robotic task itself. Consider, for instance, assistive robots supporting injured patients during their physical recovery, or human augmentation devices. It is then important to study proper ways of implementing this cooperation;

- finally, safety regulations can require the presence at all times of a person in charge of supervising and, if necessary, taking direct control of the robotic workers. This is, for example, a common requirement in all applications involving tasks in public spaces, such as autonomous vehicles in crowded areas, or UAVs flying in civil airspace over urban or populated areas.

Within this general picture, the Rainbow activities will particularly focus on (shared) cooperation between robots and humans, pursuing the following vision: on the one hand, empower robots with a large degree of autonomy, allowing them to effectively operate in non-trivial environments (e.g., outside completely defined factory settings); on the other hand, include human users in the loop, giving them (partial and bilateral) control over some aspects of the overall robot behavior. We plan to address these challenges from the methodological, algorithmic and application-oriented perspectives. The Rainbow activities are articulated along four research axes: three supporting axes (Optimal and Uncertainty-Aware Sensing; Advanced Sensor-based Control; Haptics for Robotics Applications), meant to develop the methods, algorithms and technologies needed for realizing the central theme of Shared Control of Complex Robotic Systems.

3 Research program

3.1 Main Vision

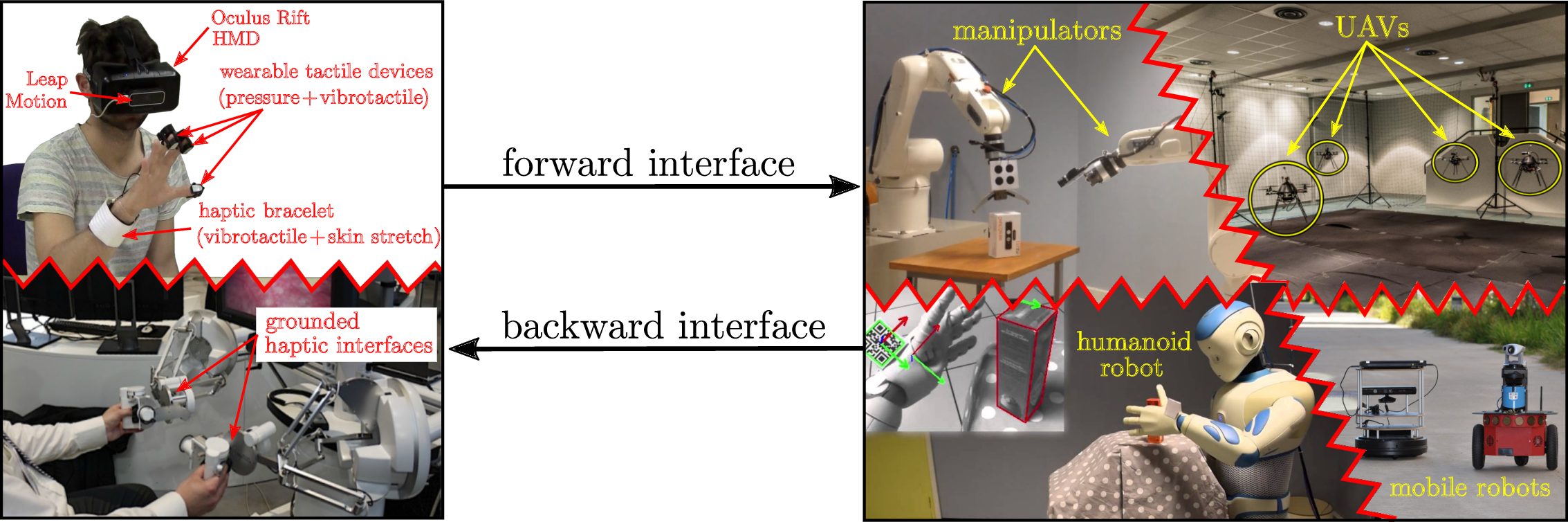

The vision of Rainbow (and its foreseen applications) raises several general scientific challenges: a high level of autonomy for complex robots in complex (unstructured) environments; forward interfaces allowing an operator to give high-level commands to the robot; backward interfaces for informing the operator about the robot status; and user studies for assessing the best interfacing, which will clearly depend on the particular task/situation. Within Rainbow, we plan to tackle these challenges at different levels of depth:

- the methodological and algorithmic side of the sought human-robot interaction will be the main focus of Rainbow. Here, we will be interested in advancing the state of the art in sensor-based online planning, control and manipulation for mobile/fixed robots. For instance, while most classical control approaches (especially sensor-based ones) have been essentially reactive, we believe that less myopic strategies based on online/reactive trajectory optimization will be needed for the future Rainbow activities. The core ideas of Model-Predictive Control (also known as Receding Horizon Control) or, more generally, numerical optimal control methods will play a role in the Rainbow activities, allowing the robots to reason/plan over some future time window and better cope with constraints. We will also consider extending classical sensor-based motion control/manipulation techniques to more realistic scenarios, such as deformable/flexible objects (“Advanced Sensor-based Control” axis). Finally, it will also be important to devote research efforts to the field of Optimal Sensing, in the sense of generating (again) trajectories that can optimize the state estimation problem in the presence of scarce sensory inputs and/or non-negligible measurement and process noise, which is especially the case for mobile robots (“Optimal and Uncertainty-Aware Sensing” axis). We also aim at addressing the case of coordination between a single human user and multiple robots, where the autonomy part plays an even more crucial role (no human can control multiple robots at once, thus a high degree of autonomy will be required by the robot group for executing the human commands);

- the interfacing side will also be a focus of the Rainbow activities. As explained above, we will be interested in both the forward (human-to-robot) and backward (robot-to-human) interfaces. The forward interface will be mainly addressed from the algorithmic point of view, i.e., how to map the few degrees of freedom available to a human operator (usually in the order of 3–4) into complex commands for the controlled robot(s). This mapping will typically be mediated by an “AutoPilot” onboard the robot(s) for autonomously assessing whether the commands are feasible and, if not, how to modify them as little as possible (“Advanced Sensor-based Control” axis).

The backward interface will, instead, mainly consist of visual/haptic feedback for the operator. Here, we aim at exploiting our expertise in using force cues for informing an operator about the status of the remote robot(s). However, the sole use of classical grounded force-feedback devices (e.g., the typical force-feedback joysticks) will not be enough, due to the different kinds of information that will have to be provided to the operator. In this context, the growing interest in wearable haptic interfaces makes them a very promising direction, which will be investigated in depth (these include, e.g., devices able to provide vibrotactile information to the fingertips, wrist, or other parts of the body). The main challenges in these activities will be the mechanical design (and construction) of suitable wearable interfaces for the tasks at hand, and the generation of force cues for the operator: the force cues will be a (complex) function of the robot state, therefore motivating research in algorithms for mapping the robot state into a few variables (the force cues) (“Haptics for Robotics Applications” axis);

- the evaluation side, which will assess the proposed interfaces through user studies or acceptability studies with human subjects. Although this activity will not be a main focus of Rainbow (complex user studies are beyond the scope of our core expertise), we will nevertheless devote some effort to achieving a reasonable level of user evaluation by applying standard statistical analysis based on psychophysical procedures (e.g., randomized tests and ANOVA). This will be particularly true for the activities involving smart wheelchairs, which are intended to be used by human users and to operate inside human crowds. Therefore, we will be interested in gaining some understanding of how semi-autonomous robots (a wheelchair in this example) can predict human intention, and of how humans react to a semi-autonomous mobile robot.
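The idea of an “AutoPilot” that modifies an infeasible command as little as possible can be illustrated, under simplifying assumptions, as a Euclidean projection of the operator's command onto the feasible set. The Python sketch below is hypothetical and is not the Rainbow AutoPilot: it assumes the constraints are linear half-spaces a·u ≤ b on a low-dimensional velocity command (e.g., speed limits or a keep-out direction near an obstacle), and it uses Dykstra's alternating projection algorithm, which converges to the closest feasible command.

```python
from typing import List, Sequence, Tuple

HalfSpace = Tuple[Sequence[float], float]  # (a, b) encodes the constraint a . u <= b


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def project_halfspace(u: Sequence[float], a: Sequence[float], b: float) -> List[float]:
    """Closest point to u (Euclidean norm) in the half-space {v : a . v <= b}."""
    violation = dot(a, u) - b
    if violation <= 0.0:
        return list(u)  # already feasible: no modification
    scale = violation / dot(a, a)
    return [ui - scale * ai for ui, ai in zip(u, a)]


def least_modified_command(u_h: Sequence[float], constraints: List[HalfSpace],
                           sweeps: int = 500) -> List[float]:
    """Dykstra's algorithm: converges to the projection of the operator command
    u_h onto the intersection of all half-spaces (hypothetical constraint set)."""
    x = list(u_h)
    corrections = [[0.0] * len(u_h) for _ in constraints]
    for _ in range(sweeps):
        for i, (a, b) in enumerate(constraints):
            y = [xi + pi for xi, pi in zip(x, corrections[i])]
            x = project_halfspace(y, a, b)
            corrections[i] = [yi - xi for yi, xi in zip(y, x)]
    return x


if __name__ == "__main__":
    # Hypothetical limits: |vx| <= 1, |vy| <= 1, and vx + vy <= 1.2.
    limits = [((1.0, 0.0), 1.0), ((-1.0, 0.0), 1.0),
              ((0.0, 1.0), 1.0), ((0.0, -1.0), 1.0),
              ((1.0, 1.0), 1.2)]
    print(least_modified_command([2.0, 0.5], limits))
```

For the command (2, 0.5), both vx ≤ 1 and vx + vy ≤ 1.2 are active at the optimum, so the result is approximately (1.0, 0.2): simply clipping vx to 1 would still violate the second constraint. A quadratic-program solver would be the usual choice for larger or time-varying constraint sets; Dykstra's method is used here only because it fits in a few dependency-free lines.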

Figure 1 illustrates the prototypical activities foreseen in Rainbow. On the right-hand side, complex robots (dual manipulators, humanoids, single/multiple mobile robots) need to perform some task with a high degree of autonomy. On the left-hand side, a human operator gives some high-level commands and receives visual/haptic feedback aimed at informing her/him as well as possible about the robot status. Again, the main challenges that Rainbow will tackle to address these issues are (in order of relevance): methods and algorithms, mostly based on first-principle modeling and, when possible, on numerical methods for online/reactive trajectory generation, for endowing the robots with high autonomy; design and implementation of visual/haptic cues for interfacing the human operator with the robots, with special attention to novel combinations of grounded/ungrounded (wearable) haptic devices; user and acceptability studies.

3.2 Main Components

Hereafter, we give a summary description of the four research axes of Rainbow.

3.2.1 Optimal and Uncertainty-Aware Sensing

Future robots will need to have a large degree of autonomy for, e.g., interpreting the sensory data for accurate estimation of the robot and world state (which can possibly include the human users), and for devising motion plans able to take into account many constraints (actuation, sensor limitations, environment), including the state estimation accuracy (i.e., how well the robot/environment state can be reconstructed from the sensed data). In this context, we will be particularly interested in:

- devising trajectory optimization strategies able to maximize some norm of the information gain gathered along the trajectory (and with the available sensors). This can be seen as an instance of Active Sensing, with the main focus on online/reactive trajectory optimization strategies able to take into account several requirements/constraints (sensing/actuation limitations, noise characteristics). We will also be interested in the coupling between optimal sensing and the concurrent execution of additional tasks (e.g., navigation, manipulation);

- formal methods for guaranteeing the accuracy of localization/state estimation in mobile robotics, mainly exploiting tools from interval analysis. The appeal of these methods is their ability to provide possibly conservative but guaranteed bounds on the best accuracy one can obtain with a given robot/sensor pair; these bounds can thus be used for planning purposes or for system design (choice of the best sensor suite for a given robot/task);

- localization/tracking of objects with poor/unknown or deformable shape, which will be of paramount importance for allowing robots to estimate the state of “complex objects” (e.g., human tissues in medical robotics, elastic materials in manipulation) and to control their pose or interaction with the objects of interest.
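One common way to make “some norm of the information gain” concrete is the D-optimality criterion, i.e., the log-determinant of the Fisher information matrix. The Python sketch below is a simplified, greedy illustration resting on assumptions that are not part of the text above: a 2D robot, range-only measurements to known landmarks with Gaussian noise of standard deviation `sigma`, a hypothetical sensing radius `max_range`, and a small isotropic `prior` that keeps the determinant positive. It scores a finite set of candidate viewpoints rather than optimizing a full trajectory online.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def range_fim(x: Point, landmarks: Sequence[Point], sigma: float,
              max_range: float) -> List[List[float]]:
    """Fisher information on the 2D position x from range-only measurements
    (Gaussian noise, std sigma) to every landmark within max_range."""
    fim = [[0.0, 0.0], [0.0, 0.0]]
    w = 1.0 / sigma ** 2
    for lx, ly in landmarks:
        dx, dy = x[0] - lx, x[1] - ly
        d = math.hypot(dx, dy)
        if d == 0.0 or d > max_range:
            continue  # landmark not usable from this position
        ux, uy = dx / d, dy / d  # gradient of the range w.r.t. the position
        fim[0][0] += w * ux * ux
        fim[0][1] += w * ux * uy
        fim[1][0] += w * ux * uy
        fim[1][1] += w * uy * uy
    return fim


def d_optimality(fim: List[List[float]], prior: float) -> float:
    """log det(prior * I + FIM): larger means a smaller uncertainty ellipse."""
    a, b = fim[0][0] + prior, fim[0][1]
    c, d = fim[1][0], fim[1][1] + prior
    return math.log(a * d - b * c)


def best_next_viewpoint(candidates: Sequence[Point], landmarks: Sequence[Point],
                        sigma: float = 0.1, max_range: float = 20.0,
                        prior: float = 1e-3) -> Point:
    """Greedy choice of the most informative candidate (hypothetical helper)."""
    return max(candidates,
               key=lambda p: d_optimality(range_fim(p, landmarks, sigma, max_range), prior))


if __name__ == "__main__":
    print(best_next_viewpoint([(5.0, 0.0), (5.0, 5.0)], [(0.0, 0.0), (10.0, 0.0)]))
```

With landmarks at (0, 0) and (10, 0), the viewpoint (5, 5) sees them along orthogonal directions and is preferred over (5, 0), where both range gradients are collinear and the lateral coordinate is left essentially unobservable.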

3.2.2 Advanced Sensor-based Control

One of the main competences of the previous Lagadic team has been, generally speaking, sensor-based control, i.e., how to exploit (typically onboard) sensors for controlling the motion of fixed/ground robots. The main emphasis has been on devising ways to directly couple the robot motion with the sensor outputs, and then inverting this mapping to drive the robot towards a configuration specified as a desired sensor reading (thus, directly in sensor space). This general idea has been applied in very different contexts: mainly standard vision (hence the Visual Servoing keyword), but also audio, ultrasound imaging, and RGB-D.
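In the classical image-based formulation of this idea, the feature error e = s - s* evolves as de/dt = L_s v, where L_s is the interaction matrix and v the camera velocity, and the control law v = -λ L_s^+ e is used to obtain an (approximately) exponential decrease of e. The Python sketch below illustrates this law in a deliberately reduced setting, which is our assumption: a translation-only camera, two point features in normalized image coordinates, and depths known from the simulation. It uses only the standard library (no ViSP calls) and computes L_s^+ e through the normal equations.

```python
from typing import List, Sequence


def det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the 3x3 linear system a x = b with Cramer's rule (a non-singular)."""
    d = det3(a)
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def ibvs_velocity(points, s_star, lam):
    """Translational camera velocity v = -lam * L_s^+ (s - s*) for point features.
    points: (X, Y, Z) in the current camera frame. Returns (v, |e|)."""
    L, e = [], []
    for (X, Y, Z), (xs, ys) in zip(points, s_star):
        x, y = X / Z, Y / Z  # normalized image coordinates
        L.append([-1.0 / Z, 0.0, x / Z])  # translational part of L_s for x
        L.append([0.0, -1.0 / Z, y / Z])  # translational part of L_s for y
        e.extend([x - xs, y - ys])
    # L_s^+ e = (L^T L)^{-1} L^T e (L has full column rank here).
    ltl = [[sum(row[i] * row[j] for row in L) for j in range(3)] for i in range(3)]
    lte = [sum(row[i] * ek for row, ek in zip(L, e)) for i in range(3)]
    v = [-lam * c for c in solve3(ltl, lte)]
    return v, sum(ek * ek for ek in e) ** 0.5


def simulate(lam: float = 1.0, dt: float = 0.01, steps: int = 1000):
    # Hypothetical scene: two points expressed in the initial camera frame.
    points = [[0.2, 0.1, 1.5], [-0.1, 0.05, 1.5]]
    # Desired features, as seen after translating the camera by (0.1, 0.05, 0.5).
    s_star = [(0.1, 0.05), (-0.2, 0.0)]
    cam = [0.0, 0.0, 0.0]
    err = float("inf")
    for _ in range(steps):
        v, err = ibvs_velocity(points, s_star, lam)
        for k in range(3):
            cam[k] += v[k] * dt  # integrate the camera displacement
            for p in points:
                p[k] -= v[k] * dt  # a camera translation moves the points the other way
    return cam, err


if __name__ == "__main__":
    cam, err = simulate()
    print("camera displacement:", [round(c, 4) for c in cam], "feature error:", err)
```

In this reduced case the camera converges to the displacement (0.1, 0.05, 0.5) that produces the desired features. Handling rotations, unknown depths or other feature types requires the full 6-column interaction matrix and depth estimates, which is where a library such as ViSP is normally used.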

Using sensors for controlling the robot motion will clearly be a central topic of the Rainbow team too, since (especially onboard) sensing is a main characteristic of any future robotics application, which should typically operate in unstructured environments and thus mainly rely on its own ability to sense the world. We then naturally aim at making the best out of the previous Lagadic experience in sensor-based control for proposing new advanced ways of exploiting sensed data for, roughly speaking, controlling the motion of a robot. In this respect, we plan to work on the following topics:

- “direct/dense methods”, which try to directly exploit the raw sensory data in computing the control law for positioning/navigation tasks. The advantage of these methods is that they require little data pre-processing, which can minimize feature extraction errors and, in general, improve the overall robustness/accuracy (since all the available data is used by the motion controller);

- sensor-based interaction with objects of unknown/deformable shapes, for gaining the ability to manipulate, e.g., flexible objects from the acquired sensed data (e.g., controlling online a needle being inserted in a flexible tissue);

- sensor-based model predictive control, by developing online/reactive trajectory optimization methods able to plan feasible trajectories for robots subject to sensing/actuation constraints, with the possibility of exploiting (onboard) sensing for continuously replanning the optimal trajectory over some future time horizon. These methods will play an important role when dealing with complex robots affected by complex sensing/actuation constraints, for which purely reactive strategies (as in most of the previous Lagadic works) are not effective. Furthermore, the coupling with the aforementioned optimal sensing will also be considered;

- multi-robot decentralized estimation and control, with the aim of devising, again, sensor-based strategies for groups of multiple robots needing to maintain a formation or perform navigation/manipulation tasks. Here, the challenges come from the need of devising “simple” decentralized and scalable control strategies in the presence of complex sensing constraints (e.g., limited field of view and occlusions when using onboard cameras). Also, locally estimating global quantities (e.g., a common frame of reference, or global properties of the formation such as connectivity or rigidity) will be a line of active research.
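Locally estimating a global quantity is often built on consensus protocols. As a hedged illustration (not necessarily the estimator used in Rainbow), the Python sketch below runs discrete-time average consensus: each robot repeatedly moves its estimate towards those of its communication neighbors, and on a connected undirected graph all estimates converge to the network-wide average. The step size `eps` and the example graph are assumptions; `eps < 1/max_degree` is a simple sufficient condition for convergence.

```python
from typing import Dict, List


def average_consensus(values: List[float], neighbors: Dict[int, List[int]],
                      eps: float, steps: int) -> List[float]:
    """Each robot i only uses its neighbors' estimates:
    x_i <- x_i + eps * sum_{j in N(i)} (x_j - x_i).
    On a connected undirected graph the sum of the estimates is preserved,
    so they all converge to the average of the initial values."""
    x = list(values)
    for _ in range(steps):
        # The comprehension reads the previous iterate x (synchronous update).
        x = [xi + eps * sum(x[j] - xi for j in neighbors[i])
             for i, xi in enumerate(x)]
    return x


if __name__ == "__main__":
    # Four robots on a line graph 0-1-2-3 (max degree 2, so eps must be < 0.5).
    line = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
    print(average_consensus([1.0, 2.0, 3.0, 10.0], line, eps=0.3, steps=200))
```

Every robot ends up with an estimate of the average (4.0 here) without any central node. The same mechanism, applied to suitably chosen local quantities, is a typical ingredient when a formation needs to agree on a common global value.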

3.2.3 Haptics for Robotics Applications

In the envisaged shared cooperation between human users and robots, the sensory channel most often exploited (besides vision) to inform the human users is the force/kinesthetic one (in general, the sense of touch and of forces applied to the human hand or limbs). Therefore, part of our activities will be devoted to studying and advancing haptic cueing algorithms and interfaces for providing feedback to the users during the execution of some shared task. We will consider:

- multi-modal haptic cueing for general teleoperation applications, by studying how to convey information through the kinesthetic and cutaneous channels. Indeed, most haptic-enabled applications only involve kinesthetic cues, e.g., the forces/torques that can be felt by grasping a force-feedback joystick/device. These cues are very informative about, e.g., preferred/forbidden motion directions, but are also inherently limited in their resolution, since the kinesthetic channel can easily become overloaded (when too much information is compressed in a single cue). In recent years, the advent of novel cutaneous devices able to, e.g., provide vibrotactile feedback on the fingertips or skin, has proven to be a viable solution to complement the classical kinesthetic channel. We will then study how to combine these two sensory modalities for different prototypical application scenarios, e.g., 6-dof teleoperation of manipulator arms, virtual fixture approaches, and remote manipulation of (possibly deformable) objects;

- in the particular context of medical robotics, we plan to address the problem of providing haptic cues for typical medical robotics tasks, such as semi-autonomous needle insertion and robotic surgery, by exploring the use of kinesthetic feedback for rendering the mechanical properties of the tissues, and vibrotactile feedback for providing guidance about pre-planned paths (with the aim of increasing the usability/acceptability of this technology in the medical domain);

- finally, in the context of multi-robot control, we would like to explore how to use the haptic channel for providing information about the status of multiple robots executing a navigation or manipulation task. In this case, the problem is (even more) how to map (or compress) information about many robots into a few haptic cues. We plan to use specialized devices, such as actuated exoskeleton gloves able to provide cues to each fingertip of a human hand, or to resort to “compression” methods inspired by hand postural synergies for providing coordinated cues representative of a few (but complex) motions of the multi-robot group, e.g., coordinated motions (translations/expansions/rotations) or collective grasping/transporting.
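As a hypothetical illustration of compressing the state of many robots into a few cue variables (not the synergy-based method itself), the Python sketch below fits the planar velocities of a robot group with a translation t, an expansion rate s and a rotation rate w about the group centroid, i.e., v_i ≈ t + s r_i + w J r_i with r_i = p_i - centroid and J r = (-r_y, r_x). Whatever the group size, the output is four scalars that could then drive haptic cues; the mapping from these scalars to actual device commands is left open.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def compress_group_motion(positions: List[Vec2],
                          velocities: List[Vec2]) -> Tuple[Vec2, float, float]:
    """Least-squares fit of v_i ~ t + s * r_i + w * J r_i.
    Because sum(r_i) = 0 and r_i . (J r_i) = 0, the normal equations decouple:
    t is the mean velocity, s and w are ratios of weighted sums."""
    n = len(positions)
    if n == 0 or n != len(velocities):
        raise ValueError("need one velocity per robot position")
    cx = sum(p[0] for p in positions) / n
    cy = sum(p[1] for p in positions) / n
    tx = sum(v[0] for v in velocities) / n
    ty = sum(v[1] for v in velocities) / n
    num_s = num_w = den = 0.0
    for (px, py), (vx, vy) in zip(positions, velocities):
        rx, ry = px - cx, py - cy  # position relative to the centroid
        dvx, dvy = vx - tx, vy - ty  # velocity relative to the mean motion
        num_s += rx * dvx + ry * dvy  # radial component -> expansion
        num_w += rx * dvy - ry * dvx  # tangential component -> rotation
        den += rx * rx + ry * ry
    if den == 0.0:
        return (tx, ty), 0.0, 0.0  # all robots at the same point
    return (tx, ty), num_s / den, num_w / den


if __name__ == "__main__":
    square = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
    # Pure rotation of the formation at 0.5 rad/s about its centroid.
    vel = [(-0.5 * y, 0.5 * x) for x, y in square]
    print(compress_group_motion(square, vel))
```

The decomposition keeps only the collective part of the motion: individual deviations from the fitted field are discarded, which is precisely the kind of information loss that a compression towards a few haptic cues accepts.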

3.2.4 Shared Control of Complex Robotics Systems

This final and main research axis will exploit the methods, algorithms and technologies developed in the previous axes for realizing applications involving complex semi-autonomous robots operating in complex environments together with human users. The leitmotiv is to realize advanced shared control paradigms, which essentially aim at blending robot autonomy and the user's intervention in an optimal way, so as to exploit the best of both worlds (robot accuracy/sensing/mobility/strength and human cognitive capabilities). A common theme will be where to “draw the line” between robot autonomy and human intervention: obviously, there is no general answer, and any design choice will depend on the particular task at hand and/or on the technological/algorithmic possibilities of the robotic system under consideration.
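A simple way to formalize an “optimal” blend, given here only as an illustrative assumption, is to choose the command u that minimizes w_h |u - u_h|^2 + w_r |u - u_r|^2, where u_h is the operator's command, u_r the command proposed by the autonomous controller, and w_h, w_r > 0 are arbitration weights. Setting the gradient to zero gives u = α u_h + (1 - α) u_r with α = w_h / (w_h + w_r). The Python sketch below implements this closed form; how the weights are chosen (e.g., from task confidence or proximity to obstacles) is deliberately left open, since it is exactly the “where to draw the line” question discussed above.

```python
from typing import List, Sequence


def blend_commands(u_h: Sequence[float], u_r: Sequence[float],
                   w_h: float, w_r: float) -> List[float]:
    """Return argmin_u  w_h*|u - u_h|^2 + w_r*|u - u_r|^2  (closed form)."""
    if w_h <= 0.0 or w_r <= 0.0:
        raise ValueError("arbitration weights must be positive")
    if len(u_h) != len(u_r):
        raise ValueError("commands must have the same dimension")
    alpha = w_h / (w_h + w_r)  # share of authority given to the human
    return [alpha * h + (1.0 - alpha) * r for h, r in zip(u_h, u_r)]


if __name__ == "__main__":
    # Operator pushes forward, autonomy suggests veering left; the human has 3x the weight.
    print(blend_commands([1.0, 0.0], [0.0, 1.0], w_h=3.0, w_r=1.0))
```

With these weights the blended command is [0.75, 0.25]. Linear blending of this kind is only one arbitration scheme among many; it does not by itself guarantee that the result satisfies the robot constraints, which is why it is often paired with a feasibility check on the final command.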

A prototypical envisaged application, exploiting and combining the previous three research axes, is as follows: a complex robot (e.g., a two-arm system, a humanoid robot, a multi-UAV group) needs to operate in an environment exploiting its onboard sensors (in general, vision as the main exteroceptive one) and to deal with many constraints (limited actuation, limited sensing, complex kinematics/dynamics, obstacle avoidance, interaction with difficult-to-model entities such as surrounding people, and so on). The robot must then possess a fairly large autonomy for interpreting and exploiting the sensed data in order to estimate its own state and that of the environment (“Optimal and Uncertainty-Aware Sensing” axis), and for planning its motion in order to fulfil the task (e.g., navigation, manipulation) while coping with all the robot/environment constraints. Therefore, advanced control methods able to exploit the sensory data to the fullest, and to cope online with constraints in an optimal way (by, e.g., continuously replanning and predicting over a future time horizon), will be needed (“Advanced Sensor-based Control” axis), with a possible (and interesting) coupling with the sensing part for simultaneously optimizing the state estimation process. Finally, a human operator will typically be in charge of providing high-level commands (e.g., where to go, what to look at, what to grasp and where) that will then be autonomously executed by the robot, with possible local modifications because of the various (local) constraints. At the same time, the operator will also receive online visual/force cues informing her/him of, in general, how well her/his commands are executed and whether the robot would prefer or suggest other plans (because of local constraints that are not of the operator's concern). This information will have to be visually and haptically rendered with an optimal combination of cues that will depend on the particular application (“Haptics for Robotics Applications” axis).

4 Application domains

The activities of Rainbow obviously fall within the scope of Robotics. Broadly speaking, our main interest is in devising novel/efficient algorithms (for estimation, planning, control, haptic cueing, human interfacing, etc.) that are general and applicable to many different robotic systems of interest, depending on the particular application/case study. For instance, we plan to consider:

- applications involving remote telemanipulation with one or two robot arms, where the arm(s) will need to coordinate their motion for approaching/grasping objects of interest under the guidance of a human operator;

- applications involving single and multiple mobile robots for spatial navigation tasks (e.g., exploration, surveillance, mapping). In the multi-robot case, the high redundancy of the multi-robot group will motivate research in autonomously exploiting this redundancy for facilitating the task (e.g., optimizing the self-localization or the environment mapping) while following the human commands, and, vice versa, in informing the operator about the status of the multi-robot group. In the single-robot case, the possible combination with manipulation devices (e.g., arms on a wheeled robot) will motivate research into remote tele-navigation and tele-manipulation;

- applications involving medical robotics, in which the “manipulators” are replaced by the typical tools used in medical applications (ultrasound probes, needles, cutting scalpels, and so on) for semi-autonomous probing and intervention;

- applications involving a direct physical “coupling” between human users and robots (rather than a “remote” interfacing), such as assistive devices used for easing the life of people with impairments. Here, we will be primarily interested in, e.g., safety and usability issues, and will also touch upon some aspects of user acceptability.

These directions are, in our opinion, very promising, since present and future robotics applications are expected to address increasingly complex tasks: for instance, it is becoming mandatory to empower robots with the ability to predict the future (to some extent) while explicitly dealing with uncertainties from sensing or actuation; to safely and effectively interact with human supervisors (or collaborators) for accomplishing shared tasks; to learn or adapt to dynamic environments from little prior knowledge; to exploit the environment (e.g., obstacles) rather than avoiding it (a typical example is a humanoid robot in a multi-contact scenario for facilitating walking on rough terrain); to optimize the onboard resources for large-scale monitoring tasks; and to cooperate with other robots either by direct sensing/communication, or via some shared database (the “cloud”).

While no single lab can reasonably address all these theoretical/algorithmic/technological challenges, we believe that our research agenda can give some concrete contributions to the next generation of robotics applications.

5 Highlights of the year

5.1 Awards

- Best 2019 IEEE Robotics and Automation Magazine Paper Award, received at ICRA 2020 [2].

- Best Demonstration Award, Eurohaptics 2020, Leiden, The Netherlands [61].

- Best IEEE Trans. Haptics Short Paper, First Honorable Mention, IEEE Haptics Symposium (HAPTICS), Washington DC, USA (held online due to COVID-19) [26].

- Best IEEE Trans. Haptics Short Paper, Second Honorable Mention, IEEE Haptics Symposium (HAPTICS), Washington DC, USA (held online due to COVID-19) [29].

- Best Video Presentation, Honorable Mention, IEEE Haptics Symposium (HAPTICS), Washington DC, USA (held online due to COVID-19) [16].

- Best Presentation Award, IEEE International Conference on Information and Computer Technologies (ICICT), San Jose, USA [45].

- Best Paper Award, EuroVR International Conference, Valencia, Spain [35].

5.2 Highlights

- Alexandre Krupa received the “Distinguished Service Award” for best Associate Editor of the IEEE Robotics and Automation Letters at the IEEE ICRA 2020 conference, for his service during the period 2015 to 2019.

- P. Robuffo Giordano is the coordinator of the “MULTISHARED” project in the CHAIRE IA Programme PNIA 2019 – AAP Chaires de recherche et d'enseignement en Intelligence Artificielle (call for research and teaching chairs in Artificial Intelligence).

- J. Pettré is the coordinator of the H2020 FET Open project “CrowdDNA”, launched in November 2020.

6 New software and platforms

6.1 New software

6.1.1 HandiViz

- Name: Driving assistance of a wheelchair

- Keywords: Health, Persons attendant, Handicap

- Functional Description: The HandiViz software provides a semi-autonomous navigation framework for a wheelchair, relying on visual servoing. It has been registered with the APP (“Agence de Protection des Programmes”, the French software protection agency) as an INSA software (IDDN.FR.001.440021.000.S.P.2013.000.10000) and is released under the GPL license.

- Contacts: François Pasteau, Marie Babel

- Participants: François Pasteau, Marie Babel

- Partner: INSA Rennes

6.1.2 UsTk

- Name: Ultrasound toolkit for medical robotics applications guided from ultrasound images

- Keywords: Echographic imagery, Image reconstruction, Medical robotics, Visual tracking, Visual servoing (VS), Needle insertion

- Functional Description: UsTK, standing for Ultrasound Toolkit, is a cross-platform extension of ViSP software dedicated to 2D and 3D ultrasound image processing and visual servoing based on ultrasound images. Written in C++, UsTK architecture provides a core module that implements all the data structures at the heart of UsTK, a grabber module that allows acquiring ultrasound images from an Ultrasonix or a Sonosite device, a GUI module to display data, an IO module for providing functionalities to read/write data from a storage device, and a set of image processing modules to compute the confidence map of ultrasound images, generate elastography images, track a flexible needle in sequences of 2D and 3D ultrasound images and track a target image template in sequences of 2D ultrasound images. All these modules were implemented on several robotic demonstrators to control the motion of an ultrasound probe or a flexible needle by ultrasound visual servoing.

- URL: https://ustk.inria.fr

- Authors: Alexandre Krupa, Fabien Spindler, Marc Pouliquen, Pierre Chatelain, Jason Chevrie

- Contacts: Alexandre Krupa, Fabien Spindler

- Participants: Alexandre Krupa, Fabien Spindler

- Partners: Inria, Université de Rennes 1

6.1.3 ViSP

- Name: Visual servoing platform

- Keywords: Augmented reality, Computer vision, Robotics, Visual servoing (VS), Visual tracking

- Scientific Description: Since 2005, we have been developing and releasing ViSP [1], an open-source library available from https://visp.inria.fr. ViSP, standing for Visual Servoing Platform, allows prototyping and developing applications using visual tracking and visual servoing techniques, which are at the heart of the Rainbow research. ViSP was designed to be hardware-independent, simple to use, expandable and cross-platform. ViSP allows designing vision-based tasks for eye-in-hand and eye-to-hand systems from the most classical visual features used in practice. It involves a large set of elementary positioning tasks with respect to various visual features (points, segments, straight lines, circles, spheres, cylinders, image moments, pose...) that can be combined together, and image processing algorithms that allow tracking of visual cues (dots, segments, ellipses...), 3D model-based tracking of known objects, or template tracking. Simulation capabilities are also available.

[1] E. Marchand, F. Spindler, F. Chaumette. ViSP for visual servoing: a generic software platform with a wide class of robot control skills. IEEE Robotics and Automation Magazine, Special Issue on "Software Packages for Vision-Based Control of Motion", P. Oh, D. Burschka (Eds.), 12(4):40-52, December 2005.

- Functional Description: ViSP provides simple ways to integrate and validate new algorithms with already existing tools. It follows a module-based software engineering design where data types, algorithms, sensors, viewers and user interaction are made available. Written in C++, ViSP is based on open-source cross-platform libraries (such as OpenCV) and builds with CMake. Several platforms are supported, including OSX, iOS, Windows and Linux. ViSP's online documentation eases learning: more than 300 fully documented classes organized in 17 modules, more than 408 examples and 88 tutorials are provided to the user. ViSP is released under a dual licensing model. It is open-source with a GNU GPLv2 or GPLv3 license, and a professional edition license that replaces the GNU GPL is also available.

- URL: http://visp.inria.fr

- Authors: Fabien Spindler, François Chaumette, Aurélien Yol, Éric Marchand, Souriya Trinh

- Contact: Fabien Spindler

- Participants: Éric Marchand, Fabien Spindler, François Chaumette

- Partners: Inria, Université de Rennes 1
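The elementary positioning tasks that ViSP provides rest on the classical image-based visual servoing law v = -λ L⁺ (s - s*), where L is the interaction matrix of the visual features s. The following is a minimal NumPy sketch for point features only, assuming normalized image coordinates and known (or estimated) depths Z. The function names are hypothetical, and this is not ViSP's C++ API, which exposes the same idea through its own classes (e.g. `vpServo`).

```python
import numpy as np


def point_interaction_matrix(x, y, Z):
    """2x6 interaction matrix of a normalized image point (x, y) at depth Z.

    Columns correspond to the camera twist (vx, vy, vz, wx, wy, wz).
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(s, s_star, depths, lam=0.5):
    """Classical IBVS law v = -lam * pinv(L) @ (s - s*).

    s, s_star: (N, 2) normalized point coordinates (current and desired).
    depths: (N,) depths used to build the interaction matrix.
    Returns the 6-vector camera twist.
    """
    s = np.asarray(s, dtype=float)
    s_star = np.asarray(s_star, dtype=float)
    # Stack one 2x6 block per point into a 2N x 6 matrix.
    L = np.vstack([point_interaction_matrix(x, y, Z)
                   for (x, y), Z in zip(s, depths)])
    error = (s - s_star).reshape(-1)
    return -lam * np.linalg.pinv(L) @ error
```

With four points already at their desired positions the commanded twist is zero; the Moore-Penrose pseudo-inverse keeps the law defined in the usual overdetermined case (8 rows, 6 unknowns).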

6.2 New platforms

6.2.1 Robot Vision Platform

Participants: François Chaumette, Alexandre Krupa, Eric Marchand, Fabien Spindler.

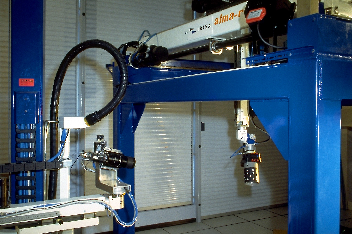

We exploit two industrial robotic systems built by Afma Robots in the nineties to validate our research in visual servoing and active vision. The first one is a 6 DoF Gantry robot and the second one a 4 DoF cylindrical robot (see Fig. 2). These robots are equipped with monocular RGB cameras, and grippers can also be mounted on the end-effector of the Gantry robot. Attached to this platform is a collection of RGB and RGB-D cameras used to validate vision-based real-time tracking algorithms.

6.2.2 Mobile Robots

Participants: Marie Babel, Solenne Fortun, François Pasteau, Julien Pettré, Quentin Delamare, Fabien Spindler.

To validate our research on personally assisted living (see Section 7.4.4), we have three electric wheelchairs: one from Permobil, one from Sunrise and one from YouQ (see Fig. 3.a). The wheelchair is controlled through a plug-and-play system inserted between the joystick and the low-level control of the wheelchair. This system lets us acquire the user's intention through the joystick position and control the wheelchair by applying corrections to its motion. The wheelchairs have been fitted with cameras, ultrasound and time-of-flight sensors to perform the servoing required to assist people with disabilities. A wheelchair haptic simulator completes this platform for developing new human interaction strategies in a virtual reality environment (see Fig. 3(b)).

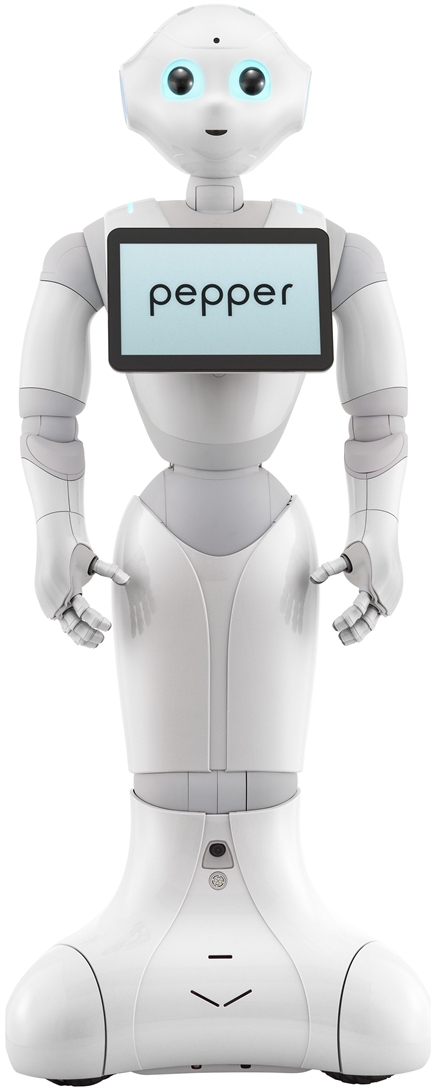

Pepper, a human-shaped robot designed by SoftBank Robotics to be a genuine day-to-day companion (see Fig. 3.c), is also part of this platform. It has 17 DoF mounted on a wheeled holonomic base and a set of sensors (cameras, laser, ultrasound, inertial, microphone) that make it well suited for studying robot-human interaction during locomotion and visual exploration strategies (Sect. 7.2.8).

Moreover, for fast prototyping of algorithms in perception, control and autonomous navigation, the team uses a Pioneer 3DX from Adept (see Fig. 3.d). This platform is equipped with various sensors needed for autonomous navigation and sensor-based control.

Note that 5 papers 13, 38, 49, 54 exploiting the mobile robots were published this year.


6.2.3 Medical Robotic Platform

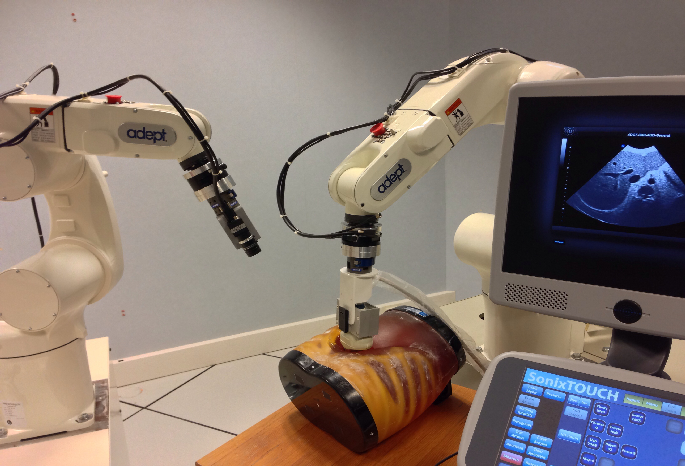

Participants: Alexandre Krupa, Fabien Spindler.

This platform is composed of two 6 DoF Adept Viper arms (see Figs. 4.a–b). Ultrasound probes connected either to a SonoSite 180 Plus or to an Ultrasonix SonixTouch 2D and 3D imaging system can be mounted on a force/torque sensor attached to each robot end-effector. The Virtuose 6D or Omega 6 haptic device (see Fig. 7.a) can also be used with this platform.

This platform was extended with an ATI Nano43 force/torque sensor attached to one of the Viper arms, which enables experiments on needle insertion.

This testbed is of primary interest for research and experiments on ultrasound visual servoing applied to probe positioning, soft tissue tracking, elastography or robotic needle insertion tasks (see Sect. 7.4.3). It can also be used to validate more classical tracking and visual servoing research.

In 2020, this platform was used to obtain experimental results presented in 5 papers 43, 46, 40, 3, 21. Moreover, 2 PhD Theses 66, 68 exploiting this platform were published this year.


6.2.4 Advanced Manipulation Platform

Participants: Claudio Pacchierotti, Paolo Robuffo Giordano, Fabien Spindler.

This new platform is composed of two Panda lightweight arms from Franka Emika equipped with torque sensors in all seven axes. An electric gripper, a camera or a soft hand from qbrobotics can be mounted on the robot end-effector (see Fig. 5.a) to validate our research on coupling force and vision for controlling robot manipulators (see Section 7.2.13) and on shared control for remote manipulation (see Section 7.4.1). Other haptic devices (see Section 6.2.6) can also be coupled to this platform.

This platform was extended with a Reflex TakkTile 2 gripper from RightHand Labs (see Fig. 5.b). A force/torque sensor from Alberobotics was also mounted on the robot end-effector to get more precision during torque control.

Four new papers 26, 19, 56, 57 and one PhD thesis 67 published this year include experimental results obtained with this platform.


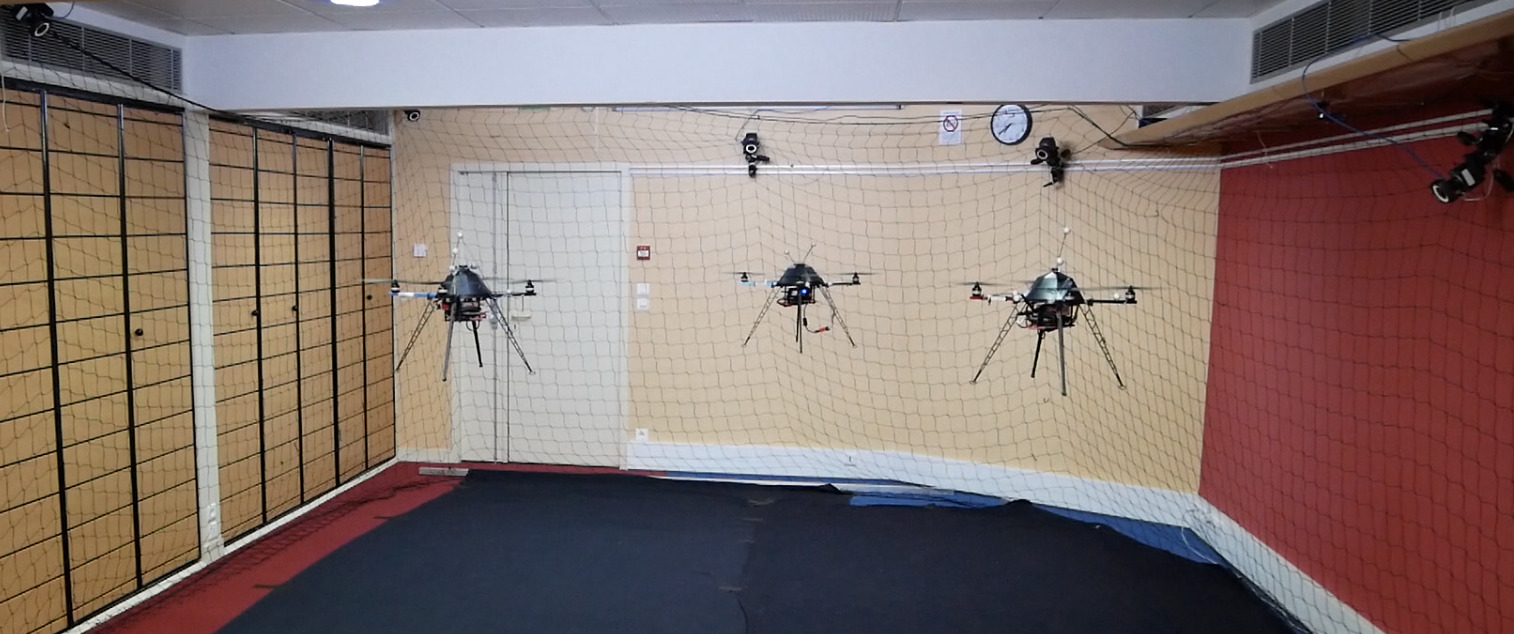

6.2.5 Unmanned Aerial Vehicles (UAVs)

Participants: Joudy Nader, Paolo Robuffo Giordano, Claudio Pacchierotti, Fabien Spindler.

Rainbow is involved in several activities on perception and control for single and multiple quadrotor UAVs. To this end, we purchased four quadrotors from Mikrokopter GmbH, Germany (see Fig. 6.a), and one quadrotor from 3DRobotics, USA (see Fig. 6.b). The Mikrokopter quadrotors have been heavily customized by reprogramming from scratch the low-level attitude controller onboard their microcontroller; by equipping each quadrotor with an NVIDIA Jetson TX2 board running Linux Ubuntu and the TeleKyb-3 software based on the genom3 framework developed at LAAS in Toulouse (the middleware used for managing the experiment flows and the communication among the UAVs and the base station); and by purchasing Flea Color USB3 cameras together with the gimbal needed to mount them on the UAVs. The quadrotors are used as robotic platforms for testing a number of single and multiple flight control schemes, with special attention to the use of onboard vision as the main sensory modality.

This year, one paper 6 contains experimental results obtained with this platform.


6.2.6 Haptics and Shared Control Platform

Participants: Claudio Pacchierotti, Paolo Robuffo Giordano, Fabien Spindler.

Various haptic devices are used to validate our research in shared control. We have a Virtuose 6D device from Haption (see Fig. 7.a), which is used as the master device in many of our shared control activities (see, e.g., Section 7.4.1). It can also be coupled to the Haption haptic glove on loan from the University of Birmingham. An Omega 6 from Force Dimension (see Fig. 7.b) and devices on loan from Ultrahaptics complete this platform, which can be coupled to the other robotic platforms.

This platform was used to obtain the experimental results presented in 9 papers 11, 26, 6, 19, 63, 56, 57, 55, 72 and 2 PhD theses 65, 67 published this year.


7 New results

7.1 Optimal and Uncertainty-Aware Sensing

7.1.1 Simultaneous Tracking and Elasticity Estimation of Deformable Objects

Participants: Agniva Sengupta, Romain Lagneau, Alexandre Krupa, Maud Marchal, Eric Marchand.

Within our research activities on deformable object tracking 68, this year we proposed a method to simultaneously track the deformation of soft objects and estimate their elasticity parameters 46. The deformable object is tracked by combining the visual information captured by an RGB-D camera with interactive Finite Element Method (FEM) simulations of the object. The elasticity parameters are estimated in parallel by minimizing the error between the tracked object and a simulated object deformed by the forces measured with a force sensor. Once the elasticity parameters are estimated, the deformation forces applied to the object can be estimated from the visual tracking of its deformation, without using a force sensor.
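To illustrate the estimation principle only, here is a sketch that replaces the interactive FEM simulation with a linear surrogate whose stiffness scales with Young's modulus, K(E) = E·k0 (which holds for linear elasticity at a fixed Poisson ratio). Because the simulated displacement is then linear in the compliance 1/E, matching tracked and simulated displacements becomes a closed-form least-squares fit. The scalar model, `k0` and the function names are hypothetical.

```python
def estimate_young_modulus(displacements, forces, k0):
    """Fit E so that simulated displacements f / (E * k0) match tracked ones.

    The simulated displacement is linear in the compliance c = 1 / E, so
    min_c sum (u_i - c * f_i / k0)^2 has a closed-form solution.
    """
    num = sum(u * f / k0 for u, f in zip(displacements, forces))
    den = sum((f / k0) ** 2 for f in forces)
    compliance = num / den
    return 1.0 / compliance


def estimate_force(displacement, young_modulus, k0):
    """Once E is known, infer the applied force from a tracked displacement."""
    return young_modulus * k0 * displacement
```

After calibration with a force sensor, `estimate_force` only needs the visually tracked displacement, which mirrors the sensor-free force estimation described above.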

7.1.2 Trajectory Generation for Optimal State Estimation

Participants: Marco Aggravi, Claudio Pacchierotti, Paolo Robuffo Giordano.

This activity addresses the general problem of active sensing, where the goal is to analyze and synthesize optimal trajectories for a robotic system that maximize the amount of information gathered by the (few) noisy outputs (i.e., sensor readings) while at the same time reducing the negative effects of the process/actuation noise. We have recently developed a general framework for solving the active sensing problem online by continuously replanning an optimal trajectory that maximizes a suitable norm of the Constructibility Gramian (CG) 75. In 11, 55, we extended this framework to a shared control task in which a human operator is in partial control of the motion of a mobile robot. The robot needs to estimate its state by measuring distances w.r.t. some landmarks, and it travels along a closed trajectory of fixed length. At the same time, an operator controls the location of the barycenter of the trajectory. This trajectory is autonomously deformed to maximize the amount of information collected by the onboard sensors, and the operator receives force feedback indicating where the autonomy would like to move the barycenter; however, the final choice is left to the operator. The results of several user studies have shown the validity of the approach. We plan to extend this framework to the multi-robot case.
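A sketch of the information index in a deliberately simplified setting: a planar robot modeled as a single integrator (so the state transition matrix is the identity), unit-weight range measurements to known landmarks, and a discrete sum instead of the continuous-time Gramian. Under these assumptions the CG reduces to the sum of H_kᵀ H_k along the path, and its smallest eigenvalue is one possible choice of the "suitable norm" to maximize. All names are illustrative.

```python
import math


def range_jacobian(p, landmark):
    """Gradient of the distance ||p - landmark|| with respect to p."""
    dx, dy = p[0] - landmark[0], p[1] - landmark[1]
    r = math.hypot(dx, dy)
    return dx / r, dy / r


def constructibility_gramian(path, landmarks, weight=1.0):
    """Sum of H_k^T W H_k along the path, as the 2x2 entries (a, b, c)."""
    a = b = c = 0.0
    for p in path:
        for lm in landmarks:
            hx, hy = range_jacobian(p, lm)
            a += weight * hx * hx
            b += weight * hx * hy
            c += weight * hy * hy
    return a, b, c


def min_eigenvalue(a, b, c):
    """Smallest eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    mean = 0.5 * (a + c)
    radius = math.sqrt((0.5 * (a - c)) ** 2 + b * b)
    return mean - radius
```

Driving straight towards a single landmark leaves the lateral direction unobserved (smallest eigenvalue zero), whereas circling it excites both directions; this is the kind of difference a replanner can exploit when it deforms the trajectory.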

7.1.3 Leveraging Multiple Environments for Learning and Decision Making

Participants: Maud Marchal, Thierry Gaugry, Antonin Bernardin.

Learning is usually performed by observing real robot executions. Physics-based simulators are a good alternative for providing highly valuable information while avoiding costly and potentially destructive robot executions. Within the Imagine project, we presented a novel approach for learning the probabilities of symbolic robot action outcomes 47. This is done by leveraging different environments, such as physics-based simulators, at execution time. To this end, we proposed MENID (Multiple Environment Noise Indeterministic Deictic) rules, a novel representation able to cope with the inherent uncertainties present in robotic tasks. MENID rules explicitly represent each possible outcome of an action, keep memory of the source of the experience, and maintain the probability of success of each outcome. We also introduced an algorithm to distribute actions among environments, based on previous experiences and expected gain. Before using physics-based simulations, we proposed a methodology for evaluating different simulation settings and determining the least time-consuming model that still produces coherent results. We demonstrated the validity of the approach in a dismantling use case, using a simulation with reduced quality as the simulated system, and a full-resolution simulation, in which noise is added to the trajectories and to some physical parameters, as a stand-in for the real system.
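A hypothetical container showing the bookkeeping that MENID rules perform according to the description above: every outcome of an action is listed explicitly, each observation is tagged with the environment that produced it, and outcome probabilities are estimated from the counts, either per environment or pooled. This is not the authors' representation (deictic references, noise outcomes and the action-distribution algorithm are omitted), and the action and environment names are made up.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class MenidRule:
    """Illustrative rule: explicit outcomes plus per-environment counts."""
    action: str
    outcomes: list
    # counts[environment][outcome] -> number of observations
    counts: dict = field(default_factory=lambda: defaultdict(lambda: defaultdict(int)))

    def record(self, outcome, environment):
        """Store one observed outcome together with its source environment."""
        if outcome not in self.outcomes:
            raise ValueError(f"unknown outcome: {outcome}")
        self.counts[environment][outcome] += 1

    def probability(self, outcome, environment=None):
        """Empirical probability of an outcome, for one environment or pooled."""
        envs = [environment] if environment is not None else list(self.counts)
        total = sum(sum(self.counts[e].values()) for e in envs)
        if total == 0:
            return 1.0 / len(self.outcomes)  # uninformed prior
        hits = sum(self.counts[e][outcome] for e in envs)
        return hits / total
```

Keeping the source of each experience separate is what allows a reduced-quality simulation and a higher-fidelity system to be compared or weighted differently later on.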

7.1.4 A Plane-based Approach for Indoor Point Clouds Registration

Participant: Eric Marchand.

Iterative Closest Point (ICP) is one of the most widely used algorithms for 3D point cloud registration. This classical approach can be hampered by the large number of points contained in a point cloud. In well-structured man-made environments, planar structures, which are far less numerous than points, can be used instead. We thus propose an ICP-inspired, plane-based registration method for indoor environments, relying solely on data acquired with a LiDAR sensor. A new metric based on plane characteristics is introduced to find the best plane correspondences. The optimal transformation is estimated through a two-step minimization approach, successively performing robust plane-to-plane minimization and non-linear robust point-to-plane registration. This work was done in cooperation with IETR Lab and published in IAPR ICPR 2020 (in January 2021).
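The plane-to-plane step can be sketched in closed form when plane correspondences are already known, writing each plane as n·x = d with a unit normal n. If x_dst = R x_src + t, then n_dst = R n_src and d_dst = d_src + n_dst·t, so R follows from an SVD (Kabsch) alignment of the normals and t from linear least squares on the offsets. This sketch omits the paper's correspondence metric, the robust weighting and the point-to-plane refinement, and it assumes at least three planes with linearly independent normals; the function name is hypothetical.

```python
import numpy as np


def align_planes(src_normals, src_offsets, dst_normals, dst_offsets):
    """Estimate (R, t) mapping source planes onto matched destination planes.

    Planes are written n . x = d with unit normals. If x_dst = R x_src + t,
    then n_dst = R n_src and d_dst = d_src + n_dst . t.
    """
    N_src = np.asarray(src_normals, dtype=float)
    N_dst = np.asarray(dst_normals, dtype=float)
    # Rotation: Kabsch/SVD alignment of the normal vectors.
    H = N_src.T @ N_dst
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    # Translation: least squares on n_dst . t = d_dst - d_src.
    rhs = np.asarray(dst_offsets, dtype=float) - np.asarray(src_offsets, dtype=float)
    t, *_ = np.linalg.lstsq(N_dst, rhs, rcond=None)
    return R, t
```

Working on a handful of planes instead of thousands of points is what keeps this step cheap; the point-to-plane refinement then corrects the residual error.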

7.1.5 Relative camera pose estimation and planar reconstruction from two images

Participant: Eric Marchand.

We propose a novel method to simultaneously perform relative camera pose estimation and planar reconstruction of a scene from two RGB images 52. We start by extracting and matching superpixel information from both images and rely on a novel multi-model RANSAC approach to estimate multiple homographies from superpixels and identify matching planes. Ambiguities in homography decomposition are handled by a voting system that more reliably estimates the relative camera pose and plane parameters. A non-linear optimization process is also proposed to perform bundle adjustment; it exploits a joint representation of homographies and works both for image pairs and for whole image sequences (vSLAM). As a result, the approach provides a means to perform dense 3D plane reconstruction from two RGB images only, without relying on RGB-D inputs or strong priors such as Manhattan assumptions, and it can be extended to handle image sequences. This work was done in cooperation with the Mimetic team.
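Each plane matched across the two images induces a homography. For a plane nᵀX = d expressed in the first camera frame and X₂ = R X₁ + t, points on the plane satisfy X₂ = (R + t nᵀ/d) X₁, hence H ∝ K (R + t nᵀ/d) K⁻¹ for intrinsics K. The sketch below builds H from known parameters; decomposing an estimated H back into (R, t/d, n) yields several candidate solutions, which is the ambiguity the voting scheme addresses. Function names are illustrative.

```python
import numpy as np


def plane_homography(K, R, t, n, d):
    """Homography induced by the plane n^T X = d (camera-1 frame).

    With X2 = R X1 + t and n^T X1 / d = 1 on the plane,
    X2 = (R + t n^T / d) X1, hence p2 ~ K (R + t n^T / d) K^-1 p1.
    """
    H = K @ (R + np.outer(t, n) / d) @ np.linalg.inv(K)
    return H / H[2, 2]  # fix the arbitrary scale


def project(K, X):
    """Pinhole projection of a 3D point to homogeneous pixel coordinates."""
    p = K @ X
    return p / p[2]
```

Applying `plane_homography(...)` to `project(K, X1)` and normalizing reproduces `project(K, X2)` for any point X1 lying on the plane.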

7.1.6 Learn Offsets for robust 6DoF object pose estimation

Participants: Mathieu Gonzalez, Eric Marchand.

Estimating the 3D translation and orientation of an object is a challenging task that arises in augmented reality and robotic applications. In 15, we propose a novel approach to perform 6 DoF object pose estimation from a single RGB-D image in cluttered scenes. We adopt a hybrid two-stage pipeline: a data-driven stage followed by a geometric stage. The data-driven stage consists of a classification CNN that estimates the 2D location of the object in the image from local patches, followed by a regression CNN trained to predict the 3D location of a set of keypoints in the camera coordinate system. We then robustly perform local voting to recover the location of each keypoint in the camera coordinate system. To extract the pose, the geometric stage aligns the 3D keypoints in the camera coordinate system with the corresponding 3D points in the world coordinate system by minimizing a registration error.
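The two geometric operations can be sketched as follows, under stated assumptions: each keypoint receives several 3D votes from local patches and is aggregated with a coordinate-wise median (a simple robust choice, not necessarily the paper's voting scheme), and the pose is then recovered by least-squares rigid alignment of the model keypoints with the voted camera-frame keypoints (Kabsch algorithm). The CNN outputs are not modeled; function names are hypothetical.

```python
import numpy as np


def vote_keypoint(votes):
    """Robust aggregate of the per-patch 3D votes for one keypoint.

    A coordinate-wise median tolerates a minority of outlier votes.
    """
    return np.median(np.asarray(votes, dtype=float), axis=0)


def rigid_registration(model_pts, camera_pts):
    """Least-squares (R, t) such that camera_pts ~ R @ model_pts + t (Kabsch)."""
    A = np.asarray(model_pts, dtype=float)
    B = np.asarray(camera_pts, dtype=float)
    ca, cb = A.mean(axis=0), B.mean(axis=0)
    H = (A - ca).T @ (B - cb)  # cross-covariance of the centered point sets
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # proper rotation
    R = Vt.T @ D @ U.T
    t = cb - R @ ca
    return R, t
```

At least three non-collinear keypoints are needed for the rotation to be unique.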

7.2 Advanced Sensor-Based Control

7.2.1 Trajectory Generation for Minimum Closed-Loop State Sensitivity

Participants: Pascal Brault, Quentin Delamare, Paolo Robuffo Giordano.

The goal of this research activity is to propose a new point of view in addressing the control of robots under parametric uncertainties: rather than striving to design a sophisticated controller with some robustness guarantees for a specific system, we propose to attain robustness (for any choice of the control action) by suitably shaping the reference motion trajectory so as to minimize the state sensitivity to parameter uncertainty of the resulting closed-loop system.

During this year, we continued working on the idea of “input sensitivity”, which yields trajectories that, when perturbed, require minimal changes of the control inputs. In particular, we studied how to best combine the input and state sensitivities in a single metric, and applied the trajectory optimization machinery to the case of a planar quadrotor. An off-the-shelf nonlinear optimization scheme was also employed to take into account (nonlinear) input constraints. A large statistical analysis was performed in simulation, and the results were submitted to the ICRA 2021 conference. We are now working towards an implementation on a real quadrotor, considering offsets in the center of mass (CoM) as one of the main sources of uncertainty.
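A toy illustration of closed-loop state sensitivity, assuming a 1-DoF mass m controlled by a computed-torque PD law built on a nominal mass. The sensitivity ∂x/∂m is approximated by central finite differences along the trajectory, rather than by integrating sensitivity equations, and summarized by its RMS value. Gains, horizon and names are illustrative; the actual work considers a planar quadrotor and combines state and input sensitivities.

```python
import math


def simulate(mass, reference, m_nominal=1.0, kp=25.0, kd=10.0, dt=1e-3, T=2.0):
    """Closed loop: plant m*xdd = u with a PD law built on m_nominal.

    reference(t) returns (r, rd, rdd). Returns the sampled positions x(t).
    """
    r0, rd0, _ = reference(0.0)
    x, v = r0, rd0  # start on the reference
    xs = []
    for k in range(int(round(T / dt))):
        r, rd, rdd = reference(k * dt)
        u = m_nominal * (rdd + kd * (rd - v) + kp * (r - x))
        x, v = x + v * dt, v + (u / mass) * dt  # explicit Euler step
        xs.append(x)
    return xs


def sensitivity_rms(reference, m_nominal=1.0, eps=1e-4):
    """RMS of dx(t)/dm at the nominal mass, via central finite differences."""
    plus = simulate(m_nominal + eps, reference, m_nominal)
    minus = simulate(m_nominal - eps, reference, m_nominal)
    sens = [(a - b) / (2.0 * eps) for a, b in zip(plus, minus)]
    return math.sqrt(sum(s * s for s in sens) / len(sens))
```

In this toy model a more aggressive reference (larger accelerations) excites the mass uncertainty more strongly, which is why shaping the reference itself is a lever for robustness.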

7.2.2 Comfortable path generation for wheelchair navigation

Participants: Guillaume Vailland, Juliette Grosset, Marie Babel.

In the case of non-holonomic robot navigation, path planning algorithms such as Rapidly-exploring Random Trees (RRT) rarely provide feasible and smooth paths without additional processing. Furthermore, in a transport context such as power wheelchair navigation, passenger comfort should be a priority and should shape the path planning strategy.

We therefore proposed a local path planner that guarantees bounded curvature and continuous connections between piecewise cubic Bézier curves. To simulate and test this cubic Bézier local path planner, we developed a new RRT variant (CBB-RRT*) that generates comfortable paths on the fly, adapted to non-holonomic constraints. This new framework has been submitted to the ICRA 2021 conference.
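The comfort constraint can be checked on a single planar cubic Bézier segment by sampling its curvature κ(t) = |x′y″ - y′x″| / (x′² + y′²)^(3/2). This is a sampled check under the assumption of non-degenerate segments (no coincident consecutive control points), not the analytical bound guaranteed by the proposed planner; function names are hypothetical.

```python
import math


def bezier_derivatives(P, t):
    """First and second derivatives of a planar cubic Bezier at parameter t."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = P
    u = 1.0 - t
    d1 = (3 * (u * u * (x1 - x0) + 2 * u * t * (x2 - x1) + t * t * (x3 - x2)),
          3 * (u * u * (y1 - y0) + 2 * u * t * (y2 - y1) + t * t * (y3 - y2)))
    d2 = (6 * (u * (x2 - 2 * x1 + x0) + t * (x3 - 2 * x2 + x1)),
          6 * (u * (y2 - 2 * y1 + y0) + t * (y3 - 2 * y2 + y1)))
    return d1, d2


def max_curvature(P, samples=200):
    """Largest sampled curvature along the segment (assumes nonzero speed)."""
    kmax = 0.0
    for i in range(samples + 1):
        (dx, dy), (ddx, ddy) = bezier_derivatives(P, i / samples)
        speed = math.hypot(dx, dy)
        kmax = max(kmax, abs(dx * ddy - dy * ddx) / speed ** 3)
    return kmax


def is_comfortable(P, kappa_max):
    """Accept a segment only if its sampled curvature stays below the bound."""
    return max_curvature(P) <= kappa_max
```

A straight segment has zero curvature, while the usual cubic approximation of a quarter circle of radius 1 (inner control points at distance 0.5523 from the endpoints) stays close to κ = 1.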

In addition, autonomous navigation of a mobile robot requires knowledge of the surrounding world, and detecting and recognizing obstacles in cluttered environments remains challenging for ensuring safe navigation. To this aim, we collaborated with Fabio Morbidi (Université Picardie-Jules Verne, Amiens) to evaluate the ability of event-based camera data to favour obstacle recognition. Due to the Covid situation, data collection was difficult to organize. We therefore designed an event-based camera simulator in order to train our machine learning framework with virtual images. The next steps will consist of implementing our solution on the smart wheelchair platform. This work was done in cooperation with Valérie Gouranton (Hybrid group) 48.

7.2.3 UWB beacon navigation of assisted power wheelchair

Participants: Julien Albrand, Pierre-Antoine Cabaret, François Pasteau, Vincent Drevelle, Eric Marchand, Marie Babel.

Perception of the environment and localization are typical problems in robotics. Visual sensors are poorly suited to autonomous wheelchair navigation, in terms of acceptability (intrusiveness), integration on the wheelchair, and overall cost.

New sensors based on Ultra Wide Band (UWB) radio technology are emerging, in particular for indoor localization and object tracking applications. This low-cost system measures distances between fixed beacons and a mobile sensor, yielding localization with decimeter-level accuracy in the best case. We seek to exploit these sensors for wheelchair navigation, despite the low accuracy of their measurements.

The challenge lies in defining an autonomous or shared sensor-based control solution that fully exploits the notion of measurement uncertainty related to UWB beacons. By modeling the uncertain distance measurements as intervals, we will try to propagate these uncertainties to the computation of the velocities to be applied to the wheelchair. This will be done using set inversion and constraint propagation methods, which characterize solutions in the form of sets.
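The set-inversion idea can be sketched with the classical SIVIA algorithm applied to position only: each UWB range is an interval [d_min, d_max], and boxes of the plane are kept, discarded or bisected depending on whether the interval of distances from the box to each beacon lies inside, outside or across the measured interval. Beacon positions, error bounds and function names are assumptions, and propagating the uncertainty to wheelchair velocities, as targeted above, is not shown.

```python
import math


def sq_interval(lo, hi):
    """Image of the interval [lo, hi] under x -> x^2."""
    if lo >= 0:
        return lo * lo, hi * hi
    if hi <= 0:
        return hi * hi, lo * lo
    return 0.0, max(lo * lo, hi * hi)


def distance_interval(box, beacon):
    """Interval of Euclidean distances from points of a 2D box to a beacon."""
    (xl, xu), (yl, yu) = box
    ax = sq_interval(xl - beacon[0], xu - beacon[0])
    ay = sq_interval(yl - beacon[1], yu - beacon[1])
    return math.sqrt(ax[0] + ay[0]), math.sqrt(ax[1] + ay[1])


def sivia(box, beacons, ranges, eps=0.05):
    """Return (inner, boundary) boxes consistent with all range intervals.

    ranges[i] = (d_min, d_max) is the interval measurement to beacons[i].
    """
    inner, boundary, stack = [], [], [box]
    while stack:
        b = stack.pop()
        status = "in"
        for beacon, (dmin, dmax) in zip(beacons, ranges):
            lo, hi = distance_interval(b, beacon)
            if hi < dmin or lo > dmax:  # whole box inconsistent: discard
                status = "out"
                break
            if lo < dmin or hi > dmax:  # partially consistent
                status = "unknown"
        if status == "in":
            inner.append(b)
        elif status == "unknown":
            (xl, xu), (yl, yu) = b
            if max(xu - xl, yu - yl) < eps:
                boundary.append(b)
            elif xu - xl >= yu - yl:  # bisect along the widest side
                xm = 0.5 * (xl + xu)
                stack += [((xl, xm), (yl, yu)), ((xm, xu), (yl, yu))]
            else:
                ym = 0.5 * (yl + yu)
                stack += [((xl, xu), (yl, ym)), ((xl, xu), (ym, yu))]
    return inner, boundary
```

The union of inner and boundary boxes is a guaranteed enclosure of all positions consistent with the bounded measurements, which is the set-valued kind of solution mentioned above.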

7.2.4 Visual Servoing for Cable-Driven Parallel Robots

Participant: François Chaumette.

This study is done in collaboration with IRT Jules Verne (Zane Zake, Nicolo Pedemonte) and LS2N (Stéphane Caro) in Nantes (see Section 8.1). It is devoted to the analysis of the robustness of visual servoing to modeling and calibration errors for cable-driven parallel robots. This year, the coupling of path planning and visual servoing has been studied to improve robustness throughout the transient phase of the servo 31.

7.2.5 Singularities in visual servoing

Participant: François Chaumette.

This study is done in the scope of the ANR Sesame project (see Section 9.3).

We have performed a complete theoretical study of the singularities of image-based visual servoing and pose estimation (PnP problem) from the observation of four image points. Highly original results have been obtained. In particular, it was shown that 2 to 6 camera positions correspond to singularities for a general configuration of 4 non-coplanar points, whereas it was previously, and wrongly, believed that no singularities occur for such a configuration 25.
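The analytical results are not reproduced here, but proximity to a singularity can be monitored numerically through the smallest singular value of the stacked 8×6 interaction matrix of the four points. In the sketch below (points given in the camera frame; names hypothetical), collinear points provide a trivially singular case, since a camera rotation about the line through the points leaves the image unchanged.

```python
import numpy as np


def stacked_interaction_matrix(points_cam):
    """8x6 IBVS interaction matrix for four 3D points in the camera frame."""
    rows = []
    for X, Y, Z in points_cam:
        x, y = X / Z, Y / Z  # normalized image coordinates
        rows.append([-1 / Z, 0, x / Z, x * y, -(1 + x * x), y])
        rows.append([0, -1 / Z, y / Z, 1 + y * y, -x * y, -x])
    return np.array(rows, dtype=float)


def smallest_singular_value(points_cam):
    """Distance-to-singularity indicator: sigma_min of the stacked matrix."""
    return np.linalg.svd(stacked_interaction_matrix(points_cam), compute_uv=False)[-1]
```

A value close to zero means that some camera motion barely changes the image, so the servo (or the PnP solution) becomes ill-conditioned near that pose.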

7.2.6 Multi-sensor-based control for accurate and safe assembly

Participants: John Thomas, François Chaumette.

This study is done in the scope of the BPI Lichie project (see Section 9.3). Its goal is to design sensor-based control strategies coupling vision and proximetry data to ensure precise positioning while avoiding obstacles in dense environments. The targeted application is the assembly of satellite parts.

7.2.7 Visual servo of a satellite constellation

Participants: Maxime Robic, Eric Marchand, François Chaumette.

This study is also done in the scope of the BPI Lichie project (see Section 9.3). Its goal is to control the orientation of the satellites of a constellation, using a camera mounted on each satellite, in order to track particular objects on the ground.

7.2.8 Visual Exploration of an Indoor Environment

Participants: Benoît Antoniotti, Eric Marchand, François Chaumette.

This study was done in collaboration with the Creative company in Rennes through Benoît Antoniotti's PhD (see Section 7.2.8).

It was devoted to the exploration of indoor environments by a mobile robot, typically Pepper (see Section 6.2.2), for a complete and accurate reconstruction of the environment. Unfortunately, Benoît decided to discontinue his PhD.

7.2.9 Model-Free Deformation Servoing of Soft Objects

Participants: Romain Lagneau, Alexandre Krupa, Maud Marchal.

Nowadays robots are mostly used to manipulate rigid objects. Manipulating deformable objects remains challenging due to the difficulty of accurately predicting the object deformations.

We proposed an approach able to deform a soft object towards a desired shape without requiring a priori knowledge of the object's mechanical parameters 66. It is based on a model-free visual servoing method that estimates online, from past observations, the deformation Jacobian relating the motion of the robot end-effector to the deformation of the object. This estimation relies on a weighted least-squares minimization of a cost function defined over a temporal sliding window. The estimated deformation Jacobian is then used in a control law that aims to exponentially decrease a visual error describing the difference between the current and desired object deformations. To address a possible lack of observation consistency, we use an eigenvalue-based confidence criterion during the Jacobian update to ensure robustness to observation noise. The approach was compared with state-of-the-art model-based and model-free methods, and the results showed that it provides better robustness to external perturbations 40.
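A minimal sketch of the estimation and control steps, assuming exponential forgetting weights over the sliding window and using the smallest eigenvalue of the weighted excitation matrix Σ w Δr Δrᵀ as the confidence test (the update is skipped when it is too small). Window length, forgetting factor, threshold and the class and function names are assumptions, not the published settings.

```python
import numpy as np


class DeformationJacobianEstimator:
    """Sliding-window weighted least-squares estimate of J in ds ~ J dr."""

    def __init__(self, n_features, n_inputs, window=20, forgetting=0.9, min_eig=1e-6):
        self.J = np.zeros((n_features, n_inputs))
        self.window = window
        self.forgetting = forgetting
        self.min_eig = min_eig
        self.history = []  # (dr, ds) pairs, most recent last

    def update(self, dr, ds):
        self.history.append((np.asarray(dr, float), np.asarray(ds, float)))
        self.history = self.history[-self.window:]
        n_in = self.J.shape[1]
        A = np.zeros((n_in, n_in))  # sum of w * dr dr^T
        B = np.zeros_like(self.J)   # sum of w * ds dr^T
        for age, (dr_k, ds_k) in enumerate(reversed(self.history)):
            w = self.forgetting ** age  # older samples weigh less
            A += w * np.outer(dr_k, dr_k)
            B += w * np.outer(ds_k, dr_k)
        # Confidence criterion: update only if all motion directions are excited.
        if np.linalg.eigvalsh(A)[0] > self.min_eig:
            self.J = B @ np.linalg.inv(A)  # minimizer of sum w ||ds - J dr||^2
        return self.J


def control_step(J, s, s_star, gain=0.5):
    """Robot displacement dr = -gain * pinv(J) (s - s*) for exponential error decay."""
    error = np.asarray(s, float) - np.asarray(s_star, float)
    return -gain * np.linalg.pinv(J) @ error
```

For a linear toy object s = J r with full column rank, the estimate becomes exact once the window holds independent motions, and each control step then removes a fraction `gain` of the remaining error.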

Building on the same principle, we proposed a method to automatically control the 3D shape of deformable wires manipulated by two robotic arms 3. To describe the deformation of the wire's 3D shape, we used as visual features a set of equidistant 3D points belonging to the wire. A geometric 3D B-spline model was used to represent the shape of the wire, and an image processing algorithm relying on a Sequential Importance Resampling (SIR) particle filter was developed to track the wire deformation in real time from a sequence of point clouds provided by an RGB-D camera. This B-spline model was only used in the visual tracking process: no mechanical model of the wire was considered in the visual control scheme, since we used our model-free deformation control approach. Several experiments on wires with different mechanical properties showed that our approach succeeded in controlling the 3D shape of the wire to reach a desired one.
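Extracting the visual features amounts to resampling the tracked curve at equal arc-length intervals. The sketch assumes the B-spline has already been evaluated densely into a 3D polyline (the B-spline fitting and the SIR particle filter are not shown), and it requires at least two polyline points and `n_points >= 2`; the function name is hypothetical.

```python
import math


def equidistant_points(polyline, n_points):
    """Resample a 3D polyline into n_points equally spaced along its length."""
    seg = [math.dist(a, b) for a, b in zip(polyline, polyline[1:])]
    total = sum(seg)
    targets = [i * total / (n_points - 1) for i in range(n_points)]
    out, acc, j = [], 0.0, 0
    for s in targets:
        # Advance to the segment that contains arc length s.
        while j < len(seg) - 1 and acc + seg[j] < s:
            acc += seg[j]
            j += 1
        a, b = polyline[j], polyline[j + 1]
        u = 0.0 if seg[j] == 0 else min(max((s - acc) / seg[j], 0.0), 1.0)
        out.append(tuple(pa + u * (pb - pa) for pa, pb in zip(a, b)))
    return out
```

Equal spacing keeps the feature vector size fixed and makes consecutive feature vectors comparable as the wire deforms.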

7.2.10 Model-Based Deformation Servoing of Soft Objects

Participants: Fouad Makiyeh, Alexandre Krupa, Maud Marchal, François Chaumette.

This study has just started and takes place in the context of the GentleMAN project (see Section 9.1.2). The objective is to develop a new visual servoing approach that controls the shape of an object towards a desired deformation. In contrast to the model-free deformation servoing approach presented in Section 7.2.9, we plan to use a model of the mechanical behaviour of the object to analytically derive the deformation Jacobian used in the control law. Indeed, an analytical formulation could potentially enlarge the convergence domain, whose limited size is currently a drawback of model-free methods.

7.2.11 Manipulation of a deformable wire by two UAVs

Participants: Lev Smolentsev, Alexandre Krupa, François Chaumette.

This study has just started and takes place in the context of the CominLabs MAMBO project (see Section 9.4). It concerns the development of a vision-based control framework for the autonomous manipulation of a deformable wire attached between two UAVs. The objective is to control the two UAVs from visual data provided by onboard RGB-D cameras in order to grasp an object on the ground with the wire and move it to another location.

7.2.12 Multi-Robot Formation Control

Participant: Paolo Robuffo Giordano.

Most multi-robot applications must rely on relative sensing among the robot pairs (rather than absolute/external sensing such as, e.g., GPS). For these systems, the concept of rigidity provides the correct framework for defining an appropriate sensing and communication topology architecture. In several previous works we have addressed the problem of coordinating a team of quadrotor UAVs equipped with onboard sensors (such as distance sensors or cameras) for cooperative localization and formation control under the rigidity framework. In 24 an interesting analysis of rigidity for robotics applications is provided in the context of distance geometry, together with other examples from different domains. This analysis helps in bringing together different notions and techniques related to rigidity that are otherwise considered very far from each other.
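For distance-based rigidity in the plane, the standard test builds the rigidity matrix, with one row per sensed pair (i, j) holding (p_i - p_j)ᵀ in the columns of robot i and (p_j - p_i)ᵀ in those of robot j, and checks that its rank equals 2n - 3. The sketch below is that textbook test under a planar assumption; in 3D the target rank becomes 3n - 6, and bearing rigidity uses a different matrix. Function names are illustrative.

```python
import numpy as np


def rigidity_matrix(positions, edges):
    """One row per edge (i, j): (p_i - p_j) for node i, (p_j - p_i) for node j."""
    P = np.asarray(positions, dtype=float)
    n, dim = P.shape
    R = np.zeros((len(edges), n * dim))
    for row, (i, j) in enumerate(edges):
        diff = P[i] - P[j]
        R[row, i * dim:(i + 1) * dim] = diff
        R[row, j * dim:(j + 1) * dim] = -diff
    return R


def is_infinitesimally_rigid(positions, edges):
    """Planar test: rank of the rigidity matrix equals 2n - 3."""
    n = len(positions)
    return np.linalg.matrix_rank(rigidity_matrix(positions, edges)) == 2 * n - 3
```

A square sensing ring can flex into a rhombus and fails the test, while adding one diagonal measurement makes the formation rigid.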

7.2.13 Coupling Force and Vision for Controlling Robot Manipulators

Participants: Alexander Oliva, François Chaumette, Paolo Robuffo Giordano.

The goal of this activity is to couple visual and force information for advanced manipulation tasks. To this end, we are exploiting the recently acquired Panda robot (see Sect. 6.2.4), a state-of-the-art 7-DoF manipulator arm with joint torque sensing, which can be commanded with torques at the joints or forces at the end-effector. The use of vision in torque-controlled robots is limited by many issues, among which the difficulty of fusing low-rate images (about 30 Hz) with high-rate torque commands (about 1 kHz), the delays caused by image processing and tracking algorithms, and the unavoidable occlusions that arise when the end-effector approaches an object to be grasped.

In this context, we recently proposed a general framework for combining force and visual information directly in the visual feature space, by reformulating and unifying the classical admittance control law in the image space. The proposed visual/force control framework has been extensively evaluated through numerous experiments on the Panda robot in peg-in-hole tasks, where both the pose and the exchanged forces could be regulated with high accuracy and good stability. These results are currently under review for RA-L/ICRA 2021.
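For reference, a generic 1-DoF Cartesian admittance law M ẍ + D ẋ + K (x - x_des) = f_ext, discretized with a semi-implicit Euler step, is sketched below. It is not the image-space formulation proposed above; the gains are illustrative and chosen for critical damping, and the function name is hypothetical. In a multi-rate setting, x_des could for instance be refreshed at the image rate (about 30 Hz) while this step runs at the control rate (about 1 kHz).

```python
def admittance_step(x, xdot, f_ext, x_des, dt, M=1.0, D=20.0, K=100.0):
    """One semi-implicit Euler step of M*xdd + D*xd + K*(x - x_des) = f_ext.

    Returns the updated reference position and velocity.
    """
    xddot = (f_ext - D * xdot - K * (x - x_des)) / M
    xdot = xdot + xddot * dt  # update velocity first...
    x = x + xdot * dt         # ...then position with the new velocity
    return x, xdot
```

Under a constant external force the reference settles at x_des + f_ext / K, so the stiffness K sets how much the robot yields to contact.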

7.2.14 Dimensionality reduction for Direct Visual Servoing

Participant: Eric Marchand.

To date, most visual servoing approaches have relied on geometric features that have to be tracked and matched in the image. Recent works have highlighted the importance of taking into account the photometric information of the entire image, which leads to direct visual servoing (DVS) approaches. The main disadvantage of DVS is its small convergence domain compared to conventional techniques, due to the strong non-linearities of the cost function to be minimized. We proposed to project the image onto orthogonal bases to build a new compact set of coordinates used as visual features. The idea is to consider the Discrete Cosine Transform (DCT), which represents the image in the frequency domain as a sum of cosine functions oscillating at various frequencies. This leads to a new set of coordinates in a precomputed orthogonal basis: the DCT coefficients. We propose to use these coefficients as the visual features in a visual servoing control law, and we derive the analytical formulation of the interaction matrix related to these coefficients 21. This is also one of the first attempts to build a visual servoing control law directly in the frequency domain.
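The feature construction can be sketched with an orthonormal DCT-II basis: the 2D coefficients are C_h I C_wᵀ, and a low-frequency k×k block is kept as the feature vector (this selection rule is an assumption). Because the DCT is linear, the interaction matrix of the coefficients is the same linear map applied to the rows of a per-pixel photometric interaction matrix, which the sketch takes as given (h·w rows in row-major pixel order, 6 columns). Function names are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix: row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)
    return C


def dct_features(image, k):
    """Low-frequency k x k block of the 2D DCT, flattened row by row."""
    h, w = image.shape
    coeffs = dct_matrix(h) @ image @ dct_matrix(w).T
    return coeffs[:k, :k].ravel()


def dct_interaction_matrix(L_photometric, shape, k):
    """Map a per-pixel photometric interaction matrix to the selected coefficients.

    With row-major vectorization, vec(C_h I C_w^T) = kron(C_h, C_w) vec(I),
    so the same linear map applies to each column of L_photometric.
    """
    h, w = shape
    T = np.kron(dct_matrix(h), dct_matrix(w))
    rows = [i * w + j for i in range(k) for j in range(k)]
    return T[rows] @ L_photometric
```

Keeping only a few low-frequency coefficients yields a much smaller, smoother feature vector than the raw pixel intensities, which is the intuition behind the larger convergence domain.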

7.3 Haptic Cueing for Robotic Applications

7.3.1 Wearable Haptics Systems Design

Participants: Claudio Pacchierotti, Marco Aggravi, Lisheng Kuang.

We have been working on wearable haptics for a few years now, from both the hardware (design of interfaces) and software (rendering and interaction techniques) points of view.

In 10, we presented a modular wearable interface for the fingers. It is composed of a 3-DoF fingertip cutaneous device and a 1-DoF finger kinesthetic exoskeleton, which can be used either together as a single device or separately as two different devices. The 3-DoF fingertip device is composed of a static body and a mobile platform. The mobile platform can make and break contact with the finger pulp and re-orient itself to replicate contacts with arbitrarily oriented surfaces. The 1-DoF finger exoskeleton provides kinesthetic force to the proximal and distal interphalangeal finger articulations using one servo motor grounded on the proximal phalanx. We carried out three human-subject experiments: the first considered a curvature discrimination task, the second a robot-assisted palpation task, and the third an immersive experience in Virtual Reality. Results showed that providing cutaneous and kinesthetic feedback through our device significantly improved performance in all the considered tasks. Moreover, although cutaneous-only feedback showed promising performance, adding kinesthetic feedback improved most metrics. Finally, subjects rated the device as highly wearable, comfortable, and effective.

In 30, we focused on the personalization of wearable haptic interfaces, aiming at devising haptic rendering techniques adapted to the specific characteristics of one's fingers. Indeed, fingertip size and shape vary significantly across humans, making it difficult to design fingertip interfaces and rendering techniques suitable for everyone. We started from an existing data-driven haptic rendering algorithm that ignores fingertip size, and we developed two software-based approaches to personalize this algorithm for fingertips of different sizes, using either additional data or geometry. We evaluated our algorithms by rendering pre-recorded tactile sensations onto rubber casts of six different fingertips, as well as onto the real fingertips of 13 human participants. Results on the casts show that both approaches significantly improve performance, reducing force error magnitudes by an average of 78% with respect to the standard non-personalized rendering technique. Congruent results were obtained for real fingertips, with subjects rating each of the two personalized rendering techniques significantly better than the standard non-personalized method.

In 45, we developed a low-cost immersive haptic, audio, and visual experience built from off-the-shelf components. It is composed of a vibrotactile glove, a Leap Motion sensor, and a head-mounted display, integrated together to provide compelling immersive sensations. To demonstrate its potential, we presented two human-subject studies in Virtual Reality, evaluating the capability of the system to provide (i) guidance during simulated drone operations, and (ii) contact haptic feedback during interaction with virtual objects. Results show that the proposed haptic-enabled framework improves performance and the illusion of presence.

In 69, we introduced a wearable armband interface capable of tracking its orientation in space as well as providing vibrotactile sensations. It is composed of four vibrotactile motors, which provide contact sensations, an inertial measurement unit (IMU), and a battery-powered Arduino board. We use two of these armbands, worn on the forearm and the upper arm, to interact in an immersive Virtual Reality (VR) environment. The system renders in VR the movements of the user’s arm as well as its interactions with virtual objects. Specifically, users are asked to catch a series of baseballs with their armband-equipped arm. Whenever a baseball hits the arm, the armband closest to the contact point vibrates. The amplitude of the vibration is proportional to the distance between the impact point and the position of the activated armband.
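The rendering rule above (activate the closest armband, scale the vibration with the impact-to-armband distance) can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of 69: each armband is reduced to one hypothetical position along the arm axis, and the gain and the clipping to a normalized amplitude range are illustrative choices.

```python
"""Hypothetical sketch of the two-armband impact rendering rule.

Assumptions (not from the original work): each armband is reduced to a
single position along the arm axis (metres from the elbow, negative on
the forearm), and the vibration amplitude is a normalized command in
[0, 1] computed with an illustrative gain.
"""

# Illustrative armband positions along the arm axis (m).
ARMBAND_POSITIONS = {"forearm": -0.15, "upper_arm": 0.15}
GAIN = 5.0  # hypothetical amplitude per metre of distance


def render_impact(impact_pos: float) -> tuple[str, float]:
    """Return the armband to activate and its vibration amplitude."""
    # Activate the armband closest to the impact point.
    name = min(ARMBAND_POSITIONS,
               key=lambda k: abs(ARMBAND_POSITIONS[k] - impact_pos))
    distance = abs(ARMBAND_POSITIONS[name] - impact_pos)
    # Amplitude proportional to the impact-to-armband distance, as stated
    # in the text, clipped to the actuator's normalized range.
    amplitude = min(1.0, GAIN * distance)
    return name, amplitude
```

For example, an impact 10 cm below the elbow (`render_impact(-0.10)`) activates the forearm armband with an amplitude of about 0.25. The clipping keeps very distant impacts from exceeding the motor's command range.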

7.3.2 Mid-Air Haptic Feedback

Participants: Claudio Pacchierotti, Thomas Howard, Guillaume Gicquel, Maud Marchal.

Graphical user interfaces (GUIs) have been the gold standard for more than 25 years. However, they only support interaction with digital information indirectly (typically using a mouse or pen), and input and output are always separated. Furthermore, GUIs do not leverage our innate human abilities to manipulate and reason with 3D objects. More recently, 3D interfaces and VR headsets have used physical objects as surrogates for tangible information, but these offer limited malleability and haptic feedback (e.g., rumble effects). In the framework of project H-Reality (Sect. 9.2.1), we are working to develop a novel mid-air haptics paradigm that can convey the information spectrum of touch sensations in the real world, which in turn calls for new, natural interaction techniques.

Currently, focused ultrasound phased arrays are the most mature solution for providing mid-air haptic feedback. They modulate the phase of an array of ultrasound emitters so as to generate focused points of oscillating high pressure, which elicit vibrotactile sensations when they encounter a user’s skin. While these arrays feature a reasonably large vertical workspace, they cannot display stimuli far beyond their horizontal limits, which severely restricts their workspace in the lateral dimensions. For this reason, we proposed a solution for enlarging the workspace of focused ultrasound arrays. It features 2 degrees of freedom, rotating the array around the pan and tilt axes, thereby significantly increasing the usable workspace and enabling multi-directional feedback. Our hardware tests and a human-subject study in an ecological VR setting show a 14-fold increase in workspace volume, with focal point repositioning speeds over 0.85 m/s while delivering tactile feedback with positional accuracy below 18 mm 16, 72.
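To make the role of the two rotational degrees of freedom concrete, the sketch below computes pan and tilt angles that point the array normal at a target, plus the focal distance along that normal. The geometry is an assumption for illustration only (pan and tilt axes intersecting at the array centre, array facing +z at zero tilt); the actual mechanism and control of 16, 72 may differ, and in practice the array can also steer its focus off-axis.

```python
import math


def aim_array(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Pan/tilt angles (rad) and focal distance (m) to reach point (x, y, z).

    Assumed geometry (hypothetical): the pan and tilt axes intersect at the
    array centre, placed at the origin; at zero tilt the array normal points
    along +z (upwards); tilt is measured from +z and pan is the azimuth
    about z, measured from +x.
    """
    distance = math.sqrt(x * x + y * y + z * z)
    if distance == 0.0:
        raise ValueError("target coincides with the array centre")
    pan = math.atan2(y, x)
    tilt = math.atan2(math.hypot(x, y), z)
    return pan, tilt, distance


def array_normal(pan: float, tilt: float) -> tuple[float, float, float]:
    """Unit vector along the array normal for the given pan/tilt angles."""
    return (math.sin(tilt) * math.cos(pan),
            math.sin(tilt) * math.sin(pan),
            math.cos(tilt))
```

With this convention, a target 20 cm to the side and 20 cm above the array yields a tilt of 45 degrees and a focal distance of about 0.283 m; the array then only needs to focus along its own axis, while the motors handle lateral placement.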

In addition to enlarging the workspace of ultrasound interfaces, we also investigated whether it is possible to render stiffness sensations through ultrasound stimulation 63. A user study enrolling 20 participants showed that it is indeed possible to render the sensation of interacting with virtual objects of different stiffness. We found just noticeable difference (JND) values of 17%, 31%, and 19% for the three reference stiffness values 7358 Pa/m, 13242 Pa/m, and 19126 Pa/m (sound pressure over displacement), respectively.
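Reading each JND as a fraction of its reference stiffness (our interpretation of the percentages, not a statement from 63), the absolute stiffness differences users needed in order to tell two stimuli apart follow from simple arithmetic:

```python
# Reference stiffnesses (Pa/m, i.e. sound pressure over displacement) and
# the relative JNDs reported above, interpreted as fractions of the reference.
references = [7358, 13242, 19126]
relative_jnd = [0.17, 0.31, 0.19]

# Absolute JND = relative JND x reference stiffness.
absolute_jnd = [k * w for k, w in zip(references, relative_jnd)]
for k, w, d in zip(references, relative_jnd, absolute_jnd):
    print(f"{k} Pa/m: {w:.0%} -> {d:.0f} Pa/m")
```

This gives roughly 1251, 4105, and 3634 Pa/m, which shows that the absolute discrimination threshold does not grow monotonically with the reference stiffness.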

7.3.3 Wearable Haptics for Interacting with Tangible Objects

Participants: Claudio Pacchierotti, Maud Marchal, Thomas Howard, Xavier de Tinguy.

We used haptic interfaces to augment the feeling of interacting with tangible objects in Virtual Reality, e.g., making a simple tangible object feel more or less stiff than it really is, or improving the sensation of making and breaking contact with virtual objects.

In 29, we studied the effect of combining simple passive tangible objects and wearable haptics to improve the display of varying stiffness, friction, and shape sensations in these environments. By providing timely cutaneous stimuli through a wearable finger device, we can make an object feel softer or more slippery than it really is, and we can also create the illusion of encountering virtual bumps and holes. We evaluated the proposed approach by carrying out three experiments with human subjects. Results confirm that we can increase the compliance of a tangible object by varying the pressure applied through a wearable device. We are also able to simulate the presence of bumps and holes by providing timely pressure and skin stretch sensations. Altering the friction of a tangible surface yielded recognition rates above chance level, albeit lower than those registered in the other experiments. Finally, we show the potential of our techniques in an immersive medical palpation use case in VR. These results pave the way for novel and promising haptic interactions in VR that better exploit the multiple ways of providing simple, unobtrusive, and inexpensive haptic displays.

In 61, we designed a wearable encounter-type haptic display (ETHD). ETHDs address the issue of rendering the sensations of making and breaking contact by bringing their end-effector in contact with the user only when collisions with virtual objects occur. We presented the design and evaluation of a wearable haptic interface for natural manipulation of tangible objects in Virtual Reality (VR), which proposes an interaction concept bridging encounter-type and tangible haptics. The actuated 1-degree-of-freedom interface brings a tangible object in and out of contact with the user’s palm, rendering the making and breaking of contact and allowing grasping and manipulation of virtual objects. Device performance tests show that changes in contact state can be rendered with delays as low as 50 ms, with additional improvements to contact synchronicity obtained through our proposed interaction technique. An exploratory user study in VR showed that our device can render compelling grasp and release interactions with static and slowly moving virtual objects, contributing to user immersion.

This work won the Best Hands-on Demonstration award at Eurohaptics 2020, held in Leiden, The Netherlands.
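Because an encounter-type display needs time to bring its tangible into contact, one generic way to hide an actuation delay such as the 50 ms reported for the wearable ETHD of 61 is to start moving early, based on the predicted time-to-contact. The sketch below shows that idea only; it is a hypothetical illustration, not the interaction technique proposed in 61, and the constant-velocity prediction is an assumption.

```python
"""Hypothetical predictive trigger for an encounter-type haptic display.

Assumption: the device needs `latency` seconds to bring the tangible into
contact, so actuation starts once the predicted time-to-contact between
the virtual hand and a virtual object (constant closing speed) falls
below that latency. Illustration only.
"""


def should_engage(gap: float, approach_speed: float, latency: float = 0.05) -> bool:
    """Decide whether to start moving the tangible toward the palm.

    gap            -- current distance between virtual hand and object (m)
    approach_speed -- closing speed (m/s); <= 0 means not approaching
    latency        -- actuation delay to compensate (s), 50 ms by default
    """
    if gap <= 0.0:
        return True  # already in (virtual) contact
    if approach_speed <= 0.0:
        return False  # hand is not approaching: no contact expected
    time_to_contact = gap / approach_speed
    return time_to_contact <= latency
```

For instance, a hand 1 cm away closing at 0.5 m/s (20 ms to contact) triggers actuation, whereas the same hand 10 cm away does not. This simple rule also suggests why slowly moving objects are easier to render: the constant-velocity prediction is more reliable when motions are slow.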

While working with wearable haptics and tangible objects, we realized that one of the main issues was tracking the human fingertip. Indeed, a key requirement is the synchronization of motion and sensory feedback between human users and their virtual avatars: whenever a user moves a limb, the avatar should replicate the same motion; similarly, whenever the avatar touches a virtual object, the user should feel the corresponding haptic sensation. In 12, we combined tracking information from a tangible object instrumented with capacitive sensors and an optical tracking system to improve contact rendering when interacting with tangibles in VR. A human-subject study shows that combining capacitive sensing with optical tracking significantly improves the visuohaptic synchronization and immersion of the VR experience.
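One simple way in which such complementary signals could be combined is sketched below: the capacitive sensor is trusted for contact events, and optical tracking for everything else. This is a hypothetical illustration of the general idea, not the fusion scheme of 12. The signed-distance input, the binary touch flag, and the minimum clearance value are all assumptions.

```python
"""Hypothetical fusion of optical tracking and capacitive contact sensing.

Assumptions: the optical tracker gives the fingertip's signed distance to
the tangible surface (m; None when occluded), and the capacitive sensor
gives a binary touch state. Capacitance decides contact; optics decides
the gap otherwise.
"""
from typing import Optional

MIN_CLEARANCE = 1e-3  # m, illustrative gap shown when no touch is sensed


def rendered_gap(optical_gap: Optional[float], touching: bool) -> Optional[float]:
    """Gap used to place the virtual fingertip relative to the virtual surface."""
    if touching:
        # Capacitive sensor reports contact: snap the avatar onto the surface,
        # regardless of optical jitter or occlusion.
        return 0.0
    if optical_gap is None:
        return None  # occluded and not touching: no reliable estimate
    # No contact sensed: never render contact or penetration from optics alone.
    return max(optical_gap, MIN_CLEARANCE)
```

With this rule, visual contact on the avatar coincides with the moment the real fingertip touches the tangible, even if the optical estimate is off by a few millimetres.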

7.3.4 Wearable Haptics for Interacting with Virtual Crowds

Participants: Claudio Pacchierotti, Julien Pettré, Florian Berton, Fabien Grzeskowiak, Marco Aggravi, Alberto Jovane.

We have been using wearable vibrotactile armbands to render collisions between a human user and a virtual crowd. In 9, we focus on the behavioural changes occurring with or without haptic rendering during a navigation task in a dense crowd, as well as on potential after-effects introduced by the use of haptic rendering. Our objective is to provide recommendations for designing VR setups to study crowd navigation behaviour. To this end, we designed an experiment (N=23) in which participants navigated a crowded virtual train station first without, then with, and then again without haptic feedback of their collisions with virtual characters. Results show that providing haptic feedback improved the overall realism of the interaction, as participants more actively avoided collisions. We also observed a significant after-effect in the users’ behaviour when haptic rendering was disabled again in the third part of the experiment. Nonetheless, haptic feedback did not have any significant impact on the users’ sense of presence and embodiment.

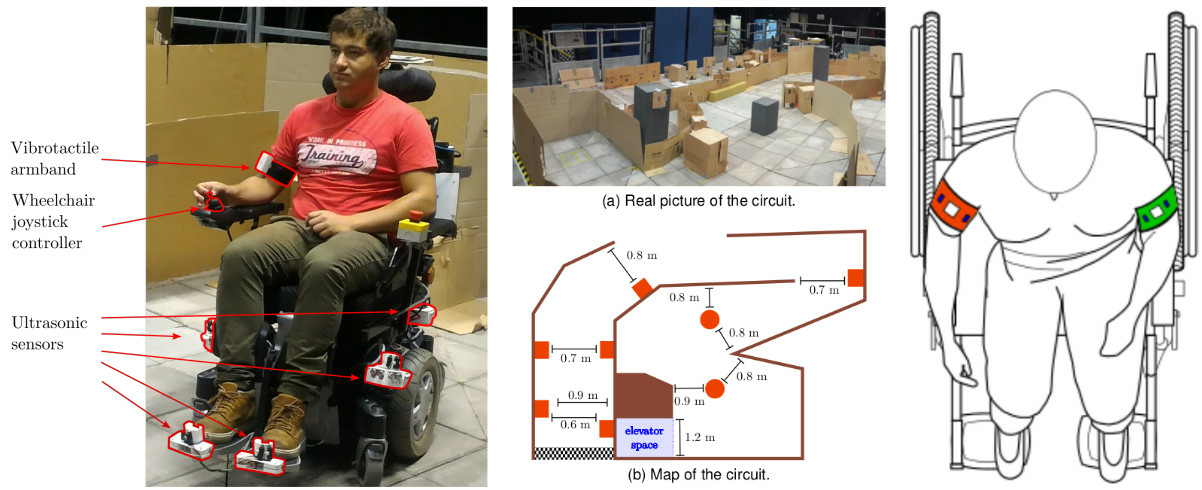

7.3.5 Wearable Haptics for an Augmented Wheelchair Driving Experience

Participants: Louise Devigne, Jeanne Hécquard, Zoé Levrel, François Pasteau, Marco Aggravi, Maud Marchal, Claudio Pacchierotti, Marie Babel.

Smart powered wheelchairs can increase mobility and independence for people with disabilities by providing navigation support. For rehabilitation or learning purposes, it would be of great benefit for wheelchair users to have a better understanding of the surrounding environment while driving. One way of providing navigation support is therefore to communicate information through a dedicated and adapted feedback interface.

In 13, we envisaged the use of wearable vibrotactile haptics, i.e., two haptic armbands, each composed of four evenly spaced vibrotactile actuators. With respect to other available solutions, our approach provides rich navigation information while always leaving the patient in control of the wheelchair motion. We then conducted experiments with volunteers who drove a wheelchair while the armbands provided them with information on the presence of obstacles. Results show that providing information on the position of the closest obstacle significantly improved the safety of the driving task (fewest collisions). This work is jointly conducted in the context of the ADAPT project (Sect. 9.2.2) and the ISI4NAVE associate team (Sect. 9.1.1).

To extend this work, we have also explored new interfaces, such as mid-air interfaces, in order to design inclusive solutions for museum and cultural heritage exploration by wheelchair users.
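The armband feedback studied in 13 (two armbands with four evenly spaced actuators each, conveying the position of the closest obstacle) can be illustrated by mapping an obstacle's bearing to one armband and one actuator. The layout and mapping below are hypothetical choices for illustration: the left or right armband encodes the side of the obstacle, and the actuator index encodes its direction quantized to 90 degrees. They are not taken from the original study.

```python
import math

# Hypothetical actuator layout: four motors at 90-degree spacing, indexed
# 0 = front, 1 = left, 2 = back, 3 = right (wheelchair frame).
ACTUATOR_NAMES = ("front", "left", "back", "right")


def closest_obstacle_cue(obstacles):
    """Map the closest obstacle to (armband, actuator name).

    obstacles -- list of (x, y) positions in the wheelchair frame (m),
                 x forward, y to the left.
    Returns None when no obstacle is detected.
    """
    if not obstacles:
        return None
    x, y = min(obstacles, key=lambda p: math.hypot(p[0], p[1]))
    bearing = math.degrees(math.atan2(y, x))  # 0 = ahead, +90 = left
    # Side of the obstacle selects the armband (straight ahead -> right,
    # an arbitrary tie-break).
    armband = "left" if bearing > 0 else "right"
    # Snap the bearing to the nearest of the four evenly spaced actuators.
    index = round(bearing / 90.0) % 4
    return armband, ACTUATOR_NAMES[index]
```

For example, among obstacles at (2.0, 0.0) and (1.0, 0.2), the closer one lies slightly to the front-left, so the front actuator of the left armband is activated.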

7.4 Shared Control Architectures

7.4.1 Shared Control for Remote Manipulation

Participants: Paolo Robuffo Giordano, Claudio Pacchierotti, Rahaf Rahal, Raul Fernandez Fernandez.

As teleoperation systems become more sophisticated and flexible, the environments and applications where they can be employed become less structured and predictable. This desirable evolution toward more challenging robotic tasks requires an increasing degree of training, skill, and concentration from the human operator. In this respect, shared control algorithms have been investigated as one of the main tools to design complex but intuitive robotic teleoperation systems, helping operators carry out increasingly difficult robotic applications, such as assisted vehicle navigation, surgical robotics, brain-computer interface manipulation, and rehabilitation. Indeed, this approach makes it possible to share the available degrees of freedom of the robotic system between the operator and an autonomous controller.
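A common textbook way of sharing degrees of freedom is a diagonal selection matrix S that routes each DoF to either the operator or the autonomous controller, u = S u_op + (I - S) u_auto. The sketch below implements that generic partition. It is not a specific architecture from the works listed below, and the function and argument names are hypothetical.

```python
def share_dofs(u_operator, u_autonomous, operator_mask):
    """Assign each DoF of the command to either the operator or the autonomy.

    u_operator, u_autonomous -- equal-length lists of per-DoF commands
    operator_mask            -- list of bools; True = DoF driven by the operator
    Equivalent to u = S u_op + (I - S) u_auto with S = diag(operator_mask).
    """
    if not (len(u_operator) == len(u_autonomous) == len(operator_mask)):
        raise ValueError("command vectors and mask must have the same length")
    return [uo if human else ua
            for uo, ua, human in zip(u_operator, u_autonomous, operator_mask)]
```

For a 6-DoF end-effector twist, for instance, the mask `[True]*3 + [False]*3` leaves translations to the operator while an autonomous controller regulates orientation. Continuous blending (a weight between 0 and 1 per DoF) is a frequent alternative when authority should shift gradually.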

Along this general line of research, during this year we gave the following contributions:

- in 26, 57 we studied how to enhance the user's comfort during a telemanipulation task. Using an inverse kinematic model of the human arm and the Rapid Upper Limb Assessment (RULA) metric, the proposed approach estimates the user’s current comfort online. From this measure and a priori knowledge of the task, we then generate dynamic active constraints guiding users towards successful completion of the task along directions that improve their posture and increase their comfort. Studies with human subjects have shown the effectiveness of the proposed approach, yielding a 30% reduction in perceived workload with respect to standard guided human-in-the-loop teleoperation.

- in 23 we presented an adaptive impedance control architecture for robotic teleoperation of contact tasks featuring continuous interaction with the environment. We used Learning from Demonstration (LfD) as a framework to learn variable stiffness control policies. Then, the learnt state-varying stiffness was used to command the remote manipulator, so as to adapt its interaction with the environment based on the sensed forces. The proposed system only relies on the on-board torque sensors of a commercial robotic manipulator and it does not require any additional hardware or user input for the estimation of the required stiffness. We also provide a passivity analysis of our system, where the concept of energy tanks is used to guarantee a stable behavior. Finally, the system was evaluated in a representative teleoperated cutting application. Results showed that the proposed variable-stiffness approach outperforms two standard constant-stiffness approaches in terms of safety and robot tracking performance.

- in 19 we focused on robotic manipulation of fragile, compliant objects, such as food items. In particular, we developed a haptic-based Learning from Demonstration (LfD) policy that enables pre-trained autonomous grasping of food items using an anthropomorphic robotic system. The policy combines data from teleoperation and direct human manipulation of objects, embodying human intent and the significant areas of interaction. We evaluated the proposed solution against a recent state-of-the-art LfD policy as well as against two standard impedance control techniques. The results showed that the proposed policy performs significantly better than the other considered techniques, leading to high grasping success rates while guaranteeing the integrity of the food at hand.
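The energy-tank argument used for the variable-stiffness teleoperation architecture of 23 can be illustrated with a minimal scalar sketch. This is the generic energy-tank idea, not the formulation of the cited work. Raising the stiffness K at a nonzero position error e injects 0.5 (K_new - K_old) e² of energy into the virtual spring. The tank pays for that energy, is refilled by the energy dissipated by damping, and rejects any stiffness increase that would drain it below a hypothetical minimum level E_MIN.

```python
"""Minimal scalar energy-tank sketch for variable-stiffness impedance control.

Generic illustration (not the cited formulation): spring force -K e and
damping force -D e_dot. Changing K at error e changes the spring's stored
energy by 0.5 * (K_new - K_old) * e**2; the tank pays for increases, is
refilled by damping dissipation, and must stay above E_MIN.
"""

E_MIN = 0.1  # J, hypothetical lower bound keeping the tank away from zero


class EnergyTank:
    def __init__(self, energy: float, stiffness: float, damping: float):
        self.energy = energy        # stored tank energy (J)
        self.stiffness = stiffness  # currently applied stiffness (N/m)
        self.damping = damping      # damping coefficient (N s/m)

    def step(self, k_desired: float, e: float, e_dot: float, dt: float) -> float:
        """Update the tank and return the stiffness actually applied."""
        # Energy dissipated by the damper during dt refills the tank.
        self.energy += self.damping * e_dot ** 2 * dt
        # Energy the stiffness change would inject into (or remove from) the spring.
        cost = 0.5 * (k_desired - self.stiffness) * e ** 2
        if self.energy - cost >= E_MIN:
            self.energy -= cost  # a decrease (cost < 0) gives energy back
            self.stiffness = k_desired
        # Otherwise keep the previous stiffness to preserve passivity.
        return self.stiffness
```

For example, with 1 J in the tank and an error of 10 cm, a request to go from 100 to 300 N/m (costing 1 J) is rejected, whereas a request for 150 N/m (costing 0.25 J) is accepted. The learned stiffness profile is thus followed only as long as the tank can pay for it.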

7.4.2 Shared Control for Multiple Robots

Participants: Marco Aggravi, Paolo Robuffo Giordano, Claudio Pacchierotti, Muhammad Nazeer.