Keywords

Computer Science and Digital Science

- A3.2.2. Knowledge extraction, cleaning

- A3.4.1. Supervised learning

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.9. Signal processing

- A5.9.2. Estimation, modeling

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.1. Well being

- B2.5.1. Sensorimotor disabilities

- B2.6.1. Brain imaging

- B3.1. Sustainable development

- B3.6. Ecology

- B9.1. Education

- B9.1.1. E-learning, MOOC

- B9.5.3. Physics

- B9.6.1. Psychology

1 Team members, visitors, external collaborators

Research Scientists

- Martin Hachet [Team leader, INRIA, Senior Researcher, HDR]

- Pierre Dragicevic [INRIA, Researcher]

- Yvonne Jansen [CNRS, Researcher]

- Fabien Lotte [INRIA, Senior Researcher, HDR]

- Arnaud Prouzeau [INRIA, Researcher]

Faculty Member

- Stephanie Cardoso [UNIV BORDEAUX MONTAIGNE, Associate Professor]

Post-Doctoral Fellows

- Hessam Djavaherpour [UNIV BORDEAUX, from Dec 2022]

- Luiz Morais [INRIA, until Jul 2022]

- Cécilia Ostertag [INRIA, from Feb 2022 until Oct 2022]

- Sébastien Rimbert [INRIA, until Jun 2022]

PhD Students

- Ambre Assor [INRIA]

- Vincent Casamayou [UNIV BORDEAUX, from Oct 2022]

- Edwige Chauvergne [INRIA]

- Adelaide Genay [INRIA]

- Morgane Koval [INRIA]

- Maudeline Marlier [SNCF, CIFRE, from Mar 2022]

- Clara Rigaud [SORBONNE UNIVERSITE]

- Aline Roc [INRIA]

- Emma Tison [UNIV BORDEAUX, from Sep 2022]

- David Trocellier [UNIV BORDEAUX]

- Marc Welter [INRIA, from Feb 2022]

Technical Staff

- Axel Bouneau [INRIA, Engineer, from Mar 2022]

- Vincent Casamayou [INRIA, Engineer, until Sep 2022]

- Justin Dillmann [INRIA, Engineer, from Nov 2022]

- Pauline Dreyer [INRIA]

- Thibaut Monseigne [INRIA, Engineer]

Interns and Apprentices

- Jordan Azzouguen [INRIA, from Feb 2022 until Aug 2022]

- Aymeric Ferron [Enseirb and UQAC, from Sep 2022]

- Remi Monier [UNIV BORDEAUX, from Jun 2022 until Jun 2022]

- Anagaël Pereira [UNIV BORDEAUX, until Nov 2022]

- Léana Petiot [UNIV BORDEAUX, from May 2022 until Jun 2022]

Administrative Assistants

- Audrey Plaza [INRIA]

- Rima Soueidan [INRIA, from Sep 2022]

Visiting Scientists

- Kim Sauvé [Lancaster University, from Feb 2022 until Apr 2022]

- Zachary Traylor [North Carolina State University, from Sep 2022]

2 Overall objectives

The standard human-computer interaction paradigm, based on mice, keyboards, and 2D screens, has shown undeniable benefits in a number of fields. It perfectly matches the requirements of a large number of interactive applications including text editing, web browsing, or professional 3D modeling. At the same time, this paradigm shows its limits in numerous situations. This is for example the case in the following activities: i) active learning educational approaches that require numerous physical and social interactions, ii) artistic performances where both a high degree of expressivity and a high level of immersion are expected, and iii) accessible applications targeted at users with special needs including people with sensori-motor and/or cognitive disabilities.

To overcome these limitations, Potioc investigates new forms of interaction that aim at pushing the frontiers of the current interactive systems. In particular, we are interested in approaches where we vary the level of materiality (i.e., with or without physical reality), both in the output and the input spaces. On the output side, we explore mixed-reality environments, from fully virtual environments to very physical ones, or between both using hybrid spaces. Similarly, on the input side, we study approaches going from brain activities, that require no physical actions of the user, to tangible interactions, which emphasize physical engagement. By varying the level of materiality, we adapt the interaction to the needs of the targeted users.

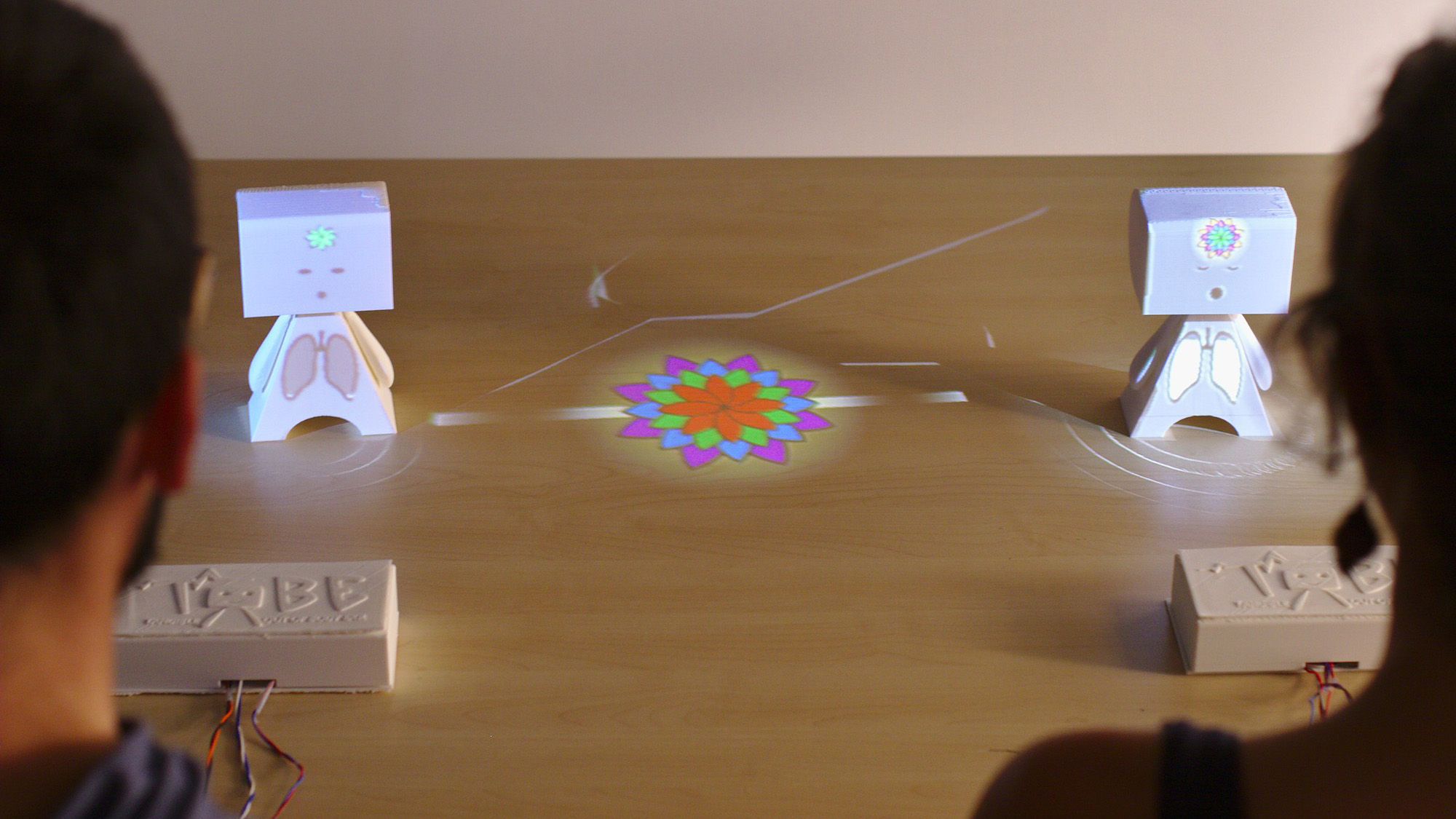

Two users are facing a physical puppet on which digital information is projected.

The main applicative domains targeted by Potioc are Education, Art, Entertainment and Well-being. For these domains, we design, develop, and evaluate new approaches that are mainly dedicated to non-expert users. In this context, we thus emphasize approaches that stimulate curiosity, engagement, and pleasure of use.

3 Research program

To achieve our overall objective, we follow two main research axes, plus one transverse axis, as illustrated in Figure 2.

In the first axis dedicated to Interaction in Mixed-Reality spaces, we explore interaction paradigms that encompass virtual and/or physical objects. We are notably interested in hybrid environments that co-locate virtual and physical spaces, and we also explore approaches that allow one to move from one space to the other.

The second axis is dedicated to Brain-Computer Interfaces (BCI), i.e., systems enabling users to interact by means of brain activity only. We target BCI systems that are reliable and accessible to a large number of people. To do so, we work on brain signal processing algorithms as well as on understanding and improving the way we train our users to control these BCIs.

Finally, in the transverse axis, we explore new approaches that involve both mixed-reality and neuro-physiological signals. In particular, tangible and augmented objects allow us to explore interactive physical visualizations of human inner states. Physiological signals also enable us to better assess user interaction, and consequently, to refine the proposed interaction techniques and metaphors.

Three images visually represent Potioc's research axes: a user with an HMD facing physical objects, a user wearing an EEG in front of a screen, and a sandbox augmented with digital information projected on it.

Main research axes of Potioc.

From a methodological point of view, for these three axes, we work at three different interconnected levels. The first level is centered on the human sensori-motor and cognitive abilities, as well as user strategies and preferences, for completing interaction tasks. We target, in a fundamental way, a better understanding of humans interacting with interactive systems. The second level is about the creation of interactive systems. This notably includes the development of hardware and software components that will allow us to explore new input and output modalities, and to propose adapted interaction techniques. Finally, at a last, higher level, we are interested in specific application domains. We want to contribute to the emergence of new applications and usages, with a societal impact.

4 Application domains

4.1 Education

Education is at the core of the motivations of the Potioc group. Indeed, we are convinced that the approaches we investigate—which target motivation, curiosity, pleasure of use and high level of interactivity—may serve education purposes. To this end, we collaborate with experts in Educational Sciences and teachers for exploring new interactive systems that enhance learning processes. We are currently investigating the fields of astronomy, optics, and neurosciences. We have also worked with special education centres for the blind on accessible augmented reality prototypes. Currently, we collaborate with teachers to enhance collaborative work for K-12 pupils. In the future, we will continue exploring new interactive approaches dedicated to education, in various fields. Popularization of Science is also a key domain for Potioc. Focusing on this subject allows us to get inspiration for the development of new interactive approaches.

4.2 Art

Art, which is strongly linked with emotions and user experiences, is also a target area for Potioc. We believe that the work conducted in Potioc may be beneficial for creation from the artist's point of view, and it may open new interactive experiences from the audience's point of view. As an example, we have worked with colleagues who are specialists in digital music, and with musicians. We have also worked with jugglers, and we are currently working with a scenographer with the goal of enhancing the interactivity of physical mockups and improving user experience.

4.3 Entertainment

Similarly, entertainment is a domain where our work may have an impact. We notably explored BCI-based gaming and non-medical applications of BCI, as well as mobile Augmented Reality games. Once again, we believe that our approaches that merge the physical and the virtual world may enhance the user experience. Exploring such a domain will raise numerous scientific and technological questions.

4.4 Well-being

Finally, well-being is a domain where the work of Potioc can have an impact. We have notably shown that spatial augmented reality and tangible interaction may favor mindfulness activities, which have been shown to be beneficial for well-being. More generally, we explore introspectible objects, which are tangible and augmented objects that are connected to physiological signals and that foster introspection. We explore these directions for the general public, including people with special needs.

5 Social and environmental responsibility

5.1 Mental Health and accessibility

In collaboration with colleagues in neuropsychology (Antoinette Prouteau), we are exploring how augmented reality can help to better explain schizophrenia and fight against stigmatization. This project is directly linked to Emma Tison's PhD thesis. In connection with this project, we are also starting the Inria “Action exploratoire” LiveIt.

Previously, we have been interested in designing and developing tools that can be used by people suffering from physiological or cognitive disorders. In particular, we used the PapARt tool developed in the team to contribute to making board games accessible for people suffering from visual impairment 47. We also designed a MOOC player, called AIANA, in order to ease access for people with cognitive disorders (attention, memory). See the dedicated page.

As part of our research on Brain-Computer Interfaces, we also worked with users with severe motor impairment (notably tetraplegic users or stroke patients) to restore or replace some of their lost functions, by designing BCI-based assistive technologies or motor rehabilitation approaches, see, e.g., 1.

5.2 Augmented reality for environmental challenges

In response to the big challenge of climate change, we are currently orienting our research towards approaches that may contribute to pro-environmental behaviors. To do so, we are framing research directions and building projects with the objective of putting our expertise in HCI, visualization, and mixed-reality to work for the reduction of human impact on the planet (see 46). In 2022, we started a new project dedicated to this subject with colleagues in environmental sciences (CIRED) and behavioral sciences (Lessac). This project received support from ANR in their annual general call and will officially start in 2023 under the name of Be·aware. See also ARwavs in 8.2.

5.3 Humanitarian information visualization

Members of the team have been involved in research promoting humanitarian goals, such as studying how to design visualizations of humanitarian data in a way that promotes charitable donations to traveling migrants and other populations in need. See 8.15.

5.4 Gender Equality

Members of the team have been involved in research on gender in academia, such as patterns of collaboration between men and women, and gender balance in organization and steering committees, and among award recipients. See 8.16.

6 Highlights of the year

This year, we got four ANR projects accepted:

- Be-aware [leader] - Bringing environmental issues closer to the public with augmented reality

- ICARE (JCJC) [leader] - Immersive Collaborative Analysis foR Education

- Proteus [leader] - Measuring, understanding and tackling variabilities in Brain-Computer Interfacing

- BCI4IA - a New BCI Paradigm To Detect Intraoperative Awareness During General Anesthesia

6.1 Awards

- Fabien Lotte is the Laureate of the USERN prize 2022 (Universal Scientific Education and Research Network) in the category “Formal Science”, for the project “BrainConquest: Boosting Brain-Computer Communication with high Quality User Training, for Healthy and Motor-Impaired Users alike”.

- Morgane Koval and Yvonne Jansen received an honorable mention award at ACM CHI for their article “Do You See What You Mean? Using Predictive Visualizations to Reduce Optimism in Duration Estimates” 22.

7 New software and platforms

7.1 New software

7.1.1 SHIRE

-

Name:

Simulation of Hobit for an Interactive and Remote Experience

-

Keywords:

Unity 3D, Optics, Education

-

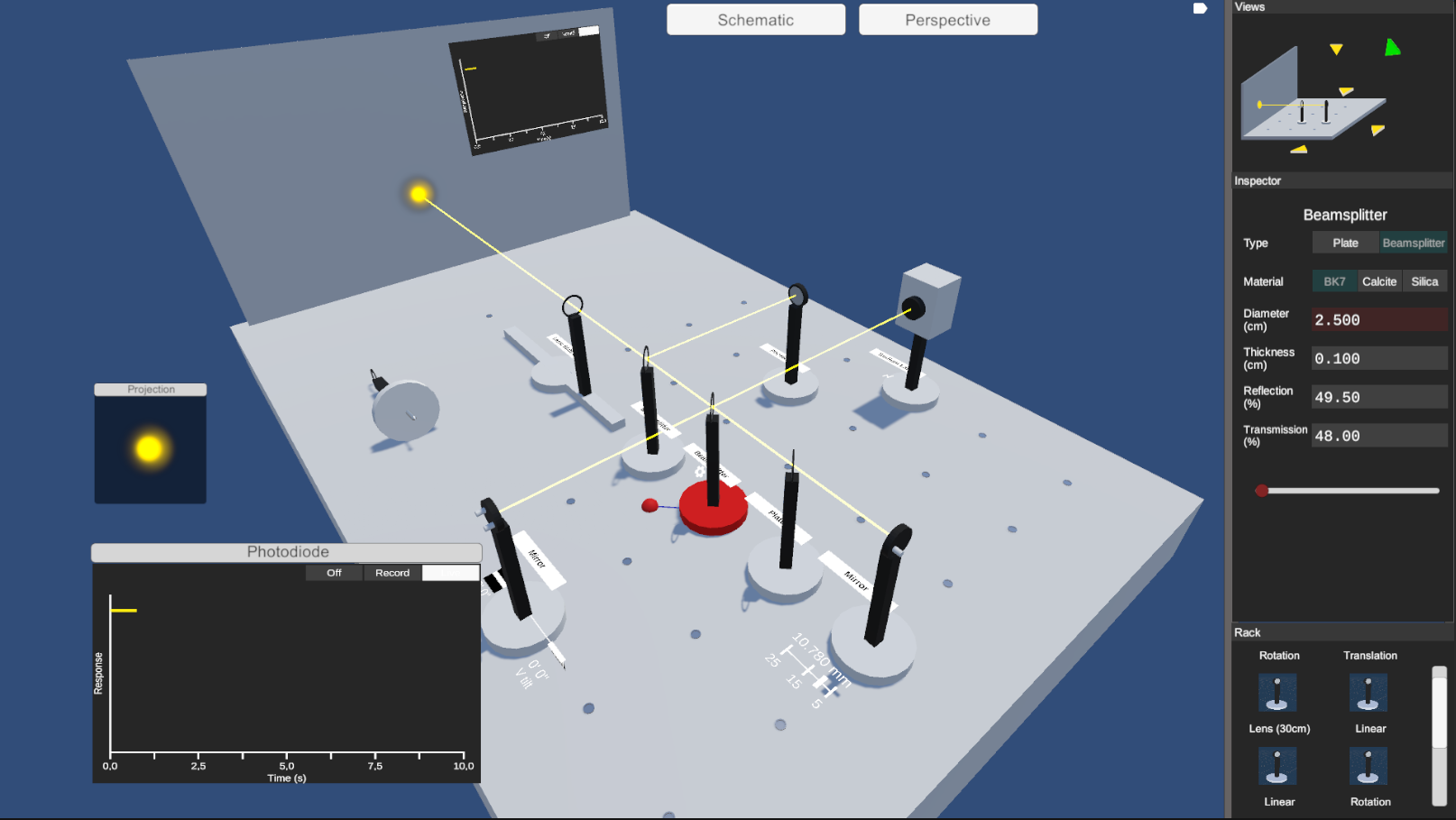

Functional Description:

SHIRE allows to have a simulation of the HOBIT device, an augmented table enhancing the teaching of optics at university level, in a completely digital version. It can be used outside of the classroom and still provide an experience similar to the one available on HOBIT. SHIRE can be used in a collaborative way, thanks to the connectivity between several instances of the software, allowing to work in groups on the same session.

-

Contact:

Vincent Casamayou

-

Participants:

Vincent Casamayou, Justin Dillmann, Martin Hachet, Bruno Bousquet, Lionel Canioni

7.2 New platforms

7.2.1 CARDS

Participants: Philippe Giraudeau, Anagael Pereira, Martin Hachet.

We have continued our work with the CARDS system, which allows us to augment pieces of paper in an interactive way. This technology serves as a basis for the startup project CoIdea led by Philippe Giraudeau. This year, we have also specialized CARDS to explore scenarios dedicated to communication around environmental issues.

7.2.2 HOBIT

Participants: Vincent Casamayou, Justin Dillmann, Martin Hachet.

External collaborators: Lionel Canioni [Université de Bordeaux], Bruno Bousquet [Université de Bordeaux].

Similarly, we have continued improving the HOBIT platform for exploring innovative teaching of wave optics. HOBIT stands for Hybrid Optical Bench for Innovative Teaching. In 2022, this hybrid (real/virtual) tool has been installed in 4 universities to support new, concrete pedagogical approaches at university level.

7.2.3 OpenViBE

Participants: Thibaut Monseigne, Axel Bouneau, Fabien Lotte.

External collaborators: Thomas Prampart [Inria Rennes - Hybrid], Anatole Lécuyer [Inria Rennes - Hybrid].

This year, we have contributed to three new official releases (OpenViBE 3.3.0, 3.3.1 & 3.4.0) of the free and open-source BCI platform OpenViBE. These releases, realized in collaboration with Inria Rennes (Hybrid project-team), include various bug fixes, performance optimizations, updated dependencies, a generic LSL box managing inputs and outputs, an upgrade of the "Sign Change Detector" box, which is now a "Threshold Crossing Detector" (it can send a stimulation whenever a chosen threshold is crossed in the input signal), an update of the "Amplitude artifact detector" box (it can now perform 3 new actions when an artifact is detected), and a new driver for the Shimmer GSR device (measuring physiological signals). Finally, we also updated the developer documentation for adding new boxes to the designer, as well as for implementing new drivers into the acquisition server.
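To illustrate the idea behind a threshold crossing detector, here is a minimal Python sketch. It is not the OpenViBE implementation and does not use the OpenViBE API: the function name `threshold_crossings`, the `"rising"`/`"falling"` labels, and the sample values are hypothetical, chosen only to show how an event could be emitted each time a signal crosses a chosen threshold.

```python
from typing import Iterable, List, Tuple


def threshold_crossings(samples: Iterable[float], threshold: float) -> List[Tuple[int, str]]:
    """Return (index, direction) for every sample where the signal crosses the threshold.

    Illustrative only: a real BCI box would emit a stimulation instead of
    collecting tuples, and would work on streamed signal chunks.
    """
    events = []
    previous_above = None  # unknown before the first sample
    for index, value in enumerate(samples):
        above = value > threshold
        # A crossing happens when the side of the threshold changes.
        if previous_above is not None and above != previous_above:
            events.append((index, "rising" if above else "falling"))
        previous_above = above
    return events


# Hypothetical signal values, for demonstration purposes only.
signal = [0.1, 0.4, 0.9, 1.2, 0.8, 0.3, 0.6, 1.1]
print(threshold_crossings(signal, threshold=0.7))
# prints [(2, 'rising'), (5, 'falling'), (7, 'rising')]
```

Compared with a sign change detector, which in effect only watches for crossings of zero, the threshold is a free parameter here, so the same logic can react to any chosen signal level.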

7.2.4 Brain Hero

Participants: Thibaut Monseigne, Aline Roc, Anagaël Pereira, Pauline Dreyer, Fabien Lotte.

We continued designing and developing Brain Hero, a gamification of the traditional feedback of mental imagery BCI protocols, whose principle is inspired by rhythm games such as Guitar Hero, in order to make the BCI sessions more interesting for the subjects. The visual design of the game was improved, some bugs were corrected, new functionalities and customization options were added, and the game is now used for real-time BCI experiments.

This picture represents the new visual interface of the Brain Hero visual BCI training environment, together with a user actually using it. The game visual looks like notes on a guitar fretboard, each note representing a specific mental task for the BCI.

The new visual feedback and gaming environment of the Brain Hero mental imagery-based BCI training, during actual use.

8 New results

8.1 Avatars in Augmented Reality

Participants: Adélaïde Genay, Martin Hachet.

External collaborators: Anatole Lécuyer [Inria Rennes - Hybrid], Kiyoshi Kiyokawa [NAIST, Japan], Riku Otono [NAIST, Japan].

In 2022, we have explored a new usage of Augmented Reality (AR) to extend perception and interaction within physical areas ahead of ourselves. To do so, we propose to detach ourselves from our physical position by creating a controllable “digital copy” of our body that can be used to navigate in local space from a third-person perspective. With such a viewpoint, we aim to improve our mental representation of distant space and understanding of action possibilities (called affordances), without requiring us to physically enter this space (see Figure 4). Our approach relies on AR to virtually integrate the user’s body in remote areas in the form of an avatar. We have proposed concrete application scenarios and several techniques to manipulate avatars in the third person as a part of a larger conceptual framework. Thanks to a user study employing one of the proposed techniques (puppeteering), we evaluated the validity of using third-person embodiment to extend our perception of the real world to areas outside of our proximal zone. We found that this approach succeeded in enhancing the user’s accuracy and confidence when estimating their action capabilities at distant locations 29.

A user with an AR-HMD interacting with his avatar.

Control of self-avatars visualized through an Augmented Reality headset to better perceive interactions and affordances in the physical surroundings. Left: Testing fire exit paths with a gamepad. Center: Planning and testing a route before climbing by controlling the avatar's limbs with gestures. Right: Evaluating possible actions on a distant step stool with body-tracking mapping.

Still in the domain of avatars, we have also explored, with our colleagues from Japan, how the Sense of Embodiment (SoE) can be enhanced by introducing transitions between one's real body and its virtual counterpart. In particular, we have shown that visual transitions effectively improve the SoE (paper conditionally accepted at IEEE VR 2023). We are also exploring priming approaches that tend to show that SoE can be enhanced when the subject follows a mental preparation protocol.

8.2 ARwavs

Participants: Ambre Assor, Arnaud Prouzeau, Pierre Dragicevic, Martin Hachet.

The negative impact humans have on the environment is partly caused by thoughtless consumption leading to unnecessary waste. A likely contributing factor is the relative invisibility of waste: waste produced by individuals is either out of their sight or quickly taken away. Nevertheless, waste disposal systems sometimes break down, creating natural information displays of waste production that can have educational value. We take inspiration from such natural displays and introduce a class of situated visualizations we call Augmented Reality Waste Accumulation Visualizations or ARwavs, which are literal representations of waste data embedded in users' familiar environment 35. We implemented examples of ARwavs (see Figure 5) and demonstrated them in feedback sessions with experts in pro-environmental behavior, and during a large tech exhibition event. We discuss general design principles and trade-offs for ARwavs. Finally, we conducted a study suggesting that ARwavs yield stronger emotional responses than non-immersive waste accumulation visualizations and plain numbers.

Virtual objects displayed in the real world.

8.3 HOBIT+

Participants: Vincent Casamayou, Justin Dillmann, Martin Hachet.

External collaborators: Bruno Bousquet [Univ. Bordeaux], Lionel Canioni [Univ. Bordeaux], Jean-Paul Guillet [Univ. Bordeaux].

We have continued extending the HOBIT platform 19. On the one hand, we have implemented new functionalities on the hybrid platform. This is for example the case of Drivers, which allows the management of automatic measurement recording, as often done in real physics experiments. On the other hand, we have continued extending the functionalities of the fully digital version, called SHIRE (see Figure 6). This software tool allows remote students to continue doing practical work, as if they were manipulating the physical table. We have also started to connect the physical table and its virtual counterparts for exploring hybrid (in situ/remote) collaborative training sessions.

A view of the desktop version of the HOBIT software

8.4 Tangible interfaces for Railroad Traffic Monitoring

Participants: Maudeline Marlier, Arnaud Prouzeau, Martin Hachet.

Maudeline Marlier started her PhD in March 2022 at Potioc on a Cifre contract with the SNCF on the use of tangible interaction in railroad traffic monitoring control rooms. She performed interviews and observations in several control rooms both in Paris and in Bordeaux to understand how operators work, their different tasks and roles, and what types of issues they are facing. We identified that while their tools are efficient for monitoring a situation, they are not designed to explore different solutions when a problem happens (see Figure 7 for an example of a center). For instance, when a train breaks down in the middle of the tracks, operators have to look at many tools to see how to reroute the other trains, where to find a new train, where to find new conductors, how to evacuate the current train, and so on. Using these interviews and observations, we built a representative scenario of such situations, and we are now working on a prototype of a system that uses spatial augmented reality and tangible interaction to allow operators to easily explore the data they have and produce alternative solutions when critical issues happen.

Picture of operators in a control room

Control room D and R lines, Paris, France.

8.5 Interdisciplinary explorations with Design

Participants: Stéphanie Cardoso, Anagaël Pereira, Aymeric Ferron, Arnaud Prouzeau, Martin Hachet.

The delegation of Stéphanie Cardoso, associate professor at Bordeaux Montaigne University, within the Potioc team let us explore both ideation design and the design of tangible interactions. Together with colleagues working on the CARDS project, they have identified, listed, and structured the resources to imagine a catalog of uses and a set of scenarios for generating ideas. Stéphanie Cardoso has also built several workshops on the role of interfaces in raising environmental awareness:

1/ The political, health and social environment: decision-making support in a health crisis situation regarding the influence of social networks and the various information filters. The objective is to promote participatory democracy.

- “Gestion de crise et anticipation : design et démocratie”, Victoire Bruna, Paris Saclay, Nicolas Gaudron (agence IDSL), Stéphanie Cardoso, ACFAS Montréal, 9 mai 2022.

- “Justice et design”, Eric Jupin (Conseil départemental de la Gironde), Stéphanie Cardoso, ACFAS 9 mai 2022.

2/ Environmental resources: experiments with master's students in Design (UBM) have highlighted two aspects in visualizing the environmental resources drawn on by our meal consumption. On the one hand, the semiological dimension involved in the design coherence of the interface improves the interaction with complex data. On the other hand, the appropriation of environmental data requires an immersive phase to allow better user involvement.

In partnership with MSH (Maison des Sciences) Bordeaux, Stéphanie Cardoso led seminars in the fall of 2022 (November 7th, November 23rd, December 7th) on design & anticipation. They addressed how interfaces can help imagine desirable futures, and which futures. Seminars and workshops have explored several topics dear to the Potioc team: anticipation and food, anticipation and energy, how digital technologies can help us in situations of health crisis, flooded territories, and electricity restriction.

8.6 Debiasing personal time management with predictive visualizations

Participants: Morgane Koval, Yvonne Jansen.

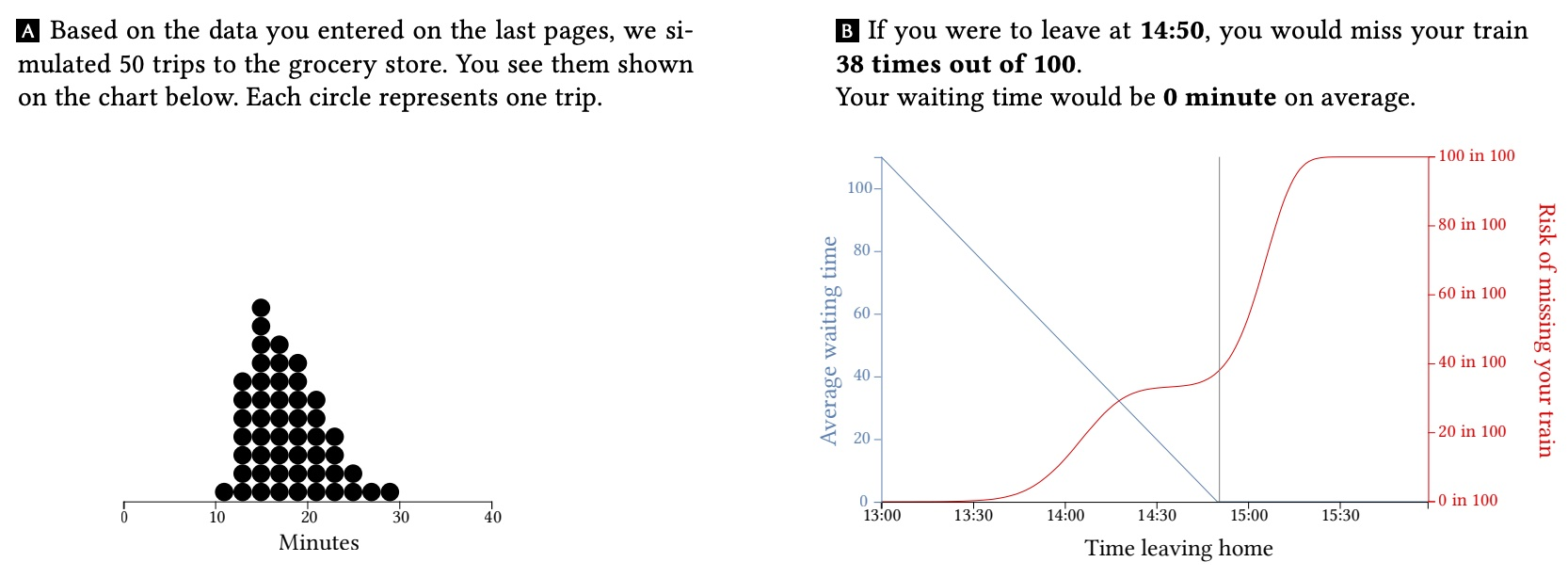

Making time estimates, such as how long a given task might take, frequently leads to inaccurate predictions because of an optimistic bias. Previous attempts to alleviate this bias, including decomposing the task into smaller components and listing potential surprises, have not shown any major improvement. Our approach built on the premise that these procedures may have failed because they involve compound probabilities and mixture distributions, which are difficult to compute in one’s head, and we hypothesized that predictive visualizations of such distributions would facilitate the estimation of task durations. We conducted a crowdsourced study in which 145 participants provided different estimates of overall and sub-task durations, and we used these to generate predictive visualizations of the resulting mixture distributions. We compared participants’ initial estimates with their updated ones and found compelling evidence that predictive visualizations encourage less optimistic estimates. Our article describing this work 22 received an honorable mention award at ACM CHI. An interactive demo of the developed tool is available on the project page https://timeestimator.github.io.

The visualizations used in our study: on the left using a quantile dotplot of plausible durations; on the right a double-axis linechart plotting the likelihood to miss one's train against the expected waiting time.
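To give an intuition of why such compound distributions are hard to compute mentally, the following Python sketch simulates the total duration of a task made of several sub-tasks plus an occasional surprise, then extracts evenly spaced quantiles of the kind a quantile dotplot would display. This is only an illustration under stated assumptions, not the method used in the study: the function names, the triangular distributions, and all durations and probabilities are hypothetical.

```python
import random
import statistics


def simulate_total_duration(subtasks, surprise_prob, surprise_delay, n_draws=10_000, seed=0):
    """Monte Carlo draws of a total duration (minutes).

    subtasks: list of (shortest, most_likely, longest) estimates per sub-task.
    surprise_prob: probability that an unexpected delay occurs.
    surprise_delay: (shortest, most_likely, longest) of that delay.
    The triangular shape is an assumption made for this sketch.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_draws):
        # Sum of sub-task durations: a compound distribution.
        total = sum(rng.triangular(low, high, mode) for (low, mode, high) in subtasks)
        # With some probability a surprise adds a delay: a mixture distribution.
        if rng.random() < surprise_prob:
            low, mode, high = surprise_delay
            total += rng.triangular(low, high, mode)
        totals.append(total)
    return totals


def dotplot_quantiles(totals, n_dots=20):
    """Return n_dots evenly spaced quantiles, one value per dot of a quantile dotplot."""
    ordered = sorted(totals)
    return [ordered[int((i + 0.5) / n_dots * len(ordered))] for i in range(n_dots)]


# Hypothetical estimates: two sub-tasks and a 20% chance of a surprise.
subtasks = [(10, 15, 30), (5, 10, 20)]
totals = simulate_total_duration(subtasks, surprise_prob=0.2, surprise_delay=(10, 20, 40))

naive_estimate = sum(mode for (_, mode, _) in subtasks)  # sum of "most likely" values
print("Naive estimate:", naive_estimate)
print("Simulated mean:", round(statistics.mean(totals), 1))
print("Dotplot values:", [round(q) for q in dotplot_quantiles(totals)])
```

With right-skewed estimates like these, the simulated mean lies above the sum of the most likely values, which is one way to see how adding up "typical" durations in one's head can produce optimistic totals.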

8.7 Capturing Activities in Makerspaces

Participants: Clara Rigaud, Yvonne Jansen.

External collaborators: Ignacio Avellino, Gilles Bailly.

Capturing content of fabrication activities is the first step for producing knowledge resources and an integral part of maker culture. As a secondary task, however, it conflicts with the fabrication activity, so it is often forgotten and knowledge resources end up incomplete. In this project, we investigated different dimensions of content capture for knowledge resources in fabrication workshops. Based on past work in this area, we first proposed a framework through which we identify two research directions to investigate. From these, we derived three dimensions to explore in more depth: the number of capturing devices, their feature variety, and the degree of automation of each feature. We then explored the design space resulting from these three dimensions with the help of a design concept and an online survey study (N=66). Results showed (1) a variety of needs and preferences justifying feature variety and multiplicity, (2) challenges in defining the right degree of manual and automatic control, and (3) the socio-technical impact of cameras in a shared space regarding privacy and ethics. The work resulted in a journal article published at CSCW 31.

8.8 Participatory Data Physicalization on the Climate Impact of Dietary Choices

Participants: Kim Sauvé, Yvonne Jansen, Pierre Dragicevic.

In early 2022 Kim Sauvé came to visit the Potioc team. She was a PhD student from Lancaster University who explored during her visit how a participatory data physicalization, or PDP, may engage people to reflect on the climate impact of their dietary choices. A PDP is a physical visualization that allows people to physically participate in the creation of the visualization, by directly encoding their data. PDPs offer a way to engage a community with data of personal relevance that otherwise would be intangible. However, their design space has only begun to be explored, and most prior work shows relatively simple encoding rules. We explored different ways of faceting contributed data: by week, day, or person. Specifically, we designed Edo, a PDP that allows a small community to contribute their data to a physical visualization showing the climate impact of their dietary choices. The project was published in the form of a pictorial 26 (a new type of research article with a heavy focus on visual communication). Fabrication instructions and data are available under CC-BY 4.0 at osf.io/q5fr6.

A close-up of Edo. It shows a variety of different circles, colored differently and with different icons on them. Each stands for one portion of one food item. The circle surface encodes the climate impact of one portion of different food items, such as a yoghurt or a steak.

8.9 Envisioning Situated Visualizations of Environmental Footprints in an Urban Environment

Participants: Yvonne Jansen, Pierre Dragicevic, Martin Hachet, Morgane Koval, Léana Petiot, Arnaud Prouzeau.

External collaborators: Federica Bucchieri [Inria Saclay], Petra Isenberg [Inria Saclay], Lijie Yao [Inria Saclay], Dieter Schmalstieg [Graz University of Technology].

During the yearly seminar of the ANR Ember project, we organized a brainstorming exercise which focused on how situated visualizations could be used to better understand the state of the environment and our personal behavioral impact on it. Specifically, we conducted a day-long workshop in Bordeaux where we envisioned situated visualizations of urban environmental footprints. We first explored the city and took photos and notes about possible situated visualizations of environmental footprints that could be embedded near places, people, or objects of interest. We found that our designs targeted four purposes and used four different methods that could be further explored to test situated visualizations for the protection of the environment. We contributed our findings as a workshop paper 21 for the Visualization for Good workshop held in conjunction with the IEEE VIS conference in 2022.


8.10 Body-based User Interfaces

Participants: Yvonne Jansen.

External collaborators: Paul Strohmeier [Saarland University, correspondent], Aske Mottelson [IT University Copenhagen], Henning Pohl [University of Copenhagen], Jess McIntosh [University of Copenhagen], Jarrod Knibbe [University of Melbourne], Joanna Bergström [University of Copenhagen], Kasper Hornbæk [University of Copenhagen].

The relation between the body and computer interfaces has undergone several shifts since the advent of computing. In early models of interaction, the body was treated as a periphery to the mind, much like a keyboard is peripheral to a computer. The goal of the interface designers was to optimize the information flow between the brain and the computer, using these imperfect peripheral devices. Toward the end of the previous century, the social body, as well as the material body and its physical manipulation skills, started receiving increased consideration from interaction designers. The goal of the interface designer shifted, requiring the designer to understand the role of the body in a given context, and to adapt the interface to respect the social context and make use of the tacit knowledge that the body has of how the physical world functions. Currently, we are witnessing another shift in the role of the body. It is no longer merely something that requires consideration for interface design. Instead, advances in technology and our understanding of interaction allow the body to become part of the interface. We call these body-based user interfaces. We published a handbook chapter, where we present a brief history of the body’s role in human–computer interaction, leading up to a definition of body-based user interfaces. We follow this by presenting examples of interfaces that reflect the different ways in which interfaces can be body-based, and conclude by presenting outlooks on benefits, drawbacks, and possible futures of body-based user interfaces.

8.11 Use of Space in Immersive Analytics

Participants: Arnaud Prouzeau, Vincent Casamayou, Yvonne Jansen, Pierre Dragicevic.

External collaborators: Maxime Cordeil [University of Queensland], Jiazhou Liu [Monash University], Barrett Ens [Monash University], Tim Dwyer [Monash University], Benjamin Lee [Monash University], Jim Smiley [Monash University], Sarah Goodwin [Monash University], Isobel Kara Nixon [Monash University], Bernhard Jenny [Monash University], Arvind Satyanarayan [MIT].

An immersive system is one whose technology allows us to “step through the glass” of a computer display to engage in a visceral experience of interaction with digitally-created elements. As immersive technologies have rapidly matured to bring commercially successful virtual and augmented reality (VR/AR) devices and mass market applications, researchers have sought to leverage their benefits, such as enhanced sensory perception and embodied interaction, to aid human data understanding and sensemaking. In 2022, we mainly explored the use of the 3D workspace in immersive analytics systems.

Recent studies have explored how users of immersive visualisation systems arrange data representations in the space around them. Generally, these have focused on placement centred at eye-level in absolute room coordinates. However, work in HCI exploring full-body interaction has identified zones relative to the user's body with different roles. We encapsulate the possibilities for visualisation view management into a design space (called “DataDancing”). From this design space we extrapolate a variety of view management prototypes, each demonstrating a different combination of interaction techniques and space use. The prototypes are enabled by a full-body tracking system including novel devices for torso and foot interaction. We explore four of these prototypes, encompassing standard wall and table-style interaction as well as novel foot interaction, in depth through a qualitative user study. Learning from the results, we improve the interaction techniques and propose two hybrid interfaces that demonstrate interaction possibilities of the design space. This work was performed in collaboration with Monash University and is conditionally accepted at the ACM CHI 2023 conference.

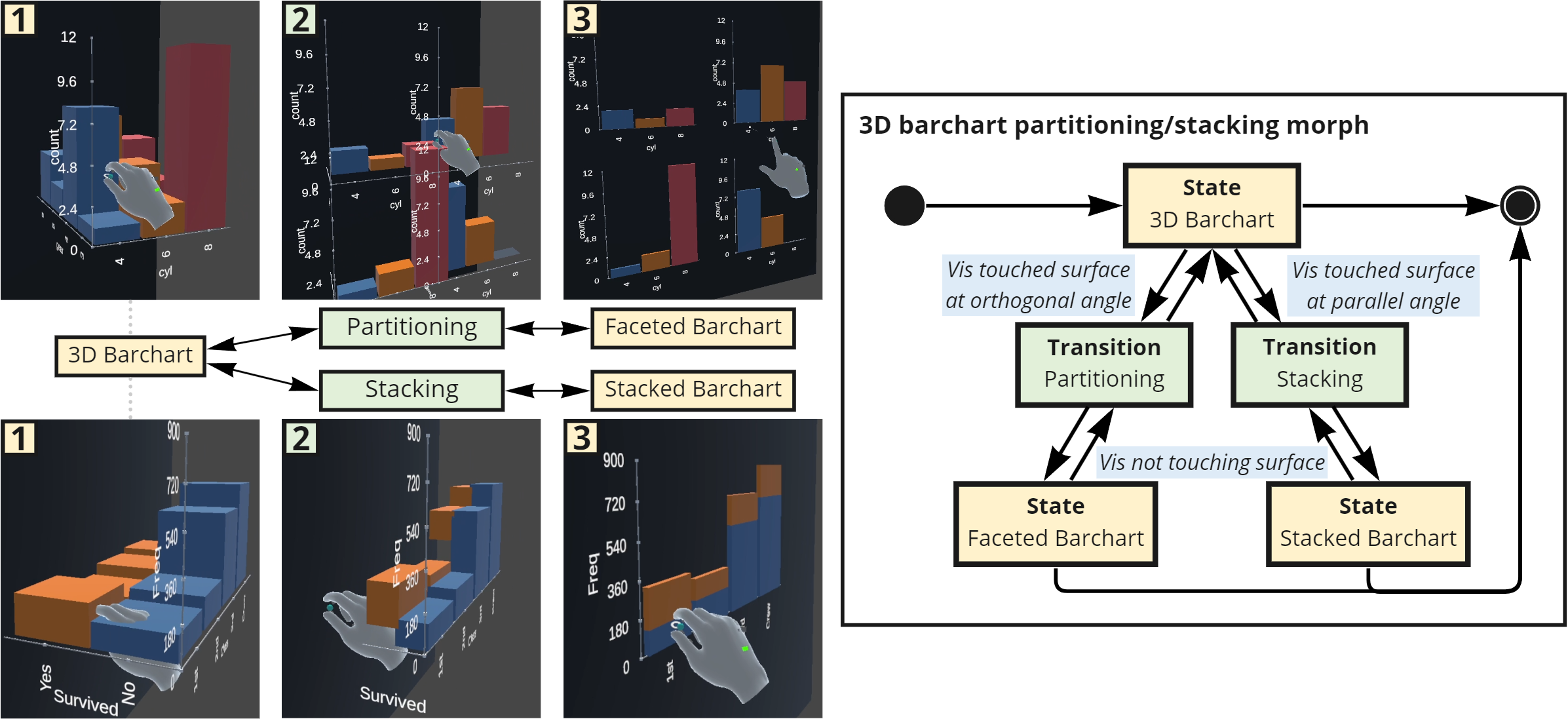

In another work, we developed Deimos, a grammar for specifying dynamic embodied immersive visualisation morphs and transitions (see Figure 11 for an example). A morph is a collection of animated transitions that are dynamically applied to immersive visualisations at runtime and is conceptually modelled as a state machine. It comprises state, transition, and signal specifications. States in a morph are used to generate animation keyframes, with transitions connecting two states together. A transition is controlled by signals, which are composable data streams that can be used to enable embodied interaction techniques. Morphs allow immersive representations of data to transform and change shape through user interaction, facilitating the embodied cognition process. We demonstrate the expressivity of Deimos in an example gallery and evaluate its usability in an expert user study of six immersive analytics researchers. Participants found the grammar to be powerful and expressive, and showed interest in drawing upon Deimos’ concepts and ideas in their own research. This work was performed in collaboration with Monash University and MIT, and is conditionally accepted at the ACM CHI 2023 conference.

An illustration showing an example of the use of Deimos

A morph that transforms any 3D barchart into either a faceted barchart or a stacked barchart when it touches a surface in the immersive environment, depending on its angle of intersection. It was developed using Deimos.
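To make the state-machine view of a morph more concrete, the following Python sketch models the barchart example with states, guarded transitions, and a dictionary of signal values. It is only an illustration under stated assumptions: it does not reproduce the actual Deimos grammar or its syntax, and the class names, signal names (`touching_surface`, `angle_deg`), the 45-degree threshold, and the mapping from angle to target chart are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Signals = Dict[str, float]  # latest value of each named signal (hypothetical names)


@dataclass
class Transition:
    source: str                        # state the transition starts from
    target: str                        # state the transition leads to
    guard: Callable[[Signals], bool]   # fires when the signal values satisfy it


@dataclass
class Morph:
    states: List[str]
    transitions: List[Transition]
    current: str

    def step(self, signals: Signals) -> str:
        """Apply the first transition leaving the current state whose guard holds."""
        for t in self.transitions:
            if t.source == self.current and t.guard(signals):
                self.current = t.target
                break
        return self.current


def make_barchart_morph() -> Morph:
    # Assumption: shallow contact angles give a stacked chart, steep ones a faceted chart.
    touching = lambda s: s.get("touching_surface", 0.0) > 0.5
    return Morph(
        states=["barchart_3d", "stacked_barchart", "faceted_barchart"],
        transitions=[
            Transition("barchart_3d", "stacked_barchart",
                       lambda s: touching(s) and s["angle_deg"] < 45.0),
            Transition("barchart_3d", "faceted_barchart",
                       lambda s: touching(s) and s["angle_deg"] >= 45.0),
        ],
        current="barchart_3d",
    )


morph = make_barchart_morph()
print(morph.step({"touching_surface": 1.0, "angle_deg": 80.0}))
```

In a real system the target state would drive animation keyframes rather than a printed label; the sketch only shows how signals can gate the transitions between states.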

Finally, we explored the use of physical navigation in 3D spaces and of more embodied techniques to interact with data. Traditionally, data visualisation has mostly focused on finding the visual encodings most suitable for two-dimensional displays and static visual examination tasks. The sense-making process used to explore data in immersive environments has thus remained more or less the same as the one used on 2D screens. In this project, we took advantage of immersive technology to propose a more subjective experience of data. We present Ride Your Data (RYD), an immersive experience in which people ride a virtual roller coaster whose shape is dictated by data, allowing them to literally ride on their data, with climbs and drops corresponding to increases and decreases in data values. We then explored the research challenges behind the design of such experiences and, more generally, of data-driven immersive experiences. This work was presented at the conference Alt.VIS 2022.

8.12 Virtual Reality to Amplify Episodic Future Thinking

Participants: Adelaide Genay, Arnaud Prouzeau.

External collaborators: Justin Mahlberg [Monash University], Simon Van Baal [Monash University], Alex Robinson [Monash University], Antonio Verdejo-Garcia [Monash University].

Neuroscience research has found that when we imagine the future with the same level of detail and affective quality as when we remember the past, we tend to make more future-oriented decisions (e.g. staying home to protect others). Episodic future thinking (EFT) is a prospection-based training that stimulates this ability and has been shown to improve future-oriented choices while simultaneously reducing anxiety about the future. Our team is currently working on two ways to improve the effectiveness and scalability of EFT: (1) building an immersive virtual reality (VR) scenario to facilitate imagining the future with sufficient detail and infusing it with positive emotions; (2) exploring the potential of collective EFT (imagining the future together) via VR communities. In 2022, we started a follow-up to the 2021 study at Monash University, which assessed the impact of using VR during EFT training and showed encouraging results regarding delay discounting, an important mechanism of impulsivity. This second study is conducted with a clinical population (food addiction) and should be finished in 2023. A Late-Breaking Work paper will be submitted to CHI 2023.

8.13 Virtual Reality Onboarding

Participants: Edwige Gros, Arnaud Prouzeau, Martin Hachet.

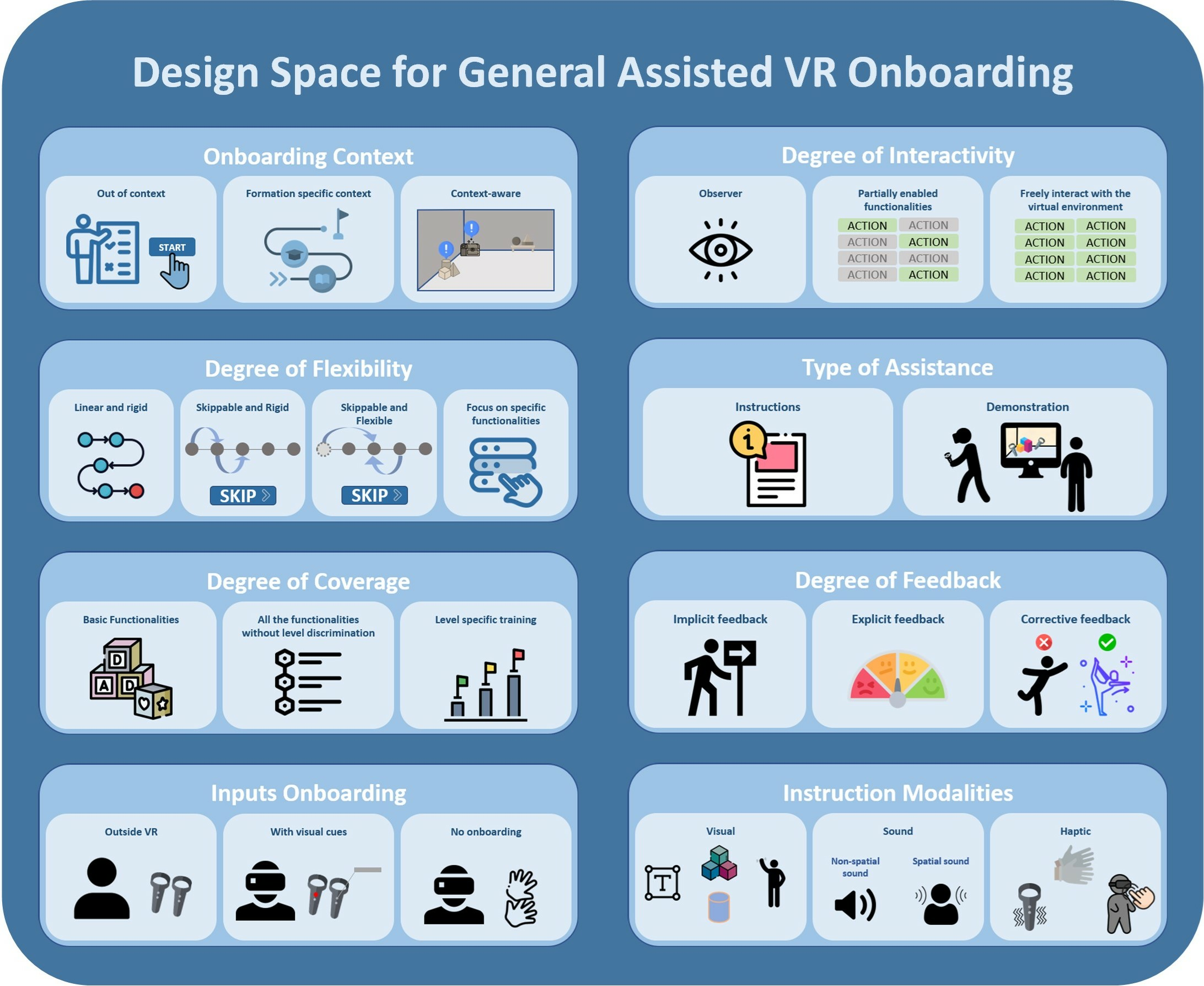

Edwige Gros is doing her PhD at Potioc and explored how onboarding is currently done for VR applications. Explaining to novice users how to interact in immersive VR applications may be challenging. This is in particular because learners are isolated from the real world and are asked to manipulate hardware and software objects they are not used to. Consequently, the onboarding phase, which consists in teaching the user how to interact with the application, is particularly crucial. In this project, we aim to provide a better understanding of current VR onboarding methods, their benefits and challenges. We performed 21 VR tutorial ergonomic reviews and 15 interviews with VR experts with experience in VR onboarding. Building on the results, we propose a design space for VR onboarding (see Figure 12) and discuss important research directions to explore the design of future efficient onboarding solutions adapted to VR. This work is conditionally accepted at the ACM CHI 2023 conference.

Visual representation of the design space for VR onboarding

Design space of assisted virtual reality onboarding.

8.14 Eye-Tracking Analysis

Participants: Arnaud Prouzeau.

External collaborators: Joshua Langmead [Monash University], Tim Dwyer [Monash University], Ryan Whitelock-Jones [Monash University], Lee Lawrence [Monash University], Sarah Goodwin [Monash University], Kun-Ting Chen [University of Stuttgart], Daniel Weiskopf [University of Stuttgart], Christophe Hurter [Ecole Nationale de l'Aviation Civile].

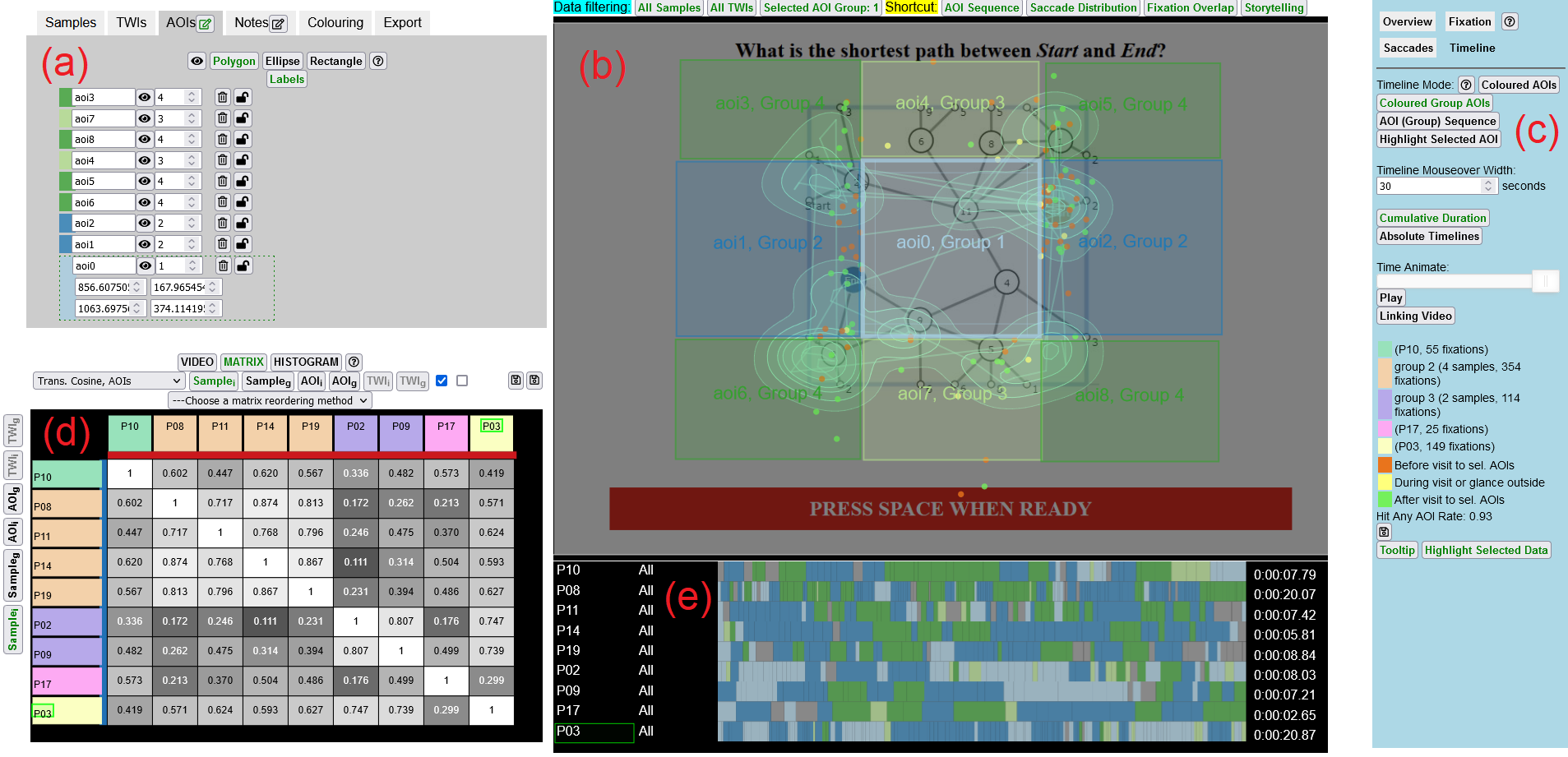

We developed Gazealytics, an open-source tool that offers a unified combination of gaze analytics features supporting flexible exploratory analysis, along with the definition of areas of interest and filter options based on multiple criteria, to visually analyse eye-tracking data across time and space (see Figure 13 for a screenshot). This approach exceeds the capabilities of existing systems by supporting flexible comparison between and within subjects, hypothesis generation, data analysis and communication of insights. Evaluation of this tool involved a field study with 10 domain experts, each spending up to 10 months with the tool. The results indicate that the matrix overview provides the ability to quickly change parameters and visualize the results in real-time. Expert feedback indicates that Gazealytics works well to support the sensemaking loop with diverse datasets and helps to gain new insights. This work was performed in collaboration with Monash University and the University of Stuttgart, and will be submitted to the ETRA 2023 conference.

Screenshot of the Gazealytics interface

Gazealytics coordinated views: (a) data control panel; top bar of (b): data filtering and shortcut for recommended coordinated views based on common eye tracking analytical tasks; (b) spatial visualisation; (c) parameter control panel; (d) metrics views in matrix and histograms; (e) timeline visualisation.

8.15 Humanitarian Data Visualizations

Participants: Pierre Dragicevic.

In a pre-print 37, we introduced immersive humanitarian visualization as a promising research area in information visualization. Humanitarian visualizations are data visualizations designed to promote human welfare. We explained why immersive display technologies taken broadly (e.g., virtual reality, augmented reality, ambient displays and physical representations) open up a range of opportunities for humanitarian visualization. In particular, immersive displays offer ways to make remote and hidden human suffering more salient. They also offer ways to communicate quantitative facts together with qualitative information and visceral experiences, in order to provide a holistic understanding of humanitarian issues that could support more informed humanitarian decisions. But despite some promising preliminary work, immersive humanitarian visualization has not taken off as a research topic yet. The goal of this paper was to encourage, motivate, and inspire future research in this area.

In a separate pre-print 36, we introduced effective altruism and how it relates to humanitarian visualization. Effective altruism is a movement whose goal is to use evidence and reason to figure out how to benefit others as much as possible. This movement is becoming influential, but effective altruists still lack tools to help them understand complex humanitarian trade-offs and make good decisions based on data. Visualization – the study of computer-supported, visual representations of data meant to support understanding, communication, and decision making – can help alleviate this issue. Conversely, effective altruism provides a powerful thinking framework for visualization research that focuses on humanitarian applications.

These position papers are a follow-up to earlier work conducted in collaboration with the University of Campina Grande in Brazil 48 and described in the 2021 activity report.

8.16 Gender in 30 Years of Visualization Research

Participants: Pierre Dragicevic.

External collaborators: Natkamon Tovanitch [Inria Saclay, Aviz team], Petra Isenberg [Inria Saclay, Aviz team].

We presented an exploratory analysis of gender representation among the authors, committee members, and award winners at the IEEE Visualization (VIS) conference over the last 30 years. Our goal was to provide descriptive data on which diversity discussions and efforts in the community can build. We looked in particular at the gender of VIS authors as a proxy for the community at large. We considered measures of overall gender representation among authors, differences in careers, positions in author lists, and collaborations. We found that the proportion of female authors has increased from 9% in the first five years to 22% in the last five years of the conference. Over the years, we found the same representation of women in program committees and slightly more women in organizing committees. Women are less likely to appear in the last author position, but more in the middle positions. In terms of collaboration patterns, female authors tend to collaborate more than expected with other women in the community.

8.17 A Survey of Tasks and Visualizations in Multiverse Analysis Reports

Participants: Yvonne Jansen, Pierre Dragicevic.

External collaborators: Brian Hall [University of Michigan], Yang Liu [University of Washington], Fanny Chevalier [University of Toronto], Matthew Kay [Northwestern University].

Analysing data from experiments is a complex, multi-step process, often with multiple defensible choices available at each step. While analysts often report a single analysis without documenting how it was chosen, this can cause serious transparency and methodological issues. To make the sensitivity of analysis results to analytical choices transparent, some statisticians and methodologists advocate the use of ‘multiverse analysis’: reporting the full range of outcomes that result from all combinations of defensible analytic choices. Summarizing this combinatorial explosion of statistical results presents unique challenges; several approaches to visualizing the output of multiverse analyses have been proposed across a variety of fields (e.g. psychology, statistics, economics, neuroscience). In this article 13, we (1) introduced a consistent conceptual framework and terminology for multiverse analyses that can be applied across fields; (2) identified the tasks researchers try to accomplish when visualizing multiverse analyses and (3) classified multiverse visualizations into ‘archetypes’, assessing how well each archetype supports each task. Our work sets a foundation for subsequent research on developing visualization tools and techniques to support multiverse analysis and its reporting.

This work is a follow-up of previous work published in 2018 and described in a previous activity report.
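As a minimal illustration of what "all combinations of defensible analytic choices" means in practice, the sketch below enumerates a tiny multiverse with `itertools.product`. The data, the two outlier rules, and the two transformations are hypothetical and chosen only to show how a single estimate (here a difference in group means) can vary across universes; it is not taken from the surveyed reports.

```python
import math
from itertools import product
from statistics import mean

# Hypothetical measurements for two experimental groups (one extreme value in group_a).
group_a = [1.2, 1.5, 1.1, 1.4, 9.0]
group_b = [1.0, 1.1, 0.9, 1.2, 1.0]

# Each dictionary lists the defensible options for one analysis step.
outlier_rules = {
    "keep_all": lambda xs: xs,
    "drop_above_5": lambda xs: [x for x in xs if x <= 5.0],
}
transforms = {
    "raw": lambda x: x,
    "log": math.log,
}


def run_multiverse():
    """Return the difference in group means for every combination of choices."""
    results = {}
    for (o_name, o_fn), (t_name, t_fn) in product(outlier_rules.items(),
                                                  transforms.items()):
        a = [t_fn(x) for x in o_fn(group_a)]
        b = [t_fn(x) for x in o_fn(group_b)]
        results[(o_name, t_name)] = mean(a) - mean(b)
    return results


multiverse = run_multiverse()
for universe, diff in sorted(multiverse.items()):
    print(universe, round(diff, 3))
```

With only two steps and two options each there are four universes; the number grows multiplicatively with every added choice, which is why summarizing the results becomes a visualization problem.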

8.18 Gesture Elicitation as a Computational Optimization Problem

Participants: Pierre Dragicevic.

External collaborators: Theophanis Tsandilas [Inria Saclay, ExSitu team].

Gesture elicitation studies are commonly used for designing novel gesture-based interfaces. There is a rich methodology literature on metrics and analysis methods that helps researchers understand and characterize data arising from such studies. However, deriving concrete gesture vocabularies from this data, which is often the ultimate goal, remains largely based on heuristics and ad hoc methods. In this paper 27, we treated the problem of deriving a gesture vocabulary from gesture elicitation data as a computational optimization problem. We showed how to formalize it as an optimal assignment problem and discussed how to express objective functions and custom design constraints through integer programs. In addition, we introduced a set of tools for assessing the uncertainty of optimization outcomes due to random sampling, and for supporting researchers' decisions on when to stop collecting data from a gesture elicitation study. We evaluated our methods on a large number of simulated studies.
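The assignment formulation can be illustrated with a small sketch. It assumes a hypothetical table counting how many participants proposed each gesture for each command, and searches for the one-to-one mapping that maximizes the total count. For clarity it enumerates all mappings by brute force rather than solving an integer program as in the paper, so it is only practical for very small vocabularies; the commands, gestures, and counts are invented for the example.

```python
from itertools import permutations

# Hypothetical elicitation counts: proposals[command][gesture] = number of participants.
proposals = {
    "open":  {"swipe": 6, "pinch": 5, "tap": 1, "circle": 0},
    "close": {"swipe": 7, "pinch": 2, "tap": 1, "circle": 1},
    "next":  {"swipe": 8, "pinch": 0, "tap": 3, "circle": 1},
}


def best_vocabulary(counts):
    """Find the command-to-gesture mapping with the highest total agreement.

    Each gesture is used for at most one command (the assignment constraint).
    """
    commands = list(counts)
    gestures = list(next(iter(counts.values())))
    best_score, best_map = -1, None
    for chosen in permutations(gestures, len(commands)):
        score = sum(counts[c][g] for c, g in zip(commands, chosen))
        if score > best_score:
            best_score, best_map = score, dict(zip(commands, chosen))
    return best_map, best_score


vocabulary, score = best_vocabulary(proposals)
print(vocabulary, score)
```

Picking the most frequent gesture for each command independently would assign "swipe" to all three commands here; the assignment constraint is what forces a trade-off across commands.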

8.19 Understanding ERD/ERS during Motor Imagery BCI use

Participants: Sébastien Rimbert, David Trocellier, Fabien Lotte.

8.19.1 ERD modulations during motor imageries relate to users' traits and BCI performances.

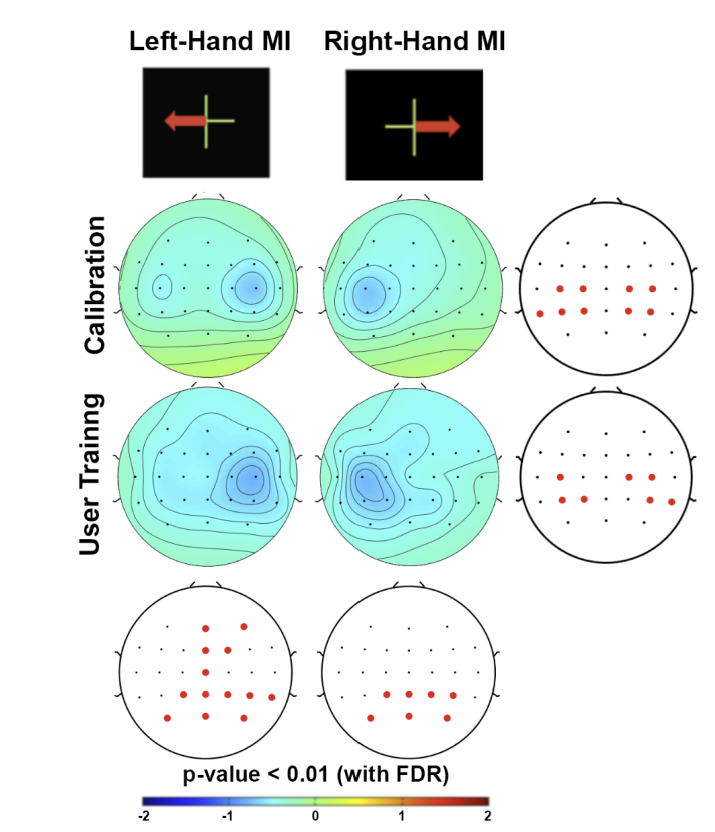

Improving user performance is one of the major issues for Motor Imagery (MI)-based BCI control. MI-BCIs exploit the modulation of sensorimotor rhythms (SMR) over the motor and sensorimotor cortices to discriminate several mental states and enable user interaction. Such modulations are known as Event-Related Desynchronization (ERD) and Synchronization (ERS), coming from the mu (7-13 Hz) and beta (15-30 Hz) frequency bands. This kind of BCI opens up promising fields, particularly to control assistive technologies, for sport training or even for post-stroke motor rehabilitation. However, MI-BCIs remain barely used outside laboratories, notably due to their lack of robustness and usability (15 to 30% of users seem unable to gain control of an MI-BCI). One way to increase user performance would be to better understand the relationships between user traits and ERD/ERS modulations underlying BCI performance. Therefore, in this study we analyzed how cerebral motor patterns underlying MI tasks (i.e., ERDs and ERSs) are modulated depending (i) on the nature of the task (i.e., right-hand MI and left-hand MI), (ii) on the session during which the task was performed (i.e., calibration or user training) and (iii) on the characteristics of the user (e.g., age, gender, manual activity, personality traits), using a large MI-BCI database of N=75 participants. One original aspect of this study is that it combines the investigation of human factors related to the user's traits with that of the neurophysiological ERD modulations during the MI task. Our study revealed for the first time an association between ERD and self-control from the 16PF5 questionnaire. This work was presented at CORTICO 2022 41 and published in the proceedings of the IEEE EMBC 2022 conference 25.

This figure shows ERD patterns during both left- and right-hand motor imagery, for the calibration and user training phases. The patterns show clear ERD over the contralateral motor cortices during MI.

Topographic map of ERD/ERS% (grand average, n=75) in the alpha/mu+beta band during the right-hand and left-hand MIs for both calibration and user training sessions. A blue colour corresponds to a strong ERD and a red one to a strong ERS. Red electrodes indicate a significant difference (p < 0.01) with an FDR (False Discovery Rate) correction.
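For readers unfamiliar with the ERD/ERS% measure used above, the sketch below shows the classical way it is usually computed: the relative change of band power during the task with respect to a baseline period. This is the standard textbook definition and is assumed here, since the exact processing pipeline of the study is not described in this report; the power values are hypothetical.

```python
from statistics import mean


def erd_ers_percent(baseline_power, task_power):
    """Relative band-power change (in %) of the task period versus the baseline.

    Negative values indicate a power decrease (ERD), positive values an increase (ERS).
    """
    reference = mean(baseline_power)
    activity = mean(task_power)
    return (activity - reference) / reference * 100.0


# Hypothetical mu-band power values (arbitrary units) for one electrode.
baseline = [4.0, 4.2, 3.8, 4.0]
motor_imagery = [2.1, 1.9, 2.0, 2.0]
print(erd_ers_percent(baseline, motor_imagery))
```

In this toy example the band power during motor imagery is half the baseline power, which corresponds to a marked ERD.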

8.19.2 Is Event-Related Desynchronization variability correlated with BCI performance?

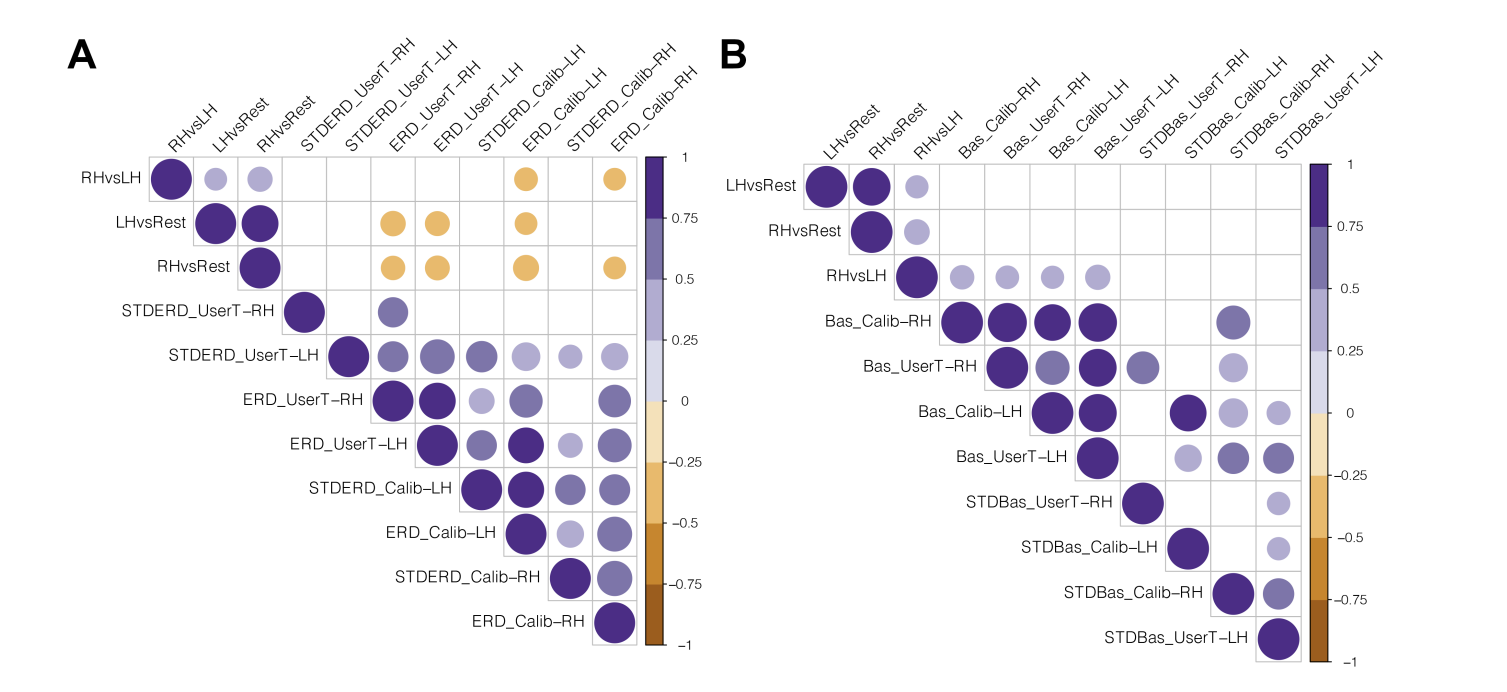

Despite current research, the relationship between the variability of Event-Related Desynchronization (ERD) generated during Motor Imagery (MI) tasks and MI-BCI performance is still not well understood. Indeed, numerous studies have shown that there is substantial inter-subject and intra-subject variability in ERD patterns, but the origin of this variability remains difficult to understand. This lack of knowledge about the variability of cerebral motor patterns limits the possibilities of improving the performance of BCIs, which remains quite poor on average. We believe that a better understanding of the variability of ERDs during BCI use is crucial for developing effective interfaces. Indeed, the analysis of inter-trial ERDs and their variability throughout the experimental session during MI is largely neglected in most studies, which have mainly focused on identifying ERD patterns averaged across trials and possibly across participants. In this study, we analyzed a large MI-BCI database (n=75 subjects) and investigated how the inter/intra-individual variability of the cerebral motor patterns underlying the right-hand and left-hand MI tasks (i.e., ERDs) is associated with BCI performance. Our study revealed that although ERD amplitude and baseline power are correlated with BCI performance, the variability of ERD amplitude or of baseline power is not. This work was published in the proceedings of the IEEE MetroXRAINE 2022 conference 32.

Correlograms for both left- and right-hand MI, showing the correlations between ERD/ERS amplitudes, variability measures and performances. They show that variability metrics do not correlate with the other metrics.

Correlograms representing Pearson correlations between BCI performances and (A) ERD modulations variability metrics, (B) baseline variability metrics. Correlations with a p-value > 0.05 are considered as not significant and are in blank. Positive correlations are displayed in blue and negative ones are in orange. The intensity of the color and the size of the circles are proportional to the correlation coefficients.

8.20 Public BCI data sharing

Participants: Pauline Dreyer, Aline Roc, Camille Benaroch, Thibaut Monseigne, Sébastien Rimbert, Fabien Lotte.

The numerous experiments carried out within the BrainConquest project allowed the creation of a large database that has been published on Zenodo (https://doi.org/10.5281/zenodo.7516451). It contains electroencephalographic signals from 87 human participants, with more than 20,800 trials in total, which represent about 70 hours of recording. It includes the performance of the associated BCI users, detailed information about the users' demographics, personality and cognitive profiles, and the experimental instructions and codes (executed in the open-source platform OpenViBE). A database paper has also been submitted to describe this new EEG database.

8.21 Towards curating personalized art exhibitions in Virtual Reality with multimodal Electroencephalography-based Brain-Computer-Interfaces

Participants: Marc Welter, Axel Bouneau, Fabien Lotte.

External collaborators: Tomàs Ward [Dublin City University].

Today, we live in an age of 'Like' where appreciation of digital content is expressed constantly by interacting with feedback icons. In contrast, Brain-Computer-Interfaces (BCIs) can decode cognitive states from neural signals without explicit user feedback that interrupts aesthetic experiences (AEs). This recently started project will elucidate the neuro-cognitive mechanisms behind art appreciation and implement an Electroencephalography (EEG)-based BCI to detect physiological correlates of artwork preference in order to curate personalized art exhibitions in Virtual Reality (VR). Most EEG recordings in visual neuroaesthetics have focused on Event-Related Potentials, often using paradigms with unnatural viewing conditions. On the other hand, the neural dynamics during visual art appreciation remain obscure and previous studies have reported conflicting results. Furthermore, the liking of visual artworks has mostly been investigated from the perspective of beauty or pleasantness, concepts which are not applicable to all aesthetic pleasures. We hypothesize instead that art preferences in general depend on rewarding AEs. Therefore, we will develop novel algorithms to decode and discriminate EEG neuromarkers of hedonic AEs. In a first step, we conceptualized neuro-cognitive components of AE, such as attention, emotion and intrinsic reward, as well as their established EEG neuromarkers. Then, we developed a novel experimental framework to investigate the physiological correlates of aesthetic 'Liking'. In the future, we will record EEG and other physiological measures, e.g. eye-tracking and heart rate, in naturalistic single-trial VR experiments, use advanced Machine Learning to detect artwork preference, and recommend further objects based on this multimodal information. Finally, we embrace open science and will make subject data and BCI algorithms publicly accessible. This work was published at the VSAC 2022 conference 44 and presented at MetroXRAINE 2022.

8.22 Identifying factors influencing the outcome of BCI-based post stroke motor rehabilitation towards its personalization with Artificial Intelligence

Participants: David Trocellier, Fabien Lotte.

External collaborators: Bernard N'Kaoua [Univ. Bordeaux].

Stroke is the leading cause of complex disability in adults. The prevalence of motor deficit and cognitive impairment after stroke is high and persistent. The most common consequence is the hemiparesis of the contralateral upper limb, with over 80% of stroke patients suffering from this condition acutely and over 40% chronically. Brain-Computer Interfaces (BCI) based on motor imagery have shown promising results in post-stroke motor recovery. However, this approach does not work for all patients, and even when it works, shows vastly different effectiveness across patients. It thus needs to be improved. This could be achieved by personalizing the BCI-based Motor Rehabilitation (MR) program to each patient, notably by personalizing the Artificial Intelligence (AI) models used. To do this, it is necessary to first identify the predictive factors of successful BCI-based motor rehabilitation. In fact, very little research has addressed the question of factors that influence post-stroke BCI-based MR. Thus, in this work, we present a survey of the literature about the factors related to successful use of BCIs in general and then the factors that are associated with post-stroke motor recovery, to identify the various factors that could influence BCI-based post-stroke MR. We then discuss how such factors could be taken into account in order to develop new AI algorithms for personalized post-stroke BCI-based MR. We presented this work at CORTICO 2022 43 and published it in the proceedings of the IEEE MetroXRAINE 2022 conference 33.

8.23 Simple Probabilistic Data-driven Model for Adaptive BCI Feedback

Participants: Fabien Lotte.

External collaborators: Jelena Mladenovic [Union Univ. Belgrade], Jérémy Frey [Ullo], Jérémie Mattout [Inserm Lyon].

Due to, among other factors, abundant signal and user variability, BCIs remain difficult to control. To increase performance, adaptive methods are a necessary means to deal with such a vast spectrum of variable data. Typically, adaptive methods deal with the signal or classification corrections (adaptive spatial filters, co-adaptive calibration, adaptive classifiers). As such, they do not necessarily account for the implicit alterations they perform on the feedback (in real-time), and in turn, on the user, creating yet another potential source of unpredictable variability. Indeed, certain user personality traits and states have been shown to correlate with BCI performance, while feedback can impact user states. For instance, altered (biased) feedback has been shown to distort participants' perception of their performance, influencing their feeling of control and their online performance. Thus, one can assume that through feedback we might implicitly guide the user towards a desired state beneficial for BCI performance. We propose a novel, simple, probabilistic, data-driven dynamic model to provide such feedback in order to maximize performance. This work was published in the proceedings of the NeuroAdaptive Technology (NAT) conference 2022 24.

8.24 Modeling subject perception and behaviour during neurofeedback training

Participants: Fabien Lotte.

External collaborators: Côme Annicchiarico [Inserm Lyon], Jérémie Mattout [Inserm Lyon].

Neurofeedback training describes a closed-loop paradigm in which a Brain-Computer Interface is typically used to provide a subject with an evaluation of his or her own mental states. As a learning process, it aims at enabling the subject to apprehend his or her own latent cognitive states in order to modulate them. Its use for therapeutic purposes has gained a lot of traction in the public sphere in the last decade, but conflicting evidence concerning its efficacy has led to increasing efforts by the scientific community to provide better explanations for the cognitive mechanisms at work. We intend to contribute to this effort by proposing a mathematical formalization of the mechanisms at play in this (arguably) quite complex dynamical system. Due to the subjective nature of the task, representing the separate beliefs and hypotheses of the subject and of the experimenter is an important first step towards a meaningful approximation. We provide a first model of the training loop based on those considerations, introducing two pipelines. The direct pipeline (subject -> feedback) makes use of a coupling between cognitive and physiological states to infer latent cognitive states from measurement. The return pipeline (feedback -> subject) describes how perception of the indicator impacts subject behaviour. To describe the behaviour of an agent facing an uncertain environment, we make use of the Active Inference framework (1), a Bayesian approach to belief updating that provides a biologically plausible model of perception, action and learning. The ensuing model is then leveraged to simulate computationally the behaviour and evolving beliefs of a neurofeedback training subject in tasks of varying nature and difficulty. We finally analyze the effects of several sources of error, such as measurement noise or uncertainty surrounding the choice of the biomarker, to conclude on their influence on training efficacy. This work was presented at CORTICO 2022 38 and published in the proceedings of the NeuroAdaptive Technology (NAT) conference 2022 20.
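To give an intuition of the Bayesian belief updating mentioned above, the sketch below simulates a subject who updates a belief about a binary latent state from a binary feedback signal. It is a deliberately simplified, hypothetical illustration of Bayes' rule and of the effect of measurement noise; it is not the Active Inference model of the paper, and the state names and likelihood values are assumptions.

```python
def update_belief(prior_focused, feedback_high, p_high_focused, p_high_distracted):
    """One Bayes-rule update of P(state = focused) after observing the feedback."""
    like_focused = p_high_focused if feedback_high else 1.0 - p_high_focused
    like_distracted = p_high_distracted if feedback_high else 1.0 - p_high_distracted
    evidence = like_focused * prior_focused + like_distracted * (1.0 - prior_focused)
    return like_focused * prior_focused / evidence


def run_session(observations, p_high_focused, p_high_distracted, prior=0.5):
    """Sequentially update the belief over a list of feedback observations."""
    belief = prior
    for feedback_high in observations:
        belief = update_belief(belief, feedback_high, p_high_focused, p_high_distracted)
    return belief


feedback = [True, True, True]  # three consecutive "high" feedback values

# Informative feedback: high values are much more likely when the subject is focused.
clean = run_session(feedback, p_high_focused=0.8, p_high_distracted=0.3)
# Noisy feedback: the indicator barely depends on the latent state.
noisy = run_session(feedback, p_high_focused=0.55, p_high_distracted=0.45)
print(round(clean, 3), round(noisy, 3))
```

The same three observations move the belief much further when the feedback is informative than when it is noisy, which is one simple way to see why measurement noise can limit training efficacy.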

8.25 User-specific frequency band and time segment selection with high class distinctiveness for Riemannian BCIs

Participants: Fabien Lotte.

External collaborators: Maria Sayu Yamamoto [Univ. Paris Saclay], Sylvain Chevallier [Univ. Versailles Saint-Quentin], Florian Yger [Univ. Paris Dauphine - PSL].

User-specific settings are known to enhance Brain-Computer Interface (BCI) performance. In particular, the optimal frequency band and time segment for oscillatory activity classification are highly user-dependent, and many selection methods have been developed in the past two decades. However, it has not been well studied whether those conventional methods can provide optimal settings for Riemannian BCIs, a recent family of BCI systems that use a different data representation, based on covariance matrices, compared to conventional BCI pipelines. In this work, we proposed a novel frequency band and time segment selection method considering class distinctiveness on the Riemannian manifold. The class distinctiveness of each combination of frequency band and time segment is quantified based on inter-class distance and intra-class variance on the Riemannian manifold. An advantage of this method is that the user-specific settings can be adjusted without computationally heavy optimization steps. To the best of our knowledge, this is the first optimization method for selecting both the frequency band and the time segment on the Riemannian manifold. We evaluated the contributions of the 3 different selection models (frequency band, time or frequency band+time), comparing classification accuracy with a baseline (a fixed frequency band of 8-30 Hz and a fixed 2s time window) and a popular conventional method for non-Riemannian BCIs, on the BCI competition IV dataset 2a (2-class motor imagery). Our method showed higher average accuracy than the baseline and the conventional method in all three models, and the frequency band selection model in particular showed the highest performance. This preliminary result suggests the importance of developing new selection algorithms that consider the properties of the manifold, rather than directly applying methods developed prior to the rise of Riemannian BCIs. This work was presented at CORTICO 2022 45 and published in the proceedings of the IEEE EMBC 2022 conference 28.
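The idea of scoring candidate frequency bands by class distinctiveness can be sketched as follows. This is a simplified illustration, not the published method: it uses the affine-invariant Riemannian distance between covariance matrices, restricted to 2x2 matrices so that it runs in pure Python, and it replaces class means and variances with average pairwise distances between and within classes. All covariance matrices and band labels are hypothetical.

```python
import math
from itertools import combinations, product


def airm_distance(a, b):
    """Affine-invariant Riemannian distance between two 2x2 SPD matrices.

    Computed from the eigenvalues of inv(a) @ b, which are real and positive
    when both matrices are symmetric positive definite.
    """
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    det_a = a11 * a22 - a12 * a21
    m11 = (a22 * b11 - a12 * b21) / det_a
    m12 = (a22 * b12 - a12 * b22) / det_a
    m21 = (-a21 * b11 + a11 * b21) / det_a
    m22 = (-a21 * b12 + a11 * b22) / det_a
    trace, det = m11 + m22, m11 * m22 - m12 * m21
    disc = math.sqrt(max(trace * trace - 4.0 * det, 0.0))
    lam1, lam2 = (trace + disc) / 2.0, (trace - disc) / 2.0
    return math.sqrt(math.log(lam1) ** 2 + math.log(lam2) ** 2)


def mean_distance(pairs):
    distances = [airm_distance(a, b) for a, b in pairs]
    return sum(distances) / len(distances)


def class_distinctiveness(class_a, class_b):
    """Ratio of between-class to within-class average pairwise distance."""
    inter = mean_distance(product(class_a, class_b))
    intra = 0.5 * (mean_distance(combinations(class_a, 2))
                   + mean_distance(combinations(class_b, 2)))
    return inter / intra


# Hypothetical trial covariance matrices per candidate band: (class A, class B).
bands = {
    "8-12 Hz": ([[[2.0, 0.1], [0.1, 1.0]], [[2.2, 0.0], [0.0, 1.1]]],
                [[[1.0, 0.1], [0.1, 2.0]], [[1.1, 0.0], [0.0, 2.1]]]),
    "20-30 Hz": ([[[1.0, 0.0], [0.0, 1.0]], [[1.5, 0.0], [0.0, 1.4]]],
                 [[[1.1, 0.0], [0.0, 1.0]], [[1.4, 0.0], [0.0, 1.5]]]),
}

scores = {band: class_distinctiveness(a, b) for band, (a, b) in bands.items()}
best_band = max(scores, key=scores.get)
print(best_band, {band: round(s, 2) for band, s in scores.items()})
```

Because each candidate only requires distance computations, every band (or time segment) can be scored without training a classifier, which is the kind of lightweight selection the paragraph above refers to.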

8.26 Retrospective on the First Passive Brain-Computer Interface Competition on Cross-Session Workload Estimation

Participants: Fabien Lotte.

External collaborators: Raphaëlle Roy [ISAE-SupAéro, Toulouse], Marcel Hinss [ISAE-SupAéro, Toulouse], Ludovic Darmet [ISAE-SupAéro, Toulouse], Simon Ladouce [ISAE-SupAéro, Toulouse], Emilie Jahanpour [ISAE-SupAéro, Toulouse], Bertille Somon [ISAE-SupAéro, Toulouse], Xiaoqi Xu [ISAE-SupAéro, Toulouse], Nicolas Drougard [ISAE-SupAéro, Toulouse], Frédéric Dehais [ISAE-SupAéro, Toulouse].

As is the case in several research domains, data sharing is still scarce in the field of Brain-Computer Interfaces (BCI), and particularly in that of passive BCIs— i.e ., systems that enable implicit interaction or task adaptation based on a user's mental state(s) estimated from brain measures. Moreover, research in this field is currently hindered by a major challenge, which is tackling brain signal variability such as cross-session variability. Hence, with a view to develop good research practices in this field and to enable the whole community to join forces in working on cross-session estimation, we created the first passive brain-computer interface competition on cross-session workload estimation. This competition was part of the 3rd International Neuroergonomics conference. The data were electroencephalographic recordings acquired from 15 volunteers (6 females; average 25 y.o.) who performed 3 sessions—separated by 7 days—of the Multi-Attribute Task Battery-II (MATB-II) with 3 levels of difficulty per session (pseudo-randomized order). The data -training and testing sets—were made publicly available on Zenodo along with Matlab and Python toy code (https://doi.org/10.5281/zenodo.5055046). To this day, the database was downloaded more than 900 times (unique downloads of all version on the 10th of December 2021: 911). Eleven teams from 3 continents (31 participants) submitted their work. The best achieving processing pipelines included a Riemannian geometry-based method. Although better than the adjusted chance level (38% with an α at 0.05 for a 3-class classification problem), the results still remained under 60% of accuracy. These results clearly underline the real challenge that is cross-session estimation. Moreover, they confirmed once more the robustness and effectiveness of Riemannian methods for BCI. On the contrary, chance level results were obtained by one third of the methods—4 teams- based on Deep Learning. 
These methods did not demonstrate superior results in this contest compared to traditional methods, which may be due to severe overfitting. Yet this competition is a first step toward a joint effort to tackle BCI variability and to promote good research practices, including reproducibility. This work was published in the journal Frontiers in Neuroergonomics: Neurotechnology and Systems Neuroergonomics 16.
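The notion of adjusted chance level used above can be illustrated with a short Python sketch. It shows one common way to compute such a threshold: the smallest accuracy that a purely random classifier stays at or below with probability at least 1 - α, taken from the binomial distribution. This is an illustration only and not necessarily the procedure used in the competition; the function name and the number of test trials (300) are hypothetical, since the trial count is not stated in this section.

```python
from math import exp, lgamma, log


def adjusted_chance_level(n_trials: int, n_classes: int, alpha: float = 0.05) -> float:
    """Return the accuracy k / n_trials for the smallest k such that
    P(X <= k) >= 1 - alpha, with X ~ Binomial(n_trials, 1 / n_classes).

    Accuracies strictly above this value are unlikely (at level alpha)
    to be produced by random guessing among balanced classes.
    """
    p = 1.0 / n_classes
    cumulative = 0.0
    for k in range(n_trials + 1):
        # Binomial probability mass computed in log space to avoid overflow.
        log_pmf = (
            lgamma(n_trials + 1) - lgamma(k + 1) - lgamma(n_trials - k + 1)
            + k * log(p) + (n_trials - k) * log(1.0 - p)
        )
        cumulative += exp(log_pmf)
        if cumulative >= 1.0 - alpha:
            return k / n_trials
    return 1.0


# Hypothetical example: 3 balanced classes, 300 test trials (assumed value).
level = adjusted_chance_level(n_trials=300, n_classes=3, alpha=0.05)
print(f"Adjusted chance level: {level:.1%}")
```

With few trials the threshold sits well above the theoretical 1/3, and it approaches 1/3 as the number of trials grows, which is why the adjusted value rather than the theoretical one is the relevant baseline.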

8.27 Exploring the evolution of users' subjective ratings in three Motor Imagery (MI)-based BCI sessions

Participants: Aline Roc, Pauline Dreyer, Thibaut Monseigne, Fabien Lotte.

Many challenges remain in understanding user-BCI interactions and making them easier, more effective, and more enjoyable, especially for Motor Imagery (MI)-based BCI. Past research on MI without feedback showed possible relations between prolonged practice (time) and decreased separability of EEG features, or increased subjective effort and mental fatigue. In MI-BCI applications, varying results suggest an unclear relationship between online performance and users' subjective ratings, e.g., fatigue or self-evaluated performance. Studies often include either very few subjects or a single session. Thus, how user experience evolves during standard training, both within and between sessions, is still unclear. Therefore, we investigated how users' subjective ratings vary in relation to time or online performance when training with the standard Graz cue-based MI-BCI. 24 participants engaged in 3 sessions of 12 short runs. Each short run (16 trials/run) was followed by self-reported ratings (Frustration, Anxiety, MentalDemand, SubjectivePerformance, MentalEffort, and MentalFatigue). Statistical analysis indicated a significant time effect for user ratings, with a within-session increase in Frustration, MentalDemand, MentalEffort, and MentalFatigue. Session 1 was rated significantly more challenging than the other two regarding Frustration, Anxiety, MentalDemand, MentalEffort, and MentalFatigue. This highlights the importance of conducting studies across multiple sessions. BCI performance did not correlate with subjective ratings. We provide a ground truth in a standard training paradigm to help elucidate the possible relationships between time, performance, and user ratings during prolonged BCI operation by novice users. This work was presented at CORTICO 2022 42 and submitted to a journal.

8.28 What can we learn of MI-BCI users' strategies and experience through thematic analysis of user interviews?

Participants: Aline Roc, Pauline Dreyer, Fabien Lotte.

In this contribution, we provide partial answers to a question considered very important: what is it like to use a BCI? Notably, we identify actionable insights into which training parameters can be considered difficult or distracting, which is a lever to better understand the needs of users and to design better exercises in the future. To do so, we conducted semi-structured interviews with 24 users after each of 3 sessions of MI-BCI user training, and analyzed them qualitatively. Overall, the three main elements that emerge from the analysis are the importance and difficulty of maintaining focus, fatigue, and the suppression of parasitic thoughts and emotions.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

SNCF - Cifre:

Participants: Maudeline Marlier, Arnaud Prouzeau, Martin Hachet.

- Duration: 2022-2025

- Local coordinator: Arnaud Prouzeau

- We started a collaboration with SNCF around the PhD thesis (Cifre) of Maudeline Marlier. The objective is to rethink railway control rooms with interactive tabletop projections.

10 Partnerships and cooperations

10.1 International research visitors

10.1.1 Visits of international scientists

Other international visits to the team

Zachary Traylor

- Status: Visiting PhD student

- Institution of origin: North Carolina State University

- Country: USA

- Dates: September 2022 - January 2023

- Context of the visit: collaboration on explainable AI to understand the characteristics of Deep Learning and Riemannian classifiers for EEG classification

- Mobility program/type of mobility: Chateaubriand Fellowship & internship

Kim Sauvé

- Status: Visiting PhD student

- Institution of origin: Lancaster University

- Country: UK

- Dates: February 2022 - April 2023

- Context of the visit: collaboration on the visualization of personal data of a local community (more specifically the climate impact of dietary choices)

- Mobility program/type of mobility: the visit was financed as part of the ANR Ember project

10.1.2 Visits to international teams

Research stays abroad

Adélaïde Genay

- Visited institution: NAIST - Care Lab

- Country: Japan

- Dates: 2022/08/16 - 2022/11/15

- Context of the visit: Collaboration on avatars, as part of Adélaïde Genay's PhD thesis.

- Mobility program/type of mobility: internship

10.2 European initiatives

10.2.1 H2020 projects

BrainConquest

BrainConquest project on cordis.europa.eu

- Title: Boosting Brain-Computer Communication with high Quality User Training

- Duration: From July 1, 2017 to December 31, 2022

- Partners: Inria Bordeaux Sud-Ouest, France

- Inria contact: Fabien Lotte

- Coordinator: Fabien Lotte (INRIA)

- Summary:

Brain-Computer Interfaces (BCIs) are communication systems that enable users to send commands to computers through brain signals only, by measuring and processing these signals. Making computer control possible without any physical activity, BCIs have promised to revolutionize many application areas, notably assistive technologies, e.g., for wheelchair control, and human-machine interaction. Despite this promising potential, BCIs are still barely used outside laboratories, due to their current poor reliability. For instance, BCIs only using two imagined hand movements as mental commands decode, on average, less than 80% of these commands correctly, while 10 to 30% of users cannot control a BCI at all. A BCI should be considered a co-adaptive communication system: its users learn to encode commands in their brain signals (with mental imagery) that the machine learns to decode using signal processing. Most research efforts so far have been dedicated to decoding the commands. However, BCI control is a skill that users have to learn too. Unfortunately, how BCI users learn to encode the commands is essential but barely studied, i.e., fundamental knowledge about how users learn BCI control is lacking. Moreover, standard training approaches are only based on heuristics, without satisfying human learning principles. Thus, poor BCI reliability is probably largely due to highly suboptimal user training. In order to obtain a truly reliable BCI, we need to completely redefine user training approaches. To do so, I propose to study and statistically model how users learn to encode BCI commands. Then, based on human learning principles and this model, I propose to create a new generation of BCIs that ensure that users learn how to successfully encode commands with high signal-to-noise ratio in their brain signals, hence making BCIs dramatically more reliable. Such a reliable BCI could positively change human-machine interaction as BCIs have promised but failed to do so far.

BITSCOPE

- Funding: CHIST-ERA Grant

- Title: BITSCOPE: Brain Integrated Tagging for Socially Curated Online Personalised Experiences

- Duration: 2022-2025 (3 years)

- Partners:

  - Dublin City University (DCU), Ireland (Project Leader. Lead: Tomàs Ward)

  - Inria Bordeaux Sud-Ouest, France (Lead: Fabien Lotte)

  - Nicolaus Copernicus University, Poland (Lead: Veslava Osinska)

  - Polytechnic University of Valencia, Spain (Lead: Mariona Alcañiz)

- Inria contact: Fabien Lotte

- Coordinator: Tomàs Ward (DCU)

- Summary:

This project presents a vision for brain computer interfaces (BCI) which can enhance social relationships in the context of sharing virtual experiences. In particular we propose BITSCOPE, that is, Brain-Integrated Tagging for Socially Curated Online Personalised Experiences. We envisage a future in which attention, memorability and curiosity elicited in virtual worlds will be measured without the requirement of “likes” and other explicit forms of feedback. Instead, users of our improved BCI technology can explore online experiences leaving behind an invisible trail of neural data-derived signatures of interest. This data, passively collected without interrupting the user, and refined in quality through machine learning, can be used by standard social sharing algorithms such as recommender systems to create better experiences. Technically the work concerns the development of a passive hybrid BCI (phBCI). It is hybrid because it augments electroencephalography with eye tracking data, galvanic skin response, heart rate and movement in order to better estimate the mental state of the user. It is passive because it operates covertly without distracting the user from their immersion in their online experience and uses this information to adapt the application. It represents a significant improvement in BCI due to the emphasis on improved denoising facilitating operation in home environments and the development of robust classifiers capable of taking inter- and intra-subject variations into account. We leverage our preliminary work in the use of deep learning and geometrical approaches to achieve this improvement in signal quality. The user state classification problem is ambitiously advanced to include recognition of attention, curiosity, and memorability which we will address through advanced machine learning, Riemannian approaches and the collection of large representative datasets in co-designed user centred experiments.

10.3 National initiatives

Inria Project Lab AVATAR:

- Duration: 2018-2022

- Partners: Inria project-teams: GraphDeco, Hybrid, Loki, MimeTIC, Morpheo

- Coordinator: Ludovic Hoyet (Inria Rennes)

- Local coordinator: Martin Hachet

- This project aims at designing avatars (i.e., the user’s representation in virtual environments) that are better embodied, more interactive and more social, by improving the whole avatar pipeline, from acquisition and simulation to the design of novel interaction paradigms and multi-sensory feedback.

- website: Avatar

ANR Project EMBER:

- Duration: 2020-2024

- Partners: Inria/AVIZ, Sorbonne Université

- Coordinator: Pierre Dragicevic

- The goal of the project is to study how embedding data into the physical world can help people gain insights into their own data. While the vast majority of data analysis and visualization takes place on desktop computers located far from the objects or locations the data refers to, in situated and embedded data visualizations, the data is directly visualized near the physical space, object, or person it refers to.

- website: Ember

AeX Inria Live-It:

- Duration: 2022-2024

- Partners: Université de Bordeaux - NeuroPsychology

- Coordinator: Arnaud Prouzeau and Martin Hachet

- In collaboration with colleagues in neuropsychology (Antoinette Prouteau), we are exploring how augmented reality can help to better explain schizophrenia and fight against stigmatization. This project is directly linked to Emma Tison's PhD thesis.

11 Dissemination

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

General chair, scientific chair

- Journée Visu du GdR IG-RV (Arnaud Prouzeau)

- First edition of the Bordeaux cognitive science forum (Forum des sciences cognitives de Bordeaux) (David Trocellier)

Member of the organizing committees

- Alt.VIS Workshop at IEEE VIS 2022 (Arnaud Prouzeau)

- Immersive Analytics Workshop at ACM CHI 2022 and ACM ISS 2022 (Arnaud Prouzeau)

- BITSCOPE special event at MetroXRAINE 2022 (Fabien Lotte, Marc Welter, Axel Bouneau)