2024 Activity report, Project-Team HYBRID

RNSR: 201322122U

- Research center: Inria Centre at Rennes University

- In partnership with: Institut national des sciences appliquées de Rennes, CNRS, Université de Rennes

- Team name: 3D interaction with virtual environments using body and mind

- In collaboration with: Institut de recherche en informatique et systèmes aléatoires (IRISA)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A2.5. Software engineering

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.7. Multimodal interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A6. Modeling, simulation and control

- A6.2. Scientific computing, Numerical Analysis & Optimization

- A6.3. Computation-data interaction

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.4. Therapies

- B2.5. Handicap and personal assistances

- B2.6. Biological and medical imaging

- B2.8. Sports, performance, motor skills

- B5.1. Factory of the future

- B5.2. Design and manufacturing

- B5.8. Learning and training

- B5.9. Industrial maintenance

- B6.4. Internet of things

- B8.1. Smart building/home

- B8.3. Urbanism and urban planning

- B9.1. Education

- B9.2. Art

- B9.2.1. Music, sound

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.4. Sports

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Anatole Lécuyer [Team leader, INRIA, Senior Researcher, HDR]

- Ferran Argelaguet [INRIA, Researcher, HDR]

- Marc Macé [CNRS, Researcher, HDR]

- Léa Pillette [CNRS, Researcher]

- Justine Saint-Aubert [CNRS, Researcher]

Faculty Members

- Bruno Arnaldi [INSA Rennes, Emeritus, HDR]

- Mélanie Cogné [UNIV Rennes, Associate Professor, HDR]

- Valérie Gouranton [INSA Rennes, Associate Professor, HDR]

Post-Doctoral Fellows

- Yann Glémarec [INRIA, Post-Doctoral Fellow]

- François Le Jeune [INRIA, UNIV Rennes, Post-Doctoral Fellow]

- Kyung-Ho Won [INRIA, Post-Doctoral Fellow]

PhD Students

- Tiffany Aires Da Cruz [UNIV Paris 1, from Oct 2024]

- Arthur Audrain [INRIA, from Oct 2024]

- Romain Chabbert [CNRS, INSA Rennes, until Oct 2024]

- Antonin Cheymol [INRIA, INSA Rennes]

- Maxime Dumonteil [UNIV Rennes]

- Jeanne Hecquard [INRIA, UNIV Rennes]

- Emilie Hummel [INRIA, INSA Rennes, until Nov 2024]

- Théo Lefeuvre [UNIV Rennes, from Oct 2024]

- Julien Lomet [UNIV Paris 8]

- Julien Manson [UNIV Rennes]

- Yann Moullec [UNIV Rennes, until Nov 2024]

- Mathieu Risy [INSA Rennes]

- Tom Roy [INRIA, CIFRE, InterDigital]

- Sony Saint-Auret [INSA Rennes]

- Nathan Salin [INSA Rennes, from Oct 2024]

- Emile Savalle [UNIV Rennes]

- Sabrina Toofany [INRIA, UNIV Rennes]

- Guillaume Vallet [UNIV Lille, from Nov 2024]

- Adriana Galan Villamarin [CENTRALE Nantes, from Oct 2024]

- Juri Yoneyama [INRIA, from Oct 2024]

- Philippe de Clermont Gallerande [UNIV Rennes, CIFRE]

Technical Staff

- Alexandre Audinot [INSA Rennes, Engineer]

- Julien Cagnoncle [UNIV Rennes, Engineer, from May 2024 until Oct 2024]

- Ronan Gaugne [UNIV Rennes, Engineer, PhD]

- Lysa Gramoli [INSA Rennes, Engineer, PhD]

- Tangui Marchand Guerniou [INSA Rennes, Engineer]

- Maé Mavromatis [INSA Rennes, Engineer]

- Maxime Meyrat [INRIA, Engineer, from Mar 2024 until Aug 2024]

- Anthony Mirabile [INRIA, Engineer]

- Florian Nouviale [INSA Rennes, Engineer]

- Thomas Prampart [INRIA, Engineer]

- Adrien Reuzeau [UNIV Rennes, Engineer, from Oct 2024]

Interns and Apprentices

- Gaspard Charvy [ENS Rennes, Intern, from Sep 2024]

- Nolhan Dumoulin [INSA Rennes, Intern, from May 2024 until Jul 2024]

- Wael El Khaledi [ENS Rennes, Intern, from Sep 2024]

- Filippo Gerbaudo [CNRS, Intern, from Nov 2024 until Nov 2024]

- Elias Goubin [INSA Rennes, Intern, from Jul 2024 until Aug 2024]

- Emilie Huard [INRIA, Intern, from May 2024 until Aug 2024]

- Théo Lefeuvre [INRIA, Intern, from Apr 2024 until Sep 2024]

- Prune Lepvraud [INSA Rennes, Intern, from May 2024 until Jul 2024]

- Vincent Philippe [UNIV Rennes, Intern, from May 2024 until Nov 2024]

- Jean-Paul Surasri [UNIV Rennes, Intern, from Apr 2024 until Aug 2024]

- Jacob Wallace [INRIA, Intern, from Apr 2024 until Aug 2024]

Administrative Assistant

- Nathalie Denis [INRIA]

External Collaborators

- Rebecca Fribourg [CENTRALE Nantes]

- Guillaume Moreau [IMT Atlantique, HDR]

- Jean-Marie Normand [CENTRALE Nantes, HDR]

2 Overall objectives

Our research project belongs to the scientific field of Virtual Reality (VR) and 3D interaction with virtual environments. VR systems can be used in numerous applications, such as industry (virtual prototyping, assembly or maintenance operations, data visualization), entertainment (video games, theme parks), arts and design (interactive sketching or sculpture, CAD, architectural mock-ups), education and science (physical simulations, virtual classrooms), or medicine (surgical training, rehabilitation systems). A major change that we foresee in the field of Virtual Reality over the next decade is the emergence of new paradigms of interaction (input/output) with Virtual Environments (VE).

Today, the most common way to interact with 3D content is still to measure the user's motor activity, i.e., his/her gestures and physical motions when manipulating different kinds of input devices. However, a recent trend is to solicit more movement and more physical engagement from the user's body. Notable examples include bimanual interaction, natural walking interfaces, and whole-body involvement. These new interaction schemes bring a new level of complexity in terms of generic physical simulation of the potential interactions between the virtual body and the virtual surroundings, and a challenging "trade-off" between performance and realism. Moreover, research is also needed to characterize the influence of these new sensory cues on the user's resulting feelings of "presence" and immersion.

Besides, a novel kind of user input has recently appeared in the field of virtual reality: the user's mental activity, which can be measured by means of a "Brain-Computer Interface" (BCI). Brain-Computer Interfaces are communication systems which measure the user's electrical brain activity and translate it, in real time, into an exploitable command. BCIs introduce a new way of interacting "by thought" with virtual environments. However, current BCIs can only detect a small number of mental states, and hence support only a small number of mental commands. Thus, research is still needed to extend the capacities of BCIs and to better exploit the few available mental states in virtual environments.
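As a minimal illustration of how a BCI can translate brain activity into a command, the Python sketch below follows one classic motor-imagery approach, assuming a single EEG channel sampled at 250 Hz: it measures power in the 8-12 Hz (mu) band and reads a drop relative to a resting baseline (an event-related desynchronization) as an imagined movement. The function names, sampling rate, frequency band and threshold are illustrative assumptions; this is neither the OpenViBE API nor the team's own method.

```python
import math

# Assumptions for this sketch: one EEG channel, 250 Hz sampling,
# 1-second windows and a fixed detection threshold.
FS = 250
MU_BAND = (8.0, 12.0)


def band_power(samples, fs, f_lo, f_hi):
    """Power of `samples` in [f_lo, f_hi] Hz, computed with a naive DFT.

    Only the in-band bins are evaluated, which is acceptable for short windows.
    """
    n = len(samples)
    total = 0.0
    for k in range(1, n // 2 + 1):
        if f_lo <= k * fs / n <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            total += (re * re + im * im) / (n * n)
    return total


def to_command(window, baseline_power, fs=FS, threshold=0.5):
    """Map one EEG window to a discrete command.

    A drop of mu-band power below `threshold` times the resting baseline
    (event-related desynchronization) is read as an imagined movement.
    """
    ratio = band_power(window, fs, *MU_BAND) / baseline_power
    return "move" if ratio < threshold else "rest"


if __name__ == "__main__":
    # Synthetic data: a 10 Hz rhythm at rest, attenuated during "imagery".
    rest = [math.sin(2 * math.pi * 10 * i / FS) for i in range(FS)]
    imagery = [0.3 * x for x in rest]
    baseline = band_power(rest, FS, *MU_BAND)
    print(to_command(imagery, baseline))
    print(to_command(rest, baseline))
```

A practical system would typically combine several channels, spatial filtering and a trained classifier (for instance LDA) instead of a single fixed threshold; the threshold here only keeps the example short.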

Our first motivation is thus to design novel “body-based” and “mind-based” controls of virtual environments and to achieve, in both cases, more immersive and more efficient 3D interaction.

Furthermore, in current VR systems, motor activities and mental activities are always considered separately and exclusively. This recalls the well-known “body-mind dualism” which is at the heart of historical philosophical debates. In this context, our objective is to introduce novel “hybrid” interaction schemes in virtual reality, by considering motor and mental activities jointly, i.e., in a harmonious, complementary, and optimized way. Thus, we intend to explore novel paradigms of 3D interaction mixing body and mind inputs. Moreover, our approach becomes even more challenging when considering and connecting multiple users, which implies multiple bodies and multiple brains collaborating and interacting in virtual reality.

Our second motivation is thus to introduce a “hybrid approach” that mixes the mental and motor activities of one or multiple users in virtual reality.

3 Research program

The scientific objective of the Hybrid team is to improve the 3D interaction of one or multiple users with virtual environments, by making full use of the physical engagement of the body and by incorporating mental states by means of brain-computer interfaces. We intend to improve each component of this framework individually, as well as their combinations.

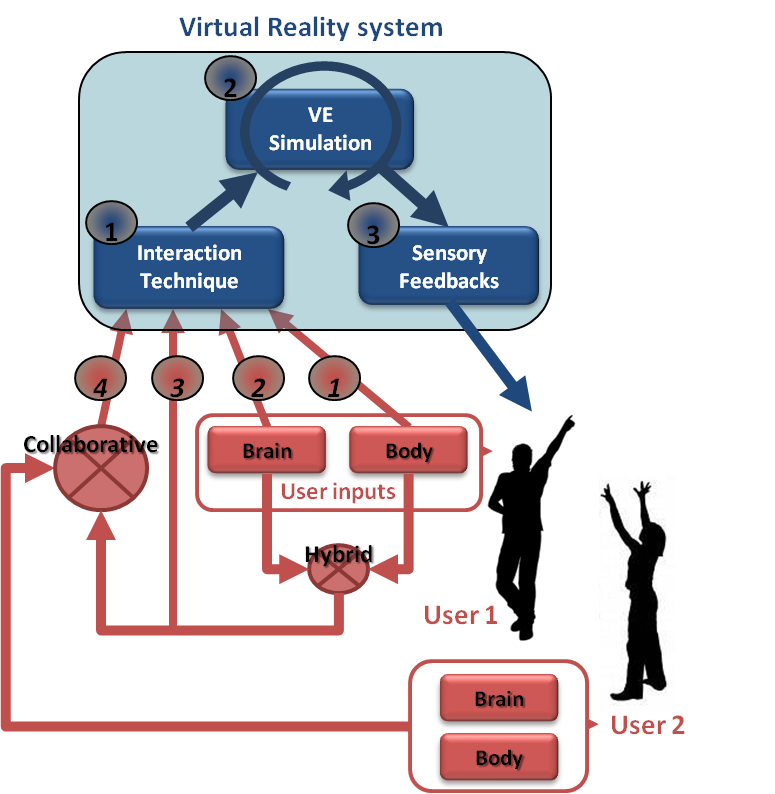

The “hybrid” 3D interaction loop between one or multiple users and a virtual environment is depicted in Figure 1. Different kinds of 3D interaction situations are distinguished (red arrows, bottom): 1) body-based interaction, 2) mind-based interaction, 3) hybrid and/or 4) collaborative interaction (with at least two users). In each case, three scientific challenges arise which correspond to the three successive steps of the 3D interaction loop (blue squares, top): 1) the 3D interaction technique, 2) the modeling and simulation of the 3D scenario, and 3) the design of appropriate sensory feedback.

3D hybrid interaction loop between one or multiple users and a virtual reality system

The 3D interaction loop involves various possible inputs from the user(s) and different kinds of output (or sensory feedback) from the simulated environment. Each user can involve his/her body and mind by means of body-based and/or brain-computer interfaces. A hybrid 3D interaction technique (1) mixes mental and motor inputs and translates them into a command for the virtual environment. The real-time simulation (2) of the virtual environment takes these commands into account to update the state of the virtual world and virtual objects. The state changes are sent back to the user and perceived through different sensory feedback channels (e.g., visual, haptic and/or auditory) (3). This sensory feedback closes the 3D interaction loop. Other users can also interact with the virtual environment using the same procedure, and can possibly “collaborate” using “collaborative interaction techniques” (4).
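The first three steps of this loop can be sketched in Python as follows. This is a hypothetical, single-user illustration: the `UserInput` fields, the "grasp" mental state and the dictionary standing in for visual and haptic output are assumptions made for the example, and the collaborative step (4) is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class UserInput:
    """Inputs of one user for one frame (hypothetical fields)."""
    hand_position: tuple  # motor input, e.g. from a tracking system
    mental_state: str     # cerebral input, e.g. "grasp" or "rest" from a BCI


@dataclass
class World:
    object_position: tuple = (0.0, 0.0, 0.0)
    grasped: bool = False
    feedback_log: list = field(default_factory=list)


def hybrid_technique(inp):
    """(1) Mix the mental input (what to do) with the motor input (where)."""
    action = "grasp" if inp.mental_state == "grasp" else "release"
    return {"action": action, "target": inp.hand_position}


def simulate(world, command):
    """(2) Update the state of the virtual world from the command."""
    world.grasped = command["action"] == "grasp"
    if world.grasped:
        world.object_position = command["target"]  # the object follows the hand
    return world


def render_feedback(world):
    """(3) Produce sensory feedback; a dict stands in for visual/haptic output."""
    return {"visual": world.object_position, "haptic": 1.0 if world.grasped else 0.0}


def interaction_loop(world, frames):
    """Run the loop once per frame; the feedback closes the loop."""
    for inp in frames:
        world = simulate(world, hybrid_technique(inp))
        world.feedback_log.append(render_feedback(world))
    return world


if __name__ == "__main__":
    frames = [UserInput((1, 2, 3), "grasp"), UserInput((4, 5, 6), "rest")]
    print(interaction_loop(World(), frames).feedback_log)
```

Keeping each step as a separate function mirrors the decomposition above: the interaction technique, the simulation model and the feedback rendering can each be replaced independently.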

This description highlights three major challenges, which correspond to three mandatory steps when designing 3D interaction with virtual environments:

- 3D interaction techniques: This first step consists in translating the actions or intentions of the user (inputs) into an explicit command for the virtual environment. In virtual reality, the classical tasks that require such user commands were classified early on into four categories 53: navigating the virtual world, selecting a virtual object, manipulating it, or controlling the application (entering text, activating options, etc.). However, adding a third dimension and using stereoscopic rendering along with advanced VR interfaces make many 2D techniques inappropriate. It is thus necessary to design specific interaction techniques and adapted tools. This challenge is renewed here by the various kinds of 3D interaction that are targeted. In our case, we consider various situations, with motor and/or cerebral inputs, and potentially multiple users.

- Modeling and simulation of complex 3D scenarios: This second step corresponds to the update of the state of the virtual environment, in real time, in response to all the potential commands or actions sent by the user. The complexity of the data and phenomena involved in 3D scenarios is constantly increasing. This includes, for instance, the multiple states of the entities present in the simulation (rigid, articulated, deformable, fluids, which can constitute both the user’s virtual body and the different manipulated objects), and the multiple physical phenomena implied by natural human interactions (squeezing, breaking, melting, etc.). The challenge here is to model and simulate these complex 3D scenarios while meeting two strong constraints of virtual reality systems: performance (real time and interactivity) and genericity (e.g., multi-resolution, multi-modal, multi-platform, etc.).

- Immersive sensory feedback: This third step corresponds to the display of the multiple sensory feedback channels (output) coming from the various VR interfaces. This feedback enables the user to perceive the changes occurring in the virtual environment. It closes the 3D interaction loop, immerses the user, and can generate a subsequent feeling of presence. Among the various VR interfaces developed so far, we can highlight two kinds of sensory feedback: visual feedback (3D stereoscopic images using projection-based systems such as CAVE systems, or Head-Mounted Displays) and haptic feedback (related to the sense of touch and to tactile or force-feedback devices). The Hybrid team has strong expertise in haptic feedback and in the design of haptic and “pseudo-haptic” rendering 54. Note that a major trend in the community, strongly supported by the Hybrid team, relates to a “perception-based” approach, which aims at designing sensory feedback that is well in line with human perceptual capabilities.

These three scientific challenges are addressed differently according to the context and the user inputs involved. We propose to consider three different contexts, which correspond to the three different research axes of the Hybrid research team, namely: 1) body-based interaction (motor input only), 2) mind-based interaction (cerebral input only), and then 3) hybrid and collaborative interaction (i.e., the mixing of body and brain inputs from one or multiple users).

Research Axes

The scientific activity of Hybrid team follows three main axes of research:

- Body-based interaction in virtual reality. Our first research axis concerns the design of immersive and effective "body-based" 3D interactions, i.e., relying on a physical engagement of the user’s body. This trend is probably the most popular one in VR research at the moment. Most VR setups make use of tracking systems which measure specific positions or actions of the user in order to interact with a virtual environment. However, in recent years, novel options have emerged for measuring “full-body” movements or other, even less conventional, inputs (e.g., body equilibrium). In this first research axis, we focus on new emerging methods of “body-based interaction” with virtual environments. This implies the design of novel 3D user interfaces and 3D interaction techniques, new simulation models and techniques, and innovative sensory feedback for body-based interaction with virtual worlds. It involves real-time physical simulation of complex interactive phenomena, and the design of corresponding haptic and pseudo-haptic feedback.

- Mind-based interaction in virtual reality. Our second research axis concerns the design of immersive and effective “mind-based” 3D interactions in Virtual Reality. Mind-based interaction with virtual environments relies on Brain-Computer Interface technology, which corresponds to the direct use of brain signals to send “mental commands” to an automated system such as a robot, a prosthesis, or a virtual environment. BCI is a rapidly growing area of research and several impressive prototypes are already available. However, the emergence of such a novel user input also calls for novel and dedicated 3D user interfaces. This implies studying the extension of the mental vocabulary available for 3D interaction with VEs, the design of specific 3D interaction techniques “driven by the mind” and, lastly, the design of immersive sensory feedback that could help improve the learning of brain control in VR.

- Hybrid and collaborative 3D interaction. Our third research axis intends to study the combination of motor and mental inputs in VR, for one or multiple users. This concerns the design of mixed systems, with potentially collaborative scenarios involving multiple users, and thus multiple bodies and multiple brains sharing the same VE. This research axis therefore involves two interdependent topics: 1) collaborative virtual environments, and 2) hybrid interaction. It should result in collaborative virtual environments with multiple users, and in shared systems with body and mind inputs.

4 Application domains

4.1 Overview

The research program of the Hybrid team targets the next generations of virtual reality and 3D user interfaces, which could address both the “body” and the “mind” of the user. Novel interaction schemes are designed for one or multiple users. We target better integrated systems and more compelling user experiences.

The applications of our research program correspond to the applications of virtual reality technologies which could benefit from the addition of novel body-based or mind-based interaction capabilities:

- Industry: with training systems, virtual prototyping, or scientific visualization;

- Medicine: with rehabilitation and re-education systems, or surgical training simulators;

- Entertainment: with the movie industry, content customization, video games, or attractions in theme parks;

- Construction: with virtual mock-up design and review, or historical/architectural visits;

- Cultural Heritage: with acquisition, virtual excavation, virtual reconstruction and visualization.

5 Social and environmental responsibility

5.1 Impact of research results

A salient initiative carried out by Hybrid in relation to social responsibility in the field of health is the Inria Covid-19 project “VERARE”. VERARE is a unique and innovative concept implemented in record time thanks to a close collaboration between the Hybrid research team and the teams from the intensive care and physical and rehabilitation medicine departments of Rennes University Hospital. VERARE consists in using virtual environments and VR technologies for the rehabilitation of Covid-19 patients coming out of a coma, weakened, and with strong difficulties in recovering walking. With VERARE, the patient is immersed in different virtual environments using a VR headset. He is represented by an “avatar” carrying out different motor tasks involving the lower limbs, for example: walking, jogging, avoiding obstacles, etc. Our main hypothesis is that observing such virtual actions, and progressively resuming motor activity in VR, will allow rehabilitation to begin earlier, as soon as the patient leaves the ICU. The patient will then be able to carry out sessions in his room, or even from his hospital bed, in simple and secure conditions, with the hope of obtaining a final clinical benefit, either in terms of motor and walking recovery or in terms of hospital length of stay. The project started at the end of April 2020, and we were able to deploy a first version of our application at the Rennes hospital in mid-June 2020, only two months after the project started. Covid patients are now using our virtual reality application at the Rennes University Hospital, and the clinical evaluation of VERARE is still ongoing and is expected to be completed in early 2025. The project is also driving the research activity of Hybrid on many aspects, e.g., haptics, avatars, and VR user experience, with 4 papers published in IEEE TVCG in 2022 and 2023.

6 Highlights of the year

The Hybrid team is fully committed to hosting the IEEE International Conference on Virtual Reality and 3D User Interfaces (IEEE VR 2025) in Saint-Malo in March 2025.

6.1 Awards

Maxime Dumonteil obtained the Best Paper Award (Honorable Mention) at ICAT-EGVE 2024.

7 New software, platforms, open data

7.1 New software

7.1.1 OpenViBE

- Keywords: Neurosciences, Interaction, Virtual reality, Health, Real time, Neurofeedback, Brain-Computer Interface, EEG, 3D interaction

- Functional Description: OpenViBE is a free and open-source software platform devoted to the design, testing and use of Brain-Computer Interfaces (BCI). The platform consists of a set of software modules that can be integrated easily and efficiently to design BCI applications. The key features of OpenViBE are its modularity, its high performance, its portability, its multi-user facilities and its connection with high-end/VR displays. The platform's Designer tool enables users to build complete scenarios from existing software modules using a dedicated graphical language and a simple Graphical User Interface (GUI). This software is available on the Inria Forge under the terms of the AGPL licence, and it was officially released in June 2009. Since then, OpenViBE has been downloaded more than 60,000 times, and it is used by numerous laboratories, projects, and individuals worldwide. More information, downloads, tutorials, videos, and documentation are available on the OpenViBE website.

- Release Contributions:

  Added:
  - Build: conda environment for dependency management
  - Build: OSX support (Intel), except Advanced Visualization
  - Box: PulseRateCalculator
  - Box: Asymmetry Index Metabox
  - CI: gitlab-ci

  Updated:
  - Box: LDA Classifier made scale-independent
  - Box: Classifier trainer randomized k-fold option moved from configuration to box settings
  - Dependency: Boost version 1.71 -> 1.77
  - Dependency: Eigen version 3.3.7 -> 3.3.8
  - Dependency: Expat version 2.1.0 -> 2.5.0
  - Dependency: Xerces-C version 3.1.3 -> 3.2.4
  - Dependency: OGG version 1.2.1 -> 1.3.4
  - Dependency: Vorbis version 1.3.2 -> 1.3.7
  - Dependency: Lua version 5.1.4 -> 5.4.6

  Removed:
  - Build: CMake ExternalProjects dependencies (now in conda)
  - Build: Scripted dependency management
  - CI: Jenkins CI (now in GitLab)

- URL:

- Publication:

- Contact: Anatole Lecuyer

- Participants: Marie-Constance Corsi, Fabrizio De Vico Fallani, Arthur Desbois, Sebastien Rimbert, Axel Bouneau, Laurent Garnier, Tristan Cabel, Marc Mace, Lea Pillette, Anatole Lecuyer, Fabien Lotte, Laurent Bougrain, Théodore Papadopoulo, Thomas Prampart

- Partners: INSERM, GIPSA-Lab

7.1.2 Xareus

- Name: Xareus

- Keywords: Virtual reality, Augmented reality, 3D, 3D interaction, Behavior modeling, Interactive Scenarios

- Scientific Description: Xareus mainly contains a scenario engine (#SEVEN) and a relation engine (#FIVE). #SEVEN is a model and an engine based on Petri nets extended with sensors and effectors, enabling the description and execution of complex and interactive scenarios. #FIVE is a framework for the development of interactive and collaborative virtual environments. #FIVE was developed to answer the need for easier and faster design and development of virtual reality applications. #FIVE provides a toolkit that simplifies the declaration of possible actions and behaviours of objects in a VE. It also provides a toolkit that facilitates the setup and management of collaborative interactions in a VE. It supports distributing the VE across different setups. It also proposes guidelines to efficiently create a collaborative and interactive VE.

- Functional Description: Xareus is implemented in C# and is available as libraries. An integration with the Unity3D engine also exists. Thanks to Xareus modules, the user can focus on the domain-specific aspects of his/her application (industrial training, medical training, etc.). These modules can be used in a vast range of domains for augmented and virtual reality applications requiring interactive environments and collaboration, such as training. The scenario engine is based on Petri nets with the addition of sensors and effectors that allow the execution of complex scenarios for driving Virtual Reality applications. Xareus comes with a scenario editor integrated into Unity 3D for creating, editing, remotely controlling and running scenarios. The relation engine contains software modules that can be interconnected and help build interactive and collaborative virtual environments.

- Release Contributions: This version contains various bug fixes, as well as improved performance and memory usage.

  Added:
  - Decision Trees
  - Visual Scripting

- URL:

- Publications:

- Contact: Valerie Gouranton

- Participants: Florian Nouviale, Valerie Gouranton, Bruno Arnaldi, Vincent Goupil, Carl-Johan Jorgensen, Emeric Goga, Adrien Reuzeau, Alexandre Audinot
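The general idea behind a scenario engine such as #SEVEN, a Petri net whose transitions are additionally guarded by sensors and trigger effectors, can be sketched as follows. This is a hypothetical Python illustration of the concept only: Xareus itself is written in C#, and the class names, fields and the valve scenario below are not its API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Transition:
    name: str
    inputs: List[str]                 # places that must each hold a token
    outputs: List[str]                # places that receive a token on firing
    sensor: Callable[[dict], bool]    # condition observed in the virtual environment
    effector: Callable[[dict], None]  # action applied to the environment on firing


@dataclass
class ScenarioNet:
    marking: Dict[str, int]
    transitions: List[Transition] = field(default_factory=list)

    def enabled(self, t, env):
        # Classic Petri net enabling rule, plus the sensor condition.
        return all(self.marking.get(p, 0) > 0 for p in t.inputs) and t.sensor(env)

    def step(self, env):
        """Fire the first enabled transition and return its name (None if none)."""
        for t in self.transitions:
            if self.enabled(t, env):
                for p in t.inputs:
                    self.marking[p] -= 1
                for p in t.outputs:
                    self.marking[p] = self.marking.get(p, 0) + 1
                t.effector(env)
                return t.name
        return None


if __name__ == "__main__":
    # Hypothetical maintenance-training scenario: open a valve, then finish.
    env = {"user_grabbed_valve": False, "valve": "closed"}
    net = ScenarioNet({"start": 1}, [
        Transition("open_valve", ["start"], ["valve_open"],
                   lambda e: e["user_grabbed_valve"], lambda e: e.update(valve="open")),
        Transition("finish", ["valve_open"], ["done"],
                   lambda e: e["valve"] == "open", lambda e: e.update(message="done")),
    ])
    print(net.step(env))  # nothing fires until the user grabs the valve
    env["user_grabbed_valve"] = True
    print(net.step(env), net.step(env), net.marking)
```

Separating the token flow (scenario logic) from sensors and effectors (links to the virtual environment) is what allows the same scenario structure to drive different applications.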

7.1.3 AvatarReady

- Name: A unified platform for the next generation of our virtual selves in digital worlds

- Keywords: Avatars, Virtual reality, Augmented reality, Motion capture, 3D animation, Embodiment

- Scientific Description: AvatarReady is an open-source tool (AGPL) written in C#, providing a plugin for the Unity 3D software to facilitate the use of humanoid avatars in mixed reality applications. Because semi-automatically configuring avatars coming from different origins, and using different interaction techniques and devices, is currently complex, AvatarReady aggregates several industrial solutions and results from the academic state of the art to offer a simple, fast and seamless way to use humanoid avatars in mixed reality. For example, it is possible to automatically configure avatars from different libraries (e.g., Rocketbox, Character Creator, Mixamo), as well as to easily use different avatar control methods (e.g., motion capture, inverse kinematics). AvatarReady is also organized in a modular way so that scientific advances can be progressively integrated into the framework. AvatarReady is furthermore accompanied by a utility to generate ready-to-use avatar packages that can be used on the fly, as well as a website to display them and offer them for download.

- Functional Description: AvatarReady is a Unity tool to facilitate the configuration and use of humanoid avatars for mixed reality applications. It comes with a utility to generate ready-to-use avatar packages and a website to display them and offer them for download.

- URL:

- Contact: Ludovic Hoyet

7.2 New platforms

7.2.1 Immerstar

Participants: Florian Nouviale, Ronan Gaugne.

URL: Immersia website

With the two virtual reality technological platforms Immersia and Immermove, grouped under the name Immerstar, the team has access to high-level scientific facilities. This equipment benefits the research teams of the center and has allowed them to extend their local, national and international collaborations. In 2023, the Immersia platform was extended with a new space dedicated to scientific experimentation in XR, named ImmerLab (see figure 2, left). A photography rig was also installed (see figure 2, right). This system, provided by InterDigital, will make it possible to explore head and face image computing and rendering.

Immerlab experimental room and Rig installation

Since 2021, Immerstar has been funded by the PIA3-Equipex+ grant CONTINUUM. The CONTINUUM project involves 22 partners and runs a collaborative research infrastructure of 30 platforms located throughout France. Driven by the Continuum consortium, Immerstar has been involved since the end of 2021 in a new National Research Infrastructure, which gathers the main platforms of CONTINUUM 25.

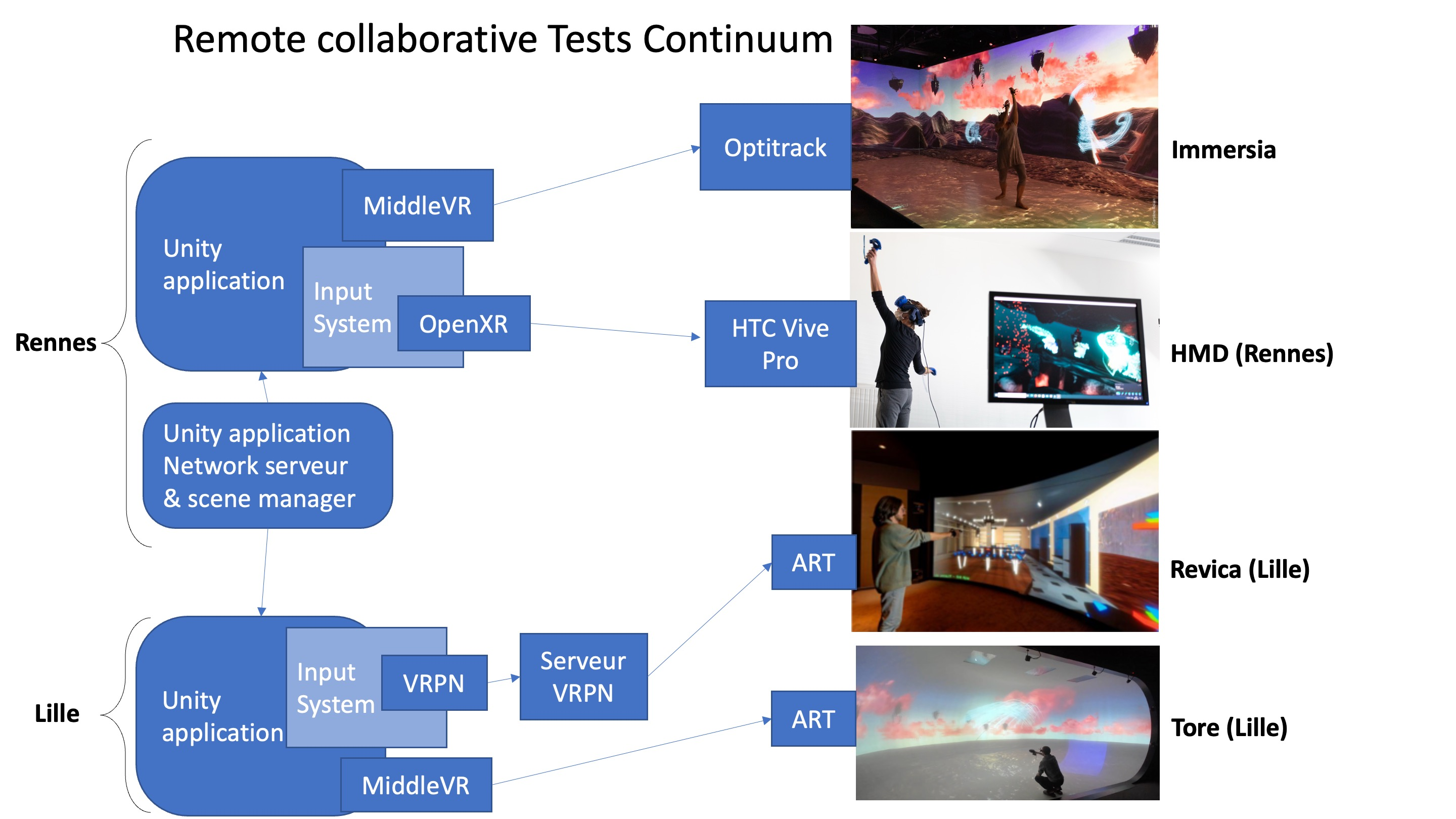

Immerstar is highly involved in the Continuum project. It hosted the annual plenary meeting in July 2024, jointly with a scientific session of the CNRS GDR IG-RV. During the 3 days of the meeting, Immersia presented several demos to the participants. In addition, during the year, Immersia was involved in several remote collaborative tests with the platforms Tore and Revica in Lille, and TransLife in Compiègne. The tests with the two platforms in Lille were based on a collaborative virtual environment developed in the Hybrid team, in collaboration with the department of digital art at Paris 8 (see figure 3). Another test, with Tore in Lille and TransLife in Compiègne, was based on the MiddleVR software platform (see figure 4). This French software has been deployed in Immerstar since 2013, and we have acted as beta testers for it since its installation.

Immerstar is also involved in EUR Digisport led by University of Rennes, H2020 EU projects GuestXR and ShareSpace, and PIA4 DemoES AIR led by University of Rennes.

After the recruitment of Adrien Reuzeau by the University of Rennes as a permanent research engineer in October 2023, Immerstar recruited Alexandre Vu in January 2024 on a two-year research engineer position with University Rennes 2. His role is dedicated to developing partnerships with private entities.

Immersia also hosted teaching activities for students from INSA Rennes, ENS Rennes, University of Rennes, and University Rennes 2.

Collaboration between platforms in Continuum with Creative Harmony

Collaboration between platforms in Continuum with MiddleVR

8 New results

8.1 Virtual Reality Interaction and Usages

8.1.1 The Influence of a prop mass on task performance in Virtual Reality

Participants: Guillaume Moreau [contact].

As Virtual Reality (VR) applications continue to develop, many questions persist regarding how to optimize user performance in virtual environments. Among the numerous variables that could influence performance, the mass of the props used within VR applications is particularly noteworthy. In this work 43, we investigate the influence of the mass of a tool replica on users' performance in a pointing task. A within-subject VR experiment was conducted with three replicas of different weights to collect objective and subjective data from participants (see Figure 5). Results suggest that the mass of the replica can influence task performance in terms of error-free selection time and number of errors, as well as subjective perceptions such as perceived difficulty and cognitive load. Indeed, performance was significantly better with a lighter replica than with a heavier one, and subjective user-experience metrics were also significantly improved with a light replica. These results help pave the way for additional research on user performance within virtual environments.

Experimental setup

This work was done in collaboration with the INUIT team of IMT Atlantique Brest and Clarté.

8.1.2 Influence of rotation gains on unintended positional drift during virtual steering navigation in virtual reality

Participants: Ferran Argelaguet [contact].

Unintended Positional Drift (UPD) is a phenomenon that occurs during navigation in Virtual Reality (VR). It is characterized by the user's unconscious or unintentional physical movements in the workspace while using a locomotion technique (LT) that does not require physical displacement (e.g., steering, teleportation). Recent work showed that some factors, such as the LT used and the type of trajectory, can influence UPD. However, little is known about the influence of rotation gains (commonly used in redirection-based LTs) on UPD during navigation in VR. In this work 30, we conducted two user studies to assess the influence of rotation gains on UPD. In the first study, participants had to perform consecutive turns in a corridor virtual environment. In the second study, participants had to explore a large office floor and collect spheres freely. We compared conditions with and without rotation gains, and we also varied the turning angle required to perform the turns, while considering factors such as sensitivity to cybersickness and the learning effect. We found that rotation gains and lower turning angles decreased UPD in the first study, but that rotation gains increased UPD in the second study. This work contributes to the understanding of UPD, an often overlooked topic, and discusses the design implications of these results for improving navigation in VR.

This work was done in collaboration with TU Wien and Telecom SudParis.
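For readers unfamiliar with rotation gains, the sketch below shows the usual principle: each physical change in head yaw is multiplied by a gain before being applied to the virtual view, so that with a gain of 1.5 a 60° physical turn yields a 90° virtual turn. It also includes one simple, hypothetical way to quantify positional drift (maximum horizontal distance from the starting position); the function names are illustrative, and the metric actually used in the study may differ.

```python
import math


def apply_rotation_gain(physical_yaw_deg, gain):
    """Virtual yaw sequence obtained by scaling every physical yaw change by `gain`.

    gain > 1: the virtual view turns more than the head (less physical turning needed);
    gain < 1: the virtual view turns less than the head.
    """
    virtual = [physical_yaw_deg[0]]
    for prev, cur in zip(physical_yaw_deg, physical_yaw_deg[1:]):
        # Shortest signed change in degrees, so that 350 -> 10 counts as +20, not -340.
        delta = (cur - prev + 180.0) % 360.0 - 180.0
        virtual.append((virtual[-1] + gain * delta) % 360.0)
    return virtual


def max_positional_drift(positions_xz):
    """Hypothetical drift metric: max horizontal distance (m) from the start position."""
    x0, z0 = positions_xz[0]
    return max(math.hypot(x - x0, z - z0) for x, z in positions_xz)


if __name__ == "__main__":
    # A 60-degree physical turn sampled in three steps, with a gain of 1.5.
    print(apply_rotation_gain([0.0, 20.0, 40.0, 60.0], 1.5))
    print(max_positional_drift([(0.0, 0.0), (0.3, 0.4), (0.1, 0.0)]))
```

Applying the gain to per-frame yaw differences, rather than to the absolute heading, keeps the manipulation continuous and lets the gain be switched on or off at any moment of the navigation.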

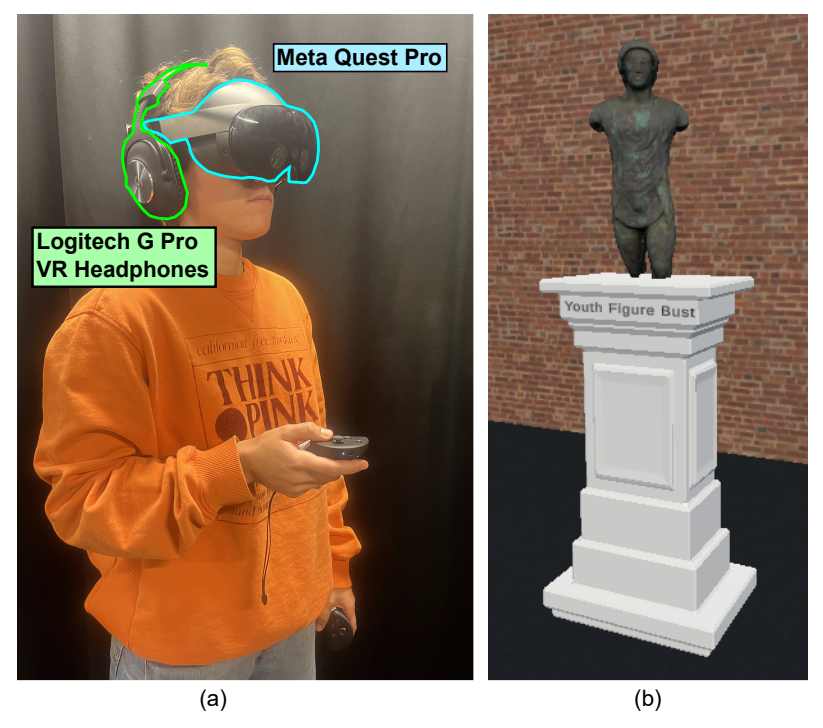

8.1.3 Examining the effects of teleportation on semantic memory of a virtual museum compared to natural walking

Participants: Ferran Argelaguet [contact].

Over the past decades, there has been extensive research investigating the trade-offs between various Virtual Reality (VR) locomotion techniques. One of the most widely researched techniques is teleportation, due to its ability to quickly traverse large virtual spaces even in limited physical tracking spaces. Most teleportation research has focused on its effects on spatial cognition, such as spatial understanding and retention. However, relatively little is known about whether the use of teleportation in immersive learning experiences can affect the acquisition of semantic knowledge (our knowledge about facts, concepts, and ideas), which is essential for long-term learning. In this work 32, we conducted a human-subject study to investigate the effects of teleportation compared to natural walking on the retention of semantic information about artifacts in a virtual museum. Participants visited unique 3D artifacts accompanied by audio clips and artifact names (see Figure 6). Our results show that participants reached the same semantic memory performance with both locomotion techniques, but with different behaviors, self-assessed performance, and preferences. In particular, participants felt that they recalled more with walking than with teleportation. However, objectively, they spent more time with the artifacts while walking, meaning that they learnt less per unit of time than with teleportation. We discuss the relationships, implications, and guidelines for VR experiences designed to help users acquire new knowledge.

Figure 6: Photograph and VR snapshot of the experimental setup

This work was done in collaboration with the University of Central Florida (SReal team) and the University of Trento.

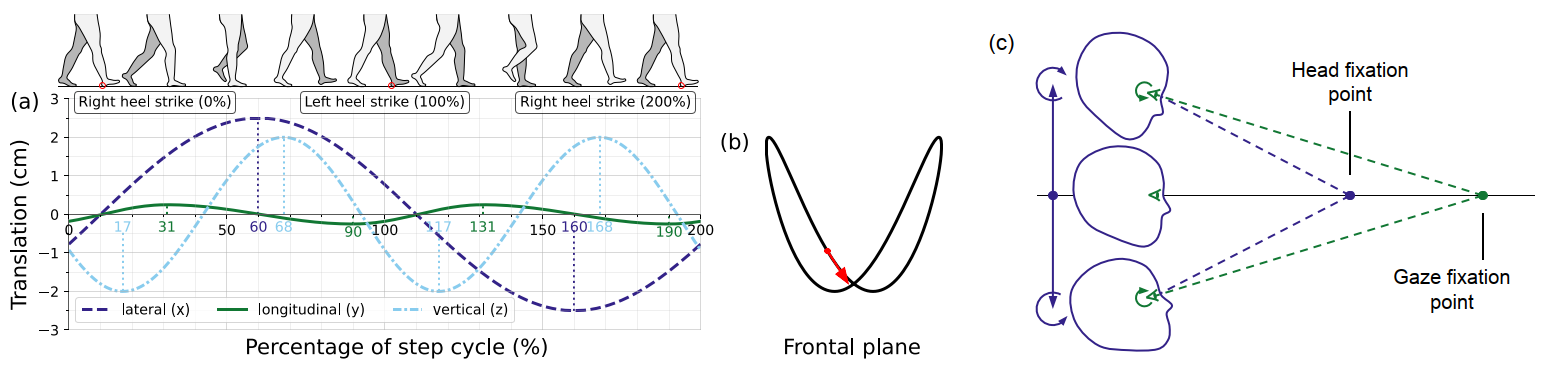

8.1.4 To use or not to use viewpoint oscillations when walking in VR? State of the art and perspectives

Participants: Yann Moullec, Justine Saint-Aubert, Mélanie Cogné, Anatole Lécuyer [contact].

Viewpoint oscillations are periodic changes in the position and/or orientation of the point of view in a virtual environment. They can be implemented in Virtual Reality (VR) walking simulations to make them feel closer to real walking (see Figure 7). This is especially useful in simulations where users remain in place because of space or hardware constraints. As of today, it remains unclear what exact benefits they bring to user experience during walking simulations, and with what characteristics they should be implemented. To answer these questions, in this work 28 we conducted a systematic literature review focusing on five main dimensions of user experience (walking sensation, vection, cybersickness, presence, and embodiment) and discussed 44 articles from the fields of VR, Vision, and Human-Computer Interaction. Overall, the literature suggests that viewpoint oscillations benefit vection and, with less evidence, walking sensation and presence. As for cybersickness, the literature reports mixed results. Based on these results, we recommend using viewpoint oscillations in applications that require accurate distance or speed perception, or that aim to provide compelling walking simulations without a walking avatar, while paying particular attention to cybersickness. Taken together, this work gives recommendations for enhancing walking simulations in VR, which may be applied to entertainment, virtual visits, and medical rehabilitation.
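As an illustration only, one straightforward way to implement positional viewpoint oscillations is to add small sinusoidal offsets to the camera position. The sketch below assumes vertical bobbing at the step frequency and lateral sway at half the step frequency (one cycle per stride of two steps); the function name, default amplitudes, and default frequency are hypothetical values, not recommendations from the review.

```python
import math
from typing import Tuple


def viewpoint_offset(t: float, step_freq_hz: float = 2.0,
                     vert_amp_m: float = 0.02, lat_amp_m: float = 0.015) -> Tuple[float, float]:
    """Return the (lateral, vertical) camera offset in metres at time t seconds.

    Vertical bobbing repeats once per step; lateral sway repeats once per stride
    (two steps), i.e. at half the step frequency. All default values are illustrative.
    """
    vertical = vert_amp_m * math.sin(2.0 * math.pi * step_freq_hz * t)
    lateral = lat_amp_m * math.sin(math.pi * step_freq_hz * t)
    return lateral, vertical
```

In a walking simulation, the returned offset would be added each frame to the camera position produced by the locomotion technique; orientation oscillations (pitch, roll) could be added in the same way.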

Figure 7: Illustration of head motions while walking

8.1.5 Handing pedagogical scenarios back over to domain experts: A scenario authoring model for VR with pedagogical objectives

Participants: Mathieu Risy, Bruno Arnaldi, Valérie Gouranton [contact].

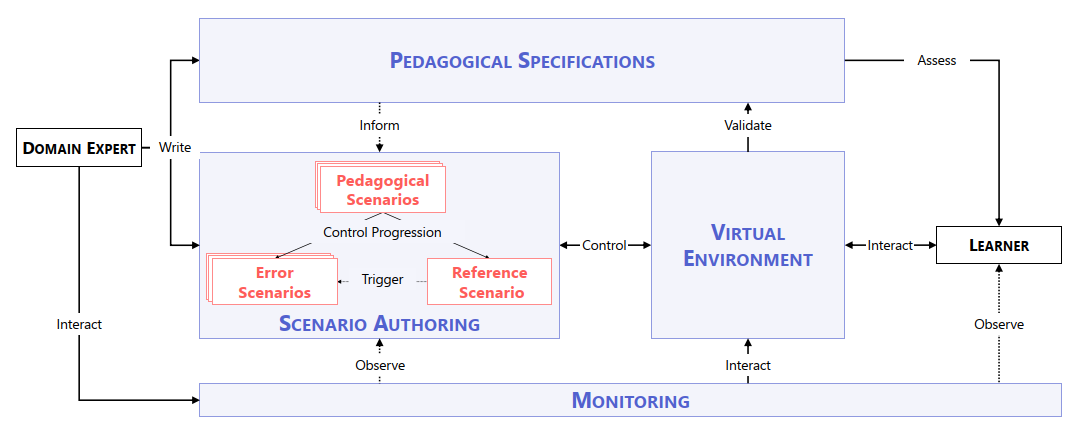

Teachers and trainers make pedagogical decisions for their training courses, so why not do the same for Virtual Reality (VR) training courses? Virtual Environments for Training (VETs) are becoming prominent educational tools. However, VET models have yet to propose scenario authoring aligned with pedagogical objectives that can account for the diversity of approaches available to teachers. This work 40 proposes a scenario authoring model for VET that directly involves domain experts and validates their pedagogical objectives. In addition, it proposes the coexistence of multiple pedagogical scenarios within the same VET, using three types of scenarios (see Figure 8). The validity of the model is then discussed using a VR welding application as a use case.

Figure 8: Schematic of a scenario

8.1.6 Generating and evaluating data of daily activities with an autonomous agent in a virtual smart home

Participants: Lysa Gramoli, Bruno Arnaldi, Valérie Gouranton [contact].

Training machine learning models to identify human behavior is a difficult yet essential task for developing autonomous and adaptive systems such as smart homes. These models require large and diverse amounts of labeled data to be trained effectively. Due to the high variety of home environments and occupant behaviors, collecting datasets that are representative of all possible homes is a major challenge. In addition, privacy and cost are major hurdles to collecting real home data. To avoid these difficulties, one solution consists of training these models on purely synthetic data, which can be generated by simulating homes and their occupants. Two challenges arise from this approach: designing a simulation methodology able to generate credible data, and evaluating this credibility. In this work 23, we explain the methodology used to generate diverse synthetic data of daily activities, through the combination of an agent model simulating an occupant and a simulated 3D house enriched with sensors and effectors (see Figure 9). We demonstrate the credibility of the generated synthetic data by comparing their efficacy for training human context understanding models against that of real data. To achieve this, we replicate a real dataset collection setting with our smart home simulator. The occupant is replaced by an autonomous agent following the same experimental protocol used for the real dataset collection. This agent is a BDI-based (Belief-Desire-Intention) model enhanced with a scheduler designed to offer a balance between control and autonomy. This balance is useful in synthetic data generation since strong constraints can be imposed on the agent to simulate desired situations, while autonomous behaviors outside these constraints generate diverse data. In our case, the constraints are those imposed during the real dataset collection that we want to replicate.
The simulated sensors and effectors were configured to react to the agent's behaviors similarly to the real ones. We experimentally show that data generated from this simulation is valuable for two human context understanding tasks: current human activity recognition and future human activity prediction. In particular, we show that models trained solely with simulated data can give reasonable predictions about real situations occurring in the original dataset.
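The balance between control and autonomy can be illustrated with a toy scheduler. This sketch is neither BDI reasoning nor the model used in 23: it assumes that protocol constraints are time windows with an imposed activity, and that outside these windows the agent samples an activity in proportion to hypothetical need urgencies. All names are illustrative.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical protocol constraint: (start minute, end minute, imposed activity).
Constraint = Tuple[int, int, str]


def next_activity(minute: int, constraints: List[Constraint],
                  needs: Dict[str, float], rng: random.Random) -> str:
    """Pick the agent's activity for `minute` of the simulated day."""
    # Control: if the replicated protocol imposes an activity now, follow it.
    for start, end, activity in constraints:
        if start <= minute < end:
            return activity
    # Autonomy: otherwise sample an activity with probability proportional to
    # the current urgency of the corresponding need.
    activities = list(needs)
    weights = [needs[a] for a in activities]
    return rng.choices(activities, weights=weights, k=1)[0]
```

Because imposed windows are checked first, the replicated protocol is always respected, while sampling outside these windows yields varied activity sequences across simulated days.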

Figure 9: VR environment snapshots

This work was done in collaboration with Orange Labs.

8.1.7 Priming and personality effects on the Sense of Embodiment for human and non-human avatars in Virtual Reality

Participants: Jean-Marie Normand [contact].

The increasingly widespread use of Virtual Reality (VR) technology calls for a deeper understanding of virtual embodiment and its relationship to human subjectivity. Individual differences and primed perceptual associations that could influence the perception of one’s virtual body remain incompletely explored. In this work 37, we exposed participants to human and non-human avatars, with half of the sample experiencing a concept primer beforehand (see Figure 10). We also measured subjective traits using the Multidimensional Assessment of Interoceptive Awareness and the Ten Item Personality Inventory. Results support previous work suggesting greater body ownership with human than with non-human avatars, and suggest that concept priming could influence embodiment and state body mindfulness. Additionally, results highlight several influences of personality traits on embodiment and body mindfulness measures.

Figure 10: VR snapshot of the avatars

This work was done in collaboration with Trinity College Dublin.

8.1.8 Avatar-centered feedback: Dynamic avatar alterations can induce avoidance behaviors to virtual dangers

Participants: Antonin Cheymol, Anatole Lécuyer, Rebecca Fribourg, Jean-Marie Normand, Ferran Argelaguet [contact].

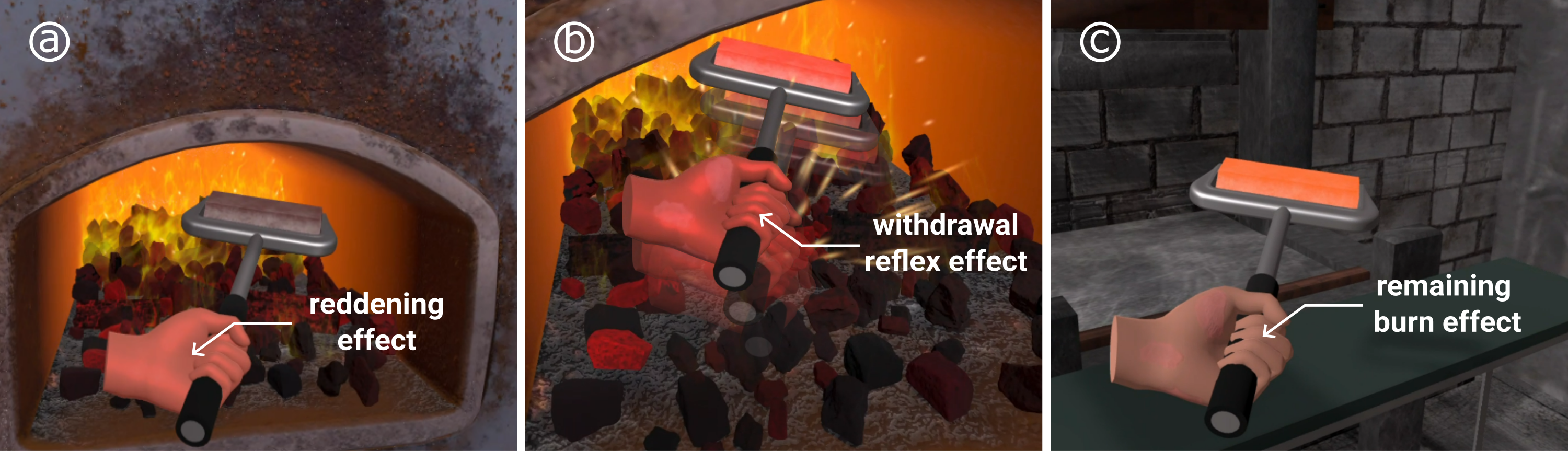

One distinctive feature of VR is its capacity to generate a strong sensation of being present in a dangerous environment without risking the physical consequences. However, this absence of consequences when interacting with virtual dangers might also limit the induction of realistic responses, such as avoidance behaviors, which are a key factor in various VR applications (e.g., training, journalism, or exposure therapies). To address this limitation, we propose avatar-centered feedback 31, a novel, device-free approach that consists of dynamically altering the avatar’s appearance and movements to offer coherent feedback from interactions with the virtual environment, including dangers. We first present a design space clarifying the range of potential implementations of this approach. We then tested this approach in a metallurgy scenario, where participants’ virtual hands would redden and display burns as they got closer to a virtual fire (appearance alteration), and would perform short withdrawal reflex movements when a spark burst next to them (movement alteration) (see Figure 11). Our results show that, compared to a control group, participants receiving avatar-centered feedback demonstrated significantly more avoidance behaviors toward the virtual fire. Interestingly, we also found that experiencing avatar-centered feedback of fire significantly increased avoidance behaviors toward a subsequent danger of a different nature (a circular saw). These results suggest that avatar-centered feedback can also impact the general perception of the avatar’s vulnerability to the virtual environment.

Figure 11: Snapshots of the VR environment

8.1.9 Experiencing an elongated limb in virtual reality modifies the tactile distance perception of the corresponding real limb

Participants: François Le Jeune [contact].

In measurement, a reference frame is needed to compare the measured object to something already known. This raises the neuroscientific question of which reference frame humans use when exploring the environment. Previous studies suggested that, in touch, the body used as a measuring tool also serves as a reference frame. Indeed, artificially modifying the perceived dimensions of the body changes the tactile perception of external object dimensions. However, it is unknown whether such a change in tactile perception would occur when the body schema is modified through the illusion of owning a limb altered in size (see Figure 12). Therefore, employing a virtual hand illusion paradigm with an elongated forearm of different lengths, we systematically tested the subjective perception of the distance between two points (tactile distance perception task, TDP task) on the corresponding real forearm following the illusion 26. The TDP task thus serves as a proxy to gauge changes in the body schema. Embodiment of the virtual arm was significantly greater after synchronous than after asynchronous visuo-tactile stimulation, and forearm elongation significantly increased the TDP. However, we did not find any link between the visuo-tactile induced ownership over the elongated arm and TDP variation, suggesting that vision plays the main role in the modification of the body schema. Additionally, the fact that elongation affected TDP but not proprioception suggests that the two are affected differently by body schema modifications. These findings confirm the malleability of the body schema and its role as a reference frame in touch.

Figure 12: VR snapshots of the elongated-arm conditions

This work was done in collaboration with Rome Bio-Medico University.

8.1.10 Preparing users to embody their avatars in VR: Insights on the effects of priming, mental imagery, and acting on embodiment experiences

Participants: Anatole Lécuyer [contact].

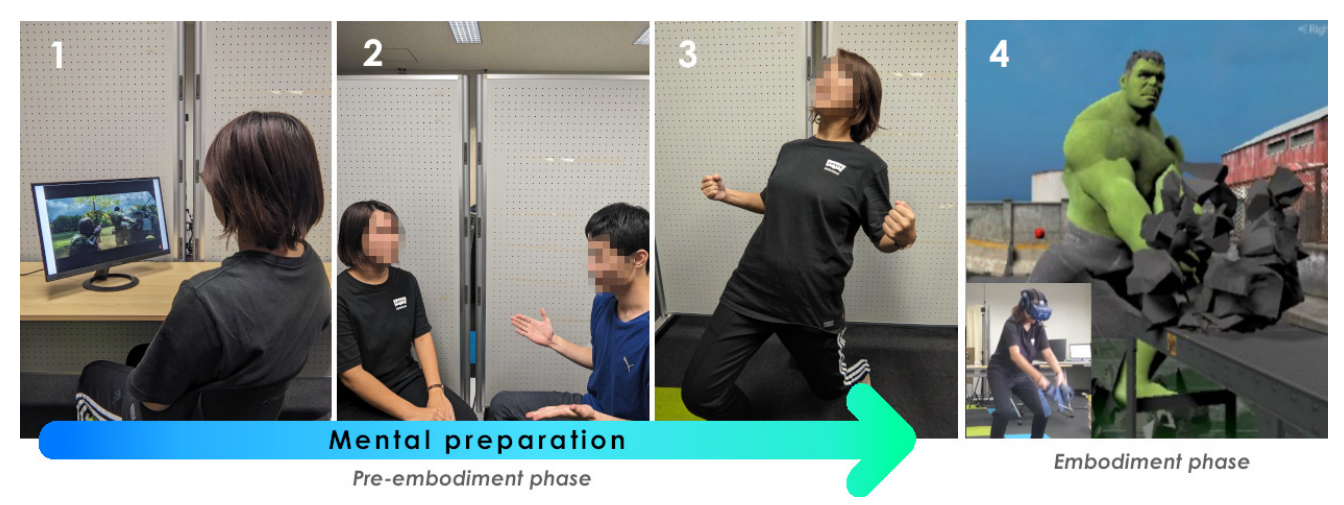

Establishing a strong connection between users and their avatars in virtual reality poses an enduring challenge. Despite technical advancements, some users resist their avatars, while others seamlessly accept them as replacements for their bodies. In this work 35, we investigate the feasibility of pre-conditioning users to embrace their avatars prior to immersion. To do so, we propose a user preparation protocol involving three stages: first, users receive information about their avatar's identity, capabilities, and appearance. Next, they engage in a mental imagery exercise, envisioning themselves as their avatar. Finally, they physically impersonate their avatar's character through an acting exercise (see Figure 13). Testing this protocol involved a study with 48 participants embodying an avatar representing the Hulk, with and without preparation. We could not find significant effects of the user preparation on the sense of embodiment, Proteus effects, or affective bond. This prompts further discussion on how users can be primed to accept their avatars as their own bodies, an idea introduced for the first time in this paper.

Figure 13: Photographs of the preparation protocol and VR snapshot of a user impersonating the Hulk

This work was done in collaboration with Inria BIVWAK team, Melbourne University and Nara Institute.

8.1.11 Evaluation of power wheelchair driving performance in simulator compared to driving in real-life situations: the SIMADAPT (simulator ADAPT) project — a pilot study

Participants: Valérie Gouranton [contact].

The objective of this study was to evaluate users’ driving performance with a Power Wheelchair (PWC) driving simulator in comparison to the same driving task in real conditions with a standard power wheelchair. Three driving circuits of progressive difficulty (C1, C2, C3), designed to assess PWC driving performance in indoor situations, were used in this study. These circuits were modeled in a 3D virtual environment to replicate the three driving task scenarios in Virtual Reality (VR) (see Figure 14). Users were asked to complete the three circuits under two testing conditions, in VR and on a real circuit (R), during three successive sessions; in each session, users completed both conditions, in randomized order. Driving performance was evaluated using the number of collisions and the time to complete the circuit. In addition, driving ability, measured with the Wheelchair Skill Test (WST), and mental load were assessed in both conditions. Cybersickness, user satisfaction, and sense of presence were measured in VR. Thirty-one participants with neurological disorders and expert wheelchair drivers were included in the study. Driving performance differed significantly between the VR and R conditions in the most complicated circuit, C3, with an increased number of collisions in VR, but did not differ significantly for the two easiest circuits, C1 and C2. WST results did not differ significantly in C1, C2, or C3. The general sense of presence was reported as acceptable (mean value of 4.6 out of 6) for all participants, and cybersickness was also reported as acceptable (SSQ mean value of 4.25 over the three circuits in the VR condition), with no significant adverse effects. These results show the value of the simulator for driving training applications. Still, the mental load was higher in VR than in the R condition, which mitigates the potential for use with people with cognitive disorders 22.

Figure 14: Photograph and VR snapshot of the experimental setup

This work was done in collaboration with Inria RAINBOW team and the Pôle Saint-Hélier (Rennes CHU hospital).

8.2 Haptics

8.2.1 PalmEx: Adding palmar force-feedback for 3D manipulation with haptic exoskeleton gloves

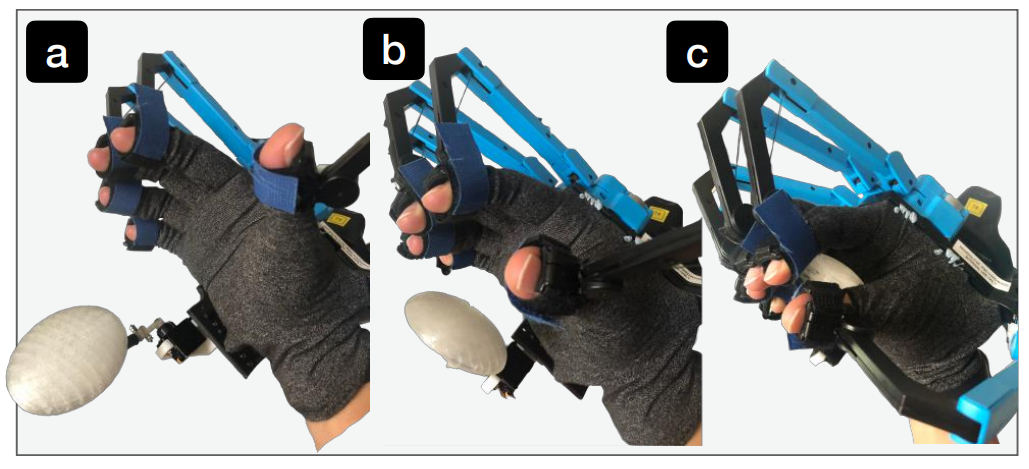

Participants: Anatole Lécuyer [contact].

Haptic exoskeleton gloves are a widespread solution for providing force-feedback in Virtual Reality (VR), especially for 3D object manipulation. However, they still lack an important feature regarding in-hand haptic sensations: palmar contact. In this work 20, we present PalmEx, a novel approach that incorporates palmar force-feedback into exoskeleton gloves to improve overall grasping sensations and manual haptic interactions in VR. PalmEx's concept is demonstrated through a self-contained hardware system augmenting a hand exoskeleton with a palmar contact interface that physically encounters the user's palm (see Figure 15). We build upon current taxonomies to characterize PalmEx's capabilities for both the exploration and manipulation of virtual objects. We first conduct a technical evaluation optimizing the delay between virtual interactions and their physical counterparts. We then empirically evaluate PalmEx's proposed design space in a user study (n=12) to assess the potential of a palmar contact for augmenting an exoskeleton. Results show that PalmEx offers the best rendering capabilities for performing believable grasps in VR. PalmEx highlights the importance of palmar stimulation and provides a low-cost solution to augment existing high-end consumer hand exoskeletons.

Figure 15: Photograph of the PalmEx device, with the exoskeleton and the encountered-type interface

This work was done in collaboration with Inria RAINBOW team.

8.2.2 Cool me down: Effects of thermal feedback on cognitive stress in virtual reality

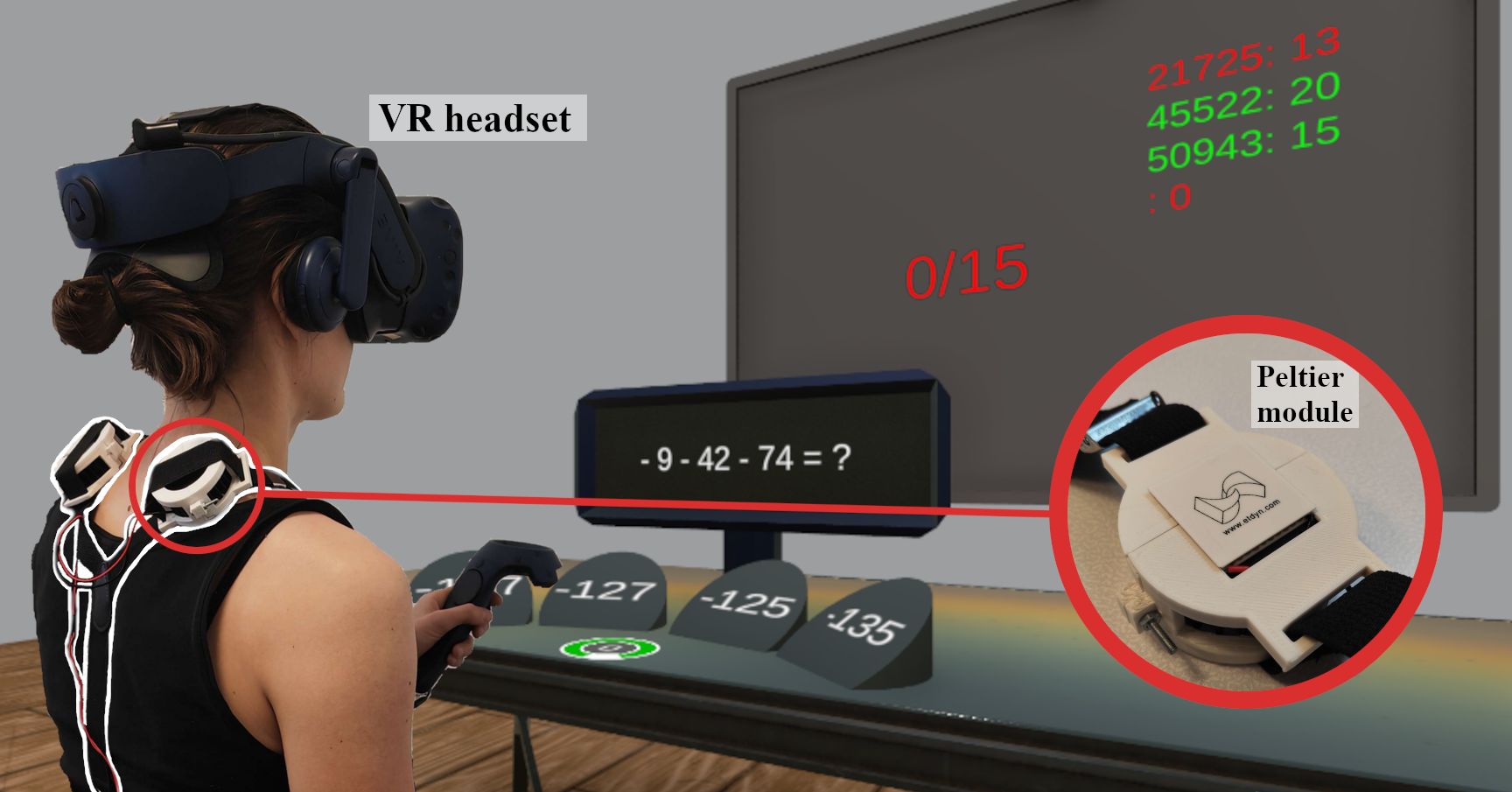

Participants: Vincent Philippe, Jeanne Hecquard [contact], Emilie Hummel, Ferran Argelaguet, Marc Macé, Valérie Gouranton, Anatole Lécuyer, Justine Saint-Aubert.

This work 39 investigates the influence of thermal haptic feedback on stress during a cognitive task in virtual reality. We hypothesized that cool feedback would help reduce stress in such tasks, where users are actively engaged. We designed a haptic system using Peltier cells to deliver thermal feedback to the left and right trapezius muscles (see Figure 16). A user study was conducted with 36 participants to investigate the influence of different temperatures (cool, warm, neutral) on users' stress level during mental arithmetic tasks. Results show that the impact of the thermal feedback depends on the participant's temperature preference. Interestingly, a subset of participants (36%) felt less stressed with cool feedback than with neutral feedback while maintaining similar performance levels, and expressed a preference for the cool condition. Emotional arousal also tended to be lower with cool feedback for these participants. This suggests that cool thermal feedback has the potential to induce relaxation during cognitive tasks. Taken together, our results pave the way for further experimentation on the influence of thermal feedback.

Figure 16: Experimental setup

This work was done in collaboration with Inria RAINBOW team.

8.2.3 Studying the influence of contact force on thermal perception at the fingertip

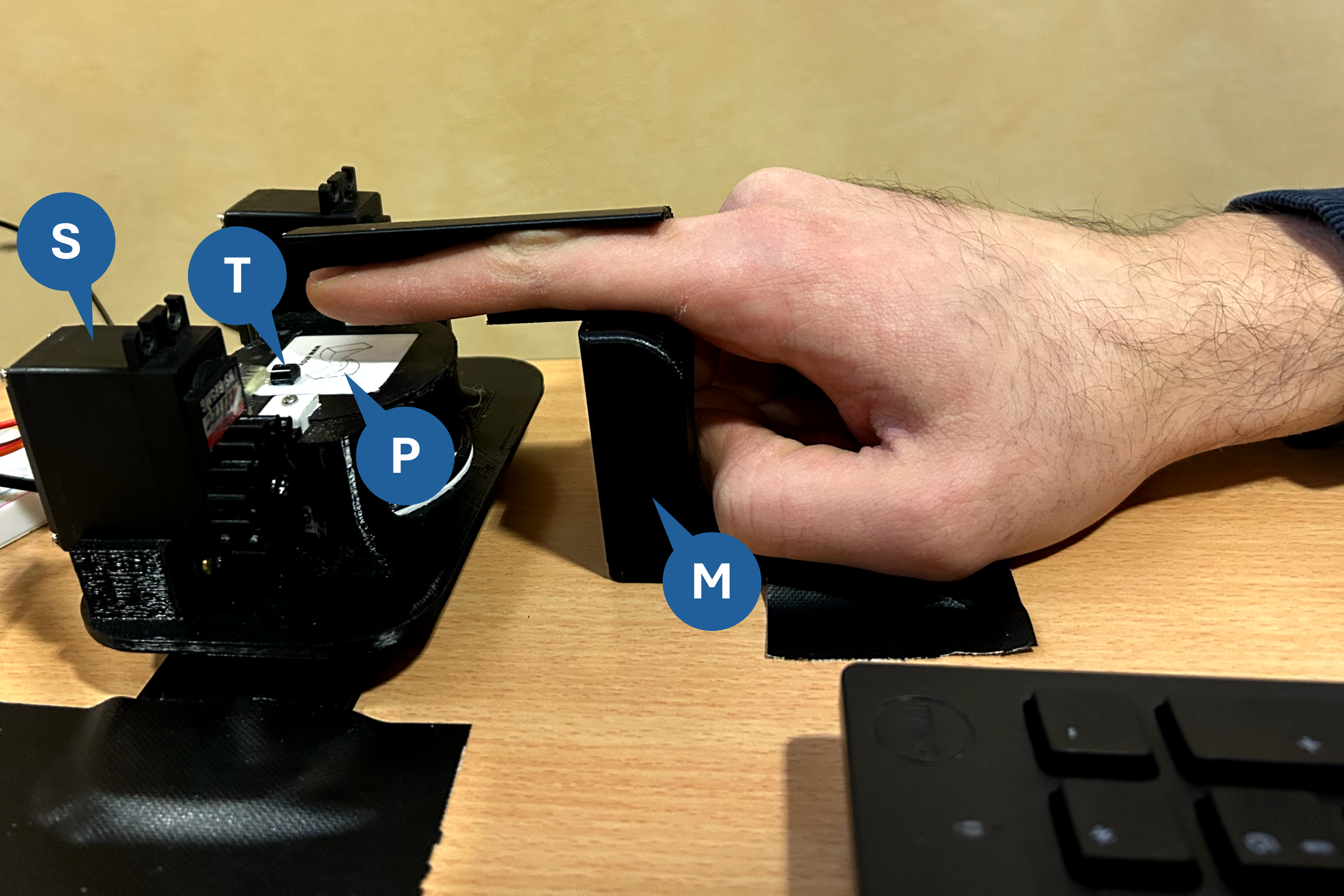

Participants: Jeanne Hecquard, Ferran Argelaguet, Justine Saint-Aubert [contact], Marc Macé, Anatole Lécuyer.

This paper 44 investigates the influence of the contact force applied to the fingertip on the perception of hot and cold temperatures, studying how variations in contact force may affect the sensitivity of cutaneous thermoreceptors or their interpretation. In a psychophysical experiment (see Figure 17), 18 participants were exposed to cold (20 °C) and hot (38 °C) thermal stimuli at contact forces ranging from gentle (0.5 N) to firm (3.5 N) touch. Results show a tendency to overestimate hot temperatures (hot feels hotter than it really is) and underestimate cold temperatures (cold feels colder than it really is) as the contact force increases. This result might be linked to the increase in fingertip contact area that occurs as the contact force between the fingertip and the plate delivering the stimuli grows.

Figure 17: Experimental setup

This work was done in collaboration with Inria RAINBOW team and the University of Pisa.

8.2.4 Warm regards: Influence of thermal haptic feedback during social interactions in VR

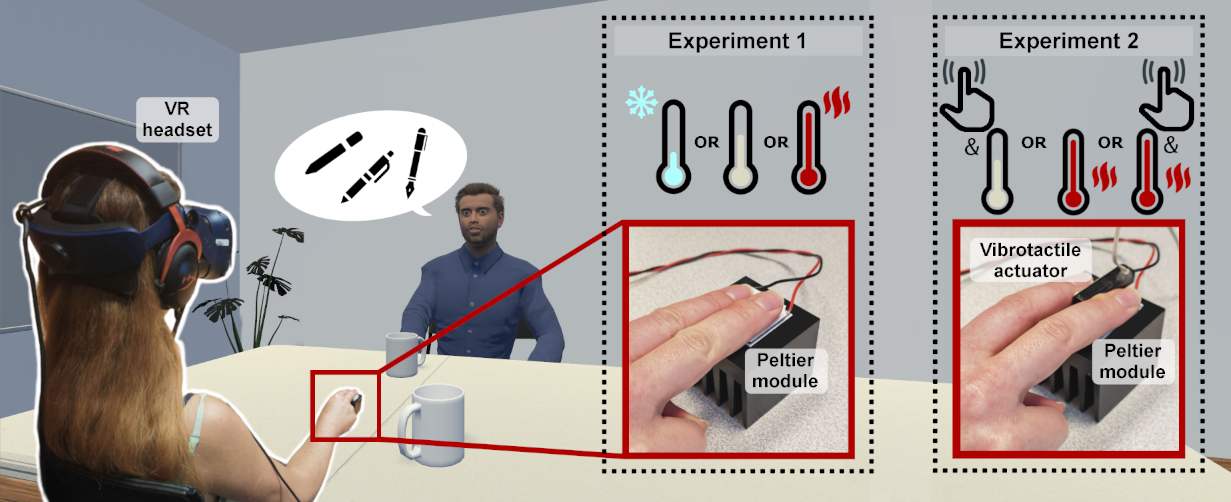

Participants: Jeanne Hecquard, Justine Saint-Aubert [contact], Ferran Argelaguet, Anatole Lécuyer, Marc Macé.

In this work 36, we study how thermal haptic feedback can influence social interactions in virtual environments, with an emphasis on persuasion, focus, co-presence, and friendliness. Physical and social warmth have been repeatedly linked in the psychological literature, which allows for speculation about the effect of thermal haptics on virtual social interactions. To that end, we conducted a study on thermal feedback during simulated social interactions with a virtual agent. We tested three conditions: warm, cool, and neutral (see Figure 18). Results showed that warm feedback positively influenced users' perception of the agent and significantly enhanced persuasion and thermal comfort. Multiple users reported the agent feeling less 'robotic' and more 'human' in the warm condition. Previous studies have also shown the potential of vibrotactile feedback for social interactions. A second study thus evaluated the combination of warmth and vibrations for social interactions, using the same protocol and three similar conditions: warmth, vibrations, and warm vibrations. Warmth was perceived as more friendly, while warm vibrations heightened the agent's virtual presence and persuasion. These results encourage the study of thermal haptics to support positive social interactions. Moreover, they suggest that some types of haptic feedback are better suited than others to certain types of social interactions and communication.

Figure 18: Experimental conditions

This work was done in collaboration with Inria RAINBOW team.

8.2.5 Towards end-user customization of haptic experiences

Participants: Tom Roy, Yann Glémarec, Ferran Argelaguet [contact].

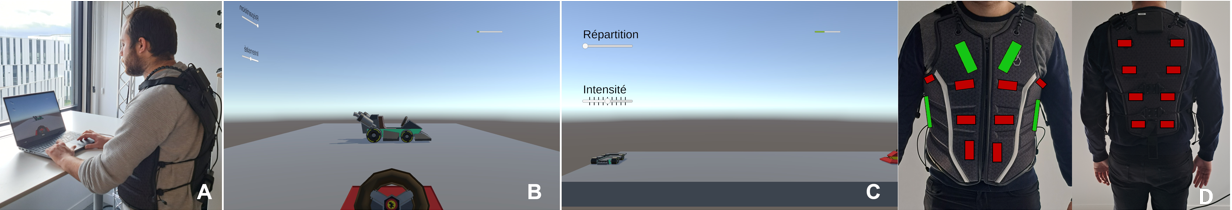

A haptic experience may take many shapes or forms, ranging from simple UI notifications on a phone to fully immersive multi-sensory experiences. In this context, dedicated haptic authoring tools have been flourishing, and the literature on haptic design has introduced many concepts and guidelines to improve Haptic Experiences. In this work 41, we propose to go beyond haptic design and explore the potential of end-user customization of Haptic Experiences. First, we discuss and extend a theoretical model of Haptic Experience. We revisit the proposed design parameters to account for the spatialized nature of haptic feedback and study the suitability of these parameters for end-user customization. Then, we detail a user study (n=52) exploring how users customize different haptic effects (18 in total) through two haptic customization parameters, intensity and spatial density (see Figure 19). Our results indicate significant variability in these parameters depending on the context, and provide directions for future user studies on haptic profile design driven by subjective data.
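To make the two customization parameters concrete, the following sketch applies a hypothetical user profile to a haptic effect represented as a grid of actuator amplitudes in [0, 1]: intensity scales every amplitude (clipped at 1), and spatial density keeps roughly that fraction of actuators active in each row. This representation and the function name are assumptions for illustration, not the parameterization used in 41.

```python
from typing import List


def customize(effect: List[List[float]], intensity: float, density: float) -> List[List[float]]:
    """Apply hypothetical end-user intensity and spatial-density settings to an actuator grid."""
    if not 0.0 < density <= 1.0:
        raise ValueError("density must be in (0, 1]")
    step = max(1, round(1.0 / density))  # keep every `step`-th actuator in each row
    return [
        [min(1.0, a * intensity) if i % step == 0 else 0.0 for i, a in enumerate(row)]
        for row in effect
    ]
```

A stored per-user profile (intensity, density) could then be applied to any effect before it is sent to the actuators, which is one way end-user customization could sit on top of designer-authored effects.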

Figure 19: Experimental setup and VR snapshots

This work was done in collaboration with Interdigital.

8.3 Brain Computer Interfaces

8.3.1 Introducing the use of thermal neurofeedback

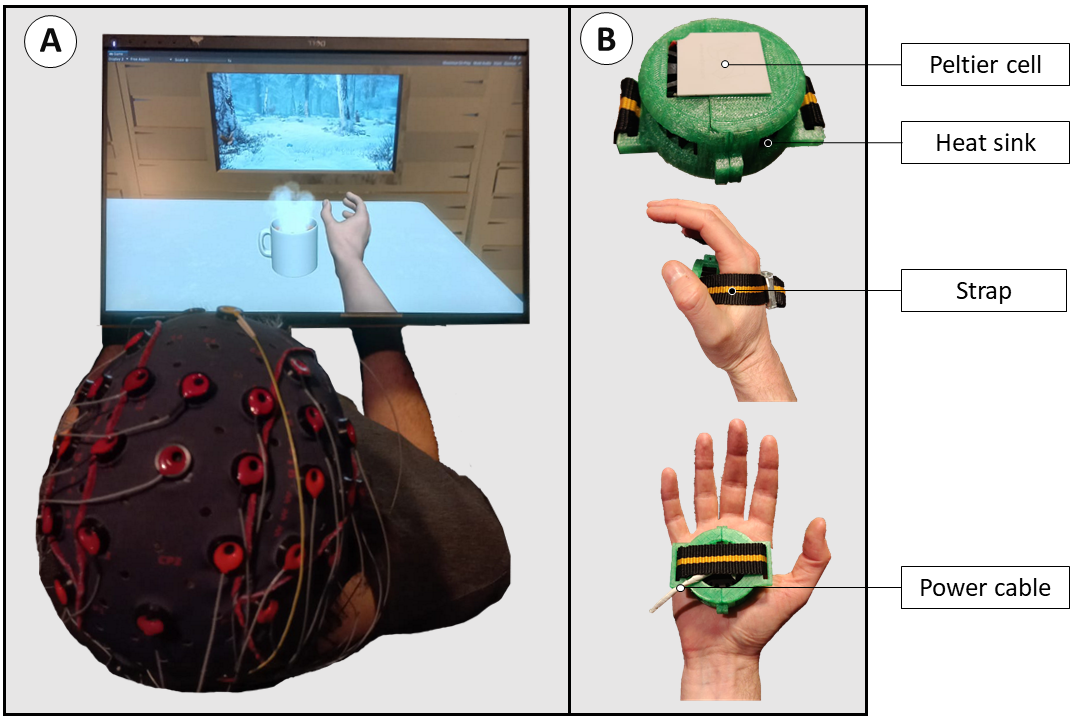

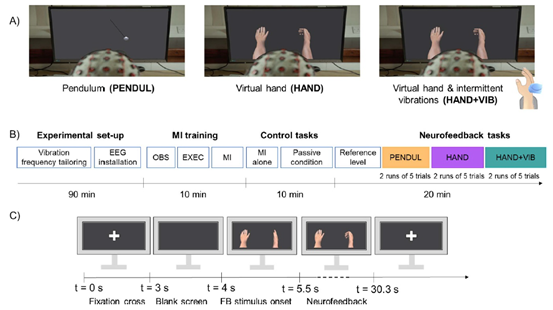

Participants: François Le Jeune, Emile Savalle, Anatole Lécuyer, Marc Macé, Léa Pillette [contact].

Motor imagery-based brain-computer interfaces (MI-BCIs) enable users to control digital devices by performing motor imagery (MI) tasks while their brain activity is recorded, typically using electroencephalography. Performing MI is challenging, especially for novices. To tackle this challenge, neurofeedback (NFB) training is frequently used; it usually relies on visual feedback to help users learn to modulate the activity of their sensorimotor cortex when performing MI tasks. Improving the feedback provided during this training is essential. This study investigates the feasibility and effectiveness of thermal feedback for MI-based NFB compared to visual feedback (see Figure 20) 46, 47. Thirteen people participated in an NFB training session with visual-only, thermal-only, and combined visuo-thermal feedback. Both visual-only and combined visuo-thermal feedback elicited significantly greater desynchronization over the sensorimotor cortex than thermal-only feedback. No significant difference was found between visual-only and combined visuo-thermal feedback, indicating that adding thermal feedback did not impair visual feedback. This study outlines the need for further exploration of alternative feedback modalities in BCI research.
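Sensorimotor desynchronization of this kind is often quantified as an event-related desynchronization percentage, ERD% = (A - R) / R × 100, where A is band power during the task and R is band power during a reference (rest) period; negative values indicate desynchronization. The sketch below assumes a single-channel signal, a plain periodogram, and an 8–12 Hz mu band; the processing pipeline actually used in this study is not described here, and the function names are hypothetical.

```python
import numpy as np


def band_power(x: np.ndarray, fs: float, fmin: float, fmax: float) -> float:
    """Mean periodogram power of a single-channel signal within [fmin, fmax] Hz."""
    x = x - x.mean()
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / (fs * x.size)
    mask = (freqs >= fmin) & (freqs <= fmax)
    return float(power[mask].mean())


def erd_percent(task: np.ndarray, rest: np.ndarray, fs: float,
                fmin: float = 8.0, fmax: float = 12.0) -> float:
    """ERD% = (A - R) / R * 100, with A the task and R the reference band power."""
    a = band_power(task, fs, fmin, fmax)
    r = band_power(rest, fs, fmin, fmax)
    return (a - r) / r * 100.0
```

For instance, if mu amplitude halves during imagery, band power drops to a quarter of its resting value and ERD% is -75; a more strongly negative value corresponds to the "greater desynchronization" compared across feedback conditions above.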

Figure 20: Experimental setup

This work was done in collaboration with Inria EMPENN team.

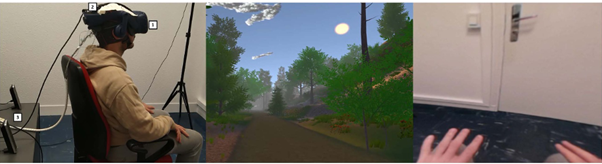

8.3.2 Towards electrophysiological measurement of presence in virtual reality through auditory oddball stimuli

Participants: Emile Savalle, Léa Pillette, Kyung-Ho Won, Ferran Argelaguet, Anatole Lécuyer, Marc Macé [contact].

In this work, we present a way of evaluating presence, an important aspect of user experience in virtual reality (VR) that corresponds to the illusion of being physically located in a virtual environment (VE). Presence is usually measured through questionnaires, which disrupt presence itself, are subjective, and do not allow real-time measurement. Electroencephalography (EEG), which measures brain activity, is increasingly used to monitor the state of users, especially while immersed in VR. Here, we evaluate presence by measuring the attention dedicated to the real environment via an EEG oddball paradigm (see Figure 21 for the setup) 29, 42. By using breaks in presence, this experimental protocol constitutes an ecological method for the study of presence, as different levels of presence are experienced in an identical VE. By analysing the EEG data of 18 participants, we found a significant increase in the neurophysiological reaction to the oddball, i.e., the P300 amplitude, in the low-presence condition compared to the high-presence condition. This amplitude was significantly correlated with the self-reported measure of presence. Using Riemannian geometry to perform single-trial classification, we present a classification algorithm that discriminates between the two presence conditions with 79% accuracy. Taken together, our results promote the use of EEG and oddball stimuli to monitor presence offline or in real time without interrupting the user in the VE.
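One widely used Riemannian approach to single-trial EEG classification is the minimum distance to mean (MDM) classifier: each trial is represented by its spatial covariance matrix, each class by a mean covariance matrix, and a trial is assigned to the class whose mean is closest under the affine-invariant Riemannian distance. The classifier actually used in this work is not detailed here. The NumPy sketch below also simplifies the class mean to the log-Euclidean mean rather than the iterative Riemannian (Karcher) mean; all function and class names are hypothetical.

```python
import numpy as np


def _spd_fn(c: np.ndarray, fn) -> np.ndarray:
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, v = np.linalg.eigh(c)
    return (v * fn(w)) @ v.T


def trial_covariance(x: np.ndarray) -> np.ndarray:
    """Spatial covariance of one EEG trial shaped (channels, samples)."""
    x = x - x.mean(axis=1, keepdims=True)
    return x @ x.T / x.shape[1]


def riemann_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Affine-invariant distance: sqrt(sum_i log(lambda_i)^2), lambda_i = eigenvalues of a^-1 b."""
    a_isqrt = _spd_fn(a, lambda w: 1.0 / np.sqrt(w))
    m = a_isqrt @ b @ a_isqrt
    w = np.linalg.eigvalsh((m + m.T) / 2.0)  # symmetrize against round-off
    return float(np.sqrt(np.sum(np.log(w) ** 2)))


def log_euclidean_mean(covs) -> np.ndarray:
    """Simplified class mean: matrix exponential of the average matrix logarithm."""
    logs = [_spd_fn(c, np.log) for c in covs]
    return _spd_fn(np.mean(logs, axis=0), np.exp)


class MDM:
    """Minimum distance to mean classifier on covariance matrices."""

    def fit(self, covs, labels):
        self.classes_ = sorted(set(labels))
        self.means_ = {
            k: log_euclidean_mean([c for c, y in zip(covs, labels) if y == k])
            for k in self.classes_
        }
        return self

    def predict(self, covs):
        return [
            min(self.classes_, key=lambda k: riemann_distance(self.means_[k], c))
            for c in covs
        ]
```

In a presence-monitoring setting, each trial would be an EEG epoch time-locked to an oddball stimulus, and the two classes would be the two presence conditions; in practice, such epochs are often augmented with class-average templates before covariance estimation, which this sketch omits.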

Figure 21: Experimental setup

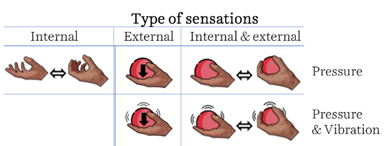

8.3.3 Which imagined sensations mostly impact electrophysiological activity?

Participants: Emile Savalle, Francois Le Jeune, Marc Macé, Léa Pillette [contact].

Motor imagery brain-computer interface (MI-BCI) user training aims at teaching people to control their sensorimotor cortex activity using feedback on this activity, often acquired using electroencephalography (EEG). During training, people are mostly asked to focus their imagery on the sensations associated with a movement, though little is known about which sensations most favor sensorimotor cortex activity. Our goal was to assess the influence of imagining different sensations on EEG data. Thirty participants performed MI tasks involving the following sensations: (i) interoceptive, arising from the muscles, tendons, and joints; (ii) exteroceptive, arising from the skin, such as thermal sensations; or (iii) both interoceptive and exteroceptive (see Figure 22 for the experimental conditions) 49. The results indicate that imagining exteroceptive sensations generates a greater neurophysiological response than imagining interoceptive sensations or both. Imagining external sensations should thus not be neglected in the instructions provided during MI-BCI user training. Our results also confirm the negative influence of mental workload and of the use of visual imagery on the resulting neurophysiological activity.

Figure 22: Table illustrating the experimental conditions

8.3.4 Neuromarkers of real touch vs. illusory touch

Participants: Emile Savalle, Léa Pillette, Kyung-Ho Won, Ferran Argelaguet, Anatole Lécuyer, Marc Macé [contact].

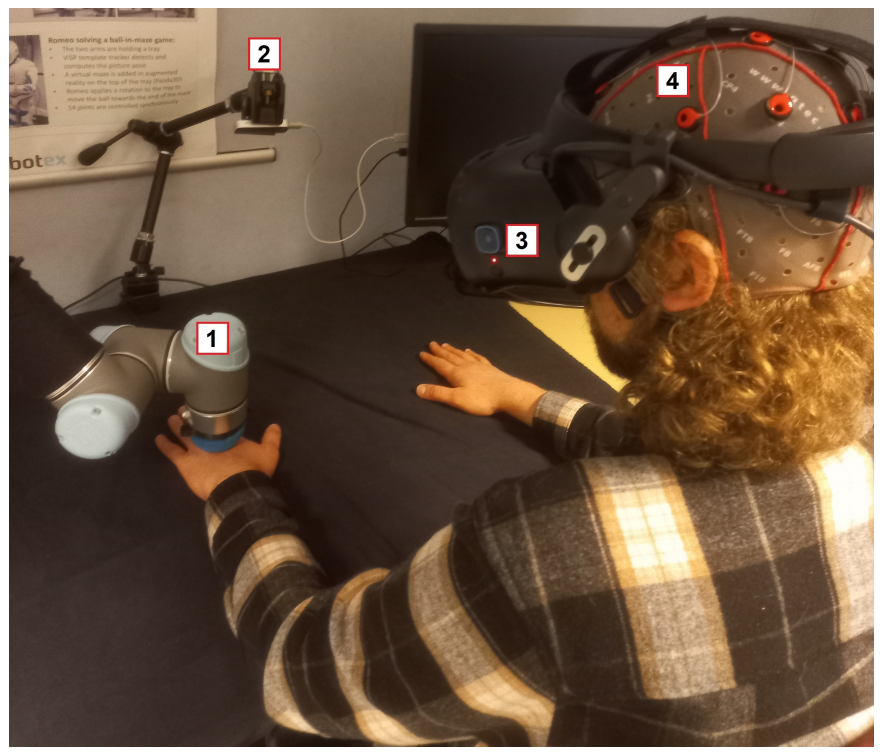

Haptic feedback allows virtual reality (VR) users to physically interact with virtual content. However, due to the limitations of haptic technology, delivering haptic feedback is still challenging [1]. To overcome such limitations, alternative methods leverage visual dominance to induce haptic illusions [2]. A few studies have investigated the effects of tactile stimuli on user perception through EEG in VR, such as for texture discrimination [3] or to study social touch [4]. However, brain activity during the perception of tactile stimuli within a virtual environment (VE) remains insufficiently understood [3]. This experimental protocol aims to investigate brain activity in response to the anticipation and perception of physical and virtual touch, and the impact of the virtual body representation 50. During the experiment, users will be immersed in a VE replicating the real environment, including a desk, their body, and a robotic arm. Touch stimulation will be delivered haptically, as a gentle physical touch on the back of the hand by a robotic arm (see Figure 23), and/or visually, as a visual stimulation matching the motion of the robot in VR. The experiment will have two within-subjects factors: touch stimulation (either coherent visual and tactile touch feedback, or visual touch only) and body representation (a realistic human avatar or a geometric body representation), as we hypothesize that body representation and ownership will alter the user’s perception. We will study the impact of our design on two aspects:

- Anticipation of touch: brain activity preceding touch, using the contingent negative variation.
- Perception of touch: somatosensory cortex response, using somatosensory evoked potentials, and mirror neuron system activation.

Our results could provide insights into the perception and anticipation of illusory and real touch by observing relevant neuromarkers.

Figure 23: Photograph of the experimental setup

8.3.5 EEG markers of acceleration perception in virtual reality

Participants: Anatole Lécuyer [contact].

This work 38 investigates neural patterns of acceleration in virtual reality (VR) using electroencephalography (EEG). Participants experienced accelerating white spheres in VR while EEG signals were recorded. Significant EEG differences were found at the fronto-central region between acceleration and slow speed, regardless of direction, and at the central region depending on the acceleration direction. Topographic responses also show differences in spatial patterns between the conditions. These findings give insights into the perception of acceleration in the brain and show potential for passive BCI applications.

This work was done in collaboration with the University of Lille and the University of Essex.

8.3.6 Comparing EEG on interaction roles during a motor task

Participants: Kyung-Ho Won, Léa Pillette, Marc Macé, Anatole Lécuyer [contact].

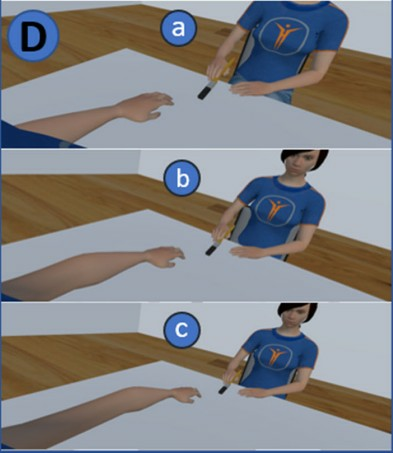

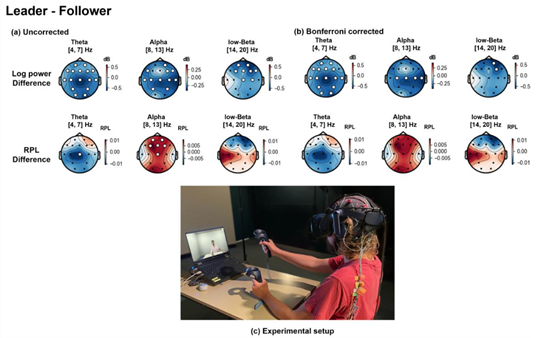

This work compared the electroencephalography (EEG) activity of leaders, who moved their hands, and followers, who followed the leader’s movements (see Figure 24, bottom, for the experimental setup). Such interaction is common in hyperscanning studies, but the influence of the leader and follower roles on EEG activity has scarcely been investigated 51. The log band power (LBP) and relative power level (RPL) were compared in the theta and alpha bands. We found that LBP and RPL were higher in the theta band for the follower, which could be related to a greater cognitive load for the follower (see Figure 24, top, for the main EEG results). The alpha band presented opposite patterns in LBP and RPL: LBP was higher in alpha for the follower, whereas RPL was lower. The lower RPL indicates that alpha power, relative to the other bands, is lower for the follower, which can be related to attentional suppression in alpha, even though the absolute power is higher for the follower. This work indicates that different cognitive workloads are involved depending on one's role in the interaction.
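LBP and RPL can both be derived from a power spectrum. As a sketch under assumed definitions (LBP as the base-10 logarithm of absolute band power, RPL as band power divided by total power over 1–40 Hz, theta as 4–8 Hz, and alpha as 8–13 Hz), which may differ from those used in 51, they could be computed as follows; the function names are hypothetical.

```python
import numpy as np

# Assumed band limits in Hz (half-open intervals [low, high)).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0)}


def periodogram(x: np.ndarray, fs: float):
    """Power at non-negative frequencies; constant scaling cancels in RPL and only shifts LBP."""
    x = x - np.mean(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / (fs * len(x))
    return freqs, power


def lbp_and_rpl(x: np.ndarray, fs: float, band: str, total=(1.0, 40.0)):
    """Return (log band power, relative power level) of `band` for signal x."""
    freqs, power = periodogram(x, fs)

    def band_power(lo: float, hi: float) -> float:
        return float(power[(freqs >= lo) & (freqs < hi)].sum())

    p_band = band_power(*BANDS[band])
    p_total = band_power(*total)
    return float(np.log10(p_band)), p_band / p_total
```

Because RPL is normalized by total power, it can decrease while the absolute (LBP) value increases, which is the pattern reported above for alpha.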

Main result and setup
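The difference between the two features compared above can be made concrete with a short sketch: LBP is the logarithm of the absolute power in a band, while RPL divides the band power by a broadband total, so the two can move in opposite directions. This is a minimal illustration assuming a precomputed power spectral density on a uniform frequency grid; the band limits (theta 4-8 Hz, alpha 8-13 Hz), the 1-40 Hz denominator and the use of log10 are assumptions, not the study's exact settings.

```python
import math

# Assumed band limits in Hz (illustrative; not taken from the study).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0)}
# Assumed broadband range used as the denominator of the relative power level.
TOTAL_RANGE = (1.0, 40.0)


def band_power(freqs, psd, low, high):
    """Sum PSD bins with low <= f < high, scaled by the (uniform) bin width."""
    df = freqs[1] - freqs[0]
    return sum(p for f, p in zip(freqs, psd) if low <= f < high) * df


def lbp_and_rpl(freqs, psd):
    """Return {band: (log band power, relative power level)} for each band."""
    total = band_power(freqs, psd, *TOTAL_RANGE)
    features = {}
    for name, (low, high) in BANDS.items():
        bp = band_power(freqs, psd, low, high)
        # LBP: absolute power on a log scale; RPL: share of broadband power.
        features[name] = (math.log10(bp), bp / total)
    return features


if __name__ == "__main__":
    freqs = [i * 0.5 for i in range(101)]  # 0 to 50 Hz in 0.5 Hz steps
    flat_psd = [1.0] * len(freqs)          # toy flat spectrum
    print(lbp_and_rpl(freqs, flat_psd))
```

Because RPL is normalized by total power, a participant whose power rises in every band can show a higher alpha LBP but a lower alpha RPL, which is the kind of dissociation reported for the follower.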

8.3.7 Real-Time neurofeedback on Inter-Brain Synchrony: Current states and perspectives

Participants: Kyung-Ho Won, Léa Pillette, Marc Macé, Anatole Lécuyer [contact].

During neurofeedback (NFB) user training, participants learn to control the feedback associated with specific components of their brain activity, also called neuromarkers, in order to improve the cognitive abilities related to these neuromarkers, such as attention and mental workload. The recent development of methods to record the brain activity of several people simultaneously opens up the study of neuromarkers related to social interactions, computed from inter-brain synchrony (IBS). Here, we review the previous articles that trained participants to control electroencephalographic neuromarkers computed from inter-brain metrics (see Figure 25, left, for the article selection method) 52. The topic remains largely unexplored: we identified only seven articles in the literature. We specifically studied the characteristics of the user training, i.e., instructions, task and feedback, and the neuromarkers used to provide feedback (see Figure 25, right, for an example of our results on the types of feedback used). The reported results are promising: four studies that included subjective measures of interaction reported higher interaction and relationship scores with higher IBS during NFB training. Finally, we draw guidelines, identify open challenges, and suggest recommendations for future studies on this topic.

Study selection flowchart (left) and selected studies (right)
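To make the idea of an inter-brain neuromarker concrete, the sketch below computes the phase-locking value (PLV), one metric commonly used to quantify inter-brain synchrony in hyperscanning, and maps it to a feedback gauge. It is a minimal illustration, not the method of any reviewed study: the phase series are assumed to have already been extracted (e.g., from band-pass filtered EEG), and the `feedback_level` mapping and its thresholds are hypothetical.

```python
import cmath
import math


def phase_locking_value(phases_a, phases_b):
    """PLV between two equal-length phase series in radians.

    PLV = |mean(exp(i * (phi_a - phi_b)))|: 1 for a constant phase lag,
    close to 0 when the phase difference is uniformly spread.
    """
    if not phases_a or len(phases_a) != len(phases_b):
        raise ValueError("phase series must be non-empty and of equal length")
    acc = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(acc) / len(phases_a)


def feedback_level(plv, low=0.2, high=0.8):
    """Hypothetical linear mapping of PLV to a 0..1 gauge, clipped at both ends."""
    return min(1.0, max(0.0, (plv - low) / (high - low)))


if __name__ == "__main__":
    phases_a = [0.1 * k for k in range(100)]
    phases_b = [p + 0.5 for p in phases_a]  # constant lag: perfectly locked
    plv = phase_locking_value(phases_a, phases_b)
    print(plv, feedback_level(plv))
```

In a real NFB loop, the PLV would be recomputed over a sliding window so that the gauge tracks changes in synchrony during training.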

8.3.8 Large scale investigation of the effect of gender on mu rhythm suppression in motor imagery brain-computer interfaces

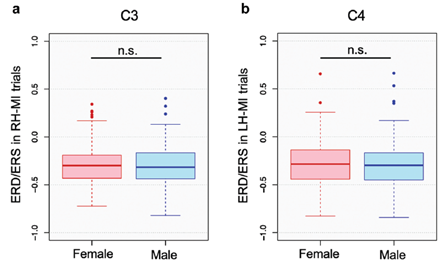

Participants: Léa Pillette [contact].

The main issue in Motor Imagery Brain-Computer Interfaces (MI-BCI) is poor performance, known as ‘BCI inefficiency’. Although past research has attempted to find a solution by investigating factors influencing users’ MI-BCI performance, the issue persists. One of the factors that has been studied in relation to MI-BCI performance is gender. Research on the influence of gender on a user’s ability to control MI-BCIs remains inconclusive, mainly due to the small sample sizes and unbalanced gender distributions of past studies. To address these issues and obtain reliable results, this study combined four MI-BCI datasets into one large dataset with 248 subjects and a balanced gender distribution 24. The datasets included EEG signals from healthy subjects of both gender groups who performed a right- vs. left-hand motor imagery task following the Graz protocol. The analysis consisted of extracting the Mu Suppression Index from the C3 and C4 electrodes and comparing the values between female and male participants. Unlike some previous findings, which reported an advantage for female BCI users in modulating mu rhythm activity, our results did not show any significant difference between the Mu Suppression Index of the two groups, suggesting that gender may not be a predictive factor for BCI performance (see Figure 26 for some of our neurophysiological results).

Neurophysiological results indicating no significant difference in neurofeedback performance between men and women

This work was done in collaboration with the Inria POTIOC team and Tilburg University.
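The analysis described above reduces each participant to a Mu Suppression Index per electrode and then compares two groups. The sketch below uses one common definition of the index, the natural log of mu-band power during motor imagery divided by power during rest (negative values indicate suppression), and Welch's t statistic as an illustrative group comparison. Both choices are assumptions for illustration; the study's exact index definition and statistical test are not stated here.

```python
import math
import statistics


def mu_suppression_index(task_power, rest_power):
    """Log ratio of mu-band power during motor imagery vs. rest.

    Negative values indicate suppression (event-related desynchronization).
    """
    return math.log(task_power / rest_power)


def welch_t(group_a, group_b):
    """Welch's t statistic for two independent samples with unequal variances."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    return (mean_a - mean_b) / math.sqrt(var_a / len(group_a) + var_b / len(group_b))


if __name__ == "__main__":
    # Toy mu-band powers (task, rest) at one electrode, e.g. C3, per participant.
    female = [mu_suppression_index(t, r) for t, r in [(4.0, 8.0), (6.0, 9.0), (5.0, 7.0)]]
    male = [mu_suppression_index(t, r) for t, r in [(5.0, 8.0), (7.0, 9.0), (4.0, 7.0)]]
    print(welch_t(female, male))
```

The same computation would be repeated for C3 and C4, since right- and left-hand imagery are expected to suppress mu activity mainly over the contralateral hemisphere.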

8.3.9 Influence of feedback transparency on motor imagery neurofeedback performance

Participants: Léa Pillette [contact].

Neurofeedback (NF) is a cognitive training procedure based on real-time feedback (FB) of a participant’s brain activity, which they must learn to self-regulate. It is widely used by athletes because it can improve motor skills. A classical visual FB in an NF task is a filling gauge reflecting a measure of brain activity. From the subject’s perspective, this abstract visual FB is not transparently linked to the task performed (e.g., motor imagery). This may decrease the sense of agency, that is, the participants’ reported control over the FB. In two experiments, we assessed (i) the influence of FB transparency on NF performance and the role of agency in this relationship (see Figure 27 for the experimental conditions) 21 and (ii) how athletes’ motor performance influences neurophysiological changes during neurofeedback compared to non-athletes 45.

Illustration of the experimental protocol

This work was done in collaboration with INCIA and the Paris Brain Institute.

8.3.10 Evaluation of multimodal EEG-fNIRS neurofeedback for motor imagery

Participants: Thomas Prampart [contact].

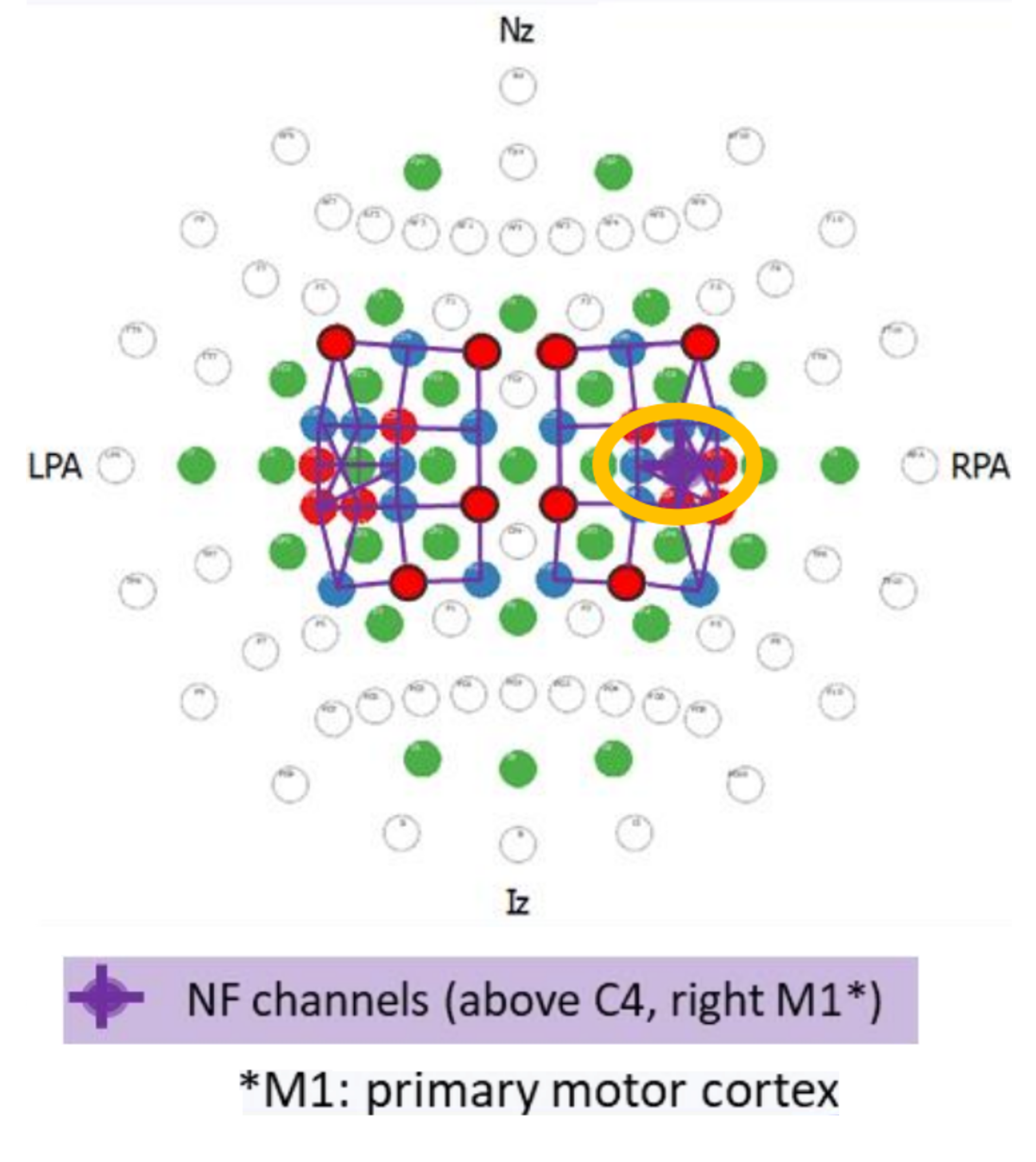

Neurofeedback (NF) enables self-regulation of brain activity through real-time feedback extracted from brain activity measures. Recently, combining several neuroimaging methods to characterize brain activity has attracted growing interest in NF. Integrating portable recording techniques such as electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS), based respectively on electrical and hemodynamic activity, could enhance the characterization of brain signals and subsequently improve NF performance. Such multimodal NF could benefit post-stroke motor rehabilitation. Nevertheless, the concomitant use of these techniques in NF to discriminate specific features of brain activity has not been exhaustively studied. The objective of this study is to evaluate the benefits of combining EEG and fNIRS for NF 48. Thirty right-handed participants are undergoing three randomized NF sessions: EEG-only, fNIRS-only, and EEG-fNIRS. EEG (Actichamp, Brain Products) and fNIRS (NIRScout, NIRx) channels are positioned above the primary sensorimotor cortex (see Figure 28 for the EEG and fNIRS montage). An NF score is computed from the right primary motor cortex signal in each of the three sessions. The participants are presented with a visual representation of a ball on a one-dimensional gauge, which moves up when they imagine left-hand movements. The association between the NF score, the neuroimaging modalities and the motor imagery strategy is then analyzed. Data collection is currently ongoing. We hypothesize that presenting participants with a visual NF representing a score based on both EEG and fNIRS signals will result in better control of the NF gauge, translating into higher NF scores. Moreover, we expect multimodal feedback, compared to unimodal feedback, to enhance task-specific brain activity in both the EEG and fNIRS modalities. This study investigates the benefits of combined EEG-fNIRS to provide multimodal NF. By potentially increasing neuroplasticity, such a system could find applications in clinical contexts, particularly in motor and brain rehabilitation, for example in post-stroke units.

EEG and fNIRS montage

This work was done in collaboration with the Inria EMPENN team and Rennes University Hospital (CHU).
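The report does not specify how the EEG and fNIRS features are fused into a single NF score. The sketch below shows one hypothetical way to do it, not the study's method. Each feature is z-scored against calibration samples. The EEG z-score is sign-flipped so that stronger sensorimotor desynchronization (lower band power) raises the score, and the two scores are combined with a weight before being mapped to the ball position on the gauge. All names, the weighting and the ±3 range are assumptions.

```python
import statistics


def zscore(value, baseline):
    """Standardize a feature value against baseline (calibration) samples."""
    return (value - statistics.mean(baseline)) / statistics.stdev(baseline)


def multimodal_nf_score(eeg_power, eeg_baseline, hbo, hbo_baseline, w_eeg=0.5):
    """Hypothetical EEG-fNIRS score: weighted mean of two z-scores.

    EEG: lower sensorimotor band power (desynchronization) should raise the
    score, so its z-score is sign-flipped. fNIRS: an increase in oxygenated
    haemoglobin (HbO) raises the score. w_eeg=1.0 gives an EEG-only score and
    w_eeg=0.0 an fNIRS-only score.
    """
    z_eeg = -zscore(eeg_power, eeg_baseline)
    z_fnirs = zscore(hbo, hbo_baseline)
    return w_eeg * z_eeg + (1.0 - w_eeg) * z_fnirs


def gauge_position(score, z_max=3.0):
    """Map a score in [-z_max, z_max] to a ball position in [0, 1], clipped."""
    return min(1.0, max(0.0, (score + z_max) / (2.0 * z_max)))


if __name__ == "__main__":
    calib = [1.0, 2.0, 3.0]  # toy calibration samples for both modalities
    score = multimodal_nf_score(1.0, calib, 4.0, calib)
    print(score, gauge_position(score))
```

Varying `w_eeg` between 0 and 1 reproduces, in this toy setting, the contrast between the fNIRS-only, EEG-only and combined conditions.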

8.4 Art and Cultural Heritage

8.4.1 A dissemination experiment centered on the scientific analysis of a cat mummy

Participants: Ronan Gaugne, Valérie Gouranton [contact].

This study combines virtual reality (VR) and 3D printing to enhance the dissemination of the scientific study of a cat mummy from the Musée des Beaux-Arts in Rennes. Using data collected through X-rays, CT scans and photogrammetry, two main media were created: a transparent 1:1 scale 3D print revealing the internal structures, and an interactive VR experience, Secret of Bastet, allowing users to explore the scientific process (see Figure 29) 34. Presented in 2023 at professional events and in the museum, the project received enthusiastic feedback from participants (83 in total). The two media offer complementary experiences: the 3D print reveals invisible details, while the VR experience provides educational and narrative immersion. This approach effectively engages the public in scientific discovery.

Photography of the experimental setup

This work was done in collaboration with UMR Trajectoires, Orange Labs, Polymorph, the Musée des Beaux-Arts de Rennes and Rennes Métropole.

8.4.2 Do we study an archaeological artifact differently in VR and in reality?

Participants: Maxime Dumonteil, Valérie Gouranton [contact], Marc Macé, Ronan Gaugne.

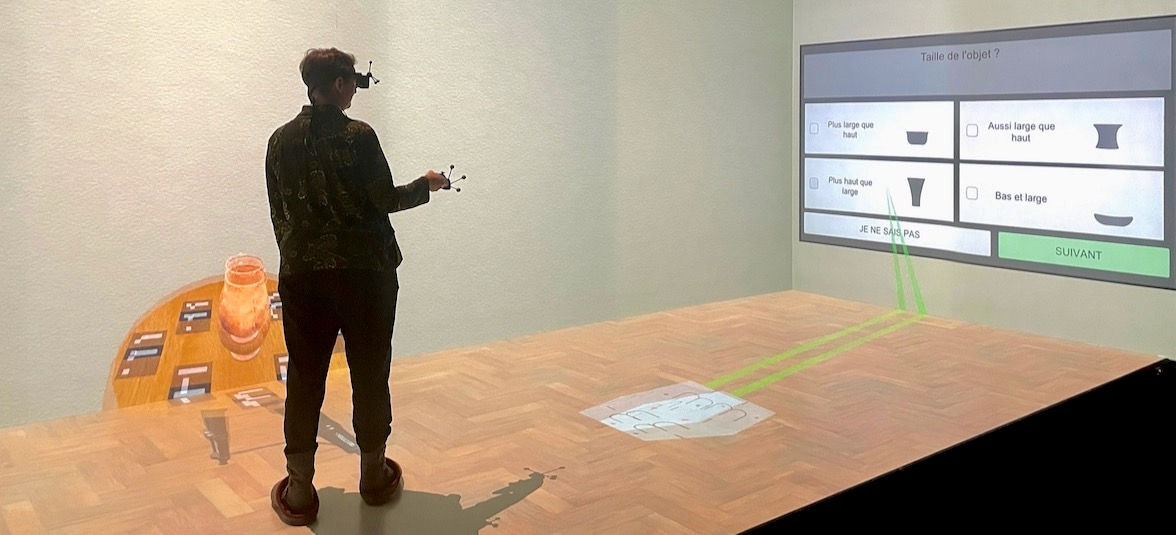

The use of virtual reality (VR) in archaeological research is increasing year after year. Nevertheless, the influence of VR technologies on researchers’ perception and interpretation is frequently overlooked. Such device-induced biases require careful consideration and mitigation strategies to ensure the integrity and reliability of archaeological research results. This work 33 aimed to identify potential interpretation biases introduced by the use of VR tools in this field, by analyzing both eye-tracking patterns and participants’ behavior. We designed a user study consisting of an analysis task on a corpus of archaeological artifacts across three modalities: a real environment and two virtual environments, one using a head-mounted display and the other an immersive room (see Figure 30). The aim of this experiment is to compare participants’ behavior (head movements, gaze patterns and task performance) across the three modalities. The main contribution of this work is a methodology for generating comparable and consistent results from the data recorded in the three different contexts. The results highlight a number of points to watch out for when using VR in archaeology for analysis and interpretation purposes.

Photography of the experiment in Immersia CAVE

This work was done in collaboration with UMR Trajectoires, Equipex+ Continuum and CNRS AJAX Project.

8.4.3 Collaboration in a virtual reality artwork: co-creation and relaxation beyond technology

Participants: Julien Lomet, Ronan Gaugne, Valérie Gouranton [contact].

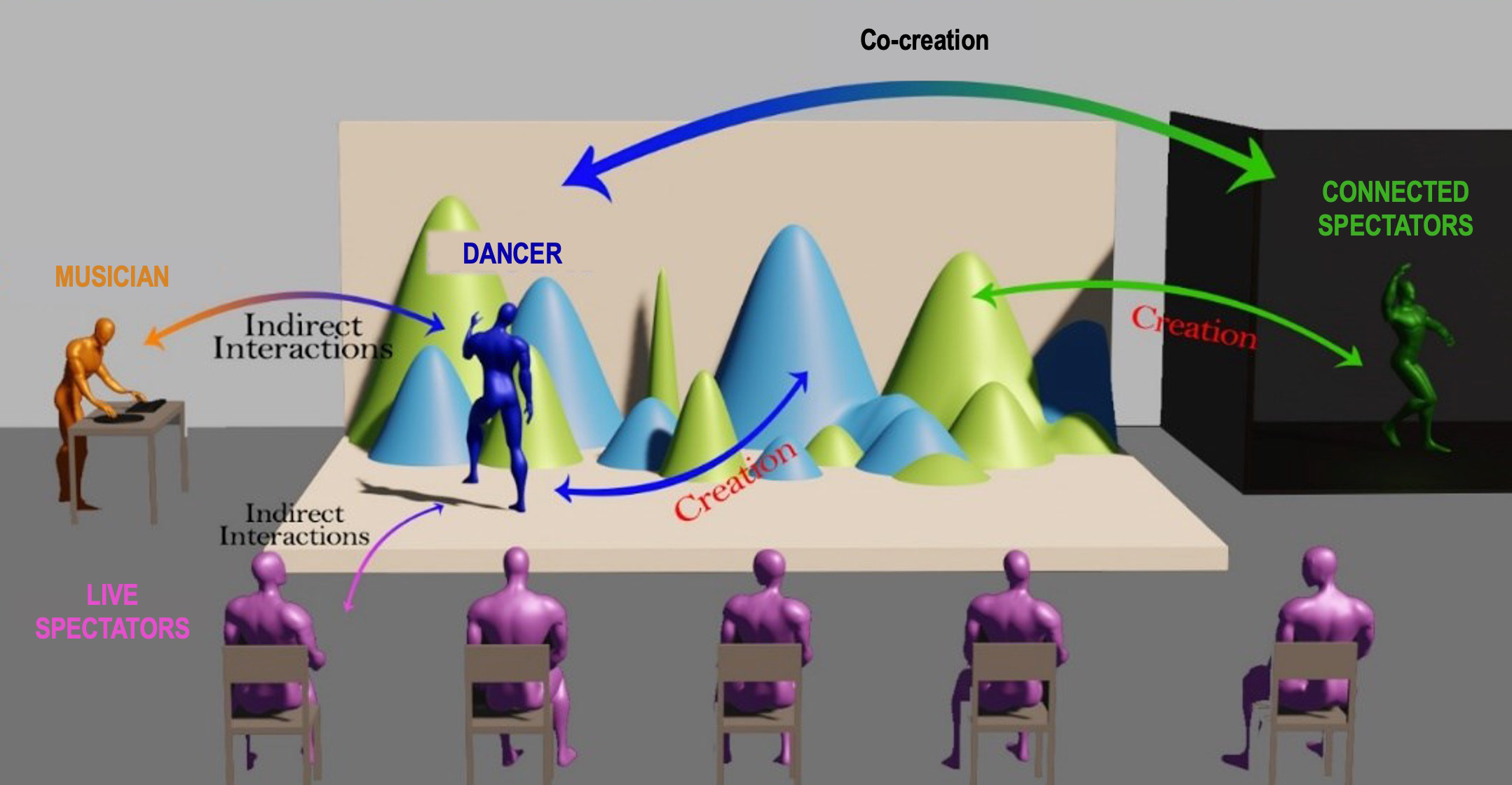

Collaborative virtual spaces allow rich relations to be established between the actors involved, from an artistic perspective. Our work contributes to the design and study of artistic collaborative virtual environments through the presentation of an immersive and interactive digital artwork installation named “Creative Harmony” (see Figure 31). In this project, we explore the different levels of collaboration offered by the “Creative Harmony” environment, and we examine the experience and interactivity of several actors immersed in this environment through different technological implementations 27.

Principle of multiple collaborations in Creative Harmony

9 Bilateral contracts and grants with industry

9.1 Grants with Industry

Nemo.AI Laboratory with InterDigital

Participants: Ferran Argelaguet [contact], Anatole Lécuyer, Yann Glemarrec, Tom Roy, Philippe Clermont de Gallerande.

To engage and employ scientists and engineers across the Brittany region in researching the technologies that will shape the metaverse, Inria, the French National Institute for Research in Digital Science and Technology, and InterDigital, Inc. (NASDAQ:IDCC), a mobile and video technology research and development company, launched the Nemo.AI Common Lab. This public-private partnership is dedicated to leveraging the combined research expertise of Inria and InterDigital labs to foster local participation in emerging innovations and global technology trends. Named after the pioneering Captain Nemo from Jules Verne’s Twenty Thousand Leagues Under the Seas, the Nemo.AI Common Lab aims to equip the Brittany region with resources to pursue cutting-edge scientific research and explore the technologies that will define media experiences in the future. The project reflects the recognized importance of artificial intelligence (AI) in enabling new media experiences in a digital and responsible society.

IRT b<>com

Participants: Florian Nouviale, Valérie Gouranton [contact].

Our participation in the IRT b<>com involves using the Xareus software with the project partners.

10 Partnerships and cooperations

10.1 International research visitors

10.1.1 Visits to international teams

Research stays abroad

Anatole Lécuyer

- Visited institutions: University of Tokyo (Prof. Inami), University of Sendai (Prof. Kitamura), and University of Keio (Prof. Sugimoto)

- Country: Japan

- Dates: May 2024

- Mobility program/type of mobility: Research exchange, invitation

Jeanne Hecquard

- Visited institution: Event Lab, University of Barcelona

- Country: Spain

- Dates: Sept-Dec 2024

- Context of the visit: GuestXR project

- Mobility program/type of mobility: Research mobility

Emile Savalle

- Visited institution: Cottbus University

- Country: Germany

- Dates: Sept-Dec 2024

- Context of the visit: Collaboration

- Mobility program/type of mobility: Research mobility

10.2 European initiatives

10.2.1 Horizon Europe

METATOO

Participants: Ferran Argelaguet [contact], Arthur Audrain.

- Title: A transfer of knowledge and technology for investigating gender-based inappropriate social interactions in the Metaverse

- Duration: From June 1, 2024 to May 30, 2027

- Partners:
  - Inria, France
  - University of Athens, Greece
  - Institut d’Investigacions Biomèdiques August Pi i Sunyer, Spain

- Inria contact: Ferran Argelaguet

- Coordinator: University of Athens, Greece

- Summary: