2024 Activity report, Project-Team ILDA

RNSR: 201521247J

- Research center: Inria Saclay Centre at Université Paris-Saclay

- In partnership with: CNRS, Université Paris-Saclay

- Team name: Interacting with Large Data

- In collaboration with: Laboratoire Interdisciplinaire des Sciences du Numérique

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A3.1.4. Uncertain data

- A3.1.7. Open data

- A3.1.10. Heterogeneous data

- A3.1.11. Structured data

- A3.2.4. Semantic Web

- A3.2.6. Linked data

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.6. Tangible interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

Other Research Topics and Application Domains

- B3.1. Sustainable development

- B3.2. Climate and meteorology

- B3.3. Geosciences

- B3.3.1. Earth and subsoil

- B3.5. Agronomy

- B5.9. Industrial maintenance

- B9.2. Art

- B9.5.3. Physics

- B9.5.6. Data science

- B9.6.7. Geography

- B9.7.2. Open data

- B9.11. Risk management

1 Team members, visitors, external collaborators

Research Scientists

- Emmanuel Pietriga [Team leader, INRIA, Senior Researcher]

- Caroline Appert [CNRS, Senior Researcher]

- Olivier Chapuis [CNRS, Researcher]

- Vanessa Pena Araya [INRIA, ISFP]

- Arnaud Prouzeau [INRIA, ISFP]

Faculty Member

- Anastasia Bezerianos [UNIV PARIS SACLAY, Professor]

PhD Students

- Julien Berry [UNIV PARIS SACLAY, from Oct 2024]

- Vincent Cavez [INRIA]

- Mehdi Rafik Chakhchoukh [INRIA, from Oct 2024]

- Mehdi Rafik Chakhchoukh [UNIV PARIS SACLAY, ATER, until Sep 2024]

- Camille Dupre [BERGER-LEVRAULT, CIFRE]

- Arthur Fages [CNRS, until Nov 2024]

- Mengfei Gao [UNIV PARIS SACLAY, from Nov 2024]

- Xinpei Zheng [CNRS, from Oct 2024, PEPR eNSEMBLE]

Technical Staff

- Ludovic David [INRIA, Engineer]

- Olivier Gladin [INRIA, Engineer]

Interns and Apprentices

- Julien Berry [INRIA, Intern, from Mar 2024 until Aug 2024]

- Celine Deivanayagam [INRIA, Intern, from May 2024 until Jul 2024]

- Noemie Hanus [INRIA, Intern, from Mar 2024 until Aug 2024]

- Abel Henry-Lapassat [Université Paris-Saclay, Intern, from May 2024 until Jul 2024]

- Doaa Hussien Yassien [INRIA, Intern, from Apr 2024 until Aug 2024]

- Omi Johnson [INRAe, Intern, from Apr 2024 until Jul 2024]

- Maryatou Kante [Université Paris-Saclay, Intern, from Jun 2024 until Jul 2024]

- Chuyun Ma [INRIA, Intern, from Mar 2024 until Aug 2024]

- Briggs Twitchell [INRAe, Intern, from Apr 2024 until Jun 2024]

Administrative Assistant

- Katia Evrat [INRIA]

External Collaborator

- Maria Lobo [IGN, from Aug 2024]

2 Overall objectives

Datasets are no longer just large. They are distributed over multiple networked sources and increasingly interlinked, consisting of heterogeneous pieces of content structured in elaborate ways. Our goal is to design data-centric interactive systems that provide users with the right data at the right time and enable them to effectively manipulate and share these data. We design, develop and evaluate novel interaction and visualization techniques to empower users in both mobile and stationary contexts involving a variety of display devices, including smartphones and tablets, augmented reality glasses, desktop workstations, tabletops, and ultra-high-resolution wall-sized displays.

Our ability to acquire or generate, store, process and query data has increased spectacularly over the last decade. This is having a profound impact in domains as varied as scientific research, commerce, social media, industrial processes or e-government. Looking ahead, technologies related to the Web of Data have started an even larger revolution in data-driven activities, by making information accessible to machines as semi-structured data 28. Indeed, novel Web-based data models considerably ease the interlinking of semi-structured data originating from multiple independent sources. They make it possible to associate machine-processable semantics with the data. This in turn means that heterogeneous systems can exchange data, infer new data using reasoning engines, and that software agents can cross data sources, resolving ambiguities and conflicts between them 53. As a result, datasets are becoming even richer and are being made even larger and more heterogeneous, but also more useful, by interlinking them 38.

These advances raise research questions and technological challenges that span numerous fields of computer science research: databases, artificial intelligence, communication networks, security and trust, as well as human-computer interaction. Our research is based on the conviction that interactive systems play a central role in many data-driven activity domains. Indeed, no matter how elaborate the data acquisition, processing and storage pipelines are, many data eventually get processed or consumed one way or another by users. The latter are faced with complex, increasingly interlinked heterogeneous datasets that are organized according to elaborate structures, resulting in overwhelming amounts of both raw data and structured information. Users thus require effective tools to make sense of their data and manipulate them.

We approach this problem from the perspective of the Human-Computer Interaction (HCI) field of research, whose goal is to study how humans interact with computers and inspire novel hardware and software designs aimed at optimizing properties such as efficiency, ease of use and learnability, in single-user or cooperative contexts of work.

ILDA designs interactive systems that display data and let users interact with them, with the aim of helping users better navigate, comprehend and manipulate complex datasets represented visually. Our research has been organized along three complementary axes, as detailed in Section 3.

3 Research program

3.1 Novel Forms of Display for Groups and Individuals

Participants: Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Arthur Fages, Mengfei Gao, Ludovic David, Olivier Gladin, Celine Deivanayagam, Abel Henry-Lapassat, Doaa Hussien Yassien.

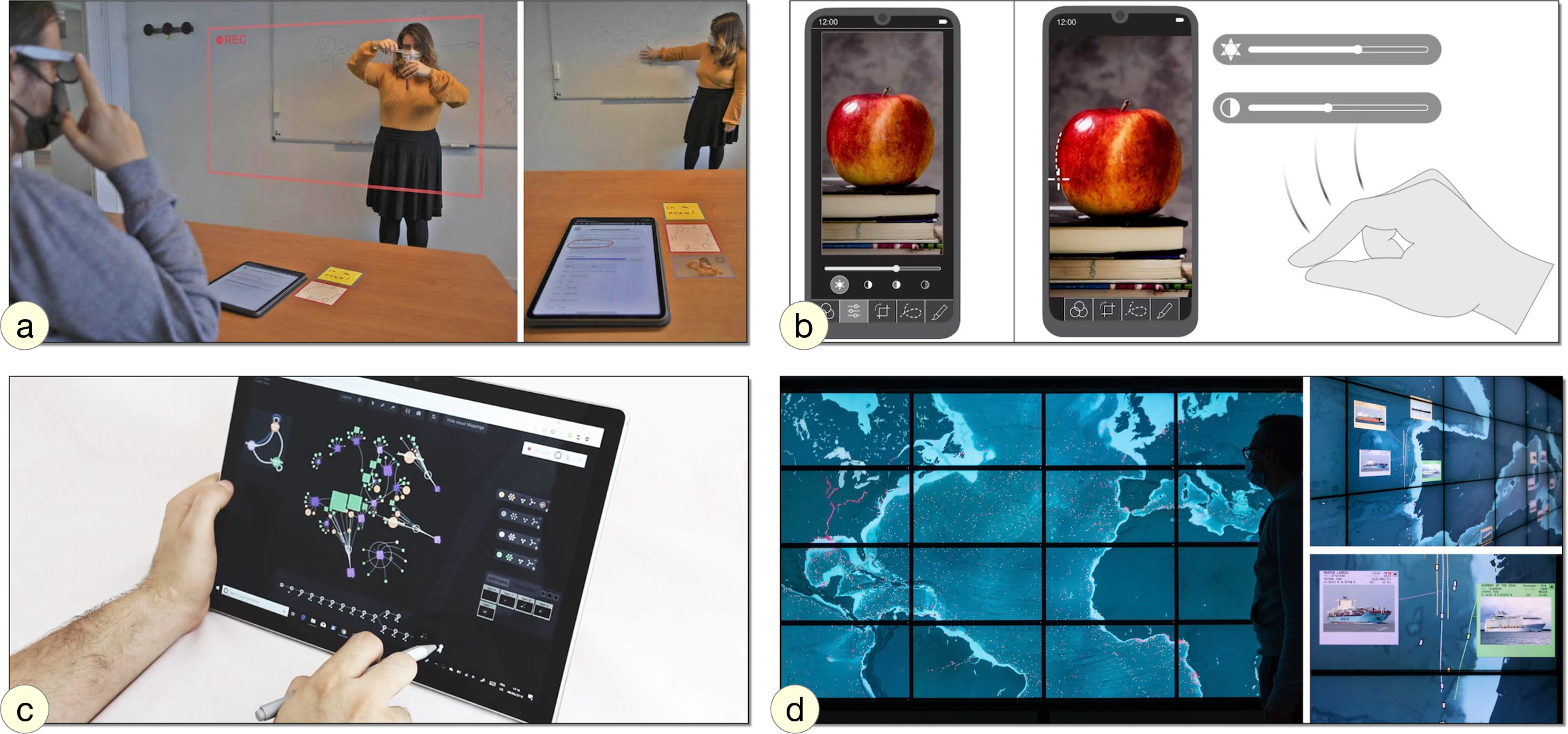

Data sense-making and analysis is not limited to individual users working with a single device, but increasingly involves multiple users working together in a coordinated manner in multi-display environments involving a variety of devices. We investigate how to empower users working with complex data by leveraging novel types of displays and combinations of displays, designing visualizations adapted to their capabilities: ultra-high-resolution wall-sized displays (Figure 1-d), augmented reality glasses (Figure 1-a & b), pen + touch interactive surfaces (Figure 1-c), handheld devices such as smartphones and tablets (Figure 1-a & b), desktop workstations and laptops.

Being able to combine or switch between representations of the data at different levels of detail and merge data from multiple sources in a single representation is central to many scenarios. The foundational question addressed here is what to display when, where and how, so as to provide effective support to users in their data understanding and manipulation tasks. We target contexts in which workers have to interact with complementary views on the same data, or with views on different-but-related datasets, possibly at different levels of abstraction, and with a particular interest for multi-variate data (Figure 1-c) that have spatio-temporal attributes.

Our activity in this axis focuses on the following themes: 1) multi-display environments that include not only interactive surfaces such as wall displays but Augmented Reality (AR) as well; 2) the combination of AR and handheld devices; 3) geovisualization and interactive cartography on a variety of interactive surfaces including desktop, tabletop, handheld and wall displays.

Projects in which we research data visualization techniques for multi-display environments and for ultra-high-resolution wall-sized displays in particular often involve geographical information 46 (Figure 1-d). But we also research interaction and visualization techniques that are more generic. For instance we study awareness techniques to aid transitions between personal and shared workspaces in collaborative contexts that include large shared displays and desktops 48. We also investigate the potential benefits of extending wall displays with AR, for personal+context navigation 40 or to seamlessly extend the collaboration space around wall displays 6.

Augmented Reality, as a novel form of display, has become a strong center of attention in the team. Beyond its combination with wall displays, we are also investigating its potential when coupled with handheld displays such as smartphones or tablets. Coupling a mobile device with AR eyewear can help address some usability challenges of both small handheld displays and AR displays. AR can be used to offload widgets from the mobile device to the air around it (Figure 1-b), for instance enabling digital content annotation 35 (Figure 1-a).

Relevant publications by team members this year: 11, 18, 20, 21, 26, 25, 27.

3.2 Novel Forms of Input for Groups and Individuals

Participants: Caroline Appert, Olivier Chapuis, Emmanuel Pietriga, Arnaud Prouzeau, Arthur Fages, Julien Berry, Vincent Cavez, Camille Dupré, Xinpei Zheng, Ludovic David, Olivier Gladin, Abel Henry-Lapassat.

The contexts in which novel types of displays are used often call for novel types of input. In addition, the interactive manipulation of complex datasets often calls for rich interaction vocabularies. We design and evaluate interaction techniques that leverage input technologies such as tactile surfaces, digital pens, 3D motion trackers and custom physical controllers built on-demand.

We develop input techniques that feature a high level of expressive power, as well as techniques that can improve group awareness and support cooperative tasks. When relevant, we aim to complement – rather than replace – traditional input devices such as keyboards and mice, which remain very effective in contexts such as the office desktop.

We aim to design rich interaction vocabularies that go beyond what current interactive surfaces offer, which rarely exceeds five gestures such as simple slides and pinches. Designing larger interaction vocabularies requires identifying discriminating dimensions in order to structure a space of manipulations that should remain few and simple, so as to be easy to memorize and execute. Beyond cognitive and motor complexity, the scalability of vocabularies also depends on our ability to design robust recognizers that will allow users to fluidly chain simple manipulations that make it possible to interlace navigation, selection and manipulation actions. We also study how to further extend input vocabularies by combining multiple modalities, such as pen and touch, or mid-air gestures and tangibles. Gestures and objects encode a lot of information in their shape, dynamics and direction. They can also improve coordination among actors in collaborative contexts for, e.g., handling priorities or assigning specific roles to different users.

In recent years, there has been a tendency in the HCI community to uncritically rely on machine learning (ML) to solve user input problems. While we acknowledge the power and potential of AI to solve a broad range of problems, we are rather interested in trying to devise interaction techniques that are less resource-intensive and that do not require training the system. We tend to rely on analytical approaches rather than on ML-based solutions, using those as a last resort only.

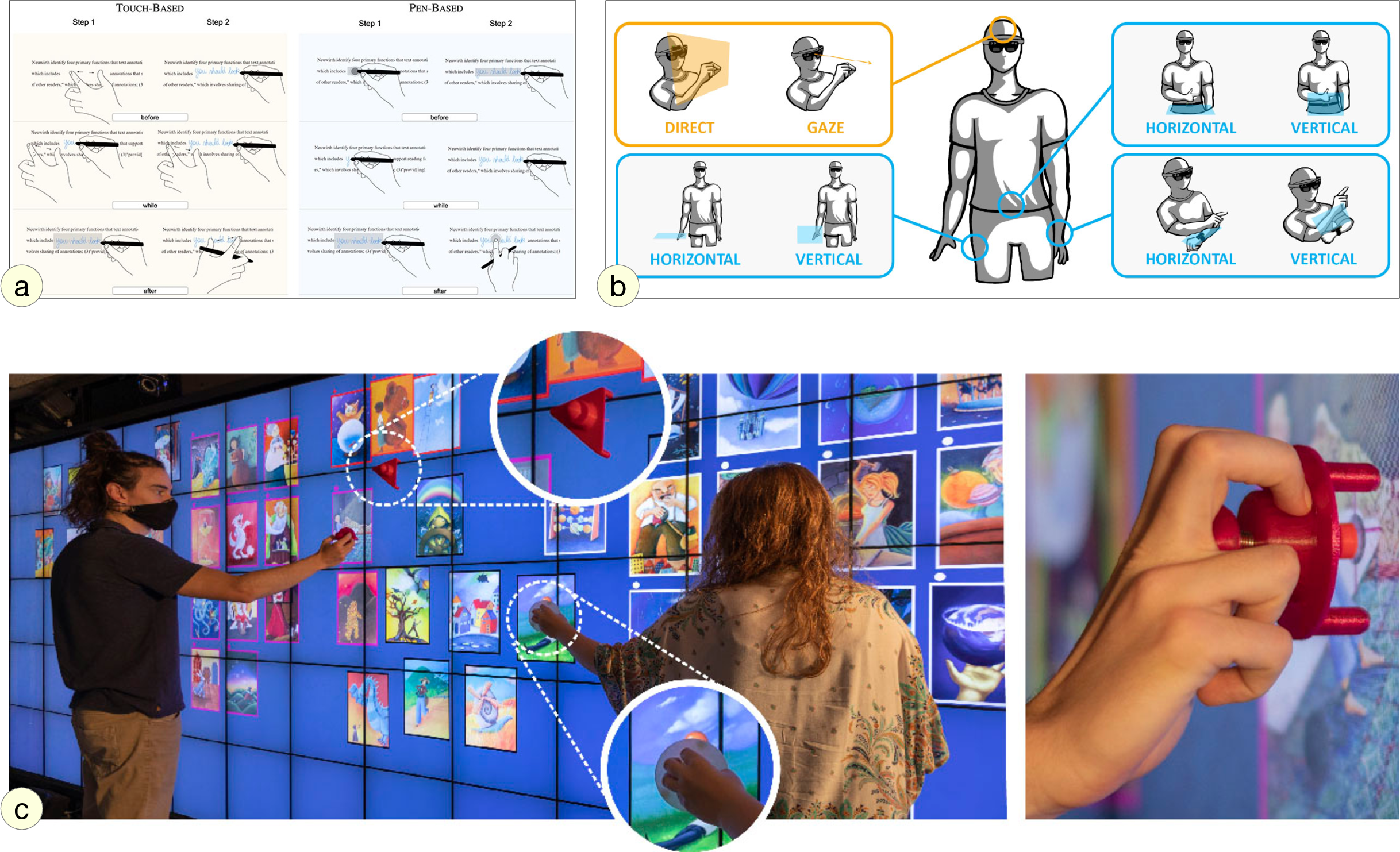

Our activity in this axis focuses on the following themes: 1) direct manipulation on interactive surfaces using a combination of pen and touch; 2) tangible input for wall displays; 3) input in immersive environments with a strong emphasis on Augmented Reality (AR), either in mid-air or combined with a handheld device.

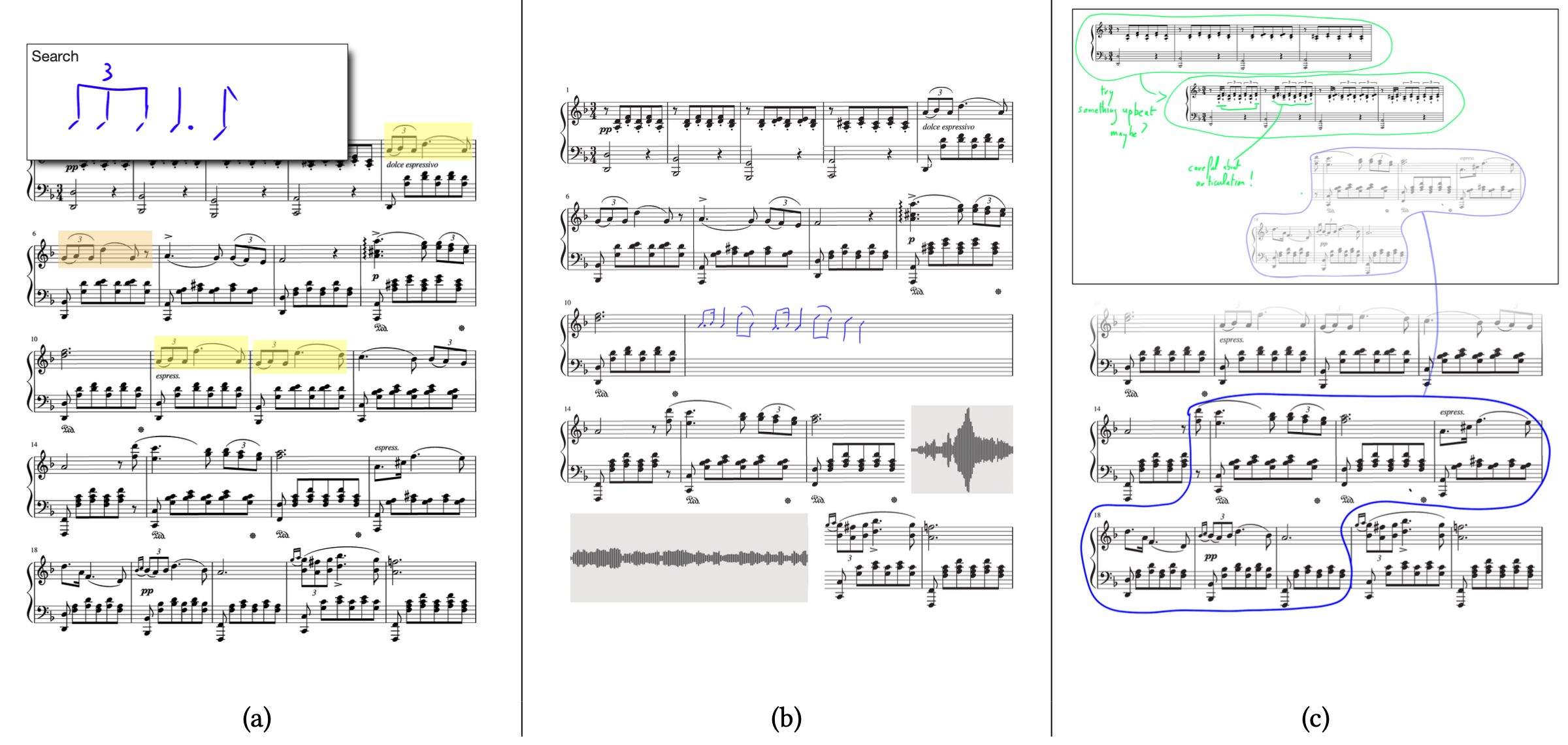

We investigate how pen and touch can be best combined to facilitate different tasks and activities: document annotation, where pen+touch techniques are used to make room for in-context annotations by dynamically reflowing documents 9 (Figure 2-a); heterogeneous dataset exploration and sensemaking 52, where that combination of modalities enables users to seamlessly transition between exploring data and externalizing their thoughts.

Following up on earlier work in the team about tangible interaction using passive tokens on interactive surfaces 43, 42, 30, we investigate tangible interaction on vertical displays. Tangibles can enrich interaction with interactive surfaces, but gravity represents a major obstacle when interacting with a vertical display. We design solutions to this problem 4 (Figure 2-c), seeking to enable manipulations on vertical screens, in the air, or both 32.

As mentioned earlier, Augmented Reality is becoming a strong center of attention in the team as a display technology. Our research is not only about how to display data in AR, but how to interact with these data as well. For instance we study the concept of mid-air pads (ARPads) 1 (Figure 2-b) as an alternative to gaze or direct hand input to control a pointer in windows anchored in the environment (similar to the window-based UI showcased by Apple for the Vision Pro). Supporting indirect interaction, ARPads allow users to control a pointer in AR through movements on a mid-air plane.
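To make the idea of indirect pointer control on a mid-air plane more concrete, here is a minimal Python sketch. It is not the ARPads implementation described in 1: the `MidAirPad` class, the `gain` value and the clamping helper are all hypothetical names and choices introduced for illustration. The sketch projects a hand displacement onto the two axes of a plane and scales it to obtain a pointer displacement inside a window.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


@dataclass
class MidAirPad:
    """Hypothetical pad model: a plane spanned by two unit axes, plus a gain."""
    u_axis: Vec3          # unit vector along the pad's horizontal direction
    v_axis: Vec3          # unit vector along the pad's vertical direction
    gain: float = 2.0     # assumed control-display gain, not a value from the paper

    def pointer_delta(self, prev_hand: Vec3, hand: Vec3) -> Vec2:
        """Project the hand displacement onto the pad plane and apply the gain."""
        move = tuple(h - p for h, p in zip(hand, prev_hand))
        return (self.gain * dot(move, self.u_axis),
                self.gain * dot(move, self.v_axis))


def move_pointer(pointer: Vec2, delta: Vec2, width: float, height: float) -> Vec2:
    """Apply a displacement to the pointer and keep it inside the window."""
    x = min(max(pointer[0] + delta[0], 0.0), width)
    y = min(max(pointer[1] + delta[1], 0.0), height)
    return (x, y)


# Usage: a pad aligned with the x and y axes; movement along z (depth) is ignored.
pad = MidAirPad(u_axis=(1.0, 0.0, 0.0), v_axis=(0.0, 1.0, 0.0))
delta = pad.pointer_delta((0.0, 0.0, 0.0), (0.1, 0.05, 0.3))
pointer = move_pointer((0.9, 0.5), delta, width=1.0, height=1.0)
```

Because only the in-plane component of the movement is used, the hand can drift in depth without moving the pointer, which is the property that makes such input indirect rather than a direct touch on the window.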

When used in combination with another device, AR enables displaying additional information in the physical volume around that device. But AR also enables using that volume to interact with the device, providing new input possibilities, as we have started investigating with the design and evaluation of AR-enhanced widgets for smartphones 31. The AR technology is not only used to offload widgets from the phone to the air around it, but to give users more control over input precision as well.

Relevant publications by team members this year: 5, 12, 13, 17, 16.

3.3 Interacting with Spatio-Temporal Data

Participants: Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Maria Lobo, Mehdi Rafik Chakhchoukh, Ludovic David, Olivier Gladin, Celine Deivanayagam, Noemie Hanus, Doaa Hussien Yassien, Omi Johnson, Maryatou Kante, Chuyun Ma, Briggs Twitchell.

Research in data management is yielding novel models that enable diverse structuring and querying strategies, give machine-processable semantics to the data and ease their interlinking. This, in turn, yields datasets that can be distributed at the scale of the Web, highly-heterogeneous and thus rich but also quite complex. We investigate ways to leverage this richness from the users' perspective, designing interactive systems adapted to the specific characteristics of data models and data semantics of the considered application area.

The general research question addressed in this part of our research program is how to design novel interaction techniques that help users manipulate their data more efficiently. By data manipulation, we mean all low-level tasks related to manually creating new data, modifying and cleaning existing content (data wrangling), possibly merging data from different sources.

We envision interactive systems in which the semantics and structure are not exposed directly to users, but serve as input to the system to generate interactive representations that convey information relevant to the task at hand and best afford the possible manipulation actions.

Our activity in this axis focuses on the following themes: 1) browsing webs of linked data; 2) visualization of multivariate networks; 3) visualization of hypergraphs; 4) visualization for decision making.

Early work in the team focused on enabling users to explore large catalogs of linked data 47 by querying dataset metadata and contents originating from multiple sources, and on interactive systems that let users explore the contents of those linked datasets. We work on new navigation paradigms, for instance based on the concept of semantic paths (SWJ, 34), which create aggregated views on collections of items of interest by analyzing the chains of triples that constitute knowledge graphs and characterizing the sets of values at the end of the paths formed by these chains.
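The notion of following chains of triples and characterizing the values found at the end of a path can be illustrated with a small Python sketch. This is a simplified illustration, not the system described in 34: the function name `follow_path`, the toy triples and the choice of summarizing end values with simple counts are all assumptions made for this example.

```python
from collections import Counter, defaultdict
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


def follow_path(triples: Iterable[Triple],
                start_items: Iterable[str],
                predicates: List[str]) -> Counter:
    """Follow a chain of predicates from a collection of items and
    count the values reached at the end of the path."""
    # Index objects by (subject, predicate) for direct lookup.
    index = defaultdict(list)
    for s, p, o in triples:
        index[(s, p)].append(o)

    frontier = list(start_items)
    for predicate in predicates:
        # Replace each node by the nodes reachable through this predicate.
        frontier = [o for s in frontier for o in index.get((s, predicate), [])]
    return Counter(frontier)


# Toy knowledge graph (hypothetical data).
TRIPLES = [
    ("paper1", "author", "alice"),
    ("paper2", "author", "bob"),
    ("paper3", "author", "alice"),
    ("alice", "affiliation", "LabA"),
    ("bob", "affiliation", "LabB"),
]

# Aggregated view of a collection of papers along the path author/affiliation.
summary = follow_path(TRIPLES, ["paper1", "paper2", "paper3"],
                      ["author", "affiliation"])
```

Here `summary` counts how many paths starting from the collection end at each affiliation, which is one simple way to characterize the set of values at the end of a path.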

While a central idea of the above system is to display information without ever exposing the underlying semi-structured directed labeled graph, we also research novel ways to visualize and manipulate multivariate networks represented as such (Figure 1-c), as there are contexts in which the structure of the data – knowledge graphs or otherwise – is important to convey. To this end, we investigate novel ways to represent complex structures and visually encode the attributes of the entities that compose these structures. This can be done, e.g., by using motion as an encoding channel for edge attributes in multivariate network visualizations 49, 51, or by letting users build representations of multivariate networks incrementally using expressive design techniques 50 (Figure 1-c).

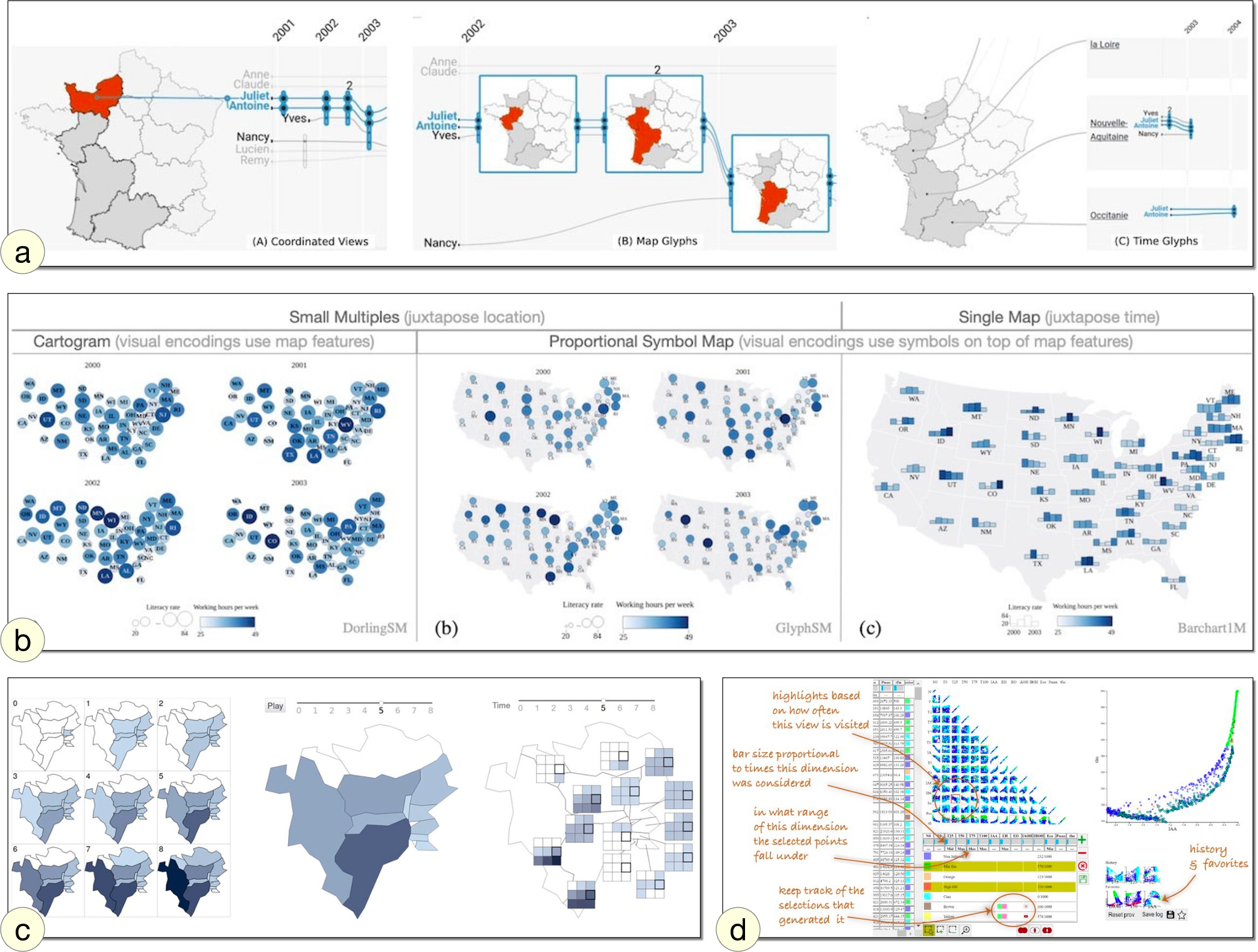

Multivariate networks and knowledge graphs are very expressive data structures that can be used to encode many different types of data. They are sometimes not sufficient, however. In some situations, even more elaborate structures are required, such as hypergraphs, which enable relating more than two vertices with a single edge and are notoriously difficult to visualize. We investigate visualization techniques for data modeled as hypergraphs, for instance in the area of data journalism by generalizing Storyline visualizations to display the temporal evolution of relationships involving multiple types of entities such as people, locations, and companies 7, 39 (Figure 3-a).
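As a small illustration of the data structure involved, the Python sketch below models a time-stamped hypergraph in which each hyperedge relates an arbitrary number of entities, and extracts for one entity the sequence of relationships it takes part in over time. The representation and the `storyline` helper are assumptions made for this example; they are not the data model used in 7 or 39.

```python
from typing import FrozenSet, List, Tuple

# A hyperedge relates any number of entities at a given time step.
Hyperedge = Tuple[int, FrozenSet[str]]  # (time step, related entities)


def storyline(entity: str, edges: List[Hyperedge]) -> List[Tuple[int, FrozenSet[str]]]:
    """For one entity, list the other entities it is related to at each
    time step, in chronological order."""
    rows = [(t, members - {entity}) for t, members in edges if entity in members]
    return sorted(rows, key=lambda row: row[0])


# Toy data mixing people, a location and a company (hypothetical).
EDGES = [
    (2, frozenset({"Alice", "Paris", "AcmeCorp"})),  # three entities, one edge
    (1, frozenset({"Alice", "Bob"})),
    (3, frozenset({"Bob", "Paris"})),
]

alice_line = storyline("Alice", EDGES)
```

A plain graph edge would only be able to relate two of the three entities involved at time step 2; the hyperedge keeps the three-way relationship intact, which is what makes such data harder to lay out visually.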

As mentioned above, interactive cartography has been an active topic in the team since 2015 when we started a collaboration with IGN – the French national geographic institute. Project MapMuxing was about interactive cartography on a variety of surfaces, including tabletops 10 and wall displays 46, but on desktop workstations as well. Since then, our work has expanded to include geovisualization. We investigate research questions about the display of multivariate data that have a spatial – and sometimes temporal – component 8 (Figure 3-b) and that evolve over time 44 (Figure 3-c).

In this research axis we also investigate visualization to support decision making. While the above projects mostly consider visualization for the purpose of data exploration, the visual representation of data can also be an effective tool for promoting situation awareness and helping subject-matter experts make decisions. The design and development of user interfaces for major astronomical observatories – which dates back to 2009 with ALMA and is still on-going with CTA (Cherenkov Telescope Array) – has been and still is an important part of our research & development activities. But we also investigate this broad topic in other contexts, for instance as part of a long-term collaboration with INRAe about the design of trade-off analysis systems 3 for experts in domains such as agronomy or manufacturing (Figure 3-d).

Relevant publications by team members this year: 11, 14, 15, 23, 24.

4 Application domains

Participants: Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Arthur Fages, Ludovic David, Olivier Gladin, Abel Henry-Lapassat.

4.1 Mission-critical Systems

Mission-critical contexts of use include emergency response & management; critical infrastructure operations such as public transportation systems, communications and power distribution networks; and the operation of large scientific instruments such as astronomical observatories and particle accelerators. Central to these contexts of work is the notion of situation awareness 36. Our goal is to investigate novel ways of interacting with computing systems that improve collaborative data analysis capabilities and decision support assistance in a mission-critical, often time-constrained, work context.

Relevant publications by team members this year: 23, 22, 16.

4.2 Exploratory Analysis of Scientific Data

Participants: Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Maria Lobo, Mehdi Rafik Chakhchoukh, Ludovic David, Olivier Gladin, Omi Johnson, Maryatou Kante, Briggs Twitchell.

Many scientific disciplines are increasingly data-driven, including astronomy, molecular biology, particle physics, or neuroanatomy. No matter their origin (experiments, remote observations, large-scale simulations), these data can be difficult to understand and analyze because of their sheer size and complexity. Our goal is to investigate how data-centric interactive systems can improve scientific data exploration.

5 New software, platforms, open data

Participants: Vincent Cavez, Ludovic David, Olivier Gladin, Caroline Appert, Olivier Chapuis, Emmanuel Pietriga, Maryatou Kante.

The following subsections list software developed by ILDA team members. The team develops research prototypes and toolkits, and has also actively contributed since 2018 to the development of the operations monitoring and control front-end of the Cherenkov Telescope Array, in collaboration with the CTAO and DESY.

More information on the ILDA website.

5.1 New software

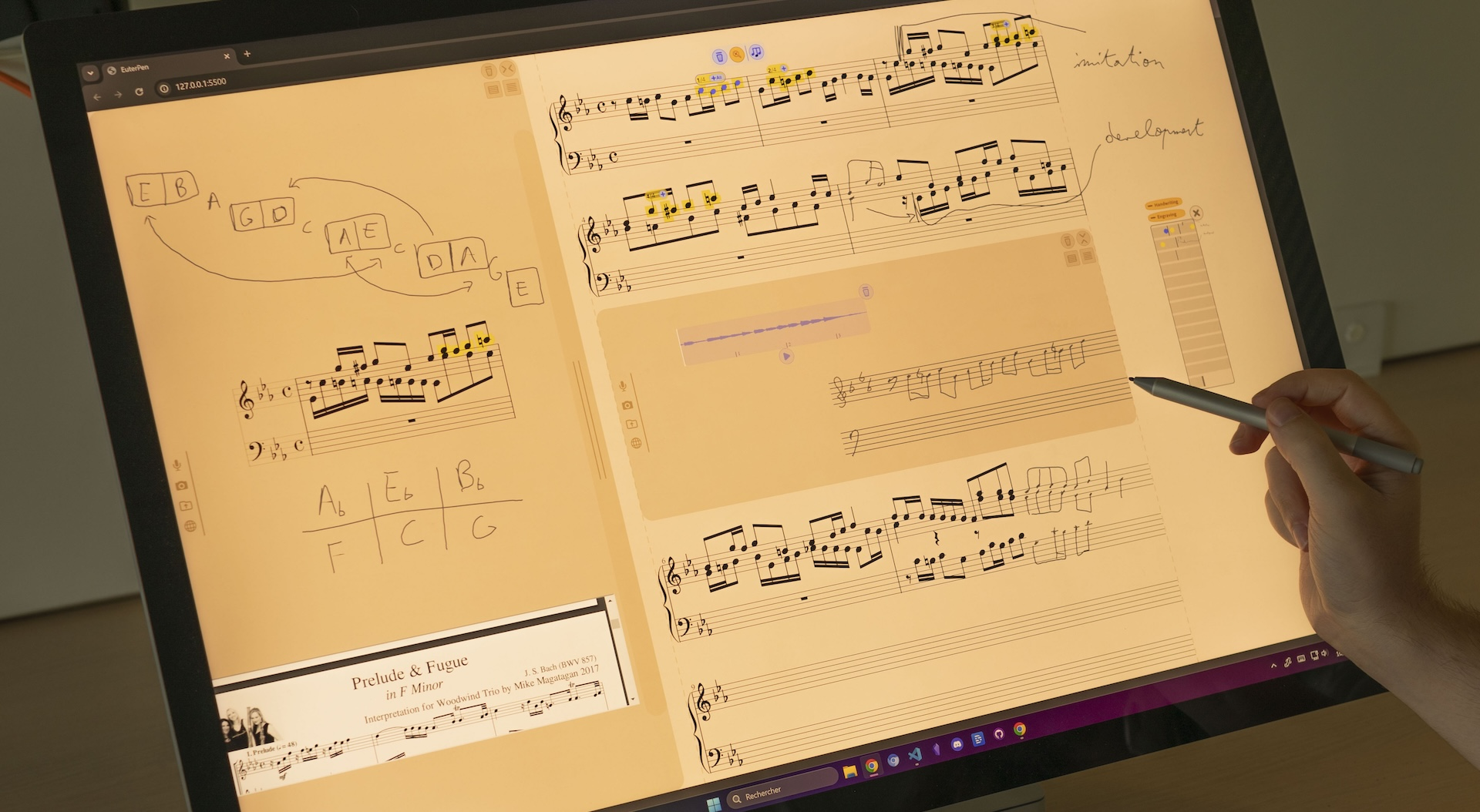

5.1.1 EuterPen

- Name: EuterPen: a Music Score Editor for Interactive Surfaces

- Keywords: Human Computer Interaction, Music notation

- Functional Description: Music score writing on interactive surfaces (pen + touch).

- News of the Year: First EuterPen functional prototype running on Microsoft Surface 2 developed in 2024.

- Publication:

- Contact: Vincent Cavez

- Participants: Vincent Cavez, Emmanuel Pietriga, Caroline Appert, Catherine Letondal

5.1.2 EunomInk

- Keywords: HCI, Spreadsheets

- Functional Description: EunomInk is a prototype spreadsheet program based on Web technologies and designed specifically for pen+touch interactive surfaces.

- URL:

- Publication:

- Contact: Vincent Cavez

- Participants: Vincent Cavez, Caroline Appert, Emmanuel Pietriga

5.1.3 ACADA-HMI

- Keywords: HCI, Data visualization

- Functional Description: Cherenkov Telescope Array Monitoring and Control software front-end, implemented using Web-based technologies

- Publications:

- Contact: Ludovic David

- Participants: Ludovic David, Emmanuel Pietriga, Dylan Lebout

- Partner: DESY

5.1.4 hypatia-vis

- Keywords: Data visualization, 3D visualisation, Human Computer Interaction

- Functional Description: hypatia-vis is a versatile platform for visualizing and interacting with astronomical data, with a primary focus on data from the Euclid space telescope, though it supports other sources as well. A key feature of hypatia-vis is its ability to run on ultra-high-resolution wall displays powered by a rendering cluster, offering a unique solution for collaborative and immersive exploration of astronomical datasets.

The platform supports a wide range of functionalities, including visualization of 2D images, such as high dynamic range FITS files, and of catalogues of celestial objects from diverse sources like Euclid, Gaia, Simbad, NED, and Chandra. It also handles data cubes and multi-band image sets, overlays images from various telescopes (e.g., Euclid, James Webb, Hubble, Herschel, ALMA), and provides 3D navigation and visualization of catalogues, as well as DS9-style annotations.

At its core, the rendering engine leverages Vulkan (via Unity3D) and is implemented in C#. In addition, hypatia-vis includes a suite of Python scripts for image processing, querying catalogue databases, and performing astronomical computations using libraries like Astropy. The platform also integrates a fork of NASA/Caltech's Montage software for generating specific types of astronomical images.

- Contact: Olivier Chapuis

- Participant: Olivier Chapuis

5.2 New platforms

Participants: Olivier Gladin, Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Arthur Fages, Abel Henry-Lapassat.

Both the WILD-512K and WILDER platforms are part of funded project ANR EquipEx+ Continuum (ANR-21-ESRE-0030). Figure 8 illustrates Earth-orbiting object tracking visualization based on a TLE data feed.

Figure 8: Picture of the WILDER ultra-wall displaying satellite tracking data.
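The visualization shown in Figure 8 is described as being driven by a TLE (two-line element set) data feed. As a rough idea of what consuming such a feed involves, the Python sketch below parses the second line of an element set using the fixed-column layout and checksum rule of the standard TLE format. The function names are hypothetical, and the example record (a commonly cited ISS element set) is used for illustration only; neither is taken from the team's software.

```python
def tle_checksum(line: str) -> int:
    """Checksum of a TLE line: sum of all digits in the first 68 columns,
    plus 1 for each minus sign, modulo 10."""
    total = 0
    for ch in line[:68]:
        if ch in "0123456789":
            total += int(ch)
        elif ch == "-":
            total += 1
    return total % 10


def parse_tle_line2(line: str) -> dict:
    """Extract the orbital elements stored in fixed columns of TLE line 2."""
    if len(line) != 69 or line[0] != "2":
        raise ValueError("not a 69-character TLE line 2")
    if tle_checksum(line) != int(line[68]):
        raise ValueError("checksum mismatch")
    return {
        "catalog_number": int(line[2:7]),
        "inclination_deg": float(line[8:16]),
        "raan_deg": float(line[17:25]),
        # Eccentricity is stored without its leading "0." (decimal point assumed).
        "eccentricity": float("0." + line[26:33]),
        "arg_perigee_deg": float(line[34:42]),
        "mean_anomaly_deg": float(line[43:51]),
        "mean_motion_rev_per_day": float(line[52:63]),
    }


# Illustrative record only (column positions matter, including the double space).
EXAMPLE_LINE2 = "2 25544  51.6416 247.4627 0006703 130.5360 325.0288 15.72125391563537"
elements = parse_tle_line2(EXAMPLE_LINE2)
```

Turning these elements into positions on screen additionally requires an orbit propagator such as SGP4, which is outside the scope of this sketch.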

6 New results

6.1 Novel Forms of Input for Groups and Individuals

Participants: Caroline Appert, Anastasia Bezerianos, Emmanuel Pietriga, Arnaud Prouzeau, Vincent Cavez, Camille Dupré, Ludovic David, Olivier Gladin.

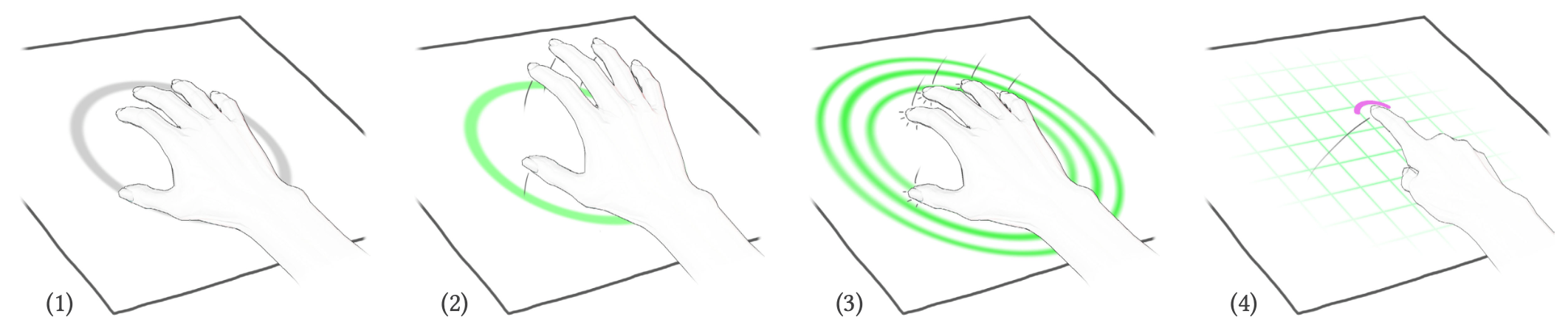

The design and evaluation of interaction techniques for manipulating content in Augmented Reality has become a major research topic in the team 1, 31, 35. Continuing our investigations in this area, we designed TriPad 5, a technique that enables opportunistic touch interaction in Augmented Reality using hand tracking only. With TriPad, users declare the surface they want to appropriate with a simple hand tap gesture, as illustrated in Figure 9. They can then use this surface at will for direct and indirect touch input. TriPad only involves analyzing hand movements and postures, without the need for additional instrumentation, scene understanding or machine learning. TriPad thus works on a variety of flat surfaces, including glass. It also ensures low computational overhead on devices that typically have a limited power budget. We evaluated TriPad with two user studies. First, we demonstrated the robustness of TriPad’s hand movement interpreter on different surface materials: wood, glass and a piece of drywall. We then compared the technique against direct mid-air AR input techniques available in commercial headsets on both discrete and continuous tasks (target acquisition, slider manipulation, 2D pursuit) and with different surface orientations. In this second study we observed that TriPad achieved a better speed-accuracy trade-off overall compared to raycast and direct touch. It also improved comfort and minimized fatigue.

Another focus of attention of the team is the design and evaluation of pen+touch input techniques for manipulating data on interactive surfaces 2, 9, 52, 50. We continued investigating the potential of pen+touch input, but this time in a specific application area that is new to the team: music composition, and most particularly music notation programs. Composers use such programs throughout their creative process. Music notation programs are essentially elaborate structured document editors that enable composers to create high-quality scores by enforcing musical notation rules. They effectively support music engraving, but impede the more creative stages in the composition process because of their lack of flexibility. Composers thus often combine these desktop tools with other mediums such as paper. Interactive surfaces that support pen+touch input have the potential to address the tension between the contradicting needs for structure and flexibility. To better understand professional composers' work process, we interviewed nine of them. These interviews yielded insights about their thought process and creative intentions. We relied on the “Cognitive Dimensions of Notations” framework 37 to capture the frictions composers experience when materializing those intentions on a score. We then identified opportunities to increase flexibility when composing music on interactive surfaces (see examples in Figure 10) by enabling composers to temporarily break the structure when manipulating the notation. These findings – interviews, analysis and opportunities – are reported in our paper “Challenges of Music Score Writing and the Potentials of Interactive Surfaces” 17.

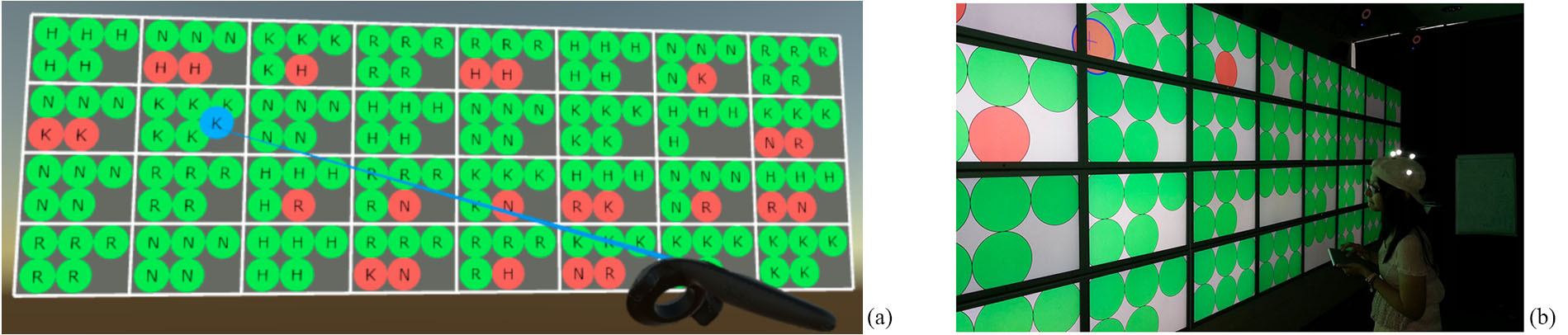

Ultra-high-resolution wall-sized displays 4, 6, 33, 46 remain an active area of research as well. This year, together with colleagues from the Aviz team, we performed an exploratory study about how pairs of users interact with speech commands and touch gestures when working in front of wall displays 13. We participated in the design and analysis of an in-depth exploratory study involving ten pairs of participants performing sensemaking tasks collaboratively. We observed their interaction choices: which interactions they used, the interplay between modalities, their collaboration style, etc. Among the main findings, we found that while touch was the most used modality, participants preferred speech commands for global operations, used them for distant interaction, and that speech interaction contributed to the awareness of the partner's actions. Participants interacted with speech equally often whether they were in loosely or closely coupled collaboration. We derive from these findings a set of design considerations for collaborative and multimodal interactive data analysis systems.

Finally, in collaboration with colleagues from Université de Toulouse and in the context of our work on multi-display environments, we initiated an investigation of collaboration involving multiple hybrid environments 16. Hybrid environments consist of a heterogeneous mix of interactive resources including large screens, personal devices, AR headsets, mixed reality systems, etc. Such environments can provide effective support to groups of users in demanding contexts of work such as emergency management and response. When members of the same group are distributed across multiple such environments – thus collaborating remotely – differences between the environments can impact the quality of the collaboration. The goal of this work is to define a model for the description of such hybrid environments in order to help identify asymmetries between remote users.

6.2 Novel Forms of Display for Groups and Individuals

Participants: Anastasia Bezerianos, Olivier Chapuis, Arnaud Prouzeau.

As mentioned in Section 6.1, ultra-high-resolution wall-sized displays (ultra-walls for short) are an active area of research in the team. The scope of this research is not limited to the design of interaction techniques, but also encompasses research on visual perception and data visualization involving these platforms. One key feature of these displays, as the name suggests, is that they have a very high display capacity: a very high pixel density over a large physical surface. At the same time, they remain expensive, require large rooms, require maintenance and involve a significant engineering effort when developing dedicated applications. We started investigating the potential of virtual reality headsets as an alternative to wall displays 18. Such headsets theoretically have the potential to emulate ultra-walls while avoiding the drawbacks mentioned above. However, it is unclear, from the perspective of visual perception, whether VR headsets actually have a high enough resolution to effectively emulate ultra-walls. We first performed an analysis based on off-the-shelf HMD (Head-Mounted Display) technical specifications, showing that their pixel density cannot yet emulate ultra-walls. We then performed user studies (Figure 11) comparing different conditions, including an actual ultra-wall, virtual walls that compensate for the above-mentioned limitation by scaling visual elements, and virtual navigation techniques. Overall, results from this work indicate that in order to properly emulate an ultra-wall, VR headsets need to achieve a pixel density that matches human visual acuity which, given the typical eye-screen distance in HMDs, should be about 2,600dpi.
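The relation between visual acuity, viewing distance and pixel density can be checked with a back-of-the-envelope computation. The Python sketch below assumes that a pixel should subtend at most one arcminute of visual angle (a common rule of thumb for normal acuity) and treats the screen as if it were viewed directly, ignoring HMD lens optics. Both assumptions, as well as the function names, are ours; the analysis in 18 may rely on different values.

```python
import math

MM_PER_INCH = 25.4


def required_dpi(eye_screen_distance_mm: float, acuity_arcmin: float = 1.0) -> float:
    """Pixel density at which one pixel subtends `acuity_arcmin` arcminutes
    when viewed from the given distance."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    pixel_pitch_mm = eye_screen_distance_mm * math.tan(angle_rad)
    return MM_PER_INCH / pixel_pitch_mm


def distance_for_dpi(dpi: float, acuity_arcmin: float = 1.0) -> float:
    """Viewing distance (mm) at which a screen of the given density
    matches the assumed acuity."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return (MM_PER_INCH / dpi) / math.tan(angle_rad)


# Under these assumptions, 2,600 dpi corresponds to a distance of a few centimeters.
distance_mm = distance_for_dpi(2600)
```

Under these assumptions `distance_mm` comes out between 33 and 34 mm, which is the order of magnitude of an eye-screen distance in a headset and is consistent with the figure of about 2,600dpi quoted above.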

Continuing work Arnaud Prouzeau was involved in while in the BIVWAC team at Centre Inria de l'Université de Bordeaux, we contributed to several projects on topics involving Augmented Reality or Virtual Reality displays:

- means to raise awareness about environmental concerns through the design and evaluation of waste-accumulation visualizations embedded in people's environment using Augmented Reality technology 11;

- help healthcare professionals better understand schizophrenia symptoms by immersively simulating, e.g., delusions and auditory hallucinations using Augmented Reality 20, 27;

- the initial stages of a study about collaborative processes in educational experiences and how to enhance those experiences in Virtual Reality 21.

6.3 Interacting with Spatio-Temporal Data

Participants: Anastasia Bezerianos, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Ludovic David, Olivier Gladin.

Our research in this axis is smoothly transitioning from Interacting with Diverse Data to the somewhat more specific scope of Interacting with Spatio-Temporal Data, continuing collaborations with the GeoVis team at IGN. This encompasses not only data with geographical attributes but any sort of data with a spatial component, including astronomical data.

On this front, we reignited a collaboration with Institut d'Astrophysique Spatiale 45 about the visualization of astronomical data – FITS data in particular – focusing on EUCLID data with Hypatia-vis, an entirely new Unity-based visualization framework developed from scratch (see Section 5.1.4).

Still in the area of Astronomy, our long-lasting collaboration with DESY continues, centered on the operations monitoring and control UI front-end of the Cherenkov Telescope Array Observatory (CTAO), the next-generation atmospheric Cherenkov gamma-ray project. Our contribution to the Array Control and Data Acquisition (ACADA) system – which controls, supervises, and handles the data generated by the telescopes and the auxiliary instruments – is about the design, implementation and testing of user interface components for the control room, in tight collaboration with Iftach Sadeh at DESY 23, 22.

Spatio-temporal data are not limited to geographic and astronomical data but also include, for instance, smaller-scale movement data. Together with colleagues from the Aviz team, we contributed to research about the effective use of visualization to display data associated with moving entities. One project 15 was about exploring the challenges and considerations involved in designing visualizations embedded in motion within sports videos, with a focus on swimming competitions. Through surveys, workshops, and a technology probe, we identified requirements and opportunities for situated visualizations. These findings contribute to understanding how to enhance sports coverage with moving visual representations tied to athletes and other dynamic elements. Another related project 14 focused on scenarios where users must balance attention between a primary task and dynamic, embedded visualizations. Using video games as a case study, we conducted a systematic review and a user study from which we derived considerations for designing visualizations that are readable, useful, and minimally distracting. The work highlights trade-offs in design and offers insights into improving user experience in dynamic, task-focused environments.

Finally, related to earlier work in the team about the visualization of hypergraphs with spatial and temporal dimensions 7, 39, we collaborated with team Cedar on a visualization tool to help users explore and understand data shared in a variety of technical formats, structured or semi-structured 24. Given as input a dataset in one of many different formats, our framework automatically derives an informative graph structure in the spirit of Property Graphs (PGs) 29, from which an interactive visual interface is created, leveraging prior work from team Cedar on integrating heterogeneous data and summarizing those data.
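To give an intuition of what deriving a property-graph-like structure from semi-structured data can look like, here is a minimal sketch. It is not the framework described above: the classes `Node`, `Edge` and `PropertyGraph`, the function `to_property_graph`, and the sample record are all hypothetical, and the mapping rule (nested objects become nodes linked by labeled edges, scalar values become node properties) is a deliberately simple assumption.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Node:
    id: int
    label: str
    properties: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: int
    target: int
    label: str


@dataclass
class PropertyGraph:
    nodes: list = field(default_factory=list)
    edges: list = field(default_factory=list)

    def add_node(self, label: str) -> Node:
        node = Node(id=len(self.nodes), label=label)
        self.nodes.append(node)
        return node


def to_property_graph(value: dict, label: str = "root",
                      graph: Optional[PropertyGraph] = None,
                      parent: Optional[Node] = None) -> PropertyGraph:
    """Map a nested, JSON-like dict to nodes, labeled edges and properties."""
    graph = PropertyGraph() if graph is None else graph
    node = graph.add_node(label)
    if parent is not None:
        graph.edges.append(Edge(parent.id, node.id, label))
    for key, child in value.items():
        children = child if isinstance(child, list) else [child]
        for item in children:
            if isinstance(item, dict):
                # Nested object: new node linked to the current one.
                to_property_graph(item, key, graph, node)
            elif isinstance(child, list):
                # Scalar inside a list: multi-valued property.
                node.properties.setdefault(key, []).append(item)
            else:
                # Plain scalar: single-valued property.
                node.properties[key] = item
    return graph


# Hypothetical input record, for illustration only.
record: dict[str, Any] = {
    "title": "Dataset A",
    "tags": ["x", "y"],
    "author": {"name": "Alice"},
    "files": [{"path": "a.csv"}, {"path": "b.csv"}],
}
graph = to_property_graph(record)
for edge in graph.edges:
    print(edge.source, f"-[{edge.label}]->", edge.target)
```

A real system handling many input formats would first need format-specific parsers and, as the text indicates, summarization of the resulting graph before it can be visualized; none of that is attempted here.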

7 Bilateral contracts and grants with industry

Participants: Caroline Appert, Camille Dupré, Emmanuel Pietriga.

7.1 Bilateral contracts with industry

- Berger-Levrault: ANRT/CIFRE PhD (Camille Dupré), 3 years, November 2022-October 2025 on the topic of Interactive content manipulation in Mixed Reality for maintenance applications.

8 Partnerships and cooperations

8.1 European initiatives

8.1.1 Other European programs/initiatives

Participants: Ludovic David, Emmanuel Pietriga.

Deutsches Elektronen-Synchrotron (DESY): Scientific collaboration on the design and implementation of user interfaces for array operations monitoring and control for the Cherenkov Telescope Array (CTA) project, currently being built in the Canary Islands (Spain) and in the Atacama desert (Chile). May 2018 – October 2026. Funding: 532k€. www.cta-observatory.org

8.2 National initiatives

8.2.1 EquipEx+ Continuum (ANR)

Participants: Emmanuel Pietriga, Olivier Gladin, Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Arnaud Prouzeau, Maria Lobo, Arthur Fages, Julien Berry, Vincent Cavez, Camille Dupré, Mengfei Gao, Ludovic David, Celine Deivanayagam, Doaa Hussien Yassien, Xinpei Zheng.

ILDA participates in EquipEx+ project Continuum, a collaborative research infrastructure of 30 platforms located throughout France to advance interdisciplinary research based on interaction between computer science and the human and social sciences. Funded by the French National Research Agency (ANR) from 2021 to 2029. 19 academic institutions and 3 research organizations. PI: Emmanuel Pietriga. Funding: 816k€.

8.2.2 ANR Interplay

Participants: Olivier Chapuis, Caroline Appert, Arthur Fages.

Novel display technologies such as wall-sized displays, very large tabletops, and headsets for Virtual Reality or Augmented Reality make it possible to redesign workspaces for more flexibility and efficiency. The goal of the project is to study rich display environments from a human-computer interaction perspective in order to inform the design of innovative workspaces for crisis management and data analysis. To reach this goal we will: (i) study the output capacities of these displays for distributing information across displays efficiently; (ii) use physical artefacts for facilitating navigation and interaction across displays; and (iii) use embodied gestures for portable and expert interactions across displays. Our studies will be informed by a real crisis management environment, and the results of the project will help redesign this environment. Coordinator: Olivier Chapuis. Funding: 629k€.

Partners:

- CEA Tech en Occitanie, Commissariat à l'Energie Atomique et aux Energies Alternatives.

- Institut de Recherche en Informatique de Toulouse (IRIT), Université de Toulouse.

- Laboratoire Traitement et Communication de l'Information, Télécom Paris & Institut Polytechnique de Paris.

8.2.3 ANR JCJC ICARE

Participants: Arnaud Prouzeau, Anastasia Bezerianos.

Partners:

- Inria/Bivwac

- Monash University

- Queensland University

In this project, we explore the use of immersive technologies for collaborative learning. First in fully virtual reality environments and then in heterogeneous ones which include different types of devices (e.g. AR/VR, wall displays, desktops), we will design interaction techniques to improve how people collaborate in practical learning activities. Website: ICARE. Coordinator: Arnaud Prouzeau. Duration: 2023-2026. Funding: 225k€.

8.2.4 PEPR eNSEMBLE

Joint actions in a collaborative digital environments: co-gestures and a sense of agency

Participants: Olivier Chapuis, Xinpei Zheng.

A wealth of behavioral and neuroimaging evidence highlights cognitive changes that emerge when we function in groups rather than individually. This raises several questions, such as: what happens when inter-individual relations are mediated by digital tools? Our project seeks to answer such questions from a cognitive science perspective. Some surfaces, such as wall displays, enable collaborative gestures, that is, coordinated gestures involving several users to jointly perform an action. This project aims to improve the user experience during collaborative gestures by building on the sense of agency – a notion borrowed from the philosophy of mind and cognitive psychology that refers to the sense of being the author of one's actions, and to the experience of controlling the effects of one's actions on the outside world. Our goal is to refine collaborative gestures based on theoretical models of joint action and methods for behavioral assessments of cooperation. 3-year PhD funding.

Partners:

- Ouriel Grynszpan, AMI team, LISN, Université Paris-Saclay.

THandgibles : Hand-Centric Collaborative Tangible Interaction

Participants: Julien Berry, Caroline Appert, Olivier Chapuis, Emmanuel Pietriga.

This PhD project aims to develop a versatile tangible interaction approach for collaborative environments with one or multiple screens, potentially distributed across various locations. The proposed "opportunistic tangible interaction" method focuses on recognizing uninstrumented objects based on hand activity tracked with augmented reality headsets. Bourse d'accompagnement for PhD students (10k€).

9 Dissemination

Participants: Caroline Appert, Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Emmanuel Pietriga, Arnaud Prouzeau, Vincent Cavez, Camille Dupré.

9.1 Promoting scientific activities

- ACM CHI Steering Committee (2019-...), ACM SIGCHI Conference on Human Factors in Computing Systems: Caroline Appert

- IEEE VIS Executive Committee (2021-...), Visualization Conference: Anastasia Bezerianos

- IEEE VIS Ombud (2022-2024): Anastasia Bezerianos

9.1.1 Scientific events: organisation

Member of the organizing committees

- para.chi.Paris'24 co-organizer: Vanessa Peña-Araya

- RJC IHM'24 co-organizer: Vanessa Peña-Araya

- IHM 2024 (Workshop chair): Arnaud Prouzeau

- EnergyVis 2024 co-organizer: Arnaud Prouzeau

- TEI 2025 (Demo chair): Arnaud Prouzeau

- BELIV workshop co-organizer: Anastasia Bezerianos

9.1.2 Scientific events: selection

Member of the conference program committees

- ACM CHI 2025: Caroline Appert, Emmanuel Pietriga, Arnaud Prouzeau (AC - Associate Chair)

- IEEE VIS 2024: Vanessa Peña-Araya (AC - Associate Chair), Anastasia Bezerianos (AC - Associate Chair short papers)

- IEEE ISMAR 2024 (journal track): Arnaud Prouzeau (AC - Associate Chair)

- PacificVis 2025 (conference track): Vanessa Peña-Araya (AC - Associate Chair)

Reviewer

- ACM CHI 2025: Anastasia Bezerianos, Vincent Cavez, Olivier Chapuis, Vanessa Peña-Araya

- ACM UIST 2024: Caroline Appert, Olivier Chapuis

- ACM DIS 2024: Camille Dupré

- ACM EICS 2024: Caroline Appert, Olivier Chapuis

- IEEE VIS 2024: Anastasia Bezerianos, Arnaud Prouzeau

- IEEE ISMAR 2024: Olivier Chapuis

- AFIHM/ACM IHM 2024: Anastasia Bezerianos, Olivier Chapuis

9.1.3 Journal

Member of the editorial boards

- ACM ToCHI, Transactions on Computer-Human Interaction: Caroline Appert (Associate Editor)

Reviewer - reviewing activities

- ACM ToCHI, Transactions on Computer-Human Interaction: Olivier Chapuis

- IEEE TVCG, Transactions on Visualization and Computer Graphics: Anastasia Bezerianos, Olivier Chapuis, Vanessa Peña-Araya, Arnaud Prouzeau

9.1.4 Invited talks

- "AI Revolution in Africa: A Human-Centered Approach" Workshop: Caroline Appert

- Novel Types of Interactive Displays: Challenges and Opportunities, Airbus Defence and Space, Visualization and Data Fusion Day: Emmanuel Pietriga

9.1.5 Leadership within the scientific community

- Conseil Scientifique d'Institut (CNRS/INS2I): Caroline Appert

- Programme blanc ANR 2024: Caroline Appert (reviewer)

- CS Hiring committee MCF Paris Saclay: Caroline Appert (member)

- Other CS Hiring committees MCF (Université de Bordeaux, Université Côte d'Azur, Université Toulouse 3 Paul Sabatier, Télécom Paris-Tech): Anastasia Bezerianos (member)

- SIGCHI Paris Chapter: Vanessa Peña-Araya (Chair), Vincent Cavez (Website Chair)

- ANR CE33 jury: Arnaud Prouzeau (member)

9.1.6 Scientific expertise

- Jury d'admission, CNRS INS2I: Caroline Appert (member)

9.1.7 Research administration

- Head of Département Interaction avec l'Humain (IaH) du LISN (UMR9015): Olivier Chapuis

- Director of pôle B de l'École Doctorale STIC Paris Saclay: Caroline Appert

- Member of Conseil de l'École Doctorale STIC Paris Saclay: Caroline Appert

- Member of Commission Scientifique Inria Saclay: Vanessa Peña-Araya

- Member of Conseil de Laboratoire (LISN): Caroline Appert

- Conseil de Gouvernance (élue), Polytech, Université Paris-Saclay: Anastasia Bezerianos

- Member of Commissions Consultatives de Spécialistes d'Université (CCUPS) at Université Paris Saclay: Emmanuel Pietriga

- Co-head of the Polytech Département IIM: Informatique et Ingénierie Mathématique: Anastasia Bezerianos

- Représentant des doctorants du pôle B de l’École Doctorale STIC Paris Saclay: Vincent Cavez

- Member of Conseil de l’École Doctorale STIC Paris Saclay: Vincent Cavez

- Member of Comité de Centre Inria Saclay: Vincent Cavez

- Groupe de Travail EduIHM de l'AFIHM: Arnaud Prouzeau (co-animator)

9.2 Teaching - Supervision - Juries

- Ingénieur (X-3A)/Master (M1/M2): Emmanuel Pietriga, Data Visualization (CSC_51052_EP), 36h, École Polytechnique / Institut Polytechnique de Paris

- Master (M1/M2): Caroline Appert, Experimental Design and Analysis, 21h, Univ. Paris Saclay

- Master (M1/M2): Anastasia Bezerianos, Interactive Information Visualization, 10h, Univ. Paris Saclay

- Master (M1/M2): Anastasia Bezerianos, Mixed Reality and Tangible Interaction, 11h, Univ. Paris Saclay

- Master (M2): Vanessa Peña-Araya, Winter School, 10.5h, Univ. Paris Saclay

- Master (M2): Vanessa Peña-Araya, Career Seminar, 13h, Univ. Paris Saclay

- Master (M1/M2): Vanessa Peña-Araya, Web Development with Node.js, 10.5h, Univ. Paris Saclay

- Ingénieur (3A): Anastasia Bezerianos, Web Programming, 36h, Polytech Paris-Saclay

- Ingénieur (3A): Anastasia Bezerianos, Interaction Humain-Machine Project, 24h, Polytech Paris-Saclay

- Ingénieur (4A / Master (M1)): Anastasia Bezerianos, 4th year CS project (Projet Bases de Données/Web), 24h, Polytech Paris-Saclay

- Ingénieur (X-3A)/Master (M1/M2): Olivier Gladin, Data Visualization (CSC_51052_EP), 18h, École Polytechnique / Institut Polytechnique de Paris

- Master (M1/M2): Vincent Cavez, Design of Interactive Systems (DOIS 2024), 20h, Univ. Paris Saclay

- Master (M2): Vincent Cavez, Winter School, 10.5h, Univ. Paris Saclay

- Licence (L2): Vincent Cavez, Introduction à l'Interaction Humain-Machine (OLIN219), 12h, Univ. Paris Saclay

- Master (M1): Arnaud Prouzeau, Human Factor and Human Computer Interaction, 6h, Université de Bordeaux

- Master (M1): Arnaud Prouzeau, Immersion and interaction with visual worlds, 7.5h, École Polytechnique

- Master (M1): Arnaud Prouzeau, Winter School, 7.5h, Univ. Paris Saclay

- Master (M1/M2): Arnaud Prouzeau, Design Project, 21h, Univ. Paris Saclay

- Bachelor (BX1): Emmanuel Pietriga, Web Programming (CSE104), 32h, École Polytechnique

9.2.1 Supervision

- PhD: Mehdi Chakhchoukh, CHORALE: Collaborative Human-Model Interaction to Support Multi-Criteria Decision Making in Agronomy, defended December 4th, 2024, Advisors: Anastasia Bezerianos, Nadia Boukhelifa

- PhD: Edwige Chauvergne, Study and Design of Interactive Immersive Visualization Experiences for non-experts, defended November 26th, 2024, Advisors: Arnaud Prouzeau, Martin Hachet

- PhD in progress: Vincent Cavez, Post-WIMP Data Wrangling, since October 2021, Advisors: Emmanuel Pietriga, Caroline Appert

- PhD in progress: Camille Dupré, Interactive content manipulation in Mixed Reality, since November 2022, Advisors: Caroline Appert, Emmanuel Pietriga

- PhD in progress: Theo Bouganim, Interactive Exploration of Semi-structured Document Collections and Databases, since October 2022, Advisors: Ioana Manolescu (EPC Cedar), Emmanuel Pietriga

- PhD in progress: Julien Berry, THandgibles : Hand-Centric Collaborative Tangible Interaction, since October 2024, Advisors: Caroline Appert, Olivier Chapuis, Emmanuel Pietriga

- PhD in progress: Mengfei Gao, Augmented Reality as a Means to Improve Web Browsing on Handheld Devices, since November 2024, Advisors: Caroline Appert, Emmanuel Pietriga

- PhD in progress: Xinpei Zheng, Joint actions in a collaborative digital environments: co-gestures and a sense of agency, since October 2024, Advisors: Ouriel Grynszpan, Olivier Chapuis

- PhD in progress: Maudeline Marlier, Tangible interactions for rail operations in operational centers, since March 2022, Advisors: Arnaud Prouzeau, Martin Hachet

- PhD in progress: Juliette Le Meudec, Exploring the use of immersive technologies for collaborative learning, since October 2023, Advisors: Arnaud Prouzeau, Anastasia Bezerianos, Martin Hachet

- PhD in progress: Victor Brehaut, Remote collaboration in hybrid environments, since October 2023, Advisors: Marcos Serrano, Emmanuel Dubois, Arnaud Prouzeau

- M2 internship: Julien Berry, A Hand-centric Approach to the Recognition of Object Manipulation in AR, 6 months. Advisors: Caroline Appert, Olivier Chapuis, Emmanuel Pietriga

- M2 internship: Noémie Hanus, Itinerary-driven Interaction for Map Navigation, 6 months. Advisors: Caroline Appert, Emmanuel Pietriga

- M2 internship: Doaa Hussien Yassien, Exploring the Transition from AR to VR from the First Person's Perspective View to a Bird's Eye View, 5 months. Advisors: Vanessa Peña-Araya, Emmanuel Pietriga

- M2 internship: Chuyun Ma, Beyond Linear Learning: Developing A Progressive, Interactive Onboarding Method for Complex Visualizations, 6 months. Advisors: María Jesús Lobo, Vanessa Peña-Araya

- M1 internship: Céline Deivanayagam, Visualizing Collaborative Integration of New Datasets in Augmented Reality, 3 months. Advisors: Vanessa Peña-Araya, Emmanuel Pietriga

- M1 internship: Abel Henry-Lapassat, Interacting with Distant Displays in a Co-located Collaborative Setting, 3 months. Advisors: Caroline Appert, Olivier Chapuis

- M1 internship: Yana Upadhyay, Exploring a Design Space for Uncertainty Visualization for Climate Data, 3 months. Advisors: María Jesús Lobo, Vanessa Peña-Araya, Jagat Sesh Challa

- MSc internship: Briggs Twitchell, Explaining complex ML models using LLM & visualization, 3 months. Advisors: Anastasia Bezerianos, Nadia Boukhelifa (INRAe)

- MSc internship: Omi Johnson, Interactive visualization to communicate bread-making Bayesian networks to domain experts, 4 months. Advisors: Anastasia Bezerianos, Nadia Boukhelifa (INRAe)

- L3 internship: Maryatou Kante, Catalogue d'objets célestes: Interrogation et traitement de données pour la visualisation, 2 months. Advisor: Olivier Chapuis

9.2.2 Juries

- HDR: Romain Vuillemot, École Centrale Lyon: Emmanuel Pietriga (rapporteur)

- HDR: Cédric Fleury, Univ. Paris-Saclay: Anastasia Bezerianos (presidente)

- PhD: Yuan Chen, Centre Inria de l'Université de Lille and University of Waterloo: Caroline Appert (examinatrice)

- PhD: Morgane Koval, Centre Inria de Bordeaux: Caroline Appert (examinatrice)

- PhD: Ricardo Langner, TU Dresden, Germany: Anastasia Bezerianos (rapportrice)

- PhD: Felipe Gonzalez, Université de Lille and Carleton University: Anastasia Bezerianos (presidente)

- PhD: Camille Gobert, Univ. Paris-Saclay: Anastasia Bezerianos (presidente)

- PhD: Edwige Chauvergne, Université de Bordeaux: Anastasia Bezerianos (examinatrice)

9.3 Popularization

9.3.1 Participation in Live events

- Inria stand at the "38ème finale internationale du championnat des jeux mathématiques" at École Polytechnique: Vanessa Peña-Araya

- NSI (Lycéen) - Jury académique des trophées NSI 2024, Académie de Versailles: Camille Dupré

9.3.2 Other relevant science outreach activities

- 1 scientifique, 1 classe : chiche ! at Lycée International Palaiseau Paris Saclay: Emmanuel Pietriga

- Les Innovantes at Centre Inria de l'Université de Lille: Camille Dupré

10 Scientific production

10.1 Major publications

- 1 inproceedings. ARPads: Mid-air Indirect Input for Augmented Reality. ISMAR 2020 - IEEE International Symposium on Mixed and Augmented Reality, ISMAR '20, Porto de Galinhas, Brazil, IEEE, November 2020, 13 pages.

- 2 article. Spreadsheets on Interactive Surfaces: Breaking through the Grid with the Pen. ACM Transactions on Computer-Human Interaction, October 2023.

- 3 article. Understanding How In-Visualization Provenance Can Support Trade-off Analysis. IEEE Transactions on Visualization and Computer Graphics, May 2022.

- 4 inproceedings. WallTokens: Surface Tangibles for Vertical Displays. Proceedings of the international conference on Human factors in computing systems, CHI 2021 - International conference on Human factors in computing systems, CHI '21, Yokohama / Virtual, Japan, ACM, May 2021, 13 pages.

- 5 inproceedings. TriPad: Touch Input in AR on Ordinary Surfaces with Hand Tracking Only. Proceedings of the 42nd SIGCHI conference on Human Factors in computing systems, CHI 2024 - The 42nd SIGCHI conference on Human Factors in computing systems, Honolulu, HI, United States, May 2024.

- 6 inproceedings. Evaluating the Extension of Wall Displays with AR for Collaborative Work. Proceedings of the international conference on Human factors in computing systems, CHI 2023 - International conference on Human factors in computing systems, Hamburg, Germany, ACM, 2023.

- 7 article. HyperStorylines: Interactively untangling dynamic hypergraphs. Information Visualization, September 2021, 1-21.

- 8 article. A Comparison of Visualizations for Identifying Correlation over Space and Time. IEEE Transactions on Visualization and Computer Graphics, October 2019.

- 9 inproceedings. SpaceInk: Making Space for In-Context Annotations. UIST 2019 - 32nd ACM User Interface Software and Technology, New Orleans, United States, October 2019.

- 10 inproceedings. Designing Coherent Gesture Sets for Multi-scale Navigation on Tabletops. Proceedings of the 36th international conference on Human factors in computing systems, CHI '18, Montreal, Canada, ACM, April 2018, 142:1-142:12.

10.2 Publications of the year

International journals

International peer-reviewed conferences

Conferences without proceedings

Reports & preprints

Other scientific publications

10.3 Cited publications

- 28 book. Data on the Web: From Relations to Semistructured Data and XML. Morgan Kaufmann, 1999.

- 29 inproceedings. The Property Graph Database Model. Proceedings of the International Workshop on Foundations of Data Management, volume 2100, CEUR Workshop Proceedings, CEUR-WS.org, 2018.

- 30 inproceedings. Custom-made Tangible Interfaces with TouchTokens. International Working Conference on Advanced Visual Interfaces (AVI '18), Proceedings of the 2018 International Conference on Advanced Visual Interfaces, Grosseto, Italy, ACM, May 2018, 15.

- 31 inproceedings. AR-enhanced Widgets for Smartphone-centric Interaction. MobileHCI '21 - 23rd International Conference on Mobile Human-Computer Interaction, ACM, Toulouse, France, September 2021.

- 32 inproceedings. SurfAirs: Surface + Mid-air Input for Large Vertical Displays. Proceedings of the international conference on Human factors in computing systems, Hamburg, Germany, ACM, April 2023, 15 pages.

- 33 inproceedings. WallTokens: Surface Tangibles for Vertical Displays. CHI 2021 - International conference on Human factors in computing systems, Yokohama (virtual), Japan, ACM, May 2021, 13 pages.

- 34 article. S-Paths: Set-based visual exploration of linked data driven by semantic paths. Open Journal Of Semantic Web, 12(1), 2021, 99-116.

- 35 inproceedings. Investigating the Use of AR Glasses for Content Annotation on Mobile Devices. ACM ISS 2022 - ACM Interactive Surfaces and Spaces Conference, volume 6, Proceedings of the ACM on Human-Computer Interaction, ISS, Wellington, New Zealand, November 2022, 1-18.

- 36 book. M. R. Endsley and D. G. Jones, eds. Designing for Situation Awareness: an Approach to User-Centered Design. 370 pages, CRC Press, Taylor & Francis, 2012.

- 37 article. Usability Analysis of Visual Programming Environments: A ‘Cognitive Dimensions’ Framework. Journal of Visual Languages & Computing, 7(2), 1996, 131-174.

- 38 book. Linked Data: Evolving the Web into a Global Data Space. Morgan & Claypool, 2011.

- 39 article. Geo-Storylines: Integrating Maps into Storyline Visualizations. IEEE Transactions on Visualization and Computer Graphics, 29(1), October 2022, 994-1004.

- 40 inproceedings. Personal+Context navigation: combining AR and shared displays in Network Path-following. GI 2020 - Conference on Graphics Interface, GI '20, Toronto, Canada, CHCCS/SCDHM, May 2020.

- 41 inproceedings. Effects of Display Size and Navigation Type on a Classification Task. CHI '14, Toronto, Canada, ACM, April 2014, 4147-4156.

- 42 inproceedings. Passive yet Expressive TouchTokens. Proceedings of the 35th SIGCHI conference on Human Factors in computing systems, Denver, United States, May 2017, 3741-3745.

- 43 inproceedings. TouchTokens: Guiding Touch Patterns with Passive Tokens. Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, CHI '16, San Jose, CA, United States, ACM, May 2016, 4189-4202.

- 44 inproceedings. A Comparison of Geographical Propagation Visualizations. CHI '20 - 38th SIGCHI conference on Human Factors in computing systems, Honolulu, United States, April 2020, 223:1-223:14.

- 45 inproceedings. Exploratory Visualization of Astronomical Data on Ultra-high-resolution Wall Displays. Proc. SPIE, Software and Cyberinfrastructure for Astronomy III, volume 9913, SPIE, Edinburgh, United Kingdom, June 2016, 15.

- 46 inproceedings. Engineering Interactive Geospatial Visualizations for Cluster-Driven Ultra-high-resolution Wall Displays. EICS 2022 - ACM SIGCHI Symposium on Engineering Interactive Computing Systems, Sophia Antipolis, France, ACM, June 2022, 3-4.

- 47 inproceedings. Browsing Linked Data Catalogs with LODAtlas. ISWC 2018 - 17th International Semantic Web Conference, Monterey, United States, Springer, October 2018, 137-153.

- 48 inproceedings. Awareness Techniques to Aid Transitions between Personal and Shared Workspaces in Multi-Display Environments. Proceedings of the 2018 International Conference on Interactive Surfaces and Spaces, ISS '18, Tokyo, Japan, ACM, November 2018, 291-304.

- 49 inproceedings. Animated Edge Textures in Node-Link Diagrams: a Design Space and Initial Evaluation. Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI '18, Montréal, Canada, ACM, April 2018, 187:1-187:13.

- 50 article. Expressive Authoring of Node-Link Diagrams with Graphies. IEEE Transactions on Visualization and Computer Graphics, 27(4), April 2021, 2329-2340.

- 51 inproceedings. Influence of Color and Size of Particles on Their Perceived Speed in Node-Link Diagrams. INTERACT 2019 - 17th IFIP Conference on Human-Computer Interaction, LNCS-11747, Human-Computer Interaction - INTERACT 2019, Part II, Part 7: Information Visualization, Paphos, Cyprus, Springer International Publishing, September 2019, 619-637.

- 52 inproceedings. ActiveInk: (Th)Inking with Data. CHI 2019 - The ACM CHI Conference on Human Factors in Computing Systems, Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, United Kingdom, ACM, May 2019.

- 53 article. The Semantic Web Revisited. IEEE Intelligent Systems, 21(3), 2006, 96-101. DOI: 10.1109/MIS.2006.62