2024 Activity report Project-Team POTIOC

RNSR: 201221023D

- Research center: Inria Centre at the University of Bordeaux

- In partnership with: Université de Bordeaux, CNRS

- Team name: Novel Multimodal Interactions for a Stimulating User Experience

- In collaboration with: Laboratoire Bordelais de Recherche en Informatique (LaBRI)

- Domain: Perception, Cognition and Interaction

- Theme: Interaction and visualization

Keywords

Computer Science and Digital Science

- A3.2.2. Knowledge extraction, cleaning

- A3.4.1. Supervised learning

- A3.4.6. Neural networks

- A3.4.7. Kernel methods

- A3.4.8. Deep learning

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.4. Brain-computer interfaces, physiological computing

- A5.1.7. Multimodal interfaces

- A5.6.4. Multisensory feedback and interfaces

- A5.9. Signal processing

- A5.9.2. Estimation, modeling

- A5.9.3. Reconstruction, enhancement

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.1. Well being

- B2.2. Physiology and diseases

- B2.2.1. Cardiovascular and respiratory diseases

- B2.2.2. Nervous system and endocrinology

- B2.5.1. Sensorimotor disabilities

- B2.6.1. Brain imaging

- B9.2. Art

- B9.6.1. Psychology

- B9.7.2. Open data

1 Team members, visitors, external collaborators

Research Scientists

- Fabien Lotte [Team leader, INRIA, Senior Researcher]

- Sebastien Rimbert [INRIA, ISFP]

PhD Students

- Come Annicchiarico [UDL (until October 2024) and INRIA (from November 2024)]

- Pauline Dreyer [INRIA]

- Valerie Marissens [INRIA]

- David Trocellier [UNIV BORDEAUX (until September 2024), then INRIA (from October 2024)]

- Marc Welter [INRIA]

Technical Staff

- Loic Bechon [INRIA, from Apr 2024 until Sep 2024]

- Axel Bouneau [INRIA, Engineer]

- Juliette Meunier [INRIA, Engineer, from Dec 2024]

- Alex Pepi [INRIA, Engineer]

Interns and Apprentices

- Gloria Makima [INRIA, Intern, from Mar 2024 until Jul 2024]

- Philomène Motti [ENSC, Intern, from Jun 2024 until Jul 2024]

- Ioanna Prodromia Siklafidou [INRIA, Intern, from Feb 2024 until Jun 2024]

Administrative Assistant

- Anne-Lise Pernel [INRIA]

Visiting Scientist

- Ettore Cinquetti [UNIV VERONE, from Nov 2024]

2 Overall objectives

The standard human-computer interaction paradigm, based on mice, keyboards, and 2D screens, has shown undeniable benefits in a number of fields. It perfectly matches the requirements of a wide range of interactive applications, including text editing, web browsing, and professional 3D modeling. At the same time, this paradigm shows its limits in numerous situations, for example in the following activities: i) active learning educational approaches that require numerous physical and social interactions, ii) artistic performances where both a high degree of expressivity and a high level of immersion are expected, and iii) accessible applications targeted at users with special needs, including people with sensorimotor and/or cognitive disabilities.

To overcome these limitations, Potioc investigates new forms of interaction that aim at pushing the frontiers of current interactive systems. Since January 2024 and the creation of the new project-team Bivwac (an offshoot of Potioc), Potioc has focused on the input side of interactive systems, notably studying approaches based on brain activity and physiological signals, which require no physical action from the user. In other words, the Potioc team focuses on the study, design and use of Brain-Computer Interfaces (BCI) and Physiological Computing systems.

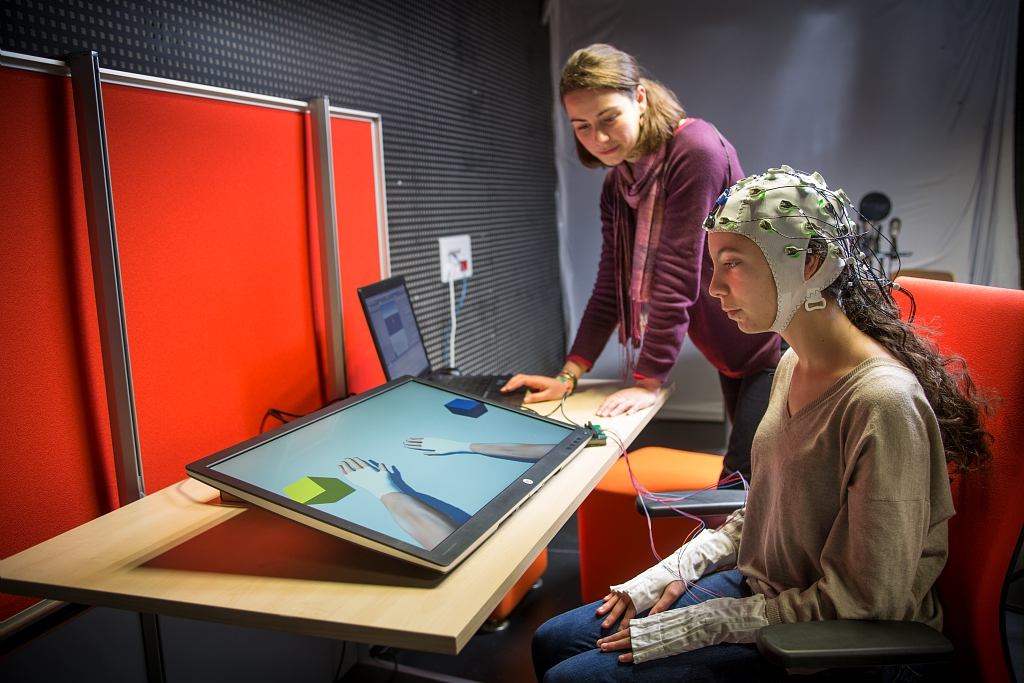

A user wearing an electroencephalography (EEG) cap and facing a screen that displays 3 hands.

The main application domains targeted by Potioc are Neuroergonomics (i.e., the study of the brain at work, in real-life situations), Art, Entertainment, and Health and Well-being. For these domains, we design, develop, and evaluate new approaches that are mainly dedicated to non-expert users.

3 Research program

To achieve our overall objective, we conduct research that has been narrowed down, since January 2024, to Brain-Computer Interfaces (BCI), i.e., systems enabling users to interact by means of brain activity only, in particular as measured by ElectroEncephaloGraphy (EEG). We target BCI systems that are reliable and accessible to a large number of people. To do so, one of our research axes focuses on brain signal processing and classification algorithms (based on machine learning) to better decode brain signals. Another research axis is dedicated to understanding and improving the way we train users to control these BCIs (human factors). Still at the fundamental research level, a third axis aims at identifying new neuromarkers (i.e., patterns of brain activity) that can reflect users' mental states or intentions, to expand the possibilities offered by BCIs. Finally, a last, application-oriented research axis aims at applying BCI technologies to concrete problems to achieve societal impact. We notably work on neuroergonomics, to assess and improve User eXperience (UX) with interactive systems, as well as on health applications, e.g., anaesthesia monitoring and cognitive or motor rehabilitation. These axes are summarized below:

- Fundamental research on BCI:

  - Machine Learning and Signal Processing of EEG signals

  - Human factors of BCI (e.g., BCI user training)

  - Neuromarkers in BCI

- Applications of BCI technology: neuroergonomics, anaesthesia monitoring, motor and cognitive rehabilitation

4 Application domains

4.1 Neuroergonomics

Neuroergonomics is the study of the brain at work, outside the lab, in real-life, unconstrained situations. In team Potioc, we focus on Neuroergonomics studies that aim at assessing and optimising User eXperience (UX) from EEG and physiological signals. We notably aim at monitoring UX-related mental states such as attention, mental workload, fatigue or aesthetic experience, in order to assess the ergonomic qualities of interactive systems and/or improve this experience by creating systems that adapt in real time to such mental states, as estimated using a BCI. For instance, through collaborations, we work on mental state monitoring in flight, or on aesthetic experience monitoring in virtual museums.

4.2 Art

Art, which is strongly linked with emotions and user experience, is also a target area for Potioc. Tools developed in neuroergonomics research, notably aesthetic experience monitoring, can be used to propose BCI-based personalized art exhibitions in virtual museums, in which the sequence of artworks presented depends on the user's experience with previous artworks, as estimated from his/her brain and physiological signals.

4.3 Health and Well-being

Finally, health and well-being is a domain where the work of Potioc can have an impact. BCIs are promising for a number of medical applications. In Potioc, we explore BCI use as an assistive technology enabling people with severe motor impairments to communicate and control computer systems. We also explore BCIs for motor and cognitive rehabilitation, by using them in neurofeedback paradigms for people after a stroke or with mental health issues. In this case, the goal is to help patients self-regulate their pathological brain activity through feedback, provided by the BCI, that reflects this activity. Finally, we also explore BCIs to detect intra-operative awareness, i.e., when a patient accidentally regains consciousness during surgery under general anaesthesia, by detecting in their EEG that they are trying to move.

5 Social and environmental responsibility

5.1 Physical/Mental Health and accessibility

As part of our research on Brain-Computer Interfaces, we work with users with severe motor impairment (notably tetraplegic users or stroke patients) to restore or replace some of their lost functions, by designing BCI-based assistive technologies or motor rehabilitation approaches, see, e.g., 1. In collaboration with Bordeaux CHU, we are also involved in research on using BCI for post-stroke motor and speech rehabilitation, as well as on wakefulness regulation through neurofeedback with psychiatrists (SANPSY) 19.

5.2 Gender Equality

Gender-related aspects are considered at two levels: 1) participant recruitment for BCI experimental campaigns and 2) staff hiring. For all our experimental campaigns, we target strict parity, with half female and half male participants, to avoid biased results. Regarding staff hiring, we make sure to consider female and male applicants equally, and even to encourage, where relevant, the hiring of female applicants, who are under-represented in the BCI field in general.

6 Highlights of the year

6.1 Awards

- David Trocellier obtained the Best Poster Award at the International Graz BCI conference 2024, for the paper 26.

6.2 New Research Grants

- Sébastien Rimbert obtained an ANR Jeune Chercheur grant, with project STIM-BCI

- Sébastien Rimbert obtained an Action Exploratoire (AEx) grant, with project DCode-Brain

6.3 International Visibility

- Fabien Lotte was co-chair of the International Neuroergonomics Conference 2024 (NEC'24), organized in Bordeaux

- Fabien Lotte was the president of the Annual BCI research award 2024

7 New software, platforms, open data

7.1 New platforms

7.1.1 OpenViBE

Participants: Axel Bouneau, Fabien Lotte.

External collaborators: Thomas Prampart [Inria Rennes - Hybrid], Anatole Lécuyer [Inria Rennes - Hybrid].

OpenViBE is a free and open-source software platform dedicated to designing, testing and using brain-computer interfaces. In 2024, version 3.6.0 of OpenViBE was released, and some developments were made towards the upcoming 3.7.0 release. These updates include, among others, the following features developed by the Potioc team:

The following functionalities and EEG signal processing modules (available as OpenViBE boxes) were added to the OpenViBE designer:

- Entropy measures (3.7.0)

- Riemannian potato field trainer (3.7.0)

- Riemannian potato field (3.7.0)

7.1.2 BrainHero for the ANR PROTEUS Project

Participants: Loïc Bechon, Pauline Dreyer, Fabien Lotte.

BrainHero is a gamified Mental Task Brain-Computer Interface (BCI) based on the Graz protocol, inspired by rhythm games like Guitar Hero. It is used in an experiment comparing the effects on users of a more engaging and stimulating environment with those of a basic one. In 2024, we updated this software for the ANR PROTEUS project with the following new functionalities:

- Adding background music during the training session

- Adding cues for the mental calculation and word association mental tasks

- Writing documentation on how to install BrainHero and link it with OpenViBE

- Designing OpenViBE scenarios that use it for the PROTEUS ANR project experiment (Filter Bank CSP)

7.2 Open data

Team Potioc shares and uses the following open EEG-BCI dataset:

A large EEG database with users' profile information for motor imagery Brain-Computer Interface research

- Contributors: Pauline Dreyer, Sébastien Rimbert, Fabien Lotte

- Description: A large database containing electroencephalographic signals from 87 human participants, with more than 20,800 trials in total, representing about 70 hours of recording. It was collected during brain-computer interface (BCI) experiments that all followed the same protocol: right- and left-hand motor imagery (MI) tasks during a single-day session. It includes the performance of the associated BCI users, detailed information about their demographics, personality and cognitive profiles, and the experimental instructions and code (executed in the open-source platform OpenViBE).

- Dataset PID (DOI): 10.5281/zenodo.8089820

- Project link:

- Publications:

- Contact: pauline.dreyer@inria.fr

8 New results

In line with our research project, our new results address both fundamental aspects of BCI, at the machine learning and human factors levels, and their applications, both medical and non-medical. They are described in more detail below.

8.1 Machine Learning methods for BCI

On the machine learning side, we proposed new algorithms that take into account the dynamics of EEG signals when representing them as covariance matrices (the current state of the art in BCI), improving the reliability of their classification. We also identified BCI-specific biases that need to be avoided when using Deep Learning for EEG classification. More generally, we proposed a number of guidelines, reflections and good practices on the use of Artificial Intelligence and Machine Learning in BCI.

8.1.1 Averaging trajectories on the manifold of symmetric positive definite matrices

Participants: Fabien Lotte.

External collaborators: Thibault de Surrel [LAMSADE], Sylvain Chevallier [Univ. Paris-Saclay], Florian Yger [LAMSADE].

In this work, we leveraged more information from a single measurement (e.g., an ElectroEncephaloGraphic (EEG) trial) by representing it as a trajectory of covariance matrices (indexed by time, for example) instead of a single aggregated one. In doing so, we aim at reducing the impact of non-stationarities and variabilities (e.g., due to fatigue or stress for EEG). Since covariance matrices are symmetric positive definite (SPD) matrices, we present two algorithms to classify trajectories on the space of SPD matrices. Both algorithms compute, in two different ways, the mean trajectory of the training trajectories of each class and use these means as class prototypes. The first method computes a pointwise mean, and the second one performs a smart matching using the Dynamic Time Warping (DTW) algorithm. As we are considering SPD matrices, the geometry used throughout these processes is the Riemannian geometry of SPD matrices. We tested our algorithms on synthetic data and on EEG data from six different datasets, and showed that they yield better average results than the state-of-the-art classifier for EEG data. This work was published at the EUSIPCO conference 25.

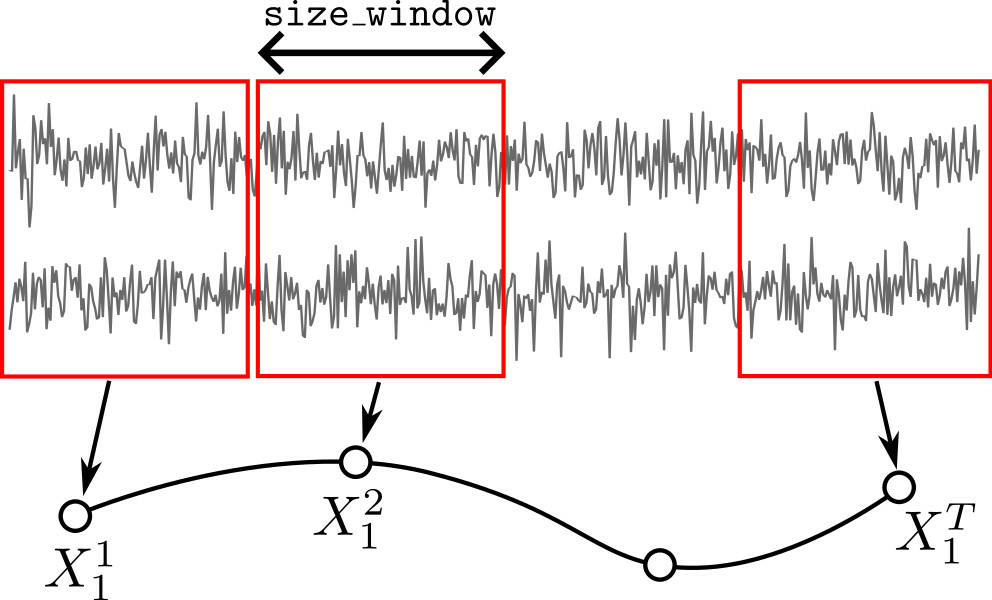

An EEG signal, with two channels, being epoched into 4 epochs, and a covariance matrix of each epoch being estimated, leading to a trajectory of 4 covariance matrices.
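To make the trajectory idea concrete, here is a minimal NumPy sketch, not the implementation from the EUSIPCO paper: it cuts a trial into epochs, estimates one covariance matrix per epoch, averages training trajectories index by index with the affine-invariant Riemannian (Karcher) mean, and assigns a new trajectory to the closest class prototype. The `dtw_distance` helper only illustrates DTW alignment with a Riemannian local cost; the paper's DTW-based averaging of trajectories is not reproduced here. All function names are illustrative.

```python
import numpy as np


def _eig_fn(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def riemann_distance(A, B):
    """Affine-invariant Riemannian distance between two SPD matrices."""
    A_isqrt = _eig_fn(A, lambda w: 1.0 / np.sqrt(w))
    w = np.linalg.eigvalsh(A_isqrt @ B @ A_isqrt)
    return np.sqrt(np.sum(np.log(w) ** 2))


def riemann_mean(mats, n_iter=50, tol=1e-9):
    """Karcher (geometric) mean of SPD matrices by fixed-point iteration."""
    M = np.mean(mats, axis=0)  # Euclidean mean as the starting point
    for _ in range(n_iter):
        M_sqrt = _eig_fn(M, np.sqrt)
        M_isqrt = _eig_fn(M, lambda w: 1.0 / np.sqrt(w))
        # Average of the matrices mapped to the tangent space at M
        T = np.mean([_eig_fn(M_isqrt @ C @ M_isqrt, np.log) for C in mats], axis=0)
        M = M_sqrt @ _eig_fn(T, np.exp) @ M_sqrt  # map back onto the manifold
        if np.linalg.norm(T) < tol:
            break
    return M


def covariance_trajectory(trial, n_epochs):
    """Split a (channels x samples) trial into epochs; one covariance per epoch."""
    return np.array([np.cov(e) for e in np.array_split(trial, n_epochs, axis=1)])


def pointwise_mean_trajectory(trajs):
    """Class prototype: Riemannian mean taken independently at each time index."""
    trajs = np.asarray(trajs)  # shape (n_trials, n_epochs, C, C)
    return np.array([riemann_mean(trajs[:, t]) for t in range(trajs.shape[1])])


def pointwise_distance(traj_a, traj_b):
    """Sum of Riemannian distances between matrices at the same time index."""
    return sum(riemann_distance(a, b) for a, b in zip(traj_a, traj_b))


def dtw_distance(traj_a, traj_b):
    """DTW alignment cost between two trajectories, Riemannian distance as local cost."""
    n, m = len(traj_a), len(traj_b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = riemann_distance(traj_a[i - 1], traj_b[j - 1])
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    return D[n, m]


def classify(traj, prototypes, dist=pointwise_distance):
    """Return the label whose prototype trajectory is closest to `traj`."""
    return min(prototypes, key=lambda label: dist(traj, prototypes[label]))
```

For example, with `protos = {"left": pointwise_mean_trajectory(left_trajs), "right": pointwise_mean_trajectory(right_trajs)}`, calling `classify(covariance_trajectory(trial, 4), protos)` returns the closer label. Passing `dist=dtw_distance` tolerates small temporal shifts between trials, at the cost of an O(n·m) alignment per comparison.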

8.1.2 Visual cues can bias EEG Deep Learning models

Participants: David Trocellier, Fabien Lotte.

External collaborators: Bernard Nkaoua [Bordeaux population Health /Univ. Bordeaux].

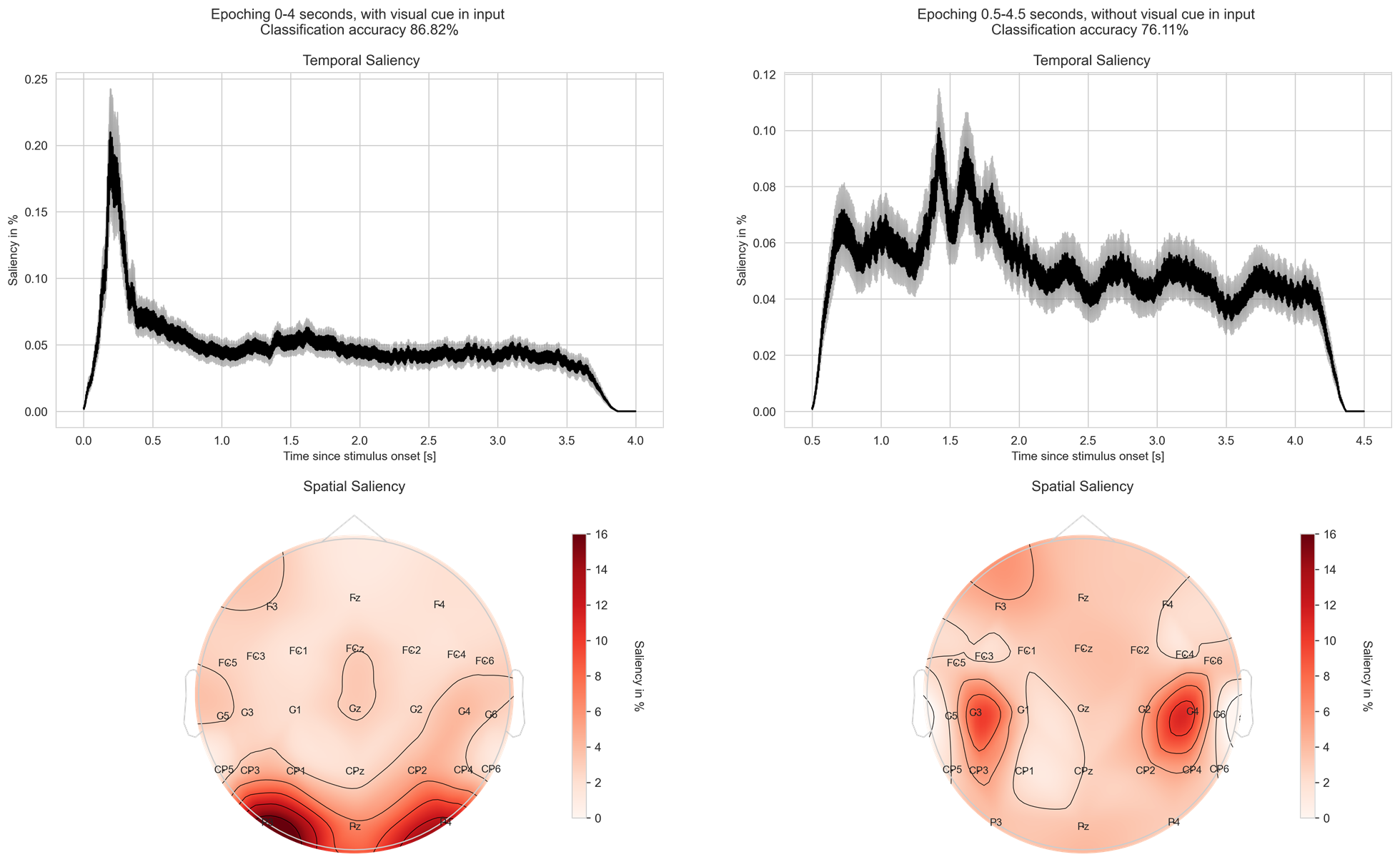

The use of Deep Learning (DL) for classification in motor imagery-based brain-computer interfaces (MI-BCIs) has grown significantly over the past years, promising to enhance EEG classification accuracies. However, the black-box nature of DL may lead to accurate but biased and/or irrelevant DL models. Here, we study the influence of including the EEG recorded during the visual cue (a common practice) in the DL input window on both the features learned and the classification performance of a state-of-the-art DL model, DeepConvNet. The classifier was tested on a large MI-BCI dataset with two time windows after the visual cue: 0-4 s (with the cue EEG) and 0.5-4.5 s (without). Performance-wise, the first condition significantly outperformed the second (86.82% vs. 76.11%, p<0.001). However, saliency map analyses demonstrated that including the visual cue EEG leads the model to extract cue-related evoked potentials, which are distinct from the MI features used by the model trained without the visual cue EEG. This research was presented at the 2024 NeuroErgonomics Conference (NEC'24), Bordeaux, 26.

Left (visual cue included): a graph showing higher temporal saliency around 0.2 s after the cue, and spatial saliency in visual areas of the brain (occipital cortex). Right (visual cue not included): a graph showing more uniform temporal saliency, and spatial saliency in the motor cortex.
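The two input-window conditions amount to epoching the continuous EEG relative to each cue onset. In this minimal NumPy sketch, `extract_window` and the 250 Hz sampling rate are illustrative assumptions, not the pipeline used in the study; the point is that both conditions yield windows of the same 4 s length and differ only in whether the first 0.5 s after the cue, which contains the cue-evoked response, is fed to the network.

```python
import numpy as np


def extract_window(eeg, cue_samples, fs, t_start, t_end):
    """Cut one epoch per trial, from t_start to t_end seconds after each cue onset.

    eeg: continuous recording, shape (n_channels, n_samples)
    cue_samples: sample index of each visual cue onset
    Returns an array of shape (n_trials, n_channels, n_window_samples).
    """
    start = int(round(t_start * fs))
    stop = int(round(t_end * fs))
    return np.stack([eeg[:, c + start:c + stop] for c in cue_samples])


# The two conditions compared in the study (sampling rate assumed to be 250 Hz):
# with_cue    = extract_window(eeg, cues, fs=250, t_start=0.0, t_end=4.0)
# without_cue = extract_window(eeg, cues, fs=250, t_start=0.5, t_end=4.5)
```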

8.1.3 Guidelines, good practices and reflexions on machine learning use in BCI

Participants: Fabien Lotte.

External collaborators: Jaime Riascos Salas [Univ. Potsdam, Univ. Envigado], Marta Molinas [NTNU], Camille Jeunet-Kelway [INCIA, CNRS], The USERN Network, Klaus Gramann [TU Berlin], Frederic Dehais [ISAE-SupAero], Hasan Ayaz [Univ. Drexel], Mathias Vukelic [Fraunhofer], Waldemar Karwowski [Univ. Central Florida], Stephen Fairclough [Univ. Liverpool], Anne-Marie Brouwer [Univ. Radboud, TNO], Raphaëlle N. Roy [ISAE-SupAéro].

Together with colleagues from various countries and institutions, we proposed a number of guidelines, good practices, and reflections on the use of machine learning and Artificial Intelligence in BCI. We notably identified common pitfalls to avoid, promising challenges, good practices for reproducibility and open science in BCI, open research questions, as well as ethical considerations and risks. These works were published in IEEE Access 11, Frontiers in Neuroergonomics 12, ESANN 2024 22 and as a book chapter 32.

8.2 Human factors in BCI

To better understand the human factors involved in BCI, we studied the impact of BCI users' biological sex (and found no differences between males and females), proposed a computational model to study user learning and training (and the impact of instructions) in BCI and neurofeedback, and proposed a new protocol to identify the impact of additional human factors in the future.

8.2.1 Large scale investigation of the effect of gender on mu rhythm suppression in motor imagery brain-computer interfaces

Participants: Fabien Lotte, Sébastien Rimbert.

External collaborators: Valentina Gamboa von Groll [Tilburg University], Nikki Leeuwis [Tilburg University], Maryam Alimardani [Tilburg University/Vrije Universiteit Amsterdam], Aline Roc [CATIE], Léa Pillette [Hybrid - 3D interaction with virtual environments using body and mind].

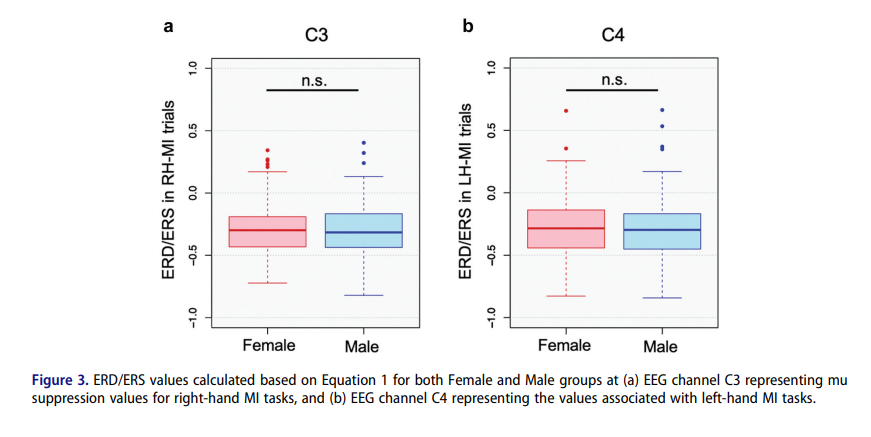

The foremost issue in Motor Imagery Brain-Computer Interfaces (MI-BCI) is poor BCI performance, known as 'BCI inefficiency'. Although past research has attempted to find a solution by investigating factors influencing users' MI-BCI performance, the issue persists. One of the factors that has been studied in relation to MI-BCI performance is gender. Research on the influence of gender on a user's ability to control MI-BCIs remains inconclusive, mainly due to the small sample sizes and unbalanced gender distributions of past studies. To address these issues and obtain reliable results, this study combined four MI-BCI datasets into one large dataset with 248 subjects and an equal gender distribution. The datasets included EEG signals from healthy subjects of both gender groups who had performed a right- vs. left-hand motor imagery task following the Graz protocol. The analysis consisted of extracting the Mu Suppression Index from the C3 and C4 electrodes and comparing its values between female and male participants. Unlike some previous findings, which reported an advantage for female BCI users in modulating mu rhythm activity, our results did not show any significant difference between the Mu Suppression Index of the two groups, indicating that gender may not be a predictive factor for BCI performance. This work was published in the Brain-Computer Interfaces journal 13.

Two box plots showing no differences in ERD/ERS between males and females, for both the C3 and C4 electrodes.
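The Mu Suppression Index is commonly computed as the log ratio of mu-band power during motor imagery to power during a rest or reference period. The band limits, reference period and spectral estimator used in this study are not given here, so the 8-12 Hz band and Welch estimator in this minimal per-electrode sketch are assumptions.

```python
import numpy as np
from scipy.signal import welch


def band_power(x, fs, band):
    """Mean Welch power spectral density of a 1-D signal within a frequency band."""
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), int(2 * fs)))
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return psd[mask].mean()


def mu_suppression_index(rest, task, fs, band=(8.0, 12.0)):
    """Log ratio of mu-band power during motor imagery vs. rest, at one electrode.

    Negative values indicate suppression (ERD) of the mu rhythm during the task.
    """
    return np.log(band_power(task, fs, band) / band_power(rest, fs, band))
```

Computed per subject at C3 and C4, the female and male groups' values could then be compared with a non-parametric test such as `scipy.stats.mannwhitneyu`; the statistical test actually used in the study is not stated here.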

8.2.2 Bayesian model of individual learning to control a motor imagery BCI

Participants: Come Annicchiarico, Fabien Lotte.

External collaborators: Jérémie Mattout [CNRL / Inserm, Lyon, France].

The cognitive mechanisms underlying subjects' self-regulation in Brain-Computer Interface (BCI) and neurofeedback (NF) training remain poorly understood. Yet, a mechanistic computational model of each individual learning trajectory is required to improve the reliability of BCI applications. The few existing attempts mostly rely on model-free (reinforcement learning) approaches. Hence, they can neither capture the strategy developed by each subject nor finely predict their learning curve. We thus proposed an alternative, model-based approach rooted in cognitive skill learning within the Active Inference framework. We showed how BCI training may be framed as an inference problem under high uncertainty. We illustrated the proposed approach on a previously published synthetic Motor Imagery ERD laterality training task, and showed how simple changes in model parameters allow us to qualitatively match experimental results and account for various subjects. In the near future, this approach may provide a powerful computational tool to model individual skill learning, and thus to optimize and finely characterize BCI training. This work was published at the 9th International Graz BCI conference 15.

8.2.3 NEARBY project - Motor Imagery BCI Protocol

Participants: Fabien Lotte, Sébastien Rimbert, Juliette Meunier.

The NEARBY (Noise and Variability-free BCI Systems for Out-of-the-lab Use) project aims to study the variability factors that influence BCI performance, such as between-user, within-user and environmental variability, which may explain the poor reliability of BCIs outside the laboratory. The project thus aims to (1) collect an extensive EEG database under different conditions and for different types of BCIs, in order to (2) develop new machine learning algorithms that are robust to such variability factors. In this context, we are collaborating with DFKI (German Research Center for Artificial Intelligence) to collect speech and MI BCI data. Our objective in Bordeaux is to gather MI BCI data. To this end, we have designed a protocol for MI BCI that incorporates various factors of variability.

8.3 Neuro and biomarkers

We studied and proposed a number of biomarkers, notably to predict BCI performance (between-user variability) before using a BCI, to predict runners' performance, and to understand performance variations due to fatigue or to the timing of stimulations in stimulation-based BCIs.

8.3.1 Validating neurophysiological predictors of BCI performance on a large open source dataset.

Participants: David Trocellier, Fabien Lotte.

External collaborators: Bernard Nkaoua [Bordeaux population Health / Univ. Bordeaux].

Brain-computer interfaces (BCI) are systems that process brain activity to decode specific commands from it, such as motor imagery patterns generated when users imagine movements. Despite the growing interest in BCIs, they present significant challenges, notably in decoding distinct neural patterns, due to considerable variability across and within users. The literature has shown that various predictors are correlated with subjects' BCI performance. Among these indicators, neurophysiological predictors appeared to be the most effective, although studies generally involved small samples and results were not always replicated, thus questioning their reliability. In our study, we used a large dataset with 85 subjects to analyse the relationship between different predictors identified in the literature and BCI performance. Our findings reveal that only four of the six predictors tested could be replicated on this dataset. These results underscore the necessity of validating literature findings to ensure the reliability and applicability of such predictors. This work was published as a conference paper at the 9th Graz BCI conference and received the "best poster award" 26.

8.3.2 Prediction of Motor-Imagery-BCI performance using Median Nerve Stimulation

Participants: Sébastien Rimbert, Valérie Marissens-Cueva, Fabien Lotte.

External collaborators: Laurent Bougrain [LORIA /Paris Brain Institute].

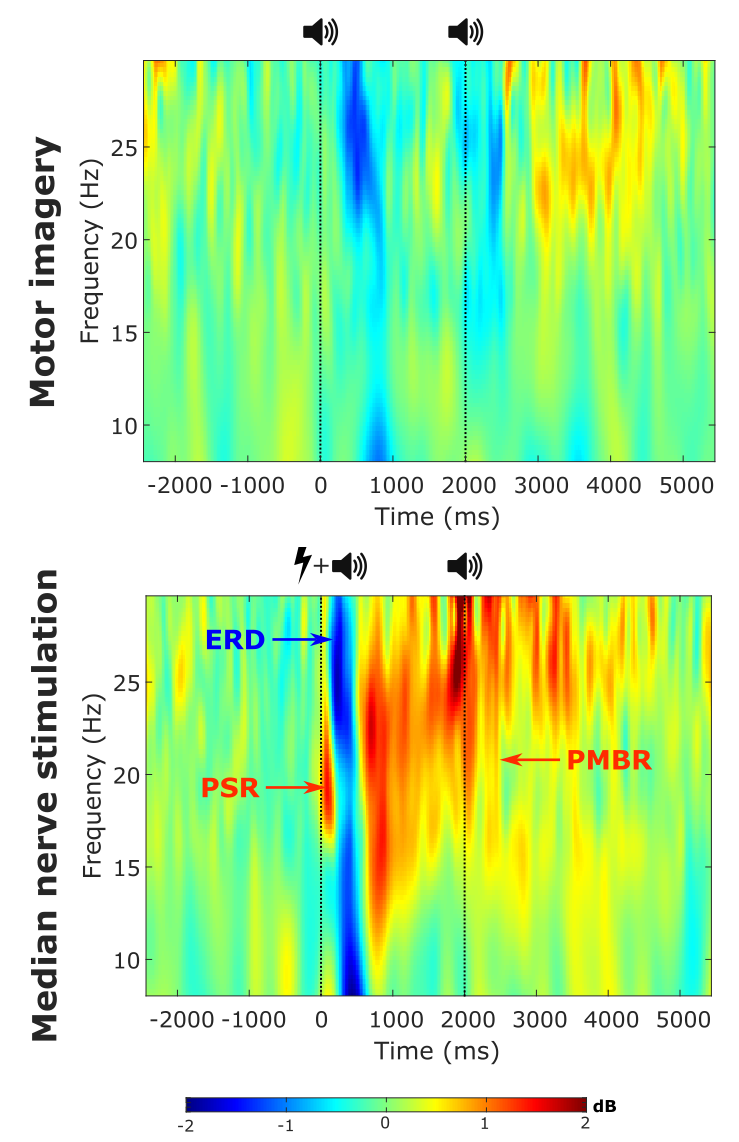

Time-frequency maps following MI (top) or MNS (bottom). They show that MI is followed by an ERD then an ERS, while MNS is followed by a brief ERS, then an ERD and a long ERS.

Motor Imagery-based Brain-Computer Interfaces (MI-BCIs) have grown significantly in recent years and have successfully overcome several challenges in rehabilitation, control, communication, artistic creation, etc. However, not everyone can use MI-BCI, and there are large variations between users in performance. Thus, predicting a user's future MI-BCI performance is now a critical issue that could enable the BCI system to be adapted as effectively as possible to each user, thereby improving its efficiency. However, few methods exist that can currently predict performance prior to a BCI session. In fact, both neurophysiological markers and questionnaires have been shown to be insufficient as predictive tools. Interestingly, it has previously been shown that painless and passive median nerve stimulation can generate well-known motor patterns of Event-Related Desynchronization (ERD) and Event-Related Synchronization (ERS). In this work, we proposed to use these post-stimulation motor patterns to predict the future performance of an MI-BCI user. Our results showed that the ERD after stimulation of the median nerve in the beta frequency band was correlated with the BCI performance of the subjects (rho =-0.58; p-value < 0.05) and that the ERD in the mu band correlated very strongly with BCI performance in this specific frequency band (rho=-0.87; p-value < 0.001). These promising results suggested that there is a strong neural predisposition to perform well with MI-BCI and that median nerve stimulation may be an easy-to-install, fast and highly effective technique for detecting it 24.
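ERD and ERS values such as those correlated with BCI performance above are band-power changes relative to a reference period. This minimal SciPy sketch uses the classic percentage definition ERD% = (A - R) / R × 100 (A: band power in the activity interval, R: band power in the reference interval), followed by a Spearman correlation across subjects. The filter order, band limits and interval boundaries are illustrative assumptions, not the settings of the study.

```python
import numpy as np
from scipy.signal import butter, filtfilt
from scipy.stats import spearmanr


def erd_percent(trials, fs, band, ref, act):
    """Band-power ERD/ERS percentage for one channel.

    trials: single-channel epochs time-locked to the stimulation, shape (n_trials, n_samples)
    band: (low, high) in Hz; ref, act: (start, end) in seconds within the epoch
    Negative values indicate an ERD (power decrease), positive values an ERS.
    """
    b, a = butter(4, band, btype="bandpass", fs=fs)
    power = filtfilt(b, a, trials, axis=-1) ** 2  # instantaneous band power
    avg = power.mean(axis=0)                       # average over trials
    R = avg[int(ref[0] * fs):int(ref[1] * fs)].mean()
    A = avg[int(act[0] * fs):int(act[1] * fs)].mean()
    return (A - R) / R * 100.0


def correlate_with_performance(erd_values, bci_accuracies):
    """Spearman rank correlation between per-subject ERD% and BCI accuracy."""
    rho, p_value = spearmanr(erd_values, bci_accuracies)
    return rho, p_value
```

A negative rho, as reported above, means that subjects with a stronger post-stimulation ERD (more negative ERD%) tend to reach higher MI-BCI accuracy.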

8.3.3 Could blink parameters be used to monitor fatigue during MI-BCI use?

Participants: Pauline Dreyer, Aline Roc, David Trocellier, Marc Welter, Fabien Lotte.

External collaborators: Raphaëlle Roy [Fédération ENAC ISAE-SUPAERO ONERA, Université de Toulouse, France].

Brain-Computer Interface (BCI) performance is affected by intra-subject variability, including mental fatigue. While typically assessed through self-reports, mental fatigue can also be measured via blink parameters from electro-oculography (EOG) signals. We analyzed blink data from 23 motor imagery BCI (MI-BCI) users, along with subjective fatigue reports and BCI performance. Although blink parameters did not correlate with MI-BCI performance or subjective fatigue, both blink number and duration increased with time-on-task, mirroring the rise in subjective fatigue. These findings suggest that blink parameters may be useful for monitoring BCI users, although their relationship with BCI performance and fatigue needs further study. This work was presented at CORTICO 2024 and at the 9th Graz BCI Conference 2024 33, 16.

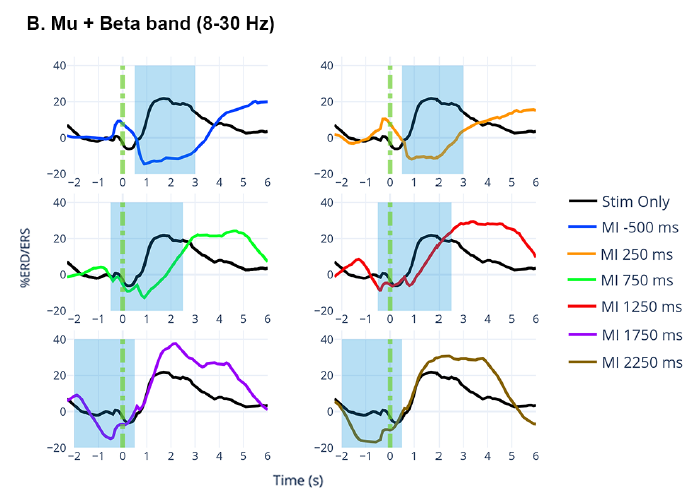
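The report does not describe how blinks were extracted from the EOG. As a minimal sketch, assuming a vertical EOG channel and a user-chosen amplitude threshold, blink number, rate and duration can be derived from contiguous supra-threshold runs, then computed per run to follow their evolution with time-on-task.

```python
import numpy as np


def detect_blinks(eog, fs, threshold):
    """Threshold-based blink detection on a vertical EOG channel.

    A blink is a contiguous run of samples where |eog| exceeds `threshold`.
    Returns a list of (onset_s, duration_s) tuples.
    """
    above = np.abs(eog) > threshold
    # Pad with False so that every run has a rising and a falling edge
    edges = np.diff(np.concatenate(([False], above, [False])).astype(int))
    onsets = np.flatnonzero(edges == 1)
    offsets = np.flatnonzero(edges == -1)
    return [(on / fs, (off - on) / fs) for on, off in zip(onsets, offsets)]


def blink_parameters(eog, fs, threshold):
    """Blink number, blink rate (per minute) and mean blink duration (s)."""
    blinks = detect_blinks(eog, fs, threshold)
    n = len(blinks)
    minutes = len(eog) / fs / 60.0
    mean_dur = float(np.mean([d for _, d in blinks])) if blinks else 0.0
    return {"number": n, "rate_per_min": n / minutes, "mean_duration_s": mean_dur}
```

A fixed threshold is the simplest choice; real recordings usually call for band-pass filtering and artifact rejection first, which this sketch omits.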

8.3.4 Investigating the overlap of ERD/ERS modulations for a Median Nerve Stimulation-based BCI

Participants: Sébastien Rimbert.

Motor-Imagery-based Brain-Computer Interfaces (MI-BCIs) show promise but face challenges to wider adoption due to accuracy and usability issues. It has been proposed that BCIs based on median nerve stimulation (MNS) could significantly improve BCI efficacy. Indeed, when MNS occurs during MI, post-stimulation neuronal synchronization is significantly reduced, potentially helping MI-BCIs distinguish MI with stimulation from stimulation alone. However, MNS-based BCIs only work under optimal timing conditions (i.e., when the MNS occurs precisely during MI). Understanding how the ERD/ERS components of mu and beta rhythms interact when MNS is close to, but not perfectly aligned with, MI is therefore essential. This study examined 14 participants in two motor conditions, MI with MNS and MNS alone, assessing timing effects on EEG activity. The results showed that when stimulation occurs before or at the onset of MI, the ERD of MI tends to abolish the ERS of the MNS 23.

Graphs showing the evolution of ERD and ERS with different delays.

8.3.5 Performance predictions for running

Participants: Alex Pepi, Fabien Lotte.

External collaborators: Pierre Gilfriche [Flit Sport], Aurélien Appriou [Flit Sport].

As part of project SPEARS, we aim at predicting the performance of runners, in order to later enable personalized training programs. When runners exercise, their running capacity can be modeled using a critical speed, which is a speed threshold: in theory, below this speed they can run indefinitely without becoming exhausted. The running performance model typically also includes a second parameter, the finite work capacity available above the critical speed. These parameters can be used to estimate the performance of a runner.
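The critical speed concept is often formalized with the linear distance-time model d = CS · t + D′, where CS is the critical speed and D′ the finite distance that can be covered above CS (the running counterpart of W′ in cycling). This minimal sketch fits that textbook model by least squares from a few exhaustive efforts; the symbol names and this particular formulation are assumptions for illustration, not necessarily the model used in project SPEARS.

```python
import numpy as np


def fit_critical_speed(durations_s, distances_m):
    """Fit the linear distance-time model d = CS * t + D' by least squares.

    Returns (cs, d_prime): critical speed in m/s and the finite distance
    capacity above CS in metres.
    """
    cs, d_prime = np.polyfit(durations_s, distances_m, deg=1)
    return cs, d_prime


def time_to_exhaustion(speed, cs, d_prime):
    """Predicted time (s) a runner can hold `speed` (m/s); infinite at or below CS."""
    return np.inf if speed <= cs else d_prime / (speed - cs)
```

For instance, a runner with CS = 4 m/s and D′ = 200 m would be predicted to hold 5 m/s for 200 s, which is how such parameters translate into performance estimates.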

Runners perform both intermittent high-intensity and low-intensity exercises. Only intermittent high-intensity exercises can be used to find the values of these parameters, because in this case the runner is above the critical speed. We thus developed a Random Forest Classifier that automatically identifies intermittent exercises in runners' running logs (from smartwatches), by extracting a few features from each exercise.
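A minimal scikit-learn sketch of such a classifier: each session's speed trace is summarized by a few features, and a Random Forest separates intermittent from continuous sessions. The three features and the `high_speed` threshold are hypothetical choices made for illustration; the report does not list the features actually extracted.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def session_features(speed, high_speed):
    """A few hypothetical features summarizing one session's speed trace.

    speed: 1-D array of speeds (m/s) sampled at a regular rate
    high_speed: speed (m/s) above which a sample counts as high intensity
    """
    above = speed > high_speed
    n_switches = np.count_nonzero(np.diff(above.astype(int)))  # intensity changes
    return [
        speed.std() / speed.mean(),  # coefficient of variation of speed
        n_switches,
        above.mean(),                # fraction of time at high intensity
    ]


def train_interval_classifier(sessions, labels, high_speed, seed=0):
    """Fit a Random Forest labelling sessions as intermittent (1) or not (0)."""
    X = np.array([session_features(s, high_speed) for s in sessions])
    clf = RandomForestClassifier(n_estimators=100, random_state=seed)
    clf.fit(X, labels)
    return clf
```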

A simple model exists for predicting these parameters. However, it requires many exercises and underestimates real performance. We thus proposed two new, better-performing models:

- A combined model, which merges the previous model with a second model that tracks the evolution of these parameters during an exercise. It works better but still requires a few intermittent exercises.

- Riemannian SVR (Support Vector Regression), a new approach that combines cardiac activity and running characteristics in a Riemannian framework to better estimate performance.
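One common way to regress on SPD matrices is to map each matrix to the tangent space at a reference point with the matrix logarithm, vectorize it, and train a standard SVR on those vectors, as pyRiemann's `TangentSpace` does. How cardiac and running signals are turned into SPD matrices in this work is not specified here, so this sketch simply assumes SPD inputs (e.g., covariance matrices of several synchronized signals); `RiemannianSVR` is a hypothetical class name.

```python
import numpy as np
from sklearn.svm import SVR


def _eig_fn(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def tangent_space(mats, ref):
    """Map SPD matrices to vectors in the tangent space at `ref` (log map + upper triangle)."""
    ref_isqrt = _eig_fn(ref, lambda w: 1.0 / np.sqrt(w))
    iu = np.triu_indices(ref.shape[0])
    # sqrt(2) weights on off-diagonal terms preserve the Frobenius norm
    weights = np.where(iu[0] == iu[1], 1.0, np.sqrt(2.0))
    return np.array([
        weights * _eig_fn(ref_isqrt @ C @ ref_isqrt, np.log)[iu] for C in mats
    ])


class RiemannianSVR:
    """Tangent-space SVR: SPD inputs -> log map at a reference -> linear-kernel SVR."""

    def __init__(self, C=1.0, epsilon=0.1):
        self.svr = SVR(kernel="linear", C=C, epsilon=epsilon)

    def fit(self, mats, y):
        # Log-Euclidean mean as reference point (a simple stand-in for the Riemannian mean)
        self.ref = _eig_fn(np.mean([_eig_fn(C, np.log) for C in mats], axis=0), np.exp)
        self.svr.fit(tangent_space(mats, self.ref), y)
        return self

    def predict(self, mats):
        return self.svr.predict(tangent_space(mats, self.ref))
```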

The final goal is to predict runners' future performance. A five-parameter model exists that can describe the evolution of performance over time. We explored this model for running (it was developed for cycling), but unfortunately it did not work well: the parameters could be well fitted for some runners but not for others. The next step is to find a new model architecture able to predict future performance for all runners.

8.4 Applications of BCIs

In our work, we explored various applications of BCIs, both for healthy users, notably to enhance workspace awareness and design personalized virtual museums, and for medical applications, to detect awareness during general anesthesia and to improve wakefulness maintenance using neurofeedback.

8.4.1 Toward Enhancing Workspace Awareness Using a Brain-Computer Interface based on Neural Synchrony

Participants: Sébastien Rimbert.

External collaborators: Arnaud Prouzeau [ILDA LISN].

This work explored the potential of EEG-based passive Brain-Computer Interfaces (BCIs) to enhance workspace awareness, using EEG synchrony indices to track cognitive states in real time. Using such measures, it would be possible to detect and compensate for misalignments in team members' focus and engagement, potentially preventing communication failures. We envision techniques that provide real-time feedback on team dynamics, enhancing decision-making within collaborative tasks. In the future, we plan to run a study to assess the performance and impact of such techniques. This work was published at the International Neuroergonomics Conference 21.
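The report does not specify which synchrony indices are used. As one widely used example, the phase-locking value (PLV) between two signals (e.g., one electrode from each of two team members) can be computed from band-pass filtered Hilbert phases. In this minimal SciPy sketch, the filter order and frequency band are illustrative choices.

```python
import numpy as np
from scipy.signal import butter, filtfilt, hilbert


def phase_locking_value(x, y, fs, band):
    """PLV between two 1-D signals in a frequency band (0 = no locking, 1 = perfect)."""
    b, a = butter(4, band, btype="bandpass", fs=fs)
    phase_x = np.angle(hilbert(filtfilt(b, a, x)))
    phase_y = np.angle(hilbert(filtfilt(b, a, y)))
    # Length of the mean unit vector of the phase differences
    return np.abs(np.mean(np.exp(1j * (phase_x - phase_y))))
```

For real-time feedback, the same function could be applied to sliding windows of a few seconds, at the cost of noisier estimates for short windows.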

8.4.2 One-Class Riemannian EEG classifier to detect anesthesia

Participants: Valérie Marissens Cueva, Sébastien Rimbert, Fabien Lotte.

External collaborators: Ana Maria Cebolla Alvarez [Université Libre de Bruxelles (ULB), LNMB], Mathieu Petieau [ULB, LNMB], Iraj Hashemi [ULB, LNMB], Viktoriya Vitkova [ULB, LNMB], Guy Cheron [ULB, LNMB], Claude Meistelman [Université de Lorraine], Philippe Guerci [CHRU de Brabois], Denis Schmartz [Hôpital Universitaire de Bruxelles, ULB], Seyed Javad Bidgoli [CHU Brugmann], Laurent Bougrain [LORIA, Paris Brain Institute].

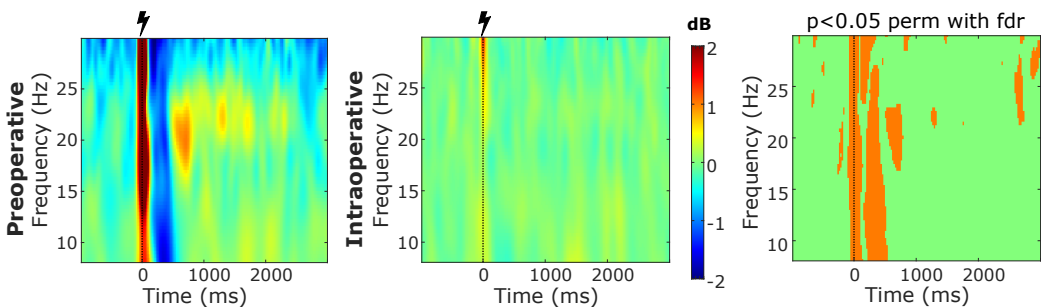

Among all the operations carried out under general anesthesia worldwide, some patients have had the terrible experience of Accidental Awareness during General Anesthesia (AAGA), an unexpected awakening during the surgical procedure. The inability to predict and prevent AAGA before its occurrence using only conventional measures, such as clinical signs, leads to the use of brain activity monitors. Given AAGA patients' first reflex to move, impeded by neuromuscular-blocking agents, we propose using a new Brain Computer Interface with Median Nerve Stimulation (MNS) to detect their movement intentions, specifically in the context of general anesthesia. Indeed, MNS induces movement-related EEG patterns, improving the detection of such intentions. We first compared MNS effects on the motor cortex before and during surgery under general anesthesia 18. Then, a Riemannian Minimum Distance to the Mean classifier achieved 97% test balanced accuracy in distinguishing awake and anesthetized states. Additionally, we observed how the classifier's response evolves with anesthesia depth, in terms of distance to the awake class centroid. This distance appears to track the patients' awareness level during surgery 30. Our objective is to develop a classifier that distinguishes EEG patterns induced by MNS under two conditions: when a patient is awake vs. under general anesthesia. Since the latter condition is unavailable before the surgery for BCI calibration, we focused on one-class methods. A One-Class Riemannian Minimum Distance to the Mean trained with the awake data correctly differentiates between these two conditions (test balanced accuracy of 85.44%), significantly better than when the classifier is trained with the beginning of intraoperative data 17.

Time-frequency maps of EEG responses to MNS when awake (left) and under general anaesthesia (center), and the statistical difference between the two (right). These maps show that the event-related desynchronization (ERD) and synchronization (ERS) patterns that follow MNS in the awake state disappear under general anaesthesia.
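The two classifiers mentioned above can be sketched in a few lines. As a minimal illustration, assuming each trial is summarized by the spatial covariance matrix of its (band-pass filtered) post-MNS EEG epoch, the MDM classifier assigns a trial to the class whose Riemannian (Karcher) mean is closest under the affine-invariant distance, while the one-class variant keeps only the awake mean and flags trials whose distance to it exceeds a threshold. The quantile-based threshold, the function names and the class name `OneClassMDM` are hypothetical and are not taken from the team's implementation; libraries such as pyRiemann provide tested versions of the MDM classifier.

```python
import numpy as np
from scipy.linalg import eigh


def spd_fun(c, fun):
    """Apply a scalar function to a symmetric positive-definite (SPD) matrix via eigh."""
    w, v = eigh(c)
    return (v * fun(w)) @ v.T


def riemann_distance(a, b):
    """Affine-invariant Riemannian distance between two SPD matrices."""
    # Generalized eigenvalues of the pencil (b, a) equal the eigenvalues of a^-1 b.
    lam = eigh(b, a, eigvals_only=True)
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))


def riemann_mean(covs, n_iter=50, tol=1e-8):
    """Karcher (Riemannian) mean of a stack of SPD matrices by fixed-point iteration."""
    m = covs.mean(axis=0)  # Euclidean mean as the starting point
    for _ in range(n_iter):
        m_sqrt = spd_fun(m, np.sqrt)
        m_isqrt = spd_fun(m, lambda w: 1.0 / np.sqrt(w))
        # Map every matrix to the tangent space at m and average there.
        t = np.mean([spd_fun(m_isqrt @ c @ m_isqrt, np.log) for c in covs], axis=0)
        # Map the tangent-space average back onto the manifold.
        m = m_sqrt @ spd_fun(t, np.exp) @ m_sqrt
        m = (m + m.T) / 2  # remove round-off asymmetry
        if np.linalg.norm(t) < tol:
            break
    return m


def mdm_predict(centers, covs):
    """Multi-class MDM: index of the closest class mean for each trial."""
    return np.array([np.argmin([riemann_distance(m, c) for m in centers]) for c in covs])


class OneClassMDM:
    """One-class MDM fitted on a single class (e.g., awake trials only)."""

    def __init__(self, quantile=0.95):
        # Hypothetical threshold rule: a quantile of the training distances.
        self.quantile = quantile

    def fit(self, covs):
        self.center_ = riemann_mean(covs)
        self.threshold_ = float(np.quantile(self.distance(covs), self.quantile))
        return self

    def distance(self, covs):
        """Distance of each trial to the training-class centroid."""
        return np.array([riemann_distance(self.center_, c) for c in covs])

    def predict(self, covs):
        # 1 = consistent with the training class, 0 = outlier (e.g., anesthetized).
        return (self.distance(covs) <= self.threshold_).astype(int)
```

The `distance` method returns the quantity discussed above (distance to the awake class centroid), which is the value one would monitor over the course of surgery; the binary decision then only depends on where the threshold is placed.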

8.4.3 Neurofeedback to improve wakefulness maintenance ability

Participants: Fabien Lotte.

External collaborators: Jean-Arthur Micoulaud-Franchi [SANPSY, Univ. Bordeaux, CHU Bordeaux], Pierre Philip [SANPSY, Univ. Bordeaux, CHU Bordeaux], Jacques Taillard [SANPSY, CNRS], Marie Pelou [SANPSY, Univ. Bordeaux], Thibault Monseigne [SANPSY, Univ. Bordeaux], Camille Jeunet-Kelway [INCIA, CNRS], Poeiti Abi-Saab [SANPSY, Univ. Bordeaux].

The aim of this study was to evaluate the effect of an EEG-based neurofeedback (NF) program on the ability to maintain wakefulness in healthy subjects after a controlled night of total sleep deprivation. The Maintenance of Wakefulness Test (MWT), which assesses objective wakefulness maintenance abilities, and the Karolinska Sleepiness Scale (KSS), which assesses subjective wakefulness maintenance abilities, were administered to a group of sleep-deprived healthy subjects (n=16) before (V1) and after (V2) eight neurofeedback sessions targeting /(-) EEG spectral band activities. Mean sleep latency on the MWT and mean KSS scores increased significantly from V1 to V2 (). This proof-of-concept study is a first step towards developing cognitive remediation strategies that use EEG neurofeedback to target sleep complaints in patients with residual hypersomnolence. Further research is needed to confirm the efficacy and benefits of this type of non-pharmacological therapy. This work was presented at the International Neuroergonomics Conference 20.
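The exact target bands of the NF program are not legible in the text above, so the sketch below keeps the two frequency bands as free parameters. As a minimal sketch, assuming the feedback value is the log-ratio of the power in two EEG bands, each estimated with Welch's method on the latest EEG window (an assumption, not the protocol used in the study), a per-window score could be computed as follows. The function names `band_power` and `nf_feedback` are hypothetical.

```python
import numpy as np
from scipy.signal import welch


def band_power(x, fs, band):
    """Mean power spectral density of signal x within a frequency band (Hz)."""
    # 2-second Welch segments (or the whole window if it is shorter).
    f, pxx = welch(x, fs=fs, nperseg=min(len(x), 2 * int(fs)))
    mask = (f >= band[0]) & (f <= band[1])
    return float(pxx[mask].mean())


def nf_feedback(x, fs, num_band, den_band):
    """Hypothetical NF score: log-ratio of two band powers in one EEG window."""
    return float(np.log(band_power(x, fs, num_band) / band_power(x, fs, den_band)))
```

In an NF loop, such a score would be recomputed on each new EEG window and mapped to a visual or auditory feedback signal; the log makes increases and decreases of the ratio symmetric around zero.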

8.4.4 Towards neuroadaptive art presentation

Participants: Marc Welter, Axel Bouneau, Fabien Lotte.

External collaborators: Tomas Ward [DCU, Ireland], Jesus Casal Martínez [UPV, Spain], Jonathan Baum [Inria Montpellier], Erin Redmond [DCU, Ireland].

The EEG correlates of art appreciation are not yet well understood. We published a literature review on the oscillatory EEG correlates of art preference in Frontiers in Neuroergonomics 14. We also tested various Machine Learning methods to decode aesthetic appreciation (liking and interest) from (neuro-)physiological data, namely EEG, GSR (Galvanic Skin Response) and PPG (PhotoPlethysmoGraphy), previously collected in a virtual museum environment. Offline classification results suggest that, at least for some individuals, aesthetic appreciation of static visual art can be decoded from single-trial (neuro-)physiological signals. Parts of this work were presented at CORTICO 2024 31, Neuroergonomics 2024 29 and the Graz BCI conference (GBCIC2024) 28. However, bridging the gap towards ecologically valid online decoding remains challenging. To this end, we worked on novel online BCI protocols and reflected on art recommender systems, as well as on ethical considerations for neuroadaptive art presentation. Some of this work was published in the abstracts of VSAC2024.
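The paragraph above does not specify which Machine Learning methods were used. As a minimal, hypothetical baseline for single-trial decoding of a binary appreciation label (e.g., liked vs. not liked), the sketch below takes per-trial feature vectors already concatenated across modalities, z-scores them with training-fold statistics only, trains a shrinkage-regularized linear discriminant analysis (LDA) and reports cross-validated balanced accuracy. Feature extraction (e.g., EEG band powers, GSR peaks, PPG-derived heart rate) is assumed to have been done beforehand, and all names are illustrative.

```python
import numpy as np


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recalls, robust to unequal numbers of trials per class."""
    classes = np.unique(y_true)
    return float(np.mean([np.mean(y_pred[y_true == c] == c) for c in classes]))


def fit_lda(X, y, shrink=0.1):
    """Binary LDA with a shrinkage-regularized pooled covariance (labels 0/1)."""
    mu0, mu1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    Xc = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    cov = Xc.T @ Xc / (len(X) - 2)
    # Shrink towards a scaled identity to stabilize the inverse with few trials.
    target = np.trace(cov) / cov.shape[0] * np.eye(cov.shape[0])
    cov = (1 - shrink) * cov + shrink * target
    w = np.linalg.solve(cov, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2
    return w, b


def cross_validated_bacc(X, y, n_folds=5, seed=0):
    """K-fold cross-validated balanced accuracy of the LDA above."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, n_folds)
    scores = []
    for k in range(n_folds):
        test = folds[k]
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        # z-score with training statistics only, to avoid test-set leakage.
        mu, sd = X[train].mean(axis=0), X[train].std(axis=0) + 1e-12
        w, b = fit_lda((X[train] - mu) / sd, y[train])
        y_hat = (((X[test] - mu) / sd) @ w + b > 0).astype(int)
        scores.append(balanced_accuracy(y[test], y_hat))
    return float(np.mean(scores))
```

For example, with per-trial feature arrays `eeg`, `gsr` and `ppg`, one would call `cross_validated_bacc(np.hstack([eeg, gsr, ppg]), y)`. Running it separately for each participant mirrors the per-person nature of the results reported above, and balanced accuracy is used because individual liking ratings are often unevenly split between classes.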

9 Partnerships and cooperations

9.1 International research visitors

9.1.1 Visits of international scientists

Other international visits to the team

Jaime Riascos Salas

- Status: PhD candidate

- Institution of origin: Univ. Potsdam & Univ. Envigado

- Country: Germany & Colombia

- Dates: July 2024

- Context of the visit: collaboration on Brain-Computer Interfaces and establishment of connections between the French (CORTICO) and Latin-American (BCI-LATAM) BCI research networks

- Mobility program/type of mobility: Research stay

Ettore Cinquetti

- Status: PhD candidate

- Institution of origin: Univ. Verona

- Country: Italy

- Dates: November 2024 - April 2025

- Context of the visit: collaboration on Brain-Computer Interfaces

- Mobility program/type of mobility: Research stay

9.1.2 Visits to international teams

Research stays abroad

Valérie Marissens Cueva

- Visited institution: Kyushu Institute of Technology (KYUTECH)

- Country: Japan

- Dates: 19 February - 29 February 2024

- Context of the visit: JST Sakura Science Program

- Mobility program/type of mobility: Research stay (visiting student)

9.2 European initiatives

9.2.1 Horizon Europe

SPEARS

Participants: Fabien Lotte, Alex Pepi, Gloria Makima.

SPEARS project on cordis.europa.eu

- Title: Skill Performance Estimation from cARdiac Signals

- Duration: From January 1, 2024 to June 30, 2025

- Funding: ERC Proof-of-Concept (PoC) grant

- Partners:

  - INSTITUT NATIONAL DE RECHERCHE EN INFORMATIQUE ET AUTOMATIQUE (INRIA), France

  - Flit Sport SAS (Flit Sport), France

- Inria contact: Fabien Lotte

- Coordinator: Fabien Lotte

- Summary:

In any learning situation, be it math education, language learning or sport training, different learners have different abilities, motivations and capacities at any given time. Thus, optimal learning can only be achieved with personalized training solutions, dynamically adapted to each learner's cognitive and/or physical states. The scientific literature has shown that such states can be estimated from Cardiac Signals (CS). In ERC PoC SPEARS, we thus propose to redefine consumer training apps by enabling them to offer personalized and adaptive training plans based on an estimation of their users' cognitive and/or physical states from their CS, measured with consumer-grade sensors, e.g., smartwatches. The outcomes of the ERC project BrainConquest should enable us to tackle this challenge. Indeed, in BrainConquest we explored such a personalized training approach for users of Brain-Computer Interfaces (BCI). In doing so, we developed Machine Learning (ML) and Signal Processing (SP) algorithms to estimate users' mental states and predict their upcoming performance from their brain and physiological signals, including CS. In SPEARS, we thus aim to adapt, improve and assess the BrainConquest ML & SP algorithms, initially designed for BCI performance prediction from research-grade brain and CS sensors in the lab, to predict cognitive and physical performance from consumer-grade CS sensors in the wild. Such algorithms could be used for adaptive training apps in education, cognitive training for healthy aging or sport training. We will then explore a commercial application of this technology for sport training in particular, in collaboration with the startup Flit Sport, which sells an app providing personalized training exercises for endurance athletes based on their past performance and ML. By integrating our CS-based prediction into the Flit Sport training app, we should be able to design optimally personalized training solutions for millions of runners worldwide.
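The summary does not say which cardiac features SPEARS relies on. As a hedged illustration of the kind of signal processing involved, assuming the consumer sensor provides beat-to-beat (RR) intervals in milliseconds, the sketch below computes standard time-domain heart-rate-variability features and expresses a value relative to the user's own history, one simple way to personalize a state estimate. The function names and the z-score personalization are assumptions, not the project's algorithms.

```python
import numpy as np


def hrv_features(rr_ms):
    """Time-domain heart-rate-variability features from RR intervals in milliseconds."""
    rr = np.asarray(rr_ms, dtype=float)
    diff = np.diff(rr)
    return {
        "mean_hr_bpm": 60000.0 / rr.mean(),       # average heart rate
        "sdnn_ms": rr.std(ddof=1),                # overall variability
        "rmssd_ms": np.sqrt(np.mean(diff ** 2)),  # beat-to-beat variability
        "pnn50": np.mean(np.abs(diff) > 50.0),    # share of successive differences > 50 ms
    }


def personal_zscore(value, history):
    """Hypothetical personalization: today's value relative to the user's own history."""
    h = np.asarray(history, dtype=float)
    return float((value - h.mean()) / h.std(ddof=1))
```

For instance, `personal_zscore(hrv_features(rr_today)["rmssd_ms"], past_rmssd_values)` would tell whether today's beat-to-beat variability is unusually high or low for that particular runner, which is the kind of input an adaptive training plan could use.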

BITSCOPE

Participants: Fabien Lotte, Axel Bouneau, Marc Welter.

- Title: BITSCOPE: Brain Integrated Tagging for Socially Curated Online Personalised Experiences

- Duration: 2022-2025 (3 years)

- Funding: CHIST-ERA Grant

- Partners:

  - Dublin City University (DCU), Ireland (project leader; lead: Tomàs Ward)

  - Inria Centre at the University of Bordeaux, France (lead: Fabien Lotte)

  - Nicolaus Copernicus University, Poland (lead: Veslava Osinska)

  - Universitat Politècnica de València, Spain (lead: Mariona Alcañiz)

- Inria contact: Fabien Lotte

- Coordinator: Tomàs Ward (DCU)

- Summary:

This project presents a vision for brain-computer interfaces (BCI) that can enhance social relationships in the context of sharing virtual experiences. In particular, we propose BITSCOPE, that is, Brain-Integrated Tagging for Socially Curated Online Personalised Experiences. We envisage a future in which attention, memorability and curiosity elicited in virtual worlds will be measured without the need for “likes” and other explicit forms of feedback. Instead, users of our improved BCI technology can explore online experiences, leaving behind an invisible trail of neural data-derived signatures of interest. These data, passively collected without interrupting the user and refined in quality through machine learning, can be used by standard social sharing algorithms such as recommender systems to create better experiences. Technically, the work concerns the development of a passive hybrid BCI (phBCI). It is hybrid because it augments electroencephalography with eye tracking data, galvanic skin response, heart rate and movement in order to better estimate the mental state of the user. It is passive because it operates covertly, without distracting users from their immersion in the online experience, and uses this information to adapt the application. It represents a significant improvement in BCI due to its emphasis on improved denoising, which facilitates operation in home environments, and on the development of robust classifiers capable of taking inter- and intra-subject variations into account. We leverage our preliminary work on deep learning and geometrical approaches to achieve this improvement in signal quality. The user state classification problem is ambitiously extended to the recognition of attention, curiosity and memorability, which we will address through advanced machine learning, Riemannian approaches and the collection of large representative datasets in co-designed, user-centred experiments.

9.2.2 Other european programs/initiatives

NEARBY

Participants: Fabien Lotte, Sebastien Rimbert, Juliette Meunier.

- Title: NEARBY: Noise and Variability Free Brain-Computer Interfaces

- Duration: 2023-2027 (3.25 years)

- Funding: Inria-DFKI project

- Partners:

  - Inria Centre at the University of Bordeaux, teams Potioc & Mnemosyne, France (lead: Fabien Lotte)

  - DFKI Saarbrücken, Germany (lead: Maurice Rekrut)

  - DFKI Bremen, Germany (lead: Marc Tabie)

- Inria contact: Fabien Lotte

- Coordinators: Fabien Lotte (for Inria) and Maurice Rekrut (for DFKI)

- Summary:

While Brain-Computer Interfaces (BCI) are promising for many applications, e.g., assistive technologies, man-machine teaming or motor rehabilitation, they are barely used outside the lab due to their poor reliability. Electroencephalographic (EEG) brain signals are indeed very noisy and variable, both between and within users. To address these issues, we first propose to join Inria and DFKI forces to build large-scale, multi-centric and open EEG-BCI databases, with controlled sources of noise and variability, for BCIs based on motor and speech activity. Building on these data, we will then design new Artificial Intelligence algorithms, notably based on Deep Learning, dedicated to EEG denoising and variability-robust EEG decoding. Such algorithms will be implemented both in open-source software and on FPGA hardware, and then demonstrated in two out-of-the-lab BCI applications: Human-Robot collaboration and exoskeleton control.

9.3 National initiatives

ANR BCI4IA

Participants: Sebastien Rimbert, Fabien Lotte, Valerie Marissens.

- Title: BCI4IA: a New BCI Paradigm To Detect Intraoperative Awareness During General Anesthesia

- Duration: 2023-2027 (4 years)

- Partners:

  - Inria Center at the University of Bordeaux, Talence (lead: Fabien Lotte)

  - LORIA, Nancy (lead: Laurent Bougrain)

  - CHRU Nancy, Nancy (lead: Claude Meistelman)

  - CHU Brugmann, Brussels (lead: Denis Schmartz)

  - Univ. Libre Bruxelles, Brussels (lead: Ana Maria Cebolla)

- Coordinator: Claude Meistelman

- Inria contact: Sebastien Rimbert

- Summary:

The BCI4IA project aims to design a brain-computer interface that enables reliable general anesthesia (GA) monitoring, in particular to detect intraoperative awareness. Currently, there is no satisfactory solution for this, even though intraoperative awareness can cause severe post-traumatic stress disorder. "I couldn't breathe, I couldn't move or open my eyes, or even tell the doctors I wasn't asleep." This testimony shows that a patient's first reaction during intraoperative awareness is usually to move in order to alert the medical staff. Unfortunately, during most surgeries, the patient is curarized, which causes neuromuscular block and prevents any movement. To prevent intraoperative awareness, we propose to study motor brain activity under GA using electroencephalography (EEG) to detect markers of motor intention (MI), combined with general brain markers of consciousness. We will analyze a combination of MI markers (relative powers, connectivity) under propofol anesthesia, with a brain-computer interface based on median nerve stimulation to amplify them. Doing so will also require designing new machine learning algorithms based on one-class (rest class) EEG classification, since no EEG examples of the patient's MI under GA are available to calibrate the BCI. Our preliminary results are very promising for providing an original solution to this problem, which causes serious trauma.

- Website:

ANR PROTEUS

Participants: Fabien Lotte, Sebastien Rimbert, Pauline Dreyer, David Trocellier, Loic Bechon.

- Title: PROTEUS: Measuring, understanding and tackling variabilities in Brain-Computer Interfacing

- Duration: 2023-2027 (3.5 years)

- Partners:

  - Inria Center at the University of Bordeaux, Talence (lead: Fabien Lotte)

  - LAMSADE, Paris (lead: Florian Yger)

  - ISAE-SupAero, Toulouse (lead: Raphaëlle Roy)

  - INSA Rouen, Rouen (lead: Florian Yger)

  - Wisear, Paris (lead: Alain Sirois)

- Coordinator: Fabien Lotte

- Inria contact: Fabien Lotte

- Summary:

While BCIs are very promising for various applications, they are not reliable. Their reliability degrades even further when they are used across contexts (e.g., across days, or with changing user states or applications) due to various sources of variability. Project PROTEUS proposes to make BCIs robust to such variabilities by 1) systematically measuring BCI and brain signal variabilities across various contexts and sharing the collected databases; 2) characterising, understanding and modelling these variabilities and their sources based on these new databases; and 3) tackling these variabilities by designing new machine learning algorithms that are optimally invariant to them according to our models, and using the resulting BCIs in two practical applications affected by variabilities: tetraplegic BCI user training and auditory attention monitoring at home or in flight.
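One family of methods from the Riemannian BCI literature for tackling cross-session variability is re-centering: the trial covariance matrices of each session (or user) are whitened by that session's own mean covariance, so that all sessions are centered on the identity matrix before a classifier is trained. The sketch below is a minimal illustration of this idea, not the PROTEUS algorithms; for brevity it uses the closed-form log-Euclidean mean as a stand-in for the Riemannian mean normally used, and the function names are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh


def spd_fun(c, fun):
    """Apply a scalar function to an SPD matrix through its eigendecomposition."""
    w, v = eigh(c)
    return (v * fun(w)) @ v.T


def log_euclidean_mean(covs):
    """Log-Euclidean mean: a cheap, closed-form stand-in for the Riemannian mean."""
    return spd_fun(np.mean([spd_fun(c, np.log) for c in covs], axis=0), np.exp)


def recenter(covs):
    """Whiten one session's covariances by its own mean so the session is centered at identity."""
    m_isqrt = spd_fun(log_euclidean_mean(covs), lambda w: 1.0 / np.sqrt(w))
    return np.array([m_isqrt @ c @ m_isqrt for c in covs])
```

A classifier trained on re-centered data from one day could then be applied to re-centered data from another day, provided a few trials of the new session are available to estimate its mean. For example, a global change in signal amplitude between sessions (all covariances scaled by the same factor) is removed entirely by this step.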

- Website:

9.4 Regional initiatives

IHU VBHI

Participants: Fabien Lotte.

- Title: VBHI: Vascular Brain Health Institute

- Duration: 2024 to 2034 (10 years)

- Funding: Institut Hospitalo-Universitaire

- Partners:

  - Univ. de Bordeaux

  - CHU Bordeaux

  - Inria

  - Fondation Bordeaux Université

  - Inserm

  - Région Nouvelle Aquitaine

- Inria contact: Fabien Lotte (for the digital therapeutics and neurofeedback project)

- Coordinator: Stéphanie Debette

- Summary:

Maintaining optimal cerebrovascular health is essential for healthy ageing and to reduce the risk of stroke and dementia. In Bordeaux, the VBHI brings together top research and clinical expertise from neuroscience, public health and cardiology to carry out translational programmes that go from patients to public health policies. The VBHI’s approaches rely on cutting-edge technologies and infrastructures (omics, AI, imaging, etc.) to invent innovative models for the prevention of cerebrovascular diseases and to share them with the scientific community and as many people as possible.

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

General chair, scientific chair

- 5th International Neuroergonomics Conference 2024 (NEC'24), July 8-12, Bordeaux, France (200+ participants, 100+ papers) (Fabien Lotte, co-chair, with Camille Jeunet-Kelway)

Member of the organizing committees

- CORTICO 2024 conference, May 22-23, Nancy, France (Valerie Marissens, Sebastien Rimbert)

Workshop and special session organization

- Workshop “OpenViBE for wannabe experts” at the 5th International Neuroergonomics Conference, July 9, 2024 (Axel Bouneau, David Trocellier, with Thomas Prampart)

- Workshop “Aesthetic Experience Decoding with multi-modal passive Brain-Computer-Interfaces” at the Graz BCI conference 2024, September 9, 2024 (Marc Welter, with Dominik Welke)

- Workshop “Variabilities in Brain-Computer Interactions” at the Graz BCI conference 2024, September 9, 2024 (Fabien Lotte, Sebastien Rimbert, with Maurice Rekrut, Marc Tabie, Niklas Küper, Tobias Jungbluth)

- Special session “Modern Machine Learning Methods for robust and real-time Brain-Computer Interfaces (BCI)” at ESANN 2024 (Fabien Lotte, with Jaime Riascos Salas and Marta Molinas)

10.1.2 Scientific events: selection

Member of the conference program committees

- ESANN 2024 & ESANN 2025 (Fabien Lotte)

- NEC 2024 (Fabien Lotte)

- International Graz BCI conference (Fabien Lotte)

Reviewer

- ESANN 2024 & ESANN 2025 (David Trocellier, Marc Welter, Fabien Lotte)

- LAWCN (Fabien Lotte)

- NEC 2024 (Fabien Lotte)

- International Graz BCI conference (Fabien Lotte)

- IEEE VR 2025 (Fabien Lotte)

- CORTICO 2024 (Fabien Lotte, Sebastien Rimbert)

10.1.3 Journal

Member of the editorial boards

- IEEE Transactions on Biomedical Engineering (Fabien Lotte)

- Frontiers in Neuroergonomics (Fabien Lotte)

- Journal of Neural Engineering (Fabien Lotte)

Reviewer - reviewing activities

- IEEE Transactions on Biomedical Engineering (David Trocellier, Fabien Lotte)

- PLOS One (Marc Welter, Sébastien Rimbert)

- Frontiers in Neuroscience (Sébastien Rimbert)

- Frontiers in Human Neuroscience (Sébastien Rimbert)

- Journal of the Franklin Institute (Fabien Lotte)

10.1.4 Invited talks

- F. Lotte, "On Variabilities in EEG-based Brain-Computer Interaction", Workshop on Neuroimaging, IRISA-Inria Rennes, November 2024, invited talk (Fabien Lotte)

- B. Glize & F. Lotte, "Neurotechnologies pour la rééducation du langage post-AVC : approches et perspectives" [Neurotechnologies for post-stroke language rehabilitation: approaches and perspectives], FEDRHA day (Fédération de Recherche sur le Handicap et l'Autonomie), Talence, France, invited talk, November 2024 (Fabien Lotte)

- F. Lotte, "Études, conceptions et applications des Interfaces Cerveau-Ordinateur" [Studies, designs and applications of Brain-Computer Interfaces], Congress of the Société Informatique de France (SIF), Poitiers, France, invited talk, June 2024 (Fabien Lotte)

- F. Lotte, "Études, conceptions et applications des Interfaces Cerveau-Ordinateur" [Studies, designs and applications of Brain-Computer Interfaces], presentation days of the 2023 Académie des sciences prize laureates, Académie des sciences, Paris, France, invited talk, May 2024 (Fabien Lotte)

- F. Lotte, "Brain-Computer Interactions: Principles, Hypes and Hopes", International Conference on Cognitive Aircraft Systems (ICCAS'24), Toulouse, France, opening keynote, May 2024 (Fabien Lotte)

10.1.5 Leadership within the scientific community

- Member of the board of CORTICO, the French BCI research society (Sebastien Rimbert, Fabien Lotte)

10.1.6 Scientific expertise

- Member of the Scientific Advisory Board of the Research Training Group “Neuromodulation”, University of Oldenburg, Germany (2023-2026) (Fabien Lotte)

- President of the Annual BCI Research Award 2024 (Fabien Lotte)

10.1.7 Research administration

- Community manager for The Hub "physical activity and performance", a collaborative platform of the university structured around themes. Its aim is to give actors from the socio-economic world access to all the resources that a university can offer, such as high-level scientific expertise, cutting-edge technologies and innovations, specific equipment, structures and suitable spaces (Pauline Dreyer)

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Master: Interface cerveau-ordinateur (Brain-Computer Interfaces), 23h eqTD, M2 Cognitive Science, Université de Lorraine (Sébastien Rimbert)

- Master: Neuroergonomie (Neuroergonomics), 6h eqTD, M2 Cognitive Science, Université de Bordeaux (Fabien Lotte)

- Master: Brain-Computer Interfaces, 22h eqTD, M2 Cognitive Science IDMC, Université de Lorraine (Valerie Marissens)

- Bachelor: Programmation et applications interactives (Programming and interactive applications), 32h eqTD, L1, Université de Bordeaux (David Trocellier)

- Bachelor: Travaux encadrés de recherche (supervised research projects), 5h eqTD, L3, Université de Bordeaux (David Trocellier)

- Bachelor: Epistémologie de science (epistemology of science), L3 MIASHS, Université de Bordeaux (Marc Welter)

- Bachelor: Travaux encadrés de recherche (supervised research projects), 20h, Culture et Compétences Numériques - PIX (digital culture and skills), L2, Université de Bordeaux (Pauline Dreyer)

- Engineering school: Immersion and interaction with visual worlds, 4.5h eqTD, Graduate Degree Artificial Intelligence and Advanced Visual Computing, École Polytechnique, Palaiseau (Fabien Lotte)

- Engineering school: Artificial Intelligence, 14h eqTD, 2nd year, Telecom Nancy (Valerie Marissens)

- IFMK: English evaluation jury, 7h eqTD, K4, IFMK du CHU de Bordeaux (Pauline Dreyer)

- Supervision of 3ème (9th grade) interns (Sébastien Rimbert, Pauline Dreyer)

10.2.2 Supervision

- PhD thesis: David Trocellier (Fabien Lotte 50%)

- PhD thesis: Marc Welter (Fabien Lotte 100%)

- PhD thesis: Côme Annicchiarico (Fabien Lotte 25%)

- PhD thesis: Maria Sayu Yamamoto (Fabien Lotte 15%)

- PhD thesis: Pauline Dreyer (Fabien Lotte 50%)

- PhD thesis: Valerie Marissens-Cueva (Fabien Lotte 33%, Sebastien Rimbert 33%)

- PhD thesis: Thibault de Surrel (Fabien Lotte 15%)

- Master internship: Gloria Makima (Fabien Lotte 10%)

- Master internship: Ioanna Prodromia Siklafidou (Fabien Lotte 20%, Sebastien Rimbert 10%)

- Bachelor internship: Philomène Motti (Loic Bechon 70%, Fabien Lotte 20%, David Trocellier 10%)

10.2.3 Juries

PhD thesis committees:

- Caio de Castro Martins, Univ. Greenwich, UK (Fabien Lotte, reviewer)

- Juan-Jesus Torre-Tresols, ISAE-Supaéro, France (Fabien Lotte, reviewer)

- Caroline Pinte, Inria / Univ. Rennes, France (Fabien Lotte, reviewer)

- Arne Van Den Kerchove, Univ. Lille, France / KU Leuven, Belgium (Fabien Lotte, reviewer)

- Subba Reddy Oota, Inria / Univ. Bordeaux, France (Fabien Lotte, examiner)

- Marcel Hinns, ISAE-Supaéro, France (Fabien Lotte, examiner)

- Igor Carrara, Inria / Univ. Côte d'Azur, France (Fabien Lotte, examiner)

PhD follow-up committees (Jury de suivi de thèse):

- Anais Pontiggia, Sorbonne Univ. (Fabien Lotte)

- Clémentine Jacques, Sorbonne Univ. (Fabien Lotte)

- Maeva Andriantsoamberomanga, Inria Bordeaux / Univ. Bordeaux / IMN (Fabien Lotte)

- Subba Reddy Oota, Inria Bordeaux / Univ. Bordeaux / IMN (Fabien Lotte)

- Camilla Mannino, Sorbonne Univ. / Inria Paris / ICM (Fabien Lotte)

10.3 Popularization

10.3.1 Productions (articles, videos, podcasts, serious games, ...)

- Podcast, CORTICO 2024, "Une interface cerveau-ordinateur pour détecter les réveils peropératoires" [A brain-computer interface to detect intraoperative awakenings], LORIA, Université de Lorraine (Valerie Marissens)

- Video interview "une-minute-avec..." [one minute with...]: a short video, shared on Twitter, Pixees and on the center's screens, highlighting the careers of scientists and research support staff (Pauline Dreyer)

- Article “Études, conceptions et applications des Interfaces Cerveau-Ordinateur” [Studies, designs and applications of Brain-Computer Interfaces] in 1024, the Bulletin of the Société Informatique de France (SIF) (Fabien Lotte)

10.3.2 Participation in Live events

- Presenting Brain Kart, a kart controlled by brain activity, at the "Forum des sciences cognitives" in Paris (David Trocellier)

- Organising and participating in the second edition of Hack1robo, a hackathon on cognitive sciences, neurosciences and AI (50 participants), February 2024 (David Trocellier)

- Organising and participating in the third edition of Hack1robo, a hackathon on cognitive sciences, neurosciences and AI (60 participants), October 2024 (David Trocellier)

- MIMM "Moi informaticienne, moi mathématicienne" [Me, a computer scientist; me, a mathematician] is an initiative of the University of Bordeaux, supported by the IMB and Inria. Its aim is to introduce middle and high school girls to the digital sciences and let them spend a week in the world of research. Contribution: presentation of BCIs, academic degrees and research experiments, followed by a question-and-answer session (Pauline Dreyer)

11 Scientific production

11.1 Major publications

- 1 Long-Term BCI Training of a Tetraplegic User: Adaptive Riemannian Classifiers and User Training. Frontiers in Human Neuroscience, 15, March 2021.

- 2 A large EEG database with users’ profile information for motor imagery brain-computer interface research. Scientific Data, 10(1), September 2023, 580.

- 3 Framework for Electroencephalography-based Evaluation of User Experience. CHI '16 - SIGCHI Conference on Human Factors in Computing Systems, San Jose, United States, May 2016.

- 4 Advances in User-Training for Mental-Imagery Based BCI Control: Psychological and Cognitive Factors and their Neural Correlates. Progress in Brain Research, February 2016.

- 5 Flaws in current human training protocols for spontaneous Brain-Computer Interfaces: lessons learned from instructional design. Frontiers in Human Neuroscience, 7, 568, September 2013. URL: http://hal.inria.fr/hal-00862716

- 6 Towards identifying optimal biased feedback for various user states and traits in motor imagery BCI. IEEE Transactions on Biomedical Engineering, September 2021.

- 7 A physical learning companion for Mental-Imagery BCI User Training. International Journal of Human-Computer Studies, 136, 102380, April 2020.

- 8 Long-term kinesthetic motor imagery practice with a BCI: Impacts on user experience, motor cortex oscillations and BCI performances. Computers in Human Behavior, 146, April 2023, 107789.

- 9 A review of user training methods in brain computer interfaces based on mental tasks. Journal of Neural Engineering, 2020.

- 10 Modeling complex EEG data distribution on the Riemannian manifold toward outlier detection and multimodal classification. IEEE Transactions on Biomedical Engineering, 72(2), 2023, 377-387. In press.

11.2 Publications of the year

International journals

International peer-reviewed conferences

Conferences without proceedings

Scientific book chapters

Other scientific publications